- ¹Music, Stroke Recovery, and Neuroimaging Laboratories, Department of Neurology, Harvard Medical School, Harvard University, Boston, MA, United States

- ²Beth Israel Deaconess Medical Center, Boston, MA, United States

Functional imaging studies have provided insight into the effect of rate on production of syllables, pseudowords, and naturalistic speech, but the influence of rate on repetition of commonly-used words/phrases suitable for therapeutic use merits closer examination.

Aim: To identify speech-motor regions responsive to rate and test the hypothesis that those regions would provide greater support as rates increase, we used an overt speech repetition task and functional magnetic resonance imaging (fMRI) to capture rate-modulated activation within speech-motor regions and determine whether modulations occur linearly and/or show hemispheric preference.

Methods: Twelve healthy, right-handed adults participated in an fMRI task requiring overt repetition of commonly-used words/phrases at rates of 1, 2, and 3 syllables/second (syll./sec.).

Results: Across all rates, bilateral activation was found in ventral portions of primary sensorimotor cortex and in middle and superior temporal regions. A repeated measures analysis of variance with pairwise comparisons revealed an overall difference between rates in temporal lobe regions of interest (ROIs) bilaterally (p < 0.001); all six comparisons reached significance (p < 0.05). Five of the six were highly significant (p < 0.008), while the left-hemisphere 2- vs. 3-syll./sec. comparison, though still significant, was less robust (p = 0.037). Temporal ROI mean beta-values increased linearly across the three rates bilaterally. Significant rate effects observed in the temporal lobes were slightly more pronounced in the right hemisphere. No significant overall rate differences were seen in sensorimotor ROIs, nor was there a clear hemispheric effect.

Conclusion: Linear effects in superior temporal ROIs suggest that sensory feedback corresponds directly to task demands. The lesser degree of significance in left-hemisphere activation at the faster, closer-to-normal rate may represent an increase in neural efficiency (and therefore, decreased demand) when the task so closely approximates a highly-practiced function. The presence of significant bilateral activation during overt repetition of words/phrases at all three rates suggests that repetition-based speech production may draw support from either or both hemispheres. This bihemispheric redundancy in regions associated with speech-motor control and their sensitivity to changes in rate may play an important role in interventions for nonfluent aphasia and other fluency disorders, particularly when right-hemisphere structures are the sole remaining pathway for production of meaningful speech.

Introduction

In healthy individuals, fluent speech production involves a series of integrated commands [e.g., predictive commands to the motor cortex to establish a target (feedforward); assessment/analysis commands to auditory-motor regions to evaluate accuracy of output compared to the predicted target (feedback); and when a mismatch is detected, corrective commands to motor cortices to both initiate and respond to those auditory targets in the feedforward/feedback loop (modification/correction)] (Houde and Jordan, 1998; Tourville et al., 2008; Houde and Nagarajan, 2011). In contrast, individuals with damage to speech-motor regions or their associated network connections [as in the case of stroke or traumatic brain injury (TBI)] are left with disruptions to speech-motor control that result in fluency disorders (Kent, 2000; van Lieshout et al., 2007; Marchina et al., 2011; Wang et al., 2013; Pani et al., 2016) that can slow, impair, or prevent production of meaningful speech.

Syllable production rate has been recognized as a sensitive clinical indicator for detecting and diagnosing speech-motor disorders [e.g., dysarthria/anarthria (Kent et al., 1987), apraxia of speech (Kent and Rosenbek, 1983; Ziegler, 2002; Aichert and Ziegler, 2004; Ogar et al., 2006), and stuttering (Logan and Conture, 1995; Arcuri et al., 2009)], and syllables per minute (spm) has long been considered a precise yet flexible measure of speech rate/fluency, capable of evaluating both the severity of disordered speech in impaired populations and the efficiency (in terms of rate and fluency) of communication in healthy speakers (Cotton, 1936; Kelly and Steer, 1949; Grosjean and Deschamps, 1975; Costa et al., 2016).

Neuroimaging studies using a variety of syllable rates have reported rate-related modulation of activity within speech-motor networks (Wildgruber et al., 2001; Riecker et al., 2005, 2006), including bilateral modulation of activation in speech-motor regions in response to changes in syllable-production rate (Wildgruber et al., 2001; Riecker et al., 2005; Liegeois et al., 2016). Others have examined rate effects in the auditory domain using passive listening to words, syllables, and non-speech noise (Binder et al., 1994; Dhankhar et al., 1997; Noesselt et al., 2003). Still others have used single syllables and pseudowords (Wildgruber et al., 2001; Riecker et al., 2006, 2008; Liegeois et al., 2016) rather than meaningful words or phrases.

Despite the common assumption that covert and overt speech use the same processes and share neural mechanisms, studies have reached different conclusions. Palmer et al. (2001) showed similar activation patterns for both response modes once motor activity associated with overt speech was removed; others found distinctly different patterns of neural activation for covert and overt speech. Both Huang et al. (2002) and Shuster and Lemieux (2005) observed stronger activation for overt speech than for covert speech; Brumberg et al. (2016) noted that speech output intensity led to better identification of neural correlates for overt, but not covert sentence production; and Basho et al. (2007) suggested that without overtly spoken responses, the scope of language tasks for functional magnetic resonance imaging (fMRI) would be limited by the inability to assess subjects’ participation, obtain behavioral measures, or monitor responses. Overt repetition of real words is particularly important for studies of speech repetition rate because it enables continuous monitoring of task compliance and accuracy of responses/consistency of adherence to rate within and across subjects.

Patients with speech-motor disorders typically respond better to therapeutic interventions that use slower rates (e.g., Southwood, 1987; Yorkston et al., 1999; Duffy and Josephs, 2012). Based on existing evidence for hemispheric specialization from studies that found left-hemisphere dominance for rapid temporal processing and right-hemisphere sensitivity to slow transitions/longer durations (McGettigan and Scott, 2012) and from studies of patients with large left-hemisphere lesions (e.g., Schlaug et al., 2009; Marchina et al., 2011; Zipse et al., 2012), we hypothesized that the two hemispheres might respond differently to speech-production rate. To capture potential shifts in activation, we chose three rates: slow (1 syll./sec.), to simulate the rate used with nonfluent aphasic patients in the early stages of treatment; moderate (2 syll./sec.), to approximate the rate used in therapy as patients improve; and closer-to-normal (3 syll./sec.), which is generally slower than that of a typical adult speaker but remains within the range reported for speakers of American English [∼2.1 syll./sec. (Ruspantini et al., 2012); ∼2.83 syll./sec. (Costa et al., 2016); ∼3.67 syll./sec. (Robb et al., 2004); ∼3.75 syll./sec. (Blank et al., 2002); ∼3–6 syll./sec. (Levelt, 2001); ∼4–5 syll./sec. (Alexandrou et al., 2016)].

The incremental range of speech rates used in our study was designed to (1) provide a more comprehensive view of the healthy brain’s regional response to rate changes within the well-described perisylvian network (Binder et al., 1997; Hickok and Poeppel, 2004; Catani et al., 2005; Crosson et al., 2007; Oliveira et al., 2017), (2) identify regions capable of supporting recovery from fluency disorders characterized by impaired initiation and/or slow, halting speech production, (3) gain important insights for the development of treatment protocols that can be adapted as fluency improves over the course of treatment/recovery, and (4) examine neural response to changes in rate in terms of linear and/or non-linear effects and hemispheric laterality.

Materials and Methods

Participants

Twelve healthy, right-handed native speakers of American English (five females, seven males) ranging in age from 30 to 69 years (mean age: 52.0 ± SD: 10.1 years), with no history of neurological, speech, language, or hearing disorders were recruited. The protocol was approved by Beth Israel Deaconess Medical Center’s Institutional Review Board, and all subjects gave written informed consent in accordance with the Declaration of Helsinki.

Behavioral Testing

Handedness was assessed by self-report using measures adapted from the Edinburgh Inventory (Oldfield, 1971). To ensure that cognition fell within a normal range, subjects completed the Shipley/Hartford Institute of Living assessment (Shipley, 1940), which correlates highly with Wechsler Adult Intelligence Scale full-scale IQ (Paulson and Lin, 1970). All subjects’ IQ equivalents (derived from Shipley scores) fell within normal limits (mean: 122.09 ± SD: 11.53); thus, all were included in the data analyses.

Experimental Stimuli

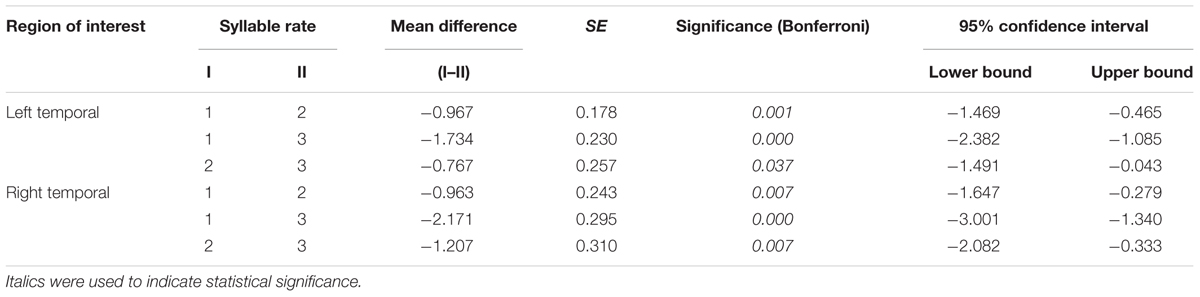

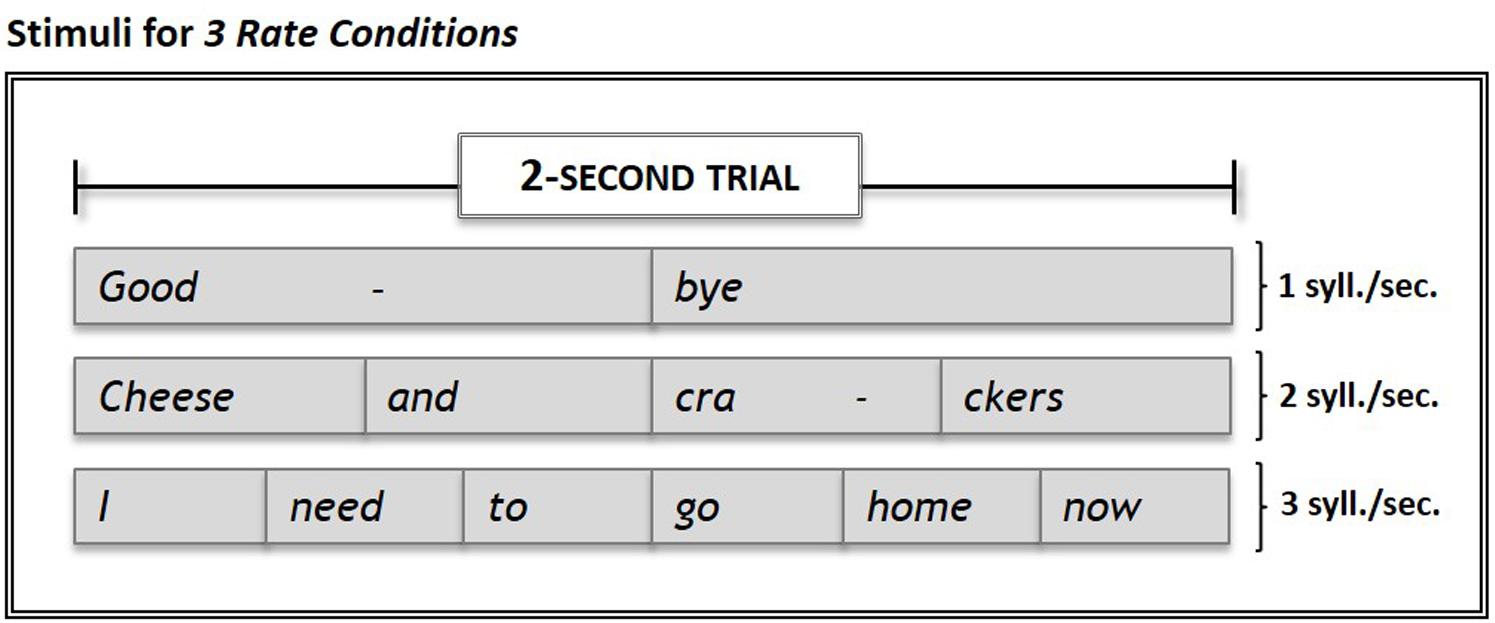

A set of 15 stimuli consisting of commonly-used 2-, 4-, and 6-syllable words/phrases (e.g., “Goodbye”; “Cheese and crackers”; “I need to go home now”) was recorded at rates of 1, 2, or 3 syll./sec. respectively, by a trained, native speaker of American English using Adobe Audition 1.5 software (Adobe, San Jose, CA, United States). The total time for each stimulus equaled 2 s. For each rate, all syllables were produced with equal duration (see Figure 1).

FIGURE 1. Examples of stimuli used in the experiment. The stimulus set consisted of 15 commonly-used words and phrases presented as 2-s stimuli at rates of 1, 2, and 3 syll./sec. Subjects’ overt repetitions of stimuli in each of the three Rate Conditions were compared to their responses during Silence (control condition) trials. Within each run, the three rate conditions and the silence (control) condition were each sampled five times.
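Because every stimulus lasted 2 s and all syllables within a stimulus had equal duration, the rate and the per-syllable duration in each condition follow directly from the syllable count. The Python sketch below makes that arithmetic explicit. It assumes, beyond what the text states, that the syllables fill the 2-s window with no pauses between them; the helper name is hypothetical.

```python
from fractions import Fraction

# Values from the stimulus description: each stimulus lasts 2 s, and the
# 2-, 4-, and 6-syllable items correspond to 1, 2, and 3 syll./sec.
STIMULUS_DURATION_S = 2
SYLLABLES_PER_ITEM = (2, 4, 6)


def rate_and_syllable_duration(n_syllables, total_s=STIMULUS_DURATION_S):
    """Return (rate in syll./sec., duration of one syllable in s) as exact fractions.

    Assumption: syllables are of equal length and fill the window without pauses.
    """
    rate = Fraction(n_syllables, total_s)
    return rate, 1 / rate


for n in SYLLABLES_PER_ITEM:
    rate, dur = rate_and_syllable_duration(n)
    print(f"{n} syllables: {float(rate):.0f} syll./sec., {float(dur):.3f} s per syllable")
```

Exact fractions are used so that the 1/3-s syllable of the fastest condition is not rounded away.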

Task Design

We used an overt repetition task in order to monitor subjects’ task compliance, adherence to protocol timing, and repetition rate in each condition, as well as to verify accuracy of repetition rates within and across subjects. Our stimuli consisted of familiar words and commonly-used phrases that hold potential for use in treatment of fluency disorders.

The fMRI protocol consisted of six runs, each with 20 active trials + 2 dummy acquisitions (15 sec./trial; 5 min 30 sec./run). Within each run, experimental conditions (three speech repetition rates: 1, 2, and 3 syll./sec.) were each sampled five times (15 trials), with five control condition (silence) trials interspersed. Conditions were pseudo-randomized {i.e., the order of the 20 active trials [5 experimental (overt repetition) trials × 3 rates + 5 control (silence) trials] was randomized once, and that same order of conditions was then used for all runs}. Order of stimuli was randomized independently for each run. For trials presented in the repetition-rate conditions, each stimulus was followed by a short “ding” that served as an auditory cue to begin overt repetition of the target word/phrase. Subjects were instructed to repeat each target exactly as they had heard it, immediately after the cue. For the control (silence) trials, no spoken stimuli were presented. Subjects were asked to remain quiet until they heard the auditory cue (ding), then take a quick breath and exhale to simulate their preparation for initiation of spoken responses in the experimental (repetition-rate) conditions.
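The run length and the pseudo-randomization scheme described above can be sketched in Python as follows. The stimulus labels, the random seed, and the helper name `make_schedule` are hypothetical; the sketch only mirrors the stated constraints: one condition order drawn once and reused for every run, with the order of stimuli reshuffled independently in each run.

```python
import random

TRIAL_S = 15          # one TR per trial
ACTIVE_TRIALS = 20    # 5 trials x 3 rates + 5 silence trials
DUMMY_SCANS = 2
N_RUNS = 6

run_s = (ACTIVE_TRIALS + DUMMY_SCANS) * TRIAL_S
minutes, seconds = divmod(run_s, 60)          # 330 s -> 5 min 30 s

# Hypothetical placeholder labels: 5 stimuli per rate, 15 in total.
STIMULI = {r: [f"rate{r}_item{i}" for i in range(1, 6)] for r in (1, 2, 3)}


def make_schedule(stimuli_by_rate, n_runs=N_RUNS, seed=0):
    """Return (shared condition order, list of runs of (condition, stimulus) trials)."""
    rng = random.Random(seed)
    conditions = [1, 2, 3] * 5 + ["silence"] * 5
    rng.shuffle(conditions)                   # drawn once, reused for every run
    runs = []
    for _ in range(n_runs):
        # Stimulus order within each rate is reshuffled independently per run.
        pools = {rate: rng.sample(items, len(items)) for rate, items in stimuli_by_rate.items()}
        trials = []
        for cond in conditions:
            stim = None if cond == "silence" else pools[cond].pop()
            trials.append((cond, stim))
        runs.append(trials)
    return conditions, runs


conditions, runs = make_schedule(STIMULI)
print(f"{run_s} s per run = {minutes} min {seconds} s")
```

Fixing the condition order across runs keeps condition timing identical in every run, while reshuffling stimuli prevents a given item from always occupying the same position.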

Prior to the fMRI experiment, a member of the research team explained the experimental design and what would take place during the scanning session. Subjects were given approximately 20 min to familiarize themselves with the stimuli and practice the tasks and timing with one of the researchers.

During the fMRI experiment, auditory stimuli were presented via MR-compatible, noise-canceling headphones while the subjects lay supine in the scanner. Subjects were asked to hold as still as possible and to keep their eyes closed throughout the scanning session to minimize motion and activation unrelated to the task. Researchers noted subjects’ responses to verify task compliance.

Image Acquisition

Functional MRI was performed on a 3T GE whole-body scanner. A gradient-echo EPI-sequence (TR 15 s, TE 25 ms, acquisition time 1.75 s) with a matrix of 64 × 64 was used for functional imaging. Twenty-eight contiguous axial slices covering the whole brain were acquired, with a voxel size of 3.75 mm × 3.75 mm × 5 mm. Image acquisition was synchronized with stimulus onset using Presentation software (Neurobehavioral Systems, Albany, CA, United States). The total scan time, including the acquisition of a high-resolution MPRAGE anatomical sequence (voxel resolution of 0.93 mm × 0.93 mm × 1.5 mm), was, on average, 40 min per subject.
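The reported acquisition parameters imply a few derived quantities that are useful when checking slice coverage and the sparse-sampling timing. The sketch below computes them; it assumes, as "contiguous" suggests, that there was no inter-slice gap, and the in-plane field of view is derived here rather than quoted from the protocol.

```python
MATRIX = 64           # in-plane matrix (64 x 64)
IN_PLANE_MM = 3.75    # in-plane voxel edge length
SLICE_MM = 5.0        # slice thickness
N_SLICES = 28         # contiguous slices (assumed: no gap)
TR_S = 15.0           # repetition time
TA_S = 1.75           # acquisition time within each TR

fov_mm = MATRIX * IN_PLANE_MM                      # in-plane field of view
coverage_mm = N_SLICES * SLICE_MM                  # superior-inferior coverage
voxel_volume_mm3 = IN_PLANE_MM ** 2 * SLICE_MM
silent_s = TR_S - TA_S                             # scanner-quiet time per TR

print(f"FOV {fov_mm} mm, coverage {coverage_mm} mm, "
      f"voxel {voxel_volume_mm3} mm^3, silent gap {silent_s} s")
```

The long silent gap relative to the acquisition time is what allows stimuli, cues, and overt responses to occur without concurrent scanner noise.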

We used a jittered, sparse temporal sampling design with precisely-timed acquisitions to capture task-related activation and reduce/eliminate auditory artifacts associated with stimulus presentation, auditory cueing, and scanner noise. The Silence condition was designed to control for activation associated with the preparatory breath and initiation of the speech-motor response necessary for overt repetition in the rate conditions. Although the TR remained constant at 15 s, the delay between the auditory cue (and thus the subjects’ responses) and the onset of MR acquisition was varied by shifting the task block within the 15-s time frame. These shifts yielded stacks of axial images acquired 3.5, 4.5, 5.5, and 6.5 s after the auditory cue. By combining the data from the four jitter points, we were able to capture the peak hemodynamic response for each condition while allowing for individual timing differences between subjects and brain regions. Ten of the 12 subjects completed all six functional runs. Due to unforeseen scanner time constraints, the sessions of the two remaining subjects were truncated, and thus they completed only four and three runs, respectively. Nevertheless, all runs of all subjects were included in the analyses.
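The jittering scheme can be illustrated by working backwards from each acquisition. The sketch below assumes that each 1.75-s acquisition starts at the beginning of its TR, that the 2-s stimulus is immediately followed by the cue (the gap `CUE_GAP_S = 0` is a hypothetical value, as the text does not give it), and that the stimulus must not overlap the preceding acquisition. The function name is hypothetical.

```python
TR_S = 15.0
TA_S = 1.75
STIM_S = 2.0
CUE_GAP_S = 0.0                    # hypothetical: cue assumed to follow stimulus offset directly
DELAYS_S = (3.5, 4.5, 5.5, 6.5)    # cue-to-acquisition delays from the text


def trial_timeline(delay_s):
    """Event times (s) within one TR.

    t = 0 is the start of the previous acquisition; t = TR_S is the start of the
    acquisition that samples this trial, delay_s after the cue.
    """
    cue = TR_S - delay_s
    stim_onset = cue - CUE_GAP_S - STIM_S
    if stim_onset < TA_S:
        raise ValueError("stimulus would overlap the previous acquisition")
    return {"stim_onset": stim_onset, "cue": cue, "acq_onset": TR_S}


for d in DELAYS_S:
    print(d, trial_timeline(d))
```

Shifting the whole stimulus-cue-response block earlier within the TR lengthens the delay to the next acquisition, so each volume samples a different point of the hemodynamic response.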

fMRI Data Analysis

Data were analyzed using SPM5 (Institute of Neurology, London, United Kingdom) implemented in MATLAB (MathWorks, Natick, MA, United States). Pre-processing included realignment and unwarping, spatial normalization, and spatial smoothing with an 8-mm isotropic Gaussian kernel. Condition and subject effects were estimated using a general linear model (Friston, 2002). The effect of global differences in scan intensity was removed by scaling each scan in proportion to its global intensity. Low-frequency drifts were removed using a temporal high-pass filter with a cutoff of 128 s (default setting).
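The two intensity-related steps above can be illustrated with a short NumPy sketch. It is not SPM's code. Proportional scaling is shown as dividing each scan by its own global mean and rescaling to a target of 100 (the target value is an assumption), and the high-pass filter is written as regression onto discrete cosine (DCT) regressors whose periods are at least the 128-s cutoff, which is the general approach SPM takes; the exact rule for counting basis functions is our assumption.

```python
import numpy as np


def proportional_scale(data, target=100.0):
    """data: (n_scans, n_voxels). Rescale every scan so its global mean equals target."""
    data = np.asarray(data, dtype=float)
    global_mean = data.mean(axis=1, keepdims=True)
    return data * (target / global_mean)


def dct_highpass(data, tr_s, cutoff_s=128.0):
    """Regress out DCT drift regressors with periods >= cutoff_s seconds.

    Cosine j has period 2 * n * tr_s / j seconds, so j runs from 1 up to
    floor(2 * n * tr_s / cutoff_s); the constant (j = 0) is left in the data.
    """
    data = np.asarray(data, dtype=float)
    n = data.shape[0]
    n_terms = min(int(np.floor(2 * n * tr_s / cutoff_s + 1)), n)  # count includes j = 0
    if n_terms <= 1:
        return data.copy()
    t = np.arange(n)[:, None]
    j = np.arange(1, n_terms)[None, :]
    basis = np.cos(np.pi * (2 * t + 1) * j / (2 * n))  # columns orthogonal to the mean
    beta, *_ = np.linalg.lstsq(basis, data, rcond=None)
    return data - basis @ beta
```

With a 15-s TR, only a handful of drift regressors fall below the 128-s cutoff in a run of about 20 scans, so the filter removes very slow trends while leaving trial-to-trial variation intact.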

Because of the sparse temporal sampling design (TR = 15 s), temporal autocorrelation between successive images was negligible. Therefore, rather than convolving the data with the hemodynamic response function, we used a flexible finite impulse response (FIR) model, which averages the BOLD response at each post-stimulus time point. The data were first analyzed at the single-subject level, and the individual contrasts were then entered into a random effects analysis. One-sample t-tests, which included a ventricular mask, were calculated separately for each syllable rate at a significance threshold of p < 0.01, corrected for multiple comparisons using the false discovery rate (FDR). For an analysis of variance (ANOVA) with three levels, we used the full factorial design and corrected for family-wise error (FWE) at a significance level of p < 0.05.
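The FIR idea and the FDR correction can both be sketched in a few lines. In the sketch below, each sparse volume is tagged with its condition and its post-cue delay, and the design has one indicator column per (condition, delay) bin; with such a design, the least-squares estimate for each column is simply the mean signal of the volumes in that bin. The FDR step is the standard Benjamini–Hochberg procedure; SPM5's own FDR implementation may differ in detail. Condition labels and signal values are made up for illustration.

```python
import numpy as np


def fir_design(conditions, delays):
    """One indicator column per (condition, delay) bin; each volume falls in exactly one bin."""
    labels = sorted(set(zip(conditions, delays)))
    column = {label: j for j, label in enumerate(labels)}
    X = np.zeros((len(conditions), len(labels)))
    for i, key in enumerate(zip(conditions, delays)):
        X[i, column[key]] = 1.0
    return X, labels


def fdr_bh(p_values, q=0.01):
    """Benjamini-Hochberg step-up: boolean mask of p-values significant at FDR level q."""
    p = np.asarray(p_values, dtype=float)
    m = p.size
    order = np.argsort(p)
    below = p[order] <= q * np.arange(1, m + 1) / m
    mask = np.zeros(m, dtype=bool)
    if below.any():
        k = np.nonzero(below)[0].max()   # largest rank that passes its threshold
        mask[order[: k + 1]] = True      # it and every smaller p-value are declared significant
    return mask


# Toy example: four volumes from one voxel (made-up values).
X, labels = fir_design(["rate1", "rate1", "silence", "rate1"], [3.5, 4.5, 3.5, 3.5])
y = np.array([2.0, 5.0, 1.0, 4.0])
beta, *_ = np.linalg.lstsq(X, y, rcond=None)   # bin means: 3.0, 5.0, 1.0
```

Because no hemodynamic shape is imposed, the four jitter bins per condition can be inspected or combined directly, which is what makes the FIR approach suited to sparse sampling.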

Local maxima in each cluster of the conjunction analysis were extracted to create a spherical ROI (10 mm). The ROIs were overlaid on each subject’s contrast images for 1-, 2-, and 3-syllable rates > silence; mean beta-values were extracted for each ROI, then used for the repeated measures ANOVA in SPSS.
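A spherical ROI and its mean beta-value can be computed from a beta image and its voxel-to-millimeter affine. The sketch below treats the reported 10 mm as the sphere's radius, which is an assumption (the text does not say whether it is the radius or the diameter); the function names are hypothetical, and loading the images from disk is left out.

```python
import numpy as np


def sphere_mask(shape, affine, center_mm, radius_mm=10.0):
    """Boolean mask of voxels whose centres lie within radius_mm of center_mm.

    affine: 4x4 matrix mapping voxel indices (i, j, k) to mm (e.g. MNI) coordinates.
    """
    ijk = np.indices(shape).reshape(3, -1)                 # all voxel indices
    xyz = affine[:3, :3] @ ijk + affine[:3, 3:4]           # their mm coordinates
    dist = np.linalg.norm(xyz - np.asarray(center_mm, dtype=float)[:, None], axis=0)
    return (dist <= radius_mm).reshape(shape)


def roi_mean_beta(beta_img, mask):
    """Mean beta inside the ROI, ignoring NaNs (e.g. voxels outside the brain mask)."""
    return float(np.nanmean(beta_img[mask]))
```

In practice the same mask would be applied to each subject's 1-, 2-, and 3-syll./sec. > silence contrast image, yielding one value per subject and rate for the repeated measures ANOVA.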

Results

Speech Repetition Rates: 1, 2, and 3 syll./sec. vs. Silence (Control Condition)

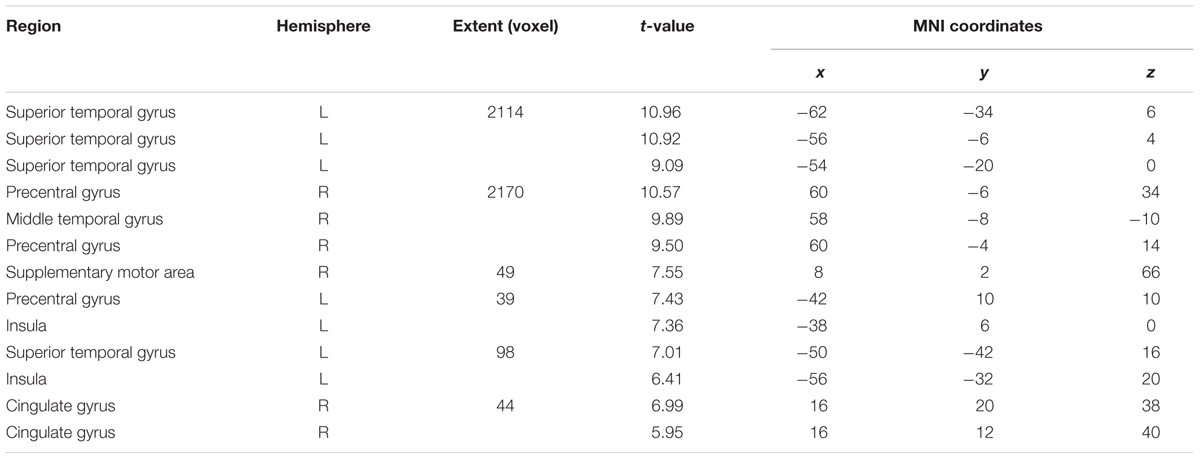

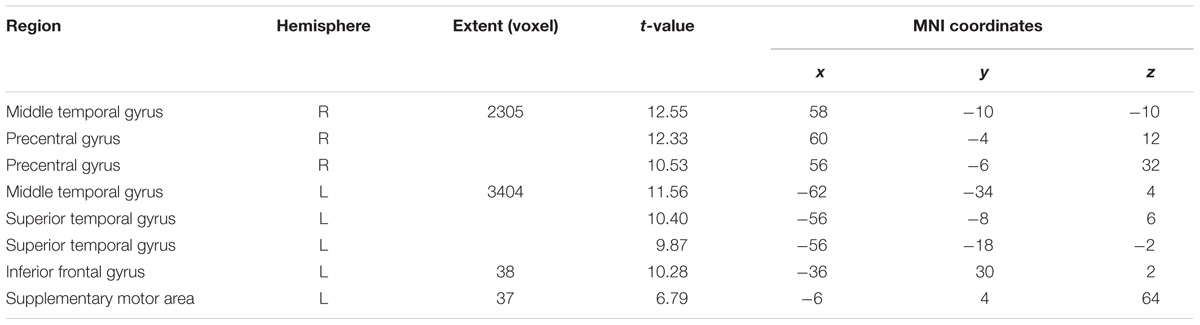

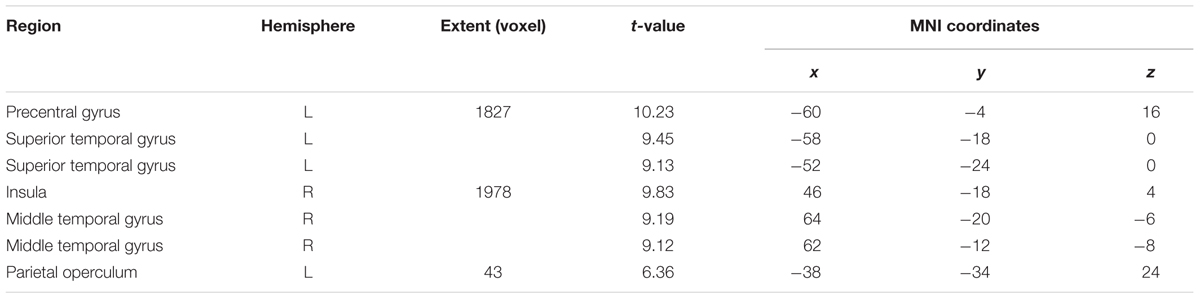

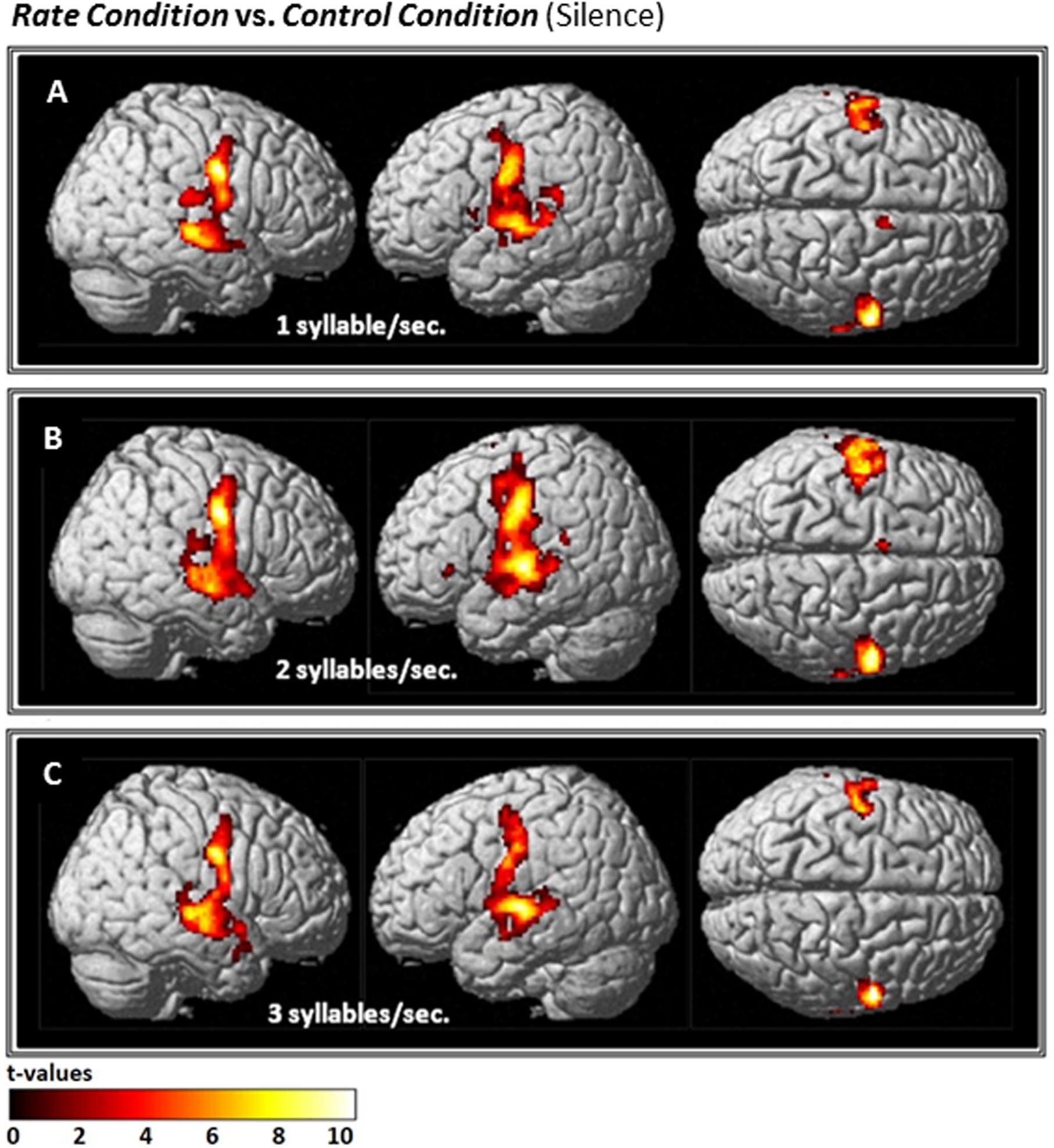

Compared with Silence (control condition), all Rate conditions yielded extensive clusters of activation in bilateral speech-motor regions that included primary motor and adjacent premotor cortices, superior temporal gyri (STG), superior temporal sulci (STS), and middle temporal gyri (MTG). In the 1-syll./sec. contrast, additional activation was found in the right cingulate gyrus and the left insula (see Figure 2A and Table 1A).

FIGURE 2. Effects of the three individual Rate Conditions vs. Silence (control condition) (A–C): t-Tests comparing 1-, 2-, and 3-syll./sec. Rates vs. Silence revealed bilateral patterns of activation in a group of 12 healthy subjects during overt repetition of words and phrases spoken at three different rates. Highly significant linear increases were observed at all three rates in the right hemisphere and at the 1- and 2-syll./sec. rates in the left. Left-hemisphere activation at the closer-to-normal speech rate (3 syll./sec.), though still significant, was less robust. Statistical maps are thresholded at p < 0.01 (FDR-corrected); the extent threshold is 20 voxels.

Similarly, the 2-syll./sec. rate elicited additional activation in the left inferior frontal gyrus (IFG) and left supplementary motor area (SMA) (see Figure 2B and Table 1B).

For the 3-syll./sec. rate, additional activation was located in the right insula and the left parietal operculum (see Figure 2C and Table 1C).

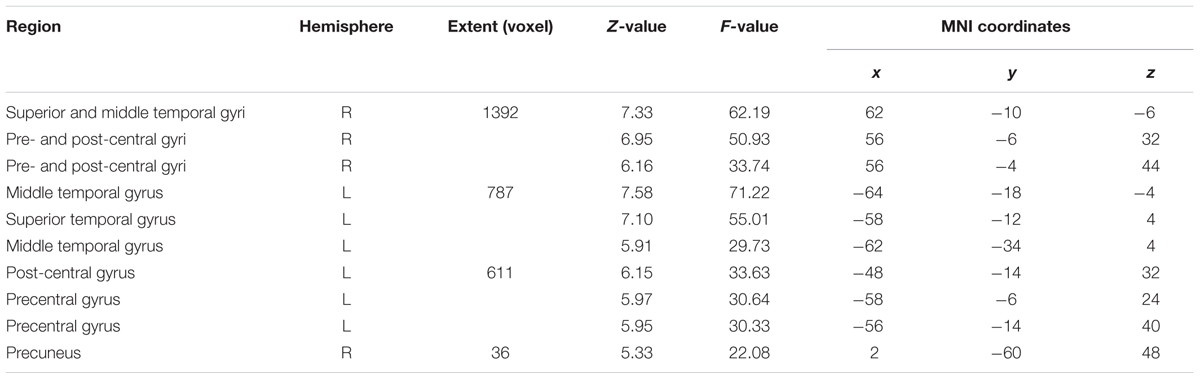

One-Way ANOVA with Three Levels

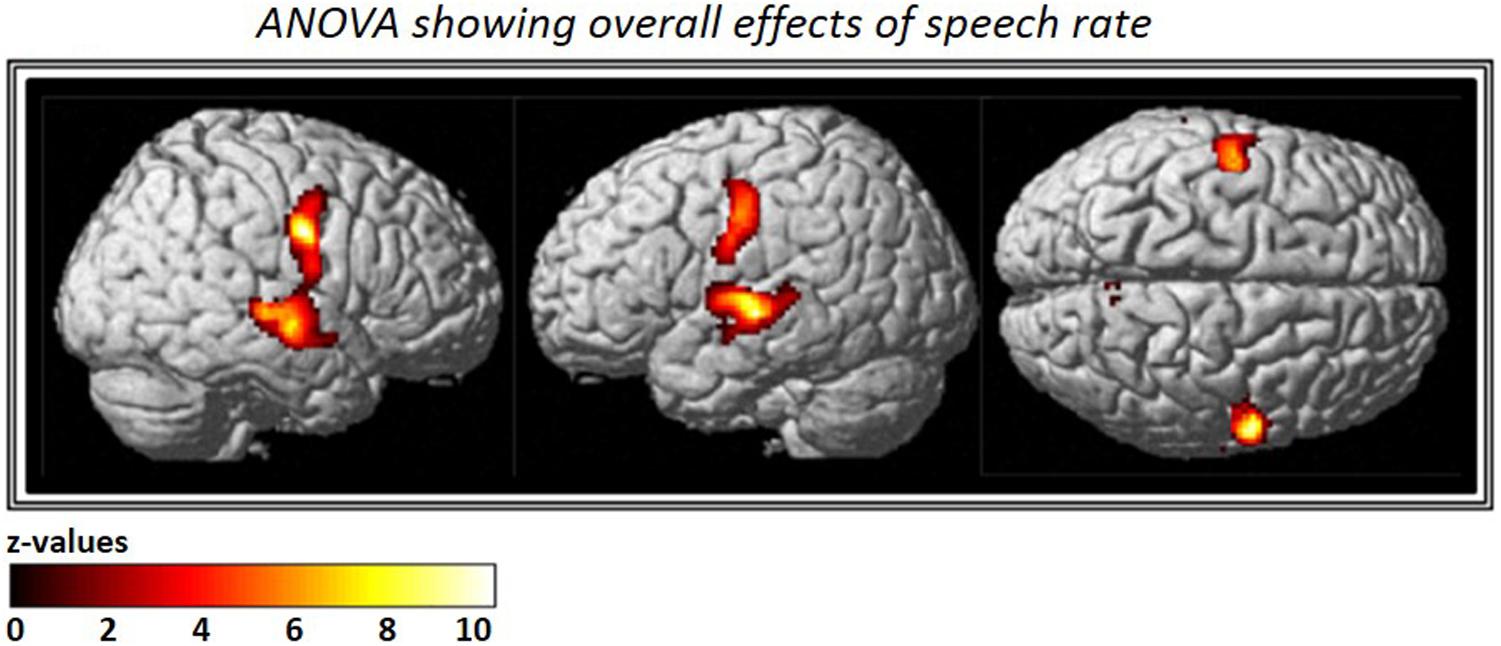

The ANOVA that included all three speech rates vs. silence revealed bilateral activation in speech-motor control regions that included the pre- and post-central gyri, the superior and middle temporal gyri, and the STS. In addition, a smaller cluster was found in the right precuneus (see Figure 3 and Table 2).

FIGURE 3. Analysis of variance (ANOVA) showing overall effects of speech repetition rate. Contrast images from the first level analysis for all three speech repetition rates were entered into a full factorial design in order to calculate an ANOVA with three levels. The resulting F-contrast was FWE corrected at a significance level of p < 0.05; an extent threshold of 20 voxels was applied.

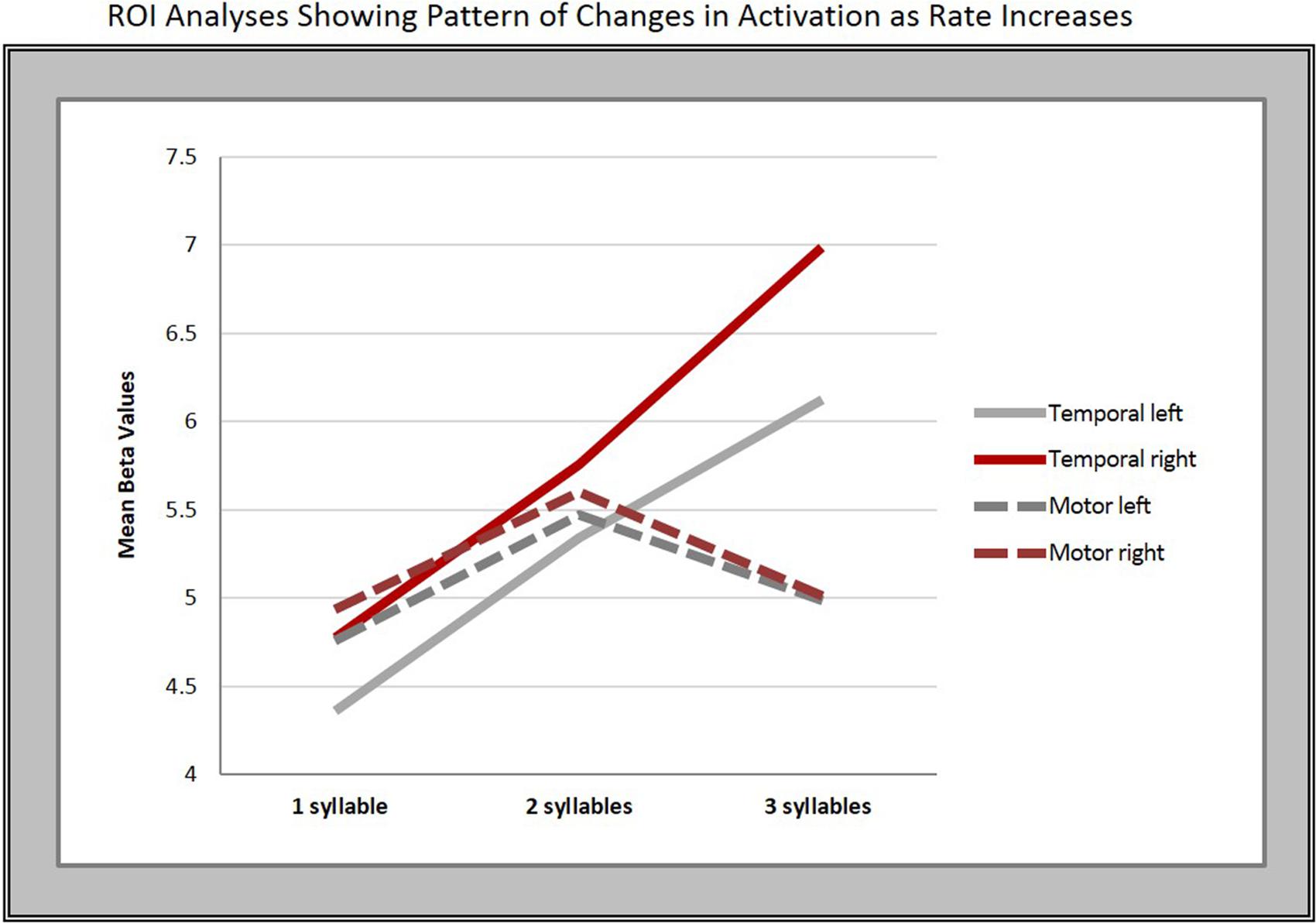

ROI Analysis

To further explore the pattern seen in the contrast estimates, significant clusters in the conjunction analysis served as the basis for a region of interest (ROI) analysis. Thus, we created two ROIs in each hemisphere using the local maxima in the superior temporal [right: 62 -12 -6; left: -64 -18 -4 (in MNI space)] and middle to inferior motor cortices (left: -48 -14 32; right: 58 -6 32). We then conducted a one-way, repeated measures ANOVA with Bonferroni post hoc pairwise comparisons, which revealed a significant difference in mean beta-values within the temporal ROIs bilaterally across syllable rates (right: F = 29.36, p < 0.001; left: F = 30.083, p < 0.001).

Five of the six pairwise comparisons between the three rates in the temporal ROIs were highly significant (p < 0.008), while the 2- vs. 3-syll./sec. contrast in the left hemisphere, though still significant, was less robust at p = 0.037 (see Figure 4 and Table 3).

FIGURE 4. Regions of interest (ROI) analyses. The graphs show the mean beta-values in response to changes in syllable rate in motor and temporal regions bilaterally.

In contrast, no significant overall differences in mean beta-values for the different syllable rates were found in the motor cortex ROIs on either the right (F = 2.42, p = 0.14, Greenhouse–Geisser corrected) or left hemisphere (F = 3.003, p = 0.07).
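The ROI statistics reported above (a one-way repeated measures ANOVA, a Greenhouse–Geisser correction where sphericity is violated, and Bonferroni-adjusted pairwise comparisons) can be sketched as follows. The analyses in the study were run in SPSS; this is an independent Python illustration, not a reproduction of SPSS output. Bonferroni adjustment is shown as multiplying each paired t-test p-value by the number of pairs within one ROI, and the demo data are synthetic.

```python
import itertools

import numpy as np
from scipy import stats


def rm_anova_oneway(y):
    """y: (n_subjects, k_conditions), e.g. ROI mean betas at 1, 2, 3 syll./sec.

    Returns (F, df1, df2, uncorrected p).
    """
    y = np.asarray(y, dtype=float)
    n, k = y.shape
    grand = y.mean()
    ss_cond = n * ((y.mean(axis=0) - grand) ** 2).sum()
    ss_subj = k * ((y.mean(axis=1) - grand) ** 2).sum()
    ss_err = ((y - grand) ** 2).sum() - ss_cond - ss_subj   # condition x subject residual
    df1, df2 = k - 1, (k - 1) * (n - 1)
    F = (ss_cond / df1) / (ss_err / df2)
    return F, df1, df2, stats.f.sf(F, df1, df2)


def gg_epsilon(y):
    """Greenhouse-Geisser epsilon from the double-centred condition covariance matrix."""
    y = np.asarray(y, dtype=float)
    k = y.shape[1]
    centre = np.eye(k) - np.full((k, k), 1.0 / k)
    d = centre @ np.cov(y, rowvar=False) @ centre
    return np.trace(d) ** 2 / ((k - 1) * np.trace(d @ d))


def gg_p(F, df1, df2, eps):
    """p-value with both degrees of freedom scaled by epsilon."""
    return stats.f.sf(F, eps * df1, eps * df2)


def bonferroni_pairwise(y, labels):
    """Paired t-tests for all condition pairs; p multiplied by the number of pairs (capped at 1)."""
    y = np.asarray(y, dtype=float)
    pairs = list(itertools.combinations(range(y.shape[1]), 2))
    results = {}
    for a, b in pairs:
        t, p = stats.ttest_rel(y[:, a], y[:, b])
        results[(labels[a], labels[b])] = (float(t), min(1.0, float(p) * len(pairs)))
    return results


# Synthetic demo data (NOT the study's data): 12 subjects x 3 rates.
rng = np.random.default_rng(0)
demo = rng.normal(size=(12, 3)) + np.array([0.5, 1.0, 1.5])
F, df1, df2, p = rm_anova_oneway(demo)
eps = gg_epsilon(demo)
print(f"F({df1}, {df2}) = {F:.2f}, p = {p:.4f}, GG eps = {eps:.3f}, GG p = {gg_p(F, df1, df2, eps):.4f}")
for pair, (t, p_adj) in bonferroni_pairwise(demo, ["1", "2", "3"]).items():
    print(pair, f"t = {t:.2f}, Bonferroni p = {p_adj:.4f}")
```

Epsilon ranges from 1/(k - 1) to 1; with three rates, values well below 1 shrink the effective degrees of freedom and make the test more conservative.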

Discussion

The aims of the present study were to (1) examine healthy adults’ neural response to changes in rate during overt repetition of meaningful words/phrases, (2) determine whether such changes are capable of modulating activation within speech-motor regions, and if so, (3) whether those modulations occur in a linear manner and/or show hemispheric preference.

Early lesion studies found language functions to be localized predominantly in the left hemisphere (Broca, 1865; Wernicke, 1874; Geschwind, 1970), but were limited in their ability to link speech function to structure in vivo. With the evolution of functional imaging, investigations of both healthy and lesioned brains have provided substantial evidence for bilateral organization of speech production (e.g., Hickok et al., 2000; Hickok and Poeppel, 2004, 2007; Saur et al., 2008). In healthy subjects, speaking rate and/or speech-repetition rate has been studied primarily as a means for understanding speech-motor control. Two recent studies of spontaneous connected speech have led to a greater understanding of the role that speech production networks and cortical regions associated with perception and production play in natural speech. Silbert et al. (2014) used a novel fMRI technique to examine unconstrained, 15-min-long, real-life speech narratives and found symmetric bilateral activation in sensorimotor and temporal brain regions. Alexandrou et al. (2017) used MEG to study the perception and production of natural speech at three different rates; they not only noted distinct patterns of modulation in cortical regions bilaterally, but also highlighted the role of the right temporo-parietal junction in task modulation.

Other fMRI studies have also shown that repetition of simple phrases and/or individual syllables activates bilateral networks (Bohland and Guenther, 2006; Ozdemir et al., 2006; Rauschecker et al., 2008). Although the present study’s task involved repetition of stimuli at increasing rates and lengths rather than 2-syllable phrases repeated at a constant rate, our results broadly align with those of Ozdemir et al. (2006), who reported bilateral activation in the IFG for motor planning and auditory-motor mapping, primary sensorimotor cortex activation for articulatory action, and middle and posterior STG/STS activation for sensory feedback.

Of particular interest in terms of repetition and rate, Wise et al. (1999) employed a listening/repetition task involving 2-syllable nouns produced at multiple slower rates [10, 20, 30, 40, and 50 words/min (i.e., ranging from 0.33 to 1.67 syll./sec.)] and found bilateral activation associated with word repetition in primary sensorimotor cortices, as well as additional activity in the left anterior insula, posterior pallidum, anterior cingulate gyrus, dorsal brainstem, and rostral right paravermal cerebellum. Increased temporal lobe activation corresponded with rate increases for both listening and repetition conditions, and a linear increase associated with increased repetition rate was seen in the sensorimotor cortex. Our data partially confirmed their findings: activation in the superior temporal cortex increased linearly across all three rates in both hemispheres, whereas the primary sensorimotor cortices showed no significant rate effects. The increased neural activity observed as speech repetition rate increases lends support to the notion that speech-motor regions modulate their activity in response to task demands (Price et al., 1992; Paus et al., 1996; Sörös et al., 2006; Dunst et al., 2014; Alexandrou et al., 2017). The less robust effect in the left hemisphere at the faster rate may reflect the fact that speech produced at a closer-to-normal pace is a highly practiced function and may therefore require little additional regional support (Dunst et al., 2014; Nussbaumer et al., 2015).
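Because the studies discussed here report rates in different units (words/min, words/sec., syll./sec., and, clinically, syllables per minute), a small conversion helper makes the comparisons above easy to check. The sketch assumes that every word in a set has the same number of syllables, as with the 2-syllable nouns of Wise et al. (1999); the function names are hypothetical.

```python
from fractions import Fraction


def per_min_to_per_sec(count_per_min):
    """Convert any per-minute rate to a per-second rate (exact fraction)."""
    return Fraction(count_per_min) / 60


def words_per_min_to_syll_per_sec(words_per_min, syllables_per_word):
    """Assumes a fixed number of syllables per word."""
    return per_min_to_per_sec(words_per_min * syllables_per_word)


# Wise et al. (1999): 2-syllable nouns at 10-50 words/min.
wise = [words_per_min_to_syll_per_sec(w, 2) for w in (10, 20, 30, 40, 50)]
# Dhankhar et al. (1997): peak at 90 and fall at 130 words/min, in words/sec.
dhankhar = [per_min_to_per_sec(w) for w in (90, 130)]
# Present study: 1, 2, and 3 syll./sec. expressed as syllables per minute (spm).
spm = [60 * r for r in (1, 2, 3)]

print([round(float(r), 2) for r in wise], [round(float(r), 2) for r in dhankhar], spm)
```

Expressed this way, the fastest rate of Wise et al. (about 1.67 syll./sec.) lies between the slow and moderate rates used here.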

Wildgruber et al. (2001) used fMRI and repetition of a simple syllable (/ta/) at three different rates (2.5, 4.0, and 5.5 Hz) to determine the independent contributions of cerebral structures that support speech-motor control. Bilateral motor cortices showed a positive correlation with production frequency, while activation in the right superior temporal lobe increased from 2.5 to 4 Hz but then decreased. These results differ from those of our study, which showed significant differences and increases across all three rates in the temporal lobe, but not in the motor cortex. The underlying cause of this discrepancy is difficult to discern because their task was covert, involving imagined silent repetition of a single syllable. Moreover, in contrast to overt repetition of commonly-used words/phrases, repetition of a nonfluent syllable such as /ta/ would likely deter rather than enhance fluency and thus may have become more difficult to “produce” as rates increased. Although Wildgruber and others have argued that speech-motor control can be successfully assessed with covert tasks (Wildgruber et al., 1996; Ackermann et al., 1998; Riecker et al., 2000), Shuster and Lemieux (2005), who used both overt and covert stimuli in a word production task, concluded that, despite similarities, the BOLD response was not the same for the two modalities.

Furthermore, because our repetition rates (1, 2, and 3 syll./sec.) were slower than the 2.5, 4.0, and 5.5 Hz used by Wildgruber et al. (2001), they may have engaged the right temporal cortex, which has been shown to be particularly sensitive to slow temporal features and may therefore underlie the encoding of syllable patterns in speech (Boemio et al., 2005; Abrams et al., 2008). Riecker et al. (2006) also investigated speech-motor control using simple, overt repetition of the syllable /pa/ at six different frequencies (2.0 to 6.0 Hz). There, the rate-to-response functions of the BOLD signal revealed a negative relationship between syllable frequency and striatal activation, whereas cortical areas and the cerebellum showed the opposite pattern. Surprisingly, unlike our study, they found no activation in the superior temporal cortex. This is, however, in line with Wildgruber et al. (2001), who suggested that simple syllable repetition requires fewer resources from temporal lobe regions. It may also indicate that a more classical perisylvian network is engaged for speaking meaningful phrases, whereas repetition of a single syllable at a faster pace requires greater support from classical motor-control networks.

Noesselt et al.’s (2003) examination of rate effects used an auditory word presentation task and showed a strong linear correlation between presentation rate and the bilateral hemodynamic response in the auditory cortices of the STG. Because word presentation rate modulated activation in these areas, they concluded that activity in these regions is stimulus-dependent. A similar result was reported by Dhankhar et al. (1997), who presented auditory stimuli at rates ranging from 0 to 130 words/min (i.e., 0 to 2.17 words/sec.). They found that the total volume of activation in the auditory regions of the STG increased with presentation rate, peaking at 90 words/min (i.e., 1.5 words/sec.) and falling at 130 words/min (i.e., 2.17 words/sec.). In our study, overt speech production at three different rates also elicited linear increases in the STG bilaterally, with a smaller increase on the left between the 2- and 3-syll./sec. rates. Converging evidence has also identified a potential role for the posterior STG (pSTG) in aspects of speech production (Price et al., 1996; Wise et al., 2001; Bohland and Guenther, 2006; Ozdemir et al., 2006). Ozdemir et al. (2006) suggested that activated regions in the temporal cortex may be responsible for providing sensory feedback, and Price et al. (1992) found a linear relationship between blood flow and presentation rate of heard words in the right STG, whereas the left STG was activated in response to the words themselves but not to the rate of presentation.

In this study, the strong superior temporal lobe activation, observed together with activation in other speech-relevant perisylvian language areas, suggests close coordination within the speech-motor circuitry among regions that support both motor preparation/execution and sensory feedforward/feedback control for speech production. This is consistent with studies that found evidence for left-hemisphere dominance in rapid temporal processing and right-hemisphere sensitivity to longer durations (McGettigan and Scott, 2012; Han and Dimitrijevic, 2015). Furthermore, clinical studies in patients with large left-hemisphere lesions and nonfluent aphasia (e.g., Schlaug et al., 2008; Zipse et al., 2012) have found right-hemispheric support for repetition of meaningful words at slower rates (1 syll./sec.). Our results indicate that bihemispheric sensorimotor regions, part of the feedforward/feedback control loop for speech production, are actively engaged during paced, overt word/phrase repetition in healthy adults. These findings complement the growing body of evidence from lesion studies and, together, advance a more comprehensive picture of the effect of rate on neural activation and its promise for the treatment of nonfluent aphasia and other fluency disorders.

There are, however, a number of limitations that deserve consideration. First, the sample size of 12 is at the smaller end for studies of this kind. Because the study was designed as a pilot to inform future studies with aphasic patients, we elected to proceed with the smallest sample that was still large enough to adequately power analyses of our sparse temporal sampling paradigm. Second, we are aware that fMRI is not necessarily the optimal imaging method with regard to temporal resolution for time-sensitive tasks; however, our main objective was to visualize and localize the neural correlates of speech repetition at different rates. Third, although subjects’ responses were recorded and assessed in real time, the study lacked an acoustic measure that could be used to assess error type/extent more precisely and to relate errors to regions activated during paced repetition. We expect, however, that such an error analysis would reveal only minor variations on the main findings, since speech repetition rate was modulated over a rather large range.

Conclusion

The linear effects seen in superior temporal lobe ROIs suggest that sensory feedback corresponds directly to task demands. The smaller increase in left-hemisphere activation between the 2- and 3-syll./sec. rates may represent an increase in neural efficiency, indicating that faster rates are less demanding on regional function in the left hemisphere when the task so closely approximates a highly practiced function. The overall pattern of bilateral activation during overt repetition, coupled with the slightly stronger right-hemisphere response to changes in speech repetition rate, further suggests that interventions aiming to improve speech fluency through repetition could draw support from either or both hemispheres. This bihemispheric redundancy in speech-motor control may play an important role in recovery of speech production/fluency, particularly for patients with large left-hemisphere lesions for whom the right hemisphere is the only option for production of meaningful speech. Results of this investigation may help identify optimal rates for treatment at different stages of recovery and provide insight for the development of interventions targeting nonfluent aphasia and other fluency disorders characterized by impaired initiation and/or slow, halting speech production that are typically treated with repetition-based therapies.

Author Contributions

Substantial contributions to the conception or design of the work; or the acquisition, analysis, or interpretation of data for the work; drafting the work or revising it critically for important intellectual content; final approval of the version to be published; agreement to be accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved: SM, AN, SK, and GS.

Funding

This study was supported by NIH (1R01DC008796), the Richard and Rosalyn Slifka Family Fund, and the Tom and Suzanne McManmon Family Fund.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The authors gratefully acknowledge Miriam Tumeo and Lauryn Zipse for their efforts in planning and executing this study.

References

Abrams, D. A., Nicol, T., Zecker, S., and Kraus, N. (2008). Right-hemisphere auditory cortex is dominant for coding syllable patterns in speech. J. Neurosci. 28, 3958–3965. doi: 10.1523/JNEUROSCI.0187-08.2008

Ackermann, H., Wildgruber, D., Daum, I., and Grodd, W. (1998). Does the cerebellum contribute to cognitive aspects of speech production? A functional magnetic resonance imaging (fMRI) study in humans. Neurosci. Lett. 247, 187–190. doi: 10.1016/S0304-3940(98)00328-0

Aichert, I., and Ziegler, W. (2004). Syllable frequency and syllable structure in apraxia of speech. Brain Lang. 88, 148–159. doi: 10.1016/S0093-934X(03)00296-7

Alexandrou, A. M., Saarinen, T., Kujala, J., and Salmelin, R. (2016). A multimodal spectral approach to characterize rhythm in natural speech. J. Acoust. Soc. Am. 139, 215–226. doi: 10.1121/1.4939496

Alexandrou, A. M., Saarinen, T., Makela, S., Kujala, J., and Salmelin, R. (2017). The right hemisphere is highlighted in connected natural speech production and perception. Neuroimage 152, 628–638. doi: 10.1016/j.neuroimage.2017.03.006

Arcuri, C. F., Osborn, E., Schiefer, A. M., and Chiari, B. M. (2009). Speech rate according to stuttering severity. Pro Fono 21, 46–50.

Basho, S., Palmer, E. D., Rubio, M. A., Wulfeck, B., and Muller, R. A. (2007). Effects of generation mode in fMRI adaptations of semantic fluency: paced production and overt speech. Neuropsychologia 45, 1697–1706. doi: 10.1016/j.neuropsychologia.2007.01.007

Binder, J. R., Frost, J. A., Hammeke, T. A., Cox, R. W., Rao, S. M., and Prieto, T. (1997). Human brain language areas identified by functional magnetic resonance imaging. J. Neurosci. 17, 353–362.

Binder, J. R., Rao, S. M., Hammeke, T. A., Frost, J. A., Bandettini, P. A., and Hyde, J. S. (1994). Effects of stimulus rate on signal response during functional magnetic resonance imaging of auditory cortex. Brain Res. Cogn. Brain Res. 2, 31–38. doi: 10.1016/0926-6410(94)90018-3

Blank, S. C., Scott, S. K., Murphy, K., Warburton, E., and Wise, R. J. (2002). Speech production: Wernicke, Broca and beyond. Brain 125, 1829–1838. doi: 10.1093/brain/awf191

Boemio, A., Fromm, S., Braun, A., and Poeppel, D. (2005). Hierarchical and asymmetric temporal sensitivity in human auditory cortices. Nat. Neurosci. 8, 389–395. doi: 10.1038/nn1409

Bohland, J. W., and Guenther, F. H. (2006). An fMRI investigation of syllable sequence production. Neuroimage 32, 821–841. doi: 10.1016/j.neuroimage.2006.04.173

Broca, P. (1865). Du siège de la faculté de langage articulé dans l’hémisphère gauche du cerveau. Bull. Soc. Anthropol. VI, 377–393. doi: 10.3406/bmsap.1865.9495

Brumberg, J. S., Krusienski, D. J., Chakrabarti, S., Gunduz, A., Brunner, P., Ritaccio, A. L., et al. (2016). Spatio-temporal progression of cortical activity related to continuous overt and covert speech production in a reading task. PLoS One 11:e0166872. doi: 10.1371/journal.pone.0166872

Catani, M., Jones, D. K., and Ffytche, D. H. (2005). Perisylvian language networks of the human brain. Ann. Neurol. 57, 8–16. doi: 10.1002/ana.20319

Costa, L. M. O., Martin-Reis, V. O., and Celeste, L. C. (2016). Methods of analysis speech rate: a pilot study. Codas 28, 41–45. doi: 10.1590/2317-1782/20162015039

Cotton, J. C. (1936). Syllabic rate: a new concept in the study of speech rate variation. Speech Monogr. 2, 112–117. doi: 10.1080/03637753609374845

Crosson, B., McGregor, K., Gopinath, K. S., Conway, T. W., Benjamin, M., Chang, Y. L., et al. (2007). Functional MRI of language in aphasia: a review of the literature and the methodological challenges. Neuropsychol. Rev. 17, 157–177. doi: 10.1007/s11065-007-9024-z

Dhankhar, A., Wexler, B. E., Fulbright, R. K., Halwes, T., Blamire, A. M., and Shulman, R. G. (1997). Functional magnetic resonance imaging assessment of the human brain auditory cortex response to increasing word presentation rates. J. Neurophysiol. 77, 476–483. doi: 10.1152/jn.1997.77.1.476

Duffy, J. R., and Josephs, K. A. (2012). The diagnosis and understanding of apraxia of speech: why including neurodegenerative etiologies may be important. J. Speech Lang. Hear. Res. 55, S1518–S1522. doi: 10.1044/1092-4388(2012/11-0309)

Dunst, B., Benedek, M., Jauk, E., Bergner, S., Koschutnig, K., Sommer, M., et al. (2014). Neural efficiency as a function of task demands. Intelligence 42, 22–30. doi: 10.1016/j.intell.2013.09.005

Friston, K. J. (2002). Bayesian estimation of dynamical systems: an application to fMRI. Neuroimage 16, 513–530. doi: 10.1006/nimg.2001.1044

Geschwind, N. (1970). The organization of language and the brain. Science 170, 940–944. doi: 10.1126/science.170.3961.940

Grosjean, F., and Deschamps, A. (1975). Analyse contrastive des variables temporelles de l’anglais et du français: vitesse de parole et variables composantes, phénomènes d’hésitation. Phonetica 31, 144–184. doi: 10.1159/000259667

Han, J. H., and Dimitrijevic, A. (2015). Acoustic change responses to amplitude modulation: a method to quantify cortical temporal processing and hemispheric asymmetry. Front. Neurosci. 9:38. doi: 10.3389/fnins.2015.00038

Hickok, G., Erhard, P., Kassubek, J., Helms-Tillery, A. K., Naeve-Velguth, S., Strupp, J. P., et al. (2000). A functional magnetic resonance imaging study of the role of left posterior superior temporal gyrus in speech production: implications for the explanation of conduction aphasia. Neurosci. Lett. 287, 156–160. doi: 10.1016/S0304-3940(00)01143-5

Hickok, G., and Poeppel, D. (2004). Dorsal and ventral streams: a framework for understanding aspects of the functional anatomy of language. Cognition 92, 67–99. doi: 10.1016/j.cognition.2003.10.011

Hickok, G., and Poeppel, D. (2007). The cortical organization of speech processing. Nat. Rev. Neurosci. 8, 393–402. doi: 10.1038/nrn2113

Houde, J. F., and Jordan, M. I. (1998). Sensorimotor adaptation in speech production. Science 279, 1213–1216. doi: 10.1126/science.279.5354.1213

Houde, J. F., and Nagarajan, S. S. (2011). Speech production as state feedback control. Front. Hum. Neurosci. 5:82. doi: 10.3389/fnhum.2011.00082

Huang, J., Carr, T. H., and Cao, Y. (2002). Comparing cortical activations for silent and overt speech using event-related fMRI. Hum. Brain Mapp. 15, 39–53. doi: 10.1002/hbm.1060

Kelly, H. C., and Steer, M. D. (1949). Revised concept of rate. J. Speech Hear. Disord. 14, 222–226. doi: 10.1044/jshd.1403.222

Kent, R. D. (2000). Research on speech motor control and its disorders: a review and prospective. J. Commun. Disord. 33, 391–427; quiz 428. doi: 10.1016/S0021-9924(00)00023-X

Kent, R. D., Kent, J. F., and Rosenbek, J. C. (1987). Maximum performance tests of speech production. J. Speech Hear. Disord. 52, 367–387. doi: 10.1044/jshd.5204.367

Kent, R. D., and Rosenbek, J. C. (1983). Acoustic patterns of apraxia of speech. J. Speech Hear. Res. 26, 231–249. doi: 10.1044/jshr.2602.231

Levelt, W. J. (2001). Spoken word production: a theory of lexical access. Proc. Natl. Acad. Sci. U.S.A. 98, 13464–13471. doi: 10.1073/pnas.231459498

Liegeois, F. J., Butler, J., Morgan, A. T., Clayden, J. D., and Clark, C. A. (2016). Anatomy and lateralization of the human corticobulbar tracts: an fMRI-guided tractography study. Brain Struct. Funct. 221, 3337–3345. doi: 10.1007/s00429-015-1104-x

Logan, K., and Conture, E. (1995). Length, grammatical complexity, and rate differences in stuttered and fluent conversational utterances of children who stutter. J. Fluency Disord. 20, 35–61. doi: 10.1016/j.jfludis.2008.06.003

Marchina, S., Zhu, L. L., Norton, A., Zipse, L., Wan, C. Y., and Schlaug, G. (2011). Impairment of speech production predicted by lesion load of the left arcuate fasciculus. Stroke 42, 2251–2256. doi: 10.1161/STROKEAHA.110.606103

McGettigan, C., and Scott, S. K. (2012). Cortical asymmetries in speech perception: what’s wrong, what’s right and what’s left? Trends Cogn. Sci. 16, 269–276. doi: 10.1016/j.tics.2012.04.006

Noesselt, T., Shah, N. J., and Jancke, L. (2003). Top-down and bottom-up modulation of language related areas–an fMRI study. BMC Neurosci. 4:13. doi: 10.1186/1471-2202-4-13

Nussbaumer, D., Grabner, R. H., and Stern, E. (2015). Neural efficiency in working memory tasks: the impact of task demand. Intelligence 50, 196–208. doi: 10.1016/j.cortex.2015.10.025

Ogar, J., Willock, S., Baldo, J., Wilkins, D., Ludy, C., and Dronkers, N. (2006). Clinical and anatomical correlates of apraxia of speech. Brain Lang. 97, 343–350. doi: 10.1016/j.bandl.2006.01.008

Oldfield, R. C. (1971). The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia 9, 97–113. doi: 10.1016/0028-3932(71)90067-4

Oliveira, F. F., Marin, S. M., and Bertolucci, P. H. (2017). Neurological impressions on the organization of language networks in the human brain. Brain Inj. 31, 140–150. doi: 10.1080/02699052.2016.1199914

Ozdemir, E., Norton, A., and Schlaug, G. (2006). Shared and distinct neural correlates of singing and speaking. Neuroimage 33, 628–635. doi: 10.1016/j.neuroimage.2006.07.013

Palmer, E. D., Rosen, H. J., Ojemann, J. G., Buckner, R. L., Kelley, W. M., and Petersen, S. E. (2001). An event-related fMRI study of overt and covert word stem completion. Neuroimage 14, 182–193. doi: 10.1006/nimg.2001.0779

Pani, E., Zheng, X., Wang, J., Norton, A., and Schlaug, G. (2016). Right hemisphere structures predict poststroke speech fluency. Neurology 86, 1574–1581. doi: 10.1212/WNL.0000000000002613

Paulson, M. J., and Lin, T. (1970). Predicting WAIS IQ from Shipley-Hartford scores. J. Clin. Psychol. 26, 453–461. doi: 10.1002/1097-4679(197010)26:4<453::AID-JCLP2270260415>3.0.CO;2-V

Paus, T., Perry, D. W., Zatorre, R. J., Worsley, K. J., and Evans, A. C. (1996). Modulation of cerebral blood flow in the human auditory cortex during speech: role of motor-to-sensory discharges. Eur. J. Neurosci. 8, 2236–2246. doi: 10.1111/j.1460-9568.1996.tb01187.x

Price, C., Wise, R., Ramsay, S., Friston, K., Howard, D., Patterson, K., et al. (1992). Regional response differences within the human auditory cortex when listening to words. Neurosci. Lett. 146, 179–182. doi: 10.1016/0304-3940(92)90072-F

Price, C. J., Wise, R. J., Warburton, E. A., Moore, C. J., Howard, D., Patterson, K., et al. (1996). Hearing and saying. The functional neuro-anatomy of auditory word processing. Brain 119(Pt 3), 919–931. doi: 10.1093/brain/119.3.919

Rauschecker, A. M., Pringle, A., and Watkins, K. E. (2008). Changes in neural activity associated with learning to articulate novel auditory pseudowords by covert repetition. Hum. Brain Mapp. 29, 1231–1242. doi: 10.1002/hbm.20460

Riecker, A., Ackermann, H., Wildgruber, D., Meyer, J., Dogil, G., Haider, H., et al. (2000). Articulatory/phonetic sequencing at the level of the anterior perisylvian cortex: a functional magnetic resonance imaging (fMRI) study. Brain Lang. 75, 259–276. doi: 10.1006/brln.2000.2356

Riecker, A., Brendel, B., Ziegler, W., Erb, M., and Ackermann, H. (2008). The influence of syllable onset complexity and syllable frequency on speech motor control. Brain Lang. 107, 102–113. doi: 10.1016/j.bandl.2008.01.008

Riecker, A., Kassubek, J., Groschel, K., Grodd, W., and Ackermann, H. (2006). The cerebral control of speech tempo: opposite relationship between speaking rate and BOLD signal changes at striatal and cerebellar structures. Neuroimage 29, 46–53. doi: 10.1016/j.neuroimage.2005.03.046

Riecker, A., Mathiak, K., Wildgruber, D., Erb, M., Hertrich, I., Grodd, W., et al. (2005). fMRI reveals two distinct cerebral networks subserving speech motor control. Neurology 64, 700–706. doi: 10.1212/01.WNL.0000152156.90779.89

Robb, M. P., Maclagan, M. A., and Chen, Y. (2004). Speaking rates of American and New Zealand varieties of English. Clin. Linguist. Phon. 18, 1–15. doi: 10.1080/0269920031000105336

Ruspantini, I., Saarinen, T., Belardinelli, P., Jalava, A., Parviainen, T., Kujala, J., et al. (2012). Corticomuscular coherence is tuned to the spontaneous rhythmicity of speech at 2-3 Hz. J. Neurosci. 32, 3786–3790. doi: 10.1523/JNEUROSCI.3191-11.2012

Saur, D., Kreher, B. W., Schnell, S., Kummerer, D., Kellmeyer, P., Vry, M. S., et al. (2008). Ventral and dorsal pathways for language. Proc. Natl. Acad. Sci. U.S.A. 105, 18035–18040. doi: 10.1073/pnas.0805234105

Schlaug, G., Marchina, S., and Norton, A. (2008). From singing to speaking: why singing may lead to recovery of expressive language function in patients with Broca’s aphasia. Music Percept. 25, 315–323. doi: 10.1525/mp.2008.25.4.315

Schlaug, G., Marchina, S., and Norton, A. (2009). Evidence for plasticity in white-matter tracts of patients with chronic Broca’s aphasia undergoing intense intonation-based speech therapy. Ann. N. Y. Acad. Sci. 1169, 385–394. doi: 10.1111/j.1749-6632.2009.04587.x

Shipley, W. C. (1940). A self-administering scale for measuring intellectual impairment and deterioration. J. Psychol. 9, 371–377. doi: 10.1080/00223980.1940.9917704

Shuster, L. I., and Lemieux, S. K. (2005). An fMRI investigation of covertly and overtly produced mono- and multisyllabic words. Brain Lang. 93, 20–31. doi: 10.1016/j.bandl.2004.07.007

Silbert, L. J., Honey, C. J., Simony, E., Poeppel, D., and Hasson, U. (2014). Coupled neural systems underlie the production and comprehension of naturalistic narrative speech. Proc. Natl. Acad. Sci. U.S.A. 111, E4687–E4696. doi: 10.1073/pnas.1323812111

Sörös, P., Sokoloff, L. G., Bose, A., McIntosh, A. R., Graham, S. J., and Stuss, D. T. (2006). Clustered functional MRI of overt speech production. Neuroimage 32, 376–387. doi: 10.1016/j.neuroimage.2006.02.046

Southwood, H. (1987). The use of prolonged speech in the treatment of apraxia of speech. Clin. Aphasiol. 15, 277–287.

Tourville, J. A., Reilly, K. J., and Guenther, F. H. (2008). Neural mechanisms underlying auditory feedback control of speech. Neuroimage 39, 1429–1443. doi: 10.1016/j.neuroimage.2007.09.054

van Lieshout, P. H., Bose, A., Square, P. A., and Steele, C. M. (2007). Speech motor control in fluent and dysfluent speech production of an individual with apraxia of speech and Broca’s aphasia. Clin. Linguist. Phon. 21, 159–188. doi: 10.1080/02699200600812331

Wang, J., Marchina, S., Norton, A. C., Wan, C. Y., and Schlaug, G. (2013). Predicting speech fluency and naming abilities in aphasic patients. Front. Hum. Neurosci. 7:831. doi: 10.3389/fnhum.2013.00831

Wildgruber, D., Ackermann, H., and Grodd, W. (2001). Differential contributions of motor cortex, basal ganglia, and cerebellum to speech motor control: effects of syllable repetition rate evaluated by fMRI. Neuroimage 13, 101–109. doi: 10.1006/nimg.2000.0672

Wildgruber, D., Ackermann, H., Klose, U., Kardatzki, B., and Grodd, W. (1996). Functional lateralization of speech production at primary motor cortex: a fMRI study. Neuroreport 7, 2791–2795. doi: 10.1097/00001756-199611040-00077

Wise, R. J., Greene, J., Buchel, C., and Scott, S. K. (1999). Brain regions involved in articulation. Lancet 353, 1057–1061. doi: 10.1016/S0140-6736(98)07491-1

Wise, R. J., Scott, S. K., Blank, S. C., Mummery, C. J., Murphy, K., and Warburton, E. A. (2001). Separate neural subsystems within ‘Wernicke’s area’. Brain 124, 83–95. doi: 10.1093/brain/124.1.83

Yorkston, K. M., Beukelman, D. R., Strand, E. A., and Bell, K. R. (1999). Management of Motor Speech Disorders in Children and Adults, 2nd Edn. Austin, TX: ProEd.

Ziegler, W. (2002). Psycholinguistic and motor theories of apraxia of speech. Semin. Speech Lang. 23, 231–244. doi: 10.1055/s-2002-35798

Keywords: speech rate, overt repetition, fMRI, bilateral activation, temporal lobes, right-hemisphere language networks, fluency, speech-motor function

Citation: Marchina S, Norton A, Kumar S and Schlaug G (2018) The Effect of Speech Repetition Rate on Neural Activation in Healthy Adults: Implications for Treatment of Aphasia and Other Fluency Disorders. Front. Hum. Neurosci. 12:69. doi: 10.3389/fnhum.2018.00069

Received: 02 November 2017; Accepted: 07 February 2018; Published: 27 February 2018.

Edited by: Peter Sörös, University of Oldenburg, Germany

Reviewed by: Fabricio Ferreira de Oliveira, Universidade Federal de São Paulo, Brazil; Anna Maria Alexandrou, Aalto University, Finland

Copyright © 2018 Marchina, Norton, Kumar and Schlaug. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Gottfried Schlaug, gschlaug@bidmc.harvard.edu