- ¹Department of Computer Science and Engineering, Toyohashi University of Technology, Toyohashi, Japan

- ²Electronics-Inspired Interdisciplinary Research Institute, Toyohashi University of Technology, Toyohashi, Japan

Faces carry important information for social communication, because social information, such as face color, expression, and gender, is obtained from faces. Therefore, individuals tend to find faces unconsciously, even in objects. Why is face-likeness perceived in non-face objects? Previous event-related potential (ERP) studies showed that the P1 component (early visual processing), the N170 component (face detection), and the N250 component (personal detection) reflect the neural processing of faces. Inverted faces were reported to enhance the amplitude and delay the latency of P1 and N170. To investigate face-likeness processing in the brain, we explored the face-related components of the ERP through a face-like evaluation task using natural faces, cars, insects, and Arcimboldo paintings presented upright or inverted. We found a significant correlation between the inversion effect index and face-like scores in P1 in both hemispheres and in N170 in the right hemisphere. These results suggest that judgment of face-likeness occurs in a relatively early stage of face processing.

Introduction

Faces are the most important visual stimuli for social communication. When humans see each other's faces, personal information can be read immediately, and emotions can be understood from facial expression and color. In this way, face perception is valuable for humans. In addition, people tend to find faces unconsciously, even in objects (e.g., ceiling stains, clouds in the sky, etc.). Even infants preferentially watch face-like objects (Kato and Mugitani, 2015). This phenomenon is called “face pareidolia,” and is a kind of visual illusion, not a hallucination. How, then, do humans perceive face-likeness in non-face objects?

Brain functions related to face processing have been studied using neuroimaging, including functional magnetic resonance imaging (fMRI) and electroencephalography (EEG). Whereas fMRI has high spatial resolution and identifies the brain areas related to face processing (Kanwisher et al., 1997; Haxby et al., 2000; Liu et al., 2014), EEG has high temporal resolution and can be used to examine dynamic processes (Bentin et al., 1996). Some EEG-based face studies have also utilized event-related potentials (ERP); some ERP components have been reported to be related to face processing. P1 is an early positive component, peaking at around 100 ms, which is sometimes larger in response to faces than objects (Eimer, 2000b; Itier and Taylor, 2004; Rossion and Caharel, 2011; Ganis et al., 2012). A more face-sensitive response was found at the level of the N170, peaking at approximately 160 ms over the occipito-temporal sites (Bentin et al., 1996; Rossion and Jacques, 2011). The N170 component is larger for faces than for all other objects, especially in the right hemisphere (Bentin et al., 1996; Rossion and Jacques, 2011). Moreover, this component is sensitive not only to human faces, but also to schematic faces (Bötzel and Grüsser, 1989; Itier et al., 2011). It is therefore considered to be intimately involved in face processing. Furthermore, the N170 differs between hemispheres (Bentin et al., 1996; Eimer, 2000a; Caharel et al., 2013); the amplitude is larger in the left hemisphere for featural processing (eyes, nose, and mouth), and in the right hemisphere for configural/holistic processing (Hillger and Koenig, 1991; Haxby et al., 2000; Caharel et al., 2013). In addition, the N250, peaking at 250–300 ms, subsequent to the N170 component, is sensitive to face identity (Sagiv and Bentin, 2001; Tanaka and Curran, 2001).

Conversely, face inversion effects have been well studied for specific face recognition. This phenomenon disrupts face recognition when face stimuli are inverted 180°. Moreover, the disruption effect is larger for face stimuli than for other object stimuli (Yin, 1969). There is evidence that configural/holistic (Tanaka and Farah, 1993; Farah et al., 1995) processing of human faces is disrupted by inversion (Tanaka and Farah, 1993; Freire et al., 2000; Leder et al., 2001; Maurer et al., 2007). Reed et al. (2006) reported slower reaction times (RTs) and higher error rates for decisions about inverted faces than for those about upright faces. This effect is observed in brain activity as well as in behavior (Bentin et al., 1996). The N170 and P1 components are larger with presentation of inverted face stimuli, but not with that of inverted object stimuli (Linkenkaer-Hansen et al., 1998; Itier and Taylor, 2004). Some previous studies have reported that the amplitudes of the P1 and N170 components increased and the latencies were delayed with presentation of inverted face images, as compared to upright face images, which suggested that the P1 component is an early indicator of endogenous processing of visual stimuli, and that the N170 component reflects an early stage of configural/holistic encoding, and is sensitive to changes in facial structure (Itier and Taylor, 2004). In addition, some studies have suggested that upright faces are dominated by holistic processing, and inverted faces by featural processing (Caharel et al., 2013). For example, Rossion et al. (1999, 2000, 2003) reported that N170 inversion effects disrupted processing of configural/holistic information. This effect is considered as a marker for special processing of upright face stimuli in the brain (Yovel and Kanwisher, 2005; Davidenko et al., 2012). Moreover, another study suggested that the inversion effect of N170 amplitude is category-sensitive (Boehm et al., 2011). These results suggest that the inversion effect is a marker for face-like processing.

Other previous studies investigating holistic and featural processing during face processing of inverted faces, using realistic and schematic images, reported that the N170 amplitude increased when inverted realistic face images were presented (Sagiv and Bentin, 2001). Conversely, the N170 amplitude decreased when inverted schematic face images were presented. This study theorized that schematic faces that did not have enough featural information were recognizable by holistic processing when presented upright. However, when the images were inverted, the N170 amplitude was reduced due to preferential featural processing instead of configural/holistic processing. This suggested that individuals perform holistic processing in response to upright faces and featural processing in response to inverted faces.

Facial inversion effect studies have investigated face-like objects as well as faces. One study investigated holistic processing using face images; Arcimboldo paintings consisting of vegetables, fruits, and books; and object images (e.g., a car and a house) (Caharel et al., 2013). In the upright stimuli, Arcimboldo paintings and face stimuli induced larger N170 amplitudes in the right hemisphere than did object stimuli. In contrast, in the left hemisphere, N170 amplitudes differed between processing of Arcimboldo paintings and face stimuli. This suggested that the right hemisphere is related to holistic processing, and the left hemisphere to featural processing.

Previous studies also suggested that face-like objects were processed in the N170 component in the right hemisphere, through holistic processing (Caharel et al., 2013; Liu et al., 2016). Furthermore, Churches et al. (2009) suggested that the amplitude of the N170 component in response to objects is affected by the face-likeness of the objects. In addition, previous studies also suggested that the P1 component is associated with face-likeness processing. Dering et al. (2011) reported that the amplitude of the P1 component was modulated in a face-sensitive fashion, independent of cropping or morphing. This means that P1 is sensitive to face processing. However, it is unclear whether the P1 and N170 components contribute to face-likeness judgment. Additionally, although these studies investigated how facial features and positions of facial parts are processed, how and when face-likeness is processed remains unknown. According to Sagiv and Bentin (2001), Churches et al. (2009), and Caharel et al. (2013), the N170 component may reflect face-likeness, because the N170 component reflects an early stage of structure coding and is sensitive to face-like stimuli, such as Arcimboldo paintings.

In this study, we investigated whether the inversion effect index of the N170 component actually reflected face-likeness, by observing the correlation between the ERP components and behavioral reports of face-likeness. We expected to find a correlation between the inversion effect index of N170 amplitude and face-like scores. Furthermore, we expected that the P1 and N250 would correlate with face-like scores, similar to the N170 component. Taken together, this study investigated face-likeness judgment as reflected by ERP components, as well as how and when face-like objects are processed. The purpose of this study was to reveal which ERP components contribute to face-likeness judgment based on correlation between face-likeness evaluation scores and the inversion effect of each ERP component.

Materials and Methods

Participants

Twenty-one healthy, right-handed volunteers (age: 19–37 years, 3 female) with normal or corrected-to-normal vision participated in the experiment. Informed written consent was obtained from participants after procedural details had been explained. The Committee for Human Research of Toyohashi University of Technology approved experimental procedures.

Stimuli

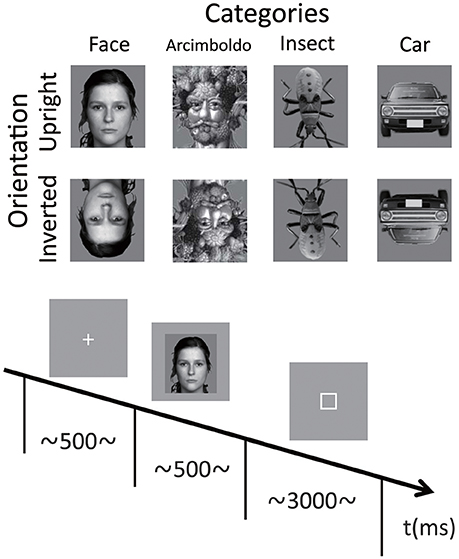

The stimuli in each category are shown in Figure 1. There were 4 categories of stimuli: natural human faces (without glasses or make-up, and with a neutral expression), Arcimboldo paintings, insects (animate category), and cars (inanimate category). The face stimuli were selected from the FACES database (Max Planck Institute for Human Development, Berlin; Ebner et al., 2010). Each category consisted of 6 kinds of stimuli. In the face category, we presented equal numbers of male and female faces. Only faces with neutral expression were chosen (interrater agreement > 0.90, as published for the reference sample). The upright orientation of the insect category was defined as the orientation that elicited the higher face-likeness evaluation score in the image evaluation experiment. All photographs were converted to gray scale, and mean luminance and size were equalized with Adobe Photoshop® CS2 software. All stimuli were 220 × 247 pixels (visual angle 9.7 to 11.6°). Each stimulus was presented in 2 different orientations, either upright or inverted 180°.

Figure 1. Example stimuli for each category and the timeline of stimulus presentation during a single trial. The face stimuli were selected from the FACES database (Max Planck Institute for Human Development, Berlin; Ebner et al., 2010). Only faces with neutral expression were chosen (interrater agreement > 0.90, as published for the reference sample). The car category was selected as representing artificial objects, and the insect category was selected as representing natural objects. The Arcimboldo paintings were selected for observing holistic and featural processing, as described by Caharel et al. (2013) and Rossion et al. (2011). Images for each condition were randomly presented, and the participants performed the face-likeness evaluation task.

EEG Recording

EEG data were recorded with 64 active Ag-AgCl sintered electrodes mounted on an elastic cap according to the extended 10–20 system and amplified by a BioSemi ActiveTwo amplifier (BioSemi; Amsterdam, The Netherlands). Electrooculography (EOG) was recorded from additional channels (the infraorbital region of the right eye, and the outer canthi of the right and left eyes). Both the EEG and the EOG were sampled at 512 Hz.

Procedure

After electrode-cap placement, participants were seated in a light- and sound-attenuated room, at a viewing distance of 60 cm from a computer monitor. Stimulus presentation was controlled by a ViSaGe system (Cambridge Research Systems, Rochester, UK) and presented on a CRT monitor (EIZO, Flexscan-T761, graphics resolution 800 × 600 pixels, frame rate: 100 Hz). Stimuli were displayed at the center of the screen on a light gray background. At the start of each trial, a fixation point appeared in the center of the screen for 500 ms, followed by the presentation of the test stimulus for 500 ms. The inter-trial interval was randomized between 1,000 and 1,500 ms. Participants performed a face-likeness evaluation task and provided their responses by pressing 1 of 7 keys on a numeric keyboard with their right or left index finger; right or left was counterbalanced across blocks (right to left or left to right). They rated face-likeness on a 7-point scale from 1 (non-face-like) to 7 (most face-like) and were requested to respond within 3,000 ms. Participants were instructed to maintain eye gaze fixation on the center of the screen throughout the trial and respond as accurately and as quickly as possible. Participants performed 96 trials per condition (6 stimuli in each category repeated 16 times in each orientation). Four blocks of 192 trials (4 categories × 6 stimuli × 2 orientations × 4 repetitions) were presented in a pseudo-random order. Thus, participants performed a total of 768 trials.
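The trial counts quoted above follow directly from the design. The short Python sketch below simply redoes that arithmetic; the variable names are ours and are not part of the original study materials.

```python
# Design figures quoted in the Procedure paragraph.
N_CATEGORIES = 4      # faces, Arcimboldo paintings, insects, cars
N_STIMULI = 6         # exemplars per category
N_ORIENTATIONS = 2    # upright, inverted
REPS_PER_BLOCK = 4    # repetitions of each image within one block
N_BLOCKS = 4

trials_per_block = N_CATEGORIES * N_STIMULI * N_ORIENTATIONS * REPS_PER_BLOCK
total_trials = trials_per_block * N_BLOCKS

# One "condition" is one category shown in one orientation.
reps_per_image = REPS_PER_BLOCK * N_BLOCKS
trials_per_condition = N_STIMULI * reps_per_image

print(trials_per_block, total_trials, reps_per_image, trials_per_condition)
```

Under these figures each block holds 192 trials, each image is shown 16 times per orientation across the session, and each of the 8 conditions receives 96 trials, for 768 trials in total.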

Data Acquisition

Behavioral Data

Face-likeness scores and reaction times (RTs) were computed for each condition and submitted to repeated-measures ANOVAs with category (faces, Arcimboldo paintings, insects, cars) and orientation (upright vs. inverted) as within-subject factors.

EEG Data

For ERP analysis, a 1–30 Hz digital band-pass filter was applied offline to continuous EEG data after re-referencing the data to an average reference using the EEGLAB toolbox (Delorme and Makeig, 2004). The continuous EEG data were divided into 900 ms epochs (−100 to +800 ms from stimulus onset) and baseline corrected (−100 to 0 ms). Correction for artifacts, including ocular movements, was performed using Independent Component Analysis (ICA) (runica algorithm) as implemented in the EEGLAB toolbox. ICA decomposition was derived from all trials concatenated across conditions. Ocular artifacts were removed from each average by ICA decomposition (Kovacevic and McIntosh, 2007). Subsequently, 4 methods of artifact rejection were performed. First, artifact epochs were rejected based on extreme values in the EEG channels (±80 μV). Next, artifacts based on linear trend/variance using the EEGLAB toolbox (max slope [μV/epoch]: 50; R-squared limit: 0.3) were rejected. Artifact epochs were also rejected using probability methods (single- and all-channel limits: 5 SD) and kurtosis methods (single- and all-channel limits: 5 SD), again using the EEGLAB toolbox. Grand-mean ERP waveforms were visually assessed and peak amplitude and latency were extracted. Peak amplitude and latency were extracted at the maximum amplitude value between 80 and 130 ms for the P1, at the minimum amplitude value between 130 and 200 ms for the N170, and at the minimum amplitude value between 220 and 300 ms for the N250, for pairs of occipito-temporal electrodes in the left and right hemispheres: 3 left hemisphere electrodes (P5, P9, PO7) and 3 right hemisphere electrodes (P6, P10, PO8). Moreover, topographies were calculated to assess which electrodes were best suited to the analysis in this study. The topographies were calculated by averaging across the 4 categories and the relevant time window of each ERP component. Amplitude and latency of the P1, N170, and N250 were submitted to separate repeated-measures ANOVAs with category, orientation, and hemisphere as within-subject factors, and post-hoc analysis was performed using the Bonferroni method.
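As an illustration of the peak-picking step, the following Python sketch applies the ±80 μV amplitude criterion and the three search windows described above to a single-channel waveform. It is a simplified stand-in, not the EEGLAB pipeline used in the study: the function names, the toy Gaussian waveform, and the choice to average epochs before picking peaks are all assumptions made for the example.

```python
import math

SFREQ_HZ = 512.0        # sampling rate from the EEG Recording section
EPOCH_START_MS = -100.0
EPOCH_END_MS = 800.0
REJECT_UV = 80.0        # extreme-value rejection threshold

# Search window (ms) and peak polarity for each component, as in the text.
WINDOWS = {
    "P1": (80.0, 130.0, max),
    "N170": (130.0, 200.0, min),
    "N250": (220.0, 300.0, min),
}

def sample_times():
    """Time stamp (ms) of every sample in one epoch."""
    n = math.floor((EPOCH_END_MS - EPOCH_START_MS) * SFREQ_HZ / 1000.0) + 1
    return [EPOCH_START_MS + i * 1000.0 / SFREQ_HZ for i in range(n)]

def is_clean(epoch):
    """True if no sample exceeds the +/-80 uV criterion."""
    return max(abs(v) for v in epoch) <= REJECT_UV

def average(epochs):
    """Point-by-point mean across epochs."""
    return [sum(samples) / len(epochs) for samples in zip(*epochs)]

def find_peak(waveform, times, component):
    """Return (amplitude, latency_ms) of the peak inside the component window."""
    start, end, pick = WINDOWS[component]
    in_window = [(amp, t) for amp, t in zip(waveform, times) if start <= t <= end]
    return pick(in_window)  # tuples compare on amplitude first

def toy_erp(t):
    """Hypothetical waveform: P1 near 100 ms, N170 near 165 ms, N250 near 260 ms."""
    bump = lambda amp, mu, sd: amp * math.exp(-((t - mu) / sd) ** 2 / 2.0)
    return bump(4.0, 100.0, 15.0) + bump(-6.0, 165.0, 15.0) + bump(-2.0, 260.0, 20.0)

times = sample_times()
clean = [toy_erp(t) for t in times]
# Third epoch carries a 120 uV spike and should be rejected.
noisy = [v + (120.0 if i == 200 else 0.0) for i, v in enumerate(clean)]

kept = [e for e in (clean, clean, noisy) if is_clean(e)]
erp = average(kept)
peaks = {name: find_peak(erp, times, name) for name in WINDOWS}
for name, (amp, lat) in peaks.items():
    print(f"{name}: {amp:.2f} uV at {lat:.1f} ms")
```

Fixed search windows keep the procedure reproducible, but a peak that falls on a window boundary is usually a sign that the true extremum lies outside the window, which is one reason the waveforms were also inspected visually.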

Inversion Effect

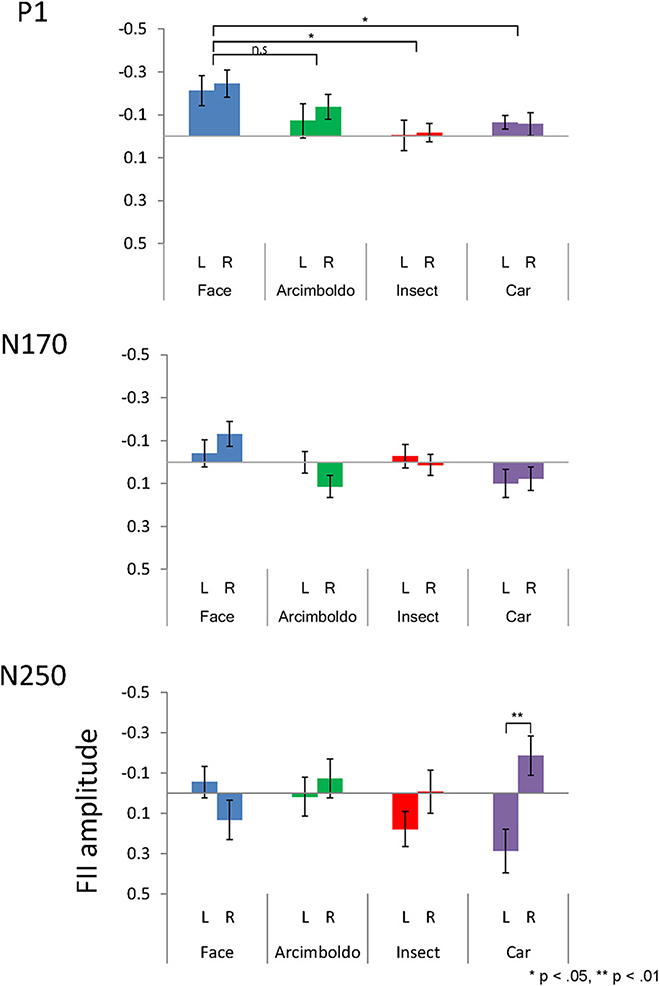

We calculated the inversion effect index using the following equation (1), which was applied to each ERP component (Sadeh et al., 2010; Suzuki and Noguchi, 2013). The index expresses the difference in amplitude (e.g., of the N170) between the upright and inverted conditions divided by the sum of the 2 conditions. If a normal face inversion effect occurs, this index should be negative. Each inversion effect index was evaluated by means of a 1-sample t-test to determine whether the effect was significantly different from 0. Furthermore, the inversion effect index values were computed for each condition and submitted to repeated-measures ANOVAs with hemisphere and category as within-subject factors (Figure 5).

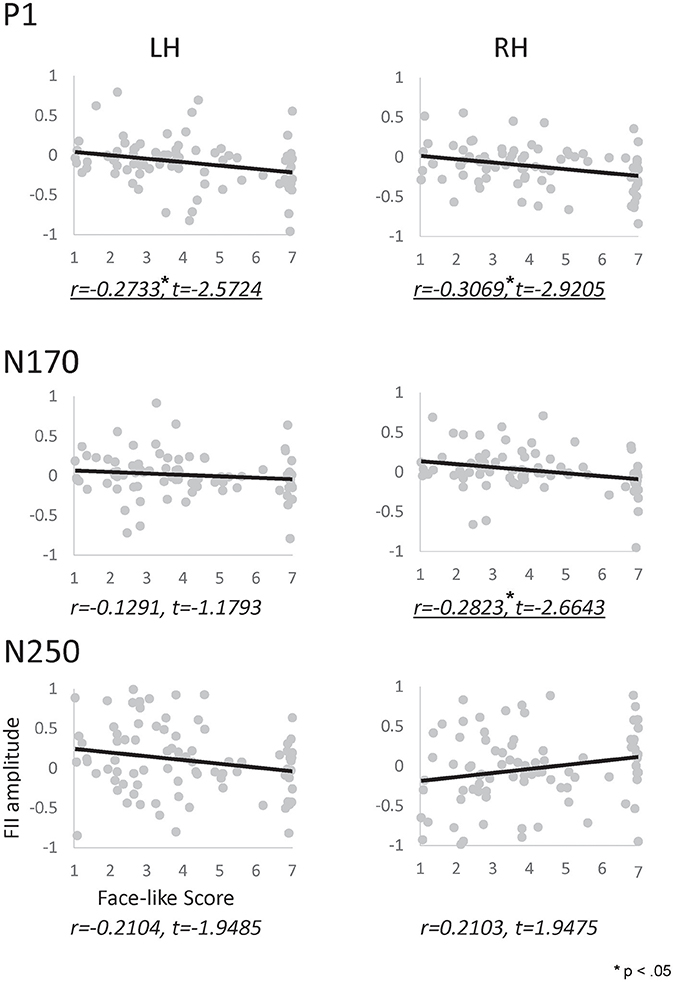
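A minimal Python sketch of the index and of the 1-sample t statistic is given below. The sign convention (upright minus inverted in the numerator) is inferred from the verbal description and from the statement that a normal inversion effect yields a negative index; the amplitude values and the list of per-participant indices are hypothetical.

```python
import math
from statistics import mean, stdev

def inversion_index(upright, inverted):
    """(upright - inverted) / (upright + inverted) for one pair of peak amplitudes."""
    return (upright - inverted) / (upright + inverted)

def one_sample_t(values, popmean=0.0):
    """t statistic and degrees of freedom for a 1-sample t-test against popmean."""
    n = len(values)
    t = (mean(values) - popmean) / (stdev(values) / math.sqrt(n))
    return t, n - 1

# Hypothetical peaks in uV: inversion makes both components larger in magnitude.
n170_index = inversion_index(upright=-5.0, inverted=-7.0)
p1_index = inversion_index(upright=5.0, inverted=7.0)
print(round(n170_index, 4), round(p1_index, 4))

# Hypothetical indices from five participants, tested against zero.
t_value, dof = one_sample_t([-0.20, -0.10, -0.15, -0.05, -0.25])
print(round(t_value, 3), dof)
```

With this convention the index is negative whenever inversion increases the magnitude of the peak, for the positive P1 and the negative N170 alike. The ratio becomes unstable when the two amplitudes have opposite signs or nearly cancel, so such cases would need separate handling.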

Correlation Analysis

Pearson's correlation analysis was performed between the inversion effect index for each ERP component and the mean face-like score (the mean between upright and inverted score) using the robust correlation toolbox (Pernet et al., 2012). The toolbox automatically implements the Bonferroni adjustment for multiple comparisons for each test and provides bootstrapped confidence intervals for the correlations themselves. For the inversion effect index, we calculated the value from each category for each ERP component in each participant.
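The following Python sketch shows the general idea of a Pearson correlation with a percentile bootstrap confidence interval. It is not the robust correlation toolbox's implementation and does not apply any multiple-comparison adjustment; the function names, the number of resamples, and the data are assumptions made for illustration.

```python
import math
import random

def pearson_r(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def bootstrap_ci(x, y, n_boot=2000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for r, resampling (x, y) pairs."""
    rng = random.Random(seed)
    n = len(x)
    rs = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        xs = [x[i] for i in idx]
        ys = [y[i] for i in idx]
        # Skip degenerate resamples in which one variable has zero variance.
        if len(set(xs)) < 2 or len(set(ys)) < 2:
            continue
        rs.append(pearson_r(xs, ys))
    rs.sort()
    lower = rs[int((alpha / 2) * len(rs))]
    upper = rs[int((1 - alpha / 2) * len(rs)) - 1]
    return lower, upper

# Hypothetical data: mean face-likeness score and inversion effect index per observation.
scores = [1.5, 2.0, 2.5, 3.0, 4.0, 5.0, 6.0, 6.5]
indices = [0.05, 0.02, -0.01, -0.03, -0.08, -0.10, -0.16, -0.18]

r = pearson_r(scores, indices)
ci_low, ci_high = bootstrap_ci(scores, indices)
print(f"r = {r:.3f}, 95% CI [{ci_low:.3f}, {ci_high:.3f}]")
```

A confidence interval that excludes 0 points the same way as a significant test, while its width shows how precisely the correlation is estimated.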

Results

Behavioral Results

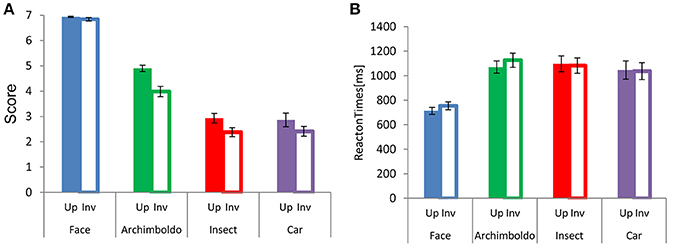

Participants gave higher face-likeness scores to faces than to images in other categories (Figure 2). There were main effects of Category and Orientation, and an interaction between these factors. This interaction showed a significant effect of Category for both orientations [Inverted: F(3, 60) = 6.480, p = 0.001, ηp² = 0.24]. For Orientation, the scores of all categories showed a significant difference between upright and inverted orientations (p < 0.001, for all). For both orientations, scores were higher for faces than for other image categories (respectively, p < 0.001, p < 0.001, and p < 0.001, for both orientations) and the scores for Arcimboldo paintings were higher than those for insects and cars (respectively, p < 0.001 and p < 0.001, for both orientations). However, there was no significant difference between the car and insect categories. The interaction also showed a significant effect of Orientation for all categories [Face: F(1, 20) = 441.970, p < 0.001, ηp² = 0.95; Car: F(1, 20) = 63.650, p < 0.001, ηp² = 0.76].

Moreover, participants responded more quickly to faces than to other types of images. Main effects were found for Category and Orientation, together with a Category × Orientation interaction. This interaction showed a significant effect of Orientation for the face and Arcimboldo painting categories: response times to faces and Arcimboldo paintings were longer for inverted orientations than for upright orientations (p < 0.001). Furthermore, the interaction showed a significant effect of Category for the upright orientation. Participants responded more quickly to faces than to the other image categories in the upright orientation (respectively, p < 0.001, p < 0.001, and p < 0.001). However, there were no significant differences between Arcimboldo vs. Insect, Arcimboldo vs. Car, and Insect vs. Car.
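The effect sizes reported next to the F statistics can be cross-checked from F and its degrees of freedom, on the assumption that they are partial eta squared (the symbol itself did not survive in the text). The Python sketch below uses the standard identity between F and partial eta squared; the function name is ours.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)

# F statistics quoted in the behavioral results.
category_inverted = partial_eta_squared(6.480, 3, 60)
orientation_car = partial_eta_squared(63.650, 1, 20)
orientation_face = partial_eta_squared(441.970, 1, 20)

print(round(category_inverted, 4), round(orientation_car, 4), round(orientation_face, 4))
```

The first two values reproduce the reported 0.24 and 0.76. The third comes out at about 0.957 against a reported 0.95, so the agreement there holds only if the reported value was truncated rather than rounded.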

Figure 2. (A) Each bar indicates the mean face-likeness score for each category in the upright (fill) and inverted (no fill) orientations. (B) Each bar indicates the mean reaction times for each category in the upright (fill) and inverted (no fill) orientation.

ERP Components

P1 Component

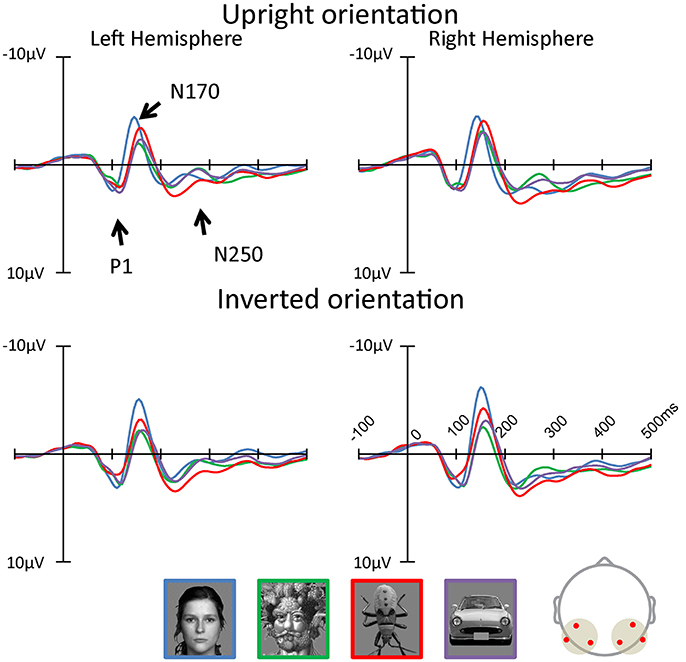

Figures 3 and 4 show the topographies and the ERP waveforms in the 6 channels (Left: PO7, P9, P5; Right: PO8, P10, P6). Clear peaks of P1, N170, and N250 are observed. ANOVAs of P1 amplitudes showed main effects of Category and Orientation. The main effect of Category indicated that P1 amplitude for the insect category was smaller than for the Arcimboldo and car categories (respectively, p < 0.001 and p = 0.005). The main effect of Orientation revealed that the P1 amplitude was larger for inverted orientations than for upright orientations (p < 0.001). ANOVAs for P1 latency showed main effects of Category, Orientation, and Hemisphere, and a Category × Orientation interaction. This interaction showed a significant effect of Orientation for the face category and the car category. Moreover, this interaction showed a significant effect of Category for both orientations. The P1 latency in response to upright orientations was shorter for the face category than for the Arcimboldo paintings category (p = 0.031), and the P1 latency in response to inverted orientations was shorter for the insect category than for other categories (respectively, face: p = 0.017, Arcimboldo paintings: p = 0.003, and car: p < 0.001).

Figure 3. The grand average of ERP waveforms elicited by each category in the upright and inverted orientations at the left and right pooled occipito-temporal electrode sites (waveforms averaged for electrodes P5/P9/PO7 and P6/P10/PO8). In addition, the waveforms of the inversion effect were calculated (see Supplementary Data Sheet 1, Supplementary Figure 1).

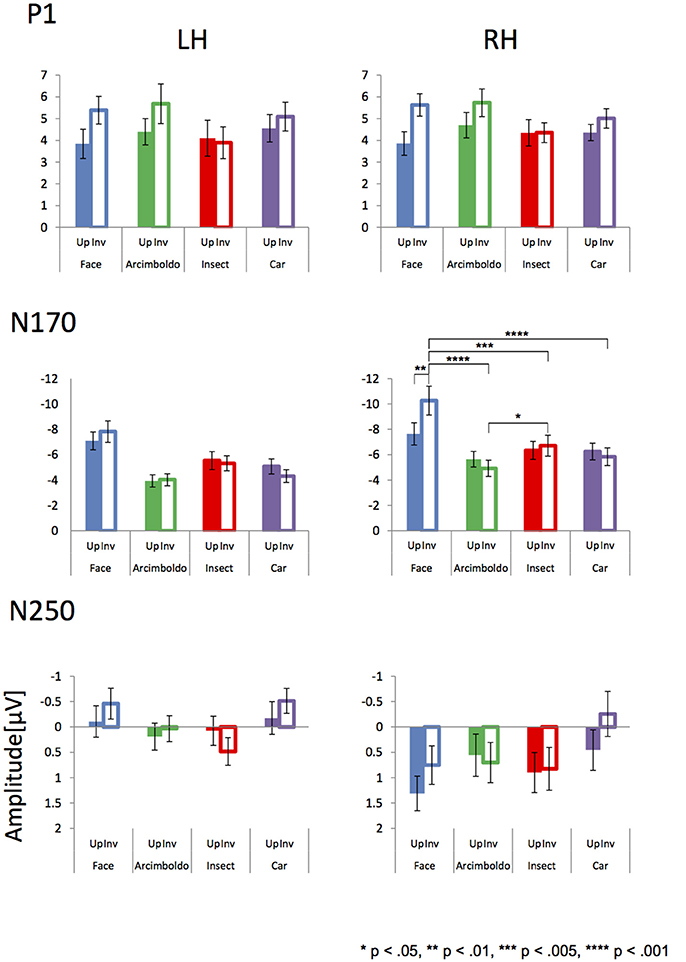

Figure 4. Peak amplitude of the P1 (Top), N170 (Middle), and N250 component (Bottom) measured at the left and right pooled occipito-temporal electrode sites (averaged for electrodes P5/P9/PO7 and P6/P10/PO8), displayed for 4 categories in the upright (fill) and inverted (no fill) orientations.

Figure 5. The inversion effect index for peak amplitude of the P1 (Top), N170 (Middle), and N250 (Bottom) components, measured at the left and right pooled occipito-temporal electrode sites (averaged for electrodes P5/P9/PO7 and P6/P10/PO8) and displayed for 4 categories.

N170 Component

ANOVAs for N170 amplitude showed main effects of Category and Hemisphere, and a Hemisphere × Orientation interaction. This Hemisphere × Orientation interaction revealed that the N170 amplitude in the inverted orientation was larger for the right hemisphere than for the left hemisphere (p = 0.012). In addition, a three-way interaction was found among hemisphere, category, and orientation. In the right hemisphere, the Category × Orientation interaction was significant, with a simple main effect of Category for the inverted orientation: the N170 amplitude for the inverted orientation was larger for the face category than for other categories (respectively, Arcimboldo paintings: p < 0.001, car: p < 0.001, and insect: p < 0.001), and was larger for the insect category than for the Arcimboldo paintings category (p = 0.011), with no statistically significant difference found between the insect and car categories (p < 1.000). However, for upright orientations, no significant Category effect was observed. Furthermore, the N170 amplitude for the face category was larger in the inverted orientation than in the upright orientation (p = 0.029). In the left hemisphere, no significant interaction was observed.

ANOVA results for the N170 latency showed main effects of Orientation and Category, and a Category × Orientation interaction. This Category × Orientation interaction showed a significant effect of Category for both orientations. The N170 latency in response to upright orientations was shorter for the face category than for other categories (p < 0.001), and the N170 latency in response to inverted orientations was longer for the car category than for the other categories (p < 0.001). Furthermore, latency in response to the face category was shorter in the upright orientation than in the inverted orientation (p < 0.001), and latency in response to the car category was likewise shorter in the upright orientation than in the inverted orientation (p < 0.001).

N250 Component

ANOVA results for the N250 amplitude showed main effects of Hemisphere and Category. The N250 amplitude was larger for the right hemisphere than for the left hemisphere (p < 0.001). In addition, there were significant interactions between Category and Hemisphere and between Category and Orientation. The Category × Orientation interaction showed a significant Category effect for the inverted orientation. The N250 amplitude for the inverted orientation was larger for the car category than for the Arcimboldo paintings and insect categories (p < 0.05). Moreover, this interaction showed an Orientation effect for the face and car categories. The N250 amplitude for the face category was larger for the inverted orientation than for the upright orientation, and the N250 amplitude for the car category was larger for the inverted orientation than for the upright orientation. The Category × Hemisphere interaction showed a significant Category effect for the right hemisphere. The N250 amplitude in the right hemisphere was larger for the car category than for the insect category. Moreover, this interaction showed a Hemisphere effect for the face category. The N250 amplitude for the face category was larger in the inverted orientation than in the upright orientation (p = 0.002). ANOVA results for N250 latency showed no significant main effects or interactions.

Inversion Effect Index

P1 Component

The inversion effect index of the P1 component was compared against 0 with a 1-sample t-test, showing a significant index for the face category in both hemispheres, the Arcimboldo painting category in the right hemisphere, and the car category in the left hemisphere (p < 0.05). The ANOVA for the P1 component showed a main effect of Category. The inversion effect index was larger for the face category than for the insect and car categories (respectively, p = 0.002 and p = 0.006).

N170 Component

The inversion effect index of the N170 component was compared against 0 with a 1-sample t-test, showing a significant index for the face category and the Arcimboldo painting category in the right hemisphere (p < 0.05). For the N170 component, no effect was found for Hemisphere, Category, or the interaction between Hemisphere and Category.

N250 Component

The inversion effect index of the N250 component was compared against 0 with a 1-sample t-test; a significant index was found only for the car category in the left hemisphere (p < 0.05). The ANOVA for the N250 component showed a main effect of Hemisphere. The inversion effect index was larger in the right hemisphere than in the left hemisphere. Moreover, there was a significant interaction between Hemisphere and Category. This Hemisphere × Category interaction revealed that the inversion effect index in response to cars was larger for the right hemisphere than for the left hemisphere (p < 0.05).

Correlation Analysis

We performed a correlation analysis to explore the relationship between the face-like score and the inversion effect index (see Figure 6). In the P1 component, a significant correlation was observed between the inversion effect index and face-like score in both hemispheres (left: r = −0.273, p < 0.05, right: r = −0.307, p < 0.05). Furthermore, in the N170 component, a significant correlation was observed between the inversion effect index and face-like score in the right hemisphere (r = −0.282, p < 0.05). In contrast, the N250 component showed no significant correlation. These results indicate that face-likeness judgment affects early face processing, especially in the right hemisphere. In addition, we performed a correlation analysis to explore the relationship between the face-like score and the raw ERP components (each orientation) or each ERP latency (see Supplementary Figures 2–4).

Figure 6. Correlation map between the inversion effect index and the face-likeness score of P1 (Top), N170 (Middle), and N250 (Bottom) components, calculated for the left (left side) and right (right side) hemispheres. The vertical axis indicates the inversion effect index value, and the horizontal axis indicates the face-likeness scores. Underlines indicate significant correlations.

Discussion

The present study investigated brain activity reflecting face-likeness and explored the correlation between the face inversion effect and face-like score. Significant correlations were observed for P1 in both hemispheres and N170 in the right hemisphere. These results suggest that face-likeness judgment affects early visual processing. After this processing, face-like objects are processed holistically in the right hemisphere. Furthermore, these results suggest that the face inversion index can be used as an indicator of face-likeness in early face processing.

Behavioral results showed that face-like scores were reduced in response to inverted objects. Conversely, the scores of human faces in inverted orientations were almost the same as those in upright orientations. Similarly, Reed et al. (2006) reported slower RTs and higher error rates for decisions about inverted human faces, compared to those for upright faces. Furthermore, Itier et al. (2006) reported lower error rates of behavioral inversion effects for natural human faces than for other objects, schematic faces, and Mooney faces (two-toned, ambiguous face images). Their results are consistent with our findings, which showed that the inversion effect was specific to face processing, as compared with processing of other object categories.

In terms of ERP results, each component (P1, N170, and N250; Figure 4) was observed for each category. The P1 amplitude showed an inversion effect in both hemispheres. P1 reflects the processing of low-level physical properties, including contrast, luminance, spatial frequency, and color (Linkenkaer-Hansen et al., 1998; Sagiv and Bentin, 2001; Itier and Taylor, 2004; Caharel et al., 2013). However, all stimuli were gray-scale images of equally calibrated luminance in this study. Furthermore, P1 affects holistic face processing (Halit et al., 2000; Wang et al., 2015), and is selective for face parts (Boutsen et al., 2006). These previous studies suggested that P1 is related to configural/holistic and featural processing, and hence, P1 amplitudes for face-like objects were almost the same as the amplitudes for face stimuli. Moreover, the Arcimboldo paintings consist of numerous objects resembling facial parts, with different local contrasts, which may be why the amplitude of the Arcimboldo painting category was higher than for other categories (Itier, 2004). In addition, the face inversion effect for the P1 amplitude was consistent with the results of Boutsen et al. (2006). According to Boutsen et al. (2006), the P1 component is sensitive to global face inversion. Therefore, the inversion effect for P1 appeared in both hemispheres in response to face, Arcimboldo and car categories. However, the inversion effect was not observed for the insect category, because insect stimuli are not dependent on orientation. Thus, the difference in amplitude according to orientation, which is the inversion effect, was not observed for the insect category.

In terms of N170 amplitude, the ANOVA results indicated that the car and insect categories were processed similarly to the face category in the right hemisphere, because there was no difference between these categories for the upright orientation. In the inverted orientation, the amplitude for the face category was larger than for other categories, and the amplitude for the Arcimboldo category was smaller than for other categories. Interestingly enough, this relationship was observed for the inverted orientation in the right hemisphere. We considered that the inverted Arcimboldo category did not contain holistic/configural face information. These results suggested that the Arcimboldo category underwent another form of processing, which was neither face processing nor object processing. In the left hemisphere, we observed no significant difference for either factor. However, the amplitude in response to the objects category was smaller than in response to the face category. These results were consistent with previous studies suggesting that the left hemisphere is specialized for analytic processing of local features of the face (Rossion and Jacques, 2011). Moreover, the face inversion effect for N170 appeared in both hemispheres in response to only the face category. In the face category, the results were consistent with the study of Itier and Taylor (2004), suggesting that the amplitude was increased and the latency was delayed by inverted orientation. In the Arcimboldo category, the results were consistent with the study of Caharel et al. (2013), suggesting that the amplitude decreased in the right hemisphere and the latency was delayed.

There was a difference in the N250 amplitude between the two hemispheres. The N250 component relates to personal detection processing in the right hemisphere (Keenan et al., 2000). Its amplitude increases when observing objects related to the self (e.g., friends, family, self-face), and hence, the amplitude was small in the right hemisphere in our study. In contrast, the amplitude in the left hemisphere increases when observing familiar objects (Gorno-Tempini and Price, 2001). Therefore, N250 amplitudes in the left hemisphere were larger in response to faces and cars. Moreover, the amplitude for the Arcimboldo category may have been increased because the Arcimboldo paintings resemble human faces. In contrast, the amplitude decreased in response to the insect category, because the insect images in this study were unfamiliar objects. This component was also reported to have no inversion effect (Schweinberger et al., 2004), perhaps because orientation processing has already been performed at N170. However, the face and car categories showed a lower inversion effect, which may be attributable to the influence of N170.

We calculated the correlation between the inversion effect index for each ERP component and the face-like score for each category. A significant correlation was observed for the P1 component in both hemispheres, suggesting that the P1 component reflects face-likeness. Moreover, a significant correlation was observed for the N170 component in the right hemisphere. The configuration of the stimuli may have been similar enough to human faces to cause this correlation only in the right hemisphere. Together, these findings suggest that the P1 component in both hemispheres and the N170 component in the right hemisphere reflect face-likeness. Finally, no significant correlation was observed for the N250 component. However, there was a trend toward a correlation between the inversion effect index in the N250 and the face-like score in both hemispheres, which suggests that the N250 component may be related to face-like processing.
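The correlation analysis described above can be illustrated with a minimal Python sketch. It is not the analysis code used in this study: the index is assumed here to be the inverted-minus-upright amplitude difference, a plain Pearson coefficient is used as a stand-in for the statistic, and all amplitudes and face-like scores are hypothetical values chosen only for illustration. The function names `inversion_effect_index` and `pearson_r` are likewise illustrative.

```python
import math


def inversion_effect_index(upright_amp, inverted_amp):
    """Assumed definition: inverted-minus-upright amplitude (microvolts)."""
    return inverted_amp - upright_amp


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for constant input")
    return sxy / math.sqrt(sxx * syy)


# Hypothetical P1 amplitudes (upright, inverted) per category; NOT study data.
p1_amplitudes = {
    "face": (4.0, 5.5),
    "arcimboldo": (4.5, 5.5),
    "car": (3.8, 4.3),
    "insect": (3.5, 3.6),
}
# Hypothetical mean face-like ratings per category; NOT study data.
face_like_scores = {"face": 6.8, "arcimboldo": 5.1, "car": 3.0, "insect": 1.9}

categories = list(p1_amplitudes)
indices = [inversion_effect_index(*p1_amplitudes[c]) for c in categories]
scores = [face_like_scores[c] for c in categories]
r = pearson_r(indices, scores)
print(f"Pearson r between inversion effect index and face-like score: {r:.3f}")
```

In practice, the same calculation would be repeated for each component (P1, N170, N250) and each hemisphere, and the coefficient would be accompanied by a significance test.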

The limitations of this study include the low correlation coefficient for each component, although a significant correlation was observed in the P1 and N170 components. The face-like score may have been biased because the stimuli used in this study included only a real face category and three face-like categories, without any non-face-like category (e.g., flowers or clocks). Moreover, the correlation between the P1 inversion effect index and face-like scores could not distinguish between face-like processing and face detection. Additionally, the stimulus images differed in spatial frequency. Thus, we cannot rule out that the P1 components were influenced by spatial frequency. Moreover, recent studies suggested that the N170 component is also influenced by low-level visual information (Dering et al., 2011; Huang et al., 2017). Thus, the N170 components may also have been influenced by spatial frequency and other low-level visual information. However, a significant Category effect was observed only in the inverted orientation in the 3-factor ANOVA. We therefore attribute the amplitude difference between the upright and inverted orientations in this study to inversion of the stimulus orientation. Finally, we did not consider the effect of gender differences in this experiment. Among the 21 participants in this study, only 3 were female. We considered that the effect of gender would be small, considering the purpose of this study. However, a recent study suggested that females tend to detect face-ness in objects more than males do (Proverbio and Galli, 2016). It is possible that our results were affected by sex differences.

Conclusion

Previous studies have suggested that face-likeness processing or face-ness detection occurs in the early visual cortex (Balas and Koldewyn, 2013). In this study, by calculating the correlation between the face-likeness evaluation of the stimulus and the inversion effect index of each ERP component, we observed significant correlations in the P1 component and the N170 component. These results suggest that face-like processing or face-ness detection is performed in the early visual cortex and that these processes affect face-likeness judgment. Accordingly, we considered that face processing and face-like processing consist of the following steps. Rough face processing, including detecting existing shapes as eye-like, nose-like, or mouth-like, is performed in the earlier visual stages represented by P1, while detailed face processing is performed in the face detection stages represented by N170. The processing from the P1 to the N170 component in this study may thus reflect face-likeness judgment. Furthermore, these results suggest that the face inversion index can be used as an indicator of face-likeness in early face processing.

Author Contributions

YN designed the study, and wrote the initial draft of the manuscript. TM and SN contributed to analysis and interpretation of data, and assisted in the preparation of the manuscript. All other authors have contributed to data collection and interpretation, and critically reviewed the manuscript. All authors approved the final version of the manuscript, and agree to be accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This work was supported by Grants-in-Aid for Scientific Research from the Japan Society for the Promotion of Science (Grant numbers 25330169 and 26240043).

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fnhum.2018.00056/full#supplementary-material

References

Balas, B., and Koldewyn, K. (2013). Early visual ERP sensitivity to the species and animacy of faces. Neuropsychologia 51, 2876–2881. doi: 10.1016/j.neuropsychologia.2013.09.014

Bentin, S., Allison, T., Puce, A., Perez, E., and McCarthy, G. (1996). Electrophysiological studies of face perception in humans. J. Cogn. Neurosci. 8, 551–565. doi: 10.1162/jocn.1996.8.6.551

Boehm, S. G., Dering, B., and Thierry, G. (2011). Category-sensitivity in the N170 range: a question of topography and inversion, not one of amplitude. Neuropsychologia 49, 2082–2089. doi: 10.1016/j.neuropsychologia.2011.03.039

Bötzel, K., and Grüsser, O. J. (1989). Electric brain potentials evoked by pictures of faces and non-faces: a search for “face-specific” EEG-potentials. Exp. Brain Res. 77, 349–360. doi: 10.1007/BF00274992

Boutsen, L., Humphreys, G. W., Praamstra, P., and Warbrick, T. (2006). Comparing neural correlates of configural processing in faces and objects: an ERP study of the Thatcher illusion. Neuroimage 32, 352–367. doi: 10.1016/j.neuroimage.2006.03.023

Caharel, S., Leleu, A., Bernard, C., Viggiano, M.-P., Lalonde, R., and Rebaï, M. (2013). Early holistic face-like processing of Arcimboldo paintings in the right occipito-temporal cortex: evidence from the N170 ERP component. Int. J. Psychophysiol. 90, 157–164. doi: 10.1016/j.ijpsycho.2013.06.024

Churches, O., Baron-Cohen, S., and Ring, H. (2009). Seeing face-like objects: an event-related potential study. Neuroreport 20, 1290–1294. doi: 10.1097/WNR.0b013e3283305a65

Davidenko, N., Remus, D. A., and Grill-Spector, K. (2012). Face-likeness and image variability drive responses in human face-selective ventral regions. Hum. Brain Mapp. 33, 2334–2349. doi: 10.1002/hbm.21367

Delorme, A., and Makeig, S. (2004). EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J. Neurosci. Methods 134, 9–21. doi: 10.1016/j.jneumeth.2003.10.009

Dering, B., Martin, C. D., Moro, S., Pegna, A. J., and Thierry, G. (2011). Face-sensitive processes one hundred milliseconds after picture onset. Front. Hum. Neurosci. 5:93. doi: 10.3389/fnhum.2011.00093

Ebner, N. C., Riediger, M., and Lindenberger, U. (2010). FACES—A database of facial expressions in young, middle-aged, and older women and men: development and validation. Behav. Res. Methods 42, 351–362. doi: 10.3758/BRM.42.1.351

Eimer, M. (2000a). Effects of face inversion on the structural encoding and recognition of faces. Cogn. Brain Res. 10, 145–158. doi: 10.1016/S0926-6410(00)00038-0

Eimer, M. (2000b). The face-specific N170 component reflects late stages in the structural encoding of faces. Neuroreport 11, 2319–2324. doi: 10.1097/00001756-200007140-00050

Farah, M. J., Tanaka, J. W., and Drain, H. M. (1995). What causes the face inversion effect? J. Exp. Psychol. Hum. Percept. Perform. 21, 628–634. doi: 10.1037/0096-1523.21.3.628

Freire, A., Lee, K., and Symons, L. A. (2000). The face-inversion effect as a deficit in the encoding of configural information: direct evidence. Perception 29, 159–170. doi: 10.1068/p3012

Ganis, G., Smith, D., and Schendan, H. E. (2012). The N170, not the P1, indexes the earliest time for categorical perception of faces, regardless of interstimulus variance. Neuroimage 62, 1563–1574. doi: 10.1016/j.neuroimage.2012.05.043

Gorno-Tempini, M. L., and Price, C. J. (2001). Identification of famous faces and buildings. A functional neuroimaging study of semantically unique items. Brain 124, 2087–2097. doi: 10.1093/brain/124.10.2087

Halit, H., de Haan, M., and Johnson, M. H. (2000). Modulation of event-related potentials by prototypical and atypical faces. Neuroreport 11, 1871–1875. doi: 10.1097/00001756-200006260-00014

Haxby, J. V., Hoffman, E. A., and Gobbini, M. I. (2000). The distributed human neural system for face perception. Trends Cogn. Sci. 4, 223–233. doi: 10.1016/S1364-6613(00)01482-0

Hillger, L. A., and Koenig, O. (1991). Separable mechanisms in face processing: evidence from hemispheric specialization. J. Cogn. Neurosci. 3, 42–58. doi: 10.1162/jocn.1991.3.1.42

Huang, W., Wu, X., Hu, L., Wang, L., Ding, Y., and Qu, Z. (2017). Revisiting the earliest electrophysiological correlate of familiar face recognition. Int. J. Psychophysiol. 120, 42–53. doi: 10.1016/j.ijpsycho.2017.07.001

Itier, R. J. (2004). N170 or N1? Spatiotemporal differences between object and face processing using ERPs. Cereb. Cortex 14, 132–142. doi: 10.1093/cercor/bhg111

Itier, R. J., Latinus, M., and Taylor, M. J. (2006). Face, eye and object early processing: what is the face specificity? Neuroimage 29, 667–676. doi: 10.1016/j.neuroimage.2005.07.041

Itier, R. J., and Taylor, M. J. (2004). Effects of repetition learning on upright, inverted and contrast-reversed face processing using ERPs. Neuroimage 21, 1518–1532. doi: 10.1016/j.neuroimage.2003.12.016

Itier, R. J., Van Roon, P., and Alain, C. (2011). Species sensitivity of early face and eye processing. Neuroimage 54, 705–713. doi: 10.1016/j.neuroimage.2010.07.031

Kanwisher, N., McDermott, J., and Chun, M. M. (1997). The fusiform face area: a module in human extrastriate cortex specialized for face perception. J. Neurosci. 17, 4302–4311.

Kato, M., and Mugitani, R. (2015). Pareidolia in infants. PLoS ONE 10:e0118539. doi: 10.1371/journal.pone.0118539

Keenan, J. P., Freund, S., Hamilton, R. H., Ganis, G., and Pascual-Leone, A. (2000). Hand response differences in a self-face identification task. Neuropsychologia 38, 1047–1053. doi: 10.1016/S0028-3932(99)00145-1

Kovacevic, N., and McIntosh, A. R. (2007). Groupwise independent component decomposition of EEG data and partial least square analysis. Neuroimage 35, 1103–1112. doi: 10.1016/j.neuroimage.2007.01.016

Leder, H., Candrian, G., Huber, O., and Bruce, V. (2001). Configural features in the context of upright and inverted faces. Perception 30, 73–83. doi: 10.1068/p2911

Linkenkaer-Hansen, K., Palva, J. M., Sams, M., Hietanen, J. K., Aronen, H. J., and Ilmoniemi, R. J. (1998). Face-selective processing in human extrastriate cortex around 120 ms after stimulus onset revealed by magneto- and electroencephalography. Neurosci. Lett. 253, 147–150. doi: 10.1016/S0304-3940(98)00586-2

Liu, J., Li, J., Feng, L., Li, L., Tian, J., and Lee, K. (2014). Seeing Jesus in toast: neural and behavioral correlates of face pareidolia. Cortex 53, 60–77. doi: 10.1016/j.cortex.2014.01.013

Liu, T., Mu, S., He, H., Zhang, L., Fan, C., Ren, J., et al. (2016). The N170 component is sensitive to face-like stimuli: a study of Chinese Peking opera makeup. Cogn. Neurodyn. 10, 535–541. doi: 10.1007/s11571-016-9399-8

Maurer, D., O'Craven, K. M., Le Grand, R., Mondloch, C. J., Springer, M. V., Lewis, T. L., et al. (2007). Neural correlates of processing facial identity based on features versus their spacing. Neuropsychologia 45, 1438–1451. doi: 10.1016/j.neuropsychologia.2006.11.016

Pernet, C. R., Wilcox, R., and Rousselet, G. A. (2012). Robust correlation analyses: false positive and power validation using a new open source matlab toolbox. Front. Psychol. 3:606. doi: 10.3389/fpsyg.2012.00606

Proverbio, A. M., and Galli, J. (2016). Women are better at seeing faces where there are none: an ERP study of face pareidolia. Soc. Cogn. Affect. Neurosci. 11, 1501–1512. doi: 10.1093/scan/nsw064

Reed, C. L., Stone, V. E., Grubb, J. D., and McGoldrick, J. E. (2006). Turning configural processing upside down: part and whole body postures. J. Exp. Psychol. Hum. Percept. Perform. 32, 73–87. doi: 10.1037/0096-1523.32.1.73

Rossion, B., and Caharel, S. (2011). ERP evidence for the speed of face categorization in the human brain: disentangling the contribution of low-level visual cues from face perception. Vision Res. 51, 1297–1311. doi: 10.1016/j.visres.2011.04.003

Rossion, B., Delvenne, J. F., Debatisse, D., Goffaux, V., Bruyer, R., Crommelinck, M., et al. (1999). Spatio-temporal localization of the face inversion effect: an event-related potentials study. Biol. Psychol. 50, 173–189. doi: 10.1016/S0301-0511(99)00013-7

Rossion, B., Dricot, L., Goebel, R., and Busigny, T. (2011). Holistic face categorization in higher order visual areas of the normal and prosopagnosic brain: toward a non-hierarchical view of face perception. Front. Hum. Neurosci. 4:225. doi: 10.3389/fnhum.2010.00225

Rossion, B., Gauthier, I., Tarr, M. J., Despland, P., Bruyer, R., Linotte, S., et al. (2000). The N170 occipito-temporal component is delayed and enhanced to inverted faces but not to inverted objects: an electrophysiological account of face-specific processes in the human brain. Neuroreport 11, 69–74. doi: 10.1097/00001756-200001170-00014

Rossion, B., and Jacques, C. (2011). “The N170: understanding the time-course of face perception in the human brain,” in The Oxford Handbook of Event-Related Potential Components, eds S. J. Luck and E. S. Kappenman (Oxford: Oxford University Press), 115–142.

Rossion, B., Joyce, C. A., Cottrell, G. W., and Tarr, M. J. (2003). Early lateralization and orientation tuning for face, word, and object processing in the visual cortex. Neuroimage 20, 1609–1624. doi: 10.1016/j.neuroimage.2003.07.010

Sadeh, B., Podlipsky, I., Zhdanov, A., and Yovel, G. (2010). Event-related potential and functional MRI measures of face-selectivity are highly correlated: a simultaneous ERP-fMRI investigation. Hum. Brain Mapp. 31, 1490–1501. doi: 10.1002/hbm.20952

Sagiv, N., and Bentin, S. (2001). Structural encoding of human and schematic faces: holistic and part-based processes. J. Cogn. Neurosci. 13, 937–951. doi: 10.1162/089892901753165854

Schweinberger, S. R., Huddy, V., and Burton, A. M. (2004). N250r: A face-selective brain response to stimulus repetitions. Neuroreport 15, 1501–1505. doi: 10.1097/01.wnr.0000131675.00319.42

Suzuki, M., and Noguchi, Y. (2013). Reversal of the face-inversion effect in N170 under unconscious visual processing. Neuropsychologia 51, 400–409. doi: 10.1016/j.neuropsychologia.2012.11.021

Tanaka, J. W., and Curran, T. (2001). A neural basis for expert object recognition. Psychol. Sci. 12, 43–47. doi: 10.1111/1467-9280.00308

Tanaka, J. W., and Farah, M. J. (1993). Parts and wholes in face recognition. Q. J. Exp. Psychol. A. 46, 225–245. doi: 10.1080/14640749308401045

Wang, H., Sun, P., Ip, C., Zhao, X., and Fu, S. (2015). Configural and featural face processing are differently modulated by attentional resources at early stages: an event-related potential study with rapid serial visual presentation. Brain Res. 1602, 75–84. doi: 10.1016/j.brainres.2015.01.017

Keywords: ERP/EEG, face perception, face inversion effect, face-like patterns, pareidolia

Citation: Nihei Y, Minami T and Nakauchi S (2018) Brain Activity Related to the Judgment of Face-Likeness: Correlation between EEG and Face-Like Evaluation. Front. Hum. Neurosci. 12:56. doi: 10.3389/fnhum.2018.00056

Received: 26 July 2017; Accepted: 31 January 2018; Published: 16 February 2018.

Edited by:

Xiaolin Zhou, Peking University, China

Reviewed by:

Xiaohua Cao, Zhejiang Normal University, China

Yiping Zhong, Hunan Normal University, China

Copyright © 2018 Nihei, Minami and Nakauchi. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Tetsuto Minami, minami@tut.jp
