
ORIGINAL RESEARCH article

Front. Hum. Neurosci. , 30 September 2014

Sec. Cognitive Neuroscience

Volume 8 - 2014 | https://doi.org/10.3389/fnhum.2014.00787

This article is part of the Research Topic: Advances in Virtual Agents and Affective Computing for the Understanding and Remediation of Social Cognitive Disorders

Background: Facial expressions of emotions represent classic stimuli for the study of social cognition. Developing virtual dynamic facial expressions of emotions, however, would open up possibilities for both fundamental and clinical research. For instance, virtual faces allow real-time human–computer feedback loops between physiological measures and the virtual agent.

Objectives: The goal of this study was to initially assess concomitants and construct validity of a newly developed set of virtual faces expressing six fundamental emotions (happiness, surprise, anger, sadness, fear, and disgust). Recognition rates, facial electromyography (zygomatic major and corrugator supercilii muscles), and regional gaze fixation latencies (eyes and mouth regions) were compared in 41 adult volunteers (20 ♂, 21 ♀) during the presentation of video clips depicting real vs. virtual adults expressing emotions.

Results: Emotions expressed by each set of stimuli were similarly recognized by both men and women. Accordingly, both sets of stimuli elicited similar activation of facial muscles and similar ocular fixation times on eye regions in male and female participants.

Conclusion: Further validation studies can be performed with these virtual faces among clinical populations known to present social cognition difficulties. Brain–Computer Interface studies with feedback–feedforward interactions based on facial emotion expressions can also be conducted with these stimuli.

Recognizing emotions expressed non-verbally by others is crucial for harmonious interpersonal exchanges. A common approach to assess this capacity is the evaluation of facial expressions. Presentations of photographs of real faces allowed the classic discovery that humans are generally able to correctly perceive six fundamental emotions (happiness, surprise, fear, sadness, anger, and disgust) experienced by others from their facial expressions (Ekman and Oster, 1979). These stimuli also helped document social cognition impairment in neuropsychiatric disorders such as autism (e.g., Dapretto et al., 2006), schizophrenia (e.g., Kohler et al., 2010), and psychopathy (Deeley et al., 2006). Given their utility, a growing number of sets of facial stimuli have been developed during the past decade, including the Montreal Set of Facial Displays of Emotion (Beaupré and Hess, 2005), the Karolinska Directed Emotional Faces (Goeleven et al., 2008), the NimStim set of facial expressions (Tottenham et al., 2009), the UC Davis set of emotion expressions (Tracy et al., 2009), the Radboud faces database (Langner et al., 2010), and the Umeå University database of facial expressions (Samuelsson et al., 2012). These sets, however, have limitations. First, they consist of static photographs of facial expressions from real persons, which cannot be readily modified to fit the specific requirements of particular studies (e.g., presenting elderly Caucasian females). Second, static facial stimuli elicit weaker muscle mimicry responses, and they are less ecologically valid than dynamic stimuli (Sato et al., 2008; Rymarczyk et al., 2011). Because recognition impairments encountered in clinical settings might be subtle, assessment of different emotional intensities is often required, which is better achieved with dynamic stimuli (incremental expression of emotions) than static photographs (Sato and Yoshikawa, 2007).

Custom-made video clips of human actors expressing emotions have also been used (Gosselin et al., 1995), although producing them is a time-consuming and costly process. Recent sets of validated video clips are available (van der Schalk et al., 2011; Bänziger et al., 2012), but again, important factors such as personal expressive style and physical characteristics (facial physiognomy, eye–hair color, skin texture, etc.) of the stimuli are fixed and difficult to control. Furthermore, video clips are not ideal for novel treatment approaches that use Human–Computer Interfaces (HCI; Birbaumer et al., 2009; Renaud et al., 2010).

A promising avenue to address all these issues is the creation of virtual faces expressing emotions (Roesch et al., 2011). Animated synthetic faces expressing emotions allow control of a number of potential confounds (e.g., equivalent intensity, gaze, physical appearance, socio-demographic variables, head angle, ambient luminosity), while giving experimenters a tool to create specific stimuli corresponding to their particular demands. Before being used with HCI in research or clinical settings, sets of virtual faces expressing emotions must be validated. Although avatars expressing emotions are still rare (Krumhuber et al., 2012), interesting results emerged from previous studies. First, basic emotions are well recognized from simple computerized line drawings depicting facial muscle movements (Wehrle et al., 2000). Second, fundamental emotions expressed by synthetic faces are recognized as well as, if not better than, those expressed by real persons (except maybe for disgust; Dyck et al., 2008). Third, virtual facial expressions of emotions elicit sub-cortical activation equivalent in magnitude to that observed with real facial expressions (Moser et al., 2007). Finally, clinical populations with deficits of social cognition also show impaired recognition of emotions expressed by avatars (Dyck et al., 2010). In brief, virtual faces expressing emotions represent a promising approach to evaluate aspects of social cognition both for fundamental and clinical research (Mühlberger et al., 2009).

We recently developed a set of adult (male and female) virtual faces from different ethnic backgrounds (Caucasian, African, Latin, or Asian), expressing seven facial emotional states (neutral, happiness, surprise, anger, sadness, fear, and disgust) with different intensities (40, 60, 100%), from different head angles (90°, 45°, and full frontal; Cigna et al., in press). The purpose of this study was to validate a dynamic version of these stimuli. In addition to verifying convergent validity with stimuli of dynamic expressions from real persons, the goal of this study was to demonstrate construct validity with physiological measures traditionally associated with facial emotion recognition of human expressions: facial electromyography (fEMG) and eye-tracking.

Facial muscles of an observer generally react with congruent contractions while observing the face of a real human expressing a basic emotion (Dimberg, 1982). In particular, the zygomatic major (lip corner pulling movement) and corrugator supercilii (brow lowering movement) muscles are rapidly, unconsciously, and differentially activated following exposure to pictures of real faces expressing basic emotions (Dimberg and Thunberg, 1998; Dimberg et al., 2000). Traditionally, these muscles are used to distinguish between positive and negative emotional reactions (e.g., Cacioppo et al., 1986; Larsen et al., 2003). In psychiatry, fEMG has been used to demonstrate sub-activation of the zygomatic major and/or the corrugator supercilii muscles in autism (McIntosh et al., 2006), schizophrenia (Mattes et al., 1995), personality disorders (Herpertz et al., 2001), and conduct disorders (de Wied et al., 2006). Interestingly, virtual faces expressing basic emotions induce the same facial muscle activation in the observer as do real faces, with the same dynamic > static stimulus advantage (Weyers et al., 2006, 2009). Thus, recordings of the zygomatic major and the corrugator supercilii muscle activations should represent a good validity measure of computer-generated faces.

Eye-trackers are also useful in the study of visual emotion recognition because gaze fixations on critical facial areas (especially mouth and eyes) are associated with efficient judgment of facial expressions (Walker-Smith et al., 1977). As expected, different ocular scanning patterns and regional gaze fixations are found among persons with better (Hall et al., 2010) or poorer recognition of facial expressions of emotions (e.g., persons with autism, Dalton et al., 2005; schizophrenia, Loughland et al., 2002; or psychopathic traits, Dadds et al., 2008). Very few eye-tracking studies of exposure to virtual expressions of emotions are available, although the data seem comparable to those obtained with real stimuli (e.g., Wieser et al., 2009). In brief, fEMG and eye-tracking measures could serve not only to validate virtual facial expressions of emotions, but also to demonstrate the possibility of using peripheral input (e.g., muscle activation and gaze fixations) with virtual stimuli for HCI.

The main goal of this study was to conduct three types of validation with a new set of virtual faces expressing emotions: (1) primary (face) validity with recognition rates; (2) concurrent validity with another, validated instrument; and (3) criterion validity with facial muscle activation and eye gaze fixations. This study was based on three hypotheses. H1: the recognition rates would not differ significantly between the real and virtual conditions for any of the six expressed emotions; H2: real and virtual conditions would elicit similar mean activation of the zygomatic major and corrugator supercilii muscles for the six expressed emotions; H3: the mean time of gaze fixations on regions of interest would be similar in both conditions (real and virtual).

Forty-one adult Caucasian volunteers participated in the study (mean age: 24.7 ± 9.2 years, range: 18–60; 20 males and 21 females). They were recruited via Facebook friends and university campus advertisement. Exclusion criteria were a history of epileptic seizures, having received a major mental disorder diagnosis, or suffering from motor impairment. Each participant signed an informed consent form and received $10 in compensation for their collaboration. This number of participants was chosen based on previous studies concerned with facial expressions of emotion (between 20 and 50 participants; Weyers et al., 2006, 2009; Dyck et al., 2008; Likowski et al., 2008; Mühlberger et al., 2009; Roesch et al., 2011; Krumhuber et al., 2012).

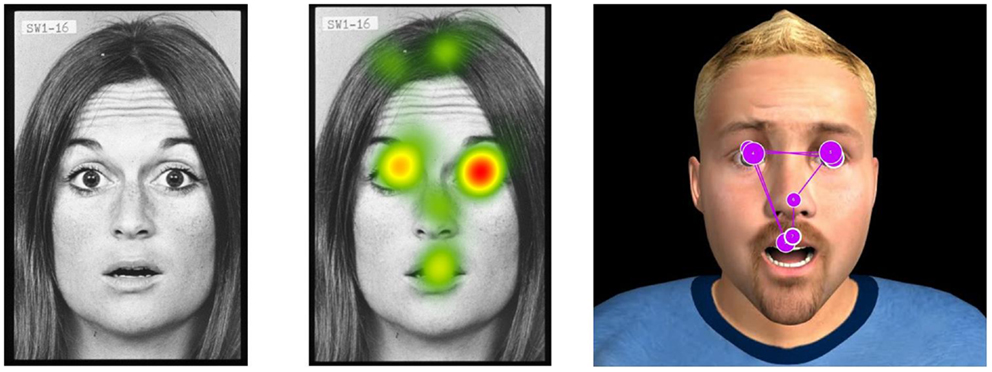

Participants were comfortably seated in front of a 19″ monitor in a sound-attenuated, air-conditioned (19°C) laboratory room. The stimuli were video clips of real Caucasian adult faces and video clips of avatar Caucasian adult faces dynamically expressing a neutral state and the six basic emotions (happiness, surprise, anger, sadness, fear, and disgust). Video clips of real persons (one male, one female) were obtained from computerized morphing (FantaMorph software, Abrasoft) of two series of photographs from the classic Pictures of Facial Affect set (POFA; Ekman and Friesen, 1976; from neutral to 100% intensity). Video clips of virtual faces were obtained from morphing (neutral to 100% intensity) static expressions of avatars from our newly developed set (one male, one female; Cigna et al., in press; Figure 1). The stimuli configurations were based on the POFA (Ekman and Friesen, 1976) and the descriptors of the Facial Action Coding System (Ekman et al., 2002). In collaboration with a professional computer graphic designer specialized in facial expressions (BehaVR solution)1, virtual dynamic facial movements were obtained by gradually moving multiple facial points (action units) along vectors involved in the 0–100% expressions (Rowland and Perrett, 1995). For the present study, 24 video clips were created: 2 (real and virtual) × 2 (man and woman) × 6 (emotions). A series example is depicted in Figure 2. Video clips of 2.5, 5, and 10 s were obtained, and pilot data indicated that 10 s presentations were optimal for eye-tracking analyses. Therefore, real and synthetic expressions were presented for 10 s, preceded by a 2 s central fixation cross. During the inter-stimulus intervals (max 10 s), participants had to select (mouse click) the emotion expressed by the stimulus from a multiple-choice questionnaire (Acrobat Pro software) appearing on the screen. Each stimulus was presented once, pseudo-randomly, in four blocks of six emotions, counterbalanced across participants (Eyeworks presentation software, Eyetracking Inc., CA, USA).
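The 2 × 2 × 6 design described above can be enumerated programmatically. The following is a minimal sketch only: it assumes that each of the four blocks corresponds to one stimulus type × actor sex combination, and it uses a seeded shuffle as a stand-in for the pseudo-random, counterbalanced ordering. Neither detail is specified in the text, and the function name `build_blocks` is hypothetical.

```python
import itertools
import random

STIMULUS_TYPES = ("real", "virtual")
ACTOR_SEXES = ("man", "woman")
EMOTIONS = ("happiness", "surprise", "anger", "sadness", "fear", "disgust")


def build_blocks(seed):
    """Return four blocks of six clips, one block per type x sex pairing.

    Assumption: blocks are organized by stimulus type and actor sex;
    the article only states "four blocks of six emotions".
    """
    rng = random.Random(seed)
    blocks = []
    for stim_type, sex in itertools.product(STIMULUS_TYPES, ACTOR_SEXES):
        emotions = list(EMOTIONS)
        rng.shuffle(emotions)  # pseudo-random emotion order within a block
        blocks.append([(stim_type, sex, emotion) for emotion in emotions])
    rng.shuffle(blocks)  # stand-in for counterbalancing block order
    return blocks


blocks = build_blocks(seed=1)
clips = [clip for block in blocks for clip in block]
print(len(blocks), "blocks,", len(clips), "clips")
```

Using a different seed per participant would give each participant a different block and emotion order while keeping every clip presented exactly once.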

Figure 2. Example of a sequence from neutral to 100% expression (anger) from a computer-generated face is shown.

Fiber contractions (microvolts) of the zygomatic major and the corrugator supercilii muscles (left side) were recorded with 7 mm bipolar (common mode rejection) Ag/AgCl pre-gelled adhesive electrodes2, placed in accordance with the guidelines of Fridlund and Cacioppo (1986). The skin was exfoliated with NuPrep (Weaver, USA) and cleansed with sterile alcohol prep pads (70%). The raw signal was pre-amplified through a MyoScan-Z sensor (Thought Technology, Montreal, QC, Canada) with built-in impedance check (<15 kΩ), referenced to the upper back. Data were relayed to a ProComp Infinity encoder (range of 0–2000 μV; Thought Technology) set at 2048 Hz, and post-processed with the Physiology Suite for Biograph Infinity (Thought Technology). Data were filtered with a 30 Hz high-pass filter, a 500 Hz low-pass filter, and a 60 Hz notch filter. Baseline EMG measures were obtained at the beginning of the session, during eye-tracking calibration. Gaze fixations were measured with a FaceLab5 eye-tracker (SeeingMachines, Australia), and regions of interest were defined as commissures of the eyes and the mouth (Eyeworks software; Figure 3). Assessments were completed in approximately 30 min.
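To illustrate what the reported filter settings do at a 2048 Hz sampling rate, the sketch below computes the combined gain of a 30 Hz high-pass, a 500 Hz low-pass, and a 60 Hz notch section. It is not the processing performed by the acquisition software: the filter order and type are not reported, so second-order sections built from the widely used audio EQ cookbook formulas are assumed, and the Q values are illustrative choices.

```python
import cmath
import math

FS = 2048.0  # sampling rate in Hz, as reported for the encoder


def biquad(kind, f0, q, fs=FS):
    """Return normalized (b, a) coefficients for one second-order section."""
    w0 = 2.0 * math.pi * f0 / fs
    cos_w0 = math.cos(w0)
    alpha = math.sin(w0) / (2.0 * q)
    if kind == "highpass":
        b = [(1 + cos_w0) / 2, -(1 + cos_w0), (1 + cos_w0) / 2]
    elif kind == "lowpass":
        b = [(1 - cos_w0) / 2, 1 - cos_w0, (1 - cos_w0) / 2]
    elif kind == "notch":
        b = [1.0, -2 * cos_w0, 1.0]
    else:
        raise ValueError("unknown filter kind: " + kind)
    a = [1 + alpha, -2 * cos_w0, 1 - alpha]
    return [x / a[0] for x in b], [x / a[0] for x in a]


def gain(section, freq, fs=FS):
    """Magnitude of the section's frequency response at freq (Hz)."""
    b, a = section
    z1 = cmath.exp(-1j * 2.0 * math.pi * freq / fs)  # z^-1 on the unit circle
    num = b[0] + b[1] * z1 + b[2] * z1 * z1
    den = a[0] + a[1] * z1 + a[2] * z1 * z1
    return abs(num / den)


# Assumed Q values: 0.7071 gives a Butterworth-like response,
# 30 gives a notch roughly 2 Hz wide around 60 Hz.
CHAIN = [
    biquad("highpass", 30.0, 0.7071),
    biquad("lowpass", 500.0, 0.7071),
    biquad("notch", 60.0, 30.0),
]


def chain_gain(freq):
    """Combined gain of the three cascaded sections at freq (Hz)."""
    total = 1.0
    for section in CHAIN:
        total *= gain(section, freq)
    return total


for f in (5.0, 60.0, 100.0, 900.0):
    print(f, "Hz ->", round(chain_gain(f), 4))
```

Under these assumptions, activity around 100 Hz passes almost unchanged, while slow drift, mains interference at 60 Hz, and content well above 500 Hz are attenuated.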

Figure 3. Example of eye-tracking data (regional gaze fixations) on real and virtual stimuli is shown.
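The regional gaze measure can be thought of as the summed duration of fixations falling inside each region of interest. The sketch below shows one way to compute it; the rectangular region coordinates, the fixation tuples, and the function names are all hypothetical, since the article does not describe the export format of the eye-tracking software.

```python
# Hypothetical regions of interest in screen pixels: (left, top, right, bottom).
ROIS = {
    "eyes": (300, 200, 700, 320),
    "mouth": (400, 480, 600, 580),
}


def classify(x, y, rois=ROIS):
    """Return the name of the region containing (x, y), or 'elsewhere'."""
    for name, (left, top, right, bottom) in rois.items():
        if left <= x <= right and top <= y <= bottom:
            return name
    return "elsewhere"


def dwell_times(fixations, rois=ROIS):
    """Sum fixation durations (ms) per region for one stimulus presentation."""
    totals = {name: 0.0 for name in rois}
    totals["elsewhere"] = 0.0
    for x, y, duration_ms in fixations:
        totals[classify(x, y, rois)] += duration_ms
    return totals


# Made-up fixations: (x, y, duration in ms).
fixations = [
    (500, 250, 400.0),
    (520, 530, 250.0),
    (100, 100, 150.0),
    (450, 300, 300.0),
]
totals = dwell_times(fixations)
print(totals)
```

Averaging such totals over stimuli within a condition gives per-participant values of the kind compared between the real and virtual conditions.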

Emotion recognition and physiological data from each participant were recorded in Excel files and converted into SPSS for statistical analyses. First, recognition rates (%) for real vs. avatar stimuli from male and female participants were compared with Chi-square analyses, corrected (p < 0.008) and uncorrected (p < 0.05) for multiple comparisons. The main goal of this study was to demonstrate that the proportion of recognition of each expressed emotion would be statistically similar in both conditions (real vs. virtual). To this end, effect sizes (ES) were computed using the Cramer’s V statistic. Cramer’s V values of 0–0.10, 0.11–0.20, 0.21–0.30, and 0.31 and above are considered null, small, medium, and large, respectively (Fox, 2009). Repeated measures analyses of variance (ANOVAs) with the factor condition (real vs. virtual) and the within-subject factor emotion (happiness, surprise, anger, sadness, fear, or disgust) were also conducted on the mean fiber contractions of the zygomatic major and the corrugator supercilii muscles, as well as the mean time spent looking at the mouth, the eyes, and elsewhere. For these comparisons, ES were computed with the r formula; values of 0.10, 0.30, and 0.50 were considered small, medium, and large, respectively (Field, 2005).
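The effect-size measures named above can be sketched in a few lines. The counts in the example table are invented for illustration and are not taken from the study. The corrected threshold of 0.008 is consistent with dividing 0.05 by six comparisons (one per emotion), which is assumed here. The conversion from F to r uses the common formula for a test with one numerator degree of freedom, r = sqrt(F / (F + df_error)).

```python
import math


def chi_square(table):
    """Pearson chi-square statistic for an r x c table of counts (no correction)."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    return stat


def cramers_v(table):
    """Cramer's V: sqrt(chi2 / (n * (min(rows, cols) - 1)))."""
    n = sum(sum(row) for row in table)
    k = min(len(table), len(table[0]))
    return math.sqrt(chi_square(table) / (n * (k - 1)))


def r_from_f(f_value, df_error):
    """Effect size r for an F test with one numerator degree of freedom."""
    return math.sqrt(f_value / (f_value + df_error))


# Assumed Bonferroni-style threshold: 0.05 spread over six emotions.
bonferroni_alpha = 0.05 / 6

# Hypothetical counts: rows = real, virtual; columns = correct, incorrect.
example_table = [[70, 12], [75, 7]]
v = cramers_v(example_table)

print(round(bonferroni_alpha, 4))
print(round(chi_square(example_table), 3), round(v, 3))
print(round(r_from_f(4.0, 36), 3))
```

With these invented counts, V is just under 0.10, which would fall in the null range of the scale described above.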

This study was approved by the ethical committee of the University of Quebec at Trois-Rivières (CER-12-186-06.09).

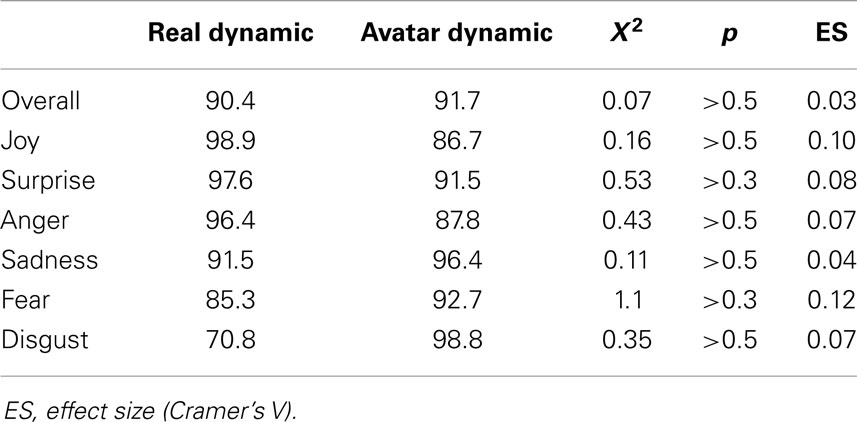

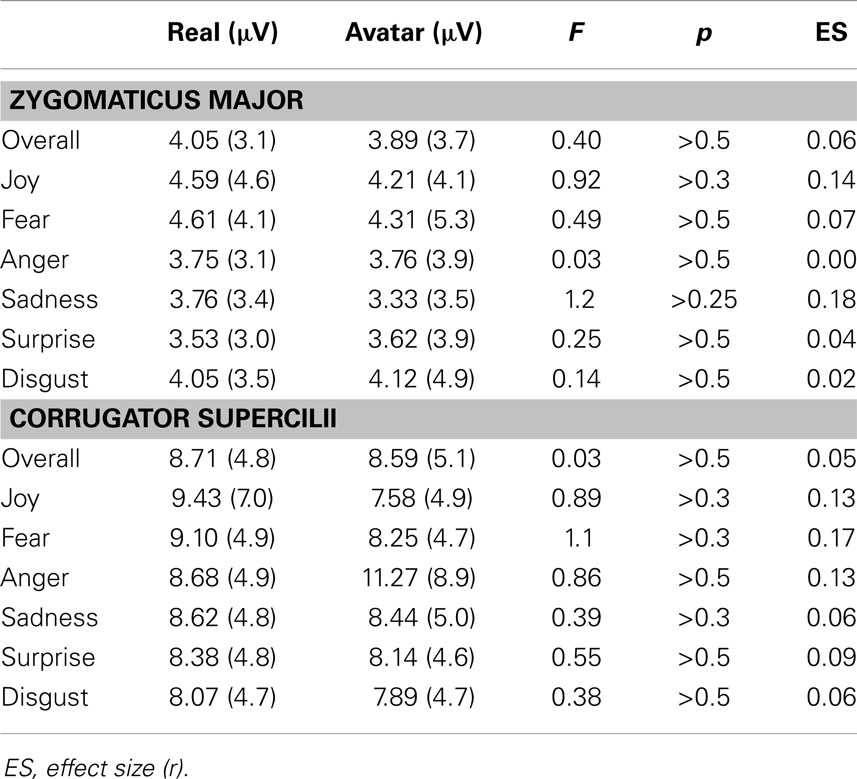

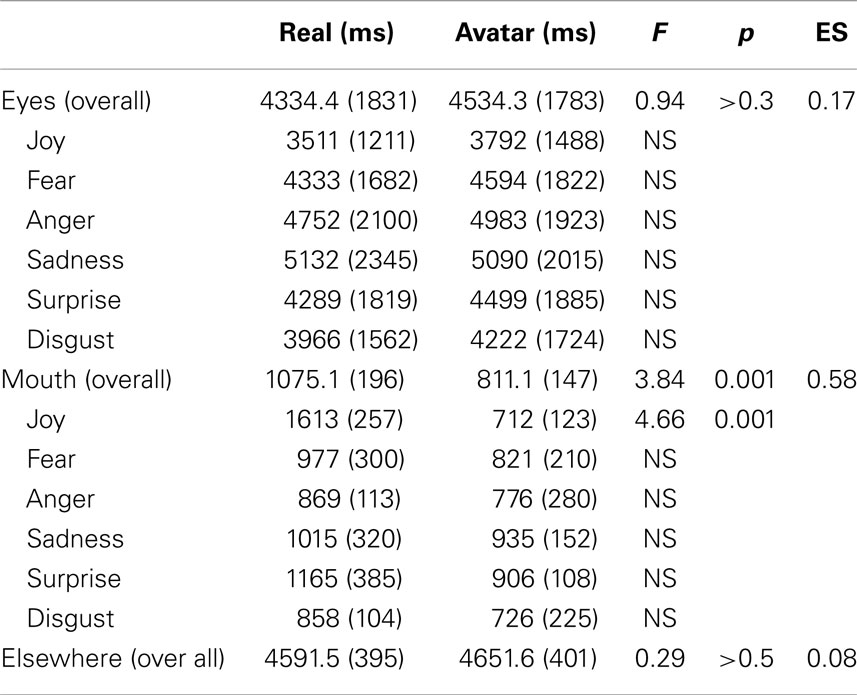

No significant difference emerged between male (90%) and female (92.1%) raters (data not shown). In accordance with H1, recognition rates of the whole sample did not differ significantly between real and virtual expressions, either overall [90.4 vs. 91.7%, respectively; χ²(1) = 0.07, p = 0.51] or for each emotion (Table 1). ES were small between conditions for all emotions, including joy (0.10), surprise (0.08), anger (0.07), sadness (0.04), fear (0.12), and disgust (0.07) (Table 1). In accordance with H2, no difference emerged between the mean contractions of the zygomatic major or the corrugator supercilii muscles between both conditions for any emotions, with all ES below 0.19 (Table 2). Finally, in partial accordance with H3, only the time spent looking at the mouth differed significantly between conditions [Real > Virtual; F(1,29) = 3.84, p = 0.001, ES = 0.58; Table 3]. Overall, the low ES indicate that very few differences exist between the real and virtual conditions. However, such low ES also generated weak statistical power (0.28 with an alpha set at 0.05 and 41 participants). Therefore, the possibility remains that these negative results reflect a type-II error (1 − power = 0.72).

Table 1. Comparisons of recognition rates (%) between real and virtual facial expressions of emotions.

Table 2. Comparisons of mean (SD) facial muscle activations during presentations of real and virtual stimuli expressing the basic emotions.

Table 3. Comparisons of mean (SD) duration (ms) of gaze fixations during presentations of real and virtual stimuli expressing the basic emotions.

The main goal of this study was to initially assess concomitants and construct validity of computer-generated faces expressing emotions. No difference was found between recognition rates, facial muscle activation, and gaze time spent on the eye region of virtual and real facial expressions of emotions. Thus, these virtual faces can be used for the study of facial emotion recognition. Basic emotions such as happiness, anger, fear, and sadness were all correctly recognized with rates higher than 80%, which is comparable to rates obtained with other virtual stimuli (Dyck et al., 2008; Krumhuber et al., 2012). Interestingly, disgust expressed by our avatars was correctly detected in 98% of the cases (compared with 71% for real stimuli), an improvement from older stimuli (Dyck et al., 2008; Krumhuber et al., 2012). The only difference we found between the real vs. virtual conditions was the time spent looking at the mouth region of the real stimuli, which might be due to an artifact. Our real stimuli were morphed photographs, which could introduce unnatural saccades or texture-smoothing from digital blending. In this study, for instance, the highest time spent looking at the mouth of real stimuli was associated with a jump in the smile of the female POFA picture set (abruptly showing her teeth). Thus, comparisons with video clips of real persons expressing emotions are warranted (van der Schalk et al., 2011). Still, these preliminary data are encouraging. They suggest that avatars could eventually serve alternative clinical approaches such as virtual reality immersion and HCI (Birbaumer et al., 2009; Renaud et al., 2010). It could be hypothesized, for instance, that better detection of others’ facial expressions would be achieved through biofeedback based on facial EMG and avatars reacting with corresponding expressions (Allen et al., 2001; Bornemann et al., 2012).

Some limits associated with this study should be addressed by future investigation. First, as mentioned above, using video clips of real persons expressing emotions would be preferable to using morphed photographs. It would also allow presentation of colored stimuli in both conditions. Second, and most importantly, the small number of participants in the present study prevents demonstrating that the negative results were not due to a type-II statistical error related to a lack of power. Most studies using avatars expressing emotions are based on sample sizes ranging from 20 to 50 participants (Weyers et al., 2006, 2009; Dyck et al., 2008; Likowski et al., 2008; Mühlberger et al., 2009; Roesch et al., 2011; Krumhuber et al., 2012), because recognition rates are elevated, physiological effects are strong, and effect sizes are high. Although demonstrating an absence of difference is more difficult, these and the present results suggest that no significant difference exists between recognition of, and reaction to, real and virtual agents' expressions of emotions. Only the addition of more participants in future investigations with our avatars will allow the possibility of a type-II error to be discarded.

Finally, with the increasing availability of software enabling the creation of whole-body avatars (Renaud et al., 2014), these virtual faces could be used to assess and treat social cognition impairment in clinical settings. We truly believe that the future of social skill evaluation and training resides in virtual reality.

This study was presented in part at the 32nd annual meeting of the Association for Treatment of Sexual Abusers (ATSA), Chicago, 2013.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Allen, J. J., Harmon-Jones, E., and Cavender, J. H. (2001). Manipulation of frontal EEG asymmetry through biofeedback alters self-reported emotional responses and facial EMG. Psychophysiology 38, 685–693. doi: 10.1111/1469-8986.3840685

Bänziger, T., Mortillaro, M., and Scherer, K. R. (2012). Introducing the Geneva Multimodal expression corpus for experimental research on emotion perception. Emotion 12, 1161–1179. doi:10.1037/a0025827

Beaupré, M. G., and Hess, U. (2005). Cross-cultural emotion recognition among Canadian ethnic groups. J. Cross Cult. Psychol. 36, 355–370. doi:10.1177/0022022104273656

Birbaumer, N., Ramos Murguialday, A., Weber, C., and Montoya, P. (2009). Neurofeedback and brain–computer interface: clinical applications. Int. Rev. Neurobiol. 86, 107–117. doi:10.1016/S0074-7742(09)86008-X

Bornemann, B., Winkielman, P., and van der Meer, E. (2012). Can you feel what you do not see? Using internal feedback to detect briefly presented emotional stimuli. Int. J. Psychophysiol. 85, 116–124. doi:10.1016/j.ijpsycho.2011.04.007

Cacioppo, J. T., Petty, R. P., Losch, M. E., and Kim, H. S. (1986). Electromyographic activity over facial muscle regions can differentiate the valence and intensity of affective reactions. J. Pers. Soc. Psychol. 50, 260–268. doi:10.1037/0022-3514.50.2.260

Cigna, M.-H., Guay, J.-P., and Renaud, P. (in press). La reconnaissance émotionnelle faciale: validation préliminaire de stimuli virtuels et comparaison avec les Pictures of Facial Affect [Facial emotional recognition: preliminary validation with virtual stimuli and the Picture of Facial Affect]. Criminologie.

Dadds, M. R., El Masry, Y., Wimalaweera, S., and Guastella, A. J. (2008). Reduced eye gaze explains “fear blindness” in childhood psychopathic traits. J. Am. Acad. Child Adolesc. Psychiatry 47, 455–463. doi:10.1097/CHI.0b013e31816407f1

Dalton, K. M., Nacewicz, B. M., Johnstone, T., Schaefer, H. S., Gernsbacher, M. A., Goldsmith, H. H., et al. (2005). Gaze fixation and the neural circuitry of face processing in autism. Nat. Neurosci. 8, 519–526. doi:10.1038/nn1421

Dapretto, M., Davies, M. S., Pfeifer, J. H., Scott, A. A., Sigman, M., Bookheimer, S. Y., et al. (2006). Understanding emotions in others: mirror neuron dysfunction in children with autism spectrum disorders. Nat. Neurosci. 9, 28–30. doi:10.1038/nn1611

de Wied, M., van Boxtel, A., Zaalberg, R., Goudena, P. P., and Matthys, W. (2006). Facial EMG responses to dynamic emotional facial expressions in boys with disruptive behavior disorders. J. Psychiatr. Res. 40, 112–121. doi:10.1016/j.jpsychires.2005.08.003

Deeley, Q., Daly, E., Surguladze, S., Tunstall, N., Mezey, G., Beer, D., et al. (2006). Facial emotion processing in criminal psychopathy: preliminary functional magnetic resonance imaging study. Br. J. Psychiatry 189, 533–539. doi:10.1192/bjp.bp.106.021410

Dimberg, U., and Thunberg, M. (1998). Rapid facial reactions to emotional facial expressions. Scand. J. Psychol. 39, 39–45. doi:10.1111/1467-9450.00054

Dimberg, U., Thunberg, M., and Elmehed, K. (2000). Unconscious facial reactions to emotional facial expressions. Psychol. Sci. 11, 86–89. doi:10.1111/1467-9280.00221

Dyck, M., Winbeck, M., Leiberg, S., Chen, Y., Gur, R. C., and Mathiak, K. (2008). Recognition profile of emotions in natural and virtual faces. PLoS ONE 3:e3628. doi:10.1371/journal.pone.0003628

Dyck, M., Winbeck, M., Leiberg, S., Chen, Y., and Mathiak, K. (2010). Virtual faces as a tool to study emotion recognition deficits in schizophrenia. Psychiatry Res. 179, 247–252. doi:10.1016/j.psychres.2009.11.004

Ekman, P., and Friesen, W. V. (1976). Pictures of Facial Affect. Palo Alto: Consulting Psychologists Press.

Ekman, P., Friesen, W. V., and Hager, J. C. (2002). Facial Action Coding System: The Manual. Palo Alto, CA: Consulting Psychologists Press.

Ekman, P., and Oster, H. (1979). Facial expressions of emotion. Annu. Rev. Psychol. 30, 527–554. doi:10.1146/annurev.ps.30.020179.002523

Field, A. (2005). Discovering Statistical Analyses Using SPSS, 2nd Edn. Thousand Oaks, CA: Sage Publications, Inc.

Fox, J. (2009). A Mathematical Primer for Social Statistics. Thousand Oaks, CA: Sage Publications, Inc.

Fridlund, A. J., and Cacioppo, J. T. (1986). Guidelines for human electromyographic research. Psychophysiology 23, 567–589. doi:10.1111/j.1469-8986.1986.tb00676.x

Goeleven, E., De Raedt, R., Leyman, L., and Verschuere, B. (2008). The Karolinska directed emotional faces: a validation study. Cognit. Emot. 22, 1094–1118. doi:10.1017/sjp.2013.9

Gosselin, P., Kirouac, G., and Doré, F. Y. (1995). Components and recognition of facial expression in the communication of emotion by actors. J. Pers. Soc. Psychol. 68, 83. doi:10.1037/0022-3514.68.1.83

Hall, J. K., Hutton, S. B., and Morgan, M. J. (2010). Sex differences in scanning faces: does attention to the eyes explain female superiority in facial expression recognition? Cogn. Emot. 24, 629–637. doi:10.1080/02699930902906882

Herpertz, S. C., Werth, U., Lukas, G., Qunaibi, M., Schuerkens, A., Kunert, H. J., et al. (2001). Emotion in criminal offenders with psychopathy and borderline personality disorder. Arch. Gen. Psychiatry 58, 737–745. doi:10.1001/archpsyc.58.8.737

Kohler, C. G., Walker, J. B., Martin, E. A., Healey, K. M., and Moberg, P. J. (2010). Facial emotion perception in schizophrenia: a meta-analytic review. Schizophr. Bull. 36, 1009–1019. doi:10.1093/schbul/sbn192

Krumhuber, E. G., Tamarit, L., Roesch, E. B., and Scherer, K. R. (2012). FACSGen 2.0 animation software: generating three-dimensional FACS-valid facial expressions for emotion research. Emotion 12, 351–363. doi:10.1037/a0026632

Langner, O., Dotsch, R., Bijlstra, G., Wigboldus, D. H., Hawk, S. T., and van Knippenberg, A. (2010). Presentation and validation of the Radboud Faces Database. Cogn. Emot. 24, 1377–1388. doi:10.1080/02699930903485076

Larsen, J. T., Norris, C. J., and Cacioppo, J. T. (2003). Effects of positive and negative affect on electromyographic activity over zygomaticus major and corrugator supercilii. Psychophysiology 40, 776–785. doi:10.1111/1469-8986.00078

Likowski, K. U., Mühlberger, A., Seibt, B., Pauli, P., and Weyers, P. (2008). Modulation of facial mimicry by attitudes. J. Exp. Soc. Psychol. 44, 1065–1072. doi:10.1016/j.jesp.2007.10.007

Loughland, C. M., Williams, L. M., and Gordon, E. (2002). Schizophrenia and affective disorder show different visual scanning behavior for faces: a trait versus state-based distinction? Biol. Psychiatry 52, 338–348. doi:10.1016/S0006-3223(02)01356-2

Mattes, R. M., Schneider, F., Heimann, H., and Birbaumer, N. (1995). Reduced emotional response of schizophrenic patients in remission during social interaction. Schizophr. Res. 17, 249–255. doi:10.1016/0920-9964(95)00014-3

McIntosh, D. N., Reichmann-Decker, A., Winkielman, P., and Wilbarger, J. L. (2006). When the social mirror breaks: deficits in automatic, but not voluntary, mimicry of emotional facial expressions in autism. Dev. Sci. 9, 295–302. doi:10.1111/j.1467-7687.2006.00492.x

Moser, E., Derntl, B., Robinson, S., Fink, B., Gur, R. C., and Grammer, K. (2007). Amygdala activation at 3T in response to human and avatar facial expressions of emotions. J. Neurosci. Methods 161, 126–133. doi:10.1016/j.jneumeth.2006.10.016

Mühlberger, A., Wieser, M. J., Herrmann, M. J., Weyers, P., Tröger, C., and Pauli, P. (2009). Early cortical processing of natural and artificial emotional faces differs between lower and higher socially anxious persons. J. Neural Transm. 116, 735–746. doi:10.1007/s00702-008-0108-6

Renaud, P., Joyal, C. C., Stoleru, S., Goyette, M., Weiskopf, N., and Birbaumer, N. (2010). Real-time functional magnetic imaging-brain-computer interface and virtual reality promising tools for the treatment of pedophilia. Prog. Brain Res. 192, 263–272. doi:10.1016/B978-0-444-53355-5.00014-2

Renaud, P., Trottier, D., Rouleau, J. L., Goyette, M., Saumur, C., Boukhalfi, T., et al. (2014). Using immersive virtual reality and anatomically correct computer-generated characters in the forensic assessment of deviant sexual preferences. Virtual Real. 18, 37–47. doi:10.1007/s10055-013-0235-8

Roesch, E. B., Tamarit, L., Reveret, L., Grandjean, D., Sander, D., and Scherer, K. R. (2011). FACSGen: a tool to synthesize emotional facial expressions through systematic manipulation of facial action units. J. Nonverbal Behav. 35, 1–16. doi:10.1007/s10919-010-0095-9

Rowland, D. A., and Perrett, D. I. (1995). Manipulating facial appearance through shape and color. IEEE Comput. Graph. Appl. 15, 70–76. doi:10.1109/38.403830

Rymarczyk, K., Biele, C., Grabowska, A., and Majczynski, H. (2011). EMG activity in response to static and dynamic facial expressions. Int. J. Psychophysiol. 79, 330–333. doi:10.1016/j.ijpsycho.2010.11.001

Samuelsson, H., Jarnvik, K., Henningsson, H., Andersson, J., and Carlbring, P. (2012). The Umeå University Database of Facial Expressions: a validation study. J. Med. Internet Res. 14, e136. doi:10.2196/jmir.2196

Sato, W., Fujimura, T., and Suzuki, N. (2008). Enhanced facial EMG activity in response to dynamic facial expressions. Int. J. Psychophysiol. 70, 70–74. doi:10.1016/j.ijpsycho.2008.06.001

Sato, W., and Yoshikawa, S. (2007). Spontaneous facial mimicry in response to dynamic facial expressions. Cognition 104, 1–18. doi:10.1016/j.cognition.2006.05.001

Tottenham, N., Tanaka, J. W., Leon, A. C., McCarry, T., Nurse, M., Hare, T. A., et al. (2009). The NimStim set of facial expressions: judgments from untrained research participants. Psychiatry Res. 168, 242–249. doi:10.1016/j.psychres.2008.05.006

Tracy, J. L., Robins, R. W., and Schriber, R. A. (2009). Development of a FACS-verified set of basic and self-conscious emotion expressions. Emotion 9, 554–559. doi:10.1037/a0015766

van der Schalk, J., Hawk, S. T., Fischer, A. H., and Doosje, B. (2011). Moving faces, looking places: validation of the Amsterdam Dynamic Facial Expression Set (ADFES). Emotion 11, 907–920. doi:10.1037/a0023853

Walker-Smith, G. J., Gale, A. G., and Findlay, J. M. (1977). Eye movement strategies in face perception. Perception 6, 313–326. doi:10.1068/p060313

Wehrle, T., Kaiser, S., Schmidt, S., and Scherer, K. R. (2000). Studying the dynamics of emotional expression using synthesized facial muscle movements. J. Pers. Soc. Psychol. 78, 105. doi:10.1037/0022-3514.78.1.105

Weyers, P., Mühlberger, A., Hefele, C., and Pauli, P. (2006). Electromyographic responses to static and dynamic avatar emotional facial expressions. Psychophysiology 43, 450–453. doi:10.1111/j.1469-8986.2006.00451.x

Weyers, P., Mühlberger, A., Kund, A., Hess, U., and Pauli, P. (2009). Modulation of facial reactions to avatar emotional faces by nonconscious competition priming. Psychophysiology 46, 328–335. doi:10.1111/j.1469-8986.2008.00771.x

Keywords: virtual, facial, expressions, emotions, validation

Citation: Joyal CC, Jacob L, Cigna M-H, Guay J-P and Renaud P (2014) Virtual faces expressing emotions: an initial concomitant and construct validity study. Front. Hum. Neurosci. 8:787. doi: 10.3389/fnhum.2014.00787

Received: 18 April 2014; Paper pending published: 03 July 2014;

Accepted: 16 September 2014; Published online: 30 September 2014.

Edited by:

Ali Oker, Université de Versailles, France

Reviewed by:

Ouriel Grynszpan, Université Pierre et Marie Curie, France

Copyright: © 2014 Joyal, Jacob, Cigna, Guay and Renaud. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Christian C. Joyal, University of Quebec at Trois-Rivières, 3351 Boul. Des Forges, C.P. 500, Trois-Rivières, QC G9A 5H7, Canada e-mail:Y2hyaXN0aWFuLmpveWFsQHVxdHIuY2E=

†Christian C. Joyal and Laurence Jacob have contributed equally to this work.

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
