- ¹ Social Interaction and Consciousness Lab (SINC), School of Psychology, University of Aberdeen, Aberdeen, UK

- ² Neuroimaging Group, Psychiatry and Psychotherapy Clinic, University Hospital of Cologne, Cologne, Germany

Psychiatric Disorders as Disorders of Social Interaction

Impairments of social interaction and communication are an important, if not essential, component of many psychiatric disorders. In the context of psychopathology, one tends to think predominantly of autism spectrum disorders. However, in DSM-5 (American Psychiatric Association, 2013) many psychopathologies are characterized to some degree by alterations or impairments of interpersonal functioning, for instance schizophrenia [even auditory hallucinations have been linked to social cognition (Bell, 2013)] or personality disorders such as borderline personality disorder (Wright et al., 2013). In different pathologies, the difficulties in social interaction may originate in different impairments; in schizophrenia, for instance, they may be related to a deficit in context processing (Cohen et al., 1999). Still, irrespective of the specific place that social interaction impairments take within different etiologies, the systematic study of interaction patterns could teach us a great deal about how these impairments manifest themselves in patients, how healthy people who interact with patients engage with these patterns, and how the patterns relate to underlying neurobiology. Here, we argue why this should be done and how it could be accomplished.

One important aspect of social interaction that is increasingly shown to be impaired in psychiatric disorders is the recognition and production of gaze behavior, often related to disorder-specific attentional biases (Armstrong and Olatunji, 2012). Schizophrenia has been associated with gaze-related attention deficits (Tso et al., 2012; Dalmaso et al., 2013). A recent study showed that patients with schizophrenia can be distinguished from neurotypical controls with exceptional accuracy on the basis of abnormal eye-movement patterns in simple tasks such as fixation and smooth pursuit (Benson et al., 2012). Depression and bipolar disorder have been associated with prefrontal and cerebellar disturbances of oculomotor control during episodes of major depression, including problems with antisaccade tasks (producing saccades away from a cue) and delayed initiation of saccades made on command (Sweeney et al., 1998). Finally, it is well known that people with autism orient to different kinds of contingencies than neurotypical people do (Gergely, 2001; Klin et al., 2009).

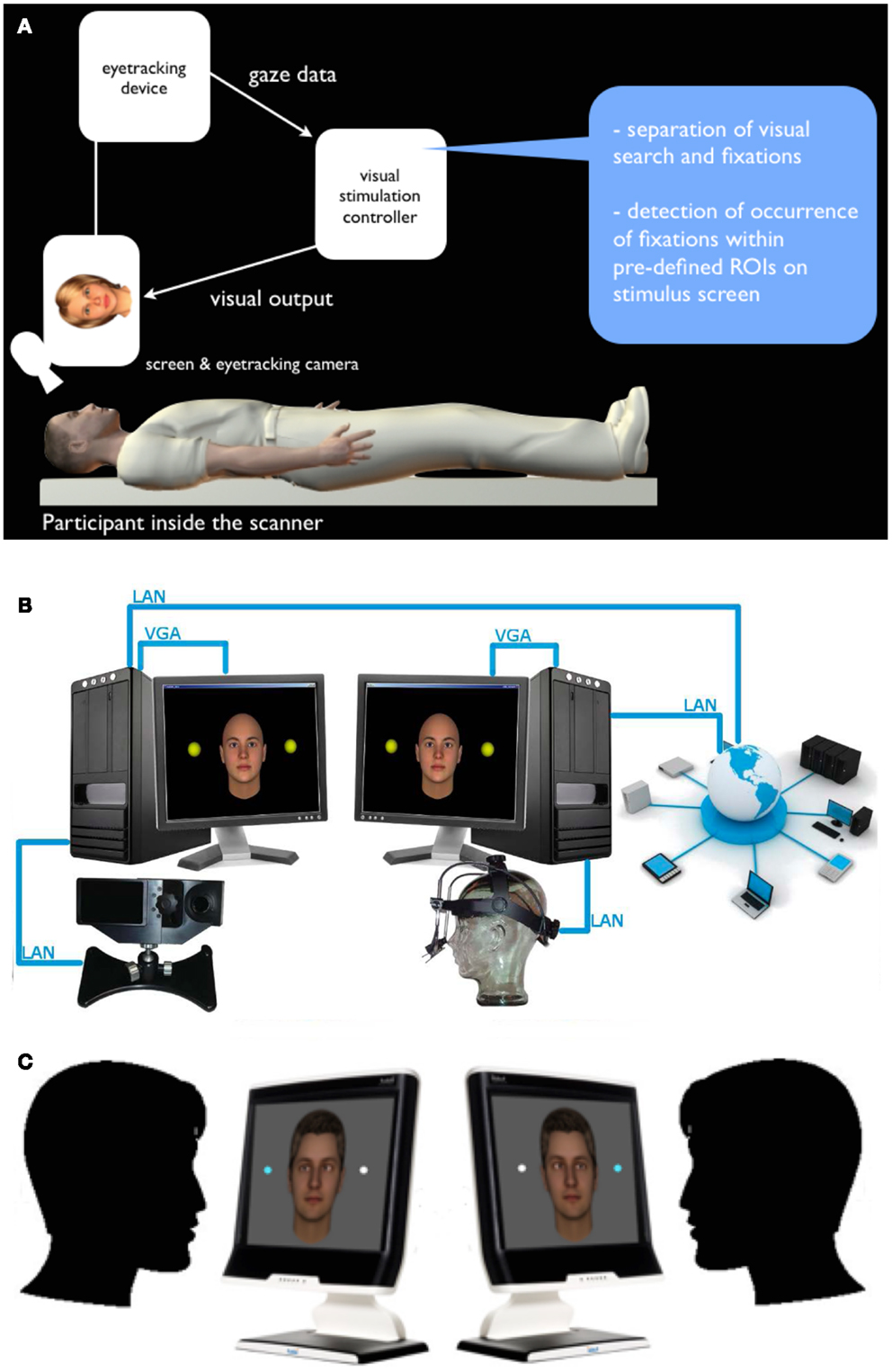

However, most of the experimental paradigms used to establish gaze anomalies are essentially non-interactive: they focus on how particular clinical populations differ in passively perceiving stimuli or social scenes. Likewise, the study of social cognition has only in the last decade begun to incorporate social interaction into its explanation of how we come to understand others and how we manage to navigate a complex social world (Schilbach et al., 2013). This “interactive turn” marks a departure from more traditional approaches, which have emphasized the importance of being able to think about the mental states of others. We have argued that the core problem with social interaction in clinical populations may lie not only in the passive perception of social cues, but also in a skewed experience of how one’s own actions influence the social world and in patients’ abilities to automatically and rapidly generate behavioral adjustments in response to social stimuli (Schilbach et al., 2012, 2013). Recently, methodological advances have allowed for the study of real-time dynamic social coordination in, for instance, children with autism (Fitzpatrick et al., 2013). Full-body social interaction is undoubtedly rich, but that richness is precisely the problem: it makes such interaction difficult to operationalize in a way that quantifies specific aspects of interpersonal coordination. Furthermore, fully interactive approaches become problematic if one wants to use neuroimaging techniques that can access deeper brain structures, such as fMRI, to investigate the neural correlates of interpersonal coordination in ongoing social interactions. This is a real limitation, since we have shown that the experience of self-initiated (gaze-based) contingencies is linked to activity in the brain’s reward system, notably the ventral striatum [Pfeiffer et al. (2014); Figure 1A], and it has been suggested that individuals with autism, for instance, may have difficulties with precisely those rewarding aspects of social interaction (Schmitz et al., 2008; Kohls et al., 2012).

Figure 1. Interactive and dual eye-tracking with virtual anthropomorphic avatars. (A) Interactive eye-tracking setup operationalized for fMRI: a virtual character is shown on screen and can be made “responsive” to the participant’s gaze behavior by means of an algorithm-based, real-time analysis of the eye-tracking data obtained from the participant. (B) Schematic setup: two eye-tracking devices are linked via a local area network (LAN), which allows two participants engaged in a mediated gaze-based interaction to be measured simultaneously (each participant is represented to the other by a virtual character). (C) Two participants engaged in a two-person perceptual decision-making task, in which both are asked to discriminate stimuli while the gaze behavior of the other participant is also visualized on the stimulus screen. Importantly, participants do not just see where the other is looking via a cursor or similar marker, but actually experience the other’s gaze, to which they can dynamically adapt.

Gaze and Avatars to Study Real-Time Social Interaction

Interactive and even dual interactive eye tracking have been around for several years (Richardson and Dale, 2005; Sangin et al., 2008; Carletta et al., 2010; Neider et al., 2010). In interactive eye tracking, a person’s eye gaze is tracked and fed back into the ongoing experiment, not so much as a behavioral response akin to a button press, but as a way of making the course of a trial or experiment contingent on the person’s gaze. In dual interactive eye tracking, the gaze of two participants is tracked simultaneously and fed not only into their own experimental course but also into that of the other person. Because they addressed different experimental questions, these dual eye-tracking setups have either simply collected joint gaze data (non-interactive) or used it to show one person where the other was looking or reading, by means of a pointer or a small rectangle. While we do not deny the merits of these methods, in social interaction one does not see where others are looking via a rectangle overlaid on a scene (though with devices such as Google Glass this may not be far off). Instead, what is minimally needed to emulate social interaction is that one person’s social cues are visible to the other. One logical option is to have people watch live videos of one another (Redcay et al., 2010), but the disadvantage is that facial features provide massive social cues that are not always controllable, and live video only allows the video to be delayed or replaced by an unrelated recorded sequence; it does not allow a systematic manipulation of interaction contingencies.
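As an illustration of what making a trial “contingent upon the person’s gaze” can mean in practice, here is a minimal Python sketch of one common kind of gaze-contingent trigger: the experiment waits until the participant’s gaze has dwelt on a rectangular area of interest (AOI), for example the avatar’s face, for a fixed time, and only then lets the avatar respond. The sample format `(timestamp_ms, x, y)`, the AOI coordinates, and the 500 ms dwell threshold are illustrative assumptions, not parameters of the setups cited above.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Tuple


@dataclass(frozen=True)
class AOI:
    """Axis-aligned rectangular area of interest in screen pixels."""
    name: str
    x0: float
    y0: float
    x1: float
    y1: float

    def contains(self, x: float, y: float) -> bool:
        # Comparisons with NaN (e.g. samples lost to blinks) are False,
        # so missing data counts as "outside the AOI".
        return self.x0 <= x <= self.x1 and self.y0 <= y <= self.y1


def first_dwell(samples: Iterable[Tuple[float, float, float]],
                aoi: AOI, dwell_ms: float = 500.0) -> Optional[float]:
    """Return the timestamp (ms) at which gaze has stayed inside `aoi` for at
    least `dwell_ms`, or None if that never happens.

    `samples` are (timestamp_ms, x, y) tuples in temporal order.
    """
    entered_at: Optional[float] = None
    for t, x, y in samples:
        if aoi.contains(x, y):
            if entered_at is None:
                entered_at = t
            if t - entered_at >= dwell_ms:
                return t  # here the experiment would trigger the avatar's response
        else:
            entered_at = None  # leaving the AOI resets the dwell timer
    return None
```

For example, with a face AOI spanning x 400 to 600 px and y 200 to 400 px, gaze that enters at 100 ms and stays there triggers at the first sample at least 500 ms later. Resetting the timer whenever gaze leaves the AOI is one design choice; a real setup might tolerate brief dropouts instead.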

In order to combine the experimental controllability of depicting the other’s gaze via an on-screen stimulus with the social aspect of perceived gaze, we developed a setup in which a person’s eye gaze either influences an avatar’s gaze behavior [simple interactive (Wilms et al., 2010; Pfeiffer et al., 2011); Figure 1A], or is displayed on the eyes of an avatar on the other person’s screen, and vice versa [dual interactive (Barišic et al., 2013); Figure 1B]. Virtual avatars have been shown to robustly elicit social effects comparable to those elicited by real faces, for instance social inhibition and facilitation, interpersonal distance regulation, social presence, empathy, and pro-social behavior (Bailenson et al., 2003; Hoyt et al., 2003; Bente et al., 2007; Gillath et al., 2008; Slater et al., 2009). Using anthropomorphic virtual characters and making them interactive therefore provides an excellent compromise between ecological validity and experimental control. The dual eye-tracking setup in particular makes it possible to design two-person tasks in which integrating the interaction partner’s gaze behavior may (or may not) become relevant for task performance and for measures of subjective experience (Figure 1C).
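A dual setup of this kind has two technical ingredients that a short sketch can make concrete: each gaze sample has to be serialized and sent to the partner’s machine over the LAN, and the partner’s gaze point has to be turned into an eye orientation for the avatar. The following Python sketch assumes a hypothetical fixed-size binary packet (participant id, timestamp, x, y) that could be sent as a UDP datagram with the standard `socket` module, and a simple geometric mapping in which the avatar’s eyes sit at the center of a 1920 × 1080 px screen viewed from 600 mm. The packet layout, screen geometry, and function names are our assumptions, not a description of the Barišic et al. (2013) implementation.

```python
import math
import struct
from typing import Tuple

# Hypothetical wire format: little-endian uint32 participant id,
# float64 timestamp (s), float32 gaze x and y (screen pixels); 20 bytes in total.
PACKET_FORMAT = "<Idff"
PACKET_SIZE = struct.calcsize(PACKET_FORMAT)


def encode_sample(pid: int, t: float, x: float, y: float) -> bytes:
    """Serialize one gaze sample for transmission to the partner's machine."""
    return struct.pack(PACKET_FORMAT, pid, t, x, y)


def decode_sample(packet: bytes) -> Tuple[int, float, float, float]:
    """Inverse of encode_sample (x and y come back at float32 precision)."""
    return struct.unpack(PACKET_FORMAT, packet)


def gaze_to_eye_angles(x_px: float, y_px: float,
                       screen_w_px: int = 1920, screen_h_px: int = 1080,
                       px_per_mm: float = 3.78, view_dist_mm: float = 600.0
                       ) -> Tuple[float, float]:
    """Map the partner's on-screen gaze point to (yaw, pitch) in degrees for the
    avatar's eyes, assuming the eyes sit at the screen center and look toward
    the point from the partner's viewing distance. Positive yaw = toward the
    right of the partner's screen, positive pitch = upward; any mirroring for
    the viewer's display is left to the rendering code."""
    dx_mm = (x_px - screen_w_px / 2) / px_per_mm
    dy_mm = (screen_h_px / 2 - y_px) / px_per_mm  # screen y grows downward
    yaw = math.degrees(math.atan2(dx_mm, view_dist_mm))
    pitch = math.degrees(math.atan2(dy_mm, view_dist_mm))
    return yaw, pitch
```

Decoding recovers x and y at float32 precision, which is ample for pixel coordinates. The value of 3.78 px/mm corresponds to a 96-dpi display and would need to be measured for an actual monitor.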

Empirical Questions and Pathologies

We see four ways in which interactive and dual setups as described above can be useful for psychiatry. First, a simple interactive eye-tracking setup, in which the experimenter controls the algorithm by which the avatar responds to the person’s gaze, could be used for diagnosis, much like the setup used by Benson et al. (2012), in which participants performed three simple tasks: smooth pursuit, a fixation stability task, and a free-viewing task. The difference is that it would operate along a social dimension: it would tell us to what degree persons are sensitive to action contingencies, or to their disruption.
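One way to give the experimenter control over the algorithm by which the avatar behaves is a single contingency parameter. The following Python sketch is a hypothetical illustration, not the algorithm of Wilms et al. (2010) or Pfeiffer et al. (2011): each time the participant fixates an object, the avatar follows that gaze with probability `contingency` and otherwise looks at a different, randomly chosen object. Comparing participants’ behavior or ratings across contingency levels would then be one possible way to probe sensitivity to action contingencies and their disruption.

```python
import random
from typing import List, Sequence


def avatar_responses(participant_targets: Sequence[str], objects: Sequence[str],
                     contingency: float, seed: int = 0) -> List[str]:
    """For each object the participant fixated, choose where the avatar looks next.

    With probability `contingency` the avatar follows the participant's gaze
    (joint attention); otherwise it looks at a different, randomly chosen object.
    `objects` must contain at least two distinct items.
    """
    if not 0.0 <= contingency <= 1.0:
        raise ValueError("contingency must be in [0, 1]")
    rng = random.Random(seed)  # seeded generator for reproducible sessions
    responses: List[str] = []
    for target in participant_targets:
        if rng.random() < contingency:  # random() is in [0, 1), so 1.0 always follows
            responses.append(target)
        else:
            others = [o for o in objects if o != target]
            responses.append(rng.choice(others))
    return responses


def congruency_rate(participant_targets: Sequence[str],
                    responses: Sequence[str]) -> float:
    """Share of trials on which the avatar looked where the participant looked."""
    hits = sum(p == r for p, r in zip(participant_targets, responses))
    return hits / len(participant_targets)
```

Fixing `seed` makes a session’s random draws reproducible, which helps when re-analyzing or replaying a session.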

Second, dual interactive setups would allow us to start examining whether and how particular psychopathologies are associated with skewed interaction patterns. Indeed, the major advantage of a dual interactive setup is that it allows for a precise quantification of gaze-interaction dynamics, using non-linear methods such as cross-recurrence quantification analysis. Such quantified interaction dynamics have been shown to correlate with person perception (Miles et al., 2009) and social motives (Lumsden et al., 2012), and have revealed a deficit in simultaneous movement synchronization in children with Autism Spectrum Disorder (Fitzpatrick et al., 2013). Thus, it would be possible to tease apart the degree to which patients (a) produce gaze patterns that differ from those of controls (and entrain controls), (b) are differentially sensitive to controls’ gaze patterns, (c) are differentially sensitive to how their gaze affects a control person’s gaze and vice versa, and (d) are differentially sensitive to the communicative signals conveyed by particular variations in the other’s gaze or in the dyadic gaze pattern. Such measures would also lend themselves to neuroimaging studies of the neural correlates of social interaction dynamics in the brain of one or both interaction partners. Moreover, because the setup is virtual, the environment can be manipulated so that the interactors perceive different scenes, which makes it possible to study the degree to which communication breaks down in certain cases.
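Cross-recurrence quantification analysis is often applied to continuous signals after phase-space embedding; for gaze, a common simplification is to code each sample by the AOI being fixated and to count two samples as recurrent when both partners fixate the same AOI. The following pure-Python sketch, under that assumption, computes a categorical cross-recurrence matrix, the recurrence rate, determinism (the share of recurrent points lying on diagonal lines of at least `l_min` samples), and a diagonal-wise recurrence profile that indicates who tends to lead. The function names are ours; for real data an established, optimized CRQA implementation would be preferable.

```python
from typing import Dict, List, Sequence

Matrix = List[List[int]]


def cross_recurrence(a: Sequence[str], b: Sequence[str]) -> Matrix:
    """Cell (i, j) is 1 when person A's AOI at sample i equals B's AOI at sample j."""
    return [[1 if ai == bj else 0 for bj in b] for ai in a]


def recurrence_rate(cr: Matrix) -> float:
    """Proportion of recurrent cells in the whole matrix."""
    return sum(map(sum, cr)) / (len(cr) * len(cr[0]))


def determinism(cr: Matrix, l_min: int = 2) -> float:
    """Share of recurrent points lying on diagonal lines of length >= l_min."""
    n, m = len(cr), len(cr[0])
    total = sum(map(sum, cr))
    if total == 0:
        return 0.0
    on_lines = 0
    for offset in range(-(n - 1), m):  # offset = j - i identifies a diagonal
        i = max(0, -offset)
        j = i + offset
        run = 0
        while i < n and j < m:
            if cr[i][j]:
                run += 1
            else:
                if run >= l_min:
                    on_lines += run
                run = 0
            i += 1
            j += 1
        if run >= l_min:  # a line that reaches the matrix edge
            on_lines += run
    return on_lines / total


def diagonal_profile(a: Sequence[str], b: Sequence[str],
                     max_lag: int) -> Dict[int, float]:
    """Recurrence rate per lag; positive lag = B fixates A's AOI `lag` samples later."""
    profile: Dict[int, float] = {}
    for lag in range(-max_lag, max_lag + 1):
        pairs = [(a[i], b[i + lag]) for i in range(len(a)) if 0 <= i + lag < len(b)]
        profile[lag] = sum(x == y for x, y in pairs) / len(pairs) if pairs else 0.0
    return profile


# Example: B repeats A's AOI sequence one sample later, so A leads.
a = ["x", "y", "z", "x", "y"]
b = ["w", "x", "y", "z", "x"]
profile = diagonal_profile(a, b, max_lag=1)
```

In the example, the profile peaks at lag +1 (`profile[1] == 1.0`): B fixates whatever A fixated one sample earlier. Asymmetries and determinism values of this kind are the sort of quantities that could be compared between dyad types.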

Third, a dual setup would allow for quantification not simply of a clinically significant aberration in gaze pattern, but rather of the diagnostic intuition: what does a clinician do and how does the patient have to react in order to be diagnosed as belonging to a particular clinical group?

Finally, a dynamically interactive setup could be implemented therapeutically. For instance, the existing VIGART system [virtual interactive system with gaze-sensitive adaptive response technology (Lahiri et al., 2011a,b)] has participants interact with a virtual avatar while their gaze is monitored in real time. Following the interaction, participants receive feedback about their gaze behavior, which helps adolescents with Autism Spectrum Disorder improve their eye gaze patterns. A fully dual interactive eye-tracking setup would allow such feedback to be given in real time, via indices computed not just from gaze behavior but from gaze contingencies.
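The text does not specify what a real-time index of gaze contingencies would be; the following Python sketch shows one hypothetical candidate: a running gaze-following rate, i.e., the share of the partner’s (or avatar’s) recent gaze shifts to an AOI that the participant followed to the same AOI within a response window. The class name, the 1000 ms window, and the 20-event history are illustrative assumptions, not features of VIGART or of any published system.

```python
from collections import deque
from typing import Deque, Optional, Tuple


class GazeFollowingIndex:
    """Running share of the partner's gaze shifts that the participant followed
    to the same AOI within `window_ms` (a hypothetical contingency index)."""

    def __init__(self, window_ms: float = 1000.0, history: int = 20) -> None:
        self.window_ms = window_ms
        # Partner shifts still awaiting a response, oldest first: (time_ms, aoi).
        self.pending: Deque[Tuple[float, str]] = deque()
        # Outcomes of the most recent `history` shifts: True = followed.
        self.outcomes: Deque[bool] = deque(maxlen=history)

    def partner_shift(self, t_ms: float, aoi: str) -> None:
        """Register that the partner's gaze moved to `aoi` at time `t_ms`."""
        self._expire(t_ms)
        self.pending.append((t_ms, aoi))

    def participant_fixation(self, t_ms: float, aoi: str) -> None:
        """Register a participant fixation; it counts as following the oldest
        pending partner shift to the same AOI that is still inside the window."""
        self._expire(t_ms)
        for k, (_, target) in enumerate(self.pending):
            if target == aoi:
                del self.pending[k]  # safe: iteration stops right here
                self.outcomes.append(True)
                break

    def _expire(self, now_ms: float) -> None:
        # Shifts not followed within the window are recorded as not followed.
        while self.pending and now_ms - self.pending[0][0] > self.window_ms:
            self.pending.popleft()
            self.outcomes.append(False)

    def value(self, now_ms: float) -> Optional[float]:
        """Current index in [0, 1], or None before any shift has been resolved."""
        self._expire(now_ms)
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)
```

Updated on every tracker event, `value()` could drive continuous feedback (for example, a visual cue that strengthens as the index rises) rather than a summary shown after the session.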

Thus, just as dual setups will allow researchers to study social cognition in a truly social setting, such setups, particularly when implemented with eye tracking and virtual avatars, would allow us to look at psychopathology in terms of clinical symptoms that are embedded in (and perhaps reinforced by) the social environment, as both partners try to engage in a social interaction for which each has a different “sketchbook.” Indeed, persons with autism often report problems in interacting with non-autistic persons, but far fewer with other persons with autism. Such questions can only be addressed in interactive setups, in which the use of virtual avatars provides many advantages.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

American Psychiatric Association. (2013). Diagnostic and Statistical Manual of Mental Disorders, 5th Edn. Arlington, VA: American Psychiatric Publishing.

Armstrong, T., and Olatunji, B. O. (2012). Eye tracking of attention in the affective disorders: a meta-analytic review and synthesis. Clin. Psychol. Rev. 32, 704–723. doi:10.1016/j.cpr.2012.09.004

Bailenson, J. N., Blascovich, J., Beall, A. C., and Loomis, J. M. (2003). Interpersonal distance in immersive virtual environments. Pers. Soc. Psychol. Bull. 29, 819–833. doi:10.1177/0146167203029007002

Barišic, I., Timmermans, B., Pfeiffer, U. J., Bente, G., Vogeley, K., and Schilbach, L. (2013). “Using dual eye-tracking to investigate real time social interactions,” in Proceedings of the “Gaze Interaction in the Post-WIMP World” Workshop at CHI2013. Available from: http://gaze-interaction.net/wp-system/wp-content/uploads/2013/04/BTP+13.pdf

Bell, V. (2013). A community of one: social cognition and auditory verbal hallucinations. PLoS Biol. 11:e1001723. doi:10.1371/journal.pbio.1001723

Benson, P. J., Beedie, S. A., Shephard, E., Giegling, I., Rujescu, D., and St Clair, D. (2012). Simple viewing tests can detect eye movement abnormalities that distinguish schizophrenia cases from controls with exceptional accuracy. Biol. Psychiatry 72, 716–724. doi:10.1016/j.biopsych.2012.04.019

Bente, G., Eschenburg, F., and Krämer, N. C. (2007). “Virtual gaze. A pilot study on the effects of computer simulated gaze in avatar-based conversations,” in Virtual Reality, ed. R. Shumaker (Berlin: Springer), 185–194.

Carletta, J., Hill, R. L., Nicol, C., Taylor, T., de Ruiter, J. P., and Bard, E. G. (2010). Eyetracking for two-person tasks with manipulation of a virtual world. Behav. Res. Methods 42, 254–265. doi:10.3758/BRM.42.1.254

Cohen, J. D., Barch, D. M., Carter, C., and Servan-Schreiber, D. (1999). Context-processing deficits in schizophrenia: converging evidence from three theoretically motivated cognitive tasks. J. Abnorm. Psychol. 108, 120–133. doi:10.1037/0021-843X.108.1.120

Dalmaso, M., Galfano, G., Tarqui, L., Forti, B., and Castelli, L. (2013). Is social attention impaired in schizophrenia? Gaze, but not pointing gestures, is associated with spatial attention deficits. Neuropsychology 27, 608–613. doi:10.1037/a0033518

Fitzpatrick, P., Richardson, M. J., and Schmidt, R. C. (2013). Dynamical methods for evaluating the time-dependent unfolding of social coordination in children with autism. Front. Integr. Neurosci. 7:21. doi:10.3389/fnint.2013.00021

Gergely, G. (2001). The obscure object of desire: “nearly, but clearly not, like me”: contingency preference in normal children versus children with autism. Bull. Menninger Clin. 65, 411–426. doi:10.1521/bumc.65.3.411.19853

Gillath, O., McCall, C., Shaver, P. R., and Blascovich, J. (2008). What can virtual reality teach us about prosocial tendencies in real and virtual environments? Media Psychol. 11, 259–282. doi:10.1080/15213260801906489

Hoyt, C. L., Blascovich, J., and Swinth, K. R. (2003). Social inhibition in immersive virtual environments. Presence 12, 183–195. doi:10.1162/105474603321640932

Klin, A., Lin, D. J., Gorrindo, P., Ramsay, G., and Jones, W. (2009). Two-year-olds with autism orient to non-social contingencies rather than biological motion. Nature 459, 257–261. doi:10.1038/nature07868

Kohls, G., Chevallier, C., Troiani, V., and Schultz, R. T. (2012). Social “wanting” dysfunction in autism: neurobiological underpinnings and treatment implications. J. Neurodev. Disord. 4, 10. doi:10.1186/1866-1955-4-10

Lahiri, U., Trewyn, A., Warren, Z., and Sarkar, N. (2011a). Dynamic eye gaze and its potential in virtual reality based applications for children with autism spectrum disorders. Autism Open Access 1, 1000101. doi:10.4172/2165-7890.1000101

Lahiri, U., Warren, Z., and Sarkar, N. (2011b). Design of a gaze-sensitive virtual social interactive system for children with autism. IEEE Trans. Neural. Syst. Rehabil. Eng. 19, 443–452. doi:10.1109/TNSRE.2011.2153874

Lumsden, J., Miles, L. K., Richardson, M. J., Smith, C. A., and Macrae, C. N. (2012). Who syncs? Social motives and interpersonal coordination. J. Exp. Soc. Psychol. 48, 746–751. doi:10.1016/j.jesp.2011.12.007

Miles, L. K., Nind, L. K., and Macrae, C. N. (2009). The rhythm of rapport: interpersonal synchrony and social perception. J. Exp. Soc. Psychol. 45, 585–589. doi:10.1016/j.jesp.2009.02.002

Neider, M. B., Chen, X., Dickinson, C. A., Brennan, S. E., and Zelinsky, G. J. (2010). Coordinating spatial referencing using shared gaze. Psychon. Bull. Rev. 17, 718–724. doi:10.3758/PBR.17.5.718

Pfeiffer, U. J., Schilbach, L., Timmermans, B., Kuzmanovic, B., Georgescu, A. L., Bente, G., et al. (2014). Why we interact: activation of the ventral striatum during real-time social interaction. Neuroimage 101, 124–137. doi:10.1016/j.neuroimage.2014.06.061

Pfeiffer, U. J., Timmermans, B., Bente, G., Vogeley, K., and Schilbach, L. (2011). A non-verbal Turing test: differentiating mind from machine in gaze-based social interaction. PLoS ONE 6:e27591. doi:10.1371/journal.pone.0027591

Redcay, E., Dodell-Feder, D., Pearrow, M. J., Mavros, P. L., Kleiner, M., Gabrieli, J. D., et al. (2010). Live face-to-face interaction during fMRI: a new tool for social cognitive neuroscience. Neuroimage 50, 1639–1647. doi:10.1016/j.neuroimage.2010.01.052

Richardson, D. C., and Dale, R. (2005). Looking to understand: the coupling between speakers’ and listeners’ eye movements and its relationship to discourse comprehension. Cogn. Sci. 29, 1045–1060. doi:10.1207/s15516709cog0000_29

Sangin, M., Molinari, G., Nüssli, M.-A., and Dillenbourg, P. (2008). “How learners use awareness cues about their peer’s knowledge? Insights from synchronized eye-tracking data,” in Proceedings of the Eighth International Conference for the Learning Sciences, eds G. Kanselaar, J. van Merriënboer, P. Kirschner, and T. de Jong (Utrecht: International Conference of the Learning Sciences), 287–294.

Schilbach, L., Eickhoff, S. B., Cieslik, E., Kuzmanovic, B., and Vogeley, K. (2012). Shall we do this together? Social gaze influences action control in a comparison group, but not in individuals with high-functioning autism. Autism 16, 151–162. doi:10.1177/1362361311409258

Schilbach, L., Timmermans, B., Reddy, V., Costall, A., Bente, G., Schlicht, T., et al. (2013). Toward a second-person neuroscience. Behav. Brain Sci. 36, 393–414; discussion 414–41; response 441–62. doi:10.1017/S0140525X12000660

Schmitz, N., Rubia, K., van Amelsvoort, T., Daly, E., Smith, A., and Murphy, D. G. (2008). Neural correlates of reward in autism. Br. J. Psychiatry 192, 19–24. doi:10.1192/bjp.bp.107.036921

Slater, M., Lotto, B., Arnold, M. M., and Sanchez-Vives, M. V. (2009). How we experience immersive virtual environments: the concept of presence and its measurement. Anu. Psicol. 40, 193–210.

Sweeney, J. A., Strojwas, M. H., Mann, J. J., and Thase, M. E. (1998). Prefrontal and cerebellar abnormalities in major depression. Evidence from oculomotor studies. Biol. Psychiatry 43, 584–594. doi:10.1016/S0006-3223(97)00485-X

Tso, I. F., Mui, M. L., Taylor, S. F., and Deldin, P. J. (2012). Eye-contact perception in schizophrenia: relationship with symptoms and socioemotional functioning. J. Abnorm. Psychol. 121, 616–627. doi:10.1037/a0026596

Wilms, M., Schilbach, L., Pfeiffer, U., Bente, G., Fink, G. R., and Vogeley, K. (2010). It’s in your eyes – using gaze contingent stimuli to create truly interactive paradigms for social cognitive and affective neuroscience. Soc. Cogn. Affect. Neurosci. 5, 98–107. doi:10.1093/scan/nsq024

Keywords: eye tracking, social interaction, anthropomorphic avatars, autism, schizophrenia

Citation: Timmermans B and Schilbach L (2014) Investigating alterations of social interaction in psychiatric disorders with dual interactive eye tracking and virtual faces. Front. Hum. Neurosci. 8:758. doi: 10.3389/fnhum.2014.00758

Received: 21 April 2014; Accepted: 08 September 2014;

Published online: 23 September 2014.

Edited by:

Ouriel Grynszpan, Université Pierre et Marie Curie, France

Reviewed by:

Daniel Belyusar, Massachusetts Institute of Technology, USA

Gnanathushran Rajendran, Heriot-Watt University, UK

Copyright: © 2014 Timmermans and Schilbach. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: bert.timmermans@abdn.ac.uk

Bert Timmermans
