ORIGINAL RESEARCH article

Front. Hum. Neurosci., 25 November 2013

Sec. Speech and Language

Volume 7 - 2013 | https://doi.org/10.3389/fnhum.2013.00809

This article is part of the Research Topic "What makes written words so special to the brain?"

Objectives: Functional magnetic resonance imaging (fMRI) is a reliable and non-invasive method with which to localize language function in pre-surgical planning. In clinical practice, visual stimulus presentation is often difficult or impossible because of the patient's restricted language or attention abilities. Therefore, our aim was to investigate modality-specific differences between visual and auditory stimulus presentation.

Methods: Ten healthy subjects participated in an fMRI study comprising two experiments, one with visual and one with auditory stimulus presentation. In both experiments, two language paradigms used in clinical practice (one for language comprehension and one for language production) were investigated. In addition to standard data analysis by means of the general linear model (GLM), independent component analysis (ICA) was performed to obtain more detailed information on language processing networks.

Results: GLM analysis revealed modality-specific brain activation for both language paradigms for the contrast visual > auditory in the area of the intraparietal sulcus and the hippocampus, two areas related to attention and working memory. Using group ICA, a language network was detected for both paradigms independent of stimulus presentation modality. The investigation of language lateralization revealed no significant differences between modalities. Visually presented stimuli further activated an attention-shift network, which could not be identified for auditorily presented language.

Conclusion: The results of this study indicate that visually presented language stimuli additionally activate an attention-shift network. These findings may provide important information for pre-surgical planning aimed at preserving reading abilities after brain surgery and may thereby help to improve surgical outcomes. Our findings suggest that the presentation modality for language paradigms should be adapted to the individual clinical indication.

Brain surgery that involves eloquent cortical areas, particularly in brain tumor or epilepsy patients, remains a challenging task (Spena et al., 2010). Preservation of neuronal functions after surgery is one of the most important goals for neurosurgeons. Accurate mapping of eloquent cortical areas ensures a sufficiently extensive and safe resection of brain parenchyma. Functional magnetic resonance imaging (fMRI) has been established as a reliable and noninvasive tool for mapping cognitive and executive functions prior to brain surgery [for review see Dimou et al. (2013)]. Reliable localization of language abilities is of great importance in pre-surgical planning, as language is an essential factor in quality of life. The gold standard for intraoperative language localization and neuronavigation is direct electrocortical stimulation (ECS; Sunaert, 2006). However, this method is time-consuming during surgery and is not applicable in all cases, as it requires the cooperation of the awake patient, which not all patients are able to provide.

Patients who undergo fMRI examination prior to neurosurgery often suffer from disease-driven restricted language abilities or have difficulties in focusing their attention on the task for the entire measurement period. Reading is especially challenging for patients undergoing pre-surgical planning, and therefore, stimuli are often presented auditorily to map language abilities (Dimou et al., 2013). However, the manner in which stimuli are presented might influence the spatial representation of processing networks, as already hypothesized by Carpentier et al. (2001), who investigated differences between auditory and visual stimulus presentation in language-related areas.

In clinical practice, two different language paradigms, one for language perception and one for language production, are usually presented visually to map language-related areas. The present study aimed to investigate the processing networks related to the presentation modality of these exact paradigms by testing auditory and visual stimulus conditions. Based on previous findings and on clinical observations that visually presented fMRI stimuli are particularly challenging for patients, we were interested in the specific characteristics of networks that process written language. We therefore hypothesized that visually presented language stimuli would additionally require an attention-shift network (Corbetta et al., 1998; Corbetta and Shulman, 2002).

To achieve a conclusive comparison of both presentation modalities, two complementary analysis approaches were used to account for temporal and spatial network patterns: a data-driven analysis using independent component analysis (ICA) to test for functionally connected processing networks, and a hypothesis-driven analysis using a general linear model (GLM) to capture purely stimulus-driven activity. Combining these two methods provides complementary information about the processing and representation of language-related areas. Because the shift of attention induced by different stimulus modalities is not yet well understood, ICA is an appropriate method: it does not require an a priori model, as it separates activation based on spatial independence rather than on temporal correlation with a predefined stimulus time course.

Ten healthy right-handed subjects (four male, six female; mean age 22 years) participated in this study. All participants completed two fMRI experiments (Experiments 1 and 2), each comprising two scanning sessions. All subjects had normal or corrected-to-normal vision and no history of psychiatric or neurological disease. All participants were native speakers of German and had a comparable educational background. Prior to inclusion, all participants were informed about the aim of the study and gave written informed consent. The study was approved by the Ethics Committee of the Medical University of Vienna.

To control for the influence of language abilities on neural activation within the language network, two language tests were administered prior to the fMRI measurements. The first was a sentence completion task, a subtest of the Intelligence Structure Test (IST-2000-R; Liepmann et al., 2007), which assesses semantic decision-making. This subtest consists of 20 sentences, each missing its last word. The participant is instructed to choose one of five given words to complete the sentence correctly. In addition, all participants completed the Regensburg Word Fluency Test (RWT; Aschenbrenner et al., 2000), which assesses verbal fluency and reflects semantic memory. Subjects had to produce as many words as possible belonging to a given category. This category can be semantic, such as fruits or animals, or phonemic, such as words beginning with the letter M (e.g., mother, man, mouse).

In Experiment 1, subjects were visually presented with two different language paradigms using an MR-compatible visual stimulation system (NordicNeuroLab, Bergen, NO).

1. Verb generation task: The first language paradigm was a covert verb generation task. Frequent German nouns were visually presented in white letters on a black screen. A block design with 30 s blocks was used. During active blocks, 15 nouns were presented for 1 s each (e.g., door, book, ball). Subjects were instructed to think of all verbs they associated with the presented noun until the next word appeared (Petersen et al., 1988; Holland et al., 2001). During baseline blocks, participants were asked to fixate on hash signs presented on the screen.

2. Phrases task: In the second language paradigm, syntactically simple and correct sentences in canonical German word order (subject–verb–object) were presented in white letters on a black screen. During active blocks, a sentence was presented every 2 s; half of the sentences contained a semantically inappropriate object (e.g., semantically appropriate: Das Mädchen spielt Klavier. (Engl.: 'The girl plays the piano.'); semantically inappropriate: Der Dichter dichtet ein Auto. (Engl.: 'The poet composes a car.')). During baseline, subjects were instructed to look at white hash signs presented on the black screen [stimuli modified from Foki et al. (2008)].

Experiment 2 consisted of the same two language paradigms. Instead of being presented visually, words and sentences were presented auditorily via MR-compatible headphones. Block durations and presentation times were identical to those of Experiment 1. During active blocks, 15 nouns or sentences were presented. During baseline, participants heard a tone every 2 s.

Measurements were performed on a 3T TIM Trio system (Siemens Medical Solutions, Erlangen, Germany) using a 12-channel head coil. fMRI data were acquired using single-shot gradient-recalled echo-planar imaging (EPI). Twenty slices (4 mm thickness, 1 mm gap) with an FOV of 210 × 210 mm and a TE/TR of 42/2000 ms were acquired. Slices were aligned parallel to the line connecting the anterior and posterior commissures. All subjects underwent four scanning sessions, each lasting 5 min: two with visually presented language paradigms (Experiment 1) and two with auditorily presented paradigms (Experiment 2).
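The acquisition parameters imply a few derived quantities that are useful when setting up the design matrix. The following minimal Python sketch computes them. It assumes that each session lasts exactly 300 s with no discarded dummy volumes and that 30 s active and baseline blocks alternate without gaps; the 2 s stimulus spacing for the verb generation task is inferred from 15 nouns per 30 s block rather than stated explicitly.

```python
# Hedged sketch: quantities implied by the reported protocol.
# Assumptions (not stated in the text): each session is exactly 300 s,
# no dummy scans are discarded, and blocks alternate without gaps.
TR_S = 2.0                 # TR = 2000 ms
SESSION_S = 5 * 60         # each scanning session lasts 5 min
BLOCK_S = 30.0             # 30 s blocks
STIMULUS_INTERVAL_S = 2.0  # one noun/sentence (or tone) every 2 s (inferred for verb generation)
N_SLICES, SLICE_THICKNESS_MM, SLICE_GAP_MM = 20, 4.0, 1.0


def protocol_summary():
    """Return derived counts and the slab coverage implied by the parameters."""
    return {
        "volumes_per_session": int(SESSION_S / TR_S),
        "blocks_per_session": int(SESSION_S / BLOCK_S),
        "volumes_per_block": int(BLOCK_S / TR_S),
        "stimuli_per_active_block": int(BLOCK_S / STIMULUS_INTERVAL_S),
        # slab coverage = 20 slices plus the 19 gaps between neighbouring slices
        "slab_coverage_mm": N_SLICES * SLICE_THICKNESS_MM + (N_SLICES - 1) * SLICE_GAP_MM,
    }


summary = protocol_summary()
print(summary)
```

Under these assumptions each session yields 150 volumes in ten 30 s blocks of 15 volumes each, consistent with the 15 stimuli per active block described above, and the 20 slices cover a slab of 99 mm.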

Stimulus fixation and eye movements were recorded using an MR-compatible eye-tracker (ViewPoint EyeTracker, Arrington Research, Scottsdale, AZ) throughout all the measurements of Experiment 1.

Preprocessing of fMRI data, including motion correction, spatial normalization to an MNI template, and spatial smoothing, was performed using SPM8 (http://www.fil.ion.ucl.ac.uk/spm/) implemented in MATLAB (Matlab 7.14.0, Release 2012a, MathWorks Inc., Natick, MA, USA). First-level analysis was performed separately for each paradigm by constructing a GLM with the block onsets as regressors. Head movement effects were modeled by including the six motion parameters as additional regressors. The contrast active > baseline was computed for both paradigms in Experiments 1 and 2. To compare visually vs. auditorily presented language, the two contrasts visual > auditory and auditory > visual were calculated at the group level.
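The first-level model described above can be illustrated with a small numerical sketch. It is not the SPM8 code: it assumes an SPM-like double-gamma HRF (gamma shapes 6 and 16, undershoot ratio 1/6), an alternating block order starting with an active block, and ordinary least squares without SPM's high-pass filtering or temporal autocorrelation modelling. Random numbers stand in for the six realignment parameters, and the helper names (`canonical_hrf`, `block_regressor`, `fit_glm`) are hypothetical.

```python
import math

import numpy as np


def canonical_hrf(tr, duration=32.0):
    """Double-gamma HRF sampled every `tr` seconds, normalized to sum 1.

    Assumed SPM-like parameters: response gamma with shape 6, undershoot
    gamma with shape 16, undershoot ratio 1/6, both with unit scale.
    """
    t = np.arange(0.0, duration, tr)
    response = t ** 5 * np.exp(-t) / math.gamma(6)
    undershoot = t ** 15 * np.exp(-t) / math.gamma(16)
    hrf = response - undershoot / 6.0
    return hrf / hrf.sum()


def block_regressor(n_vols, tr, block_s, active_first=True):
    """Alternating active/baseline boxcar convolved with the HRF."""
    vols_per_block = int(round(block_s / tr))
    block_index = np.arange(n_vols) // vols_per_block
    boxcar = (block_index % 2 == (0 if active_first else 1)).astype(float)
    return np.convolve(boxcar, canonical_hrf(tr))[:n_vols]


def fit_glm(y, X, contrast):
    """OLS fit of y = X @ beta + e and the t statistic for contrast' @ beta."""
    beta, _, rank, _ = np.linalg.lstsq(X, y, rcond=None)
    residuals = y - X @ beta
    sigma2 = residuals @ residuals / (X.shape[0] - rank)
    c = np.asarray(contrast, dtype=float)
    se = np.sqrt(sigma2 * (c @ np.linalg.pinv(X.T @ X) @ c))
    return beta, float(c @ beta / se)


# Toy voxel: 150 volumes (5 min at TR = 2 s), 30 s blocks, six stand-in
# motion regressors (random numbers, purely hypothetical) and a constant.
rng = np.random.default_rng(0)
n_vols, tr = 150, 2.0
task = block_regressor(n_vols, tr, block_s=30.0)
motion = rng.normal(size=(n_vols, 6))
X = np.column_stack([task, motion, np.ones(n_vols)])
y = 2.0 * task + 0.1 * motion[:, 0] + rng.normal(size=n_vols)
beta, t_active = fit_glm(y, X, contrast=[1, 0, 0, 0, 0, 0, 0, 0])  # active > baseline
print(round(beta[0], 2), round(t_active, 1))
```

In a real analysis the contrast t value is computed for every voxel, and the motion columns come from the realignment step rather than from a random generator.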

Additional second-level group analyses were carried out for both paradigms (phrases and verb generation) and both experiments (auditory and visual presentation) using probabilistic ICA as implemented in MELODIC (Multivariate Exploratory Linear Decomposition into Independent Components) version 3.10, part of FSL (FMRIB's Software Library, www.fmrib.ox.ac.uk/fsl), with FastICA as the estimation algorithm (Beckmann and Smith, 2004). Non-brain voxels were masked, and voxel-wise de-meaning of the data and normalization of the voxel-wise variance were carried out. The pre-processed data sets were whitened and projected into an n-dimensional subspace using probabilistic principal component analysis, in which the number of dimensions was estimated using the Laplace approximation to the Bayesian evidence of the model order (Minka, 2000; Beckmann and Smith, 2004). The estimated dimensionality n was 18 for both visually presented paradigms, 24 for the auditory phrases task, and 25 for the auditory verb generation task. For the optimization of the non-Gaussian sources, the contrast function and convergence thresholds suggested by Hyvärinen et al. (2001) were used. Estimated component maps were divided by the standard deviation of the residual noise and thresholded by fitting a mixture model to the histogram of intensity values (Beckmann and Smith, 2004). All group ICA network components were assessed by visual inspection of their spatial distribution.
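The core decomposition can be sketched as spatial ICA on a time × voxel matrix: voxel-wise de-meaning (and, optionally, variance normalization), PCA whitening to n components, and symmetric FastICA with the log-cosh contrast (nonlinearity tanh) described by Hyvärinen et al. (2001). This is a textbook FastICA written in NumPy for illustration, not MELODIC: the model order n is passed in explicitly instead of being estimated with the Laplace approximation, there is no probabilistic noise model, and no mixture-model thresholding is applied. All function names are hypothetical.

```python
import numpy as np


def preprocess(X, normalize_variance=True):
    """X: (n_time, n_voxels). Voxel-wise de-meaning and optional variance normalization."""
    X = X - X.mean(axis=0, keepdims=True)
    if normalize_variance:
        std = X.std(axis=0, keepdims=True)
        X = X / np.where(std > 0, std, 1.0)
    return X


def pca_whiten(X, n_components):
    """Reduce the time dimension to n_components principal components and whiten.

    Voxels are treated as samples (spatial ICA), so the result has shape
    (n_components, n_voxels) with identity covariance across its rows.
    """
    Xc = X - X.mean(axis=1, keepdims=True)
    cov = Xc @ Xc.T / Xc.shape[1]
    eigvals, eigvecs = np.linalg.eigh(cov)
    order = np.argsort(eigvals)[::-1][:n_components]
    whitening = (eigvecs[:, order] / np.sqrt(eigvals[order])).T
    return whitening @ Xc, whitening


def symmetric_decorrelation(W):
    """Return (W W^T)^(-1/2) W so that the rows of W are orthonormal."""
    s, U = np.linalg.eigh(W @ W.T)
    return U @ np.diag(1.0 / np.sqrt(s)) @ U.T @ W


def fastica(Z, max_iter=500, tol=1e-6, seed=0):
    """Symmetric FastICA with the log-cosh contrast (g = tanh) on whitened data Z."""
    n, n_samples = Z.shape
    W = symmetric_decorrelation(np.random.default_rng(seed).normal(size=(n, n)))
    for _ in range(max_iter):
        G = np.tanh(W @ Z)
        # fixed-point update: E{g(WZ) Z^T} - diag(E{g'(WZ)}) W
        W_new = G @ Z.T / n_samples - np.mean(1.0 - G ** 2, axis=1)[:, None] * W
        W_new = symmetric_decorrelation(W_new)
        converged = np.max(np.abs(np.abs(np.diag(W_new @ W.T)) - 1.0)) < tol
        W = W_new
        if converged:
            break
    return W @ Z, W


# Toy "spatial ICA": two super-Gaussian maps mixed by random time courses.
rng = np.random.default_rng(42)
S_true = rng.laplace(size=(2, 5000))   # 2 spatial maps x 5000 voxels
A_true = rng.normal(size=(20, 2))      # 20 time points x 2 sources
X = A_true @ S_true + 0.01 * rng.normal(size=(20, 5000))
Z, whitening = pca_whiten(preprocess(X, normalize_variance=False), n_components=2)
S_est, W = fastica(Z)
time_courses = np.linalg.pinv(W @ whitening)   # (20, 2) estimated time courses
match = np.abs(np.corrcoef(np.vstack([S_true, S_est]))[:2, 2:])
print(np.round(match, 3))
```

The toy example uses `normalize_variance=False` because rescaling each voxel by its own standard deviation slightly distorts the linear mixing model of this synthetic data; for the real data, the voxel-wise variance normalization described above was applied. Components are recovered only up to sign and order, which is why absolute correlations are compared.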

Additional group ICAs were carried out by jointly submitting the visual and auditory data sets of each paradigm to a single group analysis. After group ICA as described above, the set of spatial maps from the group-average analysis was used to generate subject-specific versions of the spatial maps and associated time series using the dual regression approach (version 0.5), part of FSL (Beckmann et al., 2009; Filippini et al., 2009). First, for each subject, the group-average set of spatial maps was regressed (as spatial regressors in a multiple regression) into the subject's 4D space-time dataset. This resulted in a set of subject-specific time series, one per group-level spatial map. Next, those time series were regressed (as temporal regressors, again in a multiple regression) into the same 4D dataset, resulting in a set of subject-specific spatial maps, one per group-level spatial map. The corresponding subject-specific spatial IC maps for both conditions were then exported to SPM8 for statistical testing. For the second-level analysis, t-tests were performed separately for each condition (p < 0.05, FWE corrected). Language-related areas common to both presentation modalities were investigated with two conjunction analyses (Friston et al., 1999), one for visual and auditory presentation of the phrases task and one for the two modalities of the verb generation task (p < 0.05, FWE corrected).
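The two regression stages of dual regression, and a conjunction across modalities, can be sketched with ordinary least squares in NumPy. This is a minimal illustration under stated assumptions: FSL's `dual_regression` script applies further demeaning and optional normalization steps that are not reproduced here, and the conjunction shown is the minimum-statistic form (a voxel survives only if it exceeds the threshold in every map). Whether this matches the exact SPM8 conjunction settings used in the study is an assumption, and the threshold in the example is purely illustrative. Function names are hypothetical.

```python
import numpy as np


def dual_regression(data, group_maps):
    """Dual regression for one subject (minimal OLS sketch).

    data:       (n_time, n_voxels) preprocessed 4D data, voxels flattened
    group_maps: (n_components, n_voxels) group-level spatial IC maps
    returns:    (time_courses, subject_maps) with shapes
                (n_time, n_components) and (n_components, n_voxels)
    """
    # Stage 1 (spatial regression): every volume is modelled as a weighted sum
    # of the group maps; the weights over time are the subject time courses.
    coef, *_ = np.linalg.lstsq(group_maps.T, data.T, rcond=None)
    time_courses = coef.T
    # Stage 2 (temporal regression): every voxel time series is modelled as a
    # weighted sum of those time courses; the weights are the subject maps.
    subject_maps, *_ = np.linalg.lstsq(time_courses, data, rcond=None)
    return time_courses, subject_maps


def conjunction_min(t_maps, threshold):
    """Minimum-statistic conjunction: True where every map exceeds threshold."""
    return np.min(np.stack(t_maps), axis=0) > threshold


# Noise-free toy subject built from three known maps and time courses.
rng = np.random.default_rng(1)
true_maps = rng.normal(size=(3, 500))
true_tc = rng.normal(size=(40, 3))
tc, maps = dual_regression(true_tc @ true_maps, true_maps)
print(np.allclose(tc, true_tc), np.allclose(maps, true_maps))  # True True

# Toy t values for "visual" and "auditory" maps at three voxels.
both = conjunction_min([np.array([3.0, 1.0, 5.0]), np.array([4.0, 6.0, 0.5])], 2.0)
print(both)  # [ True False False]
```

With noise-free synthetic data the two stages recover the generating time courses and maps exactly; with real data, the subject maps absorb both signal and noise and are then passed to the group-level tests.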

To investigate language lateralization, voxel-wise laterality maps were created from the subject-specific spatial IC maps resulting from the dual regression step. The laterality maps were computed using the LUI toolbox (http://mialab.mrn.org/software/; Swanson et al., 2011) by subtracting from each image its left/right-flipped version (Stevens et al., 2005). A voxel-wise laterality map avoids a limitation of the conventional laterality index, which is based on voxel counts and is therefore sensitive to the choice of threshold. Two-sample t-tests comparing the two presentation modalities were calculated separately for each task (p < 0.05, FWE corrected).
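The difference between a voxel-wise laterality map and a count-based laterality index can be shown on a toy example. The sketch below assumes that the image is in a space approximately symmetric about the midline and that the first half of the left/right axis is the left hemisphere (an orientation convention that must be checked for real data). It is not the LUI toolbox code, and the helper names are hypothetical.

```python
import numpy as np


def laterality_map(volume, lr_axis=0):
    """Voxel-wise laterality: image minus its left/right-flipped copy."""
    return volume - np.flip(volume, axis=lr_axis)


def laterality_index(volume, threshold, lr_axis=0):
    """Count-based LI = (L - R) / (L + R) over supra-threshold voxels.

    Assumes indices below the midpoint of lr_axis are the left hemisphere;
    for an odd number of slices the midline slice is ignored.
    """
    n = volume.shape[lr_axis]
    left = np.take(volume, np.arange(n // 2), axis=lr_axis)
    right = np.take(volume, np.arange(n - n // 2, n), axis=lr_axis)
    n_left = np.count_nonzero(left > threshold)
    n_right = np.count_nonzero(right > threshold)
    return (n_left - n_right) / (n_left + n_right) if n_left + n_right else float("nan")


# One row of voxels running from left (index 0) to right (index 3).
profile = np.array([3.0, 2.5, 1.0, 0.5])
print(laterality_map(profile))  # values 2.5, 1.5, -1.5, -2.5
for thr in (0.25, 0.75, 2.0):
    print(thr, laterality_index(profile, thr))  # LI: 0.0, 1/3, 1.0
```

The laterality map does not depend on any threshold, whereas the count-based index for the same image changes from 0 to 1/3 to 1 as the threshold is raised, which is the threshold sensitivity mentioned above.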

The language tests performed prior to the fMRI measurements revealed average language performance for all subjects. In the sentence completion subtest, the number of correct items ranged from 10 to 18 (mean 14), corresponding to average performance relative to normative data for this age group. In the RWT, participants produced on average 18 words beginning with the letter M and 35 words in the category animals, both reflecting average verbal fluency.

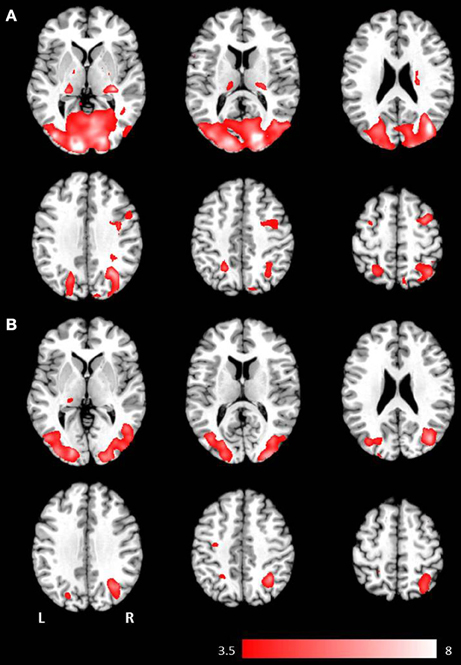

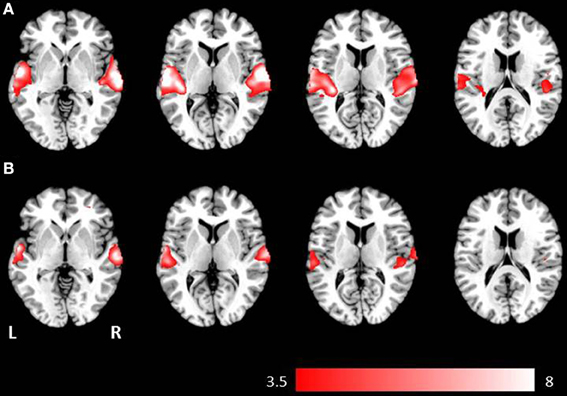

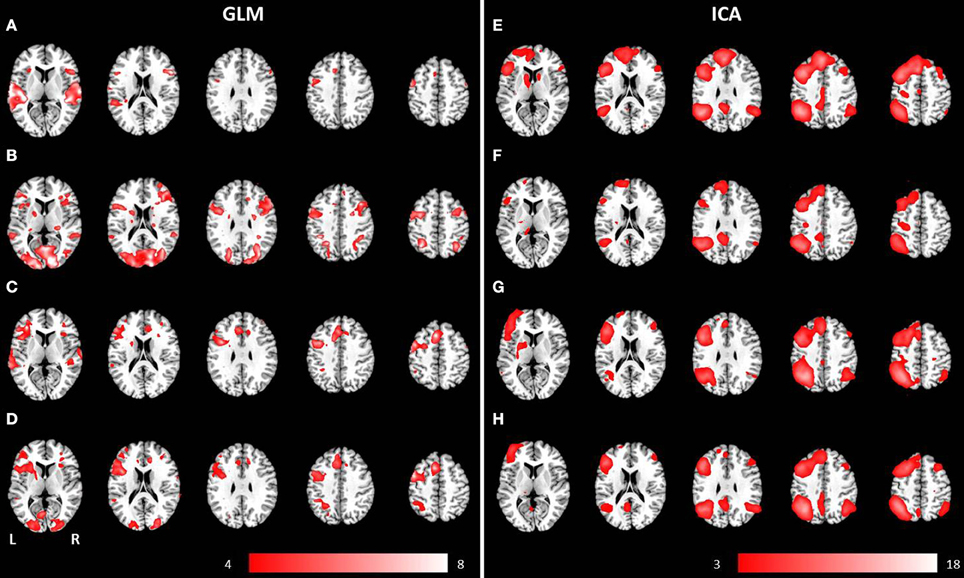

To map modality-specific effects of language processing on brain activity, two-sample t-tests were performed at the group level. These analyses comprised the t-contrasts visual > auditory (see Figure 1) and auditory > visual (see Figure 2) for both language paradigms. All resulting statistical parametric maps were thresholded at p < 0.001 (uncorrected) with a cluster extent threshold of 10 contiguous voxels.
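The group-level thresholding described above (voxel-wise p < 0.001 uncorrected, cluster extent of at least 10 contiguous voxels) can be sketched as follows. The critical value t ≈ 3.61 corresponds to a one-tailed p < 0.001 with 18 degrees of freedom (10 + 10 − 2 subjects in a two-sample design). Defining "contiguous" by face connectivity is an assumption, and SPM's own cluster definition may differ. Function names are hypothetical.

```python
from collections import deque

import numpy as np


def two_sample_t(group_a, group_b):
    """Voxel-wise pooled-variance two-sample t statistic.

    group_a, group_b: arrays of shape (n_subjects, *volume_shape), e.g.
    subject contrast images for visual and auditory presentation.
    """
    na, nb = group_a.shape[0], group_b.shape[0]
    mean_diff = group_a.mean(axis=0) - group_b.mean(axis=0)
    pooled_var = ((na - 1) * group_a.var(axis=0, ddof=1)
                  + (nb - 1) * group_b.var(axis=0, ddof=1)) / (na + nb - 2)
    return mean_diff / np.sqrt(pooled_var * (1.0 / na + 1.0 / nb))


def cluster_extent_mask(stat, t_threshold, min_voxels=10):
    """Keep supra-threshold voxels that belong to clusters of >= min_voxels.

    Clusters use face connectivity (6 neighbours in 3D), an assumption.
    """
    supra = stat > t_threshold
    visited = np.zeros(stat.shape, dtype=bool)
    keep = np.zeros(stat.shape, dtype=bool)
    offsets = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    for seed in zip(*np.nonzero(supra)):
        if visited[seed]:
            continue
        visited[seed] = True
        queue, cluster = deque([seed]), []
        while queue:  # breadth-first flood fill of one cluster
            voxel = queue.popleft()
            cluster.append(voxel)
            for offset in offsets:
                nbr = tuple(int(c + o) for c, o in zip(voxel, offset))
                inside = all(0 <= c < s for c, s in zip(nbr, stat.shape))
                if inside and supra[nbr] and not visited[nbr]:
                    visited[nbr] = True
                    queue.append(nbr)
        if len(cluster) >= min_voxels:
            for voxel in cluster:
                keep[voxel] = True
    return keep


# Toy example: a 12-voxel cluster survives, an 8-voxel cluster does not.
stat = np.zeros((10, 10, 10))
stat[1:3, 1:3, 1:4] = 5.0   # 2 x 2 x 3 = 12 voxels
stat[6:8, 6:8, 6:8] = 5.0   # 2 x 2 x 2 = 8 voxels
mask = cluster_extent_mask(stat, t_threshold=3.61, min_voxels=10)
print(mask.sum())  # 12
```

An extent threshold of this kind removes small isolated clusters but does not by itself provide family-wise error control; this is why the maps above are reported as uncorrected.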

Figure 1. Axial mean anatomical images overlaid with brain activation resulting from second-level GLM analysis, revealing higher brain activity for visual presentation compared to auditory presentation (p < 0.001, uncorrected) induced by (A) the phrases task and (B) the verb generation task.

Figure 2. Axial mean anatomical images overlaid with brain activation resulting from second-level GLM analysis, revealing higher brain activity for auditory presentation compared to visual presentation (p < 0.001, uncorrected) induced by (A) the phrases task and (B) the verb generation task.

Results for the contrast visual > auditory revealed significantly higher brain activation in the superior and inferior parietal lobule, the middle occipital gyrus, the postcentral gyrus, and in the hippocampus. For the phrases task, additional increased brain activity was obtained in the middle frontal gyrus, the precuneus, the cuneus, the precentral gyrus, and in the pallidum (see Table 1 and Figure 1B). For the verb generation task, the contrast visual > auditory evoked additional increased brain activity in the inferior temporal gyrus (see Table 1 and Figure 1A).

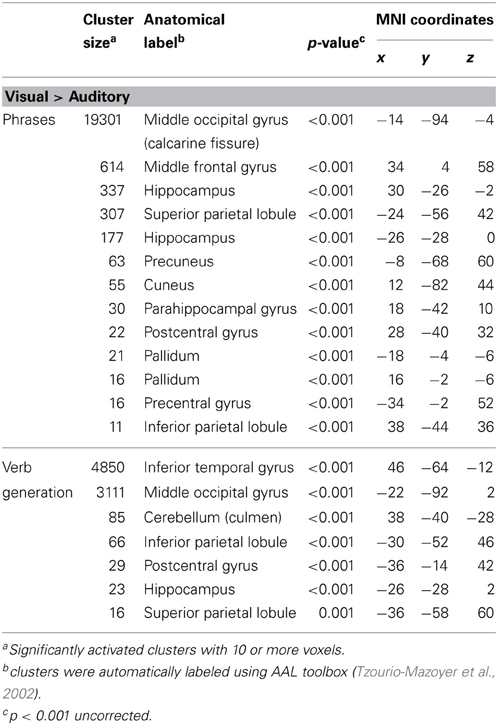

Table 1. Significantly higher activated brain areas by visual compared to auditory presentation of the two language paradigms.

Auditory presentation (contrast auditory > visual) induced significantly increased brain activation in the superior temporal gyrus bilaterally (see Table 2 and Figure 2) for both tasks. The auditory presentation of the verb generation task revealed an additional cluster in the middle frontal gyrus (see Table 2 and Figure 2A).

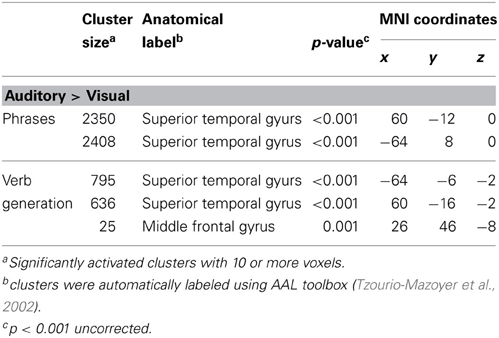

Table 2. Significantly higher activated brain areas by auditory compared to visual presentation of the two language paradigms.

Auditory presentation of the phrases task evoked brain activation bilaterally in the superior temporal gyrus, the insula, the medial frontal gyrus, and the inferior frontal gyrus, as well as in the left precentral gyrus (see Figure 3A). In contrast, visual presentation of the same paradigm induced clusters of increased neuronal activation bilaterally in the superior and inferior parietal lobule, the precentral gyrus, the lingual gyrus, the cuneus, the middle occipital gyrus, the inferior frontal gyrus, and the superior temporal gyrus, as well as in the left medial frontal gyrus and the right middle frontal gyrus (see Figure 3B).

Figure 3. Mean anatomical images overlaid with brain activation resulting from second-level GLM analysis and group ICA. Results of the second-level GLM analysis (A–D) are shown for the contrast active > baseline (p < 0.001, uncorrected) for (A) auditory presentation of the phrases task, (B) visual presentation of the phrases task, (C) auditory presentation of the verb generation task, and (D) visual presentation of the verb generation task. Group ICA revealed a left-lateralized language network independent of presentation modality and language paradigm. The identified networks are shown for (E) auditory presentation of the phrases task, (F) visual presentation of the phrases task, (G) auditory presentation of the verb generation task, and (H) visual presentation of the verb generation task.

The auditorily presented verb generation task induced brain activation bilaterally in the medial frontal gyrus, the cingulate gyrus, the insula, and the superior temporal gyrus, as well as in the left inferior frontal gyrus, the left inferior parietal lobule, and the left precentral gyrus (see Figure 3C). Visually presented stimuli also evoked neuronal activation bilaterally in the medial frontal gyrus, the cingulate gyrus, and the insula. Activation of the superior temporal gyrus was left-lateralized. Furthermore, the left-hemispheric clusters in the inferior frontal gyrus, the inferior parietal lobule, and the precentral gyrus were larger for the visual than for the auditory condition (see Figure 3D).

Separate group ICAs for both paradigms (phrases and verb generation) and both experiments (visual and auditory) yielded 18 components for each visually presented paradigm. For auditory presentation, group ICA yielded 24 components for the phrases task and 25 components for the verb generation task. Reported network components include only within-brain activations.

A group language network was determined for both paradigms, independent of the presentation modality, and involved brain areas such as the inferior frontal gyrus (Broca's area), the superior temporal gyrus (Wernicke's area), the insula, the middle occipital gyrus, the precentral gyrus, and the superior frontal gyrus (see Figures 3E–H).

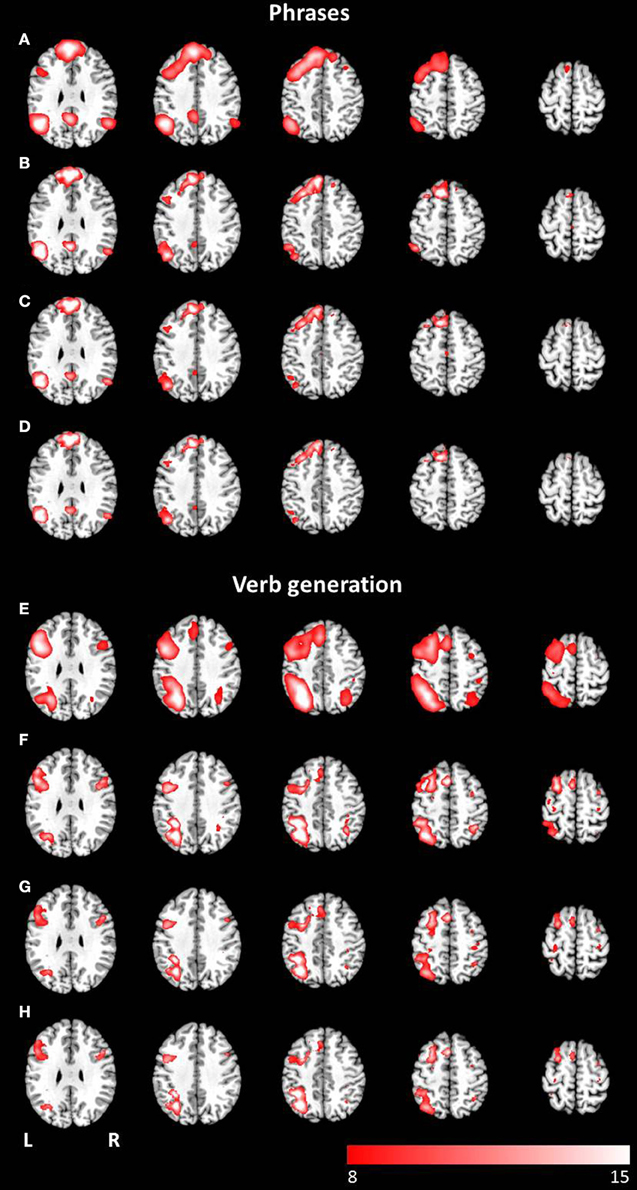

The combined group ICAs of both modalities for the phrases task and for the verb generation task each revealed a language network and an attention network. For the phrases task, a conjunction analysis (p < 0.05, FWE corrected) detected a modality-independent language network involving clusters in the left and right superior frontal gyrus, the left inferior frontal gyrus, the left and right angular gyrus, the left posterior cingulate cortex, the left middle temporal gyrus, and the left supplementary motor area (see Figures 4A–D). No significant differences between visual and auditory presentation were found. For the verb generation task, the language-related network included significant clusters of neuronal activation in the left and right inferior parietal lobule, the left and right inferior frontal gyrus, the left supplementary motor area, the left inferior and middle temporal gyrus, the right cerebellum, and the left and right precentral gyrus (see Figures 4E–H). As for the phrases task, no significant differences were detected between visual and auditory language presentation.

Figure 4. Axial mean anatomical images overlaid with the language network resulting from combined group ICA including auditory and visual stimulus presentation (p < 0.05, FWE-corrected). A network involving language-related areas was detected for (A) the phrases task and (E) the verb generation task. The comparison of modality-specific differences shows similar networks for visually (B,F) and auditorily (C,G) presented language paradigms. For both paradigms, the conjunction analysis of both modalities (D,H) revealed activation patterns similar to the modality-specific networks.

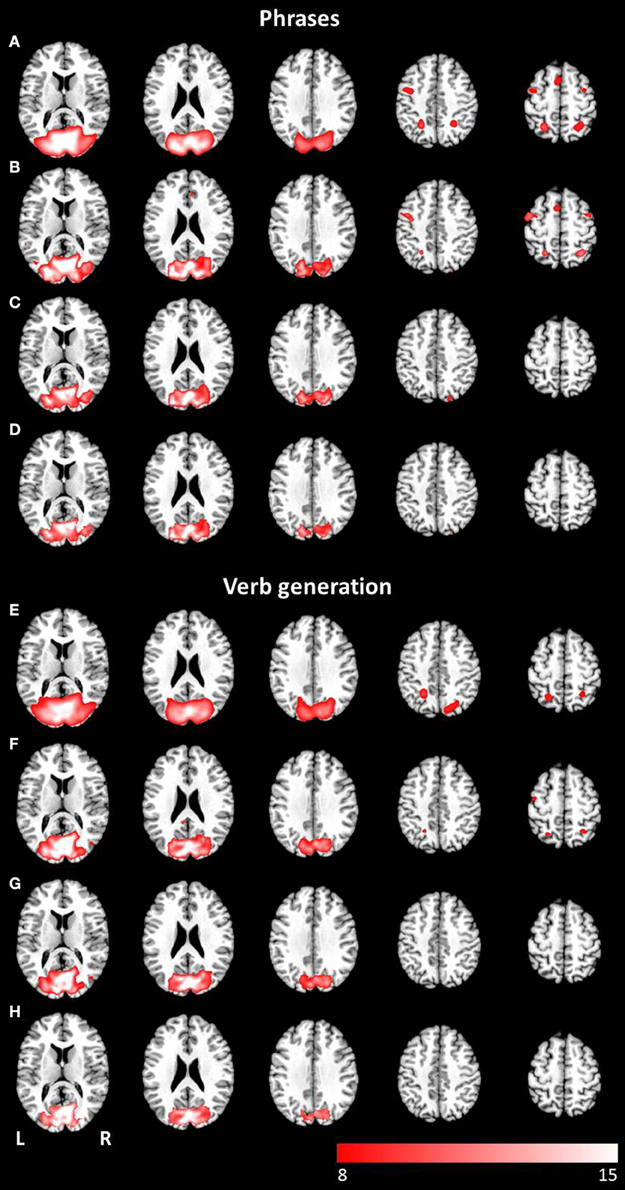

The combined group ICA of visual and auditory presentation of the phrases task revealed an attention network involving the left and right inferior, middle, and superior occipital lobule as well as the left putamen, detected by the conjunction analysis (p < 0.05, FWE corrected). In addition, visual stimulus presentation evoked significant brain activation in attention-related areas, involving the left superior and medial frontal gyrus, the left and right precentral gyrus, the left and right middle frontal gyrus, and the left and right superior parietal lobule (see Figures 5A–D). No additional brain activation was found for auditory stimulus presentation. Based on the conjunction analysis of the two modalities for the verb generation task, an attention network involving neuronal activation bilaterally in the lingual gyrus, the calcarine gyrus, and the fusiform gyrus was detected. Visual presentation evoked additional activation in the left posterior cingulate gyrus, the left and right superior parietal lobule, and the left precentral gyrus (see Figures 5E–H). As for the phrases task, no additional brain activation was found for auditory presentation.

Figure 5. Axial mean anatomical images overlaid with the attention-shift network resulting from combined group ICA including auditory and visual stimulus presentation (p < 0.05, FWE-corrected). This network was determined for (A) the phrases task and (E) the verb generation task. The comparison of modality-specific differences shows substantial differences between visually (B,F) and auditorily (C,G) presented language paradigms. Whereas visually presented stimuli evoked activity in the attention-shift network, no comparable activation pattern was detected for auditory stimuli. In the conjunction analysis (D,H), activation was found only in occipital regions.

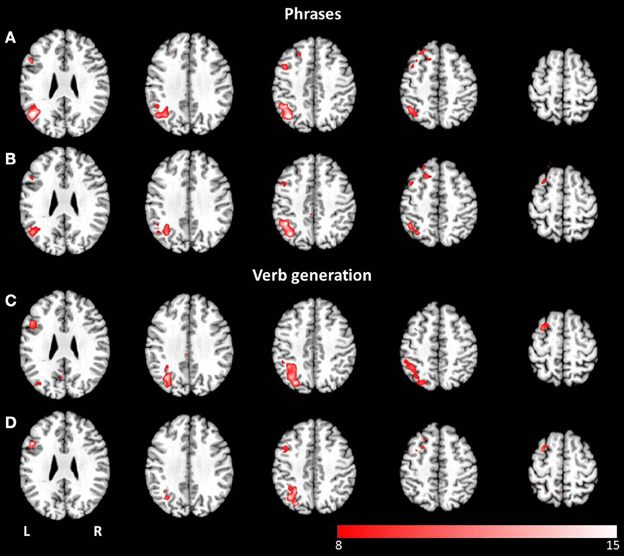

Thresholded laterality maps (p < 0.05, FWE corrected) resulting from group ICA of the phrases task revealed significant left lateralization in the inferior, middle, and superior temporal gyrus, the middle and inferior frontal gyrus, the angular gyrus, and the inferior parietal lobule (see Figures 6A,B). The laterality maps for the verb generation task revealed significant left-lateralized activation in the inferior, middle, and superior frontal gyrus, the inferior and superior parietal lobule, the supramarginal gyrus, the angular gyrus, the middle temporal gyrus, and the fusiform gyrus (see Figures 6C,D). For both paradigms, no significant differences were found between visual and auditory language presentation.

Figure 6. Axial mean anatomical images overlaid with lateralized spatial maps (p < 0.05, FWE-corrected) for the language network resulting from combined group ICA for visual (A,C) and auditory stimulus presentation (B,D). No statistically significant modality-dependent differences were obtained.

The aim of this study was to examine modality-specific differences of language processing by comparing visually and auditorily presented language paradigms (one for language production and one for language comprehension) used in clinical practice. A combined group ICA for visual and auditory presentation revealed modality-dependent differences in identified networks. For visually presented language, an attention-shift network (Corbetta et al., 1998) was found for both paradigms. In contrast, this network was not detected for auditory presentation. These results are largely consistent with our hypothesis that visual stimulus presentation of language paradigms requires an additional attention network.

Investigating modality-dependent differences in language localization is of great importance for pre-surgical planning, in which fMRI has become part of the routine procedure (Genetti et al., 2013). fMRI has been shown to be a reliable tool for determining language lateralization (Arora et al., 2009; Jones et al., 2011) and has been increasingly validated for the precise localization of language cortices (Genetti et al., 2013). The reliability of language lateralization is of particular interest in patients with left temporal lobe epilepsy, as they have an increased likelihood of atypical right-hemispheric lateralization of language processing areas (Hamberger and Cole, 2011). It is assumed that chronic epileptic activity induces a shift of language processing areas from the left to the right hemisphere (Liégeois et al., 2004; Janszky et al., 2006). Since patients awaiting neurosurgery often suffer from restricted language and attention abilities, the required compliance is often lacking, which hampers the identification of brain areas involved in language processing. Reading, in particular, may present an insurmountable challenge, and therefore paradigms for assessing language abilities are often presented auditorily [for review see Dimou et al. (2013)]. Because the neuronal patterns resulting from fMRI experiments provide essential information for neuronavigation during brain surgery, differences between auditory and visual language presentation need to be investigated in detail.

Independent of presentation modality, a language component was identified for both the verb generation and the phrases task. In clinical practice, both paradigms are usually used, as they cover different aspects of language processing. This practice is supported by the results of this study, which show differences in the language network between the two tasks. With respect to presentation modality, no significant differences between auditory and visual stimulation were found. The areas involved in the modality-independent language network are in line with previous functional imaging results on language processing [for review see Price (2010, 2012)]. In contrast to Carpentier et al. (2001), who found higher lateralization scores for visual stimuli, our study revealed no significant difference in lateralization between visual and auditory stimulus presentation. Thus, our findings suggest that auditory language presentation in functional imaging is an appropriate tool for determining lateralization, providing essential information for pre-surgical planning. However, visually presented language additionally activated an attention-shift network (Corbetta et al., 1998), which appears to be a necessary prerequisite for written language processing. A comparison of the detected attention networks showed that visual stimulus presentation evoked increased brain activation in the left superior and medial frontal gyrus, the left and right precentral gyrus, the left and right middle frontal gyrus, and the left and right superior parietal lobule, areas related to attention (Corbetta and Shulman, 2002; Daselaar et al., 2013) and short-term memory (Makuuchi and Friederici, 2013). For auditory stimulus presentation, no comparable network was found.
Our findings indicate that investigators need to be aware of the individual clinical indication for functional language mapping and should select the appropriate stimulus presentation method with respect to tumor location or reorganization of networks.

Beyond modality-dependent differences, the change of spatial processing patterns induced by language and attention shifts was investigated in this study using group ICA, an established analysis tool for language network detection (Kim et al., 2011). For visual stimulus presentation, the network components resulting from ICA included a network similar to the eye movement and attention-shift network described by Corbetta et al. (1998). For auditory stimulus presentation, this network was not detected. This network is thought to be responsible both for covert shifts of attention and for overt rapid eye movements (saccades); these two processes appear not only to be functionally related but also to share the same neural pathways. Although it has been shown that saccadic eye movements combined with short fixations are necessary for reading words (Reichle et al., 2003; Rayner and Reichle, 2010), the impact of saccades on word processing is still unknown (Temereanca et al., 2012).

The results of this study suggest that both the language task performed and the presentation modality influence the detected networks. In addition, our findings indicate that not only the task itself and the mode of stimulus presentation but also the analysis method may affect the detected language processing areas. A comparison of hypothesis-driven GLM analysis and data-driven ICA showed substantial differences in the resulting network patterns. Standard GLM analysis is based on the canonical hemodynamic response function (HRF) and thus relies on restrictive temporal modeling of the stimuli. In contrast, ICA revealed highly consistent language networks independent of the language task and the modality of stimulus presentation. ICA is thought to be able to separate networks with distinct time courses, such as attention or motor patterns (Robinson et al., 2013), and has already been shown to provide additional information on processing networks (Tie et al., 2008; Schöpf et al., 2011; Frasnelli et al., 2012; Xu et al., 2013). Previous studies have shown that language processing areas exhibit inter-individual variability (Amunts et al., 2000; Rademacher et al., 2001). These individual variations, in conjunction with additional stimulus-related functions such as attention or eye movements, may produce imprecise language localization based on GLM analysis, especially in group studies. Furthermore, a recently published study (Stoppelman et al., 2013) found a significant influence of different baseline conditions on the language-related areas identified with GLM analysis. Especially for the analysis of language paradigms, a purely data-driven method such as ICA may not only serve as an additional technique but might even be the analysis method of choice, as we were able to show that a time-locked analysis tool such as the GLM did not reflect the spatial patterns involved in the processing of visually presented language paradigms.

Although the mapping of language processing areas using fMRI has been investigated in various studies (Carpentier et al., 2001; Arora et al., 2009; Jones et al., 2011; Genetti et al., 2013), conducting fMRI is sometimes problematic in clinical practice. Usually, two different language paradigms, one for language perception and another for language production, have to be performed to cover a wide range of language processing. These tasks require focused attention on the stimuli throughout the whole experiment, which is often hard for patients to accomplish. Recently, an fMRI paradigm was presented that is intended to localize functional activation in areas for language perception and production within a single paradigm (Fedorenko et al., 2010, 2012). The validation of this paradigm in clinical practice and its effect on patient compliance should be addressed in further investigations.

Even though new language paradigms are being developed to facilitate task performance during fMRI measurements, performing the task remains challenging for patients because of the impairments mentioned above. A promising way to overcome the need for the patient's active participation is resting-state fMRI, which does not require any task performance. Previous studies have successfully identified language networks using resting-state fMRI (Tomasi and Volkow, 2012; Kollndorfer et al., 2013; Tie et al., 2013). Although its application to pre-surgical planning has already achieved promising initial results in epilepsy surgery (Negishi et al., 2011; Morgan et al., 2012), it is still a long way from becoming part of clinical routine (Böttger et al., 2011). For clinical practice, the development of a standardized imaging protocol for mapping language abilities, as called for by Sunaert (2006), will be an essential step, as it has been shown that different resting-state conditions may influence the detected networks (Kollndorfer et al., 2013).

A potential limitation of this study is the small sample size. The influence of sample size in fMRI studies has recently been debated. Friston (2012) pointed out that statistically significant results from studies with small samples are statistically valid and indicate a larger effect than an equivalent result from a larger sample. In contrast, other authors (Ingre, 2013; Lindquist et al., 2013) highlight potential pitfalls of statistical testing with small samples, such as less accurate parameter estimation or fewer opportunities to control for confounding variables. To limit the influence of confounding factors, we investigated a very homogeneous sample: young, healthy, right-handed subjects with comparable educational backgrounds. In addition, behavioral language data were collected to control for language ability parameters.
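The intuition behind Friston's argument can be illustrated with a worked example. It assumes a one-sample t-test at a two-sided α = 0.05, for which the t-statistic and the standardized effect size d are related by t = d√n; these assumptions are ours and do not describe the analyses of this study. Solving for the smallest effect that can reach the critical value shows that it shrinks as n grows:

```latex
\begin{aligned}
t &= \frac{\bar{x}}{s/\sqrt{n}} = d\sqrt{n}, \qquad d = \frac{\bar{x}}{s}\\
d_{\min} &= \frac{t_{1-\alpha/2,\,n-1}}{\sqrt{n}}\\
n = 10:\quad d_{\min} &= \frac{2.262}{\sqrt{10}} \approx 0.72\\
n = 100:\quad d_{\min} &= \frac{1.984}{\sqrt{100}} \approx 0.20
\end{aligned}
```

At the same threshold, a significant result with n = 10 therefore implies an observed effect roughly 3.6 times larger than the minimum needed with n = 100. The counterpoint is that the estimate of d itself is less precise in small samples, which corresponds to the less accurate parameter estimation noted above.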

We were able to show that the neural processing of visually presented paradigms (language perception and language production) additionally engages an attention-shift network alongside the commonly known language processing areas of the brain. These activation patterns were not detected for auditory presentation of the same tasks. Therefore, the mode of stimulus presentation should be adapted to the individual indication for functional language mapping. As the attention-shift network was restricted to visual stimuli, we assume that it is a basic prerequisite for reading. This additional attention mechanism accompanying visual language testing may provide important information for neurosurgeons, helping them to preserve language function and reading abilities and thereby improve quality of life after surgery.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The authors thank the subjects for their participation and Thomas Schlegl for his support in analyzing eye-tracking data. Special thanks go to Daniel Grailach (Tonkombüse, Vienna) for recording the voice samples. Veronika Schöpf, Kathrin Kollndorfer, and Jacqueline Krajnik are supported by the FWF (Veronika Schöpf and Kathrin Kollndorfer: P23205-B09; Jacqueline Krajnik: KLI252).

Amunts, K., Malikovic, A., Mohlberg, H., Schormann, T., and Zilles, K. (2000). Brodmann's areas 17 and 18 brought into stereotaxic space: where and how variable? Neuroimage 11, 66–84. doi: 10.1006/nimg.1999.0516

Arora, J., Pugh, K., Westerveld, M., Spencer, S., Spencer, D. D., and Todd Constable, R. (2009). Language lateralization in epilepsy patients: fMRI validated with the Wada procedure. Epilepsia 50, 2225–2241. doi: 10.1111/j.1528-1167.2009.02136.x

Aschenbrenner, S., Tucha, O., and Lange, K. (2000). Regensburg Word Fluency Test [Regensburger Wortflüssigkeits-Test (RWT)]. Göttingen: Hogrefe.

Beckmann, C., Mackay, C., Filippini, N., and Smith, S. (2009). Group comparison of resting-state FMRI data using multi-subject ICA and dual regression. Neuroimage 47(Suppl. 1), 148. doi: 10.1016/S1053-8119(09)71511-3

Beckmann, C. F., and Smith, S. M. (2004). Probabilistic independent component analysis for functional magnetic resonance imaging. IEEE Trans. Med. Imaging 23, 137–152. doi: 10.1109/TMI.2003.822821

Böttger, J., Margulies, D. S., Horn, P., Thomale, U. W., Podlipsky, I., Shapira-Lichter, I., et al. (2011). A software tool for interactive exploration of intrinsic functional connectivity opens new perspectives for brain surgery. Acta Neurochir. 153, 1561–1572. doi: 10.1007/s00701-011-0985-6

Carpentier, A., Pugh, K. R., Westerveld, M., Studholme, C., Skrinjar, O., Thompson, J. L., et al. (2001). Functional MRI of language processing: dependence on input modality and temporal lobe epilepsy. Epilepsia 42, 1241–1254. doi: 10.1046/j.1528-1157.2001.35500.x

Corbetta, M., Akbudak, E., Conturo, T. E., Snyder, A. Z., Ollinger, J. M., Drury, H. A., et al. (1998). A common network of functional areas for attention and eye movements. Neuron 21, 761–773. doi: 10.1016/S0896-6273(00)80593-0

Corbetta, M., and Shulman, G. L. (2002). Control of goal-directed and stimulus-driven attention in the brain. Nat. Rev. Neurosci. 3, 201–215. doi: 10.1038/nrn755

Daselaar, S. M., Huijbers, W., Eklund, K., Moscovitch, M., and Cabeza, R. (2013). Resting-state functional connectivity of ventral parietal regions associated with attention reorienting and episodic recollection. Front. Hum. Neurosci. 7:38. doi: 10.3389/fnhum.2013.00038

Dimou, S., Battisti, R. A., Hermens, D. F., and Lagopoulos, J. (2013). A systematic review of functional magnetic resonance imaging and diffusion tensor imaging modalities used in presurgical planning of brain tumor resection. Neurosurg. Rev. 36, 205–214. doi: 10.1007/s10143-012-0436-8

Fedorenko, E., Hsieh, P.-J., Nieto-Castañón, A., Whitfield-Gabrieli, S., and Kanwisher, N. (2010). New method for fMRI investigations of language: defining ROIs functionally in individual subjects. J. Neurophysiol. 104, 1177–1194. doi: 10.1152/jn.00032.2010

Fedorenko, E., Nieto-Castañón, A., and Kanwisher, N. (2012). Lexical and syntactic representations in the brain: an fMRI investigation with multi-voxel pattern analyses. Neuropsychologia 50, 499–513. doi: 10.1016/j.neuropsychologia.2011.09.014

Filippini, N., MacIntosh, B. J., Hough, M. G., Goodwin, G. M., Frisoni, G. B., Smith, S. M., et al. (2009). Distinct patterns of brain activity in young carriers of the APOE-epsilon4 allele. Proc. Natl. Acad. Sci. U.S.A. 106, 7209–7214. doi: 10.1073/pnas.0811879106

Foki, T., Gartus, A., Geissler, A., and Beisteiner, R. (2008). Probing overtly spoken language at sentential level: a comprehensive high-field BOLD-fMRI protocol reflecting everyday language demands. Neuroimage 39, 1613–1624. doi: 10.1016/j.neuroimage.2007.10.020

Frasnelli, J., Lundström, J. N., Schöpf, V., Negoias, S., Hummel, T., and Lepore, F. (2012). Dual processing streams in chemosensory perception. Front. Hum. Neurosci. 6:288. doi: 10.3389/fnhum.2012.00288

Friston, K. (2012). Ten ironic rules for non-statistical reviewers. Neuroimage 61, 1300–1310. doi: 10.1016/j.neuroimage.2012.04.018

Friston, K. J., Holmes, A. P., Price, C. J., Büchel, C., and Worsley, K. J. (1999). Multisubject fMRI studies and conjunction analyses. Neuroimage 10, 385–396. doi: 10.1006/nimg.1999.0484

Genetti, M., Grouiller, F., Vulliemoz, S., Spinelli, L., Seeck, M., Michel, C. M., et al. (2013). Noninvasive language mapping in patients with epilepsy or brain tumors. Neurosurgery 72, 555–565. doi: 10.1227/NEU.0b013e318282cdad

Hamberger, M. J., and Cole, J. (2011). Language organization and reorganization in epilepsy. Neuropsychol. Rev. 21, 240–251. doi: 10.1007/s11065-011-9180-z

Holland, S. K., Plante, E., Weber Byars, A., Strawsburg, R. H., Schmithorst, V. J., and Ball, W. S. (2001). Normal fMRI brain activation patterns in children performing a verb generation task. Neuroimage 14, 837–843. doi: 10.1006/nimg.2001.0875

Hyvärinen, A., Karhunen, J., and Oja, E. (2001). Independent Component Analysis. New York, NY: John Wiley and Sons. doi: 10.1002/0471221317

Ingre, M. (2013). Why small low-powered studies are worse than large high-powered studies and how to protect against “trivial” findings in research: comment on Friston (2012). Neuroimage 81, 496–498. doi: 10.1016/j.neuroimage.2013.03.030

Janszky, J., Mertens, M., Janszky, I., Ebner, A., and Woermann, F. G. (2006). Left-sided interictal epileptic activity induces shift of language lateralization in temporal lobe epilepsy: an fMRI study. Epilepsia 47, 921–927. doi: 10.1111/j.1528-1167.2006.00514.x

Jones, S. E., Mahmoud, S. Y., and Phillips, M. D. (2011). A practical clinical method to quantify language lateralization in fMRI using whole-brain analysis. Neuroimage 54, 2937–2949. doi: 10.1016/j.neuroimage.2010.10.052

Kim, K. K., Karunanayaka, P., Privitera, M. D., Holland, S. K., and Szaflarski, J. P. (2011). Semantic association investigated with functional MRI and independent component analysis. Epilepsy Behav. 20, 613–622. doi: 10.1016/j.yebeh.2010.11.010

Kollndorfer, K., Fischmeister, F. P. S., Kasprian, G., Prayer, D., and Schöpf, V. (2013). A systematic investigation of the invariance of resting-state network patterns: is resting-state fMRI ready for pre-surgical planning? Front. Hum. Neurosci. 7:95. doi: 10.3389/fnhum.2013.00095

Liégeois, F., Connelly, A., Cross, J. H., Boyd, S. G., Gadian, D. G., Vargha-Khadem, F., et al. (2004). Language reorganization in children with early-onset lesions of the left hemisphere: an fMRI study. Brain 127, 1229–1236. doi: 10.1093/brain/awh159

Liepmann, D., Beauducel, A., Brocke, B., and Amthauer, R. (2007). Intelligenz-Struktur-Test 2000 R (I-S-T 2000 R). Manual. 2nd Edn. Göttingen: Hogrefe.

Lindquist, M. A., Caffo, B., and Crainiceanu, C. (2013). Ironing out the statistical wrinkles in “ten ironic rules”. Neuroimage 81, 499–502. doi: 10.1016/j.neuroimage.2013.02.056

Makuuchi, M., and Friederici, A. D. (2013). Hierarchical functional connectivity between the core language system and the working memory system. Cortex 49, 2416–2423. doi: 10.1016/j.cortex.2013.01.007

Minka, T. (2000). Automatic choice of dimensionality for PCA, Technical Report 514. Cambridge, MA: MIT Media Lab.

Morgan, V. L., Sonmezturk, H. H., Gore, J. C., and Abou-Khalil, B. (2012). Lateralization of temporal lobe epilepsy using resting functional magnetic resonance imaging connectivity of hippocampal networks. Epilepsia 53, 1628–1635. doi: 10.1111/j.1528-1167.2012.03590.x

Negishi, M., Martuzzi, R., Novotny, E. J., Spencer, D. D., and Constable, R. T. (2011). Functional MRI connectivity as a predictor of the surgical outcome of epilepsy. Epilepsia 52, 1733–1740. doi: 10.1111/j.1528-1167.2011.03191.x

Petersen, S. E., Fox, P. T., Posner, M. I., Mintun, M., and Raichle, M. E. (1988). Positron emission tomographic studies of the cortical anatomy of single-word processing. Nature 331, 585–589. doi: 10.1038/331585a0

Price, C. J. (2010). The anatomy of language: a review of 100 fMRI studies published in 2009. Ann. N.Y. Acad. Sci. 1191, 62–88. doi: 10.1111/j.1749-6632.2010.05444.x

Price, C. J. (2012). A review and synthesis of the first 20 years of PET and fMRI studies of heard speech, spoken language and reading. Neuroimage 62, 816–847. doi: 10.1016/j.neuroimage.2012.04.062

Rademacher, J., Morosan, P., Schormann, T., Schleicher, A., Werner, C., Freund, H. J., et al. (2001). Probabilistic mapping and volume measurement of human primary auditory cortex. Neuroimage 13, 669–683. doi: 10.1006/nimg.2000.0714

Rayner, K., and Reichle, E. D. (2010). Models of the reading process. Wiley Interdiscip. Rev. Cogn. Sci. 1, 787–799. doi: 10.1002/wcs.68

Reichle, E. D., Rayner, K., and Pollatsek, A. (2003). The E-Z reader model of eye-movement control in reading: comparisons to other models. Behav. Brain Sci. 26, 445–476; discussion 477–526. doi: 10.1017/S0140525X03000104

Robinson, S. D., Schöpf, V., Cardoso, P., Geissler, A., Fischmeister, F., Wurnig, M., et al. (2013). Applying independent component analysis to clinical fMRI at 7T. Front. Hum. Neurosci. 7:496. doi: 10.3389/fnhum.2013.00496

Schöpf, V., Windischberger, C., Robinson, S., Kasess, C. H., Fischmeister, F. P., Lanzenberger, R., et al. (2011). Model-free fMRI group analysis using FENICA. Neuroimage 55, 185–193. doi: 10.1016/j.neuroimage.2010.11.010

Spena, G., Nava, A., Cassini, F., Pepoli, A., Bruno, M., D'Agata, F., et al. (2010). Preoperative and intraoperative brain mapping for the resection of eloquent-area tumors. A prospective analysis of methodology, correlation, and usefulness based on clinical outcomes. Acta Neurochir. 152, 1835–1846. doi: 10.1007/s00701-010-0764-9

Stevens, M. C., Calhoun, V. D., and Kiehl, K. A. (2005). Hemispheric differences in hemodynamics elicited by auditory oddball stimuli. Neuroimage 26, 782–792. doi: 10.1016/j.neuroimage.2005.02.044

Stoppelman, N., Harpaz, T., and Ben-Shachar, M. (2013). Do not throw out the baby with the bath water: choosing an effective baseline for a functional localizer of speech processing. Brain Behav. 3, 211–222. doi: 10.1002/brb3.129

Sunaert, S. (2006). Presurgical planning for tumor resectioning. J. Magn. Reson. Imaging 23, 887–905. doi: 10.1002/jmri.20582

Swanson, N., Eichele, T., Pearlson, G., Kiehl, K., Yu, Q., and Calhoun, V. D. (2011). Lateral differences in the default mode network in healthy controls and patients with schizophrenia. Hum. Brain Mapp. 32, 654–664. doi: 10.1002/hbm.21055

Temereanca, S., Hämäläinen, M. S., Kuperberg, G. R., Stufflebeam, S. M., Halgren, E., and Brown, E. N. (2012). Eye movements modulate the spatiotemporal dynamics of word processing. J. Neurosci. 32, 4482–4494. doi: 10.1523/JNEUROSCI.5571-11.2012

Tie, Y., Rigolo, L., Norton, I. H., Huang, R. Y., Wu, W., Orringer, D., et al. (2013). Defining language networks from resting-state fMRI for surgical planning: a feasibility study. Hum. Brain Mapp. doi: 10.1002/hbm.22231. [Epub ahead of print].

Tie, Y., Whalen, S., Suarez, R. O., and Golby, A. J. (2008). Group independent component analysis of language fMRI from word generation tasks. Neuroimage 42, 1214–1225. doi: 10.1016/j.neuroimage.2008.05.028

Tomasi, D., and Volkow, N. D. (2012). Resting functional connectivity of language networks: characterization and reproducibility. Mol. Psychiatry 17, 841–854. doi: 10.1038/mp.2011.177

Tzourio-Mazoyer, N., Landeau, B., Papathanassiou, D., Crivello, F., Etard, O., Delcroix, N., et al. (2002). Automated anatomical labeling of activations in SPM using a macroscopic anatomical parcellation of the MNI MRI single-subject brain. Neuroimage 15, 273–289. doi: 10.1006/nimg.2001.0978

Keywords: fMRI, language, attention-shift network, functional mapping, visual, auditory

Citation: Kollndorfer K, Furtner J, Krajnik J, Prayer D and Schöpf V (2013) Attention shifts the language network reflecting paradigm presentation. Front. Hum. Neurosci. 7:809. doi: 10.3389/fnhum.2013.00809

Received: 27 June 2013; Accepted: 07 November 2013;

Published online: 25 November 2013.

Edited by:

Mohamed L. Seghier, UCL, UK

Copyright © 2013 Kollndorfer, Furtner, Krajnik, Prayer and Schöpf. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Veronika Schöpf, Department of Biomedical Imaging and Image-guided Therapy, Medical University of Vienna, Waehringer Guertel 18-20, 1090 Vienna, Austria e-mail: veronika.schoepf@meduniwien.ac.at

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
