ORIGINAL RESEARCH article

Front. Hum. Neurosci., 17 April 2012

Sec. Cognitive Neuroscience

Volume 6 - 2012 | https://doi.org/10.3389/fnhum.2012.00087

This article is part of the Research Topic Towards a neuroscience of social interaction

Part of this article's content has been mentioned in:

Deceptively simple … The “deception-general” ability and the need to put the liar under the spotlight

Both the ability to deceive others and the ability to detect deception have long been proposed to confer an evolutionary advantage. Deception detection has been studied extensively, and the finding that typical individuals fare little better than chance in detecting deception is one of the more robust in the behavioral sciences. Surprisingly, little research has examined individual differences in lie production ability. As a consequence, as far as we are aware, no previous study has investigated whether there exists an association between the ability to lie successfully and the ability to detect lies. Furthermore, only a minority of studies have examined deception as it naturally occurs: in a social, interactive setting. The present study, therefore, explored the relationship between these two facets of deceptive behavior by employing a novel competitive interactive deception task (DeceIT). For the first time, signal detection theory (SDT) was used to measure performance in both the detection and production of deception. A significant relationship was found between the deception-related abilities: those who could accurately detect a lie were able to produce statements that others found difficult to classify as deceptive or truthful. Furthermore, neither ability was related to measures of intelligence or emotional ability. We, therefore, suggest the existence of an underlying deception-general ability that varies across individuals.

It is not uncommon to hear of “poachers turned gamekeepers”; originally this referred to situations in which those who stole livestock from rich landowners would later become employed by the same landowner to guard their livestock. A more modern example relates to the case of the infamous confidence-trickster, Frank Abagnale Jr., who is now an FBI financial fraud consultant. Those who employ former “poachers” assume that people who are good at breaking the law are good at detecting when others break the law. This assumption is widespread, but at least in the case of deception, there is no scientific evidence to suggest that good liars are necessarily good lie detectors.

Although the existence of a “deception-general ability” (conferring success in both lie production and detection) has not been explored in the behavioral sciences, it has been suggested that skill in both the production and detection of deception offers selective advantages in human and non-human animals, and, therefore, that each is subject to evolutionary pressure (Dawkins and Krebs, 1979; Bond and Robinson, 1988). Twin studies, in which monozygotic and dizygotic twins are compared on a characteristic of interest in order to isolate genetic and environmental contributions to that trait, provide evidence for the role of evolution in shaping at least the propensity to deceive (with heritability values of between 0.34 and 0.63; Martin and Eysenck, 1976; Young et al., 1980; Martin and Jardine, 1986; Rowe, 1986), if not the ability to do so successfully. Evolutionary biologists and comparative psychologists have characterized the relationship between deception production and detection as two sides of an intra- or inter-specific “evolutionary arms race”—improvements in the ability to deceive in one species, or in certain members of a species, prompt resultant improvements in deception detection among competitors and vice versa (Dawkins and Krebs, 1979; Bond and Robinson, 1988; Byrne, 1996). While this characterization of the relationship between the ability to deceive and to detect deception is intuitively appealing, it relies on there being an opportunity for evolution to act independently on the two processes, i.e., it assumes that the two abilities depend on different psychological and neurological mechanisms. Interestingly, models of both the production and detection of deception derived from cognitive psychology and cognitive neuroscience do not readily support such a distinction. 
They posit roles for theory of mind (the ability to represent one’s own and another’s mental states) and executive function processes (conflict monitoring, response inhibition) in both deception production and deception detection (e.g., Spence et al., 2004; Sip et al., 2008). If these models are correct, then selection pressure favouring improvement in either production or detection will result in concomitant improvements in the other ability. One may, therefore, expect that good liars will also be good lie detectors.

In two wide-ranging reviews of the psychological literature on deception, Bond and DePaulo (2006, 2008) argued that the overwhelming majority of studies show that humans are poor lie detectors (achieving approximately 54% lie-truth detection accuracy), and that stable individual differences in lie detection ability may not exist. The latter conclusion was based on a meta-analysis demonstrating that variance in lie detection performance across participants was not greater than that expected by chance, and that no individual difference measure has been shown to reliably predict lie detection performance.

While fully endorsing these conclusions based on the existing literature, we make two observations: (1) that the claim of poor, undifferentiated lie detection performance across participants is only valid given the type of paradigms that have previously been used to study deception detection ability (see DePaulo et al., 2003 for an overview of the range of deception procedures employed), and (2) that potentially the most interesting, and theoretically relevant, individual difference measure has not yet been related to lie detection ability—the ability to deceive. This study, therefore, aims to introduce a novel interactive paradigm to assess the ability to produce and to detect deceptive statements, and to determine whether these two abilities are related; that is, to discover whether a deception-general ability exists.

Real-life deception is a dynamic interpersonal process (Buller and Burgoon, 1996), yet fewer than 9% (Bond and DePaulo, 2006) of previous deception studies have allowed for even moderate degrees of social interaction between those attempting to produce deceptive statements (“Senders”) and those attempting to detect deception (“Receivers”). The potential impact of this lack of interaction is difficult to gauge at present. Assessment of deceptiveness on the basis of videotaped or written statements removes all opportunity for the Receiver to engage in explicitly taught or intuitive questioning techniques designed to make the task of deception detection easier. Furthermore, the number of channels through which (dis)honesty can be both detected and conveyed may be severely limited, with concomitant effects on the performance of both Sender and Receiver. The lack of social interaction is not the only factor that has contributed to the “dubious ecological validity” (O'Sullivan, 2008, p. 493) of previous deception research, however; further criticism centers on the “low stakes” (and accompanying lack of motivation/arousal) inherent in an experimental setting (Vrij, 2000). In an attempt to address these criticisms we introduce a novel, fully interactive, group-based competitive deception “game” based on the False-Opinion paradigm (Mehrabian, 1971; Frank and Ekman, 2004): the Deceptive Interaction Task (DeceIT).

The game entails each player competing with the other members of the group to both successfully lie, and to detect the lies of the other players. The paradigm enables free-interaction between participants, and, therefore, requires participants to control both verbal and non-verbal cues when producing deceptive statements. The competitive element of the game (with accompanying high-value prizes) provides motivation when lying and attempting to detect lies, and increases arousal. The motivational effect makes the task of producing deceptive statements harder; increased motivation has previously been reported to result in impaired control of non-verbal deceptive cues when lying (Motivational Impairment Effect, DePaulo and Kirkendol, 1989), and it renders those tasked with detecting deception more sceptical (Porter et al., 2007). Increasing the difficulty of the Senders’ task is likely to result in easier detection of deception, and thus make individual differences in deception detection more apparent.

The second advantage to this paradigm is that both deception detection and production can be simultaneously evaluated within participants. Curiously, little research has focussed on individual differences relating to lie production success (Vrij et al., 2010), despite meta-analytic results indicating substantial variance in deceptive ability (Bond and DePaulo, 2008) and prevalence studies showing that approximately 50% of lies are told by only 5% of people (Serota et al., 2010). SDT (Green and Swets, 1966; Meissner and Kassin, 2002) has proved useful in characterizing deception detection performance (by providing independent measures of both the ability to discriminate truthful from deceptive statements, and any bias toward judging statements as truthful or deceptive). Here, for the first time, we also apply SDT to characterize deception production performance (to separate the ease with which statements produced by the Sender can be discriminated on the basis of their veracity, and the credibility of the Sender, i.e., how likely their statements are to be perceived as truthful regardless of their veracity).

The deception literature provides a number of markers by which a novel deception paradigm can be validated. For example, deception has been shown to increase feelings of guilt, anxiety, and cognitive load (Caso et al., 2005) and result in longer response latencies when lying than when telling the truth (Walczyk et al., 2003). The 54% lie-truth discrimination accuracy has also been shown to be remarkably robust (Levine, 2010), and thus we would expect to see all of these effects replicated in this study. Our new paradigm (DeceIT) allows us to determine individual differences in the capacity for successful deception and lie detection. Of chief theoretical interest is whether there is a deception-general ability, perhaps due to underlying individual differences in social decoding and encoding skills (Ekman and O'Sullivan, 1991; Frank and Ekman, 1997; Vrij et al., 2010) which would result in an association between lie production and detection abilities.

Fifty-one healthy adults (27 female, mean age = 25.35 years, SD = 8.54) with English as a first language participated in the present study. All participants provided informed consent to participate. The local Research Ethics Committee (Department of Psychology, Birkbeck College) granted ethical approval of the study.

Participants were recruited to a “Communication Skills” experiment and randomly assigned to nine groups of five participants and one group of six participants, with the constraint that group members were not previously acquainted. Participants were seated in a circle and asked to complete an “Opinion Survey” questionnaire. The questionnaire comprised 10 opinion statements (e.g., “Smoking should be banned in all public places”) to which participants responded “agree” or “disagree.” Responses to the Opinion Survey served as ground truth in the subsequent task (Mehrabian, 1971; Frank and Ekman, 2004). Participants also completed the Toronto Alexithymia Scale (Parker et al., 2001), a measure of the degree to which emotions can be identified and described in the self, and the Interpersonal Reactivity Index (Davis, 1980), a measure of empathy. These instruments provide self- and other-focussed measures of emotional intelligence (Mayer et al., 1999; Parker et al., 2001). A subset of participants (n = 31, 61% of sample) also completed the Wechsler Abbreviated Scale of Intelligence (Wechsler, 1999).

Participants were then informed that they were to take part in a competitive game designed to test their communication skills and that two £50 prizes would be awarded; one to the participant who was rated as most credible across all trials and the other to the participant who was most accurate in their judgments across all trials. Participants were required to make both truthful and dishonest statements relating to their answers on the Opinion Survey, with the objective being to appear as credible as possible regardless of whether they were telling a lie or the truth. Participants played the role of both “Communicator” (Sender) and “Judge” (Receiver), and their role changed randomly on a trial-by-trial basis.

On each trial, the experimenter presented one participant with a cue card, face-down, specifying a topic from the Opinion Survey and an instruction to lie or tell the truth. This indicated to all participants the Sender for the trial. At a verbal instruction to “go,” the participant turned the card, read the instruction, and then spoke for approximately 20s, presenting either their true or false opinion and some supporting argument. A practice trial was conducted for all participants and the experimenter presented a verbatim example response from the piloting phase of the study to illustrate the type of statement required (“I’m in favour of REALITY TV, it’s got to be one of the most important ways you can learn about the world out there and the way people are going to behave; sometimes seeing a bad example is a good way to shock you down the right path and make you think about what you’re doing or going to do”). Following each trial, Senders were required to rate whether they thought they had been successful or unsuccessful in appearing credible. Simultaneously, Receivers rated whether they thought the opinion given by the Sender was true or false. Each participant completed 10 or 20 trials as Sender, half with their true opinion and half with their false opinion. Statistical analysis demonstrated that performance did not vary as a function of the number of statements produced and so this variable is not analysed further. The 50:50 lie-truth ratio was not highlighted to the participants at any stage to prevent strategic responding in either the Sender or Receiver roles. Following the task, participants were asked to rate on a five point Likert scale ranging from “not at all” to “very much” the degree to which they experienced guilt, anxiety, and cognitive load (referred to as “mental demand”) when lying and when telling the truth. 
Participants were informed of the competitive nature of the task in both the “Sender” and “Receiver” roles, were given an overview of the trial structure (as above), but at no point were explicit instructions given with regards to aspects of behavior that should be attended to during the game, nor potential strategies.
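The trial structure described above can be sketched in code. This is a minimal illustration, not the authors' procedure: the function name `build_schedule`, the participant labels, and the use of a seeded shuffle are all assumptions, and the assignment of Opinion Survey topics to cue cards is omitted. It shows only the two properties stated in the text: each Sender produces an equal number of true and false statements, and the Sender role changes unpredictably from trial to trial.

```python
import random


def build_schedule(participants, trials_per_sender=10, seed=None):
    """Return a shuffled list of (sender, instruction) cue cards.

    Hypothetical helper: each participant receives an equal number of
    "truth" and "lie" cards, and card order is randomised across the group.
    """
    if trials_per_sender % 2:
        raise ValueError("trials_per_sender must be even for a 50:50 split")
    rng = random.Random(seed)
    half = trials_per_sender // 2
    cards = []
    for sender in participants:
        cards += [(sender, "truth")] * half + [(sender, "lie")] * half
    rng.shuffle(cards)  # the Sender role changes randomly from trial to trial
    return cards


# Example: one group of five, ten Sender trials each (labels are made up).
schedule = build_schedule(["P1", "P2", "P3", "P4", "P5"], seed=1)
```

In this sketch the 50:50 split holds per Sender rather than only across the whole group, which matches the statement that each participant gave half of their statements as true opinions and half as false ones.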

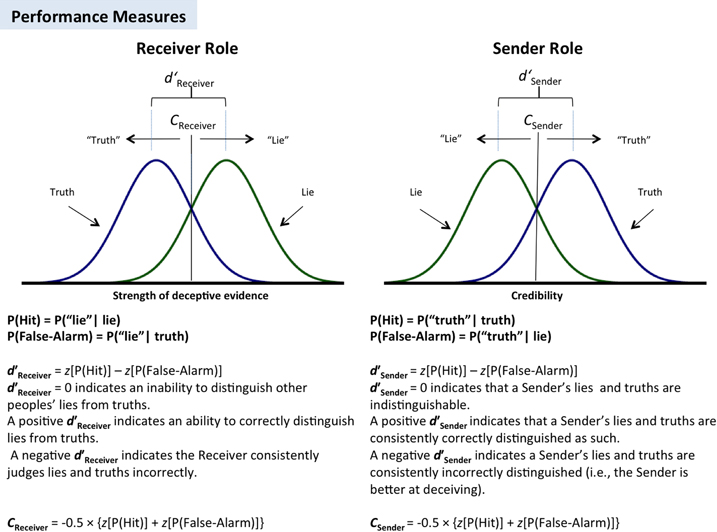

Performance in the Receiver and Sender roles was analysed using SDT (Green and Swets, 1966; see Figure 1). An advantage of SDT is that it allows lie-truth discriminability (d′) to be measured independently of judgment bias (C). Separate SDT measures were calculated for the Receiver and Sender roles: the Receiver’s capacity to discriminate lies from truths was indexed by d′Receiver; the corresponding measure of bias, CReceiver, indicates the tendency of a Receiver to endorse a given opinion as truthful (credulity). The discriminability of the Sender’s truths and lies is indexed by d′Sender. The corresponding measure of bias, CSender, indicates the perceived credibility of a Sender’s opinions, regardless of their veracity. With these measures, better lie detection is indicated by higher d′Receiver values, and increasingly successful deception is indicated by lower (more negative) values of d′Sender.

Figure 1. Individual difference parameters for Senders and Receivers based on signal detection theory (SDT).
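For readers unfamiliar with SDT, the following sketch shows the standard textbook computation of d′ and C from response counts. Several choices here are assumptions rather than details given in the text: lies are treated as the "signal" (so a hit is a lie judged to be a lie), a log-linear correction is applied to avoid infinite z-scores, and the function name `sdt_measures` is hypothetical. The same function could be applied to a Receiver's own judgments, or to all Receivers' judgments of one Sender's statements, to obtain the Receiver and Sender measures respectively.

```python
from statistics import NormalDist


def sdt_measures(hits, misses, false_alarms, correct_rejections):
    """Return (d_prime, criterion) from raw response counts.

    Assumed convention: lies are the signal.
      hits               = lies judged as lies
      misses             = lies judged as truths
      false_alarms       = truths judged as lies
      correct_rejections = truths judged as truths
    """
    # Log-linear correction (an assumption): keeps rates away from 0 and 1.
    hit_rate = (hits + 0.5) / (hits + misses + 1)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    d_prime = z(hit_rate) - z(fa_rate)
    criterion = -0.5 * (z(hit_rate) + z(fa_rate))
    return d_prime, criterion


# Hypothetical Receiver: 20 lies and 20 truths judged.
d_receiver, c_receiver = sdt_measures(hits=13, misses=7,
                                      false_alarms=9, correct_rejections=11)
```

Under this convention a positive criterion means a tendency to judge statements as truthful, and a negative criterion means a tendency to judge them as lies. Whether this sign convention matches the one used in Figure 1 is not confirmed by the text.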

In line with previous studies (Caso et al., 2005), participants reported greater Guilt, Anxiety, and Cognitive Load when lying than when telling the truth (Guilt t(50) = 7.060, p < 0.001, d = 1.226; Anxiety t(50) = 9.598, p < 0.001, d = 1.784; Cognitive Load t(50) = 9.177, p < 0.001, d = 1.421). Also in common with previous studies (Walczyk et al., 2003), Response Latency was significantly shorter when participants told the truth (M = 4.6 s, SD = 2.0) than when they lied (M = 6.5 s, SD = 3.1; t(50) = −3.885, p < 0.001, d = 0.728). Finally, task performance in the Receiver role was analyzed using conventional percentage accuracy rates, and overall accuracy was found to be 54.1% (SD = 8.7%), not significantly different from the 54% reported previously (Levine, 2010) (t(50) = 0.065, p = 0.950, d = 0.013) but significantly greater than chance (t(50) = 3.335, p = 0.002, d = 0.667). Fractional rates addressing accuracy for different types of statement showed a significantly lower mean accuracy for truths (M = 51.1%, SD = 11.9%) than for lies (M = 57.1%, SD = 10.5%; t(50) = −3.731, p < 0.001, d = 0.746). To compare any response bias in the Receiver role with findings from the literature, we calculated the percentage of statements of all types classified by Receivers as truthful and found it to be 46.7% (SD = 8.8%), a figure significantly lower than chance (t(50) = −2.667, p = 0.005, d = 0.535).
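The comparisons against chance reported above are one-sample t-tests, and they can be approximated from the summary statistics alone. The sketch below is illustrative only: the helper name `one_sample_t` is hypothetical, and because it works from the rounded means and standard deviations quoted in the text rather than the raw data, its output will differ slightly from the published values.

```python
import math


def one_sample_t(mean, sd, n, mu0):
    """t statistic for a sample mean tested against a fixed value mu0.

    Degrees of freedom are n - 1. Inputs here are rounded summary
    statistics, so results only approximate the published t values.
    """
    standard_error = sd / math.sqrt(n)
    return (mean - mu0) / standard_error


# Overall Receiver accuracy (54.1%, SD 8.7%, n = 51) against 50% chance.
t_accuracy = one_sample_t(mean=54.1, sd=8.7, n=51, mu0=50.0)

# Percentage of statements judged truthful (46.7%, SD 8.8%) against 50%.
t_truth_judgments = one_sample_t(mean=46.7, sd=8.8, n=51, mu0=50.0)
```

These come out at roughly 3.37 and −2.68, close to the reported t(50) = 3.335 and t(50) = −2.667. The small gaps are consistent with rounding in the summary values.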

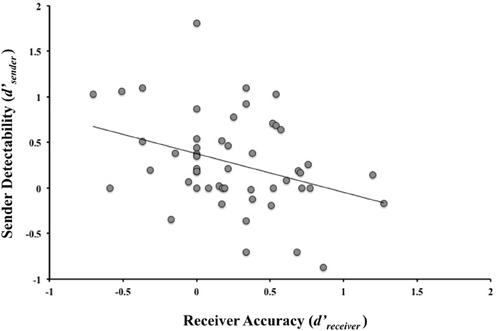

Large individual differences were observed in all four performance measures (M d′Receiver = 0.242, SD = 0.418; M CReceiver = −0.086, SD = 0.233; M d′Sender = 0.272, SD = 0.509; M CSender = 0.097, SD = 0.256). Of principal interest is the fact that detectability in the Sender role (d′Sender) and the ability to discriminate in the Receiver role (d′Receiver) were significantly correlated (r = −0.348, p = 0.006, d = 0.742; see Figure 2). As the ability to discriminate truthful from deceptive messages increased, so did the ability to produce deceptive messages that were hard to discriminate from truthful messages. Interestingly, a trend was observed for decreasing detectability in the Sender role to be associated with a reduced response latency difference between truthful and deceptive statements (Spearman’s rho = 0.259, p = 0.068). The only significant association with either measure of bias (Truth-Bias or Credibility) was a correlation between the Sender’s confidence that they were believed and their Credibility measure, i.e., those who judged they were believed were more likely to be seen as honest independently of the veracity of their statements (Spearman’s rho = −0.316, p = 0.024). Neither IQ (all r values < 0.184), emotional ability relating to the self (all r values < 0.198), nor empathy (all r values < 0.153) correlated with d′Receiver, CReceiver, d′Sender, or CSender.

Figure 2. Correlation between Sender and Receiver performance using SDT measures for Receiver Accuracy (d′Receiver) and sender detectability (d′Sender) (r = −0.348, p = 0.006, d = 0.742).
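The key analysis is a Pearson correlation between each participant's d′Receiver and d′Sender. The sketch below computes Pearson's r from paired scores and converts r to Cohen's d using the common conversion d = 2r / √(1 − r²). The participant scores are invented for illustration, the function names are hypothetical, and the text does not state which conversion the authors used. That said, the standard formula applied to |r| = 0.348 gives about 0.742, which agrees with the reported effect size.

```python
import math


def pearson_r(xs, ys):
    """Pearson product-moment correlation between two equal-length lists."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two lists of equal length with at least 2 items")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def r_to_d(r):
    """Convert a correlation coefficient to Cohen's d (standard conversion)."""
    return 2 * r / math.sqrt(1 - r * r)


# Invented scores for five participants (not data from the study).
d_receiver = [0.1, 0.5, -0.2, 0.8, 0.3]
d_sender = [0.6, 0.1, 0.9, -0.1, 0.3]
r = pearson_r(d_receiver, d_sender)  # negative: better detectors are harder to detect

d_reported = r_to_d(0.348)  # magnitude of the reported correlation
```

A negative r is the expected direction here because higher d′Receiver means better detection, whereas lower d′Sender means more successful deception.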

The relationship between lie production and lie detection abilities was examined using a novel group Sender/Receiver deceptive interaction task (DeceIT) designed to address concerns over ecological validity stemming from the use of tasks that do not require social interaction and fail to generate or maintain motivation in participants (O'Sullivan, 2008). Results indicate that the current paradigm is comparable to previous studies with regards to the participants’ self-reported experience of guilt, anxiety, and cognitive load during the task, and overall lie detection accuracy. In addition, previously reported chronometric cues to deception (Walczyk et al., 2003) were replicated in this study, with significantly longer response latencies when lying than when telling the truth. Moreover, as far as we are aware, this study is the first to provide evidence that the capacity to detect lies and the ability to deceive others are associated. This finding suggests the existence of a “deception-general” ability that may influence both “sides” of deceptive interactions.

At present the “deception-general” ability described above is little more than the association between performance on the deception production and detection tasks; the root of this ability is unknown. One can speculate that the association may be based upon personality characteristics (for example those relating to lie acceptability or those affecting the degree of affective or cognitive consequences of deception), upon learning/experience (which may affect strategies used to detect deception and to appear less deceptive), or on general socio-cognitive ability (e.g., Theory of Mind) which can be called upon during deceptive interactions. However, the data presented here merely indicate that variance in deceptive performance is not a consequence of IQ or emotional ability. It is clear that identification of the precise nature of the proposed “deception-general” ability is an important aim for deception research, and that further research should be devoted to this question.

Interestingly, some evidence was observed for an association between Sender detectability and the difference in response latency between truthful and deceptive statements, with good liars demonstrating smaller differences in response latency. This suggests that, either implicitly or explicitly, Receivers were using Response Latency in order to discriminate truthful from deceptive statements and that good liars exhibited less of this cue. A question for further research is the extent to which the control of response latency is a deliberate and consistent strategy of successful liars.

A significant correlation was also observed between a Sender’s confidence that they would be believed and their credibility, but not their discriminability. Therefore, participants could accurately judge the degree to which they would appear honest irrespective of whether they were lying or telling the truth, but neither their credibility, nor their confidence in appearing credible, was related to their success in producing lies that Receivers were less able to discriminate from truthful statements. This result bears striking resemblance to the finding that confidence in lie detection does not correlate with the ability to detect lies, but does correlate with the degree to which you judge others to be credible (DePaulo et al., 1997).

The absence of an association between IQ or emotional intelligence and the ability to produce or detect lies is in need of replication, but if supported, suggests that deceptive ability is not simply a product of cognitive or affective ability. Such a finding suggests deception-related knowledge structures that are used both to guide one’s own behavior, and aid in the interpretation of another’s behavior. The use of a shared representation system for both the self and the other is common e.g., “mirror neurons” code for one’s own and another’s action (Di Pellegrino et al., 1992), brain regions active when emotions are experienced by the self are active in response to the observation of another’s emotion (Wicker et al., 2003; Singer et al., 2004), and primary and secondary somatosensory cortices are active upon observation of another being touched (Blakemore et al., 2005). The use of a shared representational system for self and other typically promotes the detection of corresponding states; for example, induced depression increases the degree to which faces are viewed as sad (Bouhuys et al., 1995), while execution of an action enhances perception of that action when executed by another (Casile and Giese, 2006). In the current study, however, the detection of deception in another was associated with the control of deception-related cues in the self. Further work is needed to identify the relationship between deceptive success, control of deceptive cues, and the use of a shared representational system.

Despite addressing what have been described as flaws in some of the previous research on deception, two further methodological issues must be discussed in relation to the use of the DeceIT paradigm, which also apply to much of the experimental work on deception. These issues are related, and refer to the fact that in a typical experiment the experimenter usually (1) sanctions the participant’s lie, and (2) instructs the participant when to lie.

Many authors have commented negatively on the use of sanctioned lies in experimental studies of deception, arguing that the use of sanctioned lies results in the liar feeling less guilt (Ekman, 1988; Vrij, 2000), less motivation to lie and, therefore, less accompanying arousal and cognitive effort (Feeley and de Turck, 1998), and less “decision-making under conflict” (Sip et al., 2008). These arguments suggest that the use of sanctioned lies in experimental studies results in a reduction in the available cues to detection. However, empirical studies of sanctioned versus unsanctioned lies reveal very few consistent differences between cues exhibited during both types of lie. Feeley (1996) found that interviewers could detect no differences in the behavior of participants telling sanctioned or unsanctioned lies, while Feeley and de Turck (1998) found that more cues to deception were associated with sanctioned lies, than with unsanctioned lies. In their meta-analysis of deception detection studies, Sporer and Schwandt (2007) identified only one deceptive cue (smiling) from the 11 studied that differed as a result of whether the lie was sanctioned or unsanctioned.

The use of sanctioned lies in experiments has also been criticized due to a claimed lack of ecological validity. However, proponents of the use of sanctioned lies in the laboratory argue that even if levels of motivation and cognitive effort are reduced through the use of sanctioned lies, the net effect may be to make the deception more ecologically valid. In everyday life most lies are unplanned, of little importance, and of no consequence if detected (DePaulo et al., 1996; Kashy and DePaulo, 1996). In addition, the types of sanctioned lie used in most laboratory studies of deception (including the present study) involve false reports about attitudes to issues or individuals, and are precisely those most often told in everyday communication (DePaulo and Rosenthal, 1979; Levine and McCornack, 1992; Feeley and de Turck, 1998). These lies are often sanctioned by society when used to, for example, bolster another’s ego (“white lies”), while more important lies may be sanctioned by the liar’s religion, political party, friends/family, or ideals.

Instructed lies have also been argued to lack ecological validity—it has been suggested that rather than lying, participants are merely following the experimenter’s instructions (e.g., Kanwisher, 2009). As a result it has been argued that participants should be free to choose when, and if, they lie during an experiment (e.g., Sip et al., 2010). Issues regarding statistical power and experimental control notwithstanding, we suggest that the basic premise that instructed lies are not ecologically valid may be flawed. For example, employees may be instructed to lie to a client or regulator by their supervisor, children may be instructed to lie to family members by their parents, and many people are compelled to lie by the situation they are in (in response to financial, legal, or moral pressure). Therefore, the choice of when to lie may not always truly exist in everyday life. Furthermore, solely studying non-instructed lies in an experimental setting may induce experimental confounds relating to confidence. In an experiment where the participant can choose whether or not to lie, it is likely to be the case that they only tell lies that they are confident are likely to be successful. Neuroimaging studies, therefore, when attempting to elucidate neural activity differentiating lies from truths, may instead identify neural activity differentiating topics about which participants believe they can lie successfully (which may be topics about which they do not hold a strong opinion) from those that they believe they cannot lie successfully about (potentially topics about which they do have a strong opinion). Across participants, the number of lies told is also likely to vary as a function of the participant’s belief that they are a good liar, meaning that in any corpus of lie items the majority will be contributed by participants who believe they are good liars. 
Whether this participant sampling error will result in a distribution of lies which is skewed relative to an ecologically valid distribution of lies depends both on the degree to which individuals have control over when to lie in everyday life, and the degree to which instructed lies are qualitatively different from lies freely chosen. Both of these factors are presently inestimable given current data.

The implications of the arguments pertaining to the study of sanctioned and instructed lies in relation to the DeceIT paradigm are unclear. Although the experimenter gives the participant “permission” to lie, so that lies are both sanctioned and instructed, the lies are directed not toward the experimenter but toward other participants, who have not given their permission and who, owing to the competitive scenario, are disadvantaged when the participant lies successfully. Furthermore, in the present study, levels of cognitive effort, guilt, and anxiety were all significantly elevated during deceptive trials, indicating that the hypothesized reduction in guilt, motivation, and cognitive effort as a result of sanctioning lies was at least minimized using the DeceIT paradigm.

As discussed previously, it has been argued that the ability to deceive successfully, and to detect deception, each confer an evolutionary advantage (Dawkins and Krebs, 1979; Bond and Robinson, 1988). Indeed, several authors argue that the increasing utility of deception with larger social group size has driven the increase in neocortical volume observed in humans (Trivers, 1971; Humphrey, 1976) and other primates. Byrne and Corp (2004) demonstrated that among modern primate species there is an association between neocortex size and the use of tactical deception, with those species with neocortex sizes closer to humans engaging in more tactical deception.

These results do not necessarily imply that the ability to lie itself is genetically determined; it is possible that deception is a function of learning within social contexts and that different individuals have different propensities to learn socially (Cheney et al., 1986; Byrne, 1996). These individual differences in social learning may come about as a result of genetically determined differing levels of attention to conspecifics for example (Heyes, 2011). Bond et al. (1985) advance a third possibility in which individuals inherit a “demeanour bias,” which determines the degree to which other species members are likely to judge their statements as deceptive (indexed by Sender Credibility, CSender, in the current study). They suggest that individuals with a demeanour bias that results in a high probability of deceptive success are likely to use deception frequently and, therefore, improve their abilities. Conversely, those with a demeanour bias leading to a low probability of being judged truthful, are likely to learn quickly that deception is not a successful strategy for them and, therefore, to use alternative strategies. The association between a Sender’s confidence that they would be believed and their credibility/demeanour bias in the present experiment lends support to this hypothesis. It suggests that individuals track their demeanour bias and associate it with the probability of lie success.

In summary, the present study employed an interactive deception task designed to address ecological-validity concerns (O'Sullivan, 2008) and allow the within-subject comparison of deception production and detection ability. The paradigm brings motivated Senders and Receivers together in a competitive, interactive setting, and allows Receivers full access to both verbal and non-verbal cues to deception. The key finding was that Receiver accuracy and Sender detectability were reliably associated: better lie detectors tended to be better deceivers, suggesting some underlying “deception-general” ability that transfers to both aspects of deceptive engagements. Deception has been argued to be a difficult task to undertake successfully, but with the potential to confer evolutionary advantage (Spence, 2004). As proposed by Serota et al. (2010) and supported by evidence from this experiment, a small percentage of individuals may have the skills necessary to effect deception successfully, and to detect deception in their interaction partners.
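To make the key association concrete, the following is a minimal sketch of how a signal detection sensitivity index (d′) might be computed for each participant in both roles and then correlated across participants. It is an illustration under stated assumptions, not the analysis code used in this study: the function names, the log-linear correction, and all response counts are hypothetical. Here a "hit" is a lie classified as a lie and a "false alarm" is a truth classified as a lie; under that convention a higher Sender d′ means the Sender's statements were easier to classify, so better detectors being better deceivers would appear as a negative correlation.

```python
from statistics import NormalDist


def d_prime(hits, misses, false_alarms, correct_rejections):
    """Sensitivity index d' = z(hit rate) - z(false-alarm rate)."""
    # Assumption: a log-linear correction (add 0.5 to each cell) keeps the
    # rates away from 0 and 1, where the inverse normal CDF is undefined.
    hit_rate = (hits + 0.5) / (hits + misses + 1)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)
    z = NormalDist().inv_cdf
    return z(hit_rate) - z(fa_rate)


def pearson_r(xs, ys):
    """Pearson product-moment correlation between two equal-length lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5


# Hypothetical counts (hits, misses, false alarms, correct rejections)
# for four made-up participants; these are NOT data from the study.
# As Receiver: how each participant classified other people's statements.
receiver_counts = [(14, 6, 5, 15), (10, 10, 9, 11), (16, 4, 4, 16), (11, 9, 10, 10)]
# As Sender: how other people classified this participant's statements.
sender_counts = [(9, 11, 10, 10), (13, 7, 6, 14), (8, 12, 11, 9), (14, 6, 7, 13)]

receiver_d = [d_prime(*counts) for counts in receiver_counts]
sender_d = [d_prime(*counts) for counts in sender_counts]

# Negative r: participants who detect well are themselves harder to detect.
r = pearson_r(receiver_d, sender_d)
print(f"Receiver d' vs Sender d': r = {r:.2f}")
```

With real data, each participant would contribute one Receiver value and one Sender value, and the sign and reliability of the correlation would then be assessed with an appropriate inferential test.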

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

We would like to acknowledge the work of the late Sean Spence in the theoretical groundwork of this paper. This work was supported by an Economic and Social Research Council (ESRC) studentship ES/I022996/1 awarded to Gordon R. T. Wright. Christopher J. Berry was supported by ESRC grant RES-063-27-0127.

Blakemore, S-J., Bristow, D., Bird, G., Frith, C. D., and Ward, J. (2005). Somatosensory activations during the observation of touch and a case of vision-touch synaesthesia. Brain 128, 1571–1583.

Bond, C. F., and DePaulo, B. M. (2006). Accuracy of deception judgments. Pers. Soc. Psychol. Rev. 10, 214–234.

Bond, C. F., and DePaulo, B. M. (2008). Individual differences in judging deception: accuracy and bias. Psychol. Bull. 134, 477–492.

Bond, C. F., Kahler, K. N., and Paolicelli, L. M. (1985). The miscommunication of deception: an adaptive perspective. J. Exp. Soc. Psychol. 21, 331–345.

Bouhuys, L., Bloem, G. M., and Groothuis, T. G. G. (1995). Affective perception of facial emotional expressions in healthy subjects. J. Affect. Disorders 33, 215–226.

Buller, D. B., and Burgoon, J. K. (1996). Interpersonal deception theory. Commun. Theor. 6, 203–242.

Byrne, R. W., and Corp, N. (2004). Neocortex size predicts deception rate in primates. Proc. R. Soc. 271, 1693–1699.

Casile, A., and Giese, M. A. (2006). Nonvisual motor training influences biological motion perception. Curr. Biol. 16, 69–74.

Caso, L., Gnisci, A., Vrij, A., and Mann, S. (2005). Processes underlying deception: an empirical analysis of truth and lies when manipulating the stakes. J. Invest. Psychol. Offender Profiling 2, 195–202.

Cheney, D., Seyfarth, R. M., and Smuts, B. B. (1986). Social relationships and social cognition in nonhuman primates. Science 234, 1361–1366.

Davis, M. H. (1980). A multidimensional approach to individual differences in empathy. JSAS Cat. Selected Docs. Psychol. 10, 85.

Dawkins, R., and Krebs, J. R. (1979). Arms races between and within species. Proc. R. Soc. B Biol. Sci. 205, 489–511.

DePaulo, B. M., Charlton, K., Cooper, H., Lindsay, J. J., and Muhlenbruck, L. (1997). The accuracy-confidence correlation in the detection of deception. Pers. Soc. Psychol. Rev. 1, 346–357.

DePaulo, B. M., Kashy, D. A., Kirkendol, S. E., Wyer, M. M., and Epstein, J. A. (1996). Lying in everyday life. J. Pers. Soc. Psychol. 70, 979–995.

DePaulo, B. M., and Kirkendol, S. E. (1989). “The motivational impairment effect in the communication of deception,” in Credibility Assessment, ed J. C. Yuille (Dordrecht, The Netherlands: Kluwer), 51–70.

DePaulo, B. M., Lindsay, J. J., Malone, B. E., Muhlenbruck, L., Charlton, K., and Cooper, H. (2003). Cues to deception. Psychol. Bull. 129, 74–118.

Di Pellegrino, G., Fadiga, L., Fogassi, L., Gallese, V., and Rizzolatti, G. (1992). Understanding motor events: a neurophysiological study. Exp. Brain Res. 91, 176–180.

Ekman, P. (1988). Lying and nonverbal behavior: theoretical issues and new findings. J. Nonverbal. Behav. 12, 163–175.

Feeley, T. H. (1996). Exploring sanctioned and unsanctioned lies in interpersonal deception. Commun. Res. 13, 164–173.

Feeley, T. H., and de Turck, M. A. (1998). The behavioral correlates of sanctioned and unsanctioned deceptive communication. J. Nonverbal. Behav. 22, 189–204.

Frank, M. G., and Ekman, P. (1997). The ability to detect deceit generalizes across different types of high-stakes lies. J. Pers. Soc. Psychol. 72, 1429–1439.

Frank, M. G., and Ekman, P. (2004). Appearing truthful generalizes across different deception situations. J. Pers. Soc. Psychol. 86, 486–495.

Green, D. M., and Swets, J. A. (1966). Signal Detection Theory and Psychophysics. New York, NY: Wiley.

Heyes, C. (2011). What’s social about social learning? J. Comp. Psychol. [Advance online publication].

Humphrey, N. K. (1976). “The social function of intellect,” in Growing Points in Ethology, eds P. P. G. Bateson and R. A. Hinde (Cambridge: Cambridge University Press), 303–317.

Kanwisher, N. (2009). “The use of fMRI in lie detection: what has been shown and what has not,” in Using Imaging to Identify Deceit: Scientific and Ethical Questions, eds E. Bizzi, S. E. Hyman, M. E. Raichle, N. Kanwisher, E. A. Phelps, S. J. Morse, W. Sinnott-Armstrong, J. S. Rakoff and H. T. Greely (Cambridge, MA: American Academy of Arts and Sciences), 7–13.

Levine, T. R., and McCornack, S. A. (1992). Linking love and lies: a formal test of the McCornack and Parks model of deception detection. J. Soc. Pers. Relat. 9, 143–154.

Martin, N. G., and Eysenck, H. J. (1976). “Genetical factors in sexual behaviour,” in Sex and Personality, ed H. J. Eysenck (London: Open Books), 192–219.

Martin, N. G., and Jardine, R. (1986). “Eysenck’s contributions to behaviour genetics,” in Hans Eysenck: Consensus and Controversy, eds S. Modgil and C. Modgil (Philadelphia, PA: The Falmer Press), 13–47.

Mayer, J. D., Caruso, D., and Salovey, P. (1999). Emotional intelligence meets traditional standards for an intelligence. Intelligence 27, 267–298.

Meissner, C. A., and Kassin, S. M. (2002). “He’s guilty!”: investigator bias in judgments of truth and deception. Law Hum. Behav. 26, 469–480.

O’Sullivan, M. (2008). Home runs and humbugs: comment on Bond and DePaulo (2008). Psychol. Bull. 134, 493–497; discussion 501–503.

Parker, J. D. A., Taylor, G. J., and Bagby, R. M. (2001). The relationship between emotional intelligence and alexithymia. Pers. Indiv. Differ. 30, 107–115.

Porter, S., McCabe, S., Woodworth, M., and Peace, K. A. (2007). Genius is 1% inspiration and 99% perspiration? … or is it? An investigation of the effects of motivation and feedback on deception detection. Legal Crim. Psychol. 12, 297–309.

Rowe, D. C. (1986). Genetic and environmental components of antisocial behavior: a study of 265 twin pairs. Criminology 24, 513–532.

Serota, K. B., Levine, T. R., and Boster, F. J. (2010). The prevalence of lying in America: three studies of self-reported lies. Hum. Commun. Res. 36, 2–25.

Singer, T., Seymour, B., O’Doherty, J., Kaube, H., Dolan, R. J., and Frith, C. D. (2004). Empathy for pain involves the affective but not sensory components of pain. Science 303, 1157–1162.

Sip, K. E., Lynge, M., Wallentin, M., McGregor, W. B., Frith, C. D., and Roepstorff, A. (2010). The production and detection of deception in an interactive game. Neuropsychologia 48, 3619–3626.

Sip, K. E., Roepstorff, A., McGregor, W., and Frith, C. D. (2008). Detecting deception: the scope and limits. Trends Cogn. Sci. 12, 48–53.

Spence, S. A., Hunter, M. D., Farrow, T. F. D., Green, R. D., Leung, D. H., Hughes, C. J., and Ganesan, V. (2004). A cognitive neurobiological account of deception: evidence from functional neuroimaging. Philos. T. R. Soc. B. 359, 1755–1762.

Sporer, S. L., and Schwandt, B. (2007). Moderators of nonverbal indicators of deception: a meta-analytic synthesis. Psychol. Public Policy Law 13, 1–34.

Vrij, A. (2000). Detecting Lies and Deceit. The Psychology of Lying and the Implications for Professional Practice. Chichester: Wiley.

Walczyk, J. J., Roper, K. S., Seemann, E., and Humphrey, A. M. (2003). Cognitive mechanisms underlying lying to questions: response time as a cue to deception. Appl. Cogn. Psychol. 17, 755–774.

Wechsler, D. (1999). Wechsler Abbreviated Scale of Intelligence (WASI). San Antonio, TX: Harcourt Assessment.

Keywords: deception, deception detection, lying, signal detection theory, social cognition

Citation: Wright GRT, Berry CJ and Bird G (2012) “You can’t kid a kidder”: association between production and detection of deception in an interactive deception task. Front. Hum. Neurosci. 6:87. doi: 10.3389/fnhum.2012.00087

Received: 28 December 2011; Paper pending published: 14 February 2012; Accepted: 26 March 2012; Published online: 17 April 2012.

Edited by:

Chris Frith, Wellcome Trust Centre for Neuroimaging at University College London, UK

Reviewed by:

Bradford C. Dickerson, Harvard Medical School, USA

Copyright: © 2012 Wright, Berry and Bird. This is an open-access article distributed under the terms of the Creative Commons Attribution Non Commercial License, which permits non-commercial use, distribution, and reproduction in other forums, provided the original authors and source are credited.

*Correspondence: Geoffrey Bird, Department of Psychological Sciences, Birkbeck College, University of London, Malet Street, WC1E 7HX London, UK. e-mail: g.bird@bbk.ac.uk

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
