
ORIGINAL RESEARCH article

Front. Hum. Neurosci., 31 October 2011

Sec. Sensory Neuroscience

volume 5 - 2011 | https://doi.org/10.3389/fnhum.2011.00111

Studies employing event-related potentials have shown that when participants are monitoring for a novel target face, the presentation of their own face elicits an enhanced negative brain potential in posterior channels approximately 250 ms after stimulus onset. Here, we investigate whether the own face N250 effect generalizes to other highly familiar objects, specifically, images of the participant’s own dog and own car. In our experiments, participants were asked to monitor for a pre-experimentally unfamiliar target face (Joe), a target dog (Experiment 1: Joe’s Dog) or a target car (Experiment 2: Joe’s Car). The target face and object stimuli were presented with non-target foils that included novel face and object stimuli, the participant’s own face, their own dog (Experiment 1), and their own car (Experiment 2). The consistent findings across the two experiments were the following: (1) the N250 potential differentiated the target faces and objects from the non-target face and object foils and (2) despite being non-targets, the own face and own objects produced an N250 response that was equal in magnitude to the target faces and objects by the end of the experiment. Thus, as indicated by its response to personally familiar and recently familiarized faces and objects, the N250 component is a sensitive index of individuated representations in visual memory.

Successful face recognition depends on our ability to quickly and accurately individuate a particular face (e.g., that of a roommate, spouse, or best friend) from the hundreds of faces that we encounter every day. Rapid identification of people at the individual level is the hallmark of human face recognition (Tanaka, 2001). Among all the familiar faces that we individuate, perhaps no face is as familiar or as relevant to us as our own. In addition to its extensive familiarity, the own face is uniquely salient because it references one’s self-identity (Keenan et al., 2001). However, personal individuation is not unique to face recognition. A similar kind of individuation occurs when we reach for our favorite coffee mug in the kitchen cupboard or try to locate our car in a crowded parking lot. Whether the perception and identification of these highly familiar, personal objects recruit neural processes similar to those engaged by one’s own face is still an open question. Using event-related potentials (ERPs), we compare brain activity elicited by the participant’s own face with that elicited by other personal objects (i.e., the participant’s own dog and own car). We further compare the brain activity elicited by the own face and own objects with that elicited by newly familiarized faces and objects that are individuated by a name label.

Studies measuring adult ERPs have shown that faces are differentiated from non-face objects by a larger negative potential recorded over posterior electrode locations, approximately 170 ms (N170) after stimulus onset (Bentin et al., 1996; Rossion et al., 2003). Traditionally, the N170 has not been found to distinguish familiar from unfamiliar faces (e.g., celebrities, politicians) or to be sensitive to memory-related factors (Bentin and Deouell, 2000; Eimer, 2000; Schweinberger et al., 2002; Tanaka et al., 2006). However, recent investigations incorporating adaptation techniques or various other individuation tasks have found N170 effects for repeated or familiar stimuli (Jemel et al., 2005, 2009; Jacques and Rossion, 2006; Kovacs et al., 2006; Caharel et al., 2009; Keyes et al., 2010), suggesting that the N170’s sensitivity to face familiarity may be task-dependent.

In addition to being sensitive to the task, the N170 has also been found to distinguish among other important non-face objects present in the environment. For example, the N170 is larger in response to birds relative to dogs in bird experts, and to dogs relative to birds in dog experts (Tanaka and Curran, 2001). Moreover, training novices with cars or birds leads to increased N170 responses post-training relative to pre-training (Scott et al., 2006, 2008). Combined, these results suggest that the N170, traditionally thought to be part of a system involved in the initial early coding of face and face-like stimuli, is modulated by familiarity and experience for both faces and objects.

The N250 component, also recorded over posterior electrode locations, peaks approximately 250 ms after stimulus onset and, unlike the N170, is consistently found to index face familiarity and repetition (Schweinberger et al., 2002, 2004). For example, priming experiments have shown that repeated presentations of a familiar politician or celebrity elicit a larger N250 than repeated presentations of novel faces (Schweinberger et al., 2002, 2004). The N250 component is also sensitive to novel faces familiarized under laboratory training conditions (Tanaka et al., 2006) and to the presentation of a familiar person across multiple viewpoints (Kaufmann et al., 2009)¹. These results indicate that the N250 component indexes perceptual memory representations for faces at the level of the individual.

However, the N250 familiarity effect is not specific to faces; it can also be elicited by familiar objects after expertise training (e.g., cars, birds; Scott et al., 2006, 2008). For example, participants trained to identify birds or cars at the subordinate level (e.g., Snowy Owl, Subaru Outback) exhibit an enhanced N250 after subordinate-level training relative to basic-level training (e.g., Owl, Sedan). This kind of subordinate-level expertise is also present when viewing a single, personalized object (Sugiura et al., 2005; Miyakoshi et al., 2007). For example, Miyakoshi et al. (2007) asked participants to passively view cups, shoes, handbags, and umbrellas that were: (1) personal items belonging to the participant, (2) generically familiar items that were pre-experimentally known but not personally owned, or (3) novel items not known to the participants. The results showed a left-lateralized N250 component that distinguished personally familiar and generically familiar objects from novel objects, suggesting that items previously known to participants accessed stored representations in visual memory.

Previous research suggests that the N250 differentiates objects of expertise and personally familiar objects from unfamiliar objects. However, these results do not address whether the N250 responds differently to highly familiar faces and highly familiar objects. More specifically, to date no experiments have compared the N250 elicited by the participant’s own face with the N250 elicited by other highly familiar objects. Arguably, one’s own face is the definitive marker of self-identity and has been hypothesized to be our most distinctive physical feature (Tsakiris, 2008). One’s own face is identified more quickly in a visual search task (Tong and Nakayama, 1999), shows greater hemispheric specialization (Keenan et al., 2003), and elicits enhanced fusiform gyrus activity (Kircher et al., 2001). It has also been hypothesized that one’s own face captures attentional resources (Brédart et al., 2006). For example, in a flanker task in which participants judged whether two flanking digits were both even or odd, performance was significantly disrupted when the own face was centrally presented as a to-be-ignored stimulus (Devue and Brédart, 2008). Thus, the own face is a prepotent stimulus that seems to automatically grab the observer’s attention (for a revised account, see Devue et al., 2009).

In the current study, we adapted a “Joe/No Joe” task previously used to test own-face recognition (Tanaka et al., 2006) to examine the processing of highly familiar personal objects. In the “Joe/No Joe” task, participants are asked to actively monitor for a target face (“Joe”) that is sequentially presented along with two types of non-target faces: completely novel faces and the participant’s own face. Tanaka et al. (2006) found that, despite its non-target status, the participant’s own face generated a robust N250 equivalent to that elicited by the target Joe face, suggesting that the own face obligatorily activates a pre-existing representation. In the current study, participants were asked to monitor for a target face (Joe) and a target object (Joe’s dog or Joe’s car) that were shown among other novel faces, novel objects, the participant’s own face, and a personally familiar object (Experiment 1: the participant’s own dog; Experiment 2: the participant’s own car). The primary objectives of this study were to test whether personally familiar objects elicit an obligatory N250 response similar to that produced by one’s own face, and to compare the N250 responses to the own face and own object with the N250 responses to the recently familiarized, task-relevant face (Joe) and object (Joe’s Dog, Joe’s Car).

In Experiment 1, participants were asked to monitor for a novel face and a novel dog target that were serially presented among their own face and dog and novel face and dog stimuli.

Participants included 12 (3 male, 9 female) undergraduate students recruited from the University of Victoria, aged 22–40 years (mean = 27.25). Participants were right-handed and had normal or corrected-to-normal vision. All participants gave informed consent to participate in this study. Participants were pre-screened to ensure that they were not familiar with any of the other subjects in the study.

Each participant completed two sessions on different days. During the first session, pictures of the participants and their dogs were taken. During the second session, ERPs and behavioral responses were recorded while participants completed a target-detection task. Session two took approximately 2 h. Participants were paid $30 for their time.

Stimuli included full color photographs of 12 faces and 12 dogs. Photographs of faces and dogs were taken with a digital camera in a well-lit room under similar conditions and were equated for size, luminance, and contrast using Adobe Photoshop. All images were cropped to show only the face or dog stimuli and were placed on a gray background. The assignment of stimuli to each experimental condition (Joe, Own, Other) was counterbalanced across participants so that each participant was exposed to each face and dog equally, and each face/dog appeared in each condition. All stimuli were 270 × 360 pixels and were presented at a visual angle of 9.03° horizontal and 11.95° vertical. Images were presented on a computer monitor.
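The counterbalancing constraint described above (each face or dog appearing in every condition across participants) can be met with a simple rotation. The exact assignment scheme is not reported, so the sketch below is a hypothetical illustration: within a group of n participants whose own items make up the stimulus set, participant k is assigned item k as the own item and item (k + 1) mod n as the target, and the remaining items serve as other stimuli. The function name `assign_conditions` is illustrative.

```python
from collections import Counter

def assign_conditions(n_items=6):
    """Hypothetical rotation of condition roles across a group of participants.

    Participant k contributes item k (their own face or dog), monitors item
    (k + 1) % n_items as the target, and sees all remaining items as "other".
    Returns one {item: role} mapping per participant.
    """
    plan = []
    for k in range(n_items):
        roles = {}
        for item in range(n_items):
            if item == k:
                roles[item] = "own"
            elif item == (k + 1) % n_items:
                roles[item] = "joe"
            else:
                roles[item] = "other"
        plan.append(roles)
    return plan

plan = assign_conditions(6)
# Count how often each item takes each role across the group.
role_counts = Counter((item, role) for roles in plan for item, role in roles.items())
```

Under this rotation, every item serves once as an own item, once as a target, and four times as an other item across six participants, so item-level differences cannot masquerade as condition effects.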

All procedures were approved by the Institutional Review Board at the University of Victoria and were conducted in accordance with this approval. After digital photographs of each participant’s face and dog were taken, participants returned on a different day to complete the Joe/No Joe task while ERPs and behavioral measures of accuracy were recorded.

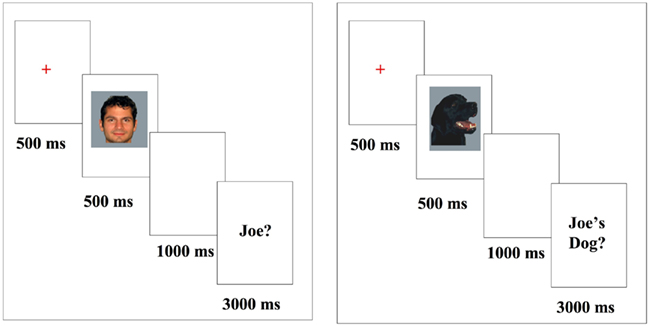

In the Joe/No Joe task (see Figure 1), participants were introduced to a target face and a target dog (e.g., “Joe” and “Joe’s Dog”) and then viewed and responded to face and dog stimuli that included pictures of their own face and dog, the target face and dog, and novel faces and dogs. After viewing each stimulus, participants indicated whether the face or dog was “Joe” or “not Joe” using two separate response buttons. Females saw six female “Jane” faces and males saw six male “Joe” faces, including their own face, as well as the six dogs owned by the corresponding people. Each trial consisted of a fixation cross (500 ms), followed by the face or dog image (500 ms), a blank screen (1000 ms), and a response prompt that remained on the screen until a response was made, up to 3000 ms. Participants were instructed to respond only when prompted, so that stimulus exposure and response were separated by a 1000-ms interval. Each participant completed 35 blocks of trials with self-paced rest breaks in between. Each block contained 24 trials: 2 presentations of each of the 6 faces and 6 dogs. In total, 840 trials were completed.

Figure 1. Stimulus presentation: each image was presented for 500 ms and followed by a “Joe?/Joe’s Dog?” forced-choice response prompt (duration 3000 ms). Each image was preceded by a fixation cross.
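The block structure described above (35 blocks of 24 trials, with each of the six faces and six dogs shown twice per block) can be sketched as a trial list. This is a minimal illustration, not the authors’ presentation script: the stimulus labels, the split into one target, one own item, and four other items per category, and within-block shuffling with Python’s `random` module are assumptions.

```python
import random
from collections import Counter

N_BLOCKS = 35       # blocks per session
REPS_PER_BLOCK = 2  # presentations of each stimulus per block

def make_stimuli():
    """Hypothetical stimulus set: (category, index, role) for 6 faces and 6 dogs.

    Assumes one target ("joe"), one own item, and four "other" items per category.
    """
    roles = ["joe", "own"] + ["other"] * 4
    return [(category, i, role)
            for category in ("face", "dog")
            for i, role in enumerate(roles)]

def make_session(stimuli, seed=0):
    """Return a list of blocks; each block shows every stimulus twice in random order."""
    rng = random.Random(seed)
    blocks = []
    for _ in range(N_BLOCKS):
        block = stimuli * REPS_PER_BLOCK
        rng.shuffle(block)
        blocks.append(block)
    return blocks

blocks = make_session(make_stimuli())
trials = [trial for block in blocks for trial in block]
print(len(blocks[0]), len(trials))  # 24 840
role_counts = Counter(role for _, _, role in trials)  # 140 joe, 140 own, 560 other
```

Each stimulus is therefore presented 35 × 2 = 70 times, and target trials make up 4 of every 24 trials (one sixth).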

Scalp voltages were collected with a 41-channel Easy Cap using Brain Vision Recorder software (Version 1.3, Brain Products, GmbH, Munich, Germany). Amplified analog voltages (Quick Amp, Brain Products, GmbH, Munich, Germany; 0.017–67.5 Hz bandpass, 90 dB/octave roll-off) were digitized at 250 Hz and collected continuously. Recording filter cutoffs were defined as the frequencies at which amplitude was reduced by 3 dB. Electrode impedances were kept below 10 kΩ throughout the experiment, and eye movements were measured using electrodes placed at the left and right canthi and below the right eye. Offline, the EEG data were band-pass filtered at 0.1–40 Hz; the high- and low-pass cutoffs again reduced amplitude by 3 dB at their cutoff frequencies, corresponding to a halving of signal power. EEG data were re-referenced to combined left and right earlobes (Joyce and Rossion, 2005; Luck, 2005; similar to Tanaka and Pierce, 2008). Ocular artifacts were corrected using the algorithm of Gratton et al. (1983), and trials with a voltage change of 35 μV or greater were rejected. For each trial and participant, 1000-ms EEG segments were extracted around stimulus onset (−200 to 800 ms). ERPs were baseline-corrected with respect to a 100-ms pre-stimulus interval and then averaged separately for each condition (face/dog × Joe/own/other) and across participants.
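The offline epoching, baseline correction, artifact rejection, and condition averaging described above can be sketched with NumPy. This is a minimal sketch, not the authors’ Brain Vision pipeline: it assumes continuous data in μV as a (channels × samples) array and event onsets as sample indices, and it interprets the 35 μV criterion as a peak-to-peak range within the epoch, which is only one possible reading of “a change in voltage of 35 μV or greater.” Filtering and ocular correction (Gratton et al., 1983) are omitted, and all function names are illustrative.

```python
import numpy as np

FS = 250                # sampling rate (Hz)
TMIN, TMAX = -0.2, 0.8  # epoch limits (s)
BASELINE = (-0.1, 0.0)  # baseline window (s)
REJECT_UV = 35.0        # assumed peak-to-peak rejection threshold (uV)

def epoch(data, onsets, fs=FS, tmin=TMIN, tmax=TMAX):
    """Cut (n_trials, n_channels, n_times) epochs around each onset sample."""
    start, stop = int(round(tmin * fs)), int(round(tmax * fs))
    return np.stack([data[:, o + start:o + stop] for o in onsets])

def baseline_correct(epochs, fs=FS, tmin=TMIN, window=BASELINE):
    """Subtract the mean of the pre-stimulus window from each channel of each epoch."""
    i0 = int(round((window[0] - tmin) * fs))
    i1 = int(round((window[1] - tmin) * fs))
    return epochs - epochs[:, :, i0:i1].mean(axis=2, keepdims=True)

def reject(epochs, threshold=REJECT_UV):
    """Keep epochs whose peak-to-peak range is below threshold on every channel."""
    ptp = epochs.max(axis=2) - epochs.min(axis=2)
    keep = (ptp < threshold).all(axis=1)
    return epochs[keep], keep

def average_by_condition(epochs, labels):
    """Average the retained epochs separately for each condition label."""
    labels = np.asarray(labels)
    return {c: epochs[labels == c].mean(axis=0) for c in np.unique(labels)}

# Synthetic usage: 4 channels of noise, 18 events in three conditions.
rng = np.random.default_rng(0)
data = rng.normal(0.0, 2.0, size=(4, 10_000))
onsets = np.arange(500, 9500, 500)
labels = np.asarray(["own", "joe", "other"] * 6)
ep = baseline_correct(epoch(data, onsets))  # 250 samples = 1000 ms per epoch
kept, mask = reject(ep)
erps = average_by_condition(kept, labels[mask])
```

Averaging within each condition before averaging across participants yields one ERP per participant and condition, which is the unit entered into the analyses described below.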

Accuracy in each condition was at ceiling and thus no analyses were conducted using measures of accuracy. Reaction times were recorded but were not considered informative due to the delayed nature of the paradigm in which participants were instructed not to respond until the prompt.

Each component of interest (N170, N250) was analyzed separately. Channels were selected by identifying the electrode locations in the right and left hemispheres with the largest N170 and N250 across all conditions (channels PO7 and PO8). For the N170, the maximum (negative) peak amplitude and its latency were determined for each participant within a 110–190 ms window. To minimize selection bias, peak times and channels were determined from ERPs averaged across all conditions. The average peak latency was then used to define the window for the N170 mean amplitude calculation, spanning ±1 SD around the average peak latency (142–183 ms). For the N250, mean amplitude was calculated within a window chosen on the basis of visual inspection and previous research (235–335 ms; Scott et al., 2006, 2008; Tanaka et al., 2006), rather than with the peak latency approach used for the N170, because N250 peaks are difficult to measure reliably in individual subjects². Statistical analyses were conducted on these mean amplitudes, which were submitted to a 2 Stimulus Type (face, dog) × 3 Condition (Joe, own, other) × 2 Experimental half (first half, second half) × 2 Hemisphere (left, right) repeated measures ANOVA. Bonferroni-corrected paired t-tests were conducted to follow up significant effects; uncorrected values are also reported below to help elucidate some of the significant interactions.
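The two-step N170 measurement described above can be sketched in NumPy: find each participant’s most negative point between 110 and 190 ms in the condition-averaged ERP, define the analysis window as the mean peak latency ±1 SD across participants, and then average voltage within that window. This is a minimal sketch under stated assumptions, not the authors’ code: the array layout (participants × samples at 250 Hz, starting at −200 ms), the use of the sample SD (`ddof=1`), and all function names are illustrative choices that the text does not specify.

```python
import numpy as np

FS = 250      # sampling rate (Hz)
T0_MS = -200  # latency of the first sample relative to stimulus onset

def times_ms(n_times, fs=FS, t0=T0_MS):
    """Latency (ms) of each sample in an epoch."""
    return t0 + np.arange(n_times) * 1000.0 / fs

def peak_latencies(erps, search=(110, 190)):
    """Latency of the most negative sample within the search window, per participant.

    erps: (n_participants, n_times) condition-averaged ERPs at one site.
    """
    t = times_ms(erps.shape[1])
    idx = np.where((t >= search[0]) & (t <= search[1]))[0]
    return t[idx[np.argmin(erps[:, idx], axis=1)]]

def sd_window(latencies):
    """Analysis window: mean peak latency +/- 1 SD across participants."""
    m, sd = latencies.mean(), latencies.std(ddof=1)
    return m - sd, m + sd

def mean_amplitude(erps, window):
    """Mean voltage within [start, end] ms along the last axis."""
    t = times_ms(erps.shape[-1])
    mask = (t >= window[0]) & (t <= window[1])
    return erps[..., mask].mean(axis=-1)

# Synthetic example: five participants with negative peaks at known latencies.
t = times_ms(250)
true_lat = np.array([140, 148, 160, 168, 180])
erps = np.stack([-5 * np.exp(-((t - lat) / 15.0) ** 2) for lat in true_lat])
peaks = peak_latencies(erps)               # recovers true_lat
n170_window = sd_window(peaks)             # centered on 159.2 ms
n170 = mean_amplitude(erps, n170_window)
n250 = mean_amplitude(erps, (235, 335))    # fixed N250 window
```

The same `mean_amplitude` helper applied with the fixed 235–335 ms window gives the N250 measure, which avoids estimating single-subject N250 peaks.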

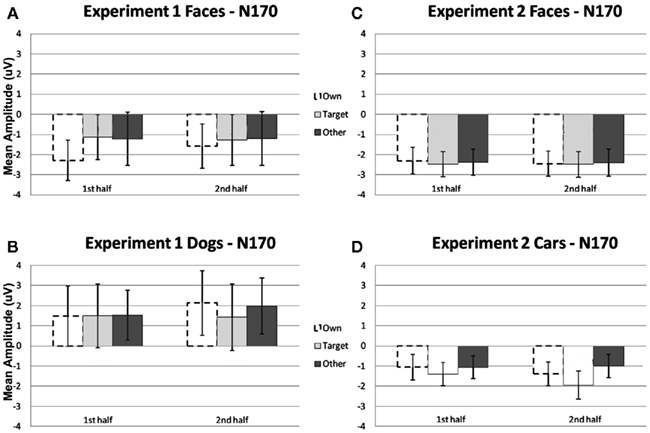

N170. A repeated measures ANOVA revealed a main effect of stimulus type, due to a greater N170 mean amplitude to faces than to dogs [F(1, 11) = 9.35, p < 0.05]. No other significant main effects were found (Figures 2A,B, 4, and 5). There was a significant interaction between stimulus type and hemisphere [F(1, 11) = 10.71, p < 0.01] and a significant experimental half by hemisphere interaction [F(1, 11) = 5.94, p < 0.05], both of which were qualified by a significant interaction between stimulus type, experimental half, and hemisphere [F(1, 11) = 4.66, p = 0.05]. This three-way interaction arose because the difference between faces and dogs occurred only within the right hemisphere, with greater N170 amplitude for faces than for dogs (p < 0.01). Whereas N170 amplitude for dogs did not differ between hemispheres in either half (p > 0.05), faces showed a marginal trend toward greater N170 amplitude in the left than in the right hemisphere in the second half only (p = 0.10).

Figure 2. Comparison of mean amplitude of the N170 to Own, Target, and Other conditions across the first and second experimental halves for (A) Experiment 1 faces, (B) Experiment 1 dogs, (C) Experiment 2 faces, and (D) Experiment 2 cars. Error bars represent 95% confidence intervals. Experiment 1 electrode sites: average of PO7 and PO8; Experiment 2 electrode sites: mean of electrodes in the left (58, 59, 64, 65, 69, and 70) and right (92, 97, 91, 96, 90, and 95) hemispheres, corresponding to regions between standard locations O1/O2 and T5/T6.

A repeated measures ANOVA conducted on N170 peak latencies with the factors stimulus type (face, dog), condition (own, Joe, other), and half (first, second) showed that latencies were significantly longer for dogs (M = 162.6 ms) than for faces (M = 149.1 ms) [F(1, 11) = 39.93, p < 0.001].

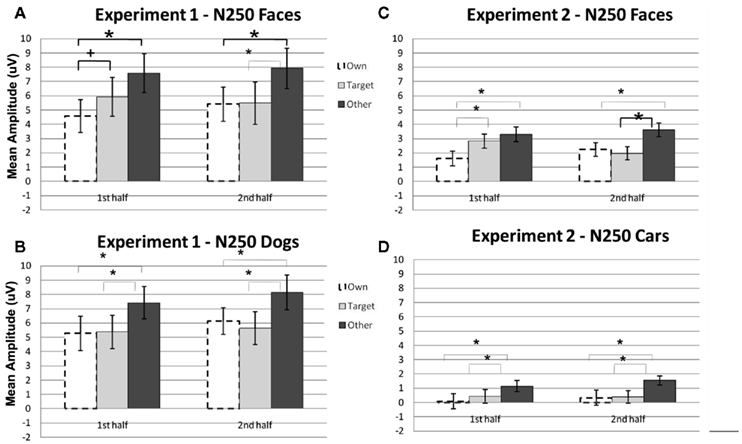

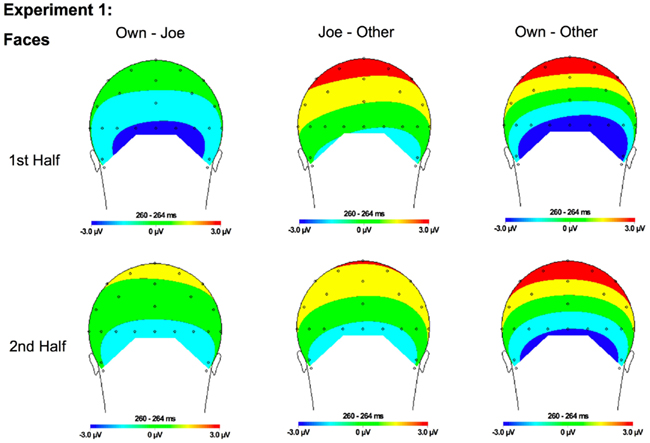

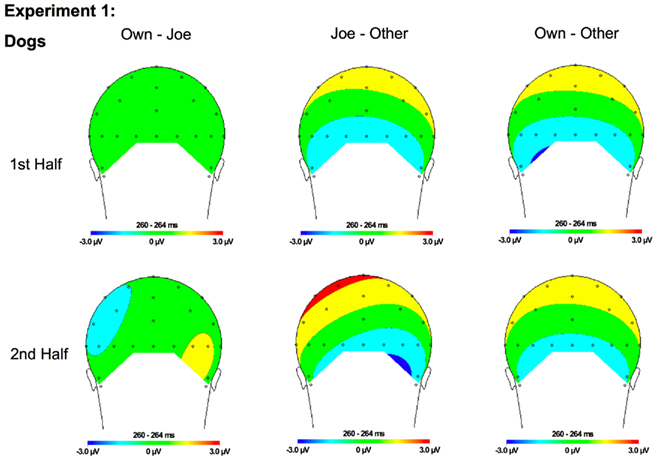

N250. A significant main effect of condition [F(2, 22) = 19.12, p < 0.001] was found, due to a greater (more negative) N250 amplitude to own and target stimuli than to other stimuli (p < 0.01; Figures 3A,B, 4, and 5). There were significant interactions between condition and experimental half [F(2, 22) = 4.87, p < 0.05] and between condition and hemisphere [F(2, 22) = 5.78, p < 0.05]. Greater N250 amplitude was seen to both own and target stimuli than to other stimuli in the first and second halves of the experiment (all p < 0.01), and N250 amplitude to own stimuli was equivalent to that for target stimuli in both halves. While N250 amplitude for own and target stimuli was equivalent across hemispheres, other stimuli elicited greater N250 amplitude in the left than in the right hemisphere (p < 0.01; see topographic maps in Figures 6 and 7).

Figure 3. Comparison of mean amplitude of the N250 to Own, Target, and Other conditions across the first and second experimental halves for (A) Experiment 1 faces, (B) Experiment 1 dogs, (C) Experiment 2 faces, and (D) Experiment 2 cars. Error bars represent 95% confidence intervals. Experiment 1 electrode sites: average of PO7 and PO8; Experiment 2 electrode sites: mean of electrodes in the left (58, 59, 64, 65, 69, and 70) and right (92, 97, 91, 96, 90, and 95) hemispheres, corresponding to regions between standard locations O1/O2 and T5/T6. *p < 0.05, +p = 0.07.

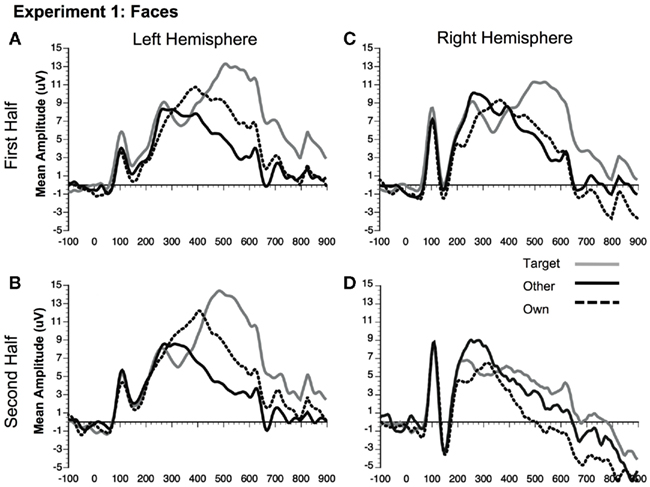

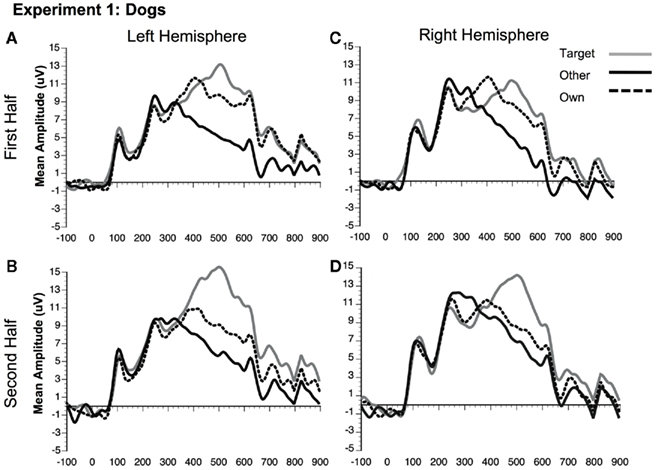

Figure 4. Comparison of ERP waveforms from stimulus onset to Own, Target, and Other conditions for Experiment 1 faces (A) first half left hemisphere, (B) second half left hemisphere, (C) first half right hemisphere, and (D) second half right hemisphere. Left hemisphere electrode: PO7; Right hemisphere electrode: PO8.

Figure 5. Comparison of ERP waveforms from stimulus onset to Own, Target, and Other conditions for Experiment 1 dogs (A) first half left hemisphere, (B) second half left hemisphere, (C) first half right hemisphere, and (D) second half right hemisphere. Left hemisphere electrode: PO7; Right hemisphere electrode: PO8.

Figure 6. Topographical maps for Experiment 1 faces showing the difference in amplitude between Own and Joe, Joe and Other, and Own and Other conditions during the first and second half of the experiment. Time points were selected as the average latency where differences across conditions were maximal.

Figure 7. Topographical maps for Experiment 1 dogs showing the difference in amplitude between Own and Joe, Joe and Other, and Own and Other conditions during the first and second half of the experiment. Time points were selected as the average latency where differences across conditions were maximal.

Although the stimulus type × condition × half interaction was not significant, pair-wise comparisons indicated different patterns of results for faces and dogs (see Figures 3A,B for significance of pair-wise comparisons). Starting with the dogs, both the own and target dogs elicited a more negative N250 than the other dogs in both the first and second halves of the experiment (p < 0.05 first half, p < 0.01 second half, uncorrected), with no differences between the own and target conditions (p > 0.10). Faces showed a similar pattern in the first half, with the own face eliciting a more negative N250 than other faces (p < 0.001), a marginally significant difference between own and target faces (p = 0.07), and a significant difference between target and other faces (p < 0.01). By the second half, the own face and the target face were similar (p > 0.10), and both were more negative than other faces (p < 0.001). By itself, this single marginal difference does not constitute much of a distinction between faces and dogs, but we point it out here because it was replicated, and reached significance, in Experiment 2.
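The distinction between uncorrected and Bonferroni-corrected follow-up tests matters here, because with three pairwise comparisons per half an uncorrected p-value below 0.05 can lose significance after correction. A minimal sketch of the Bonferroni adjustment, using hypothetical p-values rather than values from this study:

```python
def bonferroni(pvals):
    """Bonferroni adjustment: multiply each p-value by the number of tests, capped at 1."""
    m = len(pvals)
    return [min(1.0, p * m) for p in pvals]

# Hypothetical uncorrected p-values for three pairwise comparisons within one
# half (own vs. target, own vs. other, target vs. other); not the study's data.
uncorrected = [0.03, 0.004, 0.2]
corrected = bonferroni(uncorrected)  # approximately [0.09, 0.012, 0.6]
```

In this hypothetical case, the first comparison is significant uncorrected (p = 0.03) but not after correction (p ≈ 0.09), which is why both kinds of values can be informative when interpreting interactions.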

Experiment 1 was designed to test the N250 familiarity effect shown in previous studies (e.g., Tanaka et al., 2006), both by replicating effects seen for own and experimentally familiarized faces and by investigating whether this process generalizes to own and experimentally familiarized non-face objects (i.e., dogs). With respect to the earlier N170 component, a face N170 effect was observed in which the N170 to faces was earlier and larger than the N170 to dogs (e.g., Carmel and Bentin, 2002). Other differences were also found between the N170 to faces and dogs: the N170 to faces was greatest over the right hemisphere, whereas no hemisphere differences were observed for dogs. Neither the face nor the dog N170 was sensitive to familiarity, in that no within-category differences were observed between the own, Joe, and other conditions for dogs or faces.

In contrast to the N170, the N250 showed sensitivity to familiarity, as demonstrated by differences between the own, target, and other conditions. Both faces and dogs elicited more negative N250s in the own and target conditions than in the other condition. These results are similar to those previously reported by Tanaka et al. (2006), except that in the previous experiment differences between the target and other conditions did not emerge until the second half, suggesting that because the target face had to be learned over the course of the experiment, the target familiarity effect emerged more slowly than the immediate familiarity effect observed for the own face. However, consistent with Tanaka et al. (2006), the present experiment showed a trend toward a difference between the own and target faces in the first half of the experiment. By contrast, the own and target dog conditions did not differ at all. Thus, the temporal dynamics of the own versus target familiarity effects may differ between dogs and faces, for reasons that will be examined in the General Discussion. Overall, these results suggest that increased N250 amplitude to familiar stimuli is not limited to human faces, as similar results were observed for both dogs and faces. Despite monitoring for the target face and dog, participants demonstrated a robust N250 to their own face and their own dog. Experiment 2 was designed to determine whether this result is specific to living objects, such as human and animal faces, or whether a self-relevance bias generalizes to non-living objects, such as one’s own car.

In Experiment 2, participants were asked to monitor for novel face and car targets that were presented serially among images of their own face, their own car and other novel car and face stimuli.

Participants included 30 right-handed undergraduates (15 male, 15 female) recruited from the University of Colorado at Boulder. Participants had normal or corrected-to-normal vision. All participants gave informed consent to participate in this study. Data from six participants were excluded from analysis: one participant who had his picture taken was excluded for failing to return for the EEG session, and five others were excluded because excessive alpha activity obscured their ERPs. The final sample included 24 participants (12 females).

Each participant completed two sessions on different days. During the first session, pictures of the participants and their cars were taken; participants were paid $5.00 for this session. During the second session, ERPs and behavioral responses were recorded while participants completed a target-detection task. Session two took approximately 2 h, and participants were paid $15 per hour.

Stimuli included full color photographs of 30 faces and 30 cars. Photographs of faces were taken with a digital camera in a well-lit room, and photographs of cars were taken with the same digital camera outside. All images were equated for size, luminance, and contrast using Adobe Photoshop. All images were cropped to show only the face or car and were placed on a gray background. For stimulus counterbalancing purposes each participant was assigned to one of five different cohorts of six subjects. The assignment of stimuli to each experimental condition (Target, Own, Other) was counterbalanced across participants within each cohort so that each participant was exposed to each of the six faces and cars within the cohort 70 times, and each face/car appeared in each condition. Stimuli were displayed on a 15″ Mitsubishi flat-panel monitor. Each stimulus was approximately 3 cm wide and 4 cm high subtending a visual angle of approximately 1.9° and 2.4° in the horizontal and vertical dimensions respectively.
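The reported visual angles follow from the standard relation θ = 2·arctan(s / 2d) for a stimulus of size s viewed at distance d. Viewing distance is not reported in this section, so the sketch below inverts the relation to estimate the distance implied by the stated sizes and angles; treat the result as an approximation, because both the sizes (“approximately 3 cm wide and 4 cm high”) and the angles are rounded.

```python
import math

def visual_angle_deg(size_cm, distance_cm):
    """Visual angle (degrees) subtended by an object of a given size at a given distance."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))

def implied_distance_cm(size_cm, angle_deg):
    """Viewing distance at which an object of a given size subtends the given angle."""
    return size_cm / (2 * math.tan(math.radians(angle_deg) / 2))

# Experiment 2 stimuli: about 3 cm x 4 cm, reported as about 1.9 x 2.4 degrees.
d_w = implied_distance_cm(3.0, 1.9)  # roughly 90 cm
d_h = implied_distance_cm(4.0, 2.4)  # roughly 95 cm
```

The two estimates differ by a few centimeters, presumably because of the approximate sizes and rounded angles; the actual viewing distance would need to come from the original report.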

All procedures were approved by the Institutional Review Board at the University of Colorado and were conducted in accordance with this approval. After digital photographs of each participant’s face and car were taken, participants returned on a different day to complete the Joe/No Joe task while ERPs and behavioral measures of accuracy and reaction time were recorded.

As in Experiment 1, participants in the Joe/No Joe task were introduced to a target face and a target car (e.g., “Joe” and “Joe’s Car”) and then viewed and responded to face and car stimuli that included pictures of their own face and car, the target face and car, and novel faces and cars. After viewing each stimulus, participants indicated whether the face or car was “Joe” or “not Joe” using two separate response buttons. Females saw six female “Jane” faces and males saw six male “Joe” faces, including their own, along with the six cars of the corresponding people. Each trial consisted of a fixation cross (500 ms), followed by the face or car image (500 ms) and a delayed-response sequence consisting of a 500-ms blank screen and a 1000-ms response prompt. Participants were instructed to withhold their responses until the prompt appeared and then to press one of two buttons to indicate whether the stimulus was “Joe” or “Not Joe.” A blank 1000-ms inter-trial interval followed the response. Each participant completed 35 blocks of trials with self-paced rest breaks in between. Each block contained 24 trials: 2 presentations of each of the 6 faces and 6 cars in random order, for a total of 840 trials.
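Because every phase of the Experiment 2 trial has a stated duration, the nominal trial length can be worked out directly. This back-of-envelope sketch assumes that the response prompt always stayed on screen for its full 1000 ms (the text does not say whether a response ended it early), so the result is an upper bound that also ignores rest breaks and software overhead.

```python
# Nominal Experiment 2 trial timeline in milliseconds, taken from the Procedure.
# Assumes the response prompt always ran its full 1000 ms.
PHASES_MS = {
    "fixation": 500,
    "stimulus": 500,
    "blank": 500,
    "response_prompt": 1000,
    "inter_trial_interval": 1000,
}
N_TRIALS = 35 * 24  # 35 blocks of 24 trials

trial_ms = sum(PHASES_MS.values())           # 3500 ms per trial
total_min = trial_ms * N_TRIALS / 1000 / 60  # 49.0 minutes, excluding rest breaks
print(trial_ms, N_TRIALS, total_min)         # 3500 840 49.0
```

Under these assumptions, trial presentation accounts for at most about 49 min of the approximately 2-h session; the text does not break down how the remaining time was spent.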

Scalp voltages were collected with a 128-channel Geodesic Sensor Net™ (Tucker, 1993) connected to an AC-coupled, 128-channel, high input impedance amplifier (200 MΩ, Net Amps™, Electrical Geodesics Inc., Eugene, OR, USA). Amplified analog voltages (0.1–100 Hz bandpass) were digitized at 250 Hz and collected continuously. For both online and offline filtering, cutoffs were specified as the −3 dB (half-power) point. Individual sensors were adjusted until impedances were less than 50 kΩ. Offline, the EEG data were low-pass filtered at 40 Hz. Trials were discarded from analyses if they contained eye movements (vertical EOG channel differences greater than 70 μV) or more than 10 bad channels (channels changing by more than 100 μV between samples or reaching amplitudes over 200 μV). EEG from individual channels that were consistently bad for a given participant was replaced using a spherical interpolation algorithm (Srinivasan et al., 1996). EEG was recorded with respect to a vertex reference (Cz), but an average-reference transformation was used to minimize the effects of reference-site activity and to accurately estimate the scalp topography of the measured electrical fields (Dien, 1998). The average reference was corrected for the polar average-reference effect (Junghöfer et al., 1999). ERPs were baseline-corrected with respect to a 100-ms pre-stimulus recording interval.
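Two Experiment 2 preprocessing steps, the trial-rejection rule and the average-reference transformation, can be sketched in NumPy. This is a minimal sketch under stated assumptions rather than the EGI software pipeline: each trial is a (channels × samples) array in μV, the EOG criterion is applied to a precomputed vertical EOG difference signal by checking its absolute value (one reading of “differences greater than 70 μV”), and the Cz vertex reference is restored as a flat channel before averaging. Spherical interpolation and the polar average-reference correction (Junghöfer et al., 1999) are not implemented, and the function names are illustrative.

```python
import numpy as np

EOG_UV = 70.0          # vertical EOG threshold (uV)
STEP_UV = 100.0        # maximum sample-to-sample change for a good channel (uV)
ABS_UV = 200.0         # maximum absolute amplitude for a good channel (uV)
MAX_BAD_CHANNELS = 10  # trials with more bad channels than this are discarded

def bad_channels(trial):
    """Boolean mask of channels in one trial (channels x samples) flagged as bad."""
    big_step = np.abs(np.diff(trial, axis=1)).max(axis=1) > STEP_UV
    big_amp = np.abs(trial).max(axis=1) > ABS_UV
    return big_step | big_amp

def keep_trial(trial, veog):
    """Apply the stated rejection rule to one trial.

    veog: vertical EOG difference signal for the trial (assumed precomputed).
    """
    if np.abs(veog).max() > EOG_UV:
        return False
    return bad_channels(trial).sum() <= MAX_BAD_CHANNELS

def average_reference(trial):
    """Re-reference vertex-referenced data to the average of all sites.

    Cz was the recording reference, so it is appended as a flat (zero)
    channel before the mean across channels is subtracted at each sample.
    """
    with_ref = np.vstack([trial, np.zeros((1, trial.shape[1]))])
    return with_ref - with_ref.mean(axis=0, keepdims=True)

# Synthetic usage: one clean trial of 128 channels x 250 samples.
rng = np.random.default_rng(1)
trial = rng.normal(0.0, 5.0, size=(128, 250))
veog = rng.normal(0.0, 5.0, size=250)
ok = keep_trial(trial, veog)
reref = average_reference(trial)  # shape (129, 250); channel mean is zero at each sample
```

Including the reference site as a zero channel matters: without it, the average would be taken over only the recorded channels and the vertex would be omitted from the reconstructed topography.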

Accuracy in each condition was at ceiling and thus no analyses were conducted using measures of accuracy. Reaction times were collected but were not considered informative due to the delayed nature of the paradigm in which participants were instructed not to respond until the prompt.

Each component of interest (N170, N250) was analyzed separately. Because of the higher electrode density in Experiment 2, groups of electrodes were used instead of single channels. Channels were selected by identifying the electrode locations in the right and left hemispheres with the largest N170 and N250 across all conditions (channels 58 and 92, between standard locations O1/O2 and T5/T6). Analyses were conducted on ERPs averaged across each of these channels and five immediately adjacent electrodes in the left hemisphere (59, 64, 65, 69, and 70) and right hemisphere (97, 91, 96, 90, and 95). These locations are immediately lateral to standard locations PO7 and PO8 used in Experiment 1, so similar locations were analyzed in both experiments despite differences in the number of recording electrodes and the choice of reference. The analysis windows were 147–215 ms after stimulus onset for the N170 and 235–335 ms after stimulus onset for the N250. As in Experiment 1, the N170 window was calculated as ±1 SD around the average peak latency, whereas the N250 window was based on previous research and was identical to that used in Experiment 1. Mean amplitudes were submitted to a 2 Stimulus Type (face, car) × 3 Condition (Joe, own, other) × 2 Experimental half (first half, second half) × 2 Hemisphere (left, right) repeated measures ANOVA. Bonferroni-corrected paired t-tests were conducted to follow up significant effects.
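The hemisphere-specific cluster averaging described above can be sketched as follows. The channel lists and analysis windows are taken from the text; the mapping of array rows to sensor numbers (row i holds sensor i + 1), the epoch timing in the usage example, and the function name are assumptions for illustration.

```python
import numpy as np

LEFT = [58, 59, 64, 65, 69, 70]   # left-hemisphere cluster (sensor numbers)
RIGHT = [92, 97, 91, 96, 90, 95]  # right-hemisphere cluster (sensor numbers)
WINDOWS_MS = {"N170": (147, 215), "N250": (235, 335)}

def cluster_mean_amplitude(erp, channels, window, times):
    """Mean amplitude over a channel cluster and a latency window.

    erp: (n_channels, n_times) array whose row i holds sensor i + 1
    (assumed 1-based sensor numbering). times: sample latencies in ms.
    """
    rows = [ch - 1 for ch in channels]
    mask = (times >= window[0]) & (times <= window[1])
    return erp[rows][:, mask].mean()

# Synthetic usage: only sensor 58 carries a constant -6 uV signal.
times = -200 + 4.0 * np.arange(250)  # assumed epoch: -200 to 796 ms at 250 Hz
erp = np.zeros((129, 250))
erp[57, :] = -6.0
left_n170 = cluster_mean_amplitude(erp, LEFT, WINDOWS_MS["N170"], times)   # -1.0
right_n250 = cluster_mean_amplitude(erp, RIGHT, WINDOWS_MS["N250"], times)  # 0.0
```

Computing one value per participant, condition, half, and hemisphere in this way yields the cells of the 2 × 3 × 2 × 2 repeated measures design.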

N170. A repeated measures ANOVA revealed a main effect of stimulus type [F(1, 23) = 23.32, p < 0.0001], due to a greater N170 amplitude to faces than to cars. In addition, there was a marginal main effect of hemisphere [F(1, 23) = 4.04, p = 0.056], due to a greater amplitude response in the right than in the left hemisphere. No condition effects or interactions were significant (all ps > 0.05; see Figures 2C,D).

Analyses of N170 peak latency revealed a main effect of stimulus type [F(1, 23) = 16.33, p < 0.001], due to a longer latency to cars (M = 191 ms, SE = 2.2) than to faces (M = 184 ms, SE = 2.5). In addition, there was a significant interaction between condition, experimental half, and hemisphere [F(2, 46) = 4.79, p = 0.01]. This interaction was due to a longer latency in the right (M = 192 ms, SE = 2.1) than in the left (M = 185 ms, SE = 2.8) hemisphere in the second half of the Joe condition (p < 0.01).

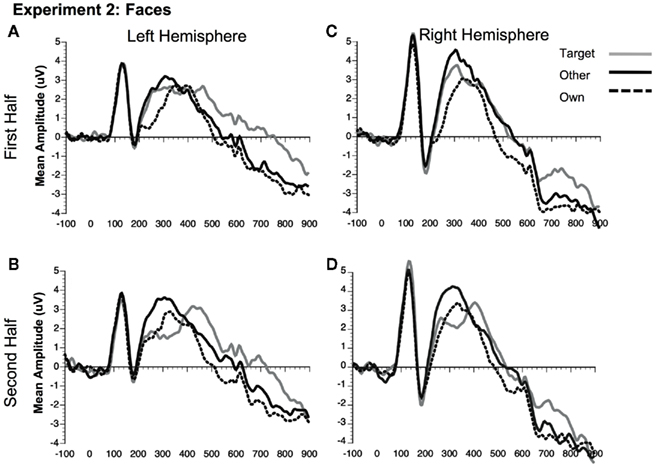

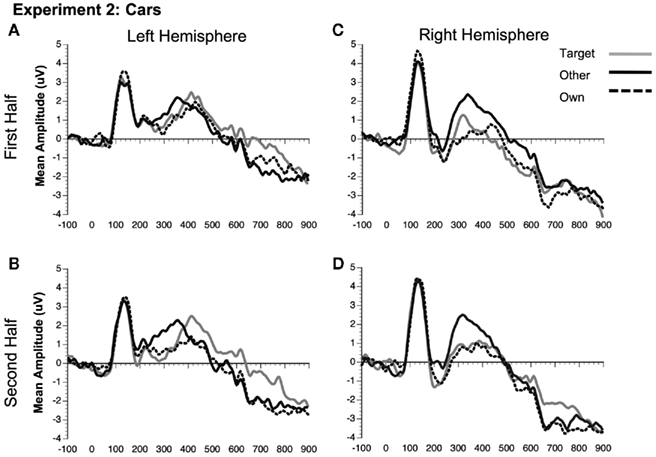

N250. Significant main effects of stimulus type [F(1, 23) = 62.88, p < 0.0001] and condition [F(2, 46) = 32.30, p < 0.0001] were found (see Figures 3C,D, 8, and 9). The main effect of stimulus type was due to a greater amplitude N250 for cars than for faces. The main effect of condition was due to a greater response to the own and target stimuli than to the other stimuli (follow-up paired comparison ps < 0.01). However, both of these main effects were qualified by interactions. Analyses revealed a significant interaction between condition and experimental half [F(2, 46) = 11.39, p < 0.001]. Follow-up comparisons showed that in the first half, the N250 was greater for own stimuli than for target stimuli, which in turn was greater than for other stimuli. In the second half, the N250 was greater for both own and target stimuli than for other stimuli (ps < 0.05; Figures 3C,D, 8, and 9).
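The Bonferroni-corrected follow-up tests can be illustrated with a minimal sketch for the three pairwise contrasts among own, Joe, and other stimuli. It assumes one mean amplitude per participant per condition. It leaves the conversion from t to p to a t distribution with n − 1 degrees of freedom; for example, `scipy.stats.ttest_rel` returns both the statistic and the p-value. The function names are hypothetical.

```python
import math
import statistics
from typing import List, Sequence, Tuple


def paired_t(x: Sequence[float], y: Sequence[float]) -> Tuple[float, int]:
    """Paired-samples t statistic (x minus y) and its degrees of freedom."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length samples with at least 2 participants")
    diffs = [a - b for a, b in zip(x, y)]
    se = statistics.stdev(diffs) / math.sqrt(len(diffs))  # standard error of the mean difference
    return statistics.mean(diffs) / se, len(diffs) - 1


def bonferroni(p_values: Sequence[float]) -> List[float]:
    """Bonferroni adjustment: multiply each p by the number of comparisons, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]
```

With three conditions there are three pairwise comparisons, so each uncorrected p-value is multiplied by 3. An uncorrected p of 0.01 becomes 0.03.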

Figure 8. Comparison of ERP waveforms, time-locked to stimulus onset, for the Own, Target, and Other conditions for Experiment 2 faces: (A) first half, left hemisphere; (B) second half, left hemisphere; (C) first half, right hemisphere; and (D) second half, right hemisphere. Left hemisphere electrodes: average of 58, 59, 64, 65, 69, and 70; right hemisphere electrodes: average of 92, 97, 91, 96, 90, and 95.

Figure 9. Comparison of ERP waveforms, time-locked to stimulus onset, for the Own, Target, and Other conditions for Experiment 2 cars: (A) first half, left hemisphere; (B) second half, left hemisphere; (C) first half, right hemisphere; and (D) second half, right hemisphere. Left hemisphere electrodes: average of 58, 59, 64, 65, 69, and 70; right hemisphere electrodes: average of 92, 97, 91, 96, 90, and 95.

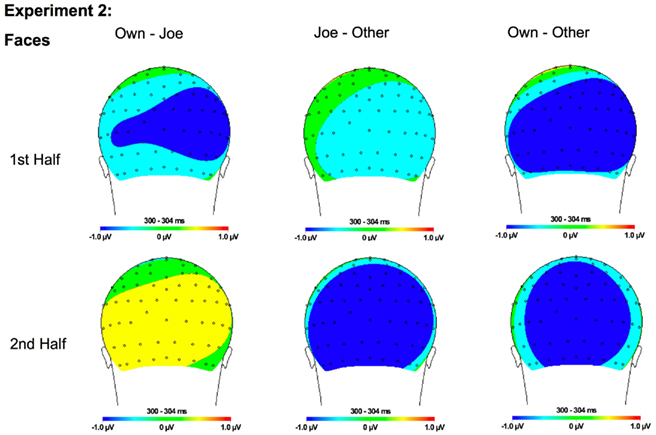

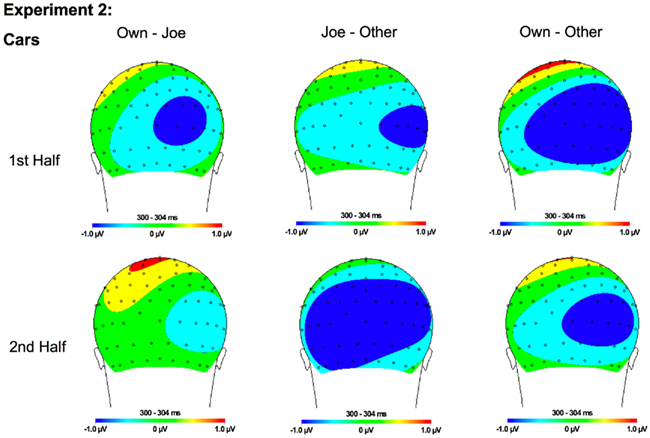

In addition, interactions between condition and hemisphere [F(2, 46) = 6.16, p < 0.01] and between stimulus type and hemisphere [F(1, 23) = 6.81, p < 0.02] were found. Follow-up analyses suggest that the condition × hemisphere interaction had the following pattern (all ps < 0.05). In the left hemisphere, the target and own stimuli elicited a greater response than the other stimuli. In the right hemisphere, the own stimuli elicited a greater response than the Joe stimuli, and both elicited a greater response than the other stimuli. Finally, the stimulus type × hemisphere interaction was due to a marginally greater amplitude to faces in the right than in the left hemisphere (p = 0.07; see topographic maps in Figures 10 and 11).

Figure 10. Topographical maps for Experiment 2 faces showing the difference in amplitude between Own and Joe, Joe and Other, and Own and Other conditions during the first and second half of the experiment. Time points were selected as the average latency where differences across conditions were maximal.

Figure 11. Topographical maps for Experiment 2 cars showing the difference in amplitude between Own and Joe, Joe and Other, and Own and Other conditions during the first and second half of the experiment. Time points were selected as the average latency where differences across conditions were maximal.
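The difference maps in Figures 10 and 11 plot one condition minus another at a selected latency. One plausible reading of that procedure is sketched below under stated assumptions. First, compute the A-minus-B difference averaged over the posterior cluster. Next, find the sample in the analysis window where the absolute difference is largest. Finally, take the difference at every channel at that sample. The data layout and function name are hypothetical. To obtain an "average latency" as in the captions, the latency returned here would still have to be averaged, for example across participants.

```python
from typing import Dict, List, Sequence, Tuple


def difference_topography(
    cond_a: Dict[int, List[float]],  # hypothetical layout: channel -> averaged waveform (µV)
    cond_b: Dict[int, List[float]],  # same channels and sampling as cond_a
    times_ms: Sequence[float],
    window: Tuple[float, float],
    roi: Sequence[int],
) -> Tuple[float, Dict[int, float]]:
    """Latency of the largest |A - B| cluster difference in the window, and the A - B map there."""
    start, end = window
    in_window = [i for i, t in enumerate(times_ms) if start <= t <= end]
    if not in_window:
        raise ValueError("no samples fall inside the window")

    def cluster_diff(i: int) -> float:
        # Mean A - B difference across the cluster channels at sample i.
        return sum(cond_a[ch][i] - cond_b[ch][i] for ch in roi) / len(roi)

    best = max(in_window, key=lambda i: abs(cluster_diff(i)))
    topo = {ch: cond_a[ch][best] - cond_b[ch][best] for ch in cond_a}
    return times_ms[best], topo
```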

As in Experiment 1, the condition × stimulus type interaction was not significant, but the pairwise comparisons suggested differences in the time course of the familiarity effects (see Figures 3C,D). In the second half of the experiment, both faces and cars again showed differences between the target and own conditions versus the other condition, whereas the target and own conditions did not differ. Faces and cars appeared to differ in the first half. Cars showed a pattern similar to the second half, but target faces fell significantly between the own and other conditions. Thus, as for faces and dogs in Experiment 1, familiarity effects developed more slowly for faces than for cars in Experiment 2.

Experiment 2 tested the specificity of the previously reported N250 familiarity effect (Tanaka et al., 2006). Participants were shown pictures of their own car and own face, along with novel faces and cars and a target car or face. Consistent with previous research investigating the electrophysiological response to faces versus objects (e.g., Carmel and Bentin, 2002), the present experiment found a greater amplitude and shorter latency N170 response to faces than to cars. This N170 effect was not influenced by within-experiment familiarization with cars or faces and did not differ between target and non-target stimuli.

Unlike the N170, the N250 amplitude was influenced by experimental familiarization. For both faces (Figure 8) and cars (Figure 9), the N250 was significantly more negative for the own condition than for the novel other condition in both the first and second halves of the experiment. This indicates that pre-experimental familiarity affected the N250 from the early part of the experiment. For cars, the N250 to target cars was similar to that to participants’ own cars, and both were differentiated from novel cars in each half of the experiment. For faces, on the other hand, target familiarity effects were slower to develop: target faces differed significantly from the own face in the first half, but not in the second half, of the experiment. Collectively, the main results from Experiment 2 suggest that the N250 is sensitive to pre-experimentally familiar stimuli, such as the participant’s own face or own car, even when these items are not directly pertinent to the experimental task. The current study shows that the N250 to personally familiar objects (Miyakoshi et al., 2007) is of the same magnitude as the N250 to personally familiar faces.

The present experiments investigated the neural correlates of perceiving personally familiar, newly familiar, and foil faces and objects. The pattern of response to the personally familiar faces and objects was remarkably consistent across the two experiments. Consistent with previous findings (e.g., Bentin et al., 1996; Eimer, 2000), the N170 component to faces was larger in amplitude and peaked earlier than that elicited by the non-face dog and car stimuli. However, the within-category manipulations of familiarity (own versus other stimuli) and task relevance (target versus non-target stimuli) had little effect on the amplitude and latency of the N170 component.

In contrast to the N170, manipulations of personal familiarity significantly influenced the magnitude of the later N250 component. The own face and own dog in Experiment 1, and the own face and own car in Experiment 2, were all non-targets. Despite this, they elicited a greater N250 response than the other non-target face and object foils. In the Joe/No Joe task, the mere presentation of the own face, dog, and car images was sufficient to evoke an N250. The N250 response was equivalent for the own face and own objects (Experiment 1: own dog; Experiment 2: own car). This indicates that the N250 familiarity effect is not restricted to faces but reflects a generalized response to individuated, personally familiar items.

For the target Joe face, the N250 familiarity effect developed over the course of the first and second halves of the experiment. Consistent with previous studies (Tanaka et al., 2006), the N250 to the target face was significantly smaller than the N250 to the own face in the first half of the experiment. By the second half, however, the N250 to the target face was comparable to that produced by the participant’s own face. This suggests that the N250 is an index of perceptual familiarity that can develop over the course of an experiment. In contrast, the N250 to the target dog and car was already equal in magnitude to the response to the personally familiar own dog and own car in the first half of the experiment. It is not clear why the N250 response to a novel face forms more slowly than the N250 to non-face objects. One possibility is that a large number of face exemplars are already stored in memory before the experiment (Valentine, 1991), so additional perceptual practice is required to differentiate a specific face from other competing faces. In contrast, less perceptual analysis is needed to distinguish a specific dog or car from the relatively few dog and car exemplars stored in perceptual memory.

Results from Experiments 1 and 2 demonstrate the importance of the name label in eliciting the N250 potential. The un-individuated “other” faces, dogs, and cars were presented as often as the target face and object stimuli, yet they failed to elicit an enhanced N250 component. The critical difference appears to be that the target face, dog, and car stimuli were marked with the “Joe” label, whereas the “other” faces and objects were not labeled. However, name labels by themselves are not sufficient to evoke an N250 response. In a recent investigation, Gordon and Tanaka (in press) familiarized participants with Joe and Bob faces at the beginning of the experiment. In the first half of the experiment, participants were asked to monitor for the target Joe shown among “other” novel faces, including Bob. Although identifiable by a name, the non-target Bob failed to elicit a differential N250 response. However, when the target face was switched from the Joe face to the Bob face halfway through the experiment, a robust N250 negativity was produced to both Bob and the non-target Joe. These findings suggest that the N250 depends on two things: a name label that individuates a face, and practice at identifying that face at a more subordinate level of categorization. Once a representation is established at this more specific level, its activation is obligatory in the sense that it does not need to be directly task relevant.

The current findings also clarify functional differences between the N170 and N250 ERP components. The N170 component has previously been proposed to index an early encoding stage in the perceptual processing of faces (Bentin and Deouell, 2000; Eimer, 2000). The same has been proposed for objects of expertise viewed by real-world (Tanaka and Curran, 2001) and laboratory-trained (Scott et al., 2006, 2008) experts. Previous studies have found that the N170 component is relatively insensitive to familiar faces, such as faces of celebrities (e.g., Bentin and Deouell, 2000; Eimer, 2000; Schweinberger et al., 2002) or even the participant’s own face (Tanaka et al., 2006). Consistent with these results, the N170 in the present study did not differentiate the participant’s highly familiar own face from completely novel faces. However, using adaptation techniques, several recent investigations have found N170 differences between familiar and unfamiliar faces (Jemel et al., 2005, 2009; Jacques and Rossion, 2006; Kovacs et al., 2006; Caharel et al., 2009). Other work has shown that face familiarity can increase the amplitude of the N170 when a very small set of familiar and unfamiliar faces is tested (Caharel et al., 2002; Keyes et al., 2010; but see Caharel et al., 2005). Thus, the presence or absence of N170 face familiarity effects is likely affected by a number of experimental factors. These include the cognitive task employed (e.g., passive viewing versus categorization), the number of faces in the stimulus set, and whether the faces are celebrities or personally familiar to the participant.

In summary, the N170 was relatively insensitive to the familiarity of exemplars within a face or object category. The N250 component, by contrast, was responsive to two types of individual within-category exemplars. First, the N250 differentiated the pre-experimentally familiar own face and own objects from novel faces and objects. Second, the naming manipulation was sufficient to evoke an N250 response to the novel Joe face and object stimuli that differentiated these items from the unnamed, novel “other” items. The N250 differences to familiar and learned dog and car stimuli (i.e., own dog, Joe’s dog, own car, Joe’s car) did not differ in amplitude from those to the face stimuli (i.e., own face, Joe’s face). Thus, in contrast to the N170, the N250 is not specific to any one category (e.g., faces, objects of expertise). Instead, it taps into long-term perceptual memory for any type of individuated stimulus, face or non-face. As demonstrated in the current study, these long-term representations are obligatorily recruited even when they are not the targets of the experimental task at hand.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

This research was funded by grants from the James S. McDonnell Foundation, National Science Foundation grant #SBE-0542013 to the Temporal Dynamics of Learning Center (an NSF Science of Learning Center), the National Institutes of Health (MH64812), and the Natural Sciences and Engineering Research Council of Canada. The authors would like to thank John Capps, Debra Chase, Casey DeBuse, Danielle Germain, Jane Hancock, Elan Walsky, Brion Woroch, and Brent Young for research assistance and participant testing.

Bentin, S., Allison, T., Puce, A., Perez, E., and McCarthy, G. (1996). Electrophysiological studies of face perception in humans. J. Cogn. Neurosci. 8, 551–565.

Bentin, S., and Deouell, L. (2000). Structural encoding and identification in face processing: ERP evidence for separate mechanism. Cogn. Neuropsychol. 17, 35–54.

Brédart, S., Delchambre, M., and Laureys, S. (2006). One’s own face is hard to ignore. Q. J. Exp. Psychol. 59, 46–52.

Caharel, S., Courtay, N., Bernard, C., Lalonde, R., and Rebai, M. (2005). Familiarity and emotional expression influence an early stage of face processing: an electrophysiological study. Brain Cogn. 59, 96–100.

Caharel, S., d’Arripe, O., Ramon, M., Jacques, C., and Rossion, B. (2009). Early adaptation to unfamiliar faces across viewpoint changes in the right hemisphere: evidence from the N170 ERP component. Neuropsychologia 47, 639–643.

Caharel, S., Poiroux, S., and Bernard, C. (2002). ERPs associated with familiarity and degree of familiarity during face recognition. Int. J. Neurosci. 112, 1531–1544.

Carmel, D., and Bentin, S. (2002). Domain specificity versus expertise: factors influencing distinct processing of faces. Cognition 83, 1–29.

Curran, T., and Hancock, J. (2007). The FN400 indexes familiarity-based recognition of faces. Neuroimage 36, 464–471.

Devue, C., and Brédart, S. (2008). Attention to self-referential stimuli: can I ignore my own face? Acta Psychol. (Amst.) 128, 290–297.

Devue, C., Van der Stigchel, S., Brédart, S., and Theeuwes, J. (2009). You do not find your own face faster; you just look at it longer. Cognition 111, 114–122.

Dien, J. (1998). Addressing misallocation of variance in principal components analysis of event-related potentials. Brain Topogr. 11, 43–55.

Eimer, M. (2000). Event-related brain potentials distinguish processing stages involved in face perception and recognition. Clin. Neurophysiol. 111, 694–705.

Gordon, I., and Tanaka, J. W. (in press). Putting a name to a face: the role of name labels in the formation of face memories. J. Cogn. Neurosci.

Gratton, G., Coles, M., and Donchin, E. (1983). A new method for off-line removal of ocular artifact. Electroencephalogr. Clin. Neurophysiol. 55, 468–484.

Jacques, C., and Rossion, B. (2006). The speed of individual face categorization. Psychol. Sci. 17, 485–492.

Jemel, B., Pisani, M., Rousselle, L., Crommelinck, M., and Bruyer, R. (2005). Exploring the functional architecture of person recognition system with event-related potentials in a within- and cross-domain self-priming of faces. Neuropsychologia 43, 2024–2040.

Jemel, B., Schuller, A.-M., and Goffaux, V. (2009). Characterizing the spatio-temporal dynamics of the neural events occurring prior to and up to overt recognition of famous faces. J. Cogn. Neurosci. 22, 2289–2305.

Joyce, C., and Rossion, B. (2005). The face-sensitive N170 and VPP components manifest the same brain processes: the effect of reference electrode site. Clin. Neurophysiol. 116, 2613–2631.

Junghöfer, M., Elbert, T., Tucker, D. M., and Braun, C. (1999). The polar average reference effect: a bias in estimating the head surface integral in EEG recording. Clin. Neurophysiol. 110, 1149–1155.

Kaufmann, J. M., Schweinberger, S. R., and Burton, A. M. (2009). N250 ERP correlates of the acquisition of face representations across different images. J. Cogn. Neurosci. 21, 625–641.

Keenan, J. P., Nelson, A., O’Connor, M., and Pascual-Leone, A. (2001). Self-recognition and the right hemisphere. Nature 409, 305.

Keenan, J. P., Wheeler, M., Platek, S. M., Lardi, G., and Lassonde, M. (2003). Self-face processing in a callosotomy patient. Eur. J. Neurosci. 18, 2391–2395.

Keyes, H., Brady, N., Reilly, R. B., and Fox, J. J. (2010). My face or yours? Event-related potential correlates of self-face processing. Brain Cogn. 72, 244–254.

Kircher, T. J., Senior, C., Phillips, M. L., Rabe-Hesketh, S., Benson, P. J., Bullmore, E. T., Brammer, M., Simmons, A., Bartels, M., and David, A. S. (2001). Recognizing one’s own face. Cognition 78, B1–B15.

Kovacs, G., Zimmer, M., Banko, E., Harza, I., Antal, A., and Vidnyanszky, Z. (2006). Electrophysiological correlates of visual adaptation to faces and body parts in humans. Cereb. Cortex 16, 742–753.

Miyakoshi, M., Nomura, M., and Ohira, H. (2007). An ERP study on self-referential object recognition. Brain Cogn. 63, 182–189.

Rossion, B., Joyce, C. A., Cottrell, G. W., and Tarr, M. J. (2003). Early lateralization and orientation tuning for face, word, and object processing in the visual cortex. Neuroimage 20, 1609–1624.

Rugg, M. D., and Curran, T. (2007). Event-related potentials and recognition memory. Trends Cogn. Sci. (Regul. Ed.) 11, 251–257.

Schweinberger, S. R., Huddy, V., and Burton, A. M. (2004). N250r: a face-selective brain response to stimulus repetitions. Neuroreport 15, 1501–1505.

Schweinberger, S. R., Pickering, E. C., Burton, A. M., and Kaufmann, J. M. (2002). Human brain potential correlates of repetition priming in face and name recognition. Neuropsychologia 40, 2057–2073.

Scott, L. S., Tanaka, J. W., Sheinberg, D. L., and Curran, T. (2006). A reevaluation of the electrophysiological correlates of expert object processing. J. Cogn. Neurosci. 18, 1453–1465.

Scott, L. S., Tanaka, J. W., Sheinberg, D. L., and Curran, T. (2008). The role of category learning in the acquisition and retention of perceptual expertise: a behavioral and neurophysiological study. Brain Res. 1210, 204–215.

Srinivasan, R., Nunez, P. L., Silberstein, R. B., Tucker, D. M., and Cadusch, P. J. (1996). Spatial sampling and filtering of EEG with spline-Laplacians to estimate cortical potentials. Brain Topogr. 8, 355–366.

Sugiura, M., Shah, N. J., Zilles, K., and Fink, G. R. (2005). Cortical representations of personally familiar objects and places: functional organization of the human posterior cingulate cortex. J. Cogn. Neurosci. 17, 183–198.

Tanaka, J. W., and Curran, T. (2001). A neural basis for expert object recognition. Psychol. Sci. 12, 43–47.

Tanaka, J. W., Curran, T., Porterfield, A. L., and Collins, D. (2006). Activation of pre-existing and acquired face representations: the N250 ERP as an index of face familiarity. J. Cogn. Neurosci. 18, 1488–1497.

Tanaka, J. W., and Pierce, L. J. (2008). The neural plasticity of other-race face recognition. Cogn. Affect. Behav. Neurosci. 9, 122–131.

Tong, F., and Nakayama, K. (1999). Robust representations for faces: evidence from visual search. J. Exp. Psychol. Hum. Percept. Perform. 25, 1016–1035.

Tsakiris, M. (2008). Looking for myself: current multisensory input alters self-face recognition. PLoS ONE 3, e4040. doi:10.1371/journal.pone.0004040

Keywords: ERPs, face recognition, object recognition, familiar faces, N170, N250

Citation: Pierce LJ, Scott LS, Boddington S, Droucker D, Curran T and Tanaka JW (2011) The N250 brain potential to personally familiar and newly learned faces and objects. Front. Hum. Neurosci. 5:111. doi: 10.3389/fnhum.2011.00111

Received: 06 December 2010;

Accepted: 14 September 2011;

Published online: 31 October 2011.

Edited by:

Srikantan S. Nagarajan, University of California San Francisco, USA

Reviewed by:

Karuna Subramaniam, University of California San Francisco, USA

Copyright: © 2011 Pierce, Scott, Boddington, Droucker, Curran and Tanaka. This is an open-access article subject to a non-exclusive license between the authors and Frontiers Media SA, which permits use, distribution and reproduction in other forums, provided the original authors and source are credited and other Frontiers conditions are complied with.

*Correspondence: Tim Curran, Department of Psychology, University of Colorado Boulder, Boulder, CO 80309-0345, USA. e-mail: tcurran@colorado.edu; James W. Tanaka, Department of Psychology, University of Victoria, Victoria, BC, Canada V8P 5C2. e-mail: jtanaka@uvic.ca

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
