PERSPECTIVE article

Front. Hum. Dyn., 11 January 2024

Sec. Digital Impacts

Volume 5 - 2023 | https://doi.org/10.3389/fhumd.2023.1289255

This article is part of the Research Topic Ethical Dilemmas of Digitalisation of Mental Health.

As mental healthcare is highly stigmatized, digital platforms and services are becoming popular. A wide variety of exciting and futuristic AI applications are now available. One application that has drawn tremendous attention from users and researchers alike is Chat Generative Pre-trained Transformer (ChatGPT), a powerful chatbot launched by OpenAI. ChatGPT interacts with users conversationally, answering follow-up questions, admitting mistakes, challenging incorrect premises, and rejecting inappropriate requests. Given its multifarious applications, the ethical and privacy considerations surrounding the use of such technologies in sensitive areas such as mental health should be carefully addressed to ensure user safety and wellbeing. The authors comment on the ethical challenges of using ChatGPT in mental healthcare that need attention at various levels, outlining six major concerns: (1) accurate identification and diagnosis of mental health conditions; (2) limited understanding and misinterpretation; (3) safety and privacy of users; (4) bias and equity; (5) lack of monitoring and regulation; and (6) gaps in evidence, and lack of educational and training curricula.

Artificial intelligence (AI) is emerging as a potential game-changer in transforming modern healthcare, including mental healthcare. AI in healthcare leverages machine learning algorithms, data analytics, and computational power to enhance various aspects of the healthcare industry (Bohr and Memarzadeh, 2020; Bajwa et al., 2021). Chat Generative Pre-trained Transformer (ChatGPT) is a powerful chatbot launched by OpenAI (Roose, 2023); it reached over 100 million users within just 2 months, making it the fastest-growing consumer application (David, 2023).

ChatGPT and ChatGPT-supported chatbots have the potential to offer significant benefits in the provision of mental healthcare. Some advantages of ChatGPT in mental health support are depicted in Figure 1. They include (1) improved accessibility, (2) anonymity and reduced stigma, (3) specialist referral, (4) continuity of care and long-term support, (5) potential for scale-up, and (6) data-driven insights for decision-making (Miner et al., 2019; Denecke et al., 2021; Cosco, 2023; Northwest Executive Education, 2023).

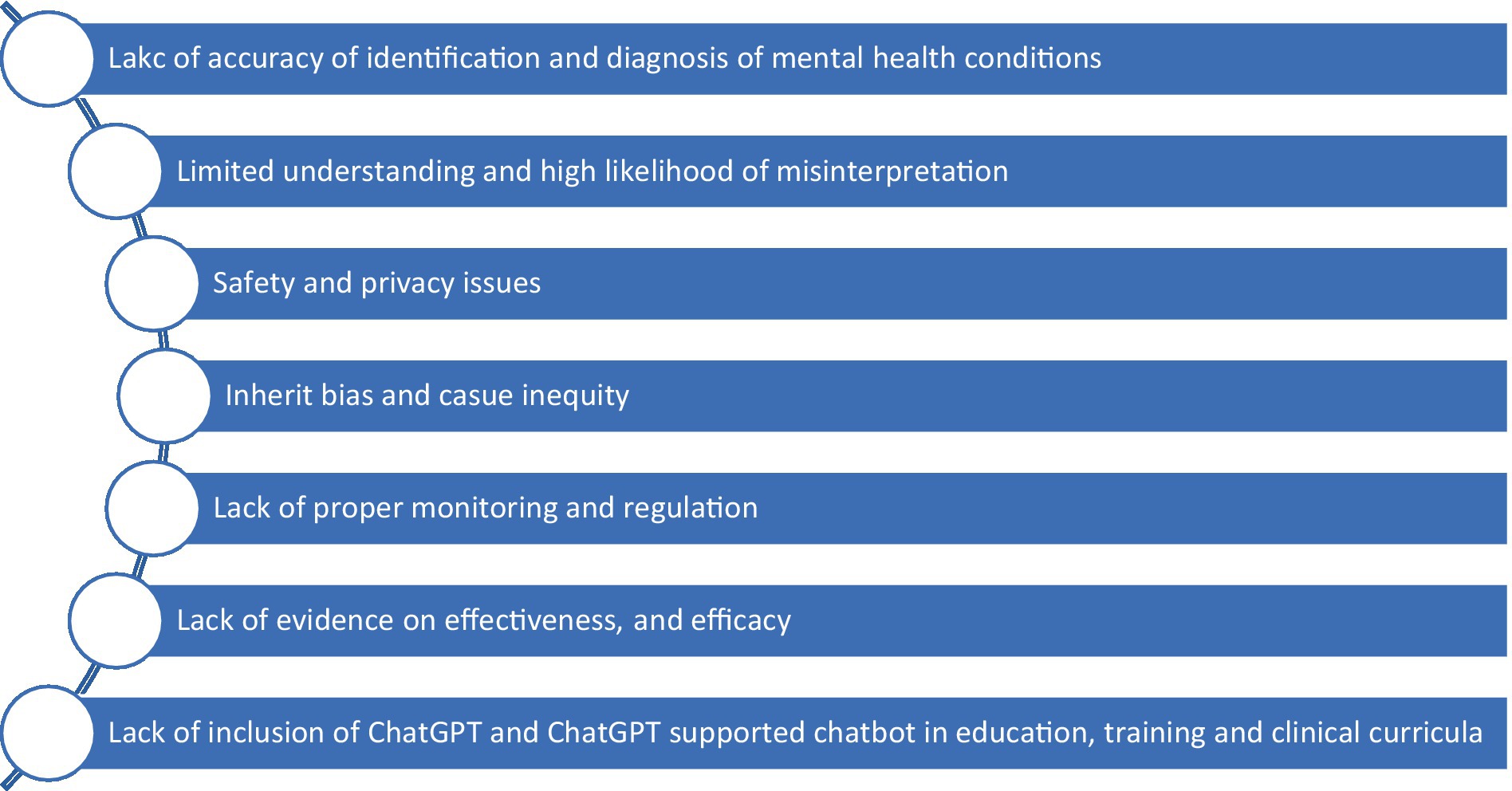

While ChatGPT-supported chatbots can provide certain benefits such as increasing accessibility to mental health support and offering a non-judgmental space for individuals to express their concerns (Denecke et al., 2021), they raise several concerns that need to be carefully addressed (Figure 2).

Table 1 offers a detailed explanation of the advantages of ChatGPT in transforming mental healthcare (Miner et al., 2019; Denecke et al., 2021; Cosco, 2023; Northwest Executive Education, 2023).

1. Lack of accuracy in the identification and diagnosis of mental health conditions: ChatGPT and ChatGPT-supported chatbots were not intended to address mental health needs; lately, however, ChatGPT is being used as a substitute for psychotherapy, which raises numerous ethical challenges. Such chatbots are not a substitute for professional mental health services, and their effectiveness depends on the quality of their design, training, and risk assessment protocols. How specifically does ChatGPT identify and address mental health concerns, considering cultural and individual differences in how users articulate symptoms? Can it accurately diagnose mental illness or identify complex comorbid mental health conditions? Can it provide support in crisis situations? Although the chatbot is knowledgeable about mental health, the accuracy with which it can diagnose users with a specific mental health condition and provide reliable support and treatment is a major concern (David, 2023). This creates a risk of users being misled, misdiagnosed, and mistreated.

2. Limited understanding and misinterpretation: Digital mental health tools can help people feel more in control and give them an always-accessible therapist, but they also pose potential risks. Chatbots, even those powered by advanced AI, have a limited ability to understand complex human emotions, nuanced language, and context. They may misinterpret or respond inadequately to a user’s mental health concerns, potentially leading to misunderstandings or inappropriate advice. ChatGPT is trained on web-based information and refined with reinforcement learning from human feedback. If such chatbots are not equipped with credible human responses and reliable resources, they may provide inaccurate information and inappropriate advice about mental health conditions, which can be harmful to persons with mental health problems. One recent, alarming news report blamed an AI chatbot for the death by suicide of a Belgian man (Cosco, 2023), and other articles discuss the harms posed by ChatGPT (Kahn, 2023; Northwest Executive Education, 2023).

3. Safety and privacy issues: Concerns about the confidentiality and privacy of users’ data are well recognized (Northwest Executive Education, 2023). Any person engaging with an AI-based app for mental health support is bound to share personal details, which leaves them vulnerable if confidentiality is breached. Engaging with ChatGPT can compromise privacy, and this compounds other concerns about confidentiality, the lack of therapist disclosure, and simulated empathy, which together challenge users’ rights and therapeutic prognosis.

4. Bias and inequity: Developers should be mindful of potential biases in the chatbot’s algorithms or data sources, because these may perpetuate systemic biases or inadvertently discriminate against certain populations. Furthermore, interacting with a mental health chatbot can evoke strong emotions and potentially trigger distressing memories or experiences for some users.

Figure 2. Key concerns regarding the use of ChatGPT and ChatGPT-supported chatbots in mental healthcare service delivery.

Currently, 64.4% of the global population has access to the internet (Statista, 2023). Roughly one in 10 people, on average, has access to conventional mental healthcare, so access to mental healthcare is far from universal, especially in low- and middle-income countries (LMICs) (Singh, 2019). Improving accessibility is therefore another important factor when determining whether ChatGPT is ready to change mental healthcare. ChatGPT should be designed to be accessible to those who may not have access to the internet. This could include making the program available to people in rural or remote areas or to those who are unable to access conventional mental healthcare due to financial, cultural, or other barriers.

5. Lack of proper monitoring and regulation: The lack of proper monitoring and regulation, combined with the universal reach of these applications, is an important concern (Imran et al., 2023; Wang et al., 2023), as it can pose significant threats to the safety and wellbeing of users. Given the diversity in digital literacy, education, language proficiency, and level of understanding among potential users across the globe, professional associations and other key stakeholders should evaluate and regulate AI-based apps for their safety, efficacy, and tolerability and provide guidance for their safe use by the general public. The Indian Council of Medical Research (ICMR) (2023) outlines ethical guidelines for the application of AI in biomedical research and healthcare, but no equivalent guidelines exist for mental healthcare that would allow for supervised care and intervention.

Several competing “open software” platforms have been launched as rivals to ChatGPT, including Google Bard (Schechner, 2023). This raises the concern that ChatGPT and these platforms may compromise the privacy of user content. Italy, for example, blocked ChatGPT over privacy concerns (EuroNews, 2023). Given this and the many other ethical dilemmas, there is a need for a global council responsible for the accreditation and standardization of AI applications in mental healthcare intervention. Such a body is essential to ensure quality care and to protect patients’ rights globally. Such programs should also be easy to navigate and understand so that users feel comfortable and confident when using them.

6. Lack of evidence on effectiveness and efficacy: To the best of our knowledge, there are no empirical studies assessing the effectiveness and efficacy of ChatGPT and ChatGPT-supported chatbots, particularly in LMICs, which leaves a significant knowledge gap (Sallam, 2023; Wang et al., 2023). The dearth of evidence on the impact of ChatGPT and ChatGPT-supported chatbots in diverse socio-cultural contexts hinders our understanding of how these AI tools perform in promoting mental health and health equity within resource-limited settings. Closing this gap through targeted research is essential to harness the potential benefits of ChatGPT in addressing public health challenges in a global context.

The use of ChatGPT and ChatGPT-supported chatbots in educational training and clinical curricula should also be explored, ensuring that their integration aligns with the evolving needs of learners and practitioners. Research is needed to evaluate the effectiveness of such chatbot programs and their impact on learners and facilitators, and to identify potential issues or areas for improvement (Cascella et al., 2023; Kooli, 2023; Xue et al., 2023). Evidence-based protocols for applying chatbots in mental healthcare are urgently required. The increasing role of AI in healthcare also makes it a prerequisite to have adequate curriculum-based training and a continuing education program on AI applications in (mental) healthcare and AI-based interventions that can be accessed by all.

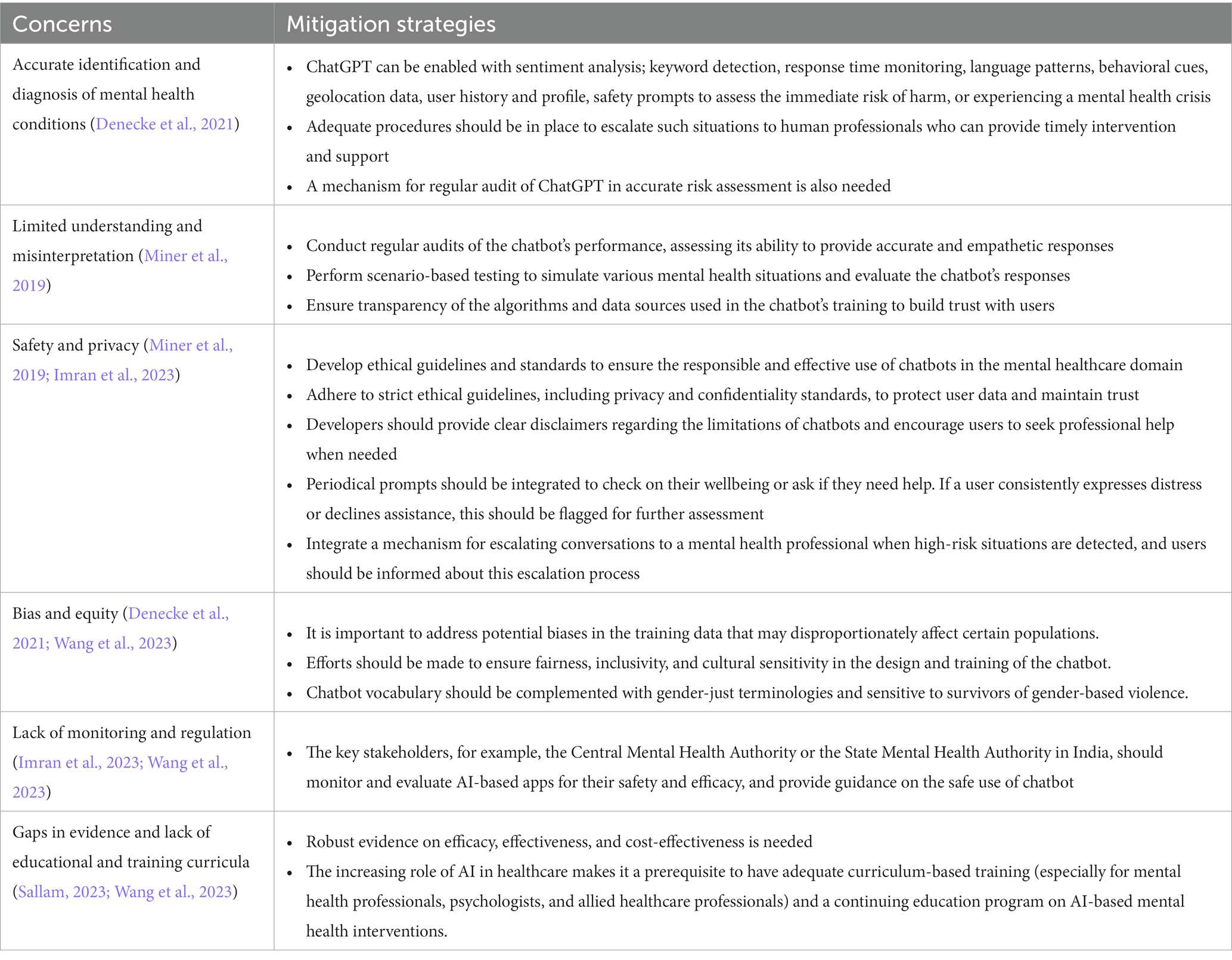

Mental health professionals need to be trained in the use of AI in mental health practice and research, to equip them for AI-assisted therapy. The key concerns, examples of each, and strategies to address them are shown in Table 2 (Denecke et al., 2021; Cosco, 2023; Northwest Executive Education, 2023; Sengupta, 2023; Wang et al., 2023).

Table 2. Concerns related to the use of ChatGPT and its mitigation strategies (Miner et al., 2019; Denecke et al., 2021; Imran et al., 2023; Sallam, 2023; Wang et al., 2023).

Implementing mitigation strategies for ChatGPT and ChatGPT-supported chatbots presents several challenges, ranging from technical and ethical considerations to user experience and bias mitigation. Some challenges are specific to LMICs, such as the infrastructure needed to make the internet available, the knowledge and skills diverse users need to use ChatGPT and ChatGPT-supported chatbots, and the training of healthcare providers in integrating these chatbots into care. Furthermore, users must understand the difference between AI-generated therapy and AI-guided therapy when accessing digital tools to support mental health. ChatGPT and ChatGPT-supported chatbots offer low-barrier, quick access to mental health support but are limited in their approach. GPT generates the next piece of text in a response without genuinely “understanding” it, which is a serious concern in mental healthcare. The “intelligence” of these bots, currently limited to simulated empathy and conversational style, is therefore of questionable value for addressing complex mental health conditions and demonstrating effective care. Digital tools should thus be used as part of a “spectrum of care” rather than as the sole measure of healthcare.

It is also important to distinguish between chatbot-enabled care and psychotherapy. Psychotherapy (therapy) is a structured intervention delivered by a trained professional. Anything that relaxes or relieves a person can be therapeutic but cannot be equated with psychotherapy. ChatGPT may be therapeutic, but there is no scientific evidence of its efficacy as a “psychotherapist.” ChatGPT can respond quickly with the “right (sounding) answers,” but it is not trained to induce reflection and insight as a therapist does. ChatGPT may be able to generate psychological and medical content, but it has no role in offering medical advice or personalized prescriptions.

The impact of ChatGPT on mental health and mental healthcare service delivery is yet to be determined. Will the future bring a generation of caring bots? That question can only be answered with far superior AI-guided programs, research, robust regulatory and monitoring mechanisms, and the integration of human intervention with ChatGPT.

The integration of ChatGPT and ChatGPT-supported chatbots opens avenues for expanding mental healthcare services to a larger population. To maximize their impact, ChatGPT and ChatGPT-supported chatbots should be part of comprehensive mental healthcare that includes screening, continuous care, and follow-up. It is essential to train ChatGPT and ChatGPT-supported chatbots to hand users over seamlessly to human professionals for diagnosis and treatment, and to point them to additional resources beyond the chatbot’s capabilities.

Addressing these challenges and the concerns of users and providers requires rigorous development processes, ongoing monitoring, regular updates, and collaboration with mental health professionals. Research is pivotal for refining ChatGPT and ChatGPT-supported chatbots, optimizing their integration into mental health services, and ensuring that they meet the evolving needs of users and healthcare providers alike within an ethical framework. Prospective research with robust methodologies can focus on assessing clinical effectiveness, efficacy, safety, and implementation challenges.

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

AP: Conceptualization, Methodology, Supervision, Writing – original draft, Writing – review & editing. PL: Writing – original draft, Writing – review & editing. AG: Technical inputs, Writing – review & editing.

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Bajwa, J., Munir, U., Nori, A., and Williams, B. (2021). Artificial intelligence in healthcare: transforming the practice of medicine. Future Healthc. J. 8, e188–e194. doi: 10.7861/fhj.2021-0095

Bohr, A., and Memarzadeh, K. (2020). “The rise of artificial intelligence in healthcare applications” in Artificial intelligence in healthcare (Academic Press), 25–60.

Cascella, M., Montomoli, J., Bellini, V., and Bignami, E. (2023). Evaluating the feasibility of ChatGPT in healthcare: an analysis of multiple clinical and research scenarios. J. Med. Syst. 47, 1–5. doi: 10.1007/s10916-023-01925-4

Cosco, T. D. (2023). ChatGPT: a game-changer for personalized mental health care for older adults? Available at: https://www.ageing.ox.ac.uk/blog/ChatGPT-A-Game-changer-for-Personalized-Mental-Health-Care-for-Older-Adults.

David, C. (2023). ChatGPT revenue and usage statistics. Business of Apps Available at: https://www.businessofapps.com/data/chatgpt-statistics.

Denecke, K., Abd-Alrazaq, A., and Househ, M. (2021). “Artificial intelligence for chatbots in mental health: opportunities and challenges” in Multiple perspectives on artificial intelligence in healthcare (lecture notes in bioengineering). eds. M. Househ, E. Borycki, and A. Kushniruk (Cham: Springer).

EuroNews. (2023). OpenAI’s ChatGPT chatbot blocked in Italy over privacy concerns. Available at: https://www.euronews.com/next/2023/03/31/openais-chatgpt-chatbot-banned-in-italy-by-watchdog-over-privacy-concerns.

Indian Council of Medical Research (ICMR) (2023). Ethical guidelines for application of artificial intelligence in biomedical research and healthcare. New Delhi: ICMR. Available at: https://main.icmr.nic.in/content/ethical-guidelines-application-artificial-intelligence-biomedical-research-and-healthcare.

Imran, N., Hashmi, A., and Imran, A. (2023). Chat-GPT: opportunities and challenges in child mental healthcare. Pak. J. Med. Sci. 39, 1191–1193. doi: 10.12669/pjms.39.4.8118

Kahn, J. (2023). ChatGPT’s inaccuracies are causing real harm. Fortune Available at: https://fortune.com/2023/02/28/chatgpt-inaccuracies-causing-real-harm/amp/.

Kooli, C. (2023). Chatbots in education and research: a critical examination of ethical implications and solutions. Sustainability 15:5614.

Miner, A. S., Shah, N., Bullock, K. D., Arnow, B. A., Bailenson, J., and Hancock, J. (2019). Key considerations for incorporating conversational AI in psychotherapy. Front. Psychiatry 10:746. doi: 10.3389/fpsyt.2019.00746

Northwest Executive Education (2023). ChatGPT and mental health: can AI provide emotional support to employees? Available at: https://northwest.education/insights/career-growth/chatgpt-and-mental-health-can-ai-provide-emotional-support-to-employees/#:~:text=Continuous%20learning%20and%20improvement%3A%20AI,strategies%20to%20address%20them%20effectively.

Roose, K. (2023). The brilliance and weirdness of ChatGPT. New York Times Available at: https://www.nytimes.com/2022/12/05/technology/chatgpt-ai-twitter.html.

Sallam, M. (2023). ChatGPT utility in healthcare education, research, and practice: systematic review on the promising perspectives and valid concerns. Healthcare 11:887. doi: 10.3390/healthcare11060887

Schechner, S. (2023). Google opens ChatGPT rival Bard for testing. Wall Street J. Available at: https://www.wsj.com/articles/google-opens-testingof-chatgpt-rival-as-artificial-intelligence-war-heats-up-11675711198 (Accessed February 28, 2023).

Sengupta, A. (2023). Death by AI? Man kills self after chatting with ChatGPT-like chatbot about climate change. India Today Available at: https://www.indiatoday.in/amp/technology/news/story/death-ai-man-kills-self-chatting-chatgpt-like-chai-chatbot-climate-change-2353975-2023-03-31.

Singh, O. P. (2019). Chatbots in psychiatry: can treatment gap be lessened for psychiatric disorders in India. Indian J. Psychiatry 61:225. doi: 10.4103/0019-5545.258323

Statista (2023). Number of internet and social media users worldwide as of January 2023 Statista Available at: https://www.statista.com/statistics/617136/digital-population-worldwide/#:~:text=Worldwide%20digital%20population%202023&text=As%20of%20January%202023%2C%20there,percent%20of%20the%20global%20population.

Wang, X., Sanders, H. M., Liu, Y., Seang, K., Tran, B. X., Atanasov, A. G., et al. (2023). ChatGPT: promise and challenges for deployment in low-and middle-income countries. Lancet Reg. Health West. Pac. 41:100905. doi: 10.1016/j.lanwpc.2023.100905

Keywords: ChatGPT, ChatGPT supported chatbots, psychotherapy, ethical dilemmas, artificial intelligence

Citation: Pandya A, Lodha P and Ganatra A (2024) Is ChatGPT ready to change mental healthcare? Challenges and considerations: a reality-check. Front. Hum. Dyn. 5:1289255. doi: 10.3389/fhumd.2023.1289255

Received: 05 September 2023; Accepted: 28 November 2023;

Published: 11 January 2024.

Edited by:

Mike Trott, Queen’s University Belfast, United Kingdom

Reviewed by:

Mohamed Khalifa, Macquarie University, Australia

Copyright © 2024 Pandya, Lodha and Ganatra. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Pragya Lodha, pragya6lodha@gmail.com
