- Lund University Cognitive Science, Department of Philosophy, Lund University, Lund, Sweden

Life-likeness is a property that can be used both to deceive people into believing that a robot is more intelligent than it is and to facilitate natural communication with humans. Over the years, different criteria have guided the design of intelligent systems, ranging from attempts to produce human-like language to trying to make a robot look like an actual human. We outline some relevant historical developments that all rely on different forms of mimicry of human life or intelligence. Many such approaches have been to some extent successful. However, we want to argue that there are ways to exploit aspects of life-likeness without deception. A life-like robot has advantages in communicating with humans, not because we believe it to be alive, but rather because we react instinctively to certain aspects of life-like behavior, as this can make a robot easier to understand and allows us to better predict its actions. Although there may be reasons for trying to design robots that look exactly like humans for specific research purposes, we argue that it is subtle behavioral cues that are important for understandable robots rather than life-likeness in itself. To this end, we are developing a humanoid robot that will be able to show human-like movements while still looking decidedly robotic, thus exploiting our ability to understand the behaviors of other people based on their movements.

1 Introduction

At the large robot exhibition in Osaka, all exhibition booths are empty. All visitors have gathered in a large cluster in the middle of the room and are looking in the same direction. But when I walk by, there are no robots. Just someone giving a presentation in Japanese. Because I do not understand a word, I move on when it suddenly strikes me. Something does not look right.

What I witnessed was one of the first demonstrations of the android Repliee Q2, a robot designed to imitate a human, with silicone skin and advanced facial expressions. The android has a large number of movement possibilities, most of them in the face. Although it still falls short of the 43 facial muscles of humans (Shimada et al., 2007), the android can show a large range of facial expressions and the mouth moves in a relatively realistic way when it speaks.

The body, on the other hand, has very little mobility and the robot must sit down or be screwed to the floor to prevent it from falling. Had it moved its body a little more, I might not have reacted at all, because what made me hesitate was its rather odd posture. The arm did not move in a completely natural way. A small detail, it may seem, but enough to break the illusion of life.

Designing life-like robots can be useful to facilitate interaction with humans, but we want to argue that imitation of life or human intelligence should not be a goal in itself. Instead, it is useful to mimic certain aspects of human behavior to facilitate the understanding of the robot as well as allowing natural communication between a robot and a human. Below, we review attempts to mimic human appearance and behavior and investigate what aspects of life-likeness are useful in a robot.

In the following sections, we highlight prominent research within AI and robotics that has aimed at reproducing different aspects of life-likeness in machines. We argue that many of these research directions are partially based on deception and suggest that the important property of life-like machines is not that they appear to be alive or try to mimic actual humans, but rather that they can potentially be easier for people to understand and interact with. Finally, we outline our work on a humanoid robot that aims at imitating some aspects of human behavior without pretending to be anything but a robot.

2 Pretending to be Human

During much of the history of artificial intelligence an overall aim has been to design technical systems that have human-like abilities. One way to approach this is to construct a machine in such a way that it appears indistinguishable from an actual human in some task or situation.

2.1 The Turing Test

But why is it a goal to succeed in pretending that a machine is a human? To understand what lies behind this approach, we must go back to the fifties and an idea launched by the British computer pioneer Alan Turing. In addition to his revolutionary contributions to theoretical computer science, Turing also contributed to research in mathematics and theoretical biology. But he is best known today for his efforts during World War II, an operation that was considered so important that it was kept secret until 1974, 20 years after his death. Together with a motley crowd of creative geniuses at Bletchley Park, he led the work of deciphering the German Navy’s radio communications. To speed up the process, the electromechanical calculator called Bombe was constructed. The machine, which was able to decipher the German Enigma cipher, is estimated by historians to have shortened World War II by more than 2 years, thereby saving between 14 and 21 million lives (Copeland, 2012).

Although Bombe was not a general-purpose computer, it was a step towards Turing’s vision of a general computational mechanism. Turing had already shown that it is possible to define an abstract machine, the Turing machine, which can calculate everything that can be calculated, and it is natural to ask whether such a machine can also become intelligent. But how should the intelligence of the machine be measured?

In one of his most acclaimed articles, Turing presented what later came to be known as the Turing test (Turing, 1950). The test tries to solve the problem of determining whether a machine is intelligent or not. The idea is to let a person converse with the machine and then ask that person to determine whether they have just communicated with a human or a machine. If it is not possible to determine, then one must conclude that the machine is as intelligent as a human.

To prevent the person performing the test from knowing who they are communicating with, Turing imagined communicating with the machine in some indirect way, for example via a text terminal. You enter sentences which are then delivered to the machine or the person at the other end, and then you get an answer back as a printout on paper. This makes it impossible to see who delivers the answers. Of course, one must also make sure that the response times correspond to those of a human being. If you ask the computer what 234 × 6,345 is, then maybe it can answer immediately, while a human takes a moment to figure the answer out.

2.2 Eliza and Parry

The Turing test is both brilliant and simple, but it has one major drawback: people can be quite gullible. As early as 1966, Joseph Weizenbaum, one of the early pioneers of artificial intelligence, succeeded in constructing the ELIZA program (Weizenbaum, 1966). The program allowed the computer to have a conversation with a person in much the same way as Turing suggested in his test. ELIZA, named after the character Eliza Doolittle in George Bernard Shaw’s play Pygmalion, aimed to imitate a psychotherapist using Carl Rogers’ humanistic approach. A central idea in Rogers’ therapy is that the therapist should primarily let the client conduct the conversation himself. This was perfect for a computer program that does not really understand anything but is just trying to keep the conversation going. If ELIZA found words like “mom” or “dad” in her input, she could answer with a ready-made sentence like “Tell me more about your family.” With a set of simple rules and more or less ready-made answers, it was possible to deceive people who in the sixties had no major experience with computers. During tests with ELIZA, it even happened that the participants asked to be left alone to talk to the program about their problems privately.
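
The keyword rule described above can be sketched in a few lines of Python. This is only a minimal illustration under our own assumptions, not Weizenbaum's original program: the rule table `RULES`, the fallback `DEFAULT_REPLY`, and the function `respond` are hypothetical names chosen for the example, and only the reply “Tell me more about your family.” is taken from the text.

```python
import re

# Hypothetical rule table: each entry pairs a keyword pattern with a reply.
# Only the first reply comes from the text; the second rule is an assumed
# example of echoing part of the input back to the speaker.
RULES = [
    (re.compile(r"\b(mom|mother|dad|father)\b", re.IGNORECASE),
     "Tell me more about your family."),
    (re.compile(r"\bI feel (.+)", re.IGNORECASE),
     "Why do you feel {0}?"),
]

# Assumed fallback that keeps the conversation going when nothing matches.
DEFAULT_REPLY = "Please go on."


def respond(utterance: str) -> str:
    """Return the reply of the first rule whose keyword occurs in the input."""
    for pattern, template in RULES:
        match = pattern.search(utterance)
        if match:
            # Strip trailing punctuation from captured text before reuse.
            groups = [group.rstrip(".!?") for group in match.groups()]
            return template.format(*groups)
    return DEFAULT_REPLY


if __name__ == "__main__":
    for line in ["My mom never listens to me", "I feel sad today."]:
        print(respond(line))
```

The sketch has no representation of what the words mean. It only reacts to surface patterns, which is why such a program depends on the human to supply the content of the conversation.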

An interesting development of the idea was made a few years later by the American psychiatrist Kenneth Colby, who picked up the thread with the program PARRY (Colby, 1981). Instead of imitating a therapist, PARRY tried to imitate a person with paranoid schizophrenia. The program was successful in that people who were asked to decide whether they were communicating with a program or a human were not able to do so, but the fact that PARRY tried to imitate an irrational paranoid person obviously contributed to the result.

That neither ELIZA nor PARRY have much to bring to a conversation became apparent when they were connected for a two-way conversation. Since both programs need human input for there to be any content in the exchange, their conversation became very empty. The only time it gets a little hot is when PARRY happens to throw in a few words about the mafia, but the subject quickly fades out again and the conversation turns into a ping-pong game with empty phrases.

The development of programs that can converse has continued and today there are annual competitions where different programs try to pass the Turing test. The programs have now become so good that it is difficult even for experts to be sure after a few minutes of interaction whether they are talking to a human or a computer. With longer interaction, however, it is easy to detect that you are talking to a machine, so even if you sometimes hear that different programs have passed the Turing test, this only applies when the length of the interaction is limited.

2.3 Geminoids

It is not easy to pass the Turing test, but it has not stopped some researchers from trying to go even further and try to build machines that are perceived as human even if you get to see them. Robot researcher Hiroshi Ishiguro, who was behind the Repliee Q2, has also designed other robots with a human appearance. One usually distinguishes between humanoids, which are robots with human form (Brooks et al., 1998; Hirai et al., 1998; Metta et al., 2008), but which still look like machines, and androids (Ishiguro, 2016), which are robots that are made to resemble a human as much as possible. Ishiguro has even gone a step further with what he calls geminoids, or twin robots, which aim to imitate specific people (Nishio et al., 2007). To this end he has constructed a copy of himself that he can remotely control with the help of sensors that read his movements and facial expressions. The latest geminoid is a copy of the Danish researcher Henrik Schärfe (Abildgaard and Scharfe, 2012).

Just trying to imitate people may seem like a rather superficial way of approaching living intelligent machines, but there are several reasons why it can still be a fruitful way to go. Why ignore certain aspects of the human constitution? To quote the two cyberneticists Norbert Wiener and Arturo Rosenblueth, “the best model of a cat is another, or preferably the same, cat” (Rosenblueth and Wiener, 1945, p. 320). The more aspects of the human we copy, the closer we get to an artificial human. What android researchers do is start on the outside, with the body’s appearance, and then gradually develop intelligence for the body.

However, even if the illusion of life is perfect, it is easily destroyed if the movements of the robot look prerecorded or if the domain of actions is too limited. This is elegantly illustrated in the TV series Westworld, where the fictional android Bernard Lowe is seen performing exactly the same movements while cleaning his glasses. Although a perfect imitation of life, the illusion quickly vanishes with multiple repetitions. To strengthen the effect, another android is shown to do the exact same movements in one of the episodes. Similarly, the character Dolores Abernathy uses a limited vocabulary, repeating the phrase “Have you ever seen anything so full of splendor?” in multiple contexts. What initially sounds sophisticated comes out as canned speech when used over and over.

In fact, humans are very sensitive to exact repetition (Despouy et al., 2020) and to interact in a natural way, a robot needs to vary its behaviors. We immediately recognize when a movement or phrase has been used before. This is similar to the effect of using unusual words or phrases more than once in a written text. Repetitions are easily recognized and distract from the contents.

2.4 The Uncanny Valley

None of today’s androids look really human-like up-close. Instead of looking human in a sympathetic way, they cause discomfort in many people (MacDorman and Entezari, 2015). You feel the same reaction as if you met a zombie or mummy. Something dead that should be immobile has come to life. The jerky and unnatural movements do not make things better.

This is an example of a phenomenon first described by the Japanese robot scientist Masahiro Mori in the seventies (Mori, 2012). He noted that the more human a robot is, the nicer it feels, but this connection is broken when you get very close to a human appearance. Then feelings of discomfort arise instead. The phenomenon is called the uncanny valley. Instead of looking more vivid, the result is rather the opposite. You pay extra attention to what is not human in the robot.

However, the concept of an uncanny valley has been questioned (Zlotowski et al., 2013) and the reactions to realistic androids depend both on personality traits (MacDorman and Entezari, 2015) and surrounding culture (Haring et al., 2014). Indeed on closer examination, the reactions to different anthropomorphic robots depend on many interacting factors. There may be an uncanny cliff rather than a valley (Bartneck et al., 2007).

Interestingly, the reaction is not as strong for a humanoid robot that is not so human. The iCub robot, which was developed in a large European consortium, has been designed to have a child’s proportions (Metta et al., 2008). It is clear that it is a robot and it is perceived by many as both cute and nice, and people report feeling comfortable while interacting with it when it behaves appropriately for the situation (Redondo et al., 2021).

3 The Importance of Body and Behavior

That the body is important for thinking and intelligence has in recent years become increasingly obvious. After all, our brain is primarily developed to control the body. Researchers in the field usually talk about embodied cognition (Wilson, 2002), meaning that the body is part of the cognitive system and that how the body looks and interacts with the environment is central to understanding intelligence.

One of the main proponents of this direction in robotics is Rodney Brooks, who in the mid-eighties revolutionized the field. He showed that robots with a purposeful body, but very little intelligence, could perform tasks that had been very difficult to solve in the traditional way (Brooks, 1991). Instead of letting the robots build complicated internal models of the environment, Brooks proposed that in most cases they should only react directly to sensory signals. By starting from body-based behavior instead of reasoning and planning, the robots were able to function quickly and efficiently despite the fact that they almost completely lacked intelligence in any traditional sense.

One inspiration for this work was models from ethology, for example Tinbergen’s behavior patterns (Tinbergen, 2020) and Timberlake’s behavior systems (Timberlake, 1994). Unlike traditional models of cognition that investigate internal states, these models are based on fixed behaviors that are triggered by specific stimuli. In the robotics community this approach is usually described as behavior-based robotics (Brooks, 1991).

Brooks’ behavior-based robots were an answer to a challenge posed by the American philosopher Daniel Dennett, who questioned the possibility of creating artificial intelligence without a body (Dennett, 1978). Why not build an entire animal? Maybe a lizard? He argued that the best way to achieve human intelligence would be to start with a simple but complete robot animal and then develop this robot further both physically and cognitively. The robots that are built in this research field are sometimes called animats and their constructions are based on insights from biology (Wilson, 1991; Ziemke et al., 2012).

Some of Brooks’ first robots tried to imitate insects and used six legs to walk around (Brooks, 1989). They had various sensors at the front that allowed them to go towards different targets or avoid obstacles. The robots had no central intelligence but the sensors were connected so that they directly affected the gait pattern in different ways. Because the robots were adapted to the environment in the same way that an animal was developed to function in its ecological niche, these simple systems worked surprisingly well. Their behavior and movement patterns showed many characteristics that are associated with biological life, far from the robots that are seen in industry.

Another robot built on these principles was Herbert (Brooks et al., 1988) which elegantly illustrates the function of a behavior-based system. The robot had the task of collecting empty cans in the lab and throwing them in a dustbin. What makes the robot so fascinating is how flexible its behavior is despite a relatively simple control system. If you help Herbert and give it a can, it can take it and then go out in search of the dustbin. If you take the can away instead, the robot no longer feels that it is holding something in its hand and will start looking for other cans instead. Although there is no plan or model of the world, Herbert will behave appropriately in most cases. By reacting directly to the outside world, there are no problems with the model of the world being incorrect. Brooks formulated this as “the world is its own best model” (Brooks, 1990).

The different behaviors are organized in what Brooks called the subsumption architecture (Brooks, 1986). The main feature of this architecture is that it is based on a hierarchy of behaviors. Higher-level behaviors are triggered by specific stimuli and subsume the output of lower-level (or default) behaviors. In many ways, the subsumption architecture mimics the evolution of neural control systems, where more recent developments build upon older structures to modulate their behaviors rather than to replace them entirely. The direct reactions to external signals produce a sense of life-likeness in the robot behavior.
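
The idea that a triggered higher-level behavior overrides a default one can be illustrated with a small Python sketch. It is a deliberately simplified assumption on our part: the layering is reduced to a priority ordering in which the highest triggered layer wins, whereas the architecture in Brooks (1986) wires suppression and inhibition between networks of finite state machines. The sensor keys, command strings, and function names are hypothetical, loosely modeled on the can-collecting task described above, and do not reproduce Herbert's actual control system.

```python
from typing import Callable, Dict, List, Optional, Tuple

Sensors = Dict[str, bool]
Behavior = Callable[[Sensors], Optional[str]]


def search(sensors: Sensors) -> Optional[str]:
    """Default behavior: always produces an output."""
    return "search_for_cans"


def grasp(sensors: Sensors) -> Optional[str]:
    """Triggered only when a can is seen (hypothetical sensor key)."""
    return "grasp_can" if sensors.get("can_in_view", False) else None


def deliver(sensors: Sensors) -> Optional[str]:
    """Triggered only when the hand holds a can (hypothetical sensor key)."""
    return "go_to_dustbin" if sensors.get("holding_can", False) else None


# Layers listed from lowest (default) to highest.
LAYERS: List[Tuple[str, Behavior]] = [
    ("search", search),
    ("grasp", grasp),
    ("deliver", deliver),
]


def select_action(sensors: Sensors) -> Optional[str]:
    """Let every triggered higher layer replace the output of the layers below."""
    command = None
    for _name, behavior in LAYERS:
        output = behavior(sensors)
        if output is not None:
            command = output  # the higher layer subsumes the lower output
    return command


if __name__ == "__main__":
    print(select_action({}))
    print(select_action({"holding_can": True}))
    print(select_action({"holding_can": False}))
```

Because the action is recomputed from the current sensor values on every call, handing the robot a can or taking it away changes the behavior immediately, without any stored plan or world model.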

These types of ideas were also the inspiration for Braitenberg’s vehicles (Braitenberg, 1986). These imaginary creatures were controlled by more or less direct connections between sensors and actuators, which consisted of a motor-driven wheel on each side of the vehicle. The symmetry of the vehicles made them show goal-directed behaviors using very simple mechanisms. For example, an odour stimulus to the right would excite the motor to the left more than the motor to the right, which would make the vehicle turn toward the odour source. This simple design would produce both goal-directed behavior and a velocity profile that is immediately recognized.
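
The crossed sensor-to-motor coupling in this example can be simulated with a few lines of Python. The sketch below is our own illustration under stated assumptions, not code from any of the cited studies: the stimulus falls off with squared distance, the two sensors sit 45 degrees to either side of the heading, and all parameter values and names (`intensity`, `step`, `simulate`, `base`, `gain`, `axle`, `reach`) are hypothetical.

```python
import math


def intensity(px, py, source):
    """Stimulus strength at a point (assumed to fall off with squared distance)."""
    d2 = (px - source[0]) ** 2 + (py - source[1]) ** 2
    return 1.0 / (1.0 + d2)


def step(x, y, heading, source, dt=0.1, base=0.2, gain=4.0, axle=0.5, reach=0.5):
    """Advance a two-wheeled vehicle by one time step."""
    # Sensors sit ahead of the body, 45 degrees to the left and to the right.
    lx = x + reach * math.cos(heading + math.pi / 4)
    ly = y + reach * math.sin(heading + math.pi / 4)
    rx = x + reach * math.cos(heading - math.pi / 4)
    ry = y + reach * math.sin(heading - math.pi / 4)
    left_sensor = intensity(lx, ly, source)
    right_sensor = intensity(rx, ry, source)

    # Crossed excitatory connections: each sensor drives the opposite motor.
    left_motor = base + gain * right_sensor
    right_motor = base + gain * left_sensor

    # Differential drive: a faster left wheel turns the vehicle to the right.
    speed = (left_motor + right_motor) / 2.0
    turn_rate = (right_motor - left_motor) / axle
    heading += turn_rate * dt
    x += speed * math.cos(heading) * dt
    y += speed * math.sin(heading) * dt
    return x, y, heading


def simulate(source, steps=200):
    """Start at the origin facing along the x-axis and record the path."""
    x, y, heading = 0.0, 0.0, 0.0
    path = [(x, y)]
    for _ in range(steps):
        x, y, heading = step(x, y, heading, source)
        path.append((x, y))
    return path


if __name__ == "__main__":
    source = (3.0, -3.0)  # stimulus placed ahead and to the right
    path = simulate(source)
    closest = min(math.hypot(px - source[0], py - source[1]) for px, py in path)
    print(f"closest approach to the source: {closest:.2f}")
```

In this sketch the motor drive grows with the stimulus, so the vehicle both turns toward the source and speeds up as it approaches, which gives the movement a characteristic velocity profile.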

Braitenberg’s vehicles have been intensively studied in both computer simulations (Balkenius, 1995) and in physical robots (Lilienthal and Duckett, 2003). Interestingly, such movement profiles may be how we as humans recognize intentions of others (Cavallo et al., 2016).

The success of behavior-based robotics led to a rapid increase in the level of ambition. In the early nineties, work on the robot Cog was in full swing in Rodney Brooks’ lab (Brooks et al., 1998). The goal was to build a humanoid robot based on behavioral principles, and according to the original plan, Cog was expected to achieve self-awareness by 1997.

Despite not achieving its goals, the Cog project has been extremely important for the development of humanoid robots. The project showed the way for further research, above all by making it acceptable to try to imitate the human body as well as our intelligence.

A very interesting result of the Cog project was that it turned out that we as humans cannot help but relate to the humanoid as if it were a living being. It had earlier been observed that people tend to behave toward computers as if they were persons, and it may not be surprising that this also applies to a humanoid robot (Reeves and Nass, 1996). Even the researchers who programmed the vision system in Cog found it difficult to work when the robot looked at them all the time and set up screens to be able to work separately.

Daniel Dennett has called this approach the intentional stance (Dennett, 1989). We choose to interpret the behavior of the robot as if its actions have intentions, as if it has ideas about the world and desires that it tries to fulfil. It does not help to know how the robot is programmed, and that it may only be simple rules that control it. The experience of intentionality is there anyway. In the most extreme interpretation of the theory, this is enough to have achieved intelligence in the robot. When the simplest explanation for the robot’s behavior is that it has intentions in the same way as a human, it does not matter how this is programmed. You can see this as an updated Turing test. We see that it is a machine, but still choose to interpret its behavior as if it were a living being.

It is this line of thinking that underlies the plot of the film Ex Machina. Programmer Caleb Smith is given the task of determining if the humanoid robot Ava is aware and can think. It is clear from the beginning that Ava has already passed the Turing test and now the question is whether she is really aware or if she is only imitating a human being. The film never gives the answer, but Dennett and Brooks would say it does not matter. If the robot appears to have a consciousness, it is reasonable to treat it as if it has, in the same way that we assume that other people have consciousness even though there is no way to prove it.

The robot in the movie is clearly not a human. Instead its life-likeness comes from its behavior, its movements, and its ability to interact with humans in a natural way. In the next section, we outline our ongoing work on the humanoid robot Epi that aims at reproducing such abilities while still being clearly robotic.

4 Toward an Honest Robot Design

There are several reasons why one would want to construct a robot that is perceived as a human. One is that a robot that is to work with humans should be able to communicate with them in a natural way. This is not just about language but also about how to communicate non-verbally through movements. When we look at another human being, we can usually understand what they are doing. The movements and body language communicate intentions and goals. We see if a person we meet in the corridor has noticed us and that they will not collide with us (Pacchierotti et al., 2006; Daza et al., 2021). This is a useful feature also in a robot that is not primarily social.

Of course, it is easier to understand such subtle signals from a robot that is human-like than one that looks like a vacuum cleaner. There may thus be reason to construct robots with a humanoid shape. And if we are to believe Dennett, we will treat the robot as if it were alive, simply because it is most economical.

The imitation of life can serve a purpose both to simplify interaction and to make robots more engaging. This is clearly shown in animated movies where life-likeness is essential to engage the audience. Similar principles can be applied to robots with great effect (Ribeiro and Paiva, 2012). It is clear that life-likeness is useful in a communicative robot (Yamaoka et al., 2006). However, this does not imply that the robot should pretend to be alive. Instead, we propose that robots should be designed in an honest way that clearly shows that they are machines.

If pretending to be a live human is not the way to produce useful robots, how should robots be designed to allow an intuitive interaction with humans? We want to argue that there are many aspects of humans that should indeed be reproduced but perhaps not the outer visual form as much as the detailed movements and non-verbal signals of a human. This is in line with the reasoning put forward by Fong et al. (2003), who argue that caricatured humans may be more suitable because they avoid the uncanny valley.

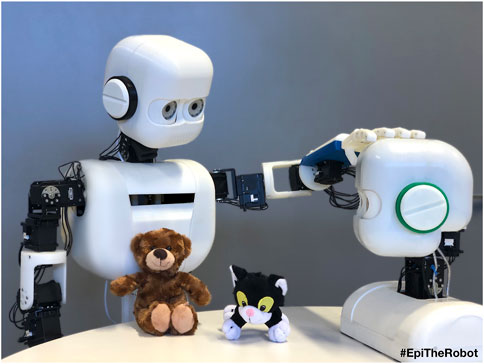

Towards this end we have designed the robot Epi (Figure 1), which is an attempt at an honest humanoid design in the sense that it is clear that it is a robot while it still tries to mimic the details of human-human interaction and reproduce a number of subtle non-verbal signals (Johansson et al., 2020). The overall design of the robot is in no way unique. Several humanoid robots use a design that clearly shows that it is a robot (e.g., Sakagami et al., 2002; Metta et al., 2008; Pandey and Gelin, 2018; Gupta et al., 2019). However, some aspects of the robot are unusual such as the animated physical irises of the eyes and the simple but robust hands.

FIGURE 1. Two versions of the humanoid robot Epi. The robots are designed to clearly show that they are machines but incorporate a number of anthropomorphic features such as the general shape of the body with a torso, two arms and head together with two eyes with animated pupils and hands with five fingers. Although the range of motion is limited compared to a human, the available degrees of freedom reflect the main joints of the human body. The version to the right in the figure uses a simplified design without arms and is used for studies of human-robot interaction.

The robot attempts to show limited anthropomorphism in that it is clearly a robot but still has some of the relevant degrees of freedom that can be found in the human body.

An important design criterion has been that the robot should be able to produce body motions that closely resemble human motions. Here, a human-like speed of motion is important (Fong et al., 2003). A recent study investigated how people would react to a robot that performed social communicative movements in a collaborative box-stacking task rather than the most efficient movement to accomplish the task (Brinck et al., 2020). As a result, the participants unconsciously reciprocated the social movements and also sought more eye contact with the robot than participants who collaborated with the more efficient but non-communicative robot. The study showed that minimal kinematic changes give large effects on how people react to a robot.

The physically animated pupils of Epi can be used to communicate with humans in a way that influences them unconsciously (Johansson et al., 2020). The control system uses a model of the brain systems involved in pupil control to let the pupil size reflect a number of inner processes including emotional and cognitive functions (Johansson and Balkenius, 2016; Balkenius et al., 2019). We are currently evaluating how people react unconsciously to the pupil dilation of the robot. Going forward, we aim to test every design decision, how people react to different features of the robot, and how these features influence interaction with the robot.

5 Conclusion

The goal should not be to deceive people into believing that a machine is a human, but to construct robots that we can easily understand and interact with (Goodrich and Schultz, 2008). Such robots can be humanoid, or perhaps animal-like, but it is not human-likeness in itself that is important, but that we can naturally communicate with them and perceive what they are doing. Here it is fundamental to look at basic aspects of communication between people: how we use body language and gaze to coordinate our actions. It is only when you succeed in capturing these properties that you will be able to build robots that are almost alive.

Interestingly, the aspects of the interaction that make the robot look alive are similar to those that make the interaction successful. There is no need for the robot to pretend to be alive as long as its movements and interaction tie in with how a real living human would react in the same situation. Life-likeness may be important in social robots, but we believe that it is equally important for robots that are not primarily social, since it potentially makes the behaviors of the robot easier to understand, which makes the robot more transparent.

Data Availability Statement

The original contributions presented in the study are included in the article/Supplementary Material, further inquiries can be directed to the corresponding author.

Author Contributions

All authors listed have made a substantial, direct, and intellectual contribution to the work and approved it for publication.

Funding

This work was partially supported by the Wallenberg AI, Autonomous Systems and Software Program—Humanities and Society (WASP-HS) funded by the Marianne and Marcus Wallenberg Foundation and the Marcus and Amalia Wallenberg Foundation. Additional funding was obtained from the Pufendorf Institute at Lund University.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors, and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Abildgaard, J. R., and Scharfe, H. (2012). “A Geminoid as Lecturer,” in International Conference on Social Robotics, Switzerland AG, October 29–31, 2012 (Springer), 408–417. doi:10.1007/978-3-642-34103-8_41

Balkenius, C., Fawcett, C., Falck-Ytter, T., Gredebäck, G., and Johansson, B. (2019). “Pupillary Correlates of Emotion and Cognition: a Computational Model,” in 2019 9th International IEEE/EMBS Conference on Neural Engineering (NER), San Francisco, CA, USA, 20-23 March 2019 (IEEE), 903–907. doi:10.1109/ner.2019.8717091

Balkenius, C. (1995). Natural Intelligence in Artificial Creatures. Lund: Lund University Cognitive Science.

Bartneck, C., Kanda, T., Ishiguro, H., and Hagita, N. (2007). “Is the Uncanny Valley an Uncanny Cliff?,” in RO-MAN 2007-The 16th IEEE International Symposium on Robot and Human Interactive Communication, Jeju, Korea, August 26–29, 2007 (IEEE), 368–373.

Brinck, I., Heco, L., Sikström, K., Wandsleb, V., Johansson, B., and Balkenius, C. (2020). “Humans Perform Social Movements in Response to Social Robot Movements: Motor Intention in Human-Robot Interaction,” in 2020 Joint IEEE 10th International Conference on Development and Learning and Epigenetic Robotics (ICDL-EpiRob), Valparaiso, Chile, 26-30 Oct. 2020 (IEEE), 1–6. doi:10.1109/icdl-epirob48136.2020.9278114

Brooks, R. (1986). A Robust Layered Control System for a mobile Robot. IEEE J. Robot. Automat. 2, 14–23. doi:10.1109/jra.1986.1087032

Brooks, R. A. (1989). A Robot that Walks; Emergent Behaviors from a Carefully Evolved Network. Neural Comput. 1, 253–262. doi:10.1162/neco.1989.1.2.253

Brooks, R. A., Breazeal, C., Marjanović, M., Scassellati, B., and Williamson, M. M. (1998). “The Cog Project: Building a Humanoid Robot,” in International workshop on computation for metaphors, analogy, and agents (Springer) 1562, 52–87.

Brooks, R. A., Connell, J., and Ning, P. (1988). Herbert: A Second Generation mobile Robot. Cambridge, MA: MIT AI Lab, A. I. Memo 1016.

Brooks, R. A. (1990). Elephants Don't Play Chess. Robotics autonomous Syst. 6, 3–15. doi:10.1016/s0921-8890(05)80025-9

Cavallo, A., Koul, A., Ansuini, C., Capozzi, F., and Becchio, C. (2016). Decoding Intentions from Movement Kinematics. Sci. Rep. 6, 37036–37038. doi:10.1038/srep37036

Colby, K. M. (1981). Modeling a Paranoid Mind. Behav. Brain Sci. 4, 515–534. doi:10.1017/s0140525x00000030

Copeland, J. (2012). Alan Turing: The Codebreaker Who Saved ‘Millions of Lives’. BBC News, June 19, 2012, https://www.bbc.com/news/technology-18419691.

Daza, M., Barrios-Aranibar, D., Diaz-Amado, J., Cardinale, Y., and Vilasboas, J. (2021). An Approach of Social Navigation Based on Proxemics for Crowded Environments of Humans and Robots. Micromachines 12, 193. doi:10.3390/mi12020193

Dennett, D. C. (1978). Why Not the Whole Iguana? Behav. Brain Sci. 1, 103–104. doi:10.1017/s0140525x00059859

Despouy, E., Curot, J., Deudon, M., Gardy, L., Denuelle, M., Sol, J.-C., et al. (2020). A Fast Visual Recognition Memory System in Humans Identified Using Intracerebral ERP. Cereb. Cortex 30, 2961–2971. doi:10.1093/cercor/bhz287

Fong, T., Nourbakhsh, I., and Dautenhahn, K. (2003). A Survey of Socially Interactive Robots. Robotics Autonomous Syst. 42, 143–166. doi:10.1016/s0921-8890(02)00372-x

Gupta, N., Smith, J., Shrewsbury, B., and Børnich, B. (2019). “2D Push Recovery and Balancing of the Eve R3-A Humanoid Robot with Wheel-Base, Using Model Predictive Control and Gain Scheduling,” in 2019 IEEE-RAS 19th International Conference on Humanoid Robots (Humanoids), Toronto, ON, Canada, 15-17 Oct. 2019 (IEEE), 365–372. doi:10.1109/humanoids43949.2019.9035044

Haring, K. S., Silvera-Tawil, D., Matsumoto, Y., Velonaki, M., and Watanabe, K. (2014). “Perception of an Android Robot in Japan and Australia: A Cross-Cultural Comparison,” in International Conference on Social Robotics (Springer), 166–175. doi:10.1007/978-3-319-11973-1_17

Hirai, K., Hirose, M., Haikawa, Y., and Takenaka, T. (1998). “The Development of Honda Humanoid Robot,” in Proceedings. 1998 IEEE International Conference on Robotics and Automation (Cat. No. 98CH36146), Leuven, Belgium, 20-20 May 1998 (IEEE), 1321–1326.

Ishiguro, H. (2016). “Android Science,” in Cognitive Neuroscience Robotics A (Tokyo: Springer), 193–234. doi:10.1007/978-4-431-54595-8_9

Johansson, B., and Balkenius, C. (2016). A Computational Model of Pupil Dilation. Connect. Sci. 30, 1–15. doi:10.1080/09540091.2016.1271401

Johansson, B., Tjøstheim, T. A., and Balkenius, C. (2020). Epi: An Open Humanoid Platform for Developmental Robotics. Int. J. Adv. Robotic Syst. 17, 1729881420911498. doi:10.1177/1729881420911498

Lilienthal, A. J., and Duckett, T. (2003). “Experimental Analysis of Smelling Braitenberg Vehicles,” in IEEE International Conference on Advanced Robotics (ICAR 2003), Coimbra, Portugal, June 30-July 3, 2003 (Coimbra, University), 375–380.

MacDorman, K. F., and Entezari, S. O. (2015). Individual Differences Predict Sensitivity to the Uncanny Valley. Interact. Stud. 16, 141–172. doi:10.1075/is.16.2.01mac

Metta, G., Sandini, G., Vernon, D., Natale, L., and Nori, F. (2008). “The iCub Humanoid Robot: an Open Platform for Research in Embodied Cognition,” in Proceedings of the 8th workshop on performance metrics for intelligent systems, Menlo Park, CA, USA, 2-4 Oct. 2011 (IEEE), 50–56.

Mori, M., MacDorman, K., and Kageki, N. (2012). The Uncanny Valley. IEEE Robot. Automat. Mag. 19, 98–100.

Nishio, S., Ishiguro, H., and Hagita, N. (2007). Geminoid: Teleoperated Android of an Existing Person. Humanoid robots: New Dev. 14, 343–352. doi:10.5772/4876

Pacchierotti, E., Christensen, H. I., and Jensfelt, P. (2006). “Evaluation of Passing Distance for Social Robots,” in RO-MAN 2006-The 15th IEEE International Symposium on Robot and Human Interactive Communication, Hatfield, UK, 6-8 Sept. 2006 (IEEE), 315–320. doi:10.1109/roman.2006.314436

Pandey, A. K., and Gelin, R. (2018). A Mass-Produced Sociable Humanoid Robot: Pepper: The First Machine of its Kind. IEEE Robot. Automat. Mag. 25, 40–48. doi:10.1109/mra.2018.2833157

Redondo, M. E. L., Sciutti, A., Incao, S., Rea, F., and Niewiadomski, R. (2021). “Can Robots Impact Human Comfortability during a Live Interview,” in Companion of the 2021 ACM/IEEE International Conference on Human-Robot Interaction, Boulder, CO, 8 March 2021, 186–189.

Reeves, B., and Nass, C. (1996). The Media Equation: How People Treat Computers, Television, and New Media like Real People. Cambridge, United Kingdom: Cambridge University Press.

Ribeiro, T., and Paiva, A. (2012). “The Illusion of Robotic Life: Principles and Practices of Animation for Robots,” in Proceedings of the seventh annual ACM/IEEE international conference on Human-Robot Interaction, Boston, MA, USA, 5-8 March 2012 (IEEE), 383–390.

Rosenblueth, A., and Wiener, N. (1945). The Role of Models in Science. Philos. Sci. 12, 316–321. doi:10.1086/286874

Sakagami, Y., Watanabe, R., Aoyama, C., Matsunaga, S., Higaki, N., and Fujimura, K. (2002). “The Intelligent Asimo: System Overview and Integration,” in IEEE/RSJ international conference on intelligent robots and systems, Lausanne, Switzerland, 30 Sept.-4 Oct. 2002 (IEEE), 2478–2483.

Shimada, M., Minato, T., Itakura, S., and Ishiguro, H. (2007). “Uncanny Valley of Androids and its Lateral Inhibition Hypothesis,” in RO-MAN 2007-The 16th IEEE International Symposium on Robot and Human Interactive Communication, Jeju, Korea (South), 26-29 Aug. 2007 (IEEE), 374–379. doi:10.1109/roman.2007.4415112

Timberlake, W. (1994). Behavior Systems, Associationism, and Pavlovian Conditioning. Psychon. Bull. Rev. 1, 405–420. doi:10.3758/bf03210945

Tinbergen, N. (2020). The Study of Instinct. Lexington, MA: Pygmalion Press, an imprint of Plunkett Lake Press.

Turing, A. M. (1950). “Computing Machinery and Intelligence,” in Parsing the Turing Test (Springer), 23–65.

Weizenbaum, J. (1966). ELIZA-a Computer Program for the Study of Natural Language Communication between Man and Machine. Commun. ACM 9, 36–45. doi:10.1145/365153.365168

Wilson, M. (2002). Six Views of Embodied Cognition. Psychon. Bull. Rev. 9, 625–636. doi:10.3758/bf03196322

Wilson, S. W. (1991). “The Animat Path to AI,” in From Animals to Animats: Proceedings of the First International Conference on Simulation of Adaptive Behavior. Editors J.-A. Meyer, and S. W. Wilson.

Yamaoka, F., Kanda, T., Ishiguro, H., and Hagita, N. (2006). “How Contingent Should a Communication Robot Be,” in Proceedings of the 1st ACM SIGCHI/SIGART conference on Human-robot interaction, Salt Lake City, Utah, USA, March 2-3, 2006, 313–320.

Ziemke, T., Balkenius, C., and Hallam, J. (Editors) (2012). From Animals to Animats 12: 12th International Conference on Simulation of Adaptive Behavior, SAB 2012, Odense, Denmark, August 27-30, 2012 (Springer).

Keywords: robots, androids, humanoids, life-like, human-robot interaction (HRI)

Citation: Balkenius C and Johansson B (2022) Almost Alive: Robots and Androids. Front. Hum. Dyn. 4:703879. doi: 10.3389/fhumd.2022.703879

Received: 30 April 2021; Accepted: 12 January 2022; Published: 22 February 2022.

Edited by: Olaf Witkowski, Cross Labs, Japan

Reviewed by: Hatice Kose, Istanbul Technical University, Turkey; Evgenios Vlachos, University of Southern Denmark, Denmark

Copyright © 2022 Balkenius and Johansson. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Christian Balkenius, christian.balkenius@lucs.lu.se
