- 1Centre for Quality Improvement and Clinical Audit, Royal College of Obstetricians and Gynaecologists, London, United Kingdom

- 2Obstetrics & Gynaecology, Croydon University Hospitals NHS Trust, London, United Kingdom

- 3Maternity Services, University Hospital Plymouth NHS Trust, Plymouth, United Kingdom

- 4Department of Health Services Research and Policy, London School of Hygiene and Tropical Medicine, London, United Kingdom

- 5Centre for Implementation Science, Health Service and Population Research Department, King’s College London, London, United Kingdom

Hybrid effectiveness-implementation studies allow researchers to combine study of a clinical intervention's effectiveness with study of its implementation, with the aim of accelerating the translation of evidence into practice. However, there is currently limited guidance on how to design and manage such hybrid studies. This is particularly true for studies that include a comparison/control arm that, by design, receives less implementation support than the intervention arm. Lack of such guidance can present a challenge for researchers both in setting up and in effectively managing participating sites in such trials. This paper uses a narrative review of the literature (Phase 1 of the research) and a comparative case study of three studies (Phase 2 of the research) to identify common themes related to study design and management. Based on these, we comment and reflect on: (1) the balance that needs to be struck between fidelity to the study design and tailoring to emerging requests from participating sites as part of the research process, and (2) the modifications to the implementation strategies being evaluated. Hybrid trial teams should carefully consider the impact of design selection, trial management decisions, and any modifications to implementation processes and/or support on the delivery of a controlled evaluation. The rationale for these choices should be systematically reported to fill the gap in the literature.

1. Introduction

In the last decade, there has been a gradual shift in the field of implementation science towards combining the study of clinical effectiveness and implementation. This shift has the potential to reduce the translational gap between evidence and practice (1). To guide this change, a “hybrid” typology has been suggested that blends clinical effectiveness and implementation research, standardises the associated terminology, and distinguishes between the primary and secondary study aims positioned on the clinical effectiveness vs. implementation research continuum (2).

In brief, a type-1 design is most suitable for an intervention with a limited evidence base, where clinical effectiveness must be established. Implementation effectiveness is a secondary focus. On the other end of the spectrum is the type-3 design, more suitable for interventions that already have an evidence base for the clinical intervention's effectiveness, and therefore implementation is the main focus. In the middle is a type-2 design, in which clinical and implementation effectiveness outcomes share equal importance.

As hybrid studies are relatively new in the field of implementation research in health, there is a lack of guidance on how to optimally design and execute them. In addition to considering the appropriate hybrid type, researchers need to consider all “usual” decisions involved in study design (3). In practice, and also in our experience of designing, conducting and reviewing such studies, we would argue that it is a relatively new concept for a study's primary focus to be on how to implement rather than what to implement—which is the case for type-2 and type-3 hybrids. Where these types of studies have more than one study arm, these are distinguished not by the evidence-based practice (EBP) that is implemented, but rather by their “implementation mechanism”—in other words, the implementation support strategy (or bundle of strategies) prescribed as part of the study to roll out the EBP and be subject to evaluation.

Furthermore, it has recently been argued that implementation studies in general, and hybrid studies in particular, need to consider whether there is contextual equipoise, or “genuine uncertainty about whether the implementation strategies will effectively deliver the evidence-based practice in a new context”. Assessing contextual equipoise involves reflecting upon the evidence for a clinical intervention/programme to perform well at scale, and whether a control group is needed to evaluate a particular implementation mechanism (4). Although contextual equipoise evolved as a concept in the realm of global health implementation studies, it usefully triggers ethical questions that we believe are pertinent in thinking about the design of control groups in hybrid studies. A fundamental such question is whether there is sufficient evidence to suggest that a particular implementation support mechanism will be effective (or require only minor adaptation prior to application), or whether a full-blown controlled evaluation is required to determine the optimal implementation mechanism. Where a controlled evaluation is required, a subsequent question is what type of control group is ethically warranted and practically scalable post-study. In other words, does one apply a traditional “no intervention” control, which means no implementation support whatsoever, or does one compare different implementation mechanisms, in the hope that the study will identify the one best suited to post-study scale-up, which might reasonably be the ultimate ambition of such a study? A further question is how to manage the delivery of the study so that the interventions being evaluated (i.e., the implementation mechanisms) are delivered in a manner that upholds the prescribed comparisons and corresponding conclusions.

Whilst this list of questions is not exhaustive, it illustrates the need for research and reflection around these issues to advance the design of hybrids. Although generic guides for the design of implementation studies have recently been published, these do not provide specific guidance on a suitable control group in hybrid trials, especially where implementation is a primary focus (5, 6).

The impetus for the present paper comes from an on-going hybrid type-3 study to improve maternity care and outcomes in Great Britain, referred to as “OASI2”. OASI2 has two study arms, one of which receives more implementation support than the other, in order to understand what is required for successful, scalable implementation of an evidence-based care bundle developed to reduce women's risk of childbirth injury (7). In the process of setting up and managing the trial, several decisions needed to be made related to the nature of the comparison arm; the nature of the implementation support strategies being evaluated; and how to support participating sites for optimal delivery of the study without deviating from the prescribed implementation mechanism and thereby compromising the study's evaluation objectives.

The work that we report here aimed to address some of the aforementioned questions in relation to the design and delivery of control groups in hybrid implementation evaluation. In Phase 1 of our work, we carried out a review of published hybrid type-3 designs that featured a comparison/control arm to understand how such studies are designed. In Phase 2, we then conducted a qualitative case study inquiry into different design and management choices and how case contexts have affected the implementation mechanism and study outcomes in three cases of hybrid evaluations: the OASI2, the “PA4E1”, and the “CATCH-UP” studies (see section 3.2, comparative case study overview for more detail) (7–9).

2. Methods

The research proceeded in two interlinked phases. In Phase 1, we carried out a review of the evidence base to identify how control groups have been operationalized in hybrid-3 studies and develop a de facto typology. Based on what we found, in Phase 2 we selected a number of exemplar case studies, which we examined in detail to enable a reflection on the nature and application within research contexts of different types of control groups.

2.1. Phase 1: narrative review

A narrative review of hybrid trials was conducted to identify hybrid type-3 trials involving comparison/control and intervention groups with well-defined implementation support strategies. On 8 February 2022, PubMed was searched for publications with the following words in the title and/or abstract: “hybrid”, “effectiveness”, “implementation”, limiting the search to studies published in the English language. The search was intentionally broad and did not specify type-3 trials, as many studies are reported as hybrid without stipulating the type. Titles and abstracts of the resulting publications were then reviewed for relevance, excluding non-hybrid studies. Hybrid studies without a specified type were assessed by the lead author (MJ) to deduce the type where possible. Publications of hybrid type-3 trials were then reviewed to identify different design structures. Information on the area of study, the intervention/programme being implemented, and implementation support strategies across the different study arms was extracted.

2.2. Phase 2: comparative case study analysis

For each design structure identified in the narrative review, one case study was selected for the comparative case study analysis, to be featured alongside the OASI2 study, which is led by our research group. Various approaches to case study methodology have been applied in the realm of implementation science (10). Our analysis is grounded in the theories of realist evaluation, where the focus is on how case-specific contexts influence the implementation mechanism and eventual study outcomes (10).

All published literature on the additional cases was reviewed and follow-up questions specific to the aims of this paper were developed for a semi-structured interview guide (e.g., how was the comparison/control arm managed in practice? Did the study team experience any challenges related to managing the comparison/control arm? Was scalability of the implementation strategies taken into account during the design? Were any changes made to implementation tools?). Lead authors of the selected case studies were invited to participate in a brief semi-structured phone interview with the lead author (MJ). The full set of follow-up questions can be found in Supplementary Data Sheet 1.

Common themes related to implementation support strategies, study arm management, and support offered to participating sites were identified from the publications and follow-up calls.

3. Results

3.1. Phase 1: narrative review

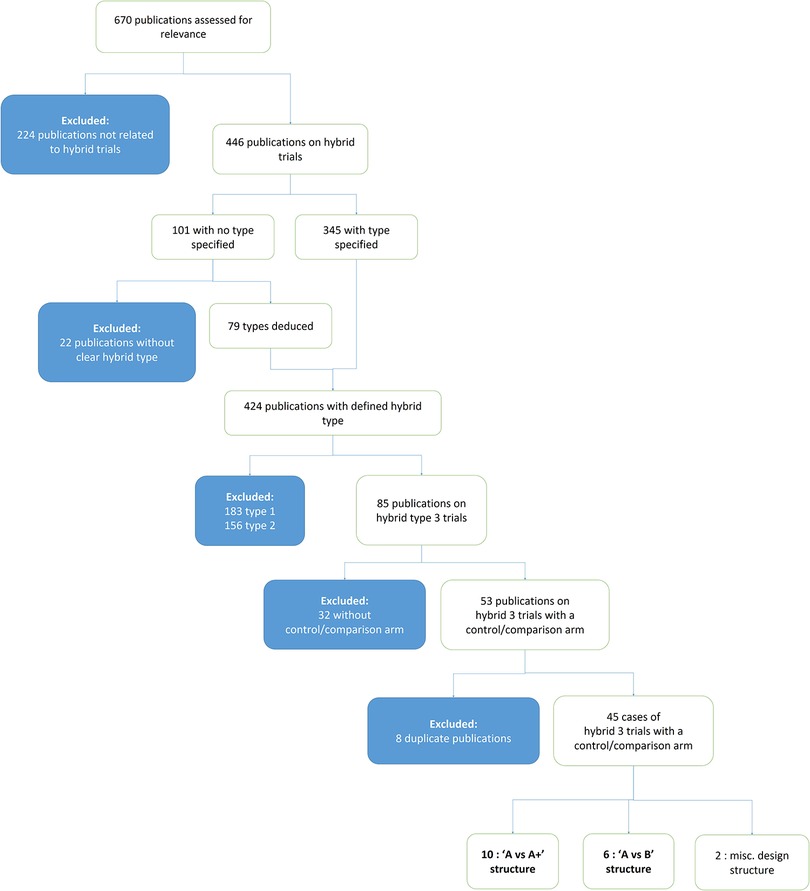

The search yielded 670 publications, excluding the OASI2 study protocol. Titles and abstracts were reviewed for relevance and categorised by type of hybrid trial. Initially, 224 publications were excluded as not relevant (not reporting on a hybrid implementation effectiveness trial). The remaining 446 articles were about hybrid trials, and 101 of these did not specify the type. The lead author (MJ) deduced the type of 79 of these 101 unclassified publications based on the description of the study design and outcomes. Of the 424 publications that were classifiable to a defined hybrid type, 183 were type-1, 156 were type-2, and 85 were type-3.

The methods of the 85 publications on hybrid type-3 trials were subsequently assessed to determine if a comparison/control arm was featured, and 32 were excluded on this basis. Of the resulting 53 in-scope publications, eight were excluded as they reported on a trial already represented by another publication.

The resulting 45 publications on distinct hybrid type-3 trials formed the corpus of the review. These reports were reviewed to identify the most common design structures. Figure 1 depicts how publications were assessed and selected for inclusion in the review.
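
The screening arithmetic reported above can be re-traced in a few lines. The sketch below is only a consistency check on the counts stated in this section; the function and variable names are illustrative and are not part of the study's methods.

```python
def screening_flow() -> dict:
    """Recompute each stage of the Phase 1 screening from the reported counts."""
    retrieved = 670           # PubMed results, excluding the OASI2 study protocol
    not_hybrid = 224          # excluded: not reporting on a hybrid trial
    hybrid = retrieved - not_hybrid

    type_unspecified = 101    # hybrid publications that did not state a type
    type_deduced = 79         # of those, type deduced by the lead author
    classifiable = hybrid - type_unspecified + type_deduced

    by_type = {"type-1": 183, "type-2": 156, "type-3": 85}

    no_comparison_arm = 32    # type-3 publications without a comparison/control arm
    duplicate_trials = 8      # trial already represented by another publication
    in_scope = by_type["type-3"] - no_comparison_arm
    corpus = in_scope - duplicate_trials

    return {
        "hybrid": hybrid,
        "classifiable": classifiable,
        "by_type_total": sum(by_type.values()),
        "in_scope": in_scope,
        "corpus": corpus,
    }


flow = screening_flow()
# The per-type counts should add up to the number of classifiable publications.
assert flow["by_type_total"] == flow["classifiable"]
print(flow)
```

Run as written, the stages reproduce the figures in the text: 446 hybrid publications, 424 classifiable, 53 in scope, and 45 distinct trials in the final corpus.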

17 had an “A vs. A+” structure (9, 11–26), 26 had an “A vs. B” structure (8, 27–51) and 2 had unique structures that did not fall into the two defined categories (52, 53).

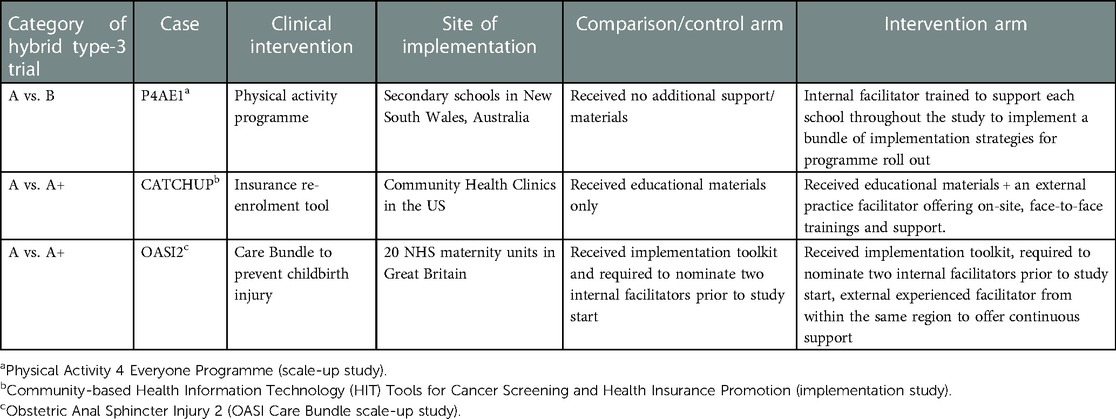

Three categories of study design structures were identified across the 45 type-3 cases: 17 had an “A vs. A+” structure, 26 had an “A vs. B” structure and 2 had unique structures that did not fall into the two defined categories. In “A vs. A+”, both study arms share a baseline mechanism of implementation (“A”) and one arm receives additional support (“A+”). In an “A vs. B” structure, one mechanism of implementation (“A”- i.e., an implementation strategy or combination of strategies) is compared to a different mechanism or no mechanism offered to the study sites at all (“B”) without overlap between the two. This includes the traditional intervention vs. “usual practice” controlled designs as well as stepped wedge trials. See Supplementary Table 1 for a detailed presentation of the 45 type-3 publications from which the case studies were selected.
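
One way to make the distinction between the two structures concrete is to treat each arm's implementation support as a set of strategies. The sketch below is an illustrative simplification rather than the classification procedure used in the review: the function `classify_design` is hypothetical, the strategy labels are paraphrased from the case descriptions in this paper, and PA4E1's seven strategies are collapsed into a single placeholder label.

```python
from typing import Set


def classify_design(comparison: Set[str], intervention: Set[str]) -> str:
    """Label a two-arm design by how the arms' strategy sets relate."""
    # "A vs. A+": the comparison arm's support is a non-empty baseline that the
    # intervention arm also receives, plus something extra.
    if comparison and comparison < intervention:
        return "A vs. A+"
    # "A vs. B": the arms share no strategies (including a "no support" control).
    if not (comparison & intervention):
        return "A vs. B"
    # Partial overlap or identical arms fit neither defined category.
    return "unique"


# Strategy labels below are paraphrased for illustration only.
oasi2_lean = {"implementation toolkit"}
oasi2_peer = {"implementation toolkit", "external peer facilitation"}

catch_up_comparison = {"educational materials"}
catch_up_intervention = {"educational materials", "beta testing", "practice facilitator"}

pa4e1_control = {"brief presentation", "reactive usual-care support"}
pa4e1_intervention = {"seven scalable implementation strategies"}  # placeholder label

print(classify_design(oasi2_lean, oasi2_peer))
print(classify_design(catch_up_comparison, catch_up_intervention))
print(classify_design(pa4e1_control, pa4e1_intervention))
```

Under these assumptions, OASI2 and CATCH-UP come out as “A vs. A+” and PA4E1 as “A vs. B”, matching how the three cases are described in this paper. Real designs need judgement that a set comparison cannot capture, for example whether a brief presentation counts as an implementation strategy at all.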

The motivation for this narrative review was to compare studies with a similar design to OASI2, which compares supported vs. unsupported facilitation of EBP implementation in Great Britain. Studies that were not comparable were not considered in the selection for comparative case study: these included studies taking place in low- and middle-income countries (4 papers), studies that did not involve facilitation as an implementation strategy (13 papers), studies with a unique design structure (2 papers), and studies with a stepped wedge design (10 papers). The rationale for excluding stepped wedge trials in particular was that one of the predicaments raised by the OASI2 team during study design was whether the unsupported (control arm) sites would continue their participation in the study without the promise of eventually receiving full implementation support. In a stepped wedge trial, all sites eventually receive that support by design, which makes it a fundamentally different scenario.

One exemplar case study was selected for each structure, to represent the ten remaining “A vs. A+” and six remaining “A vs. B” studies, respectively. The case exemplar selected for the “A vs. A+” structure was CATCH-UP, a trial implementing a health insurance enrolment tracking tool in community health centres (CHCs) in the United States (9). The case exemplar selected for the “A vs. B” structure was the scale-up of the “Physical Activity 4 Everyone” (PA4E1) programme, which promotes adolescent physical activity.

Table 1 gives an overview of these two cases alongside the OASI2 study.

3.2. Phase 2: comparative case study overview

In this section, we describe the context of each study, including the study design and how this affected the intended implementation of the EBP and the study outcomes.

3.2.1. OASI2 study

OASI2 is a cluster randomised controlled trial (C-RCT) that seeks to understand what is required for scalable implementation of the OASI Care Bundle (the EBP), a set of four evidence-based practices to reduce women's risk, and improve detection, of severe tearing during childbirth (54). OASI2 has two randomised study arms, each comprising 10 National Health Service (NHS) maternity units without prior experience of implementing the OASI Care Bundle. A third, parallel arm of 10 maternity units that had previously implemented the care bundle as part of the preceding study (OASI1) is engaged to offer external facilitation to one of the randomised arms as well as to allow further evaluation of the care bundle's sustainability over time.

An implementation toolkit was developed to guide all participating sites with EBP roll out, incorporating lessons learned from the preceding OASI1 study's process evaluation. The toolkit includes a clinical manual, an implementation guidebook for local facilitators, updated awareness campaign materials, and an eLearning package. All participating sites were also required to select two local facilitators, or “leads” to be responsible for local implementation.

The intervention arm is called the “peer support” arm. Leads from peer-supported units receive external experienced facilitation from OASI1 sites in the parallel arm. The comparison arm is the “lean implementation” arm. Leads from the lean implementation units do not receive any external support. Units in both the peer support and lean implementation arms receive the implementation toolkit.

In effect, the key difference between the two C-RCT arms is that the peer support arm receives continuous external support, while the lean implementation arm does not. The participating sites’ individual contexts (i.e., preparedness of selected leads to facilitate local implementation, senior/institutional support, and staff's pre-existing acceptance of the EBP) had an impact on how well the intended implementation mechanism was carried out. Deviations from the intended implementation mechanism include peer supported sites not engaging with their external facilitators (which can be construed as reduced fidelity of receipt of the intended implementation support intervention) and lean sites reaching out to the study team for additional support (which can likewise be construed as reduced fidelity, owing to the potential for implementation support beyond what the study design intended, and for contamination if the study team inadvertently functioned as “peer support” to the lean/control sites).

3.2.2. CATCH-UP study

The Community-based Health Insurance Technology (HIT) Tools for Cancer Screening and Health Insurance Promotion (CATCH-UP) intervention seeks to increase cancer screening and prevention care in uninsured patients in community healthcare settings in the United States. The CATCH-UP study included 23 community health clinics that implemented the HIT tool (the EBP).

The 23 participating clinics were randomised to two study arms. Clinics in the intervention arm received educational materials (electronic manual with instructions for tool use), beta testing, and a practice facilitator to explain the insurance support HIT tools, prepare clinic staff for using the tools, and assist clinics in revising workflows. The practice facilitator was available to the clinic staff and continued to engage actively during the implementation phase, including on-site trainings and support. Clinics in the comparison arm received educational materials only.

As there was no preceding study to establish the tool's effectiveness, the 23 clinics were matched with comparison sites to test the effectiveness of the tool—effectiveness was established based on outcomes in the 23 clinics in the intervention and comparison arms compared to the 23 clinics in the externally matched control group.

The implementation component of the trial compared the two levels of implementation support in the two arms, evaluating acceptance and use of the tools, as well as patient, provider, and system level factors associated with successful implementation of the tools (55, 56).

This study's context necessitated a modification to the CATCH-UP trial's insurance support tool (the EBP itself). One of the main benefits for clinics of implementing and using the tool was that it provided support with completing a complex report required by an external funding agency. Before the trial ended, the funding agency simplified its reporting requirements, decreasing the tool's value. The CATCH-UP trial team therefore had to adapt to this change by modifying the tool itself. The context thus had a major impact on the composition of the EBP and, by extension, on how it was implemented.

3.2.3. PA4E1 scale-up study

“Physical Activity 4 Everyone” (PA4E1) is a multi-component, secondary school-based intervention promoting adolescents' physical activity in New South Wales, Australia. PA4E1 was first evaluated in a preceding study where it was found to be effective in slowing the decline in physical activity of adolescents at disadvantaged schools when compared to the control group, which carried on with usual practice (57).

In the PA4E1 scale-up study, the physical activity programme (the intervention) remained largely the same as in the preceding efficacy study, except that the six implementation strategies were slightly adapted to support delivery at scale and to synchronise with existing systems (57–60). A seventh strategy, internal facilitation, was added as this was identified as a cost-effective model for scalable delivery (61).

A total of 76 schools were randomised into the intervention or control arms. Schools in the intervention arm received the scalable implementation support (the seven strategies) to implement PA4E1. Schools in the control arm were introduced to PA4E1 at the beginning of the study via a brief presentation. They did not receive additional implementation support apart from usual care, which was reactive support based on explicit requests from the schools.

An electronic portal gave participating sites access to the implementation materials. Schools in the control arm had restricted access and could only see an overview of the school physical activity programme, while schools in the intervention arm could access all of the content, including a resource manual and online training (8).

In this study, contextual factors were not reported to have impacted on the EBP itself nor on how it was implemented. Both the EBP and the implementation mechanism were rolled out as originally intended by the study team.

3.2.4. Reflection on design challenges and responses

All three hybrid case exemplars have experienced a tension on the spectrum between fidelity to the original study design and reactively tailoring to the unique needs of participating sites that surface during the study conduct.

In OASI2, the tension manifested in two ways: in choosing how to respond to requests from participating sites that contradicted the prescribed implementation mechanism, and in deciding whether to make changes to the implementation toolkit based on participants' feedback before the end of the trial. Since the start of the trial, local leads from the lean implementation arm (comparison arm) have reached out to the study team for additional guidance on how to implement the care bundle, a request in direct opposition to the “lean” nature of the arm. Similarly, there have been instances where leads in the peer support arm were not receiving external facilitation as planned. Additionally, since awareness of the OASI Care Bundle intervention had spread throughout NHS maternity units after the OASI1 study, non-participating sites began reaching out with requests to receive the toolkit before the OASI2 study launched. There was a surge of external requests around the summer of 2021, when the NHS launched an initiative to roll out new pelvic health clinics (62).

The study team convened to discuss how to address each scenario, weighing research design and evaluation considerations against “meeting the moment” and catering to the requests of both participating and non-participating sites.

Concerning the lean implementation (comparison) arm, we opted to signpost back to the toolkit. With regard to enforcing peer support in the intervention arm, we clarified expectations with the external facilitators and reminded them of their role as often as possible. In addition, we reached out to all peer supported units to investigate whether they were satisfied with the support offered to them since the start of the study. Finally, in response to requests to share the implementation materials externally, we opted to wait until all participating units had the toolkit before making it publicly available via an online request form.

After the study had launched and the toolkit was disseminated, toolkit users reached out with suggested modifications to the resources to improve their usability. The team opted to make the suggested changes in the first half of the study, recognising that the alternative option of waiting until the end of the study might negatively impact the EBP's uptake. The toolkit materials will undergo further modification to address all other feedback received at the outset of the study, with the goal of creating an updated toolkit that is publicly available after the trial is over.

In the CATCH-UP trial, staff from clinics in the comparison arm (meant to receive educational materials only) reached out to the study team asking for additional guidance. Similarly to the OASI2 team's response to its comparison arm, the CATCH-UP team opted to address these requests by organising a meeting with the clinic staff and answering their questions by signposting back to the educational materials originally provided. The CATCH-UP study team recognised during the management of the study that there was a choice to be made between not engaging (per the study design), or responding to these comparison arm clinics, which, as evidenced by their request for help, were motivated to succeed. In this instance, the team opted to engage with the site, though without offering more support than what was originally intended.

In the PA4E1 study, the research team not only recognised but foresaw the tension between fidelity and tailoring. In anticipation of some participating schools in the intervention group failing to meet programme milestones, they created an external facilitator role responsible for intervening with the underperforming schools' executive leadership to identify the key barriers and enablers. In this case, reactive tailoring was built into the implementation support as originally intended.

4. Discussion and recommendations

Our review illustrates that despite the lack of clear guidance on how to design and manage hybrid trials, particularly related to comparison/control arms and managing pragmatic challenges to the study design, such studies are increasingly prevalent: 86% (366 of 424) of the publications on hybrid trials that we identified were published in the last five years (since 2018).

The narrative review identified two dominant patterns in the design of study arms. The A vs. B design structure seeks to identify the “best overall” implementation strategy or set of strategies when compared to another set of strategies or a “true” control, while the A vs. A+ design structure aims to evaluate the value of the additional support over and above “basic”, “no-frills” implementation support approaches. We recommend that, to guide selection of design structure in the early stages of trial development, researchers should internally assess contextual equipoise and beneficence, in terms of ensuring that no study participants/sites are denied strategies with sufficient evidence of effectiveness (4). We realise that a judgement of sufficiency will always risk being subjective and that the gold standard for determining the strength of evidence to support use of an implementation strategy or bundle of strategies will be a controlled study. However, this ought to be balanced against the need to implement well-evidenced EBPs speedily and sustainably. Hybrid implementation studies require time and resource. To avoid the risk of ever extending the time lag between evidence and practice, we argue that pragmatism and true contextual equipoise ought to be considered in decision-making regarding which implementation mechanisms may require trialling, and how. Researchers should also include the rationale for their decision-making and ultimate selection of comparators/controls in study protocols to support the informed design of future trials.

Our case study analysis suggests that the OASI2, CATCH-UP, and PA4E1 study teams all recognised that their trial management required striking the right balance between fidelity to the study design and catering to the needs of participating sites. At its extreme, choosing fidelity to study design means withholding support or even contact with some sites. The foreseeable consequences of this could include sites losing interest in the trial, failing to collect data required for evaluation or even withdrawal from the study. Ethical considerations of withholding support should also be considered. The other extreme is catering to every emergent request from a participating site, regardless of study arm allocation. The consequence of this is a trial that is unable to make any claims about how to effectively implement an intervention sustainably and at scale. We argue that neither extreme is beneficial and therefore finding an optimal balance on this spectrum is vital. Researchers working on similar hybrid trials should reflect on the crossroad decisions made throughout the trial and include commentary on trial management in papers reporting study results.

All three case exemplars we report also demonstrate that modification—either of the intervention being implemented or the implementation strategies being evaluated—can be necessary and unavoidable. Although modification(s) may lead to improved implementation outcomes, it can also make evaluation challenging. To mitigate this, systematically recording these modifications is essential. The Framework for Reporting Adaptations and Modifications-Enhanced (FRAME) was developed to support the reporting of modifications made to interventions (63), and more recently, FRAME has been adapted to support reporting modifications to implementation strategies (64). All implementation trials, but especially hybrid trials, should report modifications using FRAME in results papers.

5. Limitations

The narrative review has a limited scope as the intention was to identify cases comparable to the OASI2 study. Although stepped wedge designs were excluded, they may offer valuable insight regarding optimal design and trial management decisions in hybrid trials. The 45 in-scope cases (refer to Supplementary Table 1) were reviewed to determine the design structure and only two study teams (CATCH-UP and PA4E1) were contacted for follow-up discussion.

6. Conclusion

In the interest of contributing to the development of guidance on design and management of hybrid trials, systematic reporting of rationale for design selection, crossroad decisions during the trial, and any modifications made to the intervention or implementation strategies should become routine.

Author contributions

RT, JVDM, IGU, and NS: obtained funding. MJ, IGU and NS: contributed to the conceptualisation of this work. MJ: conducted the narrative review and comparative case study analysis, and drafted the manuscript, advised by RT, FEC, LP, JVDM, IGU and NS. All authors contributed to the article and approved the submitted version.

Funding

The OASI2 study is funded by the Health Foundation. NS' research is supported by the National Institute for Health Research (NIHR) Applied Research Collaboration (ARC) South London at King's College Hospital NHS Foundation Trust. NS is a member of King's Improvement Science, which offers co-funding to the NIHR ARC South London and is funded by King's Health Partners (Guy's and St Thomas' NHS Foundation Trust, King's College Hospital NHS Foundation Trust, King's College London and South London and Maudsley NHS Foundation Trust), and Guy's and St Thomas' Foundation. The views expressed in this publication are those of the author(s) and not necessarily those of the NIHR or the Department of Health and Social Care.

Acknowledgments

We would like to thank Dr Nathalie Huguet (CATCH-UP) and Dr Rachel Sutherland (PA4E1) for their willingness and enthusiasm to share their respective experiences regarding the design and management of their studies for the purposes of this paper.

Conflict of interest

NS is the director of London Safety and Training Solutions Ltd, which offers training in patient safety, implementation solutions and human factors to healthcare organisations and the pharmaceutical industry.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The reviewer JM declared a shared affiliation, with no collaboration, with one of the authors, NS, to the handling editor at the time of the review.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frhs.2023.1059015/full#supplementary-material.

References

1. Morris ZS, Wooding S, Grant J. The answer is 17 years, what is the question: understanding time lags in translational research. J R Soc Med. (2011) 104(12):510–20. doi: 10.1258/jrsm.2011.110180. PMID: 22179294; PMCID: PMC3241518.

2. Curran GM, Bauer M, Mittman B, Pyne JM, Stetler C. Effectiveness-implementation hybrid designs: combining elements of clinical effectiveness and implementation research to enhance public health impact. Med Care. (2012) 50(3):217–26. doi: 10.1097/MLR.0b013e3182408812. PMID: 22310560; PMCID: PMC3731143.

3. Creswell JW. Research design: Qualitative, quantitative and mixed method approaches. 3rd ed. SAGE Publ (2007). Available from: https://www.ucg.ac.me/skladiste/blog_609332/objava_105202/fajlovi/Creswell.pdf.

4. Seward N, Hanlon C, Murdoch J, Colbourn T, Prince MJ, Venkatapuram S, et al. Contextual equipoise: a novel concept to inform ethical implications for implementation research in low-income and middle-income countries. BMJ Glob Health. (2020) 5(12):e003456. doi: 10.1136/bmjgh-2020-003456. PMID: 33355266; PMCID: PMC7757476.

5. Hull L, Goulding L, Khadjesari Z, et al. Designing high-quality implementation research: development, application, feasibility and preliminary evaluation of the implementation science research development (ImpRes) tool and guide. Implement Sci. (2019) 14:80. doi: 10.1186/s13012-019-0897-z

6. Wolfenden L, Foy R, Presseau J, Grimshaw JM, Ivers NM, Powell BJ, et al. Designing and undertaking randomised implementation trials: guide for researchers. Br Med J. (2021) 372:m3721. doi: 10.1136/bmj.m3721. PMID: 33461967; PMCID: PMC7812444.

7. Jurczuk M, Bidwell P, Martinez D, Silverton L, Van der Meulen J, Wolstenholme D, et al. OASI2: a cluster randomised hybrid evaluation of strategies for sustainable implementation of the obstetric anal sphincter injury care bundle in maternity units in Great Britain. Implement Sci. (2021) 16(1):55. doi: 10.1186/s13012-021-01125-z. PMID: 34022926; PMCID: PMC8140475.

8. Sutherland R, Campbell E, Nathan N, Wolfenden L, Lubans DR, Morgan PJ, et al. A cluster randomised trial of an intervention to increase the implementation of physical activity practices in secondary schools: study protocol for scaling up the physical activity 4 everyone (PA4E1) program. BMC Public Health. (2019) 19(1):883. doi: 10.1186/s12889-019-6965-0. PMID: 31272421; PMCID: PMC6610944.

9. DeVoe JE, Huguet N, Likumahuwa-Ackman S, Angier H, Nelson C, Marino M, et al. Testing health information technology tools to facilitate health insurance support: a protocol for an effectiveness-implementation hybrid randomized trial. Implement Sci. (2015) 10:123. doi: 10.1186/s13012-015-0311-4. PMID: 26652866; PMCID: PMC4676134.

10. Paparini S, Papoutsi C, Murdoch J, Green J, Petticrew M, Greenhalgh T, et al. Evaluating complex interventions in context: systematic, meta-narrative review of case study approaches. BMC Med Res Methodol. (2021) 21(1):225. doi: 10.1186/s12874-021-01418-3. PMID: 34689742; PMCID: PMC8543916.

11. Kirchner JE, Ritchie MJ, Pitcock JA, Parker LE, Curran GM, Fortney JC. Outcomes of a partnered facilitation strategy to implement primary care-mental health. J Gen Intern Med. (2014) 29 (Suppl 4):904–12. doi: 10.1007/s11606-014-3027-2. PMID: 25355087; PMCID: PMC4239280.

12. Halvarsson A, Roaldsen KS, Nilsen P, Dohrn IM, Ståhle A. Staybalanced: implementation of evidence-based fall prevention balance training for older adults-cluster randomized controlled and hybrid type 3 trial. Trials. (2021) 22(1):166. doi: 10.1186/s13063-021-05091-1. PMID: 33637122; PMCID: PMC7913398.

13. Zubkoff L, Lyons KD, Dionne-Odom JN, Hagley G, Pisu M, Azuero A, et al. A cluster randomized controlled trial comparing virtual learning collaborative and technical assistance strategies to implement an early palliative care program for patients with advanced cancer and their caregivers: a study protocol. Implement Sci. (2021) 16(1):25. doi: 10.1186/s13012-021-01086-3. PMID: 33706770; PMCID: PMC7951124.

14. Eldh AC, Joelsson-Alm E, Wretenberg P, Hälleberg-Nyman M. Onset PrevenTIon of urinary retention in orthopaedic nursing and rehabilitation, OPTION-a study protocol for a randomised trial by a multi-professional facilitator team and their first-line managers’ implementation strategy. Implement Sci. (2021) 16(1):65. doi: 10.1186/s13012-021-01135-x. PMID: 34174917; PMCID: PMC8233619.

15. Elinder LS, Wiklund CA, Norman Å, Stattin NS, Andermo S, Patterson E, et al. IMplementation and evaluation of the school-based family support PRogram a healthy school start to promote child health and prevent OVErweight and obesity (IMPROVE)—study protocol for a cluster-randomized trial. BMC Public Health. (2021) 21(1):1630. doi: 10.1186/s12889-021-11663-2. PMID: 34488691; PMCID: PMC8419825.

16. Beidas RS, Ahmedani BK, Linn KA, Marcus SC, Johnson C, Maye M, et al. Study protocol for a type III hybrid effectiveness-implementation trial of strategies to implement firearm safety promotion as a universal suicide prevention strategy in pediatric primary care. Implement Sci. (2021) 16(1):89. doi: 10.1186/s13012-021-01154-8. PMID: 34551811; PMCID: PMC8456701.

17. Becker SJ, Murphy CM, Hartzler B, Rash CJ, Janssen T, Roosa M, et al. Project MIMIC (maximizing implementation of motivational incentives in clinics): a cluster-randomized type 3 hybrid effectiveness-implementation trial. Addict Sci Clin Pract. (2021) 16(1):61. doi: 10.1186/s13722-021-00268-0. PMID: 34635178; PMCID: PMC8505014.

18. Proaño GV, Papoutsakis C, Lamers-Johnson E, Moloney L, Bailey MM, Abram JK, et al. Evaluating the implementation of evidence-based kidney nutrition practice guidelines: the AUGmeNt study protocol. J Ren Nutr. (2022) 32(5):613–25. doi: 10.1053/j.jrn.2021.09.006. Epub 2021 Oct 30. PMID: 34728124; PMCID: PMC9733590.

19. Smelson DA, Chinman M, McCarthy S, Hannah G, Sawh L, Glickman M. A cluster randomized hybrid type III trial testing an implementation support strategy to facilitate the use of an evidence-based practice in VA homeless programs. Implement Sci. (2015) 10:79. doi: 10.1186/s13012-015-0267-4. PMID: 26018048; PMCID: PMC4448312.

20. Lewis CC, Scott K, Marti CN, Marriott BR, Kroenke K, Putz JW, et al. Implementing measurement-based care (iMBC) for depression in community mental health: a dynamic cluster randomized trial study protocol. Implement Sci. (2015) 10:127. doi: 10.1186/s13012-015-0313-2. PMID: 26345270; PMCID: PMC4561429.

21. Brookman-Frazee L, Stahmer AC. Effectiveness of a multi-level implementation strategy for ASD interventions: study protocol for two linked cluster randomized trials. Implement Sci. (2018) 13(1):66. doi: 10.1186/s13012-018-0757-2. PMID: 29743090; PMCID: PMC5944167.

22. Monson CM, Shields N, Suvak MK, Lane JEM, Shnaider P, Landy MSH, et al. A randomized controlled effectiveness trial of training strategies in cognitive processing therapy for posttraumatic stress disorder: impact on patient outcomes. Behav Res Ther. (2018) 110:31–40. doi: 10.1016/j.brat.2018.08.007. Epub 2018 Aug 30. PMID: 30218837.

23. Martino S, Zimbrean P, Forray A, Kaufman JS, Desan PH, Olmstead TA, et al. Implementing motivational interviewing for substance misuse on medical inpatient units: a randomized controlled trial. J Gen Intern Med. (2019) 34(11):2520–9. doi: 10.1007/s11606-019-05257-3. Epub 2019 Aug 29. PMID: 31468342; PMCID: PMC6848470.

24. Vaughn AE, Studts CR, Powell BJ, Ammerman AS, Trogdon JG, Curran GM, et al. The impact of basic vs. enhanced go NAPSACC on child care centers’ healthy eating and physical activity practices: protocol for a type 3 hybrid effectiveness-implementation cluster-randomized trial. Implement Sci. (2019) 14(1):101. doi: 10.1186/s13012-019-0949-4. PMID: 31805973; PMCID: PMC6896698.

25. Quanbeck A, Almirall D, Jacobson N, Brown RT, Landeck JK, Madden L, et al. The balanced opioid initiative: protocol for a clustered, sequential, multiple-assignment randomized trial to construct an adaptive implementation strategy to improve guideline-concordant opioid prescribing in primary care. Implement Sci. (2020) 15(1):26. doi: 10.1186/s13012-020-00990-4. PMID: 32334632; PMCID: PMC7183389.

26. Germain A, Markwald RR, King E, Bramoweth AD, Wolfson M, Seda G, et al. Enhancing behavioral sleep care with digital technology: study protocol for a hybrid type 3 implementation-effectiveness randomized trial. Trials. (2021) 22(1):46. doi: 10.1186/s13063-020-04974-z. PMID: 33430955; PMCID: PMC7798254.

27. Swindle T, Johnson SL, Whiteside-Mansell L, Curran GM. A mixed methods protocol for developing and testing implementation strategies for evidence-based obesity prevention in childcare: a cluster randomized hybrid type III trial. Implement Sci. (2017) 12(1):90. doi: 10.1186/s13012-017-0624-6. PMID: 28720140; PMCID: PMC5516351.

28. Nguyen MXB, Chu AV, Powell BJ, Tran HV, Nguyen LH, Dao ATM, et al. Comparing a standard and tailored approach to scaling up an evidence-based intervention for antiretroviral therapy for people who inject drugs in Vietnam: study protocol for a cluster randomized hybrid type III trial. Implement Sci. (2020) 15(1):64. doi: 10.1186/s13012-020-01020-z. PMID: 32771017; PMCID: PMC7414564.

29. Kadota JL, Musinguzi A, Nabunje J, Welishe F, Ssemata JL, Bishop O, et al. Protocol for the 3HP options trial: a hybrid type 3 implementation-effectiveness randomized trial of delivery strategies for short-course tuberculosis preventive therapy among people living with HIV in Uganda. Implement Sci. (2020) 15(1):65. doi: 10.1186/s13012-020-01025-8. PMID: 32787925; PMCID: PMC7425004.

30. Dubowitz H, Saldana L, Magder LA, Palinkas LA, Landsverk JA, Belanger RL, et al. Protocol for comparing two training approaches for primary care professionals implementing the safe environment for every kid (SEEK) model. Implement Sci Commun. (2020) 1:78. doi: 10.1186/s43058-020-00059-9. PMID: 32974614; PMCID: PMC7506208.

31. Edelman EJ, Dziura J, Esserman D, Porter E, Becker WC, Chan PA, et al. Working with HIV clinics to adopt addiction treatment using implementation facilitation (WHAT-IF?): rationale and design for a hybrid type 3 effectiveness-implementation study. Contemp Clin Trials. (2020) 98:106156. doi: 10.1016/j.cct.2020.106156. Epub 2020 Sep 23. PMID: 32976995; PMCID: PMC7511156.

32. Rogal SS, Yakovchenko V, Morgan T, Bajaj JS, Gonzalez R, Park A, et al. Getting to implementation: a protocol for a hybrid III stepped wedge cluster randomized evaluation of using data-driven implementation strategies to improve cirrhosis care for Veterans. Implement Sci. (2020) 15(1):92. doi: 10.1186/s13012-020-01050-7. PMID: 33087156; PMCID: PMC7579930.

33. Campbell CI, Saxon AJ, Boudreau DM, Wartko PD, Bobb JF, Lee AK, et al. PRimary care opioid use disorders treatment (PROUD) trial protocol: a pragmatic, cluster-randomized implementation trial in primary care for opioid use disorder treatment. Addict Sci Clin Pract. (2021) 16(1):9. doi: 10.1186/s13722-021-00218-w. PMID: 33517894; PMCID: PMC7849121.

34. White-Traut R, Brandon D, Kavanaugh K, Gralton K, Pan W, Myers ER, et al. Protocol for implementation of an evidence based parentally administered intervention for preterm infants. BMC Pediatr. (2021) 21(1):142. doi: 10.1186/s12887-021-02596-1. PMID: 33761902; PMCID: PMC7988259.

35. Ridgeway JL, Branda ME, Gravholt D, Brito JP, Hargraves IG, Hartasanchez SA, et al. Increasing risk-concordant cardiovascular care in diverse health systems: a mixed methods pragmatic stepped wedge cluster randomized implementation trial of shared decision making (SDM4IP). Implement Sci Commun. (2021) 2(1):43. doi: 10.1186/s43058-021-00145-6. PMID: 33883035; PMCID: PMC8058970.

36. Swindle T, McBride NM, Selig JP, Johnson SL, Whiteside-Mansell L, Martin J, et al. Stakeholder selected strategies for obesity prevention in childcare: results from a small-scale cluster randomized hybrid type III trial. Implement Sci. (2021) 16(1):48. doi: 10.1186/s13012-021-01119-x. PMID: 33933130; PMCID: PMC8088574.

37. Brown LD, Chilenski SM, Wells R, Jones EC, Welsh JA, Gayles JG, et al. Protocol for a hybrid type 3 cluster randomized trial of a technical assistance system supporting coalitions and evidence-based drug prevention programs. Implement Sci. (2021) 16(1):64. doi: 10.1186/s13012-021-01133-z. PMID: 34172065; PMCID: PMC8235808.

38. Damschroder LJ, Reardon CM, AuYoung M, Moin T, Datta SK, Sparks JB, et al. Implementation findings from a hybrid III implementation-effectiveness trial of the diabetes prevention program (DPP) in the veterans health administration (VHA). Implement Sci. (2017) 12(1):94. doi: 10.1186/s13012-017-0619-3. PMID: 28747191; PMCID: PMC5530572.

39. Mazza D, Chakraborty S, Camões-Costa V, Kenardy J, Brijnath B, Mortimer D, et al. Implementing work-related mental health guidelines in general PRacticE (IMPRovE): a protocol for a hybrid III parallel cluster randomised controlled trial. Implement Sci. (2021) 16(1):77. doi: 10.1186/s13012-021-01146-8. PMID: 34348743; PMCID: PMC8335858.

40. Kerlin MP, Small D, Fuchs BD, Mikkelsen ME, Wang W, Tran T, et al. Implementing nudges to promote utilization of low tidal volume ventilation (INPUT): a stepped-wedge, hybrid type III trial of strategies to improve evidence-based mechanical ventilation management. Implement Sci. (2021) 16(1):78. doi: 10.1186/s13012-021-01147-7. PMID: 34376233; PMCID: PMC8353429.

41. Kellstedt DK, Schenkelberg MA, Essay AM, Welk GJ, Rosenkranz RR, Idoate R, et al. Rural community systems: youth physical activity promotion through community collaboration. Prev Med Rep. (2021) 23:101486. doi: 10.1016/j.pmedr.2021.101486. PMID: 34458077; PMCID: PMC8378795.

42. Porter G, Michaud TL, Schwab RJ, Hill JL, Estabrooks PA. Reach outcomes and costs of different physician referral strategies for a weight management program among rural primary care patients: type 3 hybrid effectiveness-implementation trial. JMIR Form Res. (2021) 5(10):e28622. doi: 10.2196/28622. PMID: 34668873; PMCID: PMC8567148.

43. Iwuji C, Osler M, Mazibuko L, Hounsome N, Ngwenya N, Chimukuche RS, et al. Optimised electronic patient records to improve clinical monitoring of HIV-positive patients in rural South Africa (MONART trial): study protocol for a cluster-randomised trial. BMC Infect Dis. (2021) 21(1):1266. doi: 10.1186/s12879-021-06952-5. PMID: 34930182; PMCID: PMC8686584.

44. Branch-Elliman W, Lamkin R, Shin M, Mull HJ, Epshtein I, Golenbock S, et al. Promoting de-implementation of inappropriate antimicrobial use in cardiac device procedures by expanding audit and feedback: protocol for hybrid III type effectiveness/implementation quasi-experimental study. Implement Sci. (2022) 17(1):12. doi: 10.1186/s13012-022-01186-8. PMID: 35093104; PMCID: PMC8800400.

45. Mello MJ, Becker SJ, Bromberg J, Baird J, Zonfrillo MR, Spirito A. Implementing alcohol misuse SBIRT in a national cohort of pediatric trauma centers-a type III hybrid effectiveness-implementation trial. Implement Sci. (2018) 13(1):35. doi: 10.1186/s13012-018-0725-x. PMID: 29471849; PMCID: PMC5824578.

46. Jere DLN, Banda CK, Kumbani LC, Liu L, McCreary LL, Park CG, et al. A hybrid design testing a 3-step implementation model for community scale-up of an HIV prevention intervention in rural Malawi: study protocol. BMC Public Health. (2018) 18(1):950. doi: 10.1186/s12889-018-5800-3. PMID: 30071866; PMCID: PMC6090759.

47. Nathan N, Wiggers J, Bauman AE, Rissel C, Searles A, Reeves P, et al. A cluster randomised controlled trial of an intervention to increase the implementation of school physical activity policies and guidelines: study protocol for the physically active children in education (PACE) study. BMC Public Health. (2019) 19(1):170. doi: 10.1186/s12889-019-6492-z. PMID: 30760243; PMCID: PMC6375171.

48. D’Onofrio G, Edelman EJ, Hawk KF, Pantalon MV, Chawarski MC, Owens PH, et al. Implementation facilitation to promote emergency department-initiated buprenorphine for opioid use disorder: protocol for a hybrid type III effectiveness-implementation study (project ED HEALTH). Implement Sci. (2019) 14(1):48. doi: 10.1186/s13012-019-0891-5. PMID: 31064390; PMCID: PMC6505286.

49. Naar S, MacDonell K, Chapman JE, Todd L, Gurung S, Cain D, et al. Testing a motivational interviewing implementation intervention in adolescent HIV clinics: protocol for a type 3, hybrid implementation-effectiveness trial. JMIR Res Protoc. (2019) 8(6):e11200. doi: 10.2196/11200. PMID: 31237839; PMCID: PMC6682301.

50. Swendeman D, Arnold EM, Harris D, Fournier J, Comulada WS, Reback C, et al. Rotheram MJ; adolescent medicine trials network (ATN) CARES team. Text-messaging, online peer support group, and coaching strategies to optimize the HIV prevention continuum for youth: protocol for a randomized controlled trial. JMIR Res Protoc. (2019) 8(8):e11165. doi: 10.2196/11165. PMID: 31400109; PMCID: PMC6707028.

51. Yoong SL, Grady A, Wiggers JH, Stacey FG, Rissel C, Flood V, et al. Child-level evaluation of a web-based intervention to improve dietary guideline implementation in childcare centers: a cluster-randomized controlled trial. Am J Clin Nutr. (2020) 111(4):854–63. doi: 10.1093/ajcn/nqaa025. PMID: 32091593; PMCID: PMC7138676.

52. Knight D, Becan J, Olson D, Davis NP, Jones J, Wiese A, et al. Justice community opioid innovation network (JCOIN): the TCU research hub. J Subst Abuse Treat. (2021) 128:108290. doi: 10.1016/j.jsat.2021.108290. Epub 2021 Jan 15. PMID: 33487517.

53. Knight D, Becan J, Olson D, Davis NP, Jones J, Wiese A, et al. Justice community opioid innovation network (JCOIN): the TCU research hub. J Subst Abuse Treat. (2021) 128:108290. doi: 10.1016/j.jsat.2021.108290. Epub 2021 Jan 15. PMID: 33487517.

54. Gurol-Urganci I, Bidwell P, Sevdalis N, Silverton L, Novis V, Freeman R, et al. Impact of a quality improvement project to reduce the rate of obstetric anal sphincter injury: a multicentre study with a stepped-wedge design. BJOG. (2021) 128(3):584–92. doi: 10.1111/1471-0528.16396. Epub 2020 Aug 9. PMID: 33426798; PMCID: PMC7818460.

55. Hatch B, Tillotson C, Huguet N, Marino M, Baron A, Nelson J, et al. Implementation and adoption of a health insurance support tool in the electronic health record: a mixed methods analysis within a randomized trial. BMC Health Serv Res. (2020) 20(1):428. doi: 10.1186/s12913-020-05317-z. PMID: 32414376; PMCID: PMC7227079.

56. Huguet N, Valenzuela S, Marino M, Moreno L, Hatch B, Baron A, et al. Correction to: effectiveness of an insurance enrollment support tool on insurance rates and cancer prevention in community health centers: a quasi-experimental study. BMC Health Serv Res. (2022) 22(1):587. doi: 10.1186/s12913-022-07974-8

57. Sutherland R, Campbell E, Lubans DR, Morgan PJ, Okely AD, Nathan N, et al. A cluster randomised trial of a school-based intervention to prevent decline in adolescent physical activity levels: study protocol for the ‘physical activity 4 everyone’ trial. BMC Public Health. (2013) 13:57. doi: 10.1186/1471-2458-13-57. PMID: 23336603; PMCID: PMC3577490.

58. Sutherland R, Campbell E, McLaughlin M, Nathan N, Wolfenden L, Lubans DR, et al. Scale-up of the physical activity 4 everyone (PA4E1) intervention in secondary schools: 24-month implementation and cost outcomes from a cluster randomised controlled trial. Int J Behav Nutr Phys Act. (2021) 18(1):137. doi: 10.1186/s12966-021-01206-8. PMID: 34688281; PMCID: PMC8542325.

59. Milat AJ, King L, Bauman AE, Redman S. The concept of scalability: increasing the scale and potential adoption of health promotion interventions into policy and practice. Health Promot Int. (2013) 28(3):285–98. doi: 10.1093/heapro/dar097. Epub 2012 Jan 12. PMID: 22241853.

60. Escoffery C, Lebow-Skelley E, Udelson H, Böing EA, Wood R, Fernandez ME, et al. A scoping study of frameworks for adapting public health evidence-based interventions. Transl Behav Med. (2019) 9(1):1–10. doi: 10.1093/tbm/ibx067. PMID: 29346635; PMCID: PMC6305563.

61. Sutherland R, Reeves P, Campbell E, Lubans DR, Morgan PJ, Nathan N, et al. Cost effectiveness of a multi-component school-based physical activity intervention targeting adolescents: the ‘physical activity 4 everyone’ cluster randomized trial. Int J Behav Nutr Phys Act. (2016) 13(1):94. doi: 10.1186/s12966-016-0418-2. PMID: 27549382; PMCID: PMC4994166.

62. NHS England. NHS pelvic health clinics to help tens of thousands of women across the country (2021). Available at: https://www.england.nhs.uk/2021/06/nhs-pelvic-health-clinics-to-help-tens-of-thousands-women-across-the-country/ (cited Sep 19, 2022).

63. Wiltsey Stirman S, Baumann AA, Miller CJ. The FRAME: an expanded framework for reporting adaptations and modifications to evidence-based interventions. Implement Sci. (2019) 14(1):58. doi: 10.1186/s13012-019-0898-y. PMID: 31171014; PMCID: PMC6554895.

Keywords: study design, study management, hybrid trial designs, narrative review, comparative case study, implementation science, effectiveness-implementation

Citation: Jurczuk M, Thakar R, Carroll FE, Phillips L, van der Meulen J, Gurol-Urganci I and Sevdalis N (2023) Design and management considerations for control groups in hybrid effectiveness-implementation trials: Narrative review & case studies. Front. Health Serv. 3:1059015. doi: 10.3389/frhs.2023.1059015

Received: 30 September 2022; Accepted: 6 February 2023;

Published: 10 March 2023.

Edited by:

Xiaolin Wei, University of Toronto, Canada

Reviewed by:

Jamie Murdoch, King's College London, United Kingdom

© 2023 Jurczuk, Thakar, Carroll, Phillips, Meulen, Gurol-Urganci and Sevdalis. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Magdalena Jurczuk, mjurczuk@rcog.org.uk

†These authors have contributed equally to this work and share senior authorship

Specialty Section: This article was submitted to Implementation Science, a section of the journal Frontiers in Health Services

Abbreviations: OASI, obstetric anal sphincter injury; OASI2, OASI care bundle scale-up study; PA4E1, physical activity 4 everyone programme; CATCH-UP, community-based health information technology (HIT) tools for cancer screening and health insurance promotion; HIT, health information technology.
