- ¹Department of Population Health Sciences, Spencer Fox Eccles School of Medicine, University of Utah, Salt Lake City, UT, United States

- ²Department of Medical Social Sciences, Northwestern University Feinberg School of Medicine, Chicago, IL, United States

- ³Departments of Psychology and Medicine, University of Miami, Coral Gables, FL, United States

- ⁴Sylvester Comprehensive Cancer Center, Miller School of Medicine, University of Miami, Miami, FL, United States

- ⁵Robert H. Lurie Comprehensive Cancer Center, Northwestern University, Chicago, IL, United States

Background: Longitudinal tracking of implementation strategies is critical for accurately reporting when and why they are used and for promoting rigor and reproducibility in implementation research, and it could facilitate generalizable knowledge if similar methods are used across research projects. This article focuses on tracking dynamic changes in the use of implementation strategies over time within a hybrid type 2 effectiveness-implementation trial of an evidence-based electronic system for assessing cancer patient-reported outcomes in a single large healthcare system.

Methods: The Longitudinal Implementation Strategies Tracking System (LISTS), a timeline follow-back procedure for documenting strategy use and modifications, was applied to the multiyear study. The research team used observation, study records, and reports from implementers to complete LISTS in an electronic data entry system. Types of and reasons for modifications were categorized. Determinants associated with each strategy were collected as a justification for strategy use and a potential explanation for strategy modifications.

Results: Thirty-four discrete implementation strategies were used, and at least one strategy was used from each of the nine strategy categories in the Expert Recommendations for Implementing Change (ERIC) taxonomy. Most of the strategies were introduced, used, and continued or discontinued according to a prospective implementation plan. A small number of strategies were introduced, most of them unplanned, in response to the changing healthcare landscape or to address an emergent barrier. Despite the changing implementation context, there were relatively few modifications to the way strategies were enacted, such as a change in the actor, action, or dose. Few differences were noted among the trial's three regional units.

Conclusion: This study occurred within the ambulatory oncology clinics of a large, academic medical center and was supported by the Quality team of the health system to ensure greater uptake, uniformity, and implementation within established practice change processes. The centralized nature of the implementation likely contributed to the relatively low proportion of modified strategies and the high degree of uniformity across regions. These results demonstrate the potential of LISTS in gathering the level of data needed to understand the impact of the many implementation strategies used to support adoption and delivery of a multilevel innovation.

Clinical trial registration: https://clinicaltrials.gov/ct2/show/NCT04014751, identifier: NCT04014751.

Introduction

Due to advances in screening and treatment, the 5-year survival rate after a cancer diagnosis is close to 70%, and there are almost 17 million cancer survivors in the US (1). Despite advances in early detection and treatments that extend survivor longevity, the survival benefit is often offset by chronic and debilitating cancer- and treatment-related symptoms that compromise health-related quality of life (2). Cancer patients experience disruptive physical and psychosocial symptoms that are often under-addressed. Research indicates that one in five cancer survivors experiences uncontrolled pain (3) and that around 32% meet Diagnostic and Statistical Manual of Mental Disorders criteria for a mental health diagnosis (e.g., adjustment, anxiety, sleep, mood) (2, 4). Providing optimal cancer care therefore requires systematic symptom monitoring (5).

Tools that capture patient-reported outcomes (PROs) are emerging as a way to bridge the gap between patient experiences and clinician understanding (6). In oncology, PROs are assessed by asking patients about their physical and psychological symptoms, functioning, quality of life, and supportive care needs. Incorporating PROs into routine oncology practice has been shown to improve patient outcomes, care satisfaction, and quality of life (7, 8). However, most studies evaluating programs to monitor and manage PROs via electronic health records (EHRs) have been limited to efficacy trials rather than implementation within the routine practice of large healthcare systems (9).

Despite available guidance on integrating PROs as a standard of care (10), additional strategies are needed to promote their consistent and sustained implementation (11–13). Tracking and reporting implementation strategies is critical to determining under what circumstances they achieve their effects (14) and for promoting rigor and reproducibility in implementation research. Moreover, reporting and tracking of implementation modifications can be used to demonstrate fidelity to the strategies per the study protocol or, conversely, track and assess protocol deviations. Strategies are often adapted, modified, and discontinued based on several multilevel factors, such as emerging barriers and facilitators and evidence of low effectiveness. Therefore, it is crucial to capture and track these modifications within implementation studies (15).

Systems for tracking implementation strategy use and modification over time have been developed (16–20). However, existing tracking methods have several limitations: (1) they lack the specificity called for by strategy reporting standards; (2) they largely collect data retrospectively or at widely spaced intervals rather than routinely throughout the implementation process; and (3) the majority have been developed or applied post hoc and have relied on existing data sources that might lack the necessary detail on the strategy and how it was enacted.

To improve upon existing tracking systems, and fill gaps in the current literature, Smith and colleagues developed the Longitudinal Implementation Strategies Tracking System (LISTS), a robust, dynamic tool for measuring, monitoring, reporting, and guiding strategy use and modifications (21–23). LISTS was iteratively developed within the National Cancer Institute's Improving the Management of symPtoms during And following Cancer Treatment (IMPACT) research consortium—a Cancer MoonshotSM program. The primary aim of LISTS is to track implementation strategies by capturing detailed data in near-real time on strategy use and modification that can be readily combined, synthesized, and compared within and between implementation projects. Secondarily, the system was developed to allow for tailoring strategies, assessing effectiveness, and evaluating costs of implementation strategies. LISTS was designed in alignment with (a) implementation strategy reporting and specification standards (14), (b) the Expert Recommendations for Implementing Change (ERIC) taxonomy (24), and (c) the Framework for Reporting Adaptations and Modifications to Evidence-based Implementation Strategies (FRAME-IS) (15). Use of LISTS over the course of 15 months in three randomized effectiveness-implementation hybrid trials (21–23) indicated that LISTS was feasible, usable, and led to meaningful data on strategy use and modification.

This study sought to demonstrate the capability of LISTS in tracking the use and modification of strategies to support implementation of the cancer patient-reported outcomes (“cPRO”) system across oncology care practices in a large healthcare system. cPRO consists of the Patient Reported Outcomes Measurement Information System (PROMIS®) computer adaptive tests (CATs) (25, 26) of (1) Depression (PROMIS Item Bank v1.0-Depression); (2) Anxiety (PROMIS Item Bank v1.0-Anxiety); (3) Fatigue (PROMIS Item Bank-Fatigue v1.0); (4) Pain Interference (PROMIS Item Bank v1.1-Pain Interference); and (5) Physical Function (PROMIS Item Bank v1.1-Physical Function), along with two supportive care checklist items (covering psychosocial and nutritional needs). Cancer center patients are asked to complete an assessment before each medical oncology visit (but no more than once a month). We report here on (a) the strategies used to support cPRO implementation; (b) the most common implementation strategy modifications made, and which strategies and strategy types were modified; and (c) which modifications were planned or unplanned, and the reasons for them. Additionally, we use this study as a use case to demonstrate the utility of LISTS for populating the Implementation Research Logic Model (IRLM) (27) when reporting the results of an implementation trial. The IRLM can provide a useful visual representation of the conceptual relationships between determinants of implementation, strategies, and targeted outcomes.

Materials and methods

This study was approved by the Northwestern University Institutional Review Board (STU00207807).

Setting and participants

The study occurred at outpatient oncology settings across multiple hospitals that are part of the Northwestern Medicine healthcare system. Existing regional units (Central, North, and West) served as the clusters for a stepped wedge trial (28). In total, 32 clinical units participated across the three regions. All regions include medical centers/hospitals and specialty clinics for the diagnosis and management of cancer. The study population included any adult clinician (physician, nurse, social worker, dietician) administering cancer care at a medical oncology clinic; oncology clinic administrative staff; and eligible patients (confirmed cancer diagnosis and receiving oncology services within the past 12 months).

The participants involved in completing the LISTS tool in this study included a team composed of one of the principal investigators (SFG), co-investigators (KAW, JDS), one of whom is an implementation scientist (JDS), and the project coordinator (SC). Implementers in the health system who enacted the implementation strategies were regularly consulted regarding LISTS data by members of the LISTS team via email, phone calls, and one-on-one conversations, but did not interact with the LISTS tool or the data entry system. This team has been involved in and/or led implementation research studies, and all members are familiar with implementation science terms, theories, and concepts. However, only JDS has formal training in implementation science and thus guided the coding and classification of data elements. All team members contributed to the coding and agreed on the results reported.

Study design and procedures

The overall study used a cluster randomized, modified stepped wedge design, using a type 2 hybrid effectiveness-implementation approach spanning 4 years (28). This approach allowed for the evaluation of both the cPRO effectiveness as well as the implementation outcomes associated with the implementation strategies. The design leveraged the healthcare system's three geographic and operational regions (Central, North, West) of 32 total clinical units. Regions were pseudo-randomly assigned to the roll-out sequence with 3-month steps. The Central region was the first cluster at the request of system leadership. West and North were then randomly assigned to the second and third spots in the sequence. For each regional cluster, a multicomponent “package” of implementation strategies was used to increase adoption and reach of cPRO. The package consisted primarily of strategies that were system-wide, which were introduced immediately prior to the crossover in the stepped wedge to evaluate their impact on implementation. cPRO usage data prior to the crossover provided an “implementation as usual” comparison.
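The roll-out ordering described above (Central fixed first, West and North randomly ordered, 3-month steps) can be sketched as follows. This is a minimal illustration, assuming Python; the function name `rollout_sequence`, the seed, and expressing crossovers as month offsets from the first step are hypothetical choices, not the trial's actual randomization procedure.

```python
import random
from typing import List, Tuple

STEP_MONTHS = 3  # length of each stepped wedge step, per the protocol


def rollout_sequence(seed: int) -> List[Tuple[str, int]]:
    """Return (region, crossover offset in months) pairs in roll-out order.

    Central is fixed as the first cluster; West and North are randomly
    ordered for the second and third steps. Offsets are counted from the
    first crossover (an illustrative assumption).
    """
    rng = random.Random(seed)  # seeded so the assignment is reproducible
    later = ["West", "North"]
    rng.shuffle(later)
    order = ["Central"] + later
    return [(region, step * STEP_MONTHS) for step, region in enumerate(order)]


if __name__ == "__main__":
    for region, offset in rollout_sequence(seed=2019):
        print(f"{region}: crosses over {offset} months after the first step")
```

Fixing the first position before shuffling mirrors the "pseudo-random" assignment: only the order of the remaining two clusters is left to chance.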

Longitudinal implementation strategies tracking system (LISTS)

Procedures and content

LISTS was used to track implementation strategy use and modifications. The LISTS team used observation, study records (meeting notes, calendars), and reports from implementers (via in-person, phone, and email inquiry) to document implementation strategy use, modifications, and discontinuations. When a modification or discontinuation occurred, it was documented as planned or unplanned, and the reasons and the persons involved in the decision were recorded. To increase the accuracy of reporting, LISTS uses a timeline follow-back procedure (29) in which members of the research and implementation teams met every 3 months (quarterly) to complete LISTS, including entering the data into a relational Research Electronic Data Capture (REDCap) (30) data entry system developed for LISTS. The team reported on strategy use and modifications at the study, region, and clinical unit levels as appropriate.
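The timeline follow-back logic can be illustrated with an idealized quarterly schedule: each strategy event is recalled at the first review on or after it occurs, and events that predate the first review (e.g., preparation-phase strategies) are captured retrospectively at that first meeting. This is a sketch in Python under stated assumptions; actual meetings were only approximately quarterly, and the helper names `add_months`, `review_schedule`, and `reporting_review` are hypothetical. The dates used are those given for LISTS start and the analysis data pull.

```python
import calendar
from bisect import bisect_left
from datetime import date
from typing import List, Optional


def add_months(d: date, months: int) -> date:
    """Shift a date by whole months, clamping the day to the target month's length."""
    total = d.month - 1 + months
    year, month = d.year + total // 12, total % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def review_schedule(start: date, end: date, interval_months: int = 3) -> List[date]:
    """Idealized follow-back review dates every `interval_months` from start to end."""
    reviews, k = [], 0
    while (d := add_months(start, k * interval_months)) <= end:
        reviews.append(d)
        k += 1
    return reviews


def reporting_review(event: date, reviews: List[date]) -> Optional[date]:
    """First review on or after the event, i.e., the meeting at which it is recalled.

    Events that predate the first review map to it (retrospective capture).
    """
    i = bisect_left(reviews, event)
    return reviews[i] if i < len(reviews) else None


reviews = review_schedule(date(2020, 1, 21), date(2022, 5, 26))
print(len(reviews), reviews[-1])  # 10 2022-04-21
print(reporting_review(date(2019, 12, 23), reviews))  # 2020-01-21
```

Keeping the recall window to one interval is what distinguishes this approach from a single post hoc reconstruction at the end of a study.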

Data elements and capture

The data elements in LISTS were captured in REDCap, and the framework was drawn from multiple sources with widespread use and familiarity to the field of implementation. First, for strategy specification and reporting, we used the recommendations outlined by Proctor et al. (14). These elements include naming (using language consistent with the existing literature) and defining (operational definitions of the strategy and its discrete components) the strategy; specifying the actor (who enacts the strategy), action (active verb statements concerning the specific actions, steps, or processes), action targets (the strategy's intended target according to a conceptual model or theory), temporality (duration of use and interval or indication for use), dose (how long the strategy takes each time), and the implementation outcome(s) (implementation processes or outcomes likely to be affected).

Second, to assist LISTS users in naming strategies using language consistent with the existing literature, the tool is prepopulated with each of the 72 discrete strategies from the Expert Recommendations for Implementing Change (ERIC) compilation (24). The team completing LISTS used the ERIC compilation of strategies as a prompt and taxonomy for characterizing the strategies used. Detailed operational definitions were entered given the often vague nature of the ERIC strategy categories/types. Third, we used the Proctor et al. (31) taxonomy of implementation outcomes to provide users with agreed upon definitions for acceptability, adoption, appropriateness, cost, feasibility, fidelity, penetration/reach, and sustainability/sustainment. Fourth, LISTS included the complete list of determinants from the Consolidated Framework for Implementation Research (CFIR) (32) for users to select which determinant the strategy is hypothetically linked with, either as a barrier to overcome or a facilitator to be leveraged. This conceptual linking is consistent with the generalized theory of implementation research (27) and other theoretical and conceptual models used in the field (33–35), and will assist users in preparing the justification.
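To make the combination of these sources concrete, one strategy entry can be sketched as a record holding the Proctor et al. specification elements, a single primary and optional secondary implementation outcomes, and the CFIR determinants it is linked to as a barrier or facilitator. This is a hypothetical Python sketch; the field names are illustrative and are not the variable names in the LISTS REDCap codebook, and the example values are invented apart from the ERIC strategy name.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

# Proctor et al. implementation outcomes offered as response options.
IMPLEMENTATION_OUTCOMES = {
    "acceptability", "adoption", "appropriateness", "cost", "feasibility",
    "fidelity", "penetration/reach", "sustainability/sustainment",
}
CFIR_ROLES = {"barrier", "facilitator"}


@dataclass
class StrategySpec:
    """One tracked strategy; field names are illustrative, not the LISTS codebook."""
    eric_name: str        # name taken from the ERIC compilation
    definition: str       # local operational definition
    actor: str
    action: str
    action_target: str
    temporality: str
    dose: str
    primary_outcome: str
    secondary_outcomes: List[str] = field(default_factory=list)
    # (CFIR construct, "barrier" or "facilitator") pairs justifying the strategy
    determinants: List[Tuple[str, str]] = field(default_factory=list)

    def __post_init__(self) -> None:
        for outcome in [self.primary_outcome, *self.secondary_outcomes]:
            if outcome not in IMPLEMENTATION_OUTCOMES:
                raise ValueError(f"unknown implementation outcome: {outcome!r}")
        for construct, role in self.determinants:
            if role not in CFIR_ROLES:
                raise ValueError(f"{construct}: role must be barrier or facilitator")


# Hypothetical entry: values other than the ERIC name are invented for illustration.
spec = StrategySpec(
    eric_name="Promote network weaving",
    definition="Connect clinic champions across regions (hypothetical)",
    actor="quality improvement leaders",
    action="host cross-clinic huddles",
    action_target="clinic staff",
    temporality="monthly during implementation",
    dose="30 minutes",
    primary_outcome="adoption",
    secondary_outcomes=["feasibility"],
    determinants=[("Networks & Communications", "barrier")],
)
```

Validating against fixed vocabularies at entry time is one way to keep records comparable across projects, which is the stated purpose of prepopulating LISTS with ERIC, the outcome taxonomy, and CFIR.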

Finally, to capture the modifications made to the implementation strategies over time, we incorporated elements of the Framework for Reporting Adaptations and Modifications to Evidence-based Implementation Strategies (FRAME-IS) (15) for specific strategies, and additional elements related to project-level modifications. Consistent with the FRAME-IS, our data capture tool allows already-entered strategies to be updated to indicate modifications to any of the elements described above, as well as the discontinuation of a strategy. For both strategy modifications and discontinuations, branching logic prompts questions concerning the reason for the change (e.g., ineffective, infeasible), who was involved in the decision (e.g., leadership, research team, clinicians), and whether the change was planned (e.g., part of an a priori protocol) or unplanned (e.g., a response to an emergent implementation barrier). It is commonplace to add strategies during implementation for various reasons, and additions can be planned (e.g., as part of an adaptive or optimization study design) or unplanned. Unique to LISTS, when a strategy is added, the same “was it planned or unplanned” and “who was involved” questions are prompted, along with the reason, with response options of “to address an emergent barrier” or “to complement/supplement other strategies to increase effectiveness.” When a new strategy is added, the data elements for reporting and specifying described above are also prompted. The full LISTS codebook with each data entry field, as well as the REDCap coding syntax, is available in the primary LISTS paper (23). Most germane to the current study, we adhered to the definitions of CFIR constructs provided at https://cfirguide.org/constructs/ and used Additional File 6 (https://static-content.springer.com/esm/art%3A10.1186%2Fs13012-015-0209-1/MediaObjects/13012_2015_209_MOESM6_ESM.docx) from Powell et al. (24) for implementation strategy definitions and codes.
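The branching logic for change events can be sketched as a record that enforces three rules: every change records who was involved, additions must use one of the two fixed reason options, and only modifications name the specification elements that changed. This is a hypothetical Python illustration of the rules described above, not the REDCap implementation; field names and the example entry (loosely based on the clinician support team mentioned later) are assumptions.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class ChangeType(Enum):
    ADDED = "added"
    MODIFIED = "modified"
    DISCONTINUED = "discontinued"


# Fixed response options for the reason a strategy was added.
ADD_REASONS = {
    "to address an emergent barrier",
    "to complement/supplement other strategies to increase effectiveness",
}


@dataclass
class StrategyChange:
    """A FRAME-IS-style change record (illustrative field names)."""
    strategy: str
    change_type: ChangeType
    planned: bool               # a priori protocol vs. emergent response
    decision_makers: List[str]  # e.g., research team, leadership, clinicians
    reason: str                 # e.g., "ineffective", "infeasible"
    modified_fields: List[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        if not self.decision_makers:
            raise ValueError("record who was involved in the decision")
        # Additions are limited to the two fixed reason options.
        if self.change_type is ChangeType.ADDED and self.reason not in ADD_REASONS:
            raise ValueError(f"invalid reason for an addition: {self.reason!r}")
        # Only modifications name the specification fields that changed.
        if (self.change_type is ChangeType.MODIFIED) != bool(self.modified_fields):
            raise ValueError("modified_fields is required for, and only for, modifications")


# Hypothetical entry; the decision makers listed are invented for illustration.
support_team = StrategyChange(
    strategy="Clinician support team",
    change_type=ChangeType.ADDED,
    planned=False,
    decision_makers=["research team", "clinicians"],
    reason="to address an emergent barrier",
)
```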

Timeframe of strategy reporting

Use of LISTS in this study began January 21, 2020. The study start date (official project period start date) was September 1, 2018, and implementation in the first region of the cluster randomized stepped wedge sequence began December 23, 2019. Although LISTS reporting began well into the project, reporting of previously used and currently in-use strategies was comprehensive and included strategies used before the start of the project period that were instrumental to obtaining grant support for the study. These were conceptualized as part of the implementation preparation phase, defined as occurring prior to implementation of the innovation (i.e., cPRO) (36). Meetings related to LISTS occurred approximately quarterly through May 26, 2022, at which time data were pulled to conduct the current analysis.

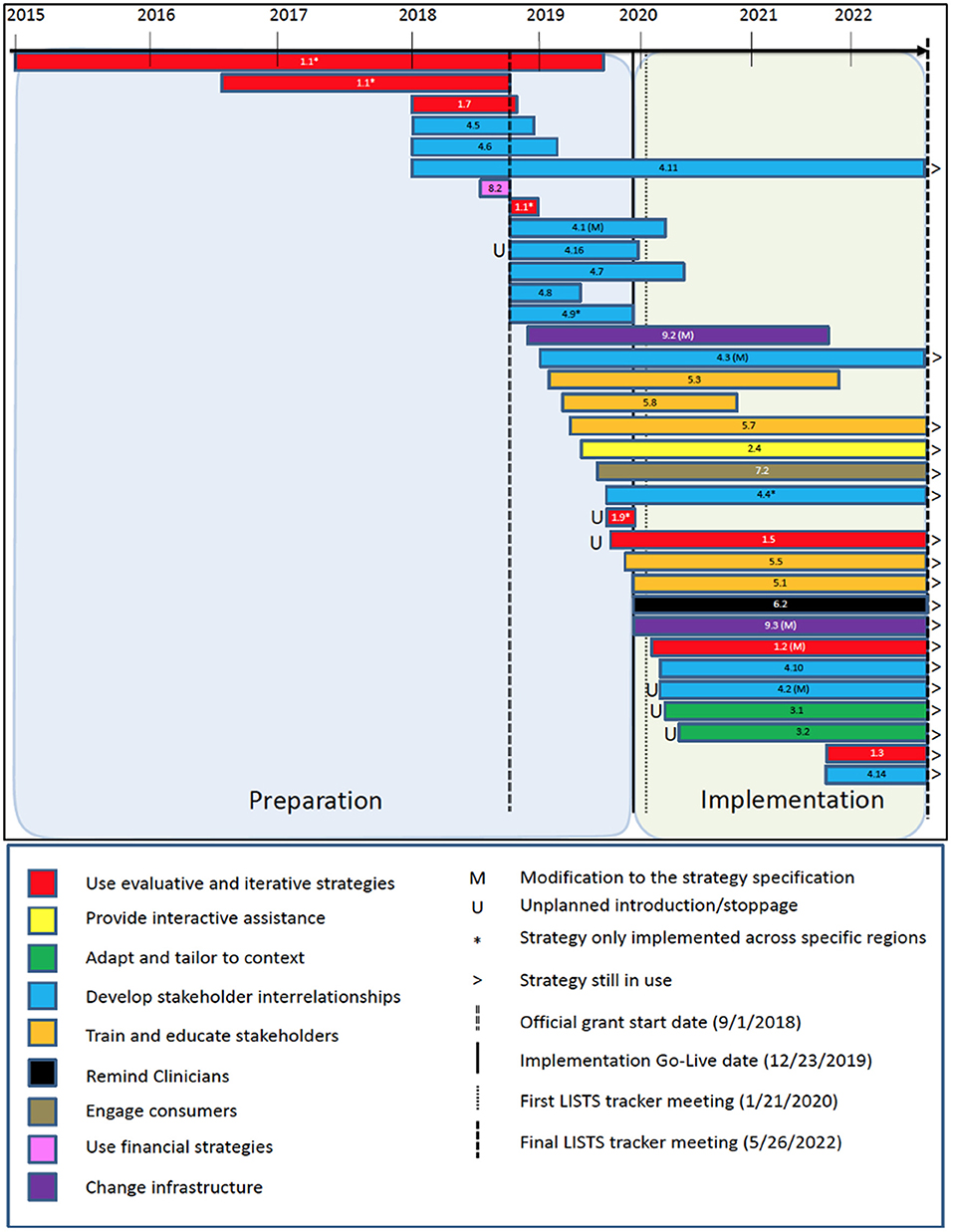

LISTS data display and output

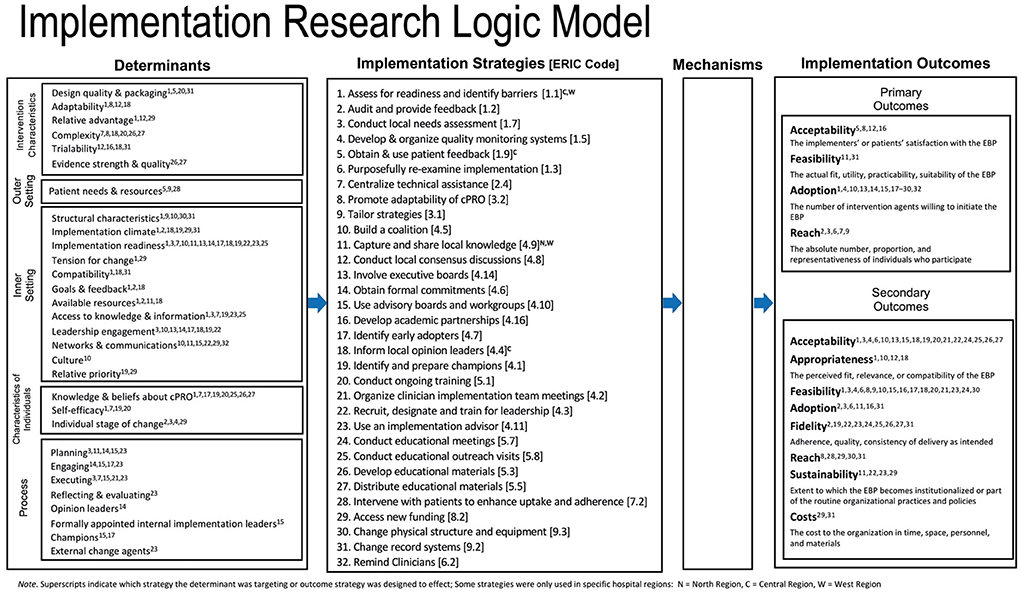

To aid in visualizing and interpreting the complex relationships between the data elements captured in the LISTS tracker, a notated timeline (Figure 1) was created that spans the length of the study to date. We also utilized the Implementation Research Logic Model (IRLM) (27) to aid readers by organizing the relationships between implementation determinants, strategies, and their purported primary and secondary outcomes. This also allowed us to critically appraise the utility of LISTS data output by assessing its fit with a tool that helps specify and synthesize implementation projects with rigor. This step could inform further refinements to the type of data captured by the LISTS methodology.

Figure 1. Timeline of implementation strategy use and modifications by phase. The number on each bar represents the associated ERIC strategy, which can be found in Additional File 6 of Powell et al. (24).
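The IRLM notation used in Figure 2 links each strategy to numbered barriers and outcomes via superscripts. A minimal sketch of how such numbering could be derived from LISTS-style strategy links is shown below, assuming Python; the function `irlm_superscripts` and the example links are hypothetical and do not reproduce the study's actual Figure 2 entries.

```python
from typing import Dict, List, Tuple

Links = Dict[str, Tuple[List[str], List[str]]]


def irlm_superscripts(links: Links):
    """Number each distinct barrier and outcome in first-seen order and
    annotate every strategy with the indices it links to, IRLM style."""
    barriers: Dict[str, int] = {}
    outcomes: Dict[str, int] = {}
    rows = []
    for strategy, (strategy_barriers, strategy_outcomes) in links.items():
        # setdefault keeps an existing number or assigns the next one
        b_idx = [barriers.setdefault(b, len(barriers) + 1) for b in strategy_barriers]
        o_idx = [outcomes.setdefault(o, len(outcomes) + 1) for o in strategy_outcomes]
        rows.append((strategy, b_idx, o_idx))
    return barriers, outcomes, rows


# Hypothetical links (CFIR constructs -> strategies -> outcomes), for illustration only.
links = {
    "Promote network weaving": (["Networks & Communications"], ["adoption", "feasibility"]),
    "Conduct educational meetings": (
        ["Knowledge & Beliefs about the Intervention", "Networks & Communications"],
        ["adoption"],
    ),
}
barriers, outcomes, rows = irlm_superscripts(links)
for strategy, b, o in rows:
    print(f"{strategy} (barriers {b}; outcomes {o})")
```

Because a barrier or outcome keeps its number once assigned, shared targets are visible at a glance, which is also what makes the many-to-many relationships discussed later so apparent.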

Results

Implementation strategies used

A total of 34 discrete implementation strategies were documented as having been used between January 2015 and May 2022. Although the formal trial described here began September 1, 2018, the team decided to capture strategies used during preparation for the trial, which included pilot studies and strategies that made submission of the grant application possible (e.g., partnership formation with the healthcare system). These strategies were coded into the ERIC categories (37), and all nine were represented. The category with the most strategies (n = 13) was “develop stakeholder interrelationships,” followed by “use evaluative and iterative strategies” (n = 8) and “train and educate stakeholders” (n = 5). Only one strategy was used from each of “provide interactive assistance,” “support clinicians,” “utilize financial strategies,” and “engage consumers.” The remainder were from “change infrastructure” (n = 2) and “adapt and tailor to context” (n = 2). Most strategies (n = 28) were prospective (i.e., planned a priori as part of the study protocol) and were used across all three regions of the healthcare system (n = 29). Research staff (n = 28) and/or quality improvement leaders (n = 27) served as the primary actor of the strategy (totals are not exclusive to one actor or the other). Figure 1 presents a timeline with key dates (study start), phases (preparation and implementation), color-coded strategy categorizations, and notation if the strategy was used in only one or two regions of the healthcare system. Detailed strategy definitions and their associated ERIC codes are available in Additional File 6 (https://static-content.springer.com/esm/art%3A10.1186%2Fs13012-015-0209-1/MediaObjects/13012_2015_209_MOESM6_ESM.docx) from Powell et al. (24).
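The category tallies above can be checked directly: they should cover all nine ERIC categories and sum to the 34 strategies reported. A short Python sketch using the counts as reported; with a real LISTS export, one would instead build the `Counter` from each strategy record's category field (a hypothetical field name).

```python
from collections import Counter

# Strategies per ERIC category, as reported in this section.
eric_counts = Counter({
    "develop stakeholder interrelationships": 13,
    "use evaluative and iterative strategies": 8,
    "train and educate stakeholders": 5,
    "change infrastructure": 2,
    "adapt and tailor to context": 2,
    "provide interactive assistance": 1,
    "support clinicians": 1,
    "utilize financial strategies": 1,
    "engage consumers": 1,
})

assert len(eric_counts) == 9             # all nine ERIC categories represented
assert sum(eric_counts.values()) == 34   # matches the 34 discrete strategies
print(eric_counts.most_common(3))
```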

Implementation strategy modifications

Modifications to strategies can be categorized into two types. First, the introduction or discontinuation of a strategy (i.e., its use) constitutes a protocol-level modification; that is, the study protocol is modified concerning which strategies are used and when. Second, modifications can occur to the way a strategy is enacted; that is, a change to one of the specifications of a strategy: actor, action, action target, temporality, dose, or outcomes/barriers addressed. The majority of modifications in this study were protocol modifications in which strategies were either introduced or discontinued per an a priori implementation plan. By extension, the majority of discontinuations were planned as opposed to unplanned. However, six strategies were unplanned introductions during the implementation phase, either to augment another strategy to increase its effectiveness (n = 4) or to address an emergent barrier (n = 2). Relatively few (n = 6) of the strategies that were used involved a modification to the strategy specification. Action (n = 3) and dose (n = 3) were the most commonly modified specifications, followed by the action target (n = 2) and actor (n = 1). Two strategies involved multiple specification modifications. Notations are provided in Figure 1 for unplanned stoppages and introductions, and for strategies whose specification was modified during the study. Finally, the individuals involved in deciding to modify the strategies were also coded; they included the research team (n = 2 strategies), program leaders and administrators (n = 2 strategies), clinicians and healthcare staff (n = 2 strategies), implementers and trainers (n = 2 strategies), and patients (n = 1 strategy).
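The two-level distinction above can be expressed as a simple classification rule: introductions and discontinuations are protocol-level, while changes to how a strategy in use is enacted are specification-level. A hypothetical Python sketch; the function name and the element labels are illustrative, not LISTS field names.

```python
# Specification elements from the strategy reporting standards (illustrative labels).
SPEC_ELEMENTS = {"actor", "action", "action_target", "temporality", "dose", "outcomes_barriers"}


def modification_level(change: str, changed_elements=()) -> str:
    """Classify a change as protocol-level (which strategies are used, and when)
    or specification-level (how a strategy in use is enacted)."""
    if change in {"introduced", "discontinued"}:
        return "protocol"
    if change == "modified":
        elements = set(changed_elements)
        if not elements:
            raise ValueError("a modification must name at least one specification element")
        unknown = elements - SPEC_ELEMENTS
        if unknown:
            raise ValueError(f"unknown specification elements: {sorted(unknown)}")
        return "specification"
    raise ValueError(f"unknown change type: {change!r}")


print(modification_level("introduced"))                # protocol
print(modification_level("modified", ["action", "dose"]))  # specification
```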

Barriers and implementation outcomes targeted by strategies

Implemented strategies targeted barriers across all five CFIR domains. Most strategies were used to overcome barriers in the inner setting (n = 26, 37%), followed by intervention characteristics (n = 17, 25%), individuals (n = 14, 20%), process (n = 9, 12%), and outer setting (n = 4, 6%). Strategies could target multiple determinants. Figure 2 provides further detail regarding the CFIR determinants coded by strategy.

Figure 2. Implementation Research Logic Model (IRLM) populated with barriers, strategies, and outcomes. The Mechanisms field of the IRLM is left blank intentionally as that element is not captured within the LISTS method in its current version.

Strategies were used primarily to increase adoption (n = 23, 68%), followed by reach (n = 5, 15%), acceptability (n = 4, 12%), and feasibility (n = 2, 6%) related to cPRO implementation. Regarding secondary outcomes, most strategies targeted feasibility (n = 19, 58%), followed by acceptability (n = 18, 55%) and fidelity (n = 9, 27%). Cost (n = 1, 6%) was the least targeted secondary outcome. A single primary outcome was selected for each strategy, whereas multiple secondary outcomes could be selected. Figure 2 presents a direct population of the IRLM using data from LISTS, with superscripts indicating the proposed barriers and outcomes associated with each strategy per best practice. Hospital region differences (i.e., Central, West, North) are also specified via notation in Figure 2.
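Because exactly one primary outcome was selected per strategy, the primary-outcome percentages share a denominator of 34 and sum to 100% up to rounding. The arithmetic can be reproduced as below (Python, using the counts reported above). Secondary outcomes are multi-select, so their percentages need not sum to 100% and are not recomputed here.

```python
# Primary implementation outcome per strategy, as reported (one per strategy).
primary = {"adoption": 23, "reach": 5, "acceptability": 4, "feasibility": 2}

n_strategies = sum(primary.values())
percent = {outcome: round(100 * count / n_strategies) for outcome, count in primary.items()}

print(n_strategies)            # 34
print(percent)                 # {'adoption': 68, 'reach': 15, 'acceptability': 12, 'feasibility': 6}
print(sum(percent.values()))   # 101 (rounding)
```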

Discussion

Tracking the use and modification of implementation strategies is critical to ensure the rigor and reproducibility of implementation research (14). Despite the centrality of strategies in this scientific field, far too little attention has been paid to accurate reporting of strategies and how they change over time at the protocol and specification levels (23, 38). LISTS was developed to more accurately capture the dynamic nature of implementation strategy use and modifications over time in implementation research. Using a timeline follow-back procedure, strategies are evaluated on a routine basis at relatively short intervals (every 1–3 months) to capture and document modifications. This study demonstrates the utility of LISTS for tracking the strategy use and modifications that occurred over a 4-year cluster randomized stepped wedge trial, using a type 2 effectiveness-implementation hybrid approach, of cancer patient-reported outcomes (cPRO) symptom monitoring in a large urban and suburban healthcare system. The results demonstrate the potential of LISTS in gathering the type and granularity of data needed to understand the impact of strategies in implementation studies of complex, multilevel innovations.

Results indicated that 34 discrete implementation strategies were used, with at least one strategy from each of the nine ERIC strategy categories. Because partnerships are crucial for implementation (39, 40), it was unsurprising that the category with the most strategies was “develop stakeholder interrelationships” (n = 13), followed by “use evaluative and iterative strategies” (n = 8). Given the scope and complexity of this strategic effort to effect system-wide change, the need for multilevel strategies cutting across ERIC categories seems reasonable and necessary. However, there is limited literature with which to contextualize this finding, specifically whether it is consistent with other implementation efforts. A study of opioid risk management implementation in the Department of Veterans Affairs found that project sites used an average of 23 strategies and a range of 16–31 discrete strategies. The most used strategies came from the ERIC categories of “adapt and tailor to the context,” “develop stakeholder interrelationships,” and “evaluative and iterative strategies,” which is consistent with our results. Adaptations to cPRO (n = 2) were few in comparison, perhaps because it is an electronic screener and simpler than the opioid risk management intervention.

Concerning the implementation strategy protocol, it was not surprising to see that most of the strategies used (28 of 34) were planned and relatively few modifications occurred to the strategies themselves once in use, which included no unplanned discontinuations, only six unplanned strategy introductions, and six unplanned modifications to a strategy's specification. The nature of the healthcare system and the experience of the study team are likely important determinants to consider when interpreting these results. This study occurred within the ambulatory oncology clinics of a large, academic medical center. As such, implementation was centralized, supported by established practice change processes, and championed by the Quality team of the health system to ensure greater uptake and uniformity across regions and clinics. This gave investigators considerable control over the protocol. Concerning the study team, there was a high degree of prior knowledge and experience related to PRO implementation in this specific healthcare system (9, 25, 26, 41). Relatedly, this study represented an attempt to improve and expand on the implementation of an already-in-use innovation (i.e., PROs), allowing for the specification of planned, targeted, strategic initiatives informed by prior data on identified barriers and effective facilitators. As such, there was a high degree of confidence in the protocol as designed. We believe these contextual factors contributed to fewer modifications.

It is worth noting that this study began prior to the COVID-19 pandemic, which had profound effects on healthcare delivery (42). Of the six strategies characterized as unplanned additions or specification modifications, three were added in March and April 2020 in direct response to the challenges that COVID-19 created for in-clinic cPRO assessment. Among these, a clinician support team was organized to provide protected time to reflect on the implementation effort, share lessons learned, and determine needed supports. Despite the challenges, the centralized nature of the implementation seems to have counterbalanced the effects of COVID-19 mitigation measures on clinic operations. Two other unplanned additions, made in September 2019 shortly before the intervention start date, were intended to augment other strategies to increase their effectiveness. These included multimethod efforts to monitor data systems to check cPRO use quantitatively and a patient advisory council to gather patient feedback regarding cPRO implementation. Though unplanned, these strategies provided a feedback loop for evaluating the ongoing implementation of cPRO. Later in implementation (September 2021), in response to patient feedback and data indicating that completion rates were lower than expected, the cPRO assessment was changed from a computer adaptive test version to a fixed-length version (called “cPRO Short”) to reduce administration time, with the goal of increasing patient response rates.

Concerning strategy use and modifications and the study design, it was important to carefully track and demonstrate that the implementation was consistent across the three regions of the Northwestern Medicine healthcare system, which served as the clusters in the stepped wedge trial design. In most stepped wedge designs, it is important to use the same implementation strategy across clusters for internal validity (43). However, this can be difficult to achieve in implementation trials because strategies are often tailored to some extent to align with the contextual factors of the participating clinics or other units (44, 45). In this study, the contextual factors were relatively homogeneous across the regions, and the centralized implementation support efforts further contributed to fewer region-level modifications to the protocol. Documenting the differences, or lack thereof, across study clusters aids interpretation of the results. Given the consistency of the strategies used across regions, we can be reasonably confident that any regional differences in outcomes are attributable to factors other than the implementation strategy package. Had there been meaningful variation in strategies across the regions, careful documentation of that variation would have helped the researchers interpret regional differences in the findings.

The number of strategies that began during implementation preparation (n = 24) was nearly two and a half times the number that began at or after implementation (n = 10). Consistent with what one might expect, “evaluative and iterative strategies,” such as “assessing for readiness and identifying barriers and facilitators” and “developing and implementing tools for quality monitoring,” began years before implementation and even before the grant period. Similarly, strategies within “develop stakeholder interrelationships” (e.g., “Obtain formal commitments,” “Promote network weaving,” and “Inform local opinion leaders”) and the financial strategy “Alter incentive/allowance structures” also began before the grant period. The remaining strategies (n = 26) began once funding was available through the grant. At the time the data were pulled for this analysis (May 16, 2022), 16 strategies were still being used to support cPRO implementation.

This study demonstrates that LISTS can be used to track strategy use and modifications at the protocol and specification levels; however, several considerations and potential future advancements could increase the utility and validity of LISTS data. Using the IRLM to visualize LISTS data on the relationships between strategies and the barriers and outcomes they target provides useful information. The superscripts show that many implementation strategies were used to address prominent barriers. Barriers in the inner setting were most commonly targeted by strategies in this trial, followed by intervention characteristics, with outer setting determinants targeted least frequently. More granular patterns of strategy-determinant relationships could be examined in a subsequent analysis of these data. Similarly, each implementation outcome is conceptually connected to more than one, and in most cases many, strategies. Each association is conceptually understandable and justifiable, but the sheer number of relationships raises questions about the specificity of each strategy's target and about how effects can be attributed to any single strategy. Although LISTS data can be used to populate the IRLM (Figure 2), further pruning and prioritization of the barriers and outcomes targeted might be needed to make it more useful and testable (e.g., through causal path analysis). Additionally, the mechanisms that form part of a causal path analysis will need to be specified, as LISTS in its current form does not prompt users to propose mechanistic targets. The IRLM was used in conjunction with LISTS data because it is becoming a popular method for reporting the results of implementation studies [see articles in the special supplement of the Journal of Acquired Immune Deficiency Syndromes (46)].
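
Domain-level tallies and many-to-many strategy-outcome links of this kind can be computed directly from LISTS entries. The following minimal sketch assumes a hypothetical export in which each strategy lists its targeted determinants as (CFIR domain, construct) pairs and its targeted implementation outcomes; the mappings are invented for illustration and are not the links reported in Figure 2.

```python
from collections import Counter

# Hypothetical strategy-to-determinant links as (CFIR domain, construct) pairs.
STRATEGY_TARGETS = {
    "Conduct educational meetings": [
        ("Inner setting", "Available resources"),
        ("Intervention characteristics", "Complexity"),
    ],
    "Audit and provide feedback": [("Inner setting", "Goals and feedback")],
    "Alter incentive/allowance structures": [
        ("Outer setting", "External policy and incentives"),
    ],
}

# Hypothetical strategy-to-implementation-outcome links.
STRATEGY_OUTCOMES = {
    "Conduct educational meetings": ["Adoption", "Fidelity"],
    "Audit and provide feedback": ["Fidelity", "Penetration"],
    "Alter incentive/allowance structures": ["Adoption"],
}


def domain_frequency(targets):
    """Count how often each CFIR domain is targeted across all strategies."""
    return Counter(domain for links in targets.values() for domain, _ in links)


def strategies_per_outcome(outcomes):
    """Number of distinct strategies linked to each implementation outcome."""
    linked = {}
    for strategy, outs in outcomes.items():
        for outcome in outs:
            linked.setdefault(outcome, set()).add(strategy)
    return {outcome: len(strats) for outcome, strats in linked.items()}


print(domain_frequency(STRATEGY_TARGETS).most_common())
print(strategies_per_outcome(STRATEGY_OUTCOMES))
```

A high count in the output of `strategies_per_outcome` is one quick signal of the attribution problem: effects on that outcome cannot easily be credited to any single strategy.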

Future directions

We envision LISTS being used in a variety of implementation studies with various research questions and designs. Tracking strategy use with LISTS or similarly rigorous tools will allow the field to advance its understanding of strategy effects. Comprehensive tracking of key elements of strategy use, specification, and modification could open the “black box” of what works, when, and under what contextual conditions. Because the results of any single study or trial inevitably have limitations, LISTS provides a uniform data collection method that can facilitate synthesis across studies. LISTS would benefit from additional research and refinement in several areas to be maximally useful to the field. First, although LISTS captures which strategies were used and modified, and to some extent why, the current tool does not capture the efficiency of strategies or their effectiveness on outcomes. Examining these requires appropriate research designs, such as optimization and factorial designs (47, 48), and LISTS is tailor-made to be the strategy data collection method for such investigations. Second, LISTS currently requires substantial knowledge of implementation science models and frameworks (namely CFIR and ERIC in the context of this study), as well as implementation theory to specify mechanisms for strategy selection. This is a potential barrier to adoption and use by implementation practitioners and community partners. Relatedly, the acceptability and utility of LISTS to implementers outside the context of rigorous implementation research have yet to be determined. Third, visual or graphical display of strategy use and modifications is a potential area of future development for LISTS data. Figure 1 in this article provides one way to visually display the timeframe of strategy use, with some notations for protocol and specification modifications.
Such a figure is useful for portraying when and which type of strategy was introduced and discontinued, but it can include less detail about strategy specification and why modifications occurred. Moreover, the figure does not distinguish different strategies with meaningfully distinct operationalizations that fall within the same ERIC code, which may be an area of future development. In addition, the current process of creating a visual display such as Figure 1 is manual; automated visualizations that users can customize are a future direction for LISTS developers to consider. Despite the need for additional research on LISTS and potential refinements and additions, the LISTS method represents an advancement over other strategy tracking methods in the literature. Future research should also formally examine the utility of the LISTS process and output from the perspective of the implementers and the research team.
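
As a rough illustration of what an automated alternative to a manually built timeline figure might look like, the sketch below renders a plain-text timeline from per-strategy records. It assumes a hypothetical structure in which time is divided into integer periods (for example, quarters) and each modification is tagged "P" (protocol) or "S" (specification); the class `TimelineRow`, the function `render_timeline`, and the records are illustrative only and are not part of LISTS.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class TimelineRow:
    """Hypothetical per-strategy timeline; periods are integer indices."""
    strategy: str
    start: int
    end: Optional[int]  # None = still in use at the last period shown
    modifications: dict[int, str] = field(default_factory=dict)  # period -> "P"/"S"


def render_timeline(rows, n_periods):
    """Render one text row per strategy: '=' active, '.' inactive, letter = modification."""
    width = max(len(r.strategy) for r in rows)
    lines = []
    for r in rows:
        last = n_periods - 1 if r.end is None else r.end
        cells = []
        for t in range(n_periods):
            if t in r.modifications:
                cells.append(r.modifications[t])
            elif r.start <= t <= last:
                cells.append("=")
            else:
                cells.append(".")
        lines.append(f"{r.strategy.ljust(width)} |{''.join(cells)}|")
    return "\n".join(lines)


# Invented records, for illustration only.
rows = [
    TimelineRow("Audit and provide feedback", start=2, end=None, modifications={4: "S"}),
    TimelineRow("Obtain formal commitments", start=0, end=1),
]
print(render_timeline(rows, 6))
```

A plotting library could replace the text rendering, but keeping the record structure separate from the display is what would let users customize the view without re-entering data.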

Conclusions

In conclusion, this study is the first to report implementation strategy use and modification over a multiyear period using LISTS, which proved feasible and yielded meaningful and reliable data. Although relatively few strategy modifications occurred in this study, due in large part to the centralized nature of the implementation support and the study being conducted within one healthcare system, we demonstrated the potential utility of LISTS for capturing the type and granularity of data on modifications needed for rigor and reproducibility in implementation studies.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

This study was approved by the Northwestern University Institutional Review Board (STU00207807). Written informed consent for participation was not required for this study in accordance with the national legislation and the institutional requirements.

Author contributions

JS conceptualized the work reported in this article, wrote sections of the manuscript, provided critical edits to all manuscript sections, and provided consultation on the creation of figures. JM wrote sections of the manuscript, provided critical edits to all manuscript sections, analyzed the data, and created the figures. KW provided critical edits to all sections of the manuscript and made a significant contribution to database organization. SC managed the database and reviewed the final version of the manuscript. SG and FP conceived of the overall trial, were awarded the grant supporting this work, and provided critical edits to the manuscript. All authors contributed to and approved the manuscript to be published.

Funding

Research reported in this publication was supported by grant R18 HS026170 from the Agency for Healthcare Research and Quality to SG and FP, by grant number UL1TR001422 from the National Institutes of Health's National Center for Advancing Translational Sciences, and by a National Institutes of Health postdoctoral training slot to JM (NLM; T15LM007124). The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Acknowledgments

The authors wish to thank staff and clinicians of Northwestern Medicine and its patients that participated in this study. We would also like to acknowledge Wynne Norton, Lisa DiMartino, Sandra Mitchell, and the rest of the IMPACT Implementation Science Working Group for their efforts in developing the Longitudinal Implementation Strategy Tracking System (LISTS).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Siegel RL, Miller KD, Fuchs HE, Jemal A. Cancer statistics, 2022. CA Cancer J Clin. (2022) 72:7–33. doi: 10.3322/caac.21708

2. Caruso R, Nanni MG, Riba MB, Sabato S, Grassi L. The burden of psychosocial morbidity related to cancer: patient and family issues. Int Rev Psychiatry. (2017) 29:389–402. doi: 10.1080/09540261.2017.1288090

3. Gallaway MS, Townsend JS, Shelby D, Puckett MC. Peer reviewed: pain among cancer survivors. Prevent Chron Dis. (2020) 17:E54. doi: 10.5888/pcd17.190367

4. American Psychiatric Association. Diagnostic and Statistical Manual of Mental Disorders: DSM-5. Arlington, VA: American Psychiatric Association (2013). doi: 10.1176/appi.books.9780890425596

5. Yang LY, Manhas DS, Howard AF, Olson RA. Patient-reported outcome use in oncology: a systematic review of the impact on patient-clinician communication. Support Care Cancer. (2018) 26:41–60. doi: 10.1007/s00520-017-3865-7

6. Gensheimer SG, Wu AW, Snyder CF, Basch E, Gerson J, Holve E, et al. Oh, the places we'll go: patient-reported outcomes and electronic health records. Patient. (2018) 11:591–8. doi: 10.1007/s40271-018-0321-9

7. Kotronoulas G, Kearney N, Maguire R, Harrow A, Domenico DD, Croy S, et al. What is the value of the routine use of patient-reported outcome measures toward improvement of patient outcomes, processes of care, and health service outcomes in cancer care? A systematic review of controlled trials. J Clin Oncol. (2014) 32:1480–501. doi: 10.1200/JCO.2013.53.5948

8. Chen J, Ou L, Hollis SJ. A systematic review of the impact of routine collection of patient reported outcome measures on patients, providers and health organisations in an oncologic setting. BMC Health Services Res. (2013) 13:211. doi: 10.1186/1472-6963-13-211

9. Penedo FJ, Oswald LB, Kronenfeld JP, Garcia SF, Cella D, Yanez B. The increasing value of eHealth in the delivery of patient-centred cancer care. Lancet Oncol. (2020) 21:e240–51. doi: 10.1016/S1470-2045(20)30021-8

10. Snyder C, Wu A. Users' Guide to Integrating Patient-Reported Outcomes in Electronic Health Records. Baltimore, MD: Johns Hopkins University (2017).

11. Porter I, Gonçalves-Bradley D, Ricci-Cabello I, Gibbons C, Gangannagaripalli J, Fitzpatrick R, et al. Framework and guidance for implementing patient-reported outcomes in clinical practice: evidence, challenges and opportunities. J Compar Effect Res. (2016) 5:507–19. doi: 10.2217/cer-2015-0014

12. Anatchkova M, Donelson SM, Skalicky AM, McHorney CA, Jagun D, Whiteley J. Exploring the implementation of patient-reported outcome measures in cancer care: need for more real-world evidence results in the peer reviewed literature. J Patient Rep Outcomes. (2018) 2:64. doi: 10.1186/s41687-018-0091-0

13. Aburahma MH, Mohamed HM. Educational games as a teaching tool in pharmacy curriculum. Am J Pharm Educ. (2015) 79:1–9. doi: 10.5688/ajpe79459

14. Proctor EK, Powell BJ, McMillen JC. Implementation strategies: recommendations for specifying and reporting. Implement Sci. (2013) 8:139. doi: 10.1186/1748-5908-8-139

15. Miller CJ, Barnett ML, Baumann AA, Gutner CA, Wiltsey-Stirman S. The FRAME-IS: a framework for documenting modifications to implementation strategies in healthcare. Implement Sci. (2021) 16:36. doi: 10.1186/s13012-021-01105-3

16. Bunger AC, Powell BJ, Robertson HA, MacDowell H, Birken SA, Shea C. Tracking implementation strategies: a description of a practical approach and early findings. Health Res Policy Syst. (2017) 15:15. doi: 10.1186/s12961-017-0175-y

17. Boyd MR, Powell BJ, Endicott D, Lewis CC. A method for tracking implementation strategies: an exemplar implementing measurement-based care in community behavioral health clinics. Behav Ther. (2018) 49:525–37. doi: 10.1016/j.beth.2017.11.012

18. Rabin BA, McCreight M, Battaglia C, Ayele R, Burke RE, Hess PL, et al. Systematic, multimethod assessment of adaptations across four diverse health systems interventions. Front Public Health. (2018) 6:102. doi: 10.3389/fpubh.2018.00102

19. Haley AD, Powell BJ, Walsh-Bailey C, Krancari M, Gruß I, Shea CM, et al. Strengthening methods for tracking adaptations and modifications to implementation strategies. BMC Med Res Methodol. (2021) 21:133. doi: 10.1186/s12874-021-01326-6

20. Walsh-Bailey C, Palazzo LG, Jones SMW, Mettert KD, Powell BJ, Wiltsey Stirman S, et al. A pilot study comparing tools for tracking implementation strategies and treatment adaptations. Implement Res Pract. (2021) 2:26334895211016028. doi: 10.1177/26334895211016028

21. Smith JD, Norton W, DiMartino L, Battestilli W, Rutten L, Mitchell S, et al., editors. A Longitudinal Implementation Strategies Tracking System (LISTS): development and initial acceptability. In: 13th Annual Conference on the Science of Dissemination and Implementation. (2020). Washington, DC.

22. Smith JD, Norton W, Battestilli W, Rutten L, Mitchell S, Dizon D, et al., editors. Usability and initial findings of the Longitudinal Implementation Strategy Tracking System (LISTS) in the IMPACT consortium. In: 14th Annual Conference on the Science of Dissemination and Implementation. (2021). Washington, DC.

23. Smith JD, Norton WE, Batestelli W, Rutten L, Mitchell S, Dizon DS, et al. The Longitudinal Implementation Strategy Tracking System (LISTS): A Novel Methodology for Measuring and Reporting Strategies Over Time. (2022).

24. Powell BJ, Waltz TJ, Chinman MJ, Damschroder LJ, Smith JL, Matthieu MM, et al. A refined compilation of implementation strategies: results from the Expert Recommendations for Implementing Change (ERIC) project. Implement Sci. (2015) 10:21. doi: 10.1186/s13012-015-0209-1

25. Cella D, Riley W, Stone A, Rothrock N, Reeve B, Yount S, et al. The Patient-Reported Outcomes Measurement Information System (PROMIS) developed and tested its first wave of adult self-reported health outcome item banks: 2005–2008. J Clin Epidemiol. (2010) 63:1179–94. doi: 10.1016/j.jclinepi.2010.04.011

26. Jensen RE, Moinpour CM, Potosky AL, Lobo T, Hahn EA, Hays RD, et al. Responsiveness of 8 Patient-Reported Outcomes Measurement Information System (PROMIS) measures in a large, community-based cancer study cohort. Cancer. (2017) 123:327–35. doi: 10.1002/cncr.30354

27. Smith JD, Li DH, Rafferty MR. The implementation research logic model: a method for planning, executing, reporting, and synthesizing implementation projects. Implement Sci. (2020) 15:84. doi: 10.1186/s13012-020-01041-8

28. Garcia SF, Smith JD, Kallen M, Webster KA, Lyleroehr M, Kircher S, et al. Protocol for a type 2 hybrid effectiveness-implementation study expanding, implementing and evaluating electronic health record-integrated patient-reported symptom monitoring in a multisite cancer centre. BMJ Open. (2022) 12:e059563. doi: 10.1136/bmjopen-2021-059563

29. Sobell LC, Sobell MB. Timeline follow-back. In: Litten RZ, Allen JP, editors. Measuring Alcohol Consumption: Psychosocial and Biochemical Methods. Totowa, NJ: Humana Press (1992). p. 41–72. doi: 10.1007/978-1-4612-0357-5_3

30. Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap)—a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. (2009) 42:377–81. doi: 10.1016/j.jbi.2008.08.010

31. Proctor E, Silmere H, Raghavan R, Hovmand P, Aarons G, Bunger A, et al. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. ADM Policy Ment Health. (2011) 38:65–76. doi: 10.1007/s10488-010-0319-7

32. Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. (2009) 4:50. doi: 10.1186/1748-5908-4-50

33. Lewis CC, Klasnja P, Powell BJ, Lyon AR, Tuzzio L, Jones S, et al. From classification to causality: advancing understanding of mechanisms of change in implementation science. Front Public Health. (2018) 6:136. doi: 10.3389/fpubh.2018.00136

34. Krause J, Van Lieshout J, Klomp R, Huntink E, Aakhus E, Flottorp S, et al. Identifying determinants of care for tailoring implementation in chronic diseases: an evaluation of different methods. Implement Sci. (2014) 9:102. doi: 10.1186/s13012-014-0102-3

35. Waltz TJ, Powell BJ, Fernández ME, Abadie B, Damschroder LJ. Choosing implementation strategies to address contextual barriers: diversity in recommendations and future directions. Implement Sci. (2019) 14:42. doi: 10.1186/s13012-019-0892-4

36. Moullin JC, Dickson KS, Stadnick NA, Rabin B, Aarons GA. Systematic review of the Exploration, preparation, implementation, sustainment (EPIS) framework. Implement Sci. (2019) 14:1. doi: 10.1186/s13012-018-0842-6

37. Waltz TJ, Powell BJ, Matthieu MM, Damschroder LJ, Chinman MJ, Smith JL, et al. Use of concept mapping to characterize relationships among implementation strategies and assess their feasibility and importance: results from the Expert Recommendations for Implementing Change (ERIC) study. Implement Sci. (2015) 10:109. doi: 10.1186/s13012-015-0295-0

38. Powell BJ, Fernandez ME, Williams NJ, Aarons GA, Beidas RS, Lewis CC, et al. Enhancing the impact of implementation strategies in healthcare: a research agenda. Front Public Health. (2019) 7:3. doi: 10.3389/fpubh.2019.00003

39. Brown CH, Kellam SG, Kaupert S, Muthén BO, Wang W, Muthén LK, et al. Partnerships for the design, conduct, and analysis of effectiveness, and implementation research: experiences of the prevention science and methodology group. Administr Policy Ment Health Ment Health Serv Res. (2012) 39:301–16. doi: 10.1007/s10488-011-0387-3

40. Chambers DA, Azrin ST. Research and services partnerships: partnership: a fundamental component of dissemination and implementation research. Psychiatr Serv. (2013) 64:509–11. doi: 10.1176/appi.ps.201300032

41. Garcia SF, Wortman K, Cella D, Wagner LI, Bass M, Kircher S, et al. Implementing electronic health record–integrated screening of patient-reported symptoms and supportive care needs in a comprehensive cancer center. Cancer. (2019) 125:4059–68. doi: 10.1002/cncr.32172

42. Roy CM, Bollman EB, Carson LM, Northrop AJ, Jackson EF, Moresky RT. Assessing the indirect effects of COVID-19 on healthcare delivery, utilization and health outcomes: a scoping review. Eur J Publ Health. (2021) 31:634–40. doi: 10.1093/eurpub/ckab047

43. Hemming K, Haines TP, Chilton PJ, Girling AJ, Lilford RJ. The stepped wedge cluster randomised trial: rationale, design, analysis, and reporting. Br Med J. (2015) 350:h391. doi: 10.1136/bmj.h391

44. Kwok EYL, Moodie STF, Cunningham BJ, Oram Cardy JE. Selecting and tailoring implementation interventions: a concept mapping approach. BMC Health Serv Res. (2020) 20:385. doi: 10.1186/s12913-020-05270-x

45. Powell BJ, Beidas RS, Lewis CC, Aarons GA, McMillen JC, Proctor EK, et al. Methods to improve the selection and tailoring of implementation strategies. J Behav Health Serv Res. (2017) 44:177–94. doi: 10.1007/s11414-015-9475-6

46. Mustanski B, Smith JD, Keiser B, Li DH, Benbow N. Supporting the growth of domestic HIV implementation research in the United States through coordination, consultation, and collaboration: how we got here and where we are headed. J Acquir Immune Defic Syndr. (2022) 90:S1–8. doi: 10.1097/QAI.0000000000002959

47. Smith JD, Brown CH. The roll-out implementation optimization (ROIO) design: rigorous testing of a data-driven implementation improvement aim. Implement Sci. (2021) 16(Suppl. 1):49. doi: 10.1186/s13012-021-01110-6

Keywords: implementation strategies, modifications, adaptations, cancer symptom screening, tracking system

Citation: Smith JD, Merle JL, Webster KA, Cahue S, Penedo FJ and Garcia SF (2022) Tracking dynamic changes in implementation strategies over time within a hybrid type 2 trial of an electronic patient-reported oncology symptom and needs monitoring program. Front. Health Serv. 2:983217. doi: 10.3389/frhs.2022.983217

Received: 30 June 2022; Accepted: 10 October 2022;

Published: 01 November 2022.

Edited by:

Catherine Battaglia, Department of Veterans Affairs, United States

Reviewed by:

Gabrielle Rocque, University of Alabama at Birmingham, United States

Prajakta Adsul, University of New Mexico, United States

Alicia Bunger, The Ohio State University, United States

Copyright © 2022 Smith, Merle, Webster, Cahue, Penedo and Garcia. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Justin D. Smith, jd.smith@hsc.utah.edu

†These authors have contributed equally to this work and share first authorship
