
ORIGINAL RESEARCH article

Front. Genet., 12 May 2022

Sec. Computational Genomics

Volume 13 - 2022 | https://doi.org/10.3389/fgene.2022.880093

This article is part of the Research Topic Medical Knowledge-Assisted Machine Learning Technologies in Individualized Medicine

Cheng-Hang Li1,2,3†, Du Cai1,2†, Min-Er Zhong1,2†, Min-Yi Lv1,2, Ze-Ping Huang1,2, Qiqi Zhu4, Chuling Hu1,2, Haoning Qi1,2, Xiaojian Wu1,2* and Feng Gao1,2*

Background: Preoperative and postoperative evaluation of colorectal cancer (CRC) patients is crucial for guiding subsequent treatment. This study aims to provide a timely and rapid assessment of the prognosis of CRC patients by applying deep learning to non-invasive preoperative computed tomography (CT) images, and to explore the underlying biological explanations.

Methods: A total of 808 CRC patients with preoperative CT (development cohort: n = 426; validation cohort: n = 382) were enrolled in this study. We proposed a novel end-to-end Multi-Size Convolutional Neural Network (MSCNN) to predict the risk of CRC recurrence from CT images (CT signature). The prognostic performance of the CT signature was evaluated with Kaplan-Meier curves. An integrated nomogram combining the CT signature with other clinicopathologic factors was constructed to improve its clinical utility. Visualization of the CT deep features and correlation analysis with paired gene expression profiles were then performed to reveal the molecular characteristics of CRC tumors that MSCNN learned from radiographic images.

Results: Kaplan-Meier analysis showed that the CT signature was a significant prognostic factor for CRC disease-free survival (DFS) [development cohort: hazard ratio (HR): 50.7, 95% CI: 28.4–90.6, p < 0.001; validation cohort: HR: 2.04, 95% CI: 1.44–2.89, p < 0.001]. Multivariable analysis confirmed the independent prognostic value of the CT signature (development cohort: HR: 30.7, 95% CI: 19.8–69.3, p < 0.001; validation cohort: HR: 1.83, 95% CI: 1.19–2.83, p = 0.006). Dimension reduction and visualization of the CT deep features showed a strong correlation with the prognosis of CRC patients. Functional pathway analysis further indicated that CRC patients with a high CT signature showed down-regulation of several immune pathways. Correlation analysis found that the CT deep features were mainly associated with activation of metabolic and proliferative pathways.

Conclusions: Our deep learning-based preoperative CT signature can effectively predict the prognosis of CRC patients. Integrative analysis of multi-omic data revealed that deep learning can capture some molecular characteristics of CRC tumors in CT images.

Colorectal cancer (CRC) is one of the most prevalent cancers and has become the third leading cause of cancer death (Siegel et al., 2020). Stratifying CRC patients is essential for designing more accurate and personalized treatment according to their clinical characteristics (Sorbye et al., 2007). The tumor-node-metastasis (TNM) system has guided treatment decisions for CRC patients for over 50 years (Nagtegaal et al., 2011). Even so, it is still inadequate for accurately assessing the prognosis of some CRC patients, especially those with stage II and III disease (Joachim et al., 2019). Because CRC is heterogeneous, patients at the same clinical stage may benefit from different preoperative and postoperative treatment options (Molinari et al., 2018). Prognostic analysis of CRC patients and the evaluation of preoperative and postoperative interventions are therefore active research topics.

Previous studies on the molecular basis of cancer and the discovery of cancer-associated genes, oncogenes and tumor suppressor genes indicate that cancer is a genetic disease (Pierotti, 2017). This gives genomic data a natural advantage in cancer survival analysis (Walther et al., 2009; Yu et al., 2015). However, the high cost and long turnaround time of genetic testing severely limit its widespread adoption. Radiomics is the high-throughput analysis of quantitative tumor characteristics from standard-of-care medical imaging, such as computed tomography (CT) and magnetic resonance imaging (MRI). Combined with machine learning, radiomics can give clinicians better decision support, for example in tumor diagnosis and prognosis prediction (Lambin et al., 2017). Compared with genetic testing, radiographic examination is non-invasive and does little harm to frail patients. In particular, CT is much cheaper than MRI, and its results are available faster. CT is a routine preoperative test used to locate the tumor before resection surgery in CRC patients, so CT imaging analysis can provide timely guidance on surgical procedures and postoperative treatment. When sophisticated image processing tools are used to extract high-dimensional features, CT images provide abundant information with powerful applications in multiple medical studies (Limkin et al., 2017).

Typical radiomic features mainly describe morphological characteristics of the tumor lesion, such as tumor size, shape and texture. These features are designed according to human perception or follow certain human-defined rules (Gillies et al., 2016). Standardization of radiomic features makes it possible to quantify phenotypic characteristics on medical imaging (van Griethuysen et al., 2017). Radiomics has been applied successfully to tumor differentiation grading (Kim et al., 2015), genomics prediction (Yang et al., 2018), prognosis prediction (Huang et al., 2016), evaluation of the tumor immune microenvironment (Jiang et al., 2020) and prediction of chemoradiation therapy response (Shi et al., 2019). These studies have demonstrated that radiographic images can provide abundant information for cancer research. However, radiomic features obtained by conventional methods are still limited by human definitions. They also fail to consider feature-to-feature relationships, which play a vital role in the tumor microenvironment. Deep learning, a branch of machine learning based on artificial neural networks, has a powerful ability in image analysis through convolutional neural networks (CNNs) (LeCun et al., 2015). Several deep learning studies have demonstrated its effectiveness in tumor assessment, such as lymph node status prediction (Zheng et al., 2020) and tumor recurrence prediction (Liu et al., 2022). Despite its high prediction accuracy, deep learning is regarded as a black box because its predictions lack interpretation, which makes it hard for clinicians to accept. Uncovering the hidden biological mechanisms behind deep learning models would improve their interpretability and promote their clinical utility. There is therefore an urgent need for a biologically interpretable deep learning model for predicting the prognosis of colorectal cancer.

In this study, we developed an end-to-end CNN model to quantify radiographic tumor characteristics and predict prognosis for CRC patients. Deep features of CT images were extracted for correlation analysis with RNA-seq data from the ICGC-ARGO project (International Cancer Genome Consortium-Accelerating Research in Genomic Oncology). This analysis explored the underlying biological mechanisms learned by the Multi-Size Convolutional Neural Network (MSCNN) model. Our results show that deep CT features can reveal molecular information about tumors to some extent and ultimately improve the stratification of CRC patients.

In this retrospective study, a total of 808 colorectal cancer patients who underwent tumor resection at the Sixth Affiliated Hospital of Sun Yat-sen University from 22 Jan 2008 to 30 Jan 2018 were included in the analysis. Patients admitted during 2008–2013 were assigned to the development cohort (n = 426) for model construction. The remaining patients, admitted during 2014–2018, were assigned to the validation cohort (n = 382) for model validation. All patients had CT examinations before resection surgery, and the image data were stored in DICOM (Digital Imaging and Communications in Medicine) format. Experienced doctors manually delineated the region of interest (ROI) covering the colorectal tumor using ITK-SNAP (version 3.2) software. Baseline clinicopathological information was collected, including age, gender, differentiation grade, lymph node metastasis and microsatellite status. Of these patients, 236 were enrolled in the ICGC-ARGO project and had paired RNA sequencing data.

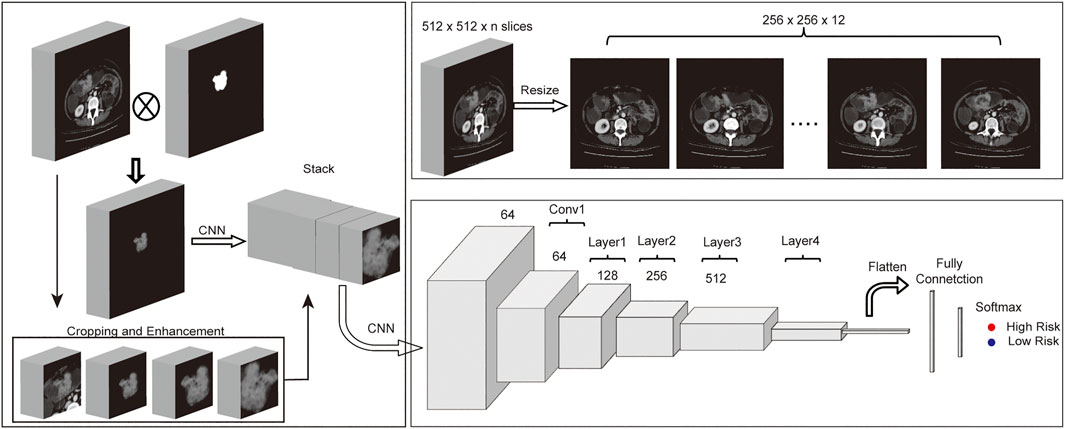

Figure 1 shows the analysis pipeline, from the original CT images and their corresponding ROIs to the predicted disease-free survival of each patient. The original CT images are 512 × 512 pixels with 23 to 682 slices (mean = 162). The number of valid slices containing the tumor ROI ranges from 3 to 77 per scan. To fit the deep learning model and reduce computational cost, only the slices containing the ROI were kept in each 3D CT image and ROI. The volumes were then resized to 256 × 256 × 12 with the SciPy ndimage submodule in Python. To better include tumor boundary information, all ROIs were binary-dilated by five pixels using the morphology functions in the ndimage submodule. The tumor ROI in colorectal cancer is usually small, accounting for only 1–5% of the whole CT image, so detailed tumor information is hard for a deep learning model to extract. To address this issue, the tumor area was cropped and magnified at different magnifications. The cropped CT images were also augmented by rotation at random angles and by flipping with a certain probability. Finally, all images for each patient were stacked together and fed into the neural network.
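The preprocessing steps above can be sketched with SciPy's `ndimage` module, which the text names. This is a minimal illustration, not the authors' code. Several details are assumptions:

- the function name `preprocess_volume`;
- the (rows, cols, slices) axis order;
- linear interpolation for CT and nearest-neighbour interpolation for the mask;
- in-plane dilation with a 3 × 3 cross repeated five times, applied after resizing.

```python
import numpy as np
from scipy import ndimage


def preprocess_volume(ct, roi, target_shape=(256, 256, 12), dilation_px=5):
    """Keep ROI-bearing slices, resize to target_shape and dilate the ROI mask.

    ct, roi: arrays shaped (rows, cols, slices); roi is a binary tumor mask.
    """
    # Keep only the axial slices that contain at least one ROI voxel.
    keep = roi.any(axis=(0, 1))
    ct, roi = ct[:, :, keep], roi[:, :, keep]

    # Per-axis zoom factors that map the cropped volume onto target_shape.
    factors = [t / s for t, s in zip(target_shape, ct.shape)]
    ct_resized = ndimage.zoom(ct.astype(np.float32), factors, order=1)
    # Nearest-neighbour interpolation (order=0) keeps the mask binary.
    roi_resized = ndimage.zoom(roi.astype(np.uint8), factors, order=0) > 0

    if dilation_px > 0:
        # 3 x 3 cross in the middle plane only, so dilation stays within each slice.
        structure = np.zeros((3, 3, 3), dtype=bool)
        structure[:, :, 1] = ndimage.generate_binary_structure(2, 1)
        roi_resized = ndimage.binary_dilation(
            roi_resized, structure=structure, iterations=dilation_px
        )
    return ct_resized, roi_resized
```

The `dilation_px > 0` guard matters. `binary_dilation` with `iterations` below 1 repeats until the mask stops changing, which would flood the whole slice.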

FIGURE 1. Workflow of MSCNN. (A) Multi-size data enhancement of CT images before they are fed into MSCNN. (B) Data preprocessing of CT images with ROIs. (C) Network structure of MSCNN, which includes a CNN that combines the multi-size CT data, a ResNet34 network that extracts tumor image features from the CT images, and a final classification network.
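The multi-size cropping and augmentation in panel (A) could look roughly like the sketch below. Several settings are hypothetical choices for illustration, since the paper does not report them:

- window sizes of 32, 64 and 128 pixels;
- a common output size of 128 pixels;
- centring on the ROI bounding box;
- a flip probability of 0.5.

```python
import numpy as np
from scipy import ndimage


def multi_size_crops(ct, roi, windows=(32, 64, 128), out_size=128):
    """Crop square windows centred on the ROI and magnify each to out_size pixels.

    ct, roi: (rows, cols, slices) arrays after preprocessing. Smaller windows
    receive larger magnification (out_size / window).
    """
    # Centre of the ROI bounding box in the axial plane.
    rows, cols = np.nonzero(roi.any(axis=2))
    cy = (rows.min() + rows.max()) // 2
    cx = (cols.min() + cols.max()) // 2
    crops = []
    for w in windows:
        # Clamp the window so it stays inside the image.
        y0 = int(np.clip(cy - w // 2, 0, ct.shape[0] - w))
        x0 = int(np.clip(cx - w // 2, 0, ct.shape[1] - w))
        patch = ct[y0:y0 + w, x0:x0 + w, :]
        # In-plane magnification of out_size / w; the slice axis is unchanged.
        crops.append(ndimage.zoom(patch, (out_size / w, out_size / w, 1.0), order=1))
    return crops


def augment(arrays, rng, flip_prob=0.5):
    """Apply the same random in-plane rotation and optional flip to every array."""
    angle = rng.uniform(0.0, 360.0)
    flip = rng.random() < flip_prob
    out = []
    for a in arrays:
        # reshape=False keeps the in-plane shape fixed after rotation.
        r = ndimage.rotate(a, angle, axes=(0, 1), reshape=False, order=1, mode="nearest")
        out.append(np.flip(r, axis=1) if flip else r)
    return out


rng = np.random.default_rng(0)
ct = rng.normal(size=(256, 256, 12)).astype(np.float32)
roi = np.zeros(ct.shape, dtype=bool)
roi[100:120, 140:160, 3:8] = True
batch = np.stack(augment(multi_size_crops(ct, roi), rng), axis=0)
print(batch.shape)  # (3, 128, 128, 12)
```

All crops in this sketch share one output size, so they can be stacked into a single array. The full 256 × 256 image would then form a second, differently sized input.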

A convolutional neural network (CNN) is a powerful deep learning algorithm that can extract relevant texture features from images. By stacking multiple convolutional layers, a deep learning model can learn deeper features from an image according to the training task. A network becomes much more difficult to train as layers are added, and the residual neural network (ResNet; He et al., 2016) was designed to solve this problem. Our model was based on ResNet34, which contains 34 weighted layers organized into four stages of residual blocks. First, a subnetwork with convolutional branches for different input sizes extracted features from the original CT image and its augmented cropped images. The features from these branches were then stacked. One convolutional layer followed by the remaining residual blocks of ResNet34 then extracted higher-level features. Finally, a fully connected (FC) network with one 64-node hidden layer and one output layer performed the disease-free survival classification.
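Two details of this architecture can be made concrete.

- The layer count of ResNet34 follows from its four stages of 3, 4, 6 and 3 basic blocks, each with two 3 × 3 convolutions (He et al., 2016), plus the stem convolution and the final fully connected layer.
- The FC head maps the 512 pooled ResNet34 features to 64 hidden nodes and then to one output.

The NumPy sketch below assumes a ReLU hidden activation and a single sigmoid output. The paper does not state these choices, and `fc_head` is a hypothetical name.

```python
import numpy as np

# ResNet34 (He et al., 2016): four stages with 3, 4, 6 and 3 basic blocks.
BLOCKS_PER_STAGE = (3, 4, 6, 3)


def resnet34_weighted_layers():
    """Count convolutional and fully connected layers on the main path."""
    stem_conv = 1                            # initial 7 x 7 convolution
    block_convs = 2 * sum(BLOCKS_PER_STAGE)  # two 3 x 3 convolutions per basic block
    fc = 1                                   # final fully connected layer
    return stem_conv + block_convs + fc      # 1 + 32 + 1 = 34 (1 x 1 shortcut convs not counted)


def fc_head(features, w1, b1, w2, b2):
    """Map pooled ResNet34 features (n, 512) to 64 hidden nodes and a recurrence probability.

    ReLU hidden activation and a single sigmoid output are assumptions.
    """
    hidden = np.maximum(features @ w1 + b1, 0.0)  # (n, 64): the "deep CT features"
    logit = hidden @ w2 + b2                       # (n,)
    return hidden, 1.0 / (1.0 + np.exp(-logit))


rng = np.random.default_rng(0)
pooled = rng.normal(size=(5, 512))  # five hypothetical patients
hidden, risk = fc_head(
    pooled,
    rng.normal(scale=0.05, size=(512, 64)), np.zeros(64),
    rng.normal(scale=0.1, size=64), 0.0,
)
high_risk = risk > 0.5  # the 0.5 threshold reported in the Results
```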

As shown in Supplementary Figure S1, the model development stage included only patients with tumor recurrence within 3 years or with disease-free survival longer than 5 years, to train the model more effectively. CT images with ROIs were fed into the deep learning model, and disease-free survival status was used as the label. The network weights were updated by backpropagating the cross-entropy loss between the predictions and the actual outcomes. The Adam optimizer was used for weight updates, with an initial learning rate of 0.001 that was halved every 10 training epochs. During training, the loss was continuously monitored, and the model weights were saved whenever the loss decreased. If the loss did not decrease for more than 20 epochs, training was stopped. The saved model with the highest area under the receiver operating characteristic (ROC) curve (AUC) was then loaded for further validation. The MSCNN model then calculated the CT signature score for all patients in the development and validation cohorts. A nomogram was constructed by combining the CT signature with other clinicopathologic risk factors. Its benefit was evaluated with calibration curves and decision curve analysis (DCA).
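The cohort selection rule, learning-rate schedule and stopping criterion above can be written as small helper functions. This is a framework-agnostic sketch with hypothetical names (`development_label`, `learning_rate`, `EarlyStopping`). It makes these assumptions:

- DFS is measured in months;
- "within 3 years" means at most 36 months;
- the Adam update and the highest-AUC checkpoint selection are left to the training framework.

```python
import math


def development_label(recurred, dfs_months):
    """Label for model development: 1, 0, or None if the patient is excluded.

    1 = recurrence within 3 years; 0 = disease-free for more than 5 years
    (a recurrence after 5 years also gets 0 here, an assumption). Everyone
    else, e.g. censored at 4 years without recurrence, is excluded.
    """
    if recurred and dfs_months <= 36:
        return 1
    if dfs_months > 60:
        return 0
    return None


def learning_rate(epoch, initial=1e-3, step=10, factor=0.5):
    """Step decay: start at 0.001 and halve every 10 epochs (epochs counted from 0)."""
    return initial * factor ** (epoch // step)


class EarlyStopping:
    """Stop once the loss has not improved for more than `patience` epochs."""

    def __init__(self, patience=20):
        self.patience = patience
        self.best_loss = math.inf
        self.epochs_without_improvement = 0

    def step(self, loss):
        """Record one epoch; return (save_checkpoint, stop_training)."""
        if loss < self.best_loss:
            self.best_loss = loss
            self.epochs_without_improvement = 0
            return True, False
        self.epochs_without_improvement += 1
        return False, self.epochs_without_improvement > self.patience
```

For example, `learning_rate(25)` gives 0.00025, because the rate has been halved twice by epoch 25.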

To compare our deep learning method with the conventional radiomics approach, we constructed a model based on CT radiomic features. For each CT image, a total of 107 radiomic features were extracted using the PyRadiomics package (van Griethuysen et al., 2017) on a Python 3.8 platform. The standard deviation (SD) and median absolute deviation (MAD) were used for an initial screen that retained features with large variation. Z-score normalization was then applied to make the remaining radiomic features comparable. Least absolute shrinkage and selection operator (LASSO) Cox regression was used to construct the final radiomics-based model.
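The SD and MAD screening followed by z-score normalization can be sketched in NumPy as below. Three points are assumptions:

- The thresholds are left as parameters; the Results report SD > 1 and MAD > 3.5.
- The sample standard deviation (`ddof=1`) is used.
- The screen follows the order given in this paragraph.

The LASSO Cox step is not shown because it requires a dedicated survival-analysis library.

```python
import numpy as np


def median_absolute_deviation(x, axis=0):
    """Median of absolute deviations from the median (unscaled)."""
    med = np.median(x, axis=axis, keepdims=True)
    return np.median(np.abs(x - med), axis=axis)


def screen_and_normalize(features, sd_min, mad_min):
    """Keep columns with SD > sd_min and MAD > mad_min, then z-score them.

    features: (patients, radiomic features). Returns (z_scored, kept_indices).
    """
    sd = features.std(axis=0, ddof=1)
    mad = median_absolute_deviation(features, axis=0)
    kept = np.flatnonzero((sd > sd_min) & (mad > mad_min))
    selected = features[:, kept]
    z_scored = (selected - selected.mean(axis=0)) / selected.std(axis=0, ddof=1)
    return z_scored, kept
```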

To visualize how MSCNN divides patients into high and low recurrence risk groups, deep features from the last two layers of the MSCNN model were exported for further analysis. A correlation heatmap of the 64 features from the hidden nodes of the FC layer was used to show which deep CT features were most related to high and low recurrence risk. Principal component analysis (PCA) was performed on the 512 original deep CT features from the ResNet34 network and on the 64 features from the hidden nodes of the FC layer.
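PCA of the exported deep features can be computed from a singular value decomposition of the centred feature matrix. The sketch below is a generic implementation, not the authors' code. The 64-column input and the planted offset in the usage lines are synthetic.

```python
import numpy as np


def pca(features, n_components=2):
    """Project (patients, features) onto the leading principal components.

    Returns the component scores and the fraction of variance each explains.
    """
    centered = features - features.mean(axis=0)
    # Rows of vt are principal axes, sorted by decreasing singular value.
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    scores = centered @ vt[:n_components].T
    explained = s ** 2 / np.sum(s ** 2)
    return scores, explained[:n_components]


rng = np.random.default_rng(1)
deep = rng.normal(size=(40, 64))   # hypothetical 64 hidden-node features
deep[:20, 0] += 10.0               # planted difference for the first 20 patients
scores, explained = pca(deep)
```

Passing either the (patients, 512) or the (patients, 64) matrix returns two-dimensional coordinates of the kind plotted in Figure 4.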

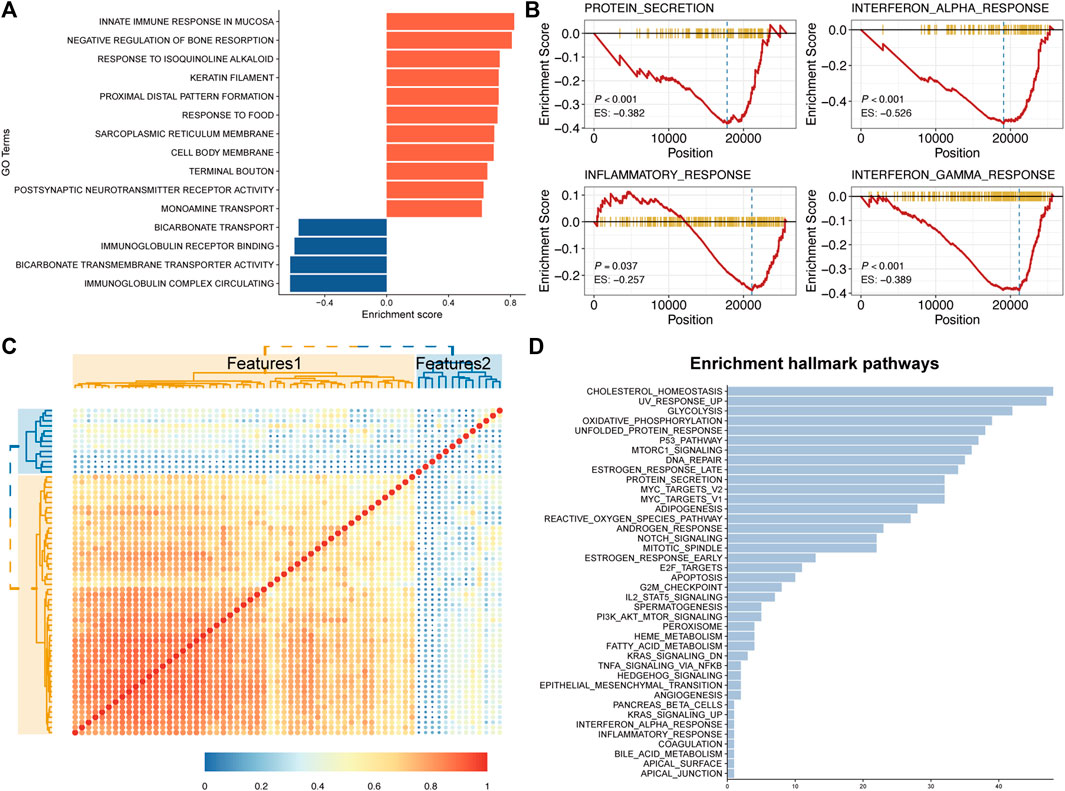

To explore the biological characteristics of the CT signature, Gene Ontology analysis and Gene Set Enrichment Analysis (GSEA) were conducted on differentially expressed genes between the risk groups. To further determine how the model captures underlying biological information from CT images, correlation analysis was performed between the 64 deep CT features and cancer-related pathways. Functional spectra were calculated with the DeepCC method to identify the biological pathways most related to the deep CT features (Gao et al., 2019). All hallmark pathways significantly correlated with these 64 deep CT features were displayed in a bar plot.
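The feature-to-pathway correlation screen can be sketched with `scipy.stats.pearsonr`. The pathway score matrix is assumed to be given, for example as functional spectra computed with DeepCC, which is not reproduced here. The text does not mention multiple-testing correction, so none is applied in this sketch.

```python
import numpy as np
from scipy import stats


def feature_pathway_correlations(deep_features, pathway_scores, alpha=0.05):
    """Pearson r and p for every (deep feature, pathway) pair.

    deep_features: (patients, n_features); pathway_scores: (patients, n_pathways),
    with rows in the same patient order. Returns r and p matrices of shape
    (n_features, n_pathways) and a mask of pathways with any p < alpha.
    """
    n_feat, n_path = deep_features.shape[1], pathway_scores.shape[1]
    r = np.empty((n_feat, n_path))
    p = np.empty((n_feat, n_path))
    for i in range(n_feat):
        for j in range(n_path):
            result = stats.pearsonr(deep_features[:, i], pathway_scores[:, j])
            r[i, j], p[i, j] = result[0], result[1]
    significant = (p < alpha).any(axis=0)
    return r, p, significant
```

The `significant` mask corresponds to the set of hallmark pathways that would be shown in the bar plot.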

All statistical analyses were performed using R software (version 4.1.1). Kaplan-Meier curves for the model predictions were generated with the R package “survival”. In univariable analysis, the log-rank test was used to evaluate the association of the model predictions and other clinicopathological factors with disease-free survival (DFS). Multivariable analysis was performed using Cox proportional hazards regression and included only the variables that were significant in univariable analysis. Correlation analyses were performed using the Pearson method. For all analyses, a two-sided p value < 0.05 was considered statistically significant.
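The analyses were run in R with the `survival` package. For readers working in Python, the Kaplan-Meier estimator and the two-group log-rank statistic can be written from their definitions as below. This is an illustrative re-implementation with hypothetical function names, not the authors' code, and it does not cover Cox regression.

```python
import numpy as np
from scipy import stats


def kaplan_meier(time, event):
    """Return distinct event times and the KM survival estimate just after each."""
    time = np.asarray(time, dtype=float)
    event = np.asarray(event, dtype=bool)
    event_times = np.unique(time[event])
    survival, s = [], 1.0
    for t in event_times:
        at_risk = np.sum(time >= t)            # still under observation at t
        deaths = np.sum((time == t) & event)   # events (recurrences) at t
        s *= 1.0 - deaths / at_risk
        survival.append(s)
    return event_times, np.array(survival)


def logrank_test(time, event, group):
    """Two-group log-rank test; returns (chi-square statistic, p value) with 1 df."""
    time = np.asarray(time, dtype=float)
    event = np.asarray(event, dtype=bool)
    group = np.asarray(group, dtype=bool)
    observed_minus_expected, variance = 0.0, 0.0
    for t in np.unique(time[event]):
        at_risk = time >= t
        n = at_risk.sum()
        n1 = (at_risk & group).sum()
        d = ((time == t) & event).sum()
        d1 = ((time == t) & event & group).sum()
        observed_minus_expected += d1 - d * n1 / n
        if n > 1:
            # Hypergeometric variance of d1 at this event time.
            variance += d * (n1 / n) * (1 - n1 / n) * (n - d) / (n - 1)
    chi2 = observed_minus_expected ** 2 / variance
    return chi2, stats.chi2.sf(chi2, df=1)
```

For example, with times 1 to 5, where the events occur at times 1, 2 and 4, `kaplan_meier` returns survival estimates of 0.8, 0.6 and 0.3.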

We calculated the recurrence risk of colorectal cancer patients from CT images and ROIs with an end-to-end deep learning method. After model training on the development cohort, the MSCNN model calculated the CT signature score of each patient (Supplementary Table S1). Patients with a recurrence risk greater than 0.5 were classified into the high-risk group, and the remaining patients into the low-risk group. Patients’ clinical characteristics in the development and validation cohorts are shown in Table 1.

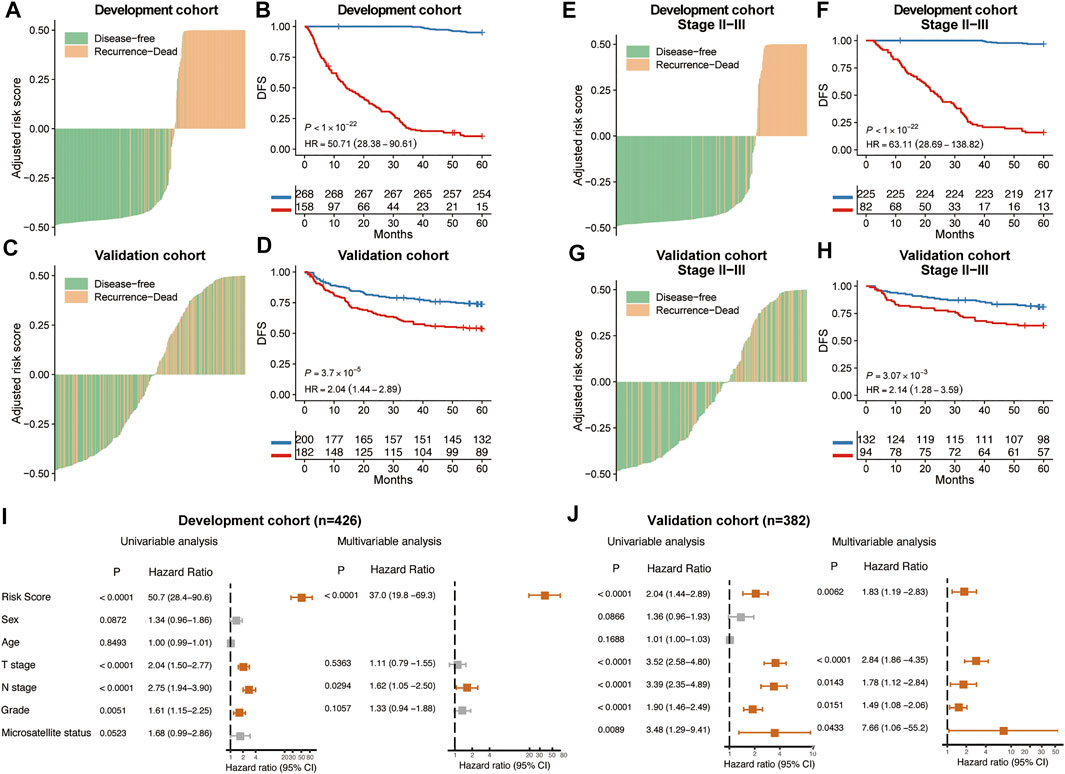

In both the development and validation cohorts, high-risk patients showed worse mean survival (23 vs. 105 months and 46 vs. 58 months, respectively). Kaplan-Meier curves revealed a significant association between the CT-based risk prediction and patients’ DFS in the development cohort (HR: 50.7, 95% CI: 28.4–90.6, p < 0.001) and the validation cohort (HR: 2.04, 95% CI: 1.44–2.89, p < 0.001) (Figures 2A–D). Previous research has shown that clinicopathological information may not be sufficient to accurately predict the recurrence risk of colorectal cancer patients with stage II and III disease (Tsikitis et al., 2014). Kaplan-Meier curves for stage II and III patients showed that our model's risk prediction can still separate these patients into groups with significantly different survival (Figures 2E–H). Univariable and multivariable Cox regression analyses were performed to identify clinicopathological factors significantly associated with cancer recurrence. In addition to the CT risk score, the multivariable analysis included sex, age, T stage, N stage, differentiation grade and microsatellite status. Forest plots showed that the CT risk score was an independent prognostic predictor of cancer recurrence in both the development and validation cohorts (Figures 2I,J).

FIGURE 2. Prognostic performance of MSCNN. The distribution of CT signature of MSCNN and its corresponding recurrence status in the development cohort (A) and validation cohort (C). Kaplan-Meier curves showed a significant survival difference between the high and low risk groups in the development cohort (B) and validation cohort (D). Prognostic analysis of CRC patients in stage II and III subgroups (E–H). Univariable and multivariable analysis of clinical factors in the development cohort (I) and validation cohort (J).

The SD and MAD of each radiomic feature were calculated after z-score normalization, and only the 50 features with SD > 1 and MAD > 3.5 were retained for subsequent modeling. LASSO Cox regression then retained 11 radiomic features to construct the classification model. The radiomics score was calculated as a linear combination of these 11 features weighted by their non-zero coefficients. To classify patients into high- and low-risk groups, the optimal cut-off of the radiomics score was determined from the time-dependent ROC curve. Survival analysis showed significant differences between high-risk and low-risk patients defined by the radiomics score (Supplementary Figures S2B,D). ROC curves comparing the MSCNN and radiomics methods showed that our model achieved better prognostic prediction in both the development and validation cohorts (Supplementary Figures S2A,C).
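The radiomics score and its cut-off can be sketched as follows. The score is the weighted sum described above. For the cut-off, this sketch substitutes a simplified rule at a fixed time horizon:

- patients with an event by that time are cases;
- patients still event-free after it are controls;
- patients censored earlier are dropped;
- the chosen cut-off maximizes Youden's index (sensitivity + specificity - 1).

This simplification and the function names are assumptions. A full time-dependent ROC estimator handles censoring differently.

```python
import numpy as np


def radiomics_score(z_features, coefficients):
    """Weighted sum of the selected z-scored features and their non-zero LASSO-Cox coefficients."""
    return np.asarray(z_features, dtype=float) @ np.asarray(coefficients, dtype=float)


def youden_cutoff(score, time, event, horizon):
    """Cut-off maximizing sensitivity + specificity - 1 at a fixed time horizon.

    Simplified dichotomization: cases had an event by `horizon`, controls were
    still event-free after `horizon`, and patients censored earlier are dropped.
    """
    score = np.asarray(score, dtype=float)
    time = np.asarray(time, dtype=float)
    event = np.asarray(event, dtype=bool)
    case = event & (time <= horizon)
    control = time > horizon
    usable = case | control
    s, y = score[usable], case[usable]
    best_cut, best_j = None, -np.inf
    for cut in np.unique(s):
        predicted_high = s >= cut
        sensitivity = np.sum(predicted_high & y) / np.sum(y)
        specificity = np.sum(~predicted_high & ~y) / np.sum(~y)
        j = sensitivity + specificity - 1.0
        if j > best_j:
            best_cut, best_j = cut, j
    return best_cut, best_j
```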

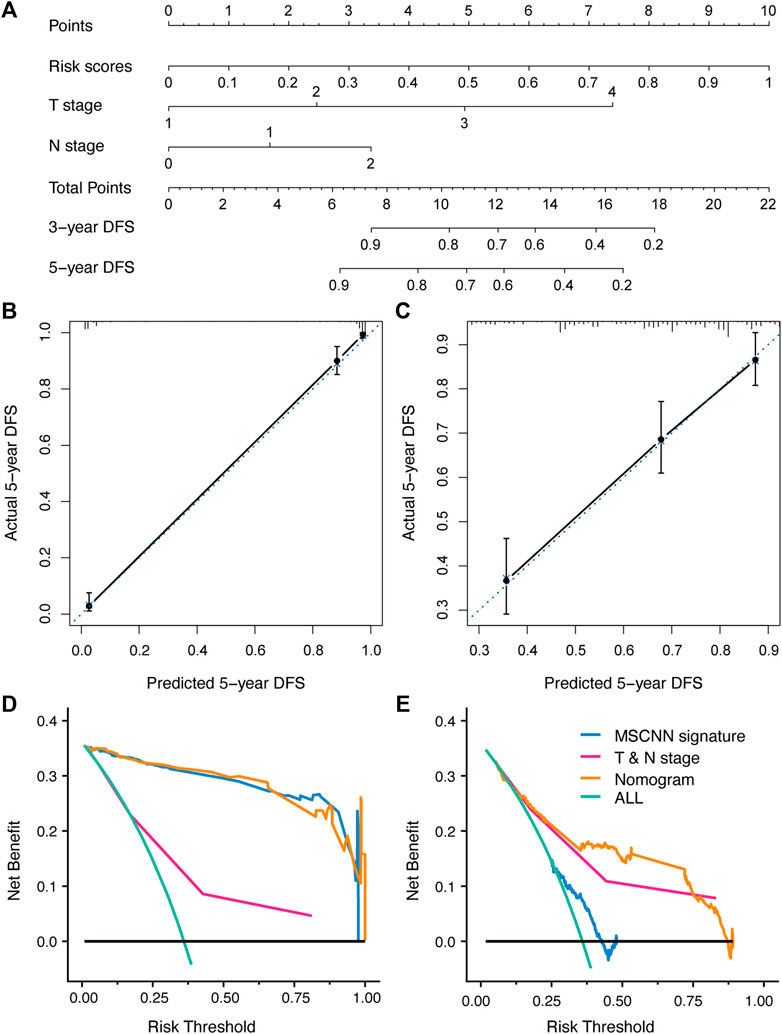

Based on the multivariable analysis, a Cox regression model incorporating the CT signature, T stage and N stage was developed and displayed as a CT signature-based nomogram (Figure 3A). The calibration curves of the nomogram showed good agreement between the predicted and actual DFS (Figures 3B,C). Decision curve analysis showed that the nomogram achieved a higher net benefit than TNM stage alone (Figures 3D,E).
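Decision curve analysis compares models by net benefit at each threshold probability p_t. Net benefit is defined as TP/n - (FP/n) × p_t / (1 - p_t) and is compared against the "treat all" and "treat none" strategies; treat none has a net benefit of 0. The sketch below assumes a binary outcome at a fixed horizon, for example recurrence within 5 years, and ignores censoring. The paper does not specify its DCA implementation.

```python
import numpy as np


def net_benefit(risk, outcome, thresholds):
    """Net benefit of treating patients whose predicted risk is >= each threshold."""
    risk = np.asarray(risk, dtype=float)
    outcome = np.asarray(outcome, dtype=bool)
    n = len(risk)
    values = []
    for pt in thresholds:
        treat = risk >= pt
        tp = np.sum(treat & outcome)
        fp = np.sum(treat & ~outcome)
        # False positives are weighted by the odds of the threshold probability.
        values.append(tp / n - fp / n * pt / (1.0 - pt))
    return np.array(values)


def net_benefit_treat_all(outcome, thresholds):
    """Reference curve for treating every patient."""
    prevalence = np.mean(np.asarray(outcome, dtype=bool))
    thresholds = np.asarray(thresholds, dtype=float)
    return prevalence - (1.0 - prevalence) * thresholds / (1.0 - thresholds)
```

A model adds value at a given threshold when its curve lies above both the treat-all curve and zero.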

FIGURE 3. The developed nomogram incorporating the CT signature with T and N stage (A). The axis length for each prognostic factor was determined by its coefficient in the Cox regression model. For each patient, the total score was calculated by summing the scores of all variables. The probability of DFS at a specified survival time was then read from the mapping between the total score and the predicted outcome. (B,C) Calibration curves of the nomogram for 5-year DFS in the development and validation cohorts. (D,E) Decision curve analysis of the nomogram in the development and validation cohorts.
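The points-to-probability mapping in the caption can be sketched for a Cox model:

- Each variable's points are proportional to its coefficient times its distance from the lowest-risk value.
- Points are scaled so the variable with the widest effect range spans 0 to 100.
- Survival at time t is S0(t) raised to exp(linear predictor).

The coefficients, covariate ranges and baseline survival below are hypothetical placeholders, not the fitted values from Figure 3A. The baseline is assumed to correspond to all covariates equal to zero.

```python
import numpy as np

# Hypothetical Cox coefficients and covariate ranges, for illustration only.
COEF = {"ct_signature": 2.0, "t_stage": 0.4, "n_stage": 0.6}
RANGES = {"ct_signature": (0.0, 1.0), "t_stage": (1, 4), "n_stage": (0, 2)}


def points_per_unit_lp(coef, ranges):
    """Scale so the variable with the widest effect range spans 0 to 100 points."""
    spans = [abs(b) * (ranges[k][1] - ranges[k][0]) for k, b in coef.items()]
    return 100.0 / max(spans)


def low_risk_value(b, value_range):
    """End of the axis with the lowest contribution to the linear predictor."""
    return value_range[0] if b >= 0 else value_range[1]


def patient_points(x, coef, ranges):
    """Points per variable: scale * coefficient * distance from its lowest-risk value."""
    scale = points_per_unit_lp(coef, ranges)
    return {k: scale * b * (x[k] - low_risk_value(b, ranges[k])) for k, b in coef.items()}


def survival_from_total_points(total, coef, ranges, baseline_survival_t):
    """Map total points back to the linear predictor and return S0(t) ** exp(lp)."""
    scale = points_per_unit_lp(coef, ranges)
    lp_at_zero = sum(b * low_risk_value(b, ranges[k]) for k, b in coef.items())
    lp = lp_at_zero + total / scale
    return baseline_survival_t ** np.exp(lp)


patient = {"ct_signature": 0.8, "t_stage": 3, "n_stage": 1}
points = patient_points(patient, COEF, RANGES)  # about 80, 40 and 30 points
total = sum(points.values())                    # about 150 points
dfs_5y = survival_from_total_points(total, COEF, RANGES, baseline_survival_t=0.95)
```

The mapping back from total points recovers the same linear predictor as summing coefficient × value directly. Reading probabilities off the nomogram is therefore equivalent to evaluating the Cox model.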

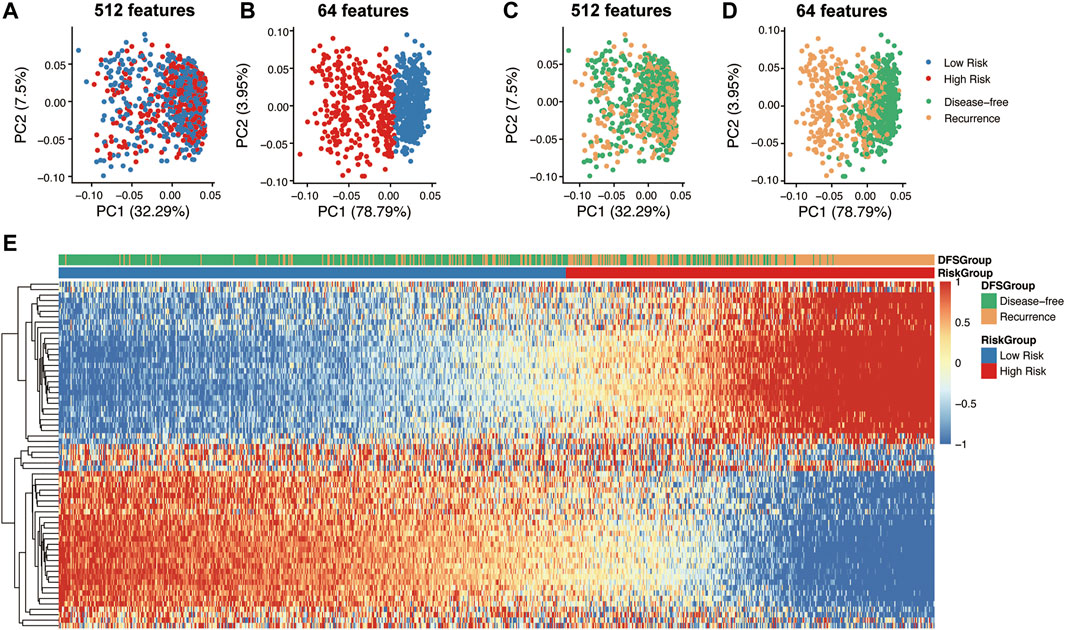

Deep features were extracted from the output of the ResNet34 network and from the hidden nodes of the FC layer. For each CT image, this gave 512 features from the ResNet34 network and 64 features related to tumor recurrence and disease-free survival from the hidden layer. PCA showed that the deep features from the ResNet34 network were not sufficient to accurately separate patients into high-risk and low-risk groups (Figures 4A,B). In contrast, the 64 recurrence-related features extracted from the hidden layer achieved a distinct separation (Figures 4C,D). Unsupervised clustering of the 64 deep CT features, displayed as a heatmap, showed that these features were highly correlated with the high- and low-risk subgroups of CRC patients (Figure 4E).

FIGURE 4. Dimension reduction for visualization and correlation analysis of deep CT features. Principal component analysis (PCA) of the 512 features of the ResNet34 network (A,C) and of the 64 features (CT features) from the hidden nodes of the FC network (B,D). Correlation heatmap between the 64 deep CT features and the prognostic groups (E).

Gene Ontology analysis between the risk groups and GSEA were used to further explore the biological interpretability of the deep CT features from the MSCNN model. Both showed significant enrichment of immune pathways (Figure 5A), such as interferon alpha response (p < 0.001), interferon gamma response (p < 0.001) and inflammatory response (p = 0.037) (Figure 5B). Genes significantly differentially expressed between the risk groups are shown in Supplementary Figure S3. In addition, correlation analysis of the 64 deep CT features (Figure 5C) found that most of these features were highly correlated with one another. Their correlations with the hallmark pathways were then analyzed to explore the biological mechanism of the MSCNN model. Hallmark pathways were selected according to their significant association with the deep CT features. The results showed that these features were significantly enriched in several metabolic and proliferative pathways (Figure 5D).
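The enrichment score underlying GSEA is a running-sum statistic over a ranked gene list. It increases at genes in the pathway, weighted by the absolute ranking metric raised to a power p (commonly p = 1), and decreases at genes outside it. The sketch below computes only this score. It omits the permutation-based significance testing that produces p values such as those above, and the function name and toy inputs are hypothetical.

```python
import numpy as np


def enrichment_score(ranked_genes, correlations, gene_set, p=1.0):
    """GSEA running-sum enrichment score (weighted Kolmogorov-Smirnov-like statistic).

    ranked_genes: genes sorted by decreasing ranking metric (e.g. correlation
    with the CT signature); correlations: the matching metric values;
    gene_set: the genes in one pathway.
    """
    correlations = np.asarray(correlations, dtype=float)
    in_set = np.array([g in gene_set for g in ranked_genes])
    weights = np.abs(correlations) ** p * in_set
    hit = np.cumsum(weights) / weights.sum()       # step up at pathway genes
    miss = np.cumsum(~in_set) / (~in_set).sum()    # step down at other genes
    running = hit - miss
    # ES is the maximum deviation from zero, keeping its sign.
    return running[np.argmax(np.abs(running))]


es = enrichment_score(["a", "b", "c", "d", "e"], [2.0, 1.0, 0.0, -1.0, -2.0], {"a", "b"})  # 1.0
```

A negative score, as for a pathway concentrated at the bottom of the ranking, corresponds to the downregulation reported for immune pathways in high-risk patients.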

FIGURE 5. Global gene set pathway analysis. (A) Gene Ontology pathway enrichment analysis between CT signatures and RNA-seq expression. (B) GSEA showed that several immune-related pathways were downregulated in patients with a high CT signature. (C,D) Correlation among the 64 deep CT features and their enriched hallmark pathways.

In this study, we proposed an end-to-end deep learning method that uses CT images to predict the prognosis of colorectal cancer patients after tumor resection. Our model identified patients at high risk of tumor recurrence whose prognosis differed significantly from that of the other patients. Univariable and multivariable analyses showed that the CT signature was an independent factor for CRC survival prediction. By combining the CT signature with clinical risk factors, we built a nomogram that can facilitate risk prediction for colorectal cancer patients. Correlation analysis with genomic data indicated that high-risk patients showed downregulation of immune pathways. It also showed that the deep CT features learned by the MSCNN model were significantly enriched in several metabolic and proliferative pathways.

Traditional prognostic analysis based on genetic testing can achieve good performance because the expression of several genes is strongly related to tumor progression (Kandimalla et al., 2018; Sveen et al., 2020). However, its high cost and long turnaround time limit large-scale application. CT imaging is much cheaper than genetic testing and is a non-invasive, routine preoperative test used to locate the tumor before resection in CRC patients. It therefore offers more opportunities for preoperative intervention. Our deep learning model focused on detailed, deeper information in CT images and achieved good performance in prognostic prediction for CRC patients.

Deep learning models with CNNs can learn features of CT images from low to high levels, along with their correlations (Yamashita et al., 2018), which may be the key reason for their high performance in image analysis. Most previous CT-based prognostic studies only used pretrained deep learning models to extract image features (Huang et al., 2020; Park et al., 2021; Liu et al., 2022). Their subsequent analysis therefore required subjective screening of these features before a separate machine learning model could be built. Moreover, these studies did not consider the special characteristics of medical images, for which generic pretrained deep learning models may not be suitable. Our MSCNN model is an end-to-end method that quickly predicts the prognosis of CRC patients from CT images. It also reduces the subjectivity of manually selecting image characteristics. In addition, tumors often occupy only about 1–5% of a CT image, which makes it difficult for ordinary CNN models to learn the key information. Our MSCNN model draws on multi-instance learning in pathology research (Bilal et al., 2021; Sirinukunwattana et al., 2021). It considers both full-image and local detail information of CT images by cropping and magnifying the ROI region, making its prognostic prediction more comprehensive and accurate.

The recent rapid development of deep learning has produced a series of CNN-based studies for radiographic analysis, such as treatment response prediction (Xu et al., 2019; Lu et al., 2021) and detection of synchronous peritoneal carcinomatosis (Yuan et al., 2020). However, few of them have addressed the interpretability of their deep learning models, making it hard for clinicians to trust their findings. Our study visualized how CRC patients were classified into high- and low-risk groups. It also found that the CT signature of our MSCNN model was significantly correlated with several immune pathways. Meanwhile, our results showed that the deep CT features were significantly enriched in several metabolic and proliferative pathways, which is consistent with previous studies (Kandimalla et al., 2019; Cai et al., 2020; La Vecchia and Sebastián, 2020).

Despite satisfactory results with sufficient biological interpretation, our study still has some limitations. First, a prospective study is needed to further confirm and optimize our model. Second, all patients were from a single center, which may bias the model validation. In addition, our approach requires manual ROI segmentation of CT images, which is time-consuming and seriously limits the applicability of our model. Deep learning-based object detection could address this given enough data, so that the model learns the ROI directly without manual delineation.

In conclusion, our study demonstrated that deep learning with CT images can be effectively applied to cancer recurrence prediction. Incorporating clinical factors gives more accurate results than routine TNM staging alone. Correlation analysis with gene expression data showed that the deep CT features captured by our model have biological meaning, which lends credibility to the MSCNN model.

The raw data supporting the conclusions of this study are available from the corresponding author (FG) upon reasonable request. The CT image data are not publicly available because they contain private patient information. We only used gene expression data (but not microarray/deep sequencing) for pathway analysis to enhance the interpretability of the main results. Gene expression data from the ICGC-ARGO project are not currently publicly available. ICGC will release them on the project website (https://www.icgc-argo.org/page/114/cgcc) after the milestone.

The studies involving human participants were reviewed and approved by The Medical Ethics Committee of the Sixth Affiliated Hospital of Sun Yat-sen University. Written informed consent for participation was not required for this study in accordance with the national legislation and the institutional requirements.

FG and XW designed the whole study. C-HL and DC wrote the paper. C-HL and DC analyzed and interpreted the data and drew the figures. DC, FG, and XW revised the paper. M-EZ planned all the data for this study. M-EZ, Z-PH, and QZ delineated the ROIs. M-YL, CH, and HQ collected and cleaned up the clinical data. COCC Working Group helped with genomic sequencing and analysis. All authors contributed to manuscript revision and approved the submitted version.

This study was supported by the National Natural Science Foundation of China (No. 82002221, FG and No. 82102475, M-EZ), Guangzhou Basic and Applied Basic Research Fund (No. 202102020820, FG), The Sixth Affiliated Hospital of Sun Yat-sen University Start-up Fund for Returnees (No. R20210217202501975, FG), China Postdoctoral Science Foundation (No. 2020M683121, M-EZ), the Guangdong Basic and Applied Basic Research Foundation (No. 2020A1515110489, M-EZ), the China Postdoctoral Science Foundation (No. 2021T140769, M-EZ).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Thanks to the COCC Working Group for their help with this study.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fgene.2022.880093/full#supplementary-material

Bilal, M., Raza, S. E. A., Azam, A., Graham, S., Ilyas, M., Cree, I. A., et al. (2021). Development and Validation of a Weakly Supervised Deep Learning Framework to Predict the Status of Molecular Pathways and Key Mutations in Colorectal Cancer from Routine Histology Images: a Retrospective Study. Lancet Digital Health 3 (12), e763–e772. doi:10.1016/s2589-7500(21)00180-1

Cai, D., Duan, X., Wang, W., Huang, Z. P., Zhu, Q., Zhong, M. E., et al. (2020). A Metabolism-Related Radiomics Signature for Predicting the Prognosis of Colorectal Cancer. Front. Mol. Biosci. 7, 613918. doi:10.3389/fmolb.2020.613918

Gao, F., Wang, W., Tan, M., Zhu, L., Zhang, Y., Fessler, E., et al. (2019). DeepCC: a Novel Deep Learning-Based Framework for Cancer Molecular Subtype Classification. Oncogenesis 8 (9), 44. doi:10.1038/s41389-019-0157-8

Gillies, R. J., Kinahan, P. E., and Hricak, H. (2016). Radiomics: Images Are More Than Pictures, They Are Data. Radiology 278 (2), 563–577. doi:10.1148/radiol.2015151169

He, K., Zhang, X., Ren, S., and Sun, J. (2016). “Deep Residual Learning for Image Recognition,” in 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27-30 June 2016, 770–778.

Huang, S.-C., Kothari, T., Banerjee, I., Chute, C., Ball, R. L., Borus, N., et al. (2020). PENet-A Scalable Deep-Learning Model for Automated Diagnosis of Pulmonary Embolism Using Volumetric CT Imaging. npj Digit. Med. 3, 61. doi:10.1038/s41746-020-0266-y

Huang, Y., Liu, Z., He, L., Chen, X., Pan, D., Ma, Z., et al. (2016). Radiomics Signature: A Potential Biomarker for the Prediction of Disease-free Survival in Early-Stage (I or II) Non-small Cell Lung Cancer. Radiology 281 (3), 947–957. doi:10.1148/radiol.2016152234

Jiang, Y., Wang, H., Wu, J., Chen, C., Yuan, Q., Huang, W., et al. (2020). Noninvasive Imaging Evaluation of Tumor Immune Microenvironment to Predict Outcomes in Gastric Cancer. Ann. Oncol. 31 (6), 760–768. doi:10.1016/j.annonc.2020.03.295

Joachim, C., Macni, J., Drame, M., Pomier, A., Escarmant, P., Veronique-Baudin, J., et al. (2019). Overall Survival of Colorectal Cancer by Stage at Diagnosis. Med. Baltim. 98 (35), e16941. doi:10.1097/md.0000000000016941

Kandimalla, R., Gao, F., Matsuyama, T., Ishikawa, T., Uetake, H., Takahashi, N., et al. (2018). Genome-wide Discovery and Identification of a Novel miRNA Signature for Recurrence Prediction in Stage II and III Colorectal Cancer. Clin. Cancer Res. 24 (16), 3867–3877. doi:10.1158/1078-0432.ccr-17-3236

Kandimalla, R., Ozawa, T., Gao, F., Wang, X., Goel, A., Nozawa, H., et al. (2019). Gene Expression Signature in Surgical Tissues and Endoscopic Biopsies Identifies High-Risk T1 Colorectal Cancers. Gastroenterology 156 (8), 2338–2341. doi:10.1053/j.gastro.2019.02.027

Kim, J. E., Lee, J. M., Baek, J. H., Moon, S. K., Kim, S. H., Han, J. K., et al. (2015). Differentiation of Poorly Differentiated Colorectal Adenocarcinomas from Well- or Moderately Differentiated Colorectal Adenocarcinomas at Contrast-Enhanced Multidetector CT. Abdom. Imaging 40 (1), 1–10. doi:10.1007/s00261-014-0176-z

La Vecchia, S., and Sebastián, C. (2020). Metabolic Pathways Regulating Colorectal Cancer Initiation and Progression. Seminars Cell & Dev. Biol. 98, 63–70. doi:10.1016/j.semcdb.2019.05.018

Lambin, P., Leijenaar, R. T. H., Deist, T. M., Peerlings, J., de Jong, E. E. C., van Timmeren, J., et al. (2017). Radiomics: the Bridge between Medical Imaging and Personalized Medicine. Nat. Rev. Clin. Oncol. 14 (12), 749–762. doi:10.1038/nrclinonc.2017.141

LeCun, Y., Bengio, Y., and Hinton, G. (2015). Deep Learning. Nature 521 (7553), 436–444. doi:10.1038/nature14539

Limkin, E. J., Sun, R., Dercle, L., Zacharaki, E. I., Robert, C., Reuzé, S., et al. (2017). Promises and Challenges for the Implementation of Computational Medical Imaging (Radiomics) in Oncology. Ann. Oncol. 28 (6), 1191–1206. doi:10.1093/annonc/mdx034

Liu, S., Sun, W., Yang, S., Duan, L., Huang, C., Xu, J., et al. (2022). Deep Learning Radiomic Nomogram to Predict Recurrence in Soft Tissue Sarcoma: a Multi-Institutional Study. Eur. Radiol. 32 (2), 793–805. doi:10.1007/s00330-021-08221-0

Lu, L., Dercle, L., Zhao, B., and Schwartz, L. H. (2021). Deep Learning for the Prediction of Early On-Treatment Response in Metastatic Colorectal Cancer from Serial Medical Imaging. Nat. Commun. 12 (1), 6654. doi:10.1038/s41467-021-26990-6

Molinari, C., Marisi, G., Passardi, A., Matteucci, L., De Maio, G., and Ulivi, P. (2018). Heterogeneity in Colorectal Cancer: A Challenge for Personalized Medicine? Int. J. Mol. Sci. 19 (12), 3733. doi:10.3390/ijms19123733

Nagtegaal, I. D., Quirke, P., and Schmoll, H.-J. (2011). Has the New TNM Classification for Colorectal Cancer Improved Care? Nat. Rev. Clin. Oncol. 9 (2), 119–123. doi:10.1038/nrclinonc.2011.157

Park, Y. J., Choi, D., Choi, J. Y., and Hyun, S. H. (2021). Performance Evaluation of a Deep Learning System for Differential Diagnosis of Lung Cancer with Conventional CT and FDG PET/CT Using Transfer Learning and Metadata. Clin. Nucl. Med. 46 (8), 635–640. doi:10.1097/RLU.0000000000003661

Pierotti, M. A. (2017). The Molecular Understanding of Cancer: from the Unspeakable Illness to a Curable Disease. ecancer 11, 747. doi:10.3332/ecancer.2017.747

Shi, L., Zhang, Y., Nie, K., Sun, X., Niu, T., Yue, N., et al. (2019). Machine Learning for Prediction of Chemoradiation Therapy Response in Rectal Cancer Using Pre-treatment and Mid-radiation Multi-Parametric MRI. Magn. Reson. Imaging 61, 33–40. doi:10.1016/j.mri.2019.05.003

Siegel, R. L., Miller, K. D., Goding Sauer, A., Fedewa, S. A., Butterly, L. F., Anderson, J. C., et al. (2020). Colorectal Cancer Statistics, 2020. CA A Cancer J. Clin. 70 (3), 145–164. doi:10.3322/caac.21601

Sirinukunwattana, K., Domingo, E., Richman, S. D., Redmond, K. L., Blake, A., Verrill, C., et al. (2021). Image-based Consensus Molecular Subtype (imCMS) Classification of Colorectal Cancer Using Deep Learning. Gut 70 (3), 544–554. doi:10.1136/gutjnl-2019-319866

Sorbye, H., Köhne, C.-H., Sargent, D. J., and Glimelius, B. (2007). Patient Characteristics and Stratification in Medical Treatment Studies for Metastatic Colorectal Cancer: a Proposal for Standardization of Patient Characteristic Reporting and Stratification. Ann. Oncol. 18 (10), 1666–1672. doi:10.1093/annonc/mdm267

Sveen, A., Kopetz, S., and Lothe, R. A. (2020). Biomarker-guided Therapy for Colorectal Cancer: Strength in Complexity. Nat. Rev. Clin. Oncol. 17 (1), 11–32. doi:10.1038/s41571-019-0241-1

Tsikitis, V. L., Larson, D. W., Huebner, M., Lohse, C. M., and Thompson, P. A. (2014). Predictors of Recurrence Free Survival for Patients with Stage II and III Colon Cancer. BMC Cancer 14, 336. doi:10.1186/1471-2407-14-336

van Griethuysen, J. J. M., Fedorov, A., Parmar, C., Hosny, A., Aucoin, N., Narayan, V., et al. (2017). Computational Radiomics System to Decode the Radiographic Phenotype. Cancer Res. 77 (21), e104–e107. doi:10.1158/0008-5472.can-17-0339

Walther, A., Johnstone, E., Swanton, C., Midgley, R., Tomlinson, I., and Kerr, D. (2009). Genetic Prognostic and Predictive Markers in Colorectal Cancer. Nat. Rev. Cancer 9 (7), 489–499. doi:10.1038/nrc2645

Xu, Y., Hosny, A., Zeleznik, R., Parmar, C., Coroller, T., Franco, I., et al. (2019). Deep Learning Predicts Lung Cancer Treatment Response from Serial Medical Imaging. Clin. Cancer Res. 25 (11), 3266–3275. doi:10.1158/1078-0432.ccr-18-2495

Yamashita, R., Nishio, M., Do, R. K. G., and Togashi, K. (2018). Convolutional Neural Networks: an Overview and Application in Radiology. Insights Imaging 9 (4), 611–629. doi:10.1007/s13244-018-0639-9

Yang, L., Dong, D., Fang, M., Zhu, Y., Zang, Y., Liu, Z., et al. (2018). Can CT-based Radiomics Signature Predict KRAS/NRAS/BRAF Mutations in Colorectal Cancer? Eur. Radiol. 28 (5), 2058–2067. doi:10.1007/s00330-017-5146-8

Yu, J., Wu, W. K. K., Li, X., He, J., Li, X.-X., Ng, S. S. M., et al. (2015). Novel Recurrently Mutated Genes and a Prognostic Mutation Signature in Colorectal Cancer. Gut 64 (4), 636–645. doi:10.1136/gutjnl-2013-306620

Yuan, Z., Xu, T., Cai, J., Zhao, Y., Cao, W., Fichera, A., et al. (2020). Development and Validation of an Image-Based Deep Learning Algorithm for Detection of Synchronous Peritoneal Carcinomatosis in Colorectal Cancer. Ann. Surg. 275 (4), e645–e651. doi:10.1097/sla.0000000000004229

Keywords: deep learning, colorectal cancer, prognosis, nomogram, pathway analysis

Citation: Li C-H, Cai D, Zhong M-E, Lv M-Y, Huang Z-P, Zhu Q, Hu C, Qi H, Wu X and Gao F (2022) Multi-Size Deep Learning Based Preoperative Computed Tomography Signature for Prognosis Prediction of Colorectal Cancer. Front. Genet. 13:880093. doi: 10.3389/fgene.2022.880093

Received: 22 February 2022; Accepted: 25 April 2022;

Published: 12 May 2022.

Edited by:

Liang Zhao, Hubei University of Medicine, China

Reviewed by:

Guixi Zheng, Shandong University, China

Copyright © 2022 Li, Cai, Zhong, Lv, Huang, Zhu, Hu, Qi, Wu and Gao. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Xiaojian Wu, wuxjian@mail.sysu.edu.cn; Feng Gao, gaof57@mail.sysu.edu.cn

†These authors have contributed equally to this work and share first authorship

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.