- 1School of Information Science and Engineering, Qufu Normal University, Rizhao, China

- 2College of Electrical Engineering and Automation, Anhui University, Hefei, China

- 3School of Information and Electrical Engineering, Ludong University, Yantai, China

As an important approach to cancer classification, cancer sample clustering is of particular importance for cancer research. For high dimensional gene expression data, examining approaches to selecting characteristic genes with high identification for cancer sample clustering is an important research area in the bioinformatics field. In this paper, we propose a novel integrated framework for cancer clustering known as the non-negative symmetric low-rank representation with graph regularization based on score function (NSLRG-S). First, a lowest rank matrix is obtained after NSLRG decomposition. The lowest rank matrix preserves the local data manifold information and the global data structure information of the gene expression data. Second, we construct the Score function based on the lowest rank matrix to weight all of the features of the gene expression data and calculate the score of each feature. Third, we rank the features according to their scores and select the feature genes for cancer sample clustering. Finally, based on selected feature genes, we use the K-means method to cluster the cancer samples. The experiments are conducted on The Cancer Genome Atlas (TCGA) data. Comparative experiments demonstrate that the NSLRG-S framework can significantly improve the clustering performance.

Introduction

High-throughput DNA microarray technology has long been used to collect biomedical cancer gene expression data (Russo et al., 2003). In general, gene expression data contain a notably large number of genes (high dimension), a small number of samples (low sample size), irrelevant genes and noisy genes caused by complex processing (Mohamad et al., 2010). Therefore, it is necessary to select feature genes or informative genes that contribute to identifying different cancers and the cancerous state (Mohamad et al., 2013; Ge and Hu, 2014; Tang et al., 2014). The selected genes have potential for use in developing cancer treatment strategies (Rappoport and Shamir, 2018). However, the high-dimensional and low-sample-size characteristics of the cancer gene expression dataset present a challenge for researchers in terms of data mining. To mitigate this problem, researchers have proposed many methods (Cui et al., 2013; Ge and Hu, 2014; Wang et al., 2016; Wang et al., 2018; Xu et al., 2019). Among the existing methods, feature selection is a reasonable method that has achieved great success.

Feature selection is an important data processing method that can select the most important feature subset from a set of features and reduce the dimension of the feature space. The existing feature selection methods can be divided into two groups: “wrapper” methods and “filter” methods (Kohavi and John, 1997). Wrapper methods use the learning algorithm to evaluate the candidate features. However, because wrapper methods are highly complex with a large amount of calculation, they are not suitable for large-scale datasets (Langley, 1994). Filter methods select a feature subset via the evaluation function. Construction of an evaluation function is based on the correlations between the features and properties of the raw data, such as the distance measures, information measures, dependence measures or others (Dash and Liu, 1997; Talavera, 2005; He et al., 2006). Among the existing evaluation functions, as a criterion, the data variance might be the simplest evaluation for feature selection. The main idea of the data-variance-based approach is to capture the directions of the maximum variance in the data, which reflects the major power of the data. The Principal Component Analysis (PCA) method and its variants belong to the filter methods and are used to find features that are useful for recovering data. However, there is no reason to confirm that selected features can effectively discriminate between data points in different classes. He et al. proposed the Laplacian Score (LS) method to select features with high identification, and the LS method is a “filter” method that is independent of other methods (He et al., 2006). The LS method constructs a nearest neighbour graph to preserve the local geometric structure. The selected features can reflect the local structure of the data space.

As we know, the global structure plays an important role in clustering when the data contain multiple subspaces (Liu et al., 2010). The LS method focuses excess attention on the relationships between local data points but ignores the influence of global data structures. This drawback might lead to reduced discrimination effects of the selected feature when the given data contain multiple subspaces. For the feature selection method, it is a challenge to satisfactorily characterize and represent global data structures from a dataset with multiple subspaces. Fortunately, the Low-Rank Representation (LRR) method solves this issue nicely. The LRR method can find a low-rank matrix to capture and represent the global structure of the raw dataset (Liu et al., 2010). The key to the LRR method is that the high-dimensional data can be represented by potential low-dimensional subspaces (You et al., 2016). In bioinformatics, LRR has achieved great success in gene expression data mining. For example, Cui et al. used the LRR method to identify subspace gene clusters and obtained good results (Cui et al., 2013). To preserve the intrinsic geometric structures of gene expression data, Wang et al. introduced graph regularization into LRR and proposed the Laplacian regularized LRR (LLRR) method (Wang et al., 2016). Recently, LLRR was applied to cancer sample clustering (Wang et al., 2019a). Furthermore, Wang et al. introduced the mixed-norm to increase the robustness of the LLRR method and proposed the mixed-norm Laplacian regularized LRR (MLLRR) method for tumour sample clustering based on penalized matrix decomposition (Wang et al., 2018). However, cancer sample clustering is processed on the obtained low-rank matrix, which is the global structural representation of the raw data. These LRR-based approaches mainly consider the global structure of data, but sometimes they ignore the single feature gene.

Motivated by the above insights, we propose a novel framework that integrates the advantages of the LRR and LS methods. Based on the multi-cancer gene expression dataset, the proposed framework is used to select the feature gene for cancer sample clustering.

First, we incorporate the constraints of the non-negative symmetric low-rank matrix and graph regularization in the LRR method and propose a non-negative symmetric low-rank representation graph regularized method, or NSLRG method for short. The NSLRG method considers the property and structure of the gene expression data. The NSLRG method obtains the lowest rank matrix, which preserves the local data manifold information and the global data structure information of the raw data.

Second, according to the lowest rank matrix, we construct a Score function to evaluate each gene for selection of the feature genes. The importance level of a gene depends on its significance for the global and local structures of the raw data. We integrate the NSLRG method with the Score function to achieve the aim of evaluating and selecting feature genes, and we refer to it as the NSLRG-S framework.

Finally, we apply the K-means method to cluster cancer samples based on the selected feature genes. Based on the different multi-cancer gene expression data, the experimental results suggest that the performance of the NSLRG-S framework is better than that of other methods.

In summary, the contributions of this paper include the following main aspects:

1. We propose a novel data mining method known as the NSLRG method. The NSLRG method operates under graph regularization and non-negative symmetric low-rank matrix constraints. The NSLRG method can learn the lowest rank matrix to satisfactorily represent the gene expression data and can capture the global structures and local geometric structures of the raw data. Non-negativity is more consistent with biological modelling. The symmetric constraint improves the interpretability of the lowest rank matrix. The constraints of non-negativity and symmetry facilitate the lowest rank matrix to learn the structure of the gene expression data.

2. Based on the lowest rank matrix, we propose a Score function to select the feature genes for cancer sample clustering. The selected feature genes have important significance to the raw data. In the clustering of cancer samples, the selected genes have strong discriminability to realize the classification of different samples.

3. We present a novel feature selection framework, known as NSLRG-S, that is designed to evaluate and select the feature genes for cancer sample clustering. Based on this framework, the selected result of the gene expression dataset has lower dimensionality. In multi-cancer sample clustering, this method has a high recognition rate to find subsets using the selected result as experimental data. We conduct extensive experiments to demonstrate that the feature gene subset selected by NSLRG-S has good performance in cancer sample clustering.

The remainder of this paper is organized as follows. In section Related Work, we briefly review the original LRR and several related variants as well as the LS method. In section Method, we first present the NSLRG method and its optimal solution, and based on the Score function, the NSLRG-S framework is clearly given for modelling of multi-cancer gene expression data. Section Experiments analyses and discusses the NSLRG method based on multiple evaluation indicators and convergence analysis. The performance of the NSLRG-S framework is validated by experiments based on synthetic data and multi-cancer gene expression data. Section Conclusions Work presents the conclusion of our work.

Related Work

In this section, we briefly introduce the original Low-Rank Representation (LRR) (Liu et al., 2010), the related variants based on the original LRR method, and the Laplacian Score method (He et al., 2006).

Low-Rank Representation

Original LRR Method

The Low-Rank Representation (LRR) method is an efficient method for exploring observed data and subspace clustering. The main idea is that each data sample can be represented as a linear combination of the dictionary data. In general, the matrix X = [x1,x2,…,xn]∈ℝm×n represents the observed data, of which each column is a data sample. Therefore, the matrix X contains n data samples drawn from independent subspaces. The matrix D = [d1,d2,…,dk]∈ℝm×k represents the dictionary data and is overcomplete. The general model of the LRR method is formulated as follows.

where the matrix Z∈ℝk×n is the coefficient matrix. The aim of this model is to learn a lowest rank matrix Z* to represent the observed data X. In the actual application, the matrix X always replaces D as the dictionary data (Liu et al., 2010; Liu et al., 2013). Therefore, Z becomes a square matrix and Z∈ℝn×n. The element can denote the confidence of sample i and j in the same subspace (Wang et al., 2019b). Hence, the matrix Z* can be used in subspace clustering that clusters data samples into several sets, with each set corresponding to a subspace.

The problem of is a rank function, which is difficult to optimize with an NP-hard nature. To mitigate this problem, the best alternative is convex relaxation on problem (1), and it is written as follows.

where ∥⋅∥* is the nuclear norm, and ∥Z∥* is defined as , where δi is the singular value of matrix Z∈ℝn×n. It has been confirmed in the literature (Cai et al., 2010) that matrix Z of the LRR can capture the global structure of the raw data using the nuclear norm item. Furthermore, to address the real data under the noise and outliers, a more reasonable formula is applied after adjustment, and it is expressed as follows.

where ∥E∥P is the error term, and it selects a different P to model special noise or outliers based on error prior information, such as l1-norm (∥E∥1) and l2,1- norm (∥E∥2,1) (Chen and Yang, 2014), and λ > 0 is the parameter that trades off the effect of the error item.

Many researchers have attempted and proposed variants based on the original LRR method. The main idea is to introduce constraint items to optimize or improve existing methods. For example, the original LRR method is improved by considering the geometric structures within the data, including the graph regularization method (Lu et al., 2013) and k-nearest neighbour graph method (Yin et al., 2016). The different norm items are used to improve the robustness of the original LRR method (Wang et al., 2018) and others.

LRR With Graph Regularization

Under certain conditions, the geometric structure within the data is crucial for the result that we desire. To address this issue, researchers introduced graph regularization into the LRR method to create the graph-regularized low-rank representation (GLRR) method (Lu et al., 2013). The equation of GLRR is written as follows.

where the error item uses the l2,1-norm and , tr(⋅) is the trace of the matrix, L is the graph Laplacian, and λ1 and λ2 are two parameters used to balance the graph-regularized item and the error item. Based on manifold learning, the graph-regularized item achieves the aim that representative data points zi and zj can hold the property of the data points xi and xj of X, which are closed in the intrinsic manifold. Therefore, the inherent geometric structure in the raw data is preserved in the low-rank matrix Z.

Non-Negative LRR With Sparsity

The non-negativity constraint ensures that every data point is in the convex hull of its neighbours. The sparse constraint ensures that each sample is associated with only a few samples. The non-negative and sparse low-rank matrix supplies a well discriminated weight for the subspace and information group.

Inspired by the above insights, Zhuang et al. proposed the non-negative low rank and sparse graph (NNLRS) method (Zhuang et al., 2012). The formula is given as follows.

where ∥Z∥1 is the l1-norm to guarantee the sparsity of coefficient matrix. In real-world applications, the sparsity and non-negativity matrix Z obtained by the NNLRS method can offer a basis for semi-supervised learning by constructing the discriminative and informative graph (You et al., 2016).

Laplacian Score Method

According to the Laplacian eigenmaps (Belkin and Niyogi, 2001) and the locality preserving projection (He and Niyogi, 2005), the aim of the Laplacian Score (LS) method is to evaluate features based on their locality preserving power (He et al., 2006). The LS is defined as follows.

where the heat kernel function is used to obtain weight matrix S, and t is a suitable constant, which is set empirically. The matrix S is used to model the local structure of the raw data space. Additionally, Var(xr,:) is the estimated variance of the r-th feature in all data points, and the larger the Var(xr,:), the more information held by the r-th feature. The is the sum of differences in the expression of r-th feature between all samples. For larger values of Sij and the smaller values of , the value of LS(r) tends to be smaller, meaning that the importance level of the feature is higher. Therefore, the important features are selected according to LS(r).

Method

In this section, we propose a novel feature selection framework to select the feature genes for cancer clustering. This framework is set up based on the NSLRG method and the Score function. We refer to this approach as the NSLRG-S Subsection NSLRG Method presents the NSLRG method and its optimization algorithm. In subsection NSLRG With Score Function, we introduce the NSLRG method with the Score function. The last subsection Framework of NSLRG-S is devoted to clustering of cancer samples based on NSLRG-S modelling of gene expression data.

NSLRG Method

Graph Regularization

Because graph regularization can preserve the intrinsic local geometric structure in the original data, it has received much attention from researchers. The theory of graph regularization is based on the principle that the representation of the intrinsic local geometric structure that is distributed in the original data is inherited by a graph under the new basis mapping. In the graph, the vertices correspond to the data points, and the edge weights represent the relationships between the data points (Du et al., 2017). Thus far, graph theory has been widely applied and developed (Chen et al., 2018).

For this paper, in the step of graph construction, we assume that if data points xi and xj are “close”, an edge exists between xi and xj. In this work, we use the K-nearest neighbour method to find the connection of xi and xj. In other words, if xi or xj is among the K-nearest neighbours of each other, the data points xi and xj are located on the same edge. This construction strategy is simpler for determination of connected edges, which tends to lead to a connected graph. In the next step, the edge weights are defined to represent the affinity between the data points. In current study, we define a symmetric weighting matrix W by the heat kernel weighting function (Cai et al., 2005). The weighting formula is defined as follows.

where the parameter t is defined as the mean value of the Euclidean distance for all data points, which can be automatically adjusted based on the different dataset. Therefore, the degree matrix D is defined as , which is a diagonal matrix. Finally, based on the connected graph, we obtain the graph Laplacian matrix L, which is defined as follows.

Accordingly, a reasonable minimize objective function exists to satisfy our assumption, and it is defined as follows.

where zi and zj are mappings of xi and xj under the new basis, which are also close to each other if xi and xj are close. The objective function is known as the graph regularization item.

Objective Function

We introduce graph regularization and sparse items into the original LRR. Furthermore, we impose the non-negative and symmetric constraints on the low-rank matrix Z. This method is known as the non-negative symmetric low-rank representation graph regularized (NSLRG) method, and its objective function is written as follows.

In the NSLRG method, we represent a given set of data points as a linear combination of other points using a low-rank matrix Z. The low-rank matrix should be sparse to improve the recognition ability. Therefore, the matrix Z with a sparse constraint could make the result of the representation more discriminative. However, the ∥Z∥0 item of problem (10) is NP-hard. Thus, as suggested by matrix completion methods (Candès et al., 2011), we use ∥Z∥1, a proper relaxed convex item, to replace ∥Z∥0, and the objective function of NSLRG can be rewritten as follows.

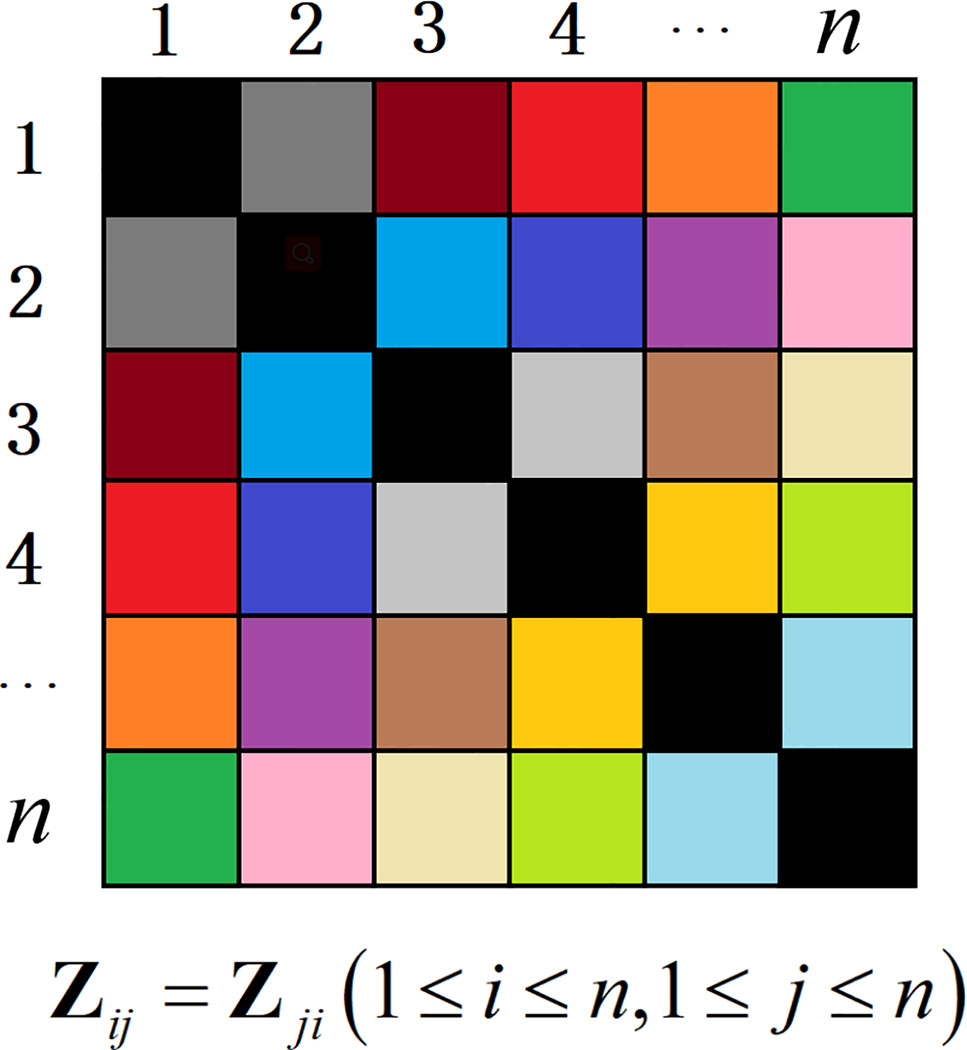

The matrix Z* is learned by the NSLRG method, and matrix Z* is a non-negative symmetric lowest rank matrix. The element zij of Z* can be treated as the degree of similarity between the data points xi and xj. In addition, the obtained matrix Z* has good interpretability, for which the element of matrix Z* can be directly converted to similar-degree weights. The symmetry constraint can strictly guarantee the consistency of similarity of data pairs. The similarity of data points i and j corresponding to the similar-degree weights elements zij and zji is equal, as shown as Figure 1. The non-negative constraint is more adaptive for the property of the gene expression data. In other words, the NSLRG method avoids the situation in which the lowest rank matrix might be negative and asymmetric, and it also avoids symmetrization of itself, as suggested in (Liu et al., 2010), i.e., . Therefore, we refer to the matrix Z* as the similar-degree matrix.

Optimization

As we know, many algorithms are based on convex relaxation to solve the high-dimension optimization problem, such as Singular Value Thresholding (SVT) (Cai et al., 2010), Accelerated Proximal Gradient (APG) (Toh and Yun, 2010), Alternating Direction Method (ADM) (Lin et al., 2009) and Linearized Alternating Direction Method with Adaptive Penalty (LADMAP) (Lin et al., 2011). As an extended ADM, the LADMAP algorithm adds the quadratic penalty term linearization and the penalty self-adaption change, which leads to use of fewer auxiliary variables and avoids matrix inversions to solve the problem. Specifically, LADMAP reduces the complexity of the LRR from O(n3) to O(rn3), where r is the rank of low-rank matrix Z. This algorithm makes it possible for LRR to be applied on large-scale dataset, such as video surveillance, digital images, and gene expression data. Therefore, the LADMAP algorithm has been recognized as the most efficient algorithm for solving the problem of convex relaxation of low-rank and sparse matrices. Similarly, we also adopt LADMAP to solve (11).

First, to easily and effectively obtain matrix Z, we use an auxiliary variable Q to separate the variables, i.e., nuclear norm (∥Z∥*) and l1-norm (∥Z∥1). The objective function can be rewritten as equation (12) using the Augmented Lagrange Multiplier method (Lin et al., 2010).

where λ1, λ2, and λ3 are positive weighting parameters; μ > 0 is the penalty parameter; Y1,Y2 are Lagrangian multipliers; A,B=tr(ATB) is the Euclidean inner product between the matrices A and B; and ∥⋅∥F is the Frobenius-norm. Mathematically, equation (12) is equivalent to equation (13) after applying a small transformation. Equation (13) facilitates processing of the next step.

Hence,

We divide equation (13) into three subproblems and solve it in three steps. The three subproblems are written as follows.

Finally, we solve the above subproblems to find the optimal solution. The specific steps are given as follows.

Step 1. Update Z: The matrix Z can be obtained by solving subproblem ℓ1 while keeping E and Q fixed. First, we define the following formula (17) based on ℓ1.

By setting the first derivative of with respect to Zk, we can obtain the following formula (18).

According to LADMAP, subproblem ℓ1 can be replaced by solving the following problem (19).

where .

Equation (19) can be transformed into the following formula (20).

To solve the symmetric and non-negative constraints of low-rank matrix Z, we adopt Lemma 1 of (Chen et al., 2017) and the non-negative operator, i.e., equation (24), respectively. Lemma 1 is defined as follows, and the detailed proofs have been given in the literature (Chen et al., 2017).

Lemma 1: If there is an expression similar to equation (21), its closed solution is equation (22).

In this work, Ur, ∑r and Vr are the members of the skinny singular value decomposition (SVD) of the matrix ; Σr = diag(δ1,δ2,…,δr); δr is the singular value for which the positive singular values are greater than , i.e., ; is defined as ; and Ir is an identity matrix with size r × r.

Based on Lemma 1, we make . We solve the Zk+1 using the singular value thresholding operator , where Sϵ = sgn(x)max(| x |−ϵ,0). The iterative formula is written as follows.

where . After obtaining matrix Zk+1 by equation (23), the non-negative constraint is imposed on matrix Zk+1 through a non-negative operator. The non-negative operator is defined as follows.

Finally, the non-negative symmetric low-rank matrix is obtained.

Step 2. Update E: The matrix E can be obtained by solving subproblem ℓ2 while keeping Z and Q fixed. Analogously, following equation (18), the first derivative of ℓ2 is set with respect to Ek, i.e., , and set . Thus, we obtain equation (25).

According to the NSHLRR method (Yin et al., 2016), the iterative formula of E is given as follows.

Step 3. Update Q: The matrix Q can be obtained by solving subproblem ℓ3 while keeping Z and E fixed. Similar to Step 2, we set the first derivative of ℓ3 with respect to Qk, i.e.,, and set . Thus, we obtain the following equation.

According to the NSHLRR method (Yin et al., 2016), the iterative formula of Q is written as follows.

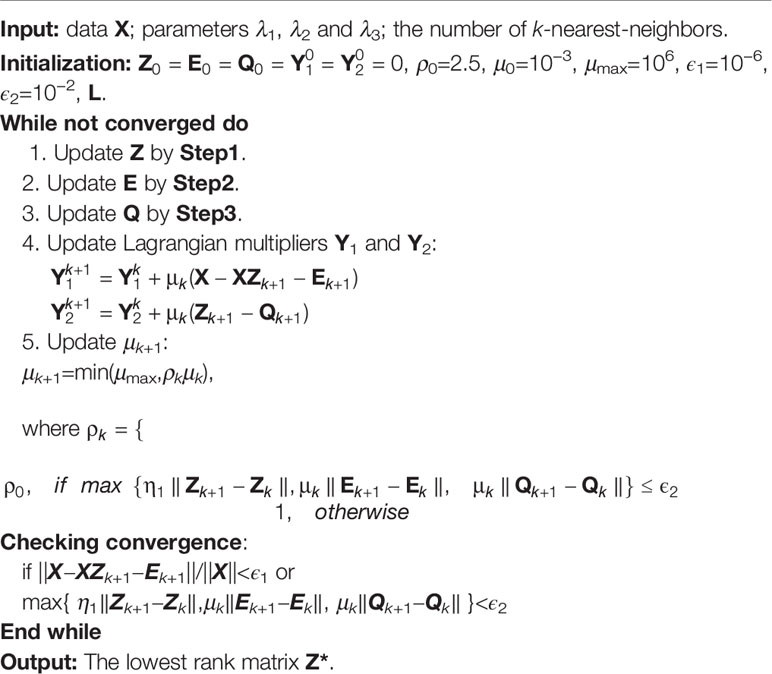

Algorithm 1 clearly summarizes the above solution steps. The initialization parameter values are set based on experimental experience and the existing relevant research recommendations (Yin et al., 2016).

NSLRG With Score Function

It is known that both local structure and global structure can influence the importance of features in raw data. However, the LS method primarily focuses on the locality preserving power of data to evaluate the features. Inspired by the lowest rank matrix Z* of the NSLRG method, which can capture the global and local structure of the raw data, we believe that the important feature of high-dimension data can be extracted based on the matrix Z*. Therefore, we propose a Score function that is established on the lowest rank matrix Z* for selection of the important feature. The formula is defined as follows.

where the Zij-NSLRG is the element of Z* obtained by the NSLRG method, and Zij-NSLRG denotes the similarity degree of the i-th and j-th samples and is used to measure the r-th feature between two samples. The property of the global and local structure captured by the lowest rank matrix can be used as a constraint for feature selection. The selected feature results are quite useful for capturing the subspace structures of raw data. In different classes, this constraint can guarantee the selected feature with high discrimination.

Based on the result of the Score function, all features are arranged in ascending order to form a score curve. The number of selected features is τ (τ <m), which occurs before the first inflection point of the score curve. Thus, we cluster the cancer samples based on the selected feature genes.

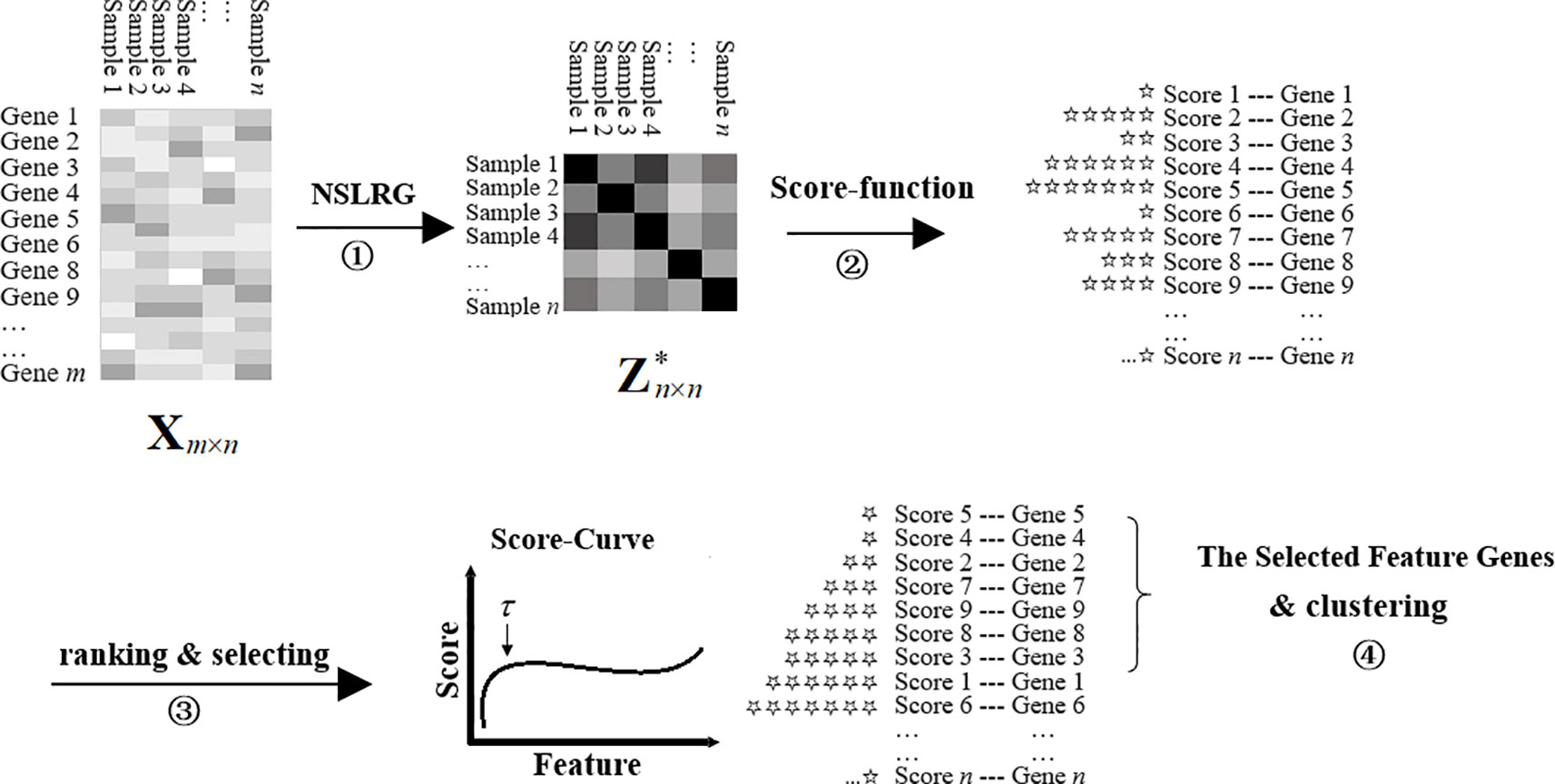

We refer to the NSLRG method with the Score function as the NSLRG-S framework for short. In a nutshell, the NSLRG-S framework can be divided into four steps. In the first step, the lowest rank matrix is obtained by the NSLRG method. In the second step, the Score function is used to evaluate and rank features based on the lowest-rank matrix of the first steps. In the third step, the feature genes are selected according to the results of the Score function. In the fourth step, cancer sample clustering is processed based on the selected feature genes. This novel framework delivers better reliability in selection of the most important feature for cancer sample clustering according to the global and local structure of the raw data.

Framework of NSLRG-S

Based on the proposed NSLRG-S framework, our goal is to model the gene expression data and cluster the cancer samples according to the selected feature genes.

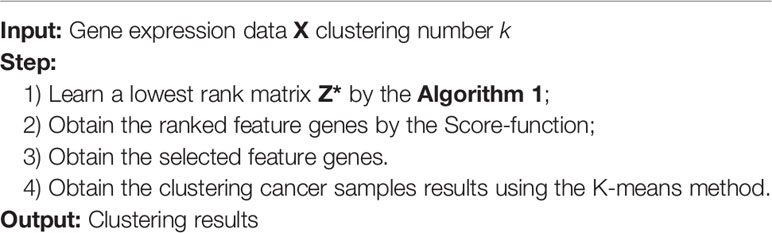

The modelling process is shown in Figure 2. At the start, the matrix Xm×n represents the gene expression data with size m × n, and one row represents the expression level of a same gene in different samples. The totals of genes and samples are m and n, respectively. Usually, m is notably large and n is rather small. The matrix is the lowest-rank matrix obtained by the NSLRG method as the basis for the Score function. Second, according to the score result, all of the genes are ranked in ascending order. The total number of τ (τ <m) feature genes are selected based on the first inflection point of the score curve. Finally, we cluster the cancer samples based on the selected feature genes to demonstrate the selected genes with efficient discrimination. The result is compared with those of different methods, including the K-means, Graph Regularized Nonnegative Matrix Factorization (GNMF) (Cai et al., 2011), Robust Principal Component analysis (RPCA) (Candès et al., 2011), Sparse Principal Component Analysis (SPCA) (Journée et al., 2010), Graph-Laplacian PCA (GLPCA) (Jiang et al., 2013), LS (He et al., 2006), and LLRR (Wang et al., 2016) methods. The details of the experimental result are described in subsection Experiments on Gene Expression Data. Algorithm 2 is the framework of the NSLRG-S for clustering of gene expression data.

Experiments

To evaluate the performance of the NSLRG-S framework, we compare the NSLRG-S framework with multiple typical methods, including the K-means, GNMF (Cai et al., 2011), RPCA (Candès et al., 2011), SPCA (Journée et al., 2010), GLPCA (Jiang et al., 2013), LS (He et al., 2006), and LLRR (Wang et al., 2016) methods. In subsection Evaluation and Quantitative Benchmarks, we select three quantitative benchmarks to evaluate the experimental results. In subsection Experiments on Synthetic Data and subsection Experiments on Gene Expression Data, comparative experiments are conducted on synthetic data and cancer gene expression data, respectively.

Evaluation and Quantitative Benchmarks

To evaluate the performance of the clustering results based on comparison methods, we select three quantitative benchmarks: the clustering accuracy rate (Acc) (Cui et al., 2013), F1 measurement (F1) (Rijsbergen, 1979), and Rand Index (RI) (Rand, 1971).

Clustering Accuracy Rate

The Acc is defined as follows.

where N is the total number of samples, and Ξ(ξi,map(ri)) is used to identify whether ξi and ri are matched. The ξi and ri are the actual label and clustering label of the i-th sample, respectively, and if they are matched, the value of Ξ(ξi,map(ri)) is equal to one; otherwise, its value is equal to zero. The map(ri) is the mapping function based on the Kuhn-Munkres method (Lovász and Plummer, 1986).

F1 Measurement

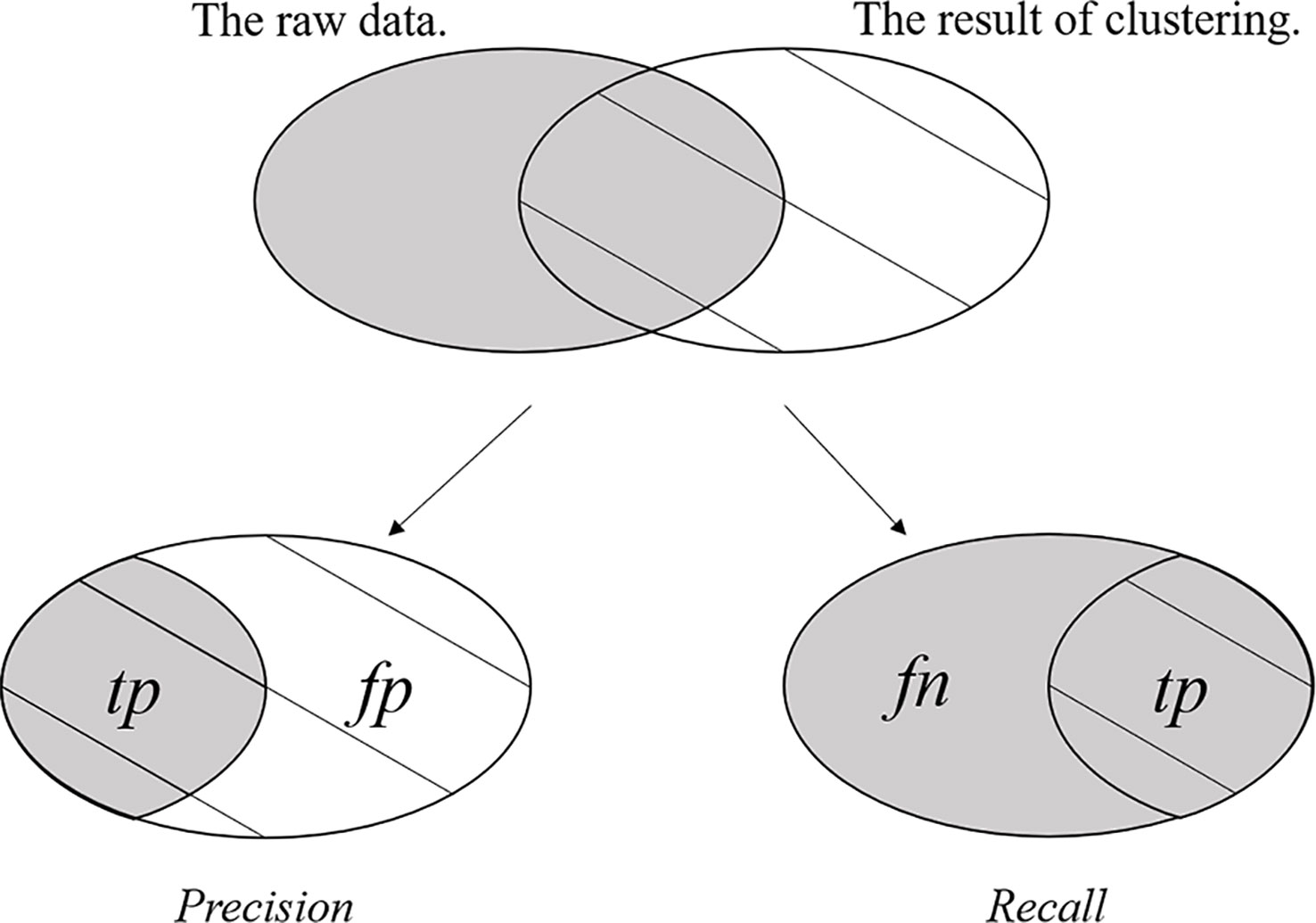

The F1 measurement is a special form of the F-Measure under a certain parameter. The F-Measure is also referred to as the F-Score and is the weighted harmonic mean of the Precision rate and Recall rate of the result of clustering. The F-Measure, Precision rate, and Recall rate are defined as follows.

where F is the F-Measure, P is the Precision rate and R is the Recall rate. The tp (true positives) is the item that records the number of positive samples that are clustered into their own positive class, fp (false positives) is the item that records the number of negative samples that are clustered into the positive class, and fn (false negatives) is the item that records the number of positive samples that are clustered into negative class. Figure 3 clearly shows tp, fp and fn. The F-Measure can balance the contribution of fn by weighting Recall through the parameter ϕ > 0. When the parameter ϕ = 1, F-Measure becomes the most common form, i.e., F1 measurement, and equation (31) is rewritten as follows.

F1 measurement reaches its best value at 1 and its worst score at 0. The relative contributions of the Precision rate and Recall rate to the F1 measurement are equal.

Rand Index

The given data have two partitions: one is the actual classification, and the other is the clustered result (returned by our Algorithm 2). The Rand Index (RI) is used to compute how similar the result of clustering is to the actual classification. The RI is defined as follows.

where a indicates the number of pairs of data points belonging to the same class in both the actual classification and the clustered result, b indicates the number of pairs of data points belonging to the different class in both the actual classification and the clustered result, and represents the total number of data pairs obtained from the given data. The range of RI is [0,1], and the larger the value, the more the clustering results are in accordance with reality.

Experiments on Synthetic Data

In this subsection, comparison experiments are conducted on synthetic data. In subsection Synthetic Data, we construct the synthetic data. In subsection Convergence Analysis, we perform convergence analysis to compare the NSLRG-S framework and other methods. In subsection Clustering Results, we analyze the performance of comparison methods on clustering data samples.

Synthetic Data

The synthetic data are constructed by the following steps (1) and (2). These synthetic data contain ten independent subspaces.

1. Construction of 10 original databases by Oi+1 = TOi, 1 ≤ i ≤ 9. The value of the database ranges from 0 to 1, T is the transform random rotation matrix, and O1 is a random orthogonal matrix of 1000×100. The rank of each original database is 100.

2. We extract 10 data vectors from each original database by Xi = OiQi,1 ≤ i ≤ 10, where the matrix is an independent identical distribution matrix N(0,1), and its size is 100×10. All extracted data vectors are combined in synthetic data .

Convergence Analysis

We define an Error-Values function FE-V(k) based on the loss function value to calculate the convergence rate. In the same iterations, the smaller the value of the Error-Values, the faster the convergence rate. The formula is given as follows.

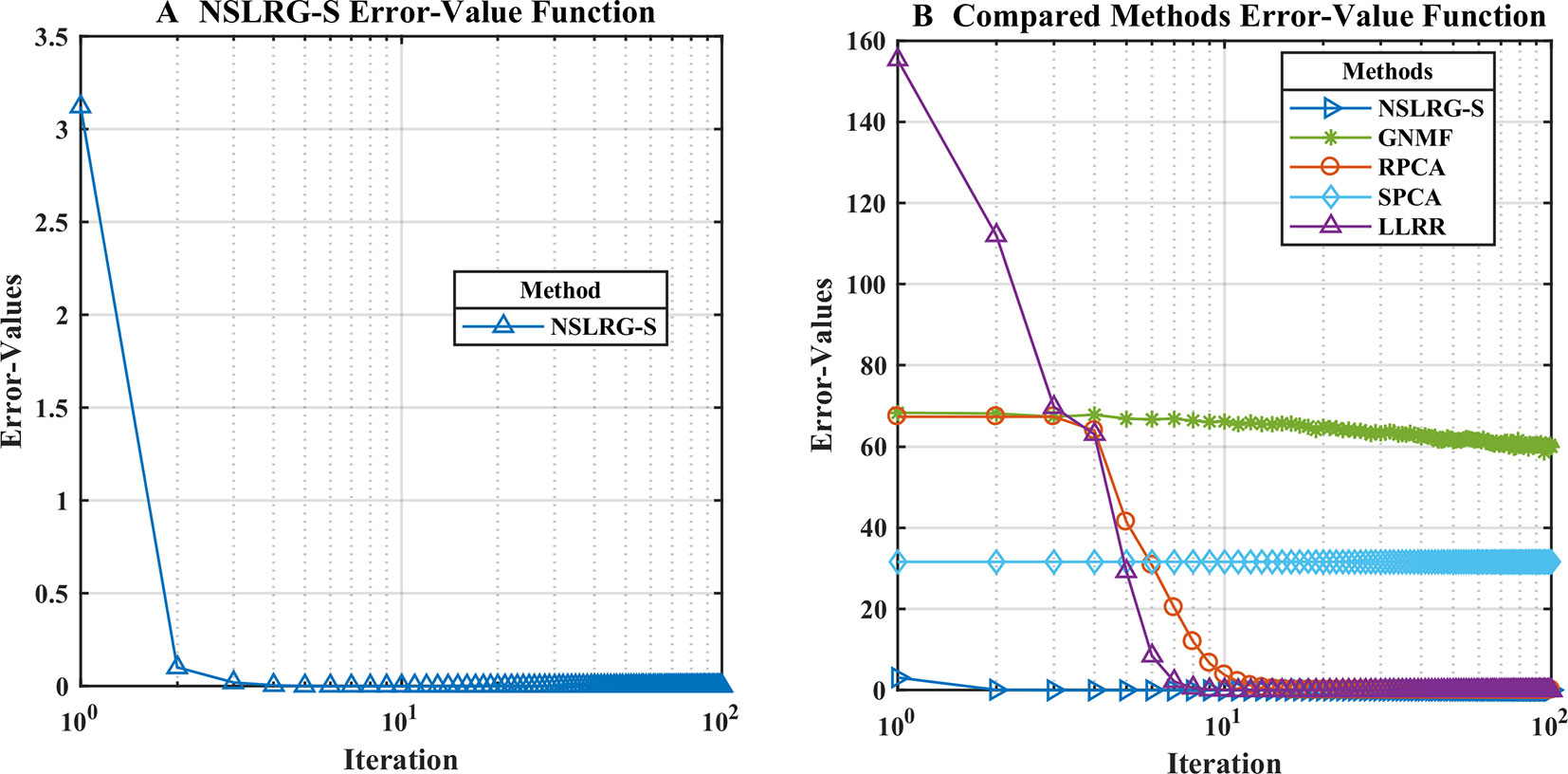

where the minimum value of FE-V(k) is equal to zero. To clearly characterize the convergence rate, Figures 4A, B show the convergence trends of the NSLRG-S and the compared methods GNMF, RPCA, SPCA, and LLRR in 100 iterations. In Figure 4B, we find that the convergence rate of the NSLRG method is faster than those of the other methods.

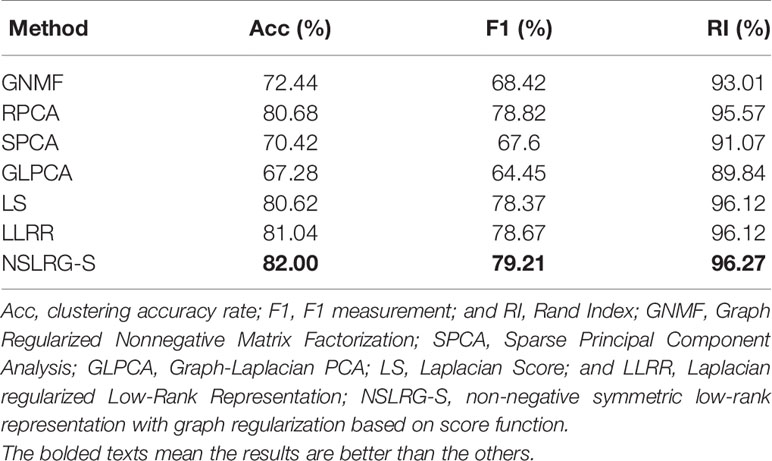

Clustering Results

Table 1 lists the results of the GNMF, RPCA, SPCA, GLPCA, LS, LLRR, and NSLRG-S methods on the three quantitative benchmarks as Acc, F1, and RI. The results show that the performance of NSLRG-S is better than those of other methods.

Experiments on Gene Expression Data

In this subsection, we conduct experiments on gene expression datasets. The experimental datasets are downloaded from the famous gene expression database The Cancer Genome Atlas (TCGA). We cluster the cancer samples based on the feature genes obtained by the NSLRG-S framework. The experimental results demonstrate that we can improve the performance in cancer samples clustering by applying the selected feature genes.

Gene Expression Datasets

The TCGA database is a source of experimental data and is an important project for accelerating and comprehensively understanding cancer genetics using innovative genome analysis technologies (Tomczak et al., 2015). This database is one of the invaluable sources for gene expression datasets. Therefore, we select the TCGA database as the data source to research the clustering performance of the NSLRG-S framework.

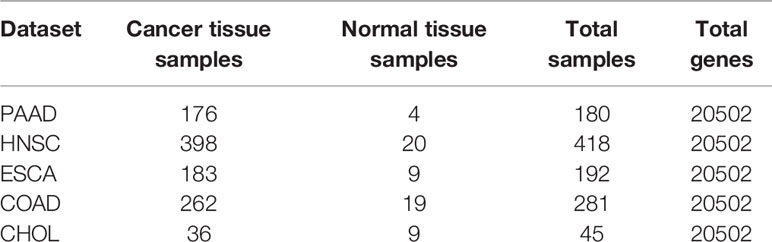

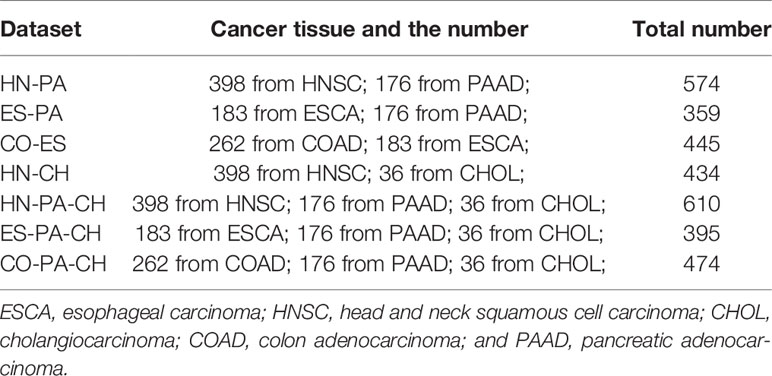

We downloaded five cancer gene expression datasets, namely, esophageal carcinoma (ESCA), head and neck squamous cell carcinoma (HNSC), cholangiocarcinoma (CHOL), colon adenocarcinoma (COAD) and pancreatic adenocarcinoma (PAAD). Each type of gene expression dataset contains cancer tissue samples and normal tissue samples. There are 20,502 genes in each tissue sample. The distribution of each gene expression dataset is listed in Table 2.

In addition, to find the feature gene with a high recognition rate between different cancers for cancer sample clustering, we construct seven mixed datasets. The mixed datasets are HN-PA, ES-PA, CO-ES and HN-CH; HN-PA-CH, ES-PA-CH, and CO-PA-CH. The construction rule combines tumour tissue samples that come from different gene expression data, and the combined datasets contain two or three types of cancers. For example, in the HN-PA data, HN represents all of the cancer tissue samples of the HNSC data, and PA represents the total of the cancer tissue samples of the PAAD data. The cancer tissue samples of HN and PA are combined to construct the new mixed data, i.e., HN-PA, which contain two types of cancers and have 574 cancer tissue samples. For the other mixed datasets, the distributions are listed in Table 3.

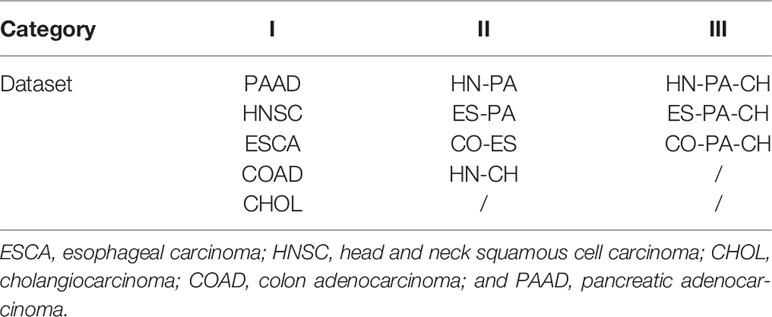

The five original datasets and seven mixed datasets are used in experiments. We classify all datasets into three categories according to the number of cancers they contain. The datasets that contain one type of cancer belong to Category I. Thus, Category I contains PAAD, HNSC, ESCA, COAD, and CHOL. Datasets that contain two types of cancers belong to Category II, and they are HN-PA, ES-PA, CO-ES, and HN-CH. The datasets that contain three types of cancers belong to Category III, and the names of these datasets are HN-PA-CH, ES-PA-CH, and CO-PA-CH. Table 4 clearly lists the category results.

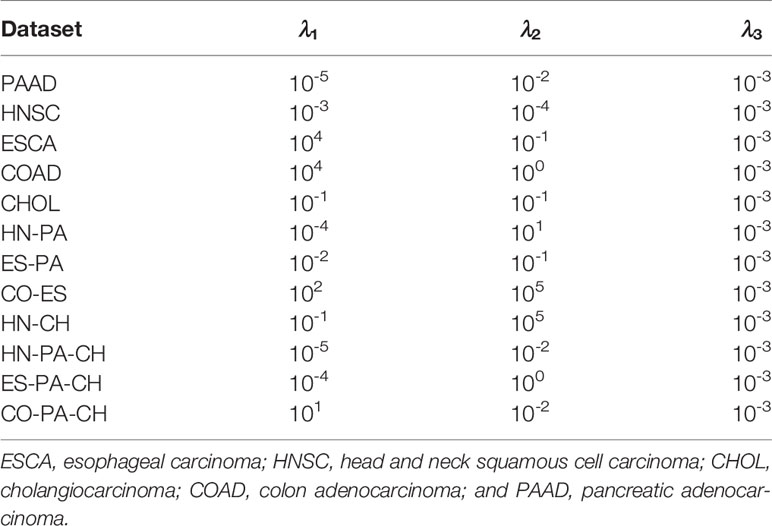

Parameter Selection

In the experiments, we need to select the optimal parameters of the different datasets. For the three parameters (λ1, λ2, λ3) of the NSLRG method, we assume that the optimal value of each parameter exists within an estimation range of 10t(t = { −5,−4,−3,−2,−1,0,1,2,3,4,5 }). We study the influence of each parameter on feature selection and select the optimal parameters according to the different datasets. First, our main task is to determine the sensitivity of each parameter to the different datasets. We change one parameter within the candidate interval while holding the other two parameters fixed to explore the influence degree of this parameter on the dataset. We find that the parameter λ3 is insensitive for all datasets. Therefore, the NSLRG method is robust for the parameter λ3, and we select the λ3 = 10-3 according to experimental experience. The details of selection of the other two parameters are listed in Table 5.

Results and Discussion

In this subsection, based on the datasets of subsection Gene Expression Datasets, we apply the NSLRG-S to cluster the cancer samples. We adopt seven clustering methods, including K-means, GNMF, RPCA, SPCA, GLPCA, LS, and LLRR, for comparison with NSLRG-S.

Typically, gene expression data mining can be recognized as addressing a small sample size and high-dimensional problem. The applied methods must face and suffer from what is known as the curse of dimensionality. This situation occurs because the more dimensions contained in the data (20,502 in our case), the more unstable the result. Therefore, in our experiments, we improve the reasonableness of the result by running the experiment 50 times. The mean of the results is taken as the measurement of the clustering results.

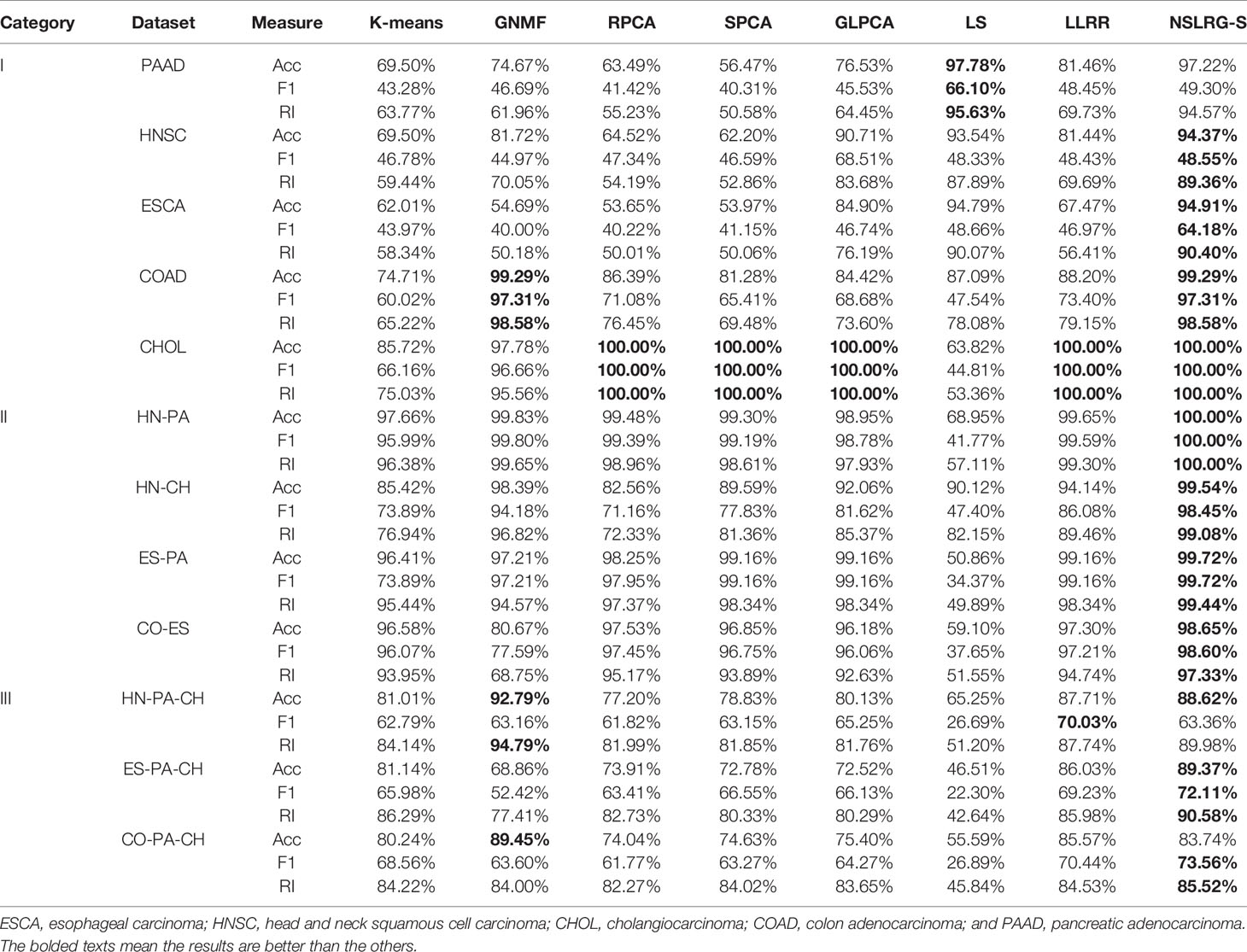

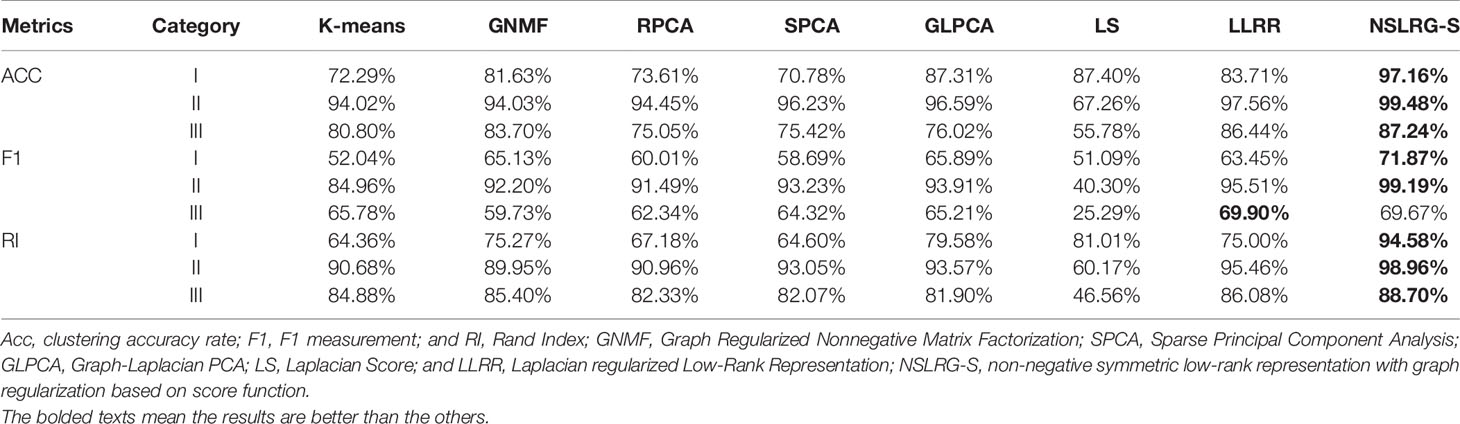

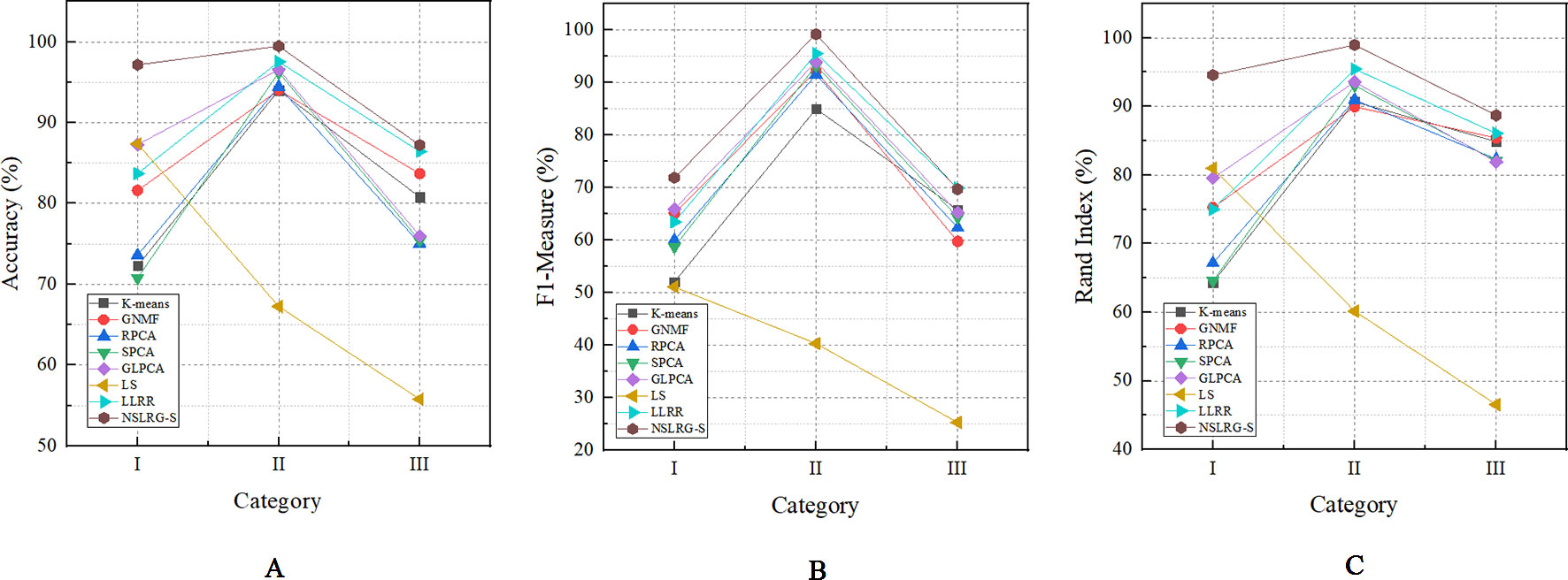

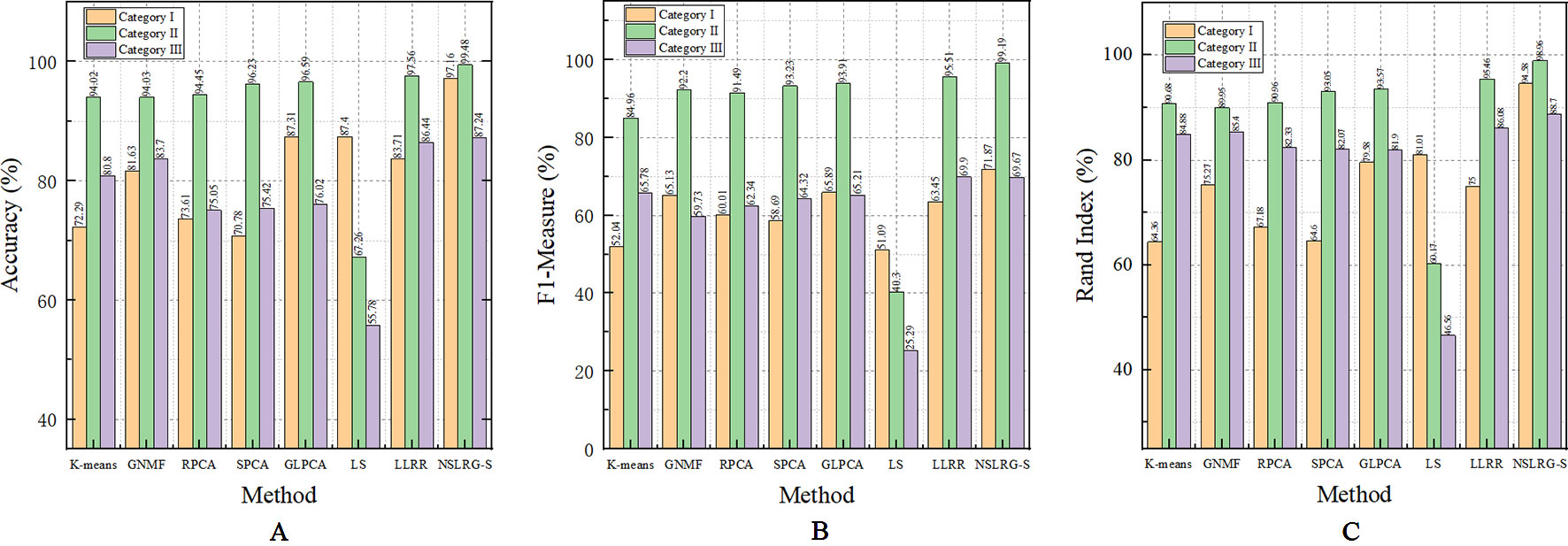

Table 6 clearly lists the experimental results of all methods. Based on Table 6, we obtain the mean metrics of each category dataset, and they are listed in Table 7. Furthermore, to clearly show the experimental results on different categories of dataset and different methods, Figure 5 presents a broken-line graph for the three category datasets corresponding to different methods. Figure 6 presents a histogram for the different methods corresponding to the three category datasets.

Figure 5 The mean metrics of experimental result for Category I, II, and III. (A) Accuracy-Category (B) F1-Category (C) Rand Index-Category.

Figure 6 The mean metrics of experimental result for all methods. (A) Accuracy-Method (B) F1-Method (C) Rand Index-Method.

By comparing the clustering results of NSLRG-S and other methods, we find that the results of the NSLRG-S method are the best of all methods in most datasets. According to Table 6, for the Category I dataset, the clustering performance of NSLRG-S for the HNSC and ESCA datasets is higher than that of other methods. In the COAD and CHOL dataset, NSLRG-S achieves the same best results as the other methods. For the Category II dataset, the clustering performance of NSLRG-S is the best of all methods. For the Category III dataset, except for the metrics of Acc and F1 on HN-PA-CH and Acc on CO-PA-CH, which are obtained by GNMF, and F1 on HN-PA-CH obtained by LLRR, the clustering performance of NSLRG-S is better than that of other methods.

In addition to the numerical comparison, we also find that the NSLRG-S method has different advantages after comparing it with different comparison methods. In the next section, we conduct a more detailed comparison and analysis between NSLRG-S and the other comparison methods.

In the seven comparison methods (K-means, GNMF, RPCA, SPCA, GLPCA, LS, and LLRR), K-means is the traditional clustering method; GNMF belongs to matrix factorization techniques, which extend the nonnegative matrix factorization with preservation of the intrinsic geometric structure (Cai et al., 2011); RPCA, SPCA, and GLPCA are variant methods of principal component analysis, which is a well-established descending dimension method for mining high dimensional data (Journée et al., 2010); LS is the feature selection method; and the LLRR is the subspace clustering method. In addition, the NSLRG-S framework combines the NSLRG method and Score function. Therefore, this framework belongs to a mixed method that combines the advantage of both sides.

First, we compare the NSLRG-S framework with K-means. Based on Table 6, we find that a higher clustering result is obtained by NSLRG-S. This comparison result shows that the proposed NSLRG-S framework is better than the traditional clustering method in cancer sample clustering. This result occurs because the NSLRG-S considers the local and global structure of the raw data. This framework can select feature genes with a high recognition rate for cancer sample clustering. In addition, the K-means method performs cancer sample clustering based on the raw data, which ignores the contents considered in NSLRG-S. Figure 5 clearly shows that the NSLRG-S is superior to the K-means method.

Second, we compare the NSLRG-S with the GNMF method. In GNMF, a nearest neighbour graph is constructed by encoding the geometrical information of the data space. The method seeks matrix factorization, which incorporates the graph structure (Cai et al., 2011). Based on Table 5, the GNMF method obtains good results, and a subset of them are even better than those of NSLRG-S method. For most of the datasets, the results of NSLRG-S are still better than those of GNMF. The reason for this result is that the NSLRG-S method can obtain the characteristics of the subspace structure of the raw data, and the corresponding subspace of different types of cancer can be satisfactorily distinguished.

Third, we compare the NSLRG-S with the RPCA, SPCA, and GLPCA methods. RPCA, SPCA, and GLPCA belong to principal component analysis methods and are suitable for processing high-dimensional gene expression data by learning a low-dimensional representation. The results of NSLRG-S are better than those of three methods, except for the CHOL dataset. We can conclude that the NSLRG-S method is better than the variant methods of principal component analysis in clustering of multiple cancer samples.

Fourth, we compare the NSLRG-S with the LS method. Based on Figure 5, we find that the performance of LS decreases gradually on the Category I, Category II and Category III datasets, and this trend is different with other methods. The reason for this result is that the feature genes selected by the LS method have locality-preserving power attributes but do not have good multi-subspace separation attributes. In the framework of the NSLRG-S, feature genes are obtained under the Score function based on the low-rank matrix obtained by the NSLRG method. This low-rank matrix can preserve the global and local structure of the raw data, and after further processing the low-rank matrix through the Score function, the selected genes have a strong discrimination in multi-subspace clustering. Therefore, the performance of NSLRG-S is better than that of LS.

Finally, we compare the NSLRG-S with the LLRR method. Based on Figure 5, the broken line of the NSLRG-S is always above that of the LLRR method except for F1 on the Category III dataset. The comparison results show that the Score function plays an important role in further mining of the low-rank matrix of the NSLRG method.

Furthermore, we note an interesting trend in the results of three categories of datasets for each method, as shown in Figure 6. Other than the LS method, which shows a downward trend, the other methods show an upward trend first followed by a downward trend. In other words, except for the LS method, after comparing all of the results of the other methods, we note that the experimental results of the Category II datasets are the best, followed by the Category III datasets or the Category I datasets, and this trend occurs in all metrics. According to Tables 2–4, the distributions of sample size in the Category II datasets are more balanced than those in Category I and Category III. Therefore, the result of the Category II dataset is more reasonable and stable than the results of Category I and Category III. However, with an increasing number of subspaces, the structure of the data is more complex, and the global and local structures of raw data are more difficult to capture. Therefore, compared with the experimental results of the Category II datasets, the experimental results of the Category III datasets decrease. Fortunately, according to Table 7, the NSLRG-S is still better than other methods. This observation demonstrates that the NSLRG-S framework has better advantages in cancer sample clustering than other methods when working with unbalanced and multi-subspace datasets. Based on the above discussion and analysis, we conclude that the NSLRG-S framework has a good effect for cancer sample clustering based on a gene expression dataset.

Conclusions Work

In this paper, we cluster the cancer samples of multi-cancer gene expression datasets based on select feature genes obtained by the NSLRG-S framework. In addition, NSLRG-S simultaneously considers the local and global structure of the raw gene expression dataset. The selected feature genes have a high recognition rate in subspace clustering. The comparison experimental results suggest that the NSLRG-S framework can significantly improve the cancer samples clustering performance.

Data Availability Statement

The datasets generated for this study can be found in the [The Cancer Genome Atlas (TCGA)] https://cancergenome.nih.gov/. We have uploaded scripts and examples on GitHub to adhere standards for reproducibility. The URL is https://github.com/guoguoguolu/NSLRG-S-method-scripts-and-example-files.

Author Contributions

JW and CL conceived the original research plans and methodology. JL and XK performed synthetic data analysis. JW, CL and XK performed experiments on gene expression data. JW and CL supervised and wrote the original draft. JW, CZ, and XZ reviewed and revised the writing.

Funding

This work was supported in part by the National Natural Science Foundation of China under Grant Nos. 61872220, 61702299, and 61873117.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We thank to the contributions of CBC 2019 that aided the efforts of the authors.

References

Belkin, M., Niyogi, P. (2001). “Laplacian eigenmaps and spectral techniques for embedding and clustering,” in Proceedings of the 14th International Conference on Neural Information Processing Systems: Natural and Synthetic (Vancouver, British Columbia, Canada: MIT Press).

Cai, D., He, X., Han, J. (2005). Document clustering using locality preserving indexing. IEEE Trans. Knowl. Data Eng. 17 (12), 1624–1637. doi: 10.1109/TKDE.2005.198

Cai, J.-F., Candès, E. J., Shen, Z. (2010). A Singular Value Thresholding Algorithm for Matrix Completion. SIAM J. Optim. 20 (4), 1956–1982. doi: 10.1137/080738970

Cai, D., He, X., Han, J., Huang, T. S. (2011). Graph Regularized Nonnegative Matrix Factorization for Data Representation. IEEE Trans. Pattern Anal. Mach. Intell. 33 (8), 1548–1560. doi: 10.1109/TPAMI.2010.231

Candès, E. J., Li, X., Ma, Y., Wright, J. (2011). Robust Principal Component Analysis? ACM 58 (3), 1–37. doi: 10.1145/1970392.1970395

Chen, J., Yang, J. (2014). Robust Subspace Segmentation Via Low-Rank Representation. IEEE Trans. Cybern. 44 (8), 1432–1445. doi: 10.1109/TCYB.2013.2286106

Chen, J., Mao, H., Sang, Y., Yi, Z. (2017). Subspace clustering using a symmetric low-rank representation. Knowl.-Based Syst. 127, 46–57. doi: 10.1016/j.knosys.2017.02.031

Chen, J., Peng, H., Han, G., Cai, H., Cai, J. (2018). HOGMMNC: a higher order graph matching with multiple network constraints model for gene–drug regulatory modules identification. Bioinformatics 35 (4), 602–610. doi: 10.1093/bioinformatics/bty662%JBioinformatics

Cui, Y., Zheng, C. H., Yang, J. (2013). Identifying subspace gene clusters from microarray data using low-rank representation. PloS One 8 (3), e59377. doi: 10.1371/journal.pone.0059377

Dash, M., Liu, H. (1997). Feature selection for classification. Intell. Data Anal. 1 (1), 131–156. doi: 10.1016/S1088-467X(97)00008-5

Du, S., Ma, Y., Ma, Y. (2017). Graph regularized compact low rank representation for subspace clustering. Knowl.-Based Syst. 118, 56–69. doi: 10.1016/j.knosys.2016.11.013

Ge, H., Hu, T. (2014). “Genetic Algorithm for Feature Selection with Mutual Information,” in 2014 Seventh International Symposium on Computational Intelligence and Design. (Piscataway, NJ: IEEE), 116–119.

He, X., Niyogi, P. (2005). In Advances in neural information processing systems 16 (NIPS). (Cambridge, MA: MIT Press),153–160.

He, X., Cai, D., Partha, N. (2006). “Laplacian Score for Feature Selection,” in the Neural Information Processing Systems Conference (NIPS) (Cambridge, MA: MIT Press), 507–514.

Jiang, B., Ding, C., Luo, B., Tang, J. (2013). “Graph-Laplacian PCA: Closed-Form Solution and Robustness,” in The IEEE Conference on Computer Vision and Pattern Recognition (CVPR). (Piscataway, NJ: IEEE).

Journée, M., Nesterov, Y., Richtárik, P., Sepulchre, R. (2010). Generalized Power Method for Sparse Principal Component Analysis. J. Mach. Learn. Res. 11 (2), 517–553.

Kohavi, R., John, G. H. (1997). Wrappers for feature subset selection. Artif. Intell. 97 (1), 273–324. doi: 10.1016/S0004-3702(97)00043-X

Langley, P. (1994). “Selection of relevant features in machine learning,” in Proc of the AAAI Fall Symposium on Relevance. (Menlo Park, CA: AAAI), 1–5.

Lin, Z., Chen, M., Ma, Y. (2009). The Augmented Lagrange Multiplier Method for Exact Recovery of Corrupted Low-Rank Matrices (University of illinois at urbana-champaign technical report). (UILU-ENG-09-2215).

Lin, Z., Chen, M., Ma, Y. (2010). The Augmented Lagrange Multiplier Method for Exact Recovery of Corrupted Low-Rank Matrices. Eprint Arxiv. 2010 (v1). doi: 10.1016/j.jsb.2012.10.010

Lin, Z., Liu, R., Su, Z. (2011). Linearized Alternating Direction Method with Adaptive Penalty for Low-Rank Representation. In Advances in Neural Information Processing Systems (NIPS 2011). (New York: Curran Associates), 612–620.

Liu, G.,, Lin, Z., , Yu, Y. (2010). “Robust Subspace Segmentation by Low-Rank Representation,” in Proceedings of the 27th International Conference on Machine Learning (Madison, Wisconsin, USA: Omnipress), 663–670.

Liu, G. C., Lin, Z. C., Yan, S. C., Sun, J., Yu, Y., Ma, Y. (2013). Robust recovery of subspace structures by low-rank representation. IEEE Trans. Pattern Anal. Mach. Intell. 35 (1), 171–184. doi: 10.1109/TPAMI.2012.88

Lovász, L., Plummer, M. D. (1986). Matching Theory. J. Appl. Math. Mech. 68 (3), 146–146. doi: 10.1002/zamm.19880680310

Lu, X., Wang, Y., Yuan, Y. (2013). Graph-Regularized Low-Rank Representation for Destriping of Hyperspectral Images. IEEE Trans. Geosci. Remote Sens. 51 (7), 4009–4018. doi: 10.1109/TGRS.2012.2226730

Mohamad, M. S., Omatu, S., Deris, S., Yoshioka, M. (2010). “A Three-Stage Method to Select Informative Genes from Gene Expression Data in Classifying Cancer Classes,” in 2010 International Conference on Intelligent Systems, Modelling and Simulation. (Piscataway, NJ: IEEE), 158–163.

Mohamad, M. S., Omatu, S., Deris, S., Yoshioka, M. (2013). “A Constraint and Rule in an Enhancement of Binary Particle Swarm Optimization to Select Informative Genes for Cancer Classification,” in Revised Selected Papers of PAKDD 2013 International Workshops on Trends and Applications in Knowledge Discovery and Data Mining - Volume 7867. (Berlin, Heidelberg: Springer), 168–178.

Rand, W. M. (1971). Objective Criteria for the Evaluation of Clustering Methods. J. Am. Stat. Assoc. 66 (336), 846–850. doi: 10.1080/01621459.1971.10482356

Rappoport, N., Shamir, R. (2018). Multi-omic and multi-view clustering algorithms: review and cancer benchmark. Nucleic Acids Res. 46 (20), 10546–10562. doi: 10.1093/nar/gky889

Russo, G., Zegar, C., Giordano, A. (2003). Advantages and limitations of microarray technology in human cancer. Oncogene 22, 6497–6507. doi: 10.1038/sj.onc.1206865

Talavera, L. (2005). An Evaluation of Filter and Wrapper Methods for Feature Selection in Categorical Clustering (Berlin Heidelberg: Springer), 440–451.

Tang, P., Tang, X., Tao, Z., Li, J. (2014). “Research on feature selection algorithm based on mutual information and genetic algorithm,” in 2014 11th International Computer Conference on Wavelet Actiev Media Technology and Information Processing (ICCWAMTIP). (Piscataway, NJ: IEEE), 403–406.

Toh, K., Yun, S. (2010). An accelerated proximal gradient algorithm for nuclear norm regularized linear least squares problems. Pac. J. Optim. 6 (3), 615–640.

Tomczak, K., Czerwinska, P., Wiznerowicz, M. (2015). The Cancer Genome Atlas (TCGA): an immeasurable source of knowledge. Contemp. Oncol. (Poznan Poland) 19 (1A), A68–A77. doi: 10.5114/wo.2014.47136

Wang, Y. X., Liu, J. X., Gao, Y. L., Zheng, C. H., Shang, J. L. (2016). Differentially expressed genes selection via Laplacian regularized low-rank representation method. Comput. Biol. Chem. 65, 185–192. doi: 10.1016/j.compbiolchem.2016.09.014

Wang, J., Liu, J. X., Zheng, C. H., Wang, Y. X., Kong, X. Z., Weng, C. G. (2018). A mixed-norm laplacian regularized low-rank representation method for tumor samples clustering. IEEE/ACM Trans. Comput. Biol. Bioinf. 16 (1), 172–182. doi: 10.1109/TCBB.2017.2769647

Wang, J., Liu, J.-X., Kong, X.-Z., Yuan, S.-S., Dai, L.-Y. (2019a). Laplacian regularized low-rank representation for cancer samples clustering. Comput. Biol. Chem. 78, 504–509. doi: 10.1016/j.compbiolchem.2018.11.003

Wang, J., Zheng, R., Liang, Z., Li, M., Wu, F.-X., Pan, Y. (2019b). SinNLRR: a robust subspace clustering method for cell type detection by non-negative and low-rank representation. Bioinformatics 35 (19), 3642–3650. doi: 10.1093/bioinformatics/btz139

Xu, A., Chen, J., Peng, H., Han, G., Cai, H. (2019). Simultaneous interrogation of cancer omics to identify subtypes with significant clinical differences. Front. Genet. 10, 236. doi: 10.3389/fgene.2019.00236

Yin, M., Gao, J., Lin, Z. (2016). Laplacian Regularized Low-Rank Representation and Its Applications. IEEE Trans. Pattern Anal. Mach. Intell. 38 (3), 504–517. doi: 10.1109/TPAMI.2015.2462360

You, C.-Z., Wu, X.-J., Palade, V., Altahhan, A. (2016). “Manifold locality constrained low-rank representation and its applications,” in 2016 International Joint Conference on Neural Networks (IJCNN). (Piscataway, NJ: IEEE), 3264–3271.

Keywords: cancer gene expression data, low-rank representation, feature selection, score function, clustering

Citation: Lu C, Wang J, Liu J, Zheng C, Kong X and Zhang X (2020) Non-Negative Symmetric Low-Rank Representation Graph Regularized Method for Cancer Clustering Based on Score Function. Front. Genet. 10:1353. doi: 10.3389/fgene.2019.01353

Received: 08 September 2019; Accepted: 10 December 2019;

Published: 22 January 2020.

Edited by:

Hongmin Cai, South China University of Technology, ChinaCopyright © 2020 Lu, Wang, Liu, Zheng, Kong and Zhang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Juan Wang, d2FuZ2p1YW5zZHVAMTYzLmNvbQ==

Conghai Lu

Conghai Lu Juan Wang

Juan Wang Jinxing Liu1

Jinxing Liu1 Chunhou Zheng

Chunhou Zheng Xiangzhen Kong

Xiangzhen Kong