- ¹ Center for International Forestry Research (CIFOR), Bogor, Indonesia

- ² Earth Innovation Institute (EII), San Francisco, CA, United States

- ³ Climate, Community & Biodiversity Alliance (CCBA), Washington, DC, United States

- ⁴ World Agroforestry Centre (ICRAF), Lima, Peru

Sustainable management of resources is crucial for balancing competing livelihood, economic, and environmental goals. Since forests and other systems do not exist in isolation, comprehensive jurisdictional approaches to forest and land-use governance can help promote sustainability. The ability of jurisdictions to provide evidence of progress toward sustainability is essential for attracting public and private sector investments and maintaining local stakeholder involvement. The Sustainable Landscapes Rating Tool (SLRT) provides a way to assess enabling conditions for jurisdictional sustainability through an evidence-based rating system. We applied this rating tool in 19 states and provinces across six countries (Brazil, Ecuador, Indonesia, Cote d'Ivoire, Mexico, Peru) that are members of the Governors' Climate and Forests Task Force (GCF TF). Each SLRT assessment was completed using publicly available information, interviews with stakeholders in the jurisdiction, and a multi-stakeholder workshop to validate the indicator ratings. This paper explores the effects of stakeholder involvement in the validation process, along with stakeholder perceptions of the tool's usefulness. Our analysis shows that the validation workshops often led to modifications of the indicator ratings, even for indicators originally assessed using publicly available data, highlighting the gap between the existence of a policy and its implementation. In addition, a more diverse composition of stakeholders at the workshops led to more changes in indicator ratings, which indicates the importance of including different perspectives when compiling and validating the assessments. Overall, most participants agreed that the tool is useful for self-assessment of the jurisdiction and for addressing coordination gaps.
Further, the validation workshops provided a space for discussions across government agencies, civil society organizations (CSOs), producer organizations, indigenous peoples and local community representatives, and researchers about improving policy and governance conditions. Our findings from the analysis of a participatory approach to collecting and validating data can be used to inform future research on environmental governance and sustainability.

Introduction

Balancing competing livelihood, economic, and environmental goals requires an integrated approach to sustainable management of resources at the landscape level. Since forests and other ecosystems do not exist in isolation, comprehensive landscape and jurisdictional approaches to forest and land-use governance are considered pathways to promote sustainability. These approaches offer an alternative to conservation and development projects and to farm-by-farm or supply chain certification schemes. Landscape approaches attempt to reconcile environmental and development trade-offs by addressing multiple objectives across scales and sectors, promoting equitable stakeholder involvement, emphasizing continual learning and adaptive management, and promoting participatory monitoring (Sayer et al., 2013). Jurisdictional approaches are similarly holistic in nature, with the key difference that the landscape is defined by political or administrative boundaries (e.g., country, province, district), which facilitates strategic alignment with public policies and allows governments to lead or play active roles in the initiatives (Nepstad et al., 2013; Boyd et al., 2018; Stickler C. et al., 2018).

Sub-national jurisdictional approaches have been gaining traction as the sub-national level is increasingly seen as a strategic level of governance (Boyd et al., 2018). In federal and other decentralized political systems, sub-national jurisdictions (e.g., provinces, districts) have at least some legal authority and political power (Busch and Amarjargal, 2020), are better placed to communicate with the communities and farmers making land-use decisions, and can help advance and support national-level goals (Stickler C. M. et al., 2018). Nearly 40 sub-national jurisdictions across the tropics have made commitments to reducing deforestation, but there has been limited financial and other support for these efforts (Stickler C. M. et al., 2018; Stickler et al., 2020). To attract the public and private finance, investments, and other partnerships needed to advance low emission development strategies, jurisdictions must demonstrate that they represent both high-performance and low-risk investment opportunities.

Sustainability standards and certification systems play an important role in global governance of production and trade as a way to demonstrate performance and compliance [UNFSS, 2018; though some research has questioned the effectiveness of such systems, e.g., Roberge et al., 2011; Glasbergen, 2018; Tröster and Hiete, 2019]. Typically, these standards and certifications are voluntary and, in theory, require that pre-specified criteria and measurable indicators of sustainable outcomes be met (Potts et al., 2014; Smith et al., 2019). In the case of commodity certification, sustainable production is treated as a binary state, certified or uncertified, with principles and criteria defined through a global multi-sector process and then interpreted for individual regions. These standards usually rely on independent monitoring or third-party conformity assessments and certification to strengthen performance claims (Potts et al., 2014). Stakeholder engagement occurs primarily when the standard's principles and criteria are revised. Standards and certifications also vary in the degree to which they encompass broader safeguards, social indices, and governance and policy issues (Potts et al., 2014). But the shift from project-level and supply chain approaches to landscape/jurisdictional approaches to sustainability implies fundamental changes in the way that success is defined, in the role of local stakeholders and the engagement of market actors, and in the metrics and scale at which progress is measured.

In contrast to traditional standards and certification systems that focus on outcomes at the scale of a project, farm, or mill (Stickler C. et al., 2018), in landscape and jurisdictional approaches the unit of performance is the entire landscape or political geography, including all forms of production and economic development. As a result, change is slower, and a system is needed that recognizes meaningful progress at this geographic scale, including an understanding of the enabling conditions needed for sustainable landscapes. One tool that aims to assess such enabling conditions is the Climate, Community & Biodiversity Alliance's (CCBA) Sustainable Landscapes Rating Tool (SLRT), which was developed in response to the distinct assessment needs of jurisdictional approaches. It rates governance conditions for sustainable landscapes against internationally recognized criteria, thereby focusing on process and enabling conditions rather than on outcomes [SLRT, 2019; for further tool background and information see section Sustainable Landscapes Rating Tool (SLRT)]. It draws inspiration from various sources, including country ratings and other governance assessment frameworks, such as the World Bank's Ease of Doing Business rankings, Transparency International's Corruption Perceptions Index, and Conservation International's Landscape Assessment Framework. It aims to facilitate private and public sector investment and other support by assessing a jurisdiction's potential to meet sustainable landscape goals. The tool can be used by external assessors or for internal self-assessment, and it can also purposefully engage multiple stakeholders in validating the information used to assess progress in a given jurisdiction.

As tools like SLRT continue to be developed, research is needed to examine and document how various implementation approaches (e.g., secondary data collection, interviews, third-party verification, stakeholder involvement/validation) affect the final outcomes of an assessment. In this paper, we contribute in part to this research need by focusing on the data validation of an assessment through stakeholder engagement. We applied this approach for the SLRT assessment across 19 sub-national jurisdictions in six countries, a subset of the Governors' Climate and Forests Task Force (GCF TF) member states. Given the differences between third-party and more participatory assessments, we assess and describe how stakeholder participation in the data validation component of the SLRT affects the final outcomes of jurisdictional assessments (i.e., modifications of indicator ratings). We also explore the extent to which documents and data are publicly available, examine stakeholder perceptions of the tool, and discuss how this participatory component of the assessment might align with and/or foster broader engagement in jurisdictional planning and policymaking. Finally, we present some recommendations for improvement of the tool based on lessons learned from our implementation in diverse contexts.

Research Design, Data Collection, and Analysis

Sustainable Landscapes Rating Tool (SLRT)

Background and Objectives

Released in 2017, the SLRT was developed by the CCBA, including its members Conservation International, Rainforest Alliance, and Wildlife Conservation Society, in partnership with EcoAgriculture Partners and Global Canopy Program. To facilitate a participatory and transparent process, tool development involved outreach to potential users in commodity and investment companies, government agencies, and international non-governmental organizations through meetings, round tables, and workshops in 2016, as well as pilot testing of a draft version of the tool (including a validation workshop) in the San Martin Region of Peru in January 2017.

The SLRT was designed to be flexible (via addition or removal of indicators) and to be applied at the subnational jurisdictional level with multiple objectives and users in mind (SLRT, 2017a). Its developers envisioned it as an “objective, evidence-based” tool that governments, producers, and other landscape actors could use to communicate the status of key governance conditions in order to attract investment and other support, to benchmark progress, to build support and alignment among diverse stakeholders, and to facilitate planning to address gaps. Additionally, external investors could use the tool for initial screening to compare jurisdictions or for in-depth risk assessment (through the selection or modification of desired indicators). Overall, the SLRT aims to foster partnerships, enhance coordination among landscape actors, and lead to improvements in policies, governance, and other enabling conditions for jurisdictional sustainability.

With a focus on land use more broadly, the SLRT differentiates itself from other tools that aim to assess governance in the land-use sector (see SLRT, 2017b for a full comparison), such as the Governance of Forests Indicators (GFI) of the World Resources Institute (WRI; wri.org/publication/assessing-forest-governance) and Assessing and Monitoring Forest Governance of the Program on Forests (PROFOR; profor.info/content/assessing-and-monitoring-forest-governance), which focus mainly on forests. Furthermore, compared with these tools, it aims to provide a simpler method for rating criteria that requires fewer resources (e.g., implementation cost and time).

The SLRT provides different and complementary information to (and is designed to be used in tandem with) other tools and platforms that assess sustainability performance. These include LandScale led by CCBA, Rainforest Alliance and Verra (landscale.org), the GCF Impact (gcfimpact.org) and the Produce-Protect Platforms led by Earth Innovation Institute (EII; produceprotectplatform.com), and the Commodities-Jurisdictions Approach led by Climate Focus, Meridian and WWF (commoditiesjurisdictions.wordpress.com), which provide information on deforestation, productivity and human development metrics in select jurisdictions.

Assessment Indicators

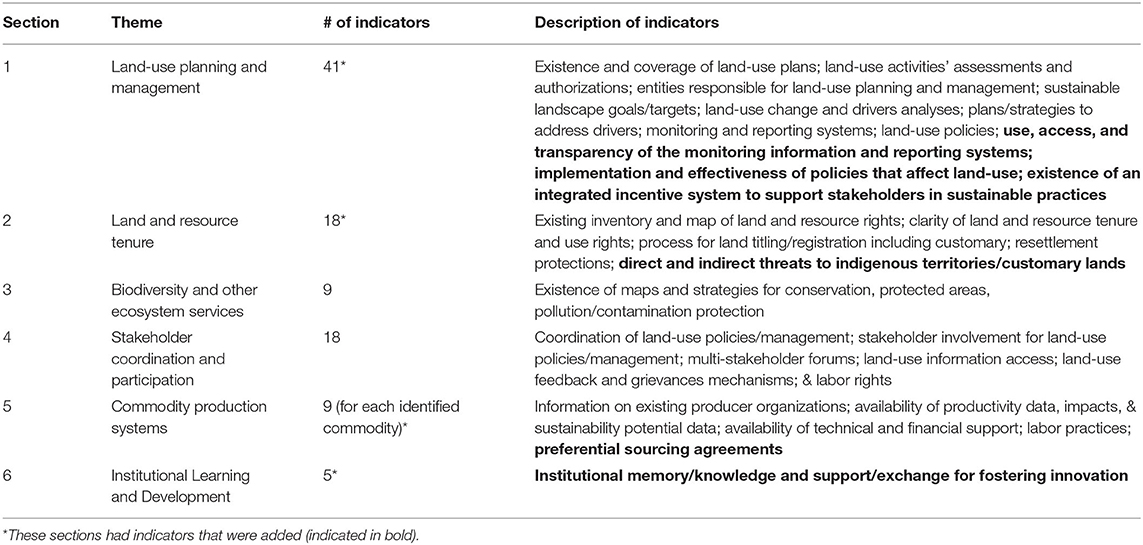

The rating tool used in this study expanded on the SLRT available on the CCBA website (version 1; www.climate-standards.org/sustainable-landscapes-rating-tool/) by adding 15 indicators to the original 85, including a new theme on institutional learning and development. The 100 indicators are organized across six themes or sections: (1) land-use planning and management; (2) land and resource tenure; (3) biodiversity and other ecosystem services; (4) stakeholder coordination and participation; (5) commodity production systems; and (6) institutional learning and development. Apart from the new Section 6, indicators were added to Sections 1, 2, and 5. Section 6 focuses on mechanisms for preserving institutional knowledge/memory and on financial support or collaboration/learning exchange to foster innovation. The additional indicators were added to assess factors, elements, and conditions deemed important in advancing (or hindering) jurisdictional sustainability strategies/programs (Stickler et al., 2014; DiGiano et al., 2016). Table 1 summarizes the information captured by the indicators in each section of the tool, including the newly added indicators.

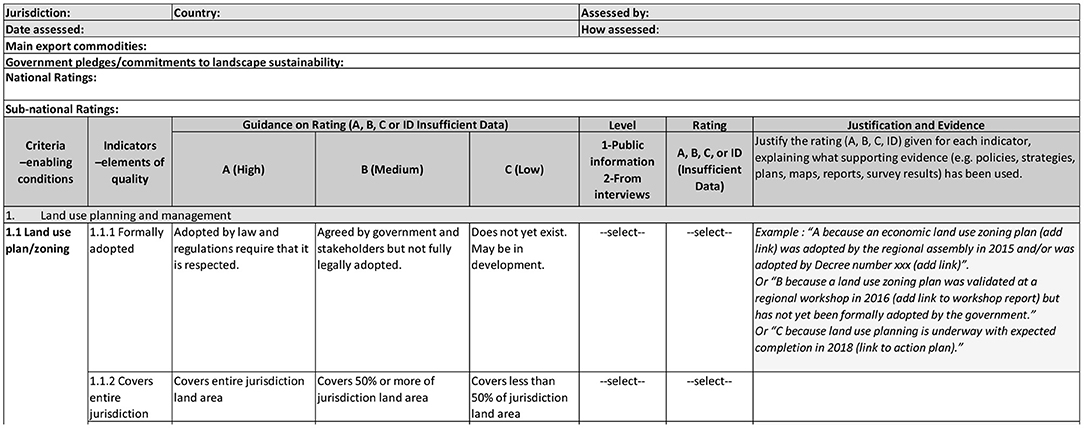

Further, the nine indicators in Section 5 on commodity production systems were assessed separately for each of the three main export-oriented products or groups of products from the jurisdiction, resulting in a total of 118 indicators. A completed SLRT not only rates the status of and progress made on each of these enabling conditions based on specific criteria but also includes a justification and evidence for the rating given (see Figure 1 for a screenshot of the tool). Each individual indicator is rated A (high), B (medium), C (low), or insufficient data (ID). The types of information used as evidence for the rating justification are detailed below.
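The total of 118 follows from counting the nine Section 5 indicators once per product rather than once overall. A minimal arithmetic sketch, assuming exactly three products per jurisdiction and nine Section 5 indicators within the 100-indicator set, as described above; the function name `total_indicator_ratings` is hypothetical:

```python
def total_indicator_ratings(base_indicators: int = 100,
                            section5_indicators: int = 9,
                            n_products: int = 3) -> int:
    """Number of ratings in one assessment when Section 5 is repeated per product.

    Section 5 is counted once in the base set, so its single copy is removed
    and one copy per commodity product is added instead.
    """
    if n_products < 1:
        raise ValueError("at least one product is required")
    other_sections = base_indicators - section5_indicators  # Sections 1-4 and 6
    return other_sections + section5_indicators * n_products


original_indicators, added_indicators = 85, 15
base = original_indicators + added_indicators  # 100 indicators in six sections
print(total_indicator_ratings(base))  # 91 + 9 * 3
```

With a single product the count falls back to the 100-indicator base set, which is a quick sanity check on the formula.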

Figure 1. A screenshot showing the top portion of the SLRT. Information about the jurisdiction can be entered at the top, and the columns containing the guidelines for each A (high), B (medium), and C (low) rating are visible. The rightmost column provides space to enter the justification and evidence supporting the rating given for each indicator.

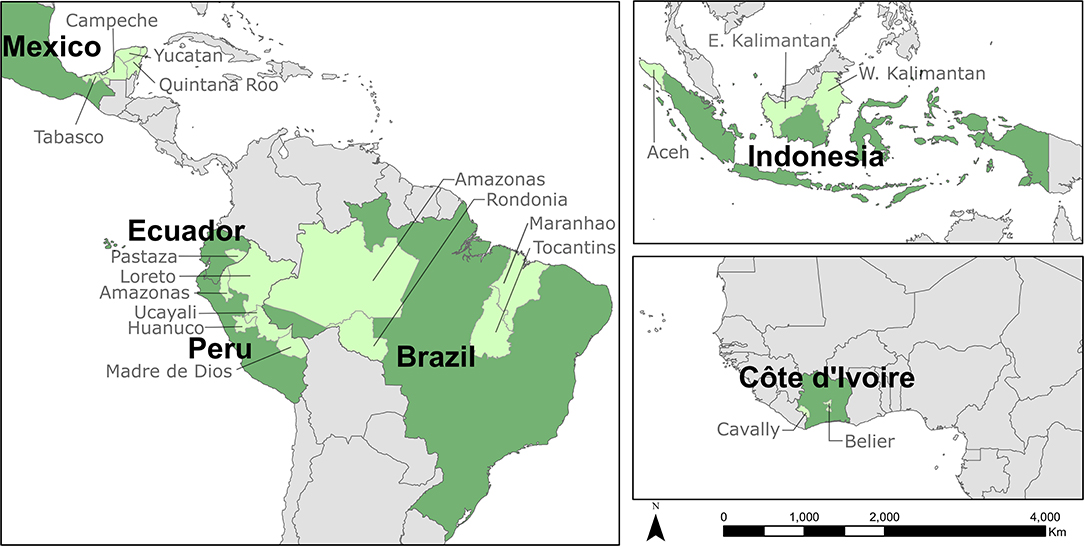

We implemented the SLRT and held a validation workshop in 19 GCF TF member jurisdictions from August 2017 until February 2019: four states in Brazil, five regions in Peru, four states in Mexico, one province in Ecuador, two regions in Cote d'Ivoire, and three provinces in Indonesia (Figure 2). GCF TF, initially established in 2008 by nine governors from Brazil, Indonesia, and the United States, is a platform for collaboration among 38 states and provinces in 10 countries to advance jurisdictional approaches to REDD+ and low emissions development (GCF Task Force, 2019). The SLRT implementation was conducted as part of a larger research program and was not aligned with any specific events, strategy development initiatives, multi-stakeholder initiatives, or monitoring efforts in the individual study jurisdictions.

Figure 2. Map of six countries and 19 sub-national jurisdictions where the SLRT (including the added indicators) was implemented, shown in dark and light green, respectively: Brazil - Amazonas, Maranhao, Rondonia, and Tocantins; Cote d'Ivoire - Belier and Cavally; Ecuador - Pastaza; Indonesia - Aceh, East Kalimantan, and West Kalimantan; Mexico - Campeche, Quintana Roo, Tabasco, and Yucatan; Peru - Amazonas, Huanuco, Loreto, Madre de Dios, and Ucayali.

Data Collection and Analyses

Following the methods set out in the SLRT guidance document (SLRT, 2017b), including the validation workshop piloted in San Martin, Peru, we completed the assessments through four sequential steps: (1) compilation of secondary data; (2) interviews with key stakeholders in the jurisdictions; (3) multi-stakeholder workshops to validate the results; and (4) revision of the assessments based on feedback from the validation. Figure 3 summarizes the steps and the full process. All assessments were completed under the supervision of a coordinating team from the Center for International Forestry Research (CIFOR), EII, and CCBA. The assessment in each state/province was carried out by country-based researchers who, as part of their onboarding, were trained in the tool's indicators and familiarized with the SLRT guidance document and the guidance for the additional indicators. The coordinating team provided guidance and comments on drafts of the assessments as they were being completed and developed detailed validation workshop protocols to ensure a standard process across assessments. Each SLRT took the country-based researchers ~25 person-days to complete.

Figure 3. Process and steps of SLRT implementation in each sub-national jurisdiction (state/province).

Publicly Available Data

For each jurisdiction, we first completed as much of the assessment as possible based on publicly available data, regulations, and other published documents from national and sub-national government sources. Some additional information was also obtained from reports published by national and international non-governmental organizations (NGOs) and research organizations. For each indicator with available secondary information, we recorded a preliminary rating of A, B, C, or insufficient data (ID), the justification for the rating, and the source of information supporting it (with a link to the website or document when possible). Similar to a rubric, the tool specifies what distinguishes each rating for each indicator. For example, an indicator in Section 1 examines whether the land-use plan or zoning has been formally adopted by the jurisdiction. For a rating of A, the plan must have been adopted by law, with regulations requiring that it is respected; for a B rating, the plan has been agreed upon by government and stakeholders but not fully legally adopted; and for a C rating, the plan does not yet exist or is still in development. The justification for this indicator should state whether the land-use plan exists, the name of the plan and associated legislation, and whether it has been approved.
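The rubric logic of the land-use plan example can be sketched as a small decision function. This is an illustration only: SLRT ratings are assigned by assessors weighing the evidence, not computed, and the `PlanStatus` categories and `rate_land_use_plan` name are hypothetical. Mapping missing evidence to ID is likewise our assumption about how that rating would apply to this indicator.

```python
from enum import Enum
from typing import Optional


class Rating(Enum):
    A = "high"
    B = "medium"
    C = "low"
    ID = "insufficient data"


class PlanStatus(Enum):
    """Hypothetical summary of what the evidence says about the land-use plan."""
    ADOPTED_BY_LAW = "adopted by law, with regulations requiring compliance"
    AGREED_NOT_ADOPTED = "agreed by government and stakeholders, not fully adopted"
    IN_DEVELOPMENT = "in development"
    ABSENT = "does not exist"


def rate_land_use_plan(status: Optional[PlanStatus]) -> Rating:
    """Map evidence on land-use plan adoption to an A/B/C/ID rating."""
    if status is None:
        # Assumption: no usable evidence was found, so no rating can be justified.
        return Rating.ID
    if status is PlanStatus.ADOPTED_BY_LAW:
        return Rating.A
    if status is PlanStatus.AGREED_NOT_ADOPTED:
        return Rating.B
    # The plan does not exist yet or is still being developed.
    return Rating.C
```

For example, a plan agreed by the government and stakeholders but not yet passed into law maps to `Rating.B`.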

Interviews With Key Stakeholders

To supplement the secondary data, we interviewed on average ~10–15 key informants per jurisdiction, mostly in the capital city of each province/state, over a period of 7–10 days; some interviews took place in the national capitals. We selected interviewees based on their expertise and their position within their organizations, according to the information required for the indicators. Interviewees included GCF TF delegates, heads of government ministries/agencies (state/provincial and national), relevant civil society organization (CSO) employees, private sector representatives, researchers/academics, representatives of producer organizations, and leaders of indigenous peoples/local community organizations.

Any indicator with information obtained or clarified through an interview was recorded as such in the SLRT assessment. Each indicator was marked as “Level 1” or “Level 2” according to its information source, with Level 1 indicating the use of publicly available information. If information about a public document was obtained during an interview and the document was used as the main source for the justification, the indicator was marked as Level 1 and, if possible, a link to the document was added to the justification. However, if the information was obtained solely from an interviewee, or if a publicly available document needed to be verified through an interview, the indicator was marked as Level 2. If different stakeholders expressed conflicting opinions about an indicator, this was captured in the indicator's justification. We revised or completed all indicators based on the additional information gathered through the interviews. The coordinating team then discussed and reviewed the evidence from secondary sources and interviews, together with the indicator ratings assigned by the country researchers, and the pre-validation ratings were finalized. All interview data were analyzed and incorporated into the preliminary SLRT assessment prior to the next step: the multi-stakeholder validation workshops.
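The Level 1/Level 2 rule above can be expressed as a short classification function. This sketch assumes each indicator's evidence can be summarized by three yes/no facts; the `EvidenceRecord` fields and the `evidence_level` function are hypothetical names, not part of the SLRT.

```python
from dataclasses import dataclass


@dataclass
class EvidenceRecord:
    """Hypothetical summary of how one indicator's evidence was obtained."""
    public_document_is_main_source: bool
    learned_of_document_in_interview: bool = False
    document_needed_interview_verification: bool = False


def evidence_level(record: EvidenceRecord) -> int:
    """Return 1 (publicly available) or 2 (interview-based).

    Hearing about a public document during an interview does not by itself
    make an indicator Level 2; needing the interview to verify it does.
    """
    if (record.public_document_is_main_source
            and not record.document_needed_interview_verification):
        return 1
    # Interview-only information, or a public document that had to be verified.
    return 2
```

The `learned_of_document_in_interview` field is kept deliberately even though it does not affect the result, to make explicit that the route by which a document was found is irrelevant to its level.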

Multi-Stakeholder Validation Workshops

As the third step of completing the SLRT assessment, we held a 1-day multi-stakeholder validation workshop in each jurisdiction, with an average of 16 participants per workshop (range 5–29). Apart from two workshops in Brazil with only five and six participants, owing to the election process, and one workshop in Peru with seven participants (although 10 had confirmed), most workshops were attended by at least 12 participants. Workshop participants included the interviewees noted above and key stakeholders from other relevant organizations. Depending on what was locally appropriate, invitations were sent either directly to the interviewees or to the heads of their organizations or government agencies. Invitations were often sent by email and then followed up with a phone call to request/confirm participation. Additional invitations beyond the interviewees were based on the recommendations of the interviewees or the respective GCF TF delegates. In some jurisdictions (in Brazil and Mexico), we were able to work with a local institution to send the invitations to potential participants. In general, 20–35 invitations were sent in each jurisdiction.

During each validation workshop, participants examined the draft ratings and justifications for each indicator. Each workshop began with an overview of the tool, after which participants were divided into three smaller groups to validate different sections based on their expertise. In the morning, the three groups reviewed and corrected the indicator ratings and justifications in Sections 1, 2, and 4, followed by a plenary discussion of any changes, including the justifications for each change. The afternoon followed a similar structure, with the groups (reshuffled so that participants could choose the section most relevant to their expertise) reviewing and discussing Sections 3, 5, and 6. Any changes to indicator ratings and justifications were noted, and the SLRT assessment was amended accordingly, resulting in the final, post-validation version. In three Brazilian states, workshops were shortened due to the highly politicized and divisive 2018 election process, so not all indicators could be validated during the workshop. In these cases, the remaining indicators were validated remotely by email, reaching out to all workshop participants and following up specifically with relevant agencies/organizations as needed. Although the process in the Brazilian states was not implemented as planned, we present these data and experiences here because they illustrate how political cycles and elections can affect participatory assessment processes, information availability, and, more broadly, jurisdictional approaches.

Further, when time allowed, we conducted a focus group discussion immediately at the end of the validation workshop on participants' perceptions of the usefulness of the tool itself. Focus group discussions with all workshop participants were conducted in the following 11 jurisdictions: East and West Kalimantan, Indonesia; Campeche, Quintana Roo, Tabasco, and Yucatan, Mexico; Amazonas, Huanuco, and Madre de Dios, Peru; and Belier and Cavally, Cote d'Ivoire. We asked participants to reflect on how the SLRT assessment might be used, to whom it might be beneficial, the advantages/disadvantages of using such a rating tool, and whether they gained anything unexpected from using the SLRT and the workshop experience.

Analyses

In addition to indicator ratings and justifications, we compiled information on whether and how individual indicator ratings changed during the validation workshops (i.e., the change between the pre- and post-validation SLRT indicator ratings). We used descriptive statistics to summarize changes in the number and types of indicators, the source of information (Level 1 vs. 2), and whether indicators were changed to a higher (e.g., B to A) or lower (e.g., B to C) rating. We looked for global, regional, and country-level trends or patterns in these variables and tested for their correlation with the types of organizations/participants at the workshops.
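The pre/post comparison can be sketched as follows, assuming an ordinal scale C < B < A. The text does not say how changes to or from insufficient data (ID) were classified, so this sketch counts them as changed but neither "up" nor "down"; that choice, the toy ratings, and all function names are assumptions for illustration.

```python
from collections import Counter

# Ordinal order of the substantive ratings; "ID" (insufficient data) has no rank.
RANK = {"C": 1, "B": 2, "A": 3}


def change_direction(pre: str, post: str) -> str:
    """Classify one indicator's change from pre- to post-validation rating."""
    if pre == post:
        return "unchanged"
    if pre in RANK and post in RANK:
        return "up" if RANK[post] > RANK[pre] else "down"
    return "id_change"  # assumption: moves to or from ID are not ordinal


def summarize_changes(pre: list[str], post: list[str]) -> dict[str, float]:
    """Percent of indicators changed, plus the up/down split among changed ones."""
    if len(pre) != len(post) or not pre:
        raise ValueError("need non-empty, equal-length rating lists")
    counts = Counter(change_direction(a, b) for a, b in zip(pre, post))
    n_changed = len(pre) - counts["unchanged"]

    def share(key: str) -> float:
        return 100 * counts[key] / n_changed if n_changed else 0.0

    return {
        "pct_changed": 100 * n_changed / len(pre),
        "pct_up_of_changed": share("up"),
        "pct_down_of_changed": share("down"),
    }


# Hypothetical ratings for five indicators (not study data).
pre_ratings = ["A", "B", "B", "C", "B"]
post_ratings = ["A", "A", "C", "C", "B"]
print(summarize_changes(pre_ratings, post_ratings))
```

In this toy example, two of five ratings change (40%), one up (B to A) and one down (B to C), giving a 50/50 split among changed indicators. Changes to a justification without a change in rating are not counted here.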

The results of the focus group discussions conducted with the workshop participants were compiled, categorized according to the questions asked, and analyzed across jurisdictions using content/thematic analysis in Microsoft Excel. Responses from the participants across the jurisdictions were examined for recurring themes or ideas regarding the use, usefulness, advantages, and disadvantages of the SLRT. An inductive approach similar to grounded theory (Glaser and Strauss, 1967) served as a basis for this analysis, where the themes and ideas identified emerged from the data (Clifford and Valentine, 2003).

Results

Source of Information

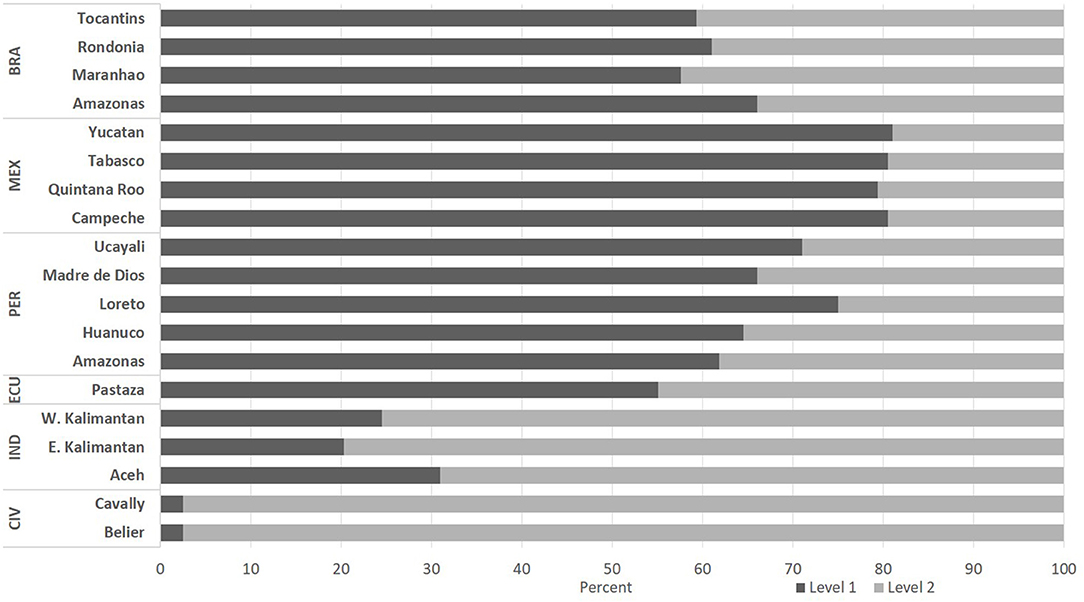

Figure 4 shows the percentage of indicators assessed via publicly available information (Level 1) or interviews (Level 2), highlighting varying levels of publicly available information across the states/provinces. Mexico had the highest share of indicators assessed at Level 1 (averaging 80%, with similar percentages across all four sampled states), followed by Peru (64%), Brazil (61%), and Ecuador (55%). Only about one-fourth (25%) of indicators in Indonesia and <3% of indicators in Cote d'Ivoire were completed using publicly available information. It is important to note that the four SLRT assessments in Brazil were completed during an election period, when, as required by Brazilian regulations, information and access to official government websites were limited. This timing likely reduced the number of indicators that could be assessed at Level 1 in Brazil. For the other countries, differences in the number of indicators assessed at Level 1 likely reflect differences in government transparency regarding official data and/or national capacity for data generation. Further, the 15 indicators that were added to the SLRT were more likely to be completed using Level 2 data (64%).

Figure 4. Percent of indicators assessed at Level 1 (publicly available) and Level 2 (based on interviews). [Mexico (MEX), Peru (PER), Brazil (BRA), Ecuador (ECU), Indonesia (IND), Cote d'Ivoire (CIV)].

Given the focus of the SLRT indicators on government policies and programs in place, many of the interviewees in each jurisdiction were from various government agencies. However, other relevant organizations and associations were also interviewed for specific indicators (Supplementary Table 1).

Validation Workshop Participants

A breakdown of participant composition at the validation workshops shows that government representatives (across various government agencies and, in some cases, from the national as well as the state/provincial level) were present at all workshops, while CSOs were present at 79% and academic/research organizations at 58%. Across all validation workshops in the 19 sites, 52% of the participants were from government, 19% from CSOs, 10% from academic/research organizations, 6% from multi-stakeholder councils/committees, 6% from producer/indigenous organizations, 3% from multi- or bi-lateral donor organizations, and 3% from private companies. The low participation of private sector and indigenous organization representatives was a limitation. In some jurisdictions, indigenous organizations/representatives did not attend despite being invited and despite efforts to ensure their attendance (covering costs of transportation and lodging and providing logistical support as needed). Engagement with the private sector was limited by difficulties of access (e.g., securing interview appointments, distance to offices) and by private sector entities' lower interest in the assessment and SLRT topics.

Change in Indicator Ratings During the Multi-Stakeholder Validation Workshop

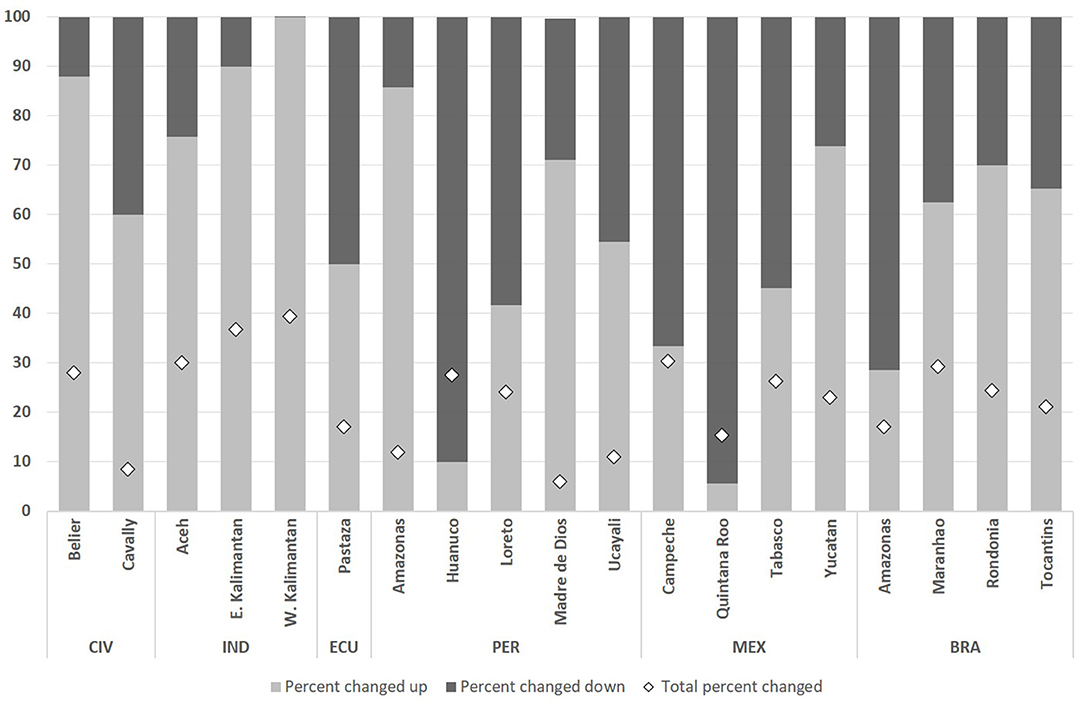

Indicators that changed during the validation workshops were changed not only to a better rating (“up,” e.g., B to A) but often also to a lower rating (“down,” e.g., B to C) relative to the pre-validation SLRT version. Figure 5 shows the percent of indicators changed per jurisdiction and the direction of the change during the validation workshops. On average, 22% of indicators were changed; of these, 58% were changed up and 42% down. Indicator justifications and ratings in SLRT Sections 4 (Stakeholder coordination and participation), 5 (Commodity production systems), 1 (Land-use planning and management), and 2 (Land and resource tenure) were changed most often. Participants in most jurisdictions changed at least some indicators downward during the workshops, though the amounts varied. For example, in East Kalimantan, Indonesia, only 10% of the changed indicators were changed downward, whereas in Huanuco, Peru, 90% were.

Figure 5. Percent of indicators changed (♢) in total and percent changed “up” or “down” (bar) in each sub-national jurisdiction. [Mexico (MEX), Peru (PER), Brazil (BRA), Ecuador (ECU), Indonesia (IND), Cote d'Ivoire (CIV)].

Indicator ratings and justifications were changed at the workshops due to the availability of more information provided by the stakeholders present. We found a positive relationship between the percent of indicators assessed at Level 1 (publicly available information) and the percent of indicators changed to a lower rating. Of the total indicators changed downward, 65% were assessed at Level 1 and only 35% at Level 2. Participants were able to provide additional details, clarity, and applicable local context even for Level 1 indicators thought to be fully assessed using publicly available data. Often workshop participants clarified information, such as for unclear definitions of land-use and land cover categories within some land planning policies and regulations, and provided details about policy implementation, which justified shifts in the ratings. For example, in one Indonesian province, participants provided more details on a provincial regulation that defines the roles and responsibilities of land-use planning institutions/agencies, clarifying that not all roles and responsibilities are fully regulated by this regulation and some are ad-hoc. With the addition of this information to the indicator justification, participants decided to lower the indicator rating. These rating changes highlight the fundamental difference between the existence of a policy and the understanding, applicability, and effective implementation of the policy—underscoring the importance of complementing publicly available information with other sources.

In some cases, the new inputs prompted modifications in the ratings only after in-depth group discussions and, in some cases, heated debates, along three main lines. First, contentious topics, such as land tenure and stakeholder participation in policy making, generated much discussion. For example, in one Indonesian province, discussions about the inclusion of women, indigenous groups, and other marginalized groups in policy/management-related consultations triggered disagreement between representatives from an indigenous organization and the government agencies present, resulting in a lower rating for the indicator. Second, there were discussions about the applicability of certain SLRT indicators to the local context. For example, in one Peruvian jurisdiction, participants asked for additional clarifications about the criteria to rate an indicator focused on clarity in the definition of land and resource management and use rights, and felt the indicator did not fit the regional context given the legal implications of the terminology. Participants ultimately agreed on an indicator justification and rating but recommended the addition of new indicators related to this topic that were better suited to the Peruvian context. Third, indicators related to corruption, child labor, and forced labor were considered extremely sensitive in most workshops and often generated discussion. In some cases, participants were uncomfortable providing ratings and justifications for these indicators. In the case of child and forced labor, many defaulted to explain that it was illegal, but others debated what child labor means in an agricultural/rural context where it is common for children to help on family farms. Thus, in one jurisdiction in Mexico and another in Peru, participants chose to assign the rating of Insufficient Data (ID) to these indicators stating they did not have sufficient information regarding the definition of child/forced labor. 
At one workshop in Indonesia, participants were hesitant to rate the corruption indicator and decided to assign it a rating of ID, stating that there was no strong evidence of corruption because, so far, no land-related cases had been registered in court. In other jurisdictions, such as one in Brazil, corruption was a difficult topic to discuss, and participants accepted the justification provided in the completed SLRT, though with considerable discontent.

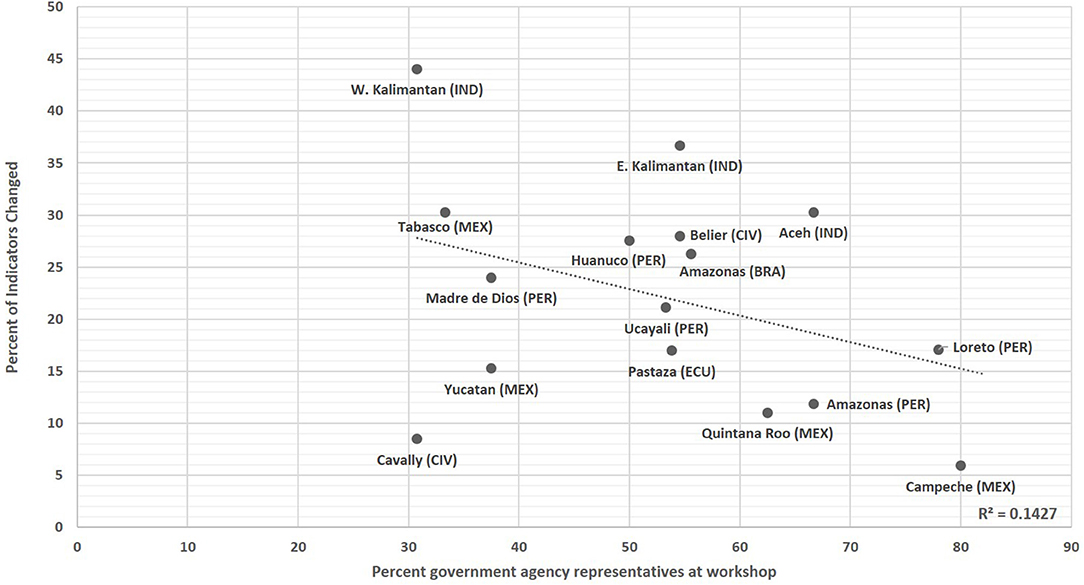

Because many indicator ratings changed as a result of the additional information and discussions, we examined how the percent of ratings changed varied with the composition of workshop participants, specifically the percent of participants who were government officials. As illustrated in Figure 6, fewer indicator ratings tended to be changed at workshops with a higher presence of government representatives relative to other types of organizations and representatives (see footnote 1). For example, the Huánuco and Loreto workshops had higher representation from CSOs and other organizations than other Peruvian jurisdictions and also had more changes in indicator ratings. This finding highlights the importance of including diverse stakeholders in such processes, given the additional information and perspectives they provide.

Figure 6. Relationship between the percent of government officials present at the validation workshop and the percent of indicator ratings changed (r = −0.377). [Mexico (MEX), Peru (PER), Brazil (BRA), Ecuador (ECU), Indonesia (IND), Cote d'Ivoire (CIV)].
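Figure 6 summarizes this relationship with a single correlation coefficient. Assuming r is a Pearson product-moment correlation (the text does not name the method), it can be computed from per-workshop pairs as in the Python sketch below. The input values are hypothetical placeholders, not the study data, and jurisdictions without non-governmental participants (footnote 1) are assumed to have been removed beforehand.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    dx = [xi - mean_x for xi in x]
    dy = [yi - mean_y for yi in y]
    sxy = sum(a * b for a, b in zip(dx, dy))  # co-deviation
    sxx = sum(a * a for a in dx)              # spread of x
    syy = sum(b * b for b in dy)              # spread of y
    if sxx == 0 or syy == 0:
        raise ValueError("correlation undefined for a constant sequence")
    return sxy / math.sqrt(sxx * syy)


# Hypothetical per-workshop values (NOT the study data).
pct_government = [80.0, 60.0, 50.0, 40.0, 30.0]  # % of participants from government
pct_changed = [5.0, 10.0, 8.0, 15.0, 20.0]        # % of indicator ratings changed
print(round(pearson_r(pct_government, pct_changed), 3))
```

A negative r means workshops with a larger government share tended to change fewer ratings; a value of −0.377 indicates a moderate association and does not by itself show that participant composition caused the changes.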

Stakeholder Perceptions of SLRT Usefulness

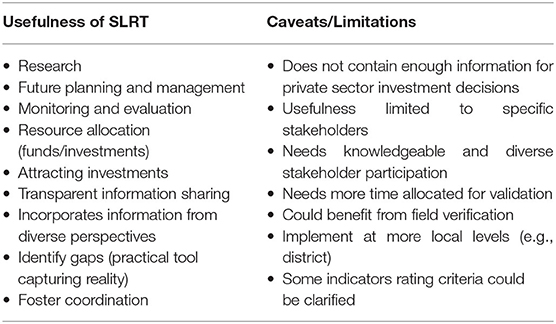

Focus groups held at the end of some workshops revealed how stakeholders perceived the usefulness of the tool. Participants mentioned many ways in which SLRT assessments could be used; these are summarized in Table 2. Overall, stakeholders indicated that the tool's main advantages were providing an overview of the problems to be addressed and serving as a guide for future decision making and planning. If implemented periodically, the tool's ratings could also track the achievements and effectiveness of the work of various stakeholders: local governments, CSOs, academic institutions, and other relevant parties. However, the SLRT could be more useful to some stakeholders than to others. Participants felt it would be most useful for specialists or technicians familiar with land-use planning and governance issues; the most commonly mentioned entities were state- and province-level government agencies, especially those focusing on forestry, environment, land and spatial planning, agriculture and plantations, and mining.

Some workshop participants also mentioned the possibility of investors using the tool to identify and select jurisdictions. Although private sector entities were consulted during the development of the SLRT so that it would include information potentially of interest to them, some workshop participants did not think the tool contained enough information to help attract investors to the jurisdiction. For example, in one Indonesian jurisdiction, a participant from the private sector mentioned that, to decide whether to invest in an area, they would need to know the risks, including political stability and safety, as well as value chain information, stocks, productivity, access and transportation, and the qualifications of local people as a potential source of labor. Thus, the usefulness of the tool for private investors, or for attracting investment, remains unclear.

Participants stressed the importance of involving a greater number and diversity of well-informed stakeholders in interviews and workshops to ensure that the SLRT is completed adequately and reflects the reality on the ground. Participants also thought it important to include some field verification in addition to the interviews, and to implement the tool at more local levels or even for different ecosystems of interest within the jurisdiction. For example, at the district level, the tool could better capture local enabling conditions, since authority over land decisions lies with this level of government.

At most workshops where perceptions of the tool were gathered, participants reported some unexpected benefits of being involved in the SLRT validation process. In one Peruvian jurisdiction, an indigenous representative stated that they were not often asked to participate in similar surveys or workshops. Another broadly shared view was that the workshop gave representatives of different government agencies an opportunity to engage with each other as well as with the non-governmental actors present; participants said it was valuable to learn about the policies, initiatives, and efforts of other government agencies working on the same issues.

Discussion and Recommendations

Our findings highlight four main points related to assessment of enabling conditions for jurisdictional sustainability using tools like the SLRT. First, the results showcase the importance of data validation with stakeholder participation even for indicators that might seem easily assessed through publicly available data alone (e.g., existence of policies or programs). Second, a greater diversity of stakeholders present at the workshops led to more changes in SLRT indicators. Third, through the workshop discussions, stakeholders interested in jurisdictional sustainability began conversations that might lead to coordination and collaboration in the future. Fourth, broad application of the SLRT reinforced the importance of balancing the need for standardized assessment tools with inclusion of measures that reflect context-specific issues. We discuss each of these points below and summarize lessons for participatory approaches in sustainability tools and platforms.

Stakeholder Participation

Through participation in the workshops, stakeholders were able to provide additional information that resulted in indicator rating changes, even for indicators assessed at Level 1 (i.e., through publicly available data). Compared with ratings supplemented by interviews and validation workshops, ratings based solely on secondary information could therefore leave gaps in assessments. Such a gap could illustrate the difference between articulated policies and their implementation, and, given the structure of the rating criteria, it would produce an overly optimistic assessment of the enabling conditions. The multi-stakeholder workshop format allows these gaps to be better identified and accounted for in the assessment.

Our results also show that changes in indicator ratings at the workshops appear to be affected by the types of stakeholders present. This illustrates the complementary role of non-governmental stakeholders relative to governments (Hemmati, 2002), which includes information sharing, especially when stakeholders have convergent goals (Coston, 1998; Najam, 2000). The in-depth discussions regarding indicator ratings and the resulting changes during the workshops, especially between civil society actors and government representatives, reinforce how non-governmental stakeholders can complement government actors in such participatory processes. Although the debates emphasized the different perspectives present at the workshops and the possibility of differing interpretations of some criteria, they also illustrate, as some workshop participants noted, the importance of interviewees and workshop participants (including CSOs) being knowledgeable about relevant policies and programs in their jurisdictions. Such knowledge is especially needed in jurisdictions where a higher percentage of the SLRT information is gathered through interviews (Level 2). This observation also highlights the importance of being cognizant of political cycles, changes in government, and personnel turnover, as it takes time for new employees to learn about the programs and initiatives within the jurisdiction's purview.

Validation Workshops as Catalyst for Relationship Building

Based on our observations of the workshops and feedback from the participants, such workshops can bring together various stakeholders and encourage discussions around jurisdictional sustainability. Participants indicated that the workshops facilitated learning and relationship building among various entities within the jurisdiction, creating opportunities for the approaches of different actors to complement one another. Although multi-stakeholder forums may already be coordinated and organized within the jurisdictions, the SLRT validation workshop focused specifically on policy and governance enabling conditions and brought together interested actors who might not participate in such forums for various reasons (e.g., limited scope, limited time, intermittent meetings). The SLRT assessment on its own will not build the trust or address the capacity or power differentials between stakeholders that are necessary for successful multi-stakeholder processes (Kowler et al., 2016; Larson et al., 2018), but it may be a way to begin closing coordination gaps. While we did not assess whether all participants trusted each other and felt able to express themselves in the workshops, most participants contributed to the discussions, and the varied structure of the workshops (i.e., plenary and small group discussions) facilitated their participation.

Pimbert and Pretty (1995) offer a continuum of participation as a way to think about the type and degree of stakeholder involvement, ranging from communication to consultation to self-mobilization (in which participants take initiatives independently to change systems). Participation during the SLRT data collection phases was mostly at the lower end of this continuum, namely participation in information giving, as interviewees acted as informants answering a set of questions. At the workshops, however, participants were able to vet the information provided for each indicator and take part in joint analysis in a multi-stakeholder setting, which enabled more interactive participation. Through this process, participants became more interested in the SLRT assessment and wanted ownership of it. There were indications that they might begin joint initiatives independent of the assessment process, suggesting a potential move toward the self-mobilization type of participation (Pimbert and Pretty, 1995). Any lasting impacts of the workshops in this direction will likely be seen in the formation of new networks or the strengthening of existing ones within jurisdictions, and would likely require a leader.

However, despite the benefits of participatory approaches, multi-stakeholder workshops add to the cost of implementing the rating tool compared with assessments based on Level 1 information only. In our case, costs varied greatly across jurisdictions, ranging from US $300 to $700 for fieldwork to conduct interviews and from US $500 to $2,200 for workshops. The various steps involved in completing each SLRT required about 1 month of the country-based researcher's time (22–25 person-days on average, and in some cases over 35 days). This included the time needed to become familiar with the tool, undergo training with the coordinating team, identify government agencies with relevant documents or data, build connections and networks in the jurisdiction, organize the validation workshop, and address logistical concerns such as scheduling and travel. Broadly, the timing of the assessment (e.g., overlap with elections), the SLRT implementer's previous experience in the jurisdiction, and the availability of interviewees will determine the time required to complete the SLRT. Overall, the SLRT cannot be rapidly implemented in 1–2 weeks without more extensive human and financial resources, especially since not all information is available online. By comparison, similar tools such as PROFOR's Assessing and Monitoring Forest Governance (15 weeks or more; Kishor and Rosenbaum, 2012) and WRI's GFI Framework (pilot assessments took more than 1 year; GFI Guidance Manual, 2013) take much longer to complete.
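The cost figures above are reported as separate ranges for interview fieldwork and for workshops. Adding the lower bounds and the upper bounds gives an outer envelope for the combined direct cost per jurisdiction, as in the small Python sketch below. It is only an envelope, since the cheapest fieldwork and the cheapest workshop need not have occurred in the same jurisdiction, and it excludes the researcher's 22–25 person-days because no day rate is reported; the `CostRange` and `combined_range` names are illustrative.

```python
from typing import NamedTuple


class CostRange(NamedTuple):
    low: int   # US dollars
    high: int  # US dollars


def combined_range(*ranges: CostRange) -> CostRange:
    # Sum lower bounds and upper bounds separately to get an outer envelope.
    return CostRange(sum(r.low for r in ranges), sum(r.high for r in ranges))


fieldwork = CostRange(300, 700)   # interview fieldwork, as reported in the text
workshop = CostRange(500, 2200)   # validation workshop, as reported in the text
print(combined_range(fieldwork, workshop))  # CostRange(low=800, high=2900)
```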

The relatively high cost may prevent the tool from being widely adopted. Costs could potentially be lowered if local jurisdictional stakeholders take on the implementation fully, which could reduce logistical and venue costs. Depending on the facilities and norms within the jurisdiction, the workshop could be held at a government agency or at an organization's office. However, the choice of venue and facilitator could affect the participation of certain stakeholders, especially those with less power. If, for example, government spaces are routinely used by multi-stakeholder forums for non-governmental purposes, there may be less risk of participation being compromised. A facilitator who is an “outsider” can be seen as contributing to the objectivity of the process and results. Furthermore, based on our experience of implementing the SLRT, we believe that although first-time implementation can be costly, subsequent implementations could be less so. With a baseline set through an initial SLRT implementation, future monitoring would likely be easier, since only indicators whose relevant policies or programs have changed would need to be updated. Additionally, if stakeholders in a jurisdiction already have the capacity and understanding needed to implement the tool, future updates can be less cumbersome.

Improving the Tool

Based on the broad implementation of this tool and feedback from workshop participants, we recommend that CCBA and any future implementers of the tool consider some changes to the SLRT and its guidelines. While workshop participants generally provided positive feedback regarding the tool, they also highlighted some areas for improvement. The SLRT was seen as a tool that could help governments take stock of advances and identify gaps (possibly as part of a monitoring plan). Focus group participants mentioned some of the intended SLRT objectives as potential uses of the tool, indicating that the tool's objectives, content, and implementation are largely aligned. The tool's value for research, which participants also listed, is not explicitly defined as an objective by its creators.

Feedback regarding the usefulness of the tool showed a mismatch between the information stakeholders believe investors want and the information contained in the SLRT, even though informing investors is one of the tool's objectives. This likely indicates that the tool does not capture enough of the information investors need. As such, the tool would need to be used in conjunction with other tools, or additional indicators would need to be developed and incorporated through conversations among investors, government, and non-governmental stakeholders. Such conversations could provide an opportunity to build a shared understanding of the types of information investors need from jurisdictions for their decision-making and the information jurisdictions can realistically provide. This is in line with what Pacheco et al. (2018) identify as possible co-learning opportunities between public and private sector actors that can contribute to compliance with commitments, maximization of benefits, and minimization of trade-offs.

There is a need to simplify and revise the SLRT and its indicators, which echoes calls for clarification and simplification of other tools and standards related to conservation and development (see Piketty and Garcia-Drigo, 2018; Romero and Putz, 2018). The completed tool is often long because of the justifications provided, and although there is an SLRT summary sheet (containing only the indicators and their ratings), an alternative summary format or infographic might help jurisdictions clearly capture and display their progress. Workshop participants also requested this, since many people, especially higher-level government officials, would not be able to quickly grasp the key information from the full or summary SLRT assessments. Including the 15 additional indicators in our implementation of the SLRT increased the length of the completed tool. Although these indicators allowed more detailed exploration of some of the tool's criteria (e.g., monitoring and reporting systems: use of data and capabilities; policies affecting land use: effective implementation; and the institutional learning and development criteria), future implementers of the tool could choose to drop some of them if they are not applicable to the selected jurisdiction.

Further, based on the indicators that generated much debate or discussion at the workshops, we recommend clarifying the indicator on child labor, especially as it relates to rural landscapes and families. Similarly, indicators that use thresholds to distinguish between ratings should state the rationale for each threshold. Additionally, we recommend placing the indicator on corruption at the top of the tool (alongside the national and sub-national ratings and government pledges and commitments; see Figure 1), to be assessed using only publicly available data and interviews with non-governmental stakeholders. In this way, it would not be discussed or validated during the workshop.

Moreover, we recommend adding guidance on the composition of workshop participants and on the length of the validation workshop, which requires at least one full day but could be split over multiple days. As our results show, a more diverse composition of stakeholders would balance interests and broaden the information generated. Ensuring participation by knowledgeable representatives, along with preparing workshop participants in advance, could also improve inputs and help to address some of the power imbalances. For example, preparation could include sharing the draft assessment with participating stakeholders before the workshop.

Lessons for Participatory Approaches in Sustainability Tools and Platforms

The participatory validation component suggested and employed in conducting the SLRT is not unique to this tool. The GFI tool mentioned earlier also suggests consulting stakeholders, not only in selecting the indicators for forest governance but also to “review the results” and test the credibility of the assessment. However, in the piloting of the GFI tool, stakeholders were engaged differently across the pilot countries, ranging from a national advisory panel that provided periodic feedback to workshops at national and local levels convened to gather feedback.

With a participatory approach being recommended for more sustainability tools and platforms, it is important to consider the lessons learned from our global application of the SLRT. In conducting the validation workshops, many indicator ratings and justifications were negotiated and changed, highlighting how broader perspectives and varied data sources are captured through the participatory validation of the tool, even for those assessed at Level 1. However, in light of our findings regarding the presence of specific stakeholder groups and changes in indicator ratings, the SLRT's aim to be “objective” in reporting the status of key governance conditions could be questioned. At the same time, in jurisdictions with less information available online, this sort of participatory platform can be helpful in triangulating data as well as increasing transparency. Participatory validation can minimize discrepancies in the resulting assessment when conducted by different users, as it would be reviewed and adjusted by the relevant stakeholders in a given jurisdiction. Through the employment of this participatory approach, a more nuanced and complete picture of the jurisdiction could be achieved, since various stakeholder groups are building consensus around the data and the assessment. Data validation by stakeholders, especially when conducted as a part of an initial- or self-assessment, can complement certification efforts that require independent, third-party assessments.

Given the SLRT's stated objective of communicating clearly and concisely, its implementation using a participatory approach needs to ensure that complicated indicator justifications resulting from diverse and nuanced stakeholder perspectives are coherently captured. Further, as participants stated during the focus groups, involvement in the validation process may make participants more interested in joining broader jurisdictional efforts and initiatives and in taking ownership of the data and assessment process. Based on our findings, we believe that the strength of the tool lies in its ability to be used by jurisdictional actors to assess their own progress (due diligence), bring together different actors and data sources, and provide a basis for collecting information that can be communicated within the jurisdiction and to interested investors to begin conversations. Moreover, as we have seen, the participatory validation of the SLRT could further coordination and partnerships within the jurisdiction, helping to address one of the tool's objectives and an enabling condition for jurisdictional approaches.

Conclusions

This study highlights the importance and contributions of validating data on governance conditions that enable sustainable landscapes through multi-stakeholder workshops. Not only do these workshops provide an avenue for obtaining additional clarity on policies and programs to better capture the reality of enabling conditions, they also allow for discussions between stakeholders within a jurisdiction. Further, successfully implementing the SLRT or a similar tool in jurisdictions must take into consideration political cycles and administration/personnel changes, as these will likely affect the availability of information and the total time needed to complete the tool. Based on our findings and workshop experiences, we recommend some revisions to the tool through additional clarifications and simplifications of the indicators that generated a lot of debate. We also believe that additional guidance on how to carry out the multi-stakeholder validation workshop, specifically regarding the structure and composition of participants, would be helpful to future implementers in balancing discussions while capturing the various perspectives.

Conducting the SLRT or similar assessments using this participatory methodology, and identifying coordination gaps within a jurisdiction, could improve coordination between participating stakeholders and sectors. Although engagement with the private sector was limited in all jurisdictions, our findings point to a need for more transparency and dialogue between private sector investors and the public sector and other key stakeholders in the jurisdictions (CSOs, producer organizations). Through the participatory validation of the SLRT assessment described in this paper, we show that inviting stakeholders to discuss sustainability indicator ratings is a useful way to initiate reflection on current policies and programs, expand the breadth of information generated, and potentially foster coordination and new partnerships to support jurisdictional approaches.

Data Availability Statement

The datasets generated for this study are available on request to the corresponding author.

Ethics Statement

The studies involving human participants were reviewed and approved by CIFOR Ethics Review Committee. The ethics committee waived the requirement of written informed consent for participation.

Author Contributions

SP, AD, and CS contributed to the conception and design of the study. CL and MK led SLRT assessments in several jurisdictions in Peru and Indonesia, respectively. JD led the participatory process for the development of the SLRT and associated guidance. SP reviewed all SLRT assessments, cleaned and organized the database, and performed the statistical analysis. SP, AD, CS, and JD wrote the manuscript with contributions from CL and MK. All authors contributed to the article and approved the submitted version.

Funding

This work was funded through the CGIAR research program on Forests, Trees, and Agroforestry, the Norwegian Agency for Development Cooperation (Norad), and the International Climate Initiative (IKI) of the Federal Ministry for the Environment, Nature Conservation, Building and Nuclear Safety (BMUB).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

Thank you to the many people who made this work possible. We would like to thank Maria DiGiano (EII) for her help with the additional indicators added to the SLRT. We would also like to thank additional field researchers who led SLRT assessments in the studied sub-national jurisdictions: Ana Carolina Crisostomo, David Solano, Dawn Ward, Ekoningtyas M. Wardani, Ines Saragih, Magda Rojas, and Natalia Cisneros. We also thank all the interviewees and workshop participants for their time. Finally, we would like to thank the reviewers and journal editors who provided helpful feedback on earlier versions of this manuscript.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/ffgc.2021.507443/full#supplementary-material

Footnotes

1. Maranhão, Rondônia, and Tocantins in Brazil were excluded from these analyses because non-governmental stakeholders were not present at their validation workshops due to complications associated with the election period mentioned earlier.

References

Boyd, W., Stickler, C., Duchelle, A. E., Seymour, F., Nepstad, D., Bahar, N. H. A., et al. (2018). Jurisdictional Approaches to REDD+ and Low Emissions Development: Progress and Prospects. Working Paper. Washington, DC: World Resources Institute. Available online at: https://www.wri.org/publication/ending-tropical-deforestation-jurisdictional-approaches-redd-and-low-emissions (accessed December 15, 2020)

Busch, J., and Amarjargal, O. (2020). Authority of second-tier governments to reduce deforestation in 30 tropical countries. Front. For. Glob. Change 3:32. doi: 10.3389/ffgc.2020.00032

Coston, J. M. (1998). A model and typology of government-NGO relationships. Nonprofit Voluntary Sector Quart. 27, 358–382. doi: 10.1177/0899764098273006

DiGiano, M. L., Stickler, C., Nepstad, D., Ardila, J., Becerra, M., Benavides, M., et al. (2016). Increasing REDD+ Benefits to Indigenous Peoples and Traditional Communities Through a Jurisdictional Approach. San Francisco, CA: Earth Innovation Institute.

GCF Task Force (2019). Available online at: https://www.gcftf.org/ (accessed October 20, 2020).

GFI Guidance Manual (2013). The Governance of Forests Initiative (GFI) Guidance Manual: A Guide to Using the GFI Indicator Framework. Available online at: https://wri-indonesia.org/sites/default/files/governance_of_forests_initiative_guidance_manual.pdf (accessed October 20, 2020).

Glasbergen, P. (2018). Smallholders do not eat certificates. Ecol. Econ. 147, 243–252. doi: 10.1016/j.ecolecon.2018.01.023

Glaser, B. G., and Strauss, A. (1967). The Discovery of Grounded Theory: Strategies for Qualitative Research. Chicago, IL: Aldine. doi: 10.1097/00006199-196807000-00014

Hemmati, M. (2002). Multi-Stakeholder Processes for Governance and Sustainability: Beyond Deadlock and Conflict. New York, NY: Earthscan.

Kishor, N., and Rosenbaum, K. (2012). Assessing and Monitoring Forest Governance: A User's Guide to a Diagnostic Tool. Washington, DC: Program on Forests (PROFOR). Available online at: https://www.profor.info/sites/profor.info/files/AssessingMonitoringForestGovernance-guide.pdf (accessed October 20, 2020).

Kowler, L. F., Ravikumar, A., Larson, A. M., Rodriguez-Ward, D., Burga, C., and Gonzales Tovar, J. (2016). Analyzing Multilevel Governance in Peru: Lessons for REDD+ From the Study of Land-Use Change and Benefit Sharing in Madre de Dios, Ucayali and San Martin. Working Paper 203. Bogor: CIFOR. Available online at: https://www.cifor.org/publications/pdf_files/WPapers/WP203Kowler.pdf (accessed December 15, 2020).

Larson, A. M., Sarmiento Barletti, J. P., Ravikumar, A., and Korhonen-Kurki, K. (2018). “Multi-level governance: Some coordination problems cannot be solved through coordination,” in Transforming REDD+: Lessons and New Directions, eds A. Angelsen, C. Martius, V. De Sy, A. E. Duchelle, A. M. Larson, and T. T. Pham (Bogor: CIFOR), 81–91.

Najam, A. (2000). The four C's of third sector–government relations: cooperation, confrontation, complementarity, and co-optation. Nonprofit Manage. Leader. 10, 375–396. doi: 10.1002/nml.10403

Nepstad, D., Irawan, S., Bezerra, T., Boyd, W., Stickler, C., Shimada, J., et al. (2013). More food, more forests, fewer emissions, better livelihoods: linking REDD+, sustainable supply chains and domestic policy in Brazil, Indonesia and Colombia. Carbon Manage. 4, 639–658. doi: 10.4155/cmt.13.65

Pacheco, P., Bakhtary, H., Camargo, M., Donofrio, S., Drigo, I., and Mithöfer, D. (2018). “The private sector: can zero deforestation commitments save tropical forests?,” in Transforming REDD+: Lessons and New Directions, eds A. Angelsen, C. Martius, V. De Sy, A. E. Duchelle, A. M. Larson, and T. T. Pham (Bogor: CIFOR), 161–173.

Piketty, M.-G., and Garcia-Drigo, I. (2018). Shaping the implementation of the FSC standard: the case of auditors in Brazil. Forest Policy Econ. 90, 160–166. doi: 10.1016/j.forpol.2018.02.009

Pimbert, M., and Pretty, J. N. (1995). Parks, People and Professionals: Putting ‘Participation' Into Protected Area Management. UNRISD Discussion Paper 57. Geneva: United Nations Research Institute for Social Development.

Potts, J., Lynch, M., Wilkings, A., Huppé, G., Cunningham, M., and Voora, V. (2014). The State of Sustainability Initiatives Review 2014. Winnipeg, MB: International Institute for Sustainable Development; London: International Institute for Environment and Development.

Roberge, A., Bouthillier, L., and Boiral, O. (2011). The influence of forest certification on environmental performance: an analysis of certified companies in the province of Quebec (Canada). Canad. J. Forest Res. 41, 661–668. doi: 10.1139/x10-236

Romero, C., and Putz, F. E. (2018). Theory-of-change development for the evaluation of forest stewardship council certification of sustained timber yields from natural forests in Indonesia. Forests 9:547. doi: 10.3390/f9090547

Sayer, J., Sunderland, T., Ghazoul, J., Pfund, J.-L., Sheil, D., Meijaard, E., et al. (2013). Ten principles for a landscape approach to reconciling agriculture, conservation, and other competing land-uses. Proc. Natl. Acad. Sci. U.S.A. 110, 8349–8356. doi: 10.1073/pnas.1210595110

SLRT (2017a). Sustainable Landscapes Rating Tool – Assessing Jurisdictional Policy and Governance Enabling Conditions. Available online at: https://s3.amazonaws.com/CCBA/sustainable+landscapes+rating+tool/Version+1+June+2017/Sustainable_Landscapes_Rating_Tool_Sept2017_overview.pdf (accessed October 20, 2020).

SLRT (2017b). Sustainable Landscapes Rating Tool: Guidance. Available online at: https://s3.amazonaws.com/CCBA/sustainable+landscapes+rating+tool/Version+1+June+2017/SLRatingTool_Guidance_1June2017.pdf (accessed October 20, 2020).

SLRT (2019). Sustainable Landscapes Rating Tool. Available online at: http://www.climate-standards.org/sustainable-landscapes-rating-tool/. (accessed October 20, 2020).

Smith, W. K., Nelson, E., Johnson, J. A., Polasky, S., Milder, J. C., Gerber, J. S., et al. (2019). Voluntary sustainability standards could significantly reduce detrimental impacts of global agriculture. Proc. Natl. Acad. Sci. U.S.A. 116, 2130–2137. doi: 10.1073/pnas.1707812116

Stickler, C., David, O., Chan, C., Ardila, J. P., and Bezerra, T. (2020). The Rio Branco declaration: assessing progress toward a near-term voluntary deforestation reduction target in subnational jurisdictions across the tropics. Front. Forests Glob. Change 3:50. doi: 10.3389/ffgc.2020.00050

Stickler, C., DiGiano, M., Nepstad, D., Swette, B., Chan, C., McGrath, D., et al. (2014). Fostering Low-Emission Rural Development from the Ground Up. San Francisco, CA: Earth Innovation Institute/Sustainable Tropics Alliance. Available online at: http://earthinnovation.org/publications/fostering_led-r_tropics-pdf/ (accessed December 15, 2020).

Stickler, C., Duchelle, A. E., Nepstad, D., and Ardila, J. P. (2018). “Subnational jurisdictional approaches: policy innovation and partnerships for change,” in Transforming REDD+: Lessons and New Directions, eds A. Angelsen, C. Martius, V. De Sy, A. E. Duchelle, A. M. Larson, and T. T. Pham (Bogor: CIFOR), 145–159.

Stickler, C. M., Duchelle, A. E., Ardila, J. P., Nepstad, D. C., David, O. R., Chan, C., et al. (2018). The State of Jurisdictional Sustainability. San Francisco, CA: Earth Innovation Institute/Bogor, Indonesia: Center for International Forestry Research/Boulder, USA: Governors' Climate & Forests Task Force Secretariat. Available online at: https://earthinnovation.org/state-of-jurisdictional-sustainability/ (accessed December 15, 2020).

Tröster, R., and Hiete, M. (2019). Do voluntary sustainability certification schemes in the sector of mineral resources meet stakeholder demands? A multi-criteria decision analysis. Resour. Policy 63:101432. doi: 10.1016/j.resourpol.2019.101432

Keywords: participation, jurisdictional approach, environmental governance, data validation, stakeholders

Citation: Peteru S, Duchelle AE, Stickler C, Durbin J, Luque C and Komalasari M (2021) Participatory Use of a Tool to Assess Governance for Sustainable Landscapes. Front. For. Glob. Change 4:507443. doi: 10.3389/ffgc.2021.507443

Received: 25 October 2019; Accepted: 04 January 2021;

Published: 12 March 2021.

Edited by: Mirjam Ros, University of Amsterdam, Netherlands

Reviewed by: Guy Chiasson, University of Quebec in Outaouais, Canada; Verina Jane Ingram, Wageningen University and Research, Netherlands

Copyright © 2021 Peteru, Duchelle, Stickler, Durbin, Luque and Komalasari. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Swetha Peteru, s.peteru@cgiar.org
