- Michigan State University Department of Community Sustainability, Natural Resources Building East Lansing, East Lansing, MI, United States

Introduction: There has been little work investigating the effect of framing in citizen science on data quality and participant outcomes (e.g., science literacy, trust in science, motivations to contribute).

Methods: To establish the impact of framing in citizen science on data quality and participant outcomes, an experimental web-based citizen science program was created in which participants engaged in tree phenology research. Participants were randomized to one of two conditions in which they performed exactly the same data collection task, but the rhetoric around their contribution was framed differently: participants were referred to as either a “Citizen Scientist” or a “Volunteer.” Participants completed pre- and post-surveys that measured science literacy, trust in science, and motivations to contribute to citizen science.

Results: There were significant differences in participant outcomes and data quality between the two conditions post-participation. Significantly more individuals in the “Citizen Scientist” condition than in the “Volunteer” condition completed the project and submitted high-quality data. Additionally, responses from individuals in the two conditions began to diverge post-participation on questions within each of the measured areas.

Discussion: This research suggests that being called a citizen scientist may elicit internally held expectations of contribution, shaped by normative and culturally informed experiences. Participants may therefore view their contributions as more consequential when framed as citizen scientists than when framed as volunteers. Research of this nature can help practitioners consider framing as part of citizen science project development and stimulate further research on best practices in citizen science project design.

Introduction

Citizen science (hereafter CS) has been championed as a means to attain scientific research goals and engender greater public support for science. In particular, CS has been helpful in addressing scientific questions when the scale or scope of data collection would be difficult for individual scientists or research teams to achieve (Newell, et al., 2012), for tasks that computers cannot yet complete reliably or competently (Savage, 2012), and in situations where research projects face a data deluge and researchers are unable to keep up with processing the incoming data (Klein, 2016). Given CS’s potential benefits to the scientific enterprise, the number of CS projects has expanded greatly across scientific fields (Follett and Strezov, 2015). However, CS project developers and managers face the challenge of balancing public engagement goals and participant recruitment while also ensuring high-quality data and minimizing participant turnover. There are often additional obligations from funding agencies to demonstrate a project’s broader impacts, which can take the form of desired changes in participant learning and personal values after engaging in the project. Studies show CS can increase participants’ scientific literacy (Bonney, et al., 2009; Dickinson, et al., 2010; Silvertown, 2009), knowledge of the specific issue of focus (Jordan, et al., 2011), civic awareness and engagement (Nerbonne and Nelson, 2004), and engagement in scientific thinking (Trumbull et al., 2000). While many professionals using CS explicitly include educational efforts (Crall, et al., 2013), citizen scientists frequently show knowledge gains simply by engaging in a project, even when learning is not an explicit goal (Nerbonne and Nelson, 2004; see Kloetzer et al., 2021 for a review of learning in CS programs).

It is important for CS project managers to identify which design elements of the CS experience influence desired scientific contributions and beneficial participant outcomes (educational, social, behavioral, etc.). A deeper understanding of these drivers can help meet the broader impact goals of many CS projects and can ease the burden on project developers and managers who may not have formal experience in design, social science, or public relations. There is, however, a persistent lack of rigorous investigation into the mechanisms that elicit particular outcomes for participants, which makes it difficult to recommend best practices in CS project design. This is not to say that CS project developers and managers do not care about design, functionality, and outcomes. Indeed, many invest considerable money and time in these areas through iterative design processes. However, without experimentally tested best practices, CS projects may not be as effective in driving participant outcomes. We therefore conducted a true experiment to test the impact of project design on CS participation. In particular, we explored whether the terminology used to refer to participants in CS can lead to differences in their contributions and outcomes.

Framing

Framing is a term used in the social sciences to refer to narratives that communicate information and why it matters (see Nisbet and Kotcher, 2009 for a discussion). Framing scientific information is often an effective means to shape the public response to scientific issues (Scheufele, 1999). A classic example of framing involves a hypothetical medical procedure (Davis, 1995) whose outcome can be framed as either an ‘80% cure rate’ or a ‘20% mortality rate.’ These statements are functionally equivalent (in terms of probability of survival), but communicating them as either a gain (being cured) or a loss (dying) can lead respondents to interpret them differently and evoke different behavioral responses. In the public health literature, message framing (presenting functionally equivalent gain versus loss messages about getting a vaccine) has been shown to be effective in driving individual vaccination behavior (Rothman, et al., 2006; Rothman, et al., 2003). Though framing is not simply about gain or loss messaging, using particular rhetoric or language can promote certain interpretations, evaluations, and solutions by emphasizing particular facets of an event or issue (Entman, 2004).

Within the context of CS projects, scholars have found that framing can influence participation and behavioral outcomes. In a recent study, Tang and Prestopnik (2019) demonstrated that game and task framing within a CS project significantly affected participants’ perceived enjoyment. They also noted that task framing, or the notion that contributions will be meaningful (for the self or for the enterprise of science), affected participants’ perceived meaningfulness of their contribution. In terms of framing influencing participant behavior, Dickinson et al. (2013) found that citizen scientists’ interest in taking action on climate change was influenced by whether the harm was framed around birds (the CS project focus) or humans.

Work by Woolley et al. (2016) on the use of rhetoric in public engagement discusses how the language around public involvement in research has shifted, how this terminology is used to drive desired behavior from members of the public, and the ethical and social implications of this shift in the biomedical sciences. Woolley et al. (2016) raise the ethical question of how participation as a “citizen scientist” should be interpreted, the normative factors encompassed in this interpretation, and the rights, duties, and role of a “citizen scientist” in biomedical research. Their work demonstrates how specific words or labels carry meaning and can be used by researchers and corporations to elicit beneficial behaviors from members of the public.

When Irwin and Bonney independently coined the term “citizen scientist,” they conceptualized citizen science and the role of participants in the process differently (Woolley, et al., 2016). Bonney takes a “top-down” approach in which people contribute data or processing power to research (Bonney, et al., 2009; Riesch and Potter, 2013), while Irwin employs a “bottom-up” approach in which projects are responsive to community needs and members of the public drive the scientific process (Irwin, 2015). These two alternative conceptions of the citizen scientist’s role in research can shape individuals’ associations with what it means to do CS and influence how citizen scientists perceive themselves and their role in the process of science. Indeed, Eitzel et al. (2017) noted that the terminology used to address participants in CS is both dynamic and often context specific, and called for shared practice that recognizes that some terminology may be problematic for some groups.

Conversations around renaming CS (e.g., community science, participatory action research, crowd-sourced science, civic science) emphasize the underlying norms and values a name connotes. Indeed, concerns within the American Citizen Science Association (CSA), culminating in its renaming to the Association for Advancing Participatory Science in 2022, highlight the broader associations that the language around CS conveys (Putnam, 2023), particularly its potential to exclude some identity groups (Wilderman et al., 2007; Eitzel et al., 2017). This controversy acknowledges the power of framing and suggests that the term “citizen scientist” is complex in its potential to affect individual interpretation and behavior. Altogether, these studies suggest that framing plays an important role in CS development and design. Given that framing through naming affects the interpretation of an idea or concept and can significantly affect behavior, it is reasonable to ask how naming in the context of CS might also influence participants’ performance in CS projects.

Objectives

In this work, we test whether framing (in terms of rhetoric and language) in a CS project can produce differences in participant outcomes in the areas of trust of scientific research (T), ideas about scientific practices (scientific literacy) (SP), and motivations to contribute to online science (MCS). Specifically, we investigate whether explicitly referring to participants as “citizen scientists” or as “volunteers” affects participant and project outcomes. We hypothesize that explicitly labeling someone who contributes to CS a “citizen scientist,” rather than a “volunteer,” will result in more positive outcomes in science literacy and trust in scientific research, and in greater reported motivation to contribute, after engaging in a CS project. We also hypothesize that framing will affect project completion rates. Through this intentional investigation of the potential mechanisms that can drive or influence participant outcomes, we seek to stimulate further rigorous research on best practices for engaging the public in CS. This work is exploratory, intended to shed light on how the framing of CS projects can influence several participant outcomes related to science learning and behavior and to spur further research along similar lines.

Methods

The study is couched in the context of climate-change-induced shifts in phenology, as reflected in the timing of buds and flowers on trees. We use climate change as the context of our study because of the ubiquity of this issue. Climate change has been described as a “wicked problem” (Levin, et al., 2012) involving great complexity, and in many cases scientists struggle to obtain enough data. Additionally, climate change is an environmental issue whose science the public often struggles with, and it is often the subject of public outreach or public participatory research programs. Biological phenomena such as phenology are often used to track planetary change and have been studied extensively through online public participatory research programs (Mayer, 2010; Klinger et al., 2023), making phenology an appropriate context for our study.

To test the effect of framing in CS, we devised an online, contributory-model CS tree phenology program. Phenology, the timing of life cycle events in plants and animals, has been used as an indicator of climatic change. Observed phenological shifts such as earlier migrations and blooming have matched patterns predicted by global warming in the Northern Hemisphere (Schwartz, et al., 2006). Given the large body of literature on phenology in CS, participants’ tasks focused exclusively on tree observations that signal seasonal changes (e.g., leaf drop or flowering), akin to the National Phenology Network tree flowering program (http://usanpn.org). The focus of this project was not the phenology data itself, but the effect of differential framing on participant outcomes and project success in a realistic CS setting.

Commonalities between conditions

The participant experience was as follows (see Figure 1 for project design): (1) the participant chooses to join the project (hereafter HIT) on the Amazon Mechanical Turk (hereafter MTurk) platform and is immediately randomized to an experimental condition platform hosted by the researchers; (2) the participant creates an anonymous identification (hereafter ID) for the project so researchers can pair pre- and post-surveys and submitted CS data; (3) the participant takes a pre-survey before entering the site; (4) the participant explores the website and reads the data collection protocol; (5) the participant collects and submits data; (6) the participant receives a link immediately after data submission to take the post-survey about their experiences; (7) upon completing the post-survey, the participant receives a HIT completion code to submit on MTurk.

Figure 1. Flow chart of participant engagement within the study. The terminology used to refer to participants differed between conditions, but messaging and participant effort were otherwise held constant.

Individuals were recruited from MTurk so that the authors could hold participant motivation constant in terms of the expected benefits of contributing, and could therefore attribute differences to the modified framing elements alone. MTurk was chosen because of the robustness of its data quality in other social science research and its customizability, and because its contributor population has been shown to be representative of the U.S. population with respect to gender, race, age, and education level (Paolacci, et al., 2010). Using MTurk, the authors were able to run a true experimental manipulation in which individuals were assigned to conditions, so that any differences in outcomes can be attributed to the framing, unlike typical CS projects, where participant outcomes can only be investigated retrospectively.

From the MTurk platform, participants opted to join the project and were then immediately randomized to a condition, with a total of 120 individuals assigned to each condition. To do this, the project was listed, with a brief description of the tasks workers were to complete, on the MTurk marketplace, where workers browse potential projects to join. No specific language around CS was used in the project description; it stated only the exact tasks participants would perform (observe and take a picture of a tree) and the timeframe for completing the project. When workers opted to join the project, they were directed at random to the password-protected homepage of one of the two conditions. Because MTurk workers choose the projects they participate in, just as citizen scientists choose projects they are interested in, we assume participants in this study had initial interest levels similar to those of citizen scientists joining a project.

Individuals who participated in one participatory platform condition (e.g., “Citizen Scientist”) could not participate in, and were unaware of, the other condition (e.g., “Volunteer”). The tasks in the two conditions were exactly the same to standardize participant effort. Both conditions were hosted on password-protected websites using free online domain software. Participants were recruited in spring 2015 and fall 2015, and recruitment was restricted to the Northeast region of the United States (New Jersey, Pennsylvania, New York, Massachusetts, Rhode Island, Connecticut, Vermont, New Hampshire, and Maine). This restriction allowed participant-contributed data to be compared with actual phenological data to assess the validity of submitted data. Participation lasted at most 1 week in either the spring or the fall (individuals were given 6 days following the pre-survey to submit data and take the post-survey).

Data submitted by participants were used to assign each participant a ‘likelihood of accuracy’ score to provide a sense of participant effort and achievement. Participants were assigned a low-likelihood score if they submitted incomplete information on the data sheet, inaccurate data for their geographic location, or clearly falsified data. For example, one low-likelihood participant reported a particular tree species on their data sheet and then provided a photo of a different species. Another submitted a clearly watermarked photo taken from the Internet that could be located with a reverse image search. High-likelihood scores were assigned to participants who submitted complete data (i.e., completed data sheets, photos consistent with the reported data, and photos of plausible tree species in the appropriate season). As the authors could not verify on the ground the true accuracy of the phenology data reported by each participant, likelihood scores represent a coarse assessment of accuracy based on whether the submitted data (1) aligned with the researchers’ expectations of tree species and phenology given the geographic location and time of year and (2) could easily be shown to be false. This approach is similar to that of other geographically distributed online CS programs (e.g., eBird, iNaturalist), which validate participant reports against seasonal and geographic expectations of species presence and rely on photographic evidence for anomalous sightings that do not align with those expectations. Participants were not made aware of this likelihood-of-accuracy metric, which the authors assigned after data submission. Only data from participants who completed both the pre- and post-surveys and submitted high-likelihood data were used in the analysis.
Project attrition rate was calculated as the number of participants who began the project but did not complete all the tasks, divided by the number of participants who began the project.
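The screening rule and attrition calculation above can be expressed as a short Python sketch. It is illustrative only: in this study the likelihood scores were assigned by the authors’ review, not by code, and the `Submission` fields, the function names, and the participant IDs below are hypothetical encodings of the criteria described in the text.

```python
from dataclasses import dataclass


@dataclass
class Submission:
    # Hypothetical fields, one per screening criterion described in the text
    participant_id: str
    datasheet_complete: bool
    photo_matches_reported_species: bool
    species_plausible_for_region: bool
    phenophase_plausible_for_season: bool
    obviously_falsified: bool  # e.g., a watermarked photo found by reverse image search


def likelihood_of_accuracy(sub: Submission) -> str:
    """Return 'high' only if every criterion is met; otherwise 'low'."""
    checks = (
        sub.datasheet_complete,
        sub.photo_matches_reported_species,
        sub.species_plausible_for_region,
        sub.phenophase_plausible_for_season,
        not sub.obviously_falsified,
    )
    return "high" if all(checks) else "low"


def analysis_sample(pre_ids, post_ids, submissions):
    """IDs with paired pre/post surveys and a high-likelihood data submission."""
    paired = set(pre_ids) & set(post_ids)
    return sorted(
        s.participant_id
        for s in submissions
        if s.participant_id in paired and likelihood_of_accuracy(s) == "high"
    )


def attrition_rate(started: int, completed: int) -> float:
    """Share of participants who began the project but did not complete all tasks."""
    if started <= 0 or not 0 <= completed <= started:
        raise ValueError("need started > 0 and 0 <= completed <= started")
    return (started - completed) / started


if __name__ == "__main__":
    subs = [
        Submission("p01", True, True, True, True, False),  # passes every check
        Submission("p02", True, False, True, True, False),  # photo shows another species
        Submission("p03", True, True, True, True, True),  # watermarked stock photo
    ]
    ids = ["p01", "p02", "p03"]
    print(analysis_sample(ids, ids, subs))  # ['p01']
    # Start/completion counts reported in the Results for the "Citizen Scientist" condition
    print(attrition_rate(started=91, completed=60))
```

Treating the score as an all-or-nothing conjunction mirrors the text’s description that any single failure (incomplete sheet, mismatched photo, implausible species or season, falsification) yields a low-likelihood score.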

The pre- and post-participation surveys were taken within a week of each other (see Appendix 1 for the full survey). The surveys included items on a five-point Likert-type scale (1 = Strongly Disagree, 3 = Neutral, 5 = Strongly Agree) in the following areas: trust of scientific research (T); ideas about scientific practice (SP); and motivation to contribute to online science (MCS). The items were taken from a series of instruments used in an ongoing CS project on invasive plant identification (described in its initial form in Jordan, et al., 2011). The post-participation survey became available directly after participants submitted their tree data and contained eight additional items assessing perceptions of project trustworthiness, benefits, and enjoyment. Three items asked about the perceived trustworthiness of the project (on the same five-point Likert-type scale as the rest of the survey), and the remaining five asked about perceived project enjoyment and benefits (on a 100-point sliding scale, with 1 = not at all, 50 = a moderate amount, and 100 = greatly). After submitting the pre-survey, post-survey, and data, participants were compensated $5 and given a debriefing statement about their participation. Because participants were asked to complete the tasks over a period ranging from a minimum of 2 h to a maximum of 7 days, this minimal compensation was deemed too small to skew the data. The research protocol was approved by the institutional review board (IRB), and participants could decline to participate before accepting the HIT if they did not wish to have their data used for human-subjects research.

Differences between conditions

While the task remained the same for all participants, the rhetoric of the online interface differed, in particular the name by which participants were referred to. Since both conditions worked through similar mechanisms of participant contribution and interaction, the framing of those contributions alone would be the expected trigger of any differences in participant outcomes. In the “Citizen Scientist” condition, participants were specifically called citizen scientists and were given a brief description of CS and its history of contributing to science (e.g., “You, as a citizen scientist, are helping us collect data about trees in your area!”). In the “Volunteer” condition, participants were called volunteers and there was no specific discussion of citizen science; however, there was a description of how people have contributed to science (e.g., “You are helping us collect data about trees in your area!”). Beyond these slight language differences, all other elements of the participant interface were the same (the scientific background on phenology, the explanation of why researchers ask members of the public to collect geographically distributed data, how tree phenology data are used to answer research questions about climate change, data submission forms, etc.), and participation effort was the same in the two conditions.

Analysis

Because participants were sampled in two periods (spring and fall), a Mann-Whitney U test was performed within each condition (“Citizen Scientist” and “Volunteer”) to test for differences related to the time of year in which individuals participated. The Mann-Whitney U test is a non-parametric test of the null hypothesis that two samples come from the same population; it does not assume a normal distribution.
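For readers unfamiliar with the test, the U statistic can be computed from pooled ranks. The following is a minimal pure-Python sketch that assigns average ranks to ties (common with Likert responses); it returns only U, not the p-value that SPSS or `scipy.stats.mannwhitneyu` would report. The spring and fall responses in the usage example are made-up values, not study data.

```python
def average_ranks(values):
    """1-based ranks, with tied values sharing the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next sorted value ties with the current one
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1  # mean of 1-based positions start+1..end+1
        for k in range(start, end + 1):
            ranks[order[k]] = shared
        start = end + 1
    return ranks


def mann_whitney_u(x, y):
    """Return (U_x, U_y); the reported statistic is usually min(U_x, U_y)."""
    n_x, n_y = len(x), len(y)
    ranks = average_ranks(list(x) + list(y))
    rank_sum_x = sum(ranks[:n_x])
    u_x = rank_sum_x - n_x * (n_x + 1) / 2
    return u_x, n_x * n_y - u_x


# Hypothetical 5-point Likert responses to one item, spring vs. fall
spring = [4, 5, 3, 4, 2]
fall = [3, 4, 4, 5, 3]
print(mann_whitney_u(spring, fall))  # (11.5, 13.5)
```

Because U depends only on ranks, it suits ordinal Likert data without assuming normality, which is the property the text cites for choosing this test.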

A chi-square test was used to test for differences in project completion rates between the two conditions, and a multivariate analysis of variance (MANOVA) was used to test for differences in participant survey responses between conditions. MANOVA extends the univariate analysis of variance (ANOVA) to multiple dependent variables. Here, the MANOVA used the Wilks’ lambda test statistic to test whether group means differ on the dependent variables (Crichton, 2000; Everitt and Dunn, 1991; Polit, 1996). If the independent variable explains a large proportion of the variance, this suggests an effect of the grouping variable, that is, that the groups have different mean values (Crichton, 2000; Everitt and Dunn, 1991; Polit, 1996). In this study, the MANOVA compared responses to the survey items between the two conditions. We report comparisons of the pre- and post-survey items between the conditions, as any post-survey differences between conditions would inform our hypothesis about the effect of framing. The Benjamini and Hochberg (1995) procedure was used as a post hoc analysis to control false discoveries (Type I errors) across the item-level comparisons. A false discovery rate of 25% was used to calculate the critical values for establishing significance. This post hoc procedure was chosen because the study is exploratory and other post hoc corrections have been shown to be overly conservative in exploratory contexts (McDonald, 2007).
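As a sketch of what the Wilks’ lambda statistic measures, the code below uses NumPy to compute Λ = det(E) / det(E + H), where E is the within-group and H the between-group sums-of-squares-and-cross-products (SSCP) matrix. It is not a reproduction of the authors’ SPSS analysis: it omits the F approximation (e.g., Rao’s) that converts Λ into a p-value, and the two small groups in the example are invented so the arithmetic is easy to check by hand.

```python
import numpy as np


def wilks_lambda(groups):
    """Wilks' lambda for a one-way MANOVA: det(E) / det(E + H).

    groups: one 2-D array per condition (rows = participants,
    columns = dependent variables such as survey items).
    Values near 1 mean the grouping explains little variance;
    values near 0 mean the group mean vectors differ strongly.
    """
    arrays = [np.asarray(g, dtype=float) for g in groups]
    grand_mean = np.vstack(arrays).mean(axis=0)
    p = grand_mean.size
    E = np.zeros((p, p))  # within-group SSCP
    H = np.zeros((p, p))  # between-group SSCP
    for g in arrays:
        group_mean = g.mean(axis=0)
        centered = g - group_mean
        E += centered.T @ centered
        diff = (group_mean - grand_mean).reshape(-1, 1)
        H += g.shape[0] * (diff @ diff.T)
    return np.linalg.det(E) / np.linalg.det(E + H)


# Two invented groups with identical spread but shifted means
group_a = np.array([[1, 2], [2, 1], [3, 3]])
group_b = group_a + 5
print(wilks_lambda([group_a, group_b]))  # 12 / 162, about 0.074
print(wilks_lambda([group_a, group_a]))  # identical groups give 1.0
```

The ratio form makes the text’s description concrete: the more of the total SSCP that is attributable to the grouping (H), the smaller Λ becomes.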

A Mann-Whitney U test was also used to compare the two conditions on the eight post-survey items about perceptions of project trustworthiness, benefits, and enjoyment. All data were analyzed using SPSS.

Results

The Mann-Whitney U tests revealed no significant differences between spring and fall respondents within either condition in the pre- or post-survey, allowing us to treat fall and spring respondents as a single population for the remainder of the analysis.

The “Citizen Scientist” condition had a significantly higher project completion rate than the “Volunteer” condition, as shown by a chi-square test (χ²(1) = 4.24, p = 0.039). In terms of participant retention, the “Citizen Scientist” condition had a 65% completion rate: 91 individuals started the project, and 60 completed paired pre/post surveys and submitted data. Ten of these 60 individuals submitted low-likelihood data (e.g., obviously watermarked photos, photos of trees in the wrong season, or photos and data that did not match), leaving 50 individuals for data analysis. The “Volunteer” condition had a 59% completion rate: 111 individuals started the project, and 66 completed paired pre/post surveys and submitted data. Twenty-six of these individuals submitted low-likelihood data, leaving 40 individuals for data analysis. The numbers of individuals starting the project differed slightly between conditions because of the nature of the MTurk platform (e.g., workers can accept the HIT and not complete the work, reducing its availability in the marketplace).

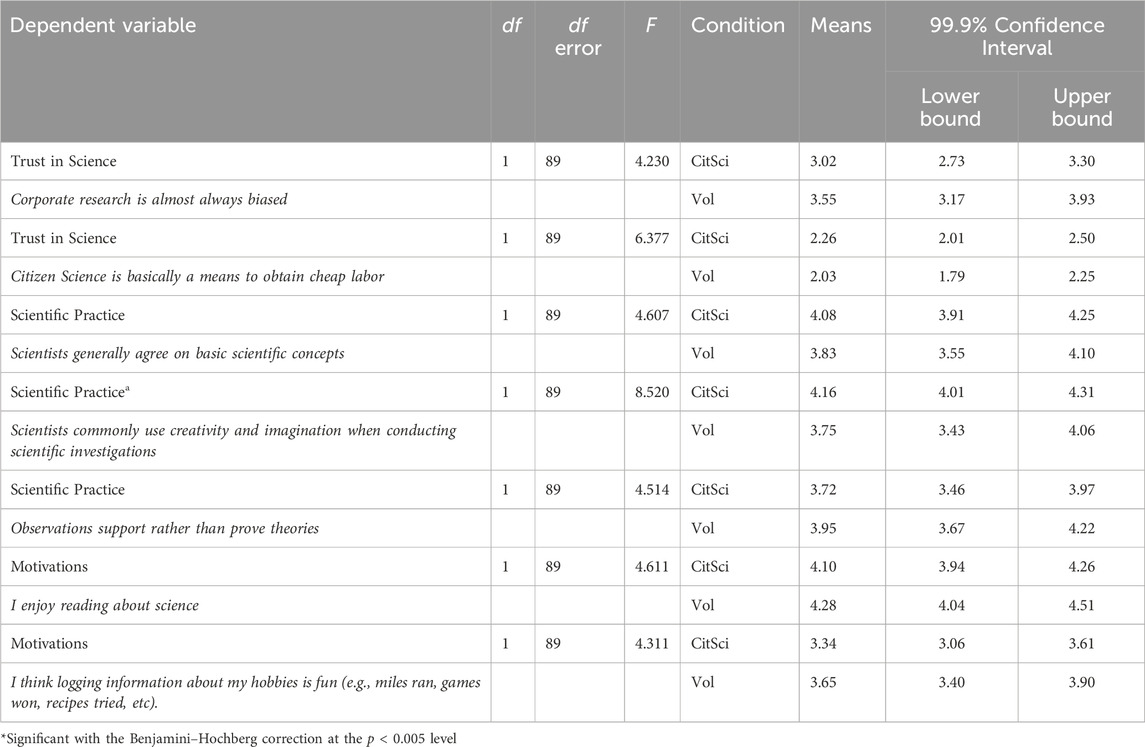

The MANOVA found no significant differences between the conditions in the pre-survey but found significant differences between the conditions in the post-survey (F(1, 94) = 1.79; p = 0.007; Wilks’ Λ = 0.512). In item-level comparisons, seven items differed significantly between the conditions before correction, and one item remained significant after the Benjamini–Hochberg correction. The items that were significant before correction came from all three areas measured in the survey: trust of scientific research (T), ideas about scientific practice (SP), and motivation to contribute to online science (MCS) (see Table 1). The item that remained significant after correction was an SP item: “Scientists commonly use creativity and imagination when conducting scientific investigations.”
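The Benjamini–Hochberg step can be illustrated with a minimal sketch: rank the item-level p-values from smallest to largest, compare each to its critical value (rank / m) × Q, with Q = 0.25 as stated in the Analysis section, and declare significant every item ranked at or below the largest p-value that falls under its critical value. The function name and the p-values below are invented for illustration and are not the study’s item-level results.

```python
def benjamini_hochberg(p_values, fdr=0.25):
    """Return a list of booleans marking which tests are significant.

    Step-up rule: find the largest rank k (1 = smallest p) with
    p_(k) <= (k / m) * fdr, then reject the k smallest p-values.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    cutoff_rank = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * fdr:
            cutoff_rank = rank  # keep the largest qualifying rank
    significant = [False] * m
    for rank, idx in enumerate(order, start=1):
        significant[idx] = rank <= cutoff_rank
    return significant


# Invented p-values for five survey items
p = [0.001, 0.04, 0.30, 0.012, 0.50]
print(benjamini_hochberg(p))            # [True, True, False, True, False]
print(benjamini_hochberg(p, fdr=0.05))  # [True, False, False, True, False]
```

The second call shows why the choice of Q matters: at a stricter false discovery rate the same p-values yield fewer significant items, which is the trade-off the authors weighed in favoring a 25% rate for exploratory work.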

Table 1. Univariate Effects for Framing Condition (Citizen Scientist or Volunteer) (at p < 0.05 level).

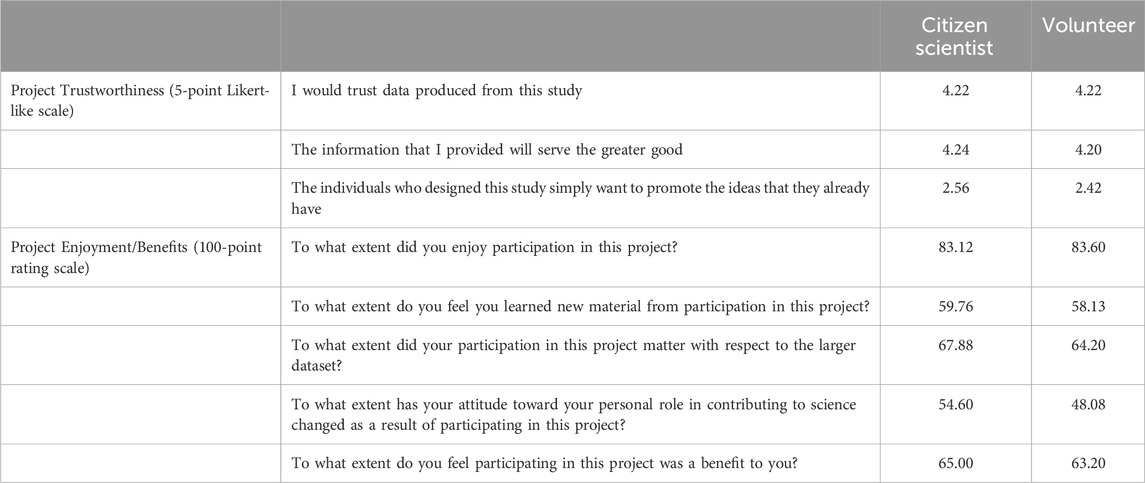

There were no significant differences between the two conditions on the eight additional post-survey items assessing perceptions of project trustworthiness, benefits, and enjoyment, as revealed by the Mann-Whitney U test (see Table 2). Individuals in both conditions rated their enjoyment of the experience highly, with an average score of 83 out of 100. In terms of project trustworthiness, individuals in both conditions reported that they would trust the data produced from the study and felt the information they provided would serve a greater good.

Table 2. Mean responses to post-survey items on perceived project trustworthiness, enjoyment, and benefits of participating. There were no significant differences between conditions on responses to these items (Citizen Scientist, n = 50; Volunteer, n = 40).

Discussion

This study suggests that the specific language used to identify participants within a CS project may increase participant retention and data quality, and may influence participant outcomes in scientific identity, attitudes, and trust. While all CS research programs experience attrition and invalid data submissions, it is notable that the two conditions in this study differed significantly in project completion and in the quality of submitted data. Significantly more individuals in the “Citizen Scientist” condition completed the program successfully and submitted data that were highly likely to be valid. Because individuals were randomly assigned to a condition when joining the project, one would expect similar completion rates and data validity across conditions in the absence of a framing effect. In the “Citizen Scientist” condition, explicitly emphasizing that we as researchers considered participants ‘citizen scientists’ may have conferred a sense of authenticity or importance on their contributions, similar to the task framing effects noted by Tang and Prestopnik (2019). Together with the notion proposed by Woolley, et al. (2016), these findings suggest that participating as a citizen scientist may carry underlying normative factors that shape what participation means to the ‘citizen scientist’ participant.

In terms of differences in outcomes between the two conditions, seven items across all three areas (Trust, Scientific Practice, Motivations to Contribute to Science) were significantly different before the post hoc analysis, and one item remained significant after it. In the area of trust of scientific research (T), two of the ten items differed between the two conditions before correction, though neither remained significant after correction. On both items, individuals in the “Citizen Scientist” condition responded more neutrally about their trust in science than individuals in the “Volunteer” condition. Perhaps, again, participation as a citizen scientist elicits underlying normative views of what it means to be a scientist. On the second trust (T) item, individuals in the “Volunteer” condition agreed more strongly that “Citizen science is basically a means to obtain cheap labor,” whereas individuals in the “Citizen Scientist” condition responded more neutrally. This aligns with the negative interpretations of ‘volunteer’ as a common name for CS participants posited by practitioners (see Figure 1 in Eitzel et al., 2017). Perhaps those in the “Volunteer” condition were primed to evaluate any contribution from a more transactional perspective, even though the project never referred to them as citizen scientists.

We also saw shifts in three of the 24 scientific practice (SP) items, but only one remained significant in the post hoc analysis. Given prior literature (e.g., Brossard, et al., 2005; Crall, et al., 2013), it is not surprising that participants in neither condition showed large, consistent, significant shifts across the construct of scientific practice or literacy. A single, week-long contributory CS experience is likely not enough to drive large, meaningful changes in individuals’ understanding of science. However, the mechanism(s) within CS projects driving the changes found in this research and in other projects (see Trumbull, et al., 2000) have not yet been conclusively identified and may be project specific.

Finally, two of the 16 items on motivation to contribute to online science (MCS) showed shifts between the two conditions. Individuals in the “Volunteer” condition reported enjoying science pursuits for pleasure more than individuals in the “Citizen Scientist” condition. This could be due to the perceived gravity of their contributions and the nature of their engagement (a job vs. a hobby). Additional experimental studies using different measures and survey instruments to investigate changes in the areas measured here (T, SP, MCS) and other potential outcomes of participating in CS (e.g., science identity, science literacy) would help build a fuller picture of the impact of framing in CS. Additionally, in-depth qualitative work is needed to understand how citizen scientists view the nature and consequences of their contributions and what factors influence this interpretation.

Summary

To the authors’ knowledge, this research is one of the first to experimentally evaluate the role of terminology framing in CS project design on participant contributions and outcomes. While this work suggests that framing in CS may influence participant contributions and outcomes, these ideas were tested on a relatively small scale, within one type of CS paradigm (online contributory) and on one ecological phenomenon in the United States. As framing effects are often mediated by identity (Brough, et al., 2016; Zhao, et al., 2014) and interpreted through the lens of culturally specific social groups (e.g., Ho, et al., 2008; Nisbet, 2005), it is reasonable to expect that international communities may respond differently to this type of framing. Researchers in public health have found that certain frames are effective only for particular populations, depending on their experience and current behavior (Gerend and Shepherd, 2007). Further efforts to replicate this work in other communities within and outside the United States are needed to determine whether these trends hold across other social groups. Additionally, the mode of engagement (solely online, hybrid in-person and online, in-person only, expedition trips, etc.) and its length (a one-day bio-blitz vs. sustained participation) likely also influence the effect of framing in CS. More work is needed to understand how CS participants view their contributions to science and how framing may affect participant outcomes in CS projects.

Along with replicating experimental efforts to understand the impact of framing in CS, additional work is needed to investigate how project focus and context may influence outcomes for CS participants. Here, participants were experimentally assigned to a condition to investigate framing, but in genuine CS projects participants come to projects for a variety of reasons. This exploratory study seeks to prompt research within the CS community on how phenomena from the communication sciences, which are inherently at play within CS projects, can influence participant outcomes, and further, on how to design projects with these phenomena in mind. Researching and vetting metrics of project design and development would help practitioners tailor particular aspects of a project toward desired outcomes. The findings here have broad implications for scientists hoping to engage the public in scientific research, highlighting the importance of being mindful of desired learning and behavioral outcomes for participants and of the important role that framing and communication may play in driving these outcomes.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by the Rutgers University Institutional Review Board (IRB approval #15-595Mx). The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

AS: Conceptualization, Data curation, Formal Analysis, Funding acquisition, Investigation, Methodology, Project administration, Resources, Software, Supervision, Validation, Visualization, Writing–original draft, Writing–review and editing. RJ: Formal Analysis, Funding acquisition, Investigation, Methodology, Project administration, Resources, Software, Supervision, Validation, Visualization, Writing–original draft, Writing–review and editing.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Acknowledgments

All research was conducted under Rutgers University IRB approval #15-595Mx. The authors would like to thank D.R. Betz and B. Meyer for their thoughtful comments and contributions to this manuscript.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fenvs.2024.1496203/full#supplementary-material

Abbreviations

CS, Citizen Science; MTurk, Amazon Mechanical Turk; HIT, Human Intelligence Task (an individual task that workers can complete within the Amazon Mechanical Turk environment); ID, Identification.

References

Benjamini, Y., and Hochberg, Y. (1995). Controlling the false discovery rate: a practical and powerful approach to multiple testing. J. R. Stat. Soc. Ser. B Methodol. 57 (1), 289–300. doi:10.1111/j.2517-6161.1995.tb02031.x

Bonney, R., Cooper, C. B., Dickinson, J., Kelling, S., Phillips, T., Rosenberg, K. V., et al. (2009). Citizen science: a developing tool for expanding science knowledge and scientific literacy. BioScience 59 (11), 977–984. doi:10.1525/bio.2009.59.11.9

Brossard, D., Lewenstein, B., and Bonney, R. (2005). Scientific knowledge and attitude change: the impact of a citizen science project. Int. J. Sci. Educ. 27 (9), 1099–1121. doi:10.1080/09500690500069483

Brough, A. R., Wilkie, J. E., Ma, J., Isaac, M. S., and Gal, D. (2016). Is eco-friendly unmanly? The green-feminine stereotype and its effect on sustainable consumption. J. Consumer Res. 43 (4), 567–582. doi:10.1093/jcr/ucw044

Crall, A. W., Jordan, R., Holfelder, K., Newman, G. J., Graham, J., and Waller, D. M. (2013). The impacts of an invasive species citizen science training program on participant attitudes, behavior, and science literacy. Public Underst. Sci. 22 (6), 745–764. doi:10.1177/0963662511434894

Davis, J. J. (1995). The effects of message framing on response to environmental communications. Journalism and Mass Commun. Q. 72 (2), 285–299. doi:10.1177/107769909507200203

Dickinson, J. L., Crain, R., Yalowitz, S., and Cherry, T. M. (2013). How framing climate change influences citizen scientists’ intentions to do something about it. J. Environ. Educ. 44 (3), 145–158. doi:10.1080/00958964.2012.742032

Dickinson, J. L., Zuckerberg, B., and Bonter, D. N. (2010). Citizen science as an ecological research tool: challenges and benefits. Annu. Rev. Ecol. Evol. Syst. 41, 149–172. doi:10.1146/annurev-ecolsys-102209-144636

Eitzel, M., Cappadonna, J., Santos-Lang, C., Duerr, R., West, S. E., Virapongse, A., et al. (2017). Citizen science terminology matters: exploring key terms. Citiz. Sci. Theory Pract. 2, 1–20. doi:10.5334/cstp.96

Entman, R. M. (2004). Projections of power: framing news, public opinion, and US foreign policy. University of Chicago Press.

Everitt, B. S., and Dunn, G. (1991). Applied multivariate data analysis. London: Edward Arnold, 219–220.

Follett, R., and Strezov, V. (2015). An analysis of citizen science based research: usage and publication patterns. PLoS ONE 10 (11), e0143687. doi:10.1371/journal.pone.0143687

Gerend, M. A., and Shepherd, J. E. (2007). Using message framing to promote acceptance of the human papillomavirus vaccine. Health Psychol. 26 (6), 745–752. doi:10.1037/0278-6133.26.6.745

Ho, S. S., Brossard, D., and Scheufele, D. A. (2008). Effects of value predispositions, mass media use, and knowledge on public attitudes toward embryonic stem cell research. Int. J. Public Opin. Res. 20 (2), 171–192. doi:10.1093/ijpor/edn017

Irwin, A. (2015). “Science, public engagement,” in International encyclopedia of the social and behavioral sciences (Oxford: Elsevier), 255–260.

Jordan, R. C., Gray, S. A., Howe, D. V., Brooks, W. R., and Ehrenfeld, J. G. (2011). Knowledge gain and behavioral change in citizen-science programs. Conserv. Biol. 25 (6), 1148–1154. doi:10.1111/j.1523-1739.2011.01745.x

Klein, A. (2016). A game for crowdsourcing the segmentation of BigBrain data. Res. Ideas Outcomes 2, e8816. doi:10.3897/rio.2.e8816

Klinger, Y. P., Eckstein, R. L., and Kleinebecker, T. (2023). iPhenology: using open-access citizen science photos to track phenology at continental scale. Methods Ecol. Evol. 14 (6), 1424–1431. doi:10.1111/2041-210x.14114

Kloetzer, L., Lorke, J., Roche, J., Golumbic, Y., Winter, S., Jõgeva, A., et al. (2021). Learning in citizen science. Sci. Citiz. Sci., 283–308. doi:10.1007/978-3-030-58278-4_15

Levin, K., Cashore, B., Bernstein, S., and Auld, G. (2012). Overcoming the tragedy of super wicked problems: constraining our future selves to ameliorate global climate change. Policy Sci. 45 (2), 123–152. doi:10.1007/s11077-012-9151-0

Mayer, A. (2010). Phenology and Citizen Science: volunteers have documented seasonal events for more than a century, and scientific studies are benefiting from the data. BioScience 60 (3), 172–175. doi:10.1525/bio.2010.60.3.3

McDonald, J. H. (2007). The handbook of biological statistics. Available at: http://www.biostathandbook.com/multiplecomparisons.html (Accessed February 1, 2019).

Nerbonne, J. F., and Nelson, K. C. (2004). Volunteer macroinvertebrate monitoring in the United States: resource mobilization and comparative state structures. Soc. Nat. Resour. 17 (9), 817–839. doi:10.1080/08941920490493837

Newell, D. A., Pembroke, M. M., and Boyd, W. E. (2012). Crowd sourcing for conservation: Web 2.0 a powerful tool for biologists. Future Internet 4 (2), 551–562. doi:10.3390/fi4020551

Nisbet, M. C. (2005). The competition for worldviews: values, information, and public support for stem cell research. Int. J. Public Opin. Res. 17 (1), 90–112. doi:10.1093/ijpor/edh058

Nisbet, M. C., and Kotcher, J. E. (2009). A two-step flow of influence? Opinion-leader campaigns on climate change. Sci. Commun. 30 (3), 328–354. doi:10.1177/1075547008328797

Paolacci, G., Chandler, J., and Ipeirotis, P. G. (2010). Running experiments on Amazon Mechanical Turk. Judgm. Decis. Mak. 5 (5), 411–419. doi:10.1017/s1930297500002205

Polit, D. F. (1996). Data analysis and statistics for nursing research. Stamford, Connecticut: Appleton and Lange, 320–321.

Putnam, R. (2023). Announcing a new name for this association - association for advancing participatory sciences. Assoc. Adv. Participatory Sci. Available at: https://participatorysciences.org/2023/07/14/announcing-a-new-name-for-this-association/.

Riesch, H., and Potter, C. (2013). Citizen science as seen by scientists: methodological, epistemological and ethical dimensions. Public Underst. Sci. 23, 107–120. doi:10.1177/0963662513497324

Rothman, A. J., Bartels, R. D., Wlaschin, J., and Salovey, P. (2006). The strategic use of gain- and loss-framed messages to promote healthy behavior: how theory can inform practice. J. Commun. 56, 202–220. doi:10.1111/j.1460-2466.2006.00290.x

Rothman, A. J., Kelly, K. M., Hertel, A., and Salovey, P. (2003). “Message frames and illness representations: implications for interventions to promote and sustain healthy behavior,” in The self-regulation of health and illness behavior. Editors L. D. Cameron, and H. Leventhal (London: Routledge), 278–296.

Savage, N. (2012). Gaining wisdom from crowds. Commun. ACM 55 (3), 13–15. doi:10.1145/2093548.2093553

Scheufele, D. A. (1999). Framing as a theory of media effects. J. Commun. 49 (1), 103–122. doi:10.1093/joc/49.1.103

Schwartz, M. D., Ahas, R., and Aasa, A. (2006). Onset of spring starting earlier across the Northern Hemisphere. Glob. Change Biol. 12 (2), 343–351. doi:10.1111/j.1365-2486.2005.01097.x

Silvertown, J. (2009). A new dawn for citizen science. Trends Ecol. and Evol. 24 (9), 467–471. doi:10.1016/j.tree.2009.03.017

Tang, J., and Prestopnik, N. R. (2019). Exploring the impact of game framing and task framing on user participation in citizen science projects. Aslib J. Inf. Manag. 71 (2), 260–280. doi:10.1108/ajim-09-2018-0214

Trumbull, D. J., Bonney, R., Bascom, D., and Cabral, A. (2000). Thinking scientifically during participation in a citizen-science project. Sci. Educ. 84 (2), 265–275. doi:10.1002/(sici)1098-237x(200003)84:2<265::aid-sce7>3.0.co;2-5

Wilderman, C. C., McEver, C., Bonney, R., and Dickinson, J. (2007). “Models of community science: design lessons from the field,” in Citizen science toolkit conference. Editors C. McEver, R. Bonney, J. Dickinson, S. Kelling, K. Rosenberg, and J. L. Shirk (Ithaca, NY).

Woolley, J. P., McGowan, M. L., Teare, H. J., Coathup, V., Fishman, J. R., Settersten, R. A., et al. (2016). Citizen science or scientific citizenship? Disentangling the uses of public engagement rhetoric in national research initiatives. BMC Med. Ethics 17 (1), 33. doi:10.1186/s12910-016-0117-1

Keywords: contributory citizen science, participant outcomes, data quality, framing, communication science

Citation: Sorensen AE and Jordan RC (2025) Framing impacts on citizen science data collection and participant outcomes. Front. Environ. Sci. 12:1496203. doi: 10.3389/fenvs.2024.1496203

Received: 13 September 2024; Accepted: 09 December 2024;

Published: 03 January 2025.

Edited by:

James Kevin Summers, Office of Research and Development, United States

Reviewed by:

John A. Cigliano, Cedar Crest College, United States
Marta Meschini, University of Liverpool, United Kingdom

Copyright © 2025 Sorensen and Jordan. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Amanda E. Sorensen, soren109@msu.edu

ORCID: Amanda E. Sorensen, orcid.org/0000-0003-2936-1144; Rebecca C. Jordan, orcid.org/0000-0001-6684-3105
