ORIGINAL RESEARCH article

Front. Environ. Sci., 05 September 2023

Sec. Environmental Citizen Science

Volume 11 - 2023 | https://doi.org/10.3389/fenvs.2023.1228480

This article is part of the Research Topic Bridging Citizen Science and Science Communication

Yaela N. Golumbic1* Marius Oesterheld2

Introduction: Attracting and recruiting volunteers is a key aspect of managing a citizen science initiative. Science communication plays a central role in this process. In this context, project descriptions are of particular importance, as they are very often the first point of contact between a project and prospective participants. As such, they need to be reader-friendly and accessible, spark interest, contain practical information, and motivate readers to join the project.

Methods: This study examines citizen science project descriptions as science communication texts. We conducted a thorough review and analysis of a random sample of 120 English-language project descriptions to investigate the quality and comprehensiveness of citizen science project descriptions and the extent to which they contain information relevant to prospective participants.

Results: Our findings reveal information deficiencies and challenges relating to clarity and accessibility. While goals and expected outcomes were frequently addressed, practical matters and aspects related to volunteer and community management were much less well-represented.

Discussion: This study contributes to a deeper understanding of citizen science communication methods and provides valuable insights and recommendations for enhancing the effectiveness and impact of citizen science.

As citizen science continuously grows and establishes itself as an independent field of scholarship, questions regarding its implementation, impact and sustainability arise. In many cases, practices from science communication have proven useful in promoting and organizing citizen science projects, streamlining communication with participants, and ensuring their needs and ideas are considered. Practices such as storytelling, data visualization and co-creation are gaining increased attention in the citizen science landscape with calls to consistently incorporate these practices into project design and implementation. Storytelling, for example, can help communicate complex scientific concepts to a wider audience and foster greater engagement and understanding among citizen scientists (Hecker et al., 2019). Data visualization can enable citizen scientists to more easily understand and interpret scientific data (Sandhaus et al., 2019; Golumbic et al., 2020) and co-creation can help build trust and collaboration between scientists and citizen scientists, whilst attending to the needs of both groups (Gunnell et al., 2021; Thomas et al., 2021).

A key element in leading a citizen science initiative is attracting and recruiting volunteers. Naturally, science communication plays a central role in this process. A successful citizen science project requires a strategic approach to volunteer recruitment, including identifying target audiences, developing compelling communication and training materials, and leveraging a range of channels to reach potential participants (Lee et al., 2018; Hart et al., 2022). Citizen science platforms, such as SciStarter (https://scistarter.org) and EU-Citizen.Science (https://eu-citizen.science), serve as key entry points for many citizen scientists as they allow users to explore a diverse range of projects (Liu et al., 2021). Within these platforms, projects are given the opportunity to introduce themselves to potential participants and provide valuable information about their goals and activities relevant to future volunteers. Often, citizen science platforms are central components in a project’s online presence and serve as the first point of contact between a project and prospective participants. While some platforms present the information provided by project representatives in a structured or semi-structured way (which is typically determined by the submission forms used for data entry), others have adopted a less pre-structured format. What all platforms have in common is that they offer the choice to include a free-style project description, which, according to Calvera-Isabal et al. (2023), is the key source of information about citizen science projects openly available online. As such, information provided in these descriptions should be presented in an easy-to-understand and engaging manner, providing all the relevant practical information, while also sparking readers’ interest and motivating them to join. It is furthermore essential to explain how potential participants may benefit from their involvement, and to make the project description as a whole reader-friendly and accessible.

There is a growing body of literature in the field of science communication that focuses on effective ways to convey scientific information to non-scientific audiences. Generally speaking, popular science writing often employs a contrasting structure to that of scientific academic writing. While academic writing tends to follow a pyramid structure with much background and detail upfront, popular science writing often employs an inverted pyramid structure similar to news and journalism standards (Pöttker, 2003; Salita, 2015). The inverted pyramid arranges content according to its newsworthiness, beginning with the conclusions and bottom line, followed by additional information in descending order of relevance (Rabe and Vaughn, 2008; Salita, 2015). Additionally, popular science often incorporates a range of rhetorical styles, such as storytelling, humor, collective identification and empathy, and strives to decrease the use of jargon and technical language (Bray et al., 2012; Rakedzon et al., 2017). Like popular science writing, project descriptions are texts intended for general audiences and would benefit from adopting science communication practices in their structure.

Over the past 2 years, several studies have been conducted within the CS Track consortium that investigated citizen science project descriptions using a variety of different approaches and methods. CS Track is an EU-funded project aimed at broadening our knowledge of citizen science by combining web analytics with social science practices (De-Groot et al., 2022). In this context, project descriptions were analyzed from various angles, examining participatory, motivational and educational aspects reflected in the descriptions’ texts (Oesterheld et al., 2022; Santos et al., 2022; Calvera-Isabal et al., 2023). This work revealed that citizen science project descriptions vary significantly in terms of content, length and style. Some are extremely short and contain very little information on project goals or concrete activities citizen scientists will be expected to engage in. Others provide lengthy and jargon-laden explanations of the project’s scientific background that are difficult for non-experts to understand. Similar findings have also been described by Lin Hunter et al. (2020) in the context of volunteer tasks as presented in project descriptions on the CitSci.org platform. In light of these observations, a set of evidence-based recommendations for writing engaging project descriptions was developed, in the form of an annotated template. This template lists ten essential elements of effective project descriptions and provides text examples for each element, as well as offering general advice on length, format and style (Golumbic and Oesterheld, 2022). Designed as a tool for citizen science project leaders and coordinators, the template has been piloted in a series of online and face-to-face workshops, where it received positive feedback.

In this paper, we aim to examine the information deficits in project descriptions that we described above in a more systematic and quantifiable manner. We ask: to what extent do citizen science project descriptions contain information relevant to prospective participants, and is this information presented in a comprehensible manner? To answer these questions, we conducted a thorough review and analysis of a sample of citizen science project descriptions, with a focus on identifying and categorizing key elements related to project scope, objectives, methods, volunteer management, community engagement, and more. Using content analysis, we quantified the prevalence of these characteristics within project descriptions, identifying deficiencies and areas that lack clarity in their communication to potential participants. The findings of this study will contribute to a deeper understanding of citizen science communication methods, identify areas for improvement and inform the development of more effective science communication strategies for the recruitment of volunteers.

The coding rubric used in this study is based on the project description template the authors developed as a resource for the citizen science community (Golumbic and Oesterheld, 2022). As described above, the template lists and exemplifies ten essential elements for writing engaging project descriptions. The development of the project description template was informed by research conducted in the CS Track consortium in order to extract information about citizen science activities in Europe from project descriptions stored in the CS Track database. In conjunction with the citizen science and science communication literature, ten essential elements were identified which jointly contribute to making a project description engaging and effective. Table 1 details the essential elements identified and their justification for being included in the rubric.

For the purpose of this study, the project description template was translated into a coding rubric (see Supplementary S1). Each element of the template was transformed into a categorical coding format, with clear definitions and examples for each category. While the “one-sentence overview” is a meta-level element that cuts across content-related distinctions, the remaining nine elements of the coding rubric can be grouped into three categories representing different dimensions of citizen science projects:

(1) the project’s purpose and expected outcomes (“goals” and “impact”)

(2) the project’s method of operation (“activities/tasks,” “target audience,” “information on how to join,” “training/educational resources”)

(3) the project’s volunteer and community management (“benefits of participation,” “recognition for citizen scientists,” “access to results”)

For six of the ten elements (impact, benefits, information on how to join, training and educational resources, access to results, recognition), presence or absence was easily detectable. Therefore, a binary coding scheme (mentioned/not mentioned) was chosen. The remaining four elements (overview, goals, activities, and target audience) were more challenging to classify into two categories since, even when an element was present, the text did not always provide enough detail to make its full content clear. We therefore introduced a three-level ordinal coding scheme (poor, fair, good). The full rubric (Supplementary S1) provides detail as to the differentiation between the 3 codes. For example, in order to qualify as a “good” description of project goals, these needed to be explicitly framed as goals using phrases such as: “this project aims to,” “Our goals are,” “the purpose of this project is.” In cases where goals were implied but not made explicit, using terms such as “we are investigating,” “we are compiling,” “this allows us to,” the respective descriptions were classified as “fair.” Finally, descriptions where no goals were detected were classified as “poor.” In order to increase the content-level granularity of our analysis, three subcategories were added for both “goals” and “impact” (scientific, social & educational, environment & policy-related). These subcategories were coded only for descriptions whose goals were classified as “good” or whose impact was classified as “mentioned.”
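The two coding schemes described above can be sketched as a small data structure. This is only an illustration: the identifiers (`Ordinal`, `BINARY_ELEMENTS`, `ORDINAL_ELEMENTS`, `code_goals`) are hypothetical names, and the keyword heuristic is a toy stand-in for the judgment of human coders, who did the actual coding in this study using the full rubric in Supplementary S1.

```python
from enum import Enum


class Ordinal(Enum):
    """Three-level ordinal scale used for four of the ten elements."""
    POOR = 0
    FAIR = 1
    GOOD = 2


# Elements coded as mentioned / not mentioned (binary scheme).
BINARY_ELEMENTS = {
    "impact", "benefits", "how_to_join",
    "training_resources", "access_to_results", "recognition",
}
# Elements coded as poor / fair / good (ordinal scheme).
ORDINAL_ELEMENTS = {"overview", "goals", "activities", "target_audience"}

# Example phrases quoted in the text; a real rubric is not a fixed keyword list.
EXPLICIT_GOAL_PHRASES = (
    "this project aims to", "our goals are", "the purpose of this project is",
)
IMPLIED_GOAL_PHRASES = (
    "we are investigating", "we are compiling", "this allows us to",
)


def code_goals(text: str) -> Ordinal:
    """Toy approximation of the 'goals' rule: explicit framing is GOOD,
    implied goals are FAIR, and no detectable goal is POOR."""
    lowered = text.lower()
    if any(phrase in lowered for phrase in EXPLICIT_GOAL_PHRASES):
        return Ordinal.GOOD
    if any(phrase in lowered for phrase in IMPLIED_GOAL_PHRASES):
        return Ordinal.FAIR
    return Ordinal.POOR
```

Keeping the binary and ordinal elements in separate sets mirrors the distinction drawn in the rubric and makes it easy to check that all ten elements are covered exactly once.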

Once the initial rubric was designed and the meaning of each category defined, examples from existing project descriptions were used to populate the rubric and clearly differentiate between coding options. This was done through discussion and negotiation between authors and with the assistance of a third coder, an expert in computational content analysis, until full agreement was achieved.

Validity-checking of the coding rubric, ensuring it is fit for purpose and that all categories are well-defined and demarcated, was conducted with the assistance of 6 external researchers who were familiar with the research goals and context, yet were not involved in the rubric development. Researchers were presented with the coding rubric and asked to review and use it for coding two independent project descriptions. Following this process, results were compared and discussed, and where disagreement arose, the rubric was adjusted and revised.

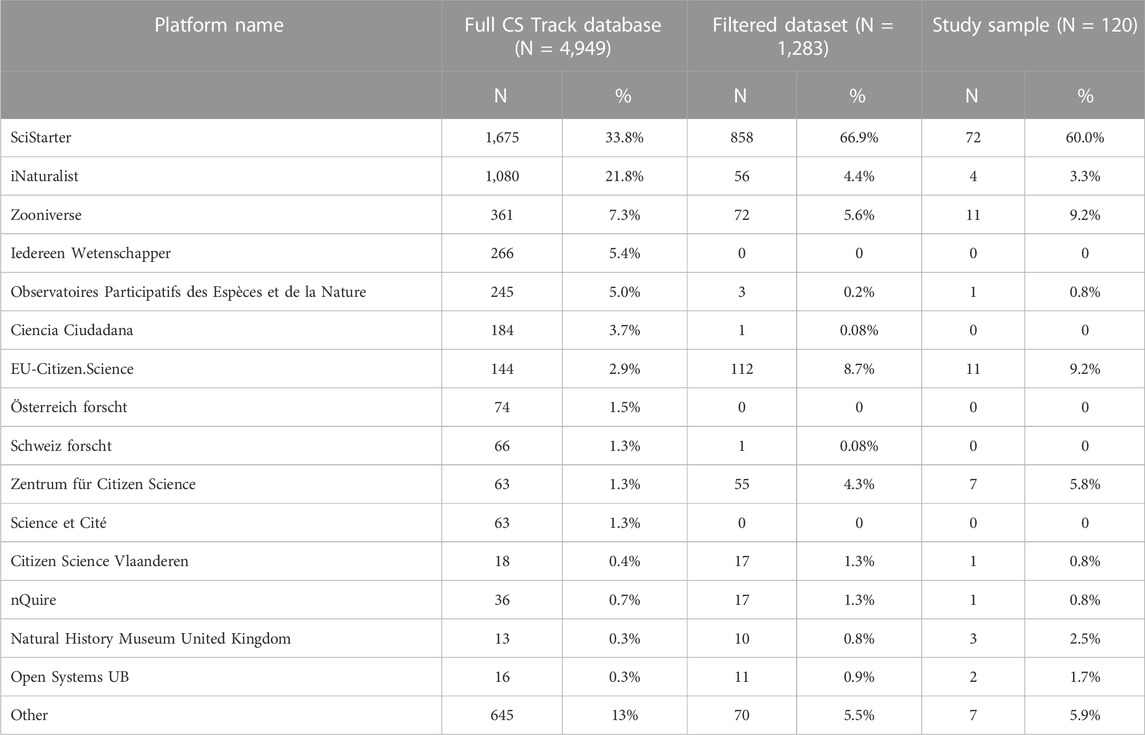

A sample of project descriptions for this study was selected from the CS Track database (De-Groot et al., 2022; Santos et al., 2022), which aggregates data from 59 citizen science project platforms, collected primarily by an automated web crawler. In cases where a project was presented on more than one platform, it is listed here under the name of the platform it was first extracted from. At the time of writing this paper, the CS Track database contained information on more than 4,900 Citizen Science projects worldwide. The distribution of projects according to their platform source is provided in Table 2.

TABLE 2. Platform distribution for the entire CS Track database, filtered dataset including only English-language project descriptions with a word count between 100 and 500, and the random sample used for the analysis of this study.

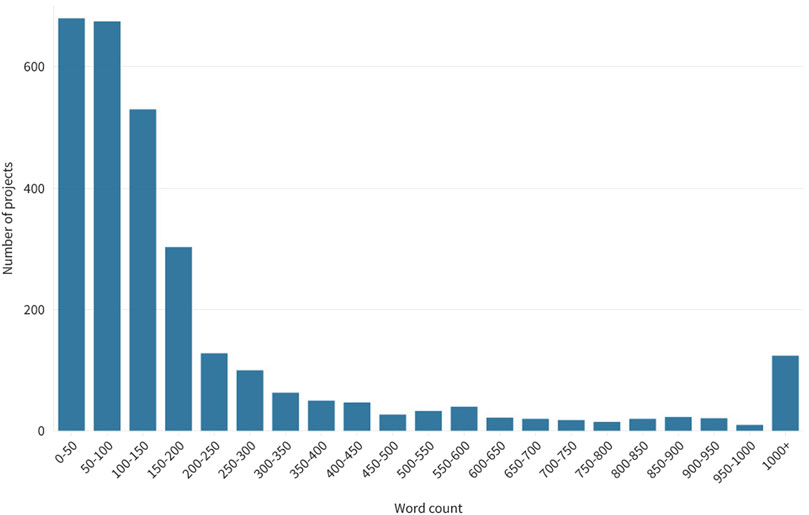

For the purpose of this study, we applied two filters to generate our sample (see Figure 1). First, due to the authors’ language skills, we created a dataset containing only English-language project descriptions, resulting in a subsample of 2,949 projects. This excluded a number of major citizen science platforms from non-English speaking countries such as Iedereen Wetenschapper from the Netherlands, which accounts for 5.4% of project descriptions in the CS Track database, or OPEN Observatoires Participatifs des Espèces et de la Nature, which accounts for 5%. We then filtered all descriptions according to their word count (see Figure 2): descriptions consisting of fewer than 100 or more than 500 words were excluded. These thresholds were set because texts of fewer than 100 words cannot be expected to contain a significant amount of information, while project descriptions of more than 500 words are less likely to be read in their entirety than shorter texts (Meinecke, 2021) and are thus ill-suited to the task of capturing the readers’ interest and prompting them to join the project. This second round of filtering excluded an additional 1,666 project descriptions, leaving a dataset of 1,283 usable texts. The distribution of these projects according to their platform source was significantly different to that of the whole dataset, as can be seen in Table 2, with an abundance of projects from one platform (SciStarter).

FIGURE 2. Distribution of English language project descriptions from the CS Track database according to their word count.

Using this dataset, we created a random sample of approximately 10% of project descriptions by applying the “random” function of RStudio. In total, we analyzed the descriptions of 120 citizen science projects from 16 different online citizen science platforms. The distribution of the sample in terms of platform sources resembles that of the abridged 1,283-project dataset (see Table 2). During the analysis process, 6 project descriptions were excluded from the final sample because they did not contain any indication of engaging the public in research processes.
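The filtering and sampling steps can be illustrated with a short sketch. The study itself used RStudio; the Python version below is an assumed equivalent, not the authors’ script. The function names, the whitespace-based word count and the fixed seed are all hypothetical choices, and the input is assumed to already contain only English-language descriptions.

```python
import random


def word_count(text: str) -> int:
    """Count whitespace-separated tokens (an assumed definition of 'word')."""
    return len(text.split())


def filter_and_sample(descriptions, min_words=100, max_words=500,
                      sample_size=120, seed=2023):
    """Keep descriptions of 100 to 500 words, then draw a random sample.

    Returns the eligible descriptions and the sample drawn from them.
    """
    eligible = [d for d in descriptions
                if min_words <= word_count(d) <= max_words]
    rng = random.Random(seed)  # fixed seed only to make the sketch reproducible
    k = min(sample_size, len(eligible))
    return eligible, rng.sample(eligible, k)
```

With 1,283 eligible texts, a sample of 120 corresponds to roughly 9.4% of the filtered dataset, in line with the “approximately 10%” stated above.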

Following the establishment of rubric validity, 10% of the project description sample was randomly selected and independently coded by the authors and the third coder introduced above. Results were compared and discussed where disagreement occurred. This was followed by a second round of coding of an additional 10% of the sample.

To increase the reliability of the results, and since at this stage inter-rater agreement of over 90% had not been established for all categories, an additional 10% of the sample was coded by all 3 coders, who also highlighted specific places where they were not confident of their coding decisions. Inter-rater reliability was calculated for all items, excluding those with low coding confidence, and was found to be over 90% for all categories. In those cases where coding was challenging, results were compared and discussed to reach full agreement. In total, 36 project descriptions were coded by 3 independent coders. Calculations of the inter-rater reliability for each category, with and without the low confidence coding, are presented in Supplementary S2.
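As an illustration of a percent-agreement calculation of this kind, the sketch below counts an item as agreed only when all coders assign the same code, and optionally skips items flagged as low confidence. Both the function name and the “all coders identical” definition are assumptions; the exact procedure used in the study is documented in Supplementary S2.

```python
def percent_agreement(codes_by_coder, low_confidence=frozenset()):
    """Percentage of items on which every coder assigned the same code.

    codes_by_coder: one equal-length list of codes per coder.
    low_confidence: indices of items to exclude from the calculation.
    """
    items = [codes for index, codes in enumerate(zip(*codes_by_coder))
             if index not in low_confidence]
    if not items:
        raise ValueError("no items left to compare")
    agreed = sum(1 for codes in items if len(set(codes)) == 1)
    return 100.0 * agreed / len(items)


# Hypothetical codes for four descriptions in one category.
coder_a = ["good", "fair", "poor", "good"]
coder_b = ["good", "fair", "fair", "good"]
coder_c = ["good", "fair", "poor", "good"]

print(percent_agreement([coder_a, coder_b, coder_c]))                      # 75.0
print(percent_agreement([coder_a, coder_b, coder_c], low_confidence={2}))  # 100.0
```

Simple percent agreement does not correct for chance agreement, which is one reason to report it per category and alongside the low-confidence cases.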

Following the coding reliability check, the remaining 80 projects were coded by one of the two authors. As in the last round of the reliability check, coders indicated places of low confidence, which were then discussed to reach full agreement.

To assess the quality and comprehensiveness of citizen science project descriptions found on online platforms, our analysis focused on 10 key elements contributing to engaging and effective project descriptions. In this section, we present the results of our analysis, which revealed several important aspects relevant to prospective participants that are missing in many project descriptions. Our results first present findings on the first key element—project overview, followed by the remaining elements divided according to the three dimensions of citizen science projects described above: 1. purpose and expected outcomes 2. methods of operation 3. volunteer and community management.

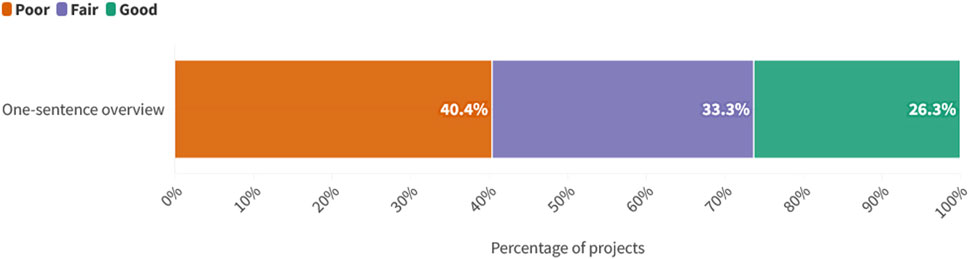

A project overview is an important part of project descriptions as it provides a clear and concise summary of the project’s main objective, scope, and expected outcomes. It can quickly capture the attention of potential participants and help them understand the purpose and value of the project and make a swift decision as to their involvement.

Yet, our analysis reveals that only 30 of the projects in our sample (i.e., 26.3%) begin their descriptions with a clear and concise one-sentence summary of the project (Figure 3). An example of a good project overview is: “[Project name] is a network of citizen scientists that monitor marine resources and ecosystem health at 450 beaches across [name of place].” In 38 cases (33.3% of our sample), a project overview was present, but either lengthy and unfocused or spread across two sentences. An example of such a lengthy and detailed project overview is “[The project] needs volunteers to undertake surveys for grassland birds, such as [names of birds], along established routes and in managed grasslands, and to collect data on bird abundance and habitat characteristics.” A more extreme case is split into two sentences: “[The project] was initiated in 1983 to provide a mid-summer estimate of the statewide [type of bird] population. On the third Saturday in July each year, volunteers survey assigned lakes, ponds, and reservoirs from 8:00 to 9:00 a.m., recording the number of adult [bird], subadult [bird] (1–2 year olds), and [bird] chicks on the water body, as well as relevant human and wildlife activity.” The remaining 40.4% of project descriptions make no attempt to open with a project overview and instead dive straight into the historic or scientific background of a project. One project, for example, started by explaining that “Scientists have flown over and systematically photographed the [name of year, place and animal] migration. This herd of [animal], estimated in 2013 to number around 1.3 million […]. An estimate of the [animal] population is completed by counting the number of [animals] in a large number of images.”

FIGURE 3. Percentage of projects descriptions coded as “poor,” “fair” or “good” in the category “one-sentence overview.”

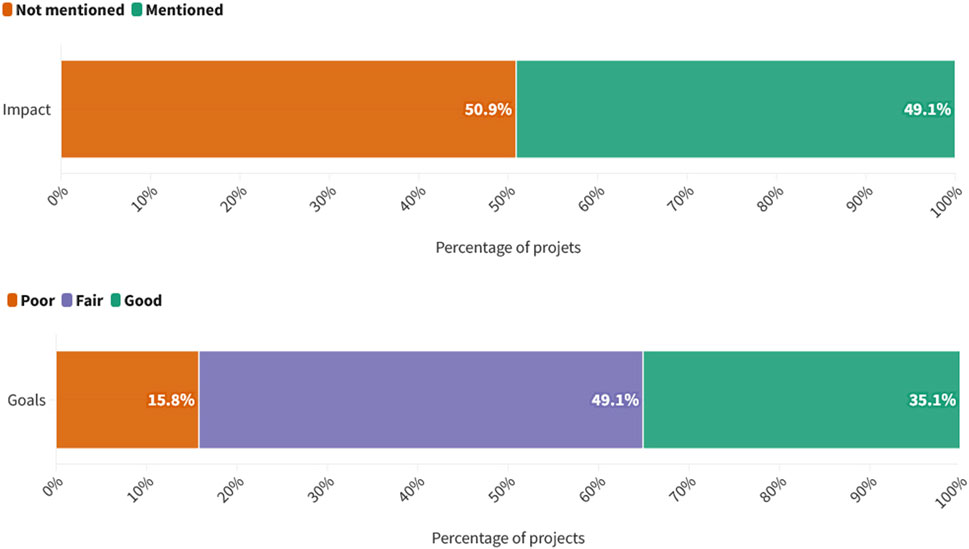

Project goals were outlined in 96 of the descriptions in our sample (i.e., 84.2%) (Figure 4), yet only 35.1% met the criteria for a “good” project goal, as defined above. An example of a good description of project goals is: “The purpose of this project is to record the occurrence and location of [ecological phenomenon] throughout Europe”. A further 56 project descriptions (58.3% of those that outlined goals) did not present goals explicitly enough and were thus categorized as “fair.” One example of a description that implies project goals without explicitly framing them as such is the following: “The [project] provides a harvest-independent index of grouse distribution and abundance during the critical breeding season in the spring.” Another description states that “[these activities] allow us to test fire mapping, interpret plant responses and assess changes to animal habitats.” Yet another project informs the reader that “These data will be used to create a snapshot of seabird density”.

FIGURE 4. Percentage of project descriptions coded as “poor,” “fair” or “good” in the category of “goals” (top) and as “not mentioned” or “mentioned” in the category of “impact” (bottom).

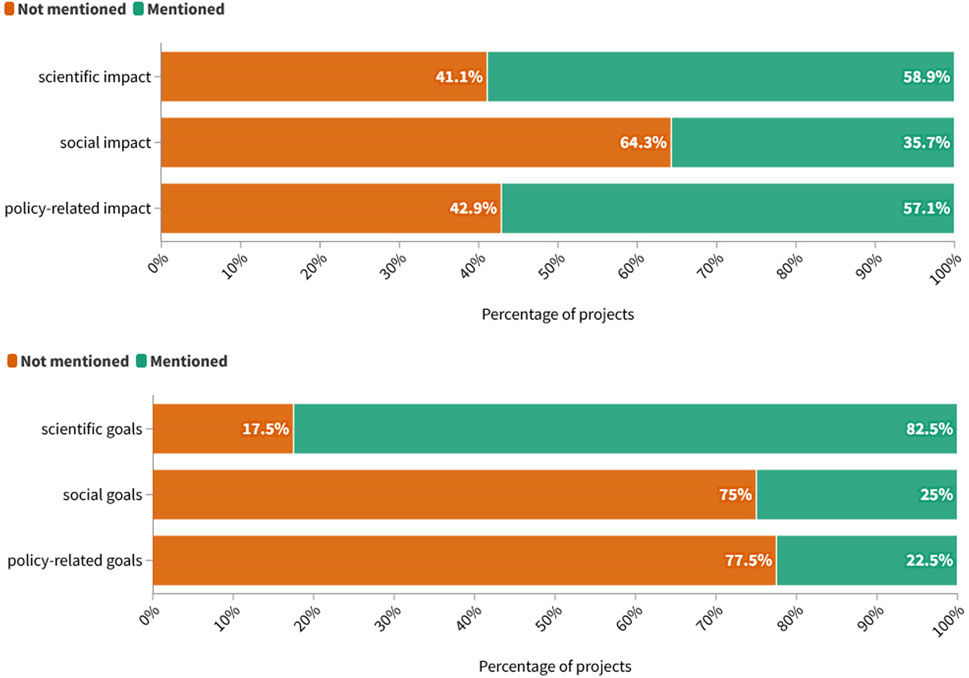

In terms of goal subcategories (scientific, social and policy), scientific goals, such as collecting data, closing data gaps and answering research questions, were most commonly referred to (namely, in 82.5% of project descriptions coded as “good” in the category of goals). Both social goals related to public discussion, education and communication and policy-related goals were mentioned much more rarely, namely, in 25% and 22.5% of cases respectively (Figure 5).

FIGURE 5. Breakdown of the types of goals (top) and impact (bottom) indicated by project descriptions. % are of those descriptions coded as “good” in the category of goals and “mentioned” in the category of impact.

Information about the project’s impact appeared in nearly 50% (N = 56) of the project descriptions we analyzed. Of these, 33 projects (58.9% of those which indicated their impact) mention scientific impact and 32 (57.1%) were coded for policy-related impact. For instance, one project specified that they create tools “that researchers all over the world can use to extract information” (scientific impact), while another one stated their project conducts “research that will ultimately help protect our fragile environment” (environmental-policy impact). Social impact was referenced in 20 project descriptions (35.7%). One of these points out that data collected by their project are “used in actions of environmental education.” Another project explains that its activities “promote a process of awareness and self-reflection on the reality of people with mental health problems.” While the impact statements are sometimes vague, they remain important elements in the text, as they provide context for the projects’ long-term contributions.

“Method of operation” refers to the inner workings or mechanics of a citizen science project, i.e., to the specific procedures and activities it uses to achieve its goals and engage participants. This includes the tasks participants are asked to complete in the project, the project’s target audience, training and didactic resources offered to participants, and information on how to join the project.

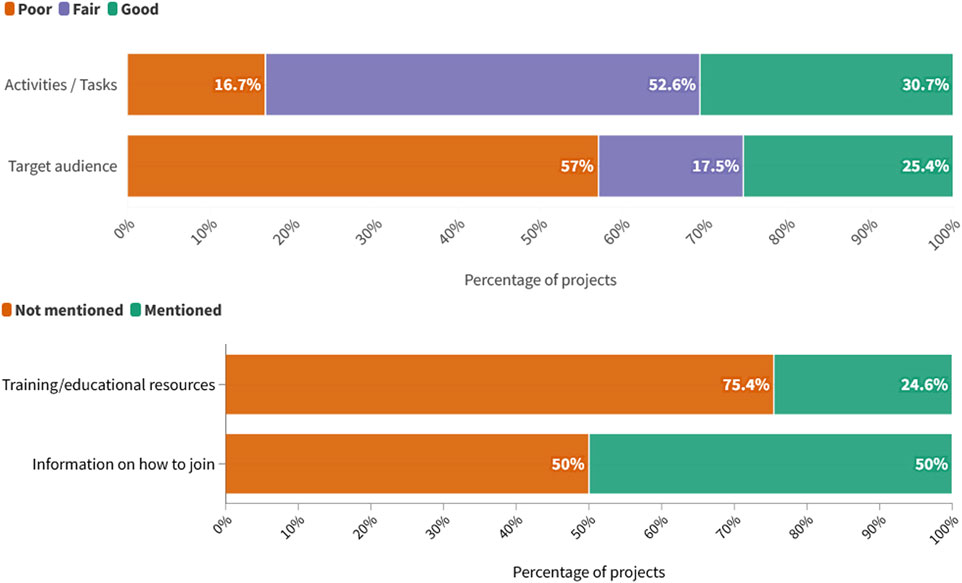

Activities and tasks associated with participation in the respective citizen science project were mentioned in 83.3% of all project descriptions we studied (Figure 6). However, the majority of these texts (63.2%) contain only partial information on location, date, tools and equipment, or required time commitment. Examples of incomplete descriptions of activities and tasks (which were coded as “fair”) include: “submit your observations”, with no specification of the nature or location of the observations, “transcribe information from the specimen labels”, with no reference to the technology used or time commitment, and “tracking a tree’s growth”, with no explanation of what this task entails. On the other hand, nearly one third of the descriptions we analyzed were coded as “good” because they contained detailed and informative explanations of the project activities. One project, for example, summarized the citizen scientists’ tasks as follows: “Using [app and website]: Stop 3–5 times along a pre-determined route and spend 5 min at each spot photographing/recording every insect that you see.” This example detailed the technology used (name of platform), location (along a pre-determined route), time investment (5 min * 3–5 times), and detailed task (photographing/recording every insect). In another project the activity was described as: “Participants register the nest boxes in their gardens or local areas and record what’s inside at regular intervals during the breeding season,” indicating location (gardens or local areas), task (record what’s inside their nest boxes) and timing and duration (regular intervals during the breeding season).

FIGURE 6. Percentage of project descriptions coded as “poor,” “fair” or “good” in the categories of “activities/tasks” and “target audience” (top) and as “not mentioned” or “mentioned” in the categories “training & educational materials” and “information on how to join” (bottom).

In terms of target audience, more than half of the descriptions in our sample (57%) failed to make any reference to the projects’ intended audience. 17.5% contained vague or superficial statements (e.g., “anyone in NSW” or “distributed global players”). Only 25.4% mentioned specific groups, equipment needed in order to join or required skills. One project, for instance, is explicitly geared towards “people who go on regular beach walks, boat trips, or scuba dives”, another project description informs readers that “Absolutely anyone can join this project—all you need is an internet connection and plenty of free time!”.

Training processes and educational resources offered to citizen scientists were only referred to in 24.6% of the descriptions in our sample. Frequently mentioned types of training or instructions include downloadable guides, information sheets or video tutorials. A handful of project descriptions talked about in-person training or lesson plans for teachers.

Concrete, practical information on how to join the respective projects was only provided in 50% of the cases we analyzed. Typically, prospective participants are asked to “sign up,” “Create a free account,” “download the mobile app” or “Click on the ‘Get Started’ button.” In some cases, they can directly “upload [their] observations” or “submit [their] data.” A handful of projects require registration via email.

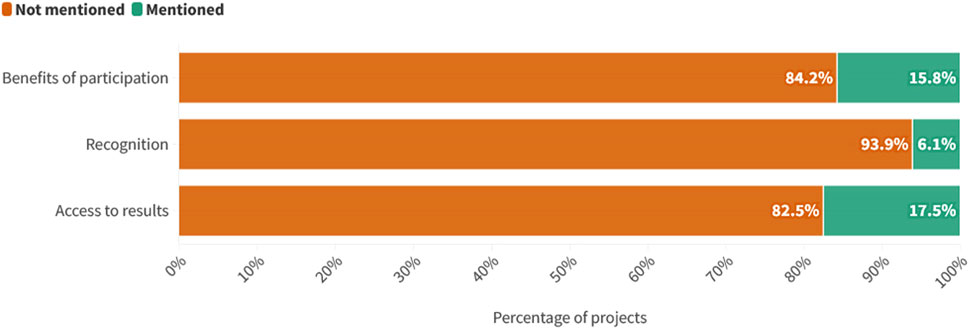

Volunteer and community management refers to the processes and strategies used to effectively engage, recruit, and retain volunteers and community members who are involved in a citizen science project. On the level of project descriptions, this includes explaining which benefits participation will have for those who decide to join the project, whether they will have access to project outcomes and findings, and how their contributions will be honored and recognized. This dimension was remarkably underrepresented in the project descriptions analyzed, with 79 project descriptions ignoring it completely.

A mere 15.8% of project descriptions contained information on how volunteers can benefit from participating in the project (Figure 7). In the vast majority of those cases, the benefits mentioned are related to learning, i.e., to acquiring new skills and knowledge. For example, one project provides participants with “new ideas for attracting wildlife to your backyard and community.” Another project offers a “fun way for young people and other members of the public to learn alongside experts”. In a few cases, learning was associated with citizen scientists’ health and safety, as in the project that “...has helped waterfront residents[...] learn what makes for safer oysters and clams” or the one where participants “learn more about the existing resources for disaster and crisis management in their surrounding.”

FIGURE 7. Percentage of projects descriptions coded as “not mentioned” or “mentioned” in the categories “benefits of participation,” “recognition for citizen scientists” and “access to results.”

Similarly to the underrepresentation of participant benefits, only a small number of project descriptions (17.5%) detailed how participants may be able to access project outputs. Most cases which do describe access to data indicate that datasets and results are available for viewing or download on some form of website. One project description mentions that, after the end of the project, participants “will receive a research report summarizing the results and findings of the whole project.”

Finally, a paltry 6.1% of project descriptions contain details on how the contributions of citizen scientists will be acknowledged and recognized. Examples include being “listed as the collector” of a specimen displayed in a public exhibition or earning credit in the platform dashboard. A handful of project descriptions include expressions of gratitude, such as “Thank you to everyone who helped us transcribe the slides”, or of appreciation, e.g., “[the project] believes that the citizens of coastal communities are essential scientific partners”.

In summary, of the three project dimensions identified above (objectives and expected outcomes, method of operation, and volunteer and community management), the one discussed most prominently in the project descriptions in our sample was the first. Although impact is significantly less represented than goals, jointly this dimension has an average omission rate of just 33.4%. At 48.9%, the average omission rate of the second dimension, methods of operation, is significantly higher, which indicates that many project coordinators do not devote much attention to the practical or technical aspects of their project’s day-to-day workings when writing project descriptions. Finally, volunteer and community management clearly is the most underrepresented dimension of the three, with an average not-mentioned rate across all categories of 86.9%.
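The average omission rate for a dimension can be illustrated as the unweighted mean of the per-category “not mentioned” percentages. The sketch below applies this to the third dimension, using the “mentioned” rates reported above (15.8%, 17.5% and 6.1%); the function name and the averaging of rounded percentages are assumptions about how such a figure might be derived.

```python
def average_omission_rate(mentioned_pct):
    """Unweighted mean of (100 - mentioned %) across a dimension's categories."""
    omitted = [100.0 - pct for pct in mentioned_pct.values()]
    return sum(omitted) / len(omitted)


# "Mentioned" percentages for volunteer and community management, as reported above.
dimension_three = {
    "benefits_of_participation": 15.8,
    "access_to_results": 17.5,
    "recognition": 6.1,
}

print(round(average_omission_rate(dimension_three), 1))  # 86.9
```

Under these assumptions, the result matches the 86.9% reported for volunteer and community management.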

This paper aims to assess the quality and comprehensiveness of citizen science project descriptions found on online platforms. Our analysis focused on several key aspects of project descriptions, including an overview of the project, its purpose and expected outcomes, the level of detail provided about projects’ methods and operation and about its approach to volunteer and community management. Through a systematic review of a sample of citizen science project descriptions, we identify areas for improvement and provide recommendations for enhancing the effectiveness and impact of citizen science initiatives.

We found that citizen science project descriptions vary greatly in terms of their content, length and style. In fact, over 50% of project descriptions in the CS Track database did not meet our inclusion criteria on length alone. Approximately half of the project descriptions stored in the CS Track database contain fewer than 100 words, meaning that key information on the project, such as technology used, tasks to be completed and benefits of participation, is inevitably lacking. Other project descriptions are extremely long, with over 1,000 words, and provide extensive detail and scientific background which may be difficult for participants to follow (Meinecke, 2021). One notable observation from the analysis is that the style of writing in many project descriptions tends to be overly academic. Even among project descriptions with an appropriate word count (100–500 words), we found descriptions which provided lengthy and excessively technical explanations of the project’s background and scientific context that make the text difficult for non-experts to understand. Overall, the style of writing was often more suited to an academic abstract than to a project description targeted at a general audience. This may be attributable to the fact that the majority of citizen science project coordinators and leaders come from a scientific background and have experience writing for such an audience. Yet, writing for non-scientists demands a different skill and style, for which many scientists are not trained (Salita, 2015). Popular science writing begins with the most important issue up front, followed by the scientific background and other technical details (Rabe and Vaughn, 2008). Yet, instead of opening with a succinct overview of the project, the descriptions we analyzed tended to start by providing background information on the field of study, describing the state of the art and identifying a research gap. As a result, the participatory dimension and the roles of citizen scientists in the project are only briefly mentioned towards the end of the description (or in some cases not at all). While this structure is perfectly appropriate for an academic abstract, it is not well-suited to capturing the attention of non-academic readers and motivating them to join. This is quite unfortunate since project descriptions are often the first point of contact between a citizen science project and prospective participants and thus play a crucial role in recruiting volunteers.
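The length bands mentioned in this paragraph can be illustrated with a short sketch. It is not the procedure used in this study: the function names are hypothetical, words are approximated by whitespace-separated tokens, and the label for the 501–1,000 word range is an assumption, since the text only names the bands below 100 words, 100–500 words, and above 1,000 words.

```python
def word_count(text: str) -> int:
    """Approximate the word count as the number of whitespace-separated tokens."""
    return len(text.split())


def length_band(text: str) -> str:
    """Assign a project description to a length band.

    Bands below 100, 100-500 and above 1,000 words follow the text;
    the "long" label for 501-1,000 words is an illustrative assumption.
    """
    n = word_count(text)
    if n < 100:
        return "too short"
    if n <= 500:
        return "appropriate"
    if n <= 1000:
        return "long"
    return "extremely long"


if __name__ == "__main__":
    sample = "This project looks at the seasonal migration patterns of two bird species."
    print(word_count(sample), length_band(sample))
```

A check of this kind only flags length; it says nothing about whether a description is explicit, active in voice, or free of jargon.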

Our findings further demonstrate that of the three dimensions of project descriptions, the first dimension, purpose and expected outcomes, received the most attention. While goals were not always explicit, they were present in 84.2% of project descriptions, with the majority of goals being of a scientific nature. Mentions of impact, on the other hand, were spread more evenly across scientific, policy and social aspects. The comparatively strong presence of these elements within project descriptions suggests that project leaders view them as important elements of project communication. Alternatively, this could stem from the academic style of writing, which emphasizes the goal of the study alongside its contribution to research and practice (Bray et al., 2012).

The second dimension, which encompasses the methods and operations of projects, featured much less prominently, meaning that the practical and technical aspects of day-to-day participant engagement were not sufficiently emphasized in project descriptions. This finding raises questions about the extent to which project coordinators are effectively conveying the operational aspects that contribute to participant engagement and project success. If potential participants do not understand the tasks they are asked to complete, or who the project is targeting, they are less likely to join the project as participants (West and Pateman, 2016; Hart et al., 2022).

The third dimension, volunteer and community management, emerges as the most underrepresented aspect across all categories, with an overwhelmingly high omission rate for all three elements (benefits, recognition and access to results). It is evident that project descriptions often fail to address the crucial role of volunteers and the management strategies implemented to support community involvement. This highlights the absence of a participant-oriented approach to project management as reflected by project descriptions. As volunteer support, recognition and community engagement are vital for the success and sustainability of citizen science projects (de Vries et al., 2019; Asingizwe et al., 2020), it is crucial that these are adequately reflected within project descriptions.

As discussed above, a significant portion of the project descriptions we analyzed were written in the format of a paper or conference abstract. Stylistic conventions typical of academic writing make the text less attractive for non-scientist audiences and may also be to blame for the rather indirect or implicit way project descriptions present information. Often, the clarity and accessibility of a project description could be improved significantly by applying the following three principles:

1. Explicitly mention your project and what it will do—make sure the project is the subject of your sentence and avoid writing in a way that leaves the reader to guess the connections between the project and its activities. For example, rather than writing: “In order to be able to make better predictions about future climate change, scientists need to know more about how decomposition occurs,” make the project and its activities more visible by stating: “By collecting data about decomposition, this project will help scientists make better predictions about future climate change”. Whilst the change in style may seem trivial to some readers, others will find the second version more accessible and clear, as it does not expect them to infer that the project will provide information scientists need.

2. Avoid the passive voice: mention the persons or teams conducting the research and write your message directly to your reader. Writing in the active voice will help you highlight and acknowledge the work scientists and citizen scientists are doing in the project. It will also make it easier for you to explicitly state which activities participants will be engaging in. For instance, instead of writing “Through this project, tens of thousands of documents will be annotated and made available to interested researchers and members of the public” you could inform the reader that “Together with the project team, you, our volunteers, will annotate tens of thousands of documents, making them available to interested researchers and members of the public.” As this example shows, a simple change in syntax affects both the tone of a sentence and the message it conveys.

3. Be brief and to the point—Do not include an excess of information, particularly regarding technical aspects or the scientific background of the project. Try to find the right balance between providing all the information prospective participants may need, while not overwhelming them with too many details. For example, “This project looks at the seasonal migration patterns of two bird species—black storks and common cranes” is much easier to understand than the much more detailed version: “This project looks at the seasonal migration patterns of the black stork (Ciconia nigra, native to Portugal, Spain, and certain parts of Central and Eastern Europe, migrates to sub-Saharan Africa) and the common crane (Grus grus or Eurasian crane, mainly found in Eastern Europe and Siberia, migrates to the Iberian Peninsula and northern Africa)”. While the additional pieces of information included in the latter version may be relevant in the project context, they hamper the comprehensibility of the text and are in all likelihood not pertinent to the readers’ decision on whether they would like to join the project or not.

Additional recommendations for writing project descriptions, alongside advice on style and format, can be found in the project description template that inspired this study (Golumbic and Oesterheld, 2022). Furthermore, some online tools exist to support writers in improving the readability of their texts for lay audiences. Examples include the De-Jargonizer¹, a free online tool developed by Rakedzon et al. (2017) which identifies overly technical words, jargon and complex phrases in the text, and the Hemingway Editor App², which highlights lengthy, complex sentences, common errors and uses of the passive voice. These tools have been tested with students and shown to enhance their writing skills and improve the reader-friendliness and accessibility of texts (Capers et al., 2022; Imran, 2022).

While this study aimed to utilize a representative sample of citizen science project descriptions, a number of limitations influence the results and interpretations. First, as the analysis was conducted in English, all projects presented in other languages have inevitably been excluded from the analysis. The results of this study therefore may not pertain to non-English platforms. Additionally, since the CS Track database contains information on citizen science projects extracted from a wide range of platforms, differences may occur in the way project descriptions are presented, which in turn may have influenced our analysis.

On some platforms, for example, information on the project is spread across several tabs, as in the case of Zooniverse, which has a landing page and an “About” section consisting of five tabs: Research, The Team, Results, Education, and FAQ. In these cases, and for reasons unknown, the web crawler sometimes extracted text from only one or two tabs, leaving out crucial information. While we excluded any descriptions that were evidently disjointed (i.e., not part of a coherent running text or narrative), we decided against manually correcting these crawler errors, since doing so would have further biased our sample and in many cases resulted in a project description exceeding our word limit. SciStarter, which accounts for 60% of the texts in our sample, asks project coordinators to fill in a form containing both text fields (e.g., “goals,” “tasks” and “description”) and drop-down menus (e.g., “average time,” “ideal frequency”), in addition to submitting a full project description. As a result, project pages on SciStarter usually contain both structured and unstructured information. The CS Track web crawler mainly extracted the unstructured information (i.e., the running text contained in the “description” field), meaning the information added in the platform’s pre-defined fields is sometimes absent in our sample. On iNaturalist (which constitutes 3.3% of our sample), all observations submitted by citizen scientists are by default visible and accessible on the platform. Accordingly, project coordinators may not see any reason to include information about “access to results” in their descriptions.

However, while limitations of the web crawler and the structure and characteristics of the platforms themselves have inevitably influenced the results of our analysis, the main argument presented in this paper still holds. Vital pieces of information about a citizen science project should always be included in the project description itself, even if they are also written elsewhere—in other tabs or structured sections of the platform. Otherwise, website visitors are forced to click and/or scroll through several pages to find the information they seek. Some readers may not be willing to invest the time and effort needed and instead simply move on to the next project.

We also acknowledge that while this analysis was based on literature and expert experience and validation, it did not incorporate perceptions of prospective audiences. Future work could examine how non-expert readers perceive and interpret texts. One option would be to present such readers with a selection of texts written in different formats, styles and speech registers, and ask them to assess their attractiveness, clarity and fitness for purpose.

Citizen science is growing dramatically, engaging thousands of volunteers who contribute daily to a wide range of initiatives, from health to astronomy and biodiversity. As citizen science continues to establish itself as an independent field of study, the pivotal role of science communication becomes increasingly evident. Yet, our analysis reveals that many citizen science project leaders or coordinators fail to incorporate science communication practices when writing project descriptions. Many project descriptions are structured like an academic abstract and do not sufficiently address practical matters of project participation and aspects related to volunteer and community management. These findings point to a much bigger challenge for citizen science, namely inclusion and diversity. Project descriptions written in an academic style contribute to the problem of homophily in citizen science: they are more likely to attract participants with university degrees and high science literacy than people with different educational and linguistic backgrounds or abilities. Our findings underscore the need for project coordinators to adopt a more holistic approach that takes into account all of the project dimensions identified in our rubric, including those related to volunteer support, recognition and community engagement. To ensure readability, project descriptions should be explicit, written in an active voice and include only vital information. Following these guidelines will help project coordinators compose comprehensive, readable and engaging project descriptions and streamline communication with potential volunteers. Engaging project descriptions will spark readers’ curiosity, foster a deeper interest in the project’s objectives and encourage their active involvement.

The raw data supporting the conclusion of this article will be made available by the authors, without undue reservation.

Ethical review and approval was not required for the study on human participants in accordance with the local legislation and institutional requirements. Written informed consent from the participants was not required to participate in this study in accordance with the national legislation and the institutional requirements.

YG and MO both led the conceptual design of the manuscript, conducted the data collection and analysis, developed the coding rubric, created images and wrote the manuscript. All authors contributed to the article and approved the submitted version.

The work in this paper has been carried out in the framework of the CS Track project (Expanding our knowledge on Citizen Science through analytics and analysis), which was funded by the European Commission under the Horizon 2020/SwafS program. Project reference number (Grant Agreement number): 872522. YG received a research fellowship from the Steinhardt Museum of Natural History, Tel Aviv University.

We would like to acknowledge all the partners of the CS Track project for their contributions to this study. Special thanks to Nicolas Gutierrez Paez for his contribution to the rubric development and validity establishment. Thank you ChatGPT for helping us craft a smart title.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fenvs.2023.1228480/full#supplementary-material

¹ De-Jargonizer. Available online at: https://scienceandpublic.com (accessed 23 May 2023).

² Hemingway Editor. Available online at: https://hemingwayapp.com (accessed 23 May 2023).

Asingizwe, D., Marijn Poortvliet, P., Koenraadt, C. J. M., van Vliet, A. J. H., Ingabire, C. M., Mutesa, L., et al. (2020). Why (not) participate in citizen science? Motivational factors and barriers to participate in a citizen science program for malaria control in Rwanda. PLoS One 15, 1–25. doi:10.1371/journal.pone.0237396

Baruch, A., May, A., and Yu, D. (2016). The motivations, enablers and barriers for voluntary participation in an online crowdsourcing platform. Comput. Hum. Behav. 64, 923–931. doi:10.1016/j.chb.2016.07.039

Bonney, R., Byrd, J., Carmichael, J. T., Cunningham, L., Oremland, L., Shirk, J., et al. (2021). Sea change: Using citizen science to inform fisheries management. Bioscience 71, 519–530. doi:10.1093/biosci/biab016

Bray, B., France, B., and Gilbert, J. K. (2012). Identifying the essential elements of effective science communication: What do the experts say? Int. J. Sci. Educ. Part B Commun. Public Engagem. 2, 23–41. doi:10.1080/21548455.2011.611627

Calvera-Isabal, M., Santos, P., Hoppe, H., and Schulten, C. (2023). How to automate the extraction and analysis Cómo automatizar la extracción y análisis de información. Educ. digital Citizsh. Algorithms, automation Commun., 23–34. doi:10.3916/C74-2023-02

Capers, R. S., Oeldorf-Hirsch, A., Wyss, R., Burgio, K. R., and Rubega, M. A. (2022). What did they learn? Objective assessment tools show mixed effects of training on science communication behaviors. Front. Commun. 6, 295. doi:10.3389/fcomm.2021.805630

de Vries, M., Land-Zandstra, A., and Smeets, I. (2019). Citizen scientists’ preferences for communication of scientific output: A literature review. Citiz. Sci. Theory Pract. 4, 1–13. doi:10.5334/cstp.136

De-Groot, R., Golumbic, Y. N., Martínez Martínez, F., Hoppe, H. U., and Reynolds, S. (2022). Developing a framework for investigating citizen science through a combination of web analytics and social science methods—the CS Track perspective. Front. Res. Metrics Anal. 7, 988544. doi:10.3389/frma.2022.988544

Golumbic, Y. N., Baram-Tsabari, A., and Koichu, B. (2019). Engagement and communication features of scientifically successful citizen science projects. Environ. Commun. 14, 465–480. doi:10.1080/17524032.2019.1687101

Golumbic, Y. N., Fishbain, B., and Baram-Tsabari, A. (2020). Science literacy in action: Understanding scientific data presented in a citizen science platform by non-expert adults. Int. J. Sci. Educ. Part B 10, 232–247. doi:10.1080/21548455.2020.1769877

Golumbic, Y., and Oesterheld, M. (2022). CS Track project description template. doi:10.5281/zenodo.7004061

Gunnell, J. L., Golumbic, Y. N., Hayes, T., and Cooper, M. (2021). Co-created citizen science: Challenging cultures and practice in scientific research. China: JCOM.

Hart, A. G., de Meyer, K., Adcock, D., Dunkley, R., Barr, M., Pateman, R. M., et al. (2022). Understanding engagement, marketing, and motivation to benefit recruitment and retention in citizen science. Citiz. Sci. Theory Pract. 7, 1–9. doi:10.5334/CSTP.436

Hecker, S., Luckas, M., Brandt, M., Kikillus, H., Marenbach, I., Schiele, B., et al. (2019). “Stories can change the world-citizen science communication in practice,” in Citizen science: Innovation in open science, society and policy. Editors S. Hecker, M. Haklay, A. Bowser, Z. Makuch, J. Vogel, and A. Bonn (London: UCL Press). doi:10.14324/111.9781787352339

Hut, R., Land-Zandstra, A. M., Smeets, I., and Stoof, C. R. (2016). Geoscience on television: A review of science communication literature in the context of geosciences. Hydrol. Earth Syst. Sci. 20, 2507–2518. doi:10.5194/HESS-20-2507-2016

Imran, M. C. (2022). Applying Hemingway app to enhance students’ writing skill. Educ. Lang. Cult., 180–185. doi:10.56314/edulec.v2i2

Lee, T. K., Crowston, K., Harandi, M., Østerlund, C., and Miller, G. (2018). Appealing to different motivations in a message to recruit citizen scientists: Results of a field experiment. J. Sci. Commun. 17, A02–A22. doi:10.22323/2.17010202

Lin Hunter, D. E., Newman, G. J., and Balgopal, M. M. (2020). Citizen scientist or citizen technician: A case study of communication on one citizen science platform. Citiz. Sci. Theory Pract. 5, 17–13. doi:10.5334/cstp.261

Liu, H.-Y., Dörler, D., Heigl, F., and Grossberndt, S. (2021). “Citizen science platforms,” in The science of citizen science. Editors K. Vohland, A. Land-Zandstra, L. Ceccaroni, R. Lemmens, J. Perello, and M. Ponti (Springer International Publishing), 439–459. doi:10.1007/978-3-030-58278-4

Lorke, J., Golumbic, Y. N., Ramjan, C., and Atias, O. (2019). Training needs and recommendations for Citizen Science participants, facilitators and designers. COST Action 15212 Rep. Available at: http://hdl.handle.net/10141/622589.

Maund, P. R., Irvine, K. N., Lawson, B., Steadman, J., Risely, K., Cunningham, A. A., et al. (2020). What motivates the masses: Understanding why people contribute to conservation citizen science projects. Biol. Conserv. 246, 108587. doi:10.1016/j.biocon.2020.108587

Meinecke, K. (2021). Practicing science communication in digital media: A course to write the antike in wien blog. Teach. Cl. Digit. Age, 69–81. doi:10.38072/2703-0784/p22

Oesterheld, M., Schmid-Loertzer, V., Calvera-Isabal, M., Amarasinghe, I., Santos, P., and Golumbic, Y. N. (2022). Identifying learning dimensions in citizen science projects. Proc. Sci. 418, 25–26. doi:10.22323/1.418.0070

Pöttker, H. (2003). News and its communicative quality: The inverted pyramid—when and why did it appear? J. Stud. 4, 501–511. doi:10.1080/1461670032000136596

Rabe, R. A. (2008). “Inverted pyramid,” in Encyclopedia of American journalism. Editor S. L. Vaughn (New York: Routledge), 223–225.

Rakedzon, T., Segev, E., Chapnik, N., Yosef, R., and Baram-Tsabari, A. (2017). Automatic jargon identifier for scientists engaging with the public and science communication educators. PLoS One 12, e0181742. doi:10.1371/journal.pone.0181742

Robinson, L. D., Cawthray, J., West, S., Bonn, A., and Ansine, J. (2018). “Ten principles of citizen science,” in Citizen science: Innovation in open science, society and policy. Editors S. Hecker, M. Haklay, A. Bowser, Z. Makuch, J. Vogel, and A. Bonn (London: UCL Press), 27–40.

Salita, J. T. (2015). Writing for lay audiences: A challenge for scientists. Med. Writ. 24, 183–189. doi:10.1179/2047480615Z.000000000320

Sandhaus, S., Kaufmann, D., and Ramirez-Andreotta, M. (2019). Public participation, trust and data sharing: Gardens as hubs for citizen science and environmental health literacy efforts. Int. J. Sci. Educ. Part B Commun. Public Engagem. 9, 54–71. doi:10.1080/21548455.2018.1542752

Santos, P., Anastasakis, M., Kikis-Papadakis, K., Weeber, K. N., Peltoniemi, A. J., Sabel, O., et al. (2022). D2.2 Final documentation of initiatives selected for analysis. doi:10.5281/zenodo.7390459

Thomas, S., Scheller, D., and Schröder, S. (2021). Co-Creation in citizen social science: The research forum as a methodological foundation for communication and participation. Humanit. Soc. Sci. Commun. 8, 244–311. doi:10.1057/s41599-021-00902-x

Wehn, U., Gharesifard, M., Ceccaroni, L., Joyce, H., Ajates, R., Woods, S., et al. (2021). Impact assessment of citizen science: State of the art and guiding principles for a consolidated approach. Sustain. Sci. 16, 1683–1699. doi:10.1007/s11625-021-00959-2

Keywords: participatory research, popular science writing, science communication, science and society, open science, public engagement with science

Citation: Golumbic YN and Oesterheld M (2023) From goals to engagement—evaluating citizen science project descriptions as science communication texts. Front. Environ. Sci. 11:1228480. doi: 10.3389/fenvs.2023.1228480

Received: 24 May 2023; Accepted: 21 August 2023;

Published: 05 September 2023.

Edited by: Dan Rubenstein, Princeton University, United States

Reviewed by: John A. Cigliano, Cedar Crest College, United States

Copyright © 2023 Golumbic and Oesterheld. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yaela N. Golumbic
