- Key Laboratory of Energy Thermal Conversion and Control of Ministry of Education, Southeast University, Nanjing, China

Introduction

With the rapid development of information technologies such as 5G mobile communication, big data, and cloud computing, the size and capacity of data centers are increasing rapidly. To achieve high-performance computing, the electronic devices in data centers are becoming ever more highly integrated, which increases both heat generation power and heat flux density (Dong et al., 2017). For example, a traditional data center consumes about 7 kW per rack, whereas a new high-performance server consumes 50 kW per rack and is expected to exceed 100 kW within 5 years (Garimella et al., 2012). The rising power density of high-performance servers makes the heat dissipation problem in data centers more pronounced (Zhang et al., 2021). Removing the heat generated by electronic devices promptly and efficiently, so that they stay within the temperature limits required for normal operation, has become the main bottleneck restricting the green development of data centers.

The cooling system accounts for over 40% of a data center’s energy consumption, making it one of the largest energy consumers in the facility (Habibi Khalaj and Halgamuge, 2017). Choosing an efficient cooling technology is therefore an effective way to avoid overheating failures of electronics and to reduce energy consumption in data centers (Liu et al., 2013). Air cooling alone has proved unable to satisfy the cooling requirements of high-power data centers, suffering from problems such as local hot spots and high energy consumption (Moazamigoodarzi et al., 2020). The specific heat capacity of liquid is 1,000–3,500 times that of air, and the thermal conductivity of liquid is 15–25 times that of air (Haywood et al., 2015). With its high reliability and low energy consumption, liquid cooling technology better meets the cooling needs of new high-density data centers (Deng et al., 2022). Among the liquid cooling technologies, cooling plate-based liquid refrigeration technology was the earliest to be applied and is the most popular, and its cooling effect is significantly better than that of air-cooled technology. In engineering applications, cold plate liquid cooling combined with air cooling can remove server heat more quickly and effectively reduce the power usage effectiveness (PUE) of the data center. It is considered the main technical approach to solving the high heat flux and high power consumption problems of data center cooling systems (Habibi Khalaj et al., 2015).
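For reference, PUE is the ratio of total facility power to IT equipment power, so a value of 1.0 would mean no overhead at all. The sketch below is a minimal illustration of that ratio; the function name `pue` and all load figures are hypothetical and are not taken from the cited studies.

```python
def pue(it_kw: float, cooling_kw: float, other_kw: float = 0.0) -> float:
    """Power usage effectiveness: total facility power / IT equipment power."""
    if it_kw <= 0:
        raise ValueError("IT power must be positive")
    return (it_kw + cooling_kw + other_kw) / it_kw


# Hypothetical facility in which cooling draws 40% of the total power.
baseline = pue(it_kw=500.0, cooling_kw=400.0, other_kw=100.0)
# Same IT load with the cooling power cut to a quarter (assumed figure).
improved = pue(it_kw=500.0, cooling_kw=100.0, other_kw=100.0)

print(f"baseline PUE = {baseline:.2f}, improved PUE = {improved:.2f}")
```

Because the IT load sits in the denominator, any reduction in cooling power lowers PUE directly, which is why the choice of cooling technology dominates the metric.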

This paper gives an outline of the development status of cooling plate-based liquid refrigeration technology and discusses the possible problems and challenges in its future application, providing a basis for the subsequent construction of green and high-efficiency data centers.

Development Status of Cold Plate Liquid Cooling Technology

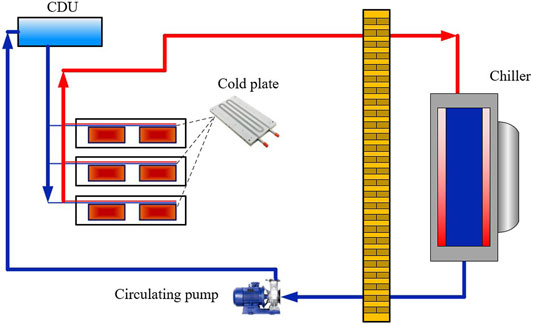

The standard cooling plate-based liquid refrigeration system consists mainly of a cold plate, a cooling distribution unit, a circulating pump, and a chiller (Kheirabadi and Groulx, 2016). The cooling plate-based liquid refrigeration technology transports the heat from the electronic device to the coolant in the circulating pipe via the cold plate, and then the coolant transports the heat to the chiller, where it is eventually dissipated to the external environment or recycled (Figure 1).
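The heat carried away by a single-phase coolant loop follows the sensible-heat balance Q = ṁ·c_p·ΔT. The sketch below uses that balance to estimate the coolant flow needed for the 50 kW rack mentioned in the Introduction and compares it with the air flow needed for the same duty. The property values, the 10 K temperature rise, and the function names are assumptions chosen for illustration, not figures from the cited works.

```python
# Assumed fluid properties near room temperature (illustrative values).
WATER_CP = 4186.0   # specific heat, J/(kg*K)
WATER_RHO = 997.0   # density, kg/m^3
AIR_CP = 1005.0     # specific heat, J/(kg*K)
AIR_RHO = 1.2       # density, kg/m^3


def mass_flow_kg_s(heat_w: float, cp: float, delta_t_k: float) -> float:
    """Mass flow needed to absorb heat_w with a temperature rise of delta_t_k."""
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    return heat_w / (cp * delta_t_k)


def volume_flow_m3_s(heat_w: float, cp: float, rho: float, delta_t_k: float) -> float:
    """Volumetric flow corresponding to the required mass flow."""
    return mass_flow_kg_s(heat_w, cp, delta_t_k) / rho


rack_heat_w = 50_000.0  # 50 kW rack, as quoted in the Introduction
delta_t_k = 10.0        # assumed coolant temperature rise across the rack

water_m3_s = volume_flow_m3_s(rack_heat_w, WATER_CP, WATER_RHO, delta_t_k)
air_m3_s = volume_flow_m3_s(rack_heat_w, AIR_CP, AIR_RHO, delta_t_k)

print(f"water: {water_m3_s * 60_000:.1f} L/min")
print(f"air:   {air_m3_s:.2f} m^3/s")
print(f"air-to-water volume flow ratio: {air_m3_s / water_m3_s:.0f}")
```

With these assumed properties, air needs a few thousand times the volume flow of water for the same heat load and temperature rise, which is in line with the heat capacity advantage of liquids cited in the Introduction.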

In a cooling system based on cooling plate-based liquid refrigeration technology, the coolant has no direct contact with the server, so this is an indirect liquid cooling technology. Depending on whether the coolant evaporates in the cold plate, the technology can be categorized into single-phase and two-phase cooling plate-based liquid refrigeration technology (Rani and Garg, 2021). Water-cooled backplanes are often used in cold plate cooling technology and are divided into open and closed water-cooled backplanes. Open water-cooled backplanes can reach a cooling power of 8–12 kW, while closed water-cooled backplanes circulate air inside the closed cabinet, which gives high cooling utilization and a cooling power of 12–35 kW (Cecchinato et al., 2010). Compared with backplanes based on traditional air-cooled methods, data center cabinets with enclosed water-cooled backplanes can save nearly 35% of annual energy consumption and allow for precise cooling (Nadjahi et al., 2018). Therefore, closed water-cooled backplane technology can be used to optimize existing open cabinets, improve the capability of the cooling system, reduce data center power consumption, and lower the data center’s PUE. The cabinet based on the closed water-cooled backplane designed by IBM successfully meets the heat dissipation requirements of high heat flux servers, and its cooling efficiency is 80% higher than that of air cooling alone (Zimmermann et al., 2012). These solutions can provide precise cooling for specific cabinets and resolve the cooling dilemma of high heat flux cabinets; however, they also have drawbacks such as high customization cost and difficult maintenance.

Combining cold plate liquid cooling with air-cooled technology makes better use of the data center cooling system and enhances its refrigeration capacity. The cold plate liquid cooling bears the main thermal load (e.g., the CPU), and the air-cooled method handles the remaining dispersed portion of the heat load (e.g., hard drives and interface cards) (Amalfi et al., 2022). The average annual PUE of a data center using this technical solution can be kept below 1.2 (Cho et al., 2012). Customizing the cooling plates based on the configurational differences and thermal requirements of different electronic devices, such as CPUs, ASICs, graphics processors, accelerators, and hard disk drives, can further improve the system’s thermal capacity (Zhang et al., 2011). In other words, matching the heat-generating parts of the server with corresponding cooling plates can raise the share of heat removed by cold plate liquid refrigeration, thus promoting the comprehensive use of cold plate liquid cooling technology in data centers and advancing their efficient and green development. When both the CPU and the memory in the server are cooled by cold plates, the proportion of cooling plate-based liquid refrigeration rises to 90%, which can reduce energy consumption by up to 50% compared with traditional air-cooled data centers (Zimmermann et al., 2012).
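The split between liquid-cooled and air-cooled heat load can be illustrated with a simple bookkeeping sketch. The component wattages below are hypothetical, chosen only so that cooling the CPU and memory together gives a 90% liquid share; the function name `liquid_cooled_fraction` is likewise an assumption for illustration.

```python
def liquid_cooled_fraction(component_power_w: dict, liquid_cooled: set) -> float:
    """Fraction of the server heat load that is removed by cold plates."""
    total = sum(component_power_w.values())
    if total <= 0:
        raise ValueError("total component power must be positive")
    liquid = sum(
        power for name, power in component_power_w.items() if name in liquid_cooled
    )
    return liquid / total


# Hypothetical per-server heat loads in watts (not measured data).
server = {
    "cpu": 300.0,
    "memory": 60.0,
    "hard_drives": 25.0,
    "interface_cards": 15.0,
}

cpu_only = liquid_cooled_fraction(server, {"cpu"})
cpu_and_memory = liquid_cooled_fraction(server, {"cpu", "memory"})

print(f"cold plate on CPU only:       {cpu_only:.0%}")
print(f"cold plates on CPU + memory:  {cpu_and_memory:.0%}")
```

Whatever heat is not captured by the cold plates remains the air-cooling system's responsibility, so extending cold plates to more components shrinks the residual air-side load.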

The cooling capacity of cooling plate-based liquid refrigeration technology depends on the structural layout of the cooling system, and the coolant parameters also significantly affect its cooling performance. The requirements placed on the coolant differ considerably between forms of cold plate liquid cooling (Li et al., 2020). Single-phase cold plate liquid cooling uses the coolant to absorb heat in the cooling plate and remove it from the chip, without allowing vaporization (Ebrahimi et al., 2014; Wang et al., 2018). Compared with ordinary insulating liquids and refrigerants, water has a high boiling point and good heat transfer performance, which makes it an ideal cooling medium for single-phase cold plate liquid cooling. However, the leakage hazard limits the application of water as a medium (Ellsworth and Iyengar, 2009; Hao et al., 2022). Thermal oil has good electrical insulation, thermal conductivity, and heat recovery value, and can replace water as the coolant for cooling plate-based liquid refrigeration technology in data centers. A cabinet with an oil-cooled backplane can effectively lower the energy usage of the refrigeration system, with a cooling efficiency 48.16% higher than that of an air-cooled cabinet (Yang et al., 2019). Two-phase cold plate liquid cooling removes the heat generated by electronic devices through the evaporative phase change of the coolant (Gess et al., 2015). Many low-boiling-point insulating liquids and refrigerants can be used as the coolant in two-phase cold plate liquid cooling; they are not discussed here.

Cold plate liquid cooling technology both lowers the cooling energy usage of data centers and offers good heat recovery potential. The higher temperature of the coolant in the circulation pipe of the cold plate liquid cooling system makes it practical to use the waste heat efficiently, further improving energy utilization in data centers (Shia et al., 2021). This waste heat can be used for district heating, absorption cooling, direct power generation, seawater desalination, and other heat recovery applications (Cecchinato et al., 2010; Gullbrand et al., 2019). District heating uses the heat directly, without conversion losses between different grades of energy; it has high economic value and is currently one of the more mature strategies for recovering waste heat from data centers. Recovering the heat of a 3.5 MW data center as a thermal supply for district heating can reduce CO2 emissions by 4,102 tons per year (Davies et al., 2016). About 1,620.87 tons of standard coal can be saved in each heating season by using cold plate liquid cooling technology to recover data center heat for district heating, and the power consumption efficiency of heat recovery (the ratio of the total heat recovered to the total power consumed for heat recovery in a data center) is 1.4 times that of air-cooled heat recovery (Marcinichen et al., 2012). In addition, if the waste heat from the data center is recycled in a power plant, the plant’s efficiency can be increased by up to 2.2 percent. As a result, if a 500 MW power plant uses waste heat from a data center to generate electricity, it can save 195,000 tons of CO2 emissions annually (Marcinichen et al., 2012).

With the full coupling of cold plate liquid cooling technology and data center waste heat utilization, district heating will be applied on a larger scale, and heat recovery technologies such as waste heat absorption refrigeration and direct power generation are becoming increasingly sophisticated. Energy saving and carbon reduction will thus be achieved in a broader sense in the future.
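The heat recovery figures quoted above can be put on a common footing with a little arithmetic. The sketch below computes the power consumption efficiency of heat recovery as defined in the text (heat recovered divided by power consumed for recovery) and normalizes the quoted district heating CO2 saving per megawatt of data center capacity. The function names and the air-cooled baseline numbers are hypothetical; only the 4,102 t/year, 3.5 MW, and 1.4 factor come from the text, and the per-megawatt figure should not be read as a scaling law.

```python
def heat_recovery_ratio(heat_recovered_kwh: float, power_consumed_kwh: float) -> float:
    """Heat recovered per unit of power consumed by the recovery system."""
    if power_consumed_kwh <= 0:
        raise ValueError("consumed power must be positive")
    return heat_recovered_kwh / power_consumed_kwh


def co2_saving_per_mw(co2_saved_t_per_year: float, capacity_mw: float) -> float:
    """Annual CO2 saving normalized by data center capacity."""
    if capacity_mw <= 0:
        raise ValueError("capacity must be positive")
    return co2_saved_t_per_year / capacity_mw


# Figures quoted in the text for district heating from a 3.5 MW data center.
per_mw = co2_saving_per_mw(4102.0, 3.5)

# Hypothetical air-cooled recovery system, used only as a baseline.
air_ratio = heat_recovery_ratio(heat_recovered_kwh=3000.0, power_consumed_kwh=1000.0)
# The text reports cold plate recovery at 1.4 times the air-cooled ratio.
cold_plate_ratio = 1.4 * air_ratio

print(f"CO2 saving: {per_mw:.0f} t per MW per year")
print(f"air-cooled ratio: {air_ratio:.2f}, cold plate ratio: {cold_plate_ratio:.2f}")
```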

Problems and Challenges of Cold Plate Liquid Cooling Technology

The number of large and ultra-large data centers continues to increase globally. Traditional air-cooled technology cannot meet the cooling demand of high-power, high-heat-flux data centers, and the trend toward high-efficiency data center construction leaves air-cooled technology facing a serious dilemma. More efficient and energy-saving cooling plate-based liquid refrigeration technology is therefore promising for future data center development. Considering its research status and the practical heat dissipation requirements of data centers, cold plate liquid cooling technology still faces many challenges:

1) Low utilization of the core server room. The deployment environment for cold plate liquid cooling technology differs from that of traditional data centers. Retrofitting a data center requires the cold plate liquid cooling technology to be matched with traditional air-cooled servers, which is costly to deploy and expensive to operate and maintain. The technology needs further optimization in terms of architecture, operation, and maintenance.

2) Leakage and corrosion prevention of coolant. Using a coolant raises the problems of leakage and corrosion prevention in the data center cooling system, which places higher requirements on the composition of the coolant and the packaging of auxiliary devices. Further research on raw materials and accessories is also needed to reduce the cost of large-scale applications.

3) Waste heat utilization of data centers. The higher the coolant temperature, the easier the heat recovery. However, raising the coolant temperature widens the temperature difference with the environment, resulting in additional heat loss and wasted power consumption in the data center, which in turn affects the PUE. The coupling among cooling temperature, energy-saving efficiency, and heat recovery performance needs further study to enhance the efficiency of energy utilization in the data center.

Author Contributions

Writing the original draft and editing, YZ; Conceptualization, CF; Formal analysis, GL. All authors contributed to manuscript revision, read, and approved the submitted version.

Funding

This work is supported by the National Key R&D Program of China (No. 2021YFB3803203).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Amalfi, R. L., Salamon, T., Cataldo, F., Marcinichen, J. B., and Thome, J. R. (2022). Ultra-Compact Microscale Heat Exchanger for Advanced Thermal Management in Data Centers. J. Electron. Packag. 144 (2), 8. doi:10.1115/1.4052767

Cecchinato, L., Chiarello, M., and Corradi, M. (2010). A Simplified Method to Evaluate the Seasonal Energy Performance of Water Chillers. Int. J. Therm. Sci. 49, 1776–1786. doi:10.1016/j.ijthermalsci.2010.04.010

Cho, J., Lim, T., and Kim, B. S. (2012). Viability of Datacenter Cooling Systems for Energy Efficiency in Temperate or Subtropical Regions: Case Study. Energy Build. 55, 189–197. doi:10.1016/j.enbuild.2012.08.012

Davies, G. F., Maidment, G. G., and Tozer, R. M. (2016). Using Data Centres for Combined Heating and Cooling: An Investigation for London. Appl. Therm. Eng. 94, 296–304. doi:10.1016/j.applthermaleng.2015.09.111

Deng, Z., Gao, S., Wang, H., Liu, X., and Zhang, C. (2022). Visualization Study on the Condensation Heat Transfer on Vertical Surfaces with a Wettability Gradient. Int. J. Heat Mass Transf. 184, 122331. doi:10.1016/j.ijheatmasstransfer.2021.122331

Dong, J., Lin, Y., Deng, S., Shen, C., and Zhang, Z. (2017). Experimental Investigation of an Integrated Cooling System Driven by Both Liquid Refrigerant Pump and Vapor Compressor. Energy Build. 154, 560–568. doi:10.1016/j.enbuild.2017.08.038

Ebrahimi, K., Jones, G. F., and Fleischer, A. S. (2014). A Review of Data Center Cooling Technology, Operating Conditions and the Corresponding Low-Grade Waste Heat Recovery Opportunities. Renew. Sustain. Energy Rev. 31, 622–638. doi:10.1016/j.rser.2013.12.007

Ellsworth, M. J., and Iyengar, M. K. (2009). Energy Efficiency Analyses and Comparison of Air and Water Cooled High Performance Servers. San Francisco, California, USA: ASME, 907–914. http://asmedigitalcollection.asme.org/InterPACK/proceedings-pdf/InterPACK2009/43604/907/2742018/907_1.pdf.

Garimella, S. V., Yeh, L.-T., and Persoons, T. (2012). Thermal Management Challenges in Telecommunication Systems and Data Centers. IEEE Trans. Compon. Packag. Manufact. Technol. 2, 1307–1316. doi:10.1109/TCPMT.2012.2185797

Gess, J. L., Bhavnani, S. H., and Johnson, R. W. (2015). Experimental Investigation of a Direct Liquid Immersion Cooled Prototype for High Performance Electronic Systems. IEEE Trans. Compon. Packag. Manufact. Technol. 5, 1451–1464. doi:10.1109/TCPMT.2015.2453273

Gullbrand, J., Luckeroth, M. J., Sprenger, M. E., and Winkel, C. (2019). Liquid Cooling of Compute System. J. Electron. Packag. Trans. ASME 141 (1), 10. doi:10.1115/1.4042802

Habibi Khalaj, A., and Halgamuge, S. K. (2017). A Review on Efficient Thermal Management of Air- and Liquid-Cooled Data Centers: From Chip to the Cooling System. Appl. Energy 205, 1165–1188. doi:10.1016/j.apenergy.2017.08.037

Habibi Khalaj, A., Scherer, T., Siriwardana, J., and Halgamuge, S. K. (2015). Multi-objective Efficiency Enhancement Using Workload Spreading in an Operational Data Center. Appl. Energy 138, 432–444. doi:10.1016/j.apenergy.2014.10.083

Hao, G., Yu, C., Chen, Y., Liu, X., and Chen, Y. (2022). Controlled Microfluidic Encapsulation of Phase Change Material for Thermo-Regulation. Int. J. Heat Mass Transf. 190, 122738. doi:10.1016/j.ijheatmasstransfer.2022.122738

Haywood, A. M., Sherbeck, J., Phelan, P., Varsamopoulos, G., and Gupta, S. K. S. (2015). The Relationship Among CPU Utilization, Temperature, and Thermal Power for Waste Heat Utilization. Energy Convers. Manag. 95, 297–303. doi:10.1016/j.enconman.2015.01.088

Kheirabadi, A. C., and Groulx, D. (2016). Cooling of Server Electronics: A Design Review of Existing Technology. Appl. Therm. Eng. 105, 622–638. doi:10.1016/j.applthermaleng.2016.03.056

Li, Y., Wen, Y., Tao, D., and Guan, K. (2020). Transforming Cooling Optimization for Green Data Center via Deep Reinforcement Learning. IEEE Trans. Cybern. 50 (5), 2002–2013. doi:10.1109/TCYB.2019.2927410

Liu, X., Chen, Y., and Shi, M. (2013). Dynamic Performance Analysis on Start-Up of Closed-Loop Pulsating Heat Pipes (CLPHPs). Int. J. Therm. Sci. 65, 224–233. doi:10.1016/j.ijthermalsci.2012.10.012

Marcinichen, J. B., Olivier, J. A., and Thome, J. R. (2012). On-chip Two-phase Cooling of Datacenters: Cooling System and Energy Recovery Evaluation. Appl. Therm. Eng. 41, 36–51. doi:10.1016/j.applthermaleng.2011.12.008

Moazamigoodarzi, H., Gupta, R., Pal, S., Tsai, P. J., Ghosh, S., and Puri, I. K. (2020). Modeling Temperature Distribution and Power Consumption in IT Server Enclosures with Row-Based Cooling Architectures. Appl. Energy 261, 114355. doi:10.1016/j.apenergy.2019.114355

Nadjahi, C., Louahlia, H., and Lemasson, S. (2018). A Review of Thermal Management and Innovative Cooling Strategies for Data Center. Sustain. Comput. Inf. Syst. 19, 14–28. doi:10.1016/j.suscom.2018.05.002

Rani, R., and Garg, R. (2021). A Survey of Thermal Management in Cloud Data Centre: Techniques and Open Issues. Wirel. Pers. Commun. 118 (1), 679–713. doi:10.1007/s11277-020-08039-x

Shia, D., Yang, J., Sivapalan, S., Soeung, R., and Amoah-Kusi, C. (2021). Corrosion Study on Single-phase Liquid Cooling Cold Plates with Inhibited Propylene Glycol/Water Coolant for Data Centers. J. Manuf. Sci. Eng. 143 (11), 7. doi:10.1115/1.4051059

Wang, J., Gao, W., Zhang, H., Zou, M., Chen, Y., and Zhao, Y. (2018). Programmable Wettability on Photocontrolled Graphene Film. Sci. Adv. 4. doi:10.1126/sciadv.aat7392

Yang, W., Yang, L., Ou, J., Lin, Z., and Zhao, X. (2019). Investigation of Heat Management in High Thermal Density Communication Cabinet by a Rear Door Liquid Cooling System. Energies 12, 4385. doi:10.3390/en12224385

Zhang, C., Chen, Y., Wu, R., and Shi, M. (2011). Flow Boiling in Constructal Tree-Shaped Minichannel Network. Int. J. Heat Mass Transf. 54, 202–209. doi:10.1016/j.ijheatmasstransfer.2010.09.051

Zhang, C., Li, G., Sun, L., and Chen, Y. (2021). Experimental Study on Active Disturbance Rejection Temperature Control of a Mechanically Pumped Two-phase Loop. Int. J. Refrig. 129, 1–10. doi:10.1016/j.ijrefrig.2021.04.038

Keywords: data center, cold plate liquid cooling, heat dissipation of electronic devices, PUE, thermal management

Citation: Zhang Y, Fan C and Li G (2022) Discussions of Cold Plate Liquid Cooling Technology and Its Applications in Data Center Thermal Management. Front. Energy Res. 10:954718. doi: 10.3389/fenrg.2022.954718

Received: 27 May 2022; Accepted: 06 June 2022;

Published: 20 June 2022.

Edited by:

Xiangdong Liu, Yangzhou University, China

Reviewed by:

Conghui Gu, Jiangsu University of Science and Technology, China

Copyright © 2022 Zhang, Fan and Li. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Guanru Li, bGlndWFucnVAc2V1LmVkdS5jbg==

Yufeng Zhang

Guanru Li