- 1 State Grid Sichuan Comprehensive Energy Service Co. Ltd., Chengdu, China

- 2 State Grid Chengdu Power Supply Co. Ltd., Chengdu, China

- 3 Sichuan Yongjing Investment Co. Ltd., Chengdu, China

As a fundamental task in power system operations, transmission-constrained unit commitment (TCUC) decides the ON/OFF state (i.e., commitment) and the scheduled generation of each unit. Generally, TCUC is formulated as a mixed-integer linear program (MILP) and must be solved within a limited time window. However, because MILP is NP-hard and power systems are increasingly complex, solving the TCUC within that window is computationally challenging. The availability of historical TCUC data and recent progress in machine learning (ML) can potentially help with this challenge. To this end, this paper designs an ML-aided framework that leverages historical data to improve the computation of TCUC. In the offline stage, ML models are trained to predict the commitments based on historical TCUC data. In the online stage, the commitments are quickly predicted using the well-trained ML models. Furthermore, a feasibility checking process is conducted to ensure the commitment feasibility. As a result, only a reduced TCUC with fewer binary variables needs to be solved, leading to computation acceleration. Case studies on an IEEE 24-bus system and a practical 5655-bus system show the effectiveness of the presented framework.

1 Introduction

The transmission-constrained unit commitment (TCUC) problem has been widely regarded as one of the most fundamental applications in power system operations Li et al. (2022, 2021a,b, 2020); Wu J. et al. (2021); Liu et al. (2021b). In practice, the TCUC is routinely implemented by Independent System Operators (ISOs) to clear the day-ahead electricity market within 4–6 h Chen et al. (2022a, 2016); Liu et al. (2020, 2021a); Chen et al. (2021); Ma et al. (2021). For a power system with many transmission lines and units, TCUC is mathematically formulated as a mixed-integer linear program (MILP) with a large number of binary and continuous variables and constraints. Aiming at the least operation cost, the MILP-based TCUC determines the optimal ON/OFF state (i.e., commitment) and scheduled generation of each unit to clear the electricity market. Typically, ISOs apply powerful commercial solvers (e.g., Gurobi) to solve the MILP-based TCUC problem on a daily basis Zhang et al. (2020).

Due to the rapid development of modern power systems, the size and the complexity of TCUC have increased significantly. For example, 42,705 buses and 1,258 units exist in the Midcontinent ISO Chen et al. (2013), resulting in a MILP-based TCUC model with hundreds of thousands of variables and constraints. Moreover, it is well known that MILP problems are NP-hard. Consequently, an ongoing challenge for ISOs is how to efficiently solve such a large-size MILP problem within a limited time window.

Regarding the computation challenge, developments in the machine learning (ML) community enable various ML algorithms [e.g., deep neural network (DNN), decision tree (DT), random forest (RF), and k-nearest neighbors (k-NN)] to be practically helpful Nair et al. (2020). For example, the Google Research team successfully developed a DNN-based framework that can quickly solve large-size MILP problems without optimality loss. The core is leveraging the massive historical MILP instances to train an array of sophisticated heuristic processes in the DNN. As long as the new features are available, the well-trained DNN can quickly yet accurately predict the binary solutions. However, even though the DNN in Nair et al. (2020) shows remarkable ability to solve MILP, it is still difficult to convince ISOs to replace mathematical-programming-based commercial solvers with black-box ML algorithms. This is because the black-box solutions may not satisfy all the physical constraints. Indeed, Xavier et al. (2021) pointed out that combining ML algorithms and off-the-shelf solvers is more practical and reliable for improving TCUC computation.

Inspired by the above problems, this paper presents a data-driven ML-aided framework for improving the TCUC computation while maintaining enough solution quality. First, the historical TCUC optimal solutions and their corresponding features are collected. Furthermore, the unit generation pattern is analyzed, so that a target set

The main works of this paper are summarized as follows:

• A data-driven ML-aided framework is presented for improving the TCUC computation. The framework possesses general and plug-and-play properties. That is, it is compatible with various ML classification algorithms and the ISOs’ practice. Most importantly, the feasibility checking process ensures that the predicted commitments satisfy all the constraints.

• Taking DNN, DT, RF, and k-NN as the core predictors, case studies are conducted on an IEEE 24-bus system and a practical 5655-bus system, showing the computation benefits of the framework. In addition, our results report an interesting observation: the most naive algorithm, k-NN, significantly outperforms DNN, DT, and RF under the framework. This observation implies that learning TCUC could be a low-hanging fruit Pineda and Morales (2021) that does not require sophisticated methods. To improve the transparency of this paper, the datasets and the ML codes have been uploaded at Lin (2022).

The remaining parts are organized as follows: Section 2 reviews related works; Section 3 introduces the mathematical model of TCUC; Section 4 expounds the presented framework; Section 5 shows the experimental results; Section 6 concludes this paper.

2 Related works

Leveraging black-box ML algorithms to solve TCUC can be traced back to the 1990s, when Huang and Huang (1997) combined neural networks and dynamic programming for solving TCUC. After the turn of the century, breakthroughs in commercial solvers made mathematical programming (white-box methods) the mainstream approach to solving TCUC.

In recent years, the significant progress of the ML community has awakened the interest in applying ML to TCUC Yang and Wu (2021). Typically, the main applications can be categorized into 1) explicitly describing the operation rules that are difficult to describe in the TCUC model mathematically Li et al. (2019); Zhang et al. (2021); Hou et al. (2020); Chen et al. (2022b); Ye et al. (2019), 2) simplifying the TCUC model Mohammadi et al. (2021); Yang et al. (2020); Wu T. et al. (2021); Pineda et al. (2020), and 3) accelerating the TCUC computation performance de Mars and O’Sullivan (2021); Nikolaidis and Chatzis (2021); Pineda and Morales (2021); Xavier et al. (2021); Yang et al. (2021); Zhou et al. (2018); Zhou et al. (2021).

Explicitly describing the operation rules. Li et al. (2019) applied a centralized Q-learning-based method that requires no prior information on the actual cost functions and can thus handle cases in which the TCUC cost functions cannot be described mathematically. Similarly, Zhang et al. (2021) utilized DNN to encode the complicated frequency response as constraints in the TCUC model. In Hou et al. (2020), a sparse oblique DT was deployed to extract security rules as sparse constraints. As a matter of fact, learning to explicitly approximate the mathematically indescribable rules of TCUC has gradually been recognized as an excellent alternative for improving the TCUC performance. Chen et al. (2022b) employed a closed-loop predict-and-optimize method to learn the RES prediction that can lead to better TCUC economics. In addition, one desirable byproduct of such learning is better TCUC computation performance. For example, Ye et al. (2019) combined deep learning and reinforcement learning to handle the computation intractability caused by the non-convexity in a bi-level electricity market model.

Simplifying the TCUC model. An important reason for the difficulty of TCUC computation is the large number of physical constraints. In fact, a large part of these constraints is redundant. Thus, ISOs can remove them without affecting the optimal solution. For example, Midcontinent ISO governs a system with 42,705 buses but only considers about 20 transmission constraints in TCUC. As a result, leveraging ML to filter out the redundant constraints is valuable. In Mohammadi et al. (2021), a tree method was employed to relieve the heavy computation burden by removing redundant constraints (most of them transmission constraints) from the original MILP-based TCUC model. In Yang et al. (2020), support vector machine, RF, and neural network were applied to classify whether a TCUC problem is a hard case. If a problem is identified as a hard case, the decision variables are aggregated and reduced, leading to a computationally easier TCUC. In Wu T. et al. (2021), the commitment variables were directly decided by a convolutional neural network. Thus, the operators only need to solve a small-scale convex optimization, leading to remarkable computation improvement. Furthermore, Pineda et al. (2020) designed a simple yet effective k-NN-based method to learn the congestion status of transmission lines so that the redundant and inactive transmission constraints can be removed. According to the experiments in Mohammadi et al. (2021); Pineda et al. (2020); Yang et al. (2020); Wu T. et al. (2021), simplifying the TCUC model by filtering out certain constraints is valuable for practical TCUC.

Accelerating the TCUC computation performance. This paper falls in this category, which aims to accelerate TCUC computation while avoiding quality losses as much as possible. This category can be further divided into two sub-categories: 1) using ML to solve TCUC directly Nikolaidis and Chatzis (2021); Zhou et al. (2018); de Mars and O’Sullivan (2021); Yang et al. (2021) and 2) using ML to aid commercial solvers in solving TCUC Xavier et al. (2021); Zhou et al. (2021); Pineda and Morales (2021). In Nikolaidis and Chatzis (2021), the authors developed a Gaussian-process-based Bayesian optimization for quickly solving TCUC and showed its effectiveness in a medium-size system. In Zhou et al. (2018), reinforcement learning was deployed to enable multi-objective TCUC solutions to bypass local optima. In de Mars and O’Sullivan (2021), a purely data-driven method that can simulate experts was presented to solve TCUC, which shows remarkable ability in a practical system. Moreover, Yang et al. (2021) designed a guided tree to solve TCUC, which computationally outperforms the unguided tree. Even though the experimental results in Nikolaidis and Chatzis (2021); Zhou et al. (2018); de Mars and O’Sullivan (2021); Yang et al. (2021) highlighted certain advantages of these black-box ML algorithms, they lack comprehensive comparisons with state-of-the-art solvers. On the other hand, Zhou et al. (2021) leveraged a classification-based method to identify useful historical TCUC instances, whose binary variables are strategically used to fix those in the new TCUC instance. According to their experiments, the method leads to a 58.82% computation improvement in a Polish 2382-bus system while guaranteeing good solution quality. Furthermore, Xavier et al. (2021) designed a framework involving k-NN and support vector machine for boosting the computation performance of commercial solvers. According to their comprehensive experiments on 9 large-size systems, the speedup reaches 17.47x without loss in solution optimality. As a result, Pineda and Morales (2021) concluded that even a naive ML algorithm could significantly boost the computation performance in solver-based TCUC solving.

Instead of focusing on a single ML algorithm, this paper presents a general ML-aided framework compatible with various ML classification algorithms (e.g., DNN, DT, RF, and k-NN). Additionally, the ML-aided framework is compared with the traditional approach that relies entirely on commercial solvers. Case studies on an IEEE 24-bus system and a practical 5655-bus system show the computation benefits.

3 Mathematical model of transmission-constrained unit commitment

The TCUC is modeled as in Eqs 1–13. The objective Eq. 1 is to minimize the total operation cost z, including start-up, shut-down, and generation costs. Regarding the unit constraints, Eqs 2, 3 indicate the minimum ON/OFF constraints; Eqs 4, 5 describe the linearized generation cost using

The computation intractability of MILP-based TCUC mainly stems from the binary variables Iti,

4 The ML-Aided framework

First, this section describes the dataset processing. Then, the utilized ML algorithms and the tuned hyper-parameters are briefly introduced. Finally, the steps to implement the framework are expounded.

4.1 Construction of dataset

The construction of the dataset is shown in Figure 1.

• A raw dataset is downloaded from Chen (2021). The dataset includes load demand

• Taking

For a system with

• The row-sum operation is applied on

• In the presented framework, each unit corresponds to a predictor. Thus,

Here,

Taking

Under the lens of ML, this mapping is essentially a multi-label classification.
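The one-predictor-per-unit, multi-label view can be sketched as follows. This is a minimal illustration rather than the paper's implementation: the array shapes, the use of a decision tree as the base classifier, the helper names `train_unit_predictors` and `predict_commitment`, and the synthetic data are all assumptions made for the example.

```python
import numpy as np
from sklearn.tree import DecisionTreeClassifier


def train_unit_predictors(features, commitments):
    """Fit one multi-label classifier per unit (hypothetical helper).

    features:    (n_days, n_features) array, e.g. flattened RES/load profiles.
    commitments: (n_days, n_units, n_hours) binary array of historical ON/OFF states.
    """
    predictors = []
    for i in range(commitments.shape[1]):
        clf = DecisionTreeClassifier(max_depth=5, random_state=0)
        # Each day contributes n_hours binary labels for unit i.
        clf.fit(features, commitments[:, i, :])
        predictors.append(clf)
    return predictors


def predict_commitment(predictors, new_features):
    """Stack per-unit predictions into an (n_units, n_hours) ON/OFF matrix."""
    row = np.asarray(new_features, dtype=float).reshape(1, -1)
    return np.vstack([clf.predict(row)[0] for clf in predictors]).astype(int)


# Synthetic data (assumption): unit i is ON all day when feature i exceeds 0.5.
rng = np.random.default_rng(0)
X = rng.random((40, 6))
Y = np.zeros((40, 3, 24), dtype=int)
for i in range(3):
    Y[:, i, :] = (X[:, i] > 0.5)[:, None]

predictors = train_unit_predictors(X, Y)
I_hat = predict_commitment(predictors, X[0])
print(I_hat.shape)
```

Keeping one predictor per unit means that units excluded from prediction can simply be left to the solver.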

Finally, the dataset is divided into two parts for creating training and testing sets: 1) Training set (83%) ranging from 01/01/2018 to 06/30/2020, which is treated as historical dispatch days indexed by

4.2 Machine learning algorithms

Among the various ML algorithms, DNN, DT, RF, and k-NN are utilized as the predictors.

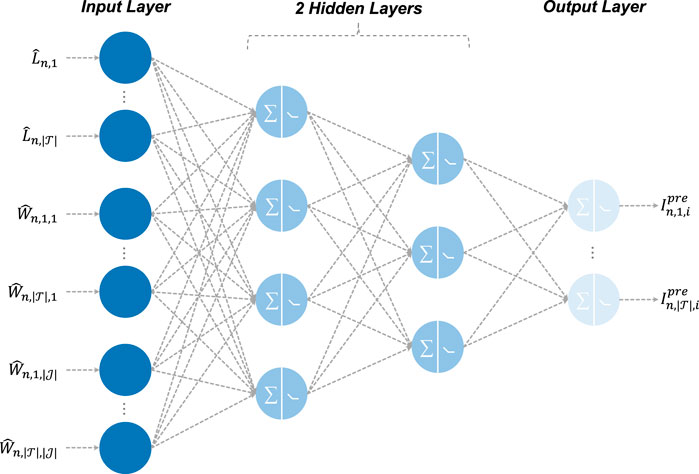

4.2.1 Deep neural network

Essentially, DNN is an artificial neural network containing two or more hidden layers, which has been recognized as a powerful supervised ML algorithm. In the case of TCUC, a simple DNN with 2 hidden layers for unit i is sketched in Figure 2.

The neurons in the hidden layers are based on certain activation functions, such as rectified linear unit Eq. 19 and threshold logic unit Eq. 20.

Here, w0 is a bias term and the remaining w indicate the weights assigned to the gray arrows; f indicates the inputs for the neurons. By combining many hidden layers, DNN has the potential to approximate the complex mapping from RES/load to the optimal unit commitment.
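A single neuron under the two activation functions can be sketched as below. The code uses the standard textbook definitions, assuming the neuron first forms w0 plus the weighted sum of its inputs f; the function names and the threshold convention (output 1 when the pre-activation is non-negative) are illustrative assumptions, not taken from Eqs 19, 20.

```python
def pre_activation(weights, inputs):
    """Return w0 + sum_j w_j * f_j, where weights[0] is the bias term w0."""
    w0, rest = weights[0], weights[1:]
    if len(rest) != len(inputs):
        raise ValueError("need exactly one weight per input plus a bias")
    return w0 + sum(w * f for w, f in zip(rest, inputs))


def relu_neuron(weights, inputs):
    """Rectified linear unit: pass positive pre-activations, clip the rest to 0."""
    return max(0.0, pre_activation(weights, inputs))


def tlu_neuron(weights, inputs):
    """Threshold logic unit: 1 if the pre-activation is non-negative, else 0."""
    return 1 if pre_activation(weights, inputs) >= 0 else 0


weights = [-1.0, 0.5, 2.0]   # [w0, w1, w2]
print(relu_neuron(weights, [1.0, 0.5]), tlu_neuron(weights, [1.0, 0.5]))
print(relu_neuron(weights, [1.0, 0.0]), tlu_neuron(weights, [1.0, 0.0]))
```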

Fine-tuning the hyper-parameters, especially those for the hidden layer structure, is the key to obtaining a high-performance DNN. This paper utilizes a grid search with 10-fold cross-validation to identify the best combination of the hidden layer structure hidden_layer_sizes = {(150, 150, 150), (150, 100, 50), (150, 150, 150, 150, 150, 150), (150, 125, 100, 75, 50, 25)} and the coefficient of the L2-norm regularization alpha = {0.000001, 0.001, 1}. The adaptive moment estimation (Adam) algorithm Pedregosa et al. (2011) is utilized for the weight optimization, due to its robustness. The rectified linear unit Eq. 19 is deployed as the activation function, which can lead to faster training. DNN is sensitive to scaling, thus the features
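One way to set up this search in scikit-learn is sketched below. The grid values, the Adam solver, and the ReLU activation come from the text; the use of `StandardScaler` for feature scaling, the `Pipeline` wrapper, `max_iter=500`, and the helper name `build_dnn_search` are assumptions made for illustration.

```python
from sklearn.model_selection import GridSearchCV
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler


def build_dnn_search(cv=10):
    """Return an unfitted grid search over the DNN hyper-parameters (hypothetical helper)."""
    pipe = Pipeline([
        # Scaling lives inside the pipeline so each CV fold is scaled
        # using statistics from its own training part only.
        ("scale", StandardScaler()),
        ("mlp", MLPClassifier(activation="relu", solver="adam",
                              max_iter=500, random_state=0)),
    ])
    param_grid = {
        "mlp__hidden_layer_sizes": [
            (150, 150, 150),
            (150, 100, 50),
            (150, 150, 150, 150, 150, 150),
            (150, 125, 100, 75, 50, 25),
        ],
        "mlp__alpha": [1e-6, 1e-3, 1.0],
    }
    return GridSearchCV(pipe, param_grid, cv=cv)


dnn_search = build_dnn_search()
# Fitting is left to the caller because it is slow for this grid, e.g.:
# dnn_search.fit(X_train, y_train); print(dnn_search.best_params_)
```

Placing the scaler inside the pipeline is a design choice that avoids leaking test-fold statistics into training during cross-validation.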

4.2.2 Decision tree

DT is a supervised ML algorithm that is good at multi-label classification. The most attractive property of DT is its interpretability, which makes it possible to visualize the map from the features to the decisions. One byproduct of the interpretability is feature selection. That is, DT can show which feature attribute has the most significant impact on the label. In the case of TCUC, the visualization can show which RES/load bus has the greatest impact on the commitment.

Additionally, DT can handle contextual data conveniently. For example, the weather information (e.g., sunny or rainy) can be utilized for training without digitization and normalization. In contrast, this is somewhat difficult for DNN.

The training of DT is essentially a process of minimizing Gini Impurity Eq. 21 or Entropy Eq. 22 for each node in a DT.

Here,

According to Pedregosa et al. (2011), the Gini Impurity Eq. 21 and Entropy Eq. 22 generally lead to similar DT predictors. However, the former could be faster in terms of training. Therefore, Gini Impurity Eq. 21 is utilized. In DT, 4 hyper-parameters could affect the performance significantly, including the maximum depth of DT max_depth = {5, 6, 7, 8, 9}, the minimum number of samples required to split an internal node min_samples_split = {2, 4}, the minimum number of samples required to be at a leaf node min_samples_leaf = {2, 4}, and the maximum number of leaf nodes max_leaf_nodes = {5, 25}. The best combination of these hyper-parameters is identified via a grid search with 10-fold cross-validation.
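The two impurity measures and the DT grid search can be sketched as below. The helper functions use the standard definitions (Gini as one minus the sum of squared class shares, entropy in bits), which are assumed to correspond to Eqs 21, 22; the hyper-parameter grid is taken from the text, while the synthetic single-label data is an assumption made only to keep the example runnable.

```python
import math
from collections import Counter

import numpy as np
from sklearn.model_selection import GridSearchCV
from sklearn.tree import DecisionTreeClassifier


def gini_impurity(labels):
    """1 - sum_k p_k^2 over the class shares p_k at a node."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def entropy(labels):
    """-sum_k p_k * log2(p_k) over the class shares p_k at a node."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


param_grid = {
    "max_depth": [5, 6, 7, 8, 9],
    "min_samples_split": [2, 4],
    "min_samples_leaf": [2, 4],
    "max_leaf_nodes": [5, 25],
}
dt_search = GridSearchCV(
    DecisionTreeClassifier(criterion="gini", random_state=0),
    param_grid,
    cv=10,  # 10-fold cross-validation
)

# Synthetic stand-in for (features, ON/OFF label of one unit-hour).
rng = np.random.default_rng(0)
X = rng.random((200, 4))
y = (X[:, 0] + X[:, 1] > 1.0).astype(int)

dt_search.fit(X, y)
best_params = dt_search.best_params_
print(best_params, round(dt_search.best_score_, 3))
```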

4.2.3 Random forest

Ensembling a number of DTs results in an RF. The RF makes predictions by aggregating the votes of its DTs. Thus, RF can be regarded as an ensemble (bagging-based) extension of DT. The RF and DT share similar properties (e.g., using Gini Impurity or Entropy for measuring split quality), except for interpretability. This is because visualizing a single tree is easy, but visualizing a whole forest is difficult and rarely meaningful.
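How the forest aggregates its trees can be checked directly in scikit-learn, whose `RandomForestClassifier` averages the class probabilities predicted by the individual trees (a soft vote) and picks the class with the highest mean. The sketch below reproduces that aggregation by hand; the synthetic data and the chosen hyper-parameter values are assumptions for illustration.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier

# Synthetic binary task (assumption): label is 1 when feature 0 exceeds feature 1.
rng = np.random.default_rng(1)
X = rng.random((300, 5))
y = (X[:, 0] > X[:, 1]).astype(int)

rf = RandomForestClassifier(
    n_estimators=25, max_depth=5, criterion="gini", random_state=0
).fit(X, y)

# Collect every tree's class probabilities, then average them across the forest.
tree_probs = np.stack([tree.predict_proba(X) for tree in rf.estimators_])
mean_probs = tree_probs.mean(axis=0)

# The forest's decision is the class with the highest averaged probability.
votes = rf.classes_[mean_probs.argmax(axis=1)]
print(np.array_equal(votes, rf.predict(X)))
```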

Regarding the hyper-parameters of RF, the number of DTs in the RF n_estimators = {5, 25, 50}, the maximum depth of the trees max_depth = {3, 5, 9}, and the minimum number of samples required to split an internal node min_samples_split = {2, 4} are tuned via a grid search with 10-fold cross-validation. The quality measurement is set as Gini Impurity, consistent with DT.

4.2.4 K-nearest neighbor

Compared to DNN, DT, and RF, k-NN is a naive ML algorithm. Essentially, k-NN conducts classification by identifying the closest instances from historical instances. In this paper, the following variant of k-NN with k = 1 is designed.

First, the closest historical instances

Here, Vec (⋅) indicates the vectorizing operation that can adaptively reformulate the matrices as a vector. Finally, historical commitment solution
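A minimal sketch of this 1-nearest-neighbor lookup is given below. The Euclidean distance, the helper names `vec` and `nearest_historical_commitment`, and the toy load/RES matrices are assumptions for illustration; the source only states that the feature matrices are vectorized and that the commitment of the closest historical instance is reused.

```python
import numpy as np


def vec(*matrices):
    """Flatten each feature matrix and concatenate the results into one vector."""
    return np.concatenate([np.asarray(m, dtype=float).ravel() for m in matrices])


def nearest_historical_commitment(new_features, hist_features, hist_commitments):
    """Return (index, commitment) of the historical day closest to the new day.

    new_features:     tuple of feature matrices for the upcoming day.
    hist_features:    list of such tuples, one per historical day.
    hist_commitments: list of ON/OFF matrices, one per historical day.
    """
    target = vec(*new_features)
    # Euclidean distance is an assumed choice of metric.
    distances = [np.linalg.norm(vec(*f) - target) for f in hist_features]
    d_star = int(np.argmin(distances))
    return d_star, hist_commitments[d_star]


# Toy history: (load, RES) matrices and the matching commitments.
hist_features = [
    (np.array([[100, 110]]), np.array([[10, 20]])),
    (np.array([[150, 160]]), np.array([[30, 40]])),
    (np.array([[200, 210]]), np.array([[5, 5]])),
]
hist_commitments = [
    np.array([[1, 0], [0, 0]]),
    np.array([[1, 1], [0, 1]]),
    np.array([[1, 1], [1, 1]]),
]
new_day = (np.array([[148, 163]]), np.array([[28, 41]]))

idx, I_hat = nearest_historical_commitment(new_day, hist_features, hist_commitments)
print(idx)
```

Because the returned commitment is copied from a solved historical instance, it already respects the unit-level constraints of that day.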

4.3 Implementation steps

The process of implementing the presented ML-aided framework is shown in Figure 3 and can be summarized as follows:

• Based on a specified ML algorithm, a predictor

• Taking

• Given the predictions

After solving Eqs 1–13, record the computation time oml and the optimal TCUC cost zml,⋆ under the presented ML-aided framework. Then, evaluate the computation improvement Eq. 24 and the optimality loss Eq. 25 relative to ocs (the computation time when the TCUC is solved by the commercial solver directly) and z⋆ (the optimal TCUC cost provided by the commercial solver).
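The two evaluation metrics can be computed as in the sketch below. The formulas are an assumed, commonly used form (relative time saving and relative cost increase, both in percent) and may differ in detail from Eqs 24, 25; the function names and the sample numbers are illustrative only.

```python
def computation_improvement(o_cs, o_ml):
    """Relative solve-time reduction in percent (assumed form of Eq. 24).

    o_cs: time when the solver handles the full TCUC directly.
    o_ml: time under the ML-aided framework.
    """
    return (o_cs - o_ml) / o_cs * 100.0


def optimality_loss(z_star, z_ml_star):
    """Relative cost increase in percent (assumed form of Eq. 25).

    z_star:    optimal cost from the solver alone.
    z_ml_star: optimal cost under the ML-aided framework.
    """
    return (z_ml_star - z_star) / z_star * 100.0


# Hypothetical numbers, for illustration only.
print(computation_improvement(o_cs=120.0, o_ml=30.0))
print(optimality_loss(z_star=1000.0, z_ml_star=1005.0))
```

A negative improvement under this definition would indicate that the ML-aided run was slower than the solver alone.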

5 Case studies

Based on a small-size IEEE 24-bus system and a large-size 5655-bus system, case studies are conducted to evaluate the presented framework. Both the systems are tuned following Chen et al. (2022b). The ML algorithms are carried out via scikit-learn Pedregosa et al. (2011) based on Python 3.8. The commercial solver is Gurobi 9.5.

5.1 Cases on 24-bus system

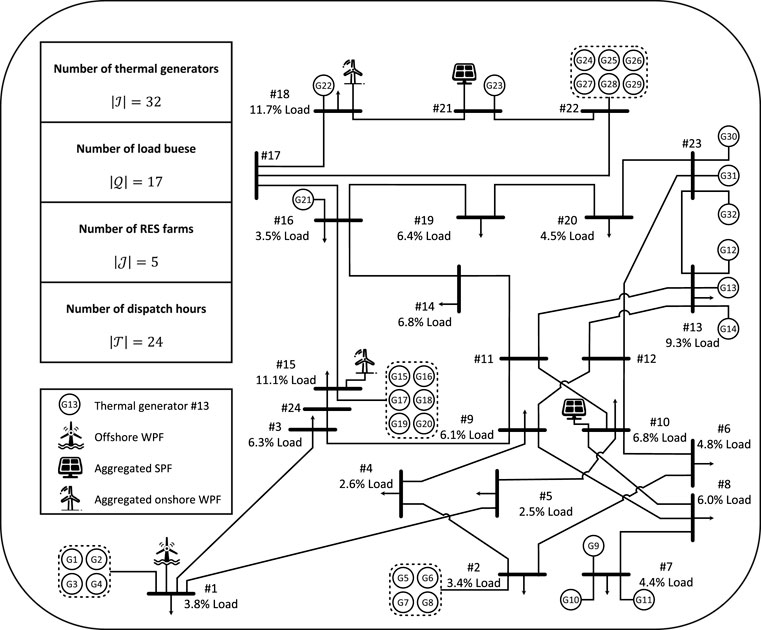

The IEEE 24-bus is sketched in Figure 4, in which 32 generators are involved.

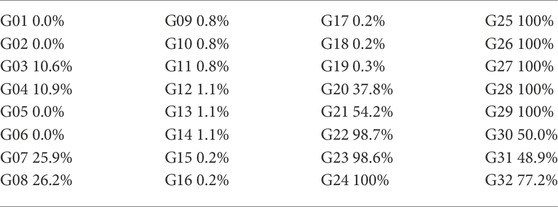

The ON-state ratios of the generators are computed using their historical datasets and listed in Table 1. According to Table 1, some generators never start up (e.g., G01 and G02) or never shut down (e.g., G28 and G29), leading to overly unbalanced historical data. Therefore, only the generators whose ON-state ratios fall within [5%, 95%] are relatively suitable for being predicted, resulting in set
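The selection of predictable generators can be sketched as follows. It is assumed here that the ON-state ratio of a unit is the share of historical unit-hours in which it is ON; the array layout, the helper name `select_predictable_units`, and the toy history are illustrative assumptions.

```python
import numpy as np


def select_predictable_units(commitments, low=0.05, high=0.95):
    """Return (ratios, indices of units whose ON-state ratio lies in [low, high]).

    commitments: (n_days, n_units, n_hours) binary array of historical ON/OFF states.
    """
    # Average over days and hours to get one ON-state ratio per unit.
    ratios = commitments.mean(axis=(0, 2))
    selected = np.flatnonzero((ratios >= low) & (ratios <= high))
    return ratios, selected


# Toy history: unit 0 never starts up, unit 1 is ON half the time, unit 2 never shuts down.
hist = np.zeros((10, 3, 2), dtype=int)
hist[:5, 1, :] = 1
hist[:, 2, :] = 1

ratios, selected = select_predictable_units(hist)
print(ratios.tolist(), selected.tolist())
```

Units outside the band keep their binary variables in the model and are decided by the solver as usual.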

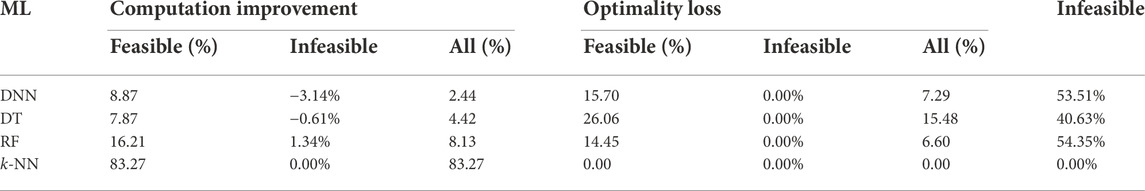

5.1.1 Overall performance

Table 2 compares the computation improvement and the optimality loss, in which the column label Feasible/Infeasible indicates the performance on feasible/infeasible TCUC cases. Regarding the computation improvement, k-NN surprisingly outperforms the other algorithms with an 83.27% improvement. This is because k-NN essentially picks an optimal commitment result from the historical days, which can satisfy all constraints of TCUC. Also, since the RES and load information of the picked historical day and the upcoming dispatch day are similar, the commitment results are mutually feasible. Additionally, it should be pointed out that the warm-start strategy for infeasible predictions may not lead to positive computation improvement. This is because an infeasible commitment could induce the Branch-and-Bound algorithm to search from a bad initial node, thus worsening the computation performance.

In terms of the optimality loss, only k-NN achieves a negligible loss. The other ML algorithms suffer noticeable optimality loss in the feasible cases. This indicates that the predicted commitments provided by DNN, DT, and RF are mathematically far from the optimal commitment. As a result, about half of these predicted commitments are infeasible. On the other hand, k-NN directly identifies mathematically similar and feasible commitments from historical data, in which the physical requirements are preserved entirely. Even though these commitments are not exactly the same as the optimal commitment, TCUC can still adjust the scheduled power p to achieve the optimal cost. As a result, k-NN achieves significant computation improvement without optimality loss in all the cases.

5.1.2 Prediction performance

Even though the prediction performance is not the main concern of this paper, it is of interest to observe the relationship between the accuracy and the computation improvement.

This section introduces accuracy Eq. 26, the receiver operating characteristic (ROC) score Géron (2019), precision Eq. 27, and recall Eq. 28 to evaluate the prediction performance. The ROC score is the area under the ROC curve, which plots the true-positive rate (i.e., recall) against the false-positive rate and thus serves as a comprehensive, threshold-independent evaluation tool.
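Accuracy, precision, and recall can be computed from the confusion counts as sketched below, treating the ON state as the positive class. The formulas are the standard definitions and are assumed to match Eqs 26–28; the function names and the toy label vectors are illustrative.

```python
def confusion_counts(y_true, y_pred):
    """Return (tp, fp, fn, tn) with ON (1) as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return tp, fp, fn, tn


def accuracy(y_true, y_pred):
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    return (tp + tn) / (tp + fp + fn + tn)


def precision(y_true, y_pred):
    """Share of predicted ON states that are truly ON (0.0 if none predicted)."""
    tp, fp, _, _ = confusion_counts(y_true, y_pred)
    return tp / (tp + fp) if tp + fp else 0.0


def recall(y_true, y_pred):
    """Share of true ON states that are detected (0.0 if there are none)."""
    tp, _, fn, _ = confusion_counts(y_true, y_pred)
    return tp / (tp + fn) if tp + fn else 0.0


y_true = [1, 0, 0, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 0, 0, 1, 1, 0]
print(accuracy(y_true, y_pred), precision(y_true, y_pred), recall(y_true, y_pred))
```

On an unbalanced unit, accuracy can stay high even when precision or recall for the rarer state is poor, which is why the three are reported together.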

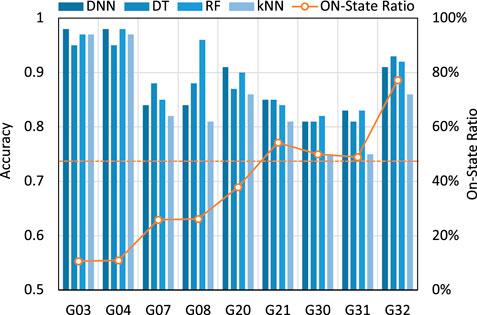

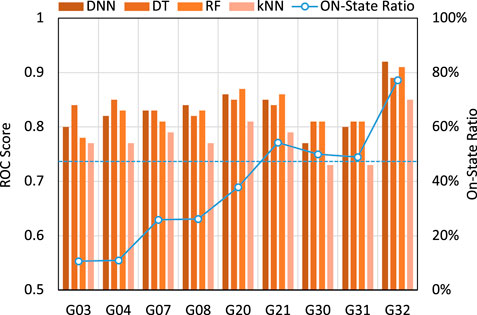

Figure 5 shows that DNN, DT, and RF are comparable regarding accuracy. Additionally, Figure 5 utilizes a dashed line to indicate the 50% ON-state ratio, which means an ideally balanced dataset. Figure 5 illustrates that the closer the ON-state ratio is to the 50% line, the worse the accuracy. This is because invariably predicting ON/OFF can achieve good accuracy for units that almost always stay in the ON/OFF state. On the other hand, when the ON-state ratio is around 50% (e.g., G30 and G31), it is challenging to maintain high accuracy.

Furthermore, Figure 6 shows that DNN, DT, and RF are comparable and significantly outperform k-NN in terms of ROC score. As a result, it can be concluded that DNN, DT, and RF perform better than k-NN regarding prediction. This is due to the sophisticated prediction mechanisms of these three algorithms.

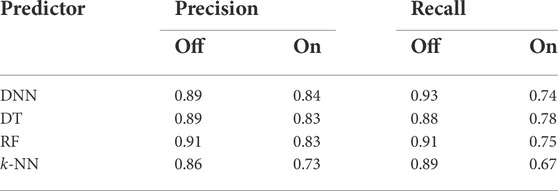

Furthermore, Table 3 lists the precision and recall. The precision indicates the accuracy of the ON-state predictions; the recall represents the ratio of ON states that the predictors correctly detect. Clearly, these algorithms only perform well in the OFF-state cases, where OFF is a more frequent state than ON. Therefore, Table 3 implies that all the algorithms struggle to predict the less-frequent state.

5.2 Cases on 5655-bus system

This section investigates the ML-aided framework in the 5655-bus system Chen et al. (2022b). The system possesses 461 generators, 6,630 transmission lines, and 5 aggregated RES farms. The predictors only predict the 246 generators with a 5%–95% ON-state ratio. Since our results show that the 5655-bus prediction comparisons have the same trends as the 24-bus comparisons, the prediction results for the 5655-bus system are not specifically analyzed in this section for better readability.

5.2.1 Overall performance

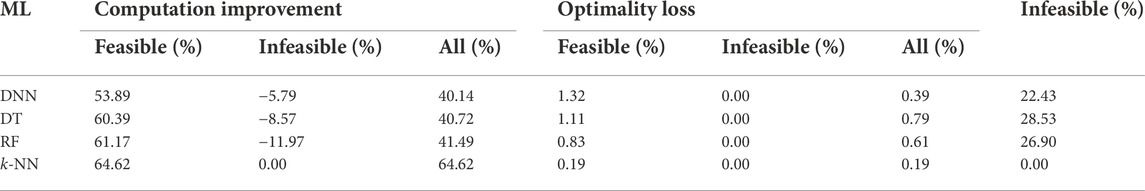

Table 4 compares the computation improvement and optimality loss on a large-size 5655-bus system. Regarding the computation improvement, k-NN still outperforms other algorithms with a 64.62% improvement, of which all the predictions are feasible. DNN, DT, and RF also achieve a computation improvement of about 40%.

In terms of optimality loss, all the algorithms result in a loss within 1%, which is acceptable because the computation benefits are at least 40%.

In sum, leaving k-NN aside, Table 4 indicates that DNN, DT, and RF are comparable in the 5655-bus system regarding the overall performance. However, DT has the fastest training speed among the three algorithms, and DNN is the slowest. Therefore, DT could be preferable.

5.2.2 Comparing performances in 24-bus and 5655-bus systems

Furthermore, it is worth comparing the framework performances in the 24-bus and 5655-bus systems.

Regarding the computation improvement, the margin between k-NN and the other three algorithms is smaller in the 5655-bus system. This is because, in such a large-size system, the massive transmission constraints Eq. 13 are also a primary cause of the computational burden. Therefore, even though fixing the binaries I can relieve the computational burden, the accelerations cannot be as significant as in the small-size 24-bus system. Additionally, due to the remarkable adjusting ability (i.e., massive online generator fleet) of the 5655-bus system, the predicted commitments are more likely to be feasible, leading to a lower infeasibility ratio in the 5655-bus system. Moreover, it should be pointed out that the adverse effects of infeasible predictions on TCUC instances also become more noticeable in the 5655-bus system. The reason is that the Branch-and-Bound algorithm is more sensitive to the initial node in the large-size system, and infeasible predictions generally make the Branch-and-Bound algorithm start from a bad initial node.

It is also worth pointing out that the optimality losses of DNN, DT, and RF become acceptable in the 5655-bus system. This is also due to the fact that the 5655-bus system possesses remarkable adjusting ability. Thus given different commitments I, the TCUC can still achieve a solution that is mathematically close to the optimal solution.

In summary, comparisons between the two systems demonstrate that the presented ML-aided framework is suitable for the large-size system with significant adjustment ability.

6 Conclusion and discussions

6.1 Conclusion

This paper designs an ML-aided framework for improving the TCUC computation performance. Specifically, DNN, DT, RF, and k-NN are leveraged to predict and fix the commitment decisions of the TCUC models, so that Gurobi can solve a reduced TCUC problem with fewer binary variables. To evaluate the effectiveness of the framework, case studies are conducted on a 24-bus system and a 5655-bus system. The following conclusions are obtained:

• The presented ML-aided framework achieves significant TCUC computation improvement, especially in the large-size system, but at the expense of certain optimality loss. In the small-size 24-bus system, the optimality loss could be 6%–17%. In the large-size 5655-bus system, the optimality loss is within 1%, while the computation improvement reaches as much as 64.62%.

• Our results report that the simplest algorithm, k-NN, outperforms the other ML algorithms (DT, RF, and DNN) in terms of computation improvement. Interestingly, k-NN also leads to the worst prediction accuracy. This indicates that learning and accelerating TCUC could be a low-hanging fruit Pineda and Morales (2021). In such a case, naive algorithms could be preferable to sophisticated algorithms, since improving the ultimate task is our primary goal.

6.2 Discussions

It is noteworthy that using infeasible predictions for the warm start could adversely affect the computation, as shown in Table 2 and Table 4. This adverse effect is even worse in the 5655-bus system. There are three potential options for relieving this problem: 1) discarding the infeasible solution and solving TCUC directly with the solver; 2) fixing the infeasible solution (especially for constraints Eqs 2, 3) and then performing the warm start; and 3) using k-NN to find the nearest feasible solution to the infeasible solution and replacing it.
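Option 2 above needs a way to detect violations of the minimum ON/OFF requirements before any repair is attempted. The sketch below is a simplified check in the spirit of Eqs 2, 3, not a reproduction of them: it works on a single unit's 0/1 schedule, and it ignores the first and last runs because their true lengths extend beyond the scheduling horizon (an assumption). The function names and sample schedules are illustrative.

```python
def run_lengths(schedule):
    """Return (state, length) pairs for consecutive runs in a 0/1 schedule."""
    runs = []
    for state in schedule:
        if runs and runs[-1][0] == state:
            runs[-1][1] += 1
        else:
            runs.append([state, 1])
    return [(s, n) for s, n in runs]


def satisfies_min_up_down(schedule, min_up, min_down):
    """Check that every interior ON run lasts >= min_up and OFF run >= min_down.

    The first and last runs are skipped: they are truncated by the horizon,
    so their real durations are unknown here (assumption).
    """
    for state, length in run_lengths(schedule)[1:-1]:
        required = min_up if state == 1 else min_down
        if length < required:
            return False
    return True


ok_schedule = [0, 0, 1, 1, 1, 0, 0, 0]
bad_schedule = [0, 1, 0, 1, 1, 1, 0, 0]
print(satisfies_min_up_down(ok_schedule, min_up=3, min_down=2))
print(satisfies_min_up_down(bad_schedule, min_up=3, min_down=2))
```

A predicted schedule that fails this check could be discarded (option 1) or repaired before being passed to the solver as a warm start (option 2).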

Although the presented framework cannot match the impressive speedups reported in the mentioned references [e.g., 17.47x in Xavier et al. (2021) and 215.9x in Pineda and Morales (2021)], it is more general, more exploratory, and more transparent. Specifically, Pineda and Morales (2021) only consider the k-NN method and do not discuss the data processing; in comparison, the presented framework is compatible with DNN, DT, and RF in addition to k-NN, and is designed with a data-processing stage. Moreover, this paper has open-sourced all the datasets and code, which can facilitate further exploration of the presented framework.

Additionally, it is worth pointing out that the TCUC model in this paper does not yet consider the security constraints (e.g., N-1 feasibility constraints) commonly used in practical applications. This is because considering these constraints may require decomposition algorithms to solve the TCUC model, while this paper intends to focus on the most fundamental TCUC problem, so that it is convenient to obtain a clear and intuitive conclusion regarding the acceleration. However, this may raise a question: is the presented framework still effective in extreme scenarios? In light of this, future works will further refine the TCUC model and discuss the effectiveness of the presented framework in different scenarios.

Data availability statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found in the article/Supplementary Material.

Author contributions

Conceptualization: ZL, LiL, and CM; methodology: ZL and YL; software: ZL; validation: YC and JY; formal analysis: HL; investigation: LWL; writing—original draft preparation: ZL and YL; writing—review and editing: ZL and YL; visualization: YL; supervision: ZL; funding acquisition: ZL. All authors have read and agreed to the published version of the manuscript.

Funding

This paper is supported by the project entitled The Operation Service of Distributed Resource Aggregators in the Park under the Background of the Opening of the Energy Market (2021YFSY0019) from State Grid Sichuan Comprehensive Energy Service Co. Ltd.

Conflict of interest

ZL, YC, JY, CM, LWL, and YL were employed by the company State Grid Sichuan Comprehensive Energy Service Co. Ltd.; HL was employed by the company State Grid Chengdu Power Supply Co. Ltd.; and LiL was employed by the company Sichuan Yongjing Investment Co. Ltd.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Chen, T., Liu, Y., Li, W., and Ma, T. (2021). Adaptive microgrid price-making strategy and economic dispatching method based on blockchain technology. Electr. Power Constr. 42, 17–28. doi:10.12204/j.issn.1000-7229.2021.06.003

Chen, X. (2021). Closed-loop ncuc dataset. GitHub. Available at: https://github.com/asxadf/closed_loop_ncuc_dataset

Chen, X., Liu, Y., and Wu, L. (2022a). Improving electricity market economy via closed-loop predict-and-optimize. arXiv preprint arXiv:2208.13065. Available at: https://arxiv.org/abs/2208.13065 (Accessed Aug 27, 2022).

Chen, X., Yang, Y., Liu, Y., and Wu, L. (2022b). Feature-driven economic improvement for network-constrained unit commitment: A closed-loop predict-and-optimize framework. IEEE Trans. Power Syst. 37, 3104–3118. doi:10.1109/tpwrs.2021.3128485

Chen, Y., Casto, A., Wang, F., Wang, Q., Wang, X., and Wan, J. (2016). Improving large scale day-ahead security constrained unit commitment performance. IEEE Trans. Power Syst. 31, 4732–4743. doi:10.1109/tpwrs.2016.2530811

Chen, Y., Gribik, P., and Gardner, J. (2013). Incorporating post zonal reserve deployment transmission constraints into energy and ancillary service co-optimization. IEEE Trans. Power Syst. 29, 537–549. doi:10.1109/tpwrs.2013.2284791

de Mars, P., and O’Sullivan, A. (2021). Applying reinforcement learning and tree search to the unit commitment problem. Appl. Energy 302, 117519. doi:10.1016/j.apenergy.2021.117519

Géron, A. (2019). Hands-on machine learning with Scikit-Learn, Keras, and TensorFlow: Concepts, tools, and techniques to build intelligent systems. California, United States: O’Reilly Media.

Hou, Q., Zhang, N., Kirschen, D. S., Du, E., Cheng, Y., and Kang, C. (2020). Sparse oblique decision tree for power system security rules extraction and embedding. IEEE Trans. Power Syst. 36, 1605–1615. doi:10.1109/tpwrs.2020.3019383

Huang, S., and Huang, C. (1997). Application of genetic-based neural networks to thermal unit commitment. IEEE Trans. Power Syst. 12, 654–660. doi:10.1109/59.589634

Li, F., Qin, J., and Zheng, W. (2019). Distributed q-learning-based online optimization algorithm for unit commitment and dispatch in smart grid. IEEE Trans. Cybern. 50, 4146–4156. doi:10.1109/tcyb.2019.2921475

Li, Z., Wu, L., Xu, Y., Moazeni, S., and Tang, Z. (2022). Multi-stage real-time operation of a multi-energy microgrid with electrical and thermal energy storage assets: A data-driven mpc-adp approach. IEEE Trans. Smart Grid 13, 213–226. doi:10.1109/tsg.2021.3119972

Li, Z., Wu, L., and Xu, Y. (2021a). Risk-averse coordinated operation of a multi-energy microgrid considering voltage/var control and thermal flow: An adaptive stochastic approach. IEEE Trans. Smart Grid 12, 3914–3927. doi:10.1109/tsg.2021.3080312

Li, Z., Xu, Y., Fang, S., Wang, Y., and Zheng, X. (2020). Multiobjective coordinated energy dispatch and voyage scheduling for a multienergy ship microgrid. IEEE Trans. Ind. Appl. 56, 989–999. doi:10.1109/tia.2019.2956720

Li, Z., Xu, Y., Wu, L., and Zheng, X. (2021b). A risk-averse adaptively stochastic optimization method for multi-energy ship operation under diverse uncertainties. IEEE Trans. Power Syst. 36, 2149–2161. doi:10.1109/tpwrs.2020.3039538

Lin, Z. (2022). Codes of ml-aided framework. Available at: https://github.com/linzhaohang11/data_mluc (GitHub).

Liu, Y., Chen, X., Li, B., Li, H., and Ye, Y. (2020). Wks-type distributionally robust optimisation for optimal sub-hourly look-ahead economic dispatch. IET Gener. Transm. & Distrib. 14, 2237–2246. doi:10.1049/iet-gtd.2019.1344

Liu, Y., Chen, X., Wu, L., and Ye, Y. (2021a). An idm-based distributionally robust economic dispatch model for iehg-mg considering wind power uncertainty. CSEE J. Power Energy Syst. 1–11. Early access. doi:10.17775/CSEEJPES.2021.03940

Liu, Y., Li, L., Liu, Z., Miao, S., Zhang, S., and Zhang, L. (2021b). Cooperative control strategy of distribution network considering generalized energy storage cluster participation. Electr. Power Constr. 42, 89–98. doi:10.12204/j.issn.1000-7229.2021.08.011

Ma, T., Liu, Y., Liu, J., Jiang, Z., and Xu, L. (2021). Distributed transaction model of electricity in multi-microgrid applying smart contract technology. Electr. Power Constr. 42, 41–48. doi:10.12204/j.issn.1000-7229.2021.01.005

Mohammadi, F., Sahraei-Ardakani, M., Trakas, D., and Hatziargyriou, N. (2021). Machine learning assisted stochastic unit commitment during hurricanes with predictable line outages. IEEE Trans. Power Syst. 36, 5131–5142. doi:10.1109/tpwrs.2021.3069443

Nair, V., Bartunov, S., Gimeno, F., von Glehn, I., Lichocki, P., Lobov, I., et al. (2020). Solving mixed integer programs using neural networks. arXiv preprint arXiv:2012.13349. Available at: https://arxiv.org/abs/2012.13349 (Accessed Dec 23, 2020).

Nikolaidis, P., and Chatzis, S. (2021). Gaussian process-based bayesian optimization for data-driven unit commitment. Int. J. Electr. Power & Energy Syst. 130, 106930. doi:10.1016/j.ijepes.2021.106930

Pedregosa, F., Varoquaux, G., Gramfort, A., Michel, V., Thirion, B., Grisel, O., et al. (2011). Scikit-learn: Machine learning in Python. J. Mach. Learn. Res. 12, 2825–2830. doi:10.5555/1953048.2078195

Pineda, S., and Morales, J. (2021). Is learning for the unit commitment problem a low-hanging fruit? arXiv preprint arXiv:2106.11687. Available at: https://arxiv.org/pdf/2106.11687.pdf (Accessed Jun 22, 2021).

Pineda, S., Morales, J., and Jiménez-Cordero, A. (2020). Data-driven screening of network constraints for unit commitment. IEEE Trans. Power Syst. 35, 3695–3705. doi:10.1109/tpwrs.2020.2980212

Wu, J., Liu, Y., Xu, L., Ma, T., Guo, J., and Yang, Q. (2021a). Robust game transaction model of multi-microgrid system applying blockchain technology considering wind power uncertainty. Electr. Power Constr. 42, 10–21. doi:10.12204/j.issn.1000-7229.2021.09.002

Wu, T., Zhang, Y., and Wang, S. (2021b). Deep learning to optimize: Security-constrained unit commitment with uncertain wind power generation and besss. IEEE Trans. Sustain. Energy 99, 1. doi:10.1109/TSTE.2021.3107848

Xavier, Á. S., Qiu, F., and Ahmed, S. (2021). Learning to solve large-scale security-constrained unit commitment problems. INFORMS J. Comput. 33, 739–756. doi:10.48550/arXiv.1902.01697

Yang, N., Yang, C., Wu, L., Shen, X., Jia, J., Li, Z., et al. (2021). Intelligent data-driven decision-making method for dynamic multi-sequence: An e-seq2seq based scuc expert system. IEEE Trans. Ind. Inf. 18, 3126–3137. doi:10.1109/tii.2021.3107406

Yang, Y., Lu, X., and Wu, L. (2020). Integrated data-driven framework for fast scuc calculation. IET Gener. Transm. & Distrib. 14, 5728–5738. doi:10.1049/iet-gtd.2020.0823

Yang, Y., and Wu, L. (2021). Machine learning approaches to the unit commitment problem: Current trends, emerging challenges, and new strategies. Electr. J. 34, 106889. doi:10.1016/j.tej.2020.106889

Ye, Y., Qiu, D., Sun, M., Papadaskalopoulos, D., and Strbac, G. (2019). Deep reinforcement learning for strategic bidding in electricity markets. IEEE Trans. Smart Grid 11, 1343–1355. doi:10.1109/tsg.2019.2936142

Zhang, S., Ye, H., Wang, F., Chen, Y., Rose, S., and Ma, Y. (2020). Data-aided offline and online screening for security constraint. IEEE Trans. Power Syst. 36, 2614–2622. doi:10.1109/tpwrs.2020.3040222

Zhang, Y., Cui, H., Liu, J., Qiu, F., Hong, T., Yao, R., et al. (2021). Encoding frequency constraints in preventive unit commitment using deep learning with region-of-interest active sampling. arXiv preprint arXiv:2102.09583. Available at: https://arxiv.org/abs/2102.09583 (Accessed Feb 18, 2021).

Zhou, M., Wang, B., Li, T., and Watada, J. (2018). A data-driven approach for multi-objective unit commitment under hybrid uncertainties. Energy 164, 722–733. doi:10.1016/j.energy.2018.09.008

Zhou, Y., Zhai, Q., and Wu, L. (2021). A data-driven variable reduction approach for fast unit commitment of large-scale systems. J. Mod. Power Syst. Clean Energy, 1–12. Early access. doi:10.35833/MPCE.2021.000382

Nomenclature

Sets and indices

Decision variables

x Vector of TCUC decisions. x = {I, Isu, Isd, Pseg, P, W, Cp}

Iti Commitment of unit i at hour t

Pti Total scheduled generation of unit i at hour t

Wtj Scheduled generation of RES farm j at hour t

zn TCUC cost of dispatch day n

⋅⋆/⋅pre Optimal/Predicted solution for a variable

Constant parameters

T The last hour in set

Bb Transmission limitation of branch b

τqb Power shifting factor from bus q to branch b

Others

|⋅| Cardinality of a set

ocs/oml TCUC solving time of the commercial solver/ML-aided framework

zml,⋆ TCUC cost of the ML-aided framework

TCUC Transmission-constrained unit commitment

MILP Mixed-integer linear programming

ML Machine learning

DT Decision tree

RF Random forest

DNN Deep neural network

k-NN k-nearest neighbors

RES Renewable energy source

ISO Independent System Operator

ROC Receiver operating characteristic

Keywords: transmission-constrained unit commitment, machine learning, data-driven, artificial intelligence, power system operation

Citation: Lin Z, Chen Y, Yang J, Ma C, Liu H, Liu L, Li L and Li Y (2023) Accelerating transmission-constrained unit commitment via a data-driven learning framework. Front. Energy Res. 10:1012781. doi: 10.3389/fenrg.2022.1012781

Received: 05 August 2022; Accepted: 07 September 2022;

Published: 11 January 2023.

Edited by:

Yikui Liu, Stevens Institute of Technology, United States

Reviewed by:

Yue Zhou, Cardiff University, United Kingdom

Nan Yang, China Three Gorges University, China

Copyright © 2023 Lin, Chen, Yang, Ma, Liu, Liu, Li and Li. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yingyuan Li
