
REVIEW article

Front. Electron. Mater., 07 October 2022

Sec. Semiconducting Materials and Devices

Volume 2 - 2022 | https://doi.org/10.3389/femat.2022.1020076

This article is part of the Research Topic Advances in Highly Efficient Neuromorphic Computing with Emerging Memory Devices.

Biologically inspired neuromorphic computing paradigms are computational platforms that imitate synaptic and neuronal activities in the human brain to process big data flows efficiently and cognitively. In the past decades, neuromorphic computing has been widely investigated in application fields such as language translation, image recognition, phase modeling, and speech recognition, especially in neural networks (NNs) built with emerging nanotechnologies. Owing to their inherent miniaturization and low power cost, these nanotechnologies can alleviate the technical barriers that neuromorphic computing faces when it relies on traditional silicon technology in practical applications. In this work, we review recent advances in the development of brain-inspired computing (BIC) systems from the perspective of a system designer, from the device and circuit levels up to the architecture and system levels. In particular, we classify NN architectures according to the data structures of the big data flows in application scenarios. Finally, we discuss the interactions of the system level with the architecture level and the circuit/device level. This review is intended to support the future development of BIC system design and to highlight its opportunities.

Emulating the analogue and cognitive information processing of the human brain is a key challenge for the brain-inspired computing (BIC) paradigm. The BIC paradigm aims to solve problems using the working principles of the brain and to drive the next wave of computer engineering (Kendall and Kumar, 2020). BIC systems have been widely used in artificial intelligence (AI) applications such as object detection and classification (Merolla et al., 2014; Pei et al., 2019; Roy et al., 2019), accelerators (Chen et al., 2016; Friedmann et al., 2016), intelligent robots (Zhang et al., 2016), in-datacenter performance analysis (Jouppi et al., 2017), LASSO optimization problems (Davies et al., 2018), and the Braindrop mixed-signal neuromorphic architecture (Neckar et al., 2018). All these applications place demands on the performance and efficiency of each module of the BIC system.

In recent years, extensive research has established neural networks (NNs) as efficient methods for handling the proliferation of data and information in the big data era on von Neumann hardware. Breakthroughs have been driven by the improved availability of big data flows, increased computing power, and better training methods (Lawrence et al., 1997; LeCun et al., 2015; Goodfellow et al., 2016). The concept of NNs was first introduced by McCulloch and Pitts (1943), and NNs have since been widely studied and developed. In 1957, Frank Rosenblatt proposed a machine that simulated human perception, known as the single-layer perceptron (SLP). The SLP was the first generation of NNs; with a single feature layer, it could be applied to recognize some letters of the alphabet. In 1985, Geoffrey Hinton replaced the single layer of the perceptron with multiple hidden layers, yielding the multilayer perceptron (MLP) and marking the beginning of the second generation of NNs. As the range of NN applications has expanded, various NN structures have emerged, such as the convolutional neural network (CNN), graph neural network (GNN), recurrent neural network (RNN), and their variants. Despite the existence of numerous types of NNs, realistically and accurately emulating the human brain remains a fundamental challenge. Thus, the third generation of NNs, represented by the spiking neural network (SNN), has emerged; SNNs are considered to be the closest to the synapses and neurons of the human brain.
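The single-layer perceptron mentioned above can be made concrete with a short sketch. The Python below implements the classic perceptron learning rule: a weighted sum, a step activation, and an error-driven weight update. The learning rate, the epoch cap, and the logical-AND training data are illustrative assumptions and do not come from the works cited here.

```python
def step(z):
    """Heaviside step activation used by the classic perceptron."""
    return 1 if z > 0 else 0


def predict(weights, bias, x):
    # Weighted sum of the inputs followed by the step activation
    return step(sum(w * xi for w, xi in zip(weights, x)) + bias)


def train_perceptron(samples, labels, lr=0.1, max_epochs=100):
    """Train a single-layer perceptron with the perceptron learning rule."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, y in zip(samples, labels):
            error = y - predict(weights, bias, x)  # -1, 0, or +1
            if error:
                mistakes += 1
                # Shift the decision boundary toward the misclassified sample
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
        if mistakes == 0:  # every sample classified correctly: converged
            break
    return weights, bias


# Toy, linearly separable task (assumed for illustration): logical AND
X = [(0, 0), (0, 1), (1, 0), (1, 1)]
Y = [0, 0, 0, 1]
w, b = train_perceptron(X, Y)
print([predict(w, b, x) for x in X])  # prints [0, 0, 0, 1]
```

A single layer can only separate classes with a straight line (a hyperplane). Non-separable problems such as XOR are the limitation that motivated the hidden layers of the MLP.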

In exploring advanced BIC paradigms, previous reviews have focused on applied materials (Ko et al., 2020; Liu et al., 2020), devices (Kwon et al., 2022; Meier and Selbach, 2022), or neuromorphic computing algorithms (Kumar et al., 2022; Yang et al., 2022). Liu et al. (2020) reviewed two-dimensional (2D) materials for next-generation computing technologies, covering a broad application scope that includes neuromorphic computing, matrix computing, and logic computing. Ko et al. (2020) reviewed emerging neuromorphic devices based on 2D materials, comprehensively exploring various 2D materials and especially their prospects for neuromorphic applications. Furthermore, in recent years, memristors have been considered promising candidates for BIC systems owing to their non-volatile and reconfigurable properties. Kwon et al. (2022) reviewed 2D memristive devices for neuromorphic computing, primarily discussing the fabrication and characterization of neuromorphic memristors. Moreover, Yang et al. (2022) reviewed research progress on memristors, especially as artificial synapses in neuromorphic systems.

In contrast to the aforementioned reviews, this work surveys the recent development of BIC systems from the perspective of a system designer. We provide a comprehensive review of advances from the device and circuit levels up to the architecture and system levels for constructing a reliable BIC system, with particular attention to the interactions among these levels. In the data-centric era, the structures of data sets in diverse application scenarios strongly influence how NNs are constructed; hence, this work emphasizes choosing NN algorithms according to data structure. The work is structured as follows. Section 2 reviews the functional materials and devices for BIC systems, classified by dimensionality into zero-dimensional (0D), one-dimensional (1D), and two-dimensional (2D). Section 3 discusses artificial synapses and neurons as the building blocks of the BIC system. Section 4 introduces and discusses NN algorithms and architectures as determined by the data structures in AI applications. Section 5 discusses the interactions among the device, circuit, and architecture from a system-level perspective. It covers the pros and cons of 0D, 1D, and 2D materials at the device level, the features of memristor-based artificial synapses and neurons at the circuit level, and the neural networks suited to various data structures at the architecture level. It closes with the current challenges and perspectives at the device/circuit, architecture, and system levels, to provide guidance for future research.

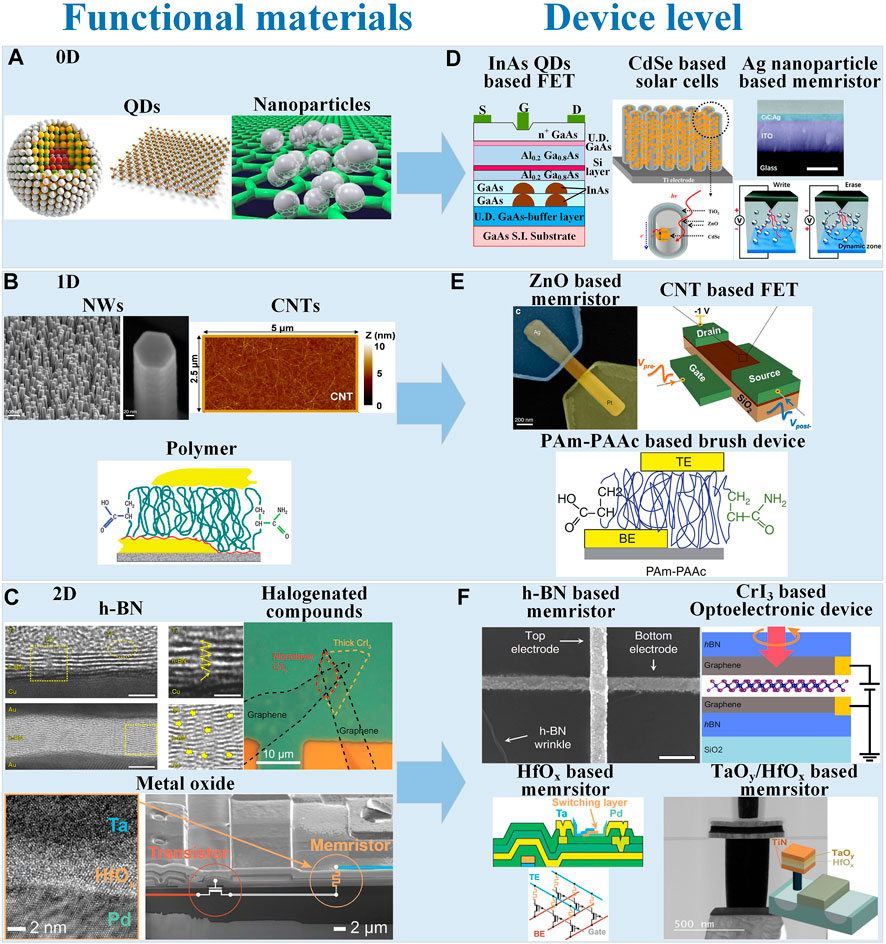

The device level is crucial for the hardware of the BIC system. Different functional materials enable diverse devices for constructing circuits in the BIC system. Functional materials can be classified in several ways according to different functional requirements. For example, organic and inorganic materials differ mainly in that organic compounds always contain carbon, while most inorganic compounds do not. Organic materials [e.g., polymers (Biesheuvel et al., 2011; Fong et al., 2017; Namsheer and Rout, 2021), organic molecular crystals (Wang et al., 2011; Lee et al., 2012), carbon allotropes (Zestos et al., 2014; Schwerdt et al., 2018; Xu et al., 2021), and hydrogel composites (Shi et al., 2014; Kayser and Lipomi, 2019; Pyarasani et al., 2019)] have been explored in neuromorphic devices (Deng et al., 2019; Tuchman et al., 2020; Go et al., 2022) owing to unique features such as long-term biocompatibility, good mechanical flexibility, and molecular diversity. Various inorganic materials with distinct advantages have also been used in many applications, e.g., metal oxides (Hu et al., 2018; Gao et al., 2022), sulfur compounds (Knoll et al., 2013; Lu and Seabaugh, 2014; Wu et al., 2017), and halogenated compounds (Cheng et al., 2021). Materials can also be classified by dimensionality as 0D, 1D, or 2D. A material is referred to as 0D when all three of its dimensions lie within the nanoscale range (1–100 nm), or when it is composed of such basic units. 1D materials are those in which electrons are confined in two dimensions and free to move in only one direction (linear motion), such as nanowire (NW) junction materials, quantum wires, carbon nanotubes (CNTs, the most representative example), and polymers. 2D materials are those in which electrons are confined to a nanoscale (1–100 nm) thickness and free to move within a plane, such as graphene, h-BN, metal oxides, sulfur compounds, and halogenated compounds.
In this section, we discuss the functional materials and devices for the BIC system, classified by dimensionality as 0D, 1D, and 2D. Table 1 summarizes the reported 0D, 1D, and 2D devices for neuromorphic computing.
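The dimensional classification above reduces to a simple counting rule: a structure's dimensionality equals the number of its three extents that are *not* nanoscale. The sketch below assumes a 100 nm cutoff. It also treats extents below 1 nm, such as the thickness of a monolayer, as confined. The function name and example sizes are hypothetical.

```python
NANOSCALE_MAX_NM = 100.0  # assumed upper bound of the nanoscale range


def classify_dimensionality(extents_nm):
    """Classify a structure as '0D', '1D', '2D', or 'bulk' from (x, y, z) extents in nm."""
    if len(extents_nm) != 3:
        raise ValueError("expected three extents (x, y, z) in nm")
    # Extents at or below the cutoff confine electron motion along that axis
    confined = sum(1 for e in extents_nm if e <= NANOSCALE_MAX_NM)
    free = 3 - confined  # directions in which electrons move freely
    return "bulk" if free == 3 else f"{free}D"


print(classify_dimensionality((5, 5, 5)))             # quantum dot
print(classify_dimensionality((1.4, 1.4, 1000)))      # carbon nanotube
print(classify_dimensionality((10_000, 10_000, 0.34)))  # graphene monolayer
```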

0D devices are built from materials with a 0D structure, such as semiconducting quantum dots (QDs) and nanoparticles, as shown in Figure 1A. First, owing to their promising optical properties, 0D materials are suitable for neuromorphic photonic systems. Because photons are not limited in space or power density during propagation, 0D photonic devices are used for parallel communication and super-connectivity. In the context of neuromorphic computing, 0D materials show great potential in many applications, such as Ag/Au nanoparticles (Alibart et al., 2010; Ge et al., 2020), InAs/InGaAs-based QDs (Mesaritakis et al., 2016), black phosphorus-based QDs (Han et al., 2017), MoS2-based QDs (Thomas et al., 2020), GaAs-based QDs (Lee et al., 2009), and CdSe-based QDs (Moreels et al., 2007), as listed in Figures 1A,D and Table 1. Local gain can be obtained from the size-tunable plasmonic responses of 0D metal nanoparticles. Owing to their charge-trapping behavior, 0D Au nanoparticles have been exploited in synaptic transistors (Alibart et al., 2010). These Au nanoparticles exhibit dynamic contraction and extension, governed by the competing effects of surface tension and the electric field, which leads to effective learning behavior. In addition, the synergistic effect between Ag nanoparticles and electrodes can be used to enhance switching properties in flexible neuromorphic networks (Ge et al., 2020), as listed in Table 1.

FIGURE 1. Overview of the transition from 0D, 1D, and 2D functional materials (A,B,C) to the device level (D,E,F). (A) 0D materials: QDs and nanoparticles (Ge et al., 2020; Thomas et al., 2020); (D) 0D devices: InAs QD FETs (Mokerov et al., 2001), CdSe solar cells (Lee et al., 2009), and Ag nanoparticle memristor (Ge et al., 2020). (B) 1D functional materials: NWs, CNTs, and long-chain polymer (Zhitenev et al., 2007; Kim et al., 2015b; Milano et al., 2018a); (E) 1D devices: ZnO memristor (Milano et al., 2018a), CNT FET (Kim et al., 2015b), and PAm-PAAc flexible brush (Zhitenev et al., 2007). (C) 2D materials: h-BN, halogenated compounds, and metal oxide (Hu et al., 2018; Shi et al., 2018; Cheng et al., 2021). (F) 2D devices: h-BN memristor (Shi et al., 2018), CrI3 light helicity detector (Cheng et al., 2021), HfOx memristor (Hu et al., 2018), and TaOy/HfOx memristor (Gao et al., 2022). All images in the diagram are adapted from the corresponding references.

InAs/InGaAs-based QDs with explicit energy levels have been used to imitate excitatory and inhibitory synaptic responses, with multi-band emission from the QDs enabling operation (Mesaritakis et al., 2016). However, integrating QDs with photonic waveguides remains a challenge. Site-controlled QDs were employed to realize electro-photo-sensitive devices on GaAs/AlGaAs wafers whose conductance can be tuned by electrical/optical pulses (Maier et al., 2015; Maier et al., 2016). In addition, metal and QD nanoparticles have been embedded in a matrix to obtain resistive random access memory (ReRAM or RRAM). In particular, black phosphorus QDs sandwiched between polymer (i.e., methyl methacrylate) bilayers have been used to print multi-level ReRAM, presenting a high switching ratio of up to

1D devices are built from materials with a 1D structure, such as nanowires (NWs), carbon nanotubes (CNTs), and polymers. Early, extensive studies showed that 1D materials share a topology very similar to that of tubular axons, which is key to achieving hyper-connectivity in biological systems. Compared with 0D materials, 1D materials offer diverse physical and chemical properties, solution processability, and bottom-up growth, which give them greater potential in BIC systems.

1D semiconductor NWs have been developed worldwide as low-dimensional single crystals. 1D metal oxide NWs fabricated by a bottom-up approach are being investigated for resistive switching devices. This approach can reduce device size beyond the limits of traditional (top-down) lithography and provides a good platform for highly localized, well-characterized switching events (Nagashima et al., 2011; Ielmini et al., 2013; Milano et al., 2018a), as listed in Figures 1B,E and Table 1. For these reasons, resistive switching has been studied not only in single NWs (Oka et al., 2009; Nagashima et al., 2010; He et al., 2011; Nagashima et al., 2011; Yang et al., 2011; Ielmini et al., 2013; Qi et al., 2013; Liang et al., 2014; O’Kelly et al., 2014) but also in NW arrays (Park et al., 2013; Anoop et al., 2017; Porro et al., 2017; Xiao et al., 2017; Milano et al., 2018b), which have become another major material for 1D devices. However, a significant problem with NWs is that Joule heating tends to melt them, leading to hardware failure. Single NW-based devices therefore still suffer from high operating voltages or poor reliability in terms of ruggedness and variability (Oka et al., 2009; Nagashima et al., 2010; He et al., 2011; Nagashima et al., 2011; Yang et al., 2011; Ielmini et al., 2013; Qi et al., 2013; Liang et al., 2014; O’Kelly et al., 2014).

Beyond the properties it shares with NWs, the CNT has many unique properties (Figures 1B,E). A CNT is a graphite cylinder that behaves as a semiconductor or a metal depending on its chiral vector, and it has been used to construct 1D devices. Single-walled CNTs have been used in post-silicon digital logic because of their high charge carrier mobility and scalability, especially in the ultra-short channel limit (i.e., sub-5 nm nodes) (Cao et al., 2017; Milano et al., 2018b). CNTs have been used in transistor devices and as models for synaptic circuits (Joshi et al., 2009; Kim et al., 2015b). However, individual CNTs face practical challenges such as wafer-level assembly and alignment, so efforts have turned to CNT networks structured as thin-film transistors (TFTs) (Milano et al., 2018b). CNTs are widely used as aligned arrays (Sanchez Esqueda et al., 2018) and random networks (Kim et al., 2013; Shen et al., 2013; Kim et al., 2015b; Feng et al., 2017; Kim et al., 2017; Danesh et al., 2019), as listed in Table 1. These structures have also indirectly demonstrated the research potential and value of synaptic transistors. In addition, these CNT-based 1D devices have been applied to neuromorphic circuits for unsupervised learning in NNs (Sanchez Esqueda et al., 2018). However, CNT-based devices lack non-volatile memory (NVM) and have an inherently lateral geometry, which prevents them from forming dense crossbar arrays.
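The dependence on the chiral vector can be illustrated with the standard zone-folding rule. An (n, m) nanotube is metallic when n − m is divisible by 3 and semiconducting otherwise. Its diameter is d = a·√(n² + nm + m²)/π, where a ≈ 0.246 nm is the graphene lattice constant. The sketch below is a first-order approximation that ignores the small curvature-induced gaps of narrow tubes. The function names are hypothetical.

```python
import math

GRAPHENE_LATTICE_NM = 0.246  # graphene lattice constant a, in nm


def cnt_electronic_type(n, m):
    """Zone-folding rule: an (n, m) tube is metallic if (n - m) is divisible by 3."""
    if n < 0 or m < 0 or (n == 0 and m == 0):
        raise ValueError("chiral indices must be non-negative and not both zero")
    return "metallic" if (n - m) % 3 == 0 else "semiconducting"


def cnt_diameter_nm(n, m):
    """Tube diameter d = a * sqrt(n^2 + n*m + m^2) / pi, in nm."""
    return GRAPHENE_LATTICE_NM * math.sqrt(n * n + n * m + m * m) / math.pi


# Armchair (10, 10), zigzag (10, 0), and chiral (13, 6) examples
for n, m in [(10, 10), (10, 0), (13, 6)]:
    print((n, m), cnt_electronic_type(n, m), round(cnt_diameter_nm(n, m), 3))
```

By this rule, roughly one-third of randomly grown tubes are metallic. This is one reason the random CNT networks discussed above are built to be semiconductor-dominated.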

Furthermore, hybrid structures such as ZnO nanowires (1D) decorated with CeO2-based QDs (0D) have been applied in memory devices (Younis et al., 2013), where their charge-trapping mechanisms were investigated with the aim of designing more advanced devices. Similarly, Ag has been observed to diffuse along ZnO nanowires once they are in contact (Figure 1E). This diffusion can produce volatile/non-volatile resistive switching, similar to that in Ag-SiOx diffusion-based memristors (Wang et al., 2017; Milano et al., 2018a).

Polymers not only offer the characteristics and research value of organic materials, which boost the properties and broaden the application areas of neuromorphic devices, but also possess 1D properties. These 1D properties have been exploited in devices such as memories, organic electrochemical transistors (OECTs), and nanoscale molecular devices (Zhitenev et al., 2007; Waser et al., 2009; Cho et al., 2011; Rivnay et al., 2018; van De Burgt et al., 2018), as listed in Figures 1B,E and Table 1. Synaptic transistors can be realized by combining polymer electrolytes with planar silicon and lithium-ion battery materials (Lai et al., 2010; Fuller et al., 2017). In addition, OECTs show promising conductivity states (

2D devices are built from materials with a 2D structure, such as graphene, MoS2, transition metal chalcogenides (TMCs) including MX2 (M = Mo, W; X = S, Se), and hexagonal boron nitride (h-BN) (Figures 1C,F and Table 1). The widespread use of 2D materials stems from three unique features. First, the atoms within each layer are covalently bonded, whereas the van der Waals forces between adjacent layers are weak, so bulk crystals can be exfoliated layer by layer. Second, in exfoliated ultrathin layers, the space in which electrons move is limited, so the channel can be precisely controlled by the gate voltage, suppressing short-channel effects. Third, the family of 2D materials is large, and new functionality can be achieved by engineering their energy band structures. In addition, 2D devices are compatible with scaling and integration using planar wafer technology. Realizing neuromorphic functionality in 2D nanomaterials has also revealed unexpected mechanisms, which in turn has spurred the development of new 2D device concepts. Despite their advantages and value, 2D devices face several obstacles to large-scale industrialization:

- they are difficult to prepare in large quantities, and preparation efficiency is low;
- they are prone to defects and impurities;
- the composition of the product is difficult to control;
- environmental requirements during preparation are high.

This is why graphene and h-BN, which have mature preparation processes, have endured for so long.

2D nanomaterials have attracted researchers as new building blocks for memristor-based (Jin et al., 2015; Lee et al., 2016; Lei et al., 2016; Schmidt et al., 2016; Vu et al., 2016; Sangwan and Hersam, 2018; Wang T.-Y. et al., 2020; Chen et al., 2021) and non-memristor-based devices (Geim and Grigorieva, 2013; Jariwala et al., 2014), which are essential parts of the BIC system. Here, we consider 2D memristor-based devices (Bessonov et al., 2015; Cheng et al., 2016; Tian et al., 2017; Huh et al., 2018; Sangwan et al., 2018; Shi et al., 2018; Jadwiszczak et al., 2019; Wang L. et al., 2019; Zhang et al., 2019; Zhong et al., 2020). A memristor typically has a metal–insulator–metal (MIM) structure; in a 2D memristor, the insulator is a 2D switching layer. 2D materials can be used for artificial synaptic devices in BIC because of their unique structure, electronic properties, and mechanical properties (Arnold et al., 2017; Bao et al., 2019). A Ta/HfOx/Pd memristor has been applied to accelerate computations in a BIC system (Hu et al., 2018), as listed in Figure 1F. MoS2, one of the most promising 2D materials, is used in a wide range of applications owing to its attractive direct band gap structure and its large conductivity and electron mobility. Subsequently, TMCs, including transition metal dichalcogenides (TMDs) (Kwon et al., 2022), have become a research hotspot (Fiori et al., 2014). The ultrathin 2D memristor devices (thickness

The performance of 2D devices can be boosted by taking full advantage of the properties of the respective 2D materials. Graphene, one of the first 2D materials to be discovered, is used as a switching layer to improve device stability and as a contact material to make memory cells more robust (Jariwala et al., 2014). h-BN, also known as white graphene, has a structure very similar to that of graphene but exhibits distinct properties, such as strong electrical insulation (Sangwan and Hersam, 2018). It is employed as a switching layer that endows devices with high endurance and low operating currents (Shi et al., 2018), as listed in Table 1. 2D memory devices can operate at low voltages and therefore consume little energy. This is attributed to one of the most promising features of 2D materials, namely, their ultrathin structure (Cheng et al., 2016; Zhang et al., 2019), as listed in Table 1. Consequently, 2D memory devices can potentially boost matrix computing through favorable operating voltage/current, accuracy, and other characteristics that are frequent concerns for devices.

One of the most promising features for matrix computing is extremely low energy consumption. As Table 1 shows, devices made from different materials have different operating voltages and currents. Generally, a low operating voltage and an ultralow current also mean low power consumption. Recently, significant efforts have been made to reduce energy consumption, operating current, and voltage. For example, a memristor crossbar array based on 2D hafnium diselenide (HfSe2) was fabricated by molecular beam epitaxy. It exhibited a small switching voltage of 0.6 V and, in particular, a low switching energy of 0.82 pJ (Li et al., 2022). In addition, Shi et al. (2018) prepared a memristor from h-BN grown by chemical vapor deposition (Figures 1C,F). The device showed excellent performance, including an ultralow power consumption of 0.1 fW made possible by the h-BN layer. With few-layer h-BN as the switching layer, bipolar/unipolar resistive switching can be achieved in some 2D devices (Gandhi et al., 2011; Ganjipour et al., 2012), in which switching is controlled through the generation of defects from active Cu/Ag. Vu et al. (2016) applied MoS2/h-BN/graphene heterostructures in random access memory devices. These devices demonstrated an ultrahigh on/off ratio of 10⁹, a low operating voltage of 6 V, and an ultralow off-state current of 10⁻¹⁴ A.
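The link between operating voltage, current, and energy can be checked with simple arithmetic. For a rectangular pulse, E = V·I·t, so a switching energy and a switching voltage together imply the charge moved per switching event (Q = E/V). The sketch below applies this to the HfSe2 figures quoted above (0.6 V, 0.82 pJ). The constant-current, rectangular-pulse assumption, the 1 V/1 µA/100 ns example pulse, and the helper names are illustrative only.

```python
def pulse_energy_j(voltage_v, current_a, duration_s):
    """Energy of a rectangular pulse with constant voltage and current: E = V * I * t."""
    return voltage_v * current_a * duration_s


def charge_per_switch_c(energy_j, voltage_v):
    """Charge implied by a switching energy at a given voltage: Q = E / V."""
    return energy_j / voltage_v


# HfSe2 crossbar figures quoted in the text: 0.6 V and 0.82 pJ per switching event
q = charge_per_switch_c(0.82e-12, 0.6)
print(f"implied charge per switch: {q * 1e12:.2f} pC")  # 1.37 pC

# Hypothetical pulse: 1 V, 1 uA, 100 ns
print(f"pulse energy: {pulse_energy_j(1.0, 1e-6, 100e-9) * 1e12:.2f} pJ")  # 0.10 pJ
```

Because E scales with the product of V and I, lowering both the voltage and the current, as the 2D devices above do, reduces the energy multiplicatively.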

As discussed above, functional 0D, 1D, and 2D materials play a crucial role in constructing devices for BIC systems. In this review, we focus on memristors because of their promising functional properties for constructing BIC systems at the circuit, architecture, and system levels.
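To illustrate how the resistance of an MIM memristor depends on the charge that has flowed through it, the sketch below integrates the widely used linear ion-drift ("HP") memristor model. This textbook model is substituted for illustration; it is not a model used by the works cited here. All parameter values (R_on, R_off, film thickness, ion mobility, drive amplitude, and frequency) are illustrative assumptions.

```python
import math


def simulate_linear_drift_memristor(v_amp=1.0, freq_hz=1.0, r_on=100.0,
                                    r_off=16e3, thickness_m=10e-9,
                                    mu_v=1e-14, x0=0.1, steps=10_000):
    """Forward-Euler simulation of the linear ion-drift memristor model.

    State x = w / D is the normalized width of the doped (low-resistance) region.
    Returns (t, v, i, m) samples over one period of a sinusoidal drive.
    """
    dt = 1.0 / (freq_hz * steps)
    x = x0
    samples = []
    for k in range(steps):
        t = k * dt
        v = v_amp * math.sin(2.0 * math.pi * freq_hz * t)
        m = r_on * x + r_off * (1.0 - x)  # memristance M(x): two resistors in series
        i = v / m
        samples.append((t, v, i, m))
        # dw/dt = mu_v * R_on / D * i, hence dx/dt = mu_v * R_on / D**2 * i
        x += mu_v * r_on / thickness_m ** 2 * i * dt
        x = min(max(x, 0.0), 1.0)  # the boundary cannot leave the device
    return samples


samples = simulate_linear_drift_memristor()
memristance = [m for _, _, _, m in samples]
print(f"M range over one cycle: {min(memristance):.0f} to {max(memristance):.0f} ohm")
```

Plotting i against v from the returned samples traces a pinched hysteresis loop. Because i = v/M, the current is zero whenever the voltage is zero. At the same nonzero voltage, however, the current differs between earlier and later parts of the cycle, because the state x has moved in between.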

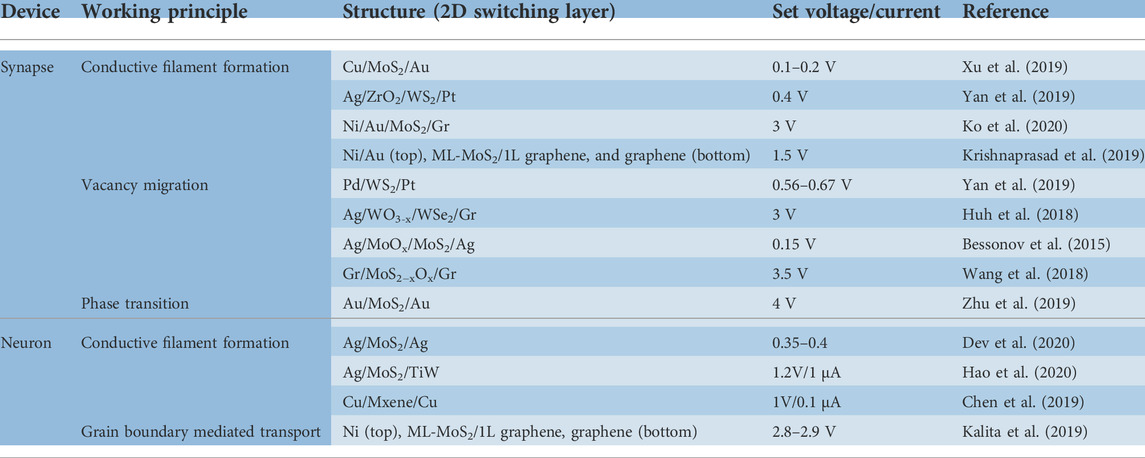

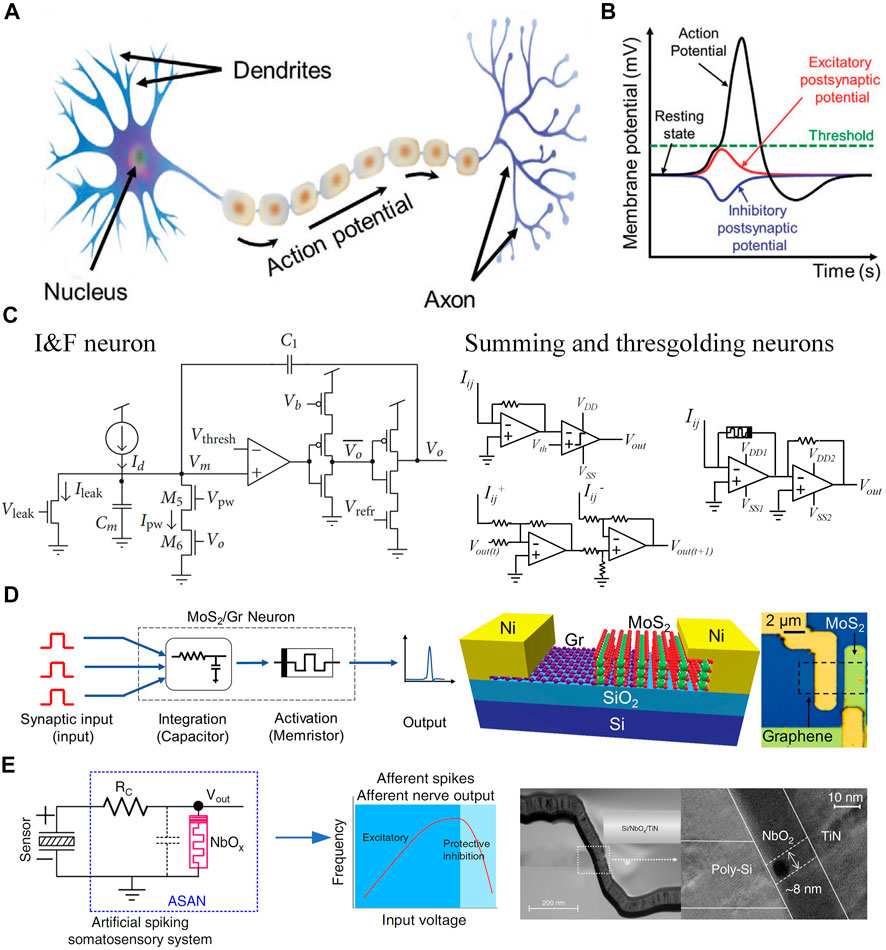

The human brain contains about 86 billion neurons, which perform activation functions and transmit information through around 1,000 trillion synapses. Together, these neurons and synapses underpin learning functions such as the senses of smell and hearing. External signals are received and passed through synapses to neurons. The neurons apply activation functions to produce new signals, which are then transmitted back to the senses, producing the so-called “limbic response.” In this section, we review artificial synapses and neurons in the BIC system. Table 2 summarizes the characteristics of artificial synapse and neuron devices based on 2D memristors, including set voltage/current, memristor structure, functional materials, and working principle.
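In spiking-neuron hardware, a neuron that receives signals through synapses and emits a new signal is commonly abstracted as a leaky integrate-and-fire (LIF) neuron. The sketch below is a generic forward-Euler LIF model with assumed parameters (membrane time constant, resistance, threshold, reset). It does not model any specific device in Table 2.

```python
def simulate_lif(input_current, dt=1e-3, tau_m=20e-3, r_m=1.0,
                 v_rest=0.0, v_threshold=1.0, v_reset=0.0):
    """Forward-Euler leaky integrate-and-fire neuron.

    Integrates tau_m * dV/dt = -(V - v_rest) + r_m * I(t) and emits a spike,
    then resets, whenever V reaches v_threshold.
    Returns the time-step indices at which spikes occurred.
    """
    v = v_rest
    spike_steps = []
    for k, i_in in enumerate(input_current):
        v += (dt / tau_m) * (-(v - v_rest) + r_m * i_in)  # leaky integration
        if v >= v_threshold:  # fire and reset
            spike_steps.append(k)
            v = v_reset
    return spike_steps


# Constant inputs for 1 s (1000 steps of 1 ms)
quiet = simulate_lif([0.5] * 1000)   # steady-state V = 0.5, below threshold
active = simulate_lif([2.0] * 1000)  # steady-state V = 2.0, above threshold
print(len(quiet), len(active))
```

A subthreshold input leaks away without firing, while a stronger input produces regular spikes whose rate grows with the input. This is the rate-coding behavior that memristive neurons aim to reproduce.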

TABLE 2. 2D memristive devices applied as artificial neurons and artificial synapses in the BIC system.

A simple synapse consists of a weight-storage unit and an induction-protocol unit, which modifies the weight according to the pulse signals. In biology, synaptic interactions arise mainly from the proliferation of plasticity-related proteins (PRPs), which are required for synaptic growth between adjacent synapses (Farris and Dudek, 2015) (Figure 2A). Synaptic interactions are fundamental and essential in neuromorphic systems. Two approaches have been implemented in the past: a software-assisted circuit concept (Sheridan et al., 2017) and a voltage-divider effect assisted by an external voltage bias (Borghetti et al., 2010). However, neither approach directly mimics the internal processes of biological synapses. Ionic coupling, which involves the diffusion and exchange of ions between artificial synaptic devices, can potentially mimic this process. It can thereby enable the competition and cooperation effects of biological synapses.

FIGURE 2. Working principle of biological synapses and representative memristive artificial synapses. (A) Illustration of functional connections between artificial neurons and synapses using MCAs. (B) Configurations of memristive artificial synapses, including 0T1R, 0T2R, and 1T1R synapses (Du et al., 2021a), and schematics of the I–V characteristics of these configurations (Sangwan and Hersam, 2020). (C) Illustration of an artificial memristive synapse based on filament formation and rupture. The inset shows filamentary switching in a single memristor unit with an Ag/SiOx:Ag/TiOx/p++-Si structure (Zhu et al., 2019; Ilyas et al., 2020; Sangwan and Hersam, 2020). (D,E) Artificial memristive synapses whose transconductance is controlled by their structural phase. (D) Insets show the local transition from the 1T′ phase (LRS) to the 2H phase (HRS) in Li+-MoS2, which is related to Li+ ion migration controlled by a voltage bias (Zhu et al., 2019; Sangwan and Hersam, 2020). (E) Insets show temperature-controlled phase changes: the CCDW phase at low temperature (left), the hexagonal NCCDW phase (middle), and the ICCDW phase at high temperature (right) (Yoshida et al., 2015). All images in the diagram are adapted from the corresponding references.

The earliest artificial synapses were floating-gate transistors, known as synaptic transistors. Charge is stored on the floating gate by hot-electron injection and removed by tunneling, so the threshold voltage and conductivity of the transistor can be modulated efficiently (Diorio et al., 1996). Robustness and an open channel are key advantages of synaptic transistors, and multiple gate terminals enable spatiotemporal responses (Buonomano and Maass, 2009; Qian et al., 2017). However, the lateral geometry of synaptic transistors results in a relatively large footprint, which is suboptimal for high-density synapses (Lanza et al., 2019).
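To first order, the threshold-voltage modulation described above follows a standard relation for floating-gate devices. The stored charge shifts the threshold voltage seen from the control gate by

$$\Delta V_T = -\frac{Q_{FG}}{C_{CG}}$$

where $Q_{FG}$ is the net charge on the floating gate and $C_{CG}$ is the control-gate-to-floating-gate capacitance. Storing electrons ($Q_{FG} < 0$) by hot-electron injection therefore raises $V_T$, while removing them by tunneling lowers it. This simplified textbook relation is given for illustration and is not taken from the cited works.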

Subsequently, memristors emerged as a popular device (Figure 2B, top) and became a suitable alternative for artificial synapses (as listed in Table 2). They are smaller (Yu and Chen, 2016; Pi et al., 2019), enable 3D stacked memory (Seok et al., 2014), and combine both the induction protocol and memory in a single device (Jeong et al., 2016). The functional materials and operating mechanisms used in memristive devices have been reviewed extensively (Waser et al., 2009; Pershin and Di Ventra, 2011; Kuzum et al., 2013; Yang et al., 2013; Tan et al., 2015).

The different configurations of memristor-based artificial synapses are shown at the bottom of Figure 2B. Memristors in the 0T1R configuration (T is the transistor, and R is the memristor) have been applied to implement artificial synapses (Krestinskaya et al., 2017; Zhang Y. et al., 2017; Du et al., 2021b), and such synaptic devices are very efficient in terms of density and power consumption. Memristors also exhibit non-volatile resistive switching, retaining distinct resistance states at zero bias (Yang et al., 2008). However, the common leakage (sneak) path problem of memristive crossbar arrays (MCAs) cannot be ignored. Placing a transistor in series with each memristor may mitigate this problem (Yao et al., 2017), but at a cost in density compared with the 0T1R configuration. Another popular configuration uses two memristors per synapse (i.e., 0T2R) (Alibart et al., 2013; Hasan and Taha, 2014), which doubles the area but can represent the negative synaptic weights required in an NN. Neurons apply voltage stimulation signals to the word lines, and current signals are generated on each bit line. In unipolar switching, switching events occur only at the same bias polarity, whereas in bipolar resistive switching the device is switched between the on- and off-states by reversing the bias (Yang et al., 2008; Chang et al., 2009). Connecting two bipolar switches back-to-back results in a complementary resistive switch (Linn et al., 2010).
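In a crossbar, the word-line voltages and bit-line currents described above implement a vector-matrix multiplication through Ohm's and Kirchhoff's laws. A 0T2R pair encodes a signed weight as the difference of two conductances. The idealized sketch below assumes linear devices, zero wire resistance, no sneak paths, and a minimum device conductance of 0 S. It computes the net (G+ minus G−) current per output. The mapping function and its g_max value are hypothetical.

```python
def signed_weights_to_pairs(weights, g_max):
    """Map weights in [-1, 1] to (G+, G-) conductance pairs; one device per pair stays at 0 S."""
    g_plus = [[max(w, 0.0) * g_max for w in row] for row in weights]
    g_minus = [[max(-w, 0.0) * g_max for w in row] for row in weights]
    return g_plus, g_minus


def crossbar_output_currents(word_line_voltages, g_plus, g_minus):
    """Ideal 0T2R crossbar read: I_j = sum_i V_i * (G+_ij - G-_ij).

    Rows are word lines driven by input voltages. Each output j is the difference
    between the summed currents of its G+ and G- devices (Kirchhoff's current law).
    """
    if not (len(word_line_voltages) == len(g_plus) == len(g_minus)):
        raise ValueError("one voltage per word line is required")
    n_cols = len(g_plus[0])
    currents = [0.0] * n_cols
    for v, row_p, row_m in zip(word_line_voltages, g_plus, g_minus):
        for j in range(n_cols):
            currents[j] += v * (row_p[j] - row_m[j])  # Ohm's law at each cross point
    return currents


weights = [[0.5, -1.0],
           [-0.25, 0.75]]
gp, gm = signed_weights_to_pairs(weights, g_max=1e-4)  # assumed 100 uS full scale
print(crossbar_output_currents([0.2, 0.1], gp, gm))
```

The whole multiply-accumulate happens in one read step, which is why MCAs are attractive for NN inference. Nonidealities such as sneak paths, wire resistance, and nonzero minimum conductance, all omitted here, are exactly what the 0T1R/1T1R trade-offs above address.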

The 1T1R configuration, a third option besides 0T1R and 0T2R, has been studied for artificial neurons and synapses (Figure 2B, bottom). Chips with 1T1R configurations have been widely used (James et al., 2017; Schuman et al., 2017; Krestinskaya et al., 2019), and almost all of them employ crossbar arrays to address individual synapse nodes. In the 1T1R configuration, the transistor serves as a selector, which reduces the leakage current. The memtransistor incorporates two memristive elements, and in addition to exhibiting a pinched hysteresis loop, it can be modulated by its gate terminals (Mouttet, 2010; Sangwan et al., 2015; Sangwan et al., 2018; Wang L. et al., 2019; Yang et al., 2019). Memristor devices are often complex in both structural design and preparation, but this complexity is also the main reason why circuits built from them can be simple (Chua and Kang, 1976; Pershin and Di Ventra, 2011; Xia et al., 2011; Abdelouahab et al., 2014; Kim et al., 2015a). Thus, in the 1T1R configuration, transistors and memristors not only combine their respective characteristics in one device but also exhibit unique features for applications such as spike-timing-dependent plasticity (STDP) and bio-realistic hyper-connectivity (Bi and Poo, 1998; Caporale and Dan, 2008; Sangwan et al., 2018). More precisely, the switching mechanism of the memristor corresponds well to that of biological synapses. It can arise from the formation and rupture of filaments (Figure 2C, top) or from modulation of the Schottky barrier by defects or migrating ionic species.
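STDP is often modeled with a pair-based exponential window, in which the weight change depends on Δt = t_post − t_pre. The synapse is potentiated when the presynaptic spike precedes the postsynaptic spike and depressed otherwise, and the magnitude decays with |Δt|. The sketch below uses assumed amplitudes and time constants. It is a generic rule, not the measured characteristics of the cited devices.

```python
import math


def stdp_weight_change(delta_t_ms, a_plus=0.01, a_minus=0.012,
                       tau_plus_ms=20.0, tau_minus_ms=20.0):
    """Pair-based exponential STDP window.

    delta_t_ms = t_post - t_pre. Pre-before-post (delta_t > 0) potentiates;
    post-before-pre (delta_t < 0) depresses. Magnitudes decay with |delta_t|.
    """
    if delta_t_ms > 0:
        return a_plus * math.exp(-delta_t_ms / tau_plus_ms)
    if delta_t_ms < 0:
        return -a_minus * math.exp(delta_t_ms / tau_minus_ms)
    return 0.0


def apply_stdp(weight, delta_t_ms, w_min=0.0, w_max=1.0):
    """Update a bounded synaptic weight, e.g. a normalized memristor conductance."""
    return min(w_max, max(w_min, weight + stdp_weight_change(delta_t_ms)))


for dt in (-40, -10, 10, 40):
    print(dt, round(stdp_weight_change(dt), 5))
```

In a memristive implementation, overlapping pre- and postsynaptic voltage waveforms across the device produce a net programming voltage that depends on Δt. This is how a device-level conductance change can approximate such a window.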

Ilyas et al. (2020) reported Ag/SiOx:Ag/TiOx/p++-Si memristors with analog switching behavior; the device is shown schematically in Figure 2C. In this simple device, the SiOx:Ag and TiOx thin layers serve as the switching layers, and the Ag top electrode (TE) and the p++-Si bottom electrode (BE) serve as the electrodes. The Ag/SiOx:Ag stack acts as the presynaptic membrane and the TiOx/p++-Si stack as the postsynaptic membrane. When the device receives a neural pulse, ions are released between the presynaptic and postsynaptic membranes, changing the synaptic weight. Specifically, the Ag ions migrate in response to the voltage pulse, which modulates the conductivity of the device. The physical model is shown in Figure 2C, and the switching mechanisms of SiOx:Ag- and SiOx:Ag/TiOx-based memristors are explained similarly.
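Pulse-driven analog switching of this kind is often described phenomenologically by conductance updates that shrink as the device approaches its bounds. The sketch below uses a simple saturating update. Each potentiating pulse applies G ← G + α(G_max − G), and each depressing pulse applies G ← G − α(G − G_min). The values of α, G_min, and G_max are assumptions, not measurements from Ilyas et al. (2020).

```python
def pulse_train(g_start, n_pulses, potentiate, g_min=1e-6, g_max=1e-4, alpha=0.05):
    """Saturating (nonlinear) conductance response to identical voltage pulses.

    Each potentiating pulse moves G a fraction alpha of the way toward g_max;
    each depressing pulse moves it a fraction alpha of the way toward g_min.
    Returns the conductance (in S) before the first pulse and after every pulse.
    """
    g = g_start
    history = [g]
    for _ in range(n_pulses):
        if potentiate:
            g += alpha * (g_max - g)
        else:
            g -= alpha * (g - g_min)
        history.append(g)
    return history


up = pulse_train(1e-6, 50, potentiate=True)
down = pulse_train(up[-1], 50, potentiate=False)
steps_up = [b - a for a, b in zip(up, up[1:])]
print(f"first step {steps_up[0]:.2e} S, last step {steps_up[-1]:.2e} S")
```

The shrinking step size captures the nonlinear, asymmetric weight updates that real filamentary devices often show. Such nonlinearity matters when these devices are used for on-chip training.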

Phase-change memristors exhibit a metal-to-insulator transition, which can be induced by local heating and rapid quenching (Figure 2D). In the device illustrated schematically in Figure 2D, the phase transition is driven electrically: electrode A drives the migration of Li+ ions, the 1T′ phase forms as the Li+ concentration increases, and the 2H phase forms again as the Li+ concentration decreases. High-resolution transmission electron microscopy images of LixMoS2 films switched to the high-resistance state (HRS) and the low-resistance state (LRS) are also shown in Figure 2D. In the HRS sample, the MoS2 lattice fringes have a uniform interlayer spacing (∼0.62 nm), whereas in the LRS sample the lattice fringes are distorted and the interlayer spacing varies (for example, 0.91 and 0.71 nm at two positions) (Zhu et al., 2019). Wang et al. (2019b) exploited the phase transition of molybdenum ditelluride (MoTe2), and Cho et al. (2015) provide another example of a polymorphic TMD for phase-change resistive memory devices. In MoTe2, the phase transition is driven by an electric field, and the two phases exhibit distinct electronic characteristics.

Figure 2E schematically illustrates quantum phase transition memristors. Yoshida et al. (2015) demonstrated non-volatile polymorphic memory switching in 1T-TaS2, which undergoes first-order charge density wave (CDW) phase transitions (Sipos et al., 2008; Stojchevska et al., 2014) (Figure 2E). In as-prepared few-layer 1T-TaS2, the phase switches between the nearly commensurate CDW (NCCDW) and commensurate CDW (CCDW) phases between 100 and 220 K, depending on the direction of the temperature sweep. These phase transitions give rise to memristive I–V characteristics and hysteretic current–temperature curves. As shown in the zoomed-in inset of Figure 2E, 13 Ta atoms form a large cluster in the CCDW phase. The NCCDW phase consists of a hexagonal arrangement of CCDW domains and transforms into the incommensurate CDW (ICCDW) phase upon further heating.

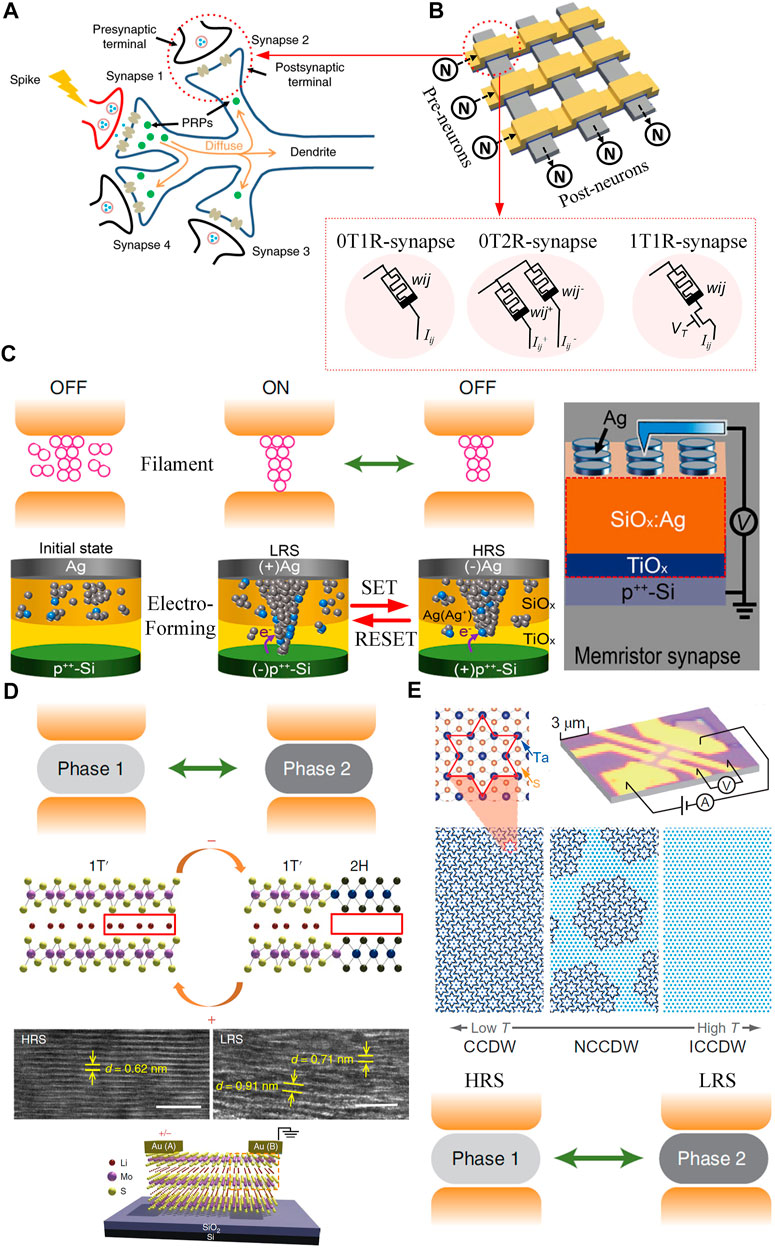

Neurons, consisting of cell bodies, axons, and dendrites, are the basic structural and functional units that transmit biological signals in the human body (Abbott and Nelson, 2000; Gerstner and Kistler, 2002), as shown in Figure 3A. A neuron receives signals from the upstream (presynaptic) neuron via its dendrites and transmits them to the downstream (postsynaptic) neuron via its axon. The cell body determines the electrical response of the neuron (i.e., the opening or closing of ion channels) according to the excitatory or inhibitory potentials it receives. Figure 3B shows how the membrane potential changes with excitatory or inhibitory inputs. When the membrane potential exceeds the threshold, the ion channels open and an action potential (spike) is generated. The action potential propagates along the axon to transmit the signal, after which ion flows across the membrane return the neuron to its initial (resting) state. When the membrane potential remains below the threshold, no action potential is generated; the accumulated charge leaks away and the membrane returns to its resting state (Bear et al., 2016).

FIGURE 3. Working principle of biological neurons and classification of memristive artificial neurons. (A) Structure of the biological neuron. (B) Membrane potential change of the neuron depending on the excitatory and inhibitory potentials (Lee et al., 2021). (C) Realizations of artificial neurons, including an integrate-and-fire (I&F) neuron circuit and summing/thresholding neuron models for 1R-synapse and 2R-synapse (Du et al., 2021a). (D) Conceptual representation of the v-MoS2/graphene memristor-based artificial neuron; schematic of a v-MoS2/graphene TSM; and optical image of the MoS2/graphene TSM (Kalita et al., 2019). (E) Artificial spiking somatosensory system, consisting of a mechanical sensor and an artificial spiking afferent nerve (ASAN) made of a resistor and an NbOx memristor. The spiking frequency shows a similar trend to that seen in its biological counterpart. Scanning electron micrograph cross-sectional image of the NbOx device (Zhang et al., 2020). All images in the diagram are adapted from the corresponding references.

Mimicking this set of behaviors of biological neurons is key to implementing artificial neurons (Zhang X. et al., 2017). Various models, such as the Hodgkin–Huxley, Izhikevich, and integrate-and-fire models, have been proposed to explain the behavior of neurons and to implement artificial neurons (Sangwan et al., 2018; Wang et al., 2019a; Chen et al., 2019; Yin et al., 2019; Lee et al., 2020; Li et al., 2020; Wang H. et al., 2020). The leaky integrate-and-fire (LIF) model is the most widely used of these models (Figure 3C left); it assumes that the sub-threshold dynamics of the membrane potential resemble those of a resistor connected in parallel with a capacitor. The LIF model describes the behavior of spiking neurons well (Yang et al., 2020). In a circuit implementation, the capacitor integrates the current from the synapses, while the leak current through the parallel resistor continuously drains charge and returns the neuron to its resting state in the absence of input. However, the large capacitor required increases the size of the neuron circuit, and its high power consumption severely limits its application.
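To make the RC picture concrete, the sketch below integrates the LIF equation τ dV/dt = −(V − V_rest) + R·I(t), with τ = RC, using a forward-Euler step and resets the membrane after each threshold crossing. It is a minimal sketch: the function name `simulate_lif` and all parameter values (R = 10 MΩ, C = 1 nF, a 20 mV threshold, a 0.1 ms time step) are hypothetical illustration choices, not values from any cited device.

```python
def simulate_lif(input_current, dt=1e-4, r_m=1e7, c_m=1e-9,
                 v_rest=0.0, v_th=0.02, v_reset=0.0):
    """Leaky integrate-and-fire neuron modeled as a parallel RC membrane.

    Integrates tau * dV/dt = -(V - v_rest) + r_m * I(t) with forward Euler.
    Returns the membrane-voltage trace (V) and the step indices of spikes.
    All parameter values are hypothetical.
    """
    tau = r_m * c_m  # membrane time constant in seconds (10 ms here)
    v = v_rest
    trace, spikes = [], []
    for step, i_syn in enumerate(input_current):
        # The leak term pulls V toward rest; the input current charges the capacitor.
        v += (-(v - v_rest) + r_m * i_syn) * dt / tau
        if v >= v_th:        # threshold crossing: emit a spike
            spikes.append(step)
            v = v_reset      # reset the membrane after firing
        trace.append(v)
    return trace, spikes


# Usage (hypothetical inputs): 0.2 s of constant current.
_, fast = simulate_lif([3e-9] * 2000)    # settles toward 30 mV: fires repeatedly
_, silent = simulate_lif([1e-9] * 2000)  # settles toward 10 mV: never fires
print(len(fast), len(silent))
```

With these values, a constant 3 nA input drives the membrane toward R·I = 30 mV, above threshold, so the neuron fires periodically, whereas 1 nA only reaches 10 mV and never fires; the leak is what turns the threshold voltage into a minimum input current.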

Transistor circuits, including comparators, summing amplifiers, and Schmitt triggers, are employed in neuron models to achieve spiking behavior efficiently. The right side of Figure 3C shows the configuration of a conventional summing-and-thresholding neuron (Chowdhury et al., 2017; Jiang et al., 2018), in which the input currents are accumulated by a summing amplifier and the corresponding voltage is fed to a comparator. If the output voltage of the amplifier exceeds the threshold (Du et al., 2021a), the comparator generates a voltage spike that is sent to the next layer of neurons. Although this design improves on the previous one, it still suffers from large size and high energy consumption, so memristors have been proposed for the realization of artificial neurons (Tang et al., 2019).
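An idealized version of the summing-and-thresholding neuron fits in a few lines. The sketch assumes ideal op-amps: an inverting summing amplifier with feedback resistance R_f produces −R_f·Σ G_i·V_i, a unity-gain inverter restores the sign, and a comparator outputs a fixed spike amplitude when the result exceeds the threshold. The function name, the inverter stage, and the spike amplitude are illustrative assumptions, not details of the cited circuits.

```python
def summing_threshold_neuron(v_in, conductances, r_f, v_th, v_spike=1.0):
    """Idealized summing-and-thresholding neuron (ideal op-amps assumed).

    v_in: input voltages (V); conductances: input conductances G_i (S);
    r_f: feedback resistance of the summing amplifier (ohm); v_th: threshold (V).
    """
    if len(v_in) != len(conductances):
        raise ValueError("one conductance per input is required")
    # Input currents G_i * V_i add up at the amplifier's virtual ground.
    i_total = sum(g * v for g, v in zip(conductances, v_in))
    v_sum = -r_f * i_total   # inverting summing amplifier output
    v_cmp = -v_sum           # unity-gain inverter restores positive polarity
    # Comparator: emit a fixed-amplitude spike only above threshold.
    return v_spike if v_cmp > v_th else 0.0


print(summing_threshold_neuron([0.5, 0.5], [1e-4, 1e-4], r_f=1e4, v_th=0.5))
```

Replacing the fixed input resistors with memristors only changes the `conductances` values, which is why a reconfigurable resistance can set the synaptic weighting of such a neuron.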

Memristors offer an alternative approach to artificial neurons (Table 2). In one design, the first amplifier, with a memristor in its feedback path, not only scales the output voltage but also implements the sigmoid activation function, with the reconfigurable resistance of the memristor controlling the feedback gain; the second amplifier inverts the output. Although memristors based on 2D materials have been used to implement neurons, the difficulty of fabricating volatile threshold switching devices means that neuron devices have been studied less than synapse devices. For memristors that operate with a filamentary mechanism, the intrinsic characteristics of the component materials (such as the diffusion dynamics and the interfacial energy of the metal/vacancy species) (Valov et al., 2011) determine the device performance. Most memristive neurons require volatile resistive switching. Hao et al. (2020) verified that volatile resistive switching is possible in MoS2-based neural components (Table 2). In their device, CVD-grown MoS2 in contact with Ag and W electrodes had a channel width of 1.5 nm; because both the Ag and W electrodes are connected to the switching layer, stable volatile switching was achieved only after the MoS2 channel width was adjusted to 500 nm. In vertical Ag/MoS2/Au memristors (Dev et al., 2019, 2020) (Table 2), the volatile or non-volatile behavior was shown to depend on thickness: thicker MoS2 (∼20 nm) exhibits volatile threshold switching, suggesting that it can be used as a neural component. Kalita et al. (2019) implemented the integrate-and-fire behavior of an artificial neuron, which fires according to the potential accumulated across it, with a v-MoS2/graphene threshold switching memristor (TSM) (Table 2); the conceptual scheme is shown on the left of Figure 3D.

The v-MoS2/graphene neuron integrates the incoming input signals on a capacitor. The capacitor accumulates charge, and once the voltage across it exceeds the TSM threshold, the TSM switches on and the neuron produces an output spike. A schematic of the v-MoS2/graphene device is shown on the right of Figure 3D. The device is built on CVD-grown monolayer graphene that is wet-transferred onto a Si/SiO2 substrate (Chan et al., 2012) and then patterned for the growth of v-MoS2; nickel contacts are deposited on both the graphene and the v-MoS2. An optical image of the device is also shown on the right of Figure 3D.
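The integrate-then-discharge behavior of a capacitor paired with a volatile TSM can be sketched as a discrete-time circuit simulation. The model below is a simplification under stated assumptions: a constant input current charges the capacitor, the TSM is treated as a two-state resistor (R_off/R_on) that turns on at V_th and relaxes off below V_hold, and every numerical value is hypothetical rather than a measured parameter of the v-MoS2/graphene device.

```python
def tsm_neuron(i_in, dt=1e-6, c=1e-9, r_off=1e8, r_on=1e4,
               v_th=1.0, v_hold=0.2, steps=20000):
    """Capacitor + volatile threshold-switching memristor (TSM) neuron.

    A constant input current i_in charges capacitor c. The TSM, in parallel with
    the capacitor, is modeled as a two-state resistor: it switches ON (r_on) when
    the voltage reaches v_th and relaxes back OFF (r_off) below v_hold.
    Returns the times (s) at which the TSM switched on, i.e. the output spikes.
    All parameter values are hypothetical.
    """
    v, on = 0.0, False
    spike_times = []
    for n in range(steps):
        r = r_on if on else r_off
        # Kirchhoff's current law at the capacitor node: C dV/dt = I_in - V / R_tsm
        v += (i_in - v / r) * dt / c
        if not on and v >= v_th:
            on = True                  # threshold reached: TSM turns on and discharges C
            spike_times.append(n * dt)
        elif on and v <= v_hold:
            on = False                 # volatile switching: TSM returns to OFF
    return spike_times


print(len(tsm_neuron(1e-6)), len(tsm_neuron(2e-6)))
```

With these hypothetical values, a larger input current shortens the charging phase and raises the spike rate, while a current too small to lift the capacitor voltage above V_th (here I_in·R_off < V_th) produces no spikes. Oscillation also requires I_in·R_on < V_hold, so that the ON state cannot hold the voltage above the hold level.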

Zhang et al. (2020) designed a memristor-based artificial spiking somatosensory system (left of Figure 3E) consisting of a two-terminal sensor device and a compact oscillator. The oscillator serves as the artificial spiking afferent nerve (ASAN) and contains two passive components: a resistor and a niobium oxide (NbOx) memristor. In biological systems, once the intensity of an input stimulus exceeds the threshold of the afferent nerve, the firing rate of the nerve increases with increasing input intensity (Sivaramakrishnan et al., 2004; Lin et al., 2006). However, when the stimulus intensity is very high, the firing rate decreases owing to protective inhibition of neuronal cells, which prevents neuronal death (Stetler et al., 2009). In this artificial somatosensory system, the sensor generates an analog input voltage, and the NbOx ASAN converts the voltage amplitude into a corresponding spike frequency. The generated spikes are then transmitted to NNs for further processing. The device has a titanium nitride (TiN) top electrode, an NbOx switching layer, and a polysilicon bottom electrode; the polysilicon bottom electrode, with its low thermal conductivity, is specifically designed to reduce the threshold current. A cross-sectional transmission electron micrograph (TEM) of the device structure is shown on the right of Figure 3E, in which a circular region of NbO2 crystals approximately 8 nm in diameter can be observed in the channel region.
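The voltage-to-frequency conversion of a resistor–memristor oscillator such as the ASAN can be estimated with an idealized RC model. The derivation below assumes that a series resistor R charges a capacitance C (for example, the parasitic capacitance across the memristor) while the memristor is off, that the memristor switches on at V_th and off again at V_hold, and that R is large enough that the on state cannot be sustained. These are textbook relaxation-oscillator assumptions, not parameters reported by Zhang et al. (2020). Starting from V_hold, the capacitor voltage charges toward V_in as

$$
V_C(t) = V_{\mathrm{in}} - \left(V_{\mathrm{in}} - V_{\mathrm{hold}}\right) e^{-t/RC}
$$

Setting $V_C(t_{\mathrm{c}}) = V_{\mathrm{th}}$ gives the charging time and, with $t_{\mathrm{d}}$ the short discharge time while the memristor is on, the spike frequency:

$$
t_{\mathrm{c}} = RC \ln\frac{V_{\mathrm{in}} - V_{\mathrm{hold}}}{V_{\mathrm{in}} - V_{\mathrm{th}}}, \qquad V_{\mathrm{in}} > V_{\mathrm{th}}, \qquad f \approx \frac{1}{t_{\mathrm{c}} + t_{\mathrm{d}}}
$$

Because the argument of the logarithm decreases toward 1 as V_in grows, t_c shrinks and f rises with input voltage, while no spikes occur for V_in ≤ V_th. This idealized expression predicts a monotonic increase and does not by itself capture the reduced firing at very high stimulus intensity.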

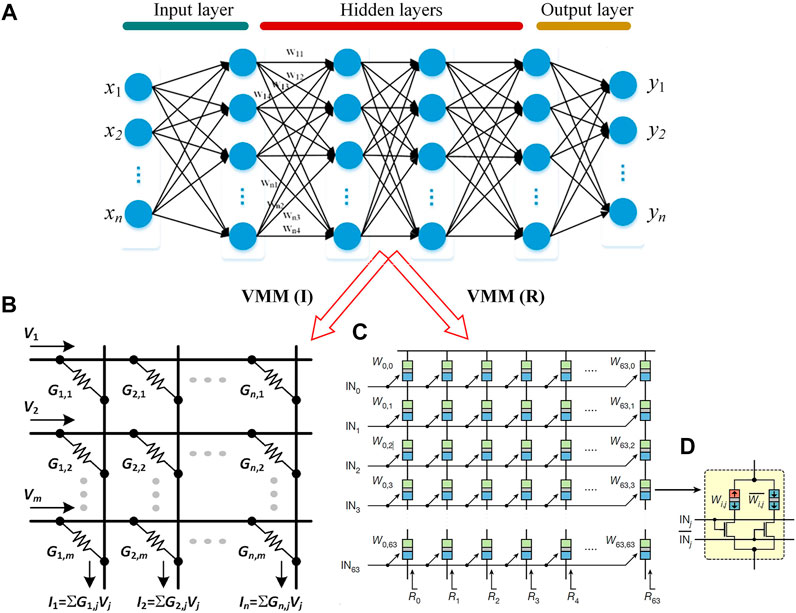

Vector–matrix multiplication (VMM) generates a new vector from an existing vector and a matrix: the output vector is a weighted sum of the rows of the matrix, with the elements of the input vector as coefficients, so each output element is the dot product of the input vector with one column of the matrix. In the BIC system, VMM is the basis of NN algorithms and can be carried out by hardware-based NNs for efficient learning. In an NN, hidden layers connect the input layer (whose values can be image pixels or other data values) to the output layer (Figure 4A), and the nodes of adjacent layers are interconnected to transfer information. Because NNs rely on VMM, VMM hardware supports inference and prediction as well as learning. The strengths of the connections between nodes, that is, the synaptic weights, can be modulated.
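A crossbar computes this product in a single step: each cell contributes a current V_i·G_ij by Ohm's law, and Kirchhoff's current law adds the currents flowing into each column. The pure-Python sketch below models an ideal crossbar (no wire resistance, sneak paths, or device variation); the function name `crossbar_vmm` is illustrative.

```python
def crossbar_vmm(voltages, conductances):
    """Column currents of an ideal crossbar: I_j = sum_i V_i * G_ij.

    voltages: row (word-line) voltages, length n_rows.
    conductances: n_rows x n_cols nested list of cell conductances (S).
    """
    n_rows, n_cols = len(conductances), len(conductances[0])
    if len(voltages) != n_rows:
        raise ValueError("need one input voltage per row")
    currents = [0.0] * n_cols
    for i in range(n_rows):
        for j in range(n_cols):
            # Ohm's law per cell; the += is Kirchhoff's current law on column j.
            currents[j] += voltages[i] * conductances[i][j]
    return currents


print(crossbar_vmm([1.0, 2.0], [[1, 2, 3], [4, 5, 6]]))
```

Each output current is the input-weighted sum of one column, so the vector of column currents equals the input vector multiplied by the conductance matrix, which is exactly the row-weighting view of VMM described above.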

FIGURE 4. VMM (I) and VMM (R) can be efficiently imitated using hardware-based crossbar topologies. (A) VMM structure performed

In hardware, VMM can be implemented efficiently in crossbar arrays of synaptic devices using Ohm's law and Kirchhoff's current law. However, because the conductance of a synaptic device is always positive, negative weights cannot be represented directly. A typical approach is to express a negative weight as the difference between the conductances of two synaptic devices (Burr et al., 2015; Choi et al., 2019). Another method is weight shifting, in which the weight distribution is shifted from being centered at zero into the positive region so that all weight values become positive; the positive weights can then be expressed directly as the conductances of the synaptic devices (wij) (Han et al., 2021). The VMM performed with
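The two schemes for negative weights can be compared on a small example. In this sketch, assumed for illustration, weights lie in [−w_max, w_max] and are linearly scaled to conductances of at most g_max. The differential scheme places the positive and negative parts on two devices and subtracts their column currents; the weight-shifting scheme adds w_max to every weight and removes the resulting offset, w_max·Σ V_i, after readout. The function names and the linear mapping are hypothetical, not the exact procedures of the cited works.

```python
def vmm(v, g):
    """Ideal crossbar: column j current = sum_i v[i] * g[i][j] (row i = input i)."""
    return [sum(v[i] * g[i][j] for i in range(len(v))) for j in range(len(g[0]))]


def differential_readout(v, weights, g_max, w_max):
    """Signed weights on a device pair: w is proportional to G+ - G-."""
    scale = g_max / w_max  # siemens per unit weight
    g_pos = [[max(w, 0.0) * scale for w in row] for row in weights]
    g_neg = [[max(-w, 0.0) * scale for w in row] for row in weights]
    # Subtract the paired column currents, then convert back to weight units.
    return [(a - b) / scale for a, b in zip(vmm(v, g_pos), vmm(v, g_neg))]


def shifted_readout(v, weights, g_max, w_max):
    """Weight shifting: store w + w_max (always >= 0) on a single device."""
    scale = g_max / (2 * w_max)  # shifted weights span [0, 2 * w_max]
    g = [[(w + w_max) * scale for w in row] for row in weights]
    offset = w_max * sum(v)      # contribution of the constant shift to every column
    return [i / scale - offset for i in vmm(v, g)]


v = [1.0, 0.5]
w = [[0.5, -1.0], [-0.25, 1.0]]  # weights[i][j]: input i -> output j
print(differential_readout(v, w, 1e-4, 1.0), shifted_readout(v, w, 1e-4, 1.0))
```

Both readouts recover the signed product; the differential scheme doubles the device count, whereas weight shifting uses one device per weight but needs the input-dependent offset subtracted.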

In these cases, the resistance-sum columns have been introduced into the crossbar array, still with the aim of obtaining dot products (Figure 4C). The architecture starts with a new bit cell design (a bit cell is an element at a row–column intersection). Each bit cell connects two paths in parallel, each consisting of an MTJ and a field effect transistor (FET) switch in series (Figure 4D). The FET gates in the left path are driven by the binary input voltage IN (VL = 0 V, or VH = 1.8 V), while the FET gates in the right path are driven by a voltage complementary to IN. The MTJ–FET path on the left stores a synaptic weight W (RL or RH; each is the sum of the MTJ and FET switching resistances), while the MTJ–FET path on the right stores a weight complementary to W. Then, the left or right path can be selected by IN, generating the resistance (RL or RH) of the selected path as a bit cell output (Jung et al., 2022).
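The resistance-sum idea can be illustrated with binary values. The sketch below assumes that the bit cells of a column are connected in series so that their resistances add, that a stored bit 1 corresponds to R_L and a stored 0 to R_H, and that IN = 1 selects the left path while IN = 0 selects the right path; the resistance values and function names are hypothetical. Under these assumptions a bit cell outputs R_L exactly when IN equals W, so the column resistance encodes how many inputs match their weights, an XNOR-and-count form of a binary dot product.

```python
R_L, R_H = 5e3, 10e3  # hypothetical path resistances (MTJ + FET), in ohms


def bit_cell_resistance(inp, weight, r_l=R_L, r_h=R_H):
    """Two-path bit cell: the left path stores W, the right path stores NOT W.

    Assumed encoding: stored bit 1 -> R_L, stored bit 0 -> R_H.
    IN = 1 turns on the left FET; IN = 0 turns on the right FET.
    """
    left = r_l if weight == 1 else r_h
    right = r_h if weight == 1 else r_l   # complementary weight
    return left if inp == 1 else right


def column_resistance(inputs, weights):
    """Series-connected bit cells: the column resistance is their sum."""
    return sum(bit_cell_resistance(x, w) for x, w in zip(inputs, weights))


def matches_from_resistance(r_col, n, r_l=R_L, r_h=R_H):
    """Invert R_col = m * R_L + (n - m) * R_H for the number of matches m."""
    return (n * r_h - r_col) / (r_h - r_l)


r = column_resistance([1, 0, 1, 1], [1, 1, 0, 1])
print(r, matches_from_resistance(r, 4))  # two of the four positions match
```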

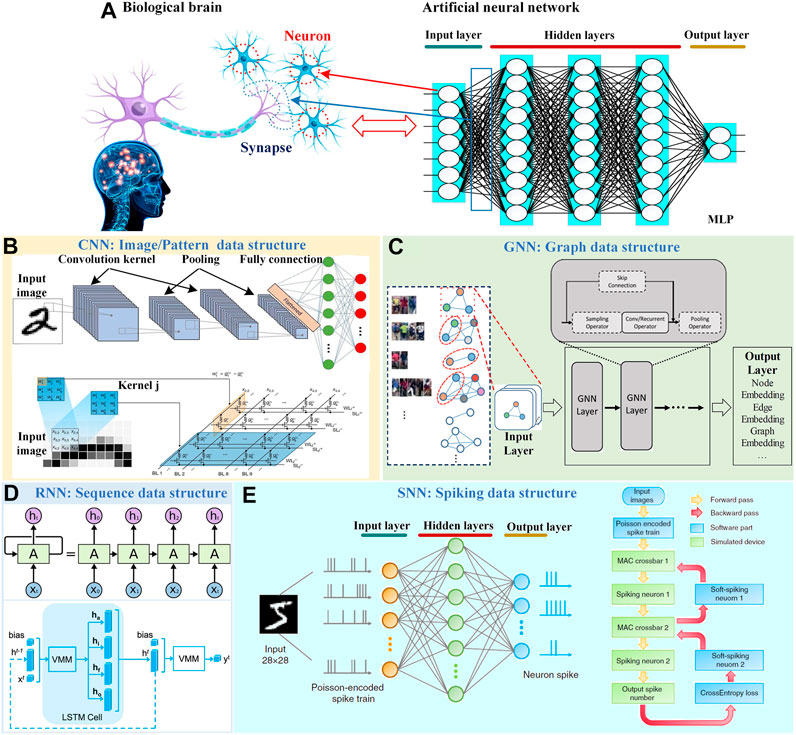

In the data-centric era, NNs are applied to different data structures in different application-specific scenarios, and the network architectures and layer configurations of BIC systems are tailored to those data structures. Inspired by the biology of the human brain, NNs have been constructed to imitate its biological properties (Figure 5A). As mentioned above, the artificial synapses correspond to the connections between the layers of the NN and enable the transmission of signals between layers, while the artificial neurons correspond to the blue units in each layer of the NN (Figure 5A).

FIGURE 5. (A) Data analysis in biological brains and artificial neural networks and comparison of synapses and neurons. Overview on memristive NNs developed for the analysis of different data structures: (B) CNN (Yao et al., 2020), (C) GNN (Huang et al., 2019), (D) RNN (Nikam et al., 2021), and (E) SNN (Duan et al., 2020) for computing image/pattern, graph, sequence, and spiking data structures, respectively. All images in the diagram are adapted from the corresponding references.

From an architectural point of view, the input layer and the output layer are indispensable in an NN, and the hidden layers lie between them. One typical hidden-layer structure is the fully connected (FC) layer shown in Figure 5A. In such a network, N neurons connected to the inputs are arranged in a column as the first (input) layer, N neurons form the second layer (the first hidden layer), M neurons form the third layer (the second hidden layer), and the last layer is the output layer. Each neuron in one layer is connected by synapses to every neuron in the next layer. A network with a single layer of trainable weights is called a single-layer perceptron (SLP), and one with one or more hidden layers is called a multilayer perceptron (MLP). In machine learning (ML), NNs built on these architectures with multiple hidden layers are referred to as deep neural networks (DNNs). In addition to FC layers, other layer types can serve as hidden layers for diverse application scenarios. In this subsection, we discuss four representative NN architectures for BIC systems: CNN, GNN, RNN, and SNN.
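A forward pass through FC layers amounts to repeated weighted sums followed by an activation. The sketch below is a minimal, framework-free MLP; the function names, the row-per-output-neuron weight layout, and the ReLU choice are assumptions for illustration.

```python
def relu(z):
    return max(0.0, z)


def dense(x, weights, biases, activation):
    """Fully connected layer: every input neuron connects to every output neuron.

    weights[k] holds the synaptic weights from all inputs to output neuron k.
    """
    return [activation(sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(weights, biases)]


def mlp_forward(x, layers):
    """layers: list of (weights, biases, activation), applied in order."""
    for weights, biases, act in layers:
        x = dense(x, weights, biases, act)
    return x


hidden = ([[1, 1], [1, -1]], [0, 0], relu)   # 2 inputs -> 2 hidden neurons
output = ([[1, 1]], [0.5], lambda z: z)      # 2 hidden -> 1 linear output
print(mlp_forward([1.0, 2.0], [hidden, output]))
```

Each `dense` call is one VMM plus an activation, which is why FC layers map directly onto a crossbar followed by neuron circuits.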

A feed-forward NN consisting of one or more convolutional layers and pooling layers is termed a CNN; its units respond to inputs within a local coverage area (receptive field). The CNN excels at processing image/pattern data structures. In addition, an input layer, activation function layers, the associated weights, and an FC layer are indispensable for building a complete CNN (Figure 5B).

In a hardware implementation of a CNN architecture, the input layer contains as many neurons as the input image/pattern has pixels. The convolutional layer is formed by N groups of convolution kernels, whose size and number also depend on the input image/pattern. Because the kernels are shared during data processing, the NN remains small, and the convolution preserves the original positional relationships of the input image. Neurons in successive layers are connected by synapses. First, the images in the data set are sorted and numbered. The convolution is then performed by sliding the shared local kernel across the input with a fixed stride and, at each position, computing the weighted sum of the kernel and the corresponding input block from the input layer. The result forms the output of the first convolutional layer, which is then subsampled by the first pooling layer, completing the first round of convolution. The pooled result becomes the input to the second convolution, and the structure above repeats. The number of convolutional layers depends on the size of the input data. In a memristor crossbar architecture, VMM is the basic operation of the convolution procedure, so the high-dimensional convolution must be mapped into low-dimensional VMM by converting the kernels into matrix form (Zhang and Hu, 2018). Each kernel is mapped onto the corresponding positive and negative weight rows, and the outputs of the later pooling layers are flattened into vectors. In this way, the high-dimensional, complex input is compressed into a lower-dimensional representation (dimensionality reduction), which is then passed to the final FC layers.
The weighted sums of the FC layer are then fed into a softmax function to compute the classification probabilities. This completes the execution of a CNN. Convolution on a memristor crossbar can be implemented in two ways. In the first, the input feature maps are fed window by window into a compact memristor crossbar to obtain the output feature map (Yakopcic et al., 2016). In the second, an entire feature map is input to a sparse crossbar at once (Yakopcic et al., 2017); however, many redundant memristors may be required, and it is challenging to keep the conductances that represent the same convolution kernel uniform.
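The mapping of convolution onto VMM can be illustrated with the common im2col approach, assumed here as one way to unroll the operation: each k × k input patch becomes an input vector, and each flattened kernel becomes one column of the weight matrix, so every output pixel is one VMM. The sketch uses stride 1, no padding, and a single input channel, omits the positive/negative weight split, and, as is usual in CNNs, computes cross-correlation; the function names are illustrative.

```python
def im2col(image, k):
    """Unroll every k x k patch (stride 1, no padding) into a flat row vector."""
    h, w = len(image), len(image[0])
    patches = []
    for r in range(h - k + 1):
        for c in range(w - k + 1):
            patches.append([image[r + i][c + j] for i in range(k) for j in range(k)])
    return patches


def conv_as_vmm(image, kernels, k):
    """Each flattened kernel is one crossbar column; each patch is one input vector."""
    # Weight matrix with k*k rows and one column per kernel (row order matches im2col).
    g = [[kern[i][j] for kern in kernels] for i in range(k) for j in range(k)]
    out = []
    for patch in im2col(image, k):
        out.append([sum(p * g[row][col] for row, p in enumerate(patch))
                    for col in range(len(kernels))])
    return out  # one row per output position, one column per kernel


img = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
kernels = [[[1, 0], [0, 1]], [[1, 1], [1, 1]]]
print(conv_as_vmm(img, kernels, 2))
```

Applying the patches one after another corresponds to the sliding-window scheme on a compact crossbar; presenting all patches at once would instead require the larger, sparser crossbar described above.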

The GNN is an NN that operates directly on graphs and consists of an input layer, hidden layers, and an output layer (Figure 5C). The GNN is capable of processing graph data structures. Given a graph, each node is first converted into a recurrent unit and each edge into a feed-forward NN. For example, in a social network graph, the node features could be age, gender, address, dress, and so on. Nodes connected by an edge may have similar characteristics, which reflects some correlation or relationship between them. After the nodes are labeled and these node and edge transformations are complete, the graph can pass messages between nodes. Nearest-neighbor aggregation (i.e., message passing) is then performed n times for all nodes, which involves pushing messages (i.e., embeddings) from the surrounding nodes along the directed edges into a given node. For a single reference node, the neighboring nodes pass their embeddings through the edge NNs to the recurrent unit of the reference node. The embedding of the reference node is then updated by applying the recurrent function to its current embedding and the sum of the edge-NN outputs computed from the neighboring embeddings. Finally, the embeddings of all nodes are summed to obtain a representation of the whole graph, which can be passed to higher-level layers or used directly to characterize the properties of the graph. Most GNNs use “convolutional” layers analogous to those in CNNs (Yujia et al., 2016; Hamilton et al., 2017). The “convolution” operation in a GNN can be roughly divided into two phases (Yan et al., 2020). The aggregation phase gathers each node's information from its multi-hop neighbors through pointer-chasing operations.
This phase incurs intensive random memory accesses. The combination phase then feeds the aggregated features into an NN to generate new features; both computation and memory access are regular in this phase. Because the layer-(L+1) embedding of every node depends only on the layer-L embeddings, this process can be executed in parallel on all nodes in the network, so there is no need to “move” messages from one node to another sequentially. In this way, the GNN propagates information directly along the graph structure rather than treating the structure as a feature, and maintains a state that can represent information from a neighborhood of user-specified depth.
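The two phases can be sketched for a single message-passing layer. This is a generic sum-aggregation layer chosen for illustration, not the specific model of any cited work: the aggregation phase sums neighbor embeddings by following adjacency lists (the irregular, data-dependent accesses), and the combination phase applies shared weight matrices and a ReLU. All new embeddings are computed from the previous layer's embeddings, which is why every node can be updated in parallel. The names and weights are hypothetical.

```python
def matvec(m, v):
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def gnn_layer(features, adjacency, w_self, w_neigh):
    """One message-passing layer: sum aggregation, then a shared linear combination + ReLU.

    features[n]: embedding of node n at layer L; adjacency[n]: neighbors of node n.
    """
    new_features = []
    for node, h in enumerate(features):
        # Aggregation phase: irregular accesses that follow the graph structure.
        agg = [0.0] * len(h)
        for nbr in adjacency[node]:
            agg = [a + b for a, b in zip(agg, features[nbr])]
        # Combination phase: the same dense weights for every node (regular compute).
        z = [s + n for s, n in zip(matvec(w_self, h), matvec(w_neigh, agg))]
        new_features.append([max(0.0, val) for val in z])
    return new_features  # layer L+1 embeddings, computed only from layer L


def graph_readout(features):
    """Sum node embeddings into a single graph-level vector."""
    return [sum(col) for col in zip(*features)]


h1 = gnn_layer([[1.0], [2.0], [3.0]], [[1], [0, 2], [1]], [[1.0]], [[1.0]])
print(h1, graph_readout(h1))  # path graph 0-1-2
```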

NNs that process sequential data using recurrent processing units are called RNNs; they consist of an input layer, a hidden layer (containing recurrent kernels), and an output layer. The RNN is capable of processing time sequences as well as image/pattern data structures. In a conventional RNN, the hidden layer applies a hyperbolic tangent (tanh) activation and acts as a memory unit: the hidden output at time t−1 is weighted and fed back, together with the current input, into the activation function to produce the output at the current time t. During training, however, the gradient propagated back through many time steps is repeatedly multiplied by the recurrent weights and the activation derivatives, so it can shrink toward zero or grow without bound; these vanishing and exploding gradient problems make it difficult for a conventional RNN to learn long-term dependencies. A special type of RNN, the long short-term memory (LSTM) network, was later developed to address this. The LSTM consists mainly of a forget gate, an input gate, an output gate, and a memory cell. Its hidden layer contains a self-connected linear unit, known as the constant error carousel (CEC), which protects the network from the vanishing and exploding gradient problems of the traditional RNN.
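The gating described above can be sketched for a single LSTM cell step. To keep it readable, the sketch assumes a scalar input and hidden state, and the parameter names (w_*, u_*, b_*) are hypothetical. The cell state is updated additively, c_t = f·c_{t−1} + i·g, so when the forget gate f is close to 1 the stored value persists across steps and the gradient along the cell state is not repeatedly squashed by an activation function.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, p):
    """One step of a scalar LSTM cell (input and hidden size 1).

    p maps hypothetical names to input weights (w_*), recurrent weights (u_*),
    and biases (b_*) for the forget (f), input (i), and output (o) gates and
    the candidate cell value (g).
    """
    f = sigmoid(p["w_f"] * x + p["u_f"] * h_prev + p["b_f"])    # forget gate
    i = sigmoid(p["w_i"] * x + p["u_i"] * h_prev + p["b_i"])    # input gate
    o = sigmoid(p["w_o"] * x + p["u_o"] * h_prev + p["b_o"])    # output gate
    g = math.tanh(p["w_g"] * x + p["u_g"] * h_prev + p["b_g"])  # candidate value
    c = f * c_prev + i * g   # additive, self-connected cell update
    h = o * math.tanh(c)     # gated hidden output
    return h, c


names = ["w_f", "u_f", "b_f", "w_i", "u_i", "b_i",
         "w_o", "u_o", "b_o", "w_g", "u_g", "b_g"]
params = {k: 0.0 for k in names}
params["b_f"] = 10.0         # forget gate nearly open: the cell keeps its content
print(lstm_step(1.0, 0.0, 1.0, params))
```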

In hardware implementations, neurons in adjacent layers are interconnected, whereas neurons within the same layer are not. Each neuron in the hidden layer of an RNN has a recurrent synapse that feeds the neuron's output at time t back as its own input at time t+1; likewise, the output at time t+1 serves as input at time t+2, and so on. This is how the RNN transfers information over time (Figure 5D). The RNN structure is iterative and its weights are shared: the inputs x at different time steps are processed with the same weight matrices, which reduces the number of parameters and thus the computational complexity. The key element is the recurrent kernel, which remembers the features of the previous hidden-layer output and passes them on with the next input. Inputs and outputs can be of arbitrary and unequal lengths. RNNs are very effective for data with sequential properties, from which they can mine temporal as well as semantic information. This ability to process sequential data recursively has enabled breakthroughs in deep learning models for natural language processing (NLP) and related tasks such as speech recognition, language modeling, machine translation, and temporal analysis.
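Weight sharing and the recurrent feedback can be seen in a few lines. The sketch below unrolls a scalar RNN with a tanh activation; the names and the scalar simplification are assumptions for illustration. The same w_x, w_h, and b are used at every step, and the hidden state from step t is the recurrent input at step t+1, so the input sequence may have any length.

```python
import math


def rnn_forward(xs, w_x, w_h, b, h0=0.0):
    """Unrolled scalar RNN: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b).

    The same (w_x, w_h, b) are reused at every time step (weight sharing);
    the recurrent term w_h * h_{t-1} carries the previous output forward.
    """
    h, hs = h0, []
    for x in xs:
        h = math.tanh(w_x * x + w_h * h + b)
        hs.append(h)
    return hs


# A single input pulse followed by zeros: the hidden state decays over time.
print(rnn_forward([1.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0))
```

The single-pulse input shows the memory effect: the hidden state does not drop to zero after the pulse but decays over the following steps at a rate set by w_h.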

The SNN, regarded as the third generation of NNs, processes data in a more biologically realistic fashion than the NNs discussed above. In addition to the input, hidden, and output layers, it includes structural units that transform data into spike sequences (Figure 5E). The SNN is capable of processing spike-sequence data structures.

SNNs process information using the timing of signals (pulses). Unlike actual physiological mechanisms, SNNs almost universally use an idealized pulse generation mechanism. First, spike trains are fed into the memristor crossbar and converted into weighted current sums along the columns. A row of transimpedance amplifiers then amplifies these currents and converts them into analog voltages in the range of −2 to 2 V. The neurons integrate the analog voltages and generate spikes when they reach the firing threshold, and the spikes propagate to the next layer for similar processing. Finally, the spikes of each output neuron are counted, and the index of the most frequently spiking neuron is taken as the prediction. In the brain, neurons communicate by propagating sequences of action potentials (also called spike trains) to downstream neurons. SNNs enhance the ability to process spatiotemporal data. Spatial means that neurons connect only with nearby neurons, so that they can process input blocks separately. Temporal means that spike trains unfold over time, so that information lost in binary encoding can be recovered from the timing of the spikes; SNNs can therefore process time-series data naturally, without additional complexity. Because the neuronal units in SNNs are active only when spikes are received or sent, SNNs are event-driven and hence energy efficient.
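The inference flow just described (crossbar, transimpedance amplifiers, integrating neurons, spike counting) can be sketched in discrete time. The assumptions are all hypothetical: each input spike applies a 1 V read pulse, the TIA output is R_tia·I clipped to ±2 V with a non-inverting sign, the neurons are discrete-time LIF units with a multiplicative leak, and the membrane resets to zero after firing. The function name and parameters are illustrative.

```python
def snn_infer(spike_trains, g, r_tia, v_th, leak=0.9):
    """Spikes -> crossbar -> TIA -> LIF neurons -> spike counts -> predicted class.

    spike_trains: T x n_in list of 0/1 input spikes (one row per time step).
    g: n_in x n_out conductance matrix (S); r_tia: transimpedance gain (ohm).
    """
    n_out = len(g[0])
    v_mem = [0.0] * n_out
    counts = [0] * n_out
    for spikes in spike_trains:
        # Crossbar: weighted current sum per column (1 V per input spike assumed).
        currents = [sum(s * g[i][j] for i, s in enumerate(spikes)) for j in range(n_out)]
        for j in range(n_out):
            v_in = max(-2.0, min(2.0, r_tia * currents[j]))  # TIA output, clipped to +/-2 V
            v_mem[j] = leak * v_mem[j] + v_in                # leaky integration
            if v_mem[j] >= v_th:
                counts[j] += 1
                v_mem[j] = 0.0                               # reset after firing
    prediction = max(range(n_out), key=lambda j: counts[j])  # most active output neuron
    return counts, prediction


g_demo = [[1e-4, 0.0], [0.0, 1e-4], [1e-4, 0.0]]
print(snn_infer([[1, 0, 1]] * 10, g_demo, r_tia=1e4, v_th=3.0))
```

Counting output spikes and taking the most active neuron is the readout described above; only time steps that actually carry spikes inject current, which reflects the event-driven character of the computation.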

In this section, we discuss five representative data structures in application-oriented scenarios applied to BIC systems: image/pattern data structure, graph data structure, sequence data structure, spiking sequence data structure, and discrete data structure.

Image/pattern data are collections of grayscale values, one numerical value per pixel. They are structured data, also known as Euclidean data, and are high-dimensional and complex. In practical applications of BIC systems, many image/pattern data structures need to be processed by suitable NNs. In addition, some data that are not natively images, such as photonic devices with freeform geometries that cannot be parameterized by a few discrete variables, can nevertheless be converted into 2D/3D images/patterns. Because connecting every neuron to all features of the input (full connection) must be traded off against other performance metrics, each neuron in a convolutional layer is connected to only one local region of the input data, called the receptive field, and the connection extends through the full depth of the input volume. Image data are processed effectively by a series of convolutional layers that extract and process spatial features (Krizhevsky et al., 2012; Simonyan and Zisserman, 2014; Szegedy et al., 2015; Szegedy et al., 2017). A CNN can extract a large number of local features and combine them into higher-order features. For such high-dimensional and complex image data, kernel sharing and convolution in 2–3 convolutional layers reduce the dimensionality, and the classification probabilities are finally computed from the low-dimensional data. Image/pattern data structures are therefore regarded as best suited to CNN architectures (Table 3).

Yao et al. (2020) applied a five-layer CNN, containing two convolutional layers, two pooling layers, and one FC layer, to recognize MNIST digit images (LeCun et al., 1998). Using max pooling and rectified linear unit (ReLU) activation functions, it achieved an accuracy of 96.19%. Compared with a Tesla V100 GPU, the system achieved more than two orders of magnitude better power efficiency and one order of magnitude better performance density. CNN architectures have also been widely employed to recognize image data (LeCun et al., 2015; He et al., 2016). In addition, image-related tasks such as image segmentation and object detection (Ren et al., 2015), image classification (Simonyan and Zisserman, 2014), video tracking (Fan et al., 2010), and NLP (Karpathy and Fei-Fei, 2015) still rely on CNN architectures.

In computer science, a graph is a data structure consisting of two parts: vertices (nodes) and edges. A graph is described by its set of vertices and the edges it contains, and it models the relationships between nodes. Graph data consist of nodes and edges carrying label information and are a typical example of non-Euclidean data in ML. Graph nodes have no inherent order, and a graph need not have clearly defined starting or ending points. Graphs can represent many things, such as social networks and molecules: nodes can represent users, products, or atoms, and edges represent the connections between them, such as following relationships, products that are usually bought together, or chemical bonds. In a social network graph, for example, the nodes are users and the edges are the connections between them. There are two main types of graphs: directed graphs and undirected graphs. In a directed graph, each connection between nodes has a direction; in an undirected graph, the order of the connected nodes does not matter. An edge in a directed graph can be either unidirectional or bidirectional (Table 3).
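The difference between directed and undirected graphs shows up directly in how adjacency is stored. In the sketch below (the function name is hypothetical), an undirected edge is recorded in both directions, a directed edge only from source to target, and a bidirectional link in a directed graph is simply two opposite edges. The neighbor sets carry no ordering, consistent with graphs lacking a natural node order.

```python
def build_adjacency(num_nodes, edges, directed):
    """Adjacency sets for a graph given as (source, target) pairs.

    Undirected: each edge is stored in both directions, so pair order is irrelevant.
    Directed: only source -> target is stored; a bidirectional link needs two edges.
    """
    adj = {n: set() for n in range(num_nodes)}
    for u, v in edges:
        adj[u].add(v)
        if not directed:
            adj[v].add(u)
    return adj


edges = [(0, 1), (1, 2)]
print(build_adjacency(3, edges, directed=True))   # {0: {1}, 1: {2}, 2: set()}
print(build_adjacency(3, edges, directed=False))  # {0: {1}, 1: {0, 2}, 2: {1}}
```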

Graph data structures are therefore suitably processed by GNNs (Henaff et al., 2015; Niepert et al., 2016; Veličković et al., 2017), which analyze and operate on information aggregated from neighboring nodes in each layer. Node classification is a typical application of the GNN architecture: each node in the graph is associated with a label, and the task is to predict the labels of the unlabeled nodes. GNNs have been applied broadly, including to the modeling of molecular drug discovery (Torng and Altman, 2019), molecular fingerprint analysis (Duvenaud et al., 2015), and phase transitions in glasses (Bapst et al., 2020). In addition, graph network architectures are highly specialized for the actual application, for example, graph generative networks (Ma et al., 2018), graph recurrent networks (Li et al., 2015), and graph attention networks (Veličković et al., 2017).

In addition to discrete data and image/pattern data, there are also large volumes of sequential data and sequential problems. Sequence data are collected at different points in time and reflect how the state or extent of a thing or phenomenon changes over time; examples include time series and text sequences. Sequential data points are temporally correlated, with each point depending on the points before and after it. Such sequences also arise in mathematics and physics, for example, in dynamic electromagnetic systems: when the discrete time steps are small enough, continuous electromagnetic variations can be represented by discrete time series without loss of generality. Moreover, both the input and output signals are time series, and the output at a given time depends not only on the input at the current moment but also on the state of the device at the previous time step (i.e., the electromagnetic variation of the device).

For example, forecasting the rise and fall of a stock requires predicting the data at the next moment, so the output depends not only on the input but also on memory (that is, the output at the previous moment acts on the system again). RNNs feed the network outputs back into the input layer, maintaining a memory of the past state of the system, which makes them well suited to modeling time-sequential systems (Graves, 2013; Weston et al., 2014; Luong et al., 2015), as listed in Table 3.

Spiking sequence data structures consist of discrete spike signals that carry both spatial information between layers and temporal information, that is, spatiotemporal data. In the human brain, when any of the body's senses is stimulated, neurons produce impulse signals containing action information. Impulse signals take two forms: data that are already spike sequences, and signals collected from the environment, which are usually continuous and analog and must first be converted into spike sequences to serve as inputs to SNNs. These individual pulses are temporally sparse, each carrying high information content with approximately uniform amplitude. Therefore, spiking sequence data structures are well suited to processing by SNN architectures, as listed in Table 3.
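The conversion of an analog signal into a spike sequence can be sketched with a simple rate code. The version below is a hypothetical, deterministic encoder (an accumulator that emits a spike each time it reaches 1), assuming inputs normalized to [0, 1]; stochastic Poisson rate coding and temporal codes are common alternatives, and the name `rate_encode` is ours.

```python
from typing import List


def rate_encode(value: float, num_steps: int) -> List[int]:
    """Deterministic rate coding of an analog value in [0, 1] into a spike train.

    An accumulator integrates the value each time step and emits a spike (1)
    whenever it reaches 1, then subtracts 1; the spike count is therefore
    approximately value * num_steps.
    """
    if not 0.0 <= value <= 1.0:
        raise ValueError("value must lie in [0, 1]")
    acc = 0.0
    spikes: List[int] = []
    for _ in range(num_steps):
        acc += value
        if acc >= 1.0:
            spikes.append(1)
            acc -= 1.0
        else:
            spikes.append(0)
    return spikes


train = rate_encode(0.25, 8)  # [0, 0, 0, 1, 0, 0, 0, 1]
```

Stronger analog inputs map to denser spike trains, while each spike keeps the same unit amplitude, matching the sparse, uniform-amplitude pulses described above.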

SNNs were proposed as the third generation of NNs in 1997 (Maass, 1997) and have since been widely studied. Merolla et al. (2014) built a million-spiking-neuron integrated circuit with a scalable communication network and interface, achieving multi-object detection and classification. The Loihi chip was used to solve LASSO optimization problems with an energy-delay product more than three orders of magnitude better than that of conventional solvers running on a CPU at iso-process/voltage/area (Davies et al., 2018), which provides an unambiguous example of spike-based computation outperforming all known conventional solutions. SNNs have become the focus of many recent applications in pattern recognition, such as vision processing, speech recognition, and medical diagnostics.

Discrete data are countable values that are numerically independent, take specific values, and lack correlation with one another. Typical discrete parameters include device geometry, such as height, width, and period, or the permittivity and permeability of a material. Many other properties of devices/objects are also described by discrete parameters, including device efficiency, quality factor, band gap, and spectral response sampled at discrete points. Because such data lack correlation, they cannot be studied effectively through partial or correlated-parameter analysis and feature extraction. Discrete data structures interface naturally with MLPs (as listed in Table 3).

DNNs have been applied to the signal-integrity problem. Shin et al. (2020) classified the characteristics of signals with a large-scale memristor-based DNN; a three-layer NN using ReLU as the activation function achieved a classification accuracy of 99.4%. Using DNN training and real measurements, they determined how strongly interconnect parasitics in memristor arrays (e.g., IR drop, crosstalk, or ringing) affect signal integrity. Similar studies have been reported (Chen et al., 2015; Lee and Kim, 2019). DNNs have also been applied to tasks ranging from robotic control (Abbeel et al., 2010) and drug discovery (Lavecchia, 2015) to image classification (Krizhevsky et al., 2012; Liu et al., 2016; Adam et al., 2018; Tang et al., 2020; Liu and Zeng, 2022) and language translation (Wu et al., 2016). These algorithms are expected to become even more powerful, particularly given the recent explosive growth of data science. Memristor-based DNNs have also been applied to the XOR operation and to digit recognition on the MNIST (Modified National Institute of Standards and Technology) (LeCun et al., 1998) data set, reaching a classification accuracy of up to 96.42% (Liu and Zeng, 2022).
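To make the XOR task and the ReLU activation concrete, the sketch below shows a tiny fully connected network with one ReLU hidden layer whose hand-set weights implement XOR. This is the well-known textbook construction, not the trained network of any work cited above; in a memristor-based implementation the weights would be mapped to device conductances, which this sketch does not model.

```python
from typing import Callable, List, Optional


def relu(x: float) -> float:
    return max(0.0, x)


def dense(inputs: List[float], weights: List[List[float]], biases: List[float],
          activation: Optional[Callable[[float], float]] = None) -> List[float]:
    """Fully connected layer: every input feeds every output unit."""
    outputs: List[float] = []
    for row, b in zip(weights, biases):
        z = sum(w * x for w, x in zip(row, inputs)) + b
        outputs.append(activation(z) if activation else z)
    return outputs


# Hand-set weights that implement XOR (no training involved)
W1 = [[1.0, 1.0], [1.0, 1.0]]
b1 = [0.0, -1.0]
W2 = [[1.0, -2.0]]
b2 = [0.0]


def xor_mlp(x1: float, x2: float) -> float:
    hidden = dense([x1, x2], W1, b1, relu)  # h = ReLU(W1 x + b1)
    return dense(hidden, W2, b2)[0]         # y = W2 h + b2 (linear output)


truth_table = {(a, b): xor_mlp(a, b) for a in (0, 1) for b in (0, 1)}
```

The hidden ReLU units make the problem linearly separable: the second unit only activates when both inputs are 1, and its negative output weight cancels the first unit in exactly that case.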

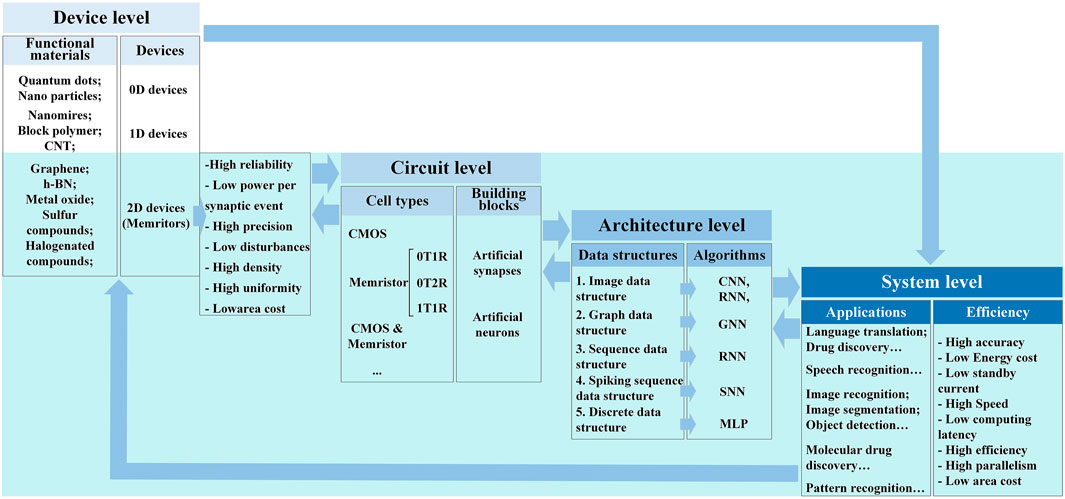

Developing and implementing a BIC system is interdisciplinary work, shaped by design decisions at the device, circuit, architecture, and system levels, as illustrated in Figure 6. Building on the review of these design levels, this section discusses the interactions between the system level and the device/circuit levels and between the system level and the architecture level.

FIGURE 6. Interaction among material/device, circuit, architecture, and system levels for the BIC system.

In BIC system design, the choice of materials and devices in the integrated circuit strongly influences the design at the architecture and system levels, especially in terms of power consumption, area density, computing speed, and system accuracy.

At the device level, the ideal functional material for integrated BIC devices should be easy to fabricate and offer excellent optical/electrical properties, high stability, microstructural tunability, controllable charge, and room for innovation. As reviewed in Section 2, 0D materials have excellent optical properties and can be fully exploited in photonic systems, but their stability is poor and the range of available materials is narrow, which also limits the variety of 0D devices. 0D devices also face fabrication challenges; for 0D QDs in particular, quantum coherence is difficult to obtain. Furthermore, in high-mobility semiconductors, the number of quantum states is critical (Altaisky et al., 2016). 1D materials are generally grown bottom-up and show metallic or semiconducting behavior depending on their chiral vector, but the application and alignment of individual 1D structures have remained challenging. The use of 1D materials is further limited by their lack of NVM characteristics and by their inherent transverse geometry, which hinders dense crossbar switch arrays. 2D materials offer the following unique features: first, 2D bulk materials can be exfoliated layer by layer because of the weak van der Waals forces between adjacent layers; second, the charge can be precisely controlled by the gate voltage to eliminate unwanted influences; and third, innovation is possible through engineering of the energy band structure. For instance, 2D memristor devices with excellent properties, as shown in Figure 6, can serve as ideal device candidates at the circuit level of a BIC system. Furthermore, integrating low-dimensional materials (0D–1D, 0D–2D, and 1D–2D) (Sangwan and Hersam, 2020) is regarded as an efficient way to overcome the limitations of the individual materials.

At the circuit level, taking 2D memristor devices as an example, ideal memristive devices for mimicking the functional behavior of synapses and neurons in an NN should offer low switching energy, small feature size, high switching speed, low device variability (high reproducibility), high endurance, and symmetrically programmable conductance states (enabling linear weight updates during training), and, above all, should support VMM, as illustrated in Figure 6. In particular, device reliability strongly affects the accuracy of a BIC system. For example, both artificial neurons and artificial synapses are needed to process and transmit signals in a BIC system. As reviewed and illustrated in Table 2 in Section 2, 2D memristors offer many options for implementing synapses but very few for implementing neurons, which is closely linked to the choice of functional material for the switching layer. This shows that the working principle of a device cannot be separated from the structure and properties of its material. Using the same material in devices with different working principles results in very different operating voltages and currents, which in turn determine power consumption. Functional materials are therefore indispensable in designing memristive devices of different types and switching mechanisms, and it is the intrinsic characteristics of the devices that make the functional properties of artificial neuronal circuits possible. Note that although longer retention is generally favored in memory devices, the required retention time depends strongly on the targeted building block in the BIC system.
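The VMM capability referred to above follows from circuit laws: with input voltages applied to the rows of a crossbar, each cross-point conductance contributes a current by Ohm's law, and the currents sum along each column by Kirchhoff's current law. The sketch below models an ideal crossbar under that assumption; the function name and the example conductances are hypothetical, and IR drop, sneak paths, and device nonlinearity are deliberately ignored.

```python
from typing import List


def crossbar_vmm(conductances: List[List[float]], voltages: List[float]) -> List[float]:
    """Ideal crossbar read: I[j] = sum_i G[i][j] * V[i].

    Rows are driven by voltages V[i]; each cross-point conductance G[i][j]
    contributes a current G * V (Ohm's law), and the currents add up along
    each column (Kirchhoff's current law). Wire resistance (IR drop), sneak
    paths, and device nonlinearity are ignored in this sketch.
    """
    n_rows, n_cols = len(conductances), len(conductances[0])
    if len(voltages) != n_rows:
        raise ValueError("need one input voltage per row")
    return [sum(conductances[i][j] * voltages[i] for i in range(n_rows))
            for j in range(n_cols)]


G = [[1e-6, 2e-6],   # conductances in siemens (hypothetical values)
     [3e-6, 4e-6]]
V = [0.1, 0.2]       # row voltages in volts
I = crossbar_vmm(G, V)  # column currents in amperes, about [7e-7, 1e-6]
```

Because every column current is produced in a single read step, the whole matrix-vector product is computed in parallel and in place, which is why low device variability and well-controlled conductance states matter for accuracy.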
For example, artificial synapses based on 2D MoS2/graphene memristors exhibited essential synaptic behaviors, notably excellent retention of 10⁴ s (Krishnaprasad et al., 2019), which matches the requirement that synapses retain data long after electrical stimulation. In contrast, an artificial neuron based on a diffusive memristor quickly returns to its initial state after generating a signal, ready to respond to the next one; it therefore requires short retention (Wang et al., 2018; Du et al., 2021b).
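The short-retention behavior required of neuron devices can be sketched with a leaky integrate-and-fire (LIF) model, a common abstraction for spiking neurons. This is a hedged, discrete-time sketch assuming a fixed leak factor, threshold, and reset to rest (all hypothetical parameters); it is not a physical model of a diffusive memristor. The leak makes the state relax toward rest, and the reset returns the neuron to its initial state after each spike.

```python
from typing import List


def lif_neuron(inputs: List[float], decay: float = 0.5, threshold: float = 1.0,
               v_rest: float = 0.0) -> List[int]:
    """Discrete-time leaky integrate-and-fire neuron (illustrative parameters).

    Each step, the membrane state leaks toward v_rest, integrates the input,
    and emits a spike (1) when it reaches the threshold, after which it is
    reset to its initial (rest) state.
    """
    v = v_rest
    spikes: List[int] = []
    for i_t in inputs:
        v = v_rest + decay * (v - v_rest) + i_t  # leak toward rest, then integrate
        if v >= threshold:
            spikes.append(1)
            v = v_rest  # fire, then return to the initial state
        else:
            spikes.append(0)
    return spikes


strong = lif_neuron([0.6] * 6)   # [0, 0, 1, 0, 0, 1]: periodic firing
weak = lif_neuron([0.4] * 50)    # never fires: state settles at 0.8 < threshold
```

With `decay` close to 1 the state would persist much longer, which is closer to the nonvolatile, long-retention behavior desired for synapses than for neurons.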

Energy cost is crucial in evaluating the performance of a BIC system, especially the energy consumed per synaptic event. Numerous reports place the energy consumption of CMOS-based artificial synapses on the order of nJ per event (Painkras et al., 2013). By contrast, memristive artificial synapses readily reach a few pJ per synaptic event (Yu et al., 2011; Jackson et al., 2013; Li et al., 2022), or even a few hundred fJ (Xiong et al., 2011; Pickett and Williams, 2012), which approaches the energy efficiency of the biological brain. As reviewed in Section 2, devices built from different materials exhibit different energy consumption because of the intrinsic properties of the materials. In particular, memristors based on 2D materials consistently achieve very low energy consumption [h-BN (Vu et al., 2016; Shi et al., 2018), MoS2 (Knoll et al., 2013), HfSe2 (Li et al., 2022)]. Therefore, for memristor devices, the choice of switching-layer material has a major impact on energy consumption and is a promising direction for optimization. Beyond the individual device, given the huge number of synapses in a Mem–BIC system, such as 10⁵ synapses in the application reported by Kornijcuk et al. (2016), memristive synaptic operations reduce the energy consumption by several orders of magnitude compared with conventional CMOS technology. Furthermore, 2D memristor devices improve the performance of parallel VMM computing in terms of power consumption (including operating current/voltage) and number of conductance states, providing a superior platform for future BIC systems.
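The scaling argument above can be checked with simple arithmetic: total synaptic energy is the number of synapses times the number of events per synapse times the energy per event. The sketch below assumes one representative value per order of magnitude quoted in the text (1 nJ, 1 pJ, and 100 fJ per event) and one event per synapse; these are illustrative round numbers, not measured results.

```python
# Illustrative per-event energies, one for each order of magnitude quoted above
ENERGY_PER_EVENT_J = {
    "cmos_nJ": 1e-9,           # CMOS synapse, ~nJ per event
    "memristor_pJ": 1e-12,     # memristive synapse, ~pJ per event
    "memristor_100fJ": 1e-13,  # memristive synapse, ~100 fJ per event
}


def network_energy(num_synapses: int, events_per_synapse: int,
                   energy_per_event_j: float) -> float:
    """Total synaptic energy (J) = synapses x events per synapse x energy per event."""
    return num_synapses * events_per_synapse * energy_per_event_j


NUM_SYNAPSES = 10**5  # network size quoted above (Kornijcuk et al., 2016)
totals = {name: network_energy(NUM_SYNAPSES, 1, e)
          for name, e in ENERGY_PER_EVENT_J.items()}
for name, joules in totals.items():
    print(f"{name}: {joules:.0e} J")
```

Under these assumptions, one update of every synapse costs about 10⁻⁴ J with CMOS synapses versus 10⁻⁷ J to 10⁻⁸ J with memristive ones, a three- to four-order-of-magnitude gap that grows linearly with network size and event rate.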

Beyond the device and circuit levels, the architecture level plays a crucial role in the BIC system because the NN architecture directly reflects the processing requirements of big data flows. Even with optimized energy cost per switching or synaptic event at the device/circuit level, larger power gains can be found at the architecture level when comparing NN architectures for comparable data sizes/representations. Note that the overhead of controlling memory blocks, that is, the circuitry for data movement, further affects energy dissipation at the architecture level.
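Why data movement matters at the architecture level can be sketched with a first-order energy model in which total energy is the sum of compute energy and data-movement energy. All parameter values below (energy per multiply-accumulate, energy per byte moved, operation and byte counts) are hypothetical and chosen only to illustrate the trade-off; real values depend strongly on technology and memory hierarchy.

```python
def layer_energy(num_macs: int, bytes_moved: int,
                 e_mac_j: float, e_byte_j: float) -> float:
    """First-order model: energy = compute part + data-movement part (joules)."""
    return num_macs * e_mac_j + bytes_moved * e_byte_j


# Hypothetical, illustrative parameters (not measured values)
E_MAC = 1e-12    # 1 pJ per multiply-accumulate
E_BYTE = 10e-12  # 10 pJ per byte fetched from off-array memory
MACS = 10**6

# Case A: every weight (1 byte each) is fetched from memory for every MAC
fetch_all = layer_energy(MACS, MACS, E_MAC, E_BYTE)
# Case B: weights stay in the array (in-memory computing); only 10^4 bytes move
in_memory = layer_energy(MACS, 10**4, E_MAC, E_BYTE)
ratio = fetch_all / in_memory  # about 10 under these assumptions
```

In case A the movement term dominates the total, so the same per-operation device energy yields very different system energy depending on how the architecture and its memory-control circuitry route data.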

Table 3 summarizes the features of the data structures for the most widely used data sets in different application scenarios. For unstructured data, only a limited number of NN structures can process the data directly, and both the data sets and the data sizes are inconsistent. Specifically, discrete data structures lack tight correlation between data and have no timeline. In the field of neuromorphic computing, the graph is the only non-Euclidean data structure, and it likewise comes with diverse data sets and data sizes. For structured data, there are strong correlations between data, such as images/patterns with positional correlations, and sequential data and pulse sequences with temporal and logical correlations. Therefore, the main features of such data can be captured during processing, reducing the dimensionality of the data and simplifying the NN to obtain an efficient system. In particular, time-dependent data (such as brain signals, stock data, gross domestic product data, and business cycles) are becoming increasingly abundant, which will raise the demands on NNs.

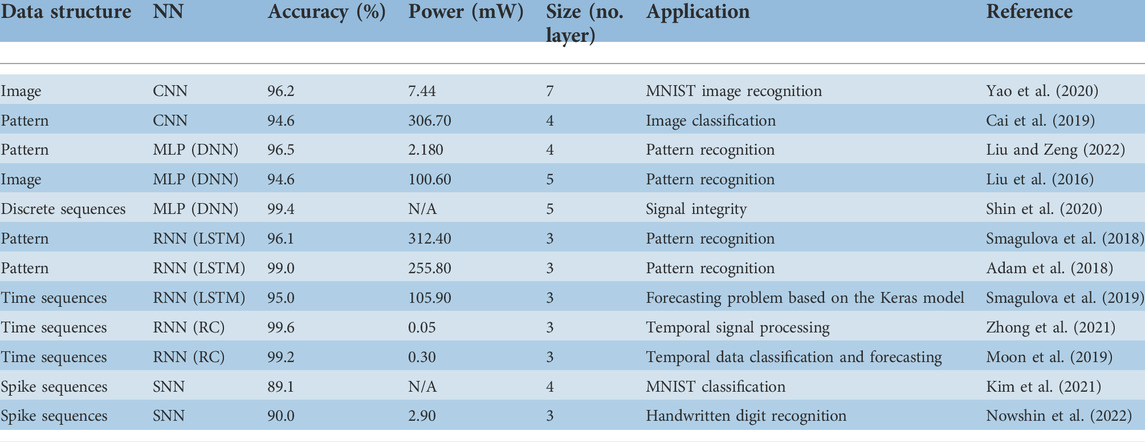

Based on the aforementioned summary of data structures and their characteristics, MLPs are suitable for unstructured discrete data that lack correlation between data and have no timeline. In an MLP, all outputs of one layer serve as inputs to the next layer, so all data information is logically related to the adjacent layers. GNNs are a class of NN architectures tailored specifically to graphs and are thus widely employed to process them; the graph is the only non-Euclidean data structure in the field of neuromorphic computing. CNNs, RNNs, and SNNs can process structured data, including images/patterns with positional correlations and sequential data and pulse sequences with temporal and logical correlations. CNNs feature weight-sharing convolutional layers and pooling layers, which reduce the dimensionality of the data and simplify the NN. The distinctive feature of RNNs is temporal recurrence: the output of the previous time step is fed back and used at the current time step, which works well for data with strong temporal correlation (such as brain signals, stock data, gross domestic product data, and business cycles). The most distinctive feature of SNNs is that they most closely resemble signaling and processing in the human brain, making them ideally suited to impulse signals similar to those of the human brain. Table 4 shows that structured data such as images/patterns are common in practice and are processed by more than one type of NN, including CNNs, RNNs, and their variants, whereas for discrete data and graph data, memristor-based BIC has seen very few practical applications.
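The dimensionality reduction from weight sharing and pooling can be made concrete by counting parameters. The sketch below compares a fully connected layer with a convolutional layer on an MNIST-sized 28 × 28 single-channel input; the layer sizes (100 hidden units, 8 filters of 3 × 3, 2 × 2 pooling) are illustrative assumptions rather than configurations from Table 4.

```python
def dense_params(n_in: int, n_out: int) -> int:
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out


def conv2d_params(in_channels: int, out_channels: int, kernel: int) -> int:
    """Weights plus biases of a 2-D convolutional layer.

    The same kernel is shared across all spatial positions, so the count
    does not depend on the image size.
    """
    return out_channels * (in_channels * kernel * kernel + 1)


def pooled_size(size: int, pool: int = 2) -> int:
    """Side length after non-overlapping pool x pool pooling (no parameters)."""
    return size // pool


fc = dense_params(28 * 28, 100)  # 78,500 parameters
conv = conv2d_params(1, 8, 3)    # 80 parameters
side = pooled_size(28)           # 14: 2 x 2 pooling quarters the feature-map area
```

For a memristor crossbar, fewer weights translate directly into fewer devices to program, which is one reason structured image data pair so naturally with CNNs.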

TABLE 4. Comparison of the performance (accuracy and power consumption) of NNs of different sizes for different data structures.