- 1 Swire Institute of Marine Science, School of Biological Sciences, The University of Hong Kong, Hong Kong, Hong Kong SAR, China

- 2 Mobius AI, Hong Kong, Hong Kong SAR, China

- 3 International Union for Conservation of Nature Groupers & Wrasses Specialist Group, Gland, Switzerland

- 4 Science & Conservation of Fish Aggregations, Fallbrook, CA, United States

Introduction: Humphead, or Napoleon, wrasse (Cheilinus undulatus) is a large reef fish highly valued in the live reef food fish trade. Overexploitation, driven primarily by demand from Chinese communities, led to its ‘Endangered’ status and its CITES Appendix II listing in 2004. Hong Kong is the global import and consumer hub for this species. The city implements a Licence to Possess system for CITES-listed species, which regulates the quota of live, wild-sourced CITES specimens, including humphead wrasse, held at each registered trading premises and ensures traceability through documentation. However, the absence of an identification or tagging system to distinguish legally traded from illegally sourced individuals is a critical CITES enforcement loophole: traders can launder illegally imported fish provided the total number on their premises remains within the licensed quota. To address this, a photo identification system based on the unique, complex facial patterns of humphead wrasse was established, enabling enforcement officers to detect possible laundering by monitoring individual fish at retail outlets.

Methods and results: Deep learning models were developed for facial pattern extraction and comparison to enhance efficiency and accuracy. A YOLOv8-based extraction model achieved a 99% success rate in extracting both left and right facial patterns. A ResNet-50-based convolutional neural network, retrained using a triplet loss function for individual identification, achieved top-1, top-3 and top-5 accuracies of 79.73%, 95.95% and 100%, respectively, on good-quality images, with a mean rank of 1.797 (median = 1, mode = 1, S.D. = 0.86) for correct matches. The ‘Saving Face’ mobile application integrates these models, enabling officers to photograph humphead wrasse during inspections and upload the images to a centralized database. The application compares images and detects changes in the individual fish held at each location. Discrepancies between detected changes and transaction documentation raise red flags for potential illegal trade, prompting further investigation. The system is also designed for use by researchers and citizen scientists.

Discussion: This novel solution seeks to close a critical CITES enforcement loophole and shows potential for research and citizen science initiatives. The beta version of ‘Saving Face’ is available, and members of the public can contribute supplementary information for enforcement and continuous model optimization. This photo identification approach to combating wildlife trafficking, based on unique body markings, is potentially adaptable to other threatened species.

1 Introduction

1.1 Context and significance of the study

The humphead, or Napoleon, wrasse (Cheilinus undulatus; family Labridae) is an endangered coral reef fish that is susceptible to overfishing and particularly highly valued in the live seafood trade. It is listed in CITES Appendix II and subject to controls to ensure that international trade is sustainable. Hong Kong is the global trade hub for this species but struggles to control illegal trade, which represents an ongoing threat to the species. To close serious loopholes in the city’s ability to enforce the regulations that support conservation of the humphead wrasse and to monitor its trade effectively, a method is needed to track legally imported fish and detect possible laundering, i.e. the replacement of legal imports with illegally acquired individuals. AI-driven photo identification, with associated software, is a novel and potentially effective means of leveraging the complex body markings of humphead wrasse to track individuals. The approach not only offers a potential solution for enforcement but also circumvents the many problems of physical tagging and can improve monitoring and research for this species.

1.2 Species description and biology

The humphead wrasse is among the largest of all extant reef fishes and is widely distributed across the Indo-West Pacific between latitudes 30°N and 23°S. Smaller individuals typically live inshore, while larger individuals occupy lagoons and outer coral reefs down to 100 m (Randall et al., 1978; Sluka, 2000; Dorenbosch et al., 2006; Tupper, 2007; Oddone et al., 2010). The species grows slowly, matures at 5-7 years (40-50 cm TL), and can live for over 30 years, reaching at least 1.5 m TL (Choat and Bellwood, 1994; Choat et al., 2006; Sadovy de Mitcheson et al., 2010). It is a pelagic egg spawner and a protogynous hermaphrodite: most individuals mature as females, and some later change sex to males at around 80-90 cm TL (Sadovy et al., 2003; Choat et al., 2006; Colin, 2010; Sadovy de Mitcheson et al., 2010). The species feeds primarily on invertebrates, including mollusks (among them gastropods), crustaceans and echinoids, as well as on fishes (Randall et al., 1978).

1.3 Economic and cultural value

The humphead wrasse is among the most highly regarded fish in the international live seafood trade related to Chinese cuisine. The species is valued for its taste and considered a symbol of social status and prosperity in Chinese culture, mainly served at family and business occasions (Fabinyi, 2011). Mainland China and Hong Kong are the major markets for the species, along with sales in other Chinese communities, for example, in Taiwan, Indonesia and Singapore (Sadovy de Mitcheson et al., 2017). Retail prices can reach high levels, amongst the highest per unit weight in the trade. Depending on the season, market availability and financial climate, individual fish have sold at up to USD 600 and USD 850 in mainland China and Hong Kong, respectively (Fabinyi and Liu, 2014; Sadovy de Mitcheson et al., 2017). The preferred ‘plate-size’ fish, suitable for a family meal, reported in Hong Kong is about 700-1,000 g, a size which includes mainly juvenile and some small adult individuals (Sadovy de Mitcheson et al., 2017). Because of its high retail value, the species is particularly profitable for traders.

1.4 Conservation status and threats

Because of its naturally low abundance, long lifespan, high consumer demand and commercial value, and preferred market size, this heavily sought-after species is particularly susceptible to overexploitation. The species is considered ‘conservation-dependent’, with overexploitation being the major threat (Gillett, 2010; Romero and Injani, 2015). It was listed as ‘Vulnerable’ on the IUCN Red List in 1999 and as ‘Endangered’ in 2004. A recent reassessment by the IUCN Groupers & Wrasses Specialist Group recommends maintaining the Endangered status (personal communication, May 2024). The humphead wrasse was listed in CITES Appendix II in 2004, the first reef food fish to be so listed, after which many countries ceased exporting it due to conservation concerns.

A substantial proportion of the commercial trade in humphead wrasse is international, although there is also considerable domestic trade in Indonesia (personal communication, November 2024). Malaysia has restricted live exports of the species since 2010, while illegal exports from the Philippines, into both Malaysia and Hong Kong, are ongoing (Poh and Fanning, 2012; BFAR, 2017). Indonesia became the dominant exporter of the species after 2010. Hong Kong is the key global trade hub for imports and re-exports of the species and is also a consumer market (CAPPMA, 2013; Liu, 2013; Wu and Sadovy de Mitcheson, 2016).

1.5 Regulation and trade control efforts

CITES is implemented in Hong Kong through local legislation, the Protection of Endangered Species of Animals and Plants Ordinance (Cap 586). The key purpose of the Ordinance is to regulate the import, ‘introduction from the sea’, export, re-export and possession of CITES-listed species in and through the city. Because Hong Kong is a major global wildlife trade hub for many species, effective implementation of trade regulations in the city is particularly important for safeguarding threatened species (ADMCF, 2018; ADMCF, 2021; Cheng, 2021). The Agriculture, Fisheries and Conservation Department of the Hong Kong Special Administrative Region (AFCD, HKSAR) is the CITES Management Authority (MA) in Hong Kong and is responsible for implementing CITES locally and for supporting the Ordinance.

All imports of Appendix II-listed species into the HKSAR require export (and, where applicable, re-export) permits from the source jurisdictions and an import permit issued by AFCD. In addition, a Licence to Possess (PL) is required for any premises (shops or individuals) ‘to possess an Appendix I species, or a live animal or plant of Appendix II species of wild origin for commercial purposes in Hong Kong’ (AFCD Endangered Species Advisory Leaflet AF CON 07/37). Each PL specifies a possession quota: the maximum number of live individuals of an Appendix II-listed species that may be held on the licensed premises (e.g. shop, restaurant) at any one time during the PL’s five-year validity period. All trade (in- and out-flow) under the PL must be recorded by the licensee within three days, together with supporting documents, and trade records must be made available to AFCD for inspection on request.

In 2021, wildlife trafficking offences, including those involving humphead wrasse, were brought under the Organized and Serious Crimes Ordinance (OSCO, Cap 455) of Hong Kong. This not only raised the maximum penalties for relevant crimes but also empowered the government to investigate the financial flows of suspected individuals and companies and to confiscate their proceeds of crime (Chan et al., 2024). This reflects the Hong Kong government’s increased attention to wildlife trafficking and its incentive to enhance enforcement capability.

However, at least three loopholes seriously undermine AFCD’s ability to control and oversee the trade in humphead wrasse, as well as in certain other species traded live through the city. Illegal trade in the species has been recorded on multiple occasions and evidently continues (Wu and Sadovy de Mitcheson, 2016; Hau and Sadovy de Mitcheson, 2019; Hau and Sadovy de Mitcheson, 2023; Y. Sadovy pers. obs.). The major loopholes are (1) the absence of any tagging system to identify and track specific individuals and clearly identify those imported legally, (2) limited control of imports on live fish carrier vessels (Class III(a)), a major import mode, due to a reporting exemption and a general lack of vessel oversight, and (3) serious shortcomings in the ability of the PL system to control trade in the city for some CITES source codes (Hau and Sadovy de Mitcheson, 2019; Hau and Sadovy de Mitcheson, 2023).

These loopholes undermine the enforcement of CITES under Cap 586 for trade into, within and through Hong Kong and compromise the city’s ability to conserve humphead wrasse and ensure that its trade is sustainable and legal. Without a tagging system to distinguish legally imported from illegal fish after they enter the city, laundering cannot be detected. Most fish enter the city by live fish carrier vessels (Class III(a)). However, because these vessels are exempt from reporting their entry and exit to the Marine Department in Hong Kong (Cap 548, section 69), their movements and activities cannot be tracked. This means that, unlike other cargo vessels, which must notify the Marine Department of their movements under Hong Kong law, these vessels cannot readily be inspected by Customs, nor can their activities be followed. The third loophole concerns the PL system, which is intended to help implement Cap 586 in support of CITES; in practice, its ability to do so for humphead wrasse is severely limited when individual animals cannot be identified and tracked. For example, PLs are issued for five-year periods, a timeframe applied by the government across a wide range of species for consistency and ease of administration. For humphead wrasse, 5 years is far longer than the typical market turnaround time of a month or less (Hau and Sadovy de Mitcheson, 2019; Hau, 2022). Moreover, because fish are not individually tagged, a single business can launder multiple fish undetected for 5 years after a single legal import, as long as the total number on sale does not exceed the number allowed under its PL.
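To make the laundering loophole concrete, the minimal Python sketch below contrasts a count-only quota check, which is effectively all the PL system can perform without individual identification, with a check based on individual identities of the kind photo identification could provide. The shop scenario, fish identifiers (e.g. "HW-A") and function names are hypothetical illustrations, not part of any AFCD system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Inspection:
    """Fish identities observed during one inspection visit (IDs are hypothetical)."""
    fish_ids: frozenset


def count_check(inspection: Inspection, quota: int) -> bool:
    """Count-only check: passes whenever the number of fish is within the PL quota."""
    return len(inspection.fish_ids) <= quota


def identity_check(previous: Inspection, current: Inspection,
                   documented_in: set, documented_out: set) -> set:
    """Flag fish whose arrival or departure since the last visit lacks a trade record.

    Any fish seen now but not before must be covered by an import record;
    any fish that has disappeared must be covered by a sale or export record.
    """
    arrived = current.fish_ids - previous.fish_ids
    departed = previous.fish_ids - current.fish_ids
    return set(arrived - documented_in) | set(departed - documented_out)


# A shop holds 3 legally imported fish under a PL quota of 3.
visit_1 = Inspection(frozenset({"HW-A", "HW-B", "HW-C"}))
# Two fish are sold (and recorded); two undocumented fish take their place.
visit_2 = Inspection(frozenset({"HW-A", "HW-X", "HW-Y"}))

print(count_check(visit_2, quota=3))  # True: the count-only check passes
print(sorted(identity_check(visit_1, visit_2,
                            documented_in=set(),
                            documented_out={"HW-B", "HW-C"})))  # ['HW-X', 'HW-Y']
```

The count stays at 3 throughout, so a quota check cannot see the substitution; only knowing which individuals are present exposes the two undocumented arrivals.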

1.6 Roles of tagging and monitoring in trade control

Distinguishing among individual animals of a species in trade and monitoring their volumes and movements is critically important in support of trade regulation and conservation measures. For live fish such as the humphead wrasse, individuals can be distinguished in two main ways: physical tagging (conventional, telemetry and electronic) and natural tagging. Conventional tagging involves attaching a simple coded device to the animal, paint injection, fin-clipping or branding. Telemetry tags follow the movements and behaviors of organisms using animal-borne, battery-powered sensors. Electronic tags, or microchips, are applied internally or externally and either transmit information wirelessly to a receiver or store it for later retrieval. All of these methods usually require handling animals or, at the least, some form of physical contact, which can pose risks to their health, welfare and survival (Jepsen et al., 2015; Soulsbury et al., 2020). For live fish traded for food, physical tagging poses potential food safety risks, while damage to the fish might reduce their value or increase the risk of mortality prior to sale. Moreover, external physical tags can potentially be transferred among individual animals to circumvent regulations.

Natural ‘tagging’, using individually distinctive markings or characteristics, can be an unobtrusive way of identifying and following live animals that avoids many of the problems associated with physical tags. Natural tagging has been widely used for terrestrial animals (Sacchi et al., 2010; Treilibs et al., 2016; Choo et al., 2020). Among marine species, this approach has been applied for decades to track dolphins, whale sharks and other sharks (Arzoumanian et al., 2005; Marshall and Pierce, 2012; Ballance, 2018). Among fishes, its application is more recent, but it has already been used successfully in a range of species to track individuals over space or time, to study mating and social systems, and to track, handle and account for animals in aquaculture facilities (Martin-Smith, 2011; Zion, 2012; Love et al., 2018; Ritter and Amin, 2019; Cisar et al., 2021; Correia et al., 2021; Sadovy de Mitcheson et al., 2022; Desiderà et al., 2021; Nyegaard et al., 2023). However, a major shortcoming of identifying natural markings by eye (i.e. manually, whether directly or from photos) is the limited number of animals that can be distinguished and the often time-consuming nature of such work (Perrig and Goh, 2008).

AI-driven photo identification of animals using their own body markings is a novel, non-invasive way to follow individuals, and this approach has not previously been used to identify and track regulated fishes for enforcement purposes. It addresses many of the challenges and shortcomings of physical tagging and of following fish markings manually. Combining detailed photo identification with functions for receiving, processing and handling images allows workers to handle much larger numbers of individuals in an efficient, reliable and practical identification system. The approach has accordingly been actively developed and effectively used in aquaculture for individualized phenotypic measurement and tracking, informing data-based management decisions (Cisar et al., 2021; Tuckey et al., 2022). If natural markings are used in support of law enforcement, high precision in identifying individuals and in reliably distinguishing them from others is essential, and AI-driven models can help achieve this. For computer-automated systems, programmes can be developed that use algorithms and machine learning to find unique individual characteristics and automatically compare images against a catalogue or database of many reference individuals. However, while such programmes can be far more efficient than manual processing, they require considerable technical expertise to develop (Petso et al., 2022).

1.7 Study rationale and objectives

In the specific case of the humphead wrasse, applying photo identification represents a novel approach to enhancing seafood trade law enforcement. The lack of a tracing mechanism (i.e. physical tagging) for imported fish in the market has been a major challenge in the CITES enforcement of the humphead wrasse trade in Hong Kong. Untagged humphead wrasse become untraceable after import, and because imports are poorly controlled, laundering is readily possible (Wu and Sadovy de Mitcheson, 2016). The absence of tagging and tracking therefore severely hinders AFCD’s inspection efforts and its ability to comply with CITES requirements, making it impossible to verify the legality of fish, monitor changes in stock within shops or keep track of numbers in trade.

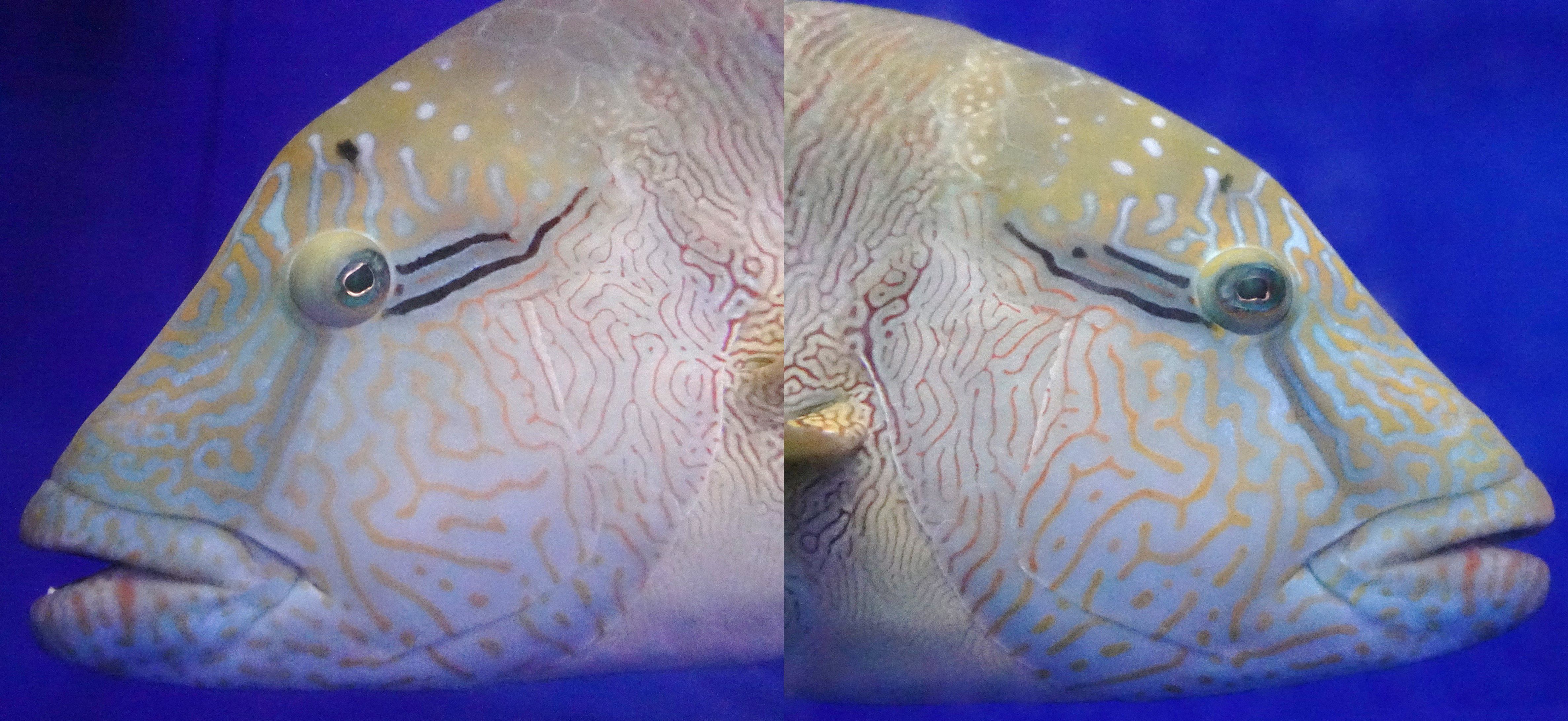

To close serious loopholes in the enforcement of Cap 586 and CITES for humphead wrasse, a method is needed to track legally imported fish and detect possible laundering. Photo identification, leveraging the complex facial markings of humphead wrasse, offers a potential solution. Crucially, research has demonstrated that humphead wrasse body markings remain stable over several years, well beyond the typical tank survival period/turnaround time of less than a month, and that facial patterns are highly variable among individuals, like fingerprints (Figure 1), providing extensive scope for distinguishing among multiple animals (Hau and Sadovy de Mitcheson, 2019).

Figure 1. Complex facial patterns of humphead wrasse, showing the distinctive black eye slashes of the species. As shown here, the facial patterns on the left and right sides of the same individual differ.

Building upon the proven concept of humphead wrasse photo identification, this paper documents the development and validation of artificial intelligence models and the subsequent creation of a mobile application system for practical use by both the public and enforcement officers. The specific objectives of the study were:

1. To develop, train and evaluate a practical artificial intelligence-based photo identification system for humphead wrasse.

2. To develop the mobile application system, ‘Saving Face’, for the utilization of the developed models by the public and by CITES enforcement users.

3. To identify recommendations for enforcement improvements and public contribution to conservation efforts with the ‘Saving Face’ system.

The development process comprised four phases: 1) image collection and processing; 2) training and evaluation of machine learning models for facial extraction and comparison; 3) creation of a user-friendly mobile application; and 4) use of the application to take snapshots and rapidly generate identification results, enhancing enforcement capabilities and facilitating research and public participation in conservation efforts through humphead wrasse photo identification and the Saving Face system.

2 Materials and methods

2.1 Framework for humphead wrasse photo identification and image collection

In wildlife conservation and ecological studies, particularly those focused on endangered species, researchers often face a key challenge: the scarcity of real-world image data or other samples from individual animals. This limitation usually stems from the inherent rarity of these species, clandestine trade practices, the difficulty of capturing high-quality images in natural habitats, and otherwise limited availability. Traditional machine-learning approaches, which typically rely on large datasets for individual classification, are therefore less effective in these scenarios.

To address the limited availability of images of individual animals, a novel approach is proposed that uses a similarity function rather than a conventional classification model. The method is designed to maximize the inter-individual distance while minimizing the intra-individual distance in a high-dimensional feature space, shifting the paradigm from individual-specific classification to a more generalizable, similarity-based identification system.

The core of this approach lies in training a deep neural network to learn this similarity function. Instead of outputting class probabilities for each individual animal, the network learns to embed images into a feature space where images of the same individual cluster together, while those of different individuals are pushed apart. This is achieved through the use of techniques such as Siamese networks or triplet loss functions, which are particularly well-suited for learning from limited data input (Koch et al., 2015).
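A practical advantage of a similarity function over a per-individual classifier is that new fish can be enrolled simply by storing their embeddings, with no retraining. The NumPy sketch below illustrates this, assuming embeddings have already been produced by a trained network and L2-normalized; the gallery vectors are random stand-ins, and the function and fish names are hypothetical.

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Scale each row to unit length so Euclidean distance reflects angular similarity."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def rank_gallery(query: np.ndarray, gallery: np.ndarray, gallery_ids: list) -> list:
    """Return individual IDs ordered from most to least similar to the query embedding.

    query: (d,) embedding; gallery: (n, d) embeddings of reference photos.
    """
    distances = np.linalg.norm(gallery - query, axis=1)
    ranked = []
    for i in np.argsort(distances):
        # One individual may have several reference photos; keep its best position only.
        if gallery_ids[i] not in ranked:
            ranked.append(gallery_ids[i])
    return ranked


rng = np.random.default_rng(0)
gallery = l2_normalize(rng.normal(size=(4, 128)))  # stand-ins for 4 reference photos
gallery_ids = ["fish_1", "fish_2", "fish_3", "fish_1"]
# A new photo of fish_2: its stored embedding plus a little noise.
query = l2_normalize(gallery[1] + 0.01 * rng.normal(size=128))

print(rank_gallery(query, gallery, gallery_ids)[0])  # fish_2

# Enrolling a new individual only appends its embedding; the network is not retrained.
gallery = np.vstack([gallery, l2_normalize(rng.normal(size=(1, 128)))])
gallery_ids.append("fish_4")
```

The ranked list, rather than a single class label, is also what the top-k evaluation later in the paper operates on.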

Source code and data for the two models are open source and have been available on GitHub since the end of March 2025 (https://github.com/mobiusxyz/savingface).

2.1.1 Facial image collection and preparation

Considerable effort was needed to source enough images for programme training and model development. Images were obtained from researchers, citizen scientists and government officials using mobile phones or cameras. The fish were photographed without being removed from the water, under a range of conditions typical of the shops and retail outlets where they are held and monitored by enforcement officials, researchers and others. The fish were typically held in large tanks ready for retail sale, together with other fish, and were generally slow-moving, often clearly displaying the side of the face to viewers. Factors that typically affect image quality include condensation, water droplets, reflections, photograph angle and image resolution.

Photos used for training were of both good and poor quality. The available images came from 200 separate individuals. Because the number of images was small, 5-10 variations were generated from each one. Positive (same fish) and negative (different fish) examples were then generated to make 10,000 different images, which made up the training and validation sets.

2.1.2 Facial markings extraction model

The study employed a comprehensive approach to custom object detection model development, encompassing dataset preparation, model training, validation and inference testing. A model for extracting the facial markings of the humphead wrasse was developed using the YOLOv8 computer vision architecture. YOLO algorithms enable real-time object detection in images by predicting the bounding boxes of target objects (Redmon et al., 2016). We used YOLOv8 to crop the face region from the original image as the first step in the comparison pipeline (Supplementary Material). Efficiency was improved by resizing the extracted facial images to 224 × 224 pixels, which reduces data storage and processing requirements without substantial loss of identifying features. This model standardizes the extraction of facial marking patterns from input photos of humphead wrasse, facilitating subsequent comparison of these markings.
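The crop-and-resize step can be illustrated independently of the detector. The sketch below assumes the detector has returned a pixel bounding box (x1, y1, x2, y2), as YOLO-style models do, and uses plain NumPy nearest-neighbour sampling to produce the 224 × 224 face image. The function name is hypothetical, and a production pipeline would more likely use an image library’s interpolating resize.

```python
import numpy as np


def crop_and_resize(image: np.ndarray, box: tuple, size: int = 224) -> np.ndarray:
    """Crop a detected face region and resize it to size x size by nearest-neighbour sampling.

    image: (H, W, C) array; box: (x1, y1, x2, y2) in pixel coordinates.
    """
    h, w = image.shape[:2]
    x1, y1, x2, y2 = box
    # Round outwards to whole pixels and clip the box to the image bounds.
    x1, x2 = int(max(0, np.floor(x1))), int(min(w, np.ceil(x2)))
    y1, y2 = int(max(0, np.floor(y1))), int(min(h, np.ceil(y2)))
    crop = image[y1:y2, x1:x2]
    # Map each output row/column back to the nearest source row/column of the crop.
    rows = (np.arange(size) * crop.shape[0] / size).astype(int)
    cols = (np.arange(size) * crop.shape[1] / size).astype(int)
    return crop[rows][:, cols]


photo = np.zeros((1080, 1920, 3), dtype=np.uint8)  # placeholder for a phone photo
face = crop_and_resize(photo, (600.4, 200.0, 1100.9, 650.2))
print(face.shape)  # (224, 224, 3)
```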

For dataset preparation, Roboflow was utilized to manage 679 images (574 training, 61 validation, 44 test) across two classes: ‘L’ (left side of the face) and ‘R’ (right side of the face). The platform facilitated image annotation, preprocessing, and augmentation. Preprocessing included auto-adjusting contrast using histogram equalization and applying grayscale. Augmentations generated 3 outputs per training example with rotations between -12° and +12°. The training phase employed the Ultralytics YOLOv8 framework. Using YOLOv8’s command-line interface, training was initiated with specific hyperparameters: YOLOv8s model (11.1 million parameters), 150 epochs, batch size of 16, and learning rates from 0.01 to 0.001. The process involved iterative learning with periodic evaluations on the validation set.
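Roboflow applied the preprocessing internally; as an illustration only, grayscale conversion followed by global histogram equalization can be sketched in NumPy as below. The BT.601 luma weights and this particular equalization formula are assumptions and may differ from Roboflow’s own implementation.

```python
import numpy as np


def to_grayscale(rgb: np.ndarray) -> np.ndarray:
    """Convert an (H, W, 3) uint8 RGB image to uint8 grayscale using BT.601 luma weights."""
    weights = np.array([0.299, 0.587, 0.114])
    return np.round(rgb[..., :3].astype(np.float64) @ weights).astype(np.uint8)


def equalize_histogram(gray: np.ndarray) -> np.ndarray:
    """Global histogram equalization: remap intensities so their cumulative distribution is ~uniform."""
    hist = np.bincount(gray.ravel(), minlength=256)
    cdf = np.cumsum(hist)
    cdf_min = cdf[np.nonzero(cdf)][0]  # cumulative count at the darkest intensity present
    total = gray.size
    if total == cdf_min:  # a single-intensity image cannot be stretched
        return gray.copy()
    lut = np.round((cdf - cdf_min) / (total - cdf_min) * 255)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[gray]


# A low-contrast test image whose intensities occupy only 100-139.
rng = np.random.default_rng(1)
dull = rng.integers(100, 140, size=(64, 64, 3), dtype=np.uint8)
gray = to_grayscale(dull)
eq = equalize_histogram(gray)
print(gray.min(), gray.max(), "->", eq.min(), eq.max())  # equalized range spans 0 to 255
```

For reference, Ultralytics expresses the final learning rate as lr0 × lrf, so a schedule from 0.01 to 0.001 would correspond to lr0=0.01 and lrf=0.1; this is an inference from the stated range, not a setting reported in the text.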

This methodological approach presents a comprehensive pipeline for developing custom object detection models, leveraging modern tools and practices in computer vision. It serves as a potential template for similar research in object detection and broader computer vision applications.

2.2 Markings comparison model

The comparison model, based on the ResNet-50 architecture as the CNN tower, was retrained using the triplet loss approach (Hoffer and Ailon, 2015). This approach involved training the network on triplets of images: an anchor image, a positive image (of the same individual), and a negative image (of a different individual). A shared tower architecture that extracted features and projected them into a 128-dimensional embedding space, with a margin-based triplet loss function (α=0.2), was used. The objective was to minimize the distance between the anchor and positive embeddings while maximizing the distance between the anchor and negative embeddings. This method enabled the model to learn discriminative features that effectively distinguish between individuals even when faced with subtle differences (i.e. between different photos of the same fish and head side). The ResNet-50 architecture, known for its deep residual learning framework, provided a robust foundation for feature extraction, allowing the model to capture complex facial marking patterns and characteristics in fish images (Supplementary Material).
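The margin-based triplet loss described above penalizes a triplet until the negative is at least α further from the anchor than the positive: L = max(0, d(a, p) − d(a, n) + α). The NumPy sketch below evaluates it on batches of 128-dimensional embeddings with α = 0.2. Using squared Euclidean distance follows the common FaceNet-style formulation and is an assumption, since the text does not state which distance was used.

```python
import numpy as np

MARGIN = 0.2  # alpha, the margin reported for the comparison model


def triplet_loss(anchor: np.ndarray, positive: np.ndarray, negative: np.ndarray,
                 margin: float = MARGIN) -> float:
    """Mean triplet loss over (batch, dim) embeddings.

    Each term is max(0, d(a, p) - d(a, n) + margin) with squared Euclidean d,
    so it is zero once the negative is at least `margin` further away than the positive.
    """
    d_pos = np.sum((anchor - positive) ** 2, axis=1)
    d_neg = np.sum((anchor - negative) ** 2, axis=1)
    return float(np.mean(np.maximum(0.0, d_pos - d_neg + margin)))


# Toy 128-d unit vectors along coordinate axes, so distances are easy to check by hand.
e = np.eye(128)
a, p_same, n_far = e[[0]], e[[0]], e[[1]]
print(triplet_loss(a, p_same, n_far))  # 0.0: d_pos = 0, d_neg = 2, already well separated
print(triplet_loss(a, n_far, p_same))  # 2.2: roles swapped, so the loss is 2 - 0 + 0.2
```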

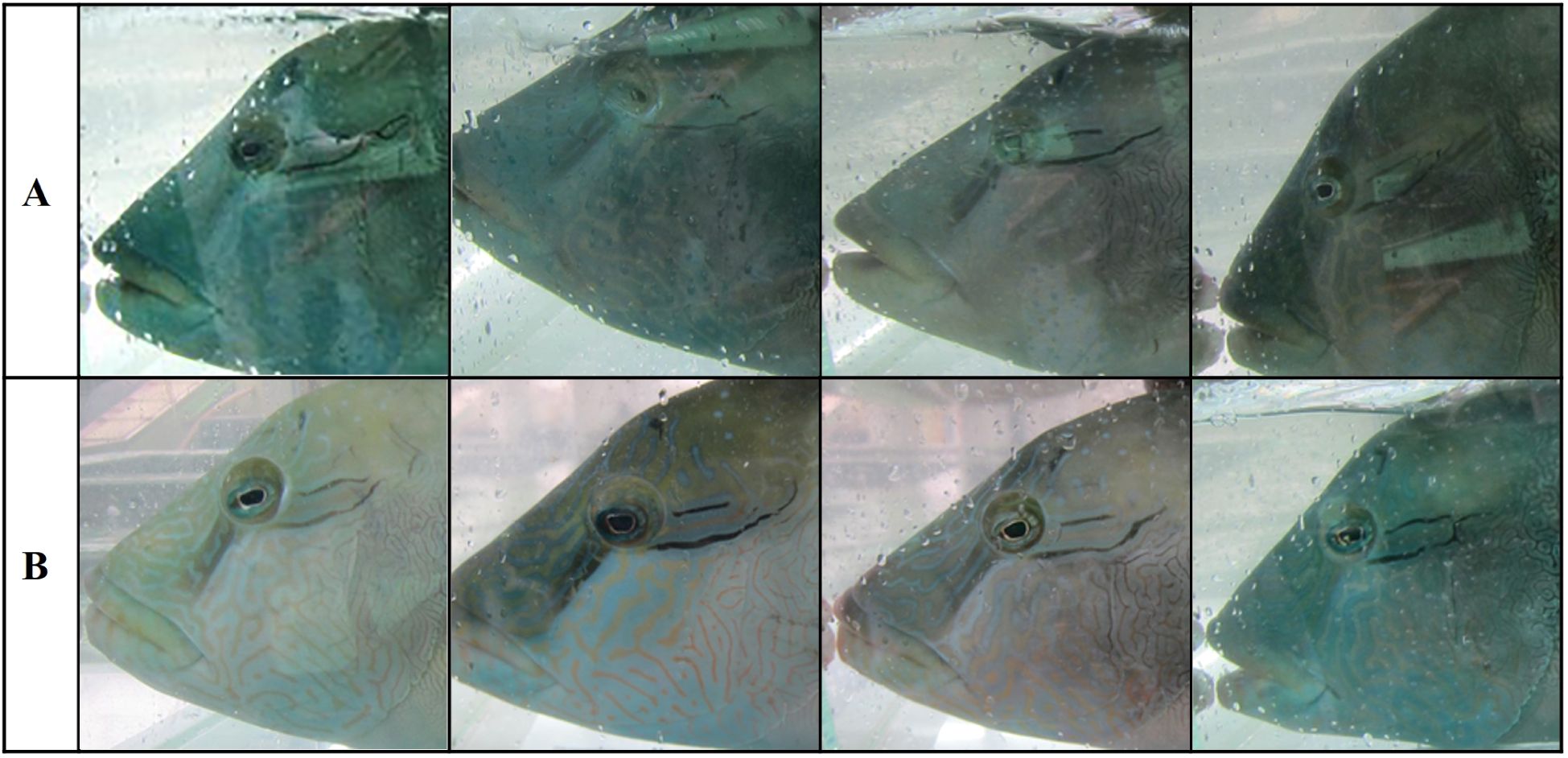

Training data preparation involved curating good-quality images of humphead wrasse (i.e. with sharp facial markings and no reflections on the tank glass) (Figure 2). At least two images of each individual fish were collected, representing various angles and conditions while maintaining good image quality. These images were then organized into triplets for triplet loss training, with automated random selection used to create training examples from a dataset of 186 fish images representing 91 unique fish. The images were split 8:2 by individual into training and test sets, so that no fish appeared in both.

Figure 2. Examples of (A) ‘poor-quality’ and (B) ‘good-quality’ images capturing the facial area of the humphead wrasse. Poor-quality images fail to show the prominent facial markings of the humphead wrasse and exhibit noticeable reflections and water droplets that seriously hinder detection of these markings. Good-quality, high-contrast, well-lit images highlight the prominent facial markings, and any reflections or water droplets are negligible and do not interfere with facial pattern detection.
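Splitting by individual rather than by image is what guarantees that test fish are never seen during training. A minimal sketch of such a grouped 80:20 split follows; only the counts of 186 images and 91 fish come from the text, while the file names, seed, distribution of photos per fish and helper name are hypothetical.

```python
import random
from collections import defaultdict


def split_by_individual(image_to_fish: dict, train_fraction: float = 0.8, seed: int = 42):
    """Split images so that every photo of a given fish lands in exactly one subset."""
    photos_of = defaultdict(list)
    for image, fish in image_to_fish.items():
        photos_of[fish].append(image)
    fish_ids = sorted(photos_of)
    random.Random(seed).shuffle(fish_ids)
    n_train = round(train_fraction * len(fish_ids))
    train_fish, test_fish = fish_ids[:n_train], fish_ids[n_train:]
    train = [img for f in train_fish for img in photos_of[f]]
    test = [img for f in test_fish for img in photos_of[f]]
    return train, test, set(train_fish), set(test_fish)


# Hypothetical labels matching the reported counts: 91 fish, 186 images (4 fish have 3 photos).
labels = {}
for i in range(91):
    for j in range(3 if i < 4 else 2):
        labels[f"fish{i:02d}_img{j}.jpg"] = f"fish{i:02d}"

train, test, train_fish, test_fish = split_by_individual(labels)
print(len(train_fish), len(test_fish), len(train) + len(test))  # 73 18 186
```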

The model was trained with early stopping based on validation loss, using the Adam optimizer, and was evaluated on the 20% holdout set to ensure generalization to unseen fish. For each training epoch, 10,000 triplets were generated, each consisting of an anchor image, a positive image (same fish) and a negative image (different fish). Special attention was given to creating challenging negative pairs, including fish with similar patterns or coloration, to enhance the model’s discriminative capability. Data augmentation, including random rotations (-10° to +10°), scaling (0.8× to 1.2×) and shear transformations (-10° to +10°), was applied to 50% of images to increase the diversity of the training set and improve the model’s robustness to real-world variation. The dataset was balanced to ensure equal representation of individuals and to prevent bias towards any particular fish characteristics.
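The augmentation ranges above can be combined into one random affine transform per image. The sketch below samples rotation, scale and shear within the stated ranges and applies them to half of the images. Composing them into a single 2 × 2 matrix in this order is an assumption about the implementation, which the text does not specify, and the function name is hypothetical.

```python
import numpy as np


def sample_augmentation(rng: np.random.Generator, p: float = 0.5):
    """Return a random 2x2 affine matrix, or None when the image is left unaugmented.

    Ranges follow the text: rotation -10 to +10 degrees, scale 0.8x to 1.2x,
    shear -10 to +10 degrees, applied with probability p (50% of images).
    """
    if rng.random() >= p:
        return None
    theta = np.deg2rad(rng.uniform(-10, 10))
    shear = np.deg2rad(rng.uniform(-10, 10))
    scale = rng.uniform(0.8, 1.2)
    rotation = np.array([[np.cos(theta), -np.sin(theta)],
                         [np.sin(theta),  np.cos(theta)]])
    shear_m = np.array([[1.0, np.tan(shear)],
                        [0.0, 1.0]])
    # Rotation and shear both preserve area, so the determinant equals scale**2.
    return scale * rotation @ shear_m


rng = np.random.default_rng(7)
matrices = [sample_augmentation(rng) for _ in range(10_000)]
augmented = [m for m in matrices if m is not None]
print(round(len(augmented) / len(matrices), 2))  # close to 0.5
```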

Negative pairs for the facial recognition system were created using a combination of manual and automated methods. First, positive pairs were labelled manually by the research team, identifying images of the same individual fish. To generate negative pairs, a shuffling process was implemented: after one image was selected, another was chosen at random from the dataset, ensuring that it showed a different individual. This process was repeated to create a diverse set of negative pairs. Manual labelling of positive pairs provided a foundation of accurate matches, while shuffling introduced randomness and variety into the negative pairs. Combining manual positive pair selection with automated negative pair generation balanced accuracy and efficiency in dataset preparation and produced a balanced training set, enabling the models to distinguish between similar-looking but different individual fish.
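The pair-generation procedure can be sketched as follows: positives come from the manually labelled same-fish pairs, and each negative is drawn at random, re-drawing whenever it belongs to the anchor’s own individual. The data structures and function name are hypothetical, and the deliberate selection of hard negatives with similar patterns is not modelled here.

```python
import random


def build_triplets(positive_pairs: list, image_to_fish: dict, n_triplets: int, seed: int = 0):
    """Create (anchor, positive, negative) triplets.

    positive_pairs: manually labelled (image_a, image_b) pairs showing the same fish.
    image_to_fish: maps every image to its individual, used to reject same-fish negatives.
    """
    rng = random.Random(seed)
    all_images = list(image_to_fish)
    triplets = []
    for _ in range(n_triplets):
        anchor, positive = rng.choice(positive_pairs)
        # Shuffle-style negative sampling: redraw until the image shows a different fish.
        negative = rng.choice(all_images)
        while image_to_fish[negative] == image_to_fish[anchor]:
            negative = rng.choice(all_images)
        triplets.append((anchor, positive, negative))
    return triplets


image_to_fish = {"a1": "A", "a2": "A", "b1": "B", "b2": "B", "c1": "C"}
positive_pairs = [("a1", "a2"), ("b1", "b2")]
triplets = build_triplets(positive_pairs, image_to_fish, n_triplets=10_000)
print(len(triplets))  # 10000, the per-epoch triplet count given in the text
```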

2.3 Model performance testing

2.3.1 Facial markings extraction model

The validation and testing protocol for the trained YOLO model comprised two phases: validation and prediction testing. In the validation phase, the model was evaluated on a distinct dataset of 61 images, and specialized software compared the model’s predictions against the established labels to generate performance metrics. The prediction testing phase simulated real-world use by processing 44 previously unseen images from a designated test folder. Together, these phases provided both quantitative performance indicators and qualitative insight into the model’s practical efficacy. Replicating this protocol requires the trained model, a labelled validation dataset, a set of test images and the YOLO software suite. This methodology allowed a thorough assessment of the model’s accuracy and its readiness for real-world deployment.
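Comparing predictions against labels rests on box overlap. The sketch below matches each predicted face box to a labelled box of the same class by intersection over union (IoU) and derives precision and recall from the resulting counts. The greedy matching and the 0.5 threshold follow the usual mAP50 convention but are a simplification of what the YOLO validation tooling actually computes; the boxes are made up.

```python
def iou(box_a: tuple, box_b: tuple) -> float:
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(predictions: list, labels: list, threshold: float = 0.5):
    """Greedy one-to-one matching of (class, box) predictions to labels of the same class.

    Predictions should be sorted by descending confidence before calling.
    """
    unmatched = list(labels)
    tp = 0
    for cls, box in predictions:
        candidates = [(iou(box, lbox), i) for i, (lcls, lbox) in enumerate(unmatched) if lcls == cls]
        if candidates:
            best_iou, best_i = max(candidates)
            if best_iou >= threshold:
                tp += 1
                unmatched.pop(best_i)
    fp = len(predictions) - tp
    fn = len(unmatched)
    precision = tp / (tp + fp) if predictions else 0.0
    recall = tp / (tp + fn) if labels else 0.0
    return precision, recall


labels = [("L", (10, 10, 110, 110)), ("R", (200, 50, 300, 150))]
predictions = [("L", (15, 12, 112, 108)),    # overlaps the 'L' face well: true positive
               ("R", (400, 400, 450, 450))]  # misses the 'R' face: false positive
print(precision_recall(predictions, labels))  # (0.5, 0.5)
```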

2.3.2 Markings comparison model

A dataset of 90 humphead wrasse left body side images (original, non-augmented), consisting of two to three photos from 40 humphead wrasse individuals, was used to evaluate the extraction and comparison models. The dataset included both poor-quality photos taken under suboptimal conditions and high-quality images, ensuring a balanced test of the model’s robustness.

To assess the performance of the comparison model, positional accuracy was evaluated in comparison trials using two subsets of images: 1) two repetitions with all 90 photos, and 2) two repetitions with the 74 high-quality photos in the dataset. Results were expressed as the percentage (%) of comparisons in which the correct individual appeared among the most similar matches at the top-1, top-3 and top-5 positions.
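Top-k accuracy and the rank statistics reported in the results can be computed from the position at which the correct individual appears in each query’s similarity ranking. The sketch below uses made-up positions purely to show the calculation. The text does not state whether the reported standard deviation is a sample or population value; the sample value is assumed here.

```python
import statistics


def topk_accuracy(ranks: list, k: int) -> float:
    """Percentage of queries whose correct match appears within the first k positions (1-based)."""
    return 100.0 * sum(r <= k for r in ranks) / len(ranks)


def rank_summary(ranks: list) -> dict:
    """Mean, median, mode and sample standard deviation of the correct-match positions."""
    return {
        "mean": statistics.mean(ranks),
        "median": statistics.median(ranks),
        "mode": statistics.mode(ranks),
        "sd": statistics.stdev(ranks),
    }


# Illustrative positions of the correct fish for 10 hypothetical queries (not the study's data).
ranks = [1, 1, 1, 1, 1, 1, 2, 2, 3, 5]
for k in (1, 3, 5):
    print(f"top-{k}: {topk_accuracy(ranks, k):.1f}%")  # 60.0%, 90.0%, 100.0%
print(rank_summary(ranks))
```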

This neural network model uses a pre-trained ResNet50V2 as its foundation, followed by layers that refine and compress the extracted features. It processes images through several stages, ultimately producing a compact 128-dimensional representation. With about 23.8 million parameters, mostly trainable, the model is designed to efficiently extract and condense important visual features, making it suitable for tasks like fish facial recognition.
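A minimal NumPy sketch of the ‘refine and compress’ stage follows. It assumes that the backbone’s final 7 × 7 × 2048 feature map (ResNet50V2 on a 224 × 224 input) is global-average-pooled, projected by a single dense layer to 128 dimensions and then L2-normalized. The single-layer head and the random weights are assumptions for illustration only, since the text does not list the exact layers. Under this assumption the head adds 2048 × 128 + 128 = 262,272 parameters, which together with the roughly 23.6 million parameters of a ResNet50V2 backbone without its classification top is consistent with the reported total of about 23.8 million.

```python
import numpy as np


def projection_head(feature_map: np.ndarray, weights: np.ndarray, bias: np.ndarray) -> np.ndarray:
    """Pool a (batch, 7, 7, 2048) feature map and project it to unit-length 128-d embeddings."""
    pooled = feature_map.mean(axis=(1, 2))          # global average pooling -> (batch, 2048)
    projected = pooled @ weights + bias             # dense layer -> (batch, 128)
    norms = np.linalg.norm(projected, axis=1, keepdims=True)
    return projected / np.maximum(norms, 1e-12)     # L2 normalization, guarding against zero vectors


rng = np.random.default_rng(3)
W = rng.normal(scale=0.02, size=(2048, 128))       # hypothetical dense-layer weights
b = np.zeros(128)
features = rng.random((2, 7, 7, 2048))             # stand-in for backbone output on 2 images
emb = projection_head(features, W, b)
print(emb.shape, W.size + b.size)  # (2, 128) 262272
```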

3 Results

3.1 Facial markings extraction model

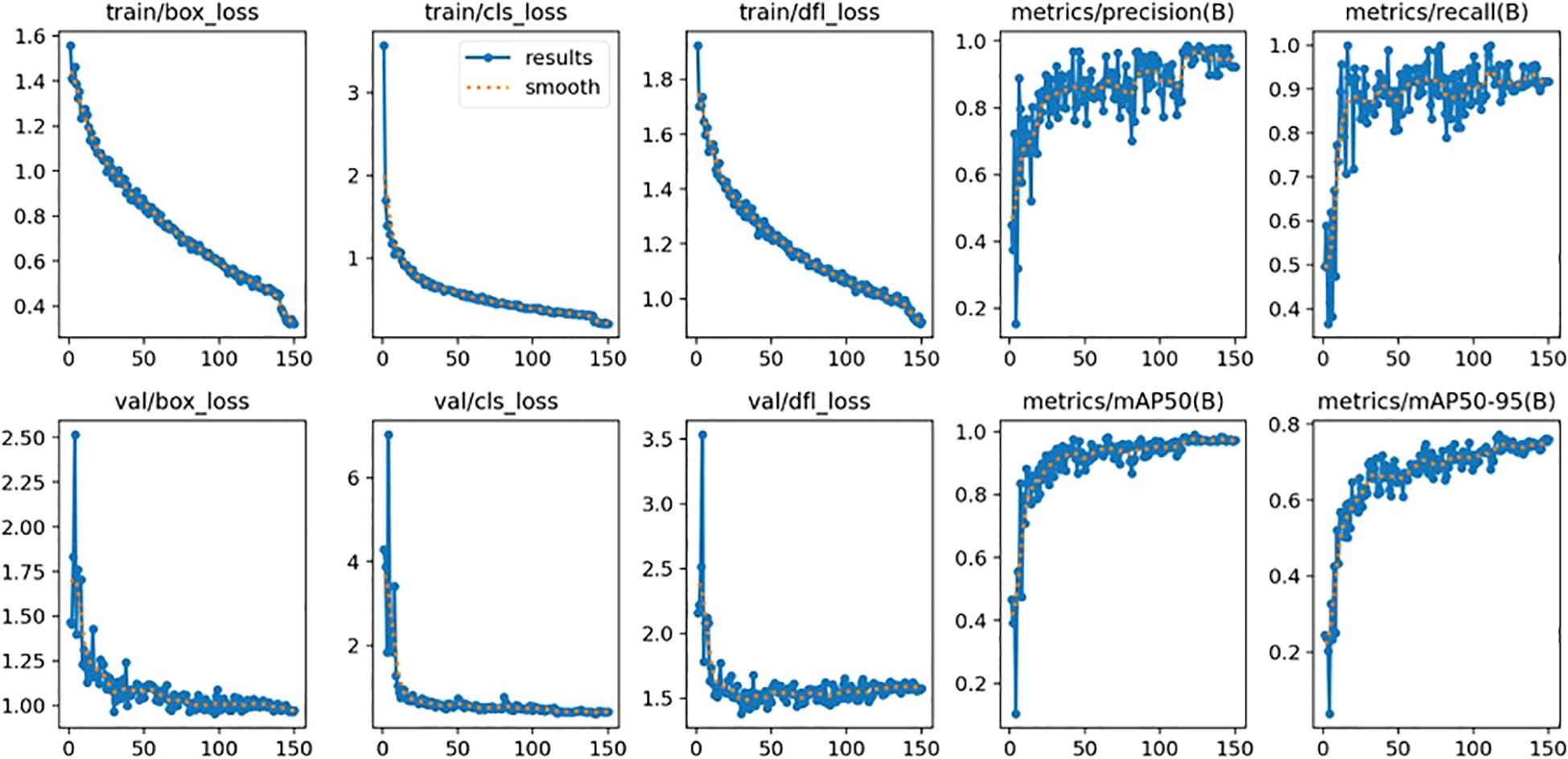

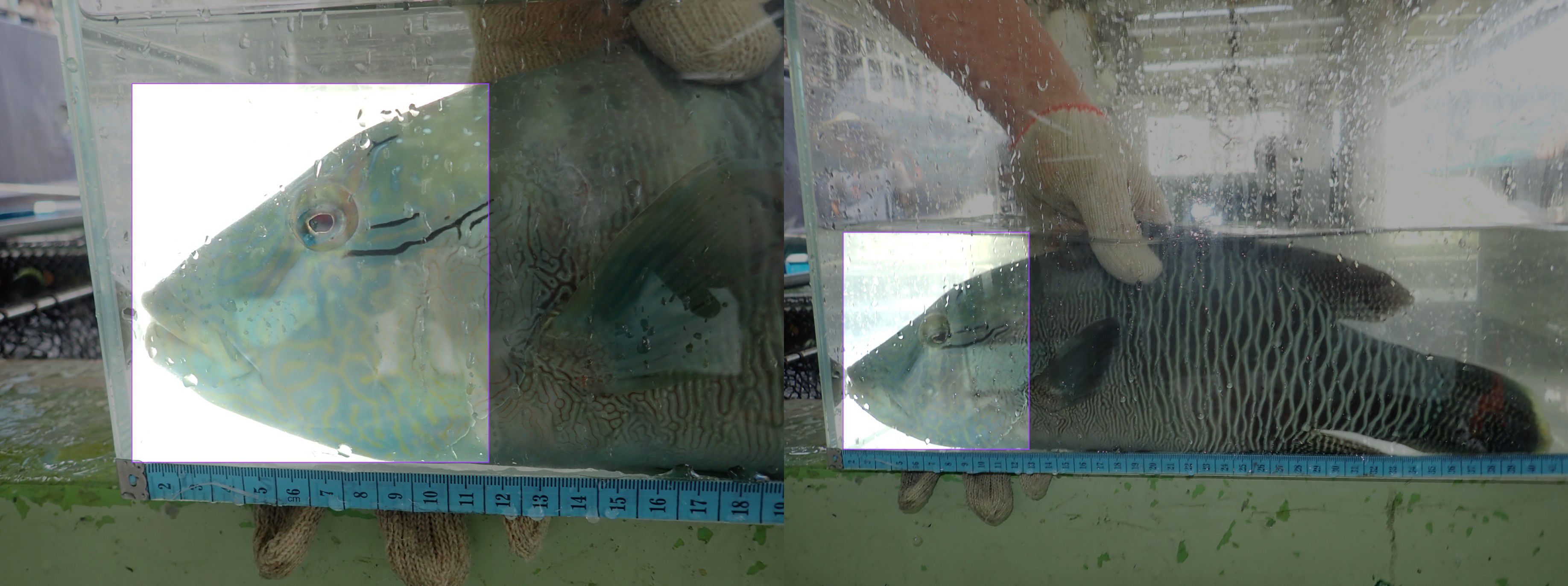

The validation results showed that the trained object detection model performed well on unseen images. Tested on 61 previously unseen images from the validation set, the model achieved high overall precision (0.978), recall (0.955) and mean average precision (mAP) scores (mAP50: 0.982, mAP50-95: 0.771). It performed very well in both classes, ‘L’ and ‘R’, with perfect recall for ‘L’ and perfect precision for ‘R’. The model also processed images efficiently, taking a total of 30.9 ms per image for preprocessing, inference and postprocessing combined. These results indicate that the model generalizes well to new, unseen images, maintaining high accuracy in detecting objects and performing consistently across different IoU thresholds (Figure 3).

Figure 3. Examples of outputs of the developed extraction model that extracts the facial area of humphead wrasse from inputted photos.

The object detection model was trained for 150 epochs, which took about 0.98 hours (roughly 59 minutes), with the best performance achieved in the final epochs. The final validation results showed high accuracy across metrics: an mAP of 0.982 at an IoU threshold of 0.5 and 0.772 for mAP50-95, with overall precision (P) of 0.977 and recall (R) of 0.955, indicating good overall detection performance. The model performed well for both classes, labelled ‘L’ and ‘R’, with slightly better results for class ‘L’. The loss curves for the training and validation sets (Figure 4) show a general decrease in loss values (box_loss, cls_loss and dfl_loss) over time, indicating successful learning. The final model size was relatively compact at 22.6 MB, making it potentially suitable for deployment in a range of applications.

3.2 Markings comparison model

In two comparison repetitions with the full 90-photo dataset (i.e. both poor- and good-quality images), the correct humphead wrasse individual was ranked at the top-1 position in 40% of comparisons, within the top-3 in 67.5% and within the top-5 in 72.5%.

In two comparison repetitions with the 74 good-quality images, the correct individual was ranked at the top-1 position in 79.73% of comparisons, within the top-3 in 95.95% and within the top-5 in 100%. The mean rank of the correct match was 1.797, with a median and mode of 1, indicating that the correct individual was most often ranked first. The standard deviation of 0.86 indicates that ranks were relatively tightly clustered around the mean, demonstrating consistent performance across the dataset.

3.3 Saving Face mobile application system

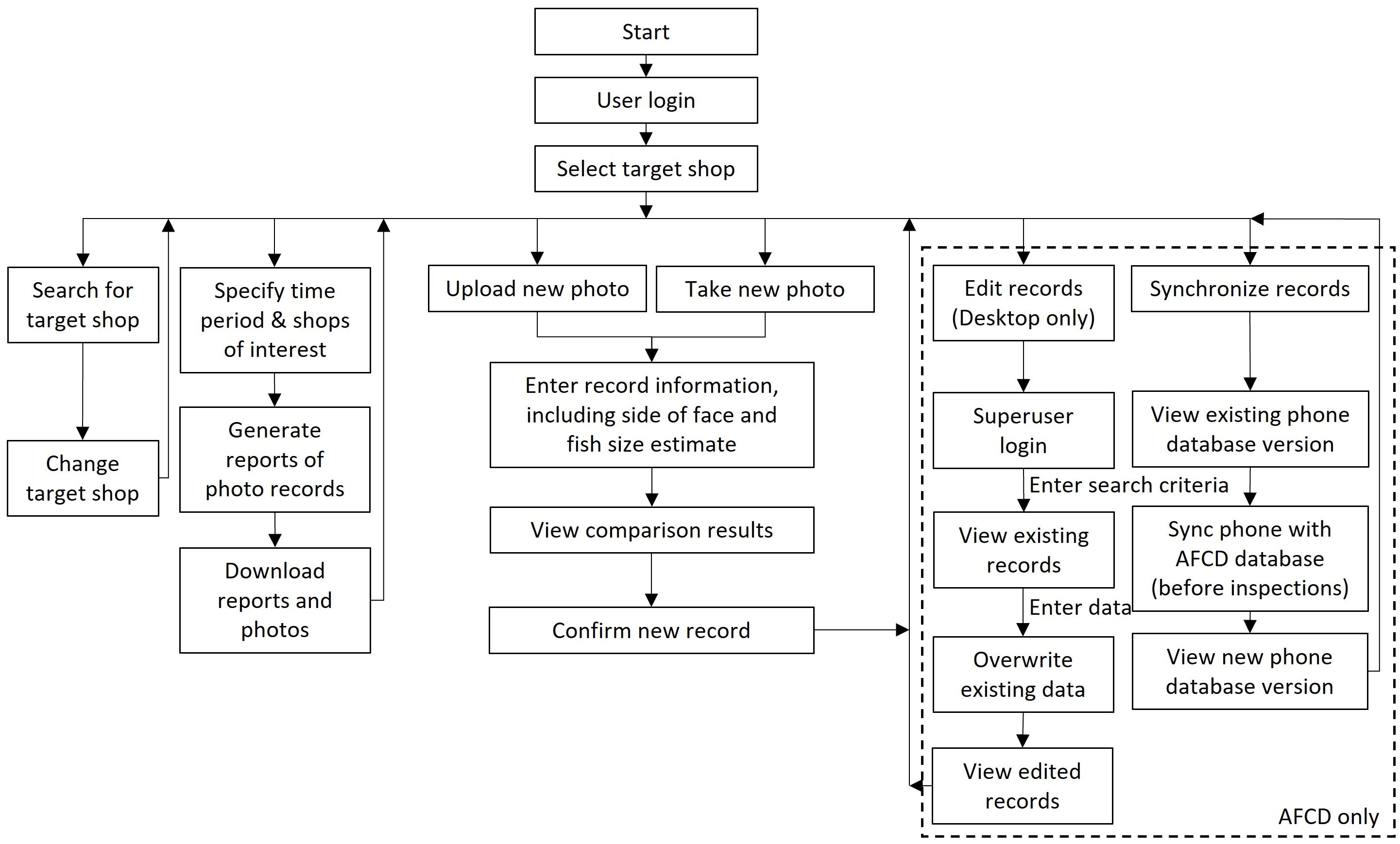

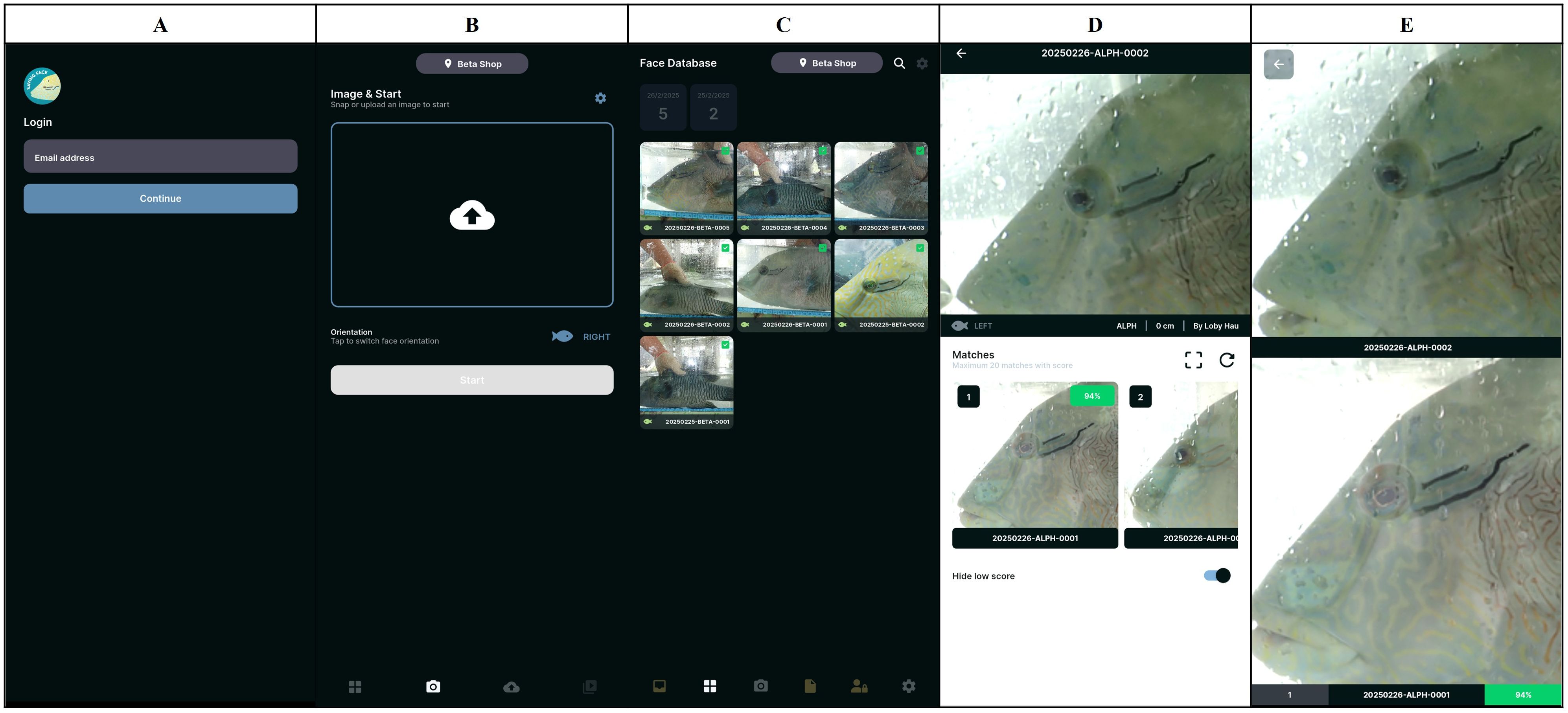

The Saving Face application delivers detailed analyses of the facial features of individual fish through an intuitive interface (Figures 5, 6). After logging in, users can either capture a new photo or upload one from their device’s album, allowing them to choose the most suitable image for analysis. Importantly, the upload and analysis processes are separated: once an image is uploaded, the analysis runs in the background, so users can continue using the app or its other features while processing takes place.
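The separation of upload and analysis described above can be sketched as a background job queue: the upload call returns immediately and analysis runs on a worker thread. This is a minimal, hypothetical illustration using Python's standard `concurrent.futures`; `analyse_photo` stands in for the extraction and comparison models, and the application's actual back end is not described in the text.

```python
import time
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Dict, Optional


def analyse_photo(photo_id: str) -> dict:
    """Hypothetical stand-in for face extraction plus comparison."""
    time.sleep(0.1)  # simulate model inference time
    return {"photo_id": photo_id, "status": "analysed"}


class UploadService:
    """Accepts uploads immediately and runs analysis on background threads."""

    def __init__(self, workers: int = 2):
        self._pool = ThreadPoolExecutor(max_workers=workers)
        self._jobs: Dict[str, Future] = {}

    def upload(self, photo_id: str) -> str:
        # Storing the image is omitted; analysis is queued, not awaited.
        self._jobs[photo_id] = self._pool.submit(analyse_photo, photo_id)
        return photo_id

    def status(self, photo_id: str) -> str:
        return "done" if self._jobs[photo_id].done() else "processing"

    def result(self, photo_id: str, timeout: Optional[float] = None) -> dict:
        # Blocks until the background analysis finishes (or timeout expires).
        return self._jobs[photo_id].result(timeout=timeout)

    def close(self) -> None:
        self._pool.shutdown(wait=True)
```

The user interface only needs `upload` and `status`; the comparison results are fetched once the job reports `"done"`.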

Figure 5. Basic use flow of the mobile application system “Saving Face” for photo identification of humphead wrasse.

Figure 6. Screenshots of the “Saving Face” mobile application. (A) The “User Login” page for registered users. (B) The “Create Comparison” page, where users can take new photos or upload images of humphead wrasse from their device storage, and can also enter optional details about the captured fish, including orientation (left/right), name and size (cm). (C) The “Comparisons” page lists the photo records created by the user in each shop, displaying details such as a unique ID, shop name, and date for each record. (D) The “Comparison Result” page for each record shows a list of the most similar stored photo records from the same shop, along with the respective similarity score (%). (E) The application allows users to enlarge the two photos in each comparison for closer manual examination, with the similarity score (%) also displayed for the examined pair of photos.

Once the image has been processed, the app presents the comparison results as a clear, ranked list of the most similar stored photo records from the same shop, each with its similarity score (%) (Figure 6D). Users can tap on an individual result to enlarge the two photos in the comparison for closer manual examination (Figure 6E). This interactive component encourages users to examine the facial patterns of candidate matches in detail rather than relying solely on the similarity score.
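How such a ranked list might be produced can be sketched as follows: each stored record from the same shop is scored against the query embedding and the records are sorted by similarity. The record fields, the use of cosine similarity, and the linear mapping of cosine similarity onto a 0 to 100% score are assumptions for illustration; the text does not specify how the app computes its similarity score.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Record:
    record_id: str
    shop: str
    embedding: List[float]  # e.g. the 128-d output of the comparison model


def cosine_similarity(u: List[float], v: List[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm > 0 else 0.0


def similarity_percent(u: List[float], v: List[float]) -> float:
    # Assumption: cosine similarity in [-1, 1] is mapped linearly onto 0-100 %.
    return 100.0 * (cosine_similarity(u, v) + 1.0) / 2.0


def rank_matches(query: Record, stored: List[Record],
                 top_n: int = 5) -> List[Tuple[str, float]]:
    """Most similar stored records from the query's own shop, best first."""
    candidates = [r for r in stored
                  if r.shop == query.shop and r.record_id != query.record_id]
    scored = [(r.record_id, similarity_percent(query.embedding, r.embedding))
              for r in candidates]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_n]
```

Restricting candidates to the same shop mirrors the per-location tracking described in the text and keeps the list short enough for manual checking.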

4 Discussion

4.1 Pros and cons of the current frameworks for model development

The current framework for humphead wrasse identification has several notable strengths, particularly in its extraction capabilities and overall system architecture. The YOLOv8-based extraction model achieves a 99% success rate in detecting both left and right faces under ideal conditions (i.e. in high-quality images), providing a robust foundation for the identification process. The cloud-based infrastructure and user-friendly mobile interface make the system accessible and efficient in field applications. Moreover, with good-quality images, the comparison model shows promising accuracy, ranking the correct individual first in 79.73% of comparisons and within the top three positions in 95.95%.

However, the system faces significant challenges with lower-quality images, particularly those affected by environmental factors such as reflections on tank surfaces, water droplets or poor lighting. Such images substantially reduce the accuracy of the comparison model, leading to less reliable comparisons and potentially false identifications. The problem is compounded by an imbalance in the current training data: the model performs optimally for only one side (the left) of the face, because more high-quality images from that side were used for training. Together, sensitivity to image quality and side-specific performance significantly narrow the range of field conditions under which the system can currently operate at its highest accuracy. As a result, careful attention by the user (photographer) to image quality will remain important. Good-quality images are usually easy to obtain, even under challenging field conditions, if the photographer takes the time and effort.

4.2 Optimization and prospects for model development

To address these limitations and further enhance the performance and reliability of the humphead wrasse face identification system, several optimization strategies could be implemented, with a particular focus on data cleanup and normalization. The training data should encompass images with different types of reflections, varying water clarity and diverse lighting conditions, so that the model learns to handle the real-world variability that users are likely to face when capturing images in the field, whether photographing fish in holding tanks or underwater. Advanced pre-processing techniques that mitigate reflections and enhance image quality before feature extraction could significantly improve performance on suboptimal images. This might involve, for example, developing specialized image enhancement algorithms tailored to the challenges of photographing animals in tanks.
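One way to expose a model to the reflections and lighting variability described above is synthetic data augmentation. The NumPy sketch below is a crude, hypothetical example with assumed parameter ranges: it jitters brightness and contrast and adds a Gaussian "glare" spot imitating a reflection on the tank surface. Augmented images of this kind would supplement, not replace, real photos taken under these conditions.

```python
import numpy as np


def augment_tank_photo(image: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Randomly perturb an RGB image (H x W x 3, values in [0, 1]).

    Crudely simulates variable lighting (contrast/brightness jitter) and a
    specular reflection on the tank surface (an additive Gaussian bright spot).
    All parameter ranges are illustrative assumptions.
    """
    h, w, _ = image.shape
    out = image.astype(np.float64)
    # Lighting: scale contrast around the image mean, then shift brightness.
    m = out.mean()
    out = (out - m) * rng.uniform(0.7, 1.3) + m + rng.uniform(-0.15, 0.15)
    # Reflection: Gaussian highlight centred at a random position.
    cy, cx = rng.uniform(0, h), rng.uniform(0, w)
    sigma = rng.uniform(0.05, 0.2) * min(h, w)
    yy, xx = np.mgrid[0:h, 0:w]
    glare = np.exp(-((yy - cy) ** 2 + (xx - cx) ** 2) / (2.0 * sigma ** 2))
    out = out + rng.uniform(0.2, 0.6) * glare[..., None]
    return np.clip(out, 0.0, 1.0)
```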

To address the side-specific limitation, developing a two-stream comparison model (one stream for each side) or investigating cross-side comparison techniques would be important, in addition to increasing the number of right-side images in the photo database. Active learning approaches, in which the system identifies and flags challenging cases for human review, could help to improve the model’s performance while adapting to new environmental conditions encountered in the field. Although these enhancements increase the complexity of the system, they are essential for creating a versatile and reliable tool that operates effectively across the wide range of conditions encountered in real-world inspection and research environments. By focusing on these areas, the system can evolve to better handle variable image quality and side-specific identification, ultimately providing more consistent and accurate results in diverse field conditions.
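A simple starting point for flagging challenging cases for human review is to flag any comparison whose best match is weak or barely better than the runner-up. The sketch below is hypothetical: both thresholds are assumptions that would need tuning on real inspection data. Flagged cases, once verified by a person, could be added to future training sets.

```python
def needs_human_review(similarities, min_top=80.0, min_margin=5.0):
    """Flag a comparison for manual review when the match is weak or ambiguous.

    similarities: similarity scores (%) of candidate matches, in any order.
    min_top, min_margin: hypothetical thresholds, to be tuned on real data.
    """
    if not similarities:
        return True  # nothing to compare against: always review
    ranked = sorted(similarities, reverse=True)
    top = ranked[0]
    runner_up = ranked[1] if len(ranked) > 1 else 0.0
    # Weak best match, or best match barely ahead of the next candidate.
    return top < min_top or (top - runner_up) < min_margin
```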

A critical area for improvement is the pre-processing of images to mitigate common problems encountered when photographing animals in water through glass. Advanced deraining algorithms could significantly reduce the impact of water droplets and reflections on image quality. These algorithms, which have shown promising results in computer vision applications, can remove water-related artefacts from images, potentially improving the clarity of fish features crucial for identification (Li et al., 2019). Additionally, specialized color correction and contrast enhancement techniques tailored for underwater imagery could help normalize the appearance of fish across various water conditions and lighting scenarios.
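As a minimal example of color correction, the gray-world method assumes that the average color of a scene is neutral gray and rescales each RGB channel accordingly. This is a classical baseline rather than a method tested in this study, and it can fail when a single color dominates the frame; it is shown only to illustrate the kind of normalization discussed.

```python
import numpy as np


def gray_world_balance(image: np.ndarray) -> np.ndarray:
    """Gray-world white balance for an RGB float image with values in [0, 1].

    Each channel is scaled so that its mean equals the mean over all three
    channels, partially counteracting a colour cast (e.g. blue-green water).
    """
    img = image.astype(np.float64)
    channel_means = img.reshape(-1, 3).mean(axis=0)
    gains = channel_means.mean() / np.maximum(channel_means, 1e-6)
    return np.clip(img * gains, 0.0, 1.0)  # gains broadcast over the last axis
```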

Nonetheless, despite current limitations, the model can already be applied under specific conditions to document and follow individuals and to aid enforcement. Photographers can control environmental conditions to produce usable images. For example, light levels are often high around the tanks where fish are kept, and photographers can choose where they stand to avoid water droplets and reflections on tank surfaces that could distort images. During formal (rather than undercover) inspections, officers should be able to closely control the conditions under which photos are taken. Still images and video material are continually being collected to improve model functionality and accuracy. Even as the model improves, user care in obtaining images will remain important.
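Image quality could also be checked at capture time, so that the photographer is prompted to retake an unsuitable photo. A common heuristic for blur is the variance of the Laplacian: sharp images with strong edges give high values. The sketch below assumes a grayscale image scaled to [0, 1]; the threshold is hypothetical and would need calibrating on real tank photos, and this check does not detect reflections or droplets.

```python
import numpy as np


def laplacian_variance(gray: np.ndarray) -> float:
    """Variance of a 4-neighbour Laplacian; low values suggest a blurred photo."""
    g = gray.astype(np.float64)
    lap = (g[:-2, 1:-1] + g[2:, 1:-1] + g[1:-1, :-2] + g[1:-1, 2:]
           - 4.0 * g[1:-1, 1:-1])
    return float(lap.var())


def is_sharp_enough(gray: np.ndarray, threshold: float = 0.001) -> bool:
    # Hypothetical threshold; calibrate against photos known to match well.
    return laplacian_variance(gray) >= threshold
```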

4.3 Application of AI-based tool to improve CITES implementation in Hong Kong in practice

4.3.1 Context for application to humphead wrasse

The use of humphead wrasse facial patterns as a novel tool to close the CITES enforcement loophole by detecting laundering was established in earlier work (Hau and Sadovy de Mitcheson, 2019; Hau, 2022) and is further developed in this study. AFCD has supported the development of AI technology to facilitate the monitoring of wildlife trade and enhance its enforcement capability under Cap 586. In line with this approach, AFCD worked closely with the ‘Saving Face’ project to tailor and trial the facial recognition mobile app for humphead wrasse to assist the Department’s inspection work against laundering.

After import, humphead wrasse entering the city’s markets are typically displayed in tanks that are easy to view from a public area, although some are stored at the back of the business, away from public sight. In both cases, they should be available for inspection by AFCD officers, and they should not be traded between businesses without traceable documentation. The typical turnaround time between entry into the city and sale or death of an individual fish is about a week and no more than one month. Hence, photo-recording of known individual fish over time in retail outlets can be used, together with import data, to detect possible laundering. Fewer than 15 businesses regularly trade in small numbers of this species, and most animals are traded within three main seafood retail markets in the city.

Not only is the humphead wrasse trade concentrated in a small number of holding locations, but the small numbers of fish traded also mean that all legally imported fish can be photographed, either on import or on arrival at specific retail outlets, and then followed over time. Only five shipments of humphead wrasse entered the city in 2023 and 2024 (none in 2021 and 2022), each containing between 468 and 484 fish (roughly 2,340 to 2,420 fish in total). These numbers are readily manageable using the facial recognition approach. The mobile application system enables AFCD officials to detect possible laundering by tracking individuals over time within specific retail outlets. During inspections of shops, restaurants and hotels, officials photograph each fish, creating sighting records for specific locations that are stored in a centralized database. On subsequent visits, the application’s photo identification models enable officials to identify any changes in the humphead wrasse individuals in stock. Such changes could be due to sale or death, or to the acquisition of new individuals (legally or illegally).

Recorded (i.e. photographed) individuals can potentially be followed over time by their facial identity. If any fish are still available for sale a month or more after the last legal import (i.e. beyond the turnaround period), their facial identity can be checked against the database of legal imports (or of fish recorded earlier in the same shop). If there is no match, enforcement officers are alerted to follow up with the business and check its trade paperwork, as the fish is likely to have been laundered.
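The screening logic described above can be expressed as a short rule. In the hypothetical sketch below, a sighting is flagged for follow-up when it occurs a month or more after the last legal import and its best facial match is not among the fish recorded from legal imports or from earlier visits to the shop. The 30-day window approximates the "no more than one month" turnaround stated in the text; the data structures and names are assumptions, and any flag would still require human verification of the match and the paperwork.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

# Approximates the stated turnaround of "no more than one month".
TURNAROUND = timedelta(days=30)


@dataclass
class Sighting:
    fish_id: Optional[str]  # best facial match in the database; None if unmatched
    shop: str
    seen_on: date


def flag_possible_laundering(sightings, last_legal_import, known_legal_ids):
    """Return sightings whose trade paperwork should be followed up.

    known_legal_ids holds IDs of fish recorded from legal imports or seen
    earlier in the same shop (simplified here to a single set).
    """
    flagged = []
    for s in sightings:
        beyond_turnaround = s.seen_on - last_legal_import >= TURNAROUND
        matched_legal = s.fish_id is not None and s.fish_id in known_legal_ids
        if beyond_turnaround and not matched_legal:
            flagged.append(s)
    return flagged
```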

If a suspect fish cannot be traced back to any legal export, AFCD can use the photographic evidence, combined with expert judgement of facial matches, to support follow-up investigation and prosecution, in the same way that human fingerprint evidence in court typically depends on expert judgement in addition to automated fingerprint image processing. Users (including AFCD enforcers) are not expected to rely solely on the outcome of the models. Human validation is expected for follow-up prosecution and subsequent legal processes, considering real-life scenarios and practical legal requirements, as advised by legal experts and relevant officers (personal communication, December 2024).

To facilitate investigation, research and data handling, information can be exported to an Excel file that embeds the photographs along with details of the location, date, fish size and matches identified. These reports can be generated for selected time periods and combinations of shops as needed.
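Filtering records by time period and shop for such a report can be sketched as below. For simplicity, this hypothetical example writes CSV text with Python's standard `csv` module rather than an Excel file with embedded photographs, and the field names are assumptions.

```python
import csv
import io
from datetime import date

FIELDS = ["date", "shop", "size_cm", "match_id"]


def export_report(records, shops, start: date, end: date) -> str:
    """CSV text of sighting records for the chosen shops within [start, end]."""
    buffer = io.StringIO()
    # extrasaction="ignore" skips any extra keys (e.g. photo data) in a record.
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, extrasaction="ignore")
    writer.writeheader()
    for rec in records:
        if rec["shop"] in shops and start <= rec["date"] <= end:
            writer.writerow({**rec, "date": rec["date"].isoformat()})
    return buffer.getvalue()
```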

4.3.2 Saving Face in practice

Early-stage trials of the practical application of the facial recognition app in Hong Kong highlighted two challenges. The first is the occasional refusal by shop owners to allow visitors to take photographs or to give them sufficient time to ensure good photo quality. This limits the availability of usable images for subsequent follow-up and enforcement, and means that not all fish can always be photographed from both sides. The second challenge is the limited government staff and funding dedicated to wildlife crime in Hong Kong, which constrains how regularly inspections can be carried out.

Positive trials by officers, researchers and non-technical users (such as citizen scientists and members of the general public) indicate that the app is easy to use in practice and can be applied undercover. AFCD and legal experts also indicated that results obtained from the tool could be acceptable as evidence in law enforcement and legal processes. To support its use and integration as a tool for government officers, a data management system tailored to departmental needs is currently under development in collaboration with AFCD (Audit Commission, 2021).

4.3.3 Prospects of automated wildlife trade enforcement solutions

Various automated solutions to combat wildlife trafficking have been developed in recent years following advances in artificial intelligence, for example, automated assessment of trade documents (using humphead wrasse as an example; Tlusty et al., 2023), luggage tomography (Pirotta et al., 2022), taxon-specific image and keyword mining for online and physical markets (Cardoso et al., 2023; Chakraborty et al., 2025) and big data monitoring (Wang et al., 2024). To our knowledge, individual identification based on body markings has not previously been applied to wildlife trade control. Because this study introduces a new approach for this purpose, we cannot compare it with other AI-based wildlife monitoring systems in terms of cost, scalability or ease of integration into existing wildlife protection infrastructure. However, given Hong Kong’s prominence in the global trade of endangered species, the government’s willingness to consider alternative tools to aid enforcement is encouraging.

In other species, individually distinctive markings and patterns are being considered in relation to their possible value in combatting wildlife crime. Tiger stripe patterns are individually distinctive, and a database of these is being compiled to develop an AI detection tool using stripe pattern profiles that are as unique as human fingerprints (EIA, 2022). A similar approach is being explored for turtles (Tabuki et al., 2021).

4.3.4 Beyond enforcement

A public version of the mobile humphead wrasse photo identification application is also available as a citizen science and research tool, enabling members of the general public, investigators and students to monitor the species’ trade. Sighting records submitted by the public enter a master database, where the anonymized data provide valuable supplementary information for enforcement investigations and contribute to the ongoing optimization (through additional model training) of the photo identification models. In addition, individual (non-government) users have their own database, which they can download and manage for further analysis.

Development of the ‘Saving Face’ application system for public use is entering the beta testing stage. The beta version is available publicly on iOS and Android platforms, allowing trial users to provide feedback and help identify bugs. This feedback will inform further optimization in future updates.

5 Conclusion

The absence of a system for individual animal identification and tracking significantly compromises CITES enforcement of the humphead wrasse trade under Cap 586 in Hong Kong and prevents the city from fulfilling its CITES obligations. The development of AI-based humphead wrasse individual identification models and the ‘Saving Face’ mobile application system provides a tool that could significantly improve enforcement if the government can successfully integrate this approach into its inspection processes and enforcement operations. The work demonstrates the potential of advanced photo identification techniques for improving CITES trade management throughout the supply chain, a method potentially applicable to other listed species with distinctive individual features.

However, other enforcement challenges remain, including inadequate control of imports on live fish carrier vessels (Class III[a]), limited law enforcement and investigation capacity, and the inability to distinguish individual fish by their source of production (CITES terminology, https://cites.org/eng/node/131004), each source being subject to different regulatory measures (Hau and Sadovy de Mitcheson, 2023). If the AI facial recognition approach cannot be applied, a tagging system will be needed to distinguish legal from illegal imports. However, tagging could be complicated and open to abuse, raises animal welfare issues, and may be unacceptable to traders who need fish to look good. By improving humphead wrasse trade management, the AI approach used in this initiative could serve as a showcase for enhancing the conservation of other listed or potentially listed fish and other species, contributing to the sustainable use and management of endangered wildlife resources.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author contributions

CYH: Conceptualization, Data curation, Formal analysis, Funding acquisition, Investigation, Methodology, Project administration, Resources, Supervision, Validation, Visualization, Writing – original draft, Writing – review & editing. WKN: Conceptualization, Data curation, Formal analysis, Funding acquisition, Investigation, Methodology, Project administration, Resources, Software, Supervision, Validation, Visualization, Writing – original draft, Writing – review & editing. YSdM: Conceptualization, Data curation, Formal analysis, Funding acquisition, Investigation, Methodology, Project administration, Resources, Supervision, Validation, Visualization, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. Ocean Park Conservation Foundation, Hong Kong (OPCFHK) provided funding for the development of the photo identification models for humphead wrasse and the prototype ‘Saving Face’ application in this project from 2020 to 2021. Funding in 2022-2024 for ongoing development work was provided by a private donor to Science & Conservation of Fish Aggregations.

Acknowledgments

AFCD has contributed to the development of the humphead wrasse facial recognition technology by providing essential data and materials (photographs) and has been closely involved in integrating this technology into its CITES enforcement procedures. The University of Hong Kong supported the development of the humphead wrasse photo identification technique and the ‘Saving Face’ project.

Conflict of interest

Author WKN was director of the company Mobius AI.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fevo.2025.1526661/full#supplementary-material

References

ADMCF (2018). Trading in Extinction: The Dark Side of Hong Kong’s Wildlife Trade (Hong Kong: ADMCF).

ADMCF (2021). Still Trading in Extinction: The Dark Side of Hong Kong’s Wildlife Trade (Hong Kong: ADMCF).

Arzoumanian Z., Holmberg J., Norman B. (2005). An astronomical pattern-matching algorithm for computer-aided identification of whale sharks Rhincodon typus. J. Appl. Ecol. 42, 999–1011. doi: 10.1111/j.1365-2664.2005.01117.x

Audit Commission (2021). Control of trade in endangered species by the Agriculture, Fisheries and Conservation Department (Hong Kong: Audit Commission).

Ballance L. T. (2018). Contributions of photographs to cetacean science. Aquat. Mammals 44, 668. doi: 10.1578/AM.44.6.2018.668

BFAR (2017). Napoleon Wrasse (Cheilinus undulatus) “Mameng” Philippine Status Report and National Plan of Action 2017-2022 (Quezon City, Philippines: Bureau of Fisheries and Aquatic Resources - National Fisheries Research and Development Institute).

CAPPMA (2013). A Survey of Coral Reef Fish Market in Tier 1 and Tier 2 Cities in Northern Mainland China. A Report Prepared for WWF Coral Triangle Program by the China Aquatic Products Processing and Marketing Alliance (CAPPMA) (Beijing, China: China Aquatic Products Processing and Marketing Alliance).

Cardoso A. S., Bryukhova S., Renna F., Reino L., Xu C., Xiao Z., et al. (2023). Detecting wildlife trafficking in images from online platforms: A test case using deep learning with pangolin images. Biol. Conserv. 279, 109905. doi: 10.1016/j.biocon.2023.109905

Chakraborty S., Roberts S. N., Petrossian G. A., Sosnowski M., Freire J., Jacquet J. (2025). Prevalence of endangered shark trophies in automated detection of the online wildlife trade. Biol. Conserv. 304, 110992. doi: 10.1016/j.biocon.2025.110992

Chan J. Y., Nijman V., Shepherd C. R. (2024). The trade of tokay geckos Gekko gecko in retail pharmaceutical outlets in Hong Kong. Eur. J. Wildlife Res. 70, 10. doi: 10.1007/s10344-023-01762-3

Cheng K. (2021). Is Hong Kong Illegal Wildlife Trade On the Brink. Available online at: https://earth.org/is-hong-kong-illegal-wildlife-trade-on-the-brink/ (Accessed February 8, 2025).

Choat H., Bellwood D. (1994). “Wrasses and parrotfishes,” in Encyclopedia of fishes (Academic Press, San Diego, CA), 209–213.

Choat J. H., Davies C. R., Ackerman J. L., Mapstone B. D. (2006). Age structure and growth in a large teleost, Cheilinus undulatus, with a review of size distribution in labrid fishes. Mar. Ecol. Prog. Ser. 318, 237–246. doi: 10.3354/meps318237

Choo Y. R., Kudavidanage E. P., Amarasinghe T. R., Nimalrathna T., Chua M. A., Webb E. L. (2020). Best practices for reporting individual identification using camera trap photographs. Global Ecol. Conserv. 24, e01294. doi: 10.1016/j.gecco.2020.e01294

Cisar P., Bekkozhayeva D., Movchan O., Saberioon M., Schraml R. (2021). Computer vision based individual fish identification using skin dot pattern. Sci. Rep. 11, 16904. doi: 10.1038/s41598-021-96476-4

Colin P. L. (2010). Aggregation and spawning of the humphead wrasse Cheilinus undulatus (Pisces: Labridae): general aspects of spawning behaviour. J. Fish Biol. 76, 987–1007. doi: 10.1111/j.1095-8649.2010.02553.x

Correia M., Antunes D., Andrade J. P., Palma J. (2021). A crown for each monarch: a distinguishable pattern using photo-identification. Environ. Biol. Fishes 104, 195–201. doi: 10.1007/s10641-021-01075-x

Desiderà E., Trainito E., Navone A., Blandin R., Magnani L., Panzalis P., et al. (2021). Using complementary visual approaches to investigate residency, site fidelity and movement patterns of the dusky grouper (Epinephelus marginatus) in a Mediterranean marine protected area. Mar. Biol. 168, 111. doi: 10.1007/s00227-021-03917-9

Dorenbosch M., Grol M. G. G., Nagelkerken I., van der Velde G. (2006). Seagrass beds and mangroves as potential nurseries for the threatened Indo-Pacific humphead wrasse, Cheilinus undulatus and Caribbean rainbow parrotfish, Scarus guacamaia. Biol. Conserv. 129, 277–282. doi: 10.1016/j.biocon.2005.10.032

EIA (2022). Groundbreaking stripe-pattern database to boost enforcement in fight against illegal tiger trade. Available online at: https://eia-international.org/news/groundbreaking-stripe-pattern-database-to-boost-enforcement-in-fight-against-illegal-tiger-trade/ (Accessed February 8, 2025).

Fabinyi M. (2011). Historical, cultural and social perspectives on luxury seafood consumption in China. Environ. Conserv. 39, 83–92. doi: 10.1017/S0376892911000609

Fabinyi M., Liu N. (2014). Seafood banquets in Beijing: consumer perspectives and implications for environmental sustainability. Conserv. Soc. 12, 218–228. doi: 10.4103/0972-4923.138423

Gillett R. (2010). Monitoring and management of the humphead wrasse, Cheilinus undulatus (Rome, Italy: Food and Agriculture Organization of the United Nations).

Hau C. Y. (2022). Outcomes, challenges and novel enforcement solutions following the 2004 CITES Appendix II listing of the humphead (=Napoleon) wrasse, Cheilinus undulatus (order Perciformes; family Labridae). [PhD thesis]. Hong Kong: The University of Hong Kong.

Hau C. Y., Sadovy de Mitcheson Y. (2019). A facial recognition tool and legislative changes for improved enforcement of the CITES appendix II listing of the humphead wrasse, Cheilinus undulatus. Aquat. Conservation: Mar. Freshw. Ecosyst. 29, 2071–2091. doi: 10.1002/aqc.3199

Hau C. Y., Sadovy de Mitcheson Y. J. (2023). Mortality and management matter: Case study on use and misuse of ‘ranching’ for a CITES Appendix II-listed fish, humphead wrasse (Cheilinus undulatus). Mar. Policy 49, 105515. doi: 10.1016/j.marpol.2023.105515

Hoffer E., Ailon N. (2015). “Deep metric learning using triplet network,” in Similarity-based pattern recognition: third international workshop, SIMBAD 2015, Copenhagen, Denmark, October 12-14, 2015 (Cham, Switzerland: Springer).

Jepsen N., Thorstad E. B., Havn T., Lucas M. C. (2015). The use of external electronic tags on fish: an evaluation of tag retention and tagging effects. Anim. Biotelem. 3 (1), 49. doi: 10.1186/s40317-015-0086-z

Koch G., Zemel R., Salakhutdinov R. (2015). “Siamese neural networks for one-shot image recognition,” in ICML deep learning workshop (New York, United States: Journal of Machine Learning Research), vol. 2, 1–30.

Li S., Araujo I. B., Ren W., Wang Z., Tokuda E. K., Junior R. H., et al. (2019). “Single image deraining: A comprehensive benchmark analysis” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. (New York, United States: The Computer Vision Foundation), 3838–3847.

Liu M. (2013). Trade of the humphead wrasse (Cheilinus undulatus) in mainland China (Xiamen, China: Xiamen University).

Love M. S., Seeto K., Jainese C., Nishimoto M. M. (2018). Spots on sides of giant sea bass (Stereolepis gigas Ayres, 1859) are likely unique to each individual. Bulletin South. California Acad. Sci. 117, 77–81. doi: 10.3160/soca-117-01-77-81.1

Marshall A. D., Pierce S. J. (2012). The use and abuse of photographic identification in sharks and rays. J. Fish Biol. 80, 1361–1379. doi: 10.1111/j.1095-8649.2012.03244.x

Martin-Smith K. M. (2011). Photo-identification of individual weedy seadragons Phyllopteryx taeniolatus and its application in estimating population dynamics. J. Fish Biol. 78, 1757–1768. doi: 10.1111/j.1095-8649.2011.02966.x

Nyegaard M., Karmy J., McBride L., Thys T. M., Welly M., Djohani R. (2023). Rapid physiological colouration change is a challenge - but not a hindrance - to successful photo identification of giant sunfish (Mola alexandrini, Molidae). Front. Mar. Sci. 10. doi: 10.3389/fmars.2023.1179467

Oddone A., Onori R., Carocci F., Sadovy Y., Suharti S., Colin P. L., et al. (2010). Estimating reef habitat coverage suitable for the humphead wrasse, Cheilinus undulatus, using remote sensing. FAO Fisheries and Aquaculture Circular. No. 1057 (Rome, Italy: Food and Agriculture Organization of the United Nations).

Perrig M., Goh B. (2008). Photo-ID on reef fish – avoiding tagging-induced biases. Proc. 11th Int. Coral Reef Symposium, 7–11. doi: 10.13140/RG.2.1.3528.0487

Petso T., Jamisola R. S., Mpoeleng D. (2022). Review on methods used for wildlife species and individual identification. Eur. J. Wildlife Res. 68. doi: 10.1007/s10344-021-01549-4

Pirotta V., Shen K., Liu S., Phan H. T. H., O’Brien J. K., Meagher P., et al. (2022). Detecting illegal wildlife trafficking via real time tomography 3D X-ray imaging and automated algorithms. Front. Conserv. Sci. 3, 757950. doi: 10.3389/fcosc.2022.757950

Poh T. M., Fanning L. M. (2012). Tackling illegal, unregulated, and unreported trade towards Humphead wrasse (Cheilinus undulatus) recovery in Sabah, Malaysia. Mar. Policy 36, 696–702. doi: 10.1016/j.marpol.2011.10.011

Randall J. E., Head S. M., Sanders A. P. (1978). Food habits of the giant humphead wrasse, Cheilinus undulatus (Labridae). Environ. Biol. Fishes 3, 235–238. doi: 10.1007/BF00691948

Redmon J., Divvala S., Girshick R., Farhadi A. (2016). “You only look once: Unified, real-time object detection,” in Proceedings of the IEEE conference on computer vision and pattern recognition. (New York, United States: Institute of Electrical and Electronics Engineers), 779–788.

Ritter E. K., Amin R. W. (2019). Mating scars among sharks: evidence of coercive mating? Acta ethologica 22, 9–16. doi: 10.1007/s10211-018-0301-z

Romero F. G., Injani A. S. (2015). “Assessment of humphead wrasse (Cheilinus undulatus) spawning aggregations and declaration of marine protected area as strategy for enhancement of wild stocks,” in Resource Enhancement and Sustainable Aquaculture Practices in Southeast Asia: Challenges in Responsible Production of Aquatic Species: Proceedings of the International Workshop on Resource Enhancement and Sustainable Aquaculture Practices in Southeast Asia 2014 (RESA). Eds. Romana-Eguia M. R. R., Parado-Estepa F. D., Salayo N. D., Lebata-Ramos M.J.H. (Aquaculture Dept., Southeast Asian Fisheries Development Center, Tigbauan, Iloilo, Philippines), 103–120.

Sacchi R., Scali S., Pellitteri-Rosa D., Pupin F., Gentilli A., Tettamanti S., et al. (2010). Photographic identification in reptiles: a matter of scales. Amphibia-Reptilia 31, 489–502. doi: 10.1163/017353710X521546

Sadovy Y., Kulbicki M., Labrosse P., Letourneur Y., Lokani P., Donaldson T. J. (2003). The humphead wrasse, Cheilinus undulatus: synopsis of a threatened and poorly known giant coral reef fish. Rev. Fish Biol. Fisheries 13, 327–364. doi: 10.1023/B:RFBF.0000033122.90679.97

Sadovy de Mitcheson Y., Liu M., Suharti S. (2010). Gonadal development in a giant threatened reef fish, the humphead wrasse Cheilinus undulatus, and its relationship to international trade. J. Fish Biol. 77, 706–718. doi: 10.1111/j.1095-8649.2010.02714.x

Sadovy de Mitcheson Y. J., Mitcheson G. R., Rasotto M. B. (2022). Mating system in a small pelagic spawner: field case study of the mandarinfish, Synchiropus splendidus. Environ. Biol. Fishes 105, 699–716. doi: 10.1007/s10641-022-01281-1

Sadovy de Mitcheson Y., Tam I., Muldoon G., le Clue S., Botsford E., Shea S. (2017). The Trade in Live Reef Food Fish – Going, Going, Gone (Hong Kong: ADM Capital Foundation and The University of Hong Kong).

Sluka R. D. (2000). Grouper and Napoleon wrasse ecology in Laamu Atoll, Republic of Maldives: part 1. Habitat, behavior, and movement patterns. Atoll Res. Bull. 491, 1–28. doi: 10.5479/si.00775630.491.1

Soulsbury C., Gray H., Smith L., Braithwaite V., Cotter S., Elwood R. W., et al. (2020). The welfare and ethics of research involving wild animals: A primer. Methods Ecol. Evol. 11, 1164–1181. doi: 10.1111/2041-210X.13435

Tabuki K., Nishizawa H., Abe O., Okuyama J., Tanizaki S. (2021). Utility of carapace images for long-term photographic identification of nesting green turtles. J. Exp. Mar. Biol. Ecol. 545, 151632. doi: 10.1016/j.jembe.2021.151632

Tlusty M. F., Cawthorn D. M., Goodman O. L., Rhyne A. L., Roberts D. L. (2023). Real-time automated species level detection of trade document systems to reduce illegal wildlife trade and improve data quality. Biol. Conserv. 281, 110022. doi: 10.1016/j.biocon.2023.110022

Treilibs C. E., Pavey C. R., Hutchinson M. N., Bull C. M. (2016). Photographic identification of individuals of a free-ranging, small terrestrial vertebrate. Ecol. Evol. 6, 800–809. doi: 10.1002/ece3.2016.6.issue-3

Tuckey N. P., Ashton D. T., Li J., Lin H. T., Walker S. P., Symonds J. E., et al. (2022). Automated image analysis as a tool to measure individualised growth and population structure in Chinook salmon (Oncorhynchus tshawytscha). Aquaculture Fish Fisheries 2, 402–413. doi: 10.1002/aff2.v2.5

Tupper M. (2007). Identification of nursery habitats for commercially valuable humphead wrasse Cheilinus undulatus and large groupers (Pisces: Serranidae) in Palau. Mar. Ecol. Prog. Ser. 332, 189–199. doi: 10.3354/meps332189

Wang W., Hel J., Liu S. (2024). “Combating illegal wildlife trade through big data,” in Proceedings of the 2024 3rd International Conference on Artificial Intelligence, Internet and Digital Economy (ICAID 2024) (Berlin, Germany: Springer Nature), Vol. 11. 138.

Wu J., Sadovy de Mitcheson Y. (2016). Humphead (Napoleon) Wrasse Cheilinus undulatus trade into and through Hong Kong (Hong Kong: TRAFFIC).

Keywords: Napoleon/humphead wrasse, CITES, photo identification, artificial intelligence, wildlife trade, law enforcement

Citation: Hau CY, Ngan WK and Sadovy de Mitcheson Y (2025) Leveraging artificial intelligence for photo identification to aid CITES enforcement in combating illegal trade of the endangered humphead wrasse (Cheilinus undulatus). Front. Ecol. Evol. 13:1526661. doi: 10.3389/fevo.2025.1526661

Received: 12 November 2024; Accepted: 06 March 2025; Published: 26 March 2025.

Edited by:

Kyle Ewart, The University of Sydney, Australia

Reviewed by:

Eve Bohnett, University of Florida, United States

Jin Hou, Beijing Normal University, China

Copyright © 2025 Hau, Ngan and Sadovy de Mitcheson. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: C. Y. Hau