ORIGINAL RESEARCH article

Front. Ecol. Evol., 17 March 2022

Sec. Models in Ecology and Evolution

Volume 10 - 2022 | https://doi.org/10.3389/fevo.2022.806453

This article is part of the Research Topic Biologically-Informed Approaches to Design Processes and Applications

Biodiversity is in a state of global collapse. Among the main drivers of this crisis is habitat degradation that destroys living spaces for animals, birds, and other species. Design and provision of human-made replacements for natural habitat structures can alleviate this situation. Can emerging knowledge in ecology, design, and artificial intelligence (AI) help? Current strategies to resolve this issue include designing objects that reproduce known features of natural forms. For instance, conservation practitioners seek to mimic the function of rapidly disappearing large old trees by augmenting utility poles with perch structures. Other approaches to restoring degraded ecosystems employ computational tools to capture information about natural forms and use such data to monitor remediation activities. At present, human-made replacements of habitat structures cannot reproduce significant features of complex natural forms while supporting efficient construction at large scales. We propose an AI agent that can synthesise simplified but ecologically meaningful representations of 3D forms that we define as visual abstractions. Previous research used AI to synthesise visual abstractions of 2D images. However, current applications of such techniques neither extend to 3D data nor engage with biological conservation or ecocentric design. This article investigates the potential of AI to support the design of artificial habitat structures and expand the scope of computation in this domain from data analysis to design synthesis. Our case study considers possible replacements of natural trees. The application implements a novel AI agent that designs by placing three-dimensional cubes – or voxels – in the digital space. The AI agent autonomously assesses the quality of the resulting visual abstractions by comparing them with three-dimensional representations of natural trees. 
We evaluate the forms produced by the AI agent by measuring relative complexity and features that are meaningful for arboreal wildlife. In conclusion, our study demonstrates that AI can generate design suggestions that are aligned with the preferences of arboreal wildlife and can support the development of artificial habitat structures. The bio-informed approach presented in this article can be useful in many situations where incomplete knowledge about complex natural forms can constrain the design and performance of human-made artefacts.

This article aims to investigate whether and how artificial intelligence can help in the urgent task of designing replacement habitat structures. We seek to demonstrate that an artificial intelligence agent can synthesise visual abstractions of natural forms and support the design of artificial habitat structures. The overarching purpose of our approach is to contribute to ecocentric design or design that seeks to benefit all forms of life and life-hosting abiotic environments.

Complex habitat structures are pervasive and tend to contain more organisms and species than simple habitats (Torres-Pulliza et al., 2020). Yet humans simplify habitats at an unprecedented rate (Díaz et al., 2015). For instance, large old trees are structurally complex organisms that provide critical habitats for many animals. Many human practices routinely remove old trees from urban and rural areas to satisfy economic, aesthetic and safety concerns (Le Roux et al., 2014). It is difficult to replace such organisms because large old trees take hundreds of years to mature (Banks, 1997). Ecologists predict that a massive reduction of the old-tree populations and the subsequent simplification of their local ecosystems are unavoidable in the near future worldwide, even if the planting of young trees increases significantly (Lindenmayer et al., 2012; Manning et al., 2012).

The global loss of large old trees and other complex natural structures calls for remedial action. Artificial coral reefs (Baine, 2001) and wetlands (Mitsch, 2014) are instances where human-made habitat opportunities attempt to augment naturally occurring structures. In these conditions, it is important to understand why complex natural habitat structures are more attractive to fauna and to design artificial replacements that reproduce their key features. We do not aim to facilitate or support the alarming loss of natural habitats, such as large old trees or coral reefs. Preservation of such environments is the highest priority. Our work aims to contribute to situations where degradation has already occurred or is inevitable.

Restoration practitioners recognise that artificial structures will never fully match the geometries and functions of complex natural structures. Old trees that can live for many centuries provide a telling example. Here, a combination of growth processes and environmental factors occurring over extended timespans produces highly differentiated shapes that typical construction methods cannot reproduce (Le Roux et al., 2015a) and that contemporary scholarship does not fully understand (Lindenmayer, 2017). As tens of millions of old trees will be lost within the next 50 years in south-eastern Australia alone (Fischer et al., 2009), designers seeking to replicate these complex forms must also consider rapid construction at scale and under conditions of uncertainty.

In such contexts, designers successfully use criteria such as the ease of construction and cost to judge the quality of artificial habitat structures. However, these approaches also introduce biases that result in simplified systems that do not occur in natural habitats. These biases include creating designs based on widely used artefacts and ignoring relationships and scales that are difficult to document. For instance, one emerging strategy for woodland restoration augments tree plantings with constructions including utility poles enriched with perch structures (Hannan et al., 2019) and nestboxes (Mänd et al., 2009). These structures can be successful in attracting wildlife. Yet their relatively homogenous forms do not replicate the number and diversity of naturally occurring branching structures of old trees. This means they are considerably less effective than their natural precedents (Le Roux et al., 2015a; Hannan et al., 2019). Therefore, designers of surrogate habitat structures need novel methods and tools that can emphasise, recognise, and replicate significant features of trees for artificial reproduction at high volumes.

Researchers, conservation managers and arborists are among human users who already use tools to sort, extract and visualise data to understand tree forms. However, these approaches rely on pre-existing parametrisation to describe and quantify tree structures. For instance, ecologists describe trees by measuring trunk diameters. This approach is simple but crude and can be significantly misleading. For example, large-diameter young trees are not ecologically equivalent to old trees, in part because they have simpler shapes (Lindenmayer, 2017). Furthermore, there is no widely accepted definition or measure of a tree structure (McElhinny et al., 2005; Ehbrecht et al., 2017). Consequently, tree geometries do not easily reduce to discrete attributes without significant artefacts and the loss of meaningful information (Parker and Brown, 2000; Ehbrecht et al., 2017).

As an alternative to such parameter-based approaches, we propose to achieve bio-informed designs through an AI-driven synthesis of visual abstractions. This process reduces the amount of information in visual data to produce simplified representations that heighten semantically relevant features of such data (for a review, see Arnheim, 2010; Viola et al., 2020). For our objective, relevant data includes three-dimensional scans of natural habitat structures. In this context, human design objectives seek to express the needs and preferences of nonhuman animals and other lifeforms (Roudavski, 2021). An ability to express needs and preferences is particularly important for nonhuman users such as arboreal fauna. These users have needs that are not fully understood by humans. The process of abstraction is beneficial in such cases because it can focus on relevant features of a source object without the need to pre-specify parameters or types.

We acknowledge an increasing interest in AI systems dealing with visual information. These systems include applications in medicine, geographical information systems, design and other domains (Soffer et al., 2019; Reimers and Requena-Mesa, 2020; Mirra and Pugnale, 2021). We propose that such emerging techniques can significantly contribute to environmental conservation and regeneration through the design of artificial habitat structures. Exploiting this opportunity, we ask whether and how an artificial intelligence agent can synthesise simplified surrogates of natural forms. Consequently, this article seeks to demonstrate the ability of AI to capture and reproduce characteristic features of complex geometries.

The article is structured as follows. Section “Materials and Methods: An Autonomous Agent for Visual Abstraction of Complex Natural Forms” discusses AI techniques for visual information processing, including data generation, reconstruction, and synthesis of visual abstractions. In this section, we also introduce our AI agent, explain the use case, describe the training procedures and discuss the evaluation methods. Section “Results: Demonstration of Autonomous Visual Abstraction and Verification” demonstrates the capability of the AI agent to synthesise visual abstractions of tree forms. Our output analysis indicates that the visual abstractions generated by the AI agent are verifiable and meaningful. Section “Discussion: Proposed Advancements and the Future Development of the Artificial Intelligence Toolkit” compares our findings with previous studies, highlighting our innovations in three areas: AI for the synthesis of visual abstractions in 3D, resistance to human biases, and design innovation. This section also presents this work’s current limitations and prospects for future research.

The steps we followed to answer the research question and test the hypothesis include:

• Step 1: Select an AI model that can synthesise visual abstractions of 3D forms. In our case, this is a reinforcement learning agent.

• Step 2: Define an appropriate case study. We use large old trees.

• Step 3: Adapt the AI model to the case study.

• Step 4: Develop measurement and analysis routines to compare human-reduced artificial habitat structures, trees represented as simplified 3D models, and AI-synthesised forms.

• Step 5: Evaluate visual abstractions produced by the AI agent.

This section justifies our choice of an AI model to synthesise visual abstractions and describes its main features. We aim to use AI to extract visual features from complex natural forms that are three-dimensional and synthesise visual abstractions of these forms. We provide a brief overview of existing AI techniques that can be trained on 3D visual data to perform similar tasks and describe their limitations. We focus on strategies to represent 3D data and applications for 3D data generation and reconstruction.

Our overview includes applications of generative models for 3D data generation and explains their unsuitability for the case study presented in this article. We then describe applications of AI agents that interact with a modelling environment to reconstruct forms. These agents can reduce the representation accuracy of target 3D forms but are trained only to reconstruct samples that are stored in the training dataset. Finally, we describe how generative models and AI agents can be combined to synthesise visual abstractions of habitat structures. We identify an existing technique that can achieve this goal in 2D and outline our plan to extend it for 3D applications.

Artificial Intelligence comprises a broad range of techniques that can simulate how humans process visual information, including 2D images and 3D forms. Among these techniques, Convolutional Neural Networks (CNNs) (Krizhevsky et al., 2012) have become mainstream in AI due to their success in image classification.

The convolution operation can extract features from spatially organised data, such as a grid of pixels – i.e., images – which the AI model combines into low-dimensional representations useful for data classification. The reverse of the convolution operation – named deconvolution – can synthesise features from low-dimensional representations. An AI model can be trained to aggregate these features and generate or reconstruct data. Convolutions and deconvolutions natively process 2D data but can work in 3D if the representation format of the 3D data is discrete and regular.

Generative models, such as generative adversarial networks (GAN) (Goodfellow et al., 2014) and variational autoencoders (VAE) (Kingma and Welling, 2014), exploit deconvolutional layers to synthesise images at high fidelities and resolutions (Brock et al., 2019; Karras et al., 2019). They can also perform a variety of image translation tasks, including translation from photographs to sketches (Isola et al., 2017) and from low-resolution to high-resolution images (Ledig et al., 2017).

Previous studies used generative models featuring 3D deconvolutions for 3D data generation. AI researchers had to develop strategies to represent 3D data in a format that complied with the requirements of the deconvolution operations. The most straightforward approach converts a 3D model into a voxel grid, or occupancy grid, which is a data format originally proposed for 3D-model classification tasks (Maturana and Scherer, 2015; Wu et al., 2015). AI researchers used voxel grids to train AI models to generate new forms (Brock et al., 2016; Wu et al., 2016) and to translate text or 2D sketches into 3D models (Chen et al., 2018; Delanoy et al., 2018).

Alternative representation strategies developed for classification tasks – including unordered point clouds (Qi et al., 2017; Wang et al., 2019) or heterogeneous meshes (Feng et al., 2019; Hanocka et al., 2019) – cannot work for data generation tasks because the output of an AI model consists of a finite and ordered set of numbers. One strategy that extends the application of generative models beyond voxel grids involves representing the 3D models that populate the dataset as isomorphic meshes and training a model to synthesise new topologically equivalent meshes (Tan et al., 2018). This approach can also work with point clouds by ensuring one-to-one point correspondences between every data sample (Gadelha et al., 2017; Nash and Williams, 2017). Besides data generation, these strategies proved effective for 3D data reconstruction from partial 3D input (Litany et al., 2018) or 2D images (Yan et al., 2016; Fan et al., 2017).

These applications demonstrate that generative models can effectively learn to synthesise 3D data, but not all representation formats are suitable for this task. Furthermore, 3D deconvolution operations produce very accurate data, making the AI-generated forms too detailed to be considered visual abstractions. In application to the design of habitat structures, this is not helpful as preserving the complexity of existing structures is not practicable.

A different approach to the problem of 3D data generation involves training an AI agent to perform modelling actions within modelling software. This approach does not rely on deconvolution operations to produce 3D forms and thus can produce less detailed forms. Therefore, it has a greater potential for synthesising visual abstractions of natural habitat structures.

The main differences with the generative models described in section “Artificial Intelligence for 3D Data Generation” are that:

• The learning task is dynamic: the agent must learn to perform a set of modelling actions rather than synthesising a form in one shot.

• Forms can be generated through any external modelling environment: The choice of a representation format that is suitable for AI training is less relevant for the quality of the generated forms because, after training, the 3D modelling actions can be rendered by any modelling environment to produce forms at high resolutions.

The application of this approach focused on inverse graphics, i.e., the problem of predicting modelling actions necessary to reconstruct 3D forms. Solving this problem involves: (1) deciding on a representation that supports comparisons between target and generated forms; (2) defining a similarity metric; and (3) designing how the AI agent interacts with the modelling environment, which includes defining the typology of modelling actions and the effect of each action on the generated form.

Generally, inverse graphics aims to reconstruct an exact replica of target forms. The modelling environment is not constrained, and the agent can perform all the actions necessary to reconstruct the form. For instance, Willis et al. (2021) developed a reinforcement learning agent that selects modelling actions such as extrude and Boolean union to reconstruct a target CAD model procedurally. They used a domain-specific language (DSL) to formalise sequences of actions in a symbolic way. Their implementation represented 3D models as graphs, and the agent assessed the quality of the reconstructions by measuring the similarity between the actions necessary to produce the target form and the actions performed by the agent. Sharma et al. (2018) also used a DSL and trained an agent to reconstruct 3D forms. Their agent performed comparisons in the voxel space and assessed similarity by a set of geometric metrics, including the chamfer distance.
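To make the idea of a geometric similarity metric concrete, the following is a minimal sketch of one common variant of the chamfer distance between two 3D point sets. It is an illustration only: the function name `chamfer_distance`, the choice of unsquared Euclidean distances, and the sample points are assumptions, and the cited studies may use a different formulation.

```python
import math


def chamfer_distance(points_a, points_b):
    """Symmetric chamfer distance between two non-empty 3D point sets.

    For every point, find the nearest point in the other set, average these
    nearest-neighbour distances in each direction, and add the two means.
    (Assumed variant: unsquared Euclidean distances.)
    """
    if not points_a or not points_b:
        raise ValueError("both point sets must be non-empty")

    def mean_nearest(src, dst):
        return sum(min(math.dist(p, q) for q in dst) for p in src) / len(src)

    return mean_nearest(points_a, points_b) + mean_nearest(points_b, points_a)


# Hypothetical target form and two candidate reconstructions.
target = [(0, 0, 0), (1, 0, 0), (2, 0, 0)]
identical = list(target)
shifted = [(x, y + 1, z) for x, y, z in target]

print(chamfer_distance(target, identical))  # 0.0
print(chamfer_distance(target, shifted))    # 2.0
```

A lower value indicates a closer reconstruction. An agent rewarded on such a metric is pushed toward exact replication, which is the behaviour that a visual abstraction task needs to relax.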

Other studies used a constrained modelling environment, such that the agent could only approximate the target form. Kim et al. (2020) developed an agent that reconstructed 3D forms through the aggregation of primitives. The model placed 3D components in a voxel grid to reconstruct target geometries that were also represented as voxels. Besides formal similarity, the model considered the stability of generated forms by getting feedback from a physics simulator. Liu et al. (2017) and Zou et al. (2017) applied a similar approach to construct simplified representations of target meshes through the aggregation of multiple primitives.

Overall, approaches based on AI agents can either produce an accurate reconstruction of target forms or simplified representations of them. The representation accuracy of the generated forms depends on how the agent interacts with the modelling environment and assesses the similarity between generated and target forms. However, most applications address data reconstruction, which in our case study would preclude the necessary innovation, for example, to support the production of the AI-generated forms via artificial means and their adaptation to novel ecosystems.

Through the analysis of literature on AI, we found that there are no models that are specifically designed to synthesise visual abstractions in 3D. Despite this, existing models do show some of the features that are necessary to perform this task. For instance, the AI agent developed by Zou et al. (2017) can generate simplified representations of target meshes by assembling solid primitives. However, their AI agent could not generate forms other than those contained in the training dataset. Conversely, generative models like GANs can learn the underlying distribution of a dataset of 3D forms and sample new regions of such distribution to generate new data (Wu et al., 2016). Yet, the GAN generator has a very high representational capacity. Consequently, a well-trained model can produce synthetic forms that are very accurate. The generator does not try to reduce the number or complexity of the extracted features and, therefore, does not produce visual abstractions.

A model that can synthesise visual abstractions should be able to extract visual features from a dataset of forms in the same way GANs do. It should also be able to produce synthetic data through interaction with a constrained modelling environment, which is what an AI agent trained for inverse graphics does.

Computer scientists achieved these objectives for 2D image applications by integrating an AI agent with a GAN discriminator. Ganin et al. (2018) used this approach to train an AI model named “Synthesising Programs for Images using Reinforced Adversarial Learning” – SPIRAL – to generate visual abstractions of 2D images. This application tasked the AI agent with learning a set of drawing actions to reproduce the key features of an image dataset within drawing software.

Unlike previous applications of AI for inverse graphics (see section “Artificial Intelligence for Inverse Graphics”), in SPIRAL, humans do not supply the agent with information about human drawings, and the agent must develop a strategy by trial and error. An additional component – a GAN discriminator – produces a similarity metric that informs the agent about the quality of the synthesised images.

The SPIRAL model and its upgrade SPIRAL++ (Mellor et al., 2019) demonstrated that the agent could learn to extract meaningful visual features from samples and reproduce such features while drawing on an empty canvas, even without examples of drawing actions. The implementation controlled the accuracy of the synthesised images by (1) number of actions; and (2) action typology. In this case, a lower number of actions produced less realistic images but forced the agent to focus more efficiently on the most relevant features. For instance, an agent trained to generate human faces in 17 drawing actions attempted to maximise the efficiency of the actions by exploiting a single stroke to produce every facial feature. The action typology affected the character of the representation. For instance, if the agent had used straight lines instead of curves, it would have produced abstract shapes that could only crudely approximate the features of a human face. Yet, humans might still easily recognise such arrangements as sketches of faces.
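The effect of a limited action budget can be illustrated with a toy example that transfers the idea to voxels. The sketch below is not the SPIRAL implementation: the class `VoxelCanvas`, the scripted `trunk_first_policy`, and the use of intersection over union as a score are all hypothetical stand-ins (SPIRAL obtains its reward from a GAN discriminator and learns its policy by trial and error).

```python
class VoxelCanvas:
    """Hypothetical constrained modelling environment: one voxel per action."""

    def __init__(self, size=32, max_actions=20):
        self.size = size
        self.max_actions = max_actions
        self.occupied = set()
        self.actions_taken = 0

    def done(self):
        return self.actions_taken >= self.max_actions

    def place(self, x, y, z):
        if self.done():
            raise RuntimeError("action budget exhausted")
        if not all(0 <= c < self.size for c in (x, y, z)):
            raise ValueError("voxel outside the grid")
        self.occupied.add((x, y, z))
        self.actions_taken += 1  # repeated placements still consume budget


def iou(generated, target):
    """Intersection over union of two voxel sets (stand-in similarity score)."""
    union = generated | target
    return len(generated & target) / len(union) if union else 1.0


def run_episode(policy, target, max_actions):
    """Let a policy act until the budget is spent, then score the result."""
    canvas = VoxelCanvas(max_actions=max_actions)
    while not canvas.done():
        canvas.place(*policy(canvas))
    return canvas, iou(canvas.occupied, target)


# Toy target: an 8-voxel trunk with a 4-voxel horizontal branch at the top.
trunk = {(16, 16, z) for z in range(8)}
branch = {(16 + dx, 16, 7) for dx in range(1, 5)}
target = trunk | branch


def trunk_first_policy(canvas):
    # Scripted stand-in for a learned policy: build the trunk bottom-up.
    return (16, 16, canvas.actions_taken)


canvas, score = run_episode(trunk_first_policy, target, max_actions=8)
print(len(canvas.occupied), round(score, 3))  # 8 0.667
```

With only eight actions, the policy cannot cover all twelve target voxels, so it must choose which features to keep. Changing `max_actions` or the kind of primitive placed per action are the two controls that correspond to the number of actions and the action typology discussed above.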

To date, studies applied SPIRAL and SPIRAL++ to generate human faces, handwritten digits, and images representing 3D scenes. There are no current implementations of SPIRAL for 3D data to our knowledge. Despite that, we selected this SPIRAL model for further development because, unlike GANs, it can autonomously decide the number and characteristics of the features to reproduce in a synthetic visual abstraction. Furthermore, unlike current applications for inverse graphics, it does not require a dataset of drawing instructions and supports fine control of the abstraction process through the specification of constraints. Later in the article, we describe how our version of SPIRAL can extract visual features from a 3D dataset of tree forms and synthesise visual abstractions of such forms.

Our second step defines a relevant use case for the AI agent: a scenario where abstraction is possible and beneficial.

We first establish a domain of complex forms suitable for simplification through a process of abstraction. We then select an example known to contain relevant features. As described in the introduction, we focus on characteristics of large old trees. Below, we define four groups of characteristics, from general to specific, and explain why trees can serve as a useful case study.

Our first group relates to patterns of energy and material flows. We acknowledge that recent research describes trees as complex and highly social organisms. At the level of whole landscapes, large old trees mediate critical physical, chemical, and biological roles at varying spatial and temporal scales. They regulate hydrological, nitrogen and carbon regimes; alter micro- and meso-climatic conditions; act as key connectivity nodes in modified landscapes such as paddocks and cities; contribute to vertical and horizontal habitat heterogeneity; and serve as local hotspots of biodiversity (Lindenmayer and Laurance, 2016). Underground, systems of roots and mycorrhizal fungi form communicatory networks that connect trees (Beiler et al., 2010). In such networks, large old trees act as hub nodes with high connectivity to other plants. Highly-connected large old trees improve responsiveness to threats, maximise resource utilisation and build forest resilience by sharing nutrients (Gorzelak et al., 2015) and supporting long-term succession (Ibarra et al., 2020). These characteristics provide ample scope for future work but remain beyond the scope of this study.

Our second group narrows our focus to the composition and arrangement of physical matter. In doing so, we consider the trees’ capability to support dwelling. Ecologists describe large old trees as keystone habitat structures because they support far more reptiles, insects, birds and other taxa than other landscape elements (Manning et al., 2006; Stagoll et al., 2012). Large old trees support more diverse animal and plant communities than smaller and younger trees because they have unique structures with many different attributes. Hollows and cavities afford breeding sites; foliage offers shelter; fissured bark offers invertebrate food resources, and large complex canopies with an abundance of diverse branches serve as sites for resting and social activities (Lindenmayer and Laurance, 2016). These important characteristics provide a broad selection of definable features for potential artificial replication.

Our third group relates to bird sheltering and perching. To demonstrate the potential for our AI agent to synthesise visual abstractions of tree habitat structures, we select two significant and interrelated activities supported by trees. Many birds, including passerines – representing 60% of all bird species – spend most of their time perching. Large tree crowns provide a variety of sheltered and exposed perch sites, creating opportunities for habitation (Hannan et al., 2019). For instance, one study observed that a quarter of all bird species perched exclusively in structures provided by large trees (Le Roux et al., 2018). Canopies of large old trees have significantly more branches and branch types and so support more birds than small trees (Stagoll et al., 2012; Barth et al., 2015). We use existing ecological evidence in combination with our own statistical estimates of branch types to generate baselines for the artificial replication of branch types and distributions.

Our fourth and final group relates to how the geometrical characteristics of canopies affect bird preferences, wellbeing, and survival. Birds rely on their physiological, sensory, and cognitive abilities to make use of tree canopies. Tall old trees are visually prominent and can serve as landmarks that link landscapes (Fischer and Lindenmayer, 2002; Manning et al., 2009). In the canopies of old trees, dieback creates vertical structural heterogeneity (Lindenmayer and Laurance, 2016), resulting in diverse and segmented branch distributions (Sillett et al., 2015). These complex canopy shapes provide a broad range of geometric conditions supporting birds, including significant quantities of exposed and near-horizontal branches (Le Roux et al., 2018). Studies of large old trees show that many individual birds and bird species prefer to perch on horizontal branches and branches lacking foliation (Le Roux et al., 2015a; Zielewska-Büttner et al., 2018). The existence of such evidence makes it possible to consider geometric properties of branch distributions in isolation from other essential characteristics of trees.

These steps show that, although large old trees have many key attributes and functions, it is possible to isolate important characteristics for use in our case study. We focus on branch distributions within the canopy structure of old trees as a test case for AI-driven visual abstraction of complex habitat structures. Our approach can accommodate and layer additional measurements for other characteristics.

The next step in our method establishes base datasets of canopy structures for use within our AI model.

We establish base datasets (Dataset 1: Natural habitat-structures and Dataset 2: Artificial habitat-structures) representing the tree canopy features identified previously as our focus (Figure 1). These datasets allow our AI agent to be exposed to branch features and distributions occurring in natural trees. They also enable us to evaluate the resulting AI-generated forms. We briefly outline these datasets below. For technical details about the workflow and the equipment and software used, refer to Appendix D: Habitat Structure Dataset Construction.

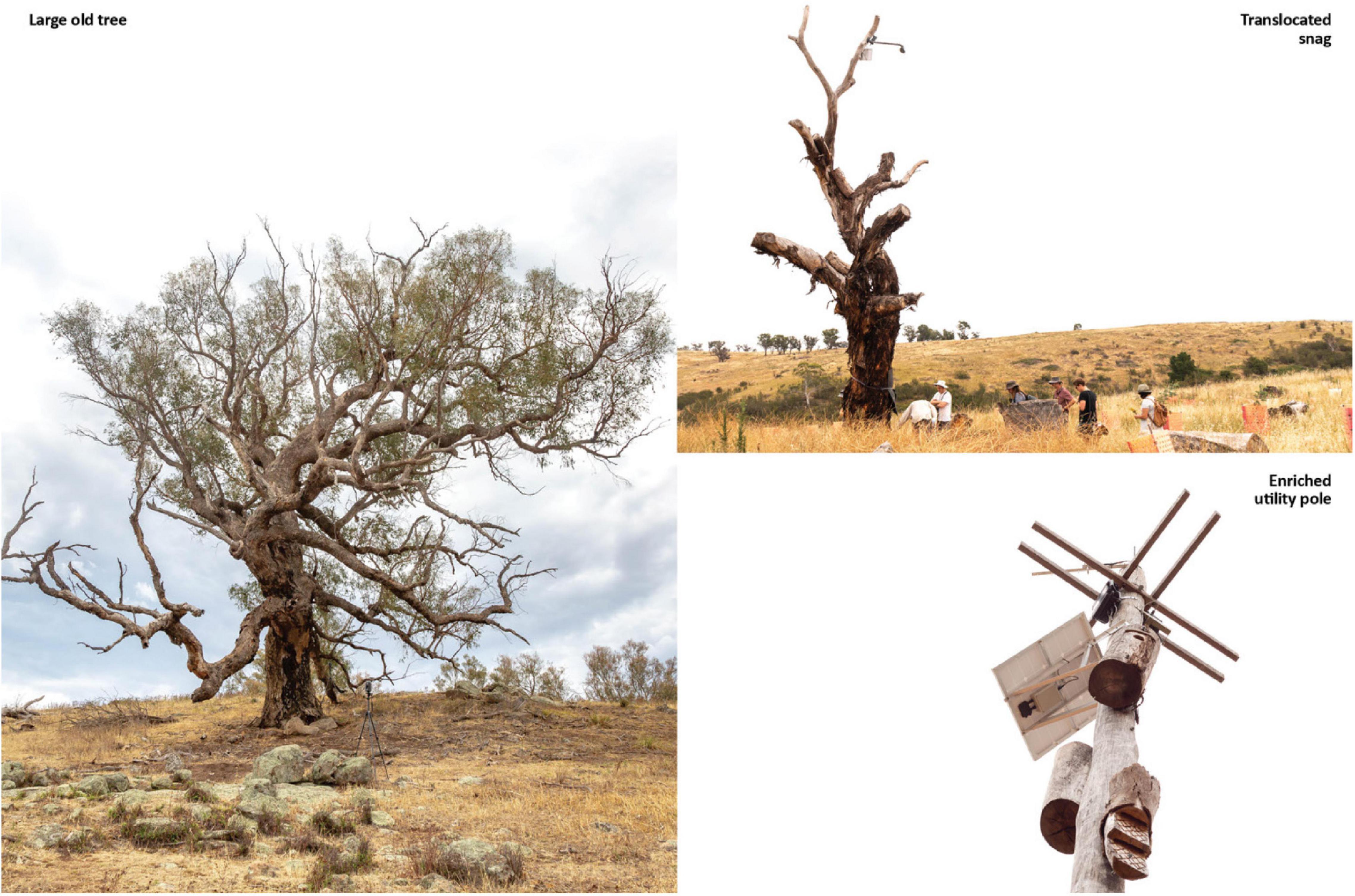

Figure 1. Natural and artificial habitat structures of differing complexity: an old tree; a translocated dead tree (complex artificial habitat structure); an enriched utility pole (simple artificial habitat structure).

To create an appropriate training dataset of branch features (Dataset 1: Natural habitat-structures), we first acquired geometries of three natural trees in a format that captures large volumes of structural information in three dimensions. Given the technical constraints of the modelling environment discussed below, we selected younger trees to test our approach. Although these trees have simpler canopy shapes than the canopy of older trees, they still represent a significant increase in structural complexity and perch diversity relative to current artificial habitat structures (refer to section “Measurement and Comparison” for a comparison between the artificial habitat structures and tree samples in our datasets).

We use LiDAR (Light Detection and Ranging), as it does not require pre-existing categorisation of geometrical features. Instead, this technology creates 3D points representing locations where the tree surfaces reflect the laser beams (Atkins et al., 2018). We then isolate points representing branches from the resulting point clouds, and fit geometric primitives that approximate individual branches. From this, we extract the 3D centroids and radii of all branches in each sample tree. Refer to Appendix D: Habitat Structure Dataset Construction for technical details of the workflow.

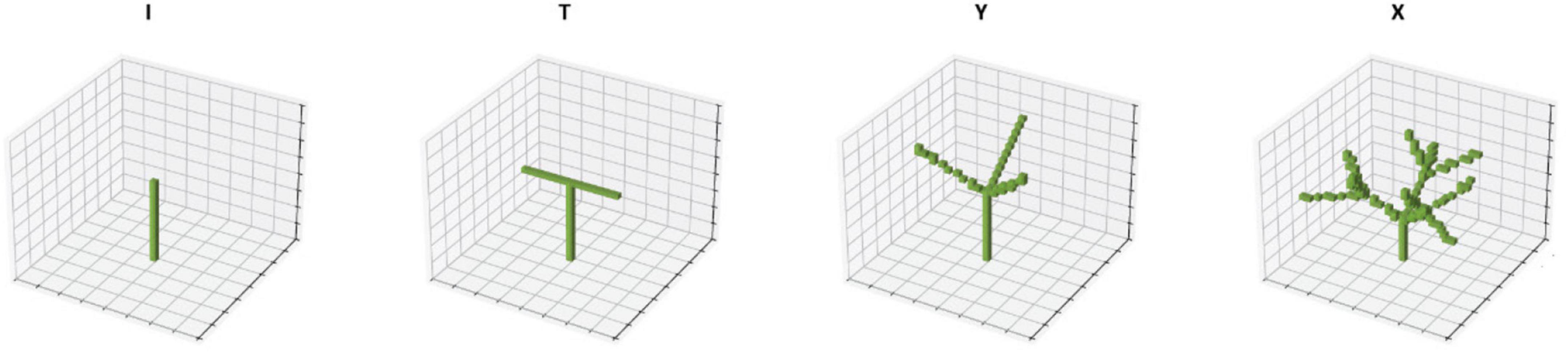

To enable an evaluation of the forms synthesised by our AI agent in comparison to existing artificial habitat structures (Dataset 2: Artificial habitat structures), we select a range of artefacts representing existing artificial habitat structures of increasing geometric complexity. These structures provided benchmark states of complexity for comparison with our AI-synthesised forms. They also represented meaningful reductions of complexity performed by humans with existing conservation techniques for artificial habitat construction (Hannan et al., 2019). This set of sample structures includes – from simple to more structurally complex – a non-habitat vertical structure representing a non-enriched utility pole (I), a two (T) and three (Y) prong habitat-structure representing utility poles enriched with perches, and a more complex 9-prong artificial structure (X) analogous to a translocated dead snag with major tree limbs retained (Figure 2).

Figure 2. Artificial habitat-structure dataset. Voxel representations of current artificial structures (Dataset 2): a non-habitat utility pole (I), two forms of utility poles enriched with perches (T and Y), and a 9-prong artificial structure analogous to a translocated dead tree (X).

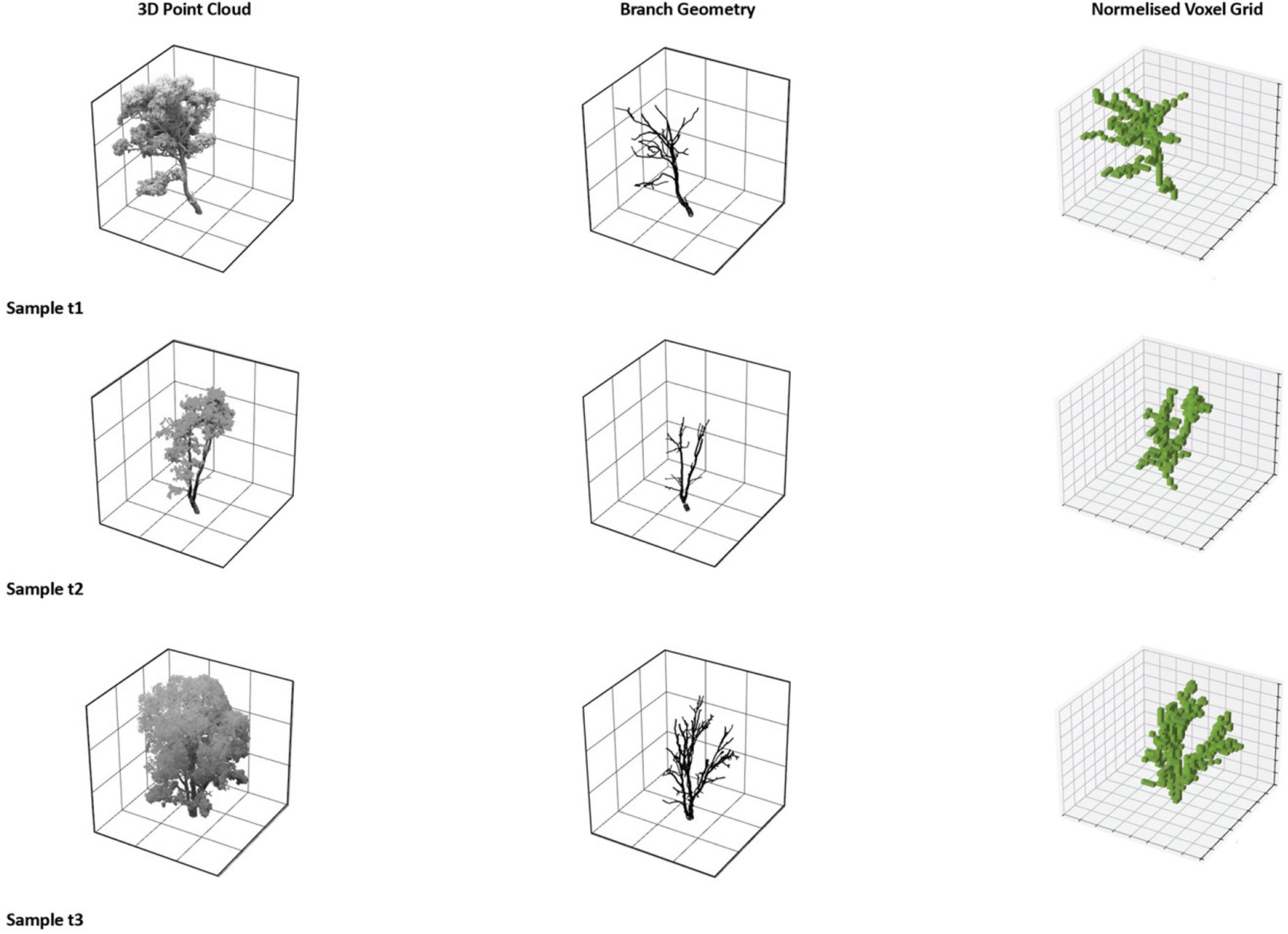

We then pre-processed our dataset to make it suitable for AI training. Current technical constraints require AI to work with input data at relatively coarse resolutions. We describe in detail the modelling environment that we constructed for the AI agent in the next section. Here, we briefly outline how we create a simplified dataset of tree forms appropriate for the limited resolution of the modelling environment.

Figure 3 shows the data preparation process. To simplify the tree representations, we first discard all branch centroids with a radius smaller than 5 cm. We then convert the remaining centroids into 32 × 32 × 32 voxel representations to comply with the maximum resolution set for the 3D modelling environment. To do so, we assign zeros to the voxels that contain one or more branch centroid points. We then normalise the data by centring and scaling the voxel representations so that their largest dimension does not exceed the size of the voxel grid. These measures discourage AI agents from differentiating forms by size at the expense of more relevant characteristics such as branching structures.

Figure 3. Conversion of the 3D scans of existing trees into 32 × 32 × 32 normalised voxel representations to create the natural habitat-structure dataset.

This process results in a set of 32 × 32 × 32 voxel grids representing natural trees (Dataset 1: Natural habitat structures). We apply a similar strategy to represent current artificial habitat structures as voxel grids (Dataset 2: Artificial habitat structures).
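The conversion steps above can be sketched as follows. This is a minimal illustration rather than the workflow used in the study: the function name `voxelise`, the input format (tuples of x, y, z and radius in metres), the nested-list grid, and the choice to centre forms horizontally while keeping their base on the ground plane are all assumptions.

```python
GRID = 32          # grid resolution stated in the text
MIN_RADIUS = 0.05  # 5 cm threshold, assuming radii are given in metres


def voxelise(branches, grid=GRID, min_radius=MIN_RADIUS):
    """Convert (x, y, z, radius) branch centroids into a dense voxel grid.

    Returns a nested list indexed as canvas[x][y][z], where 0 marks a voxel
    containing at least one centroid and 1 marks empty space.
    """
    # Discard centroids of branches thinner than the threshold.
    pts = [(x, y, z) for x, y, z, r in branches if r >= min_radius]
    canvas = [[[1] * grid for _ in range(grid)] for _ in range(grid)]
    if not pts:
        return canvas

    mins = [min(p[i] for p in pts) for i in range(3)]
    maxs = [max(p[i] for p in pts) for i in range(3)]
    # Scale uniformly so the largest dimension spans the grid exactly.
    extent = max(maxs[i] - mins[i] for i in range(3)) or 1.0
    scale = (grid - 1) / extent

    for p in pts:
        idx = []
        for i in range(3):
            span = (maxs[i] - mins[i]) * scale  # size in voxels on this axis
            # Assumption: centre along x and y, keep the base at z = 0.
            offset = 0.0 if i == 2 else (grid - 1 - span) / 2
            idx.append(int(round((p[i] - mins[i]) * scale + offset)))
        canvas[idx[0]][idx[1]][idx[2]] = 0
    return canvas
```

Because every form is rescaled to the same grid, a tall tree and a short tree with the same branching pattern produce the same voxel representation, which is the size invariance the normalisation step is meant to provide.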

The steps outlined in this section establish a useable case for training and testing our AI agent. Despite simplifications, this case is characteristic of many habitat structures and their geometries. The proposed technique supports future scaling and customisation.

This step of the process implements an artificial agent that can ingest and learn from the dataset described above, making it suitable for the use case.

The application presented in this article required several adaptations of the SPIRAL architecture. We use the implementation by Mellor et al. (2019) – SPIRAL++ – as a baseline because it features upgrades that stabilise training and simplify the learning task for the agent. In the article, we use the acronym SPIRAL to refer to both implementations by Ganin et al. (2018) and Mellor et al. (2019), as the agent’s policy architecture is the same.

Our model had to be able to observe 3D representations of trees described in the previous section and synthesise visual abstractions of such trees within a 3D modelling environment. Currently, SPIRAL works with 2D images. Although Ganin et al. describe an application for 3D modelling, the agent always observes 2D projections, and the existing design limits its actions to translations of geometric primitives already placed in the 3D canvas.

Following previous applications of AI for 3D data generation (Wu et al., 2016), we define the observation, or the visual input for the model, as a 3D array of numbers that we represent as a voxel grid. We set the size of the grid to be 32 × 32 × 32, due to hardware constraints, and assign each location of the grid values of 0 or 1. According to this definition, a 3D form is a discrete array of binary values, where 0 corresponds to the coordinate of each point and 1 to the empty space.

We prepare a 3D modelling environment to allow the SPIRAL agent to perform observable actions in three dimensions. In Ganin et al. (2018), the agent interacts with an external drawing software that allows a wide variety of drawing actions, including the selection of brushes, pressure, and colour. Our implementation runs in a custom 3D modelling environment with a limited number of actions but has future potential to integrate with common CAD software and exploit a full range of modelling actions.

The environment consists of a 3D canvas, which initialises as empty space – a 32 × 32 × 32 array of ones – and a set of modelling actions. The agent can interact with the environment by moving the cursor within a sub-voxel grid of size 16 × 16 × 16 contained in the main 32 × 32 × 32 voxel grid. The cursor can switch the state of one or multiple voxels. The environment renders the actions performed by the agent as groups of visible voxels.

Since we aim to train AI agents to emphasise features of a tree canopy structure without copying its geometry, we limited the action space to the placement of lines in the 3D modelling environment. We choose lines because existing state-of-the-art ecological research uses lines to define cylinders that describe three-dimensional branching structures of trees (Malhi et al., 2018). Spatial configurations of lines can also readily translate into full-scale construction techniques.

Our custom agent places lines by specifying start and end points. The modelling environment renders such lines as sequences of voxels with a value of 0. Formally, we describe the placement of a line as a modelling action defined by two elements: end point (P′′) and placement flag (f). Given an initial start point (P′), the agent decides the location of the endpoint within the voxel grid boundaries. Then, it controls the variable f to either place a line between P′ and P′′ or jump directly to P′′ without placing any object in the canvas. P′′ becomes the start point for the next modelling iteration.
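A single modelling action of this kind can be sketched as below. The sketch is illustrative only: the names `new_canvas` and `step` are hypothetical, the line is rasterised by simple linear interpolation (the article does not state which rasterisation method the environment uses), and end points are given directly in 32 × 32 × 32 grid coordinates rather than on the coarser cursor grid.

```python
GRID = 32


def new_canvas(grid=GRID):
    """Empty canvas: a dense grid of ones (1 = empty space, 0 = visible voxel)."""
    return [[[1] * grid for _ in range(grid)] for _ in range(grid)]


def step(canvas, start, end, place):
    """Apply one action (end point plus placement flag) and return the next start.

    If `place` is true, the voxels along the segment start -> end are set to 0.
    If it is false, the cursor jumps to `end` and the canvas is left unchanged.
    """
    if place:
        # One sample per voxel along the longest axis of the segment.
        n = max(abs(e - s) for s, e in zip(start, end))
        for i in range(n + 1):
            t = i / n if n else 0.0
            x, y, z = (int(round(s + t * (e - s))) for s, e in zip(start, end))
            canvas[x][y][z] = 0
    return end  # the end point becomes the start point of the next action


canvas = new_canvas()
p = (16, 16, 0)                              # assumed initial start point
p = step(canvas, p, (16, 16, 5), True)       # draw a vertical segment
p = step(canvas, p, (20, 16, 5), False)      # jump without drawing
p = step(canvas, p, (20, 20, 5), True)       # draw a horizontal segment
```

The jump action is what lets the agent produce disconnected line segments within a fixed budget of actions.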

We could provide 3D visual input in other formats, including point clouds and meshes. As discussed in section “Artificial Intelligence Model Selection”, many AI techniques can process such formats with limited computational power. We opt for voxel representations because the translation of the 2D convolutional layers of the SPIRAL architecture to 3D convolutional layers – necessary to extract visual features from 3D data – is straightforward and does not require extensive fine-tuning of network parameters other than layer sizes. Moreover, since the agent is trained to perform sequences of 3D modelling actions – by selecting voxel coordinates within a discrete 3D grid – our implementation generates forms that can be rendered in any CAD software and at any resolution. For these reasons, we considered voxel representations the best approach to test the hypothesis of this research work.

To process the voxel grids and let the agent perform actions in the 3D modelling environment, we reimplemented the SPIRAL model using the TensorFlow open-source machine learning platform (v 2.6.0). We modified the architectures of the agent’s policy and the discriminator as follows:

• We turned all the 2D convolutional and deconvolutional layers of the policy and the discriminator into 3D convolutional and deconvolutional layers. We preserved all original kernel and stride sizes but halved the number of filters in the residual blocks, keeping the original number of filters in the remaining layers.

• We upscaled the embedding layer by 2, due to the increased size of the action-space locations.

• We included a new term in the conditioning vector to account for the extra dimension in the action space locations.

We provide additional details of our reimplementation of the SPIRAL architecture in Appendix B: Network Architecture, and a description of our training procedure in Appendix A: Training Procedure and Hyperparameters. To validate the adaptations described above, we tested the model on a benchmark, which we describe in detail in Appendix C: Model Calibration.

Having prepared a training dataset and developed an AI agent trained to produce 3D forms, we evaluate the outcomes. These steps aim to confirm that resulting AI-synthesised visual abstractions of natural structures are verifiable, meaningful and can serve as a base for future scaling.

For this purpose, we developed a measurement and assessment routine that can compare trees represented as voxels (Dataset 1), human-reduced sets of habitat structures (Dataset 2), and AI-synthesised forms. Since our focus is on the branch distributions of old trees as outlined in section “Use-Case Selection”, we base our assessment on two quantitative indexes:

• Complexity index: Our first measure is an overall estimation of geometric complexity. We focus on complexity as a measure of the geometry-habitat relationship based on the evidence that it positively correlates with biodiversity. More diverse geometries of branches in a tree canopy allow species to use a greater number of structures as their habitat.

• Perch index: Our second measure quantifies specific geometric features we identified as relevant for birds for perching. These features are branches that are elevated, horizontal and exposed. Such branch conditions represent perch sites chosen by birds to rest for periods of time and have relatively unobstructed views with clear access.

This two-prong assessment strategy combines a broad but generic measure (the complexity index) with a measure that focuses on an important but narrow activity of a key habitat user (the perch index). For further justification of each measure, refer to Appendix E: Measurement Supporting Information.

There is also an additional, implicit measure that is automatically learnt by the AI discriminator during the training process: the similarity index. We do not use the similarity index to compare forms. Instead, the AI agent uses this measure to evaluate how many features of the voxelised trees observed during the training process are preserved in the visual abstractions it generates. This internal similarity index ensures that the voxel forms evaluated by our comparison routine are not random aggregations but are already statistically congruent to the source data of voxelised trees.

To generate the complexity index, we use the fractal dimension, which is a ratio that measures how the detail of geometry changes at different scales (Godin et al., 2005). We used box-counting in three-dimensional arrays (Chatzigeorgiou Group, 2019) as the method for our measurement, which is a standard procedure for calculating the fractal dimension of 3D objects.
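As an illustration of the box-counting idea, the sketch below estimates the fractal dimension of a voxel form. It is not the implementation cited above: the function name, the sparse representation (a set of occupied voxel coordinates), the choice of box sizes (powers of two) and the least-squares fit are assumptions made for this example.

```python
import math


def box_counting_dimension(occupied, grid=32):
    """Estimate the box-counting dimension of a set of (x, y, z) voxels.

    For each box size s, count the boxes of side s that contain at least one
    occupied voxel, then fit the slope of log N(s) against log(1 / s).
    """
    if not occupied:
        raise ValueError("at least one occupied voxel is required")

    sizes = []
    s = 1
    while s < grid:          # box sizes 1, 2, 4, 8, 16 for a 32-voxel grid
        sizes.append(s)
        s *= 2

    xs, ys = [], []
    for s in sizes:
        boxes = {(x // s, y // s, z // s) for x, y, z in occupied}
        xs.append(math.log(1 / s))
        ys.append(math.log(len(boxes)))

    # Ordinary least-squares slope of ys against xs.
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(xs, ys))
    var = sum((a - mean_x) ** 2 for a in xs)
    return cov / var


line = {(16, 16, z) for z in range(32)}
plane = {(x, y, 0) for x in range(32) for y in range(32)}
```

With these toy inputs, a straight pole yields a dimension of about 1 and a flat plane about 2, so branching forms that fill space at several scales fall between the values for simple poles and solid volumes.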

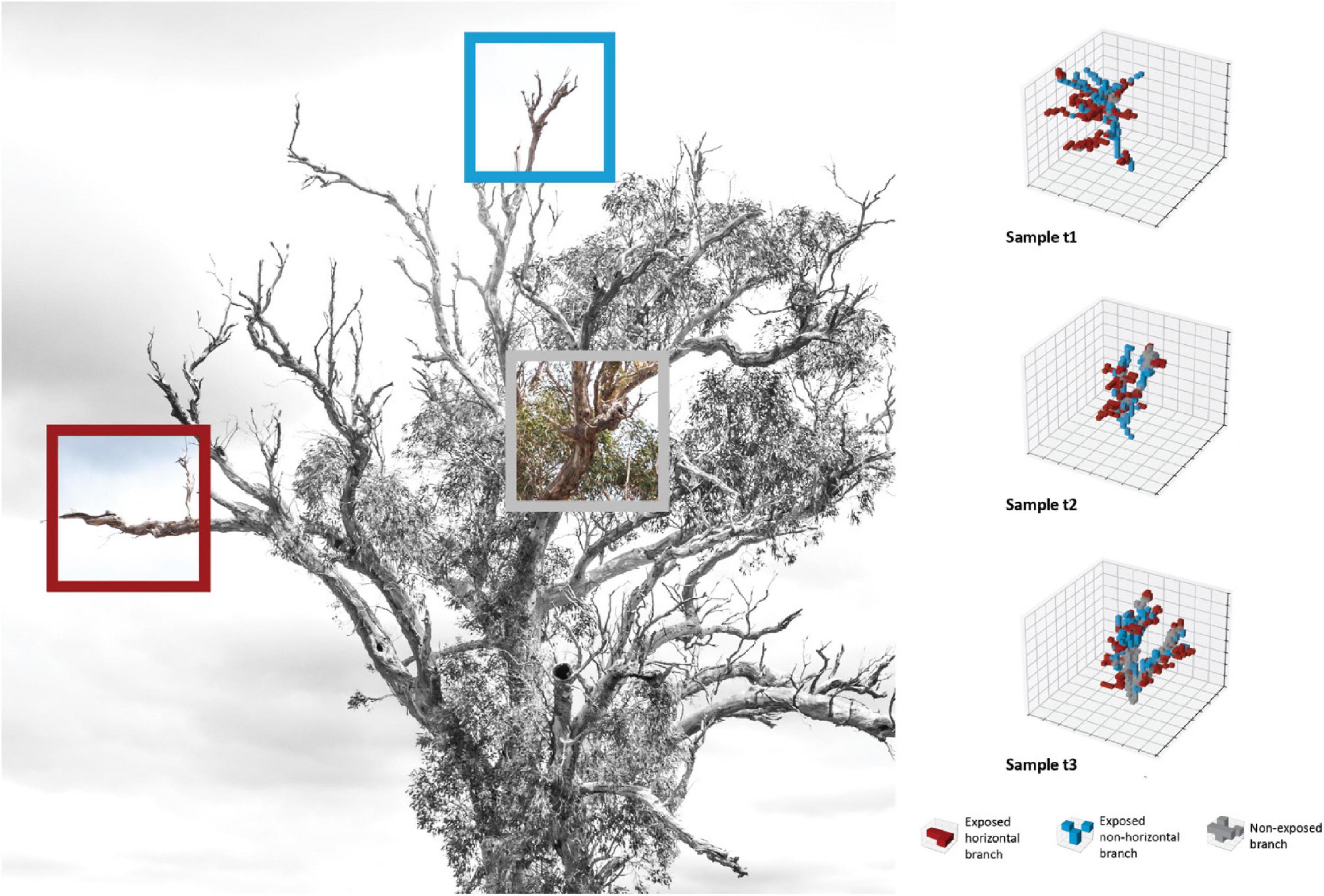

To generate the perch index, we developed an automatic procedure that finds all horizontal and exposed branches in the voxelised forms. To find these branches, we first segment the voxel representations according to three classes of branch conditions. Our branch classes are exposed horizontal branches (red), exposed non-horizontal branches (blue), and non-exposed branches (grey) (Figure 4).

Figure 4. Segmentation of 32 × 32 × 32 voxel representations. (Left) Select branch types in a large old tree. (Right) 3D segmentation of the sample tree dataset.

Our branch classification procedure works as follows. We first define a branch as a 3 × 3 × 3 window centred at the voxel location. For every voxel, we assign a label to the voxels included in the window by verifying the occurrence of the following geometric conditions:

• If the number of voxels within the window exceeds a threshold T, we label the voxels as a non-exposed branch.

• If the number of voxels within the window is less than or equal to T, we label the voxels as a non-horizontal exposed branch.

• If the number of voxels within the window is less than or equal to T and either the top or the bottom plane of the window is empty, we label the voxels as a horizontal exposed branch.

Finally, to convert this segmentation routine into a perch index, we sum the z coordinates of all voxels classified as horizontal exposed.

Following a set of experiments, we defined a value of 5 for the threshold T. The legend in Figure 4, bottom right, visualises the results obtained by segmenting randomly populated 3 × 3 × 3 windows.
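The classification rules and the perch index can be sketched as follows. This is a simplified reading of the procedure, not the authors' code: it labels only the voxel at the centre of each window, it represents a form as a set of occupied (x, y, z) coordinates, and it gives the horizontal rule precedence over the non-horizontal rule when both apply. The function and label names are hypothetical; the threshold of 5 comes from the text.

```python
T = 5  # threshold reported in the text


def classify(occupied, threshold=T):
    """Label each occupied voxel from the contents of its 3 x 3 x 3 window."""
    labels = {}
    for (x, y, z) in occupied:
        window = [
            (x + dx, y + dy, z + dz)
            for dx in (-1, 0, 1)
            for dy in (-1, 0, 1)
            for dz in (-1, 0, 1)
            if (x + dx, y + dy, z + dz) in occupied
        ]
        top_empty = not any(v[2] == z + 1 for v in window)
        bottom_empty = not any(v[2] == z - 1 for v in window)
        if len(window) > threshold:
            label = "non_exposed"
        elif top_empty or bottom_empty:
            label = "horizontal_exposed"
        else:
            label = "non_horizontal_exposed"
        labels[(x, y, z)] = label
    return labels


def perch_index(occupied, threshold=T):
    """Sum of z coordinates of all voxels labelled as horizontal exposed."""
    labels = classify(occupied, threshold)
    return sum(z for (x, y, z), lab in labels.items() if lab == "horizontal_exposed")


# Toy T-shaped pole: a vertical trunk with a horizontal bar at height 10.
trunk = {(16, 16, z) for z in range(11)}
bar = {(x, 16, 10) for x in range(12, 21)}
print(perch_index(trunk | bar))
```

Because the index sums heights, an exposed horizontal branch placed higher in the form contributes more than the same branch placed near the ground.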

We validated our routines by segmenting the voxel representations of the training dataset and visually inspecting the quality of the resulting segmentations (Figure 4, right). The analysis revealed that, despite the coarse resolution, the routine could extract semantic information that matches the three branch categories.

As a final step, we also validated our measurement workflow by comparing the assessment of structures in our training and comparison datasets with empirical observations of bird behaviour.

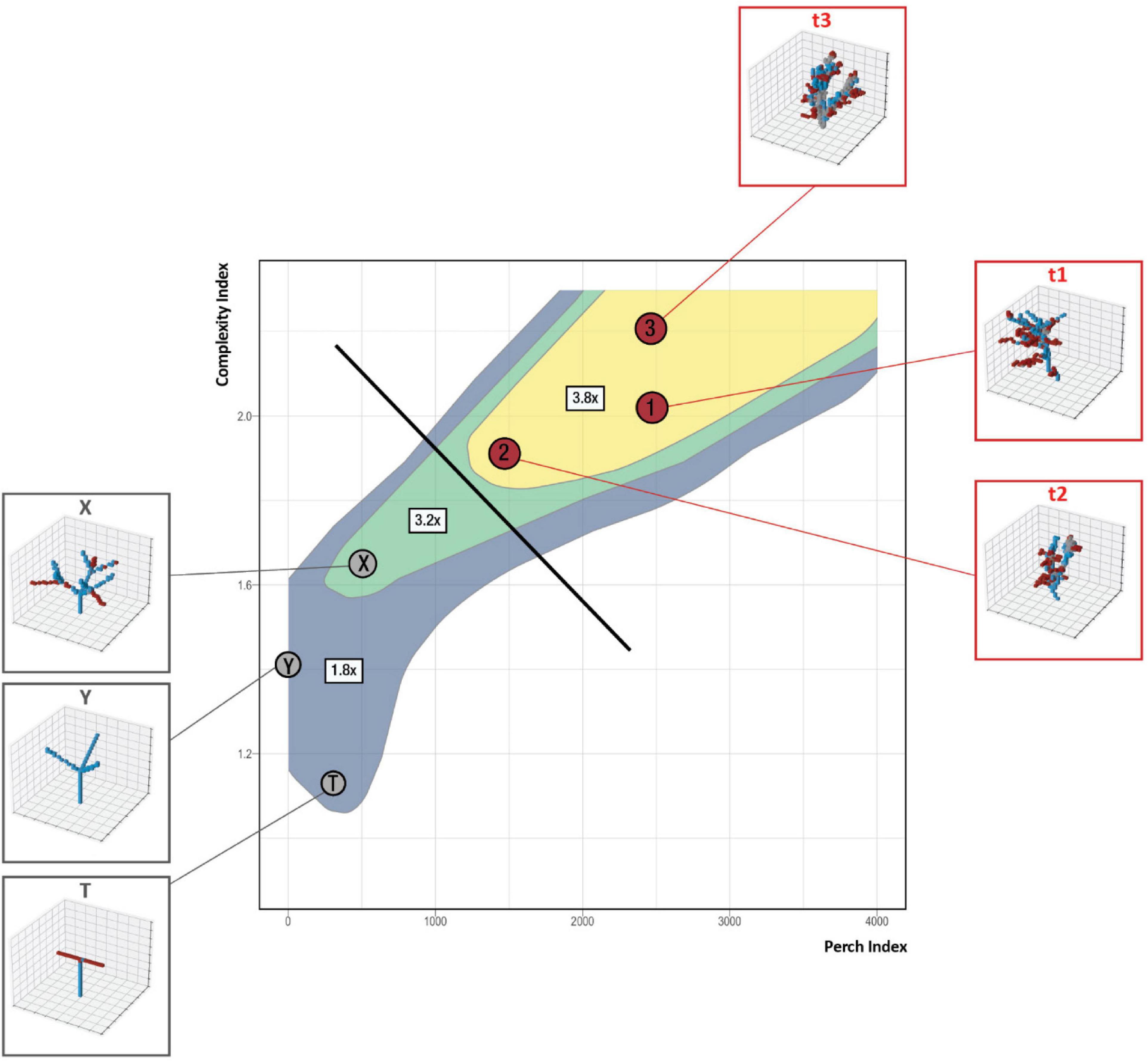

The 2D graph in Figure 5 shows the comparison space defined by our measurements. We define the assessment space by our complexity and perch indexes and plot voxel representations of natural trees (red) and artificial habitat structures (grey) in this space. Bubbles represent the three types of habitat structures in our datasets that have also been experimentally observed for their bird species response: enriched habitat pole (T and Y), translocated dead snag (X) and remnant trees (T1, T2, and T3).

Figure 5. Validation of measurement workflow through a comparison of the assessment routine outcomes and field observations of bird response to the same structural types.

The contours under this assessment space represent the observed mean native species gain when a structure type is installed compared to a site with no habitat structures. Contour levels include bird response values for enriched utility poles (blue, with the lowest species response), translocated dead trees (green) and natural trees (yellow, with the highest species response).

We use data collected by Hannan et al. (2019) to populate our species response contours. Compared to a site with no habitat structures, Hannan et al. (2019) found that enriched utility poles, translocated dead trees and living natural trees increased native bird species richness by multiples of 1.8, 3.2, and 3.8, respectively (Figure 5, blue, green and yellow contours).

We found that the positions of the structural types in our assessment space align with the species response multipliers derived from bird observations. Furthermore, Hannan et al. (2019) observed that 38% of all bird species only visit natural trees. The diagonal line (Figure 5, black) shows that we can express this gap in bird response between trees (red) and artificial structures (grey) in our assessment space.

These validation processes show that the assessment space defined by our complexity and perch indexes expresses aspects of birds’ response to structures even if other important features are not quantified. This space also suggests that, even when old trees are excluded, it is possible to design artificial habitat structures to better match birds’ preferences. Finally, this assessment space shows that there is still a significant gap between best-in-class artificial habitat structures and the performance of natural trees.

The outcome of this step is a set of validated metrics for assessing spatial complexity and features of forms generated by the AI agent.

Our results show that an AI agent can synthesise visual abstractions of complex natural shapes. In particular, they show that: (1) the agent can use the incoming data in 3D to learn design strategies; and (2) the resulting abstractions are verifiable and meaningful.

We first illustrate how the AI agent can synthesise artificial forms by learning design strategies that simplify the structures of natural trees.

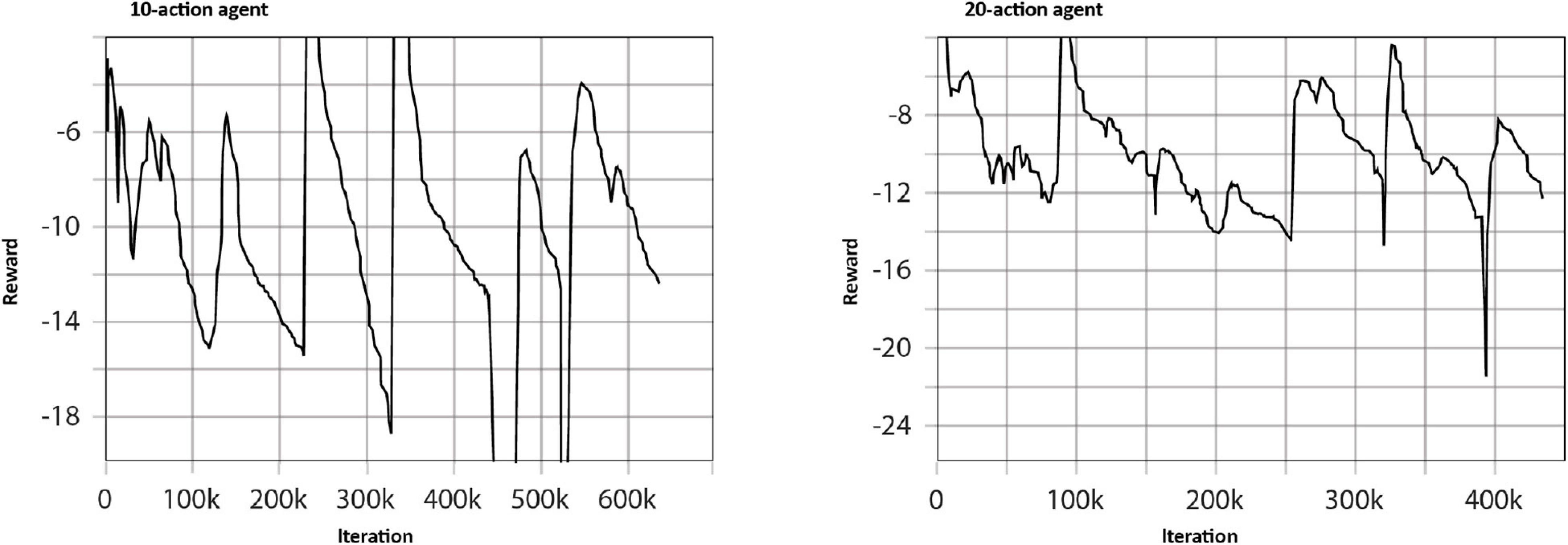

We tested the ability of the agent to synthesise visual abstractions of tree forms through two applications: (1) synthesis of forms with 10 actions; and (2) synthesis of forms with 20 actions.

We select 20 actions for two reasons. First, in the original SPIRAL implementation (Ganin et al., 2018), the agent could only be trained to perform sequences of 20 actions. Although in SPIRAL++ Mellor et al. (2019) were able to increase the number of actions to 1,000 – in which case the agents could reproduce very detailed features of the given image dataset – they also managed to generate recognisable visual abstractions of human faces with 20 actions. Second, in our 3D implementation, a number of actions higher than 20 would increase the computing time and cause out-of-memory issues. Therefore, we chose 20 actions as the upper bound. We also describe a second application where the agent can perform 10 actions. This version aims to assess the abstraction capabilities of our model further.

The two tasks involved extracting visual features from voxel representations of trees from Dataset 1: Natural habitat structures. We considered the representations in the dataset as a distribution of trees. In practice, such a limited number of 3D models is insufficient to represent the properties of tree populations. Furthermore, a dataset that contains only three samples has high variance, which causes instability during the training process.

To alleviate these issues, we implemented a strategy based on data augmentation. We augmented the dataset using rigid transformations to ensure that the agent focused on the structural features of the trees. Similar to Maturana and Scherer (2015), we rotated every 3D form about the z-axis located at the centre of the ground plane by consecutive intervals of 10°. We populated the resulting dataset with 35 extra samples per tree structure for a total of 108 voxel representations. This data augmentation strategy produced a distribution of samples characterised by an independent variable of rotation.
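The augmentation can be sketched as below for forms stored as sets of occupied voxel coordinates. This is an assumption-laden illustration: the function names are hypothetical, the rotation axis is taken to pass through the centre of the grid, and rotated coordinates are snapped to the nearest voxel, which can merge neighbouring voxels and is therefore lossy.

```python
import math


def rotate_about_z(occupied, degrees, grid=32):
    """Rotate a set of (x, y, z) voxels about the vertical axis at the grid centre."""
    c = (grid - 1) / 2
    a = math.radians(degrees)
    cos_a, sin_a = math.cos(a), math.sin(a)
    rotated = set()
    for x, y, z in occupied:
        dx, dy = x - c, y - c
        rx = int(round(c + dx * cos_a - dy * sin_a))
        ry = int(round(c + dx * sin_a + dy * cos_a))
        # Drop voxels that leave the grid after rotation.
        if 0 <= rx < grid and 0 <= ry < grid:
            rotated.add((rx, ry, z))
    return rotated


def augment(trees, step=10):
    """Return every tree at every multiple of `step` degrees (0 included)."""
    return [rotate_about_z(t, k * step) for t in trees for k in range(360 // step)]


# Three toy forms stand in for the three scanned trees.
toy_trees = [
    {(16, 16, z) for z in range(10)},
    {(16, 16, z) for z in range(10)} | {(x, 16, 9) for x in range(16, 22)},
    {(16, 16, z) for z in range(10)} | {(16, y, 9) for y in range(10, 16)},
]
dataset = augment(toy_trees)
print(len(dataset))  # 3 trees x 36 orientations = 108
```

The orientation at 0° is the original sample, so each tree contributes 35 extra samples, matching the total of 108 reported above.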

To simplify the learning task in both applications, we set the starting location of the cursor at the centre of the ground plane. This forced the agent to start modelling forms from a location aligned with the base of the dataset samples.

Figure 6 shows the results of the training process for the two applications. The graphs represent the trends of the reward collected by the agents over consecutive training iterations. We smoothed the curves using an exponential moving average with 0.8 as the smoothing factor.
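For reference, an exponential moving average of this kind can be written in a few lines. The sketch assumes that the smoothing factor is the weight given to the previous smoothed value, as in common training dashboards; the article does not specify the exact formulation, and the function name is hypothetical.

```python
def smooth(values, factor=0.8):
    """Exponential moving average: s_t = factor * s_(t-1) + (1 - factor) * x_t."""
    smoothed, last = [], None
    for v in values:
        # The first value initialises the average.
        last = v if last is None else factor * last + (1 - factor) * v
        smoothed.append(last)
    return smoothed


rewards = [0.0, 1.0, 0.0, 1.0, 0.0]  # toy reward sequence with spikes
curve = smooth(rewards)
```

Under this convention a factor of 0.8 damps a single spike to one fifth of its height, so fluctuations that remain visible after smoothing are large in the raw curve.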

Figure 6. Trend of the reward achieved by the 10-action and 20-action agents over consecutive training iterations.

We observed that the rewards fluctuated erratically throughout the process, as visible in the graph spikes. These fluctuations, which do not appear in the reward curves described by Mellor et al. (2019), are due to the increased difficulty of exploring a 3D environment. At every time step, the agents must select the end point of a line among a grid of 16³ locations and an extra value for the placement flag. This results in 2 × 16³ × 10 permutations for the 10-action agent and 2 × 16³ × 20 for the 20-action agent.
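The counts quoted above follow from a 16 × 16 × 16 cursor grid and a binary placement flag. The short sketch below reproduces the arithmetic; the constant and function names are hypothetical.

```python
CURSOR_GRID = 16   # side of the cursor sub-grid
FLAG_VALUES = 2    # place a line, or jump without placing


def choices_per_step():
    """Number of (end point, flag) combinations available at one time step."""
    return FLAG_VALUES * CURSOR_GRID ** 3


def total_choices(n_actions):
    """Per-step choices multiplied by the number of actions, as in the text."""
    return choices_per_step() * n_actions


print(choices_per_step())  # 8192
print(total_choices(10))   # 81920
print(total_choices(20))   # 163840
```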

To guarantee appropriate exploration of the action space, we increased the weight of the entropy loss term – which is a term included in the learning function to prevent early convergence – by a factor of 10. Furthermore, since every observation consists of an array of 32³ numbers, using the recommended batch size was computationally impractical. We used batch sizes of 32 and 16 – instead of 64, as recommended by Ganin et al. (2018) – to train the 10-action agent and the 20-action agent, respectively. Appendix A: Training Procedure and Hyperparameters provides further details on the hyperparameters used to train the two agents.

Although these modifications were a major source of instability of the training processes, we found that each spike corresponded to a tentative local convergence. The agents could successfully find a design strategy to trick the discriminator several times, but due to the increased entropy cost, they had to resort to a random exploration of other actions to find a different design strategy. Because of the peculiarity of the training curves – which share similarities with conventional GAN training – we stopped the training processes once they reached a time limit, rather than checking for convergence. In the following sections, we validate the success of the training process by analysing the forms produced by the agents.

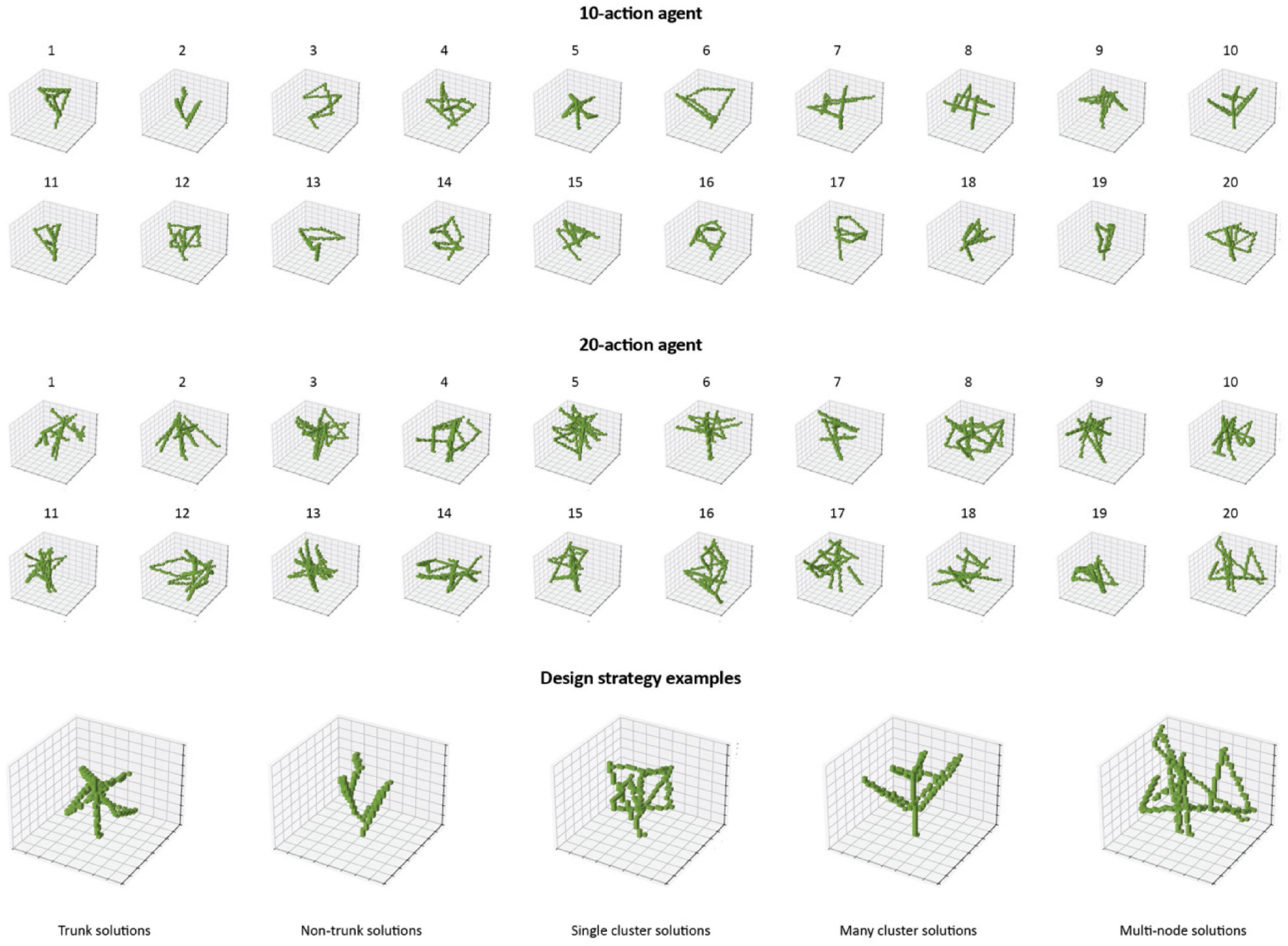

We extracted 20 forms generated by the two agents during the last training iterations to assess the success of the training process qualitatively. Our results confirm that we can describe the AI-synthesised forms in terms of the characteristics of trees and human-simplified artificial structures.

Figure 7 shows the selected forms sorted per training iterations. Through inspection, we found that the agents learnt different design strategies. In the 10-action agent, these were:

• Trunk solutions: forms with clear canopy-trunk segmentation (e.g., samples 5, 9, 10, 11, 12, and 17).

• Non-trunk solutions: forms without a clear trunk (e.g., samples 2, 3, 14, and 16).

Figure 7. Visual abstractions produced by the 10-action agent and the 20-action agent during the last training iterations.

Within each of these broader categories, we also identified:

• Single cluster solutions: forms with a single elevated aggregation of lines resembling an intact tree canopy (e.g., samples 12, 17, and 20 in the trunk category; and 6 in the no-trunk category).

• Many cluster solutions: forms characterised by multiple sub-clusters. Such distributions resemble vertically heterogeneous trees with multiple sub-canopies (e.g., samples 9 and 10 in the trunk category, samples 2 and 3 in the no-trunk category).

Clearly defined canopy structures occurred most often in conditions where agents had a scarce supply of lines, as in the 10-action agent. This suggested that confining the number of lines forced agents to place lines that maximised the reward for each line, resulting in a design strategy that produced forms most closely resembling trees.

The 20-action agent had to search within a larger space of possibilities. This agent exploited the larger number of actions to place as many horizontal lines as possible to approximate the input tree branches. This resulted in more erratic forms. However, it also resulted in an additional strategy that we did not see in the 10-action agent. This category was:

• Multi-node solutions: synthesised forms attached to the ground plane with multiple points (e.g., samples 10, 13, and 20).

Our qualitative analysis confirmed that the AI agents were able to simplify tree structures in a way that created forms that had relevant features (understood as many cluster solutions). They also produced forms that were different from trees and tree-like artificial habitat structures (understood as multi-node solutions).

The next step is to verify that the AI-synthesised visual abstractions were meaningful. We ensured that by comparing their performance with natural trees and human-made structures. We discuss the performance of these AI-generated forms in our previously established evaluation space below.

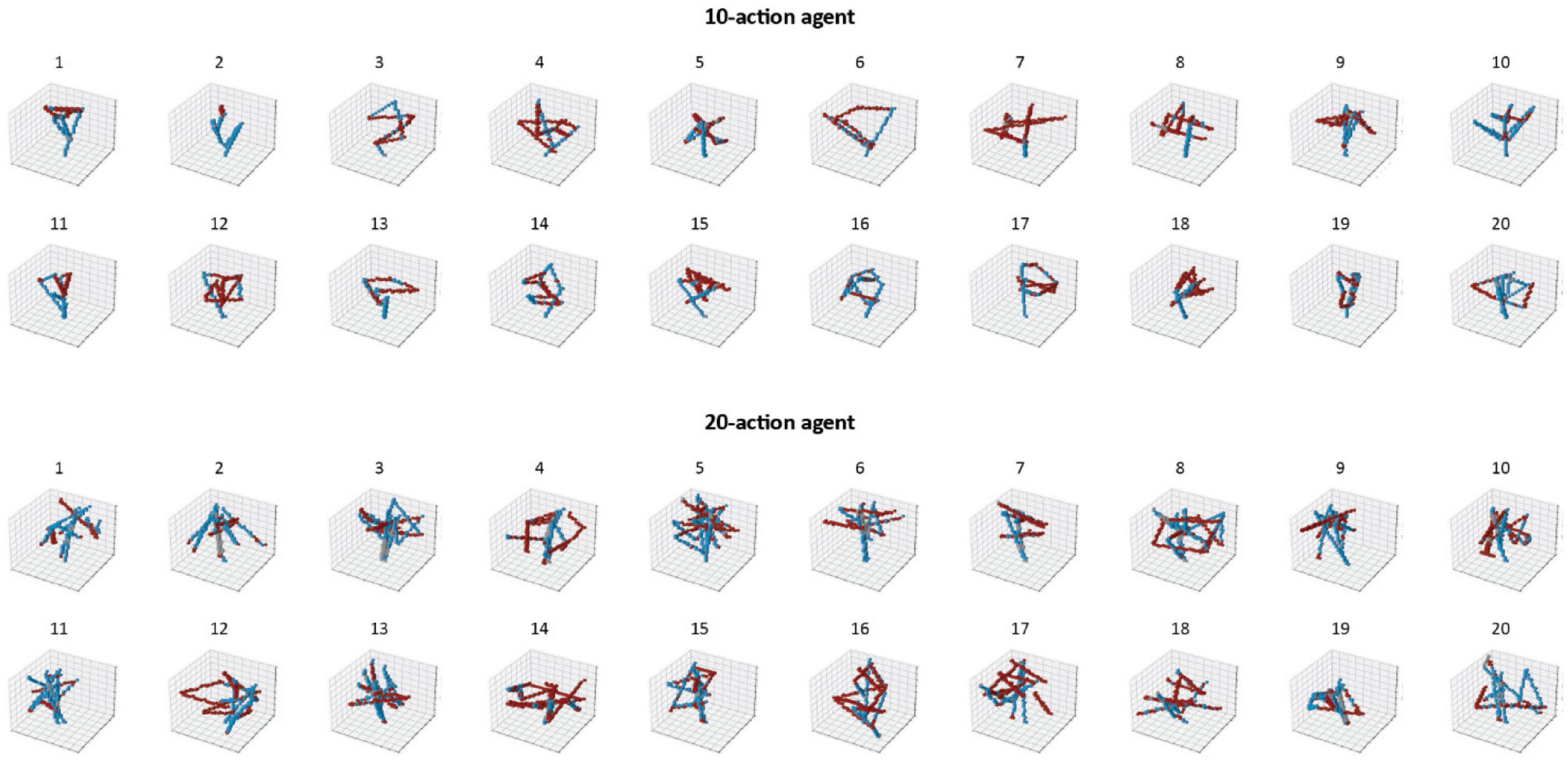

To confirm that AI-driven synthesis of visual abstractions is possible and verifiable, we performed a quantitative analysis that compared the synthesised forms with natural trees and human-made structures.

Using the comparison space described in section “Measurement and Comparison,” we compared the AI-synthesised forms with the voxel representations of human-defined structures and natural trees. Figure 8 visualises the outputs of the segmentation process that identifies exposed horizontal branches (red).

Figure 8. Segmentation of the visual abstraction produced by the 10-action agent and the 20-action agent during the last training iterations.

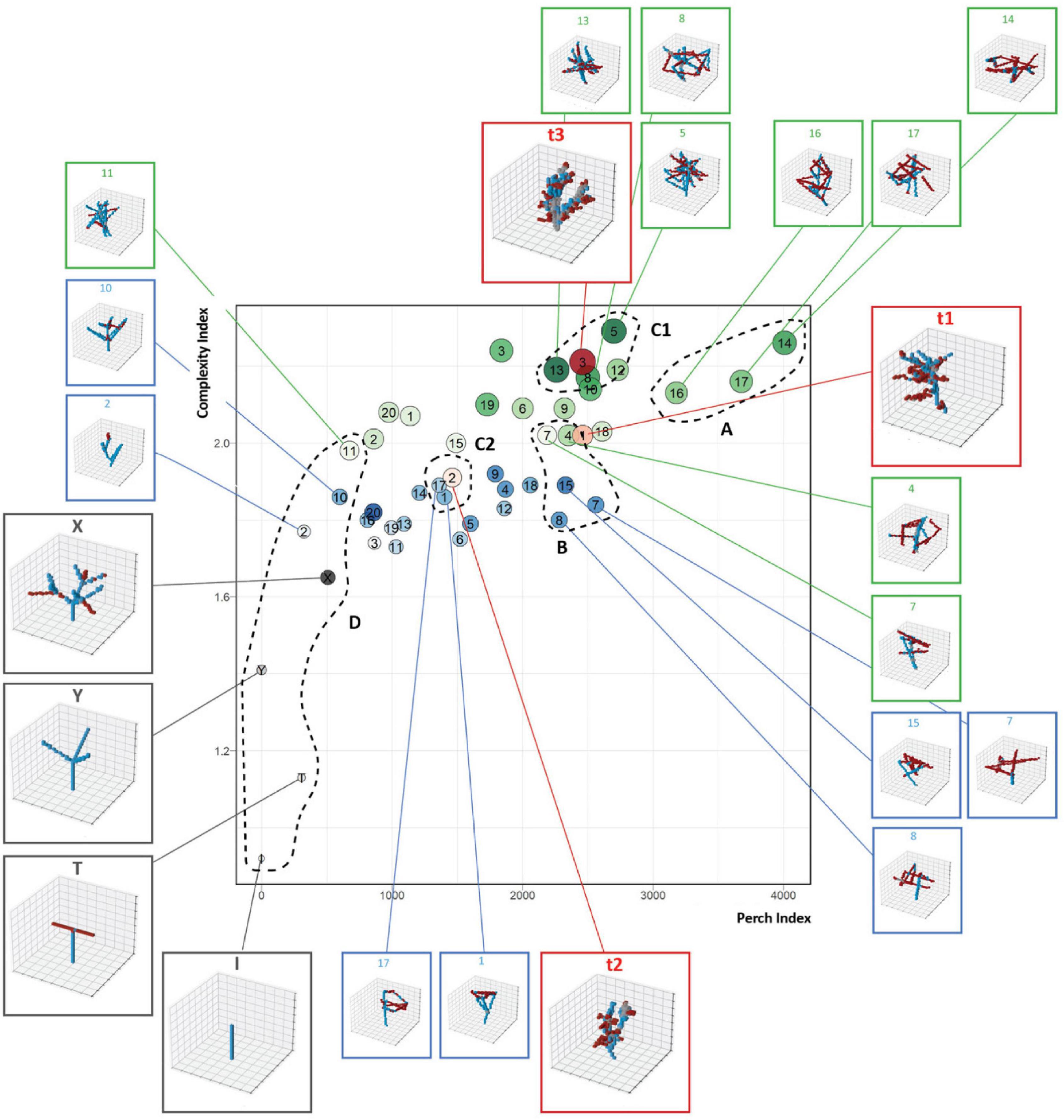

The 2D graph in Figure 9 visualises the forms as bubbles in the comparison space. The x and y axes correspond to the perch and complexity indexes. We also considered a third metric – material cost – which we computed as the sum of the visible voxels. This cost maps to the scale and colour transparency of the bubbles. We colour coded the bubbles according to our four dataset types: voxel representations of human-defined habitat structures (grey), voxel representations of natural trees (red), forms synthesised by the 20-action agent (green) and forms synthesised by the 10-action agent (blue).

Figure 9. Comparison between 3D representations of natural trees (red), human-defined simplified trees (grey), and forms synthesised by the 10-action agent (blue) and 20-action agent (green).

We first examined the location of the representations of natural trees. The three samples showed a high complexity index and occurred at the centre of the perch index axis. High geometric complexity indicated a level of diversity of branch distributions that representations of human-defined artificial structures did not have. We assumed that natural physical constraints, such as the maximum number and length of horizontal elements that a tree can have, limited the maximum perch index of these samples.

Overall, we observed a correlation between the perch index and the size of the bubbles, which represented the material cost. Generally, canopy structures represented by many voxels – and a greater material cost – also had a higher number of horizontal exposed voxels.

By observing the distributions of the four dataset types, we found a clear separation between representations of human-defined habitat structures and the AI-synthesised forms. Our samples of human-defined structures were distributed evenly along the complexity index axis and had a low perch index. In contrast, the AI-synthesised forms were distributed across the graph and concentrated around our voxel representation of natural trees. We found that the distribution of the AI-synthesised forms defined two main “bands” of complexity. These two bands partially overlapped along the perch index dimension and expanded from left to right. Occurring in the topmost band were the forms synthesised by the 20-action agent, which achieved the highest complexity.

We analysed the graph more deeply by describing and comparing the samples from the different datasets grouped into five distinct clusters: A, B, C1, C2, and D. We acknowledge that these clusters are not derived statistically. Instead, we defined them by delimiting regions in the graph we deemed relevant to the design challenge.

Cluster A included the best-performing forms synthesised by the 20-action agent as per our comparison space. These canopy structures were defined by patterns of lines that filled a large portion of the voxel grid and did not resemble trees. Form 14 returned the highest fractal dimension and perch index. However, unlike forms 16 and 17, this structure was short: it did not include enough vertical elements. Consequently, we considered form 14 a poor candidate for further design iterations.

Cluster B included AI-generated forms distributed at the centre of the graph. We highlighted these representations as they achieved a perch index comparable to natural forms while maintaining a moderate level of complexity and cost. The forms synthesised by the 10-action agent occupied the lowest part of the cluster and were all less complex than the simplest natural form (2, red). These forms had a distinct trunk and several horizontal lines that maximised space-filling while creating large voids. The cluster also included two forms synthesised by the 20-action agent, which shared similar features but had a higher fractal dimension.

Clusters C1 and C2 included AI-synthesised forms that were closer to the highest scoring natural form (3, red) and the lowest scoring one (2, red). By analysing these clusters, we found an alignment between the similarity metric considered by the agents and the metrics used for this evaluation. We used the 20-action agent to synthesise the forms in cluster C1. Forms 13 and 5 were tree-looking structures with multiple lines. Form 8 included a horizontal ring that increased the perch index. We used the 10-action agent to synthesise the forms in cluster C2. These forms consisted of simple looped and branched distributions.

Cluster D included representations of human-defined habitat structures and the AI-generated forms that had a low perch index. We chose this cluster so we could analyse features that characterised AI-synthesised forms that were closer to examples of current artificial habitat structures. Forms 2 and 10 were branched structures synthesised by the 10-action agent. These forms had a higher complexity index than the human-defined artificial habitat structures. This was due to the increased thickness of the branches containing multiple overlapping lines. These overlapping lines create a varied surface. In contrast, the representations of artificial structures were modelled to mimic simple poles. The more pronounced local diversity within individual branch shapes of the synthesised forms meant the AI solutions had a higher structural complexity than the human-defined artificial habitat structures, even if their overall appearance was similar. We note that, in reality, the translocated dead snag would have a more varied surface than Sample X, its voxelised representation, and would thus score higher on our complexity index.

In summary, our analysis demonstrates that – within the limits of the chosen voxel representation format – the AI agents produced forms that were more similar to the natural canopy structures than the examples of human-defined canopy structures used for the comparison. We assessed this similarity by combining a visual inspection and interpretation strategy with our comparison space. The analysis also showed that the agents produced a varied set of forms with differing levels of complexity and perch indexes. Many of the solutions contained diverse and relevant canopy shapes and branch distributions. We identified in the AI-synthesised forms a subset that balanced geometric complexity with material cost and perch index. This subset corresponds to Cluster B and includes the best candidates for future exploration.

We see the results presented in this article as the first step toward AI-supported design of artificial habitat structures. In this approach, AI can support human designers from idea generation to decision-making. Our results improve on previous studies in three areas, as described in Table 1.

Our first improvement relates to meaning extraction. Ecologists struggle to define large old trees because they represent ecosystem-specific phenomena that have many important ecosystem roles. We responded to this challenge by conceptualising large old trees as layers of characteristics from ecosystem dynamics to specific branch distributions. For further details, see section “Use-Case Selection”.

This study focused on branch distributions within tree canopies. These characteristics present a non-trivial challenge for meaning extraction. Branching structures in trees form highly heterogeneous and tightly packed patterns. Many of their features are unknown (Ozanne et al., 2003; Lindenmayer and Laurance, 2016) and remain difficult to quantify (Parker and Brown, 2000).

To tackle this challenge, we extended previous applications of SPIRAL by making it operational in 3D. Our implementation demonstrates that the two AI agents can reduce the complexity of branch distributions and synthesise their visual abstractions. Although the agents had no information about features meaningful to tree-dwelling organisms or artificial habitat designs, they produced forms that retained ecologically meaningful features suggesting their usefulness for the design of artificial habitat structures. Our segmentation and comparison procedures showed that even in relatively simple modelling environments, these agents could synthesise features that are relevant to arboreal organisms.

We briefly describe one extraction process that focused on elevated perches. Reflecting the input dataset of trees, the two agents adopted a strategy of concentrating lines at higher z coordinates. Using this approach, the agents synthesised structures with a limited number of vertical elements closer to the ground and more lines higher up, mimicking an elevated canopy. The availability of such structures is important to tree-dwelling organisms that need to perch above certain heights. This means that artificial perch structures close to the ground will not be suitable. The process demonstrates that autonomous visual abstraction is possible in 3D, including in a use case with complex geometries that escape typical description methods.

The AI agents discussed in this article learn from 3D coordinates of tree branches to construct visual abstractions. Future implementations can aim to consume other spatial data representing other layers of information within our conceptualisation of trees. Data about these structures and their users can be integrated in our training datasets as voxel representations. We also expect that non-spatial information, such as statistical information about bird behaviour, can further guide the agent in extracting features that are functionally relevant to the wildlife inhabitants.

We are not yet ready to claim that the agents can produce suitable habitat designs. Instead, the benefit from our approach emerges from a combination of (1) developing forms by automatically synthesising natural structures described above; and (2) providing a numeric comparison between natural, existing artificial, and synthesised forms. Through (1) and (2), we created an approach that explicitly defines the biases in current designing strategies.

Identifying biases is crucial for improved designs because structures such as enriched habitat poles and translocated dead trees are reductions or abstractions of complex natural structures. In many instances, humans perform this abstraction with a limited understanding of the biases they can bring to the process. Such biases include a tendency to:

• Create designs based on widely used existing artefacts, such as utility poles, given that the final structures often result from efforts by domain experts who are not designers by profession.

• Focus on simplified forms that could be built using common structural systems, such as regularly spaced slats, or on discrete habitat features that are easy to identify, such as perches.

• Ignore scales and relationships that are difficult to document, such as representing complex and differentiated branching.

Our synthesised forms resist conditioning by existing examples of artificial habitats. Our comparison space showed that there is a large gap between the complexity of existing designs for artificial habitat structures and the unexplored design space indicated by natural structures and possible synthetic forms. This gap shows the clear need for bio-informed construction, manufacturing and design technologies that can better engage with non-standard and highly differentiated shapes.

The ambition of our process is to consider how artificial habitat structures appear and work for birds. For this task, the third benefit of our approach relates to meaning abstraction and reproduction.

In our use case, traditional artificial habitat designs do not show the perch diversity and distributions observed in trees and do not perform well (Le Roux et al., 2015a; Lindenmayer, 2017). Recognising these limitations, recent ecological work has investigated the use of translocated dead snags as artificial habitats (Hannan et al., 2019). It has also studied the deliberate damaging of young trees to make them resemble old trees with their hollowing trunks and branches (Rueegger, 2017). There have been some notable improvements in performance through the use of these strategies (Hannan et al., 2019). However, the resulting structures closely resemble trees. This means they suffer from many of the same drawbacks as trees: they are heavy, require significant preparations, including footings and grading of terrain, and are difficult to implement at scale.

Our agents produced forms with perch distributions that are similar to trees. However, they also developed solutions that were distinct from the structure of typical trees with their central stems and radial branch distributions. We could discount many of these non-tree forms, including those with many vertically oriented lines or those with many lines close to the ground plane. Our agents also learnt more useful strategies. For example, the multi-nodal forms created by the 20-action agent were an instance of load being distributed over multiple points rather than being concentrated in one trunk. Such strategies are useful in many restoration projects that occur in environments where large footings are impossible or destructive. Even though our agents had no knowledge of structural systems, their incentive to minimally describe the training dataset created a useful alternative structural strategy: maximising space-filling by distributing elements throughout 3D space using strategies not available to most tree species. These strategies suggest possibilities for expanding design options using AI-generated visual abstraction and will need to be further tested in future research.

In this section, we discuss the limitations of this work in relation to three themes: ecological knowledge, design, and artificial intelligence.

Limitations given by the state of ecological knowledge include its incompleteness. This applies to all aspects, from the number of known and described species to species behaviour. Field observation can be resource-intensive at large sites or with many individuals, difficult in the case of cryptic species, and slow when it must follow breeding cycles that take years.

These constraints impact potential human-made designs because they misalign with project-based work, brief periods of project-related research, and the reliance on human expertise. In response to these limitations, this project aimed to take steps toward design processes that can accrue knowledge over time and remain useful under conditions of incomplete understanding of ecological interactions.

In regard to artificial intelligence, we recognise that future work will have to address several issues to improve the quality of the forms synthesised by the AI agent and turn the procedure into a useable design strategy.

First, we observed that the model was difficult to train. We related this to the complexity of the task in 3D. This task became more difficult because of the need to resort to a low-resolution voxel representation. Alternative strategies for 3D data representation are possible, and we shall test them in future work.

Second, our training dataset included only three samples. Training AI models on such a limited number of samples is a well-known issue, as the dataset does not represent an actual distribution and is characterised by high variance. All this causes instability in the training process. We partially addressed the problem by artificially expanding the dataset using a data augmentation strategy (section “The Effectiveness of the Training Procedure”). However, we consider developing larger datasets to be a priority to improve the model’s capabilities.
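To illustrate how a very small voxel dataset can be expanded, the sketch below applies quarter-turn rotations about the vertical axis and a mirror operation to a single sample. The choice of transformations is an assumption made for the example; this passage does not specify which augmentations were used, and the function names are hypothetical.

```python
def rotate_z90(voxels, size):
    """Rotate a form by 90 degrees about the vertical axis of a size^3 grid."""
    return frozenset((size - 1 - y, x, z) for x, y, z in voxels)


def mirror_x(voxels, size):
    """Mirror a form across the plane perpendicular to the X axis."""
    return frozenset((size - 1 - x, y, z) for x, y, z in voxels)


def augment(voxels, size):
    """Return the distinct variants produced by 4 rotations, each with and without mirroring."""
    variants = set()
    current = frozenset(voxels)
    for _ in range(4):
        variants.add(current)
        variants.add(mirror_x(current, size))
        current = rotate_z90(current, size)
    return variants


# Hypothetical asymmetric sample on a 4 x 4 x 4 grid (not data from the study).
sample = {(0, 0, 0), (1, 0, 0), (2, 0, 0), (0, 1, 0), (0, 1, 1)}
variants = augment(sample, 4)
print(len(variants))
```

Both operations leave Z coordinates unchanged, so the vertical distribution of branches is preserved in every variant. Augmentation of this kind increases the number of training samples but not the underlying diversity of tree forms, which is why larger datasets remain the priority.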

Third, following the original SPIRAL implementation, we defined the learning task as maximising the similarity between the synthesised forms and the dataset samples with a limited number of modelling actions. The agent did that by maximising a reward computed from the similarity score. In future work, we will augment this reward definition with other metrics, such as structural stability, to synthesise forms that satisfy additional performance requirements.
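A reward that combines similarity with an additional metric could take the form of a weighted sum. The sketch below is a minimal illustration under stated assumptions: intersection-over-union stands in for the similarity score, `grounded_fraction` is a crude hypothetical proxy for structural stability (the share of voxels connected to the ground through face-adjacent voxels) rather than a structural analysis, and the weight of 0.8 is arbitrary.

```python
from collections import deque

# Offsets to the six face-adjacent neighbours of a voxel.
NEIGHBOURS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def iou(a, b):
    """Intersection-over-union of two voxel sets (stand-in similarity score)."""
    a, b = set(a), set(b)
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def grounded_fraction(voxels):
    """Share of voxels reachable from the ground layer (z == 0) by face adjacency."""
    voxels = set(voxels)
    if not voxels:
        return 0.0
    queue = deque(v for v in voxels if v[2] == 0)
    reached = set(queue)
    while queue:
        x, y, z = queue.popleft()
        for dx, dy, dz in NEIGHBOURS:
            neighbour = (x + dx, y + dy, z + dz)
            if neighbour in voxels and neighbour not in reached:
                reached.add(neighbour)
                queue.append(neighbour)
    return len(reached) / len(voxels)


def combined_reward(form, target, similarity_weight=0.8):
    """Weighted sum of similarity and the illustrative stability proxy."""
    return (similarity_weight * iou(form, target)
            + (1.0 - similarity_weight) * grounded_fraction(form))


# Hypothetical toy forms: a full column and a floating fragment of it.
column = {(0, 0, z) for z in range(4)}
floating = {(0, 0, 2), (0, 0, 3)}
print(combined_reward(column, column))
print(combined_reward(floating, column))
```

With such a reward, a form that matches part of the target but floats above the ground scores lower than its similarity alone would suggest. The balance between the two terms would need to be tuned experimentally.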

Fourth, the SPIRAL agent learned to perform sequences of actions: at each step, the agent observed a partially designed form, got a partial reward, and decided what to design next. This feature opens several possibilities in terms of human-machine interaction that we did not explore in this article. For instance, the designer can provide SPIRAL with any partially defined form and ask the agent to complete the design based on its acquired experience. We plan to integrate this feature in a Graphical User Interface (GUI) to explore the implications of a human-AI partnership in the design of artificial habitat structures.

Last, we limited the action space to the placement of lines in the canvas, making the agent produce forms with limited variations. We will include other modelling actions to increase the expressive capabilities of the agent and synthesise more diverse and potentially more performative forms for artificial habitat structures.
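The stepwise procedure and the line-placement action space described above can be sketched as a loop that starts from a partially defined form and adds one line per step. In this sketch a greedy rule stands in for the learned SPIRAL policy, intersection-over-union stands in for the similarity score, and all names and toy forms are hypothetical.

```python
def rasterise_line(start, end):
    """Voxels along a straight segment, sampled at unit steps of its longest axis."""
    steps = max(abs(e - s) for s, e in zip(start, end))
    if steps == 0:
        return {tuple(start)}
    return {
        tuple(round(s + (e - s) * t / steps) for s, e in zip(start, end))
        for t in range(steps + 1)
    }


def iou(a, b):
    """Intersection-over-union of two voxel sets (stand-in similarity score)."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def complete_design(partial, target, candidate_lines, n_actions):
    """Greedily add lines to a partial form; a stand-in for the learned agent."""
    canvas = set(partial)
    history = []
    for _ in range(n_actions):
        # Observe the current canvas and pick the line that most improves similarity.
        best = max(candidate_lines,
                   key=lambda line: iou(canvas | rasterise_line(*line), target))
        updated = canvas | rasterise_line(*best)
        step_reward = iou(updated, target) - iou(canvas, target)
        if step_reward <= 0:
            break  # No candidate line improves the form any further.
        canvas = updated
        history.append((best, step_reward))
    return canvas, history


# Hypothetical toy target: a trunk with one horizontal branch at the top.
trunk = ((2, 2, 0), (2, 2, 4))
branch = ((0, 2, 4), (4, 2, 4))
stray = ((0, 0, 0), (4, 0, 0))
target = rasterise_line(*trunk) | rasterise_line(*branch)
partial = rasterise_line((2, 2, 0), (2, 2, 2))  # Form supplied by a designer.

final, history = complete_design(partial, target, [trunk, branch, stray], 3)
print(final == target, len(history))
```

In a human-AI workflow of the kind proposed here, `partial` would come from the designer and the greedy rule would be replaced by the trained agent. Adding further modelling actions would amount to extending the set of candidate operations beyond lines.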

As we already mentioned in section “Extending Synthesising Programs for Images Using Reinforced Adversarial Learning to 3D on a Domain-Specific Challenge,” this work serves as a basis for future scaling for trees. It also has the potential to be extended toward other domains of complex natural structures, such as rock formations or coral reefs. We expect further experimentation in this domain to continue in parallel with increasing computing power. Future input datasets can be rich, with multiple layers of information. It is already possible to create high resolution, feature-rich classifications for training in many instances. To give one example, Figure 10 illustrates an extended version of our tree sample data. This dataset has multiple layers of information, including branch centroids (bubbles), key habitat structures (colours), predictions of faunal use (colour intensity) and species richness (size of bubble). We have included this figure in the manuscript to show that such datasets already exist and represent key targets for our future research on AI.

Our AI-synthesised forms are not better than human-made artificial habitat structures or natural trees. Our approach instead complements other innovations in artificial habitat design. Wildlife response to novel artificial habitat structures such as translocated dead trees is encouraging. However, stakeholders for this infrastructure, including birds, bats, insects, and other life forms, have preferences only partially known to human ecologists, conservation managers, and designers.

More generally, the work on artificial intelligence and ecocentric design can contribute to ecological research by supplying requirements for data acquisition, novel analytical techniques for numerical analysis, and generative procedures for field testing. Future implementations of AI agents can work with additional meaningful constraints and targets, including constructability, thermal performance, materiality, modularity, and species-related requirements. These AI agents can suggest unexpected solutions that will be combinable with other design approaches such as expert-driven development or performance-oriented modelling.

In this study, we demonstrate that an artificial intelligence agent can synthesise simplified surrogates of natural forms through a process of abstraction. We show that the forms generated by the agent can be ecologically meaningful and thus contribute to the design of artificial habitat structures. Human-driven degradation of natural habitats makes such work necessary and urgent. The process of habitat simplification accelerates extinctions and loss of health because less diverse habitats can support fewer forms of life. It is difficult to create suitable artificial habitat designs because the reproduction of key features of natural habitat structures is complex and resource-intensive. In response, our study extends existing work in ecological restoration and AI. We interpret the design of artificial habitat structures as a bio-informed process that relates to the cognitive mechanism of abstraction. Our innovative AI agent can synthesise visual abstraction of 3D forms by recombining features extracted from datasets of natural forms, such as trees. The assessment routines that compared geometries of natural trees, artificial habitat structures and synthesised objects confirmed that the outcomes of the process preserve meaningful features.

Our design strategy offers novel methods for reconstructing trees and other complex natural structures as they are seen by nonhuman habitat users, such as birds and other wildlife. The forms produced by our agent can be beneficial because they avoid biases common to existing strategies for artificial habitat design. Strategies developed by our AI agent can produce structures that resemble trees but can also deviate from natural structural forms, while preserving some of their meaning. This research creates an opportunity for future work that can include objectives to satisfy additional criteria in habitat provision, such as material use and constructability, to apply our approach to other sites and species and implement the outcomes at large scales and numbers. This work will depend on further development and – in particular – on the construction and field-testing of physical prototypes. To conclude, this work offers innovative applications in ecology, design and computer science, demonstrating the potential for AI-assisted design that seeks to benefit all forms of life and life-hosting environments.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

GM developed, trained, and tested the AI model and implemented the verification workflow. AH generated datasets of trees and implemented the procedure for assessing the results. JW provided technical support to the AI development and contributed to the literature review. GM and AH contributed to the literature review, research design, and drafting and editing the manuscript. SR and AP directed the conception and design of the project and contributed to the writing and editing. All authors worked on the conclusions and approved the final version of the manuscript.

The project was supported by research funding from the Australian Research Council Discovery Project DP170104010 and the funding from the Australian Capital Territory Parks and Conservation Service.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

We respectfully acknowledge the Wurundjeri and Ngunnawal peoples who are the traditional custodians of the lands on which this research took place. We thank Philip Gibbons and Darren Le Roux for their contributions to the ecological aspects of this project.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fevo.2022.806453/full#supplementary-material

Arnheim, R. (2010). Toward a Psychology of Art: Collected Essays. Berkeley: University of California Press.

Atkins, J. W., Bohrer, G., Fahey, R. T., Hardiman, B. S., Morin, T. H., Stovall, A. E. L., et al. (2018). Quantifying Vegetation and Canopy Structural Complexity from Terrestrial Lidar Data Using the Forestr R Package. Methods Ecol. Evolut. 9, 2057–2066. doi: 10.1111/2041-210x.13061

Baine, M. (2001). Artificial Reefs: A Review of Their Design, Application, Management and Performance. Ocean Coastal Manag. 44, 241–259. doi: 10.1016/s0964-5691(01)00048-5