HYPOTHESIS AND THEORY article

Front. Ecol. Evol. , 14 May 2019

Sec. Behavioral and Evolutionary Ecology

Volume 7 - 2019 | https://doi.org/10.3389/fevo.2019.00164

This article is part of the Research Topic An Ecological Perspective on Decision-Making: Empirical and Theoretical Studies in Natural and Natural-Like Environments

Organisms have evolved to trade priorities across various needs, such as growth, survival, and reproduction. In naturally complex environments this incurs high computational costs. Models exist for several types of decisions, e.g., optimal foraging or life history theory. However, most models ignore proximate complexities and infer simple rules specific to each context. They try to deduce what the organism must do, but do not provide a mechanistic explanation of how it implements decisions. We posit that the underlying cognitive machinery cannot be ignored. From the point of view of the animal, the fundamental problems are what are the best contexts to choose and which stimuli require a response to achieve a specific goal (e.g., homeostasis, survival, reproduction). This requires a cognitive machinery enabling the organism to make predictions about the future and behave autonomously. Our simulation framework includes three essential aspects: (a) the focus on the autonomous individual, (b) the need to limit and integrate information from the environment, and (c) the importance of goal-directed rather than purely stimulus-driven cognitive and behavioral control. The resulting models integrate cognition, decision-making, and behavior in the whole phenotype that may include the genome, physiology, hormonal system, perception, emotions, motivation, and cognition. We conclude that the fundamental state is the global organismic state that includes both physiology and the animal's subjective “mind”. The approach provides an avenue for evolutionary understanding of subjective phenomena and self-awareness as evolved mechanisms for adaptive decision-making in natural environments.

Choice is fundamental for the behavior of all animals (Barnard, 2004). Making a decision involves identifying and selecting one specific physiological, behavioral, or cognitive alternative among several available options. This requires integrating various types of information and weighing conflicting needs (e.g., Mangel and Clark, 1986; McNamara and Houston, 1986). When the decision is being made, its exact outcome is usually uncertain. Thus, decision-making explicitly or implicitly involves prediction of the best alternative by the organism. Prediction ability may be fundamental for all living systems (Ramstead et al., 2018; Suddendorf et al., 2018). Even unicellular microorganisms are capable of predictive decision-making in behavior and homeostatic regulation (Lyon, 2015; Bleuven and Landry, 2016; van Duijn, 2017).

There is a paradox in evolutionary ecology. On the one hand, behavioral ecologists have developed elaborate theories and models identifying the behavioral strategies that are expected to maximize life-time fitness under specific constraints. On the other hand, evolutionary ecology is silent on how these decisions are made or how fitness maximization is implemented in the nervous system. Do animals really assess their expected fitness? Are they using proxies? Are decision-makers aware of their decisions? We argue that (i) considering both perspectives at the same time improves understanding of how animals make decisions, and what animals know about themselves, and that (ii) the cognitive system for predicting possible consequences of available behavioral choices, the “prediction machine”* (see Glossary for terms marked by asterisks), is central in animal decision-making, and rests on functions of the nervous system.

The dominant tradition in ecology is to see the animal through the lens of the researcher who is omniscient, aware of the factors that are relevant in the specific decision context, and has complete information and control. Then, analytical methodology identifies abstract decision rules and heuristics. A century ago, this was the only perspective available. By taking the perspective of the “agent”*, the acting animal, we will follow the opposite path. It essentially runs through building the whole, albeit simplified, animal, a virtual “robot,” that mechanistically implements cognitive and behavioral functions (Dean, 1998). Its machinery is adapted through simulated natural selection as in the artificial life approach (Adami, 1998; Seth, 2007). We will show how animal behavior emerges from principles of information processing, not just fitness maximization. Decisions depend on the state of the organism, and are sensitive to the mechanisms for withholding or bringing information to the decision-making system, which is encoded in nervous systems that vary throughout Animalia. We show that ecology has much to learn from “cognitive sciences”* by implementing computational models and point at some prospects.

It is convenient for ethologists and behavioral ecologists to assume that apparently complex behavioral and life history choices are implemented by simple processes where the underlying mechanisms evolved through natural selection (Dawkins and Dawkins, 1973; Krebs and Davies, 1993; Doya, 2008). Consequently, one focus in behavioral ecology has been to account for the choices observed in a specific context in terms of specific costs and benefits without referring to the proximate mechanisms involved (Krebs and Davies, 1993; Barnard, 2004). The Euler-Lotka equation provides the principal mathematical framework in evolutionary ecology. Euler (1760) showed how age-dependent survival and fecundity schedules determine population per capita growth rate, and Lotka (1925) redefined it to account for the evolutionary fitness of life-history strategies where patterns of survival and fecundity depend on the genotype (Fisher, 1930) and behavioral actions. The transition from population statistics (Euler, 1760) to decisions (Lotka, 1925) was advanced with state dependent life history theory (Mangel and Clark, 1986; McNamara and Houston, 1986), which explicitly assumes that animals make decisions—choose a specific behavior from a suite of possible ones—that depend on physiological state.
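For reference, the Euler-Lotka equation mentioned above is commonly written in the discrete-age form below. The symbols are standard demographic notation rather than anything defined in this text: $r$ is the per capita growth rate, $l_x$ the probability of surviving to age $x$, $m_x$ the fecundity at age $x$, and $\omega$ the maximum age.

```latex
\sum_{x=1}^{\omega} e^{-r x}\, l_x\, m_x = 1
```

In the life-history reading, a strategy changes the schedules $l_x$ and $m_x$, and the value of $r$ that solves the equation serves as the fitness measure for that strategy.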

In these modeling approaches, the researcher deduces the predicted decision by maximizing a metric of evolutionary fitness. Alfred Lotka already hinted that there are mechanisms within a body that enable the organism to make evolutionarily good decisions: “What guides a human being, for example in the selection of his activities, are his tastes, his desires, his pleasures and pains, actual or prospective” (Lotka, 1925, p. 352). He pointed to an evolved neurological basis, and an ability to predict, that controls decisions. When introducing Optimal Foraging Theory, Emlen (1966, p. 611) wrote that the energy efficiency rule applied “within the physical and nervous limitation of a species.” Following the “phenotypic gambit”* (Grafen, 1984), ecologists have usually ignored proximate mechanisms and focused on specific decision rules that maximize fitness in specific situations (Fawcett et al., 2013). The resulting models usually leave unspecified how animals implement these rules. Therefore, no account is given of how animals assess the probabilities and utilities of alternatives, or of which cognitive mechanisms, learning rules, or nervous system components are involved. This gap is, however, being filled with new knowledge from two directions. A growing trend in the neuroscience of decision-making accounts for natural ecological contexts and evolutionary adaptation (Glimcher, 2003; Hutchinson and Gigerenzer, 2005; Louâpre et al., 2010; Mobbs et al., 2018). Similarly, behavioral ecologists are increasingly interested in psychological mechanisms, motivation, emotion and learning as mechanisms underlying decision-making in specific ecological contexts (McNamara and Houston, 2009; Giske et al., 2013; Trimmer et al., 2013; Fawcett et al., 2014; Frankenhuis et al., 2018; Higginson et al., 2018).

Detailing individual-level decision mechanisms can be taken one step further: how does the world look from the perspective of the animal? The agent metaphor, in which the animal is seen as an adaptive autonomous system, is not new in ethology. It has been used in the theory of behavioral control and motivation (McFarland and Bosser, 1993). Even bacteria, which display impressive decision-making capacities (Lyon, 2015; Reid et al., 2015; Bi and Sourjik, 2018), are recognized as autonomous agents (Fulda, 2017). Behavioral ecology has much to gain from adopting the adaptive autonomous agent perspective of cognitive science and robotics.

In human psychology and cognitive science, decision-making is usually thought to depend on explicit thinking and deliberation by the individual (Baars and Gage, 2010). But even in humans, much of decision-making is served by model-based and goal-directed mechanisms that are not necessarily linked with consciousness (Botvinick and Cohen, 2014; Pezzulo et al., 2014; Bach and Dayan, 2017). The focus in these disciplines is on general and universal proximate mechanisms (e.g., Bayesian analysis or a set of broadly applicable heuristic rules, e.g., tallying) to account for rational decision-making across various domains of situations. In this context, rationality means that the agent chooses the best option given constraints (Kahneman, 2002; Gigerenzer and Gaissmaier, 2015). A similar approach taking the perspective of the decision-making subject and accounting for its neurobiological mechanisms for choice (e.g., reinforcement learning, role of prefrontal cortex, basal ganglia etc.) is used in neurobiology (Gold and Shadlen, 2007; Cisek and Kalaska, 2010; Brody and Hanks, 2016). A focus on the general architecture for optimal and resilient action selection is common in robotics and artificial intelligence (Arkin, 1998; Pezzulo et al., 2014; Lewis and Canamero, 2016).

There is a gap between the ultimate ecological and evolutionary understanding of optimal decisions in specific contexts and the general proximate machinery of decision-making across different situations. One bridging tool would be general models that combine evolutionary adaptation with a mechanistic system capable of trading priorities across different needs and producing optimal choices in different unpredictable contexts. Such a model cannot be an equation or a system of equations; it needs a set of computational algorithms implementing a cognitive machinery that “works” like a real system (Dean, 1998). Thus, the challenge is to bring cognitive and ecological models together. Adaptive decision-making in natural environments needs to account for the evolved organism with its integrated phenotype (Murren, 2012), including the cognitive machinery that enables it to behave autonomously, make predictions about the future through subjective* processes, and thus produce adaptive decisions in real time.

Experimenters normally use simple artificial environments and experimental systems focused on a single problem or context that are controllable and lack ambiguity (Staddon, 2003; Fawcett et al., 2014). The natural environment, however, is usually complex, heterogeneous, continuously changing, and stochastic. What constitutes the “context” may not be obvious to the organism. The animal is bombarded with numerous, conflicting, and often novel stimuli. A considerable fraction of incoming sensory information is irrelevant and distracting, while the relevant sensory input is often partial, inaccurate, and ambiguous (Tsotsos, 2011). Nervous system functions involve inherent noise (Faisal et al., 2008; Tsetsos et al., 2016), and the availability of different behavioral options changes over time and space and may be unknown (Blumstein and Bouskila, 1996; Fawcett et al., 2014; Bossaerts and Murawski, 2017).

A straightforward assumption is that animals can make better decisions through the use of all, or most, available information, so that decisions are based on Bayesian reasoning (Knill and Pouget, 2004; McNamara et al., 2006; Bogacz, 2007). This requires that the animal's cognitive system is able to calculate, represent and use probabilities and accumulate information about the different choice options. The evidence is scant (Tecwyn et al., 2017) and the current view is that this capacity in animals is generally rather poor (Johnson and Fowler, 2013). Even humans have inherent difficulties with probabilistic thinking (Kahneman and Tversky, 1982; Kahneman, 2002), especially early in life (Girotto et al., 2016). Nonetheless, animals can perform Bayesian coding and reasoning in some circumstances (Knill and Pouget, 2004; Kheifets and Gallistel, 2012; Fontanari et al., 2014). Probabilistic models based on sequential sampling, accumulating noisy information, integrating evidence (Bogacz, 2007; Forstmann et al., 2016) and Bayesian sampling (Sanborn and Chater, 2016) assume collecting much information. This way of decision-making presupposes collecting information about the probability distributions for most variables defining state as well as environmental alternatives (Griffiths et al., 2008; Lee, 2011). However, obtaining information involves costs and can be risky (Lima and Dill, 1990; Barnard, 2004; Eliassen et al., 2009).
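To make concrete what "accumulating information about the different choice options" would demand of the animal, here is a minimal sketch of Bayesian updating for a single option. It assumes a Beta prior over the probability of foraging success in one patch. The function names, the patch scenario, and the uniform prior are illustrative assumptions and are not taken from any of the models cited here.

```python
from typing import Tuple


def update_beta(alpha: float, beta: float,
                successes: int, failures: int) -> Tuple[float, float]:
    """Update a Beta(alpha, beta) belief about success probability
    after observing some successes and failures (conjugate update)."""
    return alpha + successes, beta + failures


def posterior_mean(alpha: float, beta: float) -> float:
    """Expected success probability under a Beta(alpha, beta) belief."""
    return alpha / (alpha + beta)


# Hypothetical example: uniform prior, then 3 successful and 1 failed
# foraging attempts in the same patch.
prior = (1.0, 1.0)
posterior = update_beta(*prior, successes=3, failures=1)
print("estimated success probability:", posterior_mean(*posterior))
```

Even this toy case needs a stored pair of parameters per option and repeated sampling, which hints at why full-information strategies become costly when many options and state variables are involved.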

Combinatorial complexity becomes a major problem for decision-making in dynamic and stochastic environments because the organism cannot constantly evaluate future effects of every environment and action on its survival and fecundity (Bryson, 2000; Schmid et al., 2011; Tsotsos, 2011). Such a task would require high computational power: any increase in the complexity of the system would raise the computational demands at least exponentially (Cooper, 1990; Tsotsos, 2011; Bossaerts and Murawski, 2017). The only feasible solution is to use approximate and special case algorithms (Cooper, 1990; Goldreich, 2010). One such case is represented by extreme prior probabilities, when the full-information Bayesian model is equivalent to a simple heuristic* decision rule (Parpart et al., 2018). Generally, however, determining a mapping between unconstrained stimuli (input) to a specific response (output) does not scale up to natural environments, quickly reaching an almost intractable computational complexity*. Thus, the strictly bottom-up stimulus to response decision-making process becomes infeasible (Tsotsos, 1995; Shanahan and Baars, 2005; Bossaerts and Murawski, 2017). Consequently, the cognitive machinery must restrict information entering into the decision system.

In computer science, a system based on a fixed mapping between input and output is a lookup table. Using such a table, potentially complex runtime computations are replaced by a simpler array indexing operation (Knuth, 1997). Indeed, the cost of a single lookup need not increase with table size: constant worst-case access time is achievable for fixed tables (Fredman et al., 1984). However, a non-deterministic decision tree cannot be represented by a finite lookup table. Russell and Norvig (2010) considered a hypothetical case of a table-driven autonomous agent doing a simple task limited in time. The number of entries in the lookup table required for a rather moderate-size system could exceed the number of atoms in the observable universe. A similar consideration for a brute force table-based program that could pass the Turing test is equally discouraging: a tabular program cannot make adaptive decisions in an unpredictable environment, because such a task, even limited in time, requires mental states (McDermott, 2014). Animals face even more complex situations because they do not have a specific computational goal with a halting point (Glimcher, 2003). In the general case, the size of the rule base would grow exponentially (or faster) when the set of contexts and stimuli for decision-making grows only linearly. The problem is exacerbated in systems that change over time, as the lookup table would require extra dimensions to account for planning (Pollock, 2006) and sequential decisions (Walsh and Anderson, 2014). Generally, a system based on advance planning needs higher computational capacity than one that generates adaptive behavior autonomously (Arkin, 1998; Russell and Norvig, 2010).
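The growth of such a table can be illustrated with a short calculation. The sketch below assumes a table-driven agent that needs one entry for every possible percept history of length 1 up to its lifetime. The function name and the example numbers are hypothetical and chosen only for illustration.

```python
def lookup_table_entries(num_percepts: int, lifetime_steps: int) -> int:
    """Number of table entries needed when every possible percept
    history of length 1..lifetime_steps maps to its own action."""
    return sum(num_percepts ** t for t in range(1, lifetime_steps + 1))


if __name__ == "__main__":
    # Hypothetical agent that can distinguish only 10 percepts per step.
    for steps in (1, 5, 10, 20, 100):
        entries = lookup_table_entries(num_percepts=10, lifetime_steps=steps)
        print(f"{steps:>3} steps: about 10^{len(str(entries)) - 1} entries")
```

The lifetime grows linearly across the loop while the table size grows exponentially, which is the scaling problem described above.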

Viewing animal behavior as a lookup table of fixed adaptive recipes, from which the animal can draw the best response to each potential situation, may appear simple but brings about increased complexity at other levels: (i) the need to determine the current situation and (ii) the question of how each entry in the table has evolved, become encoded in genes, or otherwise come to store the adaptive recipe.

Although natural environments are complex and unbounded, certain natural situations are highly repeatable, making simple context-specific rules feasible as a special case. Such rules would translate to simple fixed strategies governed by reflexive neural circuits like the fast startle avoidance in teleost fish (Simmons and Young, 1999). Similarly, mammals have two distinct circuits that are responsible for fear and anxiety, the “high road” and the “low road.” The former involves complex cortical processing whereas the latter can quickly transmit information from the sensory thalamus directly to the fear-processing amygdala (LeDoux, 1996). Thus, the low road may involve an evolutionary adaptation specifically to simple stimuli and rigid, repeatable contexts whereas the high road is adapted to complex, flexible general purpose processing not easily attainable through fixed automatic reflexes (Rolls, 2000; Trimmer et al., 2008).

There is a growing recognition that behavior is agentic, purposive (Dickinson, 1985), generated endogenously by the animal (Edelman, 2016), and has an intrinsic spontaneity and indeterminacy (Maye et al., 2007; Brembs, 2011). Such behavior can be based on internal predictive models (Clayton et al., 2003; Suddendorf and Corballis, 2010; Corballis, 2013) involving subjective assessment of the animal's state (Bubic et al., 2010; McNally et al., 2011; Clark, 2013). Doing so does not require a complicated nervous system (Dyer, 2012; Giurfa, 2013; Haberkern and Jayaraman, 2016). The nervous system and the organism as a whole are now increasingly depicted as a “prediction machine” (Bubic et al., 2010; McNally et al., 2011; Clark, 2013) with ability to model the environment and anticipate future consequences of actions.

Thus, the fundamental problem of adaptive behavior and decision-making in a naturally complex environment is what are the best stimuli to respond to and what are the best contexts to choose for achieving a specific goal such as homeostasis, survival, or reproduction rather than just how to best respond to particular environmental stimuli. This points to three essential aspects: (a) the focus on the autonomous agent with a subjective internal model* and predictive processing; (b) the need to limit, as much as integrate, information from the environment; (c) the importance of goal-directed (top-down) rather than purely stimulus-driven (bottom-up) cognitive and behavioral control.

Efficient decision-making in natural environments requires control over which stimuli to process and which to ignore. In neuroscience, such selectivity is thought to arise through attention: the capacity to acquire and process only a limited subset of the available sensory input (Bushnell, 1998; Katsuki and Constantinidis, 2014; Moore and Zirnsak, 2017). Furthermore, attention restriction must emerge from interactions between the components of the systems (McClelland et al., 2010; Botvinick and Cohen, 2014; Dennett, 2017).

Selective attention can be achieved through either stimulus-driven (bottom-up) or goal-driven (top-down) mechanisms (Corbetta and Shulman, 2002; Moore and Zirnsak, 2017). In its simplest form, noticeability or apparent physical salience of the stimulus induces stimulus-driven (bottom-up) attention automatically. Here the properties of the stimulus itself, rather than the internal state of the agent, elicit the selection bias. Goal-driven (top-down) attention emerges when specific types of information (e.g., specific kinds of stimuli) are actively sought out from the external environment. Top-down attention is implicated in goal-directed behavior and complex forms of cognition (Norman and Shallice, 1986; Corbetta and Shulman, 2002; Buschman and Miller, 2014).

Attention is a critical mechanism for sensory system functioning and decision-making. Quite well-developed attention can be found in animals with relatively small nervous systems, for example in insects (Nityananda, 2016). Selectivity in the visual processing center modulates later behavioral choices (Paulk et al., 2014), which leads to selective priming of motion detectors in the optic lobe, accounting for the insects' fascinating ability to predict prey movements (Kohn et al., 2018).

Selective attention is closely linked with cognitive control that involves prioritization of the information for goal-directed decision-making (Braver, 2012; Duncan, 2013; Mackie et al., 2013) typically by inhibitory neuronal mechanisms (Aron, 2007; MacLeod, 2007). Cognitive control includes hierarchically organized integration of information from many sources (Toates, 2002; Verschure et al., 2014; Pezzulo et al., 2015), top-down suppression of distracting emotion-eliciting stimuli, and regulation of the emotional response (Banich et al., 2009; Goschke and Bolte, 2014). Various brain circuits have been implicated in goal-directed cognitive control, such as basal ganglia, prefrontal cortex (Haddon and Killcross, 2005; Goschke and Bolte, 2014; Verschure et al., 2014), and lateral habenula (Hikosaka, 2010). Although few studies of cognitive control have been conducted in non-human species, the existing evidence indicates that at least some form of cognitive control can be found in species lacking prefrontal cortex, such as pigeons (Castro and Wasserman, 2016) and fruit-flies (van Swinderen, 2005). Models of cognitive control emphasize local self-organizing computations (Botvinick and Cohen, 2014).

In economic judgment and psychological decision-making, humans do not typically use exhaustive analysis of all information about the various alternatives and their probabilities. Cognitive complexity is limited by using simple heuristic rules (Hutchinson and Gigerenzer, 2005; Gigerenzer, 2008; Gigerenzer and Brighton, 2009). This reflects the trade-off between accuracy and effort (in behavioral ecology, see Chittka et al., 2009). While more information is usually beneficial, obtaining it generally follows the law of diminishing returns. Neural computations are energetically costly and consume time. At some point, obtaining more information and performing additional computations will not result in sufficiently better decisions (Eliassen et al., 2009). Instead of the best choice, the organism makes fast and frugal decisions that are good enough most of the time for the ecological conditions (Hutchinson and Gigerenzer, 2005; Gigerenzer, 2008). Simple heuristics based on limited information can perform quite well compared to complex informed decision strategies in a variety of situations (Hutchinson and Gigerenzer, 2005; Eliassen et al., 2007, 2016).

Although the human mind creates verbal heuristics and rules of thumb, the evolved rules in most animals are non-symbolic neurophysiological mechanisms. Heuristic rules that describe animal behavior are theoretical constructs, not actual machinery implemented in the nervous system. Natural selection can maintain simplified algorithms for approximate solutions in organisms with finite computational power, memory, and limited information (Geisler and Diehl, 2003; Trimmer et al., 2011; Bach and Dayan, 2017). It can also facilitate decision biases because some errors (e.g., underestimating predation risk or overconfidence in resource contests) are far more costly than erring on the other side (Houston et al., 2007; Johnson et al., 2013).

Using less information could result in better decisions than strategies based on more detailed computations, even when additional processing is cheap and information is easily available (Gigerenzer and Brighton, 2009). The main explanation for such a “less-is-more” effect is that decision-making is about predicting the future rather than accounting for the past. Including extra information and computations within a stochastic environment incurs a significant risk of overfitting. A complex model that includes more parameters and fits the observed data better is frequently less capable of predicting the future state of the environment than a simpler model.

This is a general issue that has been treated in non-parametric inference and machine learning literature. If a model is based on too limited information (high bias), prediction is inaccurate because of inadequate model specification. However, if too much information is accepted (high variance), the prediction can also be wrong because the model fits all irrelevant stochastic idiosyncrasies; there is no universal solution to this trade-off (Geman et al., 1992; Bishop, 2006). Gigerenzer and Brighton (2009) suggest that the more uncertain the environment is, the more the cognitive system should protect itself from the variance even at the expense of increasing bias, thus accounting for the prevalence of simple and imperfect but resilient heuristics.
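The trade-off described above is usually stated through the standard decomposition of expected squared prediction error. The notation is an assumption introduced here for illustration: observations are $y = f(x) + \varepsilon$ with zero-mean noise of variance $\sigma^2$, and $\hat{f}$ is the model fitted to a random sample.

```latex
\mathbb{E}\!\left[\bigl(y - \hat{f}(x)\bigr)^{2}\right]
  = \underbrace{\bigl(\mathbb{E}[\hat{f}(x)] - f(x)\bigr)^{2}}_{\text{bias}^{2}}
  + \underbrace{\mathbb{E}\!\left[\bigl(\hat{f}(x) - \mathbb{E}[\hat{f}(x)]\bigr)^{2}\right]}_{\text{variance}}
  + \underbrace{\sigma^{2}}_{\text{irreducible noise}}
```

A simple heuristic accepts a larger first term in exchange for a smaller second term. That exchange pays off when samples are small or the environment is noisy.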

How can the animal decide which heuristics to choose in each situation? This would still require a fairly complex cognitive system. The main conclusion is that the proximate decision-making architecture should not use general inference algorithms (e.g., explicit Bayesian probabilistic reasoning) to find the best solution in a wide range of contexts, nor should it be largely based on context-specific lookup table rules. Instead, the cognitive system of the animal must be able to adapt to a wide range of situations, as well as its own need states, by dynamically adjusting the information input in real time.

David Marr (2010) proposed the now classical idea that full understanding of any cognitive system requires three levels of analysis: (a) computational theory, (b) representation and algorithm, and (c) proximate mechanistic implementation. Simple heuristics, Bayesian, and active inference models provide an elegant mathematical framework (they account for what the organism should do) but do not provide a mechanistic explanation of how it is done (Gigerenzer and Brighton, 2009; Bowers and Davis, 2012). How the organism and its nervous system may implement these computations is still poorly understood. However, the agent view of the organism requires understanding at all three levels, specifically, the algorithmic implementation and neurobiological “hardware.” Understanding animal decision-making requires models that integrate goal-directed predictive processing, information-limiting heuristics and probabilistic Bayesian thinking into mechanistic evolutionary models in a (neuro-)biologically realistic way. We believe that an approach based on cognitive architecture (Anderson, 2007; Lucentini and Gudwin, 2015; Budaev et al., 2018) would be a viable strategy for a more mechanistic understanding of the adaptation and evolution of decision-making. This involves building simulation models of cognition and behavior, embodied decision-making, and action selection (Seth, 2007; Cisek and Pastor-Bernier, 2014). In terms of Marr's analysis, cognitive architecture models work at the second level (the proximate software of cognition, behavior and decision-making) and allow simplified representation at the third level (neurobiological hardware) within a simulation system. As a result, analyzing evolutionary adaptation of the proximate mechanism becomes possible (Giske et al., 2013, 2014; Evers et al., 2014; Eliassen et al., 2016; MacPherson et al., 2017; Budaev et al., 2018).

We have developed an adaptive architecture for decision-making (Huse and Giske, 1998; Strand et al., 2002; Giske et al., 2003, 2013, 2014; Andersen et al., 2016; Eliassen et al., 2016). It currently contains a general framework and simulation models that integrate cognition, decision-making and behavior in the whole integrated phenotype including the genome, physiology, hormonal system, perception, emotions, motivation and cognition (Budaev et al., 2018). It follows from and extends the classical ethological notion of behavioral control systems (see Toates, 2002; Hogan, 2009). In the following sections we show how this framework can be used to model adaptive animal decision-making.

At any time, new information from the body or the external environment may arrive at the animal's sensory system. Yet, before that, the animal has some representation within its nervous system of both itself and its surroundings. Even unicellulars can evaluate the situation within the cell and in the surroundings and make decisions about pursuing resources, closing enemies out, dividing, or entering a resting stage (Våge et al., 2014; Lyon, 2015; Bi and Sourjik, 2018). The complexity of this internal representation varies within Animalia, but it can be described as an “image” (Damasio, 2010) of aspects of itself and of the world around it. Much of incoming information will not be relevant for altering this image, and will not evoke a new behavior. We call this image an internal model (of the internal and external world). It is subjective, as it accumulates from the organism's own experiences. The subjective internal model (SIM) is the animal's cognitive environment for its decision-making. Technically, SIM represents a set of parameters that are fixed from the individual genome. We now describe the processes that can challenge an animal's SIM, and how that may lead to new decisions and behavior.

Modularity is ubiquitous in anatomy, physiology, and behavior (Lorenz et al., 2011; Clune et al., 2013). In particular, behavioral organization is viewed as hierarchically modular (Toates, 2002; Hogan, 2009). Causal factors for behavior bring about constraints and correlations across contexts making up personality (Gosling and John, 1999; Sih et al., 2004; Budaev and Brown, 2011). In this framework, modularity is represented by the elementary unit of information processing: the survival circuit.

The survival circuit is an evolutionarily conserved and highly integrated neural pathway, along with its neural centers, that responds to a specific class of innate or learned stimuli and controls a specific set of behavioral and physiological responses important for the survival (LeDoux, 2012). Such systems represent integrated sensory-motor units that link behavioral decision and action with perception of the environment. The organism has several of these systems that control specific behavioral domains linked to nutrition, danger avoidance, resting, reproduction, etc.

Animal behavior can be viewed as a series of mutually exclusive activities that occur one at a time (McFarland and Sibly, 1975). Since most organisms have several survival circuits, priority needs to be determined. This happens through mutual competition (Cisek, 2007; Colas, 2017; Alhadeff et al., 2018). The winning control system gains control over the organism and becomes its current dominant state, called the global organismic state* (GOS; LeDoux, 2012, 2014). The strength of the activation of the dominant state determines the general arousal* of the organism, which involves a wide range of neural pathways and brings about alertness to various sensory stimuli, reactivity, and motor activity (Pfaff, 2006; Calderon et al., 2016). This is an oversimplification dictated by the main application area: ecological and evolutionary analysis of animal behavior. Whereas arbitration between different controllers involves competitive exclusion, it can also include sharing of attention, working memory, and other limited resources (Daw et al., 2005; Keramati et al., 2011; Korn and Bach, 2018).
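The competition described above can be sketched as a winner-take-all selection. This is a minimal illustration and not the implementation of the cited framework: the function name, the circuit names, and the use of a single activation value per circuit are assumptions made here.

```python
from typing import Dict, Tuple


def select_gos(activations: Dict[str, float]) -> Tuple[str, float]:
    """Pick the most strongly activated survival circuit as the GOS.

    Returns the winner's name and its activation, which is treated
    here (by assumption) as the general arousal of the organism.
    """
    if not activations:
        raise ValueError("at least one survival circuit is required")
    winner = max(activations, key=lambda name: activations[name])
    return winner, activations[winner]


# Hypothetical activation levels for three survival circuits.
circuits = {"hunger": 0.4, "fear": 0.9, "reproduction": 0.2}
gos, arousal = select_gos(circuits)
print(f"GOS = {gos}, arousal = {arousal}")
```

A strict maximum implements pure competitive exclusion. The resource sharing noted in the text would need a softer arbitration rule than this one.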

What happens once a GOS has been established can differ across Animalia. Certain taxa may have to run the priorities of a GOS isolated from all other survival circuits, and may even have a higher number of specialized survival circuits. More complex nervous systems have means to communicate between survival circuits, and thus incorporate more factors in the decision-making. For instance, Milinski (1984) found that starving three-spined sticklebacks were not willing to focus entirely on attention-demanding high-speed feeding after seeing the silhouette of a predatory bird, and also chose to feed in a less attention-demanding situation after first reducing their hunger in high-risk feeding (Heller and Milinski, 1979). Thus, the brains of these fish would implement an information broadcast in the SIM across survival circuits that allows for a nuanced behavioral decision by the GOS. In the crab Heterozius rotundifrons, on the other hand, the alternative global organismic states seem to be like an on/off switch (Hazlett and McLay, 2000).

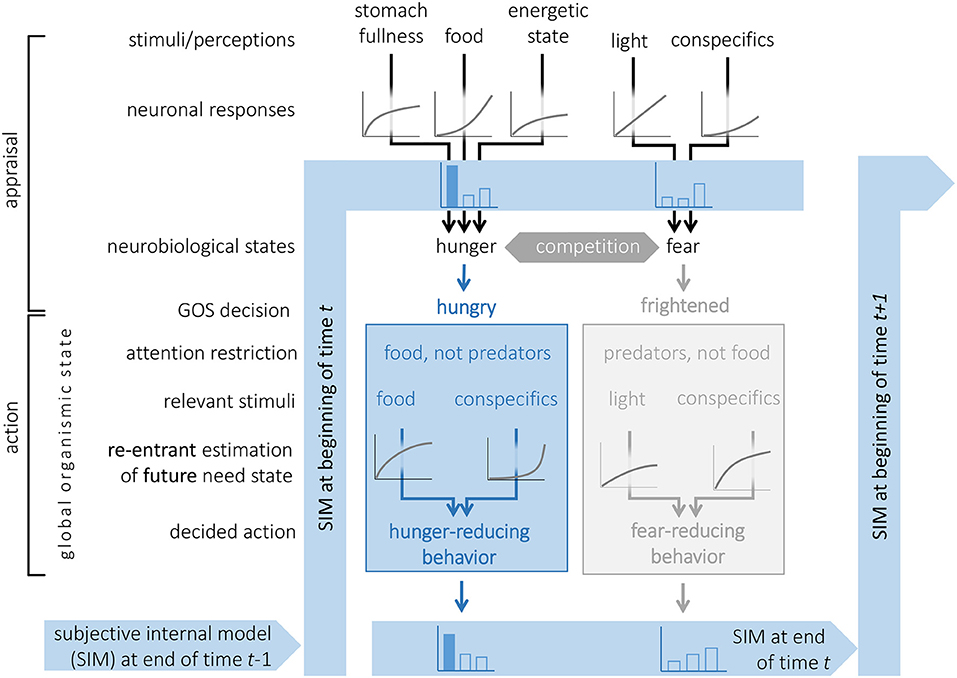

The GOS and general arousal jointly modulate the top-down attention system such that all the perceptions that are not linked with the currently dominant survival circuit are suppressed (Figure 1). The stickleback feeding (Heller and Milinski, 1979) illustrates that the extent of this suppression is proportional to the general arousal. For example, if the dominant state of the organism is fear, then hunger, thirst, and sexual drive systems are suppressed proportionally to the fear arousal. This provides a simple mechanism for goal-directed limitation and modulation of information input. That is, arousal determines the current need state of the organism (e.g., higher or lower hunger) such that a higher need brings about a stronger information limitation while a weaker need causes a wider integration of diverse stimuli. This accommodates both limited- and full-information models of decision-making: a strong need compels the organism to prefer a fast decision based on the most critical information, while being in a low-need state permits longer and more deliberate collection and analysis of information. Attention restriction in our framework is a simplification: real animals have various forms of attention (orienting, selective, expectancy, sustained, parallel resource sharing, etc.) that can involve both suppression of irrelevant stimuli and enhancement of relevant stimuli (Bushnell, 1998; Katsuki and Constantinidis, 2014; Moore and Zirnsak, 2017).
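The arousal-dependent suppression described above can be sketched in a few lines of Python. The linear scaling by `1 - arousal` is only one assumed way to make suppression proportional to arousal; the function name `attention_weights` and the example values are hypothetical.

```python
def attention_weights(perceptions, gos, arousal):
    """Suppress perceptions not linked to the GOS in proportion to arousal.

    perceptions: dict mapping a survival circuit name to its raw signal.
    gos: name of the currently dominant circuit.
    arousal: general arousal on an assumed 0..1 scale.
    """
    weighted = {}
    for circuit, signal in perceptions.items():
        if circuit == gos:
            weighted[circuit] = signal                    # relevant input passes unchanged
        else:
            weighted[circuit] = signal * (1.0 - arousal)  # assumed linear suppression
    return weighted


perceptions = {"fear": 0.9, "hunger": 0.5, "thirst": 0.4}
low_need = attention_weights(perceptions, gos="fear", arousal=0.2)
high_need = attention_weights(perceptions, gos="fear", arousal=0.9)
print(low_need)
print(high_need)
```

With low arousal most of the hunger and thirst signal still reaches the decision process (wide integration), whereas with high arousal it is almost entirely filtered out (strong information limitation).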

Figure 1. A graphical model of the cognitive environment for decision-making in an animal with Subjective Internal Model (SIM), survival circuits (two shown here, of which the blue one became the GOS) and the potential for a Global Organismic State (GOS). The continuity of the SIM across time steps is illustrated. Two survival circuits are activated from sensory information and compete for access to the GOS. The winner, in this case related to hunger reduction, narrows the attention to stimuli relevant for feeding, utilizes the prediction machine for reentrant evaluation of the future arousal of its need state to be obtained by each behavioral option available, and chooses accordingly. If the neurobiological arousal from all survival circuits is low, the organism may not enter a strict GOS, and rather make reentrant predictions that consider needs in several survival circuits. Information from both (and other) survival circuits is stored by the SIM, but will gradually be forgotten.

Top-down attention creates elementary goal-directed behavior. Indeed, the current GOS determines which stimuli the organism will select to respond to without an additional internal controller. Furthermore, this also creates an elementary form of subjective phenomena that exist only for the experiencing subject (Searle, 1997). Indeed, due to differences in their internal states, two organisms with identical genomes placed into identical environments will have different weights of environmental inputs and thus different SIMs. This is analogous to being in different subjective environments, so the organisms would come to make different decisions. As they continue to interact with their environments, their subjective differences would diverge further.

The link between perception, decision-making, and action within the survival circuit not only produces behavior directly, but can also be reactivated recursively*. This provides a simple mechanism for internal subjective simulation of action consequences. Combined with the general arousal as a proxy for the organism's subjective need, this provides a mechanistic system capable of predictive processing. Now the organism can build simple sensorimotor models of its internal and external environments by recurrent reactivation of its survival circuits and calculate its expected need state under all or many immediately expected conditions of the environment. For example, an animal can from visual inspection and memory make a prediction of how much a potential food item will reduce hunger before it decides to eat it. This simulation process within the SIM implements an elementary form of “mind” capable of elementary declarative representation: knowledge symbolizing facts about the world, e.g., “If I eat this food item, my hunger diminishes.” This is consistent with the current thinking in cognitive neuroscience where the same integrated neural pathways are recruited when the behavioral action is produced as when the same action is planned, anticipated, subjectively simulated, or even observed (Hurley, 2008; Casile et al., 2011; Soylu, 2016). Such mechanisms are not limited to human higher cognitive functions: insects are a model system for sensorimotor integration because of their simple nervous systems but complex behaviors (e.g., Huston and Jayaraman, 2011). Furthermore, computationally similar mechanisms can exist even in bacteria (Duijn et al., 2008).
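The idea that the same circuit serves both for acting and for simulating can be illustrated with a small Python sketch. It assumes that hunger arousal is simply one minus stomach fullness; the functions `hunger_arousal` and `simulate_eating` and all numbers are hypothetical.

```python
def hunger_arousal(stomach_fullness):
    """Survival circuit: map the (clamped) fullness state to hunger arousal in 0..1."""
    fullness = max(0.0, min(1.0, stomach_fullness))
    return 1.0 - fullness


def simulate_eating(stomach_fullness, perceived_energy):
    """Reactivate the same circuit on an imagined future state, not the current one."""
    return hunger_arousal(stomach_fullness + perceived_energy)


current = hunger_arousal(0.3)          # arousal experienced now
predicted = simulate_eating(0.3, 0.4)  # "If I eat this food item, my hunger diminishes"
print(current, predicted)
```

No separate predictive module is needed in this sketch: the prediction is obtained by calling the circuit a second time with an imagined input.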

Recurrent rather than simple feed-forward processing is particularly important for complex decision-making computations. For example, recurrent networks can implement Turing-complete machines and therefore perform computations of any complexity (Siegelmann and Sontag, 1995). Such recurrent networks can be supplemented with attention and memory providing a powerful unsupervised architecture capable of inferring computational algorithms (Graves et al., 2014). Whenever the same set or sequence of inputs is used, one can unwind a recurrent network into a simple linear process. The power of recurrent processing lies in its inherent and parsimonious capacity to cope with uncertainty and unpredictability.
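The unwinding of a recurrent process for a fixed input sequence can be shown with a toy Python example. The update rule in `step` and its weights are arbitrary assumptions chosen only to keep the arithmetic transparent; this is not a neural network model.

```python
def step(state, x, w_state=0.5, w_input=1.0):
    """One recurrent update: the new state depends on the previous state and the input."""
    return w_state * state + w_input * x


def run_recurrent(inputs, state=0.0):
    """Apply the same update repeatedly; works for input sequences of any length."""
    for x in inputs:
        state = step(state, x)
    return state


def run_unrolled(x1, x2, x3):
    """The same computation for exactly three inputs, written as a feed-forward chain."""
    return step(step(step(0.0, x1), x2), x3)


print(run_recurrent([1.0, 2.0, 3.0]), run_unrolled(1.0, 2.0, 3.0))
```

Both functions give the same result for three inputs, but only the recurrent version also handles a sequence whose length is not known in advance, which is where its advantage under unpredictability lies.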

It is thought that complex forms of cognition depend on reentrant* recruitment of sensorimotor bundles (Barsalou, 2008). These are involved in mental simulations of actions and provide an objective substrate for subjective experience (Barsalou, 2008). Barsalou (2005) suggested that there is a continuity of such systems across species: a common architecture can underlie conceptual systems in different taxa. Neurobiology of learning converges on the idea that even simple nervous systems should store information intrinsically in a symbolic way (Gallistel and King, 2010; Gallistel and Balsam, 2014). There is also now evidence that at least some species are able to travel in time mentally and “future-think” (Corballis, 2013; Thom and Clayton, 2015; Dere et al., 2018). Thus, a range of species, even with simple nervous systems, may have cognitive mechanisms for active, dynamic understanding of their environment (a simple form of deliberation or thinking), probably based on recurrent recruitment mechanisms and perceptual symbol systems. Furthermore, such mechanisms may show evolutionary continuity and range from simple to very complex (incidentally, there is no a priori reason to assume that primates should always use more complex internal mechanisms than fish or insects). Indeed, there is a growing literature indicating that animals are capable of causal reasoning and abstraction (Penn and Povinelli, 2007; Urcelay and Miller, 2009; Guez and Stevenson, 2011).

The adaptive decision-making architecture described in this paper provides a mechanistic model for elementary forms of subjective understanding of the environment by the organism (Budaev et al., 2018). Such an understanding does not involve building a generalized abstract representation of the environment, but is produced by the organism's internal control systems (Figure 1). It also provides a model of elementary self-awareness, which is defined as the ability of the organism to assess its own subjective internal state and use this information for decision-making and behavior (see Budaev et al., 2018). In this way, the subjective state depicted by the survival circuit and general arousal represents a common subjective currency for decision-making. For example, when choosing one of several available food items, the organism can calculate the anticipated level of hunger resulting from eating each one and then select the item that minimizes the expected arousal (Budaev et al., 2018). Thus, the organism anticipates effects of its decisions through prediction of its own subjective states. Similarly, it was shown that using simple subjective information can significantly increase the efficiency of decision-making: it can approach a Bayesian learning strategy integrating a large amount of information (Higginson et al., 2018). The ability to monitor one's own subjective state in animals should not be considered an instance of anthropomorphism. On the contrary, there is a growing understanding that many animals can in fact monitor their own cognitive state and therefore are capable of metacognition (Smith et al., 2003; Kornell, 2014).
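The food-choice example can be written as a short Python sketch in which expected arousal serves as the common currency. It assumes that each item lowers hunger by its perceived energy value and that no handling cost or risk applies; `expected_hunger`, `choose_item`, and the item values are hypothetical.

```python
def expected_hunger(current_hunger, perceived_energy):
    """Predicted hunger arousal after eating an item (cannot fall below zero)."""
    return max(0.0, current_hunger - perceived_energy)


def choose_item(current_hunger, items):
    """Return the item whose consumption minimizes the expected arousal."""
    return min(items, key=lambda name: expected_hunger(current_hunger, items[name]))


items = {"small": 0.1, "medium": 0.3, "large": 0.5}  # perceived energy per item
best = choose_item(0.6, items)
print(best)
```

In a fuller model the predicted arousal of other survival circuits, such as fear associated with a risky food patch, would enter the same comparison.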

Thus, the decision-making architecture based on simple subjective simulations with elementary self-awareness—the primary regulator of the whole organism behavior—encodes a valid, albeit limited and not always precise, model of the organism's world including both the internal and external environment (Figure 1). It is apparently much simpler and has far fewer degrees of freedom than the stochastically fluctuating environment, and can even be drastically simplified in situations of high need (high arousal) through the use of heuristics. This provides a solution to the complexity challenge in animal decision-making.

Behavior of the organism is determined by multiple causal factors (Hogan, 2009), such as reflexes, homeostatic drives, and emotions (Andersen et al., 2016). These mechanisms can be inherited and operate without individual learning. Hence, the major computational challenge of both environmental assessment and bodily priorities does not all depend on the individual's cognitive capacity: it is partially conducted through adaptive evolution of the population gene pool. Animals are unlikely to perform complex Bayesian computations, but natural selection is expected to favor organisms that approximate Bayesian inference (Ramírez and Marshall, 2017). How such computations can be done mechanistically is generally unknown.

This framework investigates these problems through the genetic algorithm to evolve solutions to genetic (Huse and Giske, 1998; Fiksen, 2000) and cognitive/behavioral (Giske et al., 2013; MacPherson et al., 2017) architecture in individual-based models. Our approach directly translates into testable experiments: increasingly adaptive behavior evolved over successive generations provides evidence for the architecture implemented (Giske et al., 2013, 2014; Andersen et al., 2016; Eliassen et al., 2016; Budaev et al., 2018). Also, the solution found by the genetic algorithm is at the level of the gene pool, so there will usually be genetic variation in the population (Giske et al., 2014). Such genetic diversity allows for consistent variation among individuals, including emergence of personality types that differ in sensory sensitivity and top-down emotional evaluation of the choices (Giske et al., 2013). This enables generating and testing hypotheses on both the decision architecture and its variability. Further models can be built testing co-evolution and/or competition of distinct architectures in common environments. We envision the framework as a digital laboratory, a middle ground between theoretical optimality models and experiments.
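The logic of evolving a gene pool with a genetic algorithm can be sketched as a toy Python example. It is far simpler than the individual-based models cited above: each individual carries a single gene, fitness is an arbitrary assumed function, and selection is by truncation with surviving parents. The names `fitness` and `evolve` and all parameter values are hypothetical.

```python
import random


def fitness(gene, optimum=0.7):
    """Assumed fitness: highest when the gene matches an arbitrary optimum."""
    return -abs(gene - optimum)


def evolve(pop_size=30, generations=40, mutation_sd=0.05, seed=1):
    """Return the final gene pool and the best fitness recorded in each generation."""
    rng = random.Random(seed)
    population = [rng.random() for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        parents = population[: pop_size // 2]  # truncation selection; parents survive
        offspring = []
        for _ in range(pop_size - len(parents)):
            child = rng.choice(parents) + rng.gauss(0.0, mutation_sd)  # mutation
            offspring.append(min(1.0, max(0.0, child)))                # keep gene in 0..1
        population = parents + offspring
    population.sort(key=fitness, reverse=True)
    history.append(fitness(population[0]))
    return population, history


population, history = evolve()
print(history[0], history[-1], len(set(population)))
```

Because the best individuals always survive, the best fitness never decreases across generations, and mutation keeps several gene variants in the final pool rather than a single optimal value.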

Individual learning plays a crucial role in decision-making (Doya, 2008; Frankenhuis et al., 2018). Much of learning can be mediated by innate cognitive machinery with subjective currency serving for the prediction error* (Sutton and Barto, 1981; Botvinick and Weinstein, 2014; Holland and Schiffino, 2016). This calls for models of learning based on top-down cognition and information rather than temporal association between stimuli (Gallistel and King, 2010; Gallistel and Balsam, 2014; Haselgrove, 2016). Individual learning is therefore linked with the evolved cognitive architecture and memory system, so that even individually acquired choices can be based on evolutionary computations over the previous generations. The elementary forms of declarative representation that result from reentrant recruitment of survival circuits in our framework may therefore provide a model for the evolution of innate core knowledge (Kinzler and Spelke, 2007; Vallortigara, 2012).

Many biological problems are complex, and studying them requires models that can incorporate complexity (Levins, 1966). Such models often contain a mixture of theory, statistical relationships from empirical studies, and theoretical relationships that need to be challenged by new data (Railsback and Grimm, 2019). An important part of the current shift toward an integrative understanding of proximate and ultimate causation involves viewing the animal as an agent rather than just a passive responder. Decisions made by the agent can be analyzed from its point of view, accounting for its sensory and motor capabilities, environmental conditions and affordances. This focuses on how the animal solves its everyday decision tasks across various contexts through its evolved cognitive architecture, acting autonomously, purposively, determining dynamically which information to use and which to ignore. Merging evolutionary ecology with the cognitive sciences, animal cognition, neuroscience as well as artificial intelligence and robotics opens important perspectives in the study of decision-making in organisms with complex cognition and behavior. A drawback is that more complex, computationally intensive simulations with expensive computer programming are required (Huse and Giske, 1998; Giske et al., 2013; MacPherson et al., 2017).

Ethologists (e.g., Tinbergen, 1952) and evolutionary ecologists (Mangel and Clark, 1986; McNamara and Houston, 1986) have long accepted that animal behavior is not a mechanical response to the external pressure from the environment but depends on the state of the organism. In this paper we argue that the fundamental state to consider is not stomach contents, fat reserves, or body mass per se, but a state of its “mind”: the global organismic state. The animal's subjective internal model can, via control of the nervous system, set the animal in a mental state that will dominate many aspects of its cognition and behavior, including decision-making. There is an exciting avenue for linking cognition and neuroscience with ecological function. Predictions on the fitness economics (Krebs and Davies, 1993) of various cognitive systems and emotions can now be produced. By integrating knowledge at different levels of analysis, this approach could depict correlations across contexts, time, and developmental stages. This provides a key to better understanding animal personalities and life history strategies. Finally, the focus on agency, top-down causes and emergence may lead to novel discoveries that are not easily predicted from simple aggregate phenomena.

SB and JG conceived the theoretical ideas. SB wrote the first version of the manuscript. JG, MM, SE, and CJ provided theoretical developments, additional references, and contributed critically to later versions of the manuscript.

This research is supported by the University of Bergen and the Research Council of Norway grant No. FRIMEDBIO 239834. MM is partially supported by the US National Science Foundation grant DEB-15-55729.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The authors have benefited from discussions with Bernhard Baars, Victoria Braithwaite, Lars O.E. Ebbesson, Tore S. Kristiansen, and Steve Railsback. The reviewers also substantially improved the manuscript.

Alhadeff, A. L., Su, Z., Hernandez, E., Klima, M. L., Phillips, S. Z., Holland, R. A., et al. (2018). A neural circuit for the suppression of pain by a competing need state. Cell 173, 140–152.e15. doi: 10.1016/j.cell.2018.02.057

Andersen, B. S., Jørgensen, C., Eliassen, S., and Giske, J. (2016). The proximate architecture for decision-making in fish. Fish Fish. 17, 680–695. doi: 10.1111/faf.12139

Anderson, J. R. (2007). How Can the Human Mind Occur in the Physical Universe?. Oxford: Oxford University Press.

Aron, A. R. (2007). The neural basis of inhibition in cognitive control. Neuroscientist 13, 214–228. doi: 10.1177/1073858407299288

Bach, D. R., and Dayan, P. (2017). Algorithms for survival: a comparative perspective on emotions. Nat. Rev. Neurosci. 18, 311–319. doi: 10.1038/nrn.2017.35

Banich, M. T., Mackiewicz, K. L., Depue, B. E., Whitmer, A. J., Miller, G. A., and Heller, W. (2009). Cognitive control mechanisms, emotion and memory: a neural perspective with implications for psychopathology. Neurosci. Biobehav. Rev. 33, 613–630. doi: 10.1016/j.neubiorev.2008.09.010

Barnard, C. (2004). Animal Behaviour: Mechanism, Development, Function and Evolution. Harlow: Prentice Hall.

Barsalou, L. W. (2005). Continuity of the conceptual system across species. Trends Cogn. Sci. 9, 309–311. doi: 10.1016/j.tics.2005.05.003

Barsalou, L. W. (2008). Grounded cognition. Annu. Rev. Psychol. 59, 617–645. doi: 10.1146/annurev.psych.59.103006.093639

Bi, S., and Sourjik, V. (2018). Stimulus sensing and signal processing in bacterial chemotaxis. Curr. Opin. Microbiol. 45, 22–29. doi: 10.1016/j.mib.2018.02.002

Bleuven, C., and Landry, C. R. (2016). Molecular and cellular bases of adaptation to a changing environment in microorganisms. Proc. R. Soc. B Biol. Sci. 283:20161458. doi: 10.1098/rspb.2016.1458

Blumstein, D. T., and Bouskila, A. (1996). Assessment and decision making in animals: a mechanistic model underlying behavioural flexibility can prevent ambiguity. Oikos 77, 569–576.

Bogacz, R. (2007). Optimal decision-making theories: linking neurobiology with behaviour. Trends Cogn. Sci. 11, 118–125. doi: 10.1016/j.tics.2006.12.006

Bossaerts, P., and Murawski, C. (2017). Computational complexity and human decision-making. Trends Cogn. Sci. 21, 917–929. doi: 10.1016/j.tics.2017.09.005

Botvinick, M., and Weinstein, A. (2014). Model-based hierarchical reinforcement learning and human action control. Philos. Trans. R. Soc. B Biol. Sci. 369:20130480. doi: 10.1098/rstb.2013.0480

Botvinick, M. M., and Cohen, J. D. (2014). The computational and neural basis of cognitive control: charted territory and new frontiers. Cogn. Sci. 38, 1249–1285. doi: 10.1111/cogs.12126

Bowers, J. S., and Davis, C. J. (2012). Bayesian just-so stories in psychology and neuroscience. Psychol. Bull. 138, 389–414. doi: 10.1037/a0026450

Braver, T. S. (2012). The variable nature of cognitive control: a dual mechanisms framework. Trends Cogn. Sci. 16, 106–113. doi: 10.1016/j.tics.2011.12.010

Brembs, B. (2011). Towards a scientific concept of free will as a biological trait: spontaneous actions and decision-making in invertebrates. Proc. R. Soc. B Biol. Sci. 278, 930–939. doi: 10.1098/rspb.2010.2325

Brody, C. D., and Hanks, T. D. (2016). Neural underpinnings of the evidence accumulator. Curr. Opin. Neurobiol. 37, 149–157. doi: 10.1016/j.conb.2016.01.003

Bryson, J. (2000). Cross-paradigm analysis of autonomous agent architecture. J. Exp. Theor. Artif. Intell. 12, 165–189. doi: 10.1080/095281300409829

Bubic, A., Von Cramon, D. Y., and Schubotz, R. I. (2010). Prediction, cognition and the brain. Front. Hum. Neurosci. 4:25. doi: 10.3389/fnhum.2010.00025

Budaev, S., Giske, J., and Eliassen, S. (2018). AHA: a general cognitive architecture for Darwinian agents. Biol. Inspired Cogn. Archit. 25, 51–57. doi: 10.1016/j.bica.2018.07.009

Budaev, S. V., and Brown, C. (2011). “Personality traits and behaviour,” in Fish Cognition and Behavior, eds C. Brown, K. Laland, and J. Krause (Cambridge: Blackwell Publishing), 135–165.

Buschman, T. J., and Miller, E. K. (2014). Goal-direction and top-down control. Philos. Trans. R. Soc. B Biol. Sci. 369:20130471. doi: 10.1098/rstb.2013.0471

Bushnell, P. J. (1998). Behavioral approaches to the assessment of attention in animals. Psychopharmacology 138, 231–259. doi: 10.1007/s002130050668

Calderon, D. P., Kilinc, M., Maritan, A., Banavar, J. R., and Pfaff, D. W. (2016). Generalized CNS arousal: an elementary force within the vertebrate nervous system. Neurosci. Biobehav. Rev. 68, 167–176. doi: 10.1016/j.neubiorev.2016.05.014

Casile, A., Caggiano, V., and Ferrari, P. F. (2011). The mirror neuron system: a fresh view. Neuroscientist 17, 524–538. doi: 10.1177/1073858410392239

Castro, L., and Wasserman, E. A. (2016). Executive control and task switching in pigeons. Cognition 146, 121–135. doi: 10.1016/j.cognition.2015.07.014

Chittka, L., Skorupski, P., and Raine, N. E. (2009). Speed-accuracy tradeoffs in animal decision making. Trends Ecol. Evol. 24, 400–407. doi: 10.1016/j.tree.2009.02.010

Cisek, P. (2007). Cortical mechanisms of action selection: the affordance competition hypothesis. Philos. Trans. R. Soc. B Biol. Sci. 362, 1585–1599. doi: 10.1098/rstb.2007.2054

Cisek, P., and Kalaska, J. F. (2010). Neural mechanisms for interacting with a world full of action choices. Annu. Rev. Neurosci. 33, 269–298. doi: 10.1146/annurev.neuro.051508.135409

Cisek, P., and Pastor-Bernier, A. (2014). On the challenges and mechanisms of embodied decisions. Philos. Trans. R. Soc. B Biol. Sci. 369:20130479. doi: 10.1098/rstb.2013.0479

Clark, A. (2013). Whatever next? Predictive brains, situated agents, and the future of cognitive science. Behav. Brain Sci. 36, 181–204. doi: 10.1017/S0140525X12000477

Clayton, N. S., Bussey, T. J., and Dickinson, A. (2003). Can animals recall the past and plan for the future? Nat. Rev. Neurosci. 4, 685–691. doi: 10.1038/nrn1180

Clune, J., Mouret, J.-B., and Lipson, H. (2013). The evolutionary origins of modularity. Proc. R. Soc. B Biol. Sci. 280:20122863. doi: 10.1098/rspb.2012.2863

Colas, J. T. (2017). Value-based decision making via sequential sampling with hierarchical competition and attentional modulation. PLoS ONE 12:e0186822. doi: 10.1371/journal.pone.0186822

Cooper, G. F. (1990). The computational complexity of probabilistic inference using Bayesian belief networks. Artif. Intell. 42, 393–405. doi: 10.1016/0004-3702(90)90060-D

Corballis, M. C. (2013). Mental time travel: a case for evolutionary continuity. Trends Cogn. Sci. 17, 5–6. doi: 10.1016/j.tics.2012.10.009

Corbetta, M., and Shulman, G. L. (2002). Control of goal-directed and stimulus-driven attention in the brain. Nat. Rev. Neurosci. 3, 201–215. doi: 10.1038/nrn755

Damasio, A. R. (2010). Self Comes to Mind. Constructing the Conscious Brain. New York, NY: Pantheon Books.

Daw, N. D., Niv, Y., and Dayan, P. (2005). Uncertainty-based competition between prefrontal and dorsolateral striatal systems for behavioral control. Nat. Neurosci. 8, 1704–1711. doi: 10.1038/nn1560

Dawkins, R., and Dawkins, M. (1973). Decisions and the uncertainty of behaviour. Behaviour 45, 83–103. doi: 10.1163/156853974X00606

Dean, J. (1998). Animats and what they can tell us. Trends Cogn. Sci. 2, 60–67. doi: 10.1016/S1364-6613(98)01120-6

Dennett, D. (2017). From Bacteria to Bach and Back: The Evolution of Minds. New York, NY: W. W. Norton and Company.

Dere, E., Dere, D., de Souza Silva, M. A., Huston, J. P., and Zlomuzica, A. (2018). Fellow travellers: working memory and mental time travel in rodents. Behav. Brain Res. 352, 2–7. doi: 10.1016/j.bbr.2017.03.026

Dickinson, A. (1985). Actions and habits: the development of behavioural autonomy. Philos. Trans. R. Soc. London. Ser. B 308, 67–78. doi: 10.1098/rstb.1985.0010

Duijn, M., Van Keijzer, F., and Franken, D. (2008). Adaptive behavior principles of minimal cognition: casting cognition as sensorimotor coordination. Adapt. Behav. 14, 157–170. doi: 10.1177/105971230601400207

Duncan, J. (2013). The structure of cognition: attentional episodes in mind and brain. Neuron 80, 35–50. doi: 10.1016/j.neuron.2013.09.015

Dyer, A. G. (2012). The mysterious cognitive abilities of bees: why models of visual processing need to consider experience and individual differences in animal performance. J. Exp. Biol. 215, 387–395. doi: 10.1242/jeb.038190

Edelman, S. (2016). The minority report: some common assumptions to reconsider in the modelling of the brain and behaviour. J. Exp. Theor. Artif. Intell. 28, 751–776. doi: 10.1080/0952813X.2015.1042534

Eliassen, S., Andersen, B. S., Jørgensen, C., and Giske, J. (2016). From sensing to emergent adaptations: modelling the proximate architecture for decision-making. Ecol. Modell. 326, 90–100. doi: 10.1016/j.ecolmodel.2015.09.001

Eliassen, S., Jørgensen, C., Mangel, M., and Giske, J. (2007). Exploration or exploitation: life expectancy changes the value of learning in foraging strategies. Oikos 116, 513–523. doi: 10.1111/j.2006.0030-1299.15462.x

Eliassen, S., Jørgensen, C., Mangel, M., and Giske, J. (2009). Quantifying the adaptive value of learning in foraging behavior. Am. Nat. 174, 478–489. doi: 10.1086/605370

Euler, L. (1760). Recherches générales sur la mortalité et la multiplication du genre humain. Hist. Acad. R. Sci. B Lett. Berl. 16, 144–164.

Evers, E., De Vries, H., Spruijt, B. M., and Sterck, E. H. M. (2014). The EMO-model: an agent-based model of primate social behavior regulated by two emotional dimensions, anxiety-FEAR and satisfaction-LIKE. PLoS ONE 9:e87955. doi: 10.1371/journal.pone.0087955

Faisal, A. A., Selen, L. P. J., and Wolpert, D. M. (2008). Noise in the nervous system. Nat. Rev. Neurosci. 9, 292–303. doi: 10.1038/nrn2258

Fawcett, T. W., Fallenstein, B., Higginson, A. D., Houston, A. I., Mallpress, D. E. W., Trimmer, P. C., et al. (2014). The evolution of decision rules in complex environments. Trends Cogn. Sci. 18, 153–161. doi: 10.1016/j.tics.2013.12.012

Fawcett, T. W., Hamblin, S., and Giraldeau, L.-A. (2013). Exposing the behavioral gambit: the evolution of learning and decision rules. Behav. Ecol. 24, 2–11. doi: 10.1093/beheco/ars085

Fiksen, Ø. (2000). The adaptive timing of diapause - a search for evolutionarily robust strategies in Calanus finmarchicus. ICES J. Mar. Sci. 57, 1825–1833. doi: 10.1006/jmsc.2000.0976

Fontanari, L., Gonzalez, M., Vallortigara, G., and Girotto, V. (2014). Probabilistic cognition in two indigenous Mayan groups. Proc. Natl. Acad. Sci. U S A. 111, 17075–17080. doi: 10.1073/pnas.1410583111

Forstmann, B. U., Ratcliff, R., and Wagenmakers, E.-J. (2016). Sequential sampling models in cognitive neuroscience: advantages, applications, and extensions. Annu. Rev. Psychol. 67, 641–666. doi: 10.1146/annurev-psych-122414-033645

Frankenhuis, W. E., Panchanathan, K., and Barto, A. G. (2018). Enriching behavioral ecology with reinforcement learning methods. Behav. Processes 161, 94–100. doi: 10.1016/j.beproc.2018.01.008

Fredman, M. L., Komlós, J., and Szemerédi, E. (1984). Storing a sparse table with O(1) worst case access time. J. ACM 31, 538–544.

Fulda, F. C. (2017). Natural agency: the case of bacterial cognition. J. Am. Philos. Assoc. 3, 69–90. doi: 10.1017/apa.2017.5

Gallistel, C. R., and Balsam, P. D. (2014). Time to rethink the neural mechanisms of learning and memory. Neurobiol. Learn. Mem. 108, 136–144. doi: 10.1016/j.nlm.2013.11.019

Gallistel, C. R., and King, A. P. (2010). Memory and the Computational Brain. Why Cognitive Science Will Transform Neuroscience. Chichester: Wiley.

Geisler, W. S., and Diehl, R. L. (2003). A Bayesian approach to the evolution of perceptual and cognitive systems. Cogn. Sci. 27, 379–402. doi: 10.1016/S0364-0213(03)00009-0

Geman, S., Doursat, R., and Bienenstock, E. (1992). Neural networks and the bias/variance dilemma. Neural Comput. 4, 1–58. doi: 10.1162/neco.1992.4.1.1

Gigerenzer, G., and Brighton, H. (2009). Homo heuristicus: why biased minds make better inferences. Top. Cogn. Sci. 1, 107–143. doi: 10.1111/j.1756-8765.2008.01006.x

Gigerenzer, G., and Gaissmaier, W. (2015). “Decision making: nonrational theories,” in International Encyclopedia of the Social and Behavioral Sciences: Second Edition, ed. J. D. Wright (Amsterdam: Elsevier), 911–916.

Girotto, V., Fontanari, L., Gonzalez, M., Vallortigara, G., and Blaye, A. (2016). Young children do not succeed in choice tasks that imply evaluating chances. Cognition 152, 32–39. doi: 10.1016/j.cognition.2016.03.010

Giske, J., Eliassen, S., Fiksen, Ø., Jakobsen, P. J., Aksnes, D. L., Jørgensen, C., et al. (2013). Effects of the emotion system on adaptive behavior. Am. Nat. 182, 689–703. doi: 10.1086/673533

Giske, J., Eliassen, S., Fiksen, Ø., Jakobsen, P. J., Aksnes, D. L., Mangel, M., et al. (2014). The emotion system promotes diversity and evolvability. Proc. R. Soc. B Biol. Sci. 281:20141096. doi: 10.1098/rspb.2014.1096

Giske, J., Mangel, M., Jakobsen, P., Huse, G., Wilcox, C., and Strand, E. (2003). Explicit trade-off rules in proximate adaptive agents. Evol. Ecol. Res. 5, 835–865.

Giurfa, M. (2013). Cognition with few neurons: higher-order learning in insects. Trends Neurosci. 36, 285–294. doi: 10.1016/j.tins.2012.12.011

Glimcher, P. W. (2003). Decisions, Uncertainty, and the Brain. The Science of Neuroeconomics. Cambridge, MA: MIT Press.

Gold, J. I., and Shadlen, M. N. (2007). The neural basis of decision making. Annu. Rev. Neurosci. 30, 535–574. doi: 10.1146/annurev.neuro.29.051605.113038

Goldreich, O. (2010). P, NP, and NP-Completeness: The Basics of Computational Complexity. Cambridge: Cambridge University Press.

Goschke, T., and Bolte, A. (2014). Emotional modulation of control dilemmas: the role of positive affect, reward, and dopamine in cognitive stability and flexibility. Neuropsychologia 62, 403–423. doi: 10.1016/j.neuropsychologia.2014.07.015

Gosling, S. D., and John, O. P. (1999). Personality dimensions in nonhuman animals: a cross-species review. Curr. Dir. Psychol. Sci. 8, 69–75. doi: 10.1111/1467-8721.00017

Grafen, A. (1984). “Natural selection, kin selection and group selection,” in Behavioural Ecology: An Evolutionary Approach, eds J. R. Krebs and N. B. Davies (Oxford: Blackwell Publishing), 62–84.

Graves, A., Wayne, G., and Danihelka, I. (2014). Neural Turing machines. arXiv:1410.5401. doi: 10.3389/neuro.12.006.2007

Griffiths, T. L., Kemp, C., and Tenenbaum, J. B. (2008). “Bayesian models of cognition,” in The Cambridge Handbook of Computational Psychology, ed R. Sun (Cambridge: Cambridge University Press), 59–100.

Guez, D., and Stevenson, G. (2011). Is reasoning in rats really unreasonable? Revisiting recent associative accounts. Front. Psychol. 2:277. doi: 10.3389/fpsyg.2011.00277

Haberkern, H., and Jayaraman, V. (2016). Studying small brains to understand the building blocks of cognition. Curr. Opin. Neurobiol. 37, 59–65. doi: 10.1016/j.conb.2016.01.007

Haddon, J., and Killcross, A. (2005). Medial prefrontal cortex lesions abolish contextual control of competing responses. J. Exp. Anal. Behav. 84, 485–504. doi: 10.1901/jeab.2005.81-04

Haselgrove, M. (2016). Overcoming associative learning. J. Comp. Psychol. 130, 226–240. doi: 10.1037/a0040180

Hazlett, B., and McLay, C. (2000). Contingencies in the behaviour of the crab Heterozius rotundifrons. Anim. Behav. 59, 965–974. doi: 10.1006/anbe.1999.1417

Heller, R., and Milinski, M. (1979). Optimal foraging of sticklebacks on swarming prey. Anim. Behav. 27, 1127–1141. doi: 10.1016/0003-3472(79)90061-7

Higginson, A. D., Fawcett, T. W., Houston, A. I., and McNamara, J. M. (2018). Trust your gut: using physiological states as a source of information is almost as effective as optimal Bayesian learning. Proc. R. Soc. B Biol. Sci. 285:20172411. doi: 10.1098/rspb.2017.2411

Hikosaka, O. (2010). The habenula: from stress evasion to value-based decision-making. Nat. Rev. Neurosci. 11, 503–513. doi: 10.1038/nrn2866

Hogan, J. A. (2009). “Causation: the study of behavioral mechanisms,” in Tinbergen's Legacy. Function and Mechanism in Behavioral Biology, eds J. J. Bolhuis and S. Verhulst (Cambridge: Cambridge University Press), 35–53.

Holland, P. C., and Schiffino, F. L. (2016). Mini-review: prediction errors, attention and associative learning. Neurobiol. Learn. Mem. 131, 207–215. doi: 10.1016/j.nlm.2016.02.014

Houston, A. I., McNamara, J. M., and Steer, M. D. (2007). Do we expect natural selection to produce rational behaviour? Philos. Trans. R. Soc. B Biol. Sci. 362, 1531–1543. doi: 10.1098/rstb.2007.2051

Hurley, S. (2008). The shared circuits model (SCM): how control, mirroring, and simulation can enable imitation, deliberation, and mindreading. Behav. Brain Sci. 31, 1–58. doi: 10.1017/S0140525X07003123

Huse, G., and Giske, J. (1998). Ecology in mare pentium: an individual-based spatio-temporal model for fish with adapted behaviour. Fish. Res. 37, 163–178. doi: 10.1016/S0165-7836(98)00134-9

Huston, S. J., and Jayaraman, V. (2011). Studying sensorimotor integration in insects. Curr. Opin. Neurobiol. 21, 527–534. doi: 10.1016/j.conb.2011.05.030

Hutchinson, J. M. C., and Gigerenzer, G. (2005). Simple heuristics and rules of thumb: where psychologists and behavioural biologists might meet. Behav. Processes 69, 97–124. doi: 10.1016/j.beproc.2005.02.019

Johnson, D. D. P., Blumstein, D. T., Fowler, J. H., and Haselton, M. G. (2013). The evolution of error: error management, cognitive constraints, and adaptive decision-making biases. Trends Ecol. Evol. 28, 474–481. doi: 10.1016/j.tree.2013.05.014

Johnson, D. D. P., and Fowler, J. H. (2013). Complexity and simplicity in the evolution of decision-making biases. Trends Ecol. Evol. 28, 446–447. doi: 10.1016/j.tree.2013.06.003

Kahneman, D. (2002). Maps of bounded rationality: a perspective on intuitive judgment and choice. Sveriges Riksbank Prize Econ. Sci. Mem. Alfred Nobel, 449–489. doi: 10.1037/0003-066X.58.9.697

Kahneman, D., and Tversky, A. (1982). “Subjective probability: a judgment of representativeness,” in Judgment Under Uncertainty: Heuristics and Biases, eds D. Kahneman, P. Slovic, and A. Tversky (Cambridge: Cambridge University Press), 32–47.

Katsuki, F., and Constantinidis, C. (2014). Bottom-up and top-down attention: different processes and overlapping neural systems. Neuroscientist 20, 509–521. doi: 10.1177/1073858413514136

Keramati, M., Dezfouli, A., and Piray, P. (2011). Speed/accuracy trade-off between the habitual and the goal-directed processes. PLoS Comput. Biol. 7:e1002055. doi: 10.1371/journal.pcbi.1002055

Kheifets, A., and Gallistel, C. R. (2012). Mice take calculated risks. Proc. Natl. Acad. Sci. U.S.A. 109, 8776–8779. doi: 10.1073/pnas.1205131109

Kinzler, K. D., and Spelke, E. S. (2007). Core systems in human cognition. Prog. Brain Res. 164, 257–264. doi: 10.1016/S0079-6123(07)64014-X

Knill, D. C., and Pouget, A. (2004). The Bayesian brain: the role of uncertainty in neural coding and computation. Trends Neurosci. 27, 712–719. doi: 10.1016/j.tins.2004.10.007

Kohn, J. R., Heath, S. L., and Behnia, R. (2018). Eyes matched to the prize: the state of matched filters in insect visual circuits. Front. Neural Circuits 12:26. doi: 10.3389/fncir.2018.00026

Korn, C. W., and Bach, D. R. (2018). Heuristic and optimal policy computations in the human brain during sequential decision-making. Nat. Commun. 9:325. doi: 10.1038/s41467-017-02750-3

Kornell, N. (2014). Where is the “meta” in animal metacognition? J. Comp. Psychol. 128, 143–149. doi: 10.1037/a0033444

Krebs, J. R., and Davies, N. B. (1993). An Introduction to Behavioural Ecology. 3rd Edn. Oxford: Blackwell Publishing.

LeDoux, J. E. (2012). Rethinking the emotional brain. Neuron 73, 653–676. doi: 10.1016/j.neuron.2012.02.004

LeDoux, J. E. (2014). Coming to terms with fear. Proc. Natl. Acad. Sci. U.S.A. 111, 2871–2878. doi: 10.1073/pnas.1400335111

Lee, M. D. (2011). How cognitive modeling can benefit from hierarchical Bayesian models. J. Math. Psychol. 55, 1–7. doi: 10.1016/j.jmp.2010.08.013

Levins, R. (1966). The strategy of model building in population biology. Am. Sci. 54, 421–431. doi: 10.2307/27828530

Lewis, M., and Cañamero, L. (2016). Hedonic quality or reward? A study of basic pleasure in homeostasis and decision making of a motivated autonomous robot. Adapt. Behav. 24, 267–291. doi: 10.1177/1059712316666331

Lima, S. L., and Dill, L. M. (1990). Behavioral decisions made under risk of predation: a review and prospectus. Can. J. Zool. 68, 619–640. doi: 10.1139/z90-092

Lorenz, D. M., Jeng, A., and Deem, M. W. (2011). The emergence of modularity in biological systems. Phys. Life Rev. 8, 129–160. doi: 10.1016/j.plrev.2011.02.003

Louâpre, P., van Alphen, J. J. M., and Pierre, J. S. (2010). Humans and insects decide in similar ways. PLoS ONE 5:e14251. doi: 10.1371/journal.pone.0014251

Lucentini, D. F., and Gudwin, R. R. (2015). A comparison among cognitive architectures: a theoretical analysis. Procedia Comput. Sci. 71, 56–61. doi: 10.1016/j.procs.2015.12.198

Lyon, P. (2015). The cognitive cell: bacterial behavior reconsidered. Front. Microbiol. 6:264. doi: 10.3389/fmicb.2015.00264

Mackie, M. A., Van Dam, N. T., and Fan, J. (2013). Cognitive control and attentional functions. Brain Cogn. 82, 301–312. doi: 10.1016/j.bandc.2013.05.004

MacLeod, C. M. (2007). “The concept of inhibition in cognition,” in Inhibition in Cognition, eds D. S. Gorfein and C. M. MacLeod (Washington, DC: American Psychological Association), 3–23.

MacPherson, B., Mashayekhi, M., Gras, R., and Scott, R. (2017). Exploring the connection between emergent animal personality and fitness using a novel individual-based model and decision tree approach. Ecol. Inform. 40, 81–92. doi: 10.1016/j.ecoinf.2017.06.004

Mangel, M., and Clark, C. (1986). Towards a unified foraging theory. Ecology 67, 1127–1138. doi: 10.2307/1938669

Marr, D. (2010). Vision. A Computational Investigation Into the Human Representation and Processing of Visual Information. Cambridge, MA: MIT Press.

Maye, A., Hsieh, C. H., Sugihara, G., and Brembs, B. (2007). Order in spontaneous behavior. PLoS ONE 2:e443. doi: 10.1371/journal.pone.0000443

McClelland, J. L., Botvinick, M. M., Noelle, D. C., Plaut, D. C., Rogers, T. T., Seidenberg, M. S., et al. (2010). Letting structure emerge: connectionist and dynamical systems approaches to cognition. Trends Cogn. Sci. 14, 348–356. doi: 10.1016/j.tics.2010.06.002

McDermott, D. (2014). On the claim that a table-lookup program could pass the Turing test. Minds Mach. 24, 143–188. doi: 10.1007/s11023-013-9333-3

McFarland, D., and Bosser, T. (1993). Intelligent Behavior in Animals and Robots. Cambridge, MA: MIT Press.

McFarland, D. J., and Sibly, R. M. (1975). The behavioural final common path. Philos. Trans. R. Soc. Lond. B Biol. Sci. 270, 265–293. doi: 10.1098/rstb.1975.0009

McNally, G. P., Johansen, J. P., and Blair, H. T. (2011). Placing prediction into the fear circuit. Trends Neurosci. 34, 283–292. doi: 10.1016/j.tins.2011.03.005

McNamara, J. M., Green, R. F., and Olsson, O. (2006). Bayes' theorem and its applications in animal behaviour. Oikos 112, 243–251. doi: 10.1111/j.0030-1299.2006.14228.x

McNamara, J. M., and Houston, A. I. (1986). The common currency for behavioral decisions. Am. Nat. 127, 358–378. doi: 10.1086/284489

McNamara, J. M., and Houston, A. I. (2009). Integrating function and mechanism. Trends Ecol. Evol. 24, 670–675. doi: 10.1016/j.tree.2009.05.011

Milinski, M. (1984). A predator's costs of overcoming the confusion-effect of swarming prey. Anim. Behav. 32, 1157–1162.

Mobbs, D., Trimmer, P. C., Blumstein, D. T., and Dayan, P. (2018). Foraging for foundations in decision neuroscience: insights from ethology. Nat. Rev. Neurosci. 19, 419–427. doi: 10.1038/s41583-018-0010-7

Moore, T., and Zirnsak, M. (2017). Neural mechanisms of selective visual attention. Annu. Rev. Psychol. 68, 47–72. doi: 10.1146/annurev-psych-122414-033400

Murren, C. J. (2012). The integrated phenotype. Integr. Comp. Biol. 52, 64–76. doi: 10.1093/icb/ics043

Nityananda, V. (2016). Attention-like processes in insects. Proc. R. Soc. B Biol. Sci. 283:20161986. doi: 10.1098/rspb.2016.1986

Norman, D. A., and Shallice, T. (1986). “Attention to action: willed and automatic control of behavior,” in Consciousness and Self Regulation, eds R. J. Davidson, G. E. Schwartz, and D. Shapiro (Boston, MA: Plenum Press), 1–18.

Parpart, P., Jones, M., and Love, B. C. (2018). Heuristics as Bayesian inference under extreme priors. Cogn. Psychol. 102, 127–144. doi: 10.1016/j.cogpsych.2017.11.006

Paulk, A. C., Pearson, T. W. J., van Swinderen, B., Stacey, J. A., Srinivasan, M. V., Taylor, G. J., et al. (2014). Selective attention in the honeybee optic lobes precedes behavioral choices. Proc. Natl. Acad. Sci. U.S.A. 111, 5006–5011. doi: 10.1073/pnas.1323297111

Penn, D. C., and Povinelli, D. J. (2007). Causal cognition in human and nonhuman animals: a comparative, critical review. Annu. Rev. Psychol. 58, 97–118. doi: 10.1146/annurev.psych.58.110405.085555

Pezzulo, G., Rigoli, F., and Friston, K. (2015). Active inference, homeostatic regulation and adaptive behavioural control. Prog. Neurobiol. 134, 17–35. doi: 10.1016/j.pneurobio.2015.09.001

Pezzulo, G., Verschure, P. F. M. J., Balkenius, C., and Pennartz, C. M. A. (2014). The principles of goal-directed decision-making: from neural mechanisms to computation and robotics. Philos. Trans. R. Soc. B Biol. Sci. 369:20130470. doi: 10.1098/rstb.2013.0470

Pfaff, D. W. (2006). Brain Arousal and Information Theory: Neural and Genetic Mechanisms. Cambridge, MA: Harvard University Press.

Pollock, J. L. (2006). Against optimality: logical foundation for decision-theoretic planning in autonomous agents. Comput. Intell. 22, 1–25. doi: 10.1111/j.1467-8640.2006.00271.x

Railsback, S., and Grimm, V. (2019). Agent-Based and Individual-Based Modeling: A Practical Introduction. Princeton, NJ: Princeton University Press.

Ramírez, J. C., and Marshall, J. A. R. (2017). Can natural selection encode Bayesian priors? J. Theor. Biol. 426, 57–66. doi: 10.1016/j.jtbi.2017.05.017

Ramstead, M. J. D., Badcock, P. B., and Friston, K. J. (2018). Answering Schrödinger's question: a free-energy formulation. Phys. Life Rev. 24, 1–16. doi: 10.1016/j.plrev.2017.09.001

Reid, C. R., Garnier, S., Beekman, M., and Latty, T. (2015). Information integration and multiattribute decision making in non-neuronal organisms. Anim. Behav. 100, 44–50. doi: 10.1016/j.anbehav.2014.11.010

Rolls, E. T. (2000). Précis of The brain and emotion. Behav. Brain Sci. 23, 177–234. doi: 10.1017/S0140525X00512424

Russell, S., and Norvig, P. (2010). Artificial Intelligence—A Modern Approach. Englewood Cliffs: Prentice Hall.

Sanborn, A. N., and Chater, N. (2016). Bayesian brains without probabilities. Trends Cogn. Sci. 20, 883–893. doi: 10.1016/j.tics.2016.10.003

Schmid, U., Ragni, M., Gonzalez, C., and Funke, J. (2011). The challenge of complexity for cognitive systems. Cogn. Syst. Res. 12, 211–218. doi: 10.1016/j.cogsys.2010.12.007

Seth, A. K. (2007). The ecology of action selection: insights from artificial life. Philos. Trans. R. Soc. B Biol. Sci. 362, 1545–1558. doi: 10.1098/rstb.2007.2052

Shanahan, M., and Baars, B. (2005). Applying global workspace theory to the frame problem. Cognition 98, 157–176. doi: 10.1016/j.cognition.2004.11.007

Siegelmann, H. T., and Sontag, E. D. (1995). On the computational power of neural nets. J. Comput. Syst. Sci. 50, 132–150. doi: 10.1006/jcss.1995.1013

Sih, A., Bell, A. M., Johnson, J. C., and Ziemba, R. E. (2004). Behavioral syndromes: an integrative overview. Q. Rev. Biol. 79, 241–277. doi: 10.1086/422893

Simmons, P. J., and Young, D. (1999). Nerve Cells and Animal Behaviour. Cambridge: Cambridge University Press.

Smith, J. D., Shields, W. E., and Washburn, D. A. (2003). The comparative psychology of uncertainty monitoring and metacognition. Behav. Brain Sci. 26, 317–373. doi: 10.1017/S0140525X03000086

Soylu, F. (2016). An embodied approach to understanding: making sense of the world through simulated bodily activity. Front. Psychol. 7:1914. doi: 10.3389/fpsyg.2016.01914

Strand, E., Huse, G., and Giske, J. (2002). Artificial evolution of life history and behavior. Am. Nat. 159, 624–644. doi: 10.1086/339997

Suddendorf, T., Bulley, A., and Miloyan, B. (2018). Prospection and natural selection. Curr. Opin. Behav. Sci. 24, 26–31. doi: 10.1016/j.cobeha.2018.01.019

Suddendorf, T., and Corballis, M. C. (2010). Behavioural evidence for mental time travel in nonhuman animals. Behav. Brain Res. 215, 292–298. doi: 10.1016/j.bbr.2009.11.044

Sutton, R. S., and Barto, A. G. (1981). Toward a modern theory of adaptive networks: expectation and prediction. Psychol. Rev. 88, 135–170.

Tecwyn, E. C., Denison, S., Messer, E. J. E., and Buchsbaum, D. (2017). Intuitive probabilistic inference in capuchin monkeys. Anim. Cogn. 20, 243–256. doi: 10.1007/s10071-016-1043-9

Thom, J. M., and Clayton, N. S. (2015). Translational research into intertemporal choice: the Western scrub-jay as an animal model for future-thinking. Behav. Processes 112, 43–48. doi: 10.1016/j.beproc.2014.09.006

Tinbergen, N. (1952). “Derived” activities; their causation, biological significance, origin, and emancipation during evolution. Q. Rev. Biol. 27, 1–32. doi: 10.1086/398642

Toates, F. (2002). Application of a multilevel model of behavioural control to understanding emotion. Behav. Processes 60, 99–114. doi: 10.1016/S0376-6357(02)00083-9

Trimmer, P. C., Houston, A. I., Marshall, J. A. R., Mendl, M., Paul, E. S., and McNamara, J. M. (2011). Decision-making under uncertainty: biases and Bayesians. Anim. Cogn. 14, 465–476. doi: 10.1007/s10071-011-0387-4