- Image Archive, ETH Library, ETH Zurich, Zurich, Switzerland

The ETH Library’s Image Archive has been drawing on the knowledge of volunteers for over 15 years to identify photographs (aerial images, documentary and scientific photographs, press photography, etc.), correct and improve image metadata, and georeference images. The first big challenge in starting such crowdsourcing projects is finding people who might be enthusiastic about them. The second is maintaining a community and keeping it going over a longer period. This article discusses a variety of experiences and tips, as well as dos and don’ts.

1 Introduction

1.1 Crowdsourcing in archives and libraries

Crowdsourcing is a form of collaboration in which tasks are outsourced to an undefined mass of people. These tasks can be in different areas, e.g., knowledge generation, product development or service delivery.

External help in indexing images and other documents is nothing new, especially in archives and libraries. What was new about 15 years ago, however, was that in the age of the internet and Web 2.0, other forms of collaboration and working with experts and interested laypeople became technically possible. The outsourcing of traditionally internal work processes to a group of voluntary users has been called “crowdsourcing” since 2004 (Surowiecki, 2004; Howe, 2006). Crowdsourcing is characterised in particular by the fact that a clearly defined task is processed in voluntary work on online platforms or by means of social web components. The participants can contribute—and this is the great innovation—regardless of location and time.

In archives and libraries, crowdsourcing is mainly used to index images and other documents. This includes tasks such as:

• Commenting on and contextualising images and documents. Example: Flickr Commons, a project initiated by the Library of Congress in January 2008 for image archives worldwide (https://www.flickr.com/commons and https://www.flickr.org/programs/flickr-commons/). Currently with over 100 participating institutions.

• Georeferencing of images and maps. Example: Georeferencing of old map materials at the British Library (https://www.bl.uk/projects/georeferencer). Georeferencer has since been used in many institutions, including the ETH Library (https://library.ethz.ch/en/locations-and-media/media-types/geodata-maps-aerial-photos/crowdsourcing-project.html).

• Transcribing and correcting texts. Example: Text corrections at the Australian Newspapers Digitisation Program of the National Library of Australia (Holley, 2010). In German-speaking countries, the Transkribus platform, an AI-supported platform for text recognition, transcription and searching of historical documents in any language, has become established (https://readcoop.eu/transkribus/).

• Classifying and tagging documents. Example: Annotation of images with keywords (tags) on the collaborative platform ARTigo since 2010 (https://www.artigo.org/en/about).

• Supplementing collections with your own documents (“user-generated content”)

Crowdsourcing offers several advantages for archives and libraries. It can increase the efficiency and effectiveness of indexing images and documents: a large pool of volunteers can index large volumes of material quickly and accurately, something that would be difficult and expensive to do with in-house staff alone. Crowdsourcing can also be used to gather feedback on new products and services, identify areas for improvement, and even generate new ideas, all of which can help to save development costs. Finally, crowdsourcing projects can give the public a sense of ownership and engagement in the preservation of their cultural heritage, which can help to increase support for archives and libraries.

Crowdsourcing also has some risks that need to be considered. The quality of crowdsourced work can vary depending on the skill and motivation of the volunteers, so quality control measures are needed to ensure that the work meets the required standards. Volunteers may lose motivation over time if they do not feel that their work is appreciated or if they do not see the results of their labour. It is therefore important to recognise and reward volunteers for their contributions.

In the sciences, the concept of lay participation was introduced shortly afterwards under the keyword “Citizen Science”. The geoscientist Muki Haklay (2013) has identified different levels of Citizen Science and defined them as follows:

- Level 1: Crowdsourcing: Citizens as sensors, Volunteered computing. This is the most basic level where citizens contribute data or computing power without necessarily understanding the scientific question.

- Level 2: Distributed Intelligence: Citizens as basic interpreters, Volunteered thinking. Citizens interpret existing data by following specific instructions or using provided tools. They contribute to the scientific process but may not fully grasp the research context.

- Level 3: Participatory Science: Participation in problem definition and data collection. Citizens are more actively involved in the scientific process. They collaborate with scientists in defining research questions, data collection methods, and sometimes even data analysis.

- Level 4: Extreme Citizen Science: Collaborative science - problem definition, data collection and analysis. This is the highest level of citizen science involvement. Citizens are considered co-creators of scientific knowledge, collaborating with scientists throughout the entire research process, from defining the question to analysing data and publishing results.

He understands crowdsourcing as the classic data collection by lay people and thus the lowest-threshold way of involving volunteers in knowledge generation.

2 Crowdsourcing projects of the ETH Library’s Image Archive

The Image Archive of the ETH Library in Zurich, with 3.6 million photographs and other picture documents from the period between 1860 and today, is one of the largest historical image archives in Switzerland. The collection focuses on image holdings directly related to the Swiss Federal Institute of Technology (ETH) Zurich, in fields such as architecture and construction sciences, engineering sciences, natural sciences, computer science, and earth and environmental sciences. Examples include experimental photographs of the first ETH professor of photography, photographs of geologists and other ETH professors travelling around the world, macro shots of orchids and insects, and screenshots of the first CAD experiments, to name but a few. Image material from organisational units of ETH Zurich and from private individuals or institutions with a direct connection to ETH Zurich is taken over, as are image holdings and archives from external bodies (private individuals, organisations, foundations, companies such as Swissair, the Glaciological Commission of the Swiss Society of Natural Sciences, the Swiss Foundation for Alpine Research, the Inventory of Swiss Peatlands). The Image Archive also houses the largest collection of oblique aerial photographs in Switzerland, totalling over 1.3 million images. In addition to collection development, the classic tasks of the ETH Library’s Image Archive are digitisation, mediation, and archiving. The cost of digitisation is high. The images must first be inventoried and repackaged in a way that is suitable for archiving. After digitisation, they are indexed in the image database. The aim is not to digitise every single image. Depending on the collection, it may make sense to make a selection in advance, so that all motifs are recorded but not every individual image is digitised.

For indexing and mediation, the Image Archive has been operating the web-based image database “E-Pics Image Archive” (https://ba.e-pics.ethz.ch) since 2006; it is part of E-Pics, ETH Zurich’s platform for photographs and image documents (https://www.e-pics.ethz.ch/). Around 1,000,000 images are publicly accessible on E-Pics Image Archive. Since the introduction of the Open Data Policy in March 2015, most of these published images have been available for download free of charge in various resolutions. The Image Archive licenses those images whose rights of use are held in full by the ETH Library under Creative Commons BY-SA 4.0. These images can be used freely for scientific, private, non-commercial, and commercial purposes, provided the correct image credit is given, and must be passed on under the same conditions if they are modified.

In addition to the indexing of images, i.e., classical metadata generation, the ETH Library Image Archive has been relying on the less classical form of data generation, crowdsourcing, for about 15 years. For the successful implementation of such crowdsourcing projects, it is crucial to find and motivate volunteers.

In supporting the indexing of its holdings through crowdsourcing, the Image Archive has pursued various approaches:

• Expert crowdsourcing: In this approach, an identifiable group of experts is recruited to collaborate on a specific collection. For example, the ETH-Library’s Image Archive was supported by a group of Swissair retirees in cataloguing the holdings of the former Swiss airline “Swissair” from 2009 to 2013.

• Open crowdsourcing: With this approach, anyone can contribute to the indexing of a collection. The ETH Library’s Image Archive opened its image database for general comment in 2015.

• Georeferencing: The ETH Library’s Image Archive has been using the collaborative georeferencing platform “sMapshot” to georeference its images since 2018.

2.1 Expert crowdsourcing

The Image Archive of the ETH Library in Zurich gained its first experience with web-based crowdsourcing between 2009 and 2013. When cataloguing the company archive (not the aerial photographs) of the former Swiss airline Swissair, it became apparent that the existing image information was often sparse, incomplete, or incorrect. A small group of interested Swissair retirees offered to systematically label the images.

Cooperation was sought with the well-organised group of former Swissair employees. Volunteers were recruited through appeals in the “Swissair News” and “Oldies News” magazines and at the annual retirees’ general meeting. Interested persons had to contact the Image Archive by e-mail, and the name and address of each were recorded in an Excel file. Together with instructions, everyone received a generic password for web access to the digitised images in the image database. About 130 volunteers came forward; on average, 40 of them (93% men) worked on the image descriptions, half a dozen regularly and intensively. Two retirees were internally crowned “indexing kings”. The volunteers included pilots, technicians, stewards, ground staff, station masters, sales staff, freight service staff and editors.

The cooperation with the Swissair retirees could be described as “controlled” or closed crowdsourcing or expert crowdsourcing. In this approach, it is not an unknown mass of volunteers that is motivated to cooperate through an open call, but an identifiable group of experts (cf. also Graf, 2016).

The project was a success and was able to significantly advance the image description of the Swissair holdings.

The following example image illustrates the added value of the volunteers’ work. There are several hundred images with the same generic title “Workshop”. With the additional information, the image can be specified and thus found more easily.

Image caption: Original information: “Workshop,” no date. Supplemented image information: Overhaul of a DC-3 engine in the Dübendorf engine workshop. Installation of the crankshaft with counterweight in the centre section of the crankcase, Pratt & Whitney R-1830 Twin Wasp, ca. 1937–1948 (DOI: http://doi.org/10.3932/ethz-a-000226464).

Expert crowdsourcing lends itself to archival collections that are monothematic, such as a district, a city, a company, a species of animal or plant, or an area of interest such as the Alps or scientific instruments. Volunteers can be approached through clearly defined territories (neighbourhood associations, city libraries and archives, local media, etc.) or through professional associations.

2.2 Open crowdsourcing

Since 2015, the Image Archive of the ETH Library has been open for general annotation. The image database was to be opened for general commenting after the completion of the Swissair project, but, partly due to technical hurdles, this only became possible at the end of 2015. Any user can comment on any image via the comment function. The slogan “Do you know more?” was placed at prominent points on E-Pics Image Archive.

The innovation was initially announced only on the homepage of E-Pics Image Archive and on the ETH Library’s website. Within a month, 100 comments were received, a considerable number compared with the previous average of one and a half e-mails per month.

Even before the Image Archive had developed a marketing concept, a coincidence led to the “Neue Zürcher Zeitung” (NZZ), one of Switzerland’s most important daily newspapers, publishing an article on crowdsourcing in January 2016 and publishing ten unidentified aerial photographs by Walter Mittelholzer from the Image Archive’s holdings (cf. Kälin, 2016). A journalist from the newspaper had become aware of it while researching the Image Archive. The report triggered a great response from readers as well as the media. That same evening, Swiss television SRF 1 reported on it in the prime-time edition of the news. After only one and a half days, the readers of the NZZ had already identified nine of the ten pictures, and a follow-up article appeared in the NZZ the following day. Other daily newspapers and radio stations also took up the topic.

During the media hype, 1,617 comments were received from 384 people within 2 weeks. Since then, 1,600 people (88% of them men) have sent at least one comment, improving 170,000 images (as of 31 M 2024). An average of 2,000 comments are received per month. Here, too, we see the phenomenon that a few people do a lot: the Top 10 is very active, and most of its members have been in the Top 10 since January 2016. The Top 10 has made 83% of all comments, or 128,000 comments. The Top 30 is also very active and has posted 92% of all comments. The experience that many people are interested in the content but only a few actively participate is also reported by Wikipedia and by the Library of Congress: among the many commenters on Flickr Commons, a core group of 20 people emerged over time; these “history detectives” regularly supplemented the images with historically relevant information (Springer et al., 2008). This social-media phenomenon is also discussed as the 1–9–90 rule: 90 percent of users are silent readers of posts, nine percent comment on or like posts, and one percent are the authors of the posts.
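The concentration of activity described above can be expressed as the share of all comments made by the N most active contributors. The following is a minimal sketch, assuming a hypothetical list of per-person comment counts; the function name `top_n_share` and the sample figures are illustrative and are not taken from the Image Archive’s data.

```python
def top_n_share(comment_counts, n):
    """Return the fraction of all comments made by the n most active people."""
    total = sum(comment_counts)
    if total == 0:
        return 0.0
    # Sort descending so the most active contributors come first.
    top = sorted(comment_counts, reverse=True)[:n]
    return sum(top) / total


# Hypothetical comment counts for ten contributors (illustrative only).
counts = [500, 300, 100, 40, 20, 15, 10, 8, 5, 2]
share_top3 = top_n_share(counts, 3)
print(f"Top 3 share: {share_top3:.1%}")  # prints "Top 3 share: 90.0%"
```

Read in the other direction, the reported figures imply a total of roughly 154,000 comments, since 128,000 comments correspond to 83% of the total (128,000 / 0.83).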

What does the crowd improve? Volunteers add and correct information about the images, such as the time an image was taken, the location where it was taken, or the people depicted. Where a title is missing (“Untitled”), they attempt to identify the image content. Localisations are also made more precise where possible, e.g., by indicating the viewing direction. In addition, we receive contextual information that goes beyond so-called Wikipedia knowledge. Even spelling and typing errors are reported. The Image Archive crowd is very conscientious and only sends substantial contributions by email. Contributions such as “What a beautiful image!”, as often found on Flickr Commons, do not occur. Usually, source information or Google Maps coordinates are included. After a plausibility check, the permanent staff of the Image Archive enters the information into the metadata. If the information is not correct, the crowd usually corrects itself.
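The plausibility check mentioned above is carried out by the permanent staff. Purely as an illustration, a first formal check on coordinates submitted in a comment could look like the sketch below. It assumes coordinates arrive as a `latitude, longitude` string in decimal degrees; the function name `parse_coordinates` and this input format are assumptions, not part of the Image Archive’s actual workflow.

```python
def parse_coordinates(text):
    """Parse a 'lat, lon' string in decimal degrees.

    Returns a (lat, lon) tuple, or None if the text is not a valid pair.
    """
    parts = text.split(",")
    if len(parts) != 2:
        return None
    try:
        lat, lon = float(parts[0]), float(parts[1])
    except ValueError:
        return None
    # Reject values outside the valid ranges for latitude and longitude.
    if not (-90.0 <= lat <= 90.0 and -180.0 <= lon <= 180.0):
        return None
    return lat, lon


print(parse_coordinates("47.3769, 8.5417"))  # a point in Zurich
print(parse_coordinates("147.0, 8.5"))       # latitude out of range
```

Such a check only confirms that the values are well formed; whether the location actually matches the image still requires human judgement.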

Open crowdsourcing has long since ceased to be a project and has been integrated into the normal work processes of indexing in the Image Archive. Incorporating the volunteer comments is first thing on the to-do list in the morning. Open crowdsourcing has led to a significant increase in the number of comments.

Open crowdsourcing encompasses all documents in an archive. Volunteers can therefore no longer be approached via clearly defined groups. The most effective way to approach volunteers is through (national) media. However, these cannot be “ordered” and often depend on chance and/or good contacts. Presentations at adult education centres or similar institutions are costly and usually have limited success.

2.3 Georeferencing with sMapshot

Since February 2018, the Image Archive has been using the collaborative platform “sMapshot” of the Laboratoire de SIG, Haute École d’Ingénierie et de Gestion du Canton de Vaud (HEIG-VD) for georeferencing oblique aerial images. The sMapshot platform was first introduced to the public with the stock of the Image Archive (https://smapshot.heig-vd.ch/owner/ethz). Since its launch, the Image Archive has published 25 so-called campaigns, each with a maximum of 9,000 images and a total of 187,000 images on sMapshot.

At the second crowdsourcing meeting of the Image Archive in early 2018, the volunteers were introduced to the new tool. Many who comment on images on the E-Pics Image Archive have also tried out the game-like georeferencing. As a result, most volunteers in the top 10 now work on both platforms. Specialisations have emerged in one direction or the other. Some have tried georeferencing but stayed with commenting, others have discovered georeferencing for themselves and leave commenting to the others. So much for the synergy effects within the crowd. Through sMapshot, the pool of volunteers has also grown, and new people have been approached. In the first year, when no other institutions were online, 158 people participated in the Image Archive campaigns. This year, there are still about 50 people who contribute regularly and usually over a longer period.

sMapshot is a collaborative platform for the georeferencing of image collections. It is open to all image archives and libraries. Currently, 13 institutions from Switzerland, Austria and Brazil are participating. The ETH Library has by far the largest collection with over 187,000 images, followed by the Swiss Federal Office of Topography swisstopo with 62,749 images and the Vorarlberger Landesbibliothek (Austria) with 14,885 images. The smallest collection comprises only 75 images.

The cooperation on sMapshot brings further synergy effects. For example, the smaller collections benefit from the volunteer pool of the ETH Library’s Image Archive. Volunteers can participate in various projects via a single point of access. Most of the time they don’t even notice which institution is behind it but choose access purely according to thematic interest.

Crowdsourcing is a valuable tool for archives and libraries. It can help increase the efficiency and effectiveness of image and document indexing, reduce costs, and encourage audience participation. In the future, crowdsourcing will continue to gain importance in archives and libraries.

3 Community management for scientific crowdsourcing projects

In crowdsourcing projects, volunteers work for free to collect, process or analyse data. To ensure successful collaboration, it is important to nurture, motivate and retain volunteers over the long term (cf. Table 1).

Community management is a continuous task. It requires tact and, above all, appreciation. Volunteers work voluntarily and therefore have different expectations than paid staff. It is important to take this into account and not to overburden them. Volunteers make a valuable contribution, and it is important to show them this, for example through praise, recognition, and small gifts.

In the following, some important aspects of community management for crowdsourcing projects are presented.

3.1 Clear task descriptions and instructions

Volunteers need to know what is expected of them. Tasks should therefore be described clearly and understandably, for example through short instructions or tutorials. This reduces confusion and increases the quality of the work.

Volunteers are open to suggestions for improvement, including basic tips such as how to format comments. They usually take such suggestions well and apply them directly, and they in turn regularly offer hints of their own on how the processes could be improved.

3.2 Short response time and transparent processes

Volunteers are often very dedicated and motivated. They work for free and invest their time and knowledge to enrich the archives and image libraries. It is therefore important that their work is valued and recognised. This can be done through the following measures:

• Establish a transparent process for processing volunteer contributions: Volunteers should be informed about the status of their contributions.

• Feedback on contributions: Volunteers should receive feedback on their contributions to improve their work.

• Recognition and appreciation: Volunteers should be appreciated for their work, e.g., through public recognition or awards.

Another important prerequisite for motivating volunteers is the quick implementation of their findings. They want to see that their contributions have a visible benefit. Therefore, it is important that the archives process the contributions quickly and transparently. This can be done through an automated process or through manual review.
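One way to make the processing of contributions transparent is to give each contribution an explicit status that can be reported back to the volunteer. The following is a minimal sketch of such a status model; the status names, the allowed transitions, and the function `advance` are hypothetical and do not describe the Image Archive’s actual system.

```python
from enum import Enum


class Status(Enum):
    RECEIVED = "received"
    IN_REVIEW = "in review"
    ACCEPTED = "accepted"
    REJECTED = "rejected"


# Allowed status changes in this hypothetical workflow.
TRANSITIONS = {
    Status.RECEIVED: {Status.IN_REVIEW},
    Status.IN_REVIEW: {Status.ACCEPTED, Status.REJECTED},
    Status.ACCEPTED: set(),
    Status.REJECTED: set(),
}


def advance(current, new):
    """Return the new status if the change is allowed, else raise ValueError."""
    if new not in TRANSITIONS[current]:
        raise ValueError(f"cannot go from {current.value} to {new.value}")
    return new


status = Status.RECEIVED
status = advance(status, Status.IN_REVIEW)
status = advance(status, Status.ACCEPTED)
print(status.value)  # prints "accepted"
```

Whether the review step itself is automated or manual, an explicit status of this kind gives volunteers a visible sign that their contribution is being handled.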

If the volunteers’ contributions are not implemented or not implemented promptly, this can lead to demotivation. Volunteers do not feel valued, and their work seems pointless. This can eventually lead to (silent) withdrawal from the project.

Motivating volunteers is crucial to the success of crowdsourcing projects. It is therefore important to value the work of volunteers and to process their contributions quickly and transparently.

3.3 Communication is the key to success

Communication with volunteers is crucial. Volunteers should be informed about new projects, tasks, or developments. This can be done through emails, a community blog, or social media.

Expert crowdsourcing like the Swissair project relies on the expertise of volunteers. To ensure the quality of the work, it is important to communicate the background knowledge of the volunteers to the permanent staff. In the Swissair project, this led to unexpected weekly phone calls with retirees. However, the additional effort was justified, as the images could be keyworded much better this way.

Email communication is essential for crowdsourcing projects. Volunteers need to be informed promptly and directly about questions and concerns. In addition to direct communication, indirect communication is also important. Volunteers want to feel valued and know that they are cared for.

The Image Archive has learned quickly from the media hype in January 2016. Previously, comments from volunteers were not even answered automatically. A first general thank-you email a month later was a motivational boost for volunteers.

The ETH Library community blog (https://crowdsourcing.ethz.ch) has been used for regular communication since May 2016. It provides information about new images, campaigns, and other important news. On Mondays, blog posts appear with images that can still be edited. On Fridays, blog posts are published with exciting or unexpected insights from the volunteers.

3.4 Crowdsourcing ideas and help

Volunteers are a valuable resource for crowdsourcing projects. Their ideas and assistance can make an important contribution. Therefore, it is important to take their ideas seriously and use them in a targeted way.

One of the most important lessons from previous Image Archive crowdsourcing projects is that the ideas of the crowd should be taken seriously. Volunteers often have a better insight into the web frontends than the permanent staff and can therefore make specific suggestions for usability improvements. Volunteers can also be asked to help directly. For example, they can serve as beta testers for new applications or as experts for certain skills. Volunteers are also often very creative and have new ideas that the permanent staff do not have. They can help strengthen the community and promote user engagement.

3.5 Bonding incentives for volunteers

Bonding incentives are measures that promote the motivation and satisfaction of volunteers. They help volunteers identify with the project and the institution and feel part of a community.

Bonding incentives can be both tangible and intangible. Examples are:

• Regular meetings: Creating opportunities for volunteers to connect and share experiences fosters a sense of community and belonging.

• Statistics and rankings: Publicly acknowledging volunteer contributions through metrics or friendly competition can increase engagement and a sense of accomplishment.

• Community blog: A dedicated space for and by volunteers to share stories, tips, and experiences can promote knowledge exchange and a feeling of being part of something bigger.

• Self-organisation: Giving volunteers some control over their tasks and schedules shows trust and can be motivating.

• Openness to new ideas: Involving volunteers in brainstorming sessions or project planning demonstrates their value and fosters a sense of ownership.

• Testimonial videos: Featuring volunteers in videos that showcase their work and the impact they have can be a source of pride and encourage others to join.

• Small material gifts: Gift certificates, branded merchandise (t-shirts, mugs), or small tokens of appreciation can be a nice way to show volunteers they are valued.

When choosing bonding incentives, the needs of the volunteers should be considered. It is important that the measures convey appreciation to the volunteers and give them the feeling of being part of a community.

Bonding incentives can play an important role in motivating and satisfying volunteers. They should be carefully selected and offered on a long-term basis.

3.6 Know your community!

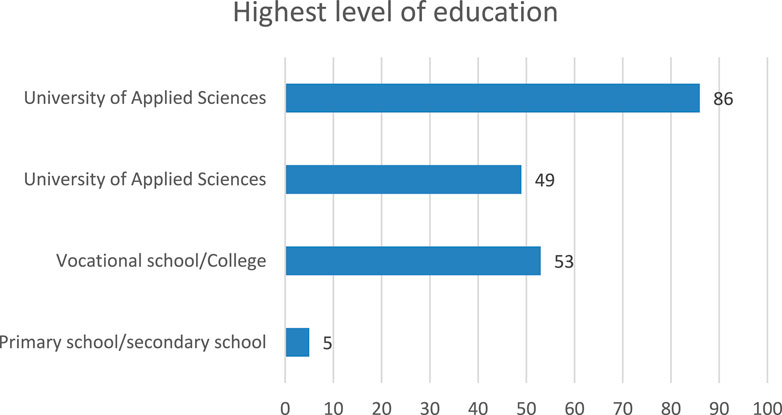

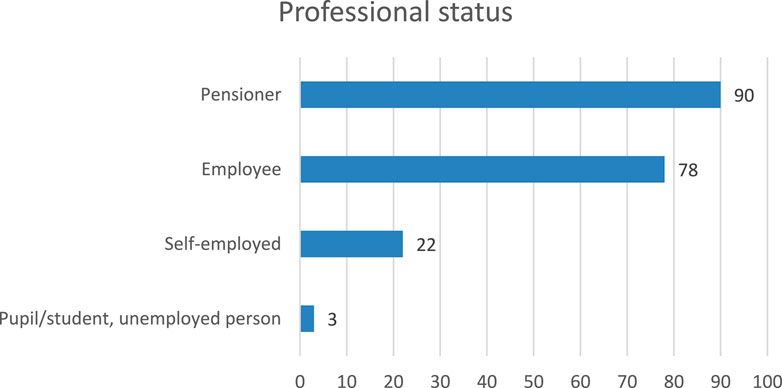

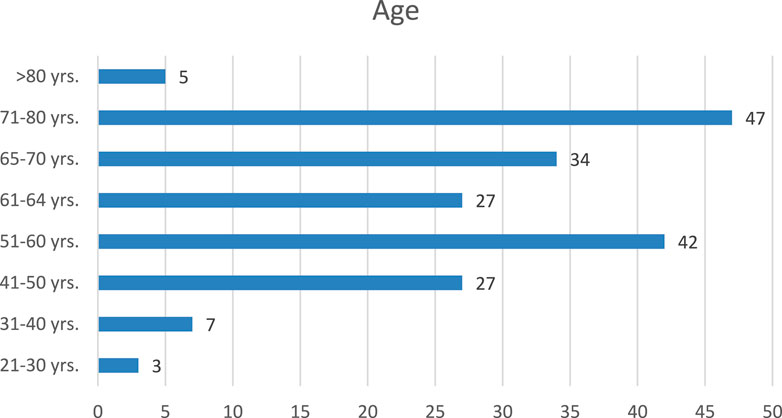

To better understand the demographics, motivation and working practices of the crowd, an online survey was conducted in January 2017 (cf. Graf, 2018) with 700 respondents. The survey resulted in a response rate of 27.6%.

The crowd consists mainly of retired people with a university or technical college degree. The average age of the respondents is 61 years, 45% are 65 years old or older. Retired people often have time and experience to contribute to the projects (cf. Figures 1–3).

Respondents are motivated to participate in the crowdsourcing project for different reasons. The most important reasons are:

• Curiosity and interest in historical images.

• Fun with detective work.

• Knowledge sharing and transmission.

• Knowing the importance of good metadata.

• Support crowdsourcing as an idea.

• Chronicle work or other projects.

The survey showed that the crowd has a wide range of interests and skills. The respondents are not only interested in historical images, but also in specific topics such as architecture, technology, or culture. They have a broad knowledge and can use it to identify and describe the images.

The survey showed that the crowd sees the crowdsourcing project as a meaningful and intellectually challenging activity. Respondents are proud of their contributions and see themselves as part of a community working to preserve cultural heritage.

3.7 Reasons why the crowd likes to participate

Crowdsourcing projects offer volunteers the opportunity to contribute to something meaningful, whether by working on a project that is personally important to them or on one that has a positive impact on the world.

There are different reasons why volunteers participate in crowdsourcing projects:

• Meaningful activity: Many people like to get involved in something they find meaningful. Crowdsourcing projects offer this opportunity, as they often help to generate knowledge or improve the world.

• Leisure activity: Crowdsourcing projects can also be a meaningful leisure activity. They can be fun and help people learn new skills.

• Low-threshold participation: Crowdsourcing projects are often low-threshold and can be carried out by anyone in their spare time. This can increase the identification with the project and the motivation of the volunteers.

The digitisation of images and other data has greatly advanced the spread of crowdsourcing projects. This means that people from all over the world can also participate and contribute to these projects.

4 Conclusion

Crowdsourcing is a form of knowledge generation and dissemination in which a clearly defined task is worked on voluntarily on online platforms or by means of social web components. In the field of cultural heritage institutions, such as archives and libraries, this form of cooperation with volunteers has gained a lot of importance in recent years.

The ETH Library Image Archive’s experience with crowdsourcing has been consistently positive. The crowdsourcing projects have led to a significant improvement in the metadata quality of the image holdings. In addition, the projects have helped to raise the profile of the Image Archive and reach new user groups.

Crowdsourcing offers archives and libraries several benefits, including:

• Increasing metadata quality: Crowdsourcing can help images to be better described and localised. This facilitates the use of images for research and teaching.

• Reducing costs and time: Crowdsourcing can reduce the costs and time needed to index images. This is especially the case for large collections that can no longer be processed with the existing resources and intellectual capacities alone.

• Increasing reach and awareness: Crowdsourcing can help increase the reach and awareness of an archive or library. This is especially the case when crowdsourcing activities are communicated via social media and other channels.

However, there are also some challenges to consider when implementing crowdsourcing projects in archives and libraries, including:

• Quality assurance: The quality of metadata created by volunteers must be ensured. This can be done by providing training and guidance to volunteers as well as moderating the contributions.

• Motivation: Volunteers need to be motivated to participate in crowdsourcing projects. This can be done by providing recognition and feedback and by creating a collaborative environment.

Crowdsourcing is a promising tool for archives and libraries. However, when implementing crowdsourcing projects, it is important to consider the challenges and take appropriate measures.

Data availability statement

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding author.

Author contributions

NG: Conceptualization, Writing–original draft.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Conflict of interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feart.2024.1328883/full#supplementary-material

References

Graf, N. (2016). Crowdsourcing. The indexing of the Swissair photo archive in the picture archive of the ETH-Bibliothek, Zurich [German]. Rundbr. Fotogr. 23 (1), 24–32.

Graf, N. (2018). Knowledge should not be lost! An online survey on the motivation and commitment of volunteers of the crowdsourcing of the ETH-Library’s Image Archive [German]. Zurich ETH Libr. 2018. doi:10.3929/ethz-b-000401438

Graf, N., and Pfister, T. (2022). Knowledge should not be lost! Interview with a volunteer, Austrian Citizen Science Conference 2022. PoS 407, 1–5. Available at: https://pos.sissa.it/407/020 (Accessed October 27, 2023). doi:10.22323/1.407.0020

Haklay, M. (2013). “Citizen science and volunteered geographic information: overview and typology of participation,” in Crowdsourcing geographic knowledge. Editors D. Sui, S. Elwood, and M. Goodchild (Dordrecht: Springer), 105–122. (Accessed October 27, 2023). doi:10.1007/978-94-007-4587-2_7

Holley, R. (2010). Crowdsourcing: how and why should libraries do it? D-Lib Mag. 16, 3–4. (Accessed October 27, 2023). doi:10.1045/march2010-holley

Howe, J. (2006). The rise of crowdsourcing. Available at: http://www.wired.com/wired/archive/14.06/crowds.html (Accessed October 27, 2023).

Kälin, A. (2016). Wer kennt die Berge, Orte und Fabriken?. Neue Zürcher Zeitung Available at: https://www.nzz.ch/zuerich/wer-kennt-die-berge-orte-und-fabriken-ld.7773 (Accessed October 27, 2023).

Springer, M., Dulabahn, B., Michel, P., Natanson, B., Reser, D. W., Ellison, N. B., et al. (2008). For the common good: the Library of Congress Flickr pilot project, final report, October 30, Available at: http://www.loc.gov/rr/print/flickr_report_final.pdf (Accessed October 27, 2023).

Keywords: crowdsourcing, community building, community management, image archive, georeferencing, citizen science

Citation: Graf N (2024) Building and maintaining a volunteer community: experiences of an image archive. Front. Earth Sci. 12:1328883. doi: 10.3389/feart.2024.1328883

Received: 27 October 2023; Accepted: 27 May 2024;

Published: 16 July 2024.

Edited by:

Claudia Schuetze, Helmholtz Association of German Research Centres (HZ), Germany

Reviewed by:

Karoly Nemeth, Institute of Earth Physics and Space Science (EPSS), Hungary

Emanuela Ercolani, National Institute of Geophysics and Volcanology, Italy

Copyright © 2024 Graf. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Nicole Graf
