- 1 Advanced Analytics and Solutions, Virtua Health, Marlton, NJ, United States

- 2 School of Systems Science and Industrial Engineering, Binghamton University, Binghamton, NY, United States

Background: Discharge date prediction plays a crucial role in healthcare management, enabling efficient resource allocation and patient care planning. Accurate estimation of the discharge date can optimize hospital operations and facilitate better patient outcomes.

Materials and methods: In this study, we employed a systematic approach to develop a discharge date prediction model. We collaborated closely with clinical experts to identify relevant data elements that contribute to the prediction accuracy. Feature engineering was used to extract predictive features from both structured and unstructured data sources. XGBoost, a powerful machine learning algorithm, was employed for the prediction task. Furthermore, the developed model was seamlessly integrated into a widely used Electronic Medical Record (EMR) system, ensuring practical usability.

Results: The model's F1-score exceeded the baseline estimates by up to 35.68 percentage points. After deployment, the model's predictions aligned closely with the MS-DRG geometric mean length of stay (MS GMLOS), and the model contributed to an 18.96% reduction in excess hospital days.

Conclusions: Our findings highlight the effectiveness and potential value of the developed discharge date prediction model in clinical practice. By improving the accuracy of discharge date estimates, the model has the potential to enhance healthcare resource management and patient care planning. Future research should evaluate the model's long-term applicability across diverse settings and analyze its influence on patient outcomes in depth.

1 Introduction

A key aspect of optimal healthcare delivery is the ability of hospitals and clinicians to achieve both patient-centered care and efficient resource utilization (1, 2). These objectives are interrelated. Early discharge can have positive clinical implications for patients: evidence shows that patients with prolonged hospital stays are more susceptible to hospital-acquired infections, pressure ulcers, and nutritional deterioration (3–5), so discharging patients once they no longer require hospital-level care may prevent complications associated with prolonged hospitalization. Timely discharge is also crucial for ensuring that beds are available for patients in the Emergency Department (ED) who need them (6). The timely transfer of critically ill patients from the ED to an appropriate inpatient unit is essential for optimal outcomes; previous studies have shown that prolonged ED boarding can lead to longer hospital stays and higher mortality for these patients (7). A less frequent but serious threat to patient safety arises when patients who need hospital care leave the ED without being seen by a physician, which can happen when EDs are overcrowded because of inefficient patient flow. These patients may experience adverse outcomes that timely medical attention could have prevented or mitigated (8).

One of the factors that affects the quality and cost of care is patient flow, which refers to the efficient use of resources and time during the patient's stay in the hospital (9, 10). A common practice in discharge planning is to rely on clinicians' estimates of the discharge date. However, this practice has drawbacks: it consumes clinician time that could be spent on other tasks or on direct patient care, and its accuracy is low (11, 12). An alternative is to apply machine learning models that predict a patient's length of stay (LOS) in the hospital, from which the discharge date follows. Several studies have proposed and developed LOS models for this purpose (11, 13–18). However, most of these studies focus on the model development stage (using retrospective data, engineering features for LOS prediction, and training and evaluating different models) without considering how to implement the models in practice. More broadly, most published healthcare machine learning studies do not demonstrate successful implementation of the proposed models, leaving a substantial gap between theory and practice. This gap underscores the need for practical research that addresses the entire life cycle of predictive models, from initial conceptualization to effective integration into operational systems (19, 20).

This article introduces a practical machine learning model that combines several clinical data sources, such as demographics, complaints, and medical problems, to predict patients' discharge dates. The model is intended to enable early, proactive discharge planning. The key contributions of this paper are as follows:

• It develops a machine learning model that is tailored for discharge date prediction and can be seamlessly integrated into the clinical workflow.

• It integrates the predictive model with a widely adopted electronic medical record system in the United States.

• It goes beyond previous studies by evaluating the model after its deployment, a step that is often overlooked in similar research, providing valuable insights into the model's real-world performance and effectiveness.

This paper is organized as follows. Section 2 describes the materials and methods, including the data sources, the data collection methods, and the model development and deployment process. Section 3 presents and discusses the results, including the model's performance during both the development and deployment stages and its operational outcomes. Section 4 concludes by summarizing the main contributions, findings, and implications of the study.

2 Materials and methods

2.1 Methodology overview

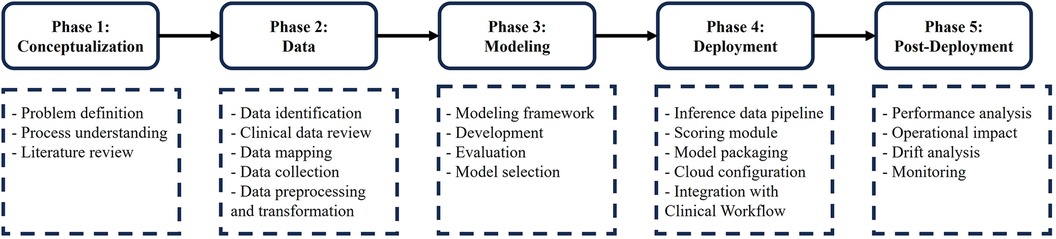

We present a systematic and rigorous approach to develop a predictive model for inpatient discharge date, as illustrated in Figure 1. Our approach consists of five phases. In the first phase, we define the healthcare problem and analyze the underlying process. In the second phase, we identify the relevant data elements and validate them with clinical teams. Then, we map the data elements to the clinical database and retrieve them for further analysis. Next, we preprocess and transform the data for modeling purposes. In the third phase, we develop and evaluate various machine learning models and select the best one to proceed to the fourth phase, where we deploy and integrate the model within the electronic medical record (EMR) system. Finally, in the fifth phase, we monitor the model's performance after deployment, assess its operational impact and evaluate its effect on the healthcare process under investigation.

2.2 Conceptualization

In this phase, we engaged in multiple discussions with the clinical team to gain a comprehensive understanding of the current discharge planning procedures and discharge processes. Additionally, we sought insights into the interdisciplinary rounds and the role of the estimated discharge date. To guide the modeling process, we focused on addressing several key questions:

• What should be the prediction window? Should it be dynamic or static?

• What is the optimal time window for generating static predictions?

• Where should the model scores be recorded to facilitate integration into the clinical workflow, specifically during interdisciplinary rounds?

The primary conclusions from these discussions are as follows:

• The model should facilitate early discharge planning; thus, static predictions are preferred.

• The model should provide discharge date estimates for patients within the first 18 h of admission.

• Model scores should be incorporated into a data column in the patient list reviewed during interdisciplinary rounds.

Additionally, we reviewed a range of studies in the literature (see Section 1) to evaluate the current advancements in discharge date prediction and to identify relevant data elements that could enhance our modeling process. This review helped us understand existing methodologies, highlight gaps in current approaches, and compile a comprehensive list of data features that could improve the accuracy and applicability of our predictive model.

2.3 Data

We identified the data based on the clinical team's input and the relevant literature (11, 13–18). We then mapped the collected data to the corresponding fields and tables in our EMR system (Epic). Next, we developed an SQL query to extract the dataset from the Clarity database. We also performed a temporal analysis to select only the data elements that were available before the model run (18 h after inpatient admission). The final list of data elements included: age, marital status, sex, admission date, admission source, problems, complaints, and MS Geometric Mean Length of Stay (MS GMLOS).
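The temporal analysis amounts to discarding any data element documented after the model run time. The Python sketch below illustrates the idea, assuming each extracted record carries a documentation timestamp; the function name, the `(timestamp, value)` record layout, and the example values are hypothetical and do not reflect the Clarity schema.

```python
from datetime import datetime, timedelta

# Model run time used in this study: 18 h after inpatient admission.
MODEL_RUN_OFFSET = timedelta(hours=18)


def available_at_model_run(events, admit_time, offset=MODEL_RUN_OFFSET):
    """Return only the events documented at or before admit_time + offset.

    `events` is a list of (timestamp, value) tuples (hypothetical layout).
    Anything documented later would not exist when the model runs, so using
    it for training would leak future information into the features.
    """
    run_time = admit_time + offset
    return [(ts, value) for ts, value in events if ts <= run_time]


admit = datetime(2023, 3, 1, 8, 0)
problems = [
    (datetime(2023, 3, 1, 9, 30), "pneumonia"),
    (datetime(2023, 3, 2, 1, 45), "hypokalemia"),  # 17 h 45 min after admission
    (datetime(2023, 3, 2, 6, 15), "delirium"),     # 22 h 15 min after admission
]
kept = [value for _, value in available_at_model_run(problems, admit)]
print(kept)  # ['pneumonia', 'hypokalemia']
```

Applying the same cutoff when building the training set and at inference time keeps the features the model learns from consistent with what is actually available at the 18-hour run.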

The raw data underwent a comprehensive preprocessing pipeline to prepare it for machine learning modeling. Considering the negligible proportion of missing values, rows containing any nulls were removed entirely. The categorical feature “marital status” was transformed into a binary feature, assigning a value of 1 to married patients and 0 otherwise. Sex was similarly encoded using two binary features: “Is_Male” and “Is_Female”. The admission date was utilized to create situational binary features indicating admission on Friday, Saturday, and Sunday (“Is_Friday”, “Is_Saturday”, “Is_Sunday”).
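A minimal Python sketch of these steps for individual records follows. The field names (`age`, `marital_status`, `sex`, `admit_date`), the category strings, and the feature name `Is_Married` are hypothetical placeholders; the Friday, Saturday, and Sunday flags are derived from the weekday of the admission date.

```python
from datetime import date


def drop_incomplete(records):
    """Remove any record that contains a missing (None) value."""
    return [r for r in records if all(v is not None for v in r.values())]


def encode_record(record):
    """Encode demographics and admission-day flags as numeric features."""
    weekday = record["admit_date"].weekday()  # Monday == 0, ..., Sunday == 6
    return {
        "Age": record["age"],
        "Is_Married": int(record["marital_status"] == "Married"),
        # Two sex flags, so a record matching neither value encodes as (0, 0).
        "Is_Male": int(record["sex"] == "Male"),
        "Is_Female": int(record["sex"] == "Female"),
        "Is_Friday": int(weekday == 4),
        "Is_Saturday": int(weekday == 5),
        "Is_Sunday": int(weekday == 6),
    }


records = [
    {"age": 67, "marital_status": "Married", "sex": "Female",
     "admit_date": date(2023, 3, 3)},  # a Friday
    {"age": None, "marital_status": "Single", "sex": "Male",
     "admit_date": date(2023, 3, 4)},  # dropped: missing age
]
features = [encode_record(r) for r in drop_incomplete(records)]
print(features)
```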

One-hot encoding was applied to the “admission source” variable, generating 27 binary features corresponding to the 27 unique admission sources. “Problems” and “complaints” underwent similar preprocessing. For each patient, all complaints were concatenated into a single text string, which was cleaned by removing extra spaces and punctuation, converted to lowercase, and tokenized into words. Each unique word was then represented by a binary feature: 1 if it was present in the patient's complaints, 0 otherwise. After highly sparse features were eliminated, 64 binary features remained for complaints. The same approach applied to problems yielded 727 features. In total, the preprocessing steps produced 814 features for machine learning analysis.

We used the XGBoost algorithm, which implicitly learns a ranking of features during training. XGBoost builds many decision trees, and each tree splits on the features that most reduce the overall loss (prediction error). More predictive features are therefore selected more often across the trees and contribute more to the final predictions.
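The text-to-binary-feature step can be sketched in Python as follows. The sparsity rule (keep words that occur for at least `min_patients` patients), the `complaint_` prefix, and replacing punctuation with spaces rather than deleting it are assumptions for illustration; the paper does not state the threshold it used.

```python
import string
from collections import Counter

# Map every punctuation character to a space so "chest-pain" yields two tokens.
_PUNCT_TO_SPACE = str.maketrans(string.punctuation, " " * len(string.punctuation))


def tokenize(entries):
    """Concatenate one patient's entries, clean them, and return unique words."""
    text = " ".join(entries).lower().translate(_PUNCT_TO_SPACE)
    return set(text.split())  # split() with no argument also drops extra spaces


def build_vocabulary(patients, min_patients):
    """Keep words that appear for at least `min_patients` patients."""
    doc_freq = Counter(word for entries in patients for word in tokenize(entries))
    return sorted(w for w, n in doc_freq.items() if n >= min_patients)


def binary_features(entries, vocabulary, prefix):
    """One 0/1 feature per vocabulary word for a single patient."""
    words = tokenize(entries)
    return {f"{prefix}_{w}": int(w in words) for w in vocabulary}


complaints = [["Chest pain", "Shortness of breath"], ["chest  pain"], ["Fever, cough"]]
vocab = build_vocabulary(complaints, min_patients=2)
print(vocab)  # ['chest', 'pain']
print(binary_features(complaints[2], vocab, "complaint"))
# {'complaint_chest': 0, 'complaint_pain': 0}
```

Counting each word once per patient (document frequency) rather than once per occurrence matches the binary, present-or-absent encoding described above.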

This study uses the MS Geometric Mean Length of Stay (MS GMLOS) as the target variable because it is the benchmark against which excess days are measured, which makes it directly relevant to streamlining discharge and reducing excess patient days. Unlike the arithmetic mean, which can be skewed by outliers, GMLOS, the geometric mean of all lengths of stay within a Diagnosis-Related Group (DRG), better represents typical patient flow within that group. This emphasis on central tendency makes GMLOS useful for identifying patients at risk of prolonged stays and targeting interventions to expedite their discharge. To use MS GMLOS in a multi-class classification model, we grouped its continuous values into five classes: discharge within 1, 2, 3, or 4 days, and stays of 5 or more days. This transformation allows classification algorithms to predict the likelihood of a patient falling into each of these discharge timeframes.
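The sketch below shows why a geometric mean resists outliers and how a continuous MS GMLOS value might be mapped to the five classes. Two caveats apply: MS GMLOS values are computed by the Centers for Medicare & Medicaid Services (CMS) and published per MS-DRG rather than computed from local data, so `geometric_mean` is illustrative only; and the rounding rule in `gmlos_class` (round up, so 2.3 days means “within 3 days”) is an assumption, since the paper does not state how values were binned.

```python
import math


def geometric_mean(los_days):
    """exp(mean(log LOS)): the n-th root of the product of n stays."""
    if not los_days or any(d <= 0 for d in los_days):
        raise ValueError("lengths of stay must be positive")
    return math.exp(sum(math.log(d) for d in los_days) / len(los_days))


def gmlos_class(gmlos, n_classes=5):
    """Map a continuous GMLOS to classes 1..n_classes (last class = n or more days).

    Rounding up is an assumed rule, not one stated in the paper.
    """
    return min(max(math.ceil(gmlos), 1), n_classes)


stays = [2, 2, 2, 32]             # one long outlier stay
print(sum(stays) / len(stays))    # arithmetic mean: 9.5
print(geometric_mean(stays))      # about 4.0, far less affected by the outlier
print([gmlos_class(g) for g in (0.8, 2.3, 4.0, 7.6)])  # [1, 3, 4, 5]
```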

The dataset, following the preprocessing steps outlined above, comprised 99,561 samples. We allocated 69,692 samples for training the model, while the remaining 29,869 (30%) samples were reserved for testing the model's performance.
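The split sizes are consistent with holding out a rounded-up 30% of the samples for testing (ceil(0.3 × 99,561) = 29,869). A minimal sketch of such a split follows; the shuffling, the seed, and splitting at the sample level without stratification are assumptions, as the paper does not describe how samples were assigned.

```python
import math
import random


def split_indices(n_samples, test_fraction=0.3, seed=42):
    """Shuffle sample indices and hold out ceil(test_fraction * n) for testing."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # seeded for reproducibility
    n_test = math.ceil(test_fraction * n_samples)
    return indices[n_test:], indices[:n_test]  # (train, test)


train_idx, test_idx = split_indices(99_561)
print(len(train_idx), len(test_idx))  # 69692 29869
```

scikit-learn's `train_test_split` also rounds a fractional test size up, so it would produce the same counts for this dataset size.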

2.4 Model development

This study proposes a predictive modeling system to estimate patients' discharge dates. The system computes a score for each patient from their attributes, and this score represents the predicted discharge date. A predictive system can range from a simple linear or nonlinear function to a highly complex model that requires additional algorithms to interpret. Greater complexity lets the system detect more intricate patterns and associations in the data. Because the association between patient attributes and discharge date is complex and nonlinear, this task calls for advanced machine learning algorithms; in particular, this study uses the Extreme Gradient Boosting algorithm (XGBoost) (21).

XGBoost is a modern predictive modeling algorithm built on the tree-boosting framework. Boosting combines many weak learners, typically decision trees with constrained depth or number of leaves, into a strong learner. The trees are trained sequentially, each one correcting the errors of the ensemble built so far, and the final prediction is the sum of the individual tree outputs, each scaled by a learning rate. We used the XGBoost package for Python, which is known for its scalability and efficiency in tree boosting. Compared with other boosting implementations, XGBoost offers built-in regularization, parallelized tree construction, and native handling of missing values.
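To make the regularization point concrete, the sketch below computes the quantity XGBoost uses to choose splits, following the formulation in (21): for a node whose samples have summed loss gradients G and Hessians H, the optimal leaf value is -G / (H + λ), and splitting it into left and right children scores 0.5 × [G_L²/(H_L + λ) + G_R²/(H_R + λ) - (G_L + G_R)²/(H_L + H_R + λ)] - γ. Here λ and γ correspond to XGBoost's `lambda` and `gamma` parameters; the default values below are illustrative only. For multi-class objectives such as `multi:softprob`, XGBoost grows one tree per class in each boosting round.

```python
def leaf_weight(grads, hessians, reg_lambda=1.0):
    """Optimal leaf value w* = -G / (H + lambda)."""
    return -sum(grads) / (sum(hessians) + reg_lambda)


def split_gain(left, right, reg_lambda=1.0, gamma=0.0):
    """Regularized gain from splitting a node into `left` and `right`.

    Each side is a (grads, hessians) pair holding per-sample first and second
    derivatives of the loss. A split is only worth making if the gain is > 0.
    """
    def structure_score(g, h):
        return g * g / (h + reg_lambda)

    g_l, h_l = sum(left[0]), sum(left[1])
    g_r, h_r = sum(right[0]), sum(right[1])
    return 0.5 * (
        structure_score(g_l, h_l)
        + structure_score(g_r, h_r)
        - structure_score(g_l + g_r, h_l + h_r)
    ) - gamma


# Squared-error example: gradient = prediction - label, Hessian = 1.
# With every prediction at 0, labels [1, 1] go left and labels [-1, -1] go right.
left = ([-1.0, -1.0], [1.0, 1.0])
right = ([1.0, 1.0], [1.0, 1.0])
print(split_gain(left, right))             # about 1.333 (4/3)
print(split_gain(left, right, gamma=2.0))  # negative: gamma blocks the split
print(leaf_weight(*left), leaf_weight(*right))  # about 0.667 and -0.667
```

Larger λ shrinks leaf values toward zero, and larger γ demands more loss reduction before a split is made; both limit overfitting.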

2.5 Model deployment

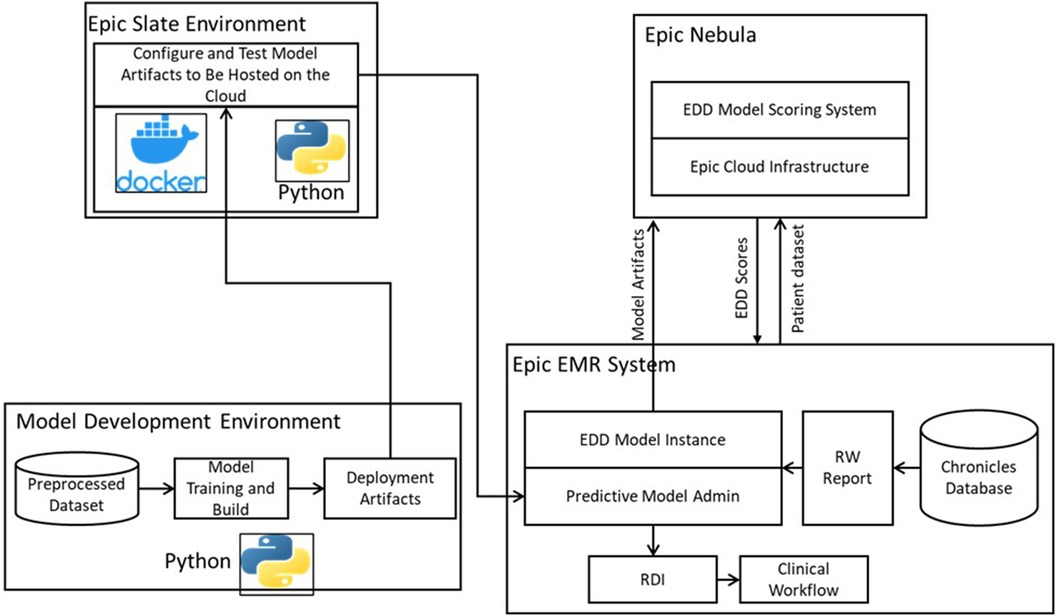

To make the model usable for clinicians, we integrated it into the clinical workflow. The model is hosted on Epic Nebula, Epic's cloud computing platform (Epic Systems, Verona, WI, United States); Figure 2 provides an overview of this integration. We used the Epic Slate Environment to configure and test the model artifacts, which ensured reliable transfer of data between the Chronicles database and the cloud-hosted model that generates the estimated discharge date (EDD). The resulting scores are returned to the EMR, where the EDD is placed in the patient list used during clinical discussions and patient flow planning. Input data are supplied to the model through Epic Workbench reports customized for the discharge date prediction model, and a batch job runs the model every hour. Together, these components embed the model in the clinical workflow, covering data exchange, EDD scoring, and display of the EDD in patient lists, so that the model is readily accessible to clinicians during discharge planning.

Figure 2. Model deployment and operationalization, adapted from (22).

2.6 Integration with clinical workflow

The model scores, specifically the estimated discharge date (EDD), were transferred from the Epic Nebula cloud back to the real-time Chronicles database. They were recorded in flowsheet rows and displayed in the interdisciplinary round (IDR) lists as a separate column labeled “Model EDD”, next to the clinical EDD column populated by clinicians. The Model EDD is generated once per patient, when the data threshold is met, that is, once the patient has been an inpatient for 18 h or more. The current configuration does not update the EDD after that point.
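The hourly batch job described in Section 2.5 only needs to pick out patients who have crossed the 18-hour data threshold and have not yet received a Model EDD. The Python sketch below shows that selection logic under stated assumptions: the census record keys (`id`, `inpatient_since`, `model_edd`) are hypothetical, and `model_edd` maps a predicted class to a date by adding that many days to the inpatient start date, which is one plausible convention rather than the documented one.

```python
from datetime import datetime, timedelta

DATA_THRESHOLD = timedelta(hours=18)


def patients_to_score(census, now, threshold=DATA_THRESHOLD):
    """IDs of inpatients past the data threshold who have no Model EDD yet.

    Already-scored patients are skipped because the EDD is written only once.
    """
    return [
        p["id"]
        for p in census
        if p["model_edd"] is None and now - p["inpatient_since"] >= threshold
    ]


def model_edd(inpatient_since, predicted_class):
    """Hypothetical mapping: EDD = inpatient start date + predicted class (days)."""
    return (inpatient_since + timedelta(days=predicted_class)).date()


now = datetime(2023, 3, 2, 10, 0)
census = [
    {"id": "A", "inpatient_since": datetime(2023, 3, 1, 15, 0), "model_edd": None},  # 19 h
    {"id": "B", "inpatient_since": datetime(2023, 3, 2, 0, 0), "model_edd": None},   # 10 h
    {"id": "C", "inpatient_since": datetime(2023, 2, 28, 9, 0), "model_edd": "2023-03-03"},
]
print(patients_to_score(census, now))  # ['A']
print(model_edd(census[0]["inpatient_since"], 3))  # 2023-03-04
```

Because the job runs hourly, a patient is scored within about an hour of reaching the threshold; patient B, for example, would be picked up on the run at or after 18:00.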

3 Results and discussion

This section presents the results and findings of our research. We first evaluate the model after development, then report its post-deployment performance, and finally describe the operational outcomes of its practical implementation.

3.1 Model evaluation

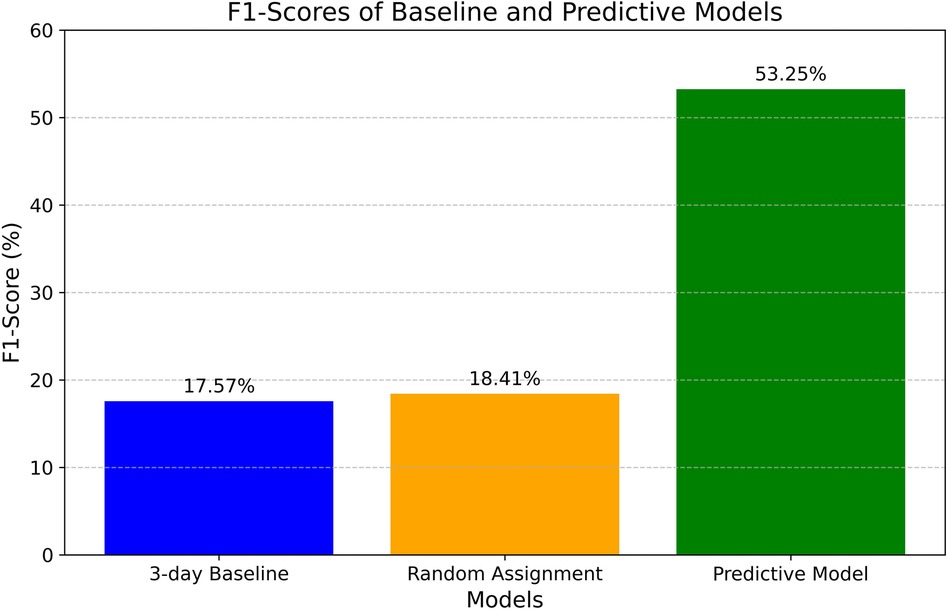

In this study, we tackled a multi-class classification problem (five classes) to predict the estimated discharge date. The model was evaluated on the hold-out testing dataset comprising 29,869 samples. We assessed the model's performance using the F1-score metric (see Table 1). All evaluations were compared against two baseline predictions:

• Predicting a three-day length of stay (discharge 3 days after admission) for every patient, which reflects the legacy process for estimating discharge dates before the predictive model was deployed.

• Randomly assigning a class to a patient, where each class (representing the estimated discharge date) is assigned with an equal likelihood of one-fifth.

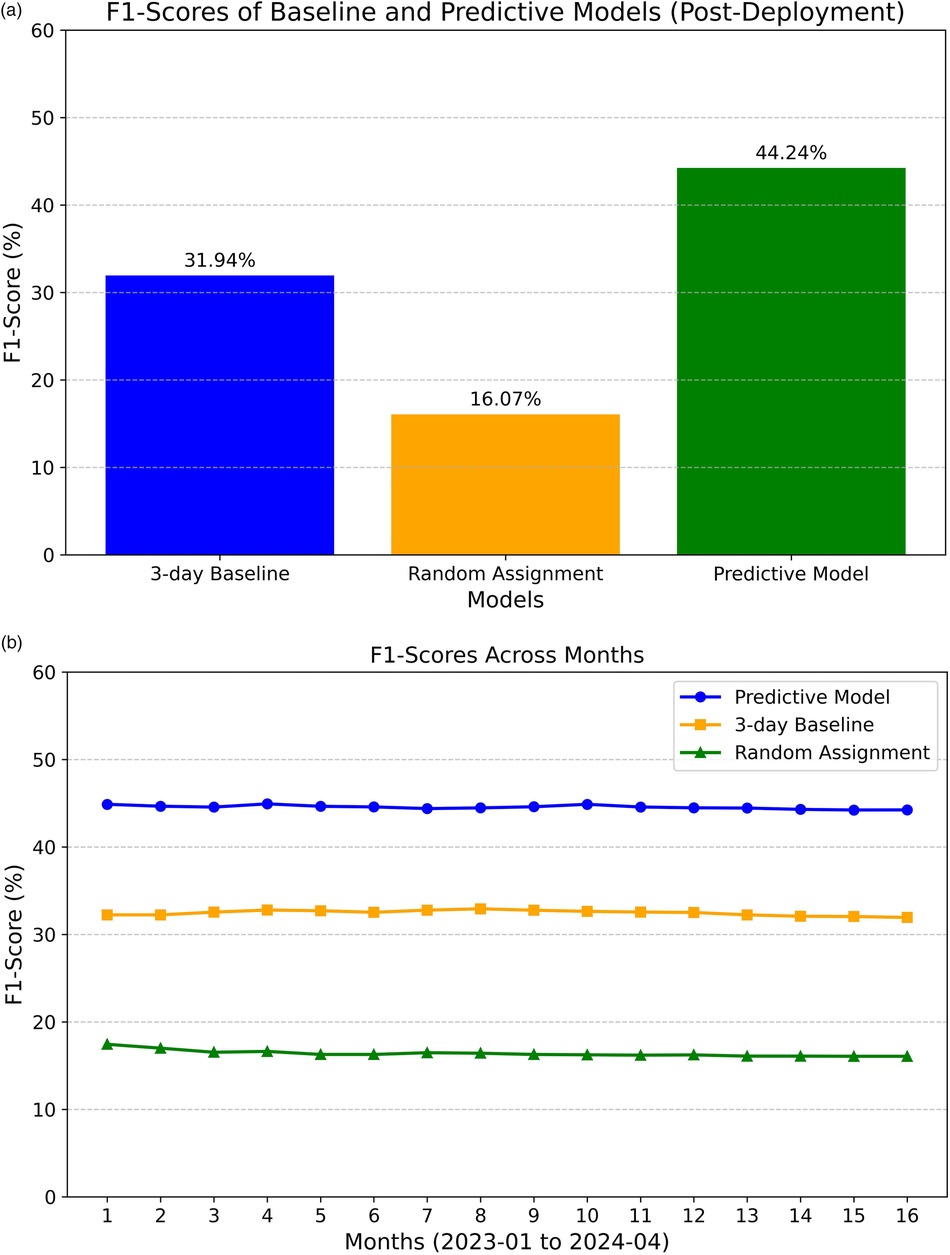

The F1-score is a widely recognized metric for evaluating classification models, representing the harmonic mean of precision and recall. Precision, also referred to as the positive predictive value, denotes the proportion of true positives among all positive predictions. Recall, or sensitivity, assesses the model's ability to accurately identify positive classes. Together, these metrics offer a comprehensive evaluation of the model's predictive performance. Figure 3 compares the baseline F1 scores to those of the predictive model.
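The paper does not state how the per-class F1-scores were averaged across the five classes. The Python sketch below assumes macro averaging (an unweighted mean of per-class F1); averaging weighted by class frequency is a common alternative and would give different numbers. It also shows how a constant three-day baseline earns credit only for class 3.

```python
from collections import Counter


def macro_f1(y_true, y_pred, labels=(1, 2, 3, 4, 5)):
    """Unweighted mean of per-class F1 = 2PR / (P + R); 0/0 is treated as 0."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # predicted class p, but it was wrong
            fn[t] += 1  # missed the true class t
    per_class = []
    for c in labels:
        precision = tp[c] / (tp[c] + fp[c]) if tp[c] + fp[c] else 0.0
        recall = tp[c] / (tp[c] + fn[c]) if tp[c] + fn[c] else 0.0
        denom = precision + recall
        per_class.append(2 * precision * recall / denom if denom else 0.0)
    return sum(per_class) / len(per_class)


y_true = [1, 2, 3, 3, 4, 5]
three_day_baseline = [3] * len(y_true)
print(macro_f1(y_true, three_day_baseline))  # about 0.1: only class 3 scores (F1 = 0.5)
print(macro_f1(y_true, y_true))              # 1.0
```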

The 3-day baseline F1-score is 17.57%, a very low score with minimal utility for this classification problem. Random label assignment achieved an F1-score of 18.41%. In contrast, the predictive model attained an F1-score of 53.25%, a substantial improvement over both baselines and a gain of 35.68 percentage points over the legacy estimation process (estimating three days for all patients). This improvement can help optimize patient flow and reduce excess days, as discussed in Sections 3.2 and 3.3.
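The reported gain is an absolute difference between F1-scores, expressed in percentage points; relative to the baselines, the model's F1-score is roughly three times higher:

$$
\begin{aligned}
53.25\% - 17.57\% &= 35.68 \text{ percentage points}, & 53.25 / 17.57 &\approx 3.03 \\
53.25\% - 18.41\% &= 34.84 \text{ percentage points}, & 53.25 / 18.41 &\approx 2.89
\end{aligned}
$$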

Several factors may have limited the model's F1-score. First, the dataset was limited to a few demographic details, problem lists, admission sources, and complaints; adding variables such as vital signs, laboratory data, and medications could improve performance. Second, the data collection window was restricted to the first 18 h after admission to enable early prediction, which excludes clinical information that emerges later in the patient's hospital stay. We expect that incorporating more clinical data elements dynamically and generating model scores more frequently during the stay would improve evaluation scores as discharge approaches. Moreover, the discharge date depends on more than clinical factors: workflow processes, clinical team decisions, transportation, and other considerations also play a role, and data on these aspects can be difficult to collect. Further investigation from these perspectives is therefore recommended.

3.2 Post-deployment performance

In this section, we assess the model's post-deployment performance against the original goal of reducing excess days relative to the MS Geometric Mean Length of Stay (MS GMLOS).

We first revisit the F1-score to gauge the model's operational performance and measure any drift from its pre-deployment performance. As shown in Figure 4A, the model's overall F1-score is 44.24%, based on operational data collected from January 2023 to April 2024 (52,885 samples). The model still outperforms the previous approach of assigning a standard three-day estimated length of stay. However, its F1-score dropped by about 9 percentage points compared with pre-deployment (from 53.25% to 44.24%) and then remained relatively stable at around 44% across months (Figure 4B).

Figure 4. Post-deployment F1-scores. (A) F1-scores of baseline and predictive models. (B) F1-score across months for both baseline and predictive models.
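Monthly tracking of the kind shown in Figure 4B can be automated with a small monitoring routine. In the Python sketch below, the `(month, y_true, y_pred)` record layout, the tolerance, and the use of plain accuracy as the metric are assumptions chosen to keep the example self-contained; any function with the signature `metric(y_true, y_pred)`, such as a macro-F1 implementation, can be passed instead.

```python
from collections import defaultdict


def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def monthly_scores(records, metric):
    """Group (month, y_true, y_pred) records by month and score each month."""
    by_month = defaultdict(lambda: ([], []))
    for month, t, p in records:
        by_month[month][0].append(t)
        by_month[month][1].append(p)
    return {m: metric(ts, ps) for m, (ts, ps) in sorted(by_month.items())}


def months_below_reference(scores, reference, tolerance):
    """Months whose score falls more than `tolerance` below the reference score."""
    return [m for m, s in scores.items() if reference - s > tolerance]


records = [
    ("2023-01", 3, 3), ("2023-01", 2, 3),
    ("2023-02", 1, 1), ("2023-02", 4, 4),
]
scores = monthly_scores(records, accuracy)
print(scores)  # {'2023-01': 0.5, '2023-02': 1.0}
print(months_below_reference(scores, reference=0.9, tolerance=0.1))  # ['2023-01']
```

In practice the reference would be the pre-deployment score on the hold-out test set, and flagged months would prompt a review of the inference data pipeline.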

Several factors may explain the decrease in performance. First, the operational inference pipeline pulls data from a real-time database, whereas the training data were collected from an offline back-end database during development. Although efforts were made to align the data between the two environments, some unaccounted-for differences may remain and warrant further investigation. Besides potential data drift, external factors, such as changes to clinical workflows in response to the model's deployment, might also have influenced the results. Nevertheless, the model retains clear operational value, as discussed below.
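One inexpensive way to look for such unaccounted-for differences is to compare how often each binary feature is set in the offline training data and in the real-time inference data. The sketch below is an illustrative check rather than the procedure used in this study; the threshold, feature names, and rows are hypothetical, and a gap can reflect a genuine population change as well as a pipeline mismatch.

```python
def prevalence(rows, features):
    """Fraction of rows in which each binary feature equals 1 (missing = 0)."""
    return {f: sum(row.get(f, 0) for row in rows) / len(rows) for f in features}


def prevalence_gaps(offline_rows, realtime_rows, features, threshold):
    """Features whose prevalence differs between the two pipelines by > threshold."""
    offline = prevalence(offline_rows, features)
    realtime = prevalence(realtime_rows, features)
    return {
        f: (offline[f], realtime[f])
        for f in features
        if abs(offline[f] - realtime[f]) > threshold
    }


features = ["Is_Sunday", "complaint_fever"]
offline = [{"Is_Sunday": 1, "complaint_fever": 1}, {}, {}, {}]
realtime = [{"complaint_fever": 1}, {"Is_Sunday": 1, "complaint_fever": 1},
            {"complaint_fever": 1}, {}]
print(prevalence_gaps(offline, realtime, features, threshold=0.2))
# {'complaint_fever': (0.25, 0.75)}
```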

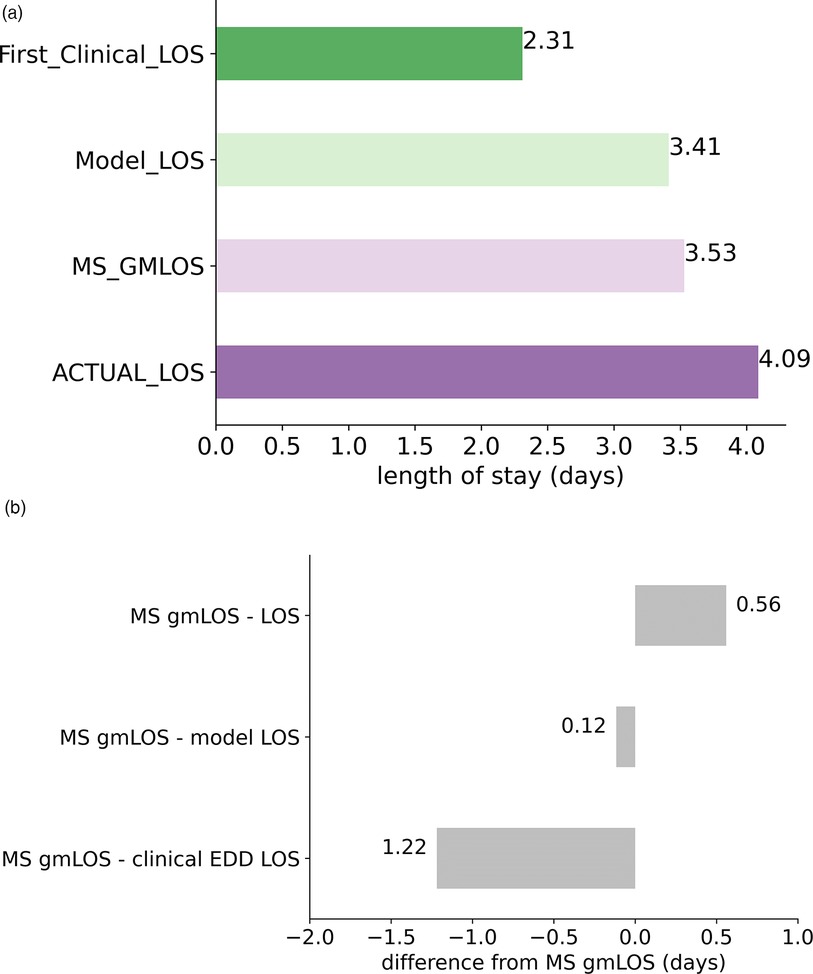

A crucial aspect of evaluating the model's operational impact is how well its predictions align with the target benchmark (MS GMLOS) used to minimize excess days. Figure 5 compares the average LOS from the predictive model, the MS GMLOS, the initial clinical estimate, and the actual LOS. All values were clipped to a range of 1–5 days because the predictive model is a multi-class classifier with five possible outcomes: one, two, three, four, and five or more days.

Figure 5. The average length of stay (LOS) comparison. (A) Average LOS for various variables including model-related LOS. (B) Difference from the reference MS GMLOS.

The predictive model aligns closely with the MS GMLOS, underestimating it slightly (by 0.12 days). In contrast, the initial clinical estimate underestimates substantially, falling 1.22 days short of the MS GMLOS; such underestimation can disrupt discharge planning. The actual LOS, meanwhile, exceeds the MS GMLOS by 0.56 days, which corresponds to excess days. The model's alignment with the MS GMLOS can therefore help reduce excess days and improve discharge planning.
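The comparison in Figure 5 can be sketched as clipping every series to the 1–5 day range and subtracting the mean clipped MS GMLOS. In the Python example below, clipping all series (including MS GMLOS and actual LOS) is an assumption, and the toy values are for illustration only, not study data.

```python
def clipped_mean(values, low=1.0, high=5.0):
    """Mean after clipping each value into [low, high]."""
    clipped = [min(max(v, low), high) for v in values]
    return sum(clipped) / len(clipped)


def difference_from_gmlos(series, gmlos):
    """Mean clipped LOS of each named series minus the mean clipped MS GMLOS."""
    reference = clipped_mean(gmlos)
    return {name: clipped_mean(values) - reference for name, values in series.items()}


gmlos = [2.5, 4.0, 6.2]                 # clipped to [2.5, 4.0, 5.0]
series = {
    "model": [2, 4, 5],
    "clinical_estimate": [1, 2, 3],
    "actual": [4, 9, 3],                # 9 is clipped to 5
}
for name, diff in difference_from_gmlos(series, gmlos).items():
    print(f"{name}: {diff:+.2f} days")
```

Clipping puts the actual LOS and MS GMLOS on the same 1–5 scale as the classifier's outputs, at the cost of understating very long stays.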

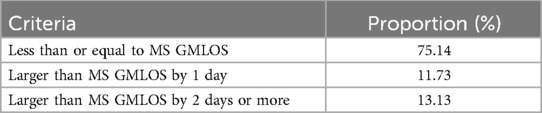

The model's operational utility can also be assessed by counting the predictions that are less than or equal to the MS GMLOS, exceed it by one day, or exceed it by two or more days (see Table 2). In 75.14% of cases, the model predicted an LOS less than or equal to the GMLOS, which can prompt the clinical team to begin discharge planning proactively and potentially reduce excess days. Predictions that stay within the benchmark help providers manage patient flow and plan discharges on time, supporting the broader goals of reducing excess hospital days, optimizing resource utilization, and improving hospital efficiency and patient outcomes.
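The categories in Table 2 can be computed by comparing each predicted LOS with the patient's MS GMLOS. Because GMLOS is continuous, the sketch below rounds the excess up to whole days (so 1.3 days over counts as “2 or more days”); that rounding rule, the category labels, and the toy values are assumptions rather than the paper's stated procedure.

```python
import math
from collections import Counter


def gmlos_alignment(predicted_days, gmlos_values):
    """Percentage of predictions at or below GMLOS, 1 day over, or 2+ days over."""
    buckets = Counter()
    for pred, gmlos in zip(predicted_days, gmlos_values):
        excess = math.ceil(pred - gmlos)  # whole days over the benchmark
        if excess <= 0:
            buckets["<= GMLOS"] += 1
        elif excess == 1:
            buckets["GMLOS + 1 day"] += 1
        else:
            buckets["GMLOS + 2 or more days"] += 1
    n = len(predicted_days)
    return {label: 100 * count / n for label, count in buckets.items()}


print(gmlos_alignment([2, 3, 5, 1], [2.4, 2.6, 2.9, 1.0]))
```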

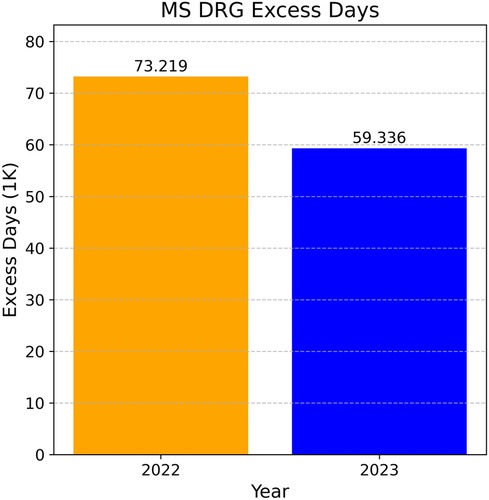

3.3 Operational outcomes

In this analysis, we compare the excess days recorded in 2022 with those in 2023, when the model was in operation. As shown in Figure 6, excess days decreased by 13,883 days (18.96%) between 2022 and 2023. The predictive model, together with other technological implementations introduced over the same period, contributed to this reduction.
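The paper does not define excess days explicitly. One plausible convention, assumed in the sketch below, counts for each stay the days beyond the benchmark, max(0, actual LOS - MS GMLOS), and sums them; the percentage reduction then compares the yearly totals. The toy stays are illustrative, not study data.

```python
def excess_days(stays):
    """Sum of max(0, actual LOS - GMLOS) over (actual_los, gmlos) pairs."""
    return sum(max(0.0, los - gmlos) for los, gmlos in stays)


def percent_reduction(before, after):
    return 100 * (before - after) / before


stays_2022 = [(6, 4.0), (3, 3.5), (10, 5.0)]  # 2 + 0 + 5 = 7 excess days
stays_2023 = [(5, 4.0), (3, 3.5), (8, 5.0)]   # 1 + 0 + 3 = 4 excess days
before, after = excess_days(stays_2022), excess_days(stays_2023)
print(before, after, round(percent_reduction(before, after), 2))  # 7.0 4.0 42.86
```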

This reduction illustrates the positive impact that data-driven decision-support tools can have on hospital management. Such tools can streamline discharge planning and improve resource utilization by giving healthcare professionals data-driven insights. By forecasting patient length of stay, these models support better scheduling and use of hospital resources, which can improve patient care and hospital efficiency. Reducing excess days lowers the unnecessary costs of prolonged hospital stays and frees capacity for more patients, improving overall healthcare delivery. The model's integration into the clinical workflow shows how advanced analytics can address critical challenges in healthcare operations.

3.4 Operational and clinical implications

Accurate estimation of the patient discharge date enables ancillary services, such as Social Work and Outcomes Management, to start insurance authorization for necessary post-discharge services such as subacute rehabilitation. This proactive approach prevents discharge delays caused by pending service authorizations and helps reduce excess hospital days.

For families, more accurate discharge estimates improve communication and preparation for the patient's transition from the hospital. Knowing the estimated discharge date in advance allows families to prepare for the patient's return home or transfer to another facility, such as subacute rehabilitation. It also enables Outcomes Management staff, nurses, and physicians to discuss patient disposition with the family several days before discharge rather than on the day of discharge. These early conversations foster more effective communication between the care team and the patient's family, ensuring a smoother transition and reducing stress for everyone involved.

3.5 Applicability to various health systems and contexts

Our model was trained on the Virtua Health dataset, with a focus on local generalizability, which we assessed on the Virtua Health hold-out test set that the model did not see during development or training. We expect the results to carry over to public datasets and other health systems whose populations resemble that of Virtua Health, through comparable demographics (similar baseline characteristics) and aligned data collection methods (similar data quality). For populations that differ from Virtua Health's, the approach can still be applied with adjustments or retraining. In summary, this paper presents a specific use case of machine learning for predicting inpatient discharge dates, and the reported performance may vary in other health settings.

4 Conclusions

This study illustrates the development and deployment of a machine learning model for inpatient discharge date prediction. By using XGBoost and integrating the model into the clinical workflow through the Epic EMR, the research offers a practical and scalable approach to improving patient flow and resource management in hospitals. The results show a substantial reduction in excess days after deployment, highlighting the model's operational benefits. Despite challenges such as data drift and the limited scope of the data, the study shows how machine learning models can provide actionable insights to improve hospital efficiency and patient outcomes. Further research is needed to refine the model by (1) incorporating more clinical data and dynamic features such as vital signs, laboratory data, and medications; (2) investigating external factors, such as transportation logistics, that affect the patient discharge date; (3) exploring a dynamic prediction window instead of a single prediction at a fixed time after admission; and (4) evaluating the model's long-term applicability across different healthcare settings.

Data availability statement

The datasets presented in this article are not readily available because of agreements made with the IRB of Virtua Health. Requests to access the datasets should be directed to Ajit Shukla, ashukla@virtua.org.

Ethics statement

The studies involving humans were approved by Virtua Health Institutional Review Board FWA00002656. The studies were conducted in accordance with the local legislation and institutional requirements. Written informed consent for participation was not required from the participants or the participants’ legal guardians/next of kin in accordance with the national legislation and institutional requirements.

Author contributions

MM: Conceptualization, Data curation, Formal Analysis, Investigation, Methodology, Software, Validation, Visualization, Writing – original draft, Writing – review & editing. KD: Formal Analysis, Validation, Visualization, Writing – review & editing. RY: Formal Analysis, Validation, Writing – review & editing. RB-D: Formal Analysis, Validation, Writing – review & editing. AS: Conceptualization, Project administration, Resources, Supervision, Validation, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Grover S, Fitzpatrick A, Azim FT, Ariza-Vega P, Bellwood P, Burns J, et al. Defining and implementing patient-centered care: an umbrella review. Patient Educ Couns. (2022) 105(7):1679–88. doi: 10.1016/j.pec.2021.11.004

2. Håkansson Eklund J, Holmström IK, Kumlin T, Kaminsky E, Skoglund K, Höglander J, et al. “Same same or different?” A review of reviews of person-centered and patient-centered care. Patient Educ Couns. (2019) 102(1):3–11. doi: 10.1016/j.pec.2018.08.029

3. Tess BH, Glenister HM, Rodrigues LC, Wagner MB. Incidence of hospital-acquired infection and length of hospital stay. Eur J Clin Microbiol Infect Dis. (1993) 12(2):81–6. doi: 10.1007/BF01967579

4. Cremasco MF, Wenzel F, Zanei SS, Whitaker IY. Pressure ulcers in the intensive care unit: the relationship between nursing workload, illness severity and pressure ulcer risk. J Clin Nurs. (2013) 22(15–16):2183–91. doi: 10.1111/j.1365-2702.2012.04216.x

5. Caccialanza R, Klersy C, Cereda E, Cameletti B, Bonoldi A, Bonardi C, et al. Nutritional parameters associated with prolonged hospital stay among ambulatory adult patients. CMAJ. (2010) 182(17):1843–9. doi: 10.1503/cmaj.091977

6. Bernstein SL, Aronsky D, Duseja R, Epstein S, Handel D, Hwang U, et al. The effect of emergency department crowding on clinically oriented outcomes. Acad Emerg Med. (2009) 16(1):1–10. doi: 10.1111/j.1553-2712.2008.00295.x

7. Chalfin DB, Trzeciak S, Likourezos A, Baumann BM, Dellinger RP; DELAY-ED Study Group. Impact of delayed transfer of critically ill patients from the emergency department to the intensive care unit. Crit Care Med. (2007) 35(6):1477–83. doi: 10.1097/01.CCM.0000266585.74905.5A

8. Rowe BH, Channan P, Bullard M, Blitz S, Saunders LD, Rosychuk RJ, et al. Characteristics of patients who leave emergency departments without being seen. Acad Emerg Med. (2006) 13(8):848–52. doi: 10.1197/j.aem.2006.01.028

9. Lagoe RJ, Westert GP, Kendrick K, Morreale G, Mnich S. Managing hospital length of stay reduction: a multihospital approach. Health Care Manage Rev. (2005) 30(2):82–92. doi: 10.1097/00004010-200504000-00002

10. Gualandi R, Masella C, Tartaglini D. Improving hospital patient flow: a systematic review. Bus Process Manag J. (2020) 26(6):1541–75. doi: 10.1108/BPMJ-10-2017-0265

11. Barnes S, Hamrock E, Toerper M, Siddiqui S, Levin S. Real-time prediction of inpatient length of stay for discharge prioritization. J Am Med Inform Assoc. (2016) 23(e1):e2–10. doi: 10.1093/jamia/ocv106

12. Sullivan B, Ming D, Boggan JC, Schulteis RD, Thomas S, Choi J, et al. An evaluation of physician predictions of discharge on a general medicine service. J Hosp Med. (2015) 10(12):808–10. doi: 10.1002/jhm.2439

13. Ippoliti R, Falavigna G, Zanelli C, Bellini R, Numico G. Neural networks and hospital length of stay: an application to support healthcare management with national benchmarks and thresholds. Cost Eff Resour Alloc. (2021) 19(1):67. doi: 10.1186/s12962-021-00322-3

14. Chrusciel J, Girardon F, Roquette L, Laplanche D, Duclos A, Sanchez S. The prediction of hospital length of stay using unstructured data. BMC Med Inform Decis Mak. (2021) 21(1):351. doi: 10.1186/s12911-021-01722-4

15. Levin S, Barnes S, Toerper M, Debraine A, DeAngelo A, Hamrock E, et al. Machine-learning-based hospital discharge predictions can support multidisciplinary rounds and decrease hospital length-of-stay. BMJ Innov. (2021) 7(2):414–21. doi: 10.1136/bmjinnov-2020-000420

16. Macedo R, Barbosa A, Lopes J, Santos M. Intelligent decision support in beds management and hospital planning. Procedia Comput Sci. (2022) 210:260–4. doi: 10.1016/j.procs.2022.10.147

17. Bacchi S, Tan Y, Oakden-Rayner L, Jannes J, Kleinig T, Koblar S. Machine learning in the prediction of medical inpatient length of stay. Intern Med J. (2022) 52(2):176–85. doi: 10.1111/imj.14962

18. Mekhaldi RN, Caulier P, Chaabane S, Chraibi A, Piechowiak S. Using machine learning models to predict the length of stay in a hospital setting. In: Rocha Á, Adeli H, Reis LP, Costanzo S, Orovic I, Moreira F, editors. Trends and Innovations in Information Systems and Technologies. Advances in Intelligent Systems and Computing, vol 1159. Cham: Springer International Publishing (2020). p. 202–11. doi: 10.1007/978-3-030-45688-7_21

19. Zhang A, Xing L, Zou J, Wu JC. Shifting machine learning for healthcare from development to deployment and from models to data. Nat Biomed Eng. (2022) 6(12):1330–45. doi: 10.1038/s41551-022-00898-y

20. Harris S, Bonnici T, Keen T, Lilaonitkul W, White MJ, Swanepoel N. Clinical deployment environments: five pillars of translational machine learning for health. Front Digit Health. (2022) 4:939292. doi: 10.3389/fdgth.2022.939292

21. Chen T, Guestrin C. XGBoost: a scalable tree boosting system. In: Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD ’16). New York, NY, USA: Association for Computing Machinery (2016). p. 785–94. doi: 10.1145/2939672.2939785

Keywords: discharge date prediction, discharge planning, machine learning, XGBoost, machine learning operations

Citation: Mahyoub MA, Dougherty K, Yadav RR, Berio-Dorta R and Shukla A (2024) Development and validation of a machine learning model integrated with the clinical workflow for inpatient discharge date prediction. Front. Digit. Health 6:1455446. doi: 10.3389/fdgth.2024.1455446

Received: 26 June 2024; Accepted: 13 September 2024;

Published: 30 September 2024.

Edited by:

Roshan Joy Martis, Global Academy of Technology, India

Reviewed by:

Parisis Gallos, National and Kapodistrian University of Athens, Greece
Shreedhar HK, Visvesvaraya Technological University, India

Copyright: © 2024 Mahyoub, Dougherty, Yadav, Berio-Dorta and Shukla. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Ajit Shukla, ashukla@virtua.org

Mohammed A. Mahyoub, Kacie Dougherty, Ravi R. Yadav, Raul Berio-Dorta