- 1Takeda Pharmaceuticals, Cambridge, MA, United States

- 2Center for Health+Technology, University of Rochester, Rochester, NY, United States

- 3Department of Neurology, University of Rochester, Rochester, NY, United States

- 4Clinical Ink, Horsham, PA, United States

- 5Balance Disorders Laboratory, Department of Neurology, Oregon Health and Science University, Portland, OR, United States

- 6Critical Path Institute, Tucson, AZ, United States

- 7Abbvie, North Chicago, IL, United States

Introduction: Digital health technologies (DHTs) have the potential to alleviate challenges experienced in clinical trials through more objective, naturalistic, and frequent assessments of functioning. However, implementing DHTs comes with its own challenges, including acceptability and ease of use for study participants. In addition to acceptability, it is important to understand device proficiency in the general population and in patient populations who may be asked to use DHTs for extended periods. We therefore aimed to provide an overview of participant feedback on the acceptability of DHTs, including body-worn sensors used in the clinic and a mobile application used at home, deployed throughout the Wearable Assessments in the Clinic and at Home in Parkinson's Disease (WATCH-PD) study, an observational, longitudinal study of disease progression in early Parkinson's Disease (PD).

Methods: Eighty-two participants with PD and 50 control participants were enrolled at 17 sites throughout the United States and followed for 12 months. We assessed participants' general device proficiency at baseline using the Mobile Device Proficiency Questionnaire (MDPQ). The mean [SD] MDPQ score at baseline did not differ significantly between PD participants and healthy controls (20.6 [2.91] vs. 21.5 [2.94], p = 0.10).

Results: Questionnaire results demonstrated that participants had generally positive views on the comfort and use of the digital technologies throughout the duration of the study, regardless of group.

Discussion: This is the first study to evaluate participant feedback on, and impressions of, using technology in a longitudinal observational study in early Parkinson's Disease. The results suggest that device proficiency and acceptability of various DHTs in people with Parkinson's do not differ from those of neurologically healthy older adults and that, overall, participants had a favorable view of the DHTs deployed in the WATCH-PD study.

1 Introduction

Digital technologies, such as mobile phones and wearables, are now ubiquitous and have changed how we interact with others and with the world around us. For example, Pew Research Center surveys show that 90% of Americans own a smartphone and 21% own a smartwatch or fitness tracker (1, 2). Beyond letting us post pictures, play games, or track workouts, these technologies have become particularly valuable in health care and research (3). In clinical trials, for example, traditional assessments are subjective and performed infrequently, whereas digital tools have the potential to provide a more holistic view of disease symptoms (4–6), progression (7–9), and response to treatment (5). Furthermore, using digital tools in fully decentralized or hybrid clinical trials can reduce or eliminate site visits, which are a documented barrier to trial participation because of the burden they place on patients and caregivers (10).

Although digital health technologies (DHTs) may alleviate some of the challenges faced in clinical trials, they often bring challenges of their own that result in lower rates of adoption, particularly among older individuals. Device proficiency is often wrongly assumed in the general population, especially among older adults, who require more assistance with digital technologies than younger populations (11). For instance, a nonexperimental study exploring attitudes toward technology found that older adults were willing to use technology but held negative views of it, associating it with inconveniences and unhelpful features that made it harder to use and navigate (12). Other factors that have contributed to low technology adoption among older adults include poor designs that do not consider their perceptual and cognitive abilities, and inadequate training on how to use the technology (13).

One disease that primarily affects older adults, and in which DHTs have been especially relevant for clinical trial measurement, is Parkinson's Disease (PD). PD, the second most prevalent and fastest growing movement disorder in the world, affects about 1% of adults aged 60 years and older (14). The cardinal features of the disease are motor impairments such as tremor, rigidity, and bradykinesia; however, its clinical features extend beyond these, and patients frequently highlight the cognitive and mood impairments caused by the disease (15, 16). The current gold standard for assessing progression in PD, the Movement Disorder Society Unified Parkinson's Disease Rating Scale (MDS-UPDRS) (17), has limitations that pose challenges for clinical trials. For instance, to adequately power a phase II clinical trial to detect a change in the MDS-UPDRS, studies must have large sample sizes and long durations (18, 19). The frequency with which participants must come into the clinic in traditional trials can also be a hurdle: because clinical trials are typically run at large academic hospitals, researchers tend to capture only participants who live in metropolitan areas or have the means to travel to study sites (20). Using digital technologies in clinical trials could provide better, more sensitive measures of disease progression and could also help reach a wider range of participants by reducing the number of clinic visits or by enabling fully remote trials.
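As a generic illustration of why a noisy, infrequently measured endpoint drives up trial size, the familiar normal-approximation formula for the number of participants per arm needed to detect a difference in mean change between two equal-sized groups is shown below. It is a textbook expression, not the power calculation used in the cited studies; here $\sigma$ is the standard deviation of the change score, $\Delta$ is the between-group difference to be detected, $\alpha$ is the two-sided significance level, and $1-\beta$ is the power.

$$
n_{\text{per arm}} = \frac{2\,\sigma^{2}\left(z_{1-\alpha/2} + z_{1-\beta}\right)^{2}}{\Delta^{2}}
$$

Because $n$ grows with $(\sigma/\Delta)^2$, halving the standardized effect $\Delta/\sigma$ roughly quadruples the required sample, which is one motivation for the more frequent and more sensitive digital measures described above.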

One method to assess comfort with technology in older adults is the Mobile Device Proficiency Questionnaire (MDPQ). The MDPQ includes items related to comfort using devices, such as tablets and smartphones, and has been found to be a highly reliable measure of mobile device proficiency in older adults (21). The MDPQ could serve as a tool to identify participants who may need more training in using digital technologies in clinical trials. Additionally, researchers can evaluate patients’ first-hand experiences using DHTs by harnessing the voice of the individuals participating in research studies and clinical trials. Acquiring patient feedback early and often, through panels, interviews, and questionnaires, can provide insights related to the acceptability of these technologies and help inform future study design.

1.1 Current study

The Wearable Assessments in the Clinic and at Home in Parkinson's Disease (WATCH-PD) study (4) was a one-year observational study exploring disease progression in early Parkinson's Disease using DHTs. Participants' perceptions of the DHTs were captured throughout the study. In this paper, we give an overview of participant feedback with the goal of better understanding the feasibility and burden of using these technologies during participation in longitudinal clinical trials. Specifically, we aimed to report whether people with PD and control participants differed in (1) device proficiency at baseline, as measured by the MDPQ, and (2) overall impressions of using digital technologies during participation in a 12-month longitudinal study.

2 Methods

2.1 Trial design

WATCH-PD is a prospective, longitudinal, multisite natural history study in people with early, untreated PD (<2 years since diagnosis) and neurologically healthy matched controls. Eighty-two participants with PD and 50 control participants were enrolled at 17 sites throughout the United States and followed for 12 months. In addition to regular clinic visits, participants completed self-administered assessments of motor and non-motor function outside the clinic using a mobile application twice monthly. A brief description is provided below; for a fuller description, see Adams et al. (4).

2.2 Participants

Participants were recruited from clinics, study interest registries, and social media. We aimed to evaluate a population similar to that of the Parkinson's Progression Markers Initiative (PPMI) (22). Thus, at enrollment, PD participants were required to be aged 30 or older; to be within 2 years of diagnosis; to be untreated with symptomatic medications [including levodopa, dopamine agonists, monoamine oxidase-B (MAO-B) inhibitors, amantadine, and anticholinergics] and not expected to require medication for at least 6 months from baseline; to have a modified Hoehn and Yahr stage ≤2; and to have either at least two of the following symptoms: resting tremor, bradykinesia, or rigidity (one of which had to be resting tremor or bradykinesia), or asymmetric resting tremor or asymmetric bradykinesia. Control participants were required to be aged 30 or older at enrollment, with no diagnosis of a significant Central Nervous System (CNS) disease (other than PD), history of repeated head injury, history of epilepsy or seizure disorder other than febrile seizures in childhood, or history of a brain magnetic resonance imaging (MRI) scan indicative of a clinically significant abnormality. For both PD participants and controls, a Montreal Cognitive Assessment (MoCA) score < 24 was exclusionary.

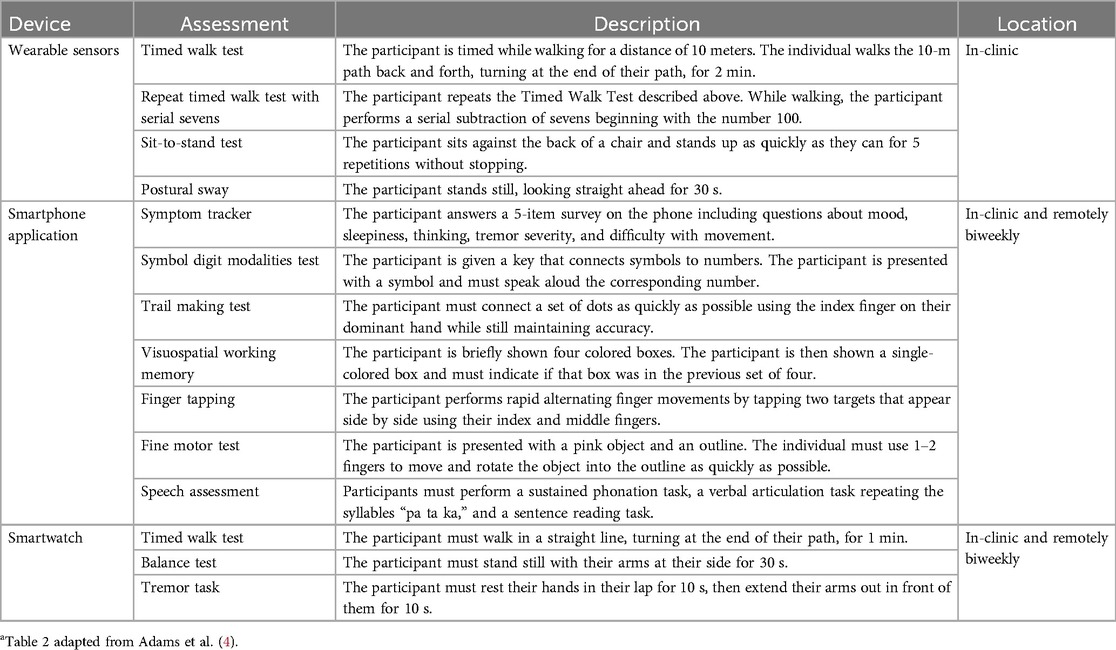

2.3 Study assessments

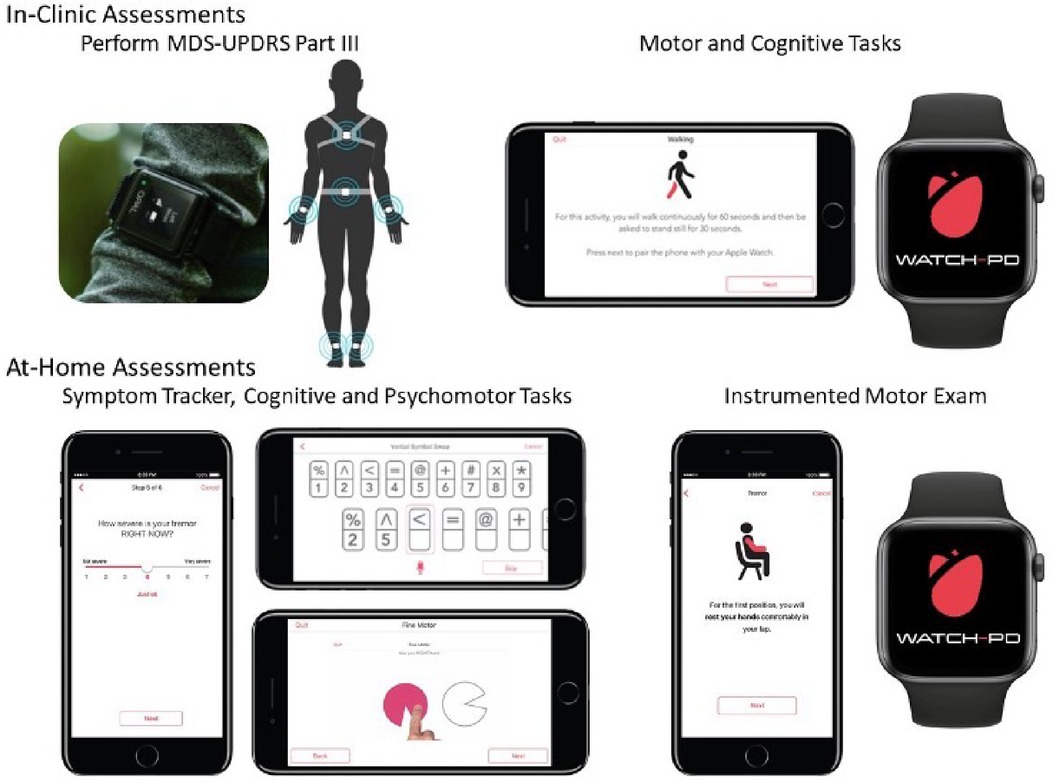

Each participant completed clinic visits at Screening/Baseline and at Months 1, 3, 6, 9, and 12. Clinic visits consisted of three core components: (1) a comprehensive battery of clinician- and patient-reported outcomes measuring both motor and non-motor function, (2) a set of motor assessments completed while wearing inertial sensors distributed across the body, and (3) a custom-developed, self-administered mobile phone battery designed to measure aspects of motor and non-motor function. In addition to the in-clinic assessments, participants were asked to wear a smartwatch on the wrist of their most affected side for 7 days following each clinic visit and to complete the same mobile battery every two weeks for the duration of the study. Because of COVID-19, a subset of participants completed some of the in-clinic assessments remotely, and not all data were available.
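To make the visit and at-home cadence concrete, the Python sketch below lays out one participant's schedule from a baseline date. It assumes calendar-month offsets for the clinic visits, a 7-day smartwatch window starting the day after each visit, and at-home batteries every 14 days until the Month 12 visit; these conventions, the function names, and the example date are illustrative and are not taken from the WATCH-PD protocol.

```python
from datetime import date, timedelta

# Clinic visits at Screening/Baseline (Month 0) and Months 1, 3, 6, 9, and 12.
CLINIC_MONTHS = (0, 1, 3, 6, 9, 12)


def add_months(d: date, months: int) -> date:
    """Shift a date by whole calendar months, clamping to the last day of the target month."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    # First day of the following month, minus one day, gives the target month's length.
    following = date(year + month // 12, month % 12 + 1, 1)
    last_day = (following - timedelta(days=1)).day
    return date(year, month, min(d.day, last_day))


def build_schedule(baseline: date, home_interval_days: int = 14, wear_days: int = 7):
    """Return (clinic visits, smartwatch wear windows, at-home battery dates).

    Hypothetical assumptions, not from the protocol: each wear window starts the day
    after a visit and lasts `wear_days` days inclusive, and at-home sessions repeat
    every `home_interval_days` until the final clinic visit. At-home dates that fall
    close to a clinic visit are not deduplicated.
    """
    clinic = [add_months(baseline, m) for m in CLINIC_MONTHS]
    wear_windows = [(v + timedelta(days=1), v + timedelta(days=wear_days)) for v in clinic]
    home = []
    session = baseline + timedelta(days=home_interval_days)
    while session < clinic[-1]:
        home.append(session)
        session += timedelta(days=home_interval_days)
    return clinic, wear_windows, home


if __name__ == "__main__":
    clinic, wear, home = build_schedule(date(2021, 1, 4))
    print("Clinic visits:", [d.isoformat() for d in clinic])
    print("First wear window:", wear[0][0].isoformat(), "to", wear[0][1].isoformat())
    print("At-home sessions:", len(home))
```

With a baseline of 4 January 2021, this yields six clinic visits ending on 4 January 2022 and 26 scheduled at-home sessions. A real schedule would also need visit windows and rescheduling rules, which the sketch omits.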

2.4 Instrumented motor assessments

At each clinic visit, participants were instrumented with six Opal sensors (OPAL system, APDM, Inc., Portland, OR, United States): one on each wrist, one on each foot, one on the sternum, and one on the lumbar area (Figure 1). The Opal sensors contain triaxial accelerometers, gyroscopes, and magnetometers and were used to capture raw kinematic data during the MDS-UPDRS Part III motor examination, a 5× sit-to-stand task, a 30 s standing balance task (eyes open), a two-minute walking task, and a two-minute walking task under cognitive load (serial sevens).

Figure 1. Digital devices evaluated in-clinic and at-home during WATCH-PD. Adapted from Adams et al. (4).

2.5 Mobile assessment battery

As noted above, participants were given a provisioned smartphone and smartwatch and completed a custom-designed mobile battery, developed by Clinical Ink (Clinical Ink, Horsham, PA, USA), at each clinic visit and every two weeks during the study. Completing the mobile battery during clinic visits served two purposes. First, it oriented participants to the devices and tasks. Second, it allowed performance measures derived from the mobile assessments to be compared with contemporaneous measures from the Opal system and with the clinician- and patient-reported outcomes collected at each visit. The mobile battery took approximately 15–20 min to complete, and participants were asked to complete it in a single session; they were allowed up to an hour, leaving time for unexpected interruptions or breaks. The battery consisted of three core components spanning motor and non-motor domains. First, participants answered six brief patient-reported outcome (PRO) questions on a 1–7 Likert scale about current mood, fatigue, sleepiness, and cognition, as well as the current severity of bradykinesia and tremor (Table 1). Participants then completed a set of brief cognitive and psychomotor tasks and a brief speech recording battery. Finally, participants completed a brief instrumented motor exam consisting of a 1-minute walking task, a 30 s balance task, and 20 s resting and postural tremor tasks.
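A study team receiving these at-home sessions might screen each upload against the constraints described above: six PRO items rated 1–7 and completion within the one-hour window. The sketch below is a hypothetical quality check, not part of the Clinical Ink platform; the item keys, the `MobileSession` dataclass, and the rule set are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta

# Hypothetical keys for the six PRO items described in the text.
PRO_ITEMS = ("mood", "fatigue", "sleepiness", "cognition", "bradykinesia", "tremor")
LIKERT_MIN, LIKERT_MAX = 1, 7
MAX_DURATION = timedelta(hours=1)  # participants were allowed up to an hour


@dataclass
class MobileSession:
    started: datetime
    finished: datetime
    pro: dict = field(default_factory=dict)  # item key -> Likert response


def session_problems(s: MobileSession) -> list:
    """Return human-readable problems; an empty list means the session passes these checks."""
    problems = []
    missing = [item for item in PRO_ITEMS if item not in s.pro]
    if missing:
        problems.append("missing PRO items: " + ", ".join(missing))
    for item in PRO_ITEMS:
        value = s.pro.get(item)
        if value is not None and not LIKERT_MIN <= value <= LIKERT_MAX:
            problems.append(f"{item} out of range: {value}")
    if s.finished < s.started:
        problems.append("finish time precedes start time")
    elif s.finished - s.started > MAX_DURATION:
        problems.append("exceeded the one-hour completion window")
    return problems


if __name__ == "__main__":
    start = datetime(2021, 3, 1, 9, 0)
    ok = MobileSession(start, start + timedelta(minutes=18), {k: 4 for k in PRO_ITEMS})
    print(session_problems(ok))  # []
```

Returning a list of messages rather than a single pass/fail flag keeps the reason for each rejection visible, which is useful when following up with participants about incomplete sessions.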

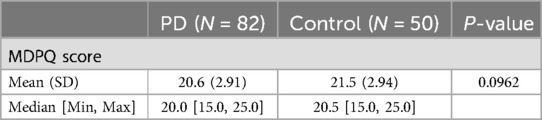

Table 1. Results of the mobile device proficiency questionnaire (MDPQ) in Parkinson's disease participants and controls at baseline.

2.6 Mobile device proficiency questionnaire

At baseline, participants completed an abridged version of the MDPQ consisting of the Mobile Device Basics subscale, which is most relevant to the tools used in the current study. This subscale comprises nine questions asking participants to rate their ability to perform tasks on a smartphone or tablet on a 1–5 Likert scale (1 = never tried, 2 = not at all, 3 = not very easily, 4 = somewhat easily, 5 = very easily). The MDPQ was available for all participants at baseline.

2.7 Wearability and comfort questionnaire

At baseline, Months 1 and 6, and the end of the study (Month 12), participants completed a questionnaire with quantitative questions about using the digital technologies both in the clinic and at home (Supplemental 1). Questions on comfort, ease of use, and burden used either a Likert or a categorical (Yes/No/Neutral) scale. The Likert scale ranged from 1 to 5 for both comfort of the devices (1 = Very Acceptable, 2 = Acceptable, 3 = Neutral, 4 = Unacceptable, 5 = Very Unacceptable) and ease of use (1 = Very Easy, 2 = Easy, 3 = Neutral, 4 = Difficult, 5 = Very Difficult). At the end of the study, participants also completed an exit questionnaire with qualitative questions about use of the devices and non-device questions about study length and compensation.

At baseline, the Wearability and Comfort Questionnaire was available for 80 participants with PD and 49 controls; however, some questions were left blank, which is reflected in our results. At Month 1, it was available for 72 participants with PD and 40 controls, and at Month 12, for 80 participants with PD and 46 controls.
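The category percentages reported in the Results can be tabulated per group and visit with a few lines of code. The sketch below assumes that blank items are excluded from the denominator (the text notes that some questions were left blank but does not state how percentages handled them) and uses the comfort coding given above; the function name and example responses are hypothetical.

```python
from collections import Counter

# Comfort coding from the questionnaire: lower codes are more favorable.
COMFORT_LABELS = {
    1: "Very Acceptable",
    2: "Acceptable",
    3: "Neutral",
    4: "Unacceptable",
    5: "Very Unacceptable",
}


def category_percentages(responses, labels=COMFORT_LABELS):
    """Percent of answered items in each category; None marks a blank item.

    Assumption: blanks are dropped from the denominator rather than counted.
    """
    answered = [r for r in responses if r is not None]
    unknown = set(answered) - set(labels)
    if unknown:
        raise ValueError(f"unexpected response codes: {sorted(unknown)}")
    if not answered:
        return {label: 0.0 for label in labels.values()}
    counts = Counter(answered)
    return {label: 100.0 * counts[code] / len(answered) for code, label in labels.items()}


if __name__ == "__main__":
    # Hypothetical responses for one device at one visit (None = left blank).
    opal_comfort = [1, 1, 2, None, 1, 3]
    for label, pct in category_percentages(opal_comfort).items():
        print(f"{label}: {pct:.1f}%")
```

In the example, three of the five answered items are coded 1, so "Very Acceptable" is 60.0%; counting the blank in the denominator instead would give 50.0%, which is why the handling of missing responses is worth stating explicitly.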

2.8 Statistical analysis

Descriptive statistics for the MDPQ subscale total score and for Wearability and Comfort Questionnaire responses at Baseline, Month 1, and Month 12 were reported for PD participants and controls. A two-tailed t-test was used to compare MDPQ scores between PD participants and controls, with p < 0.05 considered statistically significant. All analyses were performed in R Statistical Software (v4.1.2; R Core Team 2021).
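The analysis itself was run in R. As an independent check on the reported comparison, the sketch below recomputes a two-sample t-test from the published summary statistics using only the Python standard library. The text does not say whether a pooled-variance (Student) or Welch test was used, so both are shown; the two-sided p-value comes from the regularized incomplete beta function evaluated by the standard continued-fraction (Lentz) method, and all function names are illustrative.

```python
import math


def _betacf(a: float, b: float, x: float, max_iter: int = 300, eps: float = 3e-14) -> float:
    """Continued fraction for the incomplete beta function (modified Lentz method)."""
    tiny = 1e-300

    def guard(v: float) -> float:
        # Avoid division by zero in the Lentz recurrences.
        return v if abs(v) > tiny else tiny

    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 / guard(1.0 - qab * x / qap)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even term of the continued fraction.
        aa = m * (b - m) * x / ((qam + m2) * (a + m2))
        d = 1.0 / guard(1.0 + aa * d)
        c = guard(1.0 + aa / c)
        h *= d * c
        # Odd term of the continued fraction.
        aa = -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))
        d = 1.0 / guard(1.0 + aa * d)
        c = guard(1.0 + aa / c)
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h


def reg_inc_beta(a: float, b: float, x: float) -> float:
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    log_front = (math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                 + a * math.log(x) + b * math.log1p(-x))
    front = math.exp(log_front)
    # Use the symmetry I_x(a, b) = 1 - I_{1-x}(b, a) where the fraction converges faster.
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _betacf(a, b, x) / a
    return 1.0 - front * _betacf(b, a, 1.0 - x) / b


def t_two_sided_p(t: float, df: float) -> float:
    """Two-sided p-value for Student's t: P(|T| > |t|) = I_{df/(df+t^2)}(df/2, 1/2)."""
    return reg_inc_beta(df / 2.0, 0.5, df / (df + t * t))


def t_test_from_summary(m1, s1, n1, m2, s2, n2, equal_var=True):
    """Two-sample t-test from means, SDs, and sample sizes; returns (t, df, p)."""
    v1, v2 = s1 ** 2 / n1, s2 ** 2 / n2
    if equal_var:
        df = n1 + n2 - 2
        pooled = ((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / df
        se = math.sqrt(pooled * (1.0 / n1 + 1.0 / n2))
    else:
        se = math.sqrt(v1 + v2)
        # Welch-Satterthwaite degrees of freedom.
        df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    t = (m1 - m2) / se
    return t, df, t_two_sided_p(t, df)


if __name__ == "__main__":
    # Published baseline summaries: PD 20.6 (2.91), n = 82; controls 21.5 (2.94), n = 50.
    for equal_var in (True, False):
        t, df, p = t_test_from_summary(20.6, 2.91, 82, 21.5, 2.94, 50, equal_var=equal_var)
        name = "Student (pooled)" if equal_var else "Welch"
        print(f"{name}: t = {t:.3f}, df = {df:.1f}, p = {p:.3f}")
```

Because the inputs are rounded summaries, the recomputed p-value should land close to, but need not exactly match, the reported p = 0.10. Note that R's `t.test()` defaults to Welch's test unless `var.equal = TRUE` is set, so the choice of variant matters when reproducing published results.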

3 Results

3.1 Mobile device proficiency questionnaire

Table 2 summarizes the results of the MDPQ at baseline. The mean [SD] score in PD participants [20.6 (2.91)] was numerically lower than in controls [21.5 (2.94)], but the difference was not statistically significant (p = 0.10).

Table 2. Description and location of assessments conducted with the digital devices used in WATCH-PDa.

3.2 Wearability and comfort questionnaire

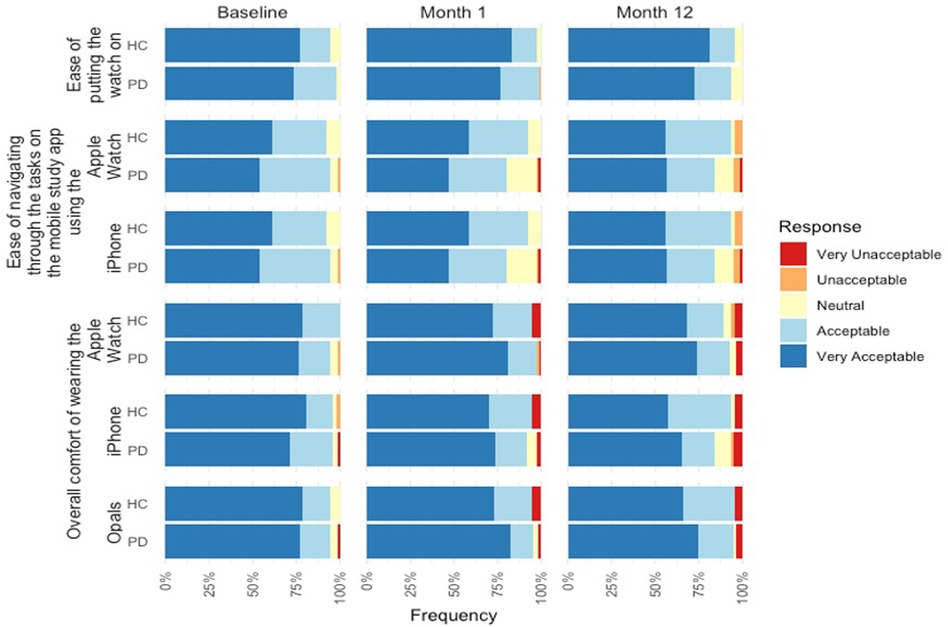

Figure 2 summarizes the results of the Wearability and Comfort Questionnaire at Baseline, Month 1, and Month 12.

3.3 Baseline

At baseline, most PD participants (75.9%) rated the comfort of wearing the Opals as very acceptable. Feedback was also positive for the mobile phone and smartwatch, with most participants rating their comfort as very acceptable (71.2% and 78.8%, respectively). Similarly, controls reported very acceptable comfort for the Opals (77.6%), mobile phone (79.6%), and smartwatch (77.6%).

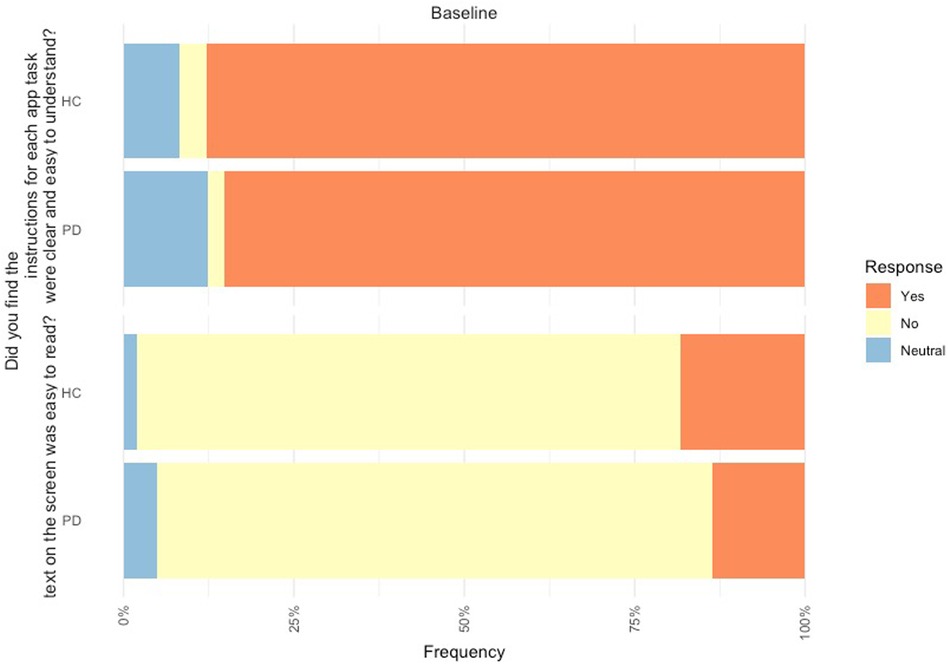

Regarding the mobile assessment, 85.2% of PD participants found the instructions clear and easy to understand, but 81.5% found the text was not easy to read. Likewise, 87.8% of controls found the instructions clear and easy to understand, and 79.6% found the text was not easy to read (Figure 3).

Figure 3. Feedback on the mobile device platform at baseline from PD participants (n = 80) and controls (n = 49).

3.4 Month 1

At Month 1, most PD participants (82.1%) rated the comfort of wearing the Opals as very acceptable. Feedback was also positive for the mobile phone and smartwatch, with most participants rating their comfort as very acceptable (74.0% and 80.8%, respectively), including the acceptability of putting on the smartwatch at home (76.7%). Similarly, controls reported very acceptable comfort for the Opals (73.0%), mobile phone (70.0%), and smartwatch (72.5%), and 83.0% rated the ease of putting on the smartwatch as very acceptable.

3.5 Month 12

At Month 12, most PD participants (74.4%) rated the comfort of wearing the Opals as very acceptable. Feedback was also positive for the mobile phone and smartwatch, with most participants rating their comfort as very acceptable (65.0% and 73.8%, respectively), including the acceptability of putting on the smartwatch at home (72.5%). Similarly, controls reported very acceptable comfort for the Opals (66.0%), mobile phone (57.4%), and smartwatch (68.1%), and 83.0% rated the ease of putting on the smartwatch as very acceptable.

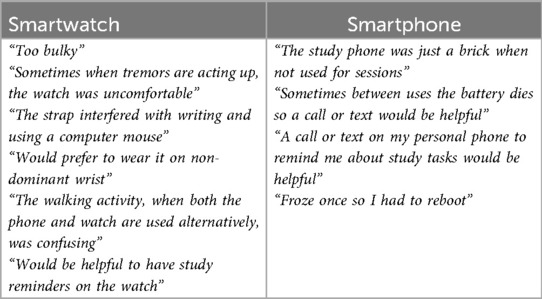

Highlights of the qualitative feedback on the devices at Month 12 were grouped and are shown in Table 3. Participants highlighted the need for a better watch strap, a wish for more notifications on the mobile device to complete the battery, and frustration with technical issues.

Table 3. Qualitative feedback from participants on use of smartwatch and smartphone at-home in WATCH-PD.

4 Discussion

This work aimed to gather participant perceptions of the DHTs used in the WATCH-PD study. This is the first study to evaluate feedback on, and impressions of, using common DHTs in both controls and people with early PD in the context of a longitudinal, observational study. We show that, in an early PD population, experience and comfort with technology did not differ from those of neurologically healthy older adults. Furthermore, participants held an overall favorable view of the usability and comfort of the digital technologies deployed in WATCH-PD, both in the clinic and at home.

Results from the MDPQ Mobile Device Basics subscale at baseline showed no significant difference in device proficiency between PD participants and controls. Responses to the Wearability and Comfort Questionnaire showed generally positive views on the comfort and use of the digital technologies in this study. Throughout the 12-month study, most participants in both cohorts rated wearing the Opal sensors, mobile phone, and smartwatch as either very acceptable or acceptable in terms of comfort. The ease of putting on the Apple Watch band was also rated favorably throughout the study, which was encouraging given that many PD participants presented with tremor-dominant symptoms at baseline.

The study has limitations. The baseline MDPQ scores, combined with the highly positive results on the Wearability and Comfort Questionnaire, may suggest that recruitment was biased toward people who were already very comfortable with technology. The cohort was also homogeneous, potentially limiting the generalizability of our findings. We therefore recommend that future work collect similar measures in more diverse cohorts, potentially through a fully remote study design that widens recruitment to a broader range of individuals. Moreover, some limitations were outside our control, including the maximum screen size of the mobile device.

5 Conclusions

The current research in early PD, along with the extant literature on DHT usability and acceptability more generally, provides a foundation for understanding the acceptability of digital tools in early PD clinical trials. Our work offers insights into how older individuals, especially those with a movement disorder, adapt to using digital technologies in clinical trials. A key to overcoming potential challenges with DHTs in older participants with neurological disorders is to incorporate the patient voice by gathering regular formal and informal feedback throughout study design and conduct. Furthermore, co-design with end users offers an opportunity to collect valuable feedback and to create a collaborative experience between researchers and patients.

Data availability statement

The datasets presented in this article are not readily available because the data are available to members of the Critical Path for Parkinson's Consortium 3DT Initiative Stage 2. Those who are not part of 3DT Stage 2 may submit a proposal to the WATCH-PD Steering Committee (via the corresponding author) for de-identified datasets. Requests to access the datasets should be directed to Jamie.Adams@chet.rochester.edu.

Ethics statement

The studies involving humans were approved by WCG Institutional Review Board. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

TK: Writing – original draft, Writing – review & editing. RL: Writing – review & editing. JA: Writing – review & editing. RD: Writing – review & editing. MK: Writing – review & editing. JS: Writing – review & editing. DA: Writing – review & editing. FH: Writing – review & editing. DS: Writing – review & editing. JC: Writing – review & editing.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. Funding was provided by Takeda, Biogen, and Critical Path Institute.

Acknowledgments

We thank the members of the Critical Path for Parkinson's Digital Drug Development Tool (3DT) initiative for generously funding and collaborating to support the 3DT project, and a talented group of scientific advisors who have supported this effort. Members include Biogen, Takeda, AbbVie, Novartis, Merck, UCB, GSK, Roche, The Michael J. Fox Foundation, and Parkinson's UK.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Sidoti O. Mobile Fact Sheet. Washington, DC: Pew Research Center (2024). Available online at: https://www.pewresearch.org/internet/fact-sheet/mobile/

2. Vogels E. About One-in-Five Americans Use a Smart Watch or Fitness Tracker. Washington, DC: Pew Research Center (2020). Available online at: https://www.pewresearch.org/short-reads/2020/01/09/about-one-in-five-americans-use-a-smart-watch-or-fitness-tracker/

3. Steinhubl SR, Wolff-Hughes DL, Nilsen W, Iturriaga E, Califf RM. Digital clinical trials: creating a vision for the future. NPJ Digit Med. (2019) 2:126. doi: 10.1038/s41746-019-0203-0

4. Adams JL, Kangarloo T, Tracey B, O’Donnell P, Volfson D, Latzman RD, et al. Using a smartwatch and smartphone to assess early Parkinson’s disease in the WATCH-PD study. NPJ Parkinson’s Dis. (2023) 9(1):64. doi: 10.1038/s41531-023-00497-x

5. Stroud C, Onnela J-P, Manji H. Harnessing digital technology to predict, diagnose, monitor, and develop treatments for brain disorders. NPJ Digit Med. (2019) 2:44. doi: 10.1038/s41746-019-0123-z

6. Torous J, Kiang MV, Lorme J, Onnela J-P. New tools for new research in psychiatry: a scalable and customizable platform to empower data driven smartphone research. JMIR Ment Health. (2016) 3:e16. doi: 10.2196/mental.5165

7. Stephenson D, Badawy R, Mathur S, Tome M, Rochester L. Digital progression biomarkers as novel endpoints in clinical trials: a multistakeholder perspective. J Parkinson’s Dis. (2021) 11:S103. doi: 10.3233/jpd-202428

8. Czech MD, Badley D, Yang L, Shen J, Crouthamel M, Kangarloo T, et al. Improved measurement of disease progression in people living with early Parkinson’s disease using digital health technologies. Commun Med. (2024) 4(1):49. doi: 10.1038/s43856-024-00481-3

9. Dodge HH, Zhu J, Mattek NC, Austin D, Kornfeld J, Kaye JA. Use of high-frequency in-home monitoring data may reduce sample sizes needed in clinical trials. PLoS One. (2015) 10:e0138095. doi: 10.1371/journal.pone.0138095

10. Brouwer WD, Patel CJ, Manrai AK, Rodriguez-Chavez IR, Shah NR. Empowering clinical research in a decentralized world. NPJ Digit Med. (2021) 4(1):102. doi: 10.1038/s41746-021-00473-w

11. Chen C, Ding S, Wang J. Digital health for aging populations. Nat Med. (2023) 29(7):1623–30. doi: 10.1038/s41591-023-02391-8

12. Gitlow L. Technology use by older adults and barriers to using technology. Phys Occup Ther Geriatr. (2014) 32(3):271–80. doi: 10.3109/02703181.2014.946640

13. Moxley J, Sharit J, Czaja SJ. The factors influencing older adults’ decisions surrounding adoption of technology: quantitative experimental study. JMIR Aging. (2022) 5:e39890. doi: 10.2196/39890

14. Samii A, Nutt JG, Ransom BR. Parkinson's disease. Lancet. (2004) 363(9423):1783–93. doi: 10.1016/S0140-6736(04)16305-8

15. Balestrino R, Schapira AHV. Parkinson disease. Eur J Neurol. (2020) 27(1):27–42. doi: 10.1111/ene.14108

16. Port RJ, Rumsby M, Brown G, Harrison IF, Amjad A, Bale CJ. People with Parkinson's disease: what symptoms do they most want to improve and how does this change with disease duration? J Parkinsons Dis. (2021) 11(2):715–24. doi: 10.3233/JPD-202346

17. Goetz CG, Tilley BC, Shaftman SR, Stebbins GT, Fahn S, Martinez-Martin P, et al. Movement disorder society-sponsored revision of the unified Parkinson’s disease rating scale (MDS-UPDRS): scale presentation and clinimetric testing results. Mov Disord. (2008) 23(15):2129–70. doi: 10.1002/mds.22340

18. Dorsey ER, Papapetropoulos S, Xiong M, Kieburtz K. The first frontier: digital biomarkers for neurodegenerative disorders. Digit Biomark. (2017) 1(1):6–13. doi: 10.1159/000477383

19. Simuni T, Siderowf A, Lasch S, Coffey CS, Caspell-Garcia C, Jennings D, et al. Longitudinal change of clinical and biological measures in early Parkinson’s disease: Parkinson’s progression markers initiative cohort. Mov Disord. (2018) 33:771–82. doi: 10.1002/mds.27361

20. Vaswani PA, Tropea TF, Dahodwala N. Overcoming barriers to Parkinson disease trial participation: increasing diversity and novel designs for recruitment and retention. Neurotherapeutics. (2020) 17:1724–35. doi: 10.1007/s13311-020-00960-0

21. Roque NA, Boot WR. A new tool for assessing mobile device proficiency in older adults: the mobile device proficiency questionnaire. J Appl Gerontol. (2018) 37:131–56. doi: 10.1177/0733464816642582

Keywords: digital tool, patient feedback, Parkinson, wearability, wearable sensors

Citation: Kangarloo T, Latzman RD, Adams JL, Dorsey R, Kostrzebski M, Severson J, Anderson D, Horak F, Stephenson D and Cosman J (2024) Acceptability of digital health technologies in early Parkinson's disease: lessons from WATCH-PD. Front. Digit. Health 6:1435693. doi: 10.3389/fdgth.2024.1435693

Received: 20 May 2024; Accepted: 2 August 2024;

Published: 26 August 2024.

Edited by:

Pradeep Nair, Central University of Himachal Pradesh, India

Reviewed by:

Jessica De Souza, University of California, San Diego, United States

Kristin Hannesdottir, Novartis, United States

Copyright: © 2024 Kangarloo, Latzman, Adams, Dorsey, Kostrzebski, Severson, Anderson, Horak, Stephenson and Cosman. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: T. Kangarloo, tairmae.kangarloo@takeda.com
