- 1 Department of Psychology, Health and Technology, Centre for eHealth and Wellbeing Research, TechMed Centre, Faculty of Behavioural, Management and Social Sciences, University of Twente, Enschede, Netherlands

- 2 Department of Health Technology Implementation, TechMed Centre, Faculty of Science & Technology, University of Twente, Enschede, Netherlands

For successful health technology innovation and implementation, it is key to understand at an early phase what the problem is and whether a proposed innovation is the best way to solve it. This review performed an initial exploration of published tools that support innovators in academic research and early-stage development with awareness and guidance along the end-to-end process of development, evaluation and implementation of health technology innovations. Tools were identified from scientific literature as well as grey literature by non-systematic searches in public research databases and search engines, and based on expert referral. A total of 14 tools were included. Tools were classified as either readiness level tool (n = 6), questionnaire/checklist tool (n = 5) or guidance tool (n = 3). A qualitative analysis of the tools identified 5 key domains, 5 innovation phases and 3 implementation principles. All tools were mapped for (partially) addressing the identified domains, phases, and principles. The present review provides awareness of available tools and of important aspects of health technology innovation and implementation (vs. non-technological or non-health related technological innovations). Considerations for tool selection include, for example, the purpose of use (awareness or guidance) and the type of health technology innovation. Considerations for novel tool development include the specific challenges in academic and early-stage development settings, the translation of implementation to early innovation phases, and the importance of multi-disciplinary strategic decision-making. A remaining attention point for future studies is the validation and effectiveness of (self-assessment) tools, especially in the context of support preferences and available support alternatives.

1 Introduction

Health technology innovations can play an important role in addressing our societies' health(care) problems. However, this does require that innovations actually get developed, commercialized and implemented in (clinical) practice. In academic and early-stage development settings, it can be a challenge to oversee the complete end-to-end innovation process,1 to understand what is needed to get an innovation implemented in (clinical) practice, and to decide on the activities needed in projects to increase the chances of successful innovation with added value for society.

1.1 Implementation science perspective

Health technology innovations are not just (medical) devices2 or technologies “ready for deployment” (1, 2). During development, evaluation and implementation, health technologies can become innovations in (clinical) practice, for example by creating an infrastructure for the way of working, for new services and concepts on how to change and improve healthcare, how to align work practices with technologies, how to prepare healthcare workers to use technologies, how to engage stakeholders to invest in maintenance, and how to assess the impact on healthcare (3). Within this context, implementation can be described as a process of several planned and guided activities to ultimately launch, introduce and maintain technologies in a certain context to innovate or improve healthcare (3, 4). These activities may, in addition, deliver the evidence for adopting and upscaling a technology in healthcare practices. Importantly, implementation of a certain health technology innovation may require a re-evaluation or even de-implementation of other existing health technology innovations or practices (5). Indeed, the implementation of health technology innovations is widely acknowledged as a highly complex process involving a variety of factors on multiple levels, including different stakeholders and perspectives that play a role during different innovation phases over an often-lengthy timeframe (i.e., years) (6–8). Numerous models and frameworks have evolved that aim to understand the processes and driving factors involved in innovation and implementation, and to predict outcomes, often academically framed as “implementation science” or “early health technology assessment” (3, 6, 9–11). All emphasize that implementation should be iteratively intertwined with development, involving multi-disciplinary stakeholders at an early stage, and that clear governance, i.e., leadership, vision, policy and accountability, including project/innovation management, should be in place (3). However, in practice these frameworks often remain underused (9, 11, 12).

1.2 Failed innovation perspective

And indeed, many health technologies fail, with failure rates being reported as high as 95% (NLC—Health Ventures) (13, 14). Analyses of failed innovations have identified several critical phases in the end-to-end innovation process, the so-called “valleys of death” (13, 15, 16). A first “valley of death” occurs during the early stages of the innovation process at the transition between original scientific research and the commercialization of associated technologies (15). An important hampering factor specifically in academic settings is that the development of technologies is often primarily funded and organized for the purpose of advancing research and academic impact (15). This has important implications. For example, the innovation process often stops after the grant money has been spent, the papers have been published and the PhD student has graduated. For medical devices in particular, the complex and evolving legal and regulatory landscape both prior to as well as during commercialization, may involve more extensive clinical validation activities as well as complex, costly and lengthy market access and maintenance procedures (14, 16). This requires additional funding as compared with non-medical (health) technology innovations, but is often not covered as part of regular research funding schemes. Together, this leads to many potential innovations not reaching commercialization.

A second “valley of death” occurs at the transition between commercialization and the actual roll-out, i.e., scaling, spreading and sustained use of technology. Notably, a large portion of technology start-ups fail within the first 2–3 years after commercialization. Although there is rarely one reason for a single start-up's failure, one of the most frequently reported reasons is the lack of market (customer) need (13). This suggests that the actual challenges experienced by people who are impacted by the introduction of a health technology innovation, including changes in workflow and responsibilities, have been insufficiently assessed in previous innovation phases. Indeed, understanding the actual problem or need is closely related to understanding the complexity of implementation. The current state/best practice is constantly evolving, and re-inventing the (wrong) wheel should be avoided. In addition, health technologies that do not have added value for society should not be pursued up to commercialization and roll-out, especially given the constraints on the current healthcare system in terms of staff shortage, increased healthcare demand, and increased costs (17). In return, society should strive for financial systems that support the development of viable innovations that also have societal value.

1.3 Supporting academic research and early-stage development

There are many lessons to be learned from implementation science and from failed innovation: early, iterative, multi-dimensional and multi-disciplinary assessment of innovation and implementation with clear governance is needed to create health technology innovations that can have added value for society, and that have a higher chance of surviving both valleys of death. However, in academic research and early-stage development settings, many organizations struggle with the question of how to best support their innovators to increase the chances of developing, evaluating and implementing health technology innovations that create impact beyond academia (7, 8, 18). Innovators' key support needs include awareness of the end-to-end innovation process and of implementation barriers/facilitators, and practical guidance at the right moment in time, e.g., which stakeholders to involve when and how, and which methods to employ (7, 19, 20).

At most academic institutes, generic research support is available to support health technology innovators in different phases of academic research and early-stage development. This includes, for example, project management and funding support. In addition, a “Knowledge Transfer Office” can support researchers with patent applications, legal advice, contracts and spin-off companies. However, innovation and implementation challenges related to the health/medical domain may require additional support that goes beyond knowledge transfer, for example support with setting up and maintaining clinical or industry collaborations, organizing clinical studies (including ethical approval), and quality and regulatory affairs (medical device classification and documentation requirements).

In addition, innovation communities have been working towards the integration or extension of models and frameworks for innovation and implementation, mostly used by experts, with practical tools for use by innovators themselves. These tools aim to support the planning, funding, and execution of health technology development in the context of the end-to-end innovation process and with the ultimate aim to implement health technology innovations in routine practice and to create socioeconomic impact (7, 21–23). Notably, some of these process support tools have become a requirement during the grant application and/or ethical approval process (1), part of healthcare insurer evaluations (24), and/or are relative requirements for the valorization of research work.

1.4 Considerations for tool development and selection

The present review is a follow-up of previous work from our group. Specifically, a previous evaluation of health technology implementation frameworks, together with researchers and support staff, resulted in the identification of 5 implementation domains that could be useful for support tool development or selection, i.e., User, organization and system requirements; Legal requirements and ethical considerations; Effectiveness; Economic aspects; and Business plan (3, 25). As an initial validation step, the potential usefulness of these 5 domains as a support tool was tested in a retrospective case study (25). A 5-point Likert scale was used to rate the implementation “maturity”3 for each domain and the results were visualized in a spider-plot. The domains, scoring, and spider-plot were found to be useful in systematically assessing, rating, and visualizing implementation maturity and for identifying and discussing differences and similarities between different innovations and healthcare organizations. However, the scoring was performed by implementation experts based on subjective judgement and the study was retrospective in nature. As such, additional validation of the domains and the scoring methodology would be required prior to continuing development efforts towards a support tool for use by innovators or project/innovation managers.
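To make the scoring and visualization step concrete, the following minimal sketch shows how five Likert ratings could be turned into the polygon of a spider-plot. It is an illustration only: the function name `spider_vertices`, the axis ordering, and the example ratings are assumptions and are not taken from the case study (25).

```python
import math

# The five implementation domains named in the text.
DOMAINS = [
    "User, organization and system requirements",
    "Legal requirements and ethical considerations",
    "Effectiveness",
    "Economic aspects",
    "Business plan",
]


def spider_vertices(scores):
    """Return (x, y) polygon vertices for a spider-plot of 1-5 Likert scores.

    Hypothetical helper: one axis per domain, first axis pointing up,
    remaining axes placed clockwise at equal angles.
    """
    if len(scores) != len(DOMAINS):
        raise ValueError("one score per domain is required")
    for s in scores:
        if not 1 <= s <= 5:
            raise ValueError("Likert scores must lie between 1 and 5")
    step = 2 * math.pi / len(DOMAINS)
    return [
        (s * math.cos(math.pi / 2 - i * step),
         s * math.sin(math.pi / 2 - i * step))
        for i, s in enumerate(scores)
    ]


# Hypothetical ratings for a single innovation (illustrative only).
example_scores = [4, 2, 3, 1, 2]
vertices = spider_vertices(example_scores)
```

The resulting vertices can be passed to any plotting library; the distance of each vertex from the origin equals the rating for that domain, which is what makes differences between innovations visible at a glance.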

In summary, there seem to be ample frameworks and tools available that could be useful in supporting the development, evaluation and implementation of health technology innovations, and this has also been the focus of several recent publications (9, 11). However, regarding the relevance of tools for supporting innovators in academic research and early-stage development with awareness and guidance, several aspects remain to be investigated. These include the number, overlap and congruence of relevant implementation domains and innovation phases, and the identification of considerations, e.g., gaps, strengths and limitations, for tool selection and/or novel tool development.

1.5 Objective

This review performed an initial exploration of different types of published tools that aim to support innovators (and project/innovation managers) in academic research and early-stage development settings with awareness and guidance along the end-to-end process of health technology innovation. Specifically, the aim was to identify and confirm key implementation domains and innovation phases by using a qualitative approach, as well as to discuss methodological considerations for each (type of) tool or method in order to inform tool selection and novel tool development. As such, this review provides novel directions for supporting innovators in pursuing health technology innovation and implementation as an integral part of academic research and early-stage development.

2 Methods

Since this concerned an initial exploration, a pragmatic non-exhaustive approach was employed. Potentially relevant tools were identified from scientific literature as well as in grey literature by non-systematic searches in public research databases and search engines, and based on expert referral. Search terms included combinations of keywords such as “implementation”, “innovation”, “tool”, “readiness”, “maturity”, “checklist”, “questionnaire”, “guidance”, and “roadmap”. Health technology innovation and implementation science experts from our institution were consulted to verify whether, based on their expertise, additional relevant tools should be considered. Tools were identified between March and August 2023.

2.1 Inclusion

Based on a preliminary evaluation, 3 types of tools were identified for potential inclusion: (1) readiness levels, (2) questionnaires/checklists, and (3) guidance tools. Tools were considered in scope for the present review when fulfilling the following criteria:

• To be used during the development, assessment and/or evaluation of health technology innovations

• Includes key domains/milestones relevant for the end-to-end development, evaluation and implementation of health technology innovations

• (Also) targeting academic researchers/innovators

• Peer-reviewed journal paper or published grey literature from governmental agencies, non-academic organizations or innovation communities (free access only)

• Published between 2018 and 2023 and/or mandatory use in academic research (e.g., Technology Readiness Levels)

• Published in the English or Dutch language

2.2 Exclusion

The following exclusion criteria were employed:

• Tools associated with frameworks primarily focusing on standardizing implementation as a science, e.g., the Consolidated Framework for Implementation Research (27)

• Frameworks focusing on the implementation/dissemination of research findings instead of innovations, e.g., RE-AIM (28)

• Grant-related impact tools, e.g., Impact Helper (29) or Impact Plan Approach (30)

• Tools that focus on estimating the chance of success of an innovation or project, e.g., funding success or funding potential

• Tools with a commercial purpose

2.3 Qualitative analysis

A step-wise approach was employed. First an overview of included tools was created. Subsequently, the overview and source documents were qualitatively analyzed, as described below, in order to identify key domains and innovation phases.

2.3.1 Overview of included tools

The following items were summarized for each tool based on information sourced from the actual publication/reference: type, tool name + reference(s) + extensions (e.g., user guidance, toolkit), purpose, target user(s), domains, innovation phases, reported underlying theoretical frameworks, and other relevant findings such as methodological considerations, gaps, strengths and limitations. For each of the included tools, we summarized (additional) explicit information on tool use, validation, effectiveness, and versatility across different innovation phases in the original source document and in other documents by screening citations (for academic sources) and/or by performing a Google search “[(partial) Tool name or title of the source document]” AND (validation OR validity).
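The search string pattern described above can be sketched as a small helper. This is only an illustration of the stated pattern; the function name `validation_query` is an assumption, and the two tool names are used purely as example inputs.

```python
def validation_query(tool_name):
    """Build a search string of the form "<tool name>" AND (validation OR validity).

    Hypothetical helper: it only formats the query text and does not
    contact any search engine.
    """
    name = tool_name.strip()
    if not name:
        raise ValueError("tool name must not be empty")
    return f'"{name}" AND (validation OR validity)'


# Example inputs; any (partial) tool name or source document title can be used.
queries = [validation_query(n) for n in ["NASSS", "CeHReS roadmap"]]
```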

2.3.2 Identification of key domains and innovation phases

The identification of key domains and innovation phases largely followed the approach for inductive thematic analysis, including dataset familiarization, coding/grouping (generating themes), and refining (31). For the purpose of this paper, all reported classifications, e.g., “themes”, “topics”, “risks”, or “barriers” were termed “domains”. As such, “domains” cover the combined content of the reported themes, topics and risks. The previous report (3) and all newly identified tools (columns) and reported domains (rows) were arranged on a digital sheet (Excel). All reported domains were compared for overlap and were grouped accordingly. Subsequently, this dataset was used to rephrase or extend the 5 domains from the previous report (3) into key domains.

Innovation phases concerned milestones, the timing and sequence of events, or a stepwise approach. All newly identified tools that reported innovation phases (columns) and the actual reported innovation phases (rows) were arranged on a digital sheet, were compared for overlap and were grouped accordingly. Subsequently, the number of different phases was reduced to a relevant minimum while still covering the end-to-end innovation process.

Reported domains or innovation phases that could not be clustered into an overarching domain or innovation phase were identified as “implementation principles” and were compared for overlap and grouped. Subsequently the number of implementation principles was reduced to a relevant minimum while still covering all principles.

2.3.3 Mapping of tools

Lastly, all tools were mapped for coverage onto the newly framed key domains, innovation phases and implementation principles. For tools that did not report innovation phases, the mapping was done based on the described purpose of the tool.
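The mapping step can be thought of as a coverage matrix of tools against key domains. The sketch below illustrates that idea under stated assumptions: the tool names, the shortened domain labels, and the assignments are placeholders and do not reproduce the actual mapping reported in this review.

```python
from collections import Counter

# Hypothetical mapping: tool -> set of key domains it (partially) addresses.
# Names and assignments are placeholders, not the content of Table 2.
coverage = {
    "Tool A": {"stakeholders", "legal/safety", "evidence"},
    "Tool B": {"legal/safety"},
    "Tool C": {"stakeholders", "legal/safety", "economics", "business"},
}


def tools_per_domain(mapping):
    """Count how many tools (partially) address each domain."""
    counts = Counter()
    for domains in mapping.values():
        counts.update(domains)
    return dict(counts)


def tools_covering_at_least(mapping, k):
    """Return the sorted names of tools that address at least k domains."""
    return sorted(tool for tool, domains in mapping.items() if len(domains) >= k)


per_domain = tools_per_domain(coverage)
broad_tools = tools_covering_at_least(coverage, 3)
```

The same structure could be reused for innovation phases and implementation principles by swapping the set contents.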

3 Results

3.1 Overview of included tools

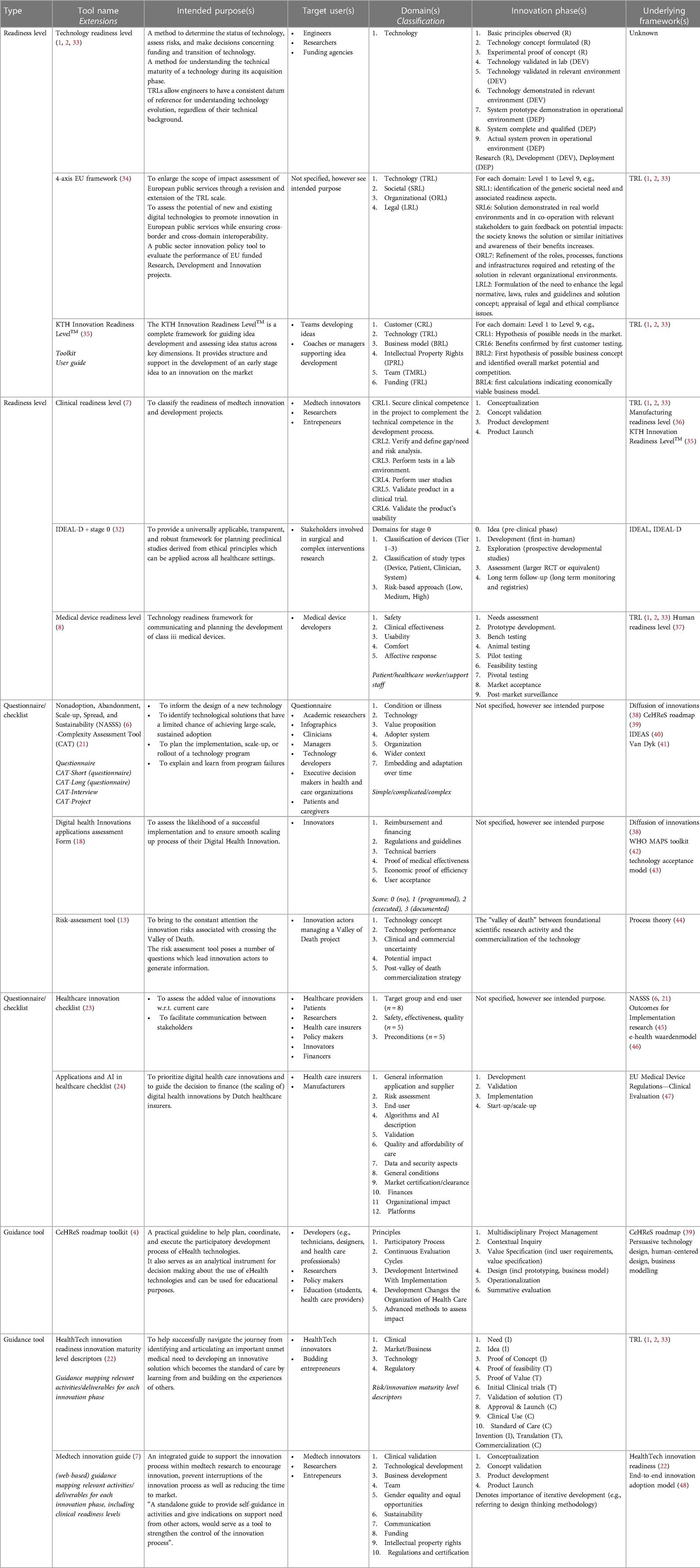

A total of 14 tools were included for qualitative analysis in this review. The majority of tools were identified from the non-systematic search (n = 11); 3 tools were identified based on expert referral (18, 23, 32). Relevant details on each included tool are presented in Table 1. Tools were classified as either readiness level tool (n = 6) (7, 8, 32–35), questionnaire/checklist tool (n = 5) (6, 15, 18, 21, 23, 24) or guidance tool (n = 3) (4, 7, 22).

For all tools, the intended purpose was made explicit in the source documentation, and the target users were clarified for all but one tool (34), see Table 1 (Intended Purpose). Tools were intended to support the development, assessment and/or evaluation of health technology innovations (4, 6–8, 15, 18, 21, 22, 24, 32), of technology innovations in general (including health technology innovations) (33–35), or of healthcare innovations in general (including health technology innovations) (23). Notably, some tools were developed for specific health technology innovations, including digital or eHealth innovations (4, 18, 24), applications and artificial intelligence (24), or medical devices (7, 8, 24, 32). Several tools were developed for localized use because of reference to national rules and regulations (18), or because of the tool language (23, 24). Importantly, all tools can be used without restrictions in academic settings or during early-stage development. However, beyond academic settings, the use of the KTH Innovation Readiness Level™ can be associated with trademark restrictions (35).

A wide variety of target users have been reported for the different tools including (teams or coaches of) innovators, engineers, researchers, entrepreneurs, medical device developers, managers, healthcare providers, patients, healthcare insurers, policy makers, funding agencies, and other financers, see Table 1—Target user(s).

All tools included the description of one or more domains, and innovation phases were specified for most tools, except for the Nonadoption, Abandonment, Scale-up, Spread, and Sustainability (NASSS) (6, 21), the Digital Health Innovations (DHI) applications assessment form (18), and the Healthcare Innovation checklist (23), for details see Table 1—Domain(s) and Table 1—Innovations Phase(s). A detailed analysis of domains and innovation phases is presented in the next section.

Not surprisingly, several of the included tools were based on the well-known Technology Readiness Level (TRL) (7, 8, 22, 34, 35). In addition, other included tools, such as the NASSS (6, 21), the HealthTech Innovation Readiness (HIR) Innovation Maturity Level Descriptors (22), and the KTH Innovation Readiness Level™ (35), were reported to underlie the development of other included tools. Other reported frameworks that were used as a basis for tool development included various types of theoretical frameworks such as the Diffusion of Innovations Theory (38), the Technology Acceptance Model (43), Process Theory (44), Outcomes for Implementation Research (45), the End-to-end Innovation Adoption Model (48) and framework literature reviews (41); readiness levels such as the Manufacturing Readiness Level (MRL) (36) and the Human Readiness Level (HRL) (37); various types of guidance tools such as the CeHReS roadmap (39), mHealth Assessment and Planning for Scale (MAPS) Toolkit (42), the Integrate, Design, Assess, and Share (IDEAS) framework (40), and the e-health waardenmodel (46); regulatory frameworks such as the EU Medical Device Regulations: Clinical Evaluation (47); design methodologies such as persuasive technology design and human-centered design; and business modelling, see Table 1 (Underlying frameworks).

From the original source documents, some form of validation during tool development was described for 7 of the included tools, mostly describing expert interviews or consultations and/or case studies (4, 6–8, 18, 21, 23, 34, 39, 49). Most of the academic tools were referenced in the literature (range between 1 and 638 citations) or on Google, and some extensively, e.g., TRL (1, 2), NASSS (6, 21, 49), CeHReS roadmap (4, 39). For non-academic sources, no additional information was found regarding the use of the tool, except for the KTH Innovation Readiness Level, which was reported to be the standard tool used in their own academic population (Sweden) (35). Together, this at least suggests that some of the tools are actually used and are considered useful. However, references were generally not focused on explicitly validating the tools. Note that several tools were extended/updated after a period of use, e.g., NASSS (6, 21, 49), CeHReS roadmap (4, 39); or explicitly invited users to report on their experiences with using the tool, e.g., IDEAL-D (32), Healthcare Innovation Checklist (23), which can be considered as an additional (ongoing) validation step. Hence, ecological validation and user/expert consensus should be considered the gold standard for the evaluation of these types of tools. Effectiveness (in terms of funding or innovation success) or versatility (in terms of usefulness during different innovation phases) was not studied for any of the tools.

3.2 Identification of key domains, innovation phases, and implementation principles

The qualitative analysis of the included tools with respect to the reported domains and innovation phases presented in Table 1 resulted in the identification of 5 key domains, 5 innovation phases, and 3 implementation principles, for details on the analysis see “Methods”.

3.2.1 Key domains

For each identified key domain a short summary and illustrative examples are presented, based on the details of the reported domains in Table 1 and the reported references.

Key domain 1: Understand the condition, user, organization, and healthcare system

This key domain reflects the importance of understanding and engaging with stakeholders4 in order to identify, evaluate, and ultimately take into account their needs, while developing, evaluating and implementing health technology innovations. This requires in the first place an understanding of the condition or illness that is being targeted (6, 21) in order to identify relevant stakeholders and experts. Regarding stakeholder needs, explicit reference was made to needs associated with the condition or illness (6, 21), to comfort (8), to safety and risk-based approach (7, 8, 24, 32), to usability (7, 8), to clinical validation (7), and to potential organizational or societal impact (e.g., adopter system, wider context) (4, 15, 24). Regarding the type of stakeholders, explicit reference was made to users or target groups (e.g., patients, healthcare workers, support staff) (8, 24, 32), customers (35), healthcare organizations and their infrastructures (6, 21, 24, 34), and societal stakeholders (e.g., political, economic, regulatory) (6, 21, 34) or “healthcare system”. Regarding the way of working, explicit reference was made to a participatory process (4), to user acceptance/user studies (7, 18), and to the embedding and adaptation of health technology innovations over time (6, 21, 35).

Key domain 2: Develop legal, safe, ethical, and environmentally sustainable health technology innovations

This key domain reflects the importance of taking into account additional technological, legal, safety, ethical and sustainability requirements in the context of developing, evaluating and implementing health technology (vs. other technology) innovations. Technical requirements were explicitly referenced by all tools. In addition, explicit reference was made to additional requirements for algorithms and AI description (24) and for platforms (24). Regarding legal, safety and ethical requirements, explicit reference was made to additional requirements for data and security aspects (24), gender equality and equal opportunities (7), risk assessment (7, 8, 23, 24, 32), the classification of devices (32), and regulations and certification (7, 21, 23, 24, 32). The importance of taking into account requirements for environmental sustainability of health technology innovations was explicitly referenced only by the MedTech Innovation Guide (7).

Key domain 3: Develop evidence strategy

This key domain reflects the importance of developing a strategy for collecting evidence (including pre-clinical and clinical evidence) during the development, evaluation and implementation of health technology innovations with the aim to demonstrate additional benefits and/or reduced risks with regard to the current state/best practice, especially in the context of additional legal, safety and ethics requirements. Tools made explicit reference to the relevance and distinction between different study types (4, 32) or study environments (7), and to the relevance of assessing and demonstrating clinical effectiveness (8, 23), usability (7, 8), and impact or added value with respect to current (care) practices (4, 6, 15, 21).

Key domain 4: Investigate economic aspects

This key domain reflects the importance of investigating economic aspects relating to the development, evaluation and implementation of health technology innovations, in the context of effectiveness and impact (added value) with respect to current practices. This was reflected in the explicit mentioning of e.g., direct (implementation) costs (23), quality and affordability of care (24), economic efficiency (18), and as relevant input for business modelling (4, 7, 22).

Key domain 5: Develop business model

This key domain reflects the importance of developing a business model to support the development, evaluation and implementation of health technology innovations, in the context of all other key domains. Elements of business modelling that were explicitly referenced as separate domains included intellectual property rights (7, 35), potential impact (15), value proposition (4, 6, 21, 35), commercialization strategy (15), securing funding (7, 18, 24, 35), reimbursement (18), and communication (7).

3.2.2 Innovation phases

The traditional technology readiness levels (TRLs) (1, 33) have been used as underlying framework for several of the included tools. As such, for each identified innovation phase the corresponding TRLs are provided as well as (partially) corresponding phases reported by other tools. For details on the reported innovation phases see references and Table 1—Innovations Phase(s).

Phase 1: Conceptualization

Conceptualization (7) corresponds with TRL1-2 (i.e., basic principles observed and technology concept formulated) (33). Other tools have identified (part of) this phase as ideation (22, 32), needs assessment (8), contextual inquiry (4), and/or value specification (including user requirements) (4).

Phase 2: Concept validation

Concept validation (7) corresponds with TRL3 (i.e., experimental proof of concept). Other tools have identified (part of) this phase as prototype development (8), design (incl prototyping, business model) (4), or proof of concept (22).

Phase 3: Development

Development (7, 24, 32) corresponds with TRL4-9 (i.e., technology validated in lab, technology validated in relevant environment, technology demonstrated in relevant environment, system prototype demonstration in operational environment, system complete and qualified, actual system proven in operational environment). Other tools have identified (part of) this phase as operationalization (4), bench testing (8), animal testing (8), pilot testing (8), feasibility testing (8, 22), exploration (prospective developmental studies), formative evaluation (4), proof of value (22), initial clinical trials (22), assessment (larger RCT or equivalent) (32), pivotal testing (8), validation (24), validation of solution (22), or valley of death (15).

Phase 4: Market access & launch

Market access & launch no longer corresponds with any TRL. Other tools have identified (part of) this phase as product launch (7), market acceptance (8), or approval & launch (22).

Phase 5: Post-market phase

Post-market phase no longer corresponds with any TRL. Other tools have identified (part of) this phase as implementation (24), clinical use (22), start-up/scale-up (24), long term follow-up (long term monitoring and registries) (32), summative evaluation (4), post-market surveillance (8), and/or standard of care (22).
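The correspondence between TRLs and the five innovation phases described above can be summarized in a small lookup. The sketch below encodes only what is stated in this section (TRL1-2, TRL3 and TRL4-9 map onto phases 1 to 3; phases 4 and 5 have no TRL); the names `PHASES` and `phase_for_trl` are illustrative assumptions.

```python
# The five innovation phases identified in this review.
PHASES = {
    1: "Conceptualization",
    2: "Concept validation",
    3: "Development",
    4: "Market access & launch",
    5: "Post-market phase",
}


def phase_for_trl(trl):
    """Return the innovation phase number that corresponds with a TRL (1-9).

    Phases 4 and 5 are never returned, because they do not correspond
    with any TRL.
    """
    if not isinstance(trl, int) or not 1 <= trl <= 9:
        raise ValueError("TRL must be an integer between 1 and 9")
    if trl <= 2:
        return 1
    if trl == 3:
        return 2
    return 3


# Phases that can be reached from some TRL.
phases_with_trl = sorted({phase_for_trl(t) for t in range(1, 10)})
```

The lookup makes one consequence of the mapping explicit: six of the nine TRLs fall into the single phase "Development", while market access, launch and post-market activities lie entirely outside the TRL scale.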

3.2.3 Implementation principles

For each identified implementation principle a short summary and illustrative examples are presented. For details see references and Table 1.

Principle 1: Multidisciplinary development teams (including project management)

This principle refers to the importance of the development team (7, 35) in relation to gender equality and equal opportunities (7), clinical competence (7), and multidisciplinary project and risk management (4).

Principle 2: Continuous evaluation cycles

The principle of continuous evaluation cycles (4) refers to the importance of employing an iterative development, evaluation and implementation process. Other tools also referenced this as embedding and adaptation over time (6, 21), or “proven over time” (35).

Principle 3: Development intertwined with implementation

The principle of development intertwined with implementation (4) refers to the importance of early and systematic assessment of risks and factors that might influence the uptake and adoption of a health technology innovation including all of the identified key domains and across all identified subsequent innovation phases.

3.2.4 Mapping of tools

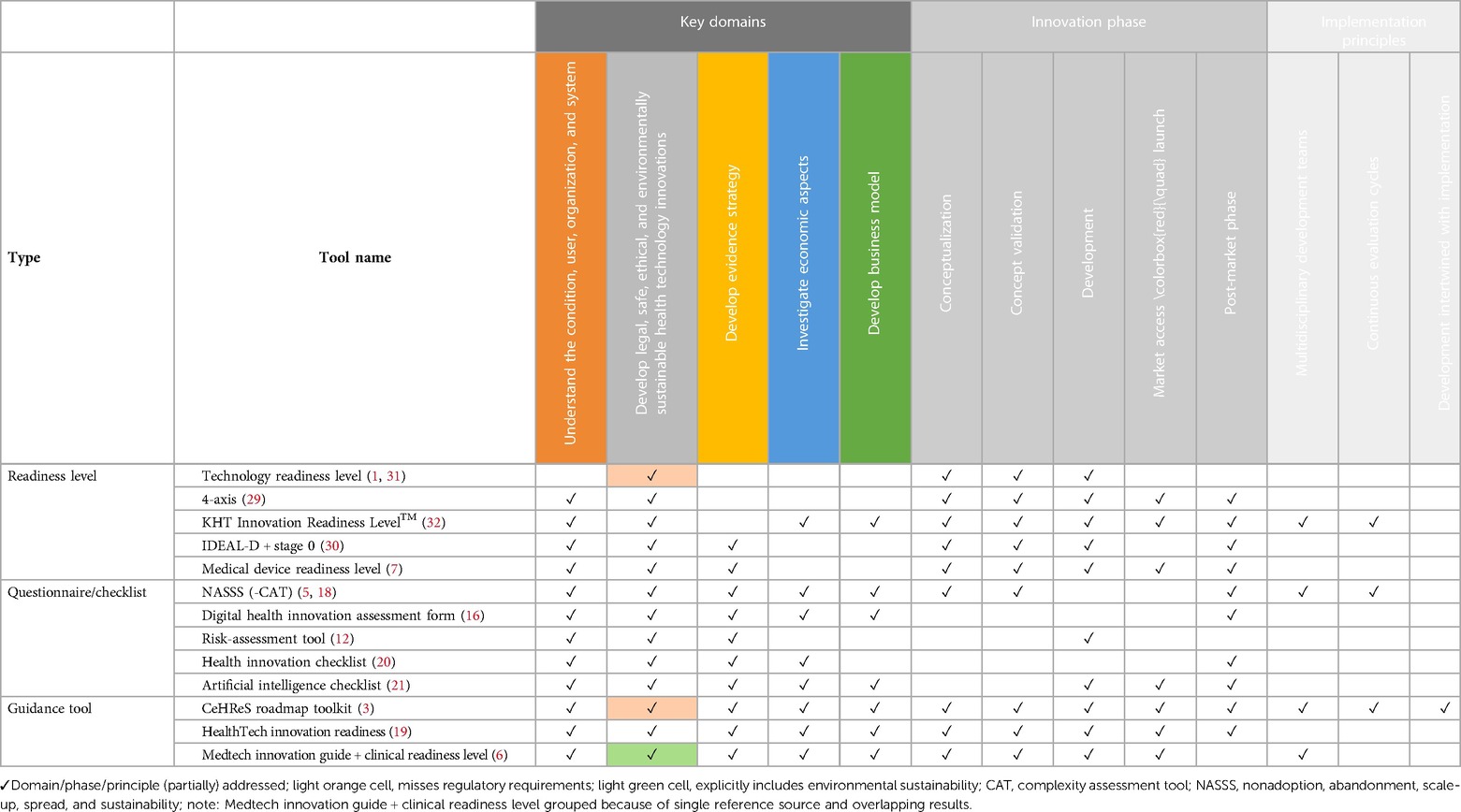

As a final step, all tools have been mapped for (partial) overlap with the identified key domains, innovation phases and implementation principles, see Table 2.

Table 2. Mapping of tools to identified key domains, innovation phases and implementation principles.

Most tools (partially) addressed at least 3 key domains (n = 11). The number of tools (partially) addressing each key domain was highest for the key domain “Develop legal, safe, ethical, and environmentally sustainable health technology innovations” (all tools), followed by “Understand the condition, user, organization, and healthcare system” (n = 12) and “Develop evidence strategy” (n = 10). “Investigate economic aspects” (n = 8) and “Develop business model” (n = 7) were only (partially) addressed by about 50% of the tools.

Several tools (partially) addressed all innovation phases (n = 5). However, the majority of tools were intended for use during specific parts of the innovation process covering either one (n = 3), three (n = 3), or four (n = 2) innovation phases. Only 50% of the tools (partially) addressed the phase “Market access & launch” (n = 7). For details see Table 2.

Implementation principles could be mapped only for the 4 tools from which they were identified, with tools (partially) addressing either one (n = 1), two (n = 2) or all three (n = 1) principles.

4 Discussion & conclusion

This review identified and evaluated published tools that can support innovators and project/innovation managers in academic research and early-stage development settings with awareness and guidance along the end-to-end process of development, evaluation and implementation of health technology innovations in order to overcome the so-called “valleys of death”. This last section interprets the results in the context of previous work and alternative approaches, and discusses considerations for tool selection and for novel tool development.

4.1 Key domains and innovation phases

Whereas previous review papers have mostly focused on the evaluation of theoretical frameworks (9, 11), this review focused on the exploration of practical tools. The number, overlap and congruence of identified key domains and innovation phases are relevant practical considerations that could subsequently impact tool selection and/or development.

This review identified 5 key domains and 5 innovation phases, whereas the number of domains and/or innovation phases reported by individual tools ranged from 1 (1) to 12 (24) domains and from 1 (15) to 10 (22) phases, although several tools did not specify any innovation phases (5, 18, 23). Naturally, for awareness, the tension lies in wanting to be complete without being too complex. This could be of particular relevance in academic and early-stage development settings. For example, a previous evaluation conducted with various research groups and support staff showed that 12 domains were too complex for a practical tool (3). In addition, although the use of 9 innovation phases for readiness levels seems to be accepted by researchers for the purpose of grant applications, readiness levels are generally not used beyond this purpose (34). Indeed, the many smaller validation steps within the phases of development and deployment often do not follow a clear sequential path. Rather, they occur in parallel iterations and most of the time across different research or innovation projects (4, 7, 34). Moreover, a limited number of innovation phases allows for a more straightforward mapping or translation of key domains to smaller/project activities to be defined or discussed for specific innovation phases, which could be beneficial for both awareness and guidance, e.g., (7). Lastly, much research on health technology innovations takes place in the post-market phase of existing (commercially available) technology (e.g., eHealth). Also, the post-market phase is the phase during which many start-ups fail (13). As such, it was deemed important to include the post-market phase, even though this was not included in all existing frameworks.

Congruence and language used for describing domains and phases are relevant aspects for both awareness and guidance. As such, abstract domains such as “clinical” [e.g., (7, 22)] should be avoided, since this could actually consist of very different types of activities such as “understanding the user” and “develop evidence strategy”, which require different expertise/stakeholders. In addition, key domains and innovation phases should be understandable for innovators working on different types of health innovations, and jargonistic language should be avoided since this has been reported as a significant barrier for the use of implementation frameworks and tools by non-experts (12). Furthermore, key domains make most sense if they can be easily mapped onto specific expertise/stakeholders, e.g., patients and healthcare organizations and system representatives (key domain 1), (medical device) design and engineering representatives (key domain 2), clinical epidemiology representatives (key domain 3), early health technology assessment representatives (key domain 4), and business development experts (key domain 5). In relation to this latter point, it is important to realize that not all health technology innovations become medical devices. As such, key domains should avoid terms such as “regulations” or “standards” but should rather reflect the core values underlying regulations and standards (i.e., delivering legal, safe, ethical, and environmentally sustainable technology). Of course, complying with regulations and standards for medical device development is seen as an important innovation and implementation barrier, and regulations and certification were explicitly mentioned in some of the tools (7, 21, 23, 24, 32). This denotes the importance of supporting researchers with understanding the potential impact of (novel) regulations such as the Medical Device Regulation in Europe, for example in the context of fulfilling (ethical/legal) requirements and administration for conducting initial clinical studies in a timely manner. During academic research and early-stage development, this could be supported by having QA/RA expertise on board during projects or by providing regulatory-specific support tools for awareness and guidance (e.g., Lean Entries).

4.2 Implementation principles

Reported domains or innovation phases that could not be clustered into an overarching domain or innovation phase were identified as implementation principles, mostly reflecting “the how” of implementation (research). Indeed, key domains and innovation phases provide awareness and guidance on what should be done when. However, in practice, overall project or innovation decisions generally impact all domains, and decisions need to be made regarding the relative priority of challenges and activities in a certain domain over those in another domain. These activities depend in part on the innovation phase but also on the specifics of the project. Implementation principles can provide awareness and guidance about how these aspects can be addressed in projects. For example, although each key domain asks for input from specific stakeholders, a multidisciplinary team (principle 1) needs to bring all different input together in order to intertwine implementation with development (principle 3) and make iterative decisions based on a continuous evaluation (principle 2) of the combined input. However, it should be noted that these principles were only operationalized for a selected number of tools (4, 6, 7, 21, 35), mostly by providing suggestions for team composition (4, 6, 7, 21, 35), but also by providing suggestions for step-wise evaluation using iterative research designs (4, 35), or by providing concrete guidance on identifying implementation barriers and strategies in an early development stage (4). An important aspect that was less operationalized by the included tools was governance beyond multidisciplinary project/innovation management, i.e., leadership, vision, policy and accountability. Although awareness of potential governance challenges is covered by an understanding of the healthcare system (key domain 1), it is not straightforward what research or innovation projects should do, and when and how, to address these challenges. Some suggestions on how to organize governance are provided by frameworks describing (relatively late phase) practical implementation strategies, focusing on accountability and responsibility regarding implementation (e.g., involving an organization's executive board, recruiting, designating and training for leadership, mandating change, changing liability laws) (50). During projects in academic research and early-stage development settings, this could for example be supported by having implementation (science) expertise on board.

4.3 Considerations for tool selection

For this review three different types of tools were considered: readiness level tools, which can be seen as milestones during innovation projects; questionnaire/checklist tools that can help to assess the complexity of a specific innovation project; and guidance tools that provide suggestions for next steps. All types of tools have their inherent pros and cons. Some considerations were mentioned previously, including the focus on specific health technology innovations (4, 18, 24); applications and artificial intelligence (24); medical devices (7, 8, 24, 32); localized use (18, 23, 24), and potential trademark restrictions (35). Still, for awareness all tools are considered to be relevant, as opposed to not using any tool. For guidance, some tools may actually be less useful. Although readiness level or questionnaire/checklist tools are generally easy to use and offer fixed “scoring criteria”, they do not offer much support for what to do next, with some exceptions, e.g., (35). In addition, being mostly evaluative tools, questionnaire/checklist tools may have limited relevance in early innovation phases, the NASSS being an exception (6, 21). Indeed, the need for guidance is likely to depend on the specific innovation phase the researcher is in, as well as on the researcher's background and preferences. When discussing some of the included tools with researchers, it was observed that the level of guidance was perceived as “too linear” by some, e.g., “check the box” (7, 22), and as “too vague” by others, e.g., thematic methodological suggestions (4). As such, for actual guidance, support from multi-disciplinary experts and stakeholders with knowledge of the different key domains is essential.

Besides the intended purpose (awareness or guidance), several other considerations need to be taken into account when selecting a support tool (26), for example the intended use, reliability, ease of use, and availability of (peer) support. Although most included tools were developed on the basis of well-accepted underlying frameworks or tools, there was only limited information available regarding the validation and effectiveness of support tools. Validation and establishing effectiveness in terms of funding or implementation success can be a challenge since most of these tools are process tools (rather than intervention tools) supporting processes that can occur over multiple (research) projects and years with changing development teams and stakeholders. Previous studies therefore mostly reported ecological validation and user/expert consensus. An alternative approach could be to use the tools as (comparative) interventions in order to test specific research questions, e.g., regarding user-friendliness, versatility for different innovation phases, or for different types of users/teams. This is something that is currently being pilot tested with academic users during, e.g., workshops and events, and could be an initial step towards more formal evaluation. In addition, experiences with support tools and other best practices should be shared via the scientific literature and via education and training initiatives in academic and early-stage development networks.

Taken together, this paper contributes to tool selection for both individual researchers and innovation communities in two ways: by mapping recent support tools onto key domains, innovation phases and implementation principles, and by providing generic considerations and suggestions that may facilitate the selection process. Given that most of the included tools and most of these considerations are not specific for academic and early-stage development settings, they may also be relevant beyond these settings, e.g., for start-ups or healthcare institutions.

4.4 Considerations for novel tool development

An abundance of available support tools was identified in the current review, and new tools are still being developed. Although the use of support tools is generally thought to improve the innovation process and increase the chance of surviving the valleys of death, as mentioned, little is known about their effectiveness. As such, novel tool development is not simply about creating a “better” tool on the basis of gaps (or opportunities) identified for currently available tools.

As introduced, in academic and early-stage development settings some domains traditionally receive limited attention (requiring awareness), but also specific challenges may be encountered (requiring guidance) (7, 22). In general, it is often difficult for innovators to effectively translate potential barriers in later innovation phases to concrete actions during the research and early development stage. Although the tools included in this review provide awareness and guidance on what needs to be done when and how, these tools generally do not address how to identify shortcomings needing extra attention, how to decide on the most relevant implementation domains to address during a particular project, and what the step-wise approach, i.e., strategy, would be. As such, an overarching strategic tool that helps multi-disciplinary teams and stakeholders to understand, discuss, balance and set priorities over the course of their innovation project seems a relevant addition to existing tools. Such a “deliberation tool” that supports 360-degree and end-to-end perspectives on innovation and implementation could also help innovation projects to, after prioritization, select the most appropriate framework, methodology, or support tools for addressing implementation throughout the project. In addition, a “deliberation tool” could serve as an “impact tool”. That is, it could support early discussions on (discontinuation due to lack of) added value, and on the potential need for de-implementation of existing health technology innovations. This may be used to inform subsequent funding, commercialization and reimbursement decisions, thereby potentially reducing the number of non-value-adding innovations being developed.

In its most basic form, a “deliberation tool” or “Health Technology Innovation 360” could be developed from the key domains, innovation phases, implementation principles, available support tools, and considerations for tool selection and tool development identified in this review, for example by mapping domains to innovation phases similar to the MedTech Innovation Guide (7) or the HIR Innovation Maturity Level Descriptors (22). Naturally, additional work would be needed to better understand how researchers and innovators would like to use a “deliberation tool” and whether they, for example, would require any training or additional support from experts (26). Especially in the context of anticipated repeated use, important aspects could be timing, access, and ease of use, which might be operationalized by creating an intuitive and engaging interactive digital tool [e.g., (7)]. Also, the formulation of deliberation criteria, or a priority scoring and visualization thereof, may help to support ease of use and communication about the “deliberation results” [e.g., (23, 25)]. Lastly, regarding tool development, lessons might be learned from other types of multi-disciplinary collaboration processes and methods, such as risk-assessment and design-thinking/futuring (26, 51).

4.5 Strengths and limitations of this review

Some methodological considerations need to be taken into account when interpreting the results of this review. The current review employed a qualitative and non-systematic approach. On the one hand this may have resulted in a non-exhaustive search for and identification of support tools and associated domains and innovation phases. On the other hand this may have impacted the reproducibility of the present results. With regard to the non-exhaustiveness of the search, this was partially mitigated by also performing an expert consultation. It could be argued that a systematic (scoping) review could have been a more suitable approach. However, the purpose of this review was not to assess and maintain a(n exhaustive) list of available tools. Rather, this review aimed to validate and extend the previous work, to identify considerations for the selection and use of support tools and to inform novel tool development. With regard to the reproducibility of the findings, this is in part also warranted by the definition of clear inclusion and exclusion criteria specific to our research question (see Methods section). For example, in academic settings there has been an increased focus on the dissemination and translation of research results beyond academia, i.e., the publication of research results in non-scientific journals and/or the translation of knowledge into updated practice guidelines. In addition, researchers are more and more required to reflect on, plan for, and report on the broader socio-economic impact of their research. As this study was focused on supporting researchers with the process of health technology innovation in order to overcome the so-called “valleys of death”, such dissemination and impact tools were considered out of scope for this review.

Together, the qualitative and non-systematic approach is deemed appropriate given that this review was firmly rooted in theoretical frameworks and started from previous analyses that identified and pilot-tested relevant implementation domains for academic research and early-stage development settings (3, 25). The results from this paper could be used as a starting point for additional systematic assessment of practical tools similar to existing reviews on theoretical frameworks (9, 11), and may additionally include specific research questions regarding the validation and effectiveness of tools.

Another consideration is that the induction of key domains from domains reported by individual tools was complicated because some domains could be captured under multiple key domains. “Clinical” (7, 22) was already mentioned, but other examples are “post-valley of death commercialization strategy” (15), “gap/need and risk analysis” (7), “design” (4), “clinical and commercial uncertainty” (15), “value specification” (4), “potential impact” (15). This overlap in domains is a factor that complicated this analysis but also complicates the interpretation, selection, use, and development of (novel) support tools.

Lastly, the present review focused on practical tools that may support innovators (rather than implementation experts) in academic research and early-stage development settings with awareness and guidance of the end-to-end innovation process and implementation. This does not mean, however, that these tools are relevant only in the academic or early-stage development context or that the complexity of implementation can indeed be captured in a simple tool. Nevertheless, tools may provide suggestions for the type of stakeholders to involve (21, 22) and for the identification of relevant themes or methods to explore, serving as a starting point for further discussion. In addition, support tools may inform and enhance other solutions for awareness and guidance. This includes academic and professional education, (improved) utilization of available experience and expertise (e.g., peer-coaching, implementing multidisciplinary teams), and building or attracting missing expertise (e.g., innovation/project management, clinical expertise, business development expertise). Support tools may also help to prepare or provide input for external support initiatives such as the innovation round-tables organized by Health Innovation Netherlands (20).

5 Conclusion

This review performed an initial exploration of different types of published tools that aim to support innovators with awareness and guidance along the end-to-end process of development, evaluation and implementation of health technology innovations. Specifically, it identified key domains and innovation phases and discussed novel directions for tool selection and novel tool development. A remaining attention point for future studies is the validation and effectiveness of (self-assessment) tools, especially in the context of support preferences and available support alternatives. This review will be used to further develop the assessment and support of health technology innovation and implementation in academic research and early-stage development settings.

Author contributions

MR: Writing – original draft, Writing – review & editing. L-GP: Writing – original draft, Writing – review & editing. RV: Writing – original draft, Writing – review & editing. SK: Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Acknowledgments

The authors would like to thank Dr. Esther Rodijk-Rozeboom for reviewing an earlier version of this manuscript.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declared that they were an editorial board member of Frontiers, at the time of submission. This had no impact on the peer review process and the final decision.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Footnotes

1. ^The step-wise (iterative) approach from basic (technological) concept towards an innovation that has added value and that gets implemented in routine (clinical) practice.

2. ^Medical devices are a specific type of health technology subject to additional legal and regulatory requirements.

3. ^Maturity with respect to the attitude and behavior of people (culture), the available processes/structures for implementation, and with respect to the integration of the technology (26).

4. ^For this paper defined as a person, group or organization with a vested interest, or stake, in the decision-making and (outcome of) activities of a business, organization or project.

References

1. Mankins JC. “Technology Readiness Levels, White Paper.” Advanced Concepts Office, Office of Space Access and Technology. Washington DC: National Aeronautics and Space Administration (1995).

2. Mankins JC. Technology readiness assessments: a retrospective. Acta Astronaut. (2009) 65(9):1216–23. doi: 10.1016/j.actaastro.2009.03.058

3. Van Gemert-Pijnen JE. Implementation of health technology: directions for research and practice. Front Digit Health. (2022) 4:1030194. doi: 10.3389/fdgth.2022.1030194

4. Kip H, Kelders SM, Sanderman R, Van Gemert-Pijnen . eHealth Research, Theory and Development: A Multi-Disciplinary Approach. 1st Edn. London: Routledge (2018). Available online at: https://www.utwente.nl/en/bms/ehealth/cehres-roadmap-toolkit/ (Accessed July 10, 2023).

5. Norton WE, Chambers DA. Unpacking the complexities of de-implementing inappropriate health interventions. Implement Sci. (2020) 15(1):2. doi: 10.1186/s13012-019-0960-9

6. Greenhalgh T, Wherton J, Papoutsi C, Lynch J, Hughes G, A'Court C, et al. Beyond adoption: a new framework for theorizing and evaluating nonadoption, abandonment, and challenges to the scale-up, spread, and sustainability of health and care technologies. J Med Internet Res. (2017) 19(11):e367. doi: 10.2196/jmir.8775

7. Mejtoft T, Lindahl O, Öhberg F, Pommer L, Jonzén K, Andersson BM, et al. Medtech innovation guide: an empiric model to support medical technology innovation. Health Technol. (2022) 12(5):911–22. doi: 10.1007/s12553-022-00689-0

8. Seva RR, Tan ALS, Tejero LMS, Salvacion MLDS. Multi-dimensional readiness assessment of medical devices. Theor Issues Ergon Sci. (2023) 24(2):189–205. doi: 10.1080/1463922X.2022.2064934

9. Moullin JC, Dickson KS, Stadnick NA, Albers B, Nilsen P, Broder-Fingert S, et al. Ten recommendations for using implementation frameworks in research and practice. Implement Sci Commun. (2020) 1(1):42. doi: 10.1186/s43058-020-00023-7

10. Nilsen P. Making sense of implementation theories, models and frameworks. Implement Sci. (2015) 10(1):53. doi: 10.1186/s13012-015-0242-0

11. Rodriguez Llorian E, Waliji LA, Dragojlovic N, Michaux KD, Nagase F, Lynd LD. Frameworks for health technology assessment at an early stage of product development: a review and roadmap to guide applications. Value Health. (2023) 26(8):1258–69. doi: 10.1016/j.jval.2023.03.009

12. Roberts NA, Young AM, Duff J. Using implementation science in nursing research. Semin Oncol Nurs. (2023) 39(2):151399. doi: 10.1016/j.soncn.2023.151399

13. CB Insights. The Top 20 Reasons Startups Fail: From Lack of Product-Market Fit to Disharmony on the Team, We Break Down the Top 20 Reasons for Startup Failure by Analyzing 101 Startup Failure Post-Mortems. New York: CB Insights (2021). Available online at: https://www.cbinsights.com/research/report/startup-failure-reasons-top/ (Accessed August 17, 2023).

14. Ramesh S, Nithyavathani JA, Syed M, Kachroo K, Sharma JK, Priyadarshini A, et al. A landscape study to determine the innovation mortality rate in health technology innovations across the globe: global health innovation mortality in health technology innovation. Glob Clin Eng J. (2024) 6(2):5–15. doi: 10.31354/globalce.v6i2.169

15. Ellwood P, Williams C, Egan J. Crossing the valley of death: five underlying innovation processes. Technovation. (2022) 109:102162. doi: 10.1016/j.technovation.2020.102162

16. MedTech Europe. MedTech Europe Survey Report Analysing the Availability of Medical Devices in 2022 in Connection to the Medical Device Regulation (MDR) Implementation. Brussels: MedTech Europe (2022). Available online at: https://www.medtecheurope.org

17. World Health Organization. World Health Statistics 2023: Monitoring Health for the SDGs, Sustainable Development Goals. Geneva: World Health Organization (2023). Available online at: https://www.who.int/publications/i/item/9789240074323 (Accessed October 02, 2023).

18. Hobeck R, Schlieter H, Scheplitz T. Overcoming diffusion barriers of digital health innovations conception of an assessment method. Presented at the Hawaii International Conference on System Sciences (2021). doi: 10.24251/HICSS.2021.443

19. Juckett LA, Bunger AC, McNett MM, Robinson ML, Tucker SJ. Leveraging academic initiatives to advance implementation practice: a scoping review of capacity building interventions. Implement Sci. (2022) 17(1):49. doi: 10.1186/s13012-022-01216-5

20. Levin L, Sheldon M, McDonough RS, Aronson N, Rovers M, Gibson CM, et al. Early technology review: towards an expedited pathway. Int J Technol Assess Health Care. (2024) 40(1):1–37. doi: 10.1017/S0266462324000047

21. Greenhalgh T, Maylor H, Shaw S, Wherton J, Papoutsi C, Betton V, et al. The NASSS-CAT tools for understanding, guiding, monitoring, and researching technology implementation projects in health and social care: protocol for an evaluation study in real-world settings. JMIR Res Protoc. (2020) 9(5):e16861. doi: 10.2196/16861

22. Consortia for Improving Medicine with Innovation & Technology (CIMIT). Navigating the Healthtech Innovation Cycle. Boston: CIMIT (2019). Available online at: https://www.cimit.org/documents/173804/228699/Navigating+the+HealthTech+Innovation+Cycle.pdf/2257c90b-d90b-3b78-6dc9-745db401fbc6 (Accessed July 10, 2023).

23. RIVM. Zorginnovaties. Een checklist voor succesvolle implementatie. (2022). Available online at: https://www.rivm.nl/documenten/zorginnovaties-checklist-voor-succesvolle-implementatie (Accessed July 10, 2023).

24. Zorgverzekeraars Nederland. Leidraad applicaties en algoritmes in de zorg. (2023). Available online at: https://www.zn.nl/dossier/digitalisering/ (Accessed August 17, 2023).

25. Van Gemert-Pijnen L, Nieuwenhuis B, Lindenberg M. Digitalisering in de gezondheidszorg nader beschouwd. (2022). Available online at: https://research.utwente.nl/en/publications/digitalisering-in-de-gezondheidszorg-nader-beschouwd-eindrapport- (Accessed June 13, 2024).

26. Mettler T. Maturity assessment models: a design science research approach. Int J Soc Syst Sci. (2011) 3(1/2):81. doi: 10.1504/IJSSS.2011.038934

27. Damschroder LJ, Reardon CM, Widerquist MAO, Lowery J. The updated consolidated framework for implementation research based on user feedback. Implement Sci. (2022) 17(1):75. doi: 10.1186/s13012-022-01245-0

28. Glasgow RE, Harden SM, Gaglio B, Rabin B, Smith ML, Porter GC, et al. RE-AIM planning and evaluation framework: adapting to new science and practice with a 20-year review. Front Public Health. (2019) 7:64. doi: 10.3389/fpubh.2019.00064

29. University of Dublin. Research Impact Toolkit. Dublin: UCD Research and Innovation (2023). Available online at: https://www.ucd.ie/impacttoolkit/ (Accessed October 10, 2023).

30. NWO. Impact Plan Approach. Den Haag: NWO (2023). Available online at: https://www.nwo.nl/en/impact-plan-approach (Accessed October 10, 2023).

31. Braun V, Clarke V. Conceptual and design thinking for thematic analysis. Qual Psychol. (2022) 9(1):3–26. doi: 10.1037/qup0000196

32. Marcus HJ, Bennett A, Chari A, Day T, Hirst A, Hughes-Hallett A, et al. IDEAL-D framework for device innovation: a consensus statement on the preclinical stage. Ann Surg. (2022) 275(1):73–9. doi: 10.1097/SLA.0000000000004907

33. NASA. Technology Readiness Levels. Washington DC: National Aeronautics and Space Administration. Available online at: https://www.nasa.gov/wp-content/uploads/2017/12/458490main_trl_definitions.pdf (Accessed July 10, 2023).

34. Bruno I, Lobo G, Covino BV, Donarelli A, Marchetti V, Panni AS, et al. Technology readiness revisited: a proposal for extending the scope of impact assessment of European public services. Proceedings of the 13th International Conference on Theory and Practice of Electronic Governance; Athens Greece: ACM (2020). p. 369–80. doi: 10.1145/3428502.3428552

35. KTH Innovation. KTH Innovation Readiness Level™. Stockholm: KTH Innovation (2023). Available online at: https://kthinnovationreadinesslevel.com/ (Accessed July 10, 2023).

36. Wheeler D, Ulsch M. Manufacturing Readiness Assessment for Fuel Cell Stacks and Systems for the Back-up Power and Material Handling Equipment Emerging Markets. Washington DC: United States Department of Energy, National Renewable Energy Laboratory (2010). NREL/TP-560-45406.

37. See JE. Human readiness levels explained. Ergon Des. (2021) 29(4):5–10. doi: 10.1177/10648046211017410

38. Rogers EM. Diffusion of Innovations, 4th Edn. New York: Free Press (2010). Available online at: https://books.google.nl/books?id=v1ii4QsB7jIC

39. Van Gemert-Pijnen JE, Nijland N, Van Limburg M, Ossebaard HC, Kelders SM, Eysenbach G, et al. A holistic framework to improve the uptake and impact of eHealth technologies. J Med Internet Res. (2011) 13(4):e111. doi: 10.2196/jmir.1672

40. Mummah SA, Robinson TN, King AC, Gardner CD, Sutton S. IDEAS (Integrate, design, assess, and share): a framework and toolkit of strategies for the development of more effective digital interventions to change health behavior. J Med Internet Res. (2016) 18(12):e317. doi: 10.2196/jmir.5927

41. Van Dyk L. A review of telehealth service implementation frameworks. Int J Environ Res Public Health. (2014) 11(2):1279–98. doi: 10.3390/ijerph110201279

42. World Health Organization. The MAPS Toolkit: MHealth Assessment and Planning for Scale. Geneva: World Health Organization (2015). Available online at: https://iris.who.int/handle/10665/185238 (Accessed October 10, 2023).

43. Davis FD. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. (1989) 13(3):319–40. doi: 10.2307/249008

44. van de Ven AH, Poole MS. Explaining development and change in organizations. Acad Manage Rev. (1995) 20(3):510–40. doi: 10.2307/258786

45. Proctor E, Silmere H, Raghavan R, Hovmand P, Aarons G, Bunger A, et al. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Adm Policy Ment Health Ment Health Serv Res. (2011) 38(2):65–76. doi: 10.1007/s10488-010-0319-7

46. Weggelaar AM, Auragh Rd, Visser M, Sulz S, Elten HV, Cranen K, et al. e-Health Waardenmodel. Rotterdam: Erasmus School of Health Policy & Management (2020). Available online at: https://www.eur.nl/en/eshpm/media/2020-07-ehealth-waardenmodel (Accessed October 07, 2023).

47. European Parliament and Council. Regulation (EU) 2017/745 of the European Parliament and of the Council of 5 April 2017 on Medical Devices. Brussels: European Parliament and Council (2021).

48. Guarcello C, Raupp E. Pandemic and Innovation in Healthcare: The End-to-End Innovation Adoption Model. Maringá: Brazilian Administration Review (2021). Available online at: https://www.scielo.br/j/bar/a/pHcpFJYsYNxbcnWMwnbXm3z/?lang=en# (Accessed October 07, 2023).

49. Greenhalgh T, Wherton J, Papoutsi C, Lynch J, Hughes G, A'Court C, et al. Analysing the role of complexity in explaining the fortunes of technology programmes: empirical application of the NASSS framework. BMC Med. (2018) 16(1):66. doi: 10.1186/s12916-018-1050-6

50. Powell BJ, McMillen JC, Proctor EK, Carpenter CR, Griffey RT, Bunger AC, et al. A compilation of strategies for implementing clinical innovations in health and mental health. Med Care Res Rev. (2012) 69(2):123–57. doi: 10.1177/1077558711430690

51. Matos-Castano J, Zaga C, Visscher K, Baibarac-Duignan C, Wildevuur S, Voort van der M. A responsible futuring approach to create spaces of transdisciplinary co-speculation. J Future Stud. (2023). Available online at: https://jfsdigital.org/a-responsible-futuring-approach-to-create-spaces-of-transdisciplinary-co-speculation/ (Accessed November 24, 2023).

Keywords: health technology, innovation, implementation, support tool, medical device

Citation: Roosink M, van Gemert-Pijnen L, Verdaasdonk R and Kelders SM (2024) Assessing health technology implementation during academic research and early-stage development: support tools for awareness and guidance: a review. Front. Digit. Health 6:1386998. doi: 10.3389/fdgth.2024.1386998

Received: 16 February 2024; Accepted: 6 June 2024; Published: 14 October 2024.

Edited by: Rhodri Saunders, Coreva Scientific, Germany

Reviewed by: Panagiotis Katrakazas, Zelus_GR P.C., Greece; Carla Fernandez Barceló, Coreva Scientific, Germany

Copyright: © 2024 Roosink, van Gemert-Pijnen, Verdaasdonk and Kelders. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Meyke Roosink, m.roosink-1@utwente.nl
