CURRICULUM, INSTRUCTION, AND PEDAGOGY article

Front. Digit. Health , 18 April 2024

Sec. Connected Health

Volume 6 - 2024 | https://doi.org/10.3389/fdgth.2024.1307817

This article is part of the Research Topic Designing and Evaluating Digital Health Interventions View all 14 articles

Michael Loizou1*, Sylvester Arnab2, Petros Lameras2, Thomas Hartley3, Fernando Loizides4, Praveen Kumar5, Dana Sumilo6

Emotions play an important role in human-computer interaction, but there is limited research on affective and emotional virtual agent design in the area of teaching simulations for healthcare provision. The purpose of this work is twofold: firstly, to describe the process for designing affective intelligent agents that are engaged in automated communications such as person to computer conversations, and secondly to test a bespoke prototype digital intervention which implements such agents. The presented study tests two distinct virtual learning environments, one of which was enhanced with affective virtual patients, with nine 3rd year nursing students specialising in mental health, during their professional practice stage. All (100%) of the participants reported that, when using the enhanced scenario, they experienced a more realistic representation of carer/patient interaction; better recognition of the patients' feelings; recognition and assessment of emotions; a better realisation of how feelings can affect patients' emotional state and how they could better empathise with the patients.

Simulation is an educational tool that is becoming increasingly prevalent in nursing education (1) and provides students with realistic clinical situations, allowing them to practice and learn in a safe environment (2). Human patient simulators provide experiences that are realistic and offer students an opportunity to assess, intervene, and evaluate patient outcomes (3). Findings from a meta-analysis suggest that simulation education improved nursing students’ performance in clinical reasoning skills (4).

A considerable amount of literature focuses on the use of digitally-mediated tools in healthcare. For example, a systematic review of 12 studies investigated the effectiveness of dementia carer-oriented interventions delivered through the internet and showed improvements in carer wellbeing (5). The review highlighted that multicomponent, individually tailored programmes that combine information, caregiving strategies, and contact with other carers can increase carers’ confidence and self-efficacy, reducing stress and depression. The evolution of nursing education teaching technologies has witnessed a shift towards interactive and immersive learning experiences. Building upon the observation by Baysan et al. (6) regarding the prevalent use of videos, recent studies have explored the integration of virtual reality (VR) and augmented reality (AR) simulations in nursing education. For instance, a study by Nakazawa et al. (7) shows that training with AR is effective in enhancing caregivers’ physical skills and fostering greater empathy towards their patients. This approach not only enhances learner engagement but also facilitates active learning and skill retention.

However, despite the potential benefits of digital health interventions, addressing the emotional challenges and stressors faced by participants remains a critical concern. Research has highlighted the impact of environmental factors and basic emotions on user experience and outcomes in health teaching simulations (8, 9). Another study found undergraduate students felt unready, were anxious about having their mistakes exposed, worried about damaging teamwork and were afraid of evaluation (10).

According to Rippon (9), anger in healthcare environments is a very common emotion, leading to aggression and violence, and healthcare professionals are exposed to it daily with an increasing number of them suffering from signs of post-traumatic stress disorder. Although these problems have been identified and the potential of technologies has been widely recognised, most Human-Computer Interaction applications tend to overlook these emotions when responding to user input (11).

Thus, there is an increasing need for computer systems to endow affective intelligent agents with emotional capabilities and socially intelligent behaviour, with the aim of achieving affective interaction between virtual agents and human users and influencing the affective state of the user (12–14). This article proposes the design, implementation, and evaluation of a model for incorporating emotional enhancements (concentrating on negative emotions such as stress, fear, and anxiety) into virtual agents applied to virtual teaching applications for healthcare provision. For this purpose, we have created the following research objectives:

• RO1: To what extent does a virtual learning scenario incorporating emotional virtual agents provide a realistic experience?

• RO2: To what extent does the incorporation of emotions into virtual agents stimulate a better set of responses from the human user?

• RO3: To what extent do emotional virtual agents improve the learning experience?

• RO4: To what extent do emotional virtual agents allow the learners to recognise their emotions and understand how these affect the virtual agents and, subsequently, allow them to feel more empathy towards them?

Although it has been increasingly accepted that emotions are an important factor in improving human-machine interaction, many times digital teaching systems do not consider the emotional dimension that human users expect to encounter in an interaction, and this can lead to frustration (11). To be able to provide affective interaction between intelligent agents and human users, the computer system needs to provide the virtual agents with capabilities for emotional and socially intelligent behaviour which should, in real time, have a measurable effect on the user (15).

Emotion recognition has been widely explored in human-robot interaction (HRI) and affective computing (16). Recent works aim to design algorithms for classifying emotional states from different input modalities, such as facial expression, body language, voice, and physiological signals (17, 18). The ability to recognise human emotional states can encourage natural bonding between humans and robotic artifacts (19).

Andotra (20) found that by integrating emotional intelligence with technological capabilities, chatbots or virtual agents could enhance user engagement and well-being through personalised and empathetic interactions. Integrating emotional capabilities in chatbots is essential for enhancing their conversational skills. AI-driven chatbots can detect user sentiments in a conversation, allowing the chatbot to comprehend the user's emotional state and generate an appropriate response (21).

There are several models for agent decision-making in interactive virtual environments, such as interactive healthcare teaching applications, for example:

• Belief, Desire, and Intention (BDI) (22), explained in more detail below

• Psi-theory (23), which models the human mind as an information processing agent controlled by a set of basic physiological, social and cognitive drives

• OCC (24), a cognitive appraisal model of emotions

• The Five Factor Model (FFM), a grouping of five characteristics used to study personality: conscientiousness, agreeableness, neuroticism, openness to experience, and extraversion.

Models are needed because agents are part of an environment that has limited resources, such as memory and computational power. This means that agents cannot take an infinite amount of time to process their next move(s). One of the most prominent models is the BDI model, which uses a set of rules designed to reduce the number of possible actions the agent can perform, thus reducing the time needed for the agent to decide its next action. The environment within which the agents act changes continuously, so the agent must decide upon its next move and act upon it quickly enough that the environment does not change in a way that invalidates the selected action. BDI constrains the reasoning needed by the agent and, in consequence, the time needed for making a decision. Within BDI, beliefs represent the agent's beliefs about itself, other agents, and the world. Desires represent what the agent would like to achieve, such as situations it would like to bring about. For example, an agent could desire a patient in a simulation to be helped with their meal. Intentions represent actions or plans the agent has committed to.
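The way BDI narrows an agent's options can be illustrated with a minimal sketch. The Python below is not the implementation used in this work; the names `Plan`, `BDIAgent` and `deliberate`, as well as the belief and desire labels for the meal example, are assumptions introduced purely for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Plan:
    """A candidate course of action (hypothetical structure)."""
    name: str
    achieves: str             # the desire this plan would satisfy
    preconditions: frozenset  # beliefs that must hold for the plan to apply


@dataclass
class BDIAgent:
    beliefs: set = field(default_factory=set)       # what the agent holds true
    desires: list = field(default_factory=list)     # ordered by priority
    intentions: list = field(default_factory=list)  # plans it has committed to

    def deliberate(self, plan_library):
        """Commit to the first applicable plan for the highest-priority desire.

        Only plans whose preconditions are currently believed are considered,
        which keeps the search over possible actions small.
        """
        for desire in self.desires:
            for plan in plan_library:
                if plan.achieves == desire and plan.preconditions <= self.beliefs:
                    self.intentions.append(plan)
                    return plan
        return None


plans = [
    Plan("ask_participant_to_help_with_meal", "patient_helped_with_meal",
         frozenset({"patient_needs_help_eating", "participant_available"})),
    Plan("call_for_assistance", "patient_helped_with_meal",
         frozenset({"patient_needs_help_eating"})),
    Plan("offer_water", "patient_hydrated", frozenset({"patient_thirsty"})),
]

tutor = BDIAgent(
    beliefs={"patient_needs_help_eating", "participant_available"},
    desires=["patient_helped_with_meal"],
)
chosen = tutor.deliberate(plans)
print(chosen.name)
```

In this sketch the agent never evaluates `offer_water`, because no current desire calls for it; filtering plans by desire and by believed preconditions is what bounds the decision time.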

Research on agent design architectures and emotions for virtual teaching of personnel for stressful situations focuses on emotion integration, the design of affective intelligent agents that interact with other agents (virtual or real) based on social norms and on how to improve agent realism and believability. Towards this goal some of the research combines and/or extends other theories and models.

For example, the extended BDI (EBDI) model (25) adds the influence of primary emotions (the first, more instinctive, emotional response to an event, e.g., fear, sadness, anger) and secondary emotions (arising from higher cognitive processes and based on what we perceive the result of a certain situation will be, e.g., joy and relief). This makes agents more engaging and believable so that they can better play a human-like role in affective intelligent agent scenarios.

In addition to the user's emotions, virtual agents in our model (described in the next section—Architecture Overview) also adapt their actions and emotions based on the user's personality traits. The cognitive-behavioural architecture (26) incorporates the notion of personality as a determining factor for the agent's future emotions and coping preferences. This approach enables improved character diversity and personality coherence across different situations.

We present an architecture for creating a virtual tutor with the ability to alter both the tutor's and the virtual patient's behaviour and mood based on the responses, emotions, and personality of human participants. Responses to a human participant's actions are selected from a database of possible rules that are based on previous research and input from psychologists and experts in the area of health training. The testing scenario used in this research focuses on teaching nurses caring for dementia patients.

The proposed architecture makes use of the BDI model and extends it by incorporating the emotions and personality of the trainees in the decision-making process. BDI is one of the most popular models for developing rational agents based on how humans act based on the information derived from an environment (27). BDI was chosen because it is one of the most prominent approaches to building agent and multiagent systems, it is based on a sound psychological theory, Michael Bratman's theory of human practical reasoning (28) and it has been successfully used for the design of realistic affective intelligent agents for many years (29–31).

The architecture proposed in this work extends BDI by incorporating two new elements. Firstly, it incorporates the current emotional state of the participant into its decision-making process. Systems that have explored emotion integration, such as the BDI tutor model (29) and Model Social Game Agent (MSGA) (30), have focused on just incorporating the emotions of trainees and do not take other factors into account, such as a human participant's personality traits. Our proposed model focuses on individual users and on the emotions of both the human participants and the virtual agents. The emotional state of the human participant is not approximated by the model; it is the dependent variable and is reported by the participants in real time, conveying their emotional state to the simulation so that it can adapt. This introduces realism and personalises the process for the human participant as it focuses on the individual's emotional state and how this changes throughout the teaching session. With advances in artificial intelligence, virtual agents are starting to play an active role in various fields such as information presentation, sales, training, education, and healthcare (32, 33). To help induce a positive emotion and thereby enhance social bonding in human–virtual agent interaction, affective communication between people and virtual agents has become important (34).

Secondly, our architecture incorporates the personality of the participant. For the purposes of this research the Five Factor Model (also known as the “Big 5”) personality test (35) is used for measuring the participant's level of neuroticism. This has been suggested to be related to the level that negative emotions affect an individual during training (36–38). The feedback provided to the participant as well as the virtual patient's emotional state is affected by both these elements.

Regarding the rule-based reasoning model, the JBoss Drools Expert rules engine is used for providing feedback to the user, and it uses the adapted BDI model for providing emotional intelligence feedback. The front end of the learning environment was designed using the Unity3D game development system and programmed in C# to communicate with the server back end. The back end was programmed in Java and runs under Tomcat so that several front-end sessions can communicate concurrently with and between modules on the server.

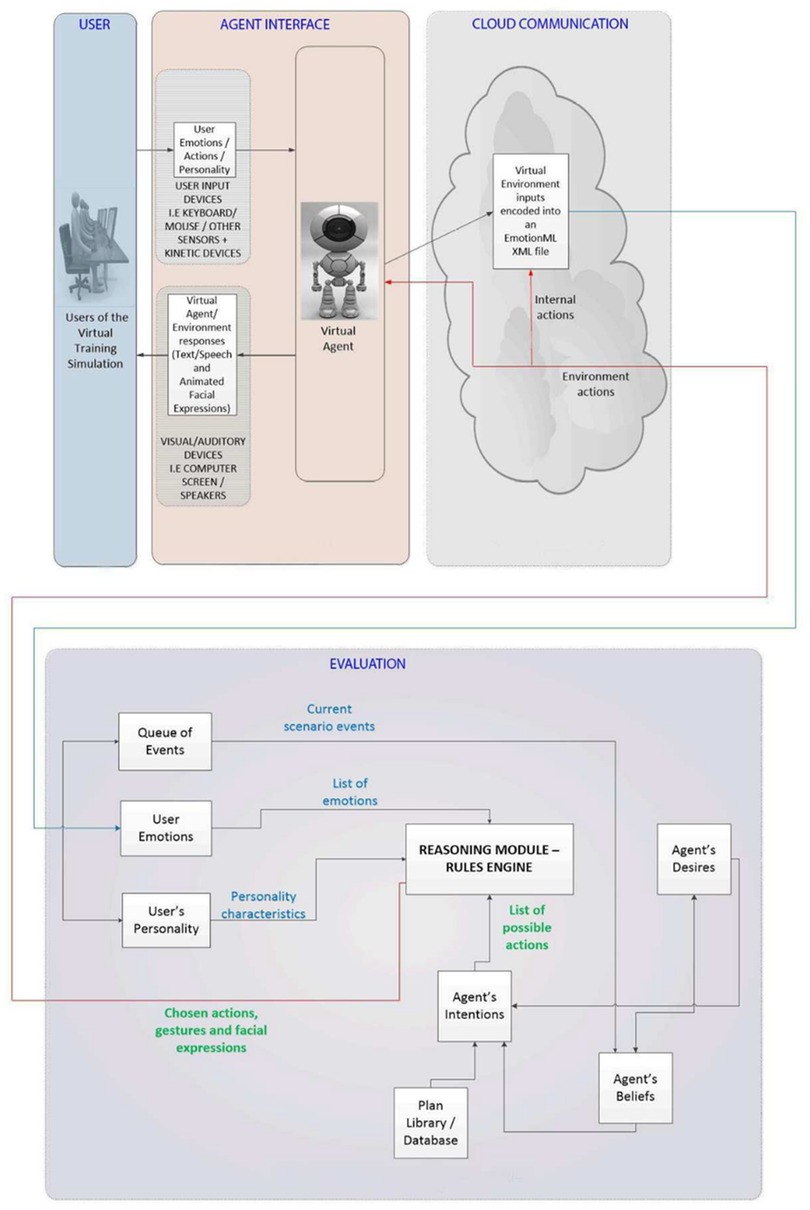

A high-level overview of the emotional virtual agent's architecture can be seen in Figure 1. The architecture comprises 4 sections. The “User” section illustrates the real world and the participant taking part in the virtual teaching simulation. The “Agent Interface” section illustrates the communication between the participant and the virtual agent. The “Cloud Communication” section illustrates the data communication between the virtual teaching environment and the emotional agent architecture on the server. Finally, the “Evaluation” section illustrates the decision-making process based on the BDI system, available plans, human participant emotions and personality. Once inputted, the events, emotions and personality characteristics of the participant are sent from the virtual learning environment to the server, as shown in the “Cloud Communication” section.
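To make the “Cloud Communication” step more concrete, the following sketch shows one way the events, emotions and personality data could be packaged by the learning environment and validated on the server. It is written in Python for brevity rather than in the C# and Java used by the actual system, and the message schema (`ParticipantUpdate`, its field names, and the 0 to 10 intensity range) is an assumption, not the format used in this work.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class ParticipantUpdate:
    """One message from the learning environment to the server (hypothetical schema)."""
    session_id: str
    event: str          # e.g. the answer option the participant selected
    emotions: dict      # emotion name -> self-reported intensity
    neuroticism: float  # score derived from the Five Factor Model test


def encode(update: ParticipantUpdate) -> str:
    """Serialise the update for transmission to the back end."""
    return json.dumps(asdict(update), sort_keys=True)


def decode(raw: str, max_intensity: int = 10) -> ParticipantUpdate:
    """Parse a message on the server side and reject out-of-range intensities."""
    update = ParticipantUpdate(**json.loads(raw))
    for name, intensity in update.emotions.items():
        if not 0 <= intensity <= max_intensity:
            raise ValueError(f"intensity for {name!r} out of range: {intensity}")
    return update


message = encode(ParticipantUpdate(
    session_id="demo-session",
    event="q1_option_a",
    emotions={"stress": 4, "agitation": 0},
    neuroticism=0.6,
))
received = decode(message)
print(received.emotions)
```

Validating on the server keeps malformed front-end input away from the rule engine, which is one reason to separate the transport format from the decision-making modules.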

The interaction between the participant and the agent interface, shown in the “User” and “Agent Interface” sections of Figure 1, is performed using traditional input devices, such as the keyboard and mouse. The participants take part in an interactive 3D virtual teaching scenario. The communication between the participant and the virtual tutor/patient is achieved using dialog boxes. The virtual tutor has no embodiment within the virtual environment. It presents messages and feedback to the participant via text boxes. The virtual patient is represented within the virtual environment by a 3D human model. It is controlled using scripted behaviours; however, the virtual tutor can alter the selection of behaviour and the mood of the virtual patient based on the responses, emotions, and personality of the human participant.

The participants have a first-person view of the virtual teaching environment and select their choice of action using selection boxes, as shown in Figure 2. Participants input their personality type and their current emotional status by selecting the type and intensity of their emotions, using selection grids. This method of emotional input is used as it is a quick and reliable way for collecting information on the emotional status of a trainee in a real time environment.

Figure 2. A screenshot of the virtual teaching environment and an interaction with the virtual agent. On-screen text: “Please enter a scale for the emotion Stress and then reply to the question below” (Current Value 0); “Please enter a scale for the emotion Agitation and then reply to the question below” (Current Value 0); “Please select Emotions”; “(Q1 of 5) Should I be here in this place?”, with the response options “I know you are worried about being here in hospital. Did you need to use the toilet?”, “You are in hospital and you have to stay in for quite some time” and “Please stop talking to me and interrupting me while I am trying to do the medicine round.”

The BDI model is used as the basis for interactive virtual environment decision-making in the emotional virtual agent's architecture. The virtual tutor's beliefs are created and updated based on its desires and current scenario events. The tutor uses dialogue boxes for teaching the participants and prompting them to perform different actions. The participant responds by performing the required actions and replies to the tutor using multiple choice and true/false responses.

As illustrated in Figure 1, the decision-making process consists of the following modules:

• The agent's Beliefs module accepts as input events from the current scenario and the agent's desires. Based on these inputs, the module outputs a list of the agent's beliefs to the agent's desires and intentions modules. For example, a scenario input could be a virtual patient that needs help finishing his meal and the tutor's desire to provide the needed help to the patient. The outputs of the module would include the intention to ask a participant to help the patient and the desire to follow through by confirming at a later time that the participant fulfilled her task.

• The agent's Desires module accepts as input a list of the agent's beliefs and outputs a list of the agent's desires to both the agent's beliefs and agent's intentions modules. Based on the example illustrated above, the module could receive the desire of the agent to confirm that the participant helped the virtual patient finish his meal. The module would then output the intention of asking the participant at a later time if she fulfilled the task of helping the virtual patient and if the patient did, indeed, manage to finish his meal.

• The agent's Intentions module accepts as input a list of possible plans, the agent's desires and the agent's beliefs. It outputs a list of possible actions to the reasoning module. For example, inputs could include the plan of the tutor to ask the participant to help the virtual patient finish his meal by helping him sit at the table and cut the meat in manageable pieces, the desire of the tutor to help the patient and the belief that the patient needs the participant's help for finishing his meal.

• The agent's Reasoning module accepts as inputs a list of possible participant responses to the tutor's question and the participant's emotions and personality traits. It outputs the chosen actions to be communicated to the participant and a set of gestures and facial expressions to be used by the virtual patient's 3D avatar. For example, if a participant's inputs indicate that they are tired and not very empathetic, then the tutor would ask her in a firmer way to help the virtual patient. The tutor would be less firm when communicating with a rested participant who is more likely to feel empathy for the patient who has difficulty finishing his meal.
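The data flow between the four modules can be sketched as a chain of small functions. This is an illustrative Python outline only, not the rule base used in this work: the lookup tables, the `tiredness` and `empathy` inputs, and the thresholds are assumptions, and the gestures and facial expressions produced by the Reasoning module are omitted for brevity.

```python
# Hypothetical lookup tables standing in for the expert-authored rule base.
EVENT_TO_BELIEF = {"patient_struggling_with_meal": "patient_needs_help_eating"}
BELIEF_TO_DESIRE = {"patient_needs_help_eating": "patient_finishes_meal"}
PLANS = {"patient_finishes_meal": ["ask_participant_to_help_with_meal"]}


def beliefs_module(events, desires):
    """Scenario events and current desires -> beliefs."""
    beliefs = {EVENT_TO_BELIEF[e] for e in events if e in EVENT_TO_BELIEF}
    # Desires that are still held are recorded as beliefs about pending goals.
    beliefs |= {f"pending:{d}" for d in desires}
    return beliefs


def desires_module(beliefs):
    """Beliefs -> desires."""
    return {BELIEF_TO_DESIRE[b] for b in beliefs if b in BELIEF_TO_DESIRE}


def intentions_module(plans, desires, beliefs):
    """Possible plans, desires and beliefs -> candidate actions."""
    # Keep only desires that are grounded in a current belief.
    supported = {BELIEF_TO_DESIRE[b] for b in beliefs if b in BELIEF_TO_DESIRE}
    return [a for d in sorted(desires & supported) for a in plans.get(d, [])]


def reasoning_module(actions, emotions, traits):
    """Candidate actions plus participant state -> chosen action and tone."""
    if not actions:
        return None
    # Thresholds are illustrative assumptions, not values from the study.
    firm = emotions.get("tiredness", 0) >= 6 and traits.get("empathy", 10) <= 4
    return {"action": actions[0], "tone": "firm" if firm else "gentle"}


def run_cycle(events, emotions, traits):
    """One pass through Beliefs -> Desires -> Intentions -> Reasoning."""
    beliefs = beliefs_module(events, desires=set())
    desires = desires_module(beliefs)
    actions = intentions_module(PLANS, desires, beliefs)
    return reasoning_module(actions, emotions, traits)


tired = run_cycle(["patient_struggling_with_meal"], {"tiredness": 8}, {"empathy": 3})
rested = run_cycle(["patient_struggling_with_meal"], {"tiredness": 2}, {"empathy": 8})
print(tired["tone"], rested["tone"])
```

Both participants receive the same action; only the tone of delivery differs, which mirrors the meal example in the list above.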

Participant emotions and personality in the proposed architecture are inputted by the participant using the keyboard/mouse within the virtual learning environment. These are then transferred over a network to the server/back-end to be interpreted by the inference engine. The participants input their emotions and personality by replying to multiple choice questions, selecting one or more emotions and their intensity (using a Likert scale). As discussed above, for this research the Five Factor Model (also known as the “Big 5”) personality test (35) is used for measuring the participant's level of neuroticism.

The participant's personality and emotions and the virtual agent's desires, intentions and beliefs are the inputs to the emotion creation and revision module. Emotions affect how an agent can best respond to requests. Personality affects the intensity and duration of these emotions. For example, using the Myers-Briggs Personality Types, a Facilitator Caretaker, with the Myers-Briggs personality preferences Extraversion, Sensing, Feeling, Judging, is more likely to feel compassion and sadness for a patient than a Conceptualizer Director, with the preferences Introversion, iNtuition, Thinking, Judging.

The virtual agents in our model predict how to respond to the indicators of a participant's emotions depending on an inference of the participant's personality. The participant's neuroticism level is then used to adjust the scale grade of the reported emotion. As people with higher levels of neuroticism tend to over-report their negative emotions, the rule is to decrease the level of emotion reported on a 1–10 scale by participants with higher than average levels of neuroticism and increase the reported level for participants with lower than average levels of neuroticism.
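A minimal sketch of such an adjustment rule is given below. It assumes a symmetric correction: reports from participants above the average neuroticism level are scaled down and reports from participants below it are scaled up. The size of the correction (`step`), the function name and the way the average is supplied are all assumptions, since the exact values are not stated here.

```python
def adjust_reported_emotion(reported, neuroticism, mean_neuroticism,
                            step=1, low=1, high=10):
    """Correct a self-reported emotion intensity for neuroticism.

    `step` is a hypothetical correction size; the result is clamped so that
    it stays on the same 1-10 scale the participant used.
    """
    if not low <= reported <= high:
        raise ValueError(f"reported intensity must be between {low} and {high}")
    if neuroticism > mean_neuroticism:
        adjusted = reported - step  # likely over-reported
    elif neuroticism < mean_neuroticism:
        adjusted = reported + step  # likely under-reported
    else:
        adjusted = reported
    return max(low, min(high, adjusted))


# A stress report of 7 from two participants on either side of the average.
print(adjust_reported_emotion(7, neuroticism=0.8, mean_neuroticism=0.5))
print(adjust_reported_emotion(7, neuroticism=0.2, mean_neuroticism=0.5))
```

Clamping matters at the ends of the scale: a report of 10 from a participant with low neuroticism stays at 10 instead of leaving the scale.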

A set of rules adapts the responses of the virtual tutor based on the trainee's emotions, emotion strength, and personality traits.

Our system outputs the feedback to be given to the participant, and information that identifies how the participant's response affected the emotional state of the virtual patient. This information can then be used to adjust the virtual patient's behaviour and animations. For example, an animation can be played, and an emoticon can be displayed that shows the patient's resulting emotional state. This approach allows the system to decide which actions will be performed by the virtual agent and what types of gestures, facial expressions and emoticons will be used for interacting with the participant within the virtual teaching environment. In a similar manner, depending on the virtual patient feedback, which is directly related to the emotion felt by the patient, an emoticon is displayed on the screen that shows clearly how the patient feels. The possible emotions portrayed are happiness, sadness, disgust, fear and anger. Figure 3 illustrates an example of sadness for the virtual patient.

Figure 3. Use of animations, sounds and emoticons for portraying the emotion of sadness for the virtual patient. “A few minutes for someone with dementia is meaningless. Mrs Smith may be unable to work out how long that might be. The response may make her feel agitated that she has to wait. She may be left feeling that she is not being taken seriously. This may lead to her getting up out of her chair very quickly and asking the same question. PLEASE TRY AGAIN.”
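The link between a participant's response, the patient's resulting emotion, and the cues shown on screen can be sketched as a simple lookup. The Python below is an illustration under stated assumptions: the five emotions come from the text, but the asset names, the response identifiers and the second feedback string are hypothetical placeholders, and the first feedback string is an abbreviated version of the message in Figure 3.

```python
from enum import Enum


class PatientEmotion(Enum):
    HAPPINESS = "happiness"
    SADNESS = "sadness"
    DISGUST = "disgust"
    FEAR = "fear"
    ANGER = "anger"


# Hypothetical asset names for the emoticon and animation tied to each emotion.
CUES = {e: (f"emoticon_{e.value}", f"anim_{e.value}") for e in PatientEmotion}

# Hypothetical outcome table: response option -> (resulting emotion, feedback).
OUTCOMES = {
    "wait_a_few_minutes": (
        PatientEmotion.SADNESS,
        "A few minutes for someone with dementia is meaningless. PLEASE TRY AGAIN.",
    ),
    "acknowledge_worry": (
        PatientEmotion.HAPPINESS,
        "Placeholder feedback for a response that reassures the patient.",
    ),
}


def present_feedback(response_id):
    """Return the feedback text and the cues the front end should display."""
    emotion, feedback = OUTCOMES[response_id]
    emoticon, animation = CUES[emotion]
    return {
        "feedback": feedback,
        "emotion": emotion.value,
        "emoticon": emoticon,
        "animation": animation,
    }


result = present_feedback("wait_a_few_minutes")
print(result["emotion"], result["emoticon"], result["animation"])
```

Keeping the emotion-to-cue mapping separate from the response-to-emotion table means new scenarios only need new outcome entries, while the visual vocabulary stays fixed.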

The chosen scenario for the data collection was designed by a team of nursing tutors that specialise in mental health and, specifically, in treating patients with dementia. The participants for the case studies consisted of nine nursing students with an interest in mental health. The data collection consisted of:

1. A pre-study interview that gathered background information about the participant;

2. An online personality test (Five Factor Model). This was used to determine the level of neuroticism of the participants;

3. Two teaching sessions. During the sessions, the participants interacted with a virtual patient with dementia and responded to common questions asked by such patients. During the interaction, interruptions occurred that had to be dealt with (these were random in occurrence and duration, in the same way these would occur in the real world), such as another patient asking for water or the fire alarm going off. These interruptions were chosen based on input from experienced nurses. The training comprised two different scenarios:

a. Scenario 1—A teaching scenario where the feedback given to the participants did not depend on their emotions and personality and the patient did not demonstrate any visual or auditory change in their mood. This session was delivered as a point of reference to the participant on how systems that do not cater for different personalities and emotions work;

b. Scenario 2—an updated system based on the model designed for this research; the second scenario provided visual (emoticons and animations) and auditory feedback on the patient's emotional state. These were based on the trainee's emotions, personality, and responses to the patient's questions.

4. An interview was conducted for gathering data on how the teaching session affected the learning process, emotional states and general satisfaction of the participants. Interview questions (Appendix A) were semi-structured and the participants were prompted to discuss and expand their replies.

A set of qualitative data was collected using observation and semi-structured interviews. The data was used to draw out patterns of how visual and auditory representations of a virtual patient's mood may affect the learning process, emotional states, and general satisfaction of the trainees.

• Data analysis

This section presents and interprets the qualitative results from the testing of the developed emotional virtual agent's architecture.

The participants were selected using convenience sampling from a 3-year nursing course at a university. Students were in their early 20s, 7 were female and 2 male, a representative ratio of nursing students according to statistics (39). Inclusion criteria included that the participants were taking part in their hospital placements in the mental health ward and were working with patients with dementia. The study was approved by the university's ethical committee and the participants signed a consent statement before participating. The sample size was deemed to be large enough for a small-scale qualitative test of the technology to evaluate feasibility and acceptability. A future quantitative study with a larger number of participants will be used to evaluate statistical significance.

A summative content analysis was first used to identify themes related to the research objectives. In the table (Appendix B) the number of occurrences that support each research theme for each of the nine participants are listed. The research objectives (RO) are as follows:

• RO1: To what extent does a virtual learning scenario incorporating emotional virtual agents provide a realistic experience?

• RO2: To what extent does the incorporation of emotions into virtual agents stimulate a better set of responses from the human user?

• RO3: To what extent do emotional virtual agents improve the learning experience?

• RO4: To what extent do emotional virtual agents allow the learners to recognise their emotions and understand how these affect the virtual agents and, subsequently, allow them to feel more empathy towards them?

Below is a summary of the occurrences for each RO from interviewing the participants

Table 1 illustrates how the scenario that provided text responses that were affected by the participant's personality traits and emotions, in addition to using visual and auditory cues, was more successful in providing a more realistic experience, better responses, improved learning and increased empathy.

In more detail, Increased Empathy was the research objective with the highest number of occurrences. This is a very important part of what we tried to achieve with our VR scenario educational design, as we aimed for a patient-centered educational design, something extremely important in general but even more so in the mental health sector. The next RO, with 26 occurrences, was Realistic Experience, something that a VR experience can certainly provide if designed correctly. Improved Learning Experience and Better Responses to patient questions followed closely with a still high 23 and 21 occurrences respectively, showing that all of the ROs were sufficiently achieved.

In the remainder of this section, we will provide a detailed analysis of the qualitative data collected for this study. The analysis is organised according to the research objectives outlined at the start of this section.

• To what extent does a virtual learning scenario incorporating emotional virtual agents provide a realistic experience

All participants (n = 9) reported that the scenario questions were realistic, based on their experience of roleplaying teaching and actual first-hand experience of working with people with dementia during hospital placement, and made them feel that they were interacting with a real patient, especially in the case that the patients reacted in a way that made their emotions clear. They also felt that the realism of the scenario could help them improve their nursing skills, especially with the integrated distractions which corresponded to what they would expect to have to deal with in a real-world scenario. They found that the realism of the system could provide a good alternative to training with real people either at university or during their placements. The realistic sounds from the patients (laughter, screams etc.) were also found to be very useful and increased the realism of the experience.

One participant stated in the initial interview that took place before the teaching session that he believed that online teaching would never replace practicing with real people.

“I’m a firm believer that simulated and online experiences will never replace the total quality of experiencing that in practice.” (Participant 9).

After the session the same participant reported that he found the online teaching to be much more realistic than expected:

“I think that's exactly the kind of challenges that a healthcare worker will have. They will go into a bay, they’ll be approached by one patient but there will be others also demanding on their time, there’ll be other important tasks and it's important to make sure that the patient is cared for and you also meet the needs of others. So, the scenarios felt very real to me.” (Participant 9).

• To what extent does the incorporation of emotions into virtual agents stimulate a better set of responses from the human user

All the participants reported that the emotionally enhanced teaching scenario made them realise how their actions can affect the patient's mood and subsequent reactions and how by being calm and not letting their emotions guide their actions they can help the patients much more. They also agreed that seeing how happy the patient was because of their care also improved their mood. The affective virtual agents also made the participants reflect on their work, when the results were positive but, mostly, when they did not succeed in keeping the patient happy. For example, one participant stated:

“… in one of the questions the patient was shouting, he wanted to get out of there. If I was shouting back it would have escalated, it would have made the patient more angry, whereas if I just calmly say we can help then at least the patient's hear that there is some help for them.” (Participant 1).

He went on to say that reporting these emotions helped him try to control them:

“… so I’ve got to keep it in and just find an easier, calmer way to say, ‘Let's sit down and we’ll sort it out.’” (Participant 1).

The participant also said that asking him to report these emotions helped him think about how he felt and prompted him to try and choose the answer that would make it less likely to transfer his negative emotions onto the patient.

“Yeah, because there were some answers that I was reading, and I thought if I answer with that it will make the scenario escalate and make the patient more agitated and angry if I tell them, “Oh just sit down, I’ll deal with you later.” They don’t feel like they’re being cared for, so they’ll just want to leave, they won’t want to sit down and talk to you but if you say, ’Sit down, I’ll get you a drink and we’ll talk about how worried you are.’” (Participant 1).

The online teaching environment also helped them respond better: they felt more secure not having to deal with real patients while still at the initial stages of learning, because in the real world the embarrassment of getting one question wrong would sometimes lead them to get more answers wrong.

• To what extent do emotional virtual agents improve the learning experience?

Again, all the participants stated that the feedback based on their responses made them understand clearly how their answers affected the patient, and it allowed them to better understand how important it is to know how the patient feels at every stage of their interaction. They reported that the experience seemed realistic and close to what they would expect to do in real life, and this helped them learn better. They also found it more enjoyable and less stressful, as real-life teaching scenarios sometimes made them feel uncomfortable and as if they were being judged by their tutor. They felt that these teaching sessions within a “safe” environment would allow them to practise and improve their skills before attempting to work with a real patient. For example:

“So, like when we did it face to face with a member of staff in a way you could feel that you were being judged by that member of staff. I suppose to test out your skills first online then you’re sort of in a safer environment than when you’re face to face with somebody. Learning this online is more of a safe environment to get it wrong and where you can get your skills up to scratch really to be able to do it face to face with somebody.” (Participant 3).

The teaching sessions also made them realise that this kind of teaching can also be applied to other patients with different conditions where it would also help improve the learning experience. Responses included:

“I think that's the thing that's missing in practice is that nobody tells you, you are so driven within the moment of stress that you don’t realise you are and you do need to take a step back. I think that's perhaps the difference here between the virtual environment and the real environment, there is no button in the real environment to press, to gauge my anxiety or my stress, to help me realise it, to help me control it.” (Participant 9).

• To what extent do emotional virtual agents allow the learners to recognise their emotions and understand how these affect the virtual agents and, subsequently, allow them to feel more empathy towards them?

The extent to which affective virtual agents allow carers to increase their empathy was investigated. Both the replies to the interview questions and the observation from the researcher provided data that supported the research objective. More specifically the participants reported that they realised how patients can feel threatened when carers let their negative emotions show and, on the other hand, how by seeing a patient being calm and happy they know that they succeeded in giving them the right care and they did not let their stress, anger or other emotions affect their work. For example, one participant commented on how having to report how stressed and agitated he was before every reply to a question helped him understand better how those emotions could affect the patient.

“Well, it made me really think of the patient. That it could escalate the patient's condition and make him feel threatened if the trainee let those emotions show.” (Participant 1).

In the same way, he noted that reporting those emotions helped him think about them more, and subsequently made him try to control them.

“Yeah, if I’m shouting, even though I might feel angry and just want to finish, if I show that it might make the patient feel threatened.” (Participant 1).

He also stated that being asked to report those emotions helped him think about how he felt and try to choose the right answer, one that would not affect the patient negatively.

“Yes, you may not have the time to and you may need to do other stuff but that way at least you’ve calmed the patient down a little.” (Participant 1).

The reactions of the patient in the second scenario made them realise clearly how the patient felt and made them try even more to be compassionate and calm and not allow their actions to have a negative impact on the patient. All the participants agreed that a happy patient made them feel happy and contented too whereas a distressed patient affected them negatively. Responses included:

“Yes, because if you feel you’re affected emotionally by the situation you do think about what it is you’ve got to say next and what you’re going to do next because you know that you don’t want your emotions to impact on the patient because it's not about you, it's about the patient and that side of care.” (Participant 4).

The results of the comparison between the two different systems are outlined below. The system that provided the visual and auditory representations of the virtual patient's emotions based on the participant's emotions, personality and responses was reported by the nine participants to have the following advantages:

1. Provided a more realistic representation of the carer/patient interaction;

2. Performed better in helping the carers:

a. recognise how the patients feel;

b. recognise and evaluate their own emotions;

c. realise how their actions can affect the patient's emotional state;

d. realise how their emotions can affect the patient's emotional state;

e. empathise with the patients.

To summarise, all (100%) of the participants reported, when using the enhanced scenario, a more realistic representation of carer/patient interaction; better recognition of the patients' feelings; recognition and assessment of emotions; a better realisation of how feelings can affect patients' emotional state and how they could better empathise with the patients.

Our aim was to explore how intelligent virtual agents in healthcare provision teaching simulations can improve the human participant's learning experience by incorporating visual and auditory representations of their emotional states. These emotional states and the virtual agent's responses were adapted based on an indication of the participant's emotions and personality.

We found that visual and auditory representations of the patient's emotional state based on our adapted BDI architecture positively affected the learning process, emotional states, and general satisfaction of trainee nurses. Two scenarios were used: one that did not include a virtual patient with different mood states, and an updated system that provided visual and auditory feedback based on the patient's emotional state.
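To make the idea of an emotionally adaptive virtual patient concrete, the following is a minimal illustrative sketch in Python. It is not the adapted BDI architecture used in this study: the class names, the 0 to 1 scales, the weights and the thresholds are all hypothetical assumptions chosen for illustration. It only shows how a patient's displayed mood could, in principle, be driven by the trainee's chosen response, self-reported emotion and a personality score.

```python
from dataclasses import dataclass


@dataclass
class TraineeState:
    # Hypothetical inputs, both on an assumed 0.0 to 1.0 scale.
    stress: float       # self-reported before each reply (0 = calm)
    neuroticism: float  # illustrative personality score


@dataclass
class VirtualPatient:
    mood: float = 0.0   # assumed scale: -1.0 (distressed) to 1.0 (happy)

    def update(self, response_quality: float, trainee: TraineeState) -> str:
        """Shift the mood after one reply and return the expression to show.

        response_quality is an assumed rating of the chosen answer,
        from -1.0 (dismissive) to 1.0 (calm and caring).
        """
        # Assumption: stress "leaks" more strongly for higher neuroticism.
        emotional_leak = trainee.stress * (0.5 + 0.5 * trainee.neuroticism)
        # Illustrative weights: the reply matters slightly more than the leak.
        delta = 0.6 * response_quality - 0.4 * emotional_leak
        # Keep the mood within the assumed [-1.0, 1.0] range.
        self.mood = max(-1.0, min(1.0, self.mood + delta))
        return self.expression()

    def expression(self) -> str:
        # Map the numeric mood to a coarse visual/auditory cue.
        if self.mood > 0.3:
            return "happy"
        if self.mood < -0.3:
            return "distressed"
        return "neutral"


if __name__ == "__main__":
    patient = VirtualPatient()
    calm_trainee = TraineeState(stress=0.0, neuroticism=0.5)
    print(patient.update(response_quality=1.0, trainee=calm_trainee))
```

In this sketch a calm, caring reply from an unstressed trainee moves the patient towards a "happy" expression, while a dismissive reply from a highly stressed trainee moves the patient towards "distressed", mirroring the kind of feedback the participants described.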

There was no negative feedback regarding the second, emotionally enhanced, scenario. After completing the emotionally enhanced scenario, some of the participants even had a complete change of heart regarding the realism and usefulness of online learning environments. All the nurses reported that the second scenario, using the enhanced learning system, was more realistic and helped them better realise how their actions and emotions can affect a patient and, thus, made them more empathetic and improved their learning experience.

Rich data collected from the nine participants support the conclusion that the adapted scenario with the emotionally enhanced patients allowed them to:

• Experience a more realistic representation of carer/patient interaction;

• Better recognise the patients' feelings;

• Better realise how feelings can affect patients' emotional state;

• Empathise with the patients.

These results will be useful for researchers in designing and conducting future studies relevant to a broader range of healthcare providers. By creating more varied scenarios focusing on different target groups, e.g., people with learning disabilities or people who have had a stroke, the architecture can be tested further and modified, if necessary, to better cater for different health conditions.

Previous research in this area has focused on:

1. Non-interactive videos in which the participants had no hands-on experience, as noted in the introduction of this paper with regard to the systematic review of nursing education teaching technologies (6).

2. Scenarios focusing only on the emotions of the participants and not on both their emotions and their personality (40, 41).

Our work can be adapted further for use in other, more varied fields, including crisis management, with both formal (soldiers, firefighters, law enforcement officers) and informal personnel. This can be done by using a different set of emotions for the participants; these can be negative, positive, or a mix of both, depending on the field and scenarios used. In future work, we plan to incorporate online assessment instruments into the virtual scenario to collect quantitative data on how emotionally enhanced virtual agents could improve the learning experience.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving humans were approved by University of Wolverhampton ethics committee. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

ML: Conceptualization, Data curation, Formal Analysis, Investigation, Methodology, Project administration, Validation, Visualization, Writing – original draft, Writing – review & editing. SA: Writing – review & editing. PL: Writing – review & editing. TH: Conceptualization, Methodology, Writing – review & editing. FL: Validation, Writing – review & editing. PK: Writing – review & editing. DS: Conceptualization, Formal Analysis, Methodology, Validation, Visualization, Writing – original draft, Writing – review & editing.

The authors declare that no financial support was received for the research, authorship, and/or publication of this article.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

1. Yuan HB, William BA, Fang JB, Ye QH. A systematic review of selected evidence on improving knowledge and skills through high-fidelity simulation. Nurse Educ Today. (2012) 32(3):294–8. doi: 10.1016/j.nedt.2011.07.010

2. Arthur C, Levett-Jones T, Kable A. Quality indicators for design and implementation of simulation experiences: a delphi study. Nurse Educ Today. (2012) 33(11):1357–61. doi: 10.1016/j.nedt.2012.07.012

3. Lee SO, Eom M, Lee JH. Use of simulation in nursing education. J Korean Acad Soc Nurs Educ. (2007) 13(1):90–4. pISSN: 1225-9578. eISSN: 2093-7814.

4. Shin S, Park JH, Kim JH. Effectiveness of patient simulation in nursing education: meta-analysis. Nurse Educ Today. (2015) 35(1):176–82. doi: 10.1016/j.nedt.2014.09.009

5. Boots LMM, de Vugt ME, van Knippenberg RJM, Kempen GIJM, Verhey FRJ. A systematic review of internet-based supportive interventions for caregivers of patients with dementia. Int J Geriatr Psychiatry. (2013) 29(4):331–44. doi: 10.1002/gps.4016

6. Baysan A, Çonoğlu G, Özkütük N, Orgun F. Come and see through my eyes: a systematic review of 360-degree video technology in nursing education. Nurse Educ Today. (2023) 128:105886. doi: 10.1016/j.nedt.2023.105886

7. Nakazawa A, Iwamoto M, Kurazume R, Nunoi M, Kobayashi M, Honda M. Augmented reality-based affective training for improving care communication skill and empathy. PLoS One. (2023) 18(7):e0288175. doi: 10.1371/journal.pone.0288175

9. Rippon TJ. Aggression and violence in health care professions. J Adv Nurs. (2000) 31(2):452–60. doi: 10.1046/j.1365-2648.2000.01284.x

10. Kang SJ, Min HY. Psychological safety in nursing simulation. Nurse Educ. (2019) 44(2):E6–9. doi: 10.1097/NNE.0000000000000571

11. Schröder M, Cowie R. Developing a consistent view on emotion-oriented computing. Lecture Notes in Computer Science. (2006):194–205. doi: 10.1007/11677482_17

12. Maples B, Pea RD, Markowitz D. Learning from intelligent social agents as social and intellectual mirrors. In: Niemi H, Pea RD, Lu Y, editors. AI in Learning: Designing the Future. Cham: Springer (2023). p. 73–89. doi: 10.1007/978-3-031-09687-7_5

13. Colombo S, Rampino L, Zambrelli F. The adaptive affective loop: how AI agents can generate empathetic systemic experiences. In: Arai K, editor. Advances in Information and Communication. FICC 2021. Advances in Intelligent Systems and Computing, Vol. 1363. Cham: Springer (2021). p. 547–59. doi: 10.1007/978-3-030-73100-7_39

14. Zall R, Kangavari MR. Comparative analytical survey on cognitive agents with emotional intelligence. Cogn Comput. (2022) 14:1223–46. doi: 10.1007/s12559-022-10007-5

15. Prendinger H. Human physiology as a basis for designing and evaluating affective communication with life-like characters. IEICE Trans Info Syst. (2005) E88-D(11):2453–60. doi: 10.1093/ietisy/e88-d.11.2453

16. Spezialetti M, Placidi G, Rossi S. Emotion recognition for human-robot interaction: recent advances and future perspectives. Front Robot AI. (2020) 7. doi: 10.3389/frobt.2020.532279

17. McColl D, Hong A, Hatakeyama N, Nejat G, Benhabib B. A survey of autonomous human affect detection methods for social robots engaged in natural HRI. J Intell Robot Syst. (2016) 82:101–33. doi: 10.1007/s10846-015-0259-2

18. Cavallo F, Semeraro F, Fiorini L, Magyar G, Sinčák P, Dario P. Emotion modelling for social robotics applications: a review. J. Bionic Eng. (2018) 15:185–203. doi: 10.1007/s42235-018-0015-y

19. Erol BA, Majumdar A, Benavidez P, Rad P, Raymond Choo K, Jamshidi M. Toward artificial emotional intelligence for cooperative social human–machine interaction. IEEE Trans Comput Soc Syst. (2020) 7:234–46. doi: 10.1109/TCSS.2019.2922593

20. Andotra S. Enhancing human-computer interaction using emotion-aware chatbots for mental health support. [Preprint]. (2023). doi: 10.13140/RG.2.2.20219.90409. [Epub ahead of print].

21. Bilquise G, Ibrahim S, Shaalan K. Emotionally intelligent chatbots: a systematic literature review. Hum Behav Emerg Technol. (2022) 2022:9601630. doi: 10.1155/2022/9601630

22. Georgeff M, Pell B, Pollack M, Tambe M, Wooldridge M. The belief-desire-intention model of agency. Lecture Notes in Computer Science. (1999):1–10. doi: 10.1007/3-540-49057-4_1

23. Dörner D, Güss CD. PSI: a computational architecture of cognition, motivation, and emotion. Rev Gen Psychol. (2013) 17(3):297–317. doi: 10.1037/a0032947

24. Clore GL, Ortony A. Psychological construction in the OCC model of emotion. Emot Rev. (2013) 5(4):335–43. doi: 10.1177/1754073913489751

25. Jiang H, Vidal JM, Huhns MN. EBDI: an architecture for emotional agents. Proceedings of the 6th International Joint Conference on Autonomous Agents and Multiagent Systems—AAMAS ‘07 (2007). doi: 10.1145/1329125.1329139

26. Sandercock J, Padgham L, Zambetta F. Creating adaptive and individual personalities in many characters without hand-crafting behaviors. Lecture Notes in Computer Science. (2006):357–68. doi: 10.1007/11821830_29

27. Chodorowski J. A case study of adding proactivity in indoor social robots using belief-desire-intention (BDI) model. Biomimetics (Basel). (2019) 4(4):74. doi: 10.3390/biomimetics4040074

28. Bratman ME, Israel DJ, Pollack ME. Plans and resource-bounded practical reasoning. Comput Intell. (1988) 4(3):349–55. doi: 10.1111/j.1467-8640.1988.tb00284.x

29. Florea A, Kalisz E. Embedding emotions in an artificial tutor. Seventh International Symposium on Symbolic and Numeric Algorithms for Scientific Computing (SYNASC’05) (2005). doi: 10.1109/synasc.2005.34

30. Johansson M, Verhagen H, Eladhari MP. Model of social believable NPCs for teacher training: using second life. 2011 16th International Conference on Computer Games (CGAMES) (2011). p. 270–4. doi: 10.1109/cgames.2011.6000351

31. Zoumpoulaki A, Avradinis N, Vosinakis S. A multi-agent simulation framework for emergency evacuations incorporating personality and emotions. In: Konstantopoulos S, Perantonis S, Karkaletsis V, Spyropoulos CD, Vouros G, editors. Artificial Intelligence: Theories, Models and Applications. SETN 2010. Lecture Notes in Computer Science, Vol. 6040. Berlin, Heidelberg: Springer (2010). p. 423–8. doi: 10.1007/978-3-642-12842-4_54

32. D’Haro LF, Kim S, Yeo KH, Jiang R, Niculescu AI, Banchs RE, et al. CLARA: a multifunctional virtual agent for conference support and touristic information. In: Lee G, Kim H, Jeong M, Kim JH, editors. Natural Language Dialog Systems and Intelligent Assistants. Cham: Springer (2015). p. 233–9. doi: 10.1007/978-3-319-19291-8_22

33. Philip P, Micoulaud-Franchi JA, Sagaspe P, Sevin E, Olive J, Bioulac S, et al. Virtual human as a new diagnostic tool, a proof of concept study in the field of major depressive disorders. Sci Rep. (2017) 7:42656. doi: 10.1038/srep42656

34. Collins EC, Prescott TJ, Mitchinson B. Saying it with light: a pilot study of affective communication using the MIRO robot. Biomimetic and Biohybrid Systems: 4th International Conference, Living Machines 2015, Barcelona, Spain, July 28–31, 2015, Proceedings 4. (2015). Vol. 9222. p. 243–55. doi: 10.1007/978-3-319-22979-9_25

35. McCrae RR, John OP. An introduction to the five-factor model and its applications. J Pers. (1992) 60(2):175–215. doi: 10.1111/j.1467-6494.1992.tb00970

36. Gallagher DJ. Extraversion, neuroticism and appraisal of stressful academic events. Pers Individ Dif. (1990) 11(10):1053–7. doi: 10.1016/0191-8869(90)90133-c

37. Sambol S, Suleyman E, Scarfo J, Ball M. Distinguishing between trait emotional intelligence and the five-factor model of personality: additive predictive validity of emotional intelligence for negative emotional states. Heliyon. (2022) 8(2):e08882. doi: 10.1016/j.heliyon.2022.e08882

38. Kumar VV, Tankha G. Association between the big five and trait emotional intelligence among college students. Psychol Res Behav Manag. (2023) 16:915–25. doi: 10.2147/PRBM.S400058

39. The Royal College of Nursing. RCN Nursing in Numbers 2023|Publications|Royal College of Nursing. (n.d.). Available online at: https://www.rcn.org.uk/Professional-Development/publications/rcn-nursing-in-numbers-english-uk-pub-011-188 (accessed June 2, 2023).

40. Mano LY, Mazzo A, Neto JR, Meska MH, Giancristofaro GT, Ueyama J, et al. Using emotion recognition to assess simulation-based learning. Nurse Educ Pract. (2019) 36:13–9. doi: 10.1016/j.nepr.2019.02.017

41. Abajas-Bustillo R, Amo-Setién F, Aparicio M, Ruiz-Pellón N, Fernández-Peña R, Silio-García T, et al. Using high-fidelity simulation to introduce communication skills about end-of-life to novice nursing students. Healthcare. (2020) 8(3):238. doi: 10.3390/healthcare8030238

Keywords: affective, virtual, reality, VR, nursing, intelligent, teaching, simulation

Citation: Loizou M, Arnab S, Lameras P, Hartley T, Loizides F, Kumar P and Sumilo D (2024) Designing, implementing and testing an intervention of affective intelligent agents in nursing virtual reality teaching simulations—a qualitative study. Front. Digit. Health 6:1307817. doi: 10.3389/fdgth.2024.1307817

Received: 5 October 2023; Accepted: 3 April 2024;

Published: 18 April 2024.

Edited by:

Edith Talina Luhanga, Carnegie Mellon University Africa, Rwanda

Reviewed by:

Jeanne Markram-Coetzer, Central University of Technology, South Africa

© 2024 Loizou, Arnab, Lameras, Hartley, Loizides, Kumar and Sumilo. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Michael Loizou, michael.loizou@plymouth.ac.uk
