- ¹Research Center on Ethical, Legal, and Social Issues, Osaka University, Suita, Japan

- ²Graduate School of Human Sciences and Headquarters, Osaka University, Suita, Japan

- ³Department of Biomedical Ethics and Public Policy, Graduate School of Medicine, Osaka University, Suita, Japan

Patients and members of the public are the end users of healthcare, but little is known about their views on the use of artificial intelligence (AI) in healthcare, particularly in the Japanese context. This paper reports on an exploratory two-part workshop conducted with members of a Patient and Public Involvement Panel in Japan, which was designed to identify their expectations and concerns about the use of AI in healthcare broadly. A total of 55 expectations and 52 concerns were elicited from workshop participants, who were then asked to cluster and title these expectations and concerns. Thematic content analysis was used to identify 12 major themes from this data. Participants had notable expectations around improved hospital administration, improved quality of care and patient experience, positive changes in roles and relationships, and reductions in costs and disparities. These were counterbalanced by concerns about problematic changes to healthcare and a potential loss of autonomy, as well as risks around accountability and data management, and the possible emergence of new disparities. The findings reflect participants' expectations for AI as a possible solution for long-standing issues in healthcare, though their overall balanced view of AI mirrors findings reported in other contexts. Thus, this paper offers initial, novel insights into perspectives on AI in healthcare from the Japanese context. Moreover, the findings are used to argue for the importance of involving patient and public stakeholders in deliberation on AI in healthcare.

1. Introduction

Public and private investments in the development of artificial intelligence (AI) technologies for healthcare are being rapidly expanded. AI for healthcare as currently conceived involves the ability to process and learn from massive amounts of data, and includes machine and deep learning, expert systems, natural language processing, healthcare informatics, and cloud computing, as well as applications in robotics (1). Despite these investments, there is a growing recognition that little is known about the views of the end-users of these systems, including patients, members of the public, and healthcare professionals (HCPs) (2). Given the particular challenges of implementing AI in healthcare, including the sensitivity of the data involved, there have been calls for increased involvement of stakeholders, including patients and the public, in the development of AI for healthcare (1).

There is a growing body of literature seeking to fill this gap, which has been captured in a recent review of the field by Young et al. (3), who found that across the 23 studies included in their review, patients and members of the public generally expressed positive views on the use of AI, but also “had many reservations and preferred human supervision”. Two recent studies, by Richardson et al. (2) and Musbahi et al. (4), sought to elicit patient and public perspectives on the application of AI in healthcare more broadly, and similarly found some ambivalence towards AI, with recognition of both its potential benefits and its risks.

However, despite groundbreaking efforts such as those by Muto and Inoue (5) and Kodera et al. (6), little research to date has examined patient and public opinions on AI in healthcare in the Japanese context. There has also been little dissemination of these results more broadly, as a recent review by Young et al. (3) of English-language literature reported no studies from the Japanese context. Yet, insights from the Japanese context can provide a counter-balance to an overly Western-dominated discourse on AI (7).

As Ishii et al. (8) indicate, Japan is a key case study through which to explore the opportunities and issues of AI in healthcare as it has “a technologically savvy populace, well-developed healthcare system founded on universal coverage, and pre-existing academic, government, and industrial collaborative alignments”. Japan has been actively investing in the development of AI for healthcare purposes, while the regulatory environment is being adjusted to facilitate the agglomeration and use of personal data on health (8). These advances are being carried out in part through a Cross-Ministerial Strategic Innovation Promotion Program implemented by the national government, which includes a target for the creation of ten “AI Hospitals”, receiving funding to integrate AI into healthcare practice (9, 10). Osaka University Hospital is one of the five hospitals where this process is currently being accelerated (11).

Patient and Public Involvement (PPI) in healthcare is increasingly relied on to ensure that advances in healthcare best meet the needs of their end-users (12). A collaborative research project entitled “Ensuring the benefits of AI in healthcare for all: Designing a Sustainable Platform for Public and Professional Stakeholder Engagement” (the AIDE Project) and jointly funded by JST-RISTEX in Japan and the UKRI in the UK is being carried out between teams at Osaka University in Japan and the University of Oxford in the UK. Now in its fourth and final year, the aim of the AIDE Project is to advance stakeholder engagement for AI in healthcare. For this reason, our work on the AIDE Project has taken a novel approach, guided by Patient and Public Involvement Panels (PPIPs) established in both Osaka and Oxford, to advise the research teams and provide insights on AI in healthcare from a patient and public perspective. It is noteworthy that despite initial efforts by the Japan Agency for Medical Research and Development to increase awareness of the importance of PPI (13), PPIPs remain few in number in the Japanese context, and there is a lack of infrastructure and structured support for their establishment.

We conducted a two-part, exploratory workshop with members of the Osaka PPIP to understand participants' expectations and concerns about AI in healthcare. In this paper, we report the results of an analysis of the workshop outcomes, with the aim of providing a snapshot of patient and public perceptions of AI in healthcare in the Japanese context and identifying areas for future attention.

2. Methods

At the time of the workshop, the Osaka AIDE PPIP was made up of 11 members, with a balanced representation of patients, caregivers, and members of the public. Panel members ranged in age from their 20s to their 70s, with a balance of participants identifying as male and female. All participants were Japanese, and although no objective measure was taken of participant knowledge about AI, no participants declared having particular expertise in the field of AI.

Following an initial orientation session for the PPIP in early 2021 in which a researcher from the Osaka University AI Hospital Project was invited to speak to the Panel about advances in AI for healthcare, a two-part workshop was conducted with PPIP members to elicit their expectations and concerns in relation to AI. Ethics approval was received through the Osaka University Graduate School of Medicine [Number 20083(T1)-3], and informed consent was received from all PPIP members for the use of this data for research. Feedback was given to PPIP members following the workshop on the themes which were identified from the data.

The workshop sessions were held on February 25 and April 21, 2021. PPIP members were divided into three groups and asked to freely record their expectations and concerns about the use of AI in healthcare, without input from the research team. A Japanese method was selected for the workshops: the “Science Café” participatory workshop approach, proposed by Nakagawa and Yagi (14), was adapted so as to enable the workshop to be conducted online due to the COVID-19 pandemic. Nakajima et al. (15) propose the use of the online platform Apisnote (16) to facilitate idea-sharing in online workshops. Aligned with this approach, in the first workshop, PPIP members were first given time for individual reflection, following which they were asked to post their ideas (items) to Apisnote. Following this, each member was given time to present on their items. In the second session, each group was asked to review, cluster, and title the expectations and concerns which they had identified in the previous session. In this process, participants were asked to keep a distinction between those items that were expectations and those that were concerns through color-coding.

The results were translated into English, with the aim in the translation process being to remain as close as possible to the original phrasing and nuance of the Japanese. As each group's titles were unique but contained overlap, thematic content analysis was used to synthesize the items across groups and to identify overarching trends in the data. The titles created by group members, rather than the items themselves, were coded, to better reflect the clustering work by PPIP members. The data was coded through an inductive process, and codes were then merged to form overarching themes, which were then reapplied to the dataset (see Appendix for further details). The results of this analysis are reported below.
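As a rough illustration of the tallying step in this kind of analysis, the sketch below maps participant-generated titles to researcher-generated themes and sums the items under each theme. It is a minimal sketch only: the titles, theme names, counts, and the helper `tally_by_theme` are hypothetical placeholders and do not come from the workshop dataset or from any tool used in the study.

```python
from collections import Counter

# Hypothetical placeholder data: (group, participant-generated title, kind, item count).
# None of these titles or counts come from the workshop dataset.
titled_clusters = [
    ("Group 1", "placeholder title A", "expectation", 4),
    ("Group 2", "placeholder title B", "expectation", 3),
    ("Group 3", "placeholder title C", "concern", 5),
    ("Group 1", "placeholder title D", "concern", 2),
]

# Hypothetical outcome of inductive coding: each title is assigned to one merged theme.
title_to_theme = {
    "placeholder title A": "theme X",
    "placeholder title B": "theme X",
    "placeholder title C": "theme Y",
    "placeholder title D": "theme Y",
}


def tally_by_theme(clusters, mapping):
    """Sum item counts per theme after mapping each title to its theme."""
    totals = Counter()
    for _group, title, _kind, n_items in clusters:
        totals[mapping[title]] += n_items
    return totals


theme_totals = tally_by_theme(titled_clusters, title_to_theme)
grand_total = sum(theme_totals.values())

# Report each theme's share of all items.
for theme, n_items in theme_totals.most_common():
    print(f"{theme}: {n_items} items ({100 * n_items / grand_total:.0f}%)")
```

Coding the titles rather than the individual items keeps the participants' own clustering intact; the mapping from titles to themes remains a matter of analyst judgment that code of this kind can only record, not produce.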

3. Results

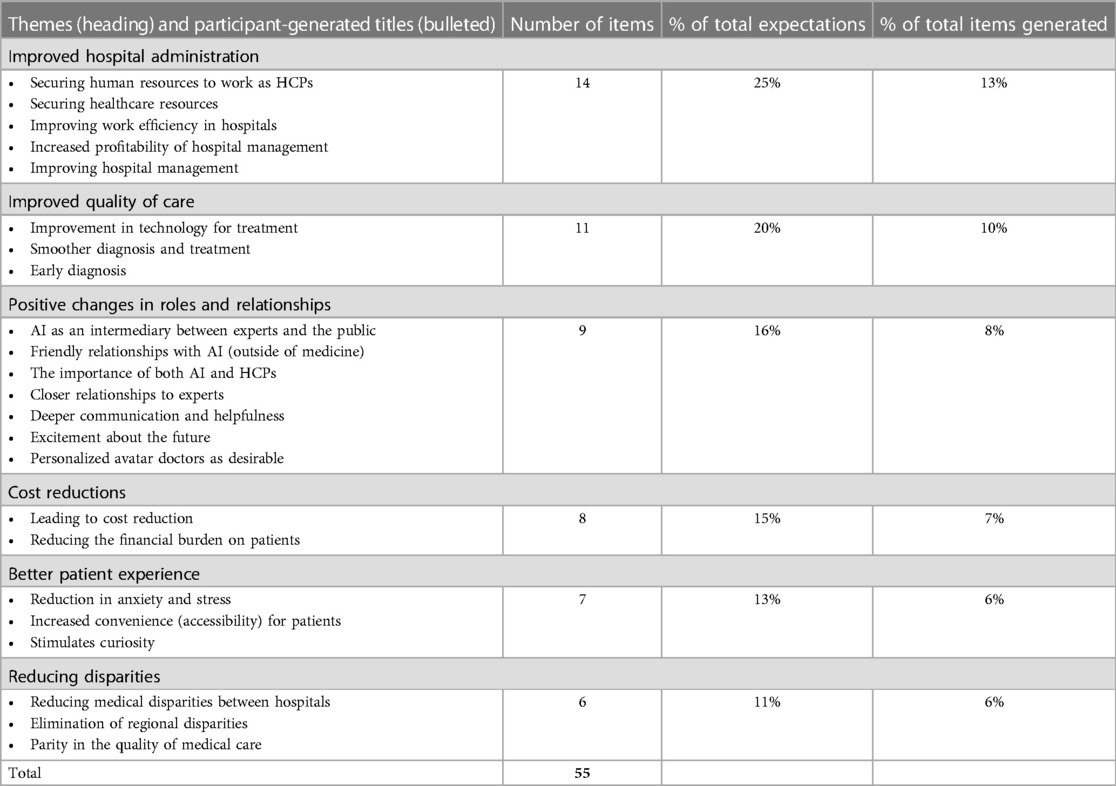

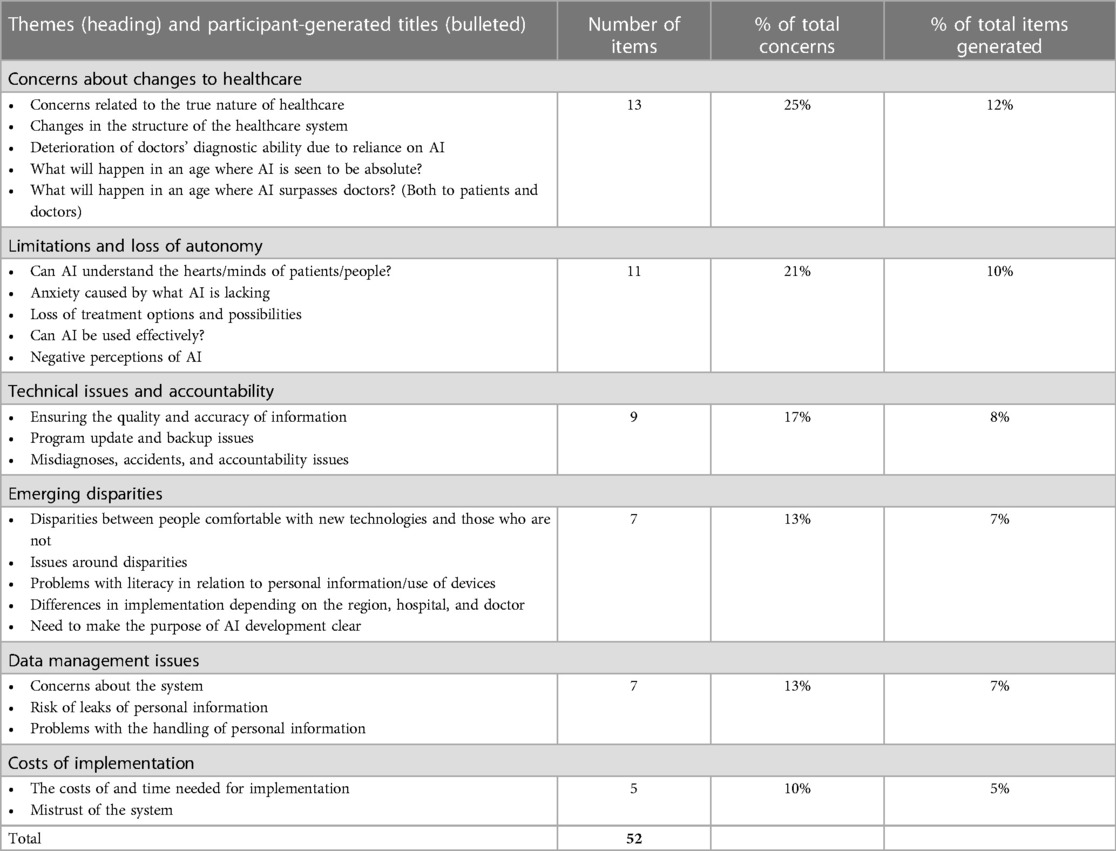

Across the three groups, a total of 107 items were identified. Of this total, 55 (51%) were expectations, while 52 (49%) were concerns. Six themes reflected expectations, while six reflected concerns. This suggests a perception of AI in healthcare among the PPIP members that is balanced overall. Through the analytic process, these items were clustered and categorized into 12 themes by AmK with review by BY.
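The split reported above can be checked with a few lines of arithmetic. The sketch below uses only the two counts stated in the text (55 expectations and 52 concerns); the helper name `share` is introduced here for illustration.

```python
def share(count, total):
    """Return count as a percentage of total, rounded to the nearest whole number."""
    return round(100 * count / total)


expectations = 55
concerns = 52
total = expectations + concerns

summary = {
    "total_items": total,
    "expectations_pct": share(expectations, total),
    "concerns_pct": share(concerns, total),
}
print(summary)
```

Rounding to whole percentages reproduces the reported 51% and 49% from a total of 107 items.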

Below, the overarching themes and the participant-generated titles within each theme will be discussed. The extracts included below are participant postings, translated by AmK with review from all co-authors.

3.1. Expectations

There were six themes reflecting expectations for AI in healthcare. These are reported in Table 1 below, where the first item in each row is the researcher-generated overarching theme and is followed by the participant-generated bulleted items.

Expected improvements in hospital administration was the most prominent theme across the results and made up 13 percent of the total items. PPIP members expressed expectations that AI would help to offset the lack of human and other resources needed for healthcare. This included the expectation that AI would enable the provision of healthcare even in remote areas and other places where there may be shortages of doctors. Moreover, it was expected that hospitals would function more efficiently in areas ranging from the management of medicines and prescriptions to clinical trials, leading to increased profitability. HCPs would benefit from a reduction in burden and in long working hours, as rote work would be reduced. This would allow them to devote time to fulfilling their true role as healthcare professionals (Extract 1).

Extract 1 (Group 2)

The possibility that healthcare professionals will be able to concentrate on the work that they should be able to focus on

The next-largest theme, comprising 10 percent of all items, was improved quality of care. This dealt with the possibility that AI implementation would lead to improvements in diagnosis and treatment. Patients would have increased access to healthcare-related information, and access to remote care would be expanded (Extract 2).

Extract 2 (Group 2)

Possibility of clinical examinations and treatment from home for people in remote areas, the elderly, and people with disabilities

This tied in with a further theme, which was the expectation that there would be positive changes to roles and relationships. AI was expected to facilitate better communication in clinical settings, and overall, there was the expectation that AI would become a familiar entity in patients' lives (Extract 3), with hopes for personalized interactions.

Extract 3 (Group 1)

Excited about the future of healthcare because AI will be something the children will be familiar with going forward

Furthermore, and closely linked with the first theme, PPIP members expected that AI would improve the diagnostic and treatment processes, which would lead to a reduction in the financial burden on patients through a broad range of knock-on effects, including the increased use of generic drugs and a reduction in medical expenses for patients, who generally have to cover 30% of costs under the universal healthcare system. Participants expected that the use of AI would also facilitate outpatient triage and allow for increased data portability as patients would be able to access and search within their own medical information, which was seen as contributing to cost reduction (Extract 4).

Extract 4 (Group 3)

It will become easier to accumulate and search (personal) information

PPIP members expected that AI would facilitate a better patient experience through a reduction in the anxiety and stress involved in hospital visits, and increased convenience for patients. One participant referred to the exhausting nature of hospital visits at present (Extract 5). There were hopes that AI would lighten the burden of hospital visits by simplifying administrative procedures. Moreover, these procedures would be more accessible to patients who did not speak Japanese, who could benefit from more user-friendly systems. Some participants expressed a personal interest in AI, and saw it as something exciting and of interest, which they said would be appreciated by those who enjoy interacting with new technologies.

Extract 5 (Group 3)

(From the perspective of patients) I expect that procedures at the hospital will be simplified and that waiting times will be shortened. I also expect that the system will be user-friendly for people with disabilities and non-Japanese speakers, who experience barriers to access to information. I hope that hospital visits will no longer exhaust patients and lead to a breakdown in their health

PPIP members also expected that AI would reduce disparities in the quality of care experienced by patients in different regions (i.e., remote or rural areas), or at different types of hospitals (i.e., smaller clinics and larger research hospitals), making medical treatment more accessible to patients regardless of location. At the same time, they expected that remote care would be expanded, thus reducing the physical burden of travel to receive care, and reducing disparities by increasing access to quality care for those living in remote areas. This would reduce the concentration of patients visiting large hospitals (Extract 6).

Extract 6 (Group 2)

Standardization of the level of healthcare, elimination of the concentration of patients at large hospitals

3.2. Concerns

Although PPIP members noted a variety of expectations for AI in healthcare, they also had concerns about its implementation. There were six themes which reflected concerns about AI in healthcare, as in Table 2.

The second largest overall theme identified in the analysis was that of concerns about changes in healthcare, which made up 12% of total items. One set of concerns was that AI may move healthcare away from its “true nature”, or how participants felt it “should” be, leading to problems in clinical relationships and in the structure of healthcare in the future. This related to concerns that doctors' diagnostic skills may be surpassed by AI, and their roles as decisionmakers would be undermined. PPIP members indicated concern about a possible negative impact on the relationship between doctors and patients, if doctors were to become over-reliant on AI, and if decisions from black-boxed algorithms were to be accepted as “absolutes”, with little room for reconsideration or second opinions (Extract 7). Moreover, contrary to the expectations discussed above, if these systems were to be made available at large hospitals, there could be an increased over-concentration of patients at large hospitals seeking out AI-powered healthcare, even if they could otherwise be treated elsewhere.

Extract 7 (Group 1)

I think that in healthcare, the language of “absolutes” is avoided, but AI healthcare may come with such absolutes

The second largest cluster of concerns was the perceived limitations of AI and potential loss of autonomy for both HCPs and patients. There was an implicit assumption that the introduction of AI into healthcare would require direct communication between patients and robots or other AI-powered entities, to which care would be delegated. This appeared, for example, in PPIP members' concerns that patients would struggle to fully express themselves in interaction with AI, or that they may experience psychological anxiety at not being able to meet “the real thing” (i.e., a human HCP; Extract 8).

Extract 8 (Group 1)

Psychological anxiety due to no longer being able to meet the real thing.

For this reason, although participants expected that AI could improve communication, as described above, there were also concerns that the technology would not have the capacity to understand patients' thoughts and feelings, and that this would impede communication and prevent them from freely expressing themselves. This tied into an overarching perception of AI as “cold”, as opposed to the implied warmer nature of human-centered healthcare. Furthermore, there were concerns that delegating care to AI-based systems would mean a loss of autonomy for patients in pursuing treatment options. Participants worried about being made to use new machines, or that they may be subject to medical decisions that they are not prepared for. One example given by a PPIP member was of being recommended surgery for which the patient is totally unprepared (Extract 9).

Extract 9 (Group 1)

Things will advance suddenly in directions unanticipated by patients (Such as having surgery suddenly recommended without any preparation)

The accuracy of the output from AI was another concern, as was the question of how legal and other issues related to accountability would be managed, for example if system errors or failures led to fatal outcomes. PPIP members also expressed concerns about whether bugs in the system would be fixed appropriately and questioned what would happen if online platforms failed due to natural or other disasters (Extract 10).

Extract 10 (Group 2)

Issues of backups when online platforms are unavailable due to natural disasters, etc.

Although, as described above, there were expectations that AI would reduce disparities, there were contradictory concerns that it would expand them at the levels of individuals, hospitals, and regions. At the individual level, participants worried that some people may not be comfortable with new technologies. They thought that not all hospitals may be able to implement new technologies equally, and that there may be regional disparities both in implementation and in the ability of doctors or hospitals to handle new technologies. One participant expressed concern about the risk for expanded disparities on a global scale (Extract 11).

Extract 11 (Group 1)

For what purpose are we developing AI? If we have an awareness of humanity as a whole, if it is only available in particular environments, won't this only serve to exacerbate disparities?

The final area of concern was around the possible costs of implementation and whether investments would bear fruit. There was unease about the possibility that the investment of time and money into developing AI may not pay off. Participants observed that other major investments in system reform paid for with taxpayer money had come to nothing, and so there was a degree of skepticism about investments in AI (Extract 12).

Extract 12 (Group 3)

Many systems created with taxpayer money cannot be used—will that not happen?

4. Discussion

Overall, this exploratory workshop with the AIDE Project PPIP highlighted the meaningful input patients and members of the public can provide on AI for healthcare in the Japanese context. This is notable given that PPI in Japan continues to be underdeveloped, and consultation with stakeholders on AI remains limited.

The results of this study reflect PPIP members' perceptions of issues in healthcare broadly, and their expectations for AI as a possible remedy for them, as exemplified through the key question raised in Group 1 above, of what the purpose behind AI development truly is (Extract 11). These issues as reflected in participants' postings include the limited availability of human and financial resources for healthcare, the need for greater efficiency and accuracy, issues in patient experience, and disparities between hospitals and regions within Japan. The expectations of participants that AI will improve healthcare align with those expressed in reports promoting its implementation in healthcare [e.g., (11, 17)]. Participants expected there would be the potential for better hospital administration, an improved quality of care and patient experience, positive changes in roles and relationships, and a reduction in disparities. Thus, it is noteworthy that the PPIP held a balanced perception of AI, with a nearly even split between expectations and concerns in the items elicited. This mirrors the ambivalent approach of patients and members of the public toward the implementation of AI in healthcare identified in other contexts [e.g., (3)].

Recent research such as that by Richardson et al. (2) and Musbahi et al. (4) has sought to identify patient and public expectations and concerns about AI in healthcare broadly and can offer a source for comparative cross-cultural insights. The themes in this study echo those found in Richardson et al.'s (2) study, in which participants were “generally enthusiastic” about AI, but held concerns about the potential impact of AI on the autonomy of patients, rising healthcare costs, data quality, and security. These themes overlap with the themes identified in our study, though data quality was not articulated as a prominent concern by our PPIP. Similarly, many of the expectations and concerns emerging from Musbahi et al.'s study (4) were also reflected in this study, including expectations for faster diagnosis, the possibility of AI-powered triage, a reduction in rote work, greater efficiency, AI as a helpful source of information, AI as an equalizer to reduce disparities, and AI as a cost-saver. There was also overlap in the concerns elicited, including about privacy and data management, issues around accountability, and the risk of deterioration in HCPs' skills (4), a risk also reported by Jutzi et al. (18).

The concern about the potential loss of the human touch in healthcare was a key theme here which has been reported in other settings, such as by Nelson et al. (19), Adams et al. (20), Yang et al. (21), and Young et al. (3). This perception that AI implementation may result in increased anxiety due to the loss of the “emotional side” (4, 19, 20) of clinical relationships emphasizes the urgency of ensuring that human skill in healthcare is enhanced rather than replaced (22).

There were also some notable absences in the themes from this Japanese workshop given recent literature on the ethics of AI for healthcare. These included a lack of expressed concern around bias (23–25)—where disparities were raised, they were generally in relation to differences in the quality of healthcare between regions within Japan, and between different types of healthcare providers. There was no expression of concern about the insufficiency of current regulatory frameworks for AI in healthcare, the export of AI into other regions internationally, or of commercial involvement (8). Also missing was concern over sustainability issues across the life-course of AI (26). Further research is needed to determine to what extent patients and the public in Japan are aware of these issues around AI, and how to facilitate information-sharing about these risks. Moreover, it is noteworthy that participants themselves did not propose increased stakeholder involvement or engagement around AI in healthcare in this workshop, although some participant-generated items pointed to the introduction of AI itself as one way through which to increase patients' involvement in their own care.

A further aspect of the findings was the broad and future-oriented range of AI applications implied by the items elicited. Several of the items centered around possible applications of AI which are more advanced than the narrow applications currently available for real-world deployment. There was an implicit assumption that AI would replace clinicians, or that clinicians would be entirely reliant on AI. This contrasts with findings from studies by Yang et al. (27) and Jutzi et al. (18), where patients did not expect clinicians to be replaced by AI. It also does not reflect the current reality in Japan, where approval of AI is limited, and only HCPs are permitted to make medical decisions (28). This future-orientation may reflect the challenges for stakeholders in understanding the entirety of AI systems and their applications and a need for greater information-sharing (29, 30).

4.1. Limitations and future directions

This study was intended as a small-scale, exploratory study spotlighting opinions of PPIP members on AI in healthcare. Though generalizability of the findings is limited, the qualitative orientation of this study and the small sample size ensured that the unique voices of each participant were well-reflected in the findings. Furthermore, this study utilized a Japanese method in eliciting stakeholder views on AI in healthcare—a novel contribution to the field. Bounds were not set around the types of AI under consideration to allow participants to situate the discussions around the technologies they found to be most of relevance or concern.

There is a need for the voices of otherwise marginalized or vulnerable stakeholders to be centered in deliberation on AI in healthcare (31). Both patients and caregivers were represented on the PPIP and offer perspectives on one aspect of potential vulnerability. Future research should actively seek out the perspectives of diverse individuals to investigate whether these themes remain consistent in a more diversified population. The AIDE Project research team is engaged in ongoing research with multiple stakeholder groups, with parallel but distinct involvement from a UK-based PPIP.

There is a growing consensus that consideration is urgently needed of the implications of AI for healthcare prior to its implementation. The meaningful involvement of stakeholders in these processes, including patients and members of the public, is essential. This study has shown that patients and members of the public are keen to be engaged around AI in healthcare. It is crucial that they be given the opportunity for such engagement.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by the Ethics Committee of the Graduate School of Medicine, Osaka University (Number 20083(T1)-3). The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

AmK, BY, AtK, and KK conceived the study. AmK, BY, AtK, and KK were involved in the workshops as organizers and facilitators. AmK translated all postings into English, with review and approval from all authors. AmK conducted data analysis, with first round review by BY and final review by AtK and KK. AmK wrote the first draft of the manuscript. All authors contributed to the article and approved the submitted version.

Funding

This work was funded by the Japan Science and Technology Agency Research Institute of Science and Technology for Society Grant Number JPMJRX19H1.

Acknowledgments

We express our appreciation to all members of the AIDE Osaka Patient and Public Involvement Panel, as well as to our co-facilitators in the workshop, Yayoi Aizawa and Seongeun Kang. We also thank members of the AIDE research team both at Osaka University and at the University of Oxford and Takuma Iwasa for administrative support. Gratitude as well to Ryo Kawasaki and researchers involved with the AI Hospital Project at Osaka University Hospital. Moreover, we express our thanks to those involved in the review process.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Fogel AL, Kvedar JC. Artificial intelligence powers digital medicine. Npj Digit Med. (2018) 1:5. doi: 10.1038/s41746-017-0012-2

2. Richardson JP, Smith C, Curtis S, Watson S, Zhu X, Barry B, et al. Patient apprehensions about the use of artificial intelligence in healthcare. Npj Digit Med. (2021) 4:140. doi: 10.1038/s41746-021-00509-1

3. Young AT, Amara D, Bhattacharya A, Wei ML. Patient and general public attitudes towards clinical artificial intelligence: a mixed methods systematic review. Lancet Digit Health. (2021) 3:e599–611. doi: 10.1016/S2589-7500(21)00132-1

4. Musbahi O, Syed L, Le Feuvre P, Cobb J, Jones G. Public patient views of artificial intelligence in healthcare: a nominal group technique study. Digit Health. (2021) 7:205520762110636. doi: 10.1177/20552076211063682

5. Muto K, Inoue Y. 医療AIと医療倫理ー患者・市民とともに考える企画の試みから [Medical AI and medical ethics - from a trial event with patients and citizens]. 医学のあゆみ. (2020) 274:890–4.

6. Kodera S, Ninomiya K, Sawano S, Katsushika S, Shinohara H, Akazawa H, et al. 医療AIに対する患者の意識調査 [A survey of patient awareness about medical AI]. Presented at the 36th Annual Conference of the Japanese Society for Artificial Intelligence (2022).

8. Ishii E, Ebner DK, Kimura S, Agha-Mir-Salim L, Uchimido R, Celi LA. The advent of medical artificial intelligence: lessons from the Japanese approach. J Intensive Care. (2020) 8:35. doi: 10.1186/s40560-020-00452-5

9. National Institutes of Biomedical Innovation, Health and Nutrition. (2021). AIホスピタルプロジェクトとは [What is the AI Hospital Project?] [WWW Document]. Available at: https://www.nibiohn.go.jp/sip/about/outline/ (Accessed 8.17.22).

10. Nikkei Staff Writers. (2018). Japan plans 10 “AI hospitals” to ease doctor shortages [WWW Document]. Available at: https://asia.nikkei.com/Politics/Japan-plans-10-AI-hospitals-to-ease-doctor-shortages (Accessed 8.17.22).

11. Nakamura Y. Japanese cross-ministerial strategic innovation promotion program “innovative AI hospital system”; how will the 4th industrial revolution affect our health and medical care system? JMA J. (2022) 5:1–8. doi: 10.31662/jmaj.2021-0133

12. Katirai A, Kogetsu A, Kato K, Yamamoto B. Patient involvement in priority-setting for medical research: a mini review of initiatives in the rare disease field. Front Public Health. (2022) 10:915438. doi: 10.3389/fpubh.2022.915438

13. Japan Agency for Medical Research and Development. (2019). 患者・市民参画 (PPI) ガイドブック ∼患者と研究者の協働を目指す第一歩として∼ [Patient and Public Involvement (PPI) Guidebook∼As a first step towards collaboration between patients and researchers∼] [WWW Document]. Available at: https://www.amed.go.jp/ppi/guidebook.html

14. Nakagawa C, Yagi E. 科学技術に関するさまざまな論点を可視化する; 科学技術に関する「論点抽出カフェ」の提案 [Proposal of the science cafe methods to enhance participants' discussion; Opinion Eliciting Workshops about Science and Technology issues]. Commun Des. (2011) 4:47–64.

15. Nakajima A, Bawiec M, Nakayama K, Horii H. The Development of APISNOTE a Digital Sticky Note System for Information Structuring (n.d.).

16. Apisnote [WWW Document]. (n.d.). Available at: https://www.apisnote.com (Accessed 9.15.22).

17. Topol EJ. High-performance medicine: the convergence of human and artificial intelligence. Nat Med. (2019) 25:44–56. doi: 10.1038/s41591-018-0300-7

18. Jutzi TB, Krieghoff-Henning EI, Holland-Letz T, Utikal JS, Hauschild A, Schadendorf D, et al. Artificial intelligence in skin cancer diagnostics: the Patients’ perspective. Front Med. (2020) 7:233. doi: 10.3389/fmed.2020.00233

19. Nelson CA, Pérez-Chada LM, Creadore A, Li SJ, Lo K, Manjaly P, et al. Patient perspectives on the use of artificial intelligence for skin cancer screening: a qualitative study. JAMA Dermatol. (2020) 156:501. doi: 10.1001/jamadermatol.2019.5014

20. Adams SJ, Tang R, Babyn P. Patient perspectives and priorities regarding artificial intelligence in radiology: opportunities for patient-centered radiology. J Am Coll Radiol. (2020) 17:1034–6. doi: 10.1016/j.jacr.2020.01.007

21. Yang L, Ene IC, Arabi Belaghi R, Koff D, Stein N, Santaguida P. Stakeholders’ perspectives on the future of artificial intelligence in radiology: a scoping review. Eur Radiol. (2022) 32:1477–95. doi: 10.1007/s00330-021-08214-z

22. Pasquale F. New laws of robotics: Defending human expertise in the age of AI. Cambridge: The Belknap Press of Harvard University Press (2020).

24. Crawford K. Atlas of AI: power, politics, and the planetary costs of artificial intelligence. New Haven: Yale University Press (2021).

25. Obermeyer Z, Powers B, Vogeli C, Mullainathan S. Dissecting racial bias in an algorithm used to manage the health of populations. Science. (2019) 366:447–53. doi: 10.1126/science.aax2342

26. WHO. Ethics and governance of artificial intelligence for health. Geneva: World Health Organization (2021).

27. Yang K, Zeng Z, Peng H, Jiang Y. Attitudes of Chinese cancer patients toward the clinical use of artificial intelligence. Patient Prefer Adherence. (2019) 13:1867–75. doi: 10.2147/PPA.S225952

29. Donia J, Shaw J. Co-design and Ethical Artificial Intelligence for Health: Myths and Misconceptions, in: AIES ‘21: Proceedings of the 2021 AAAI/ACM Conference on AI, Ethics, and Society. Presented at the AIES ‘21 (2021).

30. Donia J, Shaw JA. Co-design and ethical artificial intelligence for health: an agenda for critical research and practice. Big Data Soc. (2021) 8:205395172110652. doi: 10.1177/20539517211065248

31. Sloane M, Moss E, Awomolo O, Forlano L. (2020). Participation is not a Design Fix for Machine Learning.

Appendix

The following is a description of the analytic process used for this study.

As the workshop was conducted online, participants were asked to post their comments to Apisnote directly. For this reason, the first analytic task was to put the data into a form through which it could be analyzed. Thus, it was manually copied into an Excel table with the following categories: the group number, whether participants had identified the posting as an expectation or a concern, the content of the posting, and the title for the cluster in which that posting was included. Certain groups also further clustered multiple titles into broad areas, and this was included in the table as needed. All items were translated into English, and both the English and Japanese were retained in the table.

There was a total of 48 titles (clusters) created by participants across the three groups. As noted above, there was overlap in these titles across groups, and for this reason, the titles were coded to find these commonalities. However, an analytic decision was made to code titles rather than the items (postings) themselves, to prioritize participants' own understandings and clustering of the items they generated.

An inductive process was used to code these titles, and codes were refined and readjusted multiple times until they best captured the data. Multiple codes were collapsed together to form overarching themes. Then, these themes were applied to both the titles and the items which participants had themselves grouped together under each title. This allowed for data to be extracted about the number of items under each theme, across the groups.

This process involved decision-making by the researchers about the appropriate thematic categorization of the titles. Although the data for this study was solely made up of participant postings, this decision-making was informed by the researchers' understandings gleaned from their experiences with participants in the workshop, in which the postings were discussed by participants. However, there were cases in which the participant-generated title (and thus the theme developed through coding) did not necessarily match all of the items under a particular participant-generated title. Given the decision to prioritize participant-generated clustering and titling of items, no items were moved from one cluster to another by the researchers, but rather were left as organized by participants, even when another categorization was conceivable.

Keywords: artificial intelligence, healthcare, patient and public involvement, Patient and Public Involvement Panel, community participation

Citation: Katirai A, Yamamoto BA, Kogetsu A and Kato K (2023) Perspectives on artificial intelligence in healthcare from a Patient and Public Involvement Panel in Japan: an exploratory study. Front. Digit. Health 5:1229308. doi: 10.3389/fdgth.2023.1229308

Received: 26 May 2023; Accepted: 28 August 2023;

Published: 13 September 2023.

Edited by:

Jin Han, Black Dog Institute, University of New South Wales, Australia

Reviewed by:

Aditya U. Kale, University Hospitals Birmingham NHS Foundation Trust, United Kingdom

Adewale Adebajo, The University of Sheffield, United Kingdom

Janet L. Wale, HTAi Patient and Citizen Involvement in HTA Interest Group, Canada

© 2023 Katirai, Yamamoto, Kogetsu and Kato. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Amelia Katirai; Beverley Anne Yamamoto
