SYSTEMATIC REVIEW article

Front. Digit. Health, 04 November 2021

Sec. Digital Mental Health

Volume 3 - 2021 | https://doi.org/10.3389/fdgth.2021.764079

This article is part of the Research Topic: Highlights in Digital Mental Health 2021/22

Digital mental health interventions (DMHIs) present a promising way to address gaps in mental health service provision. However, the relationship between user engagement and outcomes in the context of these interventions has not been established. This study addressed the current state of evidence on the relationship between engagement with DMHIs and mental health outcomes. MEDLINE, PsycINFO, and EmBASE databases were searched from inception to August 1, 2021. Original or secondary analyses of randomized controlled trials (RCTs) were included if they examined the relationship between DMHI engagement and post-intervention outcome(s). Thirty-five studies were eligible for inclusion in the narrative review and 25 studies had sufficient data for meta-analysis. Random-effects meta-analyses indicated that greater engagement was significantly associated with post-intervention mental health improvements, regardless of whether this relationship was explored using correlational [r = 0.24, 95% CI (0.17, 0.32), Z = 6.29, p < 0.001] or between-groups designs [Hedges' g = 0.40, 95% CI (0.097, 0.705), p = 0.010]. This association was also consistent regardless of intervention type (unguided/guided), diagnostic status, or mental health condition targeted. This is the first review providing empirical evidence that engagement with DMHIs is associated with therapeutic gains. Implications and future directions are discussed.

Systematic Review Registration: PROSPERO, identifier: CRD 42020184706.

Mental illness is a worldwide public health concern with an overall lifetime prevalence rate of ~14%, accounting for 7% of the overall global burden of disease (1). The estimated impact of mental illness on quality of life has progressively worsened since the 1990s, with the number of disability-adjusted life years attributed to mental illness estimated to have risen by 37% between 1990 and 2013 (2). This rising mental health burden has prompted calls for the development of alternative models of care to meet increasing treatment needs, which are unlikely to be adequately met by face-to-face services alone in the future (3).

Digital mental health interventions (DMHIs) are one alternative model of care with enormous potential as a scalable solution for narrowing this service provision gap. By leveraging technology platforms (e.g., computers and smartphones), well-established psychological treatments can be delivered directly into people's hands with high fidelity and minimal human resources, empowering individuals to self-manage mental health issues (4). The anonymity, timeliness of access, and flexibility afforded by DMHIs also circumvent many of the commonly identified structural and attitudinal barriers to accessing care, such as cost, time, or stigma (5). While a growing body of evidence indicates that DMHIs can have significant small-to-moderate effects on alleviating or preventing symptoms of mental health disorders (6–10), low levels of user engagement have been reported as barriers to both optimal efficacy and adoption into health settings and other translational pathways. Health professionals will require further convincing that digital interventions are a viable adjunct or alternative to traditional therapies before they are willing to advocate for patient usage (11). Low engagement, defined as suboptimal levels of user access and/or adherence to an intervention (12), is touted as one of the main reasons why the potential benefits of these interventions remain unrealized in the real world (13, 14).

Recognizing the need to promote better engagement with digital interventions, several reviews have sought to establish both the nature of engagement with DMHIs and the effectiveness of various engagement strategies in improving uptake of, and adherence to, DMHIs. A review of empirical studies found collectively low rates of DMHI completion, with over 70% of users failing to complete all treatment modules, and more than 50% disengaging before completing half of all treatment modules (15). Reviews of the effectiveness of various engagement strategies have shown that such strategies can have a positive impact on engagement with, and efficacy of, digital interventions. Reminders, coaching, and tailored feedback delivered via telephone or email have been found to have modest to moderate effects on engagement with digital interventions targeting physical and mental health outcomes, compared with using no strategy (16). In addition, a review of efficacy studies of smartphone apps for depression and anxiety found that apps which incorporated more elements aimed at promoting user engagement had larger effect sizes (17).

Though these prior reviews are undoubtedly important to accelerating our understanding of how the benefits of DMHIs might be realized in real-world settings, it may be premature to invest substantial effort in engagement strategies while the link between engagement and outcomes remains unquantified. To date, only one prior systematic review (18) has explored the effect of engagement on the effectiveness of digital interventions. This review was limited to a narrative synthesis, citing heterogeneity in how engagement is operationalized (e.g., number of logins, module completion, frequency of use, and time spent in the intervention) as a barrier to meta-analysis. Accordingly, no meta-analysis has yet examined the pooled strength of the association between DMHI engagement and mental health outcomes. Despite the lack of meta-analytic evidence, it has been widely assumed that the association between face-to-face treatment engagement and treatment success extends to these tools, by virtue of the extensive replication of this relationship in the general intervention literature (19). However, extrapolating research findings from face-to-face therapies to their digital equivalents may not be appropriate, because differences in how content is delivered (e.g., via developing human relationships) are likely to affect both engagement and associated treatment responses (20).

The increasing number of individual studies examining the association between engagement and mental health outcomes published in recent years now enables a review and quantification of the literature. To address this gap in knowledge, and to justify the need for the development of engagement strategies, the primary purpose of this meta-analysis is to examine the relationship between level of engagement and change in mental health outcomes in the context of digital mental health interventions. Our primary hypothesis is that greater engagement is associated with greater improvement in mental health outcomes, given that individual studies suggest that users who engage poorly with DMHIs derive limited treatment benefit (21, 22). This is the first meta-analysis to quantify the association between engagement and primary mental health outcome measures for digital interventions, and as such, it extends previous work, which has been constrained by a small pool of studies characterized by substantial heterogeneity in how engagement with DMHIs is measured.

This study is registered with PROSPERO (CRD 42020184706) and adheres to the Preferred Reporting Items for Systematic Reviews and Meta-analyses (PRISMA) reporting guidelines (23).

A systematic search was undertaken in three online academic databases, covering articles indexed from inception to August 1, 2021: MEDLINE (from 1946), PsycINFO (from 1806), and EmBASE (from 1947). A test set of five papers meeting inclusion criteria (described later) was obtained via manual search. From these papers, a set of primary search terms was developed using Medical Subject Heading (MeSH) terms and centered around four search blocks: (i) engagement/adherence, (ii) digital interventions, (iii) mental health, and (iv) study design. This search strategy was tested in MEDLINE, where it achieved 100% sensitivity against the initial test set, and was subsequently adapted for use in the other databases. A manual ancestry search of the reference lists of eligible studies identified from the database search was also conducted to identify other relevant studies that could have been missed. The search terms can be found in the Supplementary Material (p. 1).

Following removal of duplicate records, titles and abstracts were independently coded for relevance by two authors (DZQG and MT). Screening of full-text records was performed in a similar way (DZQG and LM). Disagreements were resolved via mutual discussion. Inter-rater consensus was acceptable for both title/abstract (κ = 0.86, p < 0.001) and full-text screening (κ = 0.80, p < 0.001).
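For readers unfamiliar with the κ statistic reported above, the following is a minimal sketch of how Cohen's kappa is computed for two screeners. The decision lists are hypothetical and are not the review's actual screening data; the function name `cohens_kappa` is likewise introduced only for illustration.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who labelled the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Observed agreement: share of items given the same label by both raters.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each rater's marginal label proportions.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1.0 - expected)


# Hypothetical title/abstract decisions for ten records (illustration only).
screener_1 = ["include", "include", "exclude", "exclude", "exclude",
              "include", "exclude", "exclude", "exclude", "exclude"]
screener_2 = ["include", "exclude", "exclude", "exclude", "exclude",
              "include", "exclude", "exclude", "exclude", "exclude"]
kappa = cohens_kappa(screener_1, screener_2)
print(round(kappa, 3))  # 0.737
```

In this toy example the raters agree on 9 of 10 records (observed agreement 0.90) against a chance agreement of 0.62, which gives κ ≈ 0.74.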

Studies were eligible for inclusion if they were original or secondary analyses of randomized controlled trial (RCT) evaluations of digital interventions that were specifically designed for a mental health issue and which quantitatively examined the relationship between engagement and mental health. Only digital interventions that delivered manualised therapeutic content to users via a digital platform (e.g., smartphone, tablet, and computer) were included. The interventions could be unguided (self-directed, used independently without support or guidance by a trained health professional) or guided (health professional-assisted), and differences between these approaches were accounted for by analyzing them separately in the sub-analyses. The rationale for including only RCTs was that establishing whether the intervention itself had a main effect provides the context needed to interpret the impact of engagement. Non-experimental studies cannot establish efficacy causally, so asking whether user engagement improves outcomes is uninformative for an intervention whose efficacy cannot be determined. Engagement was defined as any objective indicator used to quantify the extent of intervention use. Continuous measures of engagement were generally focused on the extent of content accessed (e.g., number of modules, sessions, or lessons completed), or the extent of intervention-related activity (e.g., number of logins or visits, time spent, specific activities or exercises completed, number of online interactions with therapists or peers). Categorical measures of engagement focused on the percentage of users who downloaded, logged in to, and/or completed the intervention.

Studies were excluded if they were not peer-reviewed journal articles (e.g., dissertations, conference presentations, etc.), in a language other than English, or if they did not investigate the relationship between engagement and mental health outcomes. No restrictions were placed on the target population, setting, type of mental health condition targeted, intervention type, or language that the interventions were delivered in.

To improve comparability and reduce heterogeneity, studies were excluded if the digital intervention was (i) targeted at caregivers or health professionals (i.e., gatekeepers) rather than individuals with the mental health condition of interest, (ii) comprised solely of activities (e.g., journal and mood tracking) or psychoeducational material without any therapeutic content, or (iii) delivered by other digital means (e.g., fully text-based interventions, pre-recorded videos, and DVD).

Key study information was extracted and recorded in a custom spreadsheet by three authors (DZQG, MT, and LM). This information included the (i) study design, (ii) intervention characteristics, (iii) target population and recruitment, (iv) how engagement was measured, (v) primary mental health condition targeted, and (vi) findings pertaining to the relationship between DMHI engagement and the primary mental health outcome. One author (DZQG) extracted data for all the included papers, and the accuracy of the extraction was checked by another author (LM). Any differences were resolved through discussion, and in cases where no consensus was reached, a third author (MT) was consulted. Corresponding authors of studies were contacted by email if more information was needed to determine eligibility. Based on previous research (18), studies were expected to vary in the engagement measures and mental health outcomes reported. A narrative review was therefore undertaken to ensure a systematic discussion of the findings from all studies. Studies which utilized similar measures of engagement and which employed similar analytical techniques to test the association between engagement and outcome(s) were pooled together for meta-analysis.

Random-effects meta-analyses with the Pearson correlation coefficient (r) were used to examine the relationship between DMHI engagement and mental health outcomes. This differed from the effect size measure (Cohen's d) stated in the protocol; however, r was determined to be more appropriate as it was commonly reported by the included studies. Corresponding authors for 33 of the 35 studies were contacted for statistical or other study data. If correlation coefficients could not be obtained, estimates of r were derived based on the data available. If non-parametric correlations were reported, estimates were computed using formulae provided by Gilpin (24); if beta regression coefficients were reported, estimates were computed using formulae proposed by Peterson and Brown (25). All correlations were standardized such that a positive coefficient indicated that greater DMHI engagement was associated with improvements in mental health (i.e., reduced symptom severity) at post-intervention.
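The conversions described above can be sketched as follows. This is an illustration under stated assumptions, not the authors' procedure: the Spearman and Kendall conversions use the standard bivariate-normal relations, which are assumed here to correspond to the formulae in (24), and the beta conversion uses the imputation r = 0.98β + 0.05λ commonly attributed to (25), which was proposed for β within ±0.5. All function names are hypothetical.

```python
import math


def pearson_from_spearman(rho):
    """Approximate Pearson r from Spearman's rho (assumes bivariate normality)."""
    return 2.0 * math.sin(math.pi * rho / 6.0)


def pearson_from_kendall(tau):
    """Approximate Pearson r from Kendall's tau (assumes bivariate normality)."""
    return math.sin(math.pi * tau / 2.0)


def pearson_from_beta(beta):
    """Impute r from a standardized regression coefficient.

    Assumed form: r = 0.98 * beta + 0.05 * lam, with lam = 1 when beta is
    non-negative and 0 otherwise; only intended for |beta| <= 0.5.
    """
    if abs(beta) > 0.5:
        raise ValueError("imputation is only intended for |beta| <= 0.5")
    lam = 1.0 if beta >= 0 else 0.0
    return 0.98 * beta + 0.05 * lam


def orient(r, higher_score_is_worse=True):
    """Flip the sign so a positive r means more engagement, better outcome."""
    return -r if higher_score_is_worse else r


# Hypothetical example: rho = -0.30 between modules completed and a
# post-intervention symptom score on which higher values mean worse symptoms.
r_est = orient(pearson_from_spearman(-0.30))
print(round(r_est, 3))
```

The `orient` step mirrors the standardization described in the text: a negative raw correlation with symptom severity becomes a positive coefficient indicating improvement.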

Based on the available data, two separate analyses were conducted to address our a priori hypothesis that better engagement with digital interventions is associated with greater improvement in mental health outcomes at post-intervention. Two analyses were needed because effect sizes of studies using between-group vs. correlational designs cannot be combined (26). One meta-analysis was performed for studies which examined this association using a correlational design, while the other was conducted for studies which investigated this relationship using a between-groups design. Quantitative data for each meta-analysis were summarized using the Pearson correlation coefficient r or the Hedges' g statistics (27), respectively. For studies where data were summarized using correlation coefficients, all analyses were performed on the transformed Fisher's z-values, and subsequently transformed back to r to yield an overall summary correlation (27). Subgroup analyses were planned a priori and included comparisons between (i) level of assistance with engagement (guided or unguided interventions), (ii) user mental health severity (diagnosed or non-diagnosed), and (iii) primary mental health target (depressive- or anxiety-related symptoms).
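The correlational pooling described above (analysis on Fisher's z, back-transformation to r) can be illustrated with a short sketch. It assumes a DerSimonian-Laird estimate of the between-study variance and a normal-approximation 95% interval; the analyses in this review were run in Comprehensive Meta-Analysis, whose exact settings are not reported here, so this is not a reproduction of that software. The input correlations and sample sizes are hypothetical.

```python
import math


def pool_correlations(rs, ns):
    """Random-effects pooling of correlations on the Fisher z scale."""
    zs = [math.atanh(r) for r in rs]        # Fisher z-transform of each r
    vs = [1.0 / (n - 3) for n in ns]        # sampling variance of each z
    w = [1.0 / v for v in vs]               # fixed-effect (inverse-variance) weights
    sw = sum(w)
    z_fixed = sum(wi * zi for wi, zi in zip(w, zs)) / sw
    # Cochran's Q: weighted squared deviations from the fixed-effect mean.
    q = sum(wi * (zi - z_fixed) ** 2 for wi, zi in zip(w, zs))
    df = len(rs) - 1
    c = sw - sum(wi ** 2 for wi in w) / sw
    # DerSimonian-Laird between-study variance, truncated at zero.
    tau2 = max(0.0, (q - df) / c) if df > 0 and c > 0 else 0.0
    w_re = [1.0 / (v + tau2) for v in vs]   # random-effects weights
    z_re = sum(wi * zi for wi, zi in zip(w_re, zs)) / sum(w_re)
    se = math.sqrt(1.0 / sum(w_re))
    lo, hi = z_re - 1.96 * se, z_re + 1.96 * se
    return {
        "r": math.tanh(z_re),               # back-transform to r
        "ci": (math.tanh(lo), math.tanh(hi)),
        "z_stat": z_re / se,
        "tau2": tau2,
        "q": q,
    }


# Hypothetical study-level correlations and intervention-arm sample sizes.
result = pool_correlations([0.10, 0.35, 0.22, 0.41], [80, 120, 60, 150])
print(result)
```

Pooling on the z scale is used because the sampling variance of z depends only on the sample size, which keeps the inverse-variance weights independent of the estimated correlation.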

Study heterogeneity was evaluated for each meta-analysis using the I² statistic, with thresholds of 25, 50, and 75% denoting low, moderate, and high levels of heterogeneity, respectively (28). Ninety-five percent confidence intervals (CIs) for I² values were computed using formulae proposed by Borenstein et al. (29). For meta-analyses with a sufficient number of included studies (i.e., ≥10 studies), publication bias was assessed using funnel plots and Egger's regression test (30). All analyses were performed using Comprehensive Meta-Analysis version 3.0 (31).
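As a brief illustration of the heterogeneity statistic, the sketch below computes I² from Cochran's Q and the number of studies, and maps it onto descriptive bands. The band edges are an assumption: they treat the 25, 50, and 75% thresholds as lower bounds and label anything under 25% as minimal, which matches how values are described in the results but is not spelled out in the text. The function names are hypothetical.

```python
def i_squared(q, k):
    """I-squared (%) from Cochran's Q and the number of studies k."""
    df = k - 1
    if df <= 0 or q <= 0:
        return 0.0
    # Share of total variation attributable to between-study heterogeneity.
    return max(0.0, (q - df) / q) * 100.0


def heterogeneity_label(i2):
    """Descriptive band for an I-squared value (assumed band edges)."""
    if i2 >= 75:
        return "high"
    if i2 >= 50:
        return "moderate"
    if i2 >= 25:
        return "low"
    return "minimal"


# Hypothetical Q statistic for an 11-study meta-analysis.
i2 = i_squared(20.0, 11)
print(i2, heterogeneity_label(i2))  # 50.0 moderate
```

When Q is smaller than its degrees of freedom, I² is truncated at 0%, which is why several subgroup analyses can legitimately report I² = 0.0%.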

Risk of bias was assessed using Version 2 of the Cochrane risk-of-bias tool for randomized trials (RoB 2) (32). The RoB 2 comprises five domains and an overall risk domain, each of which was scored against a three-point rating scale corresponding to “low,” “some concerns,” or “high” risk of bias. Ratings were independently conducted by two pairs of coders (40% by DZQG and LM, and 60% by DZQG and JH). Discrepancies were resolved through mutual discussion. An overall agreement rate of 93.3% was reached.

The search yielded a total of 10,623 articles. Following removal of duplicates and non-eligible studies, 35 unique studies were identified that met inclusion criteria (Figure 1). All studies were included in the narrative synthesis, and 25 of the 35 were included in the meta-analysis. Ten studies could not be included in the meta-analysis because study authors were either unable to provide the data required for effect size calculation (n = 7) or were uncontactable (n = 3).

Table 1 summarizes key information from the 35 studies, which provided baseline data on a total of 4,484 participants who were assigned to receive the digital intervention, and 8,110 participants in total (intervention and control conditions). Consistent with the aims of the present study, from here on we report data only for participants assigned to the intervention condition. Intervention condition sample sizes ranged from 22 to 542 participants (Mdn = 81). Mean participant ages ranged from 11.0 (SD 2.57) to 58.4 (SD 9.0) years. The proportion of female participants ranged from 5.3 to 100% (Mdn = 68.8%). Most studies were conducted with adults (aged 18 years and above; 30 studies, 85.7%). Duration between baseline and post-intervention assessments for mental health outcomes ranged from 3 to 14 weeks (Mdn = 9). The studies were carried out in middle- to high-income countries across four continents: Europe (n = 21; 60%), North America (n = 9; 25.7%), Australia (n = 3; 8.6%), and Asia (n = 2; 5.7%). Most of the interventions were either fully or partially based on cognitive-behavioral therapy (27 studies, 77.1%). Digital interventions were designed to address a range of mental health symptoms; the most common symptoms targeted were anxiety (10 studies, 28.6%), depression (nine studies, 25.7%), and psychological distress/recovery (nine studies, 25.7%). Thirty (85.7%) of the DMHIs were online programs accessed primarily via computer, while the remaining five were app-based interventions accessed via smartphones. The number of modules/activities/sessions completed was the most commonly reported engagement measure (33 studies, 94.3%). Other engagement metrics included number of logins (five studies, 14.3%), time spent using the intervention (five studies, 14.3%), and other actions performed in response to DMHI content, such as emails to the therapist or interactions with other users (four studies, 11.4%). Ten studies (28.6%) reported on more than one measure of engagement.

Assessment of the methodological quality of studies on the Cochrane Risk of Bias 2.0 tool found most studies to have some level of potential bias (Supplementary Material, p. 2). Selective reporting was identified to be the largest source of bias, with 28 studies (80.0%) not reporting sufficient information—such as a prospectively published trial protocol—to rule out bias in this domain. Risk of outcome measurement bias was the second most common source of potential bias, with 19 studies (54.3%) reporting that outcome assessors—usually study participants themselves—were aware of the intervention received by study participants. Participants in 16 studies (45.7%) were not blinded to their assigned intervention. Most studies had sound random sequence generation and allocation concealment processes, and employed analytical techniques to minimize bias in missing post-intervention outcome data.

Of the 35 studies, 14 (40%) reported evidence that greater engagement with DMHIs was associated with statistically significant improvements in mental health symptoms at post-intervention; where a study used multiple engagement measures, this held across all of them (33, 34, 36, 39, 40, 50–52, 54, 55, 61, 63, 65, 66). Five studies (14.3%) reported mixed findings, where improvements in the primary mental health outcome were associated with some, but not all, measures of engagement (37, 43, 47, 49, 57). Sixteen studies (45.7%) did not find any significant association between engagement and post-intervention mental health outcomes (20, 35, 38, 41, 42, 44–46, 48, 53, 56, 58–60, 62, 64).

Seventeen studies evaluated digital interventions that were unguided (33–49). Of these, nine reported a positive association between engagement and mental health outcomes (33, 34, 36, 39–41, 43, 47, 49) while eight did not find a significant association (35, 37, 38, 42, 44–46, 48). Fourteen studies examined DMHIs that were guided (20, 50–62). Among these studies, six reported a positive association between engagement and mental health outcomes (50–52, 54, 55, 57) while eight did not find a significant association (20, 53, 56, 58–62). The remaining four studies included participants who used both unguided and guided versions of the same digital intervention (63–66). Of these studies, three reported a positive association between engagement and mental health outcomes (63, 65, 66) while one did not find a significant association (64).

In 12 of the studies, participants' mental health status was confirmed with formal diagnostic instruments. The diagnoses comprised anxiety-related disorders (20, 34, 35, 51, 53–55, 61, 66), schizophrenia-related disorders (60), gambling disorder (39), and obsessive-compulsive disorder (56). Of these studies, six reported a positive association between engagement and the primary mental health outcome (34, 39, 51, 54, 55, 66) while the other six did not find a significant association (20, 35, 53, 56, 60, 61). In the remaining 23 studies, participants were not formally diagnosed with a mental health condition but were screened for symptoms indicative of mental disorders. Of these studies, 12 reported a positive association between engagement and the primary mental health outcome (33, 36, 40, 41, 43, 47, 49, 50, 52, 57, 63, 65) while the other 11 did not find a significant association (37, 38, 42, 44–46, 48, 58, 59, 62, 64).

Ten studies (20, 34, 38, 51, 53–55, 58, 61, 66) investigated the relationship between engagement and anxiety-related symptoms. Of these, five reported a positive association between engagement and post-intervention symptoms (34, 51, 54, 55, 66) while five did not find any significant association (20, 38, 53, 58, 61).

Nine studies (33, 38, 40, 48, 49, 57, 58, 63, 65) investigated the relationship between engagement and depression-related symptoms. Of these, six reported a positive association between engagement and post-intervention symptoms (33, 40, 49, 57, 63, 65) while three did not find any significant association (38, 48, 58).

Nine studies (35, 36, 42, 44, 46, 50, 52, 59, 64) investigated the relationship between engagement and psychological distress or psychological recovery. Of these, three reported a positive association between engagement and post-intervention symptoms (36, 50, 52) while six did not find any significant association (35, 42, 44, 46, 59, 64).

Finally, nine studies reported associations between engagement and other primary mental health outcomes. These outcomes include loneliness (37), gambling symptoms (39), repetitive negative thinking (41), self-compassion (43), OCD symptoms (56), body dissatisfaction (45), motivation in schizophrenia (60), quality of life in bipolar disorder (62), and pain self-efficacy (47). Of these, five studies reported a positive association between engagement and post-intervention symptoms (37, 39, 41, 43, 47) while four studies did not find any association (45, 56, 60, 62).

Of the 25 studies included in the meta-analysis, 20 studies (20, 33–35, 37, 40, 41, 43, 47, 48, 51, 54–57, 59, 61, 63–65) employed correlational designs to investigate the association between engagement and mental health outcomes. The other five studies (36, 38, 50, 52, 66) used between-group mixed model comparisons to identify any differences in post-intervention outcomes between users who exhibited higher vs. lower levels of engagement. To maximize comparability across studies, the number of modules (also referred to as activities or sessions) completed was used as our primary measure of engagement, owing to its common use among the included studies.

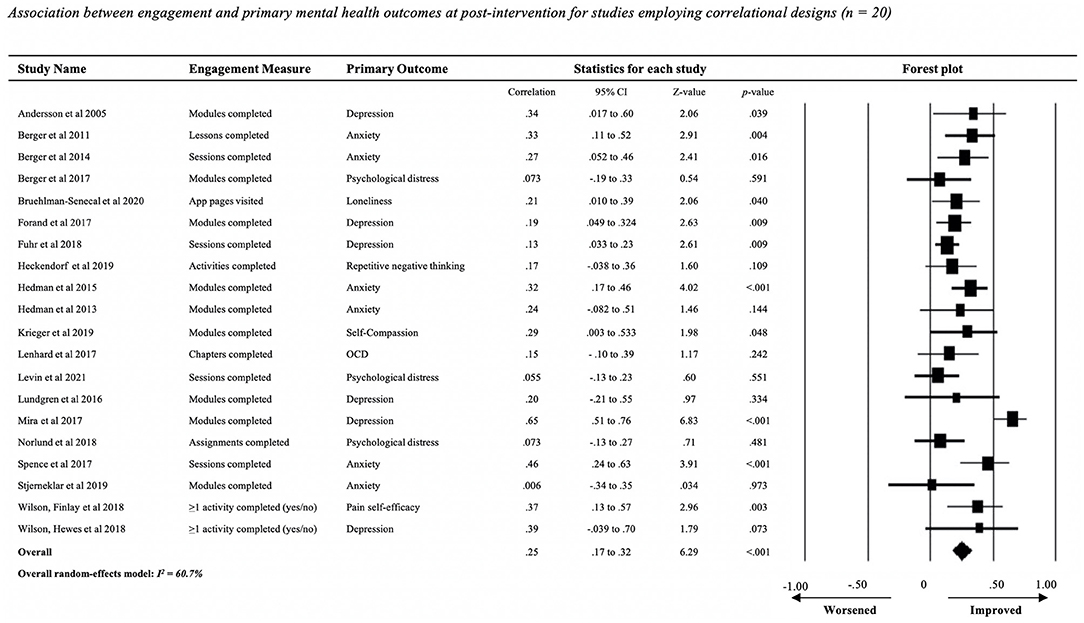

Meta-analysis of the mean pooled correlation between number of modules completed and change in any mental health outcome (20 studies, N = 1,808 participants; Figure 2) showed a small, significant positive association [r = 0.24, 95% CI (0.17, 0.32), Z = 6.29, p < 0.001]. Leave-one-out analysis revealed that no single study rendered the random-effects model non-significant. Removal of Mira et al. (65) had the largest influence on the model, reducing the overall r from 0.24 to 0.21. There was a moderate level of heterogeneity in the distribution of individual study effect sizes (I² = 60.7%). Examination of the funnel plot (Supplementary Figure 5A, p. 6) showed no evidence of publication bias for this analysis (one-tailed p = 0.11).

Figure 2. Association between engagement and primary mental health outcomes at post-intervention for studies employing correlational designs (n = 20).

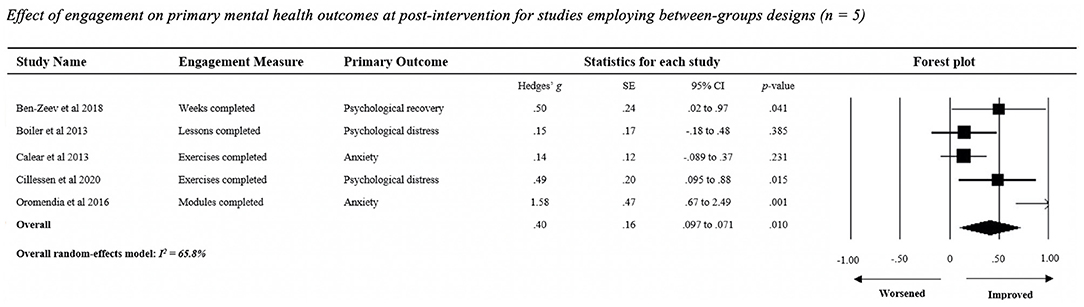

Meta-analysis of the effect of engagement on any mental health outcome among studies that used between-group comparisons (n = 5 studies; Figure 3) showed that users with higher levels of content access had significant, moderate improvements in post-intervention mental health outcomes relative to users with lower levels of engagement [Hedges' g = 0.40, SE = 0.16, 95% CI (0.097, 0.705), Z = 2.59, p = 0.010]. Leave-one-out analysis revealed that no single study rendered the random-effects model non-significant. Omission of Oromendia et al. (66) had the largest influence on the model, reducing the Hedges' g value from 0.40 to 0.25. There was a moderate level of heterogeneity in the distribution of individual study effect sizes (I² = 65.8%).

Figure 3. Effect of engagement on primary mental health outcomes at post-intervention for studies employing between-groups designs (n = 5).
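As a rough consistency check on the between-groups estimate, the reported interval can be related back to the standard error and Z statistic. The working below assumes a normal-approximation 95% interval (g ± 1.96 SE) and takes the interval midpoint as the unrounded estimate; both are assumptions, since the exact computation is not described.

$$
\mathrm{SE} \approx \frac{0.705 - 0.097}{2 \times 1.96} \approx 0.155,
\qquad
g \approx \frac{0.097 + 0.705}{2} = 0.401,
\qquad
Z \approx \frac{g}{\mathrm{SE}} \approx \frac{0.401}{0.155} \approx 2.59
$$

These values agree, to rounding, with the reported SE = 0.16 and Z = 2.59.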

Figures for all subgroup analyses can be found in the Supplementary Material (p. 3–5).

Data for nine of the 17 studies evaluating unguided interventions and for six of the 14 studies evaluating guided interventions were examined in two separate meta-analyses. To avoid ambiguity, studies that administered interventions in both guided and unguided formats (four studies) were excluded from the analyses. The overall mean pooled correlation for the meta-analysis of nine studies evaluating unguided interventions (N = 674; Supplementary Figure 4A) showed a small, significant positive association between engagement and post-intervention mental health outcomes (r = 0.23, 95% CI [0.16, 0.31], Z = 6.07, p < 0.001). Leave-one-out analysis revealed that no single study rendered the random-effects model non-significant. Removal of Forand et al. (40) had the largest influence on the model, increasing the overall r from 0.23 to 0.25. There was no heterogeneity in the distribution of individual study effect sizes (I² = 0.0%).

The overall mean pooled correlation for the meta-analysis of six studies evaluating guided interventions (N = 423; Supplementary Figure 4B) showed a significant, moderate positive association between engagement and post-intervention mental health outcomes (r = 0.30, 95% CI [0.20, 0.38], Z = 6.13, p < 0.001). Leave-one-out analysis revealed that no single study rendered the random-effects model non-significant. Removal of Spence et al. (61) had the largest influence on the model, reducing the overall r from 0.30 to 0.26. There was no heterogeneity in the distribution of individual study effect sizes (I² = 0.0%).

We analyzed studies that used self-report symptom screening (11 studies) separately from those that used diagnostic instruments (six studies). The overall mean pooled correlation for the meta-analysis of self-report symptom measures (N = 1,056; Supplementary Figure 4C) showed a significant positive association between engagement and post-intervention mental health outcomes (r = 0.24, 95% CI [0.17, 0.32], Z = 6.29, p < 0.001). Leave-one-out analysis revealed that no single study rendered the random-effects model non-significant. Removal of Mira et al. (65) had the largest influence on the model, reducing the overall r from 0.27 to 0.17. There was a moderate level of heterogeneity in the distribution of individual study effect sizes (I² = 70.2%). Examination of the funnel plot (Supplementary Figure 5B, p. 6) showed no evidence of publication bias for this analysis (one-tailed p = 0.08).

The overall mean pooled correlation for the meta-analysis of six studies with participants who fulfilled criteria for a psychiatric diagnosis (N = 530; Supplementary Figure 4D) showed a significant positive association between engagement and post-intervention mental health outcomes (r = 0.28, 95% CI [0.19, 0.36], Z = 6.03, p < 0.001). Leave-one-out analysis revealed that no single study rendered the random-effects model non-significant. Removal of Spence et al. (61) had the largest influence on the model, reducing the overall r from 0.28 to 0.26. Heterogeneity in the distribution of individual study effect sizes was minimal (I² = 11.6%).

Data for five of the 10 studies which investigated the relationship between engagement and anxiety-related symptoms, and for six of the nine studies which investigated the relationship between engagement and depression-related symptoms, were examined in two separate meta-analyses.

The overall mean pooled correlation for the meta-analysis of five studies with anxiety-related symptoms as the primary outcome (N = 411; Supplementary Figure 4E) showed a significant positive association between engagement and post-intervention mental health outcomes (r = 0.33, 95% CI [0.24, 0.41], Z = 6.76, p < 0.001). Leave-one-out analysis revealed that no single study rendered the random-effects model non-significant. Removal of Spence et al. (61) had the largest effect on the model, reducing the overall r from 0.33 to 0.20. There was no heterogeneity in the distribution of individual study effect sizes (I² = 0.0%).

The overall mean pooled correlation for the meta-analysis of six studies with depressive symptoms as the primary outcome (N = 735; Supplementary Figure 4F) showed a significant positive association between engagement and post-intervention mental health outcomes (r = 0.33, 95% CI [0.13, 0.50], Z = 3.12, p = 0.002). Leave-one-out analysis revealed that no single study rendered the random-effects model non-significant. Removal of Mira et al. (65) had the largest effect on the model, reducing the overall r from 0.33 to 0.17. There was a high level of heterogeneity in the distribution of individual study effect sizes (I² = 82.2%).

To our knowledge, this is the first systematic review and meta-analysis to quantitatively examine whether the level of user engagement with a digital intervention is associated with change in mental health outcomes after the intervention period. Although it is widely accepted that the extent of engagement with digital interventions will be positively associated with improvements in mental health, robust empirical evidence to support or validate this hypothesis is scant. While the narrative synthesis showed mixed support for a positive engagement-outcome relationship, the meta-analyses (main and subgroup) consistently supported our main a priori hypothesis. That is, the results indicate that greater engagement with digital interventions is modestly but significantly associated with improvements in mental health (effect size range: r = 0.23 to Hedges' g = 0.40) regardless of the level of guidance provided, mental health symptom severity of users, or type of mental health condition(s) targeted by the intervention.

Our findings validate the qualitative findings reported in the systematic review by Donkin and colleagues (18), who reported that improvements in mental health-related outcomes appeared to be associated with the number of modules accessed, but not with other engagement indicators (e.g., time spent, logins, and online interactions). In our study, it was not possible to quantitatively explore this relationship using these latter engagement indicators as too few of the included studies reported on such data. Future studies should consider reporting associations between multiple engagement measures and mental health outcomes to continue to build the evidence base for the impact of engagement on treatment outcomes, and to reach an understanding of what level or threshold of engagement is needed to achieve therapeutic benefits.

The study findings have several important implications for clinical practice and research. Firstly, they support the view that users' level of engagement with intervention content is likely a key mechanism for predicting the amount of treatment benefit obtained (18), justifying the development of strategies aimed at increasing engagement with digital interventions. There is already some promising research in this space, with several studies finding that external strategies such as automated reminders (13, 67), therapist-led coaching (68, 69), and moderated peer-support groups (70) can be effective in promoting engagement with digital interventions for mental health. Though the literature on the use of such strategies is still emerging, it is worthwhile for healthcare, educational, or community-based organizations that may eventually recommend or deliver digital health interventions to consider incorporating them as part of their implementation models. To ensure that digital interventions are built in ways that motivate users to engage with them, researchers should consider involving those with lived experience in the design and development process. Doing so can help ensure that these programs solve problems that users care about, are dynamic rather than static, include well-integrated and meaningful gamification, and allow personalisation or tailoring to the user (12, 71).

Secondly, our findings suggest that module completion may be one of the more acceptable measures of engagement to evaluate. Given that research on the impact of engagement on mental health outcomes has been hindered by the lack of consensus over a suitable engagement measure (72, 73), we recommend that future studies consider including module completion as the primary engagement measure to facilitate future corroboration of the present findings.

Our study had several limitations. First, the included studies differed in many ways, such as by target population, DMHIs employed, and the types of mental health conditions examined. Thus, analyses of the effect of a specific measure of engagement on a particular mental health outcome could not be conducted. Second, studies also differed in the statistical approaches they used to quantify the engagement-outcome relationship. As effect sizes from repeated measures and between-subject designs are not comparable (26), data provided by both types of studies had to be analyzed separately. Third, Pearson's r had to be estimated for some of the studies which employed correlational designs. While estimating r from other indices may not be ideal, it is preferable to omitting studies without these data, so as to maximize objectivity and minimize selection bias (26, 29). Finally, bivariate correlations between engagement and outcomes do not account for the possibility that other variables may influence this association. For example, one of the included studies reported that sessions completed and time spent were correlated with reduced depression scores at post-intervention; however, these associations were non-significant after email support was accounted for (63). Controlling for factors linked with engagement may be necessary for verifying the robustness of its relationship with outcomes.
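The final limitation can be made concrete with a first-order partial correlation, which removes the linear influence of a third variable from a bivariate association. The sketch below is illustrative only: the function name is hypothetical, the input values are invented rather than taken from any included study, and the cited study may have used a different adjustment method.

```python
import math


def partial_correlation(r_xy, r_xz, r_yz):
    """Correlation between x and y after partialling out z.

    x: engagement, y: outcome, z: a covariate such as support received.
    """
    denom = math.sqrt((1.0 - r_xz ** 2) * (1.0 - r_yz ** 2))
    if denom == 0.0:
        raise ValueError("covariate is perfectly correlated with x or y")
    return (r_xy - r_xz * r_yz) / denom


# Hypothetical values: engagement and outcome correlate at 0.30, and both
# correlate at 0.50 with the covariate.
adjusted = partial_correlation(0.30, 0.50, 0.50)
print(f"bivariate r = 0.30, partial r = {adjusted:.3f}")
```

When the covariate is related to both engagement and outcome, the adjusted association can be much smaller than the bivariate one, which is why pooled bivariate correlations should be read as upper-bound estimates of the independent association.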

This systematic review and meta-analysis provides the first meta-analytic evidence that the more that users engage with digital interventions the greater the improvements in mental health symptoms. Our findings speak to the importance of ensuring that those individuals who are less motivated to engage, or experience more barriers to engagement, have access to strategies that can overcome these challenges if we are to maximize therapeutic benefits. On a methodological level, the findings underscore the importance of standardizing measures of user engagement in future trials to build our certainty in this evidence. To further advance the field, it is important for future research to explore which engagement metrics (log-ins, sessions completed, time spent, etc.) have the greatest impact on improving mental health outcomes. This information will enable the targeted development of engagement strategies that will support users to interact with interventions in ways that they are most likely to benefit from them.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

DZQG, MT, LM, and HC designed the study. DZQG and MT planned the statistical analysis. DZQG extracted and analyzed the data, with assistance from MT and LM. DZQG, MT, LM, and JH assessed study eligibility and quality. DZQG, MT, and LM wrote the first draft of the manuscript. All authors contributed to the interpretation and subsequent edits of the manuscript.

DZQG was supported by an Australian Government Research Training Program Scholarship and a Centre of Research Excellence in Suicide Prevention (CRESP) Top-Up Scholarship for the completion of a PhD. MT was supported by a NHMRC Early Career Fellowship. JH was supported by a Commonwealth Suicide Prevention Research Fund Post-Doctoral Fellowship. HC was supported by a NHMRC Elizabeth Blackburn Fellowship.

MT, LM, JH, and HC are employed by the Black Dog Institute (University of New South Wales, Sydney, NSW, Australia), a not-for-profit research institute that develops and tests digital interventions for mental health.

The remaining author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fdgth.2021.764079/full#supplementary-material

1. Rehm J, Shield KD. Global burden of disease and the impact of mental and addictive disorders. Curr Psychiatry Rep. (2019) 21:10. doi: 10.1007/s11920-019-0997-0

2. Murray CJL, Barber RM, Foreman KJ, Ozgoren AA, Abd-Allah F, Abera SF, et al. Global, regional, and national disability-adjusted life years (DALYs) for 306 diseases and injuries and healthy life expectancy (HALE) for 188 countries, 1990–2013: quantifying the epidemiological transition. Lancet. (2015) 386:2145–91. doi: 10.1016/S0140-6736(15)61340-X

3. Aboujaoude E, Gega L, Parish MB, Hilty DM. Editorial: digital interventions in mental health: current status and future directions. Front Psychiatry. (2020) 11:111. doi: 10.3389/fpsyt.2020.00111

4. Andersson G, Titov N. Advantages and limitations of Internet-based interventions for common mental disorders. World Psychiatry. (2014) 13:4–11. doi: 10.1002/wps.20083

5. Hom MA, Stanley IH, Joiner TE Jr. Evaluating factors and interventions that influence help-seeking and mental health service utilization among suicidal individuals: a review of the literature. Clin Psychol Rev. (2015) 40:28–39. doi: 10.1016/j.cpr.2015.05.006

6. Andrews G, Basu A, Cuijpers P, Craske MG, McEvoy P, English CL, et al. Computer therapy for the anxiety and depression disorders is effective, acceptable and practical health care: an updated meta-analysis. J Anxiety Disord. (2018) 55:70–8. doi: 10.1016/j.janxdis.2018.01.001

7. Deady M, Choi I, Calvo RA, Glozier N, Christensen H, Harvey SB. eHealth interventions for the prevention of depression and anxiety in the general population: a systematic review and meta-analysis. BMC Psychiatry. (2017) 17:310. doi: 10.1186/s12888-017-1473-1

8. Mason M, Ola B, Zaharakis N, Zhang J. Text messaging interventions for adolescent and young adult substance use: a meta-analysis. Prev Sci. (2015) 16:181–8. doi: 10.1007/s11121-014-0498-7

9. Stefanopoulou E, Hogarth H, Taylor M, Russell-Haines K, Lewis D, Larkin J. Are digital interventions effective in reducing suicidal ideation and self-harm? A systematic review. J Mental Health. (2020) 29:207–16. doi: 10.1080/09638237.2020.1714009

10. Torok M, Han J, Baker S, Werner-Seidler A, Wong I, Larsen ME, et al. Suicide prevention using self-guided digital interventions: a systematic review and meta-analysis of randomised controlled trials. Lancet Digit Health. (2020) 2:e25–36. doi: 10.1016/S2589-7500(19)30199-2

11. Aboujaoude E. Telemental health: why the revolution has not arrived. World Psychiatry. (2018) 17:277–8. doi: 10.1002/wps.20551

12. Torous J, Nicholas J, Larsen ME, Firth J, Christensen H. Clinical review of user engagement with mental health smartphone apps: evidence, theory and improvements. Evid Based Mental Health. (2018) 21:116–9. doi: 10.1136/eb-2018-102891

13. Bidargaddi N, Almirall D, Murphy S, Nahum-Shani I, Kovalcik M, Pituch T, et al. To prompt or not to prompt? A microrandomized trial of time-varying push notifications to increase proximal engagement with a mobile health app. JMIR mHealth uHealth. (2018) 6:e10123. doi: 10.2196/10123

14. Yardley L, Spring BJ, Riper H, Morrison LG, Crane DH, Curtis K, et al. Understanding and promoting effective engagement with digital behavior change interventions. Am J Prev Med. (2016) 51:833–42. doi: 10.1016/j.amepre.2016.06.015

15. Karyotaki E, Kleiboer A, Smit F, Turner DT, Pastor AM, Andersson G, et al. Predictors of treatment dropout in self-guided web-based interventions for depression: an “individual patient data” meta-analysis. Psychol Med. (2015) 45:2717–26. doi: 10.1017/S0033291715000665

16. Alkhaldi G, Hamilton FL, Lau R, Webster R, Michie S, Murray E. The effectiveness of prompts to promote engagement with digital interventions: a systematic review. J Med Internet Res. (2016) 18:e6. doi: 10.2196/jmir.4790

17. Wu A, Scult MA, Barnes ED, Betancourt JA, Falk A, Gunning FM. Smartphone apps for depression and anxiety: a systematic review and meta-analysis of techniques to increase engagement. NPJ Digit Med. (2021) 4:1–9. doi: 10.1038/s41746-021-00386-8

18. Donkin L, Christensen H, Naismith SL, Neal B, Hickie IB, Glozier N, et al. Systematic review of the impact of adherence on the effectiveness of e-therapies. J Med Internet Res. (2011) 13:e52. doi: 10.2196/jmir.1772

19. Farrer LM, Griffiths KM, Christensen H, Mackinnon AJ, Batterham PJ. Predictors of adherence and outcome in internet-based cognitive behavior therapy delivered in a telephone counseling setting. Cognit Ther Res. (2014) 38:358–67. doi: 10.1007/s10608-013-9589-1

20. Stjerneklar S, Hougaard E, Thastum M. Guided internet-based cognitive behavioral therapy for adolescent anxiety: predictors of treatment response. Internet Interv. (2019) 15:116–25. doi: 10.1016/j.invent.2019.01.003

21. El Alaoui S, Ljotsson B, Hedman E, Kaldo V, Andersson E, Rück C, et al. Predictors of symptomatic change and adherence in internet-based cognitive behaviour therapy for social anxiety disorder in routine psychiatric care. PLoS ONE. (2015) 10:e0124258. doi: 10.1371/journal.pone.0124258

22. Hilvert-Bruce Z, Rossouw PJ, Wong N, Sunderland M, Andrews G. Adherence as a determinant of effectiveness of internet cognitive behavioural therapy for anxiety and depressive disorders. Behav Res Ther. (2012) 50:463–8. doi: 10.1016/j.brat.2012.04.001

23. Liberati A, Altman DG, Tetzlaff J, Mulrow C, Gøtzsche PC, Ioannidis JPA, et al. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: explanation and elaboration. J Clin Epidemiol. (2009) 62:e1–34. doi: 10.1016/j.jclinepi.2009.06.006

24. Gilpin AR. Table for conversion of Kendall's Tau to Spearman's Rho within the context of measures of magnitude of effect for meta-analysis. Educ Psychol Meas. (1993) 53:87–92. doi: 10.1177/0013164493053001007

25. Peterson RA, Brown SP. On the use of beta coefficients in meta-analysis. J Appl Psychol. (2005) 90:175–81. doi: 10.1037/0021-9010.90.1.175

28. Higgins JP, Thompson SG, Deeks JJ, Altman DG. Measuring inconsistency in meta-analyses. BMJ. (2003) 327:557–60. doi: 10.1136/bmj.327.7414.557

29. Borenstein M, Cooper H, Hedges L, Valentine J. Effect sizes for continuous data. In: Cooper H, Hedges LV, Valentine JC, editors, The Handbook of Research Synthesis and Meta-Analysis. New York, NY: Russell Sage Foundation (2009). p. 221–35.

30. Egger M, Smith GD, Schneider M, Minder C. Bias in meta-analysis detected by a simple, graphical test. BMJ. (1997) 315:629–34. doi: 10.1136/bmj.315.7109.629

31. Borenstein M, Hedges LV, Higgins JP, Rothstein HR. Comprehensive Meta-Analysis (version 3.0). Englewood, NJ: Biostat (2013).

32. Sterne JAC, Savović J, Page MJ, Elbers RG, Blencowe NS, Boutron I, et al. RoB 2: a revised tool for assessing risk of bias in randomised trials. BMJ. (2019) 366:l4898. doi: 10.1136/bmj.l4898

33. Andersson G, Bergstrom J, Hollandare F, Carlbring P, Kaldo V, Ekselius L. Internet-based self-help for depression: randomised controlled trial. Br J Psychiatry. (2005) 187:456–61. doi: 10.1192/bjp.187.5.456

34. Berger T, Caspar F, Richardson R, Kneubuhler B, Sutter D, Andersson G. Internet-based treatment of social phobia: a randomized controlled trial comparing unguided with two types of guided self-help. Behav Res Ther. (2011) 49:158–69. doi: 10.1016/j.brat.2010.12.007

35. Berger T, Urech A, Krieger T, Stolz T, Schulz A, Vincent A, et al. Effects of a transdiagnostic unguided internet intervention ('velibra') for anxiety disorders in primary care: results of a randomized controlled trial. Psychol Med. (2017) 47:67–80. doi: 10.1017/S0033291716002270

36. Bolier L, Haverman M, Kramer J, Westerhof GJ, Riper H, Walburg JA, et al. An Internet-based intervention to promote mental fitness for mildly depressed adults: randomized controlled trial. J Med Internet Res. (2013) 15:e200. doi: 10.2196/jmir.2603

37. Bruehlman-Senecal E, Hook CJ, Pfeifer JH, FitzGerald C, Davis B, Delucchi KL, et al. Smartphone app to address loneliness among college students: pilot randomized controlled trial. JMIR Mental Health. (2020) 7:e21496. doi: 10.2196/21496

38. Calear AL, Christensen H, Mackinnon A, Griffiths KM. Adherence to the MoodGYM program: outcomes and predictors for an adolescent school-based population. J Affect Disord. (2013) 147:338–44. doi: 10.1016/j.jad.2012.11.036

39. Casey LM, Oei TP, Raylu N, Horrigan K, Day J, Ireland M, et al. Internet-based delivery of cognitive behaviour therapy compared to monitoring, feedback and support for problem gambling: a randomised controlled trial. J Gambling Stud. (2017) 33:993–1010. doi: 10.1007/s10899-016-9666-y

40. Forand NR, Huibers MJH, DeRubeis RJ. Prognosis moderates the engagement–outcome relationship in unguided cCBT for depression: a proof of concept for the prognosis moderation hypothesis. J Consult Clin Psychol. (2017) 85:471–83. doi: 10.1037/ccp0000182

41. Heckendorf H, Lehr D, Ebert DD, Freund H. Efficacy of an internet and app-based gratitude intervention in reducing repetitive negative thinking and mechanisms of change in the intervention's effect on anxiety and depression: results from a randomized controlled trial. Behav Res Ther. (2019) 119:103415. doi: 10.1016/j.brat.2019.103415

42. Hensel JM, Shaw J, Ivers NM, Desveaux L, Vigod SN, Bouck Z, et al. Extending access to a web-based mental health intervention: who wants more, what happens to use over time, and is it helpful? results of a concealed, randomized controlled extension study. BMC Psychiatry. (2019) 19:39. doi: 10.1186/s12888-019-2030-x

43. Krieger T, Reber F, von Glutz B, Urech A, Moser CT, Schulz A, et al. An internet-based compassion-focused intervention for increased self-criticism: a randomized controlled trial. Behav Ther. (2019) 50:430–45. doi: 10.1016/j.beth.2018.08.003

44. Levin ME, Hayes SC, Pistorello J, Seeley JR. Web-based self-help for preventing mental health problems in universities: comparing acceptance and commitment training to mental health education. J Clin Psychol. (2016) 72:207–25. doi: 10.1002/jclp.22254

45. Luo YJ, Jackson T, Stice E, Chen H. Effectiveness of an Internet dissonance-based eating disorder prevention intervention among body-dissatisfied young Chinese women. Behav Ther. (2021) 52:221–33. doi: 10.1016/j.beth.2020.04.007

46. Twomey C, O'Reilly G, Byrne M, Bury M, White A, Kissane S, et al. A randomized controlled trial of the computerized CBT programme, moodGYM, for public mental health service users waiting for interventions. Br J Clin Psychol. (2014) 53:433–50. doi: 10.1111/bjc.12055

47. Wilson M, Finlay M, Orr M, Barbosa-Leiker C, Sherazi N, Roberts MLA, et al. Engagement in online pain self-management improves pain in adults on medication-assisted behavioral treatment for opioid use disorders. Addict Behav. (2018) 86:130–7. doi: 10.1016/j.addbeh.2018.04.019

48. Wilson M, Hewes C, Barbosa-Leiker C, Mason A, Wuestney KA, Shuen JA, et al. Engaging adults with chronic disease in online depressive symptom self-management. West J Nurs Res. (2018) 40:834–53. doi: 10.1177/0193945916689068

49. Zeng Y, Guo Y, Li L, Hong YA, Li Y, Zhu M, et al. Relationship between patient engagement and depressive symptoms among people living with HIV in a mobile health intervention: secondary analysis of a randomized controlled trial. JMIR mHealth uHealth. (2020) 8:e20847. doi: 10.2196/20847

50. Ben-Zeev D, Brian RM, Jonathan G, Razzano L, Pashka N, Carpenter-Song E, et al. Mobile health (mHealth) versus clinic-based group intervention for people with serious mental illness: a randomized controlled trial. Psychiatric Serv. (2018) 69:978–85. doi: 10.1176/appi.ps.201800063

51. Berger T, Boettcher J, Caspar F. Internet-based guided self-help for several anxiety disorders: a randomized controlled trial comparing a tailored with a standardized disorder-specific approach. Psychotherapy. (2014) 51:207–19. doi: 10.1037/a0032527

52. Cillessen L, van de Ven MO, Compen FR, Bisseling EM, van der Lee ML, Speckens AE. Predictors and effects of usage of an online mindfulness intervention for distressed cancer patients: Usability study. J Med Internet Res. (2020) 22:e17526. doi: 10.2196/17526

53. El Alaoui S, Hedman E, Ljotsson B, Bergstrom J, Andersson E, Ruck C, et al. Predictors and moderators of internet- and group-based cognitive behaviour therapy for panic disorder. PLoS ONE. (2013) 8:e79024. doi: 10.1371/journal.pone.0079024

54. Hedman E, Andersson E, Lekander M, Ljotsson B. Predictors in Internet-delivered cognitive behavior therapy and behavioral stress management for severe health anxiety. Behav Res Ther. (2015) 64:49–55. doi: 10.1016/j.brat.2014.11.009

55. Hedman E, Lindefors N, Andersson G, Andersson E, Lekander M, Ruck C, et al. Predictors of outcome in Internet-based cognitive behavior therapy for severe health anxiety. Behav Res Ther. (2013) 51:711–7. doi: 10.1016/j.brat.2013.07.009

56. Lenhard F, Andersson E, Mataix-Cols D, Rück C, Vigerland S, Högström J, et al. Therapist-guided, Internet-delivered cognitive-behavioral therapy for adolescents with obsessive-compulsive disorder: a randomized controlled trial. J Am Acad Child Adolesc Psychiatry. (2017) 56:10–9.e2. doi: 10.1016/j.jaac.2016.09.515

57. Lundgren JG, Dahlstrom O, Andersson G, Jaarsma T, Karner Kohler A, Johansson P. The effect of guided web-based cognitive behavioral therapy on patients with depressive symptoms and heart failure: a pilot randomized controlled trial. J Med Internet Res. (2016) 18:e194. doi: 10.2196/jmir.5556

58. Moberg C, Niles A, Beermann D. Guided self-help works: randomized waitlist controlled trial of Pacifica, a mobile app integrating cognitive behavioral therapy and mindfulness for stress, anxiety, and depression. J Med Internet Res. (2019) 21:e12556. doi: 10.2196/12556

59. Norlund F, Wallin E, Gustaf Olsson EM, Wallert J, Burell G, von Essen L, et al. Internet-based cognitive behavioral therapy for symptoms of depression and anxiety among patients with a recent myocardial infarction: the U-CARE heart randomized controlled trial. J Med Internet Res. (2018) 20:jmir.9710. doi: 10.2196/jmir.9710

60. Schlosser DA, Campellone TR, Truong B, Etter K, Vergani S, Komaiko K, et al. Efficacy of PRIME, a mobile app intervention designed to improve motivation in young people with schizophrenia. Schizophr Bull. (2018) 44:1010–20. doi: 10.1093/schbul/sby078

61. Spence SH, Donovan CL, March S, Kenardy JA, Hearn CS. Generic versus disorder specific cognitive behavior therapy for social anxiety disorder in youth: a randomized controlled trial using internet delivery. Behav Res Ther. (2017) 90:41–57. doi: 10.1016/j.brat.2016.12.003

62. Todd NJ, Jones SH, Hart A, Lobban FA. A web-based self-management intervention for Bipolar Disorder “Living with Bipolar”: a feasibility randomised controlled trial. J Affect Disord. (2014) 169:21–9. doi: 10.1016/j.jad.2014.07.027

63. Fuhr K, Schroder J, Berger T, Moritz S, Meyer B, Lutz W, et al. The association between adherence and outcome in an Internet intervention for depression. J Affect Disord. (2018) 229:443–9. doi: 10.1016/j.jad.2017.12.028

64. Levin ME, Krafft J, Davis CH, Twohig MP. Evaluating the effects of guided coaching calls on engagement and outcomes for online acceptance and commitment therapy. Cogn Behav Ther. (2021) 2021:1–14. doi: 10.1080/16506073.2020.1846609

65. Mira A, Breton-Lopez J, Garcia-Palacios A, Quero S, Banos RM, Botella C. An internet-based program for depressive symptoms using human and automated support: a randomized controlled trial. Neuropsychiatr Dis Treat. (2017) 13:987–1006. doi: 10.2147/NDT.S130994

66. Oromendia P, Orrego J, Bonillo A, Molinuevo B. Internet-based self-help treatment for panic disorder: a randomized controlled trial comparing mandatory versus optional complementary psychological support. Cogn Behav Ther. (2016) 45:270–86. doi: 10.1080/16506073.2016.1163615

67. Bidargaddi N, Pituch T, Maaieh H, Short C, Strecher V. Predicting which type of push notification content motivates users to engage in a self-monitoring app. Prev Med Rep. (2018) 11:267–73. doi: 10.1016/j.pmedr.2018.07.004

68. Beintner I, Jacobi C. Impact of telephone prompts on the adherence to an Internet-based aftercare program for women with bulimia nervosa: a secondary analysis of data from a randomized controlled trial. Internet Interv. (2019) 15:100–4. doi: 10.1016/j.invent.2017.11.001

69. Proudfoot J, Parker G, Manicavasagar V, Hadzi-Pavlovic D, Whitton A, Nicholas J, et al. Effects of adjunctive peer support on perceptions of illness control and understanding in an online psychoeducation program for bipolar disorder: a randomised controlled trial. J Affect Disord. (2012) 142:98–105. doi: 10.1016/j.jad.2012.04.007

70. Carolan S, Harris PR, Greenwood K, Cavanagh K. Increasing engagement with an occupational digital stress management program through the use of an online facilitated discussion group: results of a pilot randomised controlled trial. Internet Interv. (2017) 10:1–11. doi: 10.1016/j.invent.2017.08.001

71. Morton E, Barnes SJ, Michalak EE. Participatory digital health research: a new paradigm for mHealth tool development. Gen Hosp Psychiatry. (2020) 66:67–9. doi: 10.1016/j.genhosppsych.2020.07.005

72. Perski O, Blandford A, West R, Michie S. Conceptualising engagement with digital behaviour change interventions: a systematic review using principles from critical interpretive synthesis. Transl Behav Med. (2016) 7:254–67. doi: 10.1007/s13142-016-0453-1

Keywords: digital mental health, eHealth, mHealth, systematic review, meta-analysis

Citation: Gan DZQ, McGillivray L, Han J, Christensen H and Torok M (2021) Effect of Engagement With Digital Interventions on Mental Health Outcomes: A Systematic Review and Meta-Analysis. Front. Digit. Health 3:764079. doi: 10.3389/fdgth.2021.764079

Received: 25 August 2021; Accepted: 29 September 2021;

Published: 04 November 2021.

Edited by:

Thomas Berger, University of Bern, Switzerland

Reviewed by:

Matthias Domhardt, University of Ulm, Germany

Copyright © 2021 Gan, McGillivray, Han, Christensen and Torok. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Daniel Z. Q. Gan, danielzq.gan@unsw.edu.au
