
ORIGINAL RESEARCH article

Front. Dev. Psychol., 11 April 2025

Sec. Social and Emotional Development

Volume 3 - 2025 | https://doi.org/10.3389/fdpys.2025.1536440

This article is part of the Research Topic Moral Emotions Scrutinized: Developmental, Clinical and Neuroscientific Aspects.

Introduction: Objective measures of emotional valence in young children are rare, but recent work has employed motion depth sensor imaging to measure young children's emotional expression via changes in their body posture. This method efficiently captures children's emotional valence, moving beyond self-reports or caregiver reports, and avoiding extensive manual coding, e.g., of children's facial expressions. Moreover, it can be flexibly and non-invasively used in interactive study paradigms, thus offering an advantage over other physiological measures of emotional valence.

Method: Here, we discuss the merits of studying body posture in developmental research and showcase its use in six studies. To this end, we provide a comprehensive validation in which we map measures of children's posture onto the constructs of emotional valence and arousal. Using body posture data aggregated from six studies (N = 466; mean age = 5.08 years; range: 2 years, 5 months to 6 years, 2 months; 220 girls), coders rated children's expressed emotional valence and arousal and provided a discrete emotion label for each child.

Results: Emotional valence was positively associated with children's change in chest height and chest expansion: children with more upright upper-body postures were rated as expressing more positive emotional valence, whereas the relation between emotional arousal and changes in body posture was weak.

Discussion: These data add to existing evidence that changes in body posture reliably reflect emotional valence. They thus provide an empirical foundation to conduct research on children's spontaneously expressed emotional valence using the automated and efficient tool of body posture analysis.

Understanding children's emotional responses provides an important window into their cognitive, social, and moral development. For instance, scholars have relied on emotional valence to gain insights into the motivational mechanisms (such as empathy, gratitude, guilt, and shame) underlying the development and maintenance of (pro)sociality and cooperation in young children (Aknin et al., 2018; Gerdemann et al., 2022b; Malti et al., 2019; Tan and Hamlin, 2024; Vaish and Hepach, 2020). Emotional responses also enable the study of children's emerging cognitive capacity for counterfactual thinking, expressed through emotions such as regret and relief (Beck and Riggs, 2014; Weisberg and Beck, 2012). Furthermore, assessing audience effects on emotional expression can provide insights into children's metacognitive capacities and concerns for their reputation (Botto and Rochat, 2018; Jones et al., 1991).

To uncover these various cognitive and motivational mechanisms during ontogeny, reliable tools to measure young children's emotional responses are essential. One recent approach has begun to provide insights into children's emotional responses by using automated measures of children's body posture, recorded with Microsoft Kinect depth sensor imaging cameras (Gerdemann et al., 2022c; Hepach and Tomasello, 2020; Sivertsen et al., 2024; Waddington et al., 2022). With this approach, children's body posture is automatically tracked while they walk toward the camera during live interactive study paradigms. In later processing steps, the elevation of children's upper body posture is used to assess emotion expression resulting from different study manipulations. Here, we present the results of a validation study in which we show that changes in children's upper body posture (assessed using Kinect cameras) robustly reflect changes in children's emotional valence. In addition, we examine the relation between changes in children's body posture and their emotional arousal to assess which construct is best captured by changes in upper body posture elevation. Our aim is to validate body posture as a useful tool for measuring emotions in developmental science. Before describing the present validation study, we discuss other existing methods for studying children's emotional responses to illustrate the critical importance of adding measurements of posture to the toolkit available to developmental scientists interested in early emotional development.

One established approach to measuring young children's emotions involves interviewing children and directly asking them to verbally self-report their emotions (Kogut, 2012; Uprichard and McCormack, 2019). Interview methods can provide an easily accessible measure of children's emotional responses. Moreover, this approach parallels the one often taken to study adults' emotions, making comparisons between children's and adults' emotional expressions possible (de Hooge et al., 2008; Gummerum et al., 2020; Keltner and Buswell, 1996). The main limitation of interview-based methods in developmental science is that they are only suitable for verbal populations (i.e., children beyond their second or third year of life). Conducting studies of children's emotions across wide age ranges (including toddlers) is therefore difficult using interviews. Moreover, when children respond to pre-provided prompts, their potential responses are typically constrained by the available options (e.g., feeling “good,” “a little bit good,” “a little bit bad,” and so on). These broad categories may obscure subtler differences, as the responses are not recorded on a natural scale. On the other hand, open-ended interview methods, in which participants can provide richer descriptions of their emotional state, may not be suitable until children have far more sophisticated cognitive and verbal abilities and may not yield responses that can be easily analyzed using quantitative methods. Thus, while interviews provide a very useful measure of emotional experience in verbal populations, they are limited in their ability to capture subtle differences in degree and are restricted to older developmental populations.

A second approach is based on manually coding children's emotional expressions, for instance, their overall affect (Aknin et al., 2012), their facial expressions, including through the Facial Action Coding System, or FACS (Castro et al., 2018; Ekman et al., 1978; Steckler et al., 2018; Strayer and Roberts, 1997), or a combination of bodily and facial features, such as those thought to reflect complex emotions like guilt and shame (Kochanska et al., 2002; Stipek et al., 1992; Vaish et al., 2016). This approach is useful for both verbal and non-verbal populations and can therefore be applied from infancy onward. The main disadvantage of having coders rate children's emotional expression manually is that it is time-intensive and usually requires multiple naive coders. This approach may therefore only be suitable for specific kinds of studies. For instance, it may be less applicable to studies involving multiple trials and/or large sample sizes, due to resource limitations (Zaharieva et al., 2024). However, larger samples and multiple trials are often desirable to increase statistical power and therefore replicability in developmental science (Bergmann et al., 2018). Moreover, as with interview-based approaches, coders often use pre-defined categories rather than a natural scale to code children's emotional expression, potentially obscuring subtle variations in the degree of emotional response. FACS is often employed especially when the goal is to assess discrete emotions such as fear or anger (Ekman et al., 1978). With this coding scheme, different micro-movements, or facial action units, are identified. Yet, there is debate over whether discrete emotions can be identified from facial expressions, as many emotions share overlapping facial action units. Moreover, emotional expressions are often subtle, and stereotyped emotion displays of the kind usually expected when using FACS may be rarer in naturalistic contexts, limiting the informativeness of this approach (Barrett et al., 2019). Finally, deciding which (facial) actions to code, or whether to focus on overall affect instead, poses a difficult methodological challenge. Thus, although manually coding emotional responses is informative and can be used across a wide age range, it has several downsides (Zaharieva et al., 2024).

Notably, there also exist automated, computer-assisted methods for applying the Facial Action Coding System to infants and young children (Zaharieva et al., 2024; Zanette et al., 2016). These detect the occurrence and intensity of action units or of overall facial expressions. Such methods overcome some of the limitations of manually coding facial expressions, specifically concerning the efficiency with which large data sets can be coded. However, these methods have so far focused exclusively on facial expressions and have excluded other dimensions of emotional expression, such as posture (Aviezer et al., 2012).

The disadvantages of these two commonly used methods illustrate why developmental scientists have increasingly turned to direct physiological measures of children's emotional arousal, such as pupil dilation (Hepach et al., 2012) and galvanic skin conductance or electrodermal activity (EDA; Gummerum et al., 2020), and of emotional valence, such as facial electromyography (fEMG; Vacaru et al., 2020). These methods gauge young children's emotions directly and objectively on fine-grained natural scales.

It is important when discussing these measures to distinguish between two dimensions of emotion: emotional arousal and valence (Posner et al., 2005). Emotional valence is typically defined as the pleasantness of an emotion, from negative to positive (Walle and Dukes, 2023) or displeasure to pleasure (Reisenzein, 1994). On the other hand, emotional arousal refers to the degree of physiological or psychological activation compared to deactivation related to an emotion (Reisenzein, 1994). Pupillometry and electrodermal measures both assess emotional arousal but not emotional valence (Bradley et al., 2008; Sirois and Brisson, 2014). Pupil dilation is a result of activation of the sympathetic nervous system (Sirois and Brisson, 2014). This can reflect changes in emotional arousal in response to stimuli that are positive or negative in valence (Bradley et al., 2008). Measuring emotional arousal via changes in pupil dilation in infants and young children can be useful for investigating whether children differentiate between different scenes such as those involving a moral vs. a conventional violation (Kassecker et al., 2023; Yucel et al., 2020). Electrodermal activity similarly indicates sympathetic nervous system activity or stress but cannot indicate emotional valence (Fowles, 2008). For instance, measures of skin conductance have been used to indicate emotional arousal in children in response to fair and unfair resource allocations. However, the electrodermal activity data were paired with explicit emotional valence ratings to disentangle the nature of the emotional arousal (Gummerum et al., 2020).

Facial electromyography, on the other hand, can directly inform about the valence of an emotional response. This is a clear advantage of facial electromyography over other physiological methods when the goal is to study emotional valence. Accordingly, fEMG is increasingly used in developmental studies (Armstrong et al., 2007; Beall et al., 2008; Cattaneo et al., 2018; Geangu et al., 2016; Oberman et al., 2009; Tan and Hamlin, 2024; Vacaru et al., 2020). With this method, changes in electrical activity resulting from facial muscular contractions are recorded from the surface of the skin using electrodes. Activation of the brow muscle, the corrugator supercilii, has been found to be associated with negative emotional valence whereas activation of the zygomaticus major, the cheek muscle, is associated with positive emotional valence (van Boxtel, 2010). Recent studies suggest that this general pattern also holds for infant populations, who respond with congruent patterns of facial muscle activation to happy and angry action kinematics (Addabbo et al., 2020; Schröer et al., 2022). Finally, when planning studies with this method, one must consider the relatively high dropout rate with infant populations (Addabbo et al., 2020).

In summary, physiological measures are increasingly used to assess emotions in developmental populations because of their sensitivity to subtle differences in the degree of emotional response, as well as their efficiency and objectivity. However, among the reviewed methods, only facial EMG captures emotional valence, which is often of interest in developmental studies of the motivational mechanisms underlying behavior. Yet facial EMG has other disadvantages, such as being somewhat invasive due to the application of electrodes. We now turn to the use of body posture as a measure of emotion in adulthood and as a physiological measure of emotional valence in developmental studies, one that addresses some of the disadvantages of the measures described thus far.

In the adult emotion literature, as in developmental studies, there has been a predominant focus on the role of facial movements in emotional expression, especially based on FACS (Du et al., 2014; Ekman et al., 1978). Nevertheless, dating back to Darwin's The Expression of the Emotions in Man and Animals (Darwin, 1872/2009), scholars have recognized that bodily expressions play a key role in conveying emotional experience. Emotions such as shame, pride, anger, fear, happiness, sadness, and disgust can be reliably recognized by adults from body postures, even in the absence of facial information (Atkinson et al., 2004; de Gelder and Van den Stock, 2011; Tracy et al., 2009; Witkower and Tracy, 2019). Even young children from age 3 onward can recognize sadness from people's postures, and the recognition of anger and fear from posture increases between ages 4 and 8 (Witkower et al., 2021b). There is also some evidence of universality in the recognition of basic emotions from body postures: members of a small-scale traditional society were able to recognize fear, anger, sadness, and neutral emotions from postures without facial information (Witkower et al., 2021a). Posture is considered critical to the expression of emotions because it is visible from a greater distance than facial expressions and therefore enables rapid decoding of an emotional expression, allowing observers to adjust their behavior (Aviezer et al., 2012; de Gelder, 2009; Witkower and Tracy, 2019). Indeed, for some social emotions such as pride and shame, bodily actions are thought to be more critical to their expression and recognition than facial expressions (e.g., Tracy et al., 2009). In addition, for intense emotions, such as joy and frustration in response to winning or losing at sports, observers rely more on body postures than on facial expressions to identify emotional valence (Aviezer et al., 2012).

Once identified, non-verbal expressions of emotion through posture affect moral judgment and social behavior. For instance, appropriate postural expressions of shame or sadness after moral wrongdoing have been found to lead to less social exclusion and higher perceived moral standing of the perpetrator (Halmesvaara et al., 2020). This suggests that some emotion displays, including posture-based emotional expressions, function as “signs of appeasement” (Keltner, 1995). Notably, analogs to human postural emotional expressions exist in the animal kingdom in the form of appeasement vs. dominance displays (Darwin, 1872/2009). This points to the evolutionary roots of human postural emotional expressions (Beall and Tracy, 2020). We note that while body posture displays may be prototypical for some emotions, such as shame and pride (Tracy and Matsumoto, 2008), they also occur for other emotions, such as anger or joy/elation, in adulthood (Wallbott, 1998; Witkower and Tracy, 2019). Taken together, this research illustrates that body postures provide a key window into the experience of a wide range of emotions in adulthood, and that observers use body posture cues in meaningful ways to identify emotional valence.

Among children, there have been a small number of studies focusing on the expression of shame, pride, and guilt, in which bodily cues of emotion were considered (Kochanska et al., 2002; Lewis et al., 1992; Stipek et al., 1992). In these studies, children who expressed a lowered, hunched, or bent upper body posture were considered to express shame or guilt. On the other hand, an elevation of upper body posture is thought to reflect nascent pride in young children (Lewis et al., 1992; Stipek et al., 1992). Importantly, this prior work relied on coders' subjective interpretation of observed body posture.

More recent work, however, has begun to use depth sensor imaging to automatically capture dynamically expressed changes in posture as an index of emotional valence in childhood (Gerdemann et al., 2022a; Hepach et al., 2023, 2017; Waddington et al., 2022). In these studies, the elevation of children's upper body posture was measured after emotion-eliciting events, such as after children failed or succeeded in helping someone (see Table 1). After children completed the required action, their body posture was measured in the moment by depth sensor imaging. These studies focused on upper body posture (i.e., the change in chest height and the change in chest expansion) because it is thought to be most affected by changes in emotional valence, with negative emotions (such as shame, sadness, or disappointment) slumping the upper body and positive emotions (such as pride or elated joy) elevating it (Lewis et al., 1992; Stipek et al., 1992; Tracy and Matsumoto, 2008; Wallbott, 1998; Witkower and Tracy, 2019).

This recent work has found that positive actions such as successfully helping someone elevate children's upper body posture (Hepach et al., 2023, 2017; Sivertsen et al., 2024), especially when doing so involves a cost, such as having to abandon a game to provide the help (Sivertsen et al., 2024). Similarly, seeing someone receive help they deserve elevates children's body posture, especially when children are being observed (Gerdemann et al., 2022a; Hepach and Tomasello, 2020). On the other hand, failing to help lowers children's upper body posture (Gerdemann et al., 2022c). Moreover, advantageous unfairness, such as receiving four resources while a peer receives one, elevates children's upper body posture during the pre-school years (until around 5.5 years of age) but lowers it at older ages (from around 8.5 years onward) in social contexts (Gerdemann et al., 2022b). Children's posture is also differentially affected by whether the requests they make are justified, with 3-year-olds showing a lowered posture after making unjustified requests (e.g., when they have to ask for a sticker from another person who needs it more; Waddington et al., 2022).

In summary, body posture as measured by depth sensor imaging has already been employed in a number of different study paradigms with children between 2 and 10 years of age (see also Outters et al., 2023). In contrast to paradigms using other physiological measures, such as skin conductance or facial electromyography, which require the application of sensors, measures of changes in body posture as recorded by the Kinect in developmental studies do not require the use of any sensors or markers. The estimation of 20 body posture points is accomplished via an edge detection algorithm based on depth images. This can be an advantage especially when studying both neurotypical and neurodivergent younger developmental populations who might become fussy during the application of sensors or markers (Van Schaik and Dominici, 2020). Moreover, children can remain naive to the recording of their posture. This may allow for more spontaneous expressions, which are less influenced by the experimental situation. This approach also contrasts with marker-based motion tracking systems. Although these can capture shifts in body position with more precision, they come with the caveat of requiring the application of markers or sensors. Moreover, commercially available marker-based motion tracking systems are often significantly more expensive to acquire than markerless options (Van Schaik and Dominici, 2020).

A further advantage of markerless motion tracking of posture over other physiological measures is that children can move and walk through the room while their posture is recorded. Notably, this is also possible with wearable devices that measure electrodermal activity (Thammasan et al., 2020) or facial electromyography (Sato et al., 2021). However, wearable versions of these methods are not yet as widespread, perhaps in part because their processing algorithms and artifact detection have not yet been well validated. One interesting avenue for future research would be to combine wearable devices measuring electrodermal activity or facial electromyography with measures of body posture to cross-validate these measures and explore their individual strengths.

Several past investigations have suggested an association between emotional valence and changes in body posture as measured by the Kinect. First, when adults are asked to pose emotions such as pride, shame, joy, and disappointment, their body posture, as measured by motion depth sensor imaging, changed according to the valence of the emotion, i.e., it was more elevated for the more positive emotions than the negative ones (Hepach et al., 2015; von Suchodoletz and Hepach, 2021). Moreover, studies with children have found modest or descriptively positive associations between changes in body posture (as captured by the Kinect) and expressed emotional valence (as rated by human coders). For instance, in Hepach et al. (2017), the pattern of changes in children's upper body posture paralleled the pattern of the number of smiles children showed. Furthermore, Gerdemann et al. (2022c) found a small positive association between changes in children's upper body posture and their rated happiness on a 5-point scale. Similarly, Hepach and Tomasello (2020) found positive associations between changes in children's upper body posture and their rated happiness as well as their relative number of smiles, although these associations did not reach statistical significance. In addition, in Gerdemann et al. (2022c), higher ratings of shame, sadness, and anger were negatively (though non-significantly) correlated with children's upper body posture (see Table 1 for an overview). Finally, in Gerdemann et al. (2022b), children's self-reported emotional valence (feeling “good,” “a little bit good,” “a little bit bad,” and “bad”) showed the same pattern as children's change in body posture.

Thus, some of these prior studies indicate that changes in body posture reflect emotional valence (see also Aviezer et al., 2012), but several of the prior null results may be due to relatively small sample sizes and a lack of statistical power to detect small effects within a single study. Moreover, there may have been a lack of variability in the emotional responses of young children within a single study, because some studies tended to focus exclusively on negative or positive emotional events. This may render the association between changes in body posture and emotional valence difficult to measure in a single study. In the present study, therefore, we present a comprehensive validation study to assess the relation between changes in upper body posture and emotional valence.

Additionally, except for Gerdemann et al. (2022c), prior studies focused on emotional valence, self-reported emotional states, or the number of smiles children showed. Yet, emotions can be mapped along the dimensions of both valence and arousal (Posner et al., 2005), and several physiological measures reflect emotional arousal, such as pupil dilation (Sirois and Brisson, 2014). Therefore, in the present study, we included a measure of emotional arousal alongside valence.

Finally, to date, only one prior study (Gerdemann et al., 2022c) examined differences in children's expressed posture changes across discrete emotions (i.e., shame, anger, sadness, or happiness). In the developmental literature, the emotions of shame, pride, and guilt have been most strongly associated with changes in posture (Stipek et al., 1992; Tracy and Matsumoto, 2008). However, other emotions, such as joy, anger, and sadness have also been associated with changes in posture—though their expression has been explored to a lesser extent in developmental research. Thus, the present study also explored which discrete emotions children showed, with the goal of descriptively examining whether discrete emotions in children can be mapped using body posture.

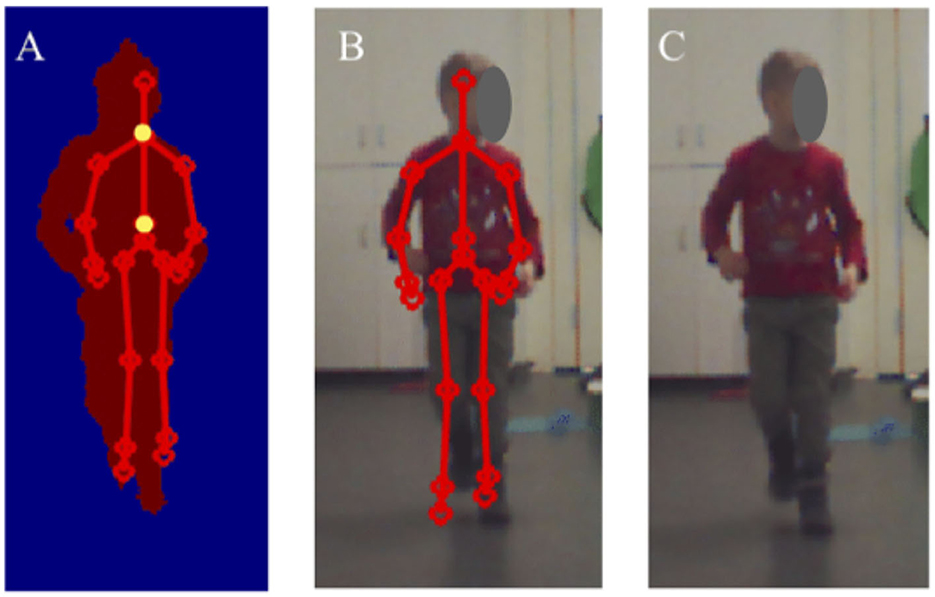

In this study, we aimed to validate automatically recorded body posture points as measures of children's emotional valence. We focused on three different posture measures (see Figure 1):

1. The change in chest height (the change in the y-value of the chest center data point).

2. The change in hip height (the change in the y-value of the hip center data point).

3. The change in chest expansion (the change in chest height minus the change in hip height).
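The three measures are simple differences of y-values between the test phase and the baseline phase. The Python sketch below is illustrative only: the `PostureSample` container and its field names are hypothetical (the authors' processing runs in their own MATLAB and R scripts), and we assume that y increases with height, so a positive change means a point moved up.

```python
from dataclasses import dataclass


@dataclass
class PostureSample:
    """Hypothetical container: y-coordinates (metres) of two tracked skeletal points.

    Assumes y grows with height, so a positive change means the point moved up.
    """
    chest_y: float  # y-value of the chest center data point
    hip_y: float    # y-value of the hip center data point


def posture_changes(baseline: PostureSample, test: PostureSample) -> dict:
    """Return the three baseline-corrected posture measures for one child."""
    d_chest = test.chest_y - baseline.chest_y  # 1. change in chest height
    d_hip = test.hip_y - baseline.hip_y        # 2. change in hip height
    d_expansion = d_chest - d_hip              # 3. change in chest expansion
    return {
        "chest_height": d_chest,
        "hip_height": d_hip,
        "chest_expansion": d_expansion,
    }


if __name__ == "__main__":
    base = PostureSample(chest_y=0.80, hip_y=0.55)
    test = PostureSample(chest_y=0.83, hip_y=0.54)
    changes = posture_changes(base, test)
    print({name: round(value, 3) for name, value in changes.items()})
```

In this toy example the chest rises 3 cm while the hips drop 1 cm, so the script prints `{'chest_height': 0.03, 'hip_height': -0.01, 'chest_expansion': 0.04}`. Subtracting the hip change is what lets chest expansion isolate the upper body from whole-body shifts.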

Figure 1. An illustration of the images provided by the Kinect. (A) Depth image with tracked skeletal points. (B) Color image with the skeletal data mapped onto it. (C) Color images of the child walking toward the Kinect. The chest and hip center data points are marked in yellow on (A). This image is taken from the dataset of Gerdemann et al. (2022a).

Our central research question was whether automatically measured changes in upper body posture (chest height and chest expansion) are related to coders' ratings of emotional valence. We hypothesized that if there is a systematic relation between measures of children's upper body posture and emotional valence, then increases in chest height and chest expansion should be positively related to ratings of children's emotional valence. We note that the change in chest height and the change in chest expansion may represent similar measures, though the change in chest expansion isolates changes in the upper body, whereas the change in chest height reflects changes in upper and lower body posture combined.

We also examined associations between changes in children's body posture and emotional arousal. This is the first study, to our knowledge, to examine the relation between emotional arousal and body posture measures as recorded by the Kinect. We also examined which discrete emotions children showed with the goal of descriptively examining whether and which emotions can be mapped using body posture as a method in developmental studies. Finally, we asked coders to select which emotion cue stood out to them when they selected a discrete emotion.

This study was pre-registered at aspredicted.org (https://aspredicted.org/blind.php?x=W2S_1HM) and the data and code required to reproduce the analyses are provided on the Open Science Framework: https://osf.io/z6pwu/. All separate studies received ethical approval from the authors' institutions.

The final participant sample for the present study included N = 466 children (mean age = 5.08 years, SD = 0.89; age range: 2 years, 5 months to 6 years, 2 months; 220 girls). This sample is two children smaller than preregistered, because two children did not contribute any usable data after all pre-processing routines to extract body posture data had been applied. Children were drawn from six different studies conducted in our laboratory or in pre-schools between 2016 and 2019 (see Table 1). Whether a child provided usable data was determined by a pre-processing algorithm available on the last author's GitHub site (https://github.com/rhepach/Kinect), as well as the individual OSF resources of the original studies (see Table 1). Parents gave informed consent for their child's participation in each of the studies, which were conducted in a mid-sized city in Germany. Children participated in the studies either individually or in dyads.

We computed the implied power to detect the effects of interest using a simulation-based approach (~1,000 iterations per sample size interval) in R (Version 1.3.1093, R Core Team, 2021). Based on prior work (see Table 1), we estimated a small positive correlation (Pearson's r = 0.15) between coders' average rating of valence and the change in children's upper body posture. We further estimated a negligible positive correlation (Pearson's r = 0.05) between emotional arousal and the change in children's upper body posture. This estimate was not based on prior work, as the present study was the first to examine this association empirically. Simulations of the preregistered linear models revealed an average power of 1 – β = 0.85 to 0.91 to detect the effect of interest (an effect of valence) with our final sample size of N = 466 (the code for the simulation is also available via the OSF repository).
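The power figures above come from simulations of the preregistered linear models, whose code is on OSF. As a simplified, illustrative cross-check only, the Python sketch below estimates power for a plain bivariate Pearson correlation tested with the Fisher z-transformation. It ignores any additional structure in the preregistered models, and the function names, seed, and α = .05 are our assumptions rather than the authors' settings.

```python
import math
import random
from statistics import NormalDist

STD_NORMAL = NormalDist()


def pearson_r(x: list, y: list) -> float:
    """Sample Pearson correlation coefficient."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def simulated_power(rho: float, n: int, n_iter: int = 1000,
                    alpha: float = 0.05, seed: int = 2021) -> float:
    """Monte Carlo power of a two-sided Fisher-z test of H0: rho = 0."""
    rng = random.Random(seed)
    z_crit = STD_NORMAL.inv_cdf(1 - alpha / 2)
    noise_sd = math.sqrt(1 - rho ** 2)
    rejections = 0
    for _ in range(n_iter):
        # Draw n bivariate-normal pairs whose population correlation is rho.
        x = [rng.gauss(0.0, 1.0) for _ in range(n)]
        y = [rho * xi + noise_sd * rng.gauss(0.0, 1.0) for xi in x]
        z = math.atanh(pearson_r(x, y)) * math.sqrt(n - 3)
        if abs(z) > z_crit:
            rejections += 1
    return rejections / n_iter


def analytic_power(rho: float, n: int, alpha: float = 0.05) -> float:
    """Closed-form Fisher-z approximation, useful as a cross-check."""
    z_crit = STD_NORMAL.inv_cdf(1 - alpha / 2)
    delta = math.atanh(rho) * math.sqrt(n - 3)
    return STD_NORMAL.cdf(delta - z_crit) + STD_NORMAL.cdf(-delta - z_crit)


if __name__ == "__main__":
    print(round(analytic_power(0.15, 466), 2), simulated_power(0.15, 466))
    print(round(analytic_power(0.05, 466), 2))
```

With N = 466, the closed-form approximation gives roughly 0.90 for r = 0.15, in line with the 0.85 to 0.91 range above, but only about 0.19 for r = 0.05. This illustrates why the arousal association, if it is as small as assumed, would often go undetected.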

Inclusion criteria in this validation study were that the study was completed between 2016 and 2019 and focused on measuring body posture as the main dependent variable of interest. Body posture studies from 2020 or later were not included because we gathered the data from these studies and began our analysis in 2021, and no additional studies had been completed in 2020. The six studies included a range of different experimental manipulations, with which the studies aimed to elicit both positive emotions (e.g., in response to successfully helping) and negative emotions (e.g., in response to failing to help; Table 1). In each of the studies, children were engaged in an activity with one or more experimenters or with a peer and an experimenter. The studies all had a similar structure: they included a baseline or neutral phase during which children's body posture was recorded at the beginning of the study, and a test phase during which children's body posture was recorded after one or more experimental manipulation(s). In addition, the studies covered a broad age range of children between 2 and 6 years.

For each study, Kinect recordings were created, which provided color (RGB) images, depth images, and the x-, y-, and z-coordinates of 20 posture points (see Figure 1). The recording and processing routines were automated using scripts written in MATLAB. The entire associated recording and processing code is available via the last author's GitHub site (https://github.com/rhepach/Kinect). The raw x, y, and z coordinates for each trial were further processed using a script run in R (R Core Team, 2021). In R, several predefined filters were applied to ensure high data quality. Data were removed, for instance, if no skeleton could clearly be mapped, which often occurred when children moved fast, crouched, or jumped. The processed data comprised body posture data along 20 increments of children's distance from the Kinect camera. The script then calculated the difference in several posture points (the chest height and hip height) between the baseline phase and the test phase after the experimental manipulation, resulting in a baseline-corrected change score that indicated the change in body posture. In each study, the main analyses included only those distance increments with more than 90% of the median number of data points.
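The baseline correction and the increment filter can be sketched as follows. This is a hypothetical Python illustration, not the authors' R script: we assume that each phase has already been reduced to one mean y-value per distance increment, and that the number of data points per increment has been tallied across all children within a study.

```python
from statistics import median


def baseline_corrected(test_y: dict, baseline_y: dict) -> dict:
    """Per-increment change score (test minus baseline) for one posture point.

    Both dicts map a distance-increment index (e.g. 1..20) to the mean
    y-value recorded in that increment; increments missing from either
    phase are skipped.
    """
    return {inc: test_y[inc] - baseline_y[inc]
            for inc in test_y if inc in baseline_y}


def usable_increments(counts: dict, share: float = 0.9) -> set:
    """Increments whose data-point count exceeds `share` times the median count."""
    cutoff = share * median(counts.values())
    return {inc for inc, count in counts.items() if count > cutoff}
```

For example, with counts `{1: 40, 2: 42, 3: 30, 4: 41, 5: 38}` the median is 40, the cutoff is 36, and increment 3 is dropped. Using a relative cutoff rather than a fixed count lets the same rule adapt to studies with different sample sizes.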

Although multiple test trials were recorded for most studies, we focused predominantly on the change in children's body posture on the first test trial for the present validation study. If children did not provide valid body posture data on the first test trial, the next available test trial was selected. Overall, we included 442 data points from the first test trial, 20 from the second test trial, and 4 from the third test trial of the respective study. Those participants who were included with data from the second or third test trial were drawn from Studies 3a or 3b (see Table 1), which included several experimental manipulations. We chose this approach because it ensured greater comparability across studies, since not all studies recorded body posture data on multiple test trials. We also averaged the posture points across all increments of distance for the analysis. In summary, for the present study, each child provided body posture data from one test trial for each of the measures: change in chest height, change in chest expansion, and change in hip height.
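The trial-selection rule (first valid test trial, otherwise the next available one) amounts to a simple fallback. The sketch below is hypothetical: it assumes each child's change scores are stored in trial order, with `None` marking a trial without valid posture data.

```python
from collections import Counter

def select_trial(trials):
    """Return (trial_number, change_score) for the first test trial with valid
    posture data, falling back to later trials; None if no trial is valid.
    `trials` is ordered by trial number; None marks an invalid trial."""
    for number, value in enumerate(trials, start=1):
        if value is not None:
            return number, value
    return None

# Hypothetical per-child change scores for up to three test trials.
children = {
    "child_a": [0.01, None, 0.02],
    "child_b": [None, -0.02, 0.03],
    "child_c": [None, None, 0.05],
    "child_d": [None, None, None],
}
selected = {cid: select_trial(t) for cid, t in children.items()}
# Tally which trial each included child contributed (cf. the 442 / 20 / 4 split).
trial_counts = Counter(s[0] for s in selected.values() if s is not None)
```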

We trained two research assistants to independently code children's emotional valence and arousal, as well as to identify which discrete emotion children expressed, based on the same video recordings that were used to automatically extract children's body posture data. The coders were unaware of the study hypotheses but were given basic information about each of the studies to understand the actions visible in the videos. Coders were provided with video stills or video recordings (without audio) that showed children walking toward the Kinect camera during the test phase (see Figure 1C, with the child's face visible). Both the video stills and recordings were taken using the Kinect camera; however, the video stills made it slightly easier for coders to view the recording, so we used them whenever available. Some studies did not produce video stills, only video recordings. Before beginning the full coding, coders were trained on a separate training data set of N = 21 videos. We ensured that coders reached satisfactory agreement with each other (ICCs ≥ 0.4) on the central measures of emotional valence and arousal before proceeding to complete the full coding.

For each of the studies included in the final sample, as well as for the training data set, coders were provided with a neutral description of the action children completed immediately preceding the recording of their body posture during the test phase (e.g., picking an object up or dropping an object off; see Supplementary Table S1). To prevent order effects, coders were instructed to rate blocks of one-eighth of a study's sample at a time. We provided coders with a pre-defined pseudo-random order in which to code each of the blocks. Moreover, the appearance of each child's ID within a study was randomized for each coder.
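The block procedure could be implemented along the following lines. This is a hypothetical sketch: the seeds, the round-robin split into eight blocks, and reading the randomized appearance of IDs as a coder-specific order within each block are assumptions rather than the authors' documented procedure.

```python
import random

def coding_schedule(child_ids, coder_seed, block_seed=1, n_blocks=8):
    """Return a list of blocks (lists of child IDs) in the order one coder
    should rate them. Block composition and block order are shared across
    coders; the order of IDs within each block is coder-specific."""
    ids = sorted(child_ids)
    # Split the sample into n_blocks blocks of near-equal size (~1/8 each).
    blocks = [ids[i::n_blocks] for i in range(n_blocks)]
    # Pre-defined pseudo-random block order, identical for all coders.
    order = list(range(n_blocks))
    random.Random(block_seed).shuffle(order)
    # Coder-specific randomization of the order in which IDs appear.
    coder_rng = random.Random(coder_seed)
    schedule = []
    for b in order:
        block = blocks[b][:]
        coder_rng.shuffle(block)
        schedule.append(block)
    return schedule
```

Fixed seeds make the schedule reproducible, so both coders receive the same block sequence while seeing children in different orders.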

To code children's emotional valence and arousal, coders were provided with self-assessment manikins (Bradley and Lang, 1994). To rate children's emotional valence, coders were presented with five different manikins expressing emotions of different valences (see Supplementary Figure S1) and the following descriptions: “The person on the far left feels very negative; the person left of the center feels slightly negative; the person in the center feels neutral, neither negative nor positive; the person right of center feels slightly positive; the person on the far right feels very positive.” Coders were asked to use the manikins to rate the valence of children's emotional expression on a scale from 1 to 5. Interrater agreement for valence was ICC = 0.70 (r = 0.62). For the analyses, the ratings of children's emotional valence were averaged across both coders.

To rate children's emotional arousal, coders were shown five different manikins indicating people at different levels of arousal and an accompanying description which read as follows: “The person on the left feels very calm; the person left of the center feels slightly calm; the person in the center feels a little bit aroused and a little bit calm; the person right of the center feels slightly aroused; the person on the far right feels very aroused” (see Supplementary Figure S2). Coders were then asked to use the manikins to indicate how aroused the child was feeling on a scale from 1 to 5. Interrater agreement for arousal was ICC = 0.57 (r = 0.43). For the analyses, the two coders' ratings of children's emotional arousal were averaged.

In addition, the coders identified a discrete emotion for each child. To do so, coders were given the option to indicate whether children expressed one of the following 10 emotions: elated joy, shame, happiness, anger, contentment, guilt, sadness, pride, disappointment, or awe. Coders were provided with a list of emotion cues for each of the emotions (see Supplementary Table S2); however, they were told that for the emotion label to apply, not all emotion cues needed to be present. They were asked to select one of the available discrete emotions, even if children did not express all emotion features associated with it (i.e., they were asked to form a global judgment). No other emotion labels were provided, but coders were given the option to indicate that the child expressed a different emotion than the ones on the list or no apparent emotion. Finally, coders selected one emotion cue that stood out to them. This was done primarily as an attention check to make sure coders were attending to the images presented to them and to explore which types of cues coders were using in their judgment.

The selection of the 10 discrete emotions for the list of emotions was in part the result of the research questions for the individual studies. In Studies 1a and 1b, the aim was to explore whether and when children express a shame- or guilt-like emotion after failing to help others (Gerdemann et al., 2022c). In Studies 2a and 2b, the goal was to explore children's expression of positive emotions (such as elated joy, awe, contentment, or happiness) after seeing a needier peer receive help (Gerdemann et al., 2022a; Hepach and Tomasello, 2020). Finally, in Studies 3a and 3b, children may have expressed emotions such as pride, elated joy, or happiness after successfully helping others (Hepach et al., 2023). In addition, we decided to code the expression of anger, sadness, and disappointment, because some of the experimental manipulations may have also caused these emotions, and to offer a broader range of negative emotions. Our goal was to include emotions that are similar in valence but are thought to differ in emotional arousal (such as anger and sadness or elated joy and contentment; Hepach et al., 2011).

Our analytic approach was pre-registered (https://aspredicted.org/blind.php?x=W2S_1HM). To examine our central research question regarding the relation between changes in children's body posture (as recorded automatically by the Kinect), and children's emotional valence and arousal (as assessed by human coders), we built a series of multiple linear regression models in R (R Core Team, 2021). In summary, we ran two linear models for each of three dependent measures. Our main prediction, in line with previous research, was that there would be an association between children's change in upper body posture (i.e., the change in chest height and the change in children's chest expansion) and emotional valence. We had no clear expectation regarding the relation between children's upper body posture and emotional arousal. Moreover, we did not expect a strong association of children's change in hip height with emotional valence or arousal. The models included the control predictors gender and age (z-transformed). As effect sizes, we report adjusted R² of the models, as well as the correlation coefficient Pearson's r. We base our interpretations on the former but include the latter for comparability to previous studies.
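The model structure can be illustrated with a minimal, dependency-free sketch, assuming one row per child with an intercept, valence, arousal, gender (coded 0/1, an assumption), and z-transformed age. The authors fit their models in R; the normal-equation solver below is only for transparency (R's `lm()` uses a QR decomposition, which is numerically more stable), and the data are synthetic, generated from known coefficients so the fit can be checked.

```python
from statistics import mean, stdev

def z_transform(values):
    """Center and scale by the sample SD (as R's scale() does)."""
    m, s = mean(values), stdev(values)
    return [(v - m) / s for v in values]

def ols(X, y):
    """Least-squares coefficients via the normal equations X'X b = X'y,
    solved by Gauss-Jordan elimination with partial pivoting.
    Each row of X starts with 1.0 for the intercept."""
    p = len(X[0])
    a = [[sum(r[i] * r[j] for r in X) for j in range(p)]
         + [sum(r[i] * yi for r, yi in zip(X, y))] for i in range(p)]
    for col in range(p):
        pivot = max(range(col, p), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(p):
            if r != col:
                f = a[r][col] / a[col][col]
                a[r] = [x - f * c for x, c in zip(a[r], a[col])]
    return [a[i][p] / a[i][i] for i in range(p)]

def adjusted_r2(X, y, beta):
    """Adjusted R^2 = 1 - (1 - R^2)(n - 1)/(n - k), k = number of coefficients."""
    n, k = len(y), len(beta)
    fitted = [sum(b * x for b, x in zip(beta, row)) for row in X]
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    y_bar = mean(y)
    ss_tot = sum((yi - y_bar) ** 2 for yi in y)
    return 1 - (ss_res / ss_tot) * (n - 1) / (n - k)

# Synthetic example: columns = intercept, valence, arousal, gender, z(age).
valence = [1, 2, 3, 4, 5, 2, 3, 4]
arousal = [2, 1, 4, 3, 5, 5, 2, 1]
gender = [0, 1, 0, 1, 0, 1, 1, 0]
z_age = z_transform([3.0, 4.5, 5.0, 2.5, 6.0, 4.0, 3.5, 5.5])
X = [[1.0, v, a, g, z] for v, a, g, z in zip(valence, arousal, gender, z_age)]
true_beta = [0.5, 0.4, -0.3, 0.1, 0.2]
y = [sum(b * x for b, x in zip(true_beta, row)) for row in X]
beta = ols(X, y)
```

The second model per dependent measure would add a valence × arousal column to each row of `X`, which costs one residual degree of freedom.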

Additionally, we planned exploratory analyses of the discrete emotion labels, examining both their valence and arousal ratings and the changes in posture associated with each emotion. These analyses focused on plotting the data and examining descriptive statistics. First, the emotions were plotted in a grid of valence and arousal (Posner et al., 2005). Then we plotted the emotions from most negative to most positive change in chest height and chest expansion (averaged). Finally, we examined which emotion cues coders selected when asked to pick one that stood out to them.
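The per-emotion summaries behind these descriptive plots could be computed as below. This is a hypothetical sketch: the record field names are invented, and it averages the raw chest-height and chest-expansion change scores as the text describes. Whether the two measures were rescaled before averaging is not stated, so no standardization is assumed.

```python
from collections import defaultdict
from statistics import mean

def emotion_summary(records):
    """Group children by coder-assigned emotion label and return per-label means,
    sorted from most reduced to most elevated upper-body change.
    Each record is a dict with the (hypothetical) keys 'label', 'valence',
    'arousal', 'chest_height', and 'chest_expansion'."""
    groups = defaultdict(list)
    for rec in records:
        groups[rec["label"]].append(rec)
    summary = []
    for label, rows in groups.items():
        summary.append({
            "label": label,
            "n": len(rows),
            # Coordinates in the valence-arousal grid.
            "valence": mean(r["valence"] for r in rows),
            "arousal": mean(r["arousal"] for r in rows),
            # Upper-body change: chest-height and chest-expansion changes, averaged.
            "upper_body": mean((r["chest_height"] + r["chest_expansion"]) / 2
                               for r in rows),
        })
    return sorted(summary, key=lambda s: s["upper_body"])
```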

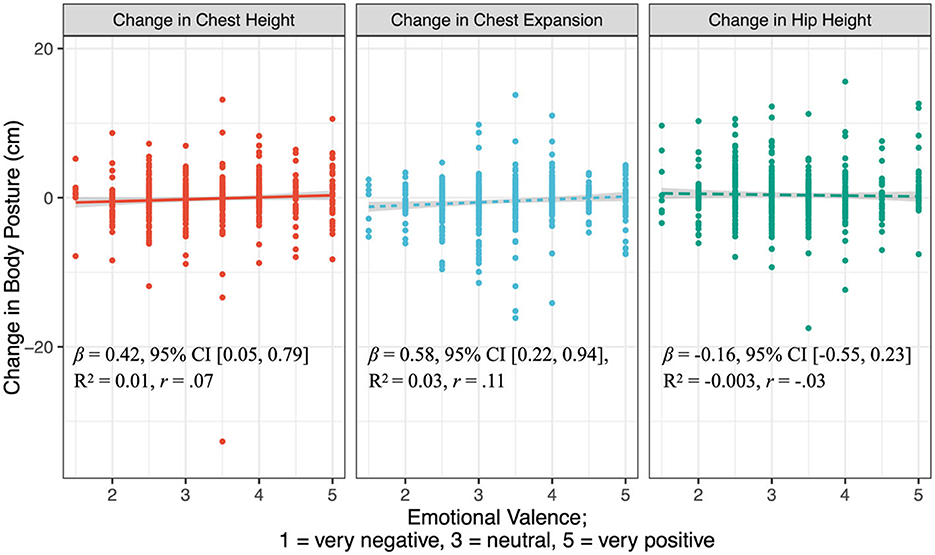

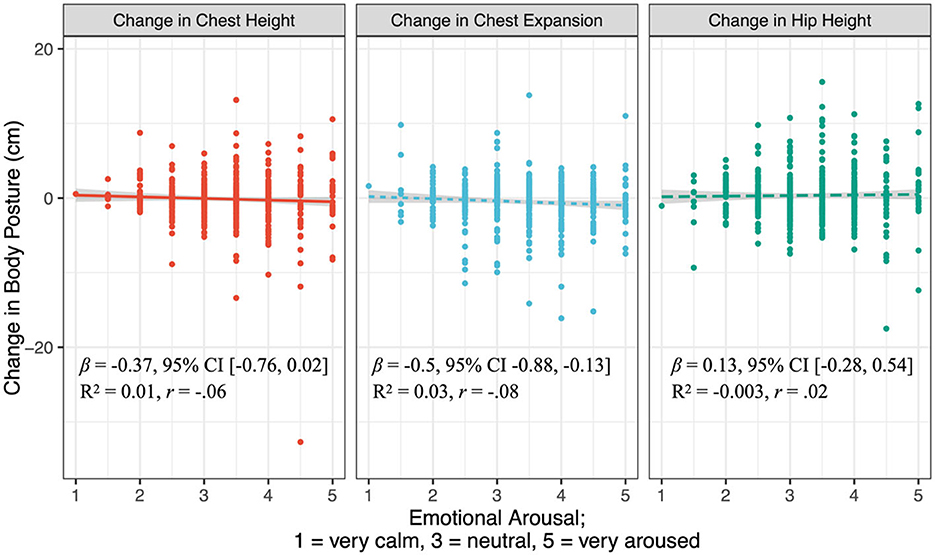

For the analysis of children's change in chest height, we first found a combined overall effect of including our variables of interest, arousal and valence, in the model, compared to including only gender and age, F(2) = 3.04, p = 0.049. In our pre-registered analysis, for children's change in chest height, as predicted, there was a statistically significant effect of valence, β = 0.42, SE = 0.19, 95% CI [0.05, 0.79], t(461) = 2.22, p = 0.03, adjusted R² = 0.01 (Figure 2). The more positive children's emotion was rated, the more elevated was their chest height compared to baseline. The correlation between emotional valence and the change in chest height was Pearson's r = 0.07. In contrast, we did not find an effect of arousal on the change in children's chest height, although this relation showed a statistical trend, β = −0.37, SE = 0.2, 95% CI [−0.76, 0.02], t(461) = −1.88, p = 0.06, Pearson's r = −0.06 (Figure 3). Interestingly, the effect for emotional arousal was in the opposite direction to the effect for valence: children with a more elevated chest height showed somewhat lower emotional arousal. There was no interaction between arousal and valence, β = 0.13, SE = 0.21, 95% CI [−0.29, 0.54], t(460) = 0.6, p = 0.55, nor were there significant effects of gender, β = 0.15, SE = 0.15, 95% CI [−0.15, 0.45], t(461) = 1.03, p = 0.31, or age, β = −0.18, SE = 0.15, 95% CI [−1.22, 0.22], t(461) = −1.22, p = 0.12.
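Assuming the reported F(2) statistics come from the standard nested-model (partial) F-test, as produced for example by R's `anova()` on two `lm()` fits, each compares the residual sums of squares (RSS) of the model with gender and age only (reduced) and the model that adds valence and arousal (full):

$$
F = \frac{\left(\mathrm{RSS}_{\text{reduced}} - \mathrm{RSS}_{\text{full}}\right)/q}{\mathrm{RSS}_{\text{full}}/(n - k_{\text{full}})}, \qquad q = 2, \quad n - k_{\text{full}} = 466 - 5 = 461
$$

Here q is the number of added predictors and k_full counts the full model's coefficients (intercept, valence, arousal, gender, age). The resulting denominator degrees of freedom, 461, match the t(461) values reported for the main-effects models, so the full test would presumably be written F(2, 461).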

Figure 2. The relation between emotional valence and the change in children's chest height, chest expansion, and hip height. This figure was created with the package ggplot2 and the function stat_smooth (method = lm). Points represent data for individual children. Note that the measurement for the change in chest expansion is taken differently than for the change in chest height and hip height. On each panel we indicate the model estimate for emotional valence, associated 95% Confidence Interval, adjusted R² of the model, and Pearson's correlation coefficient r.

Figure 3. The relation between emotional arousal and the change in children's chest height, chest expansion, and hip height. This figure was created with the package ggplot2 and the function stat_smooth (method = lm). Points represent data for individual children. Note that the measurement for the change in chest expansion is taken differently than for the change in chest height and hip height. On each panel we indicate the model estimate for emotional arousal, associated 95% Confidence Interval, adjusted R² of the model, and Pearson's correlation coefficient r.

In the model with children's change in chest expansion as a dependent variable, there was an overall influence of the predictors arousal and valence, as removing these factors resulted in a reduction in model fit compared to a model that included only gender and age, F(2) = 6.17, p = 0.002. In the pre-registered analysis, children's change in chest expansion showed a positive relation to their emotional valence, β = 0.58, SE = 0.18, 95% CI [0.22, 0.94], t(461) = 3.18, p = 0.002, adjusted R² = 0.03, Pearson's r = 0.11 (Figure 2). The more expanded children's chest as measured by the Kinect, the more positive their emotional valence was rated.

The effect of arousal was also significant, though in the opposite direction to the effect of valence, β = −0.5, SE = 0.19, 95% CI [−0.88, −0.13], t(461) = −2.64, p = 0.01, Pearson's r = −0.08. Children rated as more aroused tended to show a greater reduction in their chest expansion (see Figure 3). There was an additional effect of age on children's change in chest expansion, β = −0.34, SE = 0.15, 95% CI [−0.62, −0.05], t(461) = −2.31, p = 0.02. Overall, older children showed a greater reduction in chest expansion. The interaction of arousal and valence was not significant in predicting children's change in chest expansion, β = −0.21, SE = 0.2, 95% CI [−0.6, 0.19], t(460) = −1.02, p = 0.31. Moreover, there was no effect of gender on children's change in chest expansion, β = 0.03, SE = 0.15, 95% CI [−0.26, 0.31], t(461) = 0.18, p = 0.86.

For children's change in hip height, there was no effect of including the predictors arousal and valence in the model compared to a model that included only gender and age, F(2) = 0.36, p = 0.7. In the pre-registered analysis, children's change in hip height showed no clear relation to their emotional valence, β = −0.16, SE = 0.2, 95% CI [−0.55, 0.23], t(461) = −0.78, p = 0.44, adjusted R² = −0.003, Pearson's r = −0.03 (see Figure 2), or arousal, β = 0.13, SE = 0.21, 95% CI [−0.28, 0.54], t(461) = 0.61, p = 0.54, Pearson's r = 0.02 (Figure 3). The interaction of arousal and valence was not significant in predicting children's change in hip height, β = 0.33, SE = 0.23, 95% CI [−0.12, 0.78], t(460) = 1.47, p = 0.14. There were also no significant effects of gender, β = 0.13, SE = 0.16, 95% CI [−0.18, 0.44], t(461) = 0.8, p = 0.43, or age on children's change in hip height, β = 0.15, SE = 0.16, 95% CI [−0.16, 0.46], t(461) = 0.94, p = 0.35.

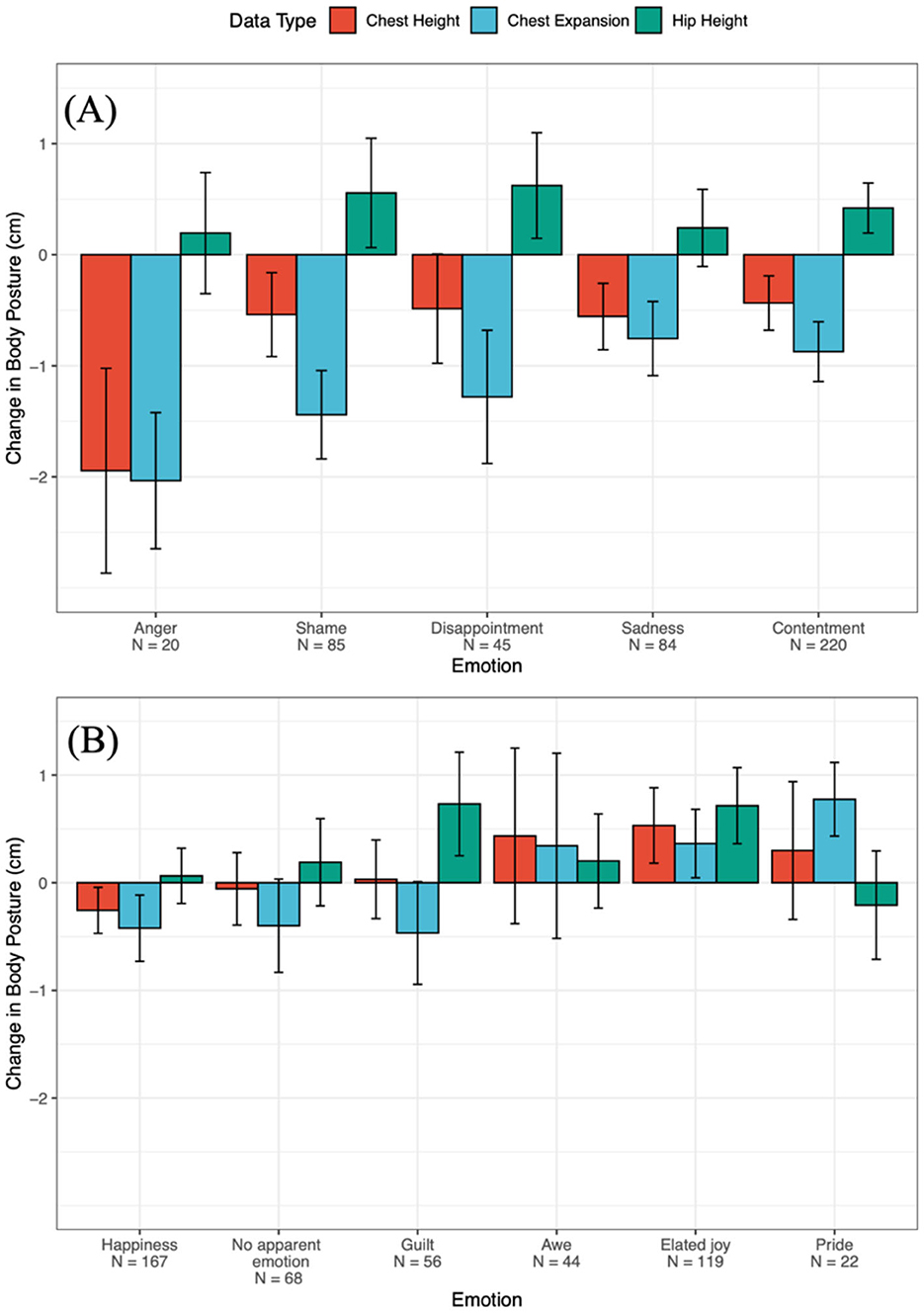

The emotion of anger was rated to be the negative emotion with the highest arousal (arousal = 3.78, valence = 2.75, n = 20), while sadness was rated as the lowest arousal negative emotion (arousal = 2.88, valence = 2.39, n = 84). Elated joy was the highest arousal positive emotion (arousal = 4.52, valence = 4.43, n = 119) and contentment the lowest arousal positive emotion (arousal = 3.08, valence = 3.15, n = 220; see Figure 4). This suggests that coders were using the labels in a way that aligns with the placement of these emotion labels in the circumplex model (Posner et al., 2005; Tseng et al., 2014).

In a plot showing each emotion label according to its average change in children's chest height and chest expansion, we found the greatest decreases in upper body posture (chest height and chest expansion, averaged) for children who received the emotion labels anger, shame, disappointment, and sadness (see Figure 5A). On the other hand, the positive emotions awe, elated joy, and pride were associated with the most elevated upper body posture. In between the negative and positive emotions were contentment, happiness, no apparent emotion, and guilt (see Figures 5A, B).

Figure 5. Change in children's chest height, chest expansion, and hip height for each discrete emotion, sorted by the average change in upper body posture (from most reduced to most elevated change in chest height and chest expansion). Panel (B) is a continuation of Panel (A). Note that the average body posture for children who were identified as showing a different emotion is not shown because this label was assigned only six times.

Supplementary Table S3 provides an overview of which emotion cues coders relied on to identify each emotion. Notably, coders predominantly relied on facial expressions to identify different emotions. However, some postural cues were also named for elated joy (“dynamic movements” was named 53.78% of the time) and pride (“elevated or expanded upper body posture” was named 72.73% of the time). This suggests that coders were, in part, using body posture cues to identify discrete emotions.

Measuring emotional valence reliably is critical to understanding how children's cognition and motivation develop. In this preregistered validation study, we show that changes in upper body posture, as measured by an automatic depth sensor imaging technology, provide a reliable measure of emotional expression, and thereby can provide a window into the underlying mechanisms of young children's behavior and development. Critically, this method goes beyond forced choice or open-ended methods such as verbal interviews and can offer more objective insights into subtle differences in children's emotional responses. In addition, it complements other physiological methods such as pupillometry, galvanic skin conductance, and facial electromyography, and offers an advantage over these methods because it provides a direct measure of emotional valence and can be used without the application of sensors.

Our validation study using data from six developmental studies found, first, that emotional valence is reliably captured by changes in chest height and chest expansion, a finding that had not been clearly and robustly demonstrated before and that provides a crucial foundation for future studies. Second, this is the first study to measure children's emotional arousal and relate this measure to the change in children's upper body posture. Here, we found that a reduction in chest expansion is related to an increase in emotional arousal. Third, the exploratory assessment of different emotion labels provided by coders suggests that diverse emotions can be mapped by changes in upper body posture, aligning with the adult emotion literature (Wallbott, 1998; Witkower and Tracy, 2019). Finally, the emotion cues that stood out to coders showed that coders relied on both facial expressions and body posture to identify each emotion (see Supplementary Table S3).

Our central finding was that emotional valence as rated by human coders was related to measures of upper body posture. This is how the change in upper body posture has predominantly been operationalized in previous studies (Gerdemann et al., 2022a,b; Hepach et al., 2017; Waddington et al., 2022). Notably, in past validation studies, adults have been asked to pose emotional expressions, supporting the notion that emotional expressions are reflected in subtle changes in upper body posture (Hepach et al., 2015; von Suchodoletz and Hepach, 2021). Similarly, in a study by Försterling et al. (2024), children were asked to pose the emotions of shame, sadness, joy, and pride after telling a story in which they felt each of these emotions. In this study, children's posture varied according to the valence of the emotions, i.e., between the shame and sadness inductions (combined) and the joy and pride inductions (combined). Importantly, however, here we used children's spontaneous emotional responses in naturalistic studies. Thus, children's emotions are expressed in changes in body posture even when children are not explicitly instructed to pose any emotion. Moreover, in a prior study by Gerdemann et al. (2022b), children's self-reported emotion on a 4-point scale paralleled their change in upper body posture. However, in our study, coders were able to identify these emotional responses from video recordings, without a verbal report from children. In line with prior findings, the present study provides clear evidence that emotional valence specifically maps onto spontaneously expressed non-verbal changes in children's upper body posture.

Even though the relations between emotional valence and the change in chest height, as well as chest expansion, align with our preregistered prediction, it is worth noting that each of the models explained only a small amount of variance, namely 1% for the change in chest height and 3% for the change in chest expansion. There may be multiple reasons for this, perhaps including that factors other than emotions, such as fatigue, affect posture. Yet this result may also be due to methodological decisions we made for the present study. For instance, the body posture measures were change scores from baseline. In contrast, we chose to code children's emotional expression only during the test trials; thus, the emotional valence and arousal values represent absolute ratings of children's emotional response after the experimental manipulations. When making this decision, we were aware that our valence and arousal measures may lack some sensitivity to parallel the subtle changes in emotional expression captured by our body posture measures. We chose this approach because we expected that requiring coders to rate many “neutral” emotional expressions would lead to undifferentiated or guessed responses. However, we acknowledge that our coded measures may be unable to capture some of the variance of the body posture measures for this reason.

Notably, in the current work, coders were asked to rate children's overall affective valence as expressed through their body posture and facial expressions. It therefore remains an open question whether body posture would also be related to, for instance, objective measures of facial movements alone, such as those obtained from facial electromyography. Thus far, studies have found positive relations between changes in body posture and the number of smiles children showed (Hepach et al., 2017). However, the relation between changes in posture and measures of facial electromyography has not been examined yet.

Interestingly, children's chest expansion tended to decrease with greater emotional arousal. We note that the sample was not entirely balanced regarding the emotional intensity of the events preceding the body posture measurement. Thus, some of the preceding events (e.g., failing to help; Gerdemann et al., 2022c) might have caused high-arousal negative emotions (similar to anger, shame, and disappointment). In contrast, the positive emotions resulting from, for instance, successfully helping (Hepach et al., 2023) or seeing others receive help (Gerdemann et al., 2022a; Hepach and Tomasello, 2020) might not have caused an equally high-arousal positive emotional response. Hence, we cannot be certain that our findings regarding emotional arousal will be replicable in a different sample of children or with other kinds of tasks. Nevertheless, it remains possible that high arousal emotions in general are expressed through a slump in children's upper body posture. In this case, through measuring body posture we may also be able to differentiate between events of higher or lower intensity. However, further studies are needed to corroborate this finding of the present study.

The slight imbalance across individual studies in the types of activities children completed prior to the measurement of their posture may, moreover, explain the effect of age on children's change in chest expansion. Older children showed a greater reduction in chest expansion, on average. Notably, somewhat older children participated in some of the studies with negative mood inductions, such as the failure to help (see Table 1). In contrast, studies with successful, positive outcomes, such as providing help or watching someone receive help, included younger children, some as young as 2 years (Hepach et al., 2023).

Regarding the discrete emotions identified by coders, our descriptive results suggest that these align with the pattern we observed for the relation between emotional valence and the change in children's upper body posture. The children whose emotion was labeled as anger, shame, disappointment, or sadness showed the greatest decrease in upper body posture. On the other hand, children who received the emotion labels awe, elated joy, and pride showed the greatest elevation in upper body posture. While these data are descriptive in nature, they nevertheless provide important insights into emotional expressions in young children. Traditionally, only the emotions of pride and shame have been associated with changes in upper body posture in young children (Kochanska et al., 2002; Lewis et al., 1992; Stipek et al., 1992; Tracy and Matsumoto, 2008). Yet in the adult emotion literature, a broader set of emotions is thought to be conveyed via postural expression (Witkower et al., 2021a; Witkower and Tracy, 2019). Our findings suggest that, like adults, young children express a variety of emotions via their upper body posture, although posture appears to reflect emotional valence more clearly than specific discrete emotions.

Notably, several of the discrete emotions showed intermediate body posture changes. This included no apparent emotion and contentment, but also happiness and guilt. Indeed, guilt showed a greater increase in upper body posture than happiness and contentment and was rated as more positive in emotional valence than shame and sadness. This might be, in part, explained by the inclusion of the cues “guilty smile” and “blushing” in the descriptions of guilt provided to the coders (see Supplementary Table S2). Notably, these cues also overlap with those for embarrassment (Keltner, 1995), so it is possible that coders used the emotion cues provided to identify a more positive emotion (embarrassment) than guilt is normally thought to be. Indeed, guilt is sometimes thought to not have a clear non-verbal emotion display and rather require a set of verbal and behavioral actions (e.g., making amends; Keltner and Buswell, 1996). Moreover, happiness was not associated with a particularly elevated upper body posture. Other emotions, such as awe, elated joy, and pride showed a greater elevation in upper body posture. This might be because happiness was perceived to be close to a neutral emotional state compared to the more specific positive emotions that children expressed.

Notably, our goal in asking coders to provide an emotion label was primarily to examine if the pattern we observe for valence and arousal is approximately stable across different emotions. Indeed, the pattern of results suggested that a wide variety of emotions differing in valence and arousal can be measured using body posture. This may be useful, for instance, for newer investigations examining emotions such as awe in young children (Gerdemann et al., 2022a; Gibhardt et al., 2024; Hepach and Tomasello, 2020). These new avenues of research may benefit from novel tools, such as body posture analysis, to study such complex emotions in childhood, especially when children cannot provide detailed verbal accounts of their emotional experience.

More generally, body posture complements currently available methods in important ways. It is not only useful to study new emotions but also to enhance existing developmental emotion research such as on the moral emotions of shame, guilt, and pride (Kochanska et al., 2002; Lewis et al., 1992; Stipek et al., 1992), or empathetic concern (Davidov et al., 2020; Hepach, 2017). Crucially, body posture methods have the advantage that they do not require extensive coding like other behavior observation methods. Yet, unlike other physiological methods, such as skin conductance and facial electromyography, this method does not require the application of any sensors and is thus non-invasive. Moreover, the current findings show that unlike pupillometry and galvanic skin response, body posture changes clearly reflect differences in emotional valence, not only arousal. As such, body posture is an invaluable addition to the methodological tools for the study of early emotional development.

Using body posture as a measure of emotion also comes with some limitations. First, posture cannot distinguish between different discrete emotions. Indeed, the body posture changes for emotions like anger, shame, disappointment, and sadness all looked quite similar, although we did not conduct a formal analysis of whether there are statistically significant differences between them. Though some emotion theories focusing on the one-to-one mapping of facial expressions to discrete emotions would posit that such clear correspondences between discrete emotions and their displays should exist (e.g., Izard and Malatesta, 1987), other emotion theories posit that information about the context in which an emotion occurred is essential to interpreting which kind of emotion is expressed (Barrett, 1993). For instance, smiles may reflect joy or happiness but could also represent embarrassment in the context of a mishap (Buss, 1980; Lewis et al., 1989). Similarly, changes in body posture might be thought to represent different emotions depending on the antecedents and concomitants of the emotional expression.

A second limitation is that, in principle, factors other than emotions can cause body posture changes. For instance, fatigue might cause children to slump their postures over time during an experimental study. As with identifying discrete emotions, it may be critical to consider the context in which a body posture change was expressed to distinguish fatigue from an emotional response. Moreover, in experimental studies, it is important to include control conditions to ensure that body posture changes are not due to fatigue accumulating over time.

Furthermore, studies focusing on body posture as a dependent measure thus far have predominantly used live interactive study paradigms in which children engage with one or more experimenters and/or peers in a semi-structured social situation (Gerdemann et al., 2022a,b; Hepach et al., 2017; Hepach and Tomasello, 2020; Sivertsen et al., 2024; Waddington et al., 2022). These situations might be especially emotion-eliciting and lead to stronger changes in body posture, because children are directly involved in or affected by the emotion-eliciting events (e.g., when they help someone solve a task; Hepach et al., 2023, 2017; Sivertsen et al., 2024). On the other hand, studies using pupillometry could detect children's emotional arousal when they were merely observers of interactions. For instance, one study found that children from 3 years onwards respond with different emotional arousal (pupil dilation) to moral vs. conventional norm violations (Yucel et al., 2020). Furthermore, in a study using facial electromyography infants responded with congruent facial expressions to happy and angry bodily action kinematics that were displayed on videos (Addabbo et al., 2020). These studies used quite subtle affective stimuli, and future investigations should explore whether children would express differences in body posture in response to observing such stimuli as well.

Moreover, within the functionalist emotion framework, emotions are thought to be non-verbal communicative signals to observers of an emotional reaction (e.g., Barrett, 1993). For example, adults displaying shame and sadness non-verbally have been found to be subject to less harsh moral punishment and social exclusion following a norm violation (Halmesvaara et al., 2020). Thus, expressing emotions in certain contexts can lead to adaptive social consequences. However, it remains an open question how emotional displays, including the ones expressed through changes in body posture, are utilized and integrated into social judgments in childhood.

Finally, one advantage of using body posture to track emotional expressions in childhood is that it can be seamlessly integrated into naturalistic experimental situations. Nevertheless, it is important to acknowledge that posture tracking remains limited to the field of view of the Kinect depth sensor imaging camera, and it is essential to arrange the experimental situation in a way that children walk directly toward the Kinect camera during the baseline and test trials of their body posture.

In conclusion, changes in upper body posture are associated with differences in emotional valence in childhood. Changes in emotional arousal are associated with differences in upper body posture to a lesser degree, and this association requires further validation in future studies. Moreover, different discrete emotions may be characterized by different changes in upper body posture, suggesting that this method can be used to study diverse emotions in early childhood. Together, the present findings point to the value of incorporating and expanding the use of body posture in the study of children's emotional development.

The datasets presented in this study can be found in an online repository at https://osf.io/z6pwu/.

The studies involving humans were approved by the Max Planck Institute for Evolutionary Anthropology Child Subjects Committee and the medical faculty of Leipzig University (IRB number: 169/17). The studies were conducted in accordance with local legislation and institutional requirements. Written informed consent for participation in this study was provided by the participants' legal guardians/next of kin.

SG: Conceptualization, Data curation, Formal analysis, Funding acquisition, Investigation, Methodology, Project administration, Visualization, Writing – original draft, Writing – review & editing. AV: Funding acquisition, Writing – review & editing. RH: Funding acquisition, Methodology, Resources, Writing – review & editing.

The author(s) declare that financial support was received for the research and/or publication of this article. This work was partially supported by a University of Leipzig Flexible Fund awarded to RH, by a graduate stipend “Doktorandenförderplatz” to SG, as well as a Max Planck Sabbatical Award awarded to AV.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declared that they were an editorial board member of Frontiers, at the time of submission. This had no impact on the peer review process and the final decision.

The author(s) declare that no Gen AI was used in the creation of this manuscript.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fdpys.2025.1536440/full#supplementary-material

Addabbo, M., Vacaru, S. V., Meyer, M., and Hunnius, S. (2020). 'Something in the way you move': infants are sensitive to emotions conveyed in action kinematics. Dev. Sci. 23:e12873. doi: 10.1111/desc.12873

Aknin, L. B., Broesch, T., Hamlin, J. K., and Van de Vondervoort, J. W. (2015). Prosocial behavior leads to happiness in a small-scale rural society. J. Exp. Psychol. Gen. 144, 788–795. doi: 10.1037/xge0000082

Aknin, L. B., Hamlin, J. K., and Dunn, E. W. (2012). Giving leads to happiness in young children. PLoS ONE 7:e39211. doi: 10.1371/journal.pone.0039211

Aknin, L. B., Van de Vondervoort, J. W., and Hamlin, J. K. (2018). Positive feelings reward and promote prosocial behavior. Curr. Opin. Psychol. 20, 55–59. doi: 10.1016/j.copsyc.2017.08.017

Armstrong, J. E., Hutchinson, I., Laing, D. G., and Jinks, A. L. (2007). Facial electromyography: responses of children to odor and taste stimuli. Chem. Senses 32, 611–621. doi: 10.1093/chemse/bjm029

Atkinson, A. P., Dittrich, W. H., Gemmell, A. J., and Young, A. W. (2004). Emotion perception from dynamic and static body expressions in point-light and full-light displays. Perception 33, 717–746. doi: 10.1068/p5096

Aviezer, H., Trope, Y., and Todorov, A. (2012). Body cues, not facial expressions, discriminate between intense positive and negative emotions. Science 338, 1225–1229. doi: 10.1126/science.1224313

Barrett, K. C. (1993). The development of nonverbal communication of emotion: a functionalist perspective. J. Nonverbal Behav. 17, 145–169. doi: 10.1007/BF00986117

Barrett, L. F., Adolphs, R., Marsella, S., Martinez, A. M., and Pollak, S. D. (2019). Emotional expressions reconsidered: challenges to inferring emotion from human facial movements. Psychol. Sci. Public Interest 20, 1–68. doi: 10.1177/1529100619832930

Beall, A. T., and Tracy, J. L. (2020). “The evolution of pride and shame,” in The Cambridge Handbook of Evolutionary Perspectives on Human Behavior, eds. J. H. Barkow, L. Workman, and W. Reader (Cambridge: Cambridge University Press), 179–193.

Beall, P. M., Moody, E. J., McIntosh, D. N., Hepburn, S. L., and Reed, C. L. (2008). Rapid facial reactions to emotional facial expressions in typically developing children and children with autism spectrum disorder. J. Exp. Child Psychol. 101, 206–223. doi: 10.1016/j.jecp.2008.04.004

Beck, S. R., and Riggs, K. J. (2014). Developing thoughts about what might have been. Child Dev. Perspect. 8, 175–179. doi: 10.1111/cdep.12082

Bergmann, C., Tsuji, S., Piccinini, P. E., Lewis, M. L., Braginsky, M., Frank, M. C., et al. (2018). Promoting replicability in developmental research through meta-analyses: insights from language acquisition research. Child Dev. 89, 1996–2009. doi: 10.1111/cdev.13079

Botto, S. V., and Rochat, P. (2018). Sensitivity to the evaluation of others emerges by 24 months. Dev. Psychol. 54, 1723–1734. doi: 10.1037/dev0000548

Bradley, M. M., and Lang, P. J. (1994). Measuring emotion: the self-assessment manikin and the semantic differential. J. Behav. Ther. Exp. Psychiatry 25, 49–59. doi: 10.1016/0005-7916(94)90063-9

Bradley, M. M., Miccoli, L., Escrig, M. A., and Lang, P. J. (2008). The pupil as a measure of emotional arousal and autonomic activation. Psychophysiology 45, 602–607. doi: 10.1111/j.1469-8986.2008.00654.x

Castro, V. L., Camras, L. A., Halberstadt, A. G., and Shuster, M. (2018). Children's prototypic facial expressions during emotion-eliciting conversations with their mothers. Emotion 18, 260–276. doi: 10.1037/emo0000354

Cattaneo, L., Veroni, V., Boria, S., Tassinari, G., and Turella, L. (2018). Sex differences in affective facial reactions are present in childhood. Front. Integr. Neurosci. 12:19. doi: 10.3389/fnint.2018.00019

Davidov, M., Paz, Y., Roth-Hanania, R., Uzefovsky, F., Orlitsky, T., Mankuta, D., et al. (2020). Caring babies: concern for others in distress during infancy. Dev. Sci. 24:e13016. doi: 10.1111/desc.13016

de Gelder, B. (2009). Why bodies? Twelve reasons for including bodily expressions in affective neuroscience. Philos. Trans. R Soc. Lond. B Biol. Sci. 364, 3475–3484. doi: 10.1098/rstb.2009.0190

de Gelder, B., and Van den Stock, J. (2011). The bodily expressive action stimulus test (BEAST). Construction and validation of a stimulus basis for measuring perception of whole body expression of emotions. Front. Psychol. 2:181. doi: 10.3389/fpsyg.2011.00181

de Hooge, I. E., Breugelmans, S. M., and Zeelenberg, M. (2008). Not so ugly after all: when shame acts as a commitment device. J. Pers. Soc. Psychol. 95, 933–943. doi: 10.1037/a0011991

Du, S., Tao, Y., and Martinez, A. M. (2014). Compound facial expressions of emotion. Proc. Natl. Acad. Sci. U.S.A. 111, E1454–E1462. doi: 10.1073/pnas.1322355111

Ekman, P., Friesen, W. V., and Hager, J. (1978). Facial action coding system: a technique for the measurement of facial movement. Palo Alto, CA: Consulting Psychologists Press.

Försterling, M., Gerdemann, S., Parkinson, B., and Hepach, R. (2024). "Exploring the expression of emotions in children's body posture using OpenPose," in Proceedings of the 46th Annual Conference of the Cognitive Science Society, eds. L. K. Samuelson, S. L. Frank, M. Toneva, A. Mackey, and E. Hazeltine (Rotterdam: UC Merced), 4901–4908.

Fowles, D. C. (2008). “The measurement of electrodermal activity in children,” in Developmental Psychophysiology: Theory, Systems, and Methods, eds. L. A. Schmidt and S. J. Segalowitz (Cambridge: Cambridge University Press), 286–316.

Geangu, E., Ichikawa, H., Lao, J., Kanazawa, S., Yamaguchi, M. K., Caldara, R., et al. (2016). Culture shapes 7-month-olds' perceptual strategies in discriminating facial expressions of emotion. Curr. Biol. 26, R663–R664. doi: 10.1016/j.cub.2016.05.072

Gerdemann, S. C., Büchner, R., and Hepach, R. (2022a). How being observed influences preschoolers' emotions following (less) deserving help. Soc. Dev. 31, 862–882. doi: 10.1111/sode.12578

Gerdemann, S. C., McAuliffe, K., Blake, P. R., Haun, D. B. M., and Hepach, R. (2022b). The ontogeny of children's social emotions in response to (un)fairness. R Soc. Open Sci. 9:191456. doi: 10.1098/rsos.191456

Gerdemann, S. C., Tippmann, J., Dietrich, B., Engelmann, J. M., and Hepach, R. (2022c). Young children show negative emotions after failing to help others. PLoS ONE 17:e0266539. doi: 10.1371/journal.pone.0266539

Gibhardt, S., Hepach, R., and Henderson, A. M. E. (2024). Observing prosociality and talent: the emotional characteristics and behavioral outcomes of elevation and admiration in 6.5- to 8.5-year-old children. Front. Psychol. 15:1392331. doi: 10.3389/fpsyg.2024.1392331

Gummerum, M., López-Pérez, B., Dijk, E. V., and Dillen, L. F. V. (2020). When punishment is emotion-driven: children's, adolescents', and adults' costly punishment of unfair allocations. Soc. Dev. 29, 126–142. doi: 10.1111/sode.12387

Halmesvaara, O., Harjunen, V. J., Aulbach, M. B., and Ravaja, N. (2020). How bodily expressions of emotion after norm violation influence perceivers' moral judgments and prevent social exclusion: a socio-functional approach to nonverbal shame display. PLoS ONE 15:e0232298. doi: 10.1371/journal.pone.0232298

Hepach, R. (2017). Prosocial arousal in children. Child Dev. Perspect. 11, 50–55. doi: 10.1111/cdep.12209

Hepach, R., Engelmann, J. M., Herrmann, E., Gerdemann, S. C., and Tomasello, M. (2023). Evidence for a developmental shift in the motivation underlying helping in early childhood. Dev. Sci. 26:e13253. doi: 10.1111/desc.13253

Hepach, R., Kliemann, D., Grüneisen, S., Heekeren, H., and Dziobek, I. (2011). Conceptualizing emotions along the dimensions of valence, arousal, and communicative frequency – implications for social-cognitive tests and training tools. Front. Psychol. 2:266. doi: 10.3389/fpsyg.2011.00266

Hepach, R., and Tomasello, M. (2020). Young children show positive emotions when seeing someone get the help they deserve. Cogn. Dev. 56:100935. doi: 10.1016/j.cogdev.2020.100935

Hepach, R., Vaish, A., and Tomasello, M. (2012). Young children are intrinsically motivated to see others helped. Psychol. Sci. 23, 967–972. doi: 10.1177/0956797612440571

Hepach, R., Vaish, A., and Tomasello, M. (2015). Novel paradigms to measure variability of behavior in early childhood: posture, gaze, and pupil dilation. Front. Psychol. 6:858. doi: 10.3389/fpsyg.2015.00858

Hepach, R., Vaish, A., and Tomasello, M. (2017). The fulfillment of others' needs elevates children's body posture. Dev. Psychol. 53, 100–113. doi: 10.1037/dev0000173

Izard, C. E., and Malatesta, C. Z. (1987). “Perspectives on emotional development I: differential emotions theory of early emotional development,” in Handbook of Infant Development, 2nd Edn, Wiley Series on Personality Processes (Oxford: John Wiley and Sons), 494–554.

Jones, S. S., Collins, K., and Hong, H.-W. (1991). An audience effect on smile production in 10-month-old infants. Psychol. Sci. 2, 45–49. doi: 10.1111/j.1467-9280.1991.tb00095.x

Kassecker, A., Verschoor, S. A., and Schmidt, M. F. H. (2023). Human infants are aroused and concerned by moral transgressions. Proc. Natl. Acad. Sci. U.S.A. 120:e2306344120. doi: 10.1073/pnas.2306344120

Keltner, D. (1995). The signs of appeasement: evidence for the distinct displays of embarrassment, amusement, and shame. J. Pers. Soc. Psychol. 68, 441–454. doi: 10.1037/0022-3514.68.3.441

Keltner, D., and Buswell, B. N. (1996). Evidence for the distinctness of embarrassment, shame, and guilt: a study of recalled antecedents and facial expressions of emotion. Cogn. Emot. 10, 155–172. doi: 10.1080/026999396380312

Kochanska, G., Gross, J. N., Lin, M.-H., and Nichols, K. E. (2002). Guilt in young children: development, determinants, and relations with a broader system of standards. Child Dev. 73, 461–482. doi: 10.1111/1467-8624.00418

Kogut, T. (2012). Knowing what I should, doing what I want: from selfishness to inequity aversion in young children's sharing behavior. J. Econ. Psychol. 33, 226–236. doi: 10.1016/j.joep.2011.10.003

Lewis, M., Alessandri, S. M., and Sullivan, M. W. (1992). Differences in shame and pride as a function of children's gender and task difficulty. Child Dev. 63, 630–638. doi: 10.1111/j.1467-8624.1992.tb01651.x

Lewis, M., Sullivan, M. W., Stanger, C., and Weiss, M. (1989). Self development and self-conscious emotions. Child Dev. 60, 146–156. doi: 10.1111/j.1467-8624.1989.tb02704.x

Malti, T., Zhang, L., Myatt, E., Peplak, J., and Acland, E. L. (2019). “Emotions in contexts of conflict and morality: developmental perspectives,” in Handbook of Emotional Development, eds. V. LoBue, K. Pérez-Edgar, and K. Buss (New York, NY: Springer), 543–567.

Oberman, L. M., Winkielman, P., and Ramachandran, V. S. (2009). Slow echo: facial EMG evidence for the delay of spontaneous, but not voluntary, emotional mimicry in children with autism spectrum disorders. Dev. Sci. 12, 510–520. doi: 10.1111/j.1467-7687.2008.00796.x

Outters, V., Hepach, R., Behne, T., and Mani, N. (2023). Children's affective involvement in early word learning. Sci. Rep. 13:7351. doi: 10.1038/s41598-023-34049-3

Posner, J., Russell, J. A., and Peterson, B. S. (2005). The circumplex model of affect: an integrative approach to affective neuroscience, cognitive development, and psychopathology. Dev. Psychopathol. 17, 715–734. doi: 10.1017/S0954579405050340

R Core Team (2021). R: A Language and Environment for Statistical Computing. Vienna: R Foundation for Statistical Computing. Available online at: https://www.R-project.org/

Reisenzein, R. (1994). Pleasure-arousal theory and the intensity of emotions. J. Pers. Soc. Psychol. 67, 525–539. doi: 10.1037//0022-3514.67.3.525

Sato, W., Murata, K., Uraoka, Y., Shibata, K., Yoshikawa, S., and Furuta, M. (2021). Emotional valence sensing using a wearable facial EMG device. Sci. Rep. 11:5757. doi: 10.1038/s41598-021-85163-z

Schröer, L., Çetin, D., Vacaru, S. V., Addabbo, M., van Schaik, J. E., and Hunnius, S. (2022). Infants' sensitivity to emotional expressions in actions: the contributions of parental expressivity and motor experience. Infant Behav. Dev. 68:101751. doi: 10.1016/j.infbeh.2022.101751

Sirois, S., and Brisson, J. (2014). Pupillometry. WIREs Cogn. Sci. 5, 679–692. doi: 10.1002/wcs.1323

Sivertsen, S. S., Haun, D., and Hepach, R. (2024). Choosing to help others at a cost to oneself elevates preschoolers' body posture. Evol. Human Behav. 45, 175–182. doi: 10.1016/j.evolhumbehav.2024.02.001