- Department of Biomedical Engineering, Ulsan National Institute of Science and Technology, Ulsan, Republic of Korea

Introduction: Behaviors often involve a sequence of events, and learning and reproducing it is essential for sequential memory. Brain loop structures refer to loop-shaped inter-regional connection structures in the brain such as cortico-basal ganglia-thalamic and cortico-cerebellar loops. They are thought to play a crucial role in supporting sequential memory, but it is unclear what properties of the loop structure are important and why.

Methods: In this study, we investigated conditions necessary for the learning of sequential memory in brain loop structures via computational modeling. We assumed that sequential memory emerges due to delayed information transmission in loop structures and presented a basic neural activity model and validated our theoretical considerations with spiking neural network simulations.

Results: Based on this model, we described the factors for the learning of sequential memory: first, the information transmission delay should decrease as the size of the loop structure increases; and second, the likelihood of the learning of sequential memory increases as the size of the loop structure increases and soon saturates. Combining these factors, we showed that moderate-sized brain loop structures are advantageous for the learning of sequential memory due to the physiological restrictions of information transmission delay.

Discussion: Our results will help us better understand the relationship between sequential memory and brain loop structures.

1 Introduction

Behaviors, including movement or language, consist of a sequence of events. To learn and reproduce a sequence of events, cognitive systems often form sequential memory. It has been suggested that brain loop structures play an important role in subserving sequential memory (Gisiger and Boukadoum, 2018; Janacsek et al., 2020; Logiaco et al., 2021). In the present study, loop structures in the brain refer only to networks in which multiple, anatomically segregated brain areas are connected in a closed form: for example, brain area X is connected to area Y, Y is connected to Z, and Z is connected back to X (X → Y → Z → X).

Loop structures are widely distributed in the brain. Among them, brain loop structures related to movement or language include parallel cortico-basal ganglia-thalamic loops (Alexander et al., 1986; Lee et al., 2020; Foster et al., 2021) and cortico-cerebellar loops (Middleton and Strick, 2000; Kelly and Strick, 2003; Ramnani, 2006). Brain injury research has revealed that damage to these structures impairs movement or language functions (Alexander et al., 1986; Middleton and Strick, 2000; Vargha-Khadem et al., 2005; Ramnani, 2006; Aoki et al., 2019; Chang and Guenther, 2020; Lee et al., 2020). Furthermore, parallel loop structures are related to the parallel modulation of different behaviors. For example, a loop passing through the ventrolateral striatum is involved in licking, and a loop passing through the medial striatum is involved in turning (Lee et al., 2020).

It has been shown that loop structures in the brain support the formation and consolidation of sequential memory related to movement or language (for a review, see Rusu and Pennartz, 2020). This relationship between these structures and sequential memory has mainly been established by experimental results: that is, the observation of increased neural activity in these structures associated with sequential memory, or the disruption of sequential memory by damage to these structures. Recently, computational models for the formation of sequential memory have been proposed using recurrent networks (Maes et al., 2020; Cone and Shouval, 2021). These studies showed that spatiotemporal patterns of inputs can be learned on recurrent structures that are randomly connected to each other by a biologically plausible learning rule. However, from a computational modeling perspective, it remains unclear how loop structures can form sequential memory, since the recurrent networks in the previous studies do not form a loop structure consisting of multiple, anatomically segregated brain areas. In fact, while closed loops can occur within a recurrently connected network, this study investigates them at a larger scale with multiple, individual areas. Although the formation of sequential memory can be computationally explained by recurrent networks, loop structures of this type are known to subserve sequential memory within the brain (for a review, see Rusu and Pennartz, 2020). Since recurrent networks may not fully elucidate how sequential memory is formed via these structures in the brain, it is more pertinent to directly investigate the role of loop structures in the creation of sequential memory.

One of the key properties of computational models built for loop structures is the size of the structures, i.e., the number of nodes in a loop. Some computational studies have demonstrated that the size of neuronal networks affects connectivity (Meisel and Gross, 2009) or energy-efficiency (Yu and Yu, 2017). If the number of neurons in a node is fixed, then increasing the size of the neuronal networks can be thought of as increasing the loop size. However, the relationship between the size of the loop structure and the formation of sequential memory is unknown. Another property to be considered for modeling these structures is time delay. Information transfer between nodes in these structures via neuronal signal transmission should accompany time delay in sequential neural activations of nodes. However, it is also unexplored how such time delay in these structures contributes to the formation of sequential memory.

In this study, we assume that the formation of sequential memory draws upon delayed information transmission across loop structures. To investigate the learning of sequential memory over these structures, we devise a basic neural activity model with delayed information transmission. Using this model, we analyze structural conditions under which sequential memory can emerge, particularly focusing on the size of loop structures. We confirm our theoretical prediction through a computer simulation.

2 Theory of the learning and retrieval of sequential memory

The theory of the formation of sequential memory will be presented in the following order: Section 2.1: we introduce the conceptual conditions necessary for the formation of sequential memory in brain loop structures; Section 2.2: we introduce a simplified neural model, referred to as the basic neural activity model, which is used to facilitate the mathematical analysis of the conceptual conditions above; Section 2.3: we analyze the mathematical necessary conditions for enabling the formation of sequential memory, building upon the basic neural activity model; and Section 2.4: we mathematically assess the likelihood of the learning of sequential memory.

We first mathematically define terms related to neural representations. Let Xt be a neural representation of the brain area X at time t. X is the set of all cell assemblies (each representing a specific subpopulation of excitatory and inhibitory neurons with strong synaptic connections) in the brain area, and a neural representation Xt ⊆ X is the set of active cell assemblies at time t. An active cell assembly is defined as a cell assembly in which, for example, more than 50% of neurons are activated. We assumed that all cell assemblies have separated subpopulations of neurons within the same brain area, but they are interconnected between different brain areas. Nearby neurons are more likely to be connected by synapses (Schnepel et al., 2015; for a review, Boucsein et al., 2011), and cell assemblies are subpopulations of neurons connected by these strong synapses (Sadeh and Clopath, 2021). Therefore, the assumption that cell assemblies within the same brain area are separated implies weak connectivity between neurons in different cell assemblies. The recurrent connections that are pervasive in the brain indicate strong connectivity between neurons within a single cell assembly in our model. As such, we did not define the recurrent connections explicitly in our model, because a cell assembly containing the recurrent connections is a basic element. Furthermore, we say that Xt is inhibited at time t+1 if and only if Xt ∩ Xt+1 is an empty set. Unless otherwise specified, it is assumed that Xt ≠ Xt+1.

Let Et represent an event at time t, signifying its occurrence alongside its neural representations of Xt, Yt, and Zt. These representations belong to brain areas related to X, Y, and Z, forming a loop structure in a sequence, X→Y→Z→X. While there may exist time delays between Xt and Yt, as well as between Yt and Zt, it is important to note that all of Xt, Yt, and Zt constitute delayed neural representations of the same event Et. In other words, Xt is not the sole representation of Et, nor are Yt and Zt solely for delayed versions of Xt.

2.1 Delayed information processing in brain loop structures

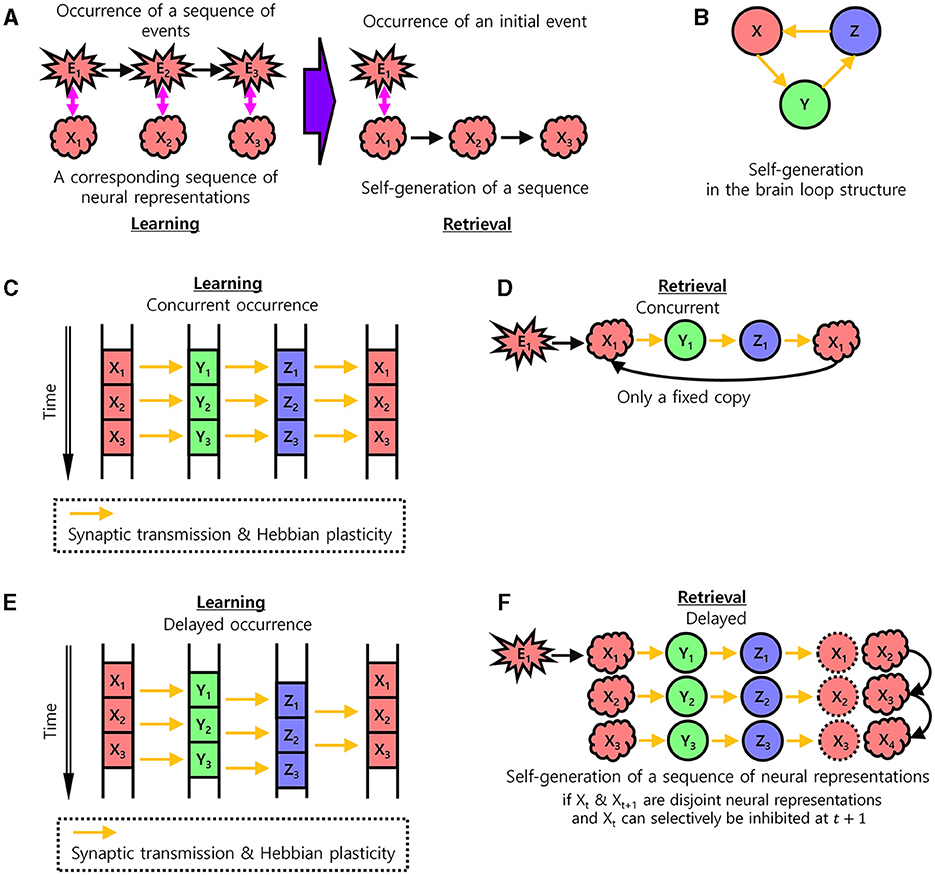

Behaviors related to movement or language consist of a sequence of events. For subsequent successful behavior, a sequence of events must be reproducible. Such a sequence of events may induce the learning of a sequence of corresponding neural representations in the brain. These neural representations can be seen as neural substrates of sequential behaviors, which construct an internal model of the corresponding behavior (McNamee and Wolpert, 2019; Yildizoglu et al., 2020; Mok and Love, 2022). In the present study, the sequential memory problem was stated as how the sequence of neural representations of a sequence of behavioral events can be self-generated in the activation of all the cell assemblies in each consecutive brain area (Figure 1A). Since brain loop structures play important roles in forming sequential memory (Gisiger and Boukadoum, 2018; Logiaco et al., 2021), we assumed that this structure enables the self-generation of a sequence of neural representations (Figure 1B). Self-generation here means that when a part of a sequence of neural representations is stimulated, the rest of the sequence is automatically activated.

Figure 1. The learning of sequential memory in brain loop structures. (A) Self-generation of a sequence of neural representations of behavioral events. In the learning step, a corresponding neural representation occurs for each behavioral event and is learned. In the retrieval step, a sequence of neural representations is self-generated by an initial behavioral event. Xt: Neural representation of the brain area X at time t. Equivalently, X: Set of all cell assemblies in the brain area, Xt ⊆ X: Set of active cell assemblies in the brain area X. Et: Event at time t. Therefore, Et is a simultaneous event with Xt, Yt, and Zt, even if Yt and Zt are delayed representations. (B) The brain loop structure for the self-generation of a sequence of neural representations. (C, D) Learning with concurrent occurrence. (C) Information transmission and Hebbian plasticity in case of the concurrent occurrence of neural representations during the learning of sequential memory. Arrows indicate pathways through which synaptic transmission takes place and results in Hebbian plasticity. (D) No self-generation of the sequence of neural representations in case of the concurrent occurrence of neural representations during the retrieval of sequential memory. (E, F) Learning with delayed occurrence. Same as (C, D) with non-zero delays. (E) Information transmission and Hebbian plasticity in case of the delayed occurrence of neural representations during the learning of sequential memory. (F) Self-generation of the sequence of neural representations in case of the delayed occurrence of neural representations (if there is inhibition of the previous neural representation) during the retrieval of sequential memory.

Let X, Y, and Z be brain areas forming a loop structure such that X→Y→Z→X (Figure 1B). Let Xt, Yt, and Zt be neural representations of a certain behavioral event, Et, occurring at time t in each area. If this behavioral event is concurrently represented in this structure with no time delay, i.e., if Xt, Yt, and Zt concurrently occur, then the Hebbian plasticity would associate neural representations, Xt → Yt, Yt → Zt, and Zt → Xt (Figure 1C). The Hebbian plasticity can be formulated as:

where wji is a synaptic weight from a cell assembly to another cell assembly and τ is a time constant. A cell assembly has the value 1 or 0 when active or non-active, respectively. In this case, when a behavioral event induces the neural representation Xt in the area X at time t, it would induce Yt and Zt concurrently, generating Xt again by Zt → Xt. Therefore, this concurrent loop would result in the learning of the self-generation of only a fixed neural representation Xt → Xt by the logical transitive relation, without learning to self-generate the next neural representation Xt+1 (Figure 1D). As such, the neural representation Xt+1 would only be induced by an external associated behavioral event, Et+1, not by the internal loop structure, making it difficult to self-generate sequential memory.

In contrast, if the neural representations of behavioral events are delayed in the brain loop structure, i.e., if Xt, Yt, and Zt are sequentially induced with non-zero delays between them when an event Et occurs, then the Hebbian plasticity may associate both neural representations, Zt → Xt and Zt → Xt+1, from Z to X (Figure 1E). If Xt and Xt+1 are disjoint and the previous neural representation Xt can be selectively inhibited at time t+1, a sequence of neural representations Xt → Yt → Zt → Xt+1 can be self-generated. Afterward, the generation of Xt+1 would induce Yt+1 and so on. Therefore, this delayed information processing along with the inhibition of previous neural representations of Xt would result in the self-generation of a sequence of disjoint neural representations, Xt → Xt+1 → … → XT in the area X (Figure 1F). This serves as a basis for generating the sequential memory of behavioral events, Et → Et+1 → … → ET, via the internal loop structure without the occurrence of external behavioral events. As such, finding a biologically plausible model that supports this self-generation of a sequence of neural representations in this structure would be important. Below, we present a biologically plausible model for the delayed information processing and the inhibition of the previous neural representation.

To emphasize, learning based on Hebbian plasticity implemented in our model primarily encodes the sequence of neural representations of events rather than precise timing. Consequently, the delay at which each neural representation sequentially appears may vary depending on the specific context. The proposed model learns sequences of neural representations from the examples of neural representations of events. Note that the stages of neural dynamics (t, t+1) in Figures 1C, E evolve on the order of tens of milliseconds. The time difference of (t, t+1) (Figures 1C, E) in the neural representation of each event does not indicate a behavioral transition. In fact, a single behavioral transition XT, X2T, and X3T can occur over multiple stages of neural dynamics t, t+1, t+2, …, t+T, …, t+2T, …, and t+3T.

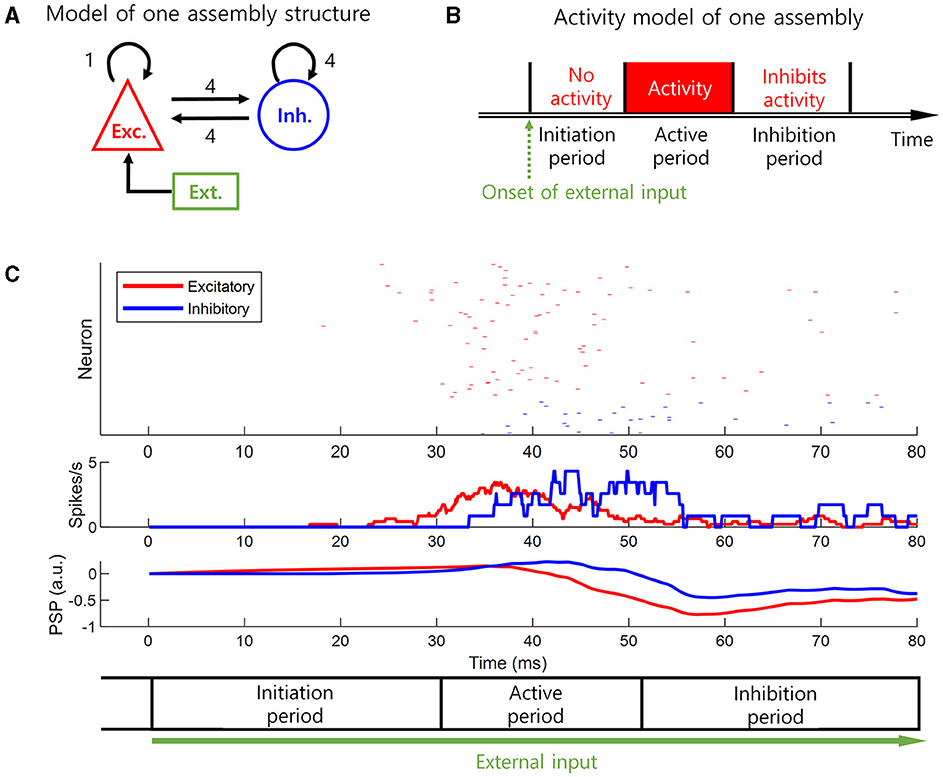

2.2 Basic neural activity model

To present a biologically plausible model of the delayed information processing and the inhibition of the previous neural representation, we modeled the neural activity of a cell assembly (i.e., a subpopulation of co-active neurons). This basic neural activity model was based on excitatory-inhibitory balanced networks (Figure 2A) and the flow of postsynaptic potential (PSP) changes (Figure 2C). The flow of PSP changes in response to an external input can be described in three periods (Figures 2B, C). When excitatory external input is received by excitatory neurons, the PSP of the excitatory neurons starts to increase until it exceeds a threshold level. We call this first period the initiation period; it shows no neural activity of excitatory neurons because the PSP remains at a subthreshold level. When the PSP of the excitatory neurons crosses the threshold, it increases the PSP of both the excitatory and inhibitory neurons via synaptic interactions. We call this second period the active period; it shows apparent neural activity of excitatory neurons. When the PSP of the inhibitory neurons exceeds the threshold, the inhibitory neurons begin to inhibit the excitatory neurons. When the input from the inhibitory neurons is greater than that from the excitatory neurons, the activity of the excitatory neurons is inhibited even though the external input persists. This period lasts for a certain amount of time, even in the absence of excitatory input from the excitatory neurons, because of the duration of the PSP of the inhibitory neurons. We call this third period the inhibition period; it shows no neural activity of the excitatory neurons (Figures 2B, C). Therefore, our basic neural activity model has three consecutive periods: (1) initiation, (2) active, and (3) inhibition periods. Apparent neural activity is present in the active period but absent in the initiation and inhibition periods (Figure 2B). The lengths of the initiation and inhibition periods depend on the rise and decay times of the PSP (Figure 2C).
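The three periods can be illustrated with a minimal Python sketch. This is not the spiking simulation from which the model was derived; the function names `phase` and `output`, the `"rest"` label for times outside a single cycle, and the example period lengths are assumptions made only for illustration.

```python
def phase(t, t_n, t_a, t_i):
    """Return the period of one cell assembly at time t after input onset.

    t_n, t_a, t_i: lengths of the initiation, active, and inhibition periods
    (same time unit as t). "rest" is a hypothetical label for times before
    onset or after one full cycle; the text does not name such a state.
    """
    if t < 0:
        return "rest"
    if t < t_n:
        return "initiation"   # PSP still below threshold, no activity
    if t < t_n + t_a:
        return "active"       # apparent neural activity
    if t < t_n + t_a + t_i:
        return "inhibition"   # activity suppressed by inhibitory input
    return "rest"


def output(t, t_n, t_a, t_i):
    """Binary model output: 1 during the active period, 0 otherwise."""
    return 1 if phase(t, t_n, t_a, t_i) == "active" else 0


if __name__ == "__main__":
    # Hypothetical period lengths in milliseconds.
    for t in (0, 39, 40, 119, 120, 169, 170):
        print(t, phase(t, 40, 80, 50), output(t, 40, 80, 50))
```

In this sketch the initiation period plays the role of the transmission delay and the inhibition period prevents an immediate re-activation of the same cell assembly.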

Figure 2. Basic neural activity model. A biologically plausible model of delayed information processing and previous neural representation inhibition. This is a neural activity model of a cell assembly, i.e., subpopulation, in the loop structure. The model is based on the excitatory-inhibitory balanced networks and the flow of postsynaptic potential (PSP) changes. (A) The excitatory-inhibitory balanced networks that form the basic neural activity model. (B) The basic neural activity model. This model has a value of 1 during the active period, and a value of 0 during the initiation or inhibition periods. (C) The simulation result used to derive the basic neural activity model based on the setting in (A). The spike rate is smoothed by a 3-ms uniform moving window, where the length of this moving window corresponds to the refractory period. The initiation and inhibition periods are set based on the slow change of the PSP, which decays slowly. The initiation period corresponds to delayed information processing, and the inhibition period corresponds to previous neural representation inhibition.

In this subsection, a basic neural activity model, a model of the cell assembly unit, was derived from the population of spiking neurons. From now on, we will perform mathematical analysis related to the formation of sequential memory using the basic neural activity model derived here, rather than the model of spiking neurons.

2.3 Necessary conditions for the learning and retrieval of sequential memory

Each brain area in a loop structure consists of multiple cell assemblies, where a cell assembly is defined as the substrate of the neural activity model introduced in Section 2.2. Suppose that each cell assembly undergoes one cycle of the neural activity model, i.e., initiation-active-inhibition, with a random starting point, i.e., random phase. Let tN, tA, and tI denote the lengths of the No-activity (initiation), Activity, and Inhibition periods, respectively. Note that tN represents a delay from a cell assembly in one area to another cell assembly in the subsequent area. Let N be the number of areas in the loop structure.

We assume that tN, tA, and tI are fixed over all the cell assemblies in each area over the loop structure. This does not have a significant impact on the conclusions of this study, especially when areal heterogeneity is not large. Let us assume that individual neural activity models defined on each cell assembly in an area share the same active period length tA, but start at different random time points, i.e., with random phases. At an arbitrary time point t, each neural activity model is in one of the active, initiation, or inhibition periods. Then, right before t+tA, the models that start their active period at t will still be in the active period. Right after t+tA, those models will end the active period and transition to the initiation, inhibition, or a new active period. If some of those models are in new active periods right after t+tA, they change to new active periods exactly once because the active period spans tA. Therefore, between t and t+tA, the active period of every model will change to the initiation, inhibition, or a new active period exactly once. Since neural activity during the active period represents an event, we can assume that a combination of the activities of all cell assemblies representing an event changes into a different combination exactly once during a period of length tA.

When the sequential activities of cell assemblies return to the first area X through the loop, i.e., after N − 1 delays of length tN, they must encounter the neural representation of a new event to form a sequential memory by Hebbian plasticity between co-active cell assemblies in the adjacent areas, where the sequential memory should consist of different neural representations, i.e., Xt → Xt+1. Hence, the following condition is necessary: the time taken for information on sequential events to return through the loop must be greater than zero, i.e., (N − 1)tN > 0. This condition yields tN > 0.

When information for sequential events returns through the loop, it must encounter the next neural representation; that is, an intermediate neural representation must not be omitted. In other words, the association must be Xt → Xt+1, not Xt → Xt+t′ where t′ > 1. If neural activity in the loop returns to the first area X after the active period ends, neural activity in the last area of the loop will activate the (t+j)-th neural activity in X where j > 1, causing the omission of the (t+1)-th neural activity in X. Consequently, the sequence of events would not be accurately represented. Hence, it is necessary that NtN ≤ tN + tA, where tA corresponds to the active period of a neural representation. Together with tN > 0, this condition yields:

$$0 < t_N \le \frac{t_A}{N-1} \tag{2}$$

To inhibit the previous neural representation (Figure 1F), the same cell assembly must not be active in succession. Consider a cell assembly in the first area of the loop structure. A neural representation that emerges in the first area during the period from tN to tN + tA will be represented in the last area during the period from NtN to NtN + tA. In this case, the combination of the outputs of the neural activity models in the last area will be associated with this cell assembly in the first area through Hebbian plasticity. That is, the combination of the outputs of the neural activity models in the last area can cause the activation of this cell assembly. If NtN + tA is larger than tN + tA + tI, the cell assembly may become active in succession. This leads to the loss of opportunity for the next event to be appropriately represented. To prevent this, it is necessary that NtN + tA ≤ tN + tA + tI. This condition yields:

$$t_N \le \frac{t_I}{N-1} \tag{3}$$

The inhibition period's role is to prevent cell assemblies activated in the neural representation of a previous event from remaining active consecutively, ensuring the generation of a distinct neural representation for the next event. As per Equation 2, (N − 1)tN ≤ tA holds, which means that the activity in the last area is associated with the cell assembly in the first area through Hebbian plasticity. If Equation 3 is not satisfied, that is, if the activity in the last area persists even after the inhibition period of the first area has ended, this association can cause the continuous activation of that cell assembly.

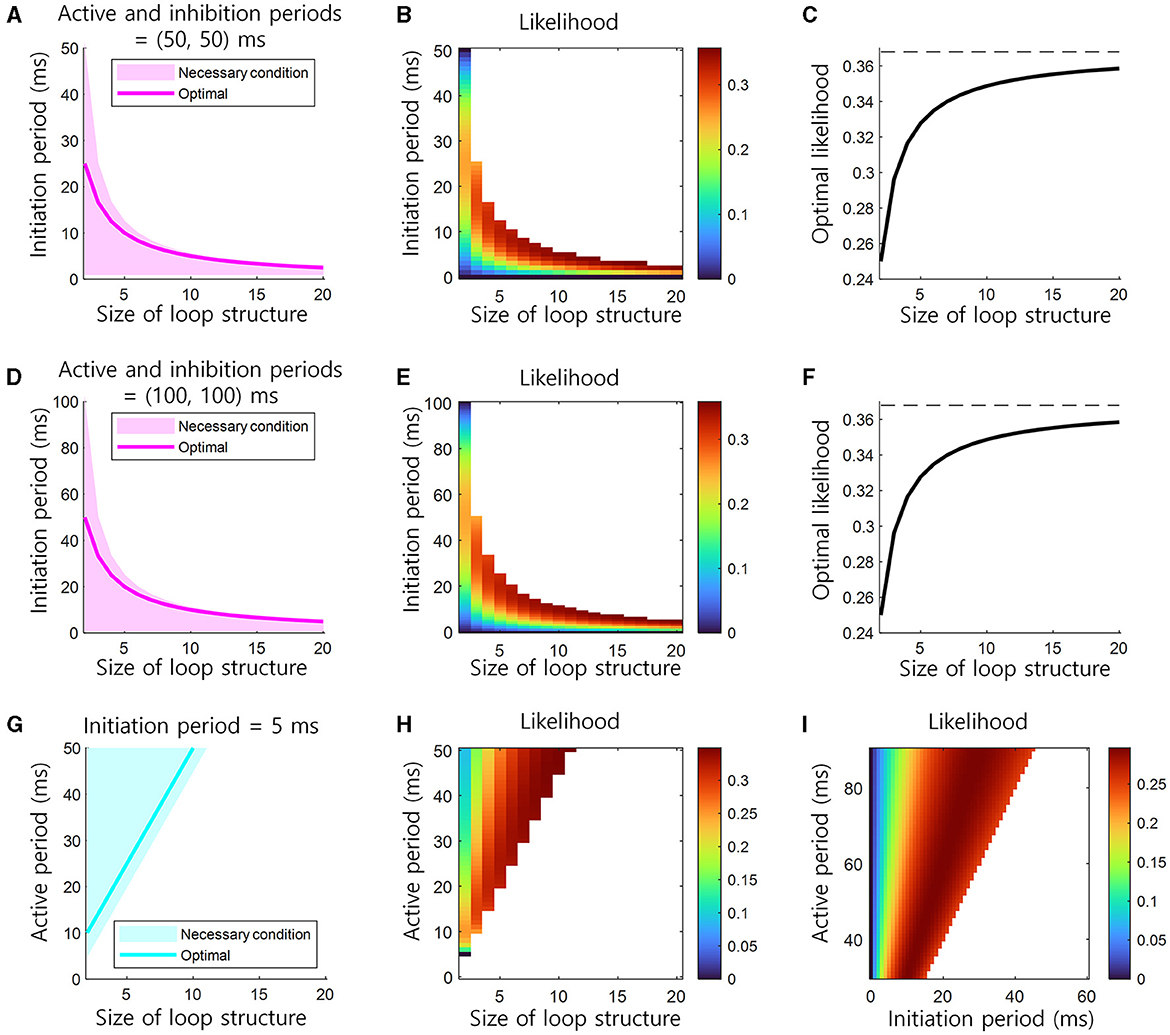

The conditions in Equation 2 are necessary for learning via Hebbian plasticity. The condition in Equation 3 is necessary for the inhibition of previous neural representation. If the lengths of the active (tA) or inhibition (tI) periods of a cell assembly are fixed, the possible range of information transmission delay (tN) is bounded by the inverse of the size of the loop structure (1/(N − 1)). In other words, as the size of this structure increases, the information transmission delay should decrease (Figures 3A, D). If the information transmission delay (tN) is fixed, the length of the active (tA) period should increase as the size of this structure (N) increases (Figure 3G).
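The two bounds can be checked numerically. The sketch below assumes homogeneous periods, as in the text, and N ≥ 2. It restates tN > 0, (N − 1)tN ≤ tA, and (N − 1)tN ≤ tI, which follow from NtN ≤ tN + tA and NtN + tA ≤ tN + tA + tI; the helper names are hypothetical and chosen only for illustration.

```python
def activity_windows(n_areas, t_n, t_a):
    """Active window (start, end) of each area along the loop.

    Following the text, the first area is active from t_n to t_n + t_a and
    the last area from N*t_n to N*t_n + t_a; intermediate areas are assumed
    to be shifted by one delay t_n per hop.
    """
    return [(k * t_n, k * t_n + t_a) for k in range(1, n_areas + 1)]


def satisfies_necessary_conditions(n_areas, t_n, t_a, t_i):
    """True if t_n > 0, (N-1)*t_n <= t_a, and (N-1)*t_n <= t_i."""
    hops = n_areas - 1
    return t_n > 0 and hops * t_n <= t_a and hops * t_n <= t_i


def max_delay(n_areas, t_a, t_i):
    """Largest admissible delay: min(t_a, t_i) / (N - 1), for N >= 2."""
    return min(t_a, t_i) / (n_areas - 1)


if __name__ == "__main__":
    # Active and inhibition periods of 50 ms, as in Figure 3A.
    for n in range(2, 7):
        print(n, max_delay(n, 50, 50))
```

With the active and inhibition periods fixed, the printed bound shrinks in proportion to 1/(N − 1), which is the trend described above.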

Figure 3. Conditions for the learning and retrieval of sequential memory in brain loop structures. (A) Necessary conditions for the learning and retrieval of sequential memory (see Equations 2, 3). The necessary condition of the initiation period as a function of the size of the loop structure when the active and inhibition periods are 50 ms. The optimum, i.e., the bold magenta line, corresponds to the location of the ridge in (B). (B) The likelihood of the learning of sequential memory as a function of the initiation period and the size of the loop structure when the active period is 50 ms (see Equation 4). (C) The optimal likelihood of the learning of sequential memory as a function of the size of the loop structure (see Equations 11, 12). This corresponds to the height of the ridge in (B). The top dashed black line indicates the upper bound of the optimal likelihood, e^(−1) ≈ 0.3679. (D–F) Similar to (A–C), but the active and inhibition periods are 100 ms. (G) The necessary condition of the active period as a function of the size of the loop structure when the initiation period is 5 ms. (H) The likelihood of the learning of sequential memory as a function of the active period and the size of the loop structure when the initiation period is 5 ms. (I) The likelihood as a function of both initiation and active periods, when the inhibition period is 50 ms and the size of the loop structure is 3.

We assume that tN, tA, and tI are fixed over all the cell assemblies in each area over the loop structure. We acknowledge that this is a strong simplification because the brain is, in general, highly heterogeneous. Nonetheless, we adopt this assumption to obtain simplified mathematical solutions and thereby arrive at simplified conclusions. If we assume that tN, tA, and tI are heterogeneous across the N brain areas, the necessary conditions involve a sum of N − 1 different terms instead of N − 1 copies of the same term. Accordingly, the condition (N − 1)tN > 0 would be written with the sum of the area-specific delays in place of (N − 1)tN, and the conditions NtN ≤ tN + tA (Equation 2) and NtN + tA ≤ tN + tA + tI (Equation 3) would be written with the corresponding sums of area-specific terms. However, using a sum of different terms instead of a multiple of the same term does not change the direction of the conclusions of this study because each term has the same characteristics.

2.4 The likelihood of the learning of sequential memory

The next step is to determine the information transmission delay for which sequential memory is most likely to be learned. Here, the likelihood indicates a probability that sequential memory can be learned in a loop structure. For every pair of adjacent areas, we calculated the “probability” of sequential memory learning between them and multiplied all those “probabilities” to form the likelihood. Hence, the likelihood here takes a value between 0 and e^(−1) ≈ 0.3679, with higher values indicating a higher possibility of sequential memory learning. The value of e^(−1) ≈ 0.3679 is derived below in this subsection. In the present study, the learning of sequential memory is based on Hebbian plasticity, which associates co-active cell assemblies. The likelihood of the learning of sequential memory between adjacent areas, i.e., Xt → Yt or Yt → Zt, except from the last to the first area, i.e., Zt → Xt+1, can be described as the proportion of time during which two areas represent the same event in one cycle of the neural representation of an event. Since tA is the duration of the representation of an event and tN is the time limiting the representation of the same event, the likelihood can be written as the ratio (tA − tN)/tA = 1 − tN/tA. The likelihood from the last to the first area, i.e., Zt → Xt+1, is the proportion of time during which the last area represents the current event while the first area represents the next event. This is the time during which the neural representation of the current event in the last area overlaps the neural representation of the next event in the first area, which can be written as the ratio of the duration (N − 1)tN to the duration tA of the next neural representation: (N − 1)tN/tA. Therefore, the likelihood in the loop structure is the product of all proportions (Figure 3B):

$$f(t_N, t_A) = \left(1-\frac{t_N}{t_A}\right)^{N-1}\frac{(N-1)\,t_N}{t_A} \tag{4}$$

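To maximize the likelihood function, we take its partial derivative with respect to tN:

$$\frac{\partial f}{\partial t_N} = \frac{\partial}{\partial t_N}\left[\left(1-\frac{t_N}{t_A}\right)^{N-1}\right]\frac{(N-1)\,t_N}{t_A} + \left(1-\frac{t_N}{t_A}\right)^{N-1}\frac{\partial}{\partial t_N}\left[\frac{(N-1)\,t_N}{t_A}\right] \tag{5}$$

The first term becomes:

$$-\frac{(N-1)^2\,t_N}{t_A^2}\left(1-\frac{t_N}{t_A}\right)^{N-2} \tag{6}$$

The second term becomes:

$$\frac{N-1}{t_A}\left(1-\frac{t_N}{t_A}\right)^{N-1} \tag{7}$$

The summation of these two terms is:

$$\frac{\partial f}{\partial t_N} = \frac{N-1}{t_A}\left(1-\frac{t_N}{t_A}\right)^{N-2}\left(1-\frac{N\,t_N}{t_A}\right) \tag{8}$$

Since the local maxima of f(tN, tA) can be taken when ∂f/∂tN = 0, the possible values are tN = tA or tN = tA/N. Since f(tN, tA) = 0 when tN = tA and f(tN, tA) > 0 when tN = tA/N, the likelihood function f(tN, tA) has the maximum value at (Figure 3A):

$$t_N = \frac{t_A}{N} \tag{9}$$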

This solution for the maximization of f(tN, tA) in Equation 9 needs to satisfy the necessary conditions 0 < tN ≤ tA/(N − 1) (Equation 2). If tA of a cell assembly is fixed, the optimal information transmission delay tN decreases as the size of the loop structure N increases. With this optimal information transmission delay tN, the maximum likelihood in this structure is:

$$\max f(t_N, t_A) = \left(1-\frac{1}{N}\right)^{N-1}\frac{N-1}{N} = \left(1-\frac{1}{N}\right)^{N} \tag{10}$$

The maximum likelihood, max f(tN, tA), increases as the size of this structure N increases and soon saturates to e^(−1) ≈ 0.3679 (Figure 3C).
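The saturation can be checked numerically. The sketch below assumes the likelihood is the product described in this subsection, i.e., N − 1 factors of (1 − tN/tA) for adjacent areas times the factor (N − 1)tN/tA for the last-to-first connection; the function names are hypothetical.

```python
import math


def likelihood(t_n, t_a, n_areas):
    """Product of the per-connection proportions for a loop of n_areas areas."""
    x = t_n / t_a
    return (1.0 - x) ** (n_areas - 1) * (n_areas - 1) * x


def optimal_delay(t_a, n_areas):
    """Delay maximizing the likelihood for a fixed active period: t_a / N."""
    return t_a / n_areas


def max_likelihood(n_areas):
    """Likelihood at the optimal delay, which simplifies to (1 - 1/N) ** N."""
    return (1.0 - 1.0 / n_areas) ** n_areas


if __name__ == "__main__":
    for n in (2, 3, 5, 10, 100):
        t_opt = optimal_delay(50.0, n)  # active period of 50 ms, as in Figure 3B
        print(n, t_opt, likelihood(t_opt, 50.0, n), max_likelihood(n))
    print("upper bound:", math.exp(-1))
```

The printed maxima grow with N but stay below e^(−1), while the optimal delay tA/N keeps shrinking, which is the trade-off discussed above.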

If we assume that tN, tA, and tI are heterogeneous for N different brain areas, the likelihood is the product of N different terms, which makes it difficult to obtain a simplified mathematical solution. In Equation 4, we would have a product of area-specific factors instead of the repeated factor (1 − tN/tA)^(N − 1). However, in this case, the number of similar terms with the same characteristics increases, so it does not have a significant impact on the conclusions of this study, especially when areal heterogeneity is not large.

3 Simulation methods

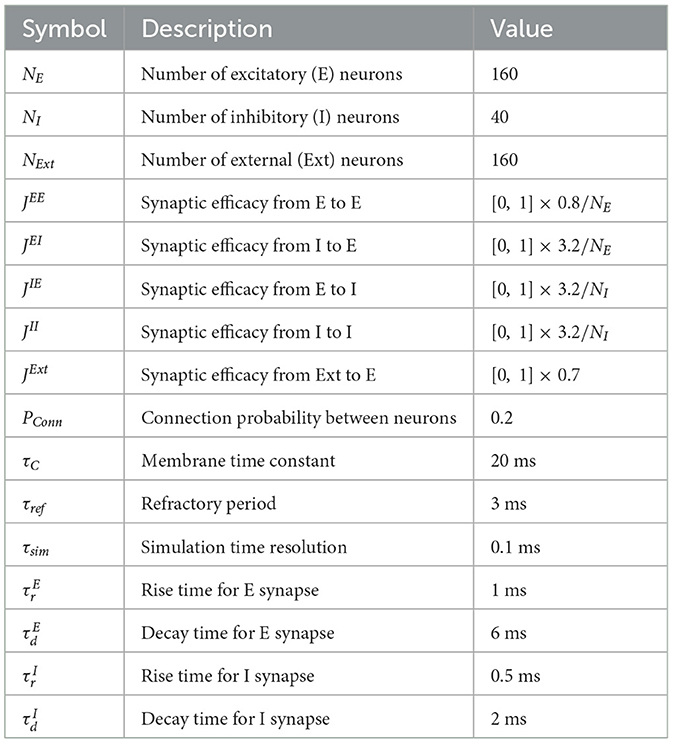

3.1 Simulation to derive the basic neural activity model

To derive the basic neural activity model, we simulated a neuronal network. All parameters used in this simulation are provided in Table 1. The neuronal network was based on the excitatory-inhibitory balanced networks and the flow of postsynaptic potential (PSP) changes. Neurons in the network belonged to one of the excitatory (E), inhibitory (I), or external (Ext) populations. The 4:1 ratio of E to I neurons was in accordance with a previous simulation study (Litwin-Kumar and Doiron, 2014). The synaptic inputs from E produced the excitatory postsynaptic potential (EPSP), while the synaptic inputs from I produced the inhibitory postsynaptic potential (IPSP). The PSP of excitatory neuron i was determined as:

where tC is the membrane time constant and the three input terms are an external input, an excitatory input, and an inhibitory input. The PSP of inhibitory neuron i was determined as:

where the two input terms are an excitatory input and an inhibitory input. Then the spiking activity of an E or I neuron was determined as:

where P is a population (E or I) and u is a pseudo-random number generated from the uniform distribution between 0 and 1. This was intended to generate irregular spike patterns reflecting probabilistic neural responses (Cannon et al., 2010). Once a neuron was active (1), it became inactive (0) for the next 3 ms, representing the refractory period of the action potential of the neurons. The spiking activity of an Ext neuron was determined as:

where w is a pseudo-random number generated from the uniform distribution between 0 and 1. The synaptic kernel, which determines the temporal properties of PSP, can be modeled as follows (Litwin-Kumar and Doiron, 2014):

where P is excitatory population E or inhibitory population I, is the rise time for synapses of the certain population E or I, and is the decay time for synapses of the certain population E or I. The current PSP is a convolution between the synaptic kernel KP(t) and previous neural activity. The rise and decay times of the current PSP is determined by and . The synaptic input from E or I neurons was the weighted sum of the spiking activities multiplied by the synaptic kernel as follows:

where P is a certain population, E or I, KP(t) is the synaptic kernel introduced in Equation 15, is the synaptic efficacy from P to E population (Table 1), and * is a convolution operator. The parameters used in the synaptic kernel were similar to the previous study (Litwin-Kumar and Doiron, 2014). The ratio of E-I to E-E synaptic efficacy was set to 4 (Table 1). This balance of the excitatory-inhibitory populations used in the simulation was similar to the previous study, which represents strong lateral inhibition (Sadeh and Clopath, 2021). The synaptic input from Ext to E neurons was as follows:

where is the synaptic efficacy from Ext to E population (Table 1).
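To illustrate how a PSP trace follows from the kernel and the spike history, the sketch below convolves a binary spike train with a difference-of-exponentials kernel, which is the general kind of kernel described by Litwin-Kumar and Doiron (2014). The exact normalization, the rise and decay times, the 1-ms time step, and the efficacy value are assumptions for illustration and are not the Table 1 values; `synaptic_kernel` and `synaptic_input` are hypothetical helper names.

```python
import numpy as np

def synaptic_kernel(t_ms, rise_ms, decay_ms):
    """Difference-of-exponentials kernel, zero for t < 0 (assumed form)."""
    t = np.asarray(t_ms, dtype=float)
    k = (np.exp(-t / decay_ms) - np.exp(-t / rise_ms)) / (decay_ms - rise_ms)
    return np.where(t >= 0.0, k, 0.0)

def synaptic_input(spikes, efficacy, rise_ms, decay_ms, dt_ms=1.0):
    """Causal convolution: every spike adds one scaled copy of the kernel."""
    t = np.arange(len(spikes)) * dt_ms
    kernel = synaptic_kernel(t, rise_ms, decay_ms)
    return efficacy * np.convolve(spikes, kernel)[: len(spikes)]

# A single presynaptic spike at t = 5 ms (illustrative values only).
spikes = np.zeros(50)
spikes[5] = 1.0
psp = synaptic_input(spikes, efficacy=1.0, rise_ms=1.0, decay_ms=6.0)
```

In this form the rise time controls how quickly the PSP builds up after a spike and the decay time controls how long it lingers, which is why these two values set the temporal properties of the PSP.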

3.2 Simulation for the learning and the retrieval of sequential memory

We primarily set the size of the loop structure to N = 3 and additionally simulated N = 2, 4, 5, and 6. The initiation period was varied over tN ∈ [20, 60] ms, which is comparable to previous studies (Siegle et al., 2021; for a review, Wang, 2022). This range is distributed around the biologically plausible initiation period of 40 ms. The active period was set to tA = 120 − tN ms, which is equal to or longer than the initiation period. The inhibition period was varied over tI ∈ [0, 120] ms, a range that is reasonable relative to the initiation period and covers both the initiation and active periods. The lengths of the initiation, active, and inhibition periods (tN, tA, and tI, respectively) were the parameters of our basic neural activity model. In spiking neural networks, the lengths of these periods depend on other parameters such as the time constant, synaptic efficacy, and spiking threshold, and they also vary across brain areas. Here, however, a range for each of the three periods was used to verify the theoretical predictions in Section 2. Within these three parameter ranges, there are parameter values that satisfy the necessary conditions for each of N = 2, …, 6. Therefore, simulations were performed at these structure sizes.

We modeled each cell assembly with one basic neural activity model, which constituted the fundamental unit of the simulation. The value of a cell assembly was 1 during the active period and 0 during the initiation or inhibition periods, which reduces assembly activity to a binary variable. These values were used to calculate the Hebbian plasticity described in Equation 1. We set five cell assemblies to represent a common event in each area. Here, an event refers to an external event and is represented by the activities of cell assemblies, e.g., Xt and Yt in Section 2. In this simulation, Xt and Yt were pre-assigned to represent each external event such that all external events were represented by disjoint sets (e.g., Xt and Xt+1) of the same number of cell assemblies, with each set assigned to appear at a specific time delay. The five cell assemblies representing an event in the same area had the same cycle of periods with the same starting point. We also assumed that different events were represented distinctly by different cell assemblies. As we presented ten consecutive events to the loop structure, a total of fifty cell assemblies were created in each area. The ten consecutive events were applied to each area with sequentially added time delays. Each time delay was equal to the length of the initiation period, reflecting a situation in which external events are sequentially represented in the brain.
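The binary description of a cell assembly can be sketched as a small function of time. This is only an illustration under stated assumptions: the cycle is taken to run initiation, then active, then inhibition, and to repeat; the assembly is taken to be silent before its onset; and `assembly_value` and its arguments are hypothetical names.

```python
def assembly_value(t_ms, onset_ms, t_init, t_active, t_inhib):
    """Return 1 during the active period and 0 otherwise.

    Assumed cycle order: initiation -> active -> inhibition, repeating.
    Before `onset_ms` the assembly is treated as silent.
    """
    if t_ms < onset_ms:
        return 0
    phase = (t_ms - onset_ms) % (t_init + t_active + t_inhib)
    return 1 if t_init <= phase < t_init + t_active else 0

# Ten events whose onsets are staggered by the initiation period (40 ms here).
t_init, t_active, t_inhib = 40, 80, 100
onsets = [k * t_init for k in range(10)]
trace_first_event = [
    assembly_value(t, onsets[0], t_init, t_active, t_inhib) for t in range(300)
]
```

With these illustrative values the first event's assemblies switch on at 40 ms and off at 120 ms, and the assemblies of each later event follow 40 ms after the previous one.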

When ten consecutive neural representations were induced by external events in the first area, the same events were presented in the second area with a delay of tN. Synaptic weights between the cell assemblies of connected areas were learned by Hebbian plasticity where a synaptic weight was strengthened if both pre- and post-synaptic neurons were activated. The sum of all synaptic weights on a cell assembly was fixed to implement the synaptic normalization during 1,000 iterations of the presentation of ten events. The value of each cell assembly was set to 1 if the weighted sum of the values of cell assemblies in other areas and the corresponding synaptic weights exceeded 0.15; otherwise, it was set to 0.
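A minimal sketch of this learning rule is given below, assuming binary assembly values, an additive Hebbian increment, and normalization of the summed incoming weight of each assembly to 1. The learning rate, the normalization target, and the function names are assumptions and are not taken from the paper; only the 0.15 threshold comes from the text.

```python
import numpy as np

THRESHOLD = 0.15  # activation threshold stated in the text

def hebbian_step(weights, pre, post, learning_rate=0.01):
    """One Hebbian update followed by synaptic normalization.

    weights[i, j] is the weight from presynaptic assembly j to
    postsynaptic assembly i; `pre` and `post` are 0/1 vectors.
    `learning_rate` is an assumed value.
    """
    updated = weights + learning_rate * np.outer(post, pre)
    # Keep the summed incoming weight of every assembly fixed (here: 1).
    return updated / updated.sum(axis=1, keepdims=True)

def next_activity(weights, pre):
    """An assembly is set to 1 when its weighted input exceeds the threshold."""
    return (weights @ pre > THRESHOLD).astype(int)

n = 10
w = np.full((n, n), 1.0 / n)       # uniform initial weights (assumed)
pre = np.eye(n, dtype=int)[0]      # assembly 0 active in the sending area
post = np.eye(n, dtype=int)[1]     # assembly 1 active in the receiving area
for _ in range(100):
    w = hebbian_step(w, pre, post)
recalled = next_activity(w, pre)
```

After repeated pairing, only the co-activated assembly in the receiving area crosses the threshold when the sending assembly is active, while normalization keeps the total input weight of every assembly constant.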

A difference between the assumptions in theory and simulation was the distribution of the phase of the cell assembly within an area. While theory assumes that the phases of assembly activity are randomly distributed, simulation assumes that all assembly activities are in the same phase to quantify the results.

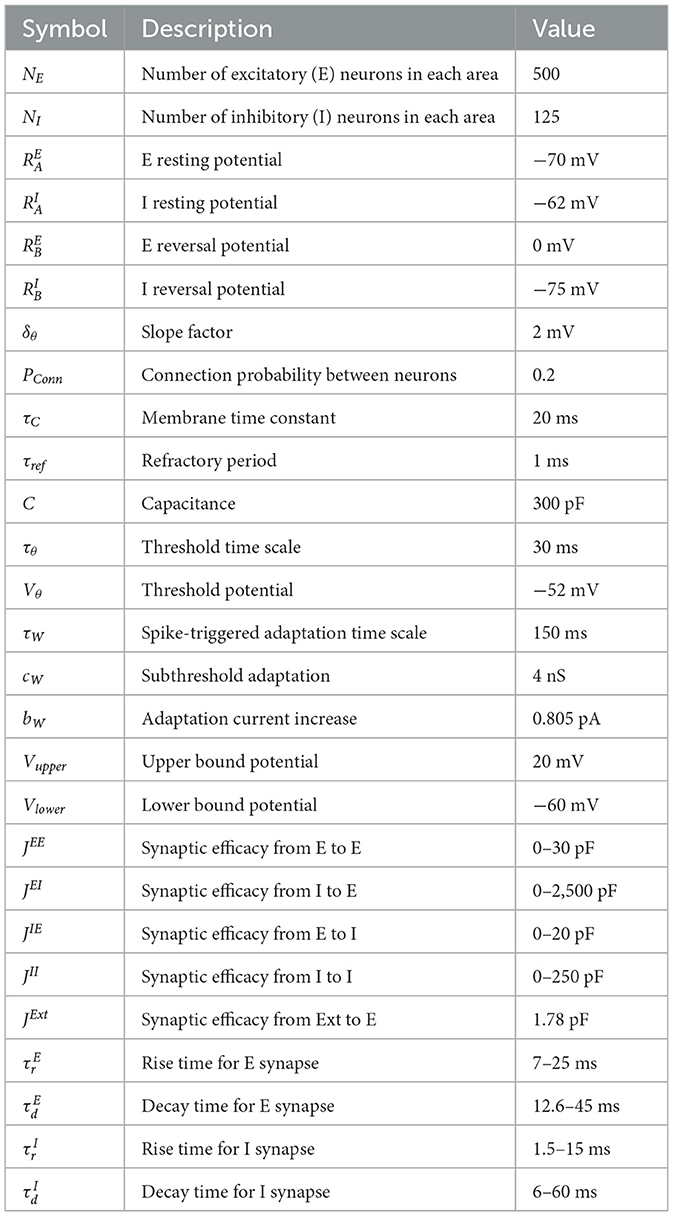

3.3 Simulation for the retrieval of sequential memory in spiking neural networks

For consistency with the simulations in the previous section, we set the size of the loop structure to N = 2, …, 6, i.e., two to six brain areas. Neurons in the network belonged to either the excitatory (E) or inhibitory (I) population. We employed an adaptive exponential leaky integrate-and-fire neuron model (Brette and Gerstner, 2005) as the spiking neuron model and adopted model parameters from a previous study (Litwin-Kumar and Doiron, 2014). All parameters used in these simulations are provided in Table 2.

All the equations and parameters used in the spiking neural network model were taken from Litwin-Kumar and Doiron (2014). Specifically, the dynamics of the potential of E neuron i at time t was as follows:

where Vθ, i(t) is the threshold dynamics, and are the E to E and I to E synaptic conductance, respectively, and Wi(t) is the adaptation current. The dynamics of the potential of I neuron i at time t was as follows:

where and are the E to I and I to I synaptic conductance, respectively. The threshold dynamics Vθ, i(t) was given as follows:

An E neuron was influenced by the dynamics of the adaptation current Wi(t) given by:

A neuron discharged a spike when its potential increased above Vupper. At the same time, Wi(t) was increased by bw. After spiking, the potential was set to Vlower during the refractory period.
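The spike, reset, and adaptation mechanism described above can be sketched with a plain adaptive exponential integrate-and-fire neuron integrated by the Euler method. This sketch is not the model used in the study: it omits the threshold dynamics and the conductance-based synapses, it is driven by a constant current, and all parameter values (including the refractory duration and the names `v_upper`, `v_lower`, and `b_w`) are illustrative assumptions rather than the Table 2 values.

```python
import math

def simulate_adex(current_pa, duration_ms=200.0, dt_ms=0.1):
    """Euler integration of a plain AdEx neuron; returns spike times in ms."""
    # Illustrative parameters (assumed). Units: pF, nS, mV, ms, pA.
    c_m, g_l, e_l = 281.0, 30.0, -70.6
    v_t, delta_t = -50.4, 2.0
    tau_w, a, b_w = 144.0, 4.0, 80.5
    v_upper, v_lower, t_ref = 20.0, -70.6, 1.0

    v, w, refractory_left = e_l, 0.0, 0.0
    spike_times = []
    for step in range(int(round(duration_ms / dt_ms))):
        # Adaptation current uses the potential before this step's update.
        dw = (a * (v - e_l) - w) / tau_w
        if refractory_left > 0.0:
            # The potential is held at v_lower during the refractory period.
            v = v_lower
            refractory_left -= dt_ms
        else:
            exp_term = g_l * delta_t * math.exp((v - v_t) / delta_t)
            v += dt_ms * (-g_l * (v - e_l) + exp_term - w + current_pa) / c_m
        w += dt_ms * dw
        if v >= v_upper:
            spike_times.append(step * dt_ms)
            v = v_lower               # reset after the spike
            w += b_w                  # spike-triggered adaptation increment
            refractory_left = t_ref
    return spike_times

spikes_driven = simulate_adex(1000.0)
spikes_rest = simulate_adex(0.0)
```

Each spike increments the adaptation current, which then decays slowly, so successive inter-spike intervals tend to lengthen under constant drive.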

The dynamics of the synaptic conductance from population P to X at time t was given as follows:

where P and X are E or I, is the synaptic efficacy from external neurons, is the synaptic efficacy from population P to population X, is the number of spikes of external neurons, represents spiking activity of neuron j, * is the convolution, and KP(t) is the synaptic kernel in Equation 16. The specific parameters of the synaptic kernel were , , , and , which indicate the rise r and decay d time for synapses of the E and I populations, respectively. These parameters were adjusted to control the initiation and inhibition periods of the simulation (see Table 2). Consequently, the initiation duration, representing the appearance of the first spike, fell within the range [25.5, 55.5] ms. An approximate inhibition duration was calculated as , resulting in a range of [15, 150] ms.

We presented 10 consecutive events within the loop structure, and the synaptic weights were preset to ensure that this sequence of events was self-generating. There were 10 assemblies in each area. E-I, I-E, and I-I populations were connected only within each assembly. The E-E populations were connected not only within an assembly in an area but also between areas. The E-E connections from the n-th to the (n + 1)-th area had similar structures, while the E-E connection matrix from the N-th (last) to the first area was shifted circularly downward by 50 rows, i.e., one assembly, along the vertical axis to enable the self-generation of spiking activity. The external stimulus was applied only to the first assembly in each area. It was given during 0–120 ms to the first area, 40–160 ms to the second area, and 80–200 ms to the third area, i.e., with a 40-ms delay between subsequent areas. The spiking activity could then propagate sequentially to other assemblies, resulting in self-generation.
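The circular shift of the loop-closing connection can be sketched as follows. The sketch assumes binary all-to-all weights between matched assemblies and a convention in which rows index postsynaptic neurons and columns index presynaptic neurons; both are assumptions, as are the function names. The assembly count and the 50-row shift follow the text.

```python
import numpy as np

N_ASSEMBLIES = 10          # assemblies per area (from the text)
NEURONS_PER_ASSEMBLY = 50  # a shift of 50 rows equals one assembly

def forward_connectivity():
    """Assembly k in the sending area projects to assembly k in the next area.

    Rows index postsynaptic neurons, columns presynaptic neurons (assumed).
    """
    block = np.ones((NEURONS_PER_ASSEMBLY, NEURONS_PER_ASSEMBLY))
    return np.kron(np.eye(N_ASSEMBLIES), block)

def closing_connectivity():
    """Last-to-first connection: the same matrix rolled down by one assembly."""
    return np.roll(forward_connectivity(), NEURONS_PER_ASSEMBLY, axis=0)

w_forward = forward_connectivity()
w_closing = closing_connectivity()
```

Because of the shift, activity of assembly k in the last area drives assembly k + 1 in the first area, so each pass around the loop advances the sequence by one event.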

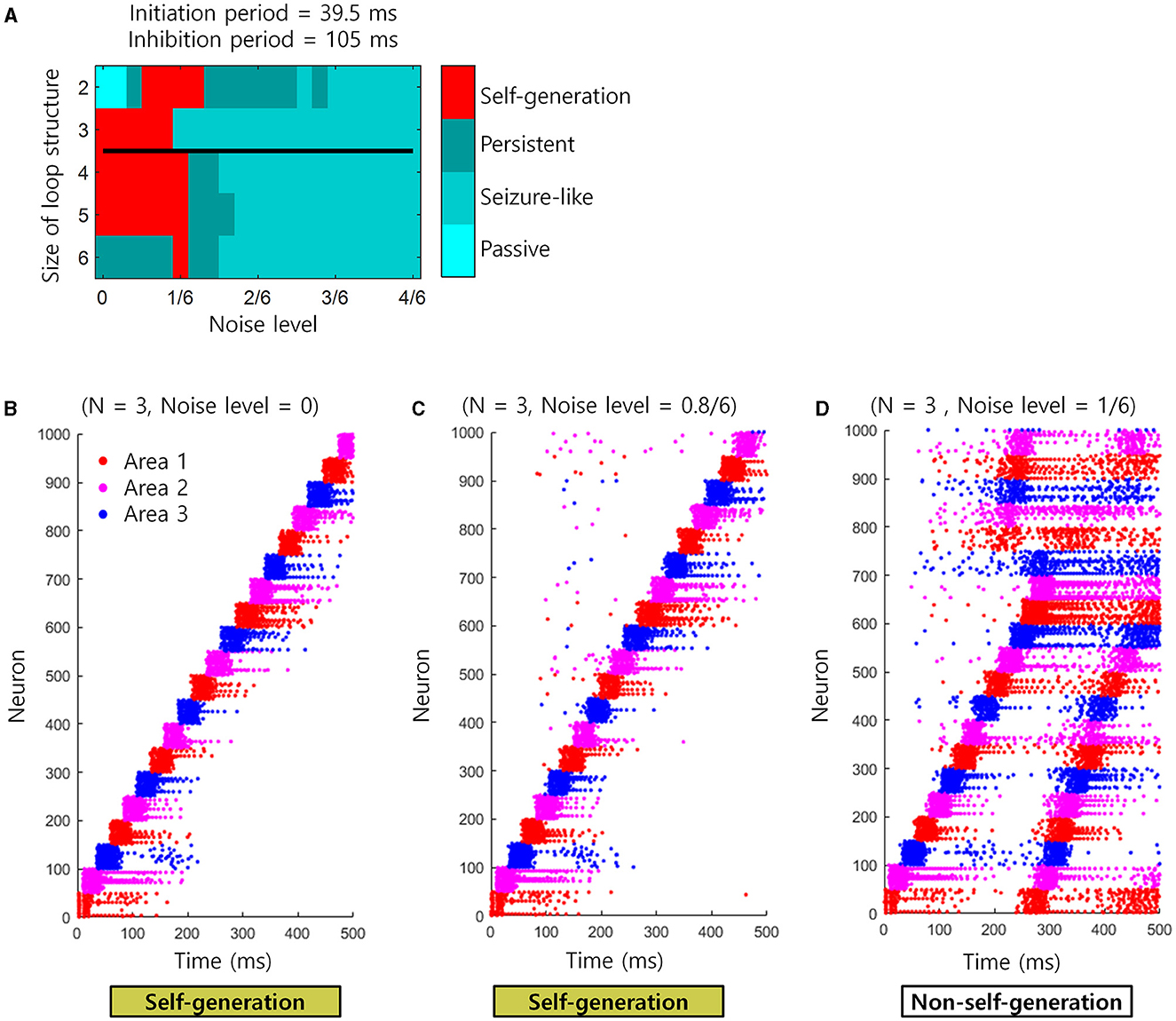

Neural activity shows trial-to-trial variability, which can be viewed as spontaneous neuronal noise. It is therefore important to know whether the sequential retrieval of “Self-generation” is affected by such noise. To test this, we repeated the simulation of the spiking neural network with an initiation period of 39.5 ms and an inhibition period of 105 ms, this time applying neuronal noise of various magnitudes (noise levels from 0 to 4/6) to 10% of randomly selected neurons. We set the external stimulus as 12-kHz spikes with the synaptic efficacy JExt. We generated the external noise as (noise level × 12 kHz) spikes with the same synaptic efficacy. Note that the magnitudes of the external stimulus and noise represent the aggregate firing rates of all presynaptic neurons. To aggregate the firing rates of different neurons, we assumed that the firing processes of neurons are mutually independent Poisson processes, so that the firing rates of different neurons add linearly. The external stimulus was delivered to a fixed set of neurons and the external noise to a set of randomly selected neurons, each comprising 10% of all neurons. For example, at a noise level of 1/6, the noise is delivered to the same number of neurons as the stimulus but at 1/6 of the stimulus intensity.
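The stimulus and noise inputs described above can be sketched as Poisson spike counts per time step. Because independent Poisson processes add linearly, an aggregate rate of 12 kHz can be drawn as a single Poisson count per step. The 1-ms time step, the choice of the first 10% of neurons as the fixed stimulated set, the seed, and the function name are assumptions for illustration.

```python
import numpy as np

STIMULUS_RATE_HZ = 12_000.0  # aggregate external rate stated in the text

def external_spike_counts(n_neurons, n_steps, noise_level, dt_ms=1.0, seed=0):
    """Return per-step external spike counts plus the two target index sets."""
    rng = np.random.default_rng(seed)
    n_target = n_neurons // 10                    # 10% of all neurons
    stim_idx = np.arange(n_target)                # fixed set (assumed: first 10%)
    noise_idx = rng.choice(n_neurons, size=n_target, replace=False)

    counts = np.zeros((n_neurons, n_steps), dtype=np.int64)
    stim_mean = STIMULUS_RATE_HZ * dt_ms / 1000.0  # expected spikes per step
    counts[stim_idx] += rng.poisson(stim_mean, size=(n_target, n_steps))
    # Noise has the same form as the stimulus, scaled by the noise level.
    counts[noise_idx] += rng.poisson(noise_level * stim_mean,
                                     size=(n_target, n_steps))
    return counts, stim_idx, noise_idx

counts, stim_idx, noise_idx = external_spike_counts(500, 2000, noise_level=1 / 6)
```

At a noise level of 1/6 the noise-receiving neurons get an aggregate rate of 2 kHz, i.e., one sixth of the 12-kHz stimulus.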

4 Simulation results

4.1 Simulation results for the learning and the retrieval of sequential memory

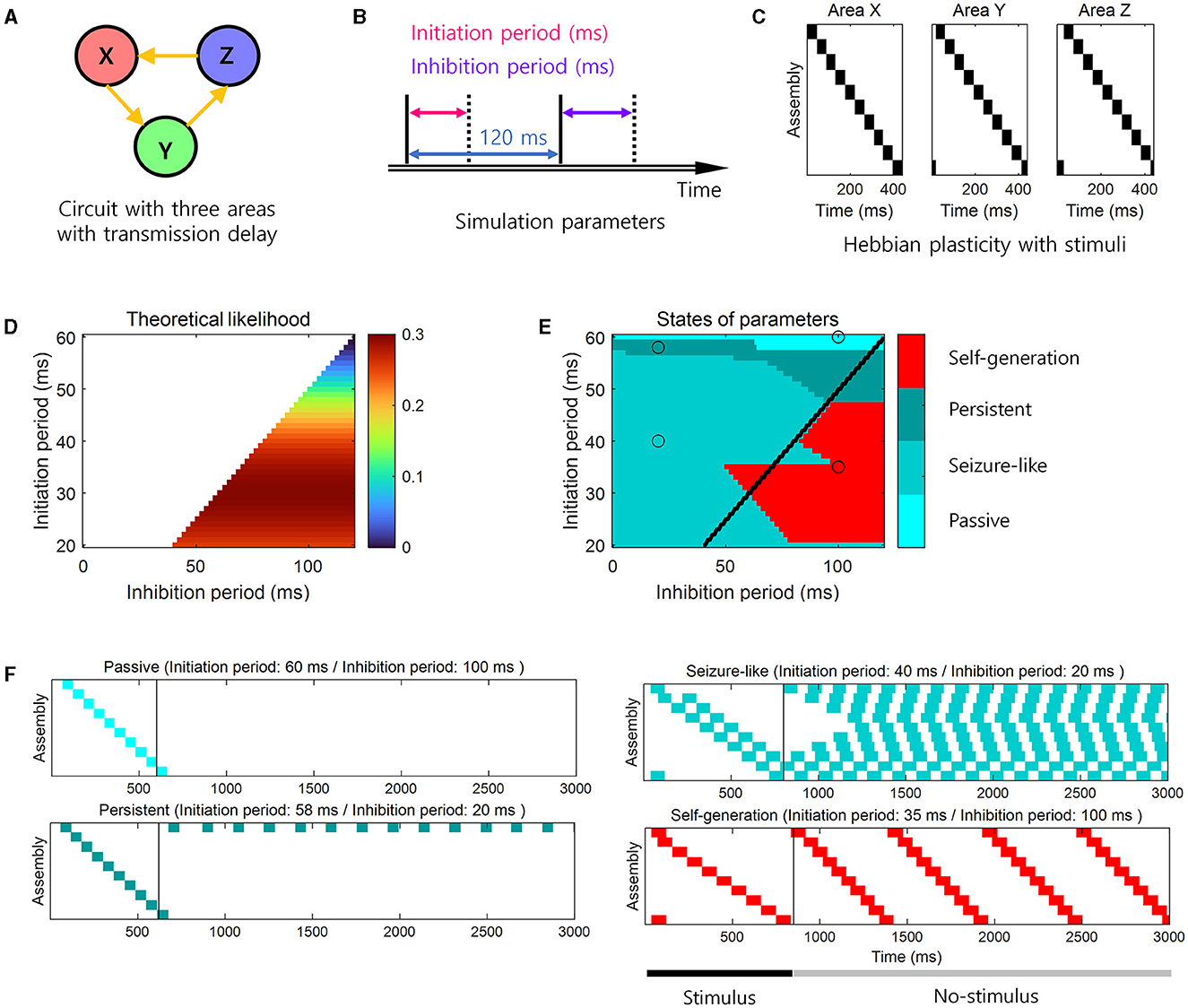

To confirm the theoretical predictions, we performed the simulation study with N = 3 (Figures 4A–C). When the theoretical likelihood (Equation 4) was high within the range of parameters that satisfied the necessary conditions (Equations 2, 3), the neural representations were self-generated and sequential memory emerged, i.e., “Self-generation” (Figure 4F). When the theoretical likelihood was low within the range that satisfied the necessary conditions, cell assemblies responded only when a stimulus was given, i.e., “Passive” (Figure 4F). In the parameter range where the necessary conditions were not satisfied, either the last stimulated cell assemblies continued to respond, i.e., “Persistent” (Figure 4F), or all cell assemblies responded, i.e., “Seizure-like” (Figure 4F). These simulation results confirmed the theoretical predictions for the learning of sequential memory (Figures 4D–F). Specifically, self-generation of sequential activities mainly occurred within the parameter range where the theoretical likelihood was >0.2 and the necessary conditions were satisfied (Figures 4D, E). For example, when the initiation period was 35 ms and the inhibition period was 100 ms, we observed “Self-generation” (Figure 4F). In contrast, when the inhibition period was shorter, e.g., 20 ms, with a similar initiation period, the necessary conditions were not satisfied, resulting in the “Seizure-like” state (Figure 4F). Additionally, when the initiation period was 60 ms and the inhibition period was 100 ms, the cell assemblies exhibited the “Passive” state (Figure 4F). When the inhibition period was 58 ms and the necessary conditions were not met, the cell assemblies were in the “Persistent” state (Figure 4F).

Figure 4. Simulation for the learning and the retrieval of sequential memory. (A) The loop structure. (B) Simulation parameters for the basic neural activity model. The active period was set to 120 ms minus the length of the initiation period. (C) Stimuli that evoke the neural representations learned via Hebbian plasticity. (D) The theoretical prediction of the learning of sequential memory for the simulation parameters. A color-coded parameter point satisfies the necessary conditions for the learning of sequential memory (see Equations 2, 3). The color codes the likelihood of the learning of sequential memory (see Equation 4). (E) The simulation results for the theoretical prediction. The color codes the four states of neural activity, which were determined as follows. The state was “Passive” if no cell assembly was active when the stimulus ended, representing the absence of activity. The state was “Seizure-like” if more than 40% of all cell assemblies were active when the stimulus ended, representing excessive activity. If active cell assemblies existed but made up <40% of all cell assemblies when the stimulus ended, the state was either “Self-generation” or “Persistent”: it was “Self-generation” if cell assemblies were activated in the order of the stimuli, representing generation of the stimulus sequence without stimulus presentation, and “Persistent” otherwise, representing the inability to generate the stimulus sequence. The black line represents the boundary of the region that satisfies the necessary conditions. (F) Examples of the four states of neural activity. The left side of the black vertical bar shows the neural activity during stimulus presentation, and the right side shows the neural activity without stimulus presentation. Each panel corresponds to a black open circle in (E).
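The state-labeling rule in the caption of Figure 4E can be written as a short decision function. This is a sketch: the function name and arguments are hypothetical, the test for "activated in the order of the stimuli" is assumed to be computed elsewhere and passed in as a flag, and the caption does not say how exactly 40% is handled, so that case is assigned to the non-seizure branch here as an assumption.

```python
def classify_state(active_fraction, follows_stimulus_order):
    """Label a run from the assembly activity observed when the stimulus ends.

    active_fraction: fraction of all cell assemblies that are active (0 to 1).
    follows_stimulus_order: True if assemblies activate in the stimulus order.
    """
    if active_fraction == 0.0:
        return "Passive"            # no active assembly
    if active_fraction > 0.40:
        return "Seizure-like"       # excessive activity
    # Some, but not too many, assemblies are active.
    return "Self-generation" if follows_stimulus_order else "Persistent"

labels = [
    classify_state(0.0, False),
    classify_state(0.9, True),
    classify_state(0.1, True),
    classify_state(0.1, False),
]
```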

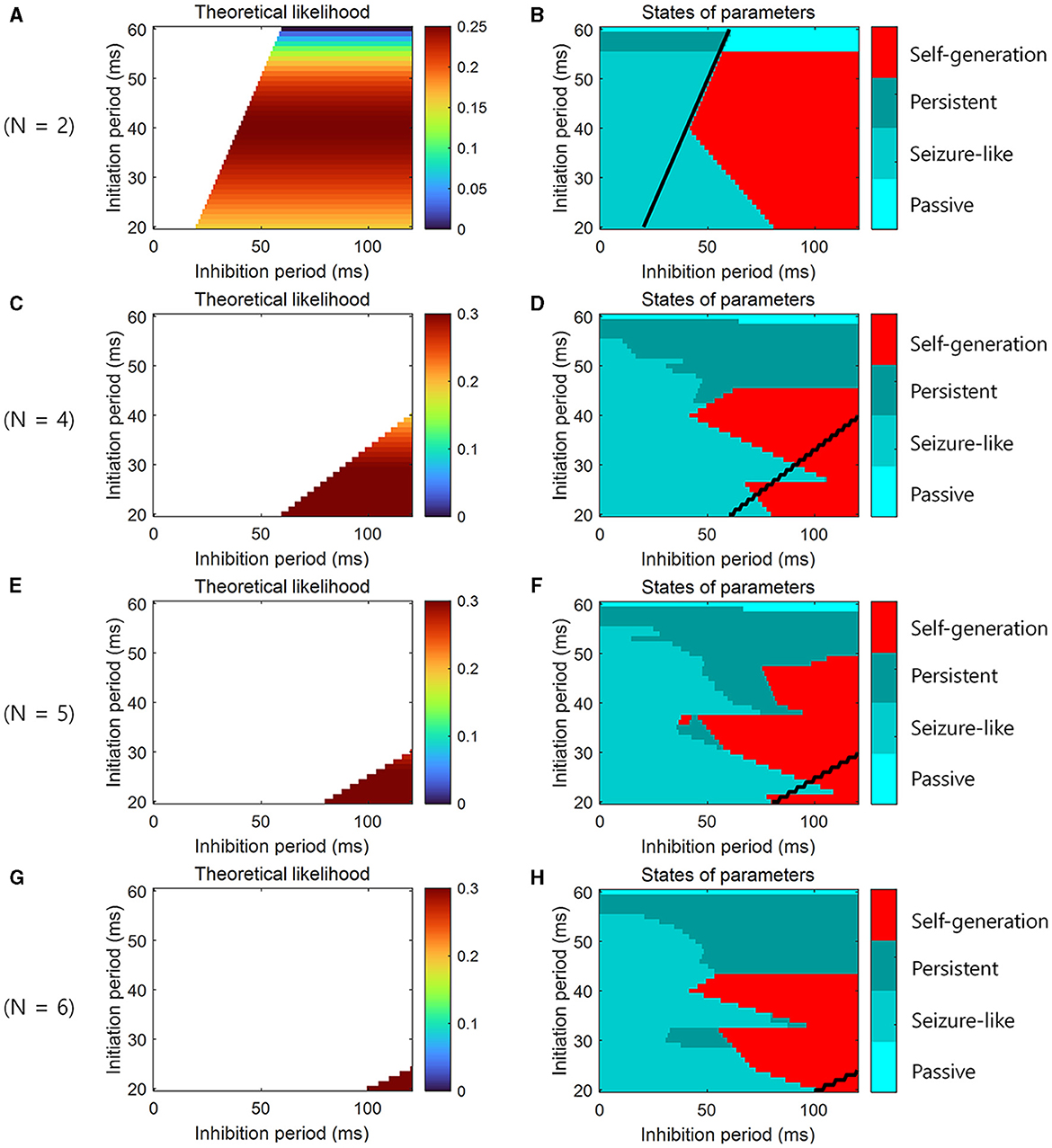

To confirm theoretical predictions in loop structures of different sizes, additional simulations were performed not only at N = 3 but also at N = 2, 4, 5, 6. When the theoretical likelihood (Equation 4) was high within the range of the parameters that satisfied the necessary conditions (Equations 2, 3), the simulation result showed that the neural representations were self-generated, and sequential memory emerged (Figures 5A–H).

Figure 5. Simulation for the learning and the retrieval of sequential memory in loop structures of various sizes. (A) The theoretical prediction of the learning of sequential memory for simulation parameters when N = 2. The color-coded parameter indicates that this parameter satisfies the necessary conditions for the learning of sequential memory (see Equations 2, 3). The color codes the likelihood of the learning of sequential memory (see Equation 4). (B) The simulation results for the theoretical prediction when N = 2. The color codes the 4 states of neural activity. (C) Similar to (A), but N = 4. (D) Similar to (B), but N = 4. (E) Similar to (A), but N = 5. (F) Similar to (B), but N = 5. (G) Similar to (A), but N = 6. (H) Similar to (B), but N = 6. We determined the state in the simulation in the same way as for Figure 4E. Black line represents the boundary of areas that satisfy the necessary conditions.

Note that our theoretical model assumes that neural activity has a random phase such that every cell assembly in an area changes its activity exactly once during tA (see Section 2.3). However, in the simulations, only a few cell assemblies were activated simultaneously; otherwise they displayed seizure-like behavior. Therefore, for the sake of visualization and effective simulations, this assumption is not always met. That is, it may be possible to change the neural activity of every cell assembly before tA. Conversely, the neural activity of cell assemblies can be unchanged during as well as after tA due to prolonged lack of activity. Let α be such timing variation. In the former case, α has a negative value; in the latter, α has a positive value. The conditions to obtain Equation 2 are then modified as follows: NtN ≤ tN + tA + α. This condition yields:
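As a sketch of the rearrangement, subtracting tN from both sides of the inequality above and dividing by N − 1 (assuming N ≥ 2) gives an explicit bound on the initiation period. The presentation may differ from the published equation.

```latex
N t_N \le t_N + t_A + \alpha
\;\Longrightarrow\;
(N-1)\, t_N \le t_A + \alpha
\;\Longrightarrow\;
t_N \le \frac{t_A + \alpha}{N-1}, \qquad N \ge 2 .
```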

The conditions to obtain Equation 3 can be modified as follows: NtN + tA ≤ tN + tA + tI + α. This condition yields:
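As a sketch of the rearrangement, cancelling tA on both sides of the inequality above, subtracting tN, and dividing by N − 1 (assuming N ≥ 2) gives the corresponding bound in terms of the inhibition period. The presentation may differ from the published equation.

```latex
N t_N + t_A \le t_N + t_A + t_I + \alpha
\;\Longrightarrow\;
(N-1)\, t_N \le t_I + \alpha
\;\Longrightarrow\;
t_N \le \frac{t_I + \alpha}{N-1}, \qquad N \ge 2 .
```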

This is why “self-generation” appears in the simulation (Figures 4E, 5A–H) even for some parameters that do not satisfy the necessary conditions (Equations 2, 3).
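The effect of the timing variation α can be checked numerically with the two inequalities stated in the text, NtN ≤ tN + tA + α and NtN + tA ≤ tN + tA + tI + α. The sketch below assumes that setting α = 0 recovers the unmodified necessary conditions; the function name and the example value of α are hypothetical.

```python
def satisfies_necessary_conditions(n_areas, t_init, t_active, t_inhib, alpha=0.0):
    """Check both inequalities from the text, with timing variation alpha (ms)."""
    first = n_areas * t_init <= t_init + t_active + alpha
    second = n_areas * t_init + t_active <= t_init + t_active + t_inhib + alpha
    return first and second

# N = 3 with t_active = 120 - t_init, as in the simulation settings.
n_areas, t_init = 3, 35
t_active = 120 - t_init
ok_long_inhibition = satisfies_necessary_conditions(n_areas, t_init, t_active, 100)
ok_short_inhibition = satisfies_necessary_conditions(n_areas, t_init, t_active, 20)
ok_with_alpha = satisfies_necessary_conditions(n_areas, t_init, t_active, 58, alpha=12)
```

With N = 3, tN = 35 ms, and tA = 85 ms, the check accepts tI = 100 ms and rejects tI = 20 ms, in line with the examples in Figure 4F. A positive α relaxes both bounds, so a point just outside the original boundary can still pass.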

4.2 Simulation results for the retrieval of sequential memory in spiking neural networks

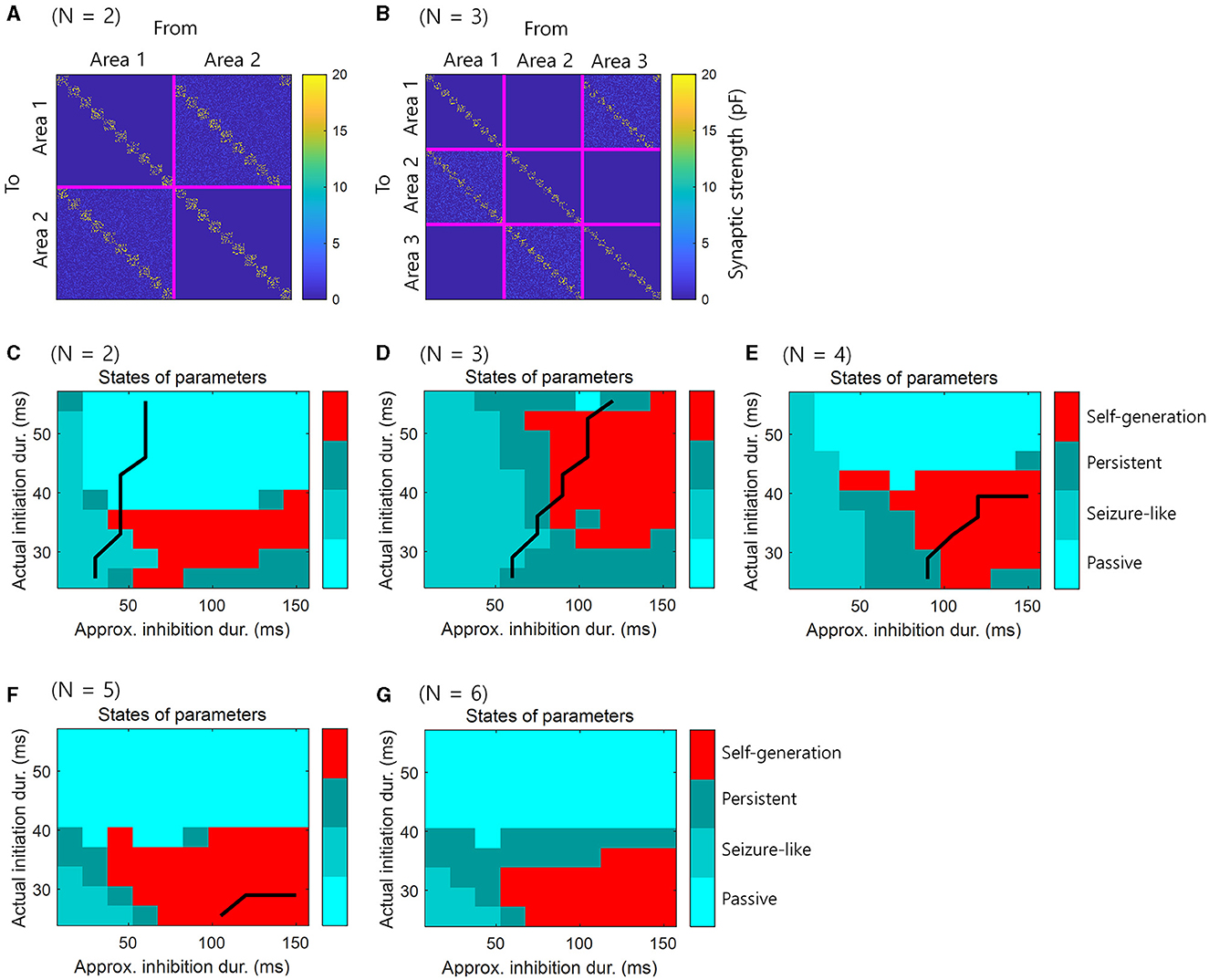

For consistency with the simulations in the previous section, we set the size of the loop structure to N = 2, …, 6, i.e., two to six brain areas. We presented 10 consecutive events within this structure, and the synaptic weights were preset to ensure that this sequence of events was self-generating. There were 10 assemblies in each area, and each assembly contained 50 neurons. The E-E populations were connected not only within an assembly but also between areas. The E-E connections from area n to area n + 1 had similar structures, while the E-E connection from area N (the last area) to area 1 was shifted by one assembly to enable the self-generation of spiking activity (Figures 6A, B). The external stimulus was applied for 120 ms to the first assembly in each area, with a 40-ms delay added cumulatively for each successive area. The spiking activity could then propagate sequentially to other assemblies, resulting in self-generation.

Figure 6. Simulation for the retrieval of sequential memory in loop structures of various sizes in spiking neural networks. (A) Pre-assigned synaptic weights for the simulation of the retrieval of sequential memory in spiking neural networks when N = 2. (B) Similar to (A), but N = 3. (C) The simulation results in spiking neural networks when N = 2. (D) Similar to (C), but N = 3. (E) Similar to (C), but N = 4. (F) Similar to (C), but N = 5. (G) Similar to (C), but N = 6. The black line represents the boundary of the region that satisfies the necessary conditions when tA is nominally set to 120 ms. The region to the lower right of the black line satisfies the necessary conditions.

What differs from previous simulations is that we controlled the initiation period and inhibition period by adjusting the parameters of the synaptic kernel. The specific parameters of the synaptic kernel were , , , and , which indicate the rise r and decay d time for synapses of the E and I populations. These parameters were adjusted to control the initiation and inhibition periods of this simulation (see, Table 2). Consequently, the actual initiation duration, representing the appearance of the first spike, fell within the range [25.5, 55.5] ms. An approximate inhibition duration was calculated as , resulting in a range of [15, 150] ms. Unlike previous simulations, the longer the initiation period was, the longer the active period was in this simulation. The purpose of this simulation is not to use a simplified basic neural activity model, but to use spiking neural networks in a real environment to verify “Self-generation”.

Simulation results showed that the phenomenon of “self-generation” was also evident in spiking neural networks of loop structure with various sizes N. We assumed that the actual initiation duration corresponds to the initiation period and the approximated inhibition duration corresponds to the inhibition period. The simulation results demonstrated that when the theoretical likelihood was high within the range of parameters satisfying the necessary conditions (Figures 4D, 5A, C, E, G), neural representations were self-generated, indicating the retrieval of sequential memory (Figures 6C–G).

Neural activity shows trial-to-trial variability, which can be viewed as spontaneous neuronal noise. It is therefore important to know whether the sequential retrieval of “Self-generation” is affected by such noise. To test this, we repeated the simulation of the spiking neural network with an initiation period of 39.5 ms and an inhibition period of 105 ms, this time applying neuronal noise of various magnitudes (noise levels from 0 to 4/6) to 10% of randomly selected neurons. As a result, the “self-generation” of the sequence was retrieved well when the noise level was ≤ 1/6 but was disrupted when the noise level exceeded 1/6 (Figure 7A). This may occur because, as the magnitude of the noise increases beyond the 1/6 level, cell assemblies can be activated regardless of the sequence (Figures 7B–D). That is, below a 1/6 noise level, spontaneous neuronal noise can be corrected and ignored by the loop structure, but above that level, these errors appear to propagate globally (Figures 7C, D). See Section 3.3 for details on how the noise was applied.

Figure 7. Simulation for noise injection in spiking neural networks. (A) The state of the loop structure (self-generation, seizure-like, persistent, or passive) depending on the noise level and the size of the loop structure. The black line represents the boundary of the region that satisfies the necessary conditions when tA is nominally set to 120 ms. The region above the black line satisfies the necessary conditions. (B) An example of spike trains when the noise level is 0. Each colored dot indicates a spike. Red, magenta, and blue dots indicate spikes of neurons in areas 1, 2, and 3, respectively. (C) Similar to (B), but the noise level is 0.8/6. (D) Similar to (B), but the noise level is 1/6.

5 Discussion

In the present study, we investigated how brain loop structures subserve the learning of sequential memory. We assumed that the sequential memory emerges by delayed information transmission in these structures (Figure 1) and presented a basic neural activity model for the delayed information transmission (Figure 2). Based on this model, we described necessary conditions for the learning of sequential memory in these structures (Figure 3). Through the simulation, it was confirmed that sequential memory emerged in this structure under the theoretically predicted conditions and the neural representations of sequential events were self-generated (Figure 4).

If the active or inhibition period of a cell assembly is fixed, the possible range of the information transmission delay is bounded by a quantity that scales inversely with the size of the loop structure. In other words, as the size of this structure increases, the information transmission delay should decrease (Equations 2, 3; Figures 3A, D). The range of the delay is also bounded by the cellular properties of the tissue, so the delay cannot be reduced indefinitely. This means that, for the learning of sequential memory, (1) the size of the brain loop structure must be limited so that it does not become too large. The optimal likelihood of the learning of sequential memory theoretically increases as the size of this structure increases and soon saturates (Equation 10; Figure 3C). This means that, for the learning of sequential memory, (2) the size of the brain loop structure must be relatively large, but not necessarily infinitely large. It remains challenging to verify these characteristics of the optimal likelihood by simulation: while simulations can confirm whether sequential memory can be learned, they cannot easily determine how well it is learned. Combining (1) and (2), we conclude that a moderate structure size, e.g., N = 5, is advantageous for the learning of sequential memory. This may explain why biological brain loop structures are made up of a moderate number of areas, such as cortico-basal ganglia-thalamic loops (Alexander et al., 1986; Lee et al., 2020; Foster et al., 2021) and cortico-cerebellar loops (Middleton and Strick, 2000; Kelly and Strick, 2003; Ramnani, 2006).

The basic neural activity model in this study is based on cell assemblies. During the inhibition and initiation periods, cell assemblies exhibit low activity levels, whereas they exhibit high activity levels during the active period, indicating oscillatory patterns. If the lengths of the inhibition plus initiation periods and the active period are unequal (Figures 4, 5), they can resemble sawtooth oscillations, similar to those observed in hippocampal theta oscillations (Montgomery et al., 2009).

The firing timing of hippocampal neurons is known to be related to the oscillatory phase of theta oscillations, and the theta sequence—the order of neuron firing timings associated with theta oscillations—is known to correlate with behavioral sequences (Foster and Wilson, 2007; Gupta et al., 2012). This exemplifies how spatially and temporally correlated neural activity, i.e., oscillations, is linked to sequences of behaviors.

In our model, if the activities of cell assemblies are oscillatory and spatially correlated, the neural activity represented by the active period spreads spatially like a traveling wave. In this case, because neural activity propagates collectively, the sequential activation of cell assemblies is less likely to be disrupted and may therefore appear more stable. These oscillatory propagations are thought to facilitate information transmission across many brain areas and frequency bands (Rubino et al., 2006; Bhattacharya et al., 2022; Zabeh et al., 2023; for reviews, see Bauer et al., 2020). This collective information transmission through loop structures enables stable sequential activation of cell assemblies, allowing the brain to better represent behavioral sequences.

Recent studies showed that a sequence of activities can be generated in randomly connected structures (Rajan et al., 2016; Rajakumar et al., 2021), other than loop structures. The present study does not exclude this possibility. Moreover, it has been shown that the neural representations of sequential events can be self-generated even when input is absent in recurrent structures (Klos et al., 2018). However, a key difference between the present study and other studies is whether it handles loop structures, which are known to be important in sequential memory.

The present study used a basic model to simplify the derivation of mathematical results, and therefore, all connections between each area were assumed to be excitatory. However, inhibitory connections are also present in cortico-basal ganglia-thalamic loops (Lanciego et al., 2012). Our results can still be applied to inhibitory connections if cell assemblies of each area selectively represent each neural representation. However, it is a limitation of this study that the diversity of these excitatory-inhibitory connections was not directly incorporated into the model. This point can be addressed in future studies.

Neural activity shows trial-to-trial variability, which can be viewed as spontaneous neuronal noise. It is therefore important to know whether the sequential retrieval of “Self-generation” is affected by such noise. In this study, we showed that the activities of cell assemblies for self-generation were robust to spontaneous neuronal noise when the noise level was < 1/6 of the external stimulus. However, this study did not comprehensively simulate different external stimulus intensities or numbers of stimulated neurons, which limits our understanding of how robust cell assemblies are to noise. Moreover, 1/6 of the external stimulus can be considered a low level of spontaneous neural noise, and the simulation results show that “self-generation” can fail even at such low noise levels (Figure 7). This is a limitation of this study. Future studies are therefore needed to understand the effects of spontaneous neuronal noise on the self-generation of sequential memory through the loop structure of cell assemblies, for example through additional theoretical predictions based on cell assembly models that include spontaneous noise, or additional simulations under conditions that are more robust to it.

Nonetheless, this study elucidated the relationship between the size of brain loop structures and the formation of sequential memories. By revealing that a moderate-sized structure is most suitable for the formation of sequential memory, it provided insight into why the biological brain loop structure is moderate-sized. The results of this study may help to advance our understanding of the relationship between brain structures and functions involved in sequential memory.

Data availability statement

The original contributions presented in the study are included in the article/Supplementary material; further inquiries can be directed to the corresponding author.

Author contributions

DS: Conceptualization, Data curation, Formal analysis, Funding acquisition, Investigation, Methodology, Project administration, Resources, Software, Supervision, Validation, Visualization, Writing – original draft, Writing – review & editing. S-PK: Funding acquisition, Project administration, Supervision, Visualization, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This research was supported by the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT) (No. RS-2023-00213187) and the Alchemist Brain to X (B2X) Project funded by the Ministry of Trade, Industry and Energy (No. 20012355; NTIS No. 1415181023).

Acknowledgments

During the preparation of the manuscript, the authors used Google Translate and ChatGPT 4.0 to improve the readability and the English writing. After using these tools, the authors reviewed and edited the text as needed and take full responsibility for the content. We have provided all input prompts to ChatGPT 4.0 and the outputs received from it in the Supplementary Figure.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declared that they were an editorial board member of Frontiers, at the time of submission. This had no impact on the peer review process and the final decision.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fncom.2024.1421458/full#supplementary-material

References

Alexander, G. E., DeLong, M. R., and Strick, P. L. (1986). Parallel organization of functionally segregated circuits linking basal ganglia and cortex. Annu. Rev. Neurosci. 9, 357–381. doi: 10.1146/annurev.ne.09.030186.002041

Aoki, S., Smith, J. B., Li, H., Yan, X., Igarashi, M., Coulon, P., et al. (2019). An open cortico-basal ganglia loop allows limbic control over motor output via the nigrothalamic pathway. Elife 8:e49995. doi: 10.7554/eLife.49995

Bauer, A.-K. R., Debener, S., and Nobre, A. C. (2020). Synchronisation of neural oscillations and cross-modal influences. Trends Cogn. Sci. 24, 481–495. doi: 10.1016/j.tics.2020.03.003

Bhattacharya, S., Brincat, S. L., Lundqvist, M., and Miller, E. K. (2022). Traveling waves in the prefrontal cortex during working memory. PLoS Comput. Biol. 18:e1009827. doi: 10.1371/journal.pcbi.1009827

Boucsein, C., Nawrot, M. P., Schnepel, P., and Aertsen, A. (2011). Beyond the cortical column: abundance and physiology of horizontal connections imply a strong role for inputs from the surround. Front. Neurosci. 5:32. doi: 10.3389/fnins.2011.00031

Brette, R., and Gerstner, W. (2005). Adaptive exponential integrate-and-fire model as an effective description of neuronal activity. J. Neurophysiol. 94, 3637–3642. doi: 10.1152/jn.00686.2005

Cannon, R. C., O'Donnell, C., and Nolan, M. F. (2010). Stochastic ion channel gating in dendritic neurons: morphology dependence and probabilistic synaptic activation of dendritic spikes. PLoS Comput. Biol. 6:e1000886. doi: 10.1371/journal.pcbi.1000886

Chang, S.-E., and Guenther, F. H. (2020). Involvement of the cortico-basal ganglia-thalamocortical loop in developmental stuttering. Front. Psychol. 10:3088. doi: 10.3389/fpsyg.2019.03088

Cone, I., and Shouval, H. Z. (2021). Learning precise spatiotemporal sequences via biophysically realistic learning rules in a modular, spiking network. Elife 10:e63751. doi: 10.7554/eLife.63751

Foster, D. J., and Wilson, M. A. (2007). Hippocampal theta sequences. Hippocampus 17, 1093–1099. doi: 10.1002/hipo.20345

Foster, N. N., Barry, J., Korobkova, L., Garcia, L., Gao, L., Becerra, M., et al. (2021). The mouse cortico–basal ganglia–thalamic network. Nature 598, 188–194. doi: 10.1038/s41586-021-03993-3

Gisiger, T., and Boukadoum, M. (2018). A loop-based neural architecture for structured behavior encoding and decoding. Neural Netw. 98, 318–336. doi: 10.1016/j.neunet.2017.11.019

Gupta, A. S., van der Meer, M. A. A., Touretzky, D. S., and Redish, A. D. (2012). Segmentation of spatial experience by hippocampal theta sequences. Nat. Neurosci. 15, 1032–1039. doi: 10.1038/nn.3138

Janacsek, K., Shattuck, K. F., Tagarelli, K. M., Lum, J. A. G., Turkeltaub, P. E., and Ullman, M. T. (2020). Sequence learning in the human brain: a functional neuroanatomical meta-analysis of serial reaction time studies. Neuroimage 207:116387. doi: 10.1016/j.neuroimage.2019.116387

Kelly, R. M., and Strick, P. L. (2003). Cerebellar loops with motor cortex and prefrontal cortex of a nonhuman primate. J. Neurosci. 23, 8432–8444. doi: 10.1523/JNEUROSCI.23-23-08432.2003

Klos, C., Miner, D., and Triesch, J. (2018). Bridging structure and function: a model of sequence learning and prediction in primary visual cortex. PLoS Comput. Biol. 14:e1006187. doi: 10.1371/journal.pcbi.1006187

Lanciego, J. L., Luquin, N., and Obeso, J. A. (2012). Functional neuroanatomy of the basal ganglia. Cold Spring Harb. Perspect. Med. 2:a009621. doi: 10.1101/cshperspect.a009621

Lee, J., Wang, W., and Sabatini, B. L. (2020). Anatomically segregated basal ganglia pathways allow parallel behavioral modulation. Nat. Neurosci. 23, 1388–1398. doi: 10.1038/s41593-020-00712-5

Litwin-Kumar, A., and Doiron, B. (2014). Formation and maintenance of neuronal assemblies through synaptic plasticity. Nat. Commun. 5:5319. doi: 10.1038/ncomms6319

Logiaco, L., Abbott, L. F., and Escola, S. (2021). Thalamic control of cortical dynamics in a model of flexible motor sequencing. Cell Rep. 35:109090. doi: 10.1016/j.celrep.2021.109090

Maes, A., Barahona, M., and Clopath, C. (2020). Learning spatiotemporal signals using a recurrent spiking network that discretizes time. PLoS Comput. Biol. 16:e1007606. doi: 10.1371/journal.pcbi.1007606

McNamee, D., and Wolpert, D. M. (2019). Internal models in biological control. Annu. Rev. Control Robot. Auton. Syst. 2, 339–364. doi: 10.1146/annurev-control-060117-105206

Meisel, C., and Gross, T. (2009). Adaptive self-organization in a realistic neural network model. Phys. Rev. E 80:061917. doi: 10.1103/PhysRevE.80.061917

Middleton, F. A., and Strick, P. L. (2000). Basal ganglia and cerebellar loops: motor and cognitive circuits. Brain Res. Rev. 31, 236–250. doi: 10.1016/S0165-0173(99)00040-5

Mok, R. M., and Love, B. C. (2022). Abstract neural representations of category membership beyond information coding stimulus or response. J. Cogn. Neurosci. 34, 1719–1735. doi: 10.1162/jocn_a_01651

Montgomery, S. M., Betancur, M. I., and Buzsáki, G. (2009). Behavior-dependent coordination of multiple theta dipoles in the hippocampus. J. Neurosci. 29, 1381–1394. doi: 10.1523/JNEUROSCI.4339-08.2009

Rajakumar, A., Rinzel, J., and Chen, Z. S. (2021). Stimulus-driven and spontaneous dynamics in excitatory-inhibitory recurrent neural networks for sequence representation. Neural Comput. 33, 2603–2645. doi: 10.1162/neco_a_01418

Rajan, K., Harvey, C. D., and Tank, D. W. (2016). Recurrent network models of sequence generation and memory. Neuron 90, 128–142. doi: 10.1016/j.neuron.2016.02.009

Ramnani, N. (2006). The primate cortico-cerebellar system: anatomy and function. Nat. Rev. Neurosci. 7, 511–522. doi: 10.1038/nrn1953

Rubino, D., Robbins, K. A., and Hatsopoulos, N. G. (2006). Propagating waves mediate information transfer in the motor cortex. Nat. Neurosci. 9, 1549–1557. doi: 10.1038/nn1802

Rusu, S. I., and Pennartz, C. M. A. (2020). Learning, memory and consolidation mechanisms for behavioral control in hierarchically organized cortico-basal ganglia systems. Hippocampus 30, 73–98. doi: 10.1002/hipo.23167

Sadeh, S., and Clopath, C. (2021). Excitatory-inhibitory balance modulates the formation and dynamics of neuronal assemblies in cortical networks. Sci. Adv. 7:eabg8411. doi: 10.1126/sciadv.abg8411

Schnepel, P., Kumar, A., Zohar, M., Aertsen, A., and Boucsein, C. (2015). Physiology and impact of horizontal connections in rat neocortex. Cereb. Cortex 25, 3818–3835. doi: 10.1093/cercor/bhu265

Siegle, J. H., Jia, X., Durand, S., Gale, S., Bennett, C., Graddis, N., et al. (2021). Survey of spiking in the mouse visual system reveals functional hierarchy. Nature 592, 86–92. doi: 10.1038/s41586-020-03171-x

Vargha-Khadem, F., Gadian, D. G., Copp, A., and Mishkin, M. (2005). FOXP2 and the neuroanatomy of speech and language. Nat. Rev. Neurosci. 6, 131–138. doi: 10.1038/nrn1605

Wang, X.-J. (2022). Theory of the multiregional neocortex: large-scale neural dynamics and distributed cognition. Annu. Rev. Neurosci. 45, 533–560. doi: 10.1146/annurev-neuro-110920-035434

Yildizoglu, T., Riegler, C., Fitzgerald, J. E., and Portugues, R. (2020). A neural representation of naturalistic motion-guided behavior in the zebrafish brain. Curr. Biol. 30, 2321–2333.e6. doi: 10.1016/j.cub.2020.04.043

Yu, L., and Yu, Y. (2017). Energy-efficient neural information processing in individual neurons and neuronal networks. J. Neurosci. Res. 95, 2253–2266. doi: 10.1002/jnr.24131

Keywords: behavioral sequence, cell assembly, loop structure, self-generation, sequential memory

Citation: Sihn D and Kim S-P (2024) A neural basis for learning sequential memory in brain loop structures. Front. Comput. Neurosci. 18:1421458. doi: 10.3389/fncom.2024.1421458

Received: 22 April 2024; Accepted: 12 July 2024; Published: 05 August 2024.

Edited by:

Renato Duarte, University of Coimbra, Portugal

Reviewed by:

Andrew E. Papale, University of Pittsburgh, United States

Petros Evgenios Vlachos, Frankfurt Institute for Advanced Studies, Germany

Copyright © 2024 Sihn and Kim. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Sung-Phil Kim, spkim@unist.ac.kr
