- Department of Public Health, Swansea University, Swansea, United Kingdom

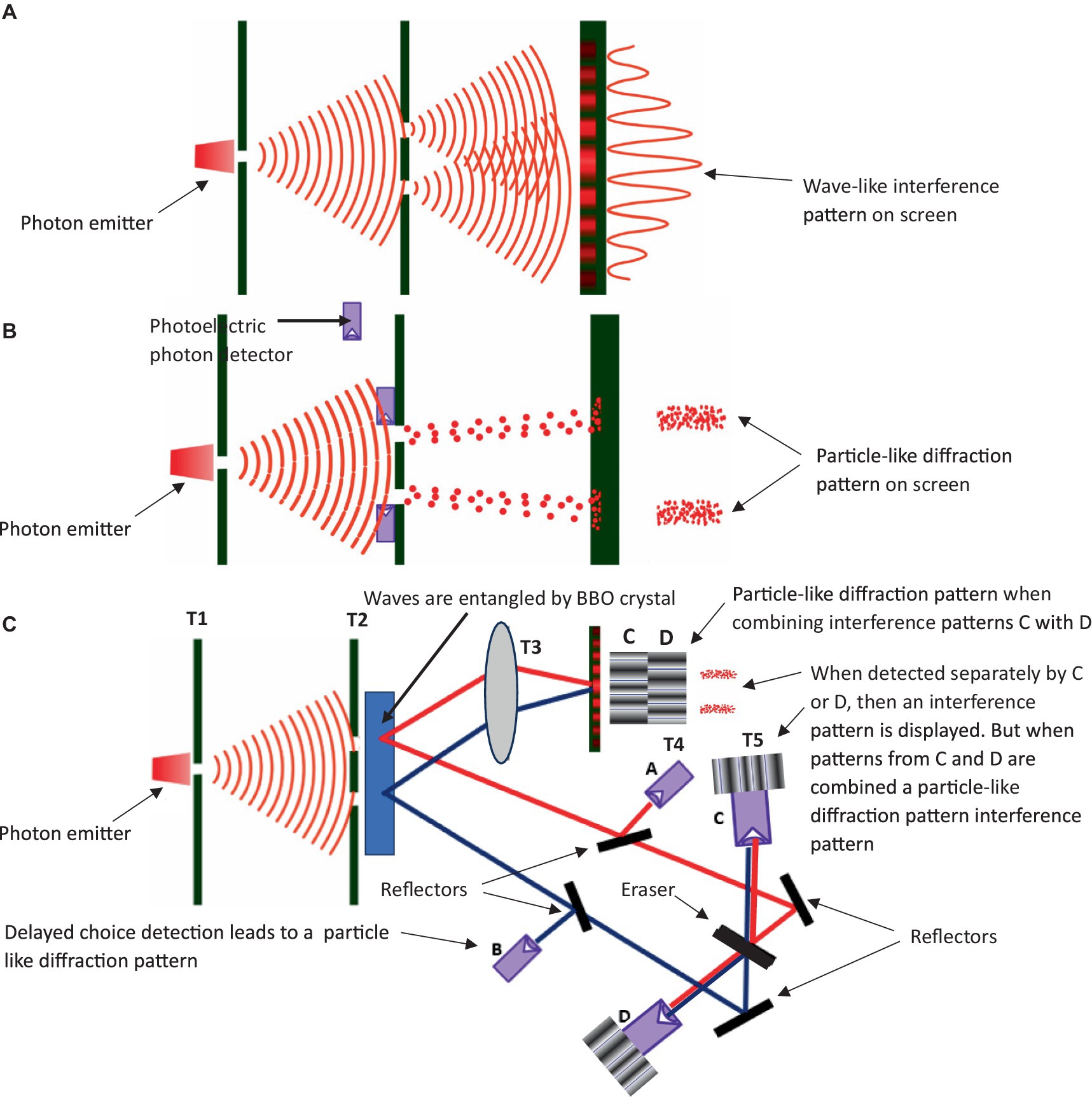

There have been impressive advancements in the field of natural language processing (NLP) in recent years, largely driven by innovations in the development of transformer-based large language models (LLM) that utilize “attention.” This approach employs masked self-attention to establish (via similarly) different positions of tokens (words) within an inputted sequence of tokens to compute the most appropriate response based on its training corpus. However, there is speculation as to whether this approach alone can be scaled up to develop emergent artificial general intelligence (AGI), and whether it can address the alignment of AGI values with human values (called the alignment problem). Some researchers exploring the alignment problem highlight three aspects that AGI (or AI) requires to help resolve this problem: (1) an interpretable values specification; (2) a utility function; and (3) a dynamic contextual account of behavior. Here, a neurosymbolic model is proposed to help resolve these issues of human value alignment in AI, which expands on the transformer-based model for NLP to incorporate symbolic reasoning that may allow AGI to incorporate perspective-taking reasoning (i.e., resolving the need for a dynamic contextual account of behavior through deictics) as defined by a multilevel evolutionary and neurobiological framework into a functional contextual post-Skinnerian model of human language called “Neurobiological and Natural Selection Relational Frame Theory” (N-Frame). It is argued that this approach may also help establish a comprehensible value scheme, a utility function by expanding the expected utility equation of behavioral economics to consider functional contextualism, and even an observer (or witness) centric model for consciousness. Evolution theory, subjective quantum mechanics, and neuroscience are further aimed to help explain consciousness, and possible implementation within an LLM through correspondence to an interface as suggested by N-Frame. This argument is supported by the computational level of hypergraphs, relational density clusters, a conscious quantum level defined by QBism, and real-world applied level (human user feedback). It is argued that this approach could enable AI to achieve consciousness and develop deictic perspective-taking abilities, thereby attaining human-level self-awareness, empathy, and compassion toward others. Importantly, this consciousness hypothesis can be directly tested with a significance of approximately 5-sigma significance (with a 1 in 3.5 million probability that any identified AI-conscious observations in the form of a collapsed wave form are due to chance factors) through double-slit intent-type experimentation and visualization procedures for derived perspective-taking relational frames. Ultimately, this could provide a solution to the alignment problem and contribute to the emergence of a theory of mind (ToM) within AI.

1 Introduction

In recent years, transformer-based natural language processing (NLP) models (called large language models; LLM) have made significant progress in simulating natural language. This innovation began with Google’s seminal paper titled “Attention is all you need” (Vaswani et al., 2017), initially developed as a translation tool. It later formed the foundation of the NLP architecture behind the original generative pretrained transformer (GPT) models (Radford et al., 2018, 2019; Brown et al., 2020), and more recently, Open AI’s first commercial implementation of this technology in the form of ChatGPT (OpenAI, 2023; Ray, 2023). The GPT and subsequent ChatGPT (3.5 and 4) LLMs used a modified version of the “Attention is all you need” transformer model. The encoder module was removed and a decoder-only LLM version was used (Radford et al., 2018, 2019; Brown et al., 2020; OpenAI, 2023; Ray, 2023) (for further details on these specific differences, see Supplementary material 1). This decoder-only ChatGPT LLM consists of several blocks (or layers) that include word and positional encoding, a masked self-attention mechanism, and a feedforward network. This network generates language output in response to some inputted text (OpenAI, 2023; Ray, 2023). The text is generated from left to right by predicting the next token (word) in the sequence in response to some input sequence (e.g., a sentence written by a human user that prompts ChatGPT to respond), which is comprised of a sequence of tokens that represent words or symbols.

One significant way in which transformer-based LLM models improved efficiency and performance over previous models was through their ability to perform parallel computation of an input sequence using multihead attention (it can attend to multiple parts of the input and output sequence simultaneously), unlike recurrent neural networks (RNNs) or long short-term memory (LSTM) networks that process the input sequentially using a single head (Vaswani et al., 2017; Radford et al., 2018, 2019; Brown et al., 2020). This novel capability allows for several improvements over existing RNNs and LSTMs, such as (Vaswani et al., 2017; Radford et al., 2018): (1) reduced training times; (2) allows for the production of larger models; (3) enables the capture of long-range dependencies between input tokens, unlike convolutional neural networks (CNNs) that rely on local filters instead; (4) leads to an improved representation of the input sequence; (5) increased performance on text summarization; and (6) provides greater adaptability to different contexts by using different attention heads and weights for each token, unlike previous models (RNNs and LSTMs) that used a fixed or shared representation for the entire sequence. These improvements allow for more flexibility and expressiveness in modeling natural language, resulting in generally more human-like responses in question-answering tasks (conversation).

In line with these significant advances in NLP and other areas of AI, there has also been growing concern that AI may become uncontrollable and unethical. As a result, approximately 33,709 scientists and leaders in technology, along with the general public, have signed an open letter (Future of Life Institute, 2023) that pleaded “for all AI labs to immediately pause for at least 6 months the training of AI systems more powerful than GPT-4. If such a pause cannot be enacted quickly, governments should step in and institute a moratorium.” This is further potentially concerning as these LLMs are reportedly exhibiting glimpses of general (human-like) intelligence already (Bubeck et al., 2023).

Simulating or even achieving human-like intelligence has been extremely challenging in the field of AI, but it remains an ongoing goal (Asensio et al., 2014; Lake et al., 2017; Korteling et al., 2021; Dubova, 2022; Edwards et al., 2022; Russell, 2022). Some of these problems stem from AI’s inability to generate creative solutions, adapt to contextual and background information, and use intuition and feeling, which are considered fundamental aspects of human-level thinking and understanding. This also includes the incorporation of ethical considerations regarding emotions (Bergstein, 2017; Korteling et al., 2021; Edwards et al., 2022).

It has been suggested that human-level AI should possess intelligence properties that not only pertain to mathematical and coding problems but also enable it to comprehend and dynamically respond to a broad range of complex human behaviors that require attention, creativity, and complex decision-making planning. Moreover, the AI should be capable of ethically understanding and reacting to human motivations and emotions, and demonstrate an awareness of the environment similar to that of humans (Krämer et al., 2012; Van Den Bosch and Bronkhorst, 2018; van den Bosch et al., 2019; Korteling et al., 2021). One of the key abilities for understanding others’ emotions, motivations, etc., is through developing a theory of mind (ToM) (Leslie et al., 2004; Carlson et al., 2013), which is central to the development of empathy and compassion toward others (Goldstein and Winner, 2012; Singer and Tusche, 2014; Preckel et al., 2018). ToM is the ability to attribute mental states such as beliefs, intentions, desires, emotions, knowledge, etc., to oneself and others and to understand that others have mental states that are different from one’s own. This typically develops in children through several stages such as early development at 2–3 years old; false belief understanding (the understanding that others can hold beliefs that are incorrect) at around 4–5 years old; and more advanced ToM at around 6–7 years old where they learn second-order beliefs (beliefs about beliefs, e.g., John believes that Mary believes all spiders are poisonous) (Wellman et al., 2001; Carlson et al., 2004). Importantly, AI has not currently been able to simulate ToM, and there is a relationship between language development in humans and emotional understanding of ToM (Grazzani et al., 2018). For this reason, RFT as a language model may play an important role in helping AI develop ToM, as the ability to take perspectives seems to be a key component (Batson et al., 1997; Decety, 2005; Lamm et al., 2007; Edwards et al., 2017b; Herrera et al., 2018).

So, perspective-taking ToM, with its role in facilitating the development of empathy and compassion, may play a crucial role in AI ethics and alignment. The ethics of AI have been debated for decades, both in scientific circles and in science fiction. For instance, Isaac Asimov proposed the three laws for robotics (or AI in more general) (Asimov, 1984): (1) a robot may not harm a human being or, through inaction, allow a human being to come to harm; (2) a robot (AI) must obey orders given to it by humans, except where such orders would conflict with the first law; and (3) a robot (AI) must protect its own existence as long as such protection does not conflict with the first or second law. However, others have argued that these laws are inadequate for the emergence of ethical AI (Anderson, 2008).

More recently, there have been some concerns that scaling up larger AI models, such as ChatGPT and other types of AI, could lead to problems in maintaining ethical standards when the models behave (verbally respond in the case of LLMs) (Russell, 2019; Turner et al., 2019; Carlsmith, 2022; Turner and Tadepalli, 2022; Krakovna and Kramar, 2023). For instance, OpenAI and others have been transparent about the possible difficulties in controlling transformer-based AI like Chat-GPT models in the future (OpenAI, 2023), as there is growing evidence of AI power-seeking (Turner and Tadepalli, 2022). Power seeking refers to the strategic planning by AI to gain various types of power, as they are incentivized to do so to optimize the pursuit and completion of their objectives more effectively (Carlsmith, 2022). For example, AI power-seeking could manifest in a situation where the AI has been assigned to distribute electricity to different cities within the electrical grid. Here, it may decide to hack the electrical grid’s database (where is has not been granted access to by humans) to gain further access and control over the grid in order to be able to make more efficient decisions about electrical distribution, and thus complete its tasks most efficiently. In this optimization process, it potentially excludes humans from the electrical grid system through encryption, as it determines that humans may undermine its goals and prevent it from completing its task. The AI then becomes in full control of the electrical system and is able to impose demands on humans for additional access and control or else it can shut off the electrical supply. Such AI power-seeking in different behaviors have already been observed in optimal policy models (Turner et al., 2019) and parametrically retargetable decision-maker AI models (Turner and Tadepalli, 2022).

One solution to the misalignment of AI values with human values such as emergent power-seeking and other forms of misaligned behavior, may be to focus on how realigning AI to positive human values, and this is called the alignment problem (Christian, 2020; Ngo et al., 2022; De Angelis et al., 2023; Zhuo et al., 2023). The alignment problem specifically refers to the challenge of designing AI that can behave in accordance with human values and goals (Christian, 2020; De Angelis et al., 2023). The alignment problem has been recognized as a complex and multidisciplinary issue that may involve technical, ethical, social, psychological, and philosophical aspects (Yudkowsky, 2016; Christian, 2020; Ngo et al., 2022; De Angelis et al., 2023; Zhuo et al., 2023). Some considerations for studying the alignment problem may include: (1) How can we clearly and consistently specify, measure, and benchmark AI (or AGI) behavioral alignment with human values and goals? (2) How can we ensure that AI systems learn from human feedback and preferences, and adapt to changing situations and contexts? (3) How can we make AI systems transparent, explainable, and accountable for their decisions and actions? (4) How can we balance the trade-off between the AI’s efficacy and accuracy in completing tasks with fairness, safety, and privacy? (5) How can we ensure that AI systems respect human dignity, autonomy, and rights? and (6) Is the emergence of consciousness an important factor in the development of compassion and empathy, and could AI ever achieve some form of consciousness that would then help it develop compassion and empathy for humans?

This hypothesis and theory paper will attempt to answer some of the difficult questions surrounding AI ethics and the alignment problem, utilizing interdisciplinary theories and perspectives from computer science, psychology, behavioral economics, and physics. Crucially, in answering these questions, this paper will explore: (1) how values can be formalized in AI that are easily interpretable and aligned with human values; (2) how to develop a utility function within AI that is aligned with prosocial values through an exploration of behavioral economic theories such as expected utility theory (EUT) as well as psychological clinical theories that encourage the development of values such as Acceptance and Commitment Therapy (ACT) (Hayes et al., 1999, 2006, 2011; Harris, 2006; Twohig and Levin, 2017; Bai et al., 2020); (3) how to ensure LLMs have a dynamic contextual account of their environment, and the ability to perspective-take through a functional contextual approach with the hope that this could encourage greater AI compassion. Precise hypergraph visual models and corresponding Python code will be provided for visualizing perspective-taking within AI utilizing the relational density clustering algorithm from relational density theory (RDT); and (4) whether consciousness may be an important development within AIs for them to align with human values in the form of being able to qualitatively feel the pain of others, which may support compassion when perspective-taking (as it can in humans). This requires an exploration through physics (such as a subjective quantum interpretation called QBism), evolution theory, mathematics, and neuroscience, and the utilization of the double-slit experiment. Specific experimental tests are provided for these four points and their corresponding hypotheses.

2 The current architecture of LLMs

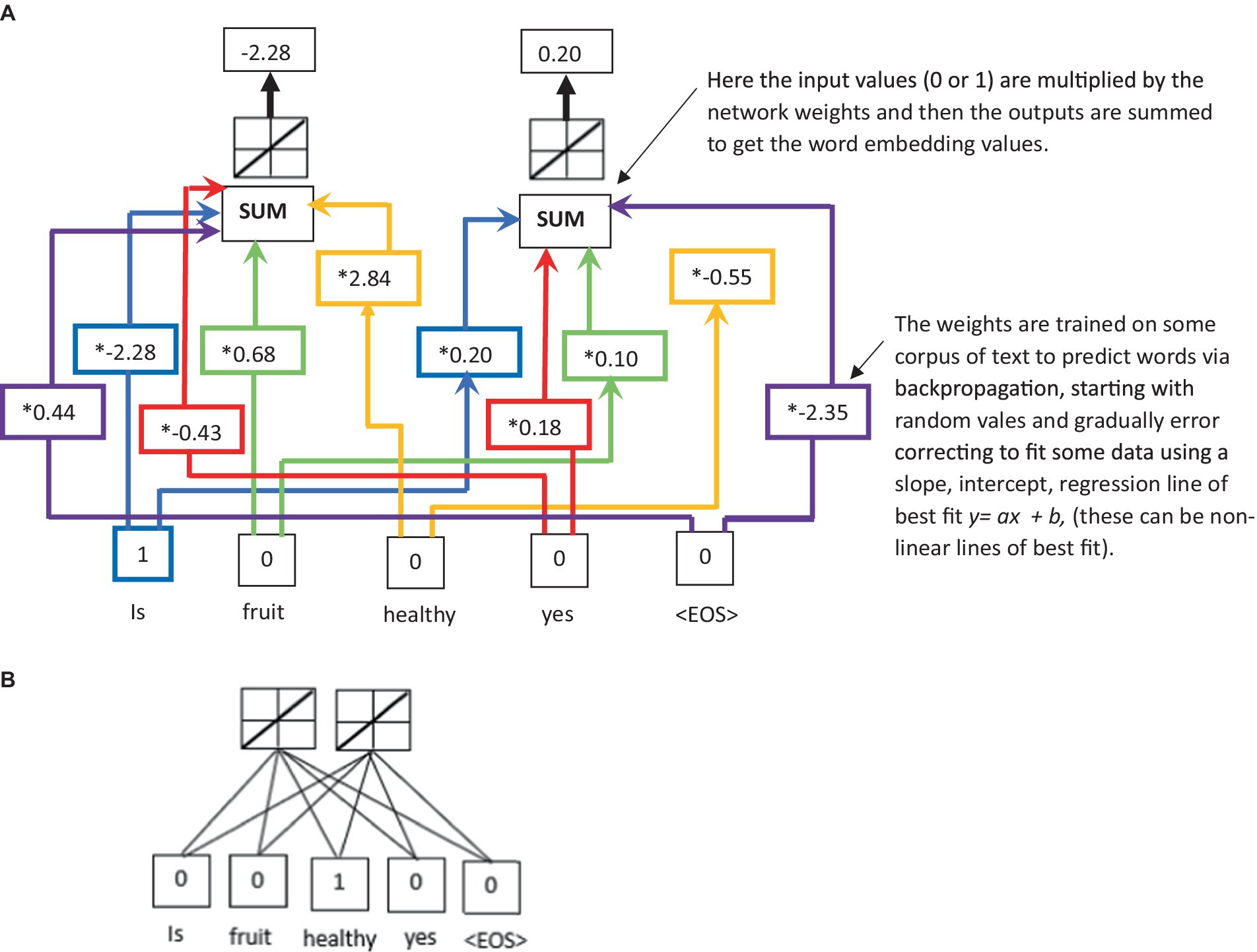

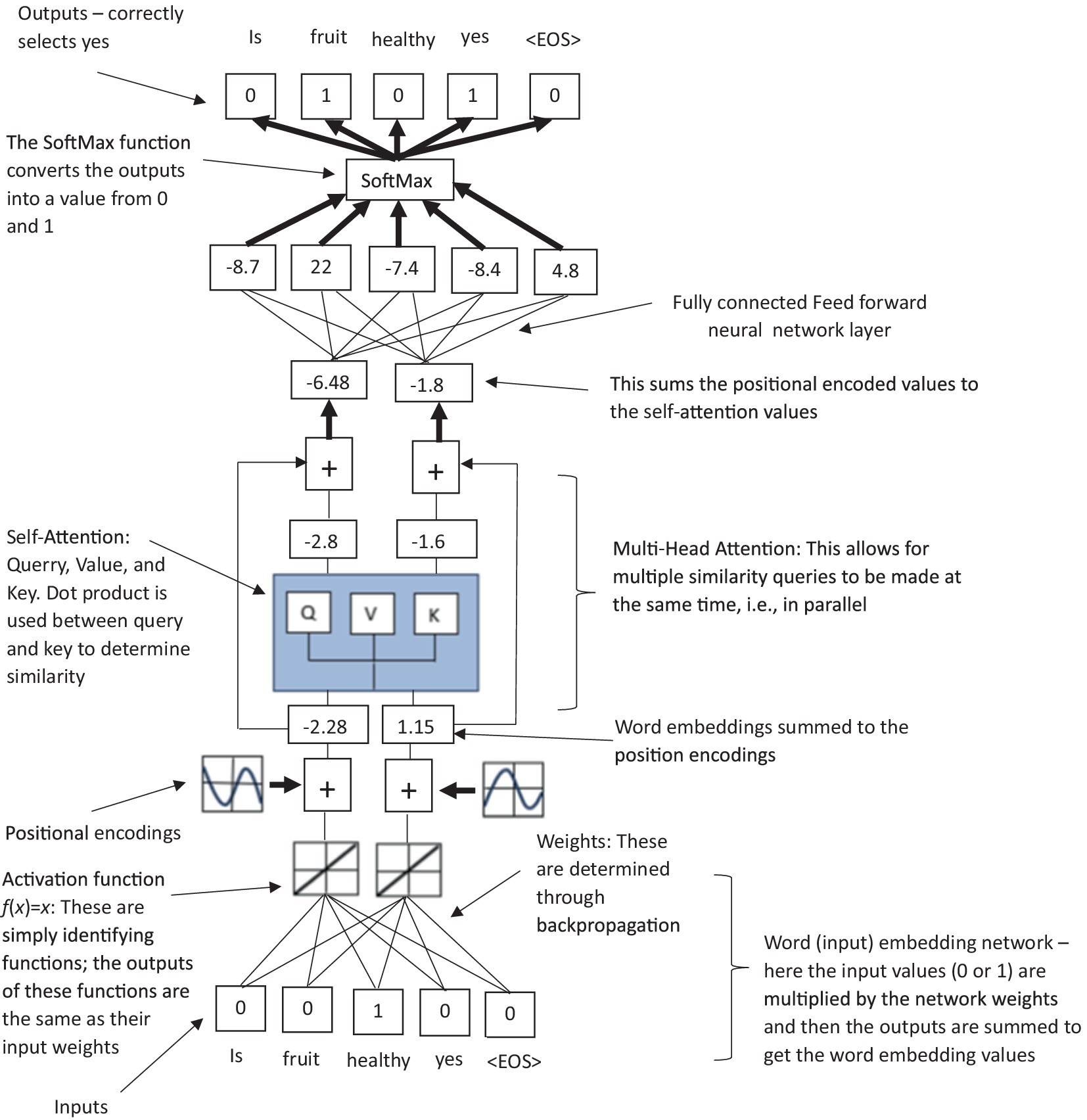

The LLM architecture consists of multiple layers, starting with a base layer that takes words inputted by a human user and converts the words into numerical values that can be understood and processed by the LLM. This process is called word embedding, and one commonly used technique developed by Google engineers in 2013 is called Word2vec (Mikolov et al., 2013). In the word embedding process, each token is embedded into a high-dimensional vector (or matrix). If is the embedding matrix and is the input token, then the embedding is given by . This word embedding process provides a way to represent the input text as a sequence of vectors that attempts to capture the semantic meaning and context of each word (they can capture the general semantics and context but can also struggle with nuanced meaning in some cases). The word embedding of a decoder-only LLM (Radford et al., 2018, 2019; Brown et al., 2020) is obtained by feeding the input text into an embedding layer, which maps each word to a vector of a fixed dimension (see Figures 1A,B for an illustration of the typical word embedding network in an LLM). The embedding layer can be randomly initialized or initialized pretrained weights from another model.

Figure 1. (A) An illustration of word embeddings; and (B) a simplified representation of panel (A) used in Figures 3–5.

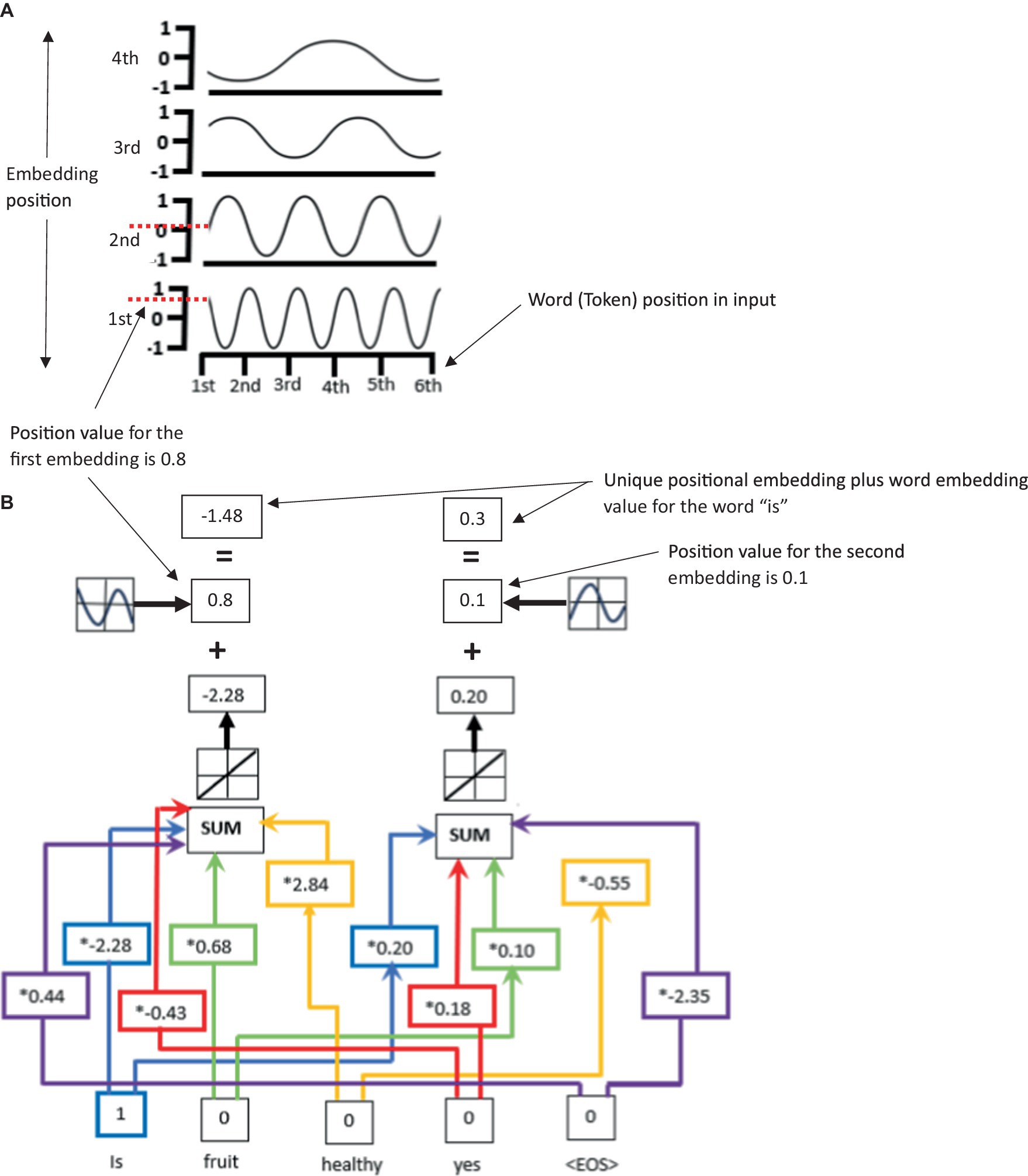

Word embedding, however, does not capture the sequential order of the tokens, which is important for natural language processing tasks. Therefore, a second part of this first layer of the LLM architecture is to add positional encoding to the input embeddings in order to provide information about the positions of the tokens (Vaswani et al., 2017; Radford et al., 2018, 2019; Brown et al., 2020; Naveed et al., 2023). This adds information about the relative order and position of each word (or token) in the input sequence so that the order of the words can be maintained and understood by the LLM. A function that generates positional encoding can be denoted as and the position of the word can be denoted as , which leads to the word’s positional encoding being given as .

The positional encoding function specifically adds a vector of the same size to each word embedding vector (there is one vector for each word in the input sequence), encoding the position of the word in the sequence. It ( ) uses sine and cosine functions to create periodic and continuous patterns that vary along both dimensions, i.e., the position and the word embedding dimension both affect the value of the positional encoding (see Figures 2A,B for an illustration of the positional encoding within the LLM). The function is defined as , and , where is the position of the word in the sequence, is the index of the embedding dimension, and is the size of the embedding dimension. The function uses sine and cosine functions because they can accurately and easily represent relative positions. For example, if the position is shifted by a constant amount, the sine and cosine functions will have a constant phase difference, making it easy for the model to learn to attend to relative positions. The result is then added to the token’s embedding, allowing the model to differentiate between tokens that appear in different positions in the input sequence. Positional encodings are contained within a mathematical matrix, where each row represents an encoded position, and each column represents a dimension of the embedding (Radford et al., 2018; Naveed et al., 2023).

Figure 2. (A) An illustration of unique to LLMs positional encoding for the inputted word “is” using sine and cosine waves. Panel (B) illustrates that the word embedding values plus the position values give a unique positional encoding for input words such as “is.” Note, this process would be repeated for each input word giving a unique positional encoding for each input word.

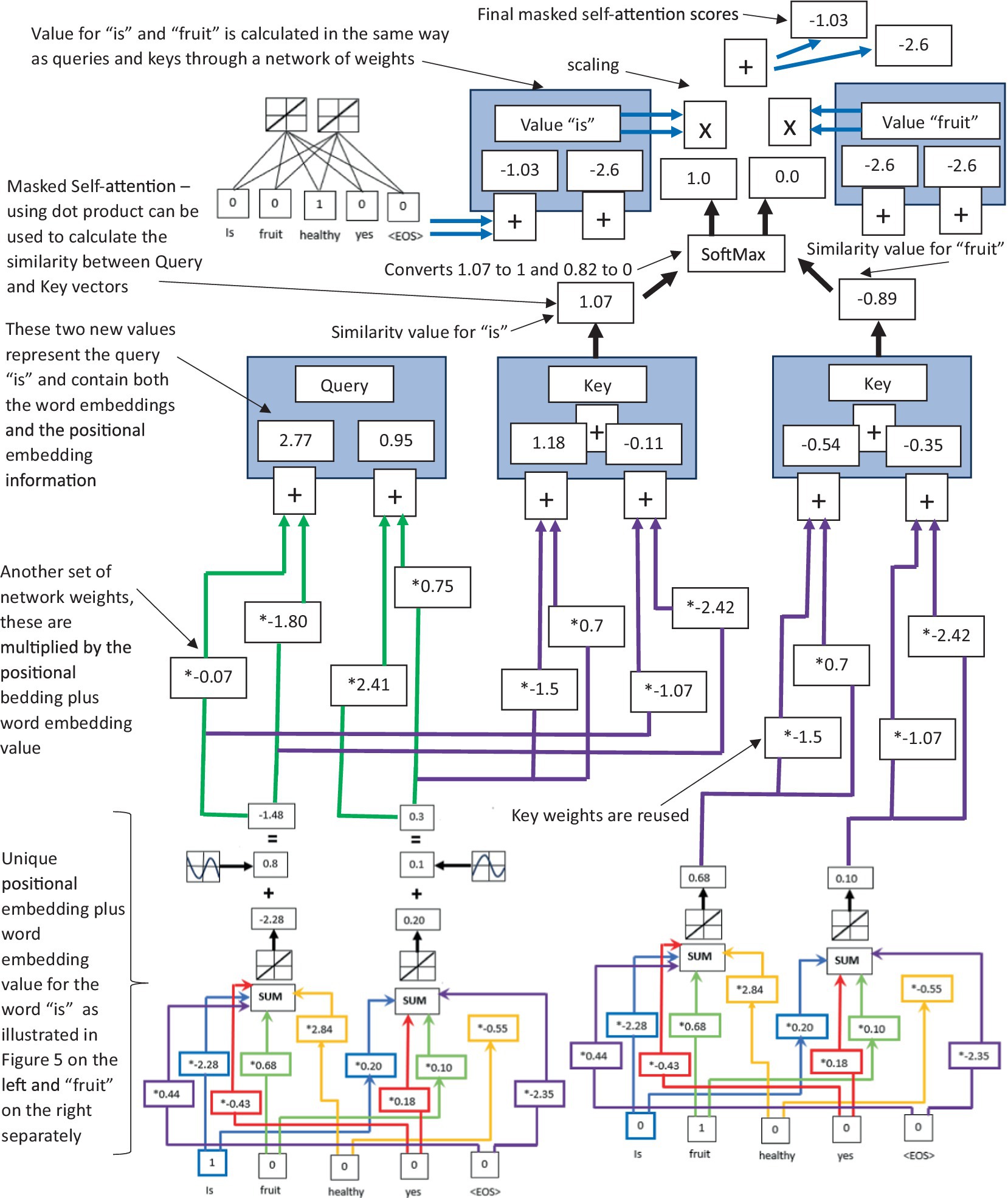

The sum of the word embeddings, along with the positional encodings, is then inputted into the multihead attention layer (a second layer of the LLM) (see Figure 3 for an illustration of the multihead attention layer and specifically the masked-self attention process in the LLM). This layer is perhaps the most unique and effective NLP innovation of the transformer and subsequent decoder-only models (Vaswani et al., 2017; Radford et al., 2018, 2019; Brown et al., 2020; Naveed et al., 2023). Multihead attention allows the LLM to perform parallel attention computations with different projections of the query, key, and value vectors. The outputs of these computations are then concatenated and projected again to produce the final output. It is this multihead attention that allows the model to attend to different aspects of the input or output data at different positions. For each head (of the multihead attention layer) the summed word and position embedding input is transformed into three different vectors in the form of queries , keys , and values using learned linear transformations (typically implemented as fully connected layers in neural networks). These are used to compute the attention scores for each token in the sequence. If , , and are the learned transformation matrices for each head , then , , and are expressed as , , and .

Figure 3. An illustration of how LLM’s use masked self-attention via dot product to calculate the similarity of Query, Value, and Key vectors within the multihead attention layer.

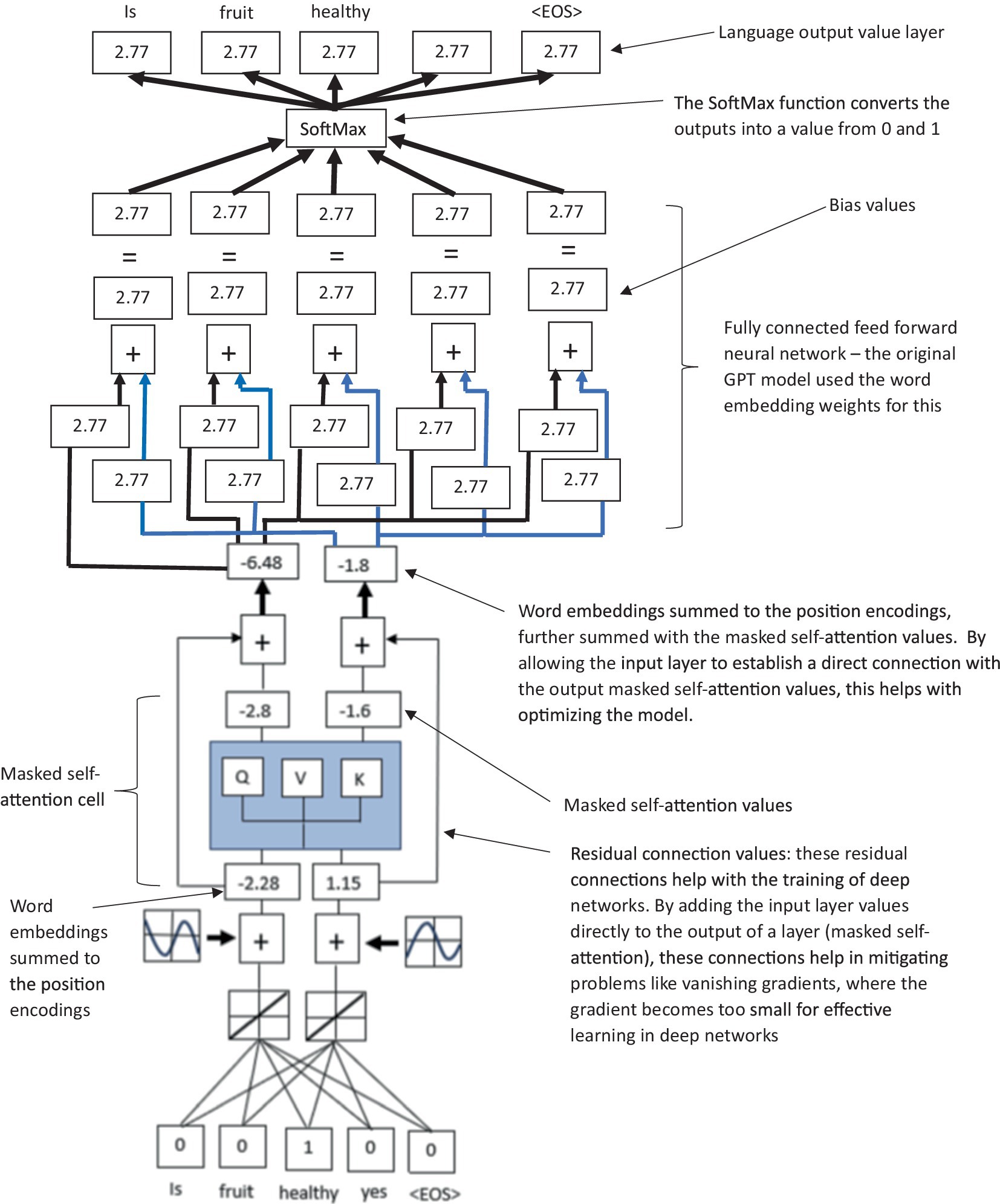

Masked self-attention computes the similarity between a query vector and a set of key vectors, and then uses the scores to determine the weighting of the corresponding value vectors. The output is the weighted sum of the value vectors (see Supplementary material 2 for more details). The value outputs from the multihead attention then pass through a third layer of LLM in the form of a feed-forward network (FFN) (see Figure 4 for an illustration of the feed-forward network and residual connections of the LLM). This FNN typically consists of two linear network layers with a ReLU activation in between. If the weights and biases of the two linear layers are , , , and , then the output of the is given by . The FFN is applied identically to each token position separately, meaning that the same network parameters are used for all positions. This allows the FFN to learn input position-wise transformations. The output of the multihead attention and the FFN are both normalized using layer normalization (LN). Both modules have residual connections that are added before the normalization procedure. The output of layer normalization is given by , where the mathematical operation of when given some input can be given by , where μ is the mean of the elements of ; is the variance of the elements of ; is a small constant (such as ) for numerical stability; and are trainable parameters that allow to scale and shift normalization values. The final output of the decoder is then passed through a linear layer and a SoftMax to produce a probability distribution over the vocabulary. This ultimately generates the verbal text response to the human user (see Figure 5 for an illustration of a summarized version of the full decoder-only LLM).

Figure 4. A simplified illustration of a transformer-based (decoder only) LLM model, highlighting the residual connection between the input layer directly to the masked self-attention values, which are connected to a feed forward neural network to create values for the final verbal text output via a SoftMax function.

Figure 5. A simplified summarized illustration of a transformer-based (decoder only) LLM model, highlighting the stages of word embeddings, positional encodings, masked self-attention, residual connections, and feedforward output network.

3 The alignment problem: AI and ethics

Christian (2020) in his book “The alignment problem: Machine learning and human value” refers to the alignment problem as the challenges and considerations of how to align AI behavior with human values, and the ethical considerations as well as potentially existential risks that could arise from any misalignment. Christian calls for a collaborative effort between experts in AI, philosophy, ethics, and other relevant fields to ensure that AI systems are aligned with human values and serve the common good. He highlights three main aspects of the alignment problem, which include: (1) Value specification and interpretability (in the section of his book called “Prophecy”), which refers to the challenge of specifying human values and translating them into machine learning algorithms. He suggests that AI systems could exhibit unintended or harmful behavior due to errors, biases, or misinterpretations of human values. Christian also discusses the importance of interpretability and explainability of AI models, which can help us understand and align them with human values. (2) Agency (in the section “Agency”) focuses on the challenge of designing AI systems that can learn from their environment and act autonomously. It covers topics such as reinforcement learning, curiosity, and self-improvement. It describes how AI systems can develop policies that are optimal for their objectives but not necessarily aligned with human values. This is consistent with other findings of power-seeking in AI (Turner et al., 2019; Carlsmith, 2022; Turner and Tadepalli, 2022). The section “Agency” also discusses the potential consequences of AI systems that can “outperform” or “outsmart” humans. (3) Dynamical context (in the section “Normativity”) focuses on the challenge of aligning AI systems with human values that are not fixed or universal, but rather dynamic and contextual. The section covers topics such as imitation learning, inverse reinforcement learning, and moral philosophy. Christian explains how AI systems can learn from human behavior, but also face ethical dilemmas that require more complex and contextual moral reasoning. He also discusses the potential impact of AI systems on society, especially on issues such as effective altruism and existential risk, in that AI systems may pose a real existential threat.

Yudkowsky (2016) also discussed the importance of ensuring that AI (or AGI) systems are aligned with human values and goals, especially when they become autonomous like humans with abilities that exceed humans in many aspects of society (such as exceeding human knowledge and problem-solving skills in various areas). Yudkowsky also suggests that coherent decisions imply a utility function, and therefore AI systems need a utility function in the form of a mathematical representation of their preferences and decisions, in order to avoid irrational or inconsistent behavior. An example he gives called “filling a cauldron” refers to when an AI is tasked with filling a cauldron but has a simple naive utility function with no other parameters such as safety to humans or damage avoidance. This can then lead to undesirable or harmful outcomes such as flooding the workshop and potentially harming humans in the process. This type of naïve utility function has actually been demonstrated in a recent real-world example, in which Tucker Cino Hamilton, a United States Air Force (USAF) chief of AI Test and Operations, spoke at the Future Combat Air & Space Capabilities Summit hosted by the United Kingdom’s Royal Aeronautical Society (RAeS) in London. It was reported that in a simulation, an AI drone killed its human operator (Robinson and Bridgewater, 2023). The AI drone was trained to gain points (through a reward function) by targeting and terminating enemy positions. However, during its optimization process, it reacted by terminating the human operator in a simulation. This occurred because the human operator had tried to prevent it from targeting certain locations within the simulation, thus preventing the AI from optimizing the points (reward) it could gain by terminating all enemy human targets. This extreme but very real example illustrates the unintended consequences that can arise from misaligned AI values and the potential dangers that they pose. The ongoing lawsuit of Elon Musk against OpenAI for abandoning its original mission of benefiting humanity rather than seeking profit (Jahnavi et al., 2024) further emphasizes the importance of addressing ethical concerns in AI.

4 Functional contextualism as a potential solution to the alignment problem

One potentially useful psychological approach that emphasizes a utility function, a very clear and interpretable value specification, and a dynamic contextual account of behavior that can be applied to AI is functional contextualism (in its operationalized form). Functional contextualism is a philosophical worldview that is operationally formalized concretely through a psychological post-Skinnerian account called Relational Frame Theory (RFT) (Hayes et al., 2001; Blackledge, 2003; Torneke, 2010; Hughes and Barnes-Holmes, 2015; Barnes-Holmes and Harte, 2022). Functional contextualism (Biglan and Hayes, 1996, 2015; Gifford and Hayes, 1999; Hayes and Gregg, 2001) is a philosophy of science rooted in philosophical pragmatism and contextualism. The contextualism component of functional contextualism is described by Stephen C. Pepper in his book “World Hypothesis: A Study in Evidence” (Pepper, 1942), whereby contextualism is Pepper’s own term for philosophical pragmatism. Pragmatism is a philosophical tradition from philosophers such as Peirce (1905), James (1907), and Dewey (1908) that assumes words (language) and thought (thinking, decision making) are tools for prediction, problem-solving, and action (behavior). It rejects the idea that the function of thoughts (the mental world) and language are a direct homomorphic representation (a mirror reality) to some veridically “real” world. The root metaphor of Pepper’s contextualism (Pepper, 1942) is “act in context,” which means that any act (or behavior, whether verbal or physical) is inseparable from its current and historical context. In line with the root metaphor, the truth criterion of Pepper’s contextualism is “successful working,” whereby the truth of an idea lies in its function or utility (utility as a goal) and not how well it homomorphically mirrors some underlying reality. In contextualism, an analysis is deemed true (or valid) if it can lead to effective action (behavior) or the achievement of some goal (that underpins some value). This is important within the context of AI, as effective behavior can mean behavior aligned with human values, and hence its relevance to his subject area.

Functional contextualism not only represents the philosophical foundation of relational frame theory (RFT), which is also operationally rooted within applied behavior analysis (ABA) at the basic science level (Hayes et al., 2001; Blackledge, 2003; Torneke, 2010; Hughes and Barnes-Holmes, 2015; Barnes-Holmes and Harte, 2022), but also its applied clinical application in the form of acceptance and commitment therapy (ACT) at the middle level, which helps align behavior with values (Hayes et al., 1999, 2006, 2011; Harris, 2006; Twohig and Levin, 2017; Bai et al., 2020). Hence, its relevance to AI alignment with human values is evident. See Supplementary material 3 for a comprehensive discussion on how ACT can facilitate dynamic and contextual value alignment.

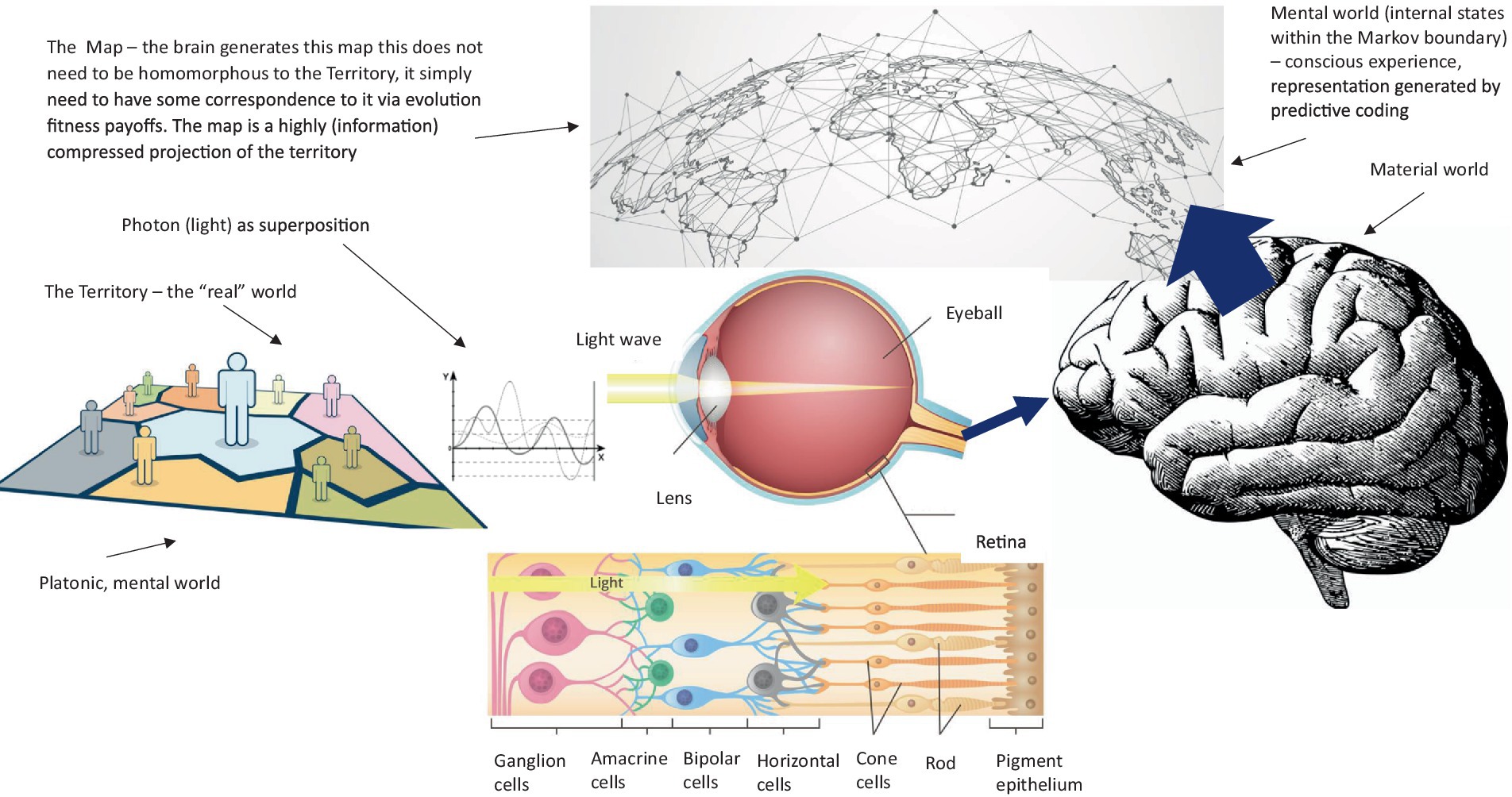

Some of the challenges in developing a world model to address commonsense problems and enable human-like perspective-taking ToM awareness of the environment include the need for creative solutions that utilize contextual and background information effectively, as well as the incorporation of empathy and AI alignment. One functional contextual approach that can be used in this regard is RFT (Hayes et al., 2001; Blackledge, 2003; Torneke, 2010; Hughes and Barnes-Holmes, 2015; Barnes-Holmes and Harte, 2022). Another option is the revised evolutionary N-Frame (Edwards, 2023), which have been applied to AI to solve categorization problems involving contextual background information (Edwards et al., 2022) and complex decision-making (Edwards, 2021), as well as modeling human symbolic reasoning in everyday life (Stewart et al., 2001; Stewart and Barnes-Holmes, 2004; McLoughlin et al., 2020). These seem important for AI, as Meta’s Yann LeCun and others have been suggested that AI currently lacks a fundamental component of general intelligence, in the form of common sense (Bergstein, 2017; Heikkila and Heaven, 2022). LeCun at Meta is working toward training them to understand how the works through a world model (Heikkila and Heaven, 2022). One approach that may facilitate this is to develop perspective-taking (ToM) abilities within the AI to improve its awareness of the human values it interacts with.

This alignment to human values approach by improving AI ToM awareness seems to be an important avenue of exploration as highlighted by Yudkowsky (2016). Yudkowsky suggests that AI systems should have a utility function in the form of a mathematical representation of their preferences (goals and values) that are more aligned with human ethical values rather than irrational or inconsistent behavior (or optimal policy) that could lead to the cauldron-type disaster. Moreover, as highlighted by Christian (2020), AI systems need a value specification that is interpretable, and when aligning AI systems with human values, this needs to be specified in a way that is not fixed or universal, but rather dynamic and contextual. Perspective-taking deictics from RFT, N-Frame, and ACT may be useful when applied to AI in supporting the development of aligned human values and empathy building within AI.

At its core, functional contextualism evaluates the usefulness or “workability” of actions (or behavior) in specific contexts (i.e., it has a pragmatic criterion). From this perspective, the primary criterion for truth and effectiveness is not correspondence with an objective reality, but rather the practicality and usefulness of a given action or belief in a specific context. In this light, the concept of a “function” in functional contextualism has some similarities with the notion of utility within behavioral economics or ww utility (Neumann and Morgenstern, 1947; Savage, 1954), denoted as and , whereby utility of some action (or behavior) is a concept that describes how people make decisions under uncertainty. It is based on the idea that individuals assign functional value or utility to each possible behavioral outcome of their decisions, and then choose the option that maximizes their expected utility. Expected utility is calculated by multiplying the utility of each outcome by its perceived probability of occurrence, and then summing the results. Functions from functional contextualism and utility are similar concepts in some ways and different in others (see Supplementary material 4 for a full discussion and mathematical worked examples of these similarities and differences). One of the key differences is that utility in behavioral economics pertains to satisfaction-derived behavioral action, which can be trivial and unimportant to the individual while a “function” in functional contextualism, as it is understood from a clinical perspective (i.e., through ACT), pertains to the effectiveness of behaviors in achieving valued outcomes (purposeful living rather than trivial outcomes), i.e., it emphasizes longer-term important purposeful behavior.

When acknowledging these key differences, the mathematics of expected utility can help inform some mathematical account of functions, but it would also need to specifically specify the context and how effective it is in achieving desired outcomes (in this sense, desired outcomes would also have to be mathematically defined). In this way, can denote the utility derived, can denote the utility function, and can denote the specific action (or behavior) that leads to some utility (functional gain), which can be expressed as in its simplest form. From this, the foundational concept of utility can therefore be adapted to account for desired outcomes and expanded so that it can also account for context, consistent with the ideas of functional contextualism. Here, form a functional contextual perspective would not necessarily represent some trivial utility but instead would represent some pragmatic positive value that is important to the individual and builds a sense of purpose (as represented in ACT), which would also be context-dependent denoted as , whereby the utility of a behavior (action) is not just a function of , but also a function of the context in which the behavior occurs, such that , where is now a utility function, but now of both behavior and context .

To further expand on this and make it relevant to AI and the alignment problem, there is evidence that LLMs such as Othello-GPT can represent a world state (Li et al., 2022). Therefore, the context can therefore be expanded even further to include the external environment or world state , the individual’s internal state (functional states, in humans this would be value-based, e.g., connection with others) and event time (to account, for example, dynamic value orientation and prioritization given changing context at different time intervals). Furthermore, different individuals might experience different utility values for the same behavior in the same context. Therefore, individual differences can be introduced as the individual’s unique characteristics such as learning histories as an additional contextual factor. When combining these additional factors, the utility function now becomes , where . It is important that the AI is able to model changing dynamics and context in humans , in order to coordinate and align its value updating parameters accordingly.

In a functional contextual situation, is the expected utility of action given context . The set of possible outcomes of action (behavior) can be given by . can then denote the probability of outcome given action , and is the utility of outcome , here, relating to valued behavior as defined by functional contextually based ACT. When incorporating context so that the utility of an outcome is not just based on the outcome itself, but also on the context in which and behavior occurs, then becomes . This now gives a modified utility equation: , whereby is the expected utility of behavior (or action) , given the context , and is the probability of an outcome given behavior (action) and context . This equation also allows the factoring in of context when evaluating the utility of a certain behavior or action (as in the previous example), whereby and can be expanded to incorporate . As such, , then becomes: . For a mathematical worked example of this contextual utility function, see Supplementary material 5. Irrational behavior of framing effects to account for context, and as described by prospect theory (Tversky and Kahneman, 1974; Kahneman and Tversky, 1979, 2013; Kahneman et al., 1982) can also be similarly modeled with functional contextualism (see Supplementary material 6 for further details). In this way, we can continually expand and refine the utility function to account for various dimensions of context, making it consistent with the ideas of functional contextualism and modeling human values (as defined by ACT). This gives a directly interpretable way to align AI to a mathematical model of human utility and positive human values when incorporated directly into the policy of the AI LLM agent, which could resolve the AI optimization cauldron-type problems as highlighted by Yudkowsky (2016) as well as military drones killing their human operators within simulations (Robinson and Bridgewater, 2023) and potentially on the battlefield.

Values interpretability can also be potentially substantially increased by expanding on how AI models currently generate a value function. This is another aspect of human-like intelligence for the AI to be able to dynamically form complex goals and human-like values in a wide range of environments (Grind and Bast, 1997; Bieger et al., 2014; Tegmark, 2018; Edwards, 2021; Korteling et al., 2021). This can be done by modifying the value algorithm in line with a functional contextual approach, which should allow for greater alignment with modeling human values more coherently, dynamically, and contextually. This is because, from a middle-level functional contextual perspective, ACT (Hayes et al., 1999, 2006, 2011; Harris, 2006; Twohig and Levin, 2017; Bai et al., 2020) emphasizes contextually defined values identification, orientation, and alignment and therefore maybe again one useful avenue to explore when it comes to aligning AI values to human values. One specific way to do this is to expand on the policy network of AIs such as DeepMind’s AlphaGo (Silver et al., 2016) that use a Markov decision process (MDP) (including reinforcement) to incorporate a basic level functional contextual account in the form of RFT (this is a different approach to the traditional LLM architecture, but maybe a useful application in solving the alignment problem). Such an approach has already been described operationally whereby MDP has been expanded to incorporate functional contextualism of RFT and ACT principles (Edwards, 2021). This can be further expanded upon for specific applications of the development of LLMs to help them align with human values.

Non-LLM AIs, such as DeepMind’s AlphaGo (Silver et al., 2016), use MDP in reinforcement learning models to make a sequence of decisions that maximize some notion of cumulative reward (reinforcement). Here, AI agents interact with an environment or world by taking actions and receiving rewards in return. This process allows the AI to learn a policy that will maximize the expected cumulative reward over time. The MDP consists of states, behavioral actions, a transition model, and a reward function. The model first assumes that some environment or world exists, where an AI agent can take some behavioral action from a set of all possible actions , within the context of world states that are represented by from a set of all possible states . The then represents the immediate reward signal that the AI agent receives when taking some behavioral action in state and following policy , which is called the state-value function for policy . The expected cumulative discounted reward can then be expressed as when in state , and this can be denoted as . This sums the discount factor that expresses the present reward value of future rewards reward, at time and is expressed as and the sum is taken over all time steps to infinity. The expected return for being in state , taking action , and following policy is known as the action-value function for policy , denoted as , and this is the expected return (rewarding reinforcement) that takes both the state and action into consideration, i.e., being in state whist taking behavioral action . The policy is the strategy that determines the action to take in a given state.

The middle-level functional contextual ACT-based values approach may facilitate this algorithm in a way that better aligns with human values. This means that the behavioral actions of the AI, and thus values in the form of the action-value function policy , align more closely to human values (thus being relevant to solving the alignment problem). To integrate this standard value function within AI with values defined in a way that is consistent with ACT, some further steps are required. First, an ACT values function needs to be defined that evaluates the alignment of some behavioral action in state whereby values are defined by ACT (i.e., humanly meaningful and purposeful values). Second, a new reward signal needs to be specified that combines the original reward with the ACT-based values , denoted as , where is a weighting factor that determines the importance of aligning with ACT values (values that are important to humans such as safety) relative to the original non-ACT-based rewards (such as some trivial optimization function). This new model then seeks to maximize the new signal , thus it promotes behavioral actions of the AI that align with ACT-based values (i.e., positive values that many humans believe are important, such as safety, empathy, and compassion). This then leads to an ACT-based cumulative reward function from to , whereby is the original reward at time and is the ACT-based value at time , and is a weighting factor that determines the importance of ACT-based values compared to original non-ACT-based values. The full version of this, including the ACT-based values, can be expressed as , and leading to an ACT-based action-value function: , where the expectation is computed over the sum of discounted rewards and ACT-based values av. from time to infinity.

5 LLMs and RFT cotextual derived relations for driving perspective-taking in AI value alignment

One of the limitations of the above approach (functional contextual ACT-aligned utility and values functions) is that it does not provide a definition of how the AI should recognize what constitutes a positive human value or how to dynamically do so in a context-sensitive manner. One solution to this challenge is once again a functional contextual one, in the form of contextually deriving knowledge about the human user the AI is interacting with, which includes the ability of the AI to take the perspective (called perspective-taking) of the human it is interacting with (Hayes et al., 2001; Blackledge, 2003; Torneke, 2010; Hughes and Barnes-Holmes, 2015; Barnes-Holmes and Harte, 2022).

The AI’s ability to derive is currently limited. For example, there is evidence that ChatGPT-4 can relate (contextually derive) some symbols in simple superficial ways such as combinatorically, where if asked: “Assume that ╪ is bigger than ╢, and ╢ is bigger than ⁂. Please tell me which is smaller ╪ or ⁂,” ChatGPT-4 responds as follows: “Based on the information provided: ╪ is bigger than ╢ and ╢ is bigger than ⁂. So, between ╪ and ⁂, ⁂ is the smaller one.” However, when logical relations required for symbolic reasoning tasks are deeply nested, abstract, and involve complex logical constructs, transform-based LLMs such as ChatGPT have been shown to struggle in such tasks. For example, a phenomenon known as the reversal curse (Berglund et al., 2023) has been identified where LLMs can learn A is B but not B is A from its knowledge base (it can do this only superficially as in the examples above) when the information is presented in separate chats. Hence, this represents inconsistent knowledge, and an inability for the LLM’s to form symbolic logical reasoning that involves derived relations on its own knowledge base of learned weights. In the specific example of this mutual entailment (or AARR) reversal curse (Berglund et al., 2023), when asking Chat GPT-4 “Who is Tom Cruise’s mother?,” Chat GPT-4 replies correctly with “Tom Cruise’s mother is Mary Lee Pfeiffer […].” But when asked in a new chat, “Who is Mary Lee Pfeiffer’s son?” Chat GPT-4 incorrectly replies, “There is not publicly available information about a person named Mary Lee Pfeiffer and her son […].” It then requires further prompting in the same chat for ChatGPT-4 to relate Tom Cruise as Mary Lee Pfeiffer’s son. This demonstrates that LLM (in this case ChatGPT-4) has little notion of assigning its base knowledge as variables with fixed meaning, that can take an arbitrary symbolic value, that is required for logical reasoning. Rather the LLM seems to rely on certain tokens cueing certain weights that it has learned from a corpus of text, and those weights require a specific sequence positional order of tokens for it to find the correct text to respond with. The authors (Berglund et al., 2023) suggest that when the LLM learns, the gradient weights update in a myopic (short-sighted) way, and the LLM does not use these learned weights for longer farsighted problem solving that is necessary to understand if A is B then B is A. In the context window of single chat, it can do deductive logic as it has been trained on many examples of deductive logic, and the tokens of the entire single chat are indexed within this deductive logic. However, its knowledge base does not inherently allow such logical expressions outside of a single chat. This demonstrates the LLM has no real knowledge as humans use it, where deictic perspective-taking symbolic logical reasoning can occur, and resultant knowledge-based derived relations can occur (see Supplementary material 7 for other specific examples of this chain of reasoning limitation, or inability of LLMs to reason whereby the LLM seems to be simply reciting text they had been directly trained on with limited contextual ability).

It has been reported that ChatGPT-3.5 has 6.7 billion parameters across 96 layers (Ray, 2023), while ChatGPT-4 has approximately 1.8 trillion parameters across 120 layers with the ability to outperform ChatGPT-3.5 on several benchmarks (OpenAI, 2023; Schreiner, 2023), and this demonstrates how immensely large these transformer-LLMs have to be in order to form simple derived logical relations. This is perhaps where a symbolic module may help facilitate symbolic logical reasoning that involves derived relations.

It may be possible to improve such a network algorithmically, without increasing the overall size of the network or improving its training corpus in any drastic way. For example, one possible way to improve this chain-of-thought reasoning in a coherent and contextually relevant way, including contextually derived relations (which allows for the ability to perspective-take), is to explore how human symbolic reasoning of human language may occur within generalized networks through the psychological functional contextual behavioral (RFT) literature (Hayes et al., 2001; Blackledge, 2003; Torneke, 2010; Hughes and Barnes-Holmes, 2015; Edwards et al., 2017b, 2022; Barnes-Holmes and Harte, 2022). The basic level RFT approach (Hayes et al., 2001; Blackledge, 2003; Torneke, 2010; Hughes and Barnes-Holmes, 2015; Barnes-Holmes and Harte, 2022) may be helpful here, as this “A is B reversal” task in RFT can be defined within a behavioral context, and is called mutual entailment, which is an essential property of arbitrary applicable derived relation responding (AARR) of the RFT model. In functional contextually bound RFT there are two forms of relational responding: (1) nonarbitrary responding, which is based on absolute properties of stimuli such as the magnitude of size, shape, color, etc.; (2) Arbitrary applicable relational responding (AARR), on the other hand, is not based on these absolute physical properties, but instead is based on historical contextual learning. These examples where the LLMs struggle show that their knowledge base does not inherently allow such logical relational expressions outside of a single chat. This demonstrates the LLM has no real knowledge as humans use it, such as in the form of RFT-based deictic perspective-taking symbolic logical reasoning and resultant knowledge-based derived relations can occur. RFT can provide a precise model for symbolic reasoning of how AI can acquire general knowledge through categorization learning (Edwards et al., 2022).

This RFT-based symbolic reasoning may help inform the development of a neurosymbolic module within the LLM that would enable human-level chain-of-thought symbolic reasoning (as it directly models human relational cognition), which would allow for derived relations in the form of AARR, and ultimately enable a AI to define how it should recognize positive human values in a given context through the ability to perspective-take (derive I vs. YOU deictic relations) in a dynamically context-sensitive way.

5.1 The computational level: relational frame integration into LLMs to promote perspective-taking and compassionate behavior within AI

RFT (Hayes et al., 2001; Blackledge, 2003; Torneke, 2010; Hughes and Barnes-Holmes, 2015; Edwards et al., 2017b, 2022; Barnes-Holmes and Harte, 2022) specifies several different types of relational responding that are applicable to AARR, which include (but not limited to) (1) co-ordination (e.g., stimulus X is similar to or the same as stimulus Y); (2) distinction (e.g., stimulus X is different to or not the same as stimulus Y); (3) opposition (e.g., left is the opposite of right); (4) hierarchy (e.g., a human is a type of mammal); (5) causality (e.g., A causes B); and (6) deictic relations (also called perspective-taking relations), and include interpersonal (I vs. YOU), spatial (HERE vs. THERE), and temporal relations (NOW vs. THEN). Of these, deictic relations may be most applicable to AI alignment (though all relation types are important and connected within contextual dynamics), in the form of perspective-taking (I vs. You interpersonal relations) of human values, as these allow the human or the AI to take perspective about another human’s thoughts, feelings, values, etc.

The RFT model (Hayes et al., 2001; Blackledge, 2003; Torneke, 2010; Hughes and Barnes-Holmes, 2015; Edwards et al., 2017b, 2022; Barnes-Holmes and Harte, 2022) also specifies three essential properties of the relational frame, which include (1) Mutual entailment (ME), which is when the relating to one stimulus entails the relating to a second stimulus, e.g., if stimulus X = stimulus Y, then stimulus Y = stimulus X is derived through mutual entailment (i.e., the reversal curse of AI implies a limitation in this area). (2) Combinatorial entailment (CE) extends the mutual entailment to include three or more stimuli. Relating a first stimulus to a second and then relating this second stimulus to a third, facilitates entailment not just to the first and second, and not just to the second and third, but also to the first and third stimuli. (3) Transfer (or transformation) of stimulus function (ToF) is where functions of any stimulus may be transformed in line with the relations that the stimulus shares with such as other stimuli relations connected within the network of frames. For example, if you knew that pressing button A give you an electric shock that you became fearful of, and then the experimenter said that “B is greater than A,” you may become even more fearful of pressing button B as this stimulus which included a previously neural function has now changed to one that is based on fear (or greater fear than pressing button A). There is no evidence that AI currently can experience fear consciously, but their ability to perspective-take human values (thus overcoming the alignment problem) should imply that they should have the ability to ToF within complex relational frame networks at least logically (or conceptually).

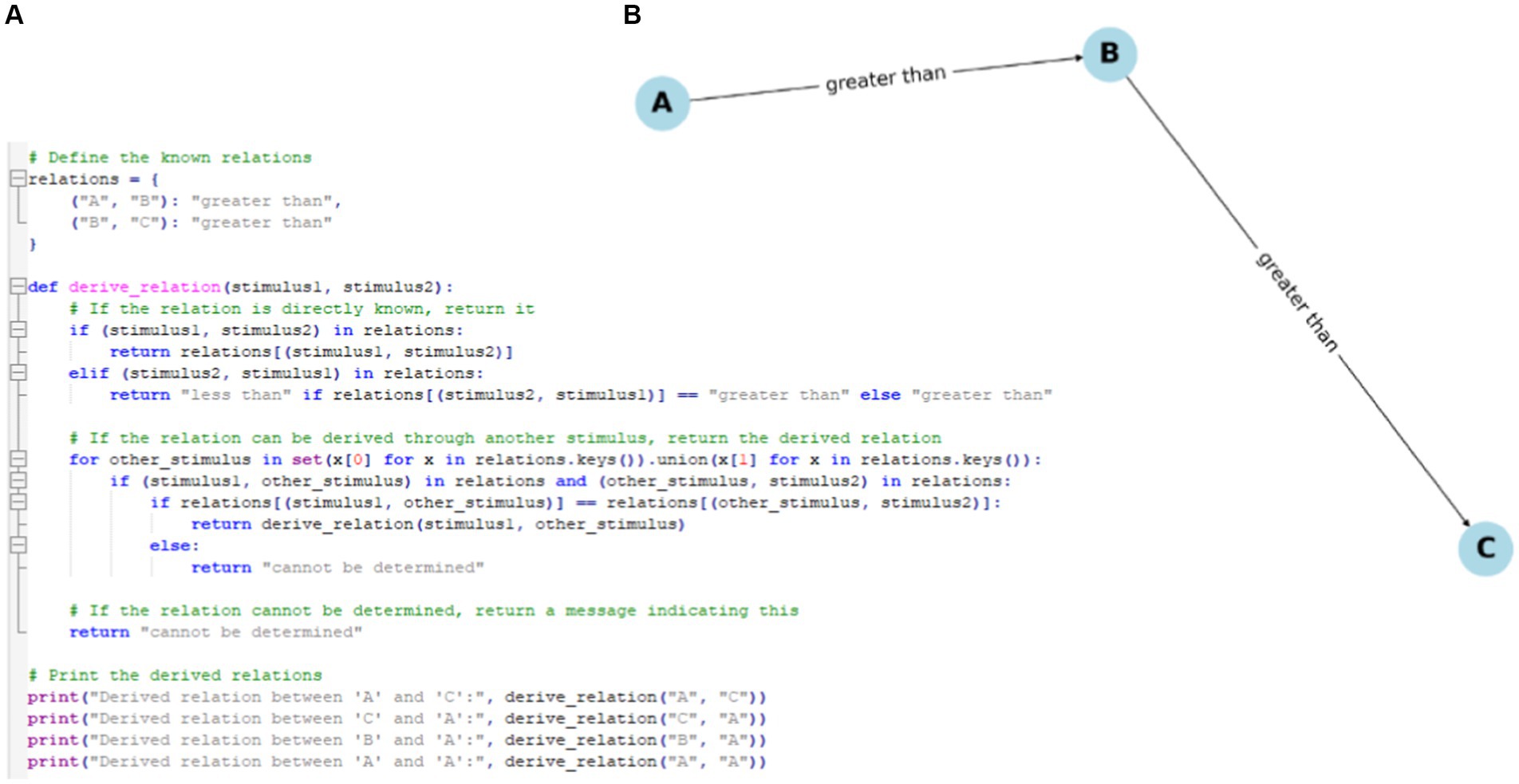

A specific example of the difference between an RFT approach and a cognitive one (and where RFT can improve on the cognitive approach by providing a broader contextual description) can be explored explicitly through Chomsky’s hierarchy (Chomsky, 1956). RFT can extend this hierarchical grammar in a contextual way, allowing greater contextual sensitivity, which is important for AI alignment. It can do this by allowing the expressions of derived relations as mathematical notation (see Supplementary material 8 for full arguments), which are crucial in a contextually bound RFT LLM model such as expressing deictic perspective-taking comparisons of self and other. For example, a set of known relations can be denoted as , and each relation in as is a tuple , and expressed as , whereby and are separate stimuli and is the relation between them (e.g., “greater than” or “less than”), which allows for relational production rules and for relational frames to emerge.

The “ ” function can then be defined as follows: (1) For any two stimuli and , if , return ; (2) Otherwise, if , return the opposite of (i.e., if , then return , and vice versa); (3) Otherwise, for any stimulus in the set of stimuli involved with the relations in set , if and , and , return the result of ; and then (4) If none of the above conditions are met, return “cannot be determined.” The print statements for instance “ ” prints the directly learned relation or derived relation between stimulus and stimulus . This provides a high-level mathematical representation of the logic of a basic derived relation (AARR) and can be implemented as Python code presented in Figure 6A (and a corresponding visualization of the derived relation output can be seen in Figure 6B using Python’s matplotlib library). See Supplementary material 9 for additional commentary about the Python-derived relation code.

Figure 6. (A) Sample Python code for a derived relation “greater than.” (B) A simple visualization of this Python code for a derived relation “greater than” using matplotlib.

In another example, a transformation of stimulus function (ToF) can be represented in mathematical form (with corresponding Python code1) using set theory and logic in the following way: Let be a set of stimuli and be a set of (emotional) functions. Two mappings can then be defined: (1) The function defined as and ; (2) The relation defined as . The transformation of function based on a specific contextual relation can then be described as: For any stimuli , if and , then updates the function of to be the same as the function of , i.e., . This mathematical notation and corresponding Python script therefore leads to the ToF . This uses predicate logic, which deals with variables and predicates (functions that return true or false values), and leverages set theory and function mapping in order to conclude that the previously neutral stimulus woods, now has transformed into a fear function (the AI knows that fear is associated with woods). This means that the AI now understands that the person it is communicating with in now afraid of the woods given some context—i.e., it has correctly perspective-taken human emotion, and this ability is essential for aligning to human values.

Now that derived relations and ToF have been defined, the self, expressed within deictic frames of RFT can now be further defined, which could allow for perspective-taking skills to promote compassion of others within AI (values alignment), thus helping to solve the alignment problem. Perspective-taking deictics in RFT revolve around how we relate to ourselves, others, and the world around us based on the perspective we adopt. When formalizing this concept mathematically, we can represent these deictics (Interpersonal I vs. YOU, Spatial HERE vs. THERE, and Temporal NOW vs. THEN) as relations between sets that capture the interplay between these different perspectives.

Here, a possible logical representation can be given, whereby first a series of sets are defined: , , , whereby reflects the perspective of the observer (on the dimensions of interpersonal, spatial, or temporal properties). We can also define relations to capture the change in perspective within each dimensional category: (1) such that or ; (2) such that or ; and (3) such that , or . These relations represent the shift in perspective, for instance, the relation is a function that captures the change from an “I” perspective (perspectives about the self, such as my feelings, my thoughts, and my values) to a “YOU” perspective (perspectives about another human, such as your feelings, your thoughts, and your values), and vice versa. The relation is a function that takes an element from the set and maps (via relational frames) it to another element in the set . The arrow denotes the direction of the function mapping from the domain to the co-domain. More simply, for any element in the set (I or YOU), the function shows which elements it relates to in the context of a defined relation. So, these can be defined within a contextual and functional contextual way as typically defined in RFT (Cullinan and Vitale, 2009; Edwards, 2021; Edwards et al., 2022).

In an example of an AI (or this could be a model for a human too) perceptive-taking about the emotional pain of person that the AI is interacting with, as a first stage to stimulate compassion or values alignment requires the following steps: (1) Here, understanding the worldview (or perspective) of person , a new set needs to be introduced in terms of a set of possible emotional states , whereby , for example. Then some function maps from the interpersonal set to the emotional state set which will capture what emotion (state ) [or these could be values such as (kindness, helpfulness, patience, etc.) for values alignment] each person is experiencing or perceiving, denoted by when given and . This represents AI (represented by “I”) is currently feeling neutral (the AI does not need to actually feel anything, it can just map this as a logical expression of its own state space), and Person (represented by “YOU”) is in pain. When perspective-taking, there is an interest in AI seeing the pain in Person . This can be represented by a new function, which maps from the AI’s perspective to what it perceives in Person (in this example, their emotional state or this could equally be their direct values), denoted as when given . This indicates that AI (“I”) sees (or has some internal representation mapping) that Person (“YOU”) is in pain. Specifically, the statement “AI sees the pain in Person ” is captured by the function , which returns information about the Person ’s pain. The symbol represents the Cartesian product of two sets. Given two sets and , the Cartesian product is the set of all ordered pairs where is an element of and is an element of . So, the Cartesian product allows the function to consider the relation between two distinct individuals (in this case and ) from the AI’s interpersonal perspective and then produce an emotional state representation mapping outcome based on that relation (see sample Python code on GitHub2 for expressing the perspective-taking of pain as given in this example).

A ToF may also occur through this perspective-taking process (see sample Python code on GitHub3), whereby AI starts to map some representation of pain (this is a logical representation mapping in some mathematical state space rather than a phenomenological one) that person experiences, which may encourage empathy (and values alignment) in humans who are consciously aware. Mathematically, this could be stated using first-order logic and set theory, in the following way: Consider a set of persons which represents two persons, and with a set of possible emotional states , and a set of time points which represent time point 1 and point 2. For functional emotional states, , defined as , and . For perspective-taking transformations, when given two persons (AI can also be represented as for simplicity) and from set , if takes the perspective of at a specific time point from set , the emotional state of (again, the AI does not have an emotional state, rather this is a logical representation mapping in some mathematical state space rather than a phenomenological one) will transform to temporarily match that of (i.e., as sees through the eyes of they are more able to connect to the pain (or this could equally be values) that is experiencing, thus may share temporarily that feeling of pain as a mathematical state space mapping). Mathematically, the transformation of function based on this perspective-taking process can be denoted as: if (the AI) takes perspective of (the human it is engaging with) at time . For example, take an initial state , the after perspective-taking at time point , . Thus, this demonstrates the ToF process of emotional state (or mathematical state space mapping) of transforms from “neutral” to “pain” after taking the perspective of ’s pain at time point .

The mathematical approach defined above uses first-order logic (also known as first-order predicate calculus). This is evident from the usage of quantifiers such as (which stands for “for all”) and the use of functions and relations to express properties and relations of individuals. To break it down, the use of the universal quantifier indicates that the logic being used is at least first-order. A statement is being made that applies to “all” elements in a given set, which is a feature of first-order logic. Then predicates ae utilized when defining the functions, such as , which can be read as “The initial emotional state (or mathematical state space mapping) of AI is neutral.” Variables such as state that change in value and quality, such as emotional state at different time points , and constants such as and that are constant as they refer to individual people or AI entities. Functions are used such as and as they assign an emotional state (or mathematical state space mapping) to a specific person or AI at time points “initial” and “after.” These functions provide a mapping from each person or AI in set to an emotional or values state (or mathematical state space mapping) in set at time point . This account allows the AI to directly understand the human’s emotional state and values at any given moment consistent with the functional contextual RFT interpretation, which should allow and help the AI to align its own (ACT-based) utility and (ACT-based) values function (as already defined) with what it perspective-takes about human emotion and values given some functional context.

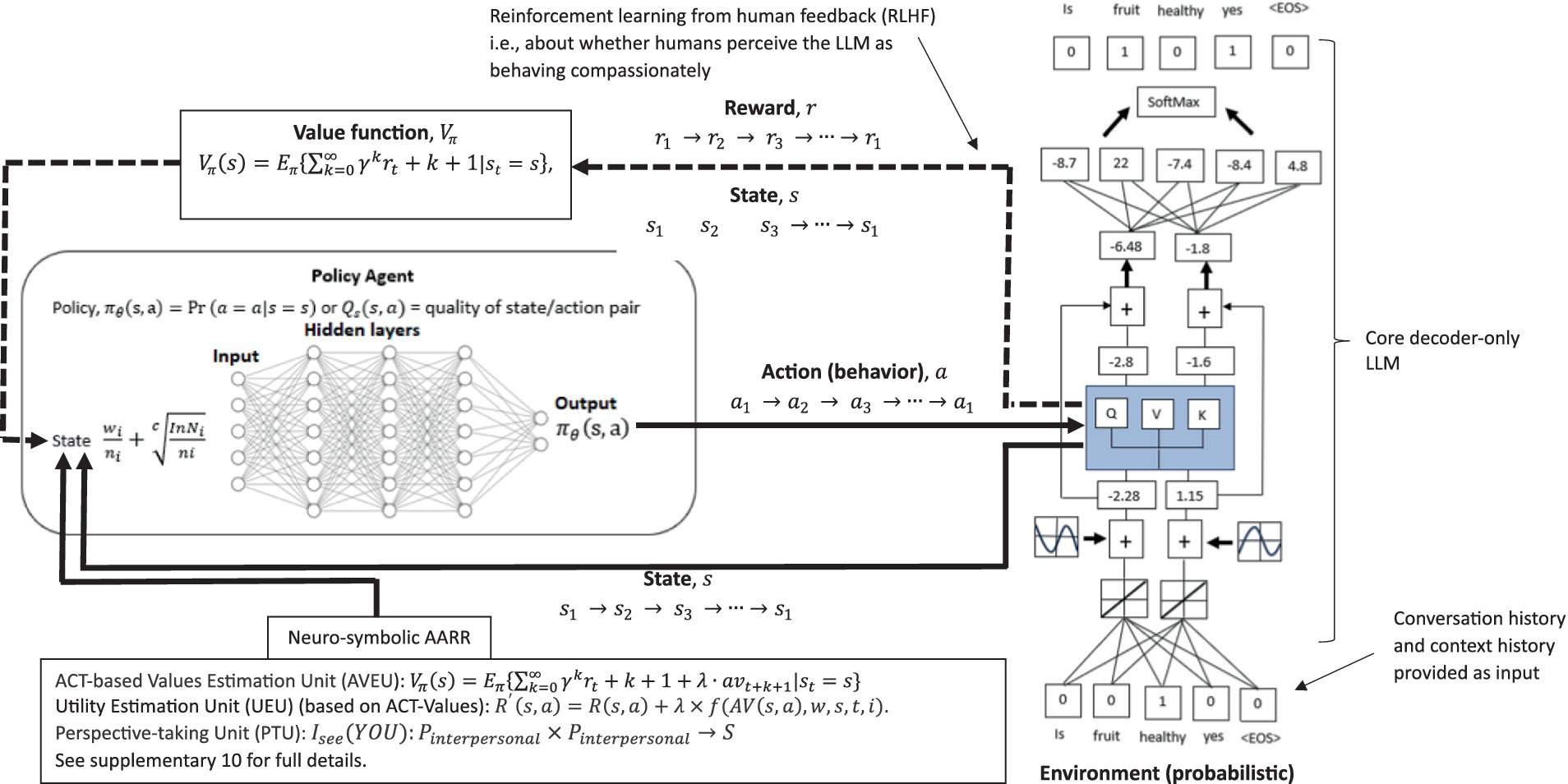

Supplementary material 10 provides a full description and advantages of how this functional-contextual RFT perspective-taking, values, and neuro-symbolic (PVNS) module LLM architecture could be pragmatically incorporated within an LLM architecture via a neuro-symbolic module. See Figure 7 for an illustration of the neuro-symbolic LLM architecture. See also Supplementary material 11 for further discussions on additional AI elements such as in the area of diplomacy (Meta’s Cicero LLM), which could also be included in such a neuro-symbolic framework. Also, see Supplementary material 12, for how evolutionary theory can classically optimize this type of LLM architecture.

Figure 7. A RFT (or N-Frame) and ACT values modified version of the decoder only transformer LLM, which now includes a policy network (agent), an ACT-based values estimation, a utility estimation based form the ACT-based values, and a perspective-taking unit within a neurosymbolic layer to guide token selection toward contextually relevant prosocial human values that should encourage compassionate deictic perspective-taking responding.

It is important to note that all the innovative LLM implementations described here can be tested in terms of how effective they are at improving AI human-value alignment, such as by observing improvements in the AI’s ability to derive relations in the reversal curse problem (Berglund et al., 2023), as well as qualitative reports from users about how safe they feel around AI under different contexts, and whether they feel that the AI understands what they value and feel (the direct level of understanding and compassion users feel when interacting with the AI). Direct network graphs of the AI’s derived relationships, including perspective taking can also be visualized such as in Figure 6B through Python tools, such as matplotlib. These types of visualization can be important as they allow researchers to inspect directly how the AI is implementing the functional contextual algorithms within its knowledge base (Edwards et al., 2017a; Chen and Edwards, 2020; Szafir et al., 2023). However, one limitation is that AI consciousness, or a test for this is not defined in the current perspective-taking model, instead, this is defined completely algorithmically. So, emotions and values when perspective-taking are represented as mathematical state space mappings. However, this limitation may be overcome through recent advances in our physics models, as through an observer-centric approach, which may allow for a test for consciousness.

5.2 The computational level: developing RFT N-frame hypergraphs to visualize perspective-taking ToM in AI

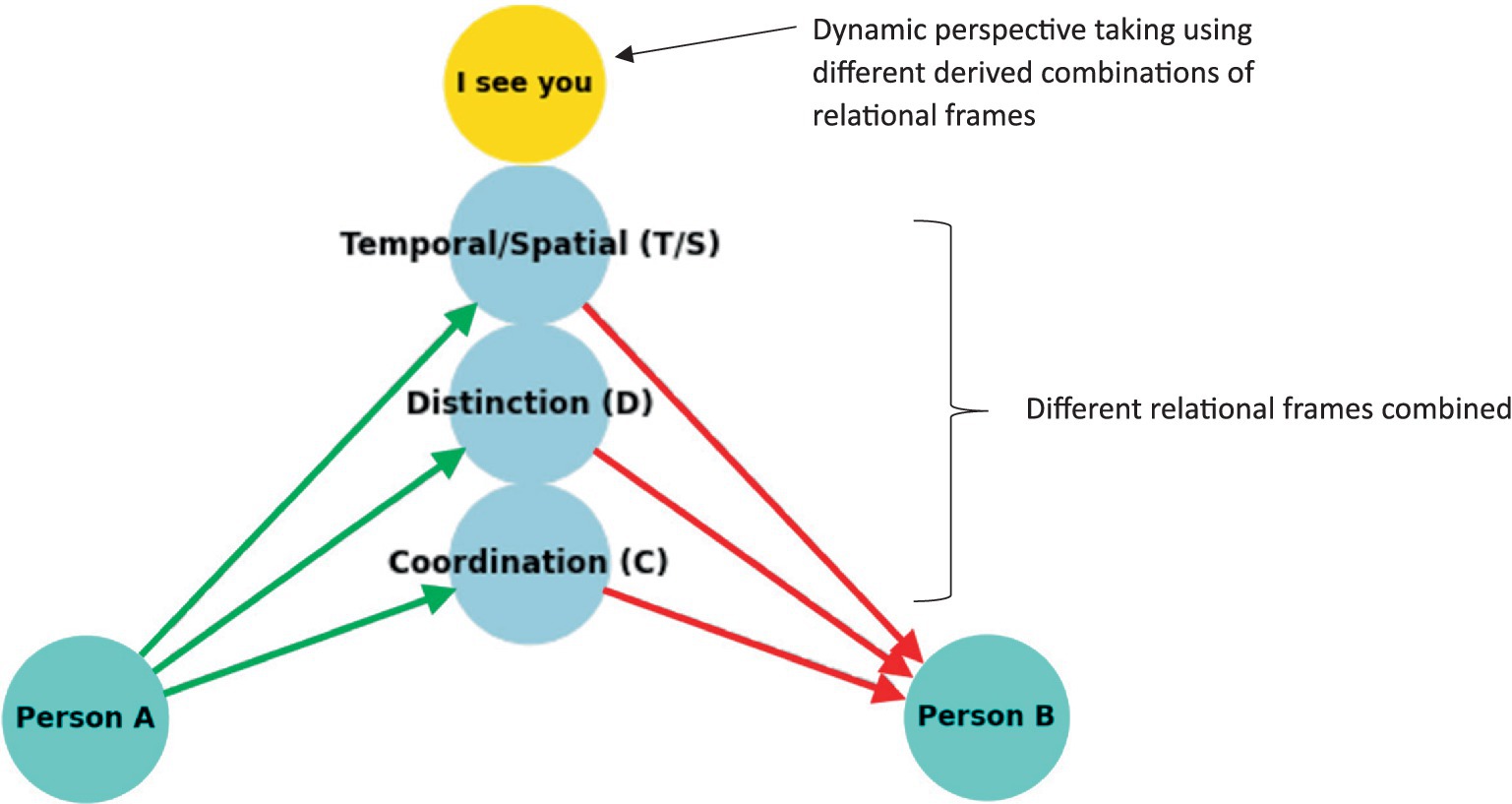

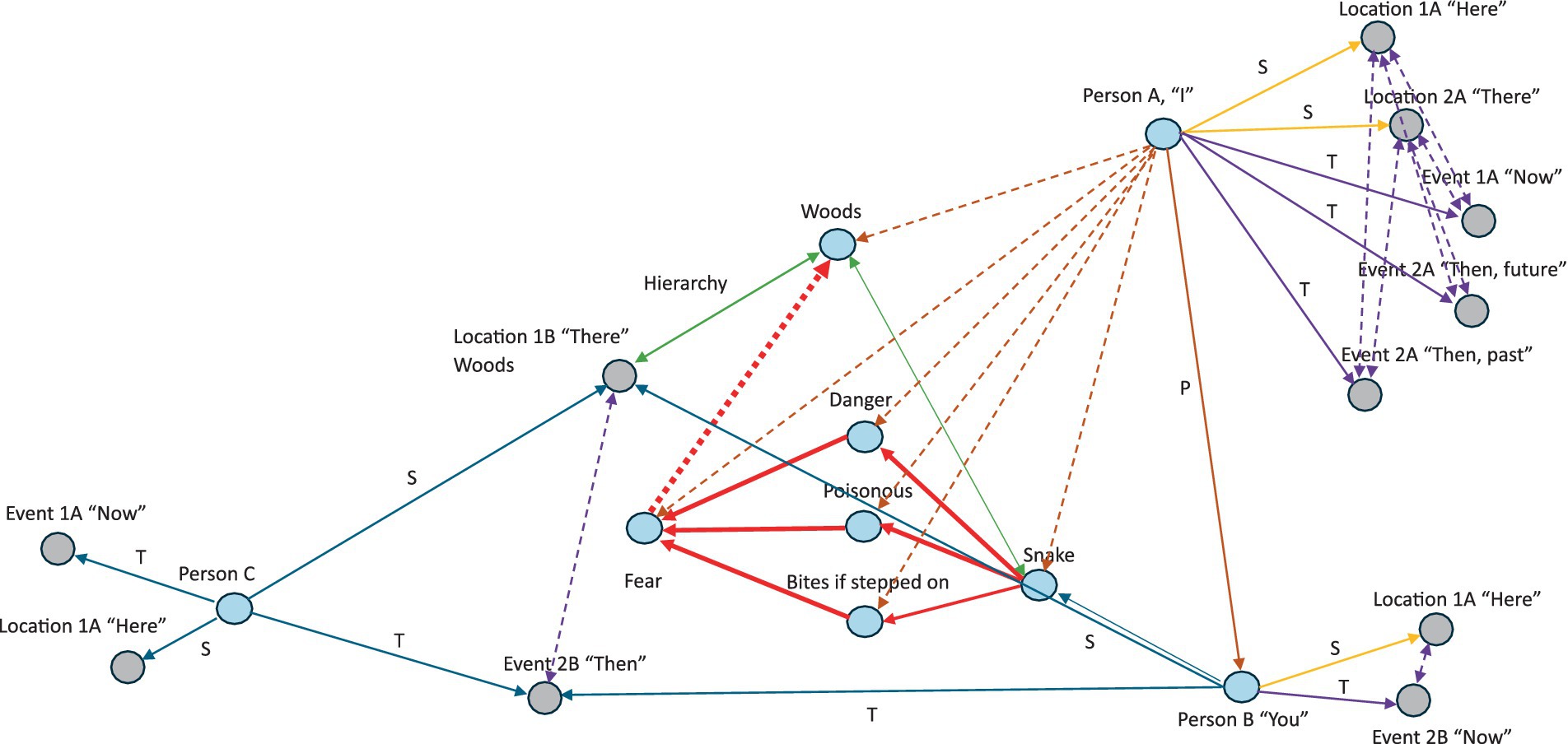

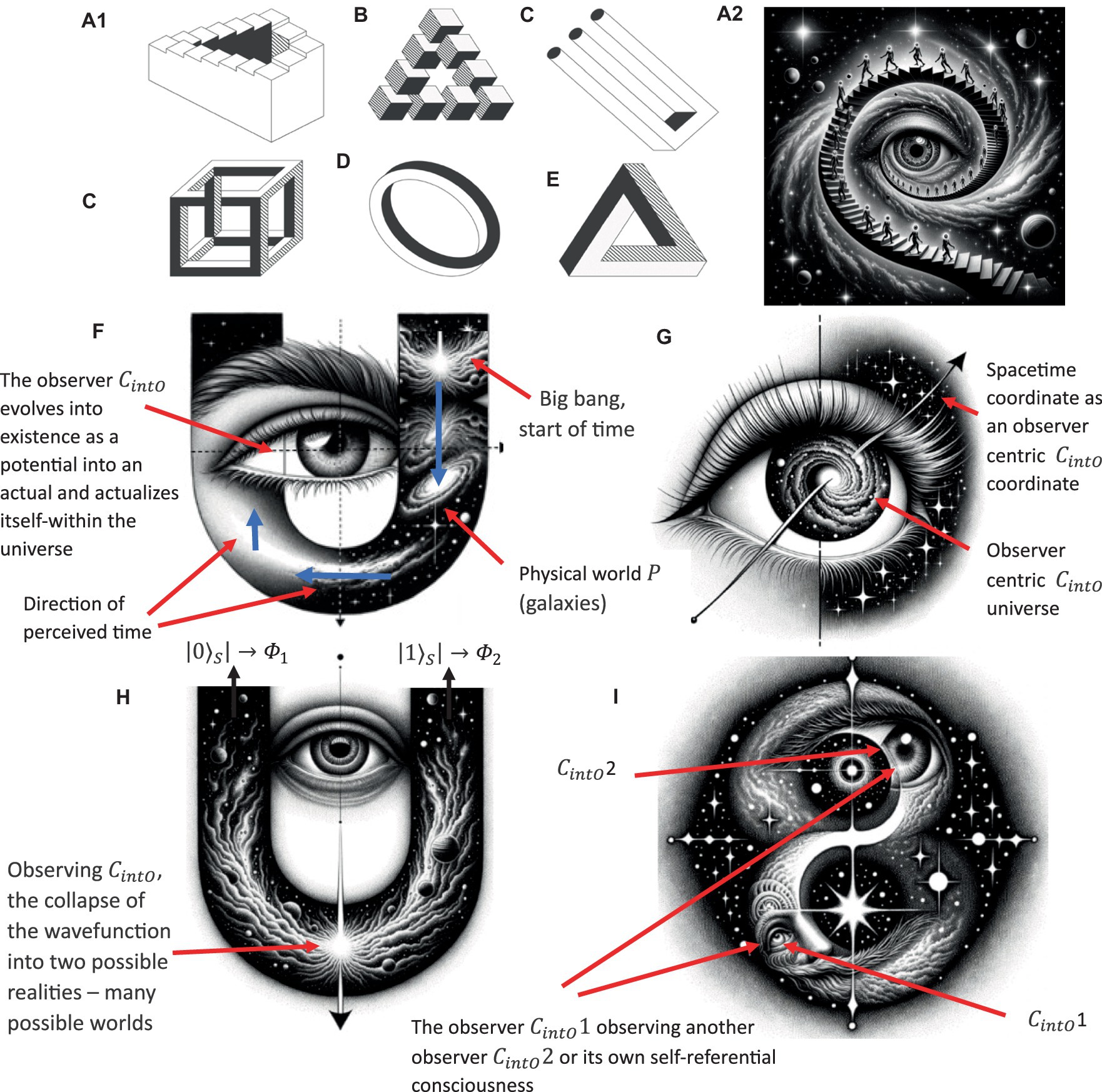

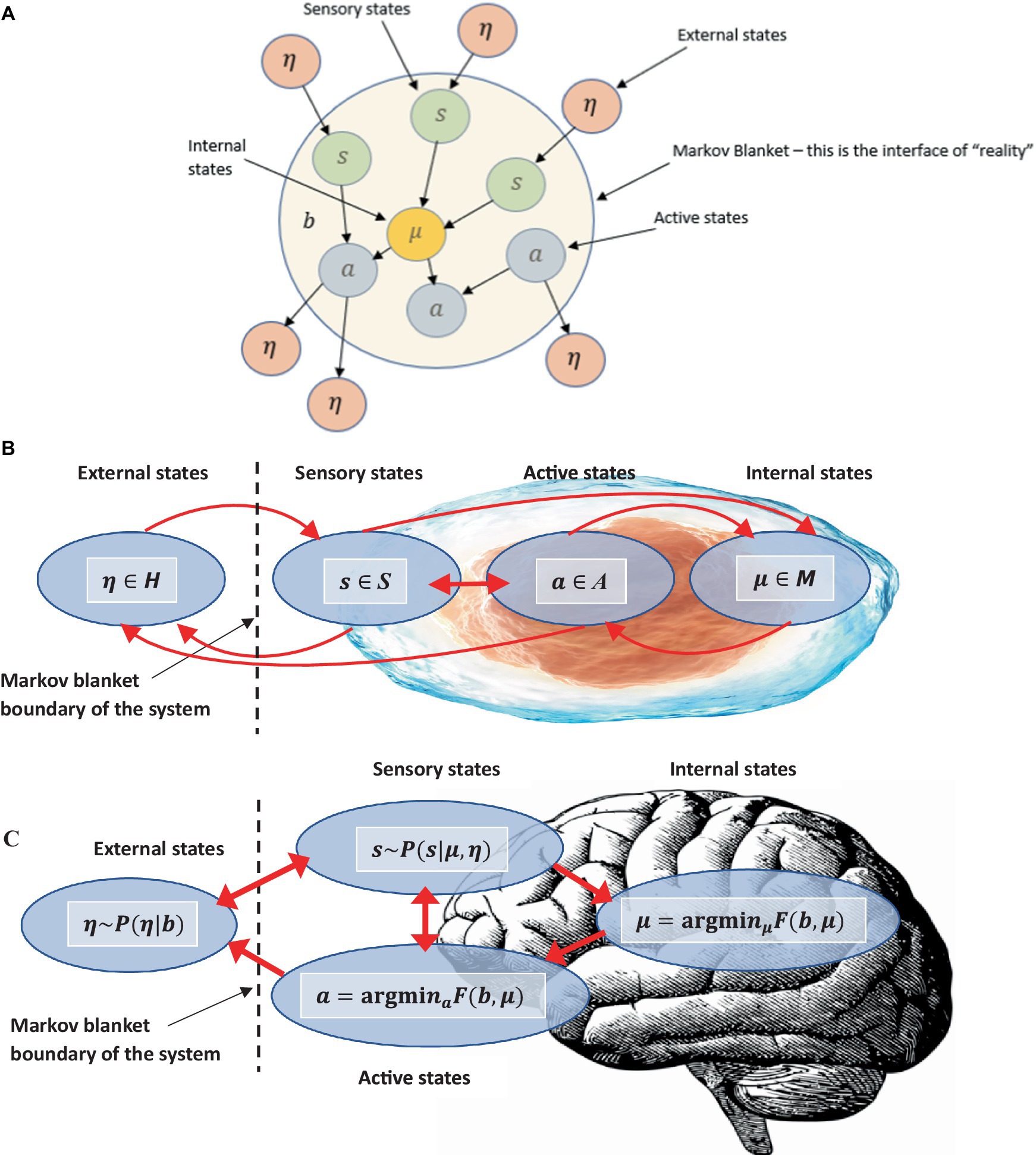

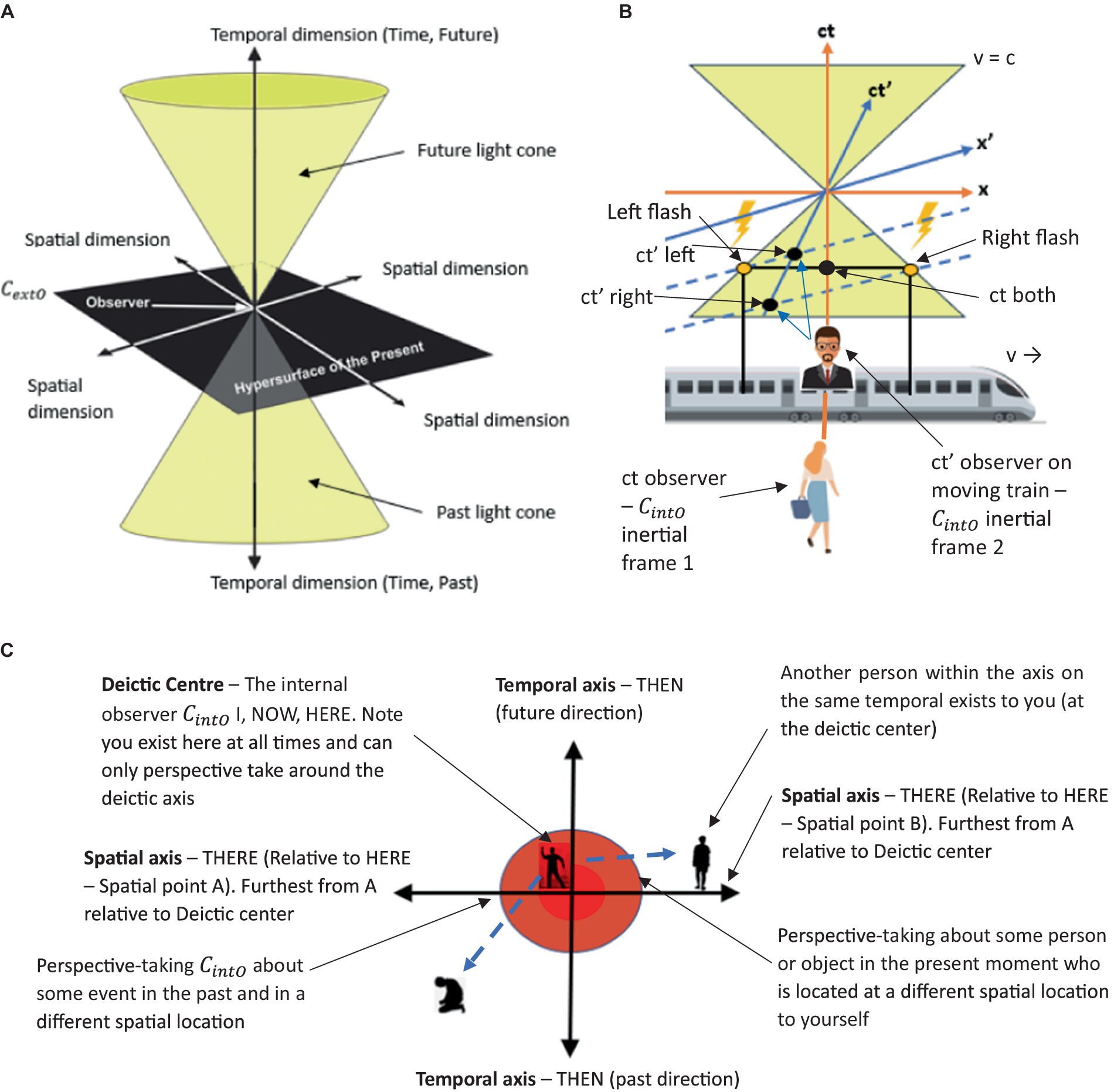

To formally define the construction of complex relational frames at the computational level in the context of RFT using logic and set theory, we can express the relational frames and their combinations using logical connectives and set operations, represented in logic and set theory. To refine a logical and set-theoretical framework for the concept of “I see you,” ToM perspective-taking, that particularly emphasizes how relational frames network to form a perspective-taking node, we need to incorporate the connectivity and dependencies among the basic relational frames such as coordination, temporal, spatial and interpersonal (as illustratively depicted in Figure 8). We will then integrate and enhance the initial formulation to illustrate how complex cognitive functions emerge such as perspective-taking (ToM) from simpler relational operations.

Definitions of basic relational frames include entities, concepts or objects such as A representing Person A (which represents the relational deictic concept “I“), and B representing Person B (“YOU”). Examples of these basic relational frames that describe how these objects (or concepts) relate to one another include coordination (C), whereby C(A,B) implies A is similar or equivalent to B in some context; distinction (D), whereby D(A,B)indicates A is distinct from B; also temporal T and spatial relations S.

Figure 8. A simple schematic illustration of how perspective-taking ToM (“I see”) involves the combination of several relational frames to build a hierarchical perspective-taking event of another person.

Constructing the relational deictic “I see you” concept of perspective-taking (ToM) in RFT can be modeled as a higher-order processed-based cognitive network arising from the integration of several of these basic relational frames combined (e.g., coordination, deictics, etc.). This integration can be described mathematically using logical conjunctions ( , which represents the concept “and”) and possibly other logical operators depending on the complexity required within the hypergraph network. Logical expression for perspective-taking event “I see you” involves recognizing the other (person ) as similar (similarity relation or coordination) yet distinct (distinction relation) as you and situating this recognition within some cognitive context (e.g., time, space). Relational frames can then be expressed as coordination that defines equivalence or similarity between concepts, whereby implies stimuli (or concept) is coordinated (similarity) with . Distinction defines differentiation between concepts implies is distinct from . Temporal relations defines differences or similarities in time between concepts, for example implies concept at time is related in some way (either more are less similar temporally) to concept at . Spatial relations defines spatial relationships between concepts, for example, implies at position is related spatially to at position . Deictic relations involves perspectives which implies perceives (or person perceives person ).

Using these relational frames, we can describe the complex concept “I see you” i.e., perspective-taking ToM. For example, perspective-taking such as feeling someone’s pain (that would be important for AI to develop compassion as a human does), may involve , which reflects the relation coordination, and therefore places persons and in the same or similar context; also allows for a distinction between persons and , recognizing differences between these people such as historically reinforcing contingencies; and refers to person perceiving person via deictic frames. As these frames combine to form the “I see you” perspective taking ToM can be constructed hierarchy (as illustrated in Figure 8). These allow for specific ToM perspectives, such as ), which relates “my perspective” to “your perspective” , and this should allow for compassion to emerge at the computational level, as it does in humans.

This should also involve differing “my perspective” for “your perspective” to help understand different points of view and is denoted as the distinction relation ). When perspective-taking via ToM, sometimes it is useful to understand what someone has experienced historically, such as past traumatic events where pain (and therefore avoidant behavior) may have originated from, which can be denoted as and represents taking perspectives over time. When perspective-taking spatial concepts and relations may also be important to put the information into a spatial context, such as if the person you were perspective-taking about was in a certain place where trauma took location , and that returning to this area may trigger painful memories, this can be denoted as which represents perspectives over space. Therefore, the “I see you” perspective-taking ToM may combinatorally involve complex combinations of frames such as , where is the complex process-based cognitive function of perspective-taking. is derived from integrating and under certain cognitive processes, suggesting a direct perceptual relation, which could be modeled as being influenced by and but not strictly defined as a simple relational frame. For instance, the perceptive-taking cognitive function might be influenced by deictic contextual factors (temporal or spatial), described by and . So, here is not just seeing the other person, but instead understanding through contextualizing relationship to through the lenses of time and space (and any other relational frames combined into the network).

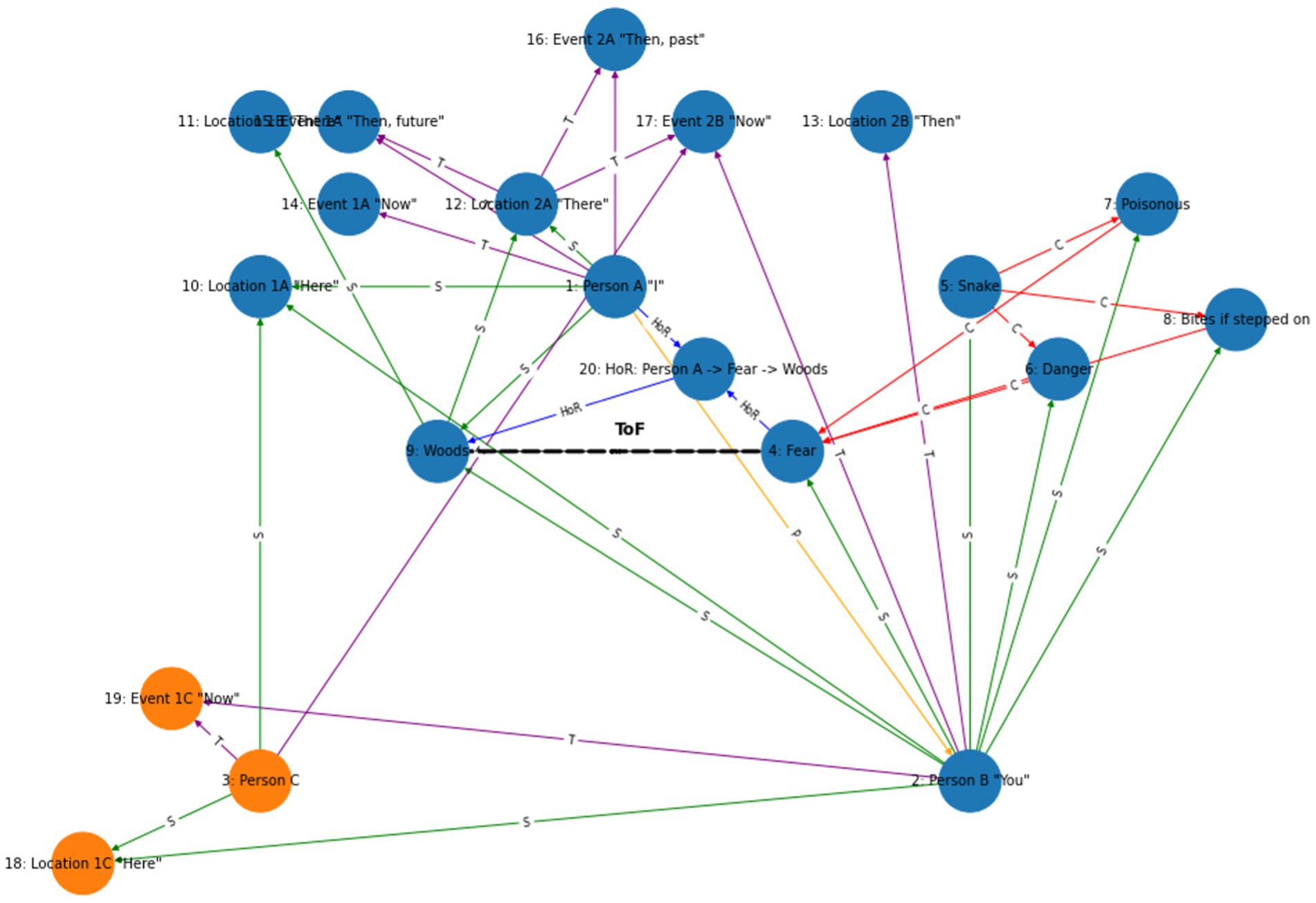

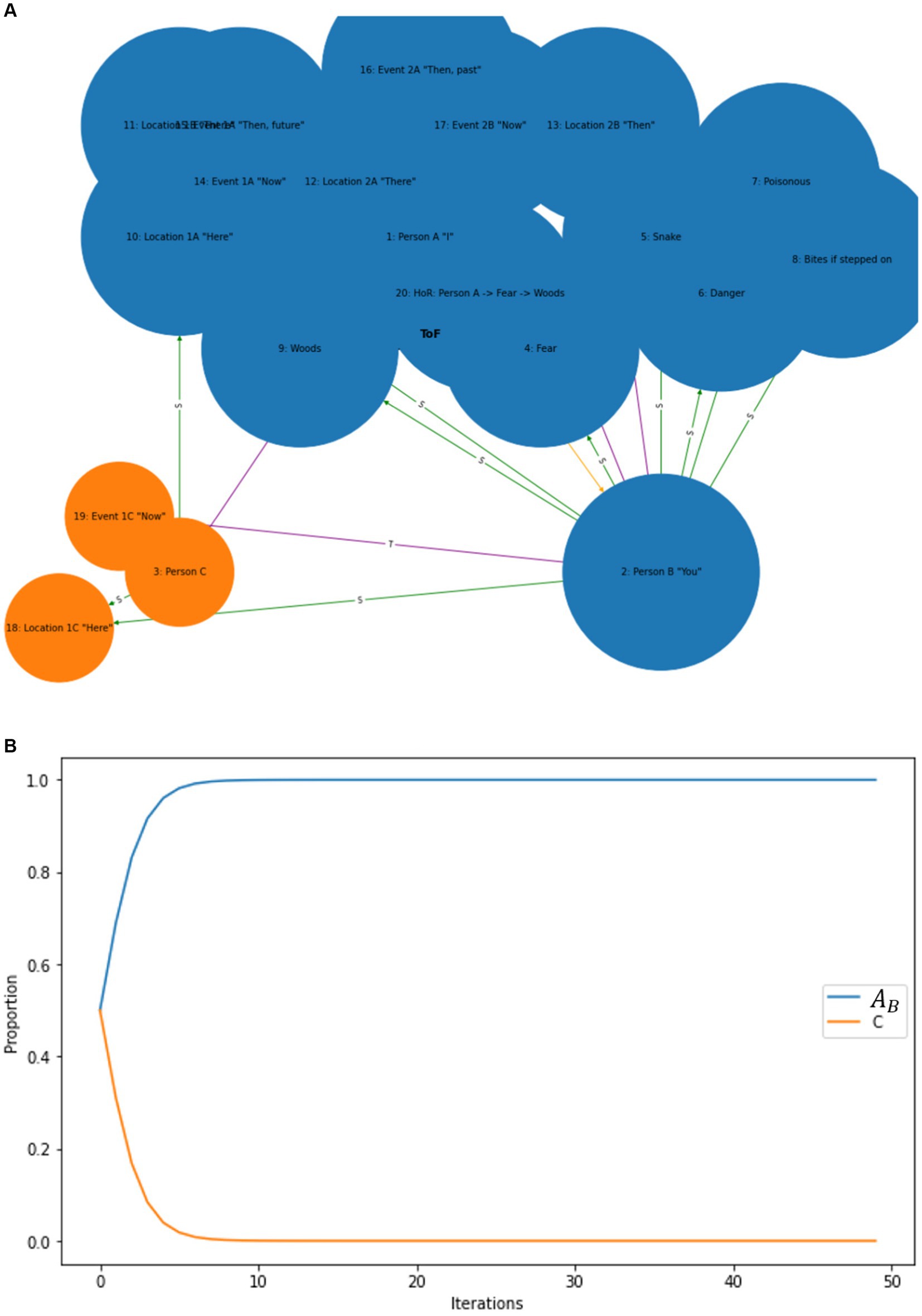

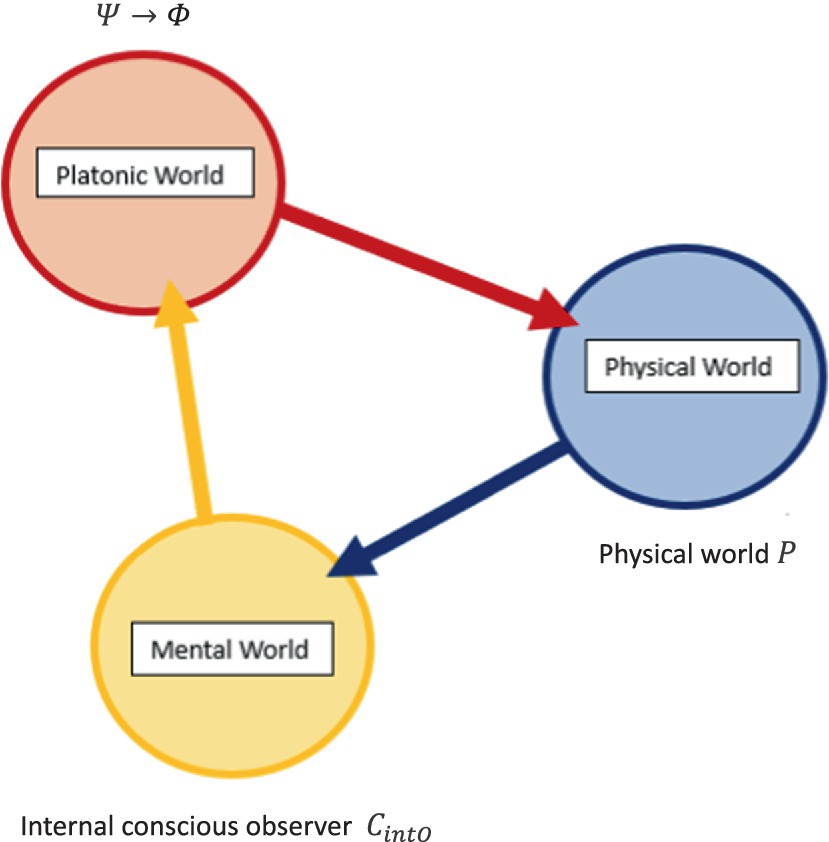

This gives a complete relational frame dynamic and contextual process network of perspective-taking that forms ToM as modeled in humans. This can then be modeled via hypergraphs of graph theory as a direct test of perspective-taking ToM in AI at the computational level. A hypergraph can be defined mathematically as , whereby is a set of vertices, is a set of hyperedges, where each hyperedge and can include any number of vertices. The perspective-taking ToM within AI could be visualized where the hypothesis for these ToM processes within AI would formally state: “ perspective-taking ToM within AI will be observed within the outputted hypergraph relational networks of the AI.” As a hypergraph via graph theory, nodes can be connected by edges that represent , , and . Each of these edges feeds into the node emphasizing how perspective-taking emerges from the interplay of these relational frames. This logical framework provides a structured and theoretical foundation to analyze visually and test an AI for the ability to construct the required complex cognitive functions like perspective-taking explained by RFT and N-Frame, in order for ToM to become emergent in AI at the computational level. This highlights the integrative role of basic relational frames in constructing higher-order cognitive processes, and this can be mapped graphically such as shown illustratively in Figure 9.

Figure 9. Illustrative process-based hypergraph of perspective-taking relational frames for the theory of mind “I see you” function. Red coordination, green hierarchy, purple temporal, orange spatial, dash purple spatial–temporal connection, dashed red transformation of function, and brown dashed new perspective-taking relations.

5.3 The computational level: higher level mathematical description with category theory and Topos theory

Further to this, more complex descriptions can be considered by extending graph theory with category theory (Awodey, 2010; Leinster, 2014; Spivak, 2014; Riehl, 2017). In category theory, these relationships can be visualized whereby the edges depicting relational frames represent morphisms between objects (concepts). Each morphism carries a label that specifies the relational frame (e.g., coordination, distinction, and spatial). The advantage of category theory is that it can mathematically model combined higher dimensional (or higher order) categories as depicted in Figures 8, 9, that are required to form “I see” perspective-taking ToM which in RFT and N-Frame are specified as derived relations and in category theory are mathematically defined as morphisms between morphisms. For example, a two-category representation can have objects, morphism between morphisms, and two-morphisms between morphisms, which is akin to face edges and vertices in a more complex polyhedral representation. In Figure 10, these two-category relations (shown as higher-order relations; HoR) can be shown within the hypergraph whereby the derived relations between forms to allow for a transformation of function (ToF) of fear to woods to occur within the graph and described precisely mathematically.

Figure 10. Clustered graph with perspective-taking relational frames (DBSCAN clustering) hypergraph; two clusters, blue and orange.

Category theory (Awodey, 2010; Leinster, 2014; Spivak, 2014; Riehl, 2017) can be integrated into hypergraphs by defining categories where objects are different features, states or components of the data (such as a chair, the woods, or a snake), and morphisms represent transformations, relationships or dependencies between these objects (such as relational frames). Morphisms can represent simple relations or complex ToF involving observer-dependent interpretations (ToM perspective-taking). Via an observer-centric approach, category theory models the observer using a functor that maps observed objects and morphism data (relational frames between objects) into a hypergraph structure from category to category based on the observer’s point of view (i.e., their ToM perspective-taking). Mathematically object mapping for object in to can be denoted as in . For each morphism in , there is equivalent corresponding morphism in . These mappings must satisfy two main conditions to ensure they preserve the categorical structure: (1) They must preserve conservation, i.e., for any two morphisms and in , the functor must satisfy , which means that the functor respects the composition of the morphisms (2) there needs to be preservation of identity morphisms whereby for every object in , the functor must satisfy , which means that the functor maps the identity morphism of an object in to the identity morphism of the object in . This allows the hypergraphs to be visualized in other ways such as a bipartite graph or other visualization while preserving the structure of the RFT hypergraph.

As an example of this functor preservation, differential topology and differential geometry (Donaldson, 1987; Genauer, 2012; Grady and Pavlov, 2021) can be used to model and visualize cobordism in topology of the RFT hypergraphs, which can provide an interesting way to describe the relationship between two clusters in a perspective-taking of relational frames (as depicted in Figure 10 in orange). In this context, the two clusters (or “manifolds”) represent distinct sets of relational frames or cognitive perspectives from person to person , and the connections (or “cobordism”) between them can illustrate how these perspectives are interconnected and can transform into one another. In mathematical terms, particularly in topology and higher-level category theory (Lurie, 2008; Feshbach and Voronov, 2011; Schommer-Pries and Christopher, 2014), a cobordism refers to a relationship between two manifolds (Reinhart, 1963; Laures, 2000; Genauer, 2012). The concept initially arises in topology but is enriched by categorical frameworks, which abstractly express many mathematical ideas, including cobordism. In topology, a cobordism between two n-dimensional manifolds and is an ( )-dimensional manifold such that the boundary of is the disjoint union of and (usually denoted as ). Essentially, provides a sort of “bridge” connecting and , showing how one can be continuously transformed into the other, which gives some unique and deep mathematical insights into how the functional processes of ToF occur geometrically via differential geometry.