REVIEW article

Front. Comput. Neurosci., 20 October 2023

Volume 17 - 2023 | https://doi.org/10.3389/fncom.2023.1222924

Biases are a fundamental aspect of everyday life decision-making. A variety of modelling approaches have been suggested to capture decision-making biases. Statistical models are a means to describe the data, but the results are usually interpreted according to a verbal theory. This can lead to an ambiguous interpretation of the data. Mathematical cognitive models of decision-making outline the structure of the decision process with formal assumptions, providing advantages in terms of prediction, simulation, and interpretability compared to statistical models. We compare studies that used both signal detection theory and evidence accumulation models as models of decision-making biases, concluding that the latter provides a more comprehensive account of the decision-making phenomena by including response time behavior. We conclude by reviewing recent studies investigating attention and expectation biases with evidence accumulation models. Previous findings, reporting an exclusive influence of attention on the speed of evidence accumulation and prior probability on starting point, are challenged by novel results suggesting an additional effect of attention on non-decision time and prior probability on drift rate.

In a decision-making task, a response bias can occur when one choice is preferred over its alternative or actively avoided (Leite and Ratcliff, 2011). Conversely, an unbiased decision involves equal competition between all possible choices (Dricu et al., 2017). Unbiased decisions, no matter how theoretical, serve as a benchmark to detect response biases and control for undesired factors. For example, researchers often compare conditions of biased attention with neutral ones. Attention and expectation are two common experimental manipulations that can induce a response bias in decision-making tasks.

A controversy exists about the exact definition of attention and addressing this issue is beyond the scope of this article. Here, we define attention as “the flexible control of limited computational resources” (Lindsay, 2020). Several attentional mechanisms are experimentally manipulated in perceptual decision-making tasks. Spatial attention allows us to prioritize information processing at a given location, like covertly attending to one side of the screen (Carrasco, 2018). Temporal attention is the ability to focus in time (Ramirez et al., 2021), and, in a similar way, feature-based and object-based attention prioritize the processing of relevant features (White and Carrasco, 2011) or objects in the environment (Ciaramitaro et al., 2011). Response biases between conditions have been detected for spatial (Ghaderi-Kangavari et al., 2023), temporal (Denison et al., 2017), feature-based (Ho et al., 2012), and object-based attention (Ciaramitaro et al., 2011).

Similarly to attention, tasks manipulating the subjects’ expectation, i.e., prior knowledge about the choices’ probability, can induce response biases in the behavioral data (Mulder et al., 2012). We usually refer to this contextual information as prior probability. In decision-making tasks, instructions informing the participant about the stimulus’ prior probability are often used to induce perceptual expectations (Cheadle et al., 2015). On a neural level, Kok et al. (2014, 2017) demonstrated in a series of functional magnetic resonance imaging (fMRI) and magnetoencephalography (MEG) experiments that perceptual predictions evoked similar BOLD activity patterns to those evoked by the stimuli in the primary visual cortex. One appealing idea is that the actual sensory input is compared to the prediction template to evaluate the quality of the prediction. If the input mismatches the prediction, a prediction error signal is generated (Smout et al., 2019).

Response biases may also be induced by reward manipulations (Mulder et al., 2012); we refer to this as a reward bias. Another bias is the choice history bias, the replication of the previous answer(s) to guide the current decision (Urai et al., 2019). Moreover, consistency in the direction of evidence (e.g., the left stimulus is consistently brighter than the right stimulus) has also been shown to bias accuracy, confidence and response times (Glickman et al., 2022). This is known as consistency bias. In sum, the term decision-making bias encompasses response biases induced by various manipulations.

The aim of this paper is to provide researchers with a conceptual overview of both the theoretical principles and empirical applications related to decision-making biases. More specifically, we will focus on the modelling approaches researchers use to study attention and expectation. We start by describing statistical models and then move to cognitive models of decision-making (Townsend and Ashby, 1983). Here we will describe their advantages over statistical models. We review studies that used both Signal Detection Theory (SDT; Green and Swets, 1966) and the Diffusion Decision Model (DDM; Ratcliff, 1978), as they stand out as well-established cognitive models (a thorough description of SDT and DDM is present in Section 2.3). We also compare these two cognitive models and argue that the latter offers greater insight into the data due to the analysis of response times (RT) distributions.

With respect to the latter models, response biases are particularly suitable for cognitive modelling because they provide valuable information about the underlying cognitive processes that lead to the observed behavior. This is because response biases can help identify the specific cognitive mechanisms involved in decision-making. For example, a response bias favoring one choice option over another may indicate that the participant is relying more heavily on certain perceptual cues or using a specific strategy. This information can then be incorporated into cognitive models to better understand how these cognitive mechanisms operate. Moreover, it is possible to jointly model both behavioral and neural activity measures (Turner et al., 2017). This simultaneous modelling offers the possibility of gaining a neural understanding of these cognitive processes. Response biases can also be used to test and compare different cognitive models. By comparing the fit of different models to the observed data, researchers can identify the model that best describes the putative underlying cognitive mechanisms. Lastly, response biases can be used to study individual differences in decision-making. By examining how response biases vary across different individuals or populations, researchers can identify factors that influence decision-making and tailor interventions accordingly.

Overall, response biases are a valuable tool for cognitive modelling as they provide rich information about the underlying cognitive processes that drive behavior, allow for the comparison of different cognitive models, and facilitate the study of individual differences in decision-making.

Researchers studying response biases are usually interested in testing a specific theory of decision-making. Nonetheless, theories of decision-making vary in their form. On the one hand, formal theories are characterized by mathematical tools and formal concepts (van Rooij and Blokpoel, 2020). On the other hand, verbal theories are verbally expressible intuitions (van Rooij and Blokpoel, 2020).

Verbal and formal theories are the two sides of the theoretical modelling “coin.” Intuitions about psychological phenomena need to be formally transcribed to be quantitatively tested. Similarly, the formalization process of a verbal theory can highlight practical limitations that were not previously conceptualized. The resolution of these practical limitations results in a theory that quantitatively predicts data. In this way, the process of creating a theory is characterized by the cyclical alternation between verbal and formal theories.

Nonetheless, researchers do not exclusively test formal theories. On the contrary, it is common to encounter studies that aim to test a verbal theory. Testing verbal and formal theories has different implications that affect the whole empirical process, from hypotheses to predictions, testing, and inferences (see Table 1).

Verbal theories can be, and usually are, tested and validated via statistical models. Statistical models do not make assumptions about the underlying cognitive processes that result in decision-making, but rather focus on describing the patterns observed in the data. The description and decomposition of variance in the data can in turn inform whether the data are in line with the theory and drive the further formalization of the verbal theory under investigation.

A substantial set of studies that probed decision-making biases applied statistical models to analyze their data (Hsu et al., 2018; Huang et al., 2018; Jigo et al., 2018; Garcia-Lazaro et al., 2019; Kveraga et al., 2019; Smout et al., 2019, 2020; Aitken et al., 2020; Moerel et al., 2022). An example is the event-related potential (ERP) study by Doherty et al. (2005), which jointly investigated the effect of temporal and spatial expectation on decision-making. The task consists of a red ball moving in steps from the left to the right side of the screen. The ball is then occluded by a grey vertical bar for two steps. When it reappears on the other side of the occluded area, participants are asked to respond if a black dot is present inside the ball. A 2-by-2 design produced a total of four conditions, namely temporal and spatial expectation (ST), spatial expectation only (S), temporal expectation only (T) and neither of the two (N). The four conditions were set up using the movement of the ball. The ball moved either with or without a constant spatial trajectory to manipulate spatial expectation, and with or without a constant step duration to manipulate temporal expectation. The authors analyzed the behavioral data using a repeated-measures two-way ANOVA (and post hoc paired-samples t tests), which examines the influence of two categorical independent factors (temporal and spatial expectations) on a continuous dependent variable (response time). Provided that the assumptions of the parametric estimation of p-values corresponding to the expectation manipulations have been met [which can be challenging, given the non-Gaussian nature of RT distributions and repeated measures paradigms (Girden, 1992)], these models can give valuable insights into the presence and size of biases caused by experimental manipulations. The authors reported faster response times in the S and T conditions compared to the N condition, but the interaction between the two factors was not significant. The reduction in mean response time in the ST condition was larger than the effects in the S and T conditions alone, indicating a so-called additive effect.
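To make the notion of an additive effect concrete, the following minimal sketch computes the interaction contrast of a 2-by-2 within-subject design. The response times are hypothetical numbers chosen for illustration, not data from Doherty et al. (2005), and the function names are assumptions. In a 2-by-2 repeated-measures design, a one-sample t test on this contrast is equivalent to the ANOVA interaction test.

```python
from math import sqrt
from statistics import mean, stdev

# Hypothetical mean RTs (ms) per participant in the four conditions.
# Illustrative numbers only, not data from any published study.
rt = {
    "N":  [520, 540, 505, 560, 530],
    "S":  [490, 512, 478, 528, 501],
    "T":  [495, 515, 480, 533, 507],
    "ST": [466, 486, 452, 502, 477],
}


def interaction_contrast(rt):
    """Per-participant contrast (N - S) - (T - ST).

    Values near zero mean the spatial benefit is the same with and
    without temporal expectation, i.e. the two effects are additive.
    """
    return [(n - s) - (t - st)
            for n, s, t, st in zip(rt["N"], rt["S"], rt["T"], rt["ST"])]


def one_sample_t(values):
    """t statistic testing whether the mean of `values` differs from 0."""
    return mean(values) / (stdev(values) / sqrt(len(values)))


contrast = interaction_contrast(rt)
spatial_benefit = mean(n - s for n, s in zip(rt["N"], rt["S"]))
temporal_benefit = mean(n - t for n, t in zip(rt["N"], rt["T"]))
joint_benefit = mean(n - st for n, st in zip(rt["N"], rt["ST"]))

print("spatial benefit (ms): ", spatial_benefit)
print("temporal benefit (ms):", temporal_benefit)
print("joint benefit (ms):   ", joint_benefit)
print("interaction t:        ", one_sample_t(contrast))
```

With these illustrative numbers the joint benefit is close to the sum of the two single benefits and the interaction contrast is close to zero, which is the pattern described as additive. The sketch says nothing about which cognitive process produces the speed-up.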

In sum, the authors provided evidence using a statistical model for an enhancing effect of both spatial and temporal expectation on response speed, plus an additive effect when both expectations were induced. Nonetheless, the verbal theory used for hypothesis generation cannot quantify the relative influences of these biases in the ST condition nor identify which cognitive processes (e.g., evidence accumulation, criterion or motor response time) have induced such changes. Thus, one may argue that the interpretation of these results remains an approximation, and that mathematical cognitive models, used alongside statistical models, may overcome this limitation. By specifying formal assumptions about the decision process and subsequently testing which model best describes the data, mathematical cognitive models offer the possibility of decomposing behavioral data into a more structured set of cognitive processes. For the very same reason, mathematical cognitive models cannot account for any underlying cognitive factor that is not specified in the model a priori.

Mathematical cognitive models permit the decomposition of the observed performance into isolated contributions of relevant cognitive processes (Heathcote et al., 2015; Forstmann et al., 2016). Nonetheless, the relationship between statistical and cognitive models is not competitive; instead, it can be understood as a hierarchy where statistical models offer preliminary inference and cognitive models delve deeper into cognitive processes by mathematically formalizing their assumptions. The fit of the model gives insight into how well the cognitive process model can capture the empirical data. Thus, cognitive models yield falsifiable, transparent, and reproducible descriptions of the cognitive processes inferred from the behavioral data (Frischkorn and Schubert, 2018).

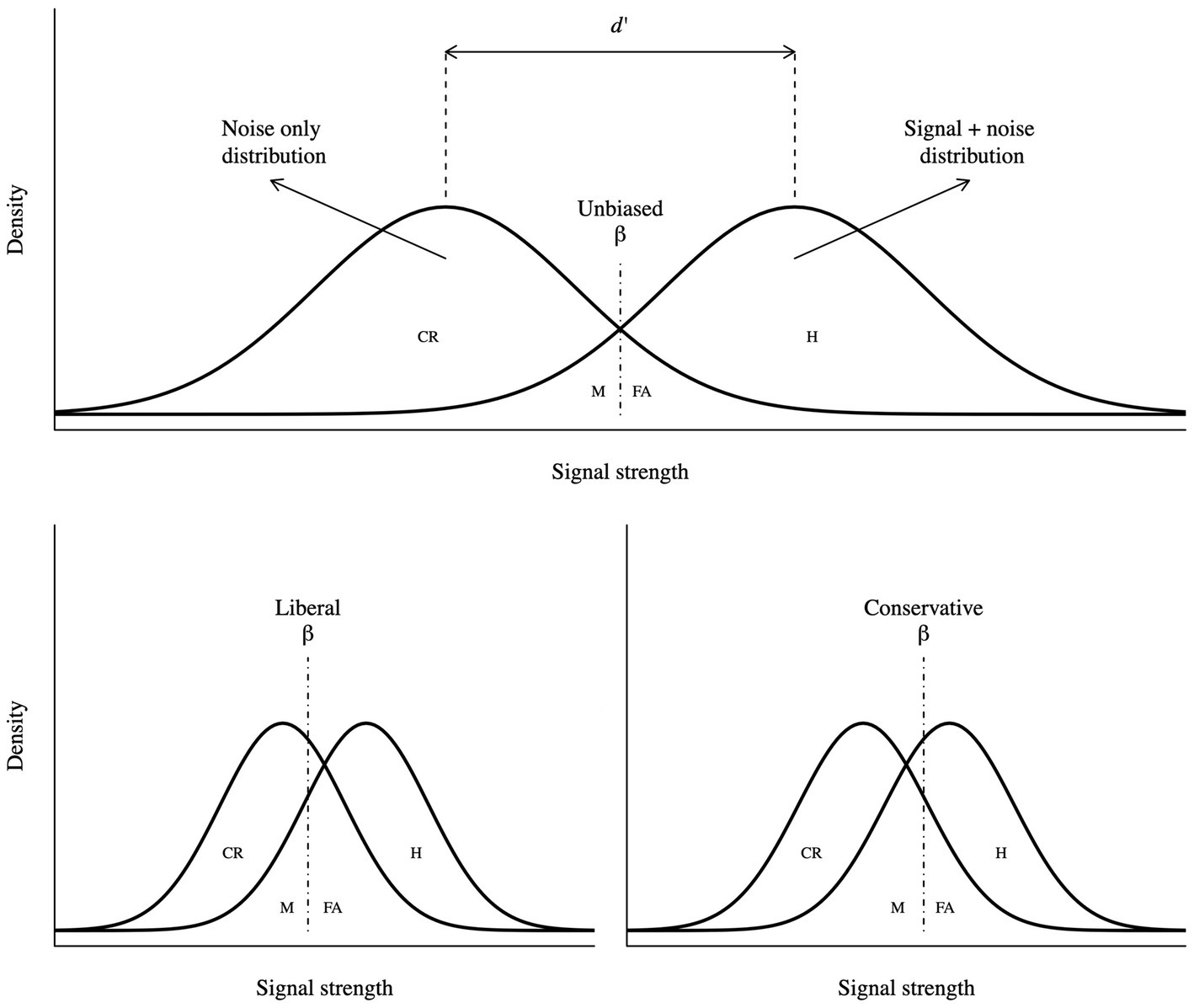

Signal detection theory is a widely used framework to study decision-making and perception. In a simple psychophysics experiment, the observer gathers noisy evidence (e) from the environment and has two categorical options, stimulus is present (h1), and stimulus is not present (h2; see Figure 1). This theory is a means to infer which of the hypotheses caused the evidence (Gold and Shadlen, 2007). If the observer correctly reports the presence or absence of the stimulus, the trial is labelled Hit (H) and Correct rejection (CR), respectively. Conversely, if the observer incorrectly reports the stimulus presence or absence, the trials are labelled as False alarm (FA) and Miss (M), respectively. In a two-choice task, the ratio of the two likelihoods (h1 and h2) defines the decision variable as follows:
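The decision variable can be written in the standard likelihood-ratio form. The notation below, with $LR(e)$ for the decision variable and $P(e \mid h_i)$ for the likelihood of the evidence under each hypothesis, is an assumption that follows the usual SDT presentation:

```latex
LR(e) = \frac{P(e \mid h_1)}{P(e \mid h_2)}
```

Values above 1 indicate that the evidence is more likely under h1 (stimulus present) than under h2 (stimulus not present).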

Figure 1. Visual representation of SDT. Sensitivity is reported as d’, response bias as beta, hits as H, false alarms as FA, misses as M and correct rejections as CR. A more liberal criterion is characterized by more positive responses, resulting in higher hit and false alarm rates. Conversely, a more conservative criterion is characterized by more negative responses, resulting in higher miss and correct rejection rates.

The likelihood ratio (β) is one measure of criterion, also called response bias, which acts as a threshold with respect to the decision variable:
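In the usual formulation, assumed here, the threshold rule compares the likelihood ratio of the evidence, $LR(e) = P(e \mid h_1) / P(e \mid h_2)$, with the criterion:

```latex
\text{respond } h_1 \ \text{if} \ LR(e) \geq \beta, \qquad \text{respond } h_2 \ \text{otherwise}
```

Under this convention, and assuming equal prior probabilities and payoffs, β = 1 corresponds to an unbiased observer, β < 1 to a liberal criterion and β > 1 to a conservative one.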

Thus, the criterion determines whether the participant systematically responds more positively or negatively (Vickers, 1979; Macmillan and Creelman, 2005); SDT effectively defines and provides a formal description of a bias. As illustrated in Figure 1, a more liberal criterion implies higher Hit and False alarm rates, while a more conservative criterion implies higher Miss and Correct rejection rates, compared to an unbiased criterion.

Conversely, the sensitivity measures the ability of discrimination, which quantifies accurate performance (Vickers, 1979; Macmillan and Creelman, 2005). Conceptually, it represents the distance between the “noise only” and “noise + signal” distributions (see Figure 1). The most common measure of sensitivity (Green and Swets, 1966) is d’, which is formalized in terms of z as follows:
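Under the equal-variance Gaussian model, which is assumed here, the standard expression for d’ is:

```latex
d' = z(H) - z(FA)
```

Here $z(\cdot)$ denotes the inverse of the standard normal cumulative distribution function, and $H$ and $FA$ are the hit and false alarm proportions.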

The same sensitivity value can be obtained from different combinations of FA and H proportions. This equivalence in sensitivity is captured by the so-called receiver operating characteristic (ROC) curve, which describes the sensitivity of any classifier. The criterion, desirably independent of sensitivity, captures the remaining component of the parameter space. In reality, only one measure of criterion – the criterion location (c) – is statistically independent from sensitivity (Macmillan and Creelman, 2005). Such independence is also depicted by the ROC curve, as a shift in criterion does not alter the curve itself but is represented as different points along the same ROC curve (meaning different combinations of FA and H proportion).
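The relation between sensitivity and criterion can be illustrated with a short sketch under the equal-variance Gaussian model. The function names `sdt_measures` and `rates_from` and the two example observers are hypothetical; the formulas are the textbook ones, with c = -(z(H) + z(FA)) / 2 and ln β = c · d’.

```python
from math import exp
from statistics import NormalDist

_STD_NORMAL = NormalDist()  # mean 0, standard deviation 1


def sdt_measures(hit_rate, fa_rate):
    """Return (d', c, beta) under the equal-variance Gaussian SDT model.

    Rates must lie strictly between 0 and 1, otherwise z is undefined.
    """
    z_hit = _STD_NORMAL.inv_cdf(hit_rate)
    z_fa = _STD_NORMAL.inv_cdf(fa_rate)
    d_prime = z_hit - z_fa        # sensitivity
    c = -0.5 * (z_hit + z_fa)     # criterion location
    beta = exp(c * d_prime)       # likelihood-ratio criterion
    return d_prime, c, beta


def rates_from(d_prime, c):
    """Hit and false alarm rates implied by a given d' and c."""
    hit_rate = _STD_NORMAL.cdf(d_prime / 2 - c)
    fa_rate = _STD_NORMAL.cdf(-d_prime / 2 - c)
    return hit_rate, fa_rate


# Two hypothetical observers on the same ROC curve (same d'),
# one unbiased (c = 0) and one liberal (c < 0).
unbiased = rates_from(1.5, 0.0)
liberal = rates_from(1.5, -0.5)

for label, (h, fa) in (("unbiased", unbiased), ("liberal", liberal)):
    d, c, beta = sdt_measures(h, fa)
    print(f"{label}: H={h:.3f} FA={fa:.3f} d'={d:.2f} c={c:.2f} beta={beta:.2f}")
```

Both observers recover the same d’ even though their hit and false alarm rates differ, which is the sense in which a criterion shift moves a point along one ROC curve without changing the curve.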

The apparent simplicity of SDT may explain the wide use of this framework as a cognitive model. There are several studies that have used SDT to investigate prior probability bias, but the results depict an inconsistent picture. Prior probability manipulations have been found to mainly influence criterion (de Lange et al., 2013; Cheadle et al., 2015; Kaneko and Sakai, 2015) but also sensitivity (Stein and Peelen, 2015). Conversely, substantial work using the attentional cue paradigm converged on the idea that spatial attention has an enhancing effect on discriminability, which is captured by the sensitivity (d’) parameter (Bashinski and Bacharach, 1980; Downing, 1988; Hawkins et al., 1990; Cheadle et al., 2015).

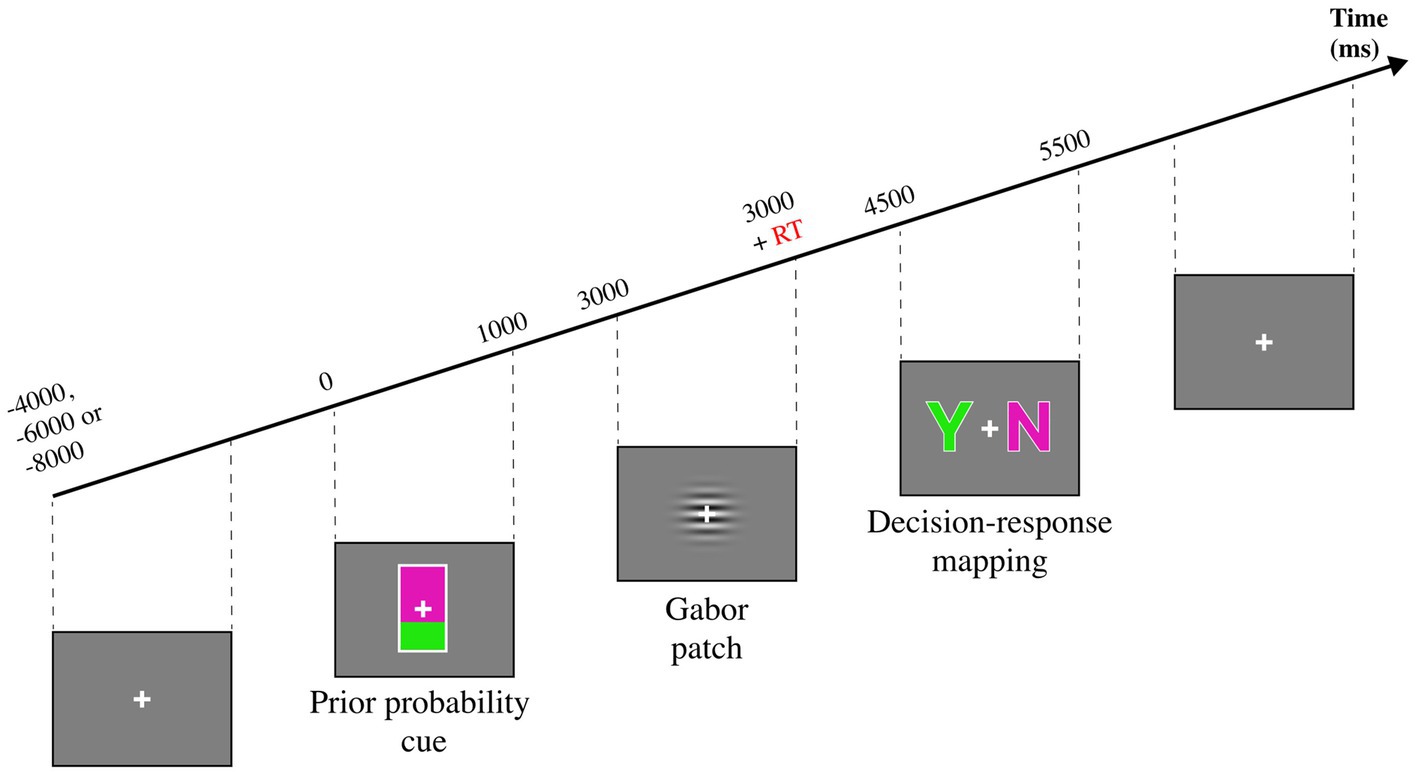

The study of Kaneko and Sakai (2015) is of relevance for this review because it highlights the benefits of applying cognitive modelling to behavioral data. In this study, the authors investigated the differences between prior probability and choice history bias with a perceptual decision-making task (see Figure 2), as it is unclear whether these biases arise via the same mechanism. The aim of the task was to report whether the target Gabor patch was moving [Target (+)] or not [Target (−)]. Prior probability was manipulated via a probabilistic cue preceding the Gabor patch. Two cues indicated whether the Gabor patch was likely (67%) or unlikely (33%) to move. The authors conducted a multilevel logistic regression analysis (a statistical model) on the behavioral data and reported that the previous decision, the cue, and the target were the factors that mostly contributed to target detection. Response times were enhanced by valid cues and impaired by invalid cues, whereas the previous decision did not affect RT. Moreover, the best-fitting model reported a significant interaction between previous decision and target on accuracy, which led the authors to suggest an effect of choice history bias only when the Gabor patch was moving. Conversely, cue and previous decision did not interact, suggesting distinct mechanisms biasing the decision process. The authors also modelled the dataset with SDT to determine the effects of prior probability and choice history on the model’s parameters. The authors report a main effect of choice history on sensitivity and a main effect of prior probability on criterion. This result further corroborates a view in which these manipulations affect decision-making via distinct mechanisms.

Figure 2. Experimental task from Kaneko and Sakai (2015). A mostly green probability cue indicates a 67% probability of seeing a moving Gabor patch, while a mostly purple cue indicates a 33% probability of seeing a moving Gabor patch. The Gabor patch is shown until the participant presses a key, indicating that they have decided. A subsequent decision-response mapping cue instructs the participants to report whether the Gabor patch was moving or not.

The main difference between the two analyses conducted by the authors is the extent to which they can link results to specific factors influencing the decision process. Even if the results of the statistical model converged with the SDT ones, only the latter ones can be associated with latent factors which are cognitively significant. The statistical model’s parameters have not been designed to map onto latent cognitive processes. Thus, while the statistical model’s parameters describe unspecified factors, the SDT’s parameters are directly linked with latent cognitive processes.

While SDT explains decision-making based on a single observation of evidence, the Sequential Probability Ratio Test (SPRT; Wald, 1945) introduces the idea of making decisions through a sequential evidence accumulation process. In SPRT, the log likelihood ratio between two competing hypotheses is updated after every observation. In turn, evidence accumulation models (EAMs) build upon this framework to better describe the whole decision-making process, including sensory encoding and motor execution time. Thus, SDT and EAMs present themselves as static and dynamic variants within the same family of hypothesis tests (Griffith et al., 2021). By estimating the accumulation of evidence over time, EAMs not only predict accuracy but also response time distributions – which have been shown to be crucial when linking behavioral and neural data (Gold and Shadlen, 2007). The assumptions shared between EAMs constitute a formal theory of decision-making (Evans and Wagenmakers, 2020) with three main assumptions: (1) evidence supporting each choice is accumulated over time, (2) the accumulation is characterized by random fluctuations, and (3) the decision is taken when enough evidence favoring one choice has been accumulated up to a threshold (Bogacz et al., 2006). While these assumptions generally hold for the whole class of EAMs, each model has different characteristics, parameters, and assumptions. For example, a major distinction between evidence accumulation models pertains to the number of accumulators. The DDM has a single accumulator with two boundaries, thus describing a two-alternative forced choice. Race models, such as the Racing Diffusion Model (RDM; Tillman et al., 2020), the Linear Ballistic Accumulator (LBA; Brown and Heathcote, 2008) and the Leaky Competing Accumulator (LCA; Usher and McClelland, 2001), can account for multiple responses and can thus be applied to a wider range of tasks. For a detailed comparison between EAMs see Ratcliff et al. (2016).
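The sequential updating described for the SPRT can be sketched as follows. This is a minimal illustration that assumes Gaussian evidence with known means under each hypothesis and uses Wald's approximate thresholds; the function names, the error rates and the example distributions are assumptions made for the example.

```python
import random
from math import log
from statistics import NormalDist


def wald_thresholds(alpha, beta_err):
    """Approximate SPRT bounds on the log likelihood ratio.

    alpha: tolerated rate of choosing h1 when h2 is true.
    beta_err: tolerated rate of choosing h2 when h1 is true.
    """
    upper = log((1 - beta_err) / alpha)
    lower = log(beta_err / (1 - alpha))
    return upper, lower


def sprt(samples, dist_h1, dist_h2, upper, lower):
    """Accumulate the log likelihood ratio until a bound is crossed.

    Returns (decision, number of samples used, final log likelihood ratio).
    The decision is None if the samples run out before a bound is reached.
    """
    llr = 0.0
    n = 0
    for e in samples:
        n += 1
        llr += log(dist_h1.pdf(e)) - log(dist_h2.pdf(e))
        if llr >= upper:
            return "h1", n, llr
        if llr <= lower:
            return "h2", n, llr
    return None, n, llr


# Hypothetical evidence distributions for the two hypotheses.
h1 = NormalDist(mu=0.5, sigma=1.0)
h2 = NormalDist(mu=-0.5, sigma=1.0)
upper, lower = wald_thresholds(alpha=0.05, beta_err=0.05)

rng = random.Random(1)
noisy_evidence = (rng.gauss(0.5, 1.0) for _ in range(1000))  # h1 is true
print(sprt(noisy_evidence, h1, h2, upper, lower))
```

The number of samples needed before a bound is crossed plays the role of decision time, which is the ingredient that SDT lacks and that evidence accumulation models build on.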

In the past, both the RDM (Tillman et al., 2020) and the LBA (Forstmann et al., 2010; Nishiguchi et al., 2019) have been used to investigate decision-making biases. Nonetheless, the DDM remains the most used EAM in the response bias research (Mulder et al., 2012; Nunez et al., 2017; Garton et al., 2019; Klatt et al., 2020; Ghaderi-Kangavari et al., 2022, 2023) and it has been shown to be the optimal decision-making mechanism for two-alternative forced choice tasks (Bogacz et al., 2006). Because of its extended applications and efficacy, here we will only report studies that used the DDM or a closely related version such as the attentional DDM (Krajbich et al., 2010).

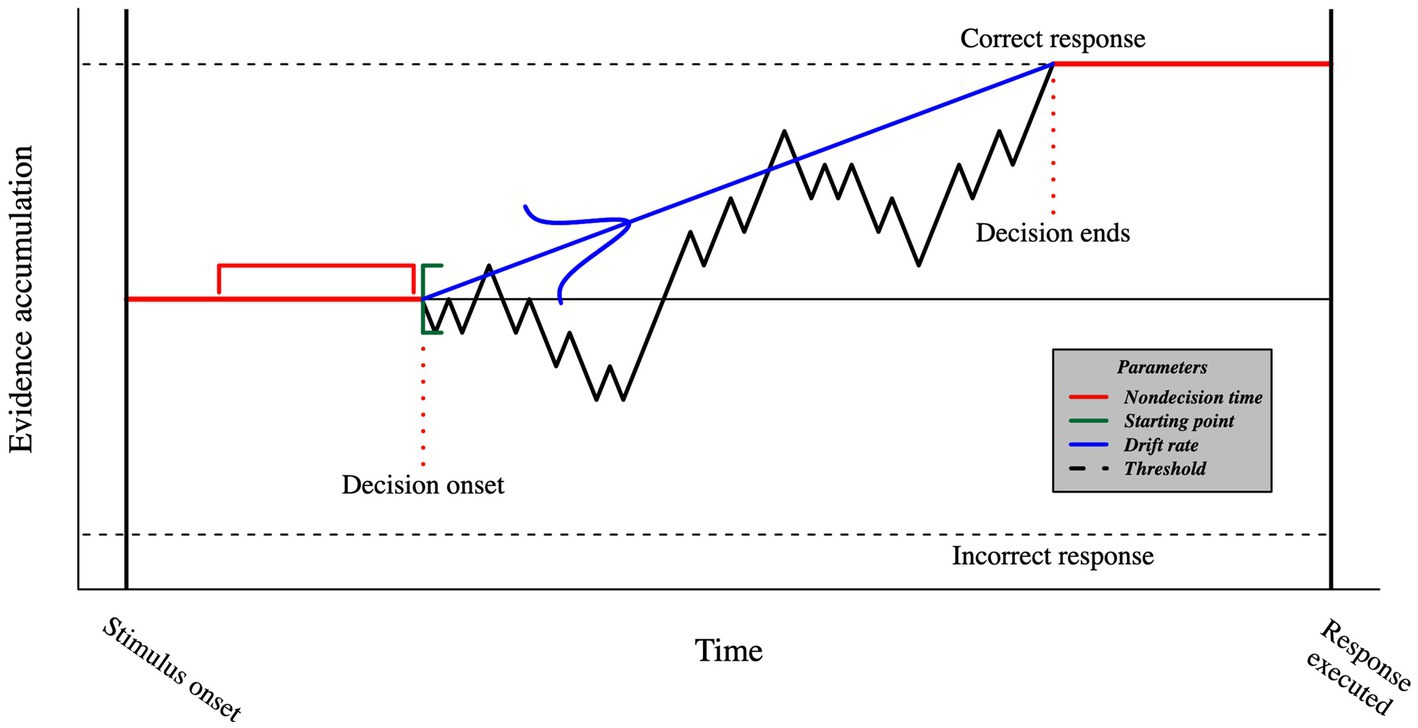

The DDM describes how sensory evidence is accumulated over time (see Figure 3). The average velocity of evidence accumulation is defined by the drift rate. The starting point of evidence accumulation is drawn from a uniform distribution and, when unbiased, is assumed to be equally distant from each choice on average so that no option has an advantage over the other. The threshold is the evidence accumulation boundary. Once it is reached, the participant commits to a choice and executes the motor processes that allow for a response. Finally, non-decision time captures all the remaining cognitive processes that happen between the stimulus onset and the decision time, such as visual encoding time and motor response execution. The DDM also has three additional parameters addressing across-trial variabilities in drift rate, starting point, and non-decision time. These parameters allow the DDM to provide accurate predictions of the law-like patterns in the data (Forstmann et al., 2016), especially predicting different RT distributions for correct and incorrect responses (Laming, 1979).

Figure 3. Visual representation of the DDM. The timeframe between stimulus onset and the execution of the response includes both decision- (black) and non-decision- (red) related processes. The evidence accumulation begins at a starting point that is sampled from a uniform distribution (green), and proceeds with a mean speed known as the drift rate, which is sampled from a normal distribution (blue). Once the threshold is reached, we assume that the participant commits to a choice and initiates the motor command. The duration of non-decision processes is sampled from a uniform distribution.
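The parameters described above can be made concrete with a small simulation. This is a sketch of a diffusion process using a simple Euler scheme, not the estimation procedure used in any of the reviewed studies; the parameter names (`v`, `a`, `z`, `t0`, `sv`, `sz`, `st0`), the default values and the time step are assumptions chosen for illustration.

```python
import random
from math import sqrt


def simulate_ddm_trial(rng, v=1.0, a=1.5, z=0.5, t0=0.3,
                       sv=0.0, sz=0.0, st0=0.0,
                       s=1.0, dt=0.001, max_t=5.0):
    """Simulate one trial and return (choice, response time in seconds).

    v: mean drift rate, a: boundary separation,
    z: relative starting point (0.5 = unbiased), t0: non-decision time,
    sv: across-trial SD of drift (normal),
    sz, st0: across-trial ranges of start point and non-decision time (uniform),
    s: within-trial noise, dt: simulation step.
    """
    drift = rng.gauss(v, sv)
    x = a * (z + rng.uniform(-sz / 2, sz / 2))
    non_decision = t0 + rng.uniform(-st0 / 2, st0 / 2)
    step_sd = s * sqrt(dt)
    t = 0.0
    while 0.0 < x < a and t < max_t:
        x += drift * dt + rng.gauss(0.0, step_sd)
        t += dt
    if x >= a:
        choice = "upper"
    elif x <= 0.0:
        choice = "lower"
    else:
        choice = None  # no boundary reached within max_t
    return choice, t + non_decision


rng = random.Random(0)
trials = [simulate_ddm_trial(rng, sv=0.5, sz=0.1, st0=0.1) for _ in range(500)]
upper_rts = [rt for choice, rt in trials if choice == "upper"]
lower_rts = [rt for choice, rt in trials if choice == "lower"]
print("proportion upper:", len(upper_rts) / len(trials))
print("mean RT upper:", sum(upper_rts) / max(len(upper_rts), 1))
print("mean RT lower:", sum(lower_rts) / max(len(lower_rts), 1))
```

Because each trial returns both a choice and a response time, the same simulated data yield accuracy and separate RT distributions for the two responses, which is what the model is fitted to in practice.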

Despite the advantages EAMs offer over simpler cognitive models or statistical tests, a series of criticisms have been raised towards this class of models. Firstly, some authors (Evans and Wagenmakers, 2020) argue that EAMs have reached a limit in the extent to which they can explain decision-making processes. The high similarity between EAMs makes it difficult to assess which cognitive dynamics best explain the decision process. A crucial challenge involves assessing how well neural data can be integrated into the EAM framework. Neural data offer greater scope for discriminating between different theoretical accounts compared to behavioral data. Secondly, some of the models’ assumptions are incompatible with recent empirical findings (Evans and Wagenmakers, 2020). For example, the motor response and the decision process have been shown to be temporally intertwined (Servant et al., 2015, 2016), contradicting the DDM assumption of sequentiality. A future step to address such a problem will consist of replacing random sources of variability with systematic ones. Other limitations include falsifiability issues which could arise with some EAMs (Heathcote et al., 2014; Jones and Dzhafarov, 2014). If the parameters are not properly constrained, the high flexibility of such models could result in an unfalsifiable model (Heathcote et al., 2014).

A diverse range of attentional tasks, from Posner Cueing to Multiple Object Tracking, is essential for comprehensively probing the intricate nature of attention. Each paradigm targets specific attentional aspects, such as spatial, feature-based, or object-based attention, enabling a more holistic understanding. As attention is a multifaceted phenomenon, employing various tasks ensures a more accurate and nuanced portrayal of its mechanisms and limitations. This broad perspective is crucial for the development of cognitive models of attention, whose primary goal is to encompass the different attentional mechanisms under a common theoretical framework.

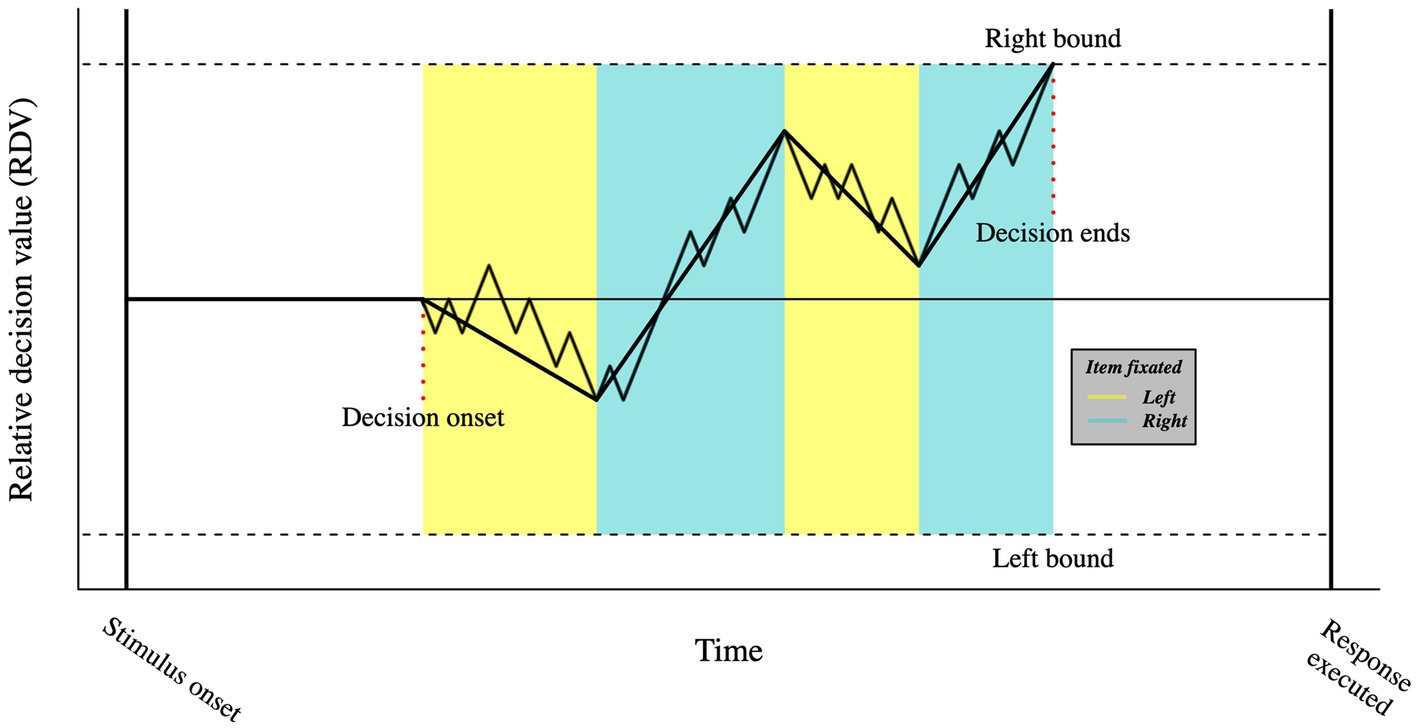

An example of this comes from the development of a variant of the DDM called the attentional drift-diffusion model (aDDM; Krajbich et al., 2010). The aDDM is based on the idea that attention mediates the formation of visual short-term memory traces, a concept previously formalized by Smith and Ratcliff (2009). The attentional drift-diffusion model keeps the main properties of the DDM but makes further assumptions about the role of eye movements. Specifically, the model assumes that as participants allocate gaze time onto one option relative to the other, they are more likely to choose the gazed option. This assumption is formalized as a drift rate bias towards the attended choice (see Figure 4). The aDDM predicts that participants will tend to choose the last seen option, unless the choice’s value is significantly worse than the alternative option; a prediction that has received empirical support (Smith and Krajbich, 2018) across different decision-making domains (e.g., food- and money-related, risky, and social choices), consolidating the role of eye movements in attention orienting. Crucially, promising prospects derive from the recent expansion of the aDDM, in which the probability of fixation on an alternative is expressed as a logistic function of its accumulated value (Gluth et al., 2020). Such models highlight how the attentional mechanisms not only increase evidence accumulation, but also account for the value of the available options (Smith and Krajbich, 2019).

Figure 4. Visual representation of the aDDM. In the aDDM, fixating one option biases the slope of the relative decision value towards that choice. As in the DDM, when the relative decision value reaches one of the boundaries, a decision is made.
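The gaze-dependent drift can be sketched in a few lines. The update rule below follows the commonly cited aDDM form, in which the value of the unattended option is discounted by a factor theta; the parameter values, the fixed alternating fixation schedule and the function name are illustrative assumptions rather than fitted estimates or the authors' fixation model.

```python
import random


def simulate_addm_trial(rng, r_left, r_right, d=0.0002, theta=0.3,
                        sigma=0.02, fixation_ms=400,
                        first_fixation="left", max_ms=20000):
    """Simulate one binary choice in 1 ms steps.

    The relative decision value starts at 0; +1 means "choose left",
    -1 means "choose right". While one option is fixated, the value of
    the other option is discounted by theta (0 <= theta <= 1).
    Returns (choice, decision time in ms, option fixated at decision).
    """
    value = 0.0
    looking = first_fixation
    for t in range(1, max_ms + 1):
        if looking == "left":
            drift = d * (r_left - theta * r_right)
        else:
            drift = d * (theta * r_left - r_right)
        value += drift + rng.gauss(0.0, sigma)
        if value >= 1.0:
            return "left", t, looking
        if value <= -1.0:
            return "right", t, looking
        if t % fixation_ms == 0:  # simplistic fixed-duration gaze switch
            looking = "right" if looking == "left" else "left"
    return None, max_ms, looking


rng = random.Random(2)
trials = [simulate_addm_trial(rng, r_left=5, r_right=5) for _ in range(200)]
decided = [tr for tr in trials if tr[0] is not None]
chose_last_seen = sum(choice == looking for choice, _, looking in decided)
print("share choosing the last fixated option:", chose_last_seen / len(decided))
```

Setting `theta=1` removes the gaze dependence and reduces the sketch to an ordinary diffusion on the value difference, which is a convenient way to check what the attentional term contributes.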

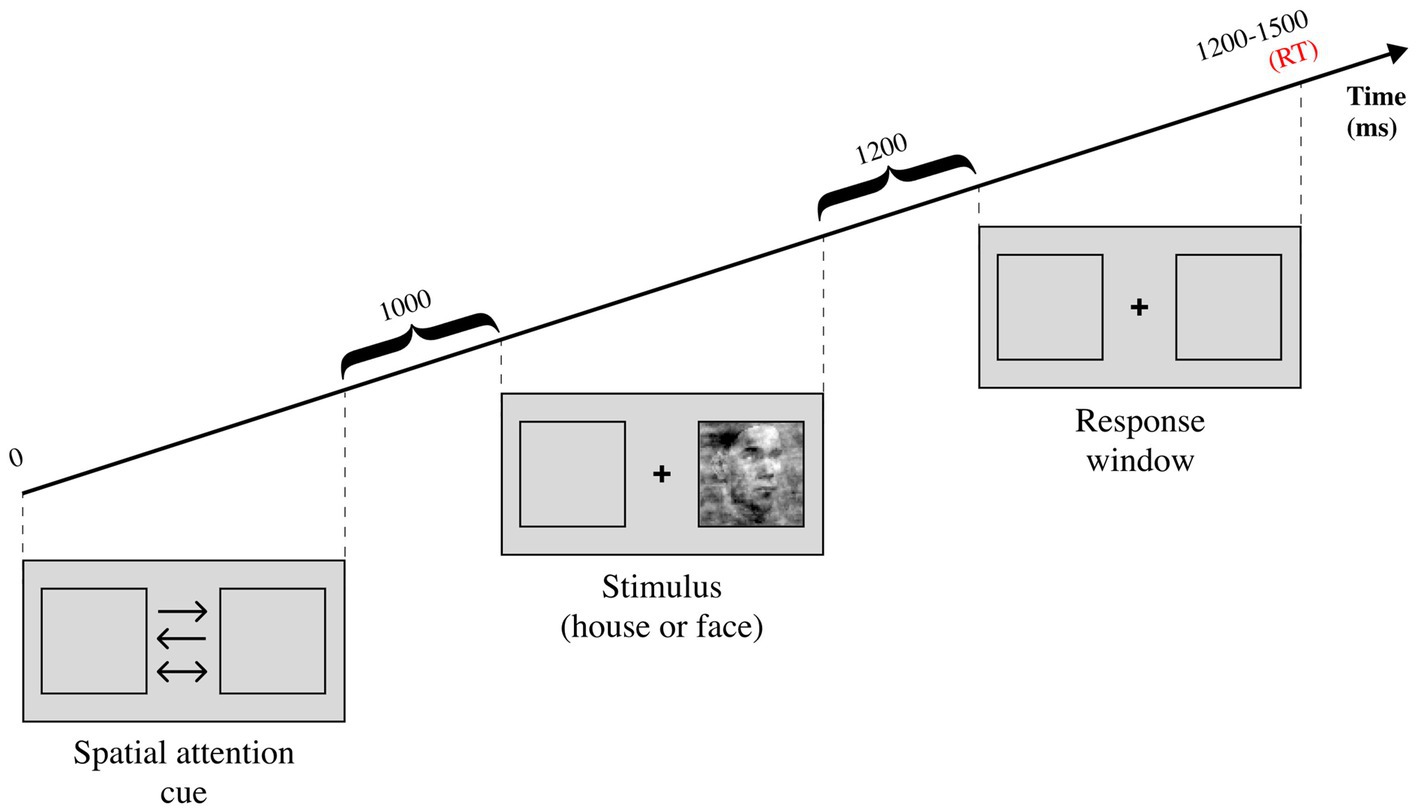

Two more recent studies investigated how spatial attention influences perceptual decision-making with the classic DDM, while recording electroencephalography (EEG) data. The paradigm, shared between the two studies, consisted of a house/face discrimination task which featured a spatial attention cue before the stimulation (see Figure 5). The first study (Ghaderi-Kangavari et al., 2023) consisted of a model comparison, assessed with the deviance information criterion (DIC) and R². The authors reported that the winning model allowed non-decision time to vary between the spatial attention conditions. This finding diverges from the idea that attention affects drift rates by increasing signal-to-noise ratio during evidence accumulation (Smith et al., 2004; Krajbich, 2019; Klatt et al., 2020) but remains in line with DDM predictions (Smith et al., 2004). Nonetheless, this result may not reflect the same attentional processes investigated in previous studies as the uncertainty factor induced by the coherence manipulation influences attentional effects (Smith and Ratcliff, 2009). It is also worth noting that the authors report a significant correlation between the amplitude of the contralateral N2 component and non-decision time, corroborating previous studies (Klatt et al., 2020). These findings were extended by the second study (Ghaderi-Kangavari et al., 2022) investigating how spatial attention affects different sub-components of non-decision time. Visual encoding time was found to be influenced by spatial attention but not exclusively. In fact, the authors speculate that motor execution time might be affected as well. Considering that older adults were found to have significantly higher non-decision times compared to younger adults (Klatt et al., 2020), both hypotheses remain plausible.

Figure 5. Experimental task from Ghaderi-Kangavari et al. (2022, 2023). There are three possible spatial attention cues, the one-sided arrows are informative while the two-sided arrow is uninformative. The stimulus can either be a face or a house, and it can either have high coherence (low noise) or low coherence (high noise). The participant is asked to report whether the stimulus is a face or a house after the stimulation is over, during the response window.

Several EAM-based studies that probed prior probability manipulations converged on the idea that they mainly influence the starting point parameter (Ratcliff and McKoon, 2008; Simen et al., 2009; Forstmann et al., 2010; Mulder et al., 2012; White and Poldrack, 2014; Garton et al., 2019). More recently, researchers combined the DDM with fMRI data to reveal how prior probability affects neural dynamics during a face/house discrimination task (Dunovan and Wheeler, 2018). The authors reported that the model with the best fit allowed both starting point and drift rate to vary between prior probability conditions. At the neural level, the drift rate bias mapped onto pre- and post-stimulus BOLD activity in the Inferior Temporal Cortex (ITC), indicating that quality of evidence is reflected by physiological activity in this category-selective region. This result corroborates previous findings that prior probability manipulations affect the drift rate parameter (Hanks et al., 2011; Kelly et al., 2021). Another recent study (Garton et al., 2019) investigated age-related differences in prior probability bias. The results indicate that flexibility of bias control, which refers to the tendency to be biased, is minimally impaired with age.
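The difference between a starting point bias and a drift rate bias can be illustrated with the closed-form choice probability of a simple diffusion process without across-trial variability. This is a minimal sketch: the function name and the parameter values are assumptions, and the formula is the standard first-passage result for a Wiener process with two absorbing boundaries.

```python
from math import exp


def p_upper(v, a, z, s=1.0):
    """Probability of reaching the upper boundary before the lower one.

    v: drift rate, a: boundary separation,
    z: absolute starting point with 0 < z < a, s: within-trial noise.
    """
    if abs(v) < 1e-12:
        return z / a  # limit of the expression below as v approaches 0
    k = 2.0 * v / s ** 2
    return (1.0 - exp(-k * z)) / (1.0 - exp(-k * a))


a = 1.5
# Ambiguous stimulus: no evidence for either choice (drift of zero).
neutral = p_upper(v=0.0, a=a, z=0.5 * a)
# Prior probability implemented as a shift of the starting point.
start_bias = p_upper(v=0.0, a=a, z=0.6 * a)
# Prior probability implemented as a constant offset added to the drift.
drift_bias = p_upper(v=0.3, a=a, z=0.5 * a)

print("neutral:", neutral)
print("starting point bias:", start_bias)
print("drift rate bias:", drift_bias)
```

Both mechanisms shift choice proportions in the same direction, so choice data alone cannot separate them. They are usually distinguished through the RT distributions, since a starting point shift mainly affects fast responses whereas a drift offset keeps acting throughout the trial.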

Taken together, studies investigating the role of attention and prior probability via cognitive modelling reveal a complex picture. The discrepancies within studies on attention or expectation might be due to the heterogeneity of paradigms used, the strategies deployed by participants, or unknown latent factors. Nonetheless, the different impact of age on attention and expectation biases supports the view that these psychological phenomena arise from different mechanisms.

Attentional manipulations, which have been often linked to the drift rate parameter, and thus to the speed of evidence accumulation, have also been shown to influence non-decision time. Such findings suggest that attention has a broad impact on cognition, which is not measurable by a single DDM parameter. This is in line with the generally shared view of attention as an all-round cognitive phenomenon, not attributable to a single brain region but rather to a network of regions. Components of the frontoparietal network have repeatedly been associated with spatial attention (Nobre and Kastner, 2014), including the posterior parietal cortex, intraparietal sulcus, dorsal premotor/posterior prefrontal cortex and anterior cingulate cortex. On the more temporal side, the results showing a correlation between the contralateral N2 component and non-decision time pave the way for future studies investigating the influence of attention on the motor component of non-decision time, e.g., via electromyography-based models of decision-making (Servant et al., 2021). The overall picture suggests that the selective influence of spatial attention manipulations on DDM parameters is challenged.

Several studies have shown that prior probability manipulations influence drift rate (Hanks et al., 2011; Dunovan and Wheeler, 2018; Kelly et al., 2021), in addition to their established influence on starting point (Ratcliff and McKoon, 2008; Simen et al., 2009; Forstmann et al., 2010; Mulder et al., 2012; White and Poldrack, 2014; Garton et al., 2019). The diffuse influence of prior probability manipulations on both drift rate and starting point suggests that a multistage process might be in place (Dunovan and Wheeler, 2018). In line with this hypothesis, the difference in drift rate between prior probability conditions correlates with ITC BOLD activity (Dunovan and Wheeler, 2018), while activation in the frontoparietal network is associated with shifts in the starting point parameter (Mulder et al., 2012).

In this paper, we reviewed the most popular methodological approaches used to study decision-making biases. We illustrated the properties of statistical models and explained why their descriptive function is fundamental to empirical research. Nonetheless, we also discussed how such models do not provide enough insight into the cognitive processes underlying decision-making. We continued by illustrating how cognitive models overcome the limitations of statistical models by providing a formal description of the investigated cognitive processes; the obtained results are unambiguously interpretable according to the model’s assumptions. We reviewed studies that applied both Signal Detection Theory and Evidence Accumulation Models and showed that EAMs have a significant advantage over SDT because they incorporate response time measures in addition to response choice. Early applications of cognitive models, such as SDT, provided a means to quantify the bias. More advanced cognitive models, such as EAMs, can account for the relationship between RT distributions and accuracy (Forstmann et al., 2016). In particular, the DDM has been shown to reliably explain a variety of speeded decision-making tasks. Finally, we reviewed recent studies that manipulated expectation and attention. Even if the emerging picture remains elusive, cognitive models of decision-making can help us to disentangle intricately intertwined processes such as attention and expectation.

In conclusion, investigating decision-making biases is a necessary step to achieve a greater understanding of the decision-making process. This is true for both basic and clinical research. We argue that, at this point in time, the implementation of state-of-the-art cognitive models is the best approach to quantify biases, test formal theories and advance the field of research.

While cognitive models are useful for identifying and quantifying (e.g., attentional) biases, they leave open the question of which neural dynamics lead to these biases.

A prominent class of models used to explain the neural dynamics of evidence accumulation is that of attractor neural network models (Wang, 2002; Wong and Wang, 2006; Quax and Van Gerven, 2018; Esnaola-Acebes et al., 2022). Attractor dynamics entail that the neural network has one or more stable states, to which it converges over time. These models have also been successful in explaining a wider variety of cognitive processes, including memory (Hopfield, 1982) and classification (Chaudhuri and Fiete, 2019). The temporal dynamics of these models can lead to response time and choice data that are highly similar to those predicted by evidence accumulation models (Keung et al., 2020). Attractor neural network models have been used to investigate which neural parameters lead to known phenomena in RT data. For example, Lo and Wang (2006) used a neural network model of cortico-basal ganglia dynamics to show that adjustments in response caution (decision thresholds) could be implemented by adjusting the synaptic strength of the cortico-striatal connections. Moreover, nonlinear attractor models can explain post-error response time biases in the absence of feedback (Berlemont and Nadal, 2019) and confidence-related sequential effects observed in empirical data (Berlemont et al., 2020).
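The idea that a network converges to one of several stable states, and that the time it takes to do so can be read out as a response time, can be illustrated with a deliberately small toy model. The sketch below is not the Wang (2002), Wong and Wang (2006) or Lo and Wang (2006) model; it is a generic pair of rate units with self-excitation and mutual inhibition, and the function name `simulate_competition`, the sigmoid transfer function, and every parameter value are assumptions chosen so that the symmetric state is unstable and one unit eventually wins.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def simulate_competition(input_1, input_2, noise=0.0, w_self=1.0, w_inh=1.0,
                         gain=8.0, theta=0.5, tau=0.02, dt=0.001,
                         t_max=2.0, threshold=0.8, seed=0):
    """Two rate units with self-excitation and mutual inhibition.

    Returns (choice, decision_time). choice is 1 or 2 for the unit whose
    rate first reaches `threshold`, or 0 if neither does within t_max.
    All parameter values are illustrative assumptions.
    """
    rng = np.random.default_rng(seed)
    r = np.zeros(2)                        # both units start at rest
    inputs = np.array([input_1, input_2])  # external evidence for each option
    n_steps = int(round(t_max / dt))

    for step in range(1, n_steps + 1):
        # net input: own activity excites, the competitor inhibits
        net = w_self * r - w_inh * r[::-1] + inputs - theta
        drive = sigmoid(gain * net)
        # Euler step of tau * dr/dt = -r + drive, plus optional additive noise
        r = r + (dt / tau) * (-r + drive) \
            + noise * np.sqrt(dt) * rng.standard_normal(2)
        if r.max() >= threshold:
            return int(np.argmax(r)) + 1, step * dt

    return 0, t_max


if __name__ == "__main__":
    print(simulate_competition(0.35, 0.30))              # weak evidence for option 1
    print(simulate_competition(0.45, 0.30))              # stronger evidence for option 1
    print(simulate_competition(0.30, 0.30, noise=0.3))   # noise breaks the tie
```

In the noise-free case the unit receiving the larger input wins, and a larger input difference leads to an earlier threshold crossing, loosely mirroring the effect of a higher drift rate in an evidence accumulation model. With equal inputs and no noise, the two units stay tied at a low rate and no decision is reached, so in this toy model noise or an input asymmetry is what pushes the network into one of its two winner-take-all states.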

Although it is not yet clear how biases in attention or prior probability could be implemented in attractor neural network models, a recent study that fit such models to empirical RT data (Berlemont et al., 2020) offers exciting opportunities for direct comparison with evidence accumulation models.

EC, SM, and BF contributed to the conception of the article. EC wrote all the sections of the article. SM and BF contributed to manuscript revision and proofreading. All authors contributed to the article and approved the submitted version.

This work was supported by the Deep Brain ERC Cog grant [grant number 8674750]. Funding sources had no role in the design, writing or interpretation of this review.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Aitken, F., Menelaou, G., Warrington, O., Koolschijn, R. S., Corbin, N., Callaghan, M. F., et al. (2020). Prior expectations evoke stimulus-specific activity in the deep layers of the primary visual cortex. PLoS Biol. 18:e3001023. doi: 10.1371/journal.pbio.3001023

Bashinski, H. S., and Bacharach, V. R. (1980). Enhancement of perceptual sensitivity as the result of selectively attending to spatial locations. Percept. Psychophys. 28, 241–248. doi: 10.3758/BF03204380

Berlemont, K., Martin, J.-R., Sackur, J., and Nadal, J.-P. (2020). Nonlinear neural network dynamics accounts for human confidence in a sequence of perceptual decisions. Sci. Rep. 10:7940. doi: 10.1038/s41598-020-63582-8

Berlemont, K., and Nadal, J.-P. (2019). Perceptual decision-making: biases in post-error reaction times explained by attractor network dynamics. J. Neurosci. 39, 833–853. doi: 10.1523/JNEUROSCI.1015-18.2018

Bogacz, R., Brown, E., Moehlis, J., Holmes, P., and Cohen, J. D. (2006). The physics of optimal decision making: a formal analysis of models of performance in two-alternative forced-choice tasks. Psychol. Rev. 113, 700–765. doi: 10.1037/0033-295X.113.4.700

Brown, S. D., and Heathcote, A. (2008). The simplest complete model of choice response time: linear ballistic accumulation. Cogn. Psychol. 57, 153–178. doi: 10.1016/j.cogpsych.2007.12.002

Carrasco, M. (2018). How visual spatial attention alters perception. Cogn. Process. 19, 77–88. doi: 10.1007/s10339-018-0883-4

Chaudhuri, R., and Fiete, I. (2019). Bipartite expander Hopfield networks as self-decoding high-capacity error correcting codes. Adv. Neural Inf. Proces. Syst. 32 Available at: https://papers.nips.cc/paper_files/paper/2019/hash/97008ea27052082be055447be9e85612-Abstract.html

Cheadle, S., Egner, T., Wyart, V., Wu, C., and Summerfield, C. (2015). Feature expectation heightens visual sensitivity during fine orientation discrimination. J. Vis. 15:14. doi: 10.1167/15.14.14

Ciaramitaro, V. M., Mitchell, J. F., Stoner, G. R., Reynolds, J. H., and Boynton, G. M. (2011). Object-based attention to one of two superimposed surfaces alters responses in human early visual cortex. J. Neurophysiol. 105, 1258–1265. doi: 10.1152/jn.00680.2010

de Lange, F. P., Rahnev, D. A., Donner, T. H., and Lau, H. (2013). Prestimulus oscillatory activity over motor cortex reflects perceptual expectations. J. Neurosci. 33, 1400–1410. doi: 10.1523/JNEUROSCI.1094-12.2013

Denison, R. N., Heeger, D. J., and Carrasco, M. (2017). Attention flexibly trades off across points in time. Psychon. Bull. Rev. 24, 1142–1151. doi: 10.3758/s13423-016-1216-1

Doherty, J. R., Rao, A., Mesulam, M. M., and Nobre, A. C. (2005). Synergistic effect of combined temporal and spatial expectations on visual attention. J. Neurosci. 25, 8259–8266. doi: 10.1523/JNEUROSCI.1821-05.2005

Downing, C. J. (1988). Expectancy and visual-spatial attention: effects on perceptual quality. J. Exp. Psychol. Hum. Percept. Perform. 14, 188–202. doi: 10.1037//0096-1523.14.2.188

Dricu, M., Ceravolo, L., Grandjean, D., and Frühholz, S. (2017). Biased and unbiased perceptual decision-making on vocal emotions. Sci. Rep. 7:16274. doi: 10.1038/s41598-017-16594-w

Dunovan, K., and Wheeler, M. E. (2018). Computational and neural signatures of pre and post-sensory expectation bias in inferior temporal cortex. Sci. Rep. 8:13256. doi: 10.1038/s41598-018-31678-x

Esnaola-Acebes, J. M., Roxin, A., and Wimmer, K. (2022). Flexible integration of continuous sensory evidence in perceptual estimation tasks. Proc. Natl. Acad. Sci. U. S. A. 119:e2214441119. doi: 10.1073/pnas.2214441119

Evans, N., and Wagenmakers, E.-J. (2020). Evidence accumulation models: current limitations and future directions. Quant. Methods Psychol. 16, 73–90. doi: 10.20982/tqmp.16.2.p073

Forstmann, B., Brown, S., Dutilh, G., Neumann, J., and Wagenmakers, E.-J. (2010). The neural substrate of prior information in perceptual decision making: a model-based analysis. Front. Hum. Neurosci. 4:40. doi: 10.3389/fnhum.2010.00040

Forstmann, B. U., Ratcliff, R., and Wagenmakers, E.-J. (2016). Sequential sampling models in cognitive neuroscience: advantages, applications, and extensions. Annu. Rev. Psychol. 67, 641–666. doi: 10.1146/annurev-psych-122414-033645

Frischkorn, G. T., and Schubert, A.-L. (2018). Cognitive models in intelligence research: advantages and recommendations for their application. J. Intelligence 6:34. doi: 10.3390/jintelligence6030034

Garcia-Lazaro, H. G., Bartsch, M. V., Boehler, C. N., Krebs, R., Donohue, S., Harris, J. A., et al. (2019). Dissociating reward- and attention-driven biasing of global feature-based selection in human visual cortex. J. Cogn. Neurosci. 31, 469–481. doi: 10.1162/jocn_a_01356

Garton, R., Reynolds, A., Hinder, M. R., and Heathcote, A. (2019). Equally flexible and optimal response bias in older compared to younger adults. Psychol. Aging 34, 821–835. doi: 10.1037/pag0000339

Ghaderi-Kangavari, A., Parand, K., Ebrahimpour, R., Nunez, M. D., and Amani Rad, J. (2023). How spatial attention affects the decision process: looking through the lens of Bayesian hierarchical diffusion model & EEG analysis. J. Cogn. Psychol. 35, 456–479. doi: 10.1080/20445911.2023.2187714

Ghaderi-Kangavari, A., Rad, J. A., Parand, K., and Nunez, M. D. (2022). Neuro-cognitive models of single-trial EEG measures describe latent effects of spatial attention during perceptual decision making. J. Math. Psychol. 111:102725. doi: 10.1016/j.jmp.2022.102725

Glickman, M., Moran, R., and Usher, M. (2022). Evidence integration and decision confidence are modulated by stimulus consistency. Nat. Hum. Behav. 6, 988–999. doi: 10.1038/s41562-022-01318-6

Gluth, S., Kern, N., Kortmann, M., and Vitali, C. L. (2020). Value-based attention but not divisive normalization influences decisions with multiple alternatives. Nat. Hum. Behav. 4, 634–645. doi: 10.1038/s41562-020-0822-0

Gold, J. I., and Shadlen, M. N. (2007). The neural basis of decision making. Annu. Rev. Neurosci. 30, 535–574. doi: 10.1146/annurev.neuro.29.051605.113038

Green, D. M., and Swets, J. A. (1966). Signal detection theory and psychophysics. New York: John Wiley.

Griffith, T., Baker, S.-A., and Lepora, N. F. (2021). The statistics of optimal decision making: exploring the relationship between signal detection theory and sequential analysis. J. Math. Psychol. 103:102544. doi: 10.1016/j.jmp.2021.102544

Hanks, T. D., Mazurek, M. E., Kiani, R., Hopp, E., and Shadlen, M. N. (2011). Elapsed decision time affects the weighting of prior probability in a perceptual decision task. J. Neurosci. 31, 6339–6352. doi: 10.1523/JNEUROSCI.5613-10.2011

Hawkins, H. L., Hillyard, S. A., Luck, S. J., Mouloua, M., Downing, C. J., and Woodward, D. P. (1990). Visual attention modulates signal detectability. J. Exp. Psychol. Hum. Percept. Perform. 16, 802–811. doi: 10.1037//0096-1523.16.4.802

Heathcote, A., Brown, S., and Wagenmakers, E. J. (2015). “An introduction to good practices in cognitive modeling,” in An introduction to model-based cognitive neuroscience. eds. B. Forstmann and E. J. Wagenmakers (New York, NY: Springer), 25–48.

Heathcote, A., Wagenmakers, E.-J., and Brown, S. (2014). The falsifiability of actual decision-making models. Psychol. Rev. 121, 676–678. doi: 10.1037/a0037771

Ho, T. C., Brown, S., Abuyo, N. A., Ku, E.-H. J., and Serences, J. T. (2012). Perceptual consequences of feature-based attentional enhancement and suppression. J. Vis. 12:15. doi: 10.1167/12.8.15

Hopfield, J. J. (1982). Neural networks and physical systems with emergent collective computational abilities. Proc. Natl. Acad. Sci. 79, 2554–2558. doi: 10.1073/pnas.79.8.2554

Hsu, Y.-F., Hämäläinen, J. A., and Waszak, F. (2018). The processing of mispredicted and unpredicted sensory inputs interact differently with attention. Neuropsychologia 111, 85–91. doi: 10.1016/j.neuropsychologia.2018.01.034

Huang, D., Xue, L., Wang, M., Hu, Q., Bu, X., and Chen, Y. (2018). Feature-based attention elicited by precueing in an orientation discrimination task. Vis. Res. 148, 15–25. doi: 10.1016/j.visres.2018.05.001

Jigo, M., Gong, M., and Liu, T. (2018). Neural determinants of task performance during feature-based attention in human cortex. eNeuro 5:ENEURO.0375-17.2018. doi: 10.1523/ENEURO.0375-17.2018

Jones, M., and Dzhafarov, E. N. (2014). Unfalsifiability and mutual translatability of major modeling schemes for choice reaction time. Psychol. Rev. 121, 1–32. doi: 10.1037/a0034190

Kaneko, Y., and Sakai, K. (2015). Dissociation in decision bias mechanism between probabilistic information and previous decision. Front. Hum. Neurosci. 9:261. doi: 10.3389/fnhum.2015.00261

Kelly, S. P., Corbett, E. A., and O’Connell, R. G. (2021). Neurocomputational mechanisms of prior-informed perceptual decision-making in humans. Nat. Hum. Behav. 5, 467–481. doi: 10.1038/s41562-020-00967-9

Keung, W., Hagen, T. A., and Wilson, R. C. (2020). A divisive model of evidence accumulation explains uneven weighting of evidence over time. Nat. Commun. 11:2160. doi: 10.1038/s41467-020-15630-0

Klatt, L.-I., Schneider, D., Schubert, A.-L., Hanenberg, C., Lewald, J., Wascher, E., et al. (2020). Unraveling the relation between EEG correlates of attentional orienting and sound localization performance: a diffusion model approach. J. Cogn. Neurosci. 32, 945–962. doi: 10.1162/jocn_a_01525

Kok, P., Failing, M. F., and de Lange, F. P. (2014). Prior expectations evoke stimulus templates in the primary visual cortex. J. Cogn. Neurosci. 26, 1546–1554. doi: 10.1162/jocn_a_00562

Kok, P., Mostert, P., and de Lange, F. P. (2017). Prior expectations induce prestimulus sensory templates. Proc. Natl. Acad. Sci. U. S. A. 114, 10473–10478. doi: 10.1073/pnas.1705652114

Krajbich, I. (2019). Accounting for attention in sequential sampling models of decision making. Curr. Opin. Psychol. 29, 6–11. doi: 10.1016/j.copsyc.2018.10.008

Krajbich, I., Armel, C., and Rangel, A. (2010). Visual fixations and the computation and comparison of value in simple choice. Nat. Neurosci. 13, 1292–1298. doi: 10.1038/nn.2635

Kveraga, K., Vito, D. D., Cushing, C. A., Im, H. Y., Albohn, D. N., and Adams, R. (2019). Spatial and feature-based attention to expressive faces. Exp. Brain Res. 237, 967–975. doi: 10.1007/s00221-019-05472-8

Laming, D. (1979). Choice reaction performance following an error. Acta Psychol. 43, 199–224. doi: 10.1016/0001-6918(79)90026-X

Leite, F. P., and Ratcliff, R. (2011). What cognitive processes drive response biases? A diffusion model analysis. Judgm. Decis. Mak. 6, 651–687. doi: 10.1017/S1930297500002680

Lindsay, G. W. (2020). Attention in psychology, neuroscience, and machine learning. Front. Comput. Neurosci. 14:29. doi: 10.3389/fncom.2020.00029

Lo, C.-C., and Wang, X.-J. (2006). Cortico–basal ganglia circuit mechanism for a decision threshold in reaction time tasks. Nat. Neurosci. 9, 956–963. doi: 10.1038/nn1722

Macmillan, N. A., and Creelman, C. D. (2005). Detection theory: a user’s guide. 2nd Edn. New York: Psychological Press.

Moerel, D., Grootswagers, T., Robinson, A. K., Shatek, S. M., Woolgar, A., Carlson, T. A., et al. (2022). The time-course of feature-based attention effects dissociated from temporal expectation and target-related processes. Sci. Rep. 12:1. doi: 10.1038/s41598-022-10687-x

Mulder, M. J., Wagenmakers, E.-J., Ratcliff, R., Boekel, W., and Forstmann, B. U. (2012). Bias in the brain: a diffusion model analysis of prior probability and potential payoff. J. Neurosci. 32, 2335–2343. doi: 10.1523/JNEUROSCI.4156-11.2012

Nishiguchi, Y., Sakamoto, J., Kunisato, Y., and Takano, K. (2019). Linear ballistic accumulator modeling of attentional bias modification revealed disturbed evidence accumulation of negative information by explicit instruction. Front. Psychol. 10:2447. doi: 10.3389/fpsyg.2019.02447

Nunez, M. D., Vandekerckhove, J., and Srinivasan, R. (2017). How attention influences perceptual decision making: single-trial EEG correlates of drift-diffusion model parameters. J. Math. Psychol. 76, 117–130. doi: 10.1016/j.jmp.2016.03.003

Quax, S., and Van Gerven, M. (2018). Emergent mechanisms of evidence integration in recurrent neural networks. PLoS One 13:e0205676. doi: 10.1371/journal.pone.0205676

Ramirez, L. D., Foster, J. J., and Ling, S. (2021). Temporal attention selectively enhances target features. J. Vis. 21:6. doi: 10.1167/jov.21.6.6

Ratcliff, R. (1978). A theory of memory retrieval. Psychol. Rev. 85, 59–108. doi: 10.1037/0033-295X.85.2.59

Ratcliff, R., and McKoon, G. (2008). The diffusion decision model: theory and data for two-choice decision tasks. Neural Comput. 20, 873–922. doi: 10.1162/neco.2008.12-06-420

Ratcliff, R., Smith, P. L., Brown, S. D., and McKoon, G. (2016). Diffusion decision model: current issues and history. Trends Cogn. Sci. 20, 260–281. doi: 10.1016/j.tics.2016.01.007

Servant, M., Logan, G. D., Gajdos, T., and Evans, N. J. (2021). An integrated theory of deciding and acting. J. Exp. Psychol. Gen. 150, 2435–2454. doi: 10.1037/xge0001063

Servant, M., White, C., Montagnini, A., and Burle, B. (2015). Using covert response activation to test latent assumptions of formal decision-making models in humans. J. Neurosci. 35, 10371–10385. doi: 10.1523/JNEUROSCI.0078-15.2015

Servant, M., White, C., Montagnini, A., and Burle, B. (2016). Linking theoretical decision-making mechanisms in the Simon task with electrophysiological data: a model-based neuroscience study in humans. J. Cogn. Neurosci. 28, 1501–1521. doi: 10.1162/jocn_a_00989

Simen, P., Contreras, D., Buck, C., Hu, P., Holmes, P., and Cohen, J. D. (2009). Reward rate optimization in two-alternative decision making: empirical tests of theoretical predictions. J. Exp. Psychol. Hum. Percept. Perform. 35, 1865–1897. doi: 10.1037/a0016926

Smith, S. M., and Krajbich, I. (2018). Attention and choice across domains. J. Exp. Psychol. Gen. 147, 1810–1826. doi: 10.1037/xge0000482

Smith, S. M., and Krajbich, I. (2019). Gaze amplifies value in decision making. Psychol. Sci. 30, 116–128. doi: 10.1177/0956797618810521

Smith, P. L., and Ratcliff, R. (2009). An integrated theory of attention and decision making in visual signal detection. Psychol. Rev. 116, 283–317. doi: 10.1037/a0015156

Smith, P. L., Ratcliff, R., and Wolfgang, B. J. (2004). Attention orienting and the time course of perceptual decisions: response time distributions with masked and unmasked displays. Vis. Res. 44, 1297–1320. doi: 10.1016/j.visres.2004.01.002

Smout, C. A., Garrido, M. I., and Mattingley, J. B. (2020). Global effects of feature-based attention depend on surprise. NeuroImage 215:116785. doi: 10.1016/j.neuroimage.2020.116785

Smout, C. A., Tang, M. F., Garrido, M. I., and Mattingley, J. B. (2019). Attention promotes the neural encoding of prediction errors. PLoS Biol. 17:e2006812. doi: 10.1371/journal.pbio.2006812

Stein, T., and Peelen, M. V. (2015). Content-specific expectations enhance stimulus detectability by increasing perceptual sensitivity. J. Exp. Psychol. Gen. 144, 1089–1104. doi: 10.1037/xge0000109

Tillman, G., Van Zandt, T., and Logan, G. (2020). Sequential sampling models without random between-trial variability: the racing diffusion model of speeded decision making. Psychon. Bull. Rev. 27, 911–936. doi: 10.3758/s13423-020-01719-6

Townsend, J. T., and Ashby, F. G. (1983). The stochastic modeling of elementary psychological processes. Cambridge, England: Cambridge University Press.

Turner, B. M., Forstmann, B. U., Love, B. C., Palmeri, T. J., and Van Maanen, L. (2017). Approaches to analysis in model-based cognitive neuroscience. J. Math. Psychol. 76, 65–79. doi: 10.1016/j.jmp.2016.01.001

Urai, A. E., de Gee, J. W., Tsetsos, K., and Donner, T. H. (2019). Choice history biases subsequent evidence accumulation. eLife 8:e46331. doi: 10.7554/eLife.46331

Usher, M., and McClelland, J. L. (2001). The time course of perceptual choice: the leaky, competing accumulator model. Psychol. Rev. 108, 550–592. doi: 10.1037/0033-295X.108.3.550

van Rooij, I., and Blokpoel, M. (2020). Formalizing verbal theories. Soc. Psychol. 51, 285–298. doi: 10.1027/1864-9335/a000428

Vickers, D. (1979). Decision processes in visual perception. Cambridge, Massachusetts, United States: Academic Press.

Wald, A. (1945). Sequential tests of statistical hypotheses. Ann. Math. Stat. 16, 117–186. doi: 10.1214/aoms/1177731118

Wang, X.-J. (2002). Probabilistic decision making by slow reverberation in cortical circuits. Neuron 36, 955–968. doi: 10.1016/S0896-6273(02)01092-9

White, A. L., and Carrasco, M. (2011). Feature-based attention involuntarily and simultaneously improves visual performance across locations. J. Vis. 11:15. doi: 10.1167/11.6.15

White, C. N., and Poldrack, R. A. (2014). Decomposing bias in different types of simple decisions. J. Exp. Psychol. Learn. Mem. Cogn. 40, 385–398. doi: 10.1037/a0034851

Keywords: cognitive modelling, decision-making bias, attention, prior probability, DDM, SDT, EAM

Citation: Cerracchio E, Miletić S and Forstmann BU (2023) Modelling decision-making biases. Front. Comput. Neurosci. 17:1222924. doi: 10.3389/fncom.2023.1222924

Received: 15 May 2023; Accepted: 09 October 2023;

Published: 20 October 2023.

Edited by:

Cristiano Capone, National Institute of Nuclear Physics of Rome, Italy

Reviewed by:

Moshe Glickman, University College London, United Kingdom

Copyright © 2023 Cerracchio, Miletić and Forstmann. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Ettore Cerracchio, e.cerracchio@uva.nl
