
REVIEW article

Front. Comput. Neurosci., 17 May 2023

Volume 17 - 2023 | https://doi.org/10.3389/fncom.2023.1151895

This article is part of the Research Topic Horizons in Computational Neuroscience 2022.

Edward W. Large1,2

Iran Roman3

Ji Chul Kim1

Jonathan Cannon4

Jesse K. Pazdera4

Laurel J. Trainor4

John Rinzel5,6

Amitabha Bose7*

Rhythmicity permeates large parts of human experience. Humans generate various motor and brain rhythms spanning a range of frequencies. We also experience and synchronize to externally imposed rhythmicity, for example from music and song or from the 24-h light-dark cycles of the sun. In the context of music, humans have the ability to perceive, generate, and anticipate rhythmic structures, for example, “the beat.” Experimental and behavioral studies offer clues about the biophysical and neural mechanisms that underlie our rhythmic abilities, and about the different brain areas involved, but many open questions remain. In this paper, we review several theoretical and computational approaches, each centered at a different level of description, that address specific aspects of musical rhythmic generation, perception, attention, perception-action coordination, and learning. We survey methods and results from applications of dynamical systems theory, neuro-mechanistic modeling, and Bayesian inference. Some frameworks rely on synchronization of intrinsic brain rhythms that span the relevant frequency range; some formulations involve real-time adaptation schemes for error-correction to align the phase and frequency of a dedicated circuit; others involve learning and dynamically adjusting expectations to make rhythm tracking predictions. Each of the approaches, while initially designed to answer specific questions, offers the possibility of being integrated into a larger framework that provides insights into our ability to perceive and generate rhythmic patterns.

Biological processes, actions, perceptions, thoughts, and emotions all unfold over time. Some types of sensory information, like static images, can carry meaning independent of time, but most, like music and language, derive all of their semantic and emotive content from temporal and sequential structure. Rhythm (see Glossary) can be defined as a temporal arrangement of sensory stimuli that offers some degree of temporal predictability. The predictability granted by rhythm allows an organism to prepare for and coordinate with future events at both neural and behavioral levels (deGuzman and Kelso, 1991; Large and Jones, 1999; Schubotz, 2007; Fujioka et al., 2012; Patel and Iversen, 2014; Vuust and Witek, 2014). For example, repetitive movements, such as locomotion, are rhythmic. Simple repetitive rhythmic movements are relatively easy to instantiate in neural circuits, as can be seen from work on invertebrates and central pattern generator circuits (Kopell and Ermentrout, 1988; Marder et al., 2005; Yuste et al., 2005). Rhythm is also the basic building block of the human auditory communication systems of speech and music (e.g., Fiveash et al., 2021), but here, of course, the dynamics are much more complex and flexible.

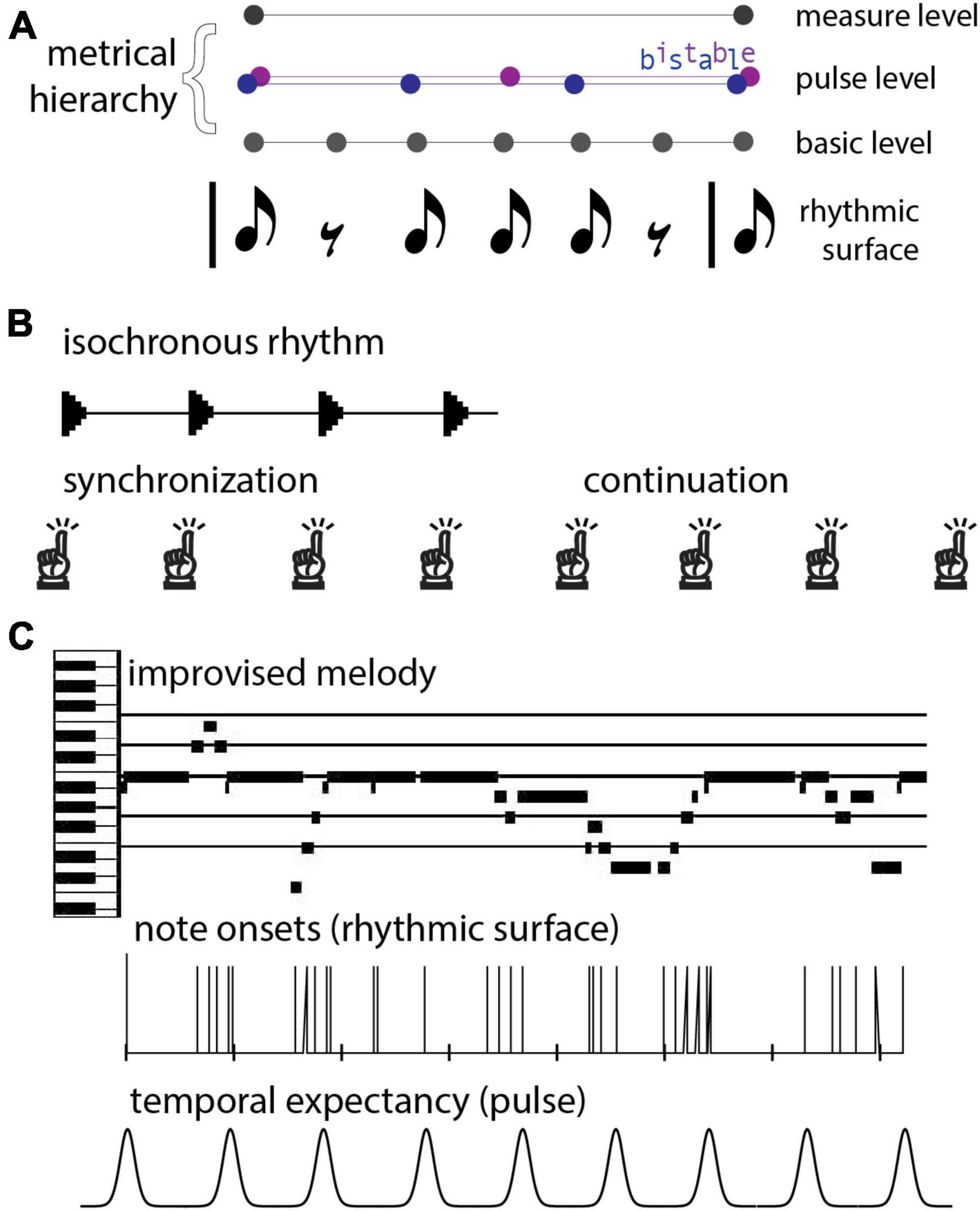

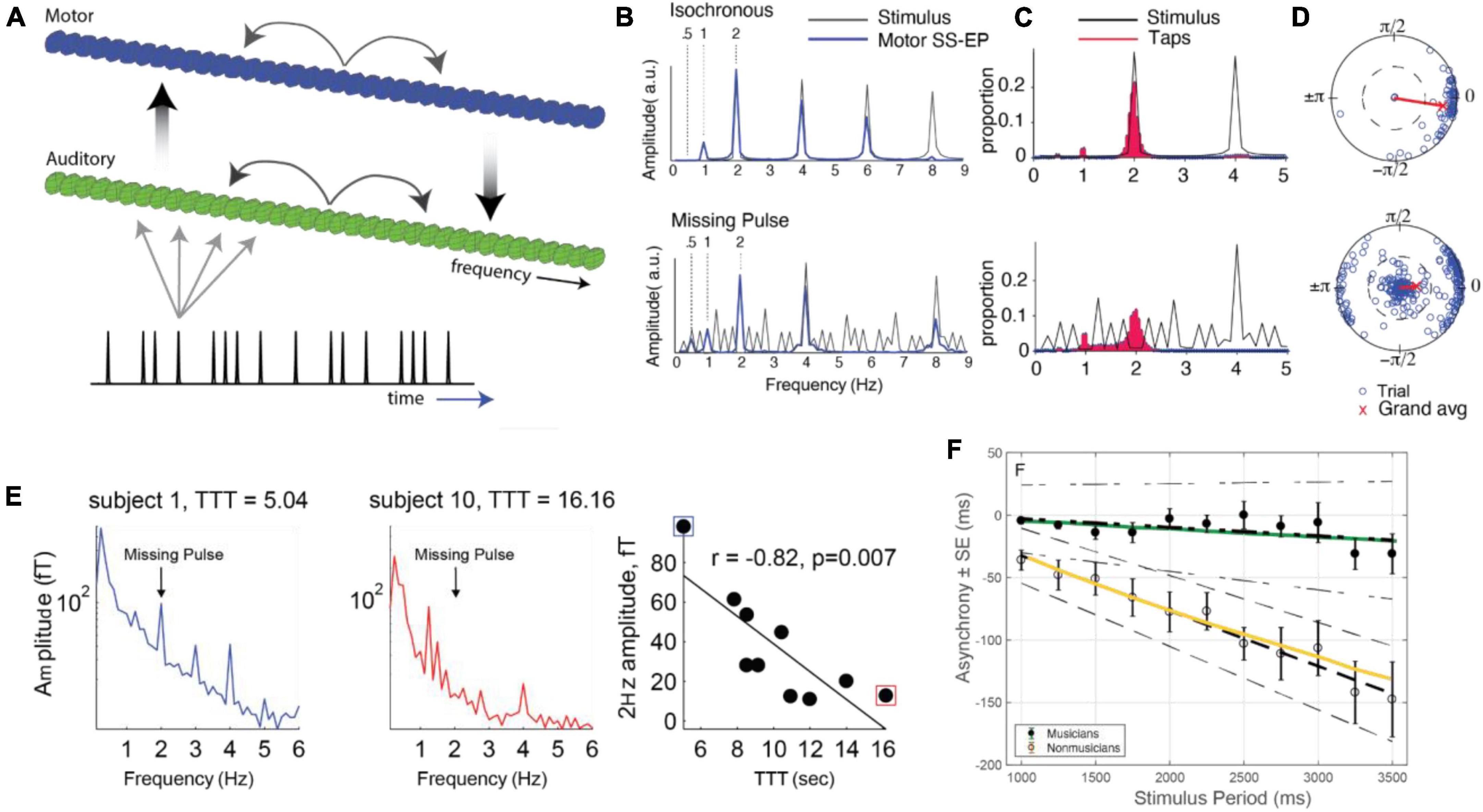

From a rhythmic pattern (rhythmic surface), humans neurally extract a (typically) periodic sequence known as a beat. Notably, beats can be perceived even where the rhythmic surface has a rest or silence (Figure 1A). Humans perceive beats at rates between about 0.5 and 8 Hz, with optimal beat perception around 2 Hz (Repp, 2005; Patel and Iversen, 2014). In addition, human brains extract a metrical hierarchy of temporal organization (or nested beat levels), typically with groups of two or three successive beat events at one level forming a single beat event at the next higher level (see Figure 1A). The term pulse refers to the most salient level of beats, behaviorally defined as the beats a listener taps when synchronizing with a musical rhythm. Importantly, energy at the pulse frequency (the tempo) of a rhythm may be weak or even entirely absent in the rhythmic surface (Haegens and Zion Golumbic, 2018). Thus, the pulse percept arises from the brain’s tendency to impose rhythmic structure on its auditory input (Tal et al., 2017), a tendency evident even early in development (Phillips-Silver and Trainor, 2005). Moreover, for complex rhythms the beat can be ambiguous or multi-stable; for example, a 6-beat pattern could be organized hierarchically as two groups of three beats or as three groups of two beats (see Figure 1A). In this review we focus on models of beat, pulse, and meter, with the aim of comparing and contrasting theoretical and modeling frameworks. While the approaches we review have also been used to make predictions about other rhythmic phenomena, such as pattern perception (Kaplan et al., 2022) and groove, these topics are beyond the scope of the current review.

Figure 1. (A) A rhythmic pattern (rhythmic surface) and possible metrical hierarchies. Notes indicate sound onsets and rests indicate silences. People perceive beats at different rates, or levels, and neurally extract periodic beat sequences whose events can coincide with both sounds and silences. Three levels of the metrical hierarchy (beat levels) are shown. In the example, the pulse (the intermediate beat level) is bistable; people can perceive this rhythm in groups of 2 or in groups of 3 pulses. (B) The synchronization-continuation task. A person first listens to an isochronous rhythm and synchronizes movements with it. Next, the stimulus ceases and the person continues tapping at the same frequency. (C) An improvised piano melody. Musical events unfold with diverse timings, and the discrete onset times are not periodic, but they may give rise to a pseudo-periodic pulse percept, which can function as a series of temporal expectancies (i.e., times at which events are likely to occur) in the human listener.

The major developmental disorders, including dyslexia, autism, attention deficits, and developmental coordination disorder, have been associated with deficits in timing and rhythm processing (Lense et al., 2021), suggesting that rhythm perception is deeply intertwined with core developmental processes. Rhythm is an important ingredient of human social functioning: the predictability of auditory rhythms enables humans to plan movements so as to synchronize with the beat, which in turn facilitates synchrony between people. A number of studies have shown that interpersonal synchrony promotes feelings of trust and social bonding (Hove and Risen, 2009; Valdesolo et al., 2010; Mogan et al., 2017; Savage et al., 2020), even in infancy (Cirelli et al., 2014; Trainor and Marsh-Rollo, 2019). The centrality of rhythm to human development and social interaction might explain why rhythmic music is universal across human societies; why people engage in music (listening and/or playing) most days of their lives; why caregivers around the world use rhythmic infant-directed singing as a tool to help infants with emotional regulation (calming or rousing) and social development; and why adults engage in rhythmic music to promote social bonding, such as at weddings, funerals, parties, team sports, cultural rituals, and religious ceremonies, and in war.

The central role of rhythm perception in human life energizes the scientific challenge of understanding it. In the present paper, we examine how different classes of computational models approach the problem of how rhythms are perceived and coordinated, focusing on the strengths and challenges faced by each approach. In the remainder of the introduction, we outline some of the basic issues that models must address. Then, in subsequent sections, we go into details of the predominant approaches to date.

At the most basic level, humans are able to perceive pulse and meter, and synchronize their motor behavior with music, such as through dance (Savage et al., 2015; Ellamil et al., 2016), body sway (Burger et al., 2014; Chang et al., 2017), or finger tapping (Repp, 2005; Repp and Su, 2013). Even synchronizing with an isochronous sequence of events, such as that produced by a metronome, raises numerous theoretical questions. Does the brain develop an explicit model of the metronome to predict its timing, or do the brain’s intrinsic neural rhythms naturally entrain to the beat? Might the brain eschew the use of oscillatory processes, and instead estimate the passage of time by counting the pulses of a pacemaker? Or does it utilize a combination of endogenous oscillatory neuronal circuits and counting processes?

Human rhythmic capabilities are simultaneously flexible enough to adapt to changes in musical tempo, yet robust enough to maintain the beat when faced with perturbations. Research on synchronization suggests that people can re-synchronize their tapping within a few beats of a tempo change (Large et al., 2002; Repp and Keller, 2004; Loehr et al., 2011; Scheurich et al., 2020); therefore, any model of rhythm perception must be able to adapt flexibly in real time, within several beats. Furthermore, music production is often a collaborative activity in which multiple performers must coordinate with one another in real time. Within these social contexts, the flexibility of human synchrony allows performers to maintain cohesion through mutual adaptation to one another’s variations in timing (Konvalinka et al., 2010; Loehr and Palmer, 2011; Dotov et al., 2022). How this adaptation occurs remains an open question: do timing changes evoke prediction errors that drive the brain to update its model of the meter, or do perturbations directly drive neural oscillations to shift in phase and/or period? Rhythm perception is also robust to brief perturbations. In real performances, musicians use deviations from “perfect” mechanical timing as a communicative device. For example, musicians often signal the metrical structure of a piece by lengthening the intervals between notes at phrase boundaries (Sloboda, 1983; Todd, 1985), a practice called phrase-final lengthening. Research suggests that such instances of expressive timing may actually aid, rather than disrupt, listeners in parsing the metrical and melodic structure of the music (Sloboda, 1983; Palmer, 1996; Large and Palmer, 2002). Alternatively, small timing deviations appear to be related to information content or entropy: phrase boundaries are typically places of high uncertainty about what notes, chords, or rhythms will come next, and there is evidence that people slow down at points of high uncertainty (Hansen et al., 2021). A viable neural model of rhythm perception must therefore not destabilize or lose track of the beat because of intermittent deviations such as these.

Our sense of rhythm is robust not only to timing perturbations but also to the complete discontinuation of auditory input: humans display the ability to continue tapping to a beat even after an external acoustic stimulus has been removed (e.g., Chen et al., 2002; Zamm et al., 2018), known as synchronization-continuation tapping (Figure 1B). Does this ability imply that we have “learned” the tempo? Or perhaps it points to hysteresis in a neural limit cycle, allowing it to maintain a rhythm even after external driving has ceased.

Important for its theoretical implications is the fact that synchronization behavior appears to be anticipatory. For example, when synchronizing finger taps with rhythms, people tend to tap tens of milliseconds before the beat – a phenomenon known as negative mean asynchrony (NMA; see Repp and Su, 2013, for a review). What neural mechanisms might be responsible for this asynchrony? Does NMA represent a prediction of an upcoming event, or might it arise from a time-delayed feedback system among neural oscillators (e.g., Dubois, 2001; Stepp and Turvey, 2010; Roman et al., 2019)?

Beyond the basic beat structure, music can be far more intricate, requiring listeners to perceive, learn, and process complex acoustic patterns, as in the case of syncopation. Evidence suggests that people can still perceive a beat in highly complex rhythms (see Figure 1C), even in rhythms so complex that they contain no spectral content at the beat frequency (Large et al., 2015; Tal et al., 2017). Explaining this ability requires considering larger network structures that respond appropriately to the different frequencies present in these patterns. What kinds of neural structures might be at play? Perhaps they are oscillator-based; if so, it is important to understand the extent to which the intrinsic and synaptic properties of the constituent elements determine model output. Perhaps these networks form a rhythm pattern generator akin to the “central pattern generators” found in motor systems, cardiac systems, and various invertebrate systems (Kopell and Ermentrout, 1988; Marder et al., 2005; Yuste et al., 2005), in which case it would be important to understand the neural basis for pacemaking within the circuit. On the other hand, the neural structures may not rely on intrinsic oscillation at all. For example, there may be stored templates used to perceive and recall a rhythm. If so, it is of interest to explore plausible neural instantiations for template learning or other non-oscillatory mechanisms.
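The claim that a rhythm can lack spectral content at its beat frequency is easy to check on an idealized onset train. The sketch below places onsets on a hypothetical 16-step grid (four steps per beat, one four-beat measure) and takes the magnitude of the discrete Fourier transform with NumPy. The pattern `syncopated` is an illustrative assumption, not a stimulus from the cited studies: it uses each of the four positions within a beat equally often, so the beat-rate components cancel exactly. Real stimuli are audio envelopes rather than unit impulses, so this only demonstrates the arithmetic, not the perception.

```python
import numpy as np

STEPS_PER_BEAT = 4   # assumption: sixteenth-note grid, four steps per beat
N_STEPS = 16         # one four-beat measure

def onset_spectrum(onset_steps, n_steps=N_STEPS):
    """Magnitude of the DFT of a binary onset train on a discrete time grid."""
    x = np.zeros(n_steps)
    x[list(onset_steps)] = 1.0          # unit impulse at each onset position
    return np.abs(np.fft.fft(x))

# Hypothetical syncopated pattern: step positions mod 4 are 0, 2, 1, 3, 0, 2, 1, 3,
# so each within-beat position occurs twice and the beat-rate components cancel.
syncopated = [0, 2, 5, 7, 8, 10, 13, 15]
# For comparison: one onset on every beat.
on_the_beat = [0, 4, 8, 12]

beat_bin = N_STEPS // STEPS_PER_BEAT    # DFT bin 4 = four cycles per measure = beat rate
sync_spec = onset_spectrum(syncopated)
beat_spec = onset_spectrum(on_the_beat)
```

Here `sync_spec[beat_bin]` is zero up to rounding error even though the pattern has eight onsets, whereas `beat_spec[beat_bin]` equals the number of onsets (4).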

Electrophysiological data from non-human primates have gradually shed light on some of the possible mechanisms of beat and rhythm processing. One main challenge is the time (years) and effort that go into training monkeys to synchronize with isochronous beats and complex rhythmic stimuli. Nonetheless, data from non-human primates have revealed that individual neurons in the medial prefrontal cortex (mPFC) show accelerating firing rates that reset after beat onsets and are a direct function of the beat tempo (Crowe et al., 2014; Merchant and Averbeck, 2017; Zhou et al., 2020). These studies reveal that the mPFC dynamically encodes the beat, and that it can integrate periodic information independently of the stimulus modality (i.e., visual or auditory; Betancourt et al., 2022). To date, we are not aware of human data collected from comparable neural substrates during a similar rhythm-tracking task.

In humans it is extremely difficult to measure single-neuron activity, which hampers the development of detailed biophysical models of the neurons and circuits that underlie human rhythm processing. However, higher-level models can be informed by non-invasive methods. In particular, scalp-recorded EEG and MEG have the temporal resolution to observe both population-level oscillatory activity in the time-frequency domain and brain events unfolding over tens of milliseconds. fMRI lacks the temporal resolution to capture fine temporal dynamics, but it can delineate the brain areas involved in rhythmic processing, as well as the connections between these areas. Despite these limitations, human neurophysiological data have informed some higher-level models of rhythm processing, both by suggesting constraints and by providing a way to test some of the models’ predictions. In summary, computational modeling efforts attempt to address fundamental questions about how the brain generates a beat and maintains it in the presence of noise and perturbations, while retaining the flexibility to adapt on a time scale of seconds to new tempos or rhythms, whether simple, like a metronome (Figure 1B), or more complex, like a piece of music (Figure 1C).

In this paper, we review multiple modeling paradigms at three different levels of description that seek to explain various aspects of human rhythmic capabilities. These models rely, to some extent, on the fact that the organized temporal structure of musical rhythms is generally predictable and often periodic. At the highest level of organization, Bayesian inference (e.g., Sadakata et al., 2006; Cannon, 2021) and predictive coding (e.g., Vuust and Witek, 2014; Koelsch et al., 2019) approaches exploit the predictability of rhythms by suggesting that the brain constructs a statistical model of the meter and uses it to anticipate the progression of a song. In contrast, oscillator models based in the theory of dynamical systems use Neural Resonance Theory (Large and Snyder, 2009) to show how structure inherent in the rhythm itself allows anticipatory behavior to emerge through the coupling of neural oscillations with musical stimuli (e.g., Large and Palmer, 2002; Large et al., 2015; Tichko and Large, 2019). In this approach, a heterogeneous oscillator network spanning the frequency range of interest mode-locks to complex musical rhythms. At perhaps the most basic level of modeling, beat perception of isochronous rhythms is addressed by mechanistic models that are either event-based (Mates, 1994a,b; Repp, 2001a; van der Steen and Keller, 2013) or based on neuronal-level oscillator descriptions (Bose et al., 2019; Byrne et al., 2020; Egger et al., 2020), both of which fall within the broad framework of dynamical systems. These mechanistic models postulate the existence of error-correction processes that adjust the period and phase of the perceived beat. Evaluating the merits of each modeling approach requires testing how well each can address specific findings from among the rich collection of rhythmic abilities and tendencies that humans exhibit.

The topic of musical rhythm has a rich history in psychology, and many different types of models have been proposed (Essens and Povel, 1985; McAngus Todd and Brown, 1996; Cariani, 2002). The models reviewed in this paper share the common feature that they are all dynamic; namely, they all involve variables that evolve in time according to some underlying set of ordinary differential equations. Another common feature is event-based updating; an event may be the onset of an auditory stimulus, the spike time of a neuron, or the zero crossing of an oscillator’s phase. At each event time, a variable or a parameter in the model is updated with a new value. In some cases, this may lead to formulation as a discrete dynamical system that can be described using a mathematical map (e.g., Large and Kolen, 1994; Large and Jones, 1999). Despite these formal similarities, the way that the dynamic formalisms are deployed often reflects deep differences in theoretical approaches. The neuro-mechanistic approach utilizes differential equations that describe the dynamics of individual neurons, which may then be combined into circuits designed to implement descriptions of neural function. The neuron-level and often the circuit-level descriptions can be analyzed using the tools of nonlinear dynamical systems (Rinzel and Ermentrout, 1998; Izhikevich, 2007), and the resulting models are well-suited to making predictions about neuron-level data. The dynamical systems approach is a theoretical framework within which the embodied view of cognition is often formalized (Kelso, 1995; Large, 2008; Schöner, 2008). Here, differential equations capture the general properties of families of physiological dynamical systems, and are derived using the tools of nonlinear dynamics (Pikovsky et al., 2001; Strogatz, 2015). These models are not intended to make predictions about neuron-level data. 
Rather, they are aimed at making predictions about ecological dynamics (the dynamics of the organism’s interaction with its environment), population-level neural dynamics, and the relationship between the two. Finally, Bayesian inference models are not always associated with dynamics; however, the domain of musical rhythm (which is all about change in time) makes the language of differential equations natural in this case. Bayesian models such as the one described here are aimed squarely at behavior-level data, not at the level of individual neurons or even neural populations. However, when deployed in the rhythmic domain, interesting parallels emerge that relate Bayesian models to more physiologically oriented descriptions, as we shall see.
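As a generic illustration of how event-based updating yields a discrete dynamical system, the sketch below iterates the standard sine circle map, which takes an oscillator’s phase (in cycles) at one stimulus onset to its phase at the next onset. It is not the specific map of Large and Kolen (1994); `omega` (frequency mismatch per stimulus cycle) and `k` (coupling strength) are illustrative values chosen here. The map has a 1:1 fixed point whenever |2π·omega/k| ≤ 1, and for these values the iteration settles onto the stable one, i.e., the oscillator phase-locks to the stimulus.

```python
import math

def circle_map(phi, omega, k):
    """One event-based update of the sine circle map; phase phi is in cycles (mod 1)."""
    return (phi + omega - (k / (2.0 * math.pi)) * math.sin(2.0 * math.pi * phi)) % 1.0

def iterate_map(phi0, omega, k, n_events):
    """Oscillator phase at each of n_events successive stimulus onsets."""
    phases = [phi0]
    for _ in range(n_events):
        phases.append(circle_map(phases[-1], omega, k))
    return phases

omega, k = 0.05, 0.5                      # illustrative detuning and coupling (assumptions)
phases = iterate_map(0.3, omega, k, 200)

# Stable 1:1 fixed point: sin(2*pi*phi*) = 2*pi*omega/k, taking the root where
# the map's slope 1 - k*cos(2*pi*phi*) lies between -1 and 1.
locked_phase = math.asin(2.0 * math.pi * omega / k) / (2.0 * math.pi)
```

With `k = 0`, each update simply advances the phase by `omega`, so the phase drifts rather than locking; coupling is what turns the event-by-event updates into synchronization.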

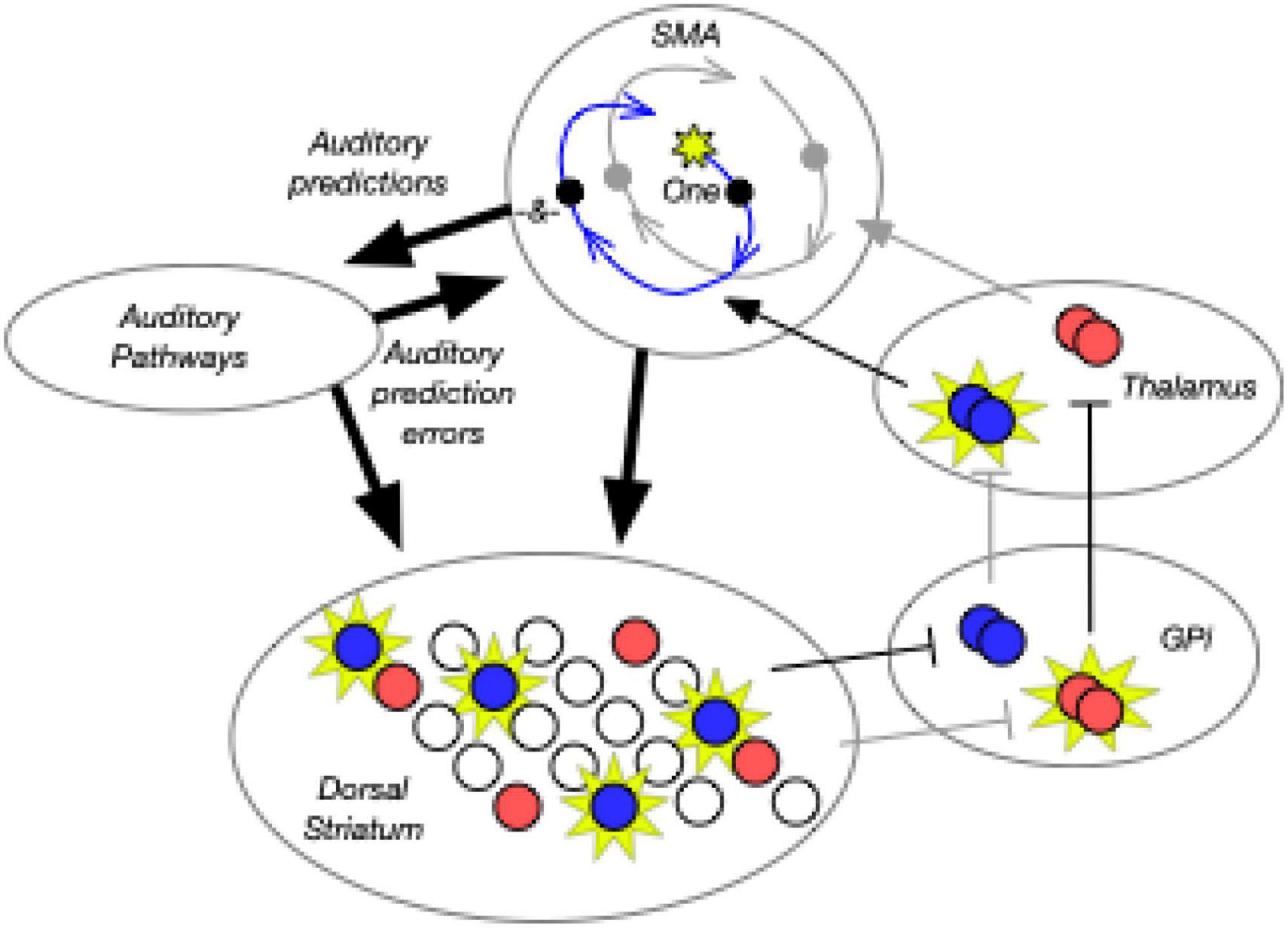

There is considerable evidence that the brain is capable of both duration-based (or single interval) timing and beat-based timing (Kasdan et al., 2022). fMRI and TMS studies in healthy populations show that duration-based timing relies particularly on the cerebellum (Teki et al., 2011; Merchant et al., 2015), and these results are corroborated in patients with cerebellar damage (Grahn and Brett, 2009; Grube et al., 2010). Furthermore, the cerebellum has been associated with prediction, absolute duration, and error detection (Kasdan et al., 2022). On the other hand, fMRI studies consistently show that beat perception activates a network involving auditory superior temporal regions, basal ganglia (notably the putamen), and supplementary (and pre-supplementary) motor areas (Lewis et al., 2004; Chen et al., 2006, 2008a,b; Grahn and Brett, 2007; Bengtsson et al., 2009; Grahn and Rowe, 2009, 2013; Teki et al., 2012; Patel and Iversen, 2014). It has been hypothesized that beat perception relies on the integration of these systems through pathways additionally involving parietal and auditory areas (Patel and Iversen, 2014; Cannon and Patel, 2021). Critical to this hypothesis is that beat perception relies on auditory-motor interactions. In humans, there is much evidence that motor areas are used for auditory timing (Schubotz, 2007; Iversen et al., 2009; Fujioka et al., 2012). Evidence from non-human primates synchronizing with periodic stimuli also shows interactions between sensory and motor areas (Betancourt et al., 2022). Thus, fully modeling rhythm processing in the human brain will likely require an architecture with both local areas and bidirectional interactions between areas.

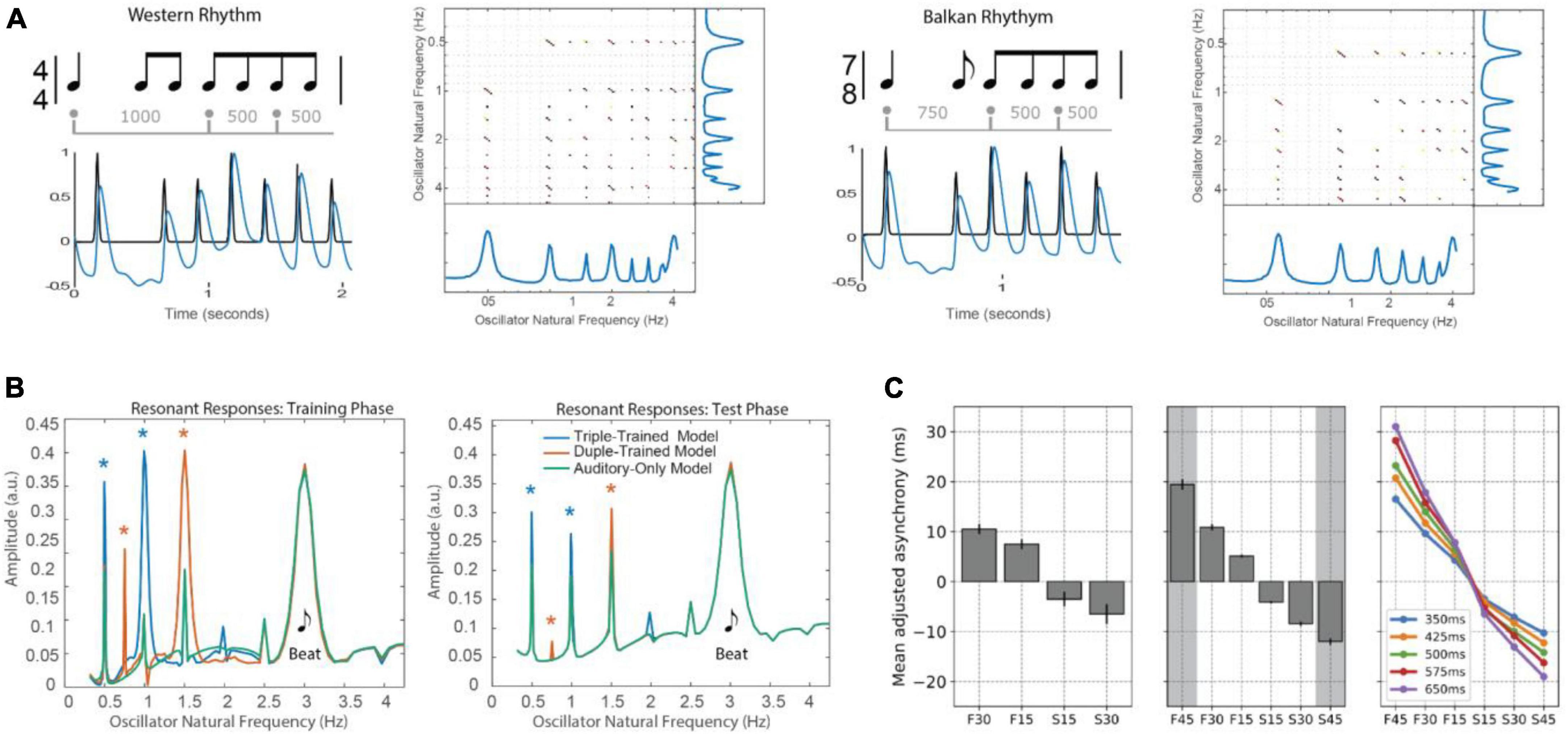

Cross-species studies suggest that humans and monkeys share mechanisms for duration-based timing, but that only humans show robust beat-based timing (Merchant and Honing, 2014; Merchant et al., 2015). Developmental studies indicate that young infants detect changes in rhythms in simple as well as moderately complex metrical structures, but that by 12 months of age, infants have already become specialized for processing rhythms with metrical structures that are common in their environment (Hannon and Trainor, 2007). Cross-cultural perceptual/tapping studies in adults have revealed that there is a universal tendency for people to “regularize” randomly timed rhythm patterns, so as to perceive/reproduce small-integer ratios between the durational intervals (Jacoby and McDermott, 2017). At the same time, people who grew up in different cultures that exposed them to different meters show different biases for the ratios that dominate their perception and production (Jacoby et al., 2021). Thus, of relevance to modeling, there appear to be both innate tendencies that may reflect intrinsic properties of neural networks and a degree of flexibility, such that networks are likely shaped both by the immediate input and longer-term learning.

Many neuroscience studies of beat and rhythm have been theoretically motivated by the idea that temporal regularity enables prediction of when upcoming beats will occur (Large and Kolen, 1994; Huron, 2008), with the consequence that perception is enhanced at the times of beat onsets, supporting expectations about both when events will occur and what those events will be (Large and Jones, 1999; Schroeder and Lakatos, 2009; Arnal and Giraud, 2012; Henry and Herrmann, 2014; Chang et al., 2019). While fMRI studies can reveal brain regions involved in processing rhythmic information, the temporal and spectral precision of EEG and MEG makes them useful for testing models of rhythm perception. Two basic approaches can be taken for EEG analysis. One examines the time course of electrical activity in the brain as sound events occur in a stimulus (event-related potentials, or ERPs), whereas the other uses frequency-based analyses to characterize periodicities and phase relations in the neural response. Components of ERPs, such as the mismatch negativity (MMN), occur in response to unexpected events in sequences or patterns of sounds (Näätänen et al., 2007; Carbajal and Malmierca, 2018), and have been shown to reflect predictive processes (e.g., Bendixen et al., 2009; Dauer et al., 2020). While a clear MMN is seen in response to a sound event with unexpected pitch or timbre (or an unexpected pattern of pitch or timbre events) within a sequence of sound events, direct evidence for predictive timing is less clear. This is likely because it is difficult to distinguish neural ERP activity at the expected time of an event (representing a response to the violation of a timing expectation) from neural activity in response to the actual sound event, whether it occurs earlier or later than expected.
However, ERP responses have been shown to be larger for sound omissions on metrically strong beats than for omissions on metrically weak beats (Bouwer et al., 2014), even in newborns (Winkler et al., 2009). ERPs can also be used indirectly to examine temporal expectations. For example, when listening to rhythms with ambiguous metrical structure, infants show larger ERP responses on beats they were previously primed to perceive as accented than on beats they were primed to hear as unaccented (Flaten et al., 2022). Thus, even infants can endogenously maintain a particular metrical interpretation of an ambiguous rhythm, and models of rhythm perception need to be able to cope with ambiguity and multi-stability.

A popular approach has been to examine the entrainment of neural responses to the frequencies present in temporally structured auditory stimuli, with interest in both frequency and phase alignment (Henry et al., 2014; Morillon and Schroeder, 2015). Many studies show that when a rhythm is presented, whether in speech or music, ongoing delta-band oscillations phase-align with the beats of the rhythm (Schroeder and Lakatos, 2009; Lakatos et al., 2013, 2016; Poeppel and Assaneo, 2020). There is debate as to whether these neural oscillations reflect the brain entraining to the input (Haegens and Zion Golumbic, 2018). Evidence suggests, however, that these oscillations do not simply reflect ERP responses following each sound event in the stimulus (Doelling et al., 2019), but rather change according to predictive cues in the input (Herbst and Obleser, 2019). Further, neural oscillations do not simply mimic the temporal structure of the input (Nozaradan et al., 2011, 2012; Tal et al., 2017), but also reflect internally driven (endogenously activated) processes, some of which appear to be present already in premature infants (Edalati et al., 2023). Thus, neural oscillations appear to offer a window into dynamic neural mechanisms (Doelling and Assaneo, 2021) and provide a way to test aspects of computational models.

The next few sections will examine and compare different modeling approaches spanning neuronal, mid-level and high-level descriptions of rhythm processing.

Models that allow for discrete event times are suited to address questions related to time-keeping, the generation of isochronous rhythms, and the synchronization of a periodic rhythm to a complex musical rhythm (beat perception). Time-keeper models are error-correction models within the information processing tradition, and they primarily operate by adapting to the time intervals in a stimulus. The continuous-time neuro-mechanistic models described in this section also produce event times. They too are error-correction models, seeking to align the timing of internally generated events with the timing of external sound onsets, and they update parameters of a dynamical neuronal system to match the phase and period of the stimulus. Adaptive oscillator models simulate synchronization (i.e., phase- and mode-locking) of an oscillation with a complex stimulus rhythm, and have been used to simulate the perception of a beat in complex musical rhythms. They adapt period based on the phase of individual events rather than on the measurement of time intervals.

The earliest time-keeper models were predominantly algorithmic error-correction models (Michon, 1967; Hary and Moore, 1987; Mates, 1994a,b; Vorberg and Wing, 1996), many of which were reviewed by Repp (2005) and Repp and Su (2013). For example, Mates (1994b) defined variables that model the internal time estimates an individual makes: the kth stimulus onset time is $S_I(k)$, the kth motor response time is $R_I(k)$, their error is $e_I(k) = S_I(k) - R_I(k)$, and the kth cycle period is $t_I(k)$. At the occurrence of each stimulus, the period is updated by the correction rule

$$t_I(k) = t_I(k-1) + \beta\,\bigl[S_I(k) - S_I(k-1) - t_I(k-1)\bigr],$$

while phase correction obeys

$$R_I(k+1) = R_I(k) + t_I(k) + \alpha\, e_I(k),$$

where α and β are the strengths of the phase and period correction, respectively. This is a linear model that can be solved explicitly. In the isochronous case, $S_I(k) - S_I(k-1)$ equals the interonset interval, denoted IOI (the time between successive stimulus onsets), provided that the estimate is itself error-free. In that case, period matching requires $t_I(k-1) = S_I(k) - S_I(k-1)$, which in turn implies $t_I(k) = t_I(k-1)$. Perfect synchrony occurs when $e_I(k) = 0$ and $t_I(k) = \mathrm{IOI}$, so that the motor response $R_I(k+1)$ follows $R_I(k)$ by exactly one IOI. Because the model is linear, it is straightforward to understand the separate effects that phase correction (strength α) and period correction (strength β) have on solutions. Further, the model readily adapts over a few cycles to tempo changes, which appear as departures of $S_I(k) - S_I(k-1)$ from a constant value. It can also exhibit asynchronies, i.e., a steady-state difference between stimulus time and motor response, by allowing $e_I(k)$ to be non-zero.
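A minimal Python sketch of this kind of linear error correction, assuming the rules take the form t_I(k) = t_I(k − 1) + β(S_I(k) − S_I(k − 1) − t_I(k − 1)) and R_I(k + 1) = R_I(k) + t_I(k) + α e_I(k), and that internal estimates are error-free (S_I(k) equals the actual onset time). The gains α = β = 0.5 and the metronome that jumps from a 500 ms to a 400 ms IOI are illustrative choices, not fitted values. The run shows the period estimate converging to the new IOI and the asynchrony caused by the tempo jump shrinking below 1 ms within roughly a dozen cycles.

```python
def simulate_mates(onsets, alpha=0.5, beta=0.5, t0=None, r0=None):
    """Linear phase and period error correction (sketch; assumed rule forms).

    Assumes error-free internal estimates, i.e. S_I(k) is the k-th onset time.
    Returns responses R_I(k), period estimates t_I(k), and errors e_I(k).
    """
    s = list(onsets)
    t = s[1] - s[0] if t0 is None else t0    # initial period estimate t_I(0)
    r = s[0] if r0 is None else r0           # first response R_I(0)
    responses, periods, errors = [r], [], []
    for k in range(len(s) - 1):
        e = s[k] - r                         # e_I(k) = S_I(k) - R_I(k)
        if k > 0:                            # period correction needs S_I(k - 1)
            t = t + beta * (s[k] - s[k - 1] - t)
        r = r + t + alpha * e                # phase correction gives R_I(k + 1)
        responses.append(r)
        periods.append(t)
        errors.append(e)
    return responses, periods, errors

# Isochronous 500 ms metronome that switches to a 400 ms IOI after the 10th onset.
onsets = [500.0 * i for i in range(10)]
onsets += [onsets[-1] + 400.0 * j for j in range(1, 31)]
responses, periods, errors = simulate_mates(onsets)
```

Because e_I(k) is defined as stimulus minus response, the negative errors right after the tempo jump mean the responses lag the faster stimulus; the phase term then pulls each next response earlier while the period term shortens t_I(k). This simple deterministic version relaxes to e_I(k) = 0, so reproducing a persistent asynchrony would require an added term.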

While time-keeper models have been successful in matching experimental data and aspects of synchronization, they leave open a major question. What are the neuro-mechanisms that the brain uses to synchronize and adapt to time intervals? Does the brain measure the passage of time? Early models focused on determining the length of intervals, anywhere from seconds to minutes. Treisman (1963), Church and Gibbon (1982), and Gibbon et al. (1984) proposed a pacemaker accumulator framework in which counts of a pacemaker clock are accumulated in a reference memory after a series of trials of different duration. These reference durations are then compared to a working memory accumulation for the current interval to make a judgment of duration. Left open is how a neuronal model may produce the pacemaker clock or the ability to count cycles and compare different counts. Also, an unaddressed issue is that in rhythmic interval timing, the model seeks a particular alignment of stimulus and beat generator phase; in single interval timing the accumulator is by definition aligned with the interval’s initial time.
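A minimal sketch of the pacemaker-accumulator idea, assuming a Poisson pacemaker (pulse gaps drawn with `random.Random.expovariate`), a reference memory that stores the mean count from a series of training trials at a single standard duration (a simplification of training with trials of different durations), and a judgment that simply compares the current working-memory count with that reference. The rate, standard duration, and number of trials are illustrative. The sketch deliberately says nothing about how neurons would implement the clock, the counting, or the comparison, which is exactly the open question raised in the text.

```python
import random

def count_pulses(duration_s, rate_hz, rng):
    """Pulses a Poisson pacemaker emits during an interval of duration_s seconds."""
    n = 0
    t = rng.expovariate(rate_hz)          # time of the first pulse
    while t < duration_s:
        n += 1
        t += rng.expovariate(rate_hz)     # exponential gap to the next pulse
    return n

def train_reference(standard_s, rate_hz, n_trials, rng):
    """Reference memory: mean accumulated count over a series of training trials."""
    counts = [count_pulses(standard_s, rate_hz, rng) for _ in range(n_trials)]
    return sum(counts) / n_trials

def judge(test_s, reference_count, rate_hz, rng):
    """Compare the working-memory count for the current interval with the reference."""
    current = count_pulses(test_s, rate_hz, rng)
    return "longer" if current > reference_count else "shorter"

rng = random.Random(0)                    # fixed seed for reproducibility
RATE_HZ = 200.0                           # hypothetical pacemaker rate
reference = train_reference(1.0, RATE_HZ, n_trials=50, rng=rng)
verdicts = {d: judge(d, reference, RATE_HZ, rng) for d in (0.5, 1.5)}
```

Because every judgment is tied to the moment counting starts, the accumulator is automatically aligned with the interval's onset, which is the contrast with rhythmic timing noted in the text.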

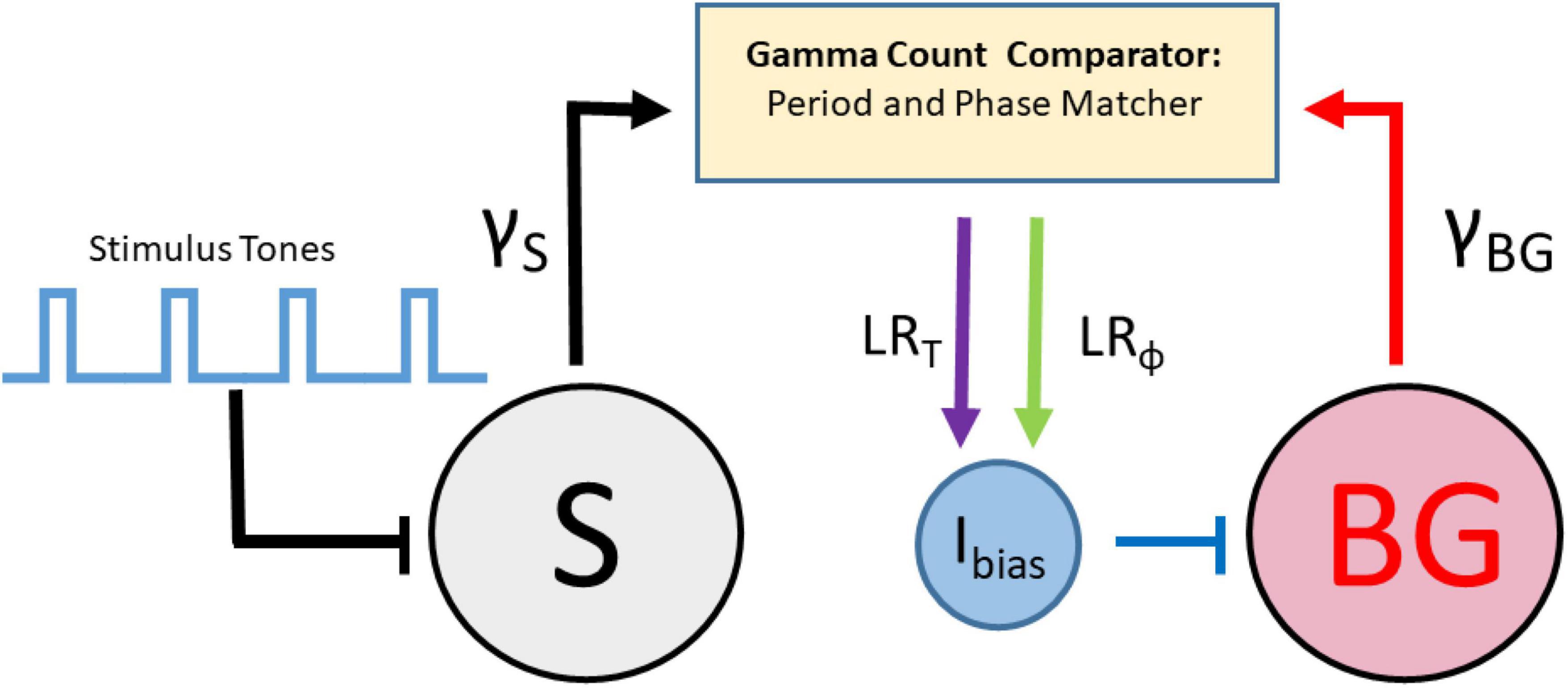

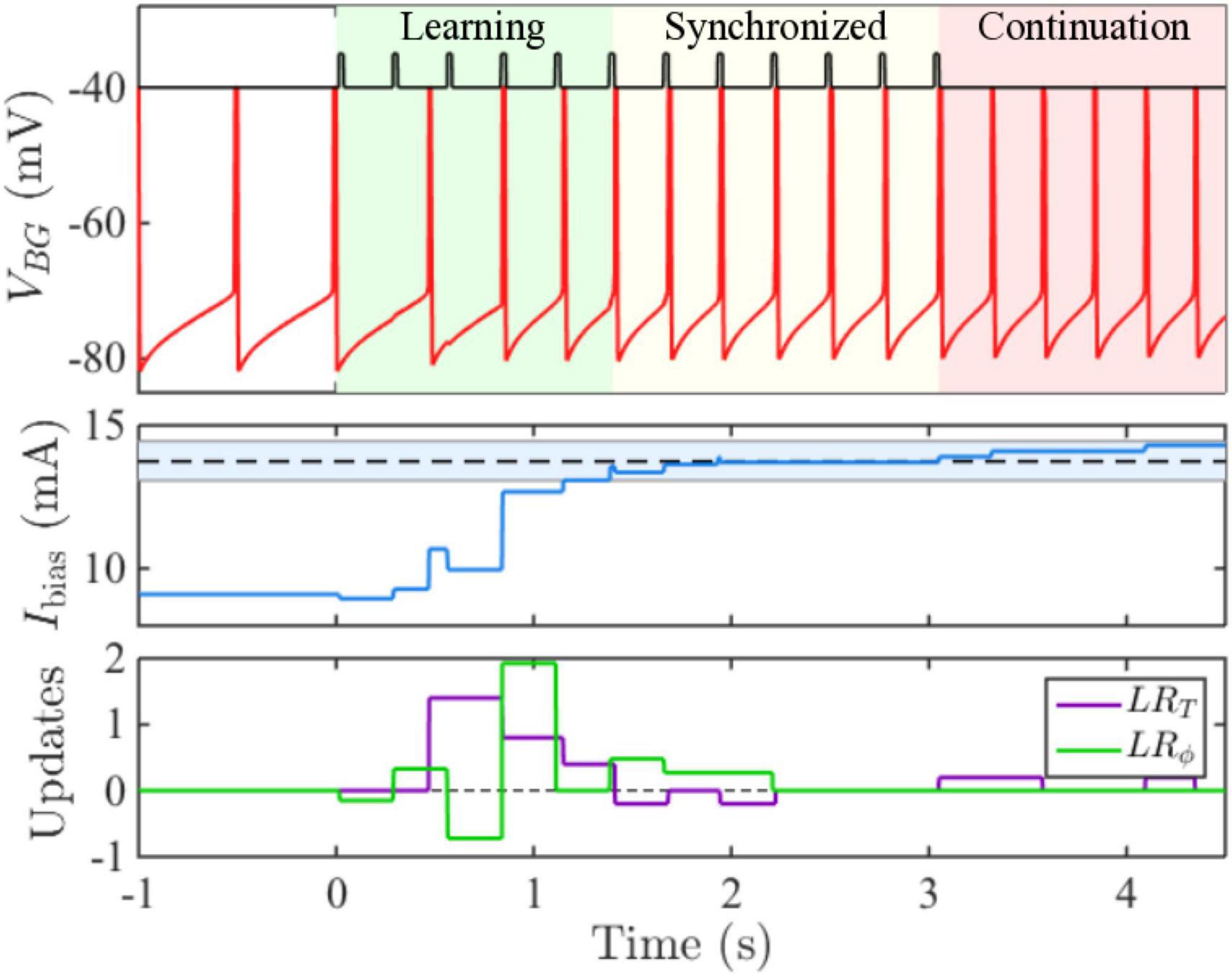

Recently, a new set of models for beat perception that are based on continuous time dynamical systems which incorporate event-based error correction rules have been derived. Bose et al. (2019) combined ideas of counting taken from pacemaker-accumulator models with those from error-correction models to develop an adaptive, biophysically based neuron/population beat generator (BG) that learns the spiking phase and period of a stimulus neuron (S) that represents an isochronous stimulus tone sequence (Figure 2). While based in dynamical systems, it is neither an information processing model, nor an entrainment model. It consists of a limit-cycle oscillator with specific period and phase learning rules that adaptively adjust an input strength Ibias to the BG. The term Ibias in conjunction with the widely found ionic currents that constitute the BG enabling it to oscillate over frequencies 0.5–8.0 Hz, that include beyond the range covered in finger-tapping experiments; (see Repp, 2005), determines the neuron’s frequency to input (freq-Input) curve. The BG neuron is a Type I neuron (oscillations can arise with arbitrarily slow frequencies as the input parameter increases through a bifurcation value) with a monotonic, non-linear freq-input curve whose shape determines several of the BG’s dynamic properties (Bose et al., 2019). It is not an entrainment model, as S does not exert an explicit forcing input to the BG. It differs from an information processing model by having an explicit neuronal representation of the beat generator, rather than positing the existence of an internal timekeeper. Indeed, as opposed to earlier algorithmic models, the error-correction rules are not directly applied to period and phase. Instead, the model compares estimates of IOI lengths by tracking the number of pacemaker cycles (e.g., gamma-frequency oscillations) between two successive BG spikes, γBG, and two successive S spikes, γS through a gamma count comparator. 
A period-correction learning rule, LRT, is then applied at each BG firing event; it adjusts Ibias → Ibias + δT(γBG − γS) so as to bring the integer count γBG into agreement with γS. A phase-correction learning rule, LRϕ, is implemented at each S firing event; it adjusts Ibias → Ibias + δϕ q(ϕ)ϕ|1 − ϕ| and is designed to send ϕ to either 0 or 1. Here ϕ is defined as the number of gamma cycles from a BG spike to the next S spike divided by γS, and q(ϕ) = sgn(ϕ − 0.5). This rule has some hidden asymmetries and may contribute to the NMA that the BG neuron displays. The model resynchronizes within a few cycles to changes in stimulus tempo (Figure 3) or phase, as well as to deviant or distracting stimulus events. It can perform synchronization-continuation (Figure 3) and displays NMA, in that, on average, the BG fires before the stimulus. Byrne et al. (2020) analyzed, in computational and mathematical detail, the dynamical features of a BG model based on the integrate-and-fire neuron. Zemlianova et al. (2022) provided a biophysically based Wilson-Cowan description of a linear array network that propagates a single active excitatory-inhibitory (E-I) pair forward with each gamma cycle, thereby representing the current gamma count to estimate interval duration.
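
The two event-triggered learning rules can be written out directly. The Python sketch below implements only the update arithmetic stated above; it assumes the gamma counts arrive as integers from some upstream counter, and the function and argument names (`period_correction`, `phase_correction`, `gamma_to_s`) are hypothetical rather than taken from Bose et al. (2019).

```python
def period_correction(i_bias, gamma_bg, gamma_s, delta_t):
    """LR_T, applied at each BG spike: I_bias -> I_bias + delta_T * (gamma_BG - gamma_S).

    For a Type I neuron, a larger I_bias means faster firing, so with delta_T > 0
    a BG interval that is too long (gamma_BG > gamma_S) raises I_bias.
    """
    return i_bias + delta_t * (gamma_bg - gamma_s)


def phase_correction(i_bias, gamma_to_s, gamma_s, delta_phi):
    """LR_phi, applied at each S spike: I_bias -> I_bias + delta_phi * q(phi) * phi * |1 - phi|.

    phi = (gamma cycles from the last BG spike to this S spike) / gamma_S and
    q(phi) = sgn(phi - 0.5), so the rule is designed to push phi toward 0 or 1.
    """
    phi = gamma_to_s / gamma_s
    q = (phi > 0.5) - (phi < 0.5)  # sign function with sgn(0) = 0
    return i_bias + delta_phi * q * phi * abs(1.0 - phi)
```

For example, an S spike arriving 2 of 10 gamma cycles after a BG spike (ϕ = 0.2) lowers Ibias by δϕ × 0.16, slowing the BG so that its spikes move closer to the stimulus.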

Figure 2. Schematic representation of the beat generator model: stimulus tones drive a stimulus neuron (S); a beat generator neuron (BG) is driven by an adjustable applied current (Ibias); and a gamma count comparator changes Ibias through rules for period learning (LRT) and phase learning (LRϕ).

Figure 3. Time courses show the BG, originally oscillating at 2 Hz, quickly learning a faster 3.65 Hz frequency and then performing synchronization-continuation. The middle panel shows how Ibias adjusts within a few cycles, according to the period and phase learning rules (lower panel), to bring its value within a specified tolerance of the target value (dashed line). The blue shaded region represents the accuracy tolerance of roughly 30 ms prescribed in the model.

Egger et al. (2020) derived a related continuous-time, dynamical-systems-based error-correction model. They use a firing-rate framework to describe the activity of neuronal populations in a feedforward two-layer (sensory and motor command) architecture that learns an isochronous stimulus sequence. Each layer incorporates an effective ramping variable, one sensory and one motor, that evolves toward a threshold. The ramping variable is derived from competition dynamics and approximately represents the motion (on a time scale in the IOI range) along the unstable manifold away from a saddle toward the steady state in which one competitor dominates. The ramping speed depends on an input parameter I that drives the competition. In the sensory layer, the value of I is adjusted at each reset toward a match with the IOI of the stimulus. The motor layer (with similar competition dynamics) adjusts I continuously in time to achieve phase alignment. The model accounts for a change in tempo as seen in their behavioral experiments. It also exhibits features of Bayesian performance in short 1–2-go and 1–3-go sequence timing tasks, in which participants are presented with 2 or 3 isochronous tones whose interval is drawn from a prior distribution and are then asked to estimate the time of the next beat. An optimal Bayesian integrator combines these measurements with prior knowledge, producing an estimate biased toward the mean of the prior distribution; the model reproduces this bias. The authors observed a modest NMA in simulations, but the effect was not analyzed, and no attempt was made to fit empirical data. Incorporating time delays in future versions of the model may, however, produce this effect (Ciszak et al., 2004; Nasuto and Hayashi, 2019; Roman et al., 2019; see also section “6.2.1. Transmission delay and negative mean asynchrony”).
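
The bias toward the prior mean can be illustrated without the Egger et al. (2020) circuit model itself. The sketch below is a generic Bayesian observer, assumed purely for illustration: intervals come from a uniform prior, each measured interval carries Gaussian noise whose standard deviation scales with the interval (scalar variability with a hypothetical Weber fraction `w`), and the estimate is the posterior mean. The function name and parameter values are hypothetical.

```python
import math


def bls_estimate(measurements, lo, hi, w, n=4001):
    """Posterior-mean (Bayes least-squares) estimate of an interval t.

    Assumptions (illustrative only): uniform prior on [lo, hi]; each measurement
    m ~ Normal(t, (w * t)^2) independently, i.e. scalar (Weber-like) noise.
    The posterior is evaluated on an evenly spaced grid of n candidate intervals.
    """
    num = den = 0.0
    for i in range(n):
        t = lo + (hi - lo) * i / (n - 1)
        sd = w * t
        like = 1.0
        for m in measurements:
            like *= math.exp(-0.5 * ((m - t) / sd) ** 2) / sd
        num += t * like  # uniform prior: posterior weight is just the likelihood
        den += like
    return num / den


# Hypothetical prior range of 0.6-1.0 s and Weber fraction 0.1
one_interval = bls_estimate([0.6], 0.6, 1.0, 0.1)        # "1-2-go": one measured interval
two_intervals = bls_estimate([0.6, 0.6], 0.6, 1.0, 0.1)  # "1-3-go": two measured intervals
```

Both estimates lie above 0.6 s, pulled toward the prior mean, and the two-interval estimate is pulled less because the combined likelihood is narrower.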

Another important early approach involved phase oscillator models (Large and Kolen, 1994; McAuley, 1995; Large and Jones, 1999; Large and Palmer, 2002) that entrain to their input. Theoretically, these oscillator models rest on the idea that the brain does not measure time; instead, they map time onto the phase of an oscillator that synchronizes to rhythmic events. Dynamic Attending Theory (DAT) refers to the hypothesis that the neural mechanisms of attention are intrinsically oscillatory and can be entrained by external stimuli, allowing attention to be directed toward specific points in time (Jones, 1976; Jones and Boltz, 1989; Large and Jones, 1999).

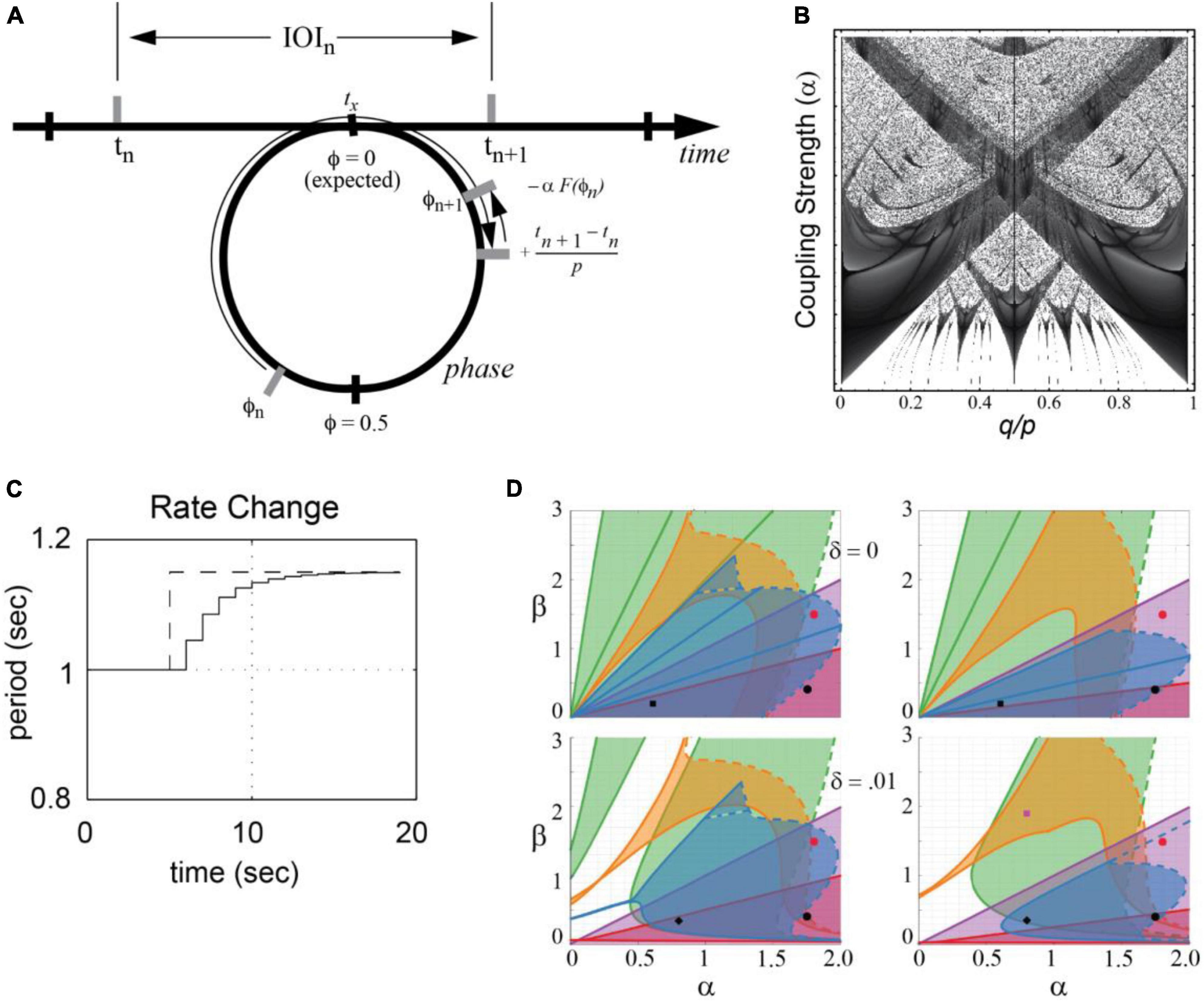

Phase oscillator models, based on circle maps, have a long history of application within dynamical systems. Given a hypothetical neural oscillation, a circle map predicts a succession of states at which events occur in a complex rhythmic stimulus. The circle map captures the fundamental hypothesis that temporal expectancies for events in a rhythmic stimulus depend on the phase of a neuronal oscillation that is driven by stimulus events, mapping time onto the phase of a neural oscillation (see Figure 4A).

Figure 4. (A) A circle map maps events at times tn and tn–1 onto the phase of an oscillator that entrains to the event sequence [adapted from Large and Palmer (2002)]. (B) Arnold tongues diagram shows regions in parameter space in which an oscillator mode-locks to a periodic stimulus. Parameters are the ratio of signal period to oscillator period (q/p) and the coupling strength (α). Darker regions correspond to parameter values that yield faster phase-locking [adapted from Large and Kolen (1994)]. (C) Period adaptation of an adaptive oscillator (solid line) to a stimulus rate change (dotted line) [adapted from Large and Jones (1999)]. (D) An analysis showing that mode-locking is preserved in phase and period-adaptive oscillators [e.g., (Loehr et al., 2011)]. δ is an added elasticity parameter. 1:2 locking (green), 2:3 locking (orange), 1:1 locking (purple), 3:2 locking (blue) and 2:1 locking (red) are shown [adapted from Savinov et al. (2021)].

Here, tn is the time of the nth event, ϕn is the phase of the nth event, and p is the intrinsic period of the neural oscillation. When F(ϕn) = sin 2πϕn and the stimulus is periodic, tn+1 − tn = q, this is the well-studied sine circle map (e.g., Glass and Mackey, 1988; Glass, 2001). In this case, the oscillator achieves a phase-locked state when the period of the stimulus, q, is not too far from the period of the oscillator, p. The greater the coupling strength, α, the greater the difference between the two periods can be while still achieving phase locking (a constant phase difference). Moreover, when the relative period of the oscillator and the stimulus, p/q, is near an integer ratio (e.g., 1:2 or 2:3), the system achieves a mode-locked state. This behavior is referred to as synchronization or entrainment and is summarized in an Arnold tongues bifurcation diagram (Figure 4B; see also section “5. Neural resonance theory”).
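
A short simulation makes the locking condition concrete. The sketch assumes the common phase-coupled form ϕn+1 = ϕn + (tn+1 − tn)/p − αF(ϕn), taken modulo 1 into [−0.5, 0.5), with F(ϕ) = sin 2πϕ and a periodic stimulus (tn+1 − tn = q); the function names and parameter values are illustrative.

```python
import math


def wrap(x):
    """Map a phase (in cycles) into [-0.5, 0.5)."""
    return (x + 0.5) % 1.0 - 0.5


def circle_map(phi0, q, p, alpha, n_events):
    """Phases of n_events successive stimulus events under an assumed sine circle map."""
    phis = [phi0]
    for _ in range(n_events):
        phi = phis[-1]
        phis.append(wrap(phi + q / p - alpha * math.sin(2 * math.pi * phi)))
    return phis


locked = circle_map(0.0, q=1.05, p=1.0, alpha=0.1, n_events=200)     # small period mismatch
drifting = circle_map(0.0, q=1.3, p=1.0, alpha=0.05, n_events=200)   # mismatch too large
```

In this form, a 1:1 fixed point requires q/p − 1 = α sin 2πϕ*, which has a solution only when |q/p − 1| ≤ α; this is the sense in which a larger α tolerates a larger period mismatch. With q/p = 1.05 and α = 0.1, sin 2πϕ* = 0.5, and the relative phase settles at ϕ* = 1/12, whereas with q/p = 1.3 and α = 0.05 it keeps drifting around the cycle.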

Mode-locking is significant because musical rhythms are not simply isochronous. They consist of patterned sequences of variable inter-onset intervals (IOIs; see Figure 1C), tn+1 − tn, which by definition contain multiple frequencies. Mode-locking is what allows Eq. 1 to model the perception of a beat at a steady tempo (frequency) in response to the multiple frequencies present in a musical rhythm.

Moreover, tempo can change in musical performances. In Eq. 1, the period p is fixed. However, to account for tempo changes, period adapting dynamics are introduced through a period correction term.

where the parameter β is the strength of the period adaptation. Together Eqs. 1, 2 constitute a period-adaptive, phase oscillator model (e.g., Large and Kolen, 1994; McAuley, 1995; Loehr et al., 2011). As illustrated in Figure 4C, period adaptation operates together with the phase entrainment to adapt to rhythms that change tempo. Period adaptation enables the system described by Eqs. 1, 2 to lock with zero phase difference to periodic rhythms different from its natural frequency (see also, Ermentrout, 1991, for a similar model of firefly synchronization). A recent analysis of the Loehr et al. (2011) model demonstrated the stability of multiple mode-locked states in the period-adapting model (Savinov et al., 2021) with and without period elasticity (Figure 4D). Extensions of this model are discussed in the sections “3.3.2. Dynamic attending and perception-action coordination” and “5. Neural resonance theory.”
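
Period adaptation can be sketched in the same way. As an assumption for illustration, the code below pairs the circle map assumed for Eq. 1 with a multiplicative period correction, pn+1 = pn + βpnF(ϕn), where F(ϕ) = sin 2πϕ; the parameter values are arbitrary. Starting from a 0.5 s period, the oscillator adapts to a 0.55 s stimulus period and settles at zero relative phase.

```python
import math


def wrap(x):
    """Map a phase (in cycles) into [-0.5, 0.5)."""
    return (x + 0.5) % 1.0 - 0.5


def adaptive_oscillator(q, p0, alpha, beta, n_events, phi0=0.0):
    """Return (relative phase, period) after n_events of an assumed period-adaptive map."""
    phi, p = phi0, p0
    for _ in range(n_events):
        F = math.sin(2 * math.pi * phi)
        # Both updates use the values from the current event (simultaneous assignment).
        phi, p = wrap(phi + q / p - alpha * F), p + beta * p * F
    return phi, p


phi_final, p_final = adaptive_oscillator(q=0.55, p0=0.5, alpha=0.2, beta=0.05, n_events=400)
```

Setting β = 0 recovers a fixed-period oscillator, which can lock to a stimulus with p ≠ q only at a nonzero relative phase.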

To model dynamic attending, Eqs. 1 and 2 were used as a quantitative model of attentional entrainment. To capture the precision of temporal expectations, an “attentional pulse” was added, defined probabilistically as

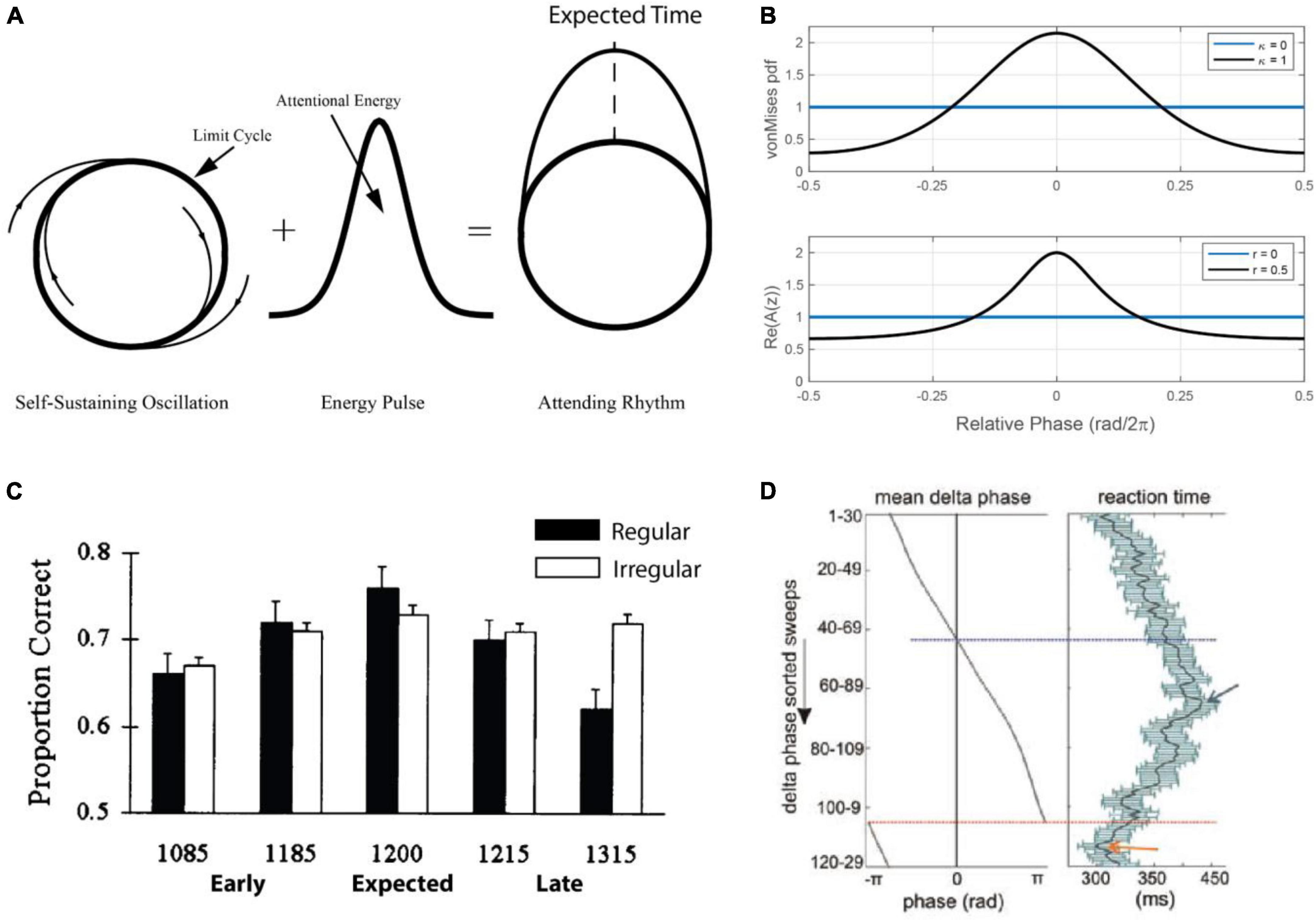

which corresponds to the von Mises distribution (Figures 5A, B; see also Figure 1C). I0(κ) denotes the modified Bessel function of the first kind of order zero. When temporal predictions are accurate, the attentional pulse becomes narrower in time, modeling increased precision of temporal predictions. The dynamics of attentional focus are included as a third dynamical equation that estimates the concentration parameter κ of the von Mises distribution (for details, see Large and Jones, 1999; Large and Palmer, 2002). The attentional pulse enabled quantitative modeling of temporal predictions in time and pitch discrimination tasks.
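
The effect of κ on the pulse can be checked numerically. This sketch assumes the standard von Mises form on phase measured in cycles, f(ϕ, κ) = exp(κ cos 2πϕ)/I0(κ), which integrates to one over a cycle; NumPy's `np.i0` supplies the Bessel function. It confirms the normalization and shows that larger κ gives a taller, narrower pulse.

```python
import numpy as np


def attentional_pulse(phi, kappa):
    """Von Mises density over phase phi (in cycles, period 1), peaked at phi = 0.

    Assumed form: exp(kappa * cos(2*pi*phi)) / I0(kappa).
    """
    return np.exp(kappa * np.cos(2 * np.pi * phi)) / np.i0(kappa)


phi_grid = np.linspace(-0.5, 0.5, 10_000, endpoint=False)
for kappa in (0.5, 2.0, 8.0):
    f = attentional_pulse(phi_grid, kappa)
    # The mean over a uniform grid on a unit-length interval approximates the integral.
    print(f"kappa={kappa}: integral={f.mean():.6f}, "
          f"peak={float(attentional_pulse(0.0, kappa)):.3f}")
```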

Figure 5. (A) The Dynamic Attending Theory model (DAT) consists of a limit-cycle oscillator with a circular probability density; together, they model an “attending rhythm” that targets attention in time [adapted from Large and Jones (1999)]. (B) The energy distribution (a von Mises distribution) for the DAT model for different values of κ, a parameter that sets the width of the distribution and reflects attentional focus around the expected phase value of zero [top; adapted from Large and Jones (1999)]. The active non-linearity of the canonical model can function as the attentional pulse in a more neurally realistic model, and the amplitude (r) plays the same role as κ in the DAT model [bottom; see the section “5.2.2. A canonical model” and Eq. 8; (Large et al., 2010)]. (C) The effect of a regular versus an irregular timing cue on a pitch discrimination task. Performance is explained by a quadratic function that reflects expectation, but only in the condition with a regular timing cue [adapted from Jones et al. (2002)]. (D) Results for a study in which non-human primates carried out a visual discrimination task in a stream of visual stimuli timed around the delta-band of cortical oscillations [adapted from Lakatos et al. (2008)].

This model (Figures 5A, B) inspired a number of studies showing that a predictable rhythmic context facilitates perceptual processing of a subsequent acoustic event, with highest accuracy for targets occurring in-phase with the rhythm (Jones et al., 2002; see also Large and Jones, 1999; Barnes and Jones, 2000; Jones et al., 2006). In one study (Figure 5C) pitch changes were better detected when an event occurred at an expected time, compared with early or late. This pattern of results has since been replicated in multiple behavioral studies (see Henry and Herrmann, 2014; Hickok et al., 2015; Haegens and Zion Golumbic, 2018), including in the visual domain (Correa and Nobre, 2008; Auksztulewicz et al., 2019; see also Rohenkohl et al., 2012; Rohenkohl et al., 2014). Moreover, early studies in primates (Schroeder and Lakatos, 2009; Figure 5D) and humans (Stefanics et al., 2010) directly linked facilitation of perceptual processing (e.g., reaction time) to the phase of measured neural oscillations. Such results provided critical support for underlying models of entrained neural oscillation, but emphasized the need for more physiologically realistic models of neural oscillation.

A number of studies have linked beat perception in musical rhythms and dynamic allocation of attention to motor system activity (e.g., Phillips-Silver and Trainor, 2005; Chen et al., 2008a; Fujioka et al., 2009, 2012; Grahn and Rowe, 2009; Morillon et al., 2014). One such study on perception and action showed that active engagement of the motor-system by tapping along with a reference rhythm enhances the processing of on-beat targets and suppression of off-beat distractors compared to a passive-listening condition (Morillon et al., 2014). The Active Sensing Hypothesis proposed that perception occurs actively via oscillatory motor sampling routines (Schroeder et al., 2010; Henry et al., 2014; Morillon et al., 2014). Such findings have informed the development of neural resonance theory (section “5. Neural resonance theory”; e.g., Large and Snyder, 2009; Large et al., 2015), and led to testing of the theories using perception-action coordination tasks.

Perception-action tasks provide much finer-grained behavioral data than perception and attention tasks do. Adults can synchronize in-phase at a wide range of tempos, from around 5 Hz (p = 200 ms; Repp, 2003; ≥8 Hz if tapping a subharmonic) down to 0.3 Hz (p = 3,333 ms) and probably lower (see Repp and Doggett, 2007). At slower frequencies (∼2 Hz and lower), people can either synchronize or syncopate (deGuzman and Kelso, 1991), reflecting bistable dynamics.

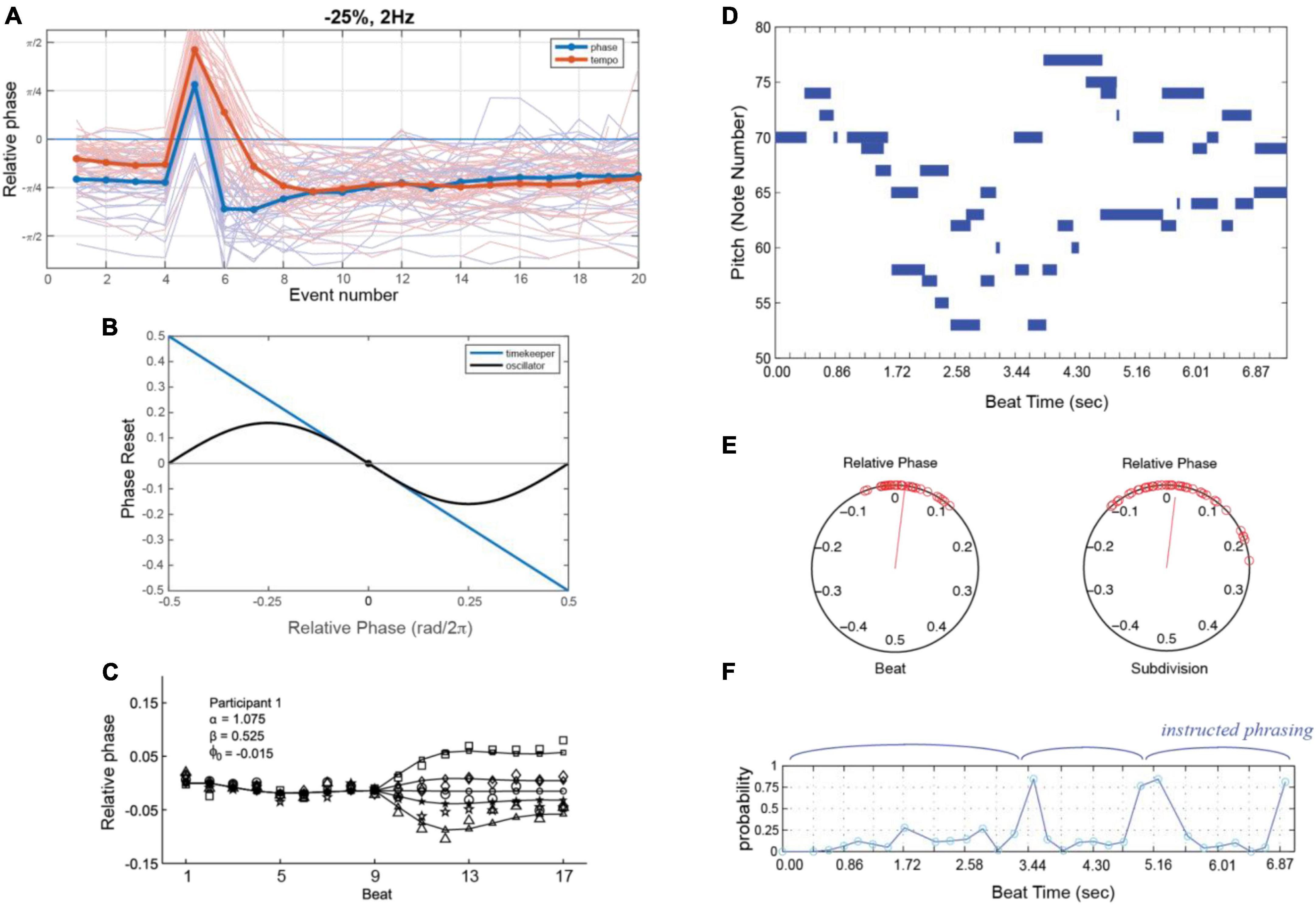

In one widely used paradigm, subjects synchronize finger taps (in-phase) with an isochronous rhythm. Once a steady-state phase is achieved (Repp, 2001a,b, 2003, 2008; Large et al., 2002), a phase or tempo perturbation is introduced, and relaxation back to steady-state is measured (Figure 6A). People respond quickly and automatically to phase perturbations (either permanent phase shifts or a shift of one event onset) of periodic sequences (Thaut et al., 1998; Repp, 2001b, 2002a,b, 2003; Large et al., 2002) and relaxation profiles match dynamical predictions. People also synchronize and recover from perturbations at small integer ratio frequencies (i.e., mode-locking; Large et al., 2002; Repp, 2008). People can also adapt to tempo perturbations (i.e., step changes of tempo; Large et al., 2002); however, tempo tracking is under volitional control and requires active attending (Repp, 2001a; Repp and Keller, 2004). People can also follow the periodic beat of complex musical rhythms (Large and Kolen, 1994; Large and Palmer, 2002), even those that have large changes in tempo (Rankin et al., 2009), as predicted by dynamical models (Large and Palmer, 2002; Loehr et al., 2011).

Figure 6. (A) Responses to phase (blue) or tempo (red) perturbations in a synchronization task (thin lines: individual trial; thick lines: grand average). Note that steady-state synchronization also displays NMA [adapted from Wei et al. (2022)]. (B) The phase reset function in an oscillator model (black) as a function of relative phase with respect to the stimulus, versus the reset in a timekeeper model (blue). (C) An oscillator model (line with small markers) can explain asynchronies in a synchronization task where the stimulus speeds up or slows down (B,C) [adapted from Loehr et al. (2011)]. (D) Piano roll notation (as in Figure 1A) of a piano performance of the 3-part invention in B-flat by J.S. Bach. (E) The relative phases of oscillators tracking beats at two different metrical levels, the main beat and a subdivision, determining attentional pulse width. (F) Probabilities that an event is late, computed using the attentional pulse, modeling perception of intended phrase boundaries (E,F) [adapted from Large and Palmer (2002)].

The seminal research of Haken et al. (1985) showed that anti-phase tapping with a periodic stimulus is possible because of bistable synchronization dynamics at low frequencies, but that the anti-phase mode loses stability via a nonequilibrium phase transition (similar to gait transitions) when the stimulus frequency increases beyond a critical value (or bifurcation point), where monostable dynamics appear. Syncopation becomes difficult as the stimulus tempo increases, even for trained musicians (e.g., Keller and Repp, 2005; Repp and Doggett, 2007). deGuzman and Kelso (1991) showed that a phase model, similar to Eq. 1 but with a slightly different coupling function, could capture their findings.
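
This bistability is usually illustrated with the relative-phase equation introduced by Haken et al. (1985), dϕ/dt = −a sin ϕ − 2b sin 2ϕ, where ϕ is relative phase in radians and the ratio b/a is taken to decrease as movement frequency increases. Linearizing at anti-phase (ϕ = π) gives a slope of a − 4b, so anti-phase is stable only when b/a > 1/4. The forward-Euler sketch below, with arbitrary parameter values, starts near anti-phase and shows the relative phase staying there for large b/a but relaxing to in-phase for small b/a.

```python
import math


def hkb_relative_phase(phi0, a, b, t_end=50.0, dt=0.01):
    """Integrate d(phi)/dt = -a*sin(phi) - 2*b*sin(2*phi) with forward Euler."""
    phi = phi0
    for _ in range(int(round(t_end / dt))):
        phi += dt * (-a * math.sin(phi) - 2.0 * b * math.sin(2.0 * phi))
    return phi


def antiphase_is_stable(a, b):
    """The slope of the vector field at phi = pi is a - 4b; negative means stable."""
    return a - 4.0 * b < 0.0


start = math.pi - 0.3  # slightly off anti-phase
print(hkb_relative_phase(start, a=1.0, b=1.0))  # b/a = 1: remains near pi (anti-phase)
print(hkb_relative_phase(start, a=1.0, b=0.1))  # b/a = 0.1: relaxes to 0 (in-phase)
```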

The models considered here also allow for exploration of conceptual questions such as the relationship between dynamical systems approaches and information processing approaches. A dynamical systems approach is meant to embody the notion that a neural oscillator adapts its period to an external (forcing) stimulus, as in Eqs. 1, 2. Information processing models suggest that internal time-keepers measure and linearly track time intervals (e.g., Wing and Kristofferson, 1973; Mates, 1994a,b; Vorberg and Wing, 1996; van der Steen and Keller, 2013). One analysis showed that the phase oscillator model of Eqs. 1, 2 is formally similar to a linear time-keeper model (Jagacinski et al., 2000; Loehr et al., 2011), such that for periodic (isochronous) stimuli and small temporal perturbations, the predictions are nearly identical (Figure 6B). However, when responding to larger perturbations, linear models incorrectly predict symmetry in speeding up versus slowing down, whereas oscillator models correctly predict an asymmetry (Figure 6C; Loehr et al., 2011; see also Bose et al., 2019).
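
The near-equivalence for small perturbations follows from sin 2πϕ ≈ 2πϕ when ϕ is small. The sketch below assumes a stimulus whose period equals the oscillator period and compares a linear correction, ϕn+1 = ϕn − α·2πϕn (analogous to the fixed-fraction phase correction of linear time-keeper models), with the sine-coupled oscillator after a single phase perturbation. The function names and parameter values are hypothetical, and the sketch does not attempt to reproduce the tempo-change asymmetry discussed above.

```python
import math


def linear_coupling(phi):
    """Linear (time-keeper-like) correction term."""
    return 2 * math.pi * phi


def sine_coupling(phi):
    """Oscillator coupling F(phi) = sin(2*pi*phi)."""
    return math.sin(2 * math.pi * phi)


def relax(phi0, alpha, n, coupling):
    """Relative phase over n events after a perturbation phi0, with q = p."""
    phis = [phi0]
    for _ in range(n):
        phis.append(phis[-1] - alpha * coupling(phis[-1]))
    return phis


def max_gap(phi0, alpha=0.1, n=10):
    """Largest difference between the linear and sine relaxation trajectories."""
    lin = relax(phi0, alpha, n, linear_coupling)
    osc = relax(phi0, alpha, n, sine_coupling)
    return max(abs(x - y) for x, y in zip(lin, osc))


print(max_gap(0.02))  # small perturbation: trajectories nearly identical
print(max_gap(0.3))   # large perturbation: trajectories diverge noticeably
```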

The critical difference, however, is that linear time-keeper models capture only 1:1 synchronization, whereas oscillator models also capture mode-locking (Loehr et al., 2011). Mode-locking implies the ability to coordinate with complex, music-like rhythms (Figures 6D–F), thus modeling the perception of beat, meter, and phrasing. In one study, oscillators entrained at multiple metrical levels (Figure 6E) to complex rhythms in classical piano performances (e.g., the 3-part invention by J.S. Bach shown in Figure 6D). The precise temporal expectancies embodied in the attentional pulse were then used to model the perception of phrase boundaries (Figure 6F), which are marked by slowing of tempo (i.e., phrase-final lengthening; see Large and Palmer, 2002).

A different computational approach to beat perception draws on the theory of the Bayesian Brain and treats rhythm perception as a process of probabilistic inference. Rather than asking what neural mechanisms give rise to the percept of beats, it asks how we can understand human rhythm perception as part of the brain’s general strategy for making sense of the world. While this question is essentially a cognitive one, models that address this question may begin to guide and constrain models of the neural mechanisms that must ultimately undergird them.

One point of entry into the Bayesian Brain theory is the proposition that organisms are well served by producing internal dynamics corresponding to (or “representing”) the dynamic states and unknown parameters of survival-relevant processes in the world around them, which they can then use to predict what will happen next. A formal version of this claim is called the Free Energy Principle (Friston, 2010). In the case of rhythm perception, this representation may encompass the static parameters and dynamic states of any process that determines the timing and sequence of auditory events: for example, the nature of an underlying repetitive metrical pattern, the momentary phase and tempo of that pattern, and the number and identities of distinct agents generating the pattern. These representations can then inform predictions of upcoming auditory events and guide the entrainment of movements. Representing these variables might be survival-relevant by, for example, allowing groups of humans to coordinate their steps and actions rhythmically.

An attempt to represent the dynamic variables and parameters underlying the generation of a rhythm encounters multiple levels of ambiguity. A given rhythmic surface may admit multiple possible organizing metrical structures (see, e.g., Figure 1A), may be generated by one or by multiple agents, and may be corrupted by temporal irregularity or noisy sensory delays that obscure the underlying temporal structure. An idealized approach to coping with this ambiguity is given by Bayes Rule, a theorem from probability theory that describes an optimal method of incorporating noisy, ambiguous sensory observations into probabilistic estimates of underlying hidden (not directly observable) variables. Bayes Rule transforms a “prior” (pre-observation) distribution P(S) over a hidden state S into a “posterior” (post-observation) distribution P(S|O) that has incorporated an observation O. It does so using a “likelihood” function P(O|S) that describes the probability of observation O given any of the possible values of the hidden state S. The likelihood function can be understood as a model of how the hidden state generates observations, or a generative model.
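
Over a finite set of hidden states, Bayes Rule takes only a few lines. In this hypothetical example, the hidden state S is a beat period chosen from three candidate values, the observation O is a single inter-onset interval, and the likelihood P(O|S) is assumed to be Gaussian with a 50 ms standard deviation; none of these values comes from the studies cited here.

```python
import math


def bayes_update(prior, likelihood):
    """Return the posterior P(S|O), proportional to P(O|S) P(S), over finitely many states."""
    unnormalized = {s: likelihood[s] * prior[s] for s in prior}
    evidence = sum(unnormalized.values())  # P(O)
    return {s: v / evidence for s, v in unnormalized.items()}


def gaussian_likelihood(obs, mean, sd):
    return math.exp(-0.5 * ((obs - mean) / sd) ** 2) / (sd * math.sqrt(2 * math.pi))


periods = [0.4, 0.5, 0.6]                       # hypothetical candidate beat periods (s)
prior = {s: 1 / len(periods) for s in periods}  # flat prior P(S)
ioi = 0.52                                      # observed inter-onset interval (s)
likelihood = {s: gaussian_likelihood(ioi, s, 0.05) for s in periods}
posterior = bayes_update(prior, likelihood)
```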

The theory of the Bayesian Brain proposes that the brain mimics the application of Bayes Rule as it integrates sensory data into representations of the world, where generative models are implicitly learned through a lifetime (or evolutionary history) of interaction with the world. Note that the application of Bayes Rule does not entail a Bayesian interpretation of probability more generally – the essential contribution of Bayes rule in this context is a formal method of weighting the influence of each new observation by its precision relative to the precision of estimates preceding the observation.

The theory of Predictive Processing elaborates on the Bayesian Brain theory. It posits that the brain may be approximating Bayes Rule by continuously changing representations to minimize the difference between actual sensory input and the sensory input predicted based on those representations (the “prediction error”; Friston, 2005). Learning generative models can proceed similarly, by gradually changing them to minimize prediction error on time scales ranging from minutes to years, though some aspects of the generative models may be innate. Importantly, unlike in static Bayesian models of perception where statistically learned priors are combined with single observations to yield percepts, Predictive Processing proposes that inference proceeds dynamically, with the posterior after one observation acting as the prior for the next.
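
For Gaussian beliefs, precision weighting and the "posterior becomes the next prior" recursion can be shown concretely. The sketch assumes a Gaussian prior over a hidden interval and Gaussian observation noise of fixed variance (illustrative values); each update weights the observation by its precision relative to the current belief, and the updated belief is carried forward as the prior for the next observation.

```python
def gaussian_update(mean, var, obs, obs_var):
    """Precision-weighted combination of a Gaussian prior and one Gaussian observation."""
    post_var = 1.0 / (1.0 / var + 1.0 / obs_var)         # precisions add
    post_mean = post_var * (mean / var + obs / obs_var)  # precision-weighted mean
    return post_mean, post_var


mean, var = 0.50, 0.01          # prior belief about an interval (s, s^2)
for obs in (0.55, 0.54, 0.56):  # successive noisy observations
    mean, var = gaussian_update(mean, var, obs, obs_var=0.01)
    # the posterior (mean, var) now serves as the prior for the next observation
```

With equal prior and observation variances, the sequential result equals the batch average of the prior mean and the three observations, 0.5375, and the variance shrinks from 0.01 to 0.0025.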

The qualitative groundwork for an inference theory of rhythm was laid by Lerdahl and Jackendoff (1996), who framed music cognition in terms of inference. In their view, the listener brings a set of musical intuitions, some of them learned, to each musical experience, and uses them to infer the latent structure underlying the musical surface (including the rhythmic surface). In their model, they treated meter as a hidden state to be inferred and upon which to base auditory timing predictions. Vuust, Witek, and later their coauthors (Vuust and Witek, 2014; Vuust et al., 2018) applied ideas from Predictive Processing to the perception of musical meter, positing that the perception of meter is determined through variational Bayesian inference, i.e., prediction error minimization, and arguing that this perspective accounts for various experimental results.

Perhaps the first fully mathematically specified inference model of rhythmic understanding took as the inferred hidden state the number of distinct processes generating a combination of jittered metronomic stimuli and used this model to account for participant tapping behavior, which synchronized differently depending on the phase proximity and jitter of the superimposed streams (Elliott et al., 2014). More recently, a model was proposed for two-participant synchronized tapping in which each tapper inferred whether the self-generated and other-generated taps could best be explained with two separate predictive models or one (“self-other integration”; Heggli et al., 2021).

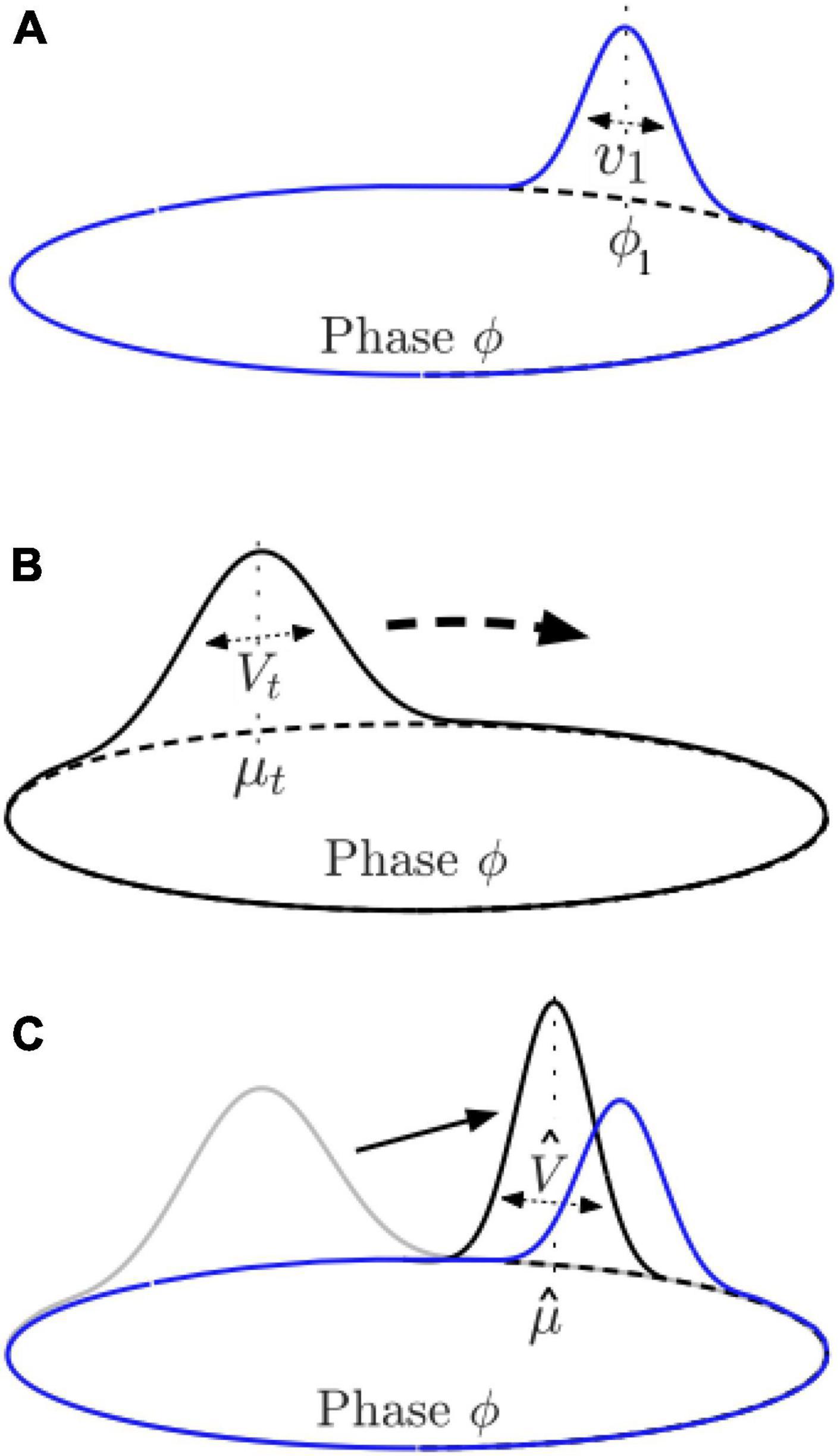

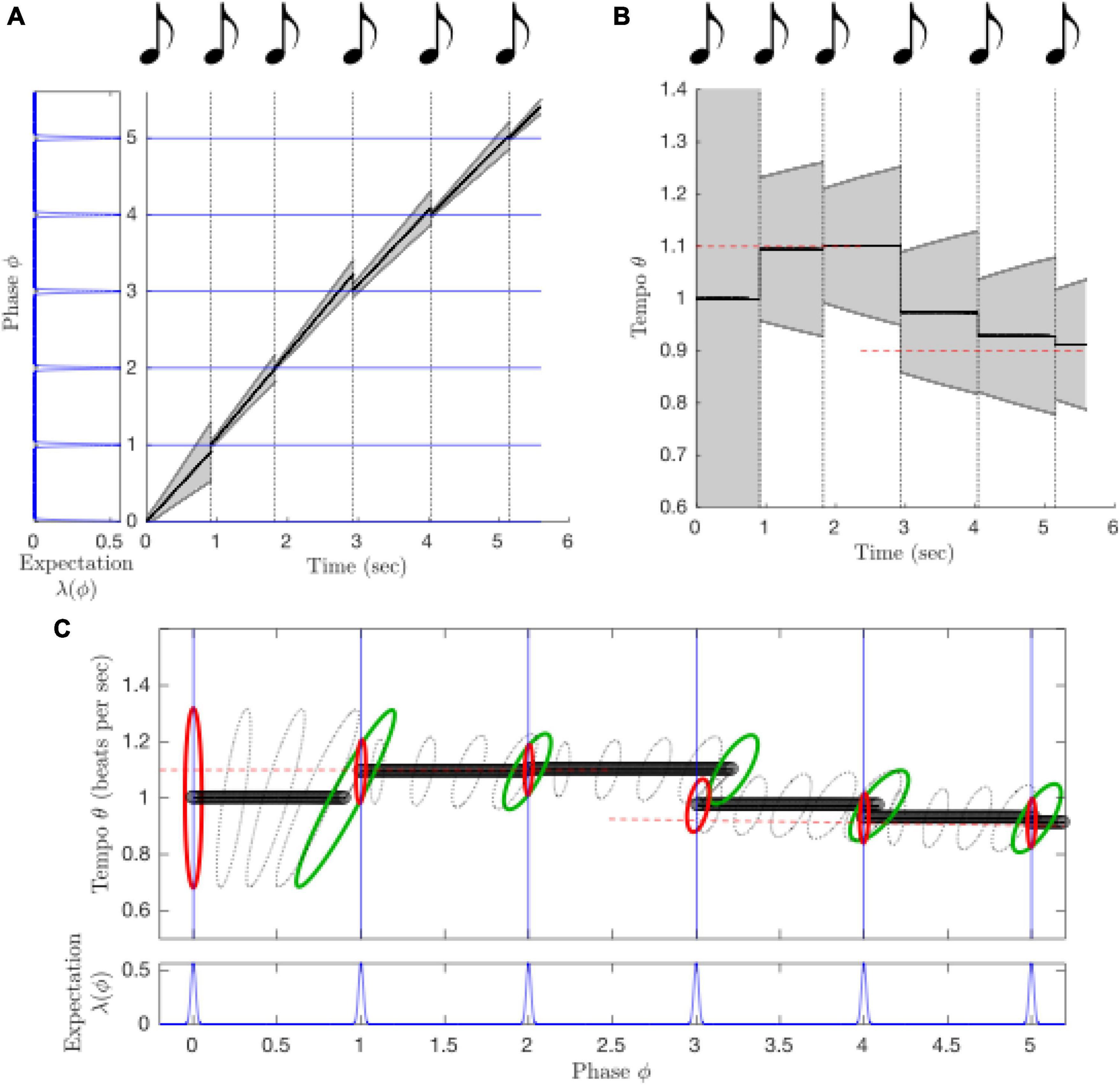

The PIPPET (Phase Inference from Point Process Event Timing) model (Cannon, 2021) attempts to characterize rhythm perception as a formal probabilistic inference process in continuous time. In its simplest incarnation, it describes an observer’s process of inferring the phase of a cyclically patterned stimulus given a known tempo and meter.

PIPPET starts with a generative model of rhythms based on a specific underlying metrical pattern, e.g., the cycle of the beat or of a group of multiple beats. Note that this generative model is not necessarily the way in which an actual stimulus is generated; instead, it describes the beliefs or expectations of the observer for the purposes of creating a representation of the rhythm and predicting future sound events. According to this generative model, the unfolding of a rhythmic stimulus is driven by a dynamic “phase” variable ϕt representing progress through the metrical pattern at time t, e.g., the momentary phase (on the circle) of an ongoing beat at time t. ϕt is expected to progress at a steady rate θ over time (corresponding to the known tempo), but with Brownian noise σWt representing the fact that even a perfectly steady rhythm will seem slightly irregular to an observer with noisy internal timekeeping:

$$d\phi_t = \theta\,dt + \sigma\,dW_t$$

The observer is assumed to know or have already identified an underlying metrical structure or “expectation template” for the rhythm (e.g., duple or triple subdivisions of a steady beat). This may be drawn from a library of learned expectation templates; we discuss the process of choosing a template below. The metrical pattern expected by the observer is represented by a function λ(ϕ) from stimulus phase to the observer’s belief about the probability of a sound event at that phase. This function consists of the sum of a constant λ0, representing the probability of non-metrical sound events, and a set of Gaussians, each with a mean ϕj representing a characteristic phase at which the observer expects events to be most probable, a scale λj representing the probability of events associated with that characteristic phase, and a variance vj related to the temporal precision with which events are expected to occur at that phase (Figure 7A):

$$\lambda(\phi) = \lambda_0 + \sum_j \lambda_j\,\mathcal{N}(\phi;\,\phi_j,\,v_j)$$

Figure 7. Illustration of components of the PIPPET model. (A) In PIPPET, rhythmic expectations are represented with one or more Gaussian peaks on the circle, with means representing phases where events are expected, e.g., ϕ1, and variances representing the temporal precision of those expectations, e.g., v1. (B) The observer approximates the phase of the cyclically patterned stimulus with a Gaussian distribution over the circle, with mean (estimated phase) μt and variance (uncertainty) Vt at time t. In the absence of sound events or strong expectations, μt moves steadily around the circle while Vt grows. (C) At a sound event, μt resets to μ̂t, moving the estimated phase closer to a nearby phase at which events are expected, and Vt resets to V̂t, adjusting the certainty about phase as appropriate.

According to the generative model, rhythmic events are produced as an inhomogeneous point process with rate λ(ϕt). As a map over cycle phase representing the position and precision of temporal expectancy, this function serves a very similar purpose to the “attentional pulse” in DAT models described above.
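
Sampling from the generative model makes its assumptions concrete. The sketch below integrates the phase equation with the Euler-Maruyama method and draws events from the inhomogeneous point process with rate λ(ϕt), using a small-step Bernoulli approximation (probability λdt per step). As simplifying assumptions, each Gaussian in the template is evaluated at the circular distance to its mean (a good approximation when vj ≪ 1), and the function names, parameter values, and two-peak template are hypothetical.

```python
import math
import random


def circ_dist(phi, center):
    """Signed distance from center to phi on a circle of circumference 1."""
    return (phi - center + 0.5) % 1.0 - 0.5


def expectation_rate(phi, lam0, template):
    """lambda(phi) = lam0 + sum_j lam_j * N(phi; phi_j, v_j), Gaussians taken on the circle."""
    rate = lam0
    for lam_j, phi_j, v_j in template:
        d = circ_dist(phi, phi_j)
        rate += lam_j * math.exp(-d * d / (2 * v_j)) / math.sqrt(2 * math.pi * v_j)
    return rate


def sample_rhythm(theta, sigma, lam0, template, t_end, dt=1e-3, seed=0):
    """Return (event_times, event_phases) sampled from the generative model."""
    rng = random.Random(seed)
    phi, times, phases = 0.0, [], []
    for i in range(int(round(t_end / dt))):
        # Euler-Maruyama step of d(phi) = theta dt + sigma dW
        phi += theta * dt + sigma * math.sqrt(dt) * rng.gauss(0.0, 1.0)
        # An event occurs in this step with probability approximately lambda(phi) * dt
        if rng.random() < expectation_rate(phi, lam0, template) * dt:
            times.append((i + 1) * dt)
            phases.append(phi % 1.0)
    return times, phases


# Hypothetical template: a strong, precise beat at phase 0 and a weaker off-beat at 0.5
template = [(1.0, 0.0, 0.02 ** 2), (0.5, 0.5, 0.03 ** 2)]
times, phases = sample_rhythm(theta=2.0, sigma=0.02, lam0=0.05, template=template, t_end=10.0)
```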

The observer uses the generative model to continuously infer the stimulus phase ϕt, approximating the full distribution over possible phases at time t with a Gaussian distribution with mean μt and variance Vt (Figure 7B):

$$p(\phi_t) \approx \mathcal{N}(\phi_t;\,\mu_t,\,V_t)$$

The parameters μt and Vt of this distribution are adjusted to make the best possible estimate of phase over time, handling various sources of timing and event noise through a variant of continuous-time Kalman filtering (Bucy and Joseph, 2005) that amounts to a continuous application of Bayes rule and that can be described by the equations below.

At any time t, let Λ denote the observer’s expectancy (or “subjective hazard function”) for sound events, defined as

Define auxiliary variables μ̂t and V̂t:

A rule for the continuous evolution of the variables μt and Vt between events can be defined:

as well as a rule for their instantaneous reset at any event time t (Figure 7C):

The rapid resetting of μt can be understood as a partial correction of inferred phase in response to event timing prediction error. Although the theoretical roots of PIPPET are very different from those of the oscillator models described here, the resulting dynamics are rather similar: the dynamics of μt are closely analogous to the phase dynamics of a pulse-forced DAT model, and the dynamics of Vt behave somewhat like the radial dynamics in a damped oscillator like those that appear in neural resonance models (with larger Vt corresponding to a smaller radius), as described in the next section.
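
The filtering scheme can be sketched as follows. This is a reconstruction under stated assumptions, not the published PIPPET implementation: Λ, μ̂t, and V̂t are obtained by moment-matching the Gaussian belief against the Gaussian-mixture template (so Λ is the template rate averaged over the belief), and the between-event drift is the one that this moment matching implies, dμt = [θ + Λ(μt − μ̂t)]dt and dVt = [σ² + Λ(Vt − V̂t − (μ̂t − μt)²)]dt, with μt ← μ̂t and Vt ← V̂t at each event. Each template peak is placed at its copy nearest μt, which is reasonable only when Vt and vj are small. Function names and parameter values are hypothetical.

```python
import math


def gauss(x, mean, var):
    return math.exp(-(x - mean) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)


def nearest_copy(phi_j, mu):
    """Copy of template phase phi_j (defined mod 1) closest to mu on the real line."""
    return mu + (phi_j - mu + 0.5) % 1.0 - 0.5


def event_moments(mu, V, lam0, template):
    """Return (Lambda, mu_hat, V_hat) for belief N(mu, V); requires lam0 > 0 or a template."""
    components = [(lam0, mu, V)]  # flat rate lam0: conditioning leaves the belief unchanged
    for lam_j, phi_j, v_j in template:
        c = nearest_copy(phi_j, mu)
        weight = lam_j * gauss(mu, c, V + v_j)  # expected rate contributed by this peak
        V_j = 1.0 / (1.0 / V + 1.0 / v_j)       # product of two Gaussians
        components.append((weight, V_j * (mu / V + c / v_j), V_j))
    Lam = sum(w for w, _, _ in components)
    mu_hat = sum(w * m for w, m, _ in components) / Lam
    V_hat = sum(w * (v + (m - mu_hat) ** 2) for w, m, v in components) / Lam
    return Lam, mu_hat, V_hat


def pippet_filter(event_times, t_end, theta, sigma, lam0, template,
                  mu0=0.0, V0=1e-4, dt=1e-3):
    """Euler integration between events; instantaneous reset at each event time."""
    mu, V = mu0, V0
    pending = sorted(event_times)
    trace = []
    for i in range(int(round(t_end / dt))):
        Lam, mu_hat, V_hat = event_moments(mu, V, lam0, template)
        mu, V = (mu + (theta + Lam * (mu - mu_hat)) * dt,
                 V + (sigma ** 2 + Lam * (V - V_hat - (mu_hat - mu) ** 2)) * dt)
        t = (i + 1) * dt
        while pending and pending[0] <= t + 1e-12:
            _, mu, V = event_moments(mu, V, lam0, template)  # mu <- mu_hat, V <- V_hat
            pending.pop(0)
        trace.append((t, mu, V))
    return trace


template = [(2.0, 0.0, 1e-3)]  # hypothetical: one strong, precise expectation per cycle
trace = pippet_filter([1.0, 2.0], t_end=2.5, theta=1.0, sigma=0.05, lam0=0.01, template=template)
```

Far from any expected phase, Λ ≈ λ0 and μ̂t ≈ μt, so μt advances at rate θ while Vt grows at rate σ²; approaching a strongly expected phase without an event, μ̂t lies ahead of μt and the estimated phase slows.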

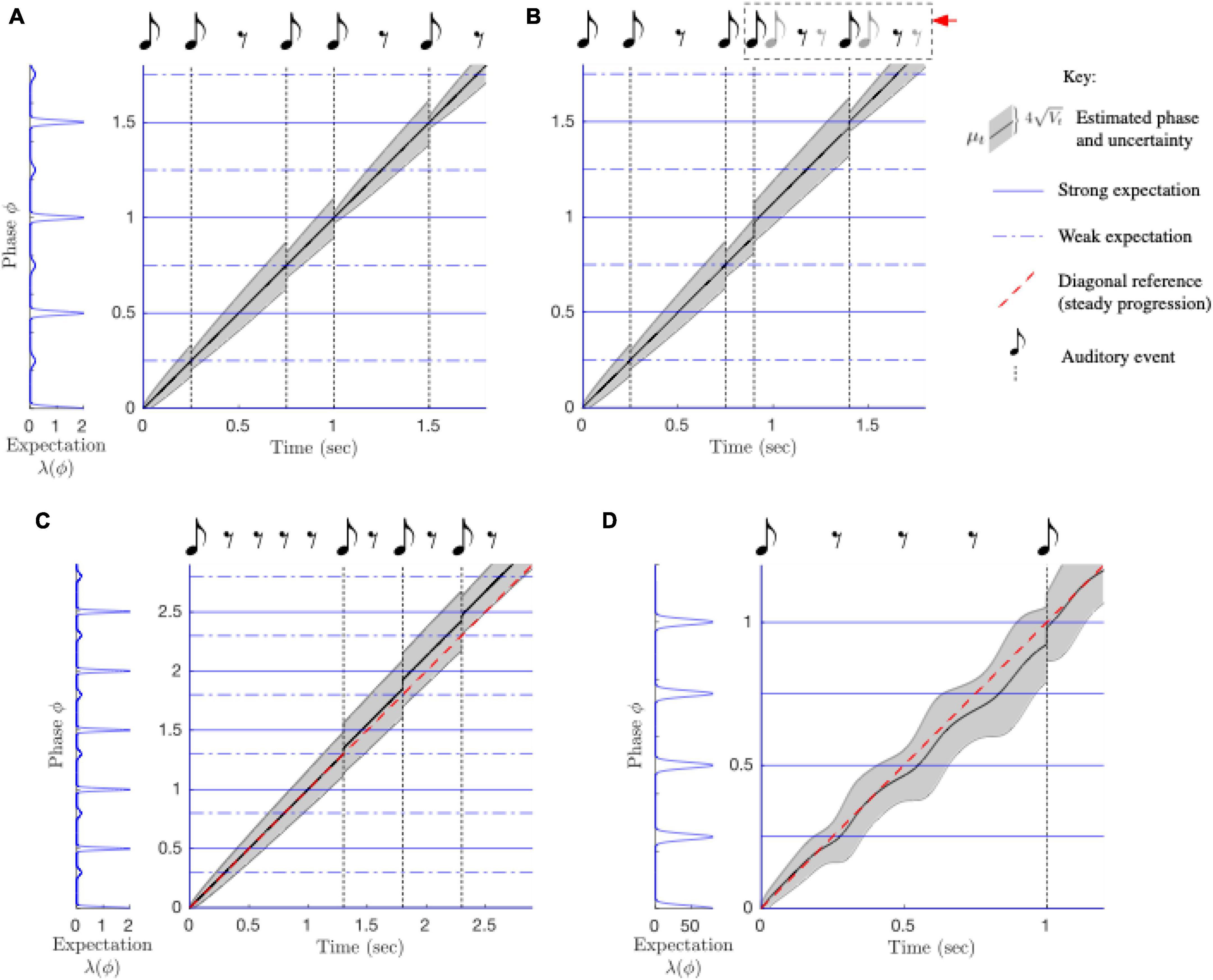

PIPPET tracks stimulus phase and anticipates sound events through steady rhythms (Figure 8A) and through rhythms that are jittered or perturbed in time (Figure 8B). The model accounts for listeners’ tendency to perceptually shift the phase of a beat if the rhythm is overly syncopated: when phase uncertainty Vt is large, μ̂t is strongly influenced by strong phase-specific expectations even if the sound event occurs well before or after that expectation (Figure 8C). Unlike other models of rhythm perception, PIPPET accounts for the empirically observed tendency to perceive rhythms with unexpected omissions as speeding up (Repp and Bruttomesso, 2009): the expectation-dependent term in the continuous evolution of μt slows the advance of estimated phase as it approaches a phase where events are strongly expected, so that the next sound seems early (Figure 8D).

Figure 8. Behavior of the PIPPET model. (A) The PIPPET algorithm continuously estimates the phase (drawn here on the real line rather than the circle) underlying a syncopated rhythm. It does so by continuously evaluating the rhythm in light of an expectation template of times at which events are more or less strongly expected (blue). Here, the template is chosen a priori; in an extended model, it may be inferred from among a library of such expectation templates. For this relatively simple rhythm, estimated phase advances steadily. Uncertainty grows through rests since they provide no information about the exact phase of the stimulus cycle. Uncertainty decreases each time a sound aligns with a moment of sound expectation, and decreases substantially when a sound aligns with the strong and precise expectation on the beat, e.g., at 1 s. (B) Phase tracking is robust to timing perturbations in the rhythm. Immediately following a phase shift (at 1 s), phase uncertainty Vt increases, but then it is reduced when the adjusted phase is used to accurately predict a strongly expected event (at 1.4 s). (C) The listener may expect a steady stream of events or may learn irregular patterns of expectations. Here, the listener expects uneven (swung) eighth notes. For this well-timed but excessively syncopated example rhythm, excessive syncopation leads to a failure of phase tracking (straying from the diagonal) similar to that observed for high syncopation in humans. (D) Strong expectations that are not met with auditory events (at 0.25, 0.5, and 0.75 s) cause the advance of estimated phase to become irregular. As a result, an auditory event at 1 s seems early.

A key aspect of the model that follows from the formulation of the problem as probabilistic inference is Vt, the continuous estimate of the participant’s uncertainty about the rhythm’s phase. This work predicts that a similar estimate should be physiologically represented in the brain alongside an estimate of the phase of the beat cycle. The model is agnostic to the neural nature of μt and Vt, and could therefore be compatible with a range of physiological mechanisms.

One exciting aspect of this model, and of probabilistic inference approaches more generally, is that they can be used to specify an exact degree of mismatch between expectations and reality. A convenient measure of this mismatch is the information-theoretic surprisal: if the probability of an occurrence is p, the surprisal associated with it is −log(p). Thus, the surprisal associated with a sound event is −log(Λdt), a function of the subjective hazard rate, i.e., the overall degree of expectancy. This is closely related to the prediction error signal thought to drive Bayesian inference in the theory of Predictive Processing.
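
As a small illustration of the surprisal −log(Λdt), the sketch below evaluates it for an event at an on-beat versus an off-beat moment. It assumes, hypothetically, that Λ is the template rate averaged over a Gaussian phase belief, Λ = λ0 + Σj λj N(μ; ϕj, vj + V), which is one natural reading of a subjective hazard; the template and values are illustrative.

```python
import math


def hazard(mu, V, lam0, template):
    """Expected event rate under belief N(mu, V); template peaks are taken on the circle."""
    total = lam0
    for lam_j, phi_j, v_j in template:
        d = (mu - phi_j + 0.5) % 1.0 - 0.5
        s2 = v_j + V
        total += lam_j * math.exp(-d * d / (2 * s2)) / math.sqrt(2 * math.pi * s2)
    return total


def surprisal(Lam, dt):
    """Information-theoretic surprisal -log(p) of an event with probability Lam * dt."""
    return -math.log(Lam * dt)


template = [(1.0, 0.0, 0.02 ** 2)]  # hypothetical: one precise beat per cycle
on_beat = surprisal(hazard(0.0, 1e-3, 0.05, template), dt=1e-3)
off_beat = surprisal(hazard(0.5, 1e-3, 0.05, template), dt=1e-3)
```

An event at the expected phase carries much less surprisal than the same event half a cycle away, where only the small non-metrical rate λ0 supports it.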

PIPPET can be extended to infer stimulus tempo as well as phase: when a dynamic tempo variable is incorporated into the generative model of rhythm, a dynamic probabilistic inference process can continuously estimate a joint distribution over phase and tempo (Figure 9). Further, PIPPET has recently been extended to include simultaneous inference about which of a library of templates is relevant to the current rhythm (Kaplan et al., 2022). In this extension, the collection of metrical patterns that the listener has been enculturated to expect determines how they interpret, tap along with, and reproduce the rhythmic surface, as has been demonstrated experimentally (Jacoby and McDermott, 2017).
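The joint phase-tempo idea can be illustrated with a simpler, swapped-in technique: a discrete Kalman filter over (phase, tempo) that reads each sound as a noisy observation that the nearest whole beat has arrived. This is not PIPPET's continuous-time filter; the noise parameters, the nearest-beat observation rule, and all names are assumptions. It does show the mechanism behind Figure 9C: because phase and tempo become correlated in the covariance, a phase error at an event also revises the tempo estimate.

```python
def kalman_phase_tempo(event_times, r0, P0=((0.01, 0.0), (0.0, 0.01)),
                       q_phase=0.001, q_tempo=0.01, R=0.01):
    """Event-driven Kalman filter over (phase in cycles, tempo in cycles/s).

    Hypothetical simplification: each sound is read as a noisy observation that
    phase equals the nearest whole beat. Returns (time, phase, tempo) per event.
    """
    phi, r = 0.0, r0                      # assume the first event sits at phase 0
    (p11, p12), (_, p22) = P0             # symmetric covariance entries
    t_prev = event_times[0]
    history = []
    for t in event_times[1:]:
        dt = t - t_prev
        t_prev = t
        # Predict: phase advances at the estimated tempo; P <- F P F^T + Q,
        # with F = [[1, dt], [0, 1]] coupling phase to tempo
        phi += r * dt
        p11, p12, p22 = (p11 + 2 * dt * p12 + dt * dt * p22 + q_phase * dt,
                         p12 + dt * p22,
                         p22 + q_tempo * dt)
        # Update with H = [1, 0]: innovation is the distance to the nearest beat
        innovation = round(phi) - phi
        s = p11 + R
        k1, k2 = p11 / s, p12 / s
        phi += k1 * innovation
        r += k2 * innovation              # tempo revised through phase-tempo covariance
        p11, p12, p22 = (1 - k1) * p11, (1 - k1) * p12, p22 - k2 * p12
        history.append((t, phi, r))
    return history


# Tempo change from 1.1 to 0.9 beats per second
times = [0.0]
for ioi in [1 / 1.1] * 12 + [1 / 0.9] * 30:
    times.append(times[-1] + ioi)
history = kalman_phase_tempo(times, r0=1.1)
```

When the events slow down, the first late events produce negative phase innovations, and the covariance term `p12` turns them into downward tempo revisions until the estimate settles near 0.9 beats per second.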

Figure 9. The extended PIPPET model infers both phase and tempo over the course of a tempo change from 1.1 beats per second to 0.9 beats per second. (A) When the sequence of sound events slows down, the advance of estimated phase slows as well; plotting conventions as in Figure 8. (B) This is because tempo (and tempo uncertainty) are also inferred as the sequence unfolds, and the estimated tempo drops when the rate of events slows. Initial and final tempi are marked with dashed red lines. (C) Phase and tempo can be inferred simultaneously because the model tracks a joint distribution over phase (x-axis, projected from the circle onto the real line) and tempo (y-axis). The contours represent level sets of a multivariate Gaussian distribution over the phase-tempo state space, strobed over time. At each event, the prior distribution (red) is updated to a posterior (green) that incorporates a likelihood function based on the phases at which events are expected.

The PIPPET model takes an interesting stance on the continuation of a beat. Without continued rhythmic input, the estimated distribution over stimulus phase gradually decays toward a uniform distribution. However, that input can be self-generated. If self-movement, e.g., stepping or finger-tapping, is actuated based on the inferred rhythmic structure, then the feedback from this movement can take the place of the stimulus and keep the phase inference process going in a closed loop of action and rhythm perception. Alternatively, auditory imagery, presumably generated elsewhere in the brain and treated as input to PIPPET, could take the place of motor feedback.

There has not yet been a published attempt to fit PIPPET's parameters carefully to rhythm perception data, but such an attempt appears feasible. The expectation template could be estimated by manipulating timing at various levels of the metrical hierarchy and observing the effect on the timing of a subsequent tap indicating the next expected beat (e.g., Repp and Doggett, 2007), and the rate at which temporal uncertainty accumulates between events could be estimated from similar experiments spanning multiple inter-beat intervals.

The Bayesian Brain perspective on rhythm perception will not, in itself, reveal neural mechanisms of beat tracking and anticipation. However, it has already demonstrated its potential to highlight nuances of rhythm perception and model them at a high level, providing guidance to the search for neural substrates. Further, by identifying the cognitive significance of dynamic variables as “representations” of hidden underlying processes, this dynamic inference approach may provide a bridge from basic mechanisms to complex musical behaviors that are easiest to specify in terms of recognition of and interaction with musical structure.

While Bayesian approaches model rhythm at the behavioral level, in this section we explore the hypothesis that physiological oscillations in large-scale brain networks underlie rhythm perception. We then ask what additional behavioral and neural phenomena may be explained by this hypothesis. But this approach brings with it significant challenges. Although the mechanisms of spiking and oscillation in individual neurons have been well known for some time (Hodgkin and Huxley, 1952; Izhikevich, 2007), emergent oscillations within large groups of neurons are still approached in a variety of ways (see e.g., Ermentrout, 1998; Buzsáki, 2004; Wang, 2010; Breakspear, 2017; Senzai et al., 2019).

While one type of research goal involves understanding how oscillations at different levels of organization are generated, another important goal is to understand how emergent oscillations in large scale brain networks relate to perception, action, and cognition at the behavioral level (Kelso, 2000). The Neural Resonance Theory (NRT) approach begins with the observation that models of neural oscillation share certain behavioral characteristics that can be captured in generic models called normal forms, or canonical models. It hypothesizes that the properties found at this level of analysis are the key to explaining rhythmic behavior.

Neural Resonance Theory refers to the hypothesis that generic properties of self-organized neuronal oscillations directly predict behavioral level observations including rhythm perception, temporal attention, and coordinated action. The theory’s predictions arise from dynamical analysis of physiological models of oscillations in large-scale cortical networks (see Hoppensteadt and Izhikevich, 1996a,b; Breakspear, 2017). As such, NRT models make additional predictions about the underlying physiology, including local field potentials, and parameter regimes of the underlying physiological dynamics.

Nonlinear resonance refers broadly to synchronization, or entrainment, of (nonlinear) neural oscillations (Pikovsky et al., 2001; Large, 2008; Kim and Large, 2015). Although terms like entrainment are sometimes used in the empirical literature to refer merely to phase-alignment of neural signals, these terms are used here in a stricter physical (or dynamical) sense, enabling predictions about behavior and physiology that arise from dynamical analysis of nonlinear oscillation.

Importantly, neural resonance predicts more than phase-alignment with rhythmic stimuli; it predicts mode-locking of neural oscillations. Mode-locking, in turn, predicts structural constraints in perception, attention, and coordinated action. Mode-locking can explain, for example, how we perceive a periodic beat in a complex (multi-frequency) rhythm (Large et al., 2015), whereas phase-alignment in linear systems cannot (see e.g., Pikovsky et al., 2001; Loehr et al., 2011).
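Mode-locking can be seen in the sine circle map, written here in one common form, θ_{n+1} = θ_n + Ω − (K/2π) sin(2πθ_n), where θ is oscillator phase (in cycles) at each stimulus onset, Ω sets the ratio of stimulus to oscillator period, and K is the coupling strength. This parameterization and the function name are assumptions and may differ from the formulation in section 3. The rotation number (mean phase advance per stimulus) locks onto rational values over finite ranges of Ω only when the nonlinear coupling is present.

```python
import math


def rotation_number(omega, K, theta0=0.1, n_transient=1000, n_iter=10000):
    """Mean phase advance per stimulus cycle of the sine circle map.

    Assumed form: theta_{n+1} = theta_n + omega - (K / (2*pi)) * sin(2*pi*theta_n),
    with theta in cycles and kept unwrapped so that advances accumulate.
    """
    def step(theta):
        return theta + omega - K / (2 * math.pi) * math.sin(2 * math.pi * theta)

    theta = theta0
    for _ in range(n_transient):      # let the orbit settle
        theta = step(theta)
    start = theta
    for _ in range(n_iter):
        theta = step(theta)
    return (theta - start) / n_iter


# Without coupling the rotation number just follows omega; with coupling it
# sticks to the rational value 1/2 over a finite range of omega (mode-locking)
uncoupled = rotation_number(0.495, K=0.0)
locked = rotation_number(0.495, K=0.9)
```

Sweeping `omega` at fixed `K` traces out a staircase of such rational plateaus, which is one way to visualize the structural constraints that mode-locking imposes.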

Synaptic plasticity is an important means by which the brain adapts and learns through exposure to environmental information. Here we describe two primary mechanisms by which adaptation may occur: Hebbian learning via synaptic plasticity, and behavioral timescale adaptation of individual oscillator parameters, such as natural frequency.
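Behavioral-timescale adaptation of a natural frequency can be sketched with a generic adaptive-frequency phase oscillator: the same phase-mismatch signal that nudges the phase also slowly drags the natural frequency toward the stimulus rate. The update rule, gains, and names below are illustrative assumptions, not the learning rule of any specific NRT model; Hebbian learning of connection strengths is not shown.

```python
import math


def adapt_frequency(stim_hz=2.0, f0_hz=1.8, K=5.0, eps=0.5, dt=0.001, T=30.0):
    """Phase oscillator whose natural frequency adapts toward a periodic stimulus.

    dphi/dt   = omega - K*sin(phi - Omega*t)       (phase coupling)
    domega/dt = -eps*K*sin(phi - Omega*t)          (natural-frequency adaptation)
    Returns the adapted natural frequency in Hz (hypothetical rule).
    """
    Omega = 2 * math.pi * stim_hz        # stimulus angular frequency (rad/s)
    omega = 2 * math.pi * f0_hz          # oscillator natural frequency (rad/s)
    phi = 0.0
    for n in range(int(round(T / dt))):
        mismatch = math.sin(phi - Omega * n * dt)   # > 0 when the oscillator runs ahead
        phi += dt * (omega - K * mismatch)
        omega += dt * (-eps * K * mismatch)
    return omega / (2 * math.pi)


learned_hz = adapt_frequency()
```

Once the stimulus stops, an oscillator adapted this way keeps running near the learned rate, whereas a purely phase-coupled oscillator would relax back to its original natural frequency.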

Transmission delays are ubiquitous in the brain, representing another generic feature of neural dynamics. But how can we perform synchronized activities, such as playing music together, or anticipate events in a rhythm in the presence of time delays? Recent work suggests that such behavioral and perceptual feats take place not in spite of time delays, but because of them (Stephen et al., 2008; Stepp and Turvey, 2010). While seemingly counter-intuitive, dynamical systems can anticipate or expect events in the external world to which they are coupled, without needing to explicitly model the environment or predict the future.
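One well-studied mechanism of this kind is anticipating synchronization: a responder with the same dynamics as its driver, coupled to the driver through its own delayed state, can settle onto a state that leads the driver by the delay. The sketch below uses Hopf-type oscillators and Euler integration; the coupling form, parameters, and names are illustrative assumptions, not the models of the works cited above.

```python
import math


def anticipating_sync(alpha=1.0, omega=2 * math.pi * 2.0, K=5.0,
                      tau=0.02, dt=0.001, T=10.0):
    """Driver and delay-coupled responder with identical Hopf-type dynamics.

    Responder: dz/dt = f(z) + K * (z_driver(t) - z(t - tau)). The manifold
    z(t) = z_driver(t + tau) is invariant, so the responder can lead the driver.
    Returns (error vs. driver tau later, error vs. driver now) at time T.
    """
    def f(z):
        return z * (alpha + 1j * omega - abs(z) ** 2)

    n_steps = int(round(T / dt))
    d = int(round(tau / dt))                    # delay in integration steps
    # Driver starts on its limit cycle; simulate d extra steps to know its "future"
    drv = [complex(math.sqrt(alpha), 0.0)]
    for _ in range(n_steps + d):
        drv.append(drv[-1] + dt * f(drv[-1]))
    # Responder starts elsewhere; before t = tau its delayed value is its initial state
    rsp = [0.3 + 0.0j]
    for n in range(n_steps):
        delayed = rsp[n - d] if n >= d else rsp[0]
        rsp.append(rsp[n] + dt * (f(rsp[n]) + K * (drv[n] - delayed)))
    lead_error = abs(rsp[n_steps] - drv[n_steps + d])
    sync_error = abs(rsp[n_steps] - drv[n_steps])
    return lead_error, sync_error


lead_error, sync_error = anticipating_sync()
```

The responder never receives the driver's future; it only sees the driver's present and its own past, yet its state ends up matching where the driver will be `tau` seconds later.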

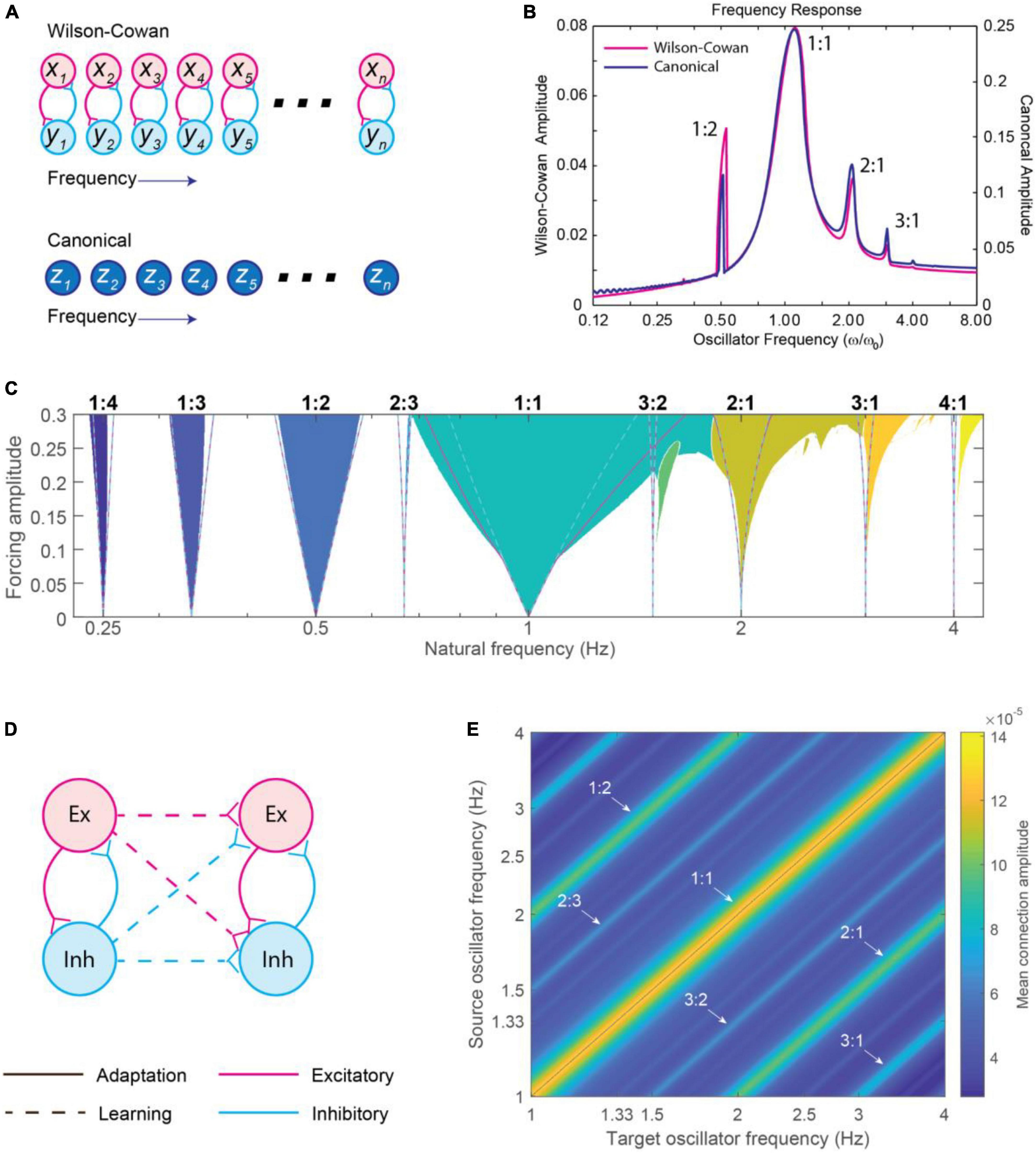

Phase models based on circle map dynamics, introduced in section "3. Models for time-keeping, beat generation and beat perception," have been used to describe phenomena including beat perception (Large and Kolen, 1994), dynamic attending (Large and Jones, 1999), perception-action coordination (deGuzman and Kelso, 1991), and tempo invariance (Large and Palmer, 2002). Inclusion of period-correcting dynamics (e.g., Large and Jones, 1999; Large and Palmer, 2002) made these models robust to stimuli that change tempo. In some models, an "attentional pulse" specified when events in an acoustic stimulus were expected to occur, as well as the precision of those temporal expectations. A related "canonical" model was derived from a physiological model of oscillation in cortical networks (Wilson and Cowan, 1973; Hoppensteadt and Izhikevich, 1996a,b; Large et al., 2010), and includes both amplitude and phase dynamics. The inclusion of realistic amplitude dynamics enriches the behavioral predictions of phase models and has also led to models that include Hebbian learning and neural time-delays.
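The effect of period correction can be sketched with a discrete beat oscillator that applies sine-shaped phase and period corrections after each onset. This is a generic sketch in the spirit of the circle-map models above; the update rules, gains, and names are assumptions rather than the published equations.

```python
import math


def track_tempo(onsets, p0, eta_phase=0.5, eta_period=0.2):
    """Sine-coupled phase and period correction of a discrete beat oscillator.

    Returns the oscillator period after each onset (hypothetical rule).
    """
    expected = onsets[0]        # assume the first onset defines the beat
    period = p0
    periods = []
    for onset in onsets[1:]:
        expected += period                          # predicted time of the next beat
        phi = (onset - expected) / period           # relative phase error (cycles)
        phi = (phi + 0.5) % 1.0 - 0.5               # wrap to [-0.5, 0.5)
        corr = math.sin(2 * math.pi * phi) / (2 * math.pi)
        expected += eta_phase * period * corr       # phase correction
        period *= 1.0 + eta_period * corr           # period correction
        periods.append(period)
    return periods


# Tempo change: inter-onset interval 0.5 s -> 0.6 s
onsets = [0.0]
for ioi in [0.5] * 20 + [0.6] * 40:
    onsets.append(onsets[-1] + ioi)
periods = track_tempo(onsets, p0=0.5)
```

With `eta_period = 0` the oscillator could only shift its phase after every onset and would never adopt the new tempo; the period term is what lets it settle at the new 0.6 s interval.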

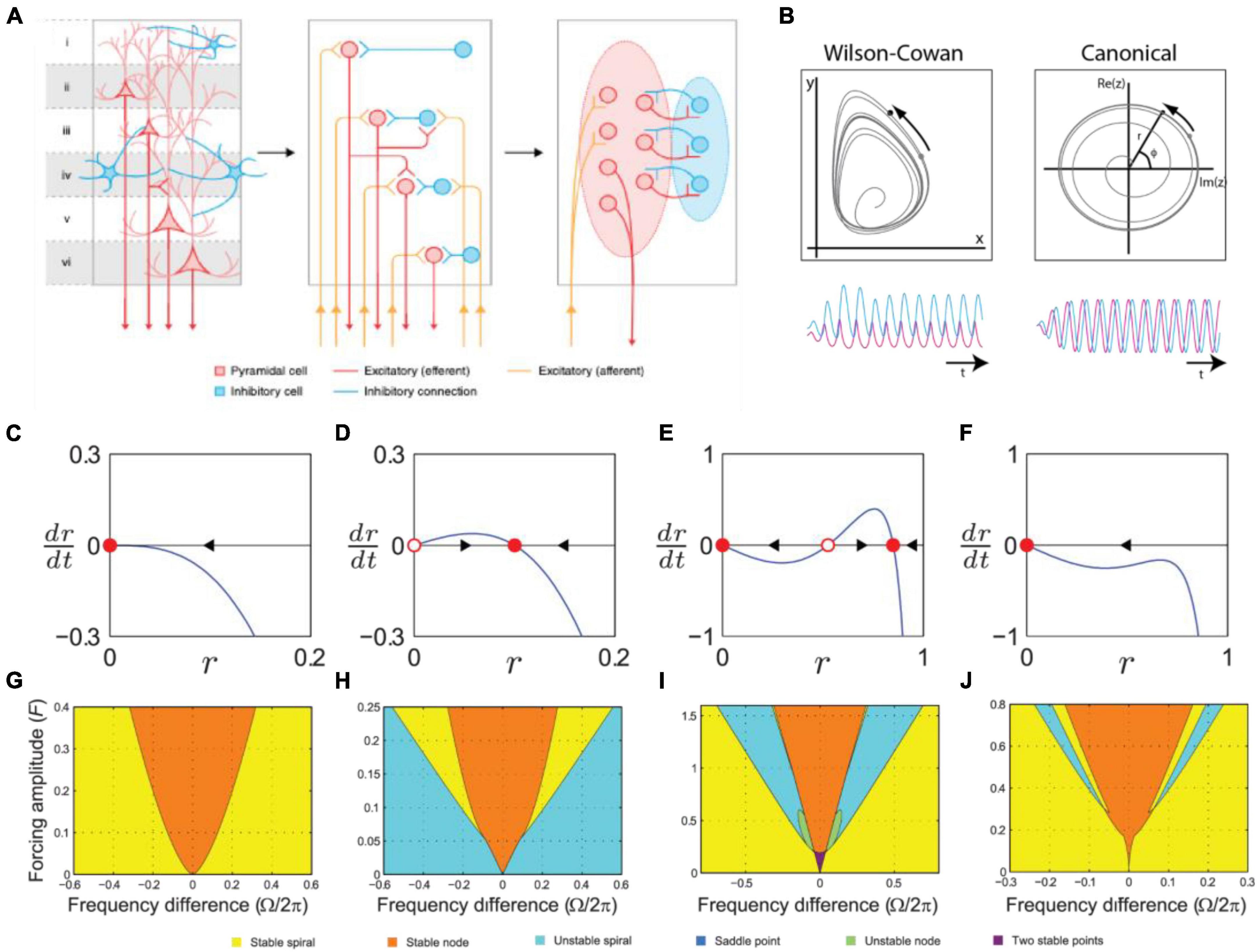

Phase models represented important steps forward in thinking about what kind of neural dynamics may underlie dynamic attending and perception-action coordination. However, the absence of amplitude dynamics in the formalism described in section "3.3.1. Circle-map phase oscillator models" limits its applicability to neural and behavioral data. Alternatively, neural mass models (also called excitation-inhibition, or E-I models, e.g., Wilson and Cowan, 1973; Hoppensteadt and Izhikevich, 1996a) characterize neural oscillations in networks of interacting excitatory and inhibitory neural populations. Models of large-scale brain dynamics (larger than a single neural oscillation) can then be based on the study of self-organized oscillation in collective neural behavior (see Breakspear, 2017 for an overview) in which oscillations have both amplitude and phase (e.g., Large et al., 2010; see Figures 10A, B). Below, we describe a derivation of a canonical model from a neural mass model, then expand this into a network model with learning, and address the role of time delays.

Figure 10. (A) The left panel shows pyramidal and inhibitory neurons present and interacting across a cortical column. The center panel shows how a traditional spiking neural model treats each neuron as a unit that is individually modeled. The right panel shows a neural mass model (NMM), where population dynamics are averaged to a low-dimensional differential equation for each class of neurons [modified from Breakspear (2017)]. (B) The oscillatory dynamics of two-dimensional Wilson-Cowan (left) and canonical oscillator (right) models, driven by a periodic sinusoidal stimulus [adapted from Large (2008)]. (C–F) Amplitude vector fields for a canonical oscillator in (C) a critical Hopf regime, (D) a supercritical Hopf regime, and (E,F) saddle-node bifurcation of periodic orbits regimes. Attractors and repellers are indicated by red dots and red circles, respectively. Arrows show the direction of trajectories [adapted from Kim and Large (2015)]. (G–J) Stability regions for a canonical oscillator as a function of sinusoidal forcing strength in (G) a critical Hopf regime, (H) a supercritical Hopf regime, and (I,J) saddle-node bifurcation of periodic orbits regimes. Colors indicate stability type [adapted from Kim and Large (2015)].

Neural mass models approximate groups of neurons by their average properties and interactions. One example is the well-known Wilson-Cowan model (Wilson and Cowan, 1973), which describes the dynamics of interactions between populations of simple excitatory and inhibitory model neurons. Both limit cycle behavior, i.e. neural oscillations, and stimulus-dependent evoked responses are captured in this model.

Here, u is the firing rate of an excitatory population, and v is the firing rate of an inhibitory population; a, b, c, and d are intrinsic parameters, ρ_u and ρ_v are bifurcation parameters, and x_u(τ) and x_v(τ) are afferent inputs to the oscillator.
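To see limit-cycle behavior in such a model, the sketch below integrates one standard way of writing a Wilson-Cowan pair with these symbol names: du/dτ = −u + S(ρ_u + au − bv + x_u(τ)) and dv/dτ = −v + S(ρ_v + cu − dv + x_v(τ)), with S a logistic sigmoid, unit time constants, and no input. This form, the parameter values, and the function names are assumptions for illustration and may differ from the article's formulation in scaling and time constants.

```python
import math


def logistic(x):
    """Logistic sigmoid S(x)."""
    return 1.0 / (1.0 + math.exp(-x))


def wilson_cowan(a=12.0, b=10.0, c=10.0, d=2.0, rho_u=-1.0, rho_v=-4.0,
                 dt=0.01, T=200.0, u0=0.51, v0=0.5):
    """Euler integration of an undriven E-I pair (x_u = x_v = 0), unit time constants.

    Assumed form: du/dtau = -u + S(rho_u + a*u - b*v),
                  dv/dtau = -v + S(rho_v + c*u - d*v).
    Returns the excitatory rate u at every step.
    """
    u, v = u0, v0
    us = []
    for _ in range(int(round(T / dt))):
        du = -u + logistic(rho_u + a * u - b * v)
        dv = -v + logistic(rho_v + c * u - d * v)
        u, v = u + dt * du, v + dt * dv
        us.append(u)
    return us


us = wilson_cowan()
late = us[len(us) // 2:]          # discard the first half as transient
amplitude = max(late) - min(late)
```

With these values the only fixed point is (u, v) = (0.5, 0.5), where the Jacobian has trace 0.5 and determinant 3.25 (an unstable focus). Because both rates stay within (0, 1), trajectories settle onto a limit cycle, i.e., a sustained neural oscillation.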

On the surface, neural mass models appear quite distinct from the sine circle map used in section “3.3.1. Circle-map phase oscillator models.” However, there is a systematic relationship. One approach to understanding their behavior is to derive a normal form. When the neural mass model is near a Hopf bifurcation, we can reduce it to the Hopf normal form via a coordinate transformation and a Taylor expansion about a fixed point (equilibrium), followed by truncation of higher order terms after averaging over slow time (Ermentrout and Cowan, 1979; Hoppensteadt and Izhikevich, 1997).
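With the coefficients a = α + iω and b_1 = β_1 + iδ_1 defined below, the truncated normal form can be sketched in its standard form; treating the input x(t) as entering additively with unit gain is an assumption of this sketch.

$$
\frac{dz}{dt} = az + b_1 z|z|^2 + x(t) + \text{H.O.T.}, \qquad a = \alpha + i\omega, \quad b_1 = \beta_1 + i\delta_1
$$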

where z = re^{iϕ} is the complex-valued oscillator state, a = α + iω and b_1 = β_1 + iδ_1 are the coefficients of the linear and cubic terms, respectively, x(t) is an external rhythmic input that may contain multiple frequencies, t = ετ is slow time, and H.O.T. denotes higher-order terms. Eq. 5 can then be transformed to separately describe the dynamics of amplitude r and phase ϕ:
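Taking Eq. 5 in the standard form dz/dt = az + b_1 z|z|² + x(t), with a real-valued input x(t) and higher-order terms dropped (simplifying assumptions of this sketch), substituting z = re^{iϕ}, multiplying through by e^{−iϕ}, and separating real and imaginary parts gives

$$
\begin{aligned}
\frac{dr}{dt} &= \alpha r + \beta_1 r^3 + x(t)\cos\phi \\
\frac{d\phi}{dt} &= \omega + \delta_1 r^2 - \frac{x(t)}{r}\sin\phi
\end{aligned}
$$

With x(t) = 0, α > 0, and β_1 < 0, the amplitude settles at r* = √(−α/β_1) and the oscillator runs at ω + δ_1 r*², which is why δ_1 captures the dependence of frequency on amplitude.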

In this formulation, the parameters are behaviorally meaningful: α is the bifurcation parameter, ω is the natural frequency, β_1 is nonlinear damping, and δ_1 is a detuning parameter that captures the dependence of frequency on amplitude. This model is systematically related to the phase model, assuming a limit cycle oscillation (α ≫ 0) and discrete input impulses (see Large, 2008).
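These behavioral readings of α, β_1, ω, and δ_1 can be checked numerically by integrating the undriven normal form with fourth-order Runge-Kutta. The sketch assumes the standard truncated form without input; parameter values and function names are illustrative.

```python
import cmath
import math


def hopf_rhs(z, alpha, omega, beta1, delta1):
    """Truncated Hopf normal form without input: z*(alpha + i*omega + (beta1 + i*delta1)*|z|^2)."""
    return z * (alpha + 1j * omega + (beta1 + 1j * delta1) * abs(z) ** 2)


def simulate_hopf(alpha=1.0, omega=2 * math.pi, beta1=-1.0, delta1=-1.0,
                  z0=0.1 + 0.0j, dt=1e-3, T=20.0, t_measure=5.0):
    """RK4 integration; returns the final amplitude and the mean angular
    frequency over the last t_measure time units."""
    args = (alpha, omega, beta1, delta1)
    z = z0
    n_steps = int(round(T / dt))
    n_measure = int(round(t_measure / dt))
    phase_advance = 0.0
    for n in range(n_steps):
        k1 = hopf_rhs(z, *args)
        k2 = hopf_rhs(z + 0.5 * dt * k1, *args)
        k3 = hopf_rhs(z + 0.5 * dt * k2, *args)
        k4 = hopf_rhs(z + dt * k3, *args)
        z_next = z + dt * (k1 + 2 * k2 + 2 * k3 + k4) / 6
        if n >= n_steps - n_measure:
            phase_advance += cmath.phase(z_next / z)   # per-step phase increment
        z = z_next
    return abs(z), phase_advance / (n_measure * dt)


r_final, freq = simulate_hopf()
```

For α > 0 and β_1 < 0 the amplitude approaches √(−α/β_1) = 1 here, and the measured angular frequency approaches ω + δ_1 r² = 2π − 1, so a negative δ_1 slows the oscillator as its amplitude grows.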

Normal form models are useful in their own right, for example as models of outer hair cells in the cochlea (e.g., Eguíluz et al., 2000). However, truncating higher-order terms loses important properties of the neural models, most importantly mode-locking, which is critical in the NRT framework. Using the same principles as normal form analysis, a fully expanded canonical model for a neural mass oscillation near a Hopf bifurcation can instead be derived (Large et al., 2010). For example, the following expanded form contains the input terms for all two-frequency (k:m) relations between an oscillator and a sinusoidal input:

In the original expanded form in Large et al. (2010), the relative strengths of high-order terms are expressed by powers of the parameter ε. Eq. 8 is a rescaled form without ε in which oscillation amplitude is normalized (|z| < 1; Kim and Large, 2021). This model displays rich dynamics and has several interesting properties that make it useful for modeling and predicting rhythmic behaviors. The fully expanded model can be decomposed to study specific properties, and to create minimal models of empirical phenomena.