95% of researchers rate our articles as excellent or good

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.

Find out more

ORIGINAL RESEARCH article

Front. Comput. Neurosci. , 03 August 2022

Volume 16 - 2022 | https://doi.org/10.3389/fncom.2022.931121

We apply the framework of nonlinear optimal control to a biophysically realistic neural mass model, which consists of two mutually coupled populations of deterministic excitatory and inhibitory neurons. External control signals are realized by time-dependent inputs to both populations. Optimality is defined by two alternative cost functions that trade the deviation of the controlled variable from its target value against the “strength” of the control, which is quantified by the integrated 1- and 2-norms of the control signal. We focus on a bistable region in state space where one low- (“down state”) and one high-activity (“up state”) stable fixed points coexist. With methods of nonlinear optimal control, we search for the most cost-efficient control function to switch between both activity states. For a broad range of parameters, we find that cost-efficient control strategies consist of a pulse of finite duration to push the state variables only minimally into the basin of attraction of the target state. This strategy only breaks down once we impose time constraints that force the system to switch on a time scale comparable to the duration of the control pulse. Penalizing control strength via the integrated 1-norm (2-norm) yields control inputs targeting one or both populations. However, whether control inputs to the excitatory or the inhibitory population dominate, depends on the location in state space relative to the bifurcation lines. Our study highlights the applicability of nonlinear optimal control to understand neuronal processing under constraints better.

Optimal control theory (OCT) provides a toolbox to investigate the effect of targeted perturbations on dynamical systems (Berkovitz and Medhin, 2012). It enables to answer the question of how stimulation must be designed to optimally induce or stop specific dynamical states or activity patterns. Optimality is defined through the global minimum of a cost function, which typically rewards closeness to desired target values of the state variables and penalizes control effort, which can be quantified, for example, in terms of the duration and strength of an external control signal (Casas et al., 2015). Applications of OCT are 2-fold. In a “synthetic” application scenario, OCT can help us to manipulate a dynamical system optimally, for example, to follow the desired trajectory. In an “analytic” application scenario, it can help us understand the way in which a natural dynamical system is designed and offers explanations of its workings in terms of optimization principles. In the past, OCT has been applied successfully in biology and biomedicine with applications to cellular systems, metabolic networks, and the development of effective treatments against pathogens (see, e.g., Ewald et al., 2017; Tsiantis and Banga, 2020 for recent reviews).

Applications to neural systems have been mostly on the synthetic side so far and cover a variety of open and closed loop approaches for modulating brain activity (cf. Grosenick et al., 2015; Tafazoli et al., 2020; Takeuchi and Berényi, 2020). Examples include deep brain stimulation for the treatment of patients with Parkinson's disease (Popovych and Tass, 2019), invasive stimulation to imprint population activity (Marshel et al., 2019), e.g., in the context of neuro-prosthetic devices (Chen et al., 2020; Flesher et al., 2021), and non-invasive transcranial electrical stimulation for modulating and improving perception, motor control, and cognition (Au et al., 2017; Colzato et al., 2017; Reteig et al., 2017). Applications of OCT, however, are few and are mostly restricted to theoretical investigations. OCT in form of minimum-energy or minimum-power control strategies was applied to phase oscillators, which were derived to match single neuron phase response curves (Nabi et al., 2012; Dasanayake and Li, 2014; Pyragas et al., 2020). Here, the first experimental verifications of this technique confirmed an improved performance (Wilson et al., 2015). OCT was applied more extensively to wave propagation in systems of coupled non-linear oscillators (cf. Löber and Engel, 2014; Ziepke et al., 2019; Shangerganesh and Sowndarrajan, 2020), which also serve as models for neurons or neural populations, but closer links to the neuroscience literature were not yet made.

Compared to other applications in biology and biomedicine, there have been fewer works exploring the potential of OCT for analytic investigations into neural systems. One exception is motor control, for which OCT and optimal feedback control theory are well-established frameworks and drive theoretical analysis and modeling on a behavioral level (Todorov and Jordan, 2002; Diedrichsen et al., 2009; Scott, 2012). Beyond validating its applicability (Bian et al., 2020), recent studies extend this framework by including feedforward strategies (Yeo et al., 2016) and stochastic effects (Berret et al., 2021). Studies on applications of OCT to neural dynamics are few. Bassett and colleagues (cf. Gu et al., 2015; Tang and Bassett, 2018; Srivastava et al., 2020) applied diagnostics from linear control theory to the dynamics of neural populations in a whole-brain network setting, arguing that linearization is a valid approximation locally. Questions that were addressed include the efficacy of network nodes to steer the network dynamics, with some of the obtained results being confirmed by numerical simulations of a corresponding non-linear model (Muldoon et al., 2016). Results were interpreted in the context of the brain's internal control of general neurophysiological processes with implications for brain development and cognitive function, but also in the context of controlling altered neurophysiological processes in a medical context. A recent work (Ref. Chouzouris et al., 2021) applied nonlinear OCT to a whole-brain network model of FitzHugh-Nagumo oscillators, discussing the predictions of linear control diagnostics vs. nonlinear optimal control for different control settings. These studies highlight the potential of control theoretic concepts in an “analytic” setting for a mechanistic understanding of neural dynamics.

In this contribution, we explore the potential of OCT for predicting optimal perturbations for a motif, which consists of two recurrently connected populations of excitatory and inhibitory neurons and which is a common building block of many neural systems. We consider a biophysically grounded two population mass model (Cakan and Obermayer, 2020), whose populations are mathematically described via mean-field approximations of infinitely large populations of exponential integrate-and-fire (EIF) neurons (Brette and Gerstner, 2005; Augustin et al., 2017) and which exhibits down-states, up-states, and several oscillatory phenomena observed in neural systems. Here, we focus on a region in state space, in which the model is bistable, i.e., in which stable states of constant high and low activity coexist. We then apply nonlinear OCT in search of the most efficient strategies (in terms of accuracy and required control strength) for an external input to steer the motif from one of its stable fixed points to the other. To do so, we implement a gradient descent algorithm minimizing a cost function, which trades accuracy (w.r.t. the control goal) against control strength (measured by the integrated 1- and 2-norms of the control signal). We first explore the performance of the optimization method and explore its limitations. When applied to the switching task we find that—in the noiseless case—low-cost control strategies exploit the intrinsic properties of the dynamical system by steering the system just slightly across the boundary to the target attractor, from where the system converges to its target state without further external input. We then apply the OCT ansatz to inquire whether it is more efficient to steer the system via inputs to the inhibitory or the excitatory population if control strength is constrained. Penalizing control strength via the integrated 1-norm we find that the answer depends on the exact location of the system in state space. Thus, optimal control may require changing control inputs between the participating neural populations when the dynamical context is changed. These results show that OCT is a valuable tool and highlight its applicability to probe the dynamics of a nonlinear neural system.

This work is structured as follows. Section 2 introduces the mean-field model and its dynamics, formalizes the optimal control problem mathematically, and finally describes the numerical implementation of our optimal control algorithm. In Section 3, we explain the setup for the experiments and present our main findings. Section 4 concludes with a brief discussion and comments on the potential and shortcomings of our approach.

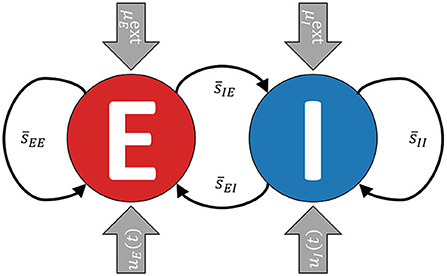

The model consists of two recurrently coupled excitatory (E) and inhibitory (I) populations (cf. Cakan and Obermayer, 2020), whose activities are measured in terms of their average firing rates rE(t) and rI(t) (see Figure 1). Both populations receive static background inputs and and time-varying external control inputs uE(t) and uI(t).

Figure 1. A simplified visualization of the model. The excitatory and the inhibitory subpopulations are recurrently coupled and receive external background inputs and time-varying external control currents uE,I(t).

The model is derived from a network of excitatory and inhibitory EIF neurons under the assumption of sparse and random connectivity to neurons of the same or opposite type and in the limit of an infinite number of neurons. All parameters and variables are biophysically grounded.

In a network of identical EIF neurons, the dynamics of the membrane voltage of the ith neuron is described by (cf. Augustin et al., 2017; Cakan and Obermayer, 2020).

The ion current Ii,ion of an EIF neuron is given by

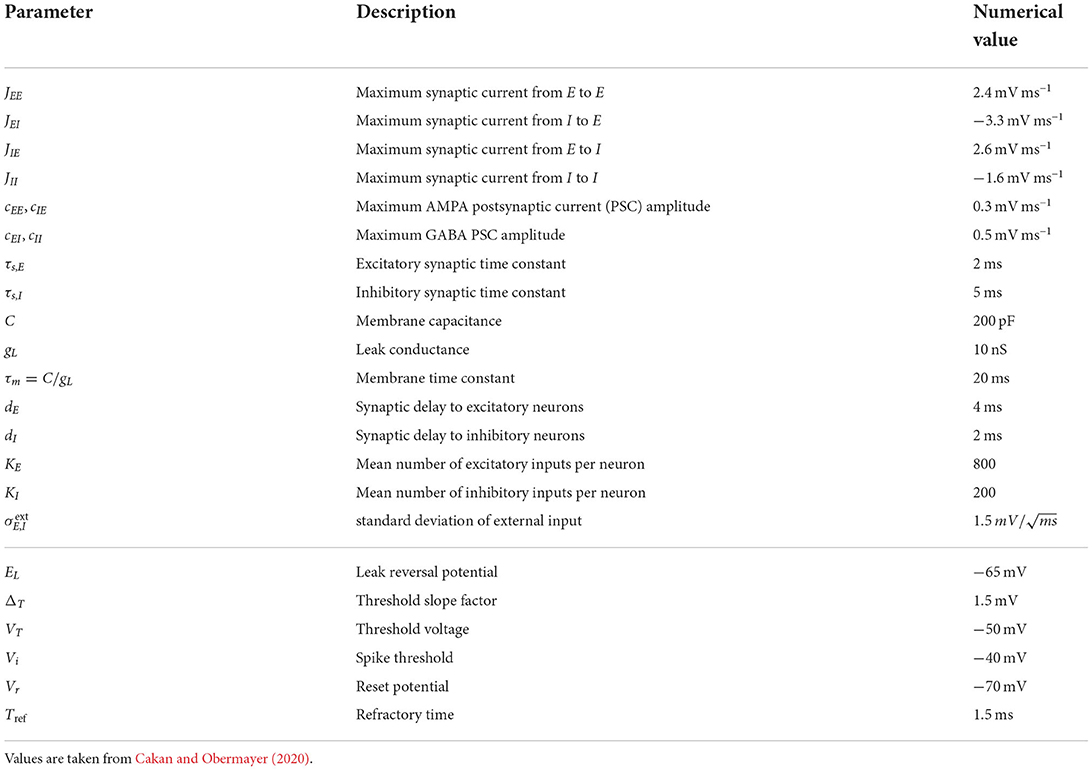

where EL, ΔT, and VT are the leak reversal potential, the threshold slope factor, and the threshold voltage, respectively. Whenever the membrane voltage reaches or exceeds the spike threshold Vs, i.e., Vi ≥ Vs, an action potential is generated, the membrane voltage is changed to the reset voltage Vr, i.e., Vi = Vr, and clamped for the refractory time Tref. Table 1 summarizes the numerical values of these parameters (cf. Cakan and Obermayer, 2020).

Table 1. Parameters of the mean-field EI EIF model (upper block) and the spiking neuron model (lower block).

Ii(t) is the sum of synaptic currents to the ith neuron induced by the neural activity of the connected neurons in the network. Excitatory (E) and inhibitory (I) neurons stimulate subsequently connected neurons differently, hence the synaptic current that neuron i of population α, α ∈ {E, I} receives is given by

C denotes the membrane capacitance, and Jαβ quantifies the coupling strength, i.e., the maximum synaptic current from population β to population α when all synapses are active. The fraction si,αβ of active synapses is determined by

where τs,β is the synaptic time constant. We sum over all spikes k that neuron j of population β emits and that are received by neuron i of population α after the time delay dα. G is a random binary connectivity matrix, i.e., Gij = 1 if neuron j is coupled to neuron i and Gij = 0 else.

Each neuron in the network receives a noisy background current with mean value and standard deviation σext, which are equal for all neurons within a population. ξi(t) is a Gaussian noise process with mean zero and variance one.

In the limit of an infinitely large population, the spiking neuron model can be turned into a neural mass model by averaging the neural dynamics of all neurons of each type. One can express the fraction of active synapses connecting population β to population α in terms of its mean value and its variance . These determine the average membrane current μα(t) and its variance , which in turn determine the mean firing rate rα(t). We denote the model as the mean-field model of excitatory and inhibitory EIF neurons (mean-field EI EIF model). For a thorough derivation of the model equations, we refer to Augustin et al. (2017). We set parameters as in Cakan and Obermayer (2020) and list the numerical values in Table 1. The model variables are summarized in Table 2. In the following, we denote the vector of dynamical variables by x(t).

The system of delay differential-algebraic equations (DDAEs) that defines the model dynamics reads

The system (Equation 5) of equations consists of four blocks. The population averages rα(t), α ∈ {E, I}, of the excitatory and inhibitory rates (first block), are determined by the precomputed transfer function Φr(μα, σα), which depends on the corresponding mean membrane current μα and its standard deviation σα. Their dynamics are described in the second block. The membrane current μα exponentially decays with the time constant τα while the weighted sum of mean synaptic inputs and the background current counteract the decay. To relate μα (given in units of mV ms-1) to a physical electric current (given in units of A), it is multiplied with the membrane capacitance C. The variances of the membrane currents combine the variances , α, β ∈ {E, I} of the synaptic inputs and the fixed parameter . rαβ denotes the population activity received by population β from population α after the time delay dβ.

The fraction of the maximum postsynaptic and the maximum synaptic current downscales the effect of the incoming rate rβ. Each neuron of population E and I is connected to Kβ neurons of population β. The third block contains the effective time constants τα, which the mean membrane current of the excitatory and inhibitory population decay with. They are determined by a precomputed function Φτ that depends on μα and σα. The last block defines the synaptic activities of the recurrently coupled populations and their variances . decays exponentially with the time constants τs,β and increases depending on the activity rαβ transmitted from population β. The variance combines the uncertainties of the different contributions to , where

Time delays enter the system through rαβ and ραβ. We denote the system of DDAEs (see Equation 5) by

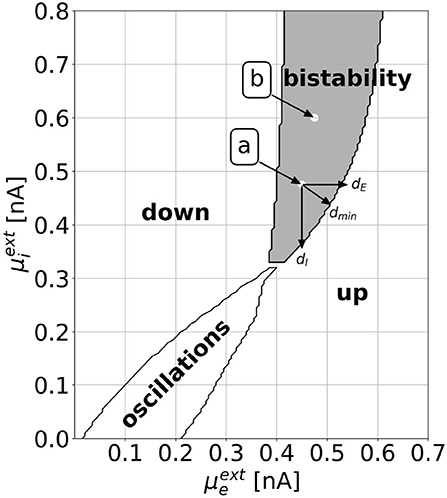

Figure 2 shows a slice through the state space of the EI EIF model. With the parameters as defined in Table 1, one can observe all dynamically interesting phenomena by varying the external background inputs and , which take the role of bifurcation parameters. With numerical simulations, we find a down state of constant low activity, an up state of constant high activity, a limit cycle with rate oscillations, and a bistable regime, where stable states of constant low and high activities coexist. We validate the stability of these points by numerically evaluating the Jacobian Matrix (see Supplementary section 1). Minimal and maximal values of the rates vary throughout the regimes. For a thorough analysis of the dynamics, refer to Cakan and Obermayer (2020). In this work, we focus on the bistable regime and investigate how to switch from one stable state to another. Bistability is considered an important feature for realistic models of brain dynamics as similar patterns appear in biological neural networks (Latham et al., 2000; Holcman and Tsodyks, 2006).

Figure 2. The dynamical landscape of the mean-field EI EIF model. Depending on the mean background inputs and , we observe a down state, an up state, an oscillatory regime, or a bistable regime, where down and up states coexist. We choose two locations, which we call point a () and point b (), for which we show explicit results in Section 3. We define the horizontal, vertical, and shortest distance to the regime boundary as dE, dI, and dmin, respectively. This definition can be applied both for the distances to the up regime, as shown in the figure, and to the down regime.

Optimal control theory enables us to find a control function u(t) that affects a dynamical system in an efficient way to reach a target state . We quantify the performance of the control u(t) with a cost functional. Minimal costs reflect optimality. Minimizing the cost functional is a constrained optimization problem. In a controlled setting, the system of DDAEs (see Equations 5 and 8) depends on the external control function u(t),

We denote the total cost functional by . It depends on the state vector x(t, u(t)), the target state , and the control u(t). The total cost is the weighted sum of three contributions (Casas et al., 2015),

The precision cost FP measures how accurately the target state is reached. It is defined as the integral over the squared difference of the actual state x(t) and the target state ,

Imprecision is penalized in a time interval [t0, t1]. In this study, [t0, t1] is at the end of the control interval [0, T]. We denote the integrand by . The “efficiency” of the control input is quantified by one cost functional that uses the L1-norm, F1, and one cost functional that uses the L2-norm, F2. In the literature, former is often referred to as the “sparsity cost” and the latter as the “energy cost.” The F1-cost is defined as Casas et al. (2015).

By integrating over the squared components of the control signal and taking the square root for each dimension individually before summing over the input dimensions, this cost functional enforces a small number of control input channels with non-zero control strength. The F2-cost measures the total strength of the control signal and enforces small absolute values. It is given by

The optimal control u*(t) is defined as the control with minimal cost,

By choosing the weights W1 and W2 appropriately, one can enforce different characteristics of the optimal control solution.

We compute the optimal control with a gradient descent algorithm. The gradient of the cost functional with respect to the control is obtained from the adjoint method (we provide an explicit derivation in the Supplementary section 2, based on Göllmann et al., 2009; Biegler, 2010). It is given by

h denotes the system dynamics (see Equations 5 and 8), Du is the Jacobian matrix with respect to the control, λ(t) is the so-called adjoint state, and the components of ∇uf = W1·∇uf1 + W2·∇uf2 are given by Casas et al. (2015).

The adjoint state λ(t) is defined by the differential equation

with the final condition λ(T) = 0. In Equation (17), χ[ta,tb] denotes the indicator function on the interval [ta, tb]. Dx, DxE, DxI, and are the Jacobian matrices with respect to the state variable at time t (i.e., x(t)), at time t − dE (i.e., x(t − dE)), at time t − dI (i.e., x(t − dI)), and the Jacobian matrix with respect to the derivative of the state variable (i.e., ).

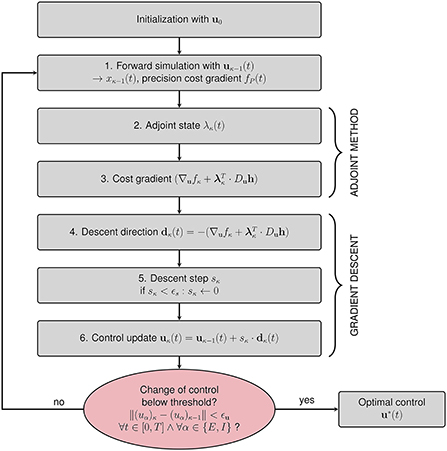

The iterative algorithm for the calculation of the optimal control u*(t) is given in Figure 3. After initialization with a first guess u0 for the optimal control (see Section 2.2.4.1), the steps in the κth iteration are as follows:

1. Perform a forward simulation using uκ−1(t) to obtain all dynamical variables xκ−1(t).

2. Compute the adjoint state λκ(t) by solving Equation (17) backward in time with the initial condition λκ(T) = 0.

3. Compute the gradient .

4. Set the descent direction .

5. Find an appropriate step size sκ such that uκ(t) = uκ−1(t)+sκ·dκ(t) outperforms uκ−1(t) in terms of total costs. We start by multiplying dκ(t) with a step size sκ = 10. We halve sκ and evaluate the cost resulting from uκ−1(t)+sκ·dκ(t) until we find the cost minimum. We choose this step size sκ. The bisection algorithm returns sκ = 0 if the step size falls below a threshold value ϵs to avoid infinite loops.

6. Update the control uκ(t) = uκ−1(t)+sκ·dκ(t).

Figure 3. Flowchart summarizing the gradient descent procedure for computing the optimal control u*(t). After initializing the algorithm with an initial guess for the control u0(t), six steps are performed within each iteration. The algorithm terminates if the change of control between subsequent iterations is below a predefined threshold value ϵu in all components and for all points of time.

We terminate the iteration if the change of the control uκ(t)−uκ−1(t) is below a threshold value ϵu in all components and for all points of time.

We add time-varying functions uE(t) and uI(t) to the differential equations that define the membrane currents of the mean-field EI EIF model (see Equation 5 and Figure 1).

Note that the control inputs are measured in units of mV ms-1. However, they can be converted to currents measured in units of A by multiplication with the membrane capacitance C. We will present our results in units of nA.

We compute and investigate the optimal control for the tasks of driving the EI EIF model from the down to the up state and vice versa, for either L1- or L2-constraints, and for various parameter combinations in the bistable regime (see Figure 2). This yields four tasks per parameter combination:

1. Down state → up state, L1-constraints: DU1-task,

2. Down state → up state, L2-constraints: DU2-task,

3. Up state → down state, L1-constraints: UD1-task,

4. Up state → down state, L2-constraints: UD2-task.

The observable physical quantity of the mean-field EI EIF model is the rate rα, α ∈ {E, I}, which, in the stable target state, does not depend on time. We observe that a state transition of rE is always accompanied by a transition of rI. Therefore, we define the target state via the mean rate of the excitatory population only, which unambiguously characterizes this target state. We chose a time window [0, T], during which control is active and penalize the deviation of the excitatory rate from its target value during an interval [t0, T], t0 ≥ 0. When t0 is small, we can investigate optimal transitions with time constraints.

We apply either L1-constraints () to investigate, to which population the application of control is more efficient, or L2-constraints () to investigate the effect of enforcing low amplitudes. The corresponding total cost reads

To make results better comparable across different lengths of the time window of penalization T − t0, we multiply the precision cost with its inverse .

We present results that investigate optimal transitions with or without time restrictions. For the former (presented in Sections 3.1, 3.2, and 3.3), we define the control time T = 500ms and the precision measurement onset time t0 = 480ms. This is significantly longer than the duration over which the optimal control signal has a finite value and enables smooth transitions without major discontinuities or other finite-size effects (see Section 3.4). Throughout the bistable regime, we find that under optimal control the target states are reached before the precision measurement starts, such that the precision cost FP is negligibly small. For transitions under time constraints (presented in Section 3.4), we decrease both the simulation duration T and the precision measurement onset time t0 from T = 500ms and t0 = 480ms to T = 20ms and t0 = 0ms stepwise, such that T − t0 = 20ms remains constant.

Gradient descent methods in general are only guaranteed to converge to a local optimum. Whether this optimum also corresponds to a global optimum of the cost depends on the initialization u0 of the control.

For investigations with T = 500ms, we find optimal control signals that lead to vanishing precision costs, FP ≈ 0. Therefore, the final control result does not depend on the weight Wj, as long as Wj is below a threshold value that we denote by Wj,max. Beyond Wj,max, it is less costly to be imprecise and stay in the initial state than to intervene and change the state, and the algorithm will return the zero control signal u(t) = 0. Wj determines the relative weight of ∇ufj (i.e., the gradient of the L1- or L2-cost; first term in Equation 15) and (resulting from the precision measurement; the second term in Equation 15). During optimization, the speed of convergence may vary with the choice of Wj. The algorithm convergences relatively fast if we frequently change Wj to a randomly chosen number between 0 and Wj,max.

We denote the components of the control vector by u(t) = (uE(t), uI(t)). For the down-to-up switching tasks, we define three initializations:

These are rectangle pulses centered at . For the up-to-down switching tasks, we multiply with −1. For each of these initializations, the algorithm converges to a pulse-shaped control signal. Depending on the task and the state space parameters , all three initializations might lead to the same or two different results. In the latter case, these correspond to local optima. We validate that the algorithm returns identically shaped control signals if initialized differently (e.g., gaussian function in uE, uI, both, zero, etc.). However, shifting signals in time is computationally very time-consuming, in particular, if initializations are centered close to t = 0 or t = t0.

For each of these u0(t), we compute the optimal control as follows:

1. We perform ten iterations with or allowing only control input uE to the excitatory population (1. initialization), uI to the inhibitory population (2. initialization), or control inputs to both populations (3. initialization).

2. We allow control inputs to both populations.

3. We set Wj to a random value between 0 and Wj,max and perform several tens of iterations. We repeat until convergence (, see Section 2.2.2 and Figure 3).

4. We set or and measure the total cost of the control.

We compare the three initializations and take the result with the lowest total cost as the optimal control. This initialization yields results with peaks approximately at .

For point a (see Figure 2), we investigate the optimal control for shorter simulation times T < 500ms. To this end, we successively reduce T and t0, keeping T − t0 = 20ms fixed. When reducing T, we initialize with the optimal control signal for T = 500ms, shifted back in time such that the peak remains at . To avoid local optima, we also compute the optimal control for T = 20ms and t0 = 0 and successively increase T and t0, keeping T − t0 = 20ms fixed. For each optimization, we initialize with the optimal control signal of the next longest T. We compare the results from the two different approaches and choose the signal with the lowest total cost as the optimal control.

We implement the optimal control algorithm using neurolib (Cakan et al., 2021), an open source python simulation framework for whole-brain neural mass modeling. Neurolib offers various models of neural dynamics, including the mean-field EI EIF model described in Section 2.1. We use Euler integration with an integration step size of dt = 0.1ms. We validate that this value is sufficiently small to avoid numerical inaccuracies, results are shown in the Supplementary section 3.

A graphical interface visualizes the optimal control signals and the resulting neural activity for the four state switching tasks for various parameter combinations () within the bistable regime (see Figure 2). The interface is available at github.com/lenasal/Optimal_Control_GUI.

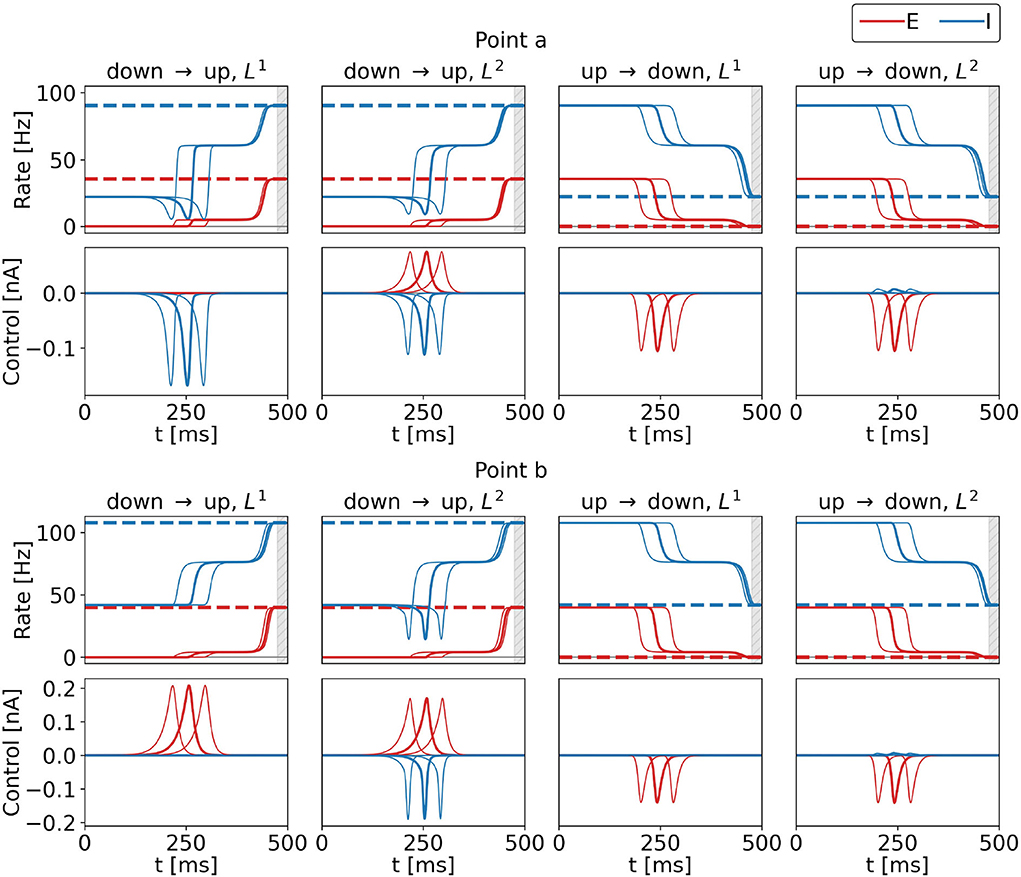

Figure 4 shows the optimal control signals and the resulting firing rates obtained from initializations as described in Section 2.2.4.1. We also show optimal control signals obtained from an initial rectangle pulse centered around 200 and 280 ms (cf. Equations 20–22). Across the three initializations, resulting costs are identical to at least five significant digits, for all four control tasks, for both points a and b. Also, there are no noteworthy differences in the control signals apart from their respective shifts by ±40 ms. We subtract the signals (shifted back by ±40 ms) from the original ones and find a difference of 31 nA at most for the two points and the four tasks. We hypothesize that there is a continuous set of optimal control signals with different peak times. For T → ∞, we thus expect a continuous set of global optima, where any peak time can be realized. In the following, we will present the solutions obtained from the initialization as explained in Section 2.2.4.1 only.

Figure 4. Control inputs and population rates for three different initializations for the four control tasks and for points a (top row) and b (bottom row) marked in the state space diagram of Figure 2. Bold lines show results obtained for the standard initialization, and the lines to the right (left) show results with the initialization pulse shifted by 40 ms (−40 ms). The top rows show the firing rates of the excitatory (red) and inhibitory (blue) population as a function of time, bottom rows show the corresponding optimal control currents, uE in red, uI in blue. From left to right, the columns show the results for the DU1-, DU2-, UD1-, and UD2-task. The respective target rates are indicated by the dashed lines. The simulation duration is T = 500 ms. During the last 20 ms, precision is penalized (gray shaded area). The numerical values for the costs are FDU1 = 3.3312, FDU2 = 3.5516, FUD1 = 2.1462, and FUD2 = 2.2901 at point a, and FDU1 = 5.0064, FDU2 = 10.9004, FUD1 = 2.6569, and FUD2 = 3.5209 at point b for all three initializations.

When optimal control is applied, the firing rates of the excitatory and inhibitory population pass a plateau (see Figure 4, all tasks and both points). Once the control pulse is applied, the system departs from the initial state. The transition is decelerated until the system reaches the intermediate plateau state. Then, the control terminates, keeping the control effort low. As a consequence, the system relaxes and naturally accelerates toward the stable target state, which is smoothly approached. This behavior is observed for all tasks in Figure 4 and throughout the whole bistable regime (results not shown).

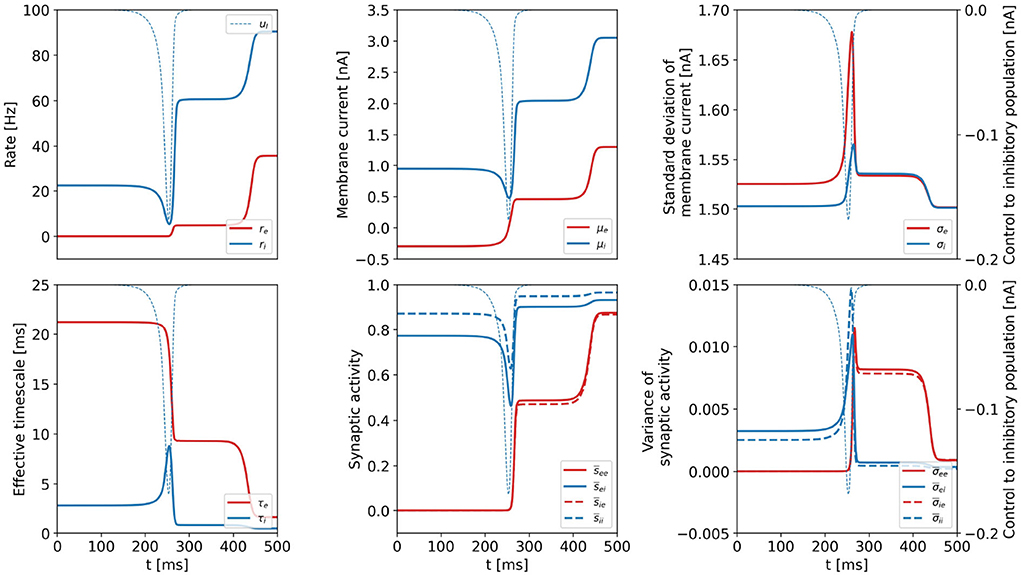

We plot all dynamical variables for the DU1-task at point a in Figure 5 and verify that the constant intermediate state is a common feature of all variables. We denote the state variables at the plateau state by xP. As the values are constant, . We hypothesize that the intermediate plateau is related to an unstable fixed point (see Supplementary section 1) that separates the basins of attraction of the initial and the final state. The control acts such that the system is steered minimally across the boundary of the basins of attraction. Once the boundary is passed, the system is certain to reach the target state without further control input.

Figure 5. Dynamical variables as a function of time for the DU1-task, when optimal control is applied. Parameters correspond to point a shown in Figure 2. Variables related to the excitatory (inhibitory) population are plotted in red (blue). We show the optimal control input to the inhibitory population in each plot as the thin, dashed, blue line (uE = 0). All dynamical variables reach a plateau state between t≈ 250 ms and t ≈ 400 ms.

Optimal control signals are bell-shaped pulses throughout the bistable regime for all tasks. We investigate four properties of the optimal control signals:

1. Dimensionality: We refer to a control as one-dimensional (1d), if it is applied to one population only. For 1d control signals, either uI = 0 or uE = 0. If a control signal is applied to both excitatory and inhibitory populations, we call it two-dimensional (2d). 2d signals can be dominated by input to the excitatory population (max|uE| ≥ max|uI|) or by input to the inhibitory population (max|uE| < max|uI|).

2. Amplitude: We define the maximum of the absolute value of each control signal as its amplitude .

3. Cost: We investigate the effects of L1- (DU1- and UD1-task) or L2-constraints (DU2- and UD2-task). The contribution to F1 of a control signal applied to the α population is given by

and the corresponding contribution to F2 by

4. Width: We define the width wα of a control signal uα(t) as the duration, over which the absolute value is at least half its maximum, i.e., wα = tw1 − tw0, where for t ∈ [tw0, tw1].

In the following, we denote the horizontal (vertical) distance from a selected point to the target regime boundary by dE (dI) and the shortest distance by dmin (see Figure 2).

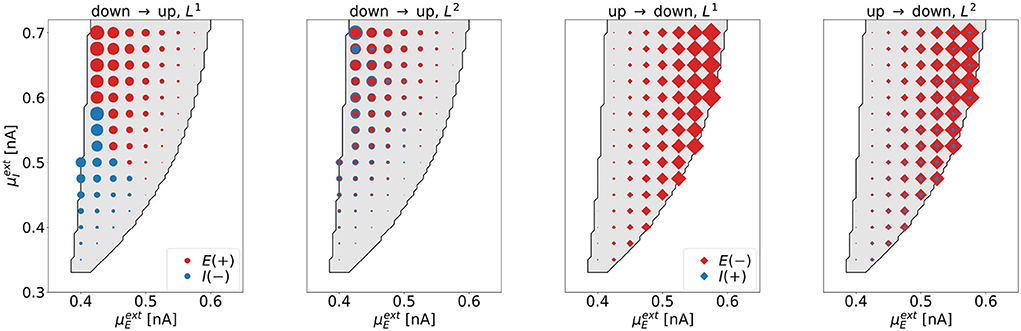

Dimensionality. We investigate the dimensionality of the optimal control signals for all tasks for various parameter combinations in the bistable regime. The results are summarized in Figure 6, where each symbol represents one point in state space, for which the optimization was performed.

Figure 6. The dimensionality of the optimal control signals at selected points in the bistable regime. The four panels correspond to the four control tasks. Each marker represents one point in state space, for which the optimal control was computed. We indicate the excitatory (inhibitory) control amplitude with red (blue) markers. The area of the markers scales with the respective amplitude of the optimal control signal. For the down-to-up tasks (first and second panel), red circles correspond to positive signals, blue circles correspond to negative signals. For the UD2-task (rightmost panel), the size of the blue diamonds was increased by a factor of 200 compared to the red diamonds to also visualize the contribution of the weak control signal uI.

As expected, we find that L1-constraints lead to one-dimensional solutions only (DU1- and UD1-task). For the DU1-task, we find 1d control of the inhibitory population for lower and 1d control of the excitatory population for higher values of . For the UD1-task, all solutions show non-zero control input to the excitatory population only. Constraints resulting from applying L2-constraints lead to 2d solutions. For the DU2-task, these are dominated by input to the inhibitory population for low and by input to the excitatory population for high values of . For the UD2-task, all solutions are dominated by control inputs to the excitatory population.

Applying control to the excitatory (inhibitory) population is related to a shift in state-space along the -axis (-axis). The control always operates such that it moves the system toward the target regime; right or downwards for the down-to-up tasks, left or upwards for the up-to-down tasks. As a consequence, uE and uI always have opposite signs. Due to the almost vertical boundary toward the down regime, applying control to the inhibitory population is not efficient for the up-to-down switching tasks.

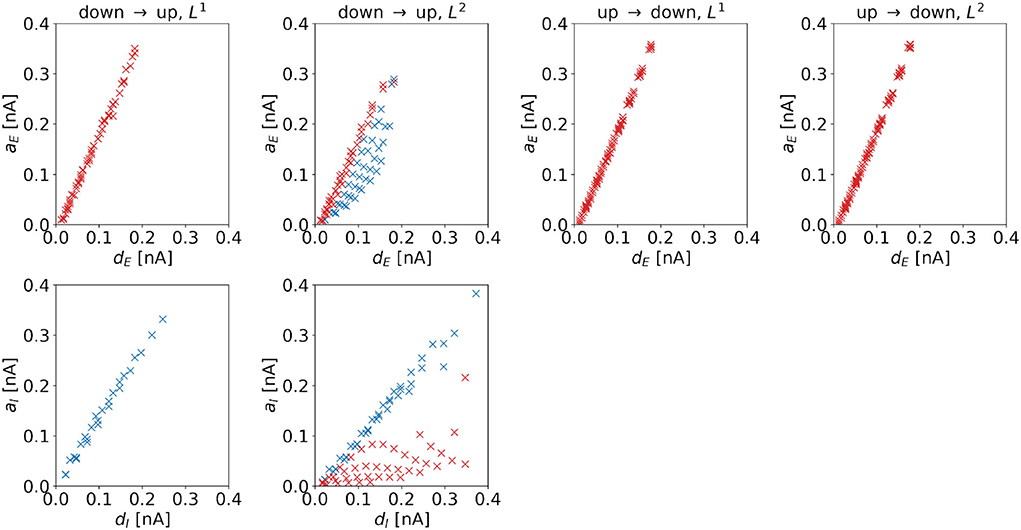

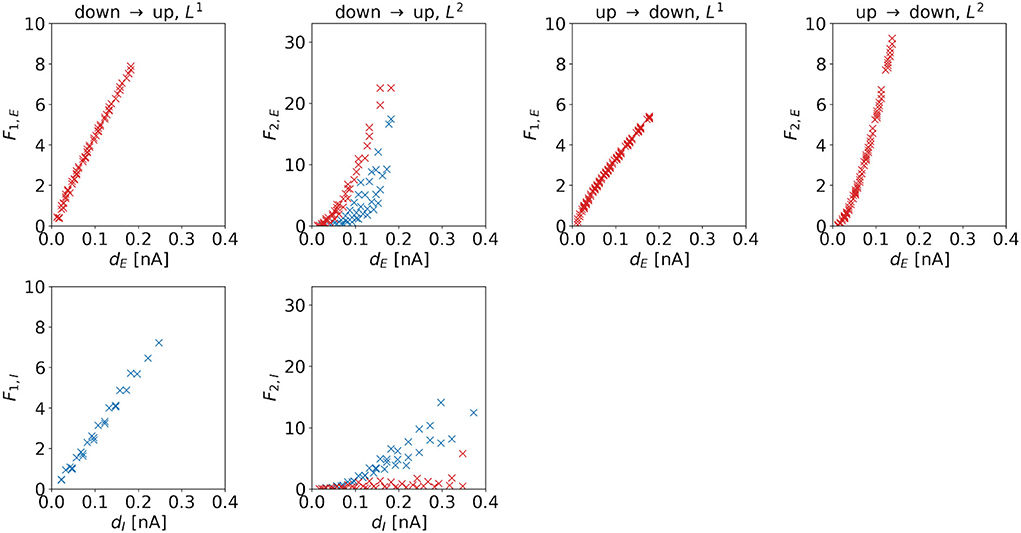

Amplitude. The amplitude of the (dominating) control signal depends on the distance to the target regime boundary. Figure 7 shows amplitudes as a function of distances for the four control tasks. We observe linear dependencies for all cases. Comparing the top and bottom panels of the up-to-down tasks, we observe that aE increases faster than aI with distance, i.e., .

Figure 7. Amplitude of the optimal control signals as a function of the horizontal or vertical distance to the target regime boundary. The four columns correspond to the different tasks. We indicate 1d control of the excitatory population or 2d control with max|uE(t)| ≥ max|uI(t)| by red color and 1d control of the inhibitory population or 2d control with max|uE(t)| < max|uI(t)| by blue color. For the down-to-up switching tasks, the figures show aE over dE (top panel) and aI over dI (bottom panel). For the DU2-task, both figures include data from optimal control signals with max|uE(t)| ≥ max|uI(t)| (red markers) and max|uE(t)| < max|uI(t)| (blue markers). For the UD1- and UD2-tasks, we only show aE over dE. Correlation coefficients of aE over dE are as follows: 0.9984 (DU1, E), 0.9935 (DU2, E), 0.8996 (DU2, I), 0.9992 (UD1), 0.9992 (UD2). Correlation coefficients of aI over dI are as follows: 0.9968 (DU1, I), 0.6279 (DU2, E), and 0.9909 (DU2, I).

For the DU2-task, we compare results with max|uE(t)| ≥ max|uI(t)| (red markers in Figure 7) to results with max|uE(t)| < max|uI(t)| (blue markers in Figure 7). For the former, aI is relatively small, indicating that transitions are mainly induced by stimulation of the excitatory population. For the latter, aE is relatively high, indicating that stimulation of both populations is crucial for optimal transitions.

A higher control strength, i.e., a higher amplitude, is needed to overcome a larger distance toward the target regime. Despite the highly nonlinear dynamics of the model, the required increase in amplitude scales linearly with the distance dE or dI in the dominating input channel.

Cost. The cost of the (dominating) control signal is also determined by the distance to the target regime boundary. Figure 8 shows costs as a function of distances for the four control tasks. We observe a linear dependence if L1-constraints are applied. For the DU2- and UD2-tasks, we also find a linear correlation, however, the dependence is superlinear for these control tasks. For the DU1-task, the slope of the excitatory cost is steeper than the slope for the inhibitory cost, i.e., .

Figure 8. F1 and F2 of the optimal control signals as a function of the horizontal or vertical distance to the target regime boundary. The four columns correspond to the different tasks. We indicate 1d control of the excitatory population or 2d control with max|uE(t)| ≥ max|uI(t)| by red color and 1d control of the inhibitory population or 2d control with max|uE(t)| < max|uI(t)| by blue color. For the down-to-up tasks, the figure shows F1,E or F2,E over dE (top panel) and F1,I or F2,I over dI (bottom panel). For the DU2-task, both figures include data from optimal control signal with max|uE(t)| ≥ max|uI(t)| (red markers) and max|uE(t)| < max|uI(t)| (blue markers). For the UD1- and UD2-tasks, we only show aE over dE. Correlation coefficients of F1,E or F2,E over dE are as follows: 0.9980 (DU1, E), 0.9652 (DU2, E), 0.7984 (DU2, I), 0.9964 (UD1), and 0.9840 (UD2). Correlation coefficients of F1,I or F2,I over dI are as follows: 0.9953 (DU1, I), 0.5253 (DU2, E), and 0.9330 (DU2, I).

For the DU2-task, we compare results with max|uE(t)| ≥ max|uI(t)| (red markers in Figure 8) to results with max|uE(t)| < max|uI(t)| (blue markers in Figure 8). Similar to the relations found for the amplitude, we find that for the former, F2,I is relatively small, whereas for the latter, F2,E is relatively high.

A higher required control strength (i.e., a higher amplitude) is reflected in the corresponding cost. Due to the mathematical definition of F1 (see Equation 19, first line) and due to the fact that the amplitude scales linearly with the distance dE or dI, the dependence of F1,e or F1,i on dE or dI is also linear. However, the definition of F2 (see Equation 19, second line) implies that, if amplitude scales linearly with distance, the dependence of the cost must be superlinear.

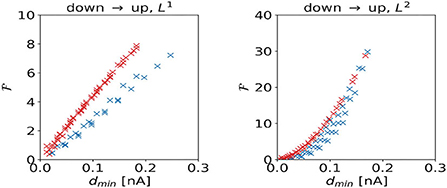

We investigate the scaling of the total cost with the shortest distance dmin to the target regime boundary. For the DU1-task, control inputs to the excitatory population produce higher total costs to overcome a certain distance to the target regime than control inputs to the inhibitory population (Figure 9, left panel). For the DU2-task, control signals dominated by inputs to the excitatory population produce higher total costs to overcome a certain distance to the target regime than control signals dominated by inputs to the inhibitory population (Figure 9, right panel).

Figure 9. Total cost as a function of the shortest distance dmin to the target regime boundary for the DU1- (left panel) and DU2-tasks (right panel).

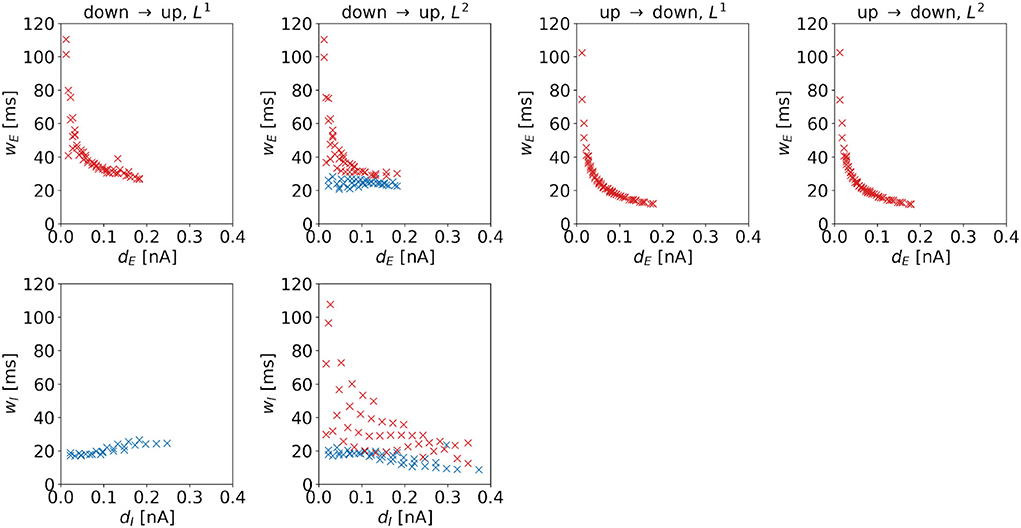

Width. The widths of the control signal depends on the distance to the regime boundary. For control signals dominated by inputs to the excitatory population, we observe a negative correlation (see red markers in Figure 10), i.e., such control pulses become sharper when moving away from the target regime boundary. For the DU2-task, this also holds for wI. In particular, wE and wI correlate strongly with each other, the Pearson correlation coefficient is 0.9916. For control signals dominated by inputs to the inhibitory population, we observe a positive correlation for the DU1-task (see Figure 10, first column, bottom panel), i.e., these control pulses become wider when moving away from the target regime boundary. For the DU2-task, the width of control signals dominated by inputs to the inhibitory population hardly changes with the distance to the target regime boundary.

Figure 10. Width of the optimal control signals as a function of the horizontal or vertical distance to the target regime boundary. The four columns correspond to the different tasks. We indicate 1d control of the excitatory population or 2d control with max|uE(t)| ≥ max|uI(t)| by red color and 1d control of the inhibitory population or 2d control with max|uE(t)| < max|uI(t)| by blue color. For the down-to-up tasks, the figure shows wE over dE (top panel) and wI over dI (bottom panel). For the DU2-task, both figures include data from optimal control signal with max|uE(t)| ≥ max|uI(t)| (red markers) and max|uE(t)| < max|uI(t)| (blue markers). For the UD1- and UD2-tasks, we only show wE over wE. Correlation coefficients of wE over dE are as follows: –0.7120 (DU1, E), –0.6479 (DU2, E), –0.6962 (DU2, I), –0.5492 (UD1), and –0.5476 (UD2). Correlation coefficients of wI over dI are as follows: 0.8848 (DU1, I), –0.6213 (DU2, E), and –0.6962 (DU2, I).

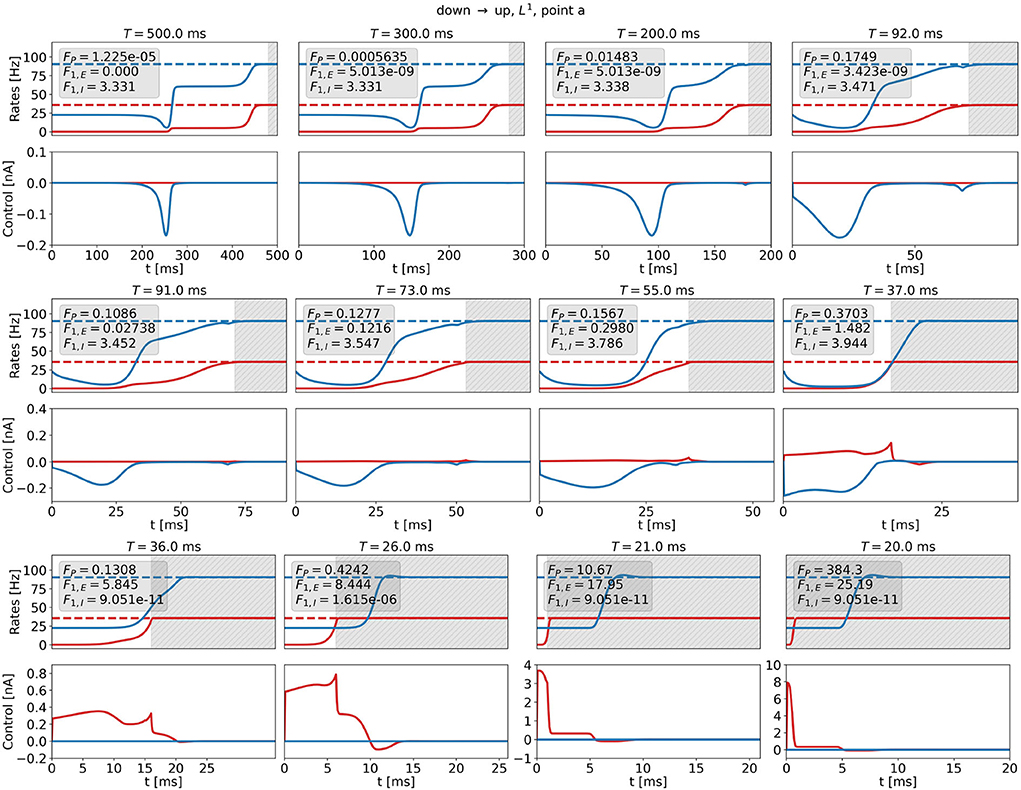

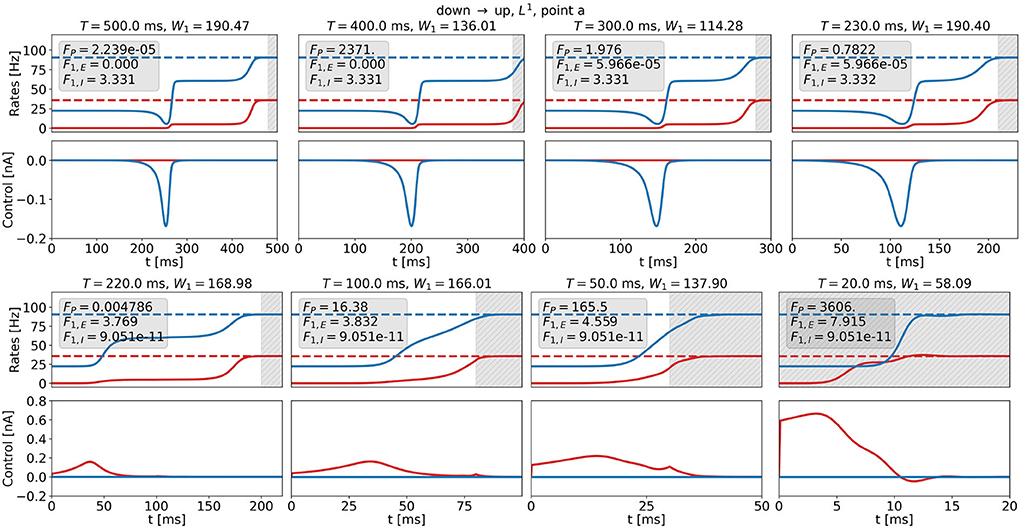

To investigate tradeoffs between transition time, precision cost, and strength of control, we reduce both the simulation duration T and the precision measurement onset time t0 from T = 500ms and t0 = 480ms to T = 20ms and t0 = 0 successively, such that T − t0 = 20ms remains constant (see Section 2.2.4.2).

We investigate optimal control signals for T ≤ 500ms for the DU1-task at point a and for two penalization strategies. We compute the optimal control for , or for W1,max. The numerical value depends on T and t0.

Figure 11 shows optimal control signals and the resulting trajectories of the firing rates for several values of T and t0 for the DU1-task at point a for . We find three different control strategies. For large transition times, t0 ≳ 72ms, T≳92ms, the optimal control remains a 1d signal to the inhibitory population (see Figure 11, top row). The cost remains almost constant with decreasing transition time, however, the plateau state becomes shorter. For intermediate transition times, 17ms ≲ t0 ≲ 71ms, 37ms ≲ T ≲ 91ms, there is a finite contribution of uE that increases when t0 becomes smaller (see Figure 11, center row). A secondary peak appears just before t0, which helps push the system toward the target state. The input to the excitatory population is much smaller than the input to the inhibitory population. For small transition times, t0 ≲ 16ms, T ≲ 36ms, the optimal control is a 1d signal to the excitatory population (see Figure 11, bottom row). The amplitude increases and reaches a maximum of approximately 8 nA for t0 = 0ms (note that the scaling along both the x- and the y-axis changes). With this control strength, the firing rate of the excitatory population reaches the target state after approximately 1 ms.

Figure 11. Firing rates (top panels) and optimal control signals (A) for transitions with various transition times t0 for the DU1-task at point a for . Excitatory (inhibitory) activity and control applied to the excitatory (inhibitory) population are plotted in red (blue). The gray area shows the time window of precision measurement, T − t0. The transition time t0 decreases from left to right and from top to bottom. The respective precision cost FP, and the F1,E- and F1,I-costs are given in the box of each figure.

Figure 12 shows optimal control signals and the resulting trajectories of the firing rates for several values of T and t0 for the DU1-task at point a for the highest possible value of W1, i.e., W1,max. We find two different control strategies. For large transition times, t0 ≳ 210ms, T≳230ms, the optimal control remains a one-dimensional signal to the inhibitory population (see Figure 12, top row). Again, the cost remains almost constant with decreasing transition time, whereas the plateau state becomes shorter. For small transition times, t0 ≲ 200ms, T ≲ 220ms, the optimal control is a one-dimensional signal to the excitatory population (see Figure 12, bottom row). The amplitude increases only for t0 ≈ 0ms and reaches a maximum of approximately 0.6 nA for t0 = 0ms. W1 is a relatively high number, preventing large input signals at the cost of an increased precision cost FP.

Figure 12. Firing rates (top panels) and optimal control signals (bottom panels) for transitions with various transition times t0 for the DU1-task at point a for W1 = W1,max. Excitatory (inhibitory) activity and control applied to the excitatory (inhibitory) population are plotted in red (blue). The gray area shows the time window of precision measurement, T − t0. The transition time t0 decreases from left to right and from top to bottom. The respective precision cost FP and the F1,E- and F1,I-cost (without the factor W1) are given in the box of each figure.

Transition strategies differ from the solution found for T = 500ms once the simulation duration becomes comparable to the width of the control signal, i.e., around t0 ≈ 180ms and T ≈ 200ms. For longer t0 and T, control signals are relatively similar to the original signal for T = 500ms (see top panel in Figures 11, 12). For shorter t0 and T, the control signals differ notably from the original signal. The respective costs increase. For t0 ≲ 180ms and T ≲ 200ms, the results for and W1 = W1,max reveal different strategies. W1 determines the relationship between precision and control strength. In accordance with expectations, we can enforce either precise transitions, by choosing , or low-amplitude transitions, by choosing . For both penalization strategies, and W1 = W1,max, it is more efficient to stimulate the inhibitory population for long transition times and the excitatory population for short transition times. This could be a consequence of the time delay dE (see Equations 6 and 7) and of the fact that we measure precision only in the firing rate of the excitatory population, as rE reacts faster to inputs to the excitatory node.

This study uses an iterative numerical algorithm to compute optimal control for a biologically motivated nonlinear mean-field model of a population of excitatory and inhibitory neurons for four different control tasks. Our key findings are as follows: First, there are continuous sets of optimal control signals for each parameter choice and task if the time interval with no penalty on precision is sufficiently long, i.e., if the precision cost at the end of this interval is negligible compared to the cost of control strength. Since the duration of the control inputs remains finite even for long time intervals [0, T], time-shifted versions of otherwise identical control signals are cost-optimal as long as control signals are not too close to the interval boundaries. Second, we find that the optimal control operates such that the system is steered just minimally beyond the boundary that separates the two basins of attraction. The system converges to the respective stable target state without the requirement of further control input beyond that boundary. This keeps the control costs low. Third, we find systematic dependencies of input channels and certain parameters related to the shape of the optimal control signals on the distance to the target regime boundary. Rather unexpectedly, we also find that optimal control strategies do not consistently select one input channel, but steer the system through the excitatory or inhibitory channel depending on the exact location in state space. Finally, in a time-constrained setting, we observe not only amplitude effects, which would be expected, but also changes in shape and input channels.

Our approach to nonlinear OCT features some technical limitations, which must be considered appropriate to ensure that reliable results are produced. First, gradient descent algorithms are not guaranteed to converge to global optima. Optimal cost solutions may reflect local optima only, and there may be other initializations that could converge to control inputs at an even lower cost. Comparing solutions resulting from different initializations, however, did not provide evidence for a complicated energy landscape. One specific control signal shape is found from different initialization strategies, and shifts in time are computationally extremely time-consuming. We conclude that our heuristic approach to initialization produces results that are satisfactorily close to a global optimum and can thus be used to reliably investigate the systematic properties of optimal control strategies. Models of higher complexity, however, may require modifications of initialization strategies (cf. Chouzouris et al., 2021).

The time complexity of the proposed OCT method depends on the number of dynamical variables, the number of iterations of the descent algorithm, and the simulation time measured in units of the integration step size. Computation time scales linearly with the simulation time T and the number of iterations. The computation of the adjoint state (see Section 2.2.2, Figure 3, and Supplementary section 1) requires the Jacobian matrix. Hence, the computational complexity of the gradient of the cost scales quadratically with the number of dynamical variables. The computation of the descent step sκ (see Section 2.2.2 and Figure 3) requires approximately forward simulations per descent step, the computational complexity of the forward simulation scales linearly with N and T. For our investigations, we find that due to a large number of forward simulations, the step size computation accounts for approximately 40–60% of the total computation time. For the EI EIF model, the computation of the optimal control signal for one initialization for one point in state space requires approximately 10 min CPU time on a laptop-computer (11th Gen Intel® Core™ i7-1165G7, CPU base frequency 2.8 GHz, maximum frequency 4.7 GHz) for T = 500ms (integration step dt = 0.1ms). The choice of abort criteria, and (see Section 2.2.2 and Figure 3), led to several thousand iterations of the gradient descent procedure. For simpler models (e.g., the Wilson-Cowan model), the computation time decreases approximately by a factor of M/N, where M is the number of the respective dynamical variables, rendering the investigation of neural mass models of complex networks feasible also on laptop computers (cf. Chouzouris et al., 2021).

Given the high metabolic demand of neural systems, evolutionary pressure could have enforced energy efficient interactions between its components (Niven, 2016; Watts et al., 2018). The consequences for the neural dynamics could, in principle, be investigated using methods from nonlinear OCT. Setting up a realistic energy balance for a neural system is a difficult task, and a neural mass model as it is investigated here would not be detailed enough to allow for this. Given the interpretation of the control u(t) as an induced ion current that affects the neurons' membrane potential, the metabolic energy E required to restore the neurons' state could be estimated roughly via the number of ions involved,1

In our simplistic example, efficiency would then be related to the L1-norm of the control, i.e., to the optima of the corresponding cost functional in Equation (19). The formalism of OCT investigated in this work, however, can be extended to other cost functionals in principle and may thus allow for realistic analytical investigations into the consequences of metabolic or other constraints on neural processing.

On the synthetic side, both the L1- and the L2-norm have previously been investigated in the context of the external control of neural systems. The L2-norm leads to so-called minimum-energy control strategies (cf. Nabi et al., 2012; Wilson et al., 2015). These strategies are motivated by reduced energy consumption of an electric stimulation device potentially supporting a longer-term deployment. The L1-norm leads to so-called minimum-charge control strategies (cf. Pyragas et al., 2018, 2020). These strategies are motivated by a reduced interference with neural tissue potentially lowering the danger of tissue damage (cf. Shannon, 1992). Gradient-based optimization as investigated in this study may provide an alternative method to derive these optimal control strategies. With properly adapted precision measures (e.g., measures of synchronization, Chouzouris et al., 2021) and alternative constraints (if required), the formalism of OCT investigated in this work can be extended to a variety of novel control goals.

This study focuses on a state-switching task in a bistable regime. In vivo experiments show that neural tissue can spontaneously transit between a state of low, steady activity (1 Hz-5 Hz) and a state of high activity or rhythmic bursting in the absence of stimuli (Latham et al., 2000; Holcman and Tsodyks, 2006). Electrophysiological recordings during the execution of memory tasks report regular transitions between states of inactivity and activity of single neurons (e.g., Funahashi et al., 1989). During sleep and anesthesia, slow-wave oscillations are observed and commonly modeled as periodic transitions of up and down states (Torao-Angosto et al., 2021). It is hypothesized that such transitions are fundamental for working memory and attention and for memory consolidation during sleep (Diekelmann and Born, 2010; Klinzing et al., 2019). Hence, bistability is thought to be a functionally important element of neural population dynamics, and efficient control of the population state may be a prerequisite for performing cognitive tasks (Durstewitz and Seamans, 2006). Beyond its biological importance, bistability enables stimulation that is limited in time and can yet produce sustainable changes in the activity of the system and is, therefore, a convenient dynamical regime for studies of control.

The results reported in this study pertain to the noise-free case. When additive noise affects the membrane currents μα (see Equation 5), the mean activities and of both excitatory and inhibitory populations decrease in the up state, and increases in the down state. In addition, noise-induced transitions between up and down states may occur. The probability of spontaneous transitions increases with noise strength. The theoretical framework needs to be adapted by replacing the precision cost in Equation (11) with its expectation value. Practically, it is required to average over several noise realizations. Preliminary investigations into the optimal control for switching between the two stable states in the bistable regime show that both the amplitude aα and the cost cα of the control signals increase. As a result, the system is pushed closer to the target regime. The plateau state vanishes thus preventing immediate noise-induced transitions back to the original state.

In general, our theoretical and algorithmic approach can be applied to a wide range of models of neural dynamics, including whole-brain network structures (cf. Cakan et al., 2022) and can be extended to different control tasks (e.g., Chouzouris et al., 2021). This could, for example, open up new ways to study the efficiency of neural interaction theoretically. Evolutionary pressure and natural selection led to a high degree of cost efficiency in biological processes. Principles of communication resulting from applying optimal control to neural dynamics could thus be reflected in biological systems. In the context of our toy example, these principles could enable conclusions on the efficiency of stimulating the excitatory vs. the inhibitory population. On the synthetic side, applying optimal control methods to a real-world framework of neural dynamics could offer a fresh view on optimal protocols for neural stimulation in a clinical context, and presumably enable to minimize undesired side- and after-effects.

The data presented in this study can be found in the Github online repository: https://github.com/lenasal/Optimal_Control_GUI.

LS implemented the algorithm, performed the simulations, and analyzed the data. KO supervised the project. Both authors conceptualized the study and drafted the manuscript.

This work was supported by the DFG (German Research Foundation) via the CRC 910 (Project number 163436311).

We would like to thank our colleagues from the Neural Information Processing Group for the valuable exchange and fruitful discussions during this project.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fncom.2022.931121/full#supplementary-material

1. ^For different ion species with different restoration costs the integrand must be replaced by a weighted sum of the individual currents.

Au, J., Karsten, C., Buschkuehl, M., and Jaeggi, S. (2017). Optimizing transcranial direct current stimulation protocols to promote long-term learning. J. Cogn. Enhan. 1, 65–72. doi: 10.1007/s41465-017-0007-6

Augustin, M., Ladenbauer, J., Baumann, F., and Obermayer, K. (2017). Low-dimensional spike rate models derived from networks of adaptive integrate-and-fire neurons: comparison and implementation. PLoS Comput. Biol. 13, 1–46. doi: 10.1371/journal.pcbi.1005545

Berkovitz, L., and Medhin, N. (2012). Nonlinear Optimal Control Theory. Boca Raton, FL: Chapman & Hall; CRC Applied Mathematics &Nonlinear Science. Taylor & Francis.

Berret, B., Conessa, A., Schweighofer, N., and Burdet, E. (2021). Stochastic optimal feedforward-feedback control determines timing and variability of arm movements with or without vision. PLoS Comput. Biol. 17, e1009047. doi: 10.1371/journal.pcbi.1009047

Bian, T., Wolpert, D. M., and Jiang, Z.-P. (2020). Model-free robust optimal feedback mechanisms of biological motor control. Neural Comput. 32, 562–595. doi: 10.1162/neco_a_01260

Biegler, L. (2010). “Nonlinear programming: concepts, algorithms, and applications to chemical processes,” in MOS-SIAM Series on Optimization. Society for Industrial and Applied Mathematics (Philadelphia, PA).

Brette, R., and Gerstner, W. (2005). Adaptive exponential integrate-and-fire model as an effective description of neuronal activity. J. Neurophysiol. 94, 3637–3642. doi: 10.1152/jn.00686.2005

Cakan, C., Dimulescu, C., Khakimova, L., Obst, D., Flöel, A., and Obermayer, K. (2022). Spatiotemporal patterns of adaptation-induced slow oscillations in a whole-brain model of slow-wave sleep. Front. Comput. Neurosci. 15, 80101. doi: 10.3389/fncom.2021.800101

Cakan, C., Jajcay, N., and Obermayer, K. (2021). neurolib: A simulation framework for whole-brain neural mass modeling. Cogn. Comput. doi: 10.1007/s12559-021-09931-9

Cakan, C., and Obermayer, K. (2020). Biophysically grounded mean-field models of neural populations under electrical stimulation. PLoS Comput. Biol. 16, e1007822. doi: 10.1371/journal.pcbi.1007822

Casas, E., Herzog, R., and Wachsmuth, G. (2015). “Analysis of spatio-temporally sparse optimal control problems of semilinear parabolic equations,” in ESAIM: Control, Optimisation and Calculus of Variations 2015, 23:263–295. Available online at: https://www.esaim-cocv.org/articles/cocv/abs/2017/01/cocv150048/cocv150048.html

Chen, X., Wang, F., Fernandez, E., and Roelfsema, P. R. (2020). Shape perception via a high-channel-count neuroprosthesis in monkey visual cortex. Science 370, 1191–1196. doi: 10.1126/science.abd7435

Chouzouris, T., Roth, N., Cakan, C., and Obermayer, K. (2021). Applications of optimal nonlinear control to a whole-brain network of FitzHugh-nagumo oscillators. Phys. Rev. E 104, 213. doi: 10.1103/PhysRevE.104.024213

Colzato, L., Nitsche, M., and Kibele, A. (2017). Noninvasive brain stimulation and neural entrainment enhance athletic performance–a review. J. Cogn. Enhan. 1, 73–79. doi: 10.1007/s41465-016-0003-2

Dasanayake, I. S., and Li, J.-S. (2014). Design of charge-balanced time-optimal stimuli for spiking neuron oscillators. Neural Comput. 26, 2223–2246. doi: 10.1162/NECO_a_00643

Diedrichsen, J., Shadmehr, R., and Ivry, R. (2009). The coordination of movement: optimal feedback control and beyond. Trends Cogn. Sci. 14, 31–39. doi: 10.1016/j.tics.2009.11.004

Diekelmann, S., and Born, J. (2010). Diekelmann s, born j. the memory function of sleep. Nat. Rev. Neurosci. 11, 114–126. doi: 10.1038/nrn2762

Durstewitz, D., and Seamans, J. (2006). Durstewitz d, seamans jk. beyond bistability: biophysics and temporal dynamics of working memory. Neuroscience. 139, 119–33. doi: 10.1016/j.neuroscience.2005.06.094

Ewald, J., Bartl, M., and Kaleta, C. (2017). Deciphering the regulation of metabolism with dynamic optimization: an overview of recent advances. Biochem. Soc. Trans. 45, BST20170137. doi: 10.1042/BST20170137

Flesher, S. N., Downey, J. E., Weiss, J. M., Hughes, C. L., Herrera, A. J., Tyler-Kabara, E. C., et al. (2021). A brain-computer interface that evokes tactile sensations improves robotic arm control. Science 372, 831–836. doi: 10.1126/science.abd0380

Funahashi, S., Bruce, C., and Goldman-Rakic, P. (1989). Funahashi s, bruce cj, goldman-rakic ps. mnemonic coding of visual space in the monkey's dorsolateral prefrontal cortex. J. Neurophysiol. 61, 331–349. doi: 10.1152/jn.1989.61.2.331

Göllmann, L., Kern, D., and Maurer, H. (2009). Optimal control problems with delays in state and control variables subject to mixed control-state constraints. Opt. Control Appl. Methods 30, 341–365. doi: 10.1002/oca.843

Grosenick, L., Marshel, J. H., and Deisseroth, K. (2015). Closed-loop and activity-guided optogenetic control. Neuron 86, 106–139. doi: 10.1016/j.neuron.2015.03.034

Gu, S., Pasqualetti, F., Cieslak, M., Telesford, Q. K., Yu, A. B., Kahn, A. E., et al. (2015). Controllability of structural brain networks. Nat. Commun. 6, 9414. doi: 10.1038/ncomms9414

Holcman, D., and Tsodyks, M. (2006). The emergence of up and down states in cortical networks. PLoS Comput. Biol. 2, e23. doi: 10.1371/journal.pcbi.0020023

Klinzing, J., Niethard, N., and Born, J. (2019). Mechanisms of systems memory consolidation during sleep. Nat. Neurosci. 22, 1598. doi: 10.1038/s41593-019-0467-3

Latham, P., Richmond, B., Nelson, P., and Nirenberg, S. (2000). Intrinsic dynamics in neuronal networks. I. theory. J. Neurophysiol. 83, 808–827. doi: 10.1152/jn.2000.83.2.808

Löber, J., and Engel, H. (2014). Controlling the position of traveling waves in reaction-diffusion systems. Phys. Rev. Lett. 112, 148305. doi: 10.1103/PhysRevLett.112.148305

Marshel, J. H., Kim, Y. S., Machado, T. A., Quirin, S., Benson, B., Kadmon, J., et al. (2019). Cortical layer-specific critical dynamics triggering perception. Science 365, eaaw5202. doi: 10.1126/science.aaw5202

Muldoon, S. F., Pasqualetti, F., Gu, S., Cieslak, M., Grafton, S. T., Vettel, J. M., et al. (2016). Stimulation-based control of dynamic brain networks. PLoS Comput. Biol. 12, e1005076. doi: 10.1371/journal.pcbi.1005076

Nabi, A., Mirzadeh, M., Gibou, F., and Moehlis, J. (2012). Minimum energy desynchronizing control for coupled neurons. J. Comput. Neurosci. 34, pages 259–271. doi: 10.1007/s10827-012-0419-3

Niven, J. E. (2016). Neuronal energy consumption: biophysics, efficiency and evolution. Curr. Opin. Neurobiol. 41, 129–135. doi: 10.1016/j.conb.2016.09.004

Popovych, O., and Tass, P. (2019). Adaptive delivery of continuous and delayed feedback deep brain stimulation - a computational study. Sci. Rep. 9, 10585. doi: 10.1038/s41598-019-47036-4

Pyragas, K., Fedaravičius, A. P., Pyragienė, T., and Tass, P. A. (2018). Optimal waveform for entrainment of a spiking neuron with minimum stimulating charge. Phys. Rev. E 98, 042216. doi: 10.1103/PhysRevE.98.042216

Pyragas, K., Fedaravičius, A. P., Pyragienė, T., and Tass, P. A. (2020). Entrainment of a network of interacting neurons with minimum stimulating charge. Phys. Rev. E 102, 012221. doi: 10.1103/PhysRevE.102.012221

Reteig, L., Talsma, L., van Schouwenburg, M., and Slagter, H. (2017). Transcranial electrical stimulation as a tool to enhance attention. J. Cogn. Enhan. 1, 10–25. doi: 10.1007/s41465-017-0010-y

Scott, S. H. (2012). The computational and neural basis of voluntary motor control and planning. Trends Cogn. Sci. 16, 541–549. doi: 10.1016/j.tics.2012.09.008

Shangerganesh, L., and Sowndarrajan, P. T. (2020). An optimal control problem of nonlocal pyragas feedback controllers for convective fitzhugh-nagumo equations with time-delay. SIAM J. Control Optim. 58, 3613–3631. doi: 10.1137/18M122248X

Shannon, R. (1992). A model of safe levels for electrical stimulation. IEEE Trans. Biomed. Eng. 39, 424–426. doi: 10.1109/10.126616

Srivastava, P., Nozari, E., Kim, J. Z., Ju, H., Zhou, D., Becker, C., et al. (2020). Models of communication and control for brain networks: distinctions, convergence, and future outlook. Network Neurosci. 4, 1122–1159. doi: 10.1162/netn_a_00158

Tafazoli, S., MacDowell, C., Che, Z., Letai, K., Steinhardt, C., and Buschman, T. (2020). Learning to control the brain through adaptive closed-loop patterned stimulation. J. Neural Eng. 17, 056007. doi: 10.1088/1741-2552/abb860

Takeuchi, Y., and Berényi, A. (2020). Oscillotherapeutics - time-targeted interventions in epilepsy and beyond. Neurosci. Res. 152, 87–107. doi: 10.1016/j.neures.2020.01.002

Tang, E., and Bassett, D. S. (2018). Colloquium: control of dynamics in brain networks. Rev. Mod. Phys. 90, 031003. doi: 10.1103/RevModPhys.90.031003

Todorov, E., and Jordan, M. (2002). Optimal feedback control as a theory of motor coordination. Nat. Neurosci. 5, 1226–1235. doi: 10.1038/nn963

Torao-Angosto, M., Manasanch, A., Mattia, M., and Sanchez-Vives, M. V. (2021). Up and down states during slow oscillations in slow-wave sleep and different levels of anesthesia. Front. Syst. Neurosci. 15, 609645. doi: 10.3389/fnsys.2021.609645

Tsiantis, N., and Banga, J. (2020). Using optimal control to understand complex metabolic pathways. BMC Bioinform. 21, 472. doi: 10.1186/s12859-020-03808-8

Watts, M. E., Pocock, R., and Claudianos, C. (2018). Brain energy and oxygen metabolism: emerging role in normal function and disease. Front. Mol. Neurosci. 11, 216. doi: 10.3389/fnmol.2018.00216

Wilson, D., Holt, A. B., Netoff, T. I., and Moehlis, J. (2015). Optimal entrainment of heterogeneous noisy neurons. Front. Neurosci. 9, 192. doi: 10.3389/fnins.2015.00192

Yeo, S.-H., Franklin, D. W., and Wolpert, D. M. (2016). When optimal feedback control is not enough: feedforward strategies are required for optimal control with active sensing. PLoS Comput. Biol. 12, e1005190. doi: 10.1371/journal.pcbi.1005190

Keywords: nonlinear optimal control, control of neural dynamics, neural mass models, bistability, delay differential-algebraic equations (DDAEs), nonlinear population dynamics

Citation: Salfenmoser L and Obermayer K (2022) Nonlinear optimal control of a mean-field model of neural population dynamics. Front. Comput. Neurosci. 16:931121. doi: 10.3389/fncom.2022.931121

Received: 28 April 2022; Accepted: 11 July 2022;

Published: 03 August 2022.

Edited by:

Valeri Makarov, Complutense University of Madrid, SpainReviewed by:

Kamran Diba, University of Michigan, United StatesCopyright © 2022 Salfenmoser and Obermayer. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Lena Salfenmoser, bGVuYS5zYWxmZW5tb3NlckB0dS1iZXJsaW4uZGU=

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.

Research integrity at Frontiers

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.