ORIGINAL RESEARCH article

Front. Comput. Neurosci., 15 July 2022

Volume 16 - 2022 | https://doi.org/10.3389/fncom.2022.930827

This article is part of the Research Topic: Computational Intelligence for Signal and Image Processing

Training infrared target detection and tracking models based on deep learning requires a large number of infrared sequence images. The cost of acquiring real infrared target sequence images is high, while conventional simulation methods lack authenticity. This paper proposes a novel infrared data simulation method that combines real infrared images and simulated 3D infrared targets. First, it stitches real infrared images into a panoramic image, which is used as the background. Then, the infrared characteristics of 3D aircraft are simulated for the tail nozzle, skin, and tail flame, and the resulting models are used as targets. Finally, the background and targets are fused in Unity3D, where the aircraft trajectory and attitude can be edited freely to generate rich multi-target infrared data. The experimental results show that the simulated images are not only visually similar to real infrared images but also consistent with them in terms of the performance of target detection algorithms. The method can provide training and testing samples for deep learning models for infrared target detection and tracking.

With the rapid development of deep-learning technology, data-driven models and algorithms have become a hot topic in infrared target detection and tracking (Dai et al., 2021; Hou et al., 2022). Unlike conventional methods, data-driven methods require a large amount of infrared data for model training and testing (Yi et al., 2019; Junhong et al., 2020).

However, the infrared image datasets currently available for object detection and tracking are of poor quality (Hui et al., 2020). Measured data are costly, and it is difficult to obtain infrared images in various scenarios (Zhang et al., 2018). For example, real data typically contain only a single target type, and infrared images of important aircraft types are hard to obtain. Simulated data, in turn, are insufficiently authentic (Xia et al., 2015). The battlefield in modern warfare involves a wide range of complex environments, and it is difficult for knowledge-based models to simulate a complex infrared battlefield. These problems significantly limit research progress in infrared target detection and tracking.

Currently, infrared target simulation follows two approaches: methods based on infrared characteristic modeling (Shuwei and Bo, 2018; Guanfeng et al., 2019; Yongjie et al., 2020) and methods based on deep neural networks (Mirza and Osindero, 2014; Alec et al., 2016; Junyan et al., 2017; Chenyang, 2019; Yi, 2020). The former are typically based on infrared radiation theory: physical models of the various parts of an aircraft (such as engines, tail nozzles, tail flames, and casings) are established, atmospheric radiation is modeled, and infrared simulation data under various conditions are obtained. These methods start from a physical model and are highly interpretable. If sufficient parameters are added, high-fidelity infrared images can be produced (Yunjey et al., 2020). Because they involve a large number of parameters and calculations, they are suitable for simple target simulations but unsuitable for real-environment simulations with complex types of ground objects (Chenyang, 2019; Rani et al., 2022). Methods based on deep learning, typically using a generative adversarial network (GAN), learn the style of infrared images from a large number of real infrared images and then translate visible-light images into infrared images (Alec et al., 2016; Junyan et al., 2017; Chenyang, 2019; Yi, 2020). These methods do not require complex physical modeling and are fast, but they lack authenticity and reliability (Shi et al., 2021; Bhalla et al., 2022). More importantly, deep-learning-based methods cannot add infrared targets as needed, nor can they edit the flight trajectory and attitude, which is exactly what an infrared target dataset needs most.

Therefore, it is valuable to study an infrared data generation method that conforms to real infrared radiation characteristics and can add aircraft targets of multiple types and in arbitrary numbers. This paper proposes such a method, and its main contributions are as follows:

(1) A method combining real infrared background data with simulated infrared target data is proposed, which can easily generate highly authentic multi-target infrared simulation data. It uses a panorama stitched from real infrared data as the background, rather than a direct 3D infrared simulation of the ground objects, and thus avoids the complex problem of infrared modeling of ground objects. Compared with a 3D infrared simulation of the whole scene, it is much easier, and the generated data are more authentic.

(2) The method uses Unity3D to fuse the target model with the infrared scene. The type and number of aircraft can be chosen freely, and the aircraft trajectory and attitude can be edited, so the method can generate rich multi-target infrared simulation data.

(3) Starting from the infrared radiation characteristics, our method simulates the physical characteristics of the key parts of the 3D target (the tail nozzle, skin, and tail flame), which yields highly authentic infrared target data.

Figure 1 shows the overall framework of this study, which is divided into three branches: infrared background stitching, infrared radiation modeling, and flight trajectory editing. The infrared radiation modeling branch first establishes a 3D model based on the size of the aircraft and then establishes an infrared radiation model of its key parts (the engine nozzle, skin, and tail flame) according to infrared radiation theory. The infrared background stitching branch performs panoramic stitching on a real infrared dataset; after uniform light processing, a uniform infrared panoramic image is obtained and used as the background of the 3D scene. The flight trajectory editing branch provides trajectory-editing tools, which users can call to create flight trajectories based on the aircraft performance parameters. A trajectory includes the time, position, and attitude of each node. The observation window can track and record targets in a field of view of a specified size. Because multiple aircraft of various types can be selected and various trajectories can be edited, a rich variety of infrared simulation data can be obtained.
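To make the trajectory description concrete, the following is a minimal sketch of how a trajectory made of nodes (time, position, attitude) could be sampled between nodes. The class name `TrajectoryNode`, the function `sample_trajectory`, and the choice of plain linear interpolation are illustrative assumptions; the article does not state how its editing tools interpolate, and the actual implementation runs in Unity3D with C#.

```python
from bisect import bisect_right
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class TrajectoryNode:
    """One trajectory node: time stamp, position, and attitude (hypothetical layout)."""
    time: float        # seconds
    position: Vec3     # x, y, z in scene units
    attitude: Vec3     # roll, yaw, pitch in degrees


def _lerp3(a: Vec3, b: Vec3, w: float) -> Vec3:
    """Component-wise linear interpolation between two 3-tuples."""
    return tuple(x + (y - x) * w for x, y in zip(a, b))


def sample_trajectory(nodes: List[TrajectoryNode], t: float) -> TrajectoryNode:
    """Return the interpolated state at time t.

    Assumes node times are strictly increasing. Angles are interpolated
    linearly without wrap-around handling, which is only adequate for
    small attitude changes between neighbouring nodes.
    """
    if not nodes:
        raise ValueError("trajectory needs at least one node")
    times = [n.time for n in nodes]
    if t <= times[0]:
        return nodes[0]      # clamp before the first node
    if t >= times[-1]:
        return nodes[-1]     # clamp after the last node
    i = bisect_right(times, t)          # nodes[i-1].time <= t < nodes[i].time
    a, b = nodes[i - 1], nodes[i]
    w = (t - a.time) / (b.time - a.time)
    return TrajectoryNode(t, _lerp3(a.position, b.position, w),
                          _lerp3(a.attitude, b.attitude, w))
```

Sampling such a trajectory once per rendered frame would give the per-frame position and attitude that drive the 3D target; a production tool would more likely use spline interpolation and quaternion-based attitude blending.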

As an infrared radiation source, the radiation characteristics of different parts of an aircraft show evident differences owing to different degrees of heat generation. The main components with the strongest infrared radiation include the engine nozzle, aircraft skin, and tail flame (Haixing et al., 1997). This study starts with the basic theory of infrared radiation, grasps the main infrared radiation characteristics of each component, and establishes its infrared radiation intensity model.

Assuming that the infrared detector can perceive light with wavelengths from λ1 to λ2 (only mid-wave infrared is considered in this study, that is, a wavelength range of 3–5 μm), according to Planck's law (Yu, 2012), the infrared radiation intensity of a gray body can be expressed as:

where T is the gray body surface temperature, c1 is the first radiation constant, typically (3.741774 ± 0.0000022) × 10⁻¹⁶ W·m², and c2 is the second radiation constant, typically (1.4387869 ± 0.00000012) × 10⁻² m·K. Letting x = c2/(λT), the above equation can be simplified as follows:
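As a hedged numerical illustration of the band-limited quantity described here, the sketch below integrates the standard spectral form of Planck's law, c1 / (λ⁵ (exp(c2/(λT)) − 1)), from λ1 to λ2 and scales it by an emissivity. The function names, the emissivity argument, and the trapezoidal integration are assumptions made for illustration; the article's exact normalization may differ.

```python
import math

C1 = 3.741774e-16   # first radiation constant, W*m^2 (value quoted in the text)
C2 = 1.4387869e-2   # second radiation constant, m*K (value quoted in the text)


def spectral_exitance(wavelength_m: float, temperature_k: float) -> float:
    """Blackbody spectral radiant exitance in W/m^3 (Planck's law)."""
    x = C2 / (wavelength_m * temperature_k)
    if x > 700.0:
        # exp(x) would overflow a float; the contribution is negligible anyway.
        return 0.0
    return C1 / (wavelength_m ** 5 * math.expm1(x))


def band_exitance(temperature_k: float,
                  lambda1_m: float = 3e-6,
                  lambda2_m: float = 5e-6,
                  emissivity: float = 1.0,
                  steps: int = 2000) -> float:
    """Gray-body radiant exitance in W/m^2 between lambda1 and lambda2.

    Uses the composite trapezoidal rule; defaults cover the 3-5 um
    mid-wave band considered in the text.
    """
    h = (lambda2_m - lambda1_m) / steps
    total = 0.5 * (spectral_exitance(lambda1_m, temperature_k)
                   + spectral_exitance(lambda2_m, temperature_k))
    for k in range(1, steps):
        total += spectral_exitance(lambda1_m + k * h, temperature_k)
    return emissivity * total * h
```

A quick sanity check of such a routine is to integrate over a very wide band and compare against the Stefan-Boltzmann value σT⁴, which the two radiation constants imply.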

When the fuel in an engine burns, it emits high-temperature radiation, which is the main heat source when the aircraft is flying (Chuanyu, 2013). As an extension of the engine outside the fuselage, the tail nozzle also exhibits relatively strong infrared radiation. The tail nozzle is a typical gray body, and the surface emissivity is approximately in the range of 0.8–0.9. According to Equation (2), the relationship between the infrared radiation intensity of the tail nozzle IW and temperature TW is as follows:

where εW is the emissivity of the nozzle surface, which is determined by the surface material; SW is the cross-sectional area of the nozzle facing the probe; and θW is the angle between the orientation of the probe and the orientation of the infrared radiation.

Aircraft skin temperature is mainly affected by two factors: the ambient temperature of the atmosphere and the heating generated by friction between the aircraft and the atmosphere during high-speed motion. Because this study only considers aircraft flying at medium and low altitudes, the atmospheric ambient temperature T0 depends linearly on the altitude H (in meters) as T0 = (288.2 − 0.0065 H) K; for simplicity, this study takes T0 = 280 K. The skin temperature TM produced by friction is a function of the ambient temperature and the flight speed, expressed through the Mach number M of the aircraft.
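As an illustration of the two relationships in this paragraph, the sketch below implements the stated lapse-rate formula for T0 and, as an assumption, the commonly used recovery-temperature relation TM = T0 (1 + r (γ − 1)/2 · M²) for aerodynamic heating. The recovery factor r = 0.82, γ = 1.4 for air, and the function names are not taken from the article and should be treated as placeholders.

```python
def ambient_temperature(altitude_m: float) -> float:
    """Ambient temperature in kelvin from the linear lapse-rate formula in the text."""
    return 288.2 - 0.0065 * altitude_m


def skin_temperature(ambient_k: float,
                     mach: float,
                     recovery_factor: float = 0.82,  # assumed typical value
                     gamma: float = 1.4) -> float:   # assumed ratio for air
    """Skin temperature in kelvin under the assumed recovery-temperature relation."""
    if mach < 0:
        raise ValueError("Mach number must be non-negative")
    return ambient_k * (1.0 + recovery_factor * (gamma - 1.0) / 2.0 * mach ** 2)
```

With these assumed values, an ambient temperature of 280 K and Mach 1 give a skin temperature of roughly 326 K, and the temperature grows quadratically with Mach number, which agrees qualitatively with the speed-dependent brightening described for the simulated targets.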

Furthermore, according to Equation (2), the functional relationship between the aircraft skin radiation intensity IM and temperature TM is as follows:

where εM is the skin surface emissivity, which is determined by the surface material of the aircraft skin. SM is the cross-sectional area of the aircraft skin facing the probe, and θM is the angle between the probe and infrared radiation orientation.

The high-temperature flame and high-temperature gas injected by the engine form the tail flame of the aircraft. We assume that the gas temperature in the tail nozzle is TF, the tail flame temperature is TP, and the gas pressures inside and outside the tail nozzle are PP and PF, respectively; then, we have:

where γ is the specific heat ratio of the gas; its value for turbofan aeroengines is 1.3. According to Equation (2), the functional relationship between the radiation intensity IP of the tail flame and the temperature TP can be established as follows:

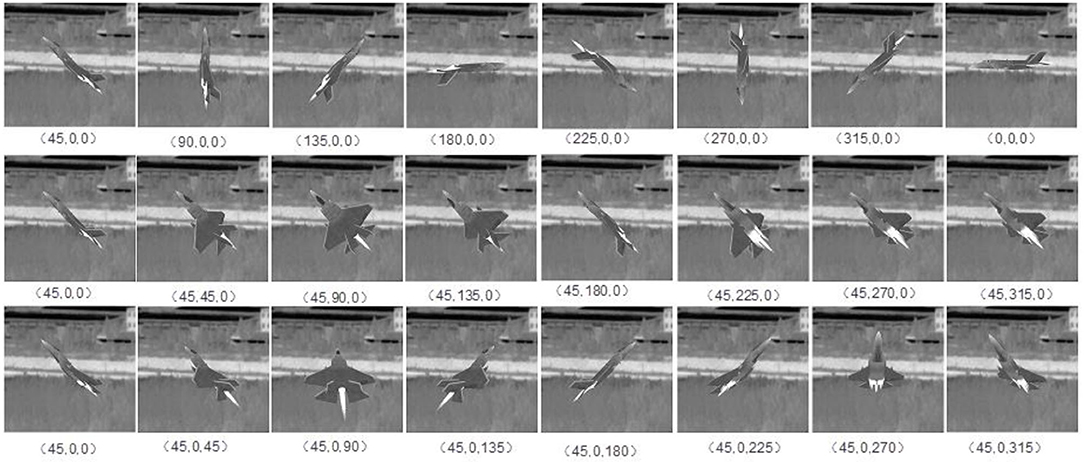

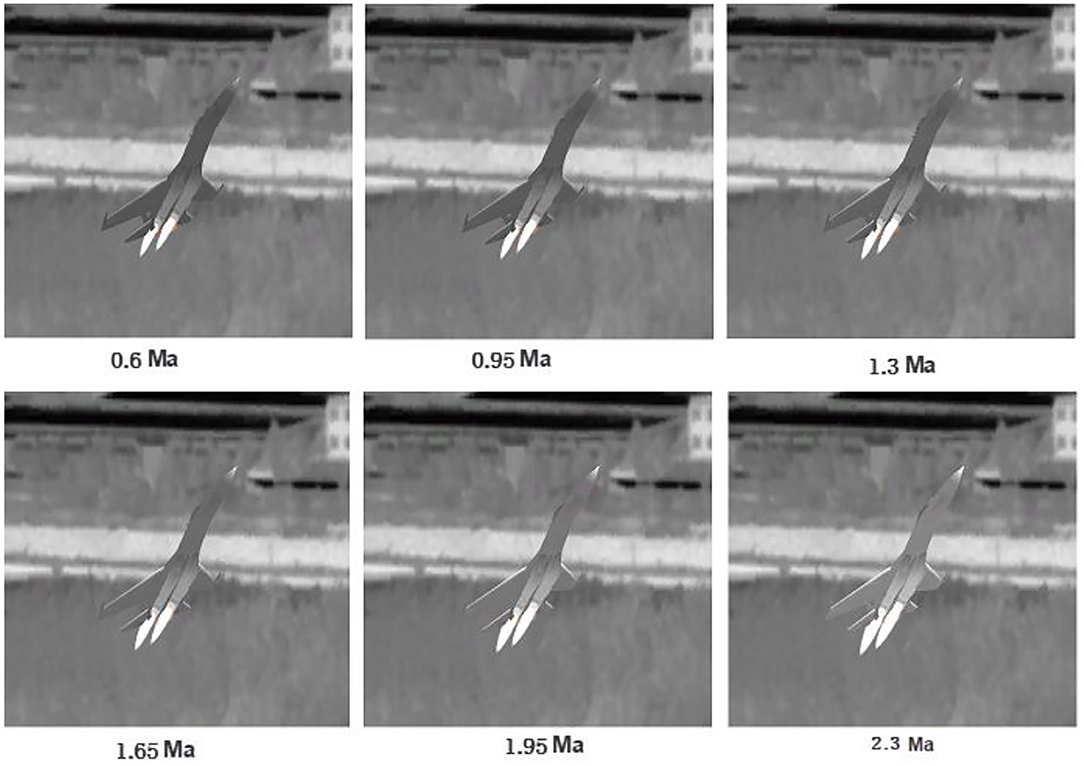

where εP is the emissivity of the aircraft tail flame, SP is the cross-sectional area of the tail flame facing the probe, and θP is the angle between the probe and the infrared radiation orientation. To improve the visual effect, the tail flame is typically simulated by a particle flow. Based on the infrared radiation model above, a 3D target with infrared radiation characteristics was obtained. The infrared radiation intensity of an aircraft changes dynamically with the speed and attitude of the target. Figure 2 shows the simulation effect of an F-35 aircraft at different attitudes, and Figure 3 shows the simulation effect of a Su-35 aircraft at different speeds.
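For the tail flame temperature discussed above, the following sketch assumes the standard isentropic expansion relation, in which the gas temperature scales with the pressure ratio raised to the power (γ − 1)/γ. The function and parameter names are hypothetical, and the mapping onto the article's symbols (TF, TP, PF, PP) is an assumption.

```python
def plume_temperature(nozzle_gas_temp_k: float,
                      pressure_outside: float,
                      pressure_inside: float,
                      gamma: float = 1.3) -> float:
    """Tail flame temperature after isentropic expansion out of the nozzle.

    Pressures may be in any unit as long as both use the same one.
    gamma = 1.3 is the value the text gives for turbofan aeroengines.
    """
    if pressure_outside <= 0 or pressure_inside <= 0:
        raise ValueError("pressures must be positive")
    exponent = (gamma - 1.0) / gamma
    return nozzle_gas_temp_k * (pressure_outside / pressure_inside) ** exponent
```

Under this assumption the flame is cooler than the gas inside the nozzle whenever the outside pressure is lower than the inside pressure, and the two temperatures coincide when the pressures are equal.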

Figure 2. Simulation effect of F-35 aircraft at different attitudes. The speed is Mach 1, and the background is a real infrared image. The coordinates are roll, yaw, and pitch.

Figure 3. Infrared characteristics of Su-35 aircraft at different speeds. The speed varies from Mach 0.6 to Mach 2.3.

We expect the targets to fly in a wide infrared scene to obtain a simulated image sequence of moving targets. However, the field of view of infrared sensors is typically narrow. For example, the field of view in the public infrared dataset (Hui et al., 2020) (dataset used for infrared detection and tracking of dim-small aircraft targets under a ground/air background, http://www.csdata.org/p/387/) is only 1° × 1°.

To obtain a continuous projection of the moving target in a real infrared scene, it is necessary to stitch infrared images of a narrow field of view into a panoramic image. In view of the small texture and low contrast of infrared images, a stitching and fusion method must be adopted specifically for infrared images, as detailed in our previous paper (Zhijian et al., 2021), which describes how to stitch a panoramic image from infrared sequence images. Figure 4 shows only a part of the stitching results.

This study fuses a static real infrared scene with dynamic simulated targets using the Unity3D engine. The main steps are as follows: (1) A hemisphere is constructed with the camera position as the center and the real farthest observation distance as the radius. The panoramic image obtained by stitching real infrared images is used as the surface texture covering the hemisphere, which yields a pseudo-3D scene, as shown in Figure 5. (2) The 3D infrared simulation target flies in 3D space according to the flight trajectory, which specifies the position, attitude, and speed of the aircraft at each moment. (3) Through human–computer interaction, the observation position and viewing angle are adjusted dynamically to track and observe the targets. (4) In each observed frame, the target is projected onto the infrared background, producing target infrared data with a real infrared background. With continuous observation, dynamic simulation image sequences of the targets are obtained.
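The geometry of step (1) can be sketched as follows. The code assumes a y-up coordinate system like Unity's and an equirectangular panorama that spans 360° of azimuth and 0–90° of elevation; both the layout and the function names are assumptions for illustration, and the actual scene is built in Unity3D with C#.

```python
import math
from typing import Tuple


def hemisphere_point(azimuth_deg: float, elevation_deg: float,
                     radius: float) -> Tuple[float, float, float]:
    """Point on the background hemisphere (camera at the origin, y axis up)."""
    if not 0.0 <= elevation_deg <= 90.0:
        raise ValueError("elevation must lie on the upper hemisphere")
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    x = radius * math.cos(el) * math.sin(az)
    y = radius * math.sin(el)
    z = radius * math.cos(el) * math.cos(az)
    return (x, y, z)


def panorama_pixel(azimuth_deg: float, elevation_deg: float,
                   width: int, height: int) -> Tuple[int, int]:
    """Column and row of the panorama texel seen in a given viewing direction.

    Row 0 is the zenith and the last row is the horizon (assumed layout).
    """
    u = (azimuth_deg % 360.0) / 360.0
    v = 1.0 - elevation_deg / 90.0
    col = min(int(u * width), width - 1)
    row = min(int(v * height), height - 1)
    return (col, row)
```

In a game engine the same mapping is normally expressed through the UV coordinates of the hemisphere mesh rather than computed per pixel, so this sketch only shows the correspondence between viewing direction and background texel.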

The real infrared data used in this experiment comes from the public infrared dataset (Hui et al., 2020) (dataset used for infrared detection and tracking of dim-small aircraft targets under a ground/air background, http://www.csdata.org/p/387/). The dataset covers a variety of scenes such as sky and ground, with a total of 22 data segments, 30 tracks, 16,177 images, and 16,944 targets. Each frame is a gray image with a resolution of 256 × 256 pixels, BMP format, 1° × 1° field of view. Each target corresponds to a label position, and each data segment corresponds to a label file. This data set is usually used in the basic research of dim-small target detection, precision guidance, and infrared target characteristics.

The hardware environment of this experiment is a dual-core CPU running above 2.0 GHz with more than 4 GB of memory. The software environment is Windows 7 or later. The experiment was developed with Unity3D version 2021.2.6f1; the development language is C#, and the development platform is Visual Studio 2017.

We selected four scenes from the real infrared data introduced in (Hui et al., 2020): sky background, ground background, mixed background, and sky multi-target, which come from data 1, data 7, data 3, and data 2, respectively, in the public dataset. Correspondingly, we extracted the same four scenarios from the simulation data; the comparative results are shown in Figure 6. Visually, both the real and simulated data have the following characteristics: (1) The images are gray overall, which conforms to the characteristics of infrared images. (2) The images have low contrast and relatively few textural features. (3) The target appears as a bright spot that diffuses into its surroundings. Therefore, the simulated and real infrared data are visually similar.

The purpose of this study was to provide simulation data for the training and testing of infrared target detection and tracking models. Therefore, determining whether the performance of an algorithm on simulated data is consistent with its performance on real data is the most effective evaluation method (Deng et al., 2022). We tested two algorithms (Tianjun et al., 2019; Xianbu et al., 2019) that were employed in the 2nd Sky Cup National Innovation and Creativity Competition in 2019 and compared their performance on real infrared data and on simulated data generated by our method.

In the experiment, the data shown in Figure 6 were used; the real infrared data came from data 1, data 7, data 3, and data 2 in the public dataset (Hui et al., 2020). The simulation data also included the sky background, ground background, mixed background, and multiple targets. The resolution was 256 × 256. The targets were all small, that is, <10 pixels.

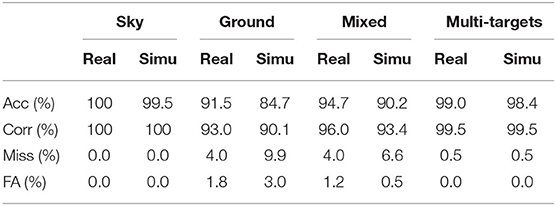

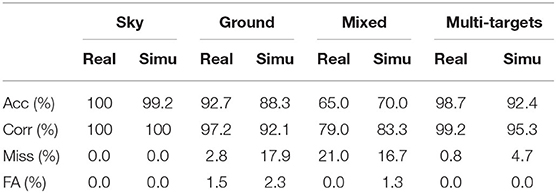

As in (Zhijian et al., 2021; Deng et al., 2022), four indicators, namely the accurate detection rate, correct detection rate, missed detection rate, and false alarm rate, were used to evaluate the performance of the algorithms. An accurate detection (Acc) occurs when the detection result is within the 3 × 3 pixel range of the ground truth. A correct detection (Corr) occurs when the detection result is within the 9 × 9 pixel range of the ground truth. A missed detection (Miss) occurs when the detection result is outside the 9 × 9 pixel range of the ground truth. A false alarm (FA) refers to a detection that does not correspond to a real target. Tables 1 and 2 present the detection results obtained without changing any parameters of the original algorithms.
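The Acc, Corr, and Miss definitions above can be sketched as follows. The code assumes one ground-truth target per evaluated frame, interprets an N × N pixel range as a window centered on the ground truth (so 3 × 3 means a Chebyshev distance of at most 1 pixel and 9 × 9 at most 4 pixels), and counts an accurate detection as also correct. These interpretations and the function names are assumptions; false alarms are omitted because their exact counting rule is not specified here.

```python
from typing import Dict, Iterable, Optional, Tuple

Point = Tuple[float, float]


def chebyshev_distance(a: Point, b: Point) -> float:
    """Largest per-axis pixel offset between two image positions."""
    return max(abs(a[0] - b[0]), abs(a[1] - b[1]))


def detection_rates(
    pairs: Iterable[Tuple[Optional[Point], Point]]
) -> Dict[str, float]:
    """Compute Acc, Corr, and Miss rates.

    Each pair is (detection, ground_truth); detection is None when the
    algorithm reported nothing for that target.
    """
    total = acc = corr = miss = 0
    for detection, truth in pairs:
        total += 1
        if detection is None:
            miss += 1
            continue
        d = chebyshev_distance(detection, truth)
        if d <= 1:        # inside the 3 x 3 window
            acc += 1
        if d <= 4:        # inside the 9 x 9 window
            corr += 1
        else:             # outside the 9 x 9 window
            miss += 1
    if total == 0:
        raise ValueError("no targets to evaluate")
    return {"acc": acc / total, "corr": corr / total, "miss": miss / total}
```

Running the same function on the real and the simulated sequences, with the detector's parameters unchanged, gives the per-scene rates whose differences are compared in the discussion of the tables.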

Table 1. Infrared target detection results on real and simulated data with algorithm (Tianjun et al., 2019).

Table 2. Infrared target detection results on real and simulated data with algorithm (Xianbu et al., 2019).

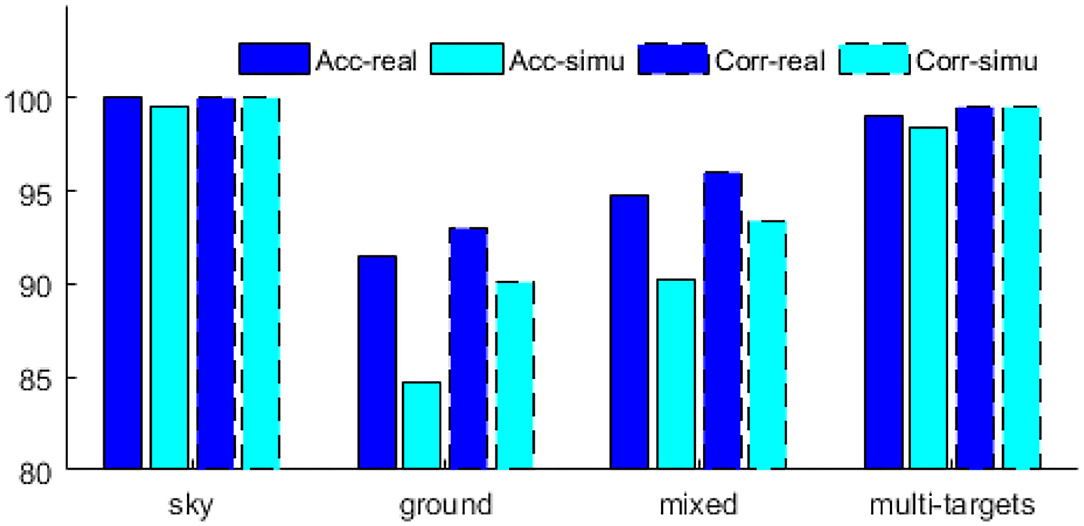

As shown in Table 1, the algorithm reported in (Tianjun et al., 2019) performed well on all four types of scenes, particularly on the sky background and multi-target scenes, where the Acc and Corr indicators exceeded 99%. Its performance on the ground and mixed backgrounds is slightly worse; nevertheless, the accurate detection rate remains above 90%. On the simulation data, the algorithm also performed well on the sky background and multi-target scenes, with results similar to those on real data. On the ground and mixed backgrounds, the detection results on the simulated data are slightly worse than those on the real data; nevertheless, the maximum difference in the accurate detection rates is no more than 7% (on the ground background, the difference between the accurate detection rates on the real and simulated data was 6.8%).

Figure 7 compares the performance of the algorithm (Tianjun et al., 2019) on the simulation data generated by our method and on the real data. Where the algorithm performs well on the real dataset, such as in the sky and multi-target scenarios, it also performs well on the simulation data. Where it performs relatively poorly on the real dataset, such as in the ground and mixed scenarios, it also performs relatively poorly on the simulation data. This consistency is reflected in both the Acc and Corr indicators. Therefore, the simulation data generated by our method are consistent with the real data in terms of the performance of the algorithm (Tianjun et al., 2019).

Figure 7. Performance of simulation data and real data on algorithm (Tianjun et al., 2019).

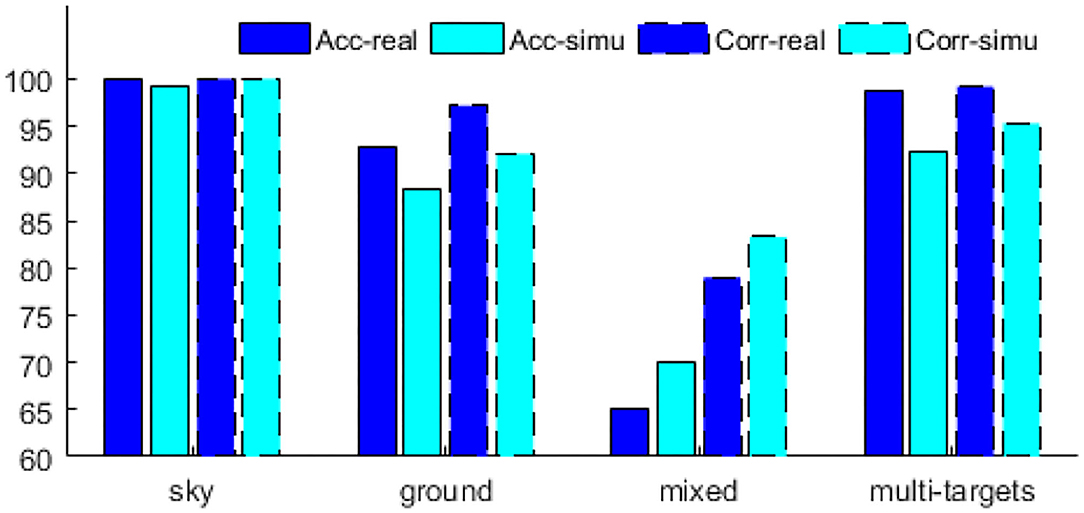

As shown in Table 2, the performance of the algorithm (Xianbu et al., 2019) is similar to that of the algorithm (Tianjun et al., 2019) on the sky background, ground background, and multi-target scenes; however, the Acc drops to 65% on the mixed background. This may be related to the applicability of the algorithm to different scenarios. Interestingly, the Acc on the simulated data also drops, to 70%. Both the simulation data and the real data thus show low performance of the algorithm (Xianbu et al., 2019) in mixed scenarios. Regardless of the scenario, the maximum difference between the accurate detection rates on the simulated and real data is still <7% (in the multi-target scenario, the difference between the accurate detection rates on the real and simulated data is 6.3%).

Similarly, Figure 8 compares the performance of the algorithm (Xianbu et al., 2019) on the simulation data generated by our method and on the real data. Where the algorithm performs well on the real dataset, such as in the sky, ground, and multi-target scenarios, it also performs well on the simulation data. Where it performs poorly on the real dataset, such as in the mixed scene, it also performs poorly on the simulation data. Therefore, the simulation data generated by our method are consistent with the real data in terms of the performance of the algorithm (Xianbu et al., 2019).

Figure 8. Performance of simulation data and real data on algorithm (Xianbu et al., 2019).

Training infrared target detection and tracking models based on deep learning requires a large number of infrared sequence images. The cost of acquiring real infrared target sequence images is high, while conventional simulation methods lack authenticity. This paper proposes a novel infrared data simulation method that combines real infrared images and simulated 3D infrared targets. First, it stitches real infrared images into a panoramic image, which is used as the background. Then, the infrared characteristics of 3D aircraft are simulated for the tail nozzle, skin, and tail flame, and the resulting models are used as targets. Finally, the background and targets are fused in Unity3D, where the aircraft trajectory and attitude can be edited freely to generate rich multi-target infrared data. The experimental results show that the simulated images are not only visually similar to real infrared images but also consistent with them in terms of the performance of target detection algorithms. The method can provide training and testing samples for deep learning models for infrared target detection and tracking.

The infrared simulation of the target in this method does not yet consider environmental factors (such as weather, temperature, and illumination) or sensor error. The precision of the target infrared simulation needs to be improved further to meet some special application scenarios, which is the direction of our future work.

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding authors.

HZ contributed the main ideas and designed the algorithm. HB contributed the main ideas. SS contributed to the experiments and result analysis. All authors contributed to the article and approved the submitted version.

This work was supported by the Key Laboratory Fund of Basic Strengthening Program (JKWATR-210503), Changsha Municipal Natural Science Foundation (kq2202067), and the Basic Science and Technology Research Project of the National Key Laboratory of Science and Technology on Automatic Target Recognition of Scientific Research under Grant (WDZC20205500209).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Professor Fan Hongqi from the ATR Key Laboratory, National University of Defense Technology, provided real infrared data. We thank Shi Tianjun of the Harbin Institute of Technology and Dong Xiaohu of the National University of Defense Technology for their outstanding contributions in the second Sky-Cup National Innovation and Creativity Competition, which provided the test algorithms.

Alec, R., Luke, M., and Soumith, C. (2016). Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks. San Juan: ICLR.

Bhalla, K., Koundal, D., Bhatia, S., Rahmani, M. K., and Tahir, M. (2022). Fusion of infrared and visible images using fuzzy based siamese convolutional network. Comput. Mater. Continua. 3, 2022. doi: 10.32604/cmc.2022.021125

Chenyang, L. (2019). The Infrared Imaging Simulation System Based on Three-Dimensional Scene and its Implementation. Beijing: The University of Chinese Academy of Sciences.

Chuanyu, Z. (2013). Infrared Image Formation for Multiple Targets. Harbin: Harbin Institute of Technology.

Dai, Y., Wu, Y., Zhou, F., and Barnard, K. (2021). Attentional local contrast networks for infrared small target detection. IEEE Trans. Geosci. Remote Sens. 59, 9813–9824. doi: 10.1109/TGRS.2020.3044958

Deng, L., Xu, D., Xu, G., and Zhu, H. (2022). A generalized low-rank double-tensor nuclear norm completion framework for infrared small target detection. IEEE Trans. Aerosp. Electr. Syst. 1. doi: 10.1109/TAES.2022.3147437

Guanfeng, Y., Changhao, Z., and Yue, C. (2019). Research on infrared imaging simulation for enhanced synthetic vision system. Aeronaut. Comput. Tech. 49, 100–103.

Haixing, Z., Jianqi, Z., and Wei, Y. (1997). Theoretical calculation of the IR radiation of an aeroplane. J. Xidian Univ. 24, 78–82.

Hou, Q., Wang, Z., Tan, F., Zhao, Y., Zheng, H., Zhang, W., et al. (2022). RISTDnet: robust infrared small target detection network. IEEE Geosci. Remote Sens. Lett. 19, 1–5. doi: 10.1109/LGRS.2021.3050828

Hui, B., Song, Z., Fan, H., Zhong, P., Hu, W., Zhang, X., et al. (2020). A dataset for infrared detection and tracking of dim-small aircraft targets under ground/air background. Chinese Sci. Data. 5, 291–302. doi: 10.11922/csdata.2019.0074.zh

Junhong, L., Ping, Z., Xiaowei, W., and Shize, H. (2020). Infrared small-target detection algorithms: a survey. J. Image Graph. 25, 1739–1753. doi: 10.11834/jig.190574

Junyan, Z., Taesung, P., and Isola, P. (2017). Unpaired Image-to-Image Translation Using Cycle-Consistent Adversarial Networks. Venice: ICCV.

Mirza, M., and Osindero, S. (2014). Conditional generative adversarial nets. Comput. Sci. 2672–80. Available online at: https://arxiv.org/abs/1411.1784

Rani, S., Singh, B. K., Koundal, D., and Athavale, V. A. (2022). Localization of stroke lesion in MRI images using object detection techniques: a comprehensive review. Neurosci Inform. 2, 100070. doi: 10.1016/j.neuri.2022.100070

Shi, Q., Gao, Y., Zhang, X., Li, Z., Du, J., Shi, R., et al. (2021). Cryogenic background infrared scene generation method based on a light-driven blackbody micro cavity array. Infrared Phys. Technol. 117, 103841. doi: 10.1016/j.infrared.2021.103841

Shuwei, T., and Bo, X. (2018). Research on infrared scene built by computer. Electro-Optic. Technol. Appl. 33, 58–61.

Tianjun, S., Guangzhen, B., Fuhai, W., Chaofei, L., and Jinnan, G. (2019). An infrared small target detection and tracking algorithm applying for multiple scenarios. Aero Weaponry. 26, 35–42. doi: 10.12132/ISSN.1673-5048.2019.0220

Xia, W., Hao, W., and Chao, X. (2015). Overview on development of infrared scene simulation. Infrared Technol. 7, 537–43. doi: 10.11846/j.issn.1001_8891.201507001

Xianbu, D., Ruigang, F., Yinghui, G., and Bio, L. (2019). Detecting and tracking of small infrared targets adaptively in complex background. Aero Weaponry. 26, 22–28. doi: 10.12132/ISSN.1673-5048.2019.0233

Yi, H. (2020). RGB-to-NIR Image Translation Using Generative Adversarial Network. Wuhan: Central China Normal University.

Yi, Y., Changbin, X., and Yuying, M. (2019). A review of infrared dim small target detection algorithms with low SNR. Laser Infrared. 49, 643–649. doi: 10.3969/j.issn.1001-5078.2019.06.001

Yongjie, Z., Zhenya, X., and Jianxun, L. (2020). Study on simulation model of aircraft infrared hyperspectral image. Aero Weaponry. 27, 91–96. doi: 10.12132/ISSN.1673-5048.2019.0082

Yu, C. (2012). Design of Infrared Decoy HIL Simulation System Based on Finite Element Module. Harbin: Harbin Institute of Technology.

Yunjey, C., Youngjung, U., Jaejun, Y., and Ha, J.-W. (2020). “StarGAN v2: diverse image synthesis for multiple domains,” in 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (Seattle).

Zhang, R., Mu, C., Yang, Y., and Xu, L. (2018). Research on simulated infrared image utility evaluation using deep representation. J. Electr. Imag. 27, 013012. doi: 10.1117/1.JEI.27.1.013012

Keywords: infrared image simulation, infrared target simulation, infrared radiation, deep learning, Unity3D

Citation: Zhijian H, Bingwei H and Shujin S (2022) An Infrared Sequence Image Generating Method for Target Detection and Tracking. Front. Comput. Neurosci. 16:930827. doi: 10.3389/fncom.2022.930827

Received: 28 April 2022; Accepted: 10 June 2022;

Published: 15 July 2022.

Edited by:

Deepika Koundal, University of Petroleum and Energy Studies, India

Reviewed by:

Arvind Dhaka, Manipal University Jaipur, India

Copyright © 2022 Zhijian, Bingwei and Shujin. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Hui Bingwei
