94% of researchers rate our articles as excellent or good

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.

Find out more

ORIGINAL RESEARCH article

Front. Comput. Neurosci., 13 December 2022

Volume 16 - 2022 | https://doi.org/10.3389/fncom.2022.881046

We establish a simple mechanism by which radially oriented simple cells can emerge in the primary visual cortex. In 1986, R. Linsker. proposed a means by which radially symmetric, spatial opponent cells can evolve, driven entirely by noise, from structure in the initial synaptic connectivity distribution. We provide an analytical derivation of Linsker's results, and further show that radial eigenfunctions can be expressed as a weighted sum of degenerate Cartesian eigenfunctions, and vice-versa. These results are extended to allow for radially dependent cell density, from which we show that, despite a circularly symmetric synaptic connectivity distribution, radially biased orientation selectivity emerges in the third layer when cell density in the first layer, or equivalently, synaptic radius, changes with eccentricity; i.e., distance to the center of the lamina. This provides a potential mechanism for the emergence of radial orientation in the primary visual cortex before eye opening and the onset of structured visual input after birth.

Synaptic plasticity underpins our understanding of learning in neural systems as it is the mechanism that describes how synaptic weights change in response to sensory inputs. Plasticity has traditionally been modeled as rate based, in which synaptic weights change in response to short-time averaged pre- and postsynaptic neuron spiking rates. Over the past two decades, the importance of pre- and postsynaptic neuron spike timing has been recognized, particularly for contexts in which high-resolution temporal information is involved at microsecond resolution (Gerstner et al., 1996; Kempter et al., 1999a), prompting the emergence of spike-timing dependent plasticity (STDP) (Gerstner et al., 1996; Markram et al., 1997). Spike-based plasticity updates synaptic strength in response to the relative timing of pre- and postsynaptic spikes, amplifying synaptic strength if the presynaptic neuron contributes to the postsynaptic neuron's spike, and depressing a synapse if the presynaptic neuron fires after the postsynaptic neuron and thus did not contribute to its spike.

Plasticity mechanisms have played a fundamental role in explaining the emergence of simple cells in the early layers of cortical processing, such as the primary visual cortex (V1). Plasticity has successfully explained the emergence of simple cells such as orientation selective cells (Bienenstock et al., 1982; Wimbauer et al., 1998; Yamakazi, 2002), direction selective cells (Wimbauer et al., 1997a,b; Senn and Buchs, 2003), ocular dominance (Miller, 1990), and feature maps in which sensitivity to a particular feature changes as the layer is traversed (Goodhill, 2007).

Much of the research on learning in cortical networks has been empirical and computational because of the analytical complexity of learning in response to parameters that describe the number of layers, connectivity structure, and neuron type. A notable exception is the analysis of the network proposed by Linsker (1986b) in which the emergence of a spatial opponent cell in the third layer of a three-layer network of Poisson neurons with Gaussian connectivity kernels was described. Learning in this network is a linear function of correlation in presynaptic neural activity, with two learning constants that control the homeostatic equilibrium. The linearity of the learning system enables an eigenfunction analysis to be used to identify the independent contributors to a postsynaptic neuron's synaptic weight structure. Eigenvalues provide a way to distinguish the eigenfunctions that are the most significant contributors, and hence determine the receptive field of the postsynaptic neuron.

Although, Linsker (1986b) focused on empirical results, there has been significant work aimed at extending the analytical framework for the network that he proposed. MacKay and Miller (1990) proposed the first three radial eigenfunctions based on the work by Tang (1990), but without providing a derivation. The proposed eigenfunctions were for a simplified learning system in which homeostatic constants were assumed zero so that all plasticity was driven by correlation between presynaptic inputs and there was no non-competitive plasticity. They provided an empirical examination of the impact of non-zero homeostatic constants, showing that the eigenfunction of the leading eigenvalue can change in response to a change in the homeostatic equilibrium.

Miller (1990) employed Linsker (1986b) network in a model of learning in the primary visual cortex, with overlapping left and right eye inputs processed by the lateral geniculate nucleus (LGN). The network structure prompted correlation and anti-correlation in two afferents originating from either the same eye or the opposite eye, leading to the emergence of an ocular dominance feature map. Miller (1990) provided a description of an analytical derivation for the eigenfunctions of ocular dominance feature maps across the cortex.

Wimbauer et al. (1998) extended Linsker (1986b) network by incorporating lateral inhibitory connections in the third layer, showing the emergence of orientation selective cells in the third layer. They provided a derivation of Cartesian eigenfunctions for learning with homeostatic constants set to zero and empirically extended the solution to the general learning equation with non-zero homeostatic constants. They simulated the development of an orientation selective feature map distributed across the primary visual cortex using a model slightly more complex than that for which they derived the eigenfunctions.

Davey et al. (2021) relaxed Linsker (1986b) implicit assumption of homogeneous spike propagation delay between all pre- and post-synaptic connections between two layers. Distance dependent propagation delay was incorporated into all synaptic connections in the three layer network, and the consequent impact on both neural activity and synaptic plasticity analytically derived. Davey et al. (2021) established that propagation delay induces low-pass filtering by dispersing arrival times of spikes from pre-synaptic neurons, providing a natural correlation cancellation mechanism for distal connections. Cut-off frequency was found to decrease as the dendritic arbor increased in radius across the pre-synaptic layer, introducing an upper limit on temporal resolution for the network.

Analytical solutions to Linsker (1986b) learning system have played a central role in explaining the emergence of spatial opponent and orientation selective cells in the network. However, thus far, no general analytical solution has been provided, with analytical results to date being for the simplified system in which the homeostatic constants are set to zero. We provide here a solution for the eigenfunctions of Linsker (1986b) network in polar coordinates. As the system is radially symmetric, polar coordinates provide a natural coordinate system that enables an straightforward extension of polar eigenfunctions to the general learning system with non-zero homeostatic constants. One of the benefits of a full analytical solution for the network is insight into why the receptive field changes in response to changes in the homeostatic equilibrium and the framework to determine exactly when this change occurs.

Thus far, the original network proposed by Linsker (1986b) and used in the subsequent analytical analyzes of Miller (1990) and Wimbauer et al. (1998) made an assumption that cells within each layer were evenly distributed and that receptive fields of all cells in a layer were statistically identical; i.e., drawn from the same synaptic connectivity distribution. To date, there has been no exploration of the impact of relaxing this assumption. However, it is known that some biological cell layers show an uneven density of cells across the lamina and contain receptive fields with different statistical properties. For example, the retina is well known to have cell density changes as a function of eccentricity (Sjöstrand et al., 1999; Watson, 2014) and receptive field sizes of neurons in the primary visual cortex increase with stimulus eccentricity (Smith et al., 2001; Wurbs et al., 2013). Furthermore, it is well established that orientation selectivity in the primary visual cortex is biased toward radial orientation in that an orientation selective neuron in the primary visual cortex is more likely to be oriented toward the center of the retina (Rodionova et al., 2004). In this study, we explore the impact of radially dependent synaptic connection distributions on emerging receptive field properties in the third layer of Linsker (1986b) network and show how introducing radially dependent synaptic connectivity distributions in the first layer results in the emergence of radial orientation selectivity in the third layer of the network.

This paper is organized as follows. Section 2.1 introduces the network and neuron models used, based on Linsker (1986b) network. Radial eigenfunctions and eigenvalues are analytically derived for the simplified learning equation, for which the homeostatic parameters are set to zero, and then extended via perturbation analysis to the full system in Section 3. Eigenfunctions and eigenvalues are also derived in Cartesian coordinates in Section 3 and compared to the radial eigenfunctions. Finally, we show in Section 4 that the introduction of radially dependent synaptic connectivity distributions in the first layer generates radial orientation selectivity in the third layer of the network.

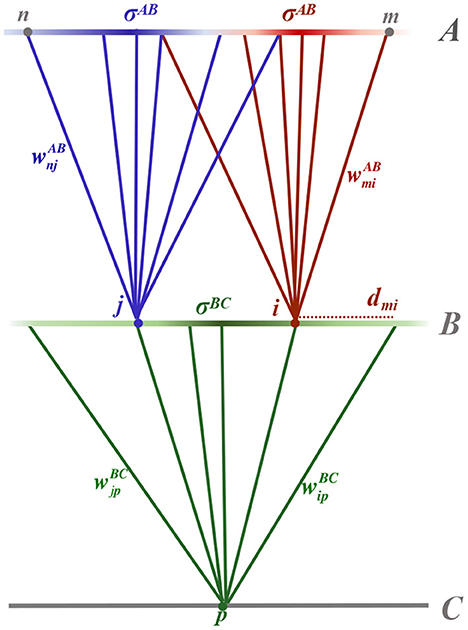

Following Linsker (1986b), we consider a three-layer, feed-forward topographical network. The network is driven by spontaneous neural activity in the first layer, layer A, which inputs to layer B, which in turn inputs to layer C, as shown schematically in Figure 1. Layers comprise populations of homogeneous neurons, equispaced in a square grid across the layer. The distance between the parallel layers is assumed to dominate sufficiently such that propagation delay experienced by action potentials from the presynaptic layer can be assumed approximately equal. Neurons m and n of layer A have synaptic inputs to neurons i and j of layer B, respectively, which both input to neuron p of layer C.

Figure 1. Schematic diagram of the three layered feed-forward network. Layer A neurons, m and n, feed into layer B neurons, i and j, respectively, which in turn feed to a layer C neuron, p. Synaptic connections between neurons are shown as solid, colored lines connecting from a presynaptic neuron to a postsynaptic neuron of the same color. The synaptic strength, for example between neurons m and i, is denoted . Synaptic connection distributions are homogeneous within a layer and modeled as being Gaussian, parameterised by distance-dependent standard deviation (radius) denoted σAB between layers A and B and σBC between layers B and C. The probability of a postsynaptic neuron having a presynaptic connection from a particular position in the layer is depicted by the intensity of the color of the presynaptic layer. Not shown in the diagram is the expected number of presynaptic connections input to a neuron, denoted by NAB and NBC for postsynaptic neurons in layers B and C, respectively. The radial distance between two neurons within a lamina, for example between neuron m from layer A and i from layer B, is denoted .

Each postsynaptic neuron has a Gaussian synaptic connection distribution, centered on its two-dimensional position in the lamina, which ensures that radially proximate neurons are more likely to connect to it than a neuron more distal in the presynaptic lamina. The connectivity distributions are parameterized by a standard deviation (radius) that is homogeneous across a layer, denoted σAB and σBC, for synaptic connections between layers A and B and layers B and C, respectively. Consequently, the probability of neuron m in layer A connecting to neuron i in layer B is given by

where xmi = (xmi, ymi) is the two-dimensional radial distance between m and i. Note that this definition differs from the standard definition by a factor of in accordance with the definition used by Linsker (1986b), and is specifically chosen for later convenience.

For postsynaptic neurons in layer C, it is useful to write the connection probability in polar coordinates by assuming, without loss of generality, that the postsynaptic neuron is at position (0, 0). The probability of presynaptic neuron j in layer B connecting to postsynaptic neuron p in layer C in polar coordinates is then

where rjp is the radial distance from neuron p, at the center of the lamina, to neuron j in layer B, and θjp is the angle to j within the two-dimensional lamina.

Linsker (1986b) showed that the Gaussian connectivity distributions introduce spatial correlations in the inputs to layer B neurons despite spontaneous neural activity in layer A being uncorrelated. Layer B neurons that are spatially more proximate will have a greater number of shared connections, and therefore more correlated input, when compared to layer B neurons that are positioned further apart in the lamina. The expected number of shared presynaptic inputs between two postsynaptic neurons in layer B, denoted NBB, is shown to be (see Appendix A for full derivation)

where NAB denotes the expected number of synaptic connections from layer A to each neuron in layer B, and represents the distance between neurons i and j such that .

The network is driven by spontaneous Poisson activity of the layer A neurons. This implies that there are no spike-based temporal correlations between input and output neurons other than what is captured in the rate-based signals and that the rates change slowly when compared to the period over which they are averaged (Kempter et al., 1999b). Activity of a layer A neuron is modeled as , where is the spiking rate of layer A neuron m at time t.

As in Linsker (1986b), we use a Poisson neuron model so that the network is linear when operating within the weight bounds, discussed below. The update equations for neural activity in layers B and C are

where , denote spontaneous firing rates, and , depict synaptic strengths between neurons m and i in layers A and B, respectively, and neurons i and p in layers B and C, respectively. Note that an implicit assumption in this Poisson model of neural activity is that propagation delay is negligible or, equivalently, is dominated by inter-layer distances between neurons and, therefore, can be considered homogeneous across all inputs to a postsynaptic neuron.

The adiabatic approximation in neural learning is that incremental weight changes occur slowly with respect to neural dynamics, which occur on a millisecond timescale. Furthermore, neurons within the same population are assumed to have the same statistical properties of neural activity and synaptic connectivity. Consequently, the system is ergodic and the spike rate can be determined from the ensemble average or from a temporal mean over the timescale of learning. Under these assumptions, the learning equation can be expressed as a differential equation (Linsker, 1986b). The general learning equations for synaptic weights between neurons in layers A and B and synapses connecting layers B and C is given by Linsker (1986b)

where η ≪ 1 is the learning rate that ensures that learning is slow on a millisecond timescale, wmin and wmax are the lower and upper bounds on the weights, respectively, and the parameters , , , are layer specific constants controlling homeostasis (i.e., independent of the correlation structure of the inputs). The definition for normalized covariance has the same structure for each layer; for example, the normalized covariance between layer A neurons m and n is defined by

where 〈〉 depicts the ensemble average, , denotes the temporal average of layer A neural activity, and is a scaling factor to normalize the covariance matrix Q.

For a Gaussian synaptic density distribution, the covariance between layer B neurons is a function of the radial distance separating the neurons, as described in Appendix B,

Only radial distances are considered, so that distances between layers are assumed to have negligible impact on learning dynamics since the inter-layer transmission delay is uniform.

Normalizing this result and incorporating it into Equation (5) gives the learning equation

where it is assumed that the covariance is normalized and we have removed the layer superscripts for readability.

It is assumed that a deeper layer is not learned until after its presynaptic layer has converged to a stable weight structure, and hence layers are learned sequentially. This accords with the approach employed by Linsker (1986b) and does not impact the final weight structure across the network. Consequently, synapses connecting layers A and B evolve to a stable structure before learning begins for synapses connecting layers B and C.

Linsker (1986b) demonstrated that individual synapses are unstable and, for excitatory synapses, all or all-but-one necessarily reach the upper bound, wmax. However, under an assumption of weak covariance of the inputs (MacKay and Miller, 1990), the mean weight of synapses input to a postsynaptic neuron is not necessarily unstable but rather controlled by homeostatic mechanisms. For excitatory connections, the mean weight of a postsynaptic neuron's synapses will converge to

where the conditions on k1 and k2 are required to ensure that the mean synaptic weight does not diverge to the bounds. For all synapses to grow until they reach the upper bound, it is required that k1 + k2 > 0. In this case, the system is unstable so that the mean synaptic weight grows until all individual synapses, or all-but-one, have reached the upper bound (Linsker, 1986b).

Linsker (1986b) selected homeostatic constants for synapses connecting layers A and B such that the mean weight was unstable and, consequently, all synapses diverged to the upper bound. For connections between layers B and C, the homeostatic constants are chosen such that the mean weight is stable, requiring some individual synapses to diverge to the lower bound and others to the upper bound.

With synaptic connections between layers A and B assumed to all reach the upper bound, the focus is on determining the learned synaptic structure for postsynaptic neurons in layer C. Given that the learning equation in Equation (5b) is linear within the weight bounds, the system lends itself to an eigenfunction analysis. That is, we wish to identify the independent eigenfunctions that contribute to the evolution of synaptic weights. Given that the system is driven by unstructured noise, it will self-organize such that the eigenfunction with the leading eigenvalue will ultimately dominate the synaptic weight structure.

In order to conduct an eigenfunction analysis, we approximate the discrete grid of neurons by its continuous limit. The probability of a synaptic connection existing between neuron m at position (xmi, ymi) in the presynaptic layer and postsynaptic neuron i, detailed in Equation (1), becomes a synaptic density describing the expected proportion of the total number of presynaptic inputs originating from (xmi, ymi). The synaptic strength is then considered to be the average weight of synapses at this location. In the continuous limit, the learning equation in Equation (8) becomes

where neuron i in layer B is denoted by its continuous position vector x = (xip, yip) and neuron j in layer B is represented by its continuous vector, , where vector subscripts have been omitted for readability. The Cartesian coordinates have been centered on the layer C neuron. Note that A contains coefficients to normalize covariance and connection probabilities, such that A = (π(σBC)2)−2.

To characterize the system in terms of its eigenfunctions, we need to solve the eigenvalue problem for the system,

Given the circular symmetry of the spatial opponent neurons that emerge from Linsker (1986b) network, we derive the radial eigenfunctions and eigenvalues of a layer C neuron's receptive field. By identifying the eigenfunction with the largest eigenvalue, we can analytically determine the expected receptive field of the neuron, since this eigenfunction is expected to grow most rapidly and dominate development of the receptive field.

To proceed we initially set k2 to zero and later consider the more general case in which k2 is non-zero. Converting to polar coordinates, such that r and θ give the magnitude and phase of x, and transforming r to be unit-less by scaling it by , the eigenvalue problem in Equation (11) becomes

The eigenfunctions and eigenvalues for the simplified learning equation are derived in Appendix C.1. Introducing a radial decay parameter that controls the rate of decay from the center of the receptive field,

the eigenfunctions and associated eigenvalues can be expressed in polar coordinates as

where Nl, n is a normalization factor and is an associated Laguerre polynomial. Since , the normalization factor can be derived as

where the factor of 2 difference occurs for the case l = n because the integral for the angular component is over cos (0θ), a constant.

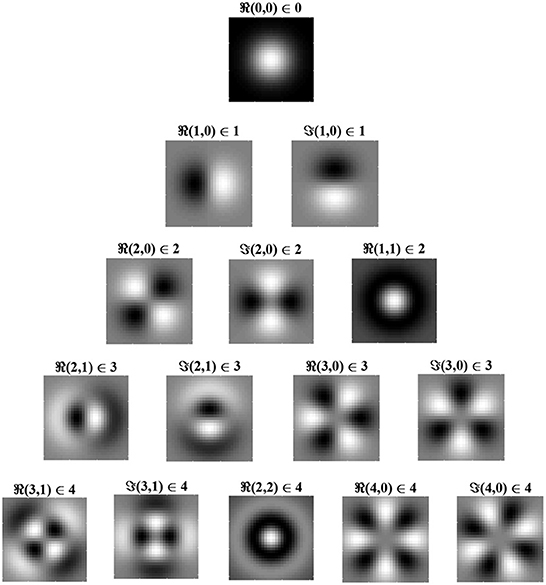

Eigenfunctions up to order 4 are shown in Figure 2 in order of decreasing eigenvalue, λ. The eigenfunctions are ordered by l + n, where n controls the shape of the Laguerre polynomial and l − n controls the angular frequency. The eigenfunction with the largest eigenvalue has order l + n = 0 and is radially symmetric with all positive synaptic weights. Consequently, for the simplified learning equation described in Equation (12) and after learning for a sufficiently long period, the synaptic weight structure of a layer C postsynaptic neuron will be all excitatory connections with weights at the upper bound.

Figure 2. Radial eigenvalues and eigenfunctions of the simplified learning equation, Equation (12), given in Equation (14) for pairs of indices, (l,n) ∈ λl,n. Eigenvalues are ordered by l + n, with l + n = 0 giving the largest eigenvalue and, hence, (0, 0) being the leading eigenfunction. Eigenfunctions in the same row have the same eigenvalue and are, therefore, degenerate. Eigenfunctions are given for both the real part of Equation (14b) (i.e., the cosine angular component) and for the imaginary part, denoted by ℜ and ℑ, respectively. From the figure, it can be seen that, when l = n, the eigenfunction is radially symmetric, being fully determined by the radial component of the eigenfunction. White indicates positive regions of synaptic weights, while black indicates negative regions. The leading eigenfunction is all positive and dominates learning. As l − n increases, the frequency of the angular component increases.

For completeness, we derive the eigenfunctions and eigenvalues of Linsker (1986b) network using Cartesian coordinates, the solution of which is a special case of that found in Wimbauer et al. (1997b). We show that a weighted sum of the Cartesian eigenfunctions produces the radial eigenfunctions, thus establishing equivalence. The derivations are given in Appendix D. For eigenvalues indexed by order u and v, for the x and y dimensions respectively, eigenfunction and eigenvalue pairs are given by

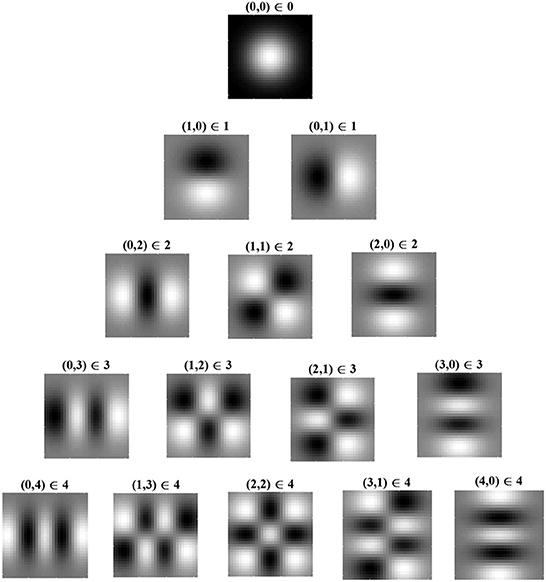

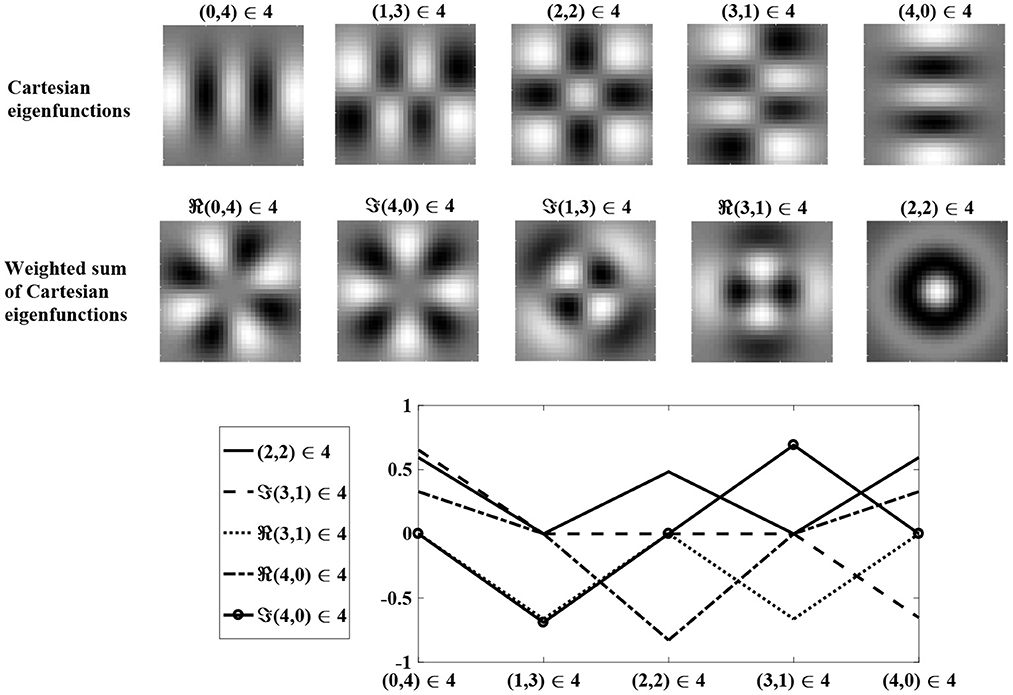

Figure 3 shows Cartesian eigenfunctions up to the fourth order, which is determined by u + v. The eigenfunctions are shown in order of decreasing eigenvalue, so that the eigenfunction with the largest eigenvalue is of order u + v = 0. This eigenfunction is radially symmetric, with all positive weights. A radial eigenfunction with a given degenerate order can be reconstructed as a weighted linear sum of Cartesian eigenfunctions of the same order (Figure 4). Consequently, the Cartesian eigenfunctions of the simplified learning equation described in Equation (61) give the same result as the radial eigenfunctions, shown in Figure 2. After sustained learning, the weight structure of a neuron in layer C will have all synapses at the upper bound.

Figure 3. Cartesian eigenfunctions of the simplified learning equation, Equation (61), defined for index pairs (u, v). Eigenvalues are determined by u + v, where (0, 0) has the largest eigenvalue and therefore is the leading eigenfunction, dominating learning. Eigenfunctions in the same row have the same eigenvalue and are, therefore, degenerate. Eigenvalues decrease with descending rows. Regions of white indicate positive synaptic weights, while black indicates negative weights. The leading eigenfunction has all positive synapse weights.

Figure 4. A weighted sum of degenerate Cartesian eigenfunctions of order u + v = 4 are used to generate radial eigenfunctions of order l + n = 4. Weights are determined using maximum likelihood regression. The top row shows degenerate Cartesian eigenfunctions of order 4. The middle row shows the weighted sum of the Cartesian eigenfunctions, reproducing the radial eigenfunctions in the bottom row of Figure 2. The bottom row shows the regression weights.

While covariance between the activity of layer B input neurons primarily drives the structure of the layer C cell, the and terms control the homeostatic equilibrium. MacKay and Miller (1990) empirically showed that the choice of can change the structure of the dominant eigenfunction, and hence the resultant receptive field of a layer C cell. As Figure 2 shows, for the simplified system, the leading eigenvalue has all synapses at the upper or the lower bound. For a negative value of , homeostasis can only be reached if some of the synapses are negative. To determine the impact of the learning constant, we find an analytical expression for the eigenfunctions of the full learning equation, Equation (11), by conducting a perturbation analysis on the simplified learning equation, in Equation (11).

The full derivation is detailed in Appendix C.2. The eigenfunctions of the first order perturbation are equal to those of the simplified equation, Equation (14). However, the eigenvalues are altered by the addition of the learning constants according to

where

and 2F1() is the hypergeometric function. As detailed in Appendix C.2, the only non-zero perturbations are where l + n is even and l = n, which happens only once for each even order degenerate eigenfunction set.

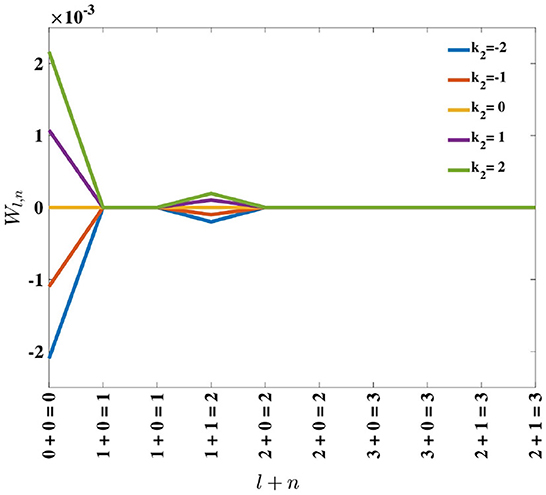

Inspection of Equation (18) reveals that, for positive k2, perturbation of the eigenvalues is positive and monotonically decreasing with l + n. Consequently, the order of the eigenvalues remains the same. For negative k2, the perturbation on the eigenvalues is negative and monotonically increasing with eigenfunction order, l + n (see Figure 5). Since these perturbations are being added to the original eigenvalues, which are positive, the result can be a change in the dominant eigenfunction. This result supports the empirical findings by MacKay and Miller (1990) who showed the emergence of a spatial opponent cell in C, where l + n = 0 for small values of k2, and bi-lobed neurons with l + n = 1, for larger values of k2.

Figure 5. Effect of adding the perturbation term, k2, on eigenvalues λl,n, represented by Wl,n in Equation (17). For positive k2, the perturbation results in Wl,n being positive, while for negative k2, the perturbation causes Wl,n to be negative.

The original network proposed by Linsker (1986b), and for which we have calculated the eigenfunctions, made an implicit assumption that neurons within each layer were evenly distributed and that receptive fields of all neurons in a layer were statistically identical, drawn from the same synaptic connectivity distribution. However, it is known that some biological neuron layers show an uneven density of cells across the lamina, and contain receptive fields with different statistical properties.

We consider the impact of changing neuron density as a function of distance to the layer center. We assume that a consequence of this is that the radius of a neuron's synaptic connectivity distribution becomes dependent on the neuron's position in the layer. That is, where neurons are spread further apart there is an increase in connectivity radius to compensate. For simplicity assume that a postsynaptic neurons's arbor is within a sufficiently small area that the presynaptic neuron connection density is parameterised by a constant radius. If we denote the spatial center of the neuron layer by c and consider this point to have location vector [0, 0], then a postsynaptic cell, located at [xic, yic], has connection density that is a function of the magnitude of its position,

Let the radius of a cell be a linear function of its radial distance to the layer center, such that

In this scenario, the probability of presynaptic neuron, m, in layer A, generating a synaptic connection to postsynaptic neuron, i, in layer B, is given by

In Appendix E, we calculate the expected number of shared inputs between two neurons in layer B. This is important to consider as shared inputs is the source of correlation between layer B neurons, which then triggers the emergence of spatial opponent neurons in Linsker (1986b) network. In the case of the synaptic connection radius increasing linearly with distance from the center of the neuron layer, the expected number of shared inputs between two layer B neurons is found to be

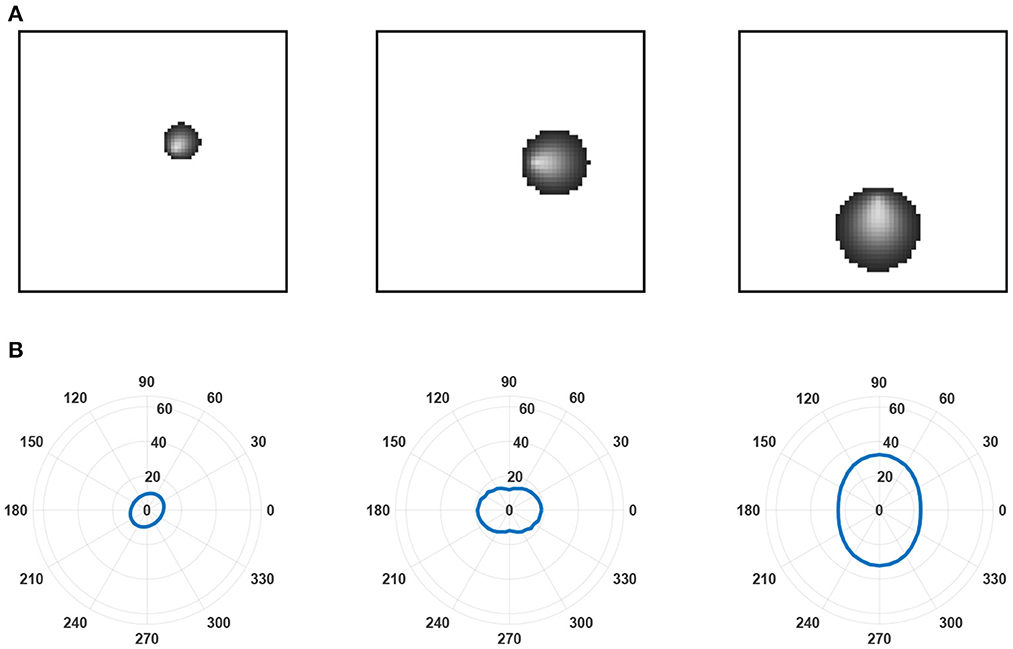

We simulated this learning equation and plotted the receptive fields of three layer C neurons in three different positions relative to a small layer of B neurons (see Figure 6). The neurons developed radial orientation tuning, with tuning curves showing an orientation directed toward the center of the lamina.

Figure 6. Examples of receptive fields and tuning curves. (A) Receptive fields predicted by the leading eigenfunction of three layer C neurons at different locations relative to the center of layer B. The layer is small relative to the receptive field size of the layer C neurons to highlight changes in receptive field size and the radial orientation selectivity of selected layer C neurons. These features emerge from a linearly increasing arbor radius in synaptic connectivity between layer A and layer B neurons. As established by Linsker (1986b), it is the overlap between the arbors of layer B neurons that prompts correlation in their activity despite only inputting unstructured noise into layer A and, subsequently, generates the structured receptive fields found in the layer C neurons. (B) Tuning curves generated for each of the layer C neurons in (A). Tuning curves were calculated for the spatial frequency prompting the largest response in the neuron.

In this paper, we provide a general expression for the complete set of eigenfunctions for the three-layer feed-forward network proposed by Linsker (1986b). Initially, the homeostatic parameters were set to zero to simplify the learning equation. This result was then extended via a perturbation analysis to provide the complete set of eigenfunctions for the network with non-zero homeostatic parameters.

Linsker (1986b) analysis was integral in revealing how neural learning occurred before the onset of structured environmental input, empirically demonstrating the emergence of spatial opponent neurons in the third layer. MacKay and Miller (1990) provided a stability analysis of Linsker (1986b) network, noting the first six eigenfunctions, determined via an ansatz based on the results of Tang (1990). MacKay and Miller (1990) showed that the receptive field structure of cells in the third layer could be either spatial opponent neuron or bi-lobed neurons, depending upon the value of the homeostatic parameters. Similarly, Walton and Bissest (1992) extended Linsker (1986b) network to the auditory system, considering the morphology of the resulting neuron based on the homeostatic parameters of the system. In this paper, we provide the complete set of eigenvalues for the full learning equation, enabling an exact calculation of the homeostatic parameters required to induce this change, and a quantitative analysis on the parameter space.

Linsker (1986a) showed that augmenting the network with additional layers prompts the development of orientation selective neurons. However, given the absence of a complete mathematical framework for the three-layer network, there has been limited analysis provided for the development of orientation selective neurons in Linsker (1986a) network (MacKay and Miller, 1990; Miller, 1990; Wimbauer et al., 1998). Yamakazi (2002) provided an analysis of deeper layers, essentially based on an ansatz for the eigenfunctions for the three-layer network. The results in this paper provide the foundation for analysis of larger networks and hence the development of features other than spatial opponent neurons. As the system is radially symmetric in connectivity distribution, radial eigenfunctions provide a natural coordinate system that will facilitate future work on more complex network and parameter regimes.

The results of this study demonstrate that relaxing the assumption of evenly distributed neurons across the layer can change the receptive fields that emerge in the third layer. Similar to the distribution in the retina, we examined a decrease in neuron density with increasing distance from the center of the layer and, consequently, an increase in synaptic connectivity radius. We analytically derived an expression for the learning equation in the third layer, as a result of a radially dependent connectivity distribution between the first and second layers. The eigenfunctions for the learning equation were empirically calculated, showing that orientation selective neurons emerge. Interestingly, the preferred orientation of the neurons was the radial orientation toward the center of the laminar. The radial bias is more pronounced for peripheral neurons, which accords with experimental results (Freeman et al., 2011).

It is well established that neural density changes as a function of eccentricity in the retina (Sjöstrand et al., 1999; Watson, 2014), and receptive field sizes of neurons in the primary visual cortex increase with stimulus eccentricity (Smith et al., 2001; Wurbs et al., 2013). Furthermore, it is known that orientation selectivity in the primary visual cortex is biased toward radial orientation, in that an orientation selective neuron in the primary visual cortex is more likely to be oriented toward the center of the retina (Rodionova et al., 2004; Sasaki et al., 2006; Antinucci and Hindges, 2018). However, the origin of radial orientation selectivity has not yet been confirmed, and hence its emergence from inhomogeneous cell density has significance as a potential mechanism. It should be noted that experimentally measured orientation bias has been shown to emerge as an artifact of visual gratings (Scholl et al., 2013). However, given that radial orientation bias has been established using a range of stimuli, such as small bars (Philips and Chakravarthy, 2017), and random dots in conjunction with reverse correlation (Mareschal et al., 2006), its presence is now well established.

The original contributions presented in the study are included in the article/Supplementary material, further inquiries can be directed to the corresponding author.

CD wrote the code and developed the idea under the supervision of AB and DG. All authors contributed to the article and approved the submitted version.

The authors acknowledge support under the Australian Research Council (ARC) Discovery Projects funding scheme (Project DP140102947). CD acknowledges support of a University of Melbourne Research Fellowship. Carlo Beenakker is acknowledged for assistance in evaluating the integral in Equation (44) in Appendix.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fncom.2022.881046/full#supplementary-material

Antinucci, P., and Hindges, R. (2018). Orientation-selective retinal circuits in vertebrates. Front. Neural Circ. 12, 11. doi: 10.3389/fncir.2018.00011

Bienenstock, E. L., Cooper, L. N., and Munro, P. W. (1982). Theory for the development of neuron selectivity: orientation specificity and binocular interaction in visual cortex. J. Neurosci. 2, 32–38. doi: 10.1523/JNEUROSCI.02-01-00032.1982

Davey, C. E., Grayden, D. B., and Burkitt, A. N. (2021). Impact of axonal delay on structure development in a multi-layered network. Neural Netw. 144, 737–754. doi: 10.1016/j.neunet.2021.08.023

Freeman, J., Brouwer, G. J., Heeger, D. J., and Merriam, E. P. (2011). Orientation decoding depends on maps, not columns. J. Neurosci. 31, 4792–4804. doi: 10.1523/JNEUROSCI.5160-10.2011

Gerstner, W., Kempter, R., van Hemmen, J. L., and Wagner, H. (1996). A neuronal learning rule for sub-millisecond temporal coding. Nature 383, 76–78. doi: 10.1038/383076a0

Goodhill, G. (2007). Contributions of theoretical modeling to the understanding of neural map development. Neuron 56, 301–311. doi: 10.1016/j.neuron.2007.09.027

Kempter, R., Gerstner, W., and van Hemmen, J. L. (1999a). Hebbian learning and spiking neurons. Phys. Rev. E 59, 4498–4514. doi: 10.1103/PhysRevE.59.4498

Kempter, R., Gerstner, W., and van Hemmen, J. L. (1999b). Spike-based compared to rate-based Hebbian learning. Adv. Neuron Inf. Process. Syst. 11, 128–131.

Linsker, R. (1986a). From basic network principles to neural architecture: emergence of orientation-selective cells. Proc. Natl. Acad. Sci. U.S.A. 83, 8390–8394. doi: 10.1073/pnas.83.21.8390

Linsker, R. (1986b). From basic network principles to neural architecture: emergence of spatial-opponent cells. Proc. Natl. Acad. Sci. U.S.A. 83, 7508–7512. doi: 10.1073/pnas.83.19.7508

MacKay, D. J., and Miller, K. D. (1990). Analysis of Linsker's application of Hebbian rules to linear networks. Network Comput. Neural Syst. 1, 257–297. doi: 10.1088/0954-898X_1_3_001

Mareschal, I., Dakin, S. C., and Bex, P. J. (2006). Dynamic properties of orientation discrimination assessed by using classification images. Proc. Natl. Acad. Sci. U.S.A. 103, 5131–5136. doi: 10.1073/pnas.0507259103

Markram, H., Lubke, J., Frotscher, M., and Sakmann, B. (1997). Regulation of synaptic efficacy by coincidence of postsynaptic aps and epsps. Science 275, 213–215. doi: 10.1126/science.275.5297.213

Miller, K. D. (1990). “Correlation-based models of neural development,” in Neuroscience and Connectionist Theory, chapter 7, eds M. A. Gluck and D. E. Rumelhart (Hillsdale), 267–352.

Philips, R. T., and Chakravarthy, V. S. (2017). A global orientation map in the primary visual cortex (V1): Could a self organizing model reveal its hidden bias? Front. Neural Circ. 10, 109. doi: 10.3389/fncir.2016.00109

Rodionova, E. I., Revishchin, A. V., and Pigarev, I. N. (2004). Distant cortical locations of the upper and lower quadrants of the visual field represented by neurons with elongated and radially oriented receptive fields. Exp. Brain Res. 158, 373–377. doi: 10.1007/s00221-004-1967-1

Sasaki, Y., Rajimehr, R., Kim, B. W., Ekstrom, L. B., Vanduffel, W., and Tootell, R. B. (2006). The radial bias: a different slant on visual orientation sensitivity in human and nonhuman primates. Neuron 51, 661–670. doi: 10.1016/j.neuron.2006.07.021

Scholl, B., Tan, A. Y., Corey, J., and Priebe, N. J. (2013). Emergence of orientation selectivity in the mammalian visual pathway. J. Neurosci. 33, 10616–10624. doi: 10.1523/JNEUROSCI.0404-13.2013

Senn, W., and Buchs, N. J. (2003). Spike-based synaptic plasticity and the emergence of direction selective simple cells: mathematical analysis. J. Comput. Neurosci. 14, 119–138. doi: 10.1023/A:1021935100586

Sjöstrand, J., Olsson, V., Popovic, Z., and Conradi, N. (1999). Quantitative estimations of foveal and extra-foveal circuitry in humans. Vision Res. 39, 2987–2998. doi: 10.1016/S0042-6989(99)00030-9

Smith, A. T., Singh, K. D., Williams, A. L., and Greenlee, M. W. (2001). Estimating receptive field size from fMRI data in human striate and extrastriate visual cortex. Cereb. Cortex 11, 1182–1190. doi: 10.1093/cercor/11.12.1182

Tang, D. S. (1990). “Information theory and early Visual information processing,” in Self-Organization, Emerging Properties, and Learning, 1st Edn (New York, NY: Plenum Press), 113–125.

Walton, L., and Bissest, D. L. (1992). “Parameterising feature sensitive cell formation in Linsker's networks in the auditory system,” in Advances in Neural Information Processing Systems (San Francisco, CA: Morgan Kaufmann Publishers), 1007–1013.

Watson, A. B. (2014). A formula for human retinal ganglion cell receptive field density as a function of visual field location. J. Vis. 14, 15. doi: 10.1167/14.7.15

Wimbauer, S., Gerstner, W., and van Hemmen, J. L. (1998). Analysis of a correlation-based model for the development of orientation-selective receptive fields in the visual cortex. Network Comput. Neural Syst. 9, 449–466. doi: 10.1088/0954-898X_9_4_004

Wimbauer, S., Wenisch, O. G., Miller, K. D., and van Hemmen, J. L. (1997a). Development of spatiotemporal receptive fields of simple cells: I. Model formulation. Biol. Cybern. 77, 453–461. doi: 10.1007/s004220050405

Wimbauer, S., Wenisch, O. G., Miller, K. D., and van Hemmen, J. L. (1997b). Development of spatiotemporal receptive fields of simple cells: II. Simulation and analysis. Biol. Cybern. 77, 463–477. doi: 10.1007/s004220050406

Wurbs, J., Mingolla, E., and Yazdanbakhsh, A. (2013). Modeling a space-variant cortical representation for apparent motion. J. Vis. 13, 1–17. doi: 10.1167/13.10.2

Keywords: neural network, rate-based neural plasticity, orientation selectivity, spatial opponent cells, neural learning, radial orientation

Citation: Davey CE, Grayden DB and Burkitt AN (2022) Emergence of radial orientation selectivity: Effect of cell density changes and eccentricity in a layered network. Front. Comput. Neurosci. 16:881046. doi: 10.3389/fncom.2022.881046

Received: 22 February 2022; Accepted: 04 November 2022;

Published: 13 December 2022.

Edited by:

Si Wu, Peking University, ChinaReviewed by:

Maria Alessandra Ragusa, University of Catania, ItalyCopyright © 2022 Davey, Grayden and Burkitt. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Catherine E. Davey, Y2F0aGVyaW5lLmRhdmV5QHVuaW1lbGIuZWR1LmF1

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.

Research integrity at Frontiers

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.