94% of researchers rate our articles as excellent or good

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.

Find out more

ORIGINAL RESEARCH article

Front. Comput. Neurosci., 15 March 2022

Volume 16 - 2022 | https://doi.org/10.3389/fncom.2022.775241

This article is part of the Research TopicSymmetry as a Guiding Principle in Artificial and Brain Neural NetworksView all 11 articles

In this article, we consider the problem of reconstructing an image that is downsampled in the space of its SE(2) wavelet transform, which is motivated by classical models of simple cell receptive fields and feature preference maps in the primary visual cortex. We prove that, whenever the problem is solvable, the reconstruction can be obtained by an elementary project and replace iterative scheme based on the reproducing kernel arising from the group structure, and show numerical results on real images.

This article introduces an iterative scheme for solving a problem of image reconstruction, motivated by the classical behavior of the primary visual cortex (V1), in the setting of group wavelet analysis. The mathematical formulation of the problem is that of the reconstruction of a function on the plane which, once represented as a function on the group SE(2) = ℝ2 ⋊ 𝕊1 of rotations and translations of the Euclidean plane via the group wavelet transform, is known only on a certain two-dimensional subset of this three-dimensional group. This problem is equivalent to that of filling in missing information related to a large subset of the SE(2) group and ultimately inquires about the completeness of the image representation provided by feature maps observed in V1.

One of the main motivations for the present study comes indeed from neuroscience and the modeling of classical receptive fields of simple cells in terms of group actions restricted to feature maps such as the orientation preference maps. The attempts to model mathematically the measured behavior of the brain's primary visual cortex (V1) have led to the introduction of the linear-nonlinear-Poisson (LNP) model (Carandini et al., 2005), which defines what is sometimes referred to as classical behavior. It describes the activity of a neuron in response to a visual stimulus as a Poisson spiking process driven by a linear operation on the visual stimulus, modeled by the receptive field of the neuron, passed through a sigmoidal nonlinearity. A series of thorough studies of single cell behavior could find a rather accurate description of the receptive fields of a large amount of V1 cells, called simple cells, within the LNP model, in terms of integrals over Gabor functions located in a given position of the visual field (Marcelja, 1980; Ringach, 2002). This description formally reduces the variability in the classical behavior of these cells to few parameters, regulating the position on the visual field, the size, the shape, and the local orientation of a two-dimensional modulated Gaussian. A slightly simplified description of a receptive field activity F in response to a visual stimulus defined by a real function f on the plane, representing light intensity, is the following: denoting by ,

where the parameters s, p ∈ ℝ+ define the local (inverse) scale and spatial frequency, the angle θ ∈ [0, 2π) defines the local direction and x ∈ ℝ2 define the local position of the receptive field in the visual field, while the complex formulation can be formally justified by the prevalence of so-called even and odd cells (Ringach, 2002). We will focus on the sole parameters x, θ. This may be considered restrictive (Hubel and Wiesel, 1962; Sarti et al., 2008; Barbieri, 2015), but it is nevertheless interesting since angles represent a relevant local feature detected by V1 (Hubel and Wiesel, 1962) whose identification has given rise to effective geometrical models of perception (Petitot and Tondut, 1999; Paul C. Bressloff, 2002; John Zweck, 2004; Citti and Sarti, 2006, 2015). We will give more details below concerning this restriction. In this case, one can then model the linear part of the classical behavior of simple cells in terms of an object that is well-studied in harmonic analysis: by rephrasing Equation (1) as

for fixed values of p, s, we obtain a SE(2) group wavelet transform of f (refer to Section 2). On the other hand, classical experiments with optical imaging have shown that not all parameters θ ∈ 𝕊1 are available for these cortical operations (Blasdel and Salama, 1986; Bonhoeffer and Grinvald, 1991; Weliky et al., 1996; Bosking et al., 1997; White and Fitzpatrick, 2007). Furthermore, with single-cell precision two-photon imaging techniques (Ohki et al., 2006), we can say with a good approximation that in many mammals, a pinwheel-shaped function Θ : ℝ2 → 𝕊1 determines the available angle at each position. This feature map can be introduced in the Model (1) by saying that the receptive fields in V1 record the data.

Within this setting, a natural question is, thus, whether this data actually contains all the sufficient information to reconstruct the original image f, and how can we reobtain f from these data. This is the main problem we aim to address. Note that one can equivalently consider the graph of the feature map Θ instead of the feature map itself: this would allow us to address the problem as that of the injectivity of the restriction of the function (Equation 2) to the subset , which is the point of view taken in Section 3.

Before proceeding, we recall that a severe limitation of the purely spatial Model (Equation 1) is that of disregarding temporal behaviors (DeAngelis et al., 1995; Cocci et al., 2012). Moreover, the classical behavior described by the LNP model is well-known to be effective only to a limited extent: several other mechanisms are present that describe a substantial amount of the neural activity in V1, such as Carandini-Heeger normalization (Carandini and Heeger, 2012), so-called non-classical behaviors (Fitzpatrick, 2000; Carandini et al., 2005), and neural connectivity (Angelucci et al., 2002). However, spatial receptive fields and the LNP provide relevant insights on the functioning of V1 (Ringach, 2002). Moreover, the ideas behind the LNP model have been a main source of inspiration in other disciplines, notably for the design of relevant mathematical and computational theories, such as wavelets and convolutional neural networks (Marr, 1980; LeCun et al., 2010). We also point out that the use of groups and invariances to describe the variability of the neural activity has proved to be a solid idea to build effective models (Citti and Sarti, 2006; Anselmi and Poggio, 2014; Petitot, 2017), whose influence on the design of artificial learning architectures is still very strong (Anselmi et al., 2019, 2020; Montobbio et al., 2019; Lafarge et al., 2021).

We would like to mention that, in addition, some other simplifications already at the level of modeling the receptive fields of V1 cells are assumed when considering (Equation 3) as the data collected by V1, i.e., by restricting the parameters of interest to only (x, θ) and by considering just one angle θ for each position x. First, in Equation (1), one performs operations x − y, meaning that both x and y are coordinates on the visual plane. On the other hand, it is known that a nontrivial retinotopic map links the cortical coordinates with the visual field (Tootell et al., 1982). Thus, in order for a cortical map Θ to be compared with actual measurements such as those of Blasdel and Salama (1986); Bonhoeffer and Grinvald (1991); Weliky et al. (1996); Bosking et al. (1997); Ohki et al. (2006); White and Fitzpatrick (2007), in Equation (3), we should not consider Θ as computed directly on the visual field coordinate x but rather compose Θ with the retinotopic map. Equation (3) is, thus, to be considered, from the strictly neural point of view, either as a local approximation or as a formulation where Θ also contains the retinotopic map. Moreover, we are assuming yet another simplification of what would be a realistic model by considering the parameters p, s in Equation (1) as fixed. More specifically, it is known that the spatial frequency p of the receptive field is itself a feature that is organized in a columnar fashion, i.e., by a cortical map (Ribot et al., 2013), which is correlated with that of orientations, and recent refined mathematical models are able to reproduce these cortical maps on the basis of the sole structure of the receptive field (Baspinar et al., 2018). Concerning the (inverse) scale parameter s, it is known (Hubel and Wiesel, 1974) that it does not only vary horizontally on the cortex, but it also changes along the transverse direction, hence providing a local analysis at more than one scale. Moreover, considering the variation of the transversal average local scale with respect to the horizontal cortical coordinates, it has been observed that, up to the scatter variations, this scale is also correlated with another cortical map, the retinotopic map (Harvey and Dumoulin, 2011). Finally, the two parameters p and s appear to be statistically correlated in the populations of simple cells whose receptive fields have been measured and fitted with the Gabor model (Ringach, 2002). If these assumptions are considered satisfactory, however, it becomes easy to reconcile a description such as that of Equation (3) with other classical ones based on the concept of tuning curves (refer to e.g., Webster and Valois, 1985. An orientation tuning curve for a given V1 cell is a measured bell-shaped curve that shows the response of the cell to different orientations. That is, it describes how a cell that has a given orientation preference θ0 actually has a nonzero activation when a visual stimulus with similar but not equally oriented stimuli are presented in its receptive field area. In this sense, each cell is not sensitive to just one orientation, in the same way as it is not sensitive to just one point in the visual space. These areas of influence in the parameter space (x, θ) for a given cell of V1 can actually be related to the structure of receptive fields and the uncertainty principle of the SE(2) group (Barbieri et al., 2014), and orientation tuning curves too can be obtained from Gabor-like receptive fields (Barbieri, 2015).

For the sake of completeness and previously unfamiliar readers, we also recall that simple cells receptive fields are sometimes modeled as scaled directional derivatives of Gaussians. This model, which can actually reproduce quite closely certain Gabor functions, was initially introduced for its theoretical interest and its neural plausibility (Webster and Valois, 1985; Young, 1985; Koenderink and van Doorn, 1987) and later used extensively in the scale space theory in computer vision (Florack et al., 1992; Lindeberg, 1994) as well as for a largely developed axiomatic theory of receptive fields (Lindeberg, 2013, 2021). A thorough discussion on the relationship between this model and that of Gabor functions can be found in (Petitot, 2017, Ch. 3).

Another motivation for studying the completeness of the data collected with Equation (3) comes from the relationship of this problem with that of sampling in reproducing kernel Hilbert spaces (RKHS). The RKHS structure, in this case, is that of the range of the group wavelet transform, and will be discussed in detail, together with the basics on the SE(2) wavelet transform, in Section 2. Reducing the number of parameters in a wavelet transform is a common operation, that one typically performs for discretization purposes (refer to e.g., Daubechies, 1992), or e.g., because it is useful to achieve square-integrability (Ali et al., 1991). The main issue is that these operations are typically constrained nontrivially in order to retain sufficient information that allows one to distinguish all interesting signals. For example, when discretizing the short-time Fourier transform to obtain a discrete Gabor Transform, the well-known density theorem (Heil, 2007) imposes a minimum density of points in phase space where the values of the continuous transform must be known in order to retain injectivity for signals of finite energy. Much is known about this class of problems in the context of sampling, from classical Shannon's theorem and uncertainty-related signal recovery results (Donoho and Stark, 1989) to general sampling results in RKHS (Fuhr et al., 2017; Grochenig et al., 2018). However, the kind of restriction implemented by feature maps does not seem to fit into these settings, even if some similarities may be found between the pinwheel-shaped orientation preference maps (Bonhoeffer and Grinvald, 1991) and Meyer's quasicrystals, which have been recently used for extending compressed sensing results (Candes et al., 2006; Matei and Meyer, 2010; Agora et al., 2019).

We remark that this article does not focus on dictionary learning techniques for image representation that may give rise to realistic receptive fields and feature maps, such as e.g., those studied in Hyvarinen and Hoyer (2001) and Miikkulainen et al. (2005). We will consider only the problem of reconstruction of the SE(2) wavelet transform from a given feature map.

The plan of the article is as follows. In Section 2, after a brief overview of the SE(2) transform and its RKHS structure, we will formalize in a precise way the problem of functional reconstruction after the restriction (Equation 3) has been performed. In Section 3, we will introduce a technique to tackle this problem, given by an iterative kernel method based on projecting the restricted SE(2) wavelet transform onto the RKHS defined by the group representation. Moreover, we will consider a discretization of the problem and, in the setting of finite dimensional vector spaces, we will give proof that the proposed iteration converges to the desired solution. Finally, in Section 4, we will show and discuss numerical results on real images.

The purpose of this section is to review the fundamental notions of harmonic analysis needed to provide a formal statement of the problem. We will focus on the group wavelet transform defined by the action on L2(ℝ2) of the group of rotations and translation of the Euclidean plane, expressed as a convolutional integral transform.

We will denote the Fourier transform of f ∈ L1(ℝ2) ∩ L2(ℝ2) by

and, as customary, we will use the same notation for its extension by density to the whole L2(ℝ2). We will also denote by * the convolution on ℝ2:

Let 𝕊1 be the abelian group of angles of the unit circle, which is isomorphic either to the one dimensional torus 𝕋 = [0, 2π) or to the group SO(2) of rotations of the plane ℝ2. The group SE(2) = ℝ2 ⋊ 𝕊1 is (refer to e.g., Sugiura, 1990, Ch. IV) the semidirect product group with elements (x, θ) ∈ ℝ2 × 𝕊1 and composition law

where . Its Haar measure, which is the (Radon) measure on the group which is invariant under group operations, is the Lebesgue measure on ℝ2 ⋊ 𝕊1.

A standard way to perform a wavelet analysis with respect to the SE(2) group on two-dimensional signals is given by the operator defined as follows.

DEFINITION 1. Let us denote by the unitary action by rotations of 𝕊1 on L2(ℝ2):

Let ψ ∈ L2(ℝ2), and denote by ψθ = R(θ)ψ. The SE(2) wavelet transform on L2(ℝ2) with the mother wavelet ψ is

In terms of this definition, we can then see that if we choose s, p ∈ ℝ+, and let be

we can write (Equation 1) as F = Wψs, pf(x, θ).

Moreover, by making use of the quasiregular representation of the SE(2) group

and denoting by , we can rewrite (Equation 4) as follows:

which is a standard form to write the so-called group wavelet transform (refer to e.g., Führ, 2005; Deitmar and Echterhoff, 2014). Note that, in the interesting case (Equation 5), we have .

The SE(2) transform (Equation 4), together with the notion of group wavelet transform, has been studied in multiple contexts (refer to e.g., Weiss and Wilson, 2001; Antoine et al., 2004; Führ, 2005; Duits et al., 2007; Deitmar and Echterhoff, 2014; Dahlke et al., 2015 and references therein), and several of its properties are well-known. In particular, if Wψf is a bounded operator from L2(ℝ2) to L2(ℝ2 × 𝕊1), which happens e.g., for ψ ∈ L1(ℝ2) ∩ L2(ℝ2) by Young's convolution inequality and the compactness of 𝕊1, it is easy to see that its adjoint reads

It is also well-known (Weiss and Wilson, 2001) that Wψf cannot be injective on the whole L2(ℝ2), i.e., we cannot retrieve an arbitrary element of L2(ℝ2) by knowing its SE(2) transform. However, as shown in Duits (2005); Duits et al. (2007), and applied successfully in a large subsequent series of works (e.g., Duits and Franken, 2010; Zhang et al., 2016; Lafarge et al., 2021), it is possible to obtain a bounded invertible transform by extending the notion of SE(2) transform to mother wavelets ψ that do not belong to L2(ℝ2), or by simplifying the problem and reduce the wavelet analysis to the space of bandlimited functions, that are those functions whose Fourier transform is supported on a bounded set, whenever a Calderón's admissibility condition holds. Since our main point in this article is not the reconstruction of the whole L2(ℝ2), we will consider the SE(2) transform with this second, simplified, approach. In this case, the image of the SE(2) transform is a reproducing kernel Hilbert subspace of L2(ℝ2 × 𝕊1) whose kernel will play an important role in the next section. For convenience, we formalize these statements with the next two theorems, and provide a sketch of the proof in the Appendix, even if they can be considered standard material.

THEOREM 2. For R > 0, let and let

The SE(2) wavelet transform (Equation 4) for a mother wavelet ψ ∈ L2(ℝ2) is a bounded injective operator from to L2(ℝ2 × 𝕊1) if and only if there exist two constants 0 < A ≤ B < ∞ such that

holds for almost every ξ ∈ BR.

Before stating the next result, we repeat the observation of Weiss and Wilson (2001) and show, using this theorem, that the SE(2) transform cannot be a bounded injective operator on the whole L2(ℝ2). Indeed, using that

we can see that the Calderón's function in condition (Equation 9) is actually a radial function

which, by Plancherel's theorem, satisfies Hence, the lower bound in condition (Equation 9) cannot be satisfied on the whole ℝ2 by any ψ ∈ L2(ℝ2).

On the other hand, for the mother wavelet (Equation 5), since , we have

From here, we can see that Cψ(ξ) > 0 for all ξ ∈ ℝ2, so, even if Cψ(ξ) → 0 as |ξ| → ∞, we have that the lower bound in Equation (9) is larger than zero for any finite R.

The next theorem shows how to construct the inverse of the SE(2) wavelet transform on bandlimited functions, and what is the structure of the closed subspace defined by its image.

THEOREM 3. Let ψ ∈ L2(ℝ2) and R > 0 be such that Equation (9) holds. Let also γ ∈ L2(ℝ2) be defined by

where , and let be as in Equation (8). Then

(i) For all , it holds .

(ii) The space is a reproducing kernel Hilbert subspace of continuous functions of L2(ℝ2 × 𝕊1), and the orthogonal projection ℙ of L2(ℝ2 × 𝕊1) onto is

Since is a Hilbert space of continuous functions, it makes sense to consider its values on the zero measure set provided by the graph of a function Θ : ℝ2 → 𝕊1, as in Equation (3). We can then provide a formal statement of the problem discussed in Section 1:

For this problem to be solvable, the graph must be a set of uniqueness for . That is, the following condition must hold:

Indeed, if this was not the case, for any nonzero that vanishes on , the function would be different from f but WψfF would coincide with Wψf on . That is, we could not solve the problem (Equation 13).

Condition (Equation 14) is in general hard to be checked, and the formal characterization of the interplay between ψ and Θ that makes it hold true is out of the scope of this article. However, in the next section, we will provide a technique for addressing (Equation 13) in a discrete setting, which will allow us to explore in Section 4, the behavior of this problem for various functions Θ inspired by the feature maps measured in V1.

In this section, we describe the discretization of the problem (Equation 13) which is used in the next section. Then, we introduce a kernel based iterative algorithm and prove its convergence to the solution whenever the solvability condition (Equation 14) holds.

The setting described in Section 2 can be discretized in a standard fashion by replacing L2(ℝ2) with ℂN×N, endowed with the usual Euclidean scalar product, circular convolution, and discrete Fourier transform (FFT), which amounts to replacing ℝ with ℤN, the integers modulo N. Explicitly, given f, ψ ∈ ℂN×N, , we have

With a uniform discretization of angles, i.e., by replacing 𝕊1 with , we obtain the following discretization of the SE(2) transform with the mother wavelet (Equation 5):

for x, ξ ∈ ℤN × ℤN and , j = 0, …, M. Thus, in particular, . Note that here, for simplicity, we have removed the normalization used in Equation (5).

This allows us to process N × N digital images while retaining the results of Theorems 2 and 3 as statements on finite frames (refer to e.g., Casazza et al., 2013) since Plancherel's theorem and Fourier convolution theorem still holds. In particular, when computing numerically the inverse of Wψ using (i), Theorem 3, one has to choose an R > 0 so that Calderón's condition (Equation 9) for

holds with some 0 < A ≤ B < ∞ for all . This is the injectivity condition on and, due to the finiteness of the space, now it can be achieved for all R, i.e., without bandlimiting. However, since this is equivalent to the frame inequalities (Equation 24), the quantity defines actually the condition number of Wψ. Thus, in order to keep stability for the inversion, the ratio cannot be arbitrarily large (refer to e.g., Duits et al., 2007; Casazza et al., 2013). Once the parameters s, p, R are chosen in such a way that this ratio provides an acceptable numerical accuracy, one can then compute the projection ℙ given by (ii), Theorem 3, on F ∈ ℂN×N×M, by making use of Fourier convolution theorem:

We note at this point that this standard discretization, in general (for M different from 2 or 4), retains all of the approaches of Section 2 but the overall semidirect group structure of ℝ2 ⋊ 𝕊1.

Let us now consider the discretization of the problem (Equation 13) and denote the graph of Θ : ℤN × ℤN → ℤM by = {(x, j) ∈ ℤN × ℤN × ℤM : j = Θ(x)}. If we denote by 𝕆Θ the selection operator that sets to zero all the components of an F ∈ ℂN×N×M that do not belong to , i.e.,

We can see that this is now an orthogonal projection of ℂN×N×M. Hence, problem (Equation 13) can be rewritten in the present discrete setting as follows: given , find F ∈ ℂN×N×M that solves the linear problem

The meaning of Equation (17) is indeed to recover Wψf, and hence f, knowing only the values Wψf(x, Θ(x)).

We propose to look for such a solution using the following iteration in ℂN×N×M: for F0 = 𝕆Θ Wψf, compute

The idea behind this iteration is elementary: we start with the values of Wψf selected by Θ, we project them on the RKHS defined by the image of Wψ, and we replace the values on of the result with the known values of Wψf. The convergence of this iteration is discussed in the next section. Before that, we observe that Equation (18) can be seen as a linearized version of the Wilson, Cowan, and Ermentrout equation (Wilson and Cowan, 1972; Ermentrout and Cowan, 1980). Indeed, denoting by K = (1 − 𝕆Θ)ℙ, Equation (18) can be seen as a forward Euler scheme (time discretization) for the vector valued ordinary differential equation.

Apart from the absence of a sigmoid, this is indeed a classical model of population dynamics. Here, the “kernel” K is not just the reproducing kernel ℙ, but it also contains the projection on a feature map 𝕆Θ. Returning to the model of V1, here, the forcing term F0 is the data collected by simple cells, while the stationary solution F, if it exists, is the full group representation of the visual stimulus defined as the solution to the Volterra-type equation F = KF + F0.

We show here that, whenever the problem (Equation 17) is solvable, the iteration (Equation 18) converges to its solution. Since the argument is general, we will consider in this subsection the setting of an arbitrary finite dimensional vector space V endowed with a scalar product and the induced norm, and two arbitrary orthogonal projections P, Q. For an orthogonal projection P, we will denote by P⊥ = 1 − P the complementary orthogonal projection. We will also denote by Ran the range, or image, and by Ker the kernel, of a matrix.

We start with a basic observation, which is just a restatement of the solvability condition (Equation 14) as that of a linear system defined by an orthogonal projection, in this case, Q, on a subspace, in this case, characterized as Ran(P). The simple proof is included for convenience, and it can be found in the Appendix.

LEMMA 4. Let P, Q be orthogonal projections of a finite dimensional vector space V. The system

has a unique solution in V for any if and only if

The problem posed by the system (Equation 19) is a problem of linear algebra: if we know that a vector F belongs to a given subspace Ran(P)⊂V, and we know the projection of F on a different subspace Ran(𝕆)⊂V, can we recover F? The next theorem shows that, if the system (Equation 19) has a unique solution, such a solution can be obtained by the project and replace iteration (Equation 18). Its proof is given in the Appendix.

THEOREM 5. Let V be finite dimensional vector space, and let P, Q be orthogonal projections of V. Given , set , and let {Fn}n∈ℕ, {Hn}n∈ℕ ⊂ V be the sequences defined by the project and replace the iteration.

If condition (Equation 20) holds, then

and the errors , decay exponentially with the number of iterations n.

We present in this study the reconstruction results of the project and replace iteration on the restriction to feature maps of the SE(2) transform of real images. We have chosen eight 512 × 512 pixels, 8-bit grayscale digital images , which are shown in Figure 1 together with their Fourier spectra. Note that, for processing, they have been bandlimited in order to formally maintain the structure described in Section 2. However, this bandlimiting has minimal effects, not visible to the eye, since the spectra have a strong decay: for this reason, the bandlimited images are not shown.

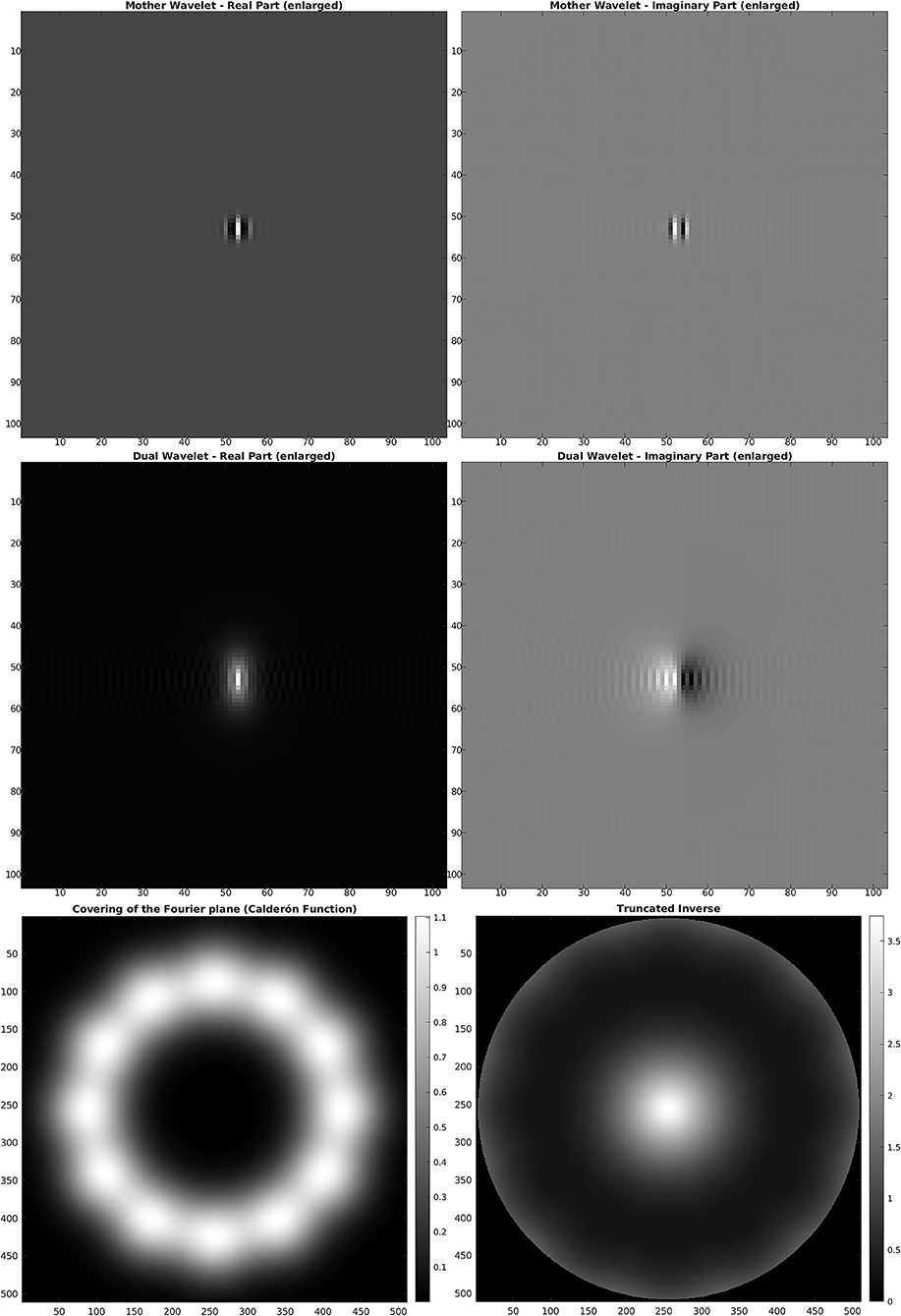

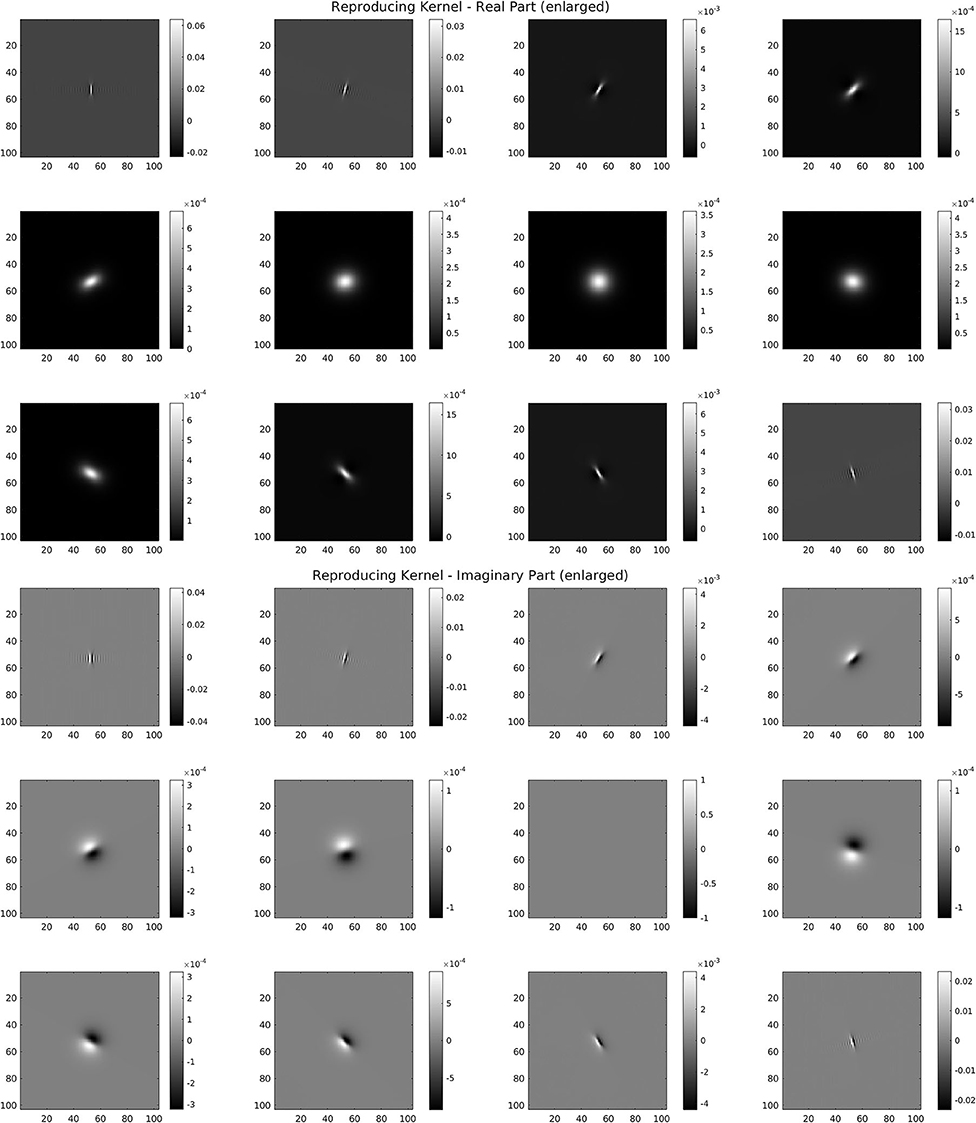

For the discrete SE(2) transform, we have chosen a discretization of 𝕊1 with 12 angles, so that, with respect to the notation of the previous section, we have N = 512 and M = 12. We have also chosen the parameter values s = 51, p = 170, R = 252. The mother wavelet ψ and dual wavelet γ produced by these parameters are shown in Figure 2, top and center, on a crop of the full 512 × 512 space for better visualization. We stress that the stability of the transform and the numerical accuracy of the projection (Equation 16) depend only on the behavior of the Calderón's function, while the accuracy of image representation under bandlimiting with radius R depends on the decay of the spectra of the images. The Calderón's function Cψ, computed as in Equation (15), is shown in Figure 2, bottom left: the chosen parameters define a ratio B/A ≈ 6 · 103, corresponding to a condition number for Wψ of less than 102. In Figure 2, bottom right, we have shown in log10 scale the multiplier χBR/Cψ that defines the dual wavelet γ as in Equation (11), and in particular, we can see the bandlimiting radius, to be compared with the spectra of Figure 1. For visualization purposes, in Figure 3, we have shown in spatial coordinates the integral kernel defining the projection (Equation 16), which is the reproducing kernel for the discrete SE(2) transform. Its real and imaginary parts are shown on the same crop used to display the wavelet of Figure 2.

Figure 2. Top: mother wavelet ψ. Center: dual wavelet γ. Bottom, left: Calderón's function. Bottom, right: inverse of the Calderón's function in log10 scale, bandlimited with R = 252.

Figure 3. Reproducing kernel for the wavelet of Figure 2. Top: real part for the 12 angles. Bottom: imaginary part for the 12 angles.

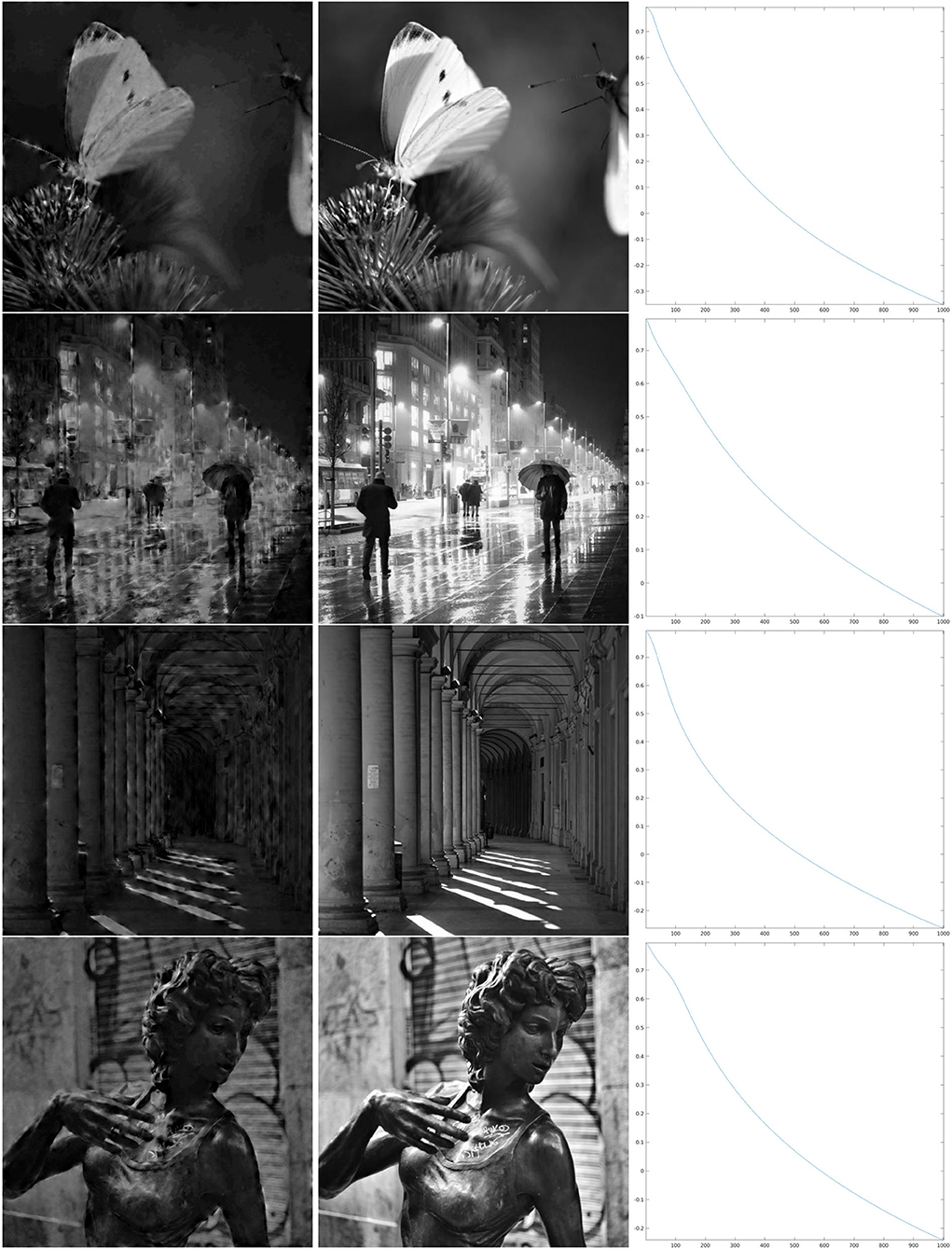

We have implemented iteration (Equation 18) for the restriction of this discrete SE(2) transform to different types of feature maps Θ, shown in Figure 4. We will comment below each one of these cases. We have chosen to illustrate the effect of that iteration as follows. We have computed the sequence as in Equation (21), for a number ν of iterations, and applied the inverse SE(2) transform to each Hn. This allows us to obtain real images that are directly comparable with the original ones. We then have shown , representing the first image that can be directly retrieved from the feature parameters, and , that is the image obtained when we stopped the iteration. Moreover, as a measure of reconstruction error, we have considered the following rescaling of the Euclidean norm, at each step n ∈ {1, …, ν}:

This adimensional quantity measures a % error obtained as the average square difference by pixel of an image fi in the dataset from its n-steps reconstruction , divided by the size of the admissible pixel range for 8 bit images, which is {0, …, 255}.

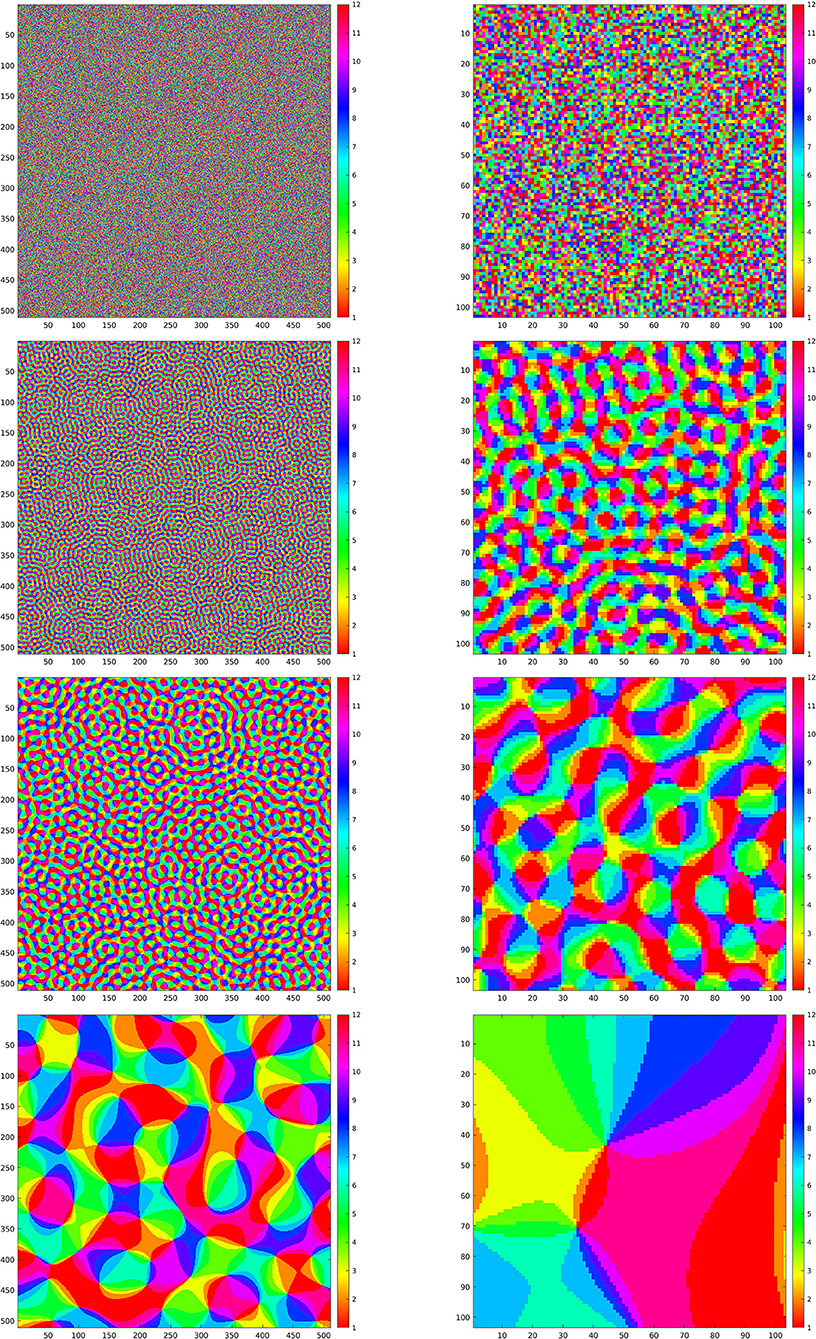

Figure 4. Left column: maps Θ for the simulations in Figures 5–8. Right column: enlargements of the same maps. First line: purely random Θ. Second, third, and fourth line: maps Θρ generated according to Equation (23) with ρ respectively given by ρ = 0.8, ρ = 0.4, and ρ = 0.06.

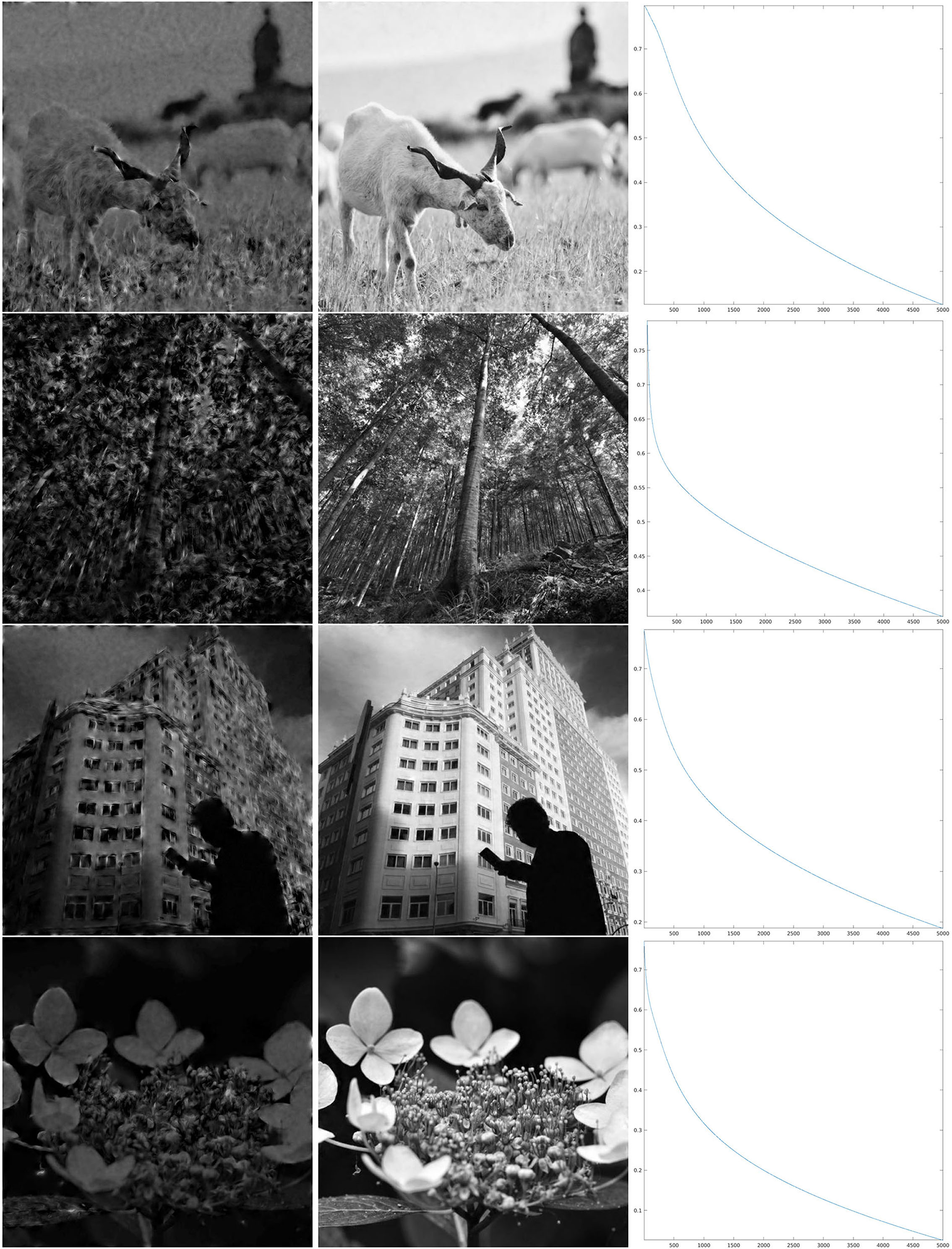

The first map that we have considered is a Θ : ℤN × ℤN → ℤM that, for each x ∈ ℤN × ℤN, simply chooses one value in ℤM as a uniformly distributed random variable. This map is shown in the first line of Figure 4, left, and in the first line of Figure 4, right, we have shown an enlargement to the same crop at which the wavelets in Figure 2 and the reproducing kernel in Figure 3 are shown. In Figure 5, we have shown the images resulting from and , and the evolution of the error (Equation 22) in log10 scale, respectively in the left, center, and right column, for the first four images of Figure 1. In this case, we can see that the error Δn goes beyond 1%, indicated by 0 on the y-axis, in just about 500 iterations. As a remark, feature maps that are similar to such configurations are commonly encountered in rodents (refer to e.g., Ho et al., 2021 and references therein).

Figure 5. Iteration on the images 1–4 of Figure 1 for the purely random Θ shown in the first line of Figure 4. Left: The first step of the iteration. Center: after 1,000 iterations. Right: log10(Δn), for the error (Equation 22).

Next, we present the results for three selection maps Θ that are pinwheel-shaped, as it is commonly observed for orientation and direction preference maps of V1 in primates and other mammals. These maps can be constructed as follows Petitot (2017): for ρ ∈ ℝ+, let ϕρ : ℤN × ℤN → ℂ be given by

where Γ is a purely random process with values in [0, 2π). The maps Θρ : ℤN × ℤN → ℤM that we have considered are obtained by

where angle(z) is the phase of a complex number z ∈ ℂ, and ⌊t⌋ is the integer part of a real number t. The resulting maps are quasiperiodic, with a characteristic correlation length that corresponds to the fact that the spectrum of ϕρ is concentrated on a ring of radius . The main feature of those maps Θρ is that they possess points, called pinwheel points, around which all angles are mapped, and these points are spaced, on average, by a distance of 2π/ρ (refer to e.g., Petitot, 2017 and references therein).

In the second, third, and fourth line of Figure 4, left, we have shown the resulting maps Θρ with ρ, respectively, given by ρ = 0.8, ρ = 0.4, and ρ = 0.06. On the right column, we have shown an enlargement to the same crop used before.

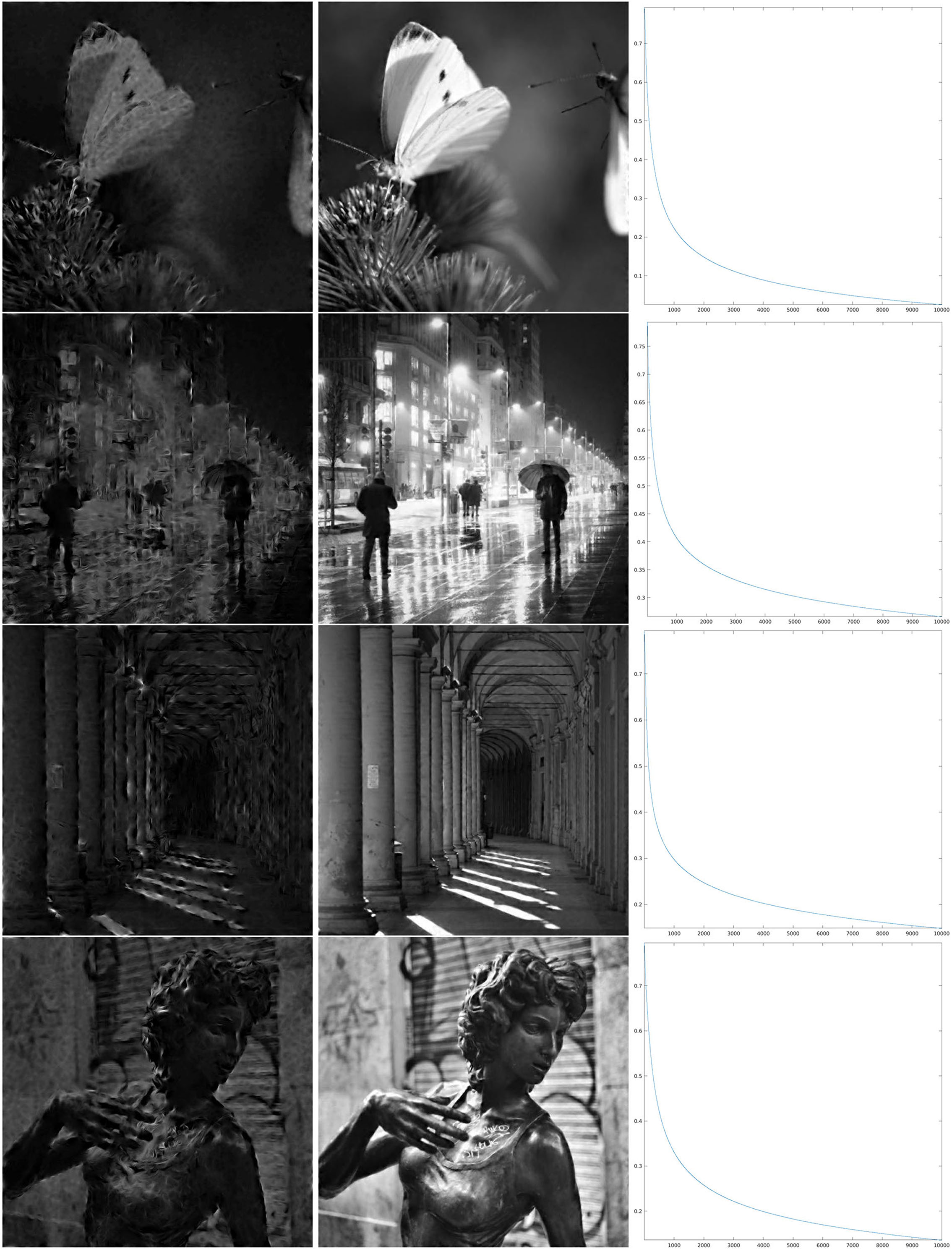

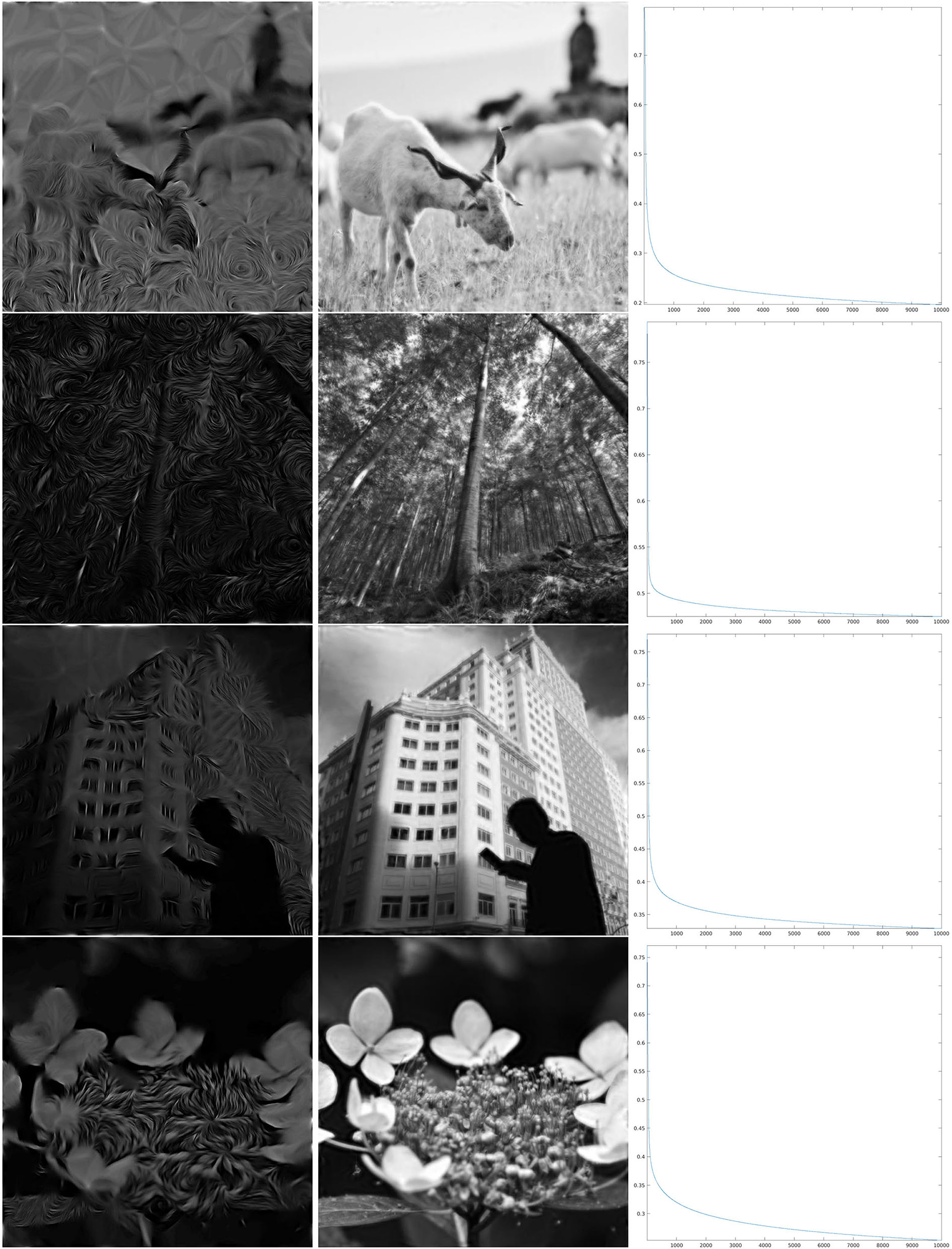

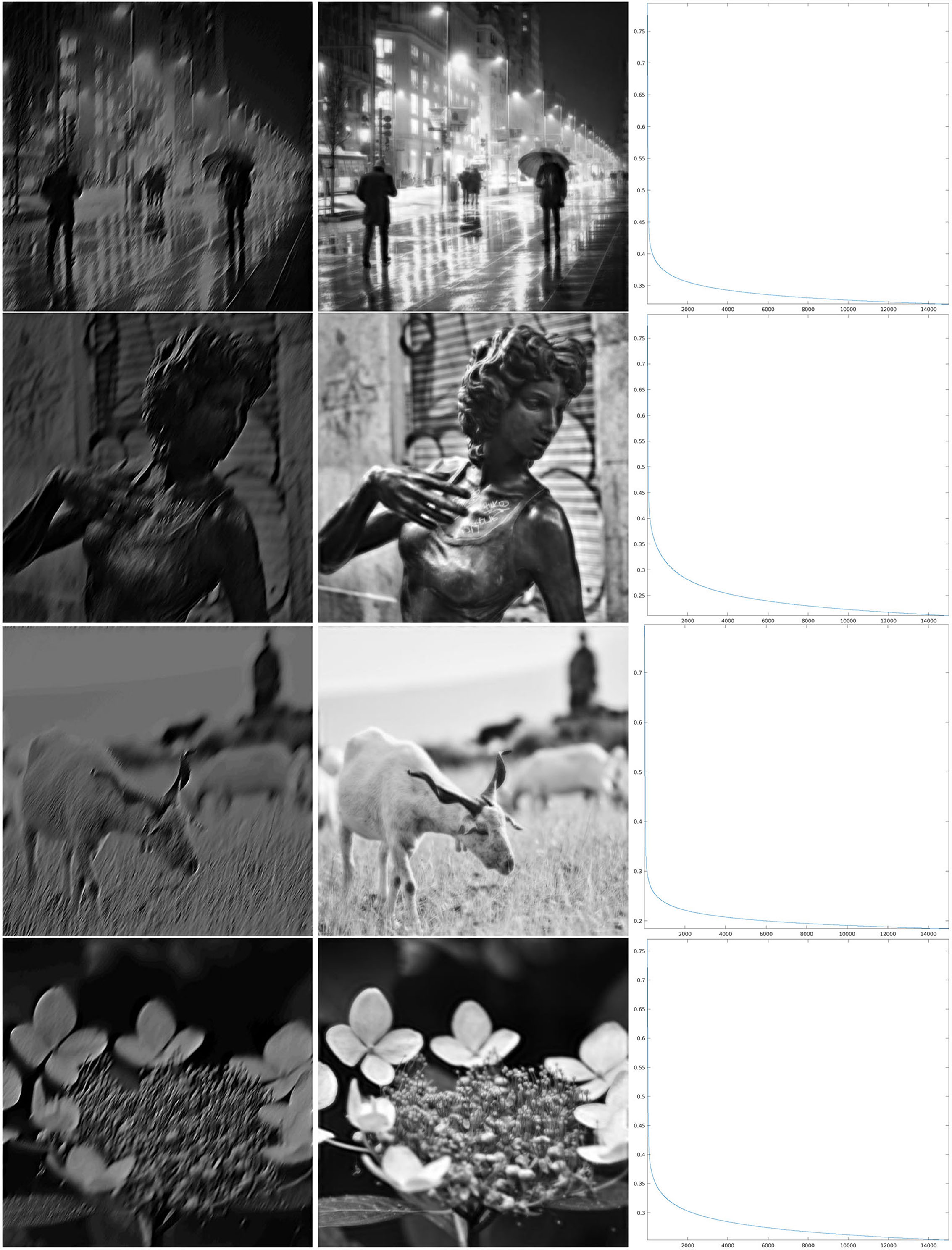

The results of the iteration are presented, as described above, in Figures 6–8, whose structure is the same as in Figure 5 with the exception of the number of iterations, which is now larger.

Figure 6. Iteration on the images 5–8 of Figure 1 for Θ0.8 shown in the second line of Figure 4. Left: The first step of the iteration. Center: after 5,000 iterations. Right: log10(Δn), for the error (Equation 22).

In order to discuss these results, we first recall that the correlation length of orientation preference maps has been often related to the size of receptive fields, as a “coverage” constraint to obtain a faithful representation of the visual stimulus (refer to Swindale, 1991; Swindale et al., 2000; Bosking et al., 2002; Keil and Wolf, 2011; Barbieri et al., 2014 and references therein). However, neither mathematical proof of this principle in terms of image reconstruction has been given so far, nor has the word size received a more quantitative meaning within the models of type (Equation 1), and we have not tried to give any more specific meaning either. However, for the three cases that we present, by comparing the crops of the maps Θρ in Figure 4 with the wavelet ψ in Figure 2, we can see that for ρ = 0.8, the correlation length of the map Θρ is approximately similar to what we could call effective support of the receptive field, while for ρ = 0.4, we have that the area of influence of the receptive field does not include two different pinwheel points, and for ρ = 0.06, the two scales are very different. Heuristically, one could then be led to think that the reconstruction properties in the three cases may present qualitative differences. For example, that condition (Equation 14), or its discrete counterpart (Equation 20), may hold in the first case and may not hold in the third case.

As can be seen from the numerical results of the proposed algorithm, there is actually a difference in the behavior of the decay. For larger values of the parameter ρ, when the map Θρ is more similar to the purely random selection described above, the decay of the error is faster, while for smaller values of ρ the decay is slower, but nevertheless, the error appears to be monotonically decreasing. In the presented cases, for ρ = 0.8, we can see in the right column of Figure 6 that in about 2,000 iterations the error decay appears to enter an exponential regime, which is rectilinear in the log10 scale. We see in Figure 7 that it takes roughly twice as many iterations for ρ = 0.4 to enter the same regime. On the other hand, for ρ = 0.06, we can see in Figure 8 that after a relatively small number of iterations the decay becomes very small and does not seem to become exponential even after 10,000 iterations. However, visual inspection of the results (which “measures” the error in a different way than the Euclidean norm) in this last case, show that the starting image appears to be qualitatively highly corrupted, while the image obtained after the iteration was stopped is remarkably true to the original one and does not display evident artifacts away from the boundaries.

Figure 7. Iteration on the images 1–4 of Figure 1 for Θ0.4 shown in the third line of Figure 4. Left: The first step of the iteration. Center: after 10,000 iterations. Right: log10(Δn), for the error (Equation 22).

Figure 8. Iteration on the images 5–8 of Figure 1 for Θ0.06, shown in the fourth line of Figure 4. Left: The first step of the iteration. Center: after 10,000 iterations. Right: log10(Δn), for the error (Equation 22).

The surprisingly good performance in the reconstruction problem for the last map Θ0.06 has motivated a performance test for an additional feature selection map, given by a function Θ that is independent of x, hence selecting just one angle in the SE(2) transform. In the same fashion as seeing the purely random distribution as a limiting case for large ρ of the pinwheel-shaped maps, this can be considered as a limiting case for small ρ. However, keeping only the values of Wψf for a single angle θ concretely corresponds to performing a convolution with one function ψθ, and aiming to reconstruct f is actually a deconvolution problem. In this case, the frequency behavior of the convolution filter is that of a Gaussian centered away from the origin, and its shape can be observed in the Calderón's function of Figure 2, which clearly shows the sum of 12 such Gaussians. We have shown the reconstruction results for this problem, using now 15,000 iterations, in Figure 9. The error decay seems to share, qualitatively, much of the same properties of the case ρ = 0.06.

Figure 9. Iteration for the SE(2) deconvolution by ψ on images of Figure 1. Left: The first step of the iteration. Center: after 15,000 iterations. Right: log10(Δn), for the error (Equation 22).

In this article, we have proposed an elementary iterative technique to address the problem of the reconstruction of images from a fixed reduced set of values of its SE(2) group wavelet transform. We have formally defined these restrictions in terms of cortical maps, as this problem is inspired by visual perception, since the SE(2) symmetry and the associated integral transform have proved to be relevant for mathematical modeling of V1, refer to e.g., (Petitot and Tondut, 1999; Paul C. Bressloff, 2002; John Zweck, 2004; Citti and Sarti, 2006, 2015). Moreover, the presented numerical simulations directly compare with the studies on the relationship between cortical maps and the efficiency of single cell encoding of information in terms of coverage (Swindale, 1991; Swindale et al., 2000; Bosking et al., 2002; Keil and Wolf, 2011; Barbieri et al., 2014). Indeed, as a result of the main theorem, and for cortical maps as formally defined in this article, we can see from the proposed implementation that, when a pinwheel structure is chosen, the relationship between the average distance between pinwheel centers and the size of receptive fields influences the invertibility of the restricted SE(2) transform.

A possible interpretation of the proposed iteration with a kernel defined by the SE(2) group as a neural computation in V1 comes from the modeling of the neural connectivity as a kernel operation (Wilson and Cowan, 1972; Ermentrout and Cowan, 1980; Citti and Sarti, 2015; Montobbio et al., 2018), especially if considered in the framework of a neural system that aims to learn group invariant representations of visual stimuli (Anselmi and Poggio, 2014; Anselmi et al., 2020). A direct comparison of the proposed technique with kernel techniques recently introduced with radically different purposes in Montobbio et al. (2018) and Montobbio et al. (2019) shows, however, two main differences at the level of the kernel that is used: here, we need the dual wavelet to build the projection kernel, and the iteration kernel effectively contains the feature maps. On the other hand, a possible application is the inclusion of a solvability condition such as Equation (14) as iterative steps within learning frameworks such as those of Anselmi et al. (2019) and Anselmi et al. (2020). From the point of view of actual neural implementation, even if it was possible to see a formal analogy between the proposed algorithm and a neural field equation, we believe that more has to be done concerning the performances and that the proposed mechanism has to be considered as a much more elementary procedure than the ones that could take place in a real visual cortex. For example, while the proposed iteration is faster than typical convex optimization implementations used for other completion problems, it still requires a large number of iterations even in the exponential regime. Improvements could be searched in several directions, e.g., by including non-classical behaviors in neural modeling.

We would like to observe also that, since the proof of convergence of this technique is general, it could be applied to other problems with a similar structure. The computational cost essentially relies on the availability of efficient methods to implement the two projections that define the problem in the discrete setting, as it happens to be the case for the setting studied in this article. In particular, similar arguments could be applied to other wavelet transforms based on semidirect product groups ℝd ⋊ G, with G a subgroup of GLd(ℝ) that defines what is sometimes referred to as local features, and to sampling projections obtained for example, but not only, from other types of feature maps Θ : ℝd → G. From the dimensional point of view, in the discrete setting the selection of local features with a feature map can be seen as downsampling that allows one to maintain in the transformed space the same dimension of the input vector. This is often a desirable property and it is already commonly realized e.g., by the MRA decomposition algorithm of classical wavelets or by the pooling operation in neural networks. Moreover, its apparent stability of convergence seems to suggest that this operation can be performed a priori, without the need for a previous study of the solvability of the problem.

Several questions remain open after this study. Probably the most fundamental one is the characterization of those maps Θ that, for a given mother wavelet ψ, satisfy the solvability condition (Equation 14). In terms of the study of the convergence of the project and replace iteration, it is plausible that one could obtain convergence under weaker conditions than Equation (20), even if maybe to a different solution, as it appears to happen in some of the numerical simulations presented.

The original contributions presented in the study are included in the article/supplementary material, further inquiries can be directed to the corresponding author.

The author confirms being the sole contributor of this work and has approved it for publication.

This project has received funding from the European Union's Horizon 2020 Research and Innovation Programme under the Marie Skłodowska-Curie grant agreement No 777822, and from Grant PID2019-105599GB-I00, Ministerio de Ciencia e Innovación, Spain.

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The author would like to thank G. Citti, A. Sarti, E. Hernández, J. Antezana, and F. Anselmi for inspiring discussion on topics related to the present study.

Agora, E., Antezana, J., Cabrelli, C., and Matei, B. (2019). Existence of quasicrystals and universal stable sampling and interpolation in lca groups. Trans. Amer. Math. Soc. 372, 4647–4674. doi: 10.1090/tran/7723

Ali, S. T., Antoine, J. P., and Gazeau, J. P. (1991). Square integrability of group representations on homogeneous spaces. i. reproducing triples and frames. Annales de l'I. H. P. A 55, 829–855.

Ali, S. T., Antoine, J. P., and Gazeau, J. P. (1993). Continuous frames in hilbert space. Ann. Phys. 222, 1–37. doi: 10.1006/aphy.1993.1016

Angelucci, A., Levitt, J. B., Watson, E. J. S., Hupeé, J. M., Bullier, J., and Lund, J. S. (2002). Circuits for local and global signal integration in primary visual cortex. J. Neurosci. 22, 8633–8646. doi: 10.1523/JNEUROSCI.22-19-08633.2002

Anselmi, F., Evangelopoulos, G., Rosasco, L., and Poggio, T. (2019). Symmetry-adapted representation learning. Pattern Recognit. 86, 201–208. doi: 10.1016/j.patcog.2018.07.025

Anselmi, F., Patel, A., and Rosasco, L. (2020). Neurally plausible mechanisms for learning selective and invariant representations. J. Math. Neurosc. 10, 12. doi: 10.1186/s13408-020-00088-7

Anselmi, F., and Poggio, T. A. (2014). Representation learning in sensory cortex: a theory. CBMM Memo Series 026.

Antoine, J. P., Murenzi, R., Vandergheynst, P., and Ali, S. T. (2004). Two-Dimensional Wavelets and their Relatives. Cambridge: Cambridge University Press.

Barbieri, D. (2015). Geometry and dimensionality reduction of feature spaces in primary visual cortex. Proc. SPIE Wavelets Sparsity XVI 9597, 95970J. doi: 10.1117/12.2187026

Barbieri, D., Citti, G., and Sarti, A. (2014). How uncertainty bounds the shape index of simple cells. J. Math. Neurosc. 4, 5. doi: 10.1186/2190-8567-4-5

Baspinar, E., Citti, G., and Sarti, A. (2018). A geometric model of multi-scale orientation preference maps via gabor functions. J. Math. Imaging Vis. 60, 900–912. doi: 10.1007/s10851-018-0803-3

Blasdel, G. G., and Salama, G. (1986). Voltage-sensitive dyes reveal a modular organization in monkey striate cortex. Nature 321, 579–585. doi: 10.1038/321579a0

Bonhoeffer, T., and Grinvald, A. (1991). Iso-orientation domains in cat visual cortex are arranged in pinwheel-like patterns. Nature 353, 429–431. doi: 10.1038/353429a0

Bosking, W. H., Crowley, J. C., and Fitzpatrick, D. (2002). Spatial coding of position and orientation in primary visual cortex. Nat. Neurosci. 5, 874–882. doi: 10.1038/nn908

Bosking, W. H., Zhang, Y., Schofield, B., and Fitzpatrick, D. (1997). Orientation selectivity and the arrangement of horizontal connections in tree shrew striate cortex. J. Neurosci. 17, 2112–2127. doi: 10.1523/JNEUROSCI.17-06-02112.1997

Candes, E. J., Romberg, J., and Tao, T. (2006). Robust uncertainty principles: exact signal reconstruction from highly incomplete frequency information. IEEE Trans. Inf. Theory 52, 489–509. doi: 10.1109/TIT.2005.862083

Carandini, M., Demb, J. B., Mante, V., Tolhurst, D. J., Dan, Y., Olshausen, B. A., et al. (2005). Do we know what the early visual system does? J. Neurosci. 25, 10577–10597. doi: 10.1523/JNEUROSCI.3726-05.2005

Carandini, M., and Heeger, D. J. (2012). Normalization as a canonical neural computation. Nat. Rev. Neurosci. 13, 51–62. doi: 10.1038/nrn3136

Citti, G., and Sarti, A. (2006). A cortical based model of perceptual completion in the roto-translation space. J. Math. Imag. Vis. 24, 307–326. doi: 10.1007/s10851-005-3630-2

Citti, G., and Sarti, A. (2015). The constitution of visual perceptual units in the functional architecture of v1. J. Comput. Neurosci. 38, 285–300. doi: 10.1007/s10827-014-0540-6

Cocci, G., Barbieri, D., and Sarti, A. (2012). Spatiotemporal receptive fields of cells in v1 are optimally shaped for stimulus velocity estimation. J. Opt. Soc. Am. A 29, 130–138. doi: 10.1364/JOSAA.29.000130

Dahlke, S., Mari, F. D., Grohs, P., and Labate, D. (2015). Harmonic and Applied Analysis. From Groups to Signals. Basel: Birkhäuser.

Daubechies, I. (1992). Ten Lectures on Wavelets. Philadelphia, PA: Siam Society for Industrial and Applied Mathematics.

DeAngelis, G. C., Ohzawa, I., and Freeman, R. D. (1995). Receptive-field dynamics in the central visual pathways. Trends Neurosci. 18, 451–458. doi: 10.1016/0166-2236(95)94496-R

Deitmar, A., and Echterhoff, S. (2014). Principles of Harmonic Analysis, 2nd Edn. Springer International Publishing Switzerland.

Donoho, D. L., and Stark, P. B. (1989). Uncertainty principles and signal recovery. SIAM J. Appl. Math. 49, 906–931. doi: 10.1137/0149053

Duits, R. (2005). Perceptual organization in image analysis (Ph.D. thesis). Eindhoven University of Technology, Department of Biomedical Engineering, Netherlands.

Duits, R., Felsberg, M., Granlund, G., and Romeny, B. T. H. (2007). Image analysis and reconstruction using a wavelet transform constructed from a reducible representation of the euclidean motion group. Int. J. Comput. Vis. 72, 79–102. doi: 10.1007/s11263-006-8894-5

Duits, R., and Franken, E. (2010). Left-invariant parabolic evolutions on se(2) and contour enhancement via invertible orientation scores part i: linear left-invariant diffusion equations on se(2). Quart. Appl. Math. 68, 255–292. doi: 10.1090/S0033-569X-10-01172-0

Ermentrout, G. B., and Cowan, J. D. (1980). Large scale spatially organized activity in neural nets. SIAM J. Appl. Math. 38, 1–21. doi: 10.1137/0138001

Fitzpatrick, D. (2000). Seeing beyond the receptive field in primary visual cortex. Curr. Opin. Neurobiol. 10, 438–443. doi: 10.1016/S0959-4388(00)00113-6

Florack, L. M., ter Haar Romeny, B. M., Koenderink, J. J., and Viergever, M. A. (1992). Scale and the differential structure of images. Image Vis. Comput. 10, 376–388. doi: 10.1016/0262-8856(92)90024-W

Führ, H. (2005). Abstract Harmonic Analysis of Continuous Wavelet Transforms. Berlin; Heidelberg: Springer-Verlag.

Fuhr, H., Grochenig, K., Haimi, A., Klotz, A., and Romero, J. L. (2017). Density of sampling and interpolation in reproducing kernel hilbert spaces. J. Lond. Math. Soc. 96, 663–686. doi: 10.1112/jlms.12083

Grochenig, K., Romero, J. L., and Stockler, J. (2018). Sampling theorems for shift-invariant spaces, gabor frames, and totally positive functions. Invent. Math. 211, 1119–1148. doi: 10.1007/s00222-017-0760-2

Harvey, B. M., and Dumoulin, S. O. (2011). The relationship between cortical magnification factor and population receptive field size in human visual cortex: constancies in cortical architecture. J. Neurosci. 31, 13604–13612. doi: 10.1523/JNEUROSCI.2572-11.2011

Heil, C. (2007). History and evolution of the density theorem for gabor frames. J. Fourier. Anal. Appl. 13, 113–166. doi: 10.1007/s00041-006-6073-2

Ho, C. L. A., Zimmermann, R., Florez Weidinger, J. D., Prsa, M., Schottdorf, M., Merlin, S., et al. (2021). Orientation preference maps in microcebus murinus reveal size-invariant design principles in primate visual cortex. Curr. Biol. 31, 733.e7–741.e7. doi: 10.1016/j.cub.2020.11.027

Hubel, D. H., and Wiesel, T. N. (1962). Receptive fields, binocular interaction and functional architecture in the cat's visual cortex. J. Physiol. 160, 106–154. doi: 10.1113/jphysiol.1962.sp006837

Hubel, D. H., and Wiesel, T. N. (1974). Uniformity of monkey striate cortex. A parallel relationship between field size, scatter and magnification factor. J. Comp. Neurol. 158, 295–306. doi: 10.1002/cne.901580305

Hyvarinen, A., and Hoyer, P. O. (2001). Topographic independent component analysis as a model of v1 organization and receptive fields. Neurocomputing 38–40, 1307–1315. doi: 10.1016/S0925-2312(01)00490-8

John Zweck, L. R. W. (2004). Euclidean group invariant computation of stochastic completion fields using shiftable-twistable functions. J. Math. Imaging Vis. 21, 135–154. doi: 10.1023/B:JMIV.0000035179.47895.bc

Keil, W., and Wolf, F. (2011). Coverage, continuity, and visual cortical architecture. Neural Syst. Circ. 1, 17. doi: 10.1186/2042-1001-1-17

Koenderink, J., and van Doorn, A. (1987). Representation of local geometry in the visual system. Biol. Cybern. 55, 367–375. doi: 10.1007/BF00318371

Lafarge, M. W., Bekkers, E. J., Pluim, J. P., Duits, R., and Veta, M. (2021). Roto-translation equivariant convolutional networks: application to histopathology image analysis. Med. Image Anal. 68, 101849. doi: 10.1016/j.media.2020.101849

LeCun, Y., Kavukcuoglu, K., and Farabet, C. (2010). “Convolutional networks and applications in vision,” in Proceedings of 2010 IEEE International Symposium on Circuits and Systems (Paris: IEEE), 253–256.

Lindeberg, T. (1994). Scale Space Theory in Computer Vision. Dordrecht: Springer Science+Business Media.

Lindeberg, T. (2013). A computational theory of visual receptive fields. Biol. Cybern. 107, 589–635. doi: 10.1007/s00422-013-0569-z

Lindeberg, T. (2021). Normative theory of visual receptive fields. Heliyon 7:e05897. doi: 10.1016/j.heliyon.2021.e05897

Marcelja, S. (1980). Mathematical description of the responses of simple cortical cells. J. Opt. Soc. Am. 70, 1297–1300. doi: 10.1364/JOSA.70.001297

Matei, B., and Meyer, Y. (2010). Simple quasicrystals are sets of stable sampling. Complex Var. Elliptic Equ. 55, 947–964. doi: 10.1080/17476930903394689

Miikkulainen, R., Bednar, J. A., Choe, Y., and Sirosh, J. (2005). Computational Maps in the Visual Cortex. New York, NY: Springer Science+Business Media, Inc.

Montobbio, N., Bonnasse-Gahot, L., Citti, G., and Sarti, A. (2018). From receptive profiles to a metric model of v1. (preprint). arXiv:1803.05783v3. doi: 10.1007/s10827-019-00716-6

Montobbio, N., Bonnasse-Gahot, L., Citti, G., and Sarti, A. (2019). Kercnn: Biologically inspired lateral connections for classification of corrupted images. (preprint). arXiv:1910.08336v1.

Ohki, K., Chung, S., Kara, P., Hubener, M., Bonhoeffer, T., and Reid, R. C. (2006). Highly ordered arrangement of single neurons in orientation pinwheels. Nature 442, 925–928. doi: 10.1038/nature05019

Paul, C., Bressloff, J.ack D., and Cowan, M. G. P. J. T. M. C. W. (2002). What geometric visual hallucinations tell us about the visual cortex. Neural Comput. 14, 473–491. doi: 10.1162/089976602317250861

Petitot, J., and Tondut, Y. (1999). Vers une neurogéométrie. fibrations corticales, structures de contact et contours subjectifs modaux. Math. Sci. Hum. 145, 5–101. doi: 10.4000/msh.2809

Ribot, J., Aushana, Y., Bui-Quoc, E., and Milleret, C. (2013). Organization and origin of spatial frequency maps in cat visual cortex. J. Neurosci. 33, 13326–13343a. doi: 10.1523/JNEUROSCI.4040-12.2013

Ringach, D. (2002). Spatial structure and symmetry of simple cells receptive fields in macaque primary visual cortex. J. Neurophysiol. 88, 455–463. doi: 10.1152/jn.2002.88.1.455

Sarti, A., Citti, G., and Petitot, J. (2008). The symplectic structure of the primary visual cortex. Biol. Cybern. 98, 33–48. doi: 10.1007/s00422-007-0194-9

Sugiura, M. (1990). Unitary Representations and Harmonic Analysis, 2nd Edn. Amsterdam: Elsevier Science Publishing Company.

Swindale, N. V. (1991). Coverage and the design of striate cortex. Biol. Cybern., 65, 415–424. doi: 10.1007/BF00204654

Swindale, N. V., Shoham, D., Grinvald, A., Bonhoeffer, T., and Hubener, M. (2000). Visual cortex maps are optimized for uniform coverage. Nat. Neurosci. 3, 822–826. doi: 10.1038/77731

Tootell, R. B. H., Silverman, M. S., Switkes, E., and Valois, R. L. D. (1982). Deoxyglucose analysis of retinotopic organization in primate striate cortex. Science 218, 902–904. doi: 10.1126/science.7134981

Webster, M. A., and Valois, R. L. D. (1985). Relationship between spatial-frequency and orientation tuning of striate-cortex cells. J. Opt. Soc. Am. A 2, 1124–1132. doi: 10.1364/JOSAA.2.001124

Weiss, G., and Wilson, E. N. (2001). “The mathematical theory of wavelets,” in Twentieth Century Harmonic Analysis – A Celebration, ed J. S. Byrnes (Springer), 329–366.

Weliky, M., Bosking, W., and Fitzpatrick, D. (1996). A systematic map of direction preference in primary visual cortex. Nature 379, 725–728. doi: 10.1038/379725a0

White, L. E., and Fitzpatrick, D. (2007). Vision and cortical map development. Neuron 56, 327–338. doi: 10.1016/j.neuron.2007.10.011

Wilson, H. R., and Cowan, J. D. (1972). Excitatory and inhibitory interactions in localized populations of model neurons. Biophys. J. 12, 1–24. doi: 10.1016/S0006-3495(72)86068-5

Young, R. A. (1985). The gaussian derivative theory of spatial vision: analysis of cortical cell receptive field line-wheighting profiles. GM Res. Labs Techn. Pub. 4920, 1–75.

Zhang, J., Dashtbozorg, B., Bekkers, E., Pluim, J., Duits, R., and ter Haar Romeny, B. (2016). Robust retinal vessel segmentation via locally adaptive derivative frames in orientation scores. IEEE Trans. Med. Imaging 35, 2631–2644. doi: 10.1109/TMI.2016.2587062

This section contains the proofs of the formal statements introduced in the text.

SKETCH OF THE PROOF OF THEOREM 2. By Fourier Convolution Theorem, Plancherel's theorem, and the definition of , we have

Thus, (9) is equivalent to the so-called frame condition

for all , which is equivalent [refer to e.g., Ali et al. (1993)] to WΨ being bounded and invertible on .□

SKETCH OF THE PROOF OF THEOREM 3. Observe first that Thus, recalling (7) and using Fourier Convolution Theorem, we can compute

which proves (i). To prove (ii), one needs to show that the elements of are continuous functions, that is self adjoint and idempotent, and that (12) holds. The continuity can be obtained as a consequence of the continuity of the unitary representation (6) and, by the same arguments used to prove (i) we can easily see that . On the other hand, (i) implies that for all , hence for all . Equation (12) can be obtained directly by (7) and the definition of Wψ.□

PROOF OF LEMMA 4. The system (19) is of course solved by , so we only need to prove that, for all , this solution is unique if and only if (20) holds. In order to see this, let 𝕊 ∈ V be a solution to (19), and denote by . Then E ∈ Ran(P) ∩ Ker(Q). Hence, (20) holds if and only if E = 0, i.e., the only solution to (19) is .□

PROOF OF THEOREM 5. Denoting by H1 = PF0, we can rewrite the iteration (21) as two separate iterations, generating the two sequences {Fn}n ∈ ℕ, {Hn}n ∈ ℕ as follows:

If (20) holds, then ||PQ⊥|| < 1 and ||Q⊥P|| < 1, because . Hence, the existence of the limits and is due to the convergence of the Neumann series [refer to e.g., Riesz and Sz.-Nagy (1990)]. Thus, getting rid of the notations and H1 = PF0, we have that the limits H and F are the solutions to the equations

We can now see that , because this equation reads

and, using that , we can rewrite it as

which is true by definition of Q⊥ = 1 − Q. Moreover, by taking the limit in the first equation of (21), and using again that , we obtain

Finally, the convergence of (18) is exponential because, using the series expression (A2) for ,

A similar argument applies to .□

Keywords: harmonic analysis, wavelet analysis, reproducing kernel Hilbert spaces, primary visual cortex, receptive fields, orientation preference maps

Citation: Barbieri D (2022) Reconstructing Group Wavelet Transform From Feature Maps With a Reproducing Kernel Iteration. Front. Comput. Neurosci. 16:775241. doi: 10.3389/fncom.2022.775241

Received: 13 September 2021; Accepted: 28 January 2022;

Published: 15 March 2022.

Edited by:

Fabio Anselmi, Baylor College of Medicine, United StatesReviewed by:

Bruno Olshausen, University of California, Berkeley, United StatesCopyright © 2022 Barbieri. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Davide Barbieri, ZGF2aWRlLmJhcmJpZXJpQHVhbS5lcw==

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.

Research integrity at Frontiers

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.