
ORIGINAL RESEARCH article

Front. Comput. Neurosci., 25 March 2022

Volume 16 - 2022 | https://doi.org/10.3389/fncom.2022.747735

This article is part of the Research Topic Affective Computing and Regulation in Brain Computer Interface.

Affective studies provide essential insights for emotion recognition and tracking. In traditional open-loop structures, a lack of knowledge about the internal emotional state makes the system incapable of adjusting stimulus parameters and automatically responding to changes in the brain. To address this issue, we propose using facial electromyogram measurements as biomarkers to infer the internal hidden brain state as feedback to close the loop. In this research, we develop a systematic way to track and control emotional valence, which codes emotions as being pleasant or obstructive. To this end, we conduct a simulation study by modeling and tracking the subject's emotional valence dynamics using state-space approaches. We employ Bayesian filtering to estimate the person-specific model parameters along with the hidden valence state, using continuous and binary features extracted from experimental electromyogram measurements. Moreover, we use a mixed-filter estimator to infer the hidden brain state in a real-time simulation environment. We close the loop with a fuzzy logic controller in two categories of regulation: inhibition and excitation. By designing a control action, we aim to automatically reflect any required adjustments within the simulation and reach the desired emotional state levels. The results demonstrate that, by making use of physiological data, the proposed controller could effectively regulate the estimated valence state. Ultimately, we envision that future outcomes of this research will support alternative forms of self-therapy using wearable machine interface architectures capable of mitigating periods of pervasive emotions and maintaining daily well-being and welfare.

Emotions directly influence the way we think and interact with others in different situations, especially when they interfere with rationality in our decision-making or perception (Dolan, 2002). Thus, a solid grasp of the dynamics of emotions is critical for providing therapeutic solutions that maintain welfare (Couette et al., 2020). Moreover, deciphering emotions has been an ongoing task among researchers, requiring joint efforts from behavioral, physiological, and computational angles (Scherer, 2005). According to James A. Russell's circumplex model of affect, emotion can be described along two perpendicular axes: valence, reflecting the spectrum from negative to positive emotions, and arousal, accounting for their intensity (Russell, 1980). In this study, we focus on improving the comprehension of emotional valence regulation by proposing an architecture to track and regulate the internal hidden valence state using physiological signals collected via wearable devices. Using wearable devices to gain insight into the internal brain state provides a good alternative for studying brain dynamics, as the usual procedures either rely on invasive techniques (e.g., drawing blood samples or performing surgery) or require large and expensive imaging equipment (Villanueva-Meyer et al., 2017; Wickramasuriya et al., 2019a,b).

Affective computing is an interdisciplinary field of research that incorporates both sentiment analysis and emotion recognition (Poria et al., 2017). Scholars have posited the importance of affective computing in endowing machines with the means to recognize, interpret, or convey emotions and sentiments (Poria et al., 2017; Burzagli and Naldini, 2020). These capabilities enable the development and enhancement of personal care systems that interact better with humans, potentially improving personal health and daily well-being (Burzagli and Naldini, 2020). Previous work in affective computing has focused on emotion feature extraction and classification through human-robot interactions (Azuar et al., 2019; Rudovic et al., 2019; Yu and Tapus, 2019; Filippini et al., 2020; Rosula Reyes et al., 2020; Val-Calvo et al., 2020), facial expressions (Chronaki et al., 2015; rong Mao et al., 2015; Yang et al., 2018a; Zeng et al., 2018), and vocal responses (Wang et al., 2015; Fayek et al., 2017; Noroozi et al., 2017; Anuja and Sanjeev, 2020). The objective of this research is to take this one step further and introduce a tracking and closed-loop control framework to regulate specific emotions.

Within a closed-loop approach, biomarkers are collected in real time as feedback, which makes it possible to automatically adjust brain stimulation levels according to the current emotional state (Wickramasuriya et al., 2019a,b; Thenaisie et al., 2021). Previous studies have shown that this strategy can increase treatment efficacy and reduce stimulation side effects compared with open-loop stimulation alone (Price et al., 2020). The benefits of closed-loop neurostimulation have been well documented for patients with Parkinson's disease who are resistant to conventional therapy (Little et al., 2016; Weiss and Massano, 2018). However, fewer studies have explored closed-loop therapies for non-motor neuropathologies such as post-traumatic stress disorder or depression (Tegeler et al., 2017; Mertens et al., 2018), even though there is already relevant evidence of improvement with open-loop therapies (Conway et al., 2018; Starnes et al., 2019; Freire et al., 2020). In the conventional open-loop approach, by contrast, brain stimulation is manually tuned during in-clinic visits, delivering predetermined quantities and risking over- or under-stimulation of the brain (Wickramasuriya et al., 2019a,b; Price et al., 2020).

To properly regulate the emotional brain state in a closed-loop manner, a suitable biomarker related to the internal emotional valence needs to be identified. Prior research has based emotion classification on facial or vocal expressions, which not only requires heavy data acquisition but also runs into ambiguity issues (Tan et al., 2012). Facial and vocal expressions can vary significantly from person to person, making it difficult to draw accurate inferences about a person's emotional state. Moreover, facial and vocal expressions (e.g., smiling) can be seen as externalized emotions that can be altered at will, which confounds such classification approaches and hinders tracking and control efforts, since the true emotional state would not be clear (Cai et al., 2018). In response, our proposed strategy aims to remove this ambiguity by using a more reliable metric: physiological signals (Cacioppo et al., 2000). Physiological signals, or biomarkers, are involuntary responses initiated by the central and autonomic nervous systems, whereas facial and vocal lineaments can be voluntarily hidden to suppress certain emotional displays (Cannon, 1927; Cacioppo et al., 2000; Lin et al., 2018; Amin and Faghih, 2020; Wilson et al., 2020). Although overall facial expression can be controlled to mask certain emotions, several studies have linked the electromyogram activity of specific facial muscles to affective states at varying valence levels, such as happiness, stress, and anger (Nakasone et al., 2005; Kulic and Croft, 2007; Gruebler and Suzuki, 2010; Tan et al., 2012; Amin et al., 2016; Cai et al., 2018). Cacioppo et al. described that the somatic effectors of the face are tied to changes in connective tissue rather than skeletal complexes (Cacioppo et al., 1986). The same researchers posited that facial electromyography could provide insight into valence state recognition even when there is no apparent change in facial expression.
Moreover, Ekman et al. (1980) and Brown and Schwartz (1980) are among the few studies showing that facial electromyogram measurements of the zygomaticus muscle (zEMG) provide the most distinct indicator of valence compared with other facial muscles involved in smiling. Multiple studies have suggested a relation between emotional states and facial electromyogram activity (Van Boxtel, 2010; Tan et al., 2011; Koelstra et al., 2012; Künecke et al., 2014; Kordsachia et al., 2018; Kayser et al., 2021; Shiva et al., 2021). Golland et al. (2018) also showed a consistent relationship between the emotional media viewed and changes in the components of the facial electromyogram signal. We focus on zEMG to build our model and track the hidden valence state. Then, we design a control strategy to automatically regulate the internal emotional valence state in real time.

It should be noted that electromyography is not the only physiological measure that has shown promise for valence recognition. Emotional valence can also be represented by many other physiological signals or combinations of them (Egger et al., 2019), such as electroencephalography (Bozhkov et al., 2017; Wu et al., 2017; Soroush et al., 2019; Feradov et al., 2020), respiration (Zhang et al., 2017; Wickramasuriya et al., 2019a,b), electrocardiography (ECG) (Das et al., 2016; Goshvarpour et al., 2017; Harper and Southern, 2020), blood volume pulse (Das et al., 2016), or heart rate variability (Ravindran et al., 2019). Egger et al. investigated the accuracy of different physiological signals in classifying emotive states such as stress, calmness, despair, discontent, erotica, interest, boredom, or elation (Egger et al., 2019). Naji and collaborators showed the difference between multimodal and single-signal measurements in emotion classification using ECG and forehead biosignals (Naji et al., 2014).

Previous studies have also investigated different ways of estimating and tracking internal brain states (Sakkalis, 2011). Brain dynamics during resting states have been studied with functional magnetic resonance imaging (fMRI) measurements using linear and non-linear models and, more recently, a tensor-based approach (Honey et al., 2009; Abdelnour et al., 2014; Al-Sharoa et al., 2018). Brain state transitions have been examined with machine learning methods and eigenvalue decomposition using data from fMRI, electroencephalogram (EEG), or magnetoencephalography (Pfurtscheller et al., 1998; Guimaraes et al., 2007; LaConte et al., 2007; Maheshwari et al., 2020). EEG measurements have also been used with machine learning techniques to estimate stress levels (Al-Shargie et al., 2015) and affect (Nie et al., 2011). The method introduced by Yadav et al. uses a state-space formulation to track and classify emotional valence based on two simultaneous assessments of brain activity (Yadav et al., 2019). In the present work, we use a similar approach to estimate and track the hidden valence state, with Bayesian filtering as a powerful statistical tool to improve state estimation under measurement uncertainty (Prerau et al., 2009; Ahmadi et al., 2019; Wickramasuriya and Faghih, 2020). Another contribution of the present work is the use of real measurements from wearable devices to develop a virtual subject environment as a simulation framework for hidden emotional levels. This is the first step toward implementing and testing closed-loop controllers that could track and regulate the internal valence state.
In a similar fashion to other control studies, providing a reliable closed-loop simulation framework can pave the way for safe experimentation of brain-related control algorithms here and in future studies (Santaniello et al., 2010; Dunn and Lowery, 2013; Yang et al., 2018b; Wei et al., 2020; Ionescu et al., 2021).

To investigate the validity of regulating emotions through a closed-loop control architecture, we design a simulation system using experimental data. Specifically, in this in silico study, we employ features extracted from zEMG data and design a fuzzy logic controller to regulate the emotional valence state in a closed-loop manner. We propose fuzzy logic because this knowledge-based controller works with a set of predetermined fuzzy rules and weights responsible for gauging the degree to which the input variables are classified into output membership functions (Klir and Yuan, 1995; Qi et al., 2019). This process is particularly useful for controlling complex biological systems, as it provides a simple yet effective way of handling the uncertainty and imprecision of these challenging systems (Lilly, 2011). In the literature, previous research has explored fuzzy logic controllers in simulation environments to control cognitive stress or regulate the energy levels of patients with clinical hypercortisolism (Azgomi and Faghih, 2019; Azgomi et al., 2019). A fuzzy controller was also combined with a classical proportional-integral-derivative (PID) controller to aid the movement of a prosthetic knee (Wiem et al., 2018) and to regulate the movement of the elbow joint of an exoskeleton during post-stroke rehabilitation (Tageldeen et al., 2016). Scholars have shown fuzzy logic controllers to outperform PID controllers in the regulation of mean arterial pressure (Sharma et al., 2020) and to improve the control of anesthetic levels in patients undergoing general anesthesia (Mendez et al., 2018). In light of these findings, in this in silico study, we develop a virtual subject environment to evaluate the efficiency of our proposed architecture.

The remainder of this paper is organized as follows. Section 2 describes the methods used in this research. Specifically, Section 2.1 describes the virtual subject environment and the steps taken toward its development (i.e., the models used, the features extracted, the valence state estimation, and the modeling of the environmental stimuli). Section 2.2 explains the controller design and the steps taken during implementation. We then present our results in Section 3, followed by a discussion in Section 4.

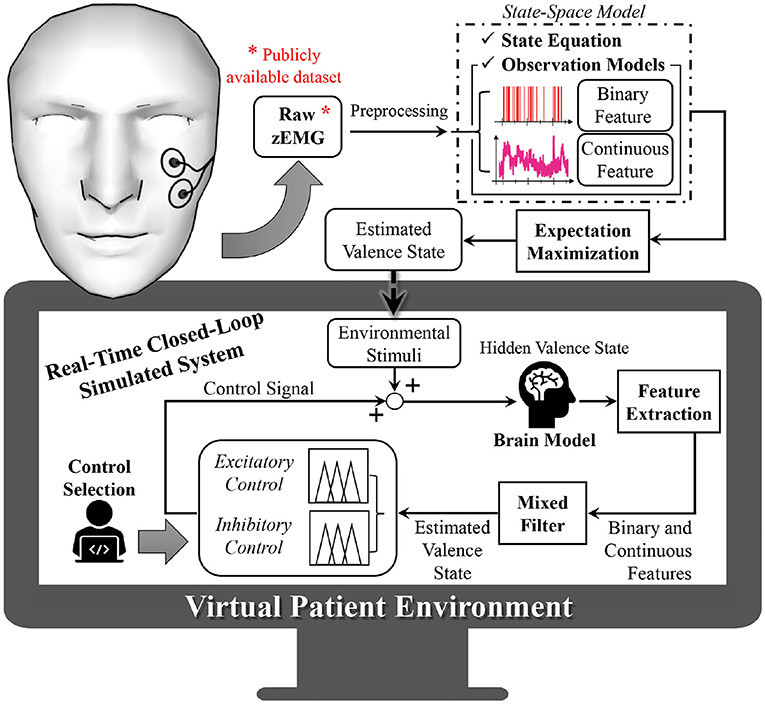

An overview of the proposed system is presented in Figure 1. To construct the virtual subject environment, we first take the zEMG measurements and preprocess the collected data for further analysis. From the zEMG data, we extract binary and continuous features that are used both to build the state-space model and to estimate hidden emotional levels. This is possible after establishing the continuous and binary observation models associated with the state-space representation of emotional valence. Since the subject's emotional valence progression is not directly measurable, we use the two simultaneous features and an expectation maximization (EM) algorithm to model and drive the environmental stimuli within the virtual subject environment. The environmental stimuli are used to recreate, in real time, different subject-specific emotional valence responses in the simulated brain model. As in the non-real-time case, binary and continuous features are extracted from the output of the brain model before it reaches the mixed-filter. The mixed-filter estimates the hidden valence state and supplies it to the selected controller, either excitatory or inhibitory. With these two classes of closed-loop regulation, we can analyze the performance of the proposed approach. Finally, the control algorithm determines the necessary control effort and provides it to the brain model, closing the loop. All simulations in this research were performed using SIMULINK in MATLAB version 2020b (The MathWorks, Inc., Natick, MA).

Figure 1. Overview of the proposed closed-loop solution. In data from a publicly available dataset, the subject wears electromyogram sensors that collect facial muscle activity. From the electromyogram measurements, binary and continuous features are extracted and used to infer the hidden emotional valence state of the subject, which cannot be measured directly. This is performed using state-space modeling and an expectation maximization algorithm. The estimated valence state is then used to model the environmental stimuli, recreating the subject's surrounding input inside the virtual subject environment. Within this virtual environment, different emotional conditions are recreated in the brain model. By extracting binary and continuous features and using a mixed filter, the subject's hidden emotional valence state is estimated and further regulated as desired (excitation or inhibition modes) by means of a fuzzy logic controller.

In this research, we develop human brain models using the publicly available Database for Emotion Analysis using Physiological Signals (DEAP) (Koelstra et al., 2012), whose authors investigated the connection between physiological signals and associated emotional tags based on a valence scale. In the DEAP dataset, 32 subjects (16 females and 16 males, mean age 26.9) were asked to watch 1-min excerpts of 40 different music videos. These videos were selected to cover the full range of both arousal and valence levels. At the end of each video trial, the researchers gathered each subject's self-assessment of emotional valence on a 1–9 scale. During the experiment, various physiological signals were collected, including the facial zEMG response sampled at 512 Hz. For our study, the self-assessed emotional valence information is taken as ground truth.

We model the valence state progression by forming stochastic state-space models.

Similar to Prerau et al. (2009), we use a first-order autoregressive state-space model,

$$x_k = x_{k-1} + \varepsilon_k + s_k + u_k, \qquad (1)$$

where $x_k$ is the hidden valence state at time step $k$ for $k = 1, \ldots, K$, and $K$ is the total number of time steps in the experiment. The model also includes the process noise $\varepsilon_k \sim \mathcal{N}(0, \sigma_\varepsilon^2)$, a zero-mean Gaussian random variable; $s_k$, a surrogate for any environmental stimuli that influenced the brain at the time of data collection; and $u_k$, the input from the controller.

We include two observation models that capture the evolution of the binary and continuous features of the zEMG signal, so that we can observe the valence state progression in Equation (1). Using two features simultaneously yields a more accurate (i.e., narrower confidence intervals) and more precise emotional state estimate (Prerau et al., 2008). The binary observations $n_k \in \{0, 1\}$ are modeled with a Bernoulli distribution (McCullagh and Nelder, 1989; Wickramasuriya et al., 2019a,b),

$$P(n_k \mid x_k) = p_k^{\,n_k} (1 - p_k)^{1 - n_k}, \qquad (2)$$

where $p_k$ is the probability of observing a spike given the current valence state, related to $x_k$ through a sigmoidal link function (Equation 3), which has been shown to describe frequency or count data well (Wickramasuriya et al., 2019a,b). The continuous observations $z_k \in \mathbb{R}$ are modeled as

$$z_k = \alpha + \beta x_k + \omega_k, \qquad (4)$$

where $\alpha$ is a coefficient representing the baseline power of the continuous feature, $\beta$ is the rate of change in the continuous feature's power, and $\omega_k \sim \mathcal{N}(0, \sigma_\omega^2)$ is zero-mean Gaussian noise. Both the continuous and binary observations are thus functions of the valence state $x_k$.

To perform the estimation process and obtain the hidden valence state, we utilize the zEMG data and extract the binary and continuous features presented in the observation models.

We use a third-order Butterworth bandpass filter between 10 and 250 Hz to remove motion artifacts and other unwanted high-frequency noise. Additionally, we use notch filters at 50 Hz and its next four harmonics to remove electrical line interference. Finally, the filtered zEMG signal, $y_k$, is segmented into non-overlapping 0.5-s bins.
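The preprocessing chain above can be sketched in Python with SciPy. This is a minimal illustration rather than the authors' implementation: it assumes offline zero-phase filtering (`sosfiltfilt`/`filtfilt`), a notch quality factor of 30, and DEAP's 512 Hz sampling rate; the function name `preprocess_zemg` is hypothetical.

```python
import numpy as np
from scipy import signal


def preprocess_zemg(raw, fs=512.0, bin_s=0.5, notch_q=30.0):
    """Band-pass and notch-filter a raw zEMG trace, then split it into bins."""
    raw = np.asarray(raw, dtype=float)
    # Third-order Butterworth band-pass, 10-250 Hz (second-order sections for stability)
    sos = signal.butter(3, [10.0, 250.0], btype="bandpass", fs=fs, output="sos")
    y = signal.sosfiltfilt(sos, raw)  # zero-phase (offline) filtering: an assumption
    # Notch filters at 50 Hz and its next four harmonics (100, 150, 200, 250 Hz)
    for f0 in 50.0 * np.arange(1, 6):
        b, a = signal.iirnotch(f0, notch_q, fs=fs)
        y = signal.filtfilt(b, a, y)
    # Non-overlapping bins of bin_s seconds; leftover samples that do not fill a bin are dropped
    n = int(round(bin_s * fs))
    n_bins = len(y) // n
    return y[: n_bins * n].reshape(n_bins, n)
```

For a 1-min DEAP trial (60 × 512 samples) this yields 120 bins of 256 samples each. A real-time pipeline would need causal filters (`sosfilt`/`lfilter`) instead of the zero-phase variants used here.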

As suggested by previous scholars, the binary features extracted from the zEMG signal may be associated with the underlying neural spiking activity (Prerau et al., 2008; Amin and Faghih, 2020; Azgomi et al., 2021a). Thus, we estimate the neural spiking pertinent to emotional valence by extracting binary features from the zEMG data. First, the bins of the filtered zEMG signal $y_k$ are rectified by taking their absolute values and then smoothed with a Gaussian kernel. Similarly to Azgomi et al. (2019) and Yadav et al. (2019), the binary features $n_k$ are obtained with the Bernoulli distribution,

where $a$ is a scaling coefficient, chosen heuristically to be 0.5, and $q_k$ is a zEMG amplitude-dependent probability function of observing a spike in bin $k$, given $y_k$.
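The binary-feature step can be sketched as follows. The exact form of $q_k$ is not reproduced in this text, so the amplitude-to-probability map used here (min-max normalized mean envelope per bin, scaled by $a = 0.5$) is a hypothetical stand-in, as are the kernel width `sigma` and the function name `binary_features`.

```python
import numpy as np
from scipy.ndimage import gaussian_filter1d


def binary_features(bins, a=0.5, sigma=25.0, rng=None):
    """Hypothetical binary-feature extraction from binned, filtered zEMG.

    Returns the spike indicators n_k and the spike probabilities q_k.
    """
    rng = np.random.default_rng() if rng is None else rng
    # Rectify each bin and smooth it with a Gaussian kernel (sigma in samples; assumed value)
    envelope = gaussian_filter1d(np.abs(bins), sigma=sigma, axis=1)
    amp = envelope.mean(axis=1)  # one amplitude summary per bin
    # Assumed amplitude-to-probability map: min-max normalize, then scale by a
    span = amp.max() - amp.min()
    q = a * (amp - amp.min()) / span if span > 0 else np.zeros_like(amp)
    n = (rng.random(q.shape) < q).astype(int)  # one Bernoulli(q_k) draw per bin
    return n, q
```

With this map, the highest-amplitude bin gets $q_k = a$ and the quietest bin gets $q_k = 0$; any monotone map from amplitude to $[0, a]$ would fit the same description.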

Using the filtered zEMG signal $y_k$, we also extract continuous features by computing the Welch power spectral density (PSD) of each 0.5-s bin with 75% window overlap. For each bin, we then compute the band power of the PSD from 10 to 250 Hz and take its logarithm. Finally, we normalize the entire signal to a 0–1 scale to provide insight into the relative band power of the zygomaticus major muscle activity across all 40 1-min trials.
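A sketch of the continuous-feature computation, assuming a Welch sub-window of 64 samples (the text specifies only the 75% overlap) and trapezoidal integration of the PSD for the band power; the function name `continuous_features` is hypothetical. All bins from all 40 trials should be passed together so that the 0–1 normalization spans the whole recording.

```python
import numpy as np
from scipy import signal
from scipy.integrate import trapezoid


def continuous_features(bins, fs=512.0, band=(10.0, 250.0), nperseg=64):
    """Log band power per bin, min-max normalized to [0, 1] across all bins."""
    # Welch PSD of every bin; noverlap = 75% of the (assumed) sub-window length
    f, pxx = signal.welch(bins, fs=fs, nperseg=nperseg, noverlap=3 * nperseg // 4, axis=-1)
    mask = (f >= band[0]) & (f <= band[1])
    # Band power: integrate the PSD over 10-250 Hz with the trapezoidal rule
    band_power = trapezoid(pxx[:, mask], f[mask], axis=-1)
    log_bp = np.log(band_power)
    # Normalize across every bin of every trial passed in
    return (log_bp - log_bp.min()) / (log_bp.max() - log_bp.min())
```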

To estimate the emotional valence fluctuations within the experimental data, we employ the state-space representation in Equation (1) without the control effort and environmental stimuli, since at this stage there is no control signal and the stimuli are inherent in the data. The hidden valence state process is thus defined by

$$x_k = x_{k-1} + \varepsilon_k.$$

Given the complete sets of extracted binary $N_{1:K} = \{n_1, \ldots, n_K\}$ and continuous $Z_{1:K} = \{z_1, \ldots, z_K\}$ features, we use the EM algorithm to estimate the model parameters $\theta = [\alpha, \beta, \sigma_\varepsilon, \sigma_\omega]$ and the hidden valence state $x_k$. The EM algorithm provides a way to jointly estimate the latent state and the parameters of state-space models. It consists of two steps: the expectation step (E-step), which computes the expected value of the complete-data log-likelihood, and the maximization step (M-step), which finds the parameters that maximize this expected log-likelihood. The algorithm iterates between these two steps until convergence (Wickramasuriya et al., 2019a,b; Yadav et al., 2019). The following equations show how, at iteration $(i + 1)$, values are recursively predicted using estimates and parameters from iteration $i$.
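Given the smoothed moments from the E-step, the linear-Gaussian parts of the model admit closed-form M-step updates. The sketch below is an illustration under stated assumptions, not the paper's exact update equations: it assumes the random-walk state above (no inputs), uses $E[x_k^2] = \sigma^2_{k|K} + x^2_{k|K}$ and $E[x_k x_{k-1}] = \sigma_{k-1,k|K} + x_{k|K}\,x_{k-1|K}$, averages the process-noise term over the $K-1$ adjacent pairs instead of modeling an initial state, and returns variances (take square roots for $\sigma_\omega$ and $\sigma_\varepsilon$). The function name `m_step` is hypothetical.

```python
import numpy as np


def m_step(z, x_s, w_s, c_s):
    """Closed-form M-step for alpha, beta, sigma_w^2 and sigma_e^2.

    z: continuous features; x_s, w_s: smoothed means and variances of x_k;
    c_s: smoothed lag-one covariances cov(x_k, x_{k+1}), length K - 1.
    """
    z, x_s, w_s, c_s = (np.asarray(a, dtype=float) for a in (z, x_s, w_s, c_s))
    K = len(z)
    ex2 = w_s + x_s**2  # E[x_k^2]
    # Normal equations for z_k = alpha + beta * x_k, using expected sufficient statistics
    A = np.array([[K, x_s.sum()], [x_s.sum(), ex2.sum()]])
    b = np.array([z.sum(), (z * x_s).sum()])
    alpha, beta = np.linalg.solve(A, b)
    # Observation-noise variance: E[(z_k - alpha - beta x_k)^2] averaged over k
    sig_w2 = np.mean((z - alpha - beta * x_s) ** 2 + beta**2 * w_s)
    # Process-noise variance of the random walk: E[(x_k - x_{k-1})^2] over adjacent pairs
    exx = c_s + x_s[1:] * x_s[:-1]  # E[x_k x_{k-1}]
    sig_e2 = np.mean(ex2[1:] - 2.0 * exx + ex2[:-1])
    return alpha, beta, sig_w2, sig_e2
```

Any offset in the binary link function would need its own update (or a fixed value); it is not handled in this sketch.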

2.1.5.1.1. Kalman-Based Mixed-Filter (Forward-Filter).

where $k = 1, \ldots, K$; $x_{k|k}$ is the estimated valence state; and $\sigma_{k|k}$ is the corresponding standard deviation.
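Because the forward-filter equations are not reproduced in this text, the following is a hedged sketch of a Gaussian-approximation mixed filter for one Bernoulli and one Gaussian observation per step, a common formulation for this model class. Assumptions: a random-walk state, a logistic link $p_k = 1/(1 + e^{-(\gamma + x_k)})$ with a constant offset $\gamma$ (Equation 3 is not shown here), conditional independence of $n_k$ and $z_k$ given $x_k$, and Newton's method to find the posterior mode, which is implicit because $p_{k|k}$ depends on $x_{k|k}$. The function name and the optional input `inp` (for $s_k + u_k$ in closed loop) are hypothetical.

```python
import numpy as np


def mixed_filter(n, z, alpha, beta, sig_e2, sig_w2, gamma=0.0, x0=0.0, v0=1.0,
                 inp=None, tol=1e-10, max_iter=50):
    """Forward mixed filter for one binary and one continuous observation per step."""
    K = len(n)
    inp = np.zeros(K) if inp is None else np.asarray(inp, dtype=float)
    x_pred, v_pred, x_post, v_post = (np.empty(K) for _ in range(4))
    x_prev, v_prev = x0, v0
    for k in range(K):
        # Predict with the random-walk state equation (plus any known input)
        x_pred[k] = x_prev + inp[k]
        v_pred[k] = v_prev + sig_e2
        # Gains for the continuous and binary innovations
        denom = beta**2 * v_pred[k] + sig_w2
        c_gain = beta * v_pred[k] / denom
        n_gain = v_pred[k] * sig_w2 / denom
        # The posterior mode is implicit in p_{k|k}; solve g(x) = 0 with Newton's method
        x = x_pred[k]
        for _ in range(max_iter):
            p = 1.0 / (1.0 + np.exp(-(gamma + x)))
            g = x - x_pred[k] - c_gain * (z[k] - alpha - beta * x_pred[k]) - n_gain * (n[k] - p)
            step = g / (1.0 + n_gain * p * (1.0 - p))
            x -= step
            if abs(step) < tol:
                break
        p = 1.0 / (1.0 + np.exp(-(gamma + x)))
        x_post[k] = x
        # Posterior variance: inverse of the negative log-posterior curvature at the mode
        v_post[k] = 1.0 / (1.0 / v_pred[k] + p * (1.0 - p) + beta**2 / sig_w2)
        x_prev, v_prev = x_post[k], v_post[k]
    return x_post, v_post, x_pred, v_pred
```

The update $x_{k|k} = x_{k|k-1} + C_k(z_k - \alpha - \beta x_{k|k-1}) + C_k(\sigma_\omega^2/\beta)(n_k - p_{k|k})$, with $C_k = \beta\sigma^2_{k|k-1}/(\beta^2\sigma^2_{k|k-1} + \sigma_\omega^2)$, is exactly the stationarity condition of the log posterior; `n_gain` is written without dividing by $\beta$ so the code also works when $\beta$ is small.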

2.1.5.1.2. Fixed-Interval Smoothing Algorithm (Backward-Filter).

2.1.5.1.3. State-Space Covariance Algorithm.

for 1 ≤ k ≤ u ≤ K.
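The backward pass and the covariance recursion can be sketched with the standard fixed-interval (Rauch-Tung-Striebel) smoother for a random-walk state, where the smoother gain is $A_k = \sigma^2_{k|k}/\sigma^2_{k+1|k}$ and the covariance algorithm gives $\sigma_{k,u|K} = A_k\,\sigma_{k+1,u|K}$. Only the lag-one case $u = k + 1$, which the M-step needs, is computed here; the function name is hypothetical and the inputs are the outputs of a forward filter such as the sketch above.

```python
import numpy as np


def fixed_interval_smoother(x_post, v_post, x_pred, v_pred):
    """Backward (fixed-interval) smoother for a random-walk state, plus lag-one covariances."""
    K = len(x_post)
    x_s, v_s = np.array(x_post, dtype=float), np.array(v_post, dtype=float)
    A = np.zeros(K - 1)
    for k in range(K - 2, -1, -1):
        A[k] = v_post[k] / v_pred[k + 1]  # smoother gain A_k
        x_s[k] = x_post[k] + A[k] * (x_s[k + 1] - x_pred[k + 1])
        v_s[k] = v_post[k] + A[k] ** 2 * (v_s[k + 1] - v_pred[k + 1])
    # Covariance algorithm with u = k + 1: cov(x_k, x_{k+1} | all data) = A_k * var_{k+1|K}
    c_s = A * v_s[1:]
    return x_s, v_s, c_s
```

The last smoothed value equals the last filtered value, and smoothed variances never exceed the filtered ones, which is a quick sanity check on any implementation.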

We model the environmental stimuli referred to in Equation (1) as a way to capture and recreate the subject's response to high valence (HV) or low valence (LV) trials. This allows for the simulation of subject-specific HV and LV conditions. The environmental stimuli are calculated as the difference between adjacent elements of the estimated valence state $\hat{x}_k$, as in

$$s_k = \hat{x}_{k+1} - \hat{x}_k$$

for k = 1, …, K−1. Then, we assume a sinusoidal harmonic formulation to model the environmental stimuli in either HV or LV trials,
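The two steps can be sketched as follows: differencing the estimated state, then a least-squares fit of a truncated Fourier series. The harmonic model itself (Equation 24) is not reproduced in this text, so the series form, the default order of 8, and the choice of fundamental frequency (one cycle per record) are assumptions; the function names are hypothetical.

```python
import numpy as np


def stimulus_from_state(x_hat):
    """s_k = x_hat[k+1] - x_hat[k] for k = 1, ..., K-1."""
    return np.diff(np.asarray(x_hat, dtype=float))


def fit_harmonic(s, dt=0.5, f0=None, order=8):
    """Least-squares fit of s(t) ~ a0 + sum_h [a_h cos(2 pi h f0 t) + b_h sin(2 pi h f0 t)]."""
    s = np.asarray(s, dtype=float)
    t = np.arange(len(s)) * dt
    f0 = 1.0 / (len(s) * dt) if f0 is None else f0  # assumed: one fundamental cycle per record
    cols = [np.ones_like(t)]
    for h in range(1, order + 1):
        cols += [np.cos(2 * np.pi * h * f0 * t), np.sin(2 * np.pi * h * f0 * t)]
    X = np.column_stack(cols)  # columns: 1, cos1, sin1, cos2, sin2, ...
    coef, *_ = np.linalg.lstsq(X, s, rcond=None)
    return coef, X @ coef
```

In the workflow described here, the fit would be run separately on the selected HV and LV trials of each subject, and the fitted curves then replayed as $s_k$ inside the virtual subject.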

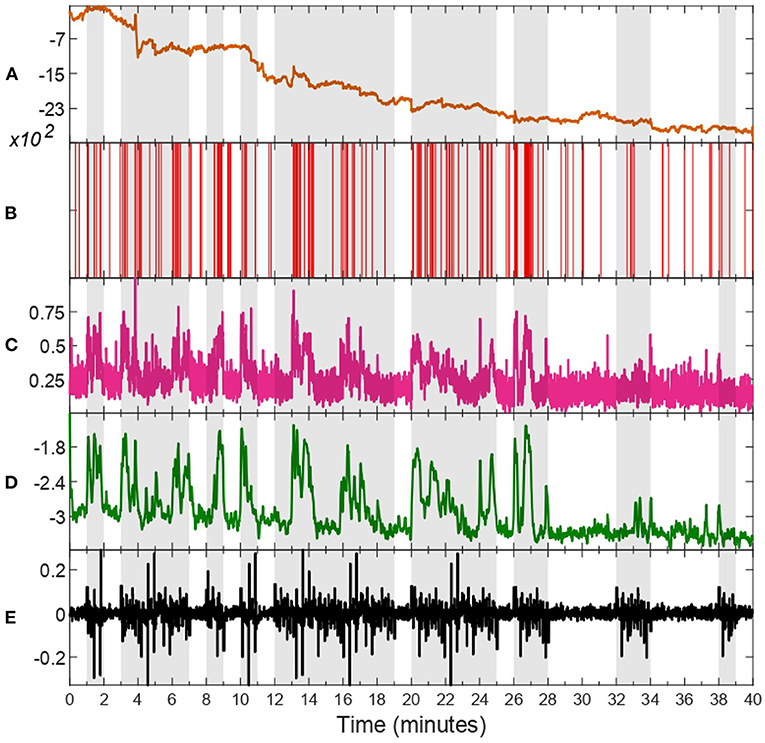

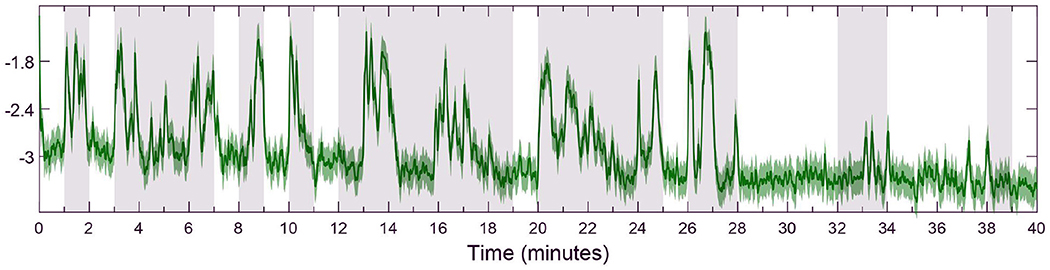

Through inspection across all subjects, we noticed that HV trials tend to have a higher mean and standard deviation than LV trials. Thus, to avoid fitting outliers to the harmonic model in Equation (24), we select the six trials with the highest mean and standard deviation of estimated valence levels to fit $s_k$ for HV, and the six trials with the lowest mean and standard deviation to model LV periods. Additionally, we include a 0.5-s transition period between different valence states, approximated as a linear ramp. This is done separately for each subject to ensure personalized models. Data from an exemplary subject are depicted in Figure 2, in which every step of the process is illustrated separately, i.e., from raw zEMG to extracted features and valence state, and finally to the corresponding environmental stimuli. In addition, Figure 3 presents the estimated emotional valence state for the same exemplary subject with 95% confidence intervals. Of the 23 subjects available in the dataset, we excluded five participants due to a lack of emotional response when comparing LV and HV periods; that is, both emotional periods showed equivalent outcomes regarding both features and the estimated valence state.

Figure 2. zEMG data, corresponding features, estimated valence state, and environmental stimuli of subject 18. Trials characterized as high valence (HV) are shaded in gray, while unshaded ones represent low valence (LV). (A) shows the raw zEMG in orange, while (B,C) show the extracted binary (red) and continuous (pink) features, respectively. (D) illustrates the hidden valence state (green) obtained with the EM algorithm using both features shown in (B,C). (E) shows the environmental stimuli (black) obtained from the valence state progression in (D).

Figure 3. Detail of estimated emotional valence state for subject 18 with 95% confidence intervals. The white background depicts LV periods while the gray-shaded areas show HV results. The solid green line shows the estimated valence state while the green region around it is a 95% confidence interval.

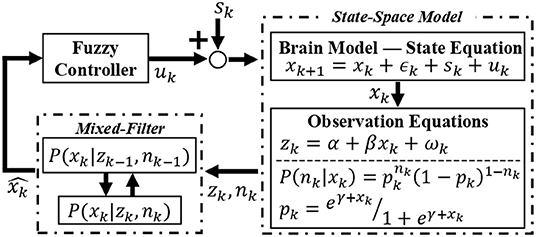

With the virtual subject environment in place, we explore the regulation of emotional valence. As in the feature extraction process, we simulate the binary and continuous responses simultaneously from the internal brain state. In other words, we use Equations (2)–(4) to recreate within the virtual subject environment what would be inherent to the zEMG data in the real world. These two features are then fed to a Kalman-based mixed-filter to estimate the hidden valence state online. The estimated state is averaged over a 10-s window to smooth any abrupt changes before reaching the fuzzy controller, which then derives the control effort $u_k$ in real time. A diagram of the closed loop is depicted in Figure 4. As the hidden valence state cannot be measured directly, we use the recursive, Kalman-based mixed-filter detailed in Equations (8)–(12) to estimate the latent valence state of the brain model. As shown in Figure 4, this filter takes in both binary and continuous observations, computes the prior distribution using the Chapman-Kolmogorov equation, and then combines it with the measurement likelihood via Bayes' theorem, which can be summarized, respectively, as

$$p(x_k \mid n_{1:k-1}, z_{1:k-1}) = \int p(x_k \mid x_{k-1})\, p(x_{k-1} \mid n_{1:k-1}, z_{1:k-1})\, dx_{k-1}$$

and

$$p(x_k \mid n_{1:k}, z_{1:k}) \propto p(n_k \mid x_k)\, p(z_k \mid x_k)\, p(x_k \mid n_{1:k-1}, z_{1:k-1}).$$

Figure 4. Overview of the closed-loop solution. The environmental stimuli $s_k$ are added to the control signal $u_k$ to form the input of the state-space brain model. The internal emotional valence state $x_k$ is governed by the state equation; using the observation equations, binary and continuous features are extracted and taken in by the recursive mixed-filter. The filter estimates and tracks the hidden brain state $\hat{x}_k$, supplying this signal to the controller. Finally, the controller takes the current estimated valence state and generates a control signal $u_k$ back to the brain model, thus closing the loop. This control signal is responsible for changing the valence state in the desired direction, i.e., increasing it for excitatory action or decreasing it for inhibitory action.
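The brain-model block of this loop can be sketched as a small simulator: each 0.5-s step advances the state with $s_k + u_k$ and emits one binary and one continuous observation, and a causal 10-s moving average (20 bins) smooths the filter output before the controller. The class and function names, the logistic link with offset `gamma`, and the per-step interface are hypothetical choices for illustration.

```python
import numpy as np


class VirtualSubject:
    """Hypothetical virtual subject: random-walk valence state driven by s_k + u_k,
    emitting one binary and one continuous observation per 0.5-s step."""

    def __init__(self, alpha, beta, sig_e, sig_w, gamma=0.0, x0=0.0, seed=None):
        self.alpha, self.beta, self.gamma = alpha, beta, gamma
        self.sig_e, self.sig_w = sig_e, sig_w  # noise standard deviations
        self.x = x0
        self.rng = np.random.default_rng(seed)

    def step(self, s_k, u_k):
        # State equation: previous state + process noise + stimulus + control
        self.x = self.x + self.rng.normal(0.0, self.sig_e) + s_k + u_k
        # Binary output: Bernoulli with an assumed logistic link
        p = 1.0 / (1.0 + np.exp(-(self.gamma + self.x)))
        n = int(self.rng.random() < p)
        # Continuous output: linear-Gaussian observation
        z = self.alpha + self.beta * self.x + self.rng.normal(0.0, self.sig_w)
        return n, z


def moving_average(history, window=20):
    """Causal mean of the last `window` estimates (20 bins x 0.5 s = 10 s)."""
    recent = history[-window:]
    return sum(recent) / len(recent)
```

In a full loop, each step would call `subject.step(s[k], u)`, pass `(n, z)` to a one-step mixed-filter update, append the estimate to a history list, and compute the next `u` from `moving_average(history)`, holding `u = 0` for the first minute while the filter converges.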

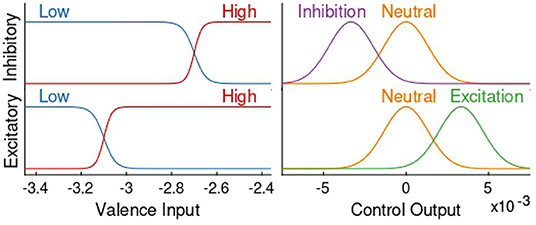

We use a Mamdani-type fuzzy logic controller with the fuzzy rules shown in Table 1 to regulate the subject's emotional valence to a more desirable level: during the inhibitory mode of control action, the goal is to reach and remain at the valence level characterized by the LV period, and vice versa for the excitatory controller. As can be observed in Figures 1, 4 and Table 1, the input signal to the controller is the estimated valence state rather than an error signal relative to a reference, as is more common in control studies. After analyzing the open-loop responses of all subjects, we designed a set of membership functions capable of directly regulating the emotional valence without subtracting it from a target reference. This allowed us to employ more intuitive membership functions, as depicted in Figure 5. Similarly to previous authors (Azgomi et al., 2021b), the fuzzy output can be obtained with

$$\mu_{\text{out}}(u) = \max_{j}\left[\min\left(\mu_{\text{valence}}^{j}(v),\ \mu_{\text{out}}^{j}(u)\right)\right],$$

where $j$ designates the active rule at each time step $k$, $\mu_{\text{valence}}^{j}$ is the membership of the fuzzified valence input $v$ in the antecedent of rule $j$, and $\mu_{\text{out}}^{j}$ is the output membership function of rule $j$. The crisp output of the fuzzy controller, i.e., the control signal $u_k$, is obtained using the centroid method as follows,

$$u_k = \frac{\int u\, \mu_{\text{out}}(u)\, du}{\int \mu_{\text{out}}(u)\, du}.$$

Figure 5. Excitatory and inhibitory fuzzy membership functions. The left side shows the membership functions of the controller's input, whilst the right side displays those for the output. The top and bottom rows depict, respectively, the membership functions of the inhibitory and excitatory controllers. In all four graphs, the y axis depicts the degree of membership, where the lowest value means no association with that function and the highest value means total association.

With a fuzzy logic controller, crisp input values are transformed to degrees of membership of certain functions called membership functions in the fuzzification process. Then, using the pre-determined fuzzy rules the fuzzy inference process takes place, in which a connection between all fuzzified inputs is made. This results in degrees of membership of a set of output membership functions, which are then defuzzified to produce a final representative crisp value (Qi et al., 2019). This fuzzy logic process is convenient when dealing with complex systems, such as those biological in nature, since it allows for the emergence of complex control behaviors using relatively simple constructions (Lilly, 2011).
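The fuzzification, min-max inference, and centroid defuzzification described above can be sketched on a discretized output universe. Table 1 and the Figure 5 membership functions are not reproduced in this text, so the three-rule inhibitory rule base, the triangular breakpoints, and the output range $[-1, 1]$ below are hypothetical placeholders; an excitatory controller would mirror it with positive outputs for low valence.

```python
import numpy as np


def trimf(x, a, b, c):
    """Triangular membership function with feet a < c and peak b."""
    x = np.asarray(x, dtype=float)
    return np.clip(np.minimum((x - a) / (b - a), (c - x) / (c - b)), 0.0, 1.0)


# Hypothetical inhibitory rule base: (input MF on valence, output MF on u)
INHIBITORY_RULES = [
    ((-10.0, 0.0, 1.0), (-0.1, 0.0, 0.1)),   # valence Low    -> u Zero
    ((0.0, 1.0, 2.0), (-0.6, -0.3, 0.0)),    # valence Medium -> u Small negative
    ((1.0, 2.0, 10.0), (-1.1, -0.8, -0.5)),  # valence High   -> u Large negative
]


def mamdani_control(v, rules=INHIBITORY_RULES, u_grid=np.linspace(-1.0, 1.0, 2001)):
    """Mamdani inference (min implication, max aggregation) with centroid defuzzification."""
    aggregated = np.zeros_like(u_grid)
    for in_mf, out_mf in rules:
        w = float(trimf(v, *in_mf))  # firing strength of this rule
        aggregated = np.maximum(aggregated, np.minimum(w, trimf(u_grid, *out_mf)))
    if aggregated.sum() == 0.0:
        return 0.0  # no rule fired
    # Discrete centroid of the aggregated output set
    return float(np.sum(u_grid * aggregated) / np.sum(aggregated))
```

With these placeholder sets, a low valence estimate yields $u_k \approx 0$ (no inhibition) and a high estimate yields a strongly negative $u_k$, matching the qualitative behavior reported for the inhibitory controller.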

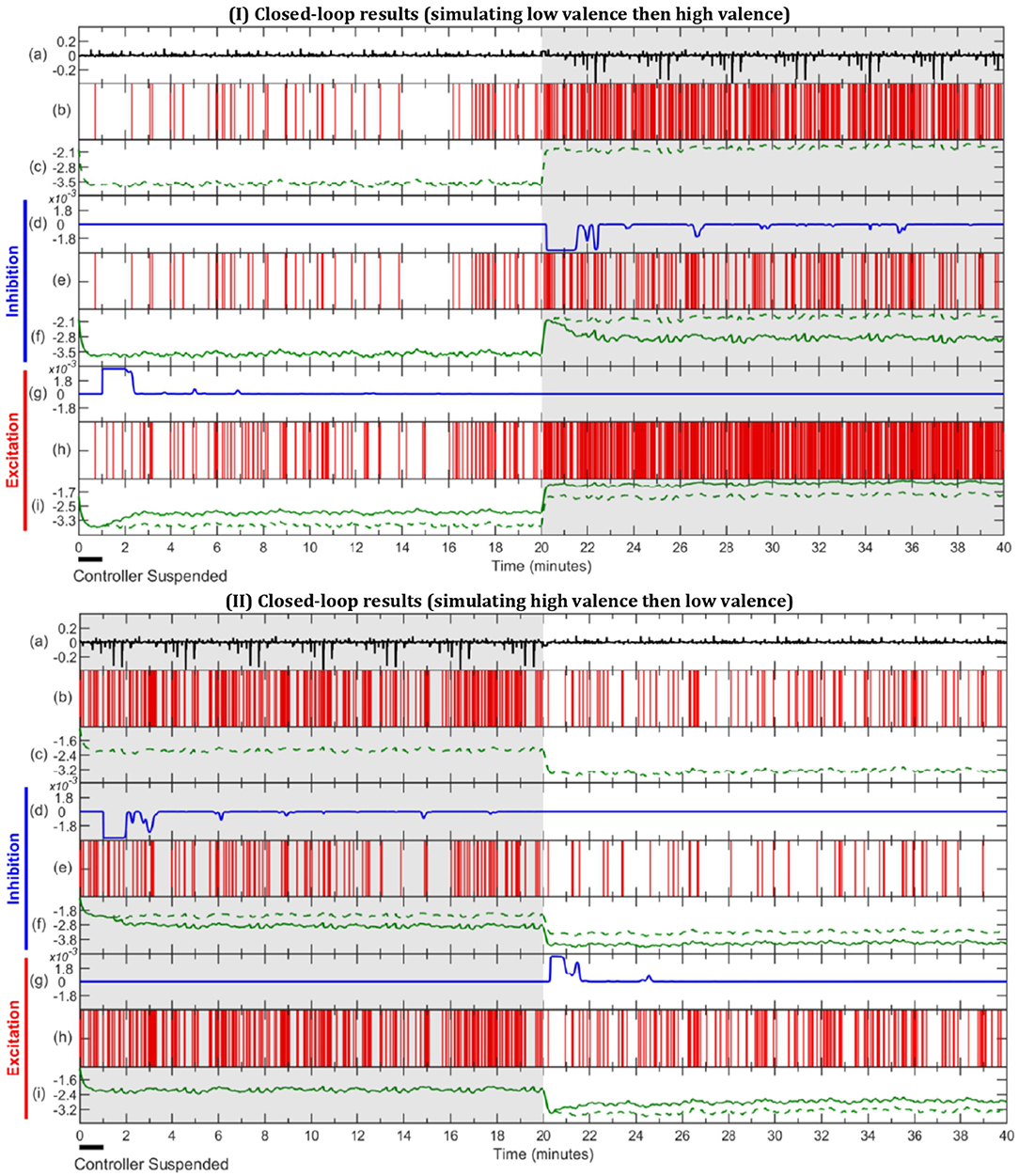

In this section, we present the results obtained for subject 7 in three different simulation scenarios: open-loop, inhibitory closed-loop, and excitatory closed-loop. The results for the other subjects are available in the Supplementary Material. We simulate with an environmental stimulus that is either half LV then half HV, or vice versa. During the first minute, the controller is suspended to let the mixed-filter converge. The results are presented in Figure 6. As depicted in sub-panel (a) of I and II in Figure 6, all three scenarios for a given subject share the same environmental stimuli, starting either with LV or with HV.

Figure 6. Simulation results of the open-loop, inhibitory closed-loop, and excitatory closed-loop scenarios for subject 7. In sub-figure I, the external stimulus comprises half LV, then half HV; sub-figure II is the opposite. In both I and II, LV and HV periods are represented with unshaded and gray-shaded areas, respectively. (a) depicts the environmental stimulus (black) used in all three simulation scenarios. (b,c) show the spike activity (red) and the estimated valence state (green, dashed) during the open-loop, respectively. (d–f) display the inhibitory closed-loop results, with (d) showing the control effort (blue), (e) the corresponding binary signal (red), and (f) the comparison between the open-loop (green, dashed) and closed-loop (green, solid) valence state. In a similar fashion, (g–i) exhibit the excitatory closed-loop outcome.

Scenario 1 - Open-Loop: Since there is no control effort in the open-loop scenario ($u_k = 0$), it is omitted, and the results consist of the spike activity in sub-panel (b) and the corresponding estimated internal state in sub-panel (c), also shown as dashed lines in sub-panels (f) and (i). In sub-figure I of Figure 6, the estimated valence state increases from the LV period in the first half to the HV period in the second half, and so does the frequency of spikes. In contrast, sub-figure II of Figure 6 shows valence levels and the number of spikes declining from the first half (HV) to the second half (LV).

Scenario 2 - Inhibitory Closed-Loop: The inhibitory results are shown in sub-panels (d, e, f) of both I and II in Figure 6. The control signal is zero during the LV periods of the simulations (i.e., during the first half in I and the second half in II). Only when the controller detects an HV period does the control effort take a negative value ($u_k < 0$) to inhibit the emotional valence, effectively lowering the number of spikes shown in sub-panel (e) and the estimated valence state depicted in sub-panel (f), compared with the open-loop case.

Scenario 3 - Excitatory Closed-Loop: The last three sub-panels (g, h, i) of both I and II in Figure 6 depict the results of the excitatory controller. Sub-panel (g) shows that there is no control effort during HV periods, i.e., in the second half of I and the first half of II. Once the controller detects a low valence state, it outputs a positive control effort (u_k > 0). This increases the number of spikes and the estimated valence level in sub-panels (h) and (i) compared with the open-loop case.
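The inhibitory and excitatory behaviors described above can be illustrated with a minimal zero-order Takagi-Sugeno fuzzy controller acting on the estimated valence. This is a sketch under assumed settings, not the controller designed in this study. The membership breakpoints (-1, 0, 1), the maximum effort `u_max` and the rule consequents are hypothetical. Inhibition outputs a negative effort only when valence is HIGH, and excitation outputs a positive effort only when valence is LOW. These correspond to u_k < 0 and u_k > 0 in Figure 6.

```python
def ramp_up(x, a, b):
    """Membership rising linearly from 0 at a to 1 at b."""
    if x <= a:
        return 0.0
    if x >= b:
        return 1.0
    return (x - a) / (b - a)


def triangle(x, a, b, c):
    """Triangular membership with feet at a and c and peak at b."""
    if x <= a or x >= c:
        return 0.0
    if x <= b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


def valence_memberships(x_hat):
    # LOW, MEDIUM and HIGH sets on the estimated valence (hypothetical breakpoints).
    # They form a partition of unity, so the firing strengths always sum to 1.
    return {
        "low": 1.0 - ramp_up(x_hat, -1.0, 0.0),
        "medium": triangle(x_hat, -1.0, 0.0, 1.0),
        "high": ramp_up(x_hat, 0.0, 1.0),
    }


def fuzzy_control(x_hat, mode, u_max=1.0):
    """Return u_k: 'inhibit' gives u_k <= 0, 'excite' gives u_k >= 0."""
    if mode == "inhibit":
        consequents = {"low": 0.0, "medium": 0.0, "high": -u_max}
    elif mode == "excite":
        consequents = {"low": u_max, "medium": 0.0, "high": 0.0}
    else:
        raise ValueError("mode must be 'inhibit' or 'excite'")
    weights = valence_memberships(x_hat)
    # Weighted average of rule consequents (zero-order Sugeno defuzzification)
    return sum(weights[s] * consequents[s] for s in weights) / sum(weights.values())
```

Because the regulation goal is passed in as `mode`, this sketch shares the mono-objective limitation discussed below. It cannot switch between exciting and inhibiting within one run.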

In this study, we use experimental data to build a virtual subject environment, which allows us to simulate and regulate emotional valence levels using a state-space brain model and a fuzzy logic feedback controller. To the best of our knowledge, this in silico feasibility study presents the first closed-loop control framework for the emotional valence state using biofeedback from facial muscles. We use two simultaneous observation models, one binary and one continuous, to relate zEMG measurements to the hidden emotional valence state. The valence state is assumed to be governed by a state-space formulation. The subjects' self-assessed valence ratings from the dataset are given on a 1 to 9 scale and are converted to the high (above 5) or low valence levels used in this study. These valence labels were previously used by scholars as ground truth and were also employed here to determine subject-specific simulation parameters (Yadav et al., 2019). This was done by selecting specific LV and HV trials for modeling based on a trend in the mean and standard deviation of the estimated valence state between the two categories. To capture the surrounding stimuli that influence the subject's affective levels and incorporate them into the simulation, we use the estimated emotional valence progression and a high-order harmonic formulation. This modeling and simulation of the environmental stimuli is currently necessary to evoke representative subject-specific emotional valence responses within the simulated brain model. Thus, modern control techniques can be systematically investigated in silico, allowing this research field to develop without risking harm to any patients.
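Two steps in this paragraph are simple enough to sketch. The first maps the 1 to 9 self-assessment ratings to the binary LV/HV labels, with ratings above 5 counted as high. The second represents the environmental stimulus as a truncated harmonic (Fourier) series fitted to the estimated valence progression. The sketch assumes a uniformly sampled progression covering exactly one period of the series. It uses the discrete Fourier projection, which coincides with the least-squares fit in that case. The number of harmonics and the function names are hypothetical and are not the formulation used in the study.

```python
import math


def valence_label(rating):
    """Map a 1-9 self-assessed valence rating to HV (above 5) or LV."""
    return "HV" if rating > 5 else "LV"


def fit_harmonics(y, n_harmonics):
    """Fit y_k ~ a0 + sum_h [a_h cos(2*pi*h*k/N) + b_h sin(2*pi*h*k/N)].

    Assumes y is uniformly sampled over one full period and n_harmonics < N / 2,
    so these projections equal the least-squares coefficients.
    """
    n = len(y)
    if not 0 < n_harmonics < n / 2:
        raise ValueError("need 0 < n_harmonics < len(y) / 2")
    a0 = sum(y) / n
    a, b = [], []
    for h in range(1, n_harmonics + 1):
        a.append(2.0 / n * sum(v * math.cos(2 * math.pi * h * k / n) for k, v in enumerate(y)))
        b.append(2.0 / n * sum(v * math.sin(2 * math.pi * h * k / n) for k, v in enumerate(y)))
    return a0, a, b


def harmonic_stimulus(a0, a, b, n):
    """Evaluate the fitted harmonic series at k = 0..n-1 to drive the simulation."""
    return [
        a0 + sum(
            a[h - 1] * math.cos(2 * math.pi * h * k / n)
            + b[h - 1] * math.sin(2 * math.pi * h * k / n)
            for h in range(1, len(a) + 1)
        )
        for k in range(n)
    ]
```

The fitted series then plays the role of the stimulus term in the state equation. Using more harmonics follows the estimated progression more closely, at the cost of also fitting its noise.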

At the current stage of this research on closed-loop emotional valence regulation, we focus our contributions on developing the simulated closed-loop framework, and we opted for a fuzzy logic controller to regulate the estimated valence state in simulated profiles. Because the accuracy of the classification method is paramount to the success of our approach, we employed the same methodology for classifying between low and high valence states that reported 89% accuracy in previous work (Yadav et al., 2019). This value is on par with other state-of-the-art methods, while relying on physiological measurements and estimation of the brain state instead of externalized facial or vocal expressions.

Using the proposed knowledge-based controller, we successfully verify the in silico feasibility of the presented methods. By employing a set of simple logic rules, the fuzzy system is capable of producing complex regulating behaviors (Lilly, 2011). This is valuable because insight about the system can come from many sources, such as doctors, other researchers, or the individuals themselves. Moreover, the fuzzy structure is easy to expand, so other physiological signals could be incorporated when designing the control systems (Azgomi et al., 2021a,b). This could further enhance the approach for valence regulation.
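The expandability argument can be illustrated by adding a second input to a rule base. In this sketch, the second input is a hypothetical normalized index from another physiological signal (for example, skin conductance). The LOW/HIGH breakpoints, the rule table and the product t-norm used to combine antecedents are assumptions, not the authors' design. Adding a signal amounts to adding one more label to each rule key, while the defuzzification step stays unchanged.

```python
def ramp_up(x, a, b):
    """Membership rising linearly from 0 at a to 1 at b."""
    if x <= a:
        return 0.0
    if x >= b:
        return 1.0
    return (x - a) / (b - a)


def low_high(x, a=0.0, b=1.0):
    # Complementary LOW/HIGH sets (hypothetical breakpoints a, b)
    high = ramp_up(x, a, b)
    return {"low": 1.0 - high, "high": high}


# Hypothetical inhibitory rule table: (valence label, second-signal label) -> u_k
RULES = {
    ("low", "low"): 0.0,
    ("low", "high"): 0.0,
    ("high", "low"): -0.5,   # high valence, second signal LOW: mild inhibition
    ("high", "high"): -1.0,  # high valence, second signal HIGH: strong inhibition
}


def inhibit_two_inputs(valence, other):
    """Inhibitory control effort from the estimated valence and a second signal."""
    mu_v, mu_o = low_high(valence), low_high(other)
    num = den = 0.0
    for (lab_v, lab_o), u in RULES.items():
        w = mu_v[lab_v] * mu_o[lab_o]  # rule firing strength (product t-norm)
        num += w * u
        den += w
    # Weighted-average defuzzification; den equals 1 here because the sets are complementary
    return num / den if den > 0 else 0.0
```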

In previous research on closed-loop regulation of human-related dynamics, scholars have developed simulators to explore controller designs for Parkinson's disease, cognitive stress, depression and other neurological and neuropsychiatric disorders, as well as for anesthetic delivery, hemodynamic stability, and mechanical ventilation (Boayue et al., 2018; Yang et al., 2018b; Azgomi et al., 2019; Parvinian et al., 2019; Fleming et al., 2020; Ionescu et al., 2021). Here, the proposed architectures are initial steps toward a future wearable machine interface (WMI) implementation. We achieved simulated emotional valence control for both inhibitory and excitatory goals, demonstrating potential for helping individuals maintain daily mental well-being (Azgomi and Faghih, 2019). While no commercial wearable solution for facial EMG measurement is available yet, the potential of this non-invasive procedure to regulate mental states encourages future efforts.

During excitatory action, we observe an increase in the number of spikes and in the overall emotional valence state when needed. For inhibition, our approach produced fewer spikes and a lower valence level as the need arose. However, the size of the response varied across subjects for several reasons. One factor is the use of a single mono-objective fuzzy controller design. In this design, the controller can act locally in the first half of the experiment and correctly adjust the mental state, without considering that the environmental stimuli will further push the subject's valence level in the second half. This architecture also does not account for each individual's peculiarities, e.g., a lack or abundance of emotional engagement throughout the experiment. Further research should explore the optimization of fuzzy membership functions to adapt to different persons and to variations over time. Because the performance of fuzzy logic controllers is highly dependent on their parameters and structure, optimization algorithms could also improve the overall results, as the parameters would not rely on pre-determined knowledge of the system (Qi et al., 2019).

In the exemplary subject depicted in Figure 6, we observe inhibitory action during the HV periods of the inhibition simulation, which lowers the number of spikes and the estimated valence level compared with the open-loop case. Similarly, the excitatory controller acts during LV periods and increases the spike frequency and valence levels accordingly. Overall, subjects 3–5, 8, 11, and 17 (Supplementary Figures S4–S6, S9, S12, S18) showed results similar to the exemplary subject in Figure 6 and achieved reasonable regulation across all scenarios. Of the remaining 10 subjects, 7 performed well in all inhibitory scenarios (Supplementary Figures S2, S3, S10, S11, S13, S16, S17), while 4 performed well in at least one excitatory scenario (Supplementary Figures S10, S14–S16). This could suggest that HV regulation is more challenging, possibly due to the highly variable nature of this mental state.

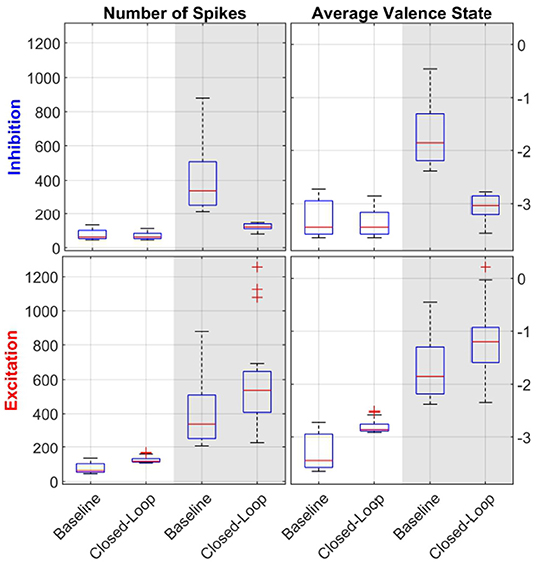

In addition to the subject exemplified in Figure 6, t-tests between the open- and closed-loop simulations were performed for 17 of the 23 subjects, as detailed in Table 2. Figure 7 displays the distribution of the data used in the t-tests for the LV-then-HV order of environmental stimuli. The HV-then-LV order is included in the Supplementary Material and yields a similar analysis. As seen in both Figure 7 and Table 2, LV periods differ significantly during excitatory action, and HV periods differ significantly during inhibition. This holds for both the average valence level and the number of spikes. This indicates that the proposed controller was able to perform as desired and alter the emotional state of various subjects when required. Likewise, LV periods did not differ significantly during inhibitory regulation when the LV period was at the beginning of the simulation, in either spike count or mean valence level. For HV periods during excitation, the number of spikes did not differ significantly when the HV period occurred before the LV period. These results indicate that the controller is able to detect when changes to the brain state are not required. In some cases, the affective state differs significantly in the second half of the experiment even though regulation was not necessary (LV inhibition and HV excitation). This happens because the proposed controller is not multi-objective: a regulation goal, either to excite or to inhibit, is selected beforehand. Thus, after the brain state is properly adjusted in the first half of the simulation, the second half differs from the open-loop baseline, and the mono-objective nature of this approach cannot address this. Further research is still required.

Figure 7. Statistical analysis with boxplot visualization (N = 17) for the LV-then-HV order of environmental stimuli. The left column of sub-panels shows the number of spikes in a given period, while the right column depicts the average valence state. The top row of sub-panels shows results from the inhibitory controller and the bottom row from the excitatory controller. Within each sub-plot, the white background depicts LV periods while the gray-shaded areas show HV results. Each pair of data (i.e., baseline and closed-loop) was used in the t-test analysis. Comparing the open-loop baseline and closed-loop results for the number of spikes and average valence levels, HV periods are statistically significant in both inhibition and excitation (all sub-panels, gray background). For LV periods, results are statistically significant only for excitation (bottom row, white background).
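The open- versus closed-loop comparison can be sketched as a paired t-test, which the pairing of baseline and closed-loop data in Figure 7 suggests. The study does not give its implementation, so this is an assumption. The sketch computes the t-statistic on per-subject differences and compares it with the two-sided 5% critical value for 16 degrees of freedom (2.120, from standard t-tables), matching N = 17 subjects. The spike counts in the usage lines are made-up illustrative numbers, not data from Table 2.

```python
import math
import statistics

# Two-sided critical value of Student's t for alpha = 0.05 and df = 16 (N = 17 pairs)
T_CRIT_DF16 = 2.120


def paired_t(baseline, closed_loop):
    """Return (t statistic, degrees of freedom) for paired samples."""
    if len(baseline) != len(closed_loop) or len(baseline) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [c - b for b, c in zip(baseline, closed_loop)]
    sd = statistics.stdev(diffs)
    if sd == 0:
        raise ValueError("differences have zero variance")
    t = statistics.mean(diffs) / (sd / math.sqrt(len(diffs)))
    return t, len(diffs) - 1


# Illustrative (made-up) spike counts for one HV period under inhibition
open_loop = [10, 12, 9, 11, 13, 10, 12, 11, 9, 10, 12, 13, 11, 10, 12, 11, 10]
reduction = [2, 3, 1, 2, 2, 3, 1, 2, 2, 3, 2, 1, 2, 2, 3, 2, 2]
closed = [o - r for o, r in zip(open_loop, reduction)]
t, df = paired_t(open_loop, closed)
# The hard-coded critical value only applies when df == 16
significant = df == 16 and abs(t) > T_CRIT_DF16
```

A library routine such as a paired-samples t-test with an exact p-value would replace the table lookup in practice. The hand-written version keeps the sketch dependency-free.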

A few subjects (4, 11, 12, 20, 21, and 23) had poor emotional valence state estimation and were discarded from the statistical analysis. These cases also point to directions for improving the proposed approach. These participants showed similar numbers of spikes and valence levels during both LV and HV periods in the open-loop scenario. Thus, in a closed-loop solution, the fuzzy controller cannot distinguish high from low valence levels, which leads to unsatisfactory results. This poor valence estimation could be due to many factors, such as the person not being emotionally engaged during the original data collection or being distracted during the experiment (Chaouachi and Frasson, 2010). As in previous work (Yadav et al., 2019), we assess the performance of the emotional valence estimation using 95% confidence intervals, as depicted in Figure 3. As can be observed in Figure 3, the confidence intervals remain close to the recovered state, which further supports the proposed state-space estimation procedure. Moreover, these discarded subjects may require additional physiological measurements (e.g., electrocardiogram, skin conductance, pupil size) to improve the estimation of the internal brain state. As mentioned above, the flexibility of the proposed state-space and fuzzy logic controller framework means that additional physiological signals could easily be incorporated.
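The estimation step and its 95% confidence intervals can be sketched with a mixed filter in the spirit of Prerau et al. (2008). The filter makes a Gaussian prediction of the state and then applies an update that combines the binary (Bernoulli) and continuous (Gaussian) observations. The posterior mode is found by Newton's method, and the posterior variance is taken from the curvature at the mode. The model form x_k = rho * x_{k-1} + eps_k, the logistic and linear observation links, and all parameter defaults are assumptions for illustration. The study estimates subject-specific parameters with its own procedure, and the stimulus and control terms are omitted here. The intervals are x_{k|k} ± 1.96 sqrt(var_{k|k}).

```python
import math


def _logistic(z):
    return 1.0 / (1.0 + math.exp(-z))


def mixed_filter(spikes, features, rho=1.0, sigma_eps2=0.005, beta0=-3.0,
                 gamma0=0.0, gamma1=1.0, sigma_v2=0.01, x0=0.0, var0=1.0,
                 newton_iters=20):
    """Posterior means and variances of x_k given binary n_k and continuous r_k."""
    x_post, v_post = x0, var0
    means, variances = [], []
    for n, r in zip(spikes, features):
        # Prediction step
        x_pred = rho * x_post
        v_pred = rho * rho * v_post + sigma_eps2
        # Update step: Newton's method on the strictly concave log posterior
        x = x_pred
        for _ in range(newton_iters):
            p = _logistic(beta0 + x)
            grad = (-(x - x_pred) / v_pred + (n - p)
                    + gamma1 * (r - gamma0 - gamma1 * x) / sigma_v2)
            hess = -1.0 / v_pred - p * (1.0 - p) - gamma1 ** 2 / sigma_v2
            x -= grad / hess
        p = _logistic(beta0 + x)
        x_post = x
        # Posterior variance: negative inverse curvature of the log posterior at the mode
        v_post = 1.0 / (1.0 / v_pred + p * (1.0 - p) + gamma1 ** 2 / sigma_v2)
        means.append(x_post)
        variances.append(v_post)
    return means, variances


def ci95(means, variances):
    """95% confidence bounds x_{k|k} +/- 1.96 * sqrt(var_{k|k})."""
    return [(m - 1.96 * math.sqrt(v), m + 1.96 * math.sqrt(v))
            for m, v in zip(means, variances)]
```

A subject whose open-loop LV and HV periods yield nearly identical features would produce overlapping intervals in both periods. That is the situation described above for the discarded subjects.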

The present study has a few limitations. The dataset used had conflicting metadata for 9 of the 32 subjects, which made it impossible to recover the position of all 40 trials; these subjects therefore had to be discarded. Additionally, in real-world scenarios, as in the dataset used, emotional valence spans a spectrum of levels, but we assume only two possible states: high and low valence. This decision is also reflected in the controller design, in which we experiment with only two classes of closed-loop regulation, i.e., excitation and inhibition. Even with this limitation, both the mixed filter and the designed controller provide continuous estimates and control efforts, which allow finer regulation within this spectrum of emotions; this can be addressed in future research. Moreover, this simulation study does not incorporate the controller dynamics or real-world actuators. To implement the proposed architectures in real-world scenarios, it is paramount to consider how valence should be modulated. This includes which actuators to use and how frequently interventions should take place. These questions are challenging to address, especially for an organ as complex as the human brain, and require further investigation. In that sense, future human subject experiments should be designed to explore the dynamics of possible actuation methods for regulating valence states. Previous studies have observed emotional brain responses to changes in lighting or music (Schubert, 2007; Vandewalle et al., 2010; Droit-Volet et al., 2013). These would be interesting to investigate since they are also non-invasive and could be incorporated into a practical system. Future research into adaptive and predictive control strategies would also help address some of the intrinsic biological variations within an individual.
Similarly, the applicability of the proposed approach in the real world depends on real-time estimation of mental states. At this time, we illustrate the feasibility of the approach by simulating brain responses on a per-individual basis. Once the system is implemented, this simulation is no longer required. However, a “training” session might be necessary to calibrate the system to each subject's peculiarities. In addition, robust state estimation or robust control design could be highly important for a real-world application. Lastly, we extracted features from LV and HV trials from the EMG signal of the Zygomaticus major facial muscle, which has been described as a good indicator of valence (Brown and Schwartz, 1980; Ekman et al., 1980; Tan et al., 2012). As a future direction of this research, quantifying the performance of the zEMG signal in detecting fake emotional expressions would further prepare the proposed approach for real-life implementation.

Using the proposed architecture, we were able to regulate a simulated emotional state, specifically emotional valence levels, by implementing a fuzzy controller that acted on a state-space model of the human brain. With a similar approach, a future WMI could recommend a specific music track for a person feeling down, advise a change in lighting for someone in a bad mental state, or even offer a cup of green tea if the user wants to maintain a desired level of well-being (Athavale and Krishnan, 2017; Cannard et al., 2020). While we used experimental data to design a closed-loop system for regulating an internal valence state in a simulation study, a future direction of this research is to design human subject experiments that close the loop in real-world settings. In our future work, we plan to validate the valence state estimator in real time and close the loop accordingly. For example, we plan to incorporate safe actuators such as music or visual stimulation to close the loop. More research is needed, but this is an important step toward new clinical applications and the self-management of mental health.

The publicly available dataset used in this study can be found at http://www.eecs.qmul.ac.uk/mmv/datasets/deap/.

RF conceived and designed the study. LB, AE, HA, and RF developed the algorithms, analysis tools, and revised the manuscript. LB and AE performed research, analyzed data, and wrote the manuscript. All authors contributed to the article and approved the submitted version.

This work was supported in part by NSF CAREER Award 1942585–MINDWATCH: Multimodal Intelligent Noninvasive brain state Decoder for Wearable AdapTive Closed-loop arcHitectures and NSF grant 1755780–CRII: CPS: Wearable-Machine Interface Architectures, and NYU start-up funds.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fncom.2022.747735/full#supplementary-material

Abdelnour, F., Voss, H. U., and Raj, A. (2014). Network diffusion accurately models the relationship between structural and functional brain connectivity networks. Neuroimage 90, 335–347. doi: 10.1016/j.neuroimage.2013.12.039

Ahmadi, M. B., Craik, A., Azgomi, H. F., Francis, J. T., Contreras-Vidal, J. L., and Faghih, R. T. (2019). “Real-time seizure state tracking using two channels: a mixed-filter approach,” in 2019 53rd Asilomar Conference on Signals, Systems, and Computers (Pacific Grove, CA: IEEE), 2033–2039.

Al-Shargie, F., Tang, T. B., Badruddin, N., and Kiguchi, M. (2015). “Mental stress quantification using EEG signals,” in International Conference for Innovation in Biomedical Engineering and Life Sciences (Springer), 15–19.

Al-Sharoa, E., Al-Khassaweneh, M., and Aviyente, S. (2018). Tensor based temporal and multilayer community detection for studying brain dynamics during resting state fMRI. IEEE Trans. Biomed. Eng. 66, 695–709. doi: 10.1109/TBME.2018.2854676

Amin, M. R., and Faghih, R. T. (2020). Identification of sympathetic nervous system activation from skin conductance: a sparse decomposition approach with physiological priors. IEEE Trans. Biomed. Eng. 68, 1726–1736. doi: 10.1109/TBME.2020.3034632

Amin, R., Shams, A. F., Rahman, S. M., and Hatzinakos, D. (2016). “Evaluation of discrimination power of facial parts from 3D point cloud data,” in 2016 9th International Conference on Electrical and Computer Engineering (ICECE) (Dhaka: IEEE), 602–605.

Anuja, T., and Sanjeev, D. (2020). “Speech emotion recognition: a review,” in Advances in Communication and Computational Technology, eds G. Hura, A. Singh, and l. Siong Hoe L (Singapore: Springer).

Athavale, Y., and Krishnan, S. (2017). Biosignal monitoring using wearables: Observations and opportunities. Biomed. Signal Process. Control 38, 22–33. doi: 10.1016/j.bspc.2017.03.011

Azgomi, H. F., Cajigas, I., and Faghih, R. T. (2021a). Closed-loop cognitive stress regulation using fuzzy control in wearable-machine interface architectures. IEEE Access. 9, 106202–106219. doi: 10.1109/ACCESS.2021.3099027

Azgomi, H. F., and Faghih, R. T. (2019). “A wearable brain machine interface architecture for regulation of energy in hypercortisolism,” in 2019 53rd Asilomar Conference on Signals, Systems, and Computers (Pacific Grove, CA: IEEE), 254–258.

Azgomi, H. F., Hahn, J.-O., and Faghih, R. T. (2021b). Closed-loop fuzzy energy regulation in patients with hypercortisolism via inhibitory and excitatory intermittent actuation. Front. Neurosci. 15, 695975. doi: 10.3389/fnins.2021.695975

Azgomi, H. F., Wickramasuriya, D. S., and Faghih, R. T. (2019). “State-space modeling and fuzzy feedback control of cognitive stress,” in 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC) (Berlin: IEEE), 6327–6330.

Azuar, D., Gallud, G., Escalona, F., Gomez-Donoso, F., and Cazorla, M. (2019). “A story-telling social robot with emotion recognition capabilities for the intellectually challenged,” in Iberian Robotics Conference (Porto: Springer), 599–609.

Boayue, N. M., Csifcsák, G., Puonti, O., Thielscher, A., and Mittner, M. (2018). Head models of healthy and depressed adults for simulating the electric fields of non-invasive electric brain stimulation. F1000Research 7, 704. doi: 10.12688/f1000research.15125.2

Bozhkov, L., Koprinkova-Hristova, P., and Georgieva, P. (2017). Reservoir computing for emotion valence discrimination from EEG signals. Neurocomputing 231, 28–40. doi: 10.1016/j.neucom.2016.03.108

Brown, S.-L., and Schwartz, G. E. (1980). Relationships between facial electromyography and subjective experience during affective imagery. Biol. Psychol. 11, 49–62. doi: 10.1016/0301-0511(80)90026-5

Burzagli, L., and Naldini, S. (2020). “Affective computing and loneliness: how this approach could improve a support system,” in International Conference on Human-Computer Interaction (Copenhagen: Springer), 493–503.

Cacioppo, J., Berntson, G., Larsen, J., Poehlmann, K., and Ito, T. (2000). “The psychophysiology of emotion,” in Handbook of Emotions (The Guilford Press), 173–191.

Cacioppo, J., Petty, R., Losch, M., and Kim, H. (1986). Electromyographic activity over facial muscle regions can differentiate the valence and intensity of affective reactions. J. Pers. Soc. Psychol. 50, 260–268. doi: 10.1037/0022-3514.50.2.260

Cai, Y., Guo, Y., Jiang, H., and Huang, M.-C. (2018). Machine-learning approaches for recognizing muscle activities involved in facial expressions captured by multi-channels surface electromyogram. Smart Health 5, 15–25. doi: 10.1016/j.smhl.2017.11.002

Cannard, C., Brandmeyer, T., Wahbeh, H., and Delorme, A. (2020). Self-health monitoring and wearable neurotechnologies. Handbook Clin. Neurol. 168, 207–232. doi: 10.1016/B978-0-444-63934-9.00016-0

Cannon, W. B. (1927). The James-Lange theory of emotions: a critical examination and an alternative theory. Am. J. Psychol. 39, 106–124. doi: 10.2307/1415404

Chaouachi, M., and Frasson, C. (2010). “Exploring the relationship between learner EEG mental engagement and affect,” in International Conference on Intelligent Tutoring Systems (Berlin: Springer), 291–293.

Chronaki, G., Hadwin, J. A., Garner, M., Maurage, P., and Sonuga-Barke, E. J. S. (2015). The development of emotion recognition from facial expressions and non-linguistic vocalizations during childhood. Br. J. Dev. Psychol. 33, 218–236. doi: 10.1111/bjdp.12075

Conway, C. R., Udaiyar, A., and Schachter, S. C. (2018). Neurostimulation for depression in epilepsy. Epilepsy Behav. 88, 25–32. doi: 10.1016/j.yebeh.2018.06.007

Couette, M., Mouchabac, S., Bourla, A., Nuss, P., and Ferreri, F. (2020). Social cognition in post-traumatic stress disorder: a systematic review. Br. J. Clin. Psychol. 59, 117–138. doi: 10.1111/bjc.12238

Das, P., Khasnobish, A., and Tibarewala, D. N. (2016). “Emotion recognition employing ECG and GSR signals as markers of ANS,” in 2016 Conference on Advances in Signal Processing (CASP) (Pune), 37–42.

Dolan, R. J. (2002). Emotion, cognition, and behavior. Science 298, 1191–1194. doi: 10.1126/science.1076358

Droit-Volet, S., Bueno, L. J., Bigand, E., et al. (2013). Music, emotion, and time perception: the influence of subjective emotional valence and arousal? Front. Psychol. 4, 417. doi: 10.3389/fpsyg.2013.00417

Dunn, E. M., and Lowery, M. M. (2013). “Simulation of PID control schemes for closed-loop deep brain stimulation,” in 2013 6th International IEEE/EMBS Conference on Neural Engineering (NER) (San Diego, CA: IEEE), 1182–1185.

Egger, M., Ley, M., and Hanke, S. (2019). Emotion recognition from physiological signal analysis: a review. Electron. Notes Theor. Comput. Sci. 343, 35–55. doi: 10.1016/j.entcs.2019.04.009

Ekman, P., Friesen, W. V., and Ancoli, S. (1980). Facial signs of emotional experience. J. Pers. Soc. Psychol. 39, 1125–1134. doi: 10.1037/h0077722

Fayek, H. M., Lech, M., and Cavedon, L. (2017). Evaluating deep learning architectures for speech emotion recognition. Neural Netw. 92, 60–68. doi: 10.1016/j.neunet.2017.02.013

Feradov, F., Mporas, I., and Ganchev, T. (2020). Evaluation of features in detection of dislike responses to audio-visual stimuli from EEG signals. Computers 9, 33. doi: 10.3390/computers9020033

Filippini, C., Spadolini, E., Cardone, D., Bianchi, D., and Preziuso, M. (2020). Facilitating the child-robot interaction by endowing the robot with the capability of understanding the child engagement: the case of Mio Amico robot. Int. J. Soc. Rob. 13, 677–689. doi: 10.1007/s12369-020-00661-w

Fleming, J. E., Dunn, E., and Lowery, M. M. (2020). Simulation of closed-loop deep brain stimulation control schemes for suppression of pathological beta oscillations in Parkinson's disease. Front. Neurosci. 14, 166. doi: 10.3389/fnins.2020.00166

Freire, R. C., Cabrera-Abreu, C., and Milev, R. (2020). Neurostimulation in anxiety disorders, post-traumatic stress disorder, and obsessive-compulsive disorder. Anxiety Disord. 1191, 331–346. doi: 10.1007/978-981-32-9705-0_18

Golland, Y., Hakim, A., Aloni, T., Schaefer, S. M., and Levit-Binnun, N. (2018). Affect dynamics of facial EMG during continuous emotional experiences. Biol. Psychol. 139, 47–58. doi: 10.1016/j.biopsycho.2018.10.003

Goshvarpour, A., Abbasi, A., and Goshvarpour, A. (2017). An accurate emotion recognition system using ECG and GSR signals and matching pursuit method. Sci. Direct Biomed. J. 40, 355–368. doi: 10.1016/j.bj.2017.11.001

Gruebler, A., and Suzuki, K. (2010). Measurement of distal EMG signals using a wearable device for reading facial expressions. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2010, 4594–4597. doi: 10.1109/IEMBS.2010.5626504

Guimaraes, M. P., Wong, D. K., Uy, E. T., Grosenick, L., and Suppes, P. (2007). Single-trial classification of MEG recordings. IEEE Trans. Biomed. Eng. 54, 436–443. doi: 10.1109/TBME.2006.888824

Harper, R., and Southern, J. (2020). A bayesian deep learning framework for end-to-end prediction of emotion from heartbeat. IEEE Trans. Affect. Comput. 1:1. doi: 10.1109/TAFFC.2020.2981610

Honey, C. J., Sporns, O., Cammoun, L., Gigandet, X., Thiran, J.-P., Meuli, R., et al. (2009). Predicting human resting-state functional connectivity from structural connectivity. Proc. Natl. Acad. Sci. U.S.A. 106, 2035–2040. doi: 10.1073/pnas.0811168106

Ionescu, C. M., Neckebroek, M., Ghita, M., and Copot, D. (2021). An open source patient simulator for design and evaluation of computer based multiple drug dosing control for anesthetic and hemodynamic variables. IEEE Access. 9, 8680–8694. doi: 10.1109/ACCESS.2021.3049880

Kayser, D., Egermann, H., and Barraclough, N. E. (2021). Audience facial expressions detected by automated face analysis software reflect emotions in music. Behav. Res. Methods 1–15. doi: 10.3758/s13428-021-01678-3

Koelstra, S., Mühl, C., Soleymani, M., Lee, J.-S., Yazdani, A., Ebrahimi, T., et al. (2012). DEAP: a database for emotion analysis using physiological signals. IEEE Trans. Affect. Comput. 3, 19–31. doi: 10.1109/T-AFFC.2011.15

Kordsachia, C. C., Labuschagne, I., Andrews, S. C., and Stout, J. C. (2018). Diminished facial EMG responses to disgusting scenes and happy and fearful faces in Huntington's disease. Cortex 106, 185–199. doi: 10.1016/j.cortex.2018.05.019

Kulic, D., and Croft, E. A. (2007). Affective state estimation for human-robot interaction. IEEE Trans. Rob. 23, 991–1000. doi: 10.1109/TRO.2007.904899

Künecke, J., Hildebrandt, A., Recio, G., Sommer, W., and Wilhelm, O. (2014). Facial EMG responses to emotional expressions are related to emotion perception ability. PLoS ONE 9, e84053. doi: 10.1371/journal.pone.0084053

LaConte, S. M., Peltier, S. J., and Hu, X. P. (2007). Real-time fMRI using brain-state classification. Hum. Brain Mapp. 28, 1033–1044. doi: 10.1002/hbm.20326

Lin, S., Jinyan, X., Mingyue, Y., Ziyi, L., Zhenqi, L., Dan, L., et al. (2018). A review of emotion recognition using physiological signals. New Trends Psychophysiol. Mental Health. 18:2074. doi: 10.3390/s18072074

Little, S., Beudel, M., Zrinzo, L., Foltynie, T., Limousin, P., Hariz, M., et al. (2016). Bilateral adaptive deep brain stimulation is effective in Parkinson's disease. J. Neurol. Neurosurg. Psychiatry 87, 717–721. doi: 10.1136/jnnp-2015-310972

Maheshwari, J., Joshi, S. D., and Gandhi, T. K. (2020). Tracking the transitions of brain states: an analytical approach using EEG. IEEE Trans. Neural Syst. Rehabil. Eng. 28, 1742–1749. doi: 10.1109/TNSRE.2020.3005950

Mendez, J. A., Leon, A., Marrero, A., Gonzalez-Cava, J. M., Reboso, J. A., Estevez, J. I., et al. (2018). Improving the anesthetic process by a fuzzy rule based medical decision system. Artif. Intell. Med. 84, 159–170. doi: 10.1016/j.artmed.2017.12.005

Mertens, A., Raedt, R., Gadeyne, S., Carrette, E., Boon, P., and Vonck, K. (2018). Recent advances in devices for vagus nerve stimulation. Expert. Rev. Med. Devices 15, 527–539. doi: 10.1080/17434440.2018.1507732

Naji, M., Firoozabadi, M., and Azadfallah, P. (2014). Classification of music-induced emotions based on information fusion of forehead biosignals and electrocardiogram. Cognit. Comput. 6, 241–252. doi: 10.1007/s12559-013-9239-7

Nakasone, A., Prendinger, H., and Ishizuka, M. (2005). “Emotion recognition from electromyography and skin conductance,” in Proceedings of the 5th International Workshop on biosignal Interpretation (Tokyo), 219–222.

Nie, D., Wang, X.-W., Shi, L.-C., and Lu, B.-L. (2011). “EEG-based emotion recognition during watching movies,” in 2011 5th International IEEE/EMBS Conference on Neural Engineering (Cancun: IEEE), 667–670.

Noroozi, F., Sapinski, T., Kaminska, D., and Anbarjafari, G. (2017). Vocal-based emotion recognition using random forests and decision tree. Int. J. Speech Technol. 20, 239–246. doi: 10.1007/s10772-017-9396-2

Parvinian, B., Pathmanathan, P., Daluwatte, C., Yaghouby, F., Gray, R. A., Weininger, S., et al. (2019). Credibility evidence for computational patient models used in the development of physiological closed-loop controlled devices for critical care medicine. Front. Physiol. 10, 220. doi: 10.3389/fphys.2019.00220

Pfurtscheller, G., Neuper, C., Schlogl, A., and Lugger, K. (1998). Separability of EEG signals recorded during right and left motor imagery using adaptive autoregressive parameters. IEEE Trans. Rehabil. Eng. 6, 316–325. doi: 10.1109/86.712230

Poria, S., Cambria, E., Bajpai, R., and Hussain, A. (2017). A review of affective computing: from unimodal analysis to multimodal fusion. Inf. Fusion 37, 98–125. doi: 10.1016/j.inffus.2017.02.003

Prerau, M. J., Smith, A. C., Eden, U. T., Kubota, Y., Yanike, M., Suzuki, W., et al. (2009). Characterizing learning by simultaneous analysis of continuous and binary measures of performance. J. Neurophysiol. 102, 3060–3072. doi: 10.1152/jn.91251.2008

Prerau, M. J., Smith, A. C., Eden, U. T., Yanike, M., Suzuki, W. A., and Brown, E. N. (2008). A mixed filter algorithm for cognitive state estimation from simultaneously recorded continuous and binary measures of performance. Biol. Cybern. 99, 1–14. doi: 10.1007/s00422-008-0227-z

Price, J. B., Rusheen, A. E., Barath, A. S., Cabrera, J. M. R., Shin, H., Chang, S.-Y., et al. (2020). Clinical applications of neurochemical and electrophysiological measurements for closed-loop neurostimulation. Neurosurg. Focus 49, E6. doi: 10.3171/2020.4.FOCUS20167

Ravindran, A. S., Nakagome, S., Wickramasuriya, D. S., Contreras-Vidal, J. L., and Faghih, R. T. (2019). “Emotion recognition by point process characterization of heartbeat dynamics,” in 2019 IEEE Healthcare Innovations and Point of Care Technologies, (HI-POCT) (Bethesda, MD: IEEE), 13–16.

rong Mao, Q., yu Pan, X., zhao Zhan, Y., and jun Shen, X. (2015). Using Kinect for real-time emotion recognition via facial expressions. Front. Inf. Technol. Electron. Eng. 16, 272–282. doi: 10.1631/FITEE.1400209

Rosula Reyes, S. J., Depano, K. M., Velasco, A. M. A., Kwong, J. C. T., and Oppus, C. M. (2020). “Face detection and recognition of the seven emotions via facial expression: Integration of machine learning algorithm into the NAO robot,” in 2020 5th International Conference on Control and Robotics Engineering (ICCRE) (Osaka: IEEE), 25–29.

Rudovic, O. O., Park, H. W., Busche, J., Schuller, B., Breazeal, C., and Picard, R. W. (2019). “Personalized estimation of engagement from videos using active learning with deep reinforcement learning,” in 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW) (Long Beach, CA: IEEE).

Russell, J. A. (1980). A circumplex model of affect. J. Pers. Soc. Psychol. 39, 1161–1178. doi: 10.1037/h0077714

Sakkalis, V. (2011). Review of advanced techniques for the estimation of brain connectivity measured with EEG/MEG. Comput. Biol. Med. 41, 1110–1117. doi: 10.1016/j.compbiomed.2011.06.020

Santaniello, S., Fiengo, G., Glielmo, L., and Grill, W. M. (2010). Closed-loop control of deep brain stimulation: a simulation study. IEEE Trans. Neural Syst. Rehabil. Eng. 19, 15–24. doi: 10.1109/TNSRE.2010.2081377

Scherer, K. R. (2005). What are emotions? And how can they be measured? Soc. Sci. Inf. 44, 695–729. doi: 10.1177/0539018405058216

Schubert, E. (2007). Locus of emotion: The effect of task order and age on emotion perceived and emotion felt in response to music. J. Music Ther. 44, 344–368. doi: 10.1093/jmt/44.4.344

Sharma, R., Deepak, K., Gaur, P., and Joshi, D. (2020). An optimal interval type-2 fuzzy logic control based closed-loop drug administration to regulate the mean arterial blood pressure. Comput. Methods Programs Biomed. 185, 105167. doi: 10.1016/j.cmpb.2019.105167

Shiva, J., Makaram, N., Karthick, P., and Swaminathan, R. (2021). Emotion recognition using spectral feature from facial electromyography signals for human-machine interface. Stud. Health Technol. Inform. 281, 486–487. doi: 10.3233/SHTI210207

Soroush, M. S., Maghooli, K., Setarehdan, S. K., and Nasrabadi, A. M. (2019). A novel EEG-based approach to classify emotions through phase space dynamics. Signal Image Video Process. (Springer).

Starnes, K., Miller, K., Wong-Kisiel, L., and Lundstrom, B. N. (2019). A review of neurostimulation for epilepsy in pediatrics. Brain Sci. 9, 283. doi: 10.3390/brainsci9100283

Tageldeen, M. K., Elamvazuthi, I., and Perumal, N. (2016). “Motion control for a multiple input rehabilitation wearable exoskeleton using fuzzy logic and PID,” in 2016 IEEE 14th International Workshop on Advanced Motion Control (AMC) (Auckland: IEEE), 473–478.

Tan, J.-W., Walter, S., Scheck, A., Hrabal, D., Hoffmann, H., Kessler, H., et al. (2011). “Facial electromyography (fEMG) activities in response to affective visual stimulation,” in 2011 IEEE Workshop on Affective Computational Intelligence (WACI) (Paris: IEEE), 1–5.

Tan, J.-W., Walter, S., Scheck, A., Hrabal, D., Hoffmann, H., Kessler, H., et al. (2012). Repeatability of facial electromyography (EMG) activity over corrugator supercilii and zygomaticus major on differentiating various emotions. J. Ambient Intell. Humaniz Comput. 3, 3–10. doi: 10.1007/s12652-011-0084-9

Tegeler, C. H., Cook, J. F., Tegeler, C. L., Hirsch, J. R., Shaltout, H. A., Simpson, S. L., et al. (2017). Clinical, hemispheric, and autonomic changes associated with use of closed-loop, allostatic neurotechnology by a case series of individuals with self-reported symptoms of post-traumatic stress. BMC Psychiatry 17, 141. doi: 10.1186/s12888-017-1299-x

Thenaisie, Y., Palmisano, C., Canessa, A., Keulen, B. J., Capetian, P., Jimenez, M. C., et al. (2021). Towards adaptive deep brain stimulation: clinical and technical notes on a novel commercial device for chronic brain sensing. medRxiv. doi: 10.1088/1741-2552/ac1d5b

Val-Calvo, M., Álvarez-Sánchez, J. R., Ferrández-Vicente, J. M., and Fernández, E. (2020). Affective robot story-telling human-robot interaction: exploratory real-time emotion estimation analysis using facial expressions and physiological signals. IEEE Access 8, 134051–134066. doi: 10.1109/ACCESS.2020.3007109

Van Boxtel, A. (2010). Facial EMG as a tool for inferring affective states. Proc. Meas. Behav. 7, 104–108. doi: 10.1371/journal.pone.0226328

Vandewalle, G., Schwartz, S., Grandjean, D., Wuillaume, C., Balteau, E., Degueldre, C., et al. (2010). Spectral quality of light modulates emotional brain responses in humans. Proc. Natl. Acad. Sci. U.S.A. 107, 19549–19554. doi: 10.1073/pnas.1010180107

Villanueva-Meyer, J. E., Mabray, M. C., and Cha, S. (2017). Current clinical brain tumor imaging. Neurosurgery 81, 397–415. doi: 10.1093/neuros/nyx103

Wang, K., An, N., Li, B. N., Zhang, Y., and Li, L. (2015). Speech emotion recognition using Fourier parameters. IEEE Trans. Affect. Comput. 6, 69–75. doi: 10.1109/TAFFC.2015.2392101

Wei, X., Huang, M., Lu, M., Chang, S., Wang, J., and Deng, B. (2020). “Personalized closed-loop brain stimulation system based on linear state space model identification,” in 2020 39th Chinese Control Conference (CCC) (Shenyang: IEEE), 1046–1051.

Weiss, D., and Massano, J. (2018). Approaching adaptive control in neurostimulation for Parkinson disease: autopilot on. Neurology 90, 497–498. doi: 10.1212/WNL.0000000000005111

Wickramasuriya, D. S., Amin, M. R., and Faghih, R. T. (2019a). Skin conductance as a viable alternative for closing the deep brain stimulation loop in neuropsychiatric disorders. Front. Neurosci. 13, 780. doi: 10.3389/fnins.2019.00780

Wickramasuriya, D. S., and Faghih, R. T. (2020). A mixed filter algorithm for sympathetic arousal tracking from skin conductance and heart rate measurements in Pavlovian fear conditioning. PLoS ONE 15, e0231659. doi: 10.1371/journal.pone.0231659

Wickramasuriya, D. S., Tessmer, M. K., and Faghih, R. T. (2019b). “Facial expression-based emotion classification using electrocardiogram and respiration signals,” in 2019 IEEE Healthcare Innovations and Point of Care Technologies (HI-POCT) (Bethesda, MD: IEEE), 9–12.

Wiem, A., Rahma, B., and Naoui, S. B. H. A. (2018). “An adaptive TS fuzzy-PID controller applied to an active knee prosthesis based on human walk,” in 2018 International Conference on Advanced Systems and Electric Technologies (IC_ASET) (Hammamet: IEEE), 22–27.

Wilson, K., James, G., and Kilts, C. (2020). Combining physiological and neuroimaging measures to predict affect processing induced by affectively valent image stimuli. Sci. Rep. 10, 9298. doi: 10.1038/s41598-020-66109-3

Wu, S., Xu, X., Shu, L., and Hu, B. (2017). “Estimation of valence of emotion using two frontal EEG channels,” in 2017 IEEE International Conference on Bioinformatics and Biomedicine (BIBM) (Kansas City, MO: IEEE), 1127–1130.

Yadav, T., Atique, M. M. U., Azgomi, H. F., Francis, J. T., and Faghih, R. T. (2019). “Emotional valence tracking and classification via state-space analysis of facial electromyography,” in 2019 53rd Asilomar Conference on Signals, Systems, and Computers (Pacific Grove, CA: IEEE), 2116–2120.

Yang, D., Alsadoon, A., Prasad, P. C., Singh, A. K., and Elchouemi, A. (2018a). An emotion recognition model based on facial recognition in virtual learning environment. Procedia Comput. Sci. 125, 2–10. doi: 10.1016/j.procs.2017.12.003

Yang, Y., Connolly, A. T., and Shanechi, M. M. (2018b). A control-theoretic system identification framework and a real-time closed-loop clinical simulation testbed for electrical brain stimulation. J. Neural Eng. 15, 066007. doi: 10.1088/1741-2552/aad1a8

Yu, C., and Tapus, A. (2019). Interactive robot learning for multimodal emotion recognition. Int. J. Soc. Rob. 633–642. doi: 10.1007/978-3-030-35888-4_59

Zeng, N., Zhang, H., Song, B., Liu, W., Li, Y., and Dobaie, A. M. (2018). Facial expression recognition via learning deep sparse autoencoders. Neurocomputing 273, 643–649. doi: 10.1016/j.neucom.2017.08.043

Keywords: closed-loop, control, brain, emotion, valence, electromyogram (EMG), wearable, state-space

Citation: Branco LRF, Ehteshami A, Azgomi HF and Faghih RT (2022) Closed-Loop Tracking and Regulation of Emotional Valence State From Facial Electromyogram Measurements. Front. Comput. Neurosci. 16:747735. doi: 10.3389/fncom.2022.747735

Received: 27 July 2021; Accepted: 21 February 2022;

Published: 25 March 2022.

Edited by:

Jane Zhen Liang, Shenzhen University, China

Reviewed by:

Qing Yun Wang, Beihang University, China

Copyright © 2022 Branco, Ehteshami, Azgomi and Faghih. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Rose T. Faghih, rfaghih@nyu.edu

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
