
ORIGINAL RESEARCH article

Front. Comput. Neurosci., 28 October 2021

Volume 15 - 2021 | https://doi.org/10.3389/fncom.2021.740601

The decision making function is governed by the complex coupled neural circuit in the brain. The underlying energy landscape provides a global picture for the dynamics of the neural decision making system and has been described extensively in the literature, but often as illustrations. In this work, we explicitly quantified the landscape for perceptual decision making based on a biophysically realistic cortical network with spiking neurons to mimic a two-alternative visual motion discrimination task. Under certain parameter regions, the underlying landscape displays bistable or tristable attractor states, which quantify the transition dynamics between different decision states. We identified two intermediate states: the spontaneous state, which increases the plasticity and robustness of changes of mind, and the “double-up” state, which facilitates the state transitions. The irreversibility of the bistable and tristable switches due to the probabilistic curl flux demonstrates the inherent non-equilibrium characteristics of the neural decision system. The results of global stability of decision-making quantified by barrier height inferred from landscape topography and mean first passage time are in line with experimental observations. These results advance our understanding of the stochastic and dynamical transition mechanism of decision-making function, and the landscape and kinetic path approach can be applied to other cognitive function related problems (such as working memory) in brain networks.

The brain operates as a complex non-linear dynamical system, performing various physiological or cognitive functions. The computational ability emerges when a collection of neurons richly interact with each other via excitation or inhibition. Decision making is the cognitive process of choosing a particular action or opinion among a set of alternatives; it governs behavioral flexibility and pervades all aspects of our life (Lee, 2013). The decision making process for sensory stimuli, such as the interpretation of an ambiguous image (Sterzer et al., 2009; Wang et al., 2013) or the discrimination of motion direction of random dots (Shadlen and Newsome, 2001; Roitman and Shadlen, 2002; Churchland et al., 2008; Lin et al., 2020), is closely associated with the lateral intraparietal cortex (area LIP), which receives inputs from sensory cortex and guides the motor output.

Decision making functions have been successfully described by the attractor network-based framework (Wang, 2002; Wong and Wang, 2006; Wong et al., 2007; Deco et al., 2013; You and Wang, 2013; Murray et al., 2017), which is characterized by its ability to account for the persistent activity observed broadly across many decision-related neurons. In the attractor network model, the persistent activity of the neural populations can be sustained, i.e., the system settles in the decided attractor state, even after the withdrawal of the external stimulus (Wang, 2002; Wong and Wang, 2006).

A concept closely related to attractor state is multistability, which refers to the coexistence of multiple steady states and exists in both a single neuron and neuronal populations in the brain, evidenced by a number of theoretical and experimental studies (Kelso, 2012). For example, two patterns of neuronal oscillations in cortical neurons, slow oscillations vs. tonic and irregular firing, correspond to two different cortical states, slow-wave sleep vs. wakefulness (Shilnikov et al., 2005; Fröhlich and Bazhenov, 2006; Destexhe et al., 2007; Sanchez-Vives et al., 2017). Perceptual multistability in visual and auditory systems is closely related to the coordination of a diversity of behaviors (Kondo et al., 2017). Furthermore, the brain is inherently noisy, with noise originating from stochastic external inputs and highly irregular spiking activity within cortical circuits (Wang, 2008; Braun and Mattia, 2010). In a multistable system, the noise induces alternations between distinct attractors in an irregular manner. For example, during extended gazing at a multistable image, inherent brain noise is responsible for inducing spontaneous switches between coexisting perceptual states (Braun and Mattia, 2010). The switching between coexisting mental states during thinking is also triggered by neuronal brain noise, either spontaneously or initiated by external stimulation (Kelso, 2012). Despite many advances on attractor networks and multistability in neural systems, the stochastic transition dynamics and global stability for decision making in neural networks have yet to be fully clarified.

For non-equilibrium dissipative dynamical systems such as biological neural circuits, the non-equilibrium potential (NEP) can be defined to facilitate the quantification of the global stability (Ludwig, 1975; Graham, 1987; Ao, 2004). Hopfield pioneered the qualitative concept of an "energy function" to explore the computational properties of neural circuits, such as associative memory (Hopfield, 1984; Hopfield and Tank, 1986; Tank and Hopfield, 1987). The underlying energy landscape provides a global and quantitative picture of system dynamics, which can potentially be used to study the stochastic transition dynamics of neural networks, and has been described extensively in the literature, but mostly as illustrations (Walczak et al., 2005; Wong and Wang, 2006; Moreno-Bote et al., 2007; Braun and Mattia, 2010; Fung et al., 2010; Rolls, 2010; Zhang and Wolynes, 2014). Recently, some efforts have been devoted to quantifying the energy landscape from mathematical models. For neural oscillations related to cognitive processes, for example the rapid-eye movement sleep cycle, the landscape shows a closed-ring attractor topography (Yan et al., 2013). The energy landscape theory also uncovers the essential stability-flexibility-energy trade-off in working memory and the speed-accuracy trade-off in decision making (Yan et al., 2016; Yan and Wang, 2020). However, these attempts are based on simplified biophysical models rather than a more realistic spiking neural network model, and so may not fully capture some key aspects of the corresponding cognitive function (Wong and Wang, 2006). Therefore, how to quantify the energy landscape of cognitive systems based on a spiking neural network is still a challenging problem. In addition, the non-equilibrium landscape and flux framework has been applied to explore cell fate decision-making based on gene regulatory networks (Wang et al., 2008; Li and Wang, 2013a,b, 2014a; Lv et al., 2015; Ge and Qian, 2016; Ye et al., 2021). In this work, we therefore focus on quantifying the energy landscape from a more plausible spiking cell-based neural network model to study the underlying stochastic dynamics mechanism of perceptual decision making in the brain.

In this work, we aim to quantify the attractor landscape, and from it the stochastic dynamics and global stability of the decision making function, using the spiking neural network model. The model we employed here was first introduced in Wang (2002) and is characterized by a winner-take-all competition mechanism for the binary decision. Depending on the parameter choice, the underlying neural circuit displays up to four stable attractors, which characterize the decision states, the spontaneous state and the intermediate states. The irreversibility of the kinetic transition paths between attractors is due to the probabilistic flux, which measures the extent to which detailed balance is broken in the non-equilibrium biological neural system. The barrier heights inferred from the landscape topography are correlated with the escape time, indicating the robustness of the decision attractor against fluctuations. The results for barrier height also agree well with the reaction time recorded in behavioral experiments. These analyses of the landscape and transition dynamics facilitate our understanding of the underlying physical mechanism of decision making functions.

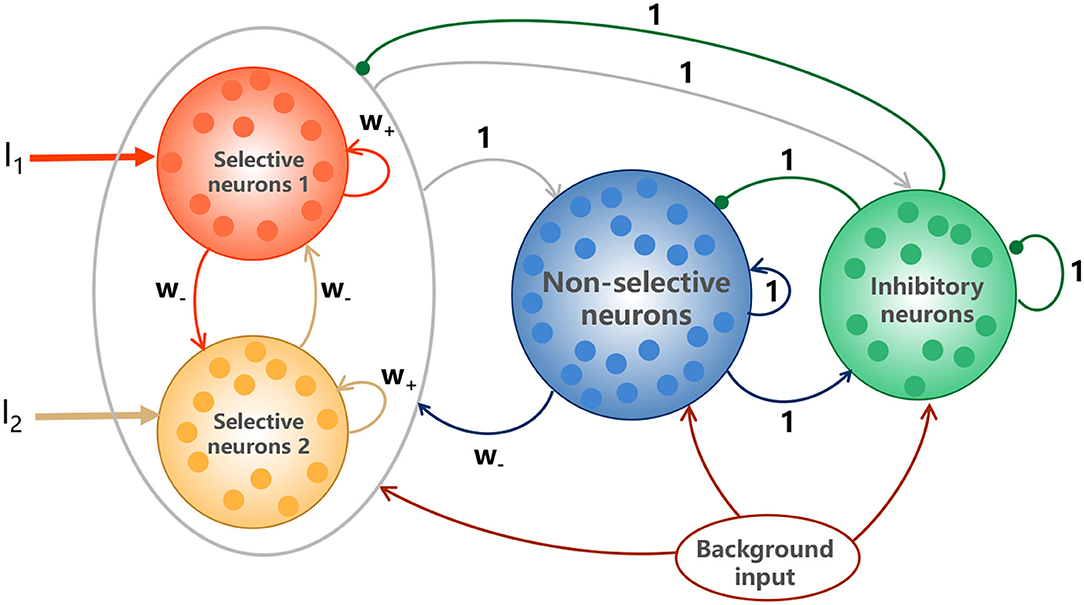

The classic random-dot motion discrimination (RDM) task is a suitable experimental paradigm to study perceptual decision making behavior and the associated brain activity (Newsome et al., 1989; Shadlen and Newsome, 1996, 2001; Resulaj et al., 2009; Stine et al., 2020). The monkeys are trained to judge the direction of motion in a random dot display and their choices are indicated by a saccadic eye movement. We explored a biologically realistic attractor model with spiking neurons, first introduced in Wang (2002), to account for the decision making function. The model is composed of two selective excitatory populations (labeled as S1 and S2), each encoding one of the two target directions, one non-selective excitatory population (labeled as NS) and one inhibitory interneuron population (labeled as I), as illustrated in Figure 1. S1 and S2 are characterized by strong recurrent self-excitation dominated by NMDA-mediated receptors and by mutual inhibition mediated by I. The model details are described in the Methods.

Figure 1. Schematic depiction of the model. The model is characterized by the strong recurrent excitation in stimulus-selective populations and the reciprocal inhibition between them mediated by GABAergic interneurons (inhibitory neurons). The two selective populations receive Poisson spike trains I1 and I2, representing the external sensory inputs. All neurons receive background inputs, allowing spontaneous firing at a few hertz. Arrows represent activation, and dots denote repression. The numbers above the links indicate the dimensionless interaction strengths.

To imitate the sensory input of the RDM task, S1 and S2 independently receive the stimulus inputs I1 and I2 until the end of the simulation, which are modeled as uncorrelated Poisson spike trains with rates μ1 and μ2 (the unit is Hz). For S1, μ1 = μ(1+c), and for S2, μ2 = μ(1−c), where c represents the motion strength (the percentage of coherently moving dots), reflecting the difficulty of the task and μ is the stimulus strength.
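The input rule above can be sketched in a few lines of Python. This is only an illustration, not the simulation code used in this work: the function names, the 1 ms bin width and the 2 s duration are hypothetical choices, and c is assumed to be given as a fraction (for example 0.032 for 3.2% coherence).

```python
import numpy as np

def stimulus_rates(mu, c):
    """Return (mu1, mu2) in Hz: mu1 = mu*(1+c), mu2 = mu*(1-c).

    Assumption: c is a fraction (0.032 means 3.2% coherence).
    """
    return mu * (1.0 + c), mu * (1.0 - c)

def poisson_spike_counts(rate_hz, duration_s, dt_s, rng):
    """Spike counts per time bin for a homogeneous Poisson process."""
    n_bins = int(round(duration_s / dt_s))
    return rng.poisson(rate_hz * dt_s, size=n_bins)

rng = np.random.default_rng(0)
mu1, mu2 = stimulus_rates(mu=58.0, c=0.032)

# Two uncorrelated spike trains, one per selective population
# (hypothetical duration and bin width, chosen for illustration only).
counts1 = poisson_spike_counts(mu1, duration_s=2.0, dt_s=1e-3, rng=rng)
counts2 = poisson_spike_counts(mu2, duration_s=2.0, dt_s=1e-3, rng=rng)
```

Note that μ1 + μ2 = 2μ for any c, so changing the coherence redistributes the input between the two pools without changing the total drive.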

In this work, we will probe how the circuit structure and stimulus input, more specifically, the recurrent connectivity w+ in S1 and S2, the stimulus strength μ and the motion strength c, influence the dynamical behavior of the decision making system in terms of the underlying energy landscape and the kinetics of state switching. The simulations are implemented in Brian2, a free, open source simulator for spiking neural networks (Stimberg et al., 2019). The equations (see Methods) are integrated numerically using a second order Runge-Kutta method with a time step dt = 0.02 ms.

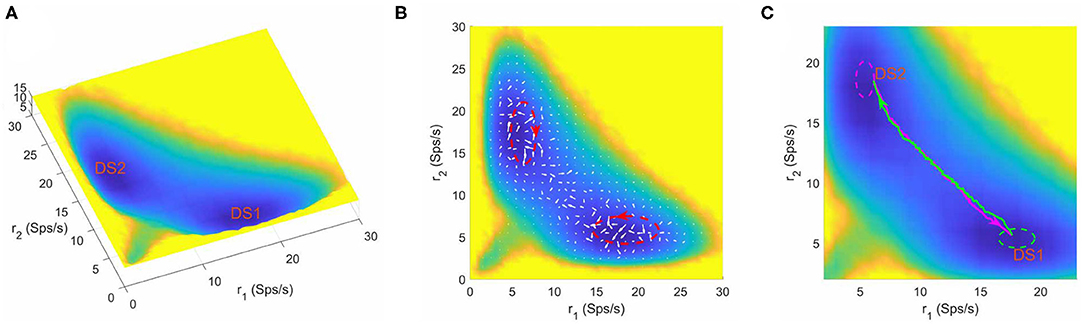

In the context of a two-choice decision task, at zero coherence, the stimuli to the two selective pools are similar and hard to distinguish, so the system will make decisions randomly in a “coin tossing” manner. Therefore, it can be anticipated that there exist two symmetric attractors, each in favor of one of the two distinct choices (the left or right motion direction), in the binary decision system. When the parameters are specified as w+ = 1.61, μ = 58 and c = 0, we obtained a bistable attractor landscape (shown in Figure 2). The normalized steady state probability distribution Pss(r1, r2) is first quantified by collecting the statistics of the system state in the “decision space” spanned by the population-averaged firing rates r1 and r2 of the two selective populations, and then the potential landscape can be mapped out by U(r1, r2) = −ln Pss(r1, r2) (Sasai and Wolynes, 2003; Li and Wang, 2013a, 2014a), where U(r1, r2) is the dimensionless potential (see section 2 for details). Here, the potential U(r1, r2) does not correspond to a real energy in a physical system, but is a reflection of the steady state probability distribution. In Figure 2A, the blue regions represent higher probability or lower potential, while the yellow regions indicate lower probability or higher potential. The landscape displays two symmetric basins of attraction. The two attractors with higher activity for one neural population (winner) and lower activity for the other (loser) are identified as distinct decision states, DS1 and DS2.
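As a rough illustration of the mapping U = −ln Pss, the sketch below bins a long (r1, r2) trajectory on a grid and takes the negative logarithm of the normalized histogram. The function name, the bin count and the synthetic two-cluster data (standing in for simulated firing rates) are assumptions made for this example; the actual procedure is given in the Methods.

```python
import numpy as np

def landscape_from_rates(r1, r2, bins=50, value_range=None):
    """Estimate P_ss(r1, r2) by a 2D histogram and return U = -ln P_ss.

    Bins that were never visited have P_ss = 0 and therefore U = +inf.
    """
    hist, r1_edges, r2_edges = np.histogram2d(r1, r2, bins=bins, range=value_range)
    p_ss = hist / hist.sum()              # normalized steady-state probability
    with np.errstate(divide="ignore"):    # log(0) -> -inf is expected here
        u = -np.log(p_ss)
    return u, p_ss, r1_edges, r2_edges

# Synthetic stand-in for firing-rate samples: two symmetric clusters,
# loosely mimicking DS1 (high r1, low r2) and DS2 (low r1, high r2).
rng = np.random.default_rng(1)
n = 20000
centers = np.array([[30.0, 5.0], [5.0, 30.0]])
which = rng.integers(0, 2, size=n)
samples = centers[which] + rng.normal(scale=3.0, size=(n, 2))

u, p_ss, r1_edges, r2_edges = landscape_from_rates(samples[:, 0], samples[:, 1], bins=40)
```

Because −ln is monotonically decreasing, the most frequently visited bin is the global minimum of U, which is why basins of attraction appear as valleys.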

Figure 2. (A) The energy landscape for the network dynamics with bistability. (B) The probabilistic flux (white arrows) for the bistable system. The red dashed circles show the curl direction of flux. (C) The dynamical transition paths between the two decision states for bistable dynamics. The green line is the transition path from DS1 to DS2 and the magenta line is the reverse transition. The green and magenta dashed circles indicate the decision boundaries where the corresponding decision is made. The parameters are set to w+ = 1.61, μ = 58, and c = 0. r1 and r2 are the population averaged firing rates for the two selective pools. DS1, decision state 1; DS2, decision state 2; Sps/s, spikes/s.

The decision making process corresponds to the transition from a spontaneous state where both S1 and S2 fire with similar low rates, to a decision state (DS1 or DS2) where they compete with each other in a winner-take-all manner, in response to the motion input. The attractor landscape provides an intuitive explanation for the decision process. Starting from an initial resting state, the system will evolve dynamically to arrive at a decision state. This process can be pictured as the system follows a path downhill to the bottom of the nearest valley which has the minimal potential energy (DS1 or DS2).

In response to the visual stimulus, subjects will make an initial decision. However, they continue to accumulate noisy evidences (Resulaj et al., 2009) or monitor the correctness of the previous decision (Cavanagh and Frank, 2014) after a choice has been made. Then the decision-maker may or may not change the mind, i.e., the initial decision is made to subsequently either reverse or reaffirm. Since the decision-making is endowed with randomness from the external stimulus and spontaneous activity fluctuations, the stability of an attractor state in landscape is only guaranteed up to a limited time-scale. In the case of changing minds, the noise will terminate the self-sustained pattern of activity and drive the system to escape away from the initial attractor and switch to the other although the stimulus input remains unchanged.

The neural system operates far from equilibrium. We therefore quantified the non-equilibrium probability flux map (denoted by the white arrows in Figure 2B) to measure the extent of the violation of detailed balance. The flux is calculated from the dynamical stochastic trajectories of neural activity (the time window used to calculate the firing rate is 50 ms) over a long time (see section 2) (Battle et al., 2016). We can see that the force from the curl flux drives the system away from one local attractor and guides the transition to the other. To see the effects of using different time windows, we show typical trajectories for different time windows (Supplementary Figure 1). We also estimated the landscape and probabilistic flux when the time window used to calculate the firing rate is 20 ms (Supplementary Figure 2). A major difference is that smaller time windows give larger fluctuations (Supplementary Figure 1), which is also reflected in the landscape: the landscape obtained with a 20 ms time window displays slightly more variation (compare Figure 2B and Supplementary Figure 2). As for the curl flux, different time windows lead to qualitatively similar results (compare Figure 2B and Supplementary Figure 2), i.e., the magnitude of the flux is larger in the region close to the basins, and the flux has a definite curl direction.
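One simple way to estimate a probability flux field from a sampled trajectory is to multiply the local probability density by the local mean velocity, using a central difference for the velocity. The sketch below uses that simplified estimator; it is not claimed to be the exact procedure of Battle et al. (2016) or of this work. The function name, the grid and the toy two-dimensional process with a rotational drift (used only to generate data with a known curl direction) are assumptions.

```python
import numpy as np

def estimate_flux(traj, dt, bins, value_range):
    """Estimate J(x) ~ P(x) * <v | x> on a grid from a (T, 2) trajectory.

    v is the central-difference velocity (x[t+1] - x[t-1]) / (2*dt).
    """
    mid = traj[1:-1]
    vel = (traj[2:] - traj[:-2]) / (2.0 * dt)
    counts, xe, ye = np.histogram2d(mid[:, 0], mid[:, 1], bins=bins, range=value_range)
    sum_vx, _, _ = np.histogram2d(mid[:, 0], mid[:, 1], bins=bins,
                                  range=value_range, weights=vel[:, 0])
    sum_vy, _, _ = np.histogram2d(mid[:, 0], mid[:, 1], bins=bins,
                                  range=value_range, weights=vel[:, 1])
    area = (xe[1] - xe[0]) * (ye[1] - ye[0])
    n = counts.sum()
    # density * mean velocity = (counts / (n*area)) * (sum_v / counts)
    jx = sum_vx / (n * area)
    jy = sum_vy / (n * area)
    return jx, jy, counts / n, xe, ye

# Toy non-equilibrium process: linear relaxation plus a rotational drift.
rng = np.random.default_rng(2)
dt, n_steps, omega = 0.01, 50000, 2.0
noise = rng.normal(scale=np.sqrt(2.0 * dt), size=(n_steps, 2))
traj = np.zeros((n_steps, 2))
for t in range(1, n_steps):
    x, y = traj[t - 1]
    drift = np.array([-x - omega * y, omega * x - y])
    traj[t] = traj[t - 1] + drift * dt + noise[t]

jx, jy, p, xe, ye = estimate_flux(traj, dt, bins=20, value_range=[[-4, 4], [-4, 4]])

# Net circulation about the origin; nonzero values signal broken detailed balance.
xc = 0.5 * (xe[:-1] + xe[1:])
yc = 0.5 * (ye[:-1] + ye[1:])
X, Y = np.meshgrid(xc, yc, indexing="ij")
area = (xe[1] - xe[0]) * (ye[1] - ye[0])
circulation = np.sum(X * jy - Y * jx) * area
```

For an equilibrium process (omega = 0 in this toy model) the estimated circulation would fluctuate around zero, whereas the rotational drift produces a counterclockwise flux and hence a positive value.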

The dynamical transition paths corresponding to the change of mind process (green line for DS1 to DS2 and magenta line for DS2 to DS1) are shown in Figure 2C. Note that the forward and backward paths are irreversible, which is a consequence of the non-equilibrium curl flux (Wang et al., 2008; Li and Wang, 2014a; Yan and Wang, 2020). It is worth noting that the curl flux and noise play different roles in the decision making system. Here, the role of the non-zero flux is to break detailed balance, which is also the cause of the irreversibility of the forward and backward transition paths (the green path and the magenta path are not the same, Figure 2C). If the flux is zero, the green and magenta paths will coincide completely, which corresponds to an equilibrium case (Wang, 2015). To see whether this irreversibility is due to stochastic effects, we used different stochastic trajectories to calculate the transition path (Supplementary Figure 3) and obtained consistent curl directions for the irreversible transition paths for both the bistable and tristable systems. We also show single-trajectory examples, which support the irreversibility of the transition path for both the bistable and tristable cases (Supplementary Figure 4). Therefore, the flux is important for the state transitions. As for the role of noise, we see that although the landscape and flux remain the same, single samples of the transition path vary due to noise effects (Supplementary Figure 4). The noise also contributes to the state transition through the barrier crossing process.

Furthermore, we found that the number of stable attractors on the landscape changes under different parameter combinations. Figure 3A shows the tristable attractor landscape of the decision making network for w+ = 1.66, μ = 16 and c = 0. Compared to Figure 2A, the attractor that emerges at the bottom left corner is identified as the spontaneous undecided state with low activity in both selective neural populations, indicated by SS on the landscape. As in the case of bistability, the curl flux and the irreversibility of the transition paths also exist in the tristable system (Figures 3B,C), illustrating the inherent non-equilibrium property of the neural system. It is worth noting that the kinetic switches between the two decision states go through the SS state (green and magenta paths in Figure 3C), indicating that the system will first erase the former decision (return to the resting state) and then make another decision (Pereira and Wang, 2014). The SS state can therefore be treated as an intermediate state.

Figure 3. (A) The energy landscape for network dynamics with tristability. (B) The probabilistic flux (white arrows) for the tristable system. The red dashed circles show the curl direction of flux. (C) The dynamical transition paths between the two decision states. The green line is the transition path from DS1 to DS2 and the magenta line is the reverse transition. The change of mind process evolves through the SS state. The green and magenta dashed circles indicate the decision boundaries where the corresponding decision is made and the white dashed circle represents the attractor region for the spontaneous state. The parameters are set to w+ = 1.66, μ = 16, and c = 0. r1 and r2 are the population averaged firing rates for the two selective pools. SS, spontaneous state; DS1, decision state 1; DS2, decision state 2; Sps/s, spikes/s.

Intermediate states have been observed in various biological processes. For example, in epithelial–mesenchymal transition, stem cell differentiation and cancer development, intermediate-state cell types play crucial roles in the cell fate decision systems governed by the corresponding gene regulatory networks (Lu et al., 2013; Li and Wang, 2014b; Li and Balazsi, 2018; Kang et al., 2019). For working memory, the presence of the intermediate state significantly enhances the flexibility of the system to a new stimulus without seriously reducing the robustness against distractors (Yan and Wang, 2020). For the decision making function in this work, our landscape picture provides some hints on the role of the intermediate state in mind changing. With the SS state present, the mind changing process occurs in a step-wise way. The system will first switch to the SS state and stay there for a while, and then, depending on the newly accumulated evidence, either transit to the other decision state (change of mind) or return to the initial decision state (reaffirm the previous decision) (Figure 3C). This demonstrates that the intermediate state may increase the plasticity and robustness of perceptual decision making, as the system can switch back to the original decision state from the intermediate state if the accumulated evidence is not enough for a change of mind.

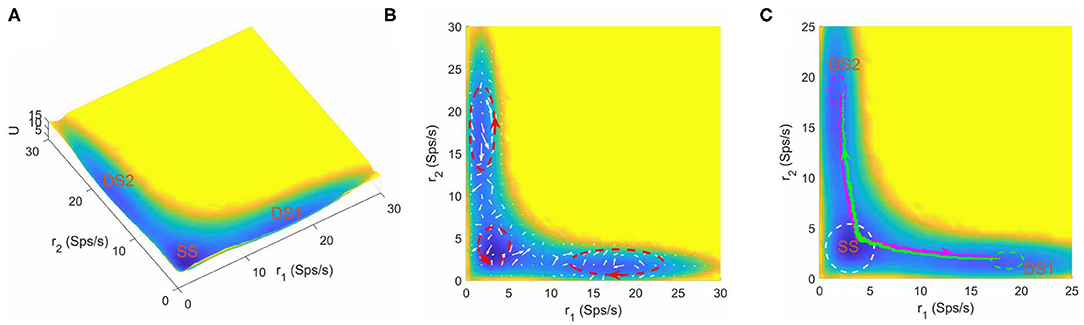

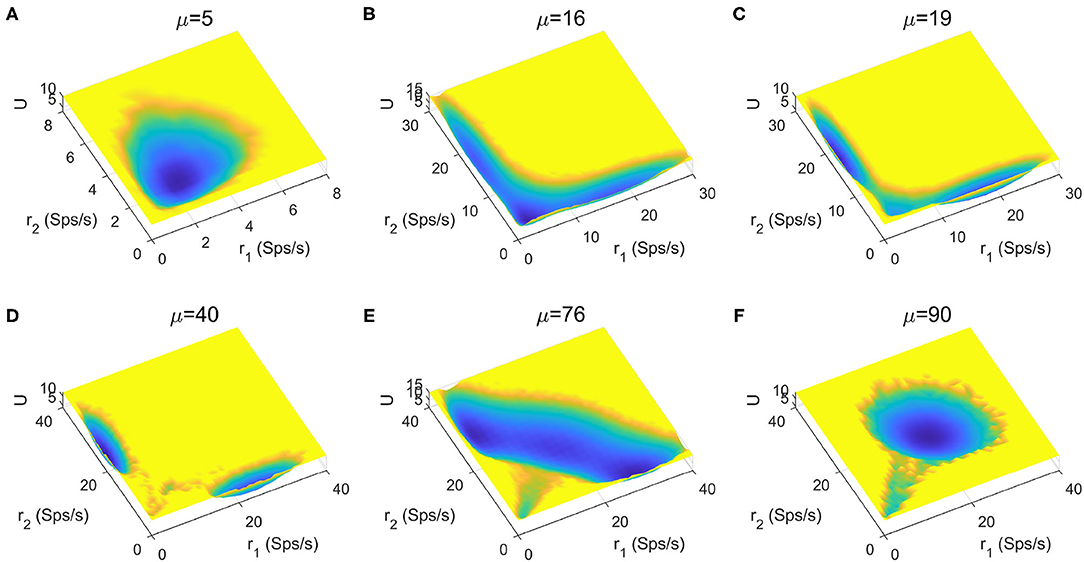

The stimulus strength applied to the two selective pools is a key factor in decision making tasks. In Figure 4, we quantitatively mapped out the potential landscape for the decision-making dynamics under different stimulus strengths μ. The landscape displays three qualitatively different topographies (monostable, bistable and tristable) across the range of stimulus strength from 5 to 90 Hz.

Figure 4. The energy landscape over a range of external inputs, applied symmetrically to both selective pools (0% coherence). (A) With slight stimulus, none of the decided attractors can be reached, the network stays at the resting state. (B) Two attractors corresponding to the decision states emerge for larger stimulus strength. (C) The central basin of the spontaneous state becomes weaker for increasing stimulus strength. (D) When the stimulus input is strong enough, the resting state vanishes, and the two decision states remain on two sides. (E) The strong inputs induce the emergence of an intermediate state, called “double-up” state, with high activity for both selective neural assemblies between decision states. (F) The “double-up” state becomes the exclusively steady state while two symmetric decision states disappear simultaneously for the further increase of the stimulus strength.

For small inputs, the spontaneous state, with both selective populations firing at low rates, is the only stable state (Figure 4A). A further increase of stimulus strength induces the emergence of the two decision attractors (Figure 4B). The central basin of the spontaneous state becomes weaker with increasing stimulus strength (Figure 4C) until the system operates in a binary decision-making region (Figure 4D). When the selective inputs are sufficiently high, an intermediate state with high activity for both neural assemblies encoding the possible alternatives, called the "double-up" state, emerges (Figure 4E), in line with both experimental evidence and computational results in delayed response tasks (Shadlen and Newsome, 1996; Roitman and Shadlen, 2002; Huk and Shadlen, 2005; Wong and Wang, 2006; Martí et al., 2008; Albantakis and Deco, 2011; Yan et al., 2016; Yan and Wang, 2020). The “double-up” state reduces the barrier between decision states, so that transitions are more likely to occur, facilitating changes of mind (Albantakis and Deco, 2011; Yan and Wang, 2020). With a further increase of the stimulus strength, the two symmetric decision states disappear simultaneously, so the system loses its ability to compute a categorical choice (Figure 4F).

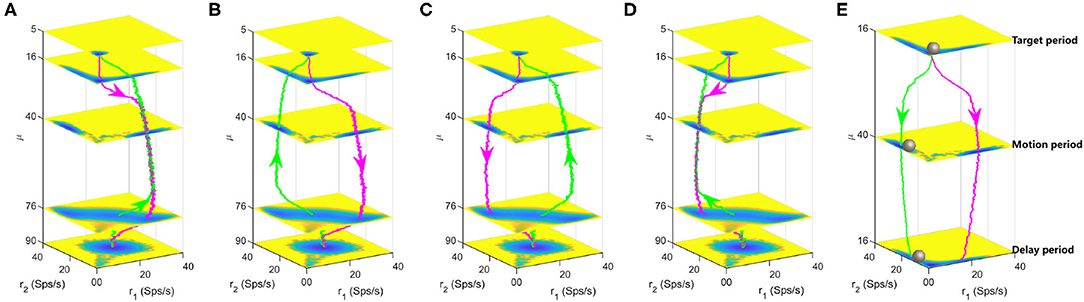

To track the landscape change and the dynamical transition properties of the decision-making process, we calculated the typical kinetic transition paths averaged over many trials for continuously increasing (from 5 to 90 Hz, magenta lines) and decreasing (from 90 to 5 Hz, green lines) stimulus inputs, as displayed in Figures 5A–D. There exist four combinations of decision paths, with corresponding landscapes, since the forward and backward paths can each pass through either the DS1 or the DS2 state due to the stochasticity. In Figures 5A,D, the backward path deviates from the forward decision-making path due to the non-equilibrium flux force, although the two paths pass through the same decision state (DS1 or DS2). Figures 5B,C show forward and backward paths that pass through distinct decision states. It can be seen that, for the forward paths, the system spends some time in the spontaneous state (μ = 5, 16) before making a decision (μ = 40), while for the backward paths it maintains the "double-up" state for a period of time (μ = 90, 76) before making a decision (μ = 40), implying the existence of hysteresis for state switches in the biological neural system.

Figure 5. Landscapes are shown in the four-dimensional pictures. The magenta and green lines are the dynamical decision paths averaged over many trials with continuously varied stimulus input along the z axis. Each layer corresponds to a three-dimensional landscape with fixed stimulus strength μ. (A) The paths for increasing and decreasing input both pass through the DS1 state. (B) The paths for increasing and decreasing input pass through the DS1 and DS2 states, respectively. (C) The paths for increasing and decreasing input pass through the DS2 and DS1 states, respectively. (D) The paths for increasing and decreasing input both pass through the DS2 state. (E) A quantitative landscape to illustrate the maintenance of decisions during the delay period in the delayed version of random-dot motion discrimination task. The gray balls represent the instantaneous states of the system. The parameters are specified as w+ = 1.66 and c = 0.

The energy landscape we obtained here also explains the delayed version of the RDM task well, which involves both decision computation and working memory. The delayed RDM task additionally requires the subjects to hold the choice in working memory across a delay period before responding with a saccadic eye movement (Shadlen and Newsome, 2001). Figure 5E shows the three layers of landscape corresponding to the targets, motion and delay periods in the delayed RDM task, respectively. The gray balls represent the instantaneous states of the system. The magenta and green lines are the two possible decision paths arising from the symmetry and fluctuations. Initially, the system rests at the spontaneous state until the visual inputs force the system to switch into one of the two decision attractor states. The decision is stored in DS1 or DS2, and this information will be retrieved to produce motor responses at the end of the delay period even though the stimulus is absent. This quantitative picture of the landscape echoes the one-dimensional illustrative diagram of the decision “landscape” proposed in Wong and Wang (2006).

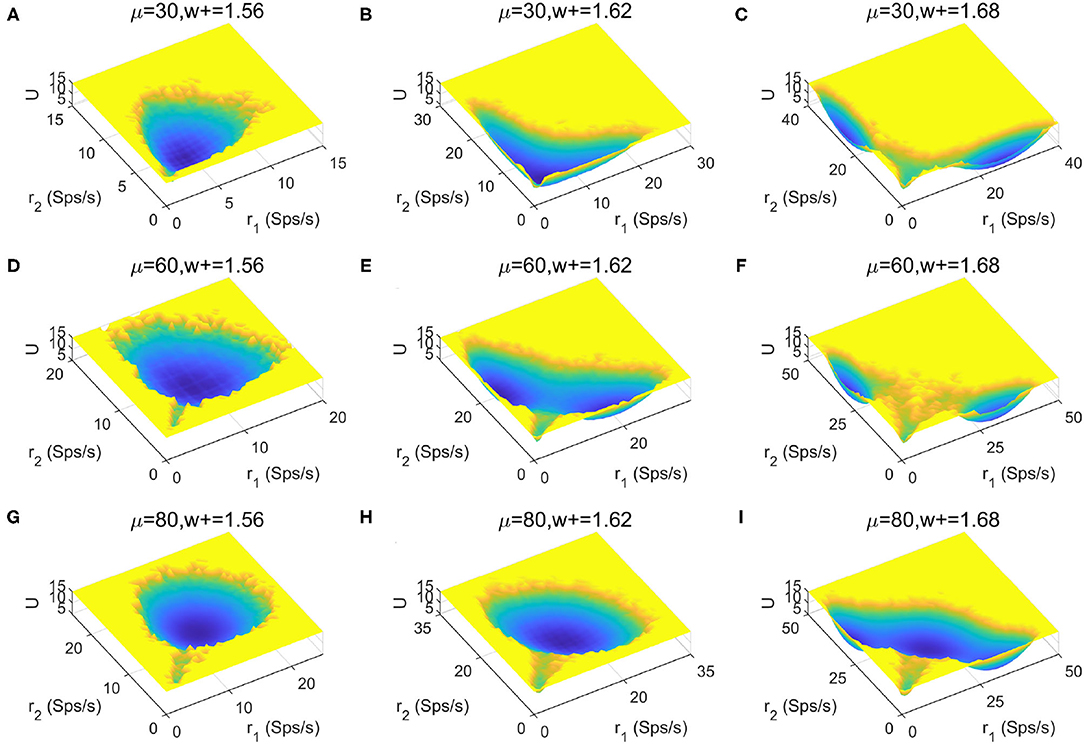

To provide a more global picture of the system dynamics, we explored the change of landscape topography when the stimulus strength μ and the recurrent connectivity w+ in the two selective populations vary (Figures 6A–I). We found that the number of stable states increases as the recurrent connectivity w+ increases (horizontal direction), whereas there is no apparent trend in the occurrence of multistability as the stimulus strength μ increases (vertical direction). This suggests that stronger self-activation may promote the occurrence of multistability.

Figure 6. Landscape comparisons when the stimulus strength μ and recurrent connectivity in the two selective populations w+ change.

The stability of attractor states is critical for the decision-making network, since decisions can be changed easily when the decision state is unstable. Experimental and modeling works both suggest that the probability of changes of mind depends on the task difficulty, namely, the motion strength c (Resulaj et al., 2009; Albantakis and Deco, 2011). For biased stimulus input (c≠0), the symmetry of the attractor landscape is broken (see Supplementary Figures 5, 6 for the asymmetric landscapes). Correspondingly, we can calculate the relative barrier height (RB) between pairs of local minima and the mean first passage time (MFPT) to quantitatively measure the global stability of the neural network under different motion strengths c.

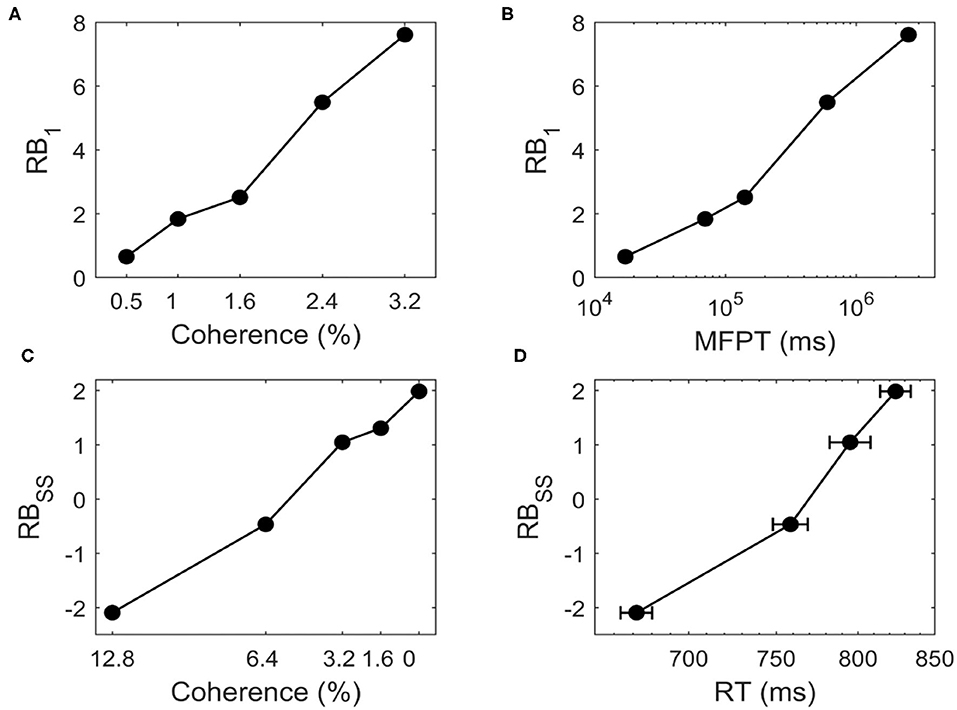

For bistable system, the relative barrier height (RB1) is defined in terms of the two basins of decision state. Based on landscape topography, we define U1 and U2 as the potential minimum of the DS1 and DS2, and Usaddle as the potential of the saddle point between the two states. The barrier heights quantifying the global stability are BH1 = Usaddle − U1 and BH2 = Usaddle − U2. Then RB1 = BH1 − BH2, quantifying the relative stability of DS1 against DS2.
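These definitions translate directly into a small helper. The numerical values below are hypothetical and chosen only to illustrate the arithmetic; the function name is likewise an assumption.

```python
def relative_barrier(u_1, u_2, u_saddle):
    """Return (BH1, BH2, RB1) from the potential minima and the saddle potential.

    BH1 = U_saddle - U1, BH2 = U_saddle - U2, RB1 = BH1 - BH2.
    """
    bh1 = u_saddle - u_1
    bh2 = u_saddle - u_2
    return bh1, bh2, bh1 - bh2

# Hypothetical dimensionless potentials, for illustration only.
bh1, bh2, rb1 = relative_barrier(u_1=2.0, u_2=2.5, u_saddle=6.0)
# bh1 = 4.0, bh2 = 3.5, rb1 = 0.5: DS1 sits in the deeper basin.
```

Since both barriers are measured from the same saddle, Usaddle cancels in the difference and RB1 = U2 − U1, so a positive RB1 simply means that the DS1 minimum lies lower than the DS2 minimum.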

The MFPT represents the average kinetic time of the alternations between different attractor states, and thus also describes the stability of attractors. With dynamical neural activity trajectories over a long time window, we can estimate the time taken for the system to switch from one attractor to another for the first time and obtain the first passage time (FPT). Here, an attractor region is roughly taken as a small ellipse centered on the local minimum (magenta and green dashed circles in Figure 2C), and the transition is finished once the stochastic trajectory enters the destination ellipse. The MFPT is then defined as the average of the FPT over samples.
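A minimal sketch of this procedure is given below. It assumes one particular timing convention, which is not specified in the text: the clock starts when the trajectory first enters the source ellipse and stops when it first enters the destination ellipse. The function names, ellipse semi-axes and the short hand-made trajectory are hypothetical.

```python
import numpy as np

def in_ellipse(points, center, semi_axes):
    """Boolean mask: which (r1, r2) points lie inside an axis-aligned ellipse."""
    d = (points - np.asarray(center)) / np.asarray(semi_axes)
    return np.sum(d ** 2, axis=1) <= 1.0

def first_passage_times(traj, dt, src_center, dst_center, semi_axes):
    """Collect first passage times from the source to the destination region.

    Assumed convention: timing starts at the first entry into the source
    ellipse and ends at the first subsequent entry into the destination.
    """
    in_src = in_ellipse(traj, src_center, semi_axes)
    in_dst = in_ellipse(traj, dst_center, semi_axes)
    fpts, start = [], None
    for t in range(len(traj)):
        if start is None:
            if in_src[t]:
                start = t                      # clock starts in the source region
        elif in_dst[t]:
            fpts.append((t - start) * dt)      # transition completed
            start = None                       # wait for the next visit to the source
    return np.array(fpts)

# Tiny hand-made trajectory (hypothetical values) with two DS1 -> DS2 transitions.
traj = np.array([[30, 5], [20, 15], [15, 20], [5, 30],
                 [30, 5], [17, 17], [5, 30]], dtype=float)
fpts = first_passage_times(traj, dt=0.5, src_center=(30, 5),
                           dst_center=(5, 30), semi_axes=(3, 3))
mfpt = fpts.mean()   # average of 1.5 and 1.0
```

Other conventions, such as starting the clock at the last exit from the source region, would give shorter times; the choice should be kept fixed when comparing MFPT values across conditions.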

We study whether the landscape can quantify the transition time. Figure 7A shows that for bistable system, RB1 increases as we increase c for c = 0.5%, 1%, 1.6%, 2.4%, 3.2%. The positive value of RB1 indicates that the two stable states are no longer symmetric and the DS1 has a deeper basin of attraction than DS2 due to the biased external inputs. When the motion strength gets stronger (larger c), the time taken to switch from DS1 to DS2 increases with higher barrier (Figure 7B), indicating that when the task is easier (larger c) the change of mind needs more time, i.e., the change of mind (the right decision becomes wrong decision in current case) is harder.

Figure 7. For the change of mind process from bistable landscape, (A) the relative barrier height (RB1) increases for c = 0.5%, 1%, 1.6%, 2.4%, 3.2%, (B) RB1 is correlated with the logarithm of the mean first passage time (MFPT). RB1 represents relative barrier height from DS1 to DS2, which quantifies the global stability of DS1 against DS2. The MFPT from DS1 to DS2 are estimated from dynamical neural activity trajectories. For the decision making process from tristable landscape, (C) The relative barrier height (RBSS) increases for c = 12.8%, 6.4%, 3.2%, 0%, (D) RBSS is correlated with the logarithm of the reaction time (RT, mean ± SEM). RBSS denotes the relative barrier height for the transition from SS to DS1. RT is the experimental observed reaction time of correct trials in the motion-discrimination task (Roitman and Shadlen, 2002).

We further ask how well the model predictions from the landscape fit quantitative experimental data. We use the tristable system as an example to describe the decision process. Here, the relative barrier height (RBSS) between SS and DS1 is defined analogously to RB1. Figure 7C shows that for the tristable system, RBSS increases as we decrease c for c = 12.8%, 6.4%, 3.2%, 0%, indicating that it is harder to make a decision in the more difficult task, since the barrier is higher. The transition from SS to DS1 represents the decision process and is thus related to the behavioral reaction time (RT). The positive correlation between RBSS and the experimentally observed RT (Roitman and Shadlen, 2002) suggests that the speed of decisions decreases in harder decision tasks (smaller c) with a higher barrier (Figure 7D). Intuitively, this means that for a more difficult task it is harder to make a decision, which leads to a longer reaction time. Therefore, the landscape results explain the experimental data well and provide an intuitive and quantitative way to understand the decision making function.

Of note, the linear correlation between the logarithm of MFPT and RT and the barrier height of landscape is not a perfect fit. In fact, this is due to another important factor, the curl flux. For a non-equilibrium system, the landscape may explain a major part of the transition rate (barrier crossing from a potential valley). However, the landscape does not solely determine the transition rate as in an equilibrium system, i.e., the flux will contribute to the transition dynamics for barrier crossing (Feng et al., 2014). We should notice that due to the approximation nature of this approach, at very small or very large time scales, it might be less precise for the estimation of the relationship between MFPT and barrier height.

The biological neural circuit underlying the decision-making function is a non-linear, non-equilibrium, non-stationary, open and strongly coupled system. The underlying energy landscape allows us to understand the complex dynamic behavior of the neural system from a global view. In this work, we explicitly quantified the landscape and further studied the stochastic transition dynamics of perceptual decision making, using a biophysically plausible spiking cell-based model that mimics the visual motion discrimination task.

When the motion strength c is zero, i.e., the two selective populations receive stimuli with the same strength, we identified qualitatively different landscape topographies with different numbers of attractors in the phase space under certain parameter regions. The bistable attractor landscape is characterized by two symmetric basins of attraction corresponding to the two competing decision states. The spontaneous state, in which both selective pools fire at low rates, emerges as an intermediate state on the tristable attractor landscape. It increases plasticity by allowing a two-step transition for changes of mind, and it increases robustness by reaffirming the previous decision. The irreversibility of the bistable and tristable switches due to the probabilistic curl flux demonstrates the inherent non-equilibrium property of the neural decision system. We found that the neural ensembles evolve across different regimes under the control of the driving input. Of note, a new intermediate state, the "double-up" state in which both selective pools fire at high rates, also emerges when the stimulus strength is sufficiently high. The "double-up" state reduces the barrier between the two decision states and facilitates the state transitions, in line with both experimental evidence and computational results in delayed-response tasks (Shadlen and Newsome, 1996; Roitman and Shadlen, 2002; Huk and Shadlen, 2005; Wong and Wang, 2006; Albantakis and Deco, 2011; Yan et al., 2016; Yan and Wang, 2020). By exploring the parameter region, we found that a possible way of promoting the occurrence of multistability and intermediate states is to increase the strength of recurrent connectivity or self-activation of neural populations.

We also quantified the global stability of decision-making by barrier height and mean first passage time to explore the influence of task difficulty. When the task becomes more difficult, i.e., the coherence level c decreases, the barrier height increases and the system takes longer to reach a decision, which is consistent with experimental observations. The landscape and path results advance our understanding of the stochastic dynamical mechanism of the decision-making function.

In a previous study, Wong and Wang reduced the spiking neural network model to a system of two coupled ordinary differential equations by mean-field approximations (Wong and Wang, 2006). This allows the dynamics to be analyzed with the tools of nonlinear dynamical systems, such as phase-plane analysis and bifurcation analysis based on ordinary differential equations. However, these analyses are carried out within a deterministic framework, so the stochastic dynamics is hard to study with bifurcation-type approaches. The non-equilibrium potential theory we employed here can quantify the stochastic dynamics and global stability of the decision-making function. The barrier height inferred from the potential landscape and the mean first passage time measure the difficulty of switching between basins of attraction, and both are also applicable to large-scale models. The quantitative picture of the landscape in Figure 5E also echoes the one-dimensional illustrative diagram of the decision “landscape” proposed in Wong and Wang (2006).

The spiking neural network we studied here consists of thousands of neurons interacting in a highly nonlinear manner, which is more biologically realistic than simplified firing rate-based models. Each parameter in the model has a specific biological meaning, allowing us to explore the neural mechanisms of decision-making. However, the current spiking neural network model also has some limitations. Firstly, the numerical simulation of the spiking neural network is computationally intensive and time-consuming. The accuracy of our results depends on the volume of collected dynamical trajectories, which is limited by the computational efficiency. Secondly, to facilitate our analysis, we reduced the dimension of the spiking neural network system to 4 by averaging the microscopic activity of individual neurons in homogeneous populations to obtain macroscopic population-averaged firing rates. For visualization and analysis, we then projected the system onto two dimensions (r1 and r2) for the estimation of the potential landscape and flux. This may introduce quantitative inaccuracy when analyzing the original high-dimensional system, so it is also important to develop other dimension reduction approaches (Kang and Li, 2021). Furthermore, the local circuit model we studied here involves only a single brain area, while biologically realistic decision-making may be distributed, engaging multiple brain regions (Siegel et al., 2015; Steinmetz et al., 2019). It is anticipated that the landscape and path approach can be applied to other cognitive functions (such as distributed working memory or decision-making) in more realistic brain networks that include more complex circuits at the neural network scale or the brain region scale (Murray et al., 2017; Schmidt et al., 2018; Mejias and Wang, 2021).

The biologically plausible model for binary decision making was first introduced in Wang (2002), illustrated in Figure 1. The model is a fully connected network, composed of N neurons with NE = 1600 for excitatory pyramidal cells and NI = 400 for inhibitory interneurons, which is consistent with the observed proportions of the pyramidal neurons and interneurons in the cerebral cortex (Abeles, 1991). Two distinct populations in excitatory neurons (S1 and S2) respond to the two visual stimuli, respectively, with NS1 = NS2 = fNE and f = 0.15, and the remaining (1−2f)NE non-selective neurons (NS) do not respond to either of the stimuli. All neurons receive a background Poisson input of vext = 2.4kHz. This can be viewed as originating from 800 excitatory connections from external neurons firing at 3Hz per neuron, which is consistent with the resting activity observed in the cerebral cortex (Rolls et al., 1998).

Table 1 gives the connection weight matrix between these four populations, where a minus sign represents inhibition. The synaptic weights between neurons are prescribed according to the Hebbian rule and remain fixed during the simulation. Therefore, inside the selective populations, the synapses are potentiated and the weight w+ is larger than 1. Between selective populations and from the nonselective population to the selective ones, the synaptic weight is w− = 1 − f(w+ − 1)/(1 − f), so that the overall recurrent excitatory synaptic drive in the spontaneous state remains constant when w+ is altered (Amit and Brunel, 1997). w− < 1 indicates synaptic depression. The remaining weights are 1.
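The population sizes, the external input rate and the normalization of w− quoted above can be checked with a few lines of arithmetic. The following is a minimal sketch; the function names `w_minus` and `mean_recurrent_weight` and the sample values of w+ are illustrative assumptions, not values taken from the text.

```python
# Sizes and input rate quoted in the text.
N_E, N_I, f = 1600, 400, 0.15
N_S1 = N_S2 = round(f * N_E)        # neurons per selective pool
N_NS = N_E - N_S1 - N_S2            # non-selective excitatory neurons
nu_ext_hz = 800 * 3.0               # 800 external synapses firing at 3 Hz each


def w_minus(w_plus, f=0.15):
    """Depressed weight that compensates the potentiated weight w_plus."""
    return 1.0 - f * (w_plus - 1.0) / (1.0 - f)


def mean_recurrent_weight(w_plus, f=0.15):
    """Mean excitatory weight onto a selective cell.

    A fraction f of its excitatory inputs (its own pool) carries w_plus;
    the remaining fraction 1 - f carries w_minus.
    """
    return f * w_plus + (1.0 - f) * w_minus(w_plus, f)


if __name__ == "__main__":
    print(N_S1, N_NS, nu_ext_hz)
    for w_plus in (1.0, 1.5, 2.0):  # hypothetical values of w+
        print(w_plus, w_minus(w_plus), mean_recurrent_weight(w_plus))
```

Because f·w+ + (1 − f)·w− simplifies to 1 for any w+, the mean recurrent excitatory weight is independent of w+, which is the sense in which the drive in the spontaneous state stays constant.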

Herein, we consider the leaky integrate-and-fire (LIF) model to describe both pyramidal cells and interneurons (Tuckwell, 1988). When the membrane potential of a neuron, V(t), is below a given voltage threshold Vth, it is described by a capacitance-voltage (CV) equation,

$$C_m \frac{dV(t)}{dt} = -g_L\,\bigl(V(t) - V_L\bigr) - I_{syn}(t). \tag{1}$$

Here, Cm is the capacitance of the neuron membrane, Cm = 0.5nF (0.2nF) for excitatory (inhibitory) neurons. gL is the leakage conductance, gL = 25nS (20nS) for excitatory (inhibitory) neurons. Each neuron has a leakage voltage VL = −70mV and firing threshold Vth = −50mV. Isyn represents the total synaptic current flowing into the neuron.

When V(t) = Vth at t = tk, the neuron emits a spike and the membrane potential is reset to Vreset = −55mV and held there for a refractory period τref,

$$V(t) = V_{reset}, \quad t_k < t \le t_k + \tau_{ref}. \tag{2}$$

After that, V(t) is again governed by the CV equation (Equation 1). Here, τref = 2ms (1ms) for excitatory (inhibitory) neurons.
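The threshold, reset and refractory rules above can be illustrated with a single excitatory neuron. The sketch below is a minimal forward-Euler integration under stated assumptions: the synaptic current is replaced by a constant depolarizing current `i_inj_na` (a hypothetical stand-in for −Isyn), and the function name, time step and simulation length are illustrative choices. The membrane parameters are those quoted for excitatory cells.

```python
import numpy as np


def simulate_lif(i_inj_na, t_end_ms=500.0, dt_ms=0.02,
                 c_m_nf=0.5, g_l_ns=25.0, v_l=-70.0,
                 v_th=-50.0, v_reset=-55.0, tau_ref_ms=2.0):
    """Integrate one LIF neuron with forward Euler; return spike times in ms.

    Units: nF, nS, mV, ms, nA. nS * mV = pA = 1e-3 nA, and nA / nF = mV / ms.
    i_inj_na is a constant depolarizing current (assumption, replaces -I_syn).
    """
    v = v_l
    refractory_left = 0.0
    spikes = []
    for step in range(int(round(t_end_ms / dt_ms))):
        t = step * dt_ms
        if refractory_left > 0.0:
            # Hold the membrane at the reset potential during refractoriness.
            v = v_reset
            refractory_left -= dt_ms
            continue
        dv_dt = (-g_l_ns * 1e-3 * (v - v_l) + i_inj_na) / c_m_nf
        v += dt_ms * dv_dt
        if v >= v_th:
            spikes.append(t + dt_ms)
            v = v_reset
            refractory_left = tau_ref_ms
    return np.array(spikes)


if __name__ == "__main__":
    for current in (0.4, 0.6):  # nA, below and above g_L * (V_th - V_L) = 0.5 nA
        print(current, len(simulate_lif(current)))
```

With these parameters the membrane time constant is Cm/gL = 20 ms, and a constant current only produces spikes if it exceeds gL(Vth − VL) = 0.5 nA.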

The synaptic model maps the spike trains of the presynaptic neurons to the postsynaptic current. For the fully connected neural network, the total postsynaptic current is the sum of the following four currents:

$$I_{syn}(t) = I_{ext,AMPA}(t) + I_{rec,AMPA}(t) + I_{rec,NMDA}(t) + I_{rec,GABA}(t). \tag{3}$$

The first term is the external excitatory current, which is assumed to be exclusively mediated by AMPA receptors. The second and third terms are the recurrent excitatory currents mediated by AMPA and NMDA receptors. The last term is the inhibitory current mediated by GABA receptors. More specifically,

where VE = 0mV and VI = −70mV. For excitatory cells, the synaptic conductances for the different channels are gext,AMPA = 2.1nS, grec,AMPA = 0.05nS, gNMDA = 0.165nS, and gGABA = 1.3nS; for inhibitory cells, gext,AMPA = 1.62nS, grec,AMPA = 0.04nS, gNMDA = 0.13nS, and gGABA = 1.0nS. wj is the dimensionless synaptic weight and sj is the gating variable, representing the fraction of open channels for the different receptors. The sum over j denotes a sum over the synapses formed by presynaptic neurons j. In particular, the NMDA synaptic currents depend on both the membrane potential and the extracellular magnesium concentration ([Mg2+] = 1mM) (Jahr and Stevens, 1990). The gating variables are given by

where the decay times for AMPA, NMDA and GABA synapses are τAMPA = 2ms, τNMDA,decay = 100ms and τGABA = 5ms (Hestrin et al., 1990; Spruston et al., 1995; Salin and Prince, 1996; Xiang et al., 1998). The rise time for NMDA synapses is τNMDA,rise = 2ms (the rise times for AMPA and GABA are neglected because they are typically very short) and α = 0.5ms−1 (Hestrin et al., 1990; Spruston et al., 1995). The sum over k represents the sum over all the spikes emitted by presynaptic neuron j at times $t_j^k$. For external AMPA currents, the spikes are generated by sampling, independently for each cell, a Poisson process with rate vext = 2.4kHz.
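For orientation, the synaptic currents and gating variables described in this subsection have the following standard form in Wang (2002), the model on which this network is based. This is a sketch under that assumption: the constants 0.062 and 3.57 in the magnesium-block term, the auxiliary variable $x_j$, and the absence of a weight factor in the GABA term are taken from that formulation rather than from the text here, and voltages are in mV.

```latex
\begin{aligned}
I_{ext,AMPA}(t) &= g_{ext,AMPA}\,\bigl(V(t)-V_E\bigr)\,s^{ext,AMPA}(t) \\
I_{rec,AMPA}(t) &= g_{rec,AMPA}\,\bigl(V(t)-V_E\bigr)\sum_j w_j\,s_j^{AMPA}(t) \\
I_{rec,NMDA}(t) &= \frac{g_{NMDA}\,\bigl(V(t)-V_E\bigr)}
                        {1+[\mathrm{Mg}^{2+}]\exp\bigl(-0.062\,V(t)\bigr)/3.57}
                   \sum_j w_j\,s_j^{NMDA}(t) \\
I_{rec,GABA}(t) &= g_{GABA}\,\bigl(V(t)-V_I\bigr)\sum_j s_j^{GABA}(t) \\[4pt]
\frac{ds_j^{AMPA}(t)}{dt} &= -\frac{s_j^{AMPA}(t)}{\tau_{AMPA}} + \sum_k \delta\bigl(t-t_j^k\bigr) \\
\frac{ds_j^{NMDA}(t)}{dt} &= -\frac{s_j^{NMDA}(t)}{\tau_{NMDA,decay}}
                             + \alpha\,x_j(t)\,\bigl(1-s_j^{NMDA}(t)\bigr) \\
\frac{dx_j(t)}{dt} &= -\frac{x_j(t)}{\tau_{NMDA,rise}} + \sum_k \delta\bigl(t-t_j^k\bigr) \\
\frac{ds_j^{GABA}(t)}{dt} &= -\frac{s_j^{GABA}(t)}{\tau_{GABA}} + \sum_k \delta\bigl(t-t_j^k\bigr)
\end{aligned}
```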

By numerically simulating the neural network system for a long time, we can obtain the raster plot, i.e., the time points at which each neuron emits a spike. However, since the spiking neural network we studied here is a high-dimensional system consisting of thousands of interacting neurons, we focus on the macroscopic population-averaged firing rates rather than the microscopic neural spikes, which effectively reduces the dimension of the system dynamics to 4 (one per population). For visualization, the two most important dimensions, i.e., the population-averaged firing rates r1 and r2 of the two selective neural groups, are chosen as coordinates to form the "decision space", and the probabilistic distribution at steady state (energy landscape) is then projected into the decision space by integrating over the other dimensions. r1 and r2 are calculated by first counting the total number of spikes of a population in a time window of 50ms, which slides with a time step of 5ms, and then dividing this count by the number of neurons and the length of the time window.
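The sliding-window rate estimate described above can be sketched as follows. The function name `population_rate` and its argument names are illustrative assumptions; the window of 50 ms and the step of 5 ms follow the text, and all times are in seconds.

```python
import numpy as np


def population_rate(spike_times, n_neurons, t_end, window=0.05, step=0.005):
    """Population-averaged firing rate (Hz) in a sliding window.

    spike_times: pooled spike times (s) of all neurons in one population.
    Returns the window start times and the rate in each window.
    """
    spike_times = np.sort(np.asarray(spike_times, dtype=float))
    starts = np.arange(0.0, t_end - window + 1e-12, step)
    # Number of spikes in [start, start + window) via two binary searches.
    lo = np.searchsorted(spike_times, starts, side="left")
    hi = np.searchsorted(spike_times, starts + window, side="left")
    counts = hi - lo
    return starts, counts / (n_neurons * window)


if __name__ == "__main__":
    # One neuron firing regularly every 10 ms, i.e., at 100 Hz.
    spikes = np.arange(100) * 0.01 + 0.0025
    starts, rate = population_rate(spikes, n_neurons=1, t_end=1.0)
    print(starts[:3], rate[:3])
```

Applying the same function to the pooled spikes of S1 and of S2 gives the two coordinates r1(t) and r2(t) of the decision space.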

To visualize the probability distribution of the system state in the "decision space" constructed by the firing rates r1 and r2, we discretize the space into a grid and collect the statistics of the system state falling into each grid cell. Finally, the potential landscape is mapped out by U(r1, r2) = −lnPss(r1, r2) (Sasai and Wolynes, 2003; Li and Wang, 2013a, 2014a), where Pss(r1, r2) represents the normalized joint probability distribution at steady state and U(r1, r2) is the dimensionless potential.
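The mapping from trajectory statistics to the potential can be sketched with a two-dimensional histogram. This is a minimal illustration: the function name `landscape`, the number of bins and the handling of empty grid cells (assigned an infinite potential) are assumptions made here, not choices stated in the text.

```python
import numpy as np


def landscape(r1, r2, bins=50, value_range=None):
    """Estimate P_ss on a grid and return U = -ln(P_ss).

    r1, r2: firing-rate time series sampled from a (near) steady state.
    Empty grid cells have P = 0 and therefore U = +inf in this sketch.
    """
    counts, edges1, edges2 = np.histogram2d(r1, r2, bins=bins, range=value_range)
    p_ss = counts / counts.sum()          # normalized joint distribution
    with np.errstate(divide="ignore"):    # log(0) -> -inf is intended here
        u = -np.log(p_ss)
    return p_ss, u, edges1, edges2


if __name__ == "__main__":
    r1 = [0.5, 0.5, 0.5, 1.5]
    r2 = [0.5, 0.5, 0.5, 1.5]
    p, u, _, _ = landscape(r1, r2, bins=2, value_range=[[0, 2], [0, 2]])
    print(p)
    print(u)
```

The most frequently visited grid cell has the lowest potential, so attractors appear as basins of U.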

Of note, a key issue is to decide when a stationary distribution has been reached. Theoretically, the steady state distribution is obtained only as time t goes to infinity (or becomes very large). Since the numerical simulation of the spiking neural network is computationally intensive and time-consuming, the length of the dynamical trajectory we can obtain is limited. To address this problem, we define a relative Euclidean distance σ between two probability distributions, namely those obtained from firing rate activity of time length t and t+500s, respectively. Thus σ measures the deviation of the distribution caused by prolonging the trajectory by 500s. If increasing t does not significantly change the probability distribution, i.e., σ falls below a threshold (σ < 0.06% for the bistable landscape in Figure 2), we consider that a steady state has been reached.
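The convergence check can be sketched as below. The exact normalization of σ is not recoverable from the text, so this example assumes one plausible reading: the Euclidean norm of the difference between the two gridded distributions, divided by the norm of the earlier one. The function names and the default threshold argument (0.06% written as a fraction) are illustrative.

```python
import numpy as np


def relative_distance(p_short, p_long):
    """Relative Euclidean distance between two gridded distributions.

    Assumed definition: ||p_long - p_short||_2 / ||p_short||_2, with both
    arrays defined on the same grid.
    """
    p_short = np.asarray(p_short, dtype=float).ravel()
    p_long = np.asarray(p_long, dtype=float).ravel()
    return np.linalg.norm(p_long - p_short) / np.linalg.norm(p_short)


def has_converged(p_short, p_long, threshold=6e-4):
    """True if extending the trajectory changed the distribution by < threshold."""
    return relative_distance(p_short, p_long) < threshold


if __name__ == "__main__":
    p_t = np.array([[0.5, 0.5]])
    p_t_plus = np.array([[0.6, 0.4]])
    print(relative_distance(p_t, p_t_plus), has_converged(p_t, p_t_plus))
```

In practice the two inputs would be the histograms obtained from the first t seconds and the first t + 500 seconds of the same trajectory.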

Biological systems, including neural circuits, are generally dissipative, exchanging energy or materials with the environment to perform functions (Lan et al., 2012). For a non-equilibrium system, the violation of detailed balance lies at the heart of its dynamics. Unlike an equilibrium system, whose dynamics is solely determined by the underlying energy landscape, a non-equilibrium system is also driven by the steady state probabilistic flux, which measures to what extent the system is out of equilibrium or detailed balance is broken (Wang et al., 2008; Yan et al., 2013, 2016; Li and Wang, 2014a; Yan and Wang, 2020). For high-dimensional systems, such as the neural network we studied here, it is challenging to quantify the non-equilibrium probabilistic flux from the diffusion equation (Wang et al., 2008; Li and Wang, 2014a). Therefore, we employ an approach based on the fluctuating steady-state trajectories to quantify the probability flux (Battle et al., 2016). As discussed above, the reduced stochastic system trajectory evolves over time in a four-dimensional phase space. However, for the sake of simplicity and visualization, the two most important dimensions, r1 and r2, are used to estimate the probabilistic flux. To determine the probability flux of the non-equilibrium neural system, we discretize the subspace constructed by r1 and r2 in a coarse-grained way, i.e., the subspace is divided into N1 × N2 equally sized, rectangular boxes, each of which represents a discrete state α. Such a discrete state is a continuous set of microstates. Then the probability flux associated with state α is the following vector:

Here, $\vec{x}_\alpha$ is the center position of the box related to state α. There exist four possible transitions since state α has two neighboring states in each direction. The rate $w^{(r_1)}_{\alpha^-,\alpha}$ is the net rate of transitions into state α from the adjacent state α− (i.e., the state with smaller r1), while $w^{(r_1)}_{\alpha,\alpha^+}$ represents the net rate of transitions from α to α+ (the state with larger r1). Similarly, $w^{(r_2)}_{\alpha^-,\alpha}$ and $w^{(r_2)}_{\alpha,\alpha^+}$ denote the corresponding transition rates between boxes arranged along the r2 direction. Of note, each rate has a sign. For example, $w^{(r_1)}_{\alpha^-,\alpha} < 0$ means there are more transitions from α to α− than in the reverse direction per unit time.

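These rates can be estimated from the temporal trajectories of r1 and r2:

$$w^{(r_i)}_{\alpha,\beta} = \frac{N^{(r_i)}_{\alpha,\beta} - N^{(r_i)}_{\beta,\alpha}}{t_{total}},$$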

where ttotal is the total simulation time and $N^{(r_i)}_{\alpha,\beta}$ ($N^{(r_i)}_{\beta,\alpha}$) is the number of transitions from state α (β) to state β (α) along the direction ri. When a trajectory goes from one box to a non-adjacent box in a single time step, we perform a linear interpolation to capture all the transitions between adjacent boxes.
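The counting procedure can be sketched in a few lines. The example below follows the construction of Battle et al. (2016), in which the flux in a box is taken as one half of the sum of the net rates across its two faces in each direction; that factor of one half, the function name `estimate_flux` and the argument names are assumptions of this sketch. As a further simplification, steps that jump to a non-adjacent or diagonal box are skipped rather than interpolated.

```python
import numpy as np


def estimate_flux(r1, r2, edges1, edges2, dt):
    """Coarse-grained probability flux on a rectangular grid.

    Returns an array of shape (n1, n2, 2) with the (r1, r2) flux components
    in each box. Simplification (assumption): non-adjacent and diagonal jumps
    are ignored instead of being linearly interpolated.
    """
    r1, r2 = np.asarray(r1, dtype=float), np.asarray(r2, dtype=float)
    n1, n2 = len(edges1) - 1, len(edges2) - 1
    i = np.clip(np.digitize(r1, edges1) - 1, 0, n1 - 1)  # box index along r1
    j = np.clip(np.digitize(r2, edges2) - 1, 0, n2 - 1)  # box index along r2
    t_total = dt * (len(r1) - 1)

    # Net transition counts across each interior face, positive toward
    # the box with the larger index.
    net1 = np.zeros((n1 - 1, n2))
    net2 = np.zeros((n1, n2 - 1))
    for k in range(len(i) - 1):
        di, dj = i[k + 1] - i[k], j[k + 1] - j[k]
        if abs(di) == 1 and dj == 0:
            net1[min(i[k], i[k + 1]), j[k]] += di
        elif di == 0 and abs(dj) == 1:
            net2[i[k], min(j[k], j[k + 1])] += dj

    # Net rates, padded with zeros at the outer boundary of the grid.
    w1 = np.pad(net1 / t_total, ((1, 1), (0, 0)))
    w2 = np.pad(net2 / t_total, ((0, 0), (1, 1)))

    flux = np.empty((n1, n2, 2))
    flux[..., 0] = 0.5 * (w1[:-1, :] + w1[1:, :])  # faces toward alpha-, alpha+
    flux[..., 1] = 0.5 * (w2[:, :-1] + w2[:, 1:])
    return flux


if __name__ == "__main__":
    edges = [0.0, 1.0, 2.0]
    # One counterclockwise loop through the four boxes of a 2 x 2 grid.
    loop_r1 = [0.5, 1.5, 1.5, 0.5, 0.5]
    loop_r2 = [0.5, 0.5, 1.5, 1.5, 0.5]
    print(estimate_flux(loop_r1, loop_r2, edges, edges, dt=1.0))
```

A trajectory that circulates around the grid yields a rotating flux field, whereas a trajectory that only hops back and forth between two boxes yields zero net flux, which is the signature of detailed balance.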

To quantify the transition paths between the steady-state attractor states on the landscape, we first treat a small spherical area centered on each local minimum point as an attractor area (green and magenta dashed circles in Figures 2C, 3C), denoted by B1, ⋯ , Bm, where m is the total number of attractors. The transition path from attractor Bi to attractor Bj is defined as a set of trajectory points {Xt1, ⋯ , Xtn} starting from Bi and ending at Bj. Since the trajectory is noisy, the start point is defined as the last point in Bi before leaving Bi, and the transition is finished once the trajectory wanders into the area of attractor Bj. Each transition path corresponds to a non-linear mapping. Suppose there are K transition paths from Bi to Bj, then the average transition path ψij is defined as

Of note, since the noise must be large enough to drive the transitions between attractors, the durations of individual transition trajectories vary. To deal with this problem, we first resample each trajectory evenly into the same number of points and then average these points across trajectories to form the average trajectory.
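The resample-then-average step can be sketched as follows. This is one plausible reading of the procedure: each path is re-parameterized by its normalized sample index and linearly interpolated onto a common grid of `n_points` points, and the K resampled paths are then averaged pointwise. The function names and the choice of linear interpolation are assumptions of this sketch.

```python
import numpy as np


def resample_path(path, n_points):
    """Resample one path (array of shape (n, d)) to n_points evenly spaced samples."""
    path = np.asarray(path, dtype=float)
    s_old = np.linspace(0.0, 1.0, len(path))   # normalized progress along the path
    s_new = np.linspace(0.0, 1.0, n_points)
    columns = [np.interp(s_new, s_old, path[:, d]) for d in range(path.shape[1])]
    return np.column_stack(columns)


def average_path(paths, n_points=100):
    """Pointwise mean of K transition paths after resampling to equal length."""
    resampled = [resample_path(p, n_points) for p in paths]
    return np.mean(resampled, axis=0)


if __name__ == "__main__":
    # Two hypothetical transition paths of different durations in (r1, r2).
    fast = [[0.0, 0.0], [1.0, 1.0]]
    slow = [[0.0, 0.0], [0.5, 0.5], [1.0, 1.0]]
    print(average_path([fast, slow], n_points=5))
```

Here each path would be a segment of the (r1, r2) trajectory that starts at the last point inside Bi and ends at the first point inside Bj.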

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding author.

CL conceived and designed the study. LY performed the research. CL and LY analyzed the results and wrote the manuscript. Both authors contributed to the article and approved the submitted version.

CL was supported by the National Key R&D Program of China (2019YFA0709502) and the National Natural Science Foundation of China (11771098).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fncom.2021.740601/full#supplementary-material

Abeles, M. (1991). Corticonics: Neural Circuits of the Cerebral Cortex. Cambridge: Cambridge University Press.

Albantakis, L., and Deco, G. (2011). Changes of mind in an attractor network of decision-making. PLoS Comput. Biol. 7:e1002086. doi: 10.1371/journal.pcbi.1002086

Amit, D. J., and Brunel, N. (1997). Model of global spontaneous activity and local structured activity during delay periods in the cerebral cortex. Cereb. Cortex 7, 237–252. doi: 10.1093/cercor/7.3.237

Ao, P. (2004). Potential in stochastic differential equations: novel construction. J. Phys. A Math. Gen. 37, L25–L30. doi: 10.1088/0305-4470/37/3/L01

Battle, C., Broedersz, C. P., Fakhri, N., Geyer, V. F., Howard, J., Schmidt, C. F., et al. (2016). Broken detailed balance at mesoscopic scales in active biological systems. Science 352, 604–607. doi: 10.1126/science.aac8167

Braun, J., and Mattia, M. (2010). Attractors and noise: Twin drivers of decisions and multistability. Neuroimage 52, 740–751. doi: 10.1016/j.neuroimage.2009.12.126

Cavanagh, J. F., and Frank, M. J. (2014). Frontal theta as a mechanism for cognitive control. Trends Cogn. Sci. 18, 414–421. doi: 10.1016/j.tics.2014.04.012

Churchland, A. K., Kiani, R., and Shadlen, M. N. (2008). Decision-making with multiple alternatives. Nat. Neurosci. 11, 693–702. doi: 10.1038/nn.2123

Deco, G., Rolls, E. T., Albantakis, L., and Romo, R. (2013). Brain mechanisms for perceptual and reward-related decision-making. Progr. Neurobiol. 103, 194–213. doi: 10.1016/j.pneurobio.2012.01.010

Destexhe, A., Hughes, S. W., Rudolph, M., and Crunelli, V. (2007). Are corticothalamic ‘up' states fragments of wakefulness? Trends Neurosci. 30, 334–342. doi: 10.1016/j.tins.2007.04.006

Feng, H., Zhang, K., and Wang, J. (2014). Non-equilibrium transition state rate theory. Chem. Sci. 5, 3761–3769. doi: 10.1039/C4SC00831F

Fröhlich, F., and Bazhenov, M. (2006). Coexistence of tonic firing and bursting in cortical neurons. Phys. Rev. E 74:031922. doi: 10.1103/PhysRevE.74.031922

Fung, C. A., Wong, K. M., and Wu, S. (2010). A moving bump in a continuous manifold: a comprehensive study of the tracking dynamics of continuous attractor neural networks. Neural Comput. 22, 752–792. doi: 10.1162/neco.2009.07-08-824

Ge, H., and Qian, H. (2016). Mesoscopic kinetic basis of macroscopic chemical thermodynamics: a mathematical theory. Phys. Rev. E 94:052150. doi: 10.1103/PhysRevE.94.052150

Graham, R. (1987). “Macroscopic potentials, bifurcations and noise in dissipative systems,” in Fluctuations and Stochastic Phenomena in Condensed Matter, ed L. Garrido (Berlin; Heidelberg: Springer Berlin Heidelberg), 1–34.

Hestrin, S., Sah, P., and Nicoll, R. A. (1990). Mechanisms generating the time course of dual component excitatory synaptic currents recorded in hippocampal slices. Neuron 5, 247–253. doi: 10.1016/0896-6273(90)90162-9

Hopfield, J., and Tank, D. (1986). Computing with neural circuits: a model. Science 233, 625–633. doi: 10.1126/science.3755256

Hopfield, J. J. (1984). Neurons with graded response have collective computational properties like those of two-state neurons. Proc. Natl. Acad. Sci. U.S.A. 81, 3088–3092. doi: 10.1073/pnas.81.10.3088

Huk, A. C., and Shadlen, M. N. (2005). Neural activity in macaque parietal cortex reflects temporal integration of visual motion signals during perceptual decision making. J. Neurosci. 25, 10420–10436. doi: 10.1523/JNEUROSCI.4684-04.2005

Jahr, C., and Stevens, C. (1990). Voltage dependence of NMDA-activated macroscopic conductances predicted by single-channel kinetics. J. Neurosci. 10, 3178–3182. doi: 10.1523/JNEUROSCI.10-09-03178.1990

Kang, X., and Li, C. (2021). A dimension reduction approach for energy landscape: Identifying intermediate states in metabolism-EMT network. Adv. Sci. 8, 2003133. doi: 10.1002/advs.202003133

Kang, X., Wang, J., and Li, C. (2019). Exposing the underlying relationship of cancer metastasis to metabolism and epithelial-mesenchymal transitions. iScience 21, 754–772. doi: 10.1016/j.isci.2019.10.060

Kelso, J. A. S. (2012). Multistability and metastability: understanding dynamic coordination in the brain. Philos. Trans. R. Soc. B Biol. Sci. 367, 906–918. doi: 10.1098/rstb.2011.0351

Kondo, H. M., Farkas, D., Denham, S. L., Asai, T., and Winkler, I. (2017). Auditory multistability and neurotransmitter concentrations in the human brain. Philos. Trans. R. Soc. B Biol. Sci. 372, 20160110. doi: 10.1098/rstb.2016.0110

Lan, G., Sartori, P., Neumann, S., Sourjik, V., and Tu, Y. (2012). The energy-speed-accuracy trade-off in sensory adaptation. Nat. Phys. 8, 422–428. doi: 10.1038/nphys2276

Lee, D. (2013). Decision making: from neuroscience to psychiatry. Neuron 78, 233–248. doi: 10.1016/j.neuron.2013.04.008

Li, C., and Balazsi, G. (2018). A landscape view on the interplay between EMT and cancer metastasis. NPJ Syst. Biol. Appl. 4, 1–9. doi: 10.1038/s41540-018-0068-x

Li, C., and Wang, J. (2013a). Quantifying cell fate decisions for differentiation and reprogramming of a human stem cell network: landscape and biological paths. PLoS Comput. Biol. 9:e1003165. doi: 10.1371/journal.pcbi.1003165

Li, C., and Wang, J. (2013b). Quantifying Waddington landscapes and paths of non-adiabatic cell fate decisions for differentiation, reprogramming and transdifferentiation. J. R. Soc. Interface 10:20130787. doi: 10.1098/rsif.2013.0787

Li, C., and Wang, J. (2014a). Landscape and flux reveal a new global view and physical quantification of mammalian cell cycle. Proc. Natl. Acad. Sci. U.S.A. 111, 14130–14135. doi: 10.1073/pnas.1408628111

Li, C., and Wang, J. (2014b). Quantifying the underlying landscape and paths of cancer. J. R. Soc. Interface 11, 20140774. doi: 10.1098/rsif.2014.0774

Lin, Z., Nie, C., Zhang, Y., Chen, Y., and Yang, T. (2020). Evidence accumulation for value computation in the prefrontal cortex during decision making. Proc. Natl. Acad. Sci. U.S.A. 117, 30728–30737. doi: 10.1073/pnas.2019077117

Lu, M., Jolly, M. K., Levine, H., Onuchic, J. N., and Ben-Jacob, E. (2013). MicroRNA-based regulation of epithelial-hybrid-mesenchymal fate determination. Proc. Natl. Acad. Sci. U.S.A. 110, 18144–18149. doi: 10.1073/pnas.1318192110

Ludwig, D. (1975). Persistence of dynamical systems under random perturbations. SIAM Rev. 17, 605–640. doi: 10.1137/1017070

Lv, C., Li, X., Li, F., and Li, T. (2015). Energy landscape reveals that the budding yeast cell cycle is a robust and adaptive multi-stage process. PLoS Comput. Biol. 11:e1004156. doi: 10.1371/journal.pcbi.1004156

Martí, D., Deco, G., Mattia, M., Gigante, G., and Del Giudice, P. (2008). A fluctuation-driven mechanism for slow decision processes in reverberant networks. PLoS ONE 3:e0002534. doi: 10.1371/journal.pone.0002534

Mejias, J. F., and Wang, X.-J. (2021). Mechanisms of distributed working memory in a large-scale network of macaque neocortex. bioRxiv.

Moreno-Bote, R., Rinzel, J., and Rubin, N. (2007). Noise-induced alternations in an attractor network model of perceptual bistability. J. Neurophysiol. 98, 1125–1139. doi: 10.1152/jn.00116.2007

Murray, J. D., Jaramillo, J., and Wang, X.-J. (2017). Working memory and decision-making in a frontoparietal circuit model. J. Neurosci. 37, 12167–12186. doi: 10.1523/JNEUROSCI.0343-17.2017

Newsome, W. T., Britten, K. H., and Movshon, J. A. (1989). Neuronal correlates of a perceptual decision. Nature 341, 52–54. doi: 10.1038/341052a0

Pereira, J., and Wang, X.-J. (2014). A tradeoff between accuracy and flexibility in a working memory circuit endowed with slow feedback mechanisms. Cereb. Cortex 25, 3586–3601. doi: 10.1093/cercor/bhu202

Resulaj, A., Kiani, R., Wolpert, D. M., and Shadlen, M. N. (2009). Changes of mind in decision-making. Nature 461, 263–266. doi: 10.1038/nature08275

Roitman, J. D., and Shadlen, M. N. (2002). Response of neurons in the lateral intraparietal area during a combined visual discrimination reaction time task. J. Neurosci. 22, 9475–9489. doi: 10.1523/JNEUROSCI.22-21-09475.2002

Rolls, E. T., Treves, A., and Rolls, E. T. (1998). Neural Networks and Brain Function, Vol. 572. Oxford: Oxford University Press.

Salin, P. A., and Prince, D. A. (1996). Spontaneous GABAA receptor-mediated inhibitory currents in adult rat somatosensory cortex. J. Neurophysiol. 75, 1573–1588. doi: 10.1152/jn.1996.75.4.1573

Sanchez-Vives, M. V., Massimini, M., and Mattia, M. (2017). Shaping the default activity pattern of the cortical network. Neuron 94, 993–1001. doi: 10.1016/j.neuron.2017.05.015

Sasai, M., and Wolynes, P. G. (2003). Stochastic gene expression as a many-body problem. Proc. Natl. Acad. Sci. U.S.A. 100, 2374–2379. doi: 10.1073/pnas.2627987100

Schmidt, M., Bakker, R., Shen, K., Bezgin, G., Diesmann, M., and van Albada, S. J. (2018). A multi-scale layer-resolved spiking network model of resting-state dynamics in macaque visual cortical areas. PLoS Comput. Biol. 14:e1006359. doi: 10.1371/journal.pcbi.1006359

Shadlen, M. N., and Newsome, W. T. (1996). Motion perception: seeing and deciding. Proc. Natl. Acad. Sci. U.S.A. 93, 628–633. doi: 10.1073/pnas.93.2.628

Shadlen, M. N., and Newsome, W. T. (2001). Neural basis of a perceptual decision in the parietal cortex (area LIP) of the rhesus monkey. J. Neurophysiol. 86, 1916–1936. doi: 10.1152/jn.2001.86.4.1916

Shilnikov, A., Calabrese, R. L., and Cymbalyuk, G. (2005). Mechanism of bistability: Tonic spiking and bursting in a neuron model. Phys. Rev. E 71:056214. doi: 10.1103/PhysRevE.71.056214

Siegel, M., Buschman, T. J., and Miller, E. K. (2015). Cortical information flow during flexible sensorimotor decisions. Science 348, 1352–1355. doi: 10.1126/science.aab0551

Spruston, N., Jonas, P., and Sakmann, B. (1995). Dendritic glutamate receptor channels in rat hippocampal CA3 and CA1 pyramidal neurons. J. Physiol. 482, 325–352. doi: 10.1113/jphysiol.1995.sp020521

Steinmetz, N. A., Zatka-Haas, P., Carandini, M., and Harris, K. D. (2019). Distributed coding of choice, action and engagement across the mouse brain. Nature 576, 266–273. doi: 10.1038/s41586-019-1787-x

Sterzer, P., Kleinschmidt, A., and Rees, G. (2009). The neural bases of multistable perception. Trends Cogn. Sci. 13, 310–318. doi: 10.1016/j.tics.2009.04.006

Stimberg, M., Brette, R., and Goodman, D. F. (2019). Brian 2, an intuitive and efficient neural simulator. Elife 8:e47314. doi: 10.7554/eLife.47314

Stine, G. M., Zylberberg, A., Ditterich, J., and Shadlen, M. N. (2020). Differentiating between integration and non-integration strategies in perceptual decision making. Elife 9:e55365. doi: 10.7554/eLife.55365

Tank, D. W., and Hopfield, J. J. (1987). Collective computation in neuronlike circuits. Sci. Am. 257, 104–115. doi: 10.1038/scientificamerican1287-104

Tuckwell, H. C. (1988). Introduction to Theoretical Neurobiology. Cambridge: Cambridge University Press.

Walczak, A. M., Sasai, M., and Wolynes, P. G. (2005). Self consistent proteomic field theory of stochastic gene switches. Biophys. J. 88, 828–850. doi: 10.1529/biophysj.104.050666

Wang, J. (2015). Landscape and flux theory of non-equilibrium dynamical systems with application to biology. Adv. Phys. 64, 1–137. doi: 10.1080/00018732.2015.1037068

Wang, J., Xu, L., and Wang, E. (2008). Potential landscape and flux framework of nonequilibrium networks: robustness, dissipation, and coherence of biochemical oscillations. Proc. Natl. Acad. Sci. U.S.A. 105, 12271–12276. doi: 10.1073/pnas.0800579105

Wang, M., Arteaga, D., and He, B. J. (2013). Brain mechanisms for simple perception and bistable perception. Proc. Natl. Acad. Sci. U.S.A. 110, E3350–E3359. doi: 10.1073/pnas.1221945110

Wang, X.-J. (2002). Probabilistic decision making by slow reverberation in cortical circuits. Neuron 36, 955–968. doi: 10.1016/S0896-6273(02)01092-9

Wang, X.-J. (2008). Decision making in recurrent neuronal circuits. Neuron 60, 215–234. doi: 10.1016/j.neuron.2008.09.034

Wong, K.-F., Huk, A., Shadlen, M., and Wang, X.-J. (2007). Neural circuit dynamics underlying accumulation of time-varying evidence during perceptual decision making. Front. Comput. Neurosci. 1:6. doi: 10.3389/neuro.10.006.2007

Wong, K.-F., and Wang, X.-J. (2006). A recurrent network mechanism of time integration in perceptual decisions. J. Neurosci. 26, 1314–1328. doi: 10.1523/JNEUROSCI.3733-05.2006

Xiang, Z., Huguenard, J. R., and Prince, D. A. (1998). GABAA receptor-mediated currents in interneurons and pyramidal cells of rat visual cortex. J. Physiol. 506, 715–730. doi: 10.1111/j.1469-7793.1998.715bv.x

Yan, H., and Wang, J. (2020). Non-equilibrium landscape and flux reveal the stability-flexibility-energy tradeoff in working memory. PLoS Comput. Biol. 16, e1008209. doi: 10.1371/journal.pcbi.1008209

Yan, H., Zhang, K., and Wang, J. (2016). Physical mechanism of mind changes and tradeoffs among speed, accuracy, and energy cost in brain decision making: Landscape, flux, and path perspectives. Chin. Phys. B 25, 078702. doi: 10.1088/1674-1056/25/7/078702

Yan, H., Zhao, L., Hu, L., Wang, X., Wang, E., and Wang, J. (2013). Nonequilibrium landscape theory of neural networks. Proc. Natl. Acad. Sci. U.S.A. 110, E4185–E4194. doi: 10.1073/pnas.1310692110

Ye, L., Song, Z., and Li, C. (2021). Landscape and flux quantify the stochastic transition dynamics for p53 cell fate decision. J. Chem. Phys. 154, 025101. doi: 10.1063/5.0030558

You, H., and Wang, D.-H. (2013). Dynamics of multiple-choice decision making. Neural Comput. 25, 2108–2145. doi: 10.1162/NECO_a_00473

Keywords: decision making, neural network, attractor, energy landscape, kinetic path

Citation: Ye L and Li C (2021) Quantifying the Landscape of Decision Making From Spiking Neural Networks. Front. Comput. Neurosci. 15:740601. doi: 10.3389/fncom.2021.740601

Received: 20 July 2021; Accepted: 05 October 2021;

Published: 28 October 2021.

Edited by:

Paul Miller, Brandeis University, United States

Reviewed by:

Maurizio Mattia, National Institute of Health (ISS), Italy

Copyright © 2021 Ye and Li. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Chunhe Li, chunheli@fudan.edu.cn
