94% of researchers rate our articles as excellent or good

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.

Find out more

ORIGINAL RESEARCH article

Front. Comput. Neurosci., 24 May 2021

Volume 15 - 2021 | https://doi.org/10.3389/fncom.2021.551111

This article is part of the Research TopicCognitive Computation: from Neuronal Circuits to Social BrainView all 6 articles

Recurrent neural networks with associative memory properties are typically based on fixed-point dynamics, which is fundamentally distinct from the oscillatory dynamics of the brain. There have been proposals for oscillatory associative memories, but here too, in the majority of cases, only binary patterns are stored as oscillatory states in the network. Oscillatory neural network models typically operate at a single/common frequency. At multiple frequencies, even a pair of oscillators with real coupling exhibits rich dynamics of Arnold tongues, not easily harnessed to achieve reliable memory storage and retrieval. Since real brain dynamics comprises of a wide range of spectral components, there is a need for oscillatory neural network models that operate at multiple frequencies. We propose an oscillatory neural network that can model multiple time series simultaneously by performing a Fourier-like decomposition of the signals. We show that these enhanced properties of a network of Hopf oscillators become possible by operating in the complex-variable domain. In this model, the single neural oscillator is modeled as a Hopf oscillator, with adaptive frequency and dynamics described over the complex domain. We propose a novel form of coupling, dubbed “power coupling,” between complex Hopf oscillators. With power coupling, expressed naturally only in the complex-variable domain, it is possible to achieve stable (normalized) phase relationships in a network of multifrequency oscillators. Network connections are trained either by Hebb-like learning or by delta rule, adapted to the complex domain. The network is capable of modeling N-channel electroencephalogram time series with high accuracy and shows the potential as an effective model of large-scale brain dynamics.

Currently, there are two prominent approaches to characterizing the neural code: the instantaneous rate or frequency at which the neuron fires action potential (“the spike frequency code” or the “rate code”) and the time of the occurrence of action potential (“spike time code”). The former assumes the information is processed/encoded over a larger time scale conveyed by average number of spikes fired in a given duration forms the basis of a large class of neural networks called the rate coded neural networks (Lippmann, 1990; Ruck et al., 1990; Lawrence et al., 1997). Whereas the latter believe that the precise timing of the action potential fired by a neuron encodes the ongoing activity, giving rise to a broad class of spiking neural network (Maass, 1997a, b; Izhikevich, 2003, 2004; Ghosh-dastidar and Lichtenstein, 2009). These two classes of neural networks are capable of universal function approximation and well explored (Maass, 1997a; Auer et al., 2008). They have been reported to solve a wide range of information processing problems like vector space transformation, dimensionality reduction, sequence processing, memory storage as an attractor and autoencoding (Hopfield, 1982; Frasconi et al., 1995; Kohonen, 1998; Trappenberg, 2003; Schmidhuber, 2015).

The third class of neural code, dubbed the oscillation coding (Fujii et al., 1996) also has been around for more than two decades. However, it has not been adopted in modeling literature as extensively as the first two types of neural code. Oscillation coding is based on the idea that not single-neuron activity, but the synchronized collective activity of a neural ensemble is the true building block of the brain’s activity. Unlike the rate code, which is often represented as a continuous signal encoding information as an average neural activity, or the spike time code, which is often described as a train of delta functions, oscillation code is a smooth signal that is said to be composed of distinct frequency bands. Oscillation coding also offers an opportunity to represent important temporal phenomena like temporal binding via transient synchrony (Wang and Terman, 1995, 1997; Shi et al., 2008) or rhythmic behaviors such as locomotion (Ermentrout and Kopell, 1989). There have been comprehensive and valorous attempts to describe all brain function in terms of the oscillatory activity of neural ensembles (Draguhn, 2004; Buzsáki et al., 2012). However, since existing literature does not commit to the exact size of a “neural ensemble,” activity measured at different scales goes by different names, including local field potentials (LFPs), electrocorticograms (ECoGs), electroencephalograms (EEGs), etc.

Since oscillations are ubiquitous in the brain, one would naturally expect nonlinear oscillator models to be used extensively to describe brain function. However, models of brain function often use sigmoidal neurons (to represent the rate code), spiking neuron models (to represent the spike time code), or more detailed conductance-based or biophysical models. In the field of neural signal processing, for example, a large body of literature depicting various deep neural network architectures such as recurrent neural network, convolutional neural network and deep belief network have been used for EEG signal processing and classification at various scales which found application in the following areas: brain-computer interface, epilepsy, cognitive and effective monitoring, etc. (Craik and He, 2019; Roy et al., 2019). Nonlinear oscillators are used exclusively for describing oscillatory phenomena like rhythmic movements or feature binding by synchronization. In an attempt to correct this bias, it would be a worthwhile modeling exercise to construct general networks of nonlinear neural oscillators that can be used to describe a wide range of brain functions.

The coupling strategies between a pair of neurons differ depending on the neuronal dynamics defined by the mathematical representation of the neural code. Biophysical neuron models employ biophysically detailed synapse models for coupling whereas the spiking neuron models employ elementary single exponential alpha function or double exponential function for coupling (Destexhe et al., 1994). Various two-variable oscillator models such as FitzHugh Nagumo model, Van der Pol, Wilson-Cowan, Kuramoto and Hopf oscillator principally employ what may be described as “real coupling strategy” (Campbell and Wang, 1994; Toral et al., 2003; Low et al., 2005; Izhikevich, 2007; Breakspear et al., 2010; Hoff et al., 2014; Sadilek and Thurner, 2015). In real coupling strategy, only the main variables (x1 and x2) of the two oscillators interact directly. Delayed real coupling strategies have also been explored for several of these oscillator models (Wirkus and Rand, 2002; Saha and Feudel, 2017). The averaged behavior of pulse-coupled oscillators has also been analyzed (Ermentrout and Kopell, 1991). On the contrary, in the complex coupling strategy (Hoppensteadt and Izhikevich, 2000), the two variables of the oscillator (x and y) are combined to form a complex variable (z = x + iy), and the complex state of one oscillator influences the complex state of the other. In this case, both x and y variables of one oscillator influence the x and y variables of the other.

In this paper, we describe a general trainable network of neural oscillators. For the oscillator model, we choose the simple Hopf oscillator. One reason behind the choice of the Hopf oscillator is the elegant form its description assumes in the complex variable domain. We show that it is important to operate in the complex domain in order to define some of the novel modeling features we introduce in this paper. Particularly, we introduce the concept of “power coupling” by which it is possible to achieve robust phase relationships among oscillators with very different intrinsic frequencies. The proposed network of oscillators can be trained to model multi-channel EEG, thereby demonstrating the potential to evolve into a large-scale brain model.

The outline of the paper is as follows. Section “Prior Work: Complex Coupling” begins with the definition of Hopf oscillator in the complex domain and show how to adapt the intrinsic frequency of the oscillator to that of a sinusoidal forcing signal. Section “A Pair of Hopf Oscillators With Complex Coupling” describes the dynamics of a pair of coupled Hopf oscillators and shows the advantages in coupling with a complex coupling constant. Section “Coupling Two Hopf Oscillators With Different Natural Frequencies” highlights the difficulties in coupling a pair of Hopf oscillators with very different intrinsic frequencies, and Section “Power Coupling” Between a Pair of Hopf Oscillators” shows how the difficulties can be overcome by adopting “power coupling.” Gathering all the modeling elements developed so far, Section “Adaptive Hopf Oscillator With Complex Input or Complex Adaptive Hopf Oscillator” describes a network of oscillators that can model multi-channel EEG. A discussion of the work was presented in the last section.

The canonical model of Hopf oscillator without any external input is described as follows in complex state variable representation , Cartesian coordinate representation (state variables: x, y) and polar coordinate representation (state variables: r, ∅) respectively:

Complex state variable representation:

Cartesian coordinate representation:

Polar coordinate representation:

Depending on the parameter values (α, β1, β2), the autonomous behavior of the oscillator principally falls into four regimes: critical Hopf regime (α = 0, β1 < 0, β2 = 0), supercritical Hopf regime (α > 0, β1 < 0, β2 = 0), supercritical double limit cycle regime (α < 0, β1 > 0, β2 < 0, localmaxima > 0) and subcritical double limit cycle regime (α < 0, β1 > 0, β2 < 0, localmaxima < 0) (Kim and Large, 2015). The critical Hopf regime has a stable fixed point at the origin and has the ability to show a stable resonating response to the complex sinusoidal input (Feiω0t). The supercritical Hopf regime has an unstable fixed point at the origin and a stable limit cycle at . In the supercritical double limit cycle regime, the system exhibits two limit cycles, one of which is stable while the other being unstable. In the subcritical Hopf regime, the system has one stable fixed point at the origin. However, it has the ability to show stable oscillation under the influence of complex sinusoidal input whose frequency is not too different from that of the oscillator (ω − ω0 < ϵ). Throughout the rest of the paper, we will be using supercritical Hopf regime (with α = μ, β1 = 1, β2 = 0), which can be defined as follows:

Complex state variable representation:

Cartesian coordinate representation:

Polar coordinate representation:

We now present a series of results related to a single oscillator, a coupled pair, and a network of Hopf oscillators in the supercritical regime defined above.

It has been previously shown that when a Hopf oscillator is influenced by a real sinusoidal input signal, it can adapt its natural frequency to the frequency of the input signal if it follows the following dynamics (Righetti et al., 2005).

Given, z = re∅ = x + iy. It can be recognized that the Hopf oscillator is perturbed by a real input signal Iext(t) with coupling strength ε. At steady-state r reaches for ε = 0. If Iext(t) = I0sin(ω0t + φ) (I0, ω0, and φ are the magnitude, frequency and phase offset of the sinusoidal input signal), the natural frequency, ω, adapts to the frequency ω0.

When a Hopf oscillator is influenced by a complex sinusoidal input signal, the natural frequency of the oscillator can adapt to the frequency of input if the natural frequency of the oscillator is updated according to the following equation:

In this scenario, Iext(t) is a complex sinusoidal signal. It is straight forward to derive the learning rule for the natural frequency of the oscillator if eq. 2a is represented in the Cartesian and polar coordinate forms, respectively, as follows:

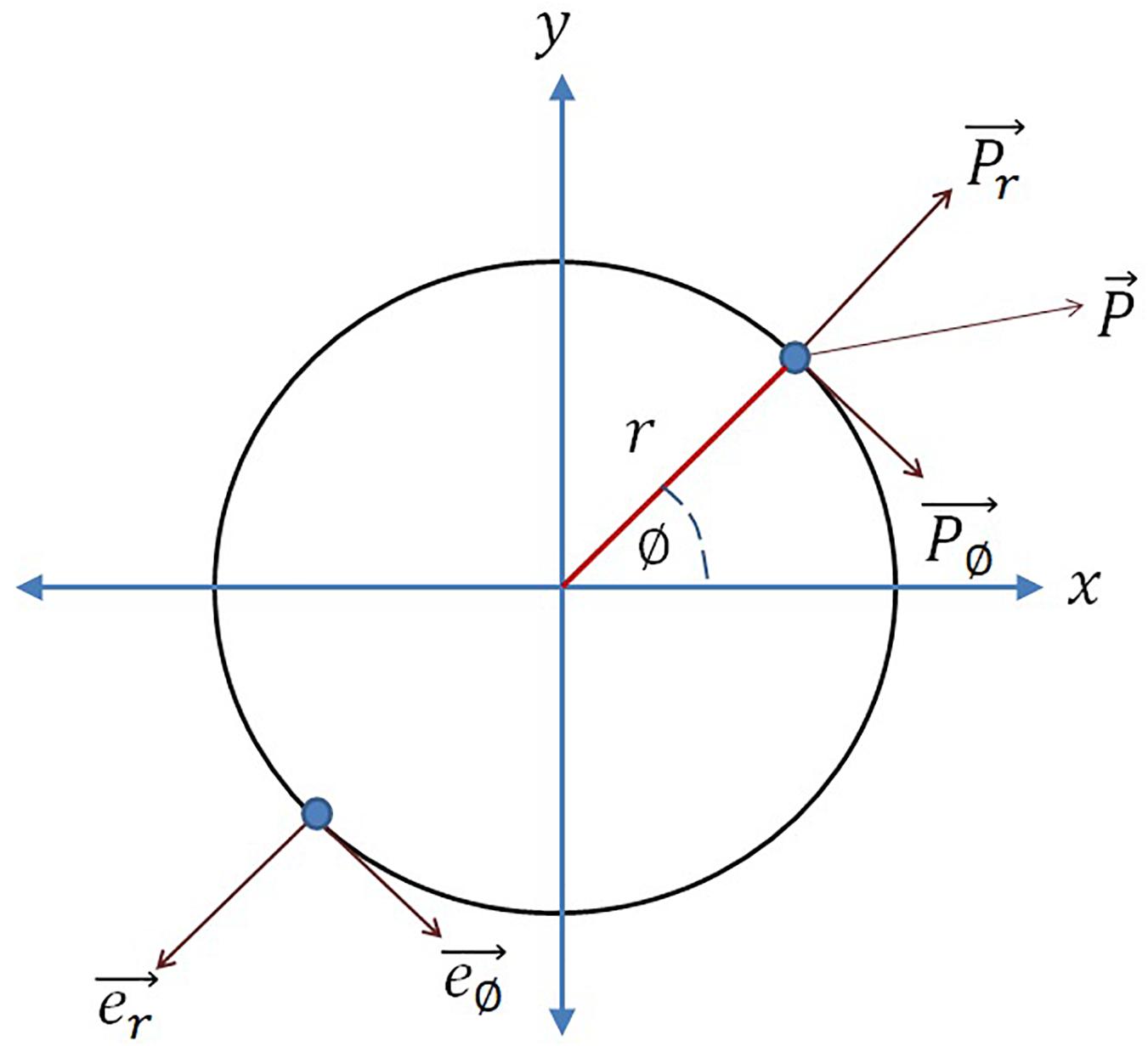

In the phase plane representation, it can be observed from eq. 2a2 that the influence caused by the input perturbation on the oscillator phase is . Whereas from the Cartesian coordinate system representation (eq. 2a1) it can be observed that the overall influence of the external perturbation on the phase point along the tangential axis of the limit cycle (), is (to understand the vector notations refer to Figure 1):

Figure 1. Considering the circle as the limit cycle of the Hopf oscillator, e∅ is the unit vector along increasing azimuth angle (∅), er is the unit vector along radius (r), P is the overall external input perturbation, P∅ and Pr are the external input perturbation along e∅ and er respectively. The analogy is drawn from Righetti et al. (2006).

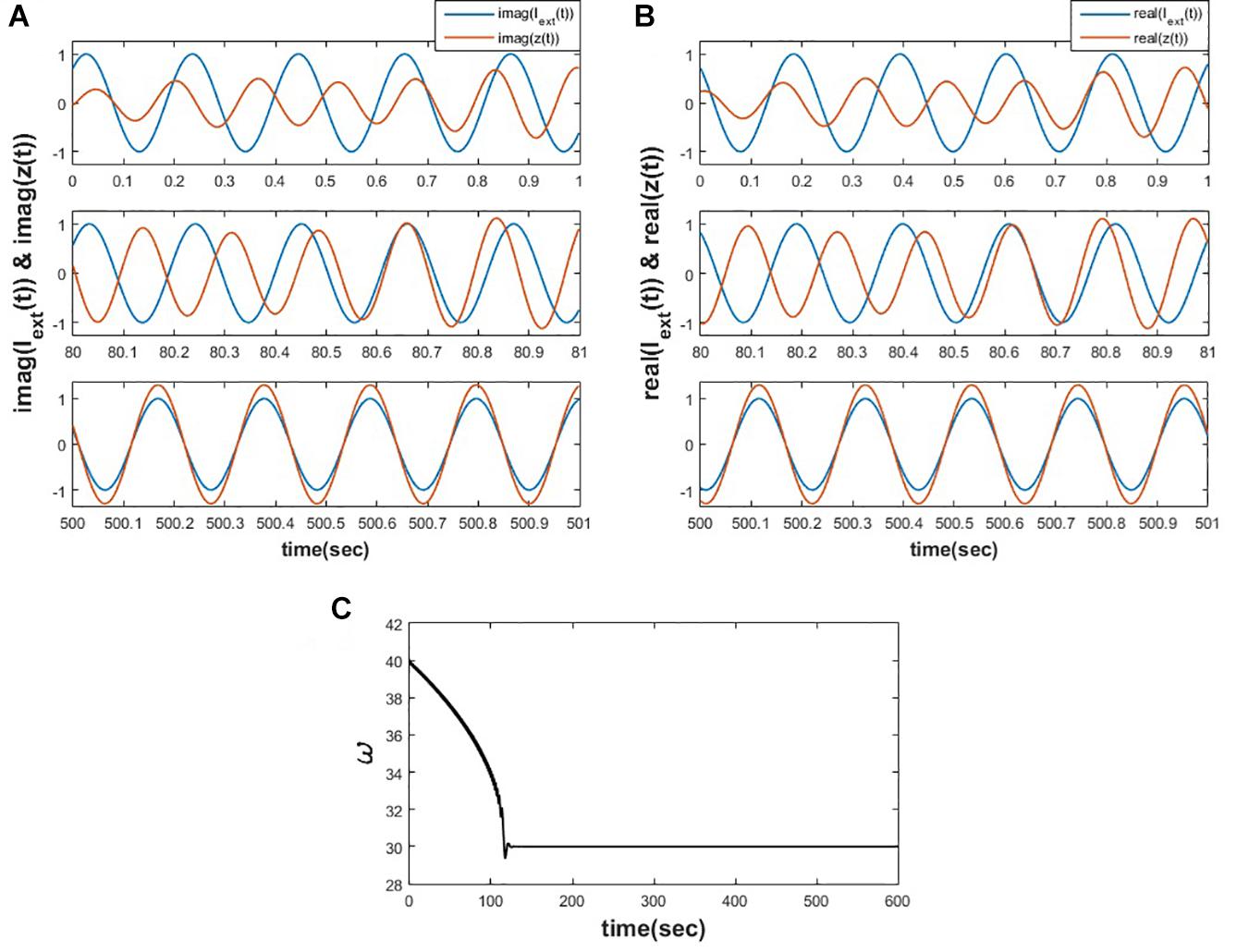

This motivates us to adapt the learning rule for the natural frequency of the oscillator, as proposed in eq. 2c, by dropping the magnitude of oscillation because of the same reason as described by Righetti et al. (2006). We have simulated the eqs. 2a-2c and observed that the proposed learning rule for the natural frequency of the oscillator allows it to adapt to the frequency of the input complex sinusoidal signal, as shown in the following Figure 2.

Figure 2. Equations 2a-c is simulated for for 1000 s. (A) Depicts the variation of y(t) w.r.t img(Iext(t)) at various time instants. (B) Depicts the variation of x(t) w.r.t real(Iext(t)) at various time instants. (C) It can be observed that the natural frequency of the Hopf oscillator adapts to the frequency of the input signal.

When two Hopf oscillators with equal natural frequencies are coupled with the real coupling coefficient as described below (eq. 3), they are going to phase lock in phase (0) or out of phase by (2n + 1)π depending on the polarity of the coupling and initialization. (for proof refer to Supplementary Appendix 1)

where W12 is the real coupling coefficient from 2nd oscillator to 1st one, and W21 is the real coupling coefficient from 1st to 2nd. In the stated scenario, both of the oscillators have identical natural frequencies, ω1 = ω2. This, with real-valued coupling, a pair of Hopf oscillators with equal intrinsic frequencies can only produce two possible values of phase difference.

When two Hopf oscillators with identical natural frequencies are coupled bilaterally through complex coefficients with Hermitian symmetry, they can exhibit phase-locked oscillation at a particular angle similar to the angle of complex coupling coefficient. (for proof refer to the Supplementary Appendix 2).

where z1 = r1ei∅1, z2 = r2ei∅2 and W = Aeiθ, W∗=Ae−iθ to be the coupling coefficient (A and θ being the magnitude and the angle of complex coupling coefficient), in polar coordinate system representation:

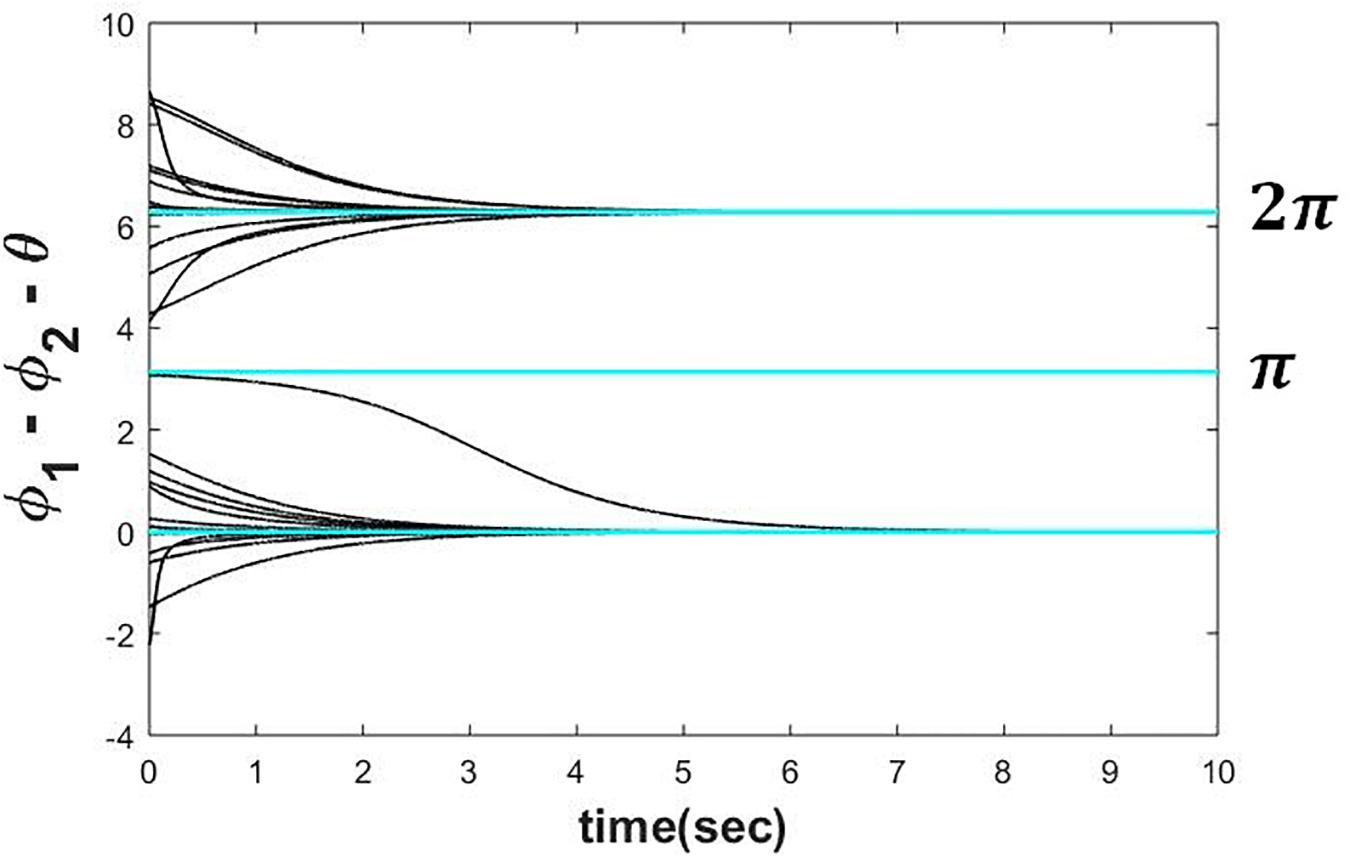

At steady-state ∅1−∅2 approaches any of the solutions 2nπ + θ depending on the initial conditions [∅1(0) and ∅2(0)], whereas the magnitude of the complex coupling coefficient determines the rate at which the phase-locking occurs. ψss (where, ψ = ∅1−∅2 and ψss is the steady state value of ψ) attains solution 2nπ + θ for the following initial condition 2nπ−(−π + θ) < ∅1(0)−∅2(0) < 2nπ−(π + θ).

To verify the above result, we have simulated eqs. 4a1 and 4b1 numerically by Euler integration method on MATLAB platform with △t = 1msec. It can be observed from the plot (refer to the Figure 3) that the steady-state phase difference between the two oscillators (ψss) is either achieving θ or 2π + θ depending on the whether it was initialized in the intervals θ − π < ψ(0) ≤ θ + π and θ + π < ψ(0) ≤ 2π + θ respectively.

Figure 3. Two Hopf oscillators, dynamics of which is defined in the eqs. 4a1 and 4b1 simulated with ω = 5, μ = 1, A = 0.5, for various initial conditions (−π < ∅1(0)−∅2(0)−θ≤3π) depicting that can reach any of the following solutions 2nπ + θ depending on the initial condition.

When a pair of complex sinusoidal inputs with identical frequency is presented to a pair of coupled Hopf oscillators with complex coupling and identical natural frequencies (eq. 4), and when the complex coupling coefficient is adapted according to eq. 5, the angle of the coupling coefficient approaches the phase difference of the external inputs. In other words, the complex coupling learns the phase relationship of the external inputs.

To train the complex coupling coefficient, a Hebbian like learning rule can be used as follows:

The polar coordinate representation:

We have limited the scope of our study only to θ dynamics by assuming . Assuming τW is the time constant for the specified learning dynamics. A similar adaptation of the Hebb’s rule was used earlier to train connections in a complex version of the Hopfield network (Hopfield, 1982). When the dynamics of two Hopf oscillators is defined by eq. 4 (with no external input), and the complex coupling coefficient is trained according to eq. 5 with very small value of A(|W|), θ(angle(W)) learns ∅1(0) − ∅2(0). From eq. 4, it can be interpreted that ∅1(t) ≈ ωt + ∅1(0) and ∅2(t) ≈ ωt + ∅2(0)(Assuming A≪1), similarly from eq. 5, we can see that θ or angle(W) learns or ∅1(t) − ∅2(t) ≈ ∅1(0) − ∅2(0). It is shown in the Supplementary Appendix 3 that the nonzero symmetric steady state solution (r1ss = r2ss ≠ 0, θ − ψss) is , ψ = 2nπ + θ, which is a stable node.

On the other hand, in a network of two coupled oscillators driven by separate external sinusoidal forcing as described in the following eq. 6 with the same frequency as the natural frequency of the two oscillators but any phase offset (φ1, φ2) will drive those oscillators to oscillate at the same phase as the external sinusoidal forcing (∅1 ≈ ωt + φ1 and ∅2 ≈ ωt + φ2), provided very low magnitude of the complex coupling coefficient (A≪1).

Under the influence of external input, the angles of z1 and z2 (∅1 and ∅2 respectively) tend to ωt + φ1 and ωt + φ2, respectively. When the complex coupling coefficient is trained according to eq. 5, the angle(W) or θ learns or ∅1(t)−∅2(t) ≈ φ1−φ2. During training, A must be kept low (A≪1) so that z1 and z2 dynamics is influenced only by the intrinsic oscillatory dynamics and the forcing signal. During testing, however, the external input is removed, A is again increased so that the phase difference between the oscillators is stable and determined by the coupling constant.

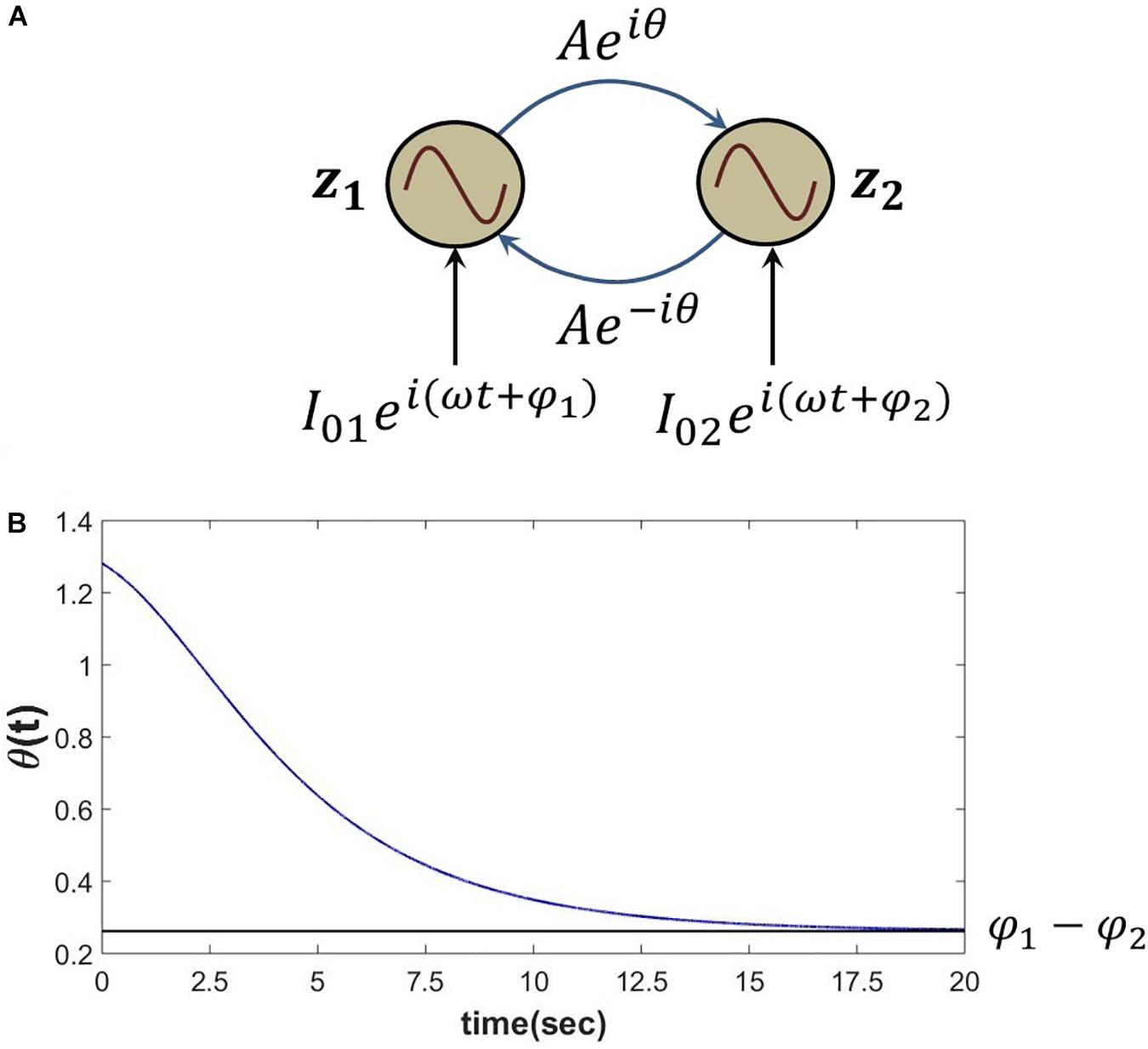

The proposed Hebb like learning rule (eq. 5) allows the angle of complex coupling weight (θ) to learn the phase difference between the complex sinusoidal inputs driving each of the oscillators for very low magnitude of the complex coupling weight (A≪1). To verify this, eq. 6 is simulated for the set of parameters as described in the following Figure 4. It can be observed that θ learns the phase difference between the complex sinusoidal input signals .

Figure 4. (A) Network schematic of dynamics represented in eq. 6 where the complex coupling coefficient is trained according to eq. 5. (B) It can be verified that for the following set of network parameters θ learns φ1−φ2. I01 = I02 = 0.3, .

A different kind of dynamics can be observed when two sinusoidal oscillators with different natural frequencies are coupled with real coupling coefficient. A pair of Hopf oscillators with real coupling shows Arnold tongue behavior which may be described as follows: the two oscillators entrain to commensurate frequencies (having a simple integral ratio) for specific ranges of the coupling strength, and the range of frequencies for which the entrainment occurs widens with the strength of the coupling coefficient (Izhikevich, 2007). There are whole ranges of the coupling strength and the natural frequencies where there is no specific phase relationship at all. Therefore, it is not easy to get a stable phase relationship between two Hopf oscillators with very different frequencies and real coupling.

The situation is a bit more facile with Kuramoto oscillators (Izhikevich, 2007). When a pair of Kuramoto oscillators are coupled according to the equation shown below, their natural frequencies converge to a particular frequency in between their natural frequencies, depending on the coupling strength.

Let, K1 and K2 be the real coupling strengths in the forward and backward directions, respectively. The phase difference between the two oscillators (∅ = ∅1 − ∅2) reaches a steady-state value if K1 + K2 > |ω1 − ω2| which is satisfied by the relationship sin∅∗= where ∅∗ be the steady-state phase difference. Notably, both of the oscillators reach a periodic solution with frequency ω∗=. In this scenario, although synchronization at a particular intermediate frequency with a particular phase difference is ensured, the natural frequency of oscillation is not maintained, i.e., to phase lock at a particular angle, the oscillation of both the oscillators had to converge at a particular intermediate value. A network of such Kuramoto oscillators (eq. 7a) with natural frequencies drawn from a Gaussian distribution with constrained standard deviation can show increased phase synchrony among the oscillators when the isotopic coupling strength (K) exceeds a threshold (Breakspear et al., 2010).

Thus, we can see that the dynamics of a pair of coupled Hopf oscillators become dramatically more complicated when we relax the equality relationship between the natural frequencies of the coupled oscillators. We can visualize a simple, desirable extension of the dynamics of a pair of coupled oscillators with distinct natural frequencies. When the natural frequencies are equal, we found that the phase difference equals the angle of the complex coupling factor. However, when the natural frequencies are unequal, there cannot be a phase difference in the usual sense (∅1 − ∅2), although when the coupled oscillators are entrained in the m:n ratio, the phase difference has been defined as m∅1 − n∅2 (where, m, n are integers). Therefore, one may define a quantity called “normalized phase difference” as follows:

Let ψ12 and ψ21 be the normalized phase difference of 1st oscillator w.r.t 2nd oscillator and vice versa. Is it possible to relate the normalized phase difference with the angle of the complex coupling coefficient? It turns out that such a relationship is not possible even with the complex coupling of eq. 4. To this end, we extend the complex coupling to a new form of coupling we label “power coupling” as described below.

A pair of sinusoidal oscillators (Hopf or Kuramoto oscillator) can entrain at a specific normalized phase difference if they are coupled through complex “power coupling” coefficient according to the following eqs. 8a and 8b at a particular value dependent on the angle of complex “power coupling” coefficient, natural frequencies of the coupled oscillators and the initial values of the phase angle of those oscillators (for proof please refer to Supplementary Appendix 4).

Coupling a pair of Hopf oscillators through power coupling:

where, is the weight of power coupling from 2nd oscillator to the 1st oscillator and is the weight of power coupling from 1st oscillator to the 2nd oscillator.

The polar coordinate representation:

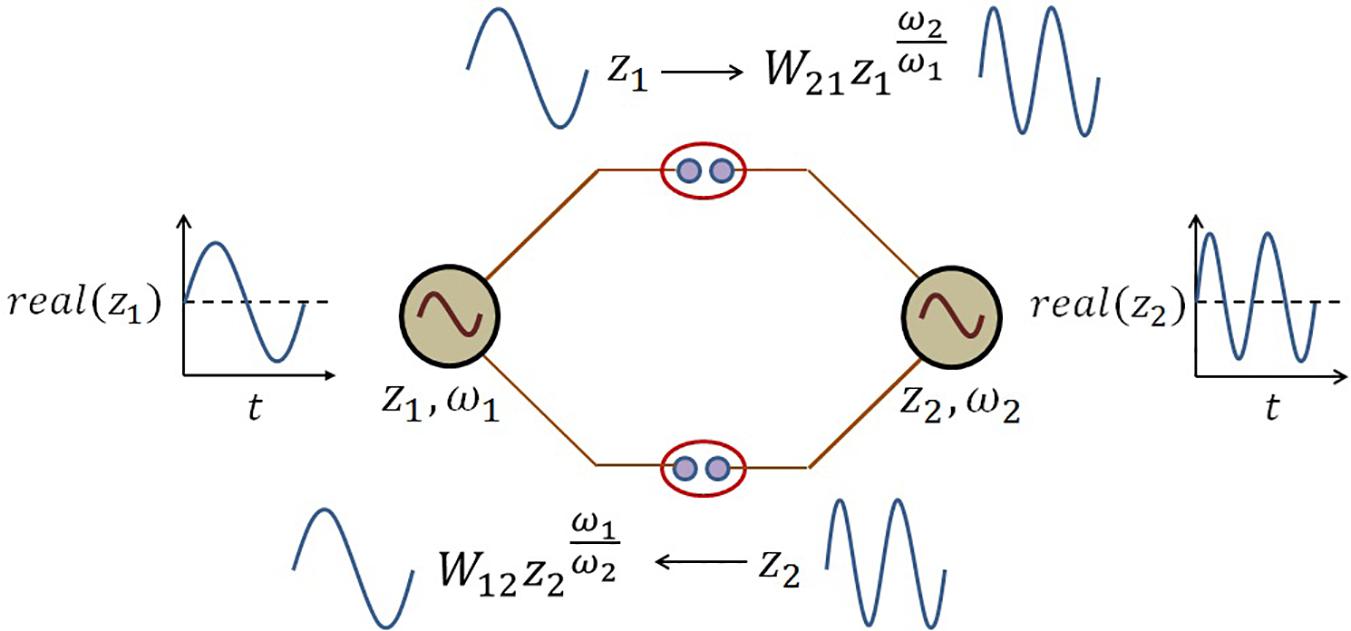

The schematic of the coupling architecture is elaborated in the following Figure 5.

Figure 5. The schematic network representation of eq. 8. There is a kind of frequency transformation occurring at the power coupling synapse because of the following term in the dynamical eq. 8:. At the power coupling synapse, this frequency-transformed version of the oscillation from jth oscillator is weighted by the power coupling weight to convert the forcing signal from jth oscillator to ith oscillator and vice versa.

Based on eqs. 8a2 and 8b2, we understand that two modified Kuramoto oscillators may be coupled using power coupling as follows:

Similarly, the dynamics of the ith oscillator in a network of N supercritical Hopf oscillators coupled through power coupling can be represented as:

Let be the weight of power coupling from the jth oscillator to ith oscillator.

The polar coordinate representation is:

Therefore, the dynamics of the ith oscillator in a network of N number Kuramoto oscillators coupled through power coupling can be represented as:

We may appreciate the difference in the second term in eqs. 7a and 12. (defined in eq. 7b) can be obtained from eqs. 8a2, 8b2 and 11b for a network of two oscillators and also for the general case of N (> 2) Hopf oscillators respectively:

At steady state, ψ12 and can attain any of the possible solutions of the above eqs. 13a,b.

For a network of any arbitrary N number of Hopf oscillators as described in eqs. 10 and 11 under the constraint eiθij = e−iθji or θij = −θji, Aij = A,μ = 1, s can achieve the following desirable solution:

for certain initial conditions.

However, there can exist other solutions for which the sum of the terms on the right-hand side of eqs. 13a,b is zero, without all the individual terms being zero. That is eq. 13b.s above is not satisfied. We call these solutions spurious solutions.

Whether the final solution is a desirable or a spurious solution depends on the initial conditions. Therefore, the solution can be achieved only for certain initial values of s.

Under the following constraints θ12 = θ, θ21 = −θ, A12 = A21 = A, μ = 1, some of the steady-state solutions of eq. 13a where both entities on the right-hand side of eq. 13a is zero, is as follows:

following the specification: . It can be noticed that n1 = n2 = 0 gives us the desired solution, as mentioned in eq. 13b.s.

Let , so, .

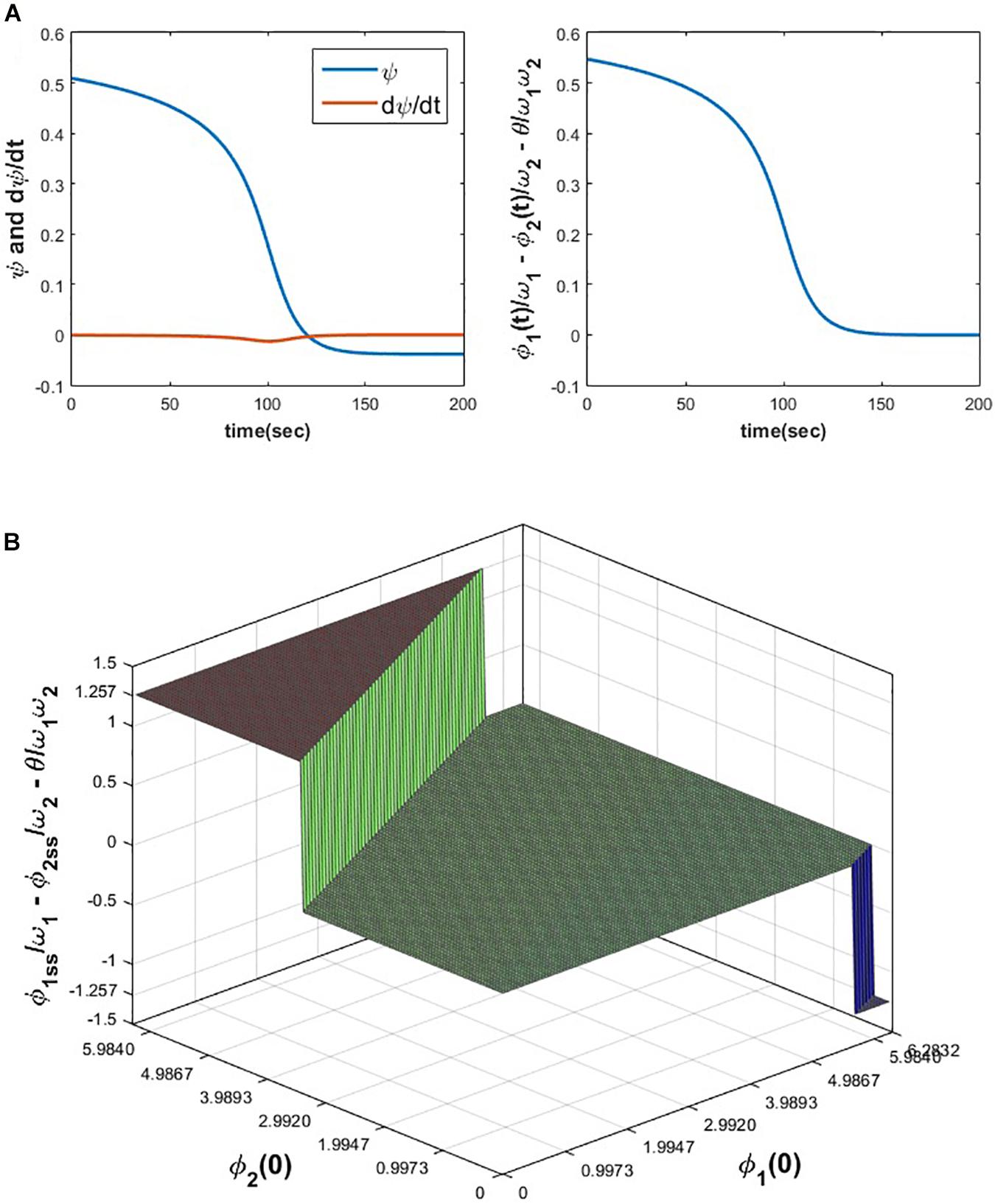

From the following Figure 6A it can be verified that ψ12 and σ12 attain and zero solution (the desired solution as mentioned before) respectively for the initialization specified in the figure caption. To check the dependency of on the initial values of ∅1 and ∅2, ∅1(0) and ∅2(0) space is discretized at △∅1(0) = △∅2(0) = 0.1, in the interval 0 < ∅1(0), ∅2(0) ≤ 2π and eqs. 8a1, 8a2, 8b1, 8b2 is simulated for 200 s to ensure the steady-state to be achieved for various combinations of ∅1(0) and ∅2(0). From the following Figure 6B it can be observed that achieves the following solutions: ; for n1 = 0, 1 or independent of θ.

Figure 6. (A) The variation of ψ, , and w.r.t time is plotted by simulating eqs. 8a1 and 8b1 for the following set of parameters and initial conditions. ω1 = 5, ω2 = 10, A = 0.05, θ = −1.8968, ∅1(0) = 3.7008, ∅2(0) = 2.3106. (B) It can be verified that depending on the various combinations of initial conditions (0 < ∅1(0), ∅2(0)≤2π), can reach one of the solutions . For the following set of parameters, simulated results show that reaches one of the following three solutions . Simulation parameters: ω1 = 5, ω2 = 10, A = 0.2, θ = 2.9644.

We now explore the solution space numerically for a three-oscillator system. When three Hopf oscillators are coupled according to the eq. 10 or 11 (N = 3) with s, chosen as θij = θi − θj∀ 0 < θi, θj ≤ 2π (i.e., θij = −θij), at steady state σij achieves one of the possible solutions depending on the initializations (∅i(0)′s). The dynamics of the three oscillators can be expressed in the following form.

At steady-state , where or : Assuming μ = 1, Aij = A and eiθij is a Hermitian matrix (i.e., eiθij = e−iθji),

The trivial and desirable solution set for the above equation is:

=, =,= or ===0

However, like before in eq. 13a, there are several spurious solutions for which the entire right-hand sides of eqs. 14a, 14b, 14c are zero, without each of the individual terms being zero separately. So, σij can achieve any of the possible solutions satisfying the above equation. It appears that finding the spurious solutions analytically are not possible.

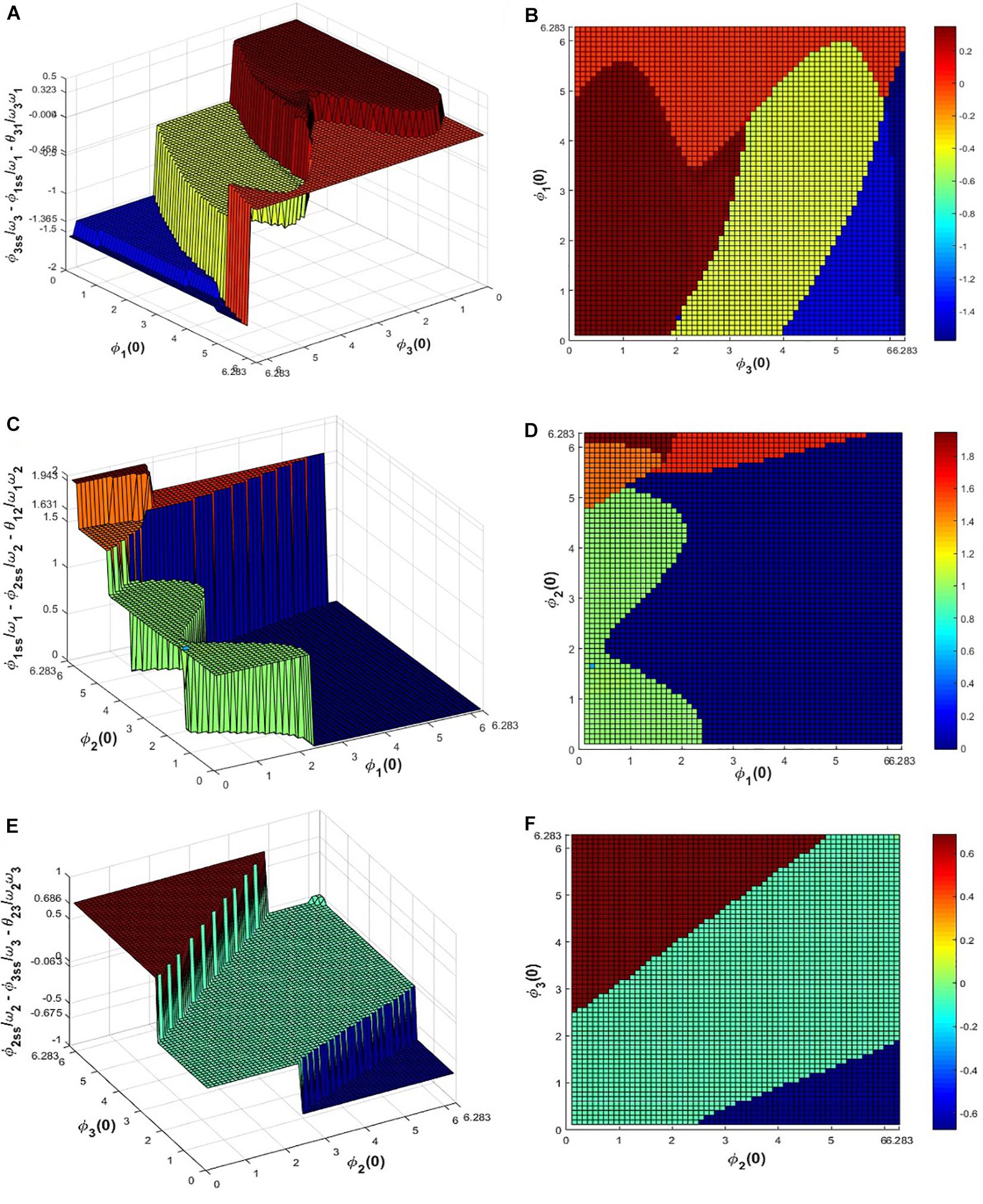

To verify this numerically eq. 11 was simulated for the given set of network parameters: ω1 = 4, ω2 = 7, ω3 = 10, Aij = A = 0.2, and the initial values of in the range 0 < ∅i(0) ≤ 2π with △∅i(0) = 0.1 for 100 s to let the system achieve a steady-state. It can be observed from eq. 14 that the possible solutions of do not depend on θij but on the natural frequencies of the oscillators (ωi). The following Figure 7 summarizes the dependency of on ∅i(0) and ∅j(0) where it can be observed that , , and achieve any of the 5, 3, 4 solutions respectively (mentioned in the figure caption) and particular combinations of these solutions satisfy the above equation. For a given initial value of ∅1, ∅2 and ∅3, ’s attained one of the solutions and for a confined subspace of ∅1(0), ∅2(0) and ∅3(0) under space 0 ≤ ∅i(0) < 2π, ’s attained the zero or desired solution .

Figure 7. Equation 11 is simulated for 100 s for the network parameter values mentioned previously to check for what combinations of initial values of , ’s reaches, which of the solutions of eq. 14. (A) and (B) depicts that for various initial values of ∅1and ∅3 in between 0 to 2π, attains 4 possible solutions: 0.3235, −0.0036, −0.4576, −1.3652, similarly from (C-F) it can be seen that for various initial values of ∅1, ∅2 and ∅2, ∅3 in between 0 to 2π, and attains the 0.99, 1.465, 1.6307, 1.9426, −0.0046 and 0.0633, 0.6855, −0.6751 solutions respectively, satisfying eq. 14.

We may extend the Hebbian learning of complex coupling (eq. 5), to the case of power coupling as follows.

The polar coordinate representation:

We have limited the scope of our study only to θij dynamics by assuming . Let τW be the time constant of the above learning dynamics. It is shown both analytically and numerically that ωj times angle(Wij) or θij tries to learn ∅iωj−∅jωi when the weight of the power coupling is trained according to eq. 15 (for proof, please refer to Supplementary Appendix 5). Similarly, as it was previously shown during the training or phase encoding of the complex coupling coefficient with very small fixed value of Aij (magnitude of complex coupling coefficient), θij (angle of complex coupling coefficient) learns ∅i(0)−∅j(0) (≈∅i−∅j) for arbitrary values of ω (constraining the natural frequencies to be identical). When the power coupling weight is updated according to eq. 15 under the same constraint as Aij≪1 and , θij learns ∅i(0)ωj−∅j(0)ωi when there is no external input.

From eq. 11, it can be interpreted that ∅i(t) ≈ ωit + ∅i(0) for Aij≪1. So, when a network of sinusoidal oscillators coupled according to eqs. 10 or 14 with the power coupling weights trained according to eq. 15, θij learns .

In such a network when individual oscillators are driven by complex sinusoidal forcing and the power coupling weights are trained as stated, θij learns φiωj−φjωi as with Aij≪1, and I0i≫Aij. s are approximately same as ωit + φi. The dynamics of such a network of supercritical Hopf oscillators is defined below:

Polar coordinate representation:

The dynamics of such a network of Kuramoto oscillators:

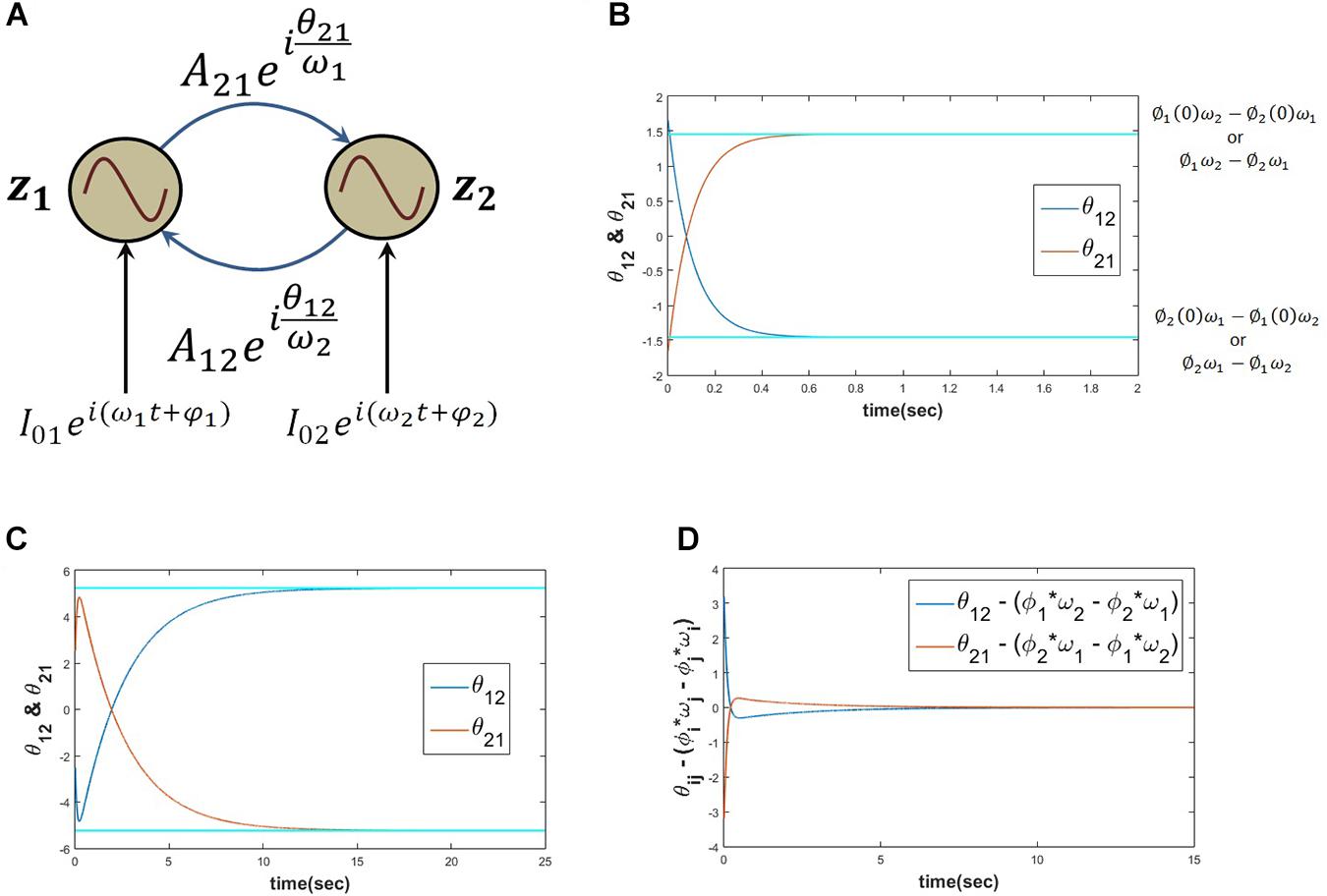

When two Hopf oscillators are coupled through power coupling with very weak coupling coefficient (Aij≪1) as described above in eq. 16, θij learns ∅i(0)ωj−∅j(0)ωi while there is no external input () and θij learns φiωj−φjωi when ith oscillator is forced by complex sinusoidal external perturbation. The following simulation results support the stated argument (Figure 8).

Figure 8. When two Hopf oscillators (z1, z2) coupled through power coupling according to eq. 16 (for N=2 and ), the schematic of the network is elaborated in panel (A), with the parameters ω1 = 5, ω2 = 10, A12 = A21 = 0.0001, θ12(0) = 1.657, θ21(0) = −1.657 and the power coupling weights are trained according to eq. 15, keeping the magnitude of the power coupling weight (Aij) constant, ωj times the angle of power coupling, θij learns ∅i(0)ωj−∅j(0)ωi or ∅iωj−∅jωi. Here ∅1(0) = 1.2046, ∅2(0) = 2.7008, and τW = 103. It is clear from the plot (B) that θ12 reaches ∅1ω2−∅2ω1 and θ21 reaches ∅2ω1−∅1ω2 w.r.t time. When two such Hopf oscillators as described in the previous simulation are forced by an external complex sinusoidal perturbation as described in eq. 16 () with the following parameters ω1 = 5, ω2 = 10, A12 = A21 = 0.0001, θ12(0) = −2.513, θ21(0) = 2.513, τW = 103 and , , θ12, and θ21 learn φ1ω2−φ2ω1 = −5.7131 and φ2ω1−φ1ω2 = 5.7131 respectively (C). (D) It can be verified that the difference between θ12 and ∅1ω2−∅2ω1 as well as θ21 and ∅2ω1−∅1ω2 becomes zero w.r.t time.

A pair of coupled adaptive Hopf oscillators driven by distinct complex sinusoidal inputs are capable of adapting their natural frequencies to the frequencies of the complex sinusoidal input signals. The trainable power coupling weight can encode the normalized phase difference between the two complex sinusoidal input signals.

When a pair of Hopf oscillators coupled through trainable power coupling coefficient (eq. 15) are driven by complex sinusoidal inputs (eq. 16), they adapt their natural frequencies according to eqs. 2b or 2c, ωj times the angle of power coupling coefficient Wij (power coupling weight from jth oscillator to ith oscillator) or θij can learn the normalized phase difference between the complex sinusoidal input signal. If is the complex sinusoidal input signal driving ith oscillator, then the normalized phase difference among them is:

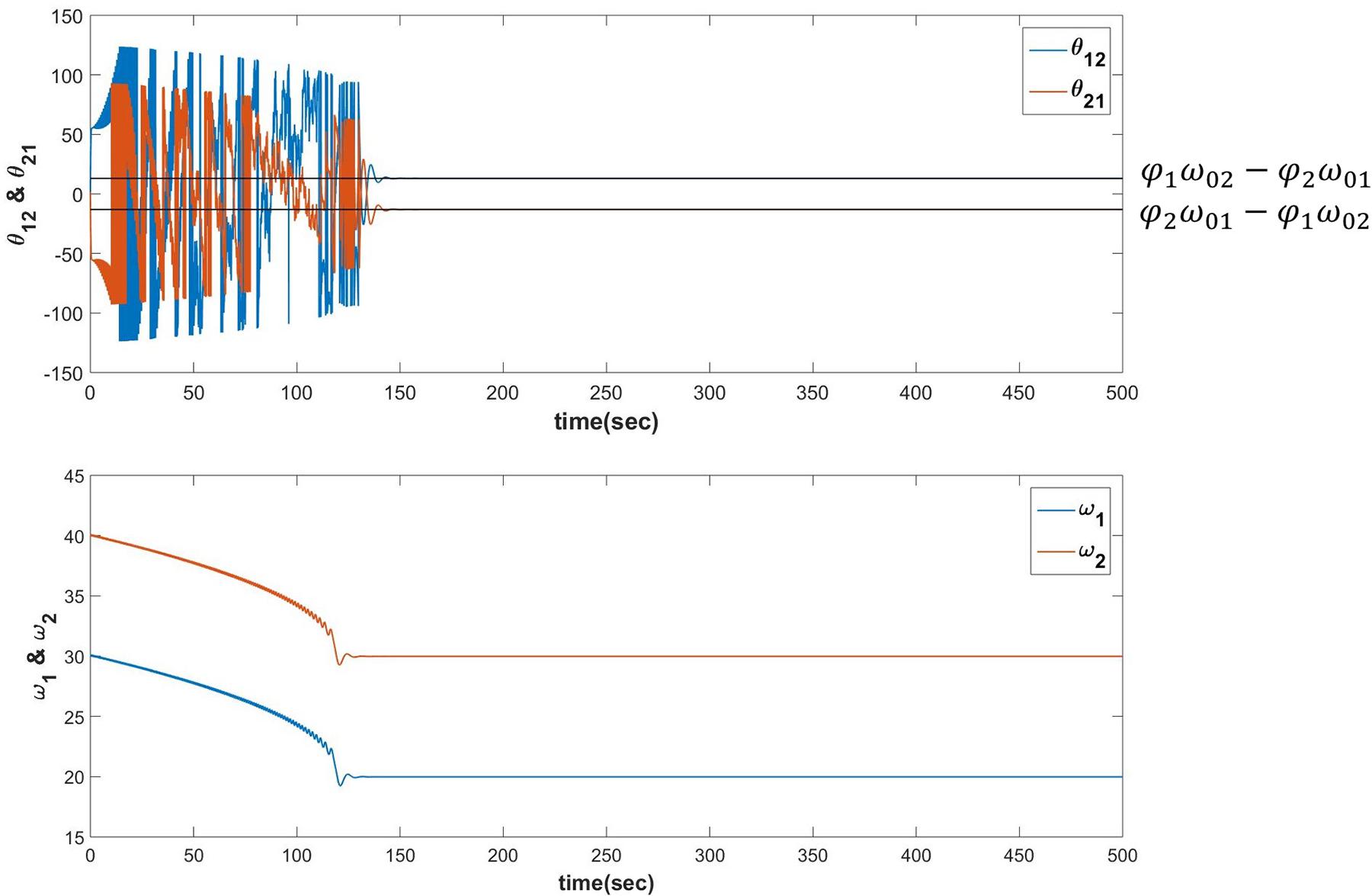

When the following dynamics is simulated for N=2 it can be verified that θij learns φjω0i−φiω0j after ωi, the natural frequency of ith oscillator learns the frequency of the input signal ω0i (described in Figure 9).

Figure 9. Equations 19a-c are simulated for the following set of parameters:ω01 = 20, ω02 = 30, ω1(0) = 30, ω2(0) = 40, A12 = A21 = 0.0001, θ12(0) = −1.7884, θ21(0) = 1.7884, τW = 103, ε = 0.9 and , . It can be verified that θij learns φjω0i−φiω0j after the s learns the corresponding s.

In the previous sections, we have described a network of oscillators in which the natural frequencies and lateral connections can be trained. We now add a feature to the network of Section “A Network of Oscillators With Adaptive Frequency and Trainable Lateral Connections” and make it learn an unknown signal by performing a Fourier-like decomposition.

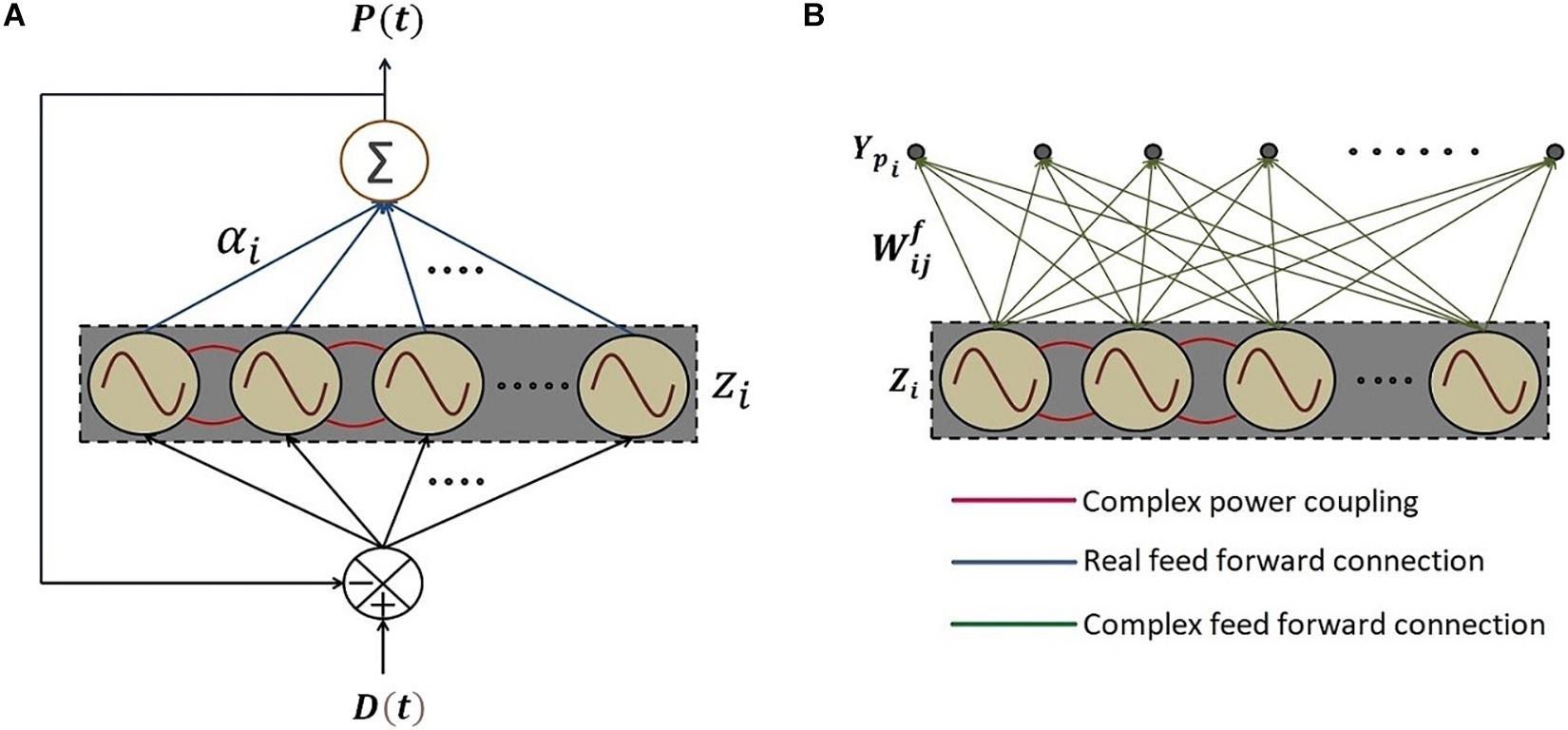

To this end, we construct a reservoir of Hopf oscillators (Figure 10A). It consists of a network of Hopf oscillators with trainable lateral connections (eq. 15) and trainable natural frequencies (eq. 20b). In addition, there exists a linear weight stage between the oscillators and the output layer. The natural frequencies of the oscillators adapt themselves to the nearest significant component in the input signal. The lateral connections involving power coupling, encode the (normalized) phase relationships among the oscillators. The output weights represent the amplitudes of the oscillatory components corresponding to the oscillators. A similar network architecture and dynamics for adaptable central pattern generator (aCPG) has been proposed by Righetti et al. (2005), the detailed comparison of which is discussed in Section “Discussion.”

Figure 10. The schematic of the network where (A) a reservoir of N number of adaptive Hopf oscillators similar to the network proposed by Righetti et al. (2005) with additional asymmetric power coupling connections between the oscillators, each of which is driven by the same error signal e(t) between the D(t), teaching time-series signal and P(t), the linear summation of the oscillations of each of the oscillators in the reservoir through the real feed-forward connection weights, elaborated in eq. 20. (B) The network architecture of 2nd phase of training with the same reservoir of oscillators with tuned natural frequencies and trained power coupling weights in the 1st phase of training. Ypi is the predicted output signal at the ith output node. Complex feed-forward weights connecting jth oscillator to the ith output node, are trained in batch mode according to eq. 23.

To reconstruct the teaching time-series signal at the output summation node, the error signal that drives each of the oscillators drives the phase offset to the desired phase offset associated with the corresponding frequency present in the teaching time-series signal. The activation and learning dynamics of the network are given below.

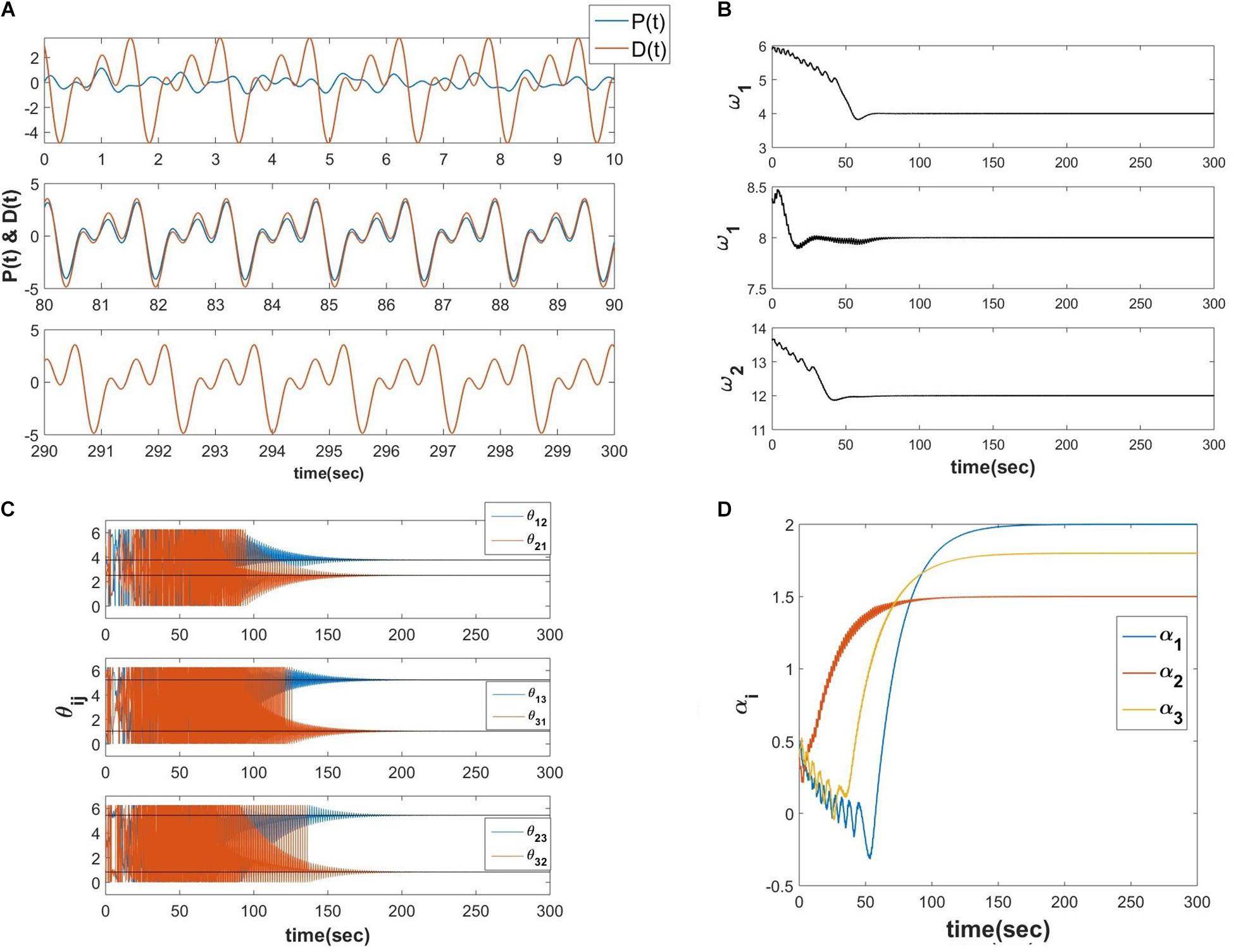

Let D(t) be the teaching time-series signal with a finite number of frequencies ( where φi be the phase offset associated with ith frequency component), zi = riei∅i, are the real feed-forward weights from ith oscillator to the output summation node, τW, ηω and ηα are the time constant of the learning dynamics for power coupling coefficient, the learning rate of the natural frequency of the oscillators and the real feed-forward weights from oscillators to the output summation node respectively, P(t) is the reconstructed signal at the output summation node. The numerical simulation of the proposed network (Figure 10A describes the schematic) is elaborated in the following Figure 11.

Figure 11. Equations 20a-e is simulated with the following parameters , Aij = 10−5, ηω = 0.1, ηα = 10−4, τW = 104, ε = 0.5, N = 3, dt = 10−3 sec. (A) The network learned signal at the output summation node P(t) and the D(t) signal at various 10 s of time interval. (B) The natural frequencies of the three oscillators learn the three frequency components present in the teaching signal. (C) ωj times the angles of the complex power coupling weights (θij) learn φiωj−φjωi. (D) The real feed-forward weights αi’s learn ai’s.

We propose a similar network for Fourier decomposition of complex time series signal with a finite number of frequencies (assuming ), which is an extension of the network proposed in section “A Network of Oscillators With Adaptive Frequency and Trainable Lateral Connections.” The proposed network is comprised of a reservoir of complex adaptive Hopf oscillators coupled through trainable power coupling weights, and driven by distinct complex sinusoidal inputs with arbitrarily different frequencies. The network is capable of learning the frequencies and encode the normalized phase relationship among the oscillatory components of the input signal. It is driven by the error signal between the complex teaching signal and the linear summation of complex activations of the Hopf oscillators. The dynamics of the network is described in the following equations.

The schematic of the network is identical to the network described in Figure 10A, and the simulated result for a D(t), which contains three frequency components is as follows (Figure 12).

Figure 12. Equations 21a-e are simulated for a teaching signal constituting three frequency components with the parameters . (A) The real part of the network learned signal at the output summation node P(t) and the D(t) signal at various 10 s of time interval. (B) The natural frequencies of the three oscillators learn the three frequency components present in the teaching signal. (C) ωj times the angles of the complex power coupling weights (θij) learn φiωj−φjωi. (D) The real feed-forward weights αi’s learn ai’s.

The proposed network is capable of modeling an arbitrary number of signals with an overlapping frequency spectrum. It is trained in two phases. In the first phase, a network exactly similar to the one in the previous section (Section “A Network for Reconstructing a Signal by a Fourier-Like Decomposition”) is used to encode the constituting frequency components of one of the input signals. During this phase, the natural frequencies (s), the real feed-forward weights () as well as the power coupling weights of a network of N Hopf oscillators are trained using the same learning rule described in the previous section (eq. 20). One key difference compared to the previous section is that the teaching signal in the present scenario is aperiodic (frequency spectrum is continuous) and has a finite duration. To overcome this issue, the limited duration teaching EEG signal is presented repeatedly to the network over multiple epochs. This helps the network to learn some sort of Fourier decomposition of the teaching signal.

In the second phase of training, the trained reservoir of oscillators tries to reconstruct M number of signals at the corresponding M output nodes assuming these M signals are the outcome of the same underlying process (producing signals with frequencies confined to a certain frequency band) by training the complex feed-forward weights connecting N oscillators of the reservoir to the M output nodes. The schematic of the network architecture (Figure 10B) and the corresponding learning rules are described below.

During the first phase of learning, an identical network with identical learning dynamics as described by eq. 20 is used. The only fundamental difference between the previous and present scenario is that D(t) is a limited duration aperiodic or quasiperiodic signal compared to the previous case where D(t) was an infinite duration periodic or quasiperiodic signal. i.e., there can be an infinite number of frequency components present in D(t) as the frequency spectrum of an aperiodic signal is continuous (refer to Figure 14A). So, the Fourier decomposition using the proposed network can be accomplished by discretely sampling the continuous frequency spectrum.

In this phase, the supervised batch mode of learning is used to train the complex feed-forward weights, Wf, defined as follows. Here Kij and ζij are respectively the magnitude and angle of the complex feed-forward weights. The derivation for the batch update rule for Kij and ζij as described in eq. 22 is given in the Supplementary Appendix 6.

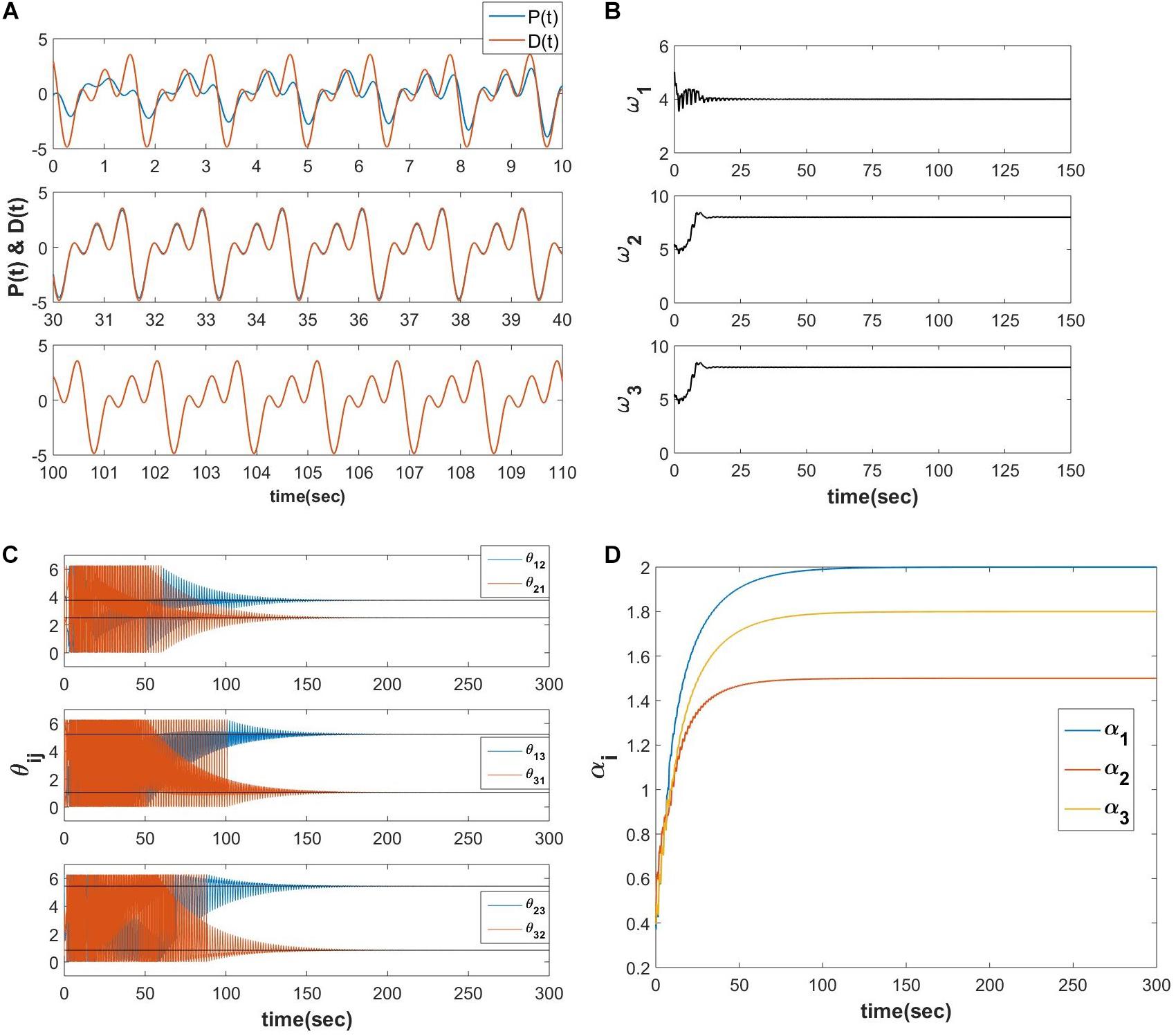

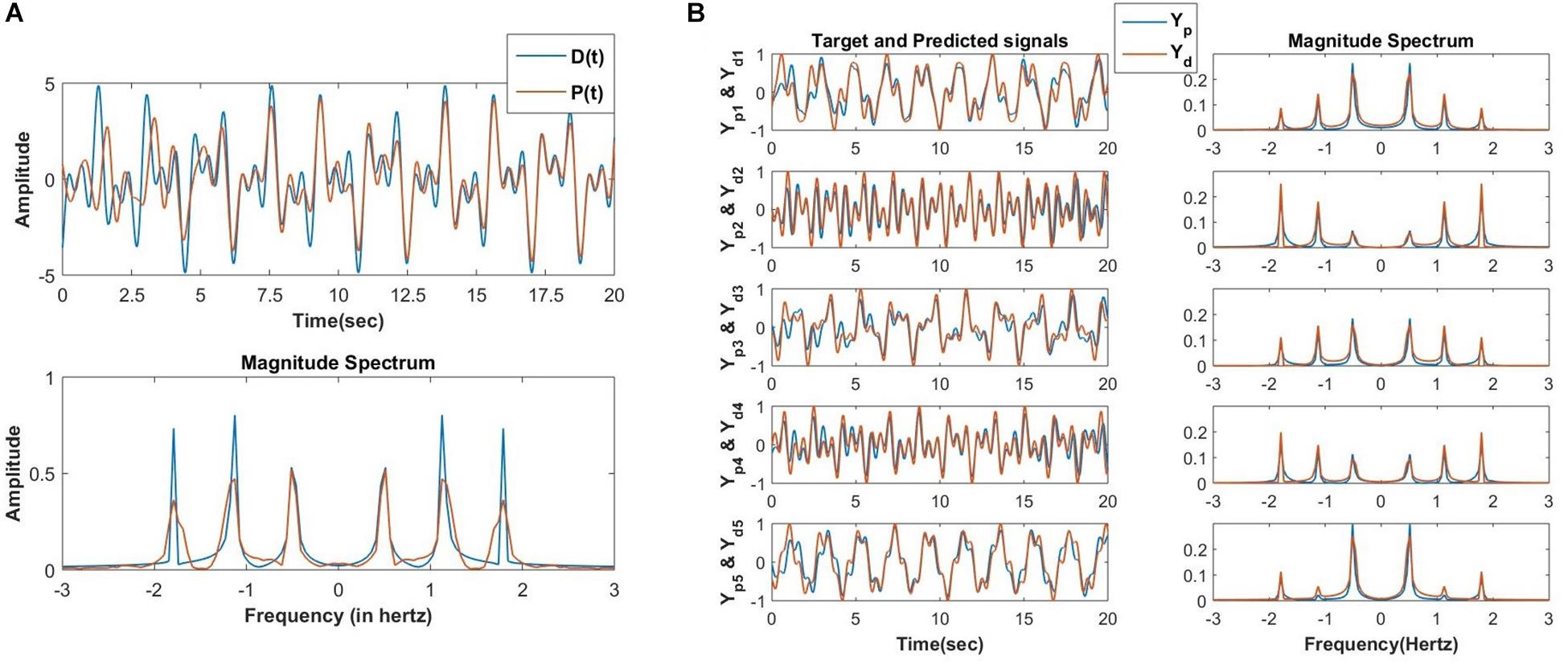

At first, the proposed network is simulated to model an arbitrary number of quasiperiodic signals with identical frequency components. The desired signal (D(t)) consisting of three frequency components (provided is not an integer) with a duration of 20 s is used for learning. The network output P(t) signal (after the first phase of learning) and the respective frequency spectrum is plotted in Figure 13A. After the second phase of training, the desired and the network reconstructed signals are plotted in Figure 13B, and the training parameters are described. One crucial condition has to be met in order to achieve an accurate reconstruction: All the oscillators in the reservoir has to be initialized at zi(0) = 1.

Figure 13. (A) In the first phase of training the oscillatory reservoir network (Figure 10A) with three oscillators is trained using the following teaching signal and learning parameters: D(t) = A1cos(ω1t + ∅1) + A2cos(ω2t + ∅2) + A3cos(ω2t + ∅2), Aij = 10−1, ηw = 10−1, ηα = 10−4, τw = 104, ϵ = 0.5, N=3, dt = 10−3sec, nepoch = 15. The network produced and the original teaching signal and their magnitude spectrum respectively after the last epoch (15) of training. (B) After the second phase of training, the network (Figure 10B) is able to reconstruct the M=5 output signals (Ydi for i = 1 to M) with identical frequency components but randomly chosen amplitude (Ai) and phase offset (∅i) with high accuracy. Learning parameters: ηK = 3 × 10−5, ηζ = 10−6, no. of epochs for the batch mode of learning = 10000.

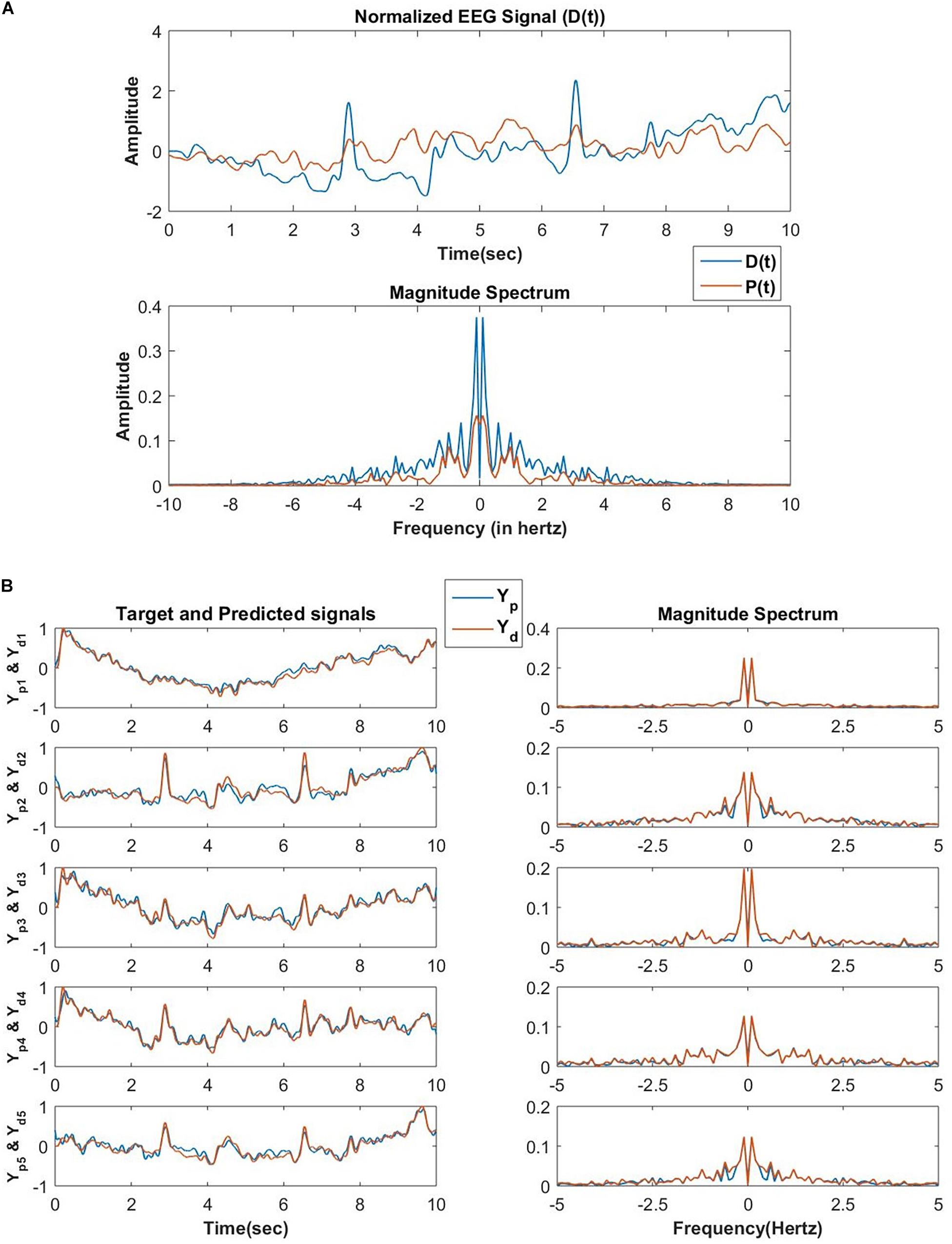

The network performance is tested on low pass filtered (cut off frequency of 5 Hertz) EEG data collected from a human subject while performing mind wandering task (Grandchamp et al., 2014). In the first phase, the network encodes N number of frequency components of an EEG signal collected from one channel of EEG recording during the mentioned experiment. There is a constraint imposed on the magnitude of the lateral power coupling weights as shown in Figure 14. This constraint allows only oscillators with nearby frequency components to interact with each other as initially, the natural frequencies of these oscillators are sorted at an increasing order after sampling from a uniform probability distribution ranging between 0 and 5 Hertz.

Figure 14. (A) The reconstructed signal by the network of 100 oscillators after 30 epochs, the teaching signal, and the respective frequency spectrum in the first phase of training. In this phase of training, the D(t) signal is an EEG signal of duration 10 s, and the exactly similar EEG signal is presented for consecutive 30 epochs. The learning parameters are: Aij = 10−1 for |j−i| < 5 and Aij = 0 for |j−i|≥5ηω = 0.1, ηα = 10−4, τW = 104, ε = 0.5, N = 100, dt = 10−3 sec nepoch = 30. (B) After the second phase of training, the network reconstructed and the original EEG signals collected from 5 channels during the same experiment from which D(t) for the first phase of training was collected and the corresponding frequency spectrum. Learning parameters: ηK = 3×10−5, ηζ = 10−6, no. of epochs for the batch mode of learning = 1000.

In the second phase, the network tries to reconstruct the EEG signals collected simultaneously from the other channels during the same experiment. A set of EEG signals from 5 other channels are reconstructed by the network. The reconstruction accuracy of the modeled EEG signals depends on the number of oscillators in the oscillatory layer and the number of desired signals to be reconstructed. Using complex weights for the feed-forward connections is a key factor as it helps to learn the amplitude, as well as phase of the Fourier decomposition of the EEG signal to be reconstructed, provided the frequency components are already learnt in the encoding phase.

The unique contribution of the proposed network of complex neural oscillators is the notion of power coupling by which it becomes possible to achieve a stable normalized phase relationship between two oscillators with arbitrary natural frequencies. With such a feature as the backbone, it is possible to construct a network of oscillators that can learn to reconstruct multiple time series signals. Another positive feature of the proposed model is the biological feasibility of the learning mechanisms. The lateral connections in the oscillatory reservoir are trained by a Hebb-like rule, while the forward connections are trained by a modified delta rule adapted to the complex domain. Experimental observations reveal that local interaction occurs at the single neuron level through single synapse whereas long-range interaction occurs between various cortical areas mediated by multiple synapses (Supplementary Figure 6). At single-cell level the synaptic property can define the relative phase difference between tonically firing presynaptic and postsynaptic neurons. Neuronal population-level interaction between various cortical areas can facilitate cross-frequency coupling ensuring a certain phase relationship (Hyafil et al., 2015) between the LFP activity of different frequencies of the respective cortical areas.

There is a vast literature pertaining to complex-valued neural network models, which are produced by extending their real-valued counterparts to the complex domain. Complex feed-forward networks trained by the complex backpropagation algorithm, complex Hopfield network, complex self-organizing maps are examples of such models. Complex formalism plays a key role in linear systems theory in which both systems and signals can be represented as complex functions. As a special case of this, in electrical circuit theory, oscillations are modeled as complex numbers called phasors, that represent the phase of the oscillations. Therefore, there is a longstanding association between complex numbers and oscillations. Nevertheless, complex-valued neural network literature did not seem to have exploited this association. For the most part, complex-valued neural networks operate like 2n dimensional versions of their n-dimensional real-valued counterparts. The proposed model attempts to take an important step in filling this lacuna. By describing oscillations in the complex plane, and invoking the power coupling principle, it is able to elegantly overcome some of the difficulties involved in harnessing the dynamics of multi-oscillator networks with real coupling.

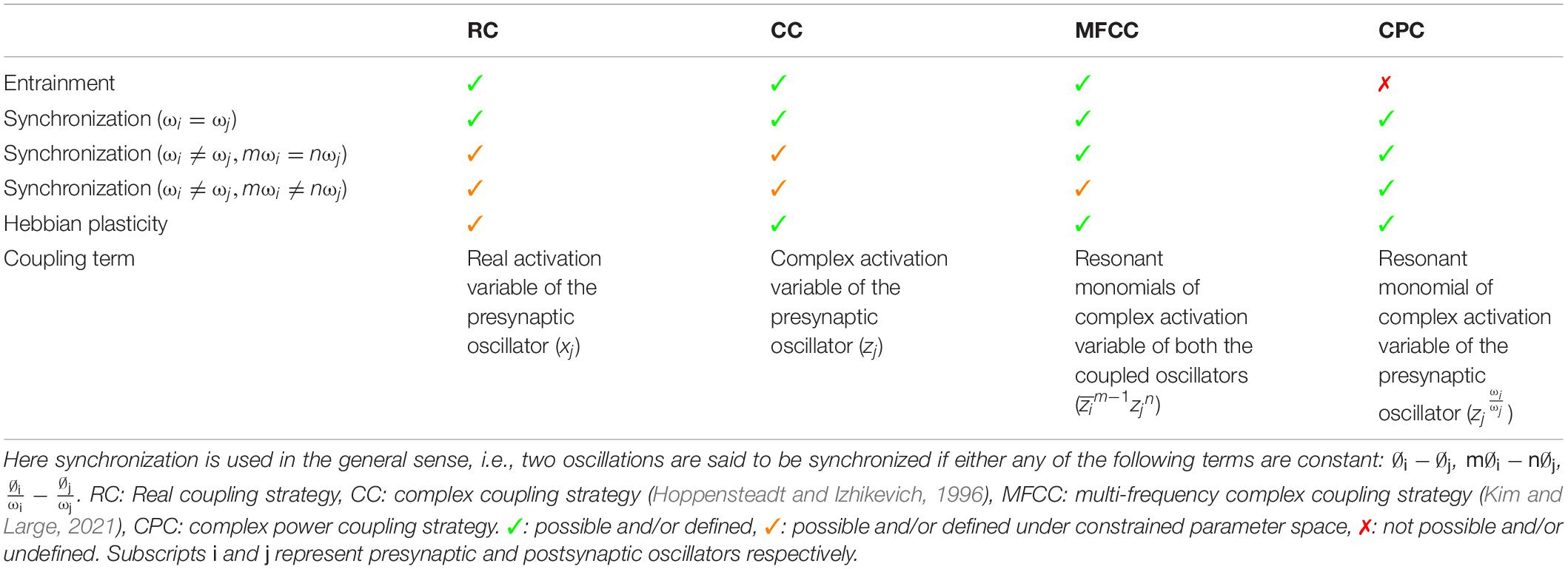

Network models of complex-valued oscillators have been described before. For example, subsequent studies by Hoppensteadt and Izhikevich (1996, 2000) have shown that weakly connected network of complex oscillators with identical natural frequencies and self-adjoint matrix of complex coupling coefficient can achieve any arbitrary phase difference between two oscillators. It has also been shown that such network can store and retrieve at least one memory pattern in terms of the phase difference of the oscillators by enabling the Hebbian-like learning rule of the complex coupling coefficient as given in eq. 5. Recent study by Kim and Large (2021) has extended the steady-state stability analysis of such a pair of oscillators to the special case of frequency detuning (ω1 ≠ ω2) for a range of parameter space. In the same study (Kim and Large, 2021), the authors have proposed a generalized framework for a pair of coupled multifrequency complex oscillators (Hoppensteadt and Izhikevich, 1997) with a Hebbian like learning rule for the complex coupling coefficient. Unlike the proposed power coupling strategy, the coupling is defined for oscillators with natural frequencies near the resonant condition: mω1 = nω2, where m and n are integer numbers. Similar steady-state stability analysis of such a pair of complex oscillators (for n=2, m=1 and higher order) shows that the angle of the complex coupling coefficient (θij) learns m∅1−n∅2 for Ω12 = mω1−nω2 = 0, and increases/decreases linearly or rotates for Ω12 ≠ 0 at steady state. However, neither of the study extended the result to the case of interacting oscillators with very different natural frequencies (|mω1−nω2| > ϵ, where ϵ is a small positive number), as we have done using power coupling. Table 1 summarizes the comparison between four different coupling strategies between a pair of complex Hopf oscillators. Comparison is made principally w.r.t two dynamical properties: entrainment and synchronization and Hebbian like plasticity of the coupling coefficient and the coupling term influencing the oscillator dynamics. Frequency entrainment is the dynamical phenomena when the natural frequency of two coupled autonomous oscillators converges to an intermediate frequency (Pikovsky et al., 2001). Whereas the synchronization has been defined here in a more general sense: any coupled oscillators can exhibit synchronization if they maintain any of the phase relationships (∅i−∅j, m∅i−n∅j, ) constant at steady-state with or without being entrained. A network of complex-valued oscillators that can store patterns as oscillatory states was described in Chakravarthy and Ghosh (1996). The model also proposed a complex form of Hebb’s rule, similar to the one used in the current study. However, the model of Chakravarthy and Ghosh (1996) was limited in its capability by the fact that all the oscillators in the model have a common frequency. A representation of synaptic strength using a complex weight, instead of the usual real weight, has an added advantage in representing the temporal relationships underlying neural dynamics. We have recently shown (Chakravarthy, 2020) that the imaginary part of the complex weight captures the temporal asymmetry between the activities of pre- and post-synaptic neurons in a manner akin to the weight kernel of Spike Time Dependent Plasticity (STDP) mechanism (Bi and Poo, 1998).

Table 1. The brief comparison among the various coupling strategies between a pair of complex Hopf oscillators.

It may be said that the network of Section “A Generative Network Which Is Capable of Modeling EEG Signals” achieves a discrete approximation of the continuous spectra of the signals that are reconstructed by the network. The greater the number of oscillators in the network, the tighter is the approximation. In this approximation, the trainable real feed-forward weights (αi) of the network learn the amplitude spectrum of D(t). The angle of the power coupling weight learns normalized phase differences, .

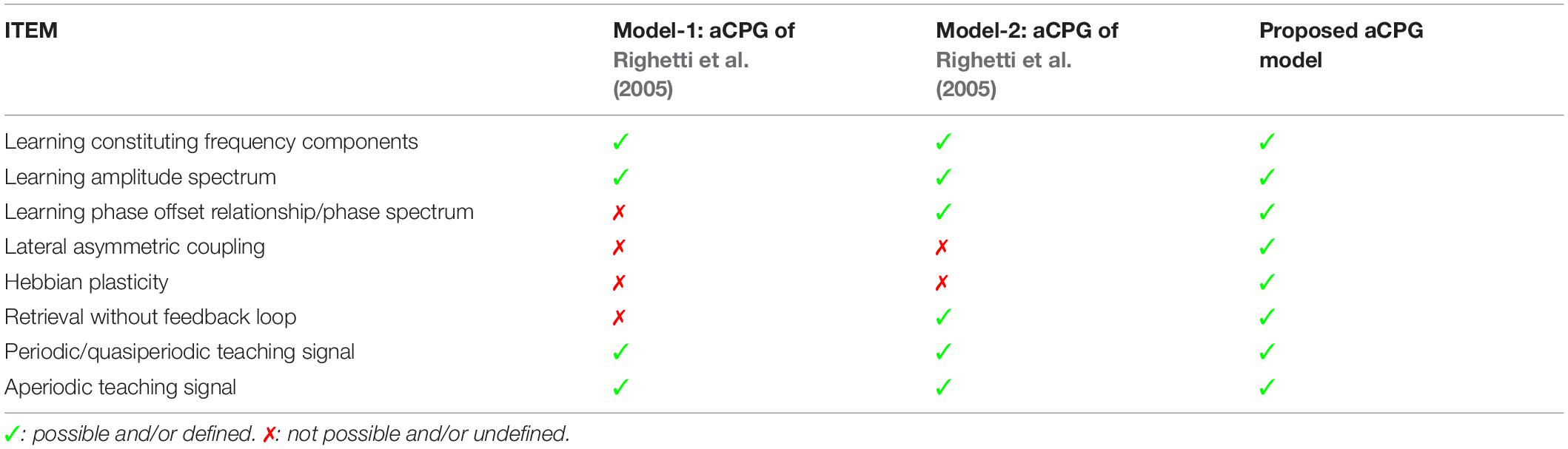

It has previously been shown in Righetti et al. (2005) that, when a network of supercritical Hopf oscillators driven by the reconstruction error signal, individual oscillators are tuned to the nearest frequency components in the target time series. In the network (net-1: schematic is described in Figure 2, and the network dynamics and the learning dynamics is given in eqs. 4 to 8 of Righetti et al., 2005) one shortcoming is that there is no way to recreate the teaching time-series signal (Pteach(t)) back without the error feedback loop as with the learnt and , when the network is reset (i.e., and are reset) there is no external forcing to drive the phase offset of each oscillator to the desired phase offsets . To overcome this issue, the authors have proposed a phase signal of frequency same as ith oscillator but same phase as the oscillator with the lowest frequency as a signal communicated by the oscillator with the lowest frequency to the ith oscillator, which makes those two oscillators maintain a certain normalized phase difference . However, to learn/store the phase relationship between the lowest frequency component and the ith frequency component in the teaching signal a “phase variable” considered as a third variable of the oscillator dynamics has been defined which learns the difference between phase signal from the oscillator with the lowest frequency and its own phase. The main issue with this network is that the network can only learn Fourier decomposition (frequency, magnitude and relative phase offset among the constituting frequency components) of a periodic signal which has frequency components of the form ωi = nω0, ω0 being the fundamental frequency of the periodic signal as n ∈ N as the phase signal Ri from the 0th oscillator is interacting with the x dynamics of the 0th oscillator through real coupling constant. Furthermore, there is an ambiguity in defining the lowest frequency component or fundamental frequency of the periodic teaching signal as the dynamics do not itself identify the oscillator, which learns the lowest frequency component in the teaching signal.

The above arrangement of representing phase difference using an explicit phase parameter located in the ith oscillator has another drawback of Righetti et al. (2005). Phase difference between two oscillators is a property that arises out of their interactions. Ideally, it must be represented as a property of the interaction strength, which in our case is the complex weight parameter. In Righetti et al. (2005), since phase difference information between two oscillators has to be encoded within one of the oscillators, in a rather awkward fashion, each oscillator is restricted to encode the phase difference only with one “standard” oscillator – the oscillator corresponding to the fundamental frequency. On the contrary, in our proposed model, (normalized) phase difference arises jointly out of the power coupling and the corresponding complex weight, there is no need for the above-mentioned restrictions. The oscillators can independently control their (normalized) phase differences with other oscillators via their mutual coupling parameters.

To overcome these issues, the proposed network as described in section “A Generative Network Which Is Capable of Modeling EEG Signals” is a similar network where the reservoir of complex supercritical Hopf oscillators is coupled through power coupling to preserve the phase relationship among the constituting frequency components in the teaching signal. When second network architecture proposed by Righetti et al. (schematic of which is described in Figure 3 and the dynamics is given in eqs. 13-20 of Righetti et al. (2005) tries to learn Fourier decomposition of a teaching signal the natural frequencies of the oscillators and the real feed-forward weights learns the magnitude spectrum of the teaching signal. During learning the error signal, F(t) drives the phase offset (∅i−ωit) of each of the oscillators to the desired phase offset (φi) can be retrieved from the phase spectrum of the teaching signal. Thus, the same network deliberated by eq. 20 with the oscillators coupled through power coupling with fixed Aij (≪μ) ensures θij to learn φiωj−φjωi.

One issue while retrieving the stored oscillatory pattern is the dependence on the initial state of the oscillators to achieve the desired solution (see section “Hebbian Learning for the N-Oscillator System With Power Coupling”). We observed that when ∅i(0)′s are sampled from space ∧, subspace of ∧ = {x ∈ RN|0 ≤ x ≤ 2π}, the network attains the desired solution at a steady-state. However, there are other plausible solutions or spurious states the network can acquire. The desired solution of (=0) and the spurious solutions are independent of the network parameters like Aij (for Aij = A) and θij but has a dependence on the natural frequency of the oscillators. However, the solutions of ψij are dependent on both network parameters (θij and ωi, assuming Aij = A). The dependency of the boundary of space ? or the boundary of the other subspaces (initializing ∅i by sampling which leads the system to spurious states) on the natural frequency of the oscillators is yet to be understood. A brief comparison between aCPG model of Righetti et al. (2005) and the proposed aCPG model is summarized in the following Table 2.

Table 2. This table compares various features related to network architecture, scope of trainability, retrievability, type of teaching signals the model can learn; of the two adaptive central pattern generator (aCPG) models proposed by Righetti et al. (2005) with our proposed aCPG model.

In the present study, we use the oscillatory neural network model to reconstruct six EEG time series simultaneously. However, the proposed learning mechanisms and network architecture can be easily scaled up. It is possible to model a much larger number of EEG channels (say, 128 or 512) simply by increasing the size of the oscillatory reservoir and the number of output neurons. In the current study, the connections among the oscillators are constrained to ensure that only oscillators with nearby natural frequencies are connected. In a large-scale model, it is conceivable to associate the individual neural oscillators with anatomical locations in the brain, and constrain their coupling based on structural connectivity information from structural imaging tools like Diffusion Tensor Imaging (DTI) (Damoiseaux and Greicius, 2009). Through such developments, the present model can evolve into a class large-scale models of brain dynamics similar to The Virtual Brain (TVB) model, with some relative advantages.

The TVB model principally uses neuronal mass models like the two-variable nonlinear oscillatory models including Fitzhugh-Nagumo model, Winson-Cowan model, Wong-Wang model, Brunel-Wang model, Jensen-Rit model, Stefanescu-Jirsa model (Wilson and Cowan, 1972; Jansen and Rit, 1995; Brunel and Wang, 2003; Wong and Wang, 2006; Stefanescu and Jirsa, 2008), which can exhibit excitable dynamics and limit cycle oscillations. In these models, the oscillators are coupled by only one variable, which is analogous to “real-valued” coupling. They are trained by global optimization algorithms that adjust the coupling strengths so that the output of the oscillatory network matches the recorded brain dynamics. Furthermore, most of them do not have an explicit natural frequency as an independent parameter which is trainable depending on the external input signal. Powanwe and Longtin (2019) developed a large-scale cortical model using a network of stochastic Wilson-Cowan model with ‘smooth nonlinearity’ to model gamma-band LFP activity. The LFP activity of excitatory and inhibitory population of neurons is modeled using sinusoidal variations (Vx(t) = Zx(t)cos(ω0t + ∅x(t))). The three characteristic features, envelop (Zx(t)), constant mean frequency (ω0) and phase (∅x(t)) respectively are dependent on network parameters. ω0 is dependent on excitatory synaptic strength, tunable through Hebbian plasticity. Other large-scale modeling studies on the same lines (Mejias et al., 2016; Joglekar et al., 2018) focus on investigating the principal network parameters behind signal propagation or intercommunication between various cortical areas located at the various levels of hierarchy. Although these models can capture the large-scale network entrainment by the external input signal through Hebb-like plasticity of the excitatory synaptic connection, they fail to provide simplified network dynamics essential to learn three constitutive features (frequency, magnitude, and phase offset) of any input signal.

The proposed network in section “Power Coupling Among N Hopf Oscillators” can reproduce a given time series signal as a linear summation of the oscillations with the same frequency components as the natural frequency of the oscillators in the reservoir and the relative phase relationships encoded in terms of the angle of the power coupling. Given the similarities between conventional attractor neural networks such as the Hopfield network, storing multiple “patterns” can be regarded as multiple time series signals with identical frequency components but non-identical relative phase relationships among them. As the relative phases among the oscillations is encoded in terms of the angle of the power coupling coefficient, the pth pattern can be regarded as:

where, θp is a N-dimensional vector, N being the no of oscillators. The power coupling coefficients are assigned as:

Where,

is the complex conjugate of the transpose of xp. However, the network retains the phase relationship among the oscillators defined by the combined phase, Θ, instead of the individual phase relationships defined by various patterns. The model equations in the current form do not seem to support storage of multiple patterns within the oscillator layer. However, with slightly modified equations in the complex domain, it is possible to achieve multiple pattern storage (Chakravarthy and Ghosh, 1996; Chakravarthy, 2009). The proposed network architectures seem to be robust to the noise inherent to the system dynamics as well as external noise, corrupting the input signal. A brief study on the performance of key network architectures w.r.t the noise power is given in the Supplementary Figures 1-5.

In the future, we plan to extend the proposed model to create a whole class of deep oscillatory networks. The network will have an input stage that serves as an encoder that performs a Fourier-like decomposition of the input time-series signals. The network model of Section “A Network for Reconstructing a Signal by a Fourier-Like Decomposition” will play the role of this encoder. The hidden layers will consist of oscillators operating at a range of frequencies. The output layer will be a decoder that converts the oscillatory outputs of the last hidden layer into the output time series. Another interesting proposed study is to develop the network model of Section “A Network for Reconstructing a Signal by a Fourier-Like Decomposition” into a model of the tonotopic map. Electrophysiological recordings from the bat’s auditory cortex revealed a map of frequencies (a tonotopic map). Kohonen had proposed a model of the tonotopic map using a self-organizing map (Kohonen, 1998). However, this model represents frequency as an explicitly available parameter, without actually modeling the responses of the neurons to oscillatory inputs (tones). We propose that by organizing the oscillators in the reservoir model of Section “A Network for Reconstructing a Signal by a Fourier-Like Decomposition” in a 2D array with neighborhood connections, it is possible to produce a biologically more feasible tonotopic map model.

The original contributions presented in the study are included in the article/Supplementary Material, further inquiries can be directed to the corresponding author/s.

The simulations were done mostly by DB with the support of SP. DB wrote the main text. VC contributed to providing the key ideas, conducting initial simulations to test the hypothesis, editing the manuscript drafts, and providing insight into structure. All authors contributed to the article and approved the submitted version.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

We acknowledge the support from undergraduate student from the Mechanical Engineering Department of IIT Madras, Asit Tarsode for his insight on the dependency of steady state solutions on the initial conditions. We also acknowledge the constant and much needed support from senior lab mate Vignayanandam R Muddapu, and other lab members. This manuscript has been released as a pre-print at bioRxiv (Biswas et al., 2020) for feedback from fellow neuroscientists.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fncom.2021.551111/full#supplementary-material

Auer, P., Burgsteiner, H., and Maass, W. (2008). A learning rule for very simple universal approximators consisting of a single layer of perceptrons $. Neural Netw. 21, 786–795. doi: 10.1016/j.neunet.2007.12.036

Bi, G. Q., and Poo, M. M. (1998). Synaptic modifications in cultured hippocampal neurons: dependence on spike timing, synaptic strength, and postsynaptic cell type. J. Neurosci. 18, 10464–10472. doi: 10.1523/jneurosci.18-24-10464.1998

Biswas, D., Sooryakiran, P., and Chakravarthy, V. S. (2020). A complex-valued oscillatory neural network for storage and retrieval of multichannel electroencephalogram signals. bioRxiv [Preprint]. doi: 10.1101/2020.03.28.013292

Breakspear, M., Heitmann, S., and Daffertshofer, A. (2010). Generative models of cortical oscillations: neurobiological implications of the Kuramoto model. Front. Hum. Neurosci. 4:190. doi: 10.3389/fnhum.2010.00190

Brunel, N., and Wang, X. J. (2003). What determines the frequency of fast network oscillations with irregular neural discharges? I. Synaptic dynamics and excitation-inhibition balance. J. Neurophysiol. 90, 415–430. doi: 10.1152/jn.01095.2002

Buzsáki, G., Anastassiou, C. A., and Koch, C. (2012). The origin of extracellular fields and currents — EEG, ECoG, LFP and spikes. Nat. Rev. Neurosci. 13, 407–420. doi: 10.1038/nrn3241

Campbell, S., and Wang, D. (1994). Synchronization and desynchronization in a network of locally coupled synchronization and desynchronization in a network of locally coupled Wilson-Cowan oscillators. IEEE Trans. Neural Netw. 7, 541–554. doi: 10.1109/72.501714

Chakravarthy, S. V., and Ghosh, J. (1996). A complex-valued associative memory for storing patterns as oscillatory states. Biol. Cybern. 75, 229–238. doi: 10.1007/s004220050290

Chakravarthy, V. S. (2009). “A complex-valued hopfield neural network: dynamics and applications,” in Complex-Valued Neural Networks: Utilizing High-Dimensional Parameters, ed. T. Nitta, (Hershey, PA: Information Science Reference), 79–103. doi: 10.4018/978-1-60566-214-5.ch004

Chakravarthy, V. S. (2020). Encoding temporal relations using complex – valued synapses. ACCS 1, 1–3.

Craik, A., and He, Y. (2019). Deep learning for electroencephalogram (EEG) classification tasks?: a review. J. Neural Eng. 16, 031001. doi: 10.1088/1741-2552/ab0ab5

Damoiseaux, J. S., and Greicius, M. D. (2009). Greater than the sum of its parts: a review of studies combining structural connectivity and resting-state functional connectivity. Brain Struct. Funct. 213, 525–533. doi: 10.1007/s00429-009-0208-6

Destexhe, A., Mainen, Z. F., and Sejnowski, T. J. (1994). Synthesis of models for excitable membranes, synaptic transmission and neuromodulation using a common kinetic formalism. J. Comput. Neurosci. 1, 195–230. doi: 10.1007/BF00961734

Draguhn, A. (2004). Neuronal oscillations in cortical networks. Science 304, 1926–1930. doi: 10.1126/science.1099745

Ermentrout, B., and Kopell, N. (1991). Multiple pulse interactions and averaging in systems of coupled neural oscillators∗. J. Math. Biol. 29, 195–217. doi: 10.1007/BF00160535

Ermentrout, G. B., and Kopell, N. (1989). Mathematical Modelling of Central Pattern Generators. Cambridge, MA: Academic Press Limited, doi: 10.1016/b978-0-12-287960-9.50013-7

Frasconi, P., Gori, M., and Soda, G. (1995). Recurrent neural networks and prior knowledge for sequence processing?: a constrained nondeterministic approach. Knowledge Based Syst. 8, 313–332. doi: 10.1016/0950-7051(96)81916-2

Fujii, H., Ito, H., Aihara, K., Ichinose, N., and Tsukada, M. (1996). Dynamical cell assembly hypothesis – theoretical possibility of spatio-temporal coding in the cortex. Neural Netw. 9, 1303–1350. doi: 10.1016/S0893-6080(96)00054-8

Ghosh-dastidar, S., and Lichtenstein, A. G. (2009). Review article spiking neural networks. Int. J. 19, 295–308.

Grandchamp, R., Braboszcz, C., and Delorme, A. (2014). Oculometric variations during mind wandering. Front. Psychol. 5:31. doi: 10.3389/fpsyg.2014.00031

Hoff, A., Santos, J. V., Manchein, C., and Albuquerque, H. A. (2014). Numerical bifurcation analysis of two coupled FitzHugh-Nagumo oscillators. Eur. Phys. J. B. 87:151. doi: 10.1140/epjb/e2014-50170-9

Hopfield, J. J. (1982). Neural networks and physical systems with emergent collective computational abilities. Proc. Natl. Acad. Sci. U.S.A. 79, 2554–2558. doi: 10.1073/pnas.79.8.2554

Hoppensteadt, F. C., and Izhikevich, E. M. (1996). Biological Cybernetics Synaptic organizations and dynamical properties of weakly connected neural oscillators. II. Learning phase information. Biol. Cybern. 135, 129–135. doi: 10.1007/s004220050280

Hoppensteadt, F. C., and Izhikevich, E. M. (1997). Weakly Connected Neural Networks. New York, NY: Springer-Verlag.

Hoppensteadt, F. C., and Izhikevich, E. M. (2000). Pattern recognition via synchronization in phase-locked loop neural networks. IEEE Trans. Neural Netw. 11, 734–738. doi: 10.1109/72.846744

Hyafil, A., Giraud, A. L., Fontolan, L., and Gutkin, B. (2015). Neural cross-frequency coupling: connecting architectures, mechanisms, and functions. Trends Neurosci. 38, 725–740. doi: 10.1016/j.tins.2015.09.001

Izhikevich, E. M. (2003). Simple model of spiking neurons. IEEE Trans. Neural Netw. 14, 1569–1572. doi: 10.1109/TNN.2003.820440

Izhikevich, E. M. (2004). Which model to use for cortical spiking neurons? IEEE Trans. Neural Netw. 15, 1063–1070. doi: 10.1109/TNN.2004.832719

Izhikevich, E. M. (2007). Dynamical Systems in Neuroscience?: The Geometry of Excitability and Bursting. Cambridge, MA: The MIT Press.

Jansen, B. H., and Rit, V. G. (1995). Electroencephalogram and visual evoked potential generation in a mathematical model of coupled cortical columns. Biol. Cybern. 73, 357–366. doi: 10.1007/BF00199471

Joglekar, M. R., Mejias, J. F., Yang, G. R., and Wang, X. J. (2018). Inter-areal balanced amplification enhances signal propagation in a large-scale circuit model of the primate cortex. Neuron 98, 222–234.e8. doi: 10.1016/j.neuron.2018.02.031

Kim, J. C., and Large, E. W. (2015). Signal processing in periodically forced gradient frequency neural networks. Front. Comput. Neurosci. 9:152. doi: 10.3389/fncom.2015.00152

Kim, J. C., and Large, E. W. (2021). Multifrequency Hebbian plasticity in coupled neural oscillators. Biol. Cybern. 115, 43–57. doi: 10.1007/s00422-020-00854-6

Lawrence, S., Giles, C. L., Tsoi, A. C., and Back, A. D. (1997). Face recognition: a convolutional neural-network approach. IEEE Trans. Neural Netw. 8, 98–113. doi: 10.1109/72.554195

Lippmann, R. P. (1990). Review of neural networks for speech recognition. Read. Speech Recognit. 38, 374–392. doi: 10.1016/b978-0-08-051584-7.50036-x

Low, L. A., Reinhall, P. G., Storti, D. W., and Goldman, E. B. (2005). Coupled van der Pol oscillators as a simplified model for generation of neural patterns for jellyfish locomotion. Struct. Control Heal. Monit. 13, 417–429. doi: 10.1002/stc.133

Maass, W. (1997a). Fast sigmoidal networks via spiking neurons. Neural Comput. 9, 279–304. doi: 10.1162/neco.1997.9.2.279

Maass, W. (1997b). Networks of spiking neurons: the third generation of neural network models. Neural Netw. 10, 1659–1671. doi: 10.1016/S0893-6080(97)00011-7

Mejias, J. F., Murray, J. D., Kennedy, H., and Wang, X. J. (2016). Feedforward and feedback frequency-dependent interactions in a large-scale laminar network of the primate cortex. Sci. Adv. 2:e1601335. doi: 10.1126/sciadv.1601335

Pikovsky, A., Rosenblum, M., and Kurths, J. (2001). Synchronization A Universal Concept in Nonlinear Sciences. Cambridge: Cambridge University Press.

Powanwe, A. S., and Longtin, A. (2019). Determinants of brain rhythm burst statistics. Sci. Rep. 9:18335. doi: 10.1038/s41598-019-54444-z

Righetti, L., Buchli, J., and Ijspeert, A. J. (2005). “From dynamic hebbian learning for oscillators to adaptive central pattern generators,” in Proceedings of 3rd International Symposium on Adaptive Motion in Animals and Machines – AMAM 2005, (Ilmenau: Verlag ISLE), 1–7.

Righetti, L., Buchli, J., and Ijspeert, A. J. (2006). Dynamic Hebbian learning in adaptive frequency oscillators. Phys. D Nonlinear Phenom. 216, 269–281. doi: 10.1016/j.physd.2006.02.009

Roy, Y., Banville, H., and Albuquerque, I. (2019). Deep learning-based electroencephalography analysis?: a systematic review. J. Neural Eng. 16:051001. doi: 10.1088/1741-2552/ab260c

Ruck, D. W., Rogers, S. K., and Kabrisky, M. (1990). Feature selection using a multilayer perceptron. J. Neural Netw. Comput. 2, 40–48.

Sadilek, M., and Thurner, S. (2015). Physiologically motivated multiplex Kuramoto model describes phase diagram of cortical activity. Sci. Rep. 5:10015. doi: 10.1038/srep10015

Saha, A., and Feudel, U. (2017). Intermittency in delay-coupled FitzHugh – Nagumo oscillators and loss of phase synchrony as its precursor. Indian Acad. Sci. Conf. Ser. 1:1. doi: 10.29195/iascs.01.01.0010

Schmidhuber, J. (2015). Deep learning in neural networks?: an overview. Neural Netw. 61, 85–117. doi: 10.1016/j.neunet.2014.09.003

Shi, Z. W., Shi, Z. Z., Liu, X., and Shi, Z. P. (2008). A computational model for feature binding. Sci. China C Life Sci. 51, 470–478. doi: 10.1007/s11427-008-0063-3

Stefanescu, R. A., and Jirsa, V. K. (2008). A low dimensional description of globally coupled heterogeneous neural networks of excitatory and inhibitory neurons. PLoS Comput. Biol. 4:e1000219. doi: 10.1371/journal.pcbi.1000219

Toral, R., Mirasso, C., and Gunton, J. (2003). System size coherence resonance in coupled FitzHugh-Nagumo models. Europhys. Lett. 61:162. doi: 10.1209/epl/i2003-00207-5

Trappenberg, T. P. (2003). Continuous Attractor Neural Networks Biographical Sketch?: Continuous Attractor Neural Networks Abstract. (Hershey, PA: IGI Global, 701 E. Chocolate Ave), 1–30.

Wang, D., and Terman, D. (1997). Image segmentation based on oscillatory correlation. Neural Comput. 9, 805–836. doi: 10.1162/neco.1997.9.4.805

Wang, D. L., and Terman, D. (1995). Locally excitatory globally inhibitory oscillator networks. IEEE Trans. Neural Netw. 6, 283–286. doi: 10.1109/72.363423

Wilson, H. R., and Cowan, J. D. (1972). Excitatory and inhibitory interactions in localized populations of model neurons. Biophys. J. 12, 1–24. doi: 10.1016/S0006-3495(72)86068-5

Wirkus, S., and Rand, R. (2002). The dynamics of two coupled van der pol oscillators. Nonlinear Dyn. 30, 205–221.

Keywords: supercritical Hopf oscillator, power coupling, complex coupling, normalized phase difference, fourier decomposition, complex valued oscillator, complex Hebb’s rule, generative model

Citation: Biswas D, Pallikkulath S and Chakravarthy VS (2021) A Complex-Valued Oscillatory Neural Network for Storage and Retrieval of Multidimensional Aperiodic Signals. Front. Comput. Neurosci. 15:551111. doi: 10.3389/fncom.2021.551111

Received: 11 April 2020; Accepted: 06 April 2021;

Published: 24 May 2021.

Edited by:

Uma Shanker Tiwary, Indian Institute of Information Technology, Allahabad, IndiaReviewed by:

Jorge F. Mejias, University of Amsterdam, NetherlandsCopyright © 2021 Biswas, Pallikkulath and Chakravarthy. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: V. Srinivasa Chakravarthy, c2NoYWtyYUBlZS5paXRtLmFjLmlu

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.

Research integrity at Frontiers

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.