- Institute of Theoretical Computer Science, Graz University of Technology, Graz, Austria

The connectivity structure of neuronal networks in cortex is highly dynamic. This ongoing cortical rewiring is assumed to serve important functions for learning and memory. We analyze in this article a model for the self-organization of synaptic inputs onto dendritic branches of pyramidal cells. The model combines a generic stochastic rewiring principle with a simple synaptic plasticity rule that depends on local dendritic activity. In computer simulations, we find that this synaptic rewiring model leads to synaptic clustering, that is, temporally correlated inputs become locally clustered on dendritic branches. This empirical finding is backed up by a theoretical analysis which shows that rewiring in our model favors network configurations with synaptic clustering. We propose that synaptic clustering plays an important role in the organization of computation and memory in cortical circuits: we find that synaptic clustering through the proposed rewiring mechanism can serve as a mechanism to protect memories from subsequent modifications on a medium time scale. Rewiring of synaptic connections onto specific dendritic branches may thus counteract the general problem of catastrophic forgetting in neural networks.

1. Introduction

Long-term imaging studies of the living brain have revealed that the cortical connectivity structure is dynamic, with dendritic spines being added and deleted on the time scale of hours to days (Holtmaat et al., 2005; Stettler et al., 2006; Kasai et al., 2010; Loewenstein et al., 2011; Rumpel and Triesch, 2016). It has been proposed that this ongoing cortical rewiring serves important functions for learning and memory (Chklovskii et al., 2004; DeBello, 2008). According to this view, synaptic rewiring defines the connectivity structure of cortical circuits and interacts with synaptic plasticity of established synaptic connections.

Many theoretical studies of cortical rewiring have explored the implications of rewiring on the network level using point neuron models (see section 3). However, rewiring can also shape the connectivity structure on the sub-cellular level, defining the dendritic targets of synaptic connections onto pyramidal cells (PCs). As dendrites of cortical PCs exhibit various types of non-linear regenerative events, so-called dendritic spikes (Larkum et al., 2009), the specific placement of synaptic connections at the dendritic tree of a PC can strongly impact its computational function (Mel, 1994; Kastellakis et al., 2015; Bono et al., 2017). In particular, with regard to the organization of synapses on dendritic branches, a popular hypothesis with strong experimental support is the synaptic clustering hypothesis, which states that functionally related synapses tend to cluster on dendritic branches (Govindarajan et al., 2006; Kastellakis et al., 2015). A theoretical justification for rewiring of synapses on non-linear dendrites was provided by Poirazi and Mel (2001). There, it was shown that a supervised structural plasticity rule can optimize memory performance of a simple non-spiking model of a pyramidal cell with non-linear dendrites. Later, Legenstein and Maass (2011) showed that branch-specific synaptic plasticity—without synaptic rewiring—can self-organize non-linear computations in neurons with non-linear branches.

In this article, we study synaptic rewiring based on simple synaptic plasticity rules in a spiking neuron model for PCs with dendritic non-linearities. In computer simulations of the model, we find that rewiring leads to a clustering of temporally correlated inputs onto dendritic branches, thus supporting the synaptic clustering hypothesis. Using this model, we argue that synaptic clustering through rewiring could play an important functional role in learning processes. It was shown by Cichon and Gan (2015) that different motor learning tasks induce dendritic spikes in different dendritic branches of PCs in mouse motor cortex. A possible explanation for this finding is that synaptic inputs are clustered onto dendritic branches in a task-specific manner such that a dendritic branch receives predominantly synaptic inputs that are activated at a specific task. This interpretation is consistent with the synaptic clustering hypothesis. When this segregation was pharmacologically disrupted, the animals could still learn the current task, but performance on an earlier learned task degraded, indicating a role of clustering for retaining older memories. One may thus hypothesize that synaptic clustering provides a mechanism to shelter previous memories from being overwritten by novel plasticity events. Testing this hypothesis, we found in another series of computer simulations that synaptic clustering through our simple rewiring dynamics is able to shelter previous memories from subsequent modifications.

To model synaptic rewiring, we take advantage of the synaptic sampling theory (Kappel et al., 2015), which provides a general framework for the interaction of synaptic plasticity and rewiring. Synaptic plasticity in this framework has a strong stochastic component (Yasumatsu et al., 2008; Dvorkin and Ziv, 2016). This stochasticity naturally gives rise to a stochastic addition and deletion of synaptic connections, which can be shown to implement a stochastic search over connectivity structures. In our application of this framework, we combine rewiring with simple plasticity rules where plasticity events are triggered by local dendritic spikes (Golding et al., 2002; Lisman and Spruston, 2005; Gordon et al., 2006). While the branch-specific plasticity mechanism inevitably leads to the independent self-organization of each dendritic branch, we show that the addition of a spike-timing-dependent plasticity mechanism, as proposed by Legenstein and Maass (2011), can orchestrate this process across branches, leading to an increased capacity to store input patterns.

To probe conditions under which clustering emerges in our model, we tested the model in various situations. While synaptic clustering can occur under various input statistics, we observed no clustering when individual synaptic inputs were activated in an unreliable stochastic manner. Since these statistics are presumably more characteristic for early sensory areas, this may explain why some studies reported a lack of synaptic clustering (Jia et al., 2010). Finally, we theoretically analyzed the consequences of rewiring in our model. We found that the proposed dynamics approximates a stochastic search over connectivity structures that favors functionally clustered synaptic configurations.

2. Results

2.1. Synaptic Rewiring on Dendritic Branches

Dendritic structures of pyramidal cells (PCs) can be divided into integrative compartments (Losonczy and Magee, 2006; Major et al., 2008; Larkum et al., 2009; Branco et al., 2010). The compartmental model for PCs adopted in this article is illustrated in Figures 1A,B. The axon of a presynaptic neuron i can contact various dendritic branches k of the postsynaptic PC, establishing a synaptic connection ki with synaptic efficacy wki. Presynaptic spikes at such a synapse ki give rise to alpha-shaped currents of amplitude wki at the branch. Each dendritic branch acts as a leaky integrator that temporally and spatially sums synaptic input currents from its incoming synapses. Note that we do not consider any specific spatial structure of the branches. The structures shown in the figures are provided for illustration purposes only.

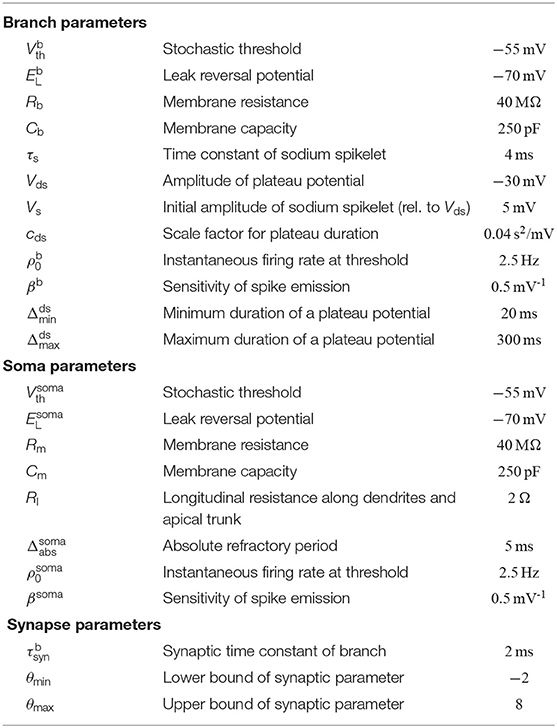

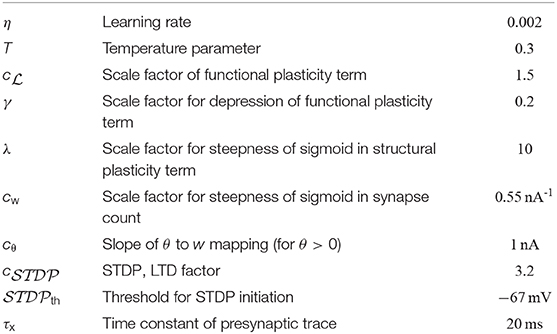

Figure 1. Schema of neuron model and plasticity/rewiring. (A) Input neurons (colored dots) are divided into different input assemblies (2 shown; color indicates assembly assignment). Bottom: spike raster of input neurons. Dots represent spike times. (B) Schematic drawing of neuron model (spatial structure of branches for illustrative purposes only) with dendritic membrane potentials (top right) and somatic membrane potential Vsoma (bottom right). Branch b5 emits two dendritic spikes with a duration of about 50 ms. Branch b1 spikes once with a longer plateau phase. No synaptic cluster was established on branch b4. Therefore, this branch did not elicit dendritic spikes. Somatic spikes are indicated for illustrative purpose by vertical lines. (C) The duration of a plateau potential grows linearly as a function of the input intensity. The synaptic efficacy w of a single synaptic input was varied between 0 and 120 nA evenly spaced; a single strong synapse was used to mimic strong synchronous input. Shown is the mean and standard deviation of the resulting plateau durations over 100 independent trials. Arrow (at 50 nA) roughly marks the onset of this linear growth. Synaptic input exceeding this threshold has a fair chance of triggering a dendritic spike. (D) Mapping between the synaptic parameter θki and the synaptic efficacy wki of synapse i onto branch k. Negative values of θki, corresponding to non-established synapses, are mapped to zero in the wki-space. (E) Evolution of three synaptic parameters as a function of time t (top) and the corresponding wiring diagram at three points in time (bottom). At time t1 the values of parameters θ1i and θ2i are positive (indicating a functional synapse) while the value of parameter θ3i is negative (indicating a non-established synapse). Parameter θ2i crosses zero shortly before t2 (becoming non-established). (F) The plasticity process. Branch potential (black; sub-threshold potentials and a dendritic spike), the somatic spike traces of input neurons i and j (top), and the evolution of synaptic parameters θki and θkj (bottom). Input neuron i is active shortly before and during the dendritic spike. The synaptic parameter θki of this connection is therefore increasing. θkj decreases, since neuron j is not active during the plateau potential.

Besides this passive response to synaptic inputs, dendrites of PCs show a variety of non-linear responses. In particular, we model local N-methyl-D-aspartate (NMDA) spikes (Antic et al., 2010) that include a brief sodium spikelet followed by a plateau potential that lasts up to a few hundreds of milliseconds, see Figure 1B [amplitude values of the plateau potentials are taken from Antic et al. (2010), see Figure 1 there]. NMDA spikes are elicited stochastically at dendritic branches based on the local membrane potential (branch potential). The duration of the spike grows linearly with stimulus intensity (Figure 1C), whereas the amplitude exhibits an all-or-none highly non-linear behavior (Antic et al., 2010). Currents from dendritic compartments to the soma are driven by the difference between the branch potential and the membrane potential of the soma. The somatic compartment is again a leaky integrator that sums currents from all dendrites (with a certain degree of amplitude attenuation, see Equation 17). Somatic action potentials are elicited stochastically with an instantaneous firing rate that depends exponentially on the somatic membrane potential. See Jolivet et al. (2006) for a fit of this model of action potential generation to layer V pyramidal neurons in rat somatosensory cortex. A more detailed description of the neuron model is given in section 4.

The connectivity structure in the cortex is not static, rather synaptic connections are rewired on a time scale of hours to days (Holtmaat et al., 2005; Stettler et al., 2006; Minerbi et al., 2009; Yang et al., 2009; Ziv and Ahissar, 2009; Kasai et al., 2010; Loewenstein et al., 2011; Rumpel and Triesch, 2016; Chambers and Rumpel, 2017). To investigate the consequences of rewiring processes for information processing in PCs, we adopted the synaptic sampling framework introduced by Kappel et al. (2015, 2018). Each compartment has a set of potential synaptic connections that could be realized by some parameter setting. However, at each point in time, only a subset of these connections is realized by functional synapses. More precisely, we maintain one parameter θki for each potential synapse ki from input neuron i to branch k of the neuron. This parameter encodes (a) through its sign whether the synapse is functional and (b) the synaptic efficacy wki of the synapse if it is functional. More precisely, the synaptic weight wki is cθθki for a functional synapse (θki > 0) and 0 otherwise (θki ≤ 0). Hence, the mapping from the parameter θki to the synaptic weight is given by wki = cθmax{0, θki} where cθ = 1 nA is the slope of this mapping for θki > 0 (Figure 1D).

Recent experimental results show that synaptic modifications contain a strong autonomous component (Dvorkin and Ziv, 2016). In accordance with previous modeling approaches (Loewenstein et al., 2011), this component is modeled in the synaptic sampling framework as a stochastic process. In general, the stochastic synaptic dynamics is given in this model as a stochastic differential equation (SDE) of the form

where η > 0 is a small learning rate (for parameter values, see Table 2). This SDE can be read as follows: the change of the synaptic parameter θki is the sum of three terms. The last term models the synapse-autonomous component that does not depend on any synaptic, presynaptic, or postsynaptic variables. Instead, it is given by the increments of a standard Wiener process , thus implementing random walk behavior. The temperature T is a constant that scales the strength of this stochastic component. The term in the brackets defines the deterministic part of the dynamics. For conceptual clarity, it is divided here into two components. The first term describes changes that are not directly relevant for the functional goal of the plasticity process but rather used to enforce structural constraints on the connectivity (for example, bound the number of connections that can be established onto one branch). The functional goal of the plasticity process is captured by the second term , see below for an example. The deterministic terms will be chosen such that they vanish for θki(t) ≤ 0. Hence, for non-established synapses, only the stochastic term remains. The random walk may at some point cross zero again, leading to the creation of a synaptic connection which may be strengthened or weakened depending on its functional relevance (Figure 1E). The model thus integrates synaptic dynamics with rewiring in one unifying framework. Note that the creation of new synapses solely depends on the Wiener process. New synapses can also directly be sampled from the set of non-established potential synapses, which however loses some of the nice mathematical properties of the model, see Bellec et al. (2018) for details.

Since in cortical PCs, the number of connections to a branch is bounded, we use for the structural component of the plasticity dynamics (1) a term that enforces a soft upper bound on the number of synaptic connections onto each branch. This term depresses weak synapses of a branch if the number of connections to it is close to or exceeds a predefined constant Nsyn (set to 20 in our simulations), and it vanishes if the number of connections is well below Nsyn.

According to the Hebbian theory of synaptic plasticity, synaptic connections are created and/or strengthened when the presynaptic neuron contributes to activity of the postsynaptic neuron (Hebb, 1949). More recent experiments, however, showed that postsynaptic somatic spiking is neither necessary nor sufficient to induce long-term potentiation (LTP) in pyramidal cells in various brain structures, both, at distal basal dendrites and at the apical tuft (Golding et al., 2002; Lisman and Spruston, 2005; Gordon et al., 2006; Gambino et al., 2014; Cichon and Gan, 2015; Brandalise et al., 2016). These studies indicate that local dendritic NMDA-dependent regenerative processes are necessary as the postsynaptic signal to trigger long-term potentiation (LTP). An important implication of this finding is that the learning signal is at least partially local to dendritic branches, leading to a synaptic organization that depends on local dendritic integration. To model such LTP within the stochastic rewiring framework, we include a term xi(t)Γk(t) in the functional term of the plasticity dynamics (1). Here, xi(t) is a trace of the spike train from presynaptic neuron i, and Γk(t) = 1 indicates the presence of an NMDA plateau potential at time t (Γk(t) = 0 otherwise). Hence, coincidence of presynaptic activity with a dendritic plateau potential will induce LTP (see Figure 1F, blue trace). While the dependence for LTP on dendritic spikes is well-established in the literature, less is known about long-term depression (LTD) in this regard. Interestingly, while Golding et al. (2002) observed LTP when pairing high-frequency stimulation of synaptic inputs with dendritic spikes in a theta-burst pairing protocol, Holthoff et al. (2004) reported long-term depression (LTD) with a single presynaptic stimulation leading to a dendritic spike, indicating that low-frequency pairing may lead to LTD, consistent with the classical findings that weak synaptic stimulation leads to LTD (Dudek and Bear, 1995) under the assumption that dendritic spikes are elicited in the postsynaptic neuron occasionally. We therefore included a term in our plasticity model that weakens a synapse if its activity is below a certain level during a plateau potential. Together, the functional component of the plasticity dynamics (1) was given by

with γ > 0 being a constant that determines the threshold activity that switches from LTD to LTP. Note that changes occur only during the presence of a plateau potential [Γk(t) = 1]. The rule can be described qualitatively as follows: if there is a dendritic spike at branch k, potentiate active synapses and depress inactive synapses to this branch k. Weak synapses will ultimately retract, and non-established potential synapses will be established at random times according to the stochastic process. We show in the section “Analysis of stochastic rewiring dynamics” that this dynamic corresponds to a distribution that defines functionally preferred parameter settings that lead to synaptic clustering.

2.2. Synaptic Clustering Through Rewiring

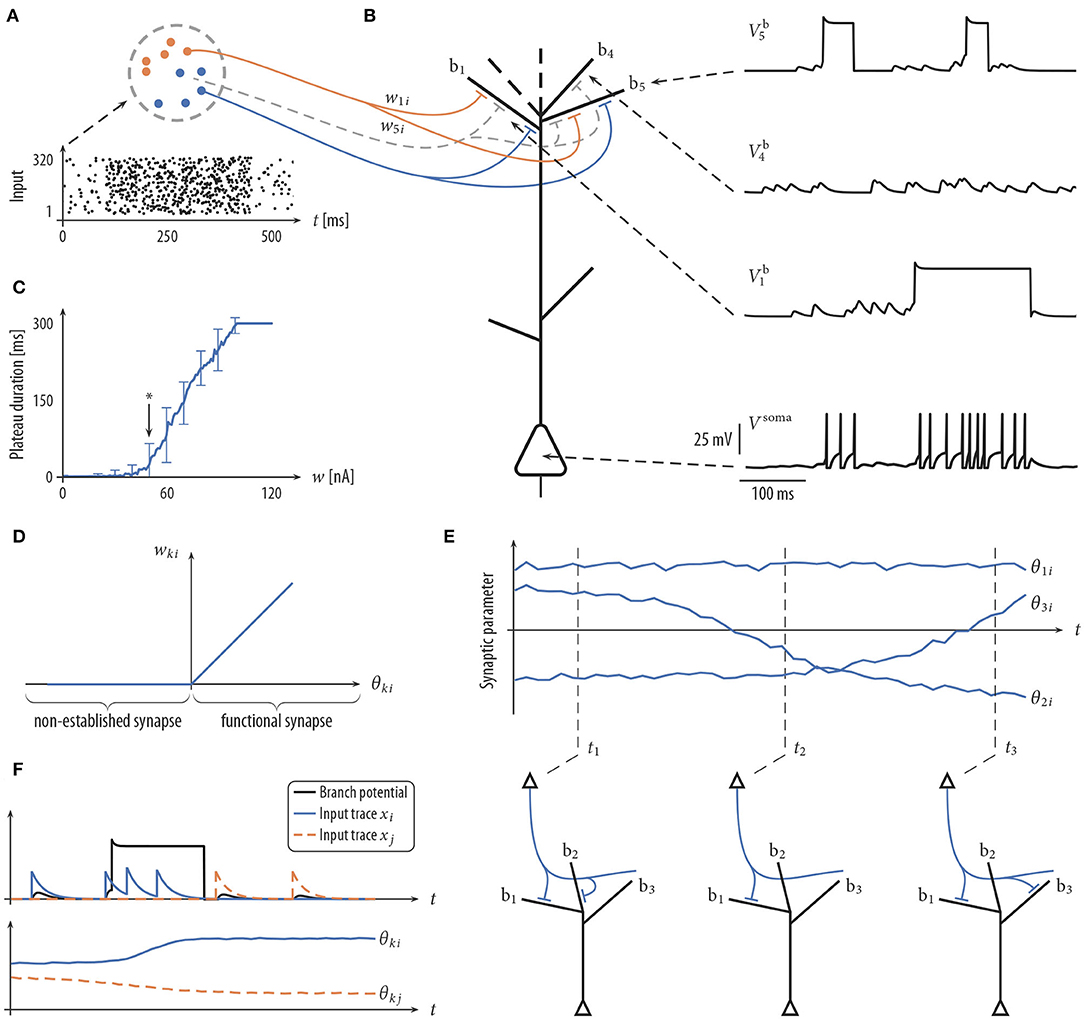

We first investigated the patterns of synaptic connections that emerge when synaptic rewiring is driven by the simple dendritic spike-dependent plasticity mechanism (2). We simulated a neuron with 12 independent dendritic compartments that received input from 320 input neurons. Input neurons were divided into 8 disjoint assemblies of 40 neurons each, producing Poisson spike trains at a background rate of 1 Hz. Every 500 ms, one assembly was chosen randomly and activated for 300 ms. These neurons elevated their firing rate to 35 Hz, leading to a specific input firing pattern (Poisson spike trains were randomly generated at every pattern presentation), see Figure 2A (top).

Figure 2. Synaptic rewiring leads to synaptic clustering at dendritic branches. (A) Spike rasters of input neurons (top), initial wiring diagram of three selected branches (middle left), dendritic membrane potentials of these branches (middle right), and the somatic membrane potential Vsoma (bottom) for the successive activation of assemblies A1, A2, and A5. Color of graph edges indicate to which of the assemblies (A1, A2, and A3; pattern presentation of assembly A3 is not shown) a connection was established. Connections in gray are connections to one of the other assemblies. At this initial configuration, the input neurons were connected to branches randomly. Brief dendritic spikes appeared occasionally (middle right) and the neuronal firing rate was low (bottom). (B) After 17 min of rewiring dynamics, inputs to each branch were originating predominantly from a specific input assembly Ai (middle left). Hence, temporally correlated branch input triggered long lasting dendritic plateau potentials (middle right). In this simulation, none of the branches received synaptic clusters from assembly A5. As a consequence, the neuron elevated its firing rate during pattern presentations of input assemblies A1 and A2, but rarely spiked during the pattern presentation of assembly A5 (bottom). (C) Evolution of the synaptic weights wki of branch b2 (top), branch b6 (middle), and branch b12 (bottom). Shown is the mean synaptic weight from three selected assembly (A1, A2, and A3) which clustered on the branch (saturated color), weights of individual synapses from that assembly (desaturated color), and weights of synapses from other assemblies (gray). Colors correspond to colors in (A,B). Synaptic connections are reorganized during the rewiring process, i.e., synapses with correlated activity are established on the branch while synapses of other assemblies retract over time. Occasional small gray bumps close to 0 in the second half of the simulation originate from synapses from other assemblies that are reconnected, but they quickly retract due to their irrelevance for activating the branch.

Input neurons were initially connected to dendritic branches randomly such that 20 synapses were established on each branch (Figure 2A middle). Synaptic parameters of these connections were independently drawn from a uniform distribution over the interval [4, 8). A soft upper bound of 20 synapses per branch was enforced through the structural component of the synaptic sampling update (1). At this initial configuration, brief dendritic plateau potentials appeared occasionally and the neuronal firing rate was low (≈ 6.19 Hz). Dendritic plateau potentials were elicited preferentially during presynaptic assembly activations, but they were not specific to any of the input assemblies. We observed a gradual assembly-specific clustering of synapses onto individual branches during the simulation. After 17 min of assembly activations, each branch usually hosted a synaptic cluster from one of the input assemblies, receiving approximately 20 synapses from that assembly with the weight close to the maximum, while other assemblies were either not connected or had weights close to zero, see Figure 2B. Many synapses that were functional after rewiring were not connected to the branches initially but were established during the rewiring process (Figure 2C). In order to quantify the behavior of the model, we performed 25 simulations with different randomly chosen initial conditions and different random assembly activations. We say that assembly synapses cluster on a branch if the branch receives at least 10 synapses from one assembly with a total weight of at least 50 nA. The value of 50 nA was chosen since synaptic input exceeding this threshold has a fair chance of triggering a dendritic spike (see arrow in Figure 1C). In our 25 independent simulations, from the 8 input assemblies, between 5 and 8 were represented on dendritic branches with a mean of 6.36 and a standard deviation (SD) of 0.84. In all simulations, most of the dendritic branches had a synaptic cluster from one assembly, and some assemblies were clustered on several branches.

Since inputs to each branch were preferentially originating from a specific input assembly after rewiring, branch inputs were temporally correlated. This collective activity was sufficient to trigger long-lasting dendritic plateau potentials and somatic spikes (Figure 2B; voltage traces at active assemblies A1 and A2). In total, three branches received synaptic clusters from assembly A2 and two branches from assembly A1. This was reflected in the firing rate of the neuron during the activation of these assemblies. The firing rate of the neuron was highest (≈ 63.5 Hz) during the pattern presentation of assembly A2 and lowest (≈ 54.2 Hz) during the activation of assembly A1. To test the response of the neuron to assemblies that were not clustered, we activated assembly A5 that was active during the rewiring process but did not evolve a synaptic cluster on any branch. Branches did not elicit spikes during the pattern presentation of this assembly and the neuronal firing rate was low (≈ 0.97 Hz; Figure 2B; voltage traces at active assembly A5).

We hypothesized that dendritic plateau potentials are crucial for assembly-specific clustering of synapses in our model. To verify this we conducted simulations of an altered model with linear dendritic integration (i.e., the same model but without dendritic spikes). We did not observe clustering of functionally related inputs onto dendrites in this altered model on a large range of relevant parameters (see section 1.1 in the Supplementary Material for details), indicating that dendritic plateau potentials are indeed necessary for assembly-specific clustering of synapses onto individual dendrites.

In summary, these results show that synaptic rewiring dynamics can give rise to (a) clustering of functionally related inputs onto dendritic compartments and (b) segregation of different assemblies onto different branches. This leads to segregated assembly-specific activation of dendritic branches. Note that this plasticity-driven self-organization of network connectivity is independent of postsynaptic somatic spikes.

2.3. STDP Increases the Capacity of Neurons to Store Assembly Patterns

With the plasticity dynamics considered above, since plasticity depends solely on synaptic inputs and local dendritic potentials, all branches are adapted independently without taking the activity of other branches into account. This implies that synaptic patterns at different branches can become correlated in the sense that projections from one assembly cluster on two or more branches of the neuron. Such adaptation may in general waste dendritic resources such that all branches become occupied by a subset of input assemblies while other assemblies are neglected. This effect would become even more pronounced if assemblies were not activated in an intermixed manner but sequentially. In this case, early assemblies would occupy all branches, leaving no representational space for later ones.

Previous work has shown that dendritic synaptic patterns can be decorrelated by a simple additional spike-timing-dependent plasticity (STDP) mechanism where each somatic spike induces a small amount of depression in all synapses with a recent presynaptic spike (Legenstein and Maass, 2011). This rule is consistent with the experimental finding that in distal dendrites, a pre-before-post spike pair leads to depression (Kampa et al., 2007). The STDP update indirectly introduces competition between dendritic branches such that branches compete for becoming activated for each input pattern. Briefly, consider an input pattern for which one branch (or a few branches) has evolved a strong synaptic cluster. Whenever this input is presented, the branch becomes active, it produces dendritic plateau potentials which in turn will lead to somatic spikes. For branches that did not yet evolve a strong cluster for that input pattern, these somatic spikes then depress via STDP active synapses and thus prevent the emergence of a cluster for this pattern. On the other hand, the branch with the strong cluster and plateau potentials is protected in the sense that potentiation from the dendritic-spike-dependent plasticity rule overcompensates the depression. This branch (or a few branches with a strong cluster) has thus won the competition for becoming activated by this input pattern.

To test the effect of this mechanism on synaptic rewiring, we performed 25 simulations as described above but with STDP added to the parameter dynamics (see section 4 for a definition of the STDP update). We found that the average number of represented assemblies increased from 6.36 ± 0.84 to 7.40 ± 0.57 (mean ± SD). This is a significant increase of the number of assemblies that are stored on the dendrites [t(24) = 5.01, p < 0.0001, unpaired t-test]. A schematic drawing of the simulated neuron and the wiring diagram after 17 min of rewiring dynamics of four selected branches of one of the conducted trials is shown in Figure 3A. Each assembly in this simulation evolved a synaptic cluster onto exactly one dendritic branch. Four branches did not receive a synaptic cluster from any of the assemblies (inset Figure 3A). Since these branches did not specialize to a specific input assembly, they could, in principle, adapt later to respond to novel input assemblies. To test whether these results are sensitive to the specific values of parameters in our rewiring model, we performed a sensitivity analysis. This analysis showed that the number of synaptic clusters is quite robust to parameter variations (see section 1.2 in the Supplementary Material). We also tested effects of the pattern duration and the delay between patterns. We found that our rewiring mechanism is, up to some point, quite robust to variations in the pattern duration and the delay between patterns (see section 1.3 in the Supplementary Material).

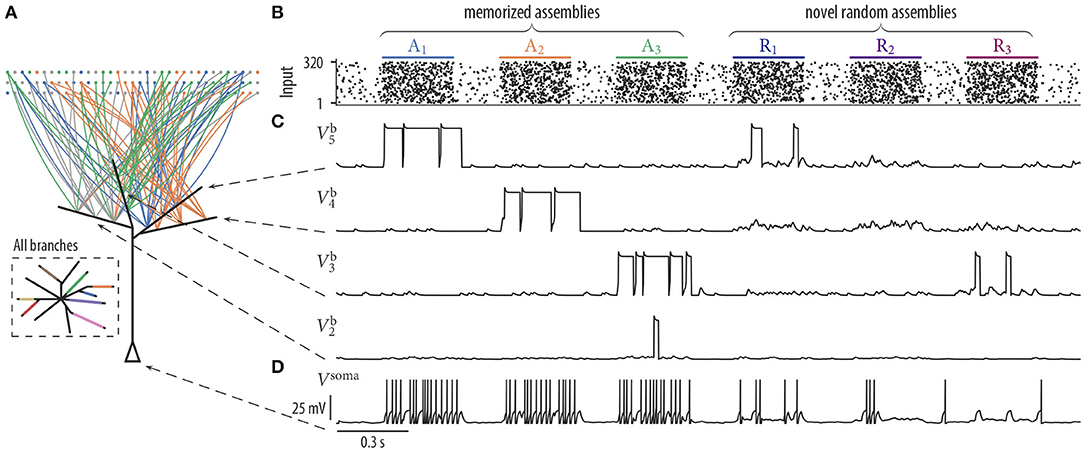

Figure 3. Synaptic rewiring with spike-timing-dependent depression and neuron response to stored and novel input patterns. (A) Schematic neuron drawing wiring diagram of four selected branches after 17 min of rewiring dynamics (as in Figure 2B). Branches b3, b4, and b5 received synaptic inputs predominantly from assemblies A3, A2, and A1, respectively. Branch b2 remained without a synaptic cluster from any assembly. Inset: Schematic drawing of all branches of the neuron. Branch color indicates assembly origin of a synaptic cluster. All assemblies were represented on the branches and each assembly established only a single cluster. Four branches remained without a synaptic cluster from any assembly. (B–D) Pattern-specific neuron responses after rewiring. (B) Input neurons spike raster. Assemblies A1, …, A3 were also activated during the previous rewiring period. The last three firing patterns were generated by novel random assemblies Ri. (C) Dendritic membrane potentials of four branches during the presentation of three test patterns of assemblies Ai and Ri. Dendritic plateau potentials were elicited preferentially during the activation of memorized assemblies Ai and appeared only occasionally at novel random assemblies Ri. (D) Somatic membrane potential Vsoma during the presentation of input patterns of memorized assemblies (A1, A2, and A3) and for patterns of novel random assemblies (R1, R2, and R3). The neuron responded with a high firing rate (≈ 27.6 Hz) to patterns of memorized assemblies and with a low firing rate (≈ 11.3 Hz) during the activation of novel random assemblies.

We next asked whether the neuron was able to differentiate after the rewiring process between stored and novel input patterns. We presented three firing patterns of memorized assemblies A1, A2, A3 and three firing patterns of novel random assemblies R1, R2, R3 to the neuron (Figure 3B). Each novel assembly was created by randomly choosing 40 distinct neurons from all 320 input neurons. Hence, assemblies R1 to R3 were not necessarily disjoint and they shared a significant amount of neurons with assemblies A1 to A8. The neuron responded with a high firing rate during the activation of memorized assemblies but spiked only occasionally for patterns of novel random assemblies (Figure 3D). Branches that did not receive a synaptic cluster from any of the assemblies still maintained rather strong individual synapses originating from multiple assemblies, but since their collective activity was not correlated; it was not sufficient to trigger long lasting dendritic plateau potentials in these branches (Figure 3C, bottom trace ).

In summary, STDP increases the capacity of the neuron in terms of the number of assemblies that are stored on dendrites. If there are more branches than assemblies in the input, some branches remain assembly-unspecific and are therefore available to store novel assemblies. Unless otherwise stated, all of the following simulations presented below are conducted with the STDP update.

2.4. Rewiring Protects Stored Information

One effect of the synaptic clustering observed in our simulations was that activation of different input assemblies led to dendritic spikes in different dendritic branches, see Figures 2A,B, 3B. Similarly, Cichon and Gan (2015) observed task-specific segregation of dendritic activity in tuft branches of layer V pyramidal neurons in mouse motor cortex. More specifically, they observed that different motor learning tasks induce dendritic spikes on different branches. The processing of different behaviors is therefore separated onto different dendritic branches. Importantly, when this segregation was pharmacologically disrupted, the animals could still learn the current task, but performance on an earlier learned task degraded, indicating a role of this clustering for retaining older memories. The authors thus argued that this segregation may provide a mechanism to shelter previous memories from being overwritten by novel plasticity events.

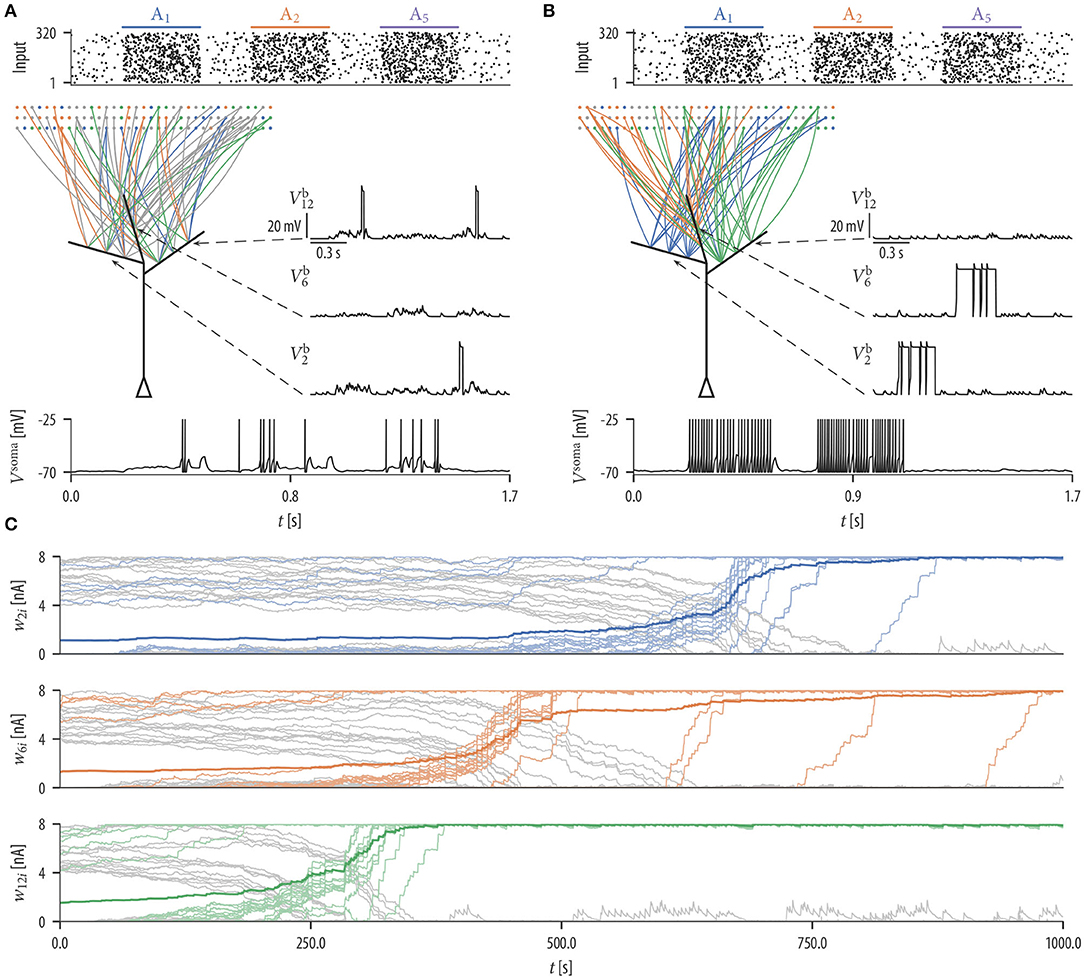

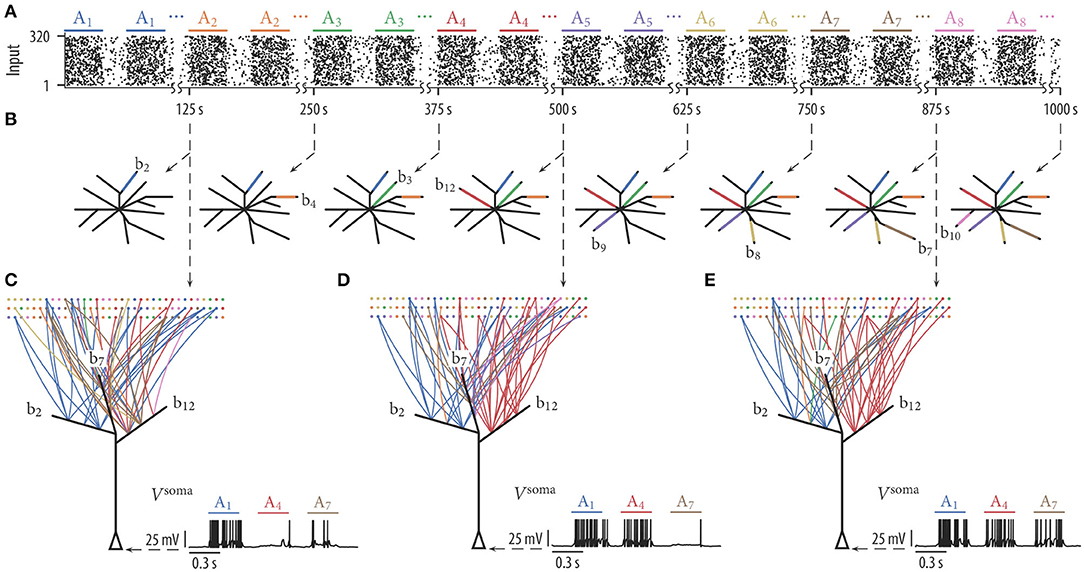

To test whether simple rewiring rules can indeed support memory protection, we performed simulations as described above. Input assembly activations and background noise were generated as before, however, assemblies were activated in a sequential manner. In other words, we first activated exclusively assembly A1 250 times, then assembly A2, then assembly A3, etc. (with a delay between each pattern presentation as in the previous simulations; Figure 4A). Figure 4B shows schematic drawings of the 12 simulated branches at various times of the rewiring process. The color of a branch in Figure 4B indicates which of the 8 sequentially presented assemblies had evolved a synaptic cluster at the branch at the specified time. Clearly, branches were recruited sequentially to store input patterns. Branch b2 received a synaptic cluster from the first assembly, whereas none of the other branches was evolving large weights for this assembly. Beginning at 125 s, assembly A2 was activated while assembly A1 was silent. Shortly afterwards, branch b4 developed large synaptic weights for this input pattern while the synapse cluster at branch b2 remained stable. This stabilization is due to the fact that (a) synapses from assembly A2 to branch b2 were weak, and dendritic plateau potentials were consequently induced rarely, and (b), presynaptic activity from assembly A1 was weak, and depression due to inverse STDP was thus very small. As further assemblies became activated every 125 s, new branches were recruited to store these patterns while synaptic weights on old branches kept stable. In this simulation, all assemblies were represented on the branches. Four branches remained without a synapse cluster from any of the assemblies. We have repeated the experiment for 25 independent trials. The mean number of represented assemblies over these trials was 6.92 ± 0.89 (mean ± SD).

Figure 4. Dendritic-spike-dependent rewiring protects stored information by segregating functionally unrelated inputs onto different dendritic branches. When input assemblies were activated sequentially, novel assemblies clustered on free branches while previously established clusters were retained. (A) Input assemblies Ai activated in a sequential manner. We first activated exclusively assembly A1, then assembly A2, then A3, etc. (B) Schematic drawing of the 12 simulated branches at 8 different time points (branch color indicates assembly origin of synaptic cluster at the branch). Branches were recruited sequentially. (C–E) Network connectivity graph and somatic membrane potential Vsoma of three selected branches (b2, b7, and b12) at three different time points. Graph edge color indicates to which of the input assemblies a connection is established. The somatic potential shows the response of the neuron to one test pattern of assembly A1, assembly A4, and assembly A7. The neuron successively reorganized its synaptic connections such that it responds to any of the patterns that have been presented previously.

We hypothesized that competition between branches through STDP is essential for this capability. Due to the competition, only one or a few branches evolve a synaptic cluster from a specific assembly while all other branches remain neutral to assemblies. This is especially important when assemblies are presented in a sequential manner. Without competition, most of the branches would adapt to respond to the first active assembly. Most branches would thus be occupied and not available for novel assemblies. To quantify the effect of dendritic competition, we repeated the experiment without STDP. In this case, the mean number of represented assemblies reduced to 4.04 ± 0.72 (mean ± SD over 25 independent trials). As expected, most of the branches evolved synaptic clusters from the first shown assembly A1 early on.

Due to the random fluctuations of weights, there is always a chance that synapses are overwritten. This behavior can be interpreted as gradual forgetting. If a synaptic cluster is inactive for an extended time, then the synaptic connections gradually degrade. If, however, that assembly is again activated, the synapses to that assembly will again stabilize provided that the weights are large enough to trigger a dendritic spike. To investigate the impact of the random fluctuations of weights in the weakening of older memories over time, we have performed simulations with various noise strengths and analyzed the weight decrease (see section 1.4 in the Supplementary Material for more detail). In section 1.5 of the Supplementary Material, we show a simple way to reduce gradual forgetting in our model and in section 1.6 of the Supplementary Material we propose a simple consolidation mechanism. Both of these methods integrate nicely with our rewiring framework. Synaptic connections of old memories are well-protected against changes induced by the noise term in this extended model (see sections 1.5, 1.6 in the Supplementary Material).

2.5. Synaptic Clustering Depends on Input Statistics

In the above simulations, input assemblies were activated in temporal isolation and each assembly activation was clean in the sense that all assembly neurons had an elevated firing rate and assemblies were disjoint. Under these conditions, our rewiring model led to robust synaptic clustering. We next determined the sensitivity of this result on these input statistics.

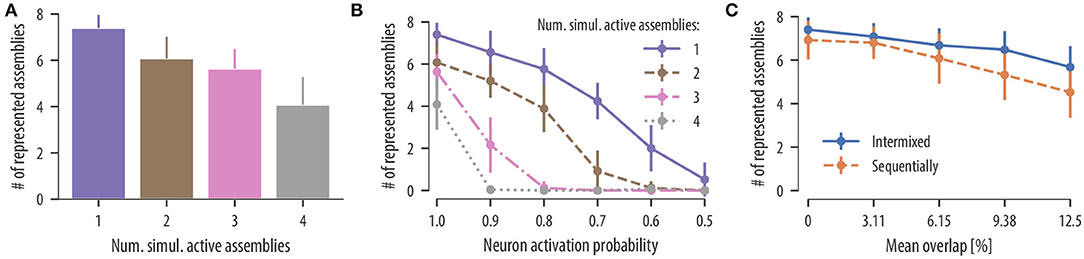

Influence of co-active assemblies: We first asked whether input assemblies can be clustered onto different dendritic compartments even when single assemblies are never activated in temporal isolation. As above, we simulated a neuron with 12 independent branches and a population of 320 input neurons divided into 8 disjoint neuronal assemblies of 40 neurons each. Input patterns and background noise were generated as before. At times of assembly activation, a medium number of assemblies was chosen uniformly at random from the set of all input assemblies and were simultaneously activated for 300 ms. For up to four simultaneous active assemblies, the rewiring dynamics separated connections from different assemblies onto different branches with some decrease of clustering. In 25 independent simulation runs, the mean number of represented assemblies on the neuron was 6.08 ± 0.94 for two simultaneously activated assemblies, 5.64 ± 0.84 for three simultaneously activated assemblies, and 4.08 ± 1.20 for four simultaneously activated assemblies (Figure 5A). Our rewiring mechanisms consequently segregates uncorrelated inputs onto different branches even when assembly patterns are not temporally isolated.

Figure 5. Synaptic clustering depends on input statistics. (A) Mean number of represented assemblies as a function of the number of simultaneous active assemblies. The difference in the mean number of represented assemblies for two and three simultaneous active assemblies were not significant. However, the number of represented assemblies significantly decreased compared to temporally isolated pattern presentations in all cases [F(3, 96) = 53.9, p < 0.0001, one-way analysis of variance (ANOVA); p < 0.0001, one active assembly vs. two simulations active assemblies, 1 vs. 3, 1 vs. 4, 2 vs. 4, 3 vs. 4; not significant (ns) p = 0.35, 2 vs. 3; n = 25 trials]. These results show that the rewiring mechanism segregates uncorrelated input assemblies onto different branches even when a medium number of assemblies are activated simultaneously. (B) Mean number of represented assemblies as a function of the assembly neuron activation probability (mean and SD over 25 independent trials). Results are shown for temporally isolated pattern presentations (violet solid line), two simultaneous active assemblies (brown dashed line), three simultaneous active assemblies (pink dashed dotted line), and four simultaneous active assemblies (gray dotted line). In all four cases, the number of represented assemblies significantly decreased at a neuron activation probability of 0.9 [t(24) = 5.09, p < 0.0001, one active assembly, activation probability of 1.0 vs. activation probability of 0.9; t(24) = 4.99, p < 0.0001, two active assemblies; t(24) = 10.8, p < 0.0001, three active assemblies; t(24) = 19.2, p < 0.0001, four active assemblies, paired t-test]. (C) Mean number of represented assemblies as a function of the mean overlap between a given pair of assemblies (mean and SD over 25 independent trials). Results are shown for intermixed pattern presentations (blue solid line) and for patterns presented in a sequential manner as in Figure 4 (orange dashed line). In both cases, the number of represented assemblies significantly decreased for a mean assembly overlap of 6.15 % [t(24) = 3.84, p = 0.0008, intermixed, disjoint assemblies vs. non-disjoint assemblies with mean overlap of 6.15 %; t(24) = 2.87, p = 0.008, sequentially, paired t-test].

Influence of stochastic assembly activation: While evidence for synaptic clustering has been reported in various cortical areas (Chen et al., 2011; Kleindienst et al., 2011; Fu et al., 2012), some studies found no evidence for it in early sensory areas (Jia et al., 2010). We hypothesized that this discrepancy may be contributed to different input statistics in different cortical areas. In particular, we conjectured that inputs to neurons in early sensory areas may be more stochastic (or unreliable) than in higher cortical areas. To study this issue quantitatively, we investigated how clustering in our model depends on the reliability of assembly activations. To this end, we repeated the experiment with simultaneously activated assemblies but with a reduced number of active neurons in each assembly activation. When an assembly was activated for 300 ms, a fraction p of assembly neurons was chosen randomly which increased their firing rate to 35 Hz as before, while the rest remained at the background rate of 1 Hz. In successive simulations, we reduced the fraction p of active neurons in each assembly from 1.0 (all neurons were active at each pattern presentation) down to 0.5 (half of the neurons were active at each pattern presentation). The mean number of represented assemblies as a function of the neuron activation probability in each assembly is shown in Figure 5B (25 independent trials). The number of assembly-synapse clusters on dendritic branches decreased with the neuron activation probability. A moderate stochasticity is tolerated by the rewiring dynamics for temporally isolated patterns and two simultaneously activated assemblies. No stochasticity is tolerated, however, when more than two assemblies are activated simultaneously at times of pattern presentation (Figure 5B). In all simulations, a number of branches did not receive any synaptic cluster. This was expected as the neuron had 12 branches while only 8 assembly patterns were presented as input. These branches hosted rather stable synaptic connections originating from various input assemblies. In conclusion, this result predicts a continuum of synaptic clustering in PCs where the extent of synaptic clustering depends on the reliability of presynaptic assembly activations. This finding may provide an explanation for diverse results on synaptic clustering in cortex.

Influence of assembly overlap: In the simulations so far, we considered disjoint input assemblies. In contrast, it was proposed that associative memories learned within short time intervals will be represented by overlapping populations of neurons (Silva et al., 2009; Rogerson et al., 2014). This claim was recently verified by experimental studies of medial temporal lobe (Ison et al., 2015), the hippocampus (Cai et al., 2016), and the lateral amygdala (Rashid et al., 2016). Their results show that linking of memories relies on the overlap of distributed neuronal assemblies.

To test whether synaptic clustering emerges also for input assemblies with various amounts of neuron overlap, we considered a setup where some of the neurons of each assembly were chosen from a shared pool of neurons. As a result, some neurons of the shared pool participated in two or more input assemblies (see section 4 for details). By increasing the fraction of shared neurons in the assemblies, we were able to increase the assembly overlap. When repeating this procedure in 25 independent trials, the overlap between a given pair of assemblies was 3.11 ± 2.48% (mean ± SD), 6.15 ± 3.53%, 9.38 ± 4.19%, and 12.5 ± 5.11% for a fraction of shared neurons per input assembly of 25, 50, 75, and 100%, respectively. After 17 min of assembly activations, we determined the number of represented assemblies on the neuron. The results for 25 independent trials are summarized in Figure 5C (solid line). Our results show that synapses are organized on dendrites in a clustered manner; this is true even for assemblies that share a significant portion of neurons, where the number of represented assemblies decreases slightly with increasing assembly overlap.

In section 2.4, we showed that our rewiring rule can support memory protection by recruiting branches sequentially as new assemblies were activated. To test whether this ability of the model depends on disjoint input assemblies, we repeated the experiment described in section 2.4 but with overlapping assemblies and various assembly overlaps. Overlapping assemblies were created as described above. After 17 min of assembly activations, the mean number of represented assemblies was 6.80 ± 0.75, 6.08 ± 1.16, 5.32 ± 1.16, and 4.52 ± 1.17 for mean assembly overlaps of 3.11 ± 2.48%, 6.15 ± 3.53%, 9.38 ± 4.19%, and 12.5 ± 5.11%, respectively (mean and SD over 25 independent trials). For all considered overlaps, synapses were still segregated in an assembly-specific manner. However, the number of represented assemblies significantly decreased for mean assembly overlaps of 6.15 % (Figure 5C, dashed line). In summary, our model predicts that associated memories with overlapping assembly representations are segregated in an assembly-specific manner onto different dendritic branches and that this segregation can indeed support memory protection.

2.6. Analysis of Stochastic Rewiring Dynamics

The stochastic rewiring dynamics considered in this work is described by the general SDE (1), reiterated here for convenience:

where the first term describes structural constraints on the connectivity, the second term captures the functional goal of the plasticity process, and the third term models stochastic contributions.

It has been shown by Kappel et al. (2015) that the long-term behavior of this dynamics can be described concisely if the deterministic parameter changes are defined through two probability distributions:

where is a probability distribution that defines the structural constraints (the structural prior on parameters), and is a distribution that defines functionally preferred parameter settings (the functional likelihood of parameters). Preferred parameter settings have high probability in these distributions, others have low probability. Note that without the noise term, Equation (1) together with Equation (4) constitute continuous-time gradient ascent dynamics on the objective . With the inclusion of the noise term, Equation (1) defines Langevin sampling dynamics (Welling and Teh, 2011), that is, parameter configurations are visited in the long run according to the distribution

In other words, the probability density to observe some specific θ is given—after an initial transient period (often called burn-in time) where parameters move from the potentially low-probability initial setting to a high-probability region—by p*(θ). We say that the network samples network configurations from p*(θ). Since this p*(θ) contains the product of the two distributions and , high-probability parameter settings are only possible if they fulfill both the structural and the functional demands as defined by these distributions. Since θ defines both the synaptic strengths and the connectivity structure (a synapse ki is connected if θki > 0), this stochastic process automatically samples network connectivity structures.

The structural prior corresponding to the structural plasticity term is discussed in detail in section 4. The functional term is given by

Synapses that contribute or are co-active with dendritic plateau potentials are potentiated, while others are depressed and eventually retract, making room for new connections.

To interpret this functional term from the perspective of the sampling distribution of the stochastic dynamics, we want to exhibit a corresponding functional likelihood . We show in section 4 that this distribution factors into branch-specific distributions

so each branch optimizes its likelihood independently from other branches. For the functional likelihood, we consider the situation where the neuron is exposed for an extended time tmax to input spike trains xi according to some distribution and it stochastically responds with branch spikes. We can interpret the functional dynamics using the following functional likelihood (see section 4)

where is a normalizing factor (we consider here parameters bounded in the range [θmin, θmax] to keep this factor bounded) and is a constant. The angular brackets 〈·〉X, Γ denote an average over stochastic realizations of inputs xi and branch spikes Γk. Because of the function Γk in the integral, only times at which a plateau potential is present at the branch contribute to the integral. In combination with the first term in the brackets, the probability of the functional likelihood is high if efficacies of those synapses are large that are active slightly before or during a plateau potential. In combination with the second term in the brackets, the probability of the functional likelihood is high if efficacies of those synapses are small that are inactive slightly before or during a plateau potential. The constant γ > 0 trades off the importance of large active vs. small inactive synapses.

Hence, the rewiring dynamics prefers parameter settings where inputs that jointly recruit dendritic regenerative events (i.e., temporally correlated inputs) have strong synaptic connections to the branch. Under the assumption that such inputs are also functionally related (corresponding for example to a specific motor behavior), the rewiring dynamics contributes to functional synaptic clustering (Kastellakis et al., 2015). While cortical connectivity is sparse even at the local scale, it has been estimated that potential (i.e., possibly realizable) connectivity is huge—a spatial scale of a few hundred microns—and every pair of PCs could be potentially connected (Chklovskii et al., 2004; Kalisman et al., 2005). Our analysis shows that in this large space of potential connectivity structures, synaptic rewiring based on simple dendritic-spike-dependent plasticity rules could implement a stochastic search algorithm that favors functionally clustered synaptic configurations, as proposed by Chklovskii et al. (2004).

3. Discussion

We have proposed in this article a model for synaptic rewiring in neurons with dendritic non-linearities. We have observed that simple plasticity rules that depend on postsynaptic dendritic spikes lead to robust synaptic clustering. The use of the synaptic sampling framework allowed us to analyze the connectivity dynamics as a sampling process from a distribution of network configurations. Our analysis revealed that synaptic clusters are preferred configurations in this sampling process. Cichon and Gan (2015) found that dendritic spike events for different motor tasks were segregated onto different tuft branches of layer V PCs in mouse motor cortex. They also found that this segregation was necessary to avoid a detrimental effect of training on a previously learned task. Our model can explain these findings. First, we found that branch activity is segregated onto different dendritic branches if it is elicited by different input assemblies. Second, we found that this segregation allows memory retention in the sense that synaptic clusters are retained after memorization of new assembly input patterns.

3.1. Synaptic Plasticity and Rewiring

Throughout this article, we assumed that strong presynaptic activity coincident with a postsynaptic dendritic spike leads to LTP whereas a postsynaptic dendritic spike paired with weak presynaptic activity leads to LTD. While these plasticity dynamics are qualitatively consistent with experimental findings both for LTP (Golding et al., 2002; Lisman and Spruston, 2005; Gordon et al., 2006; Gambino et al., 2014; Cichon and Gan, 2015; Brandalise et al., 2016) and LTD (Holthoff et al., 2004), the exact plasticity rules and their relation to dendritic regenerative events are still to be determined. For example, experimental results of a study by Cichon and Gan (2015) indicated that at distal dendrites of layer V PCs, presynaptic activity during dendritic spikes leads to LTP, which is consistent with our model. However, different from previous findings, they found that LTD was induced by presynaptic activity that preceded the dendritic spike. To test whether our model is sensitive to such alterations in the plasticity rules, we reran the simulations for Figure 3 with such depression (see section 4 for details). We found that the model behaved comparably (7.28 ± 0.60 represented assemblies, mean ± SD over 25 independent trials).

We found that the number of input patterns that can be stored by a neuron is increased if dendritic-spike-dependent plasticity is extended by an STDP mechanism where pre-before-post spike pairs induce depression. This phenomenon has already been described and analyzed in a modeling study by Legenstein and Maass (2011). In general, the back-propagating action potential provides a means for communicating global information to the dendritic branches, that is, it can indicate to all branches that the neuron is active for the current input pattern. With STDP, a pattern that does already activate enough branches to elicit strong firing of the neuron will depress synapses at inactive branches. This competition reduces the number of activated branches per pattern and thus increases the number of patterns that can be stored in a single neuron. While we showed that clustering is still observed without this mechanism (Figure 2), it is beneficial in a sequential learning paradigm, as it keeps branches free to store patterns presented later in the sequence (Figure 4).

3.2. Protection of Memories

Our results indicate that synaptic clustering may protect older memories (Figure 4). It can thus avoid catastrophic forgetting, a well-known deficiency of standard incremental learning algorithms for neural networks (McCloskey and Cohen, 1989; French, 1999; Kirkpatrick et al., 2017). The mechanism is simple: a branch with a synaptic cluster from a specific input pattern is silent to most other patterns. Since LTP and LTD is conditioned on the local dendritic spike, memories on a branch are protected for most other input patterns. At the same time, other branches can develop specificity to novel patterns as plasticity there is decoupled from protected branches. However, this does not shelter memories completely. There are still mechanisms in our model that are detrimental to old memories. In particular, the noise term in Equation (1) will inevitably corrode memories. We did indeed observe weakening of older memories over time if they were not repeated, but on a time scale much longer than the one of pattern presentation (sections 1.4, 1.5 in the Supplementary Material). This suggests that synaptic clustering may provide a mechanism to avoid catastrophic forgetting temporarily. Additional consolidation mechanisms seem necessary to protect memories over long time scales, and we have investigated one way how consolidation can be integrated in our rewiring framework (section 1.6 in the Supplementary Material). An alternative role of dendritic structures in extending memory lifetime was proposed in Bono and Clopath (2017). We have demonstrated in this article that simple dendritic-spike-dependent rewiring can protect memories in a pattern memorization setup. In future work, one could apply these ideas to larger deep-learning networks in the context of continual learning tasks, possibly using simplified neuron and plasticity models with similar properties.

3.3. Related Work

Many theoretical studies have linked rewiring processes to cortical connection statistics (Deger et al., 2012; Fauth et al., 2015), computation and memory (Knoblauch et al., 2014; Zheng and Triesch, 2014; Deger et al., 2016; Miner and Triesch, 2016; Spiess et al., 2016), and homeostasis (Gallinaro and Rotter, 2018). These studies were, however, based on point neuron models, where the phenomenons considered in the current study cannot be observed. It has been proposed by Rhodes (2008) that neurons with non-linear dendrites could serve as a recoding stage in order to orthogonalize input representations, that is, to reduce the overlap between different input patterns. It is discussed there that a dendritic-spike-dependent rewiring could be used to provide efficient wiring diagrams for this operation. Our model exhibits one possible implementation of such a rewiring strategy. In a theoretical study, the impact of active dendrites on the memory capacity of neural networks was investigated (Poirazi and Mel, 2001). They calculated that non-linear dendrites can vastly increase the number of patterns that can be stored by a simple non-spiking model of a pyramidal cell. They also considered a supervised structural plasticity rule, the “clusteron” (Mel, 1992), that was designed to cluster synaptic inputs while optimizing the memory performance of the neuron. Their simulations showed that this rule leads to memory capacities as predicted by their theoretical considerations. Our model shows that such clustering emerges also in a more detailed spiking neuron model with a simpler online dendritic-spike-dependent rewiring principle that does not need supervision. We exhibited a potential functional relevance of this clustering in terms of protection of memory but did not consider memory capacity per se. It would be interesting to study similar capacity questions in the proposed model. Memory capacity of networks of neurons with non-linear dendrites was also studied by Wu and Mel (2009). They also used a simple non-spiking neuron model which basically consisted of a “dendritic” layer with a threshold non-linearity and a somatic layer which summed the outputs of the binary dendritic elements. This article did not consider rewiring. Nevertheless, a simple plasticity rule was used that depended on the dendritic activation, which resembles some similarity with dendritic-spike-dependent plasticity considered here. Interestingly, they found that memory capacity could be increased if not only the dendritic activation but also the number of activated synapses at the dendrite was used to gate LTP. In our rewiring framework, such a rule could be applied to weak synaptic connections, but not to non-established connections as their dynamics are assumed independent of pre- and postsynaptic activity.

3.4. Relation to Experimental Literature and Experimentally Testable Predictions

The proposed model gives rise to a number of experimentally testable predictions. We have shown that temporally correlated input leads to the clustering of corresponding synaptic connections on single dendritic branches. This suggests that neuronal assemblies should connect to PCs preferentially in a branch-specific clustered manner. To verify this prediction, methods for labeling memory engrams (Tonegawa et al., 2015) could either be combined with tracing techniques or with engram reactivation and target cell Ca2+ imaging at dendritic branches. Another prediction of our model is that synaptic clustering depends on input statistics. This finding may explain that no evidence for clustering has been observed in early sensory cortical areas (Jia et al., 2010). One could test this prediction more directly through glutamate uncaging at tuft dendrites. Our model predicts that concerted correlated uncaging at single branches should lead to a clustered strengthening of synapses at the branch. Conversely, a more unreliable uncaging where each uncaging site is activated only with a certain probability would not have this effect. Various experimental studies have indicated that associated memories are stored in overlapping assemblies (Ison et al., 2015; Cai et al., 2016; Rashid et al., 2016). Our model predicts that such associations should also be visible in the clustering structure, that is, neurons that respond to each of two associated memories would exhibit synaptic co-clustering of these memories within dendrites. This prediction is consistent with the findings of a modeling study by Kastellakis et al. (2016).

3.5. Conclusions

We have shown through computer simulations and theoretical analysis that synaptic clustering emerges from simple rewiring dynamics based on dendritic-spike-dependent synaptic plasticity. This result provides support for the synaptic clustering hypothesis. We further found that this clustering in turn may serve as a mechanism to protect memories from subsequent modifications on a medium time scale. More generally, our results emphasize the importance of dendritic structures in learning and memory and demonstrate the effectiveness of synaptic rewiring to find sparse network structures in the high-dimensional search space over potential connectivities.

4. Materials and Methods

Here, we provide details to the models and simulations.

4.1. Neuron Model

We assume a spiking neuron model with Nb independent dendritic compartments. Here and in the following, we will indicate variables and parameters of dendritic branches with a b superscript or subscript. Presynaptic spikes are transformed at the synapse ki—the synapse from presynaptic neuron i to branch k of the postsynaptic neuron—into alpha-shaped postsynaptic currents, which are weighted by the corresponding synaptic efficacies wki. The total current at branch k is given by

where is the fth spike-time of presynaptic neuron i. The two sums run over all presynaptic neurons i and all firing times of neuron i. Each individual alpha-current is given by

where H denotes (here and in the following) the Heaviside step function (for parameter values, see Table 1). The membrane potential of each branch (; branch potential) follows the dynamics of a leaky integrator of the current with resting potential . In particular, the dynamics of potential of branch k is given by

with membrane resistance Rb and membrane capacity Cb (the membrane time constant is given by τb = RbCb).

Branch spikes are elicited by the branch in a stochastic manner. In particular, the voltage-dependent instantaneous firing rate is given by

where is the threshold for branch-spike initiation, is the instantaneous firing rate at threshold, and βb is the sensitivity of spike emission (for parameter values, see Table 1). We denote the times of branch-spike onsets at branch k as . A branch spike includes a brief sodium spikelet followed by a plateau potential that lasts for up to a few hundreds of milliseconds, see Figure 1B. Assuming that branch k has initiated its last dendritic spike at time , the evolution of immediately after initiation is given by

where the function ηk describes the form of the branch spike (see below). The duration of the dendritic spike is proportional to the slope of at the last spike time of the branch, i.e.,

where cds is a scaling parameter. In our simulations, we clipped the duration between a minimum of and a maximum of to avoid unnaturally brief and long plateau durations (for other parameter values, see Table 1). The functional relation between input strength and the duration of the plateau potential is shown in Figure 1C. The shape of the dendritic spike is given by a brief sodium spikelet followed by a plateau (Antic et al., 2010):

The first term in Equation (15) models the sodium spikelet, and the second term models the plateau potential. The sodium spikelet decays exponentially with time constant τs with initial amplitude Vs (added to the amplitude Vds of the plateau potential). For the membrane potential again follows the dynamics given by Equation (11). As an example, the evolution of the dendritic membrane potential for an arbitrary chosen input is depicted in Figure 1B.

During the time of the plateau potential, the dendritic branch is absolute refractory, that is, no further regenerative event can be initiated. The propagation of dendritic plateau potentials toward the soma produces a sustained depolarization of the cell body. In general, the currents from dendritic compartments to the soma are driven by the difference between the membrane potential at the branch and the membrane potential of the soma. The somatic compartment sums currents from all dendrites (with a certain degree of amplitude attenuation).

The membrane potential at the soma of the neuron is given by

where is the resting potential. The membrane time constant of the neuron is given by τm = RmCm and Isoma(t) is

where Rl represents the total of the longitudinal resistance along the dendrites and apical trunk. Note that we restrict the currents to non-negative values since we only simulate forward currents, i.e., currents from the dendritic branches to the soma. Somatic spikes are characterized by their spike times t(f). The instantaneous firing rate is given analogously to the branch spike mechanism by

Immediately after t(f), the somatic potential enters a refractory period of duration in which Vsoma(t) is set to . For the dynamics is again given by Equation (16). To illustrate the dynamics of the membrane potential, a neuron which receives arbitrary input was simulated. The results of this simulation are shown in Figure 1B.

4.2. Plasticity and Rewiring

Here, we summarize the plasticity and rewiring dynamics in the network.

4.2.1. Synaptic Parameters and Efficacies

Each dendritic compartment has a set of potential synaptic connections that could be realized by some parameter setting. However, at each point in time, only a subset of these connections is realized by functional synapses. More precisely, we maintain one parameter θki for each synapse ki from input neuron i to branch k of the neuron. This parameter encodes (a) whether the synapse is functional and (b) the synaptic efficacy wki of the synapse (if it is functional). In particular, θki > 0 indicates a functional synapse, while θki ≤ 0 indicates that the connection is not established. The synaptic weight wki is cθθki for a functional synapse, and 0 otherwise. Hence, the mapping from the parameter θki to the synaptic weight is given by wki = cθmax{0, θki} where cθ = 1 nA is the slope of this mapping for θki > 0 (for other parameter values, see Table 2).

4.2.2. Parameter Dynamics

The stochastic dynamics of parameters are given by

where η > 0 is a small learning rate and denote increments of a standard Wiener process, thus implementing random walk behavior. The temperature T > 0 is a constant that scales the strength of this stochastic component. In the simulations, the parameters θki were clipped to the range [θmin, θmax]. The upper bound is necessary to avoid that the synaptic efficacies become arbitrary large. The lower bound is useful to avoid that parameters become very negative. Such parameters would be stuck for a long time in the negative domain and hence would be unlikely to be re-established, which would slow down the rewiring dynamics. The term in the brackets defines the deterministic part of the dynamics. It is divided here into two components. The first term describes changes that are used to enforce structural constraints on the connectivity. The functional goal of the plasticity process is captured by the second term . The deterministic terms are chosen such that they vanish for θki(t) ≤ 0. Hence, for non-established synapses, only the stochastic term remains.

For the structural term, we define a soft count of synaptic connections on branch k

where σ denotes the logistic sigmoid function . For a non-established synapse (wki = 0), the term in the brackets is 0 and this potential connection is not counted. For increasing efficacies larger than 0, this term approaches 1 with the scaling constant cw defining the speed of this approach. Using this soft count, the structural term is given by

where the constant Nsyn defines a soft upper bound on the number of active synapses per branch (we used Nsyn = 20 in all simulations), the constant λ > 0 defines how hard this soft bound is enforced, and denotes the derivative of the logistic sigmoid.

The functional term combines a presynaptic activity trace with a postsynaptic term that indicates the presence of a dendritic spike. The exponential presynaptic activity trace with time constant τx > 0 is given by:

where is the set of all indices of input spikes with spike times before t. The presence of a postsynaptic dendritic spike is formalized as a function Γk which is 1 if a plateau potential is present at time t and 0 otherwise. More formally, using to indicate the time of the last branch spike initiation before t, i.e., , we define

where is the length of this plateau potential as defined in Equation (14). The functional term is then given by

with constants and γ > 0.

4.2.3. STDP

Denoting the postsynaptic somatic spike times by t(1), t(2), … , we define the postsynaptic spike trains as sums of Dirac delta pulses, i.e., . Using this notation, the STDP update term is given by

where is a constant, xi is an exponential trace of the presynaptic spike times with time constant τx as defined in Equation (22), and where is the threshold for STDP initiation (for parameter values, see Table 2). Note that this update models the induction of depression for a pre-before-post spike pair (an inverted STDP rule) as found for synapses in the distal dendrites (Kampa et al., 2007). The plasticity dynamics with STDP for the parameters θki is therefore

Note that we include the term H(θki(t)) in Equation (26) in order to stress again that only functional synapses are subject to non-stochastic parameter changes.

4.2.4. Alternative Plasticity Rule

To test the dependence of the results on the specific form of the plasticity rule, we considered an alternative rule that models findings in a study by Cichon and Gan (2015). Here, presynaptic activity during a dendritic spike still leads to LTP, but, LTD is induced by presynaptic activity preceding a dendritic spike. The functional term in this plasticity rule combines two presynaptic activity traces [with fast (LTP) and slow (LTD) decay] with postsynaptic terms that indicate the onset and the presence of a dendritic spike. The fast decaying presynaptic activity trace with time constant is incremented at each presynaptic spike of neuron i that appears within the time period of a dendritic spike of branch k. The trace is given by:

where is the set of all indices of input spikes of neuron i with spike times before t and during the presence of a dendritic spike in branch k (recall that the function Γk is 1 during a dendritic spike and 0 otherwise). The slow decaying presynaptic activity trace is defined alike. However, this trace is only incremented at each presynaptic spike if there is no postsynaptic dendritic spike. The trace with time constant is defined as

where is the set of all indices of input spikes of neuron i with spike times before t and in the absence of a postsynaptic dendritic spike. Similar to somatic spike trains, we formalize the dendritic spike train as a sum of Dirac delta pulses at times of dendritic spike onset , were denotes the onset time of the fth spike of dendrite k. The functional term is then given by

where the constants and scale the contribution of potentiation and depression. The structural term and the STDP term are not altered and are kept as defined above (Equation (21) and (25), respectively). The alternative plasticity dynamics for the parameters θki is therefore,

4.3. Experimental Design and Statistical Analysis

All simulations as well as statistical analyses were performed using Python v3.5.3 with SciPy v0.18.1 and NumPy v1.13.0. The model was simulated with a time step of 1 ms. We used the Euler method for the integration of the deterministic neuron dynamics, and we used the Euler-Maruyama method for the integration of the stochastic dynamics of the synaptic parameters. Every neuron state, that is, membrane potential, remaining refractory period, etc., as well as the synaptic parameters are represented by fixed size state vectors that are update at each time step of the simulation.

D'Agostino-Pearson tests were used to assess normality. Statistical analyses were performed using different tests as appropriate, as stated in the main text and the figure legends. All of the data is presented as mean ± SD. Significant levels for all significance tests were set at p ≤ 0.05.

Simulation parameters are listed in Tables 1, 2. All simulations were based on the same parameter sets. Parameters used in the simulations with the alternative plasticity rule are given in section 4.3.3. To investigate the impact of the parameters on the simulation results we performed a one-at-a-time sensitivity analysis (see section 1.2 in the Supplementary Material for more detail).

4.3.1. Details to Simulations for Figure 2

A total of 320 input neurons were divided into eight disjoint assemblies of 40 neurons each. Neurons within each input assembly were preferentially active together. More precisely, at times tstart + k(Δtact + Δtdelay), with 0 ≤ k < Np and Np being the number of input patterns, one assembly was chosen uniformly at random from the set of all input assemblies and each neuron in the assembly emitted a 35 Hz Poisson spike train (randomly generated at every pattern presentation) for Δtact = 300 ms. In addition, to model background activity, each neuron from the whole input population was spiking at a rate of 1 Hz. The delay between two pattern presentations (the delay between successive active assemblies) was set to Δtdelay = 200 ms. During this time and from t = 0 to tstart = 200 ms only the background noise was active. We assume that each input neuron could potentially establish a connection to each branch. Note that this is a reasonable assumption if the neurons are considered to be located within the same cortical column (Chklovskii et al., 2004; Kalisman et al., 2005). Input neurons were initially connected to branches randomly, such that exactly 20 synapses were established on each branch. Synaptic parameters of these connections were independently drawn from a uniform distribution on the interval [4, 8). The soft maximum of synapses per branch (Nsyn) was also set to 20. A total of Np= 2,000 patterns as described above were presented to the neuron. Neuron and plasticity parameters were set according to Tables 1, 2, respectively.

4.3.2. Details to Simulations for Figure 3

Patterns were generated and parameters were set as in simulations for Figure 2. In these simulations, however, we added the STDP update (Equation 25) to the parameter dynamics. All of the following simulations, that is, simulations with alternative plasticity rule, simulations for Figures 4, 5, were conducted with the STDP update.

4.3.3. Details to Simulations With Alternative Plasticity Rule

We repeated the simulations for Figure 3 but with an alternative functional term in the plasticity dynamics (according to Equation 29). We presented a total of Np= 2,000 patterns to the neuron and we used the following plasticity parameters: , , , . All other parameters were set according to to Tables 1, 2. Results of these simulations are given in the Discussion.

4.3.4. Details to Simulations for Figure 4

Input assembly activations and background noise were generated as in the simulations described above (simulations for Figures 2, 3), but instead of choosing one assembly at random at times of pattern presentation, we activated the assemblies sequentially. In other words, we first activated exclusively assembly A1 250 times, then assembly A2, then assembly A3, etc. The delay between two pattern presentations was set to Δtdelay = 200 ms as before. Parameters were set according to Tables 1, 2. At the end of all pattern presentations of an assembly, we evaluated for each branch which, if any, assemblies had synaptic clusters on that branch.

4.3.5. Details to Simulations for Figure 5A

Patterns were generated similar as in simulations for Figure 2, but instead of choosing one assembly at random at times of pattern presentations, up to 4 assemblies were chosen uniformly at random from the set of all input assemblies and were simultaneously activated. In addition, in successive simulations, we reduced the fraction p of active neurons in each assembly from 1.0 down to 0.5 (in steps of 0.1). The fraction p of assembly neurons was chosen randomly at each pattern presentation. These neurons increased their firing rate to 35 Hz as before, while the rest remained at the background rate of 1 Hz. In each of these simulations we presented a total of Np = 2,000 patterns to the neuron. Neuron and plasticity parameters were set according to Tables 1, 2, respectively.

4.3.6. Details to Simulations for Figures 5B,C