94% of researchers rate our articles as excellent or good

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.

Find out more

ORIGINAL RESEARCH article

Front. Comput. Neurosci. , 23 January 2018

Volume 12 - 2018 | https://doi.org/10.3389/fncom.2018.00001

Synaptic plasticity is believed to be the biological substrate underlying learning and memory. One of the most widespread forms of synaptic plasticity, spike-timing-dependent plasticity (STDP), uses the spike timing information of presynaptic and postsynaptic neurons to induce synaptic potentiation or depression. An open question is how STDP organizes the connectivity patterns in neuronal circuits. Previous studies have placed much emphasis on the role of firing rate in shaping connectivity patterns. Here, we go beyond the firing rate description to develop a self-consistent linear response theory that incorporates the information of both firing rate and firing variability. By decomposing the pairwise spike correlation into one component associated with local direct connections and the other associated with indirect connections, we identify two distinct regimes regarding the network structures learned through STDP. In one regime, the contribution of the direct-connection correlations dominates over that of the indirect-connection correlations in the learning dynamics; this gives rise to a network structure consistent with the firing rate description. In the other regime, the contribution of the indirect-connection correlations dominates in the learning dynamics, leading to a network structure different from the firing rate description. We demonstrate that the heterogeneity of firing variability across neuronal populations induces a temporally asymmetric structure of indirect-connection correlations. This temporally asymmetric structure underlies the emergence of the second regime. Our study provides a new perspective that emphasizes the role of high-order statistics of spiking activity in the spike-correlation-sensitive learning dynamics.

Spike-timing-dependent plasticity (STDP) (Markram et al., 1997; Bi and Poo, 1998; Caporale and Dan, 2008) is one of the major forms of Hebbian learning. In canonical STDP, a synapse is potentiated (depressed) when the presynaptic spikes precede (follow) the postsynaptic ones. With this temporally asymmetric learning window, STDP can regulate both the mean rate and variability of postsynaptic firing (Song et al., 2000), enforce the competition between convergent synaptic inputs (Song et al., 2000), and support temporal sequence learning (Rao and Sejnowski, 2001).

While STDP of a single neuron with many synapses has been well characterized, issues related to the STDP learning dynamics in recurrent networks are yet to be fully addressed, in spite of recent progress (Morrison et al., 2007; Clopath et al., 2010; Gilson, 2010; Kozloski and Cecchi, 2010; Babadi and Abbott, 2013, 2016; Litwin-Kumar and Doiron, 2014; Ocker et al., 2015; Zenke et al., 2015; Bi and Zhou, 2016; Ravid Tannenbaum and Burak, 2016). The role of the low-order statistical structure (i.e., firing rate) of spiking activity has been intensively investigated in the learning dynamics in recurrent networks (Morrison et al., 2007; Kozloski and Cecchi, 2010; Babadi and Abbott, 2013). Though it is known that, as a correlation-sensitive learning rule, STDP inherently incorporates the high-order statistics of spiking activity (Morrison et al., 2008), the role of the high-order statistics of spiking activity in STDP learning dynamics for recurrent networks remains largely unknown. In this work, we explore the issue of how the high-order firing statistics (for example, firing variability) affect the network connectivity structures learned through STDP in recurrent networks. We demonstrate that the information of firing variability is encoded in a linear response kernel that quantifies how a neuron responds to a weak incoming signal. By developing a self-consistent linear response theory pioneered in Pernice et al. (2012), Trousdale et al. (2012), and Helias et al. (2013), we show that this response kernel, together with the firing rate, determines the pairwise correlation of spiking activity. By decomposing the pairwise correlation into one component associated with local direct connections and the other associated with indirect connections, we can identify two distinct dynamical regimes. In the first regime, the local direct connections dominate over the indirect connections in determining the learned network structure. This regime corresponds to those studied in Babadi and Abbott (2013), Kozloski and Cecchi (2010), and Morrison et al. (2007), where the connections from neurons of higher firing rate to those of lower firing rate are strengthened whereas the connections with the opposite situation are weakened. The other is a regime in which the indirect connections dominate over the direct connections in learning dynamics. In this second regime, the connections from neurons of higher firing rate and higher firing variability to those of lower firing rate and lower firing variability are weakened whereas the connections with the opposite situation (i.e., connections from neurons of lower firing rate and lower firing variability to those of higher firing rate and higher firing variability) are strengthened. We find that there is a temporally asymmetric structure of common-input-induced spike correlations between different neuronal populations. This structure is induced by the heterogeneity of firing variability across neuronal populations, which underlies this counterintuitive dynamical regime. Our study provides a theoretical framework for addressing the question of how the high-order statistics of spiking activity affect network structures learned through STDP. Using this framework, we demonstrate how the introduction of heterogeneity of firing variability across different neuronal populations can give rise to counterintuitive network connectivity structures in a plastic neuronal network.

The model we used here is the current-based leaky integrate-and-fire (LIF) model with finite synaptic time constant. It is the same as that in Babadi and Abbott (2013).

where τm = 20 ms is the membrane time constant, Vr = −60 mV is the resting potential, τs = 5 ms is the synaptic time constant, wij is the synaptic strength from neuron j to neuron i and sj(t) is the spike train of neuron j. The parameters μext,i and are the mean and variance of the external input, respectively, and ξi is the white noise satisfying 〈ξi〉 = 0 and . Every time when the membrane potential Vi crosses the threshold Vth = −40 mV, neuron i would emit a spike and Vi would be reset to Vr.

The network consists of NE excitatory neurons and NI inhibitory neurons. We use NE = 250, NI = 250. The connections between these neurons are of the all-to-all type. We keep the inhibitory-to-excitatory (EI), excitatory-to-inhibitory (IE) and inhibitory-to-inhibitory (II) synaptic strengths constant during the entire simulation while the excitatory-to-excitatory (EE) connections are subject to the STDP learning rule to be introduced in section “The effect of firing rate and firing variability on learning dynamics—numerical evidence”. At the beginning of simulations, the strengths for the EE and IE are drawn at random from a uniform distribution from 0 mV to mV and mV, respectively, and the strengths for the EI and II connections are drawn at random from a uniform distribution from mV and to 0 mV, respectively.

Based on the mean and variance of external inputs, we divide the excitatory neurons into three populations in which the αth (α = 1, 2, 3) population receives the external input of the mean and standard deviation . The number of neurons in the αth (α = 1, 2, 3) population is denoted as Nα.

We first describe the statistical properties of a single LIF neuron. The dynamics of a single LIF neuron with finite synaptic time constant is governed by Equation (1) and the following equation:

where μ and σ are the mean and standard deviation of the external input respectively, and ξ(t) is the Gaussian white noise satisfying 〈ξ〉 = 0 and 〈ξ(t)ξ(t′)〉 = δ(t − t′).

When τs is much smaller than τm, the mean firing rate has the following approximation:

where , erf(·) is the error function and ζ(·) is the Riemann-Zeta function (Fourcaud and Brunel, 2002).

We use a response kernel to characterize how the LIF neuron ensemble responds to a perturbation. Specifically, we consider Equation (1) and the following equation:

where u(t) is a perturbation. When u(t) is weak, the difference between the instantaneous firing rate r(t) of neuronal ensemble that is governed by Equations (1, 5) and the mean firing rate of neuronal ensemble that is governed by Equations (1, 3) is small. Therefore, we can approximate the instantaneous firing rate r(t) by the mean firing rate plus a linear response term with respect to u(t), i.e.,

where h(τ) is the linear response kernel of Equations (1, 3) and the symbol * stands for temporal convolution.

The analytical results for the linear response kernel are available for the case of zero synaptic time constant (Fourcaud and Brunel, 2002). Recently, for the case of nonzero synaptic time constant, the analytical result was derived (Schuecker et al., 2015). Here, we use the reverse correlation method to compute it (Dayan and Abbott, 2001; Ostojic et al., 2009). Specifically, we inject a weak Gaussian white noise signal ξs(t) with correlation to the LIF neuron, i.e., adding ξs(t) on the right hand side of Equation (3). Note that this testing white noise signal ξs(t) is different from the external noise input ξ(t) in Equation (3). By using the spike-triggered average technique, we calculate the response kernel in the following way:

where is the spike timing of the LIF neuron in the duration T and the firing rate is given by Equation (4) (Dayan and Abbott, 2001). In our simulation, σs = 2 mV and T = 106 ms. We use one realization of ξs(t) and perform the average over 1,000 realizations of external noise input ξ(t).

Here, we describe how we obtain and hi(τ) for i = 1, ⋯, N in a self-consistent manner for the network described in Equations (1, 2). By splitting the spike train sj(t) in Equation (2) into the mean firing rate and its fluctuations, we can rewrite Equation (2) in the following form:

where the term can be regarded as a perturbation. By enforcing the first-order Volterra approximation of Equations (1, 8), we arrive at Equation (31), where and hi(τ) are the mean firing rate and response kernel, respectively, of Equation (1) and the following equation:

Here, we can observe that, even with the same mean and variance value of external inputs, different neurons may still have different mean firing rates due to the randomness of synaptic strengths. To simplify the self-consistent derivation of mean firing rate, we make a further assumption that the mean firing rates of single neurons within the same population are approximated by the population-averaged mean firing rate. Denote the population-averaged mean firing rates of the different populations as , α = 1, 2, 3, I, where I stands for the inhibitory population. Then, is the mean firing rate of the following equations:

where stands for the average synaptic strength from Population β to Population α. Therefore, can be determined in the following self-consistent way:

The first derivation of the power spectrum of Hawkes process (i.e., Equation 31) was carried out by Hawkes (1971a), in which the Wiener-Hopf theory was used. Here, we follow the method in Grytskyy et al. (2013). First, for the spike correlation function C(τ), the following equations hold:

for τ ≥ 0, where s(t) and r(t) are the spike train and the instantaneous firing rate of Hawkes process, respectively, and . In Equation (14b), we use the fact that 〈s(t + τ)sT(t)〉 = 〈r(t + τ)sT(t)〉 + Dδ(τ). In Equation (14c), we insert the Hawkes process (Equation 31). In Equation (14d), we take advantage of the definition of spike correlation. Next, define y(t) = ry(t) + x(t), where

and x(t) is the Gaussian white noise with correlation 〈x(t + τ)x(t)〉 = Dxδ(τ). For y(t),

for τ ≥ 0. In Equation (16b), we have made use of the definition of y(t). In Equation (16c), we take advantage of the definition of Cy(t). In Equation (18), we use the fact that . By setting Dx = D, we obtain C(τ) = Cy(τ). Therefore, to understand the correlation structure of the Hawkes process, it suffices to study the correlation structure of y(t). Define as the Fourier transform of ry(t). From Equation (16b), we have

Thus, . By using the Wiener-Khinchin theorem, we have

This completes the derivation of the power spectrum formula as discussed in Equation (32).

Assume that there are Nα (α = 1, 2, 3, I) neurons in the αth population. The spike correlation between population α and population β is defined as follows:

The corresponding spike cross correlation is defined as follows:

Note that is used in the caption of Figure 4. Inserting Equation (16a) into Equation (19), we can obtain

where stands for the linear response kernel of Population α. Similar to the result (Equation 18), the population-averaged power spectrum has the following expression:

where , and .

Formally, the power spectrum (Equation 18) can be expanded in the following way:

Therefore, the cross correlation C(τ), which is the inverse Fourier transform of by the Wiener-Khinchin theorem, can be approximated by Equation (33). The same decomposition can also be carried out for the population-averaged cross correlation as discussed previously.

We first present the numerical evidence of the complexity of mutual interactions between plastic network structures and spiking activity, as it relates to the effect of high-order statistical structures implied in the STDP learning dynamics. In particular, we emphasize the importance of firing variability of spiking activity for the network structures learned through STDP. This fact is particularly emphasized by the sharp contrast of learned network structures between two scenarios (Case I in Figure 1 and Case II Figure 2); they share the same trend for firing rate change across different neuronal populations, but have the opposite trend for their firing variability change. We then develop a self-consistent linear response theory to account for the effects of both firing rate and firing variability on learning dynamics and explain the role observed in our numerical study of high-order statistics in learning dynamics.

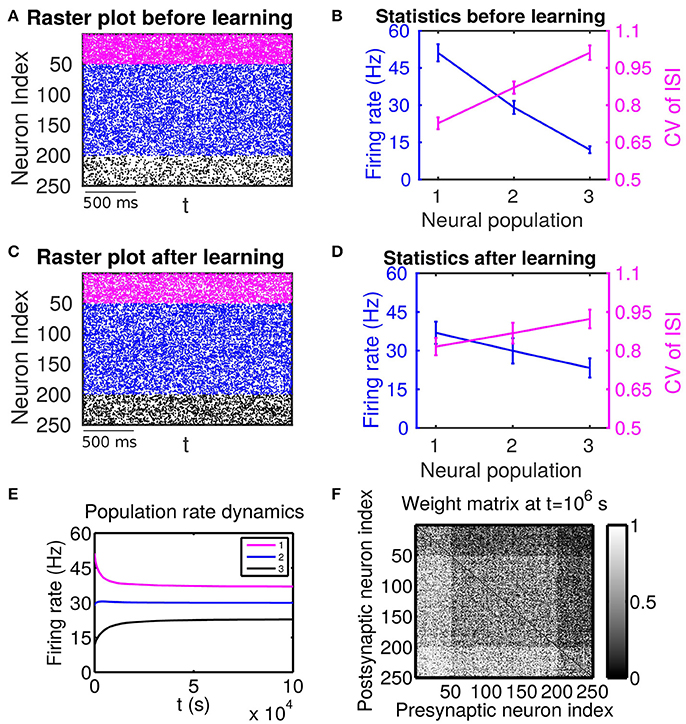

Figure 1. The effect of firing rate and variability on STDP learning dynamics—Case I. All excitatory neurons receive external inputs with an identical variance (i.e., mV for α = 1, 2, 3). Based on the values of mean of external inputs, the excitatory neurons are divided into three populations, in which Population 1 (including neurons labeled by 1–50, purple), Population 2 (including neurons labeled by 51–200, blue) and Population 3 (including neurons labeled by 201–250, black) receive the largest ( mV), intermediate ( mV) and smallest ( mV) mean, respectively. The initial values of excitatory-to-excitatory connection strengths are drawn from a uniform distribution ranging from 0 to 1 mV. (A,C) Raster plots before learning and after learning, respectively. (B,D) Firing rates and coefficients of variation (CVs) of the different populations before learning and after 106 s of learning, respectively. Error bars indicate the standard deviation of corresponding values within each population. (E) Firing rate dynamics of the different populations during learning. (F) Weight matrix after 106 s of learning. The largest (i.e., brightest) and the smallest (i.e., darkest) value of the gray bar is 1 and 0 mV, respectively.

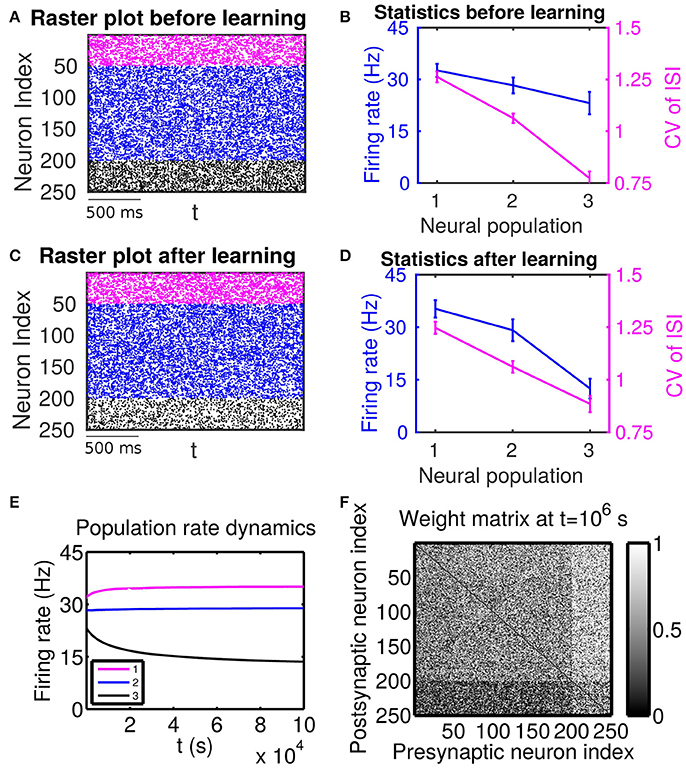

Figure 2. The effect of firing rate and variability on STDP learning dynamics—Case II. Different populations receive external inputs with different means as well as different variances. Population 1 (including neurons labeled by 1–50, purple) receives the smallest mean ( mV) but the largest variance ( mV), Population 2 (including neurons labeled by 51–200, blue) receives an intermediate mean ( mV) and variance ( mV), and Population 3 (including neurons labeled by 201–250, black) receives the largest mean ( mV) but the smallest variance ( mV). The initial values of excitatory-to-excitatory connection strengths are drawn from a uniform distribution ranging from 0 to 1 mV. (A,C) Raster plots before learning and after learning, respectively. (B,D) Firing rates and coefficients of variation (CVs) of the different populations before learning and after 106 s of learning, respectively. Error bars indicate the standard deviation of corresponding values within each population. (E) Firing rate dynamics of the different populations during learning. (F) Weight matrix after 106 s of learning. The largest (i.e., brightest) and the smallest (i.e., darkest) value of the gray bar is 1 and 0 mV, respectively.

For our numerical experiments, we use the current-based leaky integrate-and-fire (LIF) neuron model with finite synaptic time constant (Babadi and Abbott, 2013). The network consists of 250 excitatory neurons and 250 inhibitory neurons (See section Methods for details). Based on the values of mean and variance of external inputs, we divide the excitatory neurons into three populations—Population 1 (denoted as P1, including neurons indexed from 1 to 50), Population 2 (denoted as P2, including neurons indexed from 51 to 200) and Population 3 (denoted as P3, including neurons indexed from 201 to 250). The initial values of excitatory-to-excitatory (EE) connection strengths are drawn at random from a uniform distribution ranging from 0 to mV. During the simulation, the EE connections are subject to the canonical all-to-all pairwise additive STDP learning rule (Song et al., 2000; Babadi and Abbott, 2013). Specifically, let τ = ti − tj, where ti and tj are a pair of spike times of excitatory neuron i and excitatory neuron j, respectively. This pair of spike times will affect a change of the synaptic strength wij from neuron j to neuron i as follows: wij → wij + L(τ), where the STDP learning window L(τ) is given by

Similarly, for the synaptic strength wji from neuron i to neuron j, the update is wji → wji + L(−τ). As in Song et al. (2000) and Babadi and Abbott (2013), we enforce the hard bound to the value of wij and wji, i.e., set wij (wji) to if and 0 if wij(wji) < 0. In this work, we will restrict ourselves to the balanced case in which A+ = A− = 0.005 and τ+ = τ− = 20 ms. We characterize spiking activity in the network by its firing rate and coefficient of variation (CV), where CV is the ratio of the standard deviation of interspike interval (ISI) to the mean of ISI. As is well known, for a given neuron under fixed input conditions, CV is often used as a measure of firing variability — the larger CV, the more irregular the spiking activity.

If these populations receive external inputs of different means but an identical variance, we observe that, as the mean external input increases, the firing rate increases whereas the CV decreases. Figure 1B illustrates the corresponding firing statistics of these populations. For this case, as shown in Figure 1F, the connections from the populations of higher firing rate to those of lower firing rate are strengthened after learning whereas the connections with the opposite situation (i.e., connections from those of lower firing rate to those of higher firing rate) are weakened. This result is consistent with results of the previous studies (Morrison et al., 2007; Kozloski and Cecchi, 2010; Babadi and Abbott, 2013). Here, STDP is found to act as a firing rate equalizer, i.e., decreasing the rate of the neuronal populations of higher firing rate and increasing the rate of the neuronal populations of lower firing rate. Figure 1E displays an example of this behavior. Furthermore, by comparing the firing statistics before learning (Figure 1B) with that after learning (Figure 1D), we observe that STDP also acts as a firing variability equalizer, i.e., decreasing the CV of higher-CV neuronal populations and increasing the CV of lower-CV neuronal populations. For ease of discussion below, this case will be termed as Case I.

Next, we consider the case in which both the mean and variance of external inputs vary across these populations. We adjust both the mean and variance of external inputs (see the caption of Figure 2 for the specific values) such that Population 1 has the highest firing rate and the highest CV, Population 2 has an intermediate firing rate and CV, and Population 3 has the lowest firing rate and the lowest CV. The corresponding firing statistics of these populations before learning is displayed in Figure 2B. From Figure 2F, it can be seen clearly that after learning the connections from Population 1, which has the highest firing rate, to Population 3, which has the lowest firing rate, are weakened. We note that this phenomenon still exists even when the projections from the excitatory populations to the inhibitory populations become plastic (Supplementary Material). Furthermore, by comparing Figure 2B with Figure 2D, we observe that STDP tends to increase the rate of the population of the highest firing rate and decrease the rate of the population of the lowest firing rate. That is, STDP is no longer acting as a firing rate equalizer. However, it still acts as a weak equalizer for firing variability. This phenomenon cannot be explained solely by the firing rate theory (Morrison et al., 2007; Kozloski and Cecchi, 2010; Babadi and Abbott, 2013), in which the connections from the population of higher firing rate to the population of lower firing rate should be strengthened and STDP should act as a firing rate equalizer. In the following, we develop a linear response theory to characterize the importance of high-order firing statistics in learning dynamics as illustrated in Figure 2. For ease of discussion below, the case in Figure 2 will be termed as Case II.

To characterize the learning dynamics, we consider two mutually-connected neurons in a recurrent network, say neuron i and neuron j. The STDP learning rule introduced in the last section can be simply written in the following weight dynamics:

where si(t) and sj(t) respectively stand for the spike trains of neuron i and neuron j. Expressing and as the integral of sj(t) and si(t), respectively, we can obtain

By assuming that the change of wij(t) is sufficiently slow (Ocker et al., 2015), sj(t − τ)si(t) can be approximated by Cij(τ) + 〈si(t)〉〈sj(t)〉 where Cij(τ), defined as 〈si(t + τ)sj(t)〉 − 〈si(t)〉〈sj(t)〉, is the temporal spike cross correlation between neuron i and neuron j in the recurrent network with a fixed synaptic strength matrix. Combining the dynamics of wji in Equation (25), we arrive at the following equations:

Note that unlike the scenario studied in Babadi and Abbott (2013) and Ocker et al. (2015), there is no offset term in Equations (29, 30). This is due to the balanced condition A+ = A− and τ+ = τ− in our STDP learning rule. Therefore, to understand the learning dynamics (Equations 29, 30), it is facilitative to study the correlation structure in recurrent networks with static couplings.

In the following sections, we will follow the linear response theory proposed in Pernice et al. (2012), Trousdale et al. (2012), and Helias et al. (2013) and develop a self-consistent framework for the learning dynamics (Equations 29, 30). To this end, we first characterize how the firing variability information is encoded in the linear response kernel for the single LIF neuron case in section Incorporation of Firing Variability in Linear Response Kernel. Then, in section Hawkes Process as an Approximation of an LIF Network Dynamics, we approximate the LIF network dynamics with a stochastic point process called Hawkes process in which the instantaneous firing rate depends on the spiking history of interacting neurons (Hawkes, 1971b). With this Hawkes process approximation, in section Spike Correlation Structure of Hawkes Process, we discuss the issue of how firing statistics of spiking activity and network connectivity structure shape the spike correlation structure of the LIF network dynamics. In the end, we combine the correlation structure analysis with the STDP learning dynamics (Equations 29, 30) to arrive at the final learning dynamics (Equations 35, 36), which explain the observed numerical results, in section Learning Dynamics with Spike Correlation Decomposition.

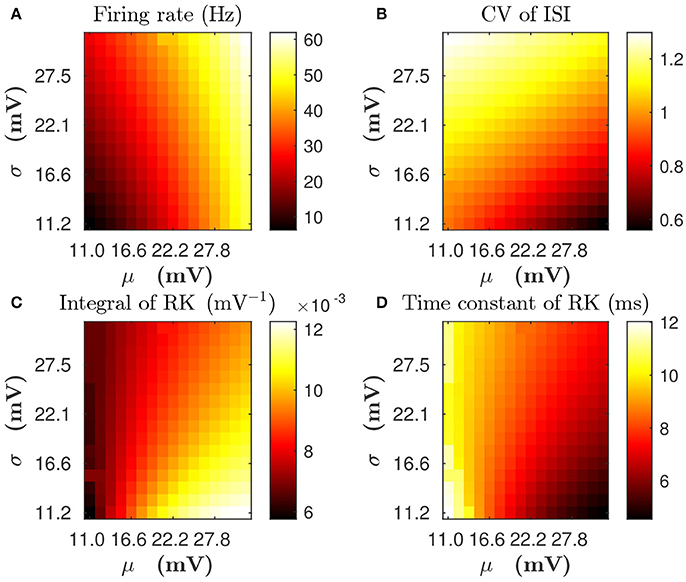

For a single LIF neuron described by Equations (1, 3), the dependence of mean firing rate and CV on input parameters (mean input value μ and standard deviation value σ) is illustrated in Figures 3A,B, respectively. By using the reverse correlation method (Dayan and Abbott, 2001; Ostojic et al., 2009) (see section Methods for details), We also computed the response kernel h(τ) that characterizes how the LIF neuron ensemble responds to a perturbation. We found that response kernel h(τ) exhibits an exponential decay profile, A exp{−τ/τeff}Θ(τ), where Θ(τ) is the Heaviside function, provided that fluctuations of the external input are sufficiently high, i.e., comparable to the difference between firing threshold and reset voltage Vth − Vr (for example, σ is about 10 ~ 30 mV). We can use two quantities — the integral of response kernel ∫h(τ)dτ, which is τeffA after the substitution of the exponential form of h(τ) = A exp{−τ/τeff}Θ(τ), and the decay time constant τeff — to characterize h(τ). Figures 3C,D illustrate the dependence of ∫h(τ)dτ and τeff on μ and σ, respectively. In Figure 3, we can observe that, for any fixed mean firing rate, when the standard deviation σ increases, both CV of ISI and τeff increase whereas ∫h(τ)dτ decreases. Therefore, if there are two neurons with the same mean firing rate but different firing CVs, both the decay time constant and the integral of response kernel of these two neurons will differ from each other. In this sense, the information of firing variability is incorporated in the linear response kernel.

Figure 3. The dependence of statistical properties of the LIF neuron on the mean μ and the standard deviation σ of external inputs. (A,B) Firing rate and CV of inter-spike interval (ISI), respectively. (C,D) Integral of response kernel ∫h(τ)dτ and linear response time constant τeff, respectively.

In general, the response of a neuron to recurrent spike inputs is nonlinear. Here, we investigate the asynchronous state, as shown in Figures 1A, 2A, in which spikes from different neurons arrive at the synapses of the target neuron at different times. By considering that the magnitude of a postsynaptic potential elicited by the firing event of a single presynaptic neuron is in general small, i.e., the synaptic strength is weak, it is reasonable to treat the asynchronous recurrent inputs in a linear manner (Pernice et al., 2012; Trousdale et al., 2012; Helias et al., 2013). Specifically, by regarding in the LIF neuronal network as a perturbation, we arrive at the following effective description:

where and hi(τ) are the mean firing rate and response kernel of the ith neuron that is driven by both the external input and the mean-driven recurrent input (see section Methods for details), respectively, and N is the number of neurons in the network. Note that (i) the mean firing rate is determined by the self-consistent Equations (12, 13); and (ii) the response kernel hi(τ) is computed based on the single LIF neuron Equations (1, 3) with the total mean input value in Equation (13) and the standard deviation value in Equation (12). Equation (31) can be understood in the following way. On the one hand, given spike trains sj(t) for j = 1, ⋯, N, Equation (31) provides a way to evolve the instantaneous firing rate of the ith neuron ri(t). On the other hand, from Equation (31), the spike train si(t) can be regarded as being generated by a jump process with the instantaneous firing rate ri(t). Therefore, we have obtained a self-consistent stochastic jump process that produces both the instantaneous firing rate ri(t) and the stochastic spike train si(t). This stochastic process Equation (31) is a Hawkes process (Hawkes, 1971b), which has been extensively used in the neuroscience literature (Pernice et al., 2012; Trousdale et al., 2012; Helias et al., 2013). From the discussion above, such a Hawkes process can be viewed as the first-order (i.e., linear) Volterra approximation of the LIF network dynamics.

For the Hawkes process (Equation 31), its spike correlation has an explicit analytical form (Hawkes, 1971a). Specifically, the power spectrum , by the Wiener-Khinchin theorem, is the Fourier transform of spike correlation C(τ) = (Cij(τ)) and has the following form (Hawkes, 1971a) (see section Methods for a derivation):

where is the Fourier transform of the response kernel h(τ) = diag{h1(τ), ⋯, hN(τ)}, w is the synaptic strength matrix, and .

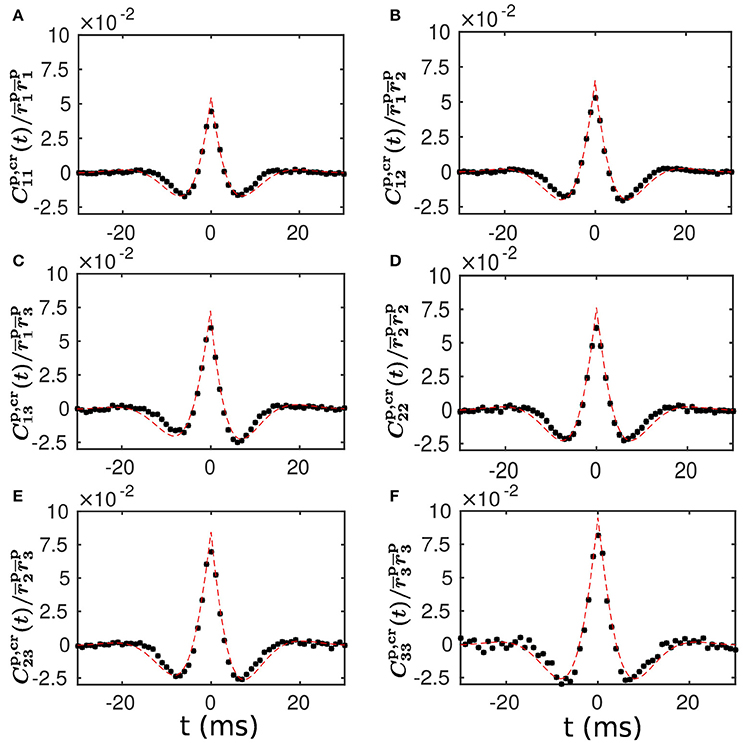

To examine the validity of our linear response theory, we compute the population-averaged cross correlation (see section Methods for details). We find that our theoretical prediction of the population-averaged cross correlation is in very good agreement with the cross correlation measured from our LIF neuronal network simulation. This agreement is clearly seen in Figure 4, demonstrating that our linear response theory can well capture the spike correlation structure of the LIF network dynamics.

Figure 4. Population-averaged cross correlation—comparison between theoretical prediction and numerical results. (A–F) Normalized population-averaged cross correlation between the different populations. Note that for α, β = 1, 2, 3 is defined in Equation (20) and for α = 1, 2, 3 is computed with Equations (12, 13) in a self-consistent way. The black dots are the cross correlation measured from a simulation of the LIF neuronal network with a static weight matrix. The red dashed line is the theoretical prediction of our linear response theory.

We note that Equation (32) also provides a starting point to study how network connectivity affects the spike correlation structure since we can decompose the connections into direct connections, common inputs, and other higher-order connections. In particular, the spike correlation has the following decomposition (see section Methods for a derivation):

where h−(τ) ≡ h(−τ). Note that the similar decomposition has been derived in Pernice et al. (2012), Trousdale et al. (2012), Hu et al. (2013), and Ocker et al. (2015).

The first term on the right hand side (RHS) of Equation (33) can be obtained by setting the connection strength w in the Hawkes process (Equation 31) to zero. There is no cross correlation in this term.

For the second term on the RHS of Equation (33), the corresponding component with respect to the correlation between neuron i and neuron j is . This involves firing rate of presynaptic neuron j, the connection strength wij from neuron j to neuron i and the linear response kernel hi(τ) of postsynaptic neuron i. Therefore, this component corresponds to the correlation induced by the direct connection from neuron j to neuron i. Similarly, the component (the third term on the RHS of Equation 33), corresponds to the correlation induced by the direct connection from neuron i to neuron j. Combining these two terms, we obtain the spike correlation induced by direct connections between neuron i and neuron j. For ease of discussion below, we denote these terms as .

We next discuss the terms associated with the indirect connections. For the fourth term on the RHS of Equation (33), the corresponding component with respect to the correlation between neuron i and neuron j is . This involves the firing rate of neuron k, the connection strengths wαk and the response kernels hα(·) for α = i, j. Therefore, this term corresponds to the correlation induced by the common inputs from neuron k to both neuron i and neuron j. As we have demonstrated above, hα(·), α = i, j, exhibits an exponential decay profile, which will be expressed as Bα exp(−t/τα)Θ(t). Then, this component becomes

For ease of discussion below, we denote this term as , the remaining higher-order terms in Equation (33) as , and the summation of and as the indirect term . We can observe from Equation (34) that, if there is a difference between response time constants τi and τj, will show an asymmetric property with respect to the axis t = 0. More precisely, if we assume τi > τj, for any t > 0. This asymmetry can be understood intuitively as follows. When there is a common input from neuron k into both neurons i and j, the neuron with the smaller response time constant (neuron j) will respond more rapidly than the neuron with the larger response time constant (neuron i) on average. Therefore, there is a higher likelihood that neuron j will fire before neuron i. In terms of the spike correlation, this implies that for any t > 0. We note that, as shown in Equation (34), the common inputs from both excitatory and inhibitory neurons into neuron i and neuron j induce a positive spike correlation between neuron i and neuron j. Therefore, if there is a significant difference between τi and τj, there will be a substantial asymmetry between the positive-t component of and the corresponding negative-t component. This will have a profound impact on the structure of Cij(t) as discussed below.

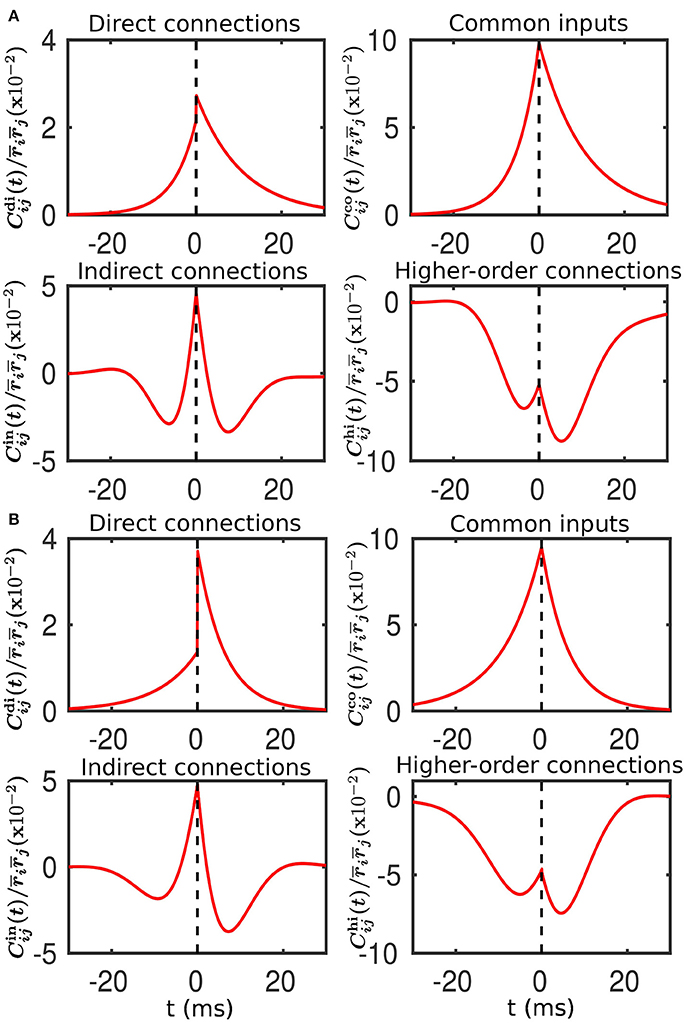

Now we study how different connectivity patterns affect the cross correlation structure between different neuronal populations for Cases I and II in Figures 1, 2. As an example, we pick neuron i from Population 3 and neuron j from Population 1. Note that, for Case I in Figure 1, neuron i (j) has a lower (higher) firing rate and a higher (lower) firing variability. Whereas, for Case II in Figure 2, neuron i (j) has a lower (higher) firing rate and a lower (higher) firing variability.

For Case I in Figure 1, we find that the net mean inputs to neuron j in Population 1 and neuron i in Population 3 are 31.45 and 11.55 mV, respectively. Note that the external mean inputs and are 40 and 20 mV, respectively. Since the net mean input is the summation of external mean input and recurrent input, the net recurrent inputs to neuron j and neuron i are −8.55 mV and −8.45 mV, respectively. This means the overall recurrent inputs to both neurons are inhibitory. In Case I, the standard deviation values for both neurons are 15.81 mV. With these net mean input values and standard deviation values, we compute the response kernel by using the reverse correlation method (see section Methods for more details). We find that ∫hj(t)dt > ∫hi(t)dt and τj < τi, which is evident in Figures 3C,D. From the left panels of Figure 5A, we can observe that (i) the positive-t component of is larger than the corresponding negative-t component; and (ii) the positive-t component of is slightly smaller than the corresponding negative-t component. To further understand how different connectivity patterns shape , we split into the common-input correlation and the higher-order-connection correlation . It can be seen clearly from the right panels of Figure 5A that the positive-t component of is smaller than the corresponding negative-t component whereas the positive-t component of is larger than the corresponding negative-t component. A combination of these two facts yields a small difference between the positive-t component of and the corresponding negative-t component. We note that the asymmetry of with respect to the axis t = 0 exhibited in the top right panel of Figure 5A is a consequence of τj < τi.

Figure 5. Decomposition of the spike cross correlation between neurons from the different populations before learning. Here, we choose neuron i from Population 3 and neuron j from Population 1. The connection strengths wij and wji are fixed at 0.5 mV. The top left, top right, bottom left and bottom right panels of both (A,B) correspond to the correlations associated with direct connections, common inputs, indirect connections, and higher-order connections, respectively. (A) Compared to neuron i, neuron j receives a larger mean and an identical variance of external inputs. This corresponds to Case I in Figure 1. (B) Compared to neuron i, neuron j receives a smaller mean but a larger variance of external inputs. This corresponds to Case II in Figure 2.

For Case II in Figure 2, we find that the net mean inputs to neuron j in Population 1 and neuron i in Population 3 are 15.58 and 20.50 mV, respectively. Note that the external mean inputs and are 27.5 and 32.5 mV, respectively. Since the net mean input is the summation of external mean input and recurrent input, the net recurrent inputs to neuron j and neuron i are −11.92 and −12.00 mV, respectively. This means the overall recurrent inputs to both neurons also are inhibitory. In Case II, the standard deviation values for neuron j and neuron i are 31.6 and 11.1 mV, respectively. With these net mean input values and standard deviation values, we compute the response kernel by using the reverse correlation method (see section Methods for more details). We find that ∫hj(t)dt < ∫hi(t)dt and τj > τi, which is also evident in Figures 3C,D. From the left panels of Figure 5B, we can observe that (i) the positive-t component of is again larger than the corresponding negative-t component; and (ii) the positive-t component of is much smaller than the corresponding negative-t component. From the right panels of Figure 5B, we find that the positive-t components of both and are smaller than the corresponding negative-t components. A combination of these two effects yields a large difference between the positive-t component of and the corresponding negative-t component. Note that the fact that for t > 0 in Figure 5B is a consequence of the inequality τj > τi. In the next section, we will demonstrate the extent of asymmetry between the positive-t component of and the corresponding negative-t component underlies the difference of network structures learned through STDP presented in Figures 1, 2.

Combining the STDP learning dynamics (Equations 29, 30) with the direct- and indirect-connection decomposition of the correlation structure in the previous section, we arrive at the following learning dynamics:

where for α = i, j and . In Equations (35, 36), the first and second terms on the RHS correspond to the effect of direct connections between neuron i and neuron j, whereas the third term corresponds to the effect of indirect connections between neuron i and neuron j. Note that here we have used the balanced STDP assumption, i.e., A+ = A− and τ+ = τ−.

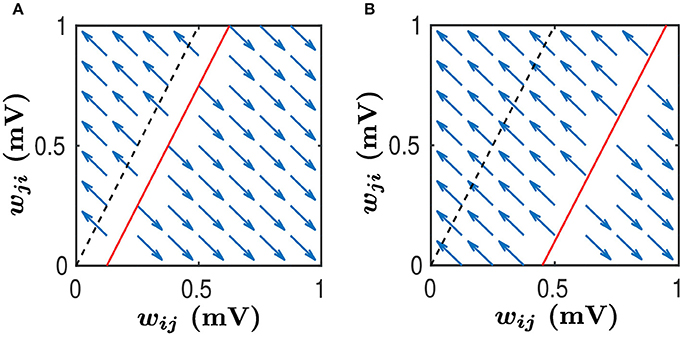

As argued in Babadi and Abbott (2013), while a linear system of differential equations such as Equations (35, 36) cannot have more than two attractors, the hard lower and upper bounds enforced by the STDP learning rule, i.e., the condition , can lead to the following two attractors: and . For the sake of discussion, we consider below. Then, the line has the slope greater than 1, as illustrated by the black dash line in Figure 6. If neglecting the indirect connection effect, that is, setting B = 0, we can observe from Equations (35, 36) that, for any (wij, wji) pair satisfying , the pair will converge to . Whereas, for any (wij, wji) pair satisfying , the pair will converge to . This is illustrated in Figure 6A with the black dash line separating the two basins. In this case, the size of basin of the attractor is larger than that of the attractor . Therefore, wij would be more likely to be potentiated, whereas wji would be more likely to be depressed.

Figure 6. Phase plane analysis of learning dynamics. This learning dynamics has two attractors — (wij, wji) = (1, 0) and (wij, wji) = (0, 1). The black dashed line satisfies while the red line satisfies . We assume that . Therefore, the slope of both the black dashed line and the red line is greater than 1. Any (wij, wji) pair in the area above the red line will converge to (0, 1) whereas any pair in the area below the red line will converge to (1, 0), as indicated by the blue arrows. Therefore, the area above the red line is the basin of the attractor (0, 1) whereas the area below the red line is the basin of the attractor (1, 0). Note that wij and wji evolve along the line wij + wji = Const as indicated by the arrows. (A) The size of basin of the attractor (0, 1) is smaller than that of the attractor (1, 0). In this case, synapses from the neuronal population to which neuron j belongs, to the neuronal population to which neuron i belongs, would be more likely to be strengthened, whereas the synapses with the opposite direction will be weakened. (B) The size of basin of the attractor (0, 1) is larger than that of the attractor (1, 0). In this case, synapses from the neuronal population to which neuron j belongs, to the neuronal population to which neuron i belongs, would be more likely to be weakened, whereas the synapses with the opposite direction will be strengthened.

When the indirect connection effect is included, there exist two distinct regimes depending on the value of B. For the sake of discussion, we denote the size of basin of (i.e., the area below the line ) as Sij and the size of basin of (i.e., the area above the line ) as Sji. One is a regime in which Sij > Sji. This occurs when B ≥ 0 or B is negative but small. As illustrated in Figure 6A, the red line separates the two basins. In this regime, wij would be more likely to be potentiated whereas wji would be more likely to be depressed. For neuronal populations, the synapses from the neuron j's population to neuron i's population will have a higher likelihood to be strengthened. In contrast, the synapses with the opposite direction will be more likely to be weakened. The other is a regime in which Sij < Sji. This occurs when B is sufficiently negative, as indicated by the red line in Figure 6B. In this regime, wij would be more likely to be depressed whereas wji would be more likely to be potentiated. For neuronal populations, the synapses from neuron i's population to neuron j's population will have a higher likelihood to be strengthened, whereas the synapses with the opposite direction will be weakened with a larger probability.

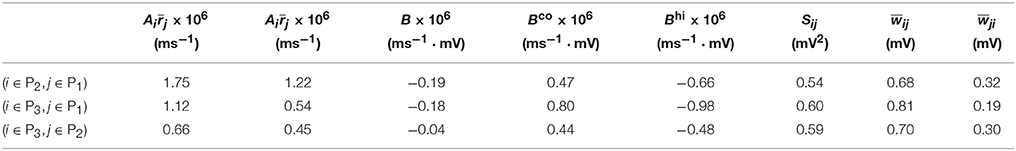

We now examine the cases in Figures 1, 2. For Case I in Figure 1 and Table 1 displays the corresponding values of , , B, Sij, Sji as well as the average connection strengths and after 106 s of learning. It can be seen clearly that for all the three (i, j) pairs, namely (i ∈ P2, j ∈ P1), (i ∈ P3, j ∈ P1) and (i ∈ P3, j ∈ P2), , indicating that for all these three pairs would be strengthened. Indeed, this strengthening is confirmed by the values of presented in Table 1 (i.e., for all three pairs). To evaluate the contribution of common inputs and higher-order connections separately, we also include Bco (defined as ) and Bhi (defined as ) in Table 1. We can observe that for all three pairs Bco > 0 whereas Bhi < 0. Since B = Bco + Bhi, this gives rise to a negative but small value of B, yielding a regime in which Sij > Sji. Since the connections from the populations of higher firing rate to those of lower firing rate are strengthened whereas the connections with the opposite direction are weakened, the rate of the populations of lower firing rate will increase whereas that of the populations of higher firing rate will decrease. The strengthening of connections from the populations of higher firing rate to those of lower firing rate leads to the increase of the mean of total inputs into the populations of lower firing rate. As illustrated in Figure 3B, when the variance of total inputs is fixed, the larger the mean total inputs, the smaller the CV. Therefore, this explains why in Figure 1 the CV of the populations of lower firing rate, which is larger before learning, decreases after learning. Therefore, STDP here acts as a weak equalizer for both firing rate and firing variability, as demonstrated in Figure 1.

Table 1. Parameters of learning dynamics (Equations 35, 36) and related quantities for Figure 1.

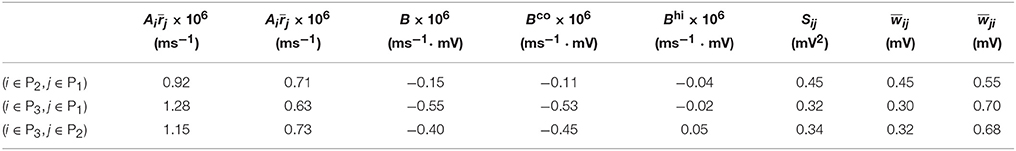

For Case II in Figure 2 and Table 2 shows that for all three (i, j) pairs, namely (i ∈ P2, j ∈ P1), (i ∈ P3, j ∈ P1) and (i ∈ P3, j ∈ P2), and . Since the connections from the populations of higher firing rate to the populations of lower firing rate are weakened whereas the connections with the opposite direction are strengthened, the rate of the populations of higher firing rate will increase further whereas that of the populations of lower firing rate will decrease further. Meanwhile, the weakening of connections from the populations of higher firing rate to those of lower firing rate leads to the decrease of the mean of total inputs into the populations of lower firing rate. As illustrated in Figure 3B, the smaller the mean total inputs, the larger the CV. Therefore, the CV of these populations of lower firing rate, which is smaller before learning, increases after learning. Note that, in Case II, those populations of lower firing rate also have the lower firing variability. This explains why STDP acts as a weak equalizer of firing variability, instead of firing rate, in Figure 2.

Table 2. Parameters of learning dynamics (Equations 35, 36) and related quantities for Figure 2.

Furthermore, for Case II in Figure 2, by examining the contribution of common inputs and higher-order connections, we can find from Table 2 that, compared to Bco, Bhi is negligible for the pairs (i ∈ P2, j ∈ P1) and (i ∈ P3, j ∈ P1). Therefore, for these two pairs, it is the contribution of common-input correlations that yields a large negative value of B and the corresponding network structures in Figure 2. Note that the asymmetric property of common-input correlations arises from the difference of response time constants between different neurons. As demonstrated in Figure 3, the response time constant encodes the information of the firing variability of spiking activity. In this sense, we have established a direct link between firing variability and learning dynamics by analyzing the spike correlation structures, and have demonstrated explicitly how firing variability shapes the network structures learned through STDP.

We have investigated how the high-order statistics of spiking activity affect the learning dynamics in a plastic LIF neuronal network. We have developed a self-consistent linear response theory, which incorporates the information of both firing rate and firing variability. By decomposing the spike correlation structure into the component associated with local direct connections and that associated with indirect connections, we have identified two distinct regimes regarding the network structures learned through STDP. In one regime, the contribution of the correlation associated with local direct connections dominates in STDP learning dynamics, leading to network structures consistent with the previous work (Morrison et al., 2007; Kozloski and Cecchi, 2010; Babadi and Abbott, 2013). In the other regime, the contribution of the correlation associated with indirect connections, instead of local direct connections, dominates, yielding network structures at variance with the prediction by the simple firing rate theory. The dominance of the indirect-connection correlation mainly arises from the asymmetric common-input correlations, which is induced by the heterogeneity of firing variability across different neuronal populations. Therefore, our study highlights the importance of firing variability of spiking activity for the evolution of plastic neural networks.

In a series of work (Gilson et al., 2009a,b,c,d, 2010), the STDP learning dynamics in recurrent networks has been investigated with Hawkes processes. However, the response kernel of the Hawkes process used there is identical across neuronal populations, thus the effect of firing variability was not addressed. Here, we study the STDP learning dynamics with the LIF neuron, in which the effect of firing variability is addressed by varying the mean and variance of external inputs across different neuronal populations. In Babadi and Abbott (2013), the authors developed a theory to explain the formation of in- and out-hubs. However, the effect of firing variability has not been discussed. Our theory can be viewed as an extension of the work (Babadi and Abbott, 2013). More recently, motif dynamics have been studied with linear response theory for one homogeneous population (Ocker et al., 2015). Instead, our work focuses on the evolution of connection strengths across different neuronal populations.

We have focused here on the balanced STDP rule. However, it is straightforward to extend our analysis to the unbalanced case by including additional terms in learning dynamics (Equations 35, 36). An unbalanced STDP rule can be expected to act as a loop-generating or loop-eliminating mechanism, depending on whether the rule is potentiation-dominated or depression-dominated (Babadi and Abbott, 2013). The neuron model we used here is the current-based LIF neuron. As we have demonstrated, the key property of a single neuron model to have an indirect-connection-dominated regime is that the firing variability of spiking activity can be encoded in the time constant of linear response kernel. Therefore, it can be expected that the indirect-connection-dominated regime can exist with other neuron models, such as conductance-based models, exponential integrate-and-fire models or more realistic Hodgkin-Huxley neurons. The connectivity here is of the all-to-all type. We can readily extend our self-consistent linear response theory to the sparsely connected network case. For larger network size, such as the network of 500 excitatory neurons and 500 inhibitory neurons, we have also found that there is an indirect-connection-dominated regime, provided that there is a significant difference of firing variability between different neuronal populations. In this work, the input statistics (i.e., input means and input variances) are kept constant, indicating that the circuit we studied receives the same sensory stimuli during the whole simulation. Under this assumption, the neural population activity can be simply quantified by mean firing rate and CV of ISI. It would be very interesting to study the learning dynamics when the stimulation periods are interleaved by spontaneous states. Another interesting issue to be addressed in the future work is how the high-order firing statistics of spiking activity affect the connectivity structures in a circuit with more complex learning rules, such as triplet STDP learning rule (Gjorgjieva et al., 2011).

In conclusion, we have demonstrated that the introduction of heterogeneity of firing variability across different neuronal populations can give rise to counterintuitive network structures in a plastic neural circuit. Our work suggests that the high-order statistics of spiking activity can play an important role in shaping the connectivity patterns in our brain.

Conceived and designed the research, performed experiments, analyzed data, and wrote the paper: BM, DZ, and DC.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

This work was supported by NYU Abu Dhabi Institute G1301 (BM, DZ, and DC), NSFC-11671259, NSFC-91630208, NSFC-11722107, Shanghai Rising-Star Program-15QA1402600 (DZ), NSFC 31571071, NSF DMS-1009575 (DC), Shanghai 14JC1403800, Shanghai 15JC1400104, SJTU-UM Collaborative Research Program (DZ and DC).

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fncom.2018.00001/full#supplementary-material

Babadi, B., and Abbott, L. F. (2013). Pairwise analysis can account for network structures arising from spike-timing dependent plasticity. PLoS Comput. Biol. 9:e1002906. doi: 10.1371/journal.pcbi.1002906

Babadi, B., and Abbott, L. F. (2016). Stability and competition in multi-spike models of spike-timing dependent plasticity. PLoS Comput. Biol. 12:e1004750. doi: 10.1371/journal.pcbi.1004750

Bi, G. Q., and Poo, M. M. (1998). Synaptic modifications in cultured hippocampal neurons: dependence on spike timing, synaptic strength, and postsynaptic cell type. J. Neurosci. 18, 10464–10472.

Bi, Z., and Zhou, C. (2016). Spike pattern structure influences synaptic efficacy variability under stdp and synaptic homeostasis. I: spike generating models on converging motifs. Front. Comput. Neurosci. 10:14. doi: 10.3389/fncom.2016.00014

Caporale, N., and Dan, Y. (2008). Spike timing-dependent plasticity: a hebbian learning rule. Annu. Rev. Neurosci. 31, 25–46. doi: 10.1146/annurev.neuro.31.060407.125639

Clopath, C., Büsing, L., Vasilaki, E., and Gerstner, W. (2010). Connectivity reflects coding: a model of voltage-based STDP with homeostasis. Nat. Neurosci. 13, 344–352. doi: 10.1038/nn.2479

Fourcaud, N., and Brunel, N. (2002). Dynamics of the firing probability of noisy integrate-and-fire neurons. Neural Comput. 14, 2057–2110. doi: 10.1162/089976602320264015

Gilson, M. (2010). STDP in recurrent neuronal networks. Front. Comput. Neurosci. 4:23. doi: 10.3389/fncom.2010.00023

Gilson, M., Burkitt, A. N., Grayden, D. B., Thomas, D. A., and van Hemmen, J. L. (2009a). Emergence of network structure due to spike-timing-dependent plasticity in recurrent neuronal networks. I. Input selectivity-strengthening correlated input pathways. Biol. Cybern. 101, 81–102. doi: 10.1007/s00422-009-0319-4

Gilson, M., Burkitt, A. N., Grayden, D. B., Thomas, D. A., and van Hemmen, J. L. (2009b). Emergence of network structure due to spike-timing-dependent plasticity in recurrent neuronal networks. II. Input selectivity-symmetry breaking. Biol. Cybern. 101, 103–114. doi: 10.1007/s00422-009-0320-y

Gilson, M., Burkitt, A. N., Grayden, D. B., Thomas, D. A., and van Hemmen, J. L. (2009c). Emergence of network structure due to spike-timing-dependent plasticity in recurrent neuronal networks III: partially connected neurons driven by spontaneous activity. Biol. Cybern. 101, 411–426. doi: 10.1007/s00422-009-0343-4

Gilson, M., Burkitt, A. N., Grayden, D. B., Thomas, D. A., and van Hemmen, J. L. (2009d). Emergence of network structure due to spike-timing-dependent plasticity in recurrent neuronal networks IV. Biol. Cybern. 101, 427–444. doi: 10.1007/s00422-009-0346-1

Gilson, M., Burkitt, A. N., Grayden, D. B., Thomas, D. A., and van Hemmen, J. L. (2010). Emergence of network structure due to spike-timing-dependent plasticity in recurrent neuronal networks V: self-organization schemes and weight dependence. Biol. Cybern. 103, 365–386. doi: 10.1007/s00422-010-0405-7

Gjorgjieva, J., Clopath, C., Audet, J., and Pfister, J.-P. (2011). A triplet spike-timing-dependent plasticity model generalizes the bienenstock-cooper-munro rule to higher-order spatiotemporal correlations. Proc. Natl. Acad. Sci. U.S.A. 108, 19383–19388. doi: 10.1073/pnas.1105933108

Grytskyy, D., Tetzlaff, T., Diesmann, M., and Helias, M. (2013). A unified view on weakly correlated recurrent networks. Front. Comput. Neurosci. 7:131. doi: 10.3389/fncom.2013.00131

Hawkes, A. G. (1971a). Point spectra of some mutually exciting point processes. J. R. Stat. Soc. Ser. B (Methodol.) 33, 438–443.

Hawkes, A. G. (1971b). Spectra of some self-exciting and mutually exciting point processes. Biometrika 58, 83–90. doi: 10.1093/biomet/58.1.83

Helias, M., Tetzlaff, T., and Diesmann, M. (2013). Echoes in correlated neural systems. New J. Phys. 15:023002. doi: 10.1088/1367-2630/15/2/023002

Hu, Y., Trousdale, J., Josic, K., and Shea-Brown, E. (2013). Motif statistics and spike correlations in neuronal networks. J. Stat. Mech. Theory Exp. 2013:P03012. doi: 10.1088/1742-5468/2013/03/P03012

Kozloski, J., and Cecchi, G. A. (2010). A theory of loop formation and elimination by spike timing-dependent plasticity. Front. Neural Circuits 4:7. doi: 10.3389/fncir.2010.00007

Litwin-Kumar, A., and Doiron, B. (2014). Formation and maintenance of neuronal assemblies through synaptic plasticity. Nat. Commun. 5:5319. doi: 10.1038/ncomms6319

Markram, H., Lübke, J., Frotscher, M., and Sakmann, B. (1997). Regulation of synaptic efficacy by coincidence of postsynaptic APs and EPSPs. Science 275, 213–215. doi: 10.1126/science.275.5297.213

Morrison, A., Aertsen, A., and Diesmann, M. (2007). Spike-timing-dependent plasticity in balanced random networks. Neural Comput. 19, 1437–1467. doi: 10.1162/neco.2007.19.6.1437

Morrison, A., Diesmann, M., and Gerstner, W. (2008). Phenomenological models of synaptic plasticity based on spike timing. Biol. Cybern. 98, 459–478. doi: 10.1007/s00422-008-0233-1

Ocker, G. K., Litwin-Kumar, A., and Doiron, B. (2015). Self-organization of microcircuits in networks of spiking neurons with plastic synapses. PLoS Comput. Biol. 11:e1004458. doi: 10.1371/journal.pcbi.1004458

Ostojic, S., Brunel, N., and Hakim, V. (2009). How connectivity, background activity, and synaptic properties shape the cross-correlation between spike trains. J. Neurosci. 29, 10234–10253. doi: 10.1523/JNEUROSCI.1275-09.2009

Pernice, V., Staude, B., Cardanobile, S., and Rotter, S. (2012). Recurrent interactions in spiking networks with arbitrary topology. Phys. Rev. E 85:031916. doi: 10.1103/PhysRevE.85.031916

Rao, R. P., and Sejnowski, T. J. (2001). Spike-timing-dependent hebbian plasticity as temporal difference learning. Neural Comput. 13, 2221–2237. doi: 10.1162/089976601750541787

Ravid Tannenbaum, N., and Burak, Y. (2016). Shaping neural circuits by high order synaptic interactions. PLoS Comput. Biol. 12:e1005056. doi: 10.1371/journal.pcbi.1005056

Schuecker, J., Diesmann, M., and Helias, M. (2015). Modulated escape from a metastable state driven by colored noise. Phys. Rev. E 92:052119. doi: 10.1103/PhysRevE.92.052119

Song, S., Miller, K. D., and Abbott, L. F. (2000). Competitive hebbian learning through spike-timing-dependent synaptic plasticity. Nat. Neurosci. 3, 919–926. doi: 10.1038/78829

Trousdale, J., Hu, Y., Shea-Brown, E., and Josic, K. (2012). Impact of network structure and cellular response on spike time correlations. PLoS Comput. Biol. 8:e1002408. doi: 10.1371/journal.pcbi.1002408

Keywords: STDP, linear response theory, correlation structure, firing variability, synaptic plasticity

Citation: Min B, Zhou D and Cai D (2018) Effects of Firing Variability on Network Structures with Spike-Timing-Dependent Plasticity. Front. Comput. Neurosci. 12:1. doi: 10.3389/fncom.2018.00001

Received: 17 August 2017; Accepted: 03 January 2018;

Published: 23 January 2018.

Edited by:

Paul Miller, Brandeis University, United StatesReviewed by:

Maxim Volgushev, University of Connecticut, United StatesCopyright © 2018 Min, Zhou and Cai. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Douglas Zhou, emR6QHNqdHUuZWR1LmNu

Dedicated to David Cai

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.

Research integrity at Frontiers

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.