95% of researchers rate our articles as excellent or good

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.

Find out more

ORIGINAL RESEARCH article

Front. Comput. Neurosci. , 03 August 2016

Volume 10 - 2016 | https://doi.org/10.3389/fncom.2016.00080

This article is part of the Research Topic Computation meets Emotional Systems: a synergistic approach View all 13 articles

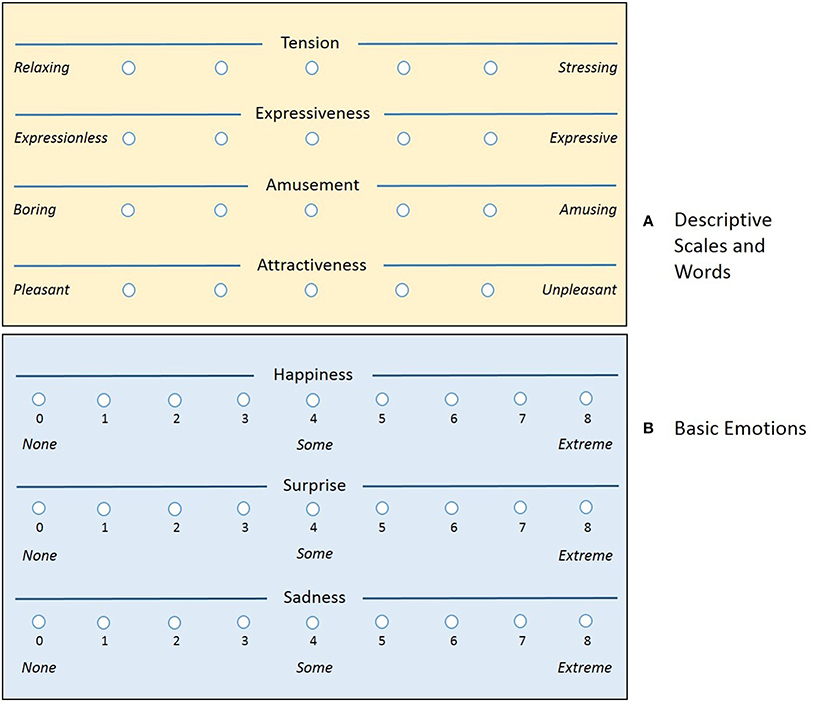

This article is based on the assumption of musical power to change the listener's mood. The paper studies the outcome of two experiments on the regulation of emotional states in a series of participants who listen to different auditions. The present research focuses on note value, an important musical cue related to rhythm. The influence of two concepts linked to note value is analyzed separately and discussed together. The two musical cues under investigation are tempo and rhythmic unit. The participants are asked to label music fragments by using opposite meaningful words belonging to four semantic scales, namely “Tension” (ranging from Relaxing to Stressing), “Expressiveness” (Expressionless to Expressive), “Amusement” (Boring to Amusing) and “Attractiveness” (Pleasant to Unpleasant). The participants also have to indicate how much they feel certain basic emotions while listening to each music excerpt. The rated emotions are “Happiness,” “Surprise,” and “Sadness.” This study makes it possible to draw some interesting conclusions about the associations between note value and emotions.

Brain structures and networks related to music processing of many kinds, including music perception, emotion and music, and sensory processing and music, have been discovered by psychologists and cognitive neuroscientists (Hunt, 2015). Recently, there has been an increase of research that relates music and emotion within neuropsychology (see e.g., Peretz, 2010). Many studies in neuropsychology and music have investigated reactions to specific musical cues (Koelsch and Siebel, 2005), such as melody (Brattico, 2006), harmony including basic dissonance-consonance (Koelsch et al., 2006), modality in terms of major-minor (Mizuno and Sugishita, 2007), rhythm (Samson and Ehrlé, 2003), and musical timbre (Caclin et al., 2006).

This article follows the findings on the assumption of the power of music to regulate the listeners mood (Fernández-Sotos et al., 2015). Indeed, emotion regulation through music is often considered one of the most important functions of music (Saarikallio and Erkkila, 2007). The two experiments presented in this paper are part of a project named “Improvement of the Elderly Quality of Life and Care through Smart Emotion Regulation” (Castillo et al., 2014b, 2016; Fernández-Caballero et al., 2014). The project's general objective is to find solutions for improving the quality of life and care of aging people who wish to continue living at home with the aid of emotion elicitation techniques. Cameras and body sensors are used for monitoring the aging adults' facial and gestural expression (Lozano-Monasor et al., 2014), activity and behavior (Castillo et al., 2014a), as well as for acquiring relevant physiological data (Costa et al., 2012; Fernández-Caballero et al., 2012; Martínez-Rodrigo et al., 2016). By using advanced monitoring techniques, the older people's emotions should be inferred and recognized. On the other hand, music, color and light are the proposed stimulating means to regulate their emotions toward a “positive” mood in accordance with the guidelines of a physician.

This paper introduces an initial step of the project that focuses on specific musical cues related to note value. Note value is the duration of a note, or the relationship of a note's duration to the measure. To sum up, the current paper introduces some hints in the projects overall aim to investigate the listeners changes in emotional state through playing different auditions that have been composed under defined parameters of note value. This way, it will be possible to conclude if and how the analyzed musical cues are able to induce positive and negative emotions in the listener.

In this sense, the article describes a couple of experiments that are aimed at detecting the individual influential issues related to two basic components of note value, that is, tempo and rhythmic unit. Tempo (time in Italian) is defined as the speed of a composition's rhythm, and it is measured according to beats per minute. Beat is the regular pulse of music which may be dictated by the rise or fall of the hand or baton of the conductor, by a metronome, or by the accents in music. On the other hand, a rhythmic unit is defined as a durational pattern that synchronizes with a pulse or pulses on the underlying metric level.

Tempo and rhythm have been studied in many previous works as broad concepts. As far as we know, it is the first time that tempo and rhythmic unit are studied as intrinsic parameters of note vale. In this paper, the individual influence of tempo and rhythmic unit are experimentally studied. In addition, from the results of both experiments carried out, the two parameters are related in their emotional influence. Lastly, this article established a basis for future study of the combined effect of both parameters.

It has been well established that arousal and mood represent different but related aspects of emotional responding (Husain et al., 2002). Although the use of these terms in the literature varies, mood typically refers to relatively long lasting emotions (Sloboda and Juslin, 2001), which may have stronger consequences for cognition than action. Arousal typically refers to the degree of physiological activation or to the intensity of an emotional response (Sloboda and Juslin, 2001). According to the arousal-mood hypothesis, listening to music affects arousal and mood, which then influence performance on various cognitive skills. The impact of music on arousal and mood is well established. People often choose to listen music for this very effect (Gabrielsson, 2001), and physiological responses to music differ depending on the type of music heard.

A research work on the impact of individual musical cues in the communication of certain emotions to the listener states that the most potent and frequently studied musical cues are mode, tempo, dynamics, articulation, timbre, and phrasing (Gabrielsson and Lindstrom, 2010). In fact, musical parameters such as tempo or mode are inherent properties of musical structure (van der Zwaag et al., 2011), which is known to influence listeners emotions. Music preferences commonly are treated as affective states (Scherer and Zentner, 2001), as they are strongly linked to valence (positive or negative experiences) (Istók, 2013). Moreover, recognizing basic emotions in music such as happiness or sadness is effortless and highly consistent among adults (Peretz et al., 1998).

In an example of experimentation with musical cues, twenty performers were asked to manipulate values of seven musical variables simultaneously (tempo, sound level, articulation, phrasing, register, timbre, and attack speed) for communicating five different emotional expressions (neutral, happy, scary, peaceful, sad) for each of four scores (Bresin and Friberg, 2011). Also, another research has revealed that there are a number of elements in sound aspects that modify emotional responses when listening to music (Glowinski and Camurri, 2012). These elements are connected to score features such as pitch (high/low), intervals (short/long), harmony (consonant/dissonant) and rhythm (regular/irregular). The dynamic between these sound elements is also important and depends largely on instrumental interpretation (or performance features), e.g., rhythmic accents, articulation (staccato/legato), variations in timbre (spectral richness, playing mode, and so on). In a systematic manipulation of musical cues, an optimized factorial design was used with six primary musical cues (mode, tempo, dynamics, articulation, timbre, and register) across four different music examples (Eerola et al., 2013). In the last 10 years, many other studies have assessed the influence of tempo, mostly combined with mode, in affective reactions. An interactive approach to understanding emotional responses to music was undertaken by simultaneously manipulating three musical elements: mode, texture, and tempo (Webster and Weir, 2005).

In our understanding all these previous studies show a need for going on in experimenting with tempo and rhythmic unit for the sake of regulating emotions through music.

Firstly, we have to highlight that our aim is to investigate solely the influence of note value parameters on emotional reactions. This is why all the musical fragments used in the two experiments have been designed in major mode. The results offered in this article would probably not apply in minor mode musical pieces in line with a series of studies (e.g., Husain et al., 2002; Knoferle et al., 2012). In Husain et al. (2002), the effects of tempo and mode in spatial abilities, levels of arousal and mood are discussed. Thus, listeners are offered four musical versions in which tempo (fast and slow) and mode (major and minor) are varied, so that they can judge them after listening. It is concluded that the performance of a spatial task increases with increasing tempo when listening in major mode. By contrast, the performance decreases with listening in slower tempo and minor mode. Similarly, in Knoferle et al. (2012) there is an experiment on the effects of mode and tempo in improving sales (marketing). During 4 weeks, play lists were placed in different shops under the same conditions (330 pop and rock songs with variations in tempo between 95 and 135 beats per minute (bpm), in major and minor mode, divided into four sets of songs, major-rapid, minor-fast, major-slow and minor-slow) for the sake of analyzing the increase or not in shopping. Thus, it is concluded that listening to music in a major mode and fast tempo is much more effective than listening to music in minor mode and slow tempo. Both studies suggest the existence of an optimal tempo for major and minor mode.

Sixty three young people (males and females) aged between 19 and 29 years have participated in the two experiments. Participants are students of the subject “Musical Perception and Expression,” taught by the first author of this paper. The students are enrolled in the 3rd year course of an Early Childhood Education Degree offered at Albacete School of Education, Spain. Some of these students are willing to teach music to preschool children in a close future. This study was carried out in accordance with the ethical standards and with the approval of the Ethics Committee for Clinical Research of the University Hospital Complex of Albacete (Spain) with written informed consent from all subjects. All subjects gave written informed consent in accordance with the Declaration of Helsinki.

The experimentation is carried out in a specially organized room, where each participant is placed in front of a computer. The evaluation of musical influence is performed with a software application. Each participant is asked to judge about each of the music pieces played on the computer at a volume of 20 decibels (dB). The participants are asked to label the music pieces for the following indirect semantic scales, where each scale is represented by two opposite descriptive words:

• “Tension”: from Relaxing to Stressing

• “Expressiveness”: from Expressionless to Expressive

• “Amusement”: from Boring to Amusing

• “Attractiveness”: from Pleasant to Unpleasant

Next, each participant reports how he/she felt one of basic emotions “Happiness,” “Surprise,” and “Sadness.” The choice of these emotions is based on the premise that the practical purpose of this study is to find musical cues that improve mood. The participants indicate a value corresponding to the intensity of each description and emotion. Each of the semantic scales is evaluated with a value ranging from 1 to 5. The self-reported emotions are also ranked similarly with a discrete integer value, now ranging from 0 to 8 (see Figure 1). As depicted in the figure, a value of 0 corresponds to None and 8 to Extreme.

Figure 1. Graphical user interface with questions. (A) Descriptive scales and words. (B) Basic emotions.

The statistical analysis was conducted using SPSS 20.0. In our study, we have calculated the mean (M) and the standard deviation (SD) of each input parameter (related to tempo and rhythmic unit) in relation to each output (descriptive scales and basic emotions). We have calculated the percent changes in the two experiments, subtracting the value from one level to the previous and dividing it by the first, in order to make data related to variations that occur in the different emotional parameters more understandable.

An ANOVA for repeated measures was used to evaluate the effects of both tempo and rhythmic unit on descriptive scales “Tension,” “Expressiveness,” “Amusement,” and “Attractiveness,” and emotions “Happiness,” “Surprise,” and “Sadness.” In the one-factor repeated measures model, we assume that the variances of the variables are equal. This assumption is equivalent to saying that the variance-covariance matrix is circular or spherical. To test this assumption, we used the Mauchly sphericity test (W). When the critical level associated with statistic W is greater than 0.05, we cannot reject the sphericity hypothesis. In such cases, we use multivariate statistics because they are not affected by failure of the sphericity. In the post-hoc comparisons, critical levels are adjusted by Bonferroni correction to control the error rates or probability from making mistakes of type I. For the significance, we have considered a critical value of p-value < 0.05. The interpretation of η2 is the common one: 0.02 small; 0.13 medium; 0.26 large.

There is no doubt that tempo is an essential element of note value. Indeed, rhythm is based around the tempo. The tempo enables perceiving music in an organized manner. It forms the basis on which the melodic-harmonic lines are built. The promotion in children of perception, acquisition and reproduction of the tempo is a widely advocated topic. This practice has a positive effect on reading assignments, learning vocabulary, maths and motor coordination of the younger (Weikart, 2003). Children perceive better the responses that they receive from the exterior through a constant beat, allowing giving logical sense to their world. This element is present in daily actions as observed in speech and body movements made by the human being (Norris, 2009). On the one hand, there is a social synchrony between human movements; the tempo is an underlying social interaction organizer (Scollon, 1982). On this basis, Norris (2009) shows that two individuals in contact tend to synchronize their movements and they reach to establish a common beat pattern. Tempos are also observed in verbal discourse, for example when a question is posed and an answer is provided. This fact is noted in the gestures and movements associated with the discourse. Moreover, this situation also occurs in listening to background music. It is worth highlighting that the listener synchronizes his/her movements with the tempo perceived in music.

Thus, the first musical test that is proposed here focuses on the evaluation of three tunes by the listener. The three melodies are really the same one, but it is varied on two occasions by altering the tempo. The piece is titled “Walking on the Street,” framed in a suite called “Three Little Bar Songs Suite” (see Figure 2). It has been written by the contemporary composer Juan Francisco Manzano Ramos. We wanted to start with this little piece in non-classical style to bring enough variety to the experimentation. The different musical pieces combine both classical and contemporary elements of music. The only requirement is that all musical pieces share a tonal harmonic language, with a harmonic rhythm of classical music and repetitive rhythmic parameters. This enables to highlight each of the auditions in order to categorize them correctly. So, in this way, we have a musical piece which rhythm uses constantly alternating dotted notes (providing a touch of swing) and syncopated notes in prominent places. Then, tempo variations are provided to the rhythm used.

As described before, the tempo is measured according to beats per minute. A very fast tempo, prestissimo, has between 200 and 208 beats per minute, presto has 168 to 200 beats per minute, allegro has between 120 and 168 bpm, moderato has 108 to 120 beats per minute, andante has 76 to 108, adagio has 66 to 76, larghetto has 60 to 66, and largo, the slowest tempo, has 40 to 60. In our experiment, we have decided to use only three different tempos. Tempos to be heard are 90, 120, and 150 bpm, respectively, covering a sufficient range of standard beats. The order of appearance of each melody is in increasing number of bpms for each listener in the computer program. The listener labels melodies in the way he/she considers more suited as described before. That is, he/she offers a value from 1 to 5 to each of the description-related scales, and from 0 to 8 to the basic emotions.

In relation to the rhythm, typically the basic rhythmic patterns are addressed in duple meter, or triple meter present in the continuous movements of the human being as, for instance, walking. Let us remind that each of the categories of meter is defined by the subdivision of beats. The number of beats per measure determine the term associated with that meter. For instance, duple meter is a rhythmic pattern with the measure being divisible by two. This includes simple double rhythm such as 2/2, 4/4, but also such compound rhythms as 6/8. And, triple meter is a metrical pattern having three beats to a measure.

Jaques-Dalcroze emphasizes the importance of implementing the rhythmic movements, perceived in music and represented through the human body in its rhythmic part, in the right balance of the nervous system. Jaques-Dalcroze (1921, 1931) stresses that rhythm is movement and all motion is material. Therefore, any movement is in need of space and time. Thus, Jaques-Dalcroze starts from the binary rhythm in his teaching, associating it to freely walking. For this reason, one of the basic methodologies used is the association of half notes (basic beat in meter two by four) with walking, eighth notes with running, and eighth notes with dotted notes and sixteenth notes with jumping. Let us remind that the duration of a note is as shown in Table 1 in common time or 4/4 time.

In this sense, the second musical experiment is geared to the variation of the rhythmic unit. Rhythmic units may be classified as metric-even patterns, such as steady eighth notes, or pulses-intrametric-confirming patterns, such as dotted eighth-sixteenth note and swing patterns-contrametric-non-confirming, or syncopated patterns and extrametric-irregular patterns, such as tuplets (also called irregular rhythms or abnormal divisions). In other words, it is the variation of the rhythm of the melody without altering the musical line, harmonics or beat. To do this, from the main melody of the symphony “Surprise” by Haydn, three rhythmic variations are established (see Figure 3). The listener hears and newly labels what the melody suggests to him/her from the list of opposing descriptive words and direct basic emotion shown on the computer interface. The principal theme is characterized by the use of rhythmic whole and half notes. Afterwards, the original melody is slightly modified, especially in the last four bars where a cadence amendment is performed. Variation 1 is characterized by the predominance of the rhythmic formula of two eighth notes. A slight variation is introduced in the sixth bar in order to bring interest to the resolution of the theme. Variation 2 is characteristic of the use of simple combinations of the representation of sixteenth notes, that is, rhythmic formulas of four sixteenth notes, two sixteenth-eighth notes and eighth-two sixteenth notes. Finally, variation 3 uses syncopated notes, dotted notes and triplets, all in value of a beat.

The results of both experiments are described separately for a better understanding of the singular influence of the tempo and the rhythmic unit on the listener's emotional state.

Table 2 shows in columns 2 to 4 the means and standard deviations for the three tempos (90, 120, and 150 bpm) used during the experimentation.

With the augmentation of tempo (from 90 to 150 bpm), there is an increase in the mean values of emotions “Happiness” and “Surprise.” There is a similar behavior in semantic scales “Tension,” “Expressiveness,” and “Amusement.” In addition, there is a decrease in the mean values of “Sadness” emotion. In relation to Attractiveness” scale, we have to conclude that there is no significant change. The mean values are similar for all three tempos. From this point on, we will no longer study the results of “Attractiveness” provided in this experiment number 1.

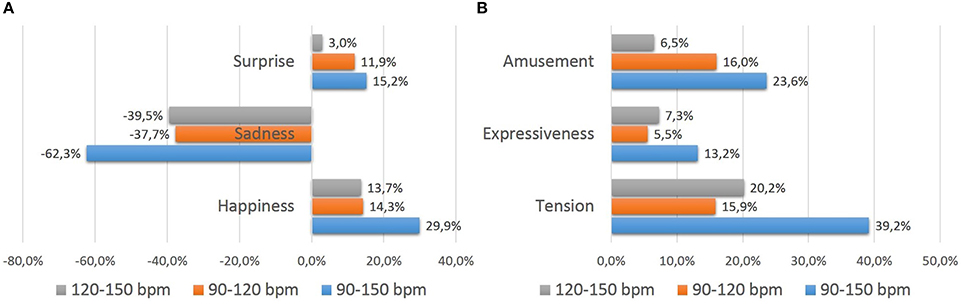

Moreover, there are significant differences in growth percentages for the parameters when studying the increase/decrease from 90 to 150 bpm (see Figure 4). Indeed, there are emotional factors that suffer large variations, while variation is not so relevant in others. The emotional perception of “Sadness” is the most affected with increasing tempo. The other emotions are less affected. In relation to descriptive scales, all of them experiment and increase, following the next order: “Tension,” “Amusement,” and “Expressiveness.”

Figure 4. Growth tendencies for experiment 1. (A) Tendencies in basic emotions. (B) Tendencies in descriptive scales.

It seems also useful to investigate the partial variations due to the augmentation from 90 to 120 bpm and from 120 to 150 bpm. Let us start with the first augmentation. The perception of emotion “Sadness” decreases by 37.7%, which the highest percentage for all emotions and descriptive words. Other four parameters increase their values in a margin from around 12 to 16% when augmenting the pulse from 90 bpm to 120 bpm. The scores of “Amusement” and “Tension” scales increase by 16.0 and 15.9%, while valuations of emotions “Happiness” and “Surprise” rise by 14.3 and 11.9%, respectively. The last parameter studied, namely “Expressiveness,” does not experience much variation in the results of emotional perception when the initial pulse is increased by 30 bpm. The score of “Expressiveness” only increases by 5.3%.

Moreover, when the tempo is augmented again (from 120 to 150 bpm), very different growth patterns are observed between the emotions and descriptive scales studied. In line with our previous assertion it is seen that the values of emotions “Happiness,” “Surprise,” and the scales “Amusement,” “Expressiveness” and “Tension” continue increasing. Again, the punctuation of emotion “Sadness” descends by 39.5%. Very different behaviors are observed here. Newly, among the descriptive words that do increase their values, only one of them stands out. Indeed, “Tension” is the only term that increases its growth with this tempo acceleration by 4.3% higher than the 15.9% previously experienced.

Table 3 has a very similar layout to Table 2. Here, columns 2 to 5 offer the measures and standard deviations for the theme and three variations performed in this experiment.

An increase in the values of emotions “Happiness” and “Surprise,” as well as in descriptive scales “Tension,” “Expressiveness,” and “Amusement” occurs in the use of rhythmic variations 1, 2, and 3 in comparison with the main theme. The gotten order of the scores is described next for these parameters. The use of whole and half notes (the theme) is punctuated with lower values for “Happiness,” “Surprise,” “Tension,” “Expressiveness,” and “Amusement,” followed by the use of eighth notes (variation 1). Then we find higher scores for the use of short syncopations, dots contained in a pulse and triplets of eighth notes (that is, variation 3). A syncopation is a deliberate upsetting of the meter or pulse of a composition by means of a temporary shifting of the accent to a weak beat or an off-beat. And, a doted note is a note that has a dot placed to the right of the note head, indicating that the duration of the note should be increased by half again its original duration.

Finally, you may observe that the use of sixteenth notes and their combinations present in variation 2 are valued with the highest scores in these parameters. These are “Happiness,” “Surprise,” “Tension,” “Expressiveness” and “Amusement.” The same order of punctuation for the theme and three variations is followed for “Sadness” emotion but in reverse order. The use of sixteenth notes (variation 2) is perceived as the less sad in this emotion, followed by variation 3, variation 1 and theme. Again, “Attractiveness” offers non-significant values, so, we will not consider the scores gotten.

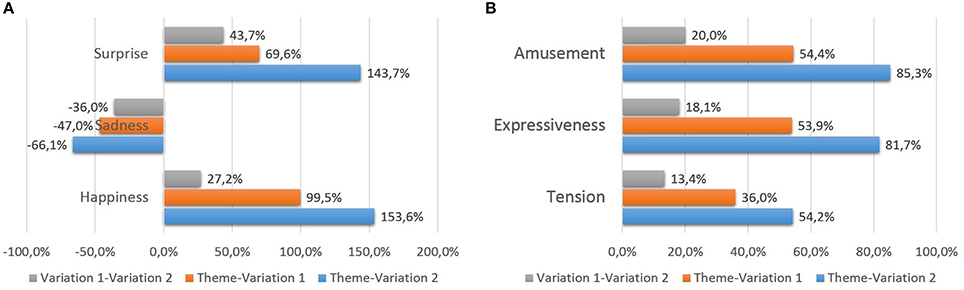

In all the emotions and descriptive words that suffer an increase/decrease in their values due to the proposed variations from the main theme (“Happiness,” “Surprise” and “Sadness”; “Tension,” “Expressiveness” and “Amusement”), it shows that growth occurs significantly in all of them, albeit with different growth percentages (see Figure 5). When passing from the theme to variation 2, the two highest increases occur for the values of “Happiness” (increased by 153.6% when playing sixteenth compared to the use of whole and half notes) and “Surprise” (increased by 143.7%). Other emotional judgments increase in a high percentage above 50% (“Amusement,” “Expressiveness,” and “Tension”). In the case of “Surprise” there is a decrease in a value of 66.1%. Again, this emotion is opposed to the feeling of “Happiness.”

Figure 5. Growth tendencies for experiment 2. (A) Tendencies in basic emotions. (B) Tendencies in descriptive scales.

Moreover, it is interesting to compare the results expressed when passing from whole and half notes (theme) to eighth notes (variation 1) and from eighth notes to sixteenth notes (variation 2). This is why, it is checked if there is proportionality in the emotional perception results, as occurs in the configuration of the proper rhythms. Thus, for those emotions in which an increase in their scores does occur, it is studied whether they follow a principle of binary multiplication results, coinciding with the structure of the rhythms (eighth notes are the result of a binary regular division of the half notes, like sixteenth notes result from a binary regular division of the eighth notes). In all cases, you can observe that there is a stronger effect in the increase/decrease of the scores when passing from theme to variation 1 vs. variation 1 to variation 2. This is especially pronounced for emotion “Happiness,” followed by “Surprise,” whilst “Sadness” experiences a lower difference. All the three remaining descriptive words also experience a very high difference in increase.

In order to discuss on the individual influence or effect of tempo and rhythmic unit on emotion regulation, we have used the well-known circumplex model of affect (Russell, 1980). This model suggests that emotions are distributed in a two-dimensional circular space, containing arousal and valence dimensions. Arousal represents the vertical axis and valence represents the horizontal axis, while the center of the circle represents a neutral valence and a medium level of arousal. In this model, emotional states can be represented at any level of valence and arousal, or at a neutral level of one or both of these factors. Circumplex models have been used most commonly to test stimuli of emotion words, emotional facial expressions, and affective states. As you can observe in see Figure 6, we have annotated the standard circumplex emotion model with the three emotions studied, that is, “Happiness,” “Sadness,” and “Surprise,” as well as the three meaningful opposed semantic scales related to “Amusement,” “Expressiveness,” “Tension,” and “Attractiveness.” We have also annotated the tempo and rhythmic unit values that were rated with higher scores for emotions and semantic scales.

The figure offers a symmetric appearance. In the middle value of arousal there is “Attractiveness,” showing that there is no influence of tempo and/or rhythmic unit. All combinations of tempo (90, 120, and 150 bpm) provide similar outputs for pleasantness. In relation to rhythmic unit, (Pleasant seems to be achieved with sixteenth notes and Unpleasant) with all the rest of possibilities. But, as seen in the Results sections, there is statistically not enough significance. At the top of Figure 6 the emotion “Surprise” appears quite isolated from the rest of emotions. Again, there are no clear outperforming tempo values (120 and 150 bmp) or rhythmic unit value (sixteenth notes) in accordance with the statistical results obtained before. Nevertheless, the most important result that can be seen in the figure is related to opposing emotions “Happiness” and “Sadness,” and couples of feeling words Amusing vs. Boring, Expressive vs. Expressionless, and Stressing vs. (Relaxing. All the first terms are related to a relatively high arousal whilst the second ones are in the low arousal level. Moreover, the first terms get their maximum score with a tempo of 150 bpm and a rhythmic unit of sixteenth notes. On the contrary, the second terms perform best with a 90 bpm tempo, and whole and half notes as rhythmic unit.

The results obtained in relation to tempo and its influence on emotional perception are clearly in line with previous research works. Indeed, the suggestion that emotion conveyed by music is determined by mode (major-minor) and tempo (fast-slow) was examined using the same set of equitone melodies in two experiments (Gagnon and Peretz, 2003). The results confirm that both mode and tempo, with tempo being more prominent, determine the “happy-sad” judgments in isolation. Thereby, tempo and mode provide essential factors to determine whether music sounds sad or happy. Slow tempo and minor mode are associated with sadness whereas music played with fast tempo and composed in major mode is commonly considered happy (for a review, see Juslin and Laukka, 2003). Moreover, tempo variation has consistently been associated with differential emotional responses to music (Dalla Bella et al., 2001; Gagnon and Peretz, 2003). For example, Rigg (1940) examined college students emotional responses to each of five different phrases presented at six tempos ranging from 60 to 160 quarter notes or beats per minute (bpm) varying in steps of 20 bpm. Across the five manipulated phrases, as tempo increased, more students were likely to describe the phrases as happy than sad. In contrast, more recent research has suggested that changes in tempo are more closely associated with changes in arousal than emotion per se (Husain et al., 2002). It is conceivable that the extraction of temporal information and the following interpretation in regard to emotion has a biological foundation because tempo is regarded as a domain-general signal property. For instance, fast tempo is commonly associated with heightened arousal (Trehub et al., 2010). Another study provides a further investigation of mode and tempo (Trochidis and Bigand, 2013). It investigates the effect of three modes (major, minor, locrian) and three tempos (slow, moderate, fast) on emotional ratings and EEG responses. Beyond the effect of mode, tempo was found to modulate the emotional ratings, with faster tempo being more associated with the emotions of anger and happiness as opposed to slow tempo, which induces stronger feeling of sadness and serenity. This suggests that the tempo modulates the arousal value of the emotions. A new point of the study was to manipulate three values of tempo (slow, moderate, fast). The findings suggest that there is a linear trend between tempo and the arousing values of the emotion: the faster the tempo, the stronger the arousal value of the emotion.

On the other hand, let us highlight that, as far as we have seen, the effect of rhythmic unit has not been studied so far in musical emotion regulation. This is why, in consonance with Figure 6, one question arises. Is there a relationship between tempo and rhythmic unit that effect on emotion regulation? Some previous research can provide some hints on this issue. Firstly, a psychological investigation (Krumhansl, 2000) has deduced that temporary construction in music, turned into rhythmic patterns, not only results in complex components in music, but also complex psychological representations mainly influencing perception. Thus, the proportions of duration in music tend to simple ratios (1/2, 1/3), producing a psychological assimilation of these categories. The pulse provides a periodic base structure that allows the perception of specific temporal patterns. Thus, a framework to organize and remember the events taking place in time is provided. The following three studies conclude that the proposed relationship between tempo and rhythmic un it really exists. In the work by Desain et al. (2000), different rhythms are offered, in which the pulse (40, 60, and 90 bpm) is changed, and the bars also vary. The rhythmic patterns are perceived differently when the pulse and bars in which they are written change. Furthermore, this variation occurs, in varying ways, depending on its complexity. In another experiment (Bergeson and Trehub, 2005), different listening rhythms were offered to 7-month babies to discuss their response to rhythmic variations (some in a particular rhythmic accentuation frame, some without, and with the use of binary and ternary measure). Babies detected rhythmic changes more easily in the rhythmic context with clear accentuation (rhythm that corresponds to the measure). From the regularity of the perceived accents temporal changes in listening could be detected. Moreover, babies detected the rhythms much more clearly in binary than in ternary compass. Lastly, in Seyerlehner et al. (2007) the relationship between rhythmic patterns and tempo perceived in them is studied. Is is concluded that listeners perceive a similar tempo when they hear songs with similar rhythmic patterns. The effectiveness to discriminate each rhythmic pattern depends largely on training, similarity and capacity of the rhythmic patterns used to capture and regroup the regular rhythmic by the listener.

Clearly, in accordance with the results of the experiments, and after taking a look at Figure 6, sixteenth notes and 150 bpm are closely related, as well as 90 bpm and whole and half notes. Rhythmic unit related to syncopated notes deserves a special paragraph. Although syncopated/dotted notes have not been determinant in the experiments performed in the present study, some of the results gotten here are also in line with musical emotion research. Indeed, it is well-known that syncopated patterns are perceived as more fun and upbeat than non syncopated. Moreover, a syncopated pattern is always perceived as more complex and exciting than a non-syncopated one. This difference increases when a non-syncopated pattern is followed by a syncopated one (Keller and Schubert, 2011).

Obviously, the results offered in Figure 6 are not sufficient to draw conclusions on how tempo and rhythmic unit (be it individually or combined) can be used to regulate emotions. It is even not possible to provide sufficient evidence on how to move from a negative to a positive mood by playing music with different tempo and/or rhythmic unit. Indeed, in the previous discussion only arousal has been introduced. But the questions is also “What happens with valence?”

If we only rely on 150 bpm and sixteenth notes vs. 90 bpm and whole and half notes, we clearly attain a higher or lower arousal level. But we do not know to what extent a person feels “Happiness” at the same time that he/she finds music to be Stressing, Expressive and Amusing. “Happiness” is for sure a positive emotion, and Expressive and Amusing are good friends for the emotion. But, is something Stressing also positive? At the opposite side, we have the just the contrary. The emotion felt is “Sadness” and the music is rated as Boring, Expressionless and Relaxing. “Sadness,” Boring and Expressionless perfectly fit under a negative emotional impression, but Relaxing is a positive feeling.

So, there must be a solution that makes things function correctly. This is why, we have prepared another figure that enables to offer a better understanding of the combined smart use of tempo and rhythmic unit to regulate emotions. Figure 7 shows, for each emotion and descriptive word, the influence of tempo (both partial increment from 90 to 120 or decrement from 120 to 90 bpm, and increment from 120 to 150 bpm or decrement from 150 to 120 bpm) combined with the most influential change in rhythmic unit for that specific feeling (in positive: white and half notes (W&H) to eighth notes (eighth) and eighth notes to sixteenth notes (sixteenth); in negative: sixteenth to eighth and eighth to W&H). The size of the font determines the importance of the influence, that is, the biggest the font, the greatest the influence. We have also used green and red color to depict the most influential value to move toward a negative (red) and negative (green) emotional state.

For instance, to reach “Happiness” Figure 7 suggests to use a tempo of 120 bpm (passing from 90 to 120 bpm (90 → 120), if necessary) and clearly establishes that you have to move rhythmic unit from whole and notes to eighth notes (W&H → eighth). Notice that this combination includes the positive feelings of Expressive and Amusing. This combination of parameters of note value do not get a feeling of Stressing, as this is achieved only if you pass from 120 to 150 bpm. And, the opposite problem also gets a correct solution in Figure 7. Obviously new experiments have to be carried out to demonstrate the validity of this approach.

This article has described the first steps in the use of music to regulate affect in a running project denominated “Improvement of the Elderly Quality of Life and Care through Smart Emotion Regulation.” The objective of the project is to find solutions for improving the quality of life and care of aging adults living at home by using emotion elicitation techniques. This paper has focused on emotion regulation through some musical parameters.

The proposal has studied the participants' changes in emotional states through listening different auditions. The present research has focused on the musical cue of note value through two basic components of the parameter note value, namely, tempo and rhythmic unit to detect the individual preferences of the listeners. The two experiments carried out have been discussed in detail to provide an acceptable manner of using both parameters to be able to understand how to move from negative to positive emotional states (and vice versa), which is one key of our current project.

The results obtained in relation to tempo and its influence on emotional perception are in line with previous research works. The results confirm that tempo clearly determines whether music sounds sad or happy. Moreover, Stressing, Expressive and Amusing are the words gotten from a high tempo, whilst the opposite terms Relaxing, Expressionless and Boring are obtained for a low tempo. This suggests that the tempo modulates the arousal value of the emotions. On the other hand, the effect of rhythmic unit, which has not been studied in musical emotion regulation, has shown significant outcomes in the same direction than tempo. Indeed, sixteenth notes and 150 bpm are closely related, as well as 90 bpm and whole and half notes.

Lastly, the paper has established a basis for future study of the combined effect of both parameters so to provide a finer tuning of the emotion regulation capabilities of the proposed system.

All authors listed, have made substantial, direct and intellectual contribution to the work, and approved it for publication.

This work was partially supported by Spanish Ministerio de Economía y Competitividad/FEDER under TIN2013-47074-C2-1-R, TIN2015-72931-EXP and DPI2016-80894-R grants.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The authors are thankful to contemporary composer Juan Francisco Manzano Ramos for his invaluable help and support.

Bergeson, T., and Trehub, S. (2005). Infants perception of rhythmic patterns. Music Percept. 23, 345–360. doi: 10.1525/mp.2006.23.4.345

Brattico, E. (2006). Cortical Processing of Musical Pitch as Reflected by Behavioural and Electrophysiological Evidence. Ph.D. dissertation, Helsinki University.

Bresin, R., and Friberg, A. (2011). Emotion rendering in music: range and characteristic values of seven musical variables. Cortex 47, 1068–1081. doi: 10.1016/j.cortex.2011.05.009

Caclin, A., Brattico, E., Tervaniemi, M., Naatanen, R., Morlet, D., Giard, M., et al. (2006). Separate neural processing of timbre dimensions in auditory sensory memory. J. Cogn. Neurosci. 18, 1959–1972. doi: 10.1162/jocn.2006.18.12.1959

Castillo, J., Carneiro, D., Serrano-Cuerda, J., Novais, P., Fernández-Caballero, A., and Neves, J. (2014a). A multi-modal approach for activity classification and fall detection. Int. J. Syst. Sci. 45, 810–824. doi: 10.1080/00207721.2013.784372

Castillo, J., Fernández-Caballero, A., Castro-González, A., Salichs, M., and López, M. (2014b). “A framework for recognizing and regulating emotions in the elderly,” in Ambient Assisted Living and Daily Activities, eds L. Pecchia, L. Chen, C. Nugent, and J. Bravo (New York, NY: Springer), 320–327.

Castillo, J., Castro-González, A., Fernández-Caballero, A., Latorre, J., Pastor, J., Fernández-Sotos, A., et al. (2016). Software architecture for smart emotion recognition and regulation of the ageing adult. Cogn. Comput. 8, 357–367. doi: 10.1007/s12559-016-9383-y

Costa, A., Castillo, J., Novais, P., Fernández-Caballero, A., and Simoes, R. (2012). Sensor-driven agenda for intelligent home care of the elderly. Exp. Syst. Appl. 39, 12192–12204. doi: 10.1016/j.eswa.2012.04.058

Dalla Bella, S., Peretz, I., Rousseau, L., and Gosselin, N. (2001). A developmental study of the affective value of tempo and mode in music. Cognition 80, 1–10. doi: 10.1016/S0010-0277(00)00136-0

Desain, P., Jansen, C., and Honing, H. (2000). “How identification of rhythmic categories depends on tempo and meter,” in Proceedings of the Sixth International Conference on Music Perception and Cognition (Staffordshire, UK: European Society for the Cognitive Sciences of Music), 29.

Eerola, T., Friberg, A., and Bresin, R. (2013). Emotional expression in music: contribution, linearity, and additivity of primary musical cues. Front. Psychol. 4:487. doi: 10.3389/fpsyg.2013.00487

Fernández-Caballero, A., Castillo, J., and Rodríguez-Sánchez, J. (2012). Human activity monitoring by local and global finite state machines. Exp. Syst. Appl. 39, 6982–6993. doi: 10.1016/j.eswa.2012.01.050

Fernández-Caballero, A., Latorre, J., Pastor, J., and Fernández-Sotos, A. (2014). “Improvement of the elderly quality of life and care through smart emotion regulation,” in Ambient Assisted Living and Daily Activities, eds L. Pecchia, L. Chen, C. Nugent, and J. Bravo (New York, NY: Springer), 320–327.

Fernández-Sotos, A., Fernández-Caballero, A., and Latorre, J. (2015). “Elicitation of emotions through music: the influence of note value,” in Artificial Computation in Biology and Medicine, eds J. F. Vicente, J. Álvarez-Sánchez, F. de la Paz López, F. Toledo-Moreo, and H. Adeli (New York, NY: Springer), 488–497. doi: 10.1007/978-3-319-18914-7_51

Gabrielsson, A. (2001). “Emotions in strong experiences with music,” in Music and Emotion: Theory and Research, eds P. Juslin and J. Sloboda (New York, NY: Oxford University Press), 431–449.

Gabrielsson, A., and Lindstrom, E. (2010). “The role of structure in the musical expression of emotions,” in Handbook of Music and Emotion: Theory, Research, and Applications, eds P. Juslin and J. Sloboda (Oxford: Oxford University Press), 367–400.

Gagnon, L., and Peretz, I. (2003). Mode and tempo relative contributions to “happy-sad” judgements in equitone melodies. Cogn. Emot. 17, 25–40. doi: 10.1080/02699930302279

Glowinski, D., and Camurri, A. (2012). “Music and emotions,” in Emotion-Oriented Systems, ed C. Pelachaud (Hoboken, NJ: John Wiley and Sons, Inc.), 247–270.

Hunt, A. (2015). Boundaries and potentials of traditional and alternative neuroscience research methods in music therapy research. Front. Hum. Neurosci. 39:342. doi: 10.3389/fnhum.2015.00342

Husain, G., Thompson, W., and Schellenberg, E. (2002). Effects of musical tempo and mode on arousal, mood, and spatial abilities. Music Percept. 20, 151–171. doi: 10.1525/mp.2002.20.2.151

Istók, E. (2013). Cognitive and Neural Determinants of Music Appreciation and Aesthetics. Ph.D. dissertation, University of Helsinki.

Juslin, P., and Laukka, P. (2003). Communication of emotions in vocal expression and music performance: different channels, same code? Psychol. Bull. 129, 770–814. doi: 10.1037/0033-2909.129.5.770

Keller, P., and Schubert, E. (2011). Cognitive and affective judgements of syncopated musical themes. Adv. Cogn. Psychol. 7, 142–156. doi: 10.2478/v10053-008-0094-0

Knoferle, K., Spangenberg, E., Herrmann, A., and Landwehr, J. (2012). It is all in the mix: the interactive effect of music tempo and mode on in-store sales. Market. Lett. 23, 325–337. doi: 10.1007/s11002-011-9156-z

Koelsch, S., Fritz, T., von Cramon, D., Muller, K., and Friederici, A. (2006). Investigating emotion with music: an fMRI study. Hum. Brain Mapp. 27, 239–250. doi: 10.1002/hbm.20180

Koelsch, S., and Siebel, W. (2005). Towards a neural basis of music perception. Trends Cogn. Sci. 9, 578–584. doi: 10.1016/j.tics.2005.10.001

Krumhansl, C. (2000). Rhythm and pitch in music cognition. Psychol. Bull. 126, 159–179. doi: 10.1037/0033-2909.126.1.159

Lozano-Monasor, E., López, M., Fernández-Caballero, A., and Vigo-Bustos, F. (2014). “Facial expression recognition from webcam based on active shape models and support vector machines,” in Ambient Assisted Living and Daily Activities, eds L. Pecchia, L. Chen, C. Nugent, and J. Bravo (New York, NY: Springer), 147–154.

Martínez-Rodrigo, A., Pastor, J., Zangróniz, R., Sánchez-Meléndez, C., and Fernández-Caballero, A. (2016). “Aristarko: a software framework for physiological data acquisition,” in Ambient Intelligence- Software and Applications: 7th International Symposium on Ambient Intelligence, eds H. Lindgren, J. D. Paz, P. Novais, A. Fernández-Caballero, H. Yoe, A. Jimenez-Ramírez, and G. Villarrubia (New York, NY: Springer), 215–223. doi: 10.1007/978-3-319-40114-0_24

Mizuno, T., and Sugishita, M. (2007). Neural correlates underlying perception of tonality-related emotional contents. NeuroReport 18, 1651–1655. doi: 10.1097/WNR.0b013e3282f0b787

Norris, S. (2009). Tempo, auftakt, levels of actions, and practice: rhythm in ordinary interactions. J. Appl. Linguist. 6, 333–355. doi: 10.1558/japl.v6i3.333

Peretz, I. (2010). “Towards a neurobiology of musical emotion,” in Handbook of Music and Emotion: Theory, Research, and Applications, eds P. Juslin and J. Sloboda (Oxford: Oxford University Press), 99–126.

Peretz, I., Gagnon, L., and Bouchard, B. (1998). Music and emotion: perceptual determinants, immediacy, and isolation after brain damage. Cognition 68, 111–141. doi: 10.1016/S0010-0277(98)00043-2

Rigg, M. (1940). Speed as a determiner of musical mood. J. Exp. Psychol. 27, 566–571. doi: 10.1037/h0058652

Russell, J. (1980). A circumplex model of affect. J. Pers. Soc. Psychol. 39, 1161–1178. doi: 10.1037/h0077714

Saarikallio, S., and Erkkila, J. (2007). The role of music in adolescents mood regulation. Psychol. Music 35, 88–109. doi: 10.1177/0305735607068889

Samson, S., and Ehrlé, N. (2003). “Cerebral substrates for musical temporal processes,” in The Cognitive Neuroscience of Music, eds I. Peretz and R. Zatorre (New York, NY: Oxford University Press), 204–216.

Scherer, K., and Zentner, M. (2001). “Emotional effects of music: production rules,” in Music and Emotion: Theory and Research, eds P. Juslin and J. Sloboda (New York, NY: Oxford University Press), 361–392.

Scollon, R. (1982). “The rhythmic integration of ordinary talk,” in Analyzing Discourse: Text and Talk, ed D. Tannen (Washington, DC: Georgetown University Press), 335–349.

Seyerlehner, K., Widmer, G., and Schnitzer, D. (2007). “From rhythm patterns to perceived tempo,” in Proceedings of the 8th International Conference on Music Information Retrieval (Vienna: Austrian Research Institute for Artificial Intelligence), 519–524.

Sloboda, J., and Juslin, P. (2001). “Psychological perspectives on music and emotion,” in Music and Emotion: Theory and Research, eds P. Juslin and J. Sloboda (New York, NY: Oxford University Press), 71–104.

Trehub, S., Hannon, E., and Schachner, A. (2010). “Perspective on music and affect in the early years,” in Handbook of Music and Emotion: Theory, Research, and Applications, eds P. Juslin and J. Sloboda (Oxford: Oxford University Press), 645–668.

Trochidis, K., and Bigand, E. (2013). Investigation of the effect of mode and tempo on emotional responses to music using eeg power asymmetry. J. Psychophysiol. 27, 142–147. doi: 10.1027/0269-8803/a000099

van der Zwaag, M. D., Westerink, J. H., and van den Broek, E. L. (2011). Emotional and psychophysiological responses to tempo, mode, and percussiveness. Music. Sci. 15, 250–269. doi: 10.1177/1029864911403364

Webster, G., and Weir, C. (2005). Emotional responses to music: interactive effects of mode, texture, and tempo. Motiv. Emot. 29, 19–39. doi: 10.1007/s11031-005-4414-0

Keywords: emotion regulation, music, note value, tempo, rhythmic unit

Citation: Fernández-Sotos A, Fernández-Caballero A and Latorre JM (2016) Influence of Tempo and Rhythmic Unit in Musical Emotion Regulation. Front. Comput. Neurosci. 10:80. doi: 10.3389/fncom.2016.00080

Received: 01 February 2016; Accepted: 19 July 2016;

Published: 03 August 2016.

Edited by:

Jose Manuel Ferrandez, Universidad Politécnica de Cartagena, SpainCopyright © 2016 Fernández-Sotos, Fernández-Caballero and Latorre. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Alicia Fernández-Sotos, YWxpY2lhLmZzb3Rvc0B1Y2xtLmVz

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.

Research integrity at Frontiers

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.