- Neurithmic Systems LLC, Newton, MA, USA

The visual cortex's hierarchical, multi-level organization is captured in many biologically inspired computational vision models, the general idea being that progressively larger scale (spatially/temporally) and more complex visual features are represented in progressively higher areas. However, most earlier models use localist representations (codes) in each representational field (which we equate with the cortical macrocolumn, “mac”), at each level. In localism, each represented feature/concept/event (hereinafter “item”) is coded by a single unit. The model we describe, Sparsey, is hierarchical as well but crucially, it uses sparse distributed coding (SDC) in every mac in all levels. In SDC, each represented item is coded by a small subset of the mac's units. The SDCs of different items can overlap and the size of overlap between items can be used to represent their similarity. The difference between localism and SDC is crucial because SDC allows the two essential operations of associative memory, storing a new item and retrieving the best-matching stored item, to be done in fixed time for the life of the model. Since the model's core algorithm, which does both storage and retrieval (inference), makes a single pass over all macs on each time step, the overall model's storage/retrieval operation is also fixed-time, a criterion we consider essential for scalability to the huge (“Big Data”) problems. A 2010 paper described a nonhierarchical version of this model in the context of purely spatial pattern processing. Here, we elaborate a fully hierarchical model (arbitrary numbers of levels and macs per level), describing novel model principles like progressive critical periods, dynamic modulation of principal cells' activation functions based on a mac-level familiarity measure, representation of multiple simultaneously active hypotheses, a novel method of time warp invariant recognition, and we report results showing learning/recognition of spatiotemporal patterns.

Introduction

In this paper, we provide the hierarchical elaboration of the macro/mini-column model of cortical computation described in Rinkus (1996, 2010) which is now named Sparsey. We report results of initial experiments involving multi-level models with multiple macrocolumns (“macs”) per level, processing spatiotemporal patterns, i.e., “events.” In particular, we show: (a) single-trial unsupervised learning of sequences where this learning results in the formation of hierarchical spatiotemporal memory traces; and (b) recognition of training sequences, i.e., exact or nearly exact reactivation of complete hierarchical traces over all frames of a sequence. The canonical macrocolumnar algorithm—which probabilistically chooses a sparse distributed code (SDC) as a function of a mac's entire input, i.e., its bottom-up (U), horizontal (H), and top-down (D) input vectors, at a given moment—operates similarly, modulo parameters, in both learning and recognition, in all macs at all levels. Computationally, Sparsey's most important property is that a mac both stores (learns) new input items—which in general are temporal-context-dependent inputs, i.e., particular spatiotemporal moments—and retrieves the spatiotemporally closest-matching stored item in time that remains fixed as the number of items stored in the mac increases. This property depends critically on the use of SDCs, is essential for scalability to “Big Data” problems, and has not been shown for any other computational model, biologically inspired or not!

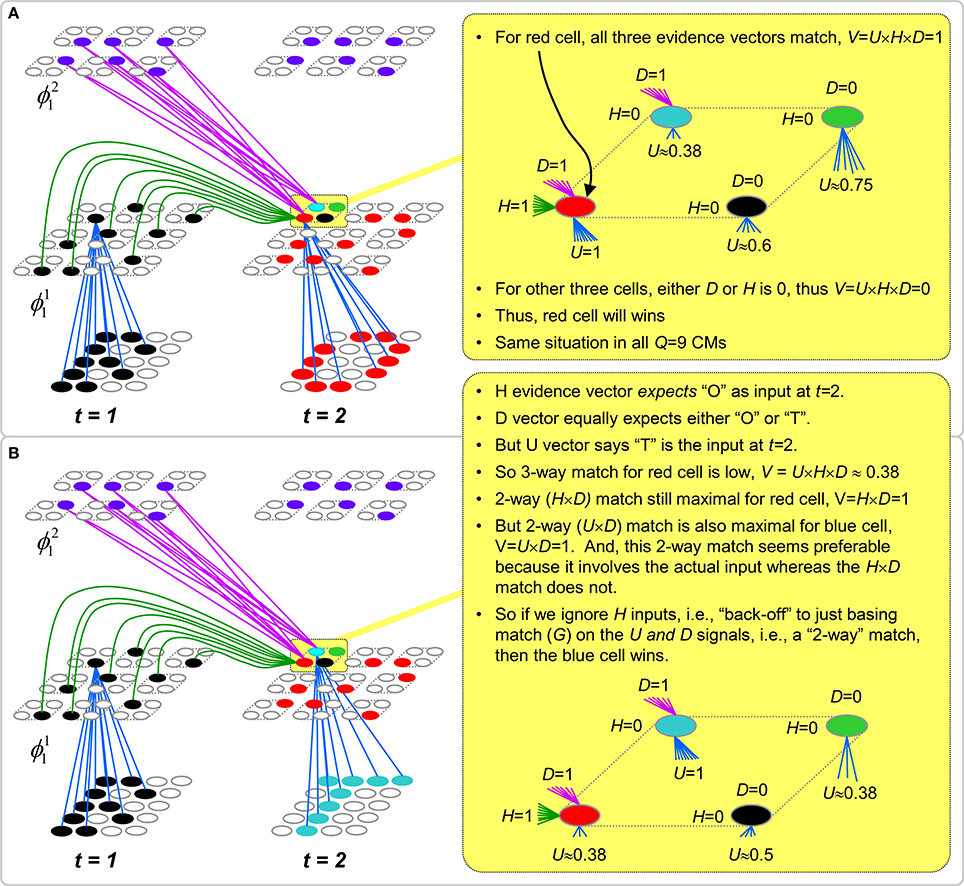

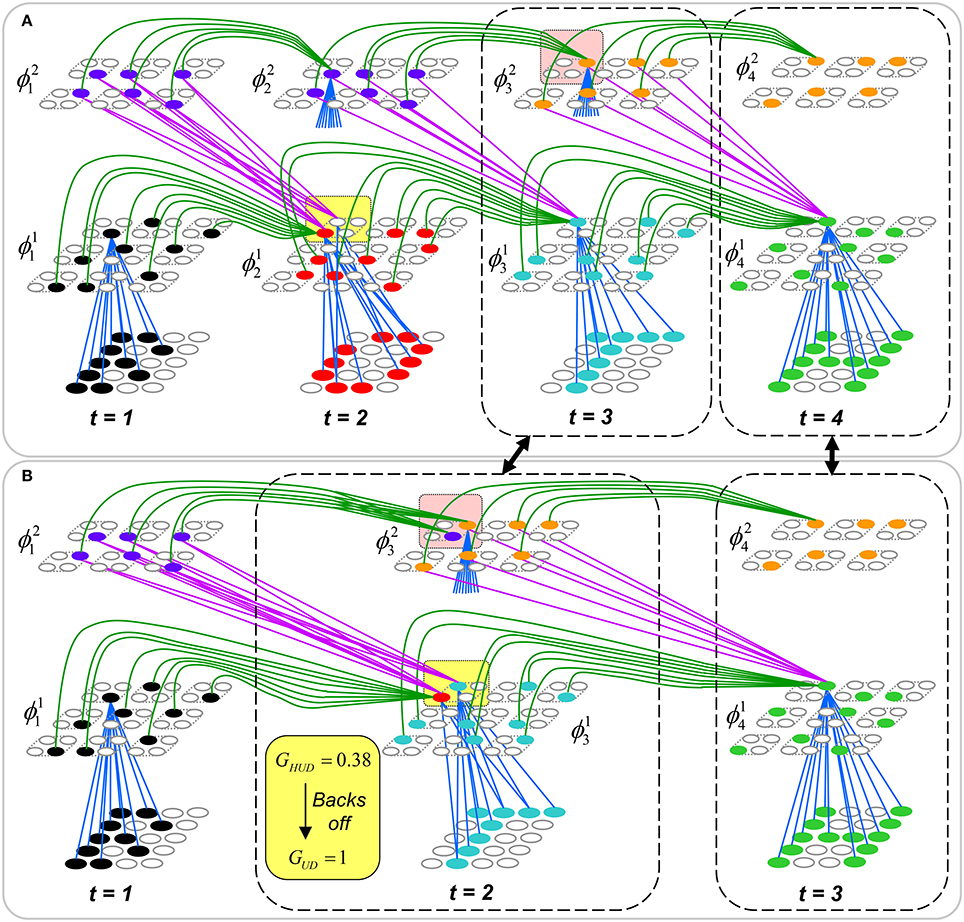

The model has a number of other interesting neurally plausible properties, including the following. (1) A “critical period” concept wherein learning is frozen in a mac's afferent synaptic projections when those projections reach a threshold saturation. In a hierarchical setting, freezing will occur beginning with the lowest level macs (analogous to primary sensory cortex) and progress upward over the course of experience. (2) A “progressive persistence” property wherein the activation duration (persistence) of the “neurons” (and thus of the SDCs which are sets of co-active neurons) increases with level; there is some evidence for increasing persistence along the ventral visual path (Rolls and Tovee, 1994; Uusitalo et al., 1997; Gauthier et al., 2012). This allows an SDC in a mac at level J to associate with sequences of SDCs in Level J-1 macs with which it is connected, i.e., a chunking (compression) mechanism. In particular, this provides a means to learn in unsupervised fashion perceptual invariances produced by continuous transforms occurring in the environment (e.g., rotation, translation, etc.). Rolls' VisNet model, introduced in Rolls (1992) and reviewed in Rolls (2012), uses a similar concept to explain learning of naturally-experienced transforms, although his trace-learning-rule-based implementation differs markedly from ours. (3) During learning, an SDC is chosen on the basis of signals arriving from all active afferent neurons in the mac's total (U, H, and D) receptive field (RF). However, during retrieval, if the highest-order match, i.e., involving all three (U, H, and D) input sources, falls below a threshold, the mac considers a progression of lower-order matches, e.g., involving only its U and D inputs, but ignoring its H inputs, and if that also falls below a threshold, a match involving only its U inputs. This “back-off” protocol, in conjunction with progressive persistence, allows a protocol by which the model can rapidly—crucially, the protocol does not increase the time complexity of closest-match retrieval—compare a test sequence (e.g., video snippet) not only to the set of all sequences actually experienced and stored, but to a much larger space of nonlinearly time-warped variants of the actually-experienced sequences. (4) During retrieval, multiple competing hypotheses can momentarily (i.e., for one or several frames) be co-active in any given mac and resolve to a single hypothesis as subsequent disambiguating information enters.

While the results reported herein are specifically for the unsupervised learning case, Sparsey also implements supervised learning in the form of cross-modal unsupervised learning, where one of the input modalities is treated as a label modality. That is, if the same label is co-presented with multiple (arbitrarily different) inputs in another (raw sensory) modality, then a single internal representation of that label can be associated with the multiple (arbitrarily different) internal representations of the sensory inputs. That internal representation of the label then de facto constitutes a representation of the class that includes all those sensory inputs regardless of how different they are, providing the model a means to learn essentially arbitrarily nonlinear categories (invariances), i.e., instances of what Bengio terms “AI Set” problems (Bengio, 2007). Although we describe this principle in this paper, its full elaboration and demonstration in the context of supervised learning will be treated in a future paper.

Regarding the model's possible neural realization, our primary concern is that all of the model's formal structural and dynamic properties/mechanisms be plausibly realizable by known neural principles. For example, we do not give a detailed neural model of the winner-take-all (WTA) competition that we hypothesize to take place in the model's minicolumns, but rather rely on the plausibility of any of the many detailed models of WTA competition in the literature, (e.g., Grossberg, 1973; Yu et al., 2002; Knoblich et al., 2007; Oster et al., 2009; Jitsev, 2010). Nor do we give a detailed neural model for the mac's computation of the overall spatiotemporal familiarity of its input (the “G” measure), or for the G-contingent modulation of neurons' activation functions. Furthermore, the model relies only upon binary neurons and a simple synaptic learning model. This paper is really most centrally an explanation of why and how the use of SDC in conjunction with hierarchy provides a computationally efficient, scalable, and neurally plausible solution to event (i.e., single- or multimodal spatiotemporal pattern) learning and recognition.

Overall Model Concept

The remarkable structural homogeneity across the neocortical sheet suggests a canonical circuit/algorithm, i.e., a core computational module, operating similarly in all regions (Douglas et al., 1989; Douglas and Martin, 2004). In addition, DiCarlo et al. (2012) present compelling first-principles arguments based on computational efficiency and evolution for a macrocolumn-sized canonical functional module whose goal they describe as “cortically local subspace untangling.” We also identify the canonical functional module with the cortical “macrocolumn” (a.k.a. “hypercolumn” in V1, or “barrel”-related volumes in rat/mouse primary somatosensory cortex), i.e., a volume of cortex, ~200–500 um in diameter, and will refer to it as a “mac.” In our view, the mac's essential function, or “meta job description,” in the terms of DiCarlo et al. (2012), is to operate as a semi-autonomous content-addressable memory. That is, the mac:

(a) assigns (stores, learns) neural codes, specifically sparse distributed codes (SDCs), representing its global (i.e., combined U, H, and D) input patterns; and

(b) retrieves (reactivates) stored codes, i.e., memories, on subsequent occasions when the global input pattern matches a stored code sufficiently closely.

If the mac's learning process ensures that similar inputs map to similar codes (SISC), as Sparsey's does, then operating as a content addressable memory is functionally equivalent to local subspace untangling.

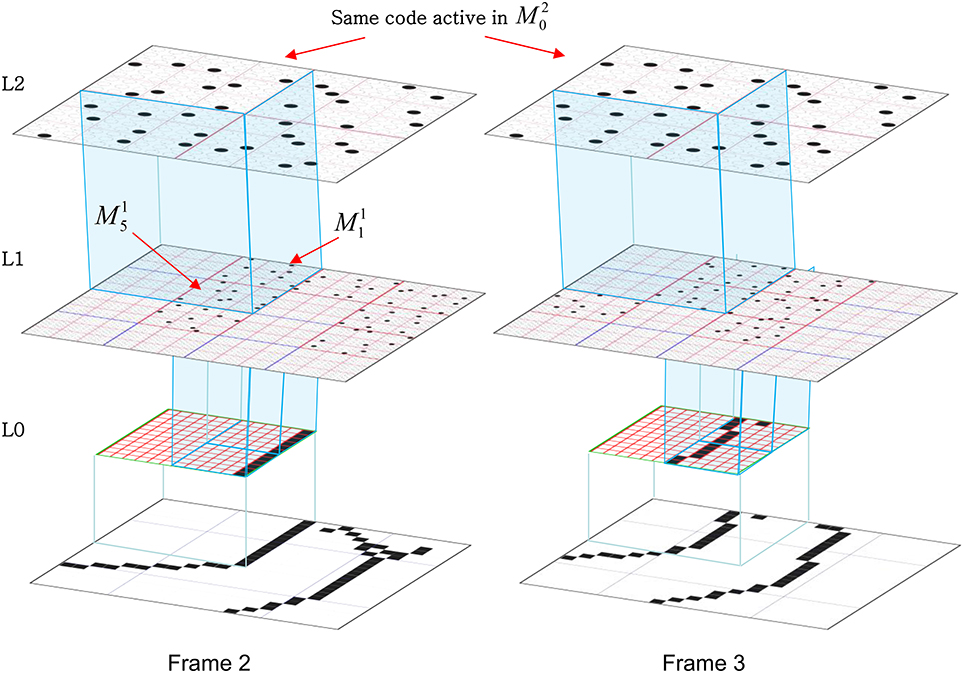

Although the majority of neurophysiological studies through the decades have formalized the responses of cortical neurons in terms of purely spatial receptive fields (RFs), evidence revealing the truly spatiotemporal nature of neuronal RFs is accumulating (DeAngelis et al., 1993, 1999; Rust et al., 2005; Gavornik and Bear, 2014; Ramirez et al., 2014). In our mac model, time is discrete: U signals arrive from neurons active on the current time step while H and D signals arrive from neurons active on the previous time step. We can view the combined U, H, and D inputs as a “context-dependent U input” (where the H and D signals are considered the “context”) or more holistically, as an overall particular spatiotemporal moment (as suggested earlier).

As will be described in detail, the first step of the mac's canonical algorithm, during both learning and retrieval, is to combine its U, H, and D inputs to yield a (scalar) judgment, G, as to the spatiotemporal familiarity of the current moment. Provided the number of codes stored in the mac is small enough, G measures the spatiotemporal similarity of the best matching stored moment, x, to the current moment, I.

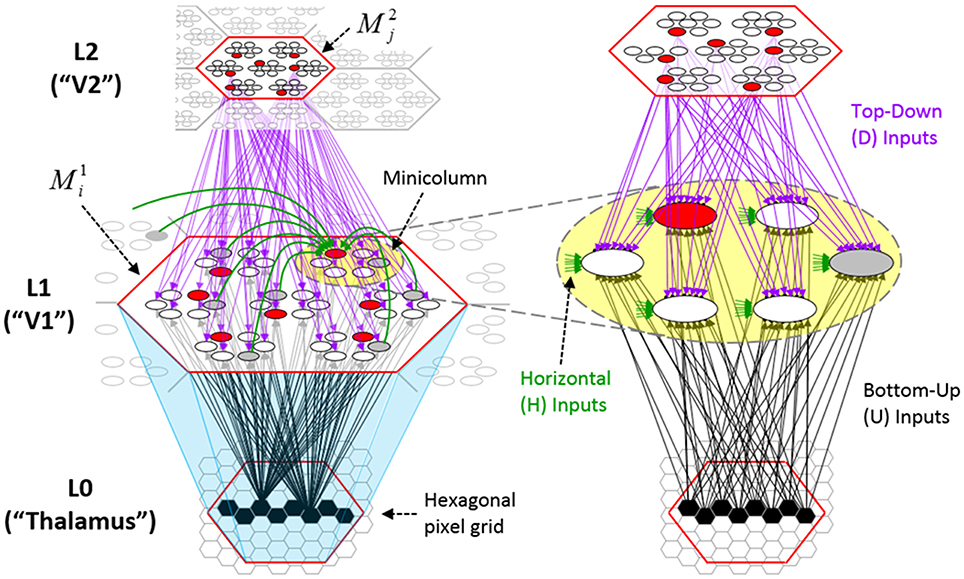

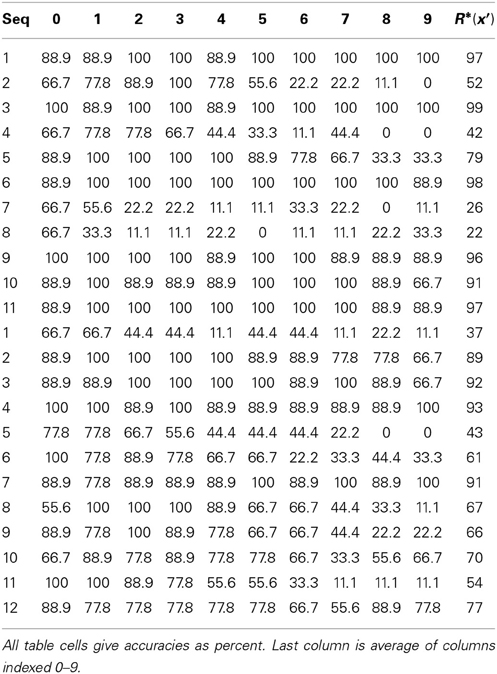

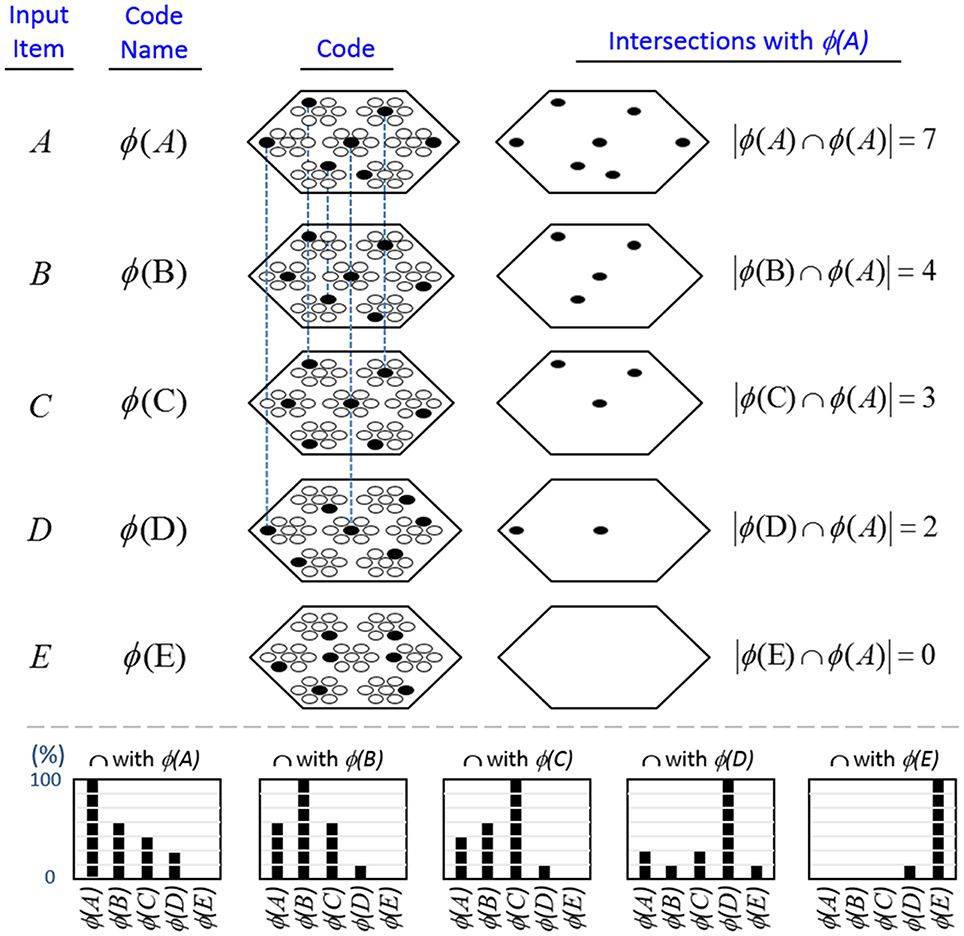

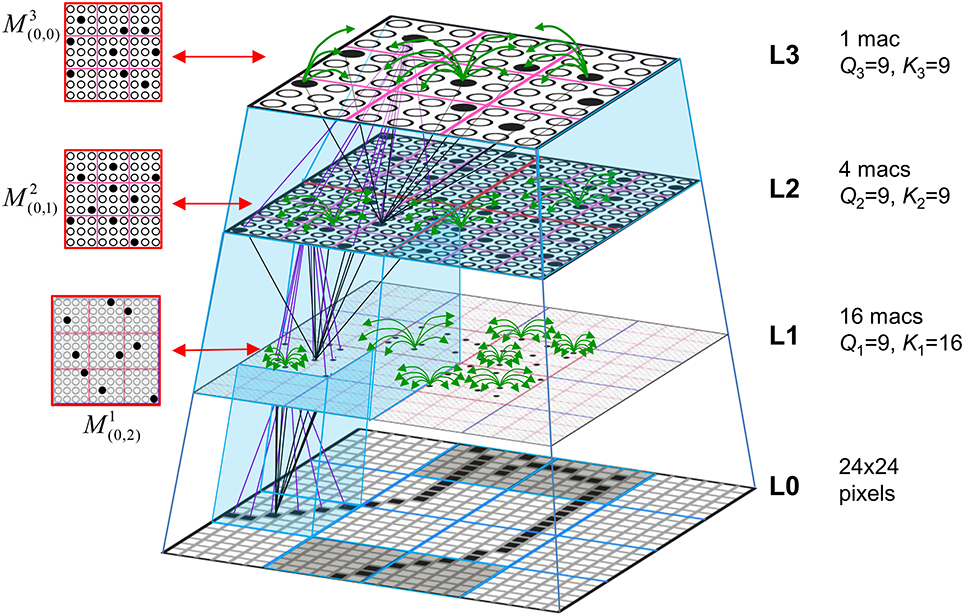

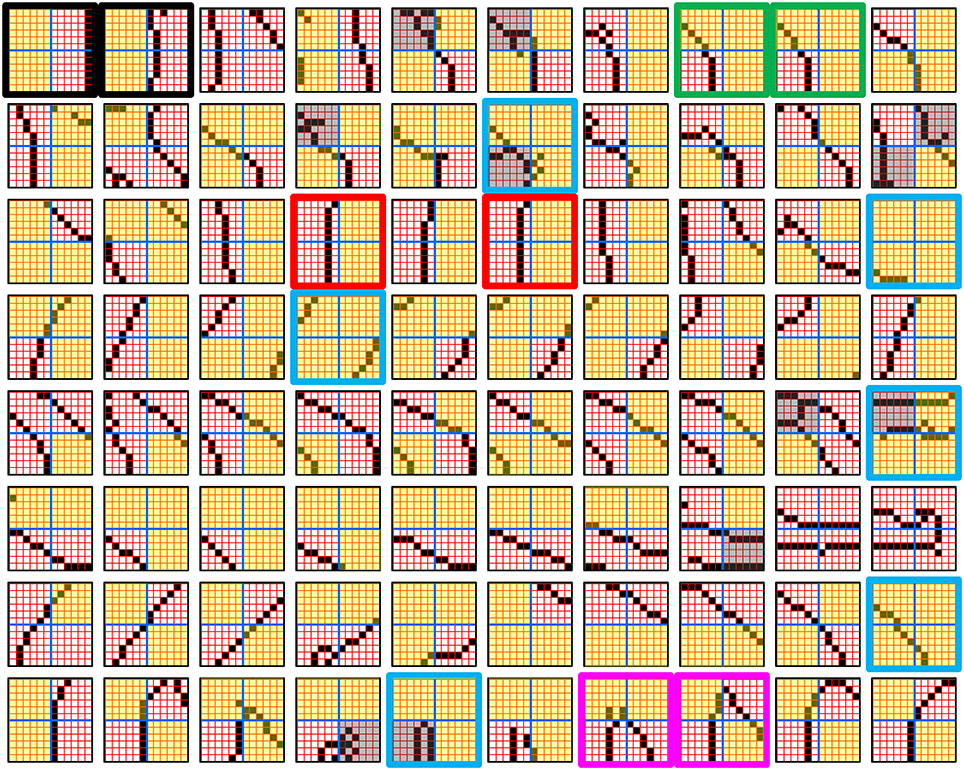

Figure I-1 shows the envisioned correspondence of Sparsey to the cortical macrocolumn. In particular, we view the mac's sub-population of L2/3 pyramidals as the actual repository of SDCs. And even more specifically, we postulate that the ~20 L2/3 pyramidals in each of the mac's ~70 minicolumns function in WTA fashion. Thus, a single SDC code will consist of 70 L2/3 pyramidals, one per minicolumn. Note: we also refer to minicolumns as competitive modules (CMs). Two-photon calcium imaging movies, e.g., Ohki et al. (2005), Sadovsky and MacLean (2014), provide some support for the existence of such macrocolumnar SDCs as they show numerous instances of ensembles, consisting of from several to hundreds of neurons, often spanning several 100 um, turning on and off as tightly synchronized wholes. We anticipate that the recently developed super-fast voltage sensor ASAP1 (St-Pierre et al., 2014) may allow much higher fidelity testing of SDCs and Sparsey in general.

Figure I-1. Proposed correspondence between the cortical macrocolumn and Sparsey's mac. Left: schematic of a cortical macrocolumn composed of ~70 minicolumns (green cylinder). SDCs representing context-dependent inputs reside in mac's L2/3 population. An SDC is a set composed of one active L2/3 pyramidal cell per minicolumn. Upper Right: 2-photon calcium image of activity in a mac-sized area of cat V1 given a left-moving vertical bar in the mac's RF; we have added dashed hexagonal boundary to suggest the boundary of macrocolumn/hypercolumn module (adapted from Ohki et al., 2005). Lower Right: two formats that we use to depict macs; they show only the L2/3 cells. The hexagonal format mac has 10 minicolumns each with seven cells. The rectangular format mac has nine minicolumns each with nine cells. Note that in these formats, active cells are black (or red as in many subsequent figures); inactive cells are white.

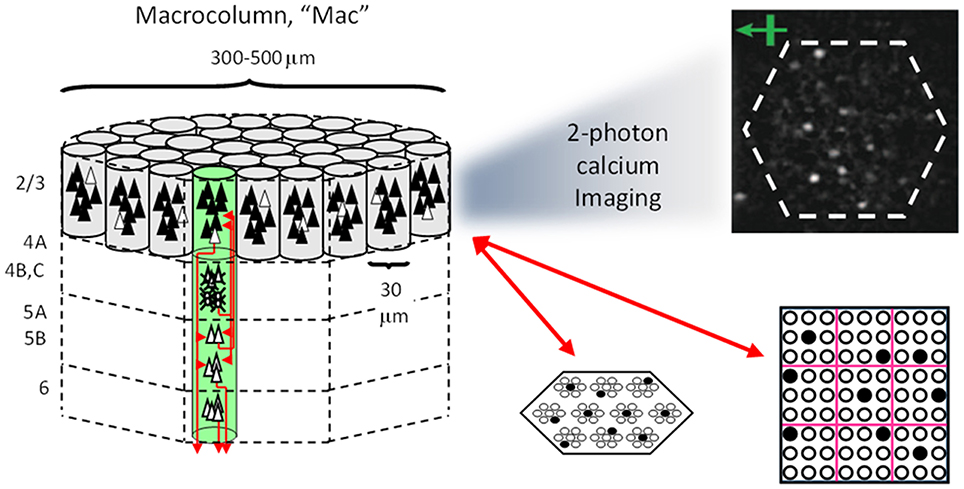

Figure I-2 (left) illustrates the three afferent projections to a particular mac at level L1 (analog of cortical V1), M1i (i.e., the ith mac at level L1). The red hexagon at L0 indicates M1i's aperture onto the thalamic representation of the visual space, i.e., its classical receptive field (RF), which we can refer to more specifically as M1i's U-RF. This aperture consists of about 40 binary pixels connected all-to-all with M1i's cells; black arrows show representative U-weights (U-wts) from two active pixels. Note that we assume that visual inputs to the model are filtered to single-pixel-wide edges and binarized. The blue semi-transparent prism represents the full bundle of U-wts comprising M1i's U-RF.

The all-to-all U-connectivity within the blue prism is essential because the concept of the RF of a mac as a whole, not of an individual cell, is central to our theory. This is because the “atomic coding unit,” or equivalently, the “atomic unit of meaning” in this theory is the SDC, i.e., a set of cells. The activation of a mac, during both learning and recognition, consists in the activation of an entire SDC, i.e., simultaneous activation of one cell in every minicolumn. Similarly, deactivation of a mac consists in the simultaneous deactivation of all cells comprising the SDC (though in general, some of the cells contained in a mac's currently active SDC might also be contained in the next SDC to become active in that mac). Thus, in order to be able to view an SDC as collectively (or atomically) representing the input to a mac as a whole, all cells in a mac must have the same RF (the same set of afferent cells). This scenario is assumed throughout this report.

In Figure I-2, magenta lines represent the D-wts comprising M1i's afferent D projection, or D-RF. In this case, M1i's D-RF consists of only one L2 (analog of V2) mac, M2j, which is all-to-all connected to M1i (representative D-wts from just two of M2j's cells are shown). Any given mac also receives complete H-projections from all nearby macs in its own level (including itself) whose centers fall within a parameter-specifiable radius of its own center. Signals propagating via H-wts are defined to take one time step (one sequence item) to propagate. Green arrows show a small representative sample of H-wts mediating signals arriving form cells active on the prior time step (gray). Red indicates cells active on current time step. At right of Figure I-2, we zoom in on one of M1i's minicolumns (CMs) to emphasize that every cell in a CM has the same H-, U-, and D-RFs. Figure I-3 further illustrates (using the rectangular format for depicting macs) the concept that all cells in a given mac have the same U-, H-, and D-RFs and that those RFs respect the borders of the source macs. Each cell in the L1 mac, M1(2,2) (here we use an alternate (x,y) coordinate indexing convention for the macs), receives a D-wt from all cells in all five L2 macs indicated, an H-wt from all cells in M1(2,2) and its N, S, E, and W neighboring macs (green shading), and a U-wt from all 36 cells in the indicated aperture.

Figure I-3. Connectivity scheme. Within each of the three afferent projections, H, U, and D, to a mac, M1(2,2) (where the mac index is now in terms of (x,y) coordinates in the level, and we have switched to the rectangular mac topology), the connectivity is full and respects mac borders. L1 is a 5 × 4 sheet of macs (blue borders), each consisting of 36 minicolumns (pink borders), but the scale is too small to see the individual cells within minicolumns. L2 is a 4 × 3 sheet of macs, each consisting of nine CMs, each consisting of nine cells.

The hierarchical organization of visual cortex is captured in many biologically inspired computational vision models with the general idea being that progressively larger scale (both spatially and temporally) and more complex visual features are represented in progressively higher areas (Riesenhuber and Poggio, 1999; Serre et al., 2005). Our cortical model, Sparsey, is hierarchical as well, but as noted above, a crucial, in fact, the most crucial difference between Sparsey and most other biologically inspired vision models is that Sparsey encodes information at all levels of the hierarchy, and in every mac at every level, with SDCs. This stands in contrast to models that use localist representations, e.g., all published versions of the HMAX family of models, (e.g., Murray and Kreutz-Delgado, 2007; Serre et al., 2007) and other cortically-inspired hierarchical models (Kouh and Poggio, 2008; Litvak and Ullman, 2009; Jitsev, 2010) and the majority of graphical probability-based models (e.g., hidden Markov models, Bayesian nets, dynamic Bayesian nets). There are several other models for which SDC is central, e.g., SDM (Kanerva, 1988, 1994, 2009; Jockel, 2009), Convergence-Zone Memory (Moll and Miikkulainen, 1997), Associative-Projective Neural Networks (Rachkovskij, 2001; Rachkovskij and Kussul, 2001), Cogent Confabulation (Hecht-Nielsen, 2005), Valiant's “positive shared” representations (Valiant, 2006; Feldman and Valiant, 2009), and Numenta's Grok (described in Numenta white papers). However, none of these models has been substantially elaborated or demonstrated in an explicitly hierarchical architecture and most have not been substantially elaborated for the spatiotemporal case.

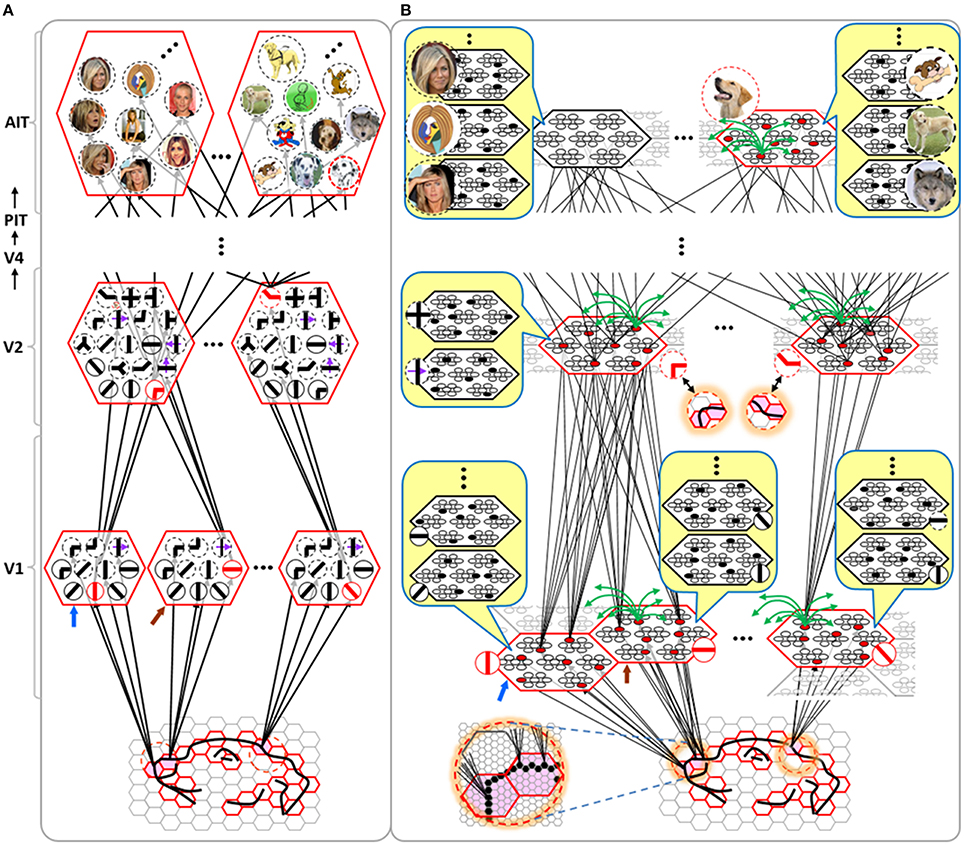

Figure I-4 illustrates the difference between a localist, e.g., an HMAX-like, model and the SDC-based Sparsey model. The input level (analogous to thalamus) is the same in both cases: each small gray/red hexagon in the input level represents the aperture (U-RF) of a single V1 mac (gray/red hexagon). In Figure I-4A, the representation used in each mac (at all levels) is localist, i.e., each feature is represented by a single cell and at any one time, only one cell (feature) is active (red) in any given mac (here the cell is depicted with an icon representing the feature it represents). In contrast, in Figure I-4B, any particular feature is represented by a set of co-active cells (red), one in each of a mac's minicolumns: compare the two macs at lower left of Figure I-4A with the corresponding macs in Figure I-4B (blue and brown arrows). Any given cell will generally participate in the codes of many different features. A yellow call-out shows codes for other features stored in the mac, besides the feature that is currently active. If you look closely, you can see that for some macs, some cells are active in more than one of the codes.

Looking at Figure I-4A, adapted from Serre et al. (2005), one can see the basic principle of hierarchical compositionality in action. The two neighboring apertures (pink) over the dog's nose lead to activation of cells representing a vertical and a horizontal feature in neighboring V1 macs. Due to the convergence/divergence of U-projections to V2, both of these cells project to the cells in the left-hand V2 mac. Each of these cells projects to multiple cells in that V2 mac, however, only the red (active) cell representing an “upper left corner” feature, is maximally activated by the conjunction of these two V1 features. Similarly, the U-signals from the cell representing the “diagonal” feature active in the right-hand V1 mac will combine with signals representing features in nearby apertures to activate the appropriate higher-level feature in the V2 mac whose U-RF includes these apertures (small dashed circles in the input level). Note that some notion of competition (e.g., the “max” operation in HMAX models) operates amongst the cells of a mac such that at any one time, only one cell (one feature) can be active.

We underscore that in Figure I-4, we depict simple (solid border) and complex (dashed border) features within individual macs, implying that complex and simple features can compete with each other. We believe that the distinction between simple and complex features may be largely due to coarseness of older experimental methods (e.g., using synthetic low-dimensional stimuli): newer studies are revealing far more precise tuning functions (Nandy et al., 2013), including temporal context specificity, even as early as V1 (DeAngelis et al., 1993, 1999), and in other modalities, somatosensory (Ramirez et al., 2014) and auditory (Theunissen and Elie, 2014).

The same hierarchical compositional scheme as between V1 and V2 continues up the hierarchy (some levels not shown), causing activation of progressively higher-level features. At higher levels, we typically call them concepts, e.g., the visual concept of “Jennifer Aniston,” the visual concept of the class of dogs, the visual concept of a particular dog, etc. We show most of the features at higher levels with dashed outlines to indicate that they are complex features, i.e., features with particular, perhaps many, dimensions of invariance, most of which are learned through experience. In Sparsey, the particular invariances are learned from scratch and will generally vary from one feature/concept to another, including within the same mac. The particular features shown in the different macs in this example are purely notional: it is the overall hierarchical compositionality principle that is important, not the particular features shown, nor the particular cortical regions in which they are shown.

The hierarchical compositional process described above in the context of the localist model of Figure I-4A applies to the SDC-based model in Figure I-4B as well. However, features/concepts are now represented by sets of cells rather than single cells. Thus, the vertical and horizontal features forming part of the dog's nose are represented with SDCs in their respective V1 macs (blue and brown arrows, respectively), rather than with single cells. The U-signals propagating from these two V1 macs converge on the cells of the left-hand V2 mac and combine, via Sparsey's code selection algorithm (CSA) (described in Section Sparsey's Core Algorithm), to activate the SDC representing the “corner” feature, and similarly on up the hierarchy. Each of the orange outlined insets at V2 shows the input level aperture of the corresponding mac, emphasizing the idea that the precise input pattern is mapped into the closest-matching stored feature, in this example, a “upper left 90° corner” at left and a “NNE-pointing 135° angle” at right. The inset at bottom of Figure I-4B zooms in to show that the U-signals to V1 arise from individual pixels of the apertures (which would correspond to individual LGN projection cells).

In the past, IT cells have generally been depicted as being narrowly selective to particular objects (Desimone et al., 1984; Kreiman et al., 2006; Kiani et al., 2007; Rust and DiCarlo, 2010). However, as DiCarlo et al. (2012) point out, the data overwhelmingly support the view of individual IT cells as having a “diversity of selectivity”; that is, individual IT cells generally respond to many different objects and in that sense are much more broadly tuned. This diversity is notionally suggested in Figures I-4B, I-5 in that individual cells are seen to participate in multiple SDCs representing different images/concepts. However, the particular input (stimulus) dimensions for which any given cell ultimately demonstrates some degree of invariance is not prescribed a priori. Rather they emerge essentially idiosyncratically over the history of a cell's inclusions in SDCs of particular experienced moments. Thus, the dimensions of invariance in the tuning functions of even immediately neighboring cells may generally end up quite different.

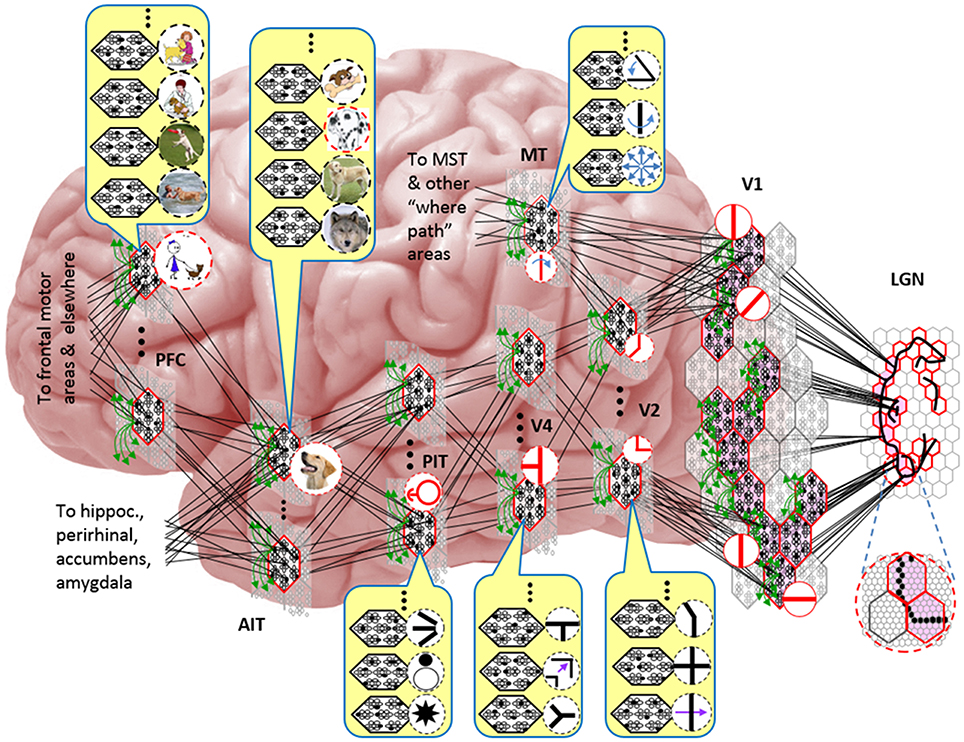

Figure I-5 embellishes the scheme shown in Figure I-4B and (turning it sideways) casts it onto the physical brain. We add paths from V1 and V2 to an MT representation as well. We add a notional PFC representation in which a higher-level concept involving the dog, i.e., the fact that it is being walked, is active. We show a more complete tiling of macs at V1 than in Figure I-4B to emphasize that only V1 macs that have a sufficient fraction of active pixels, e.g., an edge contour, in their aperture become active (pink). In general, we expect the fraction of active macs to decrease with level. As this and prior figures suggest, we currently model the macs as having no overlap with each other (i.e., they tile the local region), though their RFs [as well as their projective fields (PFs)] can overlap. However, we expect that in the real brain, macs can physically overlap. That is, any given minicolumn could be contained in multiple overlapping macs, where only one of those macs can be active at any given moment. The degree of overlap could vary by region, possibly generally increasing anteriorly. If so, then this would partially explain (in conjunction with the extremely limited view of population activity that single/few-unit electrophysiology has provided through most of the history of neuroscience) why there has been little evidence thus far for macs in more frontal regions.

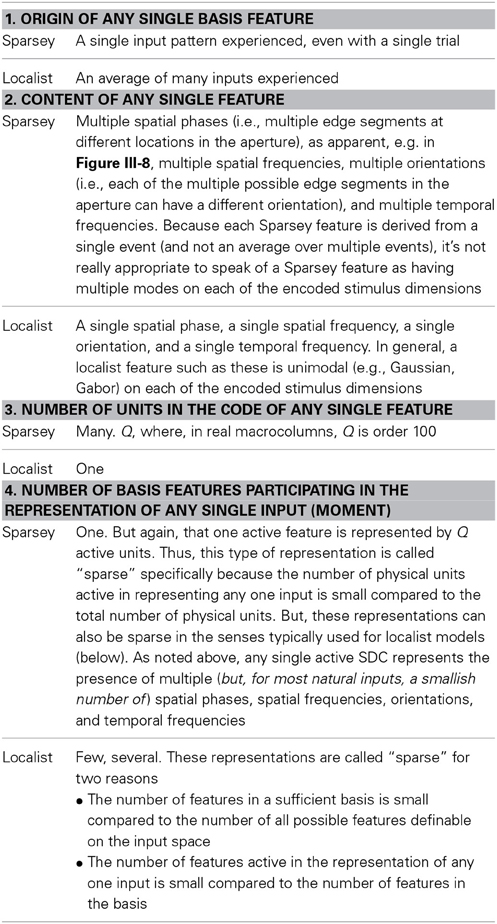

Sparse Distributed Codes vs. Localist Codes

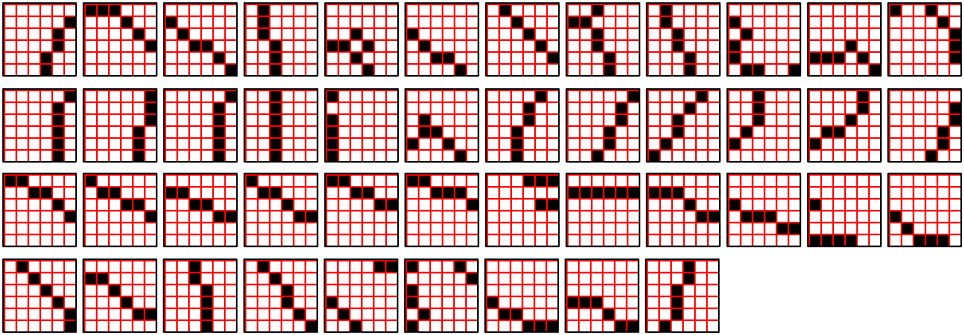

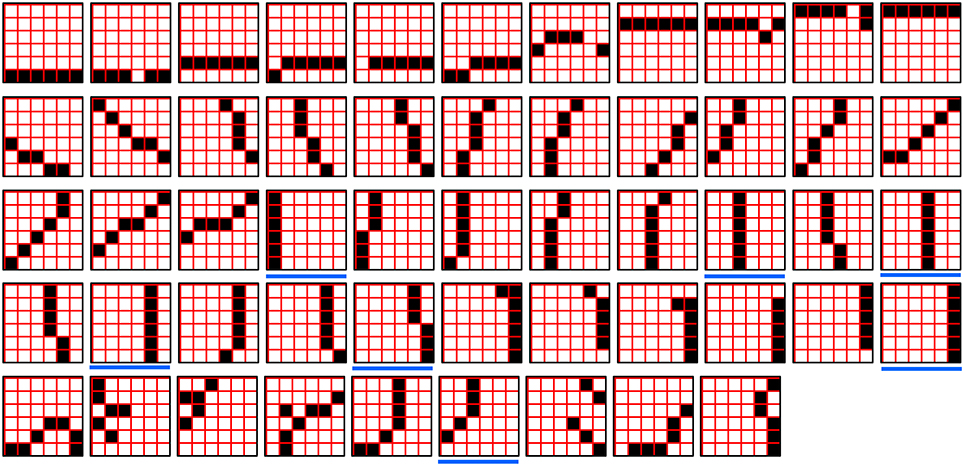

One important difference between SDC and localist representation is that the space of representations (codes) for a mac using SDC is exponentially larger than for a mac using a localist representation. Specifically, if Q is the number of CMs in a mac and K is the number of cells per CM, then there are KQ unique SDC codes for that mac. A localist mac of the same size only has Q × K unique codes. Note that it is not the case that an SDC-based mac can use that entire code space, i.e., store KQ features. Rather, the limiting factor on the number of codes storable in an SDC-based mac is the fraction of the mac's afferent synaptic weights that are set high (our model uses effectively binary weights), i.e., degree of saturation. In fact, the number of codes storable such that all stored codes can be retrieved with some prescribed average retrieval accuracy (error), is probably a vanishingly small fraction of the entire code space. However, real macrocolumns have Q ≈ 70 minicolumns, each with K ≈ 20 L2/3 principal cells: a “vanishingly small fraction” of 2070 can of course still be a large absolute number of codes.

While the difference in code space size between localist and SDC models is important, it is the distributed nature of the SDC codes per se that is most important. Many have pointed out a key property of SDC which is that since codes overlap, the number of cells in common between two codes can be used to represent their similarity. For example, if a given mac has Q = 100 CMs, then there are 101 possible degrees of intersection between codes, and thus 101 degrees of similarity, which can be represented between concepts stored in that mac. The details of the process/algorithm that assigns codes to inputs determines the specific definition of similarity implemented. We will discuss the similarity metric(s) implemented and implementable in Sparsey throughout the sequel.

However, as stated earlier, the most important distinction between localism and SDC is that SDC allows the two essential operations of associative (content-addressable) memory, storing new inputs and retrieving the best-matching stored input, to be done in fixed time for the life of the model. That is, given a model of a fixed size (dominated by the number of weights), and which therefore has a particular limit on the amount, C, of information that it can store and retrieve subject to a prescribed average retrieval accuracy (error), the time it takes to either store (learn) a new input or retrieve the best-matching stored input (memory) remains constant regardless of how much information has been stored, so long as that amount remains less than C. There is no other extant model, including all HMAX models, all convolutional network (CN) models, all Deep Learning (DL) models, all other models in the class of graphical probability models (GPMs), and the locality-sensitive hashing models, for which this capability—constant storage and best-match retrieval time over the life of the system—has been demonstrated. All these other classes of models realize the benefits of hierarchy per se, i.e., the principle of hierarchical compositionality which is critical for rapidly learning highly nonlinear category boundaries, as described in Bengio et al. (2012), but only Sparsey also realizes the speed benefit, and therefore ultimately, the scalability benefit, of SDC. We state the algorithm in Section Sparsey's Core Algorithm. The reader can see by inspection of the CSA (Table I-1) that it has a fixed number of steps; in particular, it does not iterate over stored items.

Another way of understanding the computational power of SDC compared to localism is as follows. We stated above that in a localist representation such as in Figure I-4A, only one cell, representing one hypothesis can be active at a time. The other cells in the mac might, at some point prior to the choice of a final winner, have a distribution of sub-threshold voltages that reflects the likelihood distribution over all represented hypotheses. But ultimately, only one cell will win, i.e., go supra-threshold and spike. Consequently, only that one cell, and thus that one hypothesis, will materially influence the next time step's decision process in the same mac (via the recurrent H matrix) and in any other downstream macs.

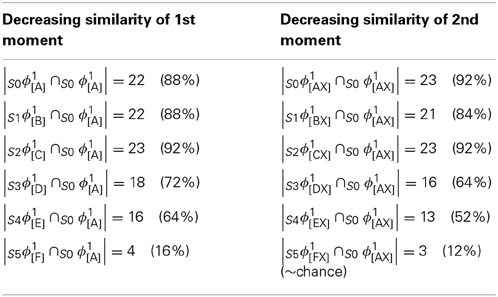

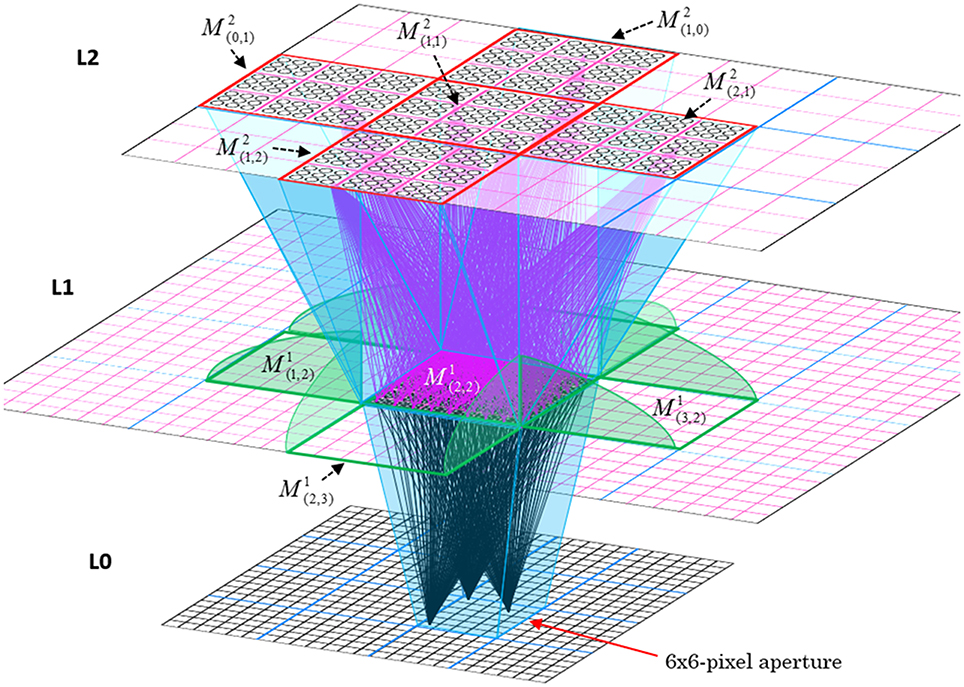

In contrast, because SDCs physically overlap, if one particular SDC (and thus, the hypothesis that it represents) is fully active in a mac, i.e., if all Q of that code's cells are active, then all other codes (and thus, their associated hypotheses) stored in that mac are also simultaneously physically partially active in proportion to the size of their intersections with the single fully active code. Furthermore, if the process/algorithm that assigns the codes to inputs has enforced the similar-inputs-to-similar-codes (SISC) property, then all stored inputs (hypotheses) are active with strength in descending order of similarity to the fully active hypothesis. We assume that more similar inputs generally reflect more similar world states and that world state similarity correlates with likelihood. In this case, the single fully active code also physically functions as the full likelihood distribution over all SDCs (hypotheses) stored in a mac. Figure I-6 illustrates this concept. We show five hypothetical SDCs, denoted with ϕ(), for five input items, A-E (the actual input items are not shown here), which have been stored in the mac shown. At right, we show the decreasing intersections of the codes with ϕ(A). Thus, when code ϕ(A) is (fully) active, ϕ(B) is 4/7 active, ϕ(C) is 3/7 active, etc. Since cells representing all of these hypotheses, not just the most likely hypothesis, A, actually spike, it follows that all of these hypotheses physically influence the next time step's decision processes, i.e., the resulting likelihood distributions, active on the next time step in the same and all downstream macs.

Figure I-6. If the process that assigns SDCs to inputs enforces the similar-input-to-similar-codes (SISC) property, then the currently active code in a mac simultaneously physically functions as the entire likelihood distribution over all hypotheses stored in the mac. At bottom, we show the activation strength distribution over all five codes (stored hypotheses), when each of the five codes is fully active. If SISC was enforced when these codes were assigned (learned), then these distributions are interpretable as likelihood distributions. See text for further discussion.

We believe this difference to be fundamentally important. In particular, it means that performing a single execution of the fixed-time CSA transmits the influence of every represented hypothesis, regardless of how strongly active a hypothesis is, to every hypothesis represented in downstream macs. We emphasize that the representation of a hypothesis's probability (or likelihood) in our model—i.e., as the fraction of a given hypothesis's full code (of Q cells) that is active—differs fundamentally from existing representations in which single neurons encode such probabilities in their strengths of activation (e.g., firing rates) as described in the recent review of Pouget et al. (2013).

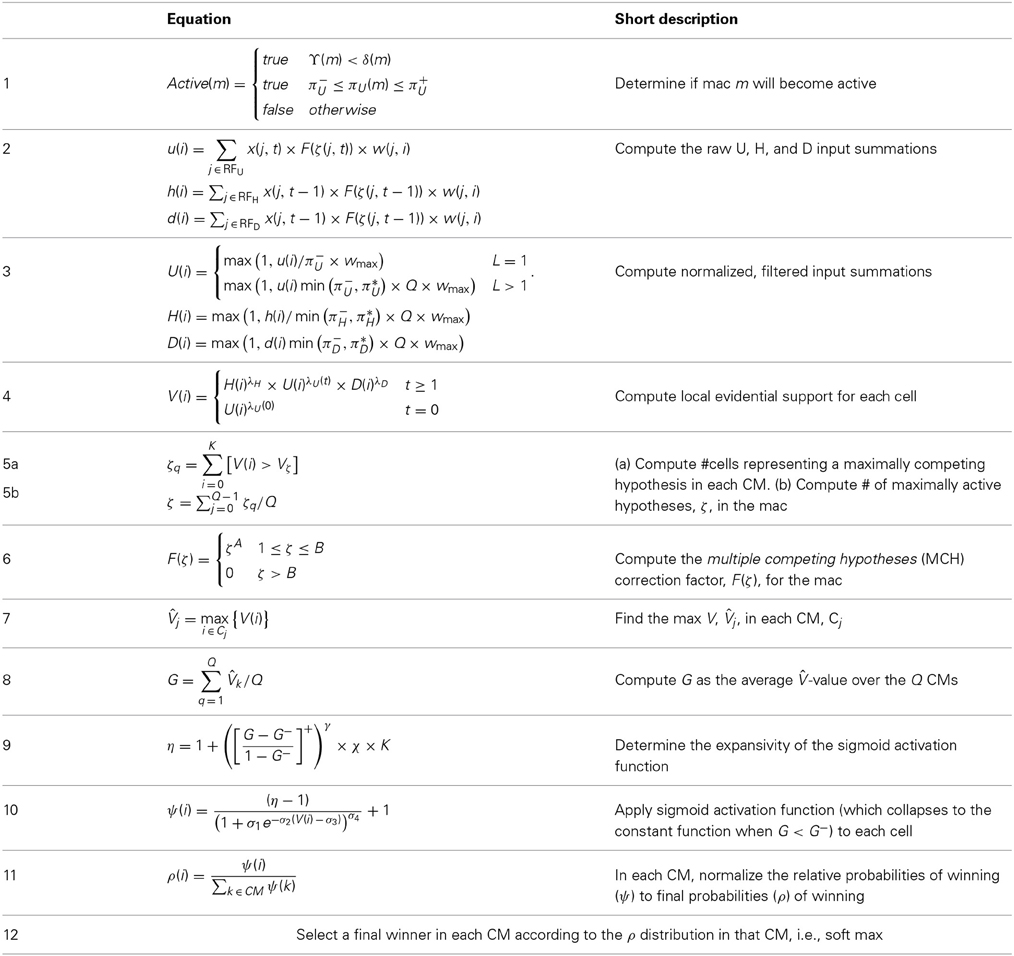

Sparsey's Core Algorithm

During learning, Sparsey's core algorithm, the code selection algorithm (CSA), operates on every time step (frame) in every mac of every level, resulting in activation of a set of cells (an SDC) in the mac. The CSA can also be used, with one major variation, during retrieval (recognition). However, there is a much simpler retrieval algorithm, essentially just the first few steps of the CSA, which is preferable if the system “knows” that it is in retrieval mode. Note that this is not the natural condition for autonomous systems: in general, the system must be able to decide for itself, on a frame-by-frame basis, whether it needs to be in learning mode (if, and to what extent, the input is novel) or retrieval mode (if the input is completely familiar). We first describe the CSA's learning mode, then its variation for retrieval, then its much simpler retrieval mode. See Table I-2 for definitions of symbols used in equations and throughout the paper.

CSA: Learning Mode

The overall goal of the CSA when in learning mode is to assign codes to a mac's inputs in adherence with the SISC property, i.e., more similar overall inputs to a mac are mapped to more highly intersecting SDCs. With respect to each of a mac's individual afferent RFs, U, H, and D, the similarity metric is extremely primitive: the similarity of two patterns in an afferent RF is simply an increasing function of the number of features in common between the two patterns, thus embodying only what Bengio et al. (2012) refer to as the weakest of priors, the smoothness prior. However, the CSA multiplicatively combines these component similarity measures and, because the H and D signals carry temporal information reflecting the history of the sequence being processed, the CSA implements a spatiotemporal similarity metric. Nevertheless, the ability to learn arbitrarily complex nonlinear similarity metrics (i.e., category boundaries, or invariances), requires a hierarchical network of macs and the ability for an individual SDC, e.g., active in one mac, to associate with multiple (perhaps arbitrarily different) SDCs in one or more other macs. We elaborate more on Sparsey's implementation of this capability in Section Learning arbitrarily complex nonlinear similarity metrics.

The CSA has 12 steps which can be broken into two phases. Phase 1 (Steps 1–7) culminates in computation of the familiarity, G (normalized to [0,1]), of the overall (H, U, and D) input to the mac as a whole, i.e., G is a function of the global state of the mac. To first approximation, G is the similarity of the current overall input to the closest-matching previously stored (learned) overall input. As we will see, computing G involves a round of deterministic (hard max) competition resulting in one winning cell in each of the Q CMs. In Phase 2 (Steps 8–12), the activation function of the cells is modified based on G and a second round of competition occurs, resulting in the final set of Q winners, i.e., the activated code in the mac on the current time step. The second round of competition is probabilistic (soft max), i.e., the winner in each CM is chosen as a draw from a probability distribution over the CM's K cells.

In neural terms, each of the CSA's two competitive rounds entail the principal cells in each CM integrating their inputs, engaging the local inhibitory circuitry, resulting in a single spiking winner. The difference is that the cell activation functions (F/I-curves) used during the second round of integration will generally be very different from those used during the first round. Broadly, the goal is as follows: as G approaches 1, make cells with larger inputs compared to others in the CM increasingly likely to win in the second round, whereas as G approaches 0, make all cells in a CM equally likely to win in the second round. We discuss this further in Section Neural implementation of CSA.

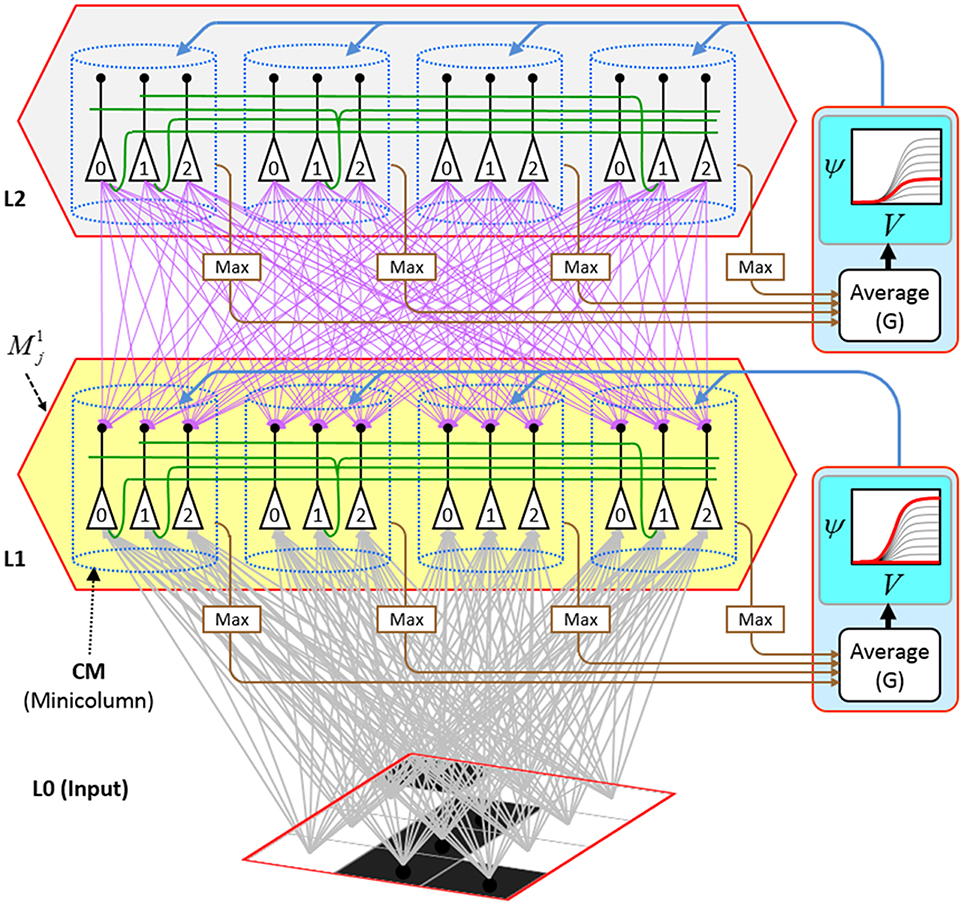

We now describe the steps of the CSA in learning mode. We will refer to the generic “circuit model” in Figure II-1 in describing some of the steps. The figure has two internal levels with one small mac at each level, but the focus, in describing the algorithm, will be on the L1 mac, M1j, highlighted in yellow. M1j consists of Q = 4 CMs, each with K = 3 cells. Gray arrows represent the U-wts from the input level, L0, consisting of 12 binary pixels. Magenta arrows represent the D-wts from the L2 mac. Green lines depict a subset of the H-wts. The representation of where the different afferents arrive on the cells is not intended to be veridical. The depicted “Max” operations are the hard max operations of CSA Step 7. The blue arrows portray the mac-global G-based modulation of the cellular V-to-ψ map (essentially, the F/I curve). The probabilistic draw operation is not explicitly depicted in this circuit model.

Step 1: Determine if the mac will become active

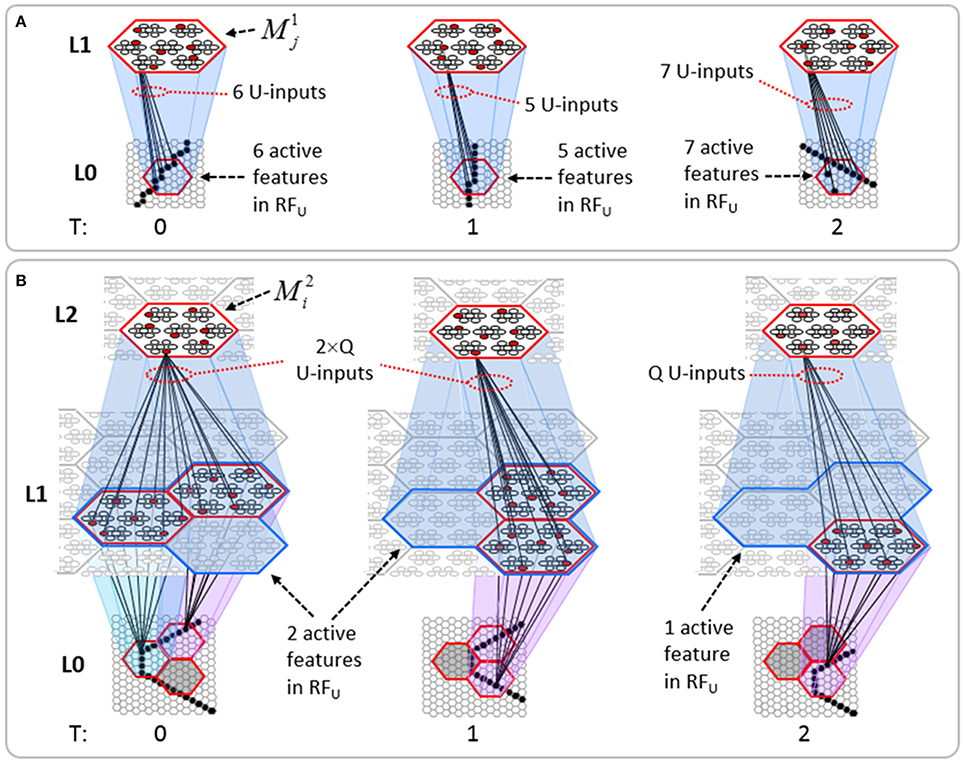

As shown in Equation (1), during learning, a mac, m, becomes active if either of two conditions hold: (a) if the number of active features in its U-RF, πU(m), is between π−U and π+U; or (b) if it is already active but the number of frames that it has been on for, i.e., its code age, ϒ(m), is less than its persistence, δ(m). That is, during learning, we want to ensure that codes remain on for their entire prescribed persistence durations. We currently have no conditions on the number of active features in the H and D RFs.

Step 2: Compute raw U, H, and D-summations for each cell, i, in the mac

Every cell, i, in the mac computes its three weighted input summations, u(i), as in Equation (2a). RFU is a synonym for U-RF. a(j, t) is pre-synaptic cell j's activation, which is binary, on the current frame. Note that the synapses are effectively binary. Although the weight range is [0,127], pre-post correlation causes a weight to increase immediately to wmax = 127 and the asymptotic weight distribution will have a tight cluster around 0 (for weights that are effectively “0”) and around 127 (for weights that are effectively “1”). The learning policy and mechanics are described in Section Learning policy and mechanics. F(ζ(j, t)) is a term needed to adjust the weights of afferent signals from cells in macs in which multiple competing hypotheses (MCHs) are active. If the number of MCHs (ζ) is small then we want to boost the weights of those signals, but if it gets too high, in which case we refer to the source mac as being muddled, those signals will generally only serve to decrease SNR in target macs and so we disregard them. Computing and dealing with MCHs is described in Steps 5 and 6. h(i) and d(i) are computed in analogous fashion Equations (2b) and (2c), with the slight change that H and D signals are modeled as originating from codes active on the previous time step (t − 1).

Step 3: Normalize and filter the raw summations

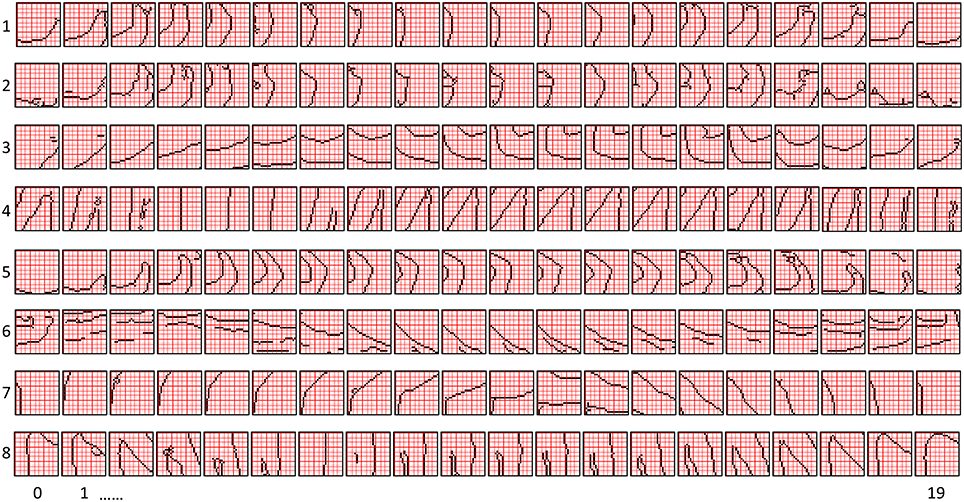

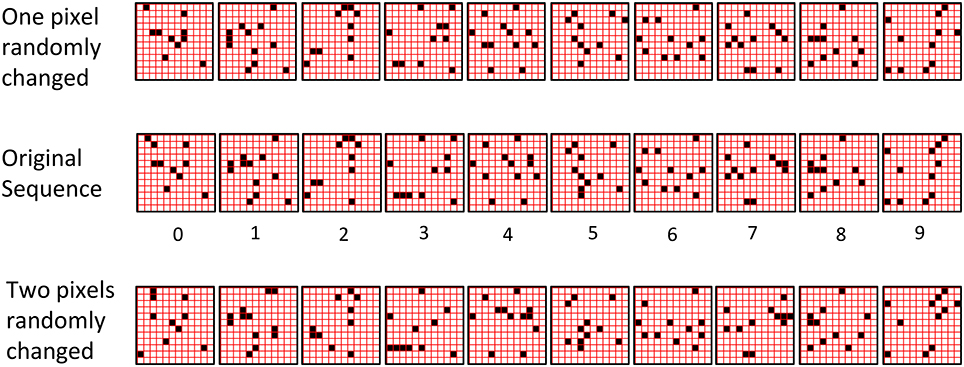

The summations, u(i), h(i), and d(i), are normalized to [0,1] interval, yielding U(i), H(i), and D(i). We explained above that a mac m only becomes active if the number of active features in its U-RF, πU(m), is between π−U and π+U, referred to as the lower and upper mac activation bounds. Given our assumption that visual inputs to the model are filtered to single-pixel-wide edges and binarized, we expect relatively straight or low-curvature edges roughly spanning the diameter of an L0 aperture to occur rather frequently in natural imagery. Figure II-2 shows two examples of such inputs, as frames of sequences, involving either only a single L0 aperture (panel A) or a region consisting of three L0 apertures, i.e., as might comprise the U-RFs of an L2 mac (e.g., as in Figure I-4B). The general problem, treated in this figure, is that the number of features present in a mac's U-RF, πU(m), may vary from one frame to the next. Note that for macs at L2 and higher, the number of features present in an RF is the number of active macs in that RF, not the total number of active cells in that RF. The policy implemented in Sparsey is that inputs with different numbers of active features compete with each other on an equal footing. Thus, normalizers (denominators) in Equations (3a–c) use the lower mac activation bound, π−U, π−H, and π−D. This necessitates hard limiting the maximum possible normalized value to 1, so that inputs with between π−U and π+U active features yield normalized values confined to [0,1]. There is one additional nuance. As noted above, if a mac in m's U-RF is muddled, then we disregard all signals from it, i.e., they are not included in the u-summations of m's cells. However, since that mac is active, it will be included in the number of active features, πU(m). Thus, we should normalize by the number of active, nonmuddled macs in m's U-RF (not simply the number of active macs): we denote this value as π*U. Finally, note that when the afferent feature is represented by a mac, that feature is actually being represented by the simultaneous activation of, and thus, inputs from, Q cells; thus the denominator must be adjusted accordingly, i.e., multiplied by Q and by the maximum weight of a synapse, wmax.

Figure II-2. The mac's normalization policy must be able to deal with inputs of different sizes, i.e., inputs having different numbers of active features. (A) An edge rotates through the aperture over three time steps, but the number of active features (in this case, pixels) varies from one time step (moment) to the next. In order for the mac to be able to recognize the 5-pixel input (T = 1) just as strongly as the 6 or 7-pixel inputs, the u-summations must be divided by 5. (B) The U-RFs of macs at L2 and higher consist of an integer number of subjacent level macs, e.g., here, M2i's U-RF consists of three L1 macs (blue border). Each active mac in M2i's U-RF represents one feature. As for panel a, the number of active features varies across moments, but in this case, the variation is in increments/decrements of Q synaptic inputs. Grayed-out apertures have too few active pixels for their associated L1 macs to become active.

Step 4: Compute overall local support for each cell in the mac

The overall local (to the individual cell) measure, V(i), of evidence/support that cell i should be activated is computed by multiplying filtered versions of the normalized inputs as in Equation (4). V(i) can also be viewed as the normalized degree of match of cell i's total afferent (including U, H, and D) synaptic weight vector to its total input pattern. We emphasize that the V measure is not a measure of support for a single hypothesis, since an individual cell does not represent a single hypothesis. Rather, in terms of hypotheses, V(i) can be viewed as the local support for the set of hypotheses whose representations (codes) include cell i. The individual normalized summations are raised to powers (λ), which allows control of the relative sensitivities of V to the different input sources (U, H, and D). Currently, the U-sensitivity parameter, λU, varies with time (index of frame with respect to beginning of sequence). We will add time-dependence to the H and D sensitivity parameters as well and explore the space of policies regarding these schedules in the future. In general terms, these parameters (along with many others) influence the shapes of the boundaries of the categories learned by a mac.

As described in Section CSA: Retrieval Mode, during retrieval, this step is significantly generalized to provide an extremely powerful, general, and efficient mechanism for dealing with arbitrary, nonlinear invariances, most notably, nonlinear time-warping of sequences.

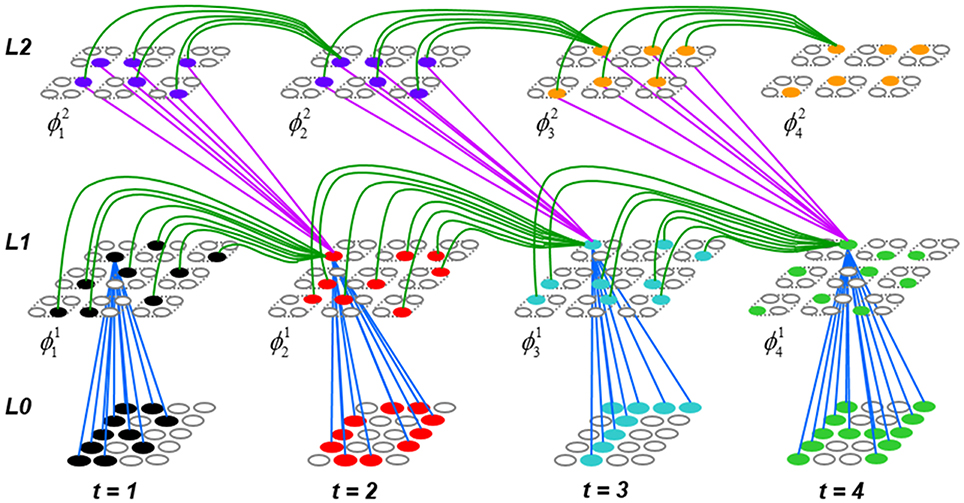

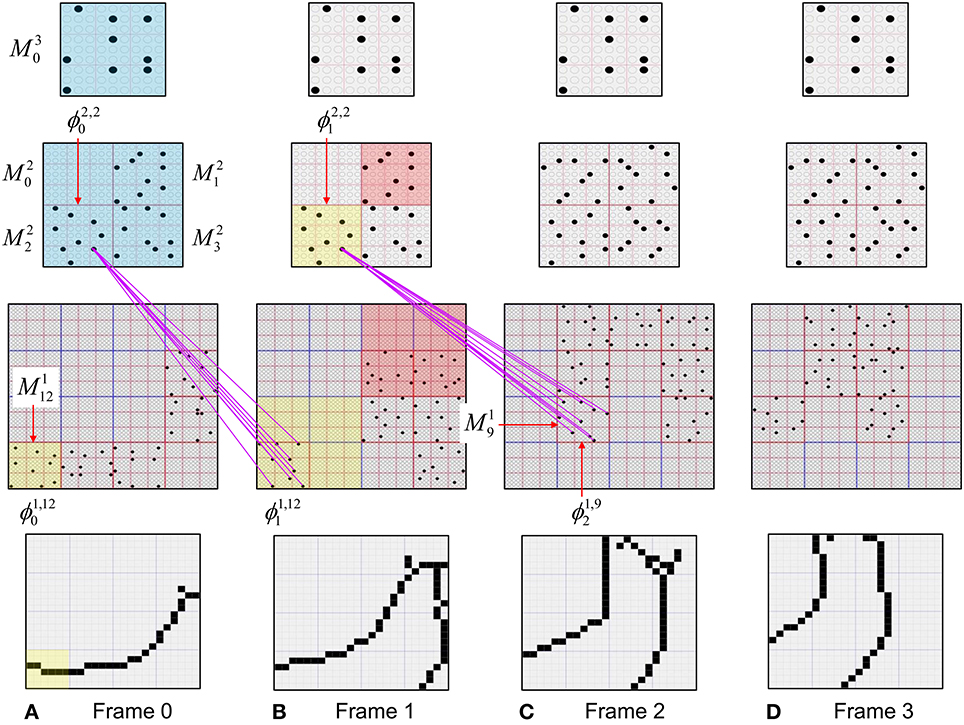

Step 5: Compute the number of competing hypotheses that will be active in the mac once the final code for this frame is activated

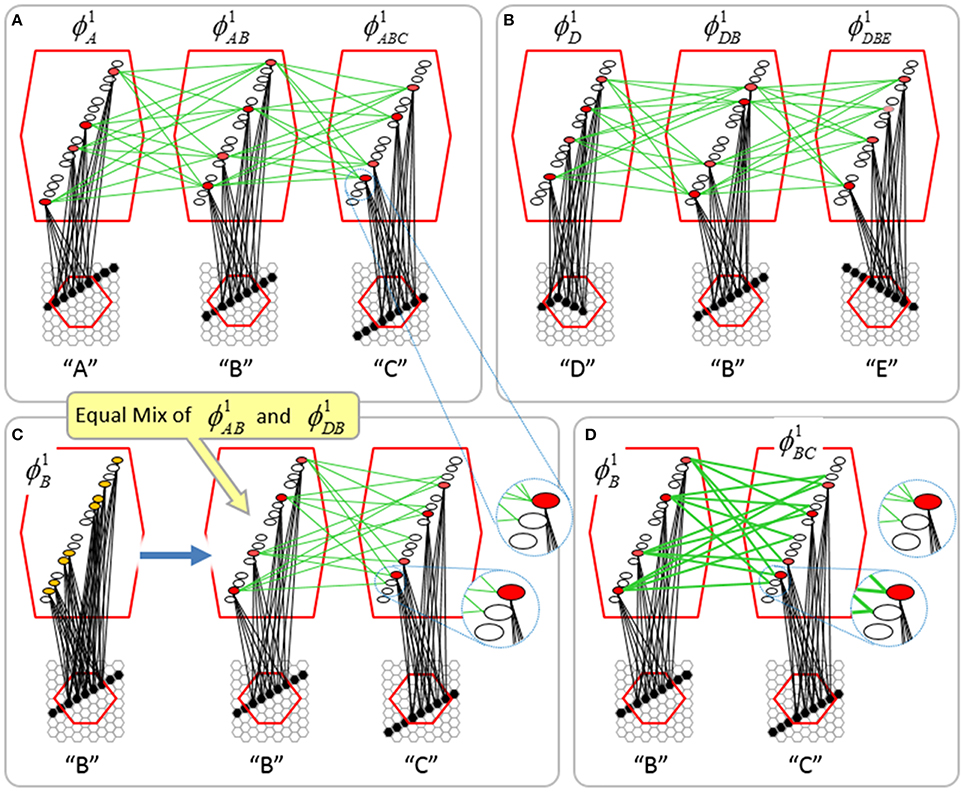

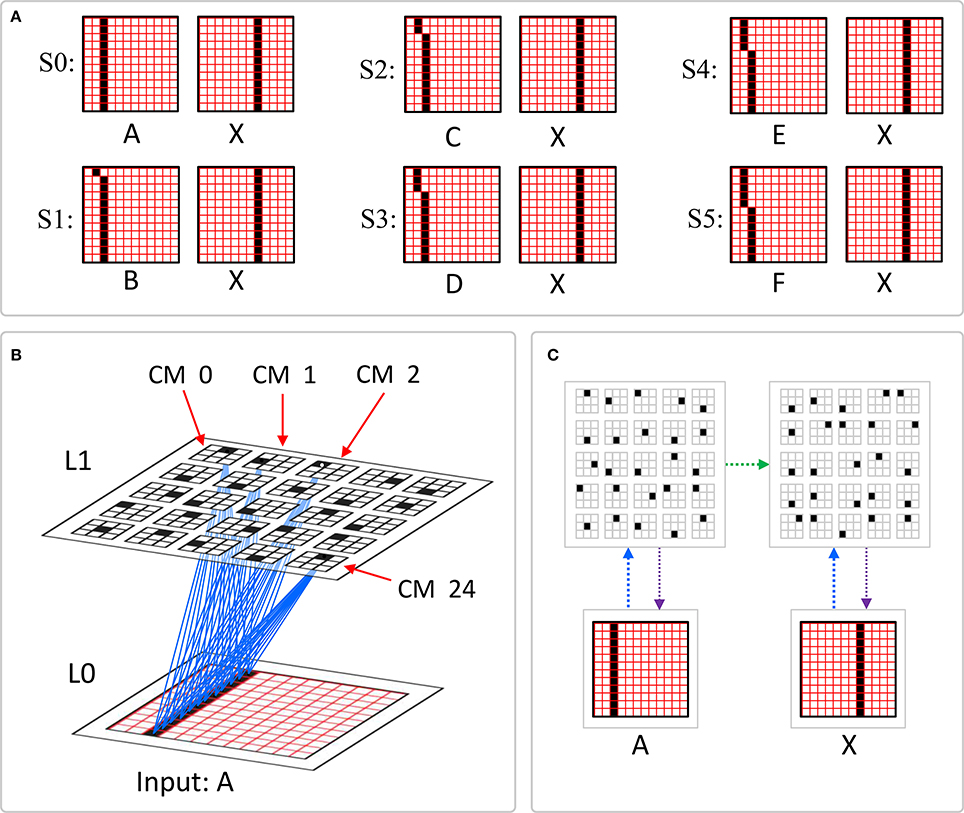

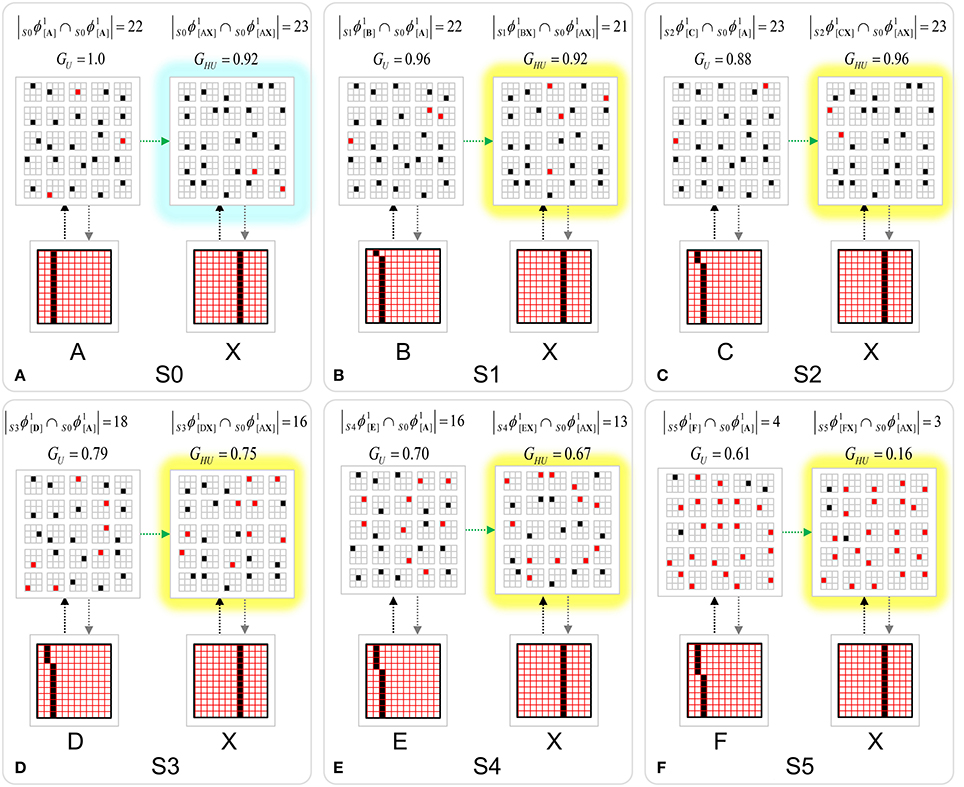

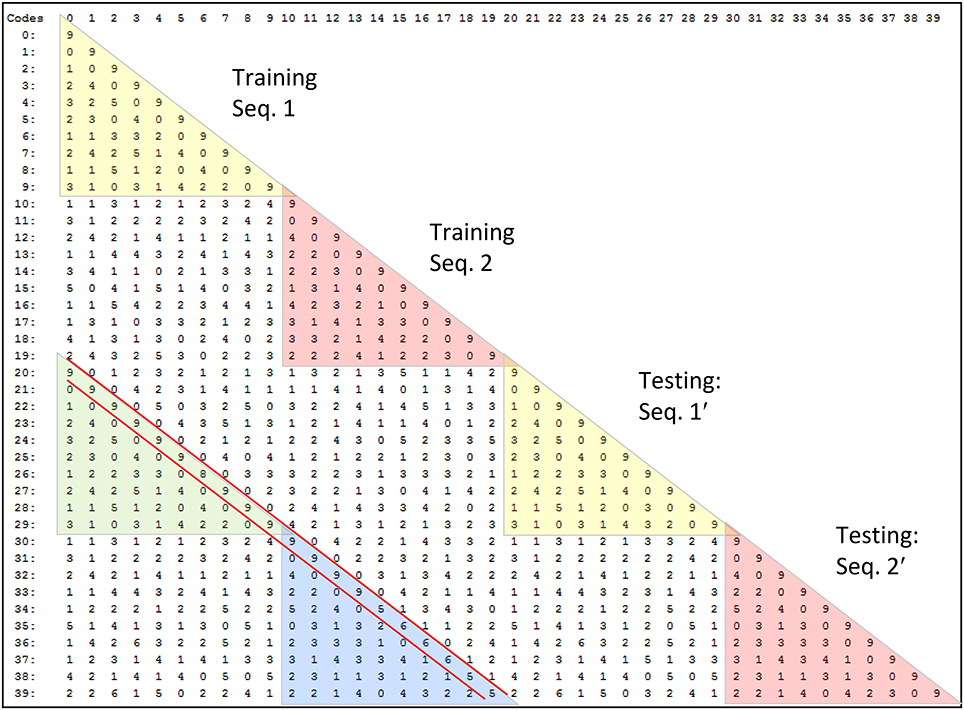

To motivate the need for keeping track of the number of competing hypotheses active in a mac, we consider the case of complex sequences, in which the same input item occurs multiple times and in multiple contexts. Figure II-3 portrays a minimal example in which item B occurs as the middle state of sequences [ABC] and [DBE]. Here, the model's single internal level, L1, consists of just one mac, with Q = 4 CMs, each with K = 4 cell. Figure II-3A shows notional codes (SDCs) chosen on the three time steps of [ABC]. The code name convention here is that ϕ denotes a code, the superscript “1” indicates the model level at which code resides. The subscript indicates the specific moment of the sequence that the code represents; thus, it is necessary for the subscript to specify the full temporal context, from start of sequence, leading up to the current input item. Successively active codes are chained together, resulting in spatiotemporal memory traces that represent sequences. Green lines indicate the H-wts that are increased from one code to the next. Black lines indicate the U-wts that are increased from currently active pixels to currently active L1 cells (red). Thus, as described earlier, e.g., in Figure I-2, individual cells learn spatiotemporal inputs in correlated fashion, as whole SDCs. Learning is described more thoroughly in Section Learning Policy and Mechanics.

Figure II-3. Portrayal of reason why macs need to know how many multiple competing hypotheses (MCHs) are/were active in their afferent macs. (A) Memory trace of 3-item sequence, [ABC]. This model has a single internal level with one mac consisting of Q = 4 CMs, each with K = 4 cells. We show notional SDCs (sets of red cells) for each of the three items. The green lines represent increased H-wts in the recurrent H-matrix: the trace is shown unrolled in time in time. (B) A notional memory trace of sequence [DBE]. The SDC chosen for item B differs from that in [ABC] because of the different temporal context signals, i.e., from the code for item D rather than the code for item A. (C) We prompt with item B, the model enters a state that has equal measures of both of B's previously assigned SDCs. Thus multiple (here, two) hypotheses are equally active. (D) If the model can detect that multiple hypotheses are active in this mac, then it can boost its efferent H-signals (multiplying them by the number of MCHs), in which case the combined H and U signals when the next item, here “C”, is presents, causing the SDC for the moment [ABC] to become fully active. See text for more details.

As portrayed in Figure II-3B, if [ABC] has been previously learned, then when item B of another sequence, [DBC], is encountered, the CSA will generally cause a different SDC, here, ϕ1DB, to be chosen. ϕ1DB will be H-associated with whatever code is activated for the next item, in this case ϕ1DBE for item E. This choosing of codes in a context-dependent way (where the dependency has no fixed Markov order and in practice can be extremely long), enables subsequent recognition of complex sequences without confusion.

However, what if in some future recognition test instance, we prompt the network with item B, i.e., as the first item of the sequence, as shown in Figure II-3C? In this case, there are no active H-wts and so the computation of local support Equation (4) depends only on the U-wts. But, the pixels comprising item B have been fully associated with the two codes, ϕ1AB and ϕ1DB, which have been assigned to the two moments when item B was presented, [AB] and [DB]. We show the two maximally implicated (more specifically, maximally U-implicated) cells in each CM as orange to indicate that a choice between them in each CM has not yet been made. However, by the time the CSA completes for the frame when item B is presented, one winner must be chosen in each CM (as will become clear as we continue to explain the CSA throughout the remainder of section Sparsey's Core Algorithm). And, because it is the case in each CM, that both orange cells are equally implicated, we choose winners randomly between them, resulting in a code that is an equal mix of the winners from ϕ1AB and ϕ1DB. In this case, we refer to the mac as having multiple competing hypotheses active (MCHs), where we specifically mean that all the active hypotheses (in this case, just two) are approximately equally strongly active.

The problem can now be seen at the right of Figure II-3C when C is presented. Clearly, once C is presented, the model has enough information to know which of the two learned sequences, or more specifically, which particular moment is intended, [ABC] rather than [DBE]. However, the cells comprising the code representing that learned moment, ϕ1ABC, will, at the current test moment (lower inset in Figure II-3C), have only half the active H-inputs that they had during the original learning instance (i.e., upper inset in Figure II-3C). This leads, once processed through steps 2b, 3b, and 4, to V-values that will be far below V = 1, for simplicity, let's say V = 0.5, for the cells comprising ϕ1ABC. As will be explained in the remaining CSA steps, this ultimately leads to the model not recognizing the current test trial moment [BC] as equivalent to the learning trial moment [ABC], and consequently, to activation of a new code that could in general be arbitrarily different from ϕ1ABC.

However, there is a fairly general solution to this problem where multiple competing hypotheses are present in an active mac code, e.g., in the code for B indicated by the yellow call-out. The mac can easily detect when an MCH condition exists. Specifically, it can tally the number cells with V = 1—or, allowing some slight tolerance for considering a cell to be maximally implicated, cells with V(i) > Vζ, where Vζ is close to 1, e.g., Vζ = 0.95—in each of its Q CMs, as in Equation (5a). It can then sum ζq over all Q CMs and divide by Q (and round to the nearest integer, “rni”), resulting in the number of MCHs active in the mac, ζ, as in Equation (5b). In this example, ζ = 2, and the principle by which the H-input conditions, specifically the h-summations, for the cells in ϕ1ABC on this test trial moment [BC] can be made the same as they were during the learning trial moment [ABC], is simply to multiply all outgoing H-signals from ϕ1B by ζ = 2. We indicate the inflated H-signals by the thicker green lines in the lower inset at right of Figure II-3D. This ultimately leads to V = 1 for all four cells comprising ϕ1ABC and, via the remaining steps of the CSA, reinstatement of 1 ϕABC with very high probability (or with certainty, in the simple retrieval mode described in Section CSA: Simple Retrieval Mode), i.e., with recognition of test trial moment [BC] as equivalent to learning trial moment [ABC]. The model has successfully gotten through an ambiguous moment based on presentation of further, disambiguating inputs.

We note here that uniformly boosting the efferent H-signals from ϕ1B also causes the h-summations for the four cells comprising the code ϕ1DBE to be the same as they were in the learning trial moment [DBE]. However, by Equation (4), the V-values depend on the U-inputs as well. In this case, the four cells of ϕ1DBE have u-summations of zero, which leads to V = 0, and ultimately to essentially zero probability of any of these cells winning the competitions in their respective CMs. Though we don't show the example here, if on the test trial, we present E instead of C after B, the situation is reversed; the u-summations of cells comprising the code ϕ1DBE are the same as they were in the learning trial moment [DBE] whereas those of the cells comprising the code ϕ1ABC are zero, resulting with high probability (or certainty) in reinstatement of ϕ1DBE.

Step 6: Compute correction factor for multiple competing hypotheses to be applied to efferent signals from this mac

The example in Figure II-3 was rather clean in that it involved only two sequences having been learned, containing a total of six moments, [A], [AB], [ABC], [D], [DB], and [DBE], and very little pixel-wise overlap between the items. Thus, cross-talk between the stored codes was minimized. However, in general, macs will store far more codes. If for example, the mac of Figure II-3 was asked to store 10 moments where B was presented, then, if we prompted the network with B as the first sequence item, we would expect almost all cells in all CMs to have V = 1. As discussed in Step 2, when the number of MCHs (ζ) in a mac gets too high, i.e., when the mac is muddled, its efferent signals will generally only serve to decrease SNR in target macs (including itself on the next time step via the recurrent H-wts) and so we disregard them. Specifically, when ζ is small, e.g., two or three, we want to boost the value of the signals coming from all active cells in that mac by multiplying by ζ (as in Figure II-3D). However, as ζ grows beyond that range, the expected overlap between the competing codes increases and to approximately account for that, we begin to diminish the boost factor as in Equation (6), where A is an exponent less than 1, e.g., 0.7. Further, once ζ reaches a threshold, B, typically set to 3 or 4, we multiply the outgoing weights by 0, thus effectively disregarding the mac completely in downstream computations. We denote the correction factor for MCHs as F(ζ), defined as in Equation (6). We also use the notation, F(ζ(j, t)), as in Equation (2), where ζ(j, t) is the number of hypotheses tied for maximal activation strength in the owning mac of a pre-synaptic cell, j, at time (frame) t.

Step 7: Determine the maximum local support in each of the mac's CMs

Operationally, this step is quite simple: simply find the cell with the highest V-value, j, in each CM, Cj, as in Equation (7).

Conceptually, the cell with j in a CM is the cell most implicated by the mac's total input (multiple cells can be tied for j), or in other words, the most likely winner in the CM. In fact, in the simple retrieval mode (Section CSA: Simple Retrieval Mode), the cell with j in each CM is chosen winner.

Step 8: Compute the familiarity of the mac's overall input

The average, G, of the maximum V's across the mac's Q CMs is computed as in Equation (8): G is a measure of the familiarity of the macs overall input. This is done on every time step (frame), so we sometimes denote G as a function of time, G(t). And, G is computed independently for each activated mac, so we may also use more general notation that indicates mac as well.

The main intuition motivating the definition and use of G is as follows. If the mac's current input moment has been experienced in the past, then all active afferent weights (U, H, and D) to the code activated in that instance would have been increased. Thus, in the current moment, all Q cells comprising that code will have V = 1. Thus, G = 1. Thus, a familiar moment must always result in G = 1 (assuming that MCHs are accounted for as described above). On the other hand, suppose that the current overall input moment is novel, even if sub-components of the current overall input have been experienced exactly before. In this case, provided that few enough codes have been stored in the mac (so that crosstalk remains sufficiently small), there will be at least some CMs, Cj, for which j is significantly less than 1. Thus, G < 1. Moreover, as the examples in the Results section will show, G correlates with the familiarity of the overall mac input. Thus, G measures the familiarity, or inverse novelty, of the global input to the mac.

Note that in the brain, this step requires that the Q cells with V = j become active (i.e., spike) so that their outputs can be summed and averaged. This constitutes the first of two rounds of competition that occurs within the mac's CMs on each execution of the CSA. However, as explained herein, this set of Q cells will, in general, not be identical to (and can often be substantially different from, especially when G ≈ 0) the finally chosen code for this execution of the CSA (i.e., the code chosen in Step 12).

Step 9: Determine the expansivity/compressivity of the I/O function to be used for the second and final round of competition within the mac's CMs

Determine the range, η, of the sigmoid activation function, which transforms a cell's V-value into its relative (within its own CM) probability of winning, ψ. We refer to that transform as the V-to-ψ map. We refer to χ as the sigmoid expansion factor and γ as the sigmoid expansion exponent.

As noted several times earlier, the overall goal of the CSA when in learning mode is to assign codes to a mac's inputs in adherence with the SISC property, i.e., more similar overall inputs to a mac are mapped to more highly intersecting SDCs. Given that G represents, to first approximation, the similarity of the closest-matching stored input to the current input, we can restate the goal as follows.

(1) as G goes to 1, meaning the input X is completely familiar, we want the probability of reinstating the code ϕX that was originally assigned to represent X, to go to 1. It is the cells comprising ϕX, which are causing the high G-value. But these are the cells with the maximal V's (V = j = 1) in their respective CMs. Thus, within each CM, Cj, we want to increase the probability of picking the cell with V = j relative to cells with V < j, i.e., we want to transform the V's via an expansive nonlinearity

(2) as G goes to 0 (completely novel input), we want the set of winners chosen to have the minimum average intersection with all stored codes. We can achieve that by choosing the winner in each CM from the uniform distribution, i.e., by making all cells in a CM equally likely to win, i.e., transform the V's via a maximally compressive nonlinearity.

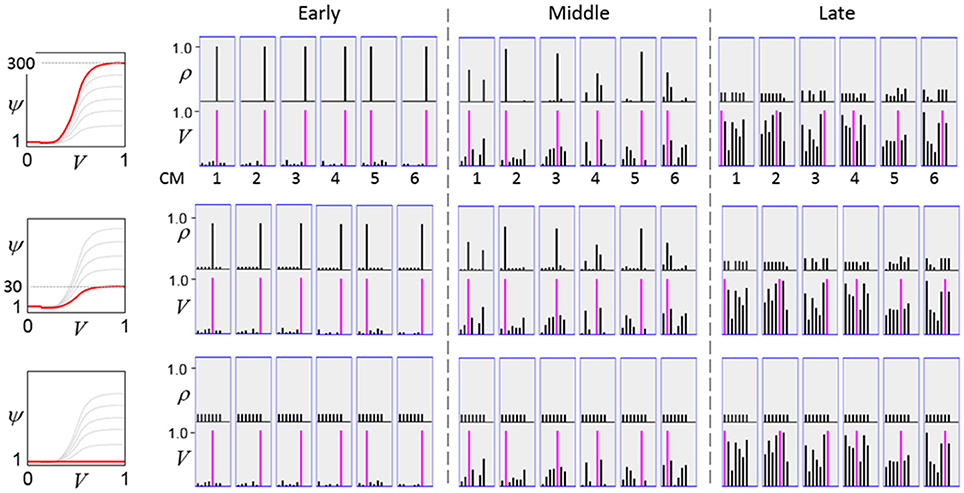

The first goal is met by making the activation function a very expansive nonlinearity. Figure II-4 shows how the expansivity of the V-to-ψ map affects cell win probability, and indirectly, whole-code reinstatement probability. All nine panels concern a small example mac with Q = 6 CMs each comprised of K = 7 cells. Each panel shows hypothetical V and ρ vectors over the cells of the CMs, across two parametrically varying conditions: model “age” (across columns), which we can take as a correlate of the number of stored codes and thus, of the amount of interference (crosstalk) between codes during retrieval, and expansivity (η) (across rows) of the V-to-ψ map. As described shortly, the V-values are first transformed to relative probabilities (ψ) (Step 10), which are then normalized to absolute probabilities (ρ) (Step 11). In all panels, the example V vector in each CM has one cell with V = 1 (pink bars). Thus, by Step 8, all panels correspond to a G = 1 condition. The other six cells (black bars) in each CM are assigned uniformly randomly chosen values in defined intervals that depend on the age of the model. The intervals for “Early,” “Middle,” and “Late,” are [0.0, 0.1], [0.1, 0.5], and [0.2, 0.8], respectively, simulating the increasing crosstalk with age.

Figure II-4. G-based sigmoid transform characteristics. All panels show hypothetical V and ρ vectors over the K = 7 cells in each of the Q = 6 CMs comprising the mac. In all nine panels, the V vector in each CM has one cell prescribed to have V = 1 (pink bars). The V's of the other six cells (black bars) in each CM are drawn randomly from defined intervals that depend on the age (amount of inputs experienced) of the model. For each age condition, we show the effects of using a V-to-ψ map with three different η-values. But our purpose here is just to show the consequences on the final ρ distribution for a given V distribution (the V distribution is the same for all three rows in any given column) as a function of the expansivity/compressivity (η) of the V-to-ψ map. See text for details.

For each age condition, we show the effects of using a V-to-ψ map with three different η-values. Note that in actual operation (specifically, Step 9), all panels would be processed with a V-to-ψ map with the maximal η-value (again, because G = 1 in all panels). But our purpose here is just to show the consequences on the final ρ distribution for a given V distribution (the V distribution is the same for all three rows in any given column) as a function of η. And, note that the minimum ψ-value in all cases is 1. Thus, for the “Early” column, the highly expansive V-to-ψ map (η = 300) (top row) results in a 300/306 ≈ 98% probability of selecting the cell with V = 1 (pink) in each CM. This results in a (300/306)6 ≈ 89% probability of choosing the pink cell in all Q = 6 CMs, i.e., of reinstating the entire correct code. In the second row, η is reduced to 30. Each of the six black cells ultimately ends up with a 1/36 probability of winning and the pink cell, with a 30/36 = 5/6 win probability. In this case the likelihood of reinstating the entire correct code, is (5/6)6 ≈ 33%. In the bottom row, η = 1, i.e., the V-to-ψ map has been collapsed to the constant function, ψ = 1. As can be seen, all cells, including the cell with V = 1 become equally likely to be chosen winner in their respective CMs.

Greater crosstalk can clearly be seen in the “Middle” condition. Consequently, even for η = 300, several of the cells with nonmaximal V end up with significant final probability ρ of being chosen winner in their respective CMs. The ρ-distributions are slightly further compressed (flatter) when η = 30, and completely compressed when η = 1 (bottom row). The “Late” condition is intended to model a later period of the life of the model, after many memories (codes) have been stored in this mac. Thus, when the input pattern associated with any of those stored codes is presented again, many of the cells in each CM will have an appreciable V-value (again, here they are drawn uniformly from [0.2, 0.8]). In this condition, even if η = 300, the probability of selecting the correct cell (pink) in each CMs is close to chance, as is the chance of reinstating the entire correct code. And the situation only gets worse for lower η-values.

Note that for any particular V distribution in a CM, the relative increase to the final probability of being chosen winner is a smoothly and faster-than-linearly increasing (typically, γ ≥ 2) function of G. Thus, in each CM, the probability that the most highly implicated (by the mac's total input) cell (those corresponding to the pink bars in Figure II-4) wins increases smoothly as G goes to 1. (Strictly, this is true only for the portion of the sigmoid nonlinearity with slope > 1). The initial (left) and final (right) portions of the sigmoid are compressive ranges.) And since the overall code is just the result of the Q independent draws, it follows that the expected intersection of the code consisting of the Q most highly implicated cells, i.e., the code of the closest-matching stored input, with the finally chosen code is also an increasing function of G, i.e., thus realizing the “SISC” property.

Step 10: Apply the modulated activation function to all the mac's cells, resulting in a relative probability distribution of winning over the cells of each CM

Apply sigmoid activation function to each cell. Note: the sigmoid collapses to a constant function, ψ(i) = 1, when η = 1 (i.e., when G < G−).

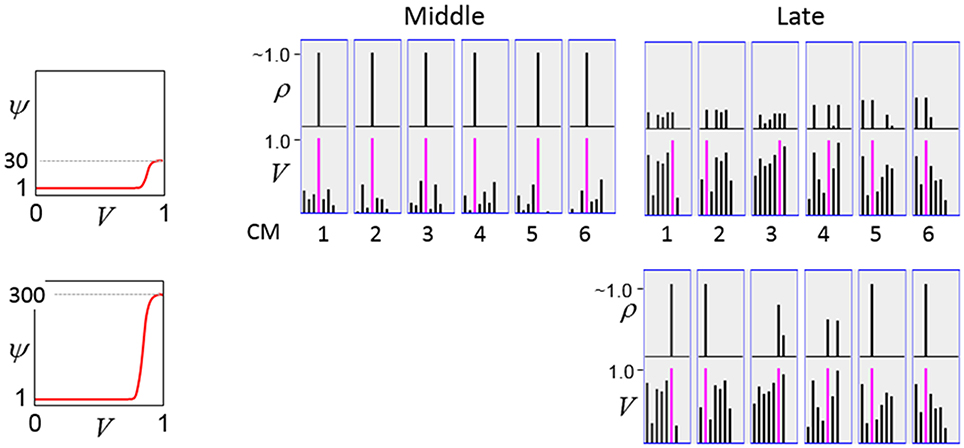

In a more general development, the CSA could include additional prior steps for setting any of the other sigmoid parameters, σ1, σ2, σ3, and σ4, all of which interact to control overall sigmoid expansivity and shape. In particular, in the current implementation, the horizontal position of the sigmoid's inflection point is moved rightward as additional codes are stored in a mac. Figure II-5 shows that doing so greatly increases the probability of choosing the correct cell in each CM and thus, of reinstating the entire correct code, even when many codes have been stored in the mac. In the “Middle” condition, even if η = 30, the probability of choosing the pink cell in each CM is very close to 1. For the “Late” condition, setting η = 30 significantly improves the situation relative to the top right panel of Figure II-4 and setting η = 300 makes the probability of choosing the correct cell close to 1 in four of the six CMs. Thus, we have a mechanism for keeping memories accessible for longer lifetimes.

Figure II-5. Moving the inflection point of the sigmoidal V-to-ψ map to the right greatly increases the probability of selecting the correct cell despite mounting crosstalk due to a growing number of codes stored in superposition.

Step 11: Convert relative win probability distributions to absolute distributions

In each of the mac's CMs, the ψ-values of the cells are converted to true probabilities of winning (ρ) and the winner is selected by drawing from the ρ distribution, resulting in a final SDC, ϕ, for the mac, as in Equation (11).

Step 12: Pick winners in the mac's CMs, i.e., activate the SDC

The last step of the CSA is just selecting a final winner in each CM according to the ρ distribution in that CM, i.e., soft max. This is the second round of competition. Our hypothesis that the canonical cortical computation involves two rounds of competition is a strong and falsifiable prediction of the model with respect to actual neural dynamics, which we would like to explore further.

The CSA is given in Table I-1.

Learning policy and mechanics

Broadly, Sparsey's learning policy can be described as Hebbian with passive weight decay. As noted earlier, the model's synapses are effectively binary. By this we mean that although the weight range is [0,127], the several learning related properties conspire to cause the asymptotic weight distribution to tend toward having two spikes, one at 0 and the other at wmax = 127, thus effectively being binary.

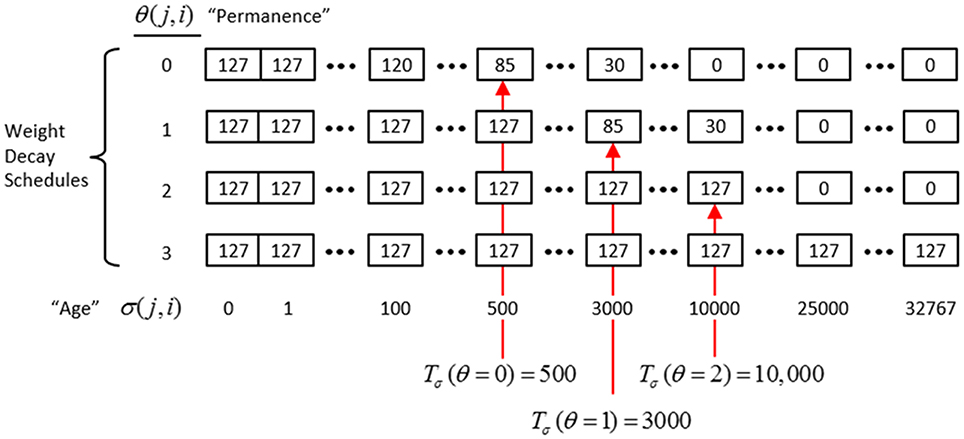

In actuality, a synapse's weight, w(j, i), where j and i index the pre- and postsynaptic cells, respectively, is determined by two primary variables, its age, σ(j, i), which is the number of time steps (e.g., video frames) since it was last increased, and its permanence, θ(j, i), which measures how resistant to decrease the weight is (i.e., the passive decay rate). The learning law is implemented as follows. Whenever a synapse's pre- and postsynaptic cells are coactive [i.e., a “pre-post correlation,” a(j) = 1 ∧ a(i) = 1], its age is set to zero, as in Equation (12a), which has the effect of setting its weight to wmax (as can be seen in the “weight table” of Figure II-6, an age of zero always maps to wmax). Otherwise, σ(j, i) increases by one on each successive time step (across all frames of all sequences presented) on which there is no pre-post correlation Equation (12c), stopping when it gets to the maximum age, σmax Equation (12d). Also note that once a synapse has reached maximum permanence, θmax, its age stays at zero, i.e., its weight stays at wmax Equation (12b). At any point, the synapse's weight, w(j,i), is gotten by dereferencing σ(j, i) and θ(j, i) from the weight table shown in Figure II-6.

Figure II-6. The “weight table”: Indexed by age (columns) and permanence (rows). A synapse's weight is gotten by dereferencing its age, σ(j, i), and its permanence, θ(j, i). See text for details.

The intent of the decay schedule (for any permanence value) is to keep the weight at or near wmax for some initial window of time (number of time steps), Tσ (θ), and then allow it to decay increasingly rapidly toward zero. Thus, the model “assumes” that a pre-post correlation reflects an important / meaningful event in the input space and therefore strongly embeds it in memory (consistent with the notion of episodic memory). If the synapse experiences a second pre-post correlations within the window Tσ (θ), its permanence is incremented as in Equation (13) and σ(j, i) is set back to 0 (i.e., its weight is set back to wmax); otherwise the age, σ(j, i), increases by one with each time step and the weight decreases according to the decay schedule in effect. Thus, pre-post correlations due to noise or spurious events, which will have a much longer expected time to recurrence, will tend to fade from memory. Sparsey's permanence property is closely related to the notion of synaptic tagging (Frey and Morris, 1997; Morris and Frey, 1999; Sajikumar and Frey, 2004; Moncada and Viola, 2007; Barrett et al., 2009).

The exact parametric details are less important, but as can be seen in the weight table, the decay rate decreases with θ(j, i) and the window, Tσ(θ), within which a second pre-post correlation will cause an increase in permanence, increases with θ(j, i) (three example values shown). Permanence can only increase and in our investigations thus far, we typically make a synaptic weight completely permanent on the second or third within-window pre-post correlation [θmax = 1 or θmax = 2, respectively]. The justification of this policy derives from two facts: (a) a mac's input is a sizable set of co-active cells; and (b) due to the SISC property, the probability that a weight will be increased correlates with the strength of the statistical regularity of the input (i.e., the structural permanence of the input feature) causing that increase. These two facts conspire to make the expected time of recurrence of a pre-post correlation exponentially longer for spurious/noisy events than for meaningful (i.e., due to structural regularities of the environment) events.

If we run the model indefinitely, then eventually every synapse will experience two successive pre-post correlations occurring within any predefined window, Tσ. Thus, without some additional mechanism in place, eventually all afferent synapses into a mac will be permanently increased to wmax = 127 at which point (total saturation of the afferent weight matrices) all information will be lost from the afferent matrices. Therefore, Sparsey implements a “critical period” concept, in which all weights leading to a mac are “frozen” (no further learning) once the fraction of weights that have been increased in any one of its afferent matrices crosses a threshold. This may seem a rather drastic solution to the classic trade-off that Grossberg termed the “stability-plasticity dilemma” (Grossberg, 1980). However, note that: (a) “critical periods” have been demonstrated in the real brain in vision and other modalities (Wiesel and Hubel, 1963; Barkat et al., 2011; Pandipati and Schoppa, 2012); (b) model parameter settings can readily be found such that in general, all synaptic matrices afferent to a mac approach their respective saturation thresholds roughly at the same time (so that the above rule for freezing a mac does not result in significantly underutilized synaptic matrices); and (c) in Sparsey, freezing of learning is applied on a mac-by-mac basis. We anticipate that in actual operation, the statistics of natural visual input domains (filtered as described earlier, i.e., to binary 1-pixel wide edges) in conjunction with model principles/parameters will result in the tendency for the lowest level macs to freeze earliest, and progressively higher macs to freeze progressively later, i.e., a “progressive critical periods” concept. Though clearly, if the model as a whole is to be able to learn new inputs throughout its entire “life,” parameters must be set so that some macs, logically those at the highest levels, never freeze. We are still in the earliest stages of exploring the vast space of model parameters that influence the pattern of freezing across levels.

The ultimate test of whether the use of critical periods as described above is too drastic or not is how well a model can continue to perform recognition/retrieval (or perform the specific recognition/retrieval-contingent tasks with which it is charged) over its operational lifetime (which will in general entail large numbers of novel inputs), in particular, after many of its lower levels have been frozen.

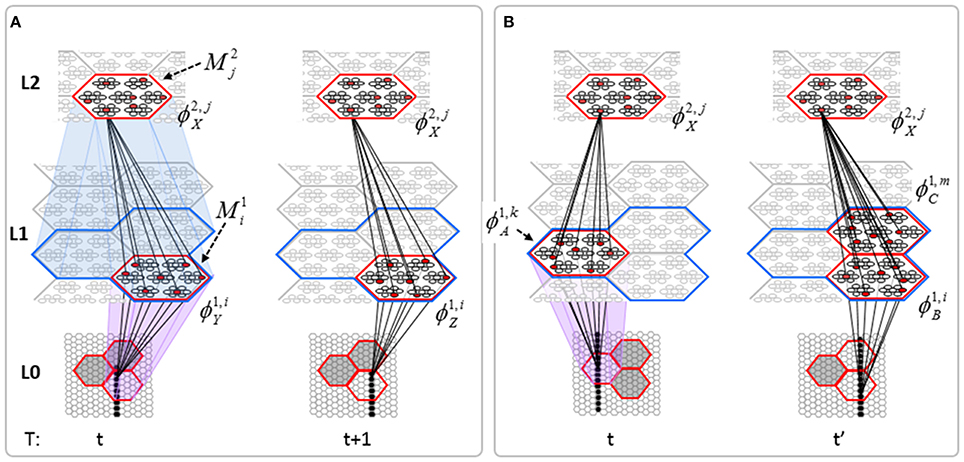

Learning arbitrarily complex nonlinear similarity metrics

The essential property needed to allow learning of arbitrarily complex nonlinear similarity metrics (i.e., category boundaries, or invariances) is the ability for an individual SDC in one mac to associate with multiple, perhaps arbitrarily different, SDCs in one or more other macs. This ability is present a priori in Sparsey in the form of the progressive persistence property wherein code duration, or persistence (δ), (measured in frames) increases with hierarchical level (in most experiments so far, δ doubles with level). For example, the V2 code ϕ2,jX in Figure II-7A becomes associated with the V1 code ϕ1, iY at time t, and because it persists for two time steps, it also becomes associated with ϕ1, iZ at t + 1. By construction of this example, ϕ2,jX represents (a particular instance of) the spatiotemporal concept, “rightward-moving vertical edge.” However, if for the moment, we ignore the fact that these two associations occurred on successive time steps, then we can view ϕ2,jX as representing XOR(ϕ1, iY, ϕ1, iZ), i.e., just two different (in fact, pixel-wise disjoint) instances of a vertical edge falling within the U-RF of M2j. That is, the U-signals from either of these two input patterns alone (but not together1) can cause reinstatement of ϕ2,jX. This provides an unsupervised means by which arbitrarily different, but temporally contiguous, input images, which may in principle portray any transformation that can be carried out over a two-time-step period and over the spatial extent of the RF in question, can be associated with the same object or class (the identity of which is carried by the persisting code, ϕ2,jX).

Figure II-7. (A) The basic model property, progressive persistence, allows SDCs in higher level macs to associate with sequences of temporally contiguous SDCs active in macs in their U-RFs. (B) More generally, any mechanism which allows a particular code, e.g., ϕ2,jX, to be activated under the control of a supervisory signal can cause ϕ2,jX to associate with two or more arbitrarily different codes presented at arbitrarily different times, thus allowing ϕ2,jX to represent arbitrary invariances (classes, similarity metrics).

Figure II-7B shows two more instances in which ϕ2,jX is active, denoted t and t' to suggest that they may occur at arbitrary times. If there is a supervisory signal by which ϕ2,jX can be activated whenever desired, then ϕ2,jX will associate with whatever codes are active in its RF (in this example, specifically, its U-RF) at such times. In this case, the two inputs associated with ϕ2,jX are just two different instances of a vertical edge falling within ϕ2,jX's RF. Furthermore, note that the number of active codes (features) in the RF can vary across association events. Thus, ϕ2,jX can serve as a code representing any invariances present in the set of codes with which it has been associated.

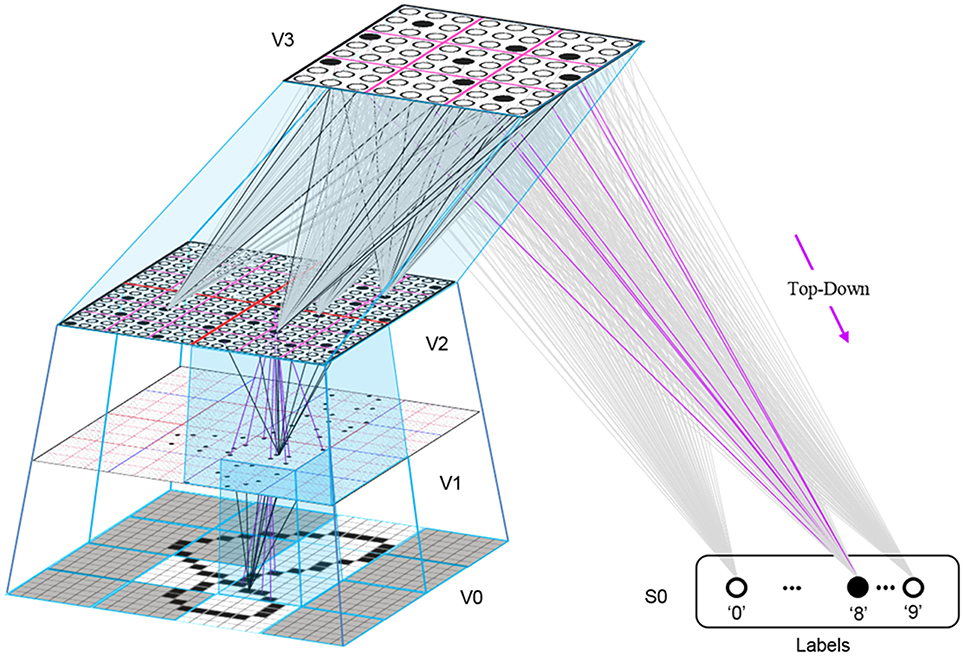

This is in fact how supervised learning is implemented in Sparsey. That is, the supervised learning signal (label) is essentially just another input modality and supervised learning is therefore treated as a special case of cross-modal unsupervised learning. We have conducted preliminary supervised learning studies involving the MNIST digit recognition database (LeCun et al., 1998) using a model architecture like that in Figure II-8. However, to adequately describe the supervised learning architecture, protocol, and theory, would add too much length to this paper and so we save that work for a separate paper. Nevertheless, we are confident that the general framework described here will allow arbitrarily complex nonlinear similarity metrics, e.g., functions described as comprising the “AI Set,” by Bengio et al. (2012), to be efficiently learned as unions, where each element of the union is a hierarchical spatiotemporal composition of the locally primitive (i.e., smoothness prior only) similarity metrics embedded in individual macs.

Figure II-8. The 4-level model used in preliminary supervised learning studies involving the MNIST digit recognition task. This shows the recognition test trial in which the “8” was presented, giving rise to a flow of U-signals activating codes in macs throughout the hierarchy, and finally a top-down flow from the activated V3 code to the Label field, where the unit with maximal D-summation, the “8” unit, wins.

Neural implementation of CSA

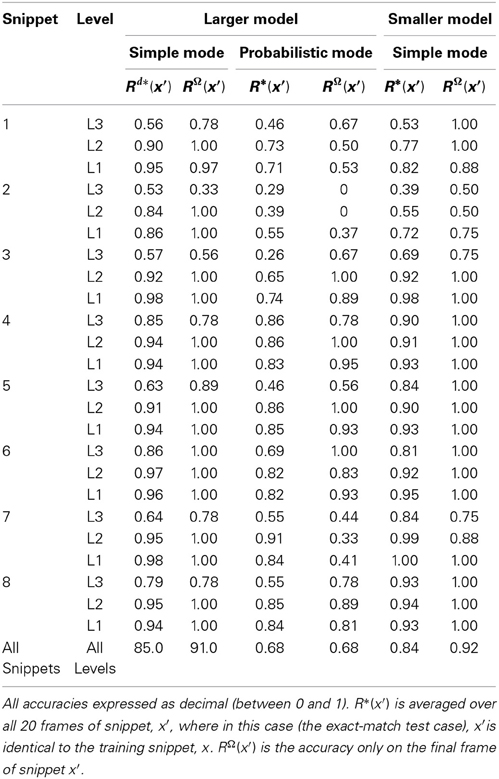

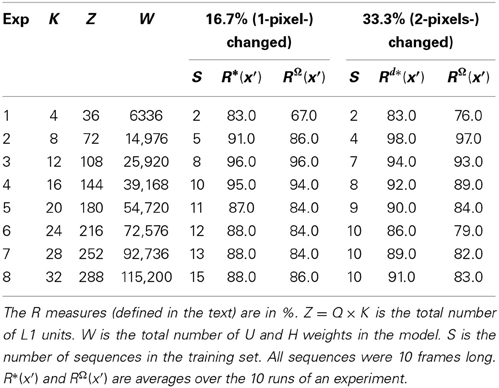

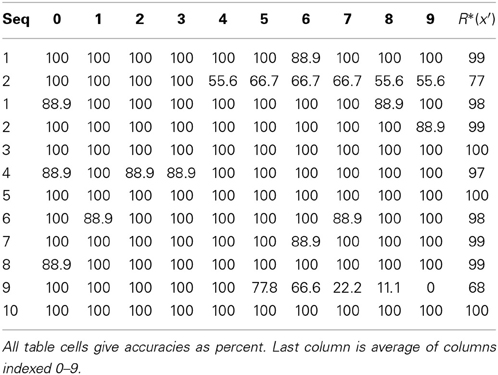

Though we identify the broad correspondence of model structures and principles to biological counterparts throughout the paper, we have thus far been less concerned with determining precise neural realizations. Our goal has been to elucidate computationally efficient and biologically plausible mechanisms for generic functions, e.g., the ability to form large numbers of permanent memory traces of arbitrary spatiotemporal events on-the-fly and based on single trials, the ability to subsequently directly (i.e., without any serial search) retrieve the best-matching or most relevant memories, invariance to nonlinear time warping, coherent handling of simultaneous activation of multiple hypotheses, etc. We believe that Sparsey meets these criterion so far. For one thing, it does not require computing any gradients or sampling of distributions, as do the Deep Learning models (Hinton et al., 2006; Salakhutdinov and Hinton, 2012). Nevertheless, we do want to make a few points concerning Sparsey's relation to the brain.