- CITI Laboratory, Univ Lyon, INSA Lyon, Inria, Villeurbanne, France

A wide range of communication systems, from wireless to power line communications, are corrupted by non-Gaussian noise. In some cases, including interference in uncoordinated OFDM-based wireless networks, the noise is both impulsive and multivariate. At present, little is known about the information capacity and corresponding optimal input distributions of such channels. In this paper, we derive upper and lower bounds on the information capacity by exploiting non-isotropic inputs. For the special case of sub-Gaussian α-stable noise models, a numerical study reveals that isotropic Gaussian inputs can remain a viable choice, although the performance depends heavily on the dependence structure of the noise.

1 Introduction

In many communication systems, additive Gaussian noise is the dominant form of signal corruption due to thermal fluctuations in the electronic devices comprising the receiver. Nevertheless, additive non-Gaussian noise has also been observed to play an important role in power line (Zimmermann and Dostert, 2002) and molecular communications (Farsad et al., 2015). Even in wireless communications, interference from uncoordinated transmitters, such as in the Internet of Things (IoT), has been suggested to admit non-Gaussian statistics (Clavier et al., 2021b). Non-Gaussian noise also arises in underwater acoustic communications (Chitre et al., 2004).

A particularly important class of non-Gaussian noise is impulsive noise, for which the probability of large-amplitude noise is significantly higher than predicted by the corresponding Gaussian model; that is, impulsive noise is heavy-tailed. A key property of impulsive noise is that higher-order moments are often infinite or undefined. Impulsive noise models include Student’s t (Hall, 1966), generalized Gaussian (Dytso et al., 2018), and α-stable models (Middleton, 1977; Sousa, 1992; Ilow and Hatzinakos, 1998; Gulati et al., 2010; Pinto and Win, 2010).

Of all impulsive noise families, one of the most ubiquitous is the family of α-stable models. As a generalization of Gaussian models that retains the key property of stability under convolution, these models arise via several mechanisms. The first mechanism, relevant for molecular communications, is via the distribution of the first hitting time of the standard Wiener process (Farsad et al., 2015). The second mechanism is via the generalized central limit theorem, which characterizes the limiting behavior of suitably centered and scaled partial sums of n independent and identically distributed random variables.

The third mechanism to obtain α-stable models relevant for interference in wireless communication systems was first identified by Middleton (Middleton, 1977) and further clarified in (Sousa, 1992; Ilow and Hatzinakos, 1998). In particular, given uncoordinated transmitting devices located according to a homogeneous Poisson point process on the plane, the interference under power-law path loss converges almost surely to an α-stable random variable by identification with the LePage series (Samorodnitsky and Taqqu, 1994). This third mechanism has recently seen application in interference studies for the IoT (Egan et al., 2018). Indeed, both theory and recent experimental data (Lauridsen et al., 2017) in the 868 MHz band, used by SigFox and LoRa devices, have indicated the presence of heavy-tailed interference, which may be modeled via α-stable models (Clavier et al., 2021b).

Despite the utility of α-stable noise models in communications, the vast majority of work has focused on real-valued noise. In this setting, information capacity bounds have been derived in (de Freitas et al., 2017) and the structure of optimal input distributions characterized in (Fahs and Abou-Faycal, 2017). The design of symbol detection strategies and their performance has been addressed in (Niranjayan and Beaulieu, 2009; Ghannudi et al., 2010; Clavier et al., 2021a) and decoding algorithms developed in (Gu and Clavier, 2012; Mestrah et al., 2020). Noise parameter estimation algorithms have also been developed in (Kuruoglu, 2001) and power control strategies in (Freitas et al., 2018).

On the other hand, baseband signals in wireless communications are typically complex-valued, and few signal processing strategies or performance analyses have been developed for this setting, with notable exceptions in (Gulati et al., 2010; Mahmood et al., 2014) for the narrowband case. The situation is further complicated when transmissions utilize orthogonal frequency division multiplexing (OFDM), where signals are transmitted over multiple subcarriers. In such cases, the noise forms a random vector and real-valued α-stable models are insufficient. Nevertheless, it has recently been shown that multivariate α-stable models can naturally arise from statistical analysis of interference in complex baseband signals over multiple subcarriers (Egan et al., 2018). However, little is known about performance limits or optimal signaling strategies in the presence of multivariate α-stable noise. In particular, the information capacity of such channels remains an open question; it is useful for selecting coding rates, via the noisy channel coding theorem, and for designing resource allocation strategies (Freitas et al., 2018).

In this paper, as a step towards resolving these open questions, we study the information capacity and signaling in multivariate symmetric α-stable noise channels with 1 < α < 2. We first return to the question of the information capacity in real-valued symmetric α-stable noise channels, where we establish new upper and lower bounds that are tighter and more general than those given in (de Freitas et al., 2017). In particular, we give bounds for inputs subject to power constraints as well as fractional moment constraints. In the case of a power constraint, we establish that the information capacity is within a constant of the information capacity of the Gaussian noise channel, and that Gaussian inputs achieve rates within a constant of the capacity.

We then turn to the case of multivariate symmetric α-stable noise. We show that there exists a unique optimal input achieving the information capacity and also derive a general upper bound, which is applicable to all multivariate symmetric α-stable noise channels subject to fractional moment and power constraints. We then derive a general lower bound applicable for fractional moment constraints with exponent r < α. In the case of sub-Gaussian α-stable models, we also obtain a lower bound on the information capacity subject to a power constraint.

Our bounds suggest, at least from an analytical point of view, that it is desirable to match the dependence structure of the input distribution to that of the noise. Indeed, our lower bounds are obtained with non-isotropic inputs, often matched to the dependence structure of the noise distribution. To study the performance of non-isotropic inputs, we consider communication in sub-Gaussian α-stable noise subject to a power constraint, and numerically study the behavior of the bounds. In this particular case, we observe that isotropic Gaussian inputs nearly achieve the capacity upper bound, suggesting that matching the input to the dependence structure of the noise is not always desirable.

1.1 Notation

Vectors are denoted by bold lowercase letters and random vectors by bold uppercase letters (e.g., x, X). We denote the distribution of a random vector X by PX. If X, Y are two random vectors equal in distribution, then we write

Let

and ‖·‖r is called the r-norm on

Let

2 Problem Formulation

In this section, we detail the problem of characterizing the information capacity and optimal input distributions in multivariate symmetric α-stable noise channels (1 < α < 2). To this end, we first recall preliminary definitions and properties of scalar and multivariate α-stable models that will be used in the sequel. For further details, we refer the reader to (Samorodnitsky and Taqqu, 1994).

2.1 α-Stable Models

The distribution of an α-stable random variable is described by four parameters: the exponent 0 < α ≤ 2; the scale parameter γ > 0; the skewness parameter β ∈ [-1, 1]; and the location parameter δ ∈ ℝ. We write X ∼ Sα(γ, β, δ).

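In general, α-stable random variables do not have closed-form probability density functions. Instead, they are more compactly represented by the characteristic function, given by (Samorodnitsky and Taqqu, 1994, Eq. 1.1.6)

$$
\mathbb{E}\left[e^{i\theta X}\right] =
\begin{cases}
\exp\left(-\gamma^{\alpha}|\theta|^{\alpha}\left(1 - i\beta\,\operatorname{sign}(\theta)\tan\frac{\pi\alpha}{2}\right) + i\delta\theta\right), & \alpha \neq 1,\\
\exp\left(-\gamma|\theta|\left(1 + i\beta\,\frac{2}{\pi}\operatorname{sign}(\theta)\log|\theta|\right) + i\delta\theta\right), & \alpha = 1.
\end{cases}
$$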
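Because closed-form densities are generally unavailable, α-stable noise is usually handled by simulation. As an illustration (not a method from this paper), the following sketch draws samples with the Chambers-Mallows-Stuck method, assuming the Sα(γ, β, δ) parametrization of Samorodnitsky and Taqqu (1994) and α ≠ 1; the function name `sample_stable` is hypothetical.

```python
import math
import random


def sample_stable(alpha, gamma=1.0, beta=0.0, delta=0.0, rng=None):
    """One draw of S_alpha(gamma, beta, delta) via the Chambers-Mallows-Stuck method.

    Assumes the Samorodnitsky-Taqqu parametrization and alpha != 1.
    """
    if not 0.0 < alpha <= 2.0 or alpha == 1.0:
        raise ValueError("this sketch requires 0 < alpha <= 2 and alpha != 1")
    rng = rng or random.Random()
    v = rng.uniform(-math.pi / 2, math.pi / 2)   # uniform random angle
    w = rng.expovariate(1.0)                     # unit-mean exponential
    t = beta * math.tan(math.pi * alpha / 2)
    shift = math.atan(t) / alpha                 # skewness-induced angle shift
    s = (1.0 + t * t) ** (1.0 / (2.0 * alpha))
    z = (s * math.sin(alpha * (v + shift)) / math.cos(v) ** (1.0 / alpha)
         * (math.cos(v - alpha * (v + shift)) / w) ** ((1.0 - alpha) / alpha))
    return gamma * z + delta
```

For example, `sample_stable(1.5, gamma=0.01)` draws one symmetric noise sample with the scale γN = 0.01 used in Section 3.3. At α = 2 the formula reduces to a Gaussian with variance 2γ², consistent with the characteristic function; the α = 1 case needs a separate formula and is omitted here.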

Observe that in the special case α = 2, the α-stable distribution is Gaussian. As such, the family of α-stable distributions generalizes the family of Gaussian distributions. In fact, like Gaussian models, if X(1) and X(2) are independent copies of an α-stable random variable X, then for any a, b > 0 there exist constants c > 0 and d ∈ ℝ such that aX(1) + bX(2) and cX + d are equal in distribution.

More precisely, the following property holds (Samorodnitsky and Taqqu, 1994).

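Property 1 Suppose Z1, Z2 are independent with Z1 ∼ Sα(γ1, β1, δ1) and Z2 ∼ Sα(γ2, β2, δ2). Then, Z1 + Z2 ∼ Sα(γ, β, δ), where

$$
\gamma = \left(\gamma_1^{\alpha} + \gamma_2^{\alpha}\right)^{1/\alpha}, \qquad
\beta = \frac{\beta_1\gamma_1^{\alpha} + \beta_2\gamma_2^{\alpha}}{\gamma_1^{\alpha} + \gamma_2^{\alpha}}, \qquad
\delta = \delta_1 + \delta_2.
$$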
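Property 1 reduces to a few lines of arithmetic. A minimal sketch, with the hypothetical helper name `stable_sum_params` and parameters ordered (γ, β, δ) as in the text:

```python
def stable_sum_params(alpha, p1, p2):
    """Parameters (gamma, beta, delta) of Z1 + Z2 for independent Z1 ~ S_alpha(*p1), Z2 ~ S_alpha(*p2)."""
    g1, b1, d1 = p1
    g2, b2, d2 = p2
    w1, w2 = g1 ** alpha, g2 ** alpha        # scales combine through their alpha-th powers
    gamma = (w1 + w2) ** (1.0 / alpha)
    beta = (b1 * w1 + b2 * w2) / (w1 + w2)   # skewness: average weighted by gamma_i^alpha
    delta = d1 + d2                          # locations add
    return gamma, beta, delta
```

In the symmetric case used in Section 3, adding an Sα(γX, 0, 0) input to Sα(γN, 0, 0) noise therefore yields an output with scale (γX^α + γN^α)^{1/α}. At α = 2 this recovers the familiar rule that variances of independent Gaussians add.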

When β = δ = 0 in Eq. 2, the resulting α-stable distribution is said to be symmetric. An important alternative characterization of symmetric α-stable random variables is via the LePage series.

Theorem 1 [Theorem 1.4.2 (Samorodnitsky and Taqqu, 1994)]. Suppose 0 < α < 2,

converges almost surely to a random variable
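The LePage representation in Theorem 1 is also a practical way to approximate symmetric α-stable samples, and it mirrors the Poisson-field interference mechanism discussed in the Introduction. The sketch below truncates the series after a fixed number of terms and uses Rademacher signs for the symmetric variables; both choices, and the name `lepage_sample`, are assumptions for illustration. The scale of the limit depends on the constant in Theorem 1, which is not computed here.

```python
import random


def lepage_sample(alpha, n_terms=200, rng=None):
    """Truncated LePage series sum_{i <= n_terms} Gamma_i^(-1/alpha) * W_i.

    Gamma_i: arrival times of a unit-rate Poisson process.
    W_i: i.i.d. Rademacher signs (an illustrative symmetric choice).
    """
    if not 0.0 < alpha < 2.0:
        raise ValueError("requires 0 < alpha < 2")
    rng = rng or random.Random()
    arrival, total = 0.0, 0.0
    for _ in range(n_terms):
        arrival += rng.expovariate(1.0)             # next Poisson arrival time
        sign = 1.0 if rng.random() < 0.5 else -1.0  # symmetric weight W_i
        total += sign * arrival ** (-1.0 / alpha)
    return total
```

Truncation discards the many small, late terms. Since the first few terms dominate the heavy tail, a few hundred terms usually suffice for a qualitative picture, although the truncation error grows as α approaches 2 because the terms then decay more slowly.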

In the multivariate setting, we consider random vectors X in

where X(1) and X(2) are independent copies of X. A sufficient condition for a random vector X in

where Γ is the unique symmetric measure on the surface of the d-dimensional unit sphere. In the case that a d-dimensional α-stable random vector X is truly d-dimensional, there exists a joint probability density function pX(⋅) on

Definition 1 Any vector X satisfying

and

2.2 The Information Capacity Problem

Consider the memoryless, stationary, linear and point-to-point communication channel

where N is a truly symmetric α-stable random vector with 1 < α < 2, admitting a multivariate probability density function pN(⋅), with X and N independent. The random vector X is defined on¹

for a given

The main focus of this paper is to investigate the information capacity and corresponding optimal inputs for communication channels of the form Eq. 10. To this end, let

where the mutual information I(X; Y) is given by

If an optimal input exists, it satisfies

Note that, by a generalization of Shannon’s noisy channel coding theorem for vector non-Gaussian channels (Han, 2003), the information capacity may be interpreted as the maximum achievable rate with asymptotically zero average probability of error.

In the remainder of this paper, we address the following questions:

(i) What is the value of C(P, r) for varying P and r?

(ii) Does an optimal input

(iii) What is the structure of nearly optimal inputs?

In this work, we will allow μX to be non-isotropic; that is, for all d × d orthogonal matrices

where X has probability measure μX. In the following section, we begin with scalar channels—which have not previously been comprehensively studied—before considering more general vector channels in Section 4.

3 Scalar Channels

Before turning to multivariate α-stable noise channels, we first consider the scalar case. In particular, we first improve on the capacity bounds in (de Freitas et al., 2017) and in the process develop techniques that will be generalized to the multivariate setting in the sequel. To begin, we specialize the problem in Eq. 12 to the scalar case: a stationary and memoryless scalar additive symmetric α-stable noise channel is given by

where the noise N is a symmetric α-stable random variable with scale parameter γN, admitting a probability density function pN(⋅), with X and N independent. The input random variable X is required to satisfy the constraint

where 1 ≤ r ≤ 2. In terms of the probability measure of X, the constraint can be written as

In this case, the information capacity of the channel (16) is defined as

It follows from (Fahs and Abou-Faycal, 2016) that an optimal solution of Eq. 19 exists and is unique. Moreover, the optimal input is known to be discrete (Fahs and Abou-Faycal, 2017).

3.1 Capacity Upper Bounds

In (de Freitas et al., 2017), an upper bound on C(P, r) was established when r = 1 and 1 < α < 2.

Theorem 2 Let λ > 0 and r = 1. For the channel (16), the capacity C(P, 1) in (19) is upper bounded by

It was shown in (de Freitas et al., 2017) that this bound is tight for moderate values of P and appropriate values of λ, but quickly becomes loose as P grows. An asymptotic upper bound, which is only guaranteed to hold as P → ∞, was also established there. In the following theorem, we establish an upper bound which holds for all 1 ≤ r ≤ 2 and p > 0.

Theorem 3 Let 1 ≤ r ≤ 2 and p > 0. For the channel (16), the capacity C(P, r) in (19) is upper bounded by

Proof. Note that under the constraint that

By the triangle inequality,

We also have by (Zolotarev, 1957)

All that remains is to obtain

Substituting Eqs 23–25 into Eq. 22 gives

as required.
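Upper bounds of this kind typically combine a maximum-entropy argument with a moment bound on Y. As a sketch of that standard ingredient (we assume, but cannot confirm from the text, that Theorem 3 uses exactly this form), the largest differential entropy of a real random variable with E|Y|^p ≤ m is attained by a generalized Gaussian density. The function name is hypothetical.

```python
import math


def max_entropy_pth_moment(p, m):
    """Maximum differential entropy (nats) of a real Y with E|Y|^p <= m.

    The maximiser is the generalized Gaussian density
    f(y) = p / (2 s Gamma(1/p)) * exp(-|y / s|^p) with s = (p m)^(1/p).
    """
    if p <= 0.0 or m <= 0.0:
        raise ValueError("p and m must be positive")
    # h(f) = log(2 s Gamma(1 + 1/p)) + 1/p = log(2 Gamma(1 + 1/p) (p e m)^(1/p))
    return math.log(2.0 * math.gamma(1.0 + 1.0 / p) * (p * math.e * m) ** (1.0 / p))
```

With p = 2 this recovers the Gaussian value ½ log(2πe m), and with p = 1 the Laplace value log(2em). Once E|Y|^p has been bounded (for instance via the triangle inequality, and noting that E|N|^p is finite only for p < α), subtracting h(N), which must itself be evaluated numerically, yields an upper bound on I(X; Y).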

3.2 Capacity Lower Bounds

We now turn to lower bounding C(P, r). We first consider the case where 1 ≤ r < α.

Theorem 4 Let 1 ≤ r < α. For the channel (16), the capacity C(P, r) in (19) is lower bounded by

Proof. Let X ∼ Sα(γX, 0, 0) with

By the stability property

and hence

where

Using (Shao and Nikias, 1993, Theorem 4)

and the constraint

Remark 1 When r = 1, Theorem 4 specializes to the lower bound in (de Freitas et al., 2017).
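The proof of Theorem 4 follows a short chain: choose an input X ∼ Sα(γX, 0, 0), use stability so that Y is Sα with scale (γX^α + γN^α)^{1/α}, note that h(cZ) = h(Z) + log c, and calibrate γX through the fractional moment formula of Shao and Nikias (1993, Theorem 4). The sketch below evaluates the resulting rate. The closed-form constant is the standard Shao-Nikias expression rewritten for the scale parameter (their paper uses the dispersion γ^α), and the function names are hypothetical; since the display of Theorem 4 is not shown here, treat this as a reconstruction of the rate achieved by that input, not a quotation of the theorem.

```python
import math


def frac_moment_const(p, alpha):
    """C(p, alpha) such that E|X|^p = C(p, alpha) * gamma**p for X ~ S_alpha(gamma, 0, 0).

    Shao-Nikias constant, written for the scale gamma instead of the dispersion
    gamma**alpha; valid for 0 < p < alpha <= 2.
    """
    if not 0.0 < p < alpha <= 2.0:
        raise ValueError("requires 0 < p < alpha <= 2")
    num = 2.0 ** (p + 1.0) * math.gamma((p + 1.0) / 2.0) * math.gamma(-p / alpha)
    den = alpha * math.sqrt(math.pi) * math.gamma(-p / 2.0)
    return num / den


def stable_input_rate(P, r, alpha, gamma_n):
    """I(X; Y) in nats for X ~ S_alpha(gamma_x, 0, 0) with E|X|^r = P, in SaS noise."""
    gamma_x = (P / frac_moment_const(r, alpha)) ** (1.0 / r)  # calibrate input scale
    # Stability: Y ~ S_alpha((gamma_x^alpha + gamma_n^alpha)^(1/alpha), 0, 0), and
    # h(cZ) = h(Z) + log c, so I(X; Y) = h(Y) - h(N) = log(gamma_y / gamma_n).
    return math.log1p((gamma_x / gamma_n) ** alpha) / alpha
```

Two sanity checks on the constant: at α = 2 and p = 1 it gives 2/√π, the mean absolute value of a Gaussian with variance 2; at α = 1 and p = ½ it gives √2, the known value of E|X|^{1/2} for a standard Cauchy variable.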

Since 1 ≤ r < α and α < 2, it follows that Theorem 4 does not apply in the important case where the input X is constrained to satisfy

Theorem 5 Let r = 2. For the channel (16), the capacity C(P, 2) in (19) is lower bounded by

where pA(a) is the probability density function of a totally skewed α/2-stable random variable with scale parameter

Proof. Let

where

As such,

as required.

A key question is whether the capacity bounds we have established so far are tight. To this end, we make the following observation.

Corollary 1 Let 1 ≤ r < α. For the channel (16), the capacity C(P, r) in (19) satisfies

Proof. Observe that

Since

the corollary follows.

By the same argument, we also have the following corollary.

Corollary 2 Let r = 2. For the channel (16), the capacity C(P, 2) in (19) satisfies

As a consequence, for sufficiently large values of P and r = 2, the rate achievable using a Gaussian input is within a constant of the capacity C(P, 2). A further observation, which will be useful in the sequel, is that matching the input distribution to the noise distribution yields a rate that forms a good approximation of the capacity. Finally, the capacity of symmetric α-stable noise channels is within a constant of the capacity for an additive Gaussian noise channel.

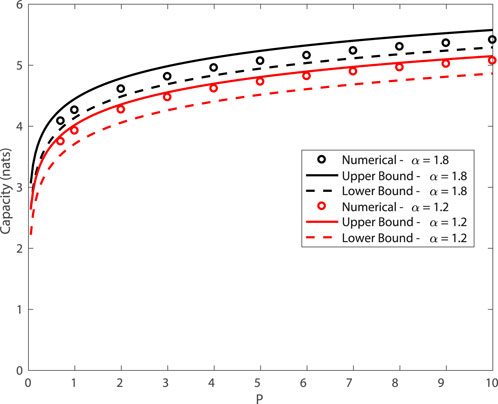

3.3 Numerical Results

In order to further verify the tightness of the upper bound in Theorem 3, we compare the bound with a numerical computation of the capacity via the Blahut-Arimoto algorithm (Blahut, 1972; Arimoto, 1972). Figure 1 plots the power against the information capacity for varying and α. The scale parameter is set to γN = 0.01. Observe that the upper bound and the numerical approximation are in good agreement. The lower bound, which is obtained with a Gaussian input, is also in good agreement, despite the fact that the optimal input is discrete (Fahs and Abou-Faycal, 2017).
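The Blahut-Arimoto algorithm operates on discrete channels, so a computation like the one behind Figure 1 presumably discretizes the input and output alphabets; that discretization is not described here, so the sketch below only shows the generic iteration, including the cost-tilted update of Blahut (1972) for input constraints. A power constraint corresponds to the cost c(x) = x², with the multiplier s ≥ 0 swept until the expected cost matches P. The function name and defaults are hypothetical.

```python
import math


def blahut_arimoto(W, cost=None, s=0.0, iters=500, tol=1e-12):
    """Blahut-Arimoto iteration for a discrete memoryless channel W[x][y] = P(y | x).

    With s > 0 and an input cost vector, each update is tilted by exp(-s * cost[x]),
    which targets one point on the capacity-cost curve (Blahut, 1972).
    Returns (mutual information in nats, input distribution, expected cost).
    """
    nx, ny = len(W), len(W[0])
    cost = list(cost) if cost is not None else [0.0] * nx
    p = [1.0 / nx] * nx

    def output_law(dist):
        return [sum(dist[x] * W[x][y] for x in range(nx)) for y in range(ny)]

    for _ in range(iters):
        q = output_law(p)
        # D[x] = KL divergence between W(. | x) and the current output law q
        D = [sum(W[x][y] * math.log(W[x][y] / q[y]) for y in range(ny) if W[x][y] > 0)
             for x in range(nx)]
        weights = [p[x] * math.exp(D[x] - s * cost[x]) for x in range(nx)]
        total = sum(weights)
        new_p = [w / total for w in weights]
        converged = max(abs(a - b) for a, b in zip(new_p, p)) < tol
        p = new_p
        if converged:
            break
    q = output_law(p)
    info = sum(p[x] * W[x][y] * math.log(W[x][y] / q[y])
               for x in range(nx) for y in range(ny) if W[x][y] > 0)
    return info, p, sum(px * cx for px, cx in zip(p, cost))
```

With `s = 0` and no cost the iteration is the unconstrained algorithm; for a binary symmetric channel with crossover probability 0.1 it returns log 2 - H(0.1) ≈ 0.368 nats with the uniform input.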

FIGURE 1. Capacity of symmetric α-stable noise channels subject to a power constraint P, with γN = 0.01.

Figure 2 plots the capacity upper bounds in Theorem 2 [from (de Freitas et al., 2017)] and our new upper bound in Theorem 3 in the case of the constraint

FIGURE 2. Capacity of symmetric α-stable noise channels subject to the constraint

4 Vector Channels

In this section, we return to the general problem in Eq. 12 for vector channels with d > 1.

4.1 Existence and Uniqueness of Optimal Inputs

While existence and uniqueness of optimal inputs is well understood in the scalar case (Fahs and Abou-Faycal, 2016), it has not yet been established in the vector case. We prove this result in the following theorem by utilizing the theory of weak convergence (Billingsley, 1999).

Theorem 6 For the optimization problem in (12), there exists a unique input probability measure μ* corresponding to an input random vector X* on

Proof. The proof proceeds in three steps: (i) weak compactness of the constraint set ΛX(P, r); (ii) weak continuity of I(X; Y) on ΛX(P, r), yielding existence of

(i) For any ϵ > 0, there exists

The inequality in Eq. 42 holds as a consequence of the generalized Markov inequality in (Marshall, 1984, Example 2.3). In more detail,

Now, choose

Hence, μ0 ∈ ΛX(P, r). Since the choice of sequence is arbitrary, it follows that ΛX(P, r) is closed in the topology of weak convergence. Since ΛX(P, r) is tight and closed in the topology of weak convergence, it then follows by Prokhorov’s theorem (Billingsley, 1999) that ΛX(P, r) is compact.
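The tightness part of step (i) can be made concrete under one assumption about the constraint set, namely that ΛX(P, r) collects the measures with E[|Xi|^r] ≤ Pi for each component i (the vector notation P ⪰ 0 in Theorem 7 suggests this form, but the defining display is not shown here). With the compact cube K_R = [-R, R]^d, a union bound and Markov's inequality give

$$
\mu\left(\mathbb{R}^d \setminus K_R\right) \le \sum_{i=1}^{d} \Pr\left(|X_i| > R\right) \le \sum_{i=1}^{d} \frac{\mathbb{E}|X_i|^r}{R^r} \le \frac{\sum_{i=1}^{d} P_i}{R^r},
$$

so the choice R = (Σi Pi / ϵ)^{1/r} yields μ(ℝ^d \ K_R) ≤ ϵ uniformly over all μ in ΛX(P, r).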

(ii) The second step is to establish that I(X; Y) is weakly continuous on ΛX(P, r). In particular, we need to show that for any weakly convergent sequence of probability measures

where Yn is the output corresponding to an input Xn with probability measure μn. Note that Yn = Xn + N admits a probability density function since N is truly d-dimensional. Observe that if the limit and the integral in Eq. 45 can be swapped, the result follows from the definition of weak convergence if the probability density function of N, pN, is bounded and continuous. Note that this is indeed the case since the characteristic function of N,

To proceed, let

which is a Cauchy density on

where the last term follows from the fact that

which tends to zero as R(δ) → ∞. Here, L < ∞ since by the Jensen and Hölder inequalities

which implies

since the probability measure μ corresponding to X lies in ΛX(P, r). Similarly,

which tends to zero as R(δ) → ∞. Moreover,

Note that

by Hölder’s inequality. As such, Eq. 53 is finite and tends to zero as R(δ) → ∞. After an application of the dominated convergence theorem, for any δ > 0

Since the identities in Eqs 49, 52, 53, 55 hold for all δ > 0, weak continuity of I(X; Y) follows by taking δ → 0 (and hence R(δ) → ∞). The existence part of Theorem 6 then holds by applying the extreme value theorem.

(iii) The uniqueness of the optimal input follows from the fact that the entropy h(Y) is a strictly concave function of the distribution PY. Since PY = PX ∗ PN depends linearly on PX and the characteristic function of N is strictly positive (and hence nonvanishing), the map PX ↦ PY is linear and one-to-one. Hence, h(Y) is a strictly concave function of PX. As the mutual information can be written as

it follows that I(X; Y) is a strictly concave function of PX since h(N) does not depend on PX. Since this holds for any input lying in Λ(P, r), it then follows that the optimal input probability measure

4.2 Capacity Upper Bound

We now obtain a general upper bound on the capacity in multivariate α-stable noise, which holds for constraints with 1 ≤ r ≤ 2.

Theorem 7 Let 1 < α < 2, 1 ≤ r ≤ 2, P ⪰ 0 and

Proof. Recall that

For each term h(Yi), the same argument as Theorem 3 yields

The result then follows since for all X with probability measure μX ∈ Λ(P, r),

As in the scalar case, the term h(N) is not available in closed form and must be evaluated numerically. In the numerical study in Section 4.4, h(N) is estimated via nearest neighbor methods.

4.3 Capacity Lower Bounds

We now generalize the results in Section 3.2 to the case of vector channels. As for scalar channels, we consider the two cases: 1 ≤ r < α; and r = 2.

Theorem 8 Let 1 < α < 2, 1 ≤ r < α and P ⪰ 0. The capacity C(P, r) in (12) is lower bounded by

where

Proof. Let

and set

In order to ensure that μX ∈ ΛX(P, r), we recall (Shao and Nikias, 1993, Theorem 4)

It then follows that

as required.

Theorem 9 Let 1 < α < 2, r = 2, and P ⪰ 0. For the channel (10) with N sub-Gaussian α-stable with underlying random vector

where ΣX is any positive definite matrix with diagonal elements

and

Proof. Let

where

In order to satisfy the power constraints, we require

which yields the desired result.

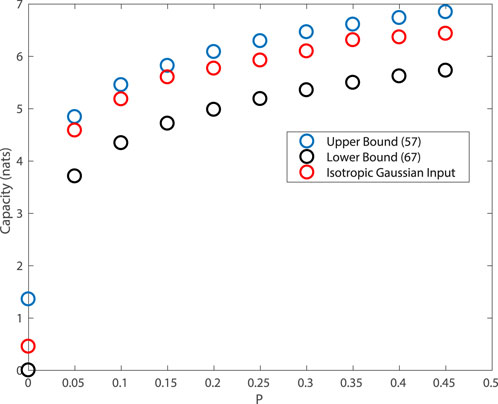

4.4 Numerical Results

In this section, we study the behavior of the bounds in the case of two-dimensional sub-Gaussian α-stable noise, where inputs are subject to a power constraint.
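Two-dimensional sub-Gaussian α-stable noise can be simulated through its standard representation N = A^{1/2}G (Samorodnitsky and Taqqu, 1994), where A ∼ Sα/2((cos(πα/4))^{2/α}, 1, 0) is a positive, totally skewed stable variable and G ∼ N(0, Σ) is independent of A. The sketch below draws A with the Chambers-Mallows-Stuck method for β = 1; the function names and the 2 × 2 Cholesky step are ours, and the code is not taken from the numerical study.

```python
import math
import random


def positive_stable(a, rng):
    """One draw of S_a(1, 1, 0) for 0 < a < 1 via Chambers-Mallows-Stuck with beta = 1."""
    v = rng.uniform(-math.pi / 2, math.pi / 2)
    w = rng.expovariate(1.0)
    shift = math.pi / 2                              # atan(tan(pi a / 2)) / a when beta = 1
    s = math.cos(math.pi * a / 2) ** (-1.0 / a)      # (1 + tan^2(pi a / 2))^(1 / (2a))
    return (s * math.sin(a * (v + shift)) / math.cos(v) ** (1.0 / a)
            * (math.cos(v - a * (v + shift)) / w) ** ((1.0 - a) / a))


def sub_gaussian_noise(alpha, cov, n, seed=0):
    """n draws of 2-D sub-Gaussian S_alpha noise N = sqrt(A) * G (0 < alpha < 2).

    A ~ S_{alpha/2}((cos(pi alpha / 4))^(2/alpha), 1, 0); G ~ N(0, cov) independent of A.
    """
    if not 0.0 < alpha < 2.0:
        raise ValueError("requires 0 < alpha < 2")
    rng = random.Random(seed)
    a = alpha / 2.0
    scale = math.cos(math.pi * alpha / 4.0) ** (2.0 / alpha)
    (s11, s12), (_, s22) = cov
    # Cholesky factor of the 2 x 2 covariance matrix
    l11 = math.sqrt(s11)
    l21 = s12 / l11
    l22 = math.sqrt(s22 - l21 * l21)
    samples = []
    for _ in range(n):
        amp = math.sqrt(scale * positive_stable(a, rng))  # shared random amplitude
        g1, g2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        samples.append((amp * l11 * g1, amp * (l21 * g1 + l22 * g2)))
    return samples
```

A call such as `sub_gaussian_noise(1.2, ((1.0, rho), (rho, 1.0)), n)` mimics the α = 1.2 setting; the unit-diagonal form of Σ is an assumption, since the matrices in Eqs. 72 and 73 are not shown here. Because both components share the random amplitude A^{1/2}, they are dependent even when Σ is diagonal, which distinguishes sub-Gaussian noise from independent SαS components.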

Figure 3 plots the capacity bounds in the previous section for varying values of P in the presence of sub-Gaussian α-stable noise, with α = 1.2 and

In order to compute the entropy h(N), the 1-nearest neighbor method (Berrett et al., 2019) was used. Observe that there is a gap of approximately one nat between the upper bound in Theorem 7 and the lower bound in Theorem 9 with ΣX chosen to be proportional to Σ.
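Berrett et al. (2019) analyse weighted k-nearest-neighbour entropy estimators; with k = 1 and no weighting, this reduces to the classical Kozachenko-Leonenko estimator ĥ = (d/n) Σi log ρi + log Vd + log(n - 1) + γE, where ρi is the distance from sample i to its nearest neighbour, Vd is the volume of the unit ball in ℝ^d, and γE is the Euler-Mascheroni constant. A brute-force sketch of that unweighted form, assuming this is what the 1-nearest neighbor method refers to here (the function name is hypothetical):

```python
import math

EULER_GAMMA = 0.5772156649015329  # Euler-Mascheroni constant, equals -digamma(1)


def kl_entropy(samples):
    """Kozachenko-Leonenko (k = 1, unweighted) differential entropy estimate in nats.

    samples: sequence of equal-length tuples, i.e. points in R^d (duplicates not allowed).
    Uses an O(n^2) brute-force nearest-neighbour search.
    """
    n, d = len(samples), len(samples[0])
    if n < 2:
        raise ValueError("need at least two samples")
    # log volume of the unit Euclidean ball in R^d
    log_unit_ball = 0.5 * d * math.log(math.pi) - math.lgamma(0.5 * d + 1.0)
    total = 0.0
    for i, xi in enumerate(samples):
        rho = min(math.dist(xi, xj) for j, xj in enumerate(samples) if j != i)
        total += d * math.log(rho)
    return total / n + log_unit_ball + math.log(n - 1) + EULER_GAMMA
```

Applied to simulated samples of N, the estimate of h(N) can be substituted into the upper bound of Theorem 7. The quadratic search is only practical for a few thousand points; a k-d tree is the usual remedy for larger sample sizes.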

FIGURE 3. Capacity bounds for two-dimensional sub-Gaussian α-stable noise channels subject to a power constraint P, with Σ given in Eq. 72 and α = 1.2.

The third curve in red corresponds to the case of a two-dimensional isotropic Gaussian input where each component has variance P. Observe that the mutual information obtained with this input is close to the upper bound. This suggests that for sub-Gaussian α-stable noise channels, Gaussian inputs perform well and, moreover, independent components are desirable. This can be understood by an inspection of Theorem 9, where choosing ΣX to be diagonal maximizes the determinant when Σ = 0.
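A 2 × 2 calculation illustrates why independent input components are favoured in bounds that involve a determinant. Suppose, as an assumption about the form of Theorem 9 whose display is not shown here, that the power constraint fixes the diagonal of ΣX at P1 and P2 and leaves the off-diagonal entry c free. Then

$$
\det\begin{pmatrix} P_1 & c \\ c & P_2 \end{pmatrix} = P_1 P_2 - c^2 \le P_1 P_2,
$$

with equality if and only if c = 0. Likewise, if Σ = diag(σ1², σ2²) and a ≥ 0, then det(ΣX + aΣ) = (P1 + aσ1²)(P2 + aσ2²) - c² is also maximized at c = 0. More generally, Hadamard's inequality states that the determinant of a positive definite matrix is at most the product of its diagonal entries.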

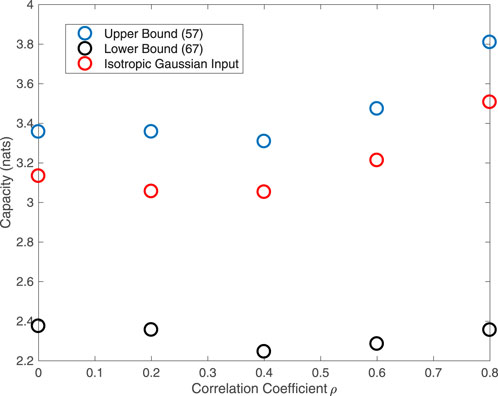

Figure 4 plots the capacity bounds in the previous section subject to a power constraint p = 0.01 for varying values of ρ in the presence of sub-Gaussian α-stable noise, with α = 1.2 and

The results are consistent with Figure 3, with the isotropic Gaussian input performing well for all values of ρ. We also observe that the curves increase for sufficiently large values of ρ, suggesting that increasing the noise dependence can lead to performance improvements. This is relevant for communication systems, such as those in (Zheng et al., 2019, 2020), where the noise is dominated by interference that may be modified via changes to access policies.

FIGURE 4. Capacity bounds for two-dimensional sub-Gaussian α-stable noise channels for varying noise dependence ρ subject to a power constraint p = 0.01, with Σ given in Eq. 73 and α = 1.2.

5 Conclusion

Multivariate α-stable models have been suggested to capture the heavy-tailed nature of interference in OFDM-based wireless communication systems. In this paper, we studied the capacity of fractional moment and power constrained signaling in the presence of such noise. By considering non-isotropic inputs, we obtained upper and lower bounds, which provide insights into the behavior of the capacity and its relation to Gaussian noise models. Via a numerical study in two-dimensional channels with sub-Gaussian α-stable noise, we compared the performance of isotropic and non-isotropic Gaussian inputs. The results suggest that, at least in this special case, isotropic Gaussian inputs remain a desirable choice.

Data Availability Statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Author Contributions

ME formulated the problem, carried out the mathematical analysis and numerical study, and wrote the paper.

Funding

This work has been (partly) funded by the French National Agency for Research (ANR) under grant ANR-16-CE25-0001-ARBURST.

Conflict of Interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

We would like to acknowledge useful discussions with L. Clavier, G. W. Peters, N. Azzaoui, and J.-M. Gorce.

Footnotes

¹ The notation

References

Arimoto, S. (1972). An Algorithm for Computing the Capacity of Arbitrary Discrete Memoryless Channels. IEEE Trans. Inform. Theor. 18, 14–20. doi:10.1109/tit.1972.1054753

Berrett, T., Samworth, R., and Yuan, M. (2019). Efficient Multivariate Entropy Estimation via K-Nearest Neighbour Distances. Ann. Stat. 47, 288–318. doi:10.1214/18-aos1688

Blahut, R. (1972). Computation of Channel Capacity and Rate-Distortion Functions. IEEE Trans. Inform. Theor. 18, 460–473. doi:10.1109/tit.1972.1054855

Byczkowski, T., Nolan, J. P., and Rajput, B. (1993). Approximation of Multidimensional Stable Densities. J. Multivariate Anal. 46, 13–31. doi:10.1006/jmva.1993.1044

Chitre, M., Potter, J., and Heng, O. (2004). “Underwater Acoustic Channel Characterisation for Medium-Range Shallow Water Communications,” in MTS/IEEE Techno-Ocean’04 (IEEE).

Clavier, L., Pedersen, T., Larrad, I., Lauridsen, M., and Egan, M. (2021b). Experimental Evidence for Heavy Tailed Interference in the IoT. IEEE Commun. Lett. 25, 692–695. doi:10.1109/lcomm.2020.3034430

Clavier, L., Peters, G., Septier, F., and Nevat, I. (2021a). Impulsive Noise Modeling and Robust Receiver Design. EURASIP J. Wireless Commun. Networking 13, 1. doi:10.1186/s13638-020-01868-1

Cover, T., and Thomas, J. (2006). Elements of Information Theory. Second Edition. Hoboken, NJ: John Wiley & Sons.

de Freitas, M. L., Egan, M., Clavier, L., Goupil, A., Peters, G. W., and Azzaoui, N. (2017). Capacity Bounds for Additive Symmetric α-Stable Noise Channels. IEEE Trans. Inform. Theor. 63, 5115–5123. doi:10.1109/tit.2017.2676104

Dytso, A., Bustin, R., Vincent Poor, H., and Shamai, S. (2018). Analytical Properties of Generalized Gaussian Distributions. J. Stat. Distributions Appl. 5, 1–40. doi:10.1186/s40488-018-0088-5

Egan, M., Clavier, L., Zheng, C., de Freitas, M., and Gorce, J.-M. (2018). Dynamic Interference for Uplink SCMA in Large-Scale Wireless Networks without Coordination. EURASIP J. Wireless Commun. Networking 213, 1. doi:10.1186/s13638-018-1225-z

Egan, M. (2019). “On Capacity Sensitivity in Additive Vector Symmetric α-stable Noise Channels,” in Proc. IEEE Wireless Communications and Networking Conference Workshop (WCNCW) (IEEE). doi:10.1109/wcncw.2019.8902901

Fahs, J., and Abou-Faycal, I. (2017). On Properties of the Support of Capacity-Achieving Distributions for Additive Noise Channel Models with Input Cost Constraints. IEEE Trans. Inform. Theor. 64, 1178–1198. doi:10.1109/tit.2017.2771815

Fahs, J., and Abou-Faycal, I. (2016). On the Finiteness of the Capacity of Continuous Channels. IEEE Trans. Commun. 64, 166–173. doi:10.1109/tcomm.2015.2503403

Farsad, N., Guo, W., Chae, C.-B., and Eckford, A. (2015). “Stable Distributions as Noise Models for Molecular Communication,” in Proc. IEEE Global Communications Conference (IEEE). doi:10.1109/glocom.2015.7417583

Freitas, M., Egan, M., Clavier, L., Savard, A., and Gorce, J.-M. (2018). “Power Control in Parallel Symmetric α-stable Noise Channels,” in IEEE International Workshop on Signal Processing Advances in Wireless Communications (SPAWC) (IEEE).

Ghannudi, H. E., Clavier, L., Azzaoui, N., Septier, F., and Rolland, P.-A. (2010). α-Stable Interference Modeling and Cauchy Receiver for an IR-UWB Ad Hoc Network. IEEE Trans. Commun. 58, 1748–1757. doi:10.1109/tcomm.2010.06.090074

Gu, W., and Clavier, L. (2012). Decoding Metric Study for Turbo Codes in Very Impulsive Environment. IEEE Commun. Lett. 16, 256–258. doi:10.1109/lcomm.2011.112311.111504

Gulati, K., Evans, B. L., Andrews, J. G., and Tinsley, K. R. (2010). Statistics of Co-channel Interference in a Field of Poisson and Poisson-Poisson Clustered Interferers. IEEE Trans. Signal. Process. 58, 6207–6222. doi:10.1109/tsp.2010.2072922

Hall, H. (1966). A New Model for Impulsive Phenomena: Application to Atmospheric-Noise Communication Channels. Stanford, CA: Tech. Rep. 3412-8, Stanford Electronics Labs, Stanford University.

Han, T. (2003). Information-Spectrum Methods in Information Theory. Berlin Heidelberg: Springer-Verlag.

Ilow, J., and Hatzinakos, D. (1998). Analytic Alpha-Stable Noise Modeling in a Poisson Field of Interferers or Scatterers. IEEE Trans. Signal. Process. 46, 1601–1611. doi:10.1109/78.678475

Kuruoglu, E. E. (2001). Density Parameter Estimation of Skewed α-stable Distributions. IEEE Trans. Signal. Process. 49, 2192–2201. doi:10.1109/78.950775

Lauridsen, M., Vejlgaard, B., Kovács, I., Nguyen, H., and Mogensen, P. (2017). “Interference Measurements in the European 868 MHz ISM Band with Focus on LoRa and SigFox,” in IEEE Wireless Communications and Networking Conference (WCNC) (IEEE). doi:10.1109/wcnc.2017.7925650

Mahmood, A., Chitre, M., and Armand, M. A. (2014). On Single-Carrier Communication in Additive White Symmetric Alpha-Stable Noise. IEEE Trans. Commun. 62, 3584–3599. doi:10.1109/tcomm.2014.2351819

Marshall, A. W. (1984). Markov’s Inequality for Random Variables Taking Values in a Linear Topological Space. Inequalities Stat. Probab. 5, 104–108. doi:10.1214/lnms/1215465634

Mestrah, Y., Savard, A., Goupil, A., Gellé, G., and Clavier, L. (2020). An Unsupervised LLR Estimation with Unknown Noise Distribution. EURASIP J. Wireless Commun. Networking 26, 1. doi:10.1186/s13638-019-1608-9

Middleton, D. (1977). Statistical-physical Models of Electromagnetic Interference. IEEE Trans. Electromagn. Compat. EMC-19, 106–127. doi:10.1109/temc.1977.303527

Niranjayan, S., and Beaulieu, N. (2009). The BER Optimal Linear Rake Receiver for Signal Detection in Symmetric Alpha-Stable Noise. IEEE Trans. Commun. 57, 3585–3588. doi:10.1109/tcomm.2009.12.0701392

Pinto, P. C., and Win, M. Z. (2010). Communication in a Poisson Field of Interferers–Part I: Interference Distribution and Error Probability. IEEE Trans. Wireless Commun. 9, 2176–2186. doi:10.1109/twc.2010.07.060438

Samorodnitsky, G., and Taqqu, M. (1994). Stable Non-Gaussian Random Processes. New York, NY: CRC Press.

Shao, M., and Nikias, C. L. (1993). Signal Processing with Fractional Lower Order Moments: Stable Processes and Their Applications. Proc. IEEE 81, 986–1010. doi:10.1109/5.231338

Sousa, E. S. (1992). Performance of a Spread Spectrum Packet Radio Network Link in a Poisson Field of Interferers. IEEE Trans. Inform. Theor. 38, 1743–1754. doi:10.1109/18.165447

Zheng, C., Egan, M., Clavier, L., Pedersen, T., and Gorce, J.-M. (2020). “Linear Combining in Dependent α-stable Interference,” in IEEE International Conference on Communications (ICC) (IEEE). doi:10.1109/icc40277.2020.9148724

Zheng, C., Egan, M., Clavier, L., Peters, G., and Gorce, J.-M. (2019). “Copula-based Interference Models for IoT Wireless Networks,” in Proc. IEEE International Conference on Communications (ICC) (IEEE). doi:10.1109/icc.2019.8761783

Zimmermann, M., and Dostert, K. (2002). Analysis and Modeling of Impulsive Noise in Broad-Band Powerline Communications. IEEE Trans. Electromagn. Compat. 44, 249–258. doi:10.1109/15.990732

Keywords: α-stable distributions, heavy-tailed noise, non-isotropic inputs, information capacity, discrete inputs

Citation: Egan M (2021) Isotropic and Non-Isotropic Signaling in Multivariate α-Stable Noise. Front. Comms. Net 2:718945. doi: 10.3389/frcmn.2021.718945

Received: 01 June 2021; Accepted: 21 September 2021;

Published: 13 October 2021.

Edited by:

Ebrahim Bedeer, University of Saskatchewan, Canada

Reviewed by:

Filbert Juwono, Curtin University Sarawak, Malaysia

Ye Wu, University of North Carolina at Chapel Hill, United States

Copyright © 2021 Egan. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Malcolm Egan , bWFsY29tLmVnYW5AaW5yaWEuZnI=

Malcolm Egan
