PERSPECTIVE article

Front. Commun., 15 April 2025

Sec. Science and Environmental Communication

Volume 10 - 2025 | https://doi.org/10.3389/fcomm.2025.1589116

This article is part of the Research Topic: The Erosion of Trust in the 21st Century: Origins, Implications, and Solutions

The recent pandemic has revealed the importance of understanding how scientific knowledge is produced, if citizens are to make decisions that protect themselves, their communities, and their nations. Creating such an understanding presents a critical and urgent challenge for the world’s education systems. In this essay, we propose that teachers prioritize producing an understanding of the scientific process at all levels of science education.

For those in the scientific community, the pandemic served as a distressing wake-up call. It revealed the stark truth that millions of people have little faith in science—or in scientists. In a survey of 9,500 American adults conducted by the Pew Research Center in 2024, nearly one-quarter of respondents (23%) expressed “not much or no confidence” that scientists act in the best interest of the public—a value that was only 12% prior to COVID (Pew Research Center, 2024). And in 2025, the entire world is experiencing the ongoing consequences of the fact that only about one-third of Americans believe that climate scientists understand very well whether climate change is happening (Pew Research Center, 2023).

Far from valuing scientific expertise, many seem to feel that scientific judgments are little more than the personal beliefs of individual scientists—and that the scientific consensus on issues that range from vaccine safety to climate change should be regarded in the same way as any other opinion one might encounter through social media. As a result, scientific conclusions in conflict with one’s views can be safely ignored.

What allowed this seemingly sudden decline in scientific trust? We contend that the public’s misapprehension about the value and meaning of science and scientific consensus reflects a failure of science education.

Those of us who trained as scientists experience firsthand how the scientific process creates reliable knowledge through a broad community effort that embraces many checks and balances. We appreciate that scientific consensus is always open to change—based on new evidence and ideas—and that our knowledge improves gradually over time, with refinements that bring it ever closer to the truth.

But in our eagerness to expose students to all the amazing things that science has discovered about the natural world, we often overlook the most critical task for scientific education: teaching students how the remarkable human endeavor that we call science creates a vast treasure trove of knowledge.

In this essay, we address the question of when and why we can trust science—and how we can identify scientific claims we can trust. We explain how scientists work together, as part of a larger scientific community, to generate reliable knowledge. We describe how the scientific process builds a consensus, and how new evidence can change the ways that scientists—and, ultimately, the rest of us—see the world. Last, but not least, we discuss how, through science education, we can help students to become “competent outsiders” who are able to separate science facts from science fiction.

Modern science is very much a team sport. In most fields of science, from astronomy to archeology, investigators work in teams, with collaborators, and within a broad scientific community. They debate their discoveries at conferences, write research proposals that are reviewed by their peers, and present their data in publications that allow others to evaluate everything from their methods to how they interpret their results.

In response to these open invitations for scrutiny, investigators devise even more rigorous strategies for testing their theories, and they adjust their hypotheses to best accommodate all of the available data—their own and that of other scientists. If two heads are better than one, the scientific enterprise benefits from having hundreds, even thousands of investigators putting their heads together to ponder a given problem and experimentally test—and retest—the proposed solutions. As Ludwik Fleck—a Polish microbiologist who also studied the sociology of science—put it, “A truly isolated investigator is impossible… Thinking is a collective activity” (Fleck et al., 1981). In this manner, the scientific community strives to produce a consensus.

Of course, scientists, like anyone, can make mistakes. But, as a group, scientists are not only dedicated to trying to understand the world, they are trained to examine everything they see with a critical eye. So, when we have questions that, by nature, require methodical and rigorous investigation, it only makes sense that we should turn to scientists to help us find the answers.

When we first learn about “the scientific method,” we are told that an investigator develops a hypothesis and conducts an experiment. If the results support the hypothesis, the hypothesis is confirmed. But this picture is vastly oversimplified. In reality, experiments are typically designed to disprove a hypothesis. Some might even argue that a major goal of science is not to make bold new discoveries, but to eliminate erroneous notions, irreproducible results, and incorrect interpretations.

Indeed, scientists are trained to be skeptical—even (or especially) of their own hypotheses. Good scientists operate with the knowledge that their initial ideas or models may require revision or even outright rejection. Thanks to a rigorous system of checks and balances, which is “baked in” to the scientific method, over time science corrects its own mistakes. In doing so, it steers us—bit by bit—away from misinformation and toward an increasingly accurate and reliable understanding of the world.

But if skepticism allows science to progress, it does so only because, as a community, scientists share a certain set of values. In his book Science and Human Values, Jacob Bronowski, a physicist and philosopher, noted: “Science confronts the work of one [investigator] with that of another and grafts each on each; it cannot survive without justice and honor and respect. Only by these means can science pursue its steadfast object, to explore truth” (Bronowski, 1956).

Although values and principles form the foundation to any honest endeavor, the scientific community has also developed a set of practices that facilitate the constant vetting of knowledge necessary for science to progress. These practices enable investigators to “check their work” by identifying potential problems in their theories and experiments and allowing them to pursue the necessary corrections.

1. Independent replication. When investigators publish their work, they provide comprehensive descriptions of the experimental procedures they followed and even all the materials they used. This excruciating level of detail is designed to allow others in the community to reproduce the original experiment (or conduct a very similar one). In this way, scientists can readily corroborate or extend each other’s findings—or identify a problem with the original study.

2. Randomized controlled trials. To determine if a new drug or vaccine (or even a high-school science curriculum) is more effective than the one currently in use, scientists compare what happens to a group of people who receive the new intervention to a “control” group that does not. This control group often includes people who receive either the conventional treatment or a placebo, that is, an inactive substance or a sham (or “dummy”) treatment. To reduce potential bias, such studies assign participants to each group at random. Randomized controlled trials represent the gold-standard approach to determining, with a high degree of confidence, whether a new treatment is both effective and safe.

3. Blinded analysis. When scientists design and conduct their experiments, they often use a “blinded analysis” to avoid (whether purposefully or unintentionally) favoring the data that support their hypotheses. For example, in clinical trials, investigators conducting the study typically do not know which participants receive the drug being tested and which get a placebo. Very often, the participants themselves do not know either—ensuring that nobody involved in the study can inadvertently sway the results.

4. Statistical validation. Scientific data will always exhibit some degree of variability, so researchers use statistical analyses to assess how likely it is that a particular result is “real,” as opposed to something that could have happened by chance. To avoid being misled, good scientists design their experiments with the appropriate controls, replicate samples, and a sample size that is large enough to assure them that their results are meaningful and not simply due to random luck.

5. Peer review. Everything that scientists do is subject to review by fellow scientists. Before they even begin their research, investigators typically submit requests for funding, explaining what they intend to do and how they intend to do it. These applications are evaluated by other researchers to ensure that only well-designed projects will receive financial backing. The articles that scientists write to describe their research are similarly assessed before being accepted for publication in “peer-reviewed” journals. Once those papers are published, they are subject to critique by the broader scientific community.
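Two of the practices listed above, random assignment and statistical validation, can be illustrated with a short sketch. The Python code below is not taken from any study cited in this article: the function names `assign_at_random` and `permutation_p_value`, the participant labels, and the scores in the usage example are all hypothetical, and a permutation test is only one of many ways to ask whether a difference between two groups could plausibly have arisen by chance.

```python
import random
from statistics import mean


def assign_at_random(participants, seed=None):
    """Shuffle the participants and split them into two groups.

    Returns (treatment_group, control_group). Random assignment keeps the
    investigator's preferences from influencing who receives the intervention.
    """
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def permutation_p_value(treatment, control, n_shuffles=10_000, seed=None):
    """Estimate how often chance alone produces a difference in group means
    at least as large as the observed one (two-sided permutation test)."""
    rng = random.Random(seed)
    observed = abs(mean(treatment) - mean(control))
    pooled = list(treatment) + list(control)
    n_treat = len(treatment)
    at_least_as_extreme = 0
    for _ in range(n_shuffles):
        # Relabel the outcomes at random, as if group membership did not matter.
        rng.shuffle(pooled)
        diff = abs(mean(pooled[:n_treat]) - mean(pooled[n_treat:]))
        if diff >= observed:
            at_least_as_extreme += 1
    return at_least_as_extreme / n_shuffles


if __name__ == "__main__":
    # Hypothetical participants and invented outcome scores, for illustration only.
    people = [f"participant_{i}" for i in range(1, 17)]
    treated, controls = assign_at_random(people, seed=42)
    print(len(treated), "treated;", len(controls), "controls")

    treated_scores = [72, 75, 78, 80, 81, 83, 85, 88]
    control_scores = [65, 68, 70, 71, 73, 74, 76, 79]
    print("estimated p-value:", permutation_p_value(treated_scores, control_scores, seed=42))
```

A small estimated p-value suggests that random relabeling rarely reproduces a difference as large as the observed one. It does not show that the result is correct, which is why replication and peer review remain part of the process.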

By publishing their results and subjecting their methods and analyses to critical review, scientists facilitate the exchange of ideas, challenge hypotheses and interpretations, and encourage each other to continually reassess their theories and refine their conclusions. Thus, although individual scientists may get things wrong, only those claims that have passed the rigorous testing of community-wide experimentation and critique are accepted as provisionally valid, thereby moving us toward a consensus view that is reliable and in which we can trust. As scientist and historian Naomi Oreskes says in her book Why Trust Science: “…the basis for our trust is not in scientists—as wise or upright individuals—but in science as a social process that rigorously vets claims” (Oreskes, 2019).

Science does not progress by simply confirming the same information, under the same set of circumstances, again and again. The beauty of the scientific enterprise is that it uses past observations and experiments to predict how the natural world will behave in the future. It does so by producing models, or conceptual frameworks, that are then tested repeatedly by investigators in other labs—and even in other fields of science—to determine whether they always hold true. New experiments may confirm a model, lead to its alteration in small or large ways, or prompt its rejection and replacement with one that better accommodates all of the data.

In this way, science has produced a vast web of interconnected, well-established knowledge that allows us not only to describe or account for the things we observe today, but also to predict what will happen tomorrow and 100 years from now. The laws of motion developed by Sir Isaac Newton in the late 1600s, for example, are still valid today. They allow us to accurately gauge how much fuel is needed to launch a rocket ship that will reach Mars, or whether detonating a precisely targeted explosion will provide enough force to alter the path of an asteroid that might otherwise collide with the Earth in 5 months, 5 years, or 5 centuries.

It is clear that science is an iterative, never-ending process of exploration and analysis in which even popular ideas are continuously re-evaluated as scientists make new observations and gather fresh evidence. In some cases, this new evidence can totally upend the way we see the world.

In the 1950s, for example, geologists were using sonar to map the ocean floor. Instead of the smooth surface that they expected, they discovered mountain ranges and trenches that were formed as the seafloor spread. As scientists continued to survey the ocean floor, studying the way that magnetic materials aligned as ancient rocks were formed, their findings revealed that not only have the continents shifted relative to one another, but so have massive slabs of the Earth’s crust—the so-called “tectonic plates” upon which the planet’s continents and its oceans all ride. This discovery caused a tectonic shift in our understanding of our planet’s history and of the earthquakes and volcanic eruptions that drifting continents leave in their wake.

Although the process of scientific inquiry occasionally leads to such dramatic changes in our understanding of the natural world, most changes in scientific knowledge are much more gradual. As more and more studies are conducted, the community moves toward a deeper understanding of a problem or question, one step at a time.

Take the discovery that specific germs can cause a particular disease. In the late 1800s, the German physician Robert Koch used a microscope to examine the blackened blood of cows and sheep killed by anthrax and saw what appeared to be tiny sticks or threads—structures he never found in healthy animals. After inoculating a mouse with the diseased blood, Koch found that the sickened rodent was teeming with the suspicious sticks. Even then, he could not be sure that there wasn’t something else in his sample that was causing the disease. So he came up with a technique for growing microbes in a culture dish that produced a pure population containing only one type of germ. This careful, step-by-step approach allowed him to prove that a specific microbe, a rod-shaped bacterium he collected and cultured in the lab, was the cause of anthrax (Koch, 1876; Blevins and Bronze, 2010).

Today, clinicians make use of powerful DNA technologies that can quickly screen patient samples for genes that are associated with hundreds of known disease-causing viruses, bacteria, parasites, and fungi (Tan et al., 2024). Such an approach led to the rapid isolation and identification of the virus responsible for COVID. In this case, the initial discovery of the virus was rapidly followed by studies of how it gets into host cells and how it is transmitted from person to person—findings that were quickly confirmed by multiple laboratories around the world. This concerted scientific effort drove the development and administration of a novel vaccine to billions of people less than a year after the first reports of infection (Saag, 2022). Such rapid progress from a basic discovery to a clinical benefit shows that—even with all of the checks and balances of controlled, blinded trials and peer review—science can sometimes reach a consensus in record time.

Thanks to the explosive expansion of the internet and the inescapable spread of social media, most of us now have virtually unlimited access to a tidal wave of information. Sadly, a great deal of this information is not accurate. Anyone with a large number of online followers, but little scientific background, can publicize dubious or unconfirmed studies, or even fabricate them out of thin air. Some may advocate sincere but unscientific or disproven beliefs, like the supposed link between autism and childhood vaccines. Others foster falsehoods for financial gain, like oil company lobbyists who deny the role that fossil fuels are playing in global climate change. In this informational free-for-all, false claims often become quickly sensationalized and disseminated to millions of people.

Although most of us recognize the need to think critically when we read or see stories on the web, on social media, or in the popular press, how can we determine whether a particular study or story is trustworthy? How can we inoculate ourselves against being fooled by scientific untruths or misrepresentations? Researchers devoted to promoting science literacy have devised a three-step process for separating science fact from science fiction (Osborne and Pimentel, 2022).

The first and perhaps most critical step involves evaluating the source of the claim. Who is providing or promoting the information? Do they have economic or political reasons to spread these views? What, if anything, might they be selling?

Next, it is important to ask whether the source of the information has the expertise and credentials to validate their claim. Does the individual have the appropriate training (an MD or PhD degree, for example) and do they work in that particular field? Even highly respected scientists can be wrong when they venture too far from their areas of expertise. Not long ago, small groups of distinguished physicists questioned whether smoking caused cancer and (until their dying breaths) opposed the idea that greenhouse gases cause climate change (Oreskes and Conway, 2011).

Having an advanced degree is clearly no guarantee that someone will act ethically: the physicists just mentioned received financial backing from the industries that benefited from their “expert” testimonials. Therefore, another point to consider is whether the experts in question are generally respected by their scientific peers. During the early days of the COVID-19 pandemic, for example, a small but vocal group of physicians advocated the use of ivermectin (an antiparasitic drug widely used to de-worm horses and other livestock) to prevent infection, a strategy that is not only ineffective but can also be harmful. Some of these clinicians had previously been criticized by their peers in the medical community for promoting other unproven and ineffective treatments. Yet they continued to publicize their unsupported claims, which were then amplified by influencers with no scientific or medical training at all.

But what happens if the source of the story seems credible? At that point, it is time to assess whether a scientific consensus exists. This can sometimes be more challenging to discern. A good place to start is the website of a reliable organization, such as a respected news outlet or the National Academy of Sciences (in the United States) or the Royal Society (in the United Kingdom). In the case of climate change, for example, the community of climatologists speaks with a broad consensus when it concludes that human activity is contributing to global warming (National Academy of Sciences and Royal Society, 2020).

By now it should be clear that the entire scientific enterprise is built on trust. Integrity is so essential to science that Albert Einstein once remarked: “Most people say that it is the intellect which makes a great scientist. They are wrong: it is character.” Scientists trust one another to adhere to the standards and practices that the community has established to enable all researchers to rely on—and build on—each other’s findings.

At the same time, scientists have an obligation to be open and honest with all of us. Our taxes support much of the authoritative research we encounter in the news. And lives can depend on whether scientific studies are conducted rigorously and presented accurately. Scientists therefore have an ethical responsibility to communicate their findings in a clear and straightforward manner, to explain honestly what their conclusions mean (and what they do not mean), and to make their data as available as they can for public scrutiny.

This policy of openness did not arise spontaneously. The worldwide institution of science, as a whole, has worked long and hard to establish a system of values and incentives that strongly encourage investigators to be meticulous with their methodology and scrupulous when it comes to sharing their results. In addition, the scientific community actively discourages various forms of “bad behavior,” including the publication of fraudulent or misleading data and the promotion of unverified research. Such misconduct can waste precious resources and limited funding, erode public trust, hamper discovery, and lead us farther from reliable knowledge—thereby undermining the primary objective of scientific research.

Maintaining the cultural values of science requires a continuous input of energy and attention. Institutions like the Royal Society (established in 1660) and the National Academy of Sciences (signed into existence by President Abraham Lincoln) shore up the pillars of science by educating future generations of scientists and instilling in them the community values and practices that are required for science to remain healthy (National Academy of Sciences, 2009).

In an ideal world, no scientist would ever stray from the virtuous search for truth. Unfortunately, scientists—like all professionals—are not only human, but can be under intense pressure to succeed. They struggle to garner recognition, funding, and trainees. They need to work quickly to avoid being “scooped,” as they compete for limited space in the most widely read journals. This ever-present pressure to “publish or perish” can lead to shortcuts in the scientific process that go undetected by peer review, such as the manipulation of data or images by a member of the research team in order to create a more compelling publication. In an analysis conducted in 2009, some 2% of the scientists surveyed admitted to fabricating, falsifying, or modifying data at least once (Fanelli, 2009).

How can the scientific community prevent such ethical breaches? Best practices and proper conduct need to be outlined and practiced at all levels of the scientific enterprise—from individual scientists to their institutions and funders. At the same time, all of these participants must remain ready to identify and investigate allegations of misconduct. Technology can help: software programs, for example, can facilitate detection of manipulated figures or plagiarized text.

Transgressions, when caught, must lead to formal sanctions. These can include the retraction of publications and the subsequent correction of the scientific record, suspension or removal of the perpetrators from their positions, and the revocation of their funding. In instances in which the misbehavior amounts to a violation of the law, the individual may even face time in prison. Such was the case for the Chinese researcher who used gene editing to irreversibly alter human embryos, a practice that is not only unethical, but illegal in China and throughout the world (Nie et al., 2020).

In the end, the responsibility for improving the public image of science falls largely on scientists themselves. Only by energetically identifying and punishing the “bad actors,” while supporting and rewarding those who play fairly and operate with openness and honesty, can the worldwide scientific enterprise ensure that the public can continue to trust in the community of scientists—and in the science they produce.

The ideas we present in this article will be familiar to anyone who has undertaken advanced training in the sciences. But even people who have achieved great success in other professions—attorneys, accountants, artists, and airline pilots—are likely entirely unaware of how the scientific “sausage” is made.

Research has shown that achieving the type of understanding that we seek is not easy and will require repeated exposures (Romine et al., 2017). Thus, developing an appreciation of the scientific process, and how scientists as a community strive to uncover new reliable knowledge, must begin early on. Even 5-year-olds can be led through activities that give them a sense of how to ask questions, test ideas, and engage in respectful discussions, just like “real scientists” do (Smith et al., 2009). Attitudes and beliefs are adopted early in life, and schools are the ideal place to plant the seeds that will blossom into a trust in the scientific process. To make this happen, we need to reform science curricula and support school systems. And perhaps most importantly, we will need to train teachers so they not only understand how science works and why science matters, but have the confidence to spread this appreciation to future generations. As others have emphasized, if misinformation is allowed to take root and flourish, “we cannot hope to halt climate change, make reasoned democratic decisions, or control a global pandemic” (West and Bergstrom, 2021).

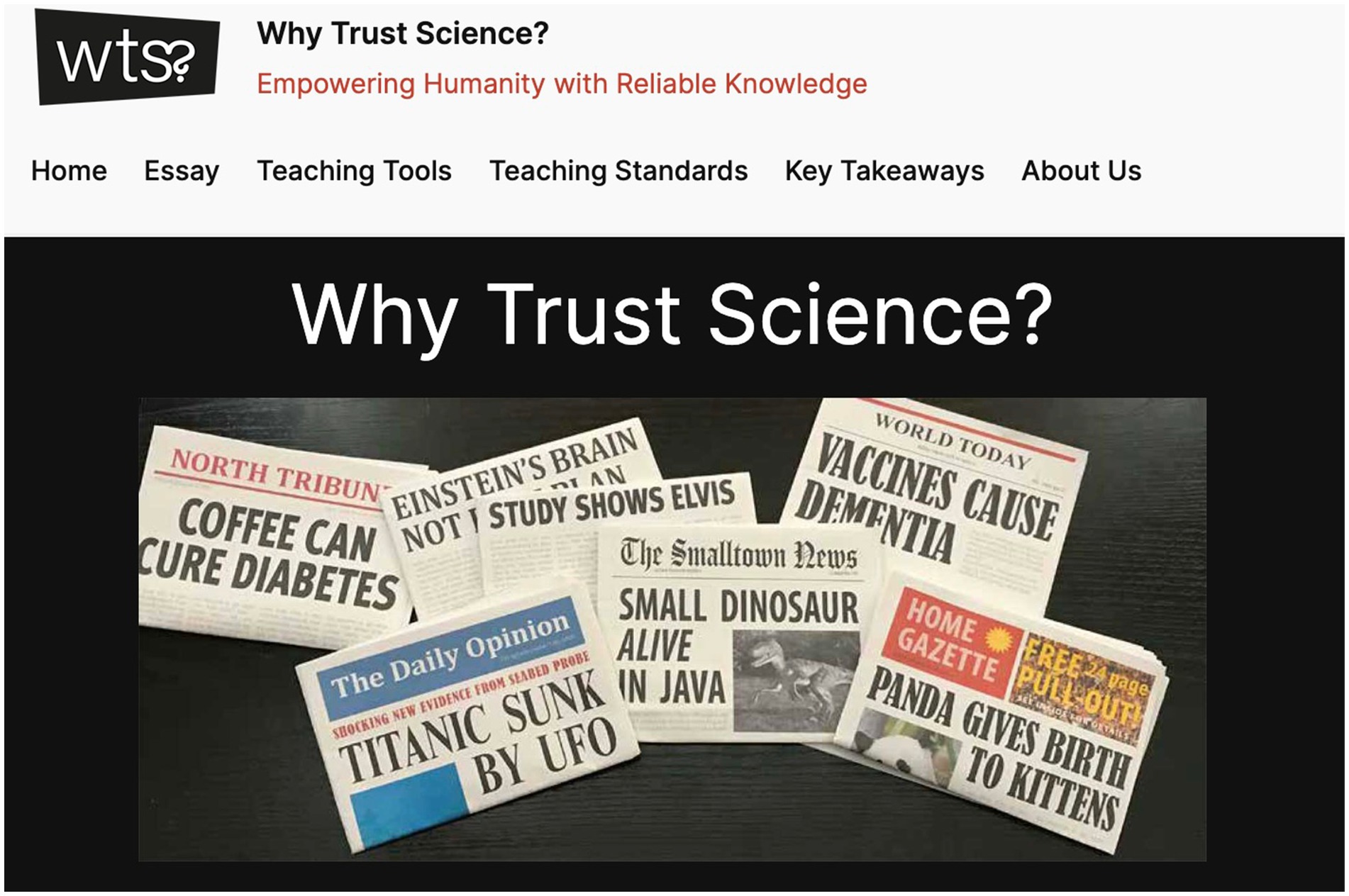

We hope that what we have written will inspire others to develop effective teaching materials for classrooms at all levels, from primary school through college. As an initial contribution, we have created a new website that presents a longer essay on why we should trust science along with a collection of free teaching tools that we have compiled from many different sources (Figure 1). We hope that readers will join us in our efforts to broaden the public understanding of science and the scientific process. Our future as a species depends on it.

Figure 1. A website to promote the explicit teaching of how the scientific community creates reliable knowledge. The teaching tools posted at https://whytrustscience.org.uk come from organizations such as the National Center for Science Education and the Strategic Education Research Partnership, as well as from individual educators and researchers. Containing a mix of in-class activities and out-of-class references to aid student learning, they represent some of the best resources we could find for teaching how science works and why evidence-based judgments are more credible than a simple belief.

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

KH: Conceptualization, Writing – original draft. KR: Validation, Visualization, Writing – review & editing. BA: Conceptualization, Writing – original draft.

The author(s) declare that no financial support was received for the research and/or publication of this article.

Much of this article has been adapted from a section we wrote for our textbook Essential Cell Biology (Norton Publishing) to which the entire author team contributed. Particular thanks are due to Sandy Johnson and to Nigel Orme, who have provided valuable input from the very first draft.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The authors declare that no Gen AI was used in the creation of this manuscript.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Blevins, S. M., and Bronze, M. S. (2010). Robert Koch and the ‘golden age’ of bacteriology. Int. J. Infect. Dis. 14, e744–e751. doi: 10.1016/j.ijid.2009.12.003

Fanelli, D. (2009). How many scientists fabricate and falsify research? A systematic review and meta-analysis of survey data. PLoS One 4:e5738. doi: 10.1371/journal.pone.0005738

Fleck, L., Kuhn, T. S., and Trenn, T. J. (1981). Genesis and development of a scientific fact. Chicago, IL: University of Chicago Press.

Koch, R. (1876). “The etiology of anthrax, founded on the course of development of Bacillus anthracis” in Essays of Robert Koch. ed. and trans. K. C. Carter (Westport, CT: Greenwood Press).

National Academy of Sciences (2009). On being a scientist: A guide to responsible conduct in research. 3rd Edn. Washington, DC: The National Academies Press.

National Academy of Sciences and Royal Society (2020). Climate change: evidence and causes: update 2020. Washington, DC: The National Academies Press.

Nie, J.-B., Xie, G., Chen, H., and Cong, Y. (2020). Conflict of interest in scientific research in China: a socio-ethical analysis of He Jiankui’s human genome-editing experiment. Bioeth. Inq. 17, 191–201. doi: 10.1007/s11673-020-09978-7

Oreskes, N., and Conway, E. M. (2011). Merchants of doubt: How a handful of scientists obscured the truth on issues from tobacco smoke to global warming. New York, NY: Bloomsbury Press.

Osborne, J., and Pimentel, D. (2022). Science, misinformation, and the role of education. Science 378, 246–248. doi: 10.1126/science.abq8093

Pew Research Center (2023). Americans continue to have doubts about scientists’ understanding of climate change. Available online at: https://www.pewresearch.org/short-reads/2023/10/25/americans-continue-to-have-doubts-about-climate-scientists-understanding-of-climate-change/ (accessed October 25, 2023).

Pew Research Center (2024). Public trust in scientists and views on their role in policymaking. Available online at: https://www.pewresearch.org/science/2024/11/14/public-trust-in-scientists-and-views-on-their-role-in-policymaking/ (accessed November 14, 2024).

Romine, W. L., Sadler, T. D., and Kinslow, D. T. (2017). Assessment of scientific literacy: development and validation of the quantitative assessment of socio-scientific reasoning (QuASSR). J. Res. Sci. Teach. 54, 274–295. doi: 10.1002/tea.21368

Saag, M. (2022). Wonder of wonders, miracle of miracles: the unprecedented speed of COVID-19 science. Physiol. Rev. 102, 1569–1577. doi: 10.1152/physrev.00010.2022

Smith, D. C., Cowan, J., and Culo, A. M. (2009). Growing seeds and scientists. Sci. Child. 47, 48–51.

Tan, K. T., Servellita, V., Stryke, D., Kelly, E., Streithorst, J., Sumimoto, N., et al. (2024). Laboratory validation of a clinical metagenomic next-generation sequencing assay for respiratory virus detection and discovery. Nat. Commun. 15:9016. doi: 10.1038/s41467-024-51470-y

Keywords: education, trust, science - general, communication, misinformation, health

Citation: Hopkin K, Roberts K and Alberts B (2025) Teaching trust in science: a critical new focus for science education. Front. Commun. 10:1589116. doi: 10.3389/fcomm.2025.1589116

Received: 06 March 2025; Accepted: 18 March 2025; Published: 15 April 2025.

Edited by:

Arri Eisen, Emory University, United States

Reviewed by:

Ernest Ricks, Morehouse School of Medicine, United States

Copyright © 2025 Hopkin, Roberts and Alberts. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Bruce Alberts, balberts@ucsf.edu

†ORCID: Keith Roberts, https://orcid.org/0000-0001-9148-6701
