- ¹ Mass Communication Department, College of Humanities and Social Sciences, King Saud University, Riyadh, Saudi Arabia

- ² Department of Arabic for International Communication at Kulliyyah of Languages and Management (KLM), International Islamic University Malaysia (IIUM), Selayang, Malaysia

- ³ Department of Mass Communication, Aligarh Muslim University, Aligarh, India

- ⁴ Radio and TV Department, Faculty of Specific Education, Minia University, Minya, Egypt

Artificial intelligence (AI) literacy is a crucial aspect of media and information literacy (MIL), regarded not only as a human right but also as a fundamental requirement for societal advancement and stability. This study aimed to provide a comprehensive, cross-border perspective on AI literacy levels by surveying 1,800 university students from four Asian and African nations. The findings revealed significant disparities in AI literacy levels based on nationality, scientific specialization, and academic degree, while age and gender did not show notable effects. Malaysian participants scored significantly higher on the AI literacy scale than participants from the other countries. The results also indicated that various demographic and academic factors were associated with respondents’ perceptions of AI and their inclination to use it. Nationality and academic degree were identified as the most influential factors, followed by scientific specialization, with age and gender exerting a lesser influence. The study highlights the need to focus research on the specific dimensions of the AI literacy scale and to examine the effects of previously untested intervening variables. It also advocates assessing AI literacy levels across different segments of society and developing appropriate measurement instruments.

Introduction

Experts from the United Nations Educational, Scientific and Cultural Organization (UNESCO) have identified 23 terms related to the field of Media and Information Literacy (MIL), including Artificial Intelligence (AI) Literacy. Specialists now consider AI Literacy a branch of Digital Literacy, which falls under the broader umbrella of Media and Information Literacy (Grizzle et al., 2021; Yang, 2022).

Wang et al. (2023) give three reasons why research on AI literacy matters: (1) it is essential to the study of human-AI interaction and to explaining how individuals behave when they interact with AI; (2) it helps measure how effectively users employ AI and establish clear standards for these competencies; and (3) it improves AI education by providing a comprehensive and detailed framework for curriculum design.

In recent years, researchers’ interest in AI literacy, particularly from an educational perspective in the social sciences and humanities, has been increasing. One review identified 30 studies in this field published between 2016 and 2021 (Ng et al., 2021).

Although AI has become a national asset that many countries are racing to use in building their economies, promoting AI literacy for everyone, as a means of advancing national goals, still requires substantial effort from policymakers, researchers, and the institutions concerned. According to Kong et al. (2021), efforts to develop AI literacy among citizens remain insufficient; for example, a course on AI literacy could be developed for university students in all disciplines.

Theoretical background

AI literacy, as an advanced aspect of information literacy, focuses on the skills individuals need to navigate and thrive in a digital world shaped by AI technologies. It does not require detailed technical expertise in AI applications; rather, it spans four dimensions: understanding AI, applying AI in practice, evaluating AI applications, and considering the ethics of AI (Zhao et al., 2022).

AI literacy can also be described as a set of competencies that enable individuals to critically evaluate AI technologies, communicate and collaborate effectively with AI, and use it as a tool online, at home, and in the workplace (Long and Magerko, 2020). This aligns with Kong et al.’s (2021) view that AI literacy has three components: understanding fundamental AI concepts, using AI concepts for evaluation, and using AI concepts to understand the real world through problem-solving.

Researchers have proposed the term “AI literacy” to emphasize its importance as an addition to the digital literacy skills that all members of society, including young children, need in the 21st century. This literacy is crucial for daily life, education, and work in the digital world. It encompasses the AI competencies that everyone should possess and focuses primarily on learners without a background in computer science, that is, non-specialists (Burgsteiner et al., 2016; Kandlhofer et al., 2016; Laupichler et al., 2022; Ng et al., 2021; Su et al., 2023).

Burgsteiner et al. (2016) used the term “AI literacy” in their paper “iRobot,” which proposed a curriculum for teaching AI basics at an Austrian secondary school and was presented at an international conference on AI in March 2016. The term had, however, appeared earlier: the Japanese author Konishi (2015) used it in an article discussing priorities for the Japanese economy.

The concept of AI literacy is still in its early stages (Su et al., 2023), and researchers initially focused on defining AI literacy as “the ability to understand the basic technologies and concepts of AI in various products and services” (Burgsteiner et al., 2016; Kandlhofer et al., 2016). Later, researchers recognized other equally important aspects of AI literacy beyond understanding the basics. These include educating learners on applying AI concepts in various contexts and applications in daily life and understanding the ethical dimensions of AI technologies. Some researchers have expanded the scope of AI literacy to include two advanced skills: critical evaluation of AI technologies and effective communication and collaboration with AI (Ng et al., 2021). These skills and dimensions are outlined as follows:

• Know and understand AI

• Use and apply AI

• Evaluate and create AI

• AI ethics

Laupichler et al. (2023b) define AI literacy as “competencies that include basic knowledge and analytical evaluation of AI, in addition to the critical use of AI applications by ordinary non-expert individuals.” They exclude programming skills from this definition because they regard them as a separate set of competencies beyond AI literacy.

Similar to data literacy or computational literacy, AI literacy is generally used to describe the competencies and skills of ordinary non-expert individuals in this field (Laupichler et al., 2023a).

Drawing on the AI-for-social-good perspective, Hermann (2022) defines AI literacy as the basic level of understanding individuals need regarding (a) how and what data are collected; (b) how data are combined or compared to draw conclusions and to create and disseminate content; (c) their own capacity to make decisions, act, and object; (d) AI’s susceptibility to bias and selectivity; and (e) the potential impact of AI in general.

Hermann (2022) addresses four dimensions of AI literacy:

• Basic understanding of AI inputs

• Basic understanding of AI functional performance

• Basic understanding of AI effectiveness and stakeholders

• Basic understanding of AI outputs and impacts

The UNESCO International Forum on AI and the Future of Education frames AI literacy as a need for all people, stating that global citizens must understand the potential impact of AI, what it can and cannot do, when it is beneficial and when skepticism is warranted, and how it can be directed toward the common good. This requires everyone to reach a certain level of competency in knowledge, understanding, skills, and guiding values (Miao and Holmes, 2021).

Literature review

Ng et al.’s (2021) review of 30 previous studies on AI literacy found that only four were set in higher education. These studies focused on learning AI in specific disciplines such as meteorology, medicine, and library science. The review noted, “University students are assumed to have a basic understanding of AI, which prepares them for further development in this field. They can apply AI skills and knowledge to solve real-world problems, addressing future academic and professional challenges.”

Laupichler et al. (2022) conducted a literature review on AI literacy in higher education and adult education to identify focal points and recent research trends in this field. They searched through 10 databases, identifying 30 studies from 902 results using predefined inclusion and exclusion criteria. The results indicated that research in this area is still in its early stages and requires improvement in defining AI literacy in adult education and determining the content that should be taught to non-experts.

Among the studies that have addressed enhancing AI literacy in higher education curricula are the series of studies by Siu-Cheung Kong and colleagues (Kong et al., 2021, 2022, 2023) and the series of studies by Laupichler et al. (2023a,b,c).

In the first study, Kong et al. (2021) evaluated an AI literacy course for university students from various academic disciplines. The study concluded that AI literacy comprises three components: grasping fundamental AI concepts, assessment, and understanding the real world through problem-solving. The course was advertised to 4,000 students, 120 of whom volunteered to participate, and took 7 h to complete. Comparisons of results before and after the course showed significant progress in grasping fundamental AI concepts among participants from diverse educational backgrounds. Participants also felt capable of functioning in their environment, and prior programming knowledge was not necessary for developing AI literacy concepts.

In the second study, Kong et al. (2022) evaluated AI literacy courses designed to build conceptual understanding among students with diverse academic backgrounds at a university in Hong Kong. Eighty-two volunteers completed two courses: a seven-hour machine learning course and a nine-hour deep learning course. The cognitive skills targeted by the learning objectives were categorized as follows:

• Remember

• Understand

• Apply

• Analyze

• Evaluate

The results indicated that the courses successfully empowered participants to grasp the fundamental concepts of AI.

In the third study, Kong et al. (2023) evaluated an educational program on AI literacy for its effectiveness in developing conceptual awareness, empowerment, and ethical awareness among university students. Thirty-six university students from varied academic backgrounds participated in the program.

A series of studies by Laupichler et al. (2023a,b,c) explored and developed a scale for measuring AI literacy among non-experts in computer science, which was later adapted to evaluate AI courses for university students.

The first study, Laupichler et al. (2023a), employed a three-stage Delphi method involving 53 experts from various fields to identify scale statements relevant to AI literacy. The validity of these statements was then verified in another study by Laupichler et al. (2023b), using a sample of 415 participants. Exploratory factor analysis was conducted on these statements, revealing the existence of three dimensions of AI literacy for non-computer science experts: technical understanding, critical evaluation, and practical application.

In the third study (Laupichler et al., 2023c), the scale was adapted to assess AI courses for non-expert university students from various disciplines. The evaluation relied on participants’ self-assessments.

A study by Schofield et al. (2023) reviewed studies that addressed Media and Information Literacy (MIL) measures between 2000 and 2021. The study concluded that the main measurement methods in those studies were self-assessments by the respondents, with few addressing large populations. Additionally, demographic and social factors were underemphasized in these measures. The study highlighted that the goal of comprehensively measuring MIL appears to be highly ambitious and challenging. This represents a notable weakness in the research.

The study recommended prioritizing several key issues in this context, including data security and privacy, harmful media content and usage, risky online behavior, content production, AI, content moderation algorithms, and surveillance.

Researchers aiming to map MIL levels must specify precisely which MIL aspects they want to measure. It will not be possible to fully examine MIL in all its breadth, no matter which framework or design is chosen (Schofield et al., 2023, p. 134). One scale that has proposed additional dimensions to measure AI literacy levels is the four-part scale that considers psychological dimensions (Carolus et al., 2023):

• Know and Understand

• Use and Apply AI

• AI ethics

• Evaluate and Create

This scale holds that the ability to assess AI systems (knowledge and understanding) matters more, as an AI literacy skill for non-specialists, than the ability to program and develop AI: “Create AI is separate and no part of AI Literacy” (Carolus et al., 2023, p. 10). In addition to these four dimensions, the scale includes two dimensions from a psychological perspective, grouped under the heading AI Self-management.

The first dimension is AI Self-Efficacy, which refers to skills related to problem-solving and continuous learning. The second dimension, AI Self-Competency, focuses on knowledge of AI’s persuasive and emotional aspects.

Pinski and Benlian (2023) presented a five-dimensional scale with 13 statements: knowledge of AI technologies, understanding of the role of humans in and interaction with AI, knowledge of the steps of AI processes (inputs and outputs), experience in interacting with AI, and experience in designing AI. This scale is clearly based on the competencies and skills of learning in the field of information systems, with the technical dimension being dominant.

Research gap

AI literacy enables individuals to critically evaluate AI technologies and use them effectively, ethically, and safely in educational, work, and personal settings, and in many other areas of life. Various measures of AI literacy have been used to capture these aspects. Some measured these competencies in a specific group, such as university students (Kong et al., 2021, 2023); others were developed for non-experts in computer science (Laupichler et al., 2023a); and in some scales the technical dimension clearly dominated (Pinski and Benlian, 2023). Carolus et al. (2023) offered a broader measure that covers the established dimensions of AI literacy while also addressing psychological and social aspects, self-development, and work readiness.

The present study sought to comprehensively review the existing body of literature pertaining to AI literacy scales within the context of higher education. This review was undertaken to identify the key components and commonalities among these measures, ultimately facilitating the selection of the most suitable instrument for a large-scale international survey.

It is clear from the above that AI literacy is a new research area and that studies on enhancing AI literacy in higher education have focused on developing measures and on students’ self-assessments across disciplines, using surveys or quasi-experimental designs. Meta-reviews of MIL measures, such as Schofield et al. (2023), concluded that few studies addressed large populations and that demographic and social factors were underemphasized. That review observed that “the goal of measuring the ‘entire’ MIL area seems to be quite ambitious and challenging,” which represents a notable weakness in the studies, and recommended focusing on several specific issues, including AI.

Montag et al. (2024) confirmed that “the investigation of attitudes toward AI together with AI literacy/competency is critical, timely, and needs to be started in large representative samples around the world.”

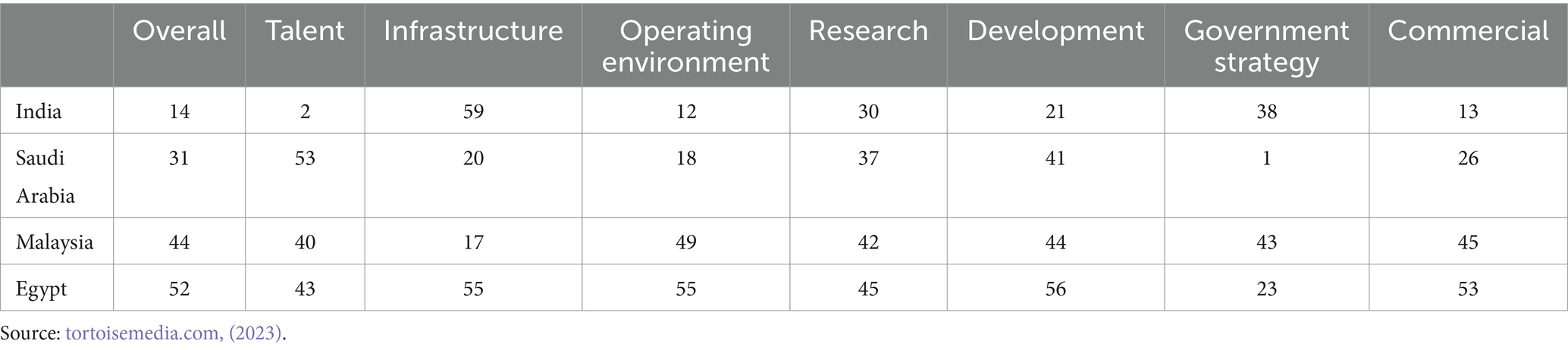

Therefore, this study aims to bridge this gap through a large-scale, cross-national survey measuring one specific aspect of information literacy, namely AI literacy, among university students from four countries in South and Southeast Asia, the Middle East, and North Africa (India, Malaysia, Saudi Arabia, and Egypt). The 2023 Global AI Index, published by Tortoise Media, ranks 62 countries by their AI capabilities. On the overall ranking, India placed 14th, Saudi Arabia 31st, Malaysia 44th, and Egypt 52nd. The four countries’ positions varied across the index’s individual criteria, as shown in Table 1. The index ranks countries by their AI capacity at the international level; this fourth iteration was published on 28 June 2023.

The global ranking highlights India’s strengths across several dimensions: talent (second place), operational environment, development, and trade. Saudi Arabia ranks first globally in government strategy and also performs well in infrastructure and the operational environment. Malaysia stands out for its robust infrastructure, while Egypt has achieved an advanced position in government strategy. The findings of this study may indicate whether the four countries’ standing on the overall index is reflected in the AI literacy of their university students.

This study aims to identify the levels of AI literacy, as part of MIL, among a sample of university students in four countries (Egypt, Saudi Arabia, India, and Malaysia) and to determine the extent to which students keep pace with developments in this field, especially since this type of literacy has come to be seen as a human right and as one of the requirements of sustainable development. The sub-objectives of the study are:

• Identifying the general levels of AI literacy among a sample of university students in the four countries.

• Investigating the statistical significance of differences among respondents in their perspectives on AI and their levels of AI literacy based on gender, nationality, age, field of study, and academic level.

Hypotheses

The study tests two main hypotheses:

• There are statistically significant differences in AI literacy levels among respondents based on gender, nationality, age, field of study, and academic level.

• There are statistically significant differences in the levels of AI desire/AI perception (enthusiasm for AI and optimistic/pessimistic views toward AI) among respondents based on gender, nationality, age, field of study, and academic level.

Methodology and instrument

The study adopted a survey approach to collect data from a sample of university students in four countries in South and Southeast Asia, the Middle East, and North Africa (India, Malaysia, Saudi Arabia, and Egypt). After approval was obtained from the King Saud University Research Ethics Committee, the online questionnaire was distributed across 10 universities in the four countries from 20 April to 20 May 2024.

Prior to commencing the questionnaire, participants were required to provide informed consent by agreeing to the terms outlined in the information letter. The letter explicitly stated the voluntary nature of participation, the anonymity of all responses, the exclusive use of data for research purposes, and the participant’s right to withdraw from the study at any time.

To ensure data quality and adherence to the study objectives, responses were subjected to a rigorous cleaning process. Respondents who failed attention checks (n = 241) were excluded from the analysis. Data collection in each country stopped once the target sample size of 450 respondents was reached. Consequently, the final dataset comprised 1,800 responses from 10 universities across the four participating countries:

1. Aligarh Muslim University (AMU)

2. Babasaheb Ambedkar Marathwada University (BAMU)

3. Cairo University (CU)

4. International Islamic University Malaysia (IIUM)

5. King Abdulaziz University (KAU)

6. King Saud University (KSU)

7. Minia University (Minia)

8. Universiti Kebangsaan Malaysia (UKM)

9. Universiti Malaya (UM)

10. University of Jeddah (UJ)

The study adopted the Meta AI Literacy Scale (MAILS) developed by Carolus et al. (2023) for the following reasons:

• It is one of the comprehensive scales that add multiple dimensions for measuring AI literacy levels. These dimensions include use and application, knowledge and understanding, detection of AI, ethics, and AI self-management, and they encompass skills such as problem-solving and continuous learning, as well as persuasive and emotional aspects related to AI.

• It integrates and builds on several earlier scales from studies in communication and psychology (Ajzen, 1985; Cetindamar et al., 2022; Dai et al., 2020; Long and Magerko, 2020; Ng et al., 2022; Wang et al., 2023).

• Carolus et al. (2023) developed MAILS in German and recommended applying it in other linguistic and cultural contexts. For this study, the scale was translated and administered in both Arabic and English.

The scale was modified slightly. The research team judged that the 11-point Likert scale (0–10) used by Carolus et al. (2023) could lengthen response time and reduce respondents’ focus. Therefore, a 6-point scale (0–5) was adopted, ranging from zero (“Cannot at all”) to five (“Very capable”). The team expected this change not to alter the measurement of respondents’ answers, an expectation examined through the reliability analyses reported below.
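The comparability of the two response formats can be illustrated with a linear mapping between their ranges. The sketch below is illustrative and not part of the authors’ procedure; the function names `rescale` and `pomp` are hypothetical. It maps a summary score from the 0–10 range onto the 0–5 range and expresses a score as a percent of the maximum possible (POMP), one common convention for comparing scales of different lengths. Note that this applies to summary scores: collapsing 11 answer options into 6 for an individual item is not a linear mapping and loses some resolution.

```python
def rescale(score, old_min=0.0, old_max=10.0, new_min=0.0, new_max=5.0):
    """Linearly map a summary score from one response range onto another.

    Defaults map the original 0-10 range onto the 0-5 range used here.
    """
    if old_max == old_min:
        raise ValueError("old range must have non-zero width")
    fraction = (score - old_min) / (old_max - old_min)
    return new_min + fraction * (new_max - new_min)


def pomp(score, scale_min=0.0, scale_max=5.0):
    """Percent of maximum possible: where a score sits on its scale, 0-100."""
    return 100.0 * (score - scale_min) / (scale_max - scale_min)


if __name__ == "__main__":
    # A mean of 7.0 on the 0-10 format corresponds to 3.5 on the 0-5 format.
    print(rescale(7.0))
    # The overall mean of 2.98 on the 0-5 format, as a share of the maximum.
    print(round(pomp(2.98), 1))
```

Because the mapping is linear, it preserves the ordering and relative spacing of summary scores, which is why means from the two formats can be placed on a common footing.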

The scale included 33 statements after one item, which served as an attention check in the original scale, was omitted. It was dropped because three other statements (21, 27, and 29) already served as attention checks, each having the same meaning as the statement immediately before it. After these slight modifications, reliability and internal consistency tests were applied, confirming the scale’s reliability.
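The text does not state the exact rule used to flag a failed attention check. The sketch below assumes, hypothetically, that a respondent fails if any check statement (21, 27, or 29) differs from the statement just before it by more than one scale point; the tolerance, the function names, and the data layout are illustrative assumptions. It also applies the per-country target of 450 retained responses described above.

```python
from collections import defaultdict

# Hypothetical screening rule: each check statement (21, 27, 29) repeats the
# meaning of the statement just before it, so attentive respondents should
# answer each pair similarly. Statement numbers are 1-based.
CHECK_PAIRS = [(20, 21), (26, 27), (28, 29)]


def passes_attention_checks(answers, tolerance=1):
    """answers maps statement number -> score on the 0-5 scale.

    The 1-point tolerance is an assumption, not the authors' documented rule.
    """
    return all(abs(answers[a] - answers[b]) <= tolerance for a, b in CHECK_PAIRS)


def clean_and_cap(responses, cap=450, tolerance=1):
    """Screen responses, then keep at most `cap` valid responses per country.

    responses: list of (country, answers) tuples in order of arrival.
    Returns (kept, excluded): kept maps country -> list of answer dicts, and
    excluded counts responses that failed the attention checks.
    """
    kept = defaultdict(list)
    excluded = 0
    for country, answers in responses:
        if not passes_attention_checks(answers, tolerance):
            excluded += 1
            continue
        if len(kept[country]) < cap:  # stop accepting once the target is met
            kept[country].append(answers)
    return dict(kept), excluded
```

Screening before capping means that the 450 retained responses per country are all ones that passed the checks, which matches the order of steps described in the methodology.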

Reliability and internal consistency of the AI literacy scale

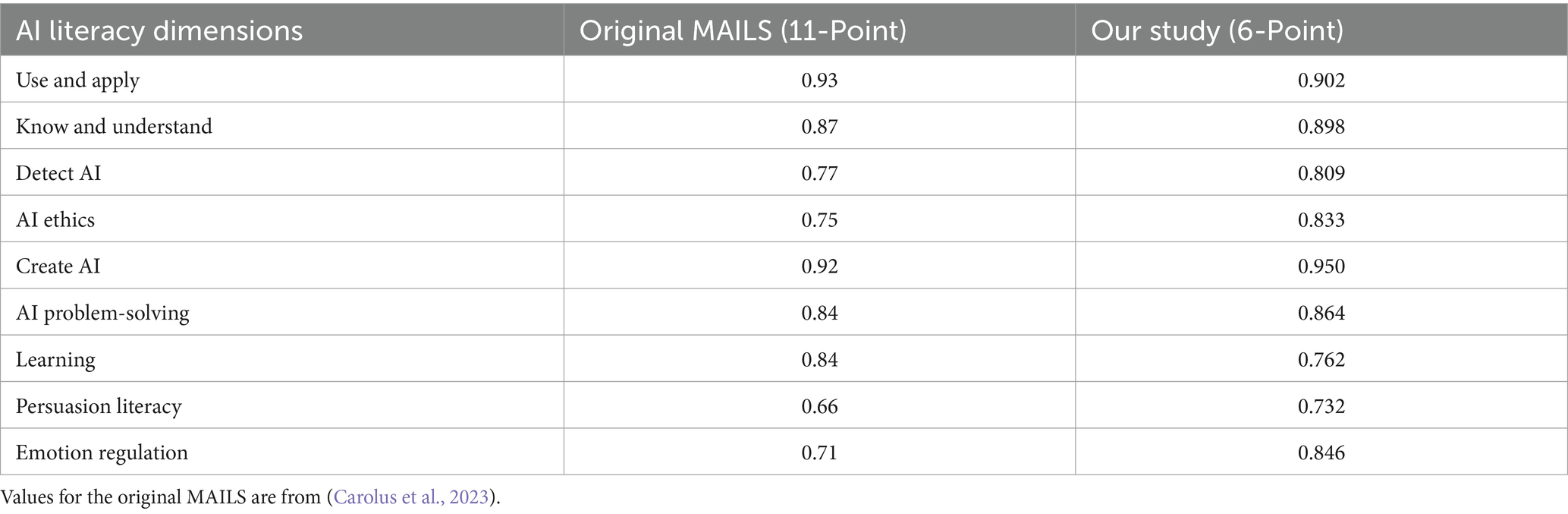

The authors conducted Cronbach’s alpha analyses to assess the reliability of the AI literacy scale, as illustrated in Table 2. For the modified 6-point scale, Cronbach’s alpha values for the AI literacy dimensions ranged from 0.732 to 0.961, indicating acceptable to high internal consistency, and were very close to those reported for the original 11-point scale.

Table 2. Comparison of Cronbach’s alpha values for the modified 6-point scale and the original 11-point scale.
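Cronbach’s alpha compares the sum of the individual item variances with the variance of the summed scale score: α = k/(k − 1) × (1 − Σ item variances / variance of totals), where k is the number of items. The pure-Python sketch below implements this standard formula; it is illustrative only, since the authors’ analysis software is not described, and it assumes complete responses with no missing values.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for one (sub)scale.

    rows: one list per respondent, holding that respondent's item scores.
    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(rows[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    items = list(zip(*rows))                  # transpose: one tuple per item
    item_var_sum = sum(variance(item) for item in items)
    totals = [sum(row) for row in rows]       # each respondent's summed score
    return k / (k - 1) * (1 - item_var_sum / variance(totals))
```

To reproduce per-dimension values such as those in Table 2, the function would be called separately on the item columns belonging to each dimension.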

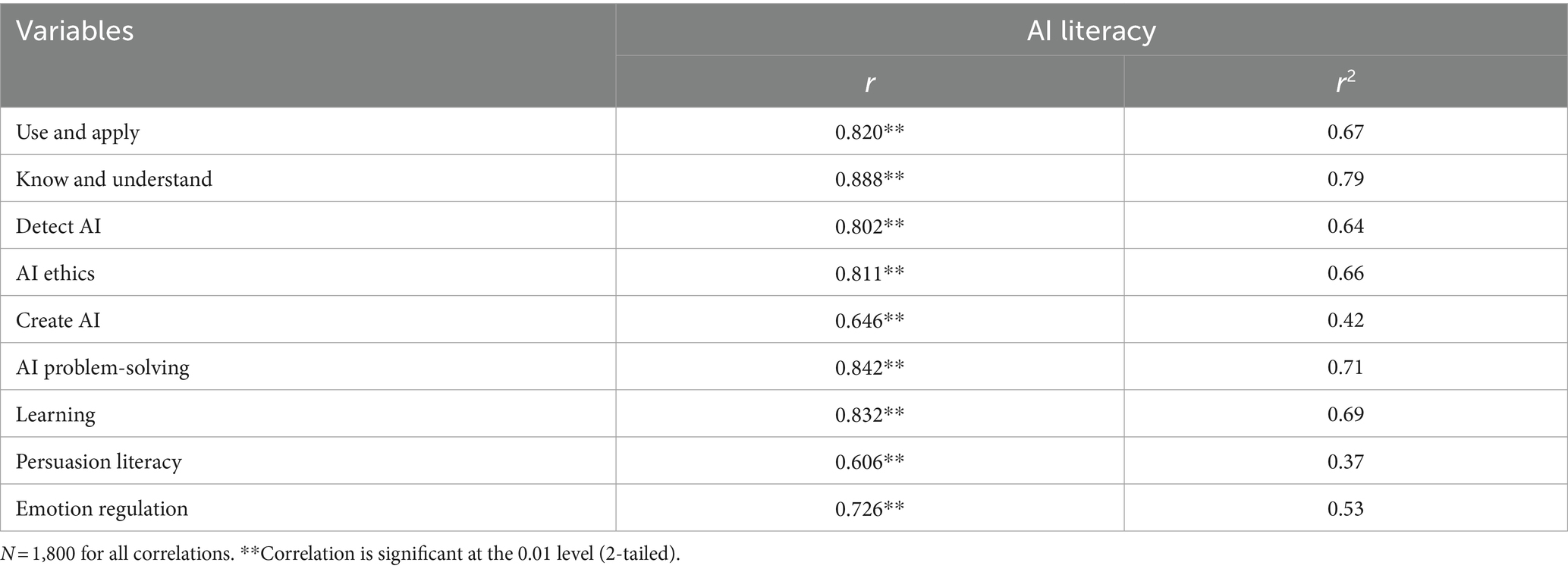

Furthermore, to evaluate the internal consistency of the AI literacy scale, the authors used Pearson correlations to examine the linear relationships between each dimension and the total scale. As illustrated in Table 3, all correlations were significant and positive (r > 0.6). The coefficients of determination (r²) ranged from 0.37 to 0.79, meaning that even the least correlated dimension shares more than one-third of its variance with the total AI literacy score. These strong correlations suggest that each component contributes to AI literacy while forming a cohesive whole. This internal consistency supports the scale’s reliability and construct validity, indicating that the dimensions are closely interrelated and may assess similar or overlapping constructs.

Table 3. Pearson correlation coefficients and coefficients of determination for internal consistency of the AI literacy scale.
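The coefficient of determination is simply the squared correlation, so r = 0.6 gives r² = 0.36; the reported lower bound of 0.37 corresponds to r ≈ 0.61, and the upper bound of 0.79 to r ≈ 0.89. The sketch below computes dimension-to-total correlations and their squares in plain Python; the function names and the dictionary layout are illustrative assumptions, not the authors’ code.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equally long lists of scores."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two lists of equal length >= 2")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def dimension_total_correlations(dimension_scores, total_scores):
    """Return {dimension: (r, r squared)} against the total scale score.

    dimension_scores maps a dimension name to one score per respondent;
    total_scores holds each respondent's total scale score in the same order.
    """
    result = {}
    for name, scores in dimension_scores.items():
        r = pearson_r(scores, total_scores)
        result[name] = (r, r * r)
    return result
```

One design choice worth noting: because each dimension is itself part of the total score, these correlations are somewhat inflated; some analysts instead correlate each dimension with a total that excludes it.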

Results

Demographic profile

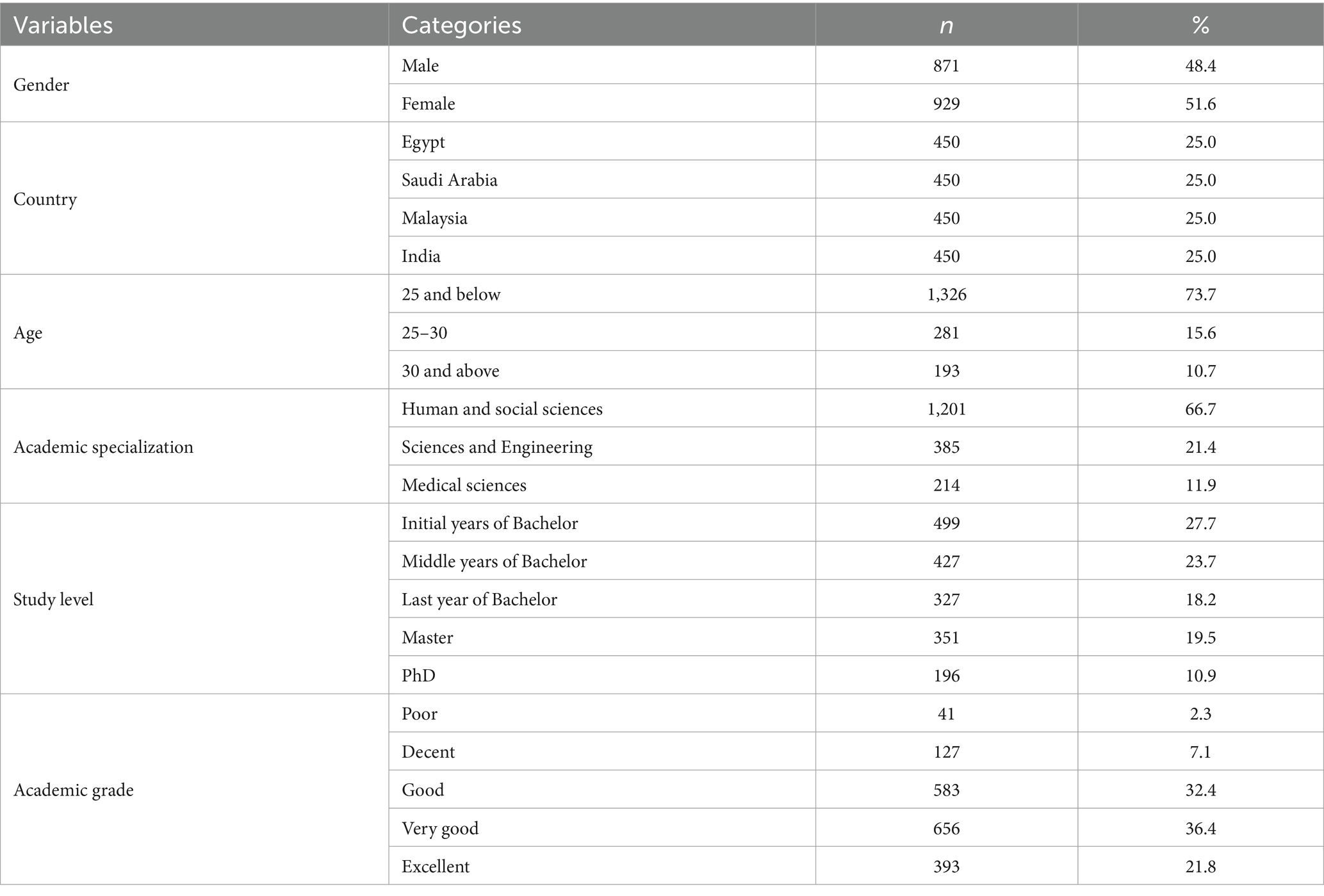

The demographic profile in Table 4 summarizes the study sample (N = 1,800) and its key features. Gender is roughly balanced, with a slight female majority (51.6%). By design, the sample is evenly distributed across the four countries (Egypt, Saudi Arabia, Malaysia, and India), each accounting for 25% of participants. The age distribution is skewed toward younger individuals, with almost 75% of participants aged 25 or younger. Most participants (66.7%) study human and social sciences, while smaller proportions are in sciences and engineering (21.4%) and medical sciences (11.9%). Study levels range from undergraduate to doctoral studies, with the largest group (27.7%) in the early stages of a bachelor’s degree. In terms of grades, participants are concentrated in the higher categories, with 69.2% attaining either “Very Good” or “Good” marks. This demographic makeup offers a solid basis for examining these factors across various cultural and academic settings.

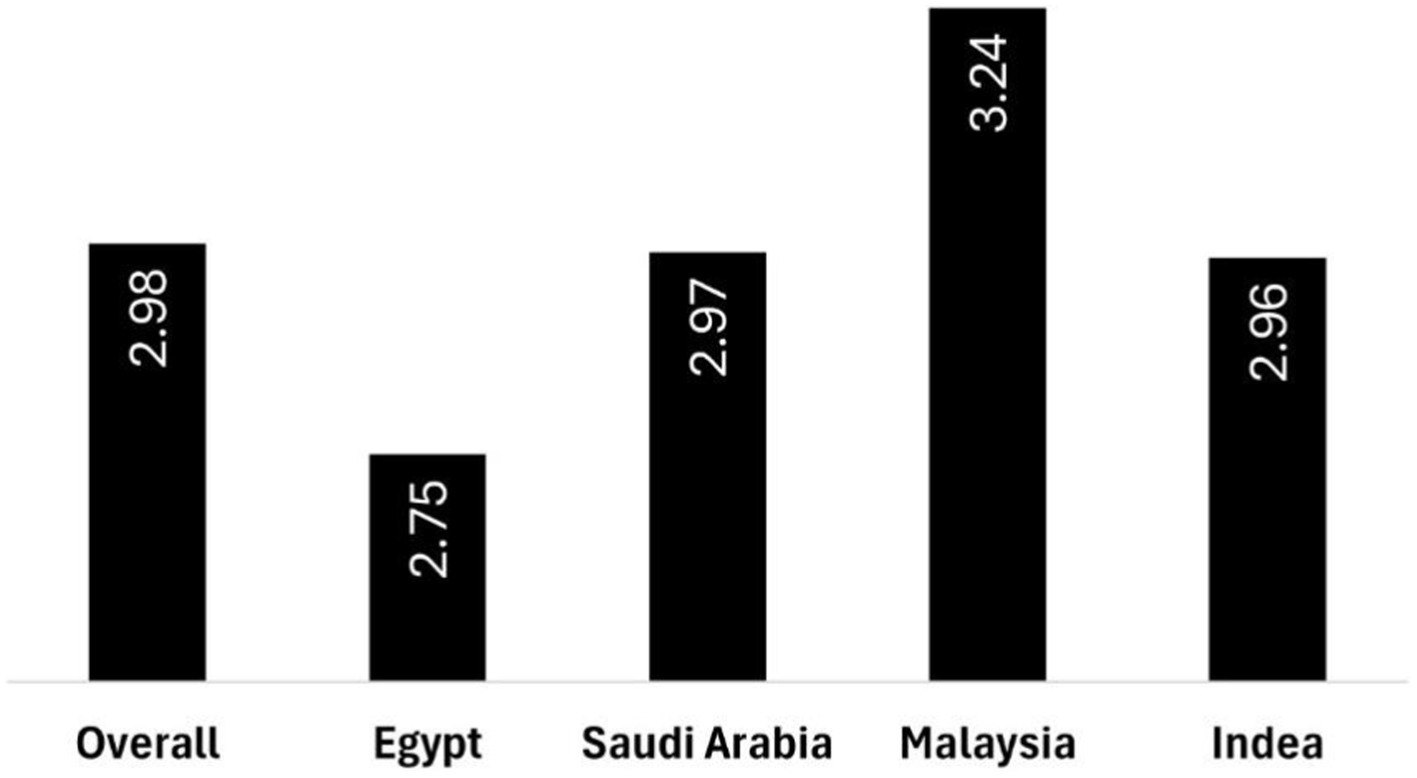

AI literacy

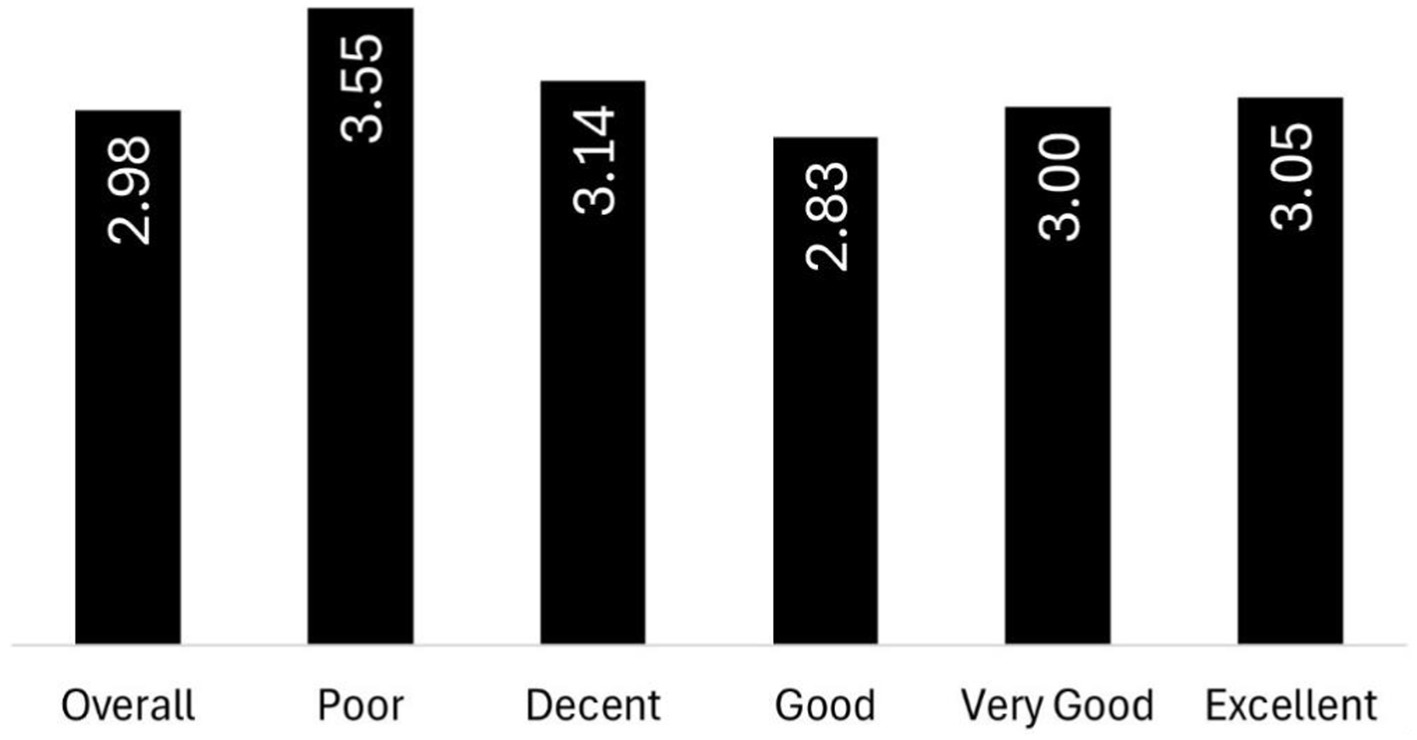

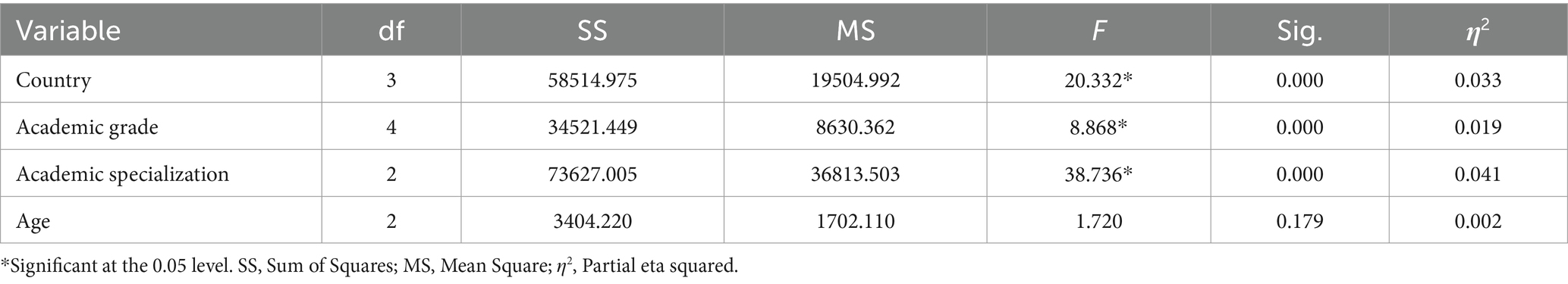

The overall scale mean is 2.98 out of 5, which falls within the “Moderately able” category (Figure 1). The one-way ANOVA results in Table 5 reveal significant differences in AI literacy scores across countries, academic grades, and academic specializations (all p < 0.001). However, the analysis did not reveal a significant difference in AI literacy scores by age (p = 0.179).

Table 5. One-way ANOVA of AI literacy differences based on country, academic grade, academic specialization, and age.

A closer look at country shows a significant effect on AI literacy scores, F(3, 1,796) = 20.33, p < 0.001, η² = 0.033, implying that about 3.3% of the variance in these scores may be attributed to national differences. Academic grade also had a significant effect, F(4, 1,795) = 8.87, p < 0.001, η² = 0.019, indicating that approximately 1.9% of the score variance could be explained by respondents’ academic grades. This association does not necessarily mean that grades determine AI literacy proficiency.

The largest effect was found for academic specialization, F(2, 1,797) = 38.74, p < 0.001, η² = 0.041, which accounted for 4.1% of the variance in AI literacy scores.
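Each η² above is the share of total variance that lies between groups (SS_between / SS_total). In a one-way design it can also be recovered from a reported F ratio as η² = F·df_between / (F·df_between + df_within); for the country effect, 20.33 × 3 / (20.33 × 3 + 1,796) ≈ 0.033, matching the reported value. The sketch below is a minimal stdlib-only one-way ANOVA that returns F and η²; it omits the p-value, which requires the F distribution (for example, `scipy.stats.f.sf(F, df_between, df_within)` in SciPy). It is illustrative and not the authors’ analysis code.

```python
def one_way_anova(groups):
    """Return (F, df_between, df_within, eta_squared) for lists of scores."""
    scores = [x for g in groups for x in g]
    n, k = len(scores), len(groups)
    grand_mean = sum(scores) / n
    means = [sum(g) / len(g) for g in groups]
    # Between-group sum of squares: group size times squared mean deviation.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Within-group sum of squares: deviations from each group's own mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    eta_squared = ss_between / (ss_between + ss_within)  # SS_between / SS_total
    return f_stat, df_between, df_within, eta_squared


def eta_squared_from_f(f_stat, df_between, df_within):
    """Recover eta squared from a reported F ratio and its degrees of freedom."""
    return f_stat * df_between / (f_stat * df_between + df_within)
```

For example, `eta_squared_from_f(38.74, 2, 1797)` gives approximately 0.041, consistent with the effect reported for academic specialization.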

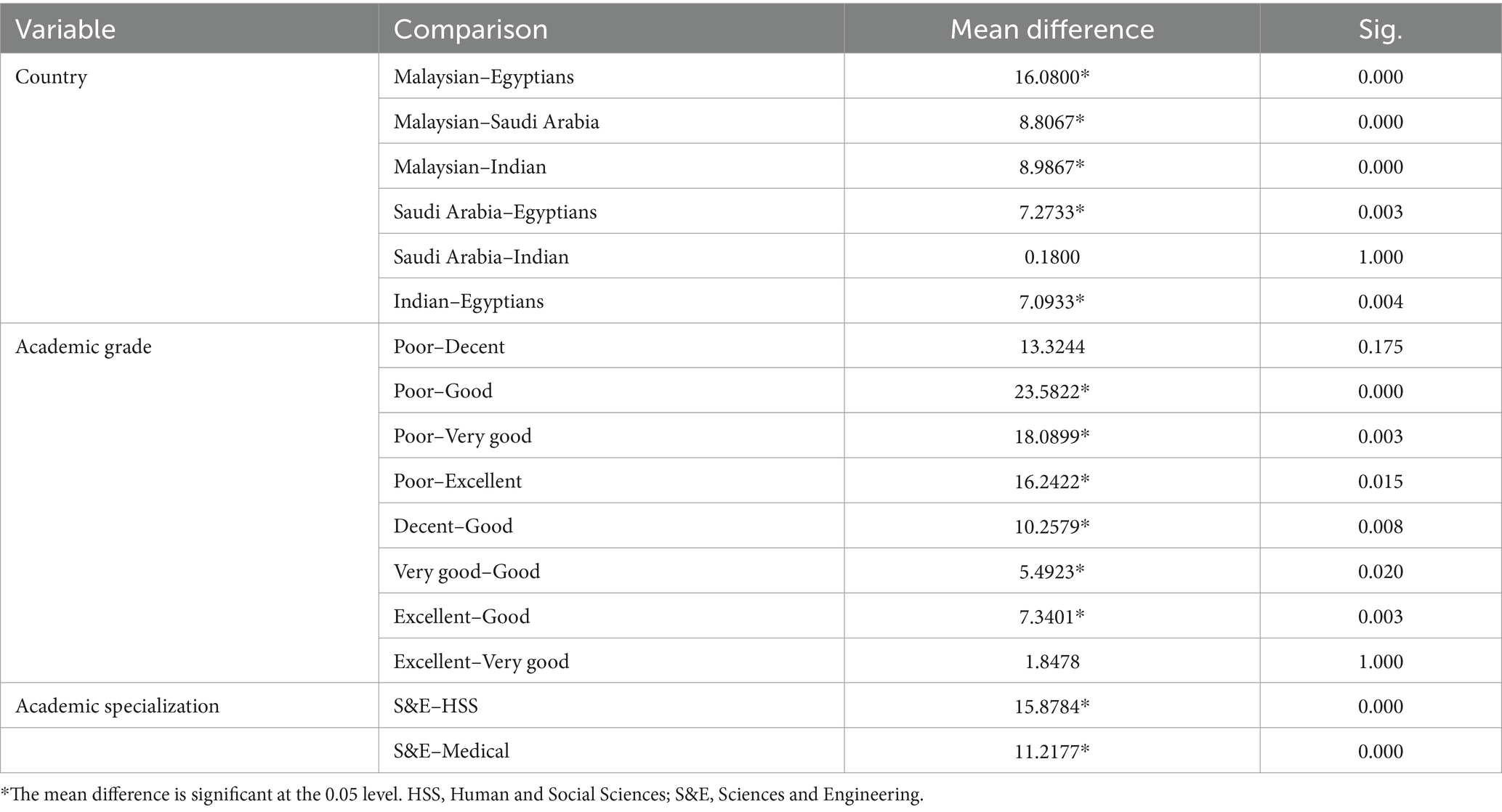

Further analyses using the Bonferroni post-hoc test for multiple comparisons (Table 6) revealed significant differences among the variables investigated.

Table 6. Post hoc comparisons of AI literacy differences by country, academic grade, academic specialization, and age using Bonferroni correction.

Concerning country-specific differences, Malaysian respondents demonstrated significantly higher AI literacy scores than participants from all other countries (p < 0.001). Saudi Arabian and Indian participants scored significantly higher than their Egyptian counterparts (p < 0.01). However, no statistically significant difference was seen in AI literacy scores between Saudi Arabian and Indian respondents. Regarding university students’ academic performance, students classified as having “Poor” grades consistently demonstrated higher levels of AI literacy compared to their peers with “Good,” “Very Good,” and “Excellent” grades (all p < 0.05). Individuals graded “Decent,” “Very Good,” and “Excellent” achieved significantly higher scores than individuals with “Good” grades (p < 0.01) (Figure 2).

Within the variable of Academic Specialization, individuals enrolled in Sciences and Engineering programs had significantly greater proficiency in AI literacy when compared to those studying Human and Social Sciences or Medical Sciences (p < 0.001).
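The Bonferroni correction guards against inflated false positives when many pairwise comparisons are run: each raw p-value is multiplied by the number of comparisons m (capped at 1), which is equivalent to testing each comparison at α/m. With four countries there are six pairwise comparisons. The sketch below shows only the adjustment step; the raw pairwise p-values are assumed to come from the post hoc tests themselves, and the function names are hypothetical.

```python
from itertools import combinations


def pairwise_labels(levels):
    """All unordered pairs of group levels, i.e. the comparisons to correct for."""
    return list(combinations(levels, 2))


def bonferroni_adjust(p_values):
    """Multiply each raw p-value by the number of comparisons, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]
```

For the four countries, `pairwise_labels(["Egypt", "India", "Malaysia", "Saudi Arabia"])` yields six pairs, so a raw p-value must fall below 0.05 / 6 ≈ 0.0083 to remain significant at the 0.05 level after correction.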

AI perception

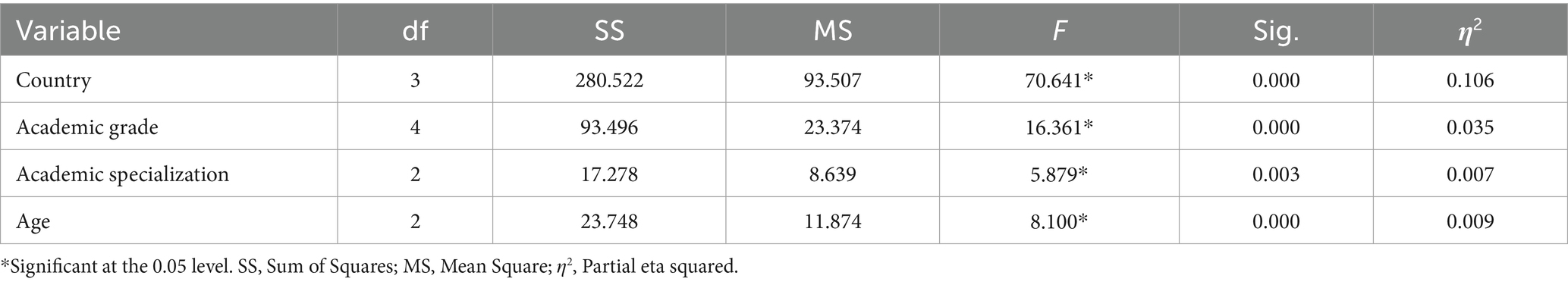

The one-way ANOVA results in Table 7 indicate significant differences in AI perception (optimistic/pessimistic views toward AI) among respondents by country, academic grade, academic specialization, and age (all p < 0.05). The effect sizes (η²) show that country has the largest effect on AI perception (η² = 0.106), followed by academic grade (η² = 0.035), while academic specialization and age have the smallest effects. These findings suggest that various demographic and academic characteristics may influence individuals’ perceptions of AI, with nationality being the most influential factor.

Table 7. One-way ANOVA of AI perception differences based on country, academic grade, academic specialization, and age.

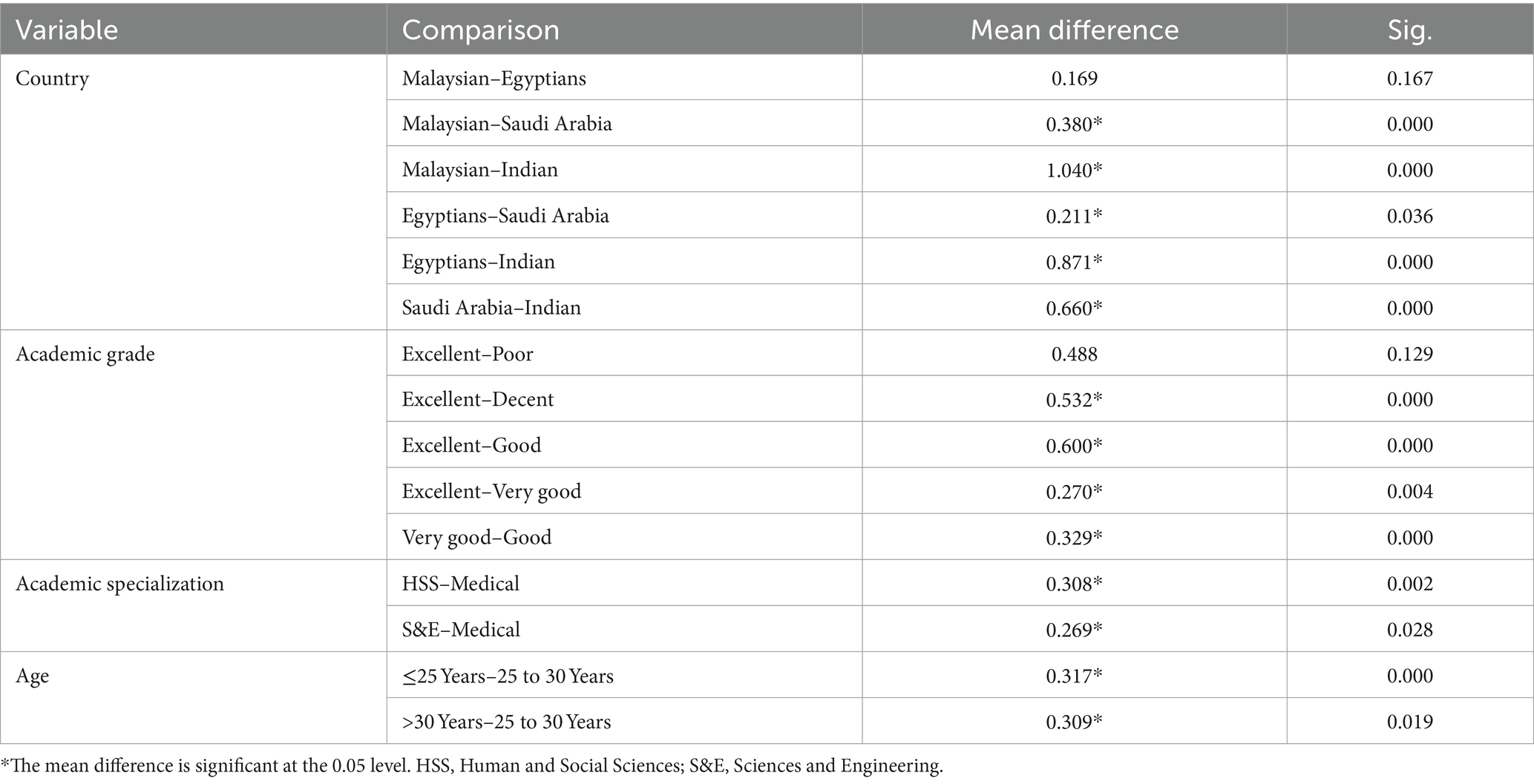

Post hoc analyses with Bonferroni correction (Table 8) revealed several significant differences in AI perception across the research variables. Most pairs of countries differed substantially, with Malaysian respondents reporting the highest AI perception scores, followed by Egyptian and Saudi Arabian students. Concerning academic performance, students with “Excellent” grades had significantly higher AI perception than those with “Very Good” grades, suggesting a positive association between academic grades and AI perception. Academic specialization also mattered: students from the humanities/social sciences and science/engineering disciplines had higher AI perception than those from medical sciences. Age differences were also significant; respondents aged 25 and younger and those over 30 had higher AI perception than those aged 25–30.

Table 8. Post hoc comparisons of AI perception differences by country, academic grade, academic specialization, and age using Bonferroni correction.

Desire to use AI

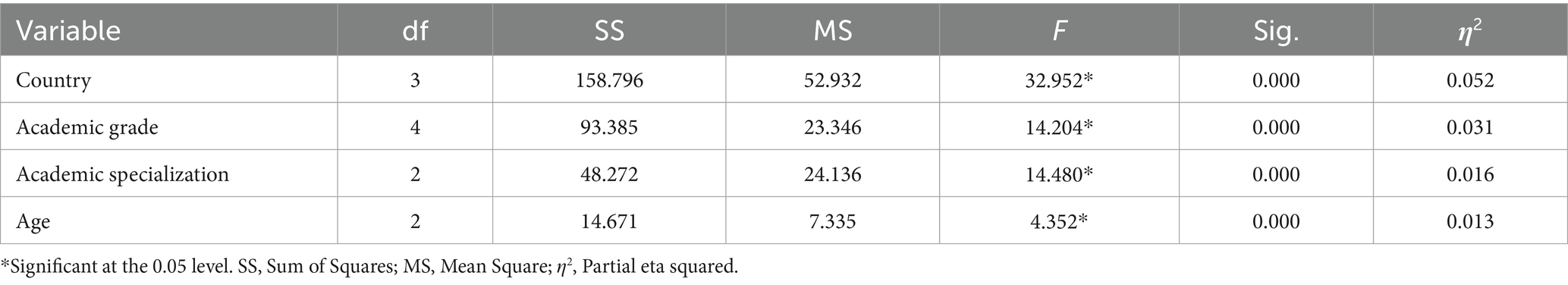

The results in Table 9 indicate significant differences in the desire to use AI by country, F(3, 1,796) = 32.952, p < 0.001, η² = 0.052, suggesting that around 5.2% of the variation in AI desire may be attributed to differences among countries. Academic grade also had a significant effect, F(4, 1,795) = 14.204, p < 0.001, η² = 0.031, suggesting that approximately 3.1% of the variation in respondents’ desire to use AI can be explained by differences in academic performance. Academic specialization was likewise significant, F(2, 1,797) = 14.480, p < 0.001, η² = 0.016, accounting for 1.6% of the variability. Lastly, age had a small but significant effect, F(2, 1,797) = 4.35, p = 0.013, suggesting that around 0.5% of the variation in the desire to use AI can be attributed to differences in respondents’ age.

Table 9. One-way ANOVA of desire to use AI differences based on country, academic grade, academic specialization, and age.

The post hoc tests in Table 10 revealed significant differences in the desire to use AI by country. Malaysian students were significantly more willing to use AI than their Egyptian and Saudi Arabian counterparts, and Indian respondents showed the lowest level of desire (all p < 0.001). The desire to use AI appears to rise with academic grade: students with “Excellent” grades showed significantly higher desire than those with “Good” grades (p < 0.001) and “Very Good” grades (p = 0.007). Regarding academic specialization, students in sciences and engineering showed a greater inclination to use AI than those in the human/social sciences (p = 0.001) and medical sciences (p < 0.001). In addition, respondents from the human/social sciences expressed significantly greater willingness than those from the medical sciences (p = 0.004). Regarding age, the group aged 30 and above showed a significantly higher desire to use AI than those aged 25–30 (p = 0.010).

Table 10. Post hoc comparisons using Bonferroni correction of desire to use AI differences based on country, academic grade, academic specialization, and age.

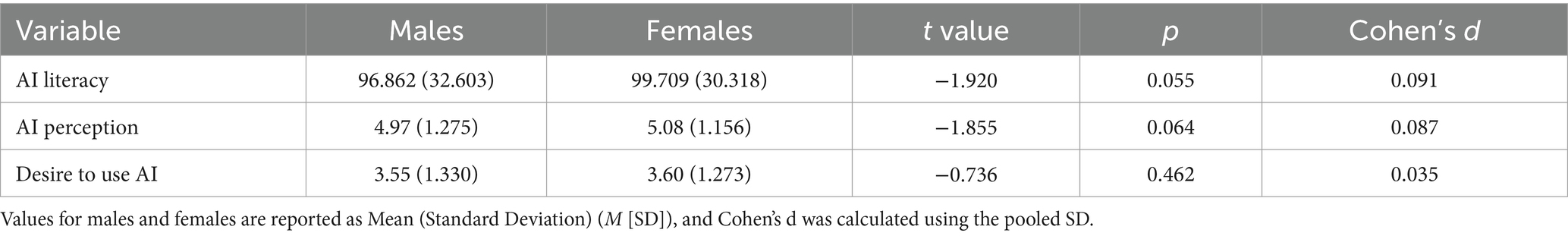
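Table 10 reports post hoc comparisons with a Bonferroni correction. The minimal sketch below shows what that correction does for a family of pairwise tests, such as the C(4, 2) = 6 country pairs. The unadjusted p-values are hypothetical and do not come from Table 10. The helper name `bonferroni_adjust` is likewise illustrative.

```python
from itertools import combinations


def bonferroni_adjust(p_values: list[float]) -> list[float]:
    """Multiply each p-value by the number of comparisons in the family, capping at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Four countries yield C(4, 2) = 6 pairwise comparisons in one family.
countries = ["Egypt", "India", "Malaysia", "Saudi Arabia"]
pairs = list(combinations(countries, 2))

# Hypothetical unadjusted p-values, one per pair (NOT the values behind Table 10).
raw_p = [0.0004, 0.002, 0.010, 0.030, 0.200, 0.500]
adjusted = bonferroni_adjust(raw_p)

for (a, b), p_raw, p_adj in zip(pairs, raw_p, adjusted):
    verdict = "significant" if p_adj < 0.05 else "not significant"
    print(f"{a} vs {b}: raw p = {p_raw:.4f}, adjusted p = {p_adj:.4f} ({verdict})")
```

With these made-up inputs, raw p-values of 0.010 and 0.030 fall below 0.05 but no longer do once multiplied by 6. This is why Bonferroni-corrected post hoc tests are conservative.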

Differences according to gender

The findings in Table 11 show a small, non-significant difference in AI literacy between males (M = 96.86, SD = 32.60) and females (M = 99.71, SD = 30.32), t(1,798) = −1.920, p = 0.055, d = 0.091. The effect size, as measured by Cohen’s d, indicates a minimal practical difference between the groups. Females had a marginally higher mean AI literacy score. Although this difference was not statistically significant, it runs counter to certain traditional assumptions in technology-related domains. For AI perception, the difference between males (M = 4.97, SD = 1.28) and females (M = 5.08, SD = 1.16) was also non-significant, t(1,798) = −1.855, p = 0.064, d = 0.087, with a minimal effect size. Likewise, there was no statistically significant difference in the desire to use AI between males (M = 3.55, SD = 1.33) and females (M = 3.60, SD = 1.27), t(1,798) = −0.736, p = 0.462, d = 0.035. This negligible effect size indicates that gender has little impact on the willingness to use AI technologies.

Table 11. Results of an independent samples t-test comparing males and females on AI literacy, AI perception, and the desire to use AI.
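The Cohen’s d values in Table 11 can be approximated either from the group means and SDs (pooled SD) or from the t statistic via d = |t|·√(1/n1 + 1/n2). This section does not report the male and female group sizes. The sketch below therefore assumes an even 900/900 split, which is labeled as an assumption in the code. The function names are illustrative.

```python
import math


def cohens_d(m1: float, sd1: float, n1: int, m2: float, sd2: float, n2: int) -> float:
    """Magnitude of Cohen's d for two independent groups, using the pooled SD."""
    pooled_var = ((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2)
    return abs(m1 - m2) / math.sqrt(pooled_var)


def d_from_t(t_value: float, n1: int, n2: int) -> float:
    """Magnitude of Cohen's d recovered from an independent-samples t statistic."""
    return abs(t_value) * math.sqrt(1 / n1 + 1 / n2)


# ASSUMPTION: group sizes are not reported in this section. t(1,798) only fixes
# n1 + n2 = 1,800, so an even 900/900 split is used here for illustration.
N_MALE, N_FEMALE = 900, 900

print(f"AI literacy (means/SDs): d = {cohens_d(96.86, 32.60, N_MALE, 99.71, 30.32, N_FEMALE):.3f}")
for label, t_value in [("AI literacy", -1.920), ("AI perception", -1.855), ("Desire to use AI", -0.736)]:
    print(f"{label} (from t): d = {d_from_t(t_value, N_MALE, N_FEMALE):.3f}")
```

Under the equal-split assumption, both routes match the reported values of d = 0.091, 0.087, and 0.035. A different split would shift the results slightly.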

Discussion and conclusions

The study aimed to provide a broad cross-border perspective on levels of AI literacy through a survey of 1,800 university students in four countries with diverse geographical, cultural, and developmental backgrounds.

The overall mean across all respondents and all scale statements was 2.98 out of 5, indicating a moderate skill level. The study compared how nationality, gender, age, academic major, and academic degree relate to scores on the AI literacy scale, to the desire or enthusiasm to use AI, and to the level of pessimism or optimism regarding AI technologies.

The findings of this study align with those of previous research conducted in Portugal (Lérias et al., 2024) and China (Chai et al., 2020; Dai et al., 2020), which collectively suggest a prevailing positive sentiment toward AI’s potential for societal benefit. Our results also corroborate those of Hornberger et al. (2023), who found a significant disparity in AI literacy among university students, particularly favoring those with technical backgrounds or prior AI experience. However, these findings diverge from those of Kong et al. (2021), who observed a broad understanding of AI concepts across diverse academic backgrounds and genders, regardless of programming proficiency. This discrepancy may be attributable to differences in measurement methodology, such as the pre- and post-course surveys used by Kong et al. (2021) compared with the self-assessment questionnaire employed in the present research.

Beyond adding to the measurement of AI literacy in different cultural and linguistic environments, the study results raise a series of questions that could be fertile ground for future research. One striking result is that students with “weak” academic performance scored higher on the AI literacy scale than their peers with “Good,” “Very Good,” and “Excellent” grades. This result should be approached with caution. It requires further testing of other potential intervening variables that may have a stronger influence, such as the field of study. It also calls for examining the individual dimensions of the AI literacy scale rather than relying solely on the overall mean level of literacy. Results for the individual dimensions could help explain such comparisons. For instance, do students in science and engineering excel in all dimensions of the literacy scale, or only in the dimension related to technical programming and development? Does the inverse relationship between academic achievement (student GPA) and AI literacy hold for students across all majors and educational backgrounds, or is it more evident in specific fields?
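The field-by-field question above could be examined by first computing mean AI literacy for each grade band separately within each specialization. The standard-library Python sketch below illustrates that descriptive step. The records are hypothetical and are not the study’s data. The field and grade labels are borrowed from the text, and the helper function names are illustrative.

```python
from collections import defaultdict
from statistics import mean

# HYPOTHETICAL records (specialization, grade band, AI literacy total score),
# invented purely to illustrate the stratified comparison; not the study's data.
records = [
    ("Sciences and Engineering", "Weak", 112),
    ("Sciences and Engineering", "Weak", 104),
    ("Sciences and Engineering", "Excellent", 98),
    ("Sciences and Engineering", "Excellent", 102),
    ("Medical Sciences", "Weak", 90),
    ("Medical Sciences", "Weak", 94),
    ("Medical Sciences", "Excellent", 96),
    ("Medical Sciences", "Excellent", 100),
]


def mean_by_grade_within_field(rows):
    """Group scores by field, then by grade band, and return the mean of each cell."""
    cells = defaultdict(lambda: defaultdict(list))
    for field, grade, score in rows:
        cells[field][grade].append(score)
    return {
        field: {grade: mean(scores) for grade, scores in grades.items()}
        for field, grades in cells.items()
    }


def inverse_pattern_by_field(means, low="Weak", high="Excellent"):
    """For each field with both bands present, report whether the low band scores higher."""
    return {
        field: cell[low] > cell[high]
        for field, cell in means.items()
        if low in cell and high in cell
    }


means = mean_by_grade_within_field(records)
print(means)
print(inverse_pattern_by_field(means))
```

With these invented numbers, the pattern appears in one field but not in the other, which an overall mean would hide. A formal test would go beyond descriptive means, for example by adding a grade × specialization interaction term in a two-way ANOVA.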

Future research could also examine students’ reliance on AI applications to complete their educational tasks and assess its effects on both AI literacy and academic performance.

Previous studies on the link between academic performance and AI literacy have yielded mixed results. Singh et al. (2024) found significant positive relationships between AI literacy, AI usage, AI learning outcomes, and academic performance. By contrast, Abbas et al. (2019), who examined digital literacy, and Asio (2024) identified only a weak correlation between literacy and academic performance. Similarly, Widowati et al. (2023) reported that digital literacy and student engagement had no direct effect on academic performance. These differing findings suggest that other factors may shape the relationship between AI literacy and academic performance.

Moreover, the current study suggests an inverse relationship between academic performance and AI literacy. One possible explanation is that students with lower academic performance rely more heavily on AI tools to complete their academic tasks. Such frequent reliance may build greater familiarity with AI technologies, as these students use AI regularly for assistance. However, their engagement with AI may lack a structured or critical approach, resulting in random or haphazard use. This superficial use of AI tools may contribute to less effective outcomes and, consequently, lower academic performance.

In contrast, high-performing students may approach AI more cautiously. Their selective use of AI tools may reflect a more strategic and discerning engagement, in which AI serves as a supplementary resource rather than the primary means of completing assignments. This more balanced approach may help them maintain academic integrity and critical thinking while relying less on AI-generated content. As a result, their lower dependence on AI may leave them less familiar with its intricacies, while their more thoughtful integration of AI into their work may support better academic outcomes. This distinction points to a potential inverse relationship between AI familiarity and academic success, in which over-reliance on AI may hinder rather than enhance performance.

Prior research on the use of digital technologies has provided empirical support for this kind of inverse relationship. For instance, studies have linked prolonged use of mobile phones and social media applications to lower academic achievement (Al-Menayes, 2014; Lepp et al., 2015; Liu et al., 2020). Additionally, research has indicated that the use of iPads can negatively influence academic performance (Nketiah-Amponsah et al., 2017).

Policy implications and limitations

Our data show that Malaysian respondents scored significantly higher than those from the other countries. This finding aligns with the Global AI Index 2023, which emphasizes Malaysia’s robust infrastructure, including “basic electricity and internet access, as well as supercomputing capabilities, deep databases, and increasing AI adoption” (tortoisemedia.com, 2023). The Global AI Index also indicates higher AI readiness in Saudi Arabia and India than in Egypt. However, a country’s overall AI ranking may not translate directly into higher AI literacy within a specific demographic, such as university students, relative to their counterparts elsewhere.

Therefore, to enhance AI literacy amongst university students specifically, it is essential to develop targeted educational curricula and methodologies. Integrating AI literacy into courses across all disciplines is a promising approach, as advocated by numerous studies in the field (Kong et al., 2021, 2022, 2023; Laupichler et al., 2023a,b,c). These findings offer valuable insights for policymakers and curriculum developers in the educational sector. As Mansoor (2023) emphasizes, approaching information literacy as a “comprehensive and complex concept to achieve educational and development goals” necessitates broader consideration by researchers, professors, and policymakers across media, education, and professional development spheres.

This study relied on respondents’ self-assessment, a method used in many studies measuring AI literacy (e.g., Laupichler et al., 2023c; Schofield et al., 2023). Other methodological approaches to measuring AI literacy, such as experimental and quasi-experimental designs, nonetheless remain valuable. The most robust measurement strategies combine more than one method, particularly when applied to large and diverse samples of respondents.

In summary, the results should be interpreted within the study’s methodological boundaries, as the sample was limited to university students in four countries. The narrow age range within this population may also explain why age had limited influence; it might have proved more influential in a sample covering a wider range of ages. Moreover, because AI literacy is required of all individuals, future surveys and scales should encompass diverse community segments of different ages and educational, economic, and social backgrounds.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by the Research Ethics Committee, Deanship of Scientific Research, King Saud University. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

HM: Writing – original draft, Supervision, Methodology, Conceptualization. AB: Writing – review & editing, Formal analysis, Data curation. MA: Writing – review & editing, Formal analysis, Data curation. AA: Writing – review & editing, Investigation, Formal analysis. AO: Writing – review & editing, Visualization, Validation, Investigation, Formal analysis, Data curation.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Abbas, Q., Hussain, S., and Rasool, S. (2019). Digital literacy effect on the academic performance of students at higher education level in Pakistan. Glob. Soc. Sci. Rev. IV, 108–116. doi: 10.31703/gssr.2019(IV-I).14

Ajzen, I. (1985). From intentions to actions: a theory of planned behavior. In Action Control. SSSP Springer Series in Social Psychology, eds. J. Kuhl and J. Beckmann (Berlin, Heidelberg: Springer), 11–39.

Al-Menayes, J. (2014). The relationship between mobile social media use and academic performance in university students. New Media and Mass Communication 25, 23–29.

Asio, J. M. R. (2024). Artificial intelligence (AI) literacy and academic performance of tertiary level students: a preliminary analysis. Soc. Sci. Human. Educ. J. 5, 309–321. doi: 10.25273/she.v5i2.20862

Burgsteiner, H., Kandlhofer, M., and Steinbauer, G. (2016). Irobot: teaching the basics of artificial intelligence in high schools. Proceedings of the AAAI conference on artificial intelligence, 30.

Carolus, A., Koch, M. J., Straka, S., Latoschik, M. E., and Wienrich, C. (2023). MAILS-Meta AI literacy scale: development and testing of an AI literacy questionnaire based on well-founded competency models and psychological change-and meta-competencies. Comput. Hum. Behav. Artif. Hum. 1:100014. doi: 10.1016/j.chbah.2023.100014

Cetindamar, D., Kitto, K., Wu, M., Zhang, Y., Abedin, B., and Knight, S. (2022). Explicating AI literacy of employees at digital workplaces. IEEE Trans. Eng. Manag. 71, 810–823. doi: 10.1109/TEM.2021.3138503

Chai, C. S., Wang, X., and Xu, C. (2020). An extended theory of planned behavior for the modelling of Chinese secondary school students’ intention to learn artificial intelligence. Mathematics 8:2089. doi: 10.3390/math8112089

Dai, Y., Chai, C.-S., Lin, P.-Y., Jong, M. S.-Y., Guo, Y., and Qin, J. (2020). Promoting students’ well-being by developing their readiness for the artificial intelligence age. Sustainability 12:6597. doi: 10.3390/su12166597

Grizzle, A., Wilson, C., Tuazon, R., Cheung, C. K., Lau, J., Fischer, R., et al. (2021). Media and information literate citizens: think critically, click wisely! UNESCO: Paris, France.

Hermann, E. (2022). Artificial intelligence and mass personalization of communication content—an ethical and literacy perspective. New Media Soc. 24, 1258–1277. doi: 10.1177/14614448211022702

Hornberger, M., Bewersdorff, A., and Nerdel, C. (2023). What do university students know about artificial intelligence? Development and validation of an AI literacy test. Comput. Educ. Artif. Intell. 5:100165. doi: 10.1016/j.caeai.2023.100165

Kandlhofer, M., Steinbauer, G., Hirschmugl-Gaisch, S., and Huber, P. (2016). Artificial intelligence and computer science in education: from kindergarten to university. 2016 IEEE frontiers in education conference (FIE), 1–9.

Kong, S.-C., Cheung, W. M.-Y., and Zhang, G. (2021). Evaluation of an artificial intelligence literacy course for university students with diverse study backgrounds. Comput. Educ. Artif. Intell. 2:100026. doi: 10.1016/j.caeai.2021.100026

Kong, S.-C., Cheung, W. M.-Y., and Zhang, G. (2022). Evaluating artificial intelligence literacy courses for fostering conceptual learning, literacy and empowerment in university students: refocusing to conceptual building. Comput. Hum. Behav. Rep. 7:100223. doi: 10.1016/j.chbr.2022.100223

Kong, S.-C., Cheung, W. M.-Y., and Zhang, G. (2023). Evaluating an artificial intelligence literacy programme for developing university students’ conceptual understanding, literacy, empowerment and ethical awareness. Educ. Technol. Soc. 26, 16–30.

Konishi, Y. (2015). What is needed for AI literacy? Priorities for the Japanese economy in 2016. Res. Inst. Econ. Trade Ind.

Laupichler, M. C., Aster, A., Haverkamp, N., and Raupach, T. (2023b). Development of the “scale for the assessment of non-experts’ AI literacy”–an exploratory factor analysis. Comput. Hum. Behav. Rep. 12:100338. doi: 10.1016/j.chbr.2023.100338

Laupichler, M. C., Aster, A., Perschewski, J.-O., and Schleiss, J. (2023c). Evaluating AI courses: a valid and reliable instrument for assessing artificial-intelligence learning through comparative self-assessment. Educ. Sci. 13:978. doi: 10.3390/educsci13100978

Laupichler, M. C., Aster, A., and Raupach, T. (2023a). Delphi study for the development and preliminary validation of an item set for the assessment of non-experts’ AI literacy. Comput. Educ. Artif. Intell. 4:100126. doi: 10.1016/j.caeai.2023.100126

Laupichler, M. C., Aster, A., Schirch, J., and Raupach, T. (2022). Artificial intelligence literacy in higher and adult education: a scoping literature review. Comput. Educ. Artif. Intell. 3:100101. doi: 10.1016/j.caeai.2022.100101

Lepp, A., Barkley, J. E., and Karpinski, A. C. (2015). The relationship between cell phone use and academic performance in a sample of US college students. SAGE Open 5. doi: 10.1177/2158244015573169

Lérias, E., Guerra, C., and Ferreira, P. (2024). Literacy in artificial intelligence as a challenge for teaching in higher education: a case study at Portalegre polytechnic university. Information 15:205. doi: 10.3390/info15040205

Liu, X., Luo, Y., Liu, Z. Z., Yang, Y., Liu, J., and Jia, C. X. (2020). Prolonged mobile phone use is associated with poor academic performance in adolescents. Cyberpsychol. Behav. Soc. Netw. 23, 303–311. doi: 10.1089/cyber.2019.0591

Long, D., and Magerko, B. (2020). What is AI literacy? Competencies and design considerations. Proceedings of the 2020 CHI conference on human factors in computing systems, 1–16.

Mansoor, H. M. (2023). Diversity and pluralism in Arab media education curricula: an analytical study in light of UNESCO standards. Humanit. Soc. Sci. Commun. 10, 1–11. Available at: https://www.nature.com/articles/s41599-023-01598-x

Miao, F., and Holmes, W. (2021). International forum on AI and the futures of education, developing competencies for the AI era, 7–8 December 2020: synthesis report. UNESCO: Paris, France.

Montag, C., Nakov, P., and Ali, R. (2024). On the need to develop nuanced measures assessing attitudes towards AI and AI literacy in representative large-scale samples. AI & Soc., 1–2. doi: 10.1007/s00146-024-01888-1

Ng, D. T. K., Leung, J. K. L., Chu, S. K. W., and Qiao, M. S. (2021). Conceptualizing AI literacy: an exploratory review. Comput. Educ. Artif. Intell. 2:100041. doi: 10.1016/j.caeai.2021.100041

Ng, D. T. K., Luo, W., Chan, H. M. Y., and Chu, S. K. W. (2022). Using digital story writing as a pedagogy to develop AI literacy among primary students. Comput. Educ. Artif. Intell. 3:100054. doi: 10.1016/j.caeai.2022.100054

Nketiah-Amponsah, E., Asamoah, M. K., Allassani, W., and Aziale, L. K. (2017). Examining students’ experience with the use of some selected ICT devices and applications for learning and their effect on academic performance. J. Comput. Educ. 4, 441–460. doi: 10.1007/s40692-017-0089-2

Pinski, M., and Benlian, A. (2023). AI literacy-towards measuring human competency in artificial intelligence. Hawaii International Conference on System Sciences (HICSS-56). University of Hawaiʻi at Mānoa Library. https://aisel.aisnet.org/hicss-56/cl/ai_and_future_work/3

Schofield, D., Kupiainen, R., Frantzen, V., and Novak, A. (2023). Show or tell? A systematic review of media and information literacy measurements. J. Media Literacy Educ. 15, 124–138. doi: 10.23860/JMLE-2023-15-2-9

Singh, E., Vasishta, P., and Singla, A. (2024). AI-enhanced education: exploring the impact of AI literacy on generation Z’s academic performance in northern India. Qual. Assur. Educ. doi: 10.1108/QAE-02-2024-0037

Su, J., Ng, D. T. K., and Chu, S. K. W. (2023). Artificial intelligence (AI) literacy in early childhood education: the challenges and opportunities. Comput. Educ. Artif. Intell. 4:100124. doi: 10.1016/j.caeai.2023.100124

tortoisemedia.com (2023). The Global AI Index: the first index to benchmark nations on their level of investment, innovation and implementation of artificial intelligence. Available at: https://www.tortoisemedia.com/intelligence/global-ai/#rankings (Accessed July 18, 2024).

Wang, B., Rau, P.-L. P., and Yuan, T. (2023). Measuring user competence in using artificial intelligence: validity and reliability of artificial intelligence literacy scale. Behav. Inform. Technol. 42, 1324–1337. doi: 10.1080/0144929X.2022.2072768

Widowati, A., Siswanto, I., and Wakid, M. (2023). Factors affecting students’ academic performance: self efficacy, digital literacy, and academic engagement effects. Int. J. Instr. 16, 885–898. doi: 10.29333/iji.2023.16449a

Yang, W. (2022). Artificial intelligence education for young children: why, what, and how in curriculum design and implementation. Comput. Educ. Artif. Intell. 3:100061. doi: 10.1016/j.caeai.2022.100061

Keywords: AI literacy, Media and Information Literacy, transnational survey, Saudi Arabia (KSA), Egypt, India, Malaysia

Citation: Mansoor HMH, Bawazir A, Alsabri MA, Alharbi A and Okela AH (2024) Artificial intelligence literacy among university students—a comparative transnational survey. Front. Commun. 9:1478476. doi: 10.3389/fcomm.2024.1478476

Edited by:

Tobias Eberwein, Austrian Academy of Sciences (OeAW), Austria

Reviewed by:

Brent Anders, American University of Armenia, Armenia

Rui Cruz, European University of Lisbon, Portugal

Copyright © 2024 Mansoor, Bawazir, Alsabri, Alharbi and Okela. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Hasan M. H. Mansoor, hmansoor@ksu.edu.sa
