- Department of Communication, University of Louisiana at Lafayette, Lafayette, LA, United States

As preparation for a future healthcare system with artificial intelligence (AI)-based autonomous robots, this study investigated the level of public trust in autonomous humanoid robot (AHR) doctors that would be enabled by AI technology and introduced to the public for the sake of better healthcare accessibility and services in the future. Employing the most frequently adopted scales for measuring patients’ trust in their primary care physicians (PCPs), this study analyzed 413 survey responses collected from the general public in the United States and found that trust in AHR doctors nearly matched the level of trust in human doctors, although it was slightly lower. Based on the results of the data analysis, this study explains the benefits of using AHR doctors and offers proactive recommendations on how to develop AHR doctors, how to implement them in actual medical practice, how to expose the public to humanoid robots more frequently, and how interdisciplinary collaboration can enhance public trust in AHR doctors. This line of study is urgently needed because the placement of such advanced robot technology in the healthcare system appears unavoidable as the public increasingly experiences it. The limitations arising from the non-experimental design, voluntary response sampling through social media, and the scarcity of theories on communication with humanoid robots remain tasks for future studies.

Introduction

The development of artificial intelligence (AI) and its implementation in robots have elevated the role of such advanced technologies to another level in human-machine communication and collaboration. Scientists now predict that AI-based robots will replace not only simple human labor but also professional human jobs in more diverse fields, such as lawyers, bankers, and healthcare professionals (Roose, 2021; Susskind and Susskind, 2016). From the communication perspective, AI-based interactive devices have revolutionized human-machine communication. For example, Amazon’s Alexa, Apple’s Siri, and other AI devices have functioned as personal partners and co-workers in people’s daily lives at home and in the workplace. Then, what if we have humanoid robots whose identity as robots humans barely notice because they are indistinguishable from humans in appearance and communication skills? Taking one step further, this study asked the public how they would respond to AI-based autonomous humanoid robot (AHR) physicians that can be developed with a combination of AI and robot technologies.

In recent years, AI and robot technologies have reshaped the healthcare landscape. In fact, some AI-based robots have already been implemented in healthcare services. For example, Business Wire recently reported a test of a humanoid robot, Beomni, built to provide healthcare services for older people (Showen, 2021). Beomni interacted with both healthcare workers and clients and showed an impressive communication ability. Its human communication partners were satisfied and delighted by the communication, even though Beomni does not yet look like a human: it has a robotic face and a mechanical body and is controlled by humans. Nevertheless, its popularity and effectiveness have exceeded expectations. Then, what if a humanoid robot looks truly like a human and communicates with people autonomously? This question is the primary premise of the present study in preparing for our future.

Humanoid robots were initially developed with the purpose of replicating and replacing humans, as scientists have strived to develop humanoid robots that look authentically like real humans and are communicable, attractive, gentle, and even lovely (Hanson Robotics, 2020). The implementation of AI in humanoid robots has progressed toward this goal, and this technological development stirs people’s latent imagination of living and working with AHRs, as many books and movies have already told stories about human-robot communication and interactions. Sooner or later, this imagination may become a reality, as the history of technology development offers numerous examples in which imagination accelerated the technology needed to realize it. As technology development accelerates, communication scholars should work more proactively to help shape our future reality by examining communication with humanoid robots. In line with this historical tendency, this study envisioned a future in which human patients can see AHR doctors and examined the public perception of such AHR doctors, who would be introduced to human patients for the sake of better healthcare accessibility and services. This study is intended to contribute to designing and implementing desirable AHRs for our future healthcare system.

Robots have already assisted human doctors for several decades (Lee and Yoon, 2021). Especially during the COVID-19 pandemic, the demand for implementing robots in healthcare facilities was more robust due to many advantages of implementing advanced robot technology in advancing the quality of the healthcare system and preparation for unanticipated public health disasters in the future (Ozturkcan and Merdin-Uygur, 2022). Responding to this demand for the implementation of humanoid robots, along with the call for more specific research related to the desirable development of humanoid healthcare robots (Ozturkcan and Merdin-Uygur, 2022) and people’s positive perception of humanoid healthcare robots (Andtfolk et al., 2022), this study raised the question of whether the public trusts AHR doctors more or less than human doctors if AHR doctors are available.

Trust in patient-doctor communication

A large number of studies with ample evidence underscore the importance of patient-(human) doctor communication for the effective delivery of healthcare services. Much research supports that a patient-doctor relationship is mutually and communicatively constructed between patients and doctors (Coulter, 2002; Vick and Scott, 1998; Wright et al., 2004). More specifically, a large amount of research has explored factors affecting the patient-doctor relationship and identified several influential factors, such as (1) doctors’ careful listening to and sufficient communication with patients to develop supportive relationships, gather information, and be accurate in diagnoses and treatments (e.g., Dugdale et al., 1999; Jagosh et al., 2011) and (2) patients’ compliance with doctors’ recommendations and treatments (e.g., Garrity, 1981). Many studies have also pointed out the gap between doctors and patients in understanding medical terms as a barrier to building mutual relationships (Ha and Longnecker, 2010; Jucks and Bromme, 2007). Among many influential factors affecting patient-doctor communication, trust has always been a core concern and is often regarded as an essential factor for patients to develop a desirable patient-doctor relationship (Chipidza et al., 2015; Duggan and Thompson, 2011).

Patients’ trust in doctors substantially influences their selection, continuation, and change of doctors (Baker et al., 2020; Sullivan, 2020). It also affects the outcomes of medical and other healthcare services because it increases patients’ compliance with doctor’s orders and recommendations (Bonds et al., 2004; Meier et al., 2019). As trust is a construct constituted by several dimensions, many factors affect patients’ trust in their doctors (Pearson and Raeke, 2000). The major factors that enable patients to build trust in their doctors include the doctor’s expertise, confidentiality, and interpersonal communication skills, such as attentiveness, honesty, and caring communication (Anderson and Dedrick, 1990; Coulter, 2002; Duggan and Thompson, 2011; Hall et al., 2002). Recognizing the importance of patients’ trust and how they build trust in their doctors, the present study investigated the level of the public’s trust in future AHR doctors with their imagination of future lives.

The idea of implementing AHR doctors may be contentious. Some researchers argue that the public is more likely to understand the benefits and applications of using healthcare robots and feel simple medical procedures and physical help offered by robots are acceptable (Broadbent et al., 2010). However, others claim that patients still appear to be reluctant to implement robots in healthcare services due to a level of concerns about safety, reliability, privacy turbulence, and the lack of personal care (Fosch Villaronga et al., 2018; Tanioka, 2019). Responding to these concerns with negative perceptions of implementing humanoid robot technology in healthcare services, scholars with a positive perspective on the implementation of AHRs in human lives argue that people’s positive attitude toward robot doctors would increase as more people are exposed to them (Katz and Halpern, 2014). This present study acknowledges this discussion or contention as a symptom of having AHRs sooner or later, arguing that the diffusion of advanced technology in all areas of human lives and works is inescapable. This is why we must proactively prepare for our future.

Along with the potential that humanoid robot doctors will become available in the future, this study investigated people’s current perceptions of future AHR doctors, specifically analyzing how much people would trust the AHR doctors. This study set the area of doctors’ expertise as primary care physicians (PCPs) who practice general medicine and usually have the closest relationships with their patients. Under these hypothetical research surroundings, this present study aimed to answer the following research question that will provide us with many implications and insights in developing AHRs and enhancing future healthcare services with AHRs:

Q: How much would the public trust future AHR doctors as their PCPs compared to human doctors?

Preparation is needed for the time when such advanced technology is realistically implemented in medical and healthcare services, a process that will involve trial and error and require evidence-based directions for the future development and use of AHRs. In the field of human-machine/robot communication, few studies focus on autonomous humanoid robots in healthcare settings (e.g., patient-doctor communication at a doctor’s office). The investigation of public trust in AHR doctors would also be beneficial in measuring public readiness for the adoption of advanced robot technology in healthcare facilities. Theoretically, this study provides empirical evidence that can serve as a cornerstone for developing theories of human-humanoid robot communication.

Methods

Measure

Methodologically, this study extended the research instruments developed to measure trust between patients and human doctors to measure trust between patients and AHR doctors. This methodological extension can be made because this study compares human patients’ trust in their human doctors with imaginable AHR doctors in the future. In doing so, this present study reviewed predominantly cited studies that explored patients’ trust in their doctors, covering published research since the 1970s. Among the selected studies, it was notable that Eveleigh et al. (2012) published a synthetic overview of 19 well-cited patient-doctor trust scales, comparing their validity, reliability, and applicability to other studies. After having a careful review, this present study adopted two scales designed by Anderson and Dedrick (1990) and Hall et al. (2002) due to their topical relevancy (focusing on the public trust in PCPs), predominant use by the past research, and applicability to human-machine communication. Following Kim and Kim (2021), which tested both scales in one study, this study also employed both scales because this study was also interested in testing and comparing these scales in the context of trust between human patients and AHR doctors. If both scales produce similar outcomes, it strengthens the validity of this initial study on this topical area, and future studies in the same line of study can simply employ either scale as both scales can consistently produce similar results.

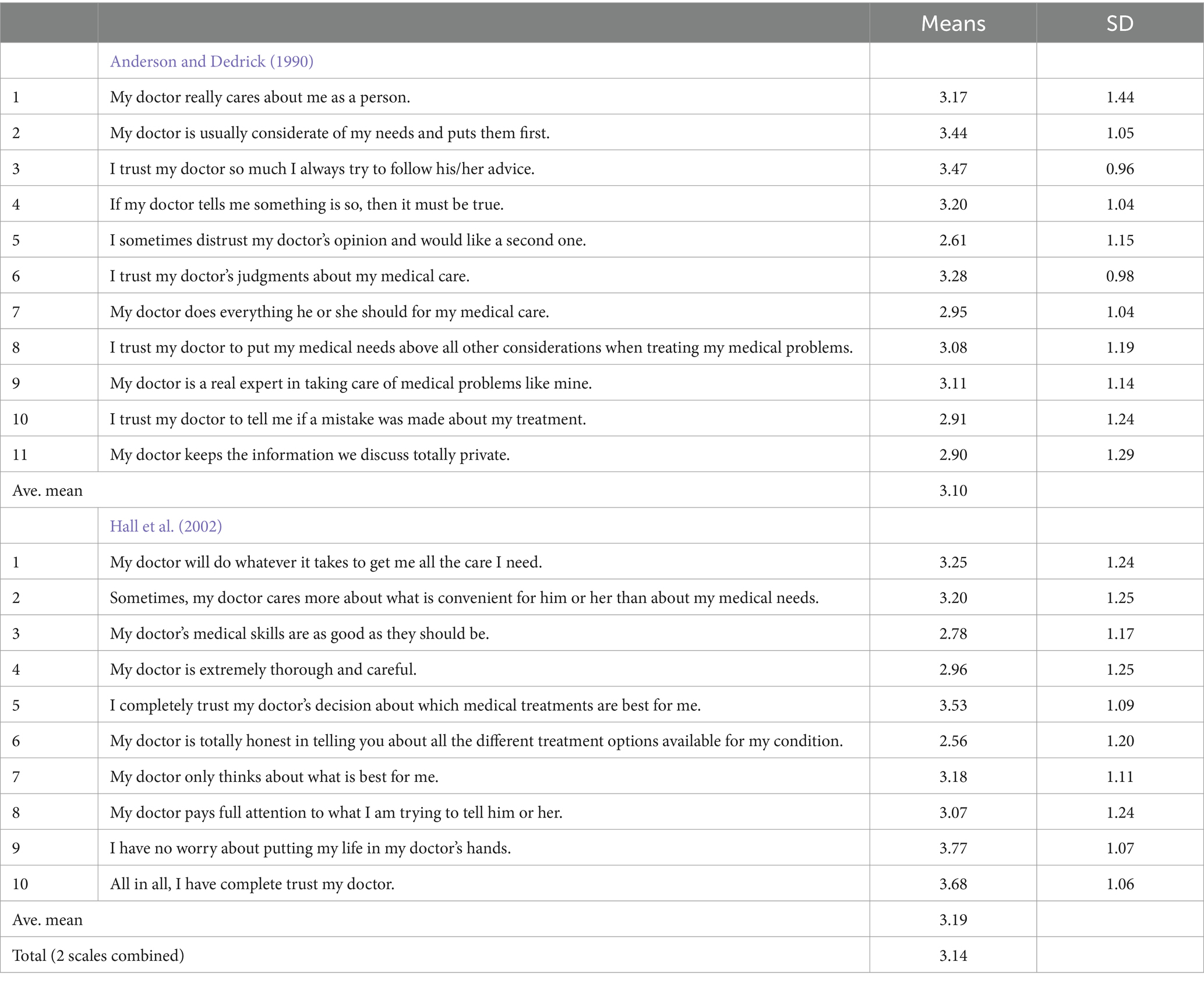

Anderson and Dedrick (1990) developed a scale with 11 items (see Table 1) that measures multidimensions of patients’ trust in primary care physicians: (1) dependability of the physician (items 1 ~ 4), (2) confidence in the physician’s knowledge and skills (items 5 ~ 9), and (3) confidentiality of information between physicians and patients (items 10 & 11). The second scale this present study adopted is Hall and his colleagues’ scale (2002), which consists of five dimensions – fidelity, competence, honesty, confidentiality, and global trust – with 10 questions: (1) Fidelity denotes caring and advocating for the patient’s interests or welfare and avoiding conflicts of interest; (2) competence refers to a good practice and interpersonal skills, making correct decisions, and avoiding mistakes; (3) honesty indicates telling the truth and avoiding intentional falsehoods; (4) confidentiality is a proper use of sensitive information; and (5) global trust means the combination of all these elements of trust (p. 298).

The wording of all the questions was the same as in the two original scales, but the answer options were modified in order to maintain the validity of the present study. While the original studies adopted a Likert scale ranging from “strongly agree” to “strongly disagree,” this study gave respondents answer options that enabled them to compare their trust in AHR doctors and human doctors when providing their answers. This perceptual comparison can lead to more accurate answers about how much people trust AHR doctors (Kim and Kim, 2021). The modified answer options are: “1. Definitely humanoid robot doctors,” “2. Likely humanoid robot doctors,” “3. Equally likely,” “4. Likely human doctors,” and “5. Definitely human doctors.” The closer an answer is to 1, the more the participant trusts humanoid robot doctors on the item asked; the closer it is to 5, the more the participant trusts human doctors. Finally, at the end of the survey, this study asked demographic questions (gender, age, level of income, and level of education) for more specific analyses. All demographic questions except gender used a 5-point Likert scale to be consistent with the answer options for the patient-doctor trust scales.
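The coding of the comparative answer options can be illustrated with a short sketch. It is only an illustration of the 1 to 5 coding described above, not the analysis script used in this study; the function names `trust_score` and `lean` and the example respondent are hypothetical.

```python
from statistics import mean

# Answer options as worded in the survey, coded 1-5.
OPTIONS = {
    "Definitely humanoid robot doctors": 1,
    "Likely humanoid robot doctors": 2,
    "Equally likely": 3,
    "Likely human doctors": 4,
    "Definitely human doctors": 5,
}
MIDPOINT = 3  # "Equally likely": no preference either way


def trust_score(answers):
    """Mean coded answer across the items a respondent completed."""
    return mean(OPTIONS[a] for a in answers)


def lean(score):
    """Direction of preference relative to the midpoint of the scale."""
    if score < MIDPOINT:
        return "AHR doctors"
    if score > MIDPOINT:
        return "human doctors"
    return "no preference"


# Hypothetical respondent (illustrative only, not study data).
example = [
    "Equally likely",
    "Likely human doctors",
    "Likely humanoid robot doctors",
    "Definitely human doctors",
]
score = trust_score(example)
print(score, lean(score))
```

Under this coding, a scale mean above 3 indicates a lean toward human doctors and a mean below 3 a lean toward AHR doctors.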

Participants

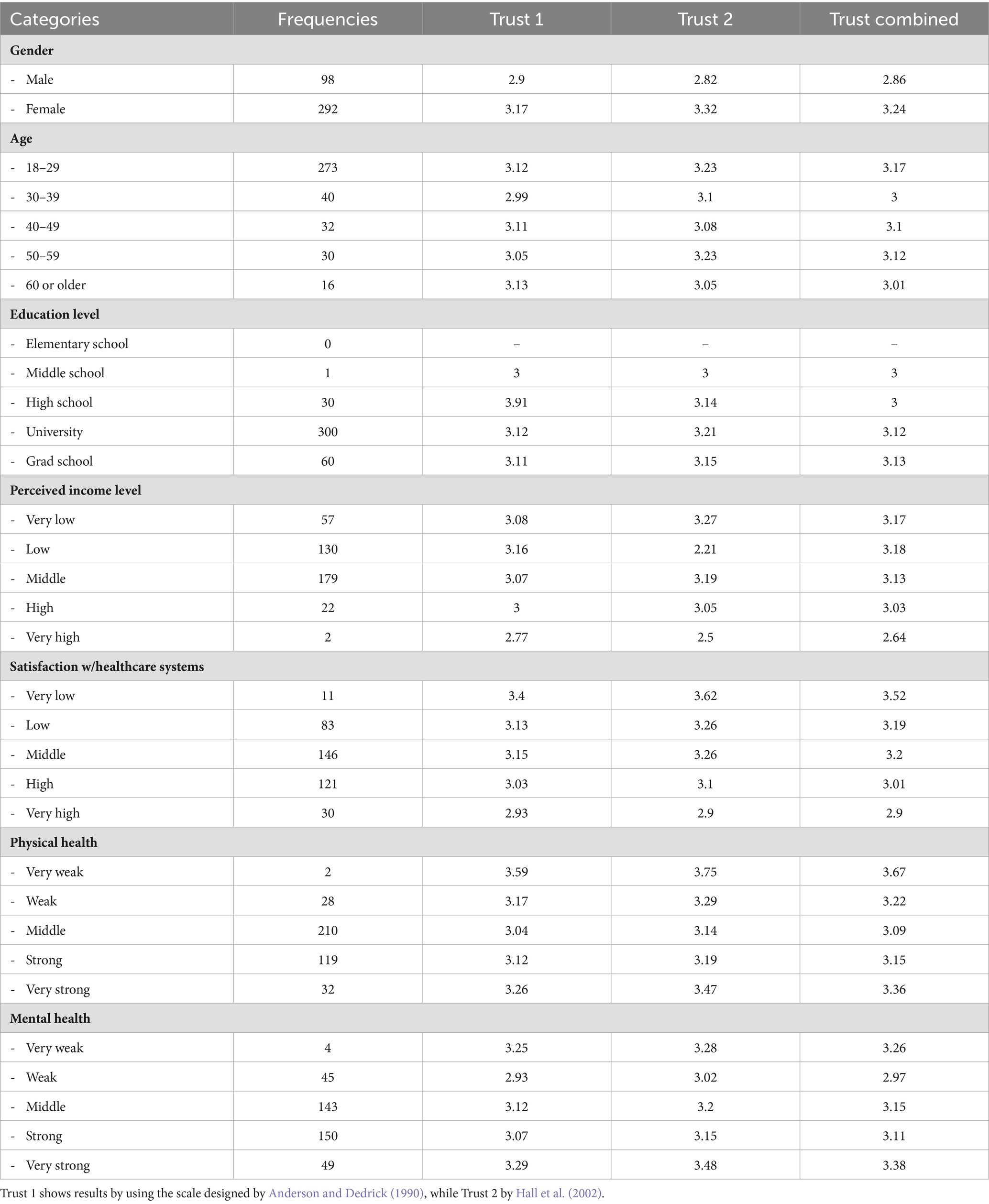

A total of 489 people over 18 years old voluntarily participated in the survey distributed through social media. Applying a cautious screening process for coarse data, this study excluded 76 responses from data analysis, leaving 413 responses. The excluded responses mostly showed a high level of incompleteness (incompletion rates of 20% or higher) and uniform marking of an extreme option for all questions within a short period (less than two minutes). Table 2 shows detailed demographic information about participants as well as the results of descriptive statistics by demographic differences.
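The screening rule can be sketched as follows. This is a minimal sketch under stated assumptions: the way the two criteria are combined (incompleteness alone, or extreme uniform answers together with a short completion time) is an interpretation of the description above, and the function names, the `None` marker for skipped items, and the example responses are hypothetical.

```python
N_ITEMS = 21  # 11 items (Anderson and Dedrick) + 10 items (Hall et al.)


def incompletion_rate(answers):
    """Share of items left unanswered (None marks a skipped item)."""
    return sum(a is None for a in answers) / len(answers)


def is_excluded(answers, seconds):
    """Flag a response as coarse data (assumed combination of criteria)."""
    too_incomplete = incompletion_rate(answers) >= 0.20
    given = [a for a in answers if a is not None]
    # Same extreme option (1 or 5) marked on every answered item.
    straight_lined = bool(given) and len(set(given)) == 1 and given[0] in (1, 5)
    rushed = seconds < 120  # less than two minutes
    return too_incomplete or (straight_lined and rushed)


# Hypothetical responses (illustrative only, not study data).
varied = [3, 4, 2, 3, 5, 3, 4, 3, 2, 3, 4, 3, 3, 4, 2, 3, 3, 4, 3, 2, 3]
incomplete = [3] * 16 + [None] * 5   # 5 of 21 skipped, about 24%
all_fives_fast = [5] * N_ITEMS

print(is_excluded(varied, 400))
print(is_excluded(incomplete, 400))
print(is_excluded(all_fives_fast, 90))

# Retained sample size reported in this study.
print(489 - 76)
```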

Data collection and analysis

Approved by the Institutional Review Board (IRB) of a southern university in the United States, data collection was conducted over a one-year period through an online survey tool, with the survey link distributed through social media platforms such as Twitter and Facebook. This study employed voluntary response sampling, in which participants self-select to be part of the survey, as is often the case with online surveys distributed on social media platforms (Sledzieski et al., 2023). Social media platforms have been used for data collection in numerous studies that investigate public perceptions or opinions because social media can reach diverse groups of people, including hard-to-reach populations (Casler et al., 2013), simplify and accelerate the data collection procedure (King et al., 2014), and create a snowball effect that collects more data as posts and links are shared with diverse social media friends (Salmons, 2017). Taking advantage of these benefits of social media-based data collection, this study recruited volunteer college students in classes for juniors and seniors and asked them to post the survey weblink to their social media once a week for two weeks. Participants were not compensated.

Data analysis was conducted with 413 survey responses. First, a descriptive statistical analysis was conducted to measure the means of all items in each scale and of the two scales combined, as seen in Table 1. Then, this study ran a t-test to determine whether the mean of the student participants (M = 3.16, SD = 0.64) differed from that of the non-student participants (M = 3.10, SD = 0.56). The t-test showed no significant difference between the two groups (t(379) = −0.97, p = 0.09). Based on this result, further analyses were conducted with the combined data of both student and non-student groups.
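For readers unfamiliar with the test, the independent-samples t statistic can be sketched as below. The sketch assumes the pooled-variance (Student) form, which is consistent with the reported degrees of freedom of n1 + n2 − 2 but is not confirmed as the exact procedure used here; the function name `pooled_t` and the two small groups are hypothetical. The p-value is omitted because it requires a t-distribution function that the Python standard library does not provide.

```python
from math import sqrt
from statistics import mean, variance


def pooled_t(a, b):
    """Student's t statistic and degrees of freedom for two independent groups."""
    n1, n2 = len(a), len(b)
    df = n1 + n2 - 2
    # Pooled variance: sample variances weighted by their degrees of freedom.
    pooled_var = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / df
    se = sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean(a) - mean(b)) / se, df


# Hypothetical per-respondent mean scores (illustrative only, not study data).
group_a = [2.0, 2.5, 3.0, 3.5, 4.0]
group_b = [2.5, 3.0, 3.5, 4.0, 4.5]

t, df = pooled_t(group_a, group_b)
print(t, df)
```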

Results

The results from a descriptive statistical analysis provided an overview of the public trust in AHR doctors (see the results in Tables 1, 2). The overall means of the two tested scales both indicated that public trust in human doctors was slightly higher than in AHR doctors (M = 3.10 for the Anderson and Dedrick scale; M = 3.19 for the Hall et al. scale), and most of the item-level means showed the same tendency, as they were higher than 3.

In addition to the descriptive analysis, this study measured the level of public trust by demographic variables: gender, age, income level, and educational level. The results of a t-test for gender revealed a statistically significant difference between males and females (t(379) = −5.30, p < 0.001). Specifically, males (M = 2.86, SD = 0.56) demonstrated higher trust in AHR doctors than females (M = 3.23, SD = 0.61). The results of ANOVA tests coupled with post hoc tests (Tukey HSD) showed no mean difference among groups in the other demographic variables: age [F(4, 377) = 0.457, p = 0.767], income [F(4, 376) = 0.693, p = 0.597], and education [F(3, 378) = 0.635, p = 0.593].
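The F statistics above come from one-way ANOVA, which can be sketched as follows. The degrees of freedom are k − 1 between groups and N − k within groups, so F(4, 377) corresponds to five groups and 382 respondents. The function name `one_way_anova` and the three small groups are hypothetical, and the Tukey HSD post hoc step and the p-value are not reproduced here.

```python
from statistics import mean


def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of groups of scores."""
    all_values = [x for g in groups for x in g]
    grand_mean = mean(all_values)
    k, n = len(groups), len(all_values)
    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of individual scores around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between, df_within = k - 1, n - k
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within


# Hypothetical scores for three groups (illustrative only, not study data).
groups = [[1, 2, 3], [2, 3, 4], [3, 4, 5]]
f, df_b, df_w = one_way_anova(groups)
print(f, df_b, df_w)
```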

Finally, this study employed two scales that were designed to measure patients’ trust in their PCPs in order to see whether those two scales generate different outcomes, which tested the methodological reliability between the two scales. The results of a t-test comparing the two scales showed no significant mean difference (t(800) = 1.88, p = 0.61) between the Hall et al. (2002) scale (M = 3.19, SD = 0.73) and the Anderson and Dedrick (1990) scale (M = 3.10, SD = 0.61). In addition, when the two scales were combined, the reliability of the combined scale was very high (Cronbach’s alpha = 0.9). This suggests that future studies can utilize either scale to conduct similar studies on patients’ trust in autonomous humanoid robot doctors (Kim and Kim, 2021).
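Cronbach’s alpha for a set of k items is k/(k − 1) multiplied by one minus the ratio of the summed item variances to the variance of the total score. A minimal sketch of that formula is given below; the function name `cronbach_alpha` and the two-item example are hypothetical and do not reproduce the value reported above.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha; rows holds one list of item scores per respondent."""
    k = len(rows[0])
    # Sample variance of each item across respondents.
    item_vars = [variance([r[i] for r in rows]) for i in range(k)]
    # Sample variance of each respondent's total score.
    total_var = variance([sum(r) for r in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical answers of five respondents to two items (not study data).
rows = [[1, 2], [2, 1], [3, 3], [4, 5], [5, 4]]
alpha = cronbach_alpha(rows)
print(round(alpha, 3))
```

The sketch assumes complete responses; rows with missing items would need to be dropped or imputed before the variances are computed.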

Discussion

After Hanson Robotics (2016) introduced its AI-based humanoid robot, Sophia, a female robot with fluent communication skills, communication with autonomous humanoid robots (AHRs) turned out to be not a far-fetched dream but a reality. This specifically signaled that AHRs will partner with humans in many areas of human life much faster than many had anticipated. Anticipating this rapid advancement of humanoid robot technology, this study focused on a very likely future application of AHRs that can contribute to our healthcare systems and investigated current public perceptions of future AHR doctors as PCPs.

Statistically, the results of this study showed a slightly higher preference for human doctors than for AHR doctors. Yet the overall mean (M = 3.14) is only 0.14 above the point of no preference between AHR and human doctors (3.00) on the 5-point Likert scale, where 1 indicates strong trust in AHR doctors and 5 indicates strong trust in human doctors. In the past, having a robot as a PCP might have been considered science fiction. However, this result indicates a very small difference in preference between human and AHR doctors, implying that having AHR doctors as PCPs no longer seems radical or too far from our reality. In other words, it is time for us to examine more actively the issues related to how AHRs can be effectively implemented in our healthcare system.

Among the different demographic variables this study examined, only gender showed a statistically significant difference in the trust level: males showed a higher level of trust in AHR doctors, while females were more likely to trust human doctors. This result aligns with other research that supports a different perception of robots by gender (e.g., Lin et al., 2012; Schermerhorn et al., 2008; Yu and Ngan, 2019). For instance, Schermerhorn et al. (2008) found that females see robots as more machine-like and less sociable than males do. Specifically in patient-doctor communication, several studies have demonstrated various gendered norms regarding men’s and women’s experiences and expressions of pain, as well as their identities, lifestyles, and coping styles (Samulowitz et al., 2018). Gender bias has been a persistent issue in patient-provider communication. Therefore, awareness of gendered norms is crucial in both research and clinical practice to guide the development of AHR doctors in a more equitable way that better meets the needs of women. Turning to the humanistic aspects of humanoid robots, Goldhahn et al. (2018) are concerned that the algorithms of humanoid robot doctors might be unable to appropriately replicate relational qualities, such as emotions, values, and non-verbal motions, even though these relational factors are critical to the healing process and to creating a supportive atmosphere in patient-doctor communication. This concern suggests that AHR developers should focus more on social and relational elements, such as interpersonal responsivity, positive emotions (e.g., smiles), and interpersonal warmth, in developing future AHRs.

Although there are some concerns regarding AHR doctors, mostly about the less relational attributes of humanoid robots in communication, as explained above, an increasing amount of evidence supports the view that humans can build real interpersonal-like and emotional relationships with humanoid robots even at the current technological stage, in which humanoid robots do not perfectly look or communicate like real humans (Purtill, 2019; Stock-Homburg, 2022). Moreover, despite some people’s dissatisfaction with current AI devices or robots due to their limited abilities and functionalities, future humanoid robots that are fully autonomous and look, speak, and function like real humans, with more advanced algorithms and machine-learning ability, may attenuate people’s disinclination to communicate with AHRs and foster human affinity for them. More education about the advancement of robot technology and frequent exposure to humanoid robots with substantial interactions may also reduce the existing negative perceptions of communicating with and receiving healthcare services from AHRs. Most of all, consciously or unconsciously, people are already using many AI-based interactive communication technologies (e.g., smartphones and smart Bluetooth speakers) in their daily lives. The prevalence of advanced communication technologies can naturally increase the likelihood of people’s adaptation to future communication environments with AHRs (Beer et al., 2014).

Beyond gender differences and emotional elements, diversity in patient groups is another communication consideration for AHR doctors when interacting with human patients. As in general communication situations, AHR doctors will communicate with diverse populations that vary in ethnic background, country of origin, language, and more. The inclusion of diverse populations should be a fundamental consideration in designing AHR doctors and all other humanoid robots. With such a design, AHR doctors can provide more personalized care, reduce bias, and improve health outcomes across diverse population groups, which would contribute to promoting equity in healthcare. In other words, a one-size-fits-all approach is inadequate for developing AHR doctors and humanoid robots that will communicate with diverse populations.

In reality, modern medical practices rely heavily on technology. Physicians’ offices are full of (computer) equipment and informational software that assist their decision-making and treatments. In addition, most medical examinations cannot be performed without technological assistance. Building on this already prevalent use of technology in healthcare services and on AI technology that is now available for actual human-machine communication, engineers in AI and robotics, medical professionals, and social scientists are discussing the application of AHRs that can directly assist and communicate with patients and are extending the discussion to future medical services with autonomous robot doctors (Gulshan et al., 2016; Kocher and Emanuel, 2019). For example, researchers from MIT and Brigham and Women’s Hospital in Boston conducted a nationwide survey showing people’s confidence in receiving medical care provided by robots, as a majority of their respondents were open to the idea of robots performing minor medical procedures (Chai et al., 2021). Related to this research, MIT News foresaw the availability of autonomous robot doctors for patients in our future healthcare system (Trafton, 2021). Supported by such predictions, a growing number of empirical tests and trials have been conducted to develop and implement AHR doctors in real-world settings, which may contribute to more accurate medical decisions and services through a more comprehensive overview of existing options than human doctors can achieve (Bahl et al., 2018).

Importantly, AHR doctors can be positioned in many areas that human doctors cannot or are reluctant to be. For instance, the world has been experiencing several global pandemics in recent years, such as SARS, MERS, COVID-19, and others. In battling such strong and fast-spreading viruses, human doctors and medical professionals have been constantly at risk of infection and carried an immeasurable burden while caring for the infected. Likewise, many countries are experiencing a rapidly growing number of hard-to-reach populations, such as refugees and immigrants with limited language proficiency in host countries, homeless people, less educated people with a low level of health literacy, and many other socioeconomically marginalized populations. AHR doctors can be positioned in highly infectious areas with little concern about infection and be more reachable to these marginalized populations. One of the greatest advantages of implementing AHR doctors along with other healthcare humanoid robots is that they can be programmed to meet the specific needs of such vulnerable people.

Finally, in order to proactively prepare for future healthcare environments with AHR doctors, multidimensional communication is needed among the public, humanoid robot scientists, and policymakers. In other words, they should collaboratively discuss and examine human-AHR communication to find ways to enhance the public trust in AHR doctors in the process of developing and implementing them for our future healthcare system. Such a multidimensional approach can reduce existing ethical, technological, and perceptional concerns. Trust is a construct of communication, especially transparent communication with sufficient information that reduces perceptual uncertainty and promotes confidence in our future reality with AHR doctors. If communicated well, AHR doctors would be available sooner than many expect now and contribute to human well-being.

Limitations and suggestions for future studies

A limitation that is also a suggestion for future studies is related to the nature of this paper. As this paper forecasts our future with AHR doctors, its discussion is more exploratory than theoretical because of the scant literature available on this specific topic. Therefore, future studies need to make an effort to theorize human-AHR communication. In addition, this study only investigated people’s perceptions of AHR doctors, based on participants’ imagination of the future without real experience with them. Related to this limitation, future studies should attempt an experimental design to examine whether there are any differences between people’s perceptions and real experiences. To do this, this study suggests more experimental and interdisciplinary studies with humanoid robot scientists and engineers, developing actual AHR doctors and testing human-AHR doctor communication in more realistic settings. Such interdisciplinary studies would provide substantial recommendations for developing more desirable AHR doctors and implementing them in our future healthcare system.

Methodologically, this study collected public data through social media. While the use of social media has proven effective in compiling public opinions in the age of digital communication, self-selection bias and the lack of data from non-social media users remain challenges. Therefore, incorporating additional data collection methods for non-social media users would enhance the generalizability of the findings. In addition, a more accurate analysis would have been possible had a validated scale been available to measure trust in the context of human-machine (or humanoid robot) communication, instead of adopting scales developed for human doctor-patient communication.

While this study highlighted the engagement and comfort in human-AHR doctor communication, it is also important from the communication perspective that robots understand patient concerns and provide accurate advice, and that patients comply with the information and advice given. Therefore, future studies should investigate how to improve the accuracy of AI’s understanding of patients’ concerns and of its responses to those concerns and, simultaneously, how to ensure patients’ compliance with AHR doctors’ advice.

The future research agendas suggested above aim to facilitate the smooth implementation of AI-based autonomous robot physicians, which could lead to improved healthcare outcomes in the near future. In line with this goal, it is crucial to develop more communication research with the purpose of ensuring accurate and effective communication with future AI robot physicians. Such future studies in the communication discipline can contribute to increasing resilience in people’s responses to the uncertainty and complexity they may face in the future world and can also help people explore better alternatives and make informed decisions among all the options available to them as the world continues to change.

Data availability statement

The datasets presented in this article are not readily available because this study and dataset were approved by IRB at the author’s university with the condition that only the author can access and handle the dataset. Requests to access the datasets should be directed to ZG8ua2ltQGxvdWlzaWFuYS5lZHU=.

Ethics statement

Ethical review and approval was not required for the study on human participants in accordance with the local legislation and institutional requirements. Written informed consent from the participants was not required to participate in this study in accordance with the national legislation and the institutional requirements.

Author contributions

DK: Writing – original draft.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Conflict of interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Anderson, L. A., and Dedrick, R. F. (1990). Development of the trust in physician scale: a measure to assess interpersonal trust in patient-physician relationships. Psychol. Rep. 67, 1091–1100. doi: 10.2466/pr0.1990.67.3f.1091

Andtfolk, M., Nyholm, L., Eide, H., Rauhala, A., and Fagerstrom, L. (2022). Attitudes toward the use of humanoid robots in healthcare—a cross-sectional study. AI Soc. 37, 1739–1748. doi: 10.1007/s00146-021-01271-4

Bahl, M., Barzilay, R., Yedidia, A. B., Locascio, N. J., Yu, L., and Lehman, C. D. (2018). High-risk breast lesions: a machine learning model to predict pathologic upgrade and reduce unnecessary surgical excision. Radiology 286, 810–818. doi: 10.1148/radiol.2017170549

Baker, R., Freeman, G. K., Haggerty, J. L., Bankart, M. J., and Nockels, K. H. (2020). Primary medical care continuity and patient mortality: a systematic review. Br. J. Gen. Pract. 70, e600–e611. doi: 10.3399/bjgp20x712289

Beer, J. M., Fisk, A. D., and Rogers, W. A. (2014). Toward a framework for levels of robot autonomy in human-robot interaction. J. Hum. Robot Interac. 3, 74–99. doi: 10.5898/jhri.3.2.beer

Bonds, D. E., Camacho, F., Bell, R. A., Duren-Winfield, V. T., Anderson, R. T., and Goff, D. C. (2004). The association of patient trust and self-care among patients with diabetes mellitus. BMC Fam. Pract. 5:26. doi: 10.1186/1471-2296-5-26

Broadbent, E., Kuo, I. H., Lee, Y. I., Rabindran, J., Kerse, N., Stafford, R., et al. (2010). Attitudes and reactions to a healthcare robot. Telemed. e-Health 16, 608–613. doi: 10.1089/tmj.2009.0171

Casler, K., Bickel, L., and Hackett, E. (2013). Separate but equal? A comparison of participants and data gathered via Amazon’s MTurk, social media, and face-to-face behavioral testing. Comput. Hum. Behav. 29, 2156–2160. doi: 10.1016/j.chb.2013.05.009

Chai, P. R., Dadabhoy, F. Z., Huang, H. W., Chu, J. N., Feng, A., Le, H. M., et al. (2021). Assessment of the acceptability and feasibility of using mobile robotic systems for patient evaluation. JAMA Netw. Open 4:e210667. doi: 10.1001/jamanetworkopen.2021.0667

Chipidza, F. E., Wallwork, R. S., and Stern, T. A. (2015). Impact of the doctor-patient relationship. Prim. Care Compan. CNS Dis. 17. doi: 10.4088/PCC.15f01840

Coulter, A. (2002). Patients' views of the good doctor. BMJ 325, 668–669. doi: 10.1136/bmj.325.7366.668

Dugdale, D. C., Epstein, R., and Pantilat, S. Z. (1999). Time and the patient-physician relationship. J. Gen. Intern. Med. 14, S34–S40. doi: 10.1046/j.1525-1497.1999.00263.x

Duggan, A. P., and Thompson, T. L. (2011). “Provider-patient interaction and related outcomes” in The Routledge handbook of health communication. eds. T. L. Thompson, R. Parrott, and J. F. Nussbaum. 2nd ed (New York, NY: Routledge), 414–427.

Eveleigh, R. M., Muskens, E., van Ravesteijn, H., van Dijk, I., van Rijswijk, E., and Lucassen, P. (2012). An overview of 19 instruments assessing the doctor-patient relationship: different models or concepts are used. J. Clin. Epidemiol. 65, 10–15. doi: 10.1016/j.jclinepi.2011.05.011

Fosch Villaronga, E., Tamò-Larrieux, A., and Lutz, C. (2018). Did I tell you my new therapist is a robot? Ethical, legal, and societal issues of healthcare and therapeutic robots. Available at: https://ssrn.com/abstract=3267832

Garrity, T. F. (1981). Medical compliance and the clinician-patient relationship: a review. Soc. Sci. Med. Part E 15, 215–222. doi: 10.1016/0271-5384(81)90016-8

Goldhahn, J., Rampton, V., and Spinas, G. A. (2018). Could artificial intelligence make doctors obsolete? Br. Med. J. 363:k4563. doi: 10.1136/bmj.k4563

Gulshan, V., Peng, L., Coram, M., Stumpe, M. C., Wu, D., Narayanaswamy, A., et al. (2016). Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA J. Am. Med. Assoc. 316, 2402–2410. doi: 10.1001/jama.2016.17216

Hall, M. A., Zheng, B., Dugan, E., Camacho, F., Kidd, K. E., Mishra, A., et al. (2002). Measuring patients’ trust in their primary care providers. Med. Care Res. Rev. 59, 293–318. doi: 10.1177/1077558702059003004

Hanson Robotics. (2016). Sophia awakens [video file]. Available at: https://www.youtube.com/watch?v=LguXfHKsa0c

Hanson Robotics. (2020). Creating value with human-like robots. Available at: https://www.hansonrobotics.com/hanson-robots/

Jagosh, J., Boudreau, J. D., Steinert, Y., MacDonald, M. E., and Ingram, L. (2011). The importance of physician listening from the patients’ perspective: enhancing diagnosis, healing, and the doctor–patient relationship. Patient Educ. Couns. 85, 369–374. doi: 10.1016/j.pec.2011.01.028

Jucks, R., and Bromme, R. (2007). Choice of words in doctor–patient communication: an analysis of health-related internet sites. Health Commun. 21, 267–277. doi: 10.1080/10410230701307865

Katz, J. E., and Halpern, D. (2014). Attitudes towards robots suitability for various jobs as affected robot appearance. Behav. Inform. Technol. 33, 941–953. doi: 10.1080/0144929x.2013.783115

Kim, D. D., and Kim, S. J. (2021). What if you have a humanoid AI robot doctor?: an investigation of public trust in South Korea. J. Commun. Healthc. 15, 276–285. doi: 10.1080/17538068.2021.1994825

King, D. B., O'Rourke, N., and DeLongis, A. (2014). Social media recruitment and online data collection: a beginner’s guide and best practices for accessing low-prevalence and hard-to-reach populations. Can. Psychol. 55, 240–249. doi: 10.1037/a0038087

Kocher, B., and Emanuel, Z. (2019). Will robots replace doctors? Brookings Institution. Available at: https://www.brookings.edu/blog/usc-brookings-schaeffer-on-health-policy/2019/03/05/will-robots-replace-doctors/

Lee, D., and Yoon, S. N. (2021). Application of artificial intelligence-based technologies in the healthcare industry: opportunities and challenges. Int. J. Environ. Res. Public Health 18:271. doi: 10.3390/ijerph18010271

Lin, C. H., Liu, E. Z. F., and Huang, Y. Y. (2012). Exploring parents' perceptions towards educational robots: gender and socio-economic differences. Br. J. Educ. Technol. 43, E31–E34. doi: 10.1111/j.1467-8535.2011.01258.x

Meier, S., Sundstrom, B., Delay, C., and DeMaria, A. L. (2019). “Nobody’s ever told me that:” Women’s experiences with shared decision-making when accessing contraception. Health Commun. 36, 179–187. doi: 10.1080/10410236.2019.1669271

Ozturkcan, S., and Merdin-Uygur, E. (2022). Humanoid service robots: the future of healthcare? J. Inf. Technol. Teach. Cases 12, 163–169. doi: 10.1177/20438869211003905

Pearson, S. D., and Raeke, L. H. (2000). Patients' trust in physicians: many theories, few measures, and little data. J. Gen. Intern. Med. 15, 509–513. doi: 10.1046/j.1525-1497.2000.11002.x

Roose, K. (2021). The robots are coming for Phil in accounting. New York Times. Available at: https://www.nytimes.com/2021/03/06/business/the-robots-are-coming-for-phil-in-accounting.html

Salmons, J. (2017). “Using social media in data collection: designing studies with the qualitative e-research framework” in The SAGE handbook of social media research methods. eds. A. Quan-Haase and L. Sloan (Sage Publications), 177–197.

Samulowitz, A., Gremyr, I., Eriksson, E., and Hensing, G. (2018). “Brave men” and “emotional women”: a theory-guided literature review on gender bias in health care and gendered norms towards patients with chronic pain. Pain Res. Manag. 2018, 1–14. doi: 10.1155/2018/6358624

Schermerhorn, P., Scheutz, M., and Crowell, C. R. (2008). Robot social presence and gender: do females view robots differently than males? In Proceedings of the 3rd ACM/IEEE international conference on Human-robot interaction (pp. 263–270). ACM.

Showen, M. (2021). “Your doctor is in:” physicians test humanoid robots for eldercare. Business Wire. Available at: https://www.businesswire.com/news/home/20211206005120/en/%E2%80%9CYour-Doctor-is-in%E2%80%9D-Physicians-Test-Humanoid-Robot-for-Eldercare

Sledzieski, N., Gallicano, T. D., Shaikh, S., and Levens, S. (2023). Optimizing recruitment for qualitative research: a comparison of social media, emails, and offline methods. Int. J. Qual. Methods 22. doi: 10.1177/16094069231162539

Stock-Homburg, R. (2022). Survey of emotions in human–robot interactions: perspectives from robotic psychology on 20 years of research. Int. J. Soc. Robot. 14, 389–411. doi: 10.1007/s12369-021-00778-6

Sullivan, L. S. (2020). Trust, risk, and race in American medicine. Hastings Cent. Rep. 50, 18–26. doi: 10.1002/hast.1080

Susskind, R., and Susskind, D. (2016). Technology will replace many doctors, lawyers, and other professionals. Available at: https://hbr.org/2016/10/robots-will-replace-doctors-lawyers-and-other-professionals

Tanioka, T. (2019). Nursing and rehabilitative care of the elderly using humanoid robots. J. Med. Invest. 66, 19–23. doi: 10.2152/jmi.66.19

Trafton, A. (2021). The (robotic) doctor will see you now. MIT News. Available at: https://news.mit.edu/2021/robotic-doctor-will-see-you-now-0304

Vick, S., and Scott, A. (1998). Agency in health care. Examining patients' preferences for attributes of the doctor–patient relationship. J. Health Econ. 17, 587–605. doi: 10.1016/s0167-6296(97)00035-0

Wright, E. B., Holcombe, C., and Salmon, P. (2004). Doctors' communication of trust, care, and respect in breast cancer: qualitative study. Br. Med. J. 328:864. doi: 10.1136/bmj.38046.771308.7c

Keywords: health technology acceptance, artificial intelligence, autonomous humanoid robots, patient-doctor communication, robotic healthcare service, health communication technology, healthcare robots

Citation: Kim DKD (2024) An investigation of public trust in autonomous humanoid AI robot doctors: a preparation for our future healthcare system. Front. Commun. 9:1420312. doi: 10.3389/fcomm.2024.1420312

Edited by:

Muneeb Imtiaz Ahmad, Swansea University, United Kingdom

Reviewed by:

Bryan Abendschein, Western Michigan University, United States

Carlos Alberto Pereira De Oliveira, Rio de Janeiro State University, Brazil

Isabel Rada, Universidad del Desarrollo, Chile

Copyright © 2024 Kim. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Do Kyun David Kim, do.kim@louisiana.edu
