
ORIGINAL RESEARCH article

Front. Commun., 19 June 2024

Sec. Disaster Communications

Volume 9 - 2024 | https://doi.org/10.3389/fcomm.2024.1391480

This article is part of the Research Topic “Dynamic Earthquake Hazard and Risk Communication”.

Earthquake misinformation has a significant impact not only on crisis management but also on trust in scientific information and institutions. As a global center for seismic information, the Euro-Mediterranean Seismological Center (EMSC) has developed a strategy to combat false information related to earthquakes, particularly on Twitter (now known as X). This strategy includes automated prebunking tweets to prevent the spread of false information, particularly unreliable earthquake prediction claims. Among the types of false information, earthquake predictions have a particular characteristic: their appearance follows a specific dynamic and the attention paid to them is predictable, which makes automating countermeasures (prebunking and debunking) both possible and relevant. The EMSC’s automatic tweets therefore aim to warn people in advance, particularly those who may be vulnerable to earthquake misinformation, while filling the information void with verified, scientifically based information. This paper examines the usefulness of such a tool by analyzing users’ engagement with these tweets. It also focuses on two case studies: the seismic sequence in Turkey following the M7.8 earthquake on February 6, 2023, and the M6.8 Morocco earthquake on September 8, 2023. The results show that users engage with the automatically generated tweets, but that manual tweets generate even greater interaction and engagement, highlighting the importance of a holistic approach. Recommendations include optimizing the visibility of the tweets, extending efforts beyond social media, and exploring alternative platforms. The paper advocates a comprehensive strategy that combines automated tools with manual engagement and draws on diverse communication channels to take cultural differences into account.

In the contemporary digital age, misinformation has become an insidious force permeating many facets of society, including information about earthquakes (Dallo et al., 2022). While the phenomenon is not new, since false information has always circulated among human beings, it has taken on outsized proportions with social media (Tandoc et al., 2018; Novaes and de Ridder, 2021). Accordingly, research on misinformation has gained unprecedented momentum in recent years, with a special focus on false information about COVID-19 (Vicari and Komendantova, 2023). Research on this phenomenon mainly addresses four areas: (1) the creation of false information (Larson, 2018; Scheufele et al., 2021), (2) the reasons that lead people to believe and share it (Huang et al., 2015; Freiling et al., 2021), (3) its consequences (Barua et al., 2020), and (4) ways of combating it (Lewandowsky et al., 2012). Research often distinguishes the unintentional dissemination of false information (misinformation) from deliberate acts aimed at causing harm (disinformation) (Baines and Elliott, 2020; Komendantova et al., 2021). Yet, when false information circulates, it is not always possible to establish definitively whether it was created and disseminated intentionally. Because this paper focuses on preventing the spread of false information, we use the term “misinformation” in a general sense, except where intentionality has been proven.

The landscape of earthquake false information encompasses three categories (Dallo et al., 2022). The first concerns earthquake predictions (Gori, 1993): speculative claims about the time, location, and magnitude of impending earthquakes proliferate, even though earthquakes cannot, to date, be scientifically predicted. Indeed, as noted by Dallo et al. (2022), “There is a belief and hope among people that earthquakes can be predicted (e.g., by monitoring the seismicity over a given area or by studying animal behavior, the moon cycle, etc.). […] However, scientists can only estimate the probability of experiencing a seismic event in a specific geographic location within a given time window. This is known as a forecast.” Second, misinformation about the origin of earthquakes perpetuates false narratives attributing some seismic activity to malicious human intentions or, for instance, to the HAARP¹ program (Erokhin and Komendantova, 2023). This second category is often associated with confusion about, or misinterpretation of, scientific work on seismicity induced by human activities such as gas extraction or geothermal exploitation (Dallo et al., 2022). The third category concerns alleged connections between earthquakes and climate, weather, and astronomy. For example, some people believe that earthquakes occur more frequently under certain climatic or meteorological conditions. Such beliefs often stem from retrospective evidence bias and confirmation bias, or from a poor understanding of phenomena with complex dynamics (Dallo et al., 2022). In the aftermath of a seismic event, a combination of these three types of misinformation commonly propagates across social media platforms, highlighting the multifaceted nature of misinformation, as documented, for instance, in Romania by Mărcău et al. (2023).
In that study, the authors give an idea of the scale of the problem: around 30% of respondents gave credence to reports predicting an earthquake after a seismic event that occurred on February 13, 2023.

Research has found that people tend to believe earthquake misinformation due to a series of socio-psychological factors as well as the specific context and dynamics of earthquake-related information. As mentioned above, cognitive biases (such as confirmation bias or belief bias) play a role in reinforcing pre-existing, sometimes false beliefs about earthquakes (Baptista and Gradim, 2020; Dallo et al., 2022). In addition, earthquake information takes time (from a few minutes to several hours) to generate and then communicate. However, people have an immediate need to make sense of what has just happened. False information can then fill this information void (Fallou et al., 2020). Finally, there is often a knowledge gap between those affected and those producing the information, which can lead to misinterpretation and therefore to the spread of false information (Marwick, 2018; Treen et al., 2019; Freiling et al., 2021; Dryhurst et al., 2022).

The concern surrounding earthquake misinformation is fueled by the tangible and intangible consequences it can precipitate. The dissemination of inaccurate information about seismic events poses a significant threat to public safety, exacerbates anxiety, and can impede emergency response efforts (Flores-Saviaga and Savage, 2021). A concrete example is the Albanian case in 2019. After an Mw5.6 earthquake on September 21, 2019, a few journalists published online articles announcing the prediction of a significant aftershock, based on information provided by Greek seismologists (Fallou et al., 2022). This false information created additional fear among a public already anxious because of the mainshock and the numerous aftershocks, and inhabitants began leaving the capital, Tirana, in panic, creating traffic jams. The journalists were subsequently prosecuted (Erebara, 2019). Although this example is exceptional in that it combined the dissemination of false information by a trusted third party (the media) with its validation by a figure normally regarded as authoritative (a scientist), it clearly illustrates that misinformation can have very real effects and lead to dangerous behavior. Additionally, the psychological impact of misinformation can leave lasting imprints on affected communities, shaping public perceptions of seismic risk in ways that may deviate from scientific reality. It can also increase mistrust of authorities and scientific communication, as happened after a series of earthquakes and the subsequent spread of misinformation in Mayotte, France (Fallou et al., 2020). More generally, research on earthquake misinformation draws on a flourishing field, that of risk and safety communication.
This field explores, in particular, the importance of trust for effective communication (Mehta et al., 2024), the challenges of communicating scientific figures so that they are properly understood (Goodwin and Peters, 2024), and the need to take emotions into account (Lu and Huang, 2024).

Recognizing the severity of the issue, seismic institutions and researchers have intensified their efforts to understand the dynamics of earthquake misinformation (Dallo et al., 2023) and to identify ways to fight it (Dallo et al., 2022). Among the solutions, key strategies are emerging at the intersection of technology, risk communication, and scientific communication. First, close collaboration with online platforms, which can moderate content on their services, is crucial (Acemoglu et al., 2022; Vese, 2022), but this may lie outside the scope of seismic institutions and is more pertinent for national or supranational authorities such as the European Union, which is currently implementing the Digital Services Act (Turillazzi et al., 2023). At the same time, more effective scientific and risk communication is essential (Lamontagne and Flynn, 2014; Bossu et al., 2020; Devès et al., 2022; Crescimbene et al., 2023). This involves not only strengthening the channels for disseminating accurate information but also adopting pedagogical approaches to educate the public about the basics of seismology and the limits of earthquake predictability (Dryhurst et al., 2022; Fallou et al., 2022). Indeed, a well-informed public becomes an ally in the fight against misinformation.

Part of the communication strategy consists of actively refuting false information after it spreads. This reactive, “therapeutic” approach falls under debunking or fact-checking techniques. For instance, Flores-Saviaga and Savage (2021) studied how, in the aftermath of the magnitude 7.1 earthquake in Mexico on September 19, 2017, volunteers used social media such as Facebook and Twitter to cross-check information about the earthquake and the relief efforts, debunking false information and improving the crisis response. In particular, through the @Verificado19S Twitter account, they shared posts composed of screenshots of false information accompanied by accurate corrections. Although debunking has proven necessary, it is often insufficient to combat misinformation (Lewandowsky et al., 2012; Jang et al., 2019; van der Linden, 2022). Recommendations have therefore emerged for the introduction of prebunking, i.e., warning people in advance that false information may appear and teaching them to spot it and not believe it (Lewandowsky and van der Linden, 2021).

Some of these solutions can be automated, notably on social media, where the appearance of false information can sometimes be anticipated, as is the case for earthquake predictions. Part of the research and technological development aims to automate the time-consuming task of fact-checking (Dierickx et al., 2023), especially on Twitter (now called X²), given that most tweets containing false information are left undebunked and spontaneous debunking is a relatively slow process (Miyazaki et al., 2023). Automation can also be applied to prebunking strategies. Indeed, after a significant earthquake, timely information is critical to fill the information void and prevent false information from circulating (Fallou et al., 2020). Social media bots such as @LastQuake, developed on Twitter by the Euro-Mediterranean Seismological Center (EMSC), enable the center to respond to the need for information within a few tens of seconds (Bossu et al., 2018, 2023, 2024; Steed et al., 2019). Because it frees the center from the constraints of human writing speed and working hours, automating the publication of part of the information guarantees fast, global, and uninterrupted information. While some bots are implicated in disseminating misinformation, others can, on a more positive note, be integral to the solution (Erokhin and Komendantova, 2023).

The aim of this paper is to analyze the effectiveness of a tool developed by the EMSC to automate part of the fight against online earthquake prediction claims on Twitter. To do so, we first introduce the @LastQuake tool, its genesis, its role in supporting crisis communication, and its evolution over a decade. We then present a methodology based on tweet analytics and explain how it is used to assess the effectiveness of the tool. Finally, we discuss the limitations of the study and future practical research directions in the fight against earthquake misinformation.

The EMSC is one of the world’s leading institutions for public seismic information, offering information on earthquakes and their effects (Bossu et al., 2024). Over the years, it has developed an innovative, multi-channel earthquake information system comprising a mobile application, a desktop and mobile website, a Twitter bot named @LastQuake, and, more recently, a Telegram bot with the same name (Bossu et al., 2018, 2023).

@LastQuake is a hybrid system comprising both automatic and manual tweets (messages published on Twitter) about earthquakes felt around the world. Automation meets the need for continuous and extremely fast service, while manual tweets are used to share context-specific information, to answer users’ questions, and, more generally, to communicate in a way that takes greater account of users’ cultural and emotional needs. The bot began operating in 2012, and a new version was released in 2022. Users’ reactions and questions following felt earthquakes were monitored meticulously to identify systematic information needs, and automatic tweets addressing these needs were then implemented in the 2022 version of the bot (for a detailed description, see Bossu et al., 2023). In January 2024, the @LastQuake Twitter account had more than 307,000 followers.

Over the years, because of its presence on social media, the EMSC has been exposed to, and sometimes unwillingly associated with, false information, especially false earthquake predictions. This led us to include the fight against misinformation in the 2022 version of @LastQuake, as further detailed in Bossu et al. (2023) and Fallou et al. (2020). Our objectives were to avoid the information void and to avoid leaving prediction claims unanswered in our timeline. More generally, the idea was to prebunk the numerous prediction-related claims that systematically flourish on social media after damaging earthquakes. Although earthquake predictions are not the only type of false information to be combated, the EMSC decided to start with them, since they are the most common type and their dynamics have been studied. We currently address the other types manually, because their occurrence is less predictable. This action is part of a scientific and ethical approach, since communicating verified and reliable information and fighting false information are part of the ethical and social responsibilities of seismological institutes (Peppoloni and Di Capua, 2012; Peppoloni, 2020; Anthony et al., 2024). As noted by Lacassin et al. (2019), scientists and seismology institutes play a crucial role because they possess scientific expertise, data, and a substantial audience, especially on social media platforms. As trusted partners, their insights are often endorsed by authorities and media outlets.

A new component of the Twitter bot was developed to respond to the misinformation challenge, based on research and recommendations developed in particular by Dallo et al. (2023). In that paper, the authors recommended the following:

1. Foster measures to counteract misinformation, especially after an event, but also at other times.

2. Check who is sharing, tagging, and linking posts, and ask not to be associated with misleading content.

3. Provide information sources that opponents of misinformation can use to support their arguments.

4. Monitor multiple social media channels.

5. Provide decision support tools to deal with misinformation on social media.

6. Create media policy measures to stimulate critical thinking.

7. Take users’ emotions on social media seriously.

8. Educate people while their attention is high.

9. Consider multi-hazard communication.

10. Foster the dissemination of correct information.

The EMSC took into account the applicable recommendations as well as the dynamics of misinformation related to earthquake predictions on Twitter. For example, we know that predictions circulate all the time but tend to flourish and attract more attention after major earthquakes. Therefore, after each earthquake whose characteristics (magnitude, affected population, etc.) are likely to generate predictions, or to let those making predictions benefit from the public and media attention the earthquake has generated, prevention tweets are published in the earthquake’s thread (Recommendations #1 and #8). We decided to produce five different types of tweets, each with a different tone, which warn of the possibility of false information, quote trusted sources, and provide further explanation, particularly through videos, thereby enhancing transparency and credibility (Figure 1) (Recommendation #3). This multi-model strategy was a conscious effort to engage diverse audiences and to address the varied ways in which misinformation is received. It also allows @LastQuake to avoid posting identical threads, particularly during aftershock sequences, when earthquakes strike the same region within a short period. Each tweet is published in English, which limits its audience, although the Twitter platform offers a translation service. In addition to these automatic tweets, the EMSC has developed a policy for prediction claims that associate @LastQuake with them as an authoritative source by including its handle in the message (Bossu et al., 2022) (Recommendation #2). In such cases, the author of the claim receives an explanation that reliable earthquake predictions do not exist and is then asked to delete the offending tweet. If the author does not do so, the account is blocked, which prevents it from accessing tweets published by @LastQuake.
In other words, the EMSC does not actively look for prediction claims to fight them but intends to offer a timeline free of unreliable and false statements (Recommendation #10). Finally, by first explaining our policy and offering the option of deleting the tweet, we avoid blocking users who may have published such a tweet out of a lack of seismological knowledge.
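The moderation policy for claims that tag @LastQuake (explain, request deletion, then block) amounts to a small decision rule. The Python sketch below is a hypothetical illustration, not the EMSC’s implementation: the `Action` names and the `next_action` function are invented for this example, and the sketch assumes the caller already knows whether a tweet tags @LastQuake, whether it is a prediction claim, whether the policy has been explained, and whether the tweet was deleted. The article does not say how long authors are given to delete the tweet, so timing is left to the caller.

```python
from enum import Enum, auto


class Action(Enum):
    """Hypothetical moderation outcomes for a tweet that tags @LastQuake."""
    NONE = auto()                          # nothing to do
    EXPLAIN_AND_REQUEST_DELETION = auto()  # explain the policy, ask for deletion
    BLOCK = auto()                         # claim kept online after the explanation


def next_action(tags_lastquake: bool, is_prediction_claim: bool,
                already_explained: bool, deleted: bool) -> Action:
    """Return the next step of the explain -> request deletion -> block policy.

    Only claims that associate @LastQuake are handled: the sketch does not
    search the platform for prediction claims in general.
    """
    if not (tags_lastquake and is_prediction_claim) or deleted:
        return Action.NONE
    if not already_explained:
        # First contact: explain and leave the option to delete the tweet.
        return Action.EXPLAIN_AND_REQUEST_DELETION
    # The policy was explained and the tweet is still online.
    return Action.BLOCK
```

Because the explanation always comes first, an author who deletes the claim after being contacted is never blocked, which matches the stated intent of not penalizing users who simply lacked seismological knowledge.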

As part of an ongoing process of evaluating and improving its communication tools, the EMSC has sought to measure their effectiveness and how they are used. This is presented in the following section.

Assessing the effectiveness of prebunking tools presents inherent challenges, primarily because it is impossible to gauge objectively what individuals would have believed or disbelieved had they not been exposed to prebunking tweets. Nevertheless, our methodology aims to shed light on how these tools are used, both in general and, more specifically, during seismic crises known to have had the potential to generate misinformation.

We focus on the metrics available for measuring the impact of prebunking tweets, including views, likes, retweets, and replies. These quantitative indicators provide insight into the level of engagement with, and reception of, the prebunking content. The data were collected automatically through the Twitter API between 31 January 2022 and 29 January 2024, covering 2 years of use. We compiled the data available at the time of the analysis (January 2024), which means that tweets or reactions from users who had since deleted their accounts are not included.

Prebunking tweets are generated automatically for earthquakes of magnitude ≥4.5 and with strong (or even destructive) shaking. Strongly felt earthquakes account for more than 99% of the earthquakes associated with a prebunking tweet. These tweets are posted in the thread associated with the earthquake. On average, they appear at rank 16 in the thread (standard deviation 3.58), which corresponds on average to 39 min after the earthquake origin time (T0) (standard deviation 13 min). This position can vary, however: for aftershocks, the number of tweets in the thread is limited to avoid repeating the same tweets, which can place the prebunking tweet at rank 5, for example, 17 min after T0.
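The triggering condition can be read as a simple predicate. The following Python sketch is an illustration under stated assumptions: the ordered shaking labels in `SHAKING_LEVELS` and the `Quake` record are hypothetical, since the article only states that prebunking tweets are generated for earthquakes of magnitude ≥4.5 with strong (or even destructive) shaking; it does not reproduce the bot’s actual shaking scale or thread logic.

```python
from dataclasses import dataclass

# Hypothetical ordered shaking labels; only "strong" and "destructive" come from the text.
SHAKING_LEVELS = ("not felt", "weak", "moderate", "strong", "destructive")
MIN_MAGNITUDE = 4.5


@dataclass
class Quake:
    magnitude: float
    max_shaking: str  # one of SHAKING_LEVELS (assumed labels)


def triggers_prebunking(q: Quake) -> bool:
    """True if the quake has M >= 4.5 and at least strong shaking.

    Raises ValueError if max_shaking is not one of SHAKING_LEVELS.
    """
    at_least_strong = (SHAKING_LEVELS.index(q.max_shaking)
                       >= SHAKING_LEVELS.index("strong"))
    return q.magnitude >= MIN_MAGNITUDE and at_least_strong
```

With these assumed labels, `Quake(6.8, "destructive")` triggers a prebunking tweet, while `Quake(4.4, "destructive")` (too small) and `Quake(5.0, "moderate")` (not strongly felt) do not.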

To complement these metrics, we conducted a detailed analysis of two case studies to enable cross-cultural comparison and gain a nuanced understanding of the underlying dynamics. Both earthquake sequences were deadly. The first case study concerns the series of earthquakes that hit Turkey and Syria starting on February 6, 2023, with an M7.8 earthquake followed by an M7.5 (Dal Zilio and Ampuero, 2023; Melgar et al., 2023). The second concerns the earthquakes in Morocco on September 8, 2023 (Yeo and McKasy, 2021). Both cases are described in detail below. They were selected because of their human death toll and the high visibility of the earthquake misinformation that circulated on social media, especially Twitter, after the earthquakes. For both cases, we consider all the earthquakes that triggered automated prebunking tweets, as well as manual publications about earthquake misinformation. Data were collected automatically through the Twitter API and then coded manually.

Specific methodological considerations apply to the study period because of significant changes to the Twitter platform. These changes went beyond the renaming of the platform and notably affected its Application Programming Interfaces (APIs), creating challenges for data continuity for certain metrics. For instance, the collection of metrics for earthquakes that occurred between April 2023 and mid-September 2023 had to be delayed. For this paper, data were collected at least 24 h after the publication of the associated tweet. The discussion section addresses the implications of these platform changes for our study.
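The 24 h rule for metric collection can be written as a simple guard that a collection script checks before reading a tweet’s counters. This is a minimal Python sketch, assuming timezone-aware UTC timestamps; `ready_for_metrics` is a hypothetical helper, and the sketch deliberately does not call the Twitter API, whose interfaces changed during the study period.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

# Minimum age of a tweet before its metrics are read (24 h, as stated in the text).
MIN_DELAY = timedelta(hours=24)


def ready_for_metrics(published_at: datetime, now: Optional[datetime] = None) -> bool:
    """Return True once at least 24 h have elapsed since the tweet was published.

    Both timestamps are assumed to be timezone-aware (UTC); mixing naive and
    aware datetimes raises TypeError in Python.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    return now - published_at >= MIN_DELAY
```

Keeping the threshold in one constant makes it easy to lengthen the delay if, as happened in 2023, metrics cannot be collected on schedule.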

During the study period, 410 prebunking tweets were published, i.e., about four per week on average (Table 1). This number corresponds to the number of earthquakes worldwide that met the criteria for triggering the prebunking strategy. Overall, these tweets generated 2,171,889 views. In terms of engagement, we recorded a total of 1,991 likes and 564 retweets. The number of replies was low, indicating that the topic generated little conversation on the platform. Nevertheless, a small but non-zero proportion of people who saw the tweets clicked for more information, either on the EMSC profile or on the educational link included in the tweet. The first observation, therefore, is that the message was relatively visible on the platform, at least within the EMSC community, but that engagement with the automatic prebunking tweets was relatively low.

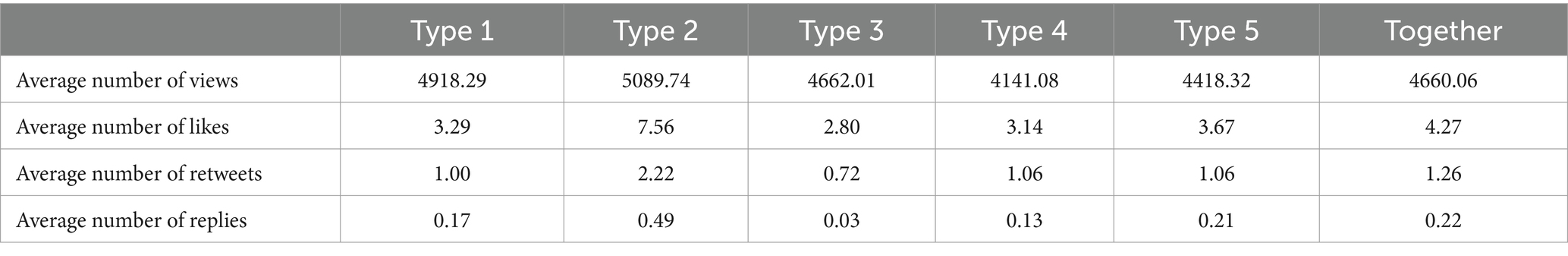

On average, each prebunking tweet was viewed over 5,200 times, liked five times, retweeted just over once, and replied to less than once. But these averages conceal major disparities, particularly between tweet types (Table 2). Although types are selected at random, type 2 was published the most often (n = 99), compared with just 71 times for type 1. Surprisingly, and independently of this, type 2 also seems to appeal most to users: it generates on average more likes, retweets, and replies, which likely explains why it also generates more views in total. Indeed, the more engagement a tweet generates, the more the platform’s algorithms make it visible to other users. Conversely, the tweet that seems to appeal least to users is type 3, with an average of just three likes and fewer than one retweet and one reply. The logical result is a markedly lower average number of views.
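The averages quoted above follow directly from the totals reported for the study period. The short Python check below recomputes them; it uses only figures given in the text (410 tweets, 2,171,889 views, 1,991 likes, 564 retweets, 31 January 2022 to 29 January 2024), and the helper name `per_tweet_averages` is illustrative.

```python
from datetime import date

N_TWEETS = 410
TOTALS = {"views": 2_171_889, "likes": 1_991, "retweets": 564}


def per_tweet_averages(totals: dict[str, int], n: int) -> dict[str, float]:
    """Divide each total by the number of prebunking tweets."""
    return {metric: value / n for metric, value in totals.items()}


# Length of the study period in weeks: 728 days, i.e. exactly 104 weeks.
weeks = (date(2024, 1, 29) - date(2022, 1, 31)).days / 7
tweets_per_week = N_TWEETS / weeks  # about 3.9, i.e. roughly four a week

averages = per_tweet_averages(TOTALS, N_TWEETS)
# views ≈ 5,297 per tweet, likes ≈ 4.9, retweets ≈ 1.4
```

The recomputed values (about 5,300 views, five likes, and just over one retweet per tweet) are consistent with the rounded figures given in the text.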

To assess the robustness of this analysis and check that the above results were not biased by a specific seismic event, we excluded the automated prebunking tweets that generated more than 10,000 views (n = 20), which represent 4.9% of all prebunking tweets. The results, presented in Table 3, show that, overall, type 2 tweets still rank highly in terms of user engagement.

Table 3. Data on average use and engagement with the automatic prebunking tweets, for tweets with fewer than 10,000 views.
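The robustness check amounts to recomputing per-type means after dropping high-visibility outliers. The Python sketch below shows one way to do this with the standard library; the record layout (dictionaries with `type`, `views`, `likes`, `retweets`, and `replies` keys) and both function names are assumptions, not the format of the EMSC’s data or code.

```python
from collections import defaultdict
from statistics import mean

METRICS = ("views", "likes", "retweets", "replies")


def mean_engagement_by_type(tweets, max_views=None):
    """Average each metric per tweet type, optionally dropping tweets above max_views."""
    groups = defaultdict(list)
    for t in tweets:
        if max_views is not None and t["views"] > max_views:
            continue  # outlier excluded from the robustness check
        groups[t["type"]].append(t)
    return {
        ttype: {m: mean(t[m] for t in rows) for m in METRICS}
        for ttype, rows in groups.items()
    }


def excluded_share(tweets, max_views):
    """Fraction of tweets removed by the views threshold."""
    tweets = list(tweets)
    return sum(t["views"] > max_views for t in tweets) / len(tweets)
```

Called with `max_views=10_000` on the study data, `excluded_share` would return 20 / 410 ≈ 0.049, i.e. the 4.9% reported above, and comparing the filtered and unfiltered outputs of `mean_engagement_by_type` shows whether the ranking of tweet types changes.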

Interestingly, the type of tweet that attracts the most users is the most provocative in tone: it invites people to post their predictions on the platform of the Collaboratory for the Study of Earthquake Predictability (CSEP), supported by a group of scientists (Zechar et al., 2016). Conversely, the tweet that generates the least engagement is the most literal and scientific one, as it provides a link to a video explaining why scientists cannot, to date, predict earthquakes. It therefore seems that the provocative nature of a tweet prompts reactions and thereby increases the visibility of the automated prebunking tweet.

While these descriptive statistics are necessary for an overall understanding, they need to be supplemented by a more qualitative analysis, particularly in cases where false information has emerged. This is the purpose of the case analyses presented below.

In this section, we explore two significant and deadly earthquakes: one in Turkey and Syria on February 6, 2023, and another in Morocco on September 8, 2023. These seismic events had a considerable impact on the affected areas and gave rise to misinformation, including earthquake prediction claims. Our analysis seeks to offer a detailed account of these events, highlighting the challenges posed by misinformation during seismic crises and the scientific scrutiny of earthquake predictions.

Before presenting these two case studies, it should be noted that, according to 2022 user data, Twitter’s popularity differs clearly between the two countries. Turkey had 16.1 million Twitter users (18.9% of the population), while Morocco had just 1.1 million (3% of the population) (Statista, 2022). The share of the population using Twitter was therefore about six times higher in Turkey than in Morocco.
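The “six times” figure compares penetration rates (the share of each country’s population on Twitter), not absolute numbers of users, which differ considerably more. A quick check using only the 2022 figures quoted above:

```latex
\frac{18.9\,\%}{3\,\%} = 6.3 \approx 6
\qquad \text{whereas} \qquad
\frac{16.1\ \text{million users}}{1.1\ \text{million users}} \approx 14.6
```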

The first case concerns the seismic catastrophe that struck Turkey and Syria on February 6, 2023, marked by two powerful earthquakes: a magnitude 7.8 event near the Syrian border in south-eastern Turkey at 1:17 UTC, followed by a second, magnitude 7.5 earthquake 100 km further north at 10:24 UTC (Dal Zilio and Ampuero, 2023). The earthquakes, felt up to 2,000 km from the epicenter, caused extensive damage and more than 55,000 deaths. False narratives quickly emerged. For instance, a video circulated on social media falsely depicting a nuclear power plant explosion in Turkey after the earthquake, even though the country has no operational nuclear power plants; the footage actually showed the 2020 explosion in Beirut, Lebanon.³ Much of the misinformation in this case study stemmed from the fact that, 2 days before the earthquake, Frank Hoogerbeets, a self-proclaimed earthquake predictor, had claimed on social media that a magnitude 7.5 earthquake would occur in the same area later struck by the M7.8 earthquake⁴ (Figure 2). This claim spread widely on various digital platforms, where Hoogerbeets was praised for supposedly predicting the event. However, seismologists unanimously rejected the claim, pointing out that its indeterminate time frame (“sooner or later”) left it without the specificity needed to have any predictive value⁵ (Chappells, 2023; Romanet, 2023). Many journalists also wrote articles debunking his claim (Rabs, 2023).

After the unprecedented magnitude 6.8 earthquake struck Morocco on September 8, 2023 (Yeo and McKasy, 2021), claiming nearly 3,000 lives, a surge of misinformation and fake news narratives also ensued. Examples included a video falsely alleging the rescue of a newborn baby in Morocco; the footage was later traced to Kanpur, India.⁶ More unusually for earthquake misinformation, a social media rumor circulated claiming that Cristiano Ronaldo had offered his hotel as a refuge.⁷ Additionally, AFP fact-checked misleading footage of a building collapse in Casablanca that had been erroneously linked to the earthquake.⁸ New rumors about earthquake predictions also circulated, giving visibility to a post by the same self-proclaimed expert as in Turkey a few months earlier, which claimed to anticipate tremors around September 5–7 based on planetary movements.⁹ This statement was in fact vague and ambiguous, as it mentioned neither a location nor a magnitude. The initial tweet was therefore not a prediction per se; what really matters is that it was perceived as one after the earthquake had occurred. In this case, it was the public’s reception and perception that, a posteriori, lent the post its predictive character, its credibility, and its virality. The original tweet was then viewed 1.4 million times, collected 2,000 likes, and generated 535 retweets and a large number of comments.

In both earthquake crises, we observed the circulation of false information about the earthquakes, including predictions, on Twitter. The EMSC channel published automatic prebunking tweets to warn the public that earthquake predictions might circulate or gain visibility after the mainshock. Because of their social impact and the number of people affected, these two series of earthquakes attracted a great deal of attention and many questions, particularly on social media and especially on Twitter. The EMSC therefore also published a series of manual tweets with a more empathetic tone, addressing the specific seismic and cultural context, unlike the automatic tweets, which were designed to be more universal. Both sequences are described in Annexes 1 and 2.

During the Turkey and Syria sequence, between February 6 and 7, 24 earthquakes in the region generated an automated prebunking tweet, owing to the high number and magnitude of the aftershocks. In parallel, the EMSC published four manual tweets during this sequence and, until February 18, replied manually three times to comments about earthquake predictions. The seismic sequence in Morocco was shorter, and only two earthquakes, the mainshock and an aftershock the following day, met the criteria for publishing an automatic prebunking tweet. In this case, manual tweets outnumbered automatic ones.

In both cases, the automatic prebunking tweets ensured that a warning against predictions was published within a few hours of the earthquake, when public attention on social media was driven by a need for information. This was particularly relevant for these two seismic crises, which began during the night, or at least outside EMSC working hours. The tweets also ensured that the warning was backed by a scientific justification through a hyperlink. In both cases, we observed that the number of views per tweet in a given thread decreased with the tweet's rank. This is a consequence of the bot's design, which follows a hierarchy of informational needs. People first need information about the earthquake parameters, so prebunking tweets are placed at the end of each thread and therefore receive fewer views than the tweets at the top.
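The thread ordering described above can be illustrated with a short Python sketch. It is not the EMSC's implementation. The `Quake` type, the `needs_prebunk` and `build_thread` functions, the 6.0 magnitude threshold, and the tweet texts are all hypothetical, since this section does not state the actual publication criteria or wording. The sketch only shows the design choice discussed here: tweets are ordered by informational need, so an optional prebunking tweet always lands last in the thread.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical trigger threshold; the EMSC's real criteria are not given here.
PREBUNK_MIN_MAGNITUDE = 6.0


@dataclass
class Quake:
    region: str
    magnitude: float


def needs_prebunk(quake: Quake, min_magnitude: float = PREBUNK_MIN_MAGNITUDE) -> bool:
    """Hypothetical rule: only sufficiently large events trigger a prebunking tweet."""
    return quake.magnitude >= min_magnitude


def build_thread(quake: Quake) -> List[str]:
    """Return tweet texts ordered by informational need (placeholder wording)."""
    thread = [
        # 1. What people look for first: the basic earthquake parameters.
        f"M{quake.magnitude:.1f} earthquake in {quake.region}",
        # 2. Follow-up information, e.g. a call for felt reports.
        "Felt it? Share your testimony.",
    ]
    if needs_prebunk(quake):
        # 3. The prebunking message is appended last, which is why it tends
        #    to collect fewer views than the tweets above it.
        thread.append("Earthquakes cannot be predicted. Beware of rumors claiming otherwise.")
    return thread


for position, text in enumerate(build_thread(Quake("Morocco", 6.8)), start=1):
    print(position, text)
```

Placing the prebunking tweet last reflects the hierarchy of informational needs. The sketch also shows the cost of that choice: the prebunking tweet inherits the lowest position in the thread.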

A descriptive analysis shows that, in both cases studied, manual tweets generated more views than automatic tweets. They received more likes and retweets and, in the Moroccan case, also more comments, although the conversation remained limited. The difference was especially marked in the Moroccan case. This can be partly explained by the fact that the manual tweets were published in French, a language commonly spoken in Morocco.
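Manual and automatic tweets reach audiences of very different sizes, so raw counts of likes and retweets are easier to compare once normalized by views. The Python sketch below assumes a simple, hypothetical `engagement_rate` metric, defined as interactions divided by views; it is not necessarily the measure used in this study. As a worked example, it uses the figures reported for the Morocco prediction tweet (1.4 million views, 2,000 likes, 535 retweets). Replies are left at zero because their number was not reported, so the result is a lower bound.

```python
def engagement_rate(views: int, likes: int, retweets: int, replies: int = 0) -> float:
    """Interactions per view (hypothetical metric); 0.0 if no views were recorded."""
    if views <= 0:
        return 0.0
    return (likes + retweets + replies) / views


# Morocco prediction tweet: replies were not counted, hence left at 0.
rate = engagement_rate(views=1_400_000, likes=2_000, retweets=535)
print(f"{rate:.4%}")  # prints 0.1811%
```

This corresponds to roughly 1.8 likes or retweets per thousand views. The same function could be applied to each manual and automatic tweet to compare them on an equal footing.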

The conversation generated by manual tweets falls into two categories of roughly equal size. On the one hand, some responses simply quoted the predictions of self-proclaimed experts, suggesting that putting forward scientific facts will not be enough to convince these users. On the other hand, other responses thanked the EMSC for its information and scientific explanations, because the users had learned something about earthquake science.

It is worth noting that the data collected do not allow us to derive a predictive model of the visibility of, and reactions to, tweets. Even beyond the seismic parameters, too many factors come into play. These include socio-cultural parameters, such as the popularity of the social media platform in the country or the presence or absence of a well-identified national source of seismic information on social media. The impact of a tweet can also differ if it is retweeted by an influential person. Technical and technological parameters are also involved. One is Twitter's algorithm, which decides whether or not to highlight tweets. Another is the availability of internet access at the time of need. In Turkey, for example, mobile networks were heavily affected because their relay antennas were located on the roofs of buildings. Turkey also temporarily restricted access to Twitter to prevent the spread of false information.10

The case studies of the Turkey-Syria and Morocco seismic events underscore the significance of the automated prebunking tool as a crucial instrument for countering misinformation during earthquake crises. The automated system plays a vital role in disseminating accurate information to the public promptly, helping to mitigate the impact of false predictions and narratives. However, our analysis reveals that relying solely on automation has its limitations. People engage more with manual tweets than with automated ones, which emphasizes the need for a diversified communication strategy. Improvements are warranted to further enhance the efficacy of the prebunking tool. One example is optimizing the tweets' visibility within threads to counteract declining view counts. Additionally, the fight against misinformation should extend beyond social media platforms. Incorporating other media can broaden the reach of prebunking efforts and foster a comprehensive approach to combating the false narratives associated with seismic predictions.

Assessing the effectiveness of an operational prebunking tool is a difficult but necessary task. Since this is a real-world experiment, there is no obvious way to measure how many people were prevented from believing and sharing an earthquake prediction. Here, we chose to study engagement with tweets. This allowed us to show a preference for a particular style of tweet, which generates more engagement. Additionally, the case study approach allowed us to gather observations on the relevance of combining an automatic approach with a manual one. However, the study would benefit from qualitative interviews with users who have been exposed to both false information and the automated prebunking tweets.

Another technical limitation of the study presented here lies in the changes made by Twitter to API access during the period studied. The company changed the conditions for access to certain metrics and the conditions for automatic publication of tweets on several occasions. What was ostensibly intended to limit troublesome bots on the social network actually hindered the operation of bots with added value for crisis management and scientific communication.

The experiment presented in this study tests the application of automatic prebunking and manual debunking methods on Twitter. Its objective is to prevent the emergence and circulation of false information about earthquake predictions in two ways: by warning people who are potentially vulnerable to misinformation in advance, and by filling the information void with science-based information. The results show that automatic tweets achieve a certain visibility, even though engagement is generally fairly low. They are designed to warn systematically of the possible circulation of false information after an earthquake, regardless of the time of day or night. This applies particularly to unjustified predictions. The combination of these automatic prebunking tweets with more empathetic manual messages appears to work, partly because this combination also allows us to act downstream through targeted debunking. Having discussed the technical limitations of the study in the preceding section, we now address the practical limitations of the tool. Some of them echo challenges documented in the existing literature and in misinformation inoculation experiments in other domains (Lewandowsky and van der Linden, 2021; Harjani et al., 2023).

The first and main limitation is visibility. As a Twitter tool, it mainly targets Twitter users, and the number of users actually exposed to the tweets depends largely on the Twitter algorithm, which is fairly opaque to users. However, given the strong presence of journalists on Twitter, the information may then be picked up by traditional media. For example, after the Mw 6.8 Morocco earthquake on September 8, 2023, journalists noticed the EMSC's actions. They devoted several interviews, and even a popular French TV program, to earthquake misinformation.11 Beyond that, for socio-technical reasons, these automated tweets are mostly visible to people close to the @LastQuake network, i.e., people who follow the account or who follow people who do. Consequently, the prebunking system relies to some extent on voluntarism, or at least curiosity, on the part of users. To maximize their chances of seeing content that will help them spot false information, users must seek out additional information about earthquakes (beyond basic information such as magnitude, epicenter, and felt map) and/or subscribe to the EMSC account. Ultimately, this limits the system's applicability to a more engaged subset of the population (Lewandowsky and van der Linden, 2021). Visibility also emerges in our results, which raise the issue of the tweets' position in the thread; this position could limit their visibility for technical and algorithmic reasons. Finally, despite the translation function, the service is more accessible to users who understand English.

The issue of visibility is inherent in all initiatives to combat false information. The challenge is always to reach the people most at risk. In the case of prebunking, this means reaching them preventively. In the case of fact-checking, it means targeting people who are likely to have encountered the false information (Porter and Wood, 2024). In the case of earthquakes, temporality and urgency can to some extent mitigate the visibility problem. Historically, Twitter has played a pivotal role in crisis management (Bruns et al., 2012; Umihara and Nishikitani, 2013; Cooper et al., 2015). Crisis managers used it to disseminate essential information. Citizens used it to share and find the information they needed to understand the crisis and to take action or help manage it. Yet recent shifts in its usage, interactions, and trust dynamics raise concerns about its future efficacy (Vaughan-Nichols, 2023). A drastic reduction in interactions on the platform diminishes its relevance for crisis management.

Beyond visibility, a second limitation lies in the timeframe. Effectively enhancing individuals' resilience against misinformation requires sustained, long-term efforts. At the individual level, however, the EMSC bot's impact is often confined to brief periods when users are specifically interested in seismic events. These are events occurring nearby or attracting global attention. Although automatic tweets remain available at all times, we currently have no way of assessing how many times the same person may have seen this information in their Twitter feed.

Finally, one limitation is intrinsically linked to automation, to cultural variations, and to the types of misinformation involved. Automatic prebunking cannot adapt to cultural variations in seismic risk culture. Nor can it adapt to the social, economic, and political contexts in which events occur. This is why manual tweets are a necessary supplement. There are cultural variations in the reception of earthquake predictions. For example, some people may be more receptive to scientifically based predictions using calculations, while others may prefer predictions based on celestial events. These differences are even more pronounced for other types of misinformation, such as claims that earthquakes are artificially created. For instance, false narratives attributing seismic events to various "enemies" vary significantly with cultural context. Some accuse the Americans, others a rival nation or a member of the government. Thus, if we were to extend our focus to other forms of misinformation such as earthquake creation, we would need to pay attention to two key elements: (1) the propagation dynamics of this type of misinformation (which may differ and have not yet been thoroughly studied) and (2) its reliance on irrational thinking. Indeed, debunking and prebunking strategies usually address prediction from a scientific angle, with an explanation that appeals to reason: we cannot predict earthquakes because we do not have the necessary knowledge. Claims that earthquakes are created work differently. What comes into play there is not so much rationality or a scientific understanding of the world as emotions and belief systems (Dallo et al., 2022; Lu and Huang, 2024). Kwanda and Lin (2020) showed that false information appealing to these modes of thinking is much harder to combat.

This discussion suggests several ways to improve the tool and to extend the fight against earthquake predictions and false information in general. Exploring alternative spaces to combat earthquake predictions is imperative. The EMSC has already deployed its publishing bot, including automatic prebunking tweets, on Mastodon and Bluesky, two recent alternatives to Twitter. Meanwhile, messaging applications are increasingly used for both conversational and informational purposes, which makes them fertile ground for the spread of false information (ICRC, 2017; Mărcău et al., 2023). For that reason, the EMSC has a duty to be present there. This is also in line with recommendation #4 of Dallo et al. (2022), which calls on institutions to "monitor multiple Social media channels." Admittedly, these tools are based on interpersonal conversations. This makes false information difficult to detect, because we cannot and do not want to monitor these conversations. On the other hand, it is possible to build a relationship of trust with users through channels or one-to-one conversations and to broadcast prebunking messages at key moments. The EMSC is currently exploring this option by developing a free Telegram bot.

In addition to the multiplication of possible channels, experience and the analysis of case studies also show that traditional media remain powerful allies in overcoming the limitations of platforms, as they can act as information relays, particularly for debunking information. Most newsrooms have acquired qualified staff and advanced tools for fact-checking, which has become an integral part of the journalist’s job, especially in times of crisis. This echoes recommendation #6 from Dallo et al. (2022) regarding the implementation of media policy measures.

Finally, while the recent transformations of the Twitter platform are often criticized, a new functionality could be explored to meet the need for cultural adaptation in the face of false information: Community Notes (Wojcik et al., 2022). Community Notes are notes written and approved by Twitter users to provide context and assess the veracity of a tweet. In the case of earthquake predictions, we could consider using them to refer to sources demonstrating the fallacious nature of the information.

Overall, the testing of the LastQuake system's prebunking tool and its integration into the EMSC's strategy to combat false information demonstrate that many of the recommendations formulated by Dallo et al. (2022) to combat the spread of earthquake predictions can feasibly be implemented. This investigation encourages us to continue the efforts that seismological institutes have initiated over the last few years to fight false information. These efforts increasingly rely on social science research on false information and risk communication (Sellnow and Sellnow, 2024).

While earthquakes cannot yet be predicted, we can at least anticipate one of their consequences: a surge of misinformation. This study examined the effectiveness of an innovative automated prebunking tool within the intricate landscape of earthquake misinformation: the @LastQuake Twitter bot developed by the EMSC. Thanks to rapid information, the system reaches earthquake witnesses within tens of seconds of an earthquake's occurrence. These witnesses are often the people most vulnerable to dubious predictions (Bossu et al., 2023). The automated system plays a crucial role in promptly disseminating accurate information and mitigating the impact of unreliable earthquake predictions. Nonetheless, our analysis underscores the importance of a multifaceted approach. The limitations encountered include the need for sustained long-term efforts and for an understanding of cultural variations. They show that automation must be supplemented with adaptable strategies and with nuanced, culturally sensitive interventions through manual tweets. The lessons drawn from our analysis call for a holistic perspective. This study shows that we are dealing with a very specific type of misinformation:

(1) it is recurrent, in the sense that the same types of false narratives appear regularly on social networks;
(2) its visibility is linked to an event (a significant earthquake) that cannot be predicted but often leads to similar misinformation;
(3) although it is recurrent, it affects a variety of individuals, since earthquakes occur in many parts of the world;
(4) nevertheless, institutions are able to respond to this misinformation on a regular basis and to identify vulnerable people.

These characteristics, linked to the dynamics and "audiences" of fake news, give it a very special status in the fake news ecosystem. They make the automatic prebunking tool all the more relevant, though not necessarily replicable for other types of false information.

When the EMSC teams launched the prebunking tool on Twitter 2 years ago, we did not expect so many developments. Some were socio-technical, with the changes in Twitter and its use. Others were socio-cultural, with a perceived change in the most widespread false information. This change includes, in particular, the spread of beliefs that earthquakes are created, as was the case with the earthquakes in Morocco and Turkey. These changes are difficult to quantify or even to objectify. Even so, they have significant consequences for the way we can combat this false information, since it does not necessarily rely on the same modes of reasoning and is sometimes imbued with conspiracy thinking. Given this, seismological institutes cannot respond unilaterally. While they have the scientific knowledge for a substantive response, they must work in close collaboration with authorities and social media platforms to rebuild trust in information.

The original contributions presented in the study are included in the article/Supplementary material; further inquiries can be directed to the corresponding author.

LF: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Project administration, Resources, Software, Supervision, Validation, Visualization, Writing – original draft, Writing – review & editing. RB: Funding acquisition, Methodology, Project administration, Writing – review & editing. J-MC: Conceptualization, Data curation, Writing – review & editing.

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. This work is part of the EU research project CORE that has received funding from the European Union’s Horizon 2020 research and innovation program under grant agreement No 101021746.

The authors would like to acknowledge the numerous discussions with seismologists and social scientists on the topic of earthquake misinformation and especially their colleagues from ETH Zurich and from the RISE EU project. We would like to thank Robert Steed and Jennifer Jones for proofreading the article and correcting the English syntax. We also would like to warmly thank the reviewers for their time and comments, which helped to improve the quality of the paper.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Opinions expressed in this article solely reflect the authors’ views; the EU is not responsible for any use that may be made of the information it contains.

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fcomm.2024.1391480/full#supplementary-material

1. ^HAARP relates to the High Frequency Active Auroral Research Program.

2. ^While the platform is now known as X, for the sake of convenience and referencing, we maintain the use of the term “Twitter” in this paper.

3. ^https://www.bbc.com/news/64557407

4. ^https://x.com/hogrbe/status/1621479563720118273?s=20

5. ^https://x.com/hogrbe/status/1622473107318398977?s=20

6. ^https://www.newsweek.com/fact-check-does-video-show-newborn-baby-rescue-morocco-earthquake-1826108

7. ^https://www.snopes.com/fact-check/ronaldo-morocco-earthquake/

8. ^https://factcheck.afp.com/doc.afp.com.33UZ8TM; https://factcheck.afp.com/doc.afp.com.33UX4RC

9. ^https://x.com/hogrbe/status/1698585825804455995?s=20

10. ^https://www.reuters.com/world/middle-east/anger-over-turkeys-temporary-twitter-block-during-quake-rescue-2023-02-09/

Acemoglu, D., Ozdaglar, A., Siderius, J., Allcott, H., Dewart, J., Gentzkow, M., et al. (2022). A model of online misinformation. Available at: https://www.cnbc.com/2016/12/30/

Anthony, K., Strait, L., Petrun Sayers, E., Alberti, L., Beckham, J., Cameron, C., et al. (2024). "The ethic of first and second things in communicating risk and safety" in Communicating Risk and Safety. eds. T. Sellnow and D. Sellnow (Berlin, Boston: De Gruyter Mouton), 237–254.

Baines, D., and Elliott, R. J. R. (2020). Defining misinformation, disinformation and malinformation: An urgent need for clarity during the COVID-19 infodemic. 1–23.

Baptista, J. P., and Gradim, A. (2020). Understanding fake news consumption: a review. Soc. Sci. 9, 1–22. doi: 10.3390/socsci9100185

Barua, Z., Barua, S., Aktar, S., Kabir, N., and Li, M. (2020). Effects of misinformation on COVID-19 individual responses and recommendations for resilience of disastrous consequences of misinformation. Progress Disast. Sci. 8:100119. doi: 10.1016/j.pdisas.2020.100119

Bossu, R., Corradini, M., Cheny, J.-M., and Fallou, L. (2022). Communicating rapid public earthquake information through a Twitter bot: The 10-year long @LastQuake experience. Front. Earth Sci. Under review.

Bossu, R., Corradini, M., Cheny, J.-M., and Fallou, L. (2023). A social bot in support of crisis communication: 10-years of @LastQuake experience on Twitter. Front. Commun. 8:992654. doi: 10.3389/fcomm.2023.992654

Bossu, R., Fallou, L., Landès, M., Roussel, F., Julien-Laferrière, S., Roch, J., et al. (2020). Rapid Public Information and Situational Awareness After the November 26, 2019, Albania Earthquake: Lessons Learned From the LastQuake System. Front. Earth Sci. 8:235. doi: 10.3389/feart.2020.00235

Bossu, R., Haslinger, F., and Héber, H. (2024). History and activities of the European-Mediterranean Seismological Centre. Seismica 3(1). doi: 10.26443/seismica.v3i1.981

Bossu, R., Roussel, F., Fallou, L., Landès, M., Steed, R., Mazet-Roux, G., et al. (2018). LastQuake: From rapid information to global seismic risk reduction. Int. J. Disast. Risk Reduct. 28, 32–42. doi: 10.1016/j.ijdrr.2018.02.024

Bruns, A., Burgess, J., Crawford, K., and Shaw, F. (2012). #qldfloods and @QPSMedia: Crisis communication on twitter in the 2011 south East Queensland floods (issue media ecologies project ARC Centre of excellence for creative industries & innovation).

Chappells, B. (2023). No, you can’t predict earthquakes, the USGS says. NPR. https://www.npr.org/2023/02/07/1154893886/earthquake-prediction-turkey-usgs

Cooper, G. P., Yeager, V., Burkle, F., and Subbarao, I. (2015). Twitter as a potential disaster risk reduction tool. PloS Curr. Disast. 1:7. doi: 10.1371/currents.dis.a7657429d6f25f02bb5253e551015f0f

Crescimbene, M., Todesco, M., La Longa, F., Ercolani, E., and Camassi, R. (2023). The whole story: rumors and science communication in the aftermath of 2012 Emilia seismic sequence. Front. Earth Sci. 10:1002648. doi: 10.3389/feart.2022.1002648

Dallo, I., Corradini, M., Fallou, L., and Marti, M. (2022). How to fight misinformation about earthquakes?—a communication guide. ETH Zürich. doi: 10.3929/ethz-b-000530319

Dallo, I., Elroy, O., Fallou, L., Komendantova, N., and Yosipof, A. (2023). Dynamics and characteristics of misinformation related to earthquake predictions on twitter. Sci. Rep. 13:13391. doi: 10.1038/s41598-023-40399-9

Dal Zilio, L., and Ampuero, J. P. (2023). Earthquake doublet in Turkey and Syria. Commun. Earth Environ. 4:71. doi: 10.1038/s43247-023-00747-z

Devès, M., Lacassin, R., Pécout, H., and Robert, G. (2022). Risk communication during seismo-volcanic crises: the example of Mayotte, France. Nat. Hazards Earth Syst. Sci. 22, 2001–2029. doi: 10.5194/nhess-22-2001-2022

Dierickx, L., Lindén, C.-G., and Opdahl, A. L. (2023). Automated fact-checking to support professional practices: systematic literature review and meta-analysis. Int. J. Commun. 17, 5170–5190.

Dryhurst, S., Mulder, F., Dallo, I., Kerr, J. R., McBride, S. K., Fallou, L., et al. (2022). Fighting misinformation in seismology: Expert opinion on earthquake facts vs fiction. Front. Earth Sci. 10:937055. doi: 10.3389/feart.2022.937055

Erebara, G. (2019). Albanian Journalists Detained for Spreading Quake Scare. BalkanInsight. https://balkaninsight.com/2019/09/23/albania-police-stops-two-journalist-over-earthquake-scare/

Erokhin, D., and Komendantova, N. (2023). The role of bots in spreading conspiracies: case study of discourse about earthquakes on twitter. Int. J. Disast. Risk Reduct. 92:103740. doi: 10.1016/j.ijdrr.2023.103740

Fallou, L., Bossu, R., Landès, M., Roch, J., Roussel, F., Steed, R., et al. (2020). Citizen seismology without seismologists? Lessons learned from Mayotte leading to improved collaboration. Front. Commun. 5:49. doi: 10.3389/fcomm.2020.00049

Fallou, L., Marti, M., Dallo, I., and Corradini, M. (2022). How to fight earthquake misinformation? A communication guide. Seismol. Res. Lett. 93, 2418–2422. doi: 10.1785/0220220086

Flores-Saviaga, C., and Savage, S. (2021). Fighting disaster misinformation in Latin America: the #19S Mexican earthquake case study. Pers. Ubiquit. Comput. 25, 353–373. doi: 10.1007/s00779-020-01411-5

Freiling, I., Krause, N. M., Scheufele, D. A., and Brossard, D. (2021). Believing and sharing misinformation, fact-checks, and accurate information on social media: the role of anxiety during COVID-19. New Media Soc. 25, 141–162. doi: 10.1177/14614448211011451

Goodwin, R., and Peters, E. (2024). "Communicating with numbers: challenges and potential solutions" in Communicating Risk and Safety. eds. T. Sellnow and D. Sellnow (Berlin, Boston: De Gruyter Mouton), 33–56.

Gori, P. L. (1993). The social dynamics of a false earthquake prediction and the response by the public sector. Bull. Seismol. Soc. Am. 83, 963–980. doi: 10.1785/BSSA0830040963

Harjani, T., Basol, M.-S., Roozenbeek, J., and van der Linden, S. (2023). Gamified inoculation against misinformation in India: a randomized control trial. J. Trial Error 3, 14–56. doi: 10.36850/e12

Huang, Y. L., Starbird, K., Orand, M., Stanek, S. A., and Pedersen, H. T. (2015). “Connected through crisis: Emotional proximity and the spread of misinformation online” in Proceedings of the 18th ACM conference on Computer Supported Cooperative Work & Social Computing, 969–980.

ICRC (2017). Humanitarian futures for messaging apps. Understanding the Opportunities and Risks for Humanitarian Action.

Jang, J., Lee, E.-J., and Shin, S. Y. (2019). What debunking of misinformation does and doesn’t. Cyberpsychol. Behav. Soc. Netw. 22, 423–427. doi: 10.1089/cyber.2018.0608

Komendantova, N., Ekenberg, L., Svahn, M., Larsson, A., Shah, S. I. H., Glinos, M., et al. (2021). A value-driven approach to addressing misinformation in social media. Human. Soc. Sci. Commun. 8:33. doi: 10.1057/s41599-020-00702-9

Kwanda, F. A., and Lin, T. T. C. (2020). Fake news practices in Indonesian newsrooms during and after the Palu earthquake: a hierarchy-of-influences approach. Inf. Commun. Soc. 23, 849–866. doi: 10.1080/1369118X.2020.1759669

Lacassin, R., Devès, M., Hicks, S., Ampuero, J., Bruhat, L., Wibisono, D., et al. (2019). Rapid collaborative knowledge building via Twitter after significant geohazard events. Geoscience Communication Discussions, EGU – European Geosciences Union, 23.

Lamontagne, M., and Flynn, B. W. (2014). Communications in the aftermath of a major earthquake: bringing science to citizens to promote recovery. Seismol. Res. Lett. 85, 561–565. doi: 10.1785/0220130118

Larson, H. J. (2018). The biggest pandemic risk? Viral misinformation. Nature 562:309. doi: 10.1038/d41586-018-07034-4

Lewandowsky, S., Ecker, U. K. H., Seifert, C. M., Schwarz, N., and Cook, J. (2012). Misinformation and its correction. Psychol. Sci. Public Interest 13, 106–131. doi: 10.1177/1529100612451018

Lewandowsky, S., and van der Linden, S. (2021). Countering misinformation and fake news through inoculation and Prebunking. Eur. Rev. Soc. Psychol. 32, 348–384. doi: 10.1080/10463283.2021.1876983

Lu, Y., and Huang, Y. (2024). "Emotions in risk and crisis communication: An individual and networked perspective" in Communicating Risk and Safety. eds. T. Sellnow and D. Sellnow (Berlin, Boston: De Gruyter Mouton), 123–142.

Mărcău, F. C., Peptan, C., Băleanu, V. D., Holt, A. G., Iana, S. A., and Gheorman, V. (2023). Analysis regarding the impact of 'fake news' on the quality of life of the population in a region affected by earthquake activity. The case of Romania-northern Oltenia. Front. Public Health 11:1244564. doi: 10.3389/fpubh.2023.1244564

Marwick, A. E. (2018). Why do people share fake news? A sociotechnical model of media effects. Georgetown Law Technol. Rev. 2, 474–512.

Mehta, A., Tyquin, E., and Bradley, L. (2024). “The role of trust and distrust in risk and safety communication” in Communicating Risk and Safety. eds. T. Sellnow and D. Sellnow (Berlin, Boston: De Gruyter Mouton), 529–550.

Melgar, D., Taymaz, T., Ganas, A., Crowell, B., Öcalan, T., Kahraman, M., et al. (2023). Sub- and super-shear ruptures during the 2023 Mw 7.8 and Mw 7.6 earthquake doublet in SE Türkiye. Seismica 2. doi: 10.26443/seismica.v2i3.387

Mero, A. (2019). In quake-rattled Albania, journalists detained on fake news charges after falsely warning of aftershocks. VOA News. Available at: https://www.voanews.com/a/europe_quake-rattled-albania-journalists-detained-fake-news-charges-after-falsely-warning/6176290.html

Miyazaki, K., Uchiba, T., Tanaka, K., An, J., Kwak, H., and Sasahara, K. (2023). “This is fake news”: Characterizing the spontaneous debunking from twitter users to COVID-19 false information. Available at: https://github.com/Mmichio/spontaneous

Novaes, C. D., and de Ridder, J. (2021). “Is fake news old news?” in The Epistemology of Fake News (Oxford: Oxford University Press), 156–179.

Peppoloni, S. (2020). Geoscientists as social and political actors. EGU General Assembly Conference Abstracts.

Peppoloni, S., and Di Capua, G. (2012). Geoethics and geological culture: Awareness, responsibility and challenges. Ann. Geophys. 55, 335–341. doi: 10.4401/ag-6099

Porter, E., and Wood, T. J. (2024). Factual corrections: Concerns and current evidence. Curr. Opin. Psychol. 55:101715. doi: 10.1016/j.copsyc.2023.101715

Romanet, P. (2023). Could planet/sun conjunctions be used to predict large (moment magnitude ≥ 7) earthquakes? Seismica 2. doi: 10.26443/seismica.v2i1.528

Scheufele, D. A., Hoffman, A. J., Neeley, L., and Reid, C. M. (2021). Misinformation about science in the public sphere. Proc. Natl. Acad. Sci. 118, 522–526. doi: 10.1073/pnas.2104068118

Sellnow, T., and Sellnow, D. (2024). Communicating Risk and Safety. Berlin, Boston: De Gruyter Mouton.

Statista (2022). Twitter users in the world. Available at: https://fr.statista.com/statistiques/1305937/nombre-utilisateurs-twitter-pays/

Steed, R. J., Fuenzalida, A., Bossu, R., Bondár, I., Heinloo, A., Dupont, A., et al. (2019). Crowdsourcing triggers rapid, reliable earthquake locations. Sci. Adv. 5, 1–7. doi: 10.1126/sciadv.aau9824

Tandoc, E. C., Lim, Z. W., and Ling, R. (2018). Defining “fake news”. Digit. Journal. 6, 137–153. doi: 10.1080/21670811.2017.1360143

Treen, K. M. I., Williams, H. T. P., and O’Neill, S. J. (2019). Online misinformation about climate change. Wires Clim. Change 11, 1–20. doi: 10.1002/wcc.665

Turillazzi, A., Taddeo, M., Floridi, L., and Casolari, F. (2023). The digital services act: an analysis of its ethical, legal, and social implications. Law Innov. Technol. 15, 83–106. doi: 10.1080/17579961.2023.2184136

Umihara, J., and Nishikitani, M. (2013). Emergent use of Twitter in the 2011 Tohoku earthquake. Prehosp. Disaster Med. 28, 434–440. doi: 10.1017/S1049023X13008704

van der Linden, S. (2022). Misinformation: susceptibility, spread, and interventions to immunize the public. Nat. Med. 28, 460–467. doi: 10.1038/s41591-022-01713-6

Vaughan-Nichols, S. (2023). Twitter seeing “record user engagement”? The data tells a different story. ZDNET. Available at: https://www.zdnet.com/article/twitter-seeing-record-user-engagement-the-data-tells-a-different-story/

Vese, D. (2022). Governing fake news: the regulation of social media and the right to freedom of expression in the era of emergency. Eur. J. Risk Regul. 13, 477–513. doi: 10.1017/err.2021.48

Vicari, R., and Komendantova, N. (2023). Systematic meta-analysis of research on AI tools to deal with misinformation on social media during natural and anthropogenic hazards and disasters. Humanities and Social Sciences Communications 10, 1–14. doi: 10.1057/s41599-023-01838-0

Wojcik, S., Hilgard, S., Judd, N., Mocanu, D., Ragain, S., Hunzaker, M. B., et al. (2022). Birdwatch: crowd wisdom and bridging algorithms can inform understanding and reduce the spread of misinformation. arXiv preprint arXiv:2210.15723.

Yeo, S. K., and McKasy, M. (2021). Emotion and humor as misinformation antidotes. Proc. Natl. Acad. Sci. USA 118, 1–7. doi: 10.1073/pnas.2002484118

Keywords: misinformation, earthquake prediction, prebunking, debunking, social media, automation, Morocco, Turkey

Citation: Fallou L, Bossu R and Cheny J-M (2024) Prebunking earthquake predictions on social media. Front. Commun. 9:1391480. doi: 10.3389/fcomm.2024.1391480

Received: 25 February 2024; Accepted: 03 June 2024;

Published: 19 June 2024.

Edited by:

Timothy L. Sellnow, University of Central Florida, United States

Reviewed by:

Joel Iverson, University of Montana, United States

Copyright © 2024 Fallou, Bossu and Cheny. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Laure Fallou, laure.fallou@hotmail.fr

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
